Direct from Development: Tech Notes

Empowering Server Power Efficiency Profiles: Unleashing Power Savings in Bills & Usage

Tue, 16 Apr 2024 15:48:44 -0000

|Read Time: 0 minutes

Introduction

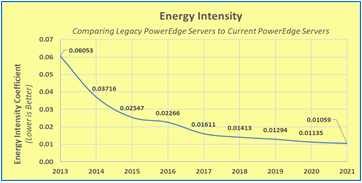

Over the last few years, the cost of power has continued to increase alongside the amount of power used in most data centers. Given these trends, customers are searching for strategies to reduce both the economic and environmental footprint of powering their server estates.

Simple strategies include virtualization and consolidation to reduce the number of physical servers, identifying zombie servers to be retired, and replacing older, less efficient servers with newer servers offering improved performance per watt.

BIOS System Profile Settings

Beyond the aforementioned strategies, Dell PowerEdge server customers can increase their power savings by selecting CPU power management and energy efficient policy settings in the system BIOS. These settings configure a collection of the rules that relate to server chip set behavior, including CPU C-state and CPU turbo mode, to increase power usage efficiency.

Selecting the most relevant setting can reduce CPU power demands while continuing to meet performance requirements to produce significant long-term cost savings. For example, in Intel®-based PowerEdge servers, customers can enable Dynamic Application Power Management (DAPC), which allows the BIOS to manage processor power states in order to achieve maximized performance per watt at all utilization levels. The full details of BIOS System Profile Settings can be found in the white paper, Set-up BIOS on the 16th Generation of PowerEdge Servers.

Testing and results

To demonstrate the effectiveness of the various profiles on power efficiency and server performance settings, SPEC Power® 2008 version 1.11.0 benchmarking was run for each setting. The SPEC Power® benchmark exercises the server at ten workload levels and combines power and performance into a single metric that measures power efficiency in operations per watt.

Table 1. SPEC Power® benchmark results

Max Perf Performance | DAPC Performance | DAPC Balanced Perf | DAPC Balanced Energy | DAPC Energy Efficient | |

SPEC Power® Score | 8621 | 10311 | 10378 | 11105 | 11564 |

SPEC Power® 100% OP/s | 8,383,505 | 8,380,816 | 8,399,796 | 8,402,421 | 8,451,740 |

SPEC Power® 100% Watts | 602 | 602 | 602 | 602 | 602 |

SPEC Power® 100% Score PPR | 13924 | 13921 | 13943 | 13956 | 14036 |

SPEC Power® 60% OP/s | 5,052,076 | 5,047,622 | 5,068,899 | 5,051,143 | 5,066,320 |

SPEC Power® 60% Watts | 549 | 488 | 477 | 392 | 360 |

SPEC Power® 60% Score PPR | 9198 | 10343 | 10624 | 12890 | 14084 |

SPEC Power® Idle Watts | 269 | 125 | 125 | 121 | 122 |

We selected a Dell PowerEdge server with dual Intel® 6448Y 2.1GHz 32 cores with 256GB ram for the test. The SPEC Power® benchmark was run by the Dell Technologies Server Performance Analysis (SPA) team in the Dell Technologies Austin Server Performance lab. The summary of the results in Table 1 shows that using DAPC/Energy Efficient policy delivered the best overall SPEC Power® score with comparable performance. Looking at the individual results more closely, a server at 100% utilization has the same power usage irrespective of the BIOS profile. However, given that most customers are not running their servers at 100%, the 60% results have been highlighted, demonstrating the power savings available for a representative customer.

Substantial energy efficiency delivered

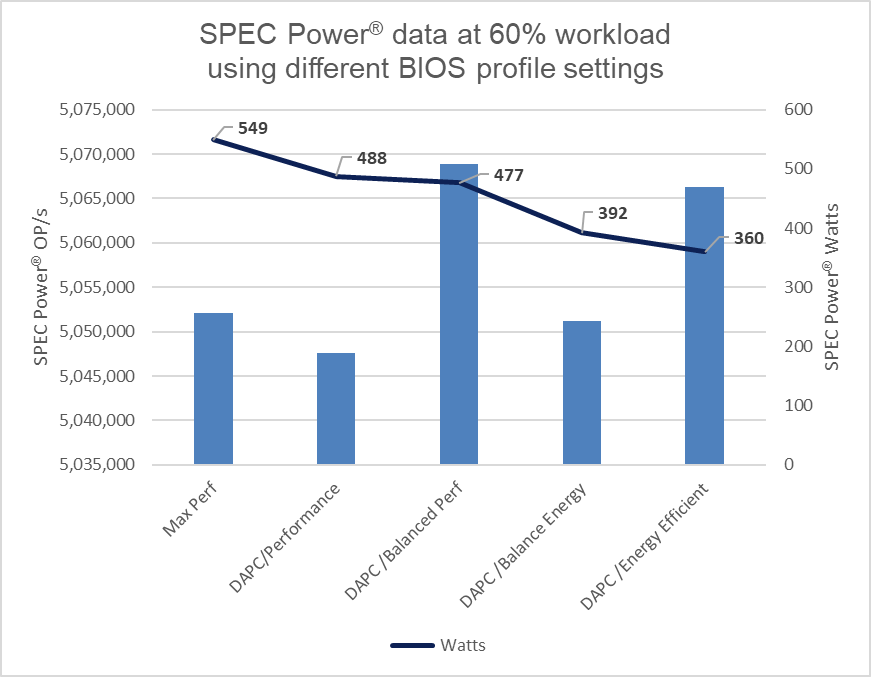

Figure 1. SPEC Power® results at 60%

The DAPC/Energy Efficient policy delivered 35% more savings in power usage as compared to the Max Performance profile.

Considering the average EU energy costs of $0.21[1] for an estate of 100 servers running at 60% load, there is a potential savings of $380,797 in energy costs over four years when comparing the Max Performance profile to the Energy Efficient policy. For a 1000-server estate, these potential savings increase to $1,523,188, all while maintaining server performance.

Those who have purchased an electric car in the last few years know that the range advertised by the manufacturer can differ to the mileage delivered in the real world. Treat these Dell Technologies results as guidance. It is recommended that customers run their own testing using their workloads.

These results are from Dell Technologies in-house testing as of January 2024. The cost of power was sourced from Consumer Energy Prices in Europe (qery.no). The full spec2008 results are posted on spec.org.

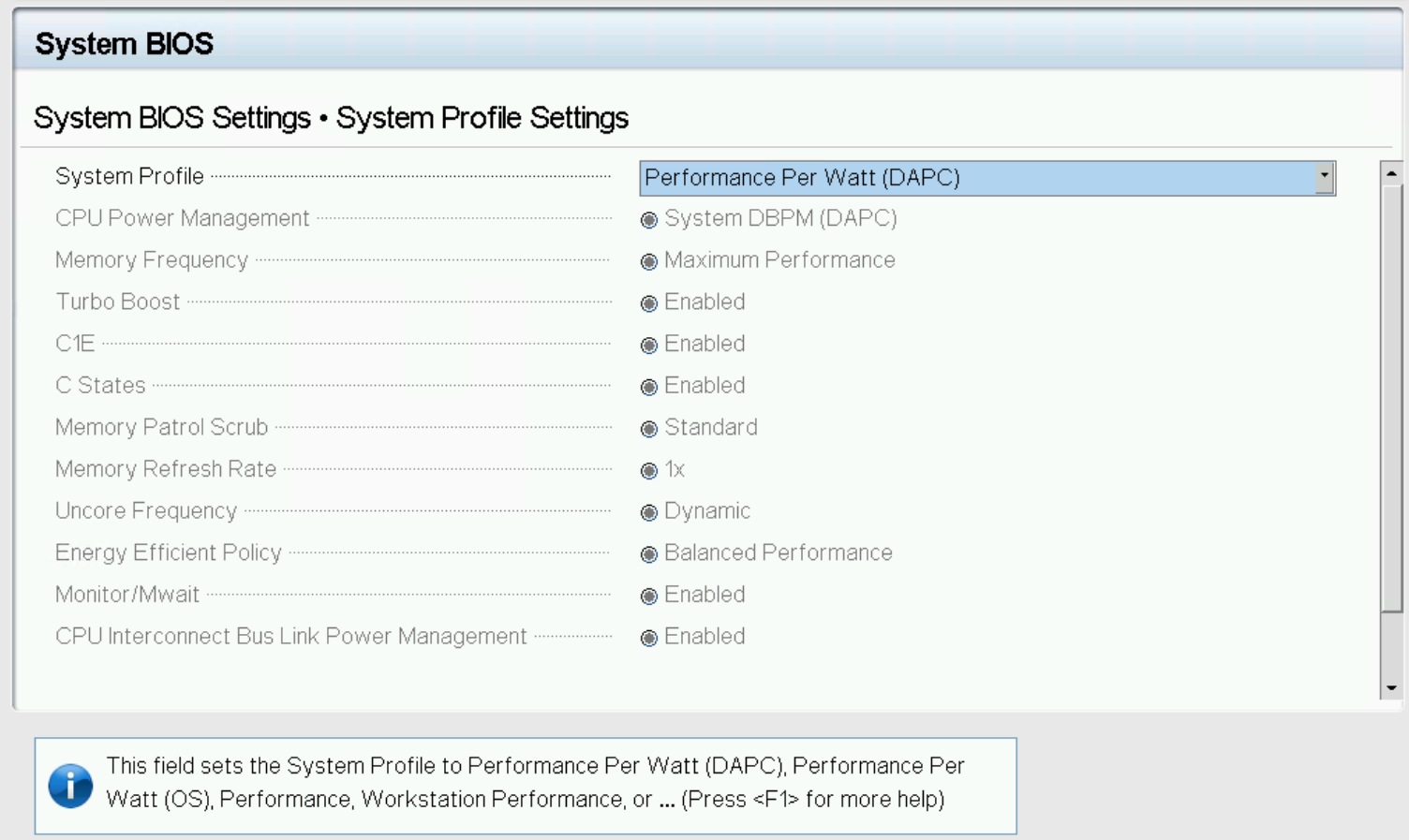

Changing BIOS profiles

BIOS profiles can be set several ways, the simplest being from the server BIOS access at boot using the <F2> key. That said, when faced with more than a few servers, this method becomes very time-consuming. There are a number of methods to automate this process, including running a script at the iDRAC API level or using a server configuration profile. A server configuration profile (SCP) is sometimes referred to as a template and can be used to bundle the system profile setting into the server firmware configuration. Using a tool such as OpenManage Enterprise (OME), a server template can then be deployed to each server’s iDRAC—or Dell remote access controller—to streamline and automate the application of these BIOS settings.

Figure 2. System profile in BIOS setup

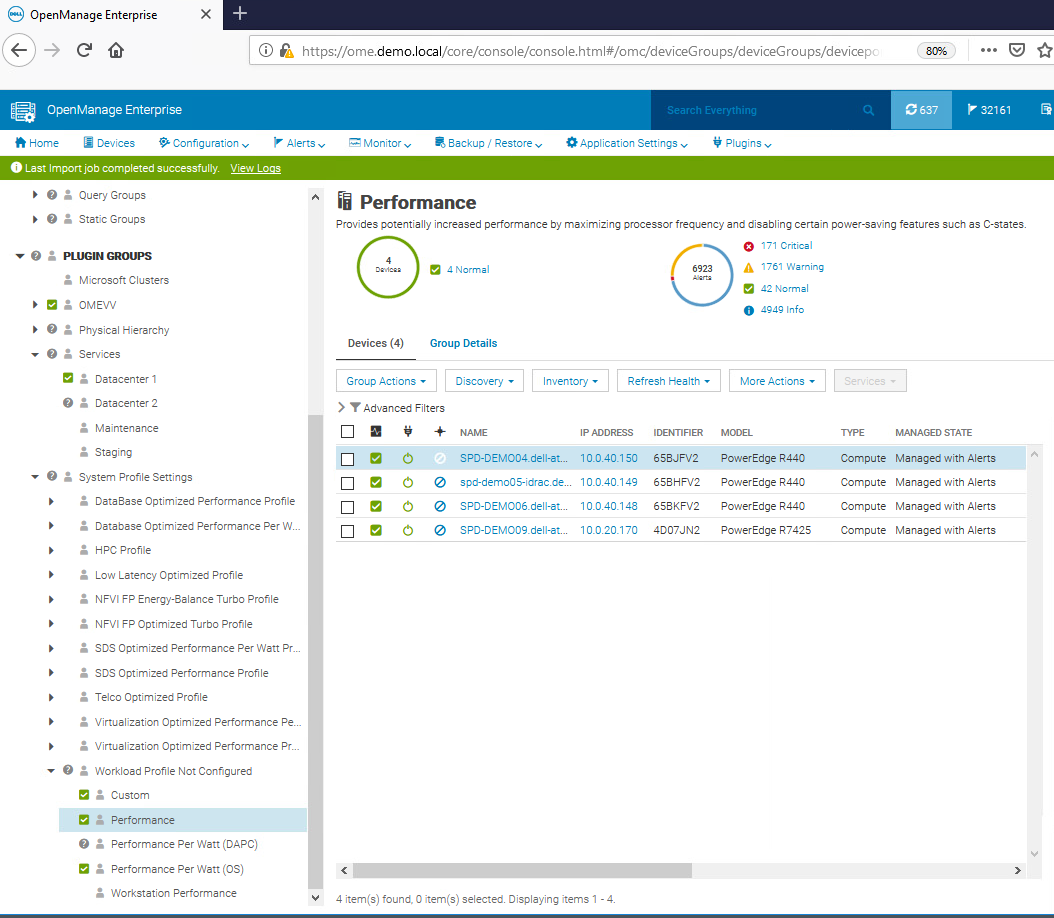

For customers who want to track and report these settings on Dell PowerEdge servers, the Dell OME Power Manager plugin for OpenManage Enterprise enables the automatic grouping of servers by profile, displaying this information on the GUI as shown in Figure 3. The Power Manager plugin also offers a ready-to-run report template that breaks down the entire server estate, grouped by server profile. This report can be scheduled or run ad hoc.

Figure 3. OpenManage Enterprise displaying BIOS profiles

System profiles and BIOS settings in detail

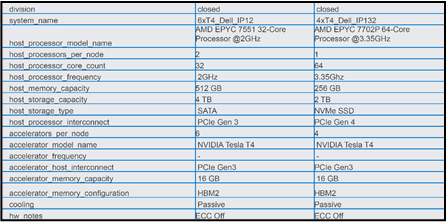

The following tables provide detailed background information about each system profile and the BIOS settings they alter for Intel®- and AMD-based PowerEdge servers.

Table 2. Intel® Platform System Profile

System Profile Settings | Performance Per Watt Optimized (DAPC) | Performance Per Watt Optimized (OS) | Performance | Workstation Performance |

CPU Power Management | System DBPM (DAPC) | OS DBPM | Maximum Performance | Maximum Performance |

Memory Frequency | Maximum Performance | Maximum Performance | Maximum Performance | Maximum Performance |

Turbo Boost | Enabled | Enabled | Enabled | Enabled |

Energy Efficient Turbo | Enabled | Enabled | Disabled | Disabled |

C1E | Enabled | Enabled | Disabled | Disabled |

C-States | Enabled | Enabled | Disabled | Enabled |

Memory Patrol Scrub | Standard | Standard | Standard | Standard |

Memory Refresh Rate | 1x | 1x | 1x | 1x |

Uncore Frequency | Dynamic | Dynamic | Maximum | Maximum |

Energy Efficient Policy | Balanced Performance | Balanced Performance | Performance | Performance |

Monitor/Mwait | Enabled | Enabled | Enabled | Enabled |

CPU Interconnect Bus Link Power Management | Enabled | Enabled | Disabled | Disabled |

PCI ASPM L1 Link Power Management | Enabled | Enabled | Disabled | Disabled |

Workload Configuration | Balance | Balance | Balance | Balance |

Table 3. AMD Platform System Profile

System Profile Settings | Performance Per Watt Optimized (OS) | Performance |

CPU Power Management | OS DBPM | Maximum Performance |

Memory Frequency | Maximum Performance | Maximum Performance |

Turbo Boost | Enabled | Enabled |

C-States | Enabled | Disabled |

Memory Patrol Scrub | Standard | Standard |

Memory Refresh Rate | 1x | 1x |

PCI ASPM L1 Link Power Management | Enabled | Disabled |

Determinism Slider | Power Determinism | Power Determinism |

Power Profile Select | High Performance Mode | High Performance Mode |

PCIE Speed PMM Control | Auto | Auto |

EQ Bypass To Highest Rate | Disabled | Disabled |

DF PState Frequency Optimizer | Enabled | Enabled |

DF PState Latency Optimizer | Enabled | Enabled |

Host System Management Port (HSMP) Support | Enabled | Enabled |

Boost FMax | 0 - Auto | 0 - Auto |

Algorithm Performance Boost Disable (ApbDis) | Disabled | Disabled |

Dynamic Link Width Management (DLWM) | Unforced | Unforced |

Conclusion

When implementing strategies for increasing server energy efficiency, selecting a BIOS system profile can result in significant power savings with minimal or no server performance degradation. The power cost savings for a 1000-server estate could potentially be $1,390,737 over four years. Additionally, as a result of low processor power consumption, the load on the cooling system in the data center is reduced, increasing savings on energy costs and power. Customers running an estate of Dell PowerEdge servers should review their use of these BIOS settings for their server workloads to better understand how these profiles can help to reduce power usage and lower energy bills.

References

- SPEC Power® benchmark details

- Dell Server BIOS attributes

- Infographic: Save energy and save money with Dell OpenManage Enterprise Power Manager

[1] For non-household consumers such as industrial, commercial, and other users not included in the households sector, average electricity prices in the EU stood at €0.21 per kWh (excluding VAT and other recoverable taxes and levies) for the first half of 2023 according to the latest Eurostat data, Consumer Energy Prices in Europe (qery.no)

Authors: Mark Maclean, PowerEdge Technical Marketing Engineering; Kevin Locklear, ISG Sustainability; Donald Russell, Senior Performance Engineer, Solution Performance Analysis

Dell 277V AC & HVDC Power Supplies

Wed, 25 Oct 2023 21:40:08 -0000

|Read Time: 0 minutes

Introduction

This Direct from Development (DfD) tech note describes how mixed-mode 277V AC & HVDC (260-400V DC) power supplies are important, and how they can benefit our customers.

Data center power and high voltages

Although 208V AC is the traditional voltage used in US data centers, there has been a shift towards higher voltages in recent years. Some modern data centers are adopting 415V AC or even 480V AC 3-phase, to further enhance energy efficiency.

480V AC 3-phase is becoming increasingly popular due to its many advantages over traditional 415V AC 3-phase or 240V AC single-phase:

- Less current is needed to deliver the same amount of power as 415V or 240V. Less resistance means less energy loss in cables and electrical components.

- Reduced wiring costs: 480V allows the use of higher gauge, thinner, cheaper wires. This also means reduced installation costs.

- Increased power density: By using 480V, data centers can increase their power density, because more power can be delivered using the same amount of space.

Data centers that are getting 480V 3-phase power from the utility typically convert it to 208V/120V single-phase. To do that, they use transformers. But even with the most efficient transformer, there are power losses during the conversion (around 3%). This is where PSUs that can support voltages higher than 240V AC become relevant.

About 277V AC

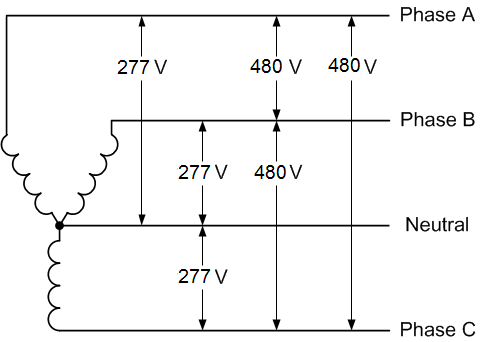

To avoid transformer losses and bring single-phase high-voltage directly to the server PSU, a convenient option exists: splitting 480V AC 3-phase into 277V AC single-phase lines.

To do that, data centers typically use Line-to-Neutral Power Distribution Units (PDUs), which divide the 480V AC 3-phase power into three separate 277V AC single-phase lines, by using “Wye” (Y) wiring:

In simple words, 277V AC is derived from 480V AC. If the PSU can directly support 277V AC, there is no need for conversion and no need for a transformer.

That is the first benefit of high-voltage PSUs: they help data centers reduce their energy consumption at the power distribution level.

The advantages of 277V AC

To understand how higher voltage can bring better efficiency, we must look at the basic principle of Power transmission.

Power, measured in watts, is the rate at which energy is transferred. It is proportional to Voltage multiplied by Current:

- Power (Watts) = Electric Tension (Volts) x Current (Amps)

Therefore, if the voltage is increased, the current required to transmit a given amount of power can be reduced. For example, to transmit 10kW of power:

- at 230V AC, the current required is 10kW / 230V = 43.5A

- at 277V AC, the current required is only 10kW / 277V = 36.1A

When transmitting power at 277V AC, the energy loss due to resistance is lower than at 230V AC. Less current (Amps) is needed, resulting in higher efficiency, and potentially significant energy savings.

Less current also means we can use higher gauge (thinner) wires, which use less copper. This can help with space optimization, by either reducing the overall volume of wires or increasing the power density.

So, although 200V-240V AC is the most common voltage range in data centers nowadays, using 277V AC brings considerable advantages:

- Reduced energy losses, resulting in Opex savings

- Reduced equipment expenses and reduced copper

- Reduced space requirements and fewer cable runs in the plenum

- Fewer breakers at the Remote Power Panel (RPP)

- Increased capacity

277V AC business opportunities

Here are the most common business opportunities for 480V AC at the rack and 277V AC at the PSU:

- Large RFPs from the US Federal Government

- HPC customers (optimized power delivery to the cluster and lower energy cost)

- AI/ML customers with high-end GPU-dense platforms

- Any customer looking for more efficiency, energy savings, and carbon footprint reduction

HVDC (260V to 400V DC)

Our high-voltage mixed-mode power supplies support these two input voltages:

- 277V AC

- HVDC (High-Voltage 260-400V DC), this voltage is mostly used by Telecommunications customers.

The advantages of HVDC

High Voltage DC has many advantages over AC and -48V DC in the Telco space:

1. Higher Efficiency:

- vs AC: DC power distribution is usually more efficient than AC power distribution, and higher voltages can improve this efficiency further. Also, fewer conversion stages are needed with HVDC (typically two instead of four).

- vs -48V DC: by increasing the voltage from -48V DC to 400V DC, power losses due to electrical resistance in cables and other components can be reduced, resulting in higher overall efficiency.

2. Lower Cost:

- vs AC: Less Capex with simplified installation and gradual investments compared to traditional AC UPS. Less Opex because easier maintenance leads to lower maintenance costs. HVDC also requires less cooling than AC due to fewer conversions.

- vs -48V DC: Less Capex because 400V DC requires fewer cables (less copper) and smaller/fewer components. Less current is needed compared to -48V DC for the same amount of power, and fewer joules are lost in the distribution.

3. Smaller Footprint:

- Because 400V DC requires fewer cables and electrical components, it can be easier to install. It can also use less physical space within the data center. This can be especially advantageous in high-density environments where space is at a premium.

HVDC business opportunities

Here are the most common business opportunities for HVDC 260-400V DC power:

- Telecom customers who are modernizing their infrastructure, either at the data center level, or at the mobile network level (antennas) where -48V DC is being replaced by 400V DC.

- The adoption of 400V DC power in telecommunications is also driven by standardization efforts. Organizations such as the Telecommunications Industry Association (TIA) and the International Electrotechnical Commission (IEC) have developed standards for higher-voltage DC power distribution in telecommunication applications.

- Renewable energy systems: solar photovoltaic installations, for example, can use 400V DC power.

- Electric Vehicle Charging Stations: EV charging stations can employ 400V DC power for fast charging capabilities. DC charging allows for direct power transfer to the vehicle's battery, reducing charging time and minimizing energy losses.

Conclusion

Modern workloads such as Generative AI or HPC require more and more power, but energy costs are rising at the same time. Therefore, companies all over the world must solve new technical and economic challenges, to power and cool down their infrastructure.

Different solutions are available today to reduce power consumption, such as using more efficient PSUs. For example, 80Plus Titanium PSUs can reach up to 96% efficiency, with only 4% of the electricity lost into heat. This type of PSU can significantly reduce power consumption and cooling costs.

Better efficiency is also achievable by right-sizing the PSU. Overloading and underloading will have a negative impact. A 50% load is considered optimal to get the best efficiency.

For example, when tested at 240V AC, a Dell 1100W Titanium PSU has the following efficiencies:

Dell 1100W Titanium PSU | ||||

PSU load in % | 10% | 20% | 50% | 100% |

PSU load in Watts | 110W | 220W | 550W | 1100W |

PSU efficiency at 240V AC | 90% | 94% | 96% | 91.5% |

But as we look at other ways to maximize power efficiency, we must also consider input voltages and the power distribution itself.

Data centers can achieve massive savings if:

- They can avoid using transformers to convert the high-voltage they get from the power utility, down to the traditional 208-240V AC

- The servers can directly take 277V AC or HVDC with appropriate PSUs

So, as we focus on sustainability, using higher voltages is an impactful way to achieve better power efficiency, reduce energy costs, and reduce the overall carbon footprint.

As data centers continue to grow, in size and number, the impact on the environment increases as well. Therefore, it is important for companies to consider not only the economic implications of their energy usage but also the environmental implications, and work towards more sustainable practices.

Dell is convinced that 277V AC & HVDC voltages can help customers solve some of the complex problems related to power distribution and achieve better sustainability overall.

We are expecting a growing market and infrastructure renewals to support high voltages in the upcoming years. For this reason, our plan is to expand our portfolio of 277V AC & HVDC PSUs and progressively increase the number of compatible platforms.

Choosing the Most Appropriate Server SDD Interfaces: E3.S, NVMe, SAS, or SATA

Sun, 10 Sep 2023 15:32:11 -0000

|Read Time: 0 minutes

Summary

This document is a straightforward guide to help PowerEdge customers choose the most appropriate SSD type, based on their business needs and goals.

As new generations of CPUs and servers are released, they frequently bring new technologies such as increased PCIe bus speeds and new storage formats, such as the EDSFF E3.S form factor for NVMe PCIe 5 Solid State Drives (SSDs), as released in early 2023. PowerEdge customers can optimize their local storage configurations based on their applications and business needs. Multiple factors must be taken into consideration to make an informed decision, such as workload demands, budget, scale, and even roadmap. Still, when all of these factors are understood, it can be difficult to determine the best choice of SSD interface among NVMe, SAS, Value SAS, and SATA.

This DfD (Direct from Development) tech note is provided to simplify and guide customers in their choice of SSD. We hope customers will find it to be a valuable resource when it becomes unclear which storage medium is the optimal choice. First, let’s summarize the history and architecture of the NVMe, SAS, Value SAS, and SATA SSD interfaces:

NVMe (Non-Volatile Memory Express)

Since it came to market in 2011, the NVMe interface remains the class of flash storage with the highest performance. The driving architectural differentiator of NVMe is that it uses the PCIe interface bus to connect directly to the CPU and streamline the data travel path. This design contrasts with SAS and SATA, which require data to first traverse to an HBA disk controller before reaching the CPU. By removing a layer from the stack, the travel path is optimized and produces reduced latency and improved performance. Scalability is also significantly improved, because NVMe drives can go beyond the traditional four lanes by using lanes from the same “pool” of lanes connected to the CPU. EDSFF including EDSFF E3.S are the next generation of NVMe SSDs. These form factors enable higher server storage density. Furthermore, NVMe performance continually improves as each new generation of the PCIe standard becomes available.

Figure 1. Latest Dell PowerEdge R7625 with 32 x E3.S drives

SAS (Serial Attached SCSI)

The SAS interface was released a few years after SATA and introduced new features that are beneficial for modern workloads. Instead of building upon the ATA (Advanced Technology Attachment) standard used in SATA, SAS serialized the existing parallel SCSI (Small Computer System Interface) standard. SAS cable architecture has four wires within two cables, creating more channels available for moving data and more connectors available for use by other devices. Furthermore, the channels are full duplex, allowing for reads and writes to traverse concurrently. Improved reliability, error reporting, and longer cable lengths were also introduced with SAS. Value SAS is often alongside SAS using the same interface but using lower performance devices, giving customers the technical benefit of SAS at a lower a price point. SAS improvements are made to this day, with SAS4 (24G) now available in certain supported PERC 12 (PowerEdge Raid Controller) configurations. For this reason, SAS still remains valuable and relevant within the market.

SATA (Serial Advanced Technology Attachment)

The SATA interface was released in 2000 and is still commonly adopted within modern servers because it is the most affordable of the SSD interface options. It replaced parallel ATA with serial ATA, which resolved various performance and physical limitations at that time. The SATA cable architecture has four wires within one cable—two for sending data and two for receiving data. These four channels are half-duplex, so data can only move in one direction at a time. At 6Gb/s, SATA write speeds are sufficient for storing information, but its read speeds are slow compared to more modern interfaces, which limits its application use for modern workloads. The last major SATA revision was in 2008, and SATA will not see further advancement in the future.

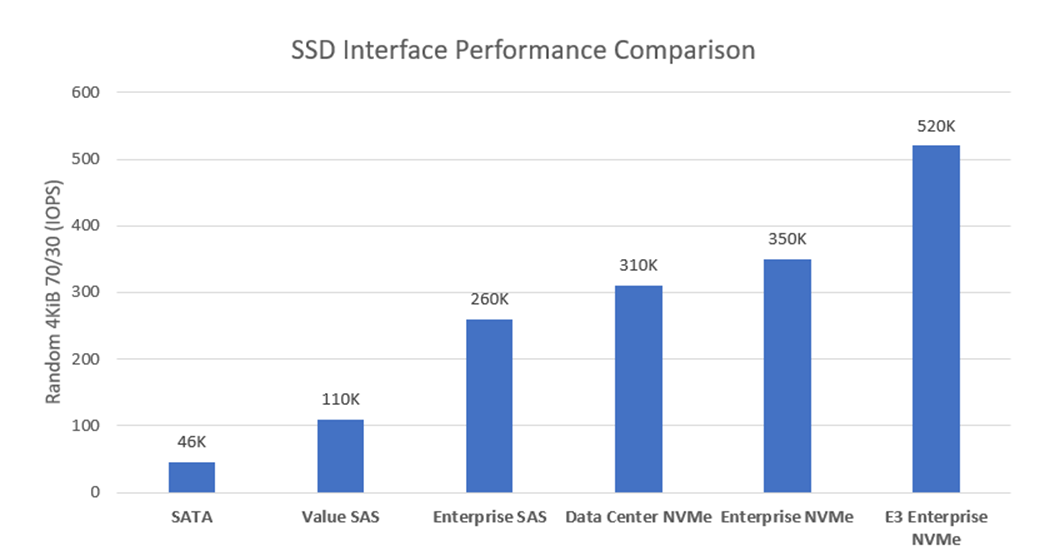

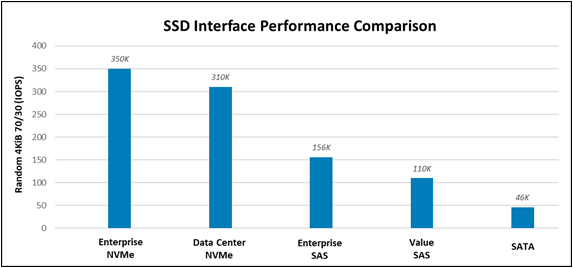

Figure 2. Random 4KiB 70% read / 30% write IOPS variances for each storage interface

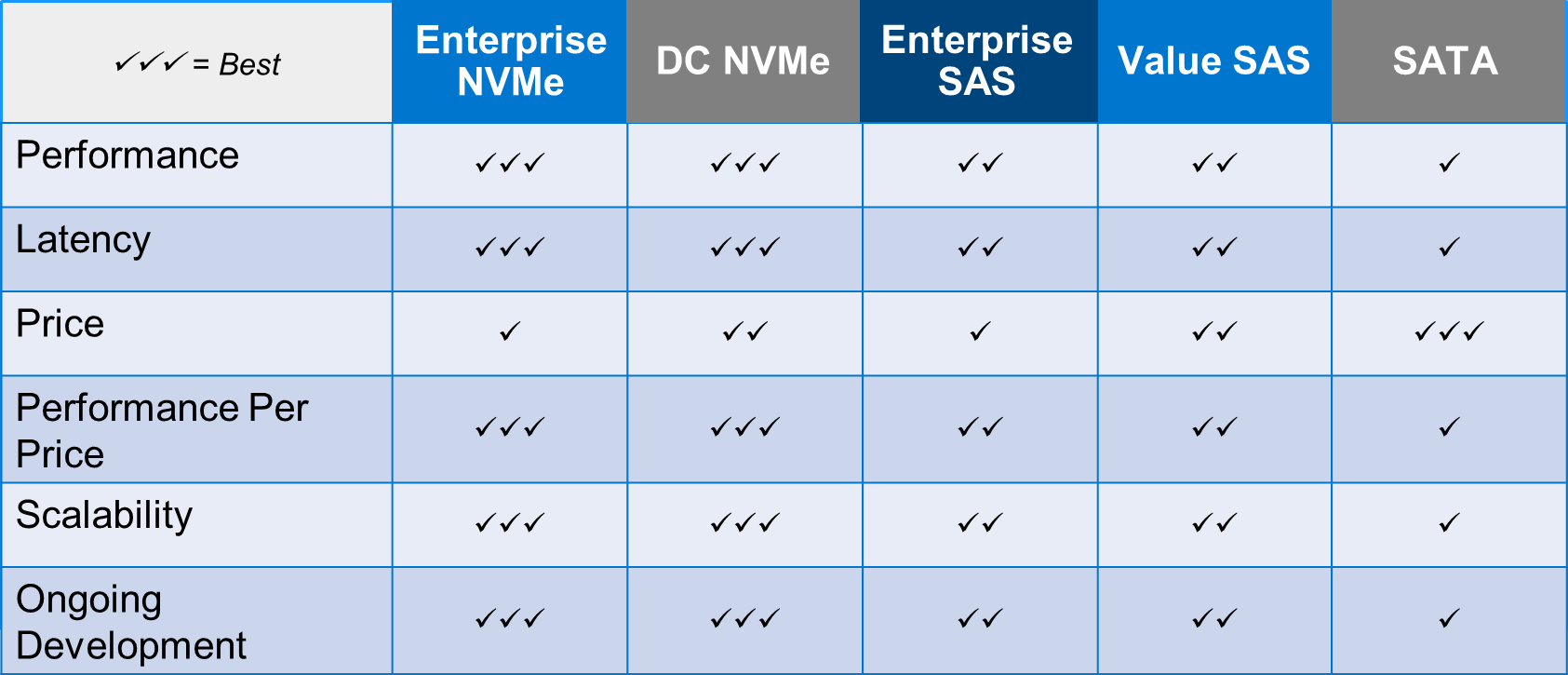

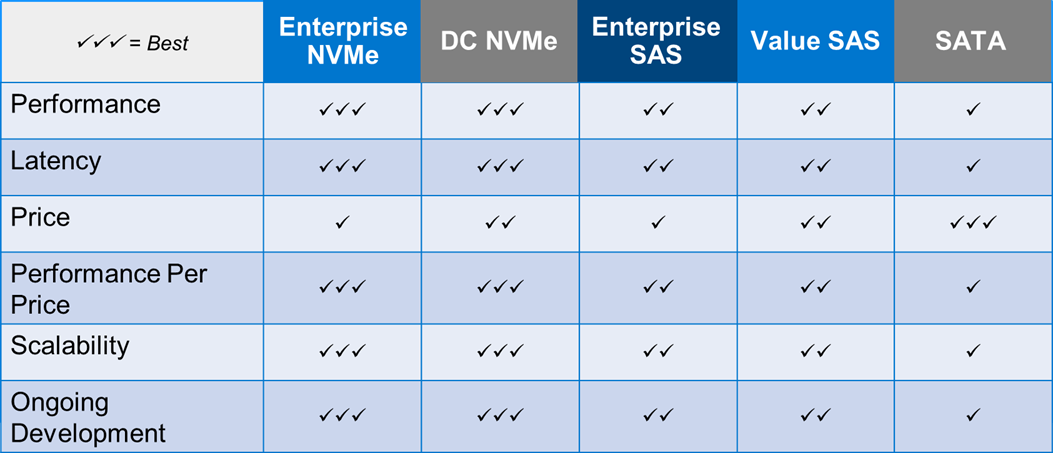

Table 1 lists key metrics for five storage-drive types most commonly attached to PowerEdge servers: Enterprise NVMe, Data Center (DC) NVMe, Enterprise SAS, Value SAS, and SATA. This comparison helps clarify which storage interface type is most applicable to specific business needs and goals.

Table 1. Ranking performance metrics of Enterprise NVMe, DC NVMe, Enterprise SAS, Value SAS, and SATA drives

Performance: Performance can be measured in various ways. For this example, Random 4 KiB 70/30 (70% reads, 30% writes) data was compared and published here by Dell, with higher IOPS being better. Figure 2 illustrates the following IOPS performance variances:

- E3.s NVMe Enterprise class drives produce 1.48x more IOPS than Enterprise NVMe SSDs.

- Enterprise NVMe SSDs produce 1.13x more IOPS than DC NVMe SSDs.

- DC NVMe SSDs produce 1.99x more IOPS than Enterprise SAS SSDs.

- Enterprise SAS SSDs produce 1.42x more IOPS than Value SAS SSDs.

Lastly, Value SAS SSDs produce 2.39x more IOPS than SATA. Random 4KiB 70% read / 30% write IOPS variances for each storage interface

Latency: The NVMe protocol reduces the number of touchpoints that data must travel (bypassing the HBA) to reach the CPU. It also has less overhead, giving it significantly lower latency than SAS and SATA. The SAS protocol is full-duplex (as opposed to half-duplex) and offers two channels (as opposed to one) for data to use, giving it over 50% lower latency than SATA.

Price: According to Dell pricing in Q1 2022, SATA SSDs are the least expensive storage interface, at ~0.9x the price of Value SAS SSDs. Value SAS SSDs are ~0.85x the price of DC NVMe SSDs. DC NVMe SSDs are ~0.85x the price of Enterprise SAS SSDs. Enterprise SAS SSDs are ~0.97x the price of Enterprise NVMe SSDs. Pricing is volatile and these number variances are subject to change at any time.

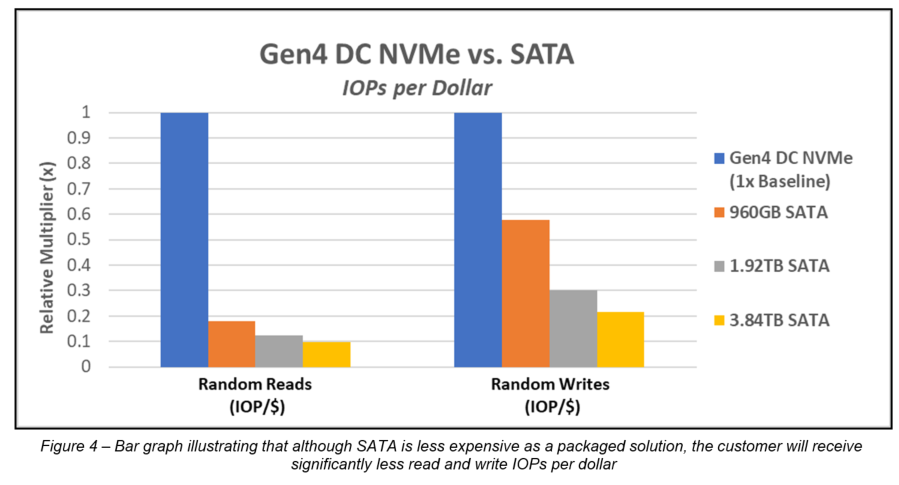

Performance per price: PowerEdge customers who have not identified which metric is most important for their business goals should strongly consider performance (IOPS) per price (dollar) to be at the top of the list. Because NVMe has such a significant performance lead over SAS and SATA, it is easily the golden standard for performance per price. DC NVMe SSDs have the best performance per price, followed closely by Enterprise DC NVMe SSDs, followed by Value SAS SSDs, followed closely by SAS SSDs, followed by SATA SSDs. This tech note gives more performance/price detail.

Scalability: Currently, NVMe shows the greatest promise for wider-scale implementation due to the abundance of lanes that can be available with low overhead. However, it can be a costly investment if existing data center infrastructures must be upgraded to support the NVMe I/O protocol. SAS is more flexible, because SAS expanders are cost-effective, and most data center infrastructures already have the required hardware to support it. However, SAS does not have the potential to scale out as aggressively as NVMe. SATA does not scale well with SSDs.

Ongoing development: The NVMe interface has consistent and substantial advancements year-over-year, including updates such as NVMe 2.0b (released in Oct. 2022) and PCIe 5.0 (released on Intel CPUs in Jan. 2023). The SAS interface also has regularly cadenced updates, but the impact is mostly marginal, except for the recent SAS4 (24G) update. There are no plans to extend the capabilities of the SATA interface beyond the current limitations.

Assigning these ranks for each storage interface and metric, and explaining why the rank was given, will make it easier to understand which drive type will be the most valuable in relation to business needs and goals.

Guidance in accordance with business goals

Each business is unique and will have different requirements for their storage drives. Factors such as intended workload, business size, plan to scale, budget, and so on, should be considered to make a confident investment decision. Although this decision is ultimately up to each business, we provide the following guidelines to help businesses that are still undecided to make an educated choice:

Enterprise NVMe SSD: Businesses that desire maximum performance and have a flexible budget should consider purchasing Enterprise NVMe SSDs. Storage I/O heavy workloads such as HPC or AI will immediately benefit from the additional cache gained from the non-volatile nature of this storage interface. The fast-paced performance growth seen in Enterprise NVMe SSDs will also allow smaller workloads like databases or collaboration to easily keep up with the ever-increasing size of data. Ultimately, because Enterprise NVMe undergoes consistent valuable changes every year, such as performance increases and cost reduction/optimization, we recommend futureproofing your data center with it.

DC NVMe SSD: Businesses that desire a budget conscious NVMe solution, in addition to the greatest value, should consider purchasing DC NVMe SSDs. These drives have the same value proposition as for Enterprise NVMe SSDs, but with a sizeable price reduction (0.83x) and performance hit (0.86x). Businesses that want to get the best value will be pleased to know that DC NVMe drives have the best performance-per-price.

Enterprise SAS: Businesses that desire to continue using their existing SCSI-based data center environment and have maximum SAS performance should consider purchasing Enterprise SAS SSDs. Although the Enterprise SAS interface does not currently have any ranking leadership for performance or pricing, it is established in the industry as highly reliable, cost-effective to scale, and shows promise for the future, with 24G available. Enterprise SAS SSDs will adequately handle medium-duty workloads, such as databases or virtualization, but will operate best when mixed with NVMe SSDs if any heavy-duty workloads are included.

Value SAS: Businesses that desire a budget-conscious SAS solution should consider purchasing Value SAS SSDs. These drives have the same value proposition as for Enterprise SAS SSDs, but with both a sizeable price reduction (0.73x) and performance hit (0.71x). For this reason, it has a slightly lower performance-per-price than Enterprise SAS, and is therefore more of a “value” play when compared to SATA. This storage interface has a purpose for existing though, because small-to-medium businesses with a smaller budget can leverage this lower-cost solution while still receiving the many benefits of the SAS interface.

SATA: Businesses that desire the lowest price storage interface should consider purchasing SATA SSDs. However, caution should be applied with this statement, because there is currently no other value proposition for SATA SSDs, and the price gap for these flash storage interfaces has been shrinking over time, which may eventually remove any valid reason for the existence of SATA. With that said, SATA is currently still a solid choice for light workloads that are not read-heavy.

Figure 3. Latest Dell PowerEdge MX760c with 8 x E3.S drives per sedge

Conclusion

The story of competing NVMe, SAS, and SATA storage interfaces is still being written. Five or more years ago, analysts made the argument that although NVMe has superior performance, its high cost warranted SAS the title of ‘best value for years to come’. What we see today is a rapidly shrinking price gap for all of these interfaces. We observe that SATA performance has fallen far behind SAS, and very far behind NVMe, with no plan to improve its current state. We also see NVMe optimizing its performance and price-point to yield more market share every year. Most importantly, we expect rapid growth in the industry adoption of heavier workloads and ever-increasing data requirements. Both storage drive and industry trends lead us to believe that the best option for any business desiring to build a future-proofed data center would be to begin making the investment in NVMe storage. However, the remaining types of storage still hold value for varying use cases. It is the customer’s choice about which storage type is best for their business goals. We hope this guide has helped to clarify the available options.

Firmware Device Order for PERC H750, H755, H350, and H355 Storage Controllers (Linux Only)

Thu, 20 Jul 2023 20:10:45 -0000

|Read Time: 0 minutes

Summary

Dell Technologies provides a feature to the PERC 11 family of controllers that gives users the limited ability to influence the ordering of devices within Linux operating systems.

This DfD tech note is intended to educate customers about this feature and its caveats. It also provides the necessary background about device enumeration.

Introduction

PERC 11-series controllers provide a feature called Firmware Device Order that provides limited operator control of the order of host-visible SCSI devices in compatible Linux distributions[1]. A This feature is called Firmware Device Order (FDO). When enabled, this feature influences the Linux kernel’s SCSI device enumeration (that is, the /dev/sdXX ordering).

This feature is particularly targeted to customers transitioning from PERC 9/10 controllers to PERC 11 on Dell’s 14G PowerEdge servers, while looking to maintain a consistent device order enumeration.

This document describes the design, control, and limitations of this feature.

Background

Linux device enumeration

The PERC device driver presents to the Linux kernel a pseudo-SCSI (Small Computing System Interface) adapter where the configured Virtual Drives (VDs) and Non-RAID drives are individual SCSI targets.

The PERC device driver does not directly control the SCSI disk drive enumeration. It is the kernel’s prerogative, for example, to use /dev/sda to refer to the first discovered drive. The feature in this DfD will enforce an ordering in the revealing of SCSI disk drives to the kernel.

PERC 11

PERC 11-series controllers support the concurrent existence of Non- RAID and Virtual Drives (VDs).

Under Linux, without Firmware Device Order enabled, the PERC driver enumerates any configured Non-RAID drives first, followed by VDs. This results in the Non-RAID drives having lower /dev/sdXX device assignments than VDs when listed alphabetically.

The ordering logic within the two groups – Non-RAID and Virtual Drives – differs between PERC H75x and PERC H35x. For details, see the following table:

Table 1. PERC 11-series default Linux enumeration

Group | Property | PERC H75x | PERC H35x |

1st | Type | Non-RAID | Non-RAID |

Ordering | Enclosure/Slot position order | Discovery order, | |

2nd | Type | Virtual Drives | Virtual Drives |

Ordering | Reverse creation order | Order of creation |

Although creating VDs while the OS is running is a supported PERC operation, note that newly created devices may not adhere to the ordering rules in Table 1. After a restart, those rules apply.

Creating a new VD after deleting Virtual Disks out-of-order might alter the presentation order (that is, deleting a VD other than the last VD, then creating a new VD).

The following table represents an example configuration where a PERC H75x controller has two VDs created and two Non-RAID drives. This ordering is what will appear in a Linux-based operating system enumeration after booting the system.

Table 2. PERC H75x default Linux enumeration example

Type | Description | Block Device |

Non-RAID

| Non-RAID in backplane slot 6 | /dev/sda |

Non-RAID in backplane slot 7 | /dev/sdb | |

Virtual Drives | Second VD created | /dev/sdc |

First VD created | /dev/sdd |

Note that for demonstration purposes, the block device enumeration is assumed to start as /dev/sda. That may not be the case in your system if the Linux kernel discovered other SCSI attached devices prior to enumeration of the drives attached to PERC.

Introducing the Firmware Device Order feature

Functionality

Firmware Device Order (FDO) alters the order of device presentation to the Linux kernel. It adds a third type - the designated boot volume. When enabled, the following order is used:

- Designated boot device

- Virtual Drives (VDs)

- Non-RAID drives

Table 3. PERC 11-series FDO Linux enumeration

Order | FDO enabled |

1st | Boot device |

2nd | Virtual Drives |

3rd | Non-RAID |

Firmware Device Order requires supported PERC 11-series controller firmware and a FDO aware Linux device driver. See the section Minimum required component versions.

Boot device

The boot device specified in the PERC controller will be presented first to the Linux kernel. The boot device may be chosen by the operator, or if none is chosen, the PERC controller automatically determines its designated boot device. Either a Virtual Drive or a Non-RAID drive can be a boot device. The PERC controller and driver use this information regardless of the system’s current boot mode and independent of whether the boot device was used to boot the current running operating system.

See the PERC 11 User’s Guide for further instructions about how to designate a boot device.

Virtual drives

After the optional boot device, the configured Virtual Drives will be presented to the Linux kernel in the order of creation (that is, the 1st VD created is presented 1st, the 2nd VD created is presented second, and so on).

Non-RAID drives

Non-RAID drives are presented after the VDs. Non-RAID drives are presented in the order of PERC’s discovery of the drives during system boot. This may not be the same as the ordering of enclosure/slot position of the drives.

Summary

The following table summarizes the Firmware Device Order behavior for PERC H75x and PERC H35x.

Table 4. PERC 11-series Firmware Device Order Linux enumeration

Group | Property | PERC H75x | PERC H35x |

1st | Type | Boot device | Boot device |

2nd | Type | Virtual Drives | Virtual Drives |

Ordering | Creation order | Creation order | |

3rd | Type | Non-RAID | Non-RAID |

Ordering | Discovery order, Not based on slot | Discovery order, Not based on slot |

How to enable Firmware Device Order

Overview

Firmware Device Order (FDO) is disabled by default. To enable FDO you can use the PERC System Setup Utility or the perccli utility. Note that FDO requires:

- Using or installing a compatible Linux-based operating system

- Using a compatible PERC Linux device driver

- Selecting a preferred boot device (see the Boot device section)

System setup

The PERC 11-series firmware includes a new Human Interactive Interface (HII) setting to enable the Firmware Device Order feature. This setting is on the Advanced Controller Properties page.

- Open the Dell PERC 11 Configuration Utility.

- Select Main Menu > Controller Management > Advanced Controller Properties.

- Select Firmware Device Order, then select the option desired.

- Confirm the change by selecting Apply Change.

Note that a system restart is necessary for an FDO enable or disable operation to take effect. See the section Manage PERC 11 Controllers Using HII Configuration Utility of the User's Guide for steps to enter and navigate in HII.

The perccli utility

You can use the perccli utility to query the current Firmware Device Order setting, and to enable/disable the feature (see the Minimum required component versions section).

To query the current setting:

# perccli /cx show deviceorderbyfirmware

To enable Firmware Device Order:

# perccli /cx set deviceorderbyfirmware=on

To disable Firmware Device Order:

# perccli /cx set deviceorderbyfirmware=off

where x is the controller instance for the PERC 11-series controller being targeted.

Note: A system restart is necessary for an FDO enable or disable operation to take effect.

Operating system support

Overview

The Firmware Device Order feature is only supported on Linux distributions. Enabling the feature on systems that run other operating systems, such as Microsoft Windows or VMware ESXi, will result in no VDs nor Non-RAID drives being visible in these operating systems. If this is attempted, disable the feature, and reboot your system. The contents on the underlying storage/devices are not affected by the setting.

Linux

A Firmware Device Order compatible device driver must be used on Linux-based distributions. Using an incompatible driver causes both VDs and Non-RAID drives to be hidden from the host.

The following table lists the minimum versions of the major Linux distributions that support the Firmware Device Order feature.

Table 5. FDO enabled distributions

Distribution | Inbox driver version |

RHEL 8.2 | 07.710.50.00-rh1 |

RHEL 7.8 | 07.710.50.00-rh1 |

SLES 15 SP2 | 07.713.01.00-rc1 |

Ubuntu 20.04 LTS | 07.710.06.00-rc1 |

Notes:

Not all operating system distribution release versions listed in Table 5 may be supported by your specific system and controlled combination. See the Linux OS Support Matrix on Dell.com to confirm the supported Linux distributions for your system and PERC controller.

Linux 5.x kernels and above probe for block devices asynchronously. Device ordering can be inconsistent because of this, even with FDO enabled. See the OS documentation for custom persistent device alternatives.

Unsupported operating systems

Attempting to boot into an operating system running a device driver that does not support Firmware Device Order will result in no storage being presented to the operating system. If PERC is your boot controller, the OS will fail to start correctly. After the system reboots, the PERC 11- series will display a warning indicating that an incompatible operating system driver was detected.

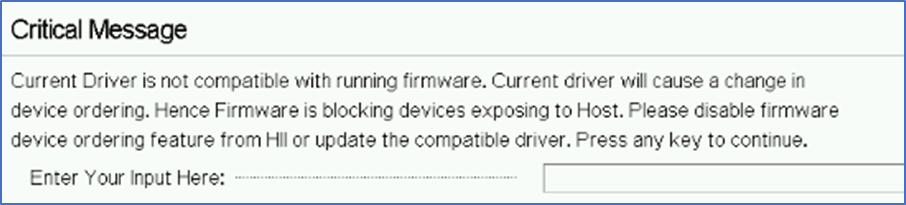

Figure 1. Critical message displayed with incompatible operating system

If this message appears on your system, it means that you are running an incompatible operating system with Firmware Device Order enabled. (To disable Firmware Device Order, see the System setup section).

Windows

Microsoft Windows is not supported with Firmware Device Order.

VMware ESXi

VMware ESXi is not supported with Firmware Device Order.

Minimum required component versions

This section lists the minimum PERC 11-series component versions required to use the Firmware Device Order (FDO) feature.

Table 6. FDO minimum component versions

Component | PERC H75x | PERC H35x |

Controller Firmware | 52.16.1-4074 | 52.19.1-4171 |

Linux Device Driver | 07.707.51.00-rc1 | 07.707.51.00-rc1 |

perccli Utility | 7.1604.00 | 7.1604.00 |

Note: Not all firmware, driver, and utility version combinations may be supported by your system and controller combination. Visit support.dell.com for the latest component releases for your system and PERC controller.

Summary

The new PERC series-11 Firmware Device Order (FDO) feature enables an alternate presentation order of Virtual Drives and Non-RAID drives. This feature is particularly targeted to those customers on Dell’s 14G PowerEdge who want to transition to PERC 11 from PERC 9/PERC 10. The FDO feature requires a supporting PERC 11-series firmware, an aware device driver, and that the system be running a Linux-based operating system. If you prefer, the feature can be turned off at any time to resume traditional enumeration, or to transition from a Linux environment to another operating system

[1] Includes PERC H750, PERC H755, PERC H350, and PERC H355 storage controllers. See the Minimum required component versions section.

Memory Bandwidth for Next-Gen PowerEdge Servers Significantly Improved with Sapphire Rapids Architecture

Mon, 17 Apr 2023 19:07:00 -0000

|Read Time: 0 minutes

Summary

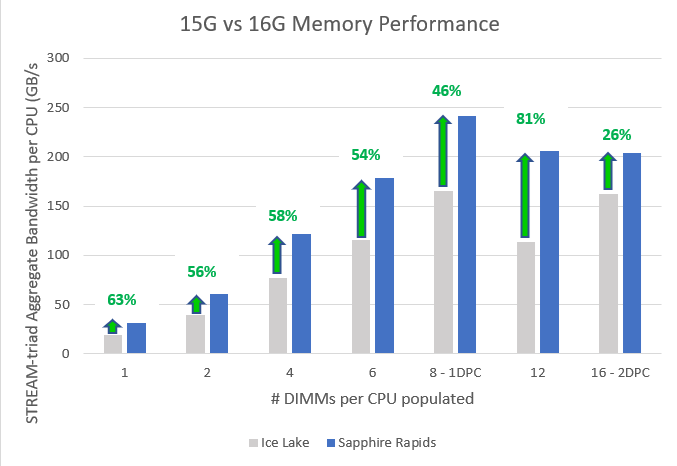

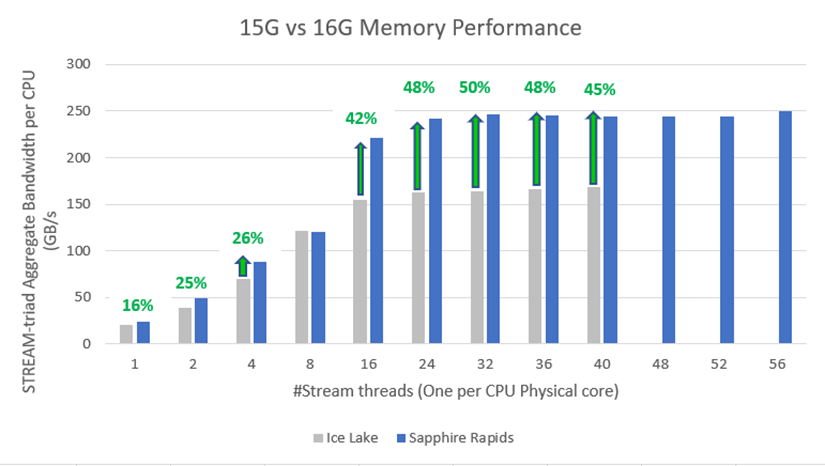

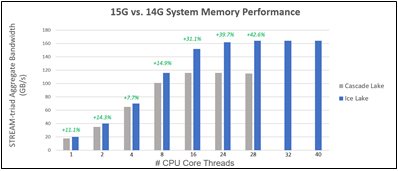

New PowerEdge servers fueled by 4th Generation Intel® Xeon® Scalable Processors can support eight DIMMs per CPU and up to 4800 MT/memory speeds. This document compares memory bandwidth readings observed on new PowerEdge servers with Sapphire Rapids CPU architecture against prior-gen PowerEdge servers with Ice-Lake CPU architecture.

Sapphire Rapids CPU architecture

4th Generation Intel® Xeon® Scalable Processors, known as Sapphire Rapids processors, are the designated CPU for new Dell PowerEdge servers.

Compared to prior-gen 3rd Generation Intel® Xeon® Scalable Processors, Sapphire Rapids Architecture supports up to 50% higher memory bandwidth (4800MTS (1DPC)/4400MTS(2DPC) on 4th Gen Intel® Xeon® Scalable Processors vs 3200MT/s on Ice Lake Processors).

Performance Data

To quantify the impact of this increase in memory support, we performed two studies. The first study[1] (see Figure 1) measured memory bandwidth determined by the number of DIMMs per CPU populated. To measure the memory bandwidth, we used the STREAM Triad benchmark. STREAM Triad is a synthetic benchmark that is designed to measure sustainable memory bandwidth (in MB/s) and a corresponding computation rate for four simple vector kernels. Of all the vector kernels, Triad is the most complex scenario. It was run on previous generation Dell PowerEdge R750 powered by Intel® Ice Lake CPU populated with eight DDR4 3200MT/s DIMMs per channel and the latest generation Dell PowerEdge R760 powered by Intel’s latest Sapphire Rapids populated with eight DDR5 4800MT/s DIMMs per channel. As a result, we saw a performance increase of 53% for 6 DIMMs per channel and 46% for 8 DIMMS per channel.

Figure 1. Sapphire Rapids and Ice Lake bandwidth comparison by # of DIMMs per CPU

The second study[1][2] (see Figure 2) measured memory bandwidth determined by the number of CPU thread cores. Both STREAM bandwidth benchmarks have Sapphire Rapids populated with eight DDR5 4800MT/s DIMMs per channel, and Ice Lake populated with eight DDR4 3200 MT/s DIMMs per channel. This resulted in up to 50% more aggregate bandwidth available for 32+ core threads, which is ideal for memory applications such as SAP HANA, MSFT SQL, and VDI.

Figure 2. Sapphire Rapids and Ice Lake bandwidth comparison by # of CPU core threads

Conclusion

With improved bandwidth, and continuous improvements for providing a quality customer experience on memory, all provided in a dense form factor of DDR5, Dell Technologies continues to provide best-in-class features and specifications for its constantly evolving better and faster PowerEdge server portfolio.

[1] These tests have been performed in the Solutions and Performance Analysis Lab at Dell Technologies on December 19, 2022.

[2] Performance numbers are based on per CPU, not aggregated or two-socket system.

Dell PowerEdge RAID Controller 12

Wed, 10 May 2023 17:18:18 -0000

|Read Time: 0 minutes

Summary

Dell Technologies’ newest RAID controller iteration, PERC 12, which is using the new Broadcom SAS4116W series chip, has increased support capabilities, including 24 Gbps SAS drives, increased cache memory speed to 3200 Mhz, 16-lane host bus type, and, most notably, only one front controller that supports both NVMe and SAS.

PERC 12 card management applications include Comprehensive Embedded Management (CEM), Dell OpenManage Storage Management, The Human Interface Infrastructure (HII) configuration utility, and the PERC command line interface (CLI). These applications enable you to manage and configure the RAID system, create and manage multiple disk groups, control and monitor multiple RAID systems, and provide online maintenance.

Introduction

As storage demands expand and processing  loads grow, RAID (Redundant Array of Independent Disks) data protection has become a necessary staple for proper enterprise storage management. Dell PowerEdge RAID Controller (PERC) provides a RAID solution that is powerful and easy-to-manage for enterprise storage data protection needs.

loads grow, RAID (Redundant Array of Independent Disks) data protection has become a necessary staple for proper enterprise storage management. Dell PowerEdge RAID Controller (PERC) provides a RAID solution that is powerful and easy-to-manage for enterprise storage data protection needs.

Dell Technologies’ newest RAID controller iteration, PERC 12, has increased support capabilities: 24 Gbps SAS drives, an increased cache memory speed of 3200 Mhz, 16-lane host bus type, and a single front controller that supports both NVMe and SAS.

PERC12 PowerEdge Support

H965i Adapter controller

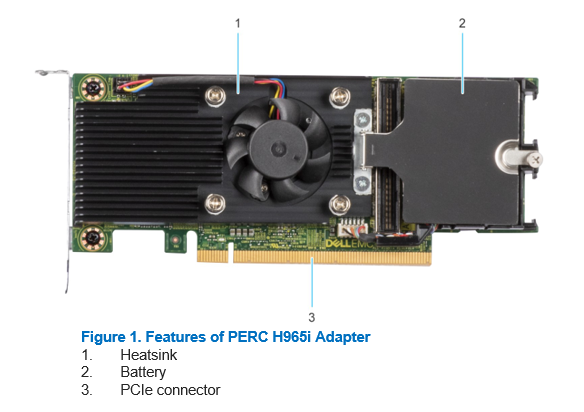

PERC12 Adapter Card adds an Active Heat Sink (Fan) on the controller, providing additional cooling capabilities, to ensure that the controller is always running at optimum temperature and does not compromise on performance because of overheating. The controller connects directly on the motherboard using a PCIe slot and uses a SlimLine connector (or a NearStack connector) for the SAS/NVMe interfaces.

H965i Front controller

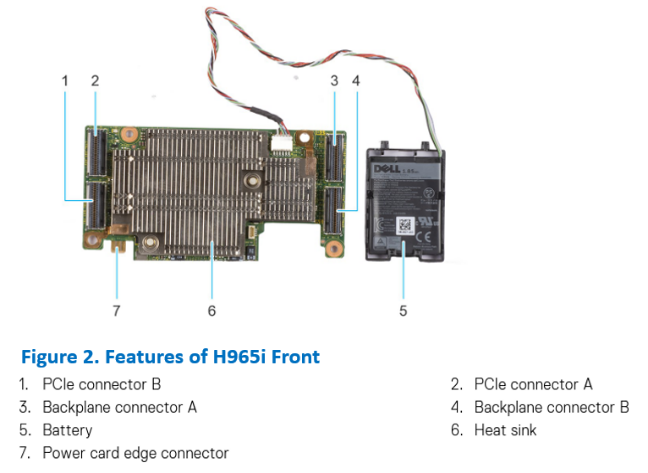

PERC12 Front Card upgrades the hardware design when compared to the previous generation controller. It combines SAS and NVMe support with a single card, eliminating the need to use different controllers for SAS and NVMe supported servers. The controller has a SlimLine connector (or a NearStack connector) for both PCIe and SAS/NVMe interfaces.

PERC12 Front Card upgrades the hardware design when compared to the previous generation controller. It combines SAS and NVMe support with a single card, eliminating the need to use different controllers for SAS and NVMe supported servers. The controller has a SlimLine connector (or a NearStack connector) for both PCIe and SAS/NVMe interfaces.

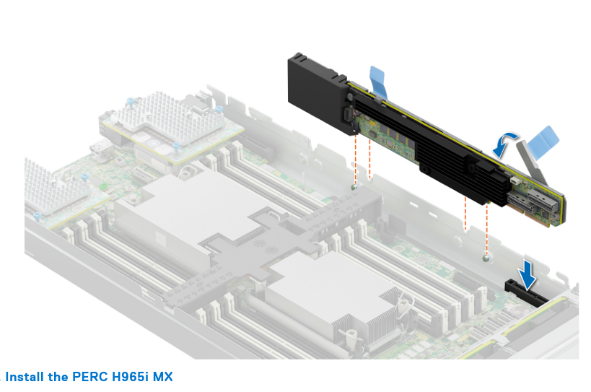

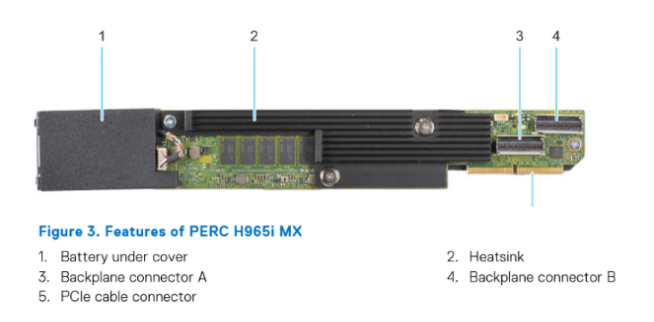

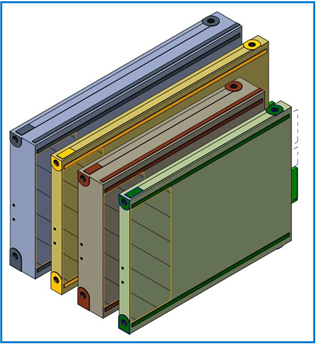

H965i MX controller

PERC12 MX Card is designed specifically for MX chassis servers and includes an energy pack similar to other form factors for power backup in case of power loss. This helps ensure proper customer cache offload to avoid any data loss. The controller connects directly on the motherboard using a PCIe slot and uses a SlimLine connector (or a NearStack connector) for the SAS/NVMe interfaces.

PERC12 MX Card is designed specifically for MX chassis servers and includes an energy pack similar to other form factors for power backup in case of power loss. This helps ensure proper customer cache offload to avoid any data loss. The controller connects directly on the motherboard using a PCIe slot and uses a SlimLine connector (or a NearStack connector) for the SAS/NVMe interfaces.

PERC 12 Supported Operating Systems

Windows Server

- Windows Server 2019

- Windows Server 2022

Linux

- RHEL 8.6

- RHEL 9.0

- SLES 15 SP4

- Ubuntu 22.04

VMware

- ESXi 7.0 U3

- ESXi 8.0

See Dell Technologies Enterprise operating systems support for a list of supported operating systems by specific server for the PERC 12 cards.

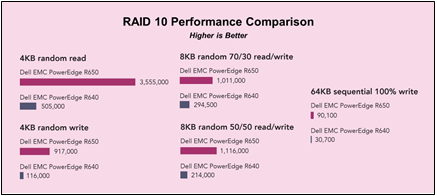

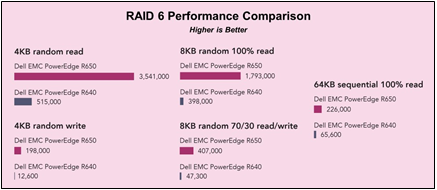

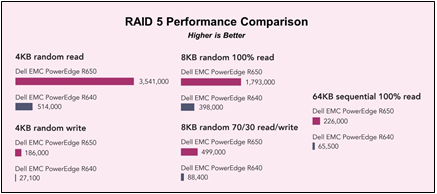

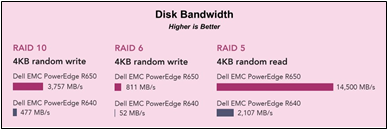

Hardware RAID Performance

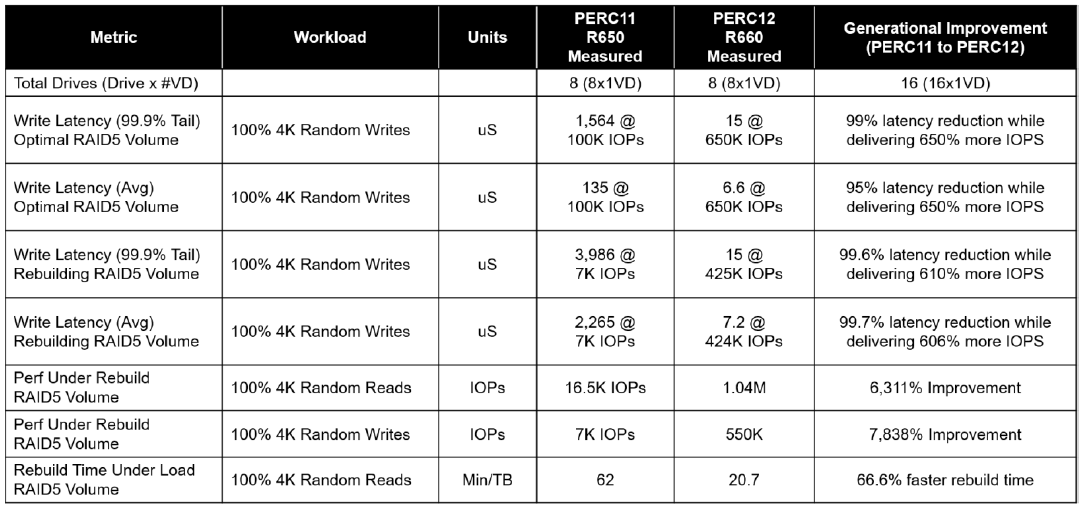

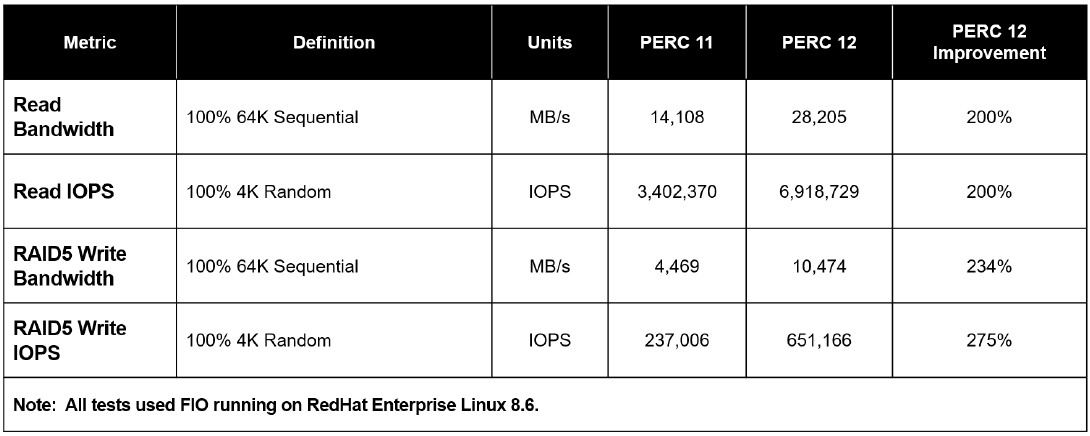

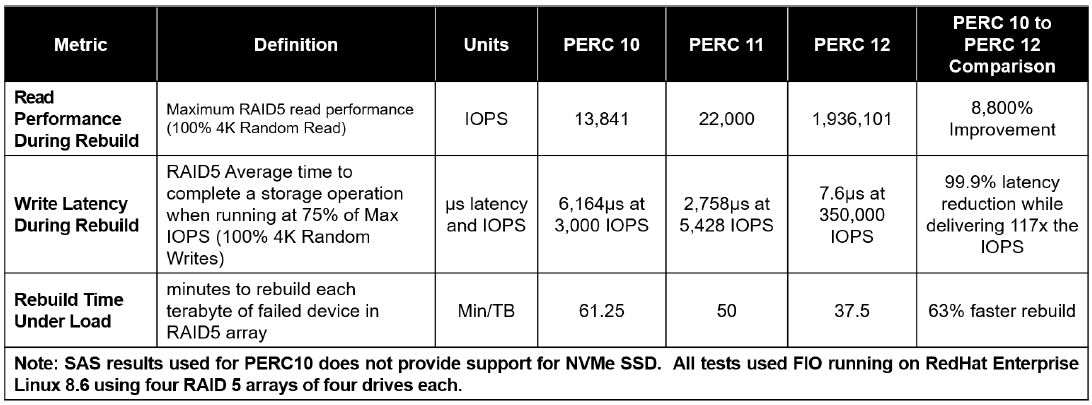

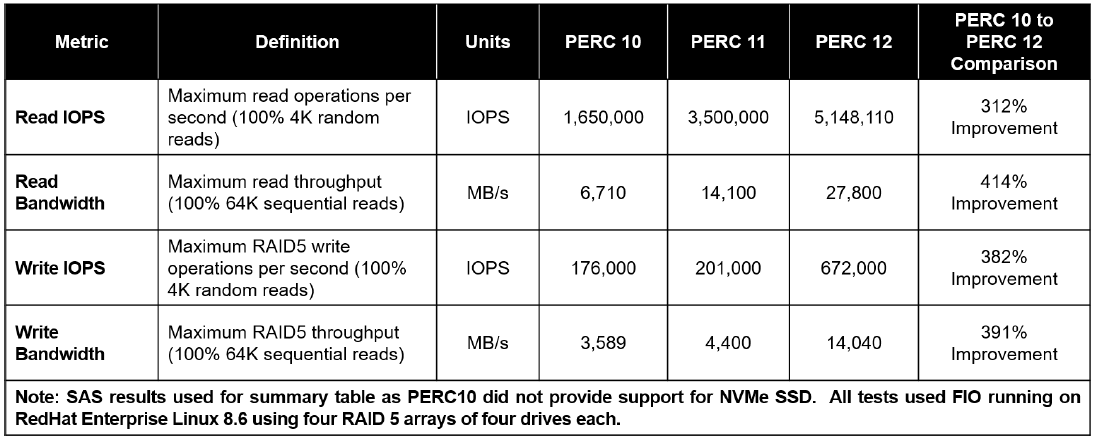

NVMe Key RAID Metrics (PERC11 / PERC12)

Table 1. Latency / Rebuild

Key NVMe RAID 5 Metrics (PERC11 / PERC12)

Table 2. IOPS / Bandwidth

Key SAS RAID Metrics (PERC10 / PERC11 / PERC12)

Table 3. IOPS / Latency Reduction During Rebuild

Key SAS RAID Metrics (PERC10 / PERC11 / PERC12)

Table 4. IOPS / Bandwidth

Conclusion

Dell PowerEdge RAID Controller 12 or PERC 12 continues to innovate by supporting hardware RAID for NVMe drives. The PERC 12 series consists of PERC H965i Adapter, PERC H965i Front, and PERC H965i MX.

Dell Next Generation PowerEdge Servers: Designed with DDR5 to Deliver Future-Ready Bandwidth

Fri, 03 Mar 2023 17:38:38 -0000

|Read Time: 0 minutes

Summary

This Direct from Development (DfD) tech note describes the DDR5 Memory technology for Dell’s latest generation PowerEdge Server portfolio. This document provides a high-level overview for DDR5, including information about generational performance improvement.

Overview

DDR5 Memory technology is the next big advancement in the world of DRAM Memory and is launching on the latest generation PowerEdge Servers.

DDR SDRAM (Dual Data Rate Synchronous Dynamic Random Access Memory) is a DRAM package on a DIMM. DDR means that the data is transferred at both the rising and falling edge of the clock signal. SDRAM is different from Asynchronous RAM because it is synchronized to the clock of the processor and hence the bus. Today, virtually all SDRAM is manufactured in compliance with standards established by JEDEC, an electronics industry association that adopts open standards to facilitate the interoperability of electronic components. This makes DDR5 an important spec for any standard server.

DDR5 is the fifth major iteration of this standard. Compared to its predecessors, DDR5 provides higher bandwidth and increased bandwidth efficiency.

The core counts are growing with every new generation of CPU. DDR4 has reached its limit in terms of memory bandwidth and density. It can only support up to 16GB Density and 3200MT/s speed. This is where DDR5 technology offers solutions to meet customer needs for greater memory capacity per core, and bandwidth per core.

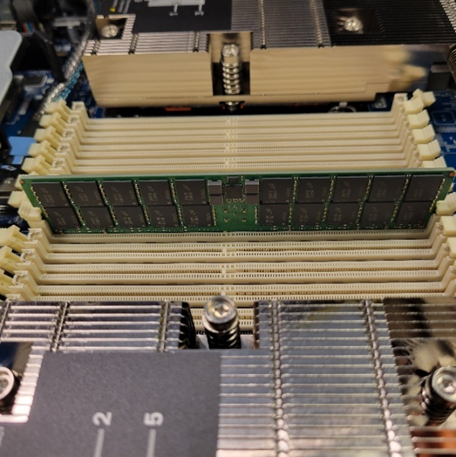

DDR5 offers a 50% increase in the bandwidth with 4800MT/s as compared to DDR4 with 3200MT/s[1]. It also supports a maximum of up to 32Gb density (a density that is not available in the latest PowerEdhe generation launch), as compared to 16Gb in the previous generation. DDR5 also offers 2x the burst length, 2x bank groups, 2x banks, Decision Feedback Equalization, two independent 40-bit channels per DIMM, and optimized power management on DIMM.

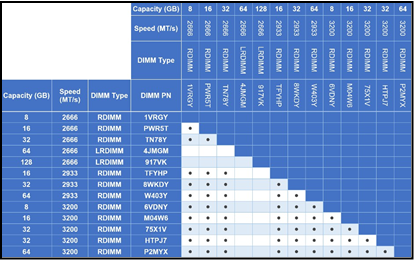

The following table provides information about the latest Dell PowerEdge portfolio for DDR5, including capacity, bandwidth, DIMM type, and Dell part numbers. Note that Dell does not support DIMM capacity mixing on the latest generation. These represent maximum bandwidth at ideal configurations. CPU vendors may reduce bandwidth capability based on their respective DIMM population rules. Total system bandwidth is expected to vary between platforms based on population capability, such as on 8 x 1 DPC Intel® CPU- based platforms.

Table 1. Details about the latest Dell PowerEdge portfolio for DDR5

DIMM Capacity (GB) | DIMM Speed (MT/s) | DIMM Type | Dell PN* | Ranks per DIMM | Data Width | Density | Technology |

16 | 4800 | RDIMM | 1V1N1 | 1 | x8 | 16Gb | SDP |

32 | 4800 | RDIMM | W08W9 | 2 | x8 | 16Gb | SDP |

64 | 4800 | RDIMM | J52K5 | 2 | x4 | 16Gb | SDP |

128 | 4800 | RDIMM | MMWR9 | 4 | x4 | 16Gb | 3DS |

256 | 4800 | RDIMM | PCFCR | 8 | x4 | 16Gb | 3DS |

* Part numbers are subject to change. Additional part numbers may be required.

Dell Customer Experience improvement for PowerEdge Servers

Beginning in March 2022 on previous PowerEdge generation platforms, Dell Technologies began a journey to improve the customer experience related to memory errors. The following key improvements were made at that time, which are also included in the latest generation of PowerEdge servers.

- Single-Bit Correctable Error Messaging – This style of the message has been removed. Working with vendor partners across the industry and studying our own field performance, we could find no relationship between correctable error reporting and subsequent uncorrectable errors on the same DIMM in the same system. To avoid concerning alert messaging and potential unnecessary downtime, we have eliminated this messaging.

- Uncorrectable Error Messaging – Previously we would recommend after an uncorrectable error to perform memory self-healing. That is still a recommended action that will occur automatically on the next reset after the error is detected. We have now determined that having an uncorrectable error causes a loss of confidence in the long-term health of the memory hardware. Customer data on this hardware is always critical and for that reason, we recommend scheduling a replacement as soon as an uncorrectable error is detected, to avoid any doubt of future system health.

- Self-Health Messaging – Previous messaging gave a notification recommending scheduling a reset to perform self-healing. However, customers notified us that we did not give an adequate indication of the urgency of the reset, and it is very costly to take down the server for this action. Upon further consideration, we schedule the self-healing to occur in the background opportunistically on the next reset because the action is typically not urgent and can wait until scheduled downtime. We will no longer send self-heal messaging in logs requesting a customer action for this reason.

- Revise Diagnostic Messaging – Certain benign system events in the prior design would trigger a MEM5100 “OEM Diagnostic Event” message with encoded details. When this occurs frequently in customer logs it can cause concern. What do these messages mean? Should I replace the DIMM? These events do not indicate a degradation of DIMM health or an early indication of DIMM failure, but the messaging was left too ambiguous for customers.

We have updated the language to clearly state the action and intent. For example, such a message might be “An event has been completed successfully in the memory device at <location>. The server and device are operating normally; no action is required.” An extended ID code is then provided for internal terms to reference when required.

The latest generation of PowerEdge improvements

Quality and a premier customer experience with Dell PowerEdge servers continues to be a focus in our latest generation design. Our specific goals to achieve this are to reduce log chattiness and give clear crisp messaging on the health of the memory hardware. With that in mind, we have continued to refine our messaging strategy so that we can act swiftly to identify and diagnose issues without filling customer logs with verbose diagnostic memory error messages. Here are a few additional changes exclusive to the latest PowerEdge server design:

- Debug logging to TSR/Support Assist – We have enabled a new pipeline of diagnostic data that only displays in the Support Assist log. This data is collected in real-time as it occurs by our iDRAC BMC but is only harvested and logged when Support Assist is requested. This eliminates the need to log continuous “bread crumb” type information into the SEL and LC logs and while maintaining the diagnosability when we need it most.

- Enhanced SPD Error Logging – The SPD for DDR5 is significantly bigger than for DDR4. Dell Technologies has a proprietary logging format that resides on each Dell DIMM device. Expanding beyond what was possible in DDR4, we have enhanced logging to include new events such as detail about the health of the on-DIMM PMIC and more robust logging of CPU data when memory errors occur. When problems arise, we understand it can be chaotic and memory could be swapped between systems or labeled in the wrong box by mistake when returning to Dell for diagnosis. This enhanced logging will help us see the history of the DIMM itself. We can identify trends and previous problems to get to a solution quickly.

- Out-of-Band Access Improvement – The information provided by iDRAC has always been available out-of-band, but beginning in this latest generation of PowerEdge server it is also available even when the system is off with the power cord plugged in. This is strategically useful for diagnosing memory health because memory is often one of the most critical components for a successful power-up sequence. What if the system hangs for some reason in the OS? What if you need to keep the system offline due to rack power constraints but you need detail about the health and history of the memory? In the latest PowerEdge servers you can still remote into the iDRAC BMC of the system and collect health and status information while the system is offline.

Figure 1. DDR5 inserted in the Dell PowerEdge Chassis

Conclusion

With improved bandwidth and continuous improvements for providing a quality customer experience on memory — all provided in a dense form factor of DDR5, Dell Technologies continues to provide best-in-class features and specifications for its constantly evolving better and faster PowerEdge Server portfolio.

References

[1] These tests were performed in the Solutions and Performance Analysis Lab at Dell Technologies in December 2022.

Dell PowerEdge Boot Optimized Storage Solution – BOSS-N1

Fri, 27 Jan 2023 21:58:02 -0000

|Read Time: 0 minutes

Summary

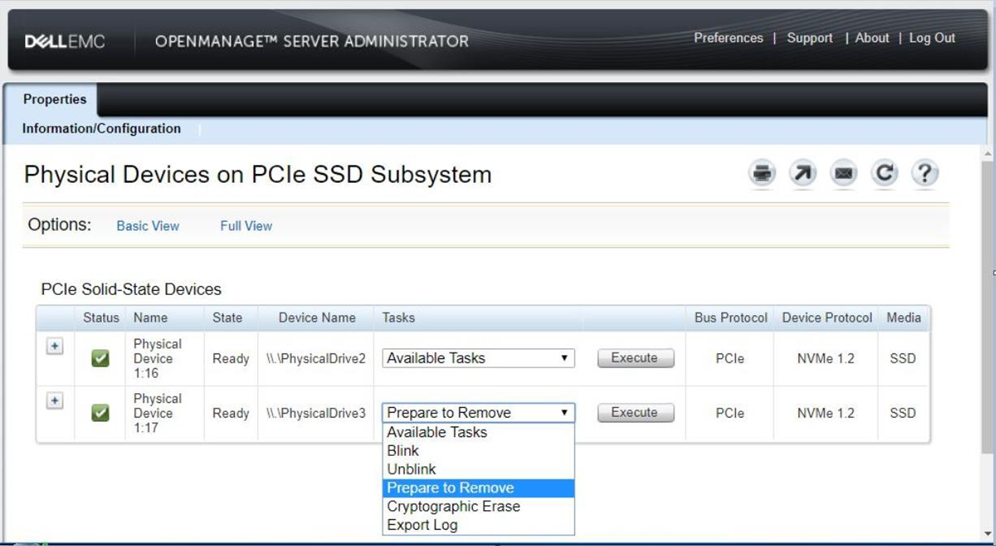

Our latest generation HW RAID BOSS solution (BOSS-N1) incorporates NVMe Enterprise class M.2 NVMe SSDs. It includes important RAS features such as rear or front facing drives on our new rack servers and full hot-plug support, so a server does not need to be taken offline in case of an SSD failure. When operating a RAID 1 mirror, a surprise removal and addition of a new SSD automatically kicks off a rebuild on the new RAID 1 member SSD that was added, so there is no need to halt server operations.

Available on the newest generation of PowerEdge systems, BOSS-N1 provides a robust, redundant, low-cost solution for boot optimization.

Introduction

The Boot Optimized Storage Solution (BOSS-N1) provides key, generational feature improvements to the highly popular BOSS subsystem and its existing value proposition. It incorporates an NVMe interface to the M.2 SSDs to ensure high performance and the latest technology. BOSS was originally designed to provide a highly reliable, cost-effective solution for separating operating system boot drives from data drives on server-internal storage. Many customers, particularly those in the Hyperconverged Infrastructure (HCI) arena and those implementing Software Defined Storage (SDS), require separating their OS drives from data drives. They also require hardware RAID mirroring (RAID 1) for their OS drives. The main motivation for this is to create a server configuration optimized for application data. Providing a separate, redundant disk solution for the OS enables a more robust and optimized compute platform.

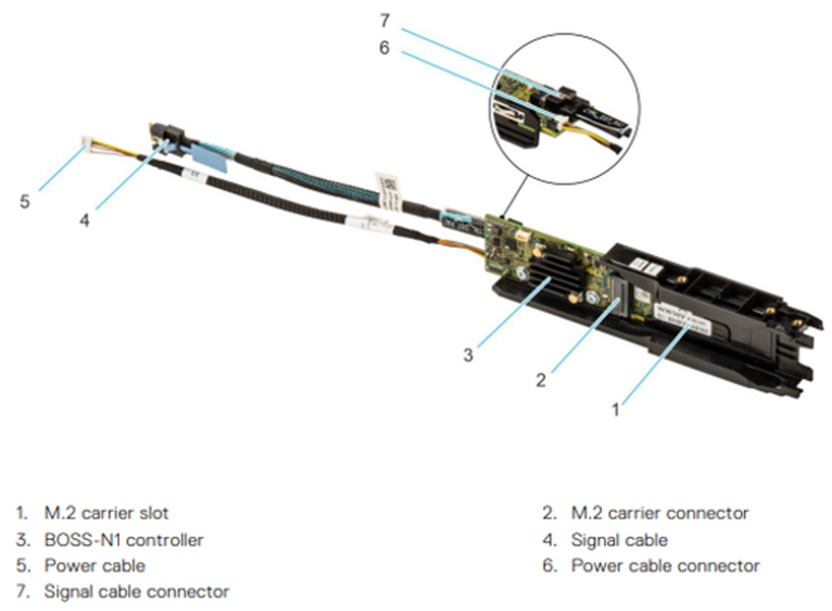

Figure 1. Installing the BOSS-N1 monolithic controller module

The Boot Optimized Storage Solution (BOSS-N1) is a simple, highly reliable and cost-effective solution to meet the requirements of our customers. The NVMe M.2 devices offer similar performance as 2.5” SSDs and support rear or front facing drive accessibility with full hot-plug support on monolithic platforms and includes surprise removal. Our design frees up and maximizes available drive slots for data requirements.

BOSS-N1 provides a secure way of updating the controller firmware

- Each of the firmware components is authenticated before being stored to firmware slot

- Authentication requires the use of public and private asymmetric key pair. This protected key pair is uniquely generated for Dell through a hardware security module (HSM) server.

- BOSS-N1 firmware updates can be updated using DUP from both In-band (Operating System) and Out-of-band (iDRAC) interfaces

You can manage BOSS-N1 with standard well-known management tools such as iDRAC, OpenManage Systems Administrator (OMSA), and the BOSS-N1 Command Line Interface (CLI).

BOSS-N1 hardware components

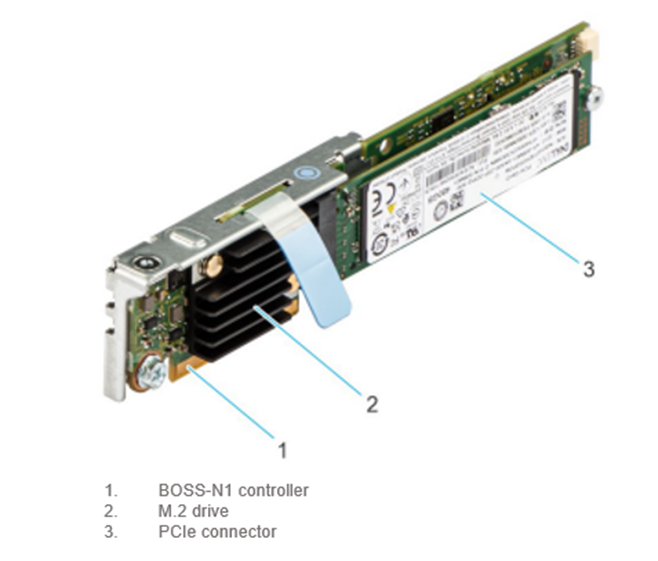

Figure 2. BOSS-N1 monolithic card

Figure 3. BOSS-N1 modular

Key features of BOSS-N1:

- Supports one (1) or two (2) 80 mm M.2 Enterprise Class NVMe SSDs

- M.2 devices are read-intensive (1 DWPD) with 480GB or 960GB capacity

- Fixed function hardware RAID 1 (mirroring) or single drive RAID 0

- Rear or front facing module for quick and easy accessibility to the M.2 SSDs on monolithic platforms

- Full hot-plug support on monolithic platforms

- M.2 drive LED functionality on monolithic platforms

- Managing BOSS-N1 is accomplished with standard, well-known management tools such as iDRAC, OpenManage Systems Administrator (OMSA), and the BOSS-N1 Command Line Interface (CLI)

BOSS-N1 supported operating systems

Windows Servers

- Windows Server 2019

- Windows Server 2022

Linux

- RHEL 8.6

- SLES 15 SP4

- Ubuntu 20.04.4

VMware

- ESXi 7.0 U3

- ESXi 8.0

References

- For more information about BOSS-N1, see the BOSS-N1 User’s Guide.

- For more information about iDRAC, such as the iDRAC User’s Guide and the iDRAC Release Notes, see the Dell Support site.

- For more information about OpenManage Server Administrator, see the OMSA 9.5 User’s Guide.

Improved PowerEdge Server Thermal Capability with Smart Flow

Fri, 03 Mar 2023 20:12:37 -0000

|Read Time: 0 minutes

Introduction

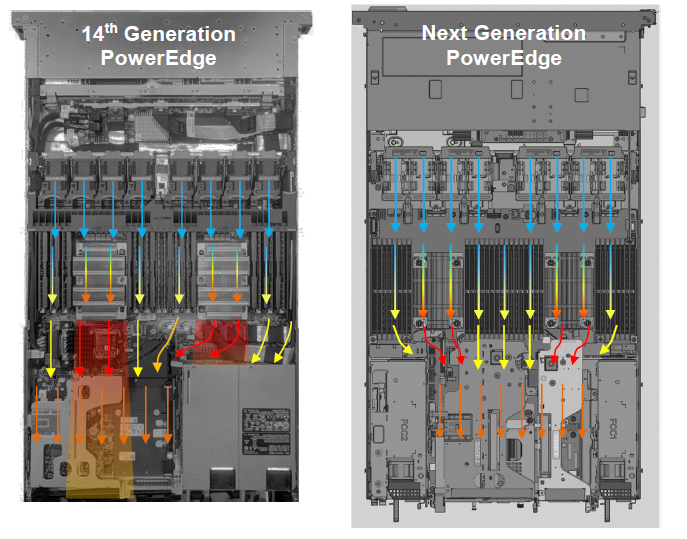

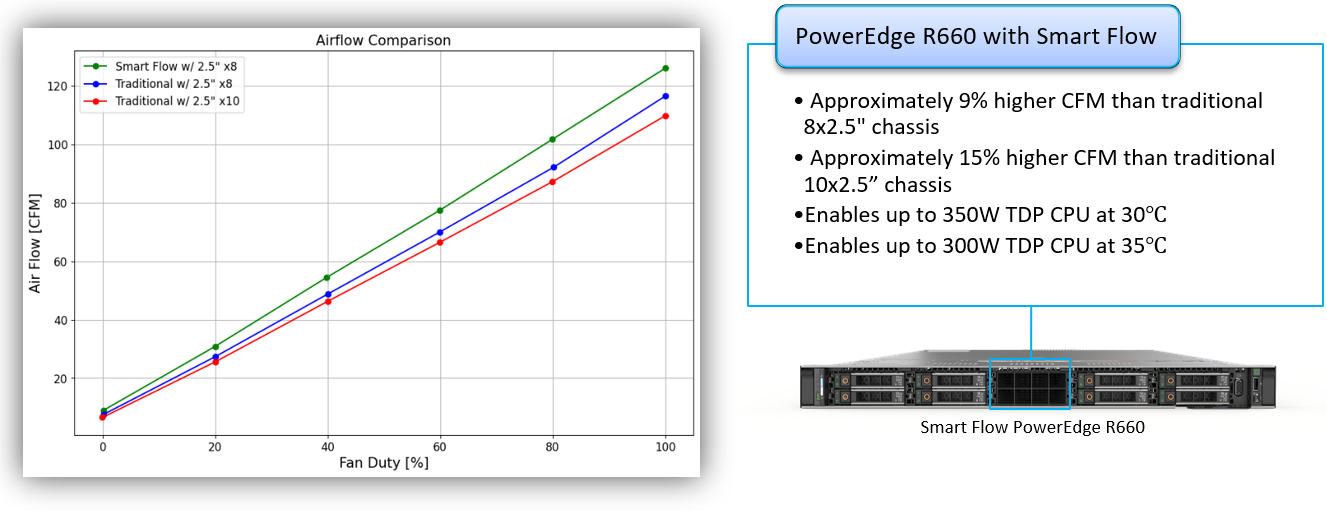

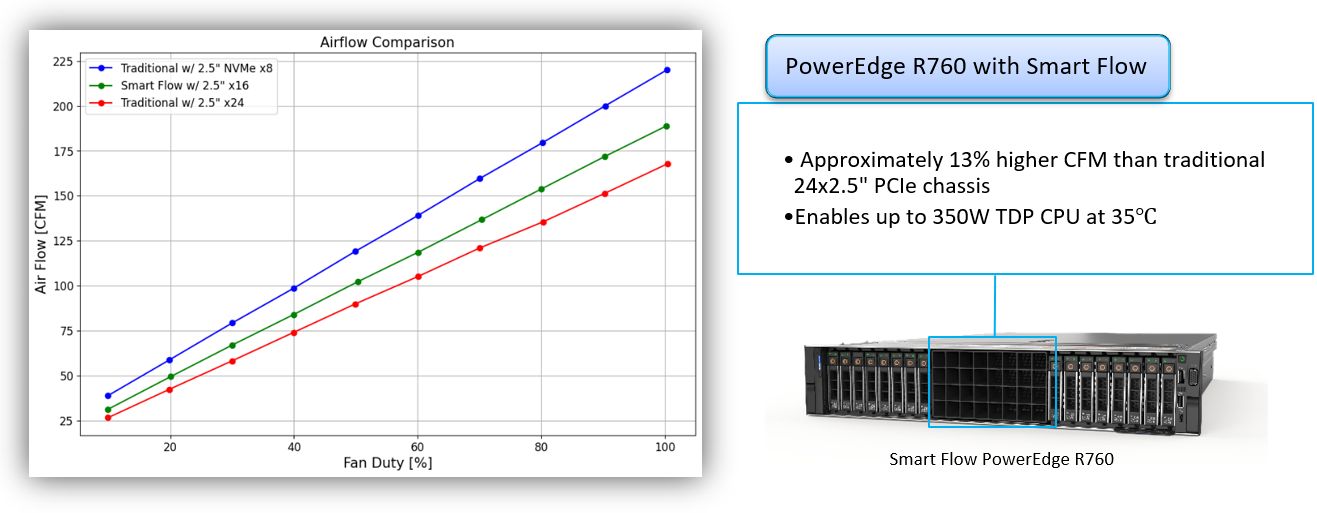

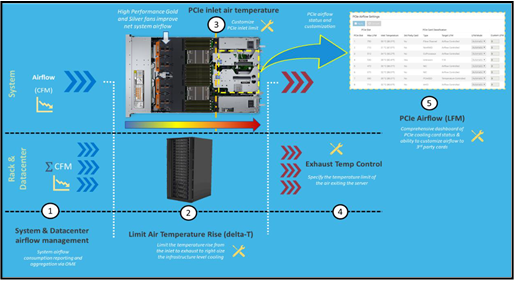

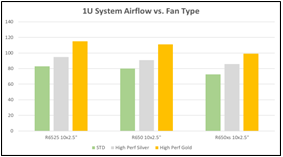

New PowerEdge Smart Flow chassis options increase airflow to support the highest core count CPUs and DDR5 in an air-cooled environment within current IT infrastructure.

What is Smart Flow?

One way to increase the thermal capacity of an air-cooled server is to increase airflow that exhausts heat generated by components. Dell PowerEdge addresses this in several ways: high-performance fans, air baffles to direct airflow within the chassis, and intelligent thermal controls that monitor temperature sensors and dynamically adjust fan speeds.

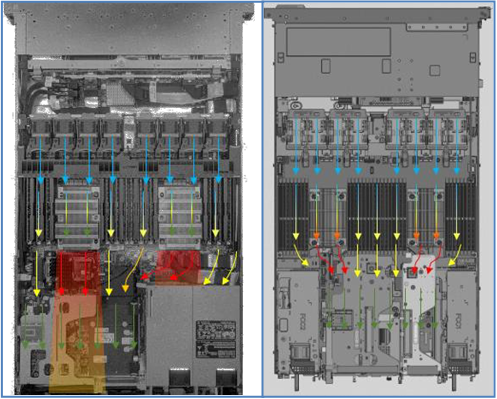

With Smart Flow, our thermal engineers have increased server thermal capacity by reducing impedance to fresh air intake on select server configurations. Servers with Smart Flow replace middle storage slots with centralized airflow inlets to maintain balanced airflow distribution within the server. This is made possible by new backplane configurations that allow larger air intake capacity. Smart Flow enables expanded CPU and memory configurations for lower storage needs in our next generation 1U and 2U air-cooled PowerEdge servers. Gains in thermal efficiency are also realized with Smart Flow implementations and will be explored in a subsequent paper. Examples for four different servers are shown here:

PowerEdge R660

Figure 1. PowerEdge R660 airflow increase with Smart Flow

PowerEdge R760

Figure 2. PowerEdge R760 airflow increase with Smart Flow

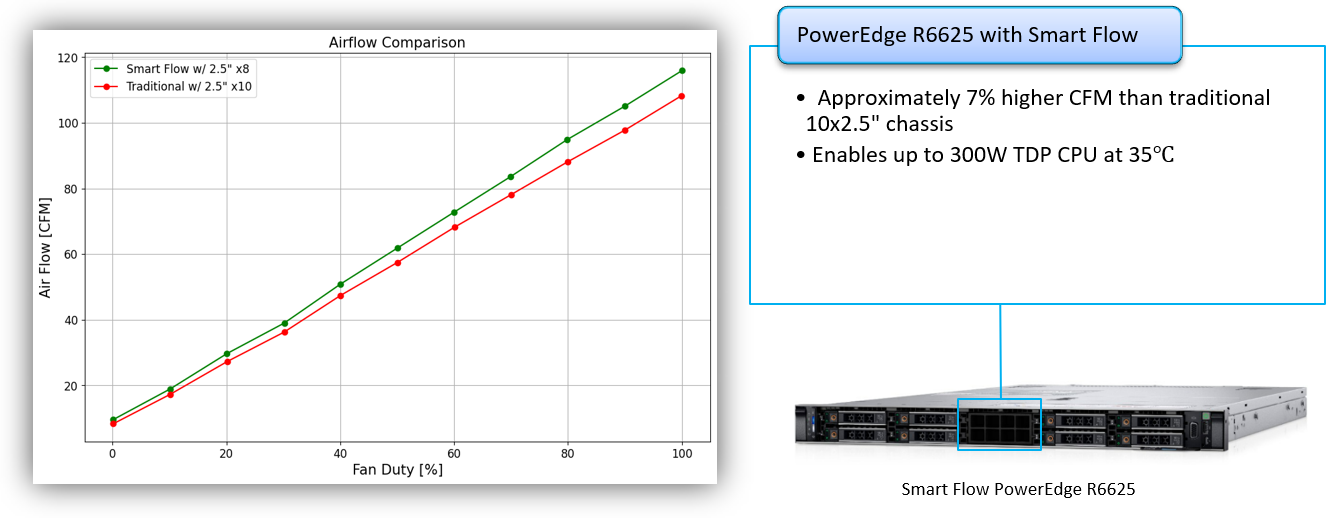

PowerEdge R6625

Figure 3. PowerEdge R6625 airflow increase with Smart Flow

PowerEdge R7625

Figure 4. PowerEdge R7625 airflow increase with Smart Flow

Conclusion

Dell PowerEdge Smart Flow increases select servers' thermal capacity, enabling high-power CPUs and GPUs, at increased ambient temperatures, for the most demanding workloads in air-cooled data centers.

Next-Generation Dell PowerEdge Servers: Designed with PCIe Gen 5 to Deliver Future-Ready Bandwidth

Fri, 03 Mar 2023 17:38:40 -0000

|Read Time: 0 minutes

Summary

This Direct from Development tech note describes PCIe Gen 5 for next-generation Dell PowerEdge servers. This document provides a high-level overview of PCIe Gen 5 and information about its performance improvement over Gen 4.

PCIe Gen 4 and Gen 5

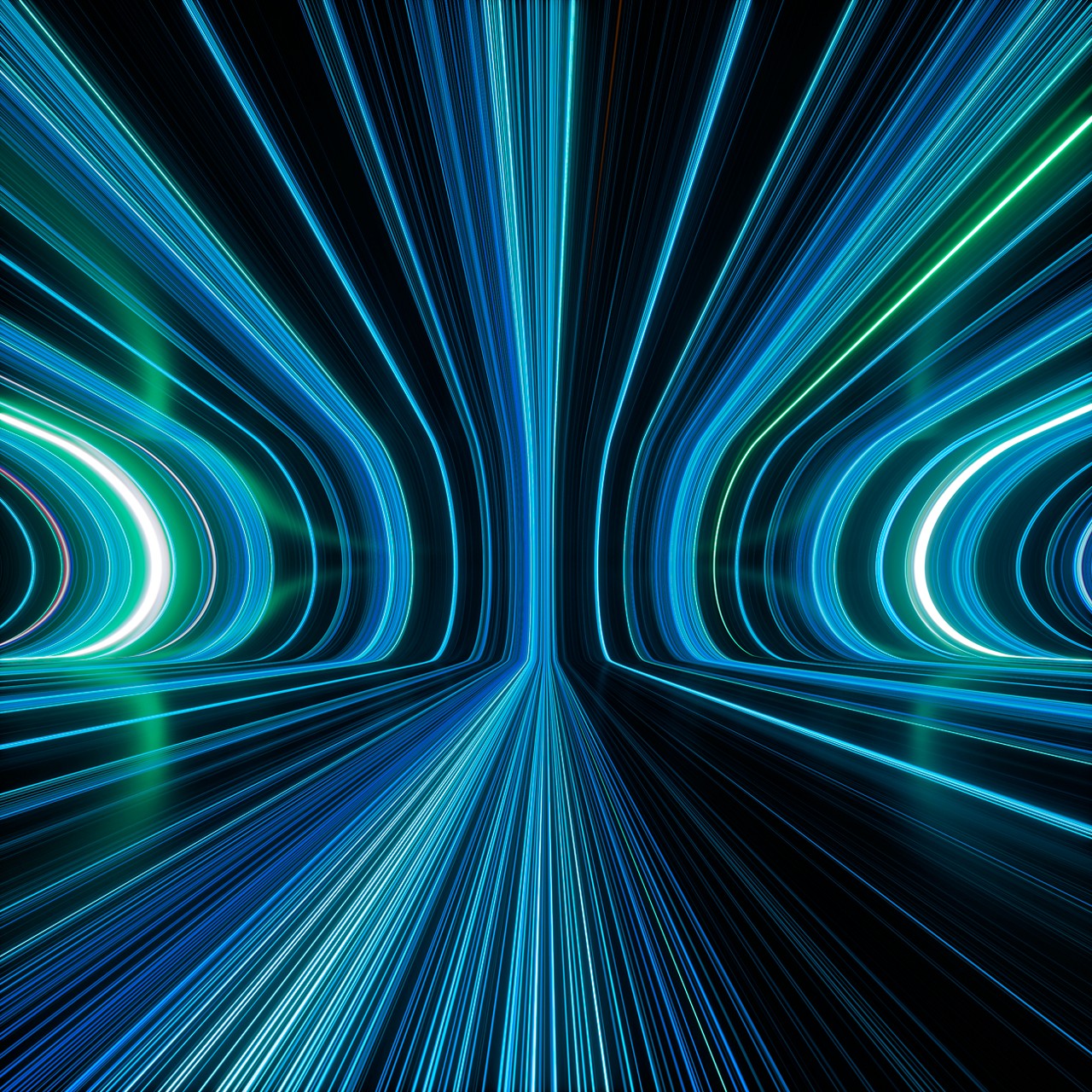

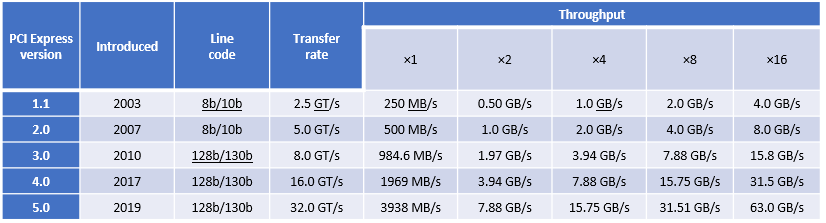

PCIe (Peripheral Component Interconnect Express) is a high-speed bus standard interface for connecting various peripherals to the CPU. This standard is maintained and developed by the PCI Special Interest Group (PCI-SIG), a group of more than 900 companies. In today’s world of servers, PCIe is the primary interface for connecting peripherals. It has numerous advantages over the earlier standards, being faster, more robust, and very flexible. These advantages have cemented the importance of PCIe.

PCIe Gen 4, which was the fourth major iteration of this standard, can carry data at the speed of 16 gigatransfers per second (GT/s). GT/s is the rate of bits (0’s and 1’s) transferred per second from the host to the end device or endpoint. After considering the overhead of the encoding scheme, Gen 4’s 16 GT/s works out to an effective delivery of 2 GB/s per lane in each direction. A PCIe Gen 4 slot with x16 lanes can have a total bandwidth of 64 GB/s.

The fifth major iteration of the PCIe standard, PCIe Gen 5, doubles the data transfer rate to 32 GT/s. This works out to an effective throughput of 4 GB/s per lane in each direction and 128 GB/s for an x16 PCIe Gen5 slot.

PCIe generations feature forward and backward compatibility. That means that you can connect a PCIe 4.0 SSD or a PCIe 5.0 SSD to a PCIe 5.0 slot, although speed is limited to the lowest generation. There are no pinout changes to from PCIe 4.0 for x16, x8, x4 packages.

Figure 1. PCIe bandwidth over time

Advantages of increased bandwidth

With the increased bandwidth of PCIe 5.0, devices might be able to achieve the same throughput while using fewer lanes, which means freeing up more lanes. For example, a graphics card that requires x16 bandwidth to run at full speed might now run at the same speed with x8, making an additional eight lanes available. Using fewer lanes is important because CPUs only provide a limited number of lanes, which need to be distributed among devices.

PCIe bandwidth improvements bring opportunities for high-bandwidth accelerators (FPGA, for example). The number of storage-attached and server-attached SSDs using PCIe continues to grow. PCIe 5.0 provides foundational bandwidth, electricals, and CEM slots for Compute Express Link (CXL) devices such as SmartNICs and accelerators. The new standard will be much more useful for machine learning and artificial intelligence, data centers, and other high performance computing environments, thanks to the increase in speeds and bandwidth. In addition, a single 200 Gb network is expected to saturate a PCIe 4.0 link in certain conditions, creating opportunities for PCIe 5.0 connectivity adapters. This unlocks opportunities for 400 Gb networking. The Intel PCIe 5.0 test chip is heavily utilized for interoperability testing.

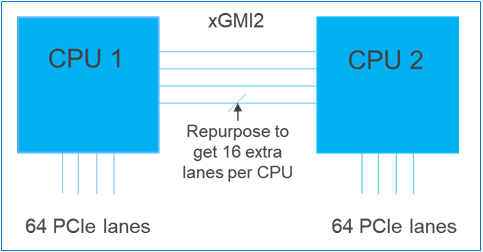

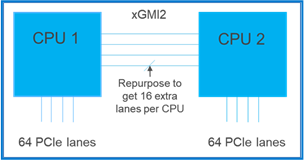

Next-generation PowerEdge servers and PCIe Gen 5

Next-generation Dell PowerEdge servers with 4th Gen Intel® Scalable processors are designed for PCIe Gen 5. The 4th Gen Intel® Xeon® series processors support the PCIe Gen 5 standard, allowing for the maximum utilization of this available bandwidth with the resulting advantages.

Single-socket 4th Gen Intel® Scalable processors have 80 PCIe Gen 5 lanes available for use, which allows for great flexibility in design. Eighty lanes also give plenty of bandwidth for many peripherals to take advantage of the high-core-count CPUs.

Conclusion

PowerEdge servers continue to deliver the latest technology. Support for PCIe Gen 5 provides increased bandwidth and improvements to make new applications possible.

NVMe, SAS, and SATA

Mon, 16 Jan 2023 13:44:19 -0000

|Read Time: 0 minutes

Summary

PowerEdge customers optimize their server configurations based on their applications and business needs. Multiple factors must be taken into consideration to make an informed decision, such as workload, budget, scale, and even roadmap. Still, when all of the factors are understood, it can be difficult to discern whether the optimized Solid State Drive (SSD) is NVMe, SAS, or SATA. This DfD (Direct from Development) tech note was written to simplify and guide customers in their choice of SSD. We hope customers will find this document to be a valuable reference guide when it becomes unclear which storage medium is the optimized decision. This paper can be used as a reference guide to help PowerEdge customers make an informed decision on which SSD interface will presumably bring the greatest value in relation to their intended business needs and goals. First, let’s summarize the history and architecture around the NVMe, SAS, and SATA SSD interfaces:

NVMe (Non-Volatile Memory Express)

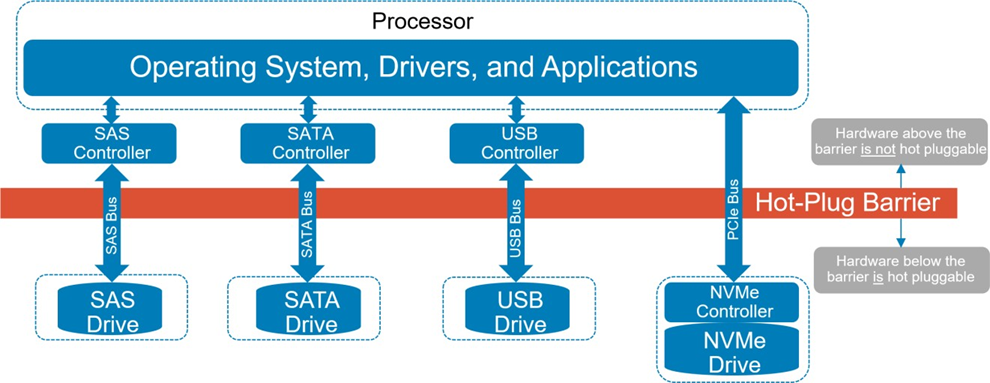

The NVMe interface is the newest type of flash storage with the highest performance. The driving architectural differentiator of NVMe is that it uses the PCIe interface bus to connect directly to the CPU and streamline the travel path. This design contrasts with SAS and SATA, which require data to first traverse to an HBA before reaching the CPU. By removing a layer from the stack, the travel path is optimized and produces reduced latency and improved performance. Scalability is also significantly improved, because NVMe drives can go beyond the traditional four lanes by using lanes from the same “pool” of lanes connected to the CPU. Furthermore, NVMe performance will continually improve as each new generation of the PCIe standard becomes available.

SAS (Serial Attached SCSI)

The SAS interface was released a few years after SATA and introduced new features that are beneficial for modern workloads. Instead of building upon the ATA (Advanced Technology Attachment) standard used in SATA, it serialized the existing parallel SCSI (Small Computer System Interface) standard. SAS cable architecture has four wires within two cables, creating more channels available for moving data and more connectors available for use by other devices. Furthermore, the channels are full duplex, allowing for reads and writes to traverse concurrently. Improved reliability, error reporting, and longer cable lengths were also introduced with SAS. SAS improvements are made to this day, with 24GB/s available soon, so it still remains valuable and relevant within the market.

SATA (Serial Advanced Technology Attachment)

The SATA interface was released in 2000 and is still commonly adopted within modern servers since it is the most-affordable of the three. It replaced parallel ATA with serial ATA, which resolved various performance and physical limitations at that time. The SATA cable architecture has four wires within one cable—two for sending data and two for receiving data. These four channels are half-duplex, so data can only move in one direction at a time. SATA write speeds are sufficient for storing information, but its read speeds are slow compared to more modern interfaces, which limits its application use for modern workloads. The last major SATA revision occurred in 2008, and will not see further advancement in the future.

Table 1. Ranking performance metrics of Enterprise NVMe, DC NVMe, Enterprise SAS, Value SAS, and SATA drives

Table 1 lists key metrics for five storage-drive types most commonly attached to PowerEdge servers: Enterprise NVMe, Data Center (DC) NVMe, Enterprise SAS, Value SAS, and SATA. This comparison helps clarify which storage interface type is most applicable in relation to business needs and goals.

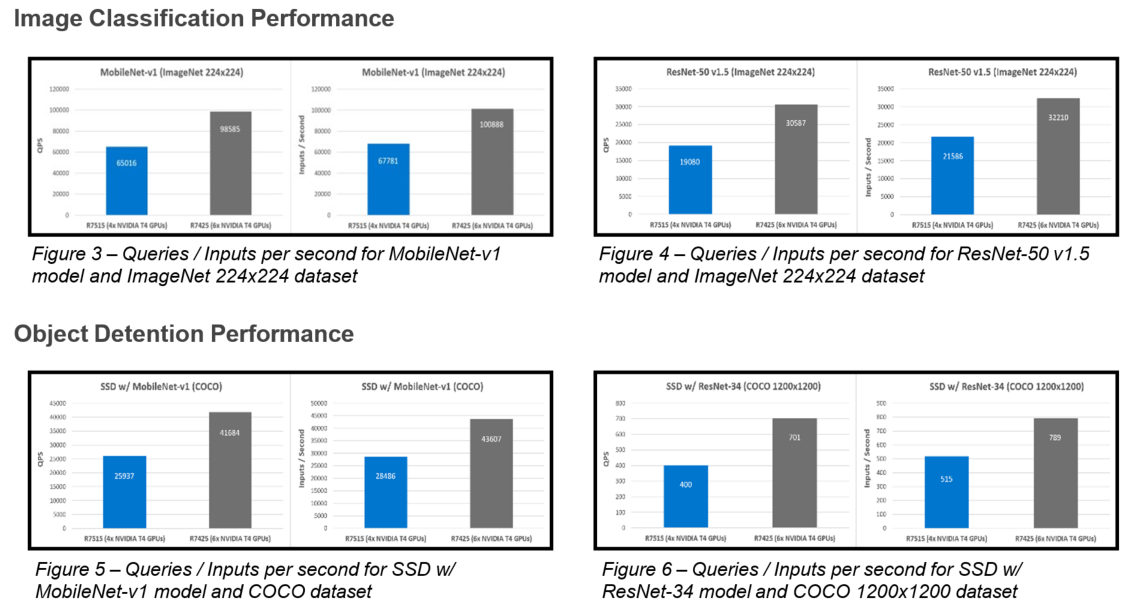

Performance: Performance can be measured in various ways. For this example, Random 4 KiB 70/30 (70% reads, 30% writes) data was compared and published by Dell, with higher IOPS being better. Enterprise NVMe SSDs produce 1.13x more IOPS than DC NVMe SSDs. DC NVMe SSDs produce 1.99x more IOPS than Enterprise SAS SSDs. Enterprise SAS SSDs produce 1.42x more IOPS than Value SAS SSDs. Lastly, Value SAS SSDs produce 2.39x more IOPS than SATA. Figure 1 below illustrates the IOPS performance variances on a bar graph for a visual representation:

Figure 1. Random 4KiB 70/30 IOPS variances for each storage interface

Latency: The NVMe protocol reduces the number of touchpoints data must travel to (bypassing the HBA) before reaching the CPU. It also has less overhead, giving it significantly lower latency than SAS and SATA. The SAS protocol is full- duplex (as opposed to half-duplex) and offers two channels (as opposed to one) for data to use, giving it over 50% lower latency than SATA.

Price: According to Dell pricing in Q1 2022, SATA SSDs are the least expensive storage interface, at ~0.9x the price of Value SAS SSDs. Value SAS SSDs are ~0.85x the price of DC NVMe SSDs. DC NVMe SSDs are ~0.85x the price of Enterprise SAS SSDs. And Enterprise SAS SSDs are ~0.97x the price of Enterprise NVMe SSDs. Pricing is volatile and these number variances are subject to change at any time.

Performance per price: PowerEdge customers that have not identified which metric is most important for their business goals should strongly consider performance (IOPS) per price (dollar) to be at the top of the list. Because NVMe has such a significant performance lead over SAS and SATA, it is easily the golden standard for performance per price. DC NVMe SSDs have the best performance per price, followed closely by Enterprise DC NVMe SSDs, followed by Value SAS SSDs, followed closely by SAS SSDs, followed by SATA SSDs. This tech note gives more performance/price detail.

Scalability: Currently, NVMe shows the greatest promise for wider-scale implementation due to the abundance of lanes that can be available with low-overhead. However, it can be a costly investment if existing data center infrastructures must be upgraded to support the NVMe I/O protocol. SAS is more flexible, since SAS expanders are cost-effective and most data center infrastructures already have the required hardware to support it. However, SAS does not have the potential to scale out as aggressively as NVMe. SATA does not scale well with SSDs.

Ongoing development: The NVMe interface has consistent and substantial advancements year-over-year, including updates like NVMe 2.0b (released in Jan. 2022) and PCIe Gen5 (released on Intel CPUs in Nov. 2021). The SAS interface also has regularly cadenced updates, but the impact is marginal, with the exception of upcoming updates like 24Gb/s and 48Gb/s. The SATA interface has no plan to extend capabilities beyond its current limitations.

Assigning these ranks for each storage interface and metric, and explaining why the rank was given, will make it easier to understand which drive type will be the most valuable in relation to business needs and goals.

Guidance in accordance with business goals

Every business is unique and will have different requirements for their storage drives. Factors such as intended workload, business size, plan to scale, budget, and so on, should be considered to confidently make an investment decision. Although this decision is ultimately up to each business, we have provided some guidelines below to help businesses that are still on the fence to make an educated choice:

Enterprise NVMe: Businesses that desire maximum performance and have a flexible budget should consider purchasing Enterprise NVMe SSDs. Heavy workloads like HPC or AI will immediately benefit from the additional cache gained from the non-volatile nature of this storage interface. The fast-paced performance growth seen in Enterprise NVMe SSDs will also allow smaller workloads like databases or collaboration to easily keep up with the ever-increasing size of data. Ultimately, because Enterprise NVMe undergoes consistent valuable changes with every passing year, such as performance increases and cost reduction/optimization, we recommend futureproofing your data center with it.

DC NVMe: Businesses that desire a budget-conscious NVMe solution, in addition to the greatest value, should consider purchasing DC NVMe SSDs. These drives have the exact same value proposition as stated above for Enterprise NVMe SSDs, but with a sizeable price reduction (0.83x) and performance hit (0.86x). Businesses that want to get the best value will be pleased to know that DC NVMe drives have the best performance-per-price.

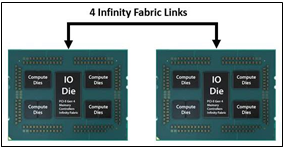

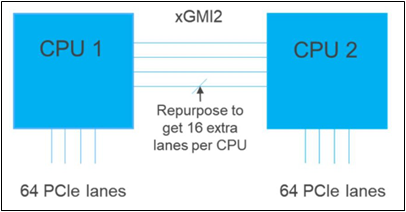

Enterprise SAS: Businesses that desire to continue using their existing SCSI-based data center environment and have maximum SAS performance should consider purchasing Enterprise SAS SSDs. Although the Enterprise SAS interface does not currently have any ranking leadership for performance or pricing, it is established in the industry as highly reliable, cost- effective to scale, and it shows promise for the future, with 24Gb/s available soon and 48Gb/s on the horizon. Enterprise SAS SSDs will adequately handle medium-duty workloads, like databases or virtualization, but will operate best when mixed with NVMe SSDs if any heavy-duty workloads are at play.