The Latest GPUs of 2022

Mon, 16 Jan 2023 13:44:30 -0000


And How We Recommend Applying Them to Enable Breakthrough Performance

Summary

Dell Technologies offers a wide range of GPUs to address different workloads and use cases. Deciding on which GPU model and PowerEdge server to purchase, based on intended workloads, can become quite complex for customers looking to use GPU capabilities. It is important that our customers understand why specific GPUs and PowerEdge servers will work best to accelerate their intended workloads. This DfD informs customers of the latest and greatest GPU offerings in 2022, as well as which PowerEdge servers and workloads we recommend to enable breakthrough performance.

PowerEdge servers support various GPU brands and models. Each model is designed to accelerate specific demanding applications by acting as a powerful assistant to the CPU. For this reason, it is vital to understand which GPUs on PowerEdge servers will best enable breakthrough performance for varying workloads. This paper describes the latest GPUs as of Q1 2022, shown below in Figure 1, to help educate PowerEdge customers on which GPU is best suited for their specific needs.

GPU Model | Number of Cores | Peak Double Precision (FP64) | Peak Single Precision (FP32) | Peak Half Precision (FP16) | Memory Size / Bus | Memory Bandwidth | Power Consumption |

A2 | 1280 | N/A | 4.5 TFLOPS | 18 TFLOPS | 16GB GDDR6 | 200 GB/s | 40-60W |

A16 | 1280 x4 | N/A | 4.5 TFLOPS x4 | 17.9 TFLOPS x4 | 16GB GDDR6 x4 | 200 GB/s x4 | 250W |

A30 | 3584 | 5.2 TFLOPS | 10.3 TFLOPS | 165 TFLOPS | 24GB HBM2 | 933 GB/s | 165W |

A40 | 10752 | N/A | 37.4 TFLOPS | 149.7 TFLOPS | 48GB GDDR6 | 696 GB/s | 300W |

MI100 | 7680 | 11.5 TFLOPS | 23.1 TFLOPS | 184.6 TFLOPS | 32GB HBM2 | 1.2 TB/s | 300W |

A100 PCIe | 6912 | 9.7 TFLOPS | 19.5 TFLOPS | 312 TFLOPS | 80GB HBM2e | 1.93 TB/s | 300W |

A100 SXM4 | 6912 | 9.7 TFLOPS | 19.5 TFLOPS | 312 TFLOPS | 40GB HBM2 | 1.55 TB/s | 400W |

A100 SXM4 | 6912 | 9.7 TFLOPS | 19.5 TFLOPS | 312 TFLOPS | 80GB HBM2e | 2.04 TB/s | 500W |

T4 | 2560 | N/A | 8.1 TFLOPS | 65 TFLOPS | 16GB GDDR6 | 300 GB/s | 70W |

Figure 1 – Table comparing 2022 GPU specifications
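As a rough illustration of how the specifications in Figure 1 can narrow a choice, the sketch below filters a subset of the table by power limit, memory size, and FP64 support. The `shortlist` helper and its parameters are hypothetical names introduced here, and the A100 SXM rows are left out for brevity; it is a starting point for comparison, not a sizing tool.

```python
# Values transcribed from Figure 1:
# (model, peak FP64 TFLOPS or None if N/A, memory in GB, max power in W)
GPUS = [
    ("A2", None, 16, 60),
    ("A16", None, 64, 250),  # 4 x 16 GB on one board
    ("A30", 5.2, 24, 165),
    ("A40", None, 48, 300),
    ("MI100", 11.5, 32, 300),
    ("A100 PCIe 80GB", 9.7, 80, 300),
    ("T4", None, 16, 70),
]


def shortlist(max_power_w, min_memory_gb=0, need_fp64=False):
    """Return the models that fit the power and memory limits (hypothetical helper)."""
    return [
        name
        for name, fp64, mem_gb, power_w in GPUS
        if power_w <= max_power_w
        and mem_gb >= min_memory_gb
        and (fp64 is not None or not need_fp64)
    ]


# Low-power cards for edge or space-constrained servers
print(shortlist(max_power_w=75))
# Cards with FP64 support and at least 24 GB of memory
print(shortlist(max_power_w=300, min_memory_gb=24, need_fp64=True))
```

The first call keeps only cards at or below 75W; the second keeps cards that list an FP64 rating in Figure 1 and have at least 24 GB of memory.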

NVIDIA A2

The NVIDIA A2 is an entry-level GPU intended to boost performance for AI-enabled applications. What makes this product unique is its extremely low power limit (40W-60W), compact size, and affordable price. These attributes position the A2 as a good “starter” GPU for users seeking performance improvements on their servers. To benefit from the A2's inference performance and entry-level specifications, we suggest attaching it to mainstream PowerEdge servers, such as the R750 and R7515, which can host up to 4x and 3x A2 GPUs, respectively. We also recommend servers built for edge and space- or power-constrained environments, such as the XR11, which can host up to 2x A2 GPUs. Customers can expect more PowerEdge support by H2 2022, including the PowerEdge R650, T550, R750xa, and XR12.

Supported Workloads: AI Inference, Edge, VDI, General Purpose

Recommended Workloads: AI Inference, Edge, VDI

Recommended PowerEdge Servers: R750, R7515, XR11

NVIDIA A16

The NVIDIA A16 is a full height, full length (FHFL) GPU card that has four GPUs connected together on a single board through a Mellanox PCIe switch. The A16 is targeted at customers requiring high user density for VDI environments, because it shares incoming requests across four GPUs instead of just one. This both increases the total user count and reduces queue times per request. All four GPUs have a high memory capacity (16GB GDDR6 for each GPU) and memory bandwidth (200GB/s for each GPU) to support a large volume of users and varying workload types. Lastly, the NVIDIA A16 has a large number of video encoders and decoders for the best user experience in a VDI environment.
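The per-GPU figures above add up at the board level as follows. This is a minimal sketch: the 2 GB per-user frame buffer is a hypothetical profile size chosen only for illustration, and real user density depends on the vGPU profiles, licensing, and workloads in use.

```python
GPUS_PER_BOARD = 4            # four GPUs on one A16 card
MEMORY_PER_GPU_GB = 16        # per-GPU memory from the text
BANDWIDTH_PER_GPU_GB_S = 200  # per-GPU memory bandwidth from the text


def board_totals():
    """Aggregate memory (GB) and memory bandwidth (GB/s) for one A16 board."""
    return (
        GPUS_PER_BOARD * MEMORY_PER_GPU_GB,
        GPUS_PER_BOARD * BANDWIDTH_PER_GPU_GB_S,
    )


def users_per_board(profile_gb):
    """Users per board if each user gets a fixed frame buffer carved from one GPU."""
    users_per_gpu = MEMORY_PER_GPU_GB // profile_gb
    return GPUS_PER_BOARD * users_per_gpu


print(board_totals())       # (64, 800)
print(users_per_board(2))   # 32, with the hypothetical 2 GB profile
```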

To take full advantage of the A16's capabilities, we suggest attaching it to newer PowerEdge servers that support PCIe Gen4. For Intel-based PowerEdge servers, we recommend the R750 and R750xa, which support 2x and 4x A16 GPUs, respectively. For AMD-based PowerEdge servers, we recommend the R7515 and R7525, which support 1x and 3x A16 GPUs, respectively.

Supported Workloads: VDI, Video Encoding, Video Analytics

Recommended Workloads: VDI

Recommended PowerEdge Servers: R750, R750xa, R7515, R7525

NVIDIA A30

The NVIDIA A30 is a mainstream GPU offering targeted at enterprise customers who seek increased performance, scalability, and flexibility in the data center. This accelerator is a versatile GPU solution because it has excellent performance specifications for a broad spectrum of math precisions, including INT4, INT8, FP16, FP32, and FP64. The ability to use third-generation Tensor Cores and the Multi-Instance GPU (MIG) feature in unison further secures quality performance gains for big and small workloads. Lastly, it has an unusually low power budget of only 165W, making it a viable GPU for virtually any PowerEdge server.

Given that the A30 GPU was built to be a versatile solution for most workloads and servers, it balances both the performance and pricing to bring optimized value to our PowerEdge servers. The PowerEdge R750, R750xa, R7525, and R7515 are all great mainstream servers for enterprise customers looking to scale. For those requiring a GPU-dense server, the PowerEdge DSS8440 can hold up to 10x A30s and will be supported in Q1 2022. Lastly, the PowerEdge XR12 can support up to 2x A30s for Edge environments.

Supported Workloads: AI Inference, AI Training, HPC, Video Analytics, General Purpose

Recommended Workloads: AI Inference, AI Training

Recommended PowerEdge Servers: R750, R750xa, R7525, R7515, DSS8440, XR12

NVIDIA A40

The NVIDIA A40 is a FHFL GPU offering that combines advanced professional graphics with HPC and AI acceleration to boost the performance of graphics and visualization workloads, such as batch rendering, multi-display, and 3D display. By providing support for ray tracing, advanced shading, and other powerful simulation features, this GPU is a unique solution targeted at customers that require powerful virtual and physical displays. Furthermore, with 48GB of GDDR6 memory, 10,752 CUDA cores, and PCIe Gen4 support, the A40 will ensure that massive datasets and graphics workload requests are moving quickly.

To accommodate the A40's hefty power budget of 300W, we suggest customers attach it to a PowerEdge server with ample power to spare, such as the DSS8440. However, if the DSS8440 is not an option, the PowerEdge R750xa, R750, R7525, and XR12 are also compatible with the A40 GPU and will function adequately provided their PSUs supply sufficient power. Lastly, populating A40 GPUs within the PowerEdge T550 is also a good option for customers who want to address visually demanding workloads outside the traditional data center.

Supported Workloads: Graphics, Batch Rendering, Multi-Display, 3D Display, VR, Virtual Workstations, AI Training, AI Inference

Recommended Workloads: Graphics, Batch Rendering, Multi-Display

Recommended PowerEdge Servers: DSS8440, R750xa, R750, R7525, XR12, T550

NVIDIA A100

The NVIDIA A100 focuses on accelerating HPC and AI workloads. It introduces double-precision tensor cores that significantly reduce HPC simulation run times. Furthermore, the A100 includes Multi-Instance GPU (MIG) virtualization and GPU partitioning capabilities, which benefit cloud users looking to use their GPUs for AI inference and data analytics. The newly supported sparsity feature can also double the throughput of tensor core operations by exploiting the fine-grained structure in DL networks. Lastly, A100 GPUs can be inter-connected either by NVLink bridge on platforms like the R750xa and DSS8440, or by SXM4 on platforms like the PowerEdge XE8545, which increases the GPU-to-GPU bandwidth when compared to the PCIe host interface.
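NVIDIA describes the A100 sparsity feature as a 2:4 pattern: in every group of four consecutive weights, two are zero, so the tensor cores can skip half of the multiplications. The sketch below shows only that pruning pattern on the CPU, in plain Python. The function name `prune_2_of_4` is hypothetical, and this is not how the hardware or NVIDIA's tooling implements pruning.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each consecutive group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest magnitudes (ties go to the lower index).
        keep = sorted(range(4), key=lambda i: (-abs(group[i]), i))[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(prune_2_of_4(row))
# [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```

Exactly half of the values in each group survive, which is where the up-to-2x throughput figure for sparse tensor core operations comes from.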

The PowerEdge DSS8440 is a great server for the A100, as it provides ample power and can hold the most GPUs. If the DSS8440 is not an option, we suggest using the PowerEdge XE8545, R750xa, or R7525. Please note that only the 80GB model is supported for PCIe connections, and be sure to provide plenty of power to accommodate the A100's 300W/400W power requirements.

Supported Workloads: HPC, AI Training, AI Inference, Data Analytics, General Purpose

Recommended Workloads: HPC, AI Training, AI Inference, Data Analytics

Recommended PowerEdge Servers: DSS8440, XE8545, R750xa, R7525

AMD MI100

The AMD MI100 value proposition is similar to the A100 in that it will best accelerate HPC and AI workloads. At 11.5 TFLOPS, its FP64 performance is industry-leading for the acceleration of HPC workloads. Similarly, at 23.1 TFLOPS, the FP32 specifications are more than sufficient for any AI workload. Furthermore, the MI100 supports 32GB of high-bandwidth memory (HBM2) to enable 1.2TB/s of memory bandwidth. In a nutshell, this GPU is designed to tackle complex, data-intensive HPC and AI workloads for enterprise customers.

The AMD MI100 is qualified on both the Intel-based PowerEdge R750xa, which supports up to 4x MI100 GPUs, and the AMD-based PowerEdge R7525, which supports up to 3x MI100 GPUs. We highly recommend adopting a powerful PSU for either server, as the MI100 also has a massive power consumption of 300W.
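To see why the PSU choice matters, the GPU share of the power budget can be estimated from the figures above. In the sketch below, the 300W rating and the GPU counts come from the text; the 800W allowance for the rest of the system and the 2400W PSU capacity are hypothetical placeholders, not Dell specifications.

```python
MI100_WATTS = 300  # per-GPU power consumption from the text


def gpu_power_w(gpu_count, watts_per_gpu=MI100_WATTS):
    """Total power drawn by the GPUs alone at their rated maximum."""
    return gpu_count * watts_per_gpu


def fits_budget(gpu_count, other_components_w, psu_capacity_w):
    """True if GPUs plus the rest of the system stay within the PSU capacity."""
    return gpu_power_w(gpu_count) + other_components_w <= psu_capacity_w


print(gpu_power_w(4))  # R750xa with 4x MI100: 1200
print(gpu_power_w(3))  # R7525 with 3x MI100: 900

# Hypothetical values: 800 W for CPUs, memory, drives, and fans; 2400 W PSU capacity
print(fits_budget(4, other_components_w=800, psu_capacity_w=2400))  # True
```

Actual sizing should follow the power guidance for the specific server configuration.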

Supported Workloads: HPC, AI Training, AI Inference, ML/DL

Recommended Workloads: HPC, AI Training, AI Inference

Recommended PowerEdge Servers: R750xa, R7525

Conclusion

The GPUs we are recommending in this list offer a wide variety of features that are designed to accelerate a diverse range of server workloads. A PowerEdge server configured with the most appropriate GPU will enable intended customer workloads to use these features in concert with other system components to yield the best performance. We hope this discussion of the latest 2022 GPUs, as well as our recommendations for Dell PowerEdge servers and workloads, will help customers choose the most appropriate GPU for their data center needs and business goals.

Learn More

Dell PowerEdge Accelerated Servers and Accelerators Dell eBook

Demystifying Deep Learning Infrastructure Choices using MLPerf Benchmark Suite HPC at Dell

Related Documents

Dell PowerEdge Servers Offer Comprehensive GPU Acceleration Options

Fri, 03 Mar 2023 19:57:27 -0000


Summary

The next generation of PowerEdge servers is engineered to accelerate insights by enabling the latest technologies. These technologies include next-gen CPUs bringing support for DDR5 and PCIe Gen 5 and PowerEdge servers that support a wide range of enterprise-class GPUs. Over 75% of next generation Dell PowerEdge servers offer support for GPU acceleration.

Accelerate insights

For the digital enterprise, success hinges on leveraging big, fast data. But as data sets grow, traditional data centers are starting to hit performance and scale limitations — especially when ingesting and querying real-time data sources. While some have long taken advantage of accelerators for speeding visualization, modeling, and simulation, today, more mainstream applications than ever before can leverage accelerators to boost insight and innovation. Accelerators such as graphics processing units (GPUs) complement and accelerate CPUs, using parallel processing to crunch large volumes of data faster. Accelerated data centers can also deliver better economics, providing breakthrough performance with fewer servers, resulting in faster insights and lower costs. Organizations in multiple industries are adopting server accelerators to outpace the competition — honing product and service offerings with data-gleaned insights, enhancing productivity with better application performance, optimizing operations with fast and powerful analytics, and shortening time to market by doing it all faster than ever before. Dell Technologies offers a choice of server accelerators in Dell PowerEdge servers so you can turbo-charge your applications.


Accelerated server architecture

Our world-class engineering team designs PowerEdge servers with the latest technologies for ultimate performance. Here’s how.

Industry enabled technologies

- Next Generation Intel and AMD Processors

- DDR5 Memory

- PCIe Gen5

- GPU Form Factor Options

Next generation air and Direct Liquid Cooling (DLC) technology

PowerEdge ensures no-compromise system performance through innovative cooling solutions while offering customers options that fit their facility or usage model.


- Innovations that extend the range of air-cooled configurations

- Advanced designs - airflow pathways are streamlined within the server, directing the right amount of air to where it is needed

- Latest generation fan and heat sinks – to manage the latest high-TDP CPUs and other key components

- Intelligent thermal controls – automatically adjust airflow during workload or environmental changes, seamless support for channel add-in cards, plus enhanced customer control options for temp/power/acoustics

- For high-performance CPU and GPU options in dense configurations, Dell DLC effectively manages heat while improving overall system efficiency

Our GPU partners

AMD

Dell Technologies and AMD have established a solid partnership to help organizations accelerate their AI initiatives. Together our technologies provide the foundation for successful AI solutions that drive the development of advanced DL software frameworks. These technologies also deliver massively parallel computing in the form of AMD Graphics Processing Units (GPUs) for parallel model training and scale-out file systems to support the concurrency, performance, and capacity requirements of unstructured image and video data sets. With the AMD ROCm open software platform, built for flexibility and performance, the HPC and AI communities can gain access to open compute languages, compilers, libraries, and tools designed to accelerate code development and solve the toughest challenges in the world today.

Intel

Dell Technologies and Intel are giving customers new choices in enterprise-class GPUs. The Intel Data Center GPUs are available with our next generation of PowerEdge servers. These GPUs are designed to accelerate AI inferencing, VDI, and model training workloads. And with toolsets like Intel® oneAPI and OpenVINO™, developers have the tools they need to develop new AI applications and migrate existing applications to run optimally on Intel GPUs.

NVIDIA

Dell Technologies solutions designed with NVIDIA hardware and software enable customers to deploy high-performance deep learning and AI-capable enterprise-class servers from the edge to the data center. This relationship allows Dell to offer Ready Solutions for AI and built-to-order PowerEdge servers with your choice of NVIDIA GPUs. With Dell Ready Solutions for AI, organizations can rely on a Dell-designed and validated set of best-of-breed technologies for software – including AI frameworks and libraries – with compute, networking, and storage. With NVIDIA CUDA, developers can accelerate computing applications by harnessing the power of the GPUs. Applications and operations (such as matrix multiplication) that are typically run serially in CPUs can run on thousands of GPU cores in parallel.
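The reason matrix multiplication parallelizes so well is that every output element depends only on one row of the first matrix and one column of the second, so all elements can be computed independently. The sketch below illustrates that independence in plain Python with a thread pool standing in for GPU threads. It is not CUDA code and will not run faster than the serial version; the function names are hypothetical and exist only for this illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def output_cell(a, b, i, j):
    """Dot product of row i of a and column j of b; needs no other output cell."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_serial(a, b):
    """Compute every output cell one after another, as a single CPU thread would."""
    return [[output_cell(a, b, i, j) for j in range(len(b[0]))] for i in range(len(a))]


def matmul_parallel(a, b, workers=4):
    """Hand each output cell to a worker, mimicking one GPU thread per element."""
    rows, cols = len(a), len(b[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda ij: output_cell(a, b, *ij), cells))
    # Reassemble the flat, in-order results into rows.
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_parallel(a, b))  # [[19, 22], [43, 50]]
```

On a GPU, the same decomposition maps each output element (or tile of elements) to its own hardware thread, which is how thousands of cores are kept busy at once.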

GPU options for next-generation PowerEdge servers

Turbo-charge your applications with performance accelerators available in select Dell PowerEdge tower and rack servers. The number and type of accelerators that fit in PowerEdge servers are based on the physical dimensions of the PCIe adapter cards and the GPU form factor.

Brand | GPU Model | GPU Memory | Max Power Consumption | Form Factor | 2-way Bridge | Recommended Workloads |

PCIe Adapter Form Factor

NVIDIA | A2 | 16 GB GDDR6 | 60W | SW, HHHL or FHHL | n/a | AI Inferencing, Edge, VDI |

NVIDIA | A16 | 64 GB GDDR6 | 250W | DW, FHFL | n/a | VDI |

NVIDIA | A40, L40 | 48 GB GDDR6 | 300W | DW, FHFL | Y, N | Performance graphics, Multi-workload |

NVIDIA | A30 | 24 GB HBM2 | 165W | DW, FHFL | Y | AI Inferencing, AI Training |

NVIDIA | A100 | 80 GB HBM2e | 300W | DW, FHFL | Y, Y | AI Training, HPC, AI Inferencing |

NVIDIA | H100 | 80 GB HBM2e | 300-350W | DW, FHFL | Y | AI Training, HPC, AI Inferencing |

AMD | MI210 | 64 GB HBM2e | 300W | DW, FHFL | Y | HPC, AI Training |

Intel | Max 1100* | 48 GB HBM2e | 300W | DW, FHFL | Y | HPC, AI Training |

Intel | Flex 140* | 12 GB GDDR6 | 75W | SW, HHHL or FHHL | n/a | AI Inferencing |

SXM / OAM Form Factor

NVIDIA | HGX A100* | 80 GB HBM2 | 500W | SXM w/ NVLink | n/a | AI Training, HPC |

NVIDIA | HGX H100* | 80 GB HBM3 | 700W | SXM w/ NVLink | n/a | AI Training, HPC |

Intel | Max 1550* | 128 GB HBM2e | 600W | OAM w/ XeLink | n/a | AI Training, HPC |

* Development or under evaluation

References

Introducing the PowerEdge T360 & R360: Gain up to Double the Performance with Intel® Xeon® E-Series Processors

Thu, 04 Jan 2024 22:08:42 -0000


Summary

The launch of the PowerEdge T360 and R360 is a prominent addition to the Dell Technologies PowerEdge portfolio. These cost-effective 1-socket servers deliver powerful performance with the latest Intel® Xeon® E-series processors, added GPU support, DDR5 memory, and PCIe Gen 5 I/O slots. They are designed to meet evolving compute demands in Small and Medium Businesses (SMB), Remote Office/Branch Office (ROBO) and Near-Edge deployments.

Both the T360 and R360 boost compute performance by up to 108% compared to the prior-generation servers. Customers also gain up to 1.8x the performance per dollar spent on the new E-series CPUs [1]. The rest of this document covers key product features and differentiators, as well as the details behind the performance testing conducted in our labs.

Feature Additions and Upgrades

We break down the new features that are common across both the rack and tower form factors as shown in the table below. Perhaps the most salient upgrades over the prior generation servers – the PowerEdge T350 and R350 – are the significantly more performant CPUs, added entry GPU support, and up to nearly 1.4x faster memory.

Table 1. T360 and R360 key feature additions

| Prior-Gen PowerEdge T350, R350 | New PowerEdge T360, R360 |

CPU | 1x Intel Xeon E-2300 Processor, up to 8 cores | 1x Intel Xeon E-2400 Processor, up to 8 cores |

Memory | 4x UDDR4, up to 3200 MT/s DIMM speed | 4x UDDR5, up to 4400 MT/s DIMM speed |

Storage | Hot Plug SATA BOSS S-2 | Hot Plug NVMe BOSS N-1 |

GPU | Not supported | 1 x NVIDIA A2 entry GPU |

- From left to right, PowerEdge R360 and T360

Entry GPU Support

We have seen a growing demand for video and audio computing, particularly in the retail, manufacturing, and logistics industries. To meet this demand, the PowerEdge T360 and R360 now support one NVIDIA A2 entry data center GPU that accelerates these media-intensive workloads, as well as emerging AI inferencing workloads. The A2 is a single-width GPU with 16GB of GPU memory and a configurable thermal design power (TDP) of 40-60W. Read more about the A2 GPU's features and up to 20x inference speedup here: A2 Tensor Core GPU | NVIDIA.

This upgrade comes at an opportune time for businesses looking to scale up and explore entry AI use cases. In fact, IDC projected $154 billion in global AI spending in 2023, with retail and banking topping the industries with the greatest AI investment. For example, a retailer could leverage the power of the A2 GPU and latest CPUs to stream video of store aisles for inventory management and customer behavior analytics.

Product Differentiation – Rack vs Tower Form Factor

The biggest differentiator between T360 and R360 is their form factors. The T360 is a tower server that can fit under a desk or even in a storage closet, while maintaining office-friendly acoustics. The R360 is a traditional 1U rack server. The table below further details the differences in the product specifications. Namely, the PowerEdge T360 has greater drive capacity for customers with data-intensive workloads or those who anticipate growing storage demand.

Table 2. T360 and R360 differentiators

| PowerEdge R360 | PowerEdge T360 |

Storage | Up to 4 x 3.5'' or 8 x 2.5'' SATA/SAS, max 64GB | Up to 8 x 3.5'' or 8 x 2.5'' SATA/SAS, max 128G |

PCIe Slots | 2 x PCIe Gen 5 (QNS) or 2 x PCIe Gen4 | 3x PCIe Gen 4 + 1x PCIe Gen 5 |

Dimensions & Form Factor | H x W x D: 1U x 17.08 in x 22.18 in 1U Rack Server | H x W x D: 14.54 in x 6.88 in x 22.06 in 4.5U Tower Server |

Processor Performance Testing

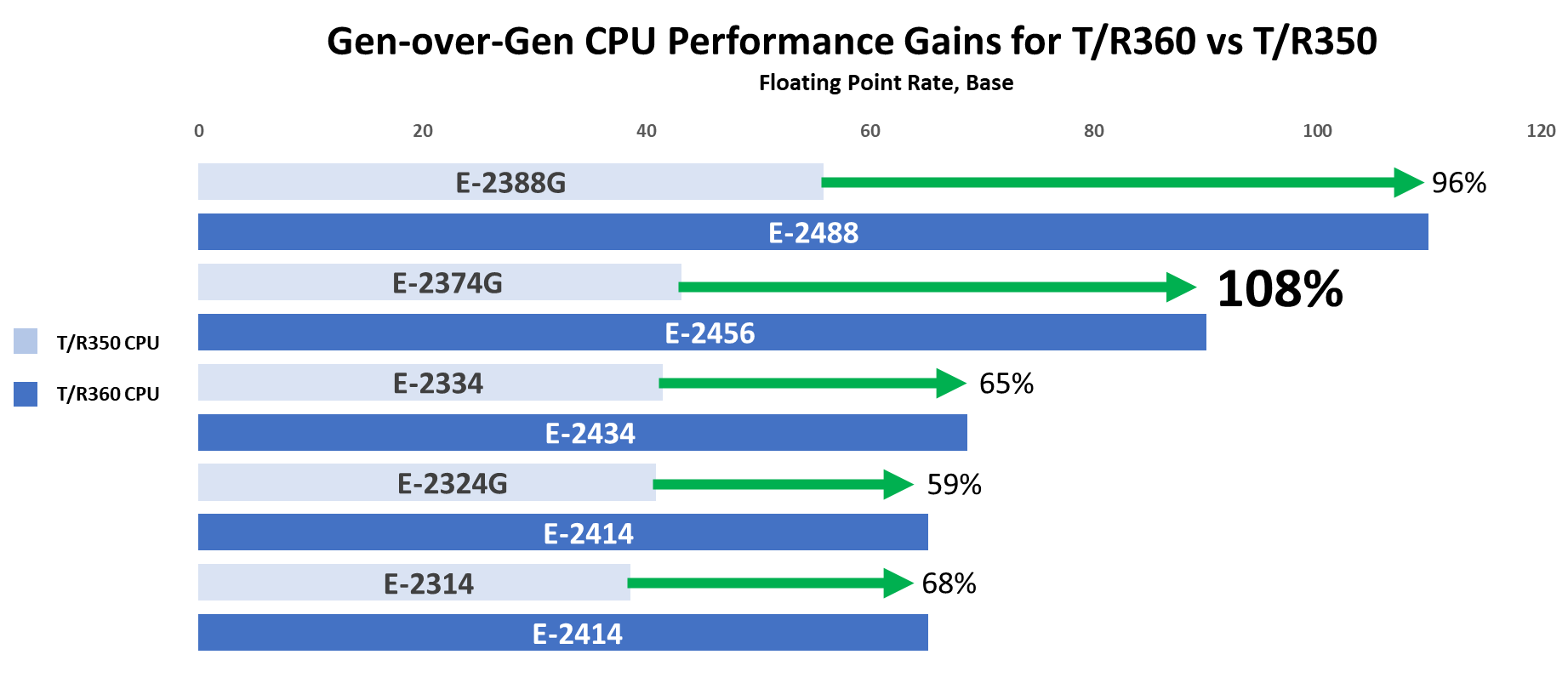

The Dell Solutions Performance Analysis Lab (SPA) ran the SPEC CPU® 2017 benchmark on both the PowerEdge T360 and R360 servers with the latest Intel Xeon E-2400 series processors. SPEC CPU is an industry-standard benchmark that measures compute performance for both floating point (FP) and integer operations. We compare these new results with the prior-generation PowerEdge T350 and R350 servers that have Intel Xeon E-2300 series processors.

The following gen-over-gen comparisons represent common Intel CPU configurations for R350/T350 and R360/T360 customers, respectively:

Table 3. Selected CPUs for T/R350 vs T/R360 comparison

Comparison # | PowerEdge R350/T350 | PowerEdge R360/T360 |

1 | E-2388G, 8 cores, 3.2 GHz base frequency | E-2488, 8 cores, 3.2 GHz base frequency |

2 | E-2374G, 4 cores, 3.7 GHz base frequency | E-2456, 6 cores, 3.3 GHz base frequency |

3 | E-2334, 4 cores, 3.4 GHz base frequency | E-2434, 4 cores, 3.4 GHz base frequency |

4 | E-2324G, 4 cores, 3.1 GHz base frequency | E-2414, 4 cores, 2.6 GHz base frequency |

5 | E-2314, 4 cores, 2.8 GHz base frequency | E-2414, 4 cores, 2.6 GHz base frequency |

Results

We report SPEC CPU’s FP rate and integer rate metrics, which measure throughput in terms of work per unit of time (so higher results are better).[1] Across all CPU comparisons and for both FP and Int rates, there was a 20% or greater uplift in performance gen-over-gen. Overall, customers can expect up to 108% better CPU performance when upgrading from the PowerEdge T/R350 to the T/R360.[2] Figure 1 below displays the results for the FP base metric, and Table 4 details results for the integer rate and FP peak metrics.

Figure 1. SPEC CPU results gen-over-gen

Table 4. Results for each CPU comparison

Comparison # | Processor | Int Rate (Base) | Int Rate (Peak) | FP Rate (Base) | FP Rate (Peak) |

1 | E-2388G | 68.1 | 71.2 | 55.9 | 60.3 |

| E-2488 | 95.1 | 99.2 | 110 | 110 |

| % Increase | 39.65% | 39.33% | 96.78% | 82.42% |

2 | E-2374G | 42.3 | 43.8 | 43.2 | 45.3 |

| E-2456 | 68.3 | 71.1 | 90.1 | 90.3 |

| % Increase | 61.47% | 62.33% | 108.56% | 99.34% |

3 | E-2334 | 39.8 | 41.2 | 41.5 | 43.4 |

| E-2434 | 50.8 | 52.6 | 68.7 | 68.9 |

| % Increase | 27.64% | 27.67% | 65.54% | 58.76% |

4 | E-2324G | 33 | 34 | 40.9 | 41.4 |

| E-2414 | 39.7 | 41.1 | 65.2 | 65.7 |

| % Increase | 20.30% | 20.88% | 59.41% | 58.70% |

5 | E-2314 | 29.4 | 30.2 | 38.6 | 39 |

| E-2414 | 39.7 | 41.1 | 65.2 | 65.7 |

| % Increase | 35.03% | 36.09% | 68.91% | 68.46% |
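The "% Increase" rows can be reproduced directly from the scores. The short sketch below recomputes the FP rate (base) uplift for each comparison using the values in the table above; the helper name `percent_increase` is introduced here for illustration only.

```python
def percent_increase(old, new):
    """Gen-over-gen uplift as a percentage of the prior-generation score."""
    return (new - old) / old * 100


# FP Rate (Base) scores from the results table: (prior-gen CPU, new CPU) -> (old, new)
fp_base = {
    ("E-2388G", "E-2488"): (55.9, 110),
    ("E-2374G", "E-2456"): (43.2, 90.1),
    ("E-2334", "E-2434"): (41.5, 68.7),
    ("E-2324G", "E-2414"): (40.9, 65.2),
    ("E-2314", "E-2414"): (38.6, 65.2),
}

for (old_cpu, new_cpu), (old, new) in fp_base.items():
    print(f"{old_cpu} -> {new_cpu}: {percent_increase(old, new):.2f}%")

# The largest uplift is the source of the "up to 108%" headline figure.
best = max(fp_base, key=lambda pair: percent_increase(*fp_base[pair]))
print(best)  # ('E-2374G', 'E-2456')
```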

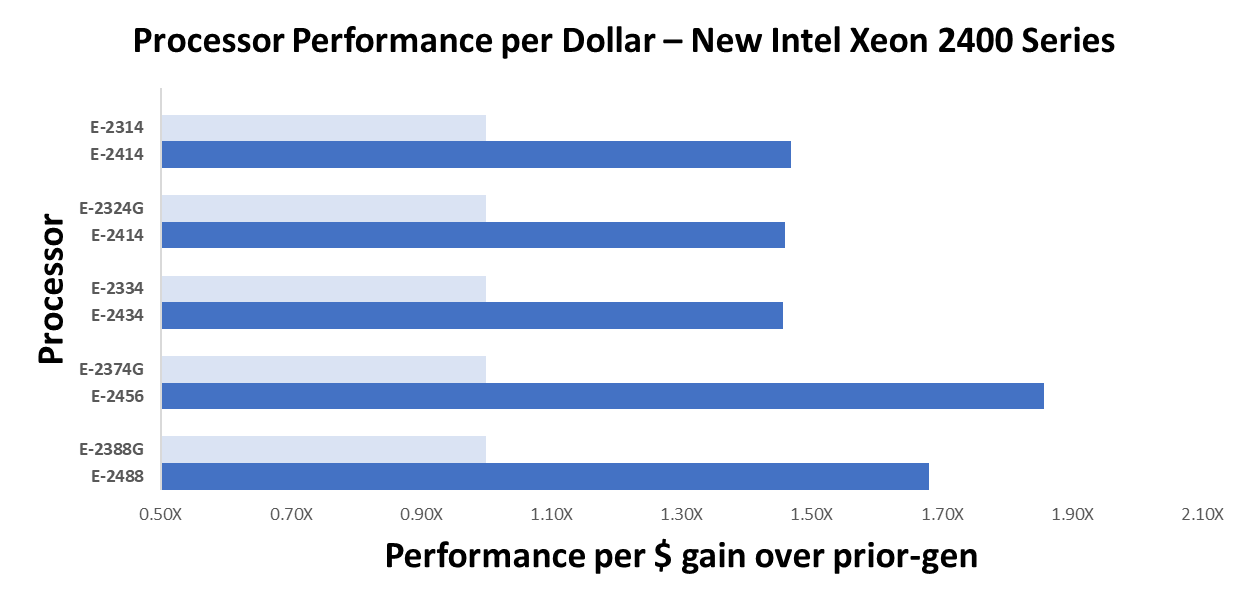

In addition to better performance, Figure 2 below illustrates the high return on investment associated with these new Intel Xeon E-2400 series processors. Specifically, customers gain up to 1.8x the performance per dollar spent on CPUs [1]. We calculated performance per dollar by dividing the FP base results reported in Table 4 by the US list price for the corresponding CPU. Please note that pricing varies by region and is subject to change.

Figure 2. Performance per Dollar gen-over-gen
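The performance-per-dollar calculation described above is a simple ratio. The sketch below shows its shape using the FP rate (base) scores for the E-2374G and E-2456; the prices are placeholder values chosen only to make the code runnable, not Dell list prices, so the printed ratio is not a result from this paper.

```python
def perf_per_dollar(score, price_usd):
    """Benchmark score divided by CPU price."""
    return score / price_usd


def relative_gain(old_score, old_price, new_score, new_price):
    """How many times more performance per dollar the new CPU delivers."""
    return perf_per_dollar(new_score, new_price) / perf_per_dollar(old_score, old_price)


# FP Rate (Base) scores come from Table 4.
# PLACEHOLDER prices (hypothetical, for illustration only):
old_score, old_price = 43.2, 100.0   # E-2374G
new_score, new_price = 90.1, 120.0   # E-2456

gain = relative_gain(old_score, old_price, new_score, new_price)
print(f"{gain:.2f}x performance per dollar (with placeholder prices)")
```

Substituting the actual list prices for a given region into `old_price` and `new_price` yields the corresponding ratio for that region.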

Conclusion

The PowerEdge T360 and R360 are impressive upgrades from the prior-generation servers, especially considering the performance gains with the latest Intel Xeon E-series CPUs and added GPU support. These highly cost-effective servers empower businesses to accelerate their traditional use cases while exploring the realm of emerging AI workloads.

References

- A2 Tensor Core GPU | NVIDIA

- Worldwide Spending on AI-Centric Systems Forecast to Reach $154 Billion in 2023, According to IDC

- Overview - CPU 2017 (spec.org)

Legal Disclosures

[1] Based on SPEC CPU® 2017 benchmarking of the E-2456 and E-2374G Intel Xeon E-series processors in the PowerEdge R360 and R350, respectively. Testing was conducted by Dell Performance Analysis Labs in October 2023, available on spec.org/cpu2017/. Actual results will vary. Pricing is based on Dell US list prices for Intel Xeon E-series processors and varies by region. Please contact your local sales representative for more information.