NUMA Configuration settings on AMD EPYC 2nd Generation

Mon, 16 Jan 2023 13:44:26 -0000

Summary

In multi-chip processors like the AMD EPYC series, differing distances between a CPU core and memory can cause Non-Uniform Memory Access (NUMA) issues. AMD offers a variety of settings to help limit the impact of NUMA. One of the key options is called Nodes per Socket (NPS). This paper discusses recommended NPS settings for different workloads.

Introduction

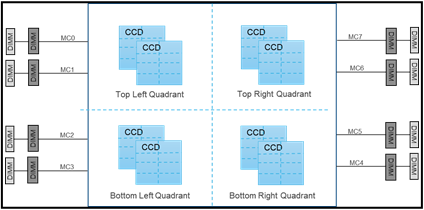

AMD EPYC is a Multi-Chip Module processor. With the 2nd generation AMD EPYC 7002 series, the silicon package was simplified. The package is now divided into 4 quadrants, with up to 2 Core Complex Dies (CCDs) per quadrant. Each CCD consists of two Core CompleXes (CCXs). Each CCX has 4 cores that share an L3 cache. All CCDs communicate through one central I/O die (IOD).

There are 8 memory controllers per socket that support eight memory channels running DDR4 at 3200 MT/s, supporting up to 2 DIMMs per channel. See Figure 1 below:

Figure 1 - Illustration of the ROME Core and memory architecture
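The channel count and transfer rate above imply a theoretical peak memory bandwidth per socket. The following is a minimal sketch of that arithmetic, assuming the standard 64-bit (8-byte) DDR4 data bus per channel; sustained bandwidth in practice will be lower than this upper bound.

```python
# Theoretical peak memory bandwidth per socket, from the figures in the text.
CHANNELS_PER_SOCKET = 8    # eight memory channels per socket
TRANSFER_RATE_MT_S = 3200  # DDR4 at 3200 mega-transfers per second
BYTES_PER_TRANSFER = 8     # assumption: 64-bit data bus per DDR4 channel


def peak_bandwidth_gb_s(channels: int, mt_per_s: int,
                        bytes_per_transfer: int = BYTES_PER_TRANSFER) -> float:
    """Return the theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    return channels * mt_per_s * 1e6 * bytes_per_transfer / 1e9


per_channel = peak_bandwidth_gb_s(1, TRANSFER_RATE_MT_S)
per_socket = peak_bandwidth_gb_s(CHANNELS_PER_SOCKET, TRANSFER_RATE_MT_S)
print(f"per channel: {per_channel:.1f} GB/s")
print(f"per socket:  {per_socket:.1f} GB/s")
```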

With this architecture, all cores on a single CCD are closest to 2 memory channels. The rest of the memory channels are across the IO die, at differing distances from these cores. Memory interleaving allows a CPU to efficiently spread memory accesses across multiple DIMMs. This allows more memory accesses to execute without waiting for one to complete, maximizing performance.
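As a rough illustration of interleaving, the sketch below maps addresses to channels in simple round-robin fashion. The 256-byte granule and the modulo mapping are assumptions made for illustration only; the actual address-to-channel mapping used by the hardware is not described in this paper and is likely more elaborate.

```python
from collections import Counter

INTERLEAVE_GRANULE = 256  # bytes; hypothetical granule size for illustration


def channel_for_address(addr: int, num_channels: int,
                        granule: int = INTERLEAVE_GRANULE) -> int:
    """Map a physical address to a channel by round-robin interleaving."""
    return (addr // granule) % num_channels


def channels_touched(start: int, length: int, num_channels: int) -> Counter:
    """Count how many granules of a contiguous buffer land on each channel."""
    first = start // INTERLEAVE_GRANULE
    last = (start + length - 1) // INTERLEAVE_GRANULE
    return Counter(g % num_channels for g in range(first, last + 1))


# A 64 KiB buffer interleaved across 8 channels versus 2 channels.
for n in (8, 2):
    spread = channels_touched(0, 64 * 1024, n)
    print(n, "channels:", dict(sorted(spread.items())))
```

With more channels in the interleave set, a contiguous buffer is spread over more DIMMs, so more accesses can be in flight at once.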

NUMA and NPS

Rome processors control memory interleaving through the Nodes Per Socket (NPS) setting, which determines how many Non-Uniform Memory Access (NUMA) domains each socket is divided into. The following NPS options can be used for different workload types:

- NPS0 – This is only available on a 2-socket system. This means one NUMA node per system. Memory is interleaved across all 16 memory channels in the system.

- NPS1 – In this, the whole CPU is a single NUMA domain, with all the cores in the socket, and all the associated memory in this one NUMA domain. Memory is interleaved across the eight memory channels. All PCIe devices on the socket belong to this single NUMA domain.

- NPS2 – This setting partitions the CPU into 2 NUMA domains, with half the cores and memory in each domain. Memory is interleaved across 4 memory channels in each NUMA domain.

- NPS4 – This setting partitions the CPU into four NUMA domains. Each quadrant is a NUMA domain, and memory is interleaved across the 2 memory channels in each quadrant. PCIe devices will be local to one of the 4 NUMA domains on the socket, depending on the quadrant of the IOD that has the PCIe root for the device.

Note: Not all CPUs support all NPS settings.
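The NPS options listed above can be summarized as a small lookup. This is a minimal sketch based only on the descriptions in this section; the names `NpsLayout` and `nps_layout` are hypothetical, and it does not check whether a given CPU model supports a given setting.

```python
from dataclasses import dataclass

CHANNELS_PER_SOCKET = 8  # from the text: eight memory channels per socket


@dataclass(frozen=True)
class NpsLayout:
    numa_domains_in_system: int
    channels_per_domain: int


def nps_layout(nps: int, sockets: int = 2) -> NpsLayout:
    """Return NUMA domain count and interleave width for an NPS setting."""
    if nps == 0:
        # NPS0: one NUMA node for the whole 2-socket system.
        if sockets != 2:
            raise ValueError("NPS0 is only available on a 2-socket system")
        return NpsLayout(1, CHANNELS_PER_SOCKET * sockets)
    if nps not in (1, 2, 4):
        raise ValueError(f"unknown NPS setting: {nps}")
    # NPS1/2/4: the socket is split into `nps` domains of equal size.
    return NpsLayout(nps * sockets, CHANNELS_PER_SOCKET // nps)


for setting in (0, 1, 2, 4):
    print(f"NPS{setting}:", nps_layout(setting))
```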

Recommended NPS Settings

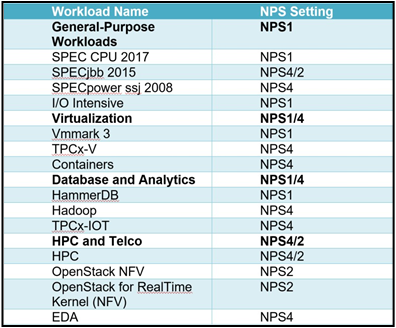

Depending on the workload type, different NPS settings might give better performance. In general, NPS1 is the default recommendation for most use cases. Highly parallel workloads like many HPC use cases might benefit from NPS4. Here is a list of recommended NPS settings for some key workloads. In some cases, benchmarks are listed to indicate the kind of workloads.

Figure 2 - Table of recommended NPS Settings depending on workload
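After changing the NPS setting, it can be useful to confirm how many NUMA nodes the operating system actually sees. The sketch below assumes a Linux host, where the kernel exposes one `nodeN` directory per NUMA node under `/sys/devices/system/node`; the function name `numa_node_ids` is hypothetical, and on other operating systems it simply returns an empty list.

```python
import re
from pathlib import Path

NODE_DIR_PATTERN = re.compile(r"^node(\d+)$")


def numa_node_ids(sysfs_root: str = "/sys/devices/system/node") -> list[int]:
    """Return the sorted NUMA node IDs found under the given sysfs directory."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []  # not Linux, or sysfs not mounted
    ids = []
    for entry in root.iterdir():
        match = NODE_DIR_PATTERN.match(entry.name)
        if match and entry.is_dir():
            ids.append(int(match.group(1)))
    return sorted(ids)


if __name__ == "__main__":
    nodes = numa_node_ids()
    print(f"NUMA nodes visible to the OS: {len(nodes)} {nodes}")
```

On a 2-socket system configured for NPS4, for example, the descriptions above imply eight visible NUMA nodes (four per socket).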

For additional tuning details, please refer to the Tuning Guides published by AMD.

For detailed discussions of the AMD memory architecture and memory configurations, please refer to the Balanced Memory Whitepaper.

In Conclusion

Dell servers based on AMD EPYC processors offer multiple configuration options to optimize memory performance. Choosing the NPS setting appropriate to the workload can help maximize performance.

Related Documents

Benchmark Performance of AMD EPYC™ Processors

Mon, 16 Jan 2023 23:40:30 -0000

Summary

The Dell PowerEdge portfolio of servers with AMD EPYC processors has achieved several world record benchmark scores, including VMmark, TPCx-HS, and SAP-SD. These scores demonstrate the advantage of these servers for key business workloads.

3rd Generation AMD EPYC™ Processors

The 3rd Generation of AMD EPYC™ Processors builds on the AMD Infinity Architecture to provide full features and functionality for both one-socket and two-socket x86 server options. The processor retains the chiplet design from the 2nd generation, with a 12nm-based IO die surrounded by 7nm-based compute dies, and is a drop-in replacement option. With a range of options from 8 cores to 64 cores, and TDPs of up to 280W, these processors can target a wide variety of workloads. Configurations support up to 160 lanes of PCIe Gen4, allowing for options like 24 direct-attach NVMe drives and dual-port 100Gbps NICs that can run at line rate.
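The line-rate and drive-count claims can be sanity-checked with simple arithmetic. This is a rough sketch that assumes the NIC sits in a x16 slot and each NVMe drive uses four lanes (neither is stated in the text); it uses the PCIe 4.0 per-lane rate of 16 GT/s with 128b/130b encoding and ignores packet-level protocol overhead, so usable bandwidth will be somewhat lower.

```python
PCIE_GEN4_GT_PER_S = 16.0        # giga-transfers per second per lane (PCIe 4.0)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding
TOTAL_LANES = 160                # from the text: up to 160 lanes of PCIe Gen4


def pcie_gen4_gbps(lanes: int) -> float:
    """Raw one-direction link bandwidth in Gb/s after line encoding."""
    return lanes * PCIE_GEN4_GT_PER_S * ENCODING_EFFICIENCY


nic_demand_gbps = 2 * 100     # dual-port 100Gbps NIC at line rate
link_gbps = pcie_gen4_gbps(16)  # assumption: NIC in a x16 slot
nvme_lanes = 24 * 4           # assumption: four lanes per NVMe drive

print(f"x16 Gen4 link: {link_gbps:.1f} Gb/s, NIC demand: {nic_demand_gbps} Gb/s")
print(f"lanes for 24 NVMe drives: {nvme_lanes} of {TOTAL_LANES}")
```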

Key New Features

The 3rd Generation of AMD EPYC processors builds on the previous generation and adds a few key optimizations that deliver significant performance improvements. The L3 cache in each CCD is now shared across all 8 cores instead of just 4. Thus, each core has access to up to 32MB of L3 cache, allowing for flexibility, lower inter-core latency, and improved cache performance. DDR memory latency has been further reduced, and there is a new 6-channel memory interleaving option. The IO memory management unit has been optimized to better handle 200Gbps line rate. Support for Hot Plug surprise removal has been improved, following the PCI-SIG's new implementation guideline. New features like SEV-SNP (Secure Encrypted Virtualization with Secure Nested Paging) provide enhanced virtualization security. There are also a few other enhancements and optimizations targeting workloads such as HPC.

What does this mean?

Technical specifications can only explain part of the story. Key workload-based benchmarks can explain some of the real-world applicability and real-world performance. Dell has worked to publish multiple key benchmarks to help customers gauge the real-world performance of the various Dell PowerEdge servers with AMD EPYC Processors.

Key Benchmarks

Some of the key benchmarks that are relevant to typical use cases are listed below:

VMmark

VMmark is a benchmark from VMware that highlights virtualization. The VMmark benchmark runs multiple tiles on the system under test. Each tile consists of 19 different virtual machines, each running a typical workload. This benchmark is well suited to identifying the capabilities of a typical IT server where workloads are virtualized and multiple workloads run on a single server.

For AMD EPYC, the large number of cores, the high-speed memory, and the high-speed PCIe Gen4 for networking (and, when used, storage) all contribute to a very positive result.

As of 3/15/2021, Dell has top scores on 4-node vSAN configurations with the R7515, the R6525, and the C6525. The R7515 and R6525 are 1-socket and 2-socket servers with scores of 15.18@16 tiles and 24.08@28 tiles, respectively. The C6525 is a modular server with 4 nodes in a single 2U system; its score of 13.74@16 tiles highlights the sheer density of compute possible in 2U of rack space.

Dell also has a leading score for a matched pair 2-socket configuration connected to a Dell EMC PowerMax. This configuration managed a score of 19.4@22 tiles from just 2 servers, achieving the maximum VM density for such a configuration. This score highlights the advantages of leveraging an excellent external storage array like the Dell EMC PowerMax to maximize reliability and performance.

Reference: https://www.vmware.com/products/vmmark/results3x.html

TPCx-HS

The TPCx-HS benchmark is built to showcase the performance of a Hadoop cluster doing data analytics. In today’s world, where data is critical, the ability to analyze and manage this data becomes very important. The benchmark can do batch processing with MapReduce or data analytics on Spark.

As of 3/15/2021, Dell PowerEdge servers with AMD EPYC 3rd generation of processors have multiple world record scores for TPCx-HS at both the 1TB and 3TB database sizes. These include performance improvements of as much as 60% over the previous world records with as much as 40% lower $/HSph.

Reference: http://tpc.org/tpcx-hs/results/tpcxhs_perf_results5.asp?version=2

SAP-SD

SAP-Sales and Distribution is a core functional module in SAP ERP Central Component that allows organizations to store and manage customer and product related data. The ability to access and manage this data at high speed, and with minimal latency is a very critical requirement of the business architecture.

For this benchmark, Dell PowerEdge servers have world record scores on Windows and Linux for both 1-S and 2-S platforms. The 2-S Linux configuration score of 75000 benchmark users is higher than even the best 4-S score for this benchmark, highlighting the significant advantage of this architecture for database use cases.

Reference: https://www.sap.com/dmc/exp/2018-benchmark-directory/#/sd

Conclusion

Dell PowerEdge servers with AMD EPYC processors have industry-leading performance numbers. Benchmarks like VMmark, TPCx-HS, and SAP-SD show that these platforms are excellent for the most common workloads and provide excellent business value.

13% Better Performance in Financial Trading with PowerEdge R7615 and AMD EPYC 9374F

Wed, 16 Aug 2023 15:41:36 -0000

Summary

Dell PowerEdge R7615 with 4th Generation AMD EPYC 9374F provides up to a 13 percent performance gain over Dell PowerEdge R7615 with 4th Generation AMD EPYC 9354P for financial trading benchmarks.[1] This Direct from Development (DfD) document looks at CPU benchmarks for three R7615 configurations based on 32-core CPUs and highlights key features that enable enterprises to host different workloads.

Dell PowerEdge R7615

Dell PowerEdge R7615 is a 2U, single-socket rack server designed to be the best investment per dollar for your data center. It provides performance and flexible, low-latency storage options in an air-cooled or Direct Liquid Cooling (DLC) configuration, and uses a 4th Generation AMD EPYC processor to deliver up to 50% more cores per single-socket platform. It targets traditional and emerging workloads, including software-defined storage, data analytics, and virtualization.

Figure 1. Side angle of the extremely scalable R7615

4th Generation AMD EPYC processors

PowerEdge R7615 is the latest single-socket AMD server supporting 4th Generation AMD EPYC 9004 Series processors, the latest generation of the AMD64 System-on-Chip (SoC) processor family. It is based on the Zen 4 microarchitecture introduced in 2022, supporting up to 128 cores (256 threads) and 12 memory channels per socket, a 50% increase over the previous generation. This series includes three different CPUs with 32 cores:

| Processor | CPU Cores | Threads | Max. Boost Clock | All-Core Boost Speed | Base Clock | L3 Cache | Default TDP |
| --- | --- | --- | --- | --- | --- | --- | --- |
| AMD EPYC 9374F | 32 | 64 | Up to 4.3GHz | 4.1GHz | 3.85GHz | 256MB | 320W |
| AMD EPYC 9354P | 32 | 64 | Up to 3.8GHz | 3.75GHz | 3.25GHz | 256MB | 280W |
| AMD EPYC 9334 | 32 | 64 | Up to 3.9GHz | 3.85GHz | 2.7GHz | 128MB | 210W |

The Base Clock, also known as Base Frequency, refers to the minimum operational clock speed of an AMD processor's cores when running under normal conditions. It serves as the foundational clock speed for the processor's overall performance. During tasks that do not require intense processing power, the processor operates at or around this speed, conserving energy and minimizing heat generation.

The Max Boost Clock, often called Max Turbo Frequency or Max Turbo Boost, signifies the upper limit of a processor's clock speed. This clock speed is achieved when specific cores of the AMD processor dynamically increase their frequency to deliver peak performance. The Max Boost Clock is typically applied to a subset of cores and is triggered when the workload demands require a burst of processing power, such as for gaming, video editing, financial trading, and other intensive applications.

The All-Core Boost Speed refers to the clock speed that all cores of an AMD processor can achieve simultaneously when under load. Unlike the Max Boost Clock, which is applicable to only a select number of cores, the All-Core Boost Speed ensures that all cores are operating at an elevated clock speed for optimized multi-threaded performance. This feature is particularly advantageous for tasks that rely heavily on parallel processing, such as rendering, simulations, and content creation.

AMD EPYC 9374F is the frequency-optimized offering, which provides up to a 13 percent higher max boost clock (4.3GHz versus 3.8GHz) than AMD EPYC 9354P, the basic 32-core 1-socket offering. The series also includes AMD EPYC 9334, which has half the L3 cache but a Default TDP about 34 percent lower than that of AMD EPYC 9374F (210W versus 320W), making it the most energy-efficient of the three CPUs.
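The relative differences between the three CPUs can be computed directly from the specification table. The following is a small sketch using only the values listed there; the dictionary layout and the helper name `pct_change` are illustrative choices, not part of any AMD or Dell tool.

```python
# Values copied from the specification table (clocks in GHz, TDP in watts).
SPECS = {
    "9374F": {"max_boost": 4.3, "all_core": 4.1, "base": 3.85, "tdp": 320},
    "9354P": {"max_boost": 3.8, "all_core": 3.75, "base": 3.25, "tdp": 280},
    "9334": {"max_boost": 3.9, "all_core": 3.85, "base": 2.7, "tdp": 210},
}


def pct_change(new: float, old: float) -> float:
    """Percentage change of `new` relative to `old`."""
    return (new - old) / old * 100


def compare(cpu: str, baseline: str) -> dict:
    """Percentage change of every listed metric of `cpu` versus `baseline`."""
    return {
        metric: pct_change(SPECS[cpu][metric], SPECS[baseline][metric])
        for metric in SPECS[cpu]
    }


for cpu, baseline in (("9374F", "9354P"), ("9334", "9374F")):
    deltas = compare(cpu, baseline)
    summary = ", ".join(f"{m}: {d:+.1f}%" for m, d in deltas.items())
    print(f"{cpu} vs {baseline}: {summary}")
```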

Performance data

We captured four benchmarks:

- Sockperf is a network benchmarking tool designed to measure network latency and throughput performance over the socket API for high-performance computing clusters and data centers.

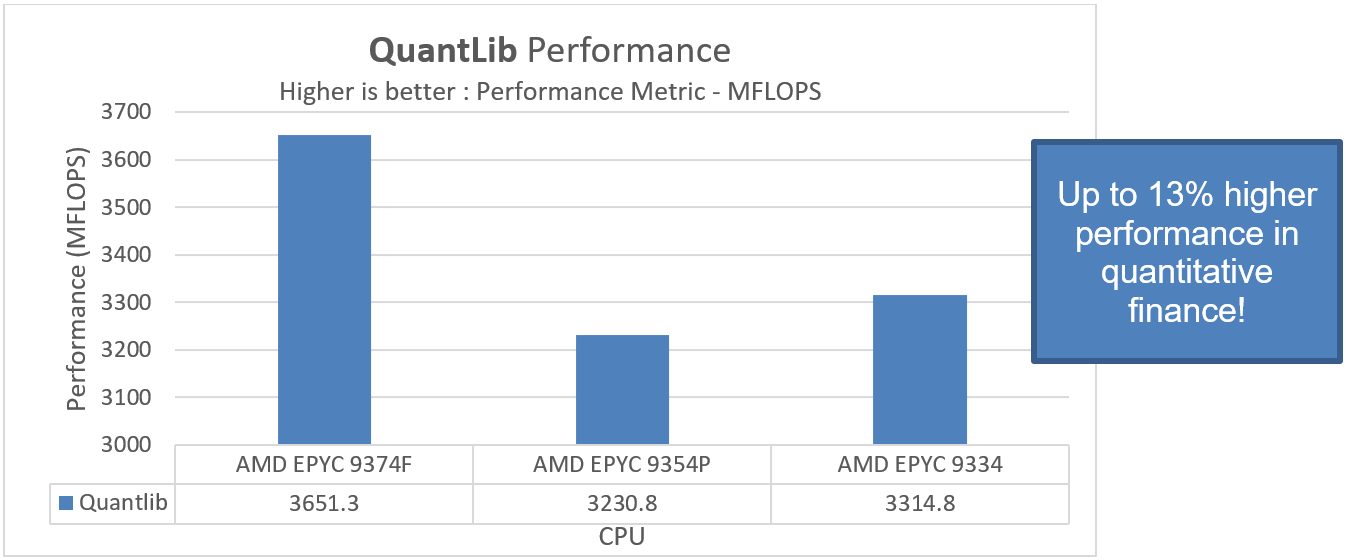

- The QuantLib benchmark exercises QuantLib, a software library used in quantitative finance and derivatives pricing for modeling and analyzing financial instruments, providing tools for pricing, risk management, and quantitative research. It is widely used by financial professionals and institutions for accurate and efficient financial calculations.

- High Performance Conjugate Gradient (HPCG) measures the computational efficiency of solving a sparse linear system using conjugate gradient methods, providing insights into HPC system performance and optimization. It complements the traditional HPL benchmark, reflecting real-world application characteristics.

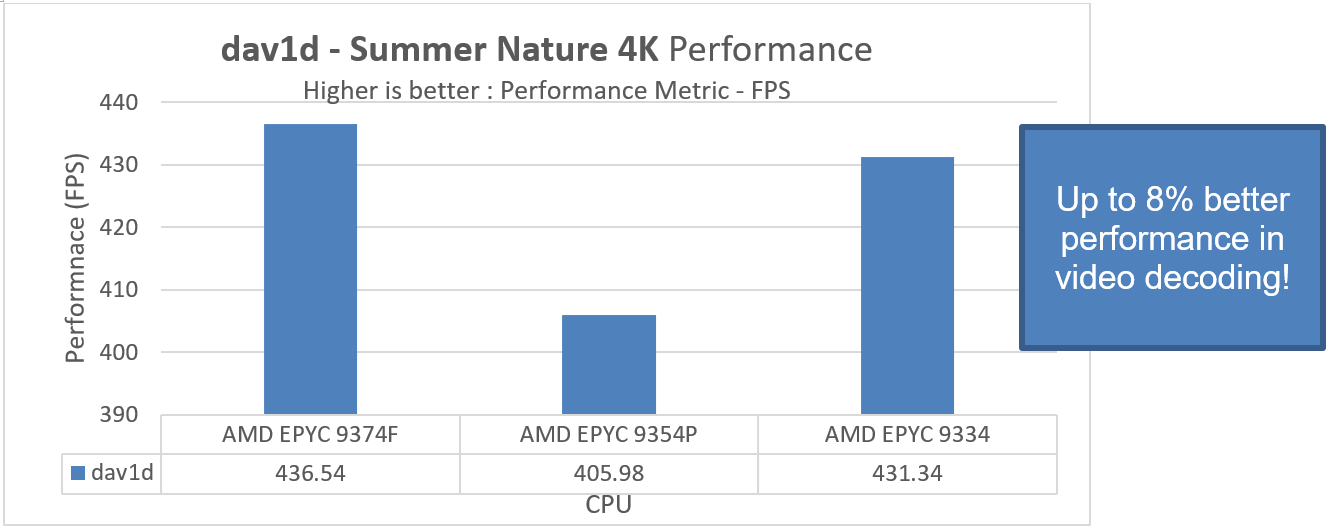

- The dav1d benchmark is a performance testing tool used to assess the decoding speed and efficiency of the AV1 video codec, helping to evaluate its real-time playback capabilities and effectiveness in video streaming applications. It aids in optimizing AV1 codec implementations for improved video compression and playback performance.

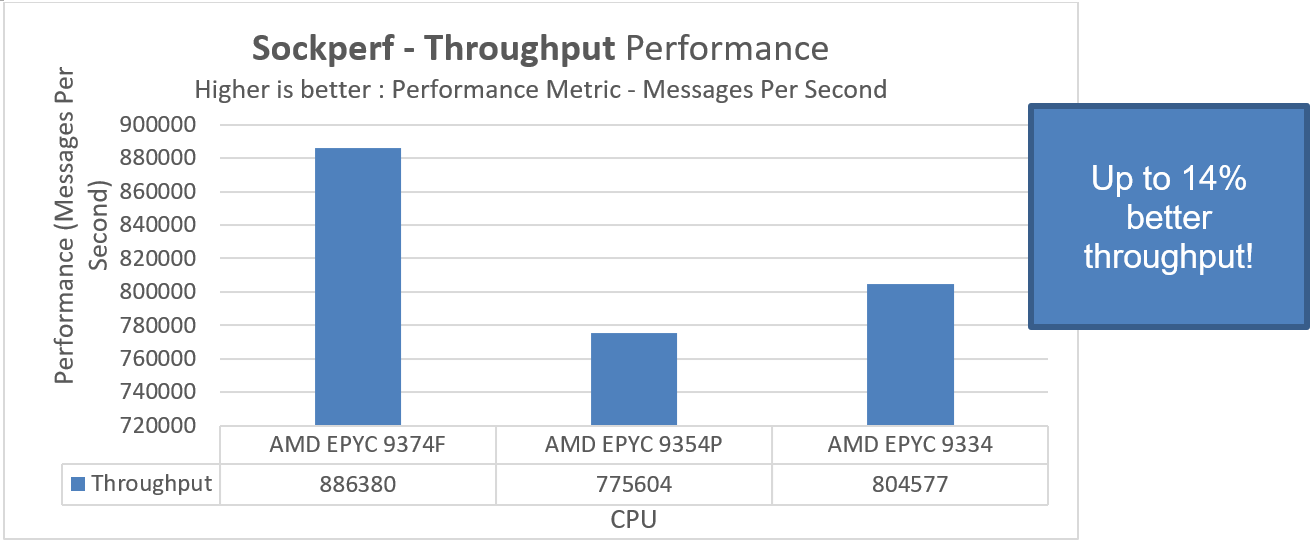

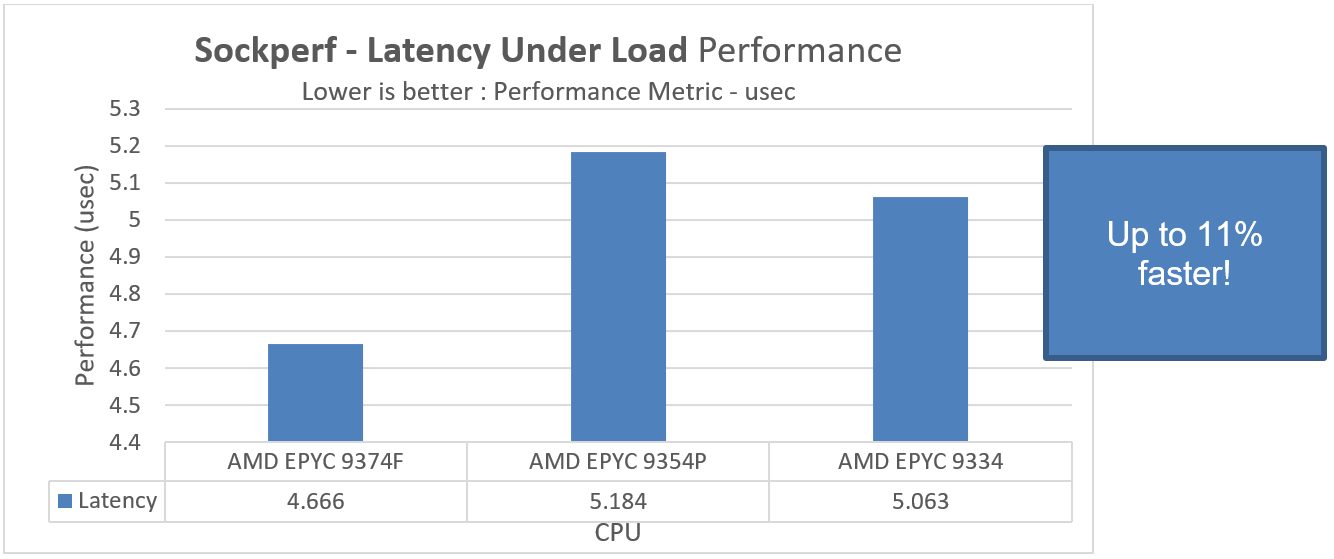

To compare performance across three R7615 4th Generation AMD EPYC processors, let us first consider the Sockperf benchmark. This benchmark reports throughput in messages per second, the rate at which messages are sent and received. It also reports latency under load in microseconds (usec), measuring the system's response time under different load conditions.

Figure 1. Three CPU comparison demonstrating Throughput performance using the Sockperf benchmark

Figure 2. CPU comparison showing Latency under Load performance using the Sockperf benchmark

With AMD EPYC 9374F in PowerEdge R7615, we see up to 14 percent better throughput and an 11 percent drop in the time taken for the Latency Under Load subtest to complete, compared with AMD EPYC 9354P, using the Sockperf benchmark.

We also report dav1d results in frames per second; this test measures the time taken to decode AV1 video content. QuantLib results are reported in MFLOPS; this benchmark represents quantitative finance workloads for modeling, trading, and risk management scenarios.

Figure 3. A three CPU comparison demonstrating dav1d performance

Figure 4. A three CPU comparison demonstrating performance using the QuantLib benchmark

PowerEdge R7615 with AMD EPYC 9374F performs better on the dav1d and QuantLib benchmarks than the other tested configurations. We find up to 8 percent better performance for video decoding and up to 13 percent better performance for financial modelling and trading workloads in Dell Technologies PowerEdge R7615 with the frequency-optimized AMD EPYC 9374F.

Conclusion

Some workloads benefit from more cores and some benefit from higher frequency. Here we have shown examples of workloads that take advantage of the higher boost frequencies.

Like most industries, the financial trading industry continues to evolve. Firms are pushing workloads harder and with larger datasets, all while expecting immediate or real-time results. These organizations must be confident that they are investing in the right platforms to support computational requirements. With PowerEdge R7615 with AMD EPYC 9374F, Dell Technologies delivers the systems to address the current and expanding needs for high-performance quantitative trading modelling and risk management scenarios.

References

- Dell PowerEdge R7615 Spec Sheet

- AMD EPYC™ 9374F Processors | AMD

- DDR5 Memory Bandwidth for Next-Generation PowerEdge Servers Featuring 4th Gen AMD EPYC Processors | Dell Technologies Info Hub

[1] Tests were performed in August 2023 at the Solutions and Performance Analysis Lab at Dell Technologies.