Direct from Development - Tech Notes

Reliability in Dell Technologies PowerEdge Servers

Thu, 25 Apr 2024 18:31:15 -0000

Introduction

Reliability is defined as the characteristic of a product or system that assures the performance of its intended function over time and assures operation in a defined environment without failure. Reliability is designed into PowerEdge servers, and it is constantly evaluated and improved throughout the product lifecycle. Full in-house test and analysis capabilities allow Dell Technologies to develop and implement robust product qualification and release procedures.

Dell Technologies Design Guidelines

Dell Technologies server design-to-criteria includes:

- Servers to operate continuously at 40 degrees C and 80% relative humidity, and allow for short-term excursions to 45 degrees C and 90% relative humidity

Note: 40C/85%RH capability is configuration-specific, but the vast majority of PowerEdge server configurations allow for these conditions.

- Additional design life margin, and accommodation for a potential lifetime limited warranty

- Potential deployment in uncontrolled environments – locations with polluted air and dust

- Customer special requests – for example, higher shock and vibration tolerance

Dell Technologies Design for Reliability Process

The Dell Technologies Reliability Engineering team is part of the Server Product Development team and has developed a full suite of design for reliability (DfR) procedures, many of which are based on industry standards: Subsystem Qualification, Ongoing Reliability Testing, Validation, Shock and Vibration, and associated Failure Analysis requirements. This suite must be met and fulfilled before any product is released.

Figure: Dell Technologies environmental test chambers

Dell Technologies uses internally developed web-based DfR tools for systems development. In addition to using these tools at Dell Technologies, we require that our supply base use these tools in their product development processes to ensure our suppliers also design in reliability.

Design for Reliability Starts at the Component Level

Dell Technologies reliability begins with choosing and approving component suppliers. Dell Technologies specifies JEDEC-qualified components from all suppliers (JEDEC is a global industry group that creates standards for a broad range of technologies). To ensure enterprise-class reliability, Dell Technologies may require qualification testing beyond the standard JEDEC suite, depending on the nature of the component, in particular for components that are new, unique, different, or difficult (NUDD). Dell Technologies has specific qualification requirements for NUDDs.

Subsystem Level Comes Next

Dell Technologies defines a qualification protocol for all subsystems (HDD, SSD, PSU, fans, memory, PCIe cards, PERC, and daughter cards) and ensures that the supply base executes to Dell Technologies requirements. Dell Technologies does this by:

- Defining test requirements, sample sizes, ramp rates, durations, and accept/reject criteria

- Working closely with suppliers during their product development process

- Reviewing and approving results, and addressing qualification failures, if any

- Auditing product by conducting our own in-house testing as appropriate

- Auditing supplier Quality and Assembly/Test processes

- Requiring ongoing reliability testing (ORT) on all subsystems throughout their shipping life

The System is the Third Level of Reliability

Dell Technologies does extensive testing and analysis of all systems during development and prior to release:

- Dell Technologies has developed and refined a suite of multiple environment over-stress validation tests that it executes on every system during its development and prior to release

- Dell Technologies has a separate suite of shock and vibration tests, many of which are industry-standards-based, that we execute on every system prior to release

- Dell Technologies has full internal capability to analyze test fails in our own in-house Failure Analysis Labs

Dell Technologies Reliability is designed in and closes the loop: from the component level to subsystem level to system level. Our product qualification and release systems ensure that design criteria, including deployment life, additional deployment life margin, and accommodation for potential lifetime limited warranty, are met before product is launched. This qualification and release system is based on industry standards and on our own rigorous methods which have been developed and refined over multiple generations of PowerEdge products. This includes Ongoing Reliability Testing (ORT) on components and subsystems which is required to be implemented throughout the shipping life of PowerEdge servers.

Dell Technologies’ focus is on Design for Reliability, using a full suite of internally developed web-based tools, hardware validation tests, and shock and vibration tests. Full in-house capabilities allow Dell Technologies to conduct all phases of product qualification and release internally, including multiple environment overstress tests, shock and vibration tests, and failure analysis.

Dell Technologies also conducts research on long term reliability of our products in expanded operating environments. This research, and associated multimillion-dollar investments in applied research facilities, allow Dell Technologies to continue to improve reliability on PowerEdge products.

Accelerate Genomics Insights and Discovery with High-Performing, Scalable Architecture from Dell and Intel

Thu, 01 Feb 2024 18:47:58 -0000

Summary

The field of Genomics requires the storage and processing of vast amounts of data. In this brief, Intel and Dell technologists discuss key considerations to successfully deploy BeeGFS based storage for Genomics applications on the latest generation PowerEdge Server portfolio offerings.

Market positioning

The life sciences industry faces intense pressure to speed results and bring new treatments to market, all while lowering costs, especially in genomics. However, life-changing discoveries often depend on processing, storing, and analyzing enormous volumes of genomic sequencing data: more than 20 TB of new data per day at one organization alone [1], with each modern genome sequencer producing up to 10 TB of new data per day. Researchers need high-performing solutions that are built to handle this volume of data along with analytics and artificial intelligence (AI) workloads, and that are easy to deploy and scale.

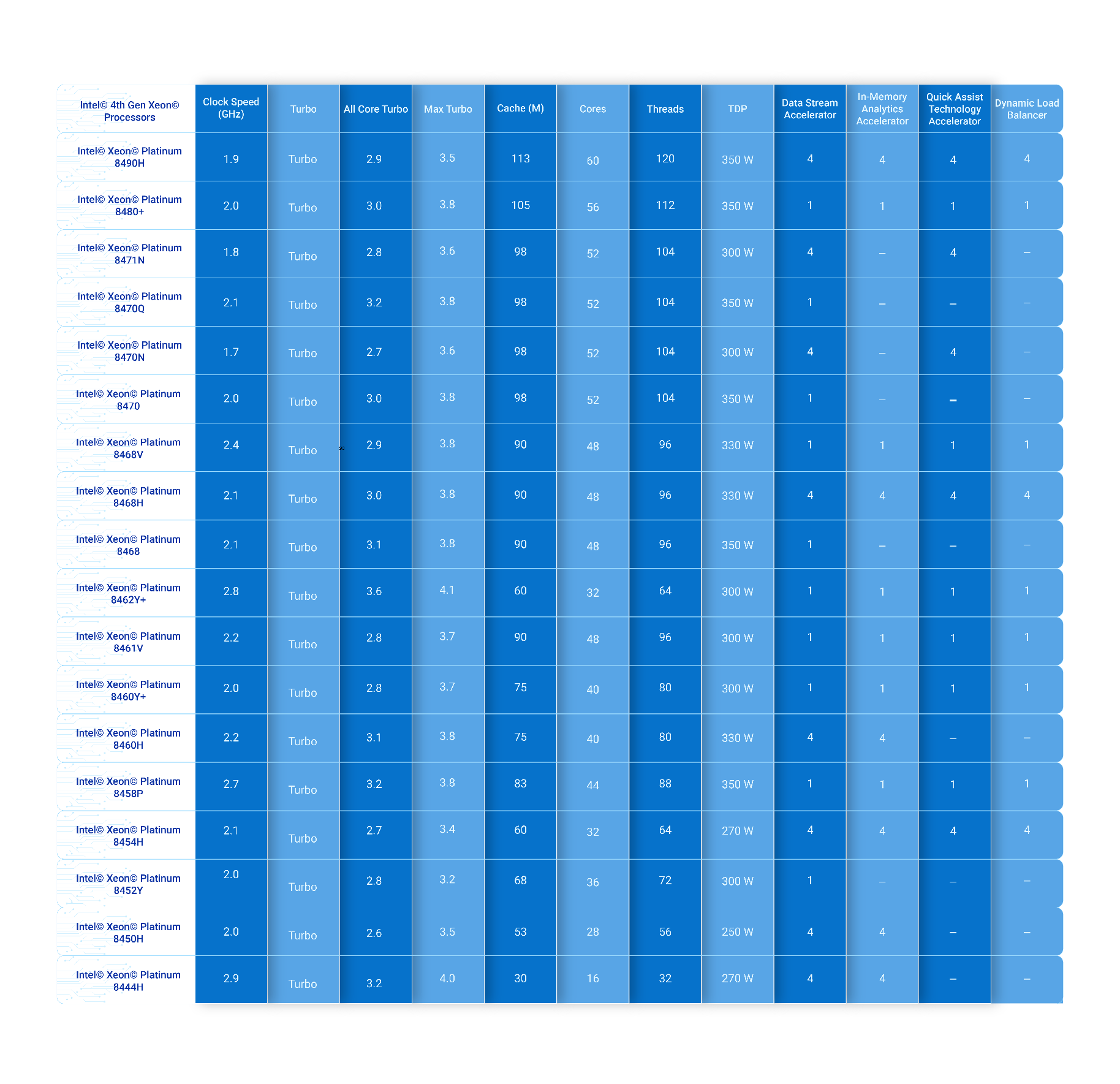

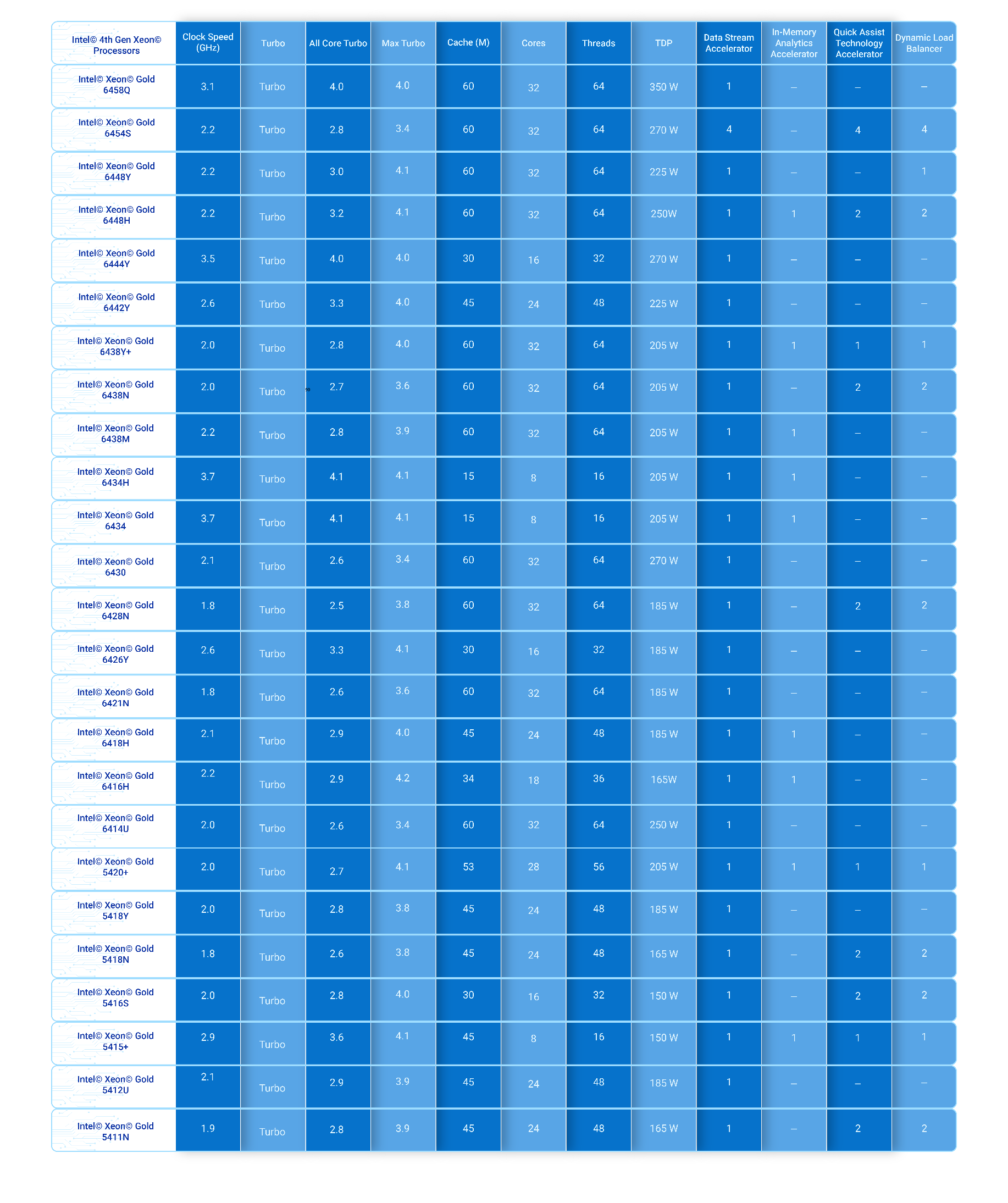

Dell and Intel have collaborated on a bill of materials (BoM) that provides life science organizations with a scalable solution for genomics. This solution features high-performance compute and storage building blocks for one of the leading parallel cluster file systems, BeeGFS. The BoM features four Dell PowerEdge rack server nodes powered by 4th Generation Intel® Xeon® Scalable processors, which deliver the performance needed for faster results and time to production.

The BoM can be tailored for each organization’s architectural needs. For dense configurations, customers can use the Dell PowerEdge C6600 enclosure with PowerEdge C6620 server nodes instead of standard PowerEdge R660 servers (each PowerEdge C6600 chassis can hold up to four PowerEdge C6620 server nodes). If they already have a storage solution in place using InfiniBand fabric, the nodes can be equipped with an additional Mellanox ConnectX-6 HDR100 InfiniBand adapter.

Key Considerations

Key considerations for deploying genomics solutions on Dell PowerEdge servers include:

- Core count: Life sciences organizations often process a whole genome on a cluster, which scales linearly with core count. The Dell PowerEdge solution offers up to 56 cores per CPU to meet performance requirements.

- Memory requirements: This BoM provides 512 GB of DRAM to support specific tasks in workloads that have higher memory requirements, such as running Burrows-Wheeler Aligner algorithms.

- Local and distributed storage: Input/output (I/O) is a big consideration for genomics workloads because datasets can reach hundreds of gigabytes in size. Dell and Intel recommend 3.2 TB of local storage specifically for commonly used genomics tools that read and write many temporary files.

Available Configurations

| Feature | Configuration |
| --- | --- |
| Platform | 4 x Dell R660 supporting 8 x 2.5” NVMe drives - direct connection |
| CPU (per server) | 2x Intel® Xeon® Platinum 8480+ (56c @ 2.0GHz) |
| DRAM | 512GB (16 x 32GB DDR5-4800MT/s) |
| Boot device | Dell BOSS-N1 with 2x 480GB M.2 NVMe SSD (RAID1) |
| Storage | 1x 3.2TB Solidigm D7-P5620 NVMe SSD (PCIe Gen4, Mixed-use) |
| Capacity storage | Dell Ready Solutions for HPC BeeGFS Storage: 500 GB capacity per 30x coverage whole genome sequence (WGS) to be processed; 800 MB/s total (200 MB/s per node). |
| NIC | Intel® E810-XXV Dual Port 10/25GbE SFP28, OCP NIC 3.0 |
| Software Versions | |
| Workload | GATK Best Practices for Germline Variant Calling WholeGenomeGermlineSingleSample_v3.1.6 |
| Applications | WARP 3.1.6, GATK 4.3.0.0, Picard 3.0.0, Samtools 1.17, Burrows-Wheeler Aligner (BWA) 0.7.17, VerifyBamID 2.0.1, MariaDB 10.3.35, Cromwell 84 |
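The per-node and per-genome figures in the configuration above can be rolled up into cluster totals. The following is a minimal sizing sketch, not a Dell or Intel tool: the class name `GenomicsBom` and its method names are hypothetical, the input numbers are taken from the table, and decimal units (1 TB = 1000 GB) are an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenomicsBom:
    """Figures copied from the configuration table; the class itself is illustrative."""
    nodes: int = 4
    cpus_per_node: int = 2
    cores_per_cpu: int = 56
    capacity_gb_per_genome: int = 500      # per 30x coverage WGS
    throughput_mb_s_per_node: int = 200

    def total_cores(self) -> int:
        """Physical cores across the whole cluster."""
        return self.nodes * self.cpus_per_node * self.cores_per_cpu

    def aggregate_throughput_mb_s(self) -> int:
        """Storage throughput the cluster needs in total."""
        return self.nodes * self.throughput_mb_s_per_node

    def capacity_tb(self, genomes: int) -> float:
        """Capacity storage for a number of genomes (assumes 1 TB = 1000 GB)."""
        return genomes * self.capacity_gb_per_genome / 1000


bom = GenomicsBom()
print(bom.total_cores())                 # 448
print(bom.aggregate_throughput_mb_s())   # 800
print(bom.capacity_tb(100))              # 50.0
```

The 800 MB/s aggregate matches the total stated in the capacity storage row, which is a quick consistency check on the per-node figure.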

Learn more

Contact your Dell or Intel account team for a customized quote at 1-877-289-3355.

Read about Intel Select Solutions for Genomics Analysis: https://www.intel.com/content/dam/www/public/us/en/documents/solution-briefs/select-genomics-analytics.pdf

Read about Dell HPC Ready Architecture for Genomics: https://infohub.delltechnologies.com/static/media/6cb85249-c458-4c06-bcec-ef35c1a363ca.pdf?dgc=SM&cid=1117&lid=spr4502976221&linkId=112053582

Learn more about Dell Ready Solutions for HPC BeeGFS Storage: https://www.dell.com/support/kbdoc/en-us/000130963/dell-emc-ready-solutions-for-hpc-beegfs-high-performance-storage

Learn more about Dell Ready Solutions for HPC BeeGFS High Capacity Storage: www.dell.com/support/kbdoc/en-ie/000132681/dell-emc-ready-solutions-for-hpc-beegfs-high-capacitystorage

Powering TigerGraph with Intel® Xeon® Processors on PowerEdge Servers

Tue, 30 Jan 2024 23:56:48 -0000

TigerGraph Overview

At the top of this webpage are 3 PDF files outlining test results and reference configurations for Dell PowerEdge servers using both the 3rd Generation Intel Xeon processors and 4th Generation Intel Xeon processors. All testing was conducted in Dell Labs by Intel and Dell Engineers in May and June of 2023.

- TigerGraph DfD ICX – highlights the recommended configurations for Dell PowerEdge servers using 3rd Generation Intel Xeon processors.

- TigerGraph DfD SPR – highlights the recommended configurations for Dell PowerEdge servers using 4th Generation Intel Xeon processors.

- DfD – PowerEdge TigerGraph Test Report – highlights the results of performance testing on both configurations, with comparisons that demonstrate the performance difference between the two platforms.

Solution Overview

TigerGraph was founded in 2012 by programmer Dr. Yu Xu under the name GraphSQL.

According to Gartner, by 2025, graph technologies will be used in 80% of data and analytics innovations, up from 10% in 2021. This projection aligns with the explosive growth of TigerGraph’s global customer base, which has increased by more than 100% in the past twelve months as more organizations use graphs to drive better business outcomes.

A graph database is designed to facilitate analysis of relationships in data. A graph database stores data as entities and the relationships between those entities. It is composed of two things: vertices and edges. Vertices represent entities such as a person, product, location, payment, order and so on; edges represent the relationship between these entities, for example, this person initiated this payment to purchase this product with this order. Graph analytics explores these connections in data and reveals insights about the connected data. These capabilities enable applications such as customer 360, cyber threat mitigation, digital twins, entity resolution, fraud detection, supply chain optimization, and much more.
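To make the vertex and edge model concrete, here is a minimal in-memory sketch in plain Python. It is not TigerGraph's API or query language; the vertex ids, relationship names, and the `reachable` helper are all made up for illustration.

```python
from collections import defaultdict

# Vertices: id -> (type, label). All values are hypothetical.
vertices = {
    "p1": ("Person", "Alice"),
    "pay1": ("Payment", "Payment 1001"),
    "prod1": ("Product", "Laptop"),
    "ord1": ("Order", "Order 42"),
}

# Edges: (source vertex, relationship, target vertex)
edges = [
    ("p1", "INITIATED", "pay1"),
    ("pay1", "PURCHASED", "prod1"),
    ("pay1", "FOR_ORDER", "ord1"),
]

# Adjacency list so that following relationships is a dictionary lookup.
adjacency = defaultdict(list)
for src, rel, dst in edges:
    adjacency[src].append((rel, dst))


def reachable(start, max_hops):
    """Return ids of vertices reachable from start within max_hops edges (breadth-first)."""
    seen = {start}
    frontier = [start]
    for _ in range(max_hops):
        next_frontier = []
        for vertex in frontier:
            for _rel, dst in adjacency[vertex]:
                if dst not in seen:
                    seen.add(dst)
                    next_frontier.append(dst)
        frontier = next_frontier
    seen.discard(start)
    return seen


print(sorted(reachable("p1", 1)))  # ['pay1']
print(sorted(reachable("p1", 2)))  # ['ord1', 'pay1', 'prod1']
```

The two-hop query answers "which products and orders is this person connected to through a payment", which is the kind of relationship question the paragraph above describes.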

TigerGraph is the only scalable graph database for the enterprise. TigerGraph’s innovative architecture allows siloed data sets to be connected for deeper and wider analysis at scale. Additionally, TigerGraph supports real-time in-place updates for operational analytics use cases.

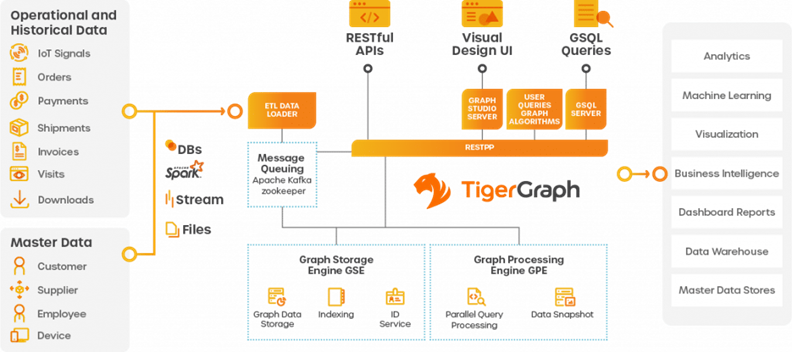

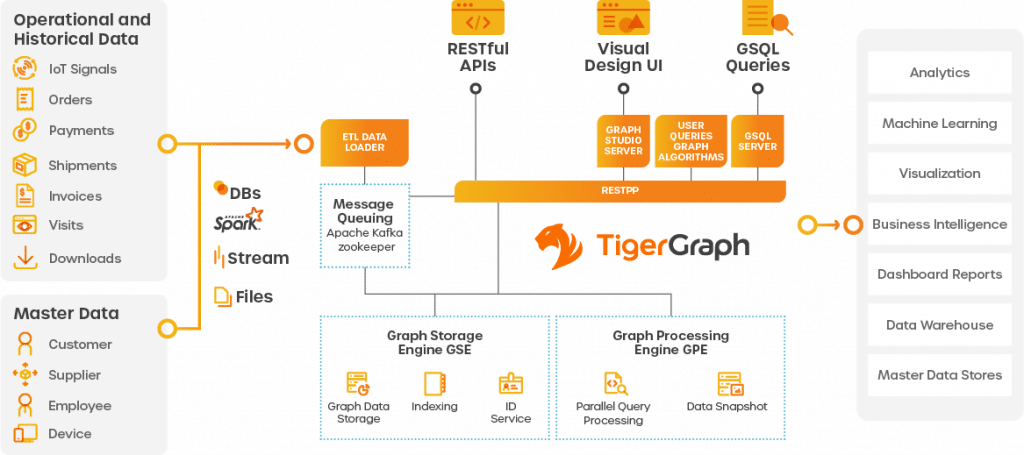

Below is an outline of the TigerGraph architecture.

Note that a TigerGraph instance is designed to process massive pools of data and uses a large number of processes to do so. Choosing the correct hardware is critical to a successful deployment.

Reference Deployments

- Four top-tier banks use TigerGraph to improve fraud detection rates by 20% or more.

- Over 300 million consumers receive personalized offers with recommendation engines powered by TigerGraph.

- More than 50 million patients receive care path recommendations to assist them on their wellness journey.

- One billion people depend on the energy infrastructure optimized by TigerGraph to reduce power outages.

TigerGraph is a native parallel graph database purpose-built for analyzing massive amounts of data (terabytes).

TigerGraph helps make graph technology more accessible. TigerGraph DB is democratizing the adoption of advanced analytics with Intel’s 4th Generation Intel Xeon Scalable Processors by enabling non-technical users to accomplish as much with graphs as the experts do.

TigerGraph with Dell PowerEdge and Intel processor benefits

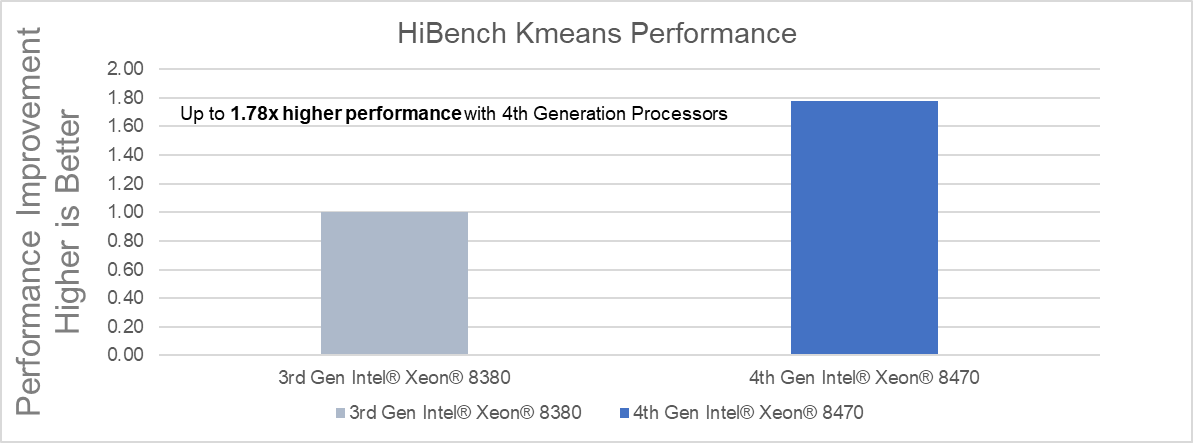

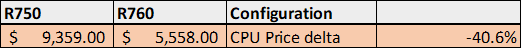

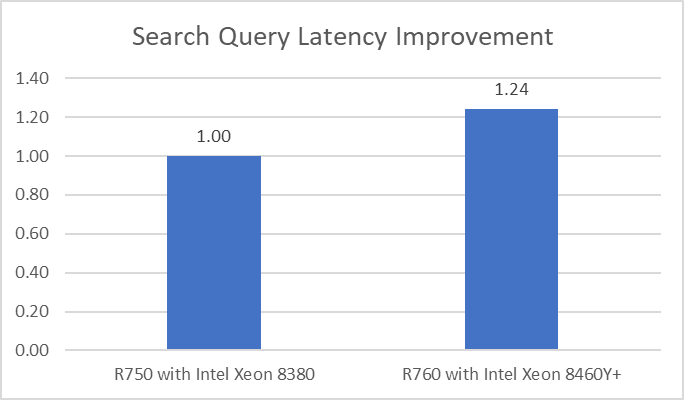

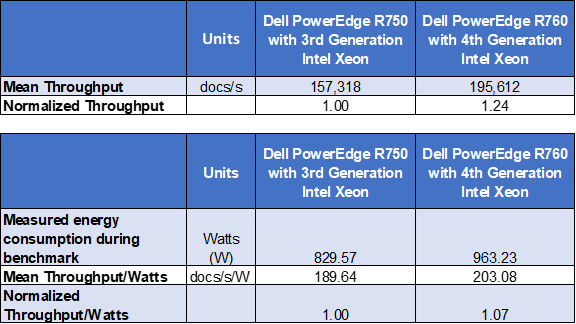

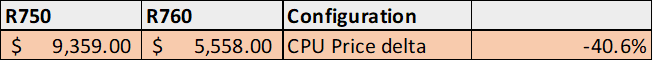

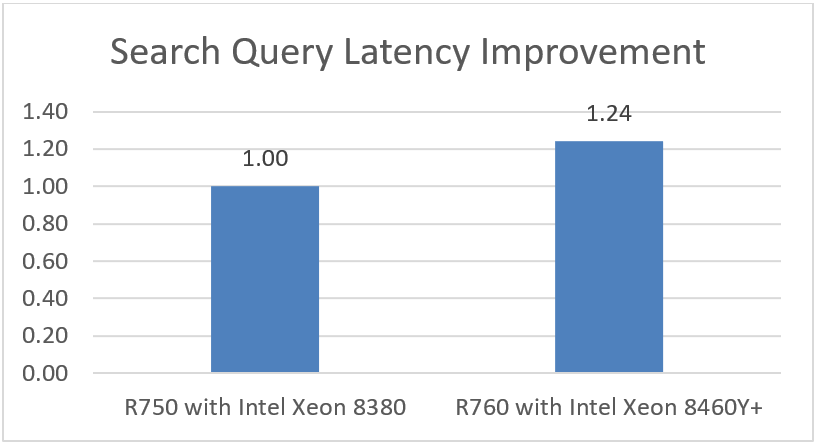

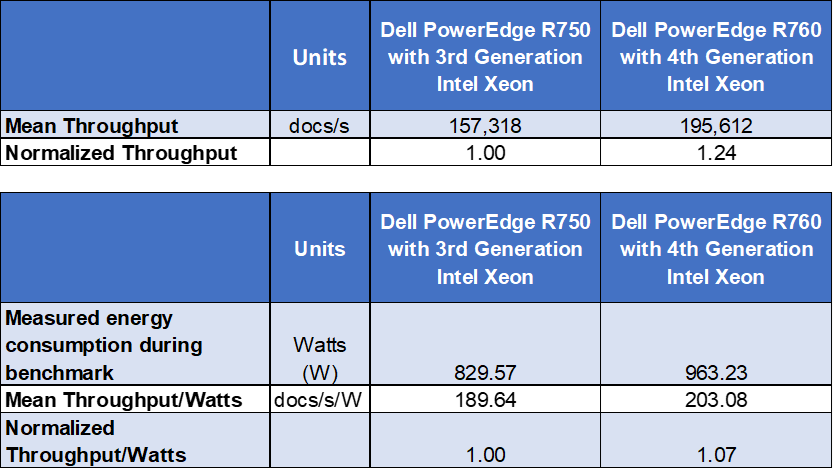

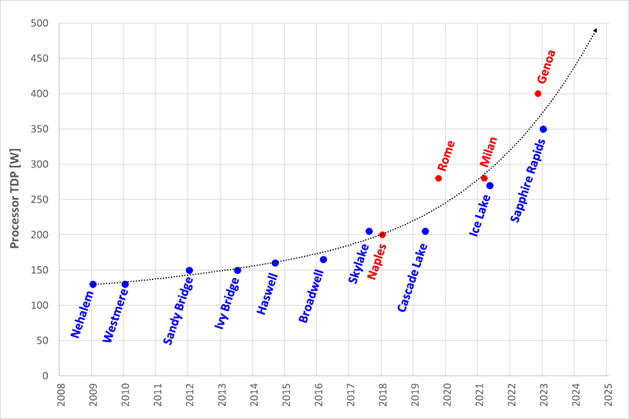

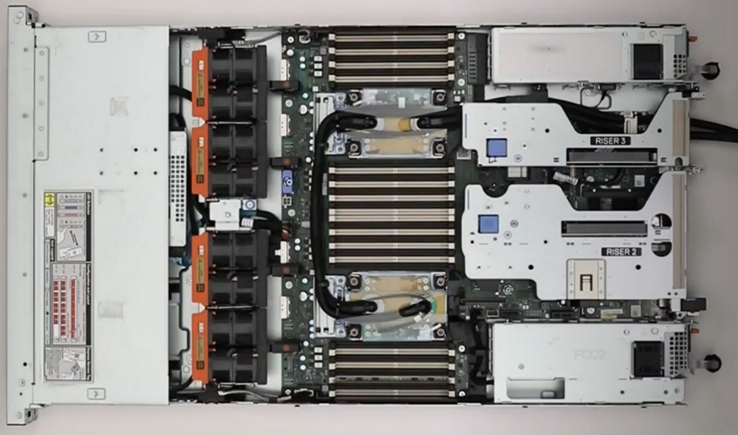

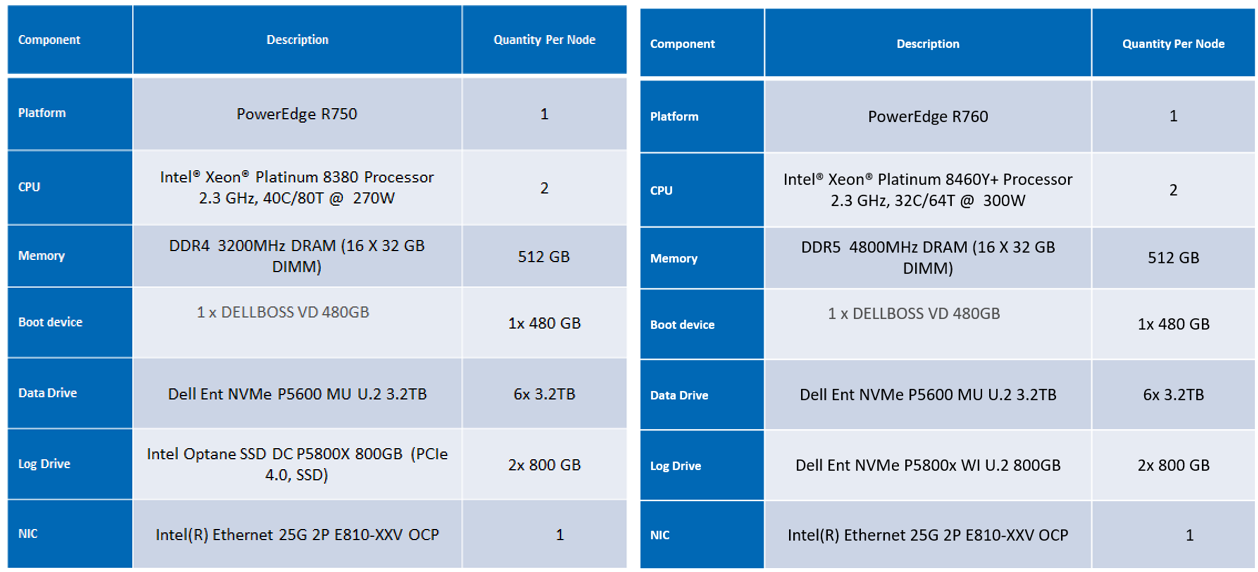

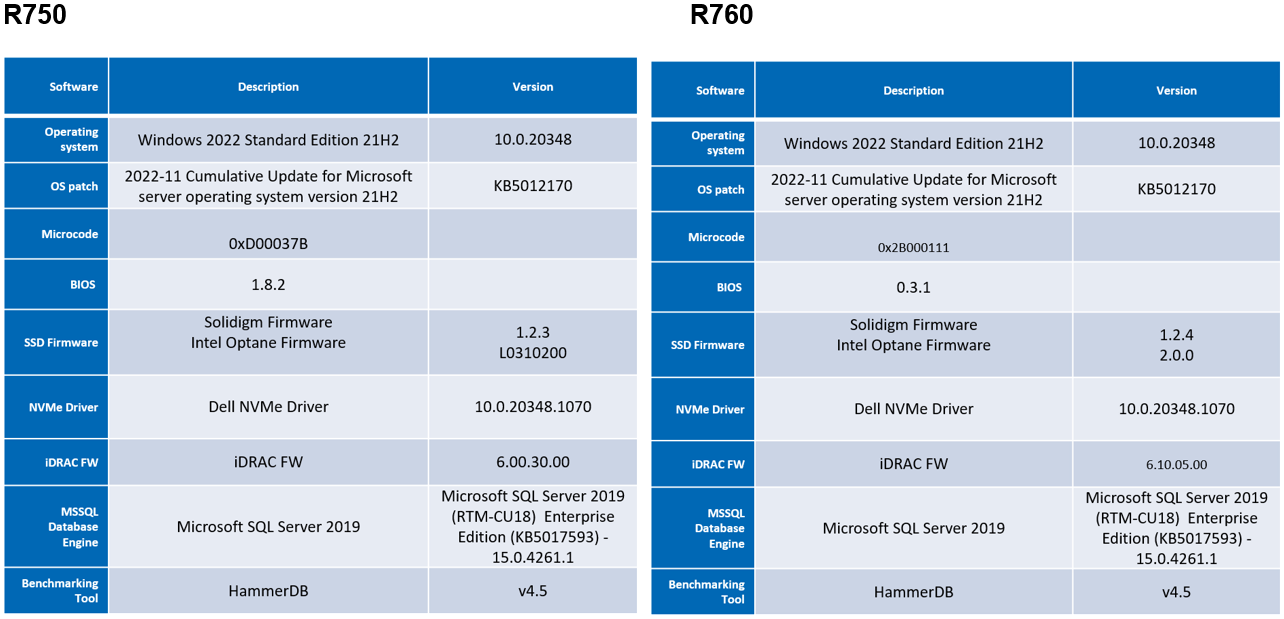

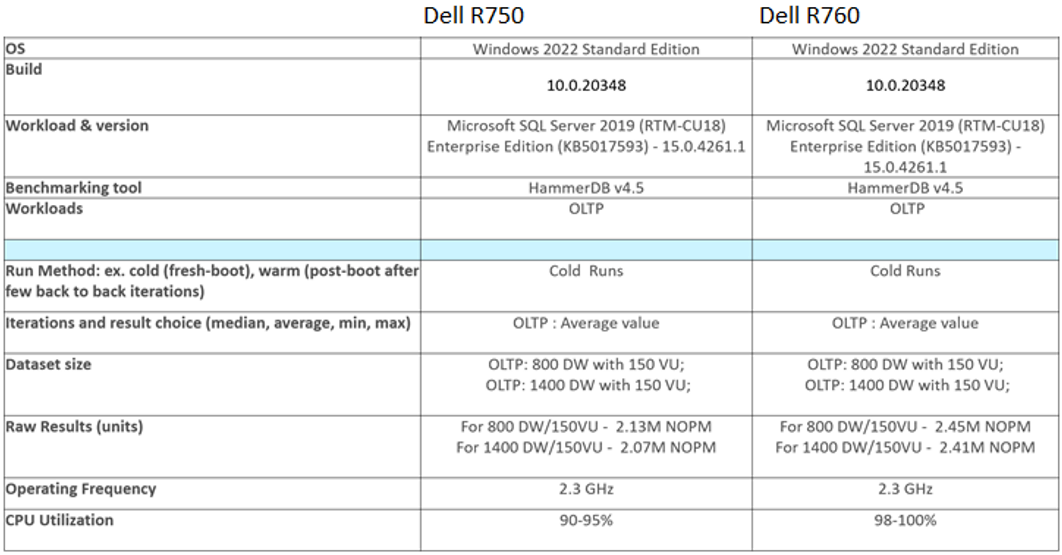

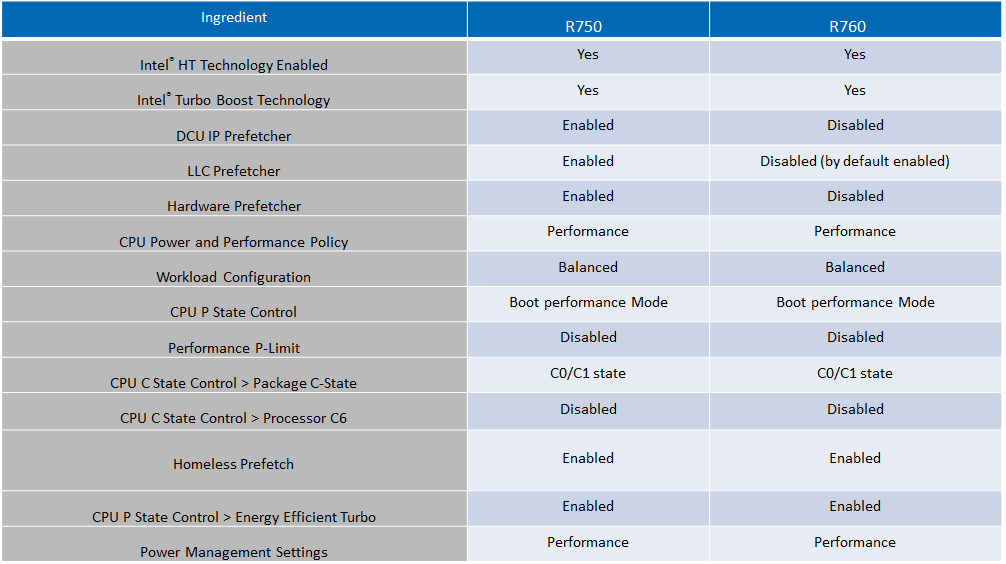

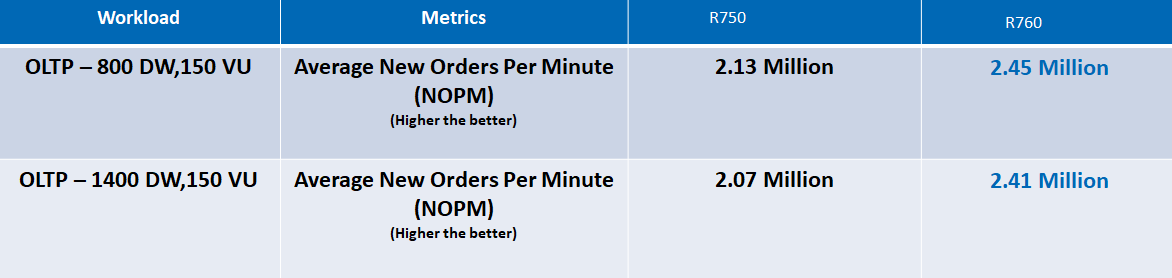

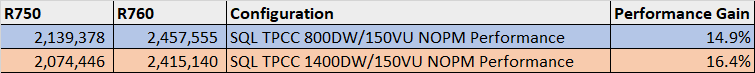

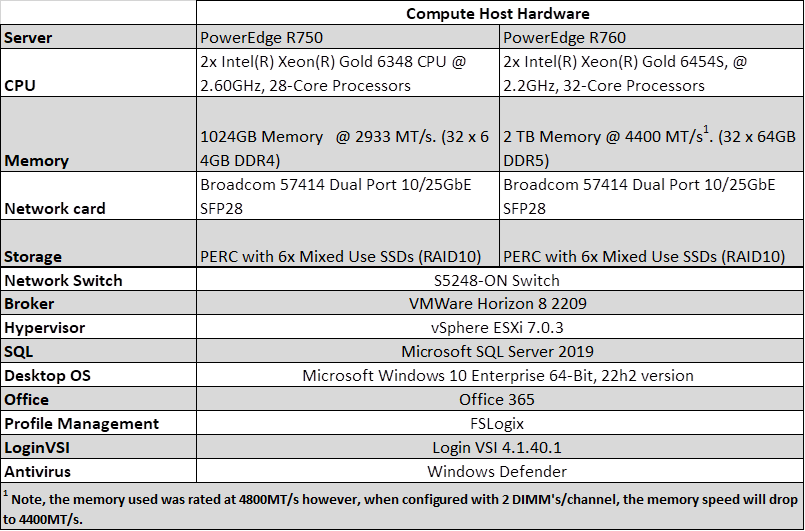

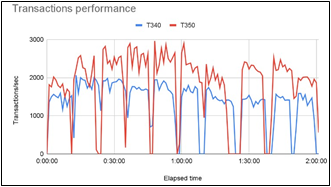

The introduction of new server technologies allows customers to deploy solutions using the newly introduced functionality, but it can also provide an opportunity for them to review their current infrastructure and determine if the new technology might increase performance and efficiency. Dell and Intel recently conducted TigerGraph performance testing on the new Dell PowerEdge R760 with 4th Generation Intel Xeon Scalable processors and compared the results to the same solution running on the previous generation R750 with 3rd generation Intel Xeon Scalable processors to determine if customers could benefit from a transition.

Dell PowerEdge R660 and R760 servers with 4th generation Intel Xeon Scalable processors deliver a fast, scalable, portable and cost-effective solution to implement and operationalize deep analysis of large pools of data.

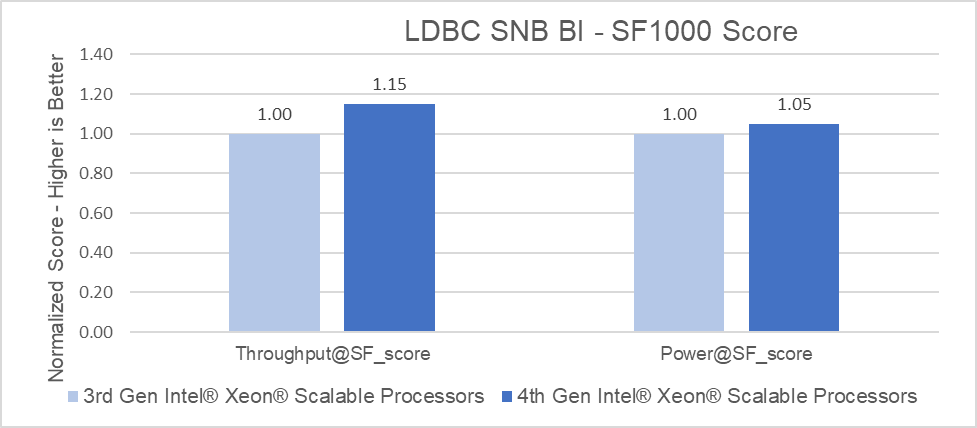

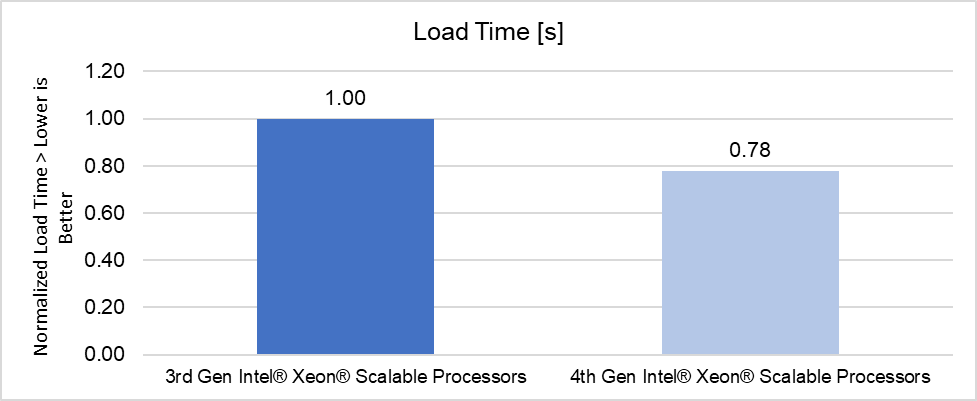

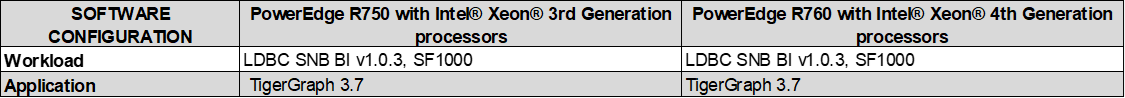

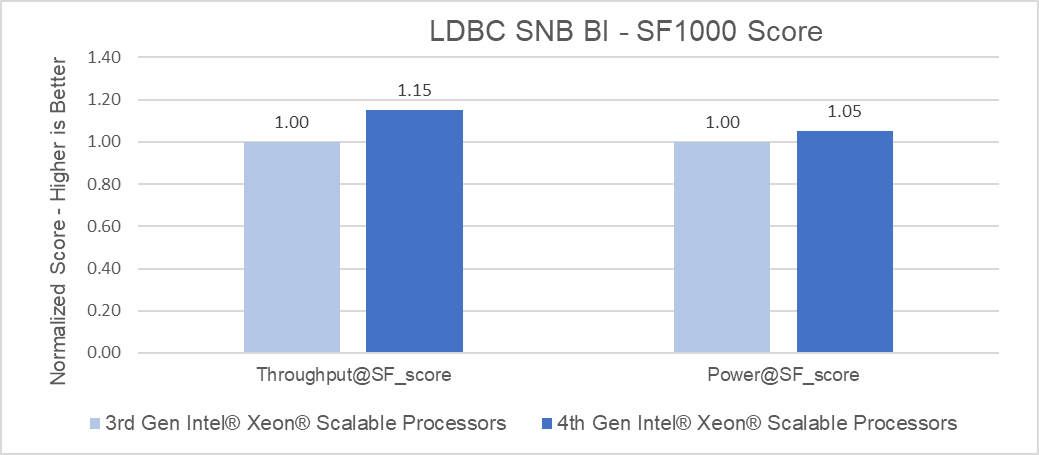

Raw performance: As noted in the report, PowerEdge servers with 4th Generation Intel Xeon Platinum processors delivered up to 1.15x better throughput than 3rd Generation Intel Xeon Platinum processors and were able to load the data set up to 1.27x faster (for TigerGraph in the LDBC SNB BI benchmark).

Benchmark score

Load time

Conclusion

Choosing the right combination of server and processor can increase performance and reduce latency. As this testing demonstrated, the Dell PowerEdge R760 with 4th Generation Intel Xeon Platinum 8468 CPUs delivered up to a 15% performance improvement for business intelligence queries over the Dell PowerEdge R750 with 3rd Generation Intel Xeon Platinum 8380 CPUs, and was able to load the data set up to 27% faster.

PowerEdge R760 with 4th Generation Intel® Xeon® Processors TigerGraph Test Report

Tue, 30 Jan 2024 23:55:41 -0000

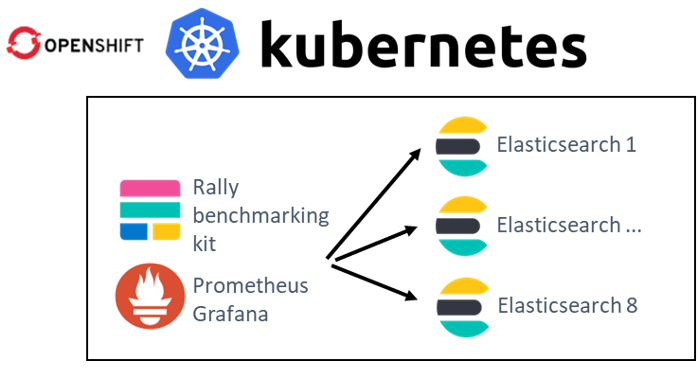

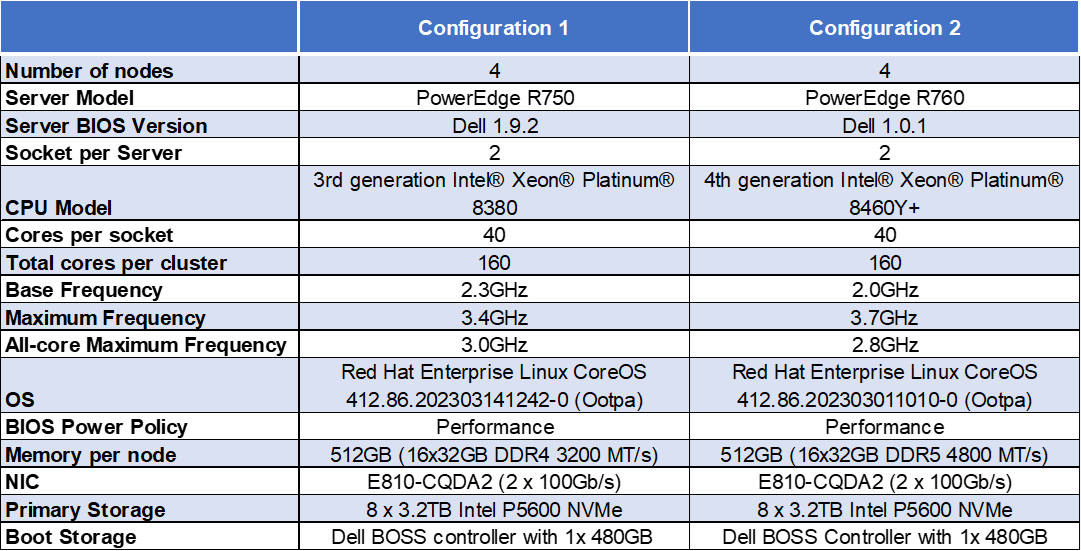

Summary

Introducing new server technologies allows customers to deploy solutions that use the newly introduced functionality. It can also provide an opportunity for them to review their current infrastructure and determine whether the new technology can increase performance and efficiency. With this in mind, Dell Technologies and Intel recently conducted testing with TigerGraph on the new Dell PowerEdge R760 with 4th Generation Intel Xeon Scalable processors. We compared the results to the same solution running on the previous generation R750 with 3rd Generation Intel Xeon Scalable processors to determine whether customers could benefit from a transition.

All testing was conducted in Dell Labs by Intel and Dell engineers in April 2023.

Solution overview

TigerGraph was founded in 2012 by programmer Dr. Yu Xu under the name GraphSQL.[i]

According to Gartner, by 2025, graph technologies will be used in 80% of data and analytics innovations, up from 10% in 2021. This projection aligns with the explosive growth of TigerGraph’s global customer base, which has increased by more than 100% in the past twelve months as more organizations use graphs to drive better business outcomes.[ii]

A graph database is designed to facilitate analysis of relationships in data. A graph database stores data as entities and the relationships between those entities. It is composed of two things: vertices and edges. Vertices represent entities such as a person, product, location, payment, order, and so on; edges represent the relationship between these entities, for example, this person initiated this payment to purchase this product with this order. Graph analytics explores these connections in data and reveals insights about the connected data. These capabilities enable applications such as customer 360, cyber threat mitigation, digital twins, entity resolution, fraud detection, supply chain optimization, and much more.

TigerGraph is the only scalable graph database for the enterprise. TigerGraph’s innovative architecture allows siloed data sets to be connected for deeper and wider analysis at scale. Additionally, TigerGraph supports real-time in-place updates for operational analytics use cases.[iii]

- Four top-tier banks use TigerGraph to improve fraud detection rates by 20% or more.

- Over 300 million consumers receive personalized offers with recommendation engines powered by TigerGraph.

- More than 50 million patients receive care path recommendations to assist them on their wellness journey.

- One billion people depend on the energy infrastructure optimized by TigerGraph to reduce power outages.

TigerGraph is a native parallel graph database purpose-built for analyzing massive amounts of data (terabytes).[iv]

TigerGraph helps make graph technology more accessible. TigerGraph DB is democratizing the adoption of advanced analytics with Intel’s 4th Generation Intel Xeon Scalable Processors by enabling non-technical users to accomplish as much with graphs as the experts do.[v]

Here is an outline of the TigerGraph architecture:

Because a TigerGraph instance is designed to process massive pools of data and uses a large number of processes to do so, choosing the correct hardware is critical to a successful deployment.

Dell PowerEdge R660 and R760 servers with 4th generation Intel Xeon Scalable processors deliver a fast, scalable, portable, and cost-effective solution to implement and operationalize deep analysis of large pools of data.

Workload description

To test the performance of TigerGraph, we chose the Linked Data Benchmark Council SNB BI benchmark.

The Linked Data Benchmark Council (LDBC) is a non-profit organization that helps to define standard graph benchmarks to foster a community around graph processing technologies. LDBC consists of members from both industry and academia, including organizations (such as Intel) and individuals.

The Social Network Benchmark (SNB) suite defines graph workloads that target database management systems. One of these is the Business Intelligence (BI) workload, which focuses on aggregation- and join-heavy complex queries that touch a large portion of the graph with microbatches of insert/delete operations. The SNB BI specification standardizes the dataset schema, data generation technique, size, and graph queries to be performed.

The SNB BI dataset represents a social network database (with Forums, Posts, Comments, and so on). In addition to analytics queries, it defines daily batches of updates to simulate changes in the social network over time (adding/removing posts, comments, users, and so on).
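The shape of this workload, aggregation queries interleaved with batches of inserts and deletes, can be illustrated with a toy example. This is not the LDBC reference implementation or any of its actual queries; the data, the `posts_per_forum` query, and the `apply_microbatch` helper are hypothetical.

```python
from collections import Counter

# Hypothetical toy data: post id -> forum name
posts = {1: "hpc", 2: "hpc", 3: "genomics"}


def posts_per_forum(posts):
    """Aggregation-style query: count the posts in each forum."""
    return Counter(posts.values())


def apply_microbatch(posts, inserts, deletes):
    """Apply one batch of updates: drop deleted post ids, then add new posts."""
    updated = {pid: forum for pid, forum in posts.items() if pid not in deletes}
    updated.update(inserts)
    return updated


before = posts_per_forum(posts)
posts = apply_microbatch(posts, inserts={4: "genomics", 5: "genomics"}, deletes={2})
after = posts_per_forum(posts)

print(dict(before))  # {'hpc': 2, 'genomics': 1}
print(dict(after))   # {'hpc': 1, 'genomics': 3}
```

In the real benchmark the queries touch a large portion of a much bigger graph, so the cost of re-running them after each batch is what stresses the database.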

The reference implementation of the benchmark is responsible for loading the data into the database, scheduling the queries, collecting the metrics, and producing scoring results.

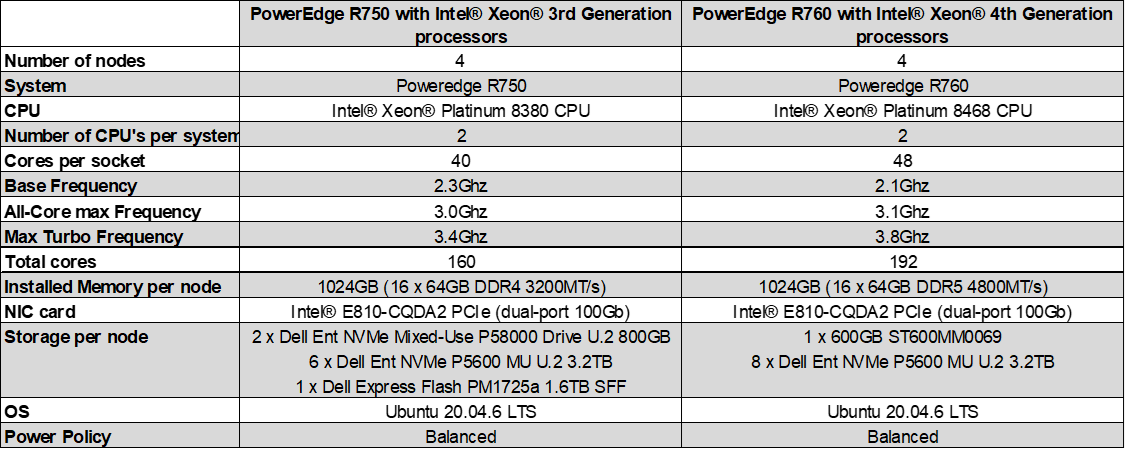

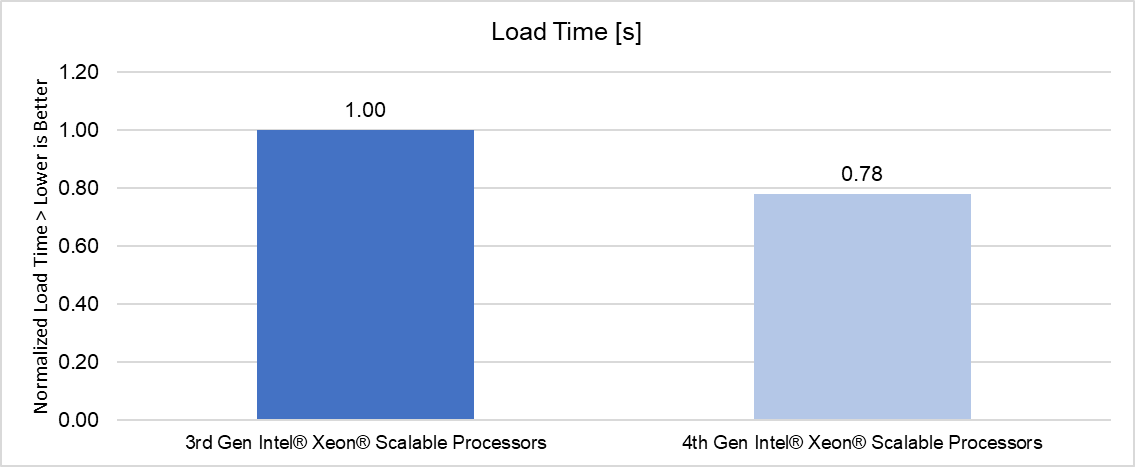

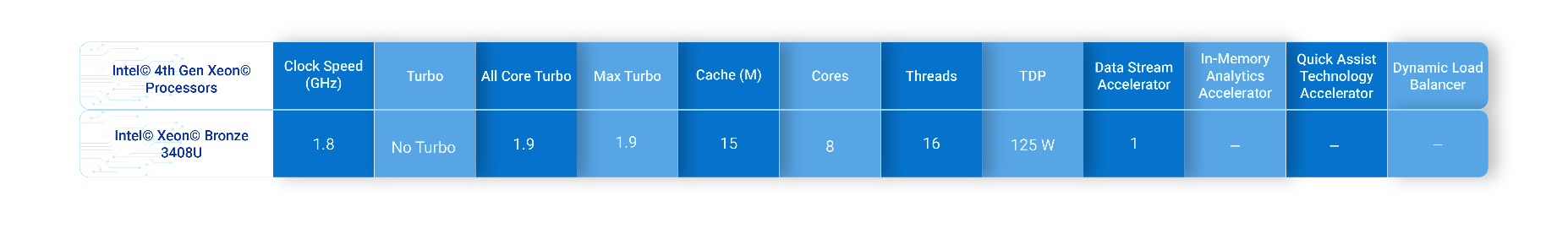

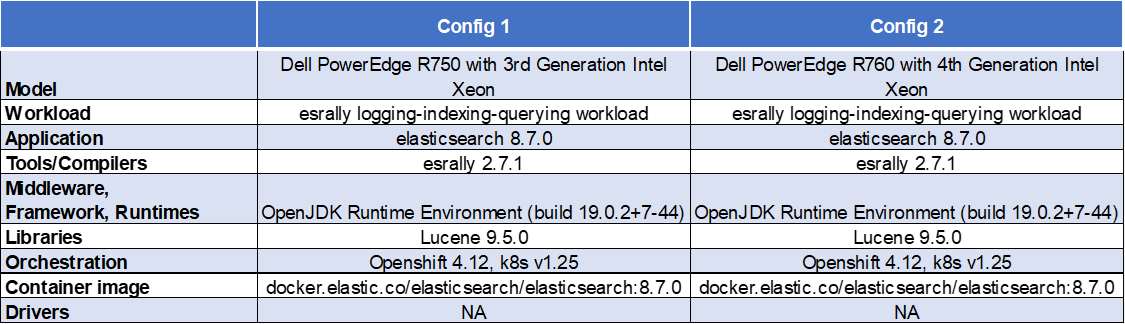

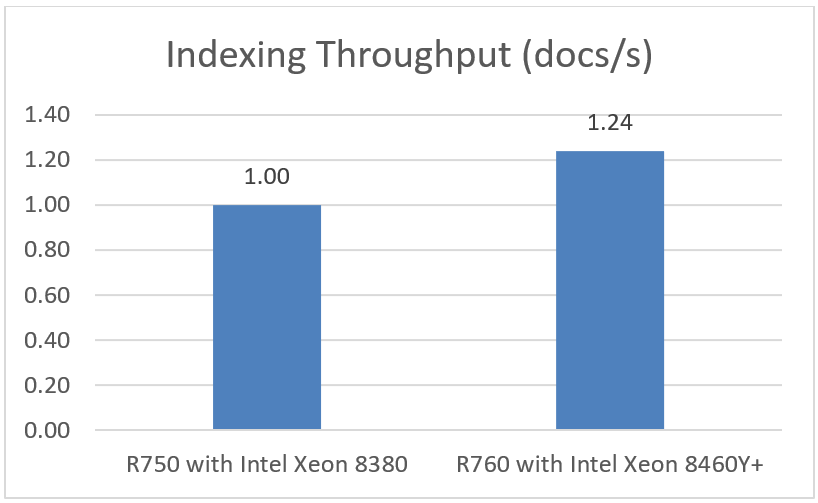

Configurations tested

Results

The following graphs highlight the relative performance differences between the two architectures.

Benchmark Score

Load Time

*Performance varies by use, configuration, and other factors. For the configuration details of this test, see the following section.

Test configuration details

- 3rd Gen Intel Xeon Scalable Processors (baseline): Test by Intel as of 04/28/23. 1-node, 2x Intel Xeon Platinum 8380 CPU @ 2.30GHz, 40 cores, HT On, Turbo On, Total Memory 1024GB (16x64GB DDR4 3200 MT/s [3200 MT/s]), BIOS 1.9.2, microcode 0xd000389, 2x NetXtreme BCM5720 2-port Gigabit Ethernet PCIe, 2x Ethernet Controller E810-C for QSFP, 2x 745.2G Dell Ent NVMe P5800x WI U.2 800GB, 6x 2.9T Dell Ent NVMe P5600 MU U.2 3.2TB, 1x 1.5T Dell Express Flash PM1725a 1.6TB SFF, Ubuntu 20.04.6 LTS, 5.15.0-71-generic, LDBC SNB BI v., TigerGraph 3.7

- 4th Gen Intel Xeon Scalable Processors: Test by Intel as of 04/28/23. 1-node, 2x Intel Xeon Platinum 8468, 48 cores, HT On, Turbo On, Total Memory 1024GB (16x64GB DDR5 4800 MT/s [4800 MT/s]), BIOS 1.0.1, microcode 0x2b000181, 2x NetXtreme BCM5720 2-port Gigabit Ethernet PCIe, 2x Ethernet Controller E810-C for QSFP, 1x 558.9G ST600MM0069, 8x 2.9T Dell Ent NVMe P5600 MU U.2 3.2TB, Ubuntu 20.04.6 LTS, 5.15.0-71-generic, LDBC SNB BI v., TigerGraph 3.7

Key takeaways

PowerEdge servers with 4th Generation Intel Xeon Platinum processors delivered up to 1.15x better throughput than 3rd Generation Intel Xeon Platinum processors and were able to load the data set up to 1.27x faster (for TigerGraph in the LDBC SNB BI benchmark).
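The ratios above map to the percentages quoted in the conclusion as shown in this short sketch. The function names are hypothetical, and the report does not state exactly how "faster" was computed, so the elapsed-time interpretation in `percent_time_saved` is an assumption offered for comparison.

```python
def percent_gain(ratio: float) -> float:
    """Percent improvement implied by an 'Nx better' ratio."""
    return (ratio - 1.0) * 100.0


def percent_time_saved(speedup: float) -> float:
    """Percent reduction in elapsed time if the same work runs `speedup` times faster."""
    return (1.0 - 1.0 / speedup) * 100.0


print(round(percent_gain(1.15), 1))        # 15.0
print(round(percent_gain(1.27), 1))        # 27.0
print(round(percent_time_saved(1.27), 1))  # 21.3
```

Read as a rate, 1.27x is a 27% gain; read as elapsed time, the same ratio corresponds to roughly 21% less time to load the data set.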

Conclusion

Choosing the right combination of server and processor can increase performance and reduce latency. As this testing demonstrated, the Dell PowerEdge R760 with 4th Generation Intel Xeon Platinum 8468 CPUs delivered up to a 15% performance improvement for business intelligence queries over the Dell PowerEdge R750 with 3rd Generation Intel Xeon Platinum 8380 CPUs, and was able to load the data set up to 27% faster, simply by upgrading the platform to 4th Generation Intel Xeon Platinum processors.

[ii] https://www.tigergraph.com/press-article/tigergraph-recognized-for-the-first-time-in-the-2022-gartner-magic-quadrant-for-cloud-database-management-systems-2/

Driving Advanced Graph Analytics with TigerGraph on Next Gen PE Servers and 4th Gen Intel® Xeon® Processors

Tue, 30 Jan 2024 22:49:38 -0000

Summary

This joint paper describes the key hardware considerations when configuring a successful TigerGraph database deployment and recommends configurations based on the next generation Dell PowerEdge Server portfolio offerings.

TigerGraph helps make graph technology more accessible. TigerGraph DB is democratizing the adoption of advanced analytics with Intel’s 4th Generation Intel Xeon Scalable Processors by enabling non-technical users to accomplish as much with graphs as the experts do. TigerGraph is a native parallel graph database purpose-built for analyzing massive amounts of data (terabytes).

Dell PowerEdge R660 and R760 servers with 4th Generation Intel Xeon Scalable processors deliver a fast, scalable, portable, and cost-effective solution to implement and operationalize deep analysis of large pools of data.

Key considerations and industry use cases

- Manufacturing/Supply Chain. Delays in orders or shipments that cannot reach their final destination translate to poor customer experience, increased customer attrition, financial penalties for delivery delays, and the loss of potential customer revenues.

With the mounting strains on global supply chains, companies are now investing heavily in technologies and processes to enhance adaptability and resiliency in their supply chains.

Real-time analysis of changes in supply and demand requires expensive database joins across the board, with the data for suppliers, orders, products, locations, and the inventory for parts and sub-assemblies. Global supply chains have multiple manufacturing partners, requiring integrating the external data from partners with the internal data. TigerGraph, Intel, and Dell Technologies provide a powerful Graph engine to find product relations and shipping alternatives for your business needs.

- Financial Services. Fraudsters are getting more sophisticated over time, creating a network of synthetic identities that combine legitimate information, such as social security or national identification number, name, phone number, and physical address. TigerGraph’s solutions on 4th Generation Intel Xeon Scalable Processors help you isolate and identify issues to keep your business safe.

- Recommendation Engines. Every business faces the challenge of maximizing the revenue opportunity from every customer interaction. Companies offering a wide range of products or services face the additional challenge of matching the right product or service based on immediate browsing and search activity along with the historical data for the customer. TigerGraph’s Recommendation Engine on 4th Generation Intel Xeon Scalable Processors powers purchases with increased click-through results leading to higher average order value and increased per-visit spend for your shoppers.

- The Dell PERC H755N NVMe RAID controller and the new PERC H965i RAID controller, when used with Self-Encrypting Drives (SED), provide additional security for stored data. Whether drives are lost, stolen, or failed, unauthorized access is prevented by rendering the drive unreadable without the encryption key. This approach also offers additional benefits, including regulatory compliance and secure decommissioning. Both controllers support Local Key Management (LKM) and external key management systems using Secure Enterprise Key Manager (SEKM).
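The Financial Services use case above, where synthetic identities reuse pieces of legitimate information, can be sketched as a grouping problem: accounts that share a phone number or address are linked, and linked accounts form clusters worth reviewing. The following uses a simple union-find structure in plain Python. It is not TigerGraph's method or API, and the account records are invented.

```python
from collections import defaultdict

# Hypothetical records: account id -> identifying attributes
accounts = {
    "acct1": {"phone": "555-0100", "address": "1 Main St"},
    "acct2": {"phone": "555-0100", "address": "9 Oak Ave"},
    "acct3": {"phone": "555-0199", "address": "9 Oak Ave"},
    "acct4": {"phone": "555-0142", "address": "7 Elm Rd"},
}

# Union-find: every account starts as its own group.
parent = {acct: acct for acct in accounts}


def find(acct):
    """Return the representative of acct's group, shortening the path as it goes."""
    while parent[acct] != acct:
        parent[acct] = parent[parent[acct]]
        acct = parent[acct]
    return acct


def union(a, b):
    """Merge the groups that contain a and b."""
    parent[find(a)] = find(b)


# Link accounts that share any (attribute, value) pair.
first_seen = {}
for acct, attrs in accounts.items():
    for key_value in attrs.items():
        if key_value in first_seen:
            union(acct, first_seen[key_value])
        else:
            first_seen[key_value] = acct

groups = defaultdict(set)
for acct in accounts:
    groups[find(acct)].add(acct)

clusters = sorted(sorted(group) for group in groups.values())
print(clusters)  # [['acct1', 'acct2', 'acct3'], ['acct4']]
```

Here acct1 and acct3 share nothing directly, but both are linked through acct2, which is the kind of indirect connection that is expensive to find with relational joins.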

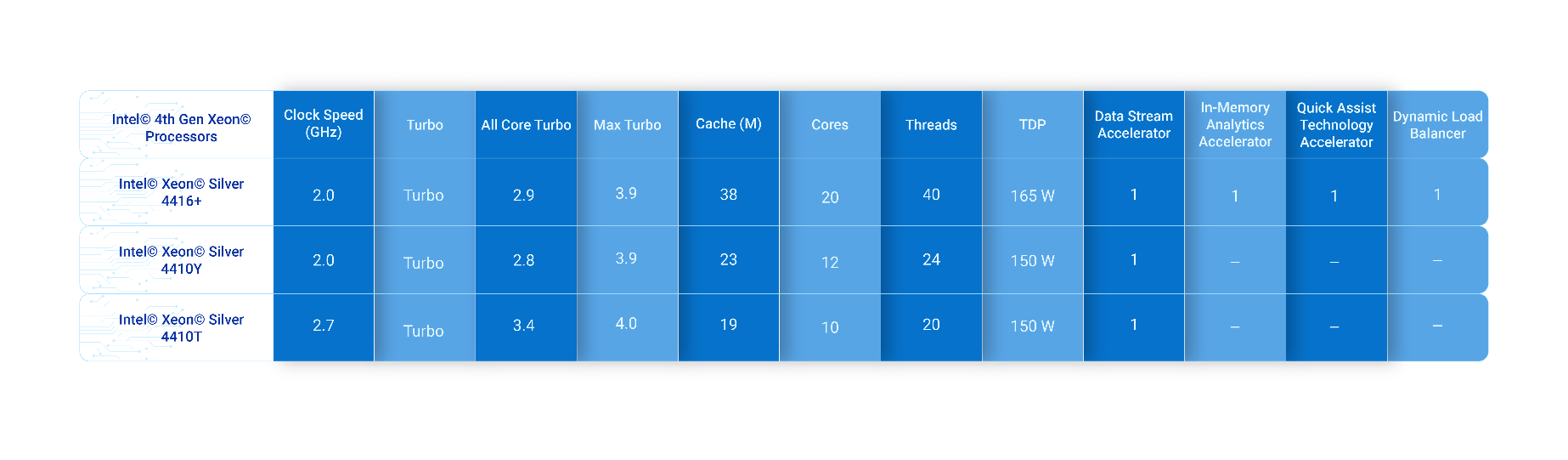

Recommended configurations

| Cost-optimized configuration | |
| --- | --- |
| Platform | PowerEdge R660 supporting up to 8 NVMe drives in RAID config or the PowerEdge R760 with support for up to 24 NVMe drives |
| CPU* | 2x Intel® Xeon® Gold 5420+ processor* (28 cores, 2.0GHz base/2.7GHz all core turbo frequency) |
| DRAM | 256 GB (16x 16 GB DDR5-4800)* |
| Boot device | Dell BOSS-S2 with 2x 240GB or 2x 480GB M.2 SATA SSD (RAID1) |
| Storage adapter | Dell PERC H755 or H965i Front NVMe RAID Controller |
| Storage | 2x (up to 8x) 1.6TB Enterprise NVMe Mixed Use P5620 Drive, U2 Gen4 |
| NIC | Intel® E810-XXVDA2 for OCP3 (dual-port 25Gb) |

* Memory attached to the Gold 5420+ operates at DDR5-4400 memory speeds.

| Balanced configuration | |
| --- | --- |
| Platform | PowerEdge R660 supporting up to 8 NVMe drives in RAID config or the PowerEdge R760 with support for up to 24 NVMe drives |
| CPU | 2x Intel® Xeon® Gold 6448Y processor (32 cores, 2.2GHz base/3.0GHz all core turbo frequency) |
| DRAM | 512 GB (16x 32 GB DDR5-4800) |
| Boot device | Dell BOSS-S2 with 2x 240GB or 2x 480GB M.2 SATA SSD (RAID1) |
| Storage adapter | Dell PERC H755 or H965i Front NVMe RAID Controller |
| Storage | 2x (up to 8x) 1.6TB Enterprise NVMe Mixed Use P5620 Drive, U2 Gen4 |
| NIC | Intel® E810-XXVDA2 for OCP3 (dual-port 25Gb) |

High-performance configuration | |

Platform | PowerEdge R660 supporting up to 8 NVMe drives in RAID config or the PowerEdge R760 with support for up to 24 NVMe drives |

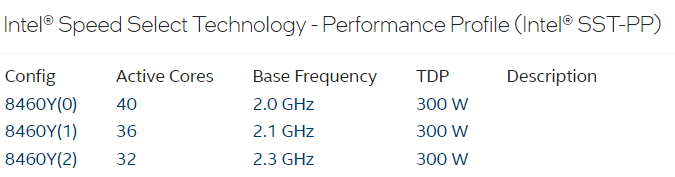

CPU | 2x Intel® Xeon® Platinum 8468 processor (48 cores, 2.1GHz base/3.1GHz all core turbo frequency) with Intel Speed Select technology |

DRAM | 1 TB (32x 32 GB DDR5-4800) |

Boot device | Dell BOSS-S2 with 2x 240GB or 2x 480GB M.2 SATA SSD (RAID1) |

Storage adapter | Dell PERC H755 or H965i Front NVMe RAID Controller |

Storage | 2x (up to 8x) 1.6TB Enterprise NVMe Mixed Use P5620 Drive, U2 Gen4 |

NIC | Intel® E810-XXVDA2 for OCP3 (dual-port 25Gb), or Intel® E810-CQDA2 PCIe (dual-port 100Gb) |

Learn more

Visit the Dell support page or contact Dell for a customized quote at 1-877-289-3355. You can also visit the Intel-Dell website for more information.

Read:

Driving Advanced Graph Analytics with TigerGraph on 15G PowerEdge Servers and 3rd Gen Intel® Xeon® Processors

Tue, 30 Jan 2024 22:23:26 -0000

Summary

This joint paper describes the key hardware considerations when configuring a successful TigerGraph database deployment, and recommends configurations based on the 15th Generation Dell PowerEdge Server portfolio offerings.

TigerGraph helps make graph technology more accessible. TigerGraph 3.x is democratizing the adoption of advanced analytics with Intel’s 3rd Generation Intel Xeon Scalable Processors by enabling non-technical users to accomplish as much with graphs as the experts do. TigerGraph is a native parallel graph database purpose-built for analyzing massive amounts of data (terabytes).

Dell PowerEdge R650 and R750 servers with 3rd Generation Intel Xeon Scalable processors deliver a fast, scalable, portable, and cost-effective solution to implement and operationalize deep analysis of large pools of data.

Key considerations and industry use cases

- Manufacturing/Supply Chain. Delays in orders or shipments that cannot reach their final destination translate to poor customer experience, increased customer attrition, financial penalties for delivery delays, and the loss of potential customer revenues.

With the mounting strains on global supply chains, companies are now investing heavily in technologies and processes to enhance adaptability and resiliency in their supply chains.

Real-time analysis of changes in supply and demand requires expensive database joins across the board, with the data for suppliers, orders, products, locations, and inventory for parts and sub-assemblies. Global supply chains have multiple manufacturing partners, requiring integrating the external data from partners with the internal data. TigerGraph, Intel, and Dell Technologies provide a powerful Graph engine to find product relations and shipping alternatives for your business needs.

- Financial Services. Fraudsters are getting more sophisticated over time, creating a network of synthetic identities that combine legitimate information, such as social security or national identification number, name, phone number, and physical address. TigerGraph’s solutions on 3rd Generation Intel Xeon Scalable Processors help you isolate and identify issues to keep your business safe.

- Recommendation Engines. Every business faces the challenge of maximizing the revenue opportunity from every customer interaction. Companies offering a wide range of products or services face the additional challenge of matching the right product or service based on immediate browsing and search activity along with the historical data for the customer. TigerGraph’s Recommendation Engine on 3rd Generation Intel Xeon Scalable Processors powers purchases with increased click-through results, leading to higher average order value and increased per-visit spend for your shoppers.

- The Dell PERC H755N NVMe RAID controller with Self-Encrypting Drives (SED) provides additional security for stored data. Whether drives are lost, stolen, or failed, unauthorized access is prevented by rendering the drive unreadable without the encryption key. It also offers additional benefits, including regulatory compliance and secure decommissioning. The PERC H755N controller supports Local Key Management (LKM) and external key management systems using Secure Enterprise Key Manager (SEKM).

Recommended configurations

Cost-optimized configuration | |

Platform | PowerEdge R650 supporting up to 8 NVMe drives in RAID config or the PowerEdge R750 with support for up to 24 NVMe drives |

CPU* | 2x Intel® Xeon® Gold 5320 processor* (26 cores, 2.2GHz base/2.8GHz all core turbo frequency) |

DRAM | 256 GB (16x 16GB DDR4-3200) |

Boot device | Dell BOSS-S2 with 2x 240GB or 2x 480GB M.2 SATA SSD (RAID1) |

Storage adapter | Dell PERC H755N Front NVMe RAID Controller |

Storage | 2x (up to 8x) 1.6TB Enterprise NVMe Mixed Use P5620 Drive, U.2 Gen4 |

NIC | Intel® E810-XXVDA2 for OCP3 (dual-port 25Gb) |

* Memory attached to the Gold 5320 operates at DDR4-2933 memory speeds.

Balanced configuration | |

Platform | PowerEdge R650 supporting up to 8 NVMe drives in RAID config or the PowerEdge R750 with support for up to 24 NVMe drives |

CPU | 2x Intel® Xeon® Gold 6348 processor (28 cores, 2.6GHz base/3.4GHz all core turbo frequency) |

DRAM | 512 GB (16x 32GB DDR4-3200) |

Boot device | Dell BOSS-S2 with 2x 240GB or 2x 480GB M.2 SATA SSD (RAID1) |

Storage adapter | Dell PERC H755N Front NVMe RAID Controller |

Storage | 2x (up to 8x) 1.6TB Enterprise NVMe Mixed Use P5620 Drive, U.2 Gen4 |

NIC | Intel® E810-XXVDA2 for OCP3 (dual-port 25Gb) |

High-performance configuration | |

Platform | PowerEdge R650 supporting up to 8 NVMe drives in RAID config or the PowerEdge R750 with support for up to 24 NVMe drives |

CPU | 2x Intel® Xeon® Platinum 8380 processor (40 cores, 2.3GHz base/3.0GHz all core turbo frequency) with Intel Speed Select technology |

DRAM | 1 TB (32x 32GB DDR4-3200) |

Boot device | Dell BOSS-S2 with 2x 240GB or 2x 480GB M.2 SATA SSD (RAID1) |

Storage adapter | Dell PERC H755N Front NVMe RAID Controller |

Storage | 2x (up to 8x) 1.6TB Enterprise NVMe Mixed Use P5620 Drive, U.2 Gen4 |

NIC | Intel® E810-XXVDA2 for OCP3 (dual-port 25Gb), or Intel® E810-CQDA2 PCIe (dual-port 100Gb) |

Learn more

Visit the Dell support page or contact Dell for a customized quote at 1-877-289-3355. You can also visit the Intel-Dell website for more information.

Achieving Significant Virtualization Performance Gains with New 16G Dell® PowerEdge™ R760 Servers

Thu, 25 Jan 2024 17:43:01 -0000

Summary

With the latest Dell PowerEdge R760 16G servers utilizing the PCIe® 5.0 interface to connect networking and storage to the CPU, there are great performance increases in data movement over previous PCIe generations. These improvements can be utilized by hyperconverged infrastructures running on these servers.

This Direct from Development (DfD) tech note presents a generational server performance comparison in a virtualized environment. It compares new 16G Dell PowerEdge R760 servers deployed with new KIOXIA CM7 Series SSDs against prior generation 14G Dell PowerEdge R740xd servers deployed with prior generation KIOXIA CM6 Series SSDs.

As the test results show, the latest-generation Dell PowerEdge servers perform the same amount of work in less time and deliver faster performance in a virtualized environment when compared with prior PCIe server generations.

Market positioning

Data center infrastructures typically fall into three categories: traditional, converged and hyperconverged. Hyperconverged infrastructures enable users to add compute, memory and storage resources as needed, delivering the flexibility of horizontal and vertical scaling. However, many virtual machine (VM) configurations run in converged infrastructures, which are often difficult to scale when VM clusters require more storage.

VMware®, Inc. enables hyperconverged infrastructures through VMware ESXi™ and VMware vSAN™ platforms. The VMware ESXi platform is a popular enterprise-grade virtualization platform that scales compute and memory as needed and provides simple management of large VM clusters. The VMware vSAN platform enables the infrastructure to transition from converged to hyperconverged, delivering incredibly fast performance since storage is local to the servers themselves. The platforms support a new VMware vSAN Express Storage Architecture™ (ESA) that has gone through a series of optimizations to utilize NVMe™ SSDs more efficiently than in the past.

Product features

Dell PowerEdge R760 Rack Server (Figure 1)

Specifications: https://www.delltechnologies.com/asset/en-us/products/servers/technical-support/poweredge-r760-spec-sheet.pdf.

Figure 1: Side angle of Dell PowerEdge R760 Rack Server¹

KIOXIA CM7 Series Enterprise NVMe SSD (Figure 2)

Specifications: https://americas.kioxia.com/en-us/business/ssd/enterprise-ssd.html.

Figure 2: Front view of KIOXIA CM7 Series SSD²

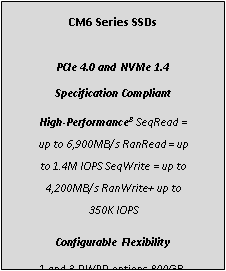

PCIe 5.0 and NVMe 2.0 specification compliant. Two configurations: CM7-R Series (read intensive), 1 Drive Write Per Day³ (DWPD), up to 30,720 gigabyte⁴ (GB) capacities; and CM7-V Series (higher endurance mixed use), 3 DWPD, up to 12,800 GB capacities.

Performance specifications: SeqRead = up to 14,000 MB/s; SeqWrite = up to 7,000 MB/s; RanRead = up to 2.7M IOPS; RanWrite = up to 600K IOPS.

Hardware/Software test configuration

The hardware and software equipment used in this virtualization comparison (Figure 3):

Server Information | ||

Server Model | Dell PowerEdge R760⁵ | Dell PowerEdge R740xd⁶ |

No. of Servers | 3 | 3 |

BIOS Version | 1.3.2 | 2.18.1 |

CPU Information | ||

CPU Model | Intel® Xeon® Gold 6430 | Intel Xeon Silver 4214 |

No. of Sockets | 2 | 2 |

No. of Cores | 64 | 24 |

Frequency (in gigahertz) | 2.1 GHz | 2.2 GHz |

Memory Information | ||

Memory Type | DDR5 | DDR4 |

Memory Speed (in megatransfers per second) | 4,400 MT/s | 2,400 MT/s |

Memory Size (in gigabytes) | 16 GB | 32 GB |

No. of DIMMs | 16 | 12 |

Total Memory (in gigabytes) | 256 GB | 384 GB |

SSD Information | ||

SSD Model | KIOXIA CM7-R Series | KIOXIA CM6-R Series |

Form Factor | 2.5-inch⁷ | 2.5-inch |

Interface | PCIe 5.0 x4 | PCIe 4.0 x4 |

No. of SSDs | 12 | 12 |

SSD Capacity (in terabytes⁴) | 3.84 TB | 3.84 TB |

Drive Write(s) Per Day (DWPD) | 1 | 1 |

Active Power | 25 watts | 19 watts |

Operating System Information | ||

Operating System (OS) | VMware ESXi | VMware ESXi |

OS Version | 8.0.1, 21813344 | 8.0.1, 21495797 |

VMware vCenter® Version | 8.0.1.00200 | 8.0.1.00200 |

Storage Type | vSAN ESA | vSAN ESA |

Load Generator Information (Test Software) | ||

Load Generator | HyperConverged Infrastructure Benchmark (HCIBench) | HCIBench |

Load Generator Version | 2.8.2 | 2.8.2 |

Figure 3: Hardware/Software configuration used in the comparison

Set-up and test procedures

Set-up:

The latest VMware ESXi 8.0 operating system was installed on all hosts.

Two clusters were created in VMware’s vCenter management interface with ‘High Availability’ and ‘Distributed Resource Scheduler’ disabled for testing.

Each Dell PowerEdge R760 host was added to one cluster, and each Dell PowerEdge R740xd host was added to a separate cluster.

VMkernel adapters were set up to have VMware vMotion™ migration, provisioning, management and the VMware vSAN platform enabled for both test configurations.

In the VMware vSAN configurations, twelve KIOXIA CM7 Series drives were added for the Dell PowerEdge R760 cluster (four drives per server), and twelve KIOXIA CM6 Series drives were added for the Dell PowerEdge R740xd cluster (four drives per server). The default storage policy was set to ‘vSAN ESA Default Policy – RAID 5’ for both configurations.

The HCIBench load generator (virtual appliance) was then imported and configured on the network.

Test procedures:

Six tests were run on each cluster – four performance tests and two power consumption tests as follows:

Performance tests:

IOPS: This metric measured the number of Input/Output operations per second that the system completed.

Throughput: This metric measured the amount of data transferred per second to and from the storage devices.

Read Latency: This metric measured the time it took to perform a read operation. It included the average time it took for the load generator to not only issue the read operation, but also the time it took to complete the operation and receive a ‘successfully completed’ acknowledgement.

Write Latency: This metric measured the time it took to perform a write operation. It included the average time it took for the load generator to not only issue the write operation, but also the time it took to complete the operation and receive a ‘successfully completed’ acknowledgement.

Power consumption tests:

IOPS per Watt: This metric measured the number of IOPS delivered per watt of power consumed by the cluster.

Throughput per Watt: This metric measured the throughput delivered per watt of power consumed by the cluster.
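
As a minimal sketch of how the two power metrics can be derived, the Python below divides a measured rate by the average cluster power. The function name and the sample readings are illustrative assumptions and are not values from this test.

```python
def per_watt(rate: float, avg_power_watts: float) -> float:
    """Normalize a rate (IOPS or MB/s) by the average power draw in watts."""
    if avg_power_watts <= 0:
        raise ValueError("average power must be positive")
    return rate / avg_power_watts


# Hypothetical cluster readings, for illustration only (not measured results).
example_iops = 500_000         # I/O operations per second
example_throughput = 2_000.0   # MB/s
example_power = 1_250.0        # average cluster power in watts

iops_per_watt = per_watt(example_iops, example_power)         # IOPS/W
mbps_per_watt = per_watt(example_throughput, example_power)   # MBps/W
```

Because both metrics share the same denominator, a cluster that draws more power must deliver proportionally more IOPS or throughput to score higher.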

For the four performance tests, the following five workloads were run with the test results recorded. For the two power consumption tests, the latter four workloads were run with the test results recorded.

100% Sequential Write (256K block size, 1 thread): This workload is representative of a data logging use case.

100% Random Read (4K block size, 4 threads): This workload is representative of a read cache system.

Random 70% Read / 30% Write (4K block size, 4 threads): This workload is representative of a common mixed read/write ratio used in commercial database systems.

Random 50% Read / 50% Write (4K block size, 4 threads): This workload is representative of other common IT use cases such as email.

Blender (block sizes/threads vary): This workload is representative of a mix of many types of sequential and random workloads at various block sizes and thread counts as VMs request storage against the vSAN storage pool.
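
The pairing of workloads and metrics can be summarized as a small test matrix. The sketch below is one possible way to express it in Python. The metric labels (`read_latency`, `iops_per_watt`, and so on) and the `Workload` type are hypothetical names chosen for illustration and are not part of HCIBench. The latency exclusions follow the notes given with the read and write latency results.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Workload:
    name: str
    read_pct: Optional[int]  # None when the read/write mix varies (Blender)


# The five workloads, in the order they are listed above.
WORKLOADS = [
    Workload("100% Sequential Write", 0),
    Workload("100% Random Read", 100),
    Workload("Random 70% Read / 30% Write", 70),
    Workload("Random 50% Read / 50% Write", 50),
    Workload("Blender", None),
]


def workloads_for(metric: str) -> list[Workload]:
    """Return the workloads that apply to a metric label (labels are hypothetical)."""
    if metric == "read_latency":
        # A pure-write workload issues no reads.
        return [w for w in WORKLOADS if w.read_pct != 0]
    if metric == "write_latency":
        # A pure-read workload issues no writes.
        return [w for w in WORKLOADS if w.read_pct != 100]
    if metric in ("iops_per_watt", "throughput_per_watt"):
        # The power consumption tests used the latter four workloads.
        return WORKLOADS[1:]
    return list(WORKLOADS)  # IOPS and throughput use all five
```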

Test results⁸

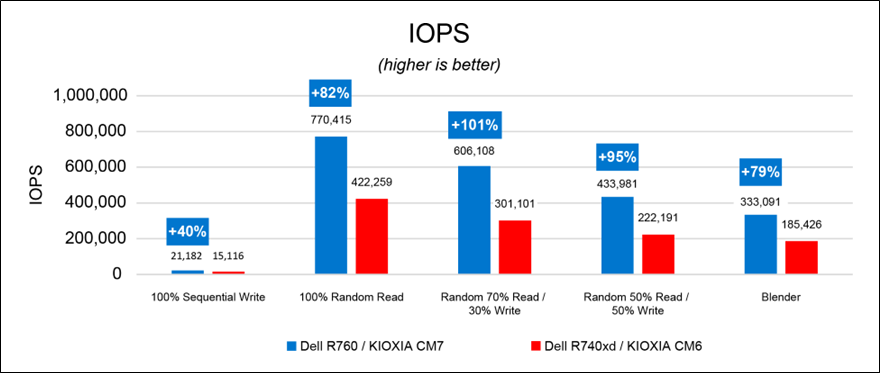

IOPS (Figure 4): The results are in IOPS - the higher result for each is better.

Figure 4: IOPS results

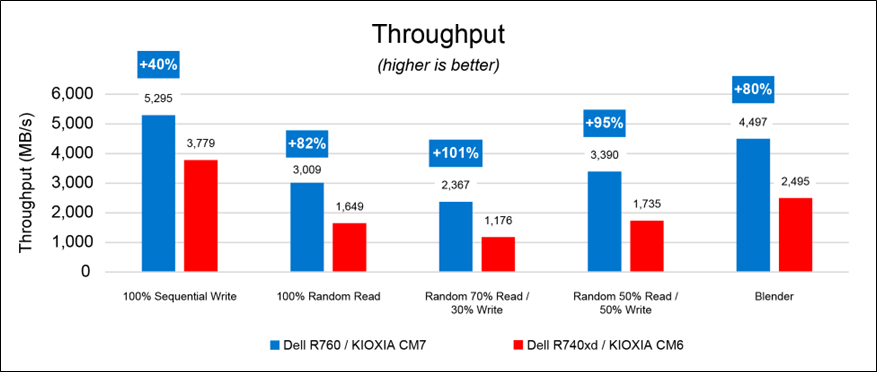

Throughput (Figure 5): The results are in megabytes per second (MB/s) - the higher result for each is better.

Figure 5: throughput results

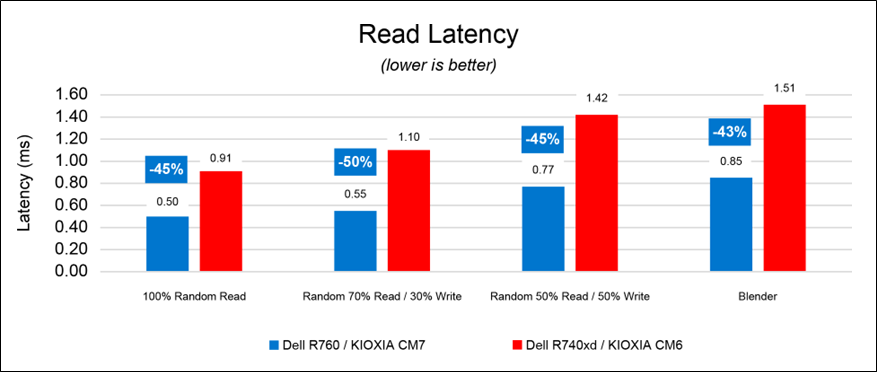

Read Latency (Figure 6): The results are in milliseconds (ms) - the lower result for each is better. The 100% sequential write workloads for both configurations were not included for this test as the workload does not include read operations.

Figure 6: read latency results

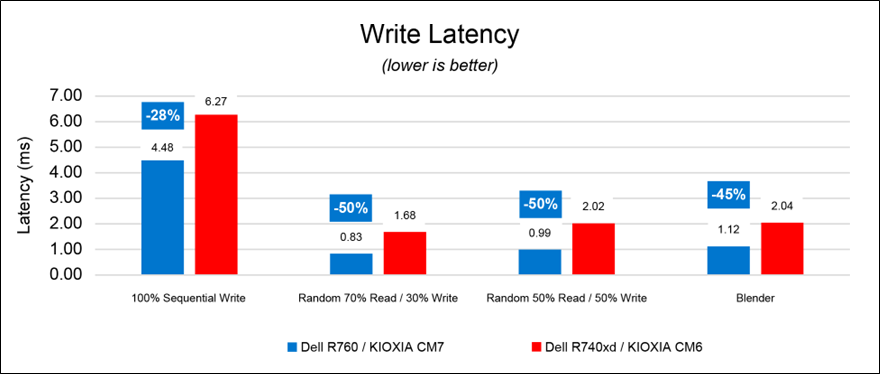

Write Latency (Figure 7): The results are in milliseconds - the lower result for each is better. The 100% random read workloads for both PCIe configurations were not included for this test as the workload does not include write operations.

Figure 7: write latency results

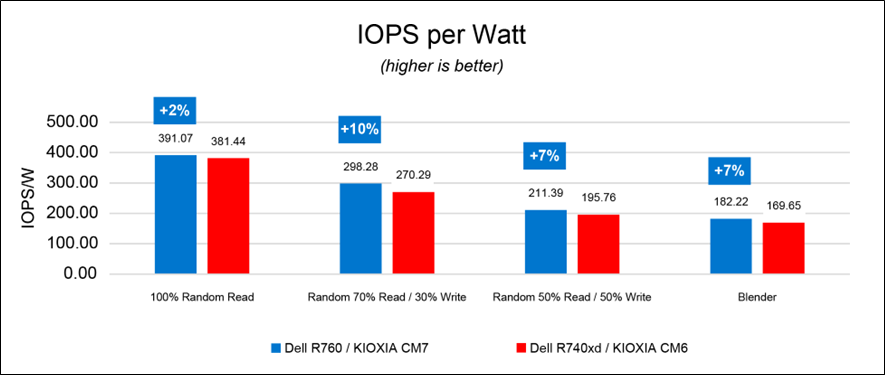

IOPS per Watt (Figure 8): The results show the amount of IOPS performed per power consumed by the cluster and are in IOPS per watt (IOPS/W). The higher result for each is better.

Figure 8: IOPS per watt results

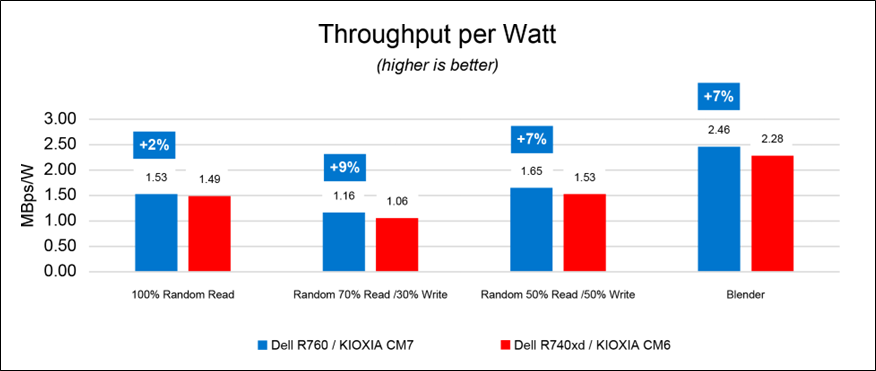

Throughput per Watt (Figure 9): The results show the amount of throughput performed per power consumed by the cluster and are in MB/s per watt (MBps/W). The higher result for each is better.

Figure 9: throughput per watt results

Final analysis

The Dell PowerEdge R760 servers equipped with new KIOXIA CM7 Series enterprise NVMe SSDs outperformed the Dell PowerEdge R740xd servers equipped with KIOXIA CM6 Series SSDs in IOPS, throughput and latency. They also delivered higher performance per watt. With the newer generation of Dell PowerEdge servers, hyperconverged infrastructures see notable gains in server, CPU, memory and storage performance when compared with prior generations.

References

Footnotes

1. The product image shown is a representation of the design model and not an accurate product depiction.

2. The product image shown was provided with permission from KIOXIA America, Inc. and is a representation of the design model and not an accurate product depiction.

3. Drive Write Per Day (DWPD) means the drive can be written and re-written to full capacity once a day, every day for five years, the stated product warranty period. Actual results may vary due to system configuration, usage and other factors. Read and write speed may vary depending on the host device, read and write conditions and file size.

4. Definition of capacity - KIOXIA Corporation defines a megabyte (MB) as 1,000,000 bytes, a gigabyte (GB) as 1,000,000,000 bytes and a terabyte (TB) as 1,000,000,000,000 bytes. A computer operating system, however, reports storage capacity using powers of 2 for the definition of 1Gbit = 2^30 bits = 1,073,741,824 bits, 1GB = 2^30 bytes = 1,073,741,824 bytes and 1TB = 2^40 bytes = 1,099,511,627,776 bytes and therefore shows less storage capacity. Available storage capacity (including examples of various media files) will vary based on file size, formatting, settings, software and operating system, and/or pre-installed software applications, or media content. Actual formatted capacity may vary.

5. The Dell PowerEdge R760 server features a PCIe 4.0 backplane.

6. The Dell PowerEdge R740xd server features a PCIe 3.0 backplane.

7. 2.5-inch indicates the form factor of the SSD and not its physical size.

8. Read and write speed may vary depending on the host device, read and write conditions and file size.
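
The arithmetic behind footnotes 3 and 4 can be checked with a short calculation. The Python sketch below applies both definitions to the 3.84 TB, 1 DWPD drives used in this comparison. The function names are illustrative, and the endurance figure assumes 365-day years over the five-year warranty period stated in footnote 3.

```python
TB = 10**12    # decimal terabyte, as defined in footnote 4
TIB = 2**40    # power-of-2 unit that an operating system typically reports


def reported_capacity_tib(capacity_tb: float) -> float:
    """Convert a decimal-TB capacity into the power-of-2 figure an OS would show."""
    return capacity_tb * TB / TIB


def lifetime_writes_tb(capacity_tb: float, dwpd: float, years: int = 5) -> float:
    """Total data written if the drive is filled `dwpd` times a day (365-day years assumed)."""
    return capacity_tb * dwpd * 365 * years


cm7_tib = reported_capacity_tib(3.84)          # about 3.49
cm7_writes = lifetime_writes_tb(3.84, dwpd=1)  # about 7,008 TB
```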

Trademarks

Dell and PowerEdge are registered trademarks or trademarks of Dell Inc.

Intel and Xeon are registered trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries. NVMe is a registered or unregistered trademark of NVM Express, Inc. in the United States and other countries. PCIe is a registered trademark of PCI-SIG.

VMware, VMware ESXi, VMware vMotion, VMware vSAN, VMware vSAN Express Storage Architecture and VMware vCenter are registered trademarks or trademarks of VMware Inc. in the United States and/or various jurisdictions.

All other company names, product names and service names may be trademarks or registered trademarks of their respective companies.

Disclaimers

© 2023 Dell, Inc. All rights reserved. Information in this tech note, including product specifications, tested content, and assessments are current and believed to be accurate as of the date that the document was published and subject to change without prior notice. Technical and application information contained here is subject to the most recent applicable product specifications.

Launch Flexible Machine Learning Models Quickly with cnvrg.io® on Red Hat OpenShift

Wed, 17 Jan 2024 14:11:31 -0000

Summary

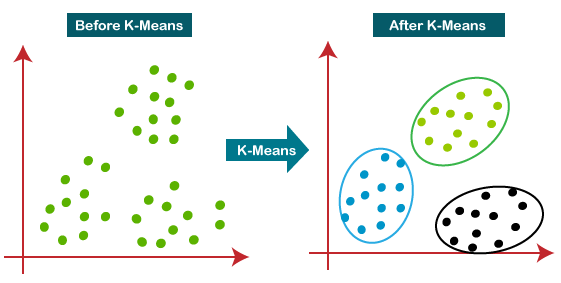

Data scientists hold a high degree of responsibility to support the decision-making process of companies and their strategies. To this end, data scientists extract insights from a large amount of heterogeneous data through a set of iterative tasks that include various aspects: cleaning and formatting the data available to them, building training and testing datasets, mining data for patterns, deciding on the type of data analysis to apply and the ML methods to use, evaluating and interpreting the results, refining ML algorithms, and possibly even managing infrastructure. To ensure that data scientists can deliver the most impactful insights for their companies efficiently and effectively, cnvrg.io provides a unified platform to operationalize the full machine learning (ML) lifecycle from research to production.

As the leading data-science platform for ML model operationalization (MLOps) and management, cnvrg.io is a pioneer in building cutting-edge ML development solutions that provide data scientists with all the tools they need in one place to streamline their processes. In addition, by deploying MLOps on Red Hat OpenShift, data scientists can launch flexible, container-based jobs and pipelines that can easily scale to deliver better efficiency in terms of compute resource utilization and cost. Infrastructure teams can also manage and monitor ML workloads in a single managed and cloud-native environment. For infrastructure architects who are deploying cnvrg.io on Dell PowerEdge servers and Intel® components, this document provides recommended hardware bill of materials (BoM) configurations to help get them started.

Key considerations

Key considerations for using the recommended hardware BoMs for deploying cnvrg.io on Red Hat OpenShift include:

- Provision external storage. When deploying cnvrg.io on Red Hat OpenShift, local storage is used only for container images and ephemeral volumes. External persistent storage volumes should be provisioned on a storage array or on another solution that you already have in place. If you do not already have a persistent storage solution, contact your Dell Technologies representative for guidance.

- Use high-performance object storage. The hardware BoMs below assume that you use an in-cluster solution based on MinIO for object storage. The number of drives and the capacity for MinIO object storage depends on the dataset size and performance requirements. An alternative object store would be an external S3-compatible object store such as Elastic Cloud Storage (ECS) or Dell PowerScale (Isilon), powered by high-capacity Solidigm SSDs.

- Scale object storage independently. Object storage capacity can be scaled independently of worker nodes by deploying additional storage nodes. Both high-performance (with NVM Express [NVMe] Solidigm solid-state drives [SSDs]) and high-capacity (with rotational hard-disk drives [HDDs]) configurations can be used. All nodes using NVMe drives should be configured with 100 Gbps network interface controllers (NICs) to take full advantage of the drives’ I/O throughput.

Recommended configurations

Controller nodes (3 nodes required) and worker nodes

Table 1. PowerEdge R660-based, up to 10 NVMe drives, 1RU

Feature | Control-Plane (Master) Nodes | ML/Artificial Intelligence (AI) CPU Cluster (Worker) Nodes | |

Platform | Dell R660 supporting 10 x 2.5” drives with NVMe backplane - direct connection | ||

| | Base configuration | Plus configuration |

CPU | 2x Xeon® Gold 6426Y (16c @ 2.5GHz) | 2x Xeon® Gold 6448Y (32c @ 2.1GHz) | 2x Xeon® Platinum 8468 (48c @ 2.1GHz) |

DRAM | 128GB (8x 16GB DDR5-4800) | 256GB (16x 16GB DDR5-4800) | 512GB (16x 32GB DDR5-4800) |

Boot device | Dell BOSS-N1 with 2x 480GB M.2 NVMe SSD (RAID1) | ||

Storage[1] | 1x 1.6TB Solidigm[2] D7-P5620 SSD (PCIe Gen4, Mixed-use) | 2x 1.6TB Solidigm[2] D7-P5620 SSD (PCIe Gen4, Mixed-use) | |

Object storage[3] | N/A | 4x (up to 10x) 1.92TB, 3.84TB or 7.68TB Solidigm D7-P5520 SSD (PCIe Gen4, Read-Intensive) | |

Shared storage[4] | N/A | External | |

NIC[5] | Intel® X710-T4L for OCP3 (Quad-port 10Gb) | Intel® X710-T4L for OCP3 (Quad-port 10Gb), or Intel® E810-CQDA2 PCIe add-on card (dual-port 100Gb) | |

Additional NIC for external storage[6] | N/A | Intel® X710-T4L for OCP3 (Quad-port 10Gb), or Intel® E810-CQDA2 PCIe add-on card (dual-port 100Gb) | |

Optional – Dedicated storage nodes

Table 2. PowerEdge R660-based, up to 10 NVMe drives or 12 SAS drives, 1RU

Feature | Description | |

Node type | High performance | High capacity |

Platform | Dell R660 supporting 10x 2.5” drives with NVMe backplane | Dell R760 supporting 12x 3.5” drives with SAS/SATA backplane |

CPU | 2x Xeon® Gold 6442Y (24c @ 2.6GHz) | 2x Xeon® Gold 6426Y (16c @ 2.5GHz) |

DRAM | 128GB (8x 16GB DDR5-4800) | |

Storage controller | None | HBA355e adapter |

Boot device | Dell BOSS-N1 with 2x 480GB M.2 NVMe SSD (RAID1) | |

Object storage[3] | up to 10x 1.92TB / 3.84TB / 7.68TB Solidigm D7-P5520 SSD (PCIe Gen4, Read-Intensive) | up to 12x 8TB/16TB/22TB 3.5in 12Gbps SAS HDD 7.2k RPM |

NIC4 | Intel® E810-CQDA2 PCIe add-on card (dual-port 100Gb) | Intel® E810-XXV for OCP3 (dual-port 25Gb) |

Learn more

Contact your Dell or Intel account team for a customized quote at 1-877-289-3355.

[1] Local storage used only for container images and ephemeral volumes; persistent volumes should be provisioned on an external storage system.

[2] Formerly Intel

[3] The number of drives and capacity for MinIO object storage depends on the dataset size and performance requirements.

[4] External shared storage required for Kubernetes persistent volumes.

[5] 100 Gb NICs are recommended for higher throughput.

[6] Optional, required only if a dedicated storage network for external storage system is necessary.

GPU Support for the PowerEdge R360 & T360 Servers Raises the Bar for Emerging Use Cases

Fri, 12 Jan 2024 17:31:43 -0000

Summary

As we enter the New Year, the market for AI solutions across numerous industries continues to grow. Specifically, UBS predicts a jump from $2.2 billion in 2022 to $255 billion in 2027 [1]. This growth is not limited to large enterprises; GPU support on the new PowerEdge T360 and R360 servers gives businesses of any size the freedom to explore entry AI inferencing use cases, in addition to graphic-heavy workloads.

We tested both a 3D rendering and AI inferencing workload on a PowerEdge R360 with one NVIDIA A2 GPU[1] to fully showcase the added performance possibilities.

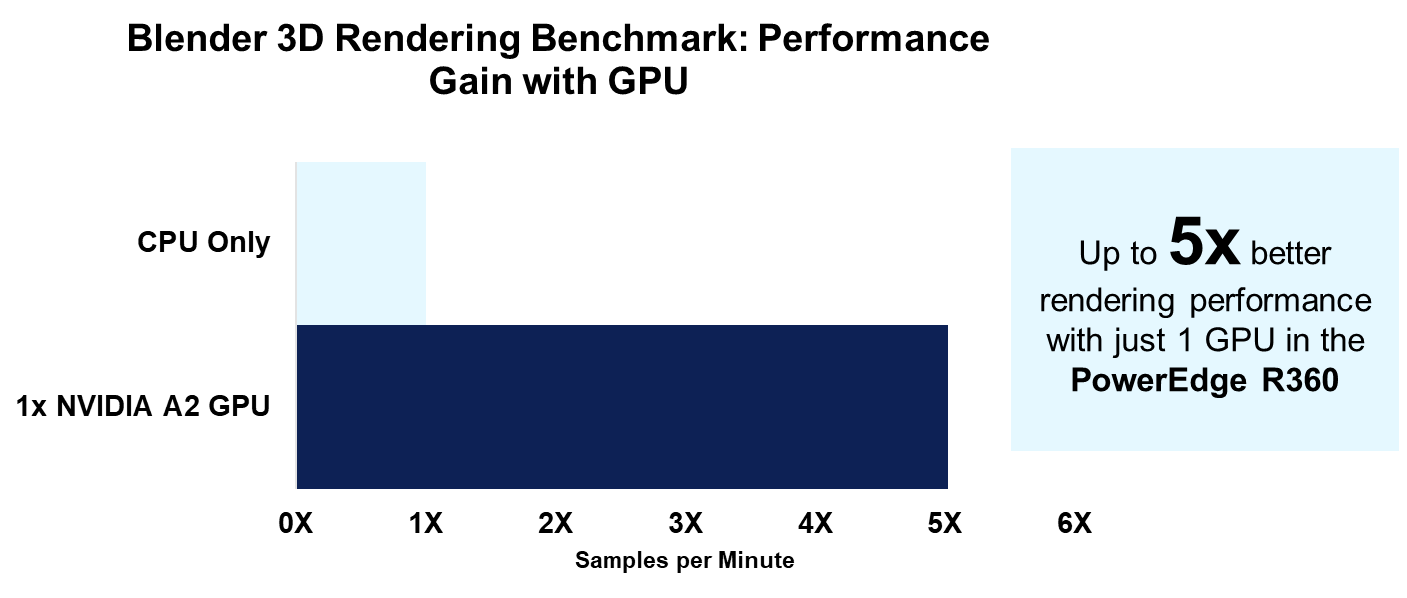

Achieve 5x rendering performance with the NVIDIA A2 GPU

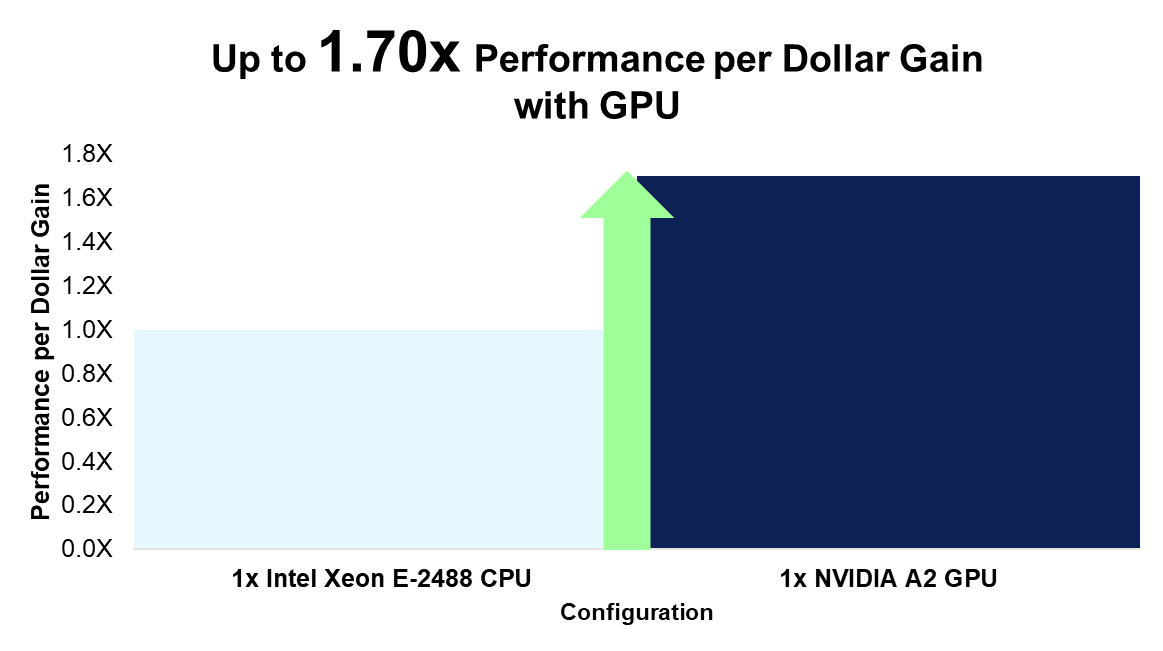

For our first test, we used Blender’s OpenData benchmark. This open-source benchmark measures rendering performance of various 3D scenes on either CPU or GPU. We achieved up to 5x better rendering performance on GPU, compared to the same workload run only on CPU [1]. As a result, customers gain up to 1.70x the performance per dollar invested in an A2 GPU compared to the CPU [2].

[1] Similar results can be expected on a PowerEdge T360 with the same configuration.

Reach max inferencing performance with limited CPU consumption

Part of the motivation behind adding GPU support is the growing demand among SMBs for on-premises, real-time video and audio processing. Thus, to evaluate AI inferencing performance, we installed NVIDIA’s open-source DeepStream toolkit (version 6.3). DeepStream is primarily used to develop AI vision applications that leverage sensor data and various camera and video streams as input. These applications can be used across various industrial sectors (for example, real-time traffic monitoring systems or retail store aisle footage analysis). With the same PowerEdge R360, we conducted inferencing on 48 streams while utilizing just over 50% of the GPU, and a limited amount of the CPU [3]. Our CPU utilization during testing averaged about 8%.

The rest of this document provides more details about the testing conducted for these two distinct use cases of a PowerEdge T360 or R360 with GPU support.

Product Overview

The PowerEdge T360 and R360 are the latest servers to join the PowerEdge family. Both are cost-effective 1-socket servers designed for small to medium businesses with growing compute demands. They can be deployed in the office, the near-edge, or in a typical data analytic environment.

The biggest differentiator between the T360 and R360 is the form factor. The T360 is a tower server that can fit under a desk or even in a storage closet, while maintaining office-friendly acoustics. The R360, on the other hand, is a traditional 1U rack server. Both servers support the newly launched Intel® Xeon® E-series CPUs, 1 NVIDIA A2 GPU, as well as DDR5 memory, NVMe BOSS, PCIe Gen5 I/O ports, and the latest remote management capabilities.

Figure 1. From left to right, PowerEdge T360 and R360

NVIDIA A2 GPU Information

Unlike the analogous prior-generation servers, the recently launched PowerEdge T360 and R360 now support 1 NVIDIA A2 entry GPU. The A2 accelerates media intensive workloads, as well as emerging AI inferencing workloads. It is a single-width GPU stacked with 16GB of GPU memory and 40-60W configurable thermal design power (TDP). Read more about the A2 GPU’s up to 20x inference speedup and features here: A2 Tensor Core GPU | NVIDIA.

Testing Configuration

We conducted benchmarking on one PowerEdge R360 with the configuration in the table below. Similar results can be expected for the PowerEdge T360 with this same configuration. We tested in a Linux Ubuntu Desktop environment, version 20.04.6.

Table 1. PowerEdge R360 System Configuration

Component | Configuration |

CPU | 1x Intel® Xeon® E-2488, 8 cores |

GPU | 1x NVIDIA A2 |

Memory | 4x 32 GB DIMMs, DDR5 |

Drives | 1x 2 TB SATA HDD |

OS | Ubuntu 20.04.6 |

NIC | 2x Broadcom NetXtreme Gigabit Ethernet |

Accelerate 3D Rendering Workloads

Entry GPUs are often used in the media and entertainment industry for 3D modeling and rendering. The NVIDIA A2 GPU is a powerful accelerator for these workloads. To highlight the magnitude of the acceleration, we ran the same Blender OpenData benchmark first only on CPU, and then only on GPU. Blender is a popular open-source 3D modeling software.

The benchmark evaluates the system’s rendering performance for three different 3D scenes, either on CPU or GPU only. Results, or scores, are reported in samples per minute. We ran the benchmark on CPU (Intel Xeon E-2488) three times, and then on GPU (NVIDIA A2) three times. The results in Table 2 below represent the average score across the three trials for each scene.

Results

Compared to the benchmark run only on CPU, we attained up to 5x better rendering performance with the same workload run on the A2 GPU [1]. Although we achieved over 4x better performance for all three 3D scenes, the classroom scene corresponds to the best result and is illustrated in the figure below.

Figure 2. Rendering performance on CPU only and GPU only

Given this 5x better rendering performance, we calculated the performance per dollar for the cost of the CPU compared to the cost of the GPU. For CPU performance, we divided the rendering score by the Dell US list price for the E-2488 CPU. For GPU performance, we divided the rendering score by the Dell US list price for the A2 GPU[2]. When comparing these results, we found customers can gain up to 1.70x the performance per dollar spent on the GPU compared to the CPU [2].

Figure 3. Rendering performance per dollar increase

Taking the analysis a step further, we also calculated the performance per dollar spent on a CPU compared to the cost of both a CPU and GPU. This comparison is relevant for customers who are investing in both an Intel Xeon E-2488 CPU and NVIDIA A2 GPU for their PowerEdge R360/T360. While we calculated the CPU performance score the same way as above, we now divided the GPU rendering score by the Dell US list price for the A2 GPU + E-2488 CPU. When comparing these results, we found customers can gain up to 1.27x the performance per dollar spent on both GPU and CPU compared to just CPU [2].

In other words, investing in an R360 with an E-2488 CPU and A2 GPU yields a higher return on investment for rendering performance compared to an R360 without an A2 GPU. It is also worth mentioning that the E-2488 CPU is the highest-end, and most expensive, CPU offered for both the T360 and R360. It is reasonable to expect an even higher return on investment for the A2 GPU when compared to the same system with a lower-end CPU.
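
The two performance-per-dollar comparisons described above follow the same pattern, which the Python sketch below makes explicit. The function name is illustrative, and the scores and prices in the usage lines are arbitrary placeholder values, not Dell list prices or measured results.

```python
def perf_per_dollar_gain(
    cpu_score: float,
    gpu_score: float,
    cpu_price: float,
    gpu_price: float,
    include_cpu_cost: bool = False,
) -> float:
    """Ratio of GPU performance per dollar to CPU performance per dollar.

    With include_cpu_cost=True, the GPU score is divided by the combined
    GPU + CPU price, matching the second comparison in the text.
    """
    cpu_ppd = cpu_score / cpu_price
    gpu_cost = gpu_price + cpu_price if include_cpu_cost else gpu_price
    gpu_ppd = gpu_score / gpu_cost
    return gpu_ppd / cpu_ppd


# Placeholder inputs in arbitrary units (hypothetical, for illustration only).
gain_gpu_only = perf_per_dollar_gain(50.0, 250.0, cpu_price=2.0, gpu_price=4.0)
gain_gpu_plus_cpu = perf_per_dollar_gain(
    50.0, 250.0, cpu_price=2.0, gpu_price=4.0, include_cpu_cost=True
)
```

Including the CPU price in the denominator always lowers the gain, which is why the second comparison in the text reports a smaller multiplier than the first.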

The full results and scores are listed in the table below.

Table 2. Blender benchmark results

Scene | CPU Only, Samples per Min | NVIDIA A2 GPU, Samples per Min | Increase from CPU to GPU |

Monster | 98.664848 | 422.8827567 | 4.29x |

Junkshop | 62.561726 | 268.386526 | 4.29x |

Classroom | 47.35613467 | 237.8551867 | 5.02x |
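
The "Increase from CPU to GPU" column can be reproduced directly from the two score columns. The short Python sketch below does so using the averages from Table 2; the variable names are illustrative.

```python
# Average scores in samples per minute, copied from Table 2: (CPU only, A2 GPU).
scores = {
    "Monster": (98.664848, 422.8827567),
    "Junkshop": (62.561726, 268.386526),
    "Classroom": (47.35613467, 237.8551867),
}

# Speedup is the GPU score divided by the CPU score, rounded to two decimals.
speedups = {scene: round(gpu / cpu, 2) for scene, (cpu, gpu) in scores.items()}

# The scene with the largest GPU-over-CPU gain.
best_scene = max(speedups, key=speedups.get)
```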

Video Analytics Performance with NVIDIA DeepStream

While 3D rendering may be a more common workload for SMBs investing in entry GPUs, the same GPU is also a powerful accelerator for entry AI inferencing and video analytic workloads. We used NVIDIA’s DeepStream version 6.3[3] to showcase the PowerEdge R360’s performance when running a sample video analytic application. DeepStream has a variety of sample applications and input streams available for testing. The provided configuration files allow you to vary the number of streams for a run of the app, which we explain in greater detail below. Input sources can be photos, video files (with either H.264 or H.265 encoding), or even RTSP IP cameras.

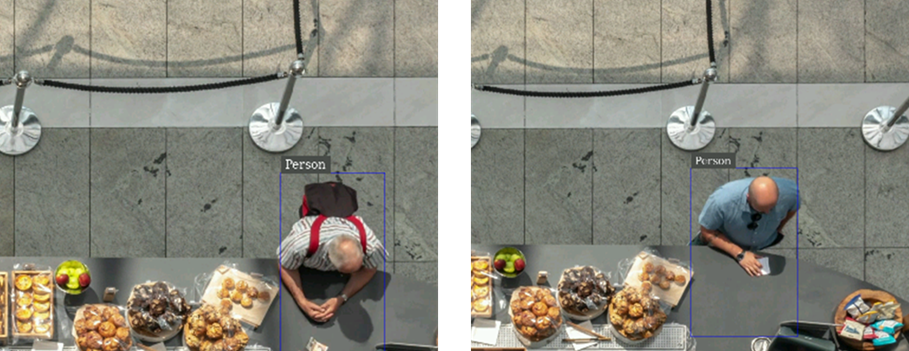

To better illustrate DeepStream’s functionality, consider the images below that were generated from our run of a DeepStream sample app. Instead of using a provided sample video, we used our own stock video of customers entering and leaving a bakery. The AI model in this scenario can identify people, cars, and bicycles. The images below, which are cropped outputs to zoom in on the person at the cash register, show how this vision application correctly identified these two customers with a bounding box and “person” label.

Figure 4. Cropped output of DeepStream sample app with modified source video

Instead of pre-recorded videos, an RTSP IP camera would theoretically allow a user to stream and analyze live footage of customers in a retail store. Check out this blog from the Dell AI Solutions team for a guide on how to get DeepStream up and running with a 1080p webcam for streaming RTSP output.

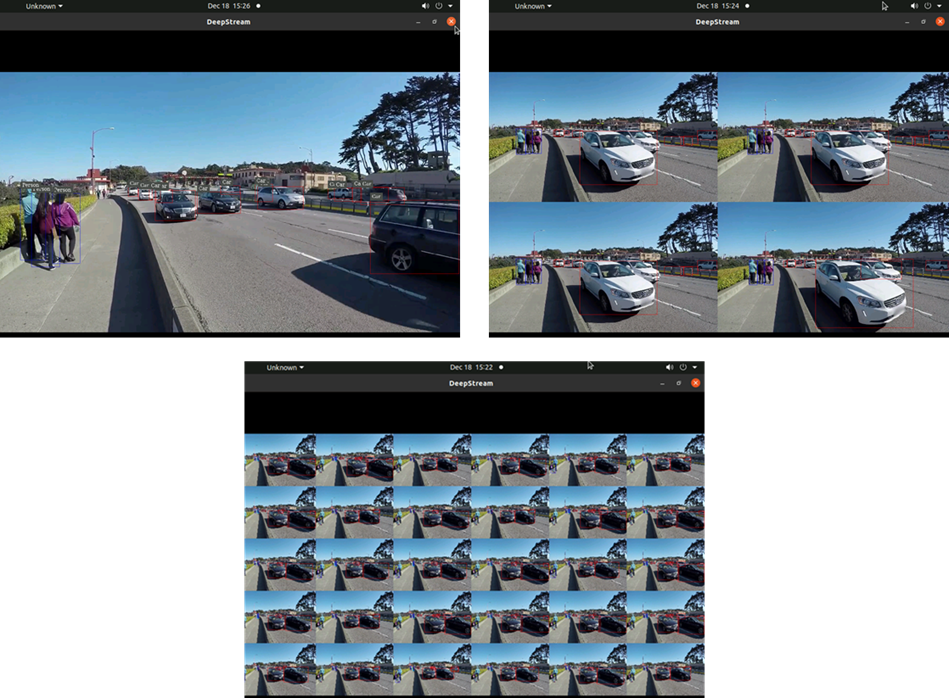

We also tested the DeepStream sample application with one of NVIDIA’s provided videos that shows cars, bicycles, and pedestrians on a busy road. The images below are screenshots of the sample app run with 1, 4, and 30 streams, respectively. In each tile, or stream, the given model places bounding boxes around the identified objects.

Figure 5. DeepStream sample video output with 1, 4, and 30 streams, respectively

Performance Testing Procedure

During a run of a sample application, NVIDIA measures performance as the number of frames per second (FPS) processed. An FPS score is displayed for each stream in 5-second intervals. For our testing, we followed the steps in the DeepStream 6.3 performance guide, which lists the appropriate modifications to the configuration file in order to maximize performance. All modifications were made to the source4_1080p_dec_infer-resnet_tracker_sgie_tiled_display_int8.txt configuration file, which is specifically described in the “Data center GPU – A2” section of the tutorial. Tiled displays like in Figures 4 and 5 above impact performance, so NVIDIA recommends disabling on-screen display/output when evaluating performance. We did the same.

With the same sample video as shown in Figure 5, NVIDIA reports that using an H.264 source, it is possible to host 48 inferencing streams at 30 FPS each. To test this with our PowerEdge R360 and A2 GPU, we followed the benchmarking procedure below:

1. Modify the sample application configuration file to take in 48 input streams by changing the parameter num-sources to 48, and the batch-size parameter under the streammux section to 48.[4] This is in addition to the other recommended configuration changes described in the guide above.

2. Let the application run for 10 minutes.[5]

3. Record the average FPS for each of the 48 streams at the end of the run.

4. Repeat steps 1-3 with 40, 30, 20, 10, 5, and 1 streams. The only modification to the configuration file should be updating the num-sources and batch-size to match the number of streams currently under test.
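
The per-run configuration change in the procedure above could be scripted rather than edited by hand. The Python sketch below is one way to do it with the standard-library `configparser` module, assuming the configuration file parses as INI-style text. The `source0` section name is an assumption about where num-sources lives (the text only names the streammux section for batch-size), and the function name is hypothetical. Note that `configparser` drops comment lines when it writes the file back out, so this is a sketch and not a drop-in tool.

```python
import configparser
import io

# Stream counts tested in the procedure above.
STREAM_COUNTS = [48, 40, 30, 20, 10, 5, 1]


def set_stream_count(config_text: str, streams: int,
                     source_section: str = "source0") -> str:
    """Return config text with num-sources and streammux batch-size set to `streams`.

    `source_section` is an assumed section name; adjust it to match the file.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # preserve key spelling exactly as written
    parser.read_string(config_text)
    parser[source_section]["num-sources"] = str(streams)
    parser["streammux"]["batch-size"] = str(streams)
    out = io.StringIO()
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()
```

A driver script could loop over `STREAM_COUNTS`, write each modified configuration to a separate file, and launch the sample application once per file.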

Our results are illustrated in the section below. We also used iDRAC tools and the nvidia-smi command to capture system telemetry data (CPU utilization, total power utilization, GPU power draw, and GPU utilization) every 7 seconds during testing trials. Each reported utilization statistic (such as GPU utilization) is the average of 100 datapoints collected over the app run period.
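
The GPU side of this telemetry capture can be approximated with a small polling script. The Python sketch below is an assumption about how such sampling might be done, not the tooling used for this test. It calls nvidia-smi with its `--query-gpu` and `--format=csv,noheader,nounits` options, reads the first GPU only, and averages the samples. The function names, the default of 100 samples, and the 7-second interval mirror the description above but are otherwise illustrative. CPU and total system power would come from iDRAC and are not covered here.

```python
from __future__ import annotations

import subprocess
import time
from statistics import mean

QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,power.draw",
    "--format=csv,noheader,nounits",
]


def parse_sample(line: str) -> tuple[float, float]:
    """Parse one CSV line such as '50, 40.5' into (utilization %, power in watts)."""
    util, power = (float(field) for field in line.strip().split(","))
    return util, power


def read_gpu_sample() -> tuple[float, float]:
    """Run nvidia-smi once and return the reading for the first GPU."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_sample(out.splitlines()[0])


def average_samples(samples: list[tuple[float, float]]) -> tuple[float, float]:
    """Average utilization and power over all collected samples."""
    utils, powers = zip(*samples)
    return mean(utils), mean(powers)


def collect(count: int = 100, interval_s: float = 7.0) -> tuple[float, float]:
    """Poll the GPU `count` times, `interval_s` seconds apart, and average the result."""
    samples = []
    for _ in range(count):
        samples.append(read_gpu_sample())
        time.sleep(interval_s)
    return average_samples(samples)
```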

Results

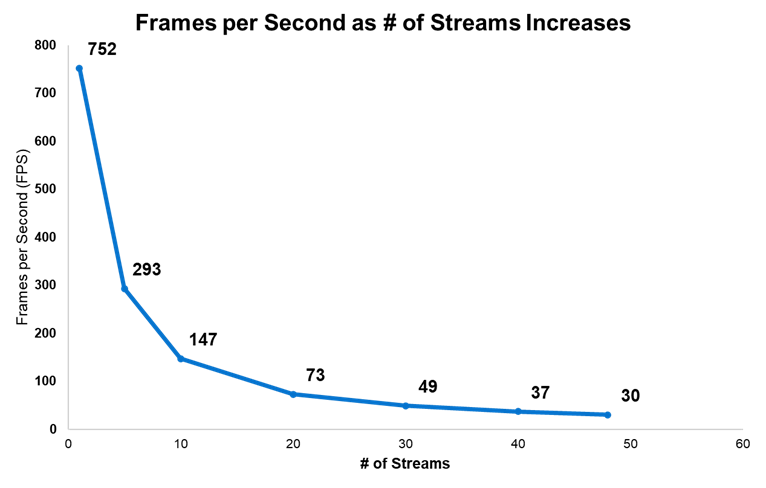

The figure below displays the average FPS (rounded to the nearest whole number) achieved for each number of streams tested. As the number of streams increases, the FPS per stream decreases.

Most notably, we achieved NVIDIA’s expected maximum performance with our PowerEdge R360: we ran 48 streams averaging 30 FPS each at the end of the 10-minute run period [3]. In general, 30 FPS is an industry-accepted rate for standard video feeds such as live TV.
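To put the 48-stream result in perspective, the short calculation below converts it into aggregate throughput. The per-frame time budget is a derived, illustrative figure (simply the inverse of the aggregate rate), not something we measured, and the function name is hypothetical.

```python
def aggregate_fps(streams: int, fps_per_stream: float) -> float:
    """Total frames processed per second across all streams."""
    return streams * fps_per_stream


total_fps = aggregate_fps(48, 30)   # 48 streams at 30 FPS each
frame_budget_ms = 1000 / total_fps  # average time available per frame

print(f"aggregate: {total_fps} FPS, about {frame_budget_ms:.2f} ms per frame")
```

At 48 streams, the pipeline as a whole is therefore handling 1,440 frames every second.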

Figure 6. DeepStream FPS for varying number of streams

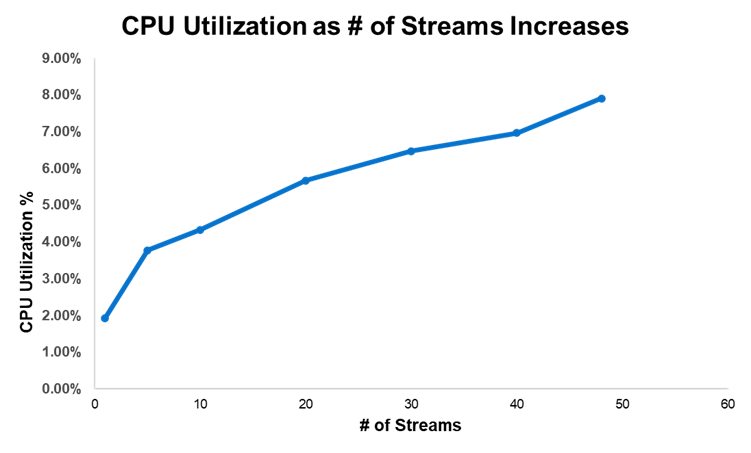

We also captured CPU utilization during our testing. Unsurprisingly, CPU utilization was highest with 48 streams. However, across all stream counts tested, CPU utilization ranged only between about 2% and 8%. This means most of the system’s CPU capacity remained available for other work while we tested DeepStream.

Figure 7. CPU utilization for varying number of streams

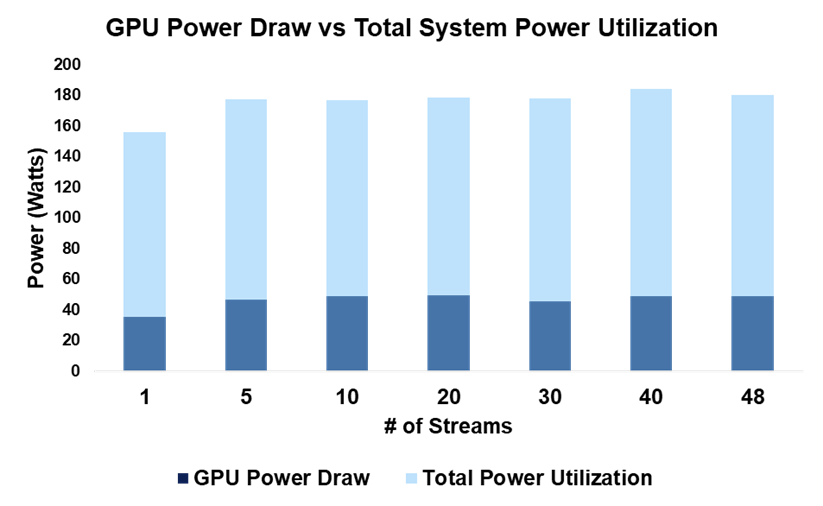

In terms of power consumption, the figure below shows GPU power draw overlaid on total system power utilization. Irrespective of the number of streams, GPU power draw represents only about 25-27% of the total system power utilization.

Figure 8. System power consumption for varying number of streams

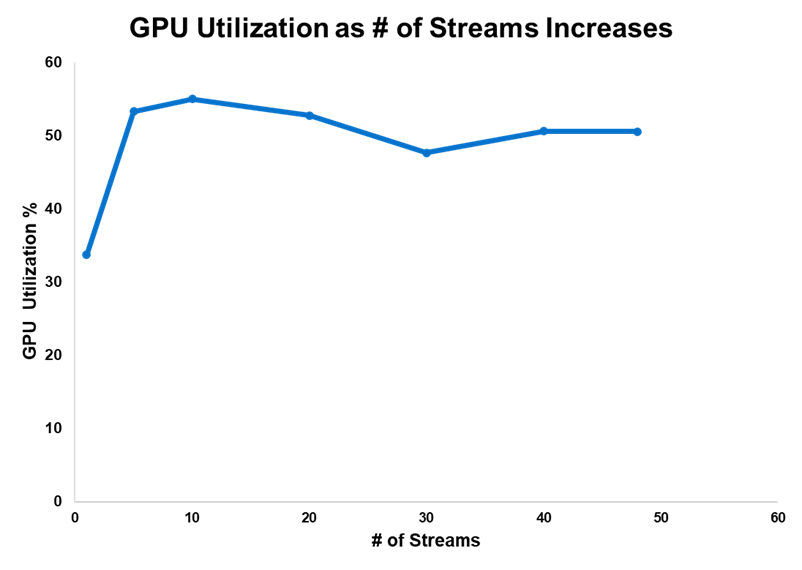

Finally, we captured GPU utilization as the number of streams increased. While it varied more than the other telemetry data, GPU utilization was about 50% at the maximum number of streams tested. In other words, we achieved these results without driving the GPU to maximum utilization.

Figure 9. GPU utilization for varying number of streams

Conclusion

We have just scratched the surface of the performance capabilities of the PowerEdge T360 and R360. From 3D rendering to entry AI-inferencing workloads, the added A2 GPU allows SMBs to explore compute-intensive use cases from the office to the near-edge. In other words, the R360 and T360 are equipped to scale with businesses as computing demand inevitably, and rapidly, evolves.

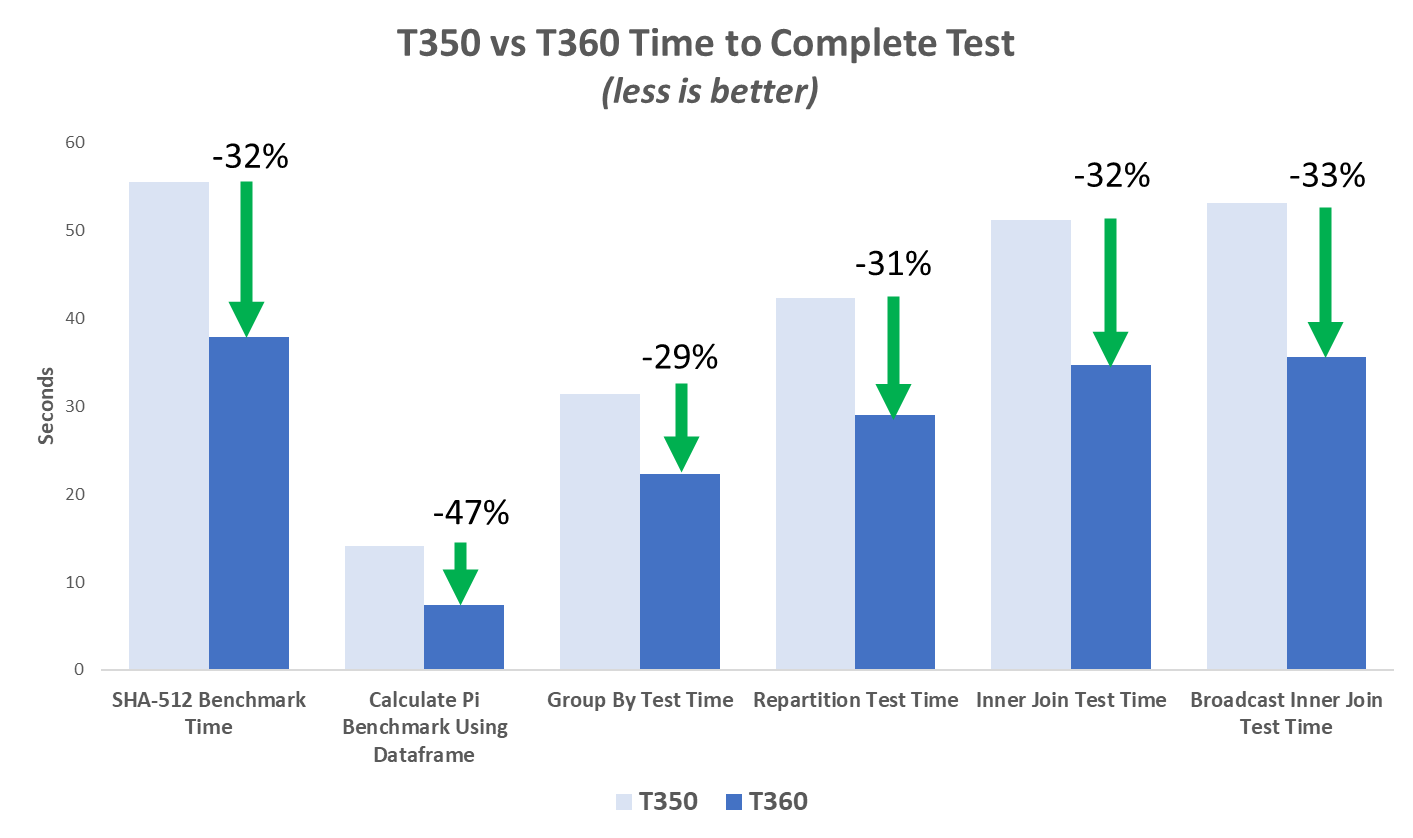

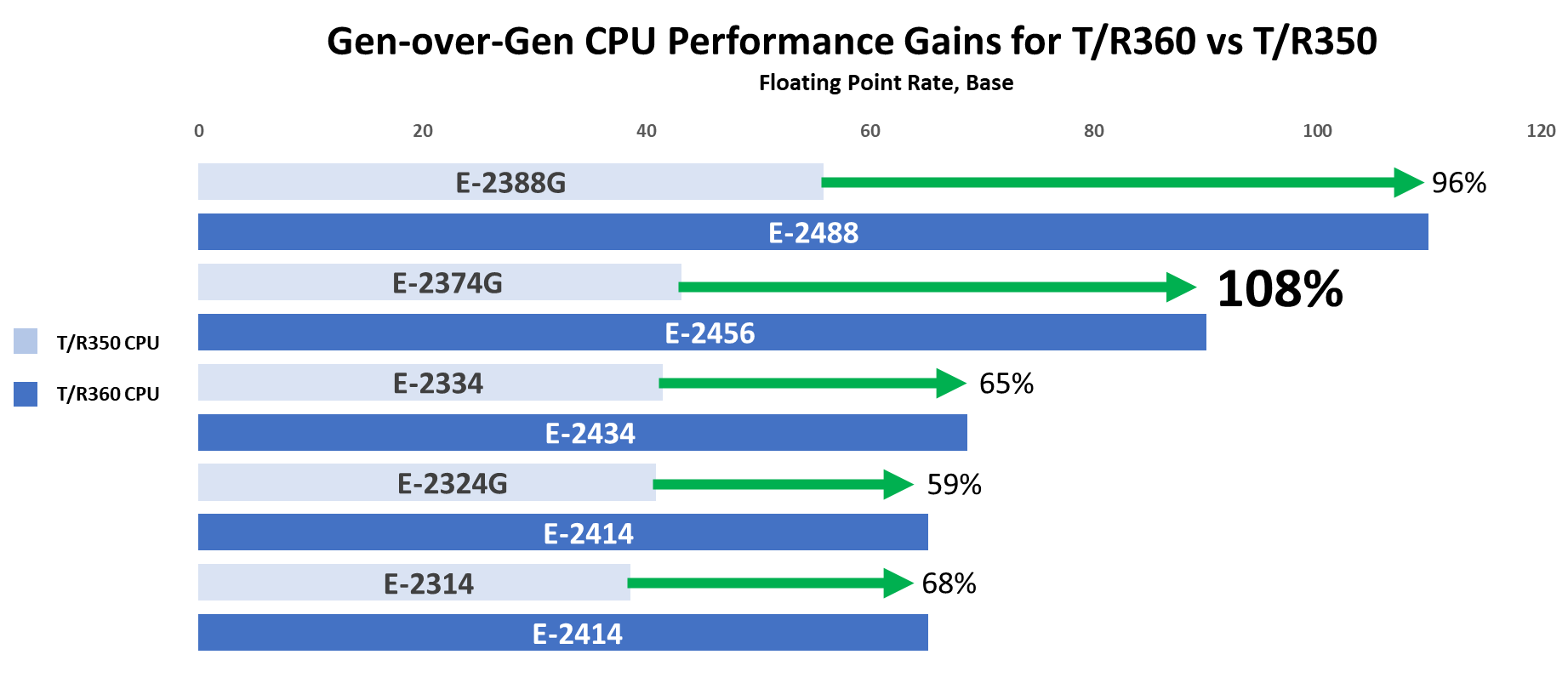

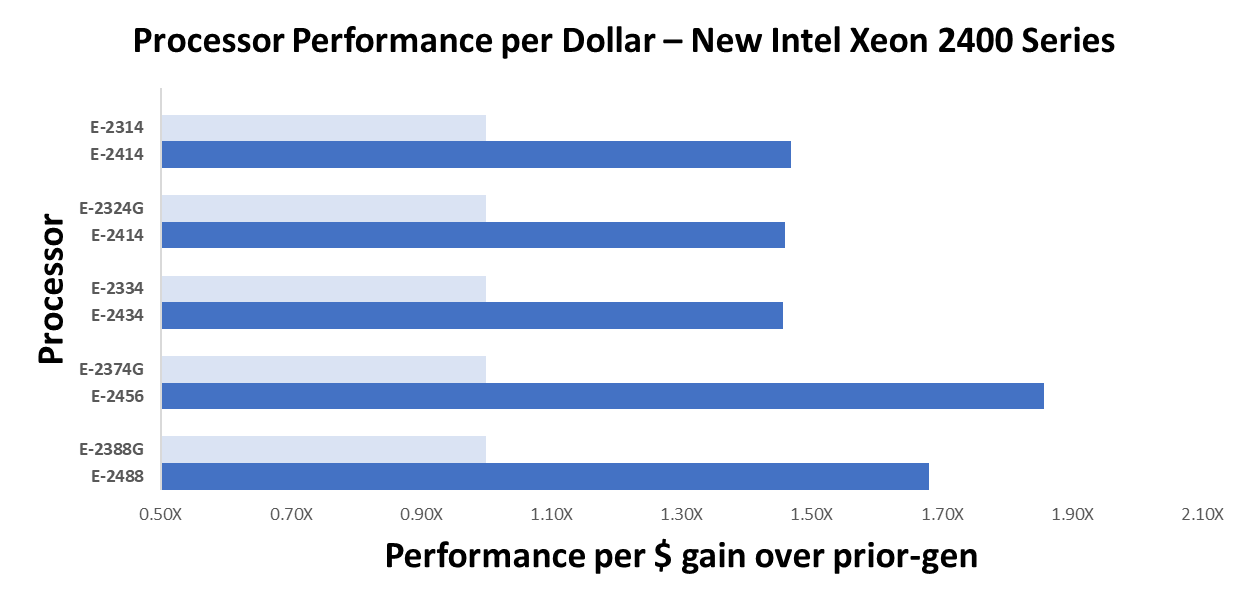

While GPU support is a defining feature of the PowerEdge T360 and R360, they also leverage the newly launched Intel® Xeon® E-series CPUs, 1.4x faster DDR5 memory, NVMe BOSS, and PCIe Gen5 I/O ports. For more information on these cost-effective, entry-level servers, you can read about their excellent performance across a variety of industry-relevant benchmarks and up to 108% better CPU performance.

References

- Daily: US tech gains set to continue on stronger AI growth | UBS Global

- Blender - Open Data

- DeepStream SDK | NVIDIA Developer | NVIDIA Developer

- A2 Tensor Core GPU | NVIDIA

- Ubuntu 20.04.6 LTS (Focal Fossa)

- Performance — DeepStream 6.3 Release documentation (nvidia.com)

- Understanding FPS Values: The Advanced Guide to Video Frame Rates (dacast.com)

- Battle of the Servers: PowerEdge T360 & R360 outperform prior-gen models across a range of benchmarks | Dell Technologies Info Hub

- Introducing the PowerEdge T360 & R360: Gain up to Double the Performance with Intel® Xeon® E-Series Processors | Dell Technologies Info Hub

Legal Disclosures

[1] Based on November 2023 Dell labs testing subjecting the PowerEdge R360 to Blender OpenData benchmark with 1x NVIDIA A2 GPU and 1x Intel Xeon E-2488 CPU. Actual results will vary. Similar results can be expected on a PowerEdge T360 with the same system configuration.

[2] Based on November 2023 Dell labs testing subjecting the PowerEdge R360 to Blender OpenData benchmark with 1x NVIDIA A2 GPU and 1x Intel Xeon E-2488 CPU. Actual results will vary. Similar results can be expected on a PowerEdge T360 with the same system configuration. Pricing analysis is based on Dell US R360 list prices for both the NVIDIA A2 GPU and Intel Xeon E-2488 processor. Pricing varies by region and is subject to change without notice. Please contact your local sales representative for more information.

[3] Based on November 2023 Dell labs testing subjecting the PowerEdge R360 with 1x A2 GPU to performance testing of NVIDIA’s DeepStream SDK, version 6.3. We tested the sample application with the configuration file named source4_1080p_dec_infer-resnet_tracker_sgie_tiled_display_int8.txt. The full testing procedure is described in this report. Similar results can be expected with a PowerEdge T360 with the same configuration. Actual results will vary.

Appendix

Dell provides an open-source Reference Toolset for iDRAC9 Telemetry Streaming. With streaming data, you can easily create a Grafana dashboard to visualize and monitor your system’s telemetry in real-time. Tutorials are available with this video and whitepaper.

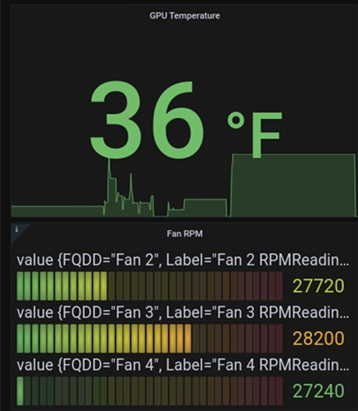

The screenshot below is from a Grafana dashboard we created for capturing PowerEdge R360 telemetry. It displays GPU temperature and rotations per minute (RPM) for three fans (we ran the Blender benchmark to demonstrate a spike in GPU temperature). You can also track GPU power consumption and utilization, among many other system metrics.

Figure 10. Grafana dashboard example

Powering Kafka with Kubernetes and Dell PowerEdge Servers with Intel® Processors

Mon, 29 Jan 2024 23:33:38 -0000


Kafka with Kubernetes

At the top of this webpage are 3 PDF files outlining test results and reference configurations for Dell PowerEdge servers using both the 3rd Generation Intel® Xeon® processors and 4th Generation Intel Xeon processors. All testing was conducted in Dell Labs by Intel and Dell Engineers in October and November of 2023.

- “Dell DfD Kafka ICX” – highlights the recommended configurations for Dell PowerEdge servers using 3rd generation Intel® Xeon® processors.

- “Dell DfD Kafka SPR” – highlights the recommended configurations for Dell PowerEdge servers using 4th generation Intel® Xeon® processors.

- “Dell DfD Kafka Kubernetes Test Report” – highlights the results of performance testing on both configurations, with comparisons that demonstrate the performance differences between them.

Solution Overview

The Apache® Software Foundation developed Kafka as an open-source solution to provide distributed event store and stream processing capabilities. Apache Kafka uses a publish-subscribe model to enable efficient data sharing across multiple applications. Applications can publish messages to a pool of message brokers, which subsequently distribute the data to multiple subscriber applications in real time.
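The publish-subscribe model described above can be illustrated with a toy, in-memory broker. This is a conceptual sketch only: the class and method names are hypothetical and are not the Kafka client API, and a real Kafka cluster persists records in partitioned logs that consumers pull from, rather than pushing messages to callbacks.

```python
from collections import defaultdict


class InMemoryBroker:
    """Toy stand-in for a message broker; not the Kafka API."""

    def __init__(self):
        # Maps each topic name to the callbacks subscribed to it.
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register a callback to receive every message sent to `topic`."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver `message` to all subscribers; return how many received it."""
        for callback in self._subscribers[topic]:
            callback(message)
        return len(self._subscribers[topic])


broker = InMemoryBroker()
analytics_inbox, audit_inbox = [], []

# Two independent applications subscribe to the same topic.
broker.subscribe("orders", analytics_inbox.append)
broker.subscribe("orders", audit_inbox.append)

# One producer publishes once; both subscribers receive the record.
delivered = broker.publish("orders", {"order_id": 1, "total": 19.99})
print(delivered, analytics_inbox, audit_inbox)
```

The point of the pattern is that the publisher never needs to know which applications consume its data, so subscribers can be added without changing the publisher.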

Kafka is often deployed for mission-critical applications and streaming analytics along with other use cases. These types of workloads require leading-edge performance which places significant demand on hardware.

There are five major APIs in Kafka[i]:

- Producer API – Permits an application to publish streams of records.

- Consumer API – Permits an application to subscribe to topics and process streams of records.

- Connect API – Runs reusable producers and consumers (connectors) that link topics to existing applications.

- Streams API – Transforms input streams into output streams and produces the result.

- Admin API – Used to manage Kafka topics, brokers, and other Kafka objects.

Kafka with Dell PowerEdge and Intel processor benefits

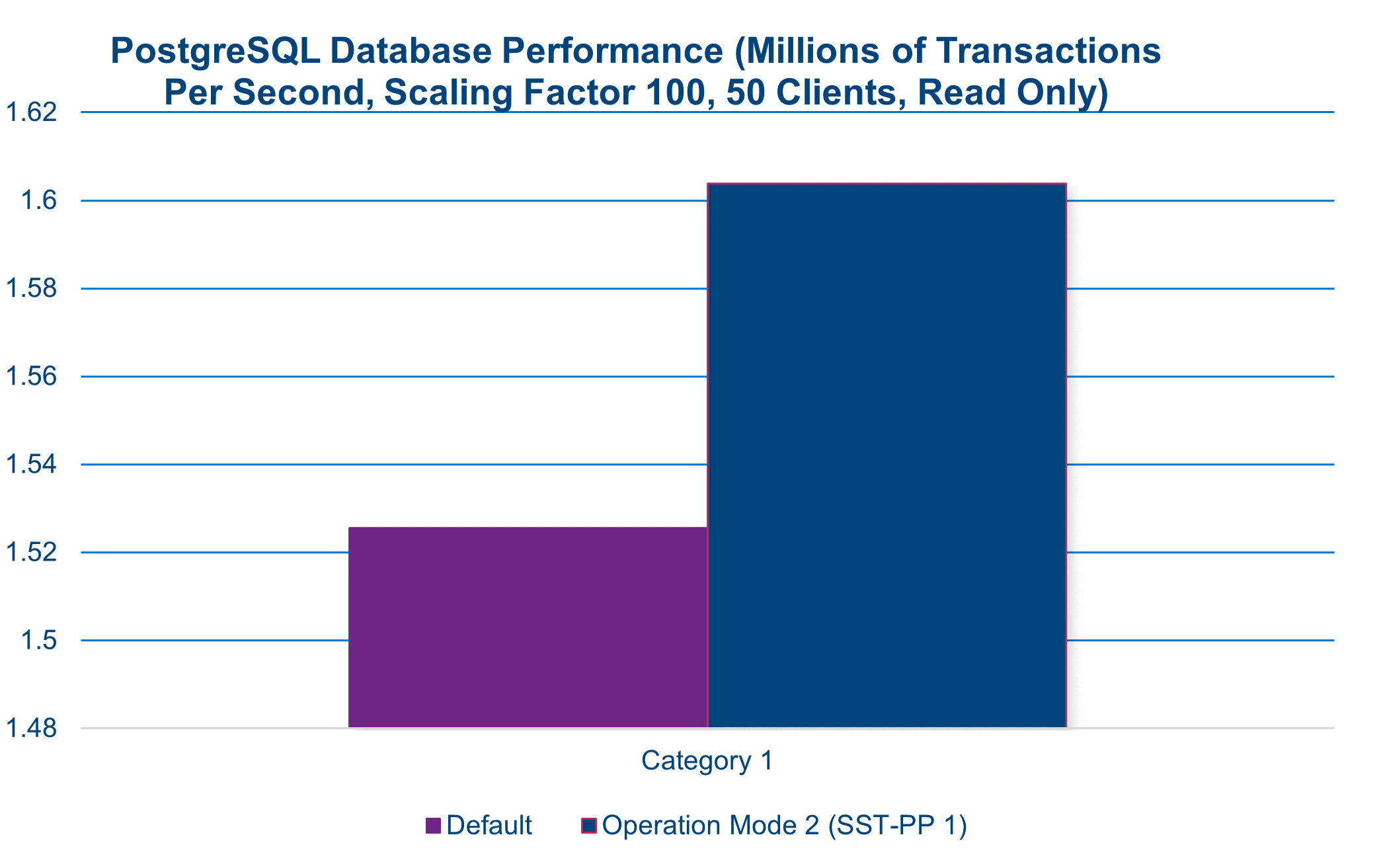

The introduction of new server technologies allows customers to deploy solutions that use the newly introduced functionality. It also gives them an opportunity to review their current infrastructure and determine whether the new technology might increase performance and efficiency. Dell and Intel recently tested Kafka performance in a Kubernetes environment, measuring two different compression engines on the new Dell PowerEdge R760 with 4th generation Intel® Xeon® Scalable processors. The results were compared with the same solution running on the previous-generation R750 with 3rd generation Intel® Xeon® Scalable processors to determine whether customers could benefit from a transition.

Some of the key changes incorporated into 4th generation Intel® Xeon® Scalable processors include:

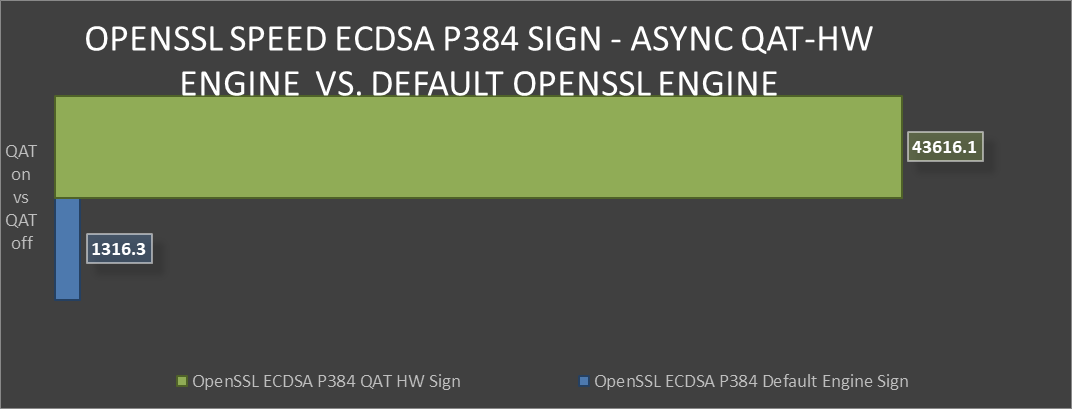

- Quick Assist Technology (QAT) to accelerate data compression and encryption.

- Support for 4800 MT/s DDR5 memory

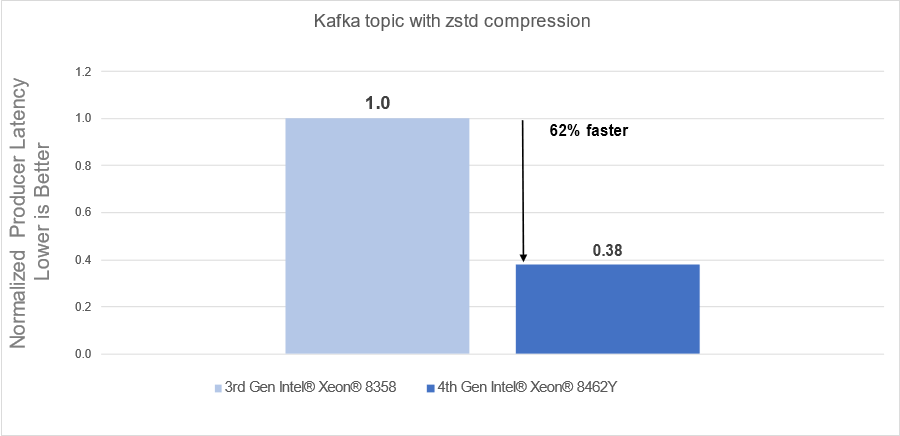

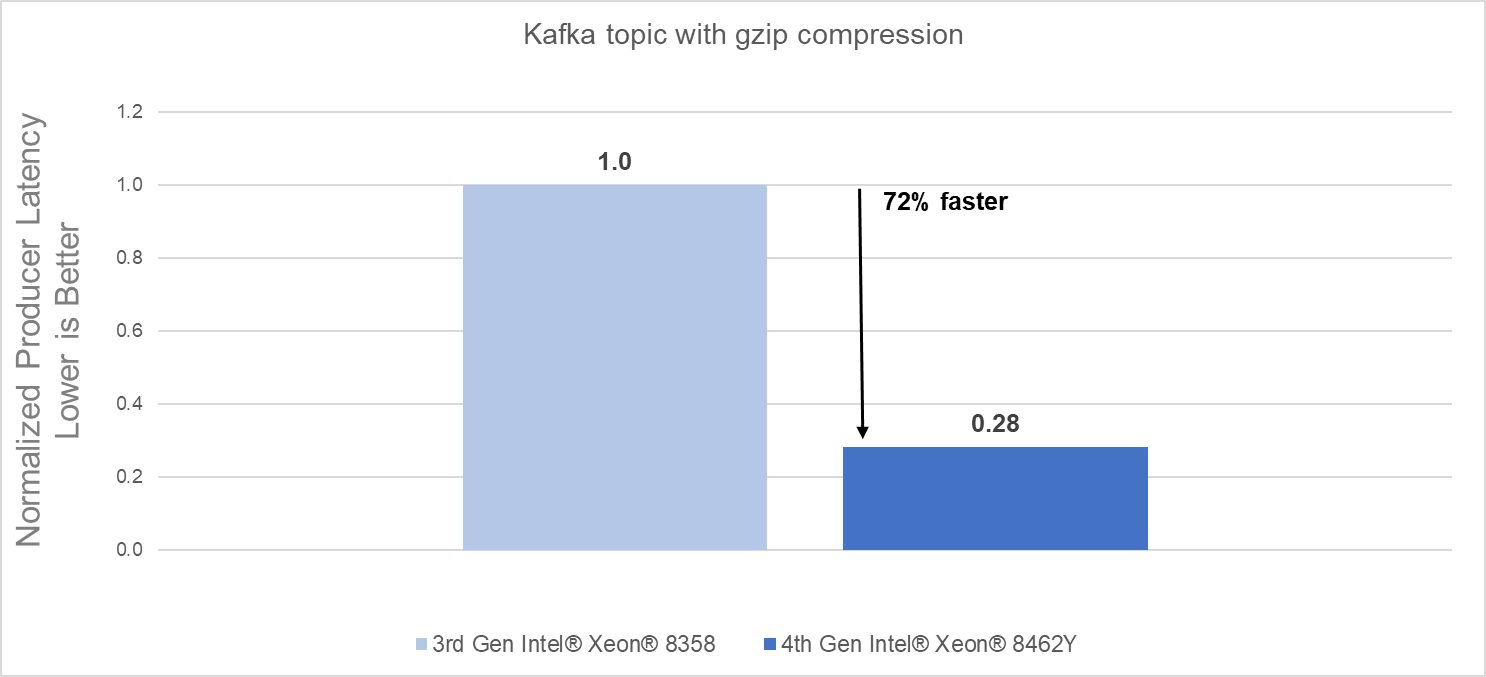

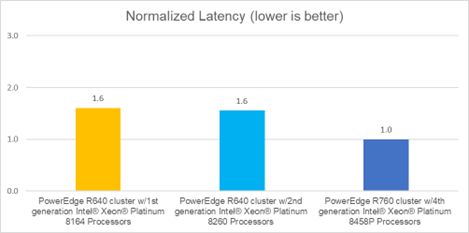

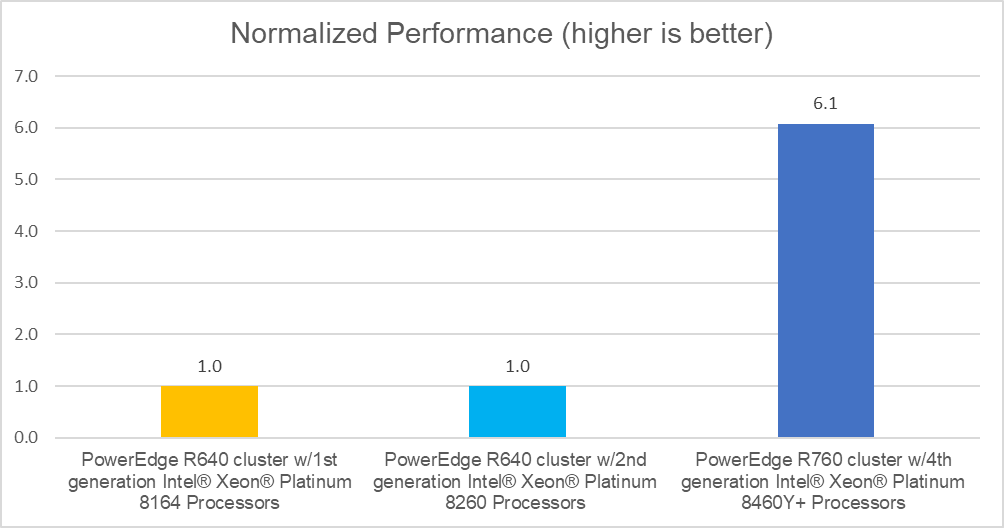

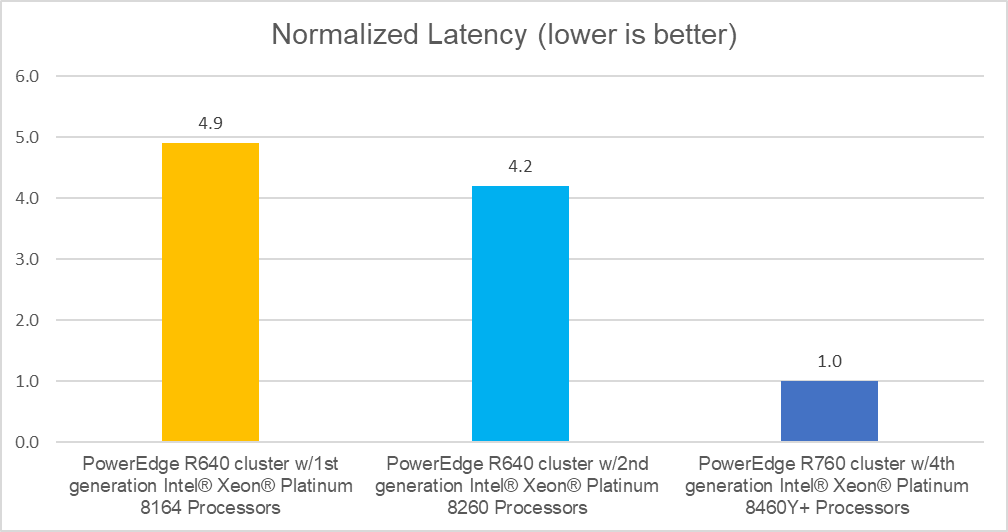

Raw performance: As noted in the report, our tests showed a 72% decrease in producer latency with gzip compression and a 62% decrease in producer latency with zstd compression.
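A latency decrease and a speedup factor are related but not identical figures. The sketch below converts the reported percentage decreases into equivalent speedup factors, assuming each percentage is measured relative to the previous-generation baseline latency; the function name is hypothetical and the speedup figures are derived here, not quoted from the test report.

```python
def speedup_from_latency_reduction(reduction_pct: float) -> float:
    """Convert a percentage latency decrease into a speedup factor.

    A 72% decrease leaves 28% of the original latency, so the same
    operation completes 1 / 0.28 times faster.
    """
    remaining_fraction = 1 - reduction_pct / 100
    return 1 / remaining_fraction


for codec, pct in {"gzip": 72, "zstd": 62}.items():
    print(f"{codec}: {speedup_from_latency_reduction(pct):.2f}x")
# prints:
# gzip: 3.57x
# zstd: 2.63x
```

Under that assumption, a 72% latency decrease corresponds to roughly 3.6 times lower latency, and a 62% decrease to roughly 2.6 times.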

Conclusion

Choosing the right combination of server and processor can increase performance and reduce latency, allowing customers to react faster and process more data. As this testing demonstrated, the Dell PowerEdge R760 with 4th Generation Intel® Xeon® CPUs significantly outperformed the previous generation.

- The Dell PowerEdge R760 with 4th Generation Intel® Xeon® Scalable processors delivered:

  - 62% lower producer latency using zstd compression

  - 72% lower producer latency using gzip compression

- The benefits of 4th Generation Intel® Xeon® Scalable processors are the result of:

  - Innovative CPU microarchitecture providing a performance boost

  - Introduction of DDR5 memory support

[i] https://en.wikipedia.org/wiki/Apache_Kafka

Yellowbrick: An efficient Cloud Data Warehouse powered by Dell Technologies

Mon, 29 Jan 2024 23:20:57 -0000


In the current economic climate, CIOs are rethinking their cloud strategy. They face challenges on several fronts - the need to continue innovating and driving growth while reducing the cost of cloud data programs and bringing tangible value. As cloud economics practices mature, private cloud and hybrid cloud are regaining strategic impetus. Organizations need the flexibility to manage data in private cloud, public cloud, co-lo, and at the edge. Yellowbrick delivers on this “Your Data Anywhere” vision.

Alongside new data management approaches such as data lakes, SQL based Data Warehouse technologies continue to prove their value as the primary business interface, with data lake vendors rushing to emulate their capabilities.

Together with Dell Technologies, this solution is designed and optimized to provide an elastic data management platform for SQL analytics at any scale.

Business Challenges and Benefits

Yellowbrick data warehouse meets these challenges with a unique architecture designed to maximize efficiency with hardened security and simplified management. Yellowbrick delivers everything you would expect from a modern high-performance SQL cloud data warehouse.

It combines cloud SaaS simplicity and elasticity with performance perfected over years of delivering value to customers within weeks and months, and it is built natively to exploit the power and agility of the cloud.

Yellowbrick uniquely combines its MPP database software and highly engineered systems design with an agile, elastic, modern Kubernetes-based architecture that delivers high efficiency and maximizes performance in every deployment scenario.

Yellowbrick is engineered for maximum efficiency and price performance, supporting thousands of concurrent users on 1/5 of the cloud resources compared with competitors. It maximizes data value with the simplicity and familiarity of SQL, and its unique pricing model alleviates concerns over unpredictable cost overruns.

Who is Yellowbrick?

The Yellowbrick Data Warehouse is an elastic, massively parallel processing (MPP) SQL database that runs on-premises, in the cloud, and at the network edge. It was designed for the most demanding batch, real-time, ad hoc, and mixed workloads and can run complex queries at up to petabyte scale with guaranteed sub-second response times. Yellowbrick is proven, providing business-critical services at many large global enterprises with thousands of concurrent users. It is available on AWS, Azure, and Google Cloud as well as on-premises.

| Advantage | Description |
| --- | --- |
| SQL Analytics for The Masses | Cost-effectively supporting thousands of concurrent users running hundreds of concurrent ad-hoc queries, Yellowbrick leapfrogs competitors while still providing full elasticity with separate storage and compute. |
| Meet Mission-Critical Service Levels | Intelligent workload management dynamically optimizes resources to ensure SLAs are consistently met without the need to scale out and spend more. |
| Ultimate Control of Data Security | Yellowbrick’s data warehouse runs in your own cloud VPC or on-premises behind your firewall, allowing you to meet data sovereignty and governance requirements and pay for your own infrastructure. |
| Engineered for Extreme Efficiency and Performance | Get answers faster with our Direct Data Path architecture. Yellowbrick runs mixed ad-hoc ETL, OLAP, and real-time streaming workloads delivering the maximum benefit from any underlying infrastructure platform. |
| Easy to Do Business With | Optimize your costs with flexible on-demand or fixed subscription – Yellowbrick is invested in your success, not in emptying your wallet. Our NPS of 82 is a testament to our customer partnership model and support excellence. |

Figure 1 The Yellowbrick Advantage

Yellowbrick Overview

Designed to run complex mixed workloads and support ad-hoc SQL while computing correct answers on any schema, Yellowbrick offers massive scalability and supports vast numbers of concurrent users. This means our clients gain deeper, more meaningful insights into their customers more quickly than was previously possible, setting us apart from other cloud data warehouses (CDWs).

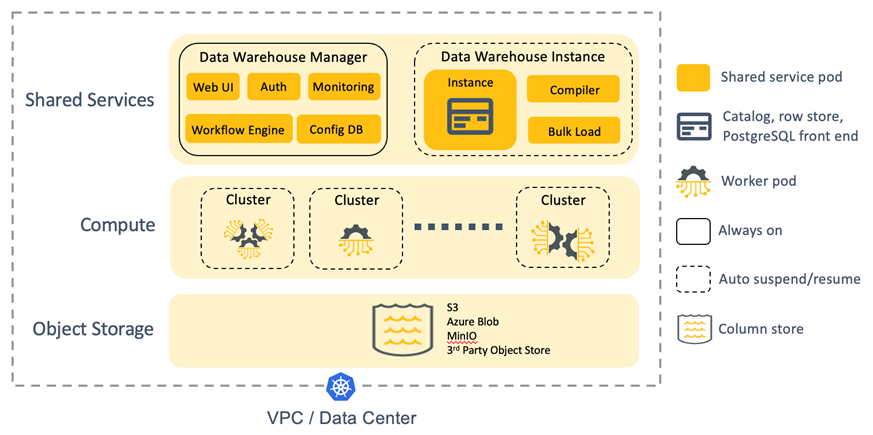

Figure 2 Yellowbrick Architecture

In an industry first, full SQL-driven elasticity with separate storage and compute is available within your own cloud account as well as on-premises. Compute resources, in the form of elastic virtual compute clusters (VCCs), are created, resized, and dropped on demand through SQL, and they cache data that is persisted on shared cloud object storage. For example, ad-hoc users can be routed to one cluster, business-critical users to a second cluster, and more clusters created and dropped on demand for ETL processing.

Each data warehouse instance runs independently of the others. There is no single point of failure, and no metadata is shared across instances. Global outages – when deployed with replication across multiple public clouds and/or on-premises – are impossible.