IT Modernization with next-generation Dell PowerEdge Servers and 4th generation Intel® Xeon® Processors

PowerEdge R760 vSAN 8.0 testing with the Original Storage Architecture (OSA)

PowerEdge R760 vSAN 8.0 testing with the Express Storage Architecture (ESA)

Thu, 03 Aug 2023 22:50:03 -0000


When transitioning to a new server technology, customers must weigh the cost of the solution against the benefits it can provide. A “solution” combines hardware, an operating environment, and software, and gaining the maximum benefit from new technology means considering all three together. One of the biggest challenges is that the three rarely arrive at the same time, so customers can find themselves constrained by past choices.

A real-world example would be a Dell, Intel, and VMware customer planning to upgrade their existing infrastructure.

As VMware’s vSAN 8 announcement (listed in the end notes) states, vSAN 8.0 with Express Storage Architecture (ESA) represents “A revolutionary release that will deliver performance and efficiency enhancements to meet customers’ business needs of today and tomorrow!” The announcement adds: “vSAN ESA will unlock the capabilities of modern hardware by adding optimization for high-performance, NVMe-based TLC flash devices with vSAN, building off vSAN’s Original Storage Architecture (vSAN OSA). vSAN was initially designed to deliver highly performant storage with SATA or SAS devices, the most common storage media at the time. vSAN 8 will give our customers the freedom of choice to decide which of the two existing architectures (vSAN OSA or vSAN ESA) to leverage to best suit their needs.”

The introduction of next-generation PowerEdge Servers, such as the PowerEdge R760, gives customers an opportunity to enhance their current and future workloads with the latest vSAN storage architecture. To fully leverage the performance benefits of this new architecture, customers can use the VMware-certified hardware configurations for vSAN ESA on Dell vSAN Ready Nodes.

vSAN ESA requires a different set of drives than OSA hardware. With the release of vSAN 8.0, customers face a decision. They likely have existing infrastructure based on the vSAN OSA architecture running vSAN 7.0U3. Now they must weigh the advantages and disadvantages of staying with OSA against upgrading to new hardware to unlock the performance of the new ESA architecture. ESA is an optional, alternative storage architecture for vSAN that offers customers a familiar yet upgraded solution, so they can choose the architecture that best fits their needs and preferences.

There are links at the top of this page detailing recent testing by Intel and Dell on the PowerEdge R760 with vSAN. All tests were conducted using VMware’s HCIBench tool, which VMware describes as “an automation wrapper around the popular and proven open-source benchmark tools: Vdbench and FIO that make it easier to automate testing across an HCI cluster.”

All 4th generation Intel® Xeon® testing was conducted in Dell Labs by engineers from Intel, supported by engineers from Dell. All testing on 1st and 2nd generation Intel® Xeon® processors was conducted in Intel Labs by engineers from Intel. Both tests were conducted between November 2022 and March 2023. Solidigm provided all NVMe drives used in these tests.

R760 vSAN 8.0 OSA vs. R640 vSAN 7.0U3 OSA

In the first paper, we configured HCIBench for Vdbench. We compared the performance of a 4-node cluster of PowerEdge R760s with 4th generation Intel® Xeon® Platinum Processors running vSAN 8.0 (OSA) to two 4-node clusters of PowerEdge R640s, one with 1st generation and one with 2nd generation Intel® Xeon® Platinum Processors, both running vSAN 7.0U3. All configurations used an all-flash storage configuration built from components certified and available for that server. The 14th generation Dell servers were configured with 2x10 Gb/s networking cards, which were common at the time. The R760 is the first generation of Dell servers with the PCIe bandwidth needed to support the OCP 3.0 2x100 Gb/s Ethernet networking cards used in the test. The Intel network cards chosen for the R760 also support RoCE v2 (RDMA over Converged Ethernet), which was enabled for this test; RoCE v2 was not available on the NICs used in the prior-generation servers. The R640 delivers performance comparable to the R740 and was chosen only for hardware availability reasons.

R760 vSAN 8.0 ESA vs. R640 vSAN 7.0U3 OSA

In the second paper, we configured HCIBench for FIO. We compared the performance of a 4-node cluster of PowerEdge R760s with 4th generation Intel® Xeon® Platinum Processors running vSAN 8.0 (ESA) to two 4-node clusters of PowerEdge R640s, one with 1st generation and one with 2nd generation Intel® Xeon® Platinum Processors, both running vSAN 7.0U3. The R640 delivers performance comparable to the R740 and was chosen only for hardware availability reasons.

Vdbench and FIO both measure throughput (reported in IOPS) and storage latency (reported in milliseconds), but their results are not directly comparable with each other. What is comparable is the ratio of performance gain within each test. After the initial Vdbench testing established a baseline, the team moved to FIO for the greater control it provides over tuning parameters. That change would affect absolute results, but it would not be expected to affect the ratios, because all systems within each test were measured the same way.
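The ratio comparison rests on two simple formulas: throughput gain is new IOPS divided by baseline IOPS, and latency improvement is baseline latency divided by new latency (inverted, because lower latency is better). The minimal Python sketch below assumes those definitions; the function names and sample numbers are hypothetical placeholders, not measurements from these tests.

```python
def throughput_gain(new_iops: float, baseline_iops: float) -> float:
    """Return how many times higher the new IOPS figure is than the baseline."""
    if baseline_iops <= 0:
        raise ValueError("baseline_iops must be positive")
    return new_iops / baseline_iops


def latency_improvement(new_ms: float, baseline_ms: float) -> float:
    """Return how many times lower the new latency is than the baseline.

    Latency is "lower is better", so the ratio is baseline / new.
    """
    if new_ms <= 0:
        raise ValueError("new_ms must be positive")
    return baseline_ms / new_ms


if __name__ == "__main__":
    # Hypothetical placeholder values, NOT results from the Dell/Intel tests.
    print(f"{throughput_gain(300_000, 200_000):.1f}x higher IOPS")
    print(f"{latency_improvement(2.0, 3.2):.1f}x lower latency")
```

Because both ratios are computed from a single tool's results, the absolute differences between Vdbench and FIO cancel out, which is the property the comparison relies on.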

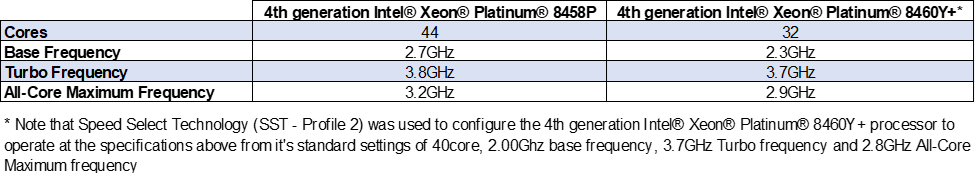

The 4th generation Intel® Xeon® processors used in the two tests were different. The first test used the 4th generation Intel® Xeon® Platinum 8458P, while the second used the 4th generation Intel® Xeon® Platinum 8460Y+. This was due to hardware constraints at the time of testing and is not expected to affect performance dramatically, based on the following key differences:

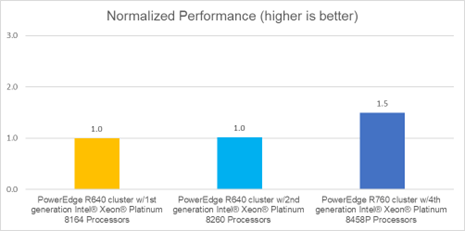

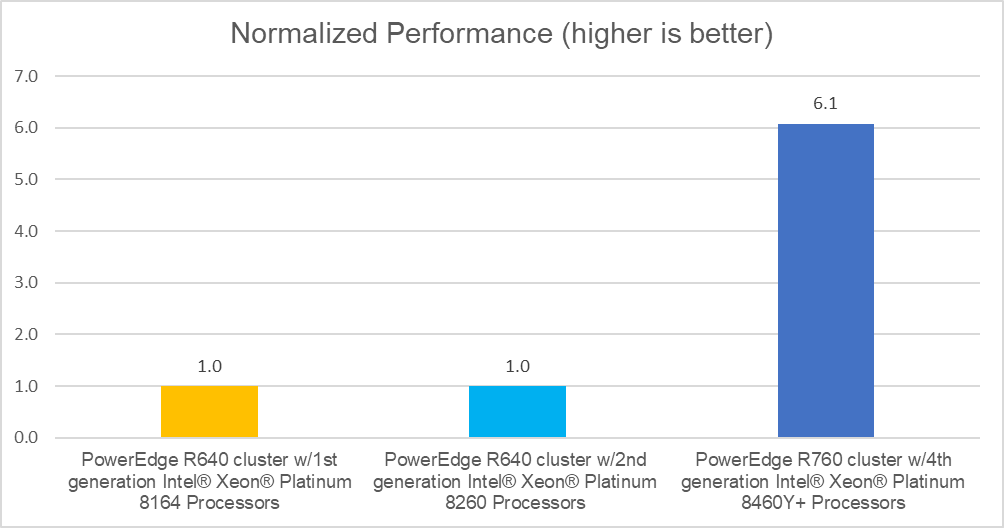

Test 1 Results

Vdbench Test Parameters: 8K block size, 70% reads, 100% random.
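HCIBench generates the Vdbench parameter file itself, and the exact file used in these tests is not reproduced here. As an illustration only, the sketch below shows how the stated parameters map onto standard Vdbench workload-definition keywords: `xfersize` for block size, `rdpct` for read percentage, and `seekpct` for the share of random I/O (100 means every I/O is random). The definition names (`wd1`, `run1`), the `sd=sd*` wildcard, and the elapsed and interval values are assumptions chosen for the example.

```python
def vdbench_workload(block_size_kb: int = 8, read_pct: int = 70,
                     random_pct: int = 100, elapsed_s: int = 3600,
                     interval_s: int = 30) -> str:
    """Build Vdbench workload (wd) and run (rd) definition lines.

    Assumes storage definitions (sd1, sd2, ...) are declared elsewhere in
    the parameter file so the sd=sd* wildcard matches them. elapsed_s and
    interval_s are illustrative, not the settings HCIBench used here.
    """
    wd = (f"wd=wd1,sd=sd*,xfersize={block_size_kb}k,"
          f"rdpct={read_pct},seekpct={random_pct}")
    rd = f"rd=run1,wd=wd1,iorate=max,elapsed={elapsed_s},interval={interval_s}"
    return "\n".join([wd, rd])


if __name__ == "__main__":
    print(vdbench_workload())
```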

Measured in IO per second (IOPS)

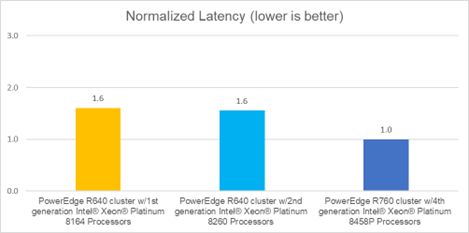

Measured in milliseconds


As these graphs show, vSAN performance in an OSA environment using the new R760 with 4th generation Intel® Xeon® Platinum Processors is up to 1.5x faster* than the two previous generations, with up to 1.6x lower latency*. These gains were likely driven by the increase in network bandwidth (100 Gb/s vs. 10 Gb/s Ethernet), together with generational improvements in the processors and in the underlying NVMe drives, which benefit from the higher PCIe throughput available in the R760.

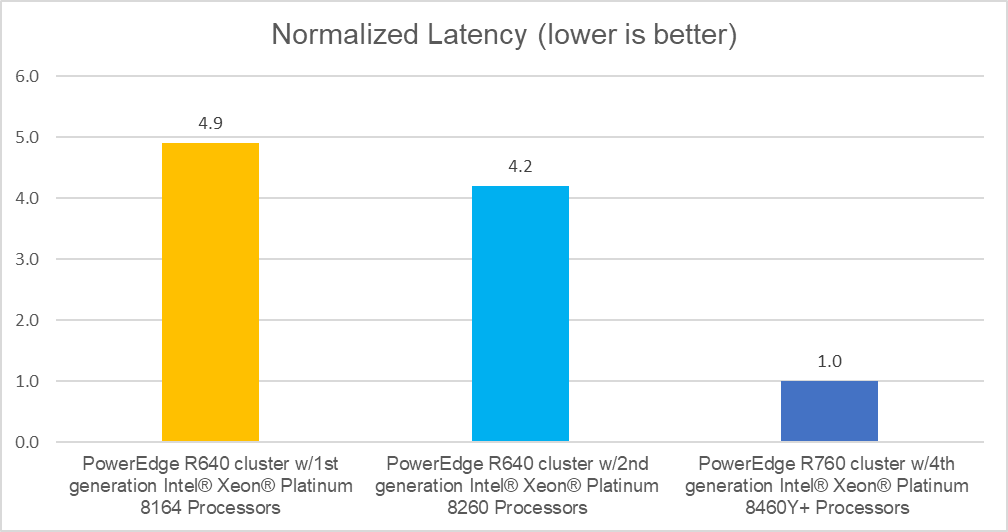
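A back-of-the-envelope ceiling shows why a 10 Gb/s network can become the bottleneck at this block size: a link's IOPS limit is its bandwidth in bytes per second divided by the I/O size. The sketch below assumes an 8 KiB (8,192-byte) I/O and ignores protocol overhead and vSAN replication traffic, so it gives an upper bound per link, not a prediction of the measured results.

```python
def max_iops_per_link(link_gbps: float, block_size_bytes: int = 8 * 1024) -> float:
    """Upper bound on IOPS one link can carry at a fixed I/O size.

    Simplified on purpose: ignores Ethernet/TCP/RDMA framing overhead and
    vSAN replication traffic, so real-world ceilings are lower.
    """
    bytes_per_second = link_gbps * 1e9 / 8  # decimal gigabits/s -> bytes/s
    return bytes_per_second / block_size_bytes


if __name__ == "__main__":
    for gbps in (10, 100):
        print(f"{gbps:>3} Gb/s link: ~{max_iops_per_link(gbps):,.0f} IOPS at 8 KiB")
```

At 8 KiB, a single 10 Gb/s link tops out at roughly 150,000 IOPS before any overhead, while a 100 Gb/s link raises that ceiling tenfold. Real ceilings are lower once protocol overhead and vSAN's own replication traffic are included.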

Test 2 Results

FIO Test Parameters: 8K block size, 70% reads, 100% random.
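HCIBench also builds the FIO job itself. The sketch below, offered only as an illustration, shows how the same three parameters map onto standard fio options: `--bs` for block size, `--rw=randrw` for fully random mixed I/O, and `--rwmixread` for the read share. The job name, target device, I/O engine, queue depth, and runtime are assumptions, not the values used in these tests.

```python
def fio_args(block_size_kb: int = 8, read_pct: int = 70,
             runtime_s: int = 3600, iodepth: int = 4,
             target: str = "/dev/sdb") -> list[str]:
    """Return an fio argument list for a random mixed read/write workload.

    --rw=randrw makes every I/O random and --rwmixread sets the read share.
    The job name, target, ioengine, iodepth and runtime are hypothetical
    illustration values, not HCIBench's settings.
    """
    return [
        "fio",
        "--name=example-8k-70read",
        f"--filename={target}",
        "--ioengine=libaio",
        "--direct=1",
        "--rw=randrw",
        f"--rwmixread={read_pct}",
        f"--bs={block_size_kb}k",
        f"--iodepth={iodepth}",
        "--time_based",
        f"--runtime={runtime_s}",
    ]


if __name__ == "__main__":
    print(" ".join(fio_args()))
```

Printing the joined list shows the resulting command line. Passing it to `subprocess.run` would write to the target device, so it should only ever point at a scratch device.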

Measured in IO per second (IOPS)

Measured in milliseconds

These graphs show that vSAN performance in an ESA environment using the new R760 with 4th generation Intel® Xeon® Platinum Processors is over 6x faster* than the two previous generations, with up to 4.9x lower latency*. Because the underlying hardware is similar to that of the previous test, this increase is primarily attributable to the new ESA architecture running on the latest-generation servers.

How to move from OSA to ESA

Given the higher performance and lower latency, moving to the vSAN 8.0 ESA architecture on the latest Dell PowerEdge Servers with 4th generation Intel® Xeon® Processors is the clear choice. The remaining question is how.

According to VMware[i], customers have three options:

- Deploy a new cluster and migrate workloads using vMotion and Storage vMotion.

- Convert existing OSA clusters to ESA by evacuating the cluster, upgrading the hardware, and redeploying it as an ESA solution.

- Perform a rolling cluster migration from OSA to a new cluster.

While the steps necessary for each of these options are different, they all use the same key process: “migrate workloads using vMotion and Storage vMotion.”

Option 1 – Pros and Cons

Option 1 involves deploying new servers into a new cluster and, as that cluster grows, migrating existing virtual machines and storage images to it.

Pros

- It requires the fewest steps.

- It does not place any existing data at risk, since data can remain in the existing cluster until it is ready to move.

- Performance and availability of the environment are affected only during vMotion and Storage vMotion activity.

- This option also provides the additional performance benefits of the new 4th generation Intel® Xeon® Processors and Dell PowerEdge Servers.

- The Enhanced vMotion Compatibility (EVC)[ii] feature of vSphere is designed to allow workloads to be live-migrated between hosts with different processor generations, preserving workload uptime.
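EVC masks processor features so that every host in a cluster presents the same CPU feature baseline to virtual machines, which means that baseline can be no newer than what the oldest host supports. The sketch below illustrates that rule under stated assumptions: `EVC_ORDER` is a simplified, hypothetical ordering of Intel baseline names (the modes actually available depend on the vCenter version), and the host names and their maximum modes are hypothetical inventory data. 1st, 2nd, and 4th generation Xeon Scalable processors correspond to the Skylake, Cascade Lake, and Sapphire Rapids microarchitectures.

```python
# Simplified, ordered subset of Intel EVC baselines (oldest first).
# Illustrative assumption; consult VMware's EVC documentation for the
# modes your vCenter version actually offers.
EVC_ORDER = ["intel-skylake", "intel-cascadelake", "intel-icelake",
             "intel-sapphirerapids"]


def highest_common_evc_mode(host_max_modes: dict[str, str]) -> str:
    """Return the newest EVC baseline every host can run.

    Each value is the newest baseline that host's CPU supports. A cluster
    baseline must be supported by all hosts, so the answer is the oldest
    of those per-host maxima.
    """
    if not host_max_modes:
        raise ValueError("no hosts given")
    return min(host_max_modes.values(), key=EVC_ORDER.index)


if __name__ == "__main__":
    hosts = {  # hypothetical host inventory
        "r640-gen1": "intel-skylake",         # 1st gen Xeon Scalable
        "r640-gen2": "intel-cascadelake",     # 2nd gen Xeon Scalable
        "r760-gen4": "intel-sapphirerapids",  # 4th gen Xeon Scalable
    }
    print(highest_common_evc_mode(hosts))
```

With the hypothetical hosts shown, the result is the Skylake baseline of the 1st generation host, so newer instructions stay hidden from workloads for as long as the older hosts share the baseline.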

Cons

- It requires the purchase of new hardware; however, this cost can be minimized by implementing the change as part of existing growth plans.
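Option 1's "migrate as the cluster grows" approach can be planned in waves. The sketch below is a hypothetical planner, not a VMware tool: it assumes VM sizes already include storage-policy overhead, takes the new cluster's usable capacity after each growth step (for example, after each node addition), and places the largest VMs first, deferring any VM that does not fit yet.

```python
def plan_migration_waves(vm_sizes_gb: dict[str, float],
                         usable_capacity_gb: list[float]) -> list[list[str]]:
    """Group VMs into migration waves that fit the new cluster as it grows.

    usable_capacity_gb[i] is the cluster's total usable capacity after
    growth step i. VMs are placed largest-first; a VM that does not fit
    waits for a later step. Raises ValueError if some VMs never fit.
    """
    remaining = sorted(vm_sizes_gb.items(), key=lambda kv: kv[1], reverse=True)
    used = 0.0
    waves: list[list[str]] = []
    for capacity in usable_capacity_gb:
        wave: list[str] = []
        still_waiting = []
        for name, size in remaining:
            if used + size <= capacity:
                wave.append(name)
                used += size
            else:
                still_waiting.append((name, size))
        remaining = still_waiting
        waves.append(wave)
    if remaining:
        raise ValueError(f"not enough capacity for: {[n for n, _ in remaining]}")
    return waves


if __name__ == "__main__":
    # Hypothetical VMs (GB) and capacity after two growth steps.
    vms = {"db": 800, "app": 500, "web1": 200, "web2": 200}
    print(plan_migration_waves(vms, [1000, 2000]))
```

For the hypothetical inputs shown, the first wave moves `db` and `web1`, and the second moves `app` and `web2` once the cluster has grown.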

Option 2 – Pros and Cons

Option 2 involves evacuating the existing cluster, upgrading its hardware (storage and network), and redeploying the existing servers into a new cluster. Once the hardware transition is complete, the final step is to migrate the previously evacuated virtual machines and storage images back to this new cluster.

Pros

- Some budget savings may be possible, because less hardware needs to be replaced.

- This approach may be suitable if existing hardware is certified for ESA[iii]. Details on ESA hardware requirements can be found at the link in this document’s end notes.
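Before counting on this option, a quick inventory pass can flag nodes that clearly cannot be reused. The sketch below checks only two conditions discussed in this article (every drive must be NVMe, and the chassis must have enough NVMe-capable bays); it is not a substitute for the VMware ESA compatibility guide. The `Node` record and the required drive count are hypothetical inputs.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical inventory record for one existing vSAN host."""
    name: str
    drive_types: list[str]  # e.g. ["NVMe", "NVMe"] or ["SSD", "HDD", "HDD"]
    nvme_capable_bays: int  # bays/connections that can host NVMe drives


def esa_blockers(node: Node, required_nvme_drives: int) -> list[str]:
    """List reasons a node could not be reused for ESA as-is.

    An empty list means neither check failed; it does NOT mean the node
    is ESA-certified. required_nvme_drives is an assumed input.
    """
    problems = []
    non_nvme = [t for t in node.drive_types if t != "NVMe"]
    if non_nvme:
        problems.append(f"{len(non_nvme)} non-NVMe drive(s) must be replaced")
    if node.nvme_capable_bays < required_nvme_drives:
        problems.append(
            f"only {node.nvme_capable_bays} NVMe bays, need {required_nvme_drives}")
    return problems


if __name__ == "__main__":
    hybrid = Node("r640-a", ["SSD", "HDD", "HDD"], nvme_capable_bays=2)
    print(hybrid.name, esa_blockers(hybrid, required_nvme_drives=4))
```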

Cons

- This approach requires that all nodes be reconfigured with NVMe drives. If the current environment uses spinning disks with SSDs as the cache tier, purchasing new drives and reprovisioning the hardware can be expensive and take many hours of work. Even existing all-NVMe clusters would be using older drives that cannot deliver the performance of the latest generation of NVMe. Depending on the choices made when the original hardware was purchased, this option may not be available at all; for example, it is not possible if the existing systems lack the space and connections needed to host the required number of NVMe drives.

- This option also adds time to the process, because vMotion and Storage vMotion must be used first to vacate the cluster and then again to repopulate it.

- This option requires sufficient capacity in other clusters to accommodate 100% of the capacity of the cluster being redeployed.

- This approach may require distributing virtual machines and storage images across multiple clusters to obtain the needed capacity. That adds complexity to the migration, because the administrators who manage the environment must determine how to rebalance all of these clusters.
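The capacity requirement above can be checked with simple arithmetic before any evacuation starts. The sketch below assumes capacities in TB that already include storage-policy overhead and keeps an assumed 25% of each receiving cluster's free space in reserve; it fills the clusters with the most free space first and fails if the evacuated data cannot be placed. The cluster names and numbers are hypothetical.

```python
def split_evacuation(cluster_used_tb: float,
                     other_clusters_free_tb: dict[str, float],
                     headroom: float = 0.25) -> dict[str, float]:
    """Plan how much of an evacuated cluster's data each other cluster takes.

    headroom is an assumed reserve: each receiving cluster keeps this
    fraction of its free space unused. Raises ValueError if the data
    cannot fit in the remaining usable space.
    """
    plan: dict[str, float] = {}
    remaining = cluster_used_tb
    # Fill the clusters with the most free space first.
    for name, free in sorted(other_clusters_free_tb.items(),
                             key=lambda kv: kv[1], reverse=True):
        if remaining <= 0:
            break
        take = min(remaining, free * (1 - headroom))
        plan[name] = take
        remaining -= take
    if remaining > 1e-9:
        raise ValueError(f"short by {remaining:.2f} TB; cannot evacuate as sized")
    return plan


if __name__ == "__main__":
    # Hypothetical: 60 TB must leave the cluster being redeployed.
    free = {"cluster-a": 40.0, "cluster-b": 30.0, "cluster-c": 20.0}
    print(split_evacuation(60.0, free))
```

For the hypothetical numbers shown, the plan places 30 TB on `cluster-a`, 22.5 TB on `cluster-b`, and 7.5 TB on `cluster-c`, which also illustrates how quickly the data ends up spread across several clusters.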

Option 3 – Pros and Cons

Option 3 involves selectively removing servers from the existing cluster, allowing time for the vSAN environment to rebuild, shutting down the selected servers, upgrading their hardware (storage and network), and redeploying them into a new cluster. As this new cluster grows, the final stage is migrating existing virtual machines and storage images to it.

Pros

- Some budget savings may be possible, because less hardware needs to be replaced.

- This approach may be suitable if existing hardware is certified for ESA[iii]. Details on ESA hardware requirements can be found at the link in this document’s end notes.

Cons

- As with Option 2, this approach requires that all nodes be reconfigured with NVMe drives. If the current environment uses spinning disks with SSDs as the cache tier, purchasing new drives and reprovisioning the hardware can be expensive and take many hours of work. Even existing all-NVMe clusters would be using older drives that cannot deliver the performance of the latest generation of NVMe. Depending on the choices made when the original hardware was purchased, this option may not be available at all; for example, it is not possible if the existing systems lack the space and connections needed to host the required NVMe drives.

- Each step of this option takes less time, but the overall transition may take longer. This approach also requires careful planning to give the existing vSAN cluster time to redistribute its data.

- This approach also introduces additional risk, because of the high level of coordination required between teams to ensure that the correct server is removed from the cluster.
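The planning this option needs can start with one question: how many hosts can leave the old cluster before the remaining hosts can no longer hold the data while vSAN resynchronizes it? The sketch below assumes hosts are removed smallest-first, that used capacity already includes policy overhead, a minimum host count of 3 (what a mirrored policy tolerating one failure needs), and 30% slack space for resynchronization. These are illustrative assumptions, not VMware-published thresholds.

```python
def max_hosts_removable(host_capacity_tb: list[float], used_tb: float,
                        min_hosts: int = 3, slack: float = 0.3) -> int:
    """Count hosts that can leave the old cluster before it runs out of room.

    Assumptions (illustrative only): hosts leave smallest-first, used_tb
    already includes storage-policy overhead, min_hosts is what the storage
    policy needs, and slack is free space reserved for vSAN resync.
    """
    remaining = sorted(host_capacity_tb)
    removed = 0
    while len(remaining) > min_hosts:
        candidate = remaining[1:]  # drop the smallest remaining host
        if sum(candidate) * (1 - slack) < used_tb:
            break  # the rest could not hold the data with slack to spare
        remaining = candidate
        removed += 1
    return removed


if __name__ == "__main__":
    # Hypothetical: six 20 TB hosts holding 50 TB of policy-inclusive data.
    print(max_hosts_removable([20.0] * 6, 50.0))
```

With the hypothetical inputs shown, two hosts can be removed and rebuilt for the new cluster before data must first be migrated off the old one.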

Conclusion

IT professionals’ primary responsibilities are reducing downtime, increasing performance and scalability, and optimizing infrastructure. As technology evolves, engineers at Dell, Intel, and VMware focus on optimizing new solutions to deliver greater value to customers. Deploying new technologies into old environments reduces, and sometimes eliminates, this value. Combining Dell PowerEdge Servers with 4th generation Intel® Xeon® Processors and the latest VMware hypervisor and vSAN software can dramatically improve performance, reduce latency, and significantly increase the business benefit. Because storage devices account for a large portion of a server’s cost, reconfiguring existing hardware to use vSAN 8.0 ESA requires a significant capital investment, yet it still will not deliver maximum performance because of the lower performance of legacy NVMe drives and servers. This approach also significantly increases the workload on existing IT staff. Based on this, Dell and Intel recommend that customers implement Option 1 to modernize their IT infrastructure, reduce risk, and maximize business benefits.

*All performance claims noted in this document were based on measurements conducted in accordance with published standards for HCIBench. Performance varies by use, configuration, and other factors. Performance results are based on testing conducted between November 2022 and March 2023.

- https://www.intel.com/content/www/us/en/products/sku/231742/intel-xeon-platinum-8458p-processor-82-5m-cache-2-70-ghz/ordering.html?wapkw=8458

- https://ark.intel.com/content/www/us/en/ark/products/231736/intel-xeon-platinum-8460y-processor-105m-cache-2-00-ghz.html

- https://networkbuilders.intel.com/solutionslibrary/power-management-technology-overview-technology-guide

- https://blogs.VMware.com/virtualblocks/2022/08/30/announcing-vsan-8-with-vsan-express-storage-architecture/

- https://flings.VMware.com/hcibench

- https://core.VMware.com/resource/migrating-express-storage-architecture-vsan-8#sec23176-sub2

- https://core.VMware.com/resource/enhanced-vmotion-compatibility-evc-explained

- https://www.VMware.com/resources/compatibility/vsanesa_profile.php