The Future of Server Cooling - Part 2: New IT hardware Features and Power Trends

Download PDFFri, 03 Mar 2023 17:21:25 -0000

|Read Time: 0 minutes

Summary

Part 1 of this three-part series, titled The Future of Server Cooling, covered the history of server and data center cooling technologies.

Part 2 of this series covers new IT hardware features and power trends with an overview of the cooling solutions that Dell Technologies provides to keep IT infrastructure cool.

Overview

The Future of Server Cooling was written because future generations of PowerEdge servers may require liquid cooling to enable certain CPU or GPU configurations. Our intent is to educate customers about why the transition to liquid cooling may be required, and to prepare them ahead of time for these changes. Integrating liquid cooling solutions on future PowerEdge servers will allow for significant performance gains from new technologies, such as next-generation Intel® Xeon® and AMD EPYC CPUs, and NVIDIA, Intel, and AMD GPUs, as well as the emerging segment of DPUs.

Part 1 of this three-part series reviewed some major historical cooling milestones and evolution of cooling technologies over time both in the server and the data center.

Part 2 of this series describes the power and cooling trends in the server industry and Dell Technologies’ response to the challenges through intelligent hardware design and technology innovation.

Part 3 of this series will focus on technical details aimed to enable customers to prepare for the introduction, optimization, and evolution of these technologies within their current and future datacenters.

Increasing power requirements and heat generation trends within servers

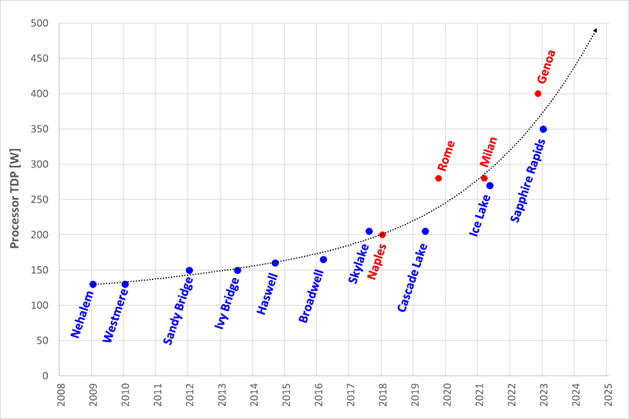

CPU TDP trends over time – Over the past ten years, significant innovations in CPU design have included increased core counts, advancements in frequency management, and performance optimizations. As a result, CPU Thermal Design Power (TDP) has nearly doubled over just a few processor generations and is expected to continue increasing.

Figure 1. TDP trends over time

Emergence of GPUs – Workloads such as Artificial Intelligence (AI) and Machine Learning (ML) capitalize the parallel processing capabilities of Graphic Processing Units (GPUs). These subsystems require significant power and generate significant amounts of heat. As it has for CPUs, the power consumption of GPUs has rapidly increased. For example, while the power of an NVIDIA A100 GPU in 2021 was 300W, NVIDIA H100 GPUs are releasing soon at up to 700W. GPUs up to 1000W are expected in the next three years.

Memory – As CPU capabilities have increased, memory subsystems have also evolved to provide increased performance and density. A 128GB LRDIMM installed in an Intel-based Dell 14G server would operate at 2666MT/s and could require up to 11.5W per DIMM. The addition of 256GB LRDIMMs for subsequent Dell AMD platforms pushed the performance to 3200MT/s but required up to 14.5W per DIMM. The latest Intel and AMD based platforms from Dell operate at 4800MT/s and with 256GB RDIMMs consuming 19.2W each. Intel based systems can support up to 32 DIMMs, which could require over 600W of power for the memory subsystem alone.

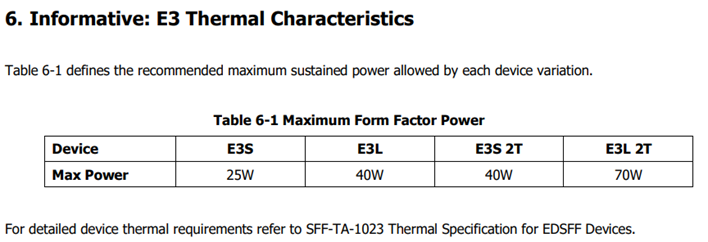

Storage – Data storage is a key driver of power and cooling. Fewer than ten years ago, a 2U server could only support up to 16 2.5” hard drives. Today a 2U server can support up to 24 2.5” drives. In addition to the increased power and cooling that this trend has driven, these higher drive counts have resulted in significant air flow impedance both on the inlet side and exhaust side of the system. With the latest generation of PowerEdge servers, a new form factor called E3 (also known as EDSFF or “Enterprise & Data Center SSD Form Factor) brings the drive count to 16 in some models but reduces the width and height of the storage device, which gives more space for airflow. The “E3” family of devices includes “Short” (E3.S), “Short – Double Thickness”: (E3.S 2T), “Long” (E3.L), and “Long – Double Thickness” (E3L.2T). While traditional 2.5” SAS drives can require up to 25W, these new EDSFF designs can require up to 70W as shown in the following table.

(Source: https://members.snia.org/document/dl/26716, page 25.)

Innovative Dell Technologies design elements and cooling techniques to help manage these trends

“Smart Flow” configurations

Dell ISG engineering teams have architected new system storage configurations to allow increased system airflow for high power configurations. These high flow configurations are referred to as “Smart Flow”. The high airflow aspect of Smart Flow is achieved using new low impedance airflow paths, new storage backplane ingredients, and optimized mechanical structures all tuned to provide up to a 15% higher airflow compared to traditional designs. Smart Flow configurations allow Dell’s latest generation of 1U and 2U servers to support new high-power CPUs, DDR5 DIMMs, and GPUs with minimal tradeoffs.

Figure 2. R660 “Smart Flow” chassis

Figure 3. R760 “Smart Flow” chassis

FGPU configurations

The R750xa and R760xa continue the legacy of the Dell C4140, with GPUs located in the “first-class” seats at the front of the system. Dell thermal and system architecture teams designed these next generation GPU optimized systems with GPUs in the front to provide fresh (non-preheated) air to the GPUs in the front of the system. These systems also incorporate larger 60x76mm fans to provide the high airflow rates required by the GPUs and CPUs in the system. Look for additional fresh air GPU architectures in future Dell systems.

Figure 4. R760xa chassis showing “first class seats” for GPU at the front of the system

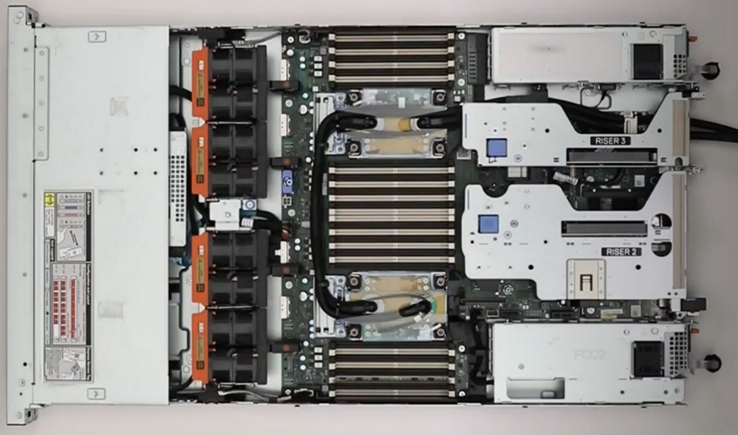

4th Generation DLC with leak detection

Dell’s latest generation of servers continue to expand on an already extensive support for direct liquid cooling (DLC). In fact, a total of 12 Dell platforms have a DLC option including an all-new offering of DLC in the MX760c. Dell’s 4th generation liquid cooling solution has been designed for robust operation under the most extreme conditions. If an excursion occurs, Dell has you covered. All platforms supporting DLC utilize Dell’s proprietary Leak Sensor solution. This solution is capable of detecting and differentiating small and large leaks which can be associated with configurable actions including email notification, event logging, and system shutdown.

Figure 5. 2U chassis with Direct Liquid Cooling heatsink and tubing

Application optimized designs

Dell closely monitors not only the hardware configurations that customers choose but also the application environments they run on them. This information is used to determine when design changes might help customers to achieve a more efficient design for power and cooling with various workloads.

An example of this is in the Smart Flow designs discussed previously, in which engineers reduced the maximum storage potential of the designs to deliver more efficient air flow in configurations that do not require maximum storage expansion.

Another example is in the design of the “xs” (R650xs, R660xs, R750xs, and R760xs) platforms. These platforms are designed to be optimized specifically for virtualized environments. Using the R750xs as an example, it supports a maximum of 16 hard drives. This reduces the density of power supplies that must be supported and allows for the use of lower cost fans. This design supports a maximum of 16 DIMMs which means that the system can be optimized for a lower maximum power threshold, yet still deliver enough capacity to support large numbers of virtual machines. Dell also recognized that the licensing structure of VMware supports a maximum of 32 cores per license. This created an opportunity to reduce the power and cooling loads even further by supporting CPUs with a maximum of 32 cores which have a lower TDP than the higher core count CPUs.

Software design

As power and cooling requirements increase, Dell is also investing in software controls to help customers manage these new environments. iDRAC and Open Manage Enterprise (OME) with the Power Manager plug-in both provide power capping. OME Power Manager will automatically manipulate power based on policies set by the customer. In addition, iDRAC, OME Power Manager, and CloudIQ all report power usage to allow the customer the flexibility to monitor and adapt power usage based on their unique requirements.

Conclusion

As Server technology evolves, power and cooling challenges will continue. Fan power in air-cooled servers is one of largest contributors to wasted power. Minimizing fan power for typical operating conditions is the key to a thermally efficient server and has a large impact on customer sustainability footprint.

As the industry adopts liquid cooling solutions, Dell is ensuring that air cooling potentials are maximized to protect customer infrastructure investments in air cooling based data centers around the globe. The latest generation of Dell servers required advanced engineering simulations and analysis to improve system design to increase system airflow per unit watt of fan power, as compared to the previous generation of platforms, not only to maximize air cooling potential but to keep it efficient as well. Additional air-cooling opportunities are enabled with Smart Flow configurations – allowing higher CPU bins to be air cooled, as compared to the requirement for liquid cooling. A large number of thermal and power sensors have been implemented to manage both power and thermal transients using Dell proprietary adaptive closed loop algorithms that maximize cooling at the lowest fan power state and that protect systems at excursion conditions by closed loop power management.