Blogs

The latest news about VxRail releases and updates

Learn About the Latest VMware Cloud Foundation 5.1.1 on Dell VxRail 8.0.210 Release

Tue, 26 Mar 2024 18:47:52 -0000


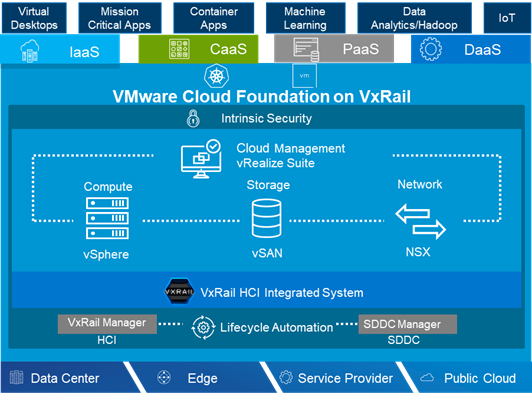

The latest VCF on VxRail release delivers GenAI-ready infrastructure, runs more demanding workloads, and is an excellent choice for supporting hardware tech refreshes and achieving higher consolidation ratios.

VMware Cloud Foundation 5.1.1 on VxRail 8.0.210 is a minor release from the perspective of versioning and new functionality but is significant in terms of support for the latest VxRail hardware platforms. This new release is based on the latest software bill of materials (BOM) featuring vSphere 8.0 U2b, vSAN 8.0 U2b, and NSX 4.1.2.3. Read on for more details…

VxRail hardware platform updates

16th generation VxRail VE-660 and VP-760 hardware platform support

Cloud Foundation on VxRail customers can now benefit from the latest, more scalable, and robust 16th generation hardware platforms. This includes a full spectrum of hybrid, all-flash, and all NVMe options that have been qualified to run VxRail 8.0.210 software. This is fantastic news as these new hardware options bring many technical innovations, which my colleagues discussed in detail in previous blogs.

These new hardware platforms are based on Intel® 4th Generation Xeon® Scalable processors, which increase VxRail core density per socket to 56 (112 max per node). They also come with built-in Intel® AMX accelerators (Advanced Matrix Extensions) that support AI and HPC workloads without the need for additional drivers or hardware.

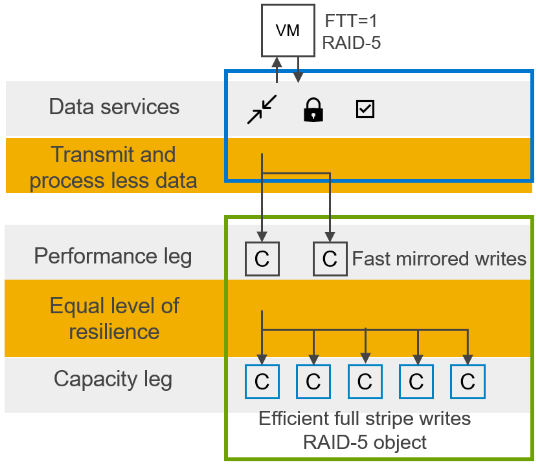

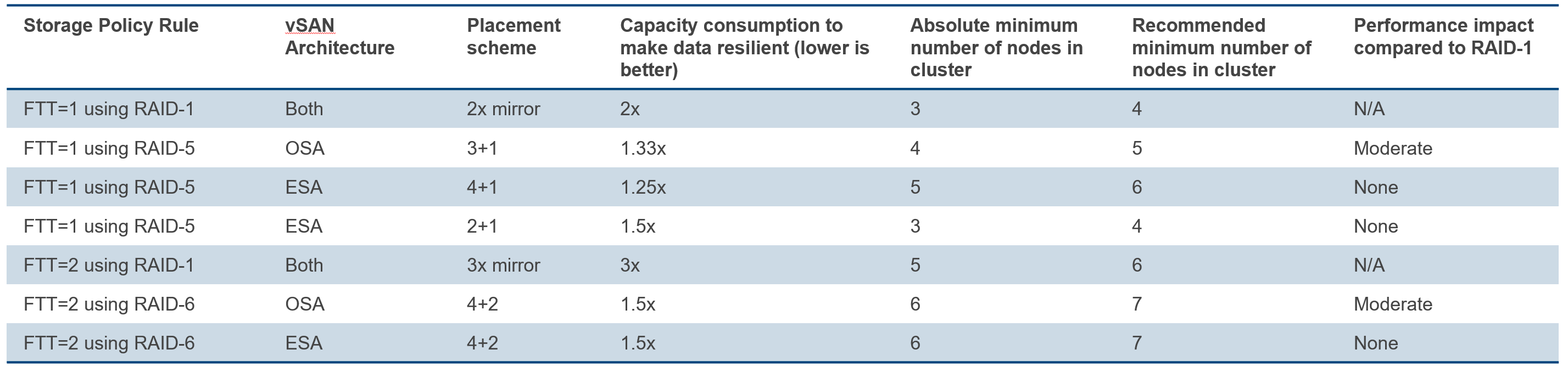

VxRail on the 16th generation hardware supports deployments with either vSAN Original Storage Architecture (OSA) or vSAN Express Storage Architecture (ESA). The VP-760 and VE-660 can take advantage of vSAN ESA’s single-tier storage architecture, which enables RAID-5 resiliency and capacity with RAID-1 performance.

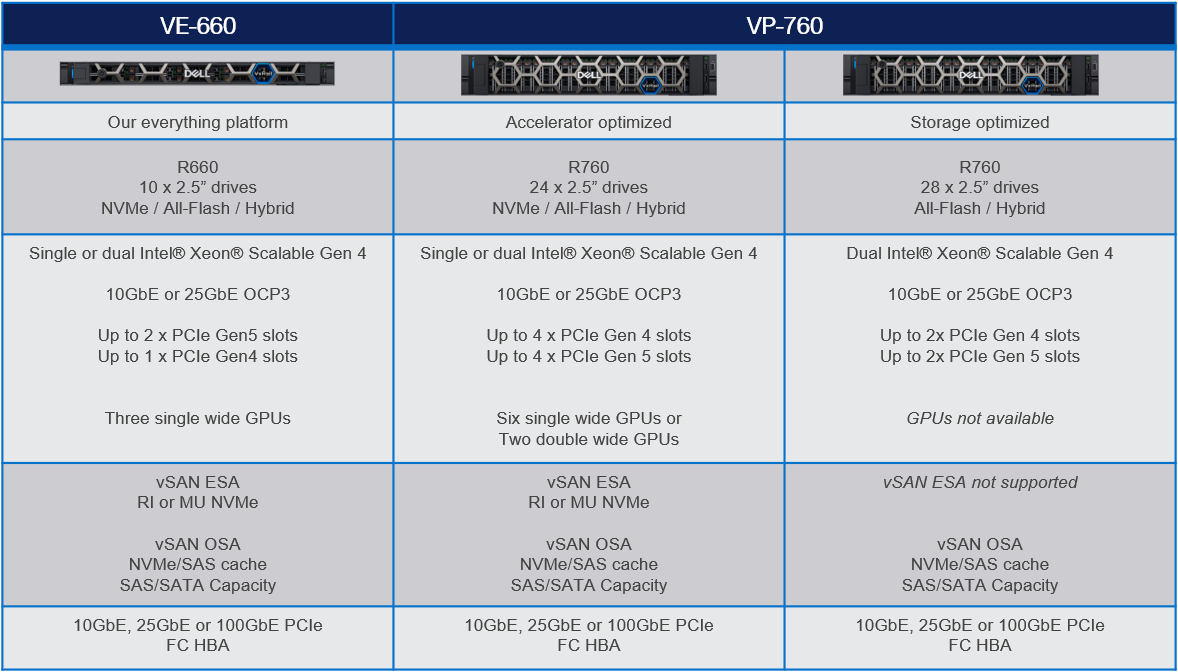

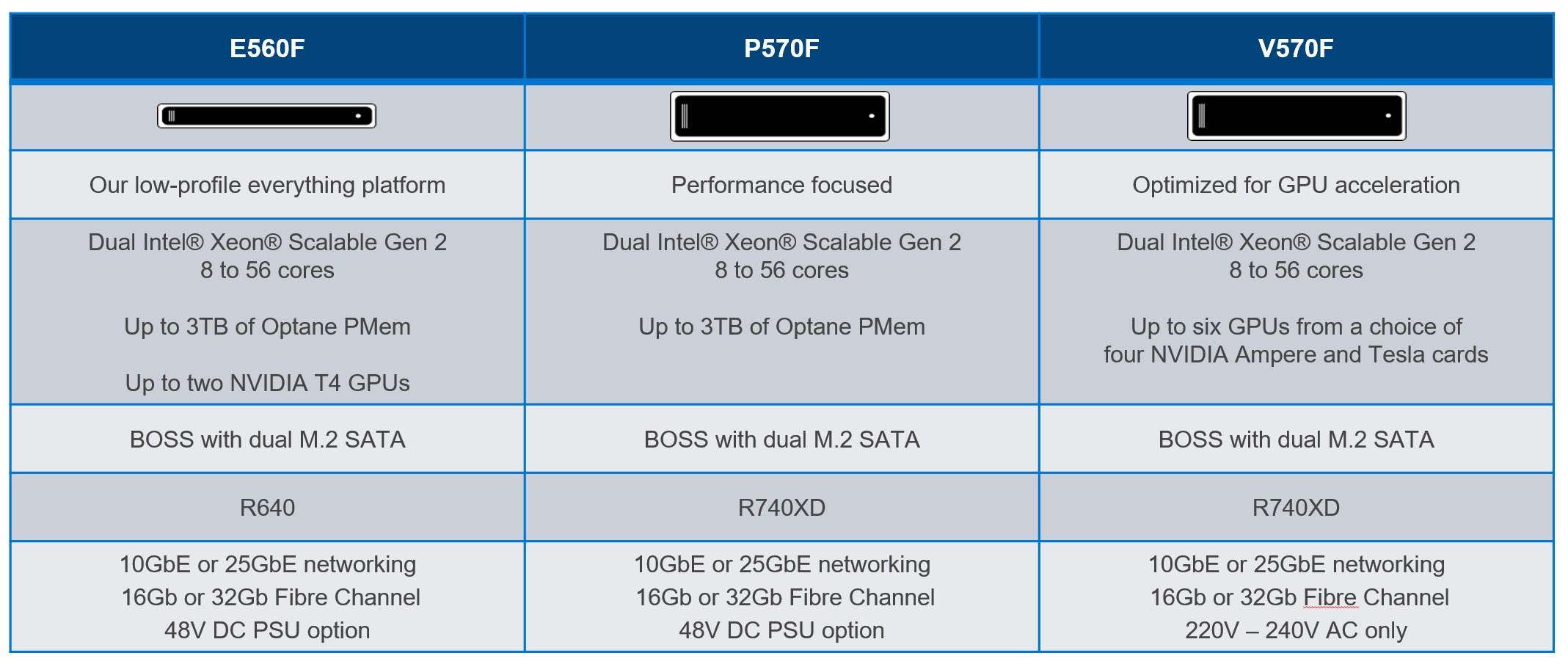

This table summarizes the configurations of the newly added platforms:

To learn more about the VE-660 and VP-760 platforms, please check Mike Athanasiou’s VxRail’s Latest Hardware Evolution blog. To learn more about Intel® AMX capability set, make sure to check out the VxRail and Intel® AMX, Bringing AI Everywhere blog, authored by Una O’Herlihy.

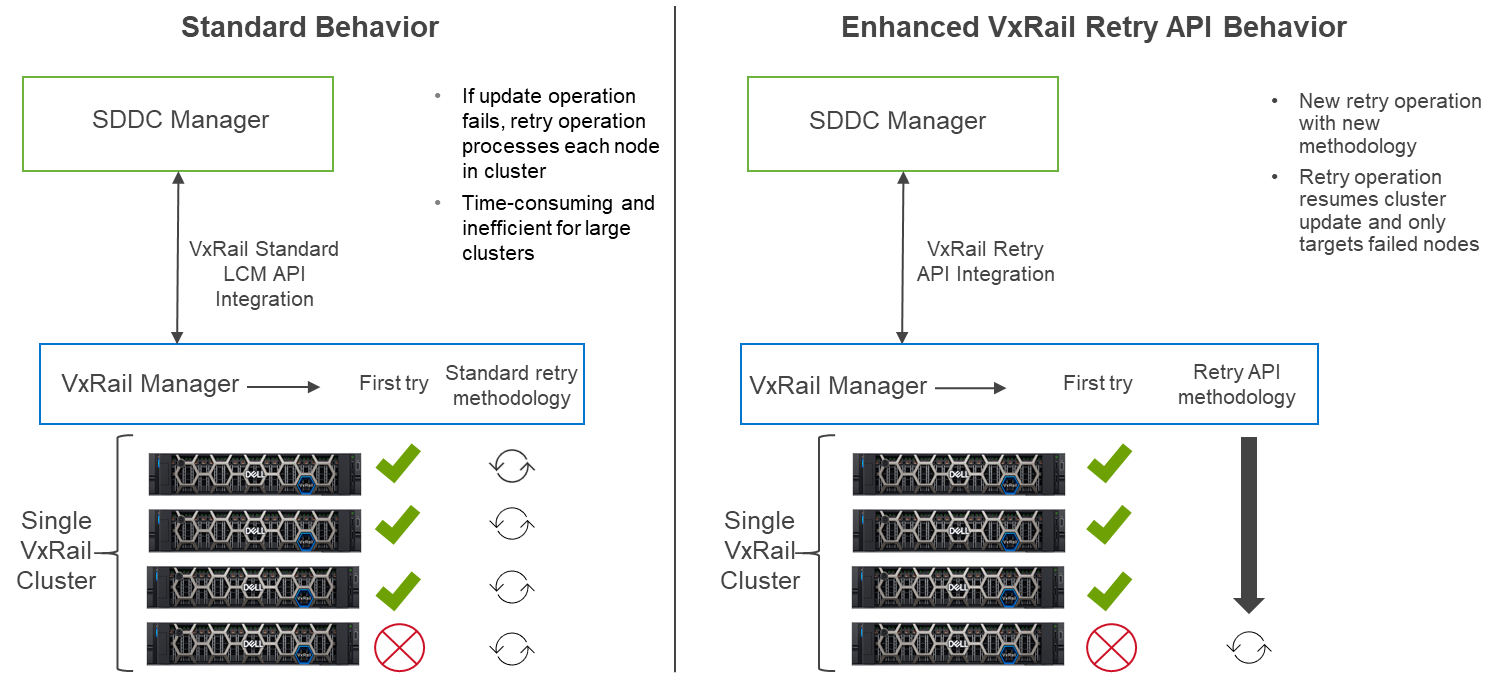

VCF on VxRail LCM updates

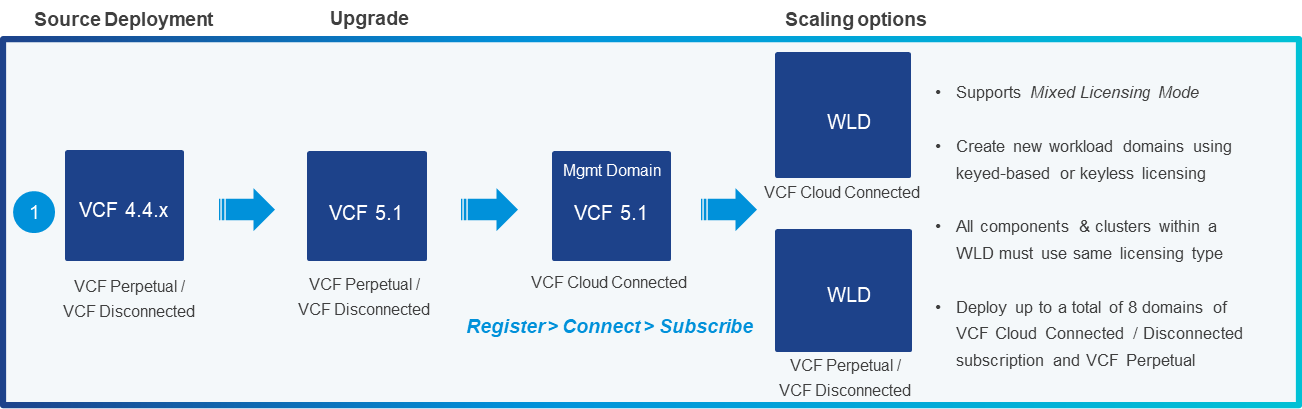

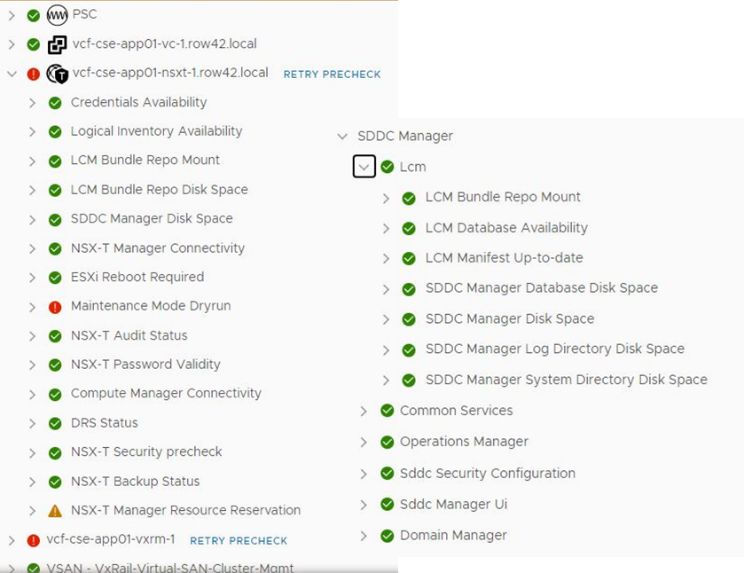

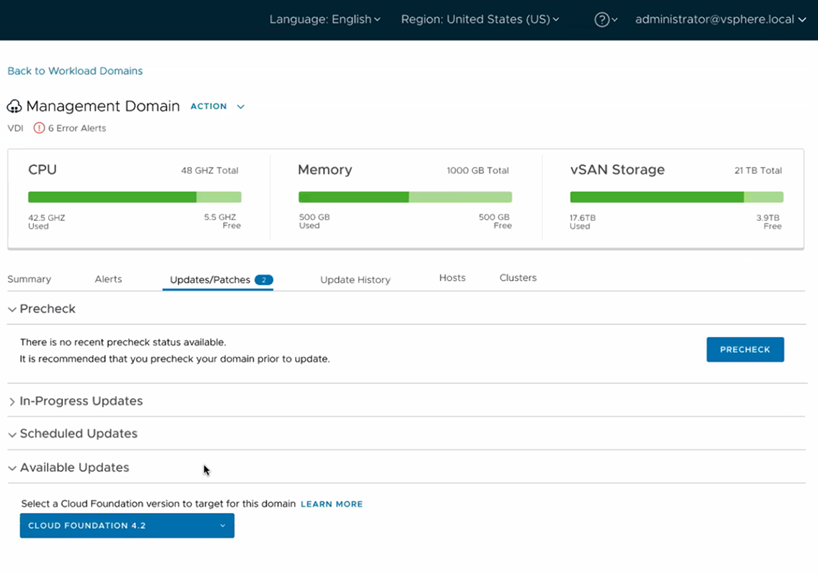

Support upgrades to VCF 5.1.1 from existing VCF 4.4.x and higher environments (N-3 upgrade support)

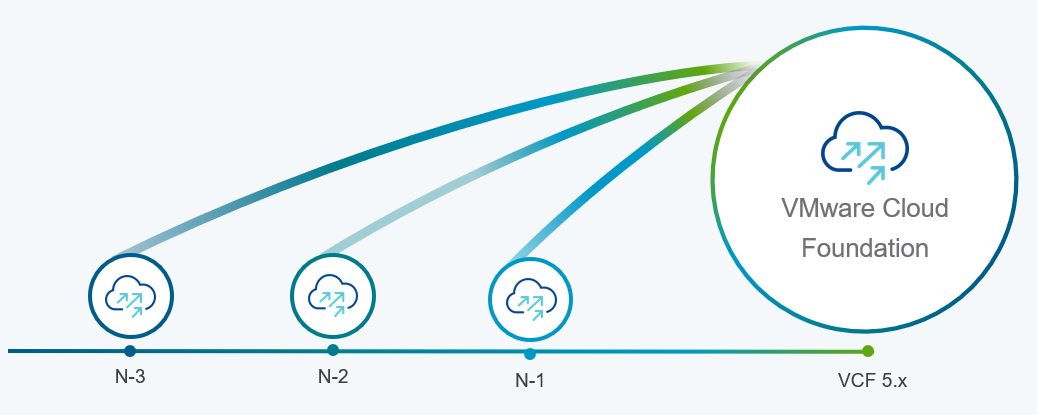

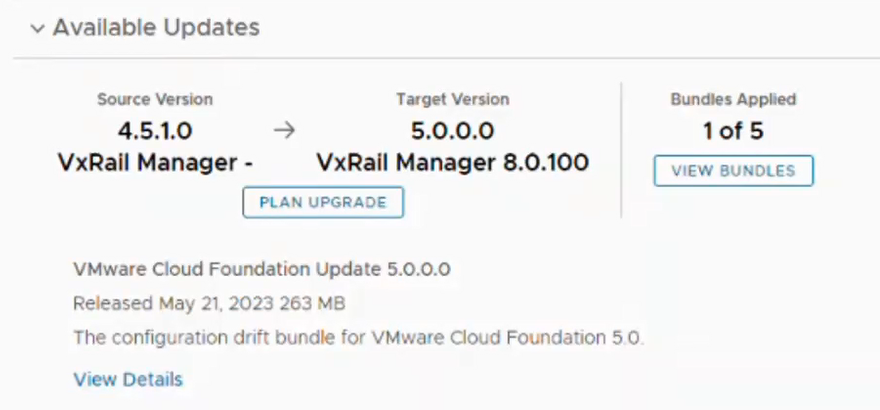

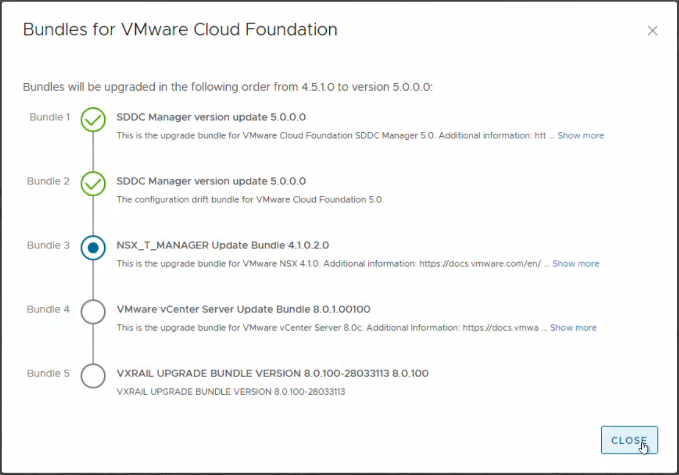

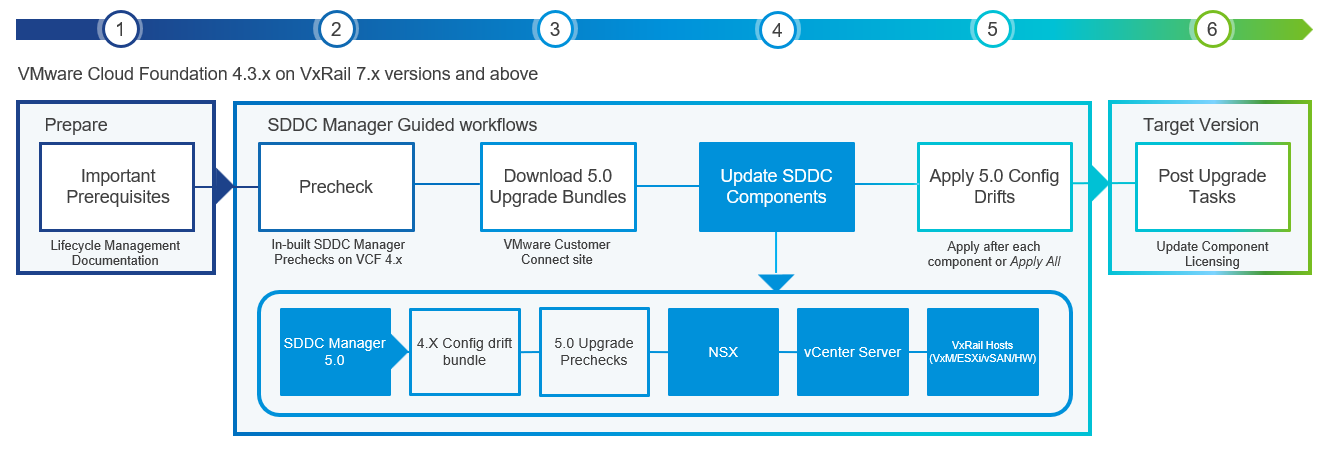

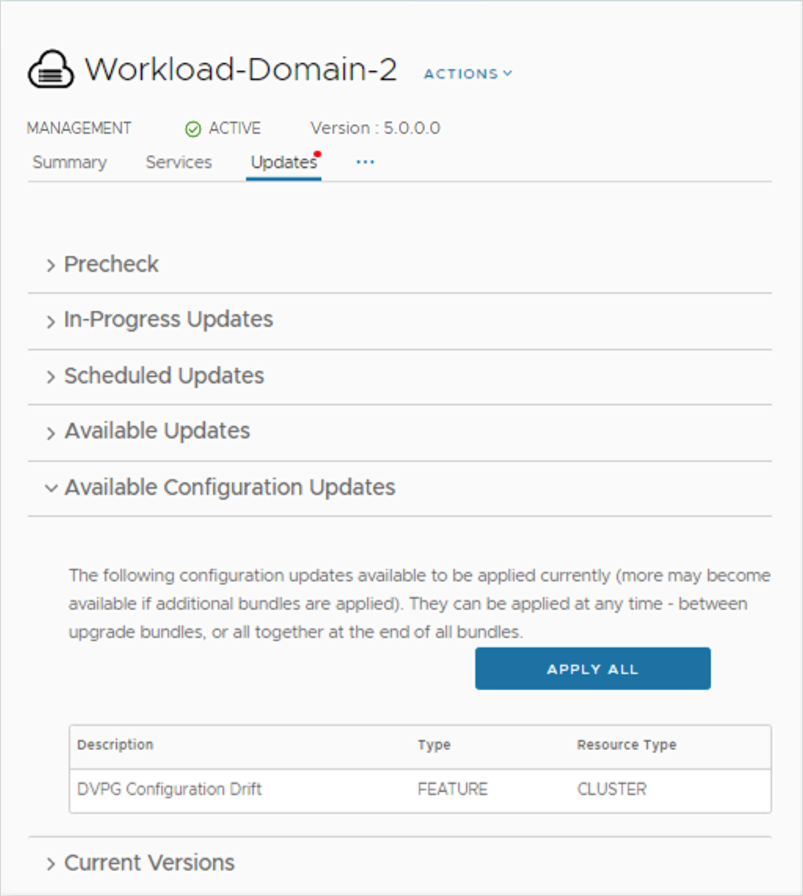

Customers who have already upgraded to VCF 5.x are familiar with the concept of the skip-level upgrade, which allows them to upgrade directly to the latest 5.x release without the need to perform upgrades to the interim versions. It significantly reduces the time required to perform the upgrade and enhances the overall upgrade experience. VCF 5.1.1 introduces so-called “N-3” upgrade support (as illustrated in the diagram), which extends the skip-level upgrade to VCF 4.4.x. This means customers can now perform a direct LCM upgrade operation from VCF 4.4.x, 4.5.x, 5.0.x, and 5.1.0 to VCF 5.1.1.
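As a rough illustration of the N-3 rule, the following sketch checks whether a given source version falls into one of the release lines listed above. The function name and the prefix-matching approach are illustrative assumptions, not part of SDDC Manager or any VMware tooling. Always confirm the supported upgrade paths in the release notes.

```python
# Release lines the text above lists as direct-upgrade sources for VCF 5.1.1.
DIRECT_UPGRADE_SOURCES = ("4.4", "4.5", "5.0", "5.1.0")


def can_upgrade_directly(current_version: str) -> bool:
    """Return True if current_version belongs to a listed source release line.

    Hypothetical helper: compares dot-separated version components so that
    "4.4.1" matches the "4.4" line but "4.40.1" does not.
    """
    parts = current_version.split(".")
    for source in DIRECT_UPGRADE_SOURCES:
        source_parts = source.split(".")
        if parts[: len(source_parts)] == source_parts:
            return True
    return False


for version in ("4.3.1", "4.4.1", "5.0.0", "5.1.0"):
    print(version, can_upgrade_directly(version))
```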

VCF licensing changes

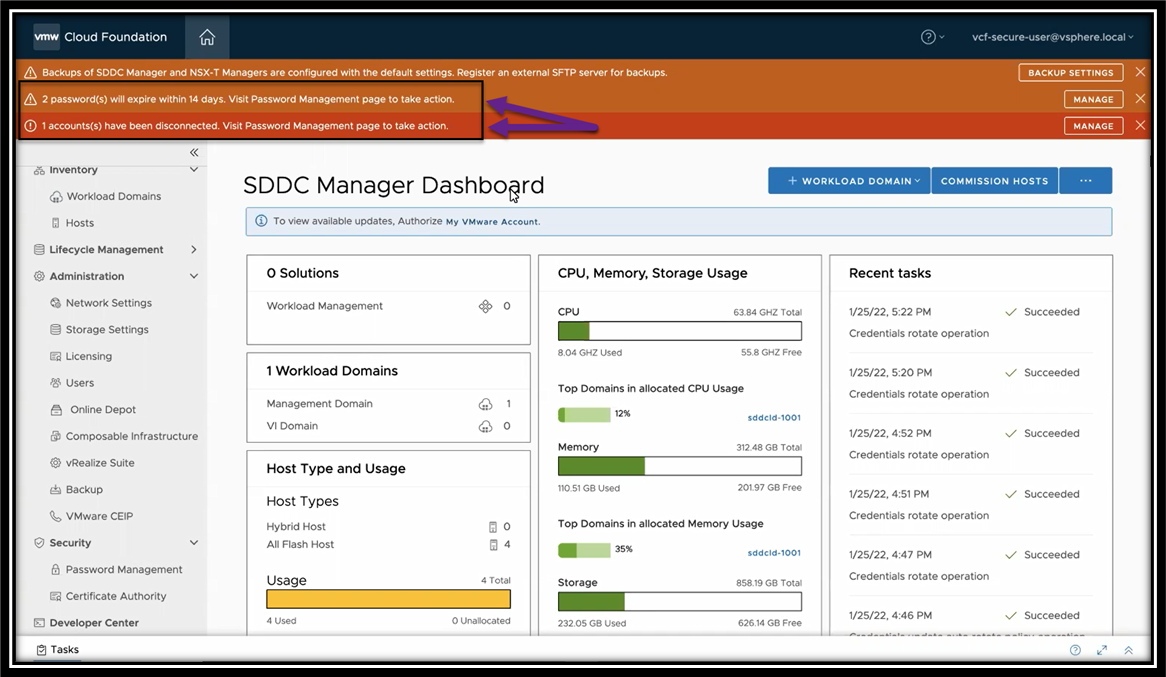

Simplified licensing using a single solution license key

Starting with VCF 5.1.1, vCenter Server, ESXi, and TKG component licenses are now entered using a single “VCF Solution License” key. This helps to simplify the licensing by minimizing the number of individual component keys that require separate management. VMware NSX Networking, HCX, and VMware Aria Suite components are automatically entitled from the vCenter Server post-deployment. The single licensing key and existing keyed licenses will continue to work in parallel.

Removal of VCF+ cloud-connected subscriptions as a supported VCF licensing type

The other significant licensing change is the deprecation of VCF+ licensing, which the new subscription model has replaced.

Support for deploying or expanding VCF instances using Evaluation Mode

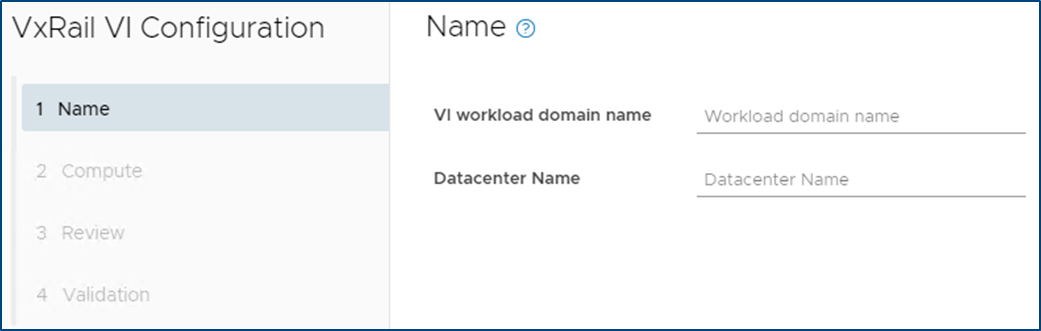

VMware Cloud Foundation 5.1.1 allows deploying a new VCF instance in evaluation mode without needing to enter license keys. An administrator has 60 days to enter licensing for the deployment, and SDDC Manager is fully functional during this time. The workflows for expanding a cluster, adding a new cluster, or creating a VI workload domain also provide an option to license later within a 60-day timeframe.
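To keep track of the 60-day evaluation window, a small date calculation like the one below can help with planning. This is a minimal sketch: the function names are hypothetical, and exactly how the product counts the 60 days (for example, whether the deployment day is included) is an assumption here.

```python
from datetime import date, timedelta

EVALUATION_PERIOD_DAYS = 60  # evaluation window stated in the text above


def license_deadline(deployed_on: date) -> date:
    """Date by which licensing should be entered (assumes a plain 60-day offset)."""
    return deployed_on + timedelta(days=EVALUATION_PERIOD_DAYS)


def days_remaining(deployed_on: date, today: date) -> int:
    """Days left in the evaluation window, never negative."""
    return max((license_deadline(deployed_on) - today).days, 0)


deployed = date(2024, 3, 26)
print(license_deadline(deployed))
print(days_remaining(deployed, date(2024, 4, 25)))
```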

For more comprehensive information about changes in VCF licensing, please consult the VMware website.

Core VxRail enhancements

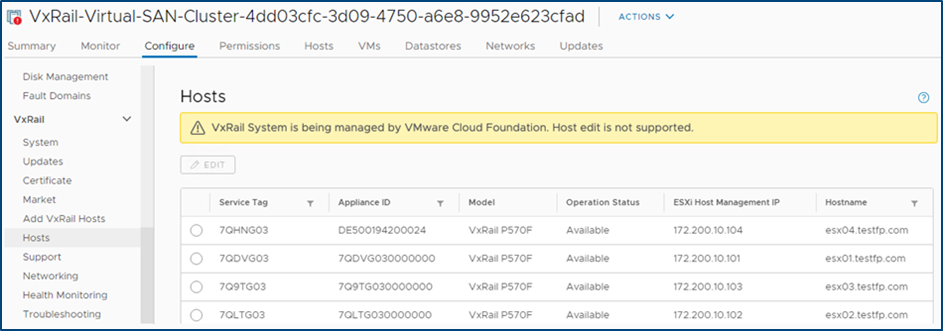

Support for remote vCenter plug-in

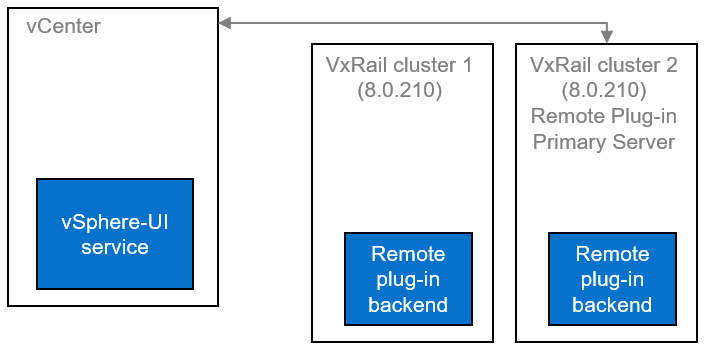

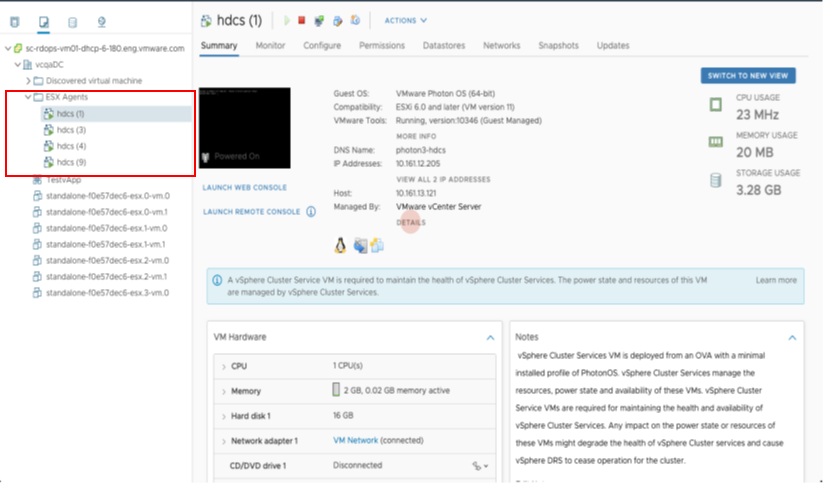

One of the notable enhancements in VxRail 8.0.210 is the adoption of the vSphere Client remote plug-in architecture. This follows the latest vSphere architecture guidelines, as local plug-ins are deprecated in vSphere 8.0 and won’t be supported in vSphere 9.0. The vSphere Client remote plug-in architecture allows plug-in functionality to be integrated without running inside vCenter Server. It’s a more robust architecture that separates vCenter Server from plug-ins and provides more security, flexibility, and scalability when choosing the programming frameworks and introducing new features. Starting with 8.0.210, a new VxRail Manager remote plug-in is deployed in the VxRail Manager Appliance.

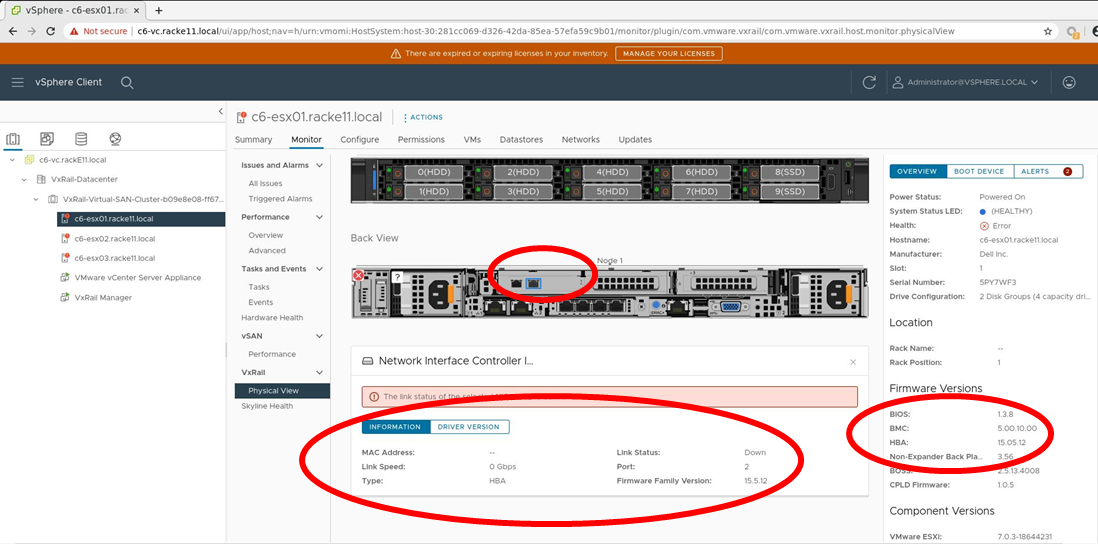

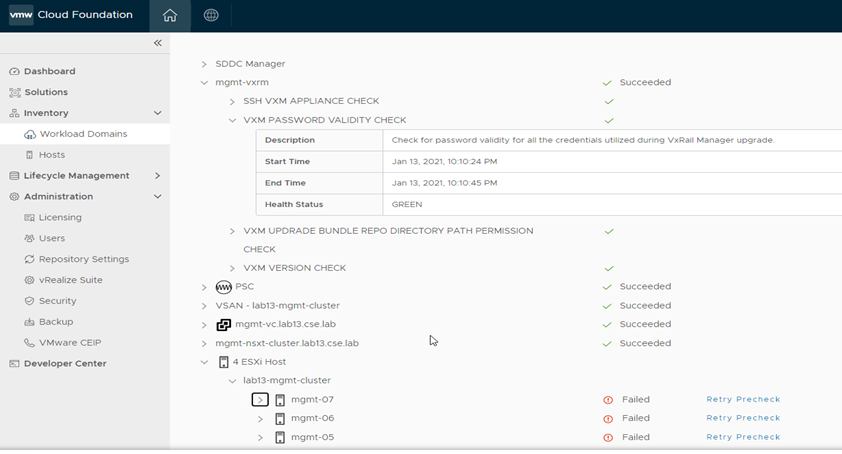

LCM enhancements, including improved VxRail pre-checks and self-remediation of iDRAC issues.

VxRail 8.0.210 also comes with several small features, based on customer feedback, that combine to improve the reliability of the LCM experience. These include:

- VxRail Manager root disk space precheck prevents the upgrade errors related to lack of disk space (for rpm-based upgrades).

- Self-remediation of iDRAC issues during LCM upgrades provides a more reliable firmware upgrade experience. By clearing the iDRAC job queue and resetting the iDRAC, the process may recover from a firmware update failure.

Serviceability enhancements, including improved expansion pre-checks, external storage reporting, and improved troubleshooting capabilities.

Another group of features contributes to overall improved serviceability and visibility into the system:

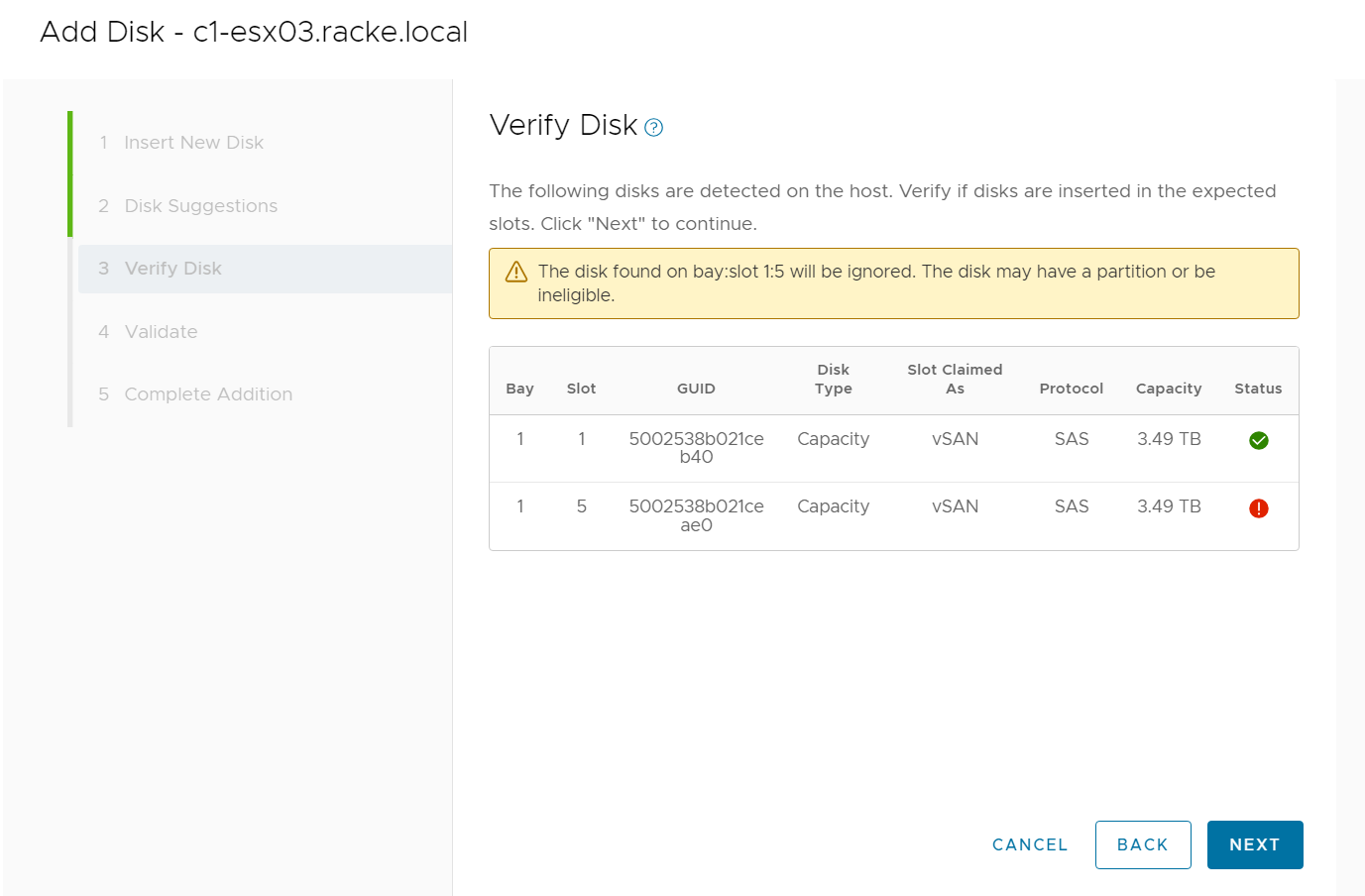

- The UI now implements new errors and warnings for incompatible disks when the user tries to add an incompatible disk during the disk addition process (see the following figure)

- The improved hardware views report on storage capacity and utilization for dynamic nodes, improving the overall visibility for the external storage attached to dynamic nodes directly from the vSphere Client.

- VxRail cluster troubleshooting efficiency has improved thanks to better standardization of log format and event grooming for disk exhaustion.

- The improved node-add health checks reduce the risk of successfully adding a faulty or mismatched node to a VxRail cluster.

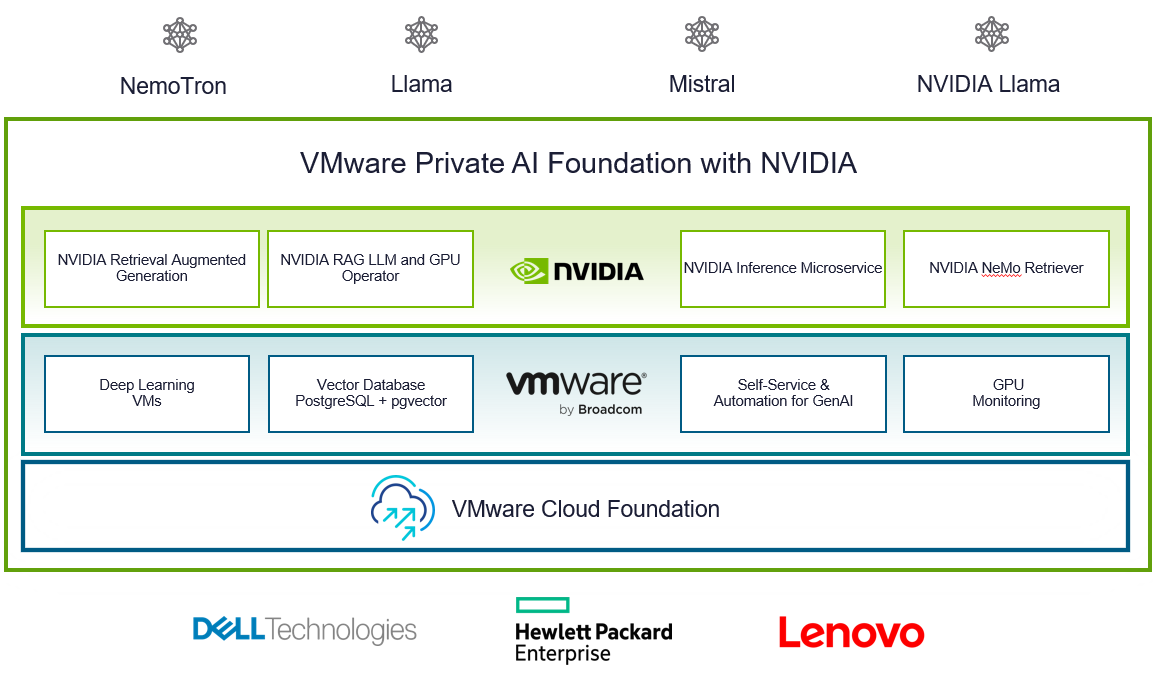

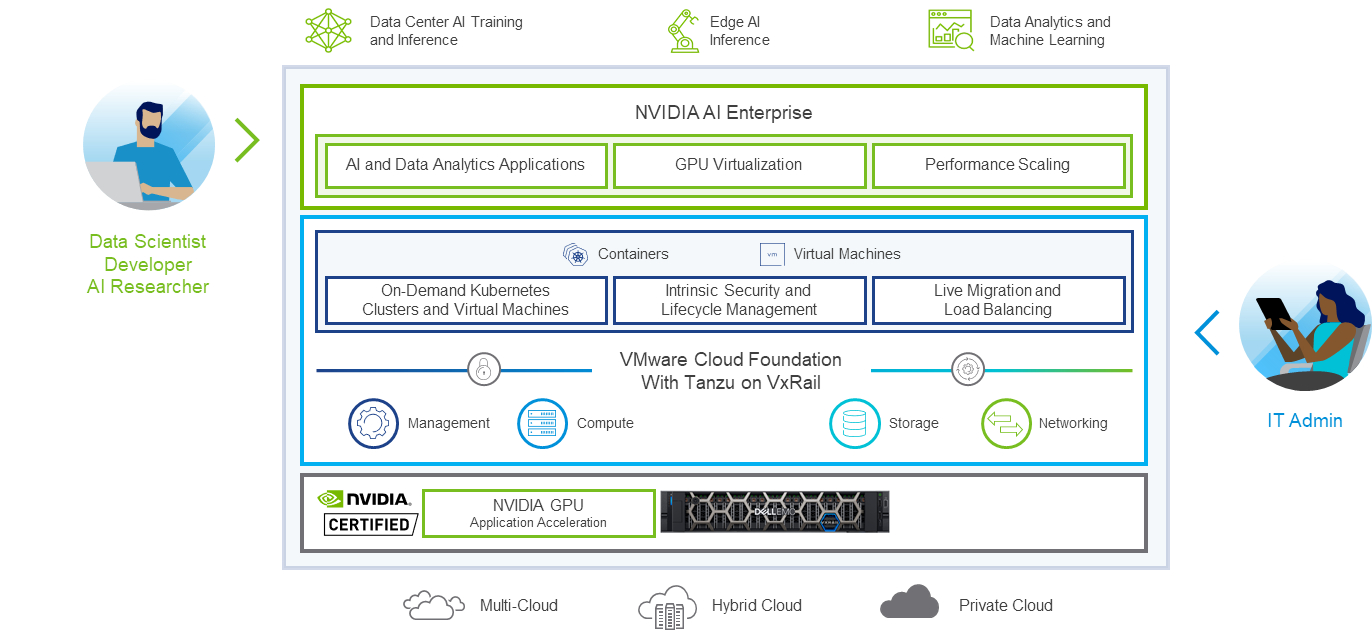

VMware Private AI Foundation with NVIDIA

With VCF 5.1.1, VMware introduces VMware Private AI Foundation with NVIDIA as Initial Access. Dell Technologies Engineering intends to validate this feature when it is generally available.

This solution aims to enable enterprise customers to adopt Generative AI capabilities more easily and securely by providing enterprises with a cost-effective, high-performance, and secure environment for delivering business value from Large Language Models (LLMs) using their private data.

Summary

The new VCF 5.1.1 on VxRail 8.0.210 release is an excellent option for customers looking for a hardware refresh, Gen AI-ready infrastructure to run more demanding workloads, or to achieve higher consolidation ratios. Additional enhancements introduced in the core VxRail functionality improve the overall LCM experience, serviceability, and visibility into the system.

Thank you for your time, and please check the additional resources if you would like to learn more.

Resources

- VxRail’s Latest Hardware Evolution blog

- VxRail and Intel® AMX, Bringing AI Everywhere

- VxRail product page

- VxRail Infohub page

- VxRail Videos

- VMware Cloud Foundation on Dell VxRail Release Notes

- VCF on VxRail Interactive Demo

- VMware Product Lifecycle Matrix

Author: Karol Boguniewicz

Twitter: @cl0udguide

Learn About the Latest Major VxRail Software Release: VxRail 8.0.210

Wed, 24 Apr 2024 12:09:24 -0000


It’s springtime, VxRail customers! VxRail 8.0.210 is our latest software release to bloom. Come see for yourself what makes this software release shine.

VxRail 8.0.210 provides support for VMware vSphere 8.0 Update 2b. All existing platforms that support VxRail 8.0 can upgrade to VxRail 8.0.210. This is also the first VxRail 8.0 software to support the hybrid and all-flash models of the VE-660 and VP-760 nodes based on Dell PowerEdge 16th Generation platforms that were released last summer and the edge-optimized VD-4000 platform that was released early last year.

Read on for a deep dive into the release content. For a more comprehensive rundown of the features and enhancements in VxRail 8.0.210, see the release notes.

Support for VD-4000

The support for VD-4000 includes vSAN Original Storage Architecture (OSA) and vSAN Express Storage Architecture (ESA). VD-4000 was launched last year with VxRail 7.0 support with vSAN OSA. Support in VxRail 8.0.210 carries over all previously supported configurations for VD-4000 with vSAN OSA. What may intrigue you even more is that VxRail 8.0.210 is introducing first-time support for VD-4000 with vSAN ESA.

In the second half of last year, VMware reduced the hardware requirements to run vSAN ESA to extend its adoption into edge environments. This change enabled customers to consider running the latest vSAN technology in areas where constraints from price points and infrastructure resources were barriers to adoption. VxRail added support for the reduced hardware requirements shortly after for existing platforms that already supported vSAN ESA, including E660N, P670N, VE-660 (all-NVMe), and VP-760 (all-NVMe). With VD-4000, the VxRail portfolio now has an edge-optimized platform that can run vSAN ESA for environments that may also have space, energy consumption, and environmental constraints. To top that off, it’s the first VxRail platform to support a single-processor node to run vSAN ESA, further reducing the price point.

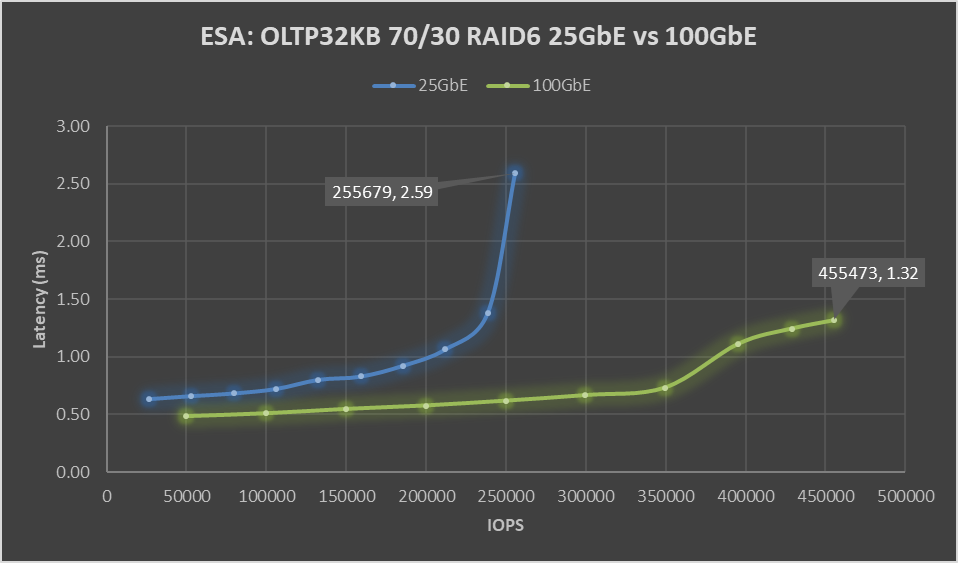

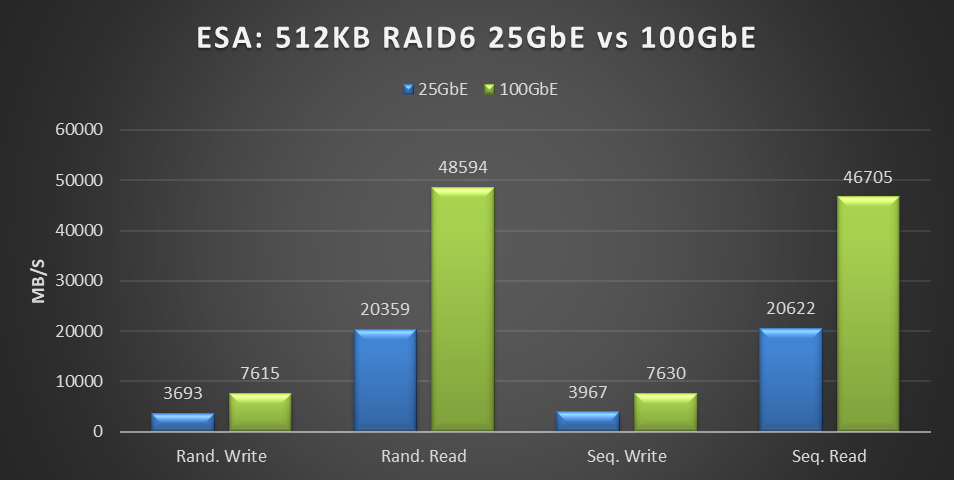

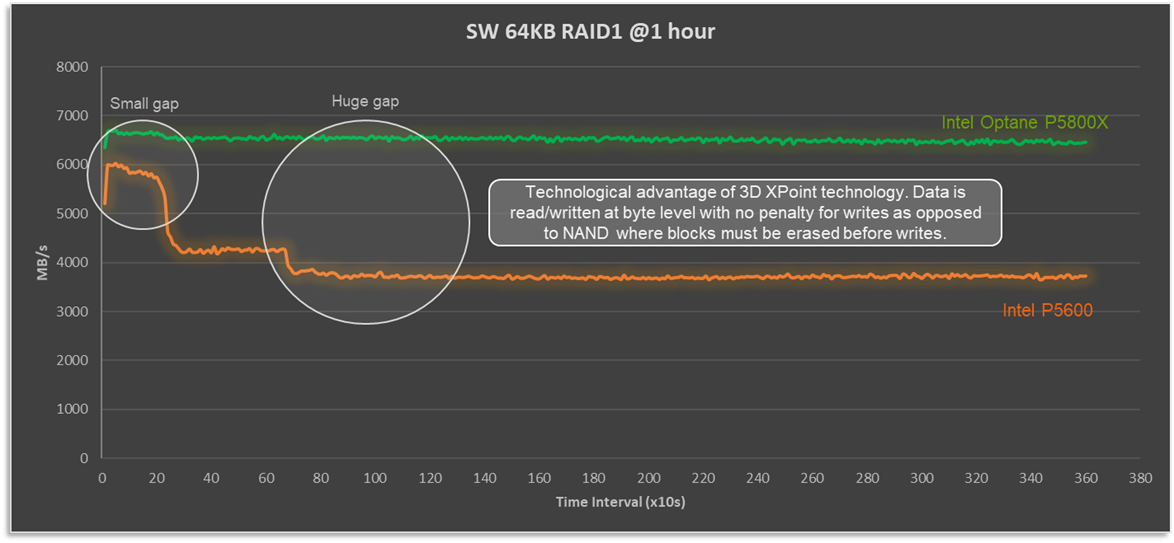

It is important to set performance expectations when running workload applications on the VD-4000 platform. While our performance testing on vSAN ESA showed stellar gains to the point where we made the argument to invest in 100GbE to maximize performance (check it out here), it is essential to understand that the VD-4000 platform is running with an Intel Xeon-D processor with reduced memory and bandwidth resources. In short, while a VD-4000 running vSAN ESA won’t be setting any performance records, it can be a great solution for your edge sites if you are looking to standardize on the latest vSAN technology and take advantage of vSAN ESA’s data services and erasure coding efficiencies.

Lifecycle management enhancements

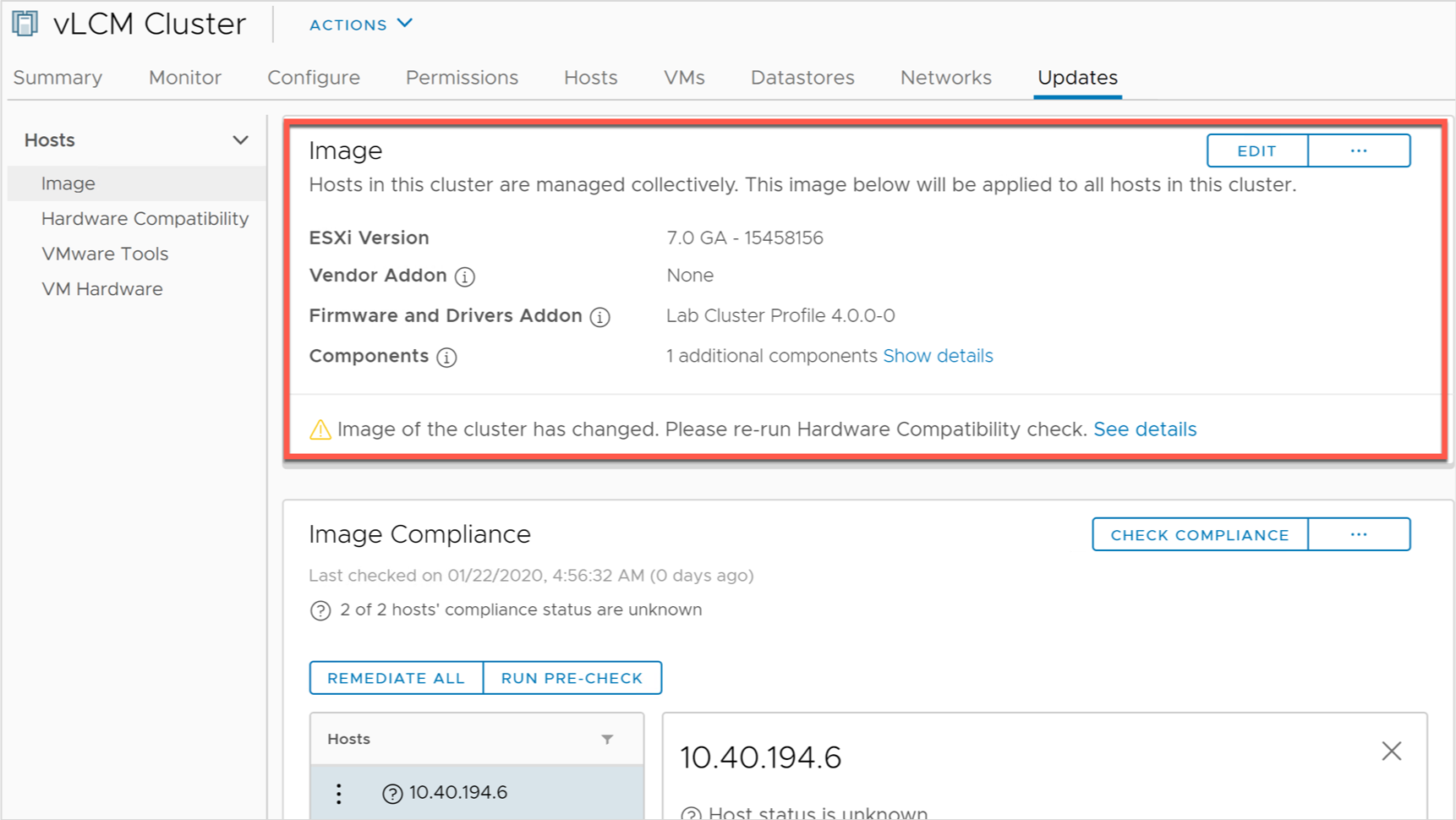

VxRail 8.0.210 offers support for a few vLCM feature enhancements that came with vSphere 8.0 Update 2. In addition, the VxRail implementation of these enhancements further simplifies the user experience.

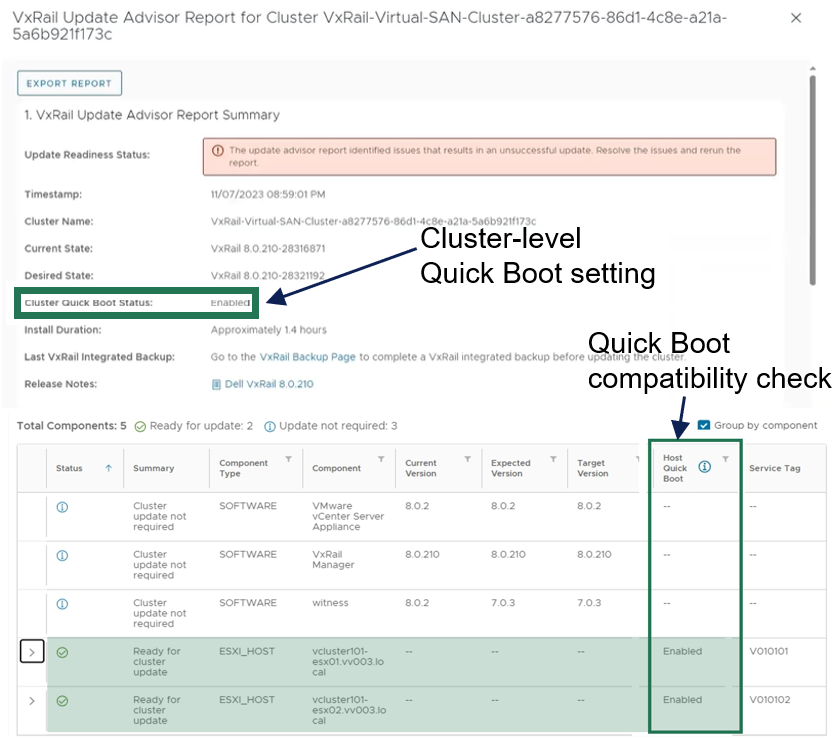

For vLCM-enabled VxRail clusters, we’ve made it easier to benefit from VMware ESXi Quick Boot. The VxRail Manager UI has been enhanced so that users can enable Quick Boot one time, and VxRail will maintain the setting whenever there is a Quick Boot-compatible cluster update. As a refresher for some folks not familiar with Quick Boot, it is an operating system-level reboot of the node that skips the hardware initialization. It can reduce the node reboot time by up to three minutes, providing significant time savings when updating large clusters. That said, any cluster update that involves firmware updates is not Quick Boot-compatible.
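To put the "up to three minutes" figure in context, the sketch below estimates an upper bound on reboot time saved across a cluster. It assumes one reboot per node and that every node sees the maximum saving, which is an optimistic simplification. The function name is hypothetical.

```python
QUICK_BOOT_MAX_SAVING_MIN = 3  # "up to three minutes" per node reboot, per the text


def max_reboot_time_saved(node_count: int,
                          saving_per_node_min: int = QUICK_BOOT_MAX_SAVING_MIN) -> int:
    """Upper bound, in minutes, on reboot time saved for one cluster update.

    Assumes one reboot per node and the maximum saving on every node.
    """
    if node_count < 0:
        raise ValueError("node_count must not be negative")
    return node_count * saving_per_node_min


print(max_reboot_time_saved(16))
```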

Using Quick Boot had been cumbersome in the past because it required several manual steps. To use Quick Boot for a cluster update, you would need to go to the vSphere Update Manager to enable the Quick Boot setting. Because the setting resets to Disabled after the reboot, this step had to be repeated for any Quick Boot-compatible cluster update. Now, the setting can be persisted to avoid manual intervention.

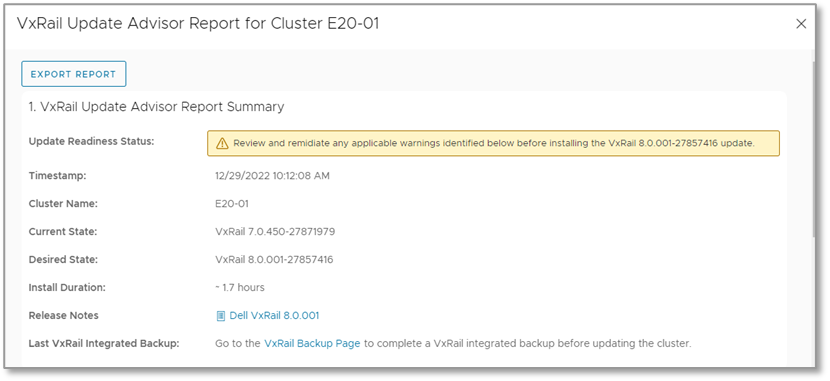

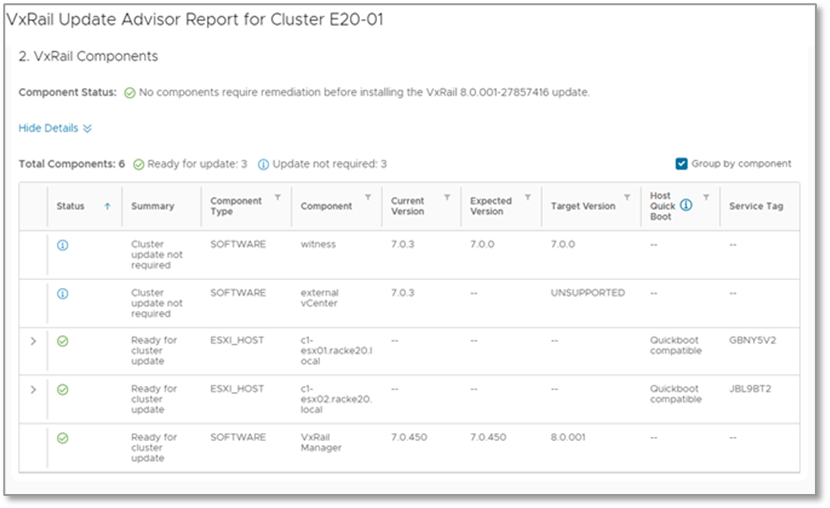

As shown in the following figure, the update advisor report now informs you whether a cluster update is Quick Boot-compatible so that the information is part of your update planning procedure. VxRail leverages the ESXi Quick Boot compatibility utility for this status check.

Figure 1. VxRail update advisor report highlighting Quick Boot information

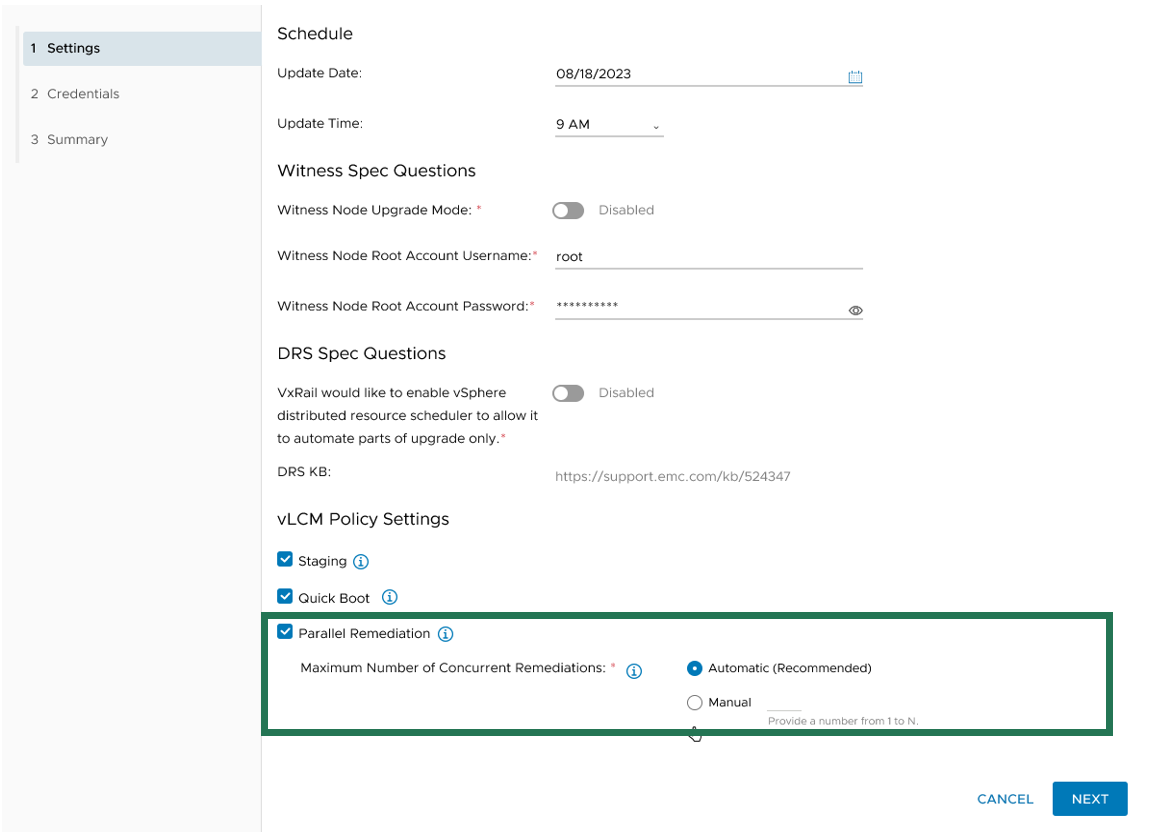

Another new vLCM feature enhancement that VxRail supports is parallel remediation. This enhancement allows you to update multiple nodes at the same time, which can significantly cut down on the overall cluster update time. However, this feature enhancement only applies to VxRail dynamic nodes because nodes in vSAN clusters still need to be updated one at a time to adhere to storage policy settings.

This feature offers substantial benefits in reducing the maintenance window, and VxRail’s implementation of the feature offers additional protections over how it can be used on vSAN Ready Nodes. For example, enabling parallel remediation with vSAN Ready Nodes means that you would be responsible for managing when nodes go into and out of maintenance mode as well as ensuring application availability because vCenter will not check whether the nodes that you select will disrupt application uptime. The VxRail implementation adds safety checks that help mitigate potential pitfalls, ensuring a smoother parallel remediation process.

VxRail Manager manages when nodes enter and exit maintenance modes and provides the same level of error checking that it already performs on cluster updates. You have the option of letting VxRail Manager automatically set the maximum number of nodes that it will update concurrently, or you can input your own number. The number for the manual setting is capped at the total node count minus two to ensure that the VxRail Manager VM and vCenter Server VM can continue to run on separate nodes during the cluster update.
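The cap on the manual setting can be expressed as simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and clamping the result at zero for very small clusters is an assumption rather than documented VxRail Manager behavior.

```python
def max_manual_concurrency(total_nodes: int) -> int:
    """Cap for the manual setting: total node count minus two.

    Two nodes stay available so that the VxRail Manager VM and the
    vCenter Server VM can keep running on separate nodes.
    """
    if total_nodes < 0:
        raise ValueError("total_nodes must not be negative")
    return max(total_nodes - 2, 0)  # clamping at zero is an assumption


def is_valid_manual_setting(requested: int, total_nodes: int) -> bool:
    """Check a requested concurrency value against the cap."""
    return 1 <= requested <= max_manual_concurrency(total_nodes)


print(max_manual_concurrency(8))
print(is_valid_manual_setting(7, 8))
```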

Figure 2. Options for setting the maximum number of concurrent node remediations

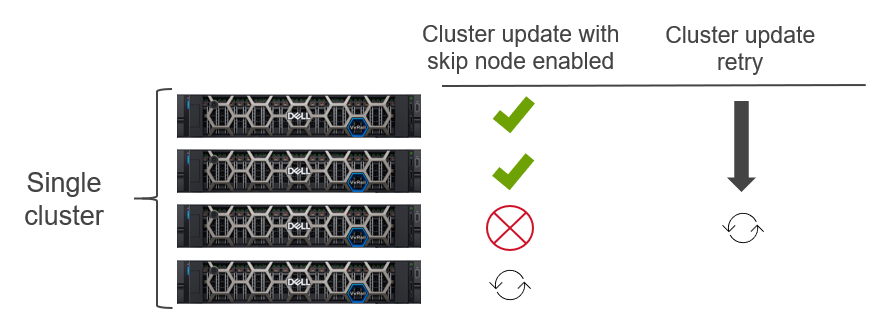

During the cluster update, VxRail Manager intelligently reduces the node count of concurrent updates if a node cannot enter maintenance mode or if the application workload cannot be migrated to another node to ensure availability. VxRail Manager will automatically defer that node to the next batch of node updates in the cluster update operation.
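The deferral behavior described above can be modeled with a short simulation. This is a conceptual sketch and not VxRail Manager's actual algorithm: the `is_ready` callback stands in for the maintenance-mode and workload-migration checks, and raising an error when no candidate is ready is a simplification chosen for the example.

```python
from typing import Callable, List, Sequence


def plan_update_batches(nodes: Sequence[str],
                        max_concurrent: int,
                        is_ready: Callable[[str, int], bool]) -> List[List[str]]:
    """Group nodes into batches, deferring nodes that are not ready.

    is_ready(node, round_number) is a hypothetical stand-in for the checks
    that decide whether a node can enter maintenance mode in that round.
    """
    if max_concurrent < 1:
        raise ValueError("max_concurrent must be at least 1")
    pending = list(nodes)
    batches: List[List[str]] = []
    round_number = 0
    while pending:
        candidates = pending[:max_concurrent]
        rest = pending[max_concurrent:]
        batch, deferred = [], []
        for node in candidates:
            (batch if is_ready(node, round_number) else deferred).append(node)
        if not batch:
            raise RuntimeError(f"no candidate node is ready: {deferred}")
        batches.append(batch)          # the batch shrinks when nodes are deferred
        pending = deferred + rest      # deferred nodes go to the next batch
        round_number += 1
    return batches


# n2 cannot enter maintenance mode in the first round, so it is deferred.
print(plan_update_batches(
    ["n1", "n2", "n3", "n4"], 2,
    lambda node, rnd: not (node == "n2" and rnd == 0)))
```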

The last vLCM feature enhancement in VxRail 8.0.210 that I want to discuss is installation file pre-staging. The idea is to upload as many installation files for the node update as possible onto the node before it actually begins the update operation. Transfer times can be lengthy, so any reduction in the maintenance window would have a positive impact on the production environment.

To reap the maximum benefits of this feature, consider using the scheduling feature when setting up your cluster update. Initiating a cluster update with a future start time allows VxRail Manager the time to pre-stage the files onto the nodes before the update begins.

As you can see, the three vLCM feature enhancements can have varying levels of impact on your VxRail clusters. Automated Quick Boot enablement only benefits cluster updates that are Quick Boot-compatible, meaning there is not a firmware update included in the package. Parallel remediation only applies to VxRail dynamic node clusters. To maximize installation files pre-staging, you need to schedule cluster updates in advance.
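The conditions summarized above can be captured in a small decision helper for update planning. The class, field, and key names below are hypothetical and exist only for illustration. The logic simply restates the conditions from the text, including the vLCM requirement mentioned earlier for these features.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ClusterUpdate:
    vlcm_enabled: bool          # cluster runs in vLCM mode
    includes_firmware: bool     # update package contains firmware updates
    dynamic_node_cluster: bool  # VxRail dynamic node cluster (no local vSAN)
    scheduled_in_advance: bool  # update initiated with a future start time


def vlcm_benefits(update: ClusterUpdate) -> Dict[str, bool]:
    """Map each vLCM enhancement to whether this update is expected to benefit."""
    if not update.vlcm_enabled:
        return {"quick_boot": False,
                "parallel_remediation": False,
                "pre_staging_head_start": False}
    return {
        "quick_boot": not update.includes_firmware,
        "parallel_remediation": update.dynamic_node_cluster,
        "pre_staging_head_start": update.scheduled_in_advance,
    }


print(vlcm_benefits(ClusterUpdate(True, False, True, True)))
```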

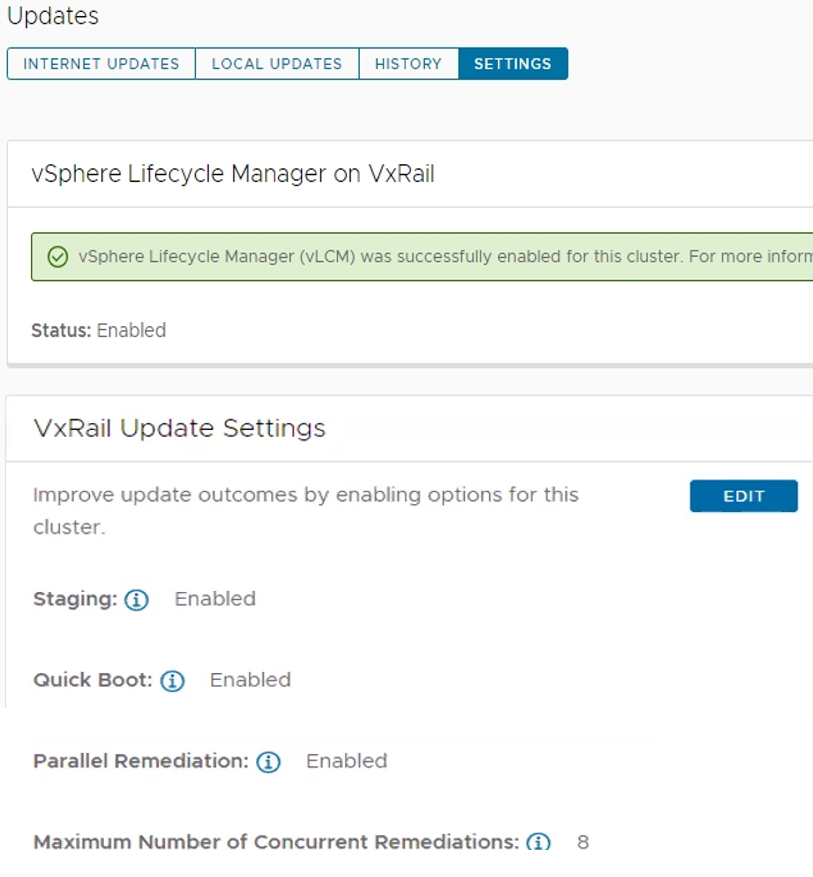

That said, two commonalities across all three vLCM feature enhancements are that you must have your VxRail clusters running vLCM mode and that the VxRail implementation for these three feature enhancements makes them more secure and easy to use. As shown in the following figure, the Updates page on the VxRail Manager UI has been enhanced so that you can easily manage these vLCM features at the cluster level.

Figure 3. VxRail Update Settings for vLCM features

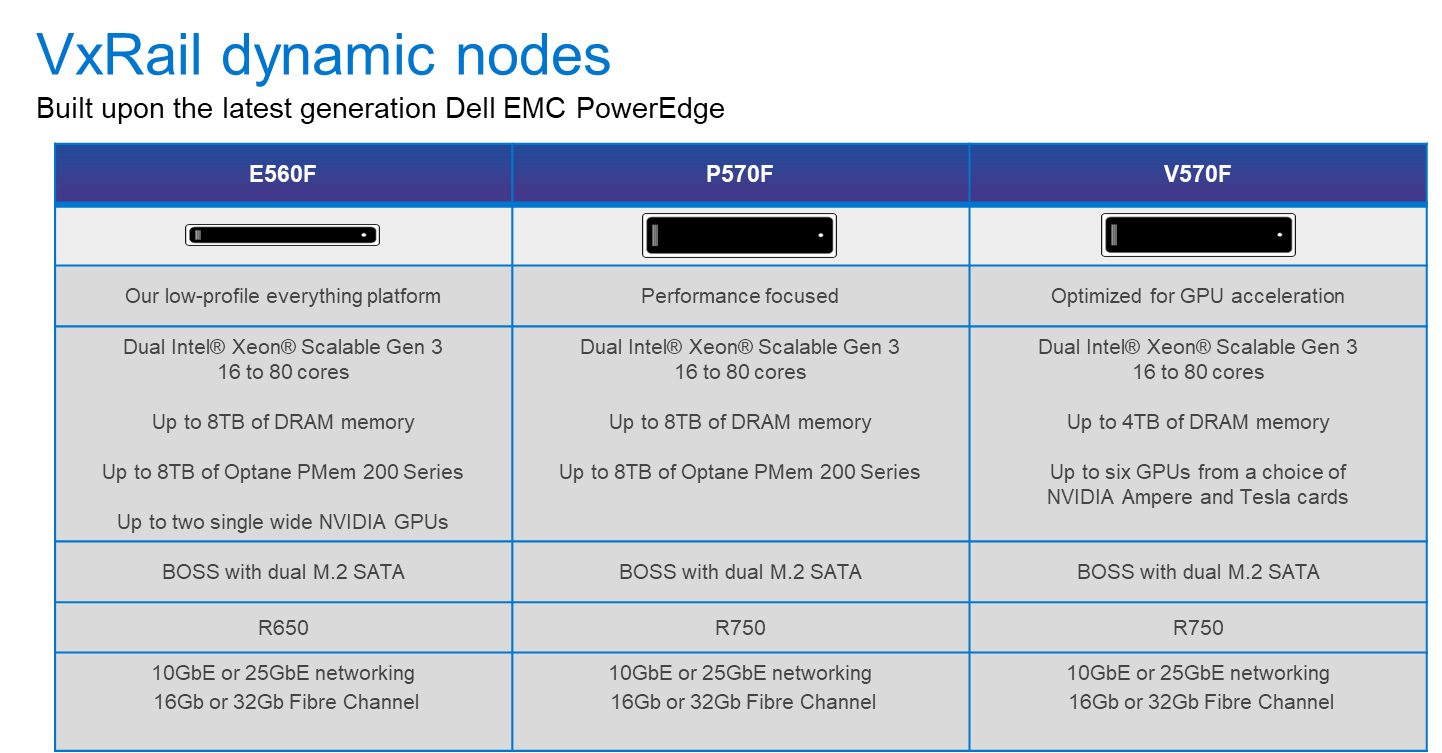

VxRail dynamic nodes

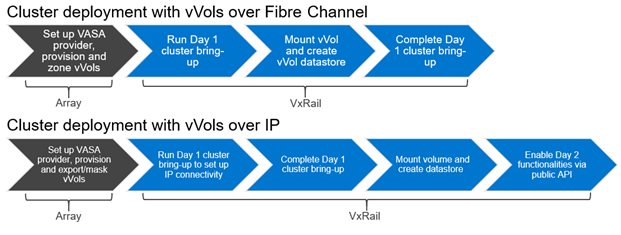

VxRail 8.0.210 also introduces an enhancement for dynamic node clusters with a VxRail-managed vCenter Server. In a recent VxRail software release, VxRail added an option for you to deploy a VxRail-managed vCenter Server with your dynamic node cluster as a Day 1 operation. The initial support was for Fibre Channel-attached storage. The parallel enhancement in this release adds support for dynamic node clusters using IP-attached storage for their primary datastore. That means iSCSI, NFS, and NVMe over TCP attached storage from PowerMax, VMAX, PowerStore, UnityXT, PowerFlex, and VMware vSAN cross-cluster capacity sharing are now supported. Just like before, you are still responsible for acquiring and applying your own vCenter Server license before the 60-day evaluation period expires.

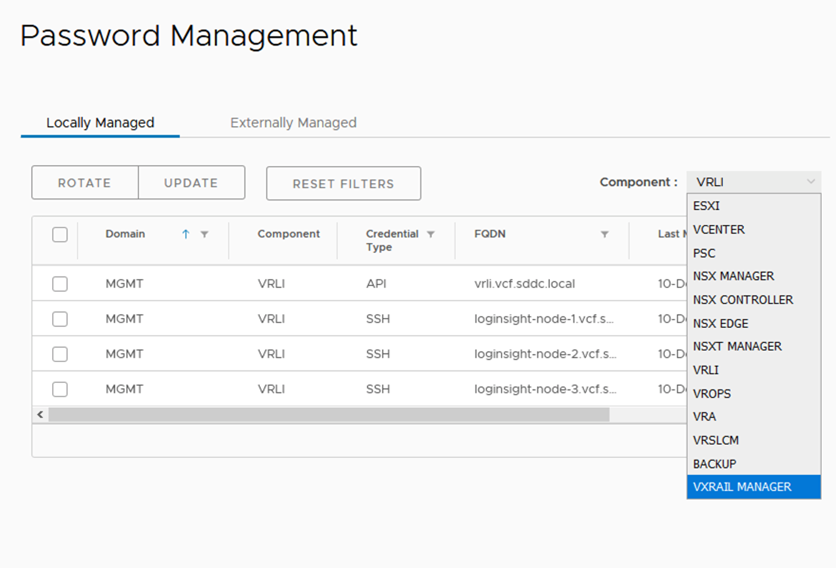

Password management

Password management is one of the key areas of focus in this software release. To reduce the manual steps needed to modify the vCenter Server management and iDRAC root account passwords, the VxRail Manager UI now lets you make the changes through a wizard-driven workflow. Previously, you had to change the password on the vCenter Server or iDRAC itself and then go to the VxRail Manager UI to provide the updated password. The enhancement simplifies the experience and reduces potential user errors.

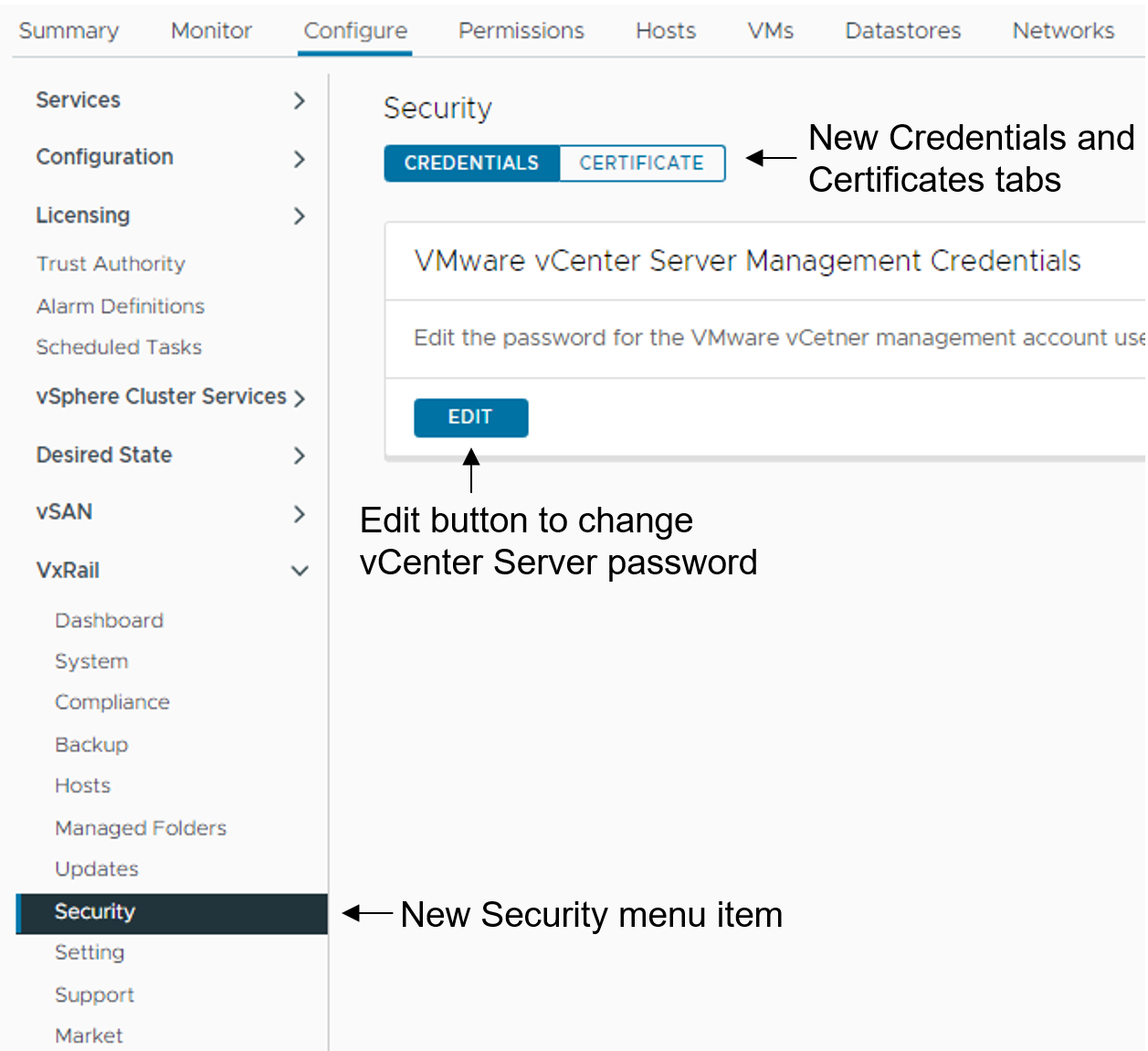

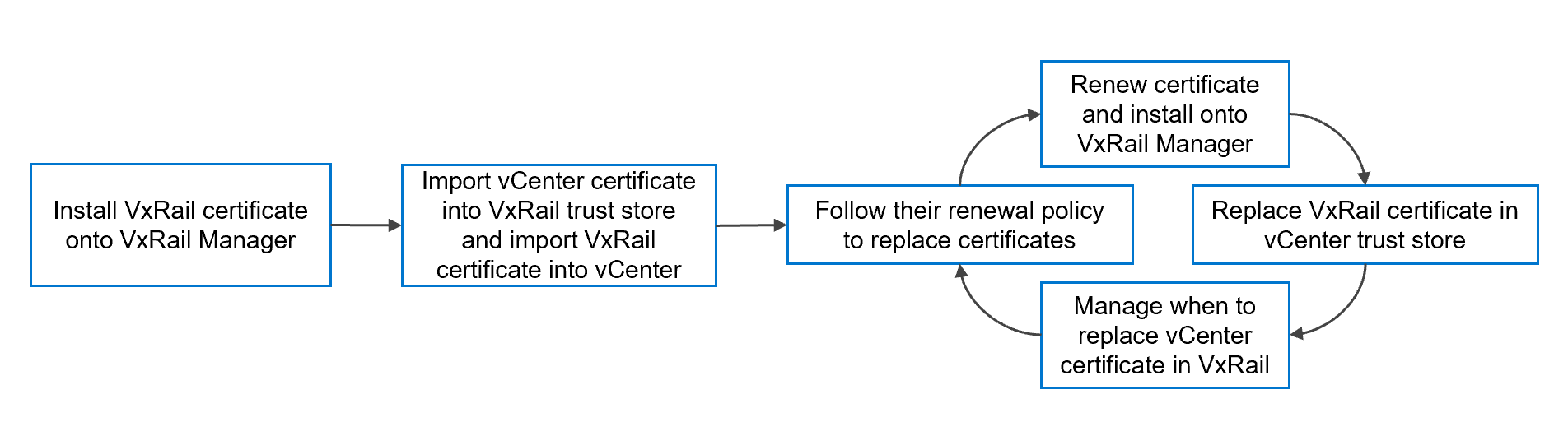

To update the vCenter Server management credentials, there is a new Security page that replaces the Certificates page. As illustrated in the following figure, the page now has a Certificate tab for certificate management and a Credentials tab for changing the vCenter Server management password.

Figure 4. How to update the vCenter Server management credentials

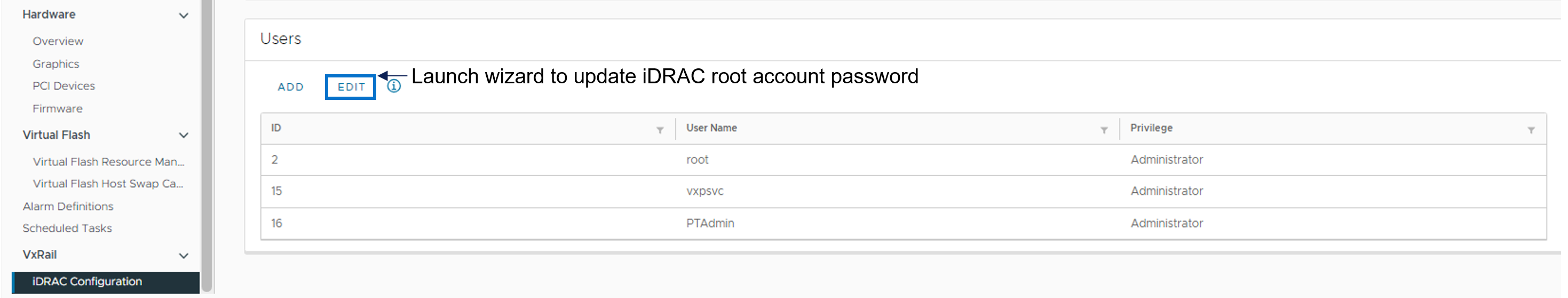

To update the iDRAC root account password, there is a new iDRAC Configuration page where you can click the Edit button to launch a wizard to change the password.

Figure 5. How to update the iDRAC root password

Deployment Flexibility

Lastly, I want to touch on two features in deployment flexibility.

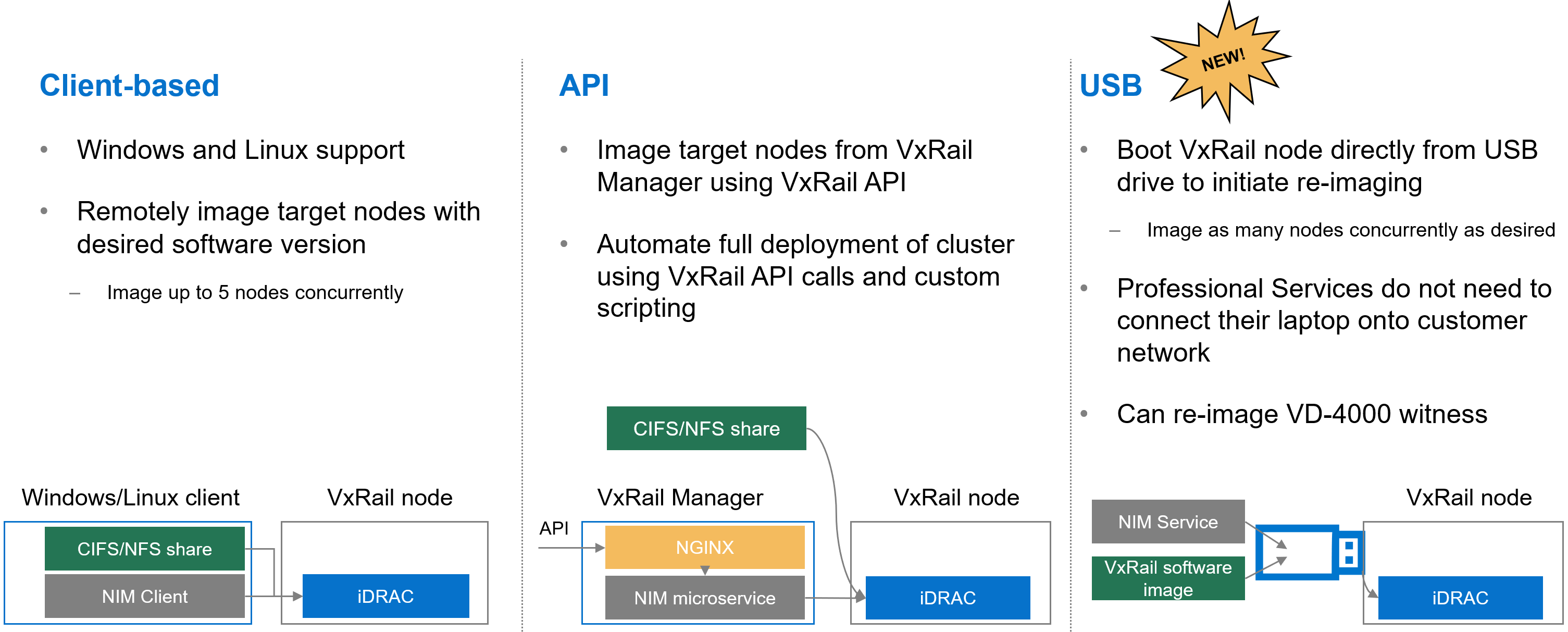

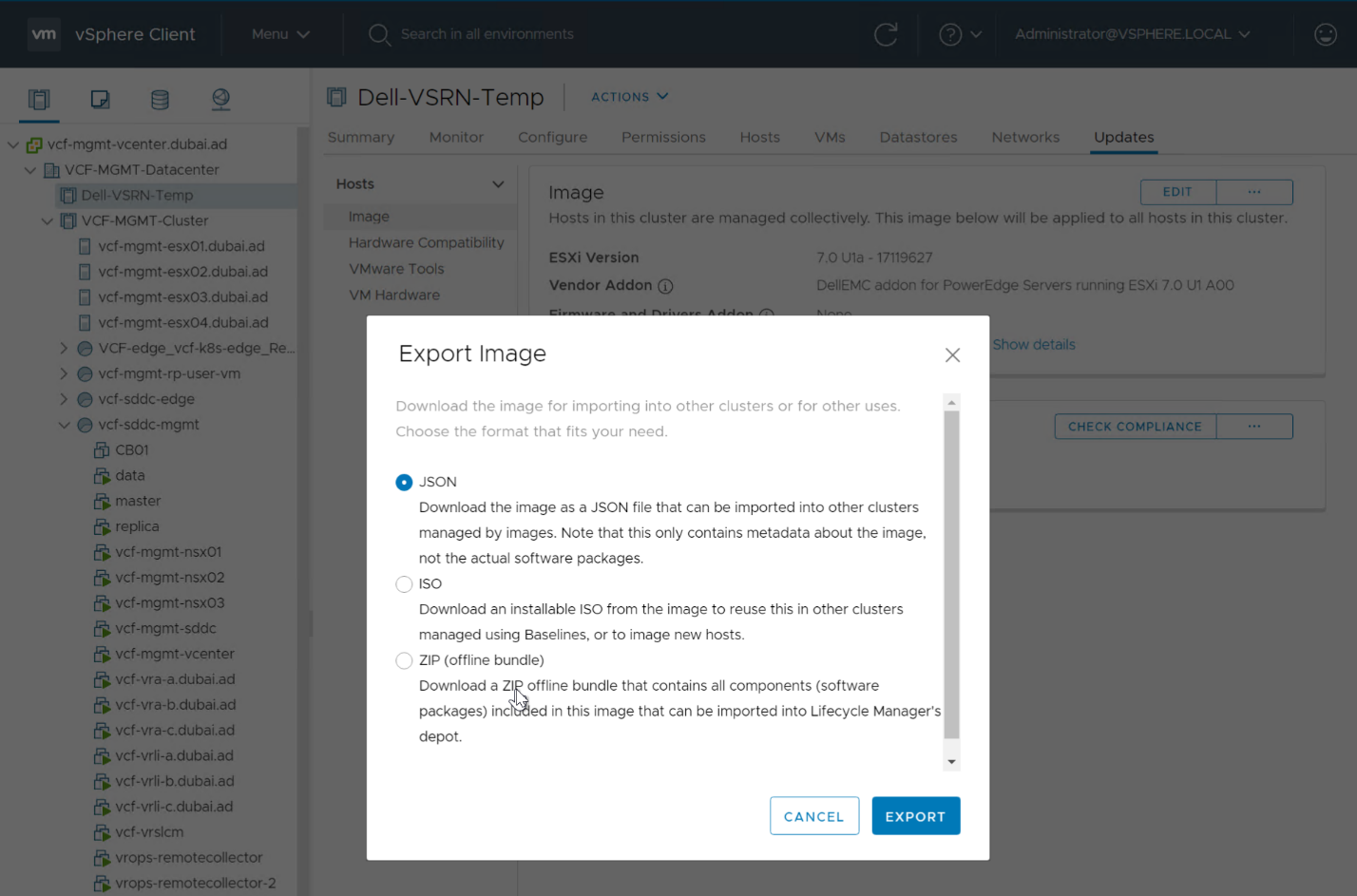

Over the past few years, the VxRail team has invested heavily in empowering you with the tools to recompose and rebuild the clusters on your own. One example is making our VxRail nodes customer-deployable with the VxRail Configuration Portal. Another is the node imaging tool.

Figure 6. Different options to use the node image management tool

Initially, the node imaging tool was Windows client-based, where the workstation has the VxRail software ISO image stored locally or on a share. By connecting the workstation to the local network where the target nodes reside, the imaging tool can be used to connect to the iDRAC of the target node. Users can reimage up to 5 nodes on the local network concurrently. In a more recent VxRail release, we added Linux client support for the tool.

We’ve also refactored the tool into a microservice within the VxRail HCI System Software so that it can be used via VxRail API. This method added more flexibility so that you can automate the full deployment of your cluster by using VxRail API calls and custom scripting.

In VxRail 8.0.210, we are introducing the USB version of the tool. Here, the tool can be self-contained on a USB drive so that users can plug the USB drive into a node, boot from it, and initiate reimaging. This provides benefits in scenarios where the 5-node maximum for concurrent reimage jobs is an issue. With this option, users can scale reimage jobs by setting up more USB drives. The USB version of the tool now allows an option to reimage the embedded witness on the VD-4000.
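To see when the 5-node concurrency limit of the client-based tool becomes a bottleneck, a quick calculation of the number of sequential reimage rounds can help. The function name is hypothetical, and the sketch assumes that every round runs at full concurrency.

```python
import math

MAX_CONCURRENT_REIMAGE = 5  # client-based tool limit stated in the text


def reimage_rounds(node_count: int, concurrency: int = MAX_CONCURRENT_REIMAGE) -> int:
    """Number of sequential rounds needed to reimage node_count nodes."""
    if node_count < 0 or concurrency < 1:
        raise ValueError("node_count must be >= 0 and concurrency >= 1")
    return math.ceil(node_count / concurrency)


print(reimage_rounds(12))                   # client-based tool, 5 at a time
print(reimage_rounds(12, concurrency=12))   # for example, one USB drive per node
```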

The final feature for deployment flexibility is support for IPv6. Whether your environment is exhausting the IPv4 address pool or there are requirements in your organization to future-proof your networking with IPv6, you will be pleasantly surprised by the level of support that VxRail offers.

You can deploy IPv6 in a dual or single network stack. In a dual network stack, you can have IPv4 and IPv6 addresses for your management network. In a single network stack, the management network is only on the IPv6 network. Initial support is for VxRail clusters running vSAN OSA with 3 or more nodes. Other than that, the feature set is on par with what you see with IPv4. Select the network stack at cluster deployment.
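When preparing address plans, it can be useful to check which network stack a set of management addresses implies. The sketch below uses Python's standard `ipaddress` module. The function name and the returned labels are illustrative assumptions and are not VxRail terminology.

```python
import ipaddress
from typing import Iterable


def classify_management_stack(addresses: Iterable[str]) -> str:
    """Classify planned management addresses as dual stack or single stack."""
    versions = {ipaddress.ip_address(addr).version for addr in addresses}
    if versions == {4, 6}:
        return "dual-stack"
    if versions == {6}:
        return "single-stack-ipv6"
    if versions == {4}:
        return "single-stack-ipv4"
    raise ValueError("at least one address is required")


# Documentation-range example addresses (RFC 5737 and RFC 3849).
print(classify_management_stack(["192.0.2.10", "2001:db8::10"]))
print(classify_management_stack(["2001:db8::10", "2001:db8::11"]))
```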

Conclusion

VxRail 8.0.210 offers a plethora of new features and platform support such that there is something for everyone. As you digest the information about this release, know that updating your cluster to the latest VxRail software provides you with the best return on your investment from a security and capability standpoint. Backed by VxRail Continuously Validated States, you can update your cluster to the latest software with confidence. For more information about VxRail 8.0.210, please refer to the release notes. For more information about VxRail in general, visit the Dell Technologies website.

Author: Daniel Chiu, VxRail Technical Marketing

https://www.linkedin.com/in/daniel-chiu-8422287/

VxRail API—Updated List of Useful Public Resources

Fri, 09 Feb 2024 16:07:26 -0000


Well-managed companies are always looking for new ways to increase efficiency and reduce costs while maintaining excellence in the quality of their products and services. Hence, IT departments and service providers look at the cloud and Application Programming Interfaces (APIs) as the enablers for automation, driving efficiency, consistency, and cost-savings.

This blog helps you get started with VxRail API by grouping the most useful VxRail API resources available from various public sources in one place. This list of resources is updated every few months. Consider bookmarking this blog as it is a useful reference.

Before jumping into the list, it is essential to answer some of the most obvious questions:

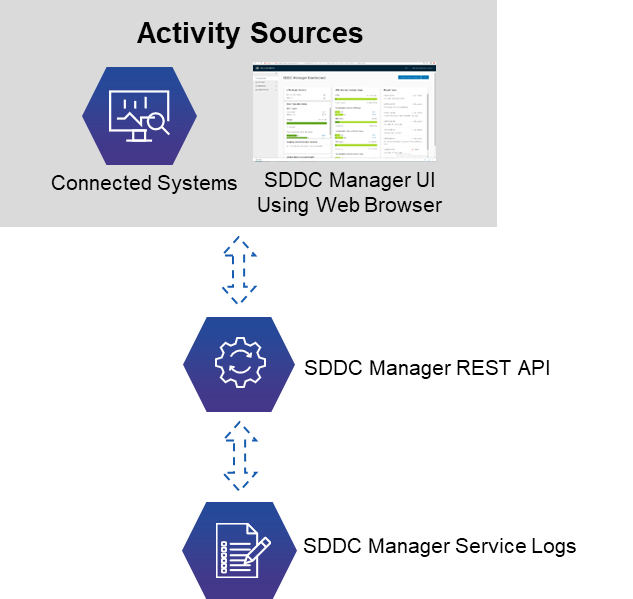

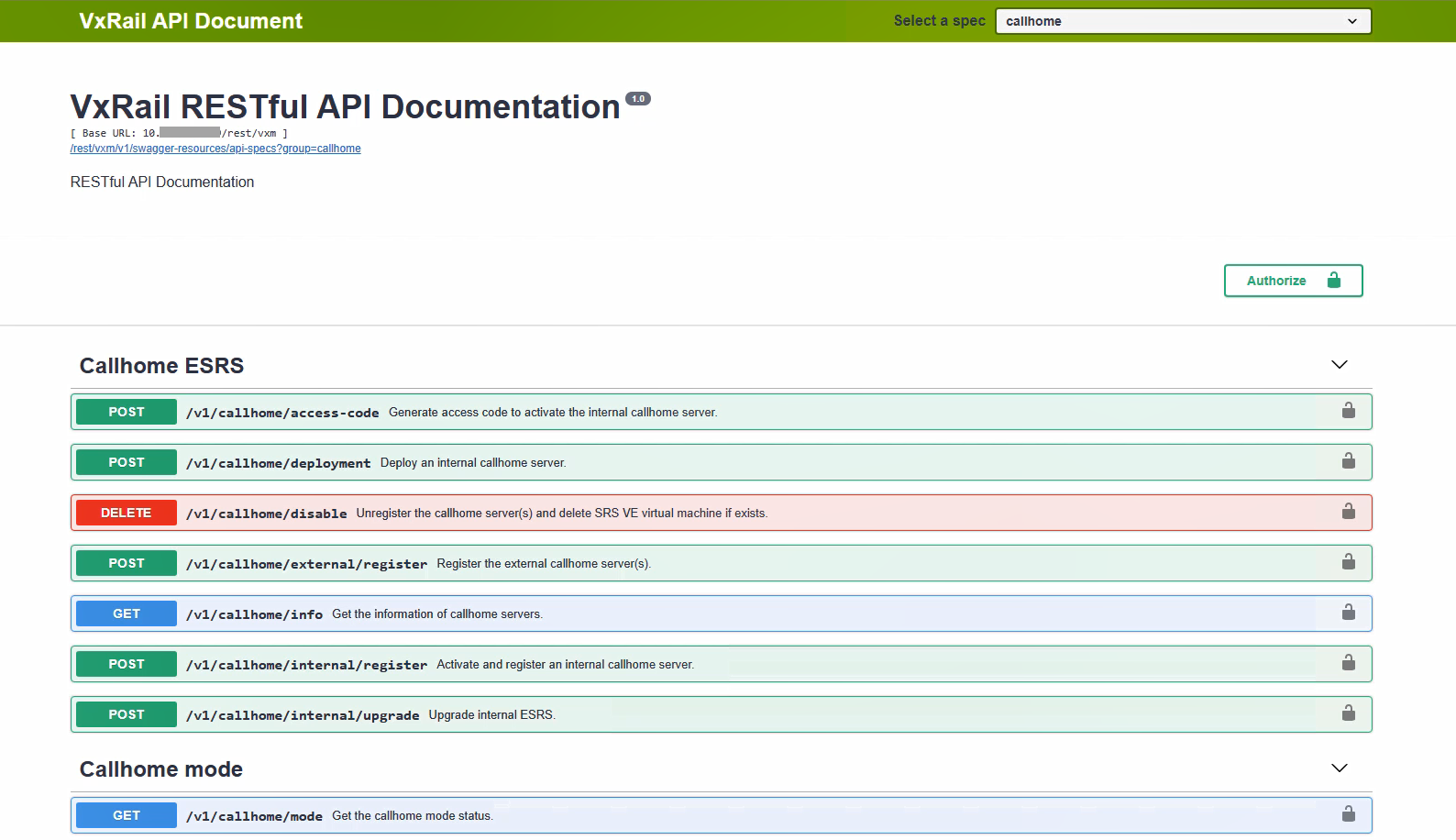

What is VxRail API?

VxRail API is a feature of the VxRail HCI System Software that exposes management functions with a RESTful application programming interface. It is designed for ease of use by VxRail customers and ecosystem partners who want to better integrate third-party products with VxRail systems. VxRail API is:

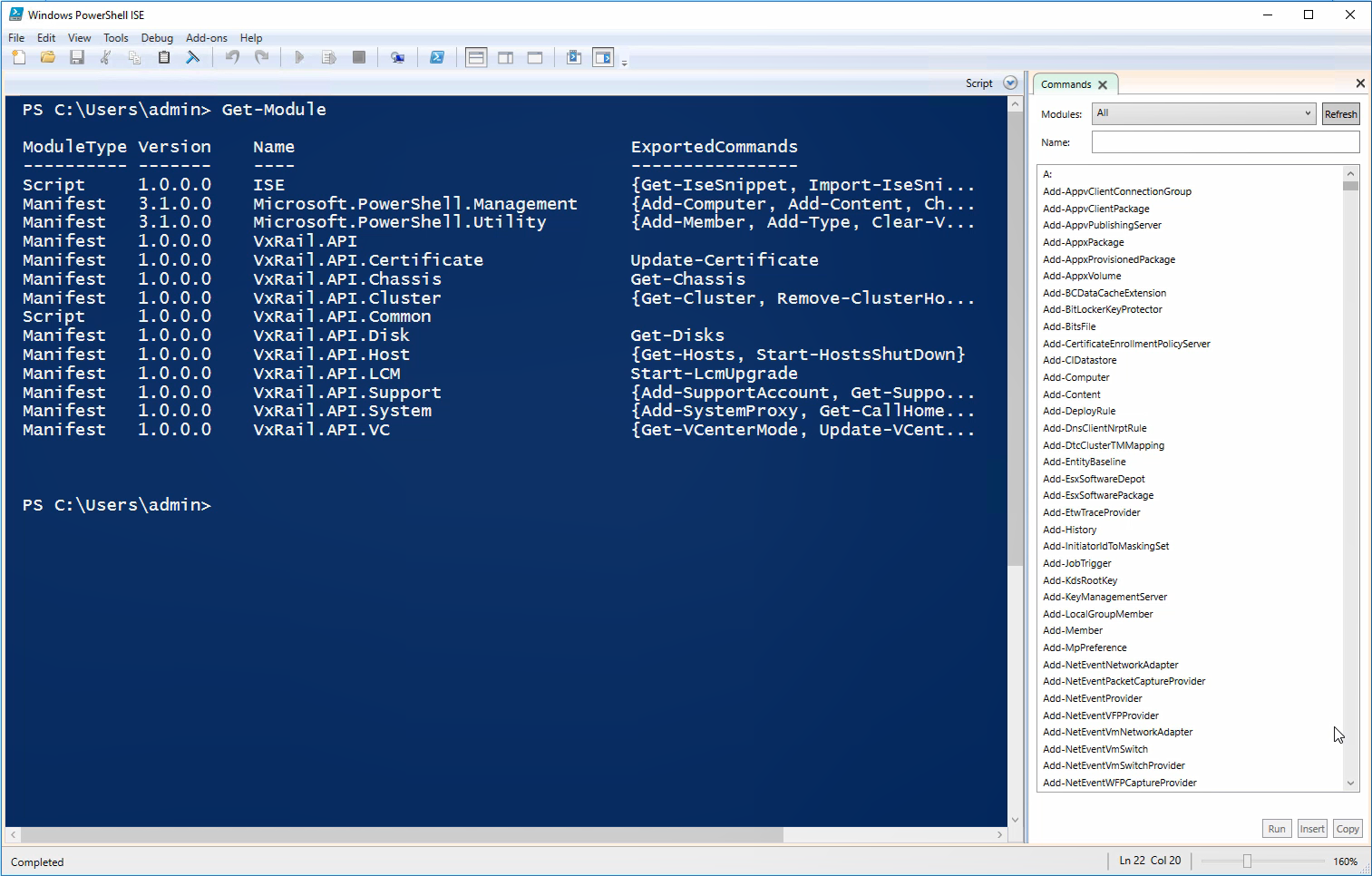

- Simple to use—Thanks to embedded, interactive, web-based documentation, and PowerShell and Ansible modules, you can easily consume the API using a supported web browser, using a familiar command line interface for Windows and VMware vSphere admins, or using Ansible playbooks.

- Powerful—VxRail offers dozens of API calls for essential operations such as automated life cycle management (LCM), and its capabilities are growing with every new release.

- Extensible—This API is designed to complement REST APIs from VMware (such as vSphere Automation API, PowerCLI, and VMware Cloud Foundation on Dell EMC VxRail API), offering a familiar look and feel and vast capabilities.

Why is VxRail API relevant?

VxRail API enables you to use the full power of automation and orchestration services across your data center. This extensibility enables you to build and operate infrastructure with cloud-like scale and agility. It also streamlines the integration of the infrastructure into your IT environment and processes. Instead of manually managing your environment through the user interface, the software can programmatically trigger and run repeatable operations.

More customers are embracing DevOps and Infrastructure as Code (IaC) models because they need reliable and repeatable processes to configure the underlying infrastructure resources that are required for applications. IaC uses APIs to store configurations in code, making operations repeatable and greatly reducing errors.
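To make the idea of programmatic access concrete, the sketch below prepares a REST request with Python's standard library without sending it. The `/rest/vxm/v1/system` path, the use of HTTP basic authentication, and the host name are assumptions made for illustration. Check the VxRail API User Guide for the endpoints and authentication methods that apply to your software version.

```python
import base64
import urllib.request


def build_system_info_request(vxm_host: str, username: str,
                              password: str) -> urllib.request.Request:
    """Prepare (but do not send) a GET request for system information.

    The endpoint path below is an assumption for illustration only.
    """
    url = f"https://{vxm_host}/rest/vxm/v1/system"
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    headers = {
        "Authorization": f"Basic {token}",
        "Accept": "application/json",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


request = build_system_info_request("vxm.example.local", "user", "pass")
print(request.get_method(), request.full_url)
```

Sending the request, for example with `urllib.request.urlopen`, would additionally require network access to VxRail Manager and appropriate TLS certificate handling.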

How can I start? Where can I find more information?

To help you navigate through all available resources, I grouped them by level of technical difficulty, starting with 101 (the simplest, explaining the basics, use cases, and value proposition), through 201, up to 301 (the most in-depth technical level).

101 Level

- Solution Brief—Dell VxRail API – Solution Brief is a concise brochure that describes the VxRail API at a high-level, typical use cases, and where you can find additional resources for a quick start. I highly recommend starting your exploration from this resource.

- Learning Tool—VxRail Interactive Journey is the "go-to resource" to learn about VxRail and HCI System Software. It includes a dedicated module for the VxRail API, with essential resources to maximize your learning experience.

- On-demand Session—Automation with VxRail API is a one-hour interactive learning session delivered as part of the Tech Exchange Live VxRail Series, available on-demand. This session is an excellent introduction for anyone new to VxRail API, discussing the value, typical use cases, and how to get started.

- On-demand Session—Infrastructure as Code (IaC) with VxRail is another one-hour interactive learning session delivered as part of the Tech Exchange Live VxRail Series, available on-demand. This one is an introduction to adopting Infrastructure as Code on VxRail, with automation tools like Ansible and Terraform.

- Instructor Session—Automation with VxRail is a live, interactive training session offered by Dell Technologies Education Services. Hear directly from the VxRail team about new capabilities and what’s on the roadmap for VxRail new releases and the latest advancements.

During the session you will:

• Learn about the VxRail ecosystem and leverage its automation capabilities

• Elevate performance of automated VxRail operations using the latest tools

• Experience live demonstrations of customer use cases and apply these examples to your environment

• Increase your knowledge of VxRail API tools such as PowerShell and Ansible modules

• Receive bonus material to support you in your automation journey

- Infographic—Dell VxRail HCI System Software RESTful API is an infographic that provides quick facts about VxRail HCI System Software differentiation. This infographic explains the value of VxRail API.

- Whiteboard Video—Level up your HCI automation with VxRail API – This technical whiteboard video introduces you to automation with VxRail API. We discuss different ways you can access the API, and provide example use cases.

- Blog Post—Take VxRail automation to the next level by leveraging APIs is my first blog that focuses on VxRail API. It addresses some of the challenges related to managing a farm of VxRail clusters and how VxRail API can be a solution. It also covers the enhancements introduced in VxRail HCI System Software 4.7.300, such as Swagger and PowerShell integration.

- Blog Post—VxRail – API PowerShell Module Examples is a blog from my colleague David, explaining how to install and get started with the VxRail API PowerShell Modules Package.

- Blog Post—Infrastructure as Code with VxRail Made Easier with Ansible Modules for Dell VxRail – my blog with an introduction to VxRail Ansible Modules, including a demo.

- (New!) Blog Post—VxRail Edge Automation Unleashed - Simplifying Satellite Node Management with Ansible – my blog explaining the use of Ansible Modules for Dell VxRail for satellite node management, including a demo.

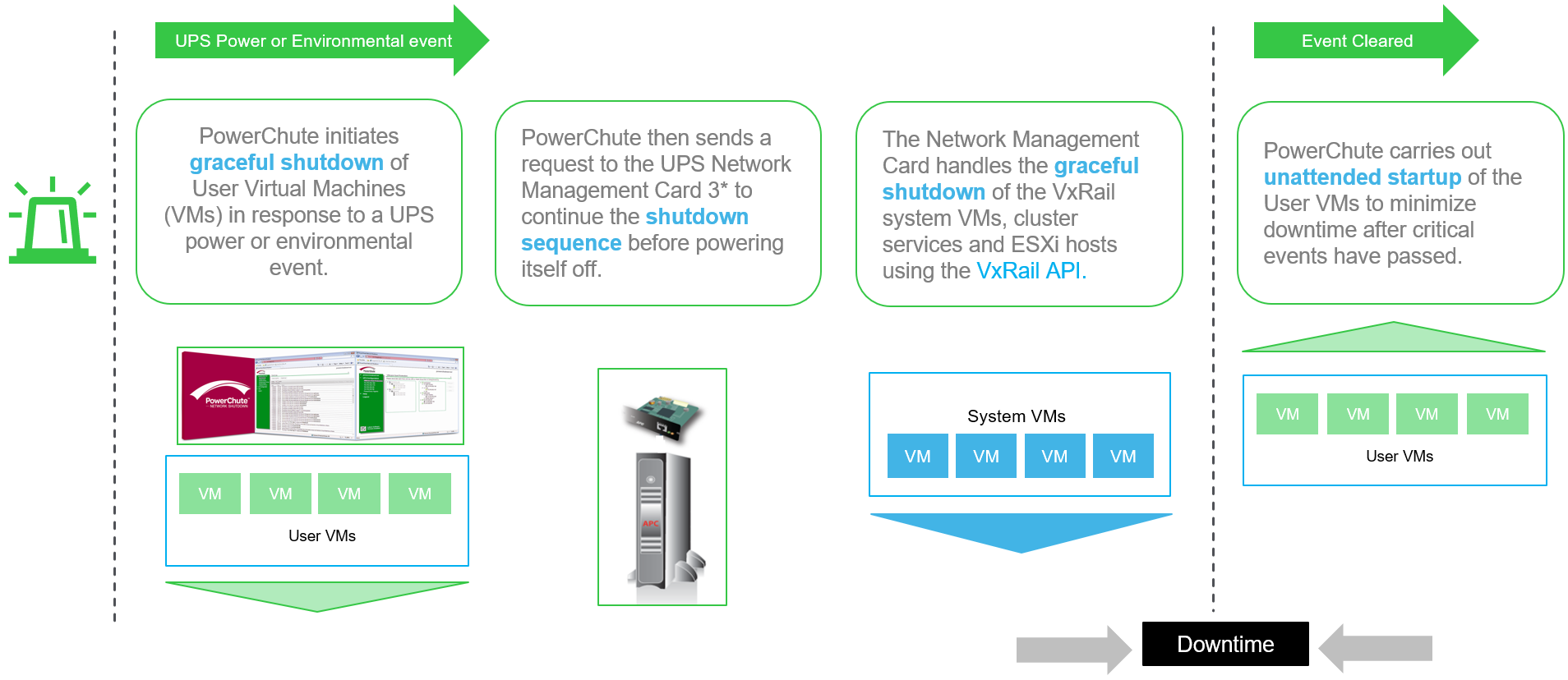

- Blog Post—Protecting VxRail from Power Disturbances is my second API-related blog, in which I explain an exciting use case by Eaton, our ecosystem partner, and the first UPS vendor who integrated their power management solution with VxRail using the VxRail API.

- Blog Post—Protecting VxRail From Unplanned Power Outages: More Choices Available describes another UPS solution integrated with the VxRail API, from our ecosystem partner APC (Schneider Electric).

- Demo—VxRail API – Overview is our first VxRail API demo published on the official Dell YouTube channel. It was recorded using VxRail HCI System Software 4.7.300, which explains VxRail API basics, API enhancements introduced in this version, and how you can explore the API using the Swagger UI.

- Demo—VxRail API – PowerShell Package is a continuation of the API overview demo referenced above, focusing on PowerShell integration. It was recorded using VxRail HCI System Software 4.7.300.

- Demo—Ansible Modules for Dell VxRail provides a quick overview of VxRail Ansible Modules. It was recorded using VxRail HCI System Software 7.0.x.

- (New!) Demo—Ansible Modules for Dell VxRail – Automating Satellite Node Management continues the subject of VxRail Ansible Modules, showcasing the satellite node management use case for the Edge. It was recorded using VxRail HCI System Software 8.0.x.

201 Level

- (Updated!) HoL—Hands On Lab: HOL-0310-01 - Scalable Virtualization, Compute, and Storage with the VxRail REST API allows you to experience the VxRail API in a virtualized demo environment using various tools. It premiered at Dell Technologies World 2022 and is a very valuable self-learning tool for VxRail API. It includes five modules:

- Module 1: Getting Started (~10 min / Basic) - The aim of this module is to get the lab up and running and dip your toe in the VxRail API waters using our web-based interactive documentation.

• Access interactive API documentation

• Explore available VxRail API functions

• Test a VxRail API function

• Explore Dell Technologies' Developer Portal

- Module 2: Monitoring and Maintenance (~15 min / Intermediate) - In this module you will navigate our VxRail PowerShell Modules and the VxRail Manager, to become more familiar with the options available to monitor the health indicators of a VxRail cluster. There are also some maintenance tasks that show how these functions can simplify the management of your environment.

Monitoring the health of a VxRail cluster:

• Check the cluster's overall health

• Check the health of the nodes

• Check the individual components of a node

Maintenance of a VxRail cluster:

• View iDRAC IP configuration

• Collect a log bundle of the VxRail cluster

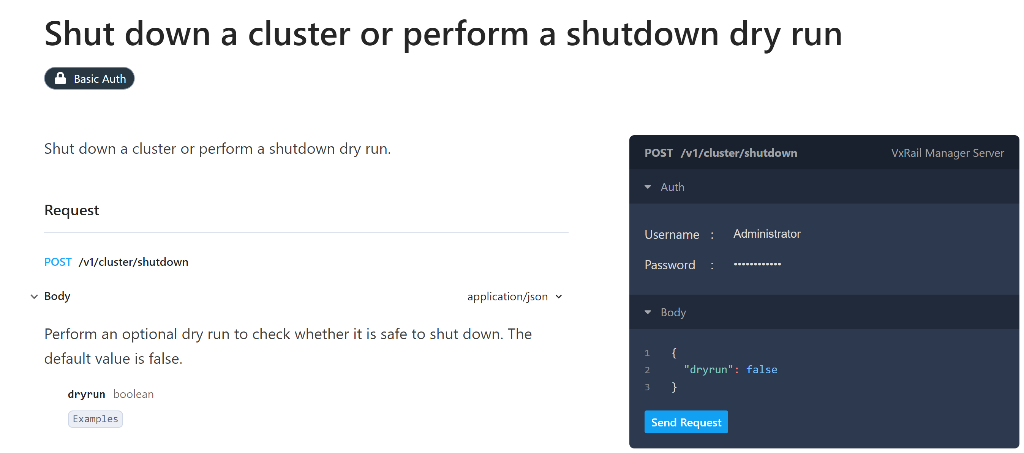

• Cluster shutdown (Dry run)

- Module 3: Add & Update VxRail Satellite Nodes (~30 min / Intermediate) - In this module, you will experiment with adding and updating VxRail satellite nodes using VxRail API and VxRail API PowerShell Modules.

• Add a VxRail satellite node

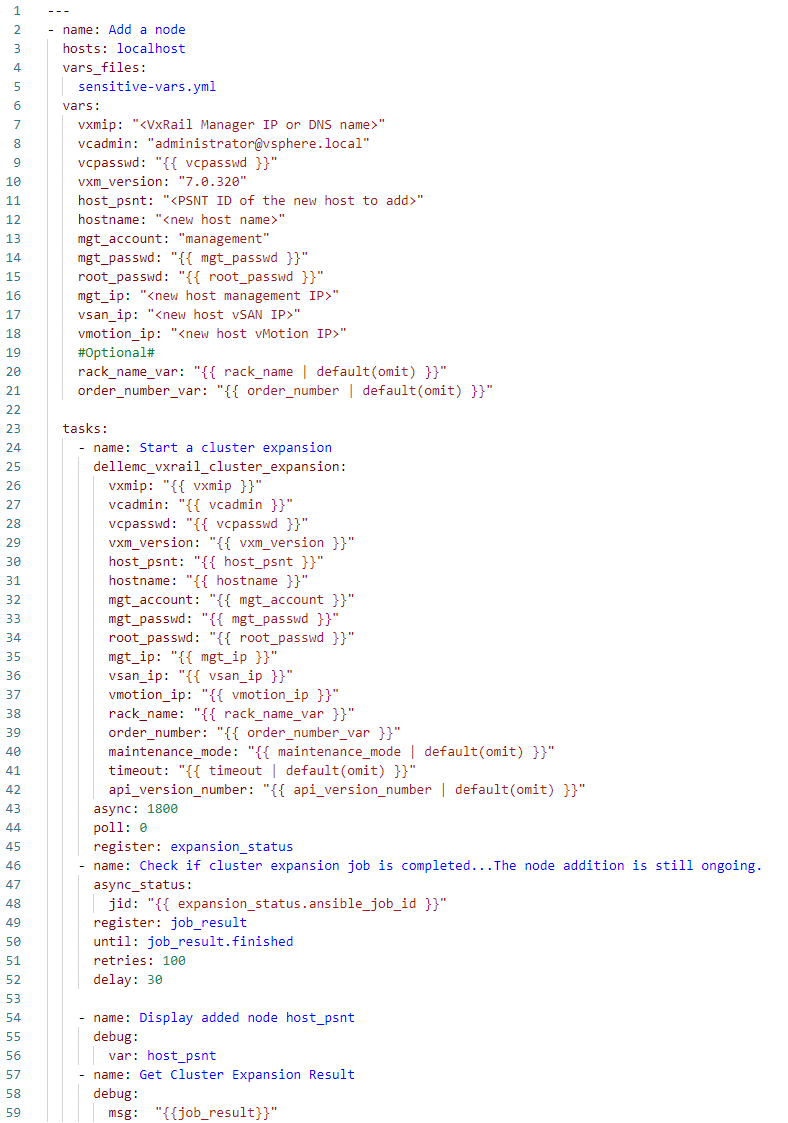

• Update a VxRail satellite node

- Module 4: Cluster Expansion or Scaling Out (~25 min / Advanced) - In this module, you will experience our official VxRail Ansible Modules and how easy it is to expand the cluster with an additional node.

• Connect to Ansible server

• View VxRail Ansible Modules documentation

• Add a node to the existing VxRail cluster

• Verify cluster state after expansion

- Module 5: Lifecycle Management or LCM (~25 min / Advanced) - In this module, you will experience our VxRail APIs using POSTMAN. You will see how easy LCM operations are using our VxRail API and software.

• Explore POSTMAN

• Generate a compliance report

• Explore the LCM pre-check and LCM upgrade API functions available to bring the cluster to the next VxRail version.

Note: If you’re a customer, you will need to ask your Dell or partner account team to create a session for you and provide a hyperlink to access this lab.

- vBrownBag session—vSphere and VxRail REST API: Get Started in an Easy Way is a vBrownBag community session that took place at the VMworld 2020 TechTalks Live event. There are no slides and no “marketing fluff,” but an extensive demo showing:

- How you can begin your API journey by using interactive, web-based API documentation

- How you can use these APIs from different frameworks (such as scripting with PowerShell in Windows environments) and configuration management tools (such as Ansible on Linux)

- How you can consume these APIs from virtually any application in any programming language.

- vBrownBag session—Automating large scale HCI deployments programmatically using REST APIs is a vBrownBag community session that took place at the VMworld 2021 TechTalks Live event. This approx. 10 minute session discusses the sample use cases and tools at your disposal, allowing you to jumpstart your API journey in various frameworks quickly. It includes a demo of VxRail cluster expansion using PowerShell.

301 Level

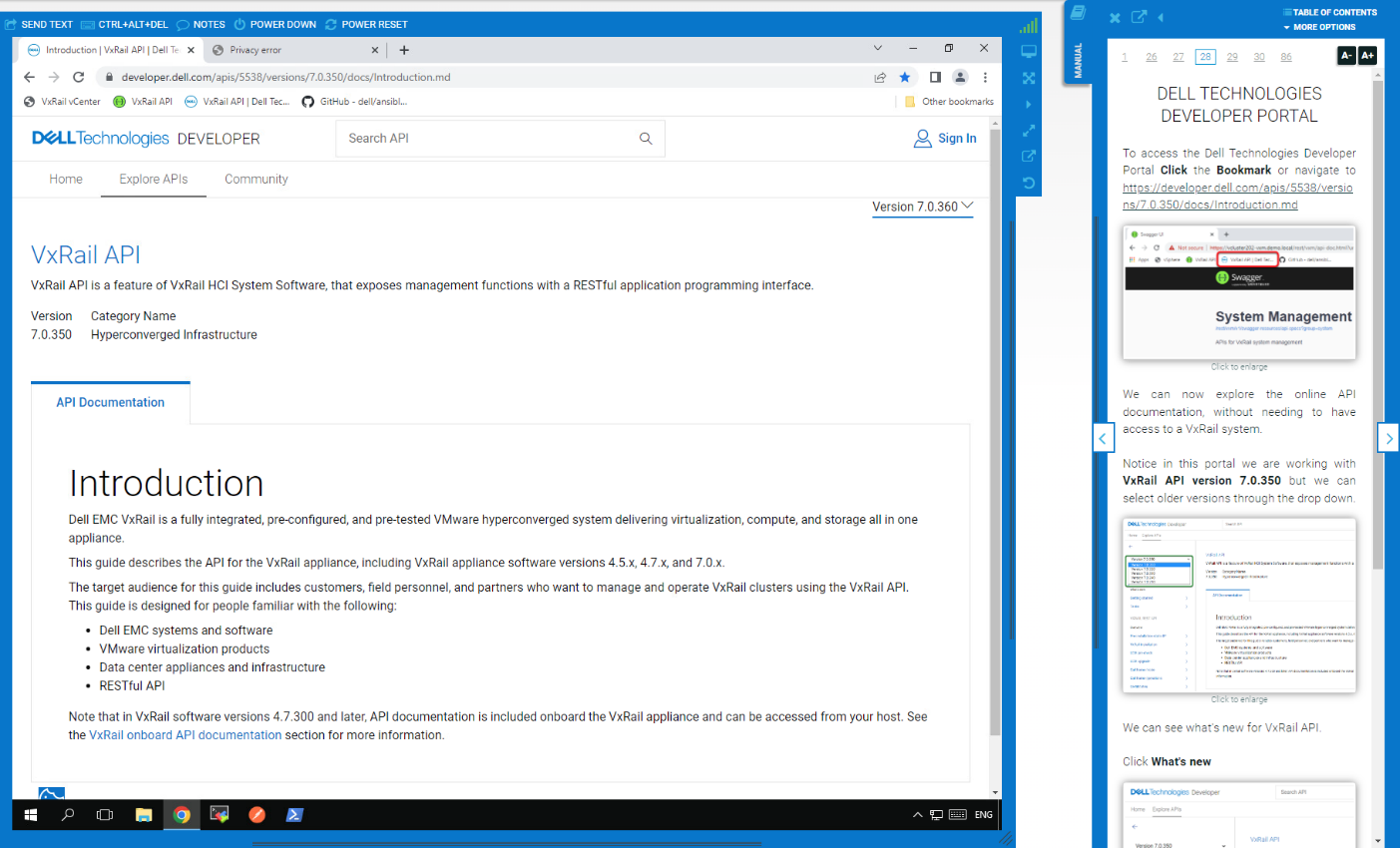

- (Updated!) Manual—VxRail API User Guide at Dell Technologies Developer Portal is an official web-based version of the reference manual for VxRail API. It provides a detailed description of each available API function.

Make sure to check the “Tutorials” section of this web-based manual, which contains code examples for various use cases and will replace the API Cookbook over time.

- Manual—VxRail API User Guide is an official reference manual for VxRail API in PDF format. It provides a detailed description of each available API function, support information for specific VxRail HCI System Software versions, request parameters and possible response codes, successful call response data models, and example values returned. Dell Technologies Support portal access is required.

- (Updated!) Ansible Modules—The Ansible Modules for Dell VxRail available on GitHub and Ansible Galaxy allow data center and IT administrators to use Red Hat Ansible to automate and orchestrate the configuration and management of Dell VxRail.

The Ansible Modules for Dell VxRail are used for gathering system information and performing cluster level operations. These tasks can be executed by running simple playbooks written in YAML syntax. The modules are written so that all the operations are idempotent, therefore making multiple identical requests has the same effect as making a single request.

- PowerShell Package—VxRail API PowerShell Modules is a package with VxRail.API PowerShell Modules that allows simplified access to the VxRail API, using dedicated PowerShell commands and integrated help. This version supports VxRail HCI System Software 7.0.010 or later.

Note: You must sign into the Dell Technologies Support portal to access this link successfully.

- API Reference—vSphere Automation API is an official vSphere REST API reference that provides API documentation, request/response samples, and usage descriptions of the vSphere services.

- API Reference—VMware Cloud Foundation on Dell VxRail API Reference Guide is an official VMware Cloud Foundation (VCF) on VxRail REST API reference that provides API documentation, request/response samples, and usage descriptions of the VCF on VxRail services.

- Blog Post—Deployment of Workload Domains on VMware Cloud Foundation 4.0 on Dell VxRail using Public API is a VMware blog explaining how you can deploy a workload domain on VCF on VxRail using the API with the CURL shell command.

I hope you find this list useful. If so, make sure that you bookmark this blog for your reference. I will update it over time to include the latest collateral.

Enjoy your Infrastructure as Code journey with the VxRail API!

Author: Karol Boguniewicz, Senior Principal Engineering Technologist, VxRail Technical Marketing

Twitter: @cl0udguide

VxRail’s Latest Hardware Evolution

Thu, 04 Jan 2024 17:22:21 -0000

|Read Time: 0 minutes

December is a time of celebration and anticipation, a month in which we may reflect on the events of the year and look ahead to what is yet to come. Charles Dickens’ “A Christmas Carol” – and its many stage and movie remakes – is one of those literary classics that helps showcase this season’s magic at its finest. It is even said that there is a special kind of magic—one full of excitement, innovation, and productivity—that finds a way to (hyper)converge the past, present, and future for data center administrators all around the world who have been good all year!

No, your wondering eyes do not deceive you. Appearing today are VxRail’s next generation platforms—the VE-660 and VP-760—in all-new, all-NVMe configurations! While Santa’s elves have spent the year building their backlog of toys and planning supply-chain delivery logistics that rival SLA standards of the world’s largest e-tailers, the VxRail team has been hard at work innovating our VxRail family portfolio to ensure that your workloads can run faster than ever before. So, let’s grab a glass of eggnog and invite the holiday spirits along for a tour of VxRail past, present, and future to better understand our latest portfolio addition.

Figure 1. VxRail VE-660

Figure 2. VxRail VP-760

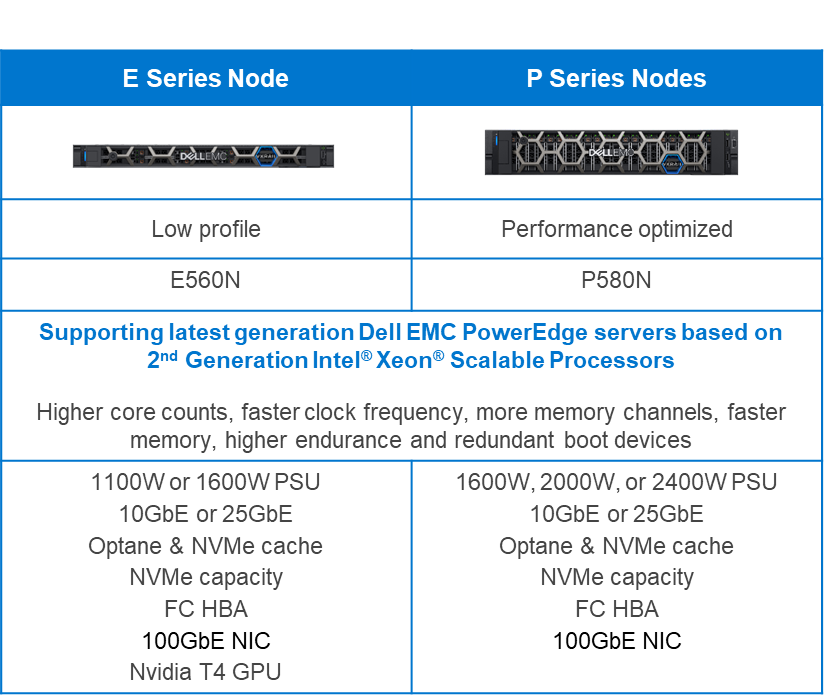

Spirit of VxRail Past

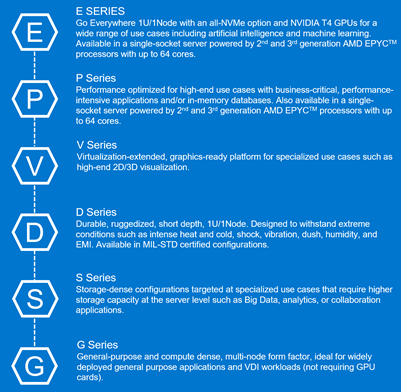

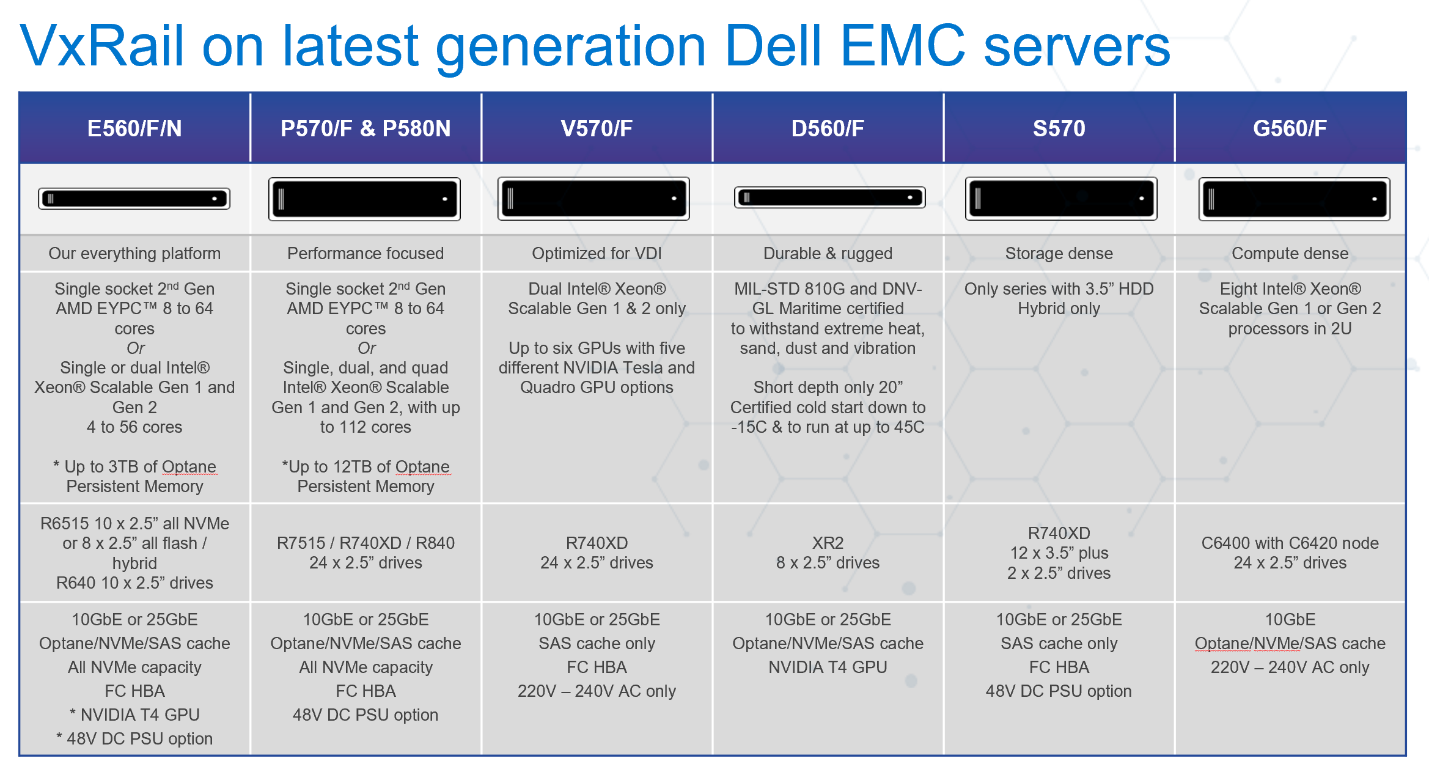

This was especially true earlier this summer when we launched the VE-660 and VP-760 VxRail platforms based on 16th Generation Dell PowerEdge servers. These next-gen successors to the VxRail E-Series and P-Series platforms not only contained the latest hardware innovations, but also represented a systemic change in the overall VxRail offering.

First, the mainline E- and P-series platforms were respectively re-christened as the VE-660 and VP-760. This was done primarily to invite easier comparison points to the underlying PowerEdge servers on which they’re based: the R660 and R760. Second, we tracked how the use of accelerators in the data center had evolved over the years and made the strategic decision to fold the capabilities of the V-Series platform into the P-Series by way of specific riser configurations. Now, customers can glean all the benefits of a high-performance 2U system with the choice of either storage-optimized (up to 28 total drive bays) or accelerator-optimized (up to 2x double-wide or 6x single-wide GPUs) chassis configurations, whichever best aligns with their workload needs. And third, VxRail platforms dropped the storage type suffix from the model name. Hybrid and all-flash (and, as of today, all-NVMe; more on this later) storage variants are now offered as part of the riser configuration selection options of these baseline platforms, where applicable.

These changes are representative of how the breadth and depth of customer needs have grown tremendously over the years. By taking these steps to streamline the VxRail portfolio, we charted an evolutionary path forward that continues our commitment to offer greater customer choice and flexibility.

Spirit of VxRail Present

These themes of greater choice and flexibility are amplified by the architectural improvements underpinning these new VxRail platforms. Primary among them is the introduction of Intel® 4th Generation Xeon® Scalable processors. Intel’s latest processors do more than bump VxRail core density per socket to 56 (112 max per node). They also come with built-in AMX accelerators (Advanced Matrix Extensions) that support AI and HPC workloads without the need for any additional drivers or hardware. For a deeper dive into the Intel® AMX capability set, the Spirit of VxRail Present invites you to read this blog: VxRail and Intel® AMX, Bringing AI Everywhere, authored by Una O’Herlihy.

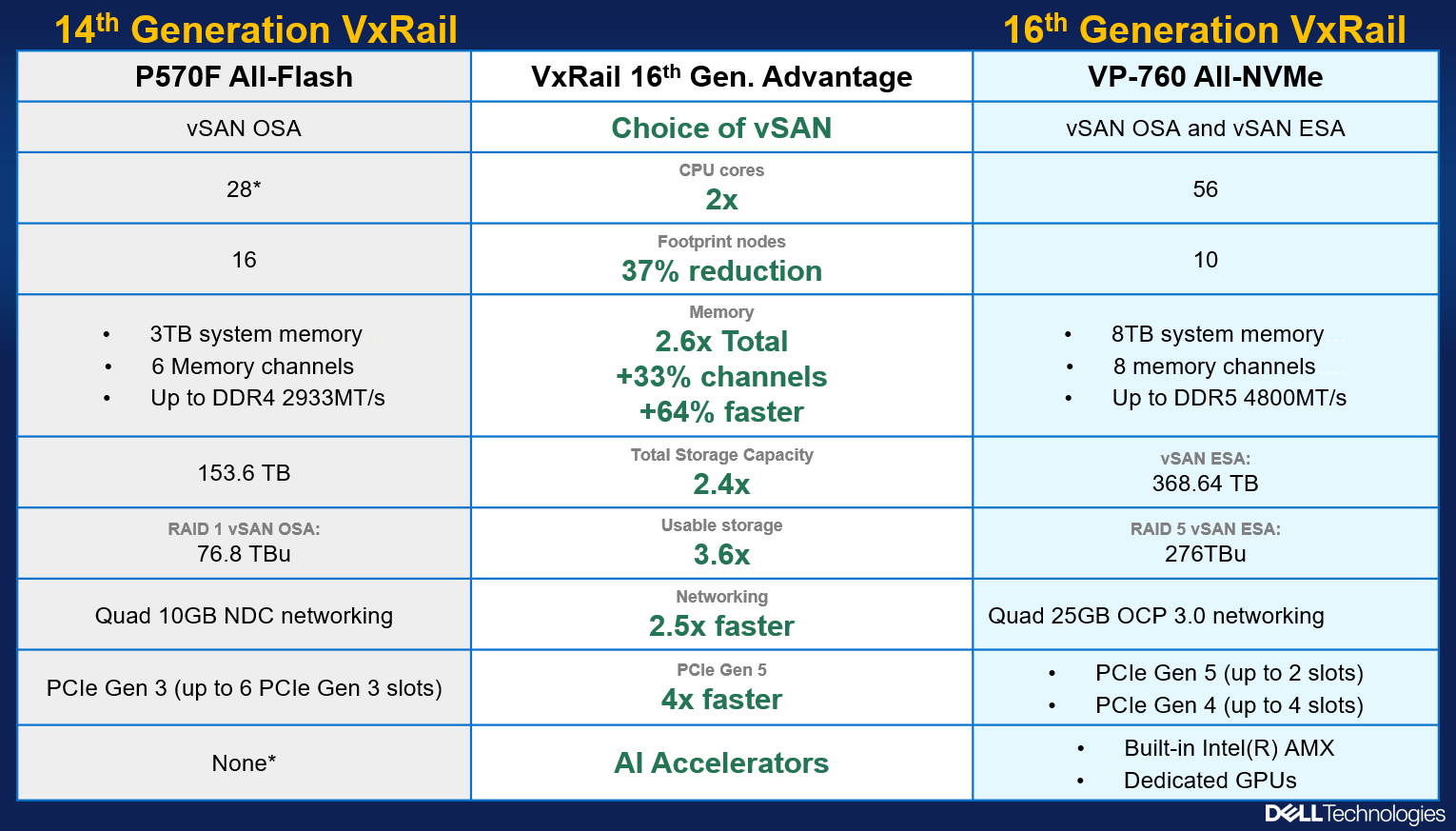

Intel’s latest processors also usher in support for DDR5 memory and PCIe Gen 5, two other architectural pillars that underpin significant jumps in performance. The following table offers a high-level overview and comparison of these pillars and a useful at-a-glance primer for those considering a technology refresh from earlier generation VxRail:

Table 1. VxRail 14th Generation to 16th Generation comparison

| | VxRail VE-660 & VP-760 | VxRail E560, P570 & V570 |
|---|---|---|
| Intel Chipset | 4th Generation Xeon | 2nd Generation Xeon |
| Cores | 8 - 56 | 4 - 28 |
| TDP | 125W – 350W | 85W – 205W |
| Max DRAM Memory | 4TB per socket | 1.5TB per socket |
| Memory Channels | 8 (DDR5) | 6 (DDR4) |
| Memory Bandwidth | Up to 4800 MT/s | Up to 2933 MT/s |
| PCIe Generation | PCIe Gen 5 | PCIe Gen 3 |
| PCIe Lanes | 80 | 48 |
| PCIe Throughput | 32 GT/s | 8 GT/s |

As the operational needs of a business change day-by-day, finding the right balance between workload density and load balance can often feel like an infinite war for resources. The adoption of DDR5 memory across the latest generation of VxRail platforms offers additional flexibility in the way system resources can be divvied up by virtue of two key benefits: greater memory density and faster bandwidth. The VE-660 and VP-760 wield eight memory channels per processor, with the ability to slot up to two 4800MT/s DIMMs per channel for a maximum memory capacity of 8TB per node. Compared to a VxRail P570, the density and speed improvements are staggering: 33% more memory channels per processor, 2.6x increase in per system total memory, and up to a 64% increase in memory speed! With faster and greater density compute and memory available for workloads, each node in a VxRail cluster can handle more VMs, and if there is ever a case of task bottlenecking, there are plenty of resources still available for optimal load balancing.
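The generational memory figures quoted above can be reproduced from Table 1 with a few lines of arithmetic. The following is a minimal sketch, not an official sizing tool: the `MemoryConfig` class and its field names are hypothetical, two sockets per node is assumed for both generations, and the peak-bandwidth property assumes a 64-bit (8-byte) data path per memory channel, which is a theoretical ceiling rather than a measured result.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryConfig:
    """Per-socket memory characteristics taken from Table 1 (names are illustrative)."""
    channels_per_socket: int
    speed_mts: int             # DIMM transfer rate in MT/s
    max_tb_per_socket: float
    sockets: int = 2           # assumption: dual-socket nodes in both generations

    @property
    def max_tb_per_node(self) -> float:
        return self.max_tb_per_socket * self.sockets

    @property
    def peak_gbs_per_socket(self) -> float:
        # Theoretical peak: transfers per second x 8 bytes per channel x channels.
        return self.speed_mts * 8 * self.channels_per_socket / 1000


def pct_increase(new: float, old: float) -> float:
    """Percentage increase of new over old."""
    return (new / old - 1) * 100


gen16 = MemoryConfig(channels_per_socket=8, speed_mts=4800, max_tb_per_socket=4.0)
gen14 = MemoryConfig(channels_per_socket=6, speed_mts=2933, max_tb_per_socket=1.5)

channel_gain = pct_increase(gen16.channels_per_socket, gen14.channels_per_socket)
speed_gain = pct_increase(gen16.speed_mts, gen14.speed_mts)
capacity_ratio = gen16.max_tb_per_node / gen14.max_tb_per_node

print(f"Memory channels per processor: +{channel_gain:.0f}%")
print(f"Memory speed:                  +{speed_gain:.0f}%")
print(f"Max memory per node:           {capacity_ratio:.2f}x")
print(f"Theoretical peak per socket:   {gen14.peak_gbs_per_socket:.1f} GB/s -> "
      f"{gen16.peak_gbs_per_socket:.1f} GB/s")
```

The channel and speed gains round to the 33% and 64% quoted in the text, and the per-node capacity ratio works out to about 2.67x, in line with the roughly 2.6x figure above.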

When we consider the presence of PCIe Gen 5, we see an even greater increase in the overall performance envelope. PowerEdge’s Next-Generation Tech Note does a great job of contextualizing the capabilities of PCIe Gen 5. The main takeaway for VxRail, however, is that it increases the maximum bandwidth achievable from various peripheral components by roughly 25% when compared to PCIe Gen 4 and roughly 66% when compared to PCIe Gen 3. In particular, the jump in available PCIe lanes (48 lanes to a luxurious 80 lanes) and associated throughput (8 GT/s to 32 GT/s per lane) from Gen 3 to Gen 5 significantly reduces performance bottlenecks, resulting in faster storage transfer rates and more bandwidth for accelerators to process AI and ML workloads.
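To put the Table 1 PCIe figures into more familiar units, the sketch below converts the per-lane transfer rate (GT/s) into an approximate payload rate (GB/s) and multiplies by the lane count. It assumes the 128b/130b line encoding used by PCIe Gen 3 through Gen 5 and ignores packet and protocol overhead, so the results are theoretical ceilings in one direction, not benchmark numbers. The function names are illustrative.

```python
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding (PCIe Gen 3 through Gen 5)


def lane_gbytes_per_s(gt_per_s: float) -> float:
    """Approximate one-direction payload rate of a single lane in GB/s."""
    return gt_per_s * ENCODING_EFFICIENCY / 8


def aggregate_gbytes_per_s(gt_per_s: float, lanes: int) -> float:
    """Approximate one-direction payload rate across all lanes in GB/s."""
    return lane_gbytes_per_s(gt_per_s) * lanes


# Values from Table 1: Gen 3 at 8 GT/s with 48 lanes, Gen 5 at 32 GT/s with 80 lanes.
gen3_total = aggregate_gbytes_per_s(8, 48)
gen5_total = aggregate_gbytes_per_s(32, 80)
lane_count_gain_pct = (80 / 48 - 1) * 100

print(f"Gen 3: {lane_gbytes_per_s(8):.2f} GB/s per lane, {gen3_total:.1f} GB/s aggregate")
print(f"Gen 5: {lane_gbytes_per_s(32):.2f} GB/s per lane, {gen5_total:.1f} GB/s aggregate")
print(f"Lane count increase (48 -> 80): {lane_count_gain_pct:.1f}%")
print(f"Aggregate ratio Gen 5 / Gen 3:  {gen5_total / gen3_total:.2f}x")
```

Under these assumptions, each Gen 5 lane carries four times the data of a Gen 3 lane, and the move from 48 to 80 lanes adds roughly two-thirds more lanes on top of that.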

PCIe Gen 5 is also backwards compatible with previous generation peripherals, enabling a certain degree of flexibility with respect to VxRail’s component extensibility and longevity in the data center. Yesterday’s technologies can still be used, but the VE-660 and VP-760 can adapt to growing workload demands by taking full advantage of the latest peripherals as they are released. They are even equipped with an additional PCIe slot over their E- & P-Series predecessors, providing extra dimensions of configuration. These boons in flexibility ensure any investment into this generation of VxRail enjoys longer relevance as your infrastructure backbone.

Spirit of VxRail Future

Even with all these architectural improvements defining the VP-760 and VE-660, we knew we could find ways of improving the capability set. So, we made our list of desired features (and checked it twice!) and determined that the best way to augment these next-generation hardware enhancements would be with the introduction of all-NVMe storage options.

The Spirit of VxRail Past wishes to remind us that VxRail with all-NVMe storage is not new—NVMe first made its way to the VxRail lineup with the P580N and E560N almost four years ago and has been a mainstay facet of the VxRail with vSAN architecture ever since. However, what is most compelling about all-NVMe versions of the VE-660 and VP-760—what the Spirit of VxRail Future wishes to strongly communicate—is that NVMe opens the door to two very compelling benefits: additional flexibility of choice with respect to vSAN architecture and an associated increase in overall storage capacity with the addition of read intensive NVMe drives in sizes of up to 15.36TB.

The following figure outlines all of the generational advantages customers can benefit from when transitioning from existing 14th Generation VxRail environments to VP-760 all-NVMe platforms.

Figure 4. The VxRail 16th Generation all-NVMe advantage

In addition, VxRail on 16th Generation hardware can now support deployments with either vSAN Original Storage Architecture (OSA) or vSAN Express Storage Architecture (ESA). David Glynn provided a great summary of the core value vSAN ESA brings to the table for VxRail in his blog written nearly a year ago. With today’s launch, the VP-760 and VE-660 can now take advantage of vSAN ESA’s single-tier storage architecture that enables RAID-5 resiliency and capacity with RAID-1 performance. Customers who choose to deploy with vSAN OSA can also see the benefit of these new read intensive NVMe drives, with a total storage per node of up to 122.88TB in the VE-660 and 322.56TB in the VP-760. For those who deploy with vSAN ESA, maximum achievable storage is 153.6TB on the VE-660 and up to 368.64TB on the VP-760.
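As a quick sanity check on these capacity figures, the stated per-node maximums can be divided by the 15.36TB drive size mentioned earlier. This is a small sketch of that arithmetic only. The drive counts it prints are quotients implied by the published numbers, not a statement of supported configurations, and the dictionary layout and function name are hypothetical.

```python
from decimal import Decimal

DRIVE_TB = Decimal("15.36")  # largest read intensive NVMe drive size quoted in the text

# Maximum storage per node as stated in the text, keyed by (platform, vSAN architecture).
MAX_TB_PER_NODE = {
    ("VE-660", "OSA"): Decimal("122.88"),
    ("VP-760", "OSA"): Decimal("322.56"),
    ("VE-660", "ESA"): Decimal("153.6"),
    ("VP-760", "ESA"): Decimal("368.64"),
}


def implied_drive_count(total_tb: Decimal, drive_tb: Decimal = DRIVE_TB) -> int:
    """Return how many drives of drive_tb add up to total_tb, or raise if not a whole number."""
    count = total_tb / drive_tb
    if count != count.to_integral_value():
        raise ValueError(f"{total_tb}TB is not a whole multiple of {drive_tb}TB")
    return int(count)


for (platform, architecture), total in MAX_TB_PER_NODE.items():
    drives = implied_drive_count(total)
    print(f"{platform} {architecture}: {total}TB = {drives} x {DRIVE_TB}TB")

for platform in ("VE-660", "VP-760"):
    gain = MAX_TB_PER_NODE[(platform, "ESA")] / MAX_TB_PER_NODE[(platform, "OSA")] - 1
    print(f"{platform}: ESA maximum is {gain * 100:.1f}% above the OSA maximum")
```

Using `Decimal` keeps the division exact, which avoids floating-point noise when checking that each total is a whole multiple of the drive size.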

The Spirit of VxRail Future has seen the value of all-NVMe and is content knowing that VxRail will continue to underpin VMware mission-critical workloads for years to come.

Resources

Author: Mike Athanasiou, Sr. Engineering Technologist

VxRail and Intel® AMX, Bringing AI Everywhere

Wed, 13 Dec 2023 22:54:31 -0000

|Read Time: 0 minutes

We have seen exponential growth and adoption of Artificial Intelligence (AI) across nearly every sector in recent years, with many implementing their AI strategies as soon as possible to tap into the benefits and efficiencies AI has to offer.

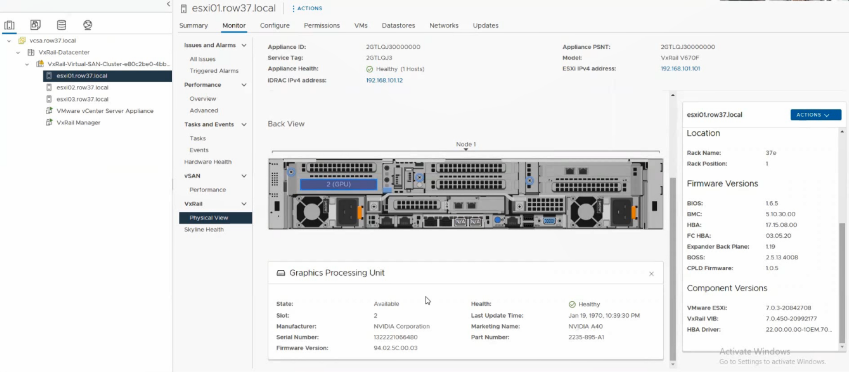

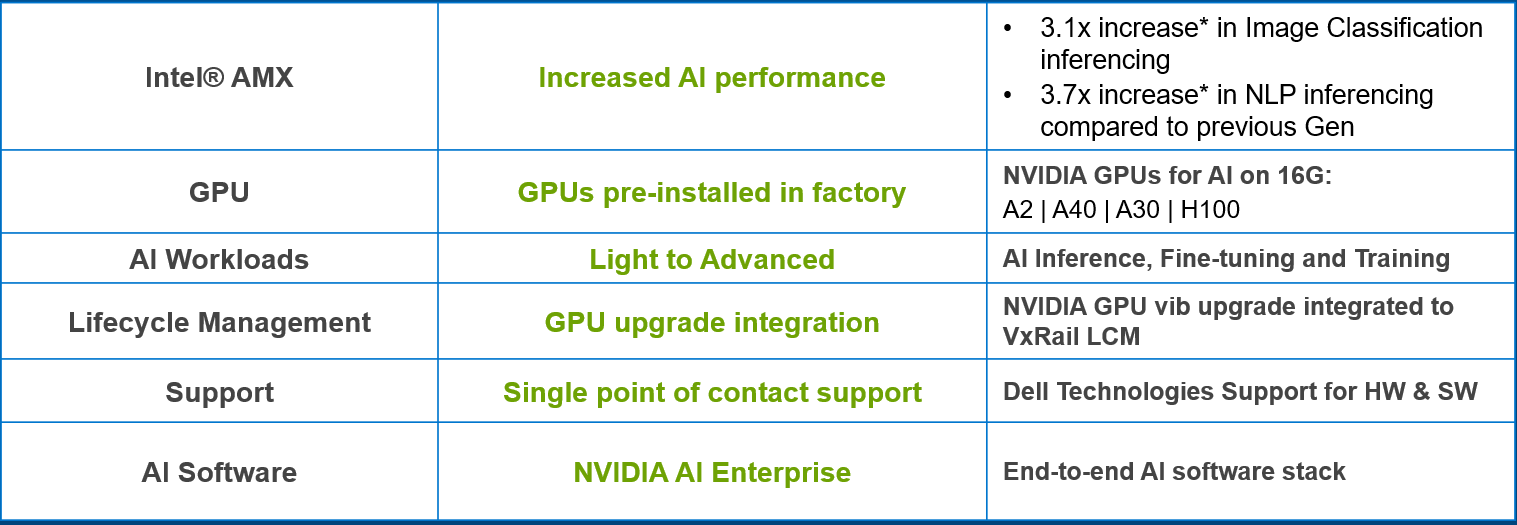

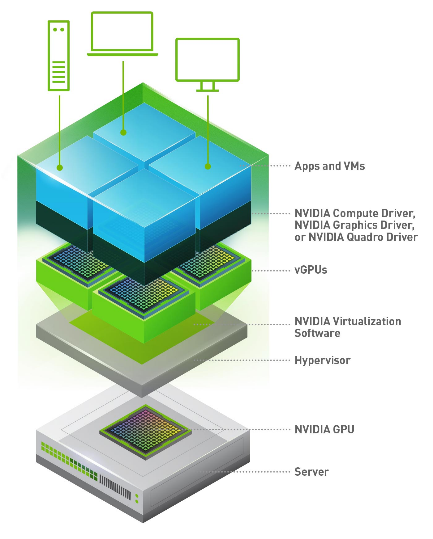

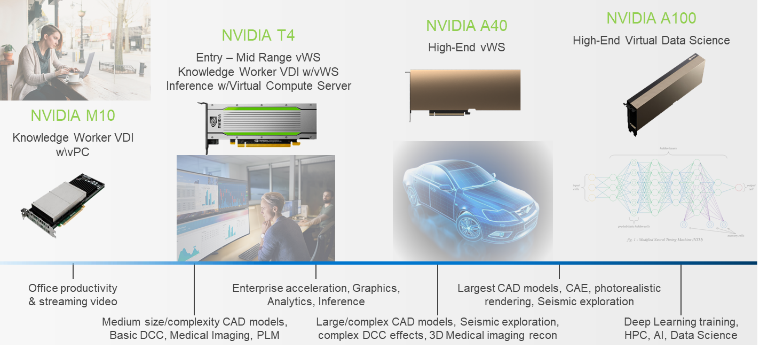

With our VxRail platforms, we have been supporting fully integrated and pre-installed GPUs for many years, with an array of NVIDIA GPUs available that already caters for high performance compute, graphics, and, of course, AI workloads.

These GPUs are stood up as part of the first run deployment of your VxRail system (though licensing for NVIDIA will be separate) and will be displayed and managed in vCenter. On top of this integration with vSphere and VxRail Manager, you will also see the GPUs’ lifecycle management taken care of through vLCM, where the GPU vib will be added to VxRail’s LCM bundle and then upgraded as part of that upgrade process.

When considering the type of accelerator you need for your VxRail system, there is now an additional option outside of discrete GPUs, which may have just enough acceleration capabilities to cater to your AI workloads.

Figure 1. Embrace AI with VxRail

Our VxRail 16th Generation platforms, launched this past summer, come with a choice of Intel® 4th generation Xeon® Scalable processors, all of which come with built-in accelerators called Intel® Advanced Matrix Extensions (AMX) that are deeply embedded in every core of the processor. The Intel® AMX accelerator, which benefits both AI and HPC workloads, is supported out-of-the-box and comes as standard without any requirement for drivers, special hardware, or additional licensing.
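If you want to confirm that a Linux guest actually sees the AMX capability, one simple approach is to look for the AMX feature flags that the Linux kernel reports in `/proc/cpuinfo` (`amx_tile`, `amx_int8`, and `amx_bf16`). The following is a minimal sketch under that assumption. The helper names are hypothetical, and whether the flags are exposed to a virtual machine depends on the hypervisor and VM configuration, which this blog does not cover.

```python
import os

AMX_FLAGS = ("amx_tile", "amx_int8", "amx_bf16")


def amx_flags_present(cpuinfo_text: str) -> dict[str, bool]:
    """Report which AMX feature flags appear in the first 'flags' line of cpuinfo text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            flags.update(value.split())
            break  # all logical CPUs normally report the same flags
    return {name: name in flags for name in AMX_FLAGS}


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read the kernel's CPU description (Linux only)."""
    with open(path, encoding="utf-8") as handle:
        return handle.read()


if __name__ == "__main__":
    if os.path.exists("/proc/cpuinfo"):
        for flag, present in amx_flags_present(read_cpuinfo()).items():
            print(f"{flag}: {'yes' if present else 'no'}")
    else:
        print("/proc/cpuinfo not found; this check only applies to Linux systems.")
```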

In this blog, we will cover the performance testing carried out by our VxRail Performance team in conjunction with Intel, as well as the gains we can expect to see when running AI inferencing workloads on our VxRail 16th Generation platforms leveraging Intel® AMX.

But first – what is Intel® AMX and how does it work?

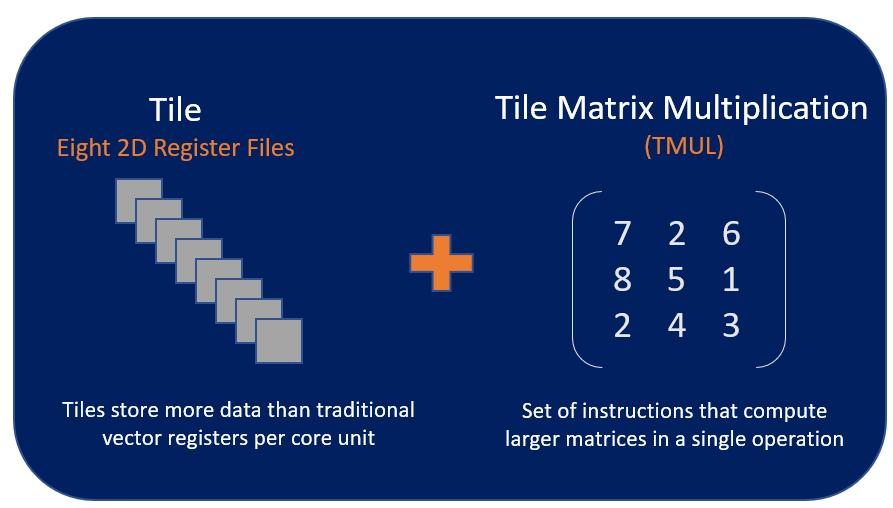

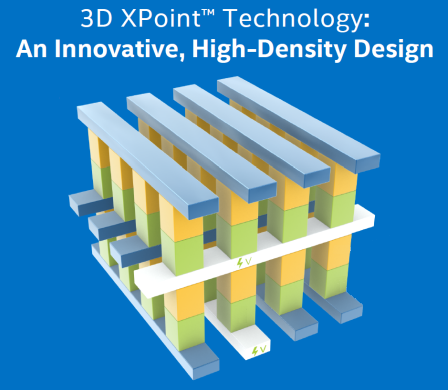

Intel® AMX’s architecture consists of two components:

- Tiles – consisting of eight two-dimensional registers that store large chunks of data, each 1 kilobyte in size

- Tile Matrix Multiplication (TMUL) – an accelerator engine attached to the tiles that performs matrix-multiply computations for AI

The accelerator works by combining larger 2D register files called tiles and a set of matrix multiplication instructions, enabling Intel® AMX to deliver the type of matrix compute functionality that you commonly find in dedicated AI accelerators (such as GPUs) directly in the CPU cores. This allows AI workloads to run on the CPU instead of offloading them to dedicated GPUs.

Figure 2. Intel AMX Architecture Tile and TMUL

With this functionality, the Intel® AMX accelerator works best with AI workloads that rely on matrix math, like natural language processing, recommendation systems, and image recognition. The Intel® AMX accelerator delivers acceleration for both inferencing and deep learning on these workloads, providing a significant performance boost which we will cover shortly.

There are two data types – INT8 and BF16 – supported for Intel® AMX, both of which allow for the matrix multiplication I mentioned earlier.
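To make the tile and TMUL idea more concrete, here is a plain-Python emulation of a tiled INT8 matrix multiplication. It is only a conceptual sketch and does not execute any AMX instructions. The tile shape of 16 rows by 64 bytes is an assumption based on the 1 kilobyte tile size described above, the function names are hypothetical, and the special memory layout that real hardware expects for the second operand is deliberately ignored.

```python
TILE_ROWS = 16        # assumed maximum rows per tile
TILE_ROW_BYTES = 64   # assumed maximum bytes per tile row (16 x 64 bytes = 1 KB)


def tile_multiply_accumulate(acc, a_tile, b_tile):
    """acc += a_tile x b_tile: the multiply-accumulate step a TMUL-style engine performs."""
    for m, a_row in enumerate(a_tile):
        for n in range(len(b_tile[0])):
            acc[m][n] += sum(a_row[k] * b_tile[k][n] for k in range(len(b_tile)))


def blocked_matmul_int8(a, b):
    """Multiply int8 matrices a (M x K) and b (K x N) one tile at a time."""
    rows, inner, cols = len(a), len(b), len(b[0])
    tile_cols = TILE_ROW_BYTES // 4  # 16 four-byte accumulators fit in one 64-byte row
    c = [[0] * cols for _ in range(rows)]
    for i in range(0, rows, TILE_ROWS):
        for j in range(0, cols, tile_cols):
            # Accumulator tile: up to 16 x 16 wide integers.
            acc = [[0] * len(b[0][j:j + tile_cols]) for _ in a[i:i + TILE_ROWS]]
            for k in range(0, inner, TILE_ROW_BYTES):
                # Input tiles: up to 16 x 64 int8 values from a, 64 x 16 from b.
                a_tile = [row[k:k + TILE_ROW_BYTES] for row in a[i:i + TILE_ROWS]]
                b_tile = [row[j:j + tile_cols] for row in b[k:k + TILE_ROW_BYTES]]
                tile_multiply_accumulate(acc, a_tile, b_tile)
            for r, acc_row in enumerate(acc):
                c[i + r][j:j + len(acc_row)] = acc_row
    return c
```

The point of the sketch is the data flow: small int8 input tiles are loaded, multiplied, and accumulated into a wider integer tile, which is the pattern that matrix-heavy AI workloads repeat many times over.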

Some Intel® AMX workload use cases include:

- Image recognition

- Natural language processing

- Recommendation systems

- Media processing

- Machine translation

Did you say performance testing?

Yes, I did.

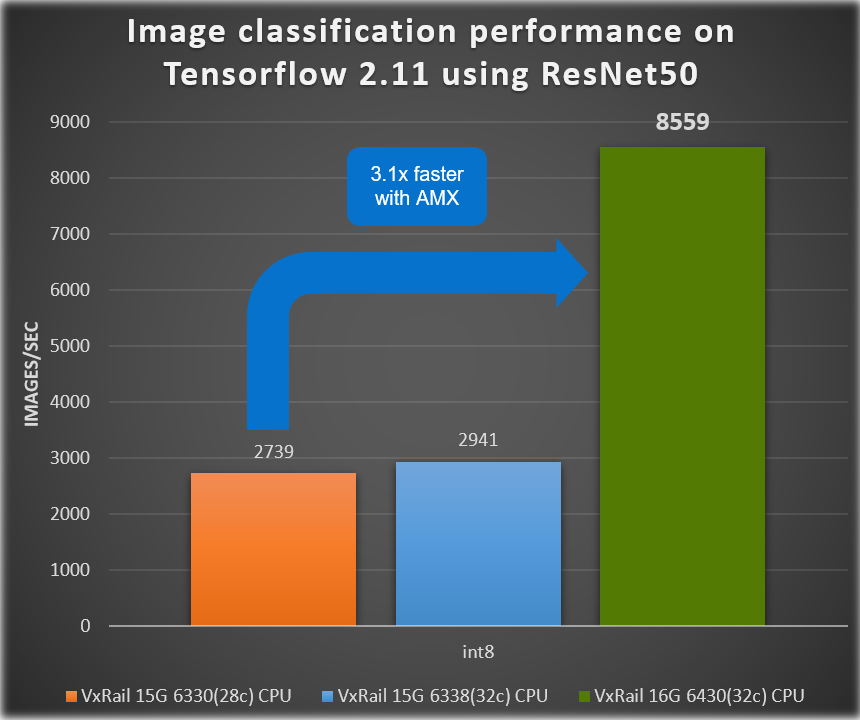

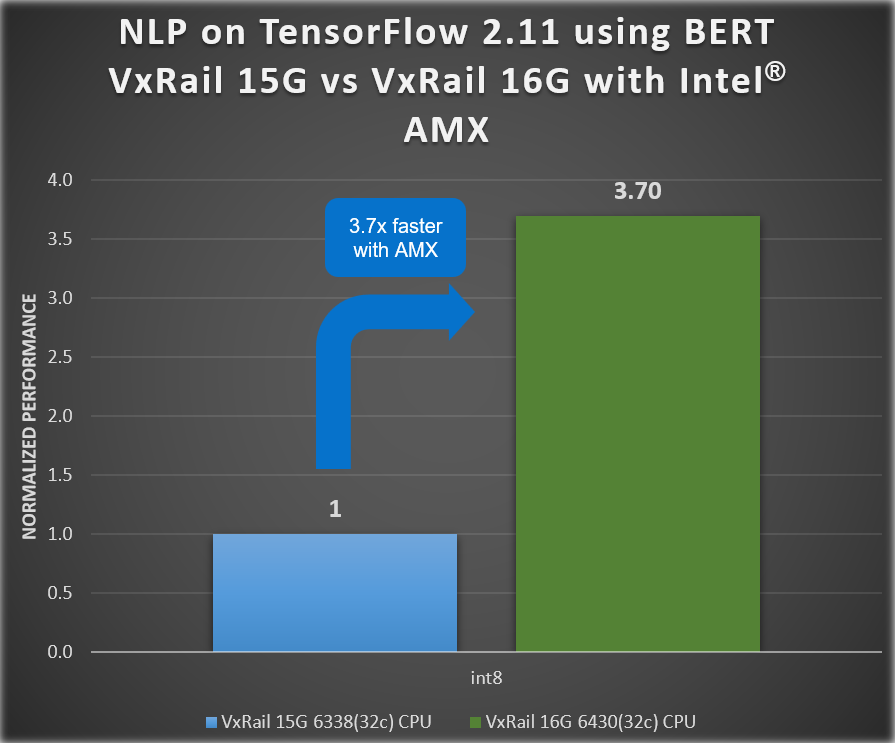

With this testing, we saw increased AI performance for two sets of benchmark results that demonstrated the generation-to-generation inference performance gains delivered by our 16th generation VxRail VE-660 platform (with Intel® AMX!) compared to previous 15th generation VxRail platforms.

The testing focused on the inferencing of two different AI tasks, one for image classification with the ResNet50 model and the other for natural language processing with the BERT-large model. The following covers the details of the testing:

Benchmark Testing:

- ResNet50 for Image Classification

- BERT benchmark for Natural Language Processing

Framework: TensorFlow 2.11

Table 1. Tested VxRail hardware overview

Generation | 16th Generation | 15th Generation | 15th Generation (different processor) |

System Name | VxRail VE-660 | VxRail E660N | VxRail E660N |

Number of Nodes | 4 | 4 | 4 |

| Components per VE-660 node | Components per E660N node | Components per E660N node |

Processor Model | Intel® Xeon ® 6430 (32c) | Intel® Xeon ® 6338 (32c) | Intel® Xeon ® 6330 (28c) |

Intel® AMX? | Yes | No | No |

Processors per node | 2 | 2 | 2 |

Core count per node | 64 | 64 | 56 |

Processor Frequency | 2.1 GHz, 3.4 GHz Turbo boost | 2.0 GHz, 3.0 GHz Turbo boost | 2.0 GHz, 3.10 GHz Turbo boost |

Memory per node | 512GB RAM | 512GB RAM | 512GB RAM |

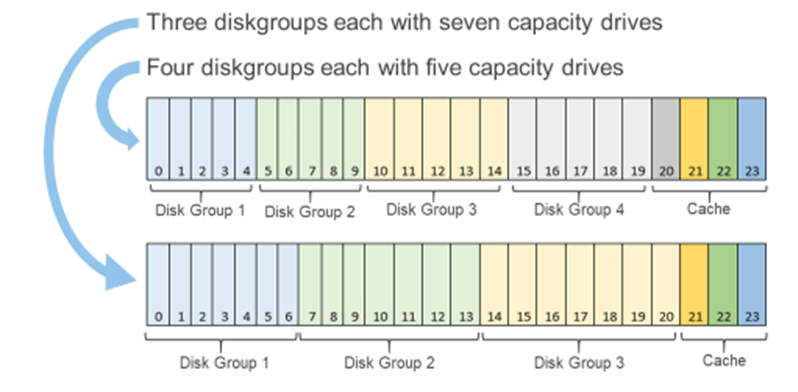

Storage | 2x diskgroups (1 x cache, 3 x capacity) | 2x diskgroups (1 x cache, 4 x capacity) | 2x diskgroups (1 x cache, 4 x capacity) |

vSAN OSA | vSAN OSA 8.0 U2 | vSAN OSA 8.0 | vSAN OSA 8.0 |

VxRail version | Engineer pre-release VxRail code | 8.0.010 | 8.0.010 |

We can see in the following figures that the ResNet50 image classification throughput increased by 3.1x, and we see a 3.7x increase in AI performance for the BERT benchmark results for natural language processing (NLP).

Figure 3. VxRail Generation-to-Generation – ResNet 50 inference results

Figure 4. VxRail Generation-to-Generation - BERT inference results

This exceptional increase in performance illustrates the type of AI performance gains you can achieve with Intel® AMX on VxRail without needing to invest in dedicated GPUs, enabling you to start your AI journey whenever you want.

Before we go, let’s review some highlights…

Intel® AMX and VxRail are…

- Already included in any Intel processor on VxRail 16th Generation VE-660 and VP-760 platforms

- Highly optimized for matrix operations common to AI workloads

- Cost-effective, allowing you to run AI workloads without the need of a dedicated GPU

- Integral to increased AI performance on VxRail 16th Generation platforms:

  - 3.1x for Image Classification*

  - 3.7x for Natural Language Processing (NLP)*

Intel® AMX and VxRail support…

- Most popular AI frameworks, including TensorFlow, PyTorch, OpenVINO, and more

- int8 and bf16 data types

- Deep Learning AI Inference and Training Workloads for:

  - Image recognition

  - Natural language processing

  - Recommendation systems

  - Media processing

  - Machine translation

(*Results based on engineering pre-release VxRail code)

Conclusion

Our VE-660 and VP-760 VxRail platforms come with built-in Intel® AMX accelerators which improve AI performance by 3.1x for image classification and 3.7x for NLP. The combination of these 16th Generation VxRail platforms and 4th generation Intel® Xeon® processors provides a cost-effective solution for customers that rely on Intel® AMX to meet their SLA for AI workload acceleration.

Author: Una O’Herlihy

A Closer Look at New Features Brought with VxRail 7.0.480

Sat, 17 Feb 2024 23:57:31 -0000

|Read Time: 0 minutes

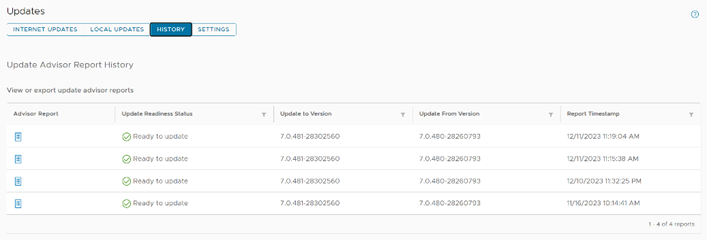

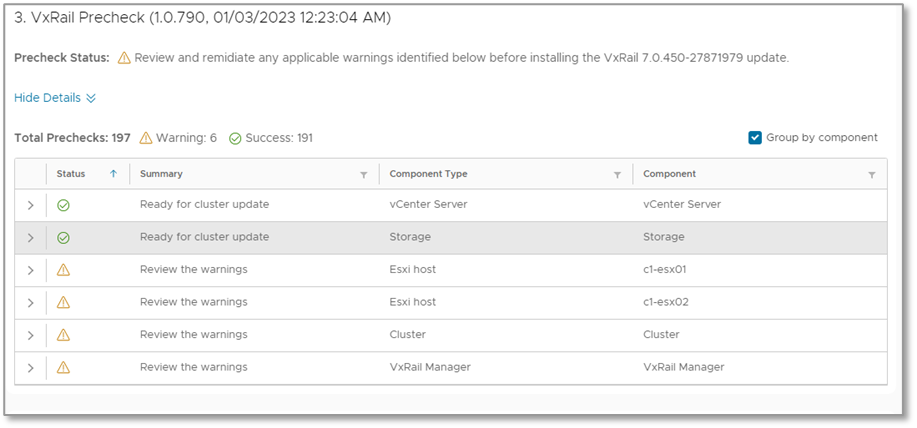

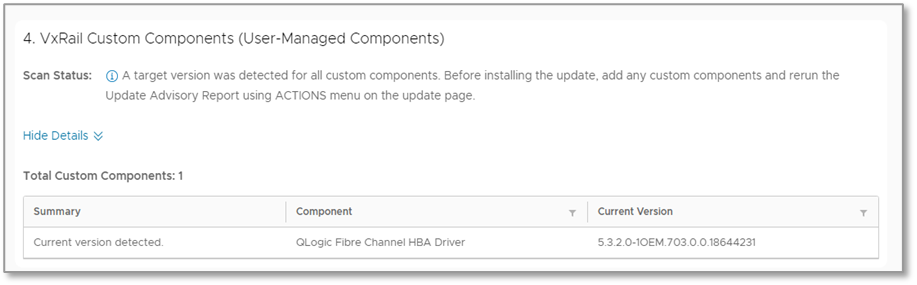

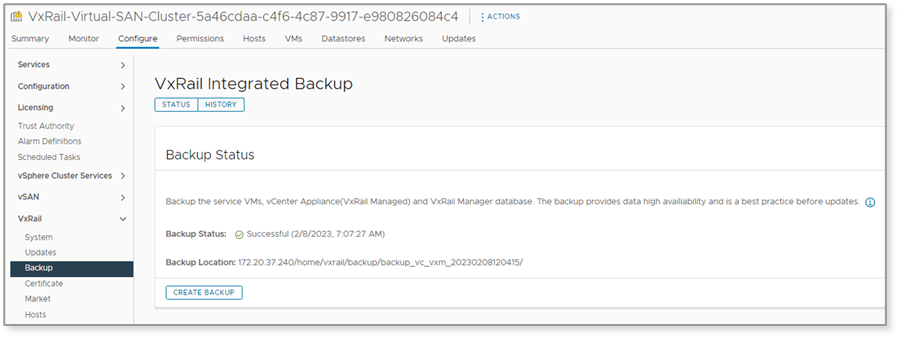

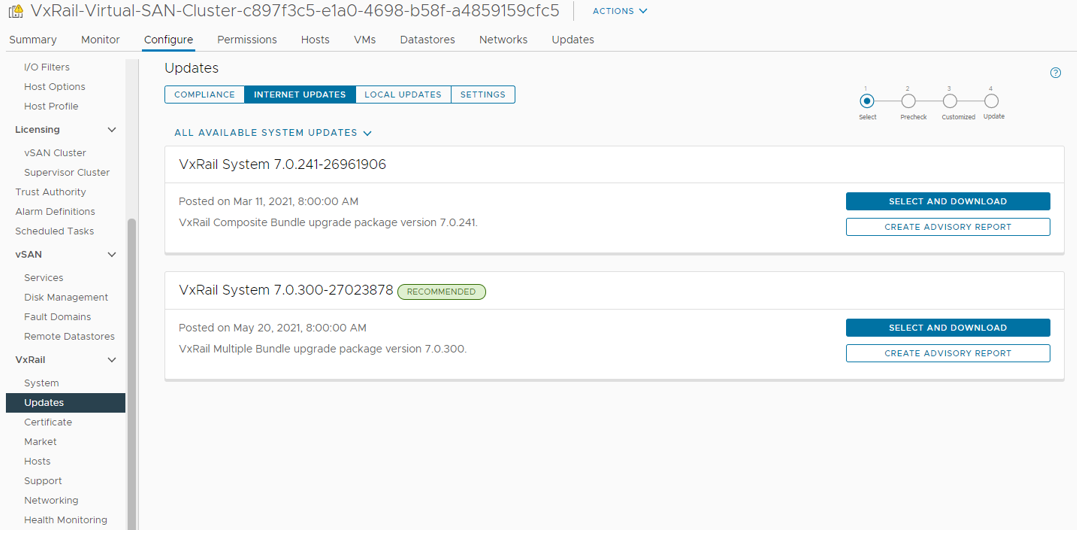

The landscape of VxRail software is ever-evolving. As software releases become available, so too do new features and functions. These new features and functions create a more robust ecosystem, focusing on simplifying regular tasks that appear mundane but are critical to maintaining a secure, up-to-date, and healthy IT environment. VxRail 7.0.480 brought several new and enhanced capabilities to administrators, continuing to build on the streamlined infrastructure management experience that VxRail offers. Many of these improvements are part of the LCM experience. Let’s take a moment to discuss some of these new software improvements and what they can do for infrastructure staff. These include expanded storage of update advisor reports from one report to thirty reports, the ability to export compliance reports to preservable files, automated node reboots for clusters, and extended ADC bundle and installer metadata upload functionality for improved prechecking and update advisor reporting.

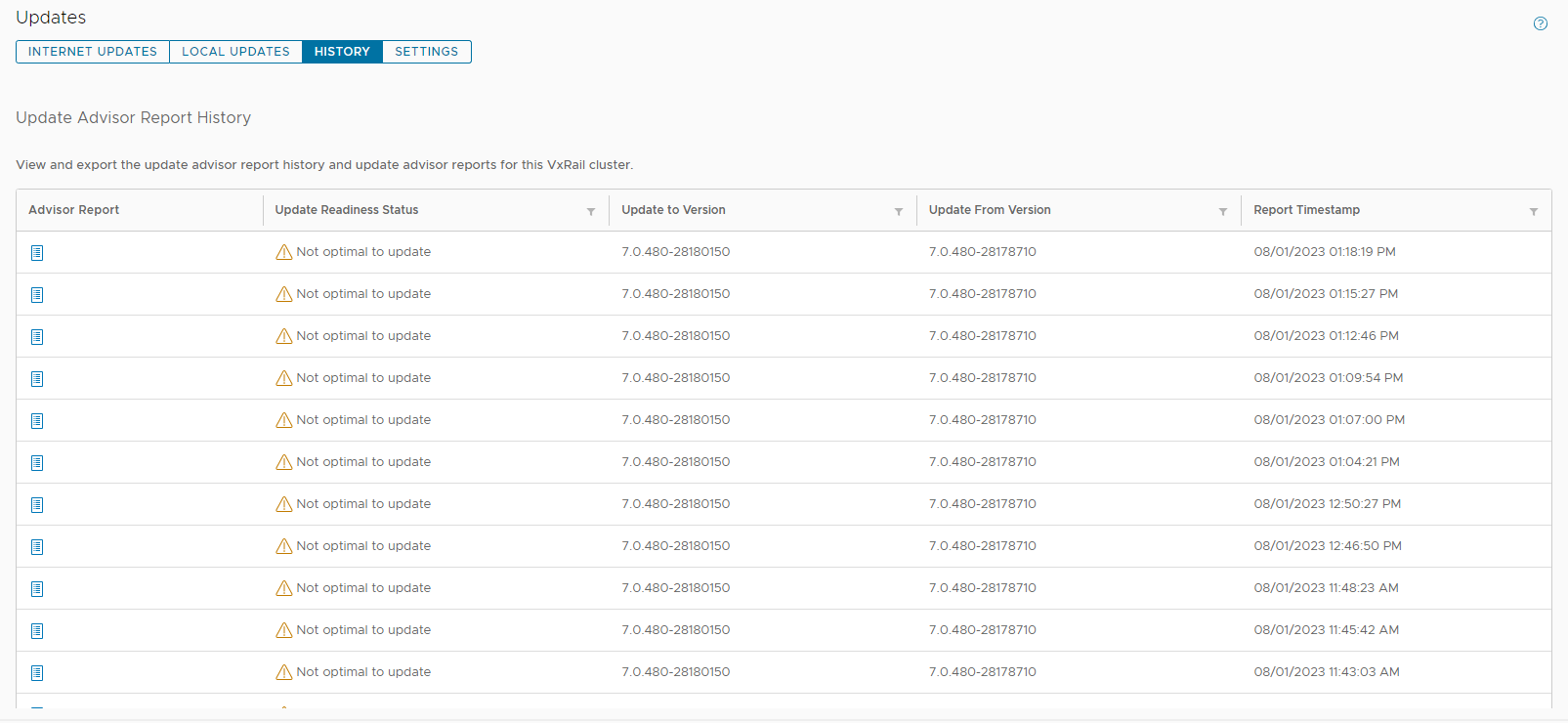

Extended update advisor report availability

Administrative teams have likely seen various update advisor reports. These reports have been part of the VxRail LCM experience for the past few releases and present a look at the cluster as it is at the moment. That said, storing multiple reports helps provide a documented history of the cluster. VxRail 7.0.480 has taken these singular reports and extended their storage to hold up to thirty reports, granting administrators the information and reporting to review up to the last thirty updates.

Figure 1. A view of the pane showing multiple update advisor reports available for review

Imagine that you have a large cluster. Different nodes could need different remediating actions. The ability to maintain multiple reports would enable administrators to address issues raised in a report while also creating a documentation trail for when corrective actions take multiple administrative cycles spanning extended lengths of time, possibly exceeding a day.

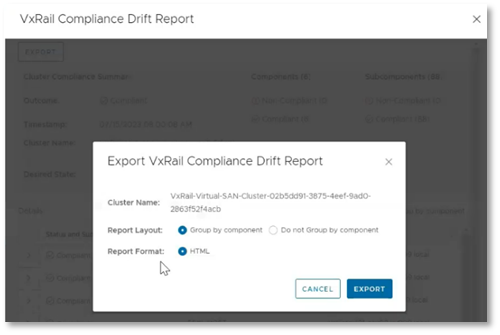

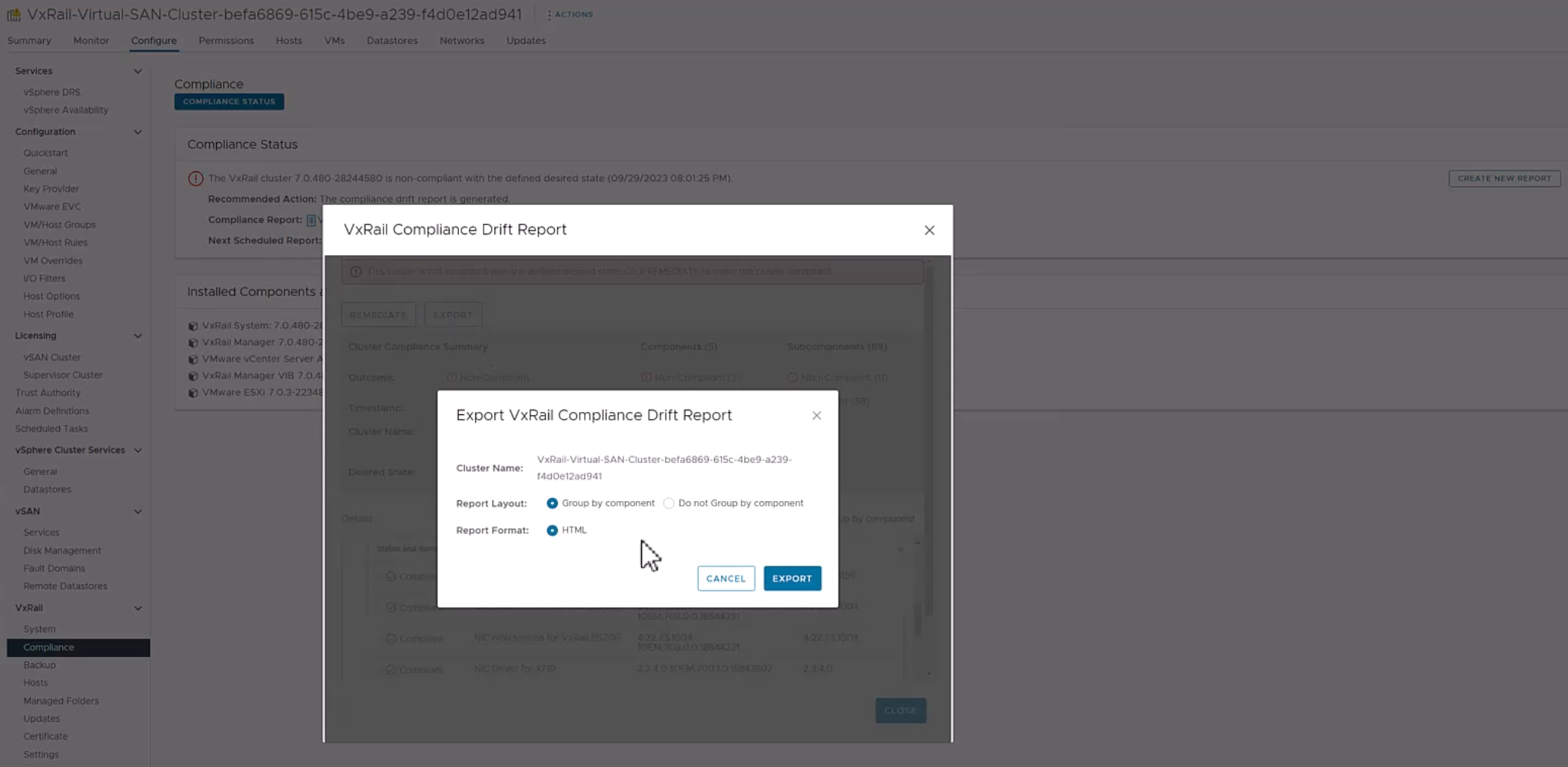

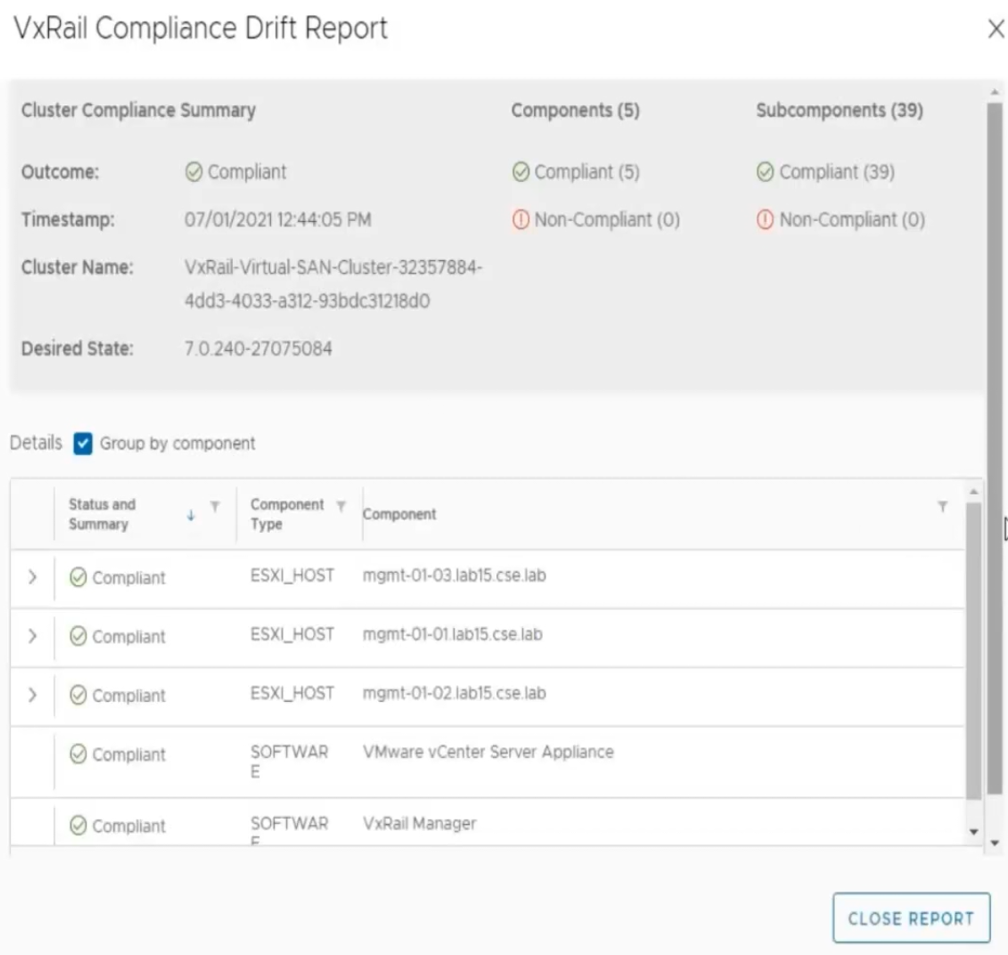

Export of compliance drift reports

Compliance drift reports are another reporting element of the LCM process, helping administrators to ensure that clusters conform with a Continuously Validated State (CVS) on a daily basis. This frees up administrators to attend to business-specific tasks, while ensuring that the more mundane work of gathering software versions for review is automated. This is a critical task that helps prevent time-intensive infrastructure issues that IT teams need to dedicate resources to correcting. Additionally, these reports ensure that LCM updates are successful by identifying any components that may have drifted from what is defined by the current Continuously Validated State.

Figure 2. The option to export a drift report to a local HTML file

These compliance drift reports can now be exported, as shown in Figure 2, aiding administrators in creating and maintaining a documented history of their clusters' adherence to Continuously Validated States. Each report can be grouped by components and is saved to an HTML file, preserving the original view that VxRail administrators have come to know.

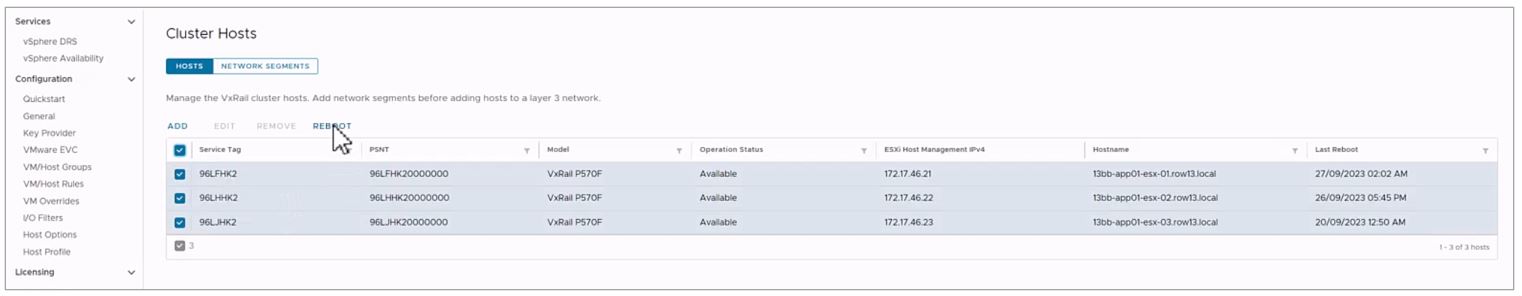

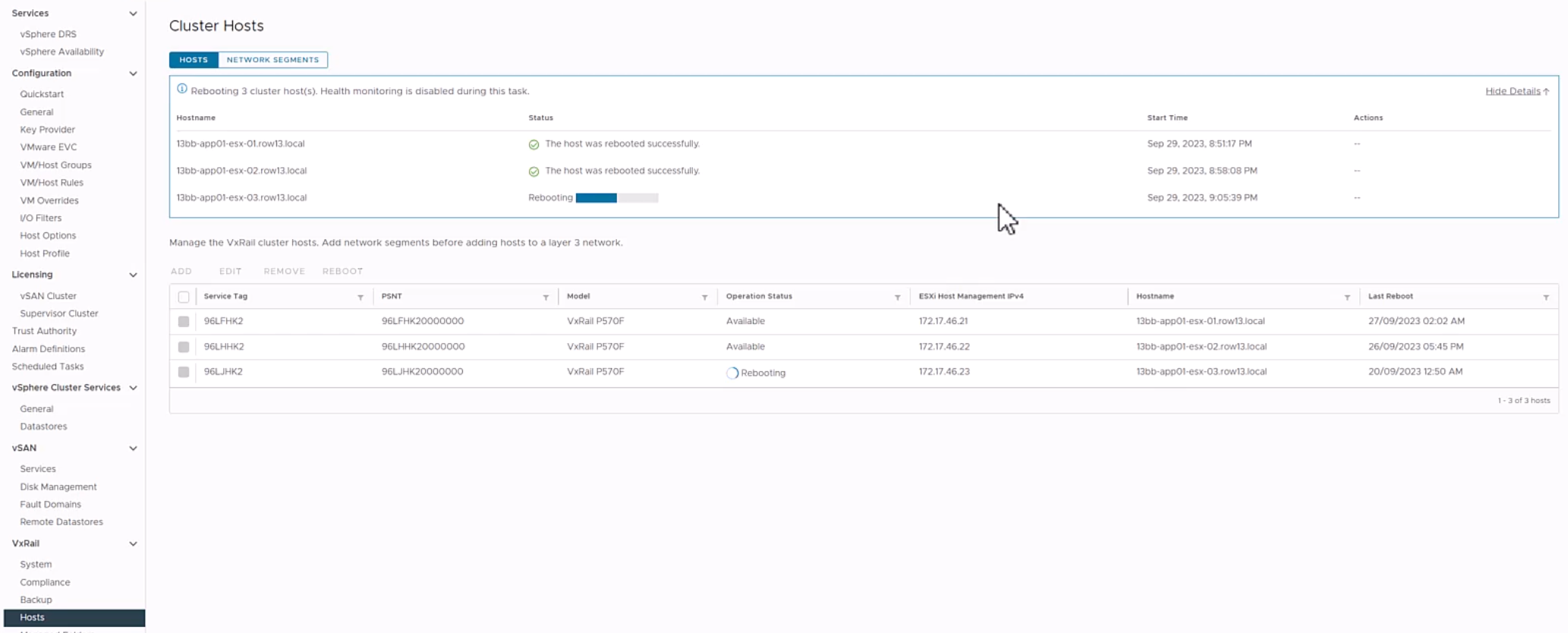

Sequential node reboot

Our next new feature automates the sequential reboot of nodes within a cluster, a task that many customers engage in manually. The automatic node reboot function is found within the Hosts submenu in the Configure tab. As shown in the following demonstration, administrators simply select the nodes they want to reboot, click the reboot button, and then complete the wizard. The wizard offers the options to begin rebooting immediately or schedule the reboots for a later time. Once this selection is made, the wizard will run a precheck, and the reboot cycles can begin. While this feature most benefits larger clusters, clusters of any size benefit from automating infrastructure tasks. Node reboots can help further improve update cycle success rates by clearing issues like memory utilization or restarting any potentially hung processes.

As an example, let’s consider memory utilization again. If there were an issue with the balloon driver making memory available, the update precheck would detect it, and rebooting the node would restart the service and force the memory to be made available once again. We’ve also observed cases where larger clusters are updated less often compared to smaller clusters due to longer maintenance windows. This can lead to longer times between reboots for larger clusters. The sequential reboot of nodes within a cluster eases the difficulty in restarting larger clusters through automation and orchestration, leading to restarts with minimal administrator activity. This can clear a variety of issues that could halt an upgrade.

That said, manually rebooting each node within a cluster can require a significant time investment. Imagine for a moment that we have a 20-node cluster. If it took just 10 minutes per node to migrate workloads away from a node, restart the host, bring it back online physically and relaunch software services, and finally bring workload back, cycling through all 20 nodes would still take over three hours of an administrator's undivided attention and time. In reality, this reboot cycle would likely take longer. Automating these actions allows clusters to benefit from these actions while freeing IT staff up to focus on other critical business tasks.
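The back-of-the-envelope estimate above is easy to adapt to your own cluster size. The following tiny sketch simply multiplies node count by an assumed per-node cycle time. The function names are illustrative, and the 10-minute figure is the example value from the text, not a measured duration.

```python
def sequential_reboot_minutes(node_count: int, minutes_per_node: int) -> int:
    """Total wall-clock minutes when nodes are rebooted strictly one after another."""
    if node_count < 0 or minutes_per_node < 0:
        raise ValueError("node_count and minutes_per_node must be non-negative")
    return node_count * minutes_per_node


def format_duration(total_minutes: int) -> str:
    """Render minutes as hours and minutes, for example '3h 20m'."""
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours}h {minutes:02d}m"


total = sequential_reboot_minutes(node_count=20, minutes_per_node=10)
print(f"20 nodes at 10 minutes each: {format_duration(total)}")
```

For the 20-node example this comes to 200 minutes, which is where the "over three hours" figure comes from.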

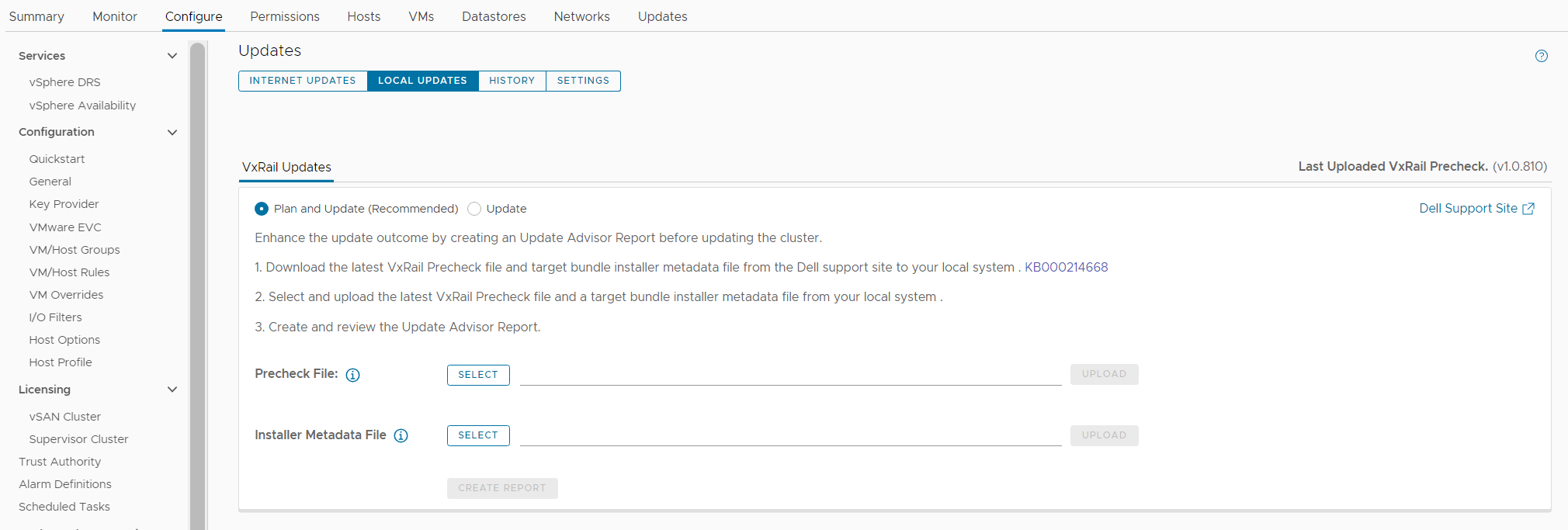

ADC bundle and installer metadata upload

VxRail 7.0.480 brings the ability to use the adaptive data collector (ADC) bundle and installer metadata, shown being uploaded in the following demonstration, to update the LCM precheck and update advisor functions VxRail Manager provides. This is helpful because the precheck routinely welcomes new developments, leading to a more robust precheck and more successful LCM update cycles. For example, one of the more recent precheck developments involves an additional check on memory utilization. The LCM precheck examines CPU and memory utilization of the vCenter Server appliance. If either CPU or memory utilization exceeds an 80% threshold, a warning will appear in the precheck report. If the check occurs as part of an upgrade cycle, then the warning appears in the update progress dashboard. The update advisor metadata file includes all the version information related to the target VxRail release version. This allows the update advisor to create reports showing the current, expected, and target software versions for each LCM cycle. These packages are pulled by VxRail Manager automatically over the network for clusters using a Secure Connect Gateway connection and are also available to offline dark sites using the Local Updates tab.
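The utilization check described above boils down to a simple threshold comparison. The sketch below illustrates that logic only. It is not VxRail Manager code, the function name and message wording are hypothetical, and it reads "exceeds" as strictly greater than the 80% threshold.

```python
UTILIZATION_THRESHOLD_PCT = 80.0  # threshold quoted in the text


def utilization_warnings(cpu_pct: float, memory_pct: float,
                         threshold_pct: float = UTILIZATION_THRESHOLD_PCT) -> list[str]:
    """Return one warning per vCenter Server appliance metric that exceeds the threshold."""
    warnings = []
    for name, value in (("CPU", cpu_pct), ("memory", memory_pct)):
        if value > threshold_pct:
            warnings.append(
                f"vCenter Server appliance {name} utilization {value:.0f}% "
                f"exceeds the {threshold_pct:.0f}% threshold"
            )
    return warnings


for message in utilization_warnings(cpu_pct=42.0, memory_pct=87.5):
    print(f"WARNING: {message}")
```

A result with no entries means neither metric crossed the threshold. In the product, the equivalent warning is surfaced in the precheck report or the update progress dashboard, as described above.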

Conclusion

The VxRail engineering team routinely delivers new features and functions to our customers. In this blog, we reviewed the enhancements for expanded update advisor report storage, the ability to export drift reports to local HTML files, automated cluster node reboot cycles, and the enhanced LCM precheck and update advisor with the ADC bundle and installer metadata file uploads. As we move forward, we continue to enhance LCM operations and minimize the time required to manage VxRail. As such, VxRail is a fantastic choice to run your virtualized workloads and will continue to become a more robust and administration-friendly platform.

Author: Dylan Jackson, Engineering Technologist

VxRail Edge Automation Unleashed - Simplifying Satellite Node Management with Ansible

Thu, 30 Nov 2023 17:43:03 -0000

|Read Time: 0 minutes

VxRail Edge Automation Unleashed

Simplifying Satellite Node Management with Ansible

In the previous blog, Infrastructure as Code with VxRail made easier with Ansible Modules for Dell VxRail, I introduced the modules which enable the automation of VxRail operations through code-driven processes using Ansible and VxRail API. This approach not only streamlines IT infrastructure management but also aligns with Infrastructure as Code (IaC) principles, benefiting both technical experts and business leaders.

The corresponding demo is available on YouTube:

The previous blog laid the foundation for the continued journey where we explore more advanced Ansible automation techniques, with a focus on satellite node management in the VxRail ecosystem. I highly recommend checking out that blog before diving deeper into the topics discussed here, as the concepts discussed in this demo will then be much easier to absorb.

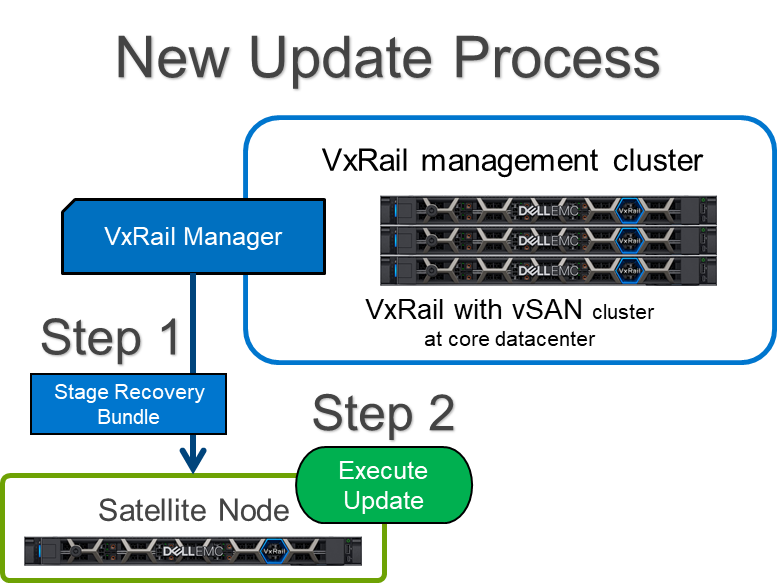

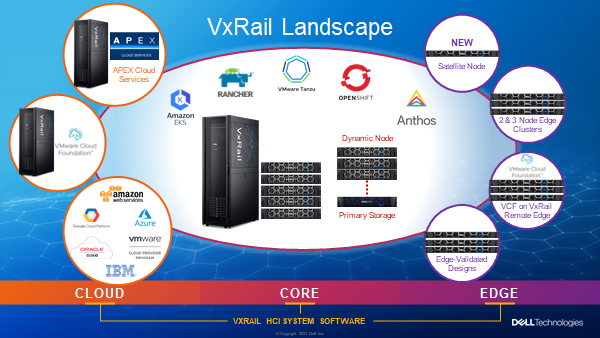

What are the VxRail satellite nodes?

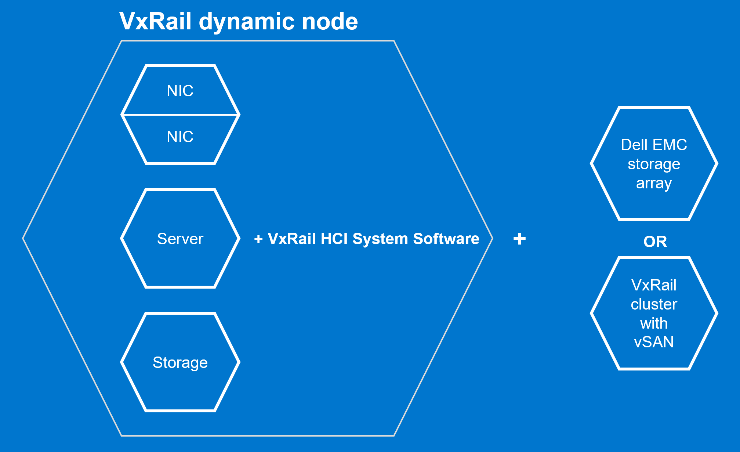

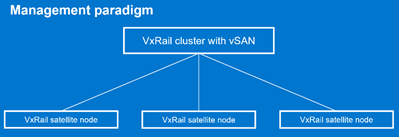

VxRail satellite nodes are individual nodes designed specifically for deployment in edge environments and are managed through a centralized primary VxRail cluster. Satellite nodes do not leverage vSAN to provide storage resources and are an ideal solution for those workloads where the SLA and compute demands do not justify even the smallest of VxRail 2-node vSAN clusters.

Satellite nodes enable customers to achieve uniform and centralized operations within the data center and at the edge, ensuring VxRail management throughout. This includes comprehensive, automated lifecycle management for VxRail satellite nodes, while encompassing hardware and software and significantly reducing the need for manual intervention.

To learn more about satellite nodes, please check the following blogs from my colleagues:

- David’s introduction: Satellite nodes: Because sometimes even a 2-node cluster is too much

- Stephen’s update on enhancements: Enhancing Satellite Node Management at Scale

Automating VxRail satellite node operations using Ansible

You can leverage the Ansible Modules for Dell VxRail to automate various VxRail operations, including more advanced use cases such as satellite node management. This is possible today using the samples provided in the official repository on GitHub.

Have a look at the following demo, which leverages the latest available version of these modules at the time of recording – 2.2.0. In the demo, I discuss and demonstrate how you can perform the following operations from Ansible:

- Collecting information about the number of satellite nodes added to the primary VxRail cluster

- Adding a new satellite node to the primary VxRail cluster

- Performing lifecycle management operations – staging the upgrade bundle and executing the upgrade on managed satellite nodes

- Removing a satellite node from the primary cluster

The examples used in the demo are slightly modified versions of the following samples from the modules' documentation on GitHub. If you’d like to replicate them in your environment, start from the corresponding samples listed here and adjust them as needed:

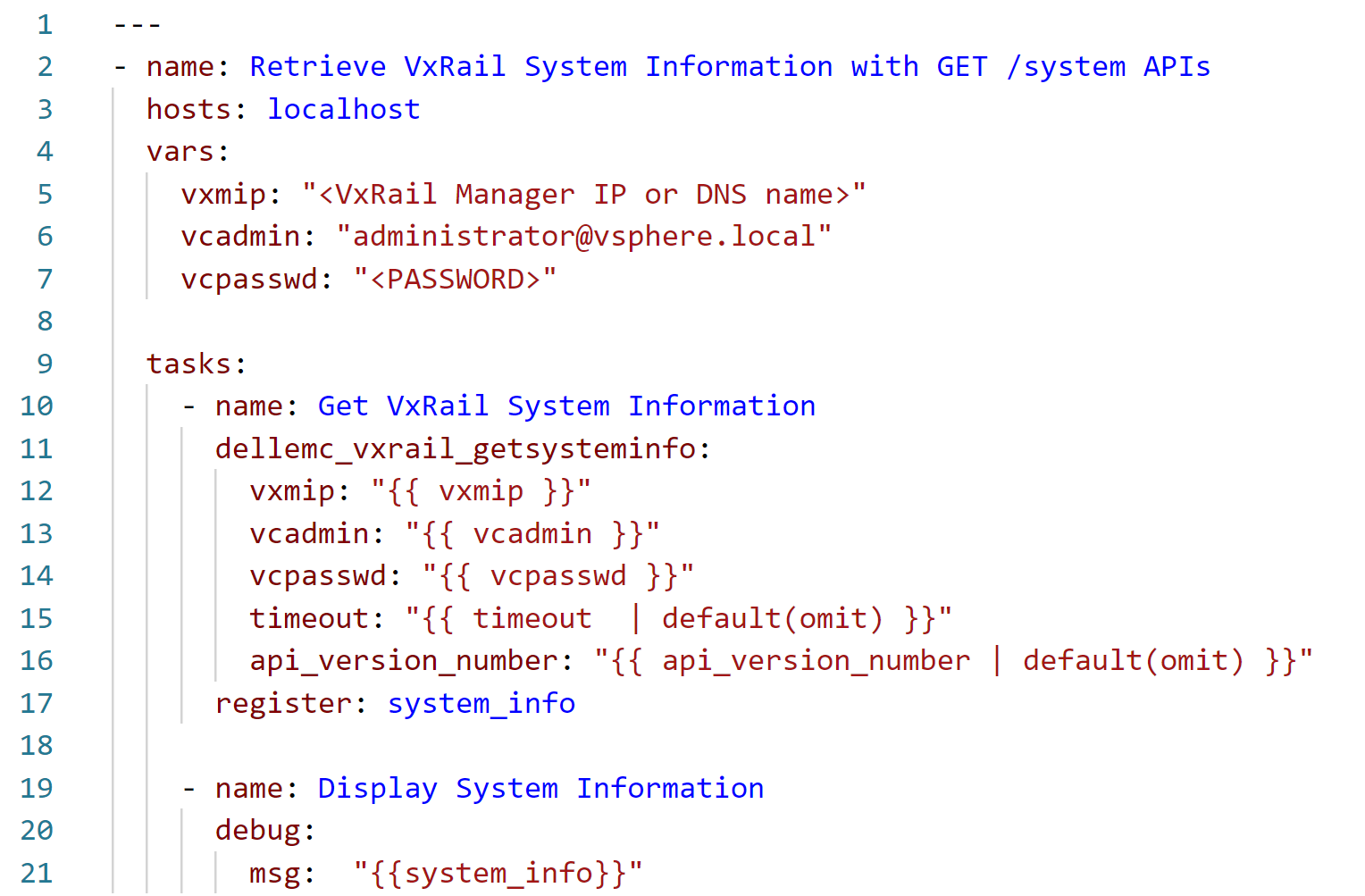

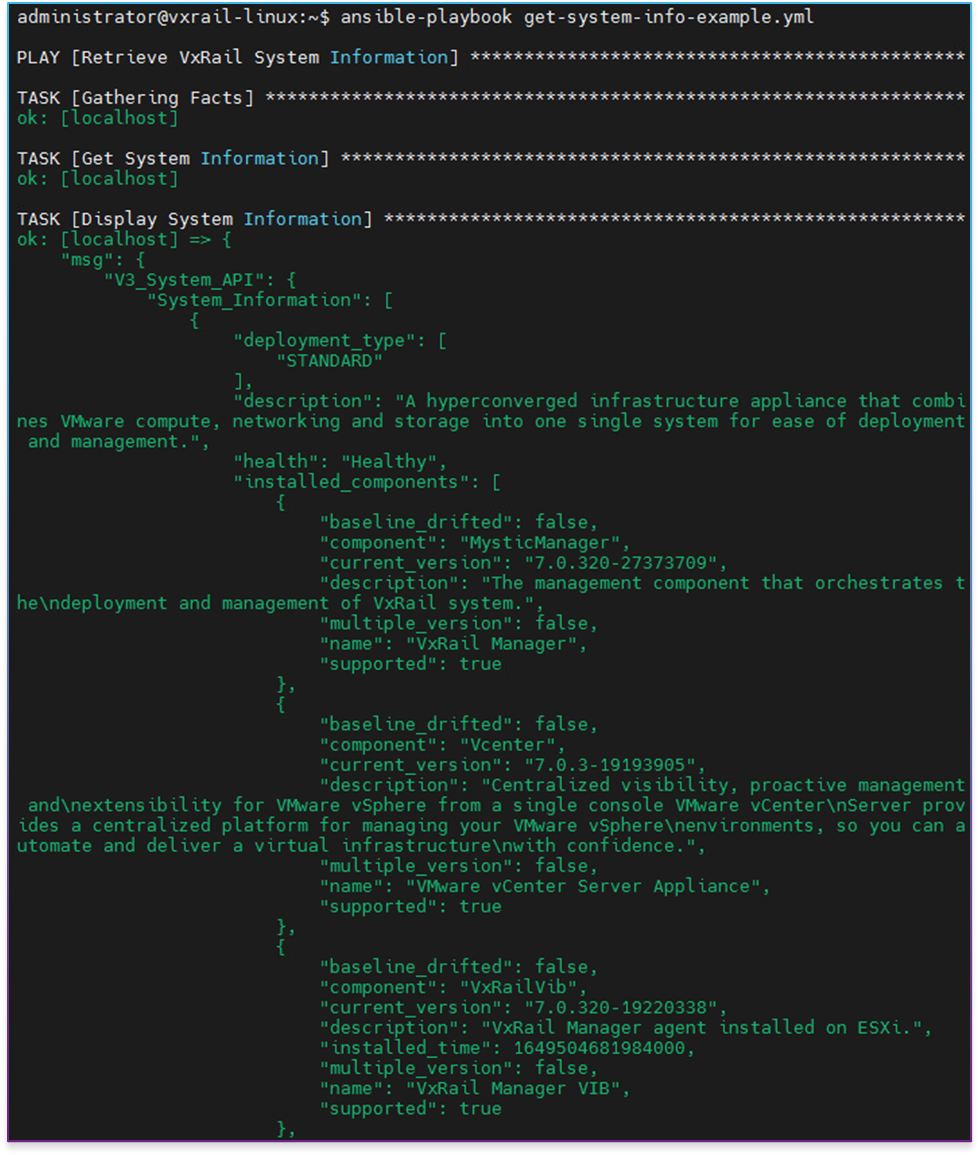

- Retrieving system information: systeminfo.yml

- Adding a new satellite node: add_satellite_node.yml

- Performing LCM operations: upgrade_host_folder.yml (both staging and upgrading as explained in the demo)

- Removing a satellite node: remove_satellite_node.yml

In the demo, you can also observe an interesting feature of the Ansible Modules for Dell VxRail that is shown in action but not explained explicitly. You might be aware that some VxRail API functions are available in multiple versions. Typically, a new version is introduced when new features become available in the VxRail HCI System Software, while the previous versions are retained for backward compatibility. An example is “GET /vX/system”, which is used to retrieve the number of satellite nodes; this property was introduced in version 4. If you do not specify the version, the modules automatically select the latest supported version, simplifying the end-user experience.
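To make the "latest supported version" behavior concrete, here is a minimal Python sketch of the idea. It is not the modules' actual implementation: the function names and the list of supported versions are hypothetical, and only the “GET /vX/system” path shape comes from the text above.

```python
from typing import Iterable, Optional


def latest_supported_version(supported: Iterable[int]) -> int:
    """Return the highest version number in `supported`."""
    versions = list(supported)
    if not versions:
        raise ValueError("no supported API versions given")
    return max(versions)


def system_endpoint(supported: Iterable[int],
                    requested: Optional[int] = None) -> str:
    """Build the 'GET /vX/system' path (hypothetical helper).

    If no version is requested, fall back to the newest supported one,
    mirroring the behavior described for the Ansible modules.
    """
    versions = list(supported)
    if requested is None:
        version = latest_supported_version(versions)
    elif requested in versions:
        version = requested
    else:
        raise ValueError(f"API version {requested} is not supported")
    return f"/v{version}/system"


# Assumed example: a system exposing versions 1 through 4 of the call.
print(system_endpoint([1, 2, 3, 4]))               # newest version chosen
print(system_endpoint([1, 2, 3, 4], requested=2))  # explicit older version
```

Pinning an explicit version is still useful when a playbook must behave the same way across systems running different software releases.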

How can you get more hands-on experience with automating VxRail operations programmatically?

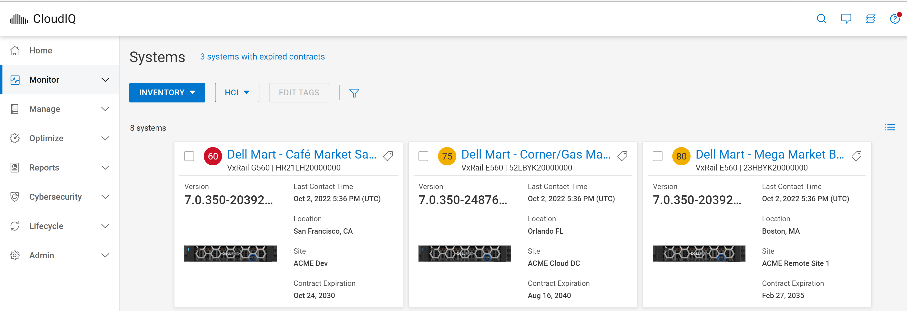

The above demo of satellite node management using Ansible was set up in the VxRail API hands-on lab, which is available in the Dell Technologies Demo Center. With the help of the Demo Center team, we built this lab as a self-education tool for learning the VxRail API and how it can be used to automate VxRail operations using various methods: the built-in, interactive, web-based documentation, VxRail API PowerShell Modules, Ansible Modules for Dell VxRail, and Postman.

The hands-on lab provides a safe VxRail API sandbox, where you can easily start experimenting by following the exercises from the lab guide or trying some other use cases on your own without any concerns about making configuration changes to the VxRail system.

The lab was refreshed for the Dell Technologies World 2023 conference to leverage VxRail HCI System Software 8.0.x and the latest version of the Ansible Modules. If you’re a Dell partner, you should have access directly, and if you’re a customer who’d like to get access – please contact your Account SE from Dell or Dell Partner. The lab is available in the catalog as: “HOL-0310-01 - Scalable Virtualization, Compute, and Storage with the VxRail REST API”.

Conclusion

In the fast-evolving landscape of IT infrastructure, the ability to automate operations efficiently is not just a convenience but a necessity. With the Ansible Modules for Dell VxRail, we've explored how this necessity can be met, using satellite node management as an example. We encourage you to embrace the full potential of VxRail automation using the VxRail API with Ansible or other tools. If this is new to you, you can gain experience by experimenting with the hands-on lab available in the Demo Center catalog.

Resources

- Previous blog: Infrastructure as Code with VxRail Made Easier with Ansible Modules for Dell VxRail

- The “master” blog containing a curated list of publicly-available educational resources about the VxRail API: VxRail API - Updated List of Useful Public Resources

- Ansible Modules for Dell VxRail on GitHub, which is the central code repository for the modules. It also contains complete product documentation and examples.

- Dell Technologies Demo Center, which includes the VxRail API hands-on lab.

Author: Karol Boguniewicz, Senior Principal Engineering Technologist, VxRail Technical Marketing

Twitter/X: @cl0udguide

LinkedIn: https://www.linkedin.com/in/boguniewicz/

Learn About the Latest VMware Cloud Foundation 5.1 on Dell VxRail 8.0.200 Release

Tue, 05 Dec 2023 17:06:36 -0000

Pairing more configuration flexibility with more integrated automation delivers even more simplified outcomes to meet more business needs!

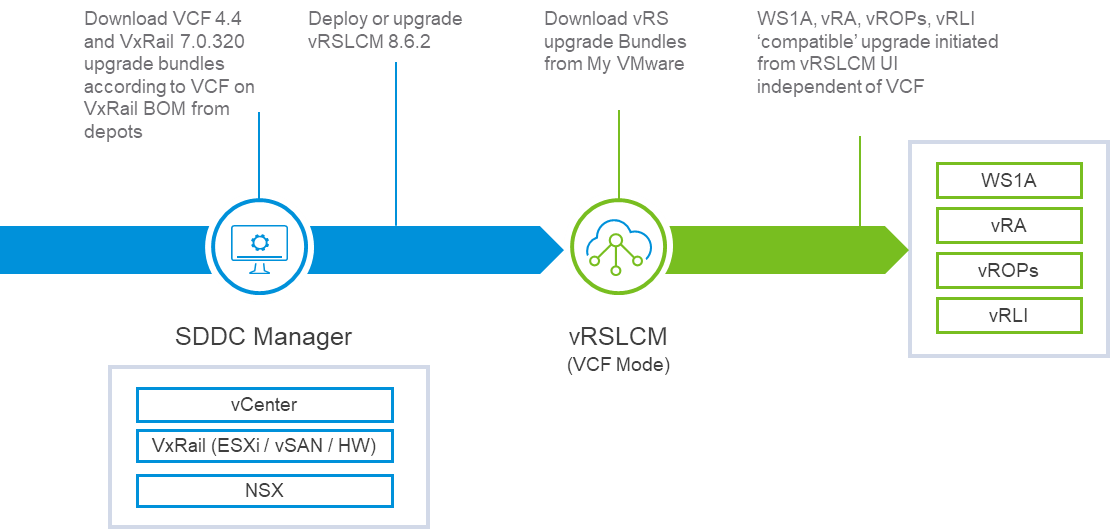

More is what sums up this latest Cloud Foundation on VxRail release! This new release is based on the latest software bill of materials (BOM) featuring vSphere 8.0 U2, vSAN 8.0 U2, and NSX 4.1.2. Read on for more details…

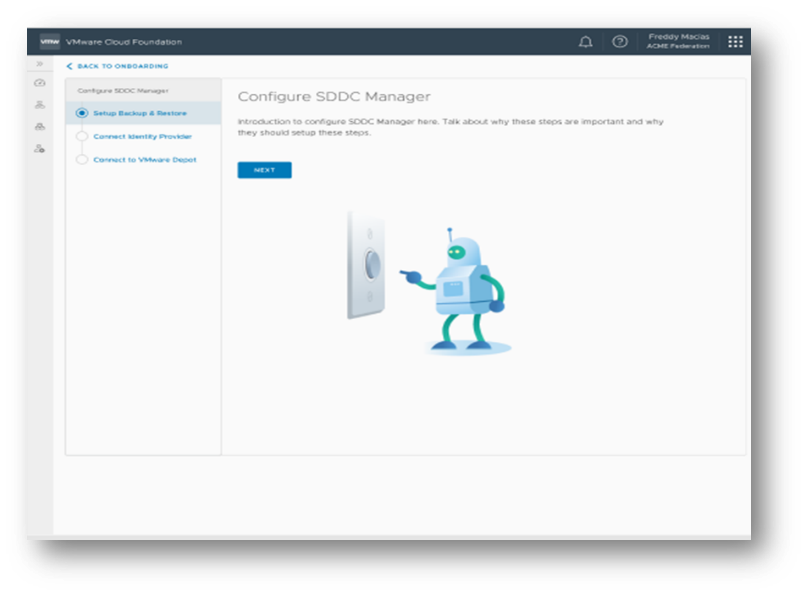

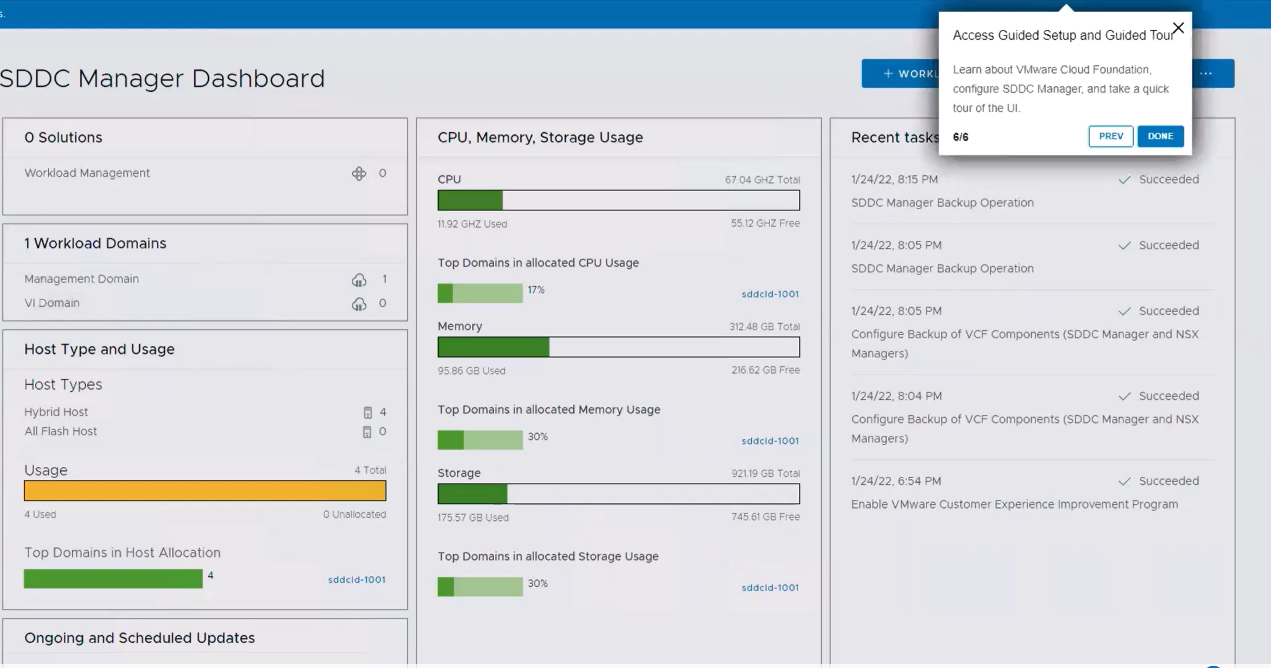

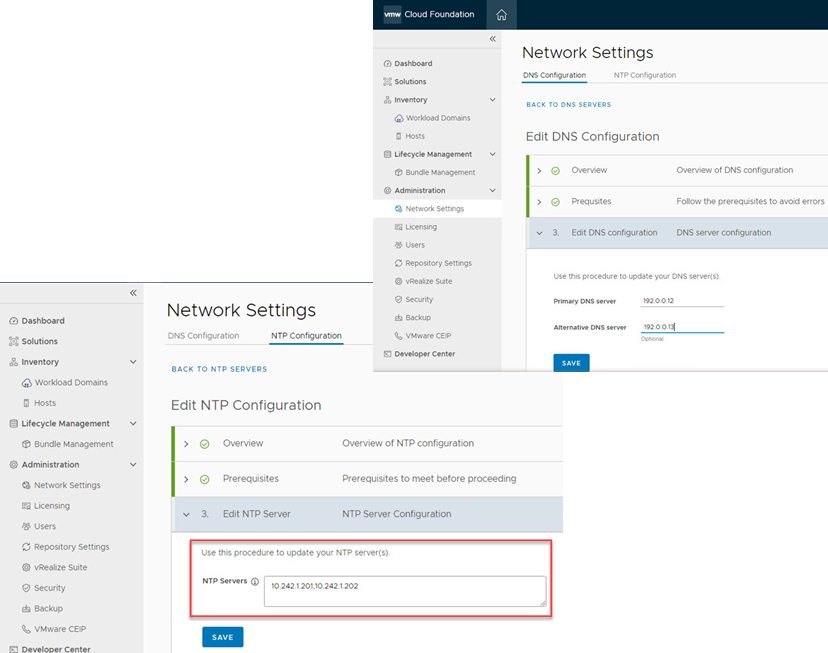

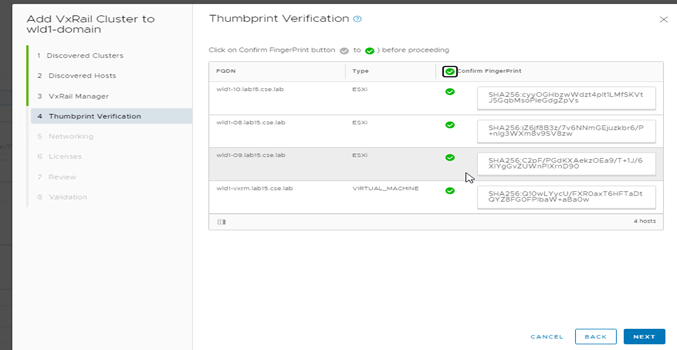

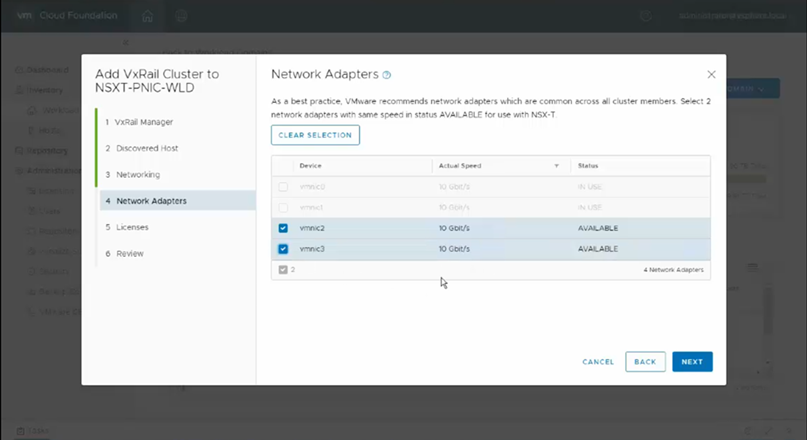

Operations and serviceability user experience updates

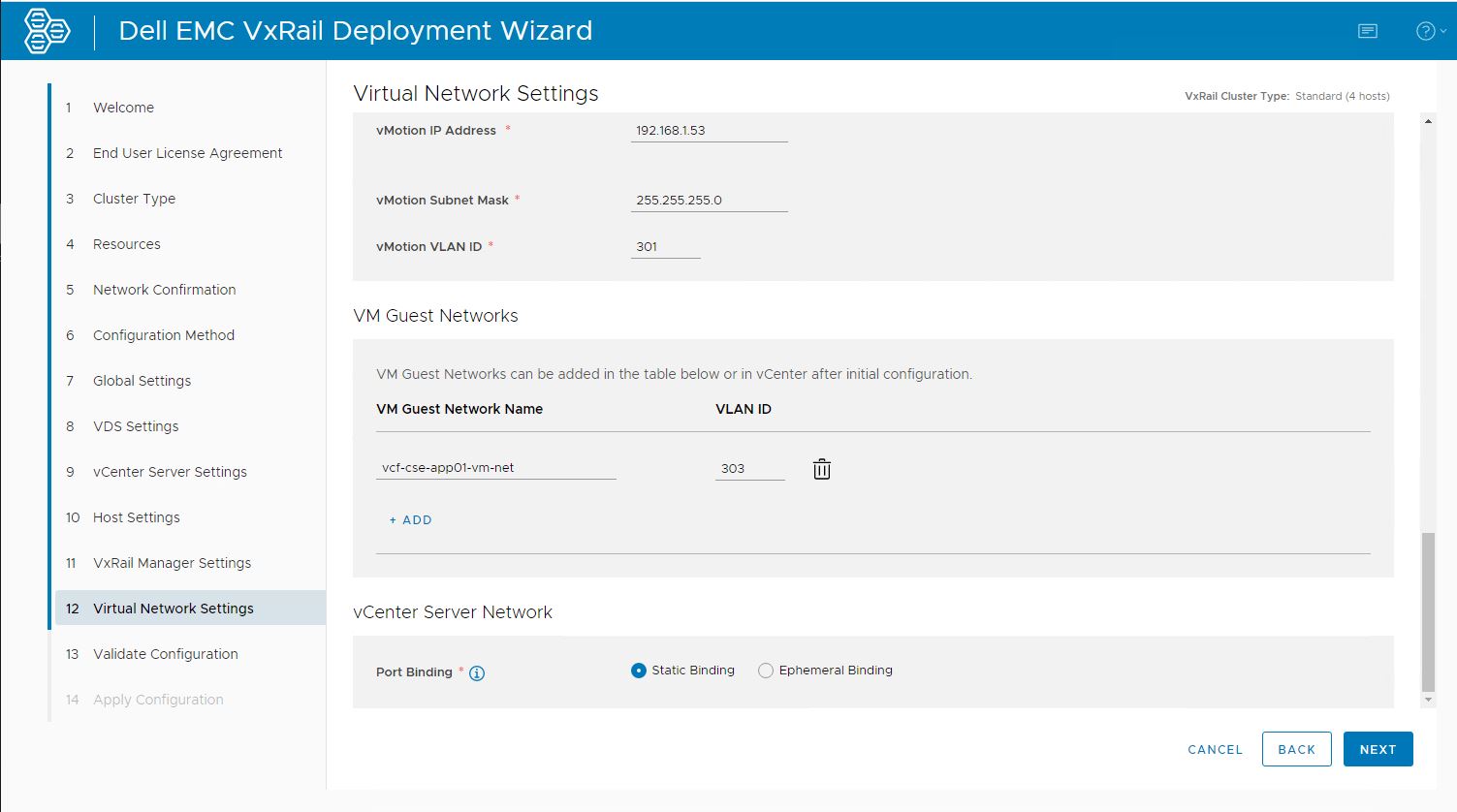

SDDC Manager WFO UI custom host networking configuration enhancements

With this enhancement, the administrator can configure the networking of a new workload domain or VxRail cluster using either the “Default” VxRail Network Profiles or a “Custom” Network Profile configuration. Cloud Foundation on VxRail already lets administrators deploy custom host networking configurations through the SDDC Manager WFO API deployment method. This new feature brings the same support to the SDDC Manager WFO UI deployment method, making it even easier to operationalize.

The following demo walks through using the SDDC Manager WFO UI to create a new workload domain with a VxRail cluster that is configured with vSAN ESA, VxRail vLCM mode enabled, and a custom network profile.

New VCF Infrastructure as Code (IaC) tooling with new Terraform VCF Provider and PowerCLI VCF Module

Infrastructure teams can now use the Terraform Provider for VCF and the VCF module, which is now integrated into VMware’s official PowerCLI tool, to apply Infrastructure as Code (IaC) practices when deploying, managing, and operating VMware Cloud Foundation on VxRail deployments.

By using prebuilt, best-practice IaC code that is designed to interface with a single VCF API, IaC teams can perform infrastructure provisioning tasks more quickly. This can accelerate IaC adoption and lessen the burden of developing and maintaining code for individual infrastructure components intended to deliver similar outcomes.

Important Note: Not all operations using these tools may be supported in Cloud Foundation on VxRail. Please refer to tool documentation links at the bottom of this post for details.

LCM updates

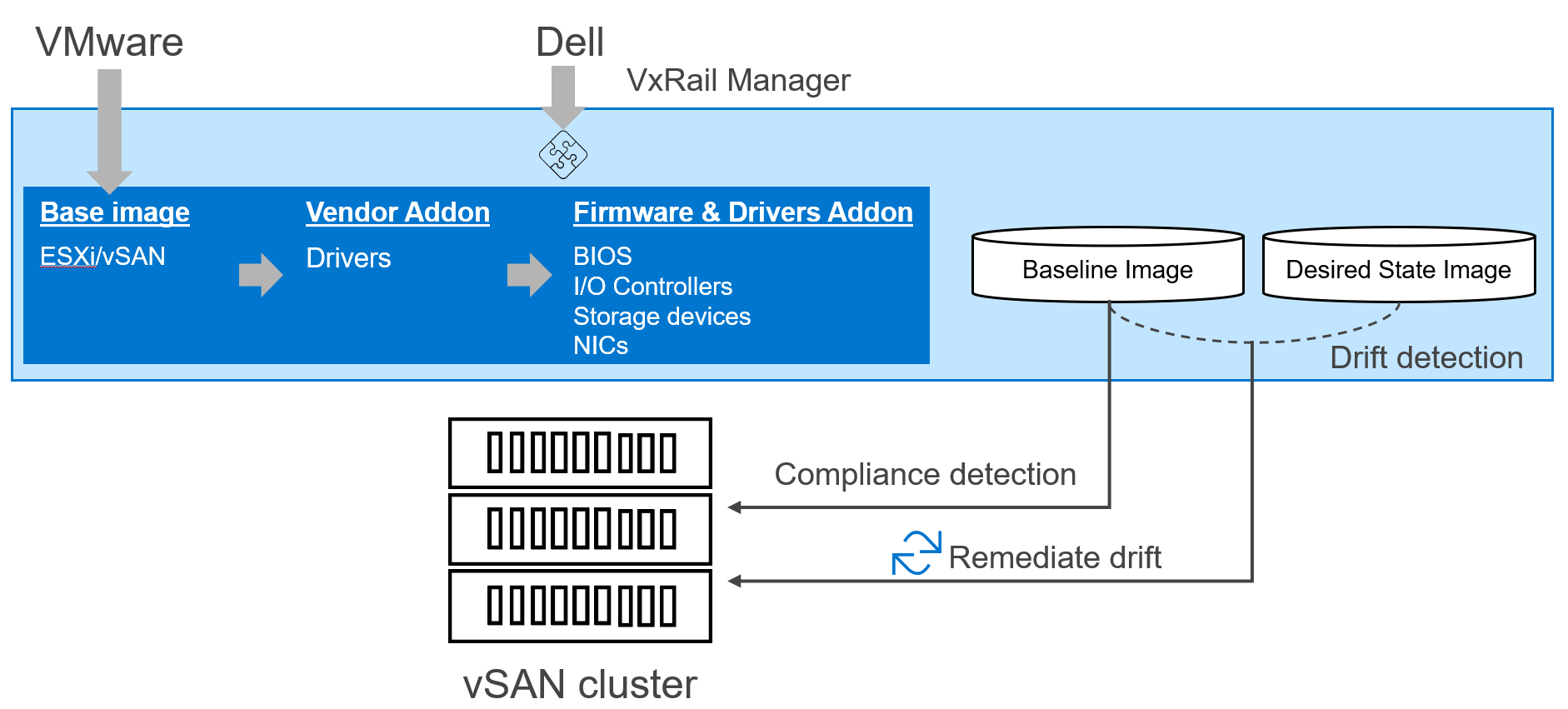

Day 1 VxRail vLCM mode compatibility for management and workload domains

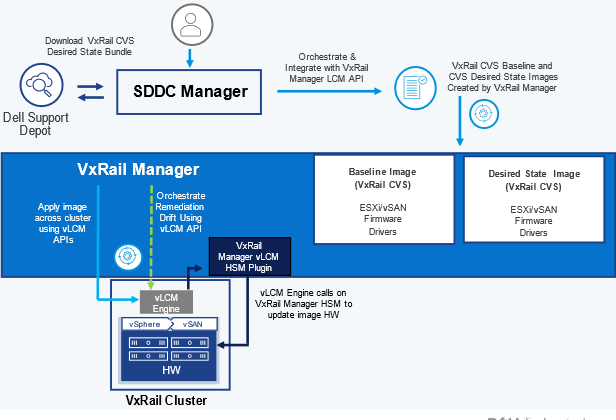

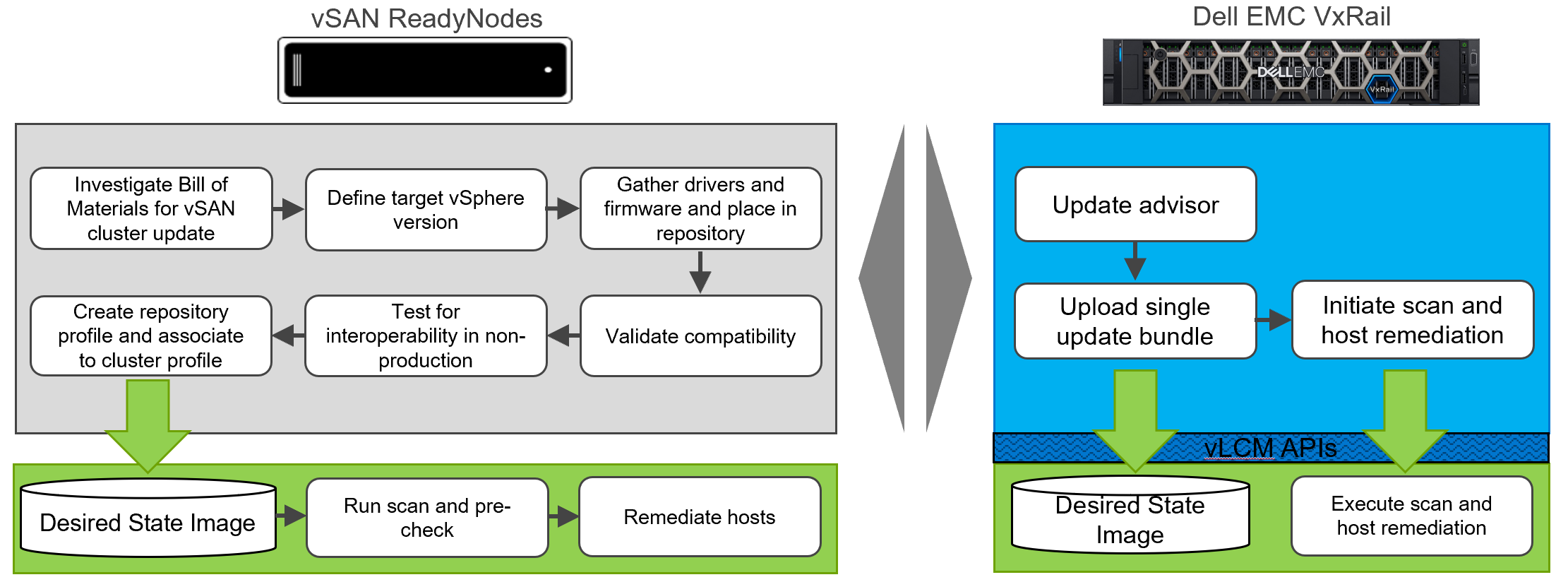

VMware Cloud Foundation 5.1 on VxRail 8.0.200 now supports the configuration and deployment of new domains using VxRail clusters enabled for vSphere Lifecycle Manager (vLCM) images, as depicted in Figure 1. VxRail vLCM-enabled clusters can leverage VxRail Manager to unify not only your ESXi image but also your BIOS, firmware, and drivers in a single update process. The whole process is orchestrated by VxRail Manager through the integrated SDDC Manager’s native LCM operations experience via VxRail APIs. VxRail clusters have their VxRail Continuously Validated State image managed at the cluster level by VxRail Manager, just as in clusters running in VxRail standard LCM mode.

Figure 1. High-level VxRail vLCM mode architecture

Mixed-mode support for workload domains as a steady state

Existing VMware Cloud Foundation 5.x on VxRail 8.x deployments now allow administrators to run workload domains of different VCF 5.x versions as a “steady state”. Administrators can now update the management domain and any other workload domain of a VCF 5.0 deployment to the latest VCF 5.x version without the need to upgrade all workload domains. Mixed-mode support also allows administrators to leverage the benefits of new SDDC Manager features in the management domain without having to upgrade a full VCF 5.x on VxRail 8.x instance.

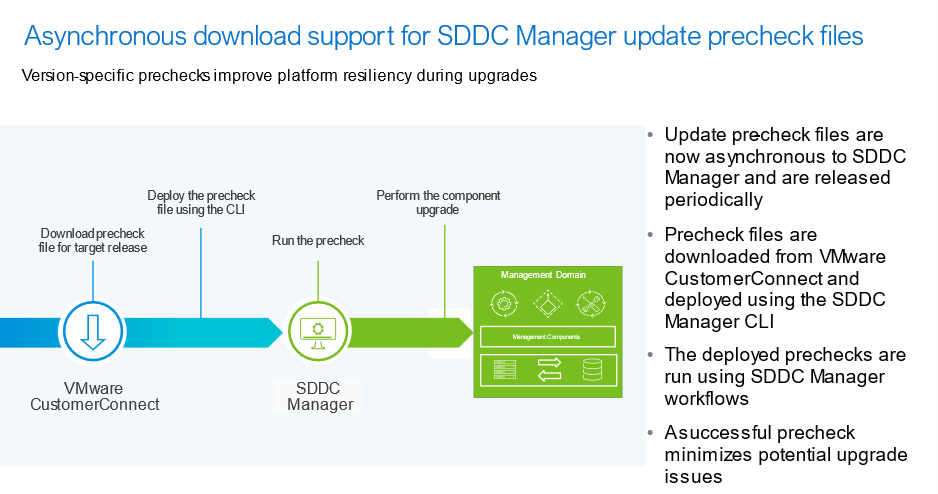

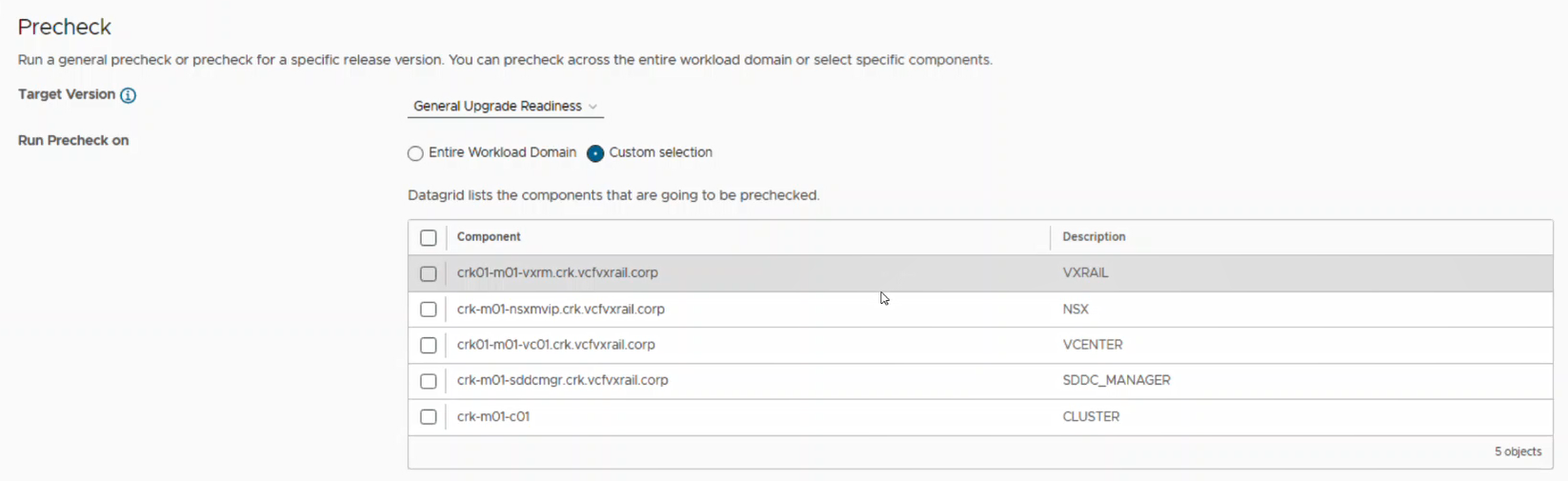

Asynchronous download support for SDDC Manager update precheck files

SDDC Manager update precheck files can now be downloaded and updated asynchronously from full release updates, in addition to the similar asynchronous VxRail-specific precheck file updates that already exist within VxRail Manager. This feature allows administrators to download, deploy, and run SDDC Manager update prechecks tailored to a specific VMware Cloud Foundation on VxRail release. SDDC Manager precheck files are created by VMware engineering and contain detailed checks for SDDC Manager to run prior to upgrading to a newer VCF on VxRail target release, as shown in the following figure.

Figure 2. High-level process of asynchronous download support for SDDC Manager update precheck files

Networking updates

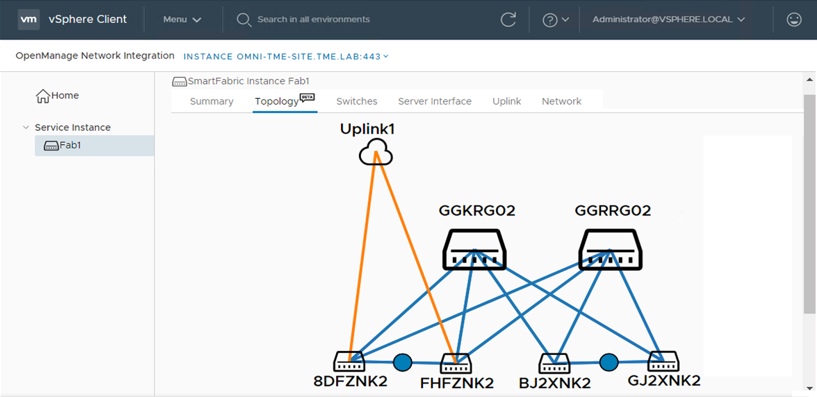

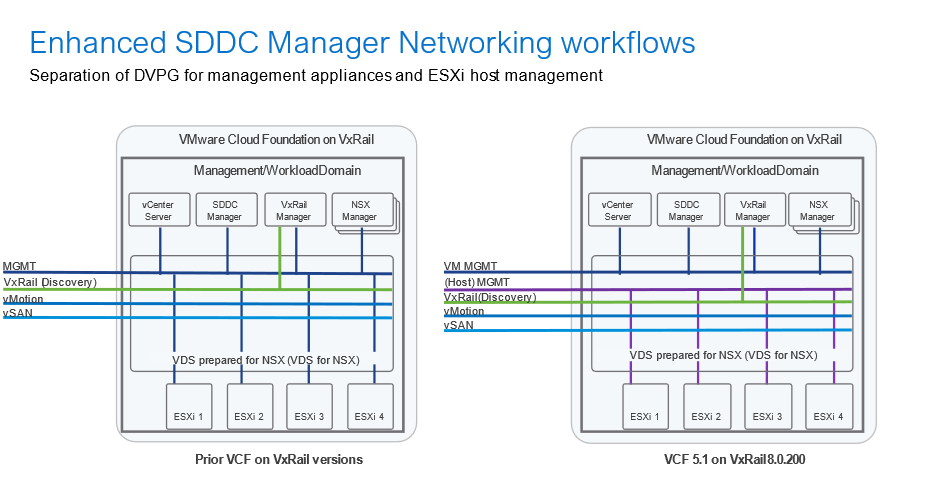

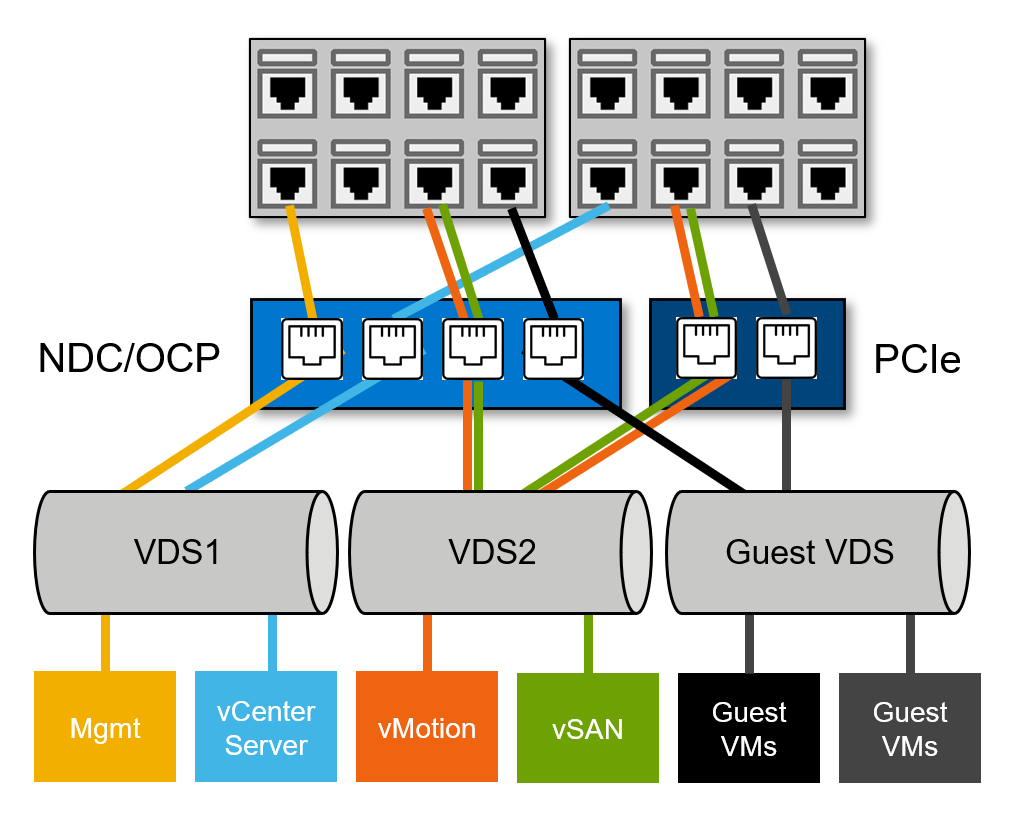

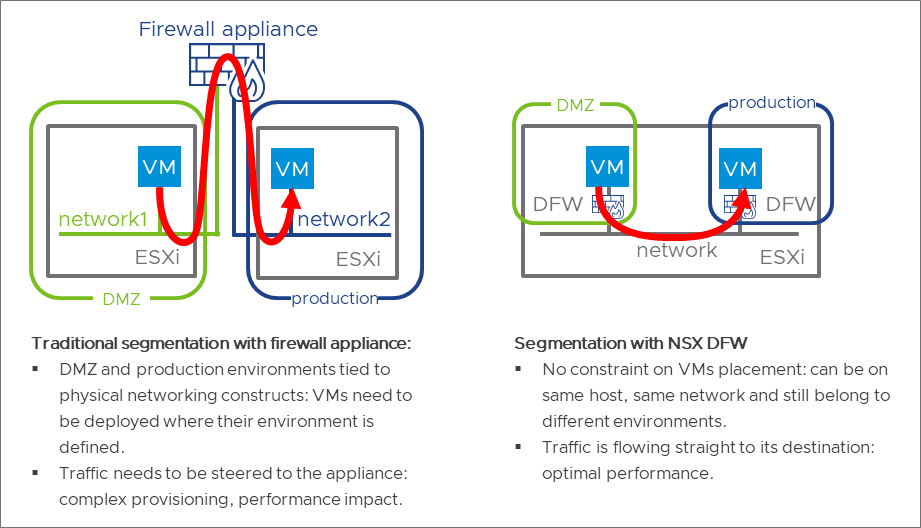

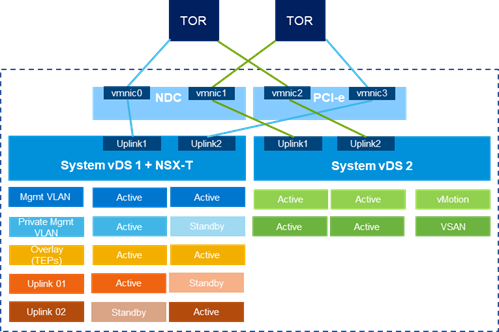

Support for the separation of DvPG for management appliances and ESXi host (VMKernel) management

Prior to this release, the default networking topology deployed by VMware Cloud Foundation on VxRail placed the ESXi host management interfaces (vmkernel interfaces) and the management components (vCenter Server, SDDC Manager, NSX components, VxRail Manager, and so on) on the same Distributed Virtual Port Group (DvPG). This new DvPG separation feature enables traffic isolation between management component VMs and ESXi host management vmkernel interfaces, helping align with an organization’s desired security posture. Figure 3 illustrates this new configuration architecture.

Figure 3. New DvPG architecture

Configure custom NSX Edge cluster without 2-tier routing (via API)

VMware Cloud Foundation 5.1 on VxRail 8.0.200 now provides the option to deploy a custom NSX Edge cluster without the need to configure both a Tier-0 and Tier-1 gateway. These types of NSX Edge cluster deployments can be configured only through the SDDC Manager API.

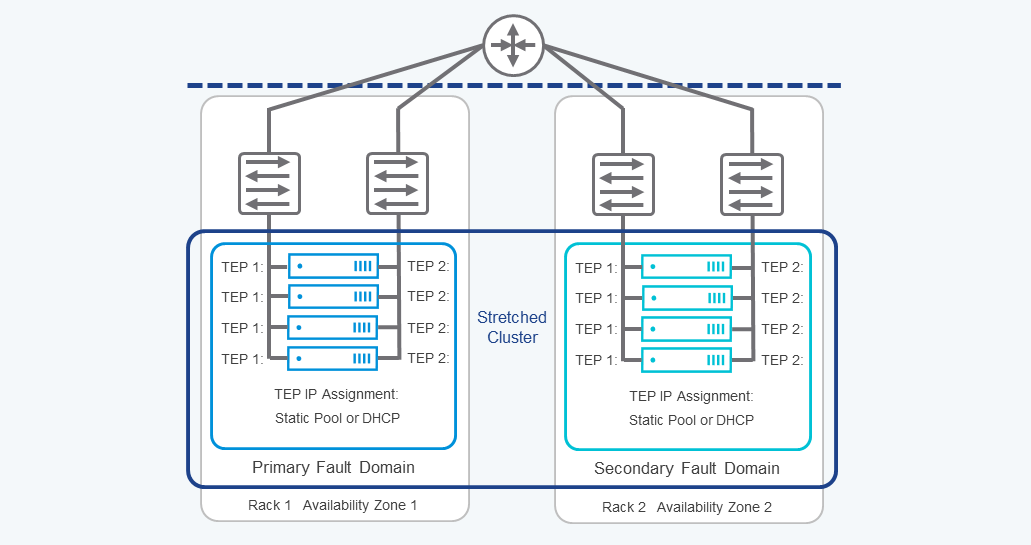

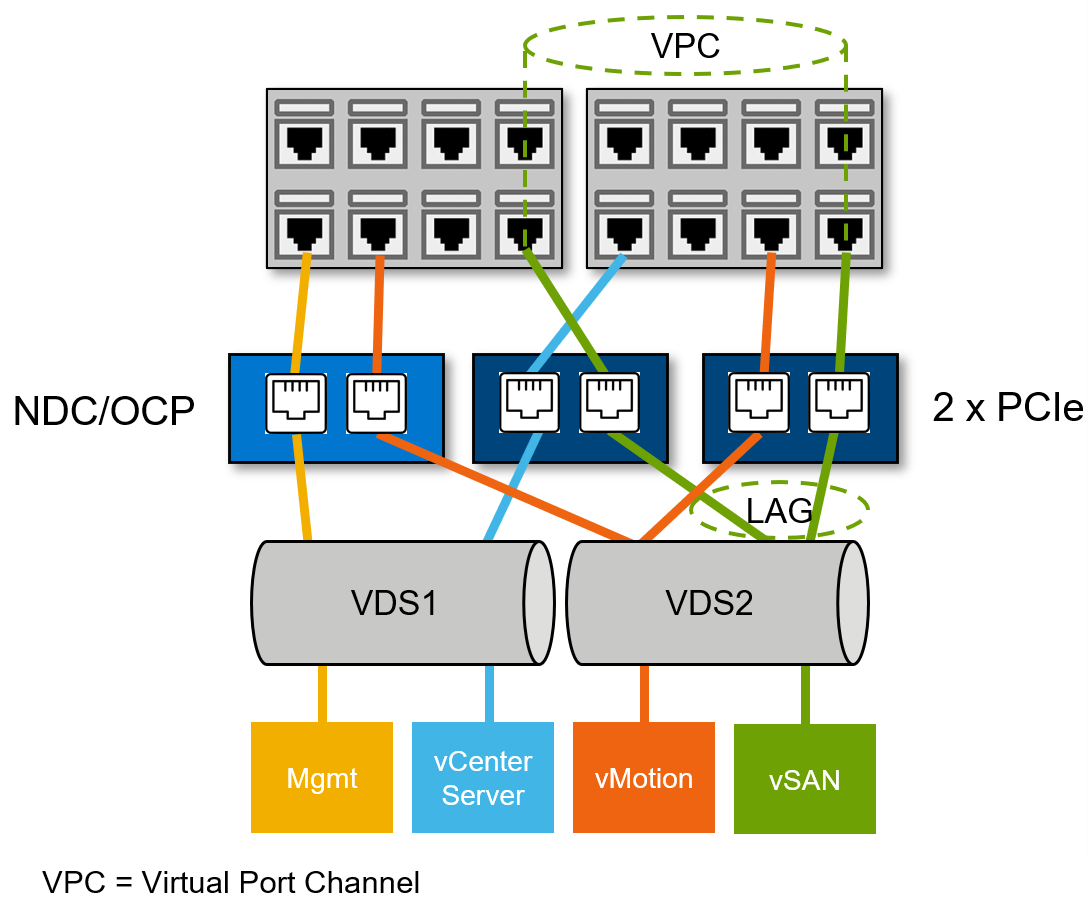

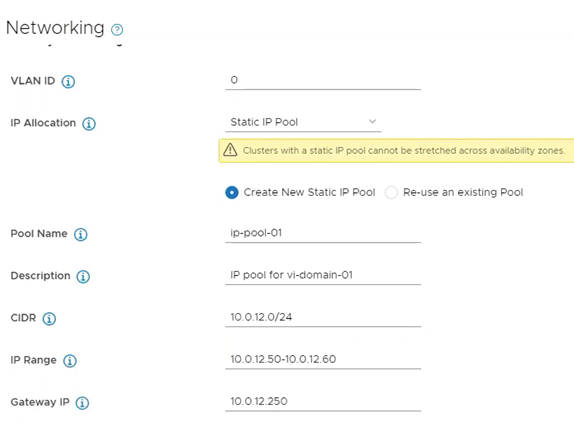

Static IP-based NSX Tunnel End Point and Sub Transport Node Profile assignment support for L3 aware clusters and L2/L3 vSAN stretched clusters

VxRail stretched clusters that are deployed using vSAN OSA can now be configured with vLCM mode enabled. In addition, administrators can now configure NSX Host TEPs to use an NSX static IP pool and no longer need to manually maintain an external DHCP server to support Layer 3 vSAN OSA stretched clusters, as illustrated in the following figure.

Figure 4. TEP Configuration Flexibility Example for vSAN Stretched Clusters

Building on these capabilities, deployments of VxRail stretched clusters with vSAN OSA that are configured using static IP pools can now also leverage Sub-Transport Node Profiles (Sub-TNP), a feature introduced with NSX-T 3.2.2 and NSX 4.1.

Sub-TNPs can be used to prepare clusters of hosts without L2 adjacency to the Host TEP VLAN. This is useful for customers with rack-based IP schemas and allows Host TEP IPs to be configured on their own separate networks. Configuring vSAN stretched clusters using NSX Sub-TNP provides increased security, allowing administrators to enable and configure Distributed Malware Prevention and Detection. An example of this is depicted in the following figure.

Figure 5. Sub-TNP vSAN L3 Stretched Cluster Configuration Example
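As a rough illustration of a rack-based IP schema with static pools, the following Python sketch hands each host the next free address from its own rack's TEP subnet. It does not call NSX or SDDC Manager; the function name, host names, rack labels, and subnets are all hypothetical, and a real pool would also reserve addresses such as the gateway.

```python
import ipaddress
from typing import Dict, List


def assign_tep_ips(hosts_by_rack: Dict[str, List[str]],
                   pool_by_rack: Dict[str, str]) -> Dict[str, str]:
    """Assign each host the next usable address from its rack's static pool."""
    assignments: Dict[str, str] = {}
    for rack, hosts in hosts_by_rack.items():
        pool = ipaddress.ip_network(pool_by_rack[rack])
        # hosts() yields usable addresses (network/broadcast excluded).
        addresses = iter(pool.hosts())
        for host in hosts:
            try:
                assignments[host] = str(next(addresses))
            except StopIteration:
                raise ValueError(f"static pool for {rack} is exhausted") from None
    return assignments


# Assumed example: two racks (sites), each with its own TEP network.
hosts_by_rack = {"rack-a": ["esx01", "esx02"], "rack-b": ["esx03"]}
pool_by_rack = {"rack-a": "172.16.10.0/24", "rack-b": "172.16.20.0/24"}
for host, ip in assign_tep_ips(hosts_by_rack, pool_by_rack).items():
    print(host, ip)
```

Because each rack draws from a separate subnet, no Layer 2 adjacency between the racks' TEP networks is assumed, which is the situation Sub-TNPs are described as addressing.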

Note: Stretched VxRail with vSAN ESA clusters are not yet supported.

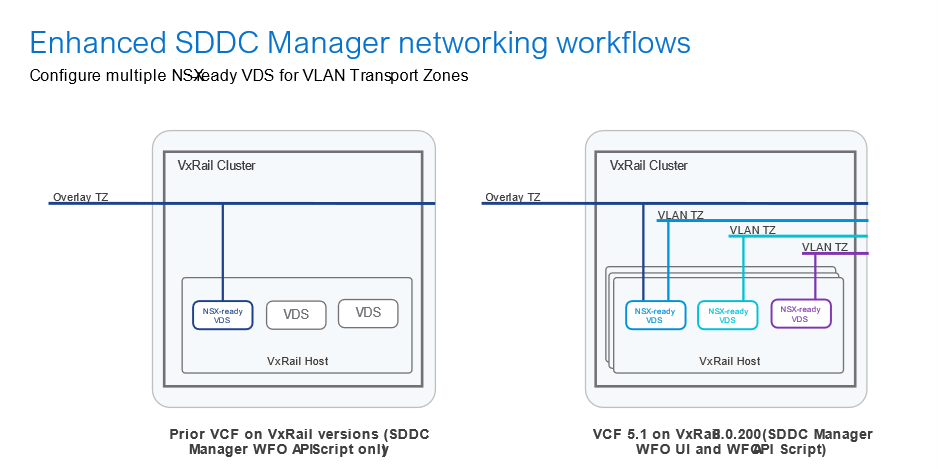

Support for multiple VDS for NSX host networking configurations

This release now provides the option to configure multiple VDS for NSX through the SDDC Manager WFO UI and WFO API.

Administrators can now configure an additional VxRail host VDS prepared for NSX (VDS for NSX) that uses VLAN Transport Zones (VLAN TZs), as shown in the following figure. This gives administrators the added benefit of configuring NSX Distributed Firewall (DFW) for workloads in VLAN transport zones, allowing security to be more granular. These capabilities further simplify the configuration of advanced networking and security for Cloud Foundation on VxRail.

Figure 6. Configuring an additional VxRail host VDS for NSX that uses VLAN TZs

Security and access updates

Okta SSO identity federation support

VMware Cloud Foundation 5.1 on VxRail 8.0.200 now supports the option to configure the VMware Identity Broker for federation using Okta as a third-party identity provider (IdP). Once configured, federated users can seamlessly move between vCenter Server and NSX Manager consoles without being prompted to re-authenticate.

Storage updates

vSAN OSA/ESA support for management and workload domain VxRail clusters

VMware Cloud Foundation 5.1 on VxRail 8.0.200 adds support for both vSAN OSA-based and vSAN ESA-based VxRail clusters when deploying a new management domain (greenfield VCF on VxRail instance) and new workload domains/clusters in VCF on VxRail instances that have been upgraded to this latest release. VCF requires that vSAN ESA-based cluster deployments have vLCM mode enabled. Also, as of this release, only 15th generation VxRail vSAN ESA compatible hardware platforms are supported. 16th generation VxRail platform support is planned for a future release.
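The storage rules above can be expressed as a small pre-deployment check. The Python sketch below is only an illustration under stated assumptions: the `ClusterSpec` type and its field names are hypothetical and not part of any VCF or VxRail API, and it reads the hardware generation restriction as applying to vSAN ESA clusters.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClusterSpec:
    """Hypothetical description of a planned VxRail cluster."""
    storage: str        # "OSA" or "ESA"
    vlcm_enabled: bool  # VxRail vLCM mode
    hw_generation: int  # VxRail hardware generation, e.g. 15


def check_cluster_spec(spec: ClusterSpec) -> List[str]:
    """Return the rule violations for this release (empty list if none)."""
    problems: List[str] = []
    if spec.storage not in ("OSA", "ESA"):
        problems.append(f"unknown vSAN architecture: {spec.storage}")
    if spec.storage == "ESA":
        # VCF requires vLCM mode for vSAN ESA-based clusters.
        if not spec.vlcm_enabled:
            problems.append("vSAN ESA clusters must have vLCM mode enabled")
        # Assumption: the 15th generation restriction is applied to ESA only.
        if spec.hw_generation != 15:
            problems.append("only 15th generation ESA platforms are supported")
    return problems


print(check_cluster_spec(ClusterSpec("ESA", vlcm_enabled=True, hw_generation=15)))
print(check_cluster_spec(ClusterSpec("ESA", vlcm_enabled=False, hw_generation=16)))
```

Returning a list of messages rather than raising on the first failure lets an administrator see every issue with a planned cluster in one pass.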

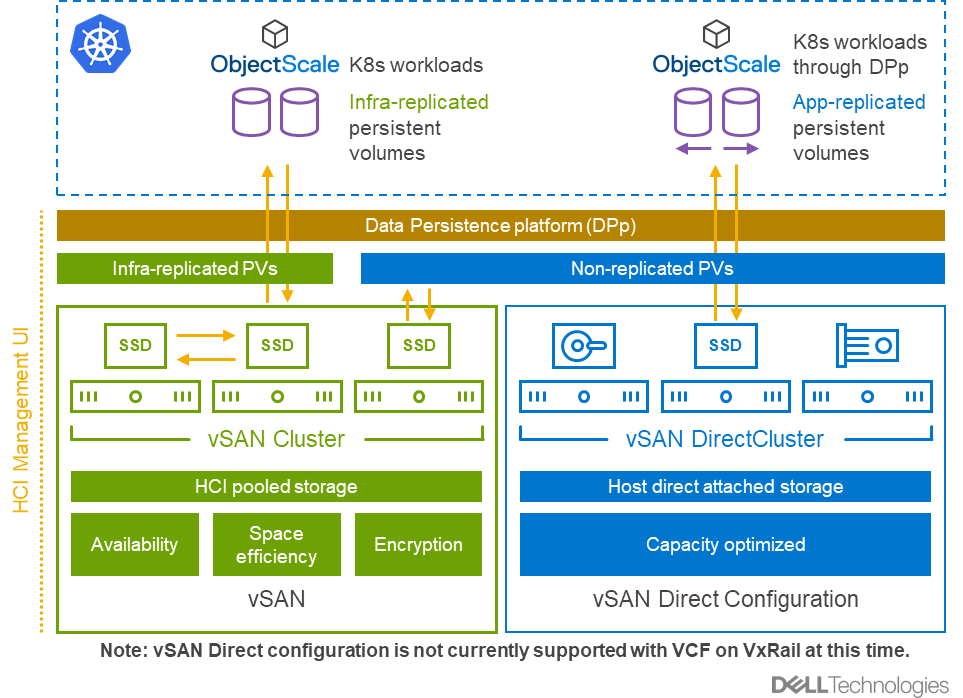

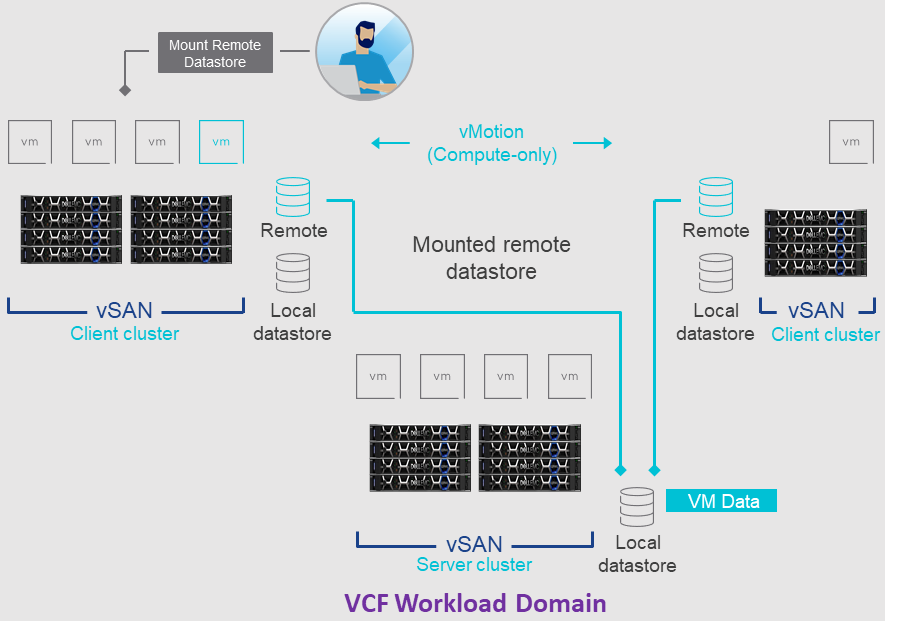

Support for vSAN OSA/ESA remote datastores as principal storage when used with VxRail dynamic node workload domain clusters

This release adds support for VxRail dynamic node compute-only clusters in cross-cluster capacity sharing use cases. This means that vSAN OSA or ESA remote datastores sourced from a standard VxRail HCI cluster with vSAN within the same workload domain can now be used as principal storage for VxRail dynamic node compute-only workload domain clusters. This capability is available via the SDDC Manager WFO script deployment method only.

Platform and scale updates

Increased VCF remote cluster maximum support for up to 16 nodes and up to 150ms latency

There are newly validated latency and bandwidth requirements for VCF remote clusters. The links to these clusters now require at least 10 Mbps of available bandwidth and a latency of less than 150 ms.

There have also been updates to the supported size range of VCF remote clusters. A VCF remote cluster now requires a minimum of 3 hosts when using local vSAN as cluster principal storage, or 2 hosts when using supported Dell external storage as principal storage with VxRail dynamic nodes. At the upper end, VCF remote clusters cannot exceed the new maximum of 16 VxRail hosts in either case.

Note: Support for this feature is expected to be available after GA.
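The limits above translate naturally into a simple validation routine. This Python sketch encodes only the numbers stated in this section; the function and parameter names are hypothetical, and it follows the body text in treating the latency limit as strictly less than 150 ms.

```python
from typing import List

MAX_HOSTS = 16           # maximum VxRail hosts in a VCF remote cluster
MIN_BANDWIDTH_MBPS = 10  # minimum available link bandwidth
MAX_LATENCY_MS = 150     # latency must be below this value


def check_remote_cluster(hosts: int, local_vsan: bool,
                         bandwidth_mbps: float, latency_ms: float) -> List[str]:
    """Return the limits a planned remote cluster violates (empty if none)."""
    problems: List[str] = []
    # 3 hosts with local vSAN, 2 with Dell external storage on dynamic nodes.
    min_hosts = 3 if local_vsan else 2
    if hosts < min_hosts:
        problems.append(f"needs at least {min_hosts} hosts, got {hosts}")
    if hosts > MAX_HOSTS:
        problems.append(f"exceeds the maximum of {MAX_HOSTS} hosts")
    if bandwidth_mbps < MIN_BANDWIDTH_MBPS:
        problems.append(f"needs at least {MIN_BANDWIDTH_MBPS} Mbps of bandwidth")
    if latency_ms >= MAX_LATENCY_MS:
        problems.append(f"latency must be less than {MAX_LATENCY_MS} ms")
    return problems


# Assumed example inputs for two planned edge sites.
print(check_remote_cluster(hosts=3, local_vsan=True, bandwidth_mbps=10, latency_ms=100))
print(check_remote_cluster(hosts=2, local_vsan=True, bandwidth_mbps=5, latency_ms=200))
```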

Support for 2-node workload domain VxRail dynamic node clusters when using VMFS on FC Dell external storage as principal storage

Cloud Foundation on VxRail now supports deploying 2-node dynamic node-based workload domain clusters when using VMFS on FC Dell external storage as cluster principal storage.

Increased GPU scale for Private AI

NVIDIA GPUs can be configured for AI/ML to support a variety of use cases. In VMware Cloud Foundation 5.1 on VxRail 8.0.200, where GPUs have been configured for vGPUs, a VM can now be configured with up to 16 vGPU profiles that represent all of a GPU or parts of a GPU. These enhancements allow customers to support larger generative AI and large language model (LLM) workloads while delivering maximum performance.

VxRail hardware platform updates

15th generation VxRail E660N and P670N all-NVMe vSAN ESA hardware platform support

Cloud Foundation on VxRail administrators can now use VxRail hardware platforms that have been qualified to run vSAN ESA and VxRail 8.0.200 software. The all-NVMe VxRail platforms such as the 15th generation VxRail E660N and P670N can now be ordered and deployed in Cloud Foundation 5.1 on VxRail 8.0.200 environments.

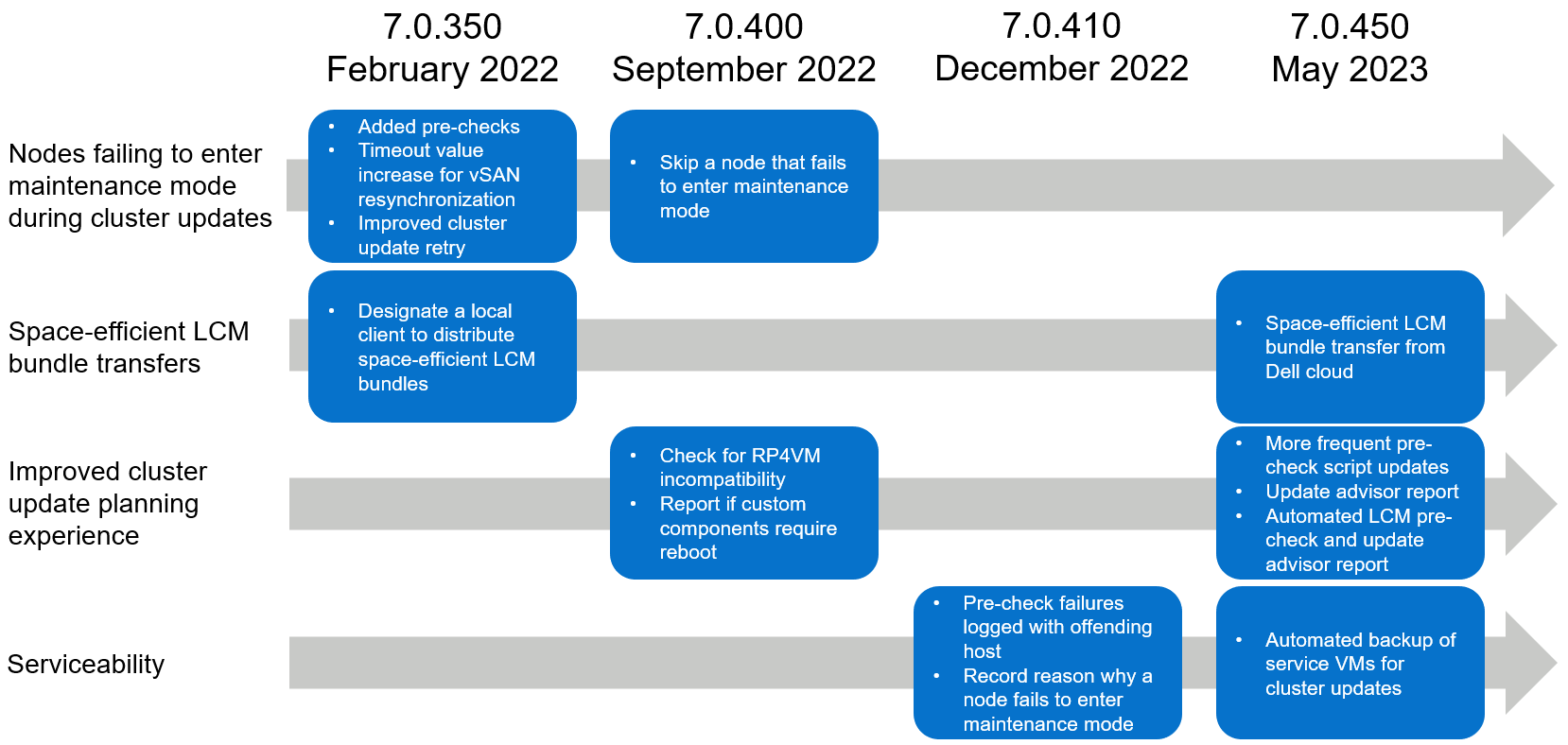

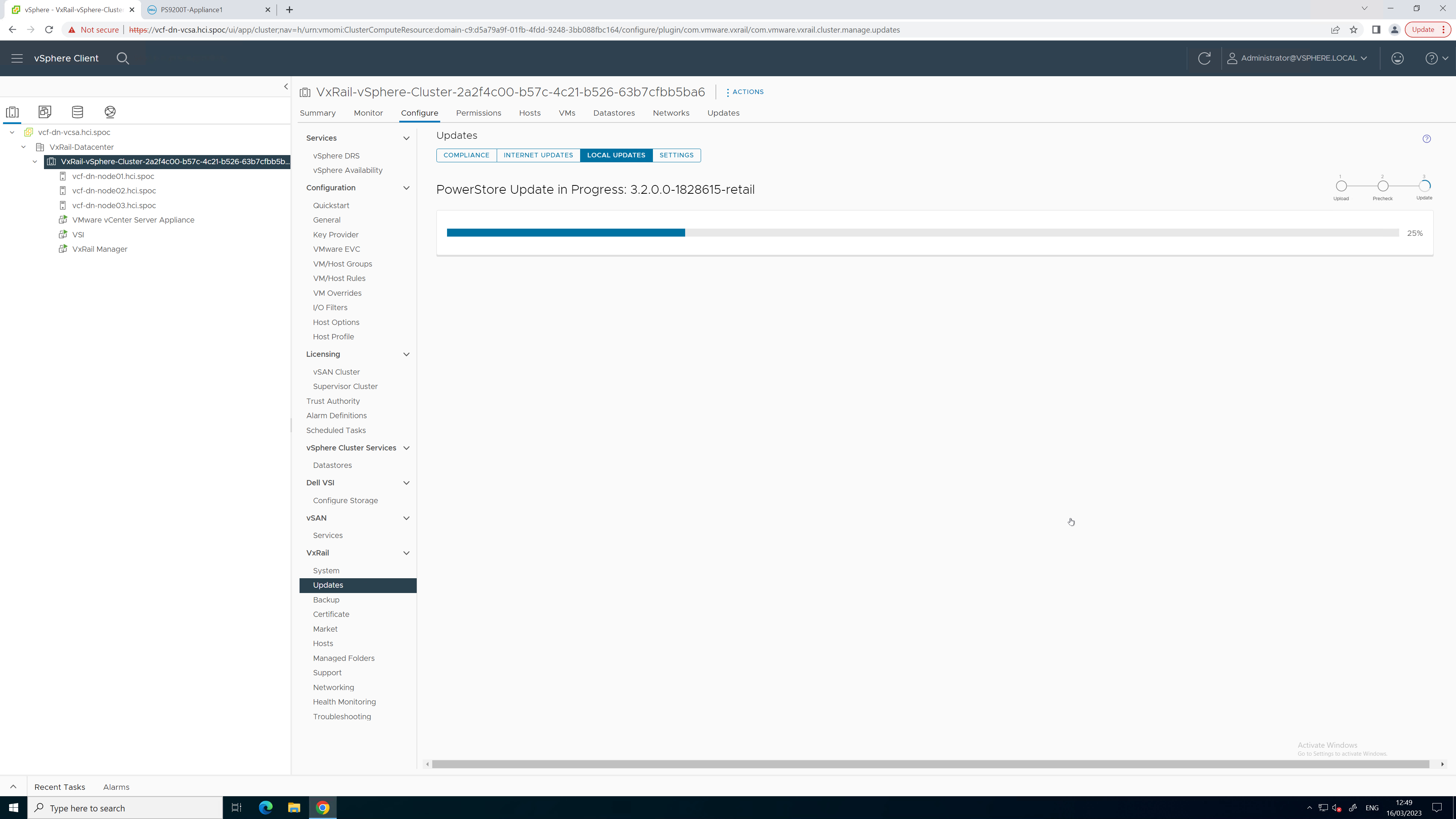

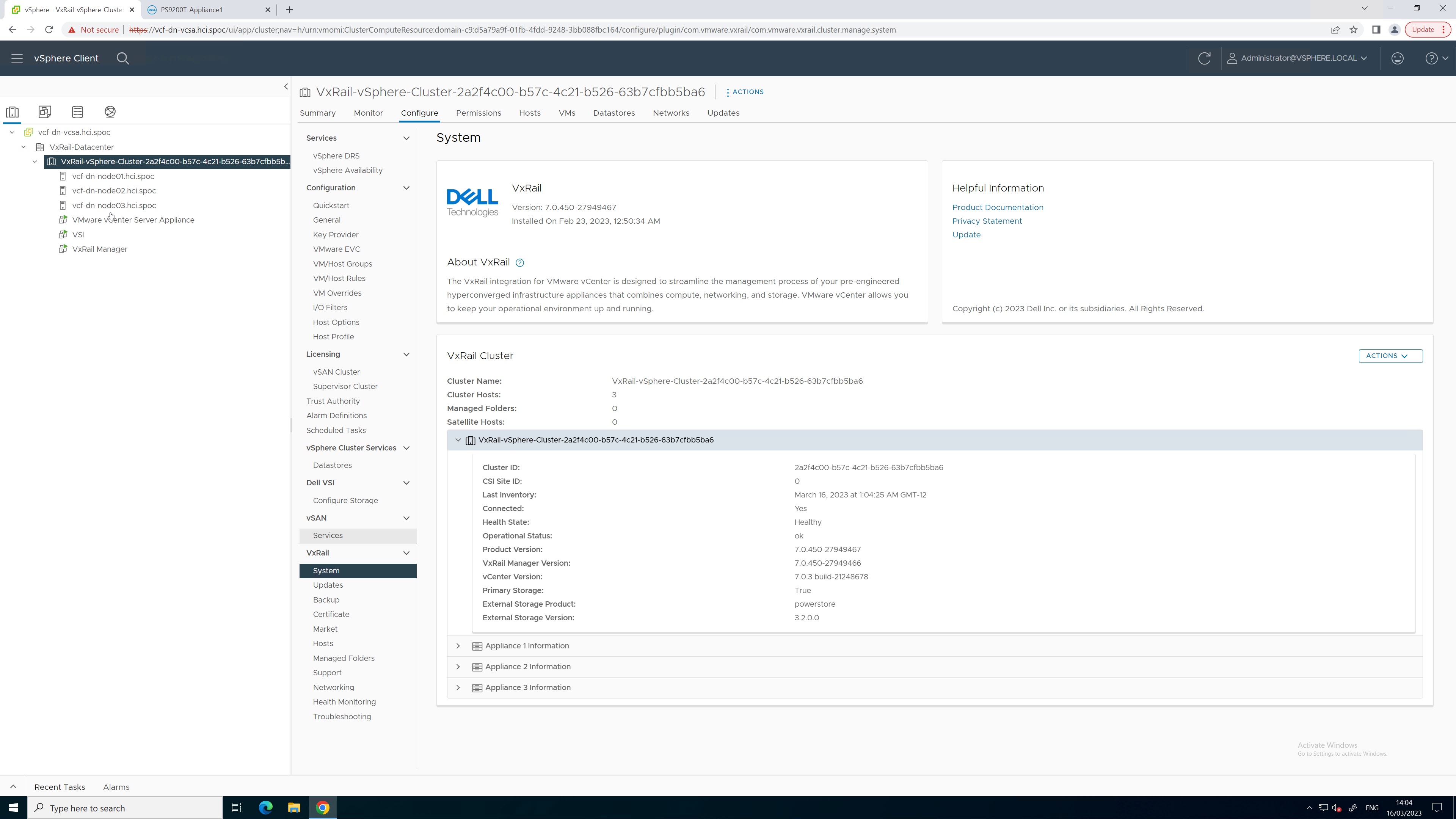

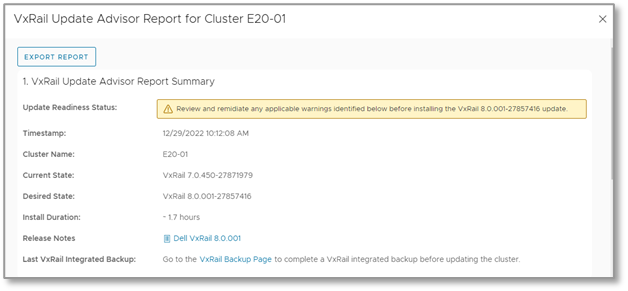

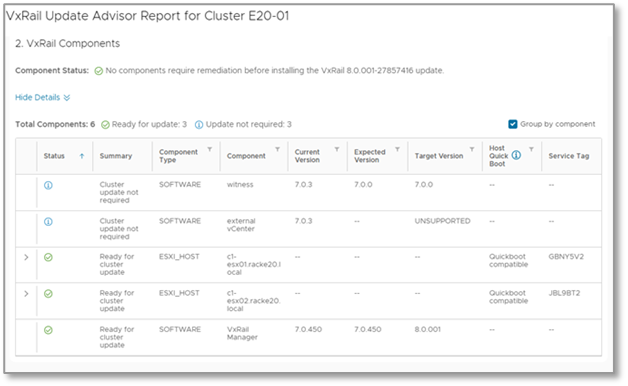

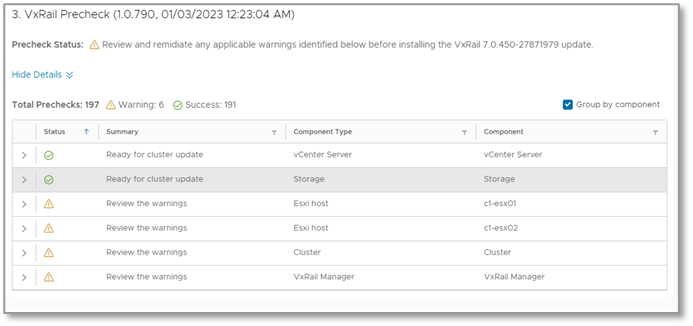

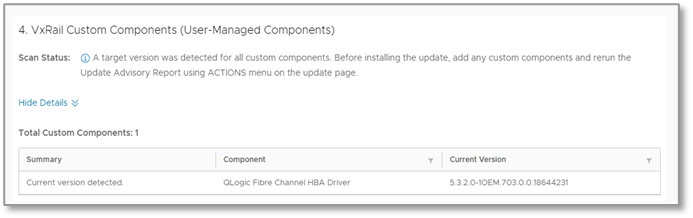

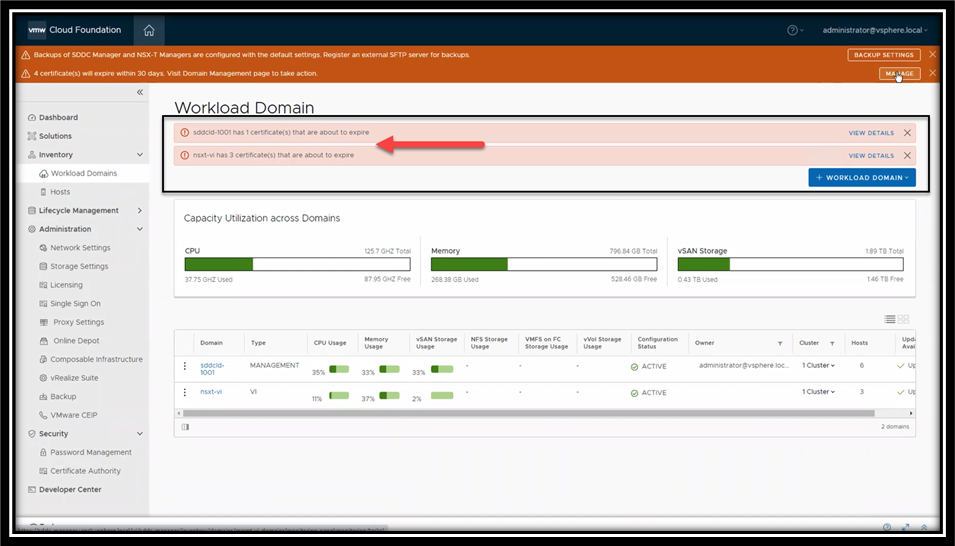

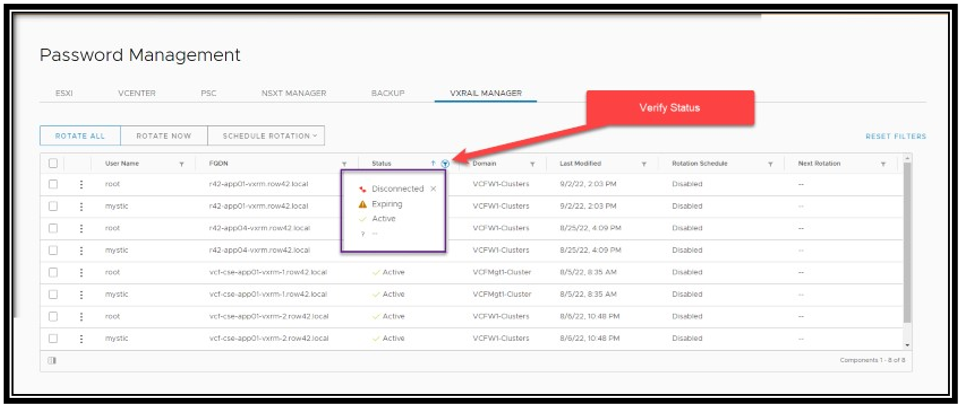

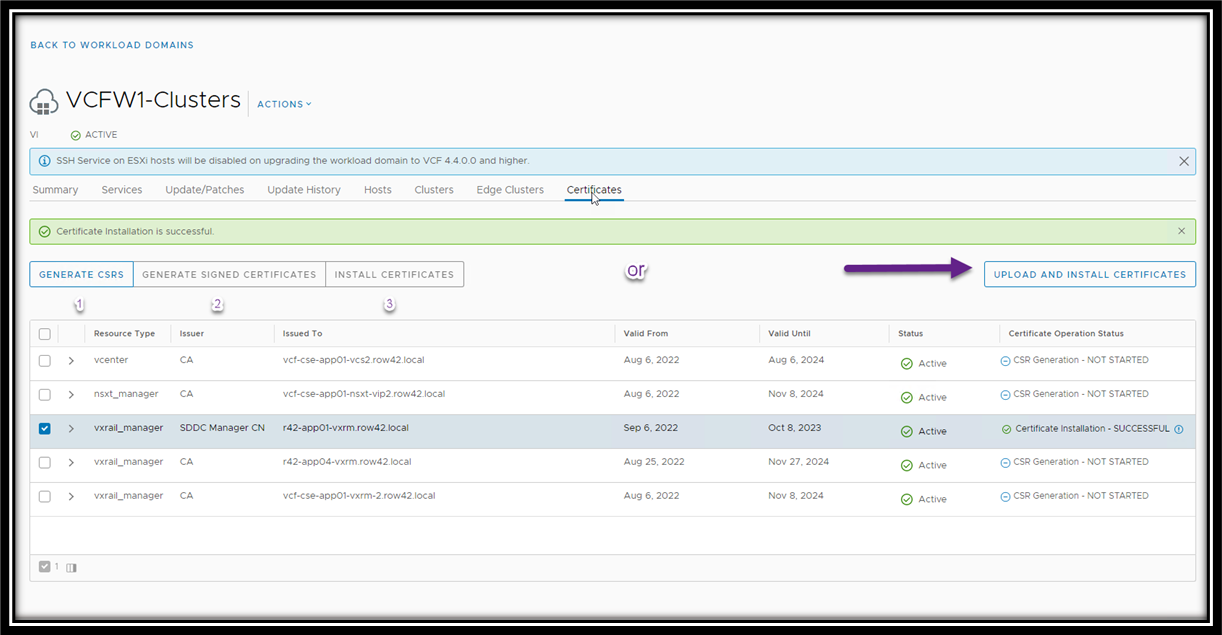

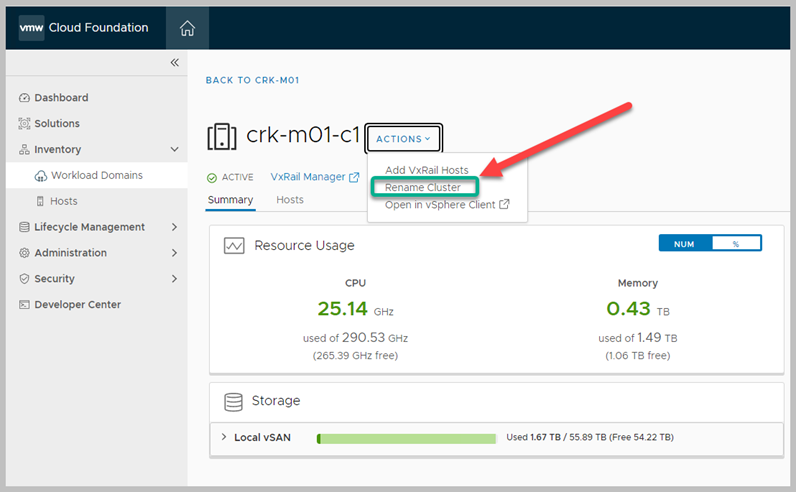

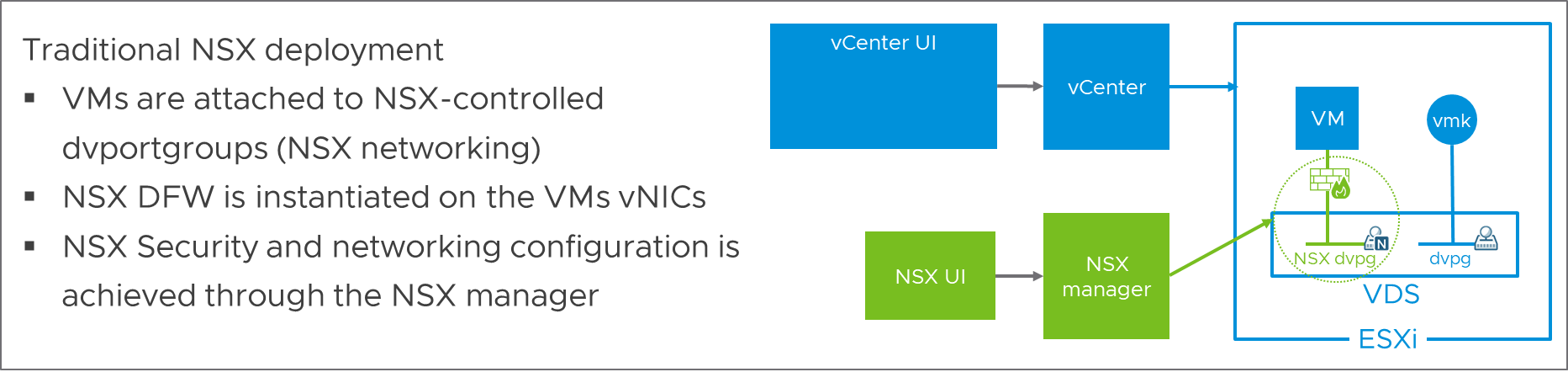

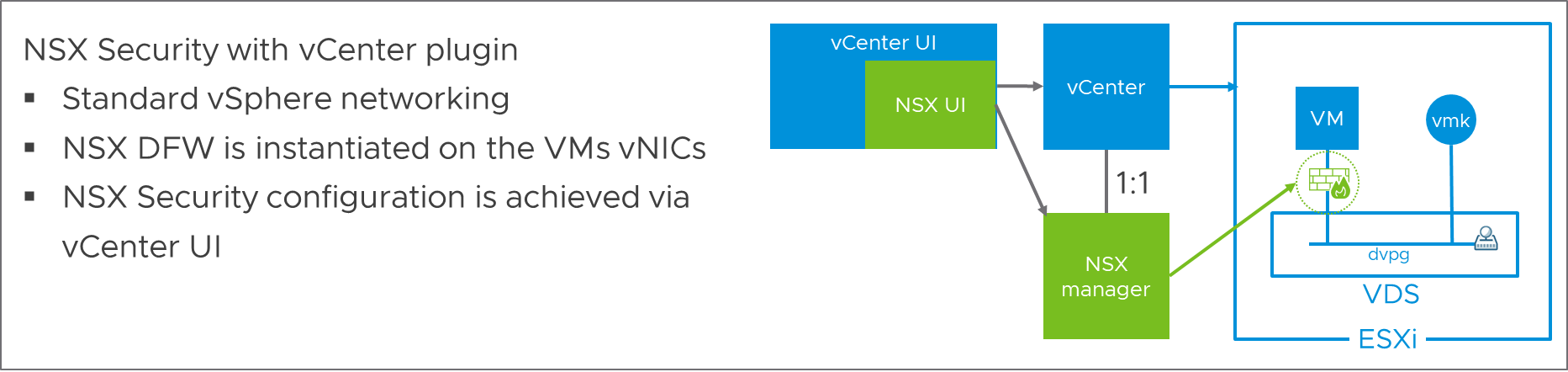

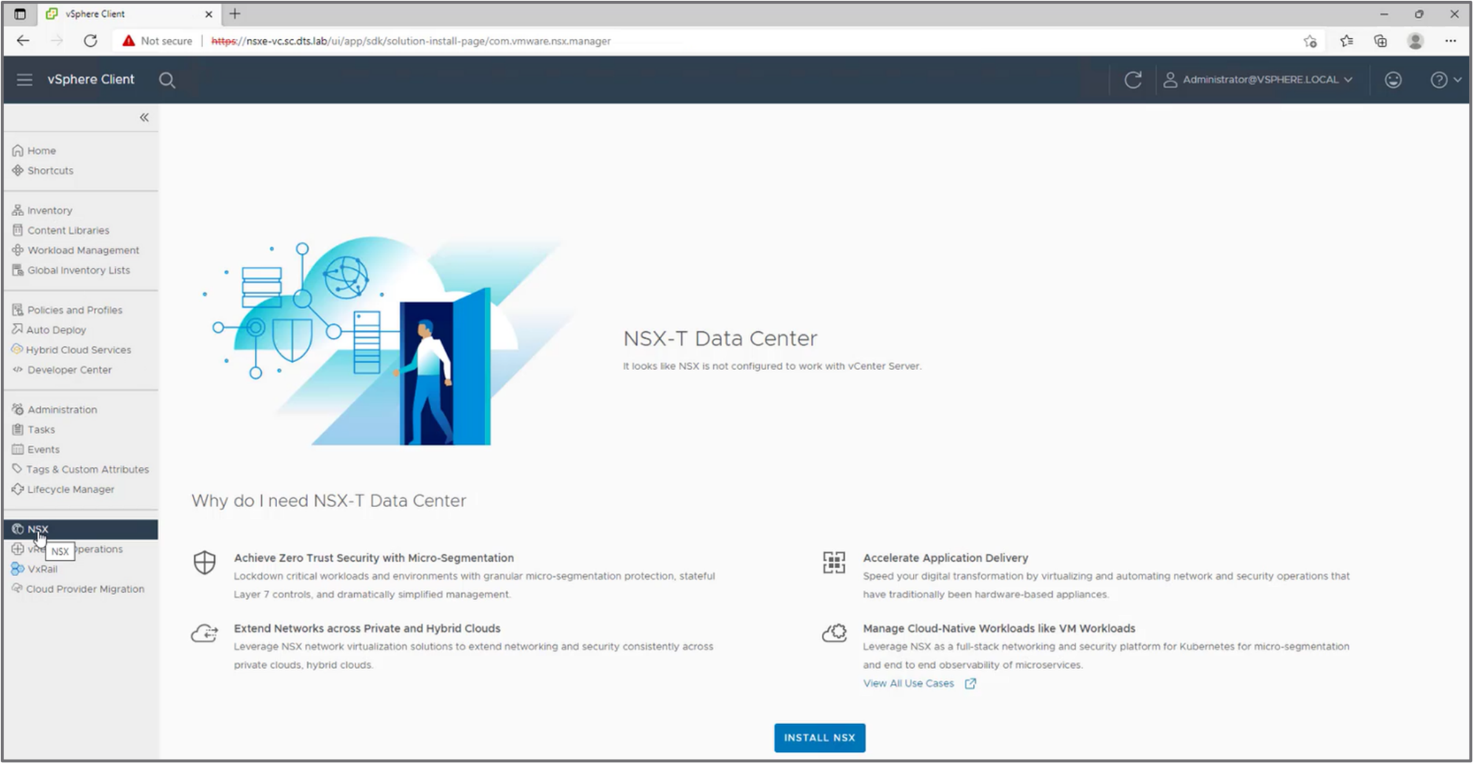

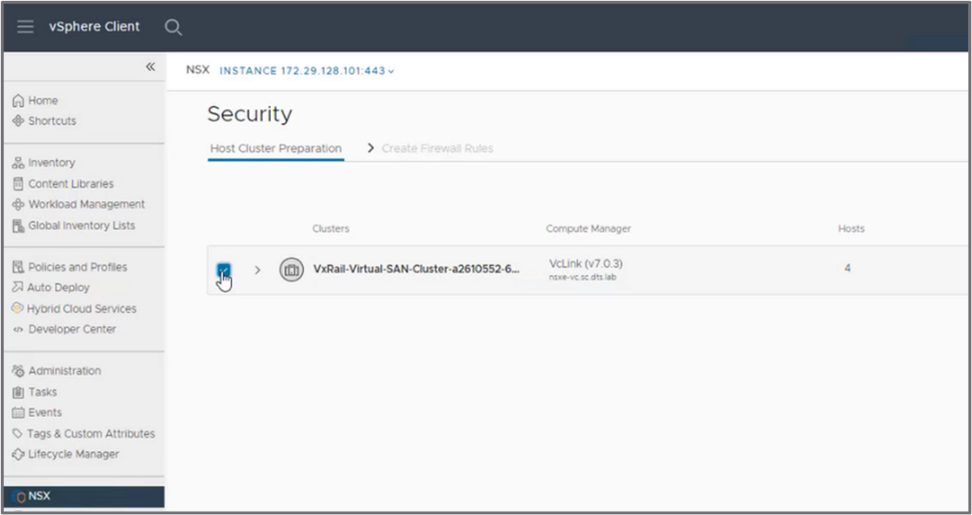

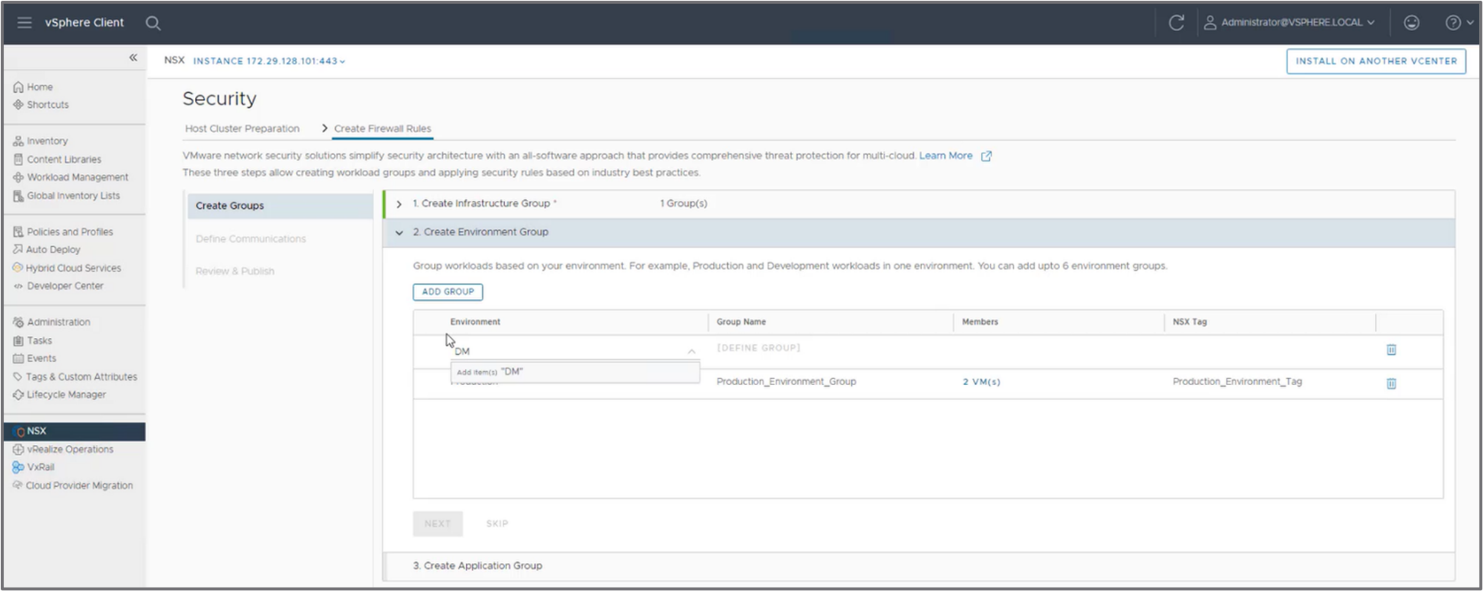

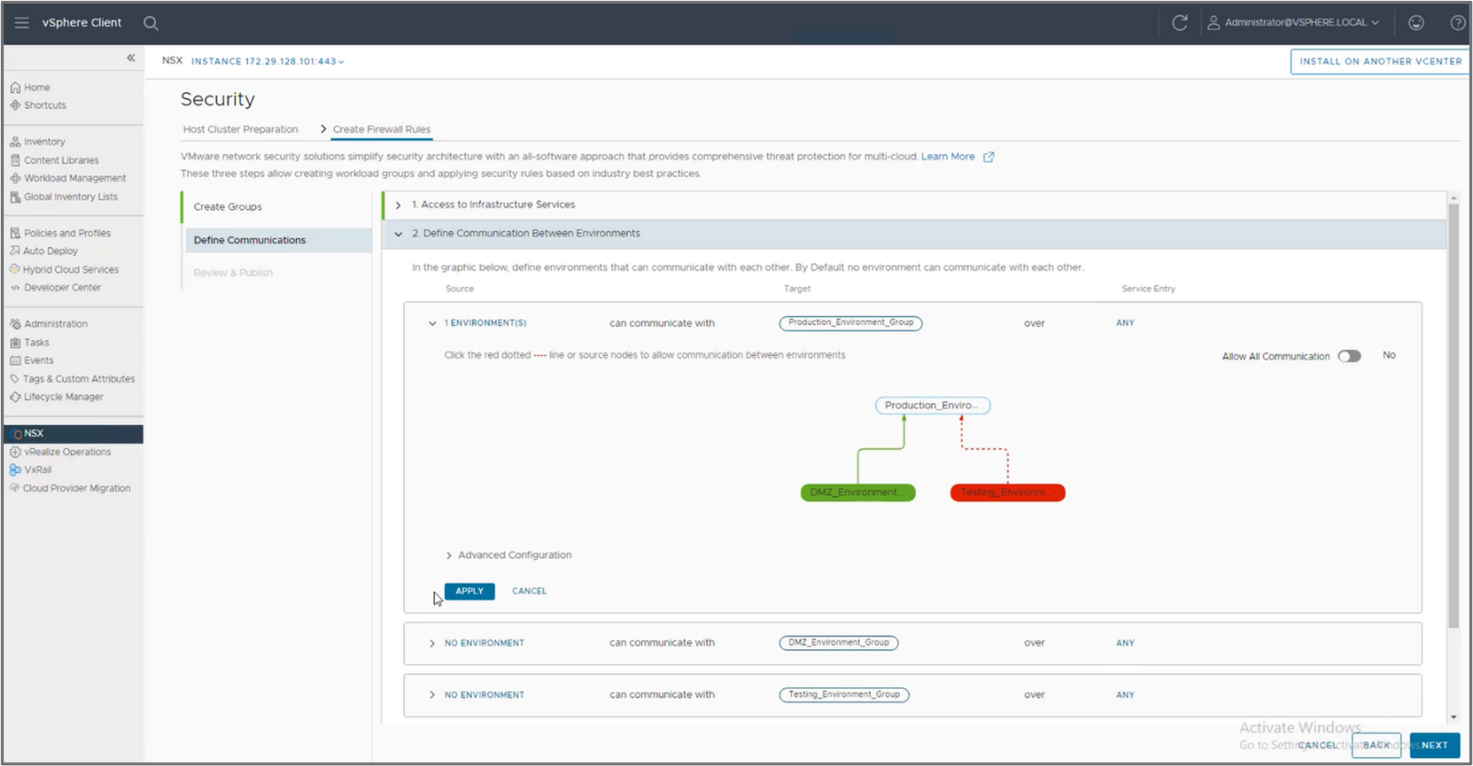

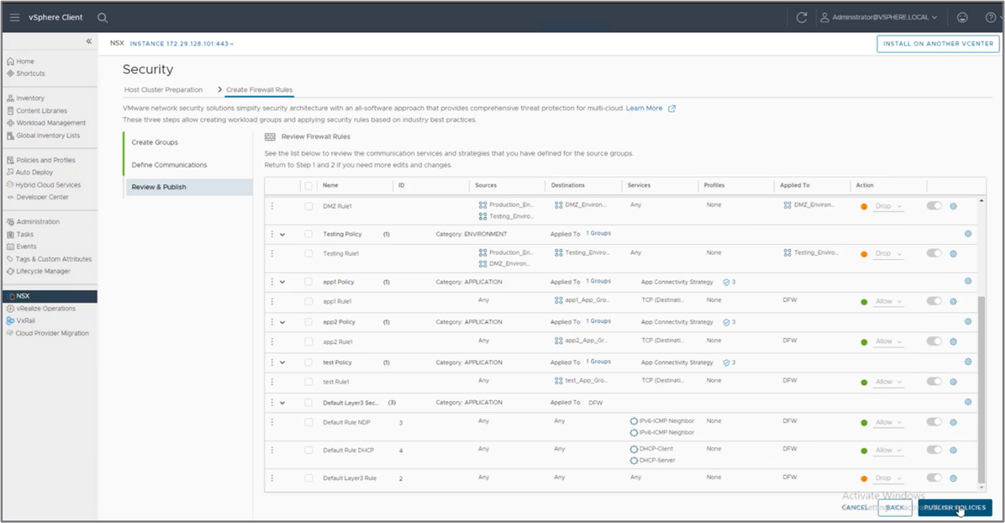

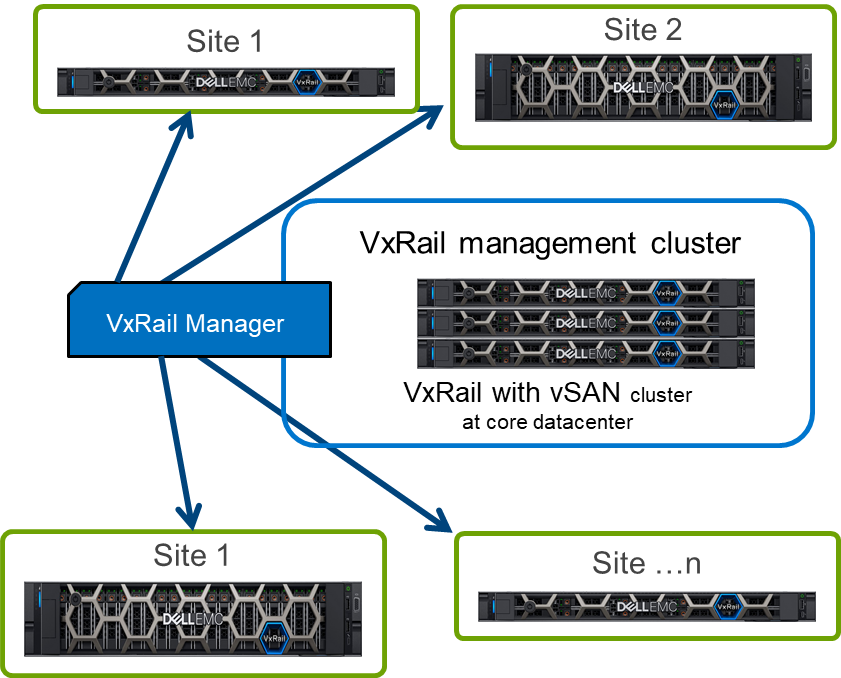

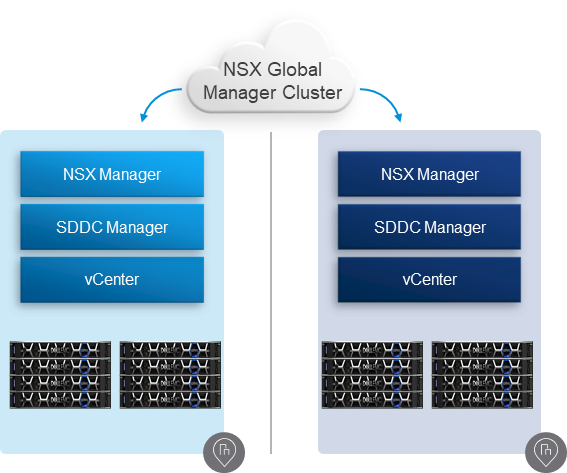

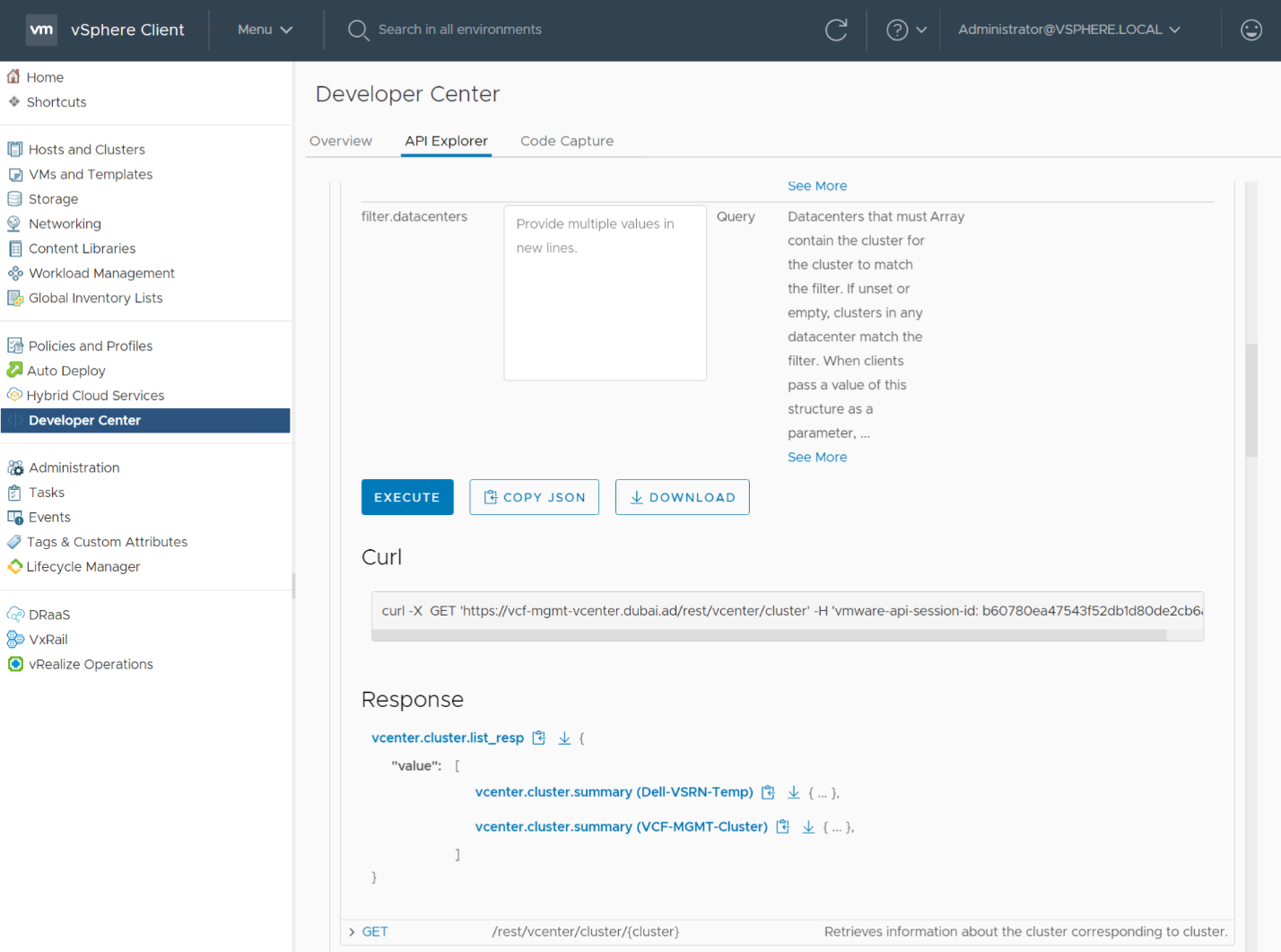

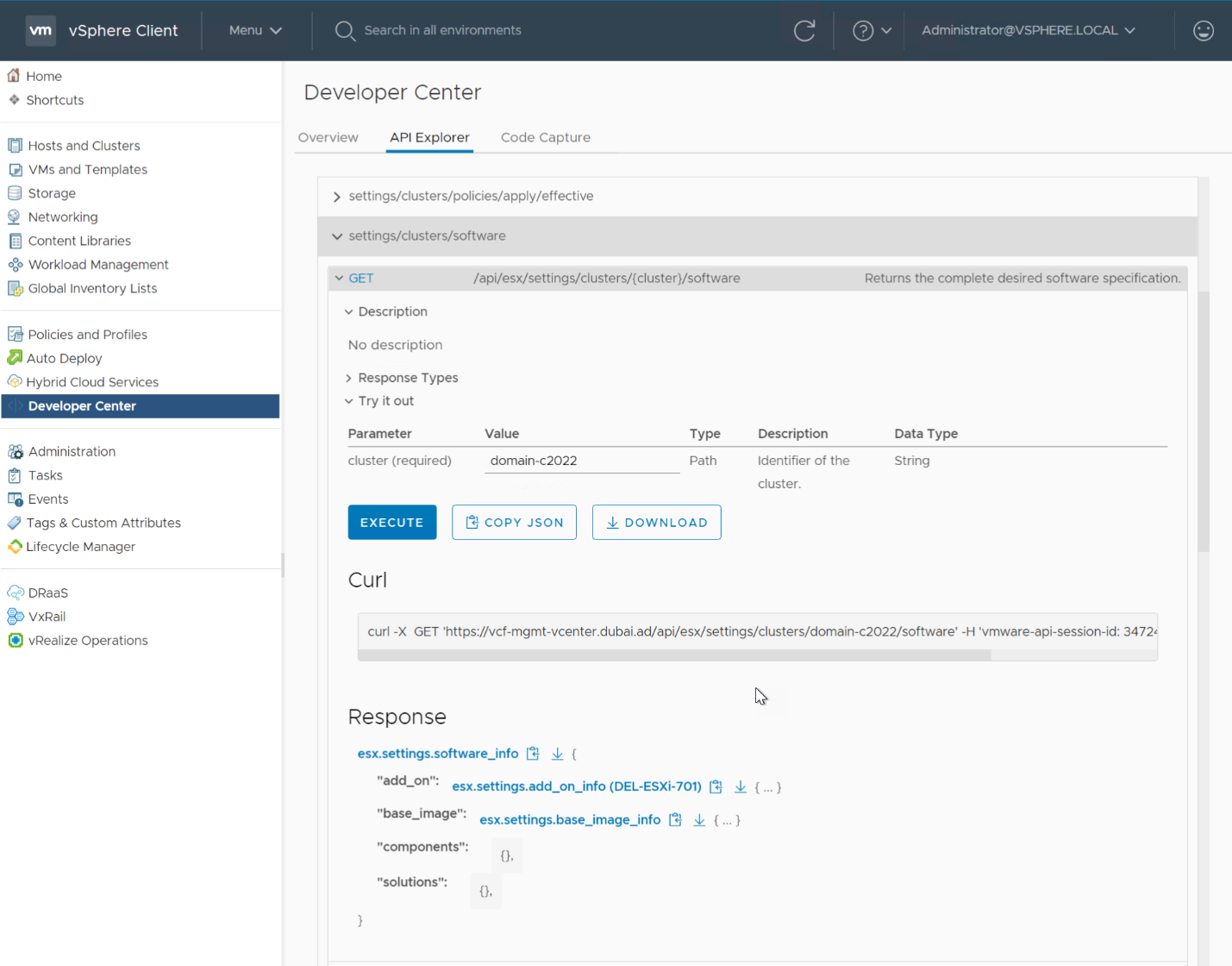

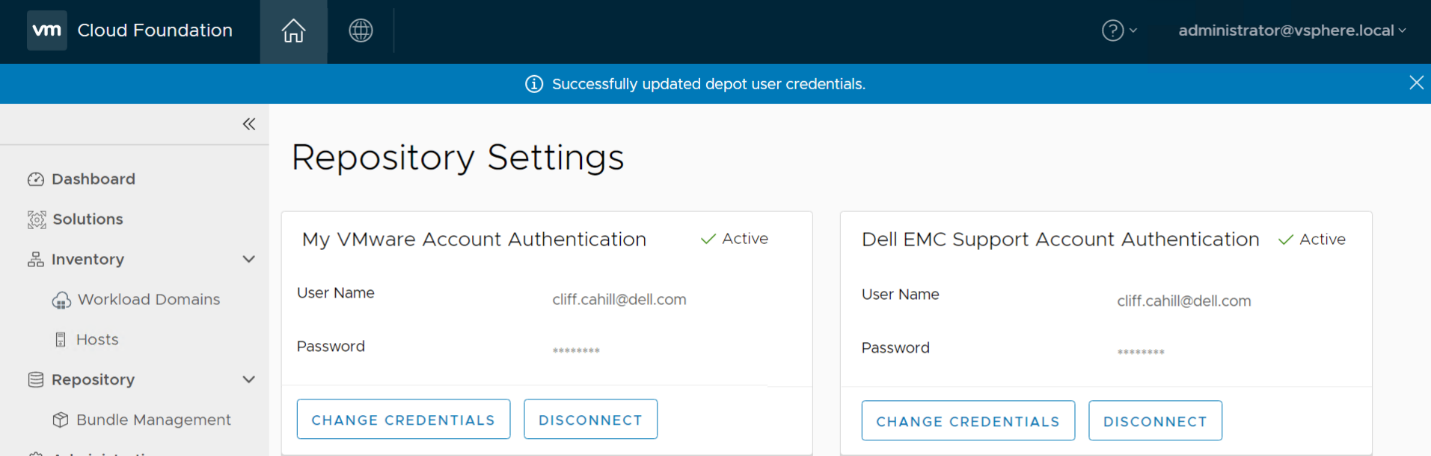

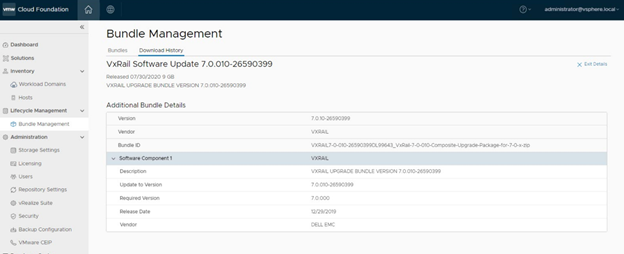

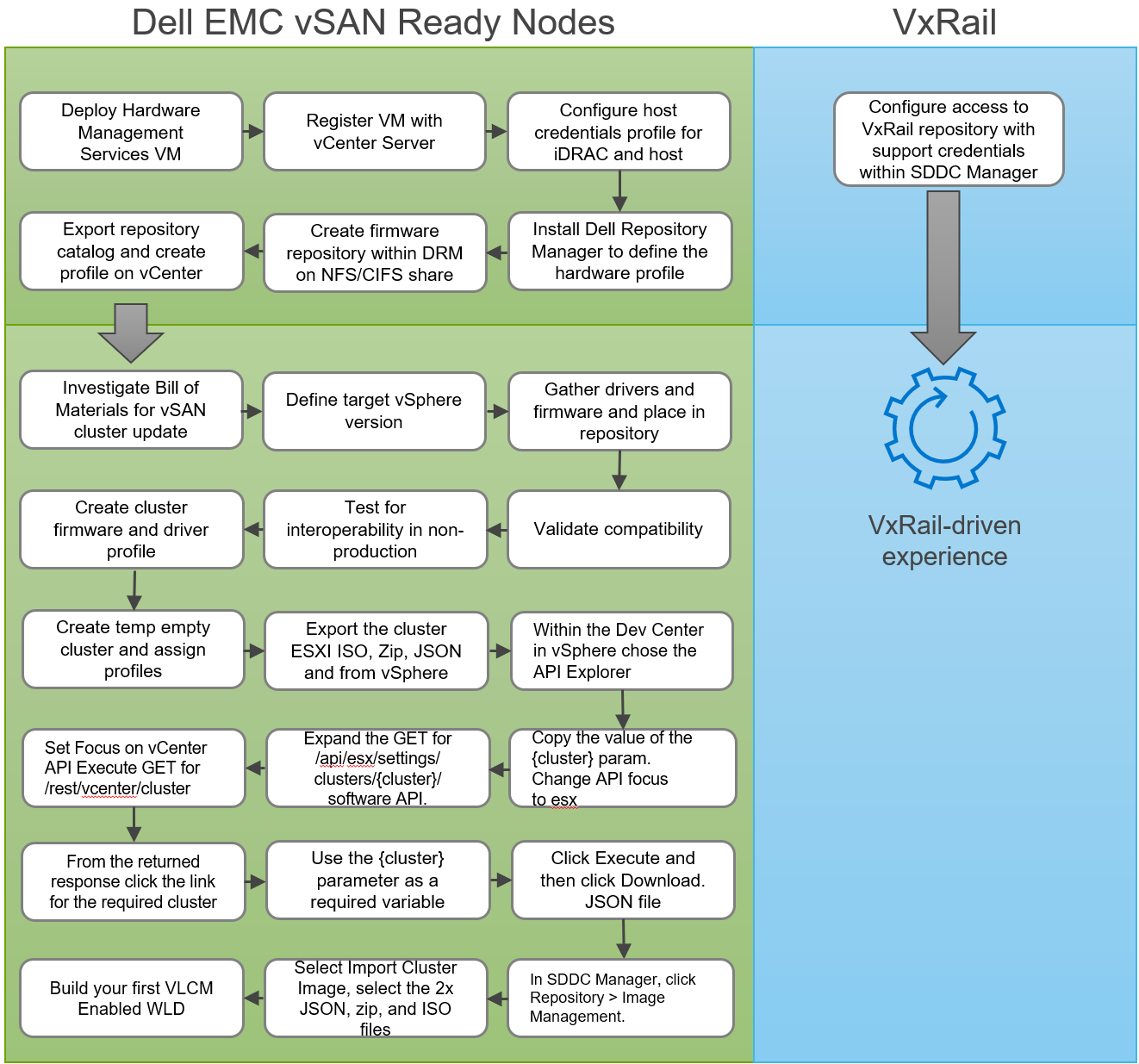

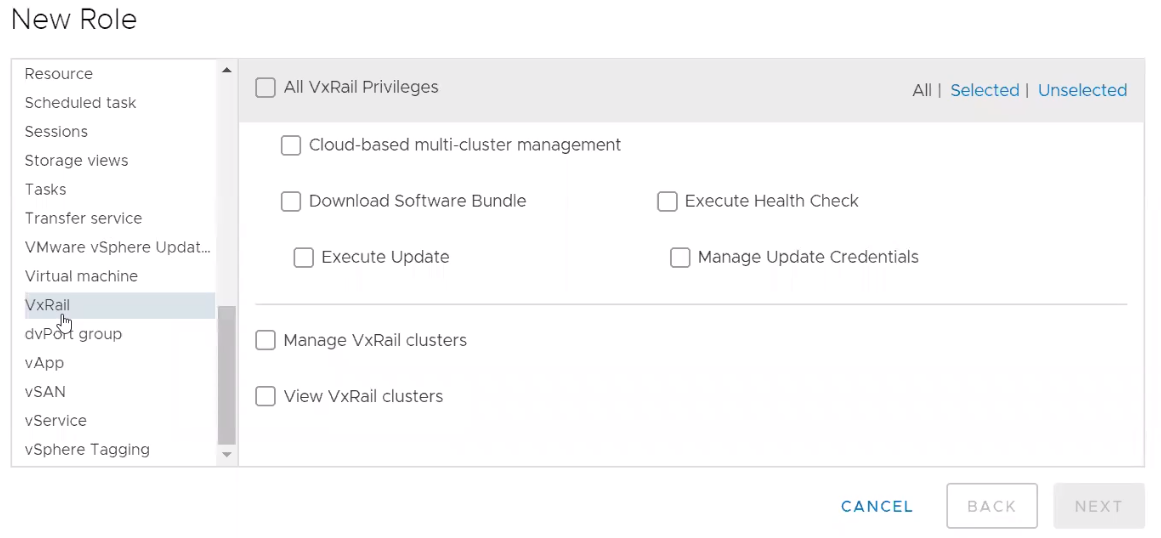

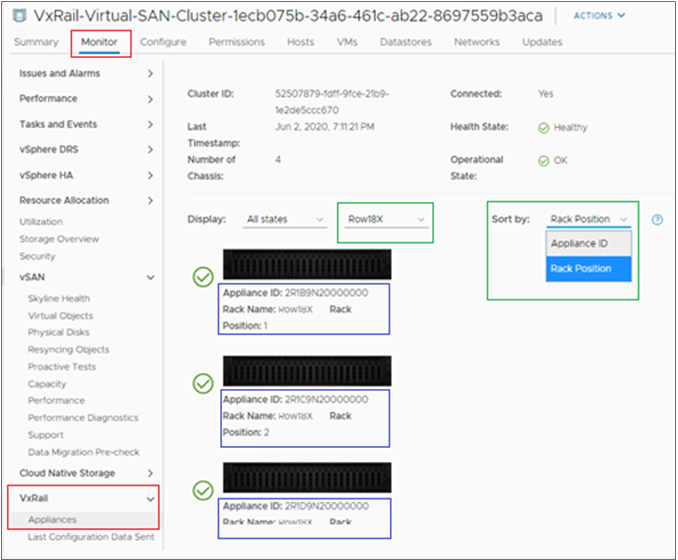

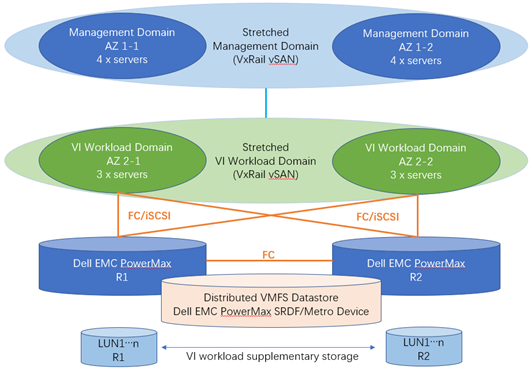

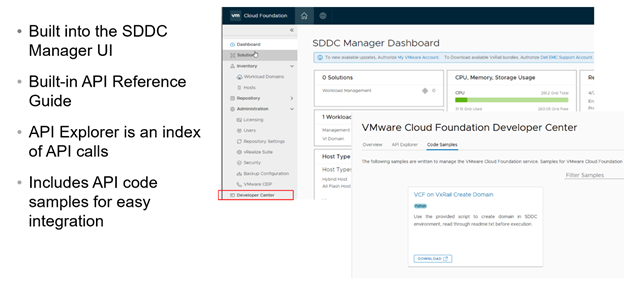

Hybrid cloud management updates