The Dell Technologies Cloud Platform – Smaller in Size, Big on Features

Wed, 03 Aug 2022 21:32:15 -0000

The latest VMware Cloud Foundation 4.0 on VxRail 7.0 release introduces a more accessible entry cloud option with support for new four node configurations. It also delivers a simple and direct path to vSphere with Kubernetes at cloud scale.

The Dell Technologies team is very excited to announce that May 12, 2020 marked the general availability of our latest Dell Technologies Cloud Platform release, VMware Cloud Foundation 4.0 on VxRail 7.0. There is so much to unpack in this release across all layers of the platform, from the latest features of VCF 4.0 to deployment configurations newly supported for VCF on VxRail. To help you navigate through all of the goodness, I have broken out this post into two sections: VCF 4.0 updates and new features introduced specifically to VCF on VxRail deployments. Let’s jump right to it!

VMware Cloud Foundation 4.0 Updates

A lot of great information on VCF 4.0 features was already published by VMware as a part of their Modern Apps Launch earlier this year. If you haven’t caught up yet, check out the links to some VMware blogs at the end of this post. Some of my favorite new features include new support for vSphere with Kubernetes (GAMECHANGER!), support for NSX-T in the Management Domain, and the NSX-T compatible vSphere Distributed Switch.

Now let’s dive into the items that are new to VCF on VxRail deployments, specifically ones that customers can take advantage of on top of the latest VCF 4.0 goodness.

New to VCF 4.0 on VxRail 7.0 Deployments

VCF Consolidated Architecture Four Node Deployment Support for Entry Level Cloud (available beginning May 26, 2020)

New to VCF on VxRail is support for the VCF Consolidated Architecture deployment option. Until now, VCF on VxRail required that all deployments use the VCF Standard Architecture. This was due to several factors: a major one was that NSX-T was not supported in the VCF Management Domain until this latest release. Having this capability was a prerequisite before we could support the consolidated architecture with VCF on VxRail.

Before we jump into the details of a VCF Consolidated Architecture deployment, let's review what the current VCF Standard deployment is all about.

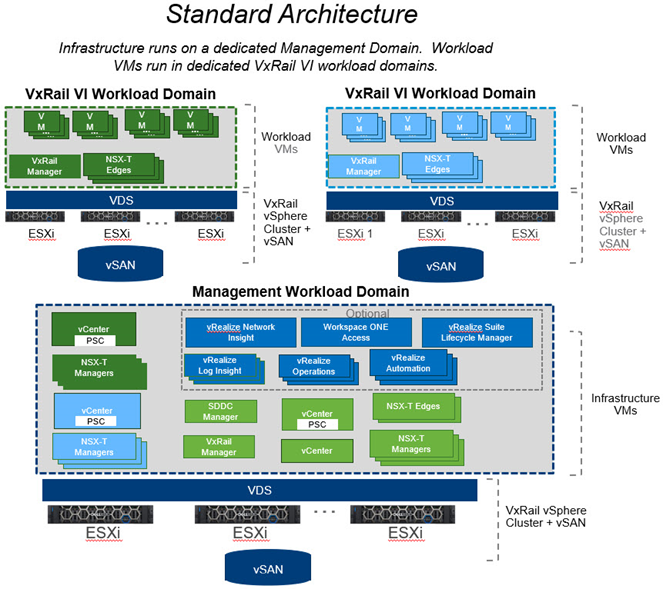

VCF Standard Architecture Details

This deployment would consist of:

- A minimum of seven VxRail nodes (however eight is recommended)

- A four node Management Domain dedicated to run the VCF management software and at least one dedicated workload domain that consists of a three node cluster (however four is recommended) to run user workloads

- The Management Domain runs its own dedicated vCenter and NSX-T instance

- The workload domains are deployed with their own dedicated vCenter instances and choice of dedicated or shared NSX-T instances that are separate from the Management Domain NSX-T instance.

A summary of features includes:

- Requires a minimum of 7 nodes (8 recommended)

- A Management Domain dedicated to run management software components

- Dedicated VxRail VI domain(s) for user workloads

- Each workload domain can consist of multiple clusters

- Up to 15 domains are supported per VCF instance including the Management Domain

- vCenter instances run in linked-mode

- Supports vSAN storage only as principal storage

- Supports using external storage as supplemental storage

This deployment architecture design is preferred because it provides the most flexibility, scalability, and workload isolation for customers scaling their clouds in production. However, this does require a larger initial infrastructure footprint, and thus cost, to get started.

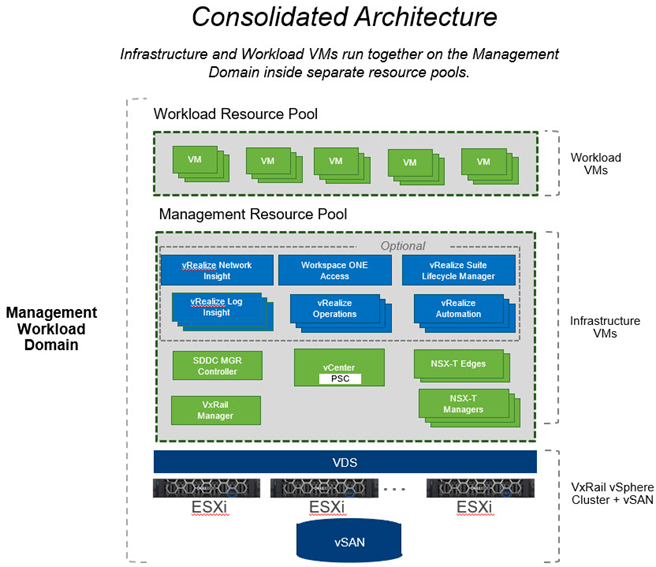

For something that allows customers to start smaller, VMware developed a validated VCF Consolidated Architecture option. This allows for the Management domain cluster to run both the VCF management components and a customer’s general purpose server VM workloads. Since you are just using the Management Domain infrastructure to run both your management components and user workloads, your minimum infrastructure starting point consists of the four nodes required to create your Management Domain. In this model, vSphere Resource Pools are used to logically isolate cluster resources to the respective workloads running on the cluster. A single vCenter and NSX-T instance is used for all workloads running on the Management Domain cluster.

VCF Consolidated Architecture Details

A summary of features of a Consolidated Architecture deployment:

- Minimum of 4 VxRail nodes

- Infrastructure and compute VMs run together on shared management domain

- Resource Pools used to separate and isolate workload types

- Supports multi-cluster and scale to documented vSphere maximums

- Does not support running Horizon Virtual Desktop or vSphere with Kubernetes workloads

- Supports vSAN storage only as principal storage

- Supports using external storage as supplemental storage for workload clusters

For customers to get started with an entry level cloud for general purpose VM server workloads, this option provides a smaller entry point, both in terms of required infrastructure footprint as well as cost.

With the Dell Technologies Cloud Platform, we now have you covered across your scalability spectrum, from entry level to cloud scale!
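As a quick check on the sizing arithmetic above, here is a minimal Python sketch of the minimum node counts for the two architectures. The function and constant names are illustrative only; the numbers come straight from the summaries above (a four node Management Domain, plus a three node workload domain cluster for Standard, with four recommended).

```python
# Node counts as stated in this post; all names here are illustrative.
MGMT_DOMAIN_NODES = 4        # Management Domain cluster size
WLD_MIN_NODES = 3            # smallest workload domain cluster
WLD_RECOMMENDED_NODES = 4    # recommended workload domain cluster size


def min_nodes(architecture: str, recommended: bool = False) -> int:
    """Return the smallest VxRail node count for an initial deployment."""
    if architecture == "consolidated":
        # Management components and user workloads share one four node cluster.
        return MGMT_DOMAIN_NODES
    if architecture == "standard":
        wld_nodes = WLD_RECOMMENDED_NODES if recommended else WLD_MIN_NODES
        return MGMT_DOMAIN_NODES + wld_nodes
    raise ValueError(f"unknown architecture: {architecture!r}")


print(min_nodes("standard"))                    # 7
print(min_nodes("standard", recommended=True))  # 8
print(min_nodes("consolidated"))                # 4
```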

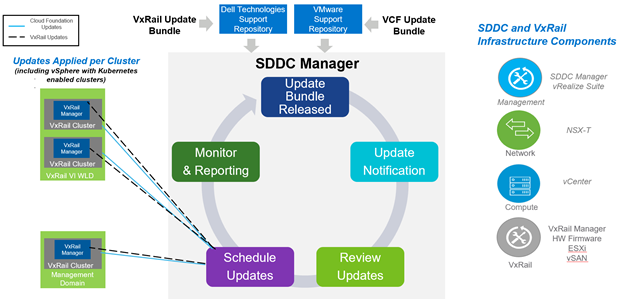

Automated and Validated Lifecycle Management Support for vSphere with Kubernetes Enabled Workload Domain Clusters

How is it that we can support this? How does this work? What benefits does this provide you, as a VCF on VxRail administrator, as a part of this latest release? You may be asking yourself these questions. Well, the answer is through the unique integration that Dell Technologies and VMware have co-engineered between SDDC Manager and VxRail Manager. With these integrations, we have developed a unique set of LCM capabilities that can benefit our customers tremendously. You can read more about the details in one of my previous blog posts here.

VCF 4.0 on VxRail 7.0 customers who benefit from the automated full stack LCM integration that is built into the platform can now include in this integration vSphere with Kubernetes components that are a part of the ESXi hypervisor! Customers are future proofed to be able to automatically LCM vSphere with Kubernetes enabled clusters when the need arises with fully automated and validated VxRail LCM workflows natively integrated into the SDDC Manager management experience. Cool right?! This means that you can now bring the same streamlined operations capabilities to your modern apps infrastructure just like you already do for your traditional apps! The figure below illustrates the LCM process for VCF on VxRail.

VCF on VxRail LCM Integrated Workflow

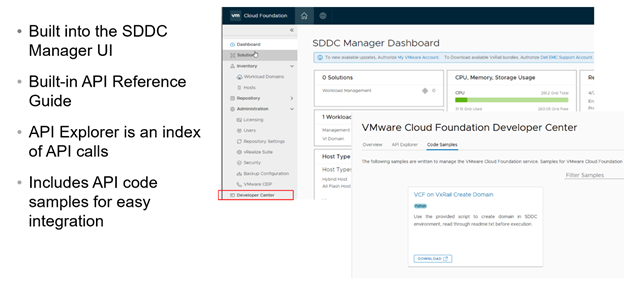

Introduction of initial support of VCF (SDDC Manager) Public APIs

VMware Cloud Foundation first introduced the concept of SDDC Manager Public APIs back in version 3.8. These APIs have expanded in subsequent releases and have been geared toward VCF deployments on Ready Nodes.

Well, we are happy to say that in this latest release, the VCF on VxRail team is offering initial support for VCF Public APIs. These will include a subset of the various APIs that are applicable to a VCF on VxRail deployment. For a full listing of the available APIs, please refer to the VMware Cloud Foundation on Dell EMC VxRail API Reference Guide.

Another new API related feature in this release is the availability of the VMware Cloud Foundation Developer Center. This provides some very handy API references and code samples built right into the SDDC Manager UI. These references are readily accessible and help our customers to better integrate their own systems and other third party systems directly into VMware Cloud Foundation on VxRail. The figure below provides a summary and a sneak peek at what this looks like.

VMware Cloud Foundation Developer Center SDDC Manager UI View

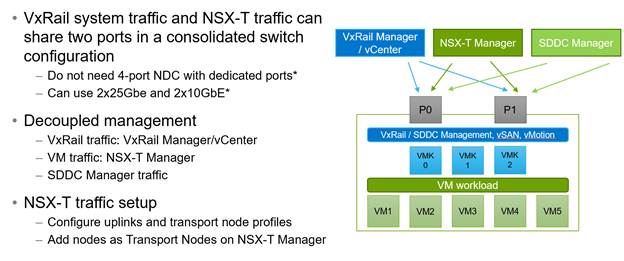
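To give a feel for what calling the public API from your own tooling might look like, here is a minimal Python sketch that only builds the HTTP requests and does not send them. The hostname is hypothetical, and the `/v1/tokens` and `/v1/domains` paths and the bearer-token flow are assumptions based on the general shape of the VCF API; confirm them against the API Reference Guide or the Developer Center before relying on them.

```python
import json
import urllib.request

# Hypothetical SDDC Manager address, used for illustration only.
SDDC_MANAGER = "https://sddc-manager.example.com"


def token_request(username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a request for an API access token.

    The /v1/tokens path is an assumption to verify in the API reference.
    """
    body = json.dumps({"username": username, "password": password}).encode("utf-8")
    return urllib.request.Request(
        f"{SDDC_MANAGER}/v1/tokens",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def domains_request(access_token: str) -> urllib.request.Request:
    """Build (but do not send) a request that lists workload domains.

    The /v1/domains path is an assumption to verify in the API reference.
    """
    return urllib.request.Request(
        f"{SDDC_MANAGER}/v1/domains",
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


req = token_request("administrator@vsphere.local", "example-password")
print(req.get_method(), req.full_url)
```

Passing either request to `urllib.request.urlopen` would perform the call; certificate handling and error handling are left out of the sketch.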

Reduced VxRail Networking Hardware Configuration Requirements

Finally, we end our journey of new features on the hardware front. In this release, we have officially reduced the minimum VxRail node networking hardware configurations required for VCF use cases. With the introduction of vSphere 7.0 in VCF 4.0, admins can now use the vSphere Distributed Switch (VDS) for NSX-T, so a separate N-VDS switch is no longer required. So why is this important and how does this lead to VxRail node network hardware configuration improvements?

Well, up until now, VxRail and SDDC management networks have been configured to use the VDS. And this VDS would be configured to use at least two physical NIC ports as uplinks for high availability. When introducing the use of NSX-T on VxRail, an administrator would need to create a separate N-VDS switch for the NSX-T traffic to use. This switch would require its own pair of dedicated uplinks for high availability. Thus, in VCF on VxRail environments in which NSX-T would be used, each VxRail node would require a minimum of four physical NIC ports to support the two different pairs of uplinks for each of the switches. This resulted in a higher infrastructure footprint for both the VxRail nodes and for a customer’s Top of Rack Switch infrastructure because they would need to turn on more ports on the switch to support all of these host connections. This, in turn, would come with a higher cost.

Fast forward to this release -- now we can run NSX-T traffic on the same VDS as the VxRail and SDDC Manager management traffic. And when you can share the same VDS, you can get away with reducing the number of physical uplink ports to provide high availability down to two and reduce the upfront hardware footprint and cost across the board! Win win! The following figure highlights this new feature.

NSX-T Dual pNIC Features
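The port math behind this change can be sketched in a few lines of Python. The function names are illustrative; the only inputs are the facts stated above: each switch needs a pair of uplinks for high availability, and NSX-T previously needed its own N-VDS in addition to the VDS.

```python
UPLINKS_PER_SWITCH = 2  # one pair of uplinks per switch for high availability


def pnic_ports_per_node(separate_nvds: bool) -> int:
    """Minimum physical NIC ports a VxRail node needs."""
    switches = 2 if separate_nvds else 1  # VDS plus N-VDS, or one shared VDS
    return UPLINKS_PER_SWITCH * switches


def tor_ports_needed(nodes: int, separate_nvds: bool) -> int:
    """Top of Rack switch ports consumed by host connections."""
    return nodes * pnic_ports_per_node(separate_nvds)


# A four node cluster before and after the shared VDS became available.
print(tor_ports_needed(4, separate_nvds=True))   # 16
print(tor_ports_needed(4, separate_nvds=False))  # 8
```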

Well, that about sums it all up. Thanks for coming on this journey and learning about the boat load of new features in VCF 4.0 on VxRail 7.0. As always, feel free to check out the additional resources for more information. Until next time, stay safe and stay healthy out there!

Jason Marques

Twitter - @vwhippersnapper

Additional Resources

What’s New in Cloud Foundation 4 VMware Blog Post

Delivering Kubernetes At Scale With VMware Cloud Foundation (Part 1) VMware Blog Post

Consistency Makes the Tanzu Difference VMware Blog Post

VxRail page on DellTechnologies.com

VMware Cloud Foundation 4.0 on VxRail 7.0 Documentation and Release Notes

Related Blog Posts

Take VMware Tanzu to the Cloud Edge with Dell Technologies Cloud Platform

Wed, 12 Jul 2023 16:23:35 -0000

Dell Technologies and VMware are happy to announce the availability of VMware Cloud Foundation 4.1.0 on VxRail 7.0.100.

This release brings support for the latest versions of VMware Cloud Foundation and Dell EMC VxRail to the Dell Technologies Cloud Platform and provides a simple and consistent operational experience for developer ready infrastructure across core, edge, and cloud. Let’s review these new features.

Updated VMware Cloud Foundation and VxRail BOM

Cloud Foundation 4.1 on VxRail 7.0.100 introduces support for the latest versions of the SDDC listed below:

- vSphere 7.0 U1

- vSAN 7.0 U1

- NSX-T 3.0 P02

- vRealize Suite Lifecycle Manager 8.1 P01

- vRealize Automation 8.1 P02

- vRealize Log Insight 8.1.1

- vRealize Operations Manager 8.1.1

- VxRail 7.0.100

For the complete list of component versions in the release, please refer to the VCF on VxRail release notes. A link is available at the end of this post.

VMware Cloud Foundation Software Feature Updates

VCF on VxRail Management Enhancements

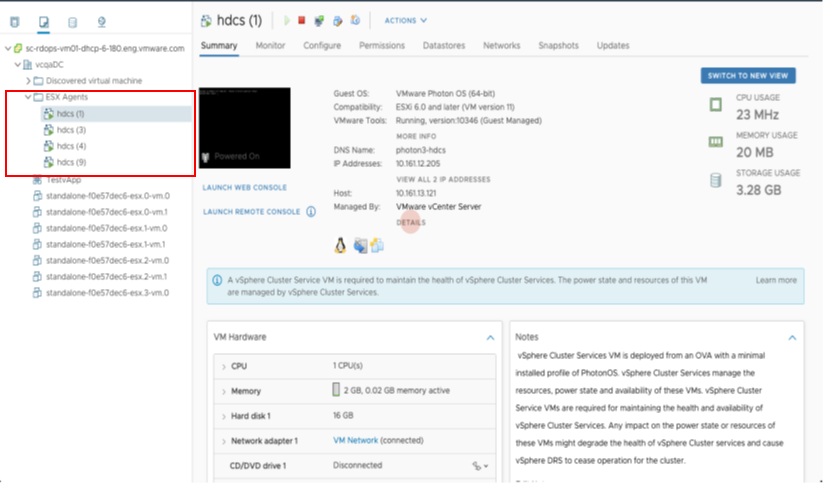

vSphere Cluster Services (vCLS)

vSphere Cluster Services is a new capability introduced in the vSphere 7 Update 1 release that is included as a part of VCF 4.1. It runs as a set of virtual machines deployed on top of every vSphere cluster. Its initial functionality provides foundational capabilities that are needed to create a decoupled and distributed control plane for clustering services in vSphere. vCLS ensures cluster services like vSphere DRS and vSphere HA are all available to maintain the resources and health of the workloads running in the clusters independent of the availability of vCenter Server. The figure below shows the components that make up vCLS from the vSphere Web Client.

Figure 1

Not only is vSphere 7 providing modernized data services like embedded vSphere Native Pods with vSphere with Tanzu but features like vCLS are now beginning the evolution of modernizing to distributed control planes too!

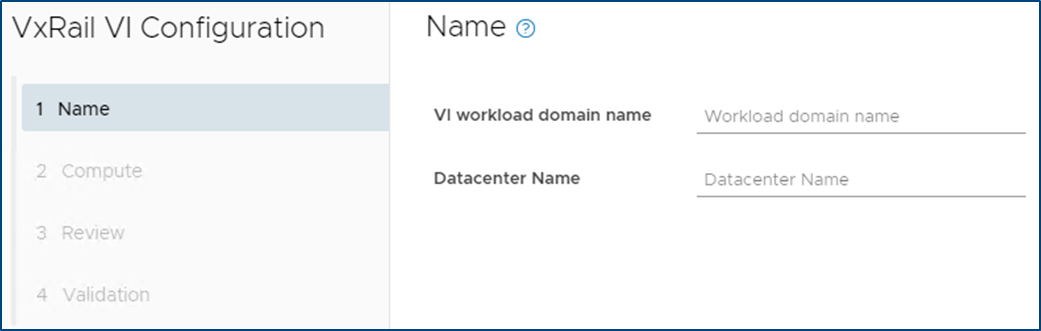

VCF Managed Resources and VxRail Cluster Object Renaming Support

VCF can now rename resource objects post creation, including the ability to rename domains, datacenters, and VxRail clusters.

The domain is managed by the SDDC Manager. As a result, you will find that there are additional options within the SDDC Manager UI that will allow you to rename these objects.

VxRail Cluster objects are managed by a given vCenter server instance. In order to change cluster names, you will need to change the name within vCenter Server. Once you do, you can go back to the SDDC Manager and after a refresh of the UI, the new cluster name will be retrieved by the SDDC Manager and shown.

In addition to the domain and VxRail cluster object rename, SDDC Manager now supports the use of a customized Datacenter object name. The enhanced VxRail VI WLD creation wizard now includes an input for the Datacenter name, which is automatically imported into the SDDC Manager inventory during the VxRail VI WLD creation workflow. Note: Make sure the Datacenter name matches the one used during the VxRail Cluster First Run. The figure below shows the Datacenter Input step in the enhanced VxRail VI WLD creation wizard from within SDDC Manager.

Figure 2

Being able to customize resource object names makes VCF on VxRail more flexible in aligning with an IT organization’s naming policies.

VxRail Integrated SDDC Manager WLD Cluster Node Removal Workflow Optimization

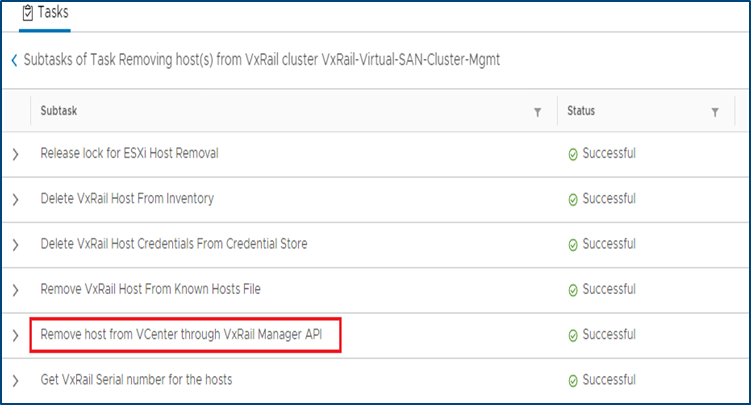

Furthering the Dell Technologies and VMware co-engineering integration efforts for VCF on VxRail, new workflow optimizations have been introduced in VCF 4.1 that take advantage of VxRail Manager APIs for VxRail cluster host removal operations.

When the time comes for VCF on VxRail cloud administrators to remove hosts from WLD clusters and repurpose them for other domains, admins will use the SDDC Manager “Remove Host from WLD Cluster” workflow to perform this task. This remove host operation has now been fully integrated with native VxRail Manager APIs to automate removing physical VxRail hosts from a VxRail cluster as a single end-to-end automated workflow that is kicked off from the SDDC Manager UI or VCF API. This integration further simplifies and streamlines VxRail infrastructure management operations all from within common VMware SDDC management tools. The figure below illustrates the SDDC Manager sub tasks that include new VxRail API calls used by SDDC Manager as a part of the workflow.

Figure 3

Note: Removed VxRail nodes require reimaging prior to repurposing them into other domains. This reimaging currently requires Dell EMC support to perform.

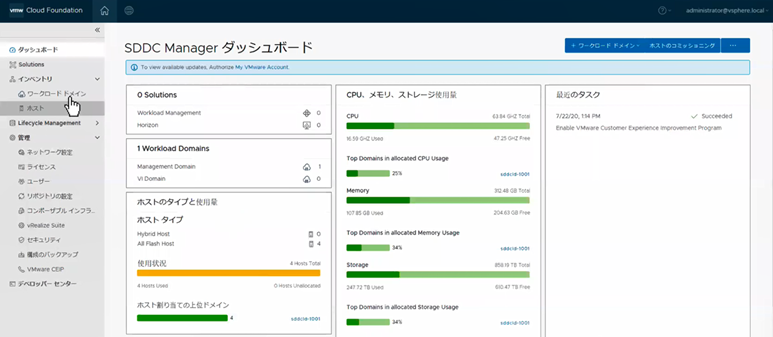

I18N Internationalization and Localization (SDDC Manager)

SDDC Manager now has international language support that meets the I18N Internationalization and Localization standard. Options to select the desired language are available in the Cloud Builder UI, which installs SDDC Manager using the selected language settings. SDDC Manager will have localization support for the following languages – German, Japanese, Chinese, French, and Spanish. The figure below illustrates an example of what this would look like in the SDDC Manager UI.

Figure 4

vRealize Suite Enhancements

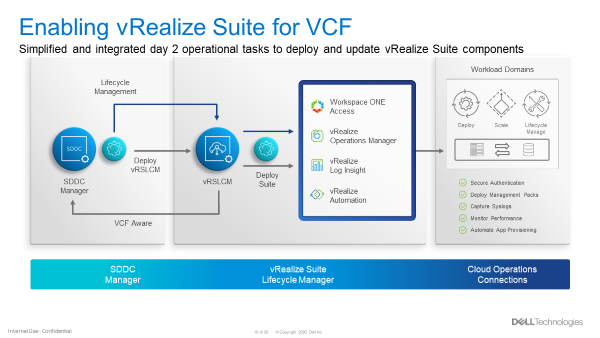

VCF Aware vRSLCM

New in VCF 4.1, the vRealize Suite is fully integrated into VCF. The SDDC Manager deploys vRSLCM and creates a two-way communication channel between the two components. When deployed, vRSLCM is now VCF aware and reports back to the SDDC Manager which vRealize products are installed. The installation of vRealize Suite components uses standardized VVD best practice deployment designs that leverage Application Virtual Networks (AVNs).

Software Bundles for the vRealize Suite are all downloaded and managed through the SDDC Manager. When patches or updates become available for the vRealize Suite, lifecycle management of the vRealize Suite components is controlled from the SDDC Manager, calling on vRSLCM to execute the updates as part of SDDC Manager LCM workflows. The figure below showcases the process for enabling vRealize Suite for VCF.

Figure 5

VCF Multi-Site Architecture Enhancements

VCF Remote Cluster Support

VCF Remote Cluster Support enables customers to extend their VCF on VxRail operational capabilities to ROBO and Cloud Edge sites, enabling consistent operations from core to edge. Pair this with an awesome selection of VxRail hardware platform options and Dell Technologies has your Edge use cases covered. More on hardware platforms later. For a detailed explanation of this exciting new feature, check out the link to a VMware blog post on the topic at the end of this post.

VCF LCM Enhancements

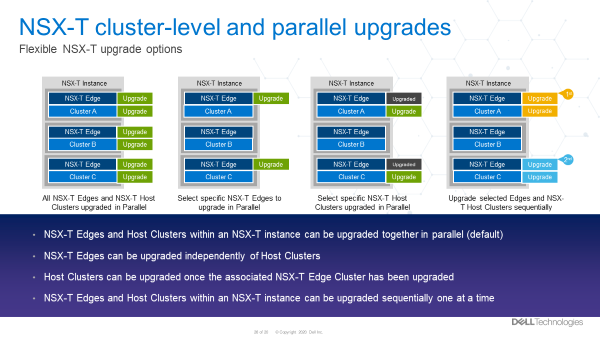

NSX-T Edge and Host Cluster-Level and Parallel Upgrades

With previous VCF on VxRail releases, NSX-T upgrades were all-encompassing, meaning that a single update required updating all the transport hosts as well as the NSX Edge and Manager components in one pass.

With VCF 4.1, support has been added to perform staggered NSX updates to help minimize maintenance windows. Now, an NSX upgrade can consist of three distinct parts:

- Updating the edges

  - Can be one job or multiple jobs. Rerun the wizard.

  - Must be done before moving to the hosts

- Updating the transport hosts

- Updating the NSX Managers, once the hosts within the clusters have been updated

Multiple NSX edge and/or host transport clusters within the NSX-T instance can be upgraded in parallel. The administrator can select a subset of clusters rather than all of them. Clusters within an NSX-T fabric can also be upgraded sequentially, one at a time.

NSX-T components can be updated in several ways:

- NSX-T Edges and Host Clusters within an NSX-T instance can be upgraded together in parallel (default)

- NSX-T Edges can be upgraded independently of NSX-T Host Clusters

- NSX-T Host Clusters can be upgraded independently of NSX-T Edges only after the Edges are upgraded first

- NSX-T Edges and Host Clusters within an NSX-T instance can be upgraded sequentially one after another.

The figure below visually depicts these options.

Figure 6

These options provide Cloud admins with a ton of flexibility so they can properly plan and execute NSX-T LCM updates within their respective maintenance windows. More flexible and simpler operations. Nice!
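To make the ordering rules concrete, here is a minimal Python sketch that checks whether a planned sequence of upgrade batches respects them. It is a simplification written for this post, not how SDDC Manager implements the workflow: it tracks component types rather than individual clusters, and the function name and batch representation are illustrative.

```python
from typing import List


def valid_nsxt_plan(batches: List[List[str]]) -> bool:
    """Check an upgrade plan against the ordering rules described above.

    Each inner list is one batch of component types ("edge", "host",
    "manager") that are upgraded together in parallel.
    """
    done = set()
    for batch in batches:
        kinds = set(batch)
        # Hosts may run alongside edges (the default) or after them, never before.
        if "host" in kinds and "edge" not in (done | kinds):
            return False
        # NSX Managers are updated only after the hosts have been updated.
        if "manager" in kinds and "host" not in done:
            return False
        done |= kinds
    return True


print(valid_nsxt_plan([["edge", "host"], ["manager"]]))    # True: parallel default
print(valid_nsxt_plan([["edge"], ["host"], ["manager"]]))  # True: fully sequential
print(valid_nsxt_plan([["host"], ["edge"], ["manager"]]))  # False: hosts before edges
```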

VCF Security Enhancements

Read-Only Access Role, Local and Service Accounts

A new ‘view-only’ role has been added to VCF 4.1. For some context, let’s talk a bit now about what happens when logging into the SDDC Manager.

First, you will provide a username and password. This information gets sent to the SDDC Manager, which then sends it to the SSO domain for verification. Once verified, the SDDC Manager can see which role the account has been assigned.

In previous versions of Cloud Foundation, the role would either be for an Administrator or it would be for an Operator.

Now, there is a third role available called a ‘Viewer’. Like its name suggests, this is a view only role which has no ability to create, delete, or modify objects. Users who are assigned this role may not see certain items in the SDDC Manager UI, such as the User screen. They may also see a message saying they are unauthorized to perform certain actions.

Also new, VCF now has a local account that can be used during an SSO failure. To help understand why this is needed let’s consider this: What happens when the SSO domain is unavailable for some reason? In this case, the user would not be able to log in. To address this, administrators can now configure a VCF local account called admin@local. This account allows certain actions to be performed until the SSO domain is functional again. This VCF local account is defined in the deployment worksheet and used in the VCF bring up process. If bring up has already been completed and the local account was not configured, then a warning banner will be displayed on the SDDC Manager UI until the local account is configured.

Lastly, SDDC Manager now uses new service accounts to streamline communications between SDDC Manager and the products within Cloud Foundation. These new service accounts follow VVD guidelines for pre-defined usernames and are administered through the admin user account to improve inter-VCF communications within SDDC Manager.
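The Viewer role and the admin@local fallback described in this section can be modeled with a small Python sketch. This is a toy model for illustration, not SDDC Manager code: the names are made up, credential checking is omitted, and because this post does not spell out how Operator differs from Administrator, the sketch only separates the read-only role from the other two.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "ADMIN"
    OPERATOR = "OPERATOR"
    VIEWER = "VIEWER"


LOCAL_ACCOUNT = "admin@local"  # the VCF local account named in this post


def may_modify(role: Role) -> bool:
    """Viewer is view only: it cannot create, delete, or modify objects."""
    return role is not Role.VIEWER


def can_log_in(username: str, sso_available: bool) -> bool:
    """SSO-backed users need the SSO domain; the local account does not.

    Password verification is deliberately left out of this sketch.
    """
    return sso_available or username == LOCAL_ACCOUNT


print(may_modify(Role.VIEWER))                        # False
print(can_log_in("jane@vsphere.local", False))        # False: SSO is down
print(can_log_in(LOCAL_ACCOUNT, False))               # True: local fallback
```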

VCF Data Protection Enhancements

As described in this blog, with VCF 4.1, SDDC Manager backup-recovery workflows and APIs have been improved to add capabilities such as backup management, backup scheduling, retention policy, on-demand backup & auto retries on failure. The improvement also includes Public APIs for 3rd party ecosystem and certified backup solutions from Dell PowerProtect.

VxRail Software Feature Updates

VxRail Networking Enhancements

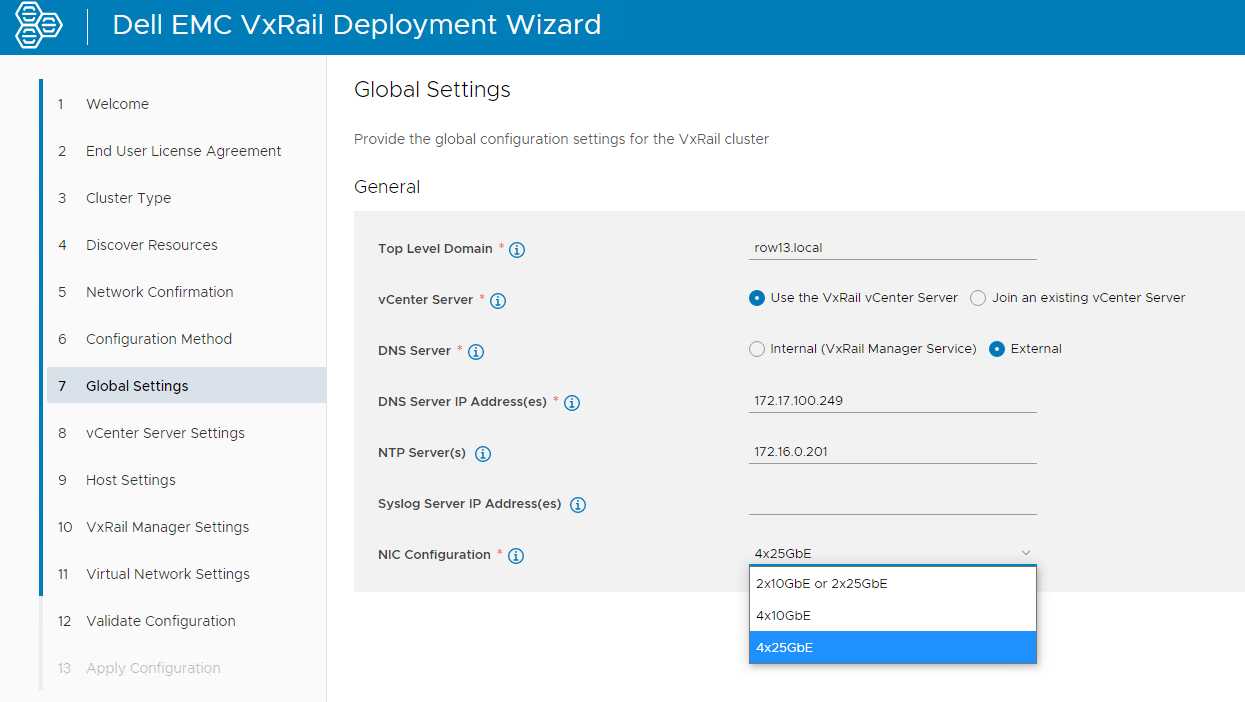

VxRail 4 x 25Gbps pNIC redundancy

VxRail engineering continues to innovate in areas that drive more value to customers. The latest VCF on VxRail release follows through on delivering just that for our customers. New in this release, customers can use the automated VxRail First Run Process to deploy VCF on VxRail nodes using 4 x 25Gbps physical port configurations to run the VxRail System vDS for system traffic such as Management, vSAN, and vMotion. The physical port configuration of the VxRail nodes would include 2 x 25Gbps NDC ports and an additional 2 x 25Gbps PCIe NIC ports.

In this 4 x 25Gbps set up, NSX-T traffic would run on the same System vDS. But what is great here (and where the flexibility comes in) is that customers can also choose to separate NSX-T traffic on its own NSX-T vDS that uplinks to separate physical PCIe NIC ports by using SDDC Manager APIs. This ability was first introduced in the last release and can also be leveraged here to expand the flexibility of VxRail host network configurations.

The figure below illustrates the option to select the base 4 x 25Gbps port configuration during VxRail First Run.

Figure 7

By allowing customers to run the VxRail System VDS across the NDC NIC ports and PCIe NIC ports, customers gain an extra layer of physical NIC redundancy and high availability. This has already been supported with 10Gbps based VxRail nodes. This release now brings the same high availability option to 25Gbps based VxRail nodes. Extra network high availability AND 25Gbps performance!? Sign me up!
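The redundancy argument comes down to whether a switch's uplinks span more than one physical adapter. Here is a minimal Python sketch of that check; the class, the function, and the vmnic names are hypothetical and used only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Uplink:
    name: str        # hypothetical vmnic name
    adapter: str     # "NDC" or "PCIe"
    speed_gbps: int


def survives_adapter_failure(uplinks: List[Uplink]) -> bool:
    """True when the uplinks are spread over more than one physical adapter."""
    return len({u.adapter for u in uplinks}) > 1


four_port = [
    Uplink("vmnic0", "NDC", 25),
    Uplink("vmnic1", "NDC", 25),
    Uplink("vmnic2", "PCIe", 25),
    Uplink("vmnic3", "PCIe", 25),
]
ndc_only = four_port[:2]

print(survives_adapter_failure(four_port))  # True: NDC and PCIe ports
print(survives_adapter_failure(ndc_only))   # False: both ports on the NDC
```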

VxRail Hardware Platform Updates

Recently introduced support for ruggedized D-Series VxRail hardware platforms (D560/D560F) continues to expand the available VxRail hardware platforms supported in the Dell Technologies Cloud Platform.

These ruggedized and durable platforms are designed to meet the demand for more compute, performance, storage, and more importantly, operational simplicity that deliver the full power of VxRail for workloads at the edge, in challenging environments, or for space-constrained areas.

These D-Series systems are a perfect match when paired with the latest VCF Remote Cluster features introduced in Cloud Foundation 4.1.0 to enable Cloud Foundation with Tanzu on VxRail to reach these space-constrained and challenging ROBO/Edge sites to run cloud native and traditional workloads, extending existing VCF on VxRail operations to these locations! Cool right?!

To read more about the technical details of VxRail D-Series, check out the VxRail D-Series Spec Sheet.

Well that about covers it all for this release. The innovation train continues. Until next time, feel free to check out the links below to learn more about DTCP (VCF on VxRail).

Jason Marques

Twitter - @vwhippersnapper

Additional Resources

VMware Blog Post on VCF Remote Clusters

Cloud Foundation on VxRail Release Notes

VxRail page on DellTechnologies.com

VCF on VxRail Interactive Demos

Announcing VMware Cloud Foundation 4.0.1 on Dell EMC VxRail 7.0

Wed, 03 Aug 2022 15:21:13 -0000

The latest Dell Technologies Cloud Platform release introduces new support for vSphere with Kubernetes for entry cloud deployments and more

Dell Technologies and VMware are happy to announce the general availability of VCF 4.0.1 on VxRail 7.0.

This release offers several enhancements including vSphere with Kubernetes support for entry cloud deployments, enhanced bring up features for more extensibility and accelerated deployments, increased network configuration options, and more efficient LCM capabilities for NSX-T components. Below is the full listing of features that can be found in this release:

- Kubernetes in the management domain: vSphere with Kubernetes is now supported in the management domain. With VMware Cloud Foundation Workload Management, you can deploy vSphere with Kubernetes on the management domain default cluster starting with only four VxRail nodes. This means that DTCP entry cloud deployments can take advantage of running Kubernetes containerized workloads alongside general purpose VM workloads on a common infrastructure!

- Multi-pNIC/multi-vDS during VCF bring-up: The Cloud Builder deployment parameter workbook now provides five vSphere Distributed Switch (vDS) profiles that allow you to perform bring-up of hosts with two, four, or six physical NICs (pNICs) and to create up to two vSphere Distributed Switches for isolating system (Management, vMotion, vSAN) traffic from overlay (Host, Edge, and Uplinks) traffic.

- Multi-pNIC/multi-vDS API support: The VCF API now supports configuring a second vSphere Distributed Switch (vDS) using up to four physical NICs (pNICs), providing more flexibility to support high performance use cases and physical traffic separation.

- NSX-T cluster-level upgrade support: Users can upgrade specific host clusters within a workload domain so that the upgrade can fit into their maintenance windows bringing about more efficient upgrades.

- Cloud Builder API support for bring-up operations: VCF on VxRail deployment workflows have been enhanced to support using a new Cloud Builder API for bring-up operations. VCF software installation on VxRail during VCF bring-up can now be done using either an API or GUI, providing even more platform extensibility capabilities.

- Automated externalization of the vCenter Server for the management domain: Externalizing the vCenter Server that gets created during the VxRail first run (the one used for the management domain) is now automated as part of the bring-up process. This enhanced integration between the VCF Cloud Builder bring-up automation workflow and VxRail API helps to further accelerate installation times for VCF on VxRail deployments.

- BOM Updates: Updated VCF software Bill of Materials with new product versions.

Jason Marques

Twitter - @vwhippersnapper

Additional Resources