
VCF on VxRail – More business-critical workloads welcome!

Wed, 07 Feb 2024 22:30:10 -0000


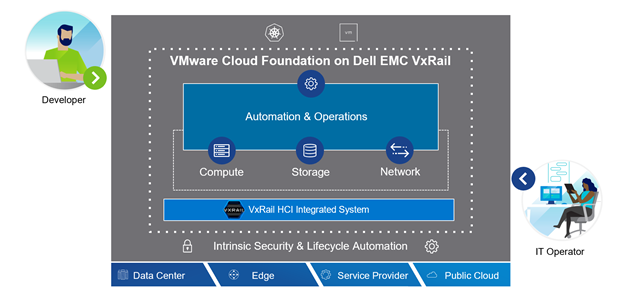

New platform enhancements for stronger mobility and flexibility

February 4, 2020

Today, Dell EMC made the new VCF 3.9.1 on VxRail 4.7.410 release available for download to existing VCF on VxRail customers, with availability for new customers planned for February 19, 2020. Let’s dive into what’s new in this latest version.

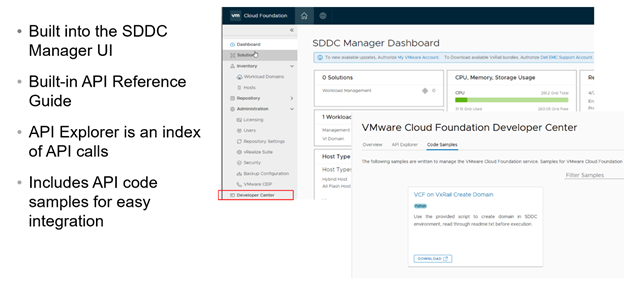

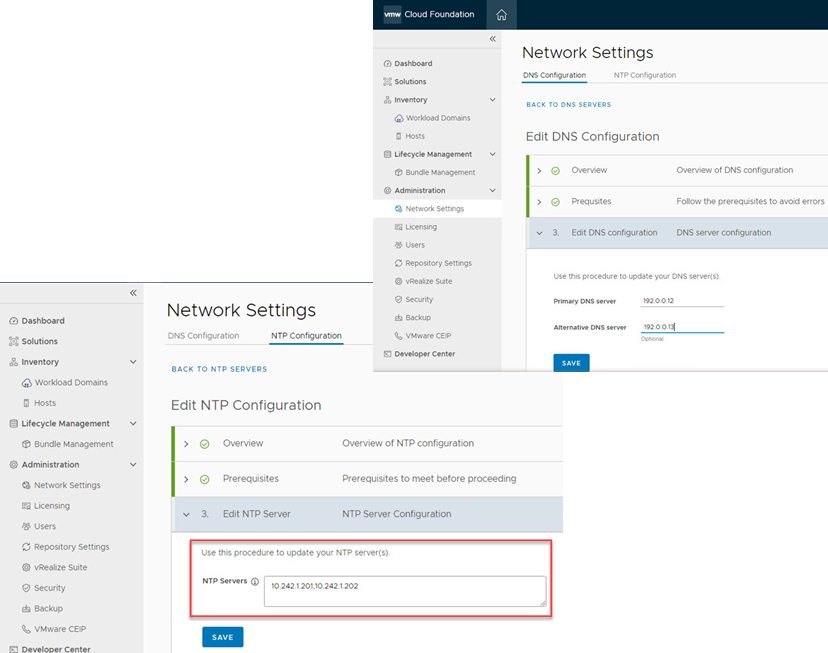

Expand your turnkey cloud experience with additional unique VCF on VxRail integrations

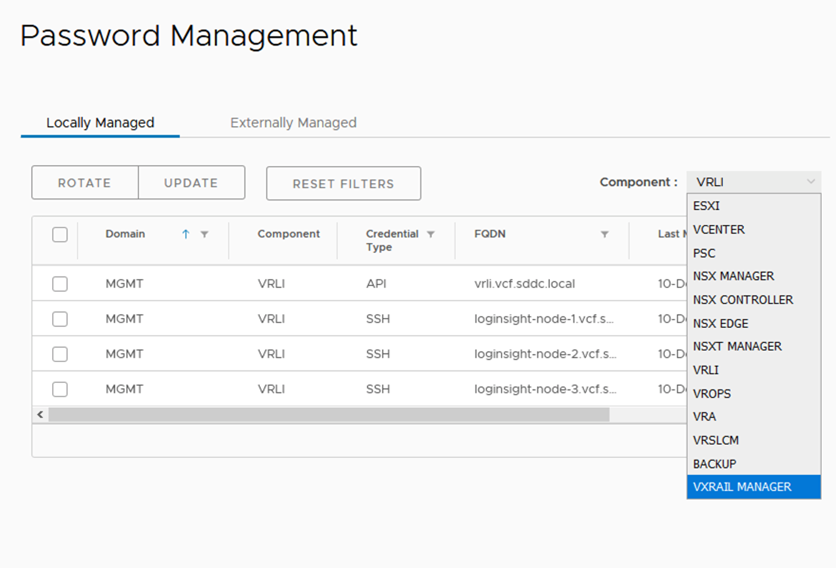

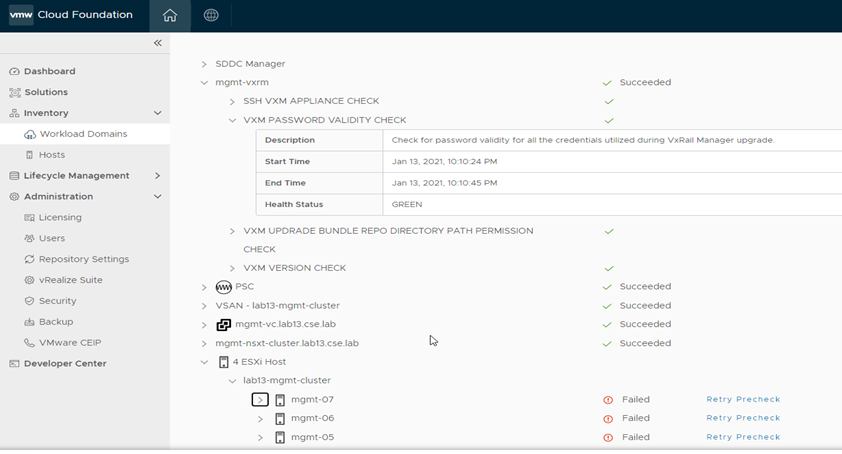

This release continues the co-engineering innovation efforts of Dell EMC and VMware to provide our joint customers with better outcomes, this time in the area of security. Password management for VxRail Manager accounts such as root and mystic, as well as for ESXi, has been integrated into the SDDC Manager UI Password Management framework. Now the components of the full SDDC and HCI infrastructure stack can be centrally managed as one complete turnkey platform using your native VCF management tool, SDDC Manager. Figure 1 illustrates what this looks like.

Figure 1

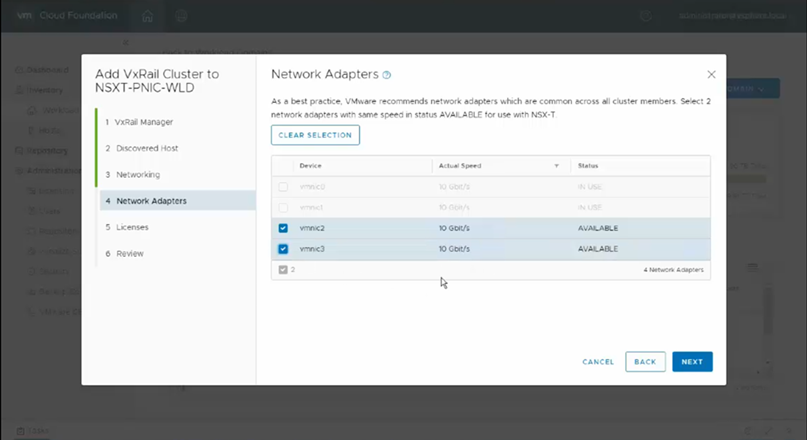

Support for Layer 3 VxRail Stretched Cluster Configuration Automation

Building on the support for Layer 3 stretched clusters introduced in VCF 3.9 on VxRail 4.7.300 using manual guidance, VCF 3.9.1 on VxRail 4.7.410 now supports automating the configuration of Layer 3 VxRail stretched clusters for both NSX-V and NSX-T backed VxRail VI Workload Domains. This is done through the CLI of the VCF SOS Utility.

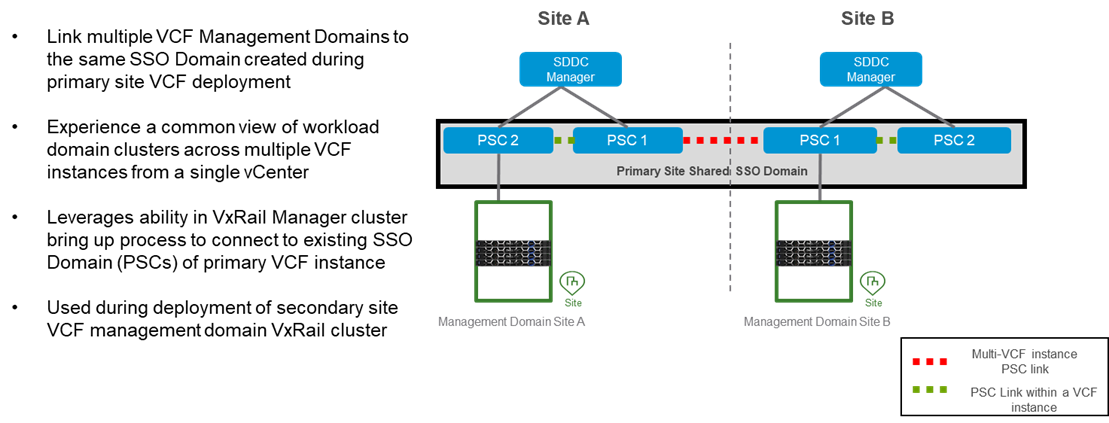

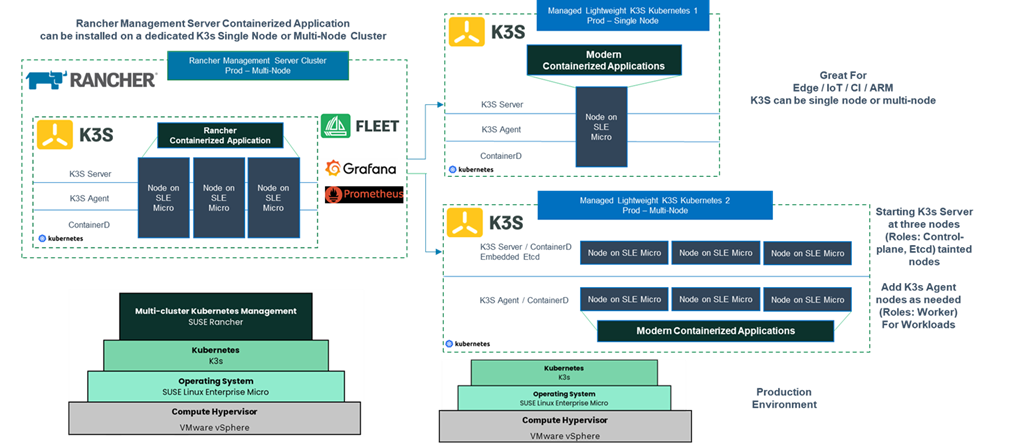

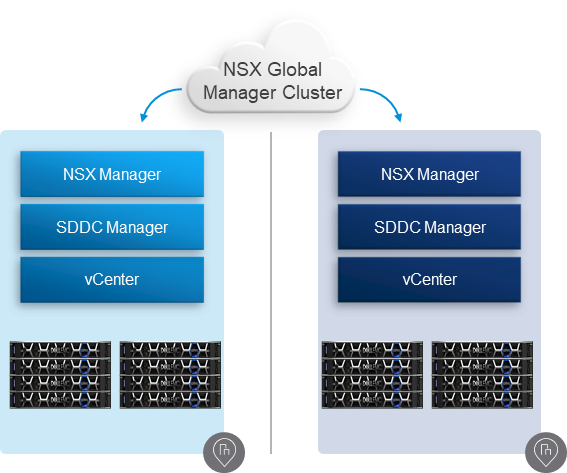

Greater management visibility and control across multiple VCF instances

For new installations, this release can extend a common management and security model across two VCF on VxRail instance deployments. It does this by sharing a common Single Sign-On (SSO) domain between the PSCs of multiple VMware Cloud Foundation instances, so that the management and VxRail VI Workload Domains are visible in each instance. This is known as a Federated SSO Domain.

What does this mean exactly? Referring to Figure 2, Site B can join the SSO instance of Site A. This allows VCF to align further with the VMware Validated Design (VVD), which shares SSO domains where it makes sense given the 150 ms round-trip time (RTT) limit of Enhanced Linked Mode.

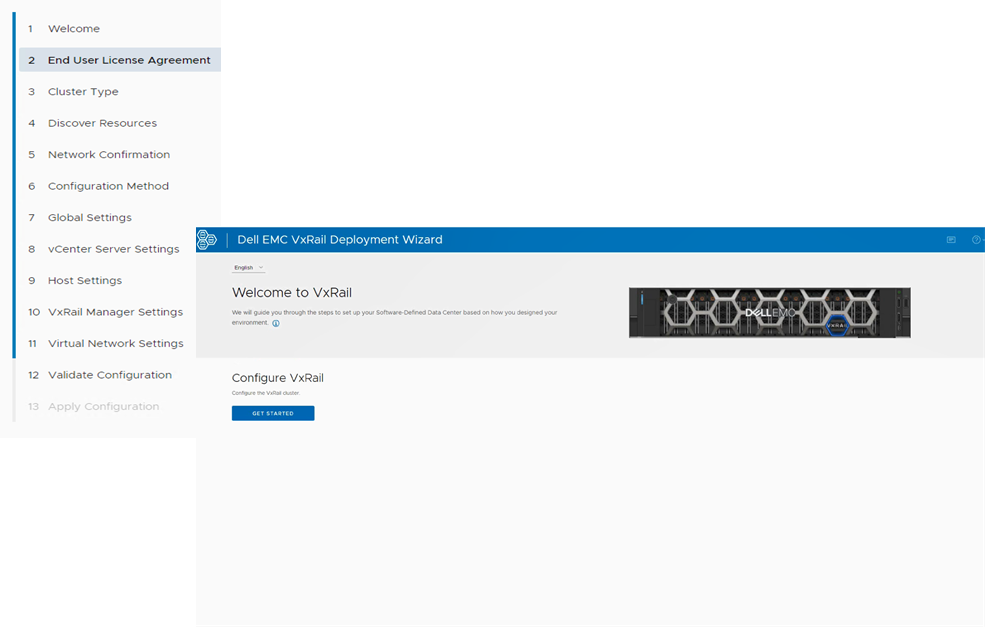

This leverages a recently added option in the VxRail first run that connects the VxRail cluster to an existing SSO domain (PSCs). Consider the VxRail cluster for the second management domain, which belongs to the second VCF instance deployed in Site B. When you stand it up, you connect it to the SSO domain (PSCs) that the first management domain of the VCF instance in Site A created.
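Federation only makes sense when the two sites sit within the Enhanced Linked Mode RTT limit mentioned above. The following is a minimal sketch of that pre-check, assuming you already have RTT samples between the sites. The function name and the choice to compare the worst sample are hypothetical, not part of VCF or VxRail tooling.

```python
from statistics import median

# Limit cited in this post for Enhanced Linked Mode between sites.
ELM_MAX_RTT_MS = 150.0


def can_federate_sso(rtt_samples_ms: list[float], use_worst_case: bool = True) -> bool:
    """Return True if measured site-to-site RTT stays within the ELM limit.

    Comparing the worst sample is an assumed, conservative policy; pass
    use_worst_case=False to compare the median RTT instead.
    """
    if not rtt_samples_ms:
        raise ValueError("need at least one RTT sample")
    observed = max(rtt_samples_ms) if use_worst_case else median(rtt_samples_ms)
    return observed <= ELM_MAX_RTT_MS


# Usage: RTT samples (ms) measured between Site A and Site B.
print(can_federate_sso([42.0, 55.5, 61.2]))  # True
print(can_federate_sso([120.0, 180.0]))      # False: worst sample exceeds 150 ms
```

Comparing the worst sample rather than the median is deliberately conservative. Intermittent latency spikes are what break linked-mode operations in practice.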

Figure 2

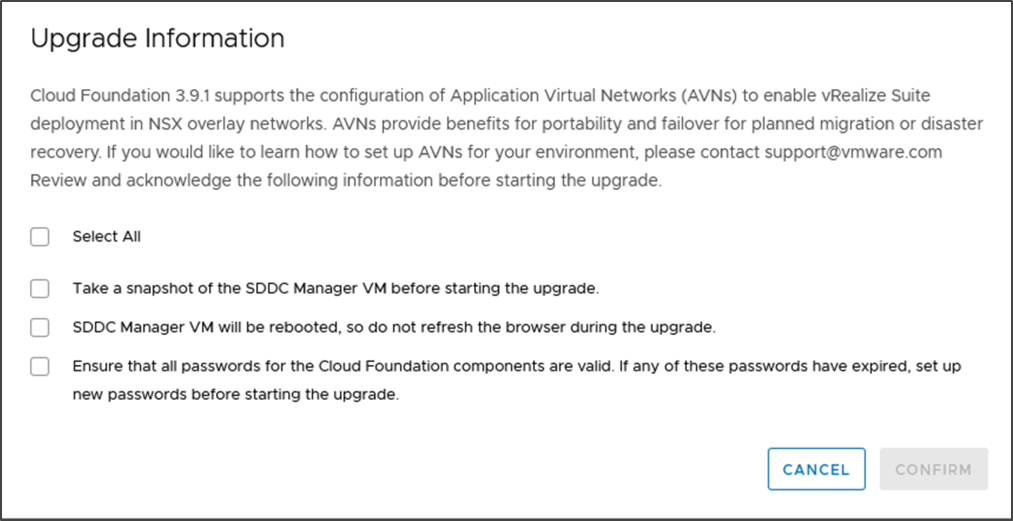

Application Virtual Networks – Enabling Stronger Mobility and Flexibility with VMware Cloud Foundation

One of the new features in the 3.9.1 release of VMware Cloud Foundation (VCF) is the use of Application Virtual Networks (AVNs) to completely abstract the hardware and realize the true value of a software-defined cloud computing model. Read more about it in VMware’s blog post. A key note on this feature: it is set up automatically for new VCF 3.9.1 installations. Customers upgrading from a previous version of VCF currently need to engage the VMware Professional Services Organization (PSO) to configure AVNs. Figure 3 shows the message existing customers will see when attempting the upgrade.

Figure 3

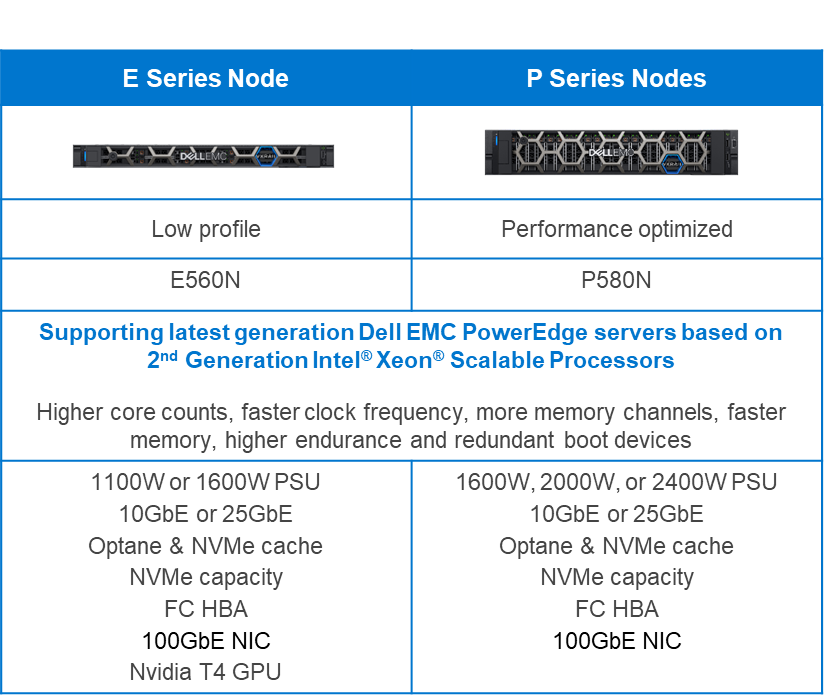

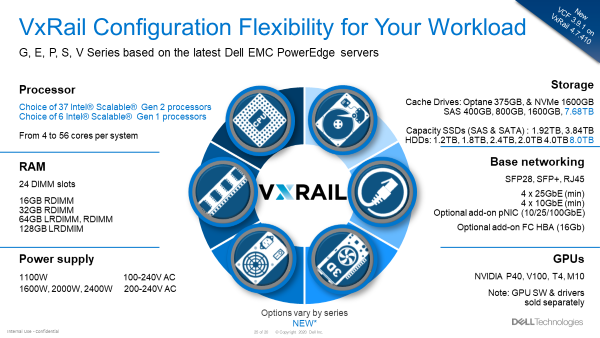

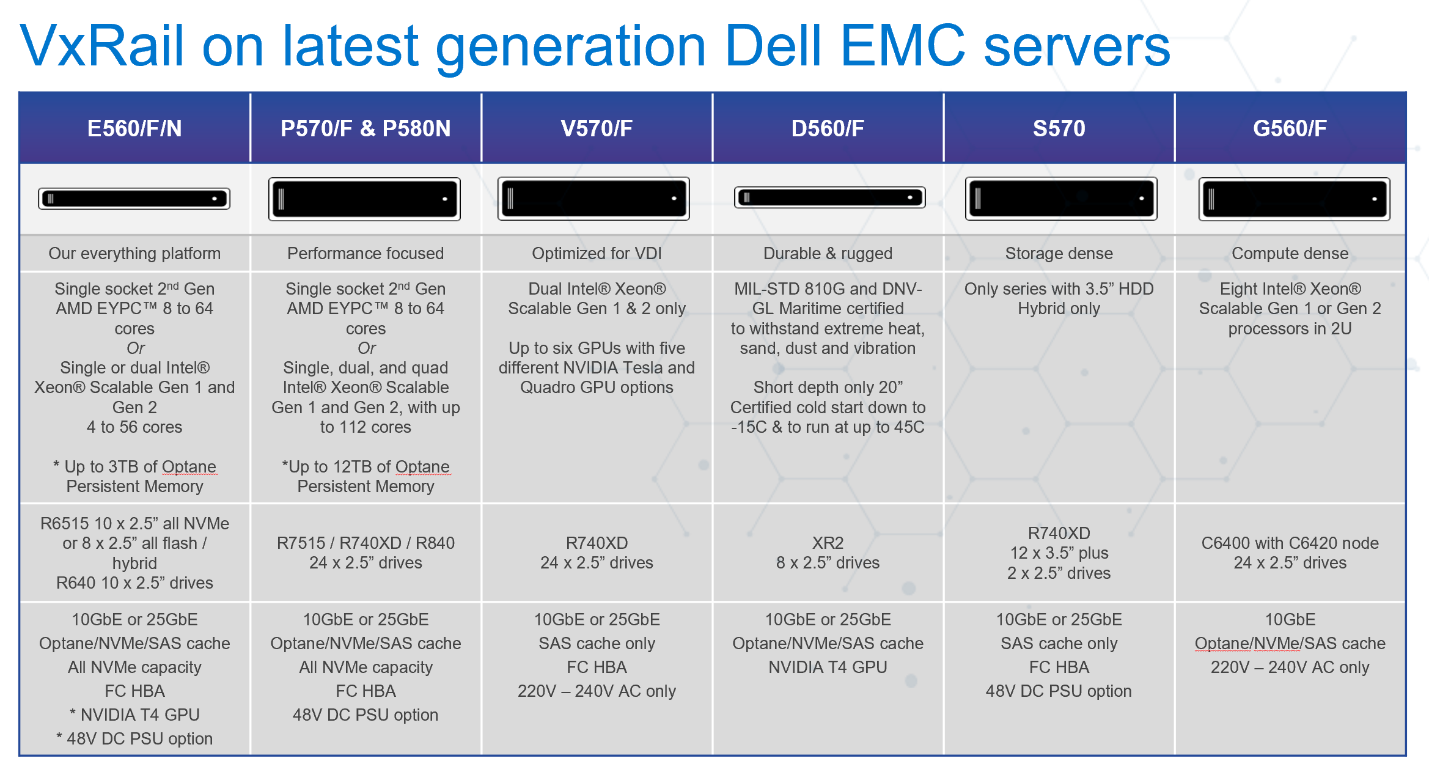

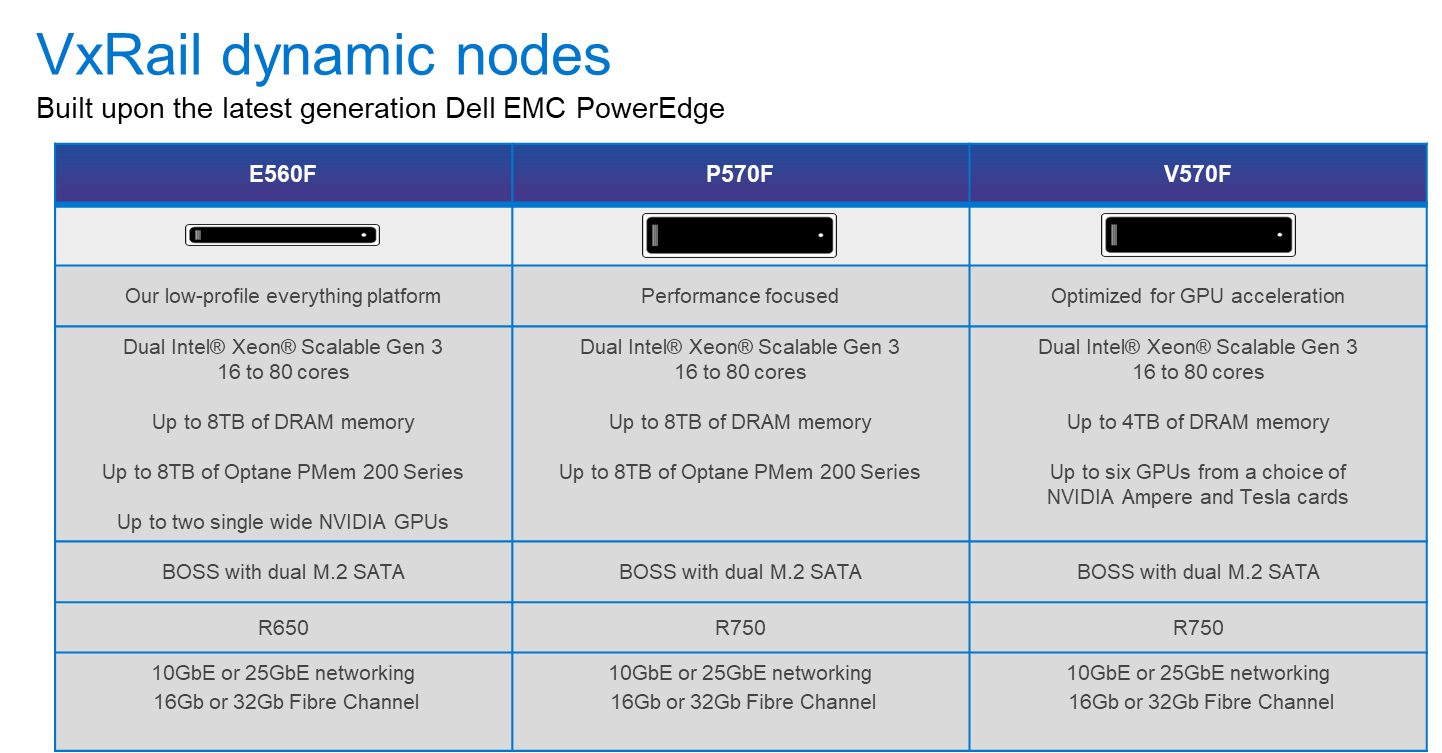

VxRail 4.7.410 platform enhancements

VxRail 4.7.410 brings a slew of new hardware platforms and hardware configuration enhancements that expand your ability to support even more business-critical applications.

- New VxRail P580N model, a four-socket PowerEdge server platform with 2nd Generation Intel® Xeon® Scalable Processors. It delivers 2x the memory per system*, making it the optimal VxRail platform for SAP HANA and other in-memory databases. The P580N provides 2x the CPU of the P570/F. It also offers 25% more processing potential than virtual storage architecture (VSA) 4S platforms, which require a dedicated socket to run the VSA. See Figure 4.

- New cost-effective E560N, an all-NVMe platform for read-intensive applications. See Figure 4.

- New configuration choices, including Mellanox 100GbE NICs for media broadcast use cases and 8 TB high-density disk drives for video surveillance. See Figure 5.

- GPUs are now available in the E Series. For the first time, GPU cards are supported outside the V Series. NVIDIA T4 GPUs in 1U E Series platforms serve entry-level AI/ML, data inferencing, and VDI workloads. They enable customers to expand the breadth of critical business applications across VMware Cloud Foundation on VxRail cloud infrastructures. See Figure 5 for the available options in VCF on VxRail configurations.

Figure 4

Figure 5

There you have it! We hope you find these latest features beneficial. Until next time…

Jason Marques

Twitter - @vwhippersnapper

Additional Resources

VxRail page on DellTechnologies.com

VCF 3.9.1 on VxRail 4.7.410 Release Notes

VCF on VxRail Interactive Demos

Learn About the Latest VMware Cloud Foundation 5.1 on Dell VxRail 8.0.200 Release

Tue, 05 Dec 2023 17:06:36 -0000


Pairing more configuration flexibility with more integrated automation delivers even more simplified outcomes to meet more business needs!

More is what sums up this latest Cloud Foundation on VxRail release! This new release is based on the latest software bill of materials (BOM), featuring vSphere 8.0 U2, vSAN 8.0 U2, and NSX 4.1.2. Read on for more details.

Operations and serviceability user experience updates

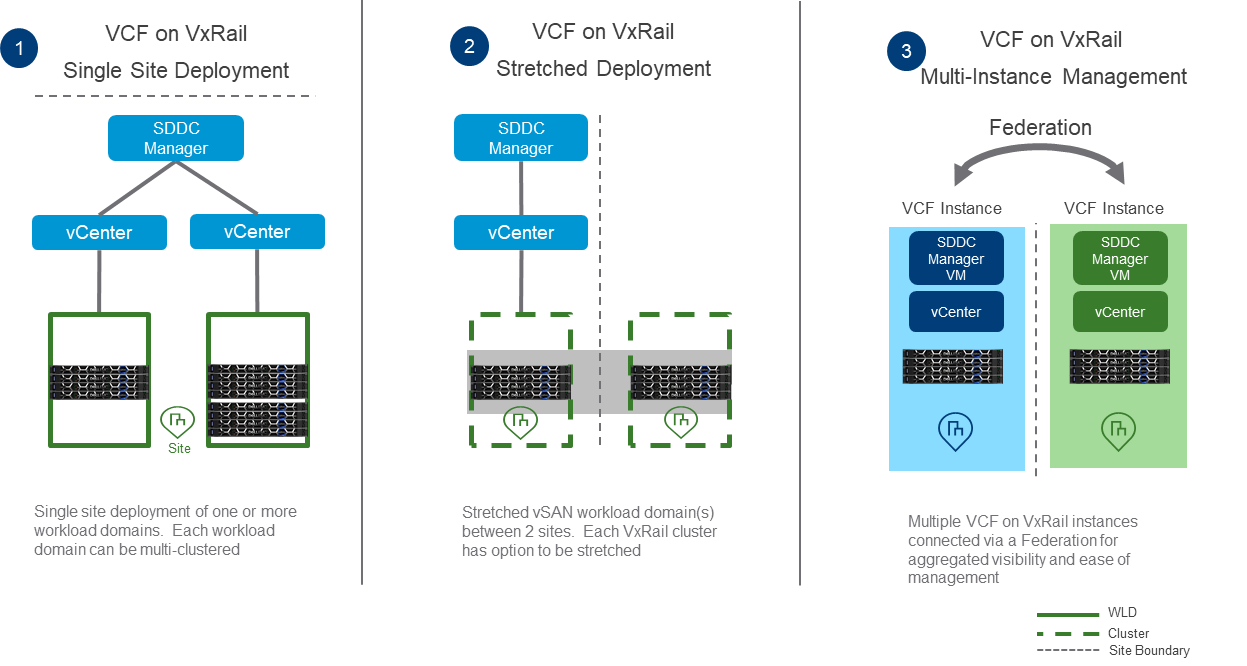

SDDC Manager WFO UI custom host networking configuration enhancements

With this enhancement, administrators can configure the networking of a new workload domain or VxRail cluster using either a “Default” VxRail Network Profile or a “Custom” Network Profile configuration. Cloud Foundation on VxRail already supported custom host networking configurations through the SDDC Manager WFO API deployment method. This new feature brings that support to the SDDC Manager WFO UI deployment method, making it even easier to operationalize.

The following demo walks through using the SDDC Manager WFO UI to create a new workload domain with a VxRail cluster that is configured with vSAN ESA and VxRail vLCM mode enabled and a custom network profile.

New VCF Infrastructure as Code (IaC) tooling with new Terraform VCF Provider and PowerCLI VCF Module

Infrastructure teams can now use the Terraform Provider for VCF, and the VCF module now integrated into VMware’s official PowerCLI tool, to practice Infrastructure as Code (IaC). This lets them deploy, manage, and operate VMware Cloud Foundation on VxRail deployments.

IaC teams can use prebuilt, best-practice IaC code designed to interface with a single VCF API. With it, they can perform infrastructure provisioning tasks that accelerate IaC adoption. It also lessens the burden of developing and maintaining code for individual infrastructure components that are meant to deliver similar outcomes.

Important Note: Not all operations using these tools may be supported in Cloud Foundation on VxRail. Please refer to tool documentation links at the bottom of this post for details.
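Both tools sit on top of the public VCF API. The following is a minimal sketch, using only the Python standard library, that illustrates what "a single VCF API" looks like. It builds the two requests most automation starts with:
- POST /v1/tokens, which exchanges credentials for an access token.
- GET /v1/domains, which lists domains.

The endpoint paths follow the public VCF API reference. The hostname and credentials are hypothetical, and this is not code from the Terraform provider or the PowerCLI module.

```python
import json
import urllib.request


def token_request(sddc_manager: str, username: str, password: str) -> urllib.request.Request:
    """Build a POST /v1/tokens request that exchanges credentials for tokens."""
    body = json.dumps({"username": username, "password": password}).encode("utf-8")
    return urllib.request.Request(
        f"https://{sddc_manager}/v1/tokens",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def list_domains_request(sddc_manager: str, access_token: str) -> urllib.request.Request:
    """Build a GET /v1/domains request authenticated with a bearer token."""
    return urllib.request.Request(
        f"https://{sddc_manager}/v1/domains",
        headers={"Authorization": f"Bearer {access_token}", "Accept": "application/json"},
        method="GET",
    )


def domain_names(domains_response: dict) -> list[str]:
    """Extract domain names from a paged VCF API response ({"elements": [...]})."""
    return [d["name"] for d in domains_response.get("elements", [])]


# Usage (hypothetical host): send with urllib.request.urlopen(req) against a
# reachable SDDC Manager whose certificate your client trusts.
req = token_request("sddc-manager.example.local", "administrator@vsphere.local", "changeme")
print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes the calls easy to inspect and test before pointing them at a real SDDC Manager.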

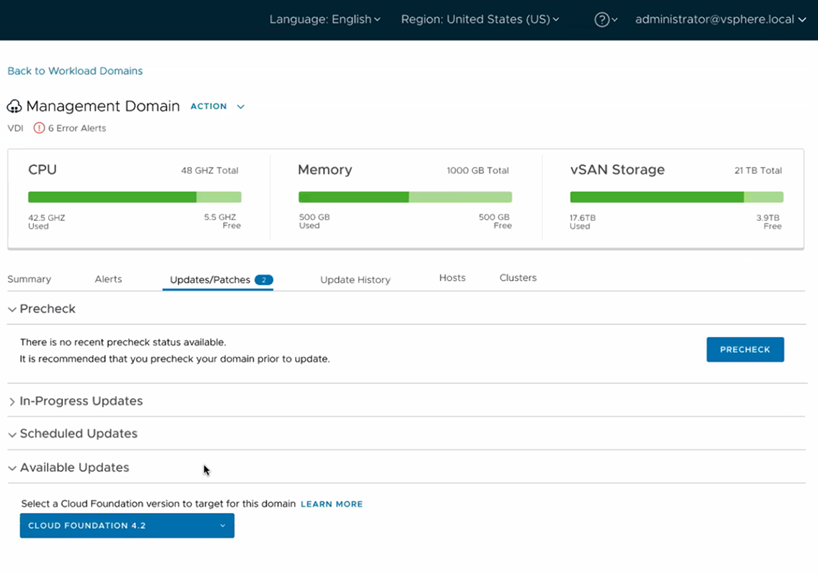

LCM updates

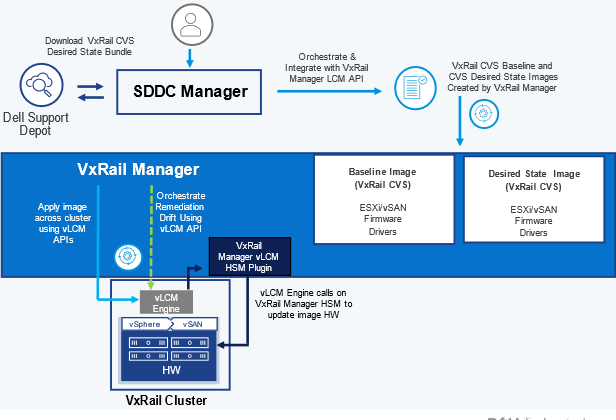

Day 1 VxRail vLCM mode compatibility for management and workload domains

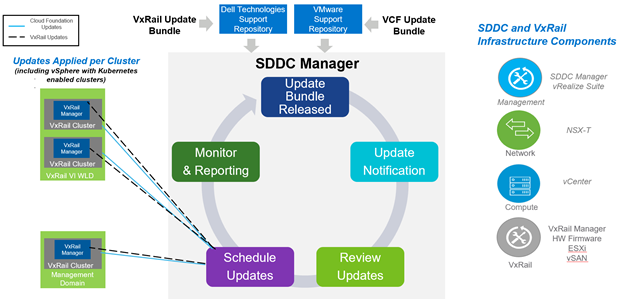

VMware Cloud Foundation 5.1 on VxRail 8.0.200 now supports configuring and deploying new domains on VxRail clusters enabled for vSphere Lifecycle Manager Images (vLCM), as depicted in Figure 1. In vLCM-enabled VxRail clusters, VxRail Manager unifies your ESXi image, BIOS, firmware, and drivers into a single update process. VxRail Manager orchestrates that process through VxRail APIs, from within SDDC Manager’s native, integrated LCM operations experience. VxRail clusters will have their VxRail Continuously Validated State image managed at the cluster level by VxRail Manager, just like clusters in VxRail standard LCM mode.

Figure 1. High-level VxRail vLCM mode architecture

Mixed-mode support for workload domains as a steady state

Existing VMware Cloud Foundation 5.x on VxRail 8.x deployments now allow administrators to run workload domains of different VCF 5.x versions as a “steady state”. Administrators can now update the management domain and any other workload domain of a VCF 5.0 deployment to the latest VCF 5.x version without the need to upgrade all workload domains. Mixed-mode support also allows administrators to leverage the benefits of new SDDC Manager features in the management domain without having to upgrade a full VCF 5.x on VxRail 8.x instance.
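To make the "steady state" idea concrete, the following minimal sketch checks whether a set of domain versions forms an acceptable mixed-mode layout. It assumes two rules that are not taken from product code, so verify them against the release notes:
- Every domain must be on VCF 5.x.
- No workload domain may run a newer VCF version than the management domain, since the management domain is updated first.

The function names are hypothetical.

```python
def parse_version(v: str) -> tuple[int, ...]:
    """Turn '5.1.0' into (5, 1, 0) so versions compare numerically."""
    return tuple(int(part) for part in v.split("."))


def mixed_mode_problems(mgmt_version: str, wld_versions: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the layout is acceptable.

    Assumed rules: all domains on VCF 5.x, and no workload domain ahead of
    the management domain.
    """
    problems = []
    mgmt = parse_version(mgmt_version)
    if mgmt[0] != 5:
        problems.append(f"management domain is on {mgmt_version}, not VCF 5.x")
    for name, version in wld_versions.items():
        wld = parse_version(version)
        if wld[0] != 5:
            problems.append(f"{name} is on {version}, not VCF 5.x")
        elif wld > mgmt:
            problems.append(
                f"{name} ({version}) is newer than the management domain ({mgmt_version})"
            )
    return problems


# Usage: management domain updated to 5.1.0, one workload domain left on 5.0.0.
print(mixed_mode_problems("5.1.0", {"WLD01": "5.0.0", "WLD02": "5.1.0"}))  # []
```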

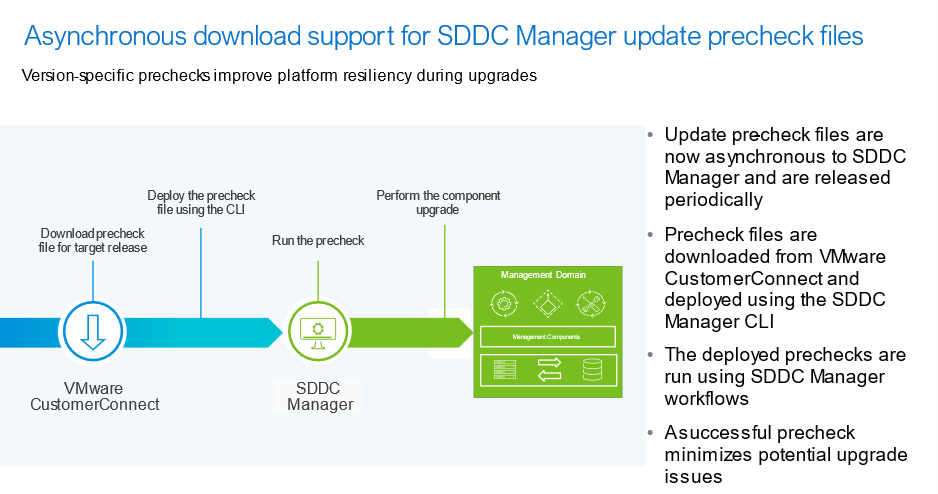

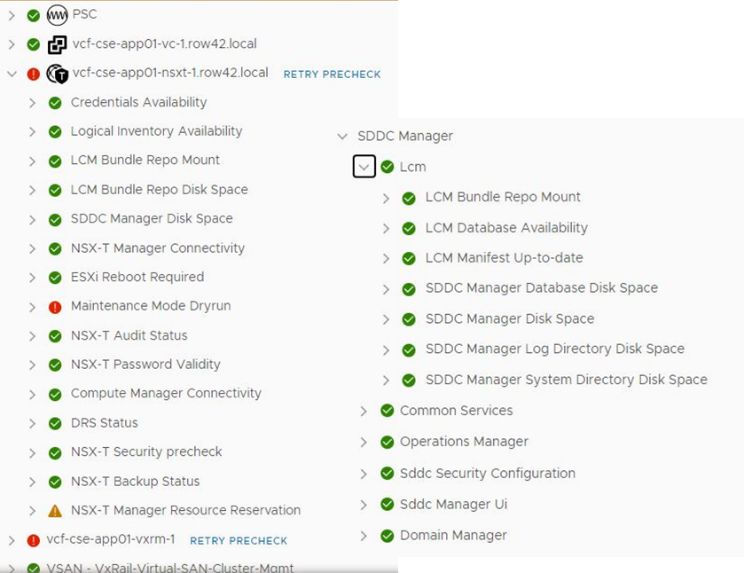

Asynchronous download support for SDDC Manager update precheck files

SDDC Manager update precheck files can now be downloaded and updated asynchronously from full release updates. This adds to the similar asynchronous VxRail-specific precheck file updates that already exist within VxRail Manager. This feature allows administrators to download, deploy, and run SDDC Manager update prechecks tailored to a specific VMware Cloud Foundation on VxRail release. VMware engineering creates the SDDC Manager precheck files. They contain detailed checks for SDDC Manager to run before upgrading to a newer VCF on VxRail target release, as shown in the following figure.

Figure 2. High-level process of asynchronous download support for SDDC Manager update precheck files

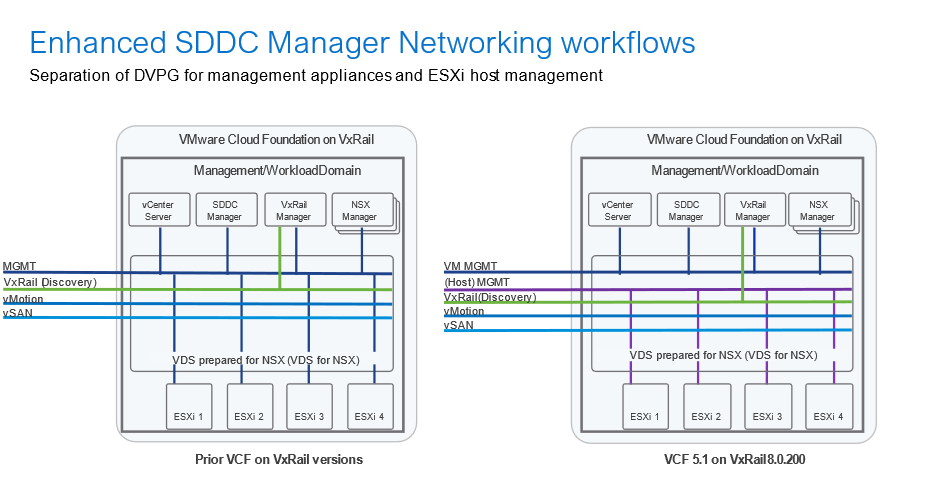

Networking updates

Support for the separation of DvPG for management appliances and ESXi host (VMkernel) management

Prior to this release, the default networking topology deployed by VMware Cloud Foundation on VxRail used one Distributed Virtual Port Group (DvPG) for two kinds of traffic. The first was the ESXi host management interfaces (VMkernel interfaces). The second was the management components (vCenter Server, SDDC Manager, NSX components, VxRail Manager, and so on). This new DvPG separation feature isolates traffic between management component VMs and ESXi host management VMkernel interfaces, helping align with an organization’s desired security posture. Figure 3 illustrates this new configuration architecture.

Figure 3. New DvPG architecture

Configure custom NSX Edge cluster without 2-tier routing (via API)

VMware Cloud Foundation 5.1 on VxRail 8.0.200 now provides the option to deploy a custom NSX Edge cluster without configuring both a Tier-0 and a Tier-1 gateway. This type of NSX Edge cluster deployment can be configured through the SDDC Manager API only.

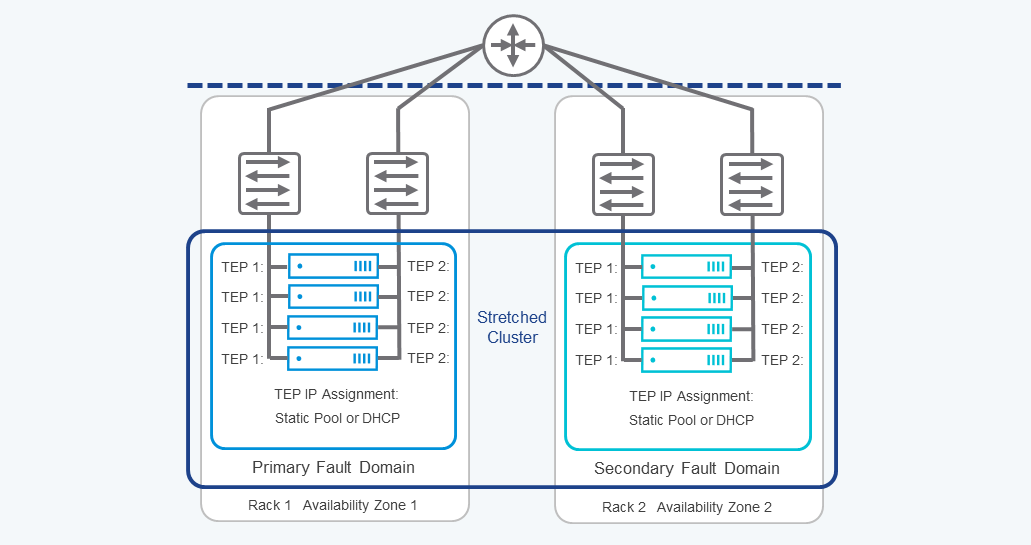

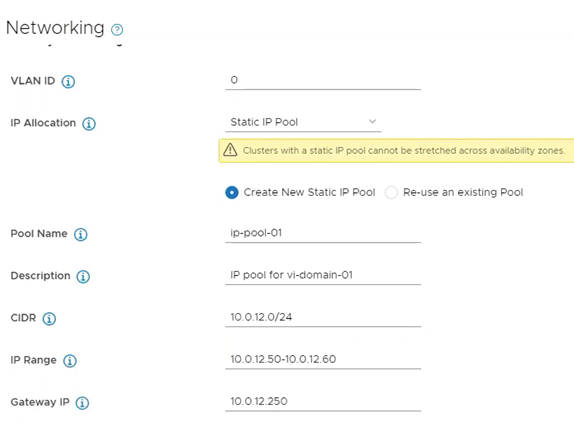

Static IP-based NSX Tunnel End Point and Sub Transport Node Profile assignment support for L3 aware clusters and L2/L3 vSAN stretched clusters

VxRail stretched clusters deployed using vSAN OSA can now be configured with vLCM mode enabled. In addition, administrators can now configure NSX Host TEPs to use an NSX static IP pool. They no longer need to manually maintain an external DHCP server to support Layer 3 vSAN OSA stretched clusters, as illustrated in the following figure.

Figure 4. TEP Configuration Flexibility Example for vSAN Stretched Clusters
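A static IP pool replaces the external DHCP server with deterministic, pre-planned TEP addresses. The following minimal sketch shows that idea: it hands out Host TEP addresses from an allocation range inside a pool subnet. It is a hypothetical model, not NSX code. The default of two TEPs per host is an assumption (one per uplink), and the addresses are illustrative.

```python
import ipaddress


def allocate_tep_ips(pool_cidr: str, first: str, last: str,
                     hosts: list[str], teps_per_host: int = 2) -> dict[str, list[str]]:
    """Assign static Host TEP addresses from an allocation range, in host order.

    Hypothetical model of a static IP pool: a subnet plus a start/end range.
    Raises ValueError if the range is invalid or runs out of addresses.
    """
    network = ipaddress.ip_network(pool_cidr)
    start, end = ipaddress.ip_address(first), ipaddress.ip_address(last)
    if start not in network or end not in network or start > end:
        raise ValueError("allocation range must lie inside the pool subnet")
    next_ip = start
    assignments: dict[str, list[str]] = {}
    for host in hosts:
        ips = []
        for _ in range(teps_per_host):
            if next_ip > end:
                raise ValueError(f"pool exhausted while allocating for {host}")
            ips.append(str(next_ip))
            next_ip += 1
        assignments[host] = ips
    return assignments


# Usage: two hosts, two TEPs each, from 172.16.10.10-172.16.10.20.
print(allocate_tep_ips("172.16.10.0/24", "172.16.10.10", "172.16.10.20", ["esx01", "esx02"]))
# {'esx01': ['172.16.10.10', '172.16.10.11'], 'esx02': ['172.16.10.12', '172.16.10.13']}
```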

Building on these capabilities, VxRail stretched clusters with vSAN OSA that use static IP pools can now also leverage Sub-Transport Node Profiles (Sub-TNPs), a feature introduced with NSX-T 3.2.2 and NSX 4.1.

Sub-TNPs can be used to prepare clusters of hosts that lack L2 adjacency to the Host TEP VLAN. This is useful for customers with rack-based IP schemas, because Host TEP IPs can then be configured on their own separate networks. Configuring vSAN stretched clusters using NSX Sub-TNPs also increases security, because it allows administrators to enable and configure Distributed Malware Prevention and Detection. An example is depicted in the following figure.

Figure 5. Sub-TNP vSAN L3 Stretched Cluster Configuration Example

Note: Stretched VxRail clusters with vSAN ESA are not yet supported.
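The following is a minimal sketch of how Sub-TNPs let hosts without L2 adjacency get their own TEP settings. It assumes that hosts in a sub-cluster with a Sub-TNP receive that profile's TEP VLAN and pool, and that all other hosts fall back to the parent Transport Node Profile. The data class, profile names, VLAN IDs, and pool names are hypothetical, not NSX API objects.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TepConfig:
    """Hypothetical summary of the TEP settings a (Sub-)TNP applies to a host."""
    profile: str
    tep_vlan: int
    tep_pool: str


def effective_tep_config(host_subclusters: dict[str, Optional[str]],
                         parent: TepConfig,
                         sub_tnps: dict[str, TepConfig]) -> dict[str, TepConfig]:
    """Resolve which TEP settings each host receives.

    Hosts in a sub-cluster that has a Sub-TNP get that Sub-TNP's settings;
    every other host falls back to the parent Transport Node Profile.
    """
    result = {}
    for host, subcluster in host_subclusters.items():
        if subcluster is not None and subcluster in sub_tnps:
            result[host] = sub_tnps[subcluster]
        else:
            result[host] = parent
    return result


# Usage: site B hosts sit on a different TEP VLAN and pool than site A.
parent = TepConfig("tnp-site-a", 1614, "tep-pool-site-a")
site_b = TepConfig("subtnp-site-b", 2614, "tep-pool-site-b")
cfg = effective_tep_config({"esx01": None, "esx05": "site-b"}, parent, {"site-b": site_b})
print(cfg["esx05"].profile)  # subtnp-site-b
```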

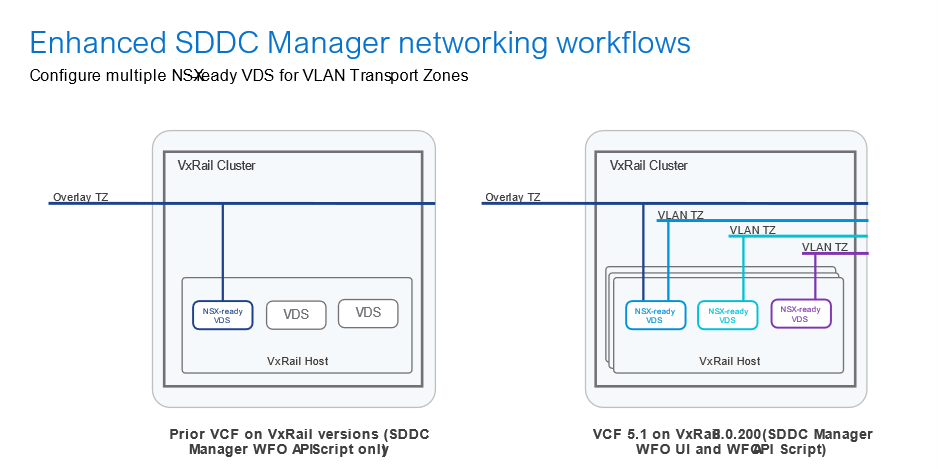

Support for multiple VDS for NSX host networking configurations

This release now provides the option to configure multiple VDS for NSX through the SDDC Manager WFO UI and WFO API.

Administrators can now configure additional VxRail host VDSs prepared for NSX (VDS for NSX) that use VLAN Transport Zones (VLAN TZs), as shown in the following figure. Administrators can then configure the NSX Distributed Firewall (DFW) for workloads in VLAN transport zones, making security more granular. These capabilities further simplify the configuration of advanced networking and security for Cloud Foundation on VxRail.


Figure 6. Configuring additional VxRail host VDS for NSX to configure using VLAN TZs

Security and access updates

Okta SSO identity federation support

VMware Cloud Foundation 5.1 on VxRail 8.0.200 now supports the option to configure the VMware Identity Broker for federation using Okta (a third-party IdP). Once configured, federated users can move seamlessly between the vCenter Server and NSX Manager consoles without being prompted to re-authenticate.

Storage updates

vSAN OSA/ESA support for management and workload domain VxRail clusters

VMware Cloud Foundation 5.1 on VxRail 8.0.200 adds support for both vSAN OSA-based and vSAN ESA-based VxRail clusters. This applies when deploying a new management domain (a greenfield VCF on VxRail instance). It also applies to new workload domains or clusters in VCF on VxRail instances that have been upgraded to this release. VCF requires vSAN ESA-based cluster deployments to have vLCM mode enabled. Also, as of this release, only 15th generation VxRail vSAN ESA-compatible hardware platforms are supported. Support for 16th generation VxRail platforms is planned for a future release.
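These rules lend themselves to a pre-deployment check. The following minimal sketch encodes only the two ESA rules stated above: vLCM mode is required, and only 15th generation platforms are allowed in this release. The ClusterSpec class and its fields are hypothetical simplifications, and platform ESA compatibility beyond the generation number is not modeled.

```python
from dataclasses import dataclass


@dataclass
class ClusterSpec:
    """Hypothetical, simplified description of a planned VxRail cluster."""
    name: str
    vsan_architecture: str    # "OSA" or "ESA"
    vlcm_mode: bool
    platform_generation: int  # e.g. 15 for 15th generation VxRail


def esa_problems(spec: ClusterSpec) -> list[str]:
    """Apply the vSAN ESA rules stated for VCF 5.1 on VxRail 8.0.200."""
    problems = []
    if spec.vsan_architecture == "ESA":
        if not spec.vlcm_mode:
            problems.append(f"{spec.name}: vSAN ESA clusters require vLCM mode")
        if spec.platform_generation != 15:
            problems.append(
                f"{spec.name}: only 15th generation ESA-compatible platforms "
                "are supported in this release"
            )
    elif spec.vsan_architecture != "OSA":
        problems.append(f"{spec.name}: unknown vSAN architecture {spec.vsan_architecture!r}")
    return problems


# Usage
print(esa_problems(ClusterSpec("wld01-cl01", "ESA", True, 15)))   # []
print(esa_problems(ClusterSpec("wld01-cl02", "ESA", False, 16)))  # two problems: vLCM mode and generation
```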

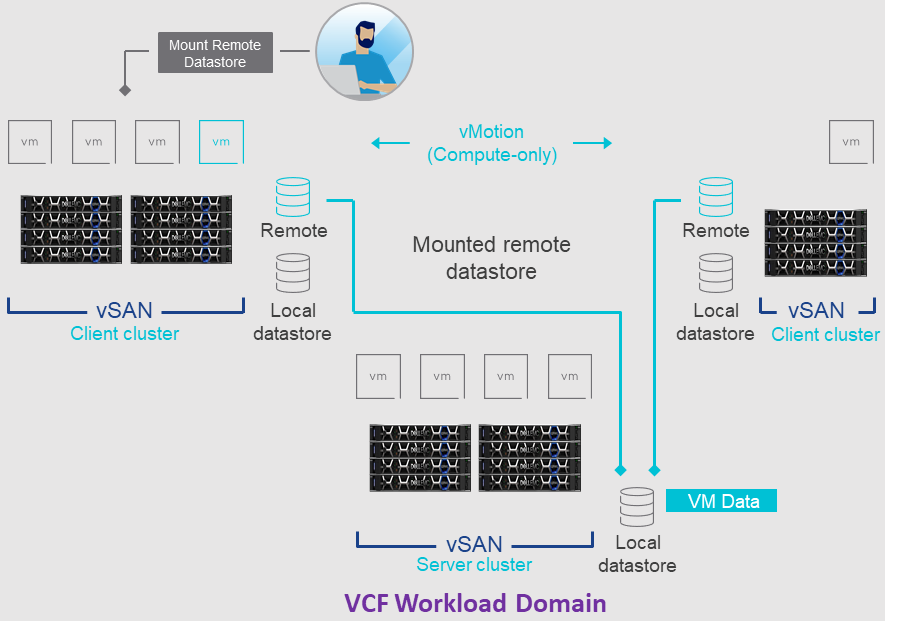

Support for vSAN OSA/ESA remote datastores as principal storage when used with VxRail dynamic node workload domain clusters

This release adds support for VxRail dynamic node compute-only clusters in cross-cluster capacity sharing use cases. A standard VxRail HCI cluster with vSAN can now source vSAN OSA or ESA remote datastores for other clusters in the same workload domain. Those datastores can serve as principal storage for VxRail dynamic node compute-only workload domain clusters. This capability is available through the SDDC Manager WFO script deployment method only.

Platform and scale updates

Increased VCF remote cluster maximum support for up to 16 nodes and up to 150ms latency

VCF remote clusters have new validated maximum latency requirements. Links to remote clusters now require 10 Mbps of available bandwidth and latency of less than 150 ms.

The supported size range for VCF remote clusters has also been updated. A VCF remote cluster now requires a minimum of 3 hosts when using local vSAN as cluster principal storage. It requires a minimum of 2 hosts when using supported Dell external storage as principal storage with VxRail dynamic nodes. In either case, a VCF remote cluster cannot exceed the new maximum of 16 VxRail hosts.

Note: Support for this feature is expected to be available after GA.
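These limits can be checked before committing a site design. The following minimal sketch encodes the numbers stated above:
- 3 to 16 hosts with local vSAN.
- 2 to 16 hosts with supported Dell external storage on dynamic nodes.
- At least 10 Mbps of bandwidth.
- Latency under 150 ms.

The function and its parameter names are hypothetical.

```python
def remote_cluster_problems(hosts: int, principal_storage: str,
                            bandwidth_mbps: float, latency_ms: float) -> list[str]:
    """Check a planned VCF remote cluster against the limits stated in this post.

    principal_storage is "vsan" (local vSAN) or "external" (supported Dell
    external storage with VxRail dynamic nodes).
    """
    minimums = {"vsan": 3, "external": 2}
    if principal_storage not in minimums:
        raise ValueError(f"unknown principal storage type: {principal_storage!r}")
    problems = []
    if hosts < minimums[principal_storage]:
        problems.append(f"needs at least {minimums[principal_storage]} hosts with {principal_storage} storage")
    if hosts > 16:
        problems.append("exceeds the 16-host remote cluster maximum")
    if bandwidth_mbps < 10:
        problems.append("link needs at least 10 Mbps of available bandwidth")
    if latency_ms >= 150:
        problems.append("link latency must be less than 150 ms")
    return problems


# Usage
print(remote_cluster_problems(4, "vsan", 100, 40))  # []
print(remote_cluster_problems(2, "vsan", 10, 150))
# ['needs at least 3 hosts with vsan storage', 'link latency must be less than 150 ms']
```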

Support for 2-node workload domain VxRail dynamic node clusters when using VMFS on FC Dell external storage as principal storage

Cloud Foundation on VxRail now supports deploying 2-node dynamic node-based workload domain clusters when using VMFS on FC Dell external storage as cluster principal storage.

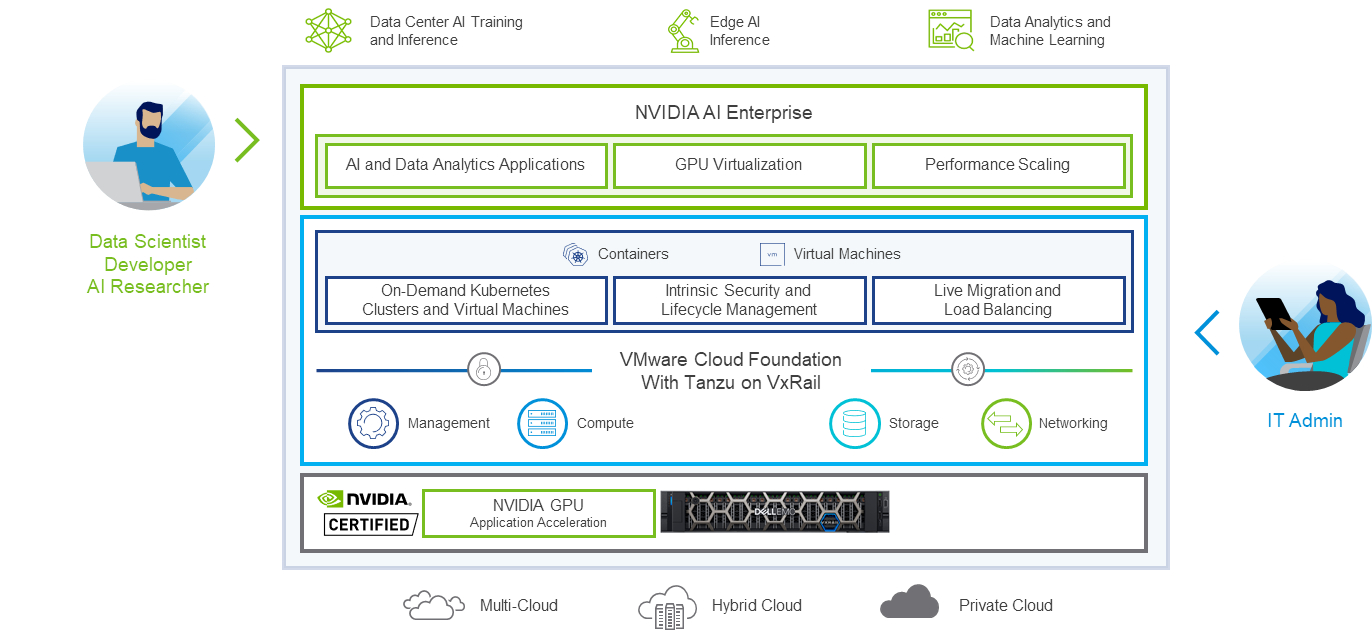

Increased GPU scale for Private AI

NVIDIA GPUs can be configured for AI/ML to support a variety of use cases. In VMware Cloud Foundation 5.1 on VxRail 8.0.200, where GPUs have been configured for vGPU, a VM can now be configured with up to 16 vGPU profiles, each representing all or part of a GPU. These enhancements allow customers to support larger generative AI and large language model (LLM) workloads while delivering maximum performance.

VxRail hardware platform updates

15th generation VxRail E660N and P670N all-NVMe vSAN ESA hardware platform support

Cloud Foundation on VxRail administrators can now use VxRail hardware platforms that have been qualified to run vSAN ESA and VxRail 8.0.200 software. The all-NVMe VxRail platforms such as the 15th generation VxRail E660N and P670N can now be ordered and deployed in Cloud Foundation 5.1 on VxRail 8.0.200 environments.

Hybrid cloud management updates

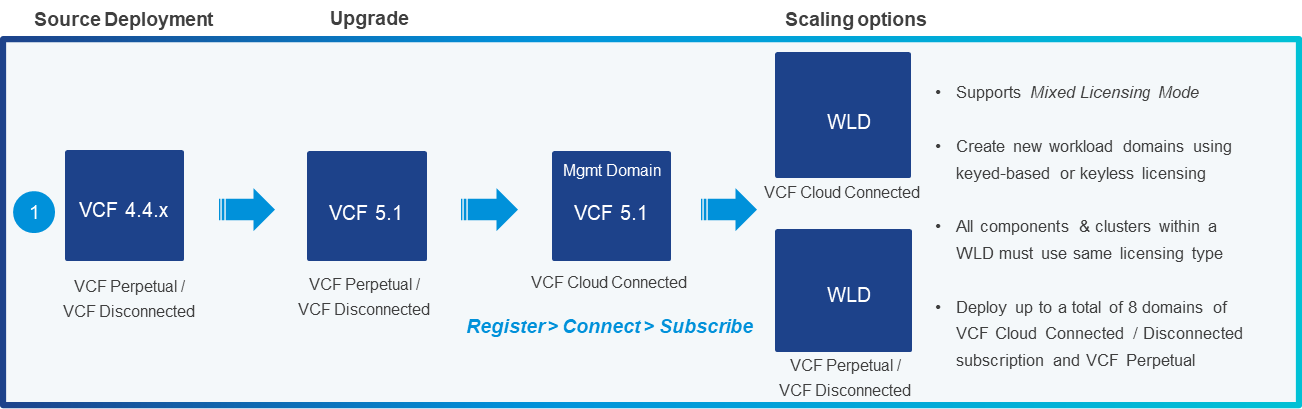

VCF mixed licensing mode support

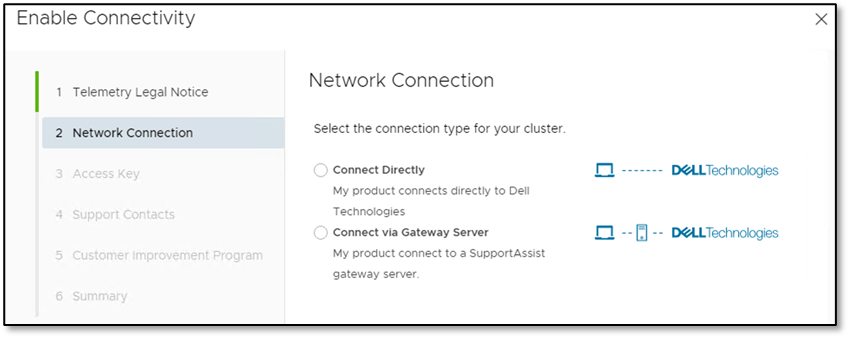

VMware Cloud Foundation 5.1 on VxRail 8.0.200 introduces support for both Key-based and Keyless licensing for existing deployments, as illustrated in the following figure.

To enable this deployment scenario, the management domain must first be cloud connected and subscribed. Once that is complete, enhanced SDDC Manager workflows let administrators license a new workload domain with either of two license types. One is Keyless licenses (cloud connected subscription). The other is Key-based licenses (perpetual or cloud disconnected subscription). This deployment scenario is referred to as Mixed Licensing Mode. All licensing within a domain must be homogeneous: every component within a domain must use either a Key-based or a Keyless license, not a combination of the two.

Figure 7. Understanding Key-based and Keyless licensing for existing deployments
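The following minimal sketch captures the two Mixed Licensing Mode rules described above:
- Keyless licensing requires a cloud connected, subscribed management domain.
- Licensing must be homogeneous within each domain.

The enum, function, and domain names are hypothetical and do not reflect an SDDC Manager API.

```python
from enum import Enum


class License(Enum):
    KEY_BASED = "key-based"  # perpetual or cloud disconnected subscription
    KEYLESS = "keyless"      # cloud connected subscription


def licensing_problems(mgmt_cloud_connected: bool,
                       domains: dict[str, list[License]]) -> list[str]:
    """Apply the Mixed Licensing Mode rules described in this post.

    domains maps a domain name to the license type of each of its components.
    """
    problems = []
    uses_keyless = any(License.KEYLESS in comps for comps in domains.values())
    if uses_keyless and not mgmt_cloud_connected:
        problems.append(
            "management domain must be cloud connected and subscribed before using Keyless licensing"
        )
    for name, comps in domains.items():
        if len(set(comps)) > 1:
            problems.append(f"{name}: mixes Key-based and Keyless licenses within one domain")
    return problems


# Usage: one Keyless and one Key-based workload domain is a valid mixed layout.
print(licensing_problems(True, {
    "WLD01": [License.KEYLESS] * 3,
    "WLD02": [License.KEY_BASED] * 3,
}))  # []
```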

VMware Cloud Disaster Recovery service for VCF cloud connected subscription deployments

VMware Cloud Foundation on VxRail cloud connected subscriptions now support VMware Cloud Disaster Recovery (VCDR) as an add-on service through the VMware Cloud Portal.

Other asynchronous release-independent related updates

VMware redefines Cloud Foundation product lifecycle policies

The product lifecycle policies for new and existing VMware Cloud Foundation releases have been redefined by VMware. VCF on VxRail product lifecycle policies align with VMware’s VCF product lifecycle policy.

End of General Support for VCF 5.x is now four (4) years from the original VCF 5.0 launch date. This change allows IT teams to run their VMware Cloud Foundation on VxRail deployments for longer before planning an upgrade, providing more control for IT organizations to adopt a cloud operating model that evolves at the pace of their business.

Summary

Well, there you have it! Another release in the books. If you want even more information beyond what was discussed here, feel free to check out the resources linked below. See you next time!

Resources

- VxRail product page

- VxRail Info Hub page

- VxRail Videos

- VMware Cloud Foundation on Dell VxRail Release Notes

- VCF on VxRail Interactive Demo

- VMware Product Lifecycle Matrix

- Terraform Provider for VCF

- PowerCLI VCF Module

Author: Jason Marques

Twitter: @vWhipperSnapper

Take VMware Tanzu to the Cloud Edge with Dell Technologies Cloud Platform

Wed, 12 Jul 2023 16:23:35 -0000


Dell Technologies and VMware are happy to announce the availability of VMware Cloud Foundation 4.1.0 on VxRail 7.0.100.

This release brings support for the latest versions of VMware Cloud Foundation and Dell EMC VxRail to the Dell Technologies Cloud Platform and provides a simple and consistent operational experience for developer ready infrastructure across core, edge, and cloud. Let’s review these new features.

Updated VMware Cloud Foundation and VxRail BOM

Cloud Foundation 4.1 on VxRail 7.0.100 introduces support for the latest versions of the SDDC components listed below:

- vSphere 7.0 U1

- vSAN 7.0 U1

- NSX-T 3.0 P02

- vRealize Suite Lifecycle Manager 8.1 P01

- vRealize Automation 8.1 P02

- vRealize Log Insight 8.1.1

- vRealize Operations Manager 8.1.1

- VxRail 7.0.100

For the complete list of component versions in the release, please refer to the VCF on VxRail release notes. A link is available at the end of this post.

VMware Cloud Foundation Software Feature Updates

VCF on VxRail Management Enhancements

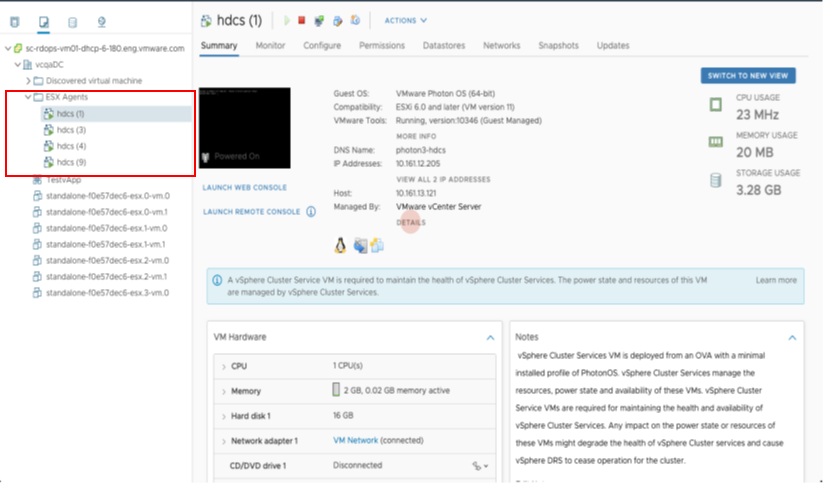

vSphere Cluster Services (vCLS)

vSphere Cluster Services is a new capability introduced in the vSphere 7 Update 1 release and included as part of VCF 4.1. It runs as a set of virtual machines deployed on top of every vSphere cluster. Its initial functionality provides the foundational capabilities needed to create a decoupled and distributed control plane for clustering services in vSphere. vCLS keeps cluster services such as vSphere DRS and vSphere HA available to maintain the resources and health of the workloads running in the clusters. It does so independent of the availability of vCenter Server. The figure below shows the components that make up vCLS in the vSphere Web Client.

Figure 1

vSphere 7 provides modernized data services, such as embedded vSphere Native Pods with vSphere with Tanzu. On top of that, features like vCLS are beginning the evolution toward distributed control planes too!

VCF Managed Resources and VxRail Cluster Object Renaming Support

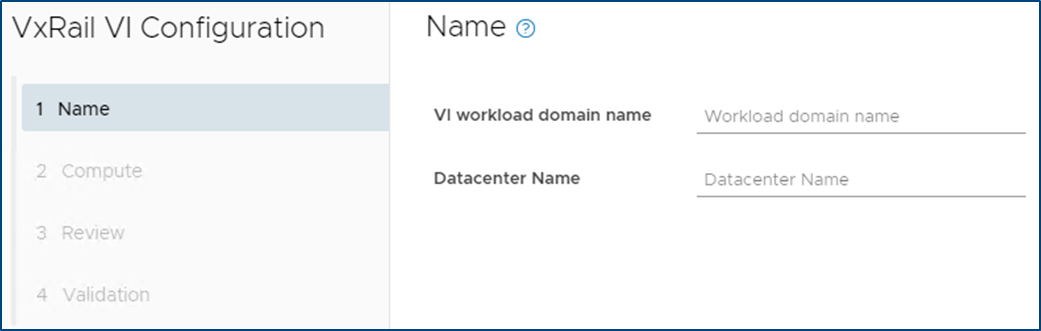

VCF can now rename resource objects post creation, including the ability to rename domains, datacenters, and VxRail clusters.

The domain is managed by the SDDC Manager. As a result, you will find that there are additional options within the SDDC Manager UI that will allow you to rename these objects.

VxRail cluster objects are managed by a given vCenter Server instance. To change a cluster name, you need to change it within vCenter Server. Once you do, go back to SDDC Manager. After a refresh of the UI, SDDC Manager retrieves and displays the new cluster name.

In addition to domain and VxRail cluster object renaming, SDDC Manager now supports a customized Datacenter object name. The enhanced VxRail VI WLD creation wizard now includes an input for the Datacenter Name. SDDC Manager automatically imports that name into its inventory during the VxRail VI WLD creation workflow. Note: Make sure the Datacenter name matches the one used during the VxRail cluster First Run. The figure below shows the Datacenter input step in the enhanced VxRail VI WLD creation wizard within SDDC Manager.

Figure 2

Being able to customize resource object names makes VCF on VxRail more flexible in aligning with an IT organization’s naming policies.

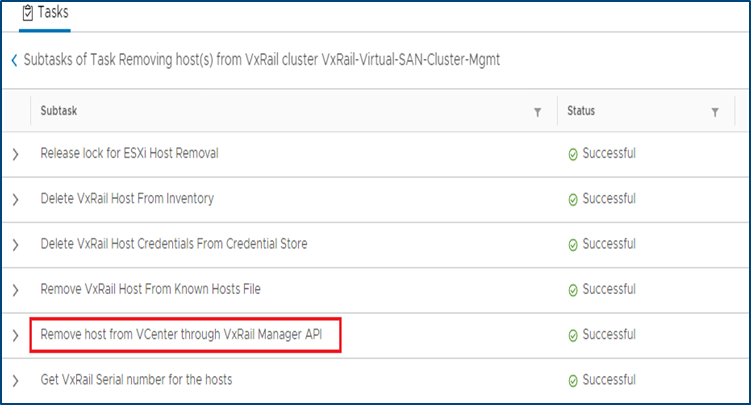

VxRail Integrated SDDC Manager WLD Cluster Node Removal Workflow Optimization

Furthering the Dell Technologies and VMware co-engineering integration efforts for VCF on VxRail, new workflow optimizations have been introduced in VCF 4.1 that take advantage of VxRail Manager APIs for VxRail cluster host removal operations.

When the time comes for VCF on VxRail cloud administrators to remove hosts from WLD clusters and repurpose them for other domains, admins will use the SDDC Manager “Remove Host from WLD Cluster” workflow to perform this task. This remove host operation has now been fully integrated with native VxRail Manager APIs to automate removing physical VxRail hosts from a VxRail cluster as a single end-to-end automated workflow that is kicked off from the SDDC Manager UI or VCF API. This integration further simplifies and streamlines VxRail infrastructure management operations all from within common VMware SDDC management tools. The figure below illustrates the SDDC Manager sub tasks that include new VxRail API calls used by SDDC Manager as a part of the workflow.

Figure 3

Note: Removed VxRail nodes require reimaging before they can be repurposed into other domains. Currently, this reimaging must be performed by Dell EMC Support.
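Because the workflow can also be started through the VCF API, the following minimal sketch builds such a request. It assumes the PATCH /v1/clusters/{id} endpoint with a clusterCompactionSpec body listing host IDs, as we understand it from the public VCF API reference. Verify the field names for your release. The hostname, token, cluster ID, and host IDs are hypothetical.

```python
import json
import urllib.request


def remove_hosts_request(sddc_manager: str, token: str, cluster_id: str,
                         host_ids: list[str]) -> urllib.request.Request:
    """Build a PATCH /v1/clusters/{id} request that removes hosts from a cluster.

    Body shape assumed from the cluster compaction spec in the public VCF
    API reference; check it against the reference for your VCF release.
    """
    if not host_ids:
        raise ValueError("specify at least one host to remove")
    body = {"clusterCompactionSpec": {"hosts": [{"id": h} for h in host_ids]}}
    return urllib.request.Request(
        f"https://{sddc_manager}/v1/clusters/{cluster_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="PATCH",
    )


# Usage (hypothetical IDs); send with urllib.request.urlopen(req) when ready.
req = remove_hosts_request("sddc-manager.example.local", "<access-token>", "cluster-id-123", ["host-id-1"])
print(req.get_method(), req.full_url)
```

SDDC Manager then drives the VxRail Manager API calls described above. The removed nodes still need reimaging before reuse.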

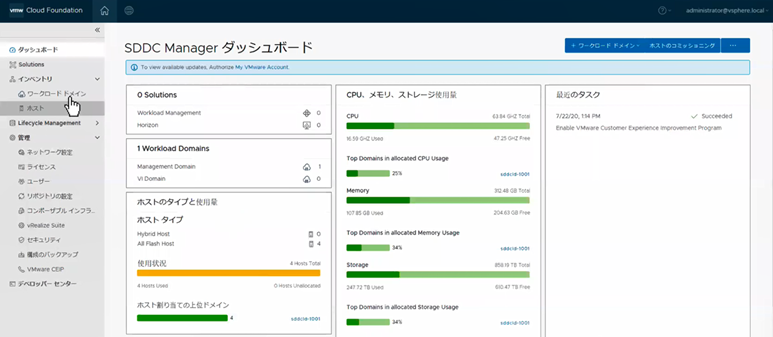

I18N Internationalization and Localization (SDDC Manager)

SDDC Manager now has international language support that meets the I18N Internationalization and Localization standard. Options to select the desired language are available in the Cloud Builder UI, which installs SDDC Manager using the selected language settings. SDDC Manager will have localization support for the following languages – German, Japanese, Chinese, French, and Spanish. The figure below illustrates an example of what this would look like in the SDDC Manager UI.

Figure 4

vRealize Suite Enhancements

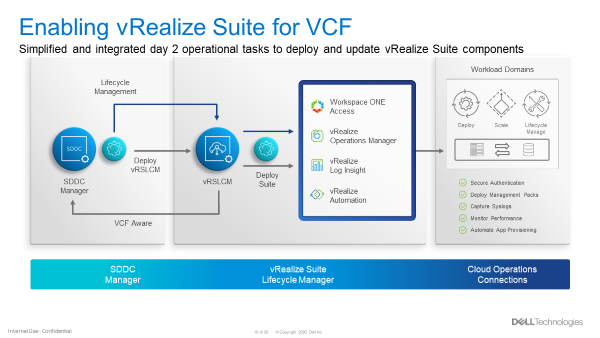

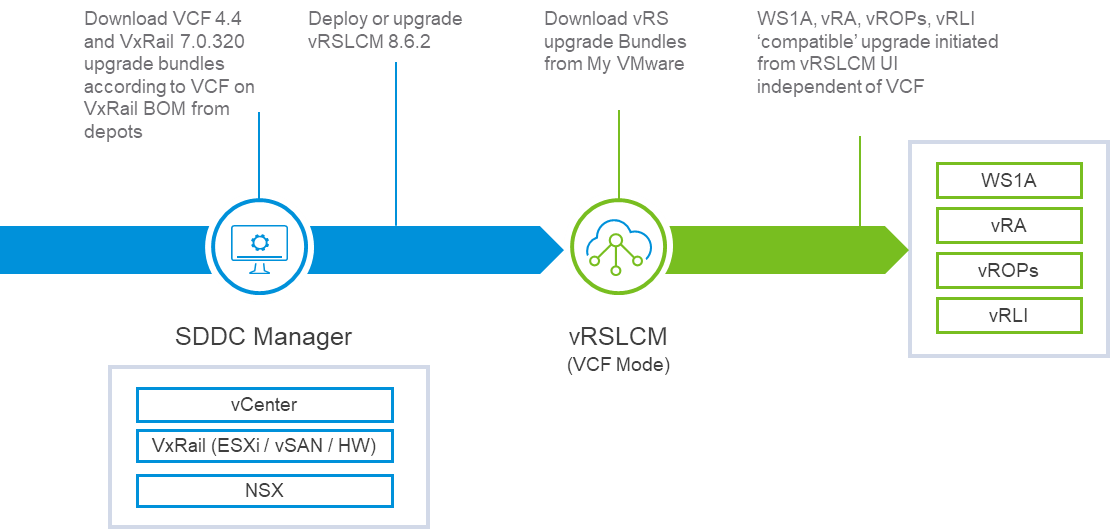

VCF Aware vRSLCM

New in VCF 4.1, the vRealize Suite is fully integrated into VCF. SDDC Manager deploys vRSLCM and creates a two-way communication channel between the two components. When deployed, vRSLCM is VCF aware and reports back to SDDC Manager which vRealize products are installed. The installation of vRealize Suite components uses standardized VVD best-practice deployment designs that leverage Application Virtual Networks (AVNs).

Software Bundles for the vRealize Suite are all downloaded and managed through the SDDC Manager. When patches or updates become available for the vRealize Suite, lifecycle management of the vRealize Suite components is controlled from the SDDC Manager, calling on vRSLCM to execute the updates as part of SDDC Manager LCM workflows. The figure below showcases the process for enabling vRealize Suite for VCF.

Figure 5

VCF Multi-Site Architecture Enhancements

VCF Remote Cluster Support

VCF Remote Cluster Support enables customers to extend their VCF on VxRail operational capabilities to ROBO and Cloud Edge sites, enabling consistent operations from core to edge. Pair this with an awesome selection of VxRail hardware platform options, and Dell Technologies has your edge use cases covered. More on hardware platforms later. For a detailed explanation of this exciting new feature, check out the link to VMware’s blog post on the topic at the end of this post.

VCF LCM Enhancements

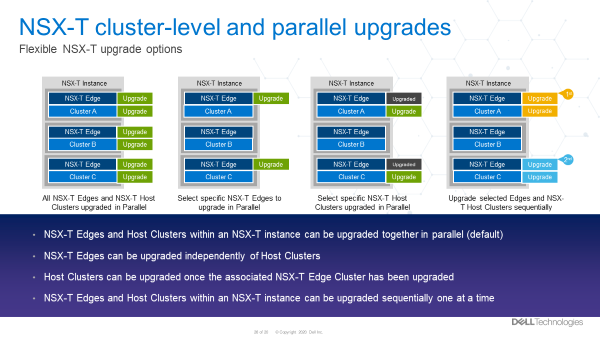

NSX-T Edge and Host Cluster-Level and Parallel Upgrades

With previous VCF on VxRail releases, NSX-T upgrades were all-encompassing. A single update required updating all the transport hosts as well as the NSX Edge and Manager components in one operation.

With VCF 4.1, support has been added for staggered NSX updates to help minimize maintenance windows. Now, an NSX upgrade can consist of three distinct parts:

- Updating the edges

  - Can be one job or multiple jobs (rerun the wizard for each).

  - Must be done before moving on to the hosts.

- Updating the transport hosts

- Updating the NSX Managers, once the hosts within the clusters have been updated

Multiple NSX edge and/or host transport clusters within the NSX-T instance can be upgraded in parallel. The administrator can choose some clusters without having to choose all of them. Clusters within an NSX-T fabric can also be upgraded sequentially, one at a time. Below are some examples of how NSX-T components can be updated.

NSX-T components can be updated in several ways:

- NSX-T Edges and Host Clusters within an NSX-T instance can be upgraded together in parallel (default)

- NSX-T Edges can be upgraded independently of NSX-T Host Clusters

- NSX-T Host Clusters can be upgraded independently of NSX-T Edges only after the Edges are upgraded first

- NSX-T Edges and Host Clusters within an NSX-T instance can be upgraded sequentially one after another.

The figure below visually depicts these options.

Figure 6

These options provide Cloud admins with a ton of flexibility so they can properly plan and execute NSX-T LCM updates within their respective maintenance windows. More flexible and simpler operations. Nice!
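The ordering rules above lend themselves to a simple plan check. The following minimal sketch validates an ordered NSX-T upgrade plan in which each stage is a set of components upgraded in parallel. It enforces two rules: every edge cluster comes before any host cluster, and the NSX Managers come last. We read the "together in parallel" default as edge clusters in parallel followed by host clusters in parallel, which matches the edges-first rule. The function and the reserved "nsx-managers" name are hypothetical.

```python
def upgrade_plan_problems(stages: list[list[str]], edge_clusters: set[str],
                          host_clusters: set[str]) -> list[str]:
    """Validate an ordered NSX-T upgrade plan.

    Each stage lists components upgraded in parallel; "nsx-managers" is a
    reserved name for the NSX Manager upgrade. Rules modeled: all edge
    clusters before any host cluster, and NSX Managers after everything else.
    """
    problems = []
    done: set[str] = set()
    known = edge_clusters | host_clusters | {"nsx-managers"}
    for i, stage in enumerate(stages, start=1):
        for comp in stage:
            if comp not in known:
                problems.append(f"stage {i}: unknown component {comp}")
            elif comp in host_clusters and not edge_clusters <= done:
                problems.append(f"stage {i}: host cluster {comp} scheduled before all edges are upgraded")
            elif comp == "nsx-managers" and not (edge_clusters | host_clusters) <= done:
                problems.append(f"stage {i}: NSX Managers scheduled before all clusters are upgraded")
        done.update(stage)  # a stage only counts as finished after it completes
    return problems


# Usage: parallel host clusters (default style) and a sequential variant.
edges, hosts = {"edge-cl01"}, {"wld01-cl01", "wld01-cl02"}
print(upgrade_plan_problems([["edge-cl01"], ["wld01-cl01", "wld01-cl02"], ["nsx-managers"]], edges, hosts))  # []
print(upgrade_plan_problems([["edge-cl01"], ["wld01-cl01"], ["wld01-cl02"], ["nsx-managers"]], edges, hosts))  # []
```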

VCF Security Enhancements

Read-Only Access Role, Local and Service Accounts

A new ‘view-only’ role has been added to VCF 4.1. For some context, let’s talk a bit now about what happens when logging into the SDDC Manager.

First, you provide a username and password. This information is sent to SDDC Manager, which then sends it to the SSO domain for verification. Once the credentials are verified, SDDC Manager determines which role the account has been granted.

In previous versions of Cloud Foundation, the role would either be for an Administrator or it would be for an Operator.

Now, there is a third role available called ‘Viewer’. As its name suggests, this is a view-only role with no ability to create, delete, or modify objects. Users assigned this role may not see certain items in the SDDC Manager UI, such as the User screen. They may also see a message saying they are not authorized to perform certain actions.

Also new, VCF now has a local account that can be used during an SSO failure. To understand why this is needed, consider what happens when the SSO domain is unavailable for some reason: users cannot log in. To address this, administrators can now configure a VCF local account called admin@local, which allows certain actions to be performed until the SSO domain is functional again. This VCF local account is defined in the deployment worksheet and used in the VCF bring-up process. Suppose bring-up has already been completed without configuring the local account. In that case, a warning banner is displayed in the SDDC Manager UI until the local account is configured.

Lastly, SDDC Manager now uses new service accounts to streamline communications between SDDC Manager and the products within Cloud Foundation. These service accounts follow VVD guidelines for predefined usernames and are administered through the admin user account to improve inter-VCF communications within SDDC Manager.
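The following minimal sketch models the login flow and roles described above:
- SSO users are verified by the SSO domain, which supplies their role.
- The admin@local account works only if it was configured.
- Viewers cannot modify objects.

It is a hypothetical model, not SDDC Manager code. The post says only that admin@local allows "certain actions", so the sketch conservatively does not grant it modify rights.

```python
import hmac
from enum import Enum
from typing import Callable, Optional


class Role(Enum):
    ADMIN = "admin"
    OPERATOR = "operator"
    VIEWER = "viewer"        # view only: cannot create, delete, or modify
    LOCAL = "local-account"  # admin@local, usable while SSO is down


LOCAL_ACCOUNT = "admin@local"


def log_in(username: str, password: str, sso_up: bool,
           sso_verify: Callable[[str, str], Optional[Role]],
           local_password: Optional[str]) -> Optional[Role]:
    """Return the role granted to a login attempt, or None if it is refused.

    sso_verify stands in for the SSO domain: it returns the account's role for
    valid credentials and None otherwise. local_password is None when
    admin@local was never configured.
    """
    if username == LOCAL_ACCOUNT:
        if local_password is None:
            return None
        ok = hmac.compare_digest(password.encode(), local_password.encode())
        return Role.LOCAL if ok else None
    if not sso_up:
        return None  # SSO users cannot be verified while SSO is unavailable
    return sso_verify(username, password)


def can_modify(role: Optional[Role]) -> bool:
    """Only Admin and Operator may change objects in this sketch."""
    return role in (Role.ADMIN, Role.OPERATOR)


# Usage with a stand-in SSO directory.
users = {("alice", "pw1"): Role.ADMIN, ("bob", "pw2"): Role.VIEWER}
verify = lambda u, p: users.get((u, p))
print(log_in("alice", "pw1", False, verify, "s3cret"))        # None: SSO is down
print(log_in("admin@local", "s3cret", False, verify, "s3cret"))  # Role.LOCAL
```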

VCF Data Protection Enhancements

As described in a related blog post, VCF 4.1 improves the SDDC Manager backup and recovery workflows and APIs. New capabilities include backup management, backup scheduling, retention policies, on-demand backups, and automatic retries on failure. The improvements also include public APIs for the third-party ecosystem and certified backup solutions from Dell PowerProtect.
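The post lists retention policies among the new backup capabilities but does not describe how they are applied. As a generic illustration only, the following minimal sketch implements a common policy shape: keep the N most recent backups plus the newest backup from each of the last D days that have one. This is an assumed policy, not SDDC Manager's algorithm, and the function name is hypothetical.

```python
from datetime import datetime


def backups_to_keep(backups: list[datetime], most_recent: int, daily: int) -> set[datetime]:
    """Pick which backups a simple retention policy would keep.

    Keeps the `most_recent` newest backups plus the newest backup from each of
    the `daily` most recent calendar days that have at least one backup.
    """
    newest_first = sorted(backups, reverse=True)
    keep = set(newest_first[:most_recent])
    days_seen = set()
    for b in newest_first:
        day = b.date()
        if day not in days_seen:
            if len(days_seen) == daily:
                break
            days_seen.add(day)
            keep.add(b)  # first hit for a day is that day's newest backup
    return keep


# Usage: twice-daily backups over three days.
history = [datetime(2020, 10, d, h) for d in (1, 2) for h in (2, 14)] + [datetime(2020, 10, 3, 2)]
print(len(backups_to_keep(history, most_recent=2, daily=3)))  # 3
```

Anything not in the returned set would be a candidate for deletion.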

VxRail Software Feature Updates

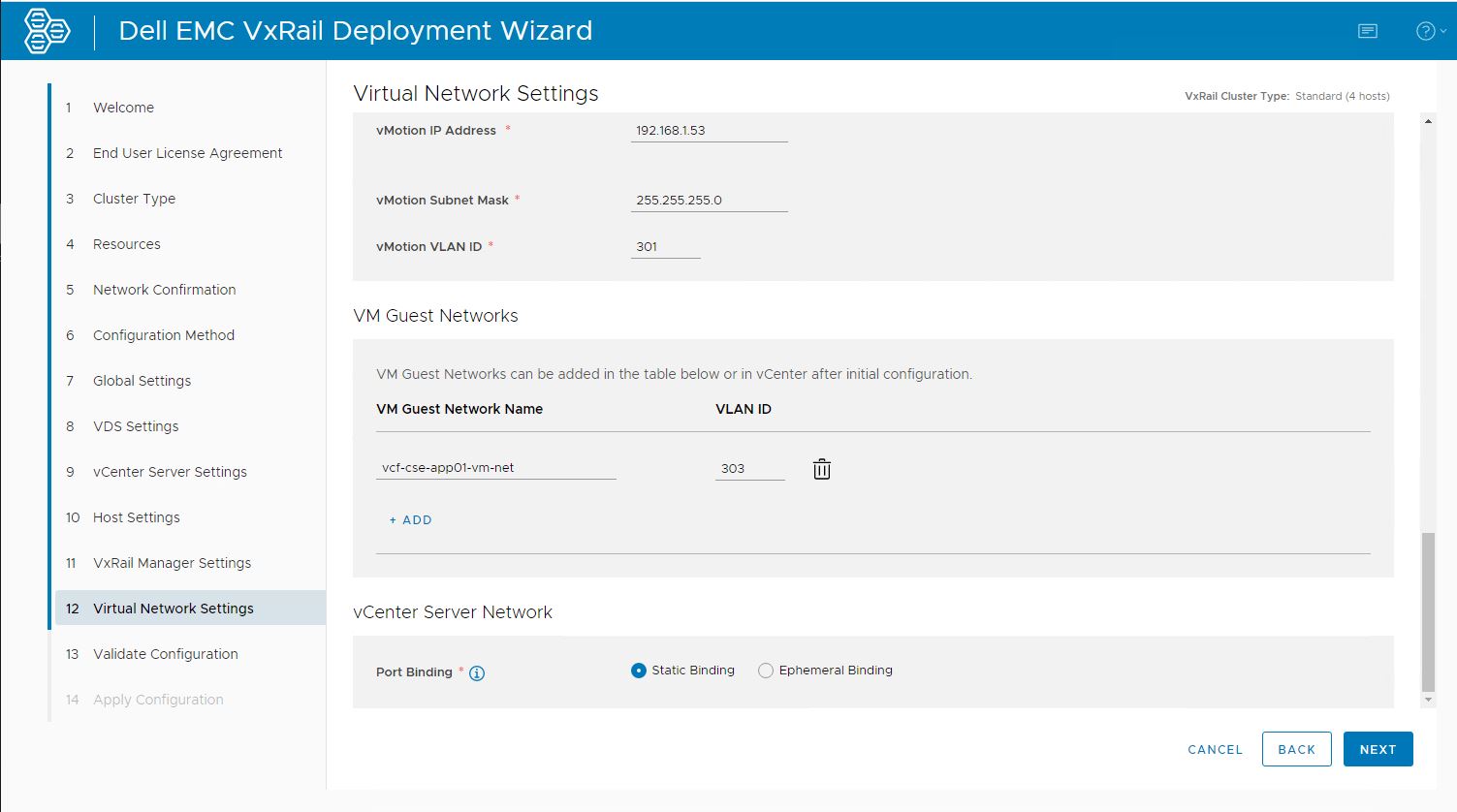

VxRail Networking Enhancements

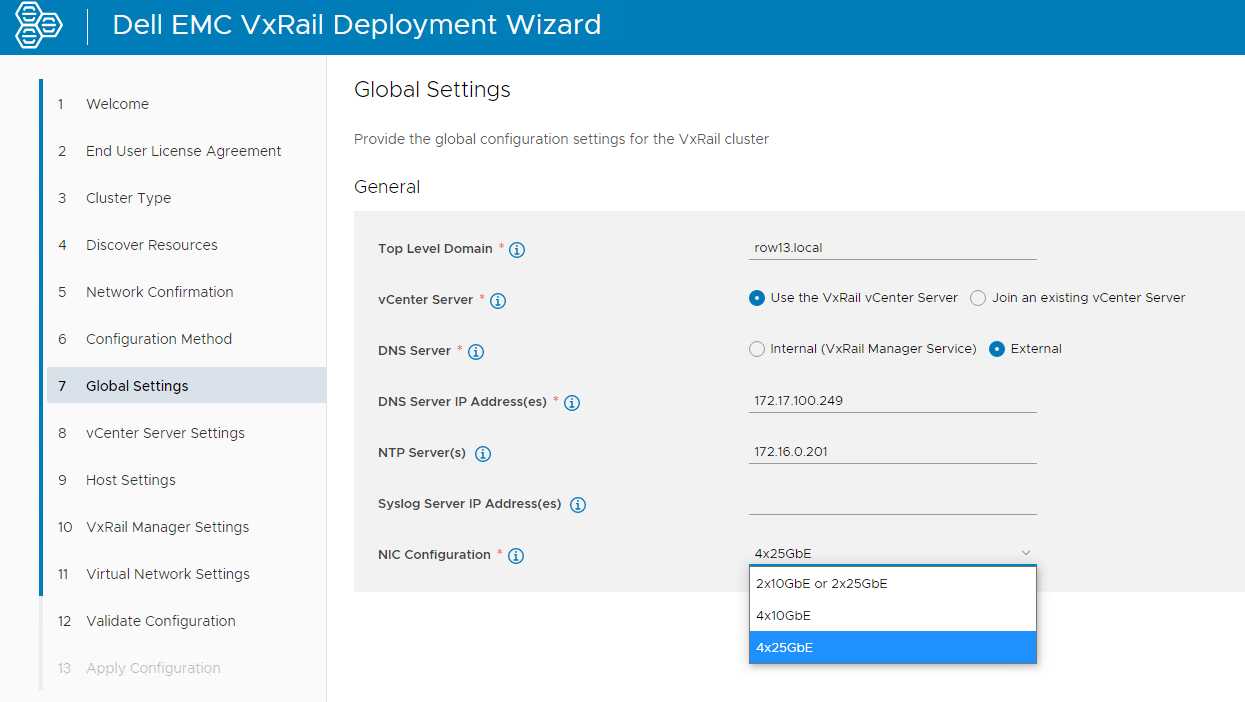

VxRail 4 x 25Gbps pNIC redundancy

VxRail engineering continues to innovate in areas that drive more value for customers, and the latest VCF on VxRail release delivers just that. New in this release, customers can use the automated VxRail First Run process to deploy VCF on VxRail nodes with 4 x 25Gbps physical port configurations. These ports run the VxRail System vDS for system traffic such as Management, vSAN, and vMotion. The physical port configuration of the VxRail nodes includes 2 x 25Gbps NDC ports and an additional 2 x 25Gbps PCIe NIC ports.

In this 4 x 25Gbps setup, NSX-T traffic runs on the same System vDS. But what is great here (and where the flexibility comes in) is that customers can also choose to separate NSX-T traffic. Using SDDC Manager APIs, they can move it onto its own NSX-T vDS that uplinks to separate physical PCIe NIC ports. This ability was first introduced in the previous release and can also be leveraged here to expand the flexibility of VxRail host network configurations.

The figure below illustrates the option to select the base 4 x 25Gbps port configuration during VxRail First Run.

Figure 7

By running the VxRail System vDS across both the NDC NIC ports and the PCIe NIC ports, customers gain an extra layer of physical NIC redundancy and high availability. This was already supported with 10Gbps-based VxRail nodes. This release brings the same high availability option to 25Gbps-based VxRail nodes. Extra network high availability AND 25Gbps performance!? Sign me up!
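The redundancy benefit comes from each traffic type having uplinks on both cards. The following minimal sketch checks a teaming layout for traffic types that a single NIC card failure would cut off. The uplink-to-card mapping and teaming below are hypothetical; the post does not describe the exact teaming that VxRail First Run applies.

```python
# Hypothetical mapping of uplinks to physical cards in a 4 x 25Gbps node:
# two ports on the NDC and two on an add-in PCIe NIC.
UPLINK_CARD = {
    "uplink1": "ndc",
    "uplink2": "ndc",
    "uplink3": "pcie",
    "uplink4": "pcie",
}


def single_card_risks(teaming: dict[str, list[str]]) -> list[str]:
    """Return traffic types that would lose every uplink if one card failed.

    teaming maps a traffic type to the uplinks (active or standby) it may use.
    A traffic type is protected only if its uplinks span both cards.
    """
    at_risk = []
    for traffic, uplinks in teaming.items():
        cards = {UPLINK_CARD[u] for u in uplinks}
        if len(cards) < 2:
            at_risk.append(traffic)
    return at_risk


# Usage: each system traffic type pairs one NDC port with one PCIe port.
spread = {
    "management": ["uplink1", "uplink3"],
    "vsan": ["uplink2", "uplink4"],
    "vmotion": ["uplink4", "uplink2"],
}
print(single_card_risks(spread))                            # []
print(single_card_risks({"vsan": ["uplink1", "uplink2"]}))  # ['vsan']
```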

VxRail Hardware Platform Updates

Recently introduced support for the ruggedized D-Series VxRail hardware platforms (D560/D560F) continues to expand the range of VxRail hardware platforms supported in the Dell Technologies Cloud Platform.

These ruggedized, durable platforms are designed to meet the demand for more compute, performance, and storage, and, more importantly, for operational simplicity. They deliver the full power of VxRail for workloads at the edge, in challenging environments, or in space-constrained areas.

These D-Series systems are a perfect match when paired with the latest VCF Remote Cluster features introduced in Cloud Foundation 4.1.0 to enable Cloud Foundation with Tanzu on VxRail to reach these space-constrained and challenging ROBO/Edge sites to run cloud native and traditional workloads, extending existing VCF on VxRail operations to these locations! Cool right?!

To read more about the technical details of VxRail D-Series, check out the VxRail D-Series Spec Sheet.

Well, that about covers it for this release. The innovation train continues. Until next time, feel free to check out the links below to learn more about DTCP (VCF on VxRail).

Jason Marques

Twitter - @vwhippersnapper

Additional Resources

VMware Blog Post on VCF Remote Clusters

Cloud Foundation on VxRail Release Notes

VxRail page on DellTechnologies.com

VCF on VxRail Interactive Demos

Deploying VMware Tanzu for Kubernetes Operations on Dell VxRail: Now for the Multicloud

Wed, 17 May 2023 15:56:43 -0000

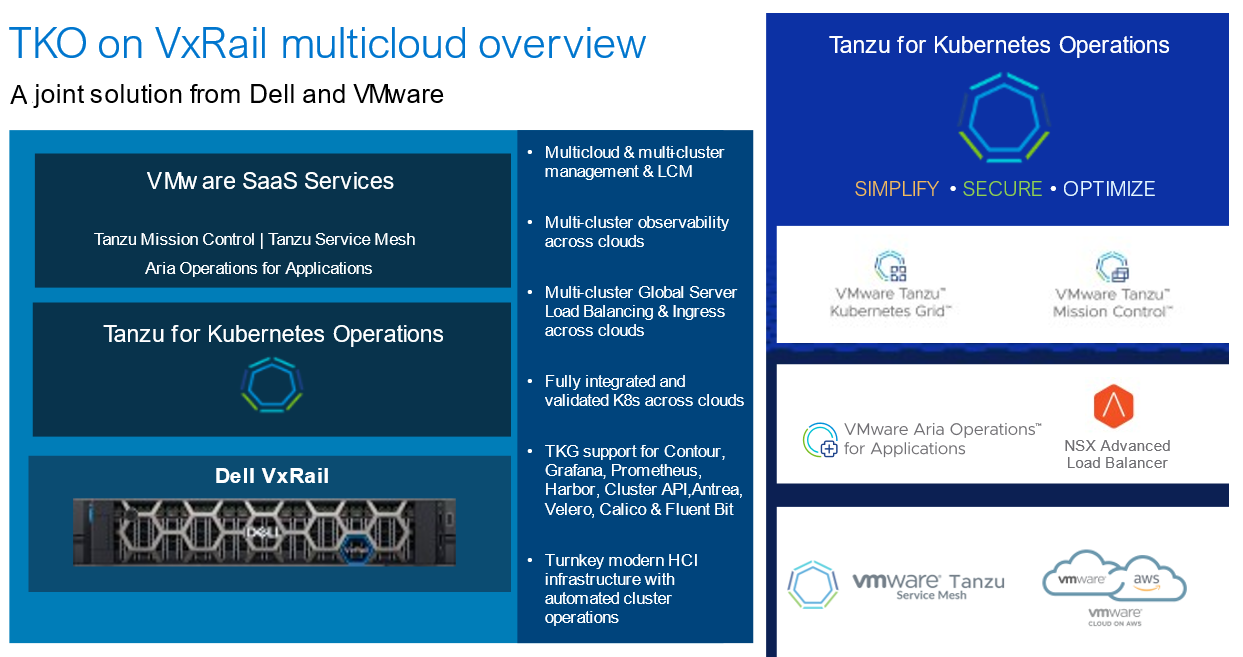

VMware Tanzu for Kubernetes Operations (TKO) on Dell VxRail is a jointly validated Dell and VMware reference architecture solution designed to streamline Kubernetes use for the enterprise. The latest version has been extended to showcase multicloud application deployment and operations use cases. Read on for more details.

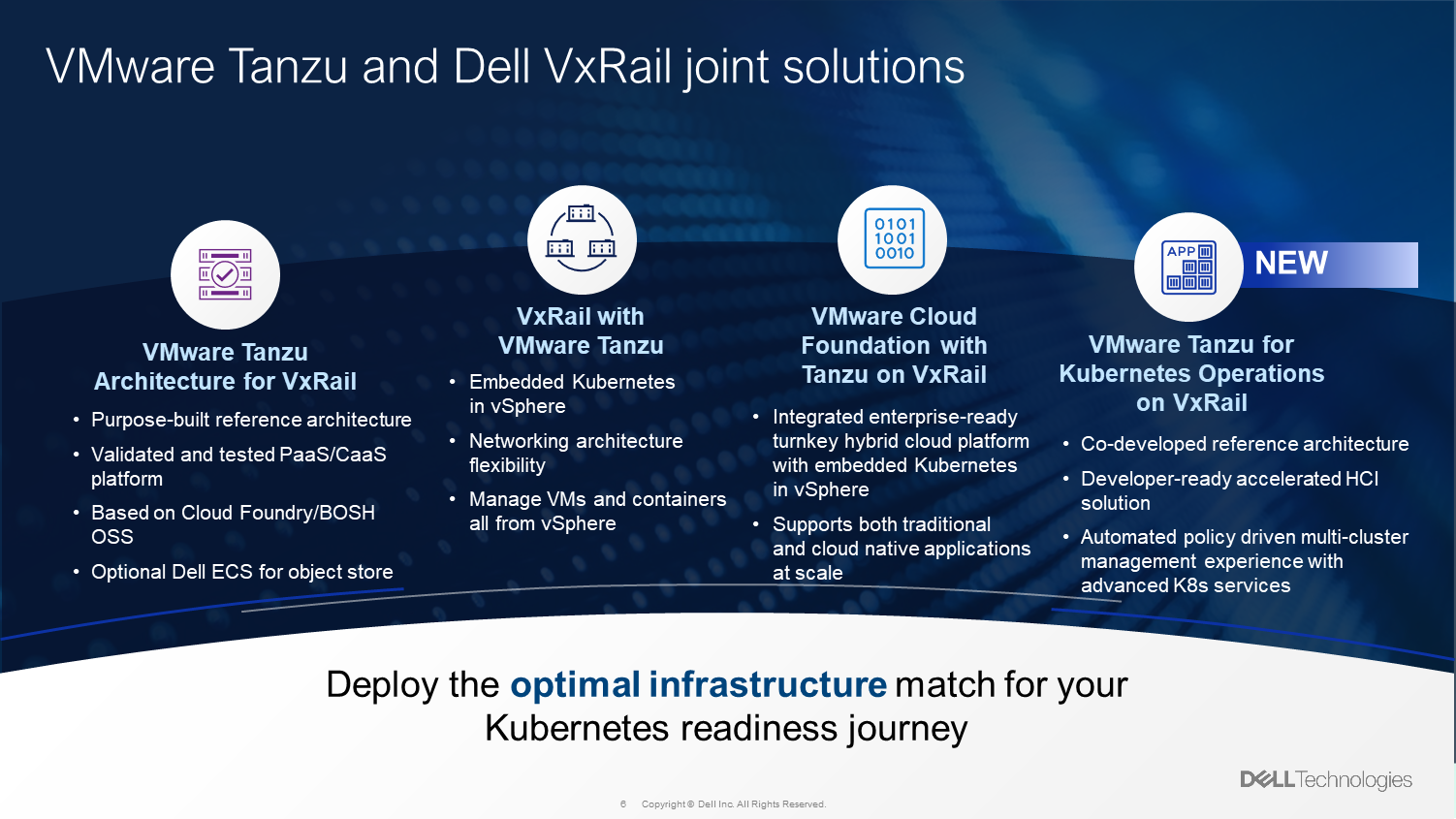

VMware Tanzu and Dell VxRail joint solutions

VMware TKO on Dell VxRail is yet another example of the strong partnership and joint development efforts that Dell and VMware continue to deliver on behalf of our joint customers so they can find success in their infrastructure modernization and digital transformation efforts. It is an addition to an existing portfolio of jointly developed and/or engineered products and reference architecture solutions that are built upon VxRail as the foundation to help customers accelerate and simplify their Kubernetes adoption.

Figure 1 highlights the joint VMware Tanzu and Dell VxRail offerings available today. Each is specifically designed to meet customers where they are in their journey to Kubernetes adoption.

Figure 1. Joint VMware Tanzu and Dell VxRail solutions

VMware TKO on VxRail

VMware Tanzu for Kubernetes Operations on Dell VxRail reference architecture updates

This latest release of the jointly developed reference architecture builds off the first release. To learn more about what TKO on VxRail is and our objective for jointly developing it, take a look at this blog post introducing its first iteration.

Okay… Now that you are all caught up, let’s dive into what is new in this latest version of the reference architecture.

Additional TKO multicloud components

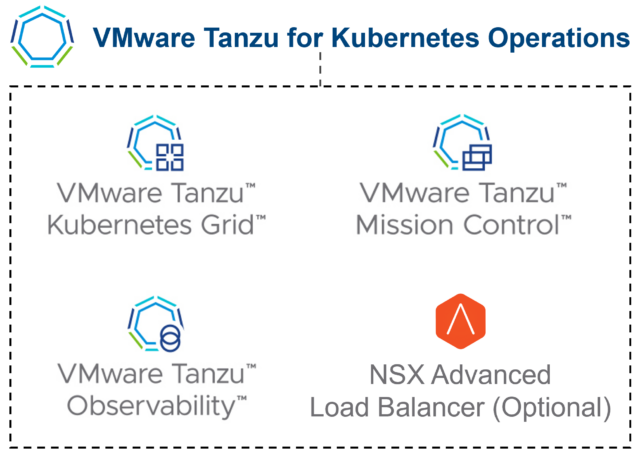

Let’s dive a bit deeper and highlight what we see as the essential building blocks for your cloud infrastructure transformation that are included in the TKO edition of Tanzu.

First, you’re going to need a consistent Kubernetes runtime like Tanzu Kubernetes Grid (TKG) so you can manage and upgrade clusters consistently as you move to a multicloud Kubernetes environment.

Next, you’re going to need a way to manage your platform. A management plane like Tanzu Mission Control (TMC), which provides centralized visibility and control over your platform, will be critical to helping you roll it out to distributed teams.

Also, having platform-wide observability like Aria Operations for Applications (formerly known as Tanzu/Aria Observability) ensures that you can effectively monitor and troubleshoot issues faster. Having data protection capabilities allows you to protect your data both at rest and in transit, which is critical if your teams will be deploying applications that run across clusters and clouds. And with NSX Advanced Load Balancer, TKO can also help you implement global load balancing and advanced traffic routing that allows for automated service discovery and north-south traffic management.

TKO on VxRail, VMware and Dell’s joint solution for core IT and cloud platform teams, can help you get started with your IT modernization project and enable you to build a standardized platform that will support you as you grow and expand to more clouds.

In the initial release of the reference architecture with VxRail, Tanzu Mission Control (TMC) and Aria Operations for Applications were used to establish a solid on-premises foundation on which to build our multicloud architecture. The following figure shows the TKO components included in the first iteration.

Figure 2. Base TKO components used in initial version of reference architecture

In this second phase, we extended the on-premises architecture to a true multicloud environment fit for a new generation of applications.

Added to the latest version of the reference architecture are VMware Cloud on AWS, Amazon Elastic Kubernetes Service (EKS), Tanzu Service Mesh, and Global Server Load Balancing (GSLB) functionality provided by NSX Advanced Load Balancer to build a global namespace for modern applications.

New TMC functionality that was not part of the first reference architecture was also added, such as EKS LCM and continuous delivery capabilities. AWS is still the most widely used public cloud provider, but that is not the only reason it was chosen for this reference architecture. The VMware SaaS products also have the most features available for AWS cloud services, while support for other hyperscaler public cloud services is still in the VMware development pipeline. For example, today you can perform life cycle management of Amazon EKS clusters through Tanzu Mission Control. That capability isn’t available yet with other cloud providers. The following figure highlights the high-level set of components used in this latest reference architecture update.

Figure 3. Additional components used in latest version of TKO on VxRail RA

New multicloud testing environment

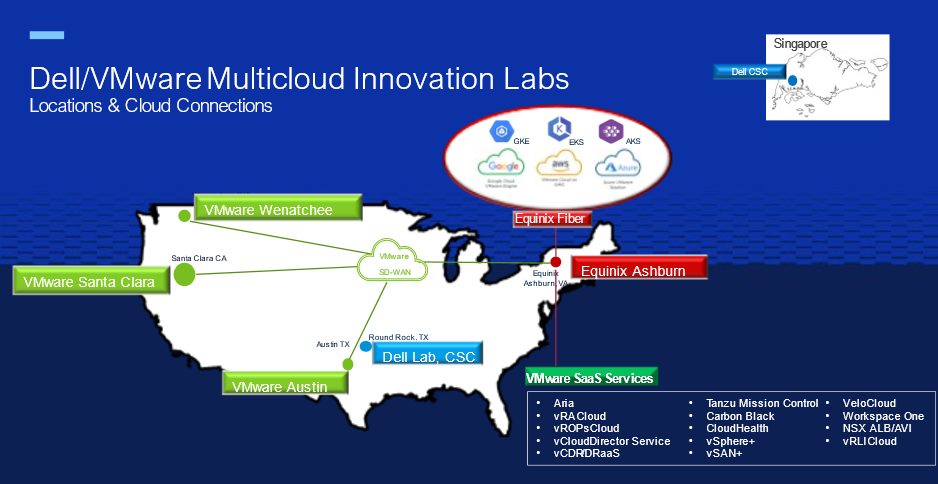

To test this multicloud architecture, the Dell and VMware engineering teams needed a true multicloud environment. Figure 4 illustrates a snapshot of the multisite/multicloud lab infrastructure that our VMware and Dell engineering teams built to provide a “real-world” environment to test and showcase our solutions. We use this environment to work on projects with internal teams and external partners.

Figure 4. Dell/VMware Multicloud Innovation Lab Environments

The environment is made up of five data centers and private clouds across the US, all connected by VMware SD-WAN, delivering a private multicloud environment. An Equinix data center provides the fiber backbone to connect with most public cloud providers as well as VMware Cloud Services.

Extended TKO on VxRail multicloud architecture

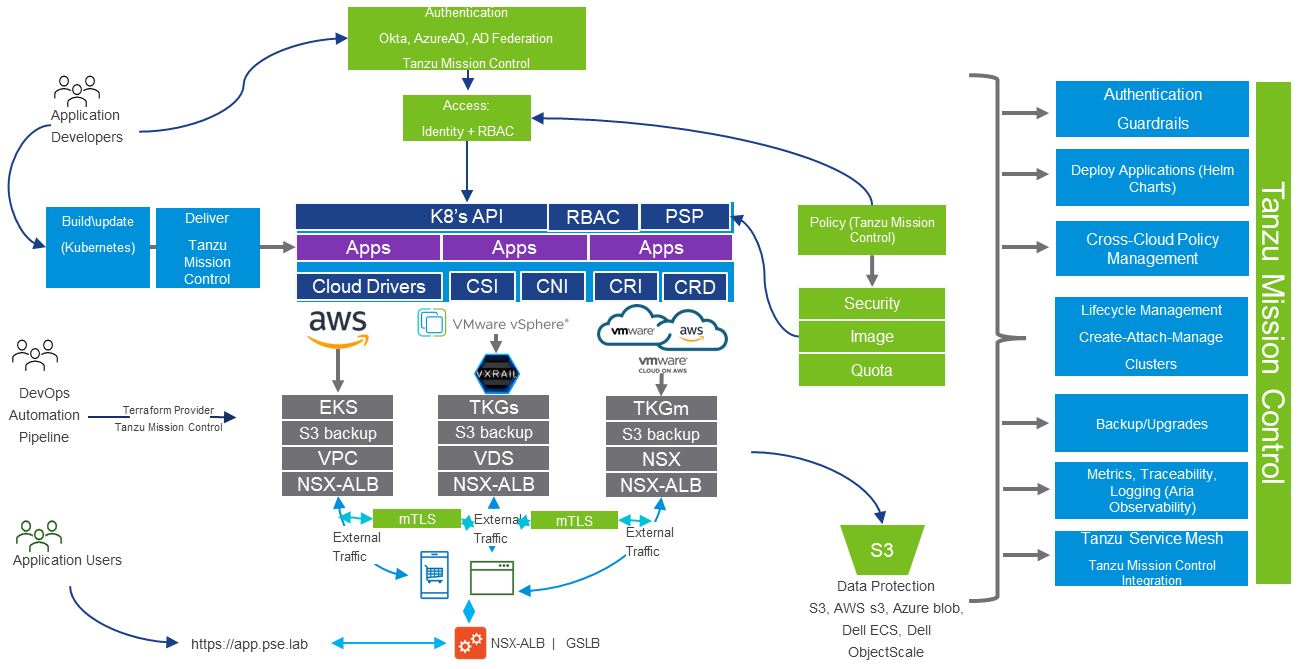

Figure 5 shows the multicloud implementation of Tanzu for Kubernetes Operations on VxRail. Here you have K8s clusters on-premises and running on multiple cloud providers.

Figure 5. TKO on VxRail Reference Architecture Multicloud Architecture

Tanzu Mission Control (TMC), which is part of Tanzu for Kubernetes Operations, provides you with a management plane through which platform operators or DevOps team members can manage the entire K8s environment across clouds. Developers can have self-service access, authenticated by either cloud identity providers like Okta or Microsoft Active Directory or through corporate Active Directory federation. With TMC, you can assign consistent policies across your cross-cloud K8s clusters. DevOps teams can use the TMC Terraform provider to manage the clusters as infrastructure-as-code.

Through TMC support for open-source K8s projects such as Velero, teams can back up clusters to Azure Blob Storage, Amazon S3, or on-premises S3-compatible storage solutions such as Dell ECS, Dell ObjectScale, or another object store of their choice.

When you enable data protection for a cluster, Tanzu Mission Control installs Velero with Restic (an open-source backup tool), configured to use the opt-out approach. With this approach, Velero backs up all pod volumes using Restic.
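The opt-out rule can be pictured in a few lines of Python. This is a simplified model based on Velero's documented opt-out behavior. Under that behavior, every pod volume is backed up unless its name appears in the pod's `backup.velero.io/backup-volumes-excludes` annotation, which takes a comma-separated list. The pod dictionary is a trimmed-down, hypothetical stand-in for a Kubernetes Pod manifest. Velero's own handling of volume types that file-system backup does not support, such as hostPath volumes, is not modeled.

```python
EXCLUDE_ANNOTATION = "backup.velero.io/backup-volumes-excludes"


def volumes_to_back_up(pod: dict) -> list[str]:
    """Opt-out model: back up every pod volume except the ones named in the annotation."""
    annotations = pod.get("metadata", {}).get("annotations") or {}
    excluded = {
        name.strip()
        for name in annotations.get(EXCLUDE_ANNOTATION, "").split(",")
        if name.strip()
    }
    volumes = pod.get("spec", {}).get("volumes") or []
    return [v["name"] for v in volumes if v["name"] not in excluded]


# Hypothetical pod: the "scratch" volume is opted out of backup.
pod = {
    "metadata": {"name": "orders-db-0", "annotations": {EXCLUDE_ANNOTATION: "scratch"}},
    "spec": {"volumes": [{"name": "data"}, {"name": "scratch"}, {"name": "logs"}]},
}
print(volumes_to_back_up(pod))  # ['data', 'logs']
```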

TMC integration with Aria Operations for Applications (formerly Tanzu/Aria Observability) delivers fine-grained insights and analytics about the microservices applications running across the multicloud environments.

TMC also has integration with Tanzu Service Mesh (TSM), so you can add your clusters to TSM. When the TKO on VxRail multicloud reference architecture is implemented, users would connect to their multicloud microservices applications through a single URL provided by NSX Advanced Load Balancer (formerly AVI Load Balancer) in conjunction with TSM. TSM provides advanced, end-to-end connectivity, security, and insights for modern applications—across application end users, microservices, APIs, and data—enabling compliance with service level objectives (SLOs) and data protection and privacy regulations.

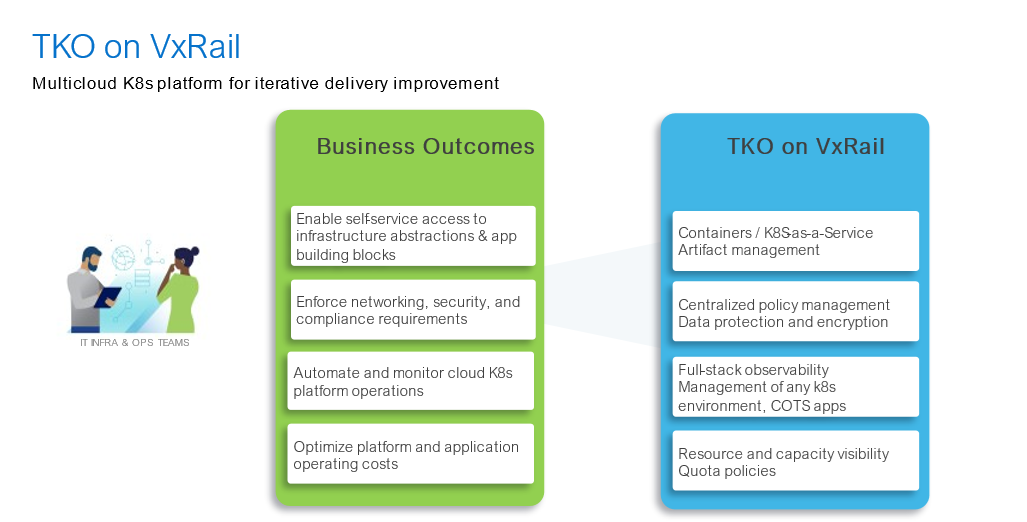

TKO on VxRail business outcomes

Dell and VMware know what business outcomes matter to enterprises, and together we help customers map those outcomes to transformations.

Figure 6 highlights the business outcomes that customers are asking for and that we are delivering through the Tanzu portfolio on VxRail today. They also set the stage to inform our joint development teams about future capabilities we look forward to delivering.

Figure 6. TKO on VxRail and business outcomes alignment

Learn more at Dell Technologies World 2023

Want to dive deeper into VMware Tanzu for Kubernetes Operations on Dell VxRail? Visit our interactive Dell Technologies and VMware booths at Dell Technologies World to talk with any of our experts. You can also attend our session Simplify & Streamline via VMware Tanzu for Kubernetes Operations on VxRail.

Also, feel free to check out the VMware Blog on this topic, written by Ather Jamil from VMware. It includes some cool demos showing TKO on VxRail in action!

Author: Jason Marques (Dell Technologies)

Twitter: @vWhipperSnapper

Contributor: Ather Jamil (VMware)

Resources

- VxRail page on DellTechnologies.com

- VxRail InfoHub

- VxRail videos

- Tanzu for Kubernetes Operations VMware page

- TKO on VxRail Reference Architecture

What’s New: VMware Cloud Foundation 4.5.1 on Dell VxRail 7.0.450 Release and More!

Thu, 11 May 2023 15:55:52 -0000

This latest Cloud Foundation (VCF) on VxRail release includes updated versions of software BOM components, a bunch of new VxRail platform enhancements, and some good ol’ under-the-hood improvements that lay the groundwork for future features designed to deliver an even better customer experience. Read on for the highlights…

VCF on VxRail operations and serviceability enhancements

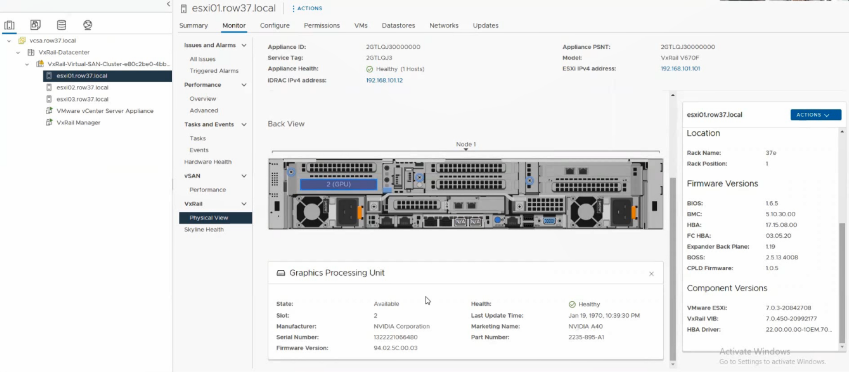

View Nvidia GPU hardware details in VxRail Manager vCenter plugin ‘Physical View’ and VxRail API

Leveraging the power of GPU acceleration with VCF on VxRail delivers a lot of value to organizations looking to harness the power of their data. VCF on VxRail makes it easier to operationalize infrastructure with Nvidia GPUs by providing native GPU visualization and details through the VxRail Manager vCenter Plugin ‘Physical View’ and the VxRail API. Administrators can quickly gain deeper hardware insight into the health and details of the Nvidia GPUs running on their VxRail nodes, easily map the hardware layer to the virtual layer, and improve infrastructure management and serviceability operations.

Figure 1 shows what this looks like.

Figure 1. Nvidia GPU visualization and details – VxRail vCenter Plugin ‘Physical View’ UI

Support for the capturing, displaying, and proactive Dell dial home alerting for new VxRail iDRAC system events and alarms

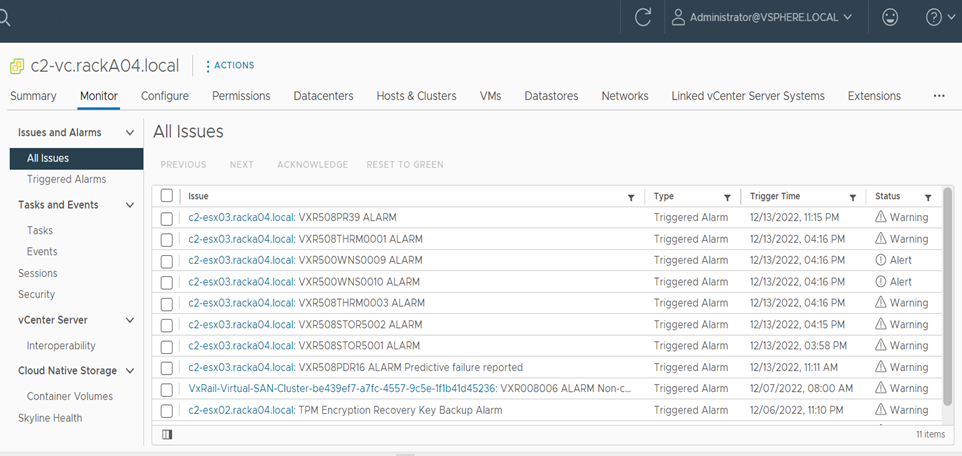

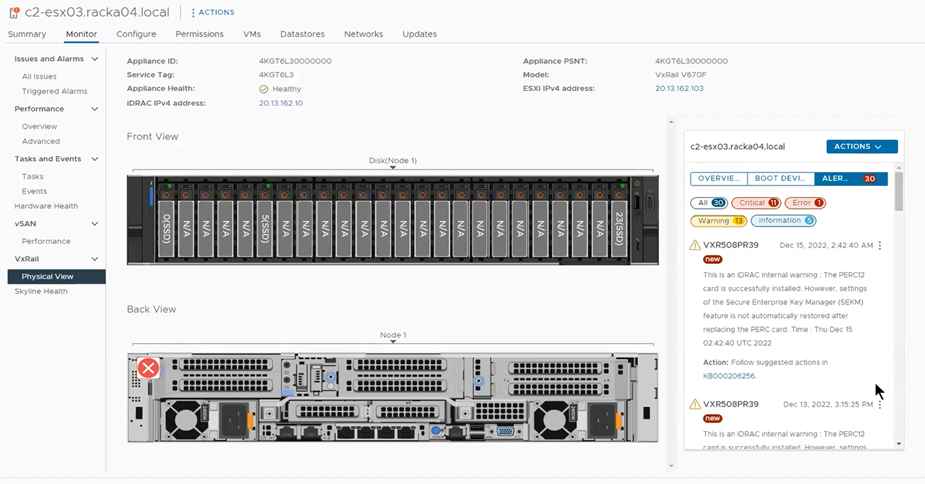

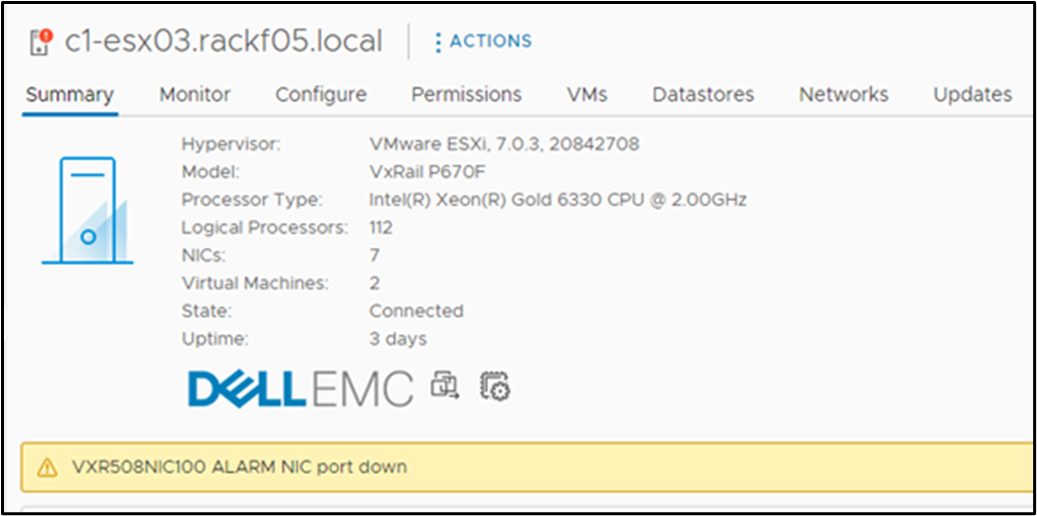

Introduced in VxRail 7.0.450 and available in VCF 4.5.1 on VxRail 7.0.450 are enhancements to VxRail Manager intelligent system health monitoring of iDRAC critical and warning system events. With this new feature, new iDRAC warning and critical system events are captured, and through VxRail Manager integration with both iDRAC and vCenter, alarms are triggered and posted in vCenter.

Customers can view these events and alarms in the native vCenter UI and in the VxRail Manager vCenter Plugin Physical View, where each event description contains KB article links that provide added details and remediation guidance. These new events also trigger call home actions to inform Dell support about the incident.

These improvements are designed to improve the serviceability and support experience for customers of VCF on VxRail. Figures 2 and 3 show these events as they appear in the vCenter UI ‘All Issues’ view and the VxRail Manager vCenter Plugin Physical View UI, respectively.

Figure 2. New iDRAC events displayed in the vCenter UI ‘All Issues’ view

Figure 3. New iDRAC events displayed in the VxRail Manager vCenter Plugin UI ‘Physical View’

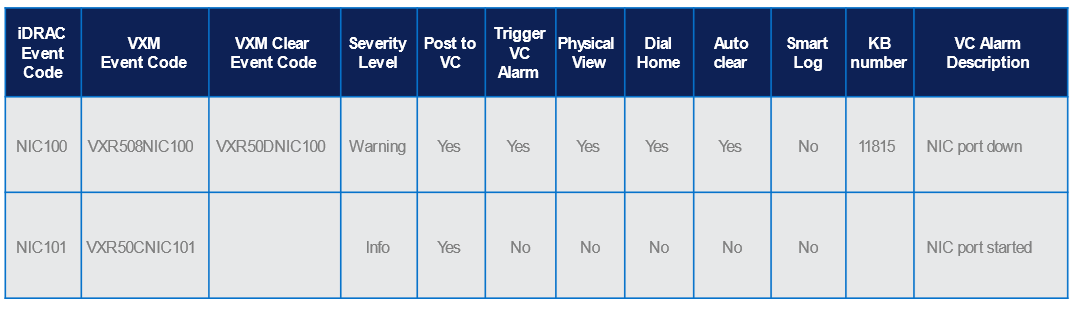

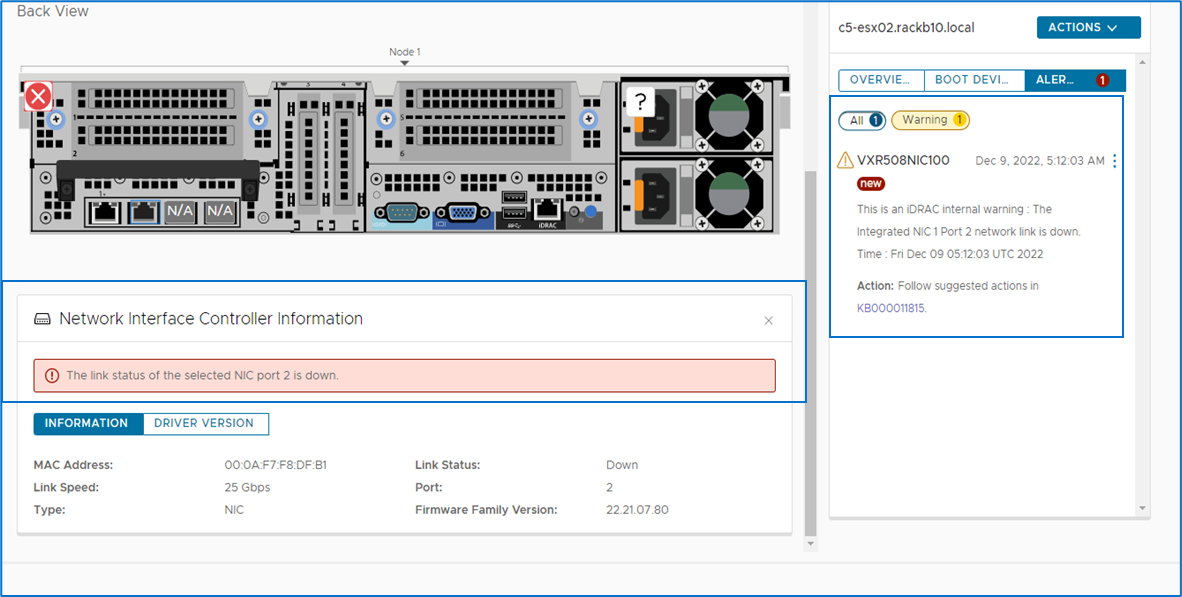

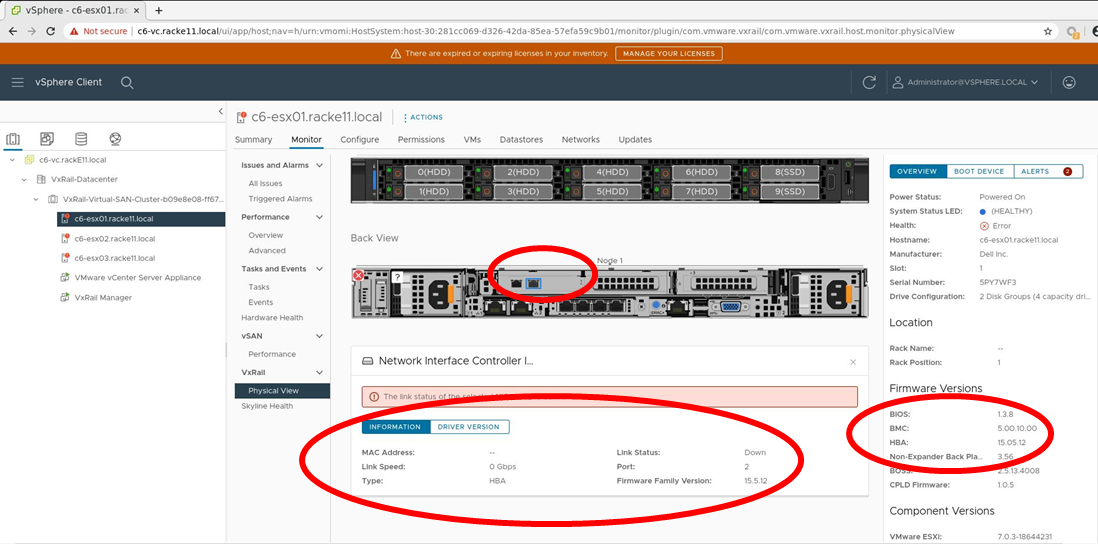

Support for the capturing, displaying, and proactive dial home alerting for new iDRAC NIC port down events and alarms

To further improve system serviceability and simplify operations, VxRail 7.0.450 introduces the capture of new iDRAC system events related to host NIC port link status. These include a NIC port down warning event, indicated by event code NIC100, and a ‘NIC port is started/up’ info event, indicated by event code NIC101.

A NIC100 event indicates either that a network cable is not connected, or that the network device is not working.

A NIC101 event indicates that the transition from a network link ‘down’ state to a network link ‘started’ or ‘up’ state has been detected on the corresponding NIC port.

VxRail Manager now creates new VxM events that track these NIC port states.

As a result, users can be alerted through an alarm in vCenter when a NIC port is down. VxRail Manager will also generate a dial-home event when a NIC port is down. When the condition is no longer present, VxRail Manager will automatically clear the alarm by generating a clear-alarm event.
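The event semantics described above can be summarized as a small lookup plus an alarm lifecycle. The sketch below is a hypothetical model, not VxRail Manager code. The `NicAlarmTracker` class, the port names, and the action strings are all illustrative, and the throttling behavior discussed next is deliberately left out.

```python
from dataclasses import dataclass, field

# Event codes as described in the text.
NIC_EVENTS = {
    "NIC100": "NIC port down (cable not connected or network device not working)",
    "NIC101": "NIC port started/up (link transitioned from down to up)",
}


@dataclass
class NicAlarmTracker:
    """Hypothetical tracker: one open alarm per port, cleared when the port comes back up."""
    open_alarms: set[str] = field(default_factory=set)
    actions: list[str] = field(default_factory=list)

    def handle(self, event_code: str, port: str) -> None:
        if event_code == "NIC100" and port not in self.open_alarms:
            self.open_alarms.add(port)
            self.actions.append(f"vCenter alarm: {port} down")
            self.actions.append(f"dial home: {port} down")
        elif event_code == "NIC101" and port in self.open_alarms:
            self.open_alarms.remove(port)
            self.actions.append(f"clear alarm: {port}")
        # Any other event code, or a repeat of the current state, is ignored here.


tracker = NicAlarmTracker()
tracker.handle("NIC100", "vmnic2")
tracker.handle("NIC101", "vmnic2")
print(tracker.actions)
# ['vCenter alarm: vmnic2 down', 'dial home: vmnic2 down', 'clear alarm: vmnic2']
```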

Finally, to reduce false positive events and prevent unnecessary alarm and dial home events, VxRail Manager implements an intelligent throttling mechanism for situations, such as network maintenance activities, in which false positive alarms could occur. This makes the triggered alarms and events more credible for an admin to act on.

Table 1 contains a summary of the details of these two events and the VxRail Manager serviceability behavior.

Table 1. iDRAC NIC port down and started event and behavior details

Let’s double click on this serviceability behavior in a bit more detail.

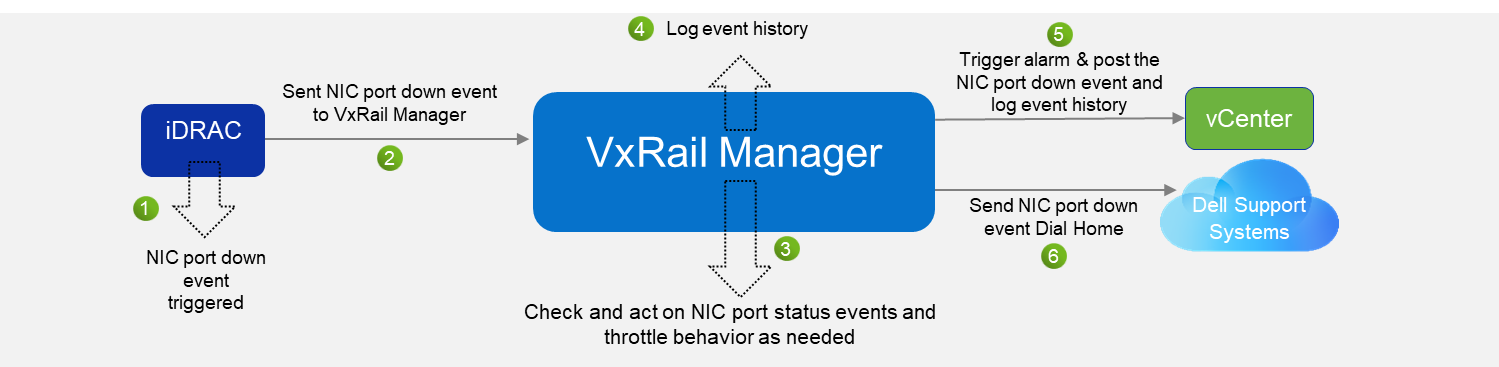

Figure 4 depicts the behavior process flow VxRail Manager takes when iDRAC discovers and triggers a NIC port down system event. Let’s walk through the details now:

1. The first thing that occurs is that iDRAC discovers that the NIC port state has gone down and triggers a NIC port down event.

2. Next, iDRAC will send that event to VxRail Manager.

3. At this stage, VxRail Manager will validate how long the NIC port down event has been active and check whether a NIC port started (or up) event has been triggered within 30 minutes of the original NIC port down event. If no NIC port started event has been triggered, VxRail Manager will begin throttling NIC port down event communication to prevent duplicate alerts about the same event.

If a NIC port started event is detected during the 30-minute window, VxRail Manager will stop throttling and clear the event.

4. When the VxRail Manager event throttling state is active, VxRail Manager will log it in its event history.

5. VxRail Manager will then trigger a vCenter alarm and post the event to vCenter.

6. Finally, VxRail Manager will trigger a NIC port down dial home event communication to backend Dell Support Systems, if connected.

Figure 4. Processing VxRail NIC port down events, and VxRail Manager throttling logic
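The numbered flow leaves some room for interpretation. The sketch below encodes one plausible reading, under these stated assumptions:

- A port down event starts a 30-minute window.
- A port up event inside that window clears the port without raising an alarm, as might happen during planned network maintenance.
- Duplicate port down events for the same port are throttled.
- Only a port that is still down when the window expires raises the vCenter alarm and dial home.

The class and method names are hypothetical. The `tick` method stands in for whatever periodic evaluation VxRail Manager actually performs.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=30)  # window length stated in the flow above


class NicDownThrottler:
    """Hypothetical model of one reading of the Figure 4 flow, not VxRail Manager code."""

    def __init__(self) -> None:
        self.down_since: dict[str, datetime] = {}
        self.alerted: set[str] = set()
        self.history: list[str] = []

    def on_port_down(self, port: str, now: datetime) -> None:
        if port in self.down_since:
            # Duplicate NIC100 for a port already being tracked: throttle it.
            self.history.append(f"{now:%H:%M} throttled duplicate down event for {port}")
            return
        self.down_since[port] = now

    def on_port_up(self, port: str, now: datetime) -> None:
        if port not in self.down_since:
            return
        del self.down_since[port]
        if port in self.alerted:
            self.alerted.remove(port)
            self.history.append(f"{now:%H:%M} {port} up: clear vCenter alarm")
        else:
            self.history.append(f"{now:%H:%M} {port} up within window: no alarm raised")

    def tick(self, now: datetime) -> list[str]:
        """Escalate ports that stayed down for the full window without a NIC101."""
        escalations = []
        for port, since in self.down_since.items():
            if port not in self.alerted and now - since >= WINDOW:
                self.alerted.add(port)
                self.history.append(f"{now:%H:%M} throttling active for {port}")
                escalations.append(f"vCenter alarm + dial home: {port}")
        return escalations


t0 = datetime(2023, 5, 11, 9, 0)
throttler = NicDownThrottler()
throttler.on_port_down("vmnic1", t0)                          # maintenance flap...
throttler.on_port_up("vmnic1", t0 + timedelta(minutes=5))     # ...back inside the window
throttler.on_port_down("vmnic3", t0)
throttler.on_port_down("vmnic3", t0 + timedelta(minutes=10))  # duplicate, throttled
print(throttler.tick(t0 + timedelta(minutes=31)))  # ['vCenter alarm + dial home: vmnic3']
```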

Figure 5 shows what this looks like in the vCenter UI.

Figure 5. VxRail NIC port down trigger alarm in vCenter UI

Figure 6 shows what this looks like in the VxRail Manager vCenter Plugin ‘Physical View’ UI.

Figure 6. VxRail Manager vCenter Plugin ‘Physical View’ UI view of a VxRail NIC port down event

VCF on VxRail storage updates

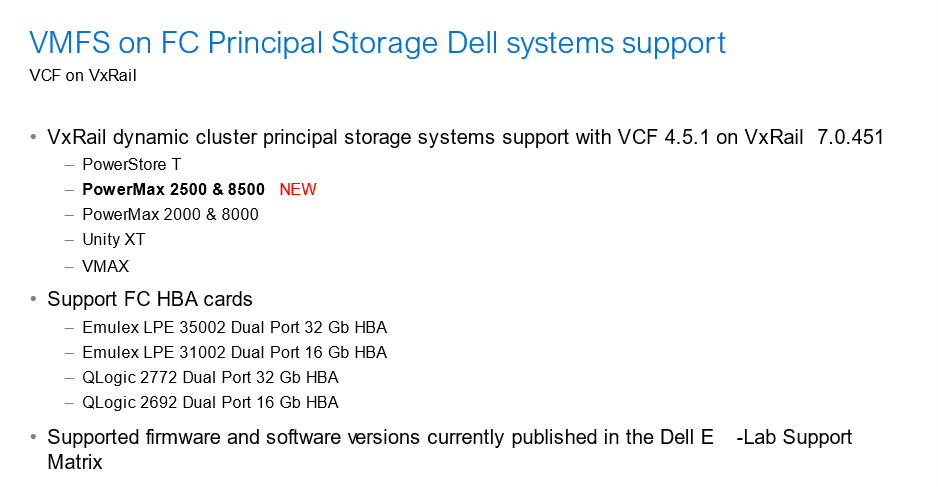

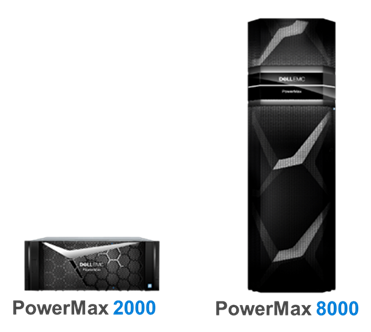

Support for new PowerMax 2500 and 8500 storage arrays with VxRail 14G and 15G dynamic nodes using VMFS on FC principal storage

Starting in VCF 4.5.1 on VxRail 7.0.450, support has been added for the latest next-generation Dell PowerMax 2500 and 8500 storage systems as VMFS on FC principal storage when deployed with 14G and 15G VxRail dynamic node clusters in VI workload domains.

Figure 7 lists the Dell storage arrays that support VxRail dynamic node clusters using VMFS on FC principal storage for VCF on VxRail, along with the corresponding supported FC HBA makes and models.

Note: Compatible supported array firmware and software versions are published in the Dell E-Lab Support Matrix for reference.

Figure 7. Supported Dell storage arrays used as VMFS on FC principal storage

VCF on VxRail lifecycle management enhancements

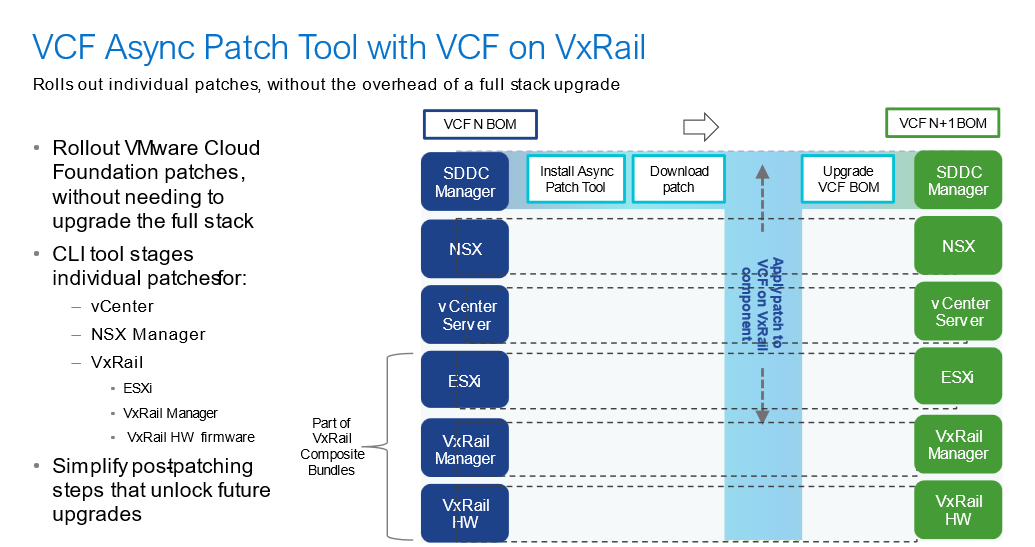

VCF Async Patch Tool 1.0.1.1 update

This tool addresses both LCM and security areas. Although it is not officially a feature of any specific VCF on VxRail release, it does get released asynchronously (pun intended) and is designed for use in VCF and VCF on VxRail environments. Thus, it deserves a call out.

For some background, the VCF Async Patch Tool is a new CLI-based tool that allows cloud admins to apply individual out-of-band component security patches to their VCF on VxRail environment, separately from an official VCF LCM update release. This enables organizations to address security vulnerabilities faster without having to wait for a full VCF release update. It also allows admins to install these patches themselves without needing to engage support resources to apply them manually.

With this latest AP Tool 1.0.1.1 release, the AP Tool now supports patching VxRail within VCF on VxRail environments. This covers all of the components in a VxRail update bundle, including the VxRail Manager and ESXi software components and VxRail HW firmware/drivers. This is a great addition to the tool’s initial support for patching vCenter and NSX Manager in its first release. VCF on VxRail customers now have a centralized, standardized process for applying security patches to core VCF and VxRail software and to core VxRail HCI stack hardware components (such as server BIOS or pNIC firmware/drivers). All of this comes in the simple, integrated manner that VCF on VxRail customers have come to expect from a jointly engineered, integrated turnkey hybrid cloud platform.

Note: Hardware patching is made possible by the way VxRail implements HW updates within the core VxRail update bundle. All VxRail patches for VxRail Manager, ESXi, and HW components are delivered in the VxRail update bundle, which the AP Tool uses to apply them.

From an operational standpoint, when patches for the respective software and hardware components have been applied, and a new VCF on VxRail BOM update is available that includes the security fixes, admins can use the tool to download the latest VCF on VxRail LCM release bundles and upgrade their environment back to an official in-band VCF on VxRail release BOM. After that, admins can continue to use the native SDDC Manager LCM workflow process for applying additional VCF on VxRail upgrades. Figure 8 highlights this process at a high level.

Figure 8. Async Patch Tool overview
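The return path to an in-band release boils down to a containment check: the target BOM must include every fix that was applied out of band. The sketch below models only that decision. The fix identifiers, release names, and function names are hypothetical, and the code does not call the Async Patch Tool or any VCF API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bom:
    release: str                   # hypothetical release label
    included_fixes: frozenset[str]  # hypothetical fix identifiers shipped in this BOM


def covers_async_patches(applied: set[str], bom: Bom) -> bool:
    # Subset test: every out-of-band fix must already be part of the in-band BOM.
    return applied <= bom.included_fixes


def first_in_band_target(applied: set[str], candidates: list[Bom]) -> Optional[str]:
    """Return the earliest candidate BOM (list assumed oldest first) that covers all applied fixes."""
    for bom in candidates:
        if covers_async_patches(applied, bom):
            return bom.release
    return None


applied_fixes = {"FIX-VC-001", "FIX-VXRAIL-002"}  # hypothetical patch IDs
candidates = [
    Bom("release-A", frozenset({"FIX-VC-001"})),
    Bom("release-B", frozenset({"FIX-VC-001", "FIX-VXRAIL-002", "FIX-NSX-003"})),
]
print(first_in_band_target(applied_fixes, candidates))  # release-B
```

Upgrading to a BOM that lacks one of the applied fixes would effectively roll that fix back. This is why the check is a strict subset test rather than a version comparison.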

You can access VCF Async Patch Tool instructions and documentation from VMware’s website.

Summary

In this latest release, the new features and platform improvements help set the stage for even more innovation in the future. For more details about bug fixes in this release, see VMware Cloud Foundation on Dell VxRail Release Notes. For this and other Cloud Foundation on VxRail information, see the following additional resources.

Author: Jason Marques

Twitter: @vWhipperSnapper

Additional Resources

- VMware Cloud Foundation on Dell VxRail Release Notes

- VxRail page on DellTechnologies.com

- VxRail Info Hub

- VCF on VxRail Interactive Demo

- Videos

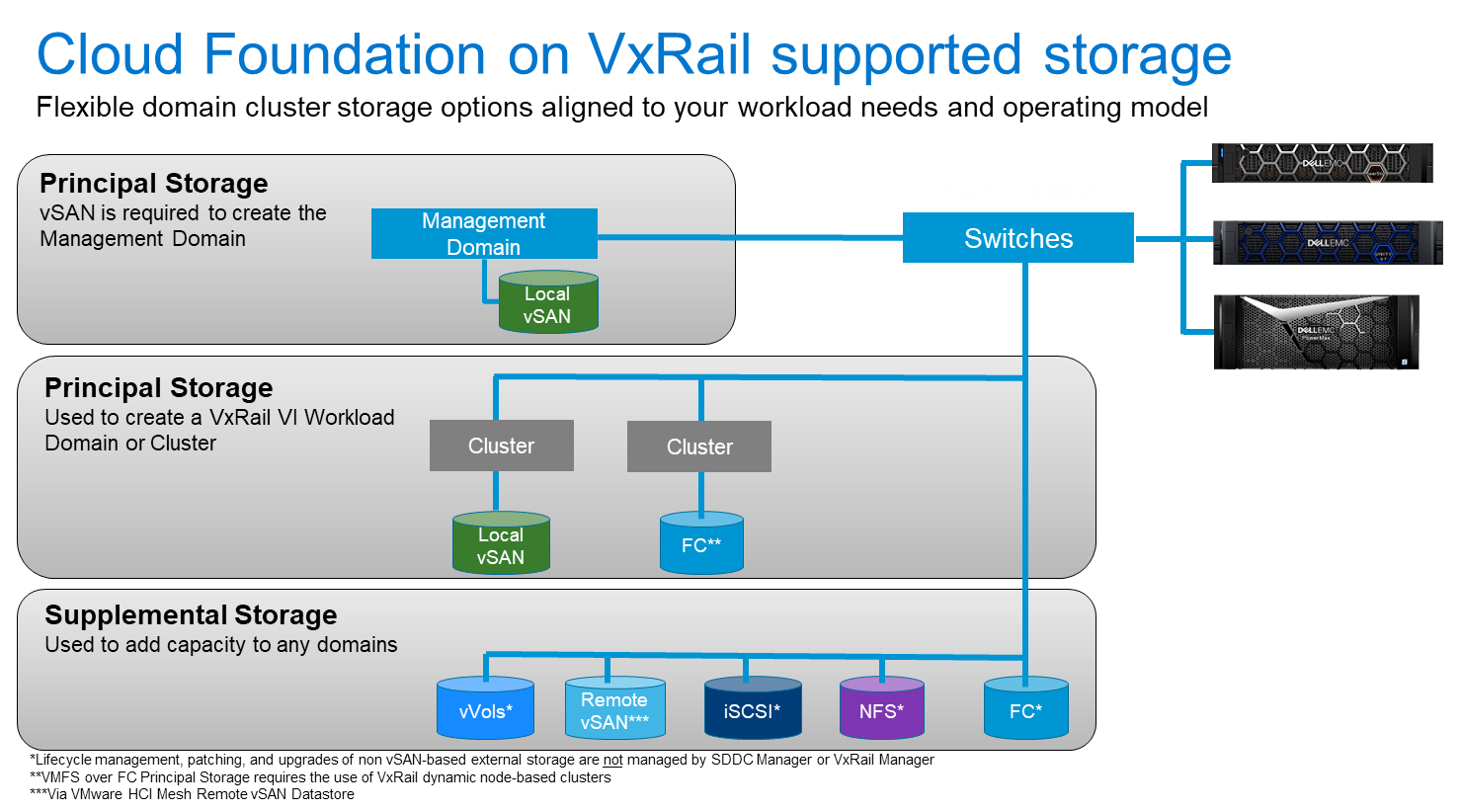

Getting To Know VMware Cloud Foundation on Dell VxRail Flexible Storage Options

Thu, 09 Feb 2023 20:45:22 -0000

Have you been tasked with executing your company’s cloud transformation strategy, but you’re worried about creating yet another infrastructure silo just for the subset of workloads that will run in this cloud? Do you need a solution that delivers storage flexibility? Then you have come to the right place.

Dell Technologies and VMware have you covered with VMware Cloud Foundation on Dell VxRail. VCF on VxRail delivers cloud infrastructure with the storage flexibility to meet you where you are in your cloud adoption journey.

This new whiteboard video walks through the flexible storage options available to you and how they might align with your business, workload, and operational needs. Check it out below.

And for more information about VCF on VxRail, visit the VxRail Info Hub page.

Author: Jason Marques

Twitter: @vWhipperSnapper

Additional Resources

Improved management insights and integrated control in VMware Cloud Foundation 4.5 on Dell VxRail 7.0.400

Tue, 11 Oct 2022 12:59:13 -0000

The latest release of the co-engineered hybrid cloud platform delivers new capabilities to help you manage your cloud with the precision and ease of a fighter jet pilot in the cockpit! The new VMware Cloud Foundation (VCF) on VxRail release includes:

- Support for the latest Cloud Foundation and VxRail software components based on vSphere 7
- Support for the latest VxRail P670N single-socket All-NVMe 15th Generation HW platform
- VxRail API integrations with SDDC Manager

Together, these components streamline and automate VxRail cluster creation and LCM operations, provide greater insights into platform health and activity status, and more! There is a ton of airspace to cover. Ready to take off? Then buckle up and let’s hit Mach 10, Maverick!

VCF on VxRail operations and serviceability enhancements

Support for VxRail cluster creation automation using SDDC Manager UI

The best pilots are those that can access the most fully integrated tools to get the job done all from one place: the cockpit interface that they use every day. Cloud Foundation on VxRail administrators should also be able to access the best tools, minus the cockpit of course.

The newest VCF on VxRail release introduces support for VxRail cluster creation as a fully integrated, end-to-end SDDC Manager workflow driven from within the SDDC Manager UI. This integrated, API-driven workload domain and VxRail cluster feature extends the deep integration between SDDC Manager and VxRail Manager. It enables users to deploy VxRail clusters when creating new VI workload domains or when expanding existing workload domains by adding new VxRail clusters to them. All of this happens in an end-to-end workflow driven from the SDDC Manager UI.

In the initial SDDC Manager UI deployment workflow integration, only unused VxRail nodes discovered by VxRail Manager are supported. It also only supports clusters that are using one of the VxRail predefined network profile cluster configuration options. This method supports deploying VxRail clusters using both vSAN and VMFS on FC as principal storage options.

Another enhancement allows administrators to provide custom user-defined cluster names and custom user-defined VDS and port group names as configuration parameters as part of this workflow.

You can watch this new feature in action in this demo.

Now that’s some great co-piloting!

Support for SDDC Manager WFO Script VxRail cluster deployment configuration enhancements

The SDDC Manager WFO Script deployment method was first introduced in VCF 4.3 on VxRail 7.0.202 to support advanced VxRail cluster configuration deployments within VCF on VxRail environments. This deployment method is also integrated with the VxRail API and can be used with or without VxRail JSON cluster configuration files as inputs, depending on what type of advanced VxRail cluster configuration is desired.

Note:

- The legacy method for deploying VxRail clusters using the VxRail Manager Deployment Wizard has been deprecated with this release.

- VxRail cluster deployments using the SDDC Manager WFO Script method currently require the use of professional services.

Proactive notifications about expired passwords and certificates in SDDC Manager UI and from VCF public API

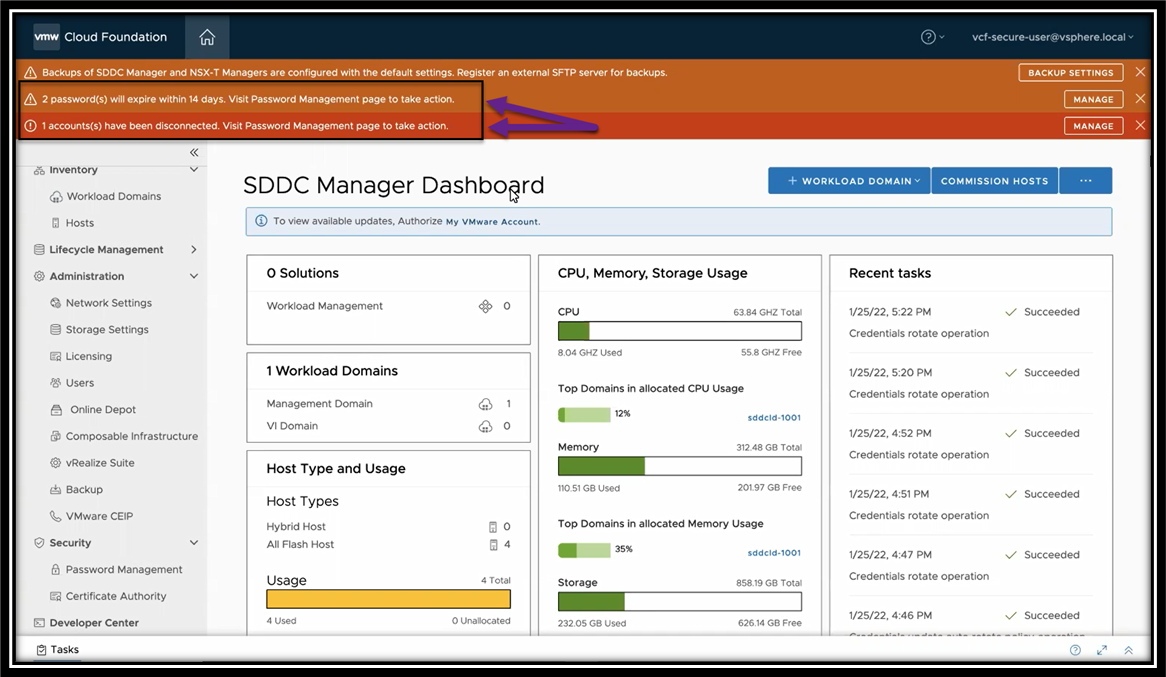

To deliver improved management insights into the cloud infrastructure system and its health status, this release introduces new proactive SDDC Manager UI notifications for impending VCF and VxRail component expired passwords and certificates. Now, within 30 days of expiration, a notification banner is automatically displayed in the SDDC Manager UI to give cloud administrators enough time to plan a course of action before these components expire. Figure 1 illustrates these notifications in the SDDC Manager UI.

Figure 1. Proactive password and certificate expiration notifications in SDDC Manager UI
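The 30-day notification rule is simple to express. In the sketch below, anything that expires within 30 days, or has already expired, is flagged for the banner. The `Credential` type and the component names are hypothetical. In VCF, SDDC Manager performs this check itself.

```python
from dataclasses import dataclass
from datetime import date, timedelta

NOTICE_WINDOW = timedelta(days=30)  # window stated for the SDDC Manager banner


@dataclass(frozen=True)
class Credential:
    component: str   # hypothetical component name
    kind: str        # "password" or "certificate"
    expires_on: date


def banner_items(creds: list[Credential], today: date) -> list[Credential]:
    """Items expiring within the window (already-expired items included), soonest first."""
    due = [c for c in creds if c.expires_on - today <= NOTICE_WINDOW]
    return sorted(due, key=lambda c: c.expires_on)


today = date(2022, 10, 11)
creds = [
    Credential("vcenter-01", "certificate", date(2022, 10, 25)),
    Credential("vxrail-manager", "password", date(2023, 3, 1)),
    Credential("nsx-edge-01", "password", date(2022, 10, 1)),
]
for c in banner_items(creds, today):
    print(c.component, c.kind, c.expires_on)
```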

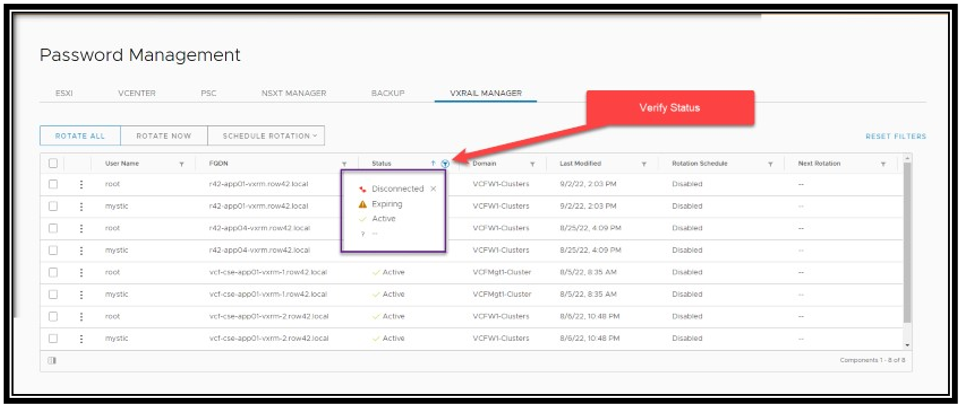

VCF also displays different types of password status categories to help better identify a given account’s password state. These status categories include:

- Active – Password is in a healthy state and not within a pending expiry window. No action is necessary.

- Expiring – Password is in a healthy state but is reaching a pending expiry date. Action should be taken to use SDDC Manager Password Management to update the password.

- Disconnected – Password of component is unknown or not in sync with the SDDC Manager managed passwords database inventory. Action should be taken to update the password at the component and remediate with SDDC Manager to resync.

The password status is displayed on the SDDC Manager UI Password Management dashboard so that users can easily reference it.
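The three status categories map naturally onto a small decision function. This is an illustrative sketch only, and the inputs are hypothetical. The 30-day `expiring_window_days` default is also an assumption, because the text does not state the threshold SDDC Manager uses for the Expiring state.

```python
from enum import Enum


class PasswordStatus(Enum):
    ACTIVE = "Active"
    EXPIRING = "Expiring"
    DISCONNECTED = "Disconnected"


# Suggested follow-up for each state, paraphrasing the list above.
NEXT_STEP = {
    PasswordStatus.ACTIVE: "No action necessary",
    PasswordStatus.EXPIRING: "Update the password with SDDC Manager Password Management",
    PasswordStatus.DISCONNECTED: "Update the password at the component, then remediate in SDDC Manager to resync",
}


def password_status(in_sync: bool, days_to_expiry: int, expiring_window_days: int = 30) -> PasswordStatus:
    # expiring_window_days is an assumed threshold, not a documented SDDC Manager value.
    if not in_sync:
        # Unknown or out of sync with SDDC Manager's managed password inventory.
        return PasswordStatus.DISCONNECTED
    if days_to_expiry <= expiring_window_days:
        return PasswordStatus.EXPIRING
    return PasswordStatus.ACTIVE


status = password_status(in_sync=True, days_to_expiry=12)
print(status.value, "->", NEXT_STEP[status])
# Expiring -> Update the password with SDDC Manager Password Management
```

The sync check comes first because an out-of-sync password cannot be rotated through SDDC Manager until it has been remediated, regardless of its expiry date.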

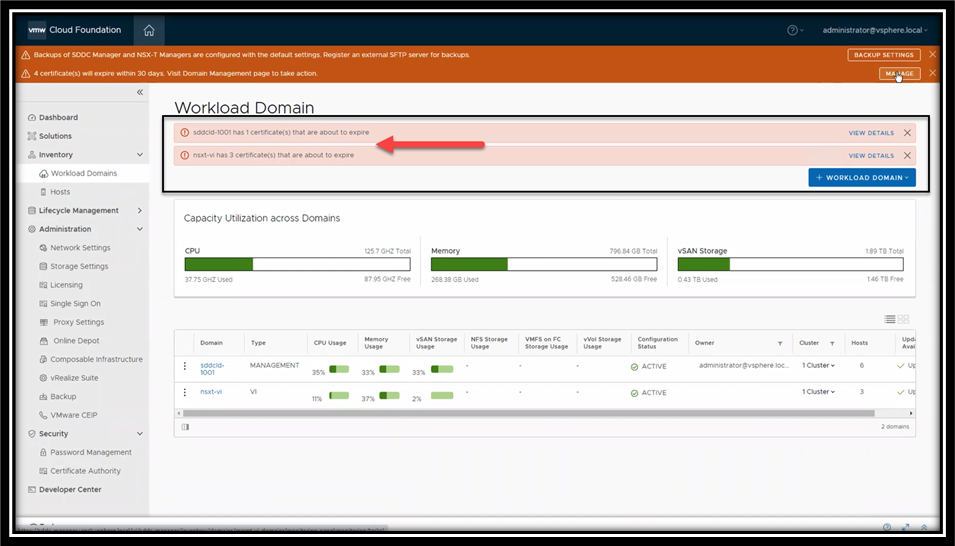

Figure 2. Password status display in SDDC Manager UI

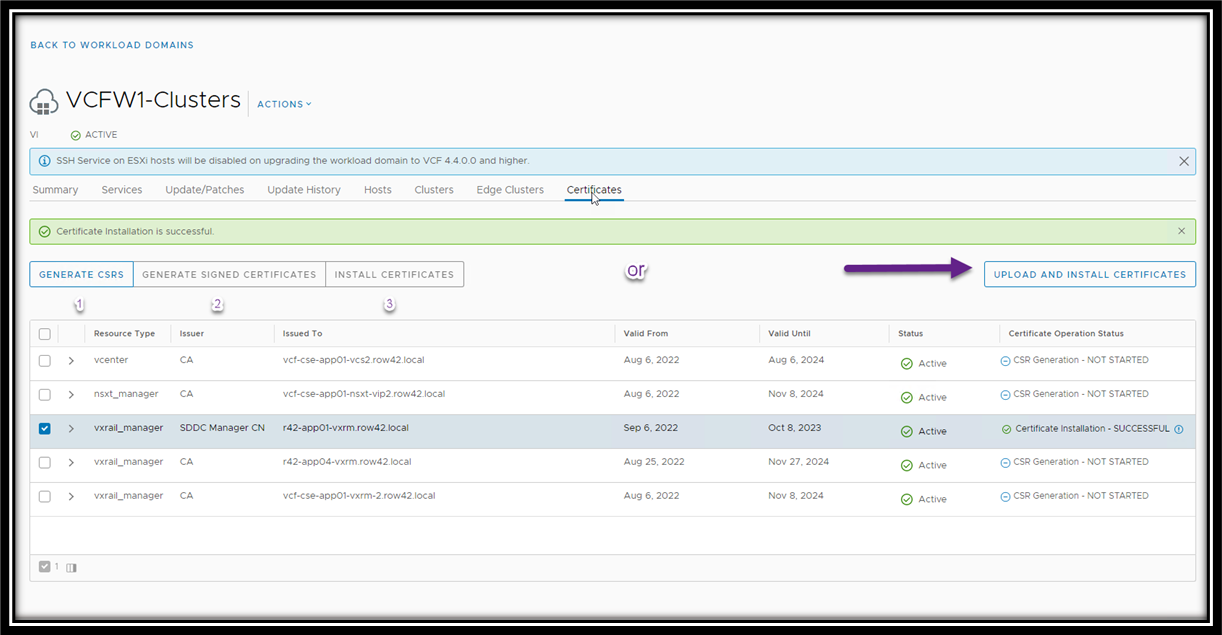

Similarly, certificate status state is also monitored. Depending on the certificate state, administrators can remediate expired certificates using the automated SDDC Manager certificate management capabilities, as shown in Figure 3.

Figure 3. Certificate status and management in SDDC Manager UI

Finally, administrators looking to capture this information programmatically can now use the VCF public API to query the system for any expired passwords and certificates.

Add and delete hosts from WLD clusters within a workload domain in parallel using SDDC Manager UI or VCF public API

Agility and efficiency are what cloud administrators strive for. The last thing anyone wants is to have to wait for the system to complete a task before being able to perform the next one. To address this, VCF on VxRail now allows admins to add and delete hosts in clusters within a workload domain in parallel using the SDDC Manager UI or VCF Public API. This helps to perform infrastructure management operations faster: some may even say at Mach 9!

Note:

- Prerequisite: Currently, VxRail nodes must first be added to existing clusters using VxRail Manager before you run SDDC Manager add host workflow operations in VCF.

- Currently a maximum of 10 operations of each type can be performed simultaneously. Always check the VMware Configuration Maximums Guide for VCF documentation for the latest supported configuration maximums.
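From a client's point of view, the concurrency limit behaves like a bounded worker pool per operation type. The sketch below is hypothetical: `run_host_operations` only sleeps in place of a real SDDC Manager workflow, and the host names are made up. It shows add and delete batches running side by side while each type stays at or below 10 in-flight operations.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_PARALLEL_PER_TYPE = 10  # limit cited above; confirm against the VCF Configuration Maximums


class PeakCounter:
    """Context manager that records the highest number of simultaneous entries."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.current = 0
        self.peak = 0

    def __enter__(self) -> "PeakCounter":
        with self._lock:
            self.current += 1
            self.peak = max(self.peak, self.current)
        return self

    def __exit__(self, *exc) -> None:
        with self._lock:
            self.current -= 1


def run_host_operations(op: str, hosts: list[str], seconds: float = 0.01) -> tuple[list[str], int]:
    """Hypothetical batch runner: returns per-host results and the peak concurrency observed."""
    counter = PeakCounter()

    def task(host: str) -> str:
        with counter:
            time.sleep(seconds)  # placeholder for the real add/delete host workflow
            return f"{op} {host}: done"

    # One bounded pool per operation type keeps each type within its own limit.
    with ThreadPoolExecutor(max_workers=MAX_PARALLEL_PER_TYPE) as pool:
        results = list(pool.map(task, hosts))
    return results, counter.peak


to_add = [f"esx-new-{i:02d}" for i in range(25)]    # hypothetical host names
to_delete = [f"esx-old-{i:02d}" for i in range(4)]
with ThreadPoolExecutor(max_workers=2) as outer:
    add_job = outer.submit(run_host_operations, "add", to_add)
    delete_job = outer.submit(run_host_operations, "delete", to_delete)
    added, add_peak = add_job.result()
    deleted, delete_peak = delete_job.result()

print(len(added), add_peak <= MAX_PARALLEL_PER_TYPE)       # 25 True
print(len(deleted), delete_peak <= MAX_PARALLEL_PER_TYPE)  # 4 True
```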

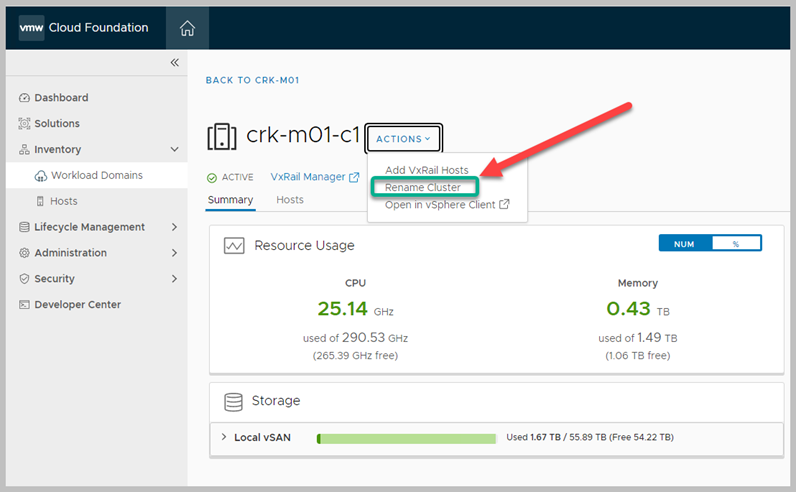

SDDC Manager UI: Support for Day 2 renaming of VCF cluster objects

To continue making the VCF on VxRail platform more accommodating to each organization’s governance policies and naming conventions, this release enables administrators to rename VCF cluster objects from within the SDDC Manager UI as a Day 2 operation.

New menu actions to rename the cluster are visible in context when operating on cluster objects from within the SDDC Manager UI. This is just the first step in a larger initiative to make VCF on VxRail even more adaptable to naming conventions across many other VCF objects in the future. Figure 4 shows what the new in-context rename cluster menu option looks like.

Figure 4. Day 2 Rename Cluster Menu Option in SDDC Manager UI

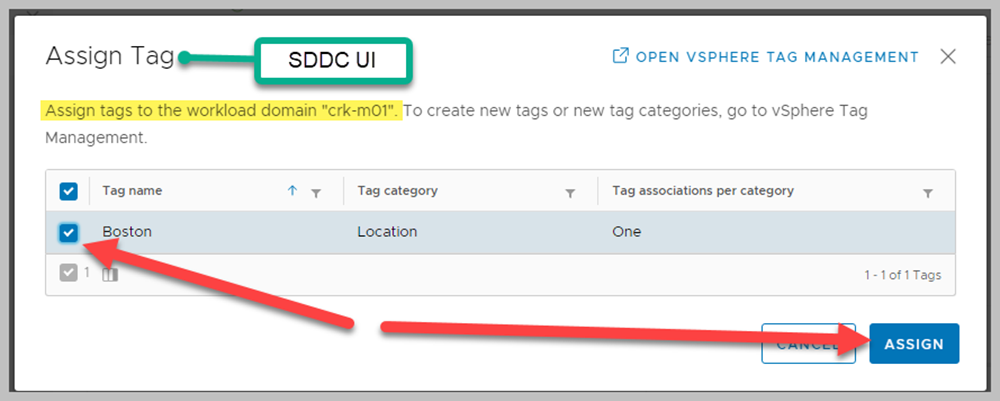

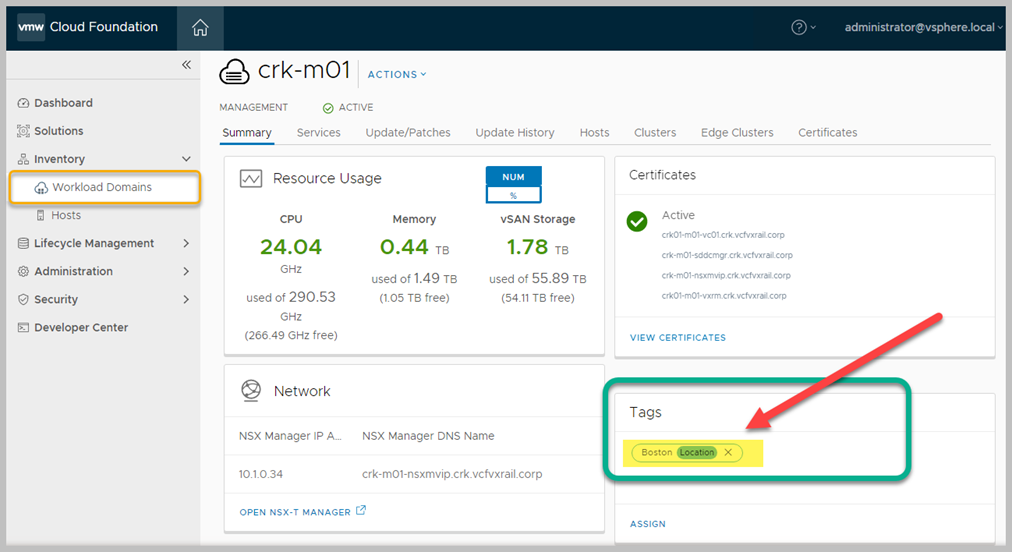

Support for assigning user defined tags to WLD, cluster, and host VCF objects in SDDC Manager

VCF on VxRail now incorporates SDDC Manager support for assigning and displaying user defined tags for workload domain, cluster, and host VCF objects.

Administrators now see a new Tags pane in the SDDC Manager UI that displays the tags that have been created and assigned to WLD, cluster, and host VCF objects. If no tags exist or are assigned, or if existing tags need changes, an assign link lets an administrator either assign a tag or launch into that object in vCenter, where tag management (create, delete, modify) can be performed. When tags are instantiated, VCF syncs them and allows administrators to assign and display them in the Tags pane in the SDDC Manager UI, as shown in Figure 5.

Figure 5. User-defined tags visibility and assignment, using SDDC Manager

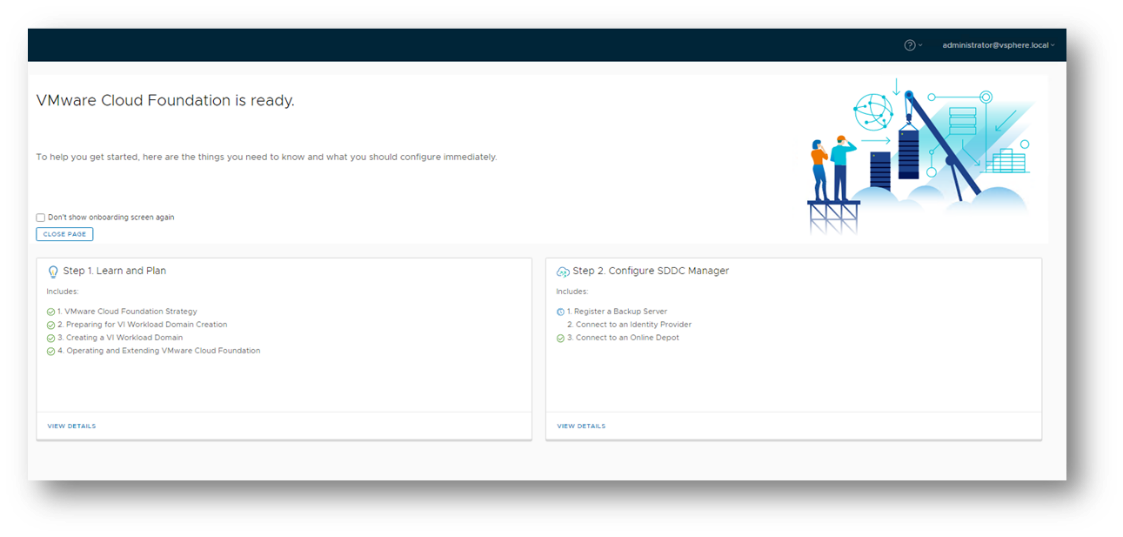

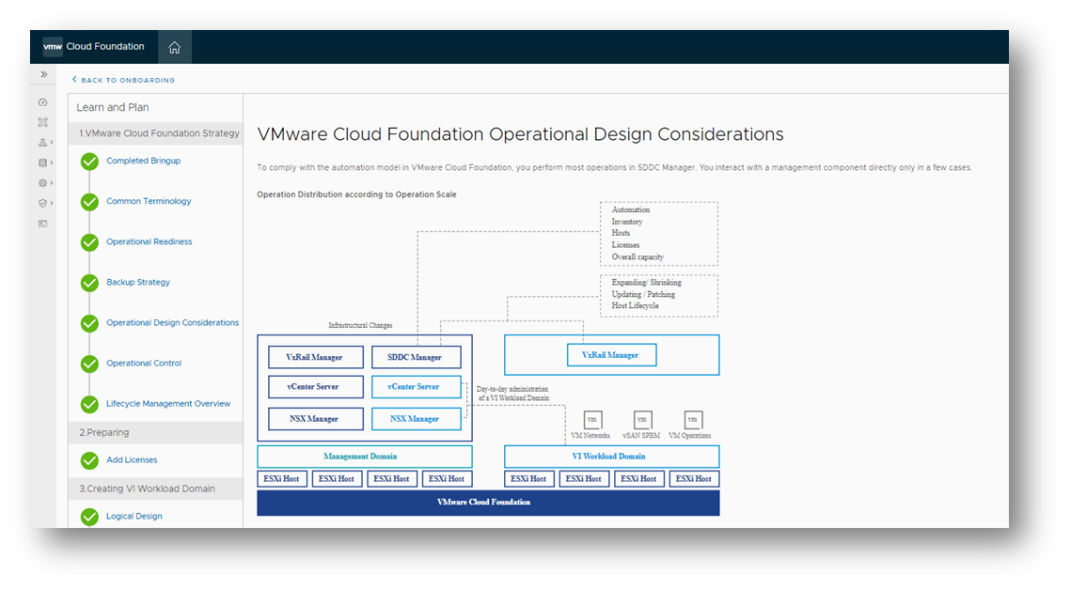

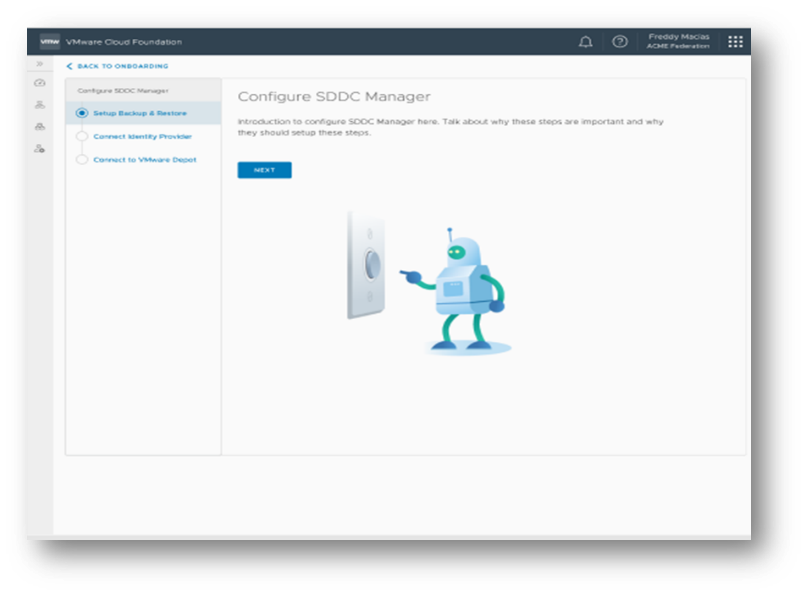

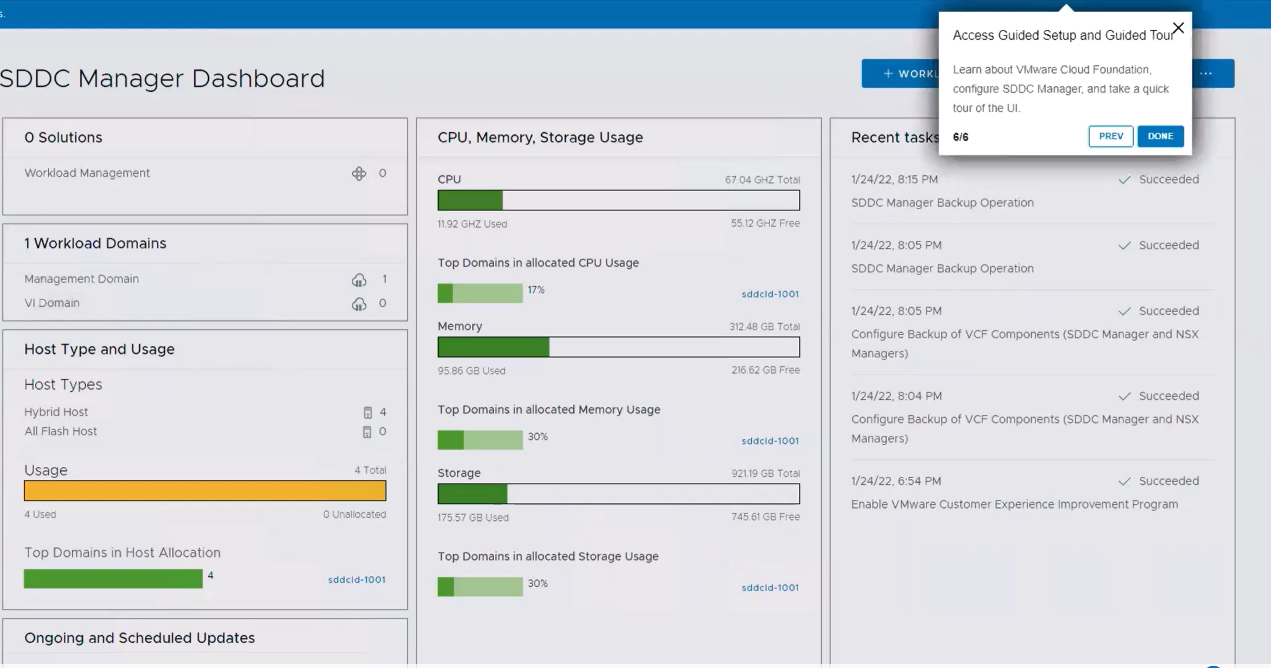

Support for SDDC Manager onboarding within SDDC Manager UI

VCF on VxRail is a powerful and flexible hybrid cloud platform that enables administrators to manage and configure the platform to meet their business requirements. To help organizations make the most of their strategic investments and start operationalizing them quicker, this release introduces support for a new SDDC Manager UI onboarding experience.

The new onboarding experience:

- Focuses on the ‘Learn and plan’ and ‘Configure SDDC Manager’ phases, with drill-down to configure each phase

- Includes in-product context that enables administrators to learn, plan, and configure their workload domains, with added details including documentation articles and technical illustrations

- Introduces a step-by-step UI walkthrough wizard for initial SDDC Manager configuration setup

- Provides an intuitive UI guided walkthrough tour of SDDC Manager UI in stages of configuration that reduces the learning curve for customers

- Provides opt-out and revisit options for added flexibility

Figure 6 illustrates the new onboarding capabilities.

Figure 6. SDDC Manager Onboarding and UI Tour Experience

VCF on VxRail lifecycle management enhancements

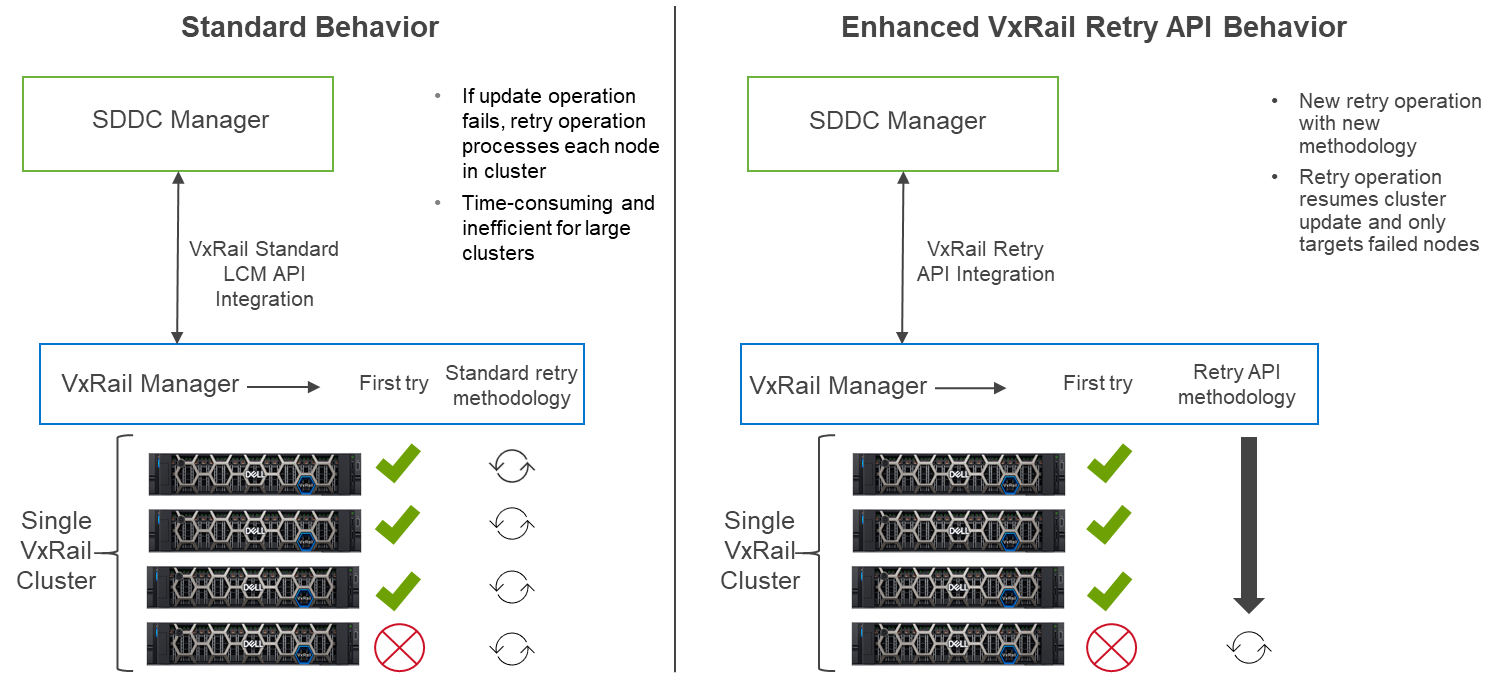

VCF integration with VxRail Retry API

The new VCF on VxRail release delivers new integrations with SDDC Manager and the VxRail Retry API to help reduce overall LCM performance time. If a cloud administrator has attempted to perform LCM operations on a VxRail cluster within their VCF on VxRail workload domain and only a subset of those nodes within the cluster can be upgraded successfully, another LCM attempt would be required to fully upgrade the rest of the nodes in the cluster.

Before VxRail Retry API, the VxRail Manager LCM would start the LCM from the first node in the cluster and scan each one to determine if it required an upgrade or not, even if the node was already successfully upgraded. This rescan behavior added unnecessary time to the LCM execution window for customers with large VxRail clusters.

The VxRail Retry API makes LCM even smarter. During an LCM update where a cluster has a mix of updated and non-updated nodes, VxRail Manager automatically skips directly to the non-updated nodes and runs the LCM process on them until all remaining nodes are upgraded. This can save cloud administrators significant time. Figure 7 shows the difference between the standard and the enhanced VxRail Retry API behavior.

Figure 7. Comparison between standard and enhanced VxRail Retry API LCM Behavior
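The difference in Figure 7 can be sketched by counting which nodes each approach visits. This is a simplified, hypothetical model (the `Node` type and function names are illustrative, not the VxRail API), assuming an eight-node cluster where an earlier attempt upgraded only the first five nodes.

```python
from typing import NamedTuple

class Node(NamedTuple):
    name: str
    upgraded: bool  # True if an earlier LCM attempt already upgraded this node

def standard_lcm_visits(nodes: list[Node]) -> list[str]:
    """Pre-Retry API behavior: start at the first node and scan every node."""
    # Already-upgraded nodes are rescanned even though they need no work.
    return [node.name for node in nodes]

def retry_lcm_visits(nodes: list[Node]) -> list[str]:
    """Retry API behavior: skip straight to the nodes that still need the upgrade."""
    return [node.name for node in nodes if not node.upgraded]

if __name__ == "__main__":
    # An 8-node cluster where an earlier attempt upgraded only the first 5 nodes.
    cluster = [Node(f"node-{i:02d}", upgraded=(i <= 5)) for i in range(1, 9)]
    print("standard:", len(standard_lcm_visits(cluster)), "nodes visited")  # 8
    print("retry:   ", len(retry_lcm_visits(cluster)), "nodes visited")     # 3
```

The saving grows with cluster size, since the standard path always revisits every node regardless of how far the previous attempt got.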

The VxRail Retry API behavior for VCF 4.5 on VxRail 7.0.400 has been natively integrated into the SDDC Manager LCM workflow. Administrators can continue to manage their VxRail upgrades within the SDDC Manager UI as usual, and they can take advantage of these improved operational workflows without any additional manual configuration changes.

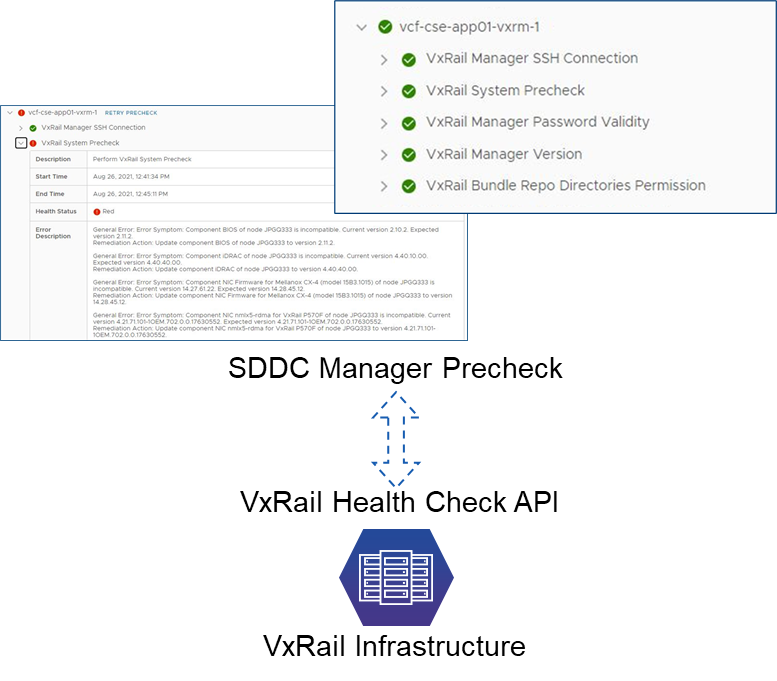

Improved SDDC Manager prechecks

More prechecks that help fortify platform stability and simplify operations have been integrated into the platform. These are:

- Verification of valid licenses for software components

- Checks for expired NSX Edge cluster passwords

- Verification of system inconsistent state caused by any prior failed workflows

- Additional host maintenance mode prechecks:

  - Determine whether a host is in maintenance mode

  - Determine whether the CPU reservation for NSX-T exceeds the VCF recommendation

  - Determine whether the DRS policy has changed from the VCF recommended setting (Fully Automated)

- Additional filesystem capacity and permissions checks

VCF on VxRail already includes many core prechecks that monitor common system health issues, and more will continue to be integrated into the platform with each new release.
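A precheck pass of this kind can be sketched as a set of independent checks over a snapshot of the environment, each adding a failure message. The snapshot fields, function names, and the CPU reservation threshold are hypothetical (the actual VCF recommendation is not stated here), and the filesystem capacity and permissions checks are left out for brevity.

```python
from dataclasses import dataclass
from datetime import date

RECOMMENDED_DRS = "Fully Automated"  # the VCF-recommended DRS policy named in the text

@dataclass
class EnvSnapshot:
    """Hypothetical snapshot of the state a precheck run would inspect."""
    license_expiry: date
    nsx_edge_password_expiry: date
    failed_workflows: int            # prior failed workflows left unresolved
    hosts_in_maintenance: list[str]
    nsx_cpu_reservation_mhz: int
    drs_policy: str

def run_prechecks(env: EnvSnapshot, today: date, max_nsx_cpu_mhz: int) -> list[str]:
    """Return precheck failures; an empty list means every check passed.

    max_nsx_cpu_mhz is a stand-in for the VCF recommendation, whose value is assumed.
    """
    failures = []
    if env.license_expiry < today:
        failures.append("software license is not valid")
    if env.nsx_edge_password_expiry < today:
        failures.append("NSX Edge cluster password has expired")
    if env.failed_workflows > 0:
        failures.append("inconsistent state from a prior failed workflow")
    for host in env.hosts_in_maintenance:
        failures.append(f"host {host} is in maintenance mode")
    if env.nsx_cpu_reservation_mhz > max_nsx_cpu_mhz:
        failures.append("NSX-T CPU reservation exceeds the recommendation")
    if env.drs_policy != RECOMMENDED_DRS:
        failures.append(f"DRS policy is '{env.drs_policy}', expected '{RECOMMENDED_DRS}'")
    return failures

if __name__ == "__main__":
    snapshot = EnvSnapshot(
        license_expiry=date(2030, 1, 1),
        nsx_edge_password_expiry=date(2020, 1, 1),
        failed_workflows=0,
        hosts_in_maintenance=["esx-03"],
        nsx_cpu_reservation_mhz=1000,
        drs_policy="Partially Automated",
    )
    for failure in run_prechecks(snapshot, today=date(2022, 9, 1), max_nsx_cpu_mhz=2000):
        print("FAIL:", failure)
```

Keeping each check independent means a single run reports every problem at once instead of stopping at the first one.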

Support for vSAN health check silencing

The new VCF on VxRail release also includes vSAN health check interoperability improvements. These improvements allow VCF to:

- Address common upgrade blockers due to vSAN HCL precheck false positives

- Make vSAN prechecks more granular, so that administrators run only those that apply to their environment

- Display failed vSAN health checks during domain-level precheck and upgrade LCM operations

- Enable administrators to silence health checks
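The effect of silencing can be sketched as a filter over health check results: silenced failures are still recorded but no longer block the operation. The check names and the function below are hypothetical, chosen only to illustrate the idea.

```python
def summarize_vsan_health(results: dict[str, bool], silenced: set[str]) -> dict[str, list[str]]:
    """Split failed checks into blocking and silenced ones.

    results maps a (hypothetical) health check name to True (passed) or False (failed).
    """
    failed = sorted(name for name, passed in results.items() if not passed)
    return {
        "blocking": [name for name in failed if name not in silenced],
        "silenced": [name for name in failed if name in silenced],
    }

if __name__ == "__main__":
    results = {
        "hcl-db-up-to-date": False,  # e.g. an HCL precheck false positive
        "disk-balance": True,
        "network-latency": False,
    }
    print(summarize_vsan_health(results, silenced={"hcl-db-up-to-date"}))
```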

Display VCF configuration drift bundle progress details in the SDDC Manager UI during LCM operations

In a VCF on VxRail context, configuration drift is the set of configuration changes required to bring upgraded BOM components (such as vCenter, NSX, and so on) into alignment with the configuration of a new VCF on VxRail installation. These configuration changes are delivered by VCF configuration drift LCM update bundles.

VCF configuration drift update improvements deliver greater visibility into what specifically is being changed, improved error details for better troubleshooting, and more efficient behavior for retry operations.
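To make configuration drift concrete, here is a minimal sketch that compares current settings with a target configuration, applies the differences one at a time with a progress log, and shows why recomputing the drift makes a retry cheaper. The setting names and functions are hypothetical; this illustrates the concept only and is not VCF's implementation.

```python
from typing import Dict, Optional, Tuple

def compute_drift(current: Dict[str, str],
                  target: Dict[str, str]) -> Dict[str, Tuple[Optional[str], str]]:
    """Return {setting: (current_value, target_value)} for each target setting that differs."""
    return {
        key: (current.get(key), value)
        for key, value in sorted(target.items())
        if current.get(key) != value
    }

def apply_drift(current: Dict[str, str], target: Dict[str, str],
                fail_on: Optional[str] = None) -> list:
    """Apply pending changes in order and log each one; stop at a simulated failure.

    The drift is recomputed on every call, so a retry only applies what is still pending.
    """
    log = []
    for key, (old, new) in compute_drift(current, target).items():
        if key == fail_on:
            log.append(f"FAILED {key}")  # error detail surfaced for troubleshooting
            break
        current[key] = new
        log.append(f"{key}: {old} -> {new}")  # progress detail: what changed
    return log

if __name__ == "__main__":
    # Hypothetical setting names; real drift bundles define their own changes.
    current = {"nsx.setting_b": "x", "vcenter.setting_a": "old"}
    target = {"nsx.setting_b": "y", "nsx.setting_c": "on", "vcenter.setting_a": "new"}
    print(apply_drift(current, target, fail_on="nsx.setting_c"))  # first attempt fails midway
    print(apply_drift(current, target))                           # retry applies only the rest
    print(compute_drift(current, target))                         # {} once everything is applied
```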

VCF Async Patch Tool support

VCF Async Patch Tool support offers both LCM and security enhancements.

Note: This feature is not officially included in this new release, but it is newly available.

The VCF Async Patch Tool is a new CLI-based tool that allows cloud administrators to apply individual out-of-band component security patches to their VCF on VxRail environment, separately from an official VCF LCM update release. This enables organizations to address security vulnerabilities faster without waiting for a full VCF release update. It also lets administrators install these patches without engaging support resources.

Today, VCF on VxRail supports the ability to use the VCF Async Patch Tool for NSX-T and vCenter security patch updates only. Once patches have been applied and a new VCF BOM update is available that includes the security fixes, administrators can use the tool to download the latest VCF LCM release bundles and upgrade their environment back to an official in-band VCF release BOM. After that, administrators can continue to use the native SDDC Manager LCM workflow process to apply additional VCF on VxRail upgrades.

Note: Using VCF Async Patch Tool for VxRail and ESXi patch updates is not yet supported for VCF on VxRail deployments. There is currently separate manual guidance available for customers needing to apply patches for those components.
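The support boundaries above can be summarized in a short, hypothetical sketch: which components route to the VCF Async Patch Tool, and whether a new VCF BOM already contains every out-of-band patch so the environment can return to an in-band release. The component set comes from the text; the version strings and helper names are illustrative assumptions, and this is not the tool's actual logic or CLI.

```python
from typing import Dict, Tuple

# Per the text: on VCF on VxRail, the Async Patch Tool covers NSX-T and vCenter only.
ASYNC_PATCH_COMPONENTS = {"NSX-T", "vCenter"}

def patch_route(component: str) -> str:
    """Return how an out-of-band security patch for a component is applied."""
    if component in ASYNC_PATCH_COMPONENTS:
        return "VCF Async Patch Tool"
    return "separate manual guidance"  # e.g. VxRail and ESXi

def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version such as '7.0.3.1' into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))

def bom_includes_patches(patched: Dict[str, str], bom: Dict[str, str]) -> bool:
    """True if the new BOM ships each patched component at the patched version or later."""
    return all(
        comp in bom and parse_version(bom[comp]) >= parse_version(ver)
        for comp, ver in patched.items()
    )

if __name__ == "__main__":
    # Hypothetical version numbers, for illustration only.
    patched = {"vCenter": "7.0.3.1", "NSX-T": "3.2.1.2"}
    new_bom = {"vCenter": "7.0.3.2", "NSX-T": "3.2.1.2", "ESXi": "7.0.3.5"}
    for comp in ("NSX-T", "vCenter", "VxRail", "ESXi"):
        print(comp, "->", patch_route(comp))
    print("safe to return to an in-band BOM:", bom_includes_patches(patched, new_bom))
```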

Instructions on downloading and using the VCF Async Patch Tool can be found here.

VCF on VxRail hardware platform enhancements

Support for 24-drive All-NVMe 15th Generation P670N VxRail platform

The VxRail 7.0.400 release delivers support for the latest 15th Generation P670N VxRail hardware platform. This 2U1N single-CPU-socket model delivers an All-NVMe storage configuration of up to 24 drives for improved workload performance. Now that would be a powerful single-engine aircraft!

Time to come in for a landing…

I don’t know about you, but I am flying high with excitement about all the innovation delivered with this release. Now it’s time to take ourselves down for a landing. For more information, see the following additional resources so you can become your organization’s Cloud Ace.

Author: Jason Marques

Twitter: @vWhipperSnapper

Additional resources

VMware Cloud Foundation on Dell VxRail Release Notes

VxRail page on DellTechnologies.com

Find Your Edge: Running SUSE Rancher and K3s with SLE Micro on Dell VxRail

Wed, 28 Sep 2022 10:26:37 -0000



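The goal of our ongoing partnership between Dell Technologies and SUSE is to bring validated modern products and solutions to market that enable our joint customers to operate CNCF-Certified Kubernetes clusters in the core, in the cloud, and at the edge, to support their digital businesses and harness the power of their data.

Existing examples of this collaboration have already begun to bear fruit with work done to validate SUSE Rancher and RKE2 on Dell VxRail. You can find more information on that in a Solution Brief here and blog post here. This initial example highlighted deploying and operating Kubernetes clusters in a core datacenter use case.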

But what about jointly validated solutions for near edge use cases? More and more organizations are looking to deploy solutions at the edge, because the edge is increasingly where data is generated and analyzed. As a result, our ongoing technology validation efforts have recently shifted their focus there.

Our latest validation exercise featured deploying SUSE Rancher and K3s with the SUSE Linux Enterprise Micro operating system (SLE Micro) on Dell VxRail hyperconverged infrastructure. A team of SUSE and Dell VxRail engineers installed these technologies in a non-production lab environment. All installation steps followed the SUSE documentation without any unique VxRail customization. This illustrates how seamlessly these technologies work together and how they allow for standardized deployment practices using the out-of-the-box capabilities of both VxRail and the SUSE products.

Solution Components Overview

Before jumping into the details of the solution validation itself, let’s do a quick review of the major components that we used.

- SUSE Rancher is a complete software stack for teams that are adopting containers. It addresses the operational and security challenges of managing multiple Kubernetes (K8s) clusters, including lightweight K3s clusters, across any infrastructure, while providing DevOps teams with integrated tools for running containerized workloads.

- K3s is a CNCF sandbox project that delivers a lightweight yet powerful certified Kubernetes distribution.

- SUSE Linux Enterprise Micro (SLE Micro) is an ultra-reliable, lightweight operating system purpose-built for containerized and virtualized workloads.

- Dell VxRail is the only fully integrated, pre-configured, and tested HCI system optimized with VMware vSphere, making it ideal for customers who want to leverage SUSE Rancher, K3s, and SLE Micro through vSphere to create and operate lightweight Kubernetes clusters on-premises or at the edge.

Validation Deployment Details

Now, let’s dive into the details of the deployment for this solution validation.

First, we deployed a single VxRail cluster with these specifications:

- 4 x VxRail E660F nodes running VxRail software version 7.0.370

- 2 x Intel® Xeon® Gold 6330 CPUs

- 512 GB RAM

- Broadcom Adv. Dual 25 Gb Ethernet NIC

- 2 x vSAN Disk Groups:

  - 1 x 800 GB Cache Disk

  - 3 x 4 TB Capacity Disks

- vSphere K8s CSI/CNS
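As a quick worked example, the cluster-wide totals for this configuration can be computed from the list above. The sketch assumes the CPU, memory, and disk group entries are per node, and the usable-capacity figure assumes a RAID-1, FTT=1 vSAN storage policy, which the validation does not specify.

```python
# Spec values from the list above, assumed to be per node (an interpretation).
NODES = 4
CPUS_PER_NODE = 2
RAM_GB_PER_NODE = 512
DISK_GROUPS_PER_NODE = 2
CACHE_GB_PER_DISK_GROUP = 800
CAPACITY_DISKS_PER_DISK_GROUP = 3
TB_PER_CAPACITY_DISK = 4

def cluster_totals() -> dict:
    """Aggregate the per-node specification across the whole VxRail cluster."""
    disk_groups = NODES * DISK_GROUPS_PER_NODE
    return {
        "cpus": NODES * CPUS_PER_NODE,
        "ram_gb": NODES * RAM_GB_PER_NODE,
        "disk_groups": disk_groups,
        # Cache disks buffer I/O; in a vSAN disk group they do not add datastore capacity.
        "cache_gb": disk_groups * CACHE_GB_PER_DISK_GROUP,
        "raw_capacity_tb": disk_groups * CAPACITY_DISKS_PER_DISK_GROUP * TB_PER_CAPACITY_DISK,
    }

def mirrored_usable_tb(raw_tb: float) -> float:
    """Rough usable capacity under an assumed RAID-1, FTT=1 policy (two copies of data).

    Ignores slack space, deduplication/compression, and metadata overhead.
    """
    return raw_tb / 2

if __name__ == "__main__":
    totals = cluster_totals()
    print(totals)  # cpus 8, ram_gb 2048, disk_groups 8, cache_gb 6400, raw_capacity_tb 96
    print("approx. mirrored usable TB:", mirrored_usable_tb(totals["raw_capacity_tb"]))  # 48.0
```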

After we built the VxRail cluster, we deployed a set of three virtual machines running SLE Micro 5.1. On these VMs, we installed a multi-node K3s cluster running version 1.23.6 with server and agent services, etcd, and the containerd container runtime. We then installed SUSE Rancher 2.6.3 on the K3s cluster. The Rancher installation on the K3s cluster also included Fleet GitOps services, Prometheus monitoring and metrics capture services, and Grafana metrics visualization services. All of this formed our Rancher Management Server.

We then used Rancher to deploy managed K3s workload clusters. In this validation, we deployed two managed K3s workload clusters on the same VxRail cluster as the Rancher Management Server: a single-node cluster and a six-node cluster, both running on vSphere VMs with the SLE Micro operating system installed.
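The lab topology described above can be captured as a small data model, which makes it easy to check whether workload clusters share infrastructure with the management cluster. This is a hypothetical sketch: the cluster names and the `K3sCluster` type are illustrative, and it assumes all three K3s clusters ran on the single VxRail cluster built for this validation.

```python
from dataclasses import dataclass

@dataclass
class K3sCluster:
    name: str
    nodes: int
    vxrail_cluster: str  # which VxRail cluster hosts its SLE Micro VMs
    role: str            # "management" or "workload"

def colocated_workloads(clusters: list[K3sCluster]) -> list[str]:
    """Name the workload clusters that share a VxRail cluster with a management cluster."""
    mgmt_hosts = {c.vxrail_cluster for c in clusters if c.role == "management"}
    return [c.name for c in clusters if c.role == "workload" and c.vxrail_cluster in mgmt_hosts]

if __name__ == "__main__":
    lab = [
        K3sCluster("rancher-mgmt", 3, "vxrail-01", "management"),
        K3sCluster("k3s-workload-1", 1, "vxrail-01", "workload"),
        K3sCluster("k3s-workload-2", 6, "vxrail-01", "workload"),
    ]
    print(colocated_workloads(lab))  # ['k3s-workload-1', 'k3s-workload-2']
```

That co-location is acceptable in a lab, but it is exactly what a production layout would change.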

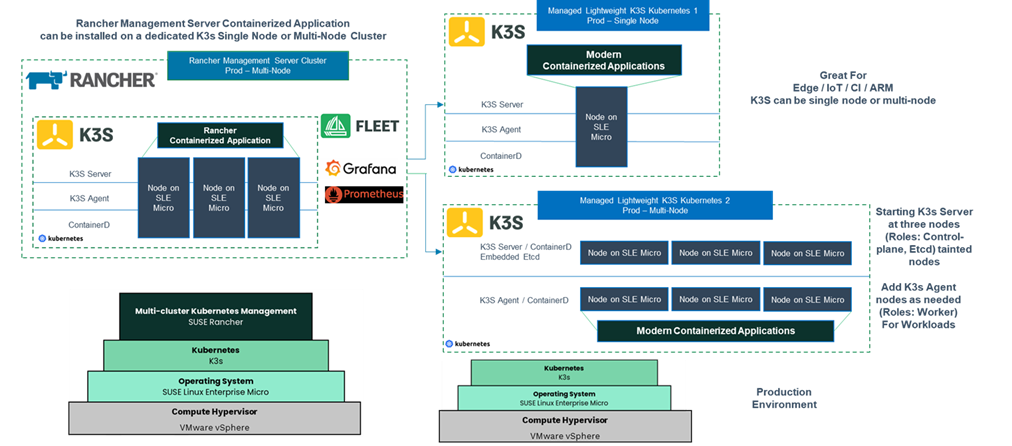

You can easily adapt this validated architecture to be more highly available and production ready. The following diagram shows how to incorporate more resilience.

Figure 1: SUSE Rancher and K3s with SLE Micro on Dell VxRail - Production Architecture

The Rancher Management Server stays the same, because it was already deployed on a highly available four-node VxRail cluster with three SLE Micro VMs running a multi-node K3s cluster. As a production best practice, managed K3s workload clusters should run on highly available infrastructure that is separate from the Rancher Management Server, to keep management and workloads apart. In this case, you can deploy a second four-node VxRail cluster. Each managed K3s workload cluster should have at least three nodes to provide high availability for both the etcd services and the workloads running on it. However, three nodes are not enough to also separate the etcd services and the workloads onto different nodes while keeping both highly available. To address this, you can deploy a K3s cluster with at least six nodes (as shown in the diagram with the K3s Kubernetes 2 Prod cluster).
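The three-node minimum follows from etcd's majority quorum: a cluster of n etcd members stays writable only while floor(n/2) + 1 members are healthy. The sketch below works through that arithmetic and compares a three-node cluster with a six-node layout. It assumes, as one reading of "node separation", that the six nodes split into three K3s server nodes running embedded etcd and three agent nodes dedicated to workloads; the function names are hypothetical.

```python
def etcd_quorum(members: int) -> int:
    """Majority of members needed for etcd to keep accepting writes."""
    return members // 2 + 1

def etcd_failures_tolerated(members: int) -> int:
    """How many etcd members can fail before quorum is lost."""
    return members - etcd_quorum(members)

def layout(servers: int, agents: int) -> dict:
    """Summarize a K3s layout where (by assumption) only server nodes run embedded etcd."""
    return {
        "nodes": servers + agents,
        "etcd_failures_tolerated": etcd_failures_tolerated(servers),
        "dedicated_workload_nodes": agents,
    }

if __name__ == "__main__":
    for n in (1, 3, 5):
        print(n, "etcd members -> tolerates", etcd_failures_tolerated(n), "failure(s)")
    print("3-node, all servers:", layout(3, 0))
    print("6-node, 3 servers + 3 agents:", layout(3, 3))
```

Both layouts tolerate one etcd failure, but only the six-node layout also keeps a separate, redundant set of nodes for workloads.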

Summary

Although this validation features Dell VxRail, you can also deploy similar architectures using other Dell hardware platforms, such as Dell PowerEdge and Dell vSAN Ready Nodes running VMware vSphere!

For more information and to see other jointly validated reference architectures using Dell infrastructure with SUSE Rancher, K3s, and more, check out the following resource pages and documentation. We hope to see you back here again soon.

Author: Jason Marques

Dell Resources

- Dell VxRail Hyperconverged Infrastructure

- Dell VxRail System TechBook

- Solution Brief – Running SUSE Rancher and K3s with SLE Micro on Dell VxRail

- Reference Architecture - SUSE Rancher, K3s, and SUSE Linux Enterprise Micro for Edge Computing: Based on Dell PowerEdge XR11 and XR12 Servers

SUSE Resources

Find Your Edge: Running SUSE Rancher and K3s with SLE Micro on Dell VxRail

Tue, 16 Aug 2022 13:51:15 -0000


The goal of our ongoing partnership between Dell Technologies and SUSE is to bring validated modern products and solutions to market that enable our joint customers to operate CNCF-Certified Kubernetes clusters in the core, in the cloud, and at the edge, to support their digital businesses and harness the power of their data.

Existing examples of this collaboration have already begun to bear fruit with work done to validate SUSE Rancher and RKE2 on Dell VxRail. You can find more information on that in a Solution Brief here and blog post here. This initial example highlighted deploying and operating Kubernetes clusters in a core datacenter use case.

But what about jointly validated solutions for near edge use cases? More and more organizations are looking to deploy solutions at the edge, because the edge is increasingly where data is generated and analyzed. As a result, our ongoing technology validation efforts have recently shifted their focus there.

Our latest validation exercise featured deploying SUSE Rancher and K3s with the SUSE Linux Enterprise Micro operating system (SLE Micro) on Dell VxRail hyperconverged infrastructure. A team of SUSE and Dell VxRail engineers installed these technologies in a non-production lab environment. All installation steps followed the SUSE documentation without any unique VxRail customization. This illustrates how seamlessly these technologies work together and how they allow for standardized deployment practices using the out-of-the-box capabilities of both VxRail and the SUSE products.

Solution Components Overview

Before jumping into the details of the solution validation itself, let’s do a quick review of the major components that we used.

SUSE Rancher is a complete software stack for teams that are adopting containers. It addresses the operational and security challenges of managing multiple Kubernetes (K8s) clusters, including lightweight K3s clusters, across any infrastructure, while providing DevOps teams with integrated tools for running containerized workloads.

K3s is a CNCF sandbox project that delivers a lightweight yet powerful certified Kubernetes distribution.

SUSE Linux Enterprise Micro (SLE Micro) is an ultra-reliable, lightweight operating system purpose-built for containerized and virtualized workloads.

Dell VxRail is the only fully integrated, pre-configured, and tested HCI system optimized with VMware vSphere, making it ideal for customers who want to leverage SUSE Rancher, K3s, and SLE Micro through vSphere to create and operate lightweight Kubernetes clusters on-premises or at the edge.

Validation Deployment Details

Now, let’s dive into the details of the deployment for this solution validation.

First, we deployed a single VxRail cluster with these specifications:

- 4 x VxRail E660F nodes running VxRail software version 7.0.370

- 2 x Intel® Xeon® Gold 6330 CPUs

- 512 GB RAM

- Broadcom Adv. Dual 25 Gb Ethernet NIC

- 2 x vSAN Disk Groups:

  - 1 x 800 GB Cache Disk

  - 3 x 4 TB Capacity Disks

- vSphere K8s CSI/CNS