Find Your Edge: Running SUSE Rancher and K3s with SLE Micro on Dell VxRail

Tue, 16 Aug 2022 13:51:15 -0000


The goal of our ongoing partnership between Dell Technologies and SUSE is to bring validated modern products and solutions to market that enable our joint customers to operate CNCF-Certified Kubernetes clusters in the core, in the cloud, and at the edge, to support their digital businesses and harness the power of their data.

This collaboration has already borne fruit in the work done to validate SUSE Rancher and RKE2 on Dell VxRail, which is described in a Solution Brief and an accompanying blog post. That initial example highlighted deploying and operating Kubernetes clusters in a core datacenter use case.

But what about jointly validated solutions for near edge use cases? More and more organizations are looking to deploy solutions at the edge, because a growing share of data is generated and analyzed there. As a result, this is where the focus of our ongoing technology validation efforts recently moved.

Our latest validation exercise featured deploying SUSE Rancher and K3s with the SUSE Linux Enterprise Micro operating system (SLE Micro) and running them on Dell VxRail hyperconverged infrastructure. A team of SUSE and Dell VxRail engineers installed these technologies in a non-production lab environment. All the installation steps followed the SUSE documentation without any VxRail-specific customization. This illustrates how well these technologies work together and shows that standardized deployment practices apply with the out-of-the-box capabilities of both VxRail and the SUSE products.

Solution Components Overview

Before jumping into the details of the solution validation itself, let’s do a quick review of the major components that we used.

- SUSE Rancher is a complete software stack for teams that are adopting containers. It addresses the operational and security challenges of managing multiple Kubernetes (K8s) clusters, including lightweight K3s clusters, across any infrastructure, while providing DevOps teams with integrated tools for running containerized workloads.

- K3s is a CNCF sandbox project that delivers a lightweight yet powerful certified Kubernetes distribution.

- SUSE Linux Enterprise Micro (SLE Micro) is an ultra-reliable, lightweight operating system purpose built for containerized and virtualized workloads.

- Dell VxRail is the only fully integrated, pre-configured, and tested HCI system optimized with VMware vSphere, making it ideal for customers who want to leverage SUSE Rancher, K3s, and SLE Micro through vSphere to create and operate lightweight Kubernetes clusters on-premises or at the edge.

Validation Deployment Details

Now, let’s dive into the details of the deployment for this solution validation.

First, we deployed a single VxRail cluster with these specifications:

- 4 x VxRail E660F nodes running VxRail 7.0.370 version software

- 2 x Intel® Xeon® Gold 6330 CPUs

- 512 GB RAM

- Broadcom Adv. Dual 25 Gb Ethernet NIC

- 2 x vSAN Disk Groups:

  - 1 x 800 GB Cache Disk

  - 3 x 4 TB Capacity Disks

- vSphere K8s CSI/CNS
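As a rough sanity check on the storage figures in this list, the following Python sketch multiplies them out. It assumes that the two disk groups are configured on each of the four nodes, which the list does not state explicitly, and it reports raw capacity only. Usable capacity depends on the vSAN storage policy and is not estimated here. The function names are illustrative.

```python
# Raw vSAN capacity sketch for the lab cluster described above.
# ASSUMPTION: the disk-group layout applies to each of the four nodes;
# the source list does not say this explicitly.
NODES = 4
DISK_GROUPS_PER_NODE = 2        # assumed to be per node
CAPACITY_DISKS_PER_GROUP = 3
CAPACITY_DISK_TB = 4
CACHE_DISK_GB = 800             # one cache disk per disk group


def raw_capacity_tb(nodes: int = NODES) -> int:
    """Raw capacity tier in TB, before any vSAN storage-policy overhead."""
    return nodes * DISK_GROUPS_PER_NODE * CAPACITY_DISKS_PER_GROUP * CAPACITY_DISK_TB


def cache_total_gb(nodes: int = NODES) -> int:
    """Total cache tier in GB; cache disks do not add to capacity."""
    return nodes * DISK_GROUPS_PER_NODE * CACHE_DISK_GB


if __name__ == "__main__":
    print(f"raw capacity tier: {raw_capacity_tb()} TB")
    print(f"cache tier:        {cache_total_gb()} GB")
```

Under that per-node assumption, the cluster has 96 TB of raw capacity and 6,400 GB of cache.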

After we built the VxRail cluster, we deployed a set of three virtual machines running SLE Micro 5.1. On these VMs, we installed a multi-node K3s cluster running version 1.23.6 with Server and Agent services, etcd, and the containerd container runtime. We then installed SUSE Rancher 2.6.3 on the K3s cluster. The Rancher installation on the K3s cluster also included Fleet GitOps services, Prometheus monitoring and metrics capture services, and Grafana metrics visualization services. All of this formed our Rancher Management Server.

We then used Rancher to deploy managed K3s workload clusters. In this validation, we deployed two managed K3s workload clusters on the Rancher Management Server cluster: a single-node cluster and a six-node cluster, both running on vSphere VMs with the SLE Micro operating system installed.

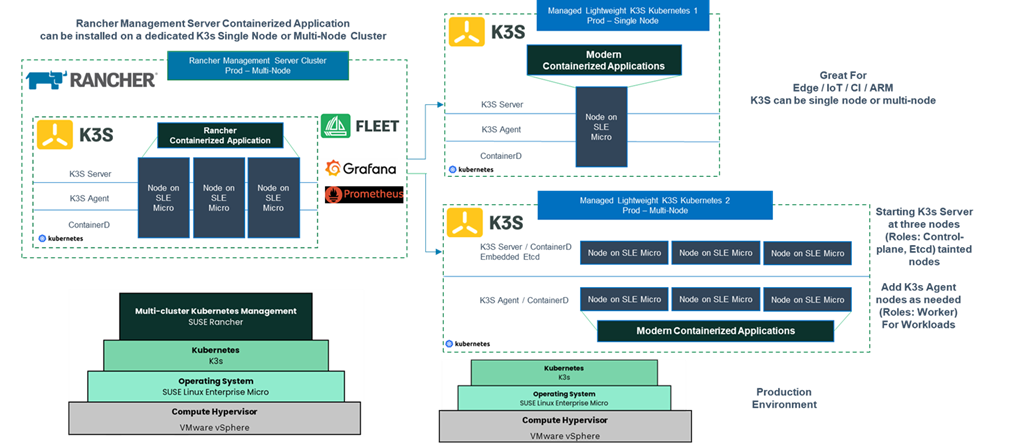

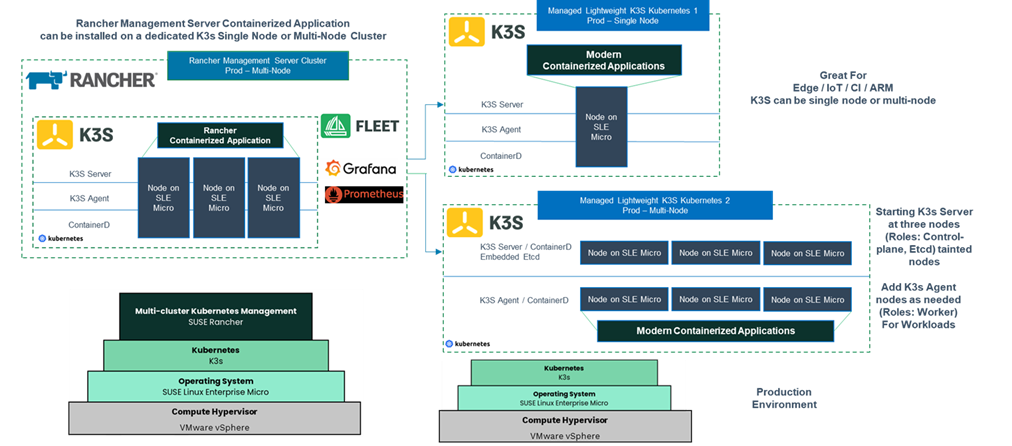

You can easily modify this validation to be more highly available and production ready. The following diagram shows how to incorporate more resilience.

Figure 1: SUSE Rancher and K3s with SLE Micro on Dell VxRail - Production Architecture

The Rancher Management Server stays the same, because it was already deployed with a highly available four-node VxRail cluster and three SLE Micro VMs running a multi-node K3s cluster. As a production best practice, managed K3s workload clusters should run on highly available infrastructure that is separate from the Rancher Management Server, to keep management and workloads apart. In this case, you can deploy a second four-node VxRail cluster. Each managed K3s workload cluster needs at least three nodes to provide high availability for both the etcd services and the workloads running on it. However, three nodes are not enough to also run the etcd services and the workloads on separate nodes while keeping both highly available. To achieve that, you can deploy a K3s cluster with a minimum of six nodes (as shown in the diagram with the K3s Kubernetes 2 Prod cluster).
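The three-node minimum follows from how etcd reaches consensus: a cluster stays writable only while a majority of its members is available. The short Python sketch below works through that arithmetic. The function names are illustrative, and the three-server plus three-agent split mentioned afterward is an assumed reading of the six-node layout, not something the validation spells out.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with the given member count."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(f"{n} etcd members: quorum {quorum(n)}, "
              f"tolerates {failures_tolerated(n)} failure(s)")
```

One member tolerates no failures, three tolerate one, and a fourth adds no extra tolerance over three. If a six-node cluster is split into three etcd-running server nodes and three agent nodes for workloads (an assumption here), each group can lose one node and keep running.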

Summary

Although this validation features Dell VxRail, you can also deploy similar architectures using other Dell hardware platforms, such as Dell PowerEdge and Dell vSAN Ready Nodes running VMware vSphere!

For more information and to see other jointly validated reference architectures using Dell infrastructure with SUSE Rancher, K3s, and more, check out the following resource pages and documentation. We hope to see you back here again soon.

Author: Jason Marques

Dell Resources

- Dell VxRail Hyperconverged Infrastructure

- Dell VxRail System TechBook

- Solution Brief – Running SUSE Rancher and K3s with SLE Micro on Dell VxRail

- Reference Architecture - SUSE Rancher, K3s, and SUSE Linux Enterprise Micro for Edge Computing: Based on Dell PowerEdge XR11 and XR12 Servers

SUSE Resources

Related Blog Posts

Deploying VMware Tanzu for Kubernetes Operations on Dell VxRail: Now for the Multicloud

Wed, 17 May 2023 15:56:43 -0000


VMware Tanzu for Kubernetes Operations (TKO) on Dell VxRail is a jointly validated Dell and VMware reference architecture solution designed to streamline Kubernetes use for the enterprise. The latest version has been extended to showcase multicloud application deployment and operations use cases. Read on for more details.

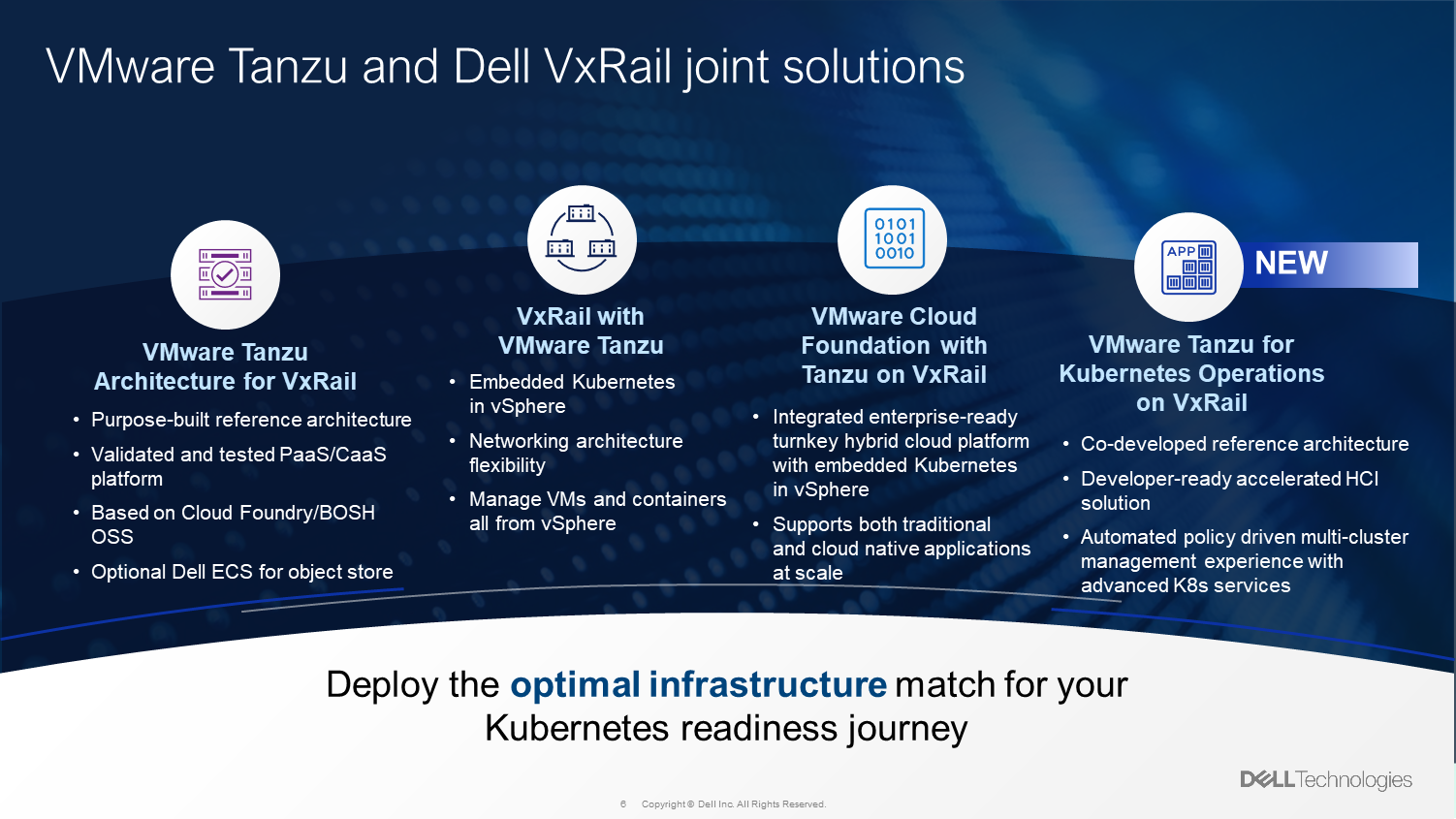

VMware Tanzu and Dell VxRail joint solutions

VMware TKO on Dell VxRail is yet another example of the strong partnership and joint development efforts that Dell and VMware continue to deliver on behalf of our joint customers so they can find success in their infrastructure modernization and digital transformation efforts. It is an addition to an existing portfolio of jointly developed and/or engineered products and reference architecture solutions that are built upon VxRail as the foundation to help customers accelerate and simplify their Kubernetes adoption.

Figure 1 highlights the joint VMware Tanzu and Dell VxRail offerings available today. Each is specifically designed to meet customers where they are in their journey to Kubernetes adoption.

Figure 1. Joint VMware Tanzu and Dell VxRail solutions

VMware TKO on VxRail

VMware Tanzu For Kubernetes Operations on Dell VxRail reference architecture updates

This latest release of the jointly developed reference architecture builds off the first release. To learn more about what TKO on VxRail is and our objective for jointly developing it, take a look at this blog post introducing its first iteration.

Okay… Now that you are all caught up, let’s dive into what is new in this latest version of the reference architecture.

Additional TKO multicloud components

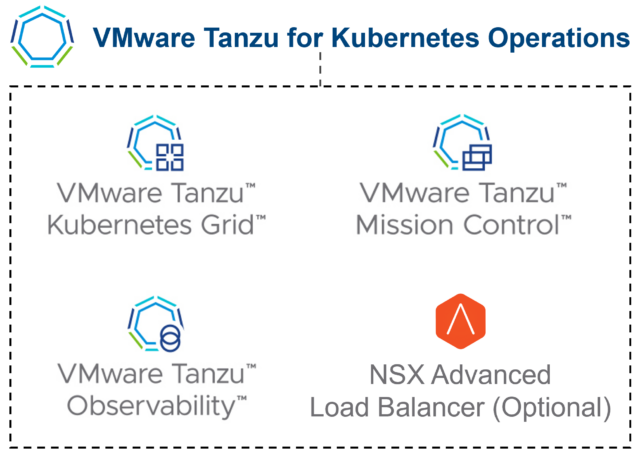

Let’s dive a bit deeper and highlight what we see as the essential building blocks for your cloud infrastructure transformation that are included in the TKO edition of Tanzu.

First, you’re going to need a consistent Kubernetes runtime like Tanzu Kubernetes Grid (TKG) so you can manage and upgrade clusters consistently as you move to a multicloud Kubernetes environment.

Next, you’re going to need some way to manage your platform. A management plane like Tanzu Mission Control (TMC), which provides centralized visibility and control over your platform, will be critical to helping you roll it out to distributed teams.

Also, platform-wide observability like Aria Operations for Applications (formerly known as Tanzu/Aria Observability) helps you monitor your environment effectively and troubleshoot issues faster. Data protection capabilities allow you to protect your data both at rest and in transit, which is critical if your teams will be deploying applications that run across clusters and clouds. And with NSX Advanced Load Balancer, TKO can also help you implement global load balancing and advanced traffic routing that allows for automated service discovery and north-south traffic management.

TKO on VxRail, VMware and Dell’s joint solution for core IT and cloud platform teams, can help you get started with your IT modernization project and enable you to build a standardized platform that will support you as you grow and expand to more clouds.

In the initial release of the reference architecture with VxRail, Tanzu Mission Control (TMC) and Aria Operations for Applications were used, and a solid on-premises foundation was established for building our multicloud architecture onward. The following figure shows the TKO items included in the first iteration.

Figure 2. Base TKO components used in initial version of reference architecture

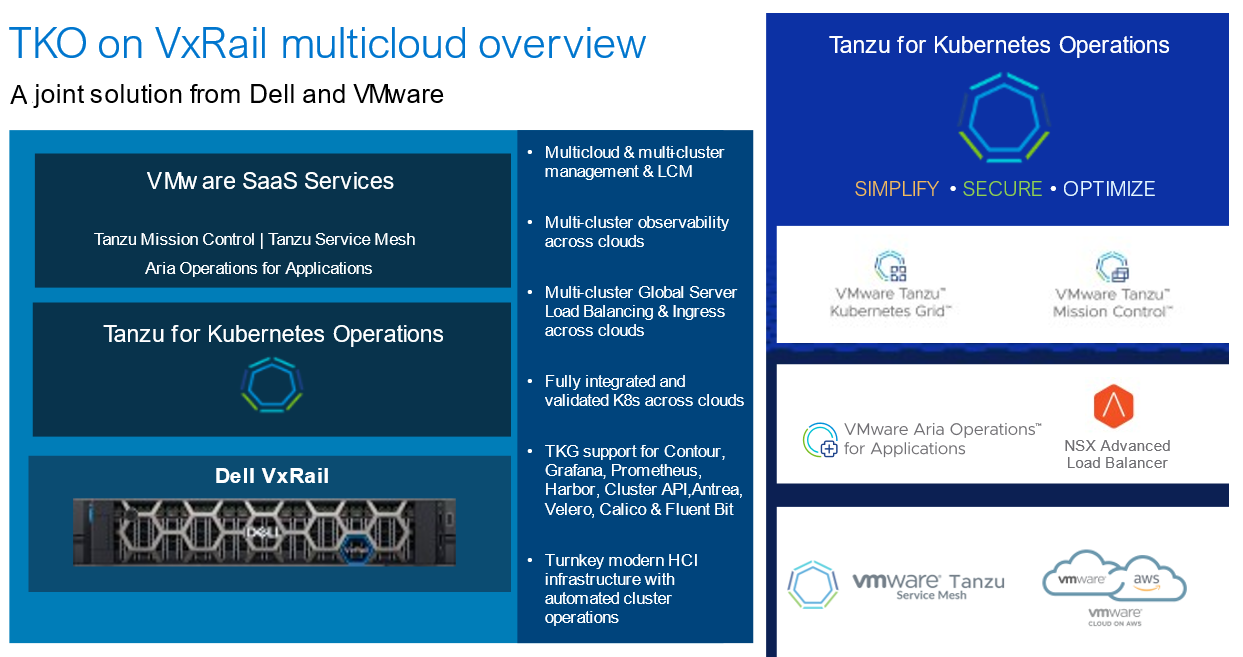

In this second phase, we extended the on-premises architecture to a true multicloud environment fit for a new generation of applications.

Added to the latest version of the reference architecture are VMware Cloud on AWS, Amazon EKS, Tanzu Service Mesh, and Global Server Load Balancing (GSLB) functionality provided by NSX Advanced Load Balancer to build a global namespace for modern applications.

New TMC functionalities that were not part of the first reference architecture were also added, such as EKS life cycle management (LCM) and continuous delivery capabilities. AWS is still the most widely used public cloud provider, but it was also chosen for this reference architecture because the VMware SaaS products have the most features available for AWS cloud services. Support for other hyperscaler public cloud provider services is still in the VMware development pipeline. For example, today you can perform life cycle management of Amazon EKS clusters through Tanzu Mission Control. This life cycle management capability isn’t available yet with other cloud providers. The following figure highlights the high-level set of components used in this latest reference architecture update.

Figure 3. Additional components used in latest version of TKO on VxRail RA

New multicloud testing environment

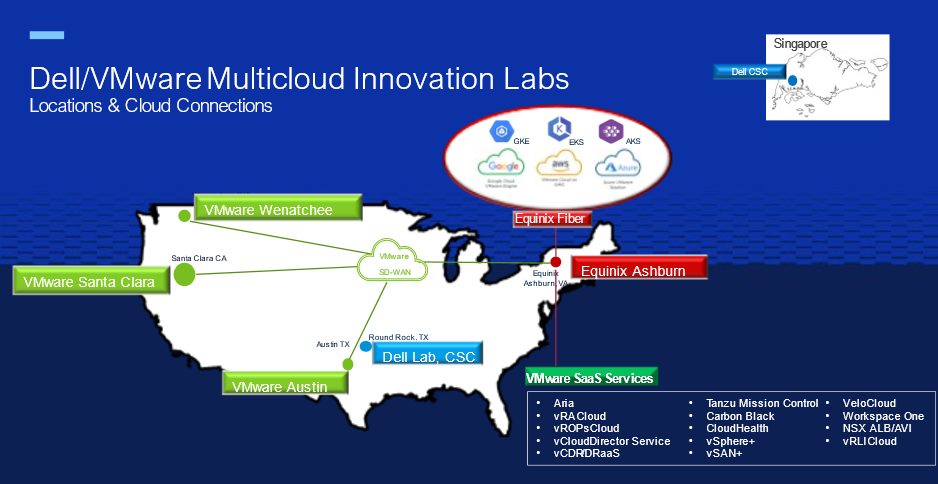

To test this multicloud architecture, the Dell and VMware engineering teams needed a true multicloud environment. Figure 4 illustrates a snapshot of the multisite/multicloud lab infrastructure that our VMware and Dell engineering teams built to provide a “real-world” environment to test and showcase our solutions. We use this environment to work on projects with internal teams and external partners.

Figure 4. Dell/VMware Multicloud Innovation Lab Environments

The environment is made up of five data centers and private clouds across the US, all connected by VMware SD-WAN, delivering a private multicloud environment. An Equinix data center provides the fiber backbone to connect with most public cloud providers as well as VMware Cloud Services.

Extended TKO on VxRail multicloud architecture

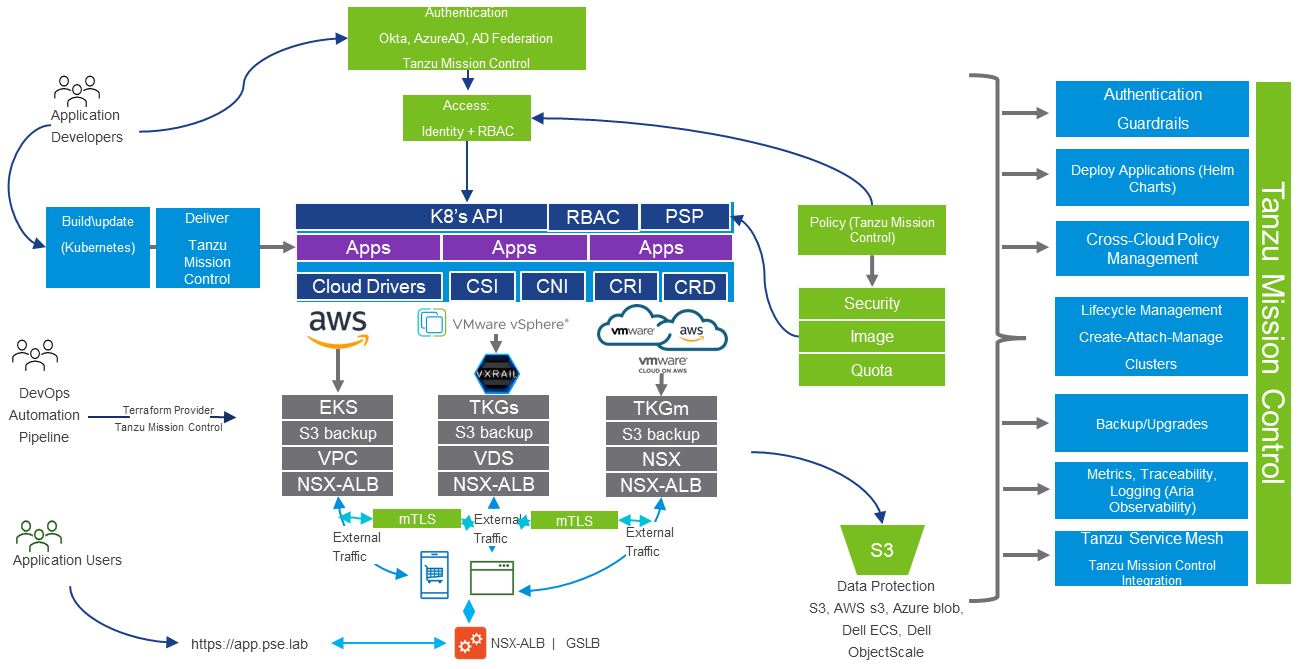

Figure 5 shows the multicloud implementation of Tanzu for Kubernetes Operations on VxRail. Here you have K8s clusters on-premises and running on multiple cloud providers.

Figure 5. TKO on VxRail Reference Architecture Multicloud Architecture

Tanzu Mission Control (TMC), which is part of Tanzu for Kubernetes Operations, provides you with a management plane through which platform operators or DevOps team members can manage the entire K8s environment across clouds. Developers can have self-service access, authenticated through cloud identity providers like Okta or Microsoft Active Directory, or through corporate Active Directory federation. With TMC, you can assign consistent policies across your K8s clusters in every cloud. DevOps teams can use the TMC Terraform provider to manage the clusters as infrastructure-as-code.

Through TMC support for open-source K8s project technologies such as Velero, teams can back up clusters to Azure Blob Storage, Amazon S3, or on-premises S3 storage solutions such as Dell ECS, Dell ObjectScale, or another object storage of their choice.

When you enable data protection for a cluster, Tanzu Mission Control installs Velero with Restic (an open-source backup tool), configured to use the opt-out approach. With this approach, Velero backs up all pod volumes using Restic.
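To make the opt-out approach concrete, the Python sketch below models the selection rule: every pod volume is backed up unless the pod names it in Velero's `backup.velero.io/backup-volumes-excludes` annotation. This is a simplified illustration, not Velero's implementation. The function name and the sample pod are hypothetical, and the sketch ignores the volume types that Velero skips automatically, such as hostPath, Secret, and ConfigMap volumes.

```python
from typing import Dict, List

# Pod annotation that Velero reads to exclude volumes under the opt-out approach.
EXCLUDES_ANNOTATION = "backup.velero.io/backup-volumes-excludes"


def volumes_to_back_up(pod_volumes: List[str], annotations: Dict[str, str]) -> List[str]:
    """Return the volume names selected for backup under the opt-out rule.

    Simplified model: all volumes are included unless listed, comma-separated,
    in the excludes annotation.
    """
    raw = annotations.get(EXCLUDES_ANNOTATION, "")
    excluded = {name.strip() for name in raw.split(",") if name.strip()}
    return [name for name in pod_volumes if name not in excluded]


if __name__ == "__main__":
    # Hypothetical pod with three volumes, two of which opt out.
    volumes = ["data", "cache", "logs"]
    annotations = {EXCLUDES_ANNOTATION: "cache, logs"}
    print(volumes_to_back_up(volumes, annotations))
```

With no annotation present, the function returns every volume, which matches the opt-out default described above.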

TMC integration with Aria Operations for Applications (formerly Tanzu/Aria Observability) delivers fine-grained insights and analytics about the microservices applications running across the multicloud environments.

TMC also integrates with Tanzu Service Mesh (TSM), so you can add your clusters to TSM. When the TKO on VxRail multicloud reference architecture is implemented, users connect to their multicloud microservices applications through a single URL provided by NSX Advanced Load Balancer (formerly AVI Load Balancer) in conjunction with TSM. TSM provides advanced, end-to-end connectivity, security, and insights for modern applications (across application end users, microservices, APIs, and data), enabling compliance with service level objectives (SLOs) and data protection and privacy regulations.

TKO on VxRail business outcomes

Dell and VMware know what business outcomes matter to enterprises, and together we help customers map those outcomes to transformations.

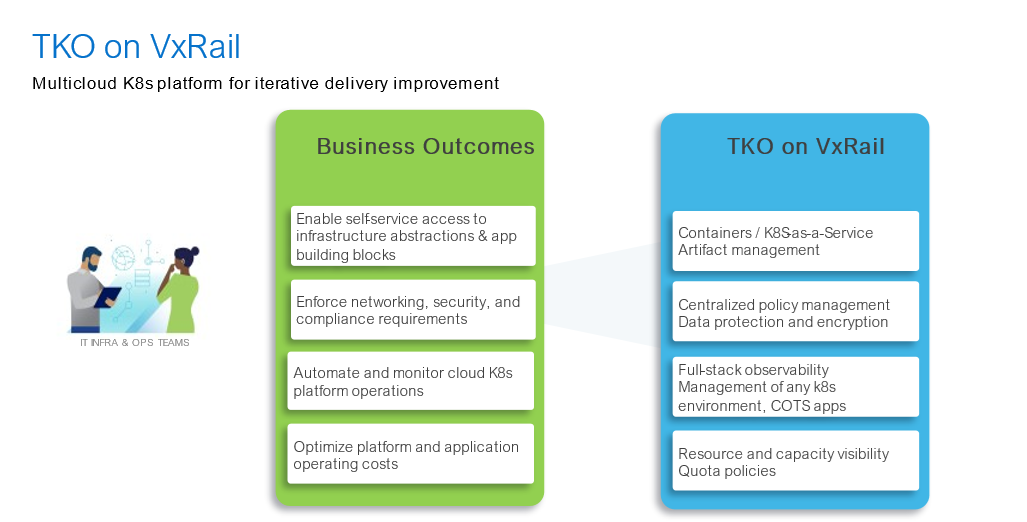

Figure 6 highlights the business outcomes that customers are asking for and that we are delivering through the Tanzu portfolio on VxRail today. They also set the stage to inform our joint development teams about future capabilities we look forward to delivering.

Figure 6. TKO on VxRail and business outcomes alignment

Learn more at Dell Technologies World 2023

Want to dive deeper into VMware Tanzu for Kubernetes Operations on Dell VxRail? Visit our interactive Dell Technologies and VMware booths at Dell Technologies World to talk with any of our experts. You can also attend our session Simplify & Streamline via VMware Tanzu for Kubernetes Operations on VxRail.

Also, feel free to check out the VMware Blog on this topic, written by Ather Jamil from VMware. It includes some cool demos showing TKO on VxRail in action!

Author: Jason Marques (Dell Technologies)

Twitter: @vWhipperSnapper

Contributor: Ather Jamil (VMware)

Resources

- VxRail page on DellTechnologies.com

- VxRail InfoHub

- VxRail videos

- Tanzu for Kubernetes Operations VMware page

- TKO on VxRail Reference Architecture