2nd Gen AMD EPYC now available to power your favorite hyperconverged platform ;) VxRail

Mon, 17 Aug 2020 18:31:32 -0000

Expanding the range of VxRail choices to include 64 cores of 2nd Gen AMD EPYC compute

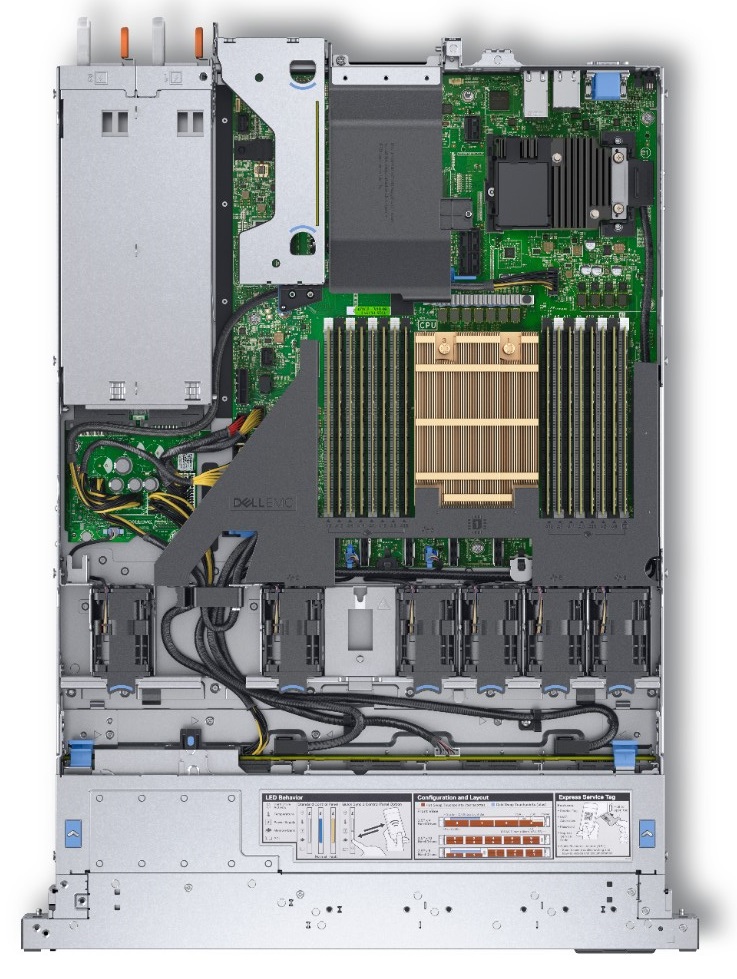

Last month, Dell EMC expanded our very popular E Series (the E for Everything Series) with the introduction of the E665/F/N, our very first VxRail with an AMD processor, and what a processor it is! The 2nd Gen AMD EPYC processor came to market with a lot of industry-leading capabilities:

- Up to 64 cores in a single processor, with 8, 12, 16, 24, 32, or 48 core offerings also available

- Eight memory channels that are not only more numerous but also faster, at 3200 MT/s. The 2nd Gen EPYC can also address much more memory per processor

- 7nm transistors. Smaller transistors mean more powerful and more energy efficient processors

- Up to 128 lanes of PCIe Gen 4.0, with 2X the bandwidth of PCIe Gen 3.0.
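To put the PCIe bullet in numbers, here is a minimal Python sketch. It assumes the published PCIe signaling rates (8 GT/s per lane for Gen 3.0, 16 GT/s for Gen 4.0) and the 128b/130b line encoding both generations use. The function names are illustrative, and the results are theoretical per-direction figures, not measured VxRail throughput.

```python
# Theoretical PCIe bandwidth per direction, before protocol overhead.
# Assumptions (not from the article): 8 GT/s and 16 GT/s per lane,
# 128b/130b encoding for both generations.
ENCODING_EFFICIENCY = 128 / 130
TRANSFER_RATE_GT_S = {"Gen 3.0": 8.0, "Gen 4.0": 16.0}


def lane_bandwidth_gb_s(generation: str) -> float:
    """Usable GB/s for a single lane, one direction."""
    return TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY / 8


def total_bandwidth_gb_s(generation: str, lanes: int = 128) -> float:
    """Usable GB/s across all lanes, one direction."""
    return lanes * lane_bandwidth_gb_s(generation)


if __name__ == "__main__":
    for gen in TRANSFER_RATE_GT_S:
        print(f"PCIe {gen}: {lane_bandwidth_gb_s(gen):.3f} GB/s per lane, "
              f"{total_bandwidth_gb_s(gen):.0f} GB/s across 128 lanes")
```

The ratio between the two generations works out to exactly 2, which is the "2X" in the bullet above.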

These industry-leading capabilities enable the VxRail E665 series to deliver dual-socket performance in a single-socket model, and can provide up to 90% greater general-purpose CPU capacity than other VxRail models when configured with single-socket processors.

So, what is the sweet spot or ideal use case for the E665? As always, it depends on many things. Unlike the D Series (our D for Durable Series) that we also launched last month, which has clear rugged use cases, the E665 and the rest of the E Series very much live up to their “Everything” name, and perform admirably in a variety of use cases.

While the 2nd Gen EPYC 64-core processors grab the headlines, there are multiple AMD processor options, including the 16-core AMD 7F52 at 3.50GHz with a max boost of 3.9GHz for applications that benefit from raw clock speed, or where application licensing is core based. On the topic of licensing, I would be remiss if I didn’t mention VMware’s update to its per-CPU pricing earlier this year. As a result, processors with more than 32 cores require a second VMware per-CPU license. This may make a 32-core processor an attractive option when weighing overall capacity and performance against hardware and licensing cost.
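The licensing rule above is simple arithmetic, sketched here in Python. The 32-core threshold comes from the paragraph above. The rounding-up formula and the function names are illustrative assumptions, and actual entitlements should be confirmed against VMware's licensing terms.

```python
import math

# Per the paragraph above: one per-CPU license covers up to 32 cores,
# and a processor with more than 32 cores needs a second license.
CORES_PER_LICENSE = 32


def licenses_per_cpu(cores: int) -> int:
    """Per-CPU licenses needed for one processor (hypothetical helper)."""
    if cores < 1:
        raise ValueError("a processor needs at least one core")
    return math.ceil(cores / CORES_PER_LICENSE)


def licenses_per_node(cores_per_cpu: int, sockets: int = 1) -> int:
    """Licenses for a node; the E665 is single socket, so sockets defaults to 1."""
    return sockets * licenses_per_cpu(cores_per_cpu)


if __name__ == "__main__":
    # Core counts listed for 2nd Gen EPYC earlier in this post.
    for cores in (8, 12, 16, 24, 32, 48, 64):
        print(f"{cores:>2} cores -> {licenses_per_node(cores)} license(s)")
```

Under these assumptions, the 48-core and 64-core parts each need two licenses while everything up to 32 cores needs one, which is why the 32-core option sits at the edge of the pricing step.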

Speaking of overall costs, the E665 has dual 10Gb RJ45/SFP+ or dual 25Gb SFP28 base networking options, which can be further expanded with PCIe NICs including a dual 100Gb SFP28 option. From a cost perspective, the price delta between 10Gb and 25Gb networking is minimal. This is worth considering, particularly for greenfield sites and even for brownfield sites where the networking may be upgraded in the near future. Last year, we began offering Fibre Channel cards on VxRail, which are also available on the E665. While FC connectivity may sound strange for a hyperconverged infrastructure platform, it does make sense for many of our customers who have existing SAN infrastructure, or who have applications (PowerMax for an extremely large database requiring SRDF) or storage needs (Isilon for a large file repository of medical files) that are better suited to SAN. While we’d prefer these SANs to be Dell EMC products, any SAN on the VMware SAN HCL can be connected. Providing this option enables customers to get the best both worlds have to offer.

The options don’t stop there. While the majority of VxRail nodes are sold with all-flash configurations, there are customers whose needs are met with hybrid configs, or who are looking towards all-NVMe options. The E665 can be configured with as little as 960GB of raw storage capacity, up to maximums of 14TB hybrid, 46TB all-flash, or 32TB all-NVMe. Memory options consist of 4, 8, or 16 RDIMMs of 16GB, 32GB, or 64GB in size. Maximum memory performance, 3200 MT/s, is achieved with one DIMM per memory channel; adding a second matching DIMM per channel reduces the speed slightly to 2933 MT/s.
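The memory options above can be enumerated with a short Python sketch. The DIMM counts, DIMM sizes, eight channels, and the two speeds come from this post. The 8 bytes per transfer figure reflects a standard 64-bit DDR4 channel. Treating a 4-DIMM configuration as four populated channels running at 3200 MT/s is an assumption, and the bandwidth figures are theoretical peaks rather than measured results.

```python
CHANNELS = 8                   # memory channels per 2nd Gen EPYC processor
DIMM_COUNTS = (4, 8, 16)       # RDIMM population options
DIMM_SIZES_GB = (16, 32, 64)   # RDIMM size options
BYTES_PER_TRANSFER = 8         # 64-bit DDR4 channel


def memory_speed_mts(dimm_count: int) -> int:
    """3200 MT/s at up to one DIMM per channel, 2933 MT/s at two per channel."""
    return 3200 if dimm_count <= CHANNELS else 2933


def peak_bandwidth_gb_s(dimm_count: int) -> float:
    """Theoretical peak bandwidth across the populated channels."""
    populated = min(dimm_count, CHANNELS)  # assumes DIMMs are spread across channels
    return populated * memory_speed_mts(dimm_count) * BYTES_PER_TRANSFER / 1000


def capacity_gb(dimm_count: int, dimm_size_gb: int) -> int:
    return dimm_count * dimm_size_gb


if __name__ == "__main__":
    for count in DIMM_COUNTS:
        sizes = ", ".join(f"{capacity_gb(count, s)} GB" for s in DIMM_SIZES_GB)
        print(f"{count:>2} DIMMs @ {memory_speed_mts(count)} MT/s, "
              f"peak {peak_bandwidth_gb_s(count):.1f} GB/s, capacities: {sizes}")
```

The trade-off is visible in the output: 16 DIMMs double the maximum capacity relative to 8 DIMMs, at the cost of a modest drop in theoretical peak bandwidth.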

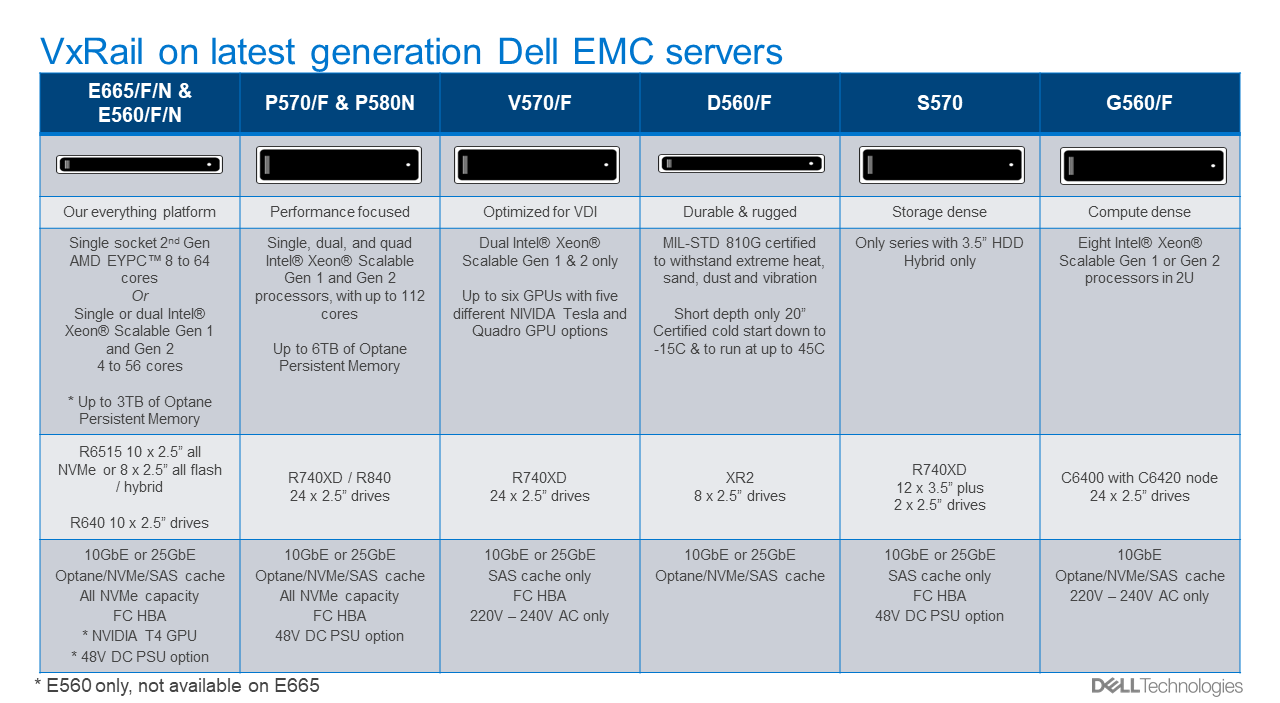

VxRail and Dell Technologies very much recognize that the needs of our customers vary greatly. A product with a single set of options cannot meet all our customers’ different needs. Today, VxRail offers six different series, each with a different focus:

- Everything E Series: a power-packed 1U of choice

- Performance-focused P Series with dual or quad socket options

- VDI-focused V Series with a choice of five different NVIDIA GPUs

- Durable D Series, MIL-STD-810G certified for extreme heat, sand, dust, and vibration

- Storage-dense S Series with 96TB of hybrid storage capacity

- General purpose and compute dense G Series with 228 cores in a 2U form factor

With the highly flexible configuration choices, there is a VxRail for almost every use case, and if there isn’t, there is more than likely something in the broad Dell Technologies portfolio that is.

Author: David Glynn, Sr. Principal Engineer, VxRail Tech Marketing

Resources:

VxRail Spec Sheet

E665 Product Brief

E665 One Pager

D560 3D product landing page

D Series video

D Series spec sheet

D Series Product Brief

Related Blog Posts

More GPUs, CPUs and performance - oh my!

Mon, 14 Jun 2021 14:30:46 -0000

Continuous hardware and software changes deployed with VxRail’s Continuously Validated State

A wonderful aspect of software-defined-anything, particularly when built on world class PowerEdge servers, is speed of innovation. With a software-defined platform like VxRail, new technologies and improvements are continuously added to provide benefits and gains today, and not a year or so in the future. With the release of VxRail 7.0.200, we are at it again! This release brings support for VMware vSphere and vSAN 7.0 Update 2, and for new hardware: 3rd Gen AMD EPYC processors (Milan), and more powerful hardware from NVIDIA with their A100 and A40 GPUs.

VMware, as always, does a great job of detailing the many enhanced or new features in a release, from high-level What’s New corporate or personal blog posts to in-depth videos by Duncan Epping. However, there are a few changes that I want to highlight:

Get thee to 25GbE: A trilogy of reasons - Storage, load-balancing, and pricing.

vSAN is a distributed storage system. To that end, anything that improves the network or networking efficiency improves storage performance and application performance, but there is more to networking than big, low-latency pipes. RDMA has been a part of vSphere since the 6.5 release, but it is only with 7.0 Update 2 that it is leveraged by vSAN. John Nicholson explains the nuts and bolts of vSAN RDMA in this blog post, but only touches on the performance gains. From our performance testing on VxRail, I can share the gains we have seen: up to 5% reduction in CPU utilization, up to 25% lower latency, and up to 18% higher IOPS, along with increases in read and write throughput. It should be noted that even with medium block IO, vSAN is more than capable of saturating a 10GbE port; RDMA is pushing performance beyond that, and we’ve yet to see what Intel 3rd Generation Xeon processors will bring. The only fly in the ointment for vSAN RDMA is the current small list of approved network cards, though no doubt more will be added soon.

vSAN is not the only feature that enjoys large low-latency pipes. Niels Hagoort describes the changes in vSphere 7.0 Update 2 that have made vMotion faster, thus making Balancing Workloads Invisible and the lives of virtualization administrators everywhere a lot better. Aside: Can I say how awesome it is to see VMware continuing to enhance a foundational feature that they first introduced in 2003, a feature that for many was that lightbulb Aha! moment that started their virtualization journey.

One last nudge: pricing. The cost delta between 10GbE and 25GbE network hardware is minimal, so for greenfield deployments the choice is easy; you may not need it today, but workloads and demands continue to grow. For brownfield, where the existing network is not due for replacement, the choice is still easy. 25GbE NICs and switch ports can negotiate down to 10GbE, making a phased migration possible: VxRail nodes now and switches in the future. The inverse is also possible: upgrade the network to 25GbE switches while still connecting your existing VxRail 10GbE SFP+ NIC ports.

Is 25GbE in your infrastructure upgrade plans yet? If not, maybe it should be.

A duo of AMD goodness

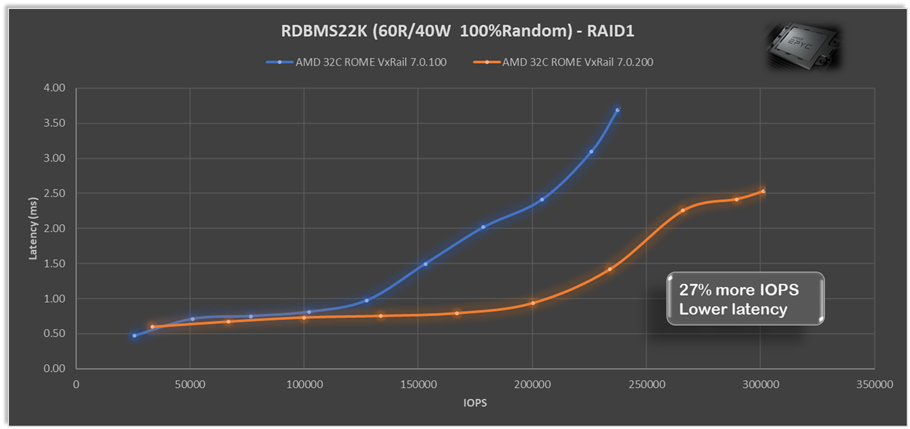

Last year we released two AMD-based VxRail platforms, the E665/F and the P675F/N, so I’m delighted to see CPU scheduler optimizations for AMD EPYC processors, as described in Aditya Sahu’s blog post. What is even better is the 29-page performance study Aditya links to; the depth of detail provided on how ESXi CPU scheduling works, and didn’t work, with AMD EPYC processors is truly educational. The extensive performance testing VMware continuously runs and the results they share (spoiler: they achieved very significant gains) are also a worthwhile read. In our testing we’ve seen that with just these AMD scheduler optimizations alone, VxRail 7.0.200 can provide up to 27% more IOPS and up to 27% lower latency for both RAID1 and RAID5 with relational database (RDBMS22K 60R/40W 100%Random) workloads.

VxRail begins shipping the 3rd generation AMD EPYC processors, also known as Milan, in VxRail E665 and P675 nodes later this month. These are not a replacement for the current 2nd Gen EPYC processors we offer, but rather the addition of higher-performing 24-core, 32-core, and 64-core choices to the VxRail lineup, delivering up to 33% more IOPS and 16% lower latency across a range of workloads and block sizes. Check out this VMware blog post for the performance gains they showcase with the VMmark benchmarking tool.

HCI Mesh – only recently introduced, yet already getting better

When VMware released HCI Mesh just last October, it enabled stranded storage on one VxRail cluster to be consumed by another VxRail cluster. With the release of VxRail 7.0.200, this has been expanded, making it applicable to more customers by enabling any vSphere cluster to also be a consumer of that excess storage capacity. These remote clusters do not require a vSAN license and consume the storage in the same manner they would any other datastore. This opens up some interesting multi-cluster use cases, for example:

In solutions where a software application’s licensing requires each core/socket in the vSphere cluster to be licensed, this licensing cost can easily dwarf other costs. Now this application can be deployed on a small compute-only cluster, while consuming storage from the larger VxRail cluster. Or where the density of storage per socket didn’t make VxRail viable, it can now be achieved with a smaller VxRail cluster, plus a separate compute-only cluster. If only all the goodness that is VxRail was available in a compute-only cluster – now that would be something dynamic…

A GPU for every workload

GPUs, once the domain of PC gamers, are now a data center staple with their parallel processing capabilities accelerating a variety of workloads. The versatile VxRail V Series has multiple NVIDIA GPUs to choose from and we’ve added two more with the addition of the NVIDIA A40 and A100. The A40 is for sophisticated visual computing workloads – think large complex CAD models, while the A100 is optimized for deep learning inference workloads for high-end data science.

Evolution of hardware in a software-defined world

PowerEdge took a big step forward with their recent release built on 3rd Gen Intel Xeon Scalable processors. Software-defined principles enable VxRail to not only quickly leverage this big step forward, but also to quickly leverage all the small steps in hardware changes throughout a generation. Building on the latest PowerEdge servers, we Reimagine HCI with VxRail with the next-generation VxRail E660/F, P670F, or V670F. Plus, what’s great about VxRail is that you can seamlessly integrate this latest technology into your existing infrastructure environment. This is an exciting release, but equally exciting are all the incremental changes that VxRail software-defined infrastructure will get along the way with PowerEdge and VMware.

VxRail, flexibility is at its core.

Availability

- VxRail systems with Intel 3rd Generation Xeon processors will be globally available in July 2021.

- VxRail systems with AMD 3rd Generation EPYC processors will be globally available in June 2021.

- VxRail HCI System Software updates will be globally available in July 2021.

- VxRail dynamic nodes will be globally available in August 2021.

- VxRail self-deployment options will begin availability in North America through an early access program in August 2021.

Additional resources

- Blog: Reimagine HCI with VxRail

- Attend our launch webinar to learn more.

- Press release: Dell Technologies Reimagines Dell EMC VxRail to Offer Greater Performance and Storage Flexibility

100 GbE Networking – Harness the Performance of vSAN Express Storage Architecture

Wed, 05 Apr 2023 12:48:50 -0000

For a few years, 25GbE networking has been the mainstay of rack networking, with 100 GbE reserved for uplinks to spine or aggregation switches. 25 GbE provides a significant leap in bandwidth over 10 GbE, and today carries no outstanding price premium over 10 GbE, making it a clear winner for new buildouts. But should we still be continuing with this winning 25 GbE strategy? Is it time to look to a future of 100 GbE networking within the rack? Or is that future now?

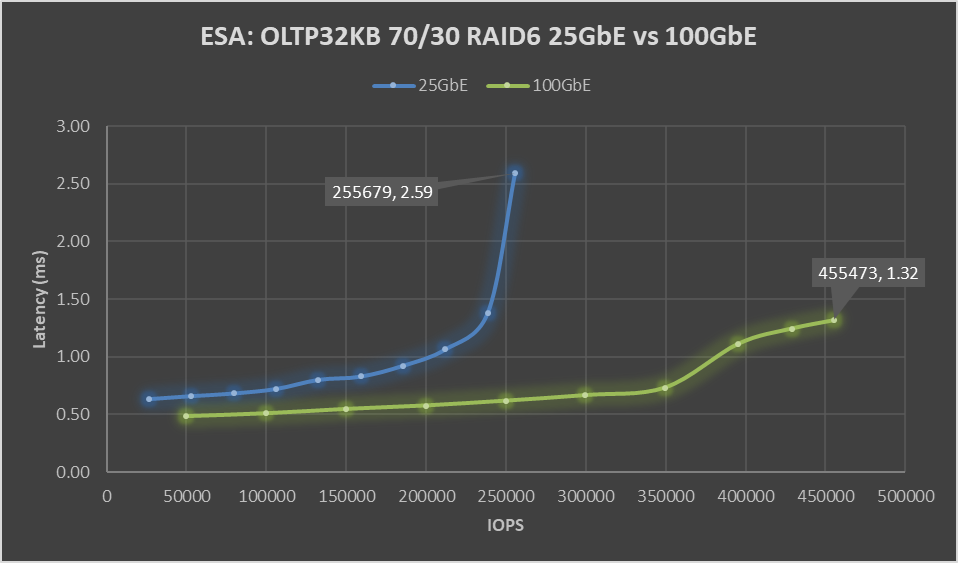

This question stems from my last blog post, VxRail with vSAN Express Storage Architecture (ESA), where I called out VMware’s recommendation of 100 GbE for maximum performance. But just how much more performance can vSAN ESA deliver with 100 GbE networking? VxRail is fortunate to have its own performance team, who stood up two six-node VxRail with vSAN ESA clusters that were identical except for the networking. One was configured with Broadcom 57414 25 GbE networking, and the other with Broadcom 57508 100 GbE networking. For more VxRail white papers, guides, and blog posts visit VxRail Info Hub.

When it comes to benchmark tests, there is a large variety to choose from. Some benchmark tests are ideal for generating headline hero numbers for marketing purposes – think quarter-mile drag racing. Others are good for helping with diagnosing issues. Finally, there are benchmark tests that are reflective of real-world workloads. OLTP32K is a popular one, reflective of online transaction processing with a 70/30 read-write split and a 32K block size, a profile that is in line with the aggregated results from thousands of Live Optics workload observations across millions of servers.

One more thing before we get to the results of the VxRail Performance Team's testing: the environment configuration. We used a storage policy of erasure coding with a failure tolerance of two and compression enabled.

When VMware announced vSAN with Express Storage Architecture they published a series of blogs all of which I encourage you to read. But as part of our 25 GbE vs 100 GbE testing, we also wanted to verify the astounding claims of RAID-5/6 with the Performance of RAID-1 using the vSAN Express Storage Architecture and vSAN 8 Compression - Express Storage Architecture. In short, forget the normal rules of storage performance, VMware threw that book out of the window. We didn’t throw our copy out of the window, well not at first, but once our results validated their claims… it went out.

Let’s look at the data: Boom!

Figure 1. ESA: OLTP32KB 70/30 RAID6 25 GbE vs 100 GbE performance graph

Boom! A 78% increase in peak IOPS with a substantial 49% drop in latency. This is a HUGE increase in performance, and the sole difference is the use of the Broadcom 57508 100 GbE networking. Also, check out that latency ramp-up on the 25 GbE line: it’s just like hitting a wall, while the 100 GbE line stays almost flat.

But nobody runs constantly at 100%, or at least they shouldn’t. Typically, 60 to 70% of absolute max is a comfortable day-to-day peak workload, leaving some headroom for spikes or node maintenance. At that range, there is an 88% increase in IOPS with a 19 to 21% drop in latency; the drop in latency is smaller here because the 25 GbE configuration has not yet hit a wall. As much as applications like high performance, they need that performance delivered with consistent and predictable latency, and if that latency is low, all the better. If we focus on just latency, the 100 GbE networking enabled 350K IOPS to be delivered at 0.73 ms, while the 25 GbE networking can squeak out 106K IOPS at 0.72 ms. That may not be the fairest of comparisons, but it does highlight how much 100 GbE networking can benefit latency-sensitive workloads.

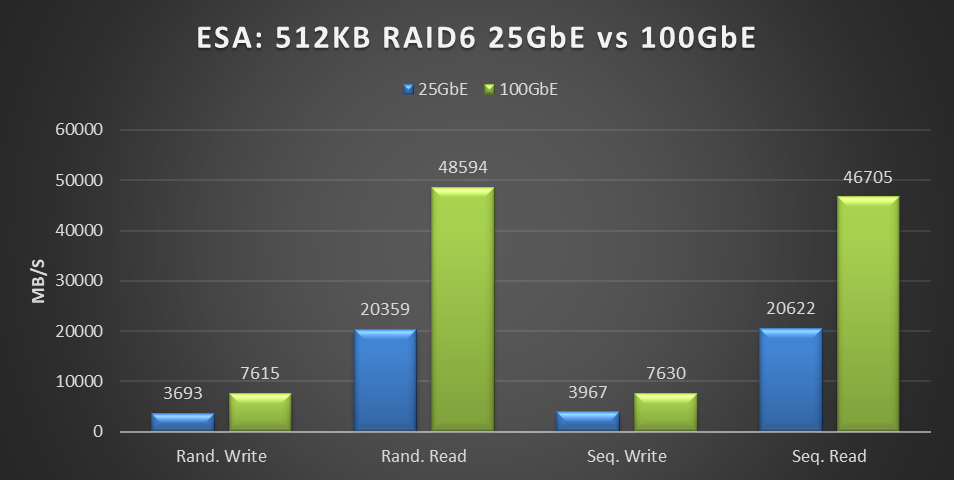

Boom, again! This benchmark is not reflective of real-world workloads but is a diagnostic test that stresses the network with 100% read and 100% write workloads. Can this find the bottleneck that 25 GbE hit in the previous benchmark?

Figure 2. ESA: 512KB RAID6 25 GbE vs 100 GbE performance graph

This testing was performed on a six-node cluster, with each node contributing one-sixth of the throughput shown in this graph. The 25 GbE cluster delivered 20,359 MB/s of random read throughput, or 3,393 MB/s per node, which is slightly above the theoretical maximum throughput of 3,125 MB/s that 25 GbE can deliver. This is the absolute maximum that 25 GbE can deliver! In the world of HCI, the virtual machine workload is co-resident with the storage. As a result, some of the IO is local to the workload and never crosses the network, resulting in higher than theoretical throughput. For comparison, the 100 GbE cluster achieved 48,594 MB/s of random read throughput, or 8,099 MB/s per node out of a theoretical maximum of 12,500 MB/s.
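The per-node arithmetic above can be reproduced with a few lines of Python. The cluster throughput figures and node count are taken from this post. The function names are illustrative, and the line-rate conversion simply divides the nominal link speed by eight, ignoring Ethernet and protocol overhead.

```python
NODES = 6  # six-node clusters, as described above

# Random read throughput for each cluster, in MB/s, from the figures above.
CLUSTER_READ_MB_S = {25: 20_359, 100: 48_594}


def line_rate_mb_s(link_gbe: int) -> float:
    """Theoretical maximum of one link: Gb/s converted to MB/s."""
    return link_gbe * 1000 / 8


def per_node_mb_s(cluster_mb_s: float, nodes: int = NODES) -> float:
    """Each node contributes an equal share of the cluster throughput."""
    return cluster_mb_s / nodes


if __name__ == "__main__":
    for link, cluster in CLUSTER_READ_MB_S.items():
        node = per_node_mb_s(cluster)
        limit = line_rate_mb_s(link)
        print(f"{link} GbE: {node:,.0f} MB/s per node, "
              f"{node / limit:.0%} of the {limit:,.0f} MB/s line rate")
```

A result above 100% for 25 GbE is consistent with the explanation above that some reads are served locally, while the 100 GbE result shows the network is no longer the limiting factor.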

But this is just the first release of the Express Storage Architecture. In the past, VMware has added significant gains to vSAN, as seen in the lab-based performance analysis of Harnessing the Performance of Dell EMC VxRail 7.0.100. We can only speculate on what else they have in store to improve upon this initial release.

What about costs, you ask? Street pricing can vary greatly depending on the region, so it's best to reach out to your Dell account team for local pricing information. Using US list pricing as of March 2023, I got the following:

| Component | Dell PN | List price | Per port | 25 GbE | 100 GbE |
|---|---|---|---|---|---|
| Broadcom 57414 dual 25 Gb | 540-BBUJ | $769 | $385 | $385 | |
| S5248F-ON 48 port 25 GbE | 210-APEX | $59,216 | $1,234 | $1,234 | |
| 25 GbE Passive Copper DAC | 470-BBCX | $125 | $125 | $125 | |
| Broadcom 57508 dual 100 Gb | 540-BDEF | $2,484 | $1,242 | | $1,242 |
| S5232F-ON 32 port 100 GbE | 210-APHK | $62,475 | $1,952 | | $1,952 |
| 100 GbE Passive Copper DAC | 470-ABOX | $360 | $360 | | $360 |
| Total per port | | | | $1,743 | $3,554 |

Overall, the per-port cost of the 100 GbE equipment was 2.04 times that of the 25 GbE equipment. However, this doubling of network cost provides four times the bandwidth, a 78% increase in storage performance, and a 49% reduction in latency.

If your workload is IOPS-bound or latency-sensitive and you had planned to address this issue by adding more VxRail nodes, consider this a wakeup call. Adding dual 100Gb came at a total list cost of $42,648 for the twelve ports used. This cost is significantly less than the list price of a single VxRail node and a fraction of the list cost of adding enough VxRail nodes to achieve the same level of performance increase.
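For readers who want to rerun the cost comparison with their own pricing, here is a small Python sketch of the per-port arithmetic. The list prices and port counts are the March 2023 US figures quoted in this post. The `Item` class and function names are illustrative, and the twelve-port total multiplies the rounded per-port figure, which is how the $42,648 above is obtained.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    name: str
    list_price: float  # US list price, March 2023, as quoted in this post
    ports: int         # ports the price is spread across


ITEMS_25GBE = [
    Item("Broadcom 57414 dual 25 Gb", 769, 2),
    Item("S5248F-ON 48 port 25 GbE", 59_216, 48),
    Item("25 GbE Passive Copper DAC", 125, 1),
]
ITEMS_100GBE = [
    Item("Broadcom 57508 dual 100 Gb", 2_484, 2),
    Item("S5232F-ON 32 port 100 GbE", 62_475, 32),
    Item("100 GbE Passive Copper DAC", 360, 1),
]


def per_port_total(items: list[Item]) -> float:
    """Sum of each component's list price divided by its port count."""
    return sum(item.list_price / item.ports for item in items)


if __name__ == "__main__":
    cost_25 = per_port_total(ITEMS_25GBE)
    cost_100 = per_port_total(ITEMS_100GBE)
    print(f"25 GbE per port:  ${cost_25:,.0f}")
    print(f"100 GbE per port: ${cost_100:,.0f}")
    print(f"Cost ratio:       {cost_100 / cost_25:.2f}x")
    print(f"Twelve 100 GbE ports: ${12 * round(cost_100):,}")
```

Swapping in local street pricing only requires changing the `list_price` values.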

Reach out to your networking team; they would be delighted to help deploy the 100 Gb switches your savings funded. If decision-makers need further encouragement, send them this link to the white paper on this same topic Dell VxRail Performance Analysis (similar content, just more formal), and this link to VMware's vSAN 8 Total Cost of Ownership white paper.

While 25 GbE has its place in the datacenter, when it comes to deploying vSAN Express Storage Architecture, it's clear that we're moving beyond it and onto 100 GbE. The future is now 100 GbE, and we thank Broadcom for joining us on this journey.