VxRail brings key features with the release of 4.7.510

Mon, 17 Aug 2020 18:31:30 -0000

VxRail recently released a new version of our software, 4.7.510, which brings key new features and product offerings.

At a high level, this release further solidifies VxRail’s synchronous release commitment with vSphere of 30 days or less. VxRail 4.7.510 integrates and aligns with VMware by including the vSphere 6.7U3 patch release. More importantly, vSphere 6.7U3 provides the underlying support for Intel Optane persistent memory (or PMem), also offered in this release.

Intel Optane persistent memory is a non-volatile storage medium with RAM-like performance characteristics. Intel Optane PMem in a hyperconverged VxRail environment accelerates IT transformation with faster analytics (think in-memory DBMS) and cloud services.

Intel Optane PMem (in App Direct mode) provides added memory options for the E560/F/N and P570/F and is supported on version 4.7.410. Additionally, PMem will be supported on the P580N beginning with version 4.7.510 on July 14.

This technology is ideal for many use cases, including in-memory databases and block storage devices. It’s also flexible and scalable, allowing you to start small with a single PMem module (card) and scale as needed. Other use cases include real-time analytics and transaction processing, journaling, massive parallel query functions, checkpoint acceleration, recovery time reduction, paging reduction, and overall application performance improvements.

New functionality enables customers to schedule and run "on demand" health checks in advance of, and independently of, the LCM upgrade. Not only does this give customers the flexibility to proactively troubleshoot issues, but it ensures that clusters are in a ready state for the next upgrade or patch. This is extremely valuable for customers that have stringent upgrade schedules, as they can rest assured that clusters will seamlessly upgrade within a specified window. Of course, running health checks on a regular basis also provides peace of mind, knowing that your clusters are always ready for unscheduled patches and security updates.

Finally, the VxRail 4.7.510 release introduces optimized security functionality with two-factor authentication (or 2FA) with SecurID for VxRail. 2FA allows users to log in to VxRail via the vCenter plugin when the vCenter is configured for RSA 2FA. Prior to this version, the user would be required to enter a username and password. The RSA authentication manager automatically verifies multiple prerequisites and system components to identify and authenticate users. This new functionality saves time by removing the separate username/password entry step for VxRail access. Two-factor authentication methods are often required by government agencies or large enterprises. VxRail has already incorporated enhanced security offerings including security hardening, VxRail ACLs and RBAC, KMIP-compliant key management, secure logging, and DARE. Now, with the release of 4.7.510, the inclusion of 2FA further distinguishes VxRail as a market leader.

Please check out these resources for more VxRail 4.7.510 information:

By: KJ Bedard - VxRail Technical Marketing Engineer

LinkedIn: https://www.linkedin.com/in/kj-bedard-50b25315/

Twitter: @KJbedard

Related Blog Posts

VxRail & Intel Optane for Extreme Performance

Tue, 02 Mar 2021 17:47:20 -0000

Enabling high performance for HCI workloads is exactly what happens when VxRail is configured with Intel Optane Persistent Memory (PMem). Optane PMem provides compute and storage performance to better serve applications and business-critical workloads. So, what is Intel Optane Persistent Memory? Persistent memory is memory that can be used as storage, providing RAM-like performance, very low latency, and high bandwidth. It’s great for applications that require or consume large amounts of memory, like SAP HANA, and has many other use cases, as shown in Figure 1. VxRail is certified for SAP HANA as well as Intel Optane PMem.

Moreover, PMem can be used as block storage where data can be written persistently; DBMS log files are a great example. A key advantage of this technology is that you can start small with a single PMem card (or module), then scale and grow as needed, with the ability to add up to 12 cards. Customers can take advantage of PMem immediately because there’s no need to make major hardware or configuration changes, nor to budget for a large capital expenditure.

There are a wide variety of use cases today including those you see here:

Figure 1: Intel Optane PMem Use Cases

PMem offers two very different operating modes, Memory and App Direct, and App Direct in turn can be used in two very different ways.

First, Intel Optane PMem in Memory mode is not yet supported by VxRail. This mode acts as volatile system memory and provides significantly lower cost per GB than traditional DRAM DIMMs. A follow-on update to this blog will describe this mode and test results in much more detail once it is supported.

As for App Direct mode (supported today), PMem is consumed by virtual machines as either a block storage device, known as vPMemDisk, or as byte-addressable memory, known as Virtual NVDIMM. Both provide great benefit to the applications running in a virtual machine, just in very different ways. vPMemDisk can be used by any virtual machine hardware, and by any guest OS. Since it’s presented as a block device, it is treated like any other virtual disk, and applications and/or data can then be placed on it. The second consumption method, NVDIMM, has the advantage of being addressed in the same way as regular RAM while retaining its data through reboots or power failures. This is a considerable plus for large in-memory databases like SAP HANA, where cache warm-up or the time to load tables in memory can be significant!

However, it’s important to note that, like any other memory module, the PMem module does not provide data redundancy. This may not be an issue for some data files on commonly used applications that can be re-created in case of a host failure. But a key principle when using PMem, either as block storage or as byte-addressable memory, is that the applications are responsible for handling data replication to provide durability.

New data redundancy options are expected on applications that are using PMem and should be well understood before deployment.
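As a rough illustration of the two App Direct consumption styles described above, the sketch below contrasts block-style access (write through the file API, then sync) with byte-addressable access (map the region and update bytes in place). It makes some loud assumptions: an ordinary temporary file stands in for a file on a PMem-backed device, the function names are hypothetical, and Python's standard `mmap` module is used only to show the access pattern. It is not how VxRail or any particular application consumes PMem; real PMem-aware applications typically rely on purpose-built libraries.

```python
import mmap
import os
import tempfile

RECORD = b"commit:42"  # hypothetical log record used for the demo


def write_block_style(path: str, data: bytes) -> None:
    """Block-device style: write via the file API and force it to stable storage."""
    with open(path, "wb") as f:
        f.write(data)
        f.flush()
        os.fsync(f.fileno())


def write_byte_addressable(path: str, offset: int, data: bytes) -> None:
    """Memory style: map the file and update a byte range in place."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as mm:  # length 0 maps the whole file
            mm[offset:offset + len(data)] = data
            mm.flush()  # push the modified pages back to the backing file


with tempfile.TemporaryDirectory() as tmp:
    # Assumption: this temp file is a stand-in for a PMem-backed file.
    path = os.path.join(tmp, "pmem_demo.bin")
    write_block_style(path, bytes(4096))        # lay down one zeroed 4 KB block
    write_byte_addressable(path, 128, RECORD)   # then patch a few bytes in place
    with open(path, "rb") as f:
        f.seek(128)
        readback = f.read(len(RECORD))

print(readback)
```

Note that neither path adds redundancy: if the host holding the module is lost, so is the data, which is why replication remains the application's job.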

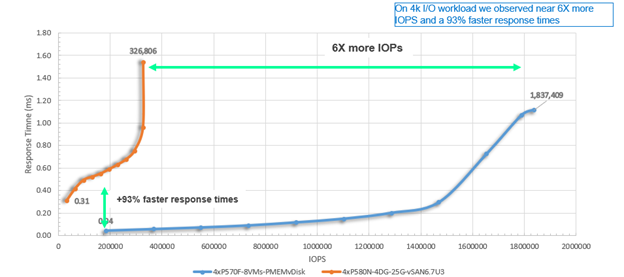

First, we’ll look at test results using PMem as a virtual disk (or vPMemDisk). Our Engineering team tested VxRail with PMem in App Direct mode and ran comparison tests against a VxRail all-flash system (P570F series platform). The testing simulated a typical 4K OLTP workload with a 70/30 RW ratio. Our results show more than 1.8M IOPS, or 6X more than the all-flash VxRail system. That equates to 93% faster response times (or lower latency) and 6X greater throughput, as shown here:

Figure 2: VxRail PMem App Direct versus VxRail all-flash

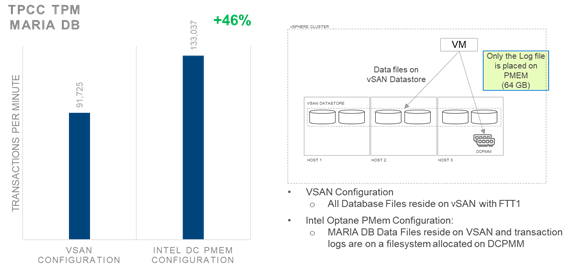
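To put the 4K OLTP numbers above in perspective, here is a small back-of-the-envelope calculation. It is only a sketch under stated assumptions: 4K is taken as 4,096 bytes, the "6X" figure is treated as an exact multiplier, and "93% faster response times" is read as a 93% reduction in latency. The derived baseline and latency factor are therefore estimates, not published results.

```python
def throughput_gb_per_s(iops: float, block_bytes: int) -> float:
    """Convert an IOPS figure at a fixed block size into decimal GB/s."""
    return iops * block_bytes / 1e9


PMEM_IOPS = 1_800_000        # "more than 1.8M IOPS" from the test above
BLOCK_BYTES = 4 * 1024       # assumption: 4K means 4,096 bytes
SPEEDUP = 6                  # "6X more than the all-flash VxRail system"
LATENCY_REDUCTION = 0.93     # assumption: 93% lower latency

pmem_gbps = throughput_gb_per_s(PMEM_IOPS, BLOCK_BYTES)
implied_baseline_iops = PMEM_IOPS / SPEEDUP
latency_factor = 1 / (1 - LATENCY_REDUCTION)

print(f"PMem throughput at 4K: about {pmem_gbps:.2f} GB/s")
print(f"Implied all-flash baseline: about {implied_baseline_iops:,.0f} IOPS")
print(f"Latency lower by a factor of about {latency_factor:.1f}")
```

Under these assumptions the vPMemDisk result works out to a little over 7 GB/s of 4K traffic, with response times roughly fourteen times shorter than the all-flash baseline.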

This latency difference indicates the potential to improve the performance of legacy applications by placing specific data files on a PMem module, for example, placing log files on PMem. To verify the benefit of this log acceleration use case, we ran a TPC-C benchmark comparing VxRail configured with a log file on a vPMemDisk to a VxRail all-flash vSAN, and we saw a 46% improvement in the number of transactions per minute.

Figure 3: Log file acceleration use case

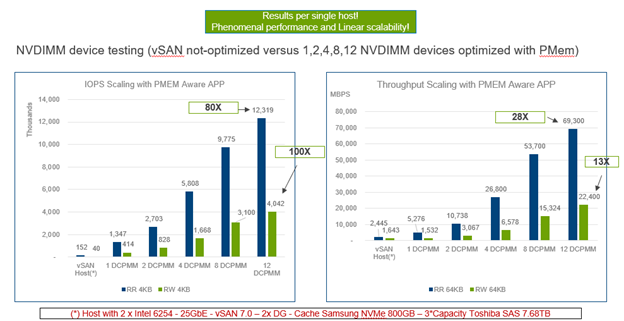

For the second consumption method, we tested PMem in App Direct mode using the NVDIMM consumption method. We performed tests using 1, 2, 4, 8, and then 12 PMem modules. All testing has been evaluated and validated by ESG (Enterprise Strategy Group). The certified white paper has been published, as highlighted in the resources section.

Figure 4: NVDIMM device testing (vSAN not-optimized versus optimized PMem NVDIMM)

The results show close to linear scalability as we increase the number of modules from 1 to 12. With 12 PMem modules, VxRail achieves 80 times more IOPS than when running against non-optimized vSAN (meaning VxRail all-flash vSAN with no PMem involved), and 100X for the 4K RW workload. The right half of the graphic depicts throughput results for very large IO, 64KB. With PMem optimized on 12 modules, we saw 28X higher throughput for a 64KB random read (RR) workload, and PMem is 13 times faster for the 64KB RW workload.

What you see here is amazing performance on a single VxRail host and almost linear scalability when adding PMem!! Yes, that warrants a double bang. If you were to max out a 64-node cluster, the potential scalability is phenomenal and game changing!

So, what does all this mean? Key takeaways are:

- The local performance of VxRail with Intel Optane PMem can scale to 12M read IOPS and more than 4M write IOPS, or 70GB/s read throughput and 22GB/s write throughput, on a single host.

- The use of PMem modules doesn’t affect the regular activity on vSAN datastores and extends the value of your VxRail platform in many ways:

- It can be used to accelerate legacy applications, such as RDBMS log acceleration

- It enables the deployment of in-memory databases and applications that can benefit from the higher IO throughput provided by PMem while still benefiting from vSAN characteristics in the VxRail platform

- The local performance of a single host with 12 x 128GB PMem modules achieves more than 12M read IOPS and more than 4M write IOPS

- It not only increases performance of traditional HCI workloads such as VDI, but also supports performance-intensive transactional and analytics workloads

- It offers orders-of-magnitude faster performance than traditional storage

- It provides more memory for less cost, as PMem is much less costly than DRAM

The references and validation testing have been completed by ESG (Enterprise Strategy Group). White papers and other resources on VxRail for Extreme Performance are available via the links listed below.

Additional Resources

ESG Validation: Dell EMC VxRail and Intel Optane Persistent Memory

VxRail and Intel Optane for Extreme Performance – Engineering presentation

Dell EMC & Intel Optane Persistent Memory - video

ESG Validation: Dell EMC VxRail with Intel Xeon Scalable Processors and Intel Optane SSDs

By: KJ Bedard – VxRail Technical Marketing Engineer

LinkedIn: https://www.linkedin.com/in/kj-bedard-50b25315/

Twitter: @KJbedard

I feel the need – the need for speed (and endurance): Intel Optane edition

Wed, 13 Oct 2021 17:37:52 -0000

It has only been three short months since we launched VxRail on 15th Generation PowerEdge, but we're already expanding the selection of configuration offerings. So far we've added 18 additional processors to power your workloads, including some high frequency and low core count options (delightful news for those with applications that are licensed per core), an additional NVIDIA GPU (the A30), and a slew of additional drives, and we've doubled the RAM capacity to 8TB. I've probably missed something, as it can be hard to keep up with all the innovations taking place within this race car that is VxRail!

In my last blog, I hinted at one of those drive additions: faster cache drives. Today I'm excited to announce that you can now order, and turbo charge your VxRail with, the 400GB or 800GB Intel P5800X, Intel’s second generation Optane NVMe drive. Before we delve into some of the performance numbers, let’s discuss what it is about the Optane drives that makes them so special. More specifically, what is it about them that enables them to deliver so much more performance, in addition to significantly higher endurance rates?

To grossly over-simplify it, and my apologies in advance to the Intel engineers who poured their lives into this, when writing to NAND flash an erase cycle needs to be performed before a write can be made. These erase cycles are time-consuming operations and the main reason why random write IO capability on NAND flash is often a fraction of the read capability. Additionally, garbage collection runs continuously in the background to ensure that there is space available for incoming writes. Optane, on the other hand, does bit-level write-in-place operations, so it doesn’t require an erase cycle or garbage collection, and it doesn’t incur a write performance penalty. Hence, random write IO capability almost matches the random read IO capability.

So just how much better is endurance with this new Optane drive? Endurance can be measured in Drive Writes Per Day (DWPD), which measures how many times the drive's entire capacity could be overwritten each day of its warranty life. For the 1.6TB NVMe P5600 this is 3 DWPD, or 55 MB per second, every second for five years: just shy of 9PB of writes, not bad. However, the 800GB Optane P5800X is rated at 100 DWPD, so it will endure 146PB over its five-year warranty life, or almost 1 GB per second (926 MB/s), every second for five years. Not quite indestructible, but that is a lot of writes, so much so that you don’t need extra capacity for wear leveling and a smaller capacity drive will suffice.
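The endurance figures above follow directly from the DWPD definition, and the arithmetic can be sketched in a few lines. The helper name `endurance` is hypothetical, and the calculation assumes decimal units (1 TB = 1,000,000 MB, 1 PB = 1,000 TB) and 365-day years.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365


def endurance(capacity_tb: float, dwpd: float, warranty_years: int = 5):
    """Return (sustained write rate in MB/s, total writes in PB) for a DWPD rating."""
    tb_per_day = capacity_tb * dwpd
    mb_per_second = tb_per_day * 1_000_000 / SECONDS_PER_DAY
    total_pb = tb_per_day * DAYS_PER_YEAR * warranty_years / 1_000
    return mb_per_second, total_pb


p5600 = endurance(capacity_tb=1.6, dwpd=3)      # 1.6TB NVMe P5600
p5800x = endurance(capacity_tb=0.8, dwpd=100)   # 800GB Optane P5800X

for name, (rate, total) in (("P5600", p5600), ("P5800X", p5800x)):
    print(f"{name}: {rate:.1f} MB/s sustained, {total:.2f} PB over five years")
```

This gives roughly 55.6 MB/s and 8.76 PB for the P5600, and about 926 MB/s and 146 PB for the P5800X, in line with the numbers quoted above.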

You might wonder why you should care about endurance, as Dell EMC will replace the drive under warranty anyway. There are three reasons. First, when a cache drive fails, its disk group is taken offline, so not only have you lost performance and capacity, your environment is also taking on the additional burden of a rebuild operation to re-protect your data. Second, more and more systems are being deployed outside of the core data center. Replacing a drive in your data center is straightforward, and you might even have spares onsite, but what about outside of your core data center? What is your plan for replacing a drive at a remote office, or a thousand miles away? What if that remote location is not an office but an oil rig one hundred miles offshore, or a cruise ship halfway around the world, where the cost of getting a replacement drive there is not trivial? In these remote locations, onsite spares are commonplace, but the exceptions are what lead me to the third reason: Murphy's Law. IT and IT staffing might be an afterthought at these remote locations. Getting a failed drive swapped out at a remote location that lacks true IT staffing may not get the priority it deserves, and then there is that ever present risk of user error... “Oh, you meant the other drive?!? Sorry...”

Cache in its many forms plays an important role in the datacenter. Cache enables switches and storage to deliver higher levels of performance. On VxRail, our cache drives fall into two categories, SAS and NVMe, with NVMe delivering up to 35% higher IOPS and 14% lower latency. Among our NVMe cache drives we have two from Intel: the 1.6TB P5600 and the Optane P5800X, in 400GB and 800GB capacities. The links for each will bring you to the drive specification, including performance details. But how does the performance at a drive level impact performance at the solution level? After all, solution-level performance, after cache mirroring, network hops, and the vSAN stack, is what your application actually consumes. Intel is a great partner to work with; when we checked with them about publishing solution-level performance data comparing the two drives side-by-side, they were all for it.

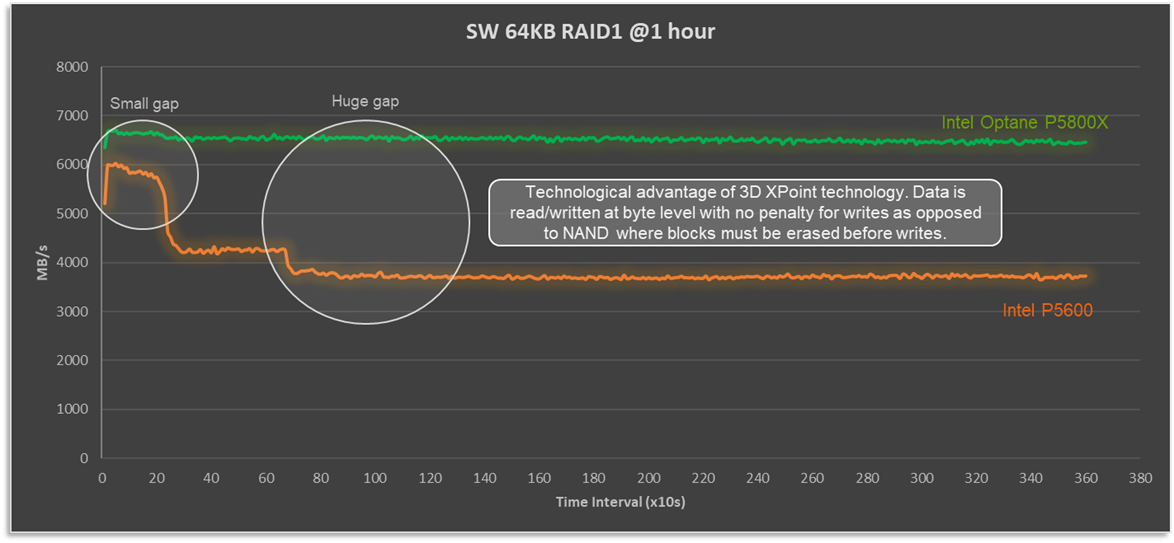

In my over-simplified explanation above, I described how the write cycle for Optane drives is significantly different, as an erase operation does not need to be done first. So how does that play out in a full solution stack? Figure 1 compares the two cache drives in a four node VxRail P670F cluster running a 100% sequential write 64KB workload. This is not a test that reflects any real-world workload, but it really stresses the vSAN cache layer, highlights the consistent write performance that 3D XPoint technology delivers, and shows how Optane is able to de-stage cache when it fills up without compromising performance.

Figure 1: Optane cache drives deliver consistent and predictable write performance

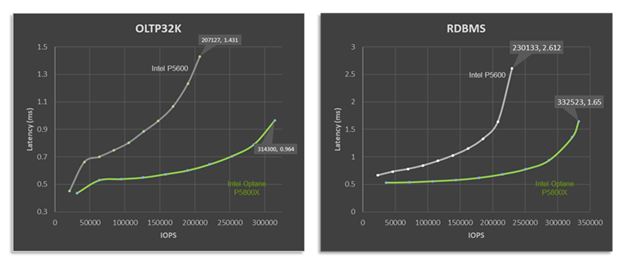

When we look at performance, there are two numbers to keep in mind: IOPS and latency. The target is to have high IOPS with low and predictable latency, at a real-world IO size and read:write ratio. To that end, let’s look at how VxRail performance differs with the P5600 and P5800X under OLTP32K (70R30W) and RDBMS (60R40W) benchmark workloads, as shown in Figure 2.

Figure 2: Optane cache drives deliver higher performance and lower latency across a variety of workload types.

It doesn't take an expert to see that with the P5800X this four node VxRail P670F cluster's peak performance is significantly higher than when it is equipped with the P5600 as a cache drive. For RDBMS workloads, it delivers up to 44% higher IOPS with a 37% reduction in latency. But peak performance isn't everything. Many workloads, particularly databases, place a higher importance on latency requirements. What if our workload, database or otherwise, requires 1ms response times? Maybe this is the Service Level Agreement (SLA) that the infrastructure team has with the application team. In such a situation, based on the data shown, and for an OLTP 70:30 workload with a 32K block size, the VxRail cluster would deliver over twice the performance at the same latency SLA, going from 147,746 to 314,300 IOPS.
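The "over twice the performance" claim at the 1ms SLA is easy to check from the two IOPS figures quoted above. The short sketch below does just that; the function name `improvement` is hypothetical and exists only for this illustration.

```python
def improvement(before: float, after: float) -> tuple:
    """Return (ratio, percent increase) when going from `before` to `after`."""
    ratio = after / before
    return ratio, (ratio - 1) * 100


# IOPS at the 1ms latency SLA, OLTP 70:30 with a 32K block size
P5600_IOPS = 147_746
P5800X_IOPS = 314_300

ratio, pct = improvement(P5600_IOPS, P5800X_IOPS)
print(f"P5800X delivers {ratio:.2f}x the IOPS of the P5600 ({pct:.0f}% more)")
```

The ratio comes out at a little over 2.1, so the cluster with P5800X cache drives more than doubles the IOPS available within the same latency budget.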

In the datacenter, as in life, we are often faced with "Good, fast, or cheap. Choose two." When you compare the price tag of the P5600 and P5800X side by side, the Optane drive has a significant premium for its good and fast. However, keep in mind that you are not buying an individual drive, you are buying a full stack solution of several pieces of hardware and software, where the cost of the premium pales in comparison to the increased endurance and performance. Whether you are looking to turbo charge your VxRail like a racecar, or make it as robust as a tank, Intel Optane SSD drives will get you both.

Author Information

David Glynn, Technical Marketing Engineer, VxRail at Dell Technologies

Twitter: @d_glynn

LinkedIn: David Glynn

Additional Resources

Intel SSD D7-P5600 Series 1.6TB 2.5in PCIe 4.0 x4 3D3 TLC Product Specifications

Intel Optane SSD DC P5800X Series 800GB 2.5in PCIe x4 3D XPoint Product Specifications