I feel the need – the need for speed (and endurance): Intel Optane edition

Wed, 13 Oct 2021 17:37:52 -0000

It has only been three short months since we launched VxRail on 15th Generation PowerEdge, but we're already expanding the selection of configuration offerings. So far we've added 18 additional processors to power your workloads, including some high frequency and low core count options, which is delightful news for those with applications that are licensed per core. We've also added an additional NVIDIA GPU (the A30) and a slew of additional drives, and doubled the RAM capacity to 8TB. I've probably missed something, as it can be hard to keep up with all the innovations taking place within this race car that is VxRail!

In my last blog, I hinted at one of those drive additions: faster cache drives. Today I'm excited to announce that you can now order, and turbo charge your VxRail with, the 400GB or 800GB Intel P5800X, Intel’s second generation Optane NVMe drive. Before we delve into some of the performance numbers, let’s discuss what it is about the Optane drives that makes them so special. More specifically, what is it about them that enables them to deliver so much more performance, in addition to significantly higher endurance?

To grossly over-simplify it, and my apologies in advance to the Intel engineers who poured their lives into this: when writing to NAND flash, an erase cycle needs to be performed before a write can be made. These erase cycles are time-consuming operations and the main reason why random write IO capability on NAND flash is often a fraction of the read capability. Additionally, garbage collection runs continuously in the background to ensure that there is space available for incoming writes. Optane, on the other hand, does bit-level write-in-place operations, so it doesn’t require an erase cycle or garbage collection, and its writes carry no such performance penalty. Hence, random write IO capability almost matches the random read IO capability.

So just how much better is endurance with this new Optane drive? Endurance can be measured in Drive Writes Per Day (DWPD), which measures how many times the drive's entire capacity could be overwritten each day of its warranty life. For the 1.6TB NVMe P5600 this is 3 DWPD, or 55 MB per second, every second for five years: just shy of 9PB of writes, not bad. However, the 800GB Optane P5800X is rated at 100 DWPD, so it will endure 146PB of writes over its five-year warranty life, or almost 1 GB per second (926 MB/s) every second for five years. Not quite indestructible, but that is a lot of writes, so much so that you don’t need extra capacity for wear leveling and a smaller capacity drive will suffice.
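
The DWPD arithmetic above can be reproduced with a few lines of Python. This is a minimal sketch, assuming decimal units (1 TB = 1,000,000 MB), a 365-day year, and a five-year warranty; the function and variable names are illustrative and not part of any Dell or Intel tool.

```python
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_YEAR = 365  # assumption: leap days ignored


def endurance(capacity_tb: float, dwpd: float, warranty_years: int = 5):
    """Return (lifetime writes in PB, sustained write rate in MB/s)."""
    tb_per_day = capacity_tb * dwpd
    # Total data written if the drive is filled `dwpd` times every day of the warranty.
    lifetime_pb = tb_per_day * DAYS_PER_YEAR * warranty_years / 1000
    # The same daily volume expressed as a constant write rate.
    mb_per_second = tb_per_day * 1_000_000 / SECONDS_PER_DAY
    return lifetime_pb, mb_per_second


p5600 = endurance(capacity_tb=1.6, dwpd=3)     # 1.6TB NAND NVMe drive
p5800x = endurance(capacity_tb=0.8, dwpd=100)  # 800GB Optane drive

for name, (pb, mbps) in {"P5600": p5600, "P5800X": p5800x}.items():
    print(f"{name}: {pb:.2f} PB lifetime writes, {mbps:.0f} MB/s sustained")
```

Under these assumptions the P5600 works out to roughly 8.76 PB and just over 55 MB/s, and the P5800X to 146 PB and about 926 MB/s, matching the figures quoted above.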

You might wonder why you should care about endurance, as Dell EMC will replace the drive under warranty anyway. There are three reasons. First, when a cache drive fails, its disk group is taken offline, so not only have you lost performance and capacity, but your environment is also taking on the additional burden of a rebuild operation to re-protect your data. Secondly, more and more systems are being deployed outside of the core data center. Replacing a drive in your data center is straightforward, and you might even have spares onsite, but what about outside of your core data center? What is your plan for replacing a drive at a remote office, or a thousand miles away? What if that remote location is not an office but an oil rig one hundred miles offshore, or a cruise ship halfway around the world, where the cost of getting a replacement drive there is not trivial? In these remote locations, onsite spares are commonplace, but the exceptions are what lead me to the third reason: Murphy's Law. IT and IT staffing might be an afterthought at these remote locations. Swapping out a failed drive at a remote location that lacks true IT staffing may not get the priority it deserves, and then there is that ever-present risk of user error... “Oh, you meant the other drive?!? Sorry...”

Cache in its many forms plays an important role in the datacenter. Cache enables switches and storage to deliver higher levels of performance. On VxRail, our cache drives fall into two categories, SAS and NVMe, with NVMe delivering up to 35% higher IOPS and 14% lower latency. Among our NVMe cache drives we have two from Intel, the 1.6TB P5600 and the Optane P5800X, in 400GB and 800GB capacities. The links for each will bring you to the drive specification including performance details. But how does performance at the drive level impact performance at the solution level? At the end of the day, solution-level performance is what your application consumes, after cache mirroring, network hops, and the vSAN stack. Intel is a great partner to work with: when we checked with them about publishing solution level performance data comparing the two drives side-by-side, they were all for it.

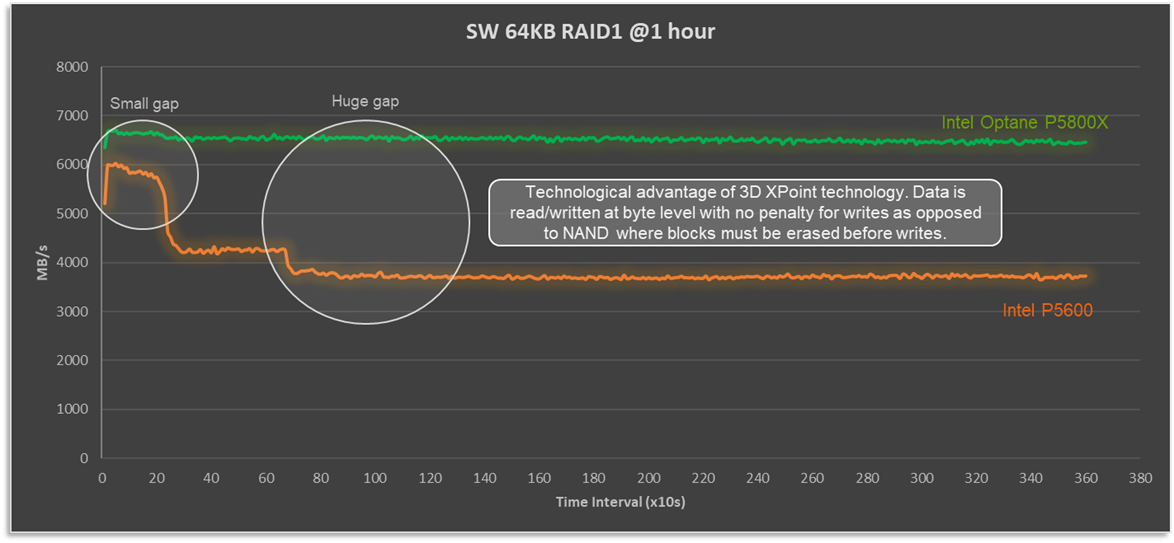

In my over-simplified explanation above, I described how the write cycle for Optane drives is significantly different, as an erase operation does not need to be done first. So how does that play out in a full solution stack? Figure 1 compares the two cache drives in a four node VxRail P670F cluster running a 100% sequential write 64KB workload. This is not a test that reflects any real-world workload, but one that really stresses the vSAN cache layer, highlights the consistent write performance that 3D XPoint technology delivers, and shows how Optane is able to de-stage cache when it fills up without compromising performance.

Figure 1: Optane cache drives deliver consistent and predictable write performance

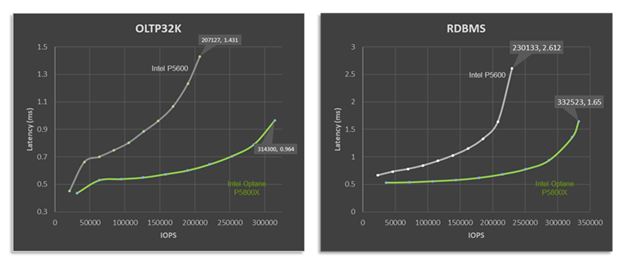

When we look at performance, there are two numbers to keep in mind: IOPS and latency. The target is to have high IOPS with low and predictable latency, at a real-world IO size and read:write ratio. To that end, let’s look at how VxRail performance differs with the P5600 and P5800X under OLTP32K (70R30W) and RDBMS (60R40W) benchmark workloads, as shown in Figure 2.

Figure 2: Optane cache drives deliver higher performance and lower latency across a variety of workload types.

It doesn't take an expert to see that with the P5800X this four node VxRail P670F cluster's peak performance is significantly higher than when it is equipped with the P5600 as a cache drive. For RDBMS workloads, it delivers up to 44% higher IOPS with a 37% reduction in latency. But peak performance isn't everything. Many workloads, particularly databases, place a higher importance on latency requirements. What if our workload, database or otherwise, requires 1ms response times? Maybe this is the Service Level Agreement (SLA) that the infrastructure team has with the application team. In such a situation, based on the data shown, and for an OLTP 70:30 workload with a 32K block size, the VxRail cluster would deliver over twice the performance at the same latency SLA, going from 147,746 to 314,300 IOPS.
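
To put a number on "over twice the performance", the two IOPS figures quoted above can be compared directly. This is a small sketch using only the values from the text; the helper name `improvement` is illustrative.

```python
def improvement(baseline: float, candidate: float) -> tuple[float, float]:
    """Return (ratio, percentage gain) of candidate over baseline."""
    ratio = candidate / baseline
    return ratio, (ratio - 1) * 100


# IOPS at the 1ms latency SLA, OLTP 70:30 with a 32K block size, as quoted in the text.
p5600_iops = 147_746
p5800x_iops = 314_300

ratio, pct_gain = improvement(p5600_iops, p5800x_iops)
print(f"P5800X delivers {ratio:.2f}x the IOPS ({pct_gain:.0f}% more) at the same SLA")
```

The ratio comes out at a little over 2.1, which is where "over twice" comes from.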

In the datacenter, as in life, we are often faced with "Good, fast, or cheap. Choose two." When you compare the price tag of the P5600 and P5800X side by side, the Optane drive has a significant premium for its good and fast. However, keep in mind that you are not buying an individual drive, you are buying a full stack solution of several pieces of hardware and software, where the cost of the premium pales in comparison to the increased endurance and performance. Whether you are looking to turbo charge your VxRail like a racecar, or make it as robust as a tank, Intel Optane SSD drives will get you both.

Author Information

David Glynn, Technical Marketing Engineer, VxRail at Dell Technologies

Twitter: @d_glynn

LinkedIn: David Glynn

Additional Resources

Intel SSD D7P5600 Series 1.6TB 2.5in PCIe 4.0 x4 3D3 TLC Product Specifications

Intel Optane SSD DC P5800X Series 800GB 2.5in PCIe x4 3D XPoint Product Specifications

Related Blog Posts

Top benefits to using Intel Optane NVMe for cache drives in VxRail

Mon, 17 Aug 2020 18:31:31 -0000

Performance, endurance, and all without a price jump!

There is a saying that “A picture paints a thousand words” but let me add that a “graph can make for an awesome picture”.

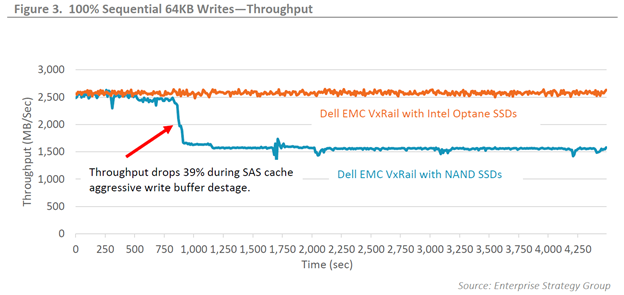

Last August we at VxRail worked with ESG on a technical validation paper that included, among other things, the recent addition of Intel Optane NVMe drives for the vSAN caching layer. Figure 3 in this paper is a graph showing the results of a throughput benchmark workload (more on benchmarks later). When I do customer briefings and the question of vSAN caching performance comes up, this is my go-to whiteboard sketch because on its own it paints a very clear picture about the benefit of using Optane drives – and also because it is easy to draw.

In the public and private cloud, predictability of performance is important, doubly so for any form of latency. This is where caching comes into play: rather than having to wait on a busy system, we just leave it in the write cache inbox and get an acknowledgment. The inverse is also true. Like many parents, I read almost the same bedtime stories to my young kids every night, so you can be sure those books remain close to hand on my bedside “read cache” table. This write and read caching greatly helps in providing performance and consistent latency. With vSAN all-flash there is no longer any read cache, as the flash drives at the capacity layer provide enough random read access performance… just as my collection of bedtime story books has been replaced with a Kindle full of eBooks. Back to the write cache inbox where we’ve been dropping things off: at some point, this write cache needs to be emptied, and this is where the Intel Optane NVMe drives shine. Drawing the comparison back to my kids, I no longer drive to a library to drop off books. With a flick of my finger I can return, or in cache terms de-stage, books from my Kindle back to the town library, the capacity drives if you will. This is a lot less disruptive to my day-to-day life: I don’t need to schedule it, I don’t need to stop what I’m doing, and with a bit of practice I’ve been able to do this mid-story. Let’s look at this in actual IT terms and business benefits.

To really show off how well the Optane drives shine, we want to stress the write cache as much as possible. This is where benchmarking tools and the right knowledge of how to apply them come into play. We had ESG design and run these benchmarking workloads for us. Now let’s be clear, this test is not reflective of a real-world workload but was designed purely to stress the write cache, in particular the de-staging from cache to capacity. The workload that created my go-to whiteboard sketch was the 100% sequential 64KB workload with a 1.2TB working set per node for 75 minutes.

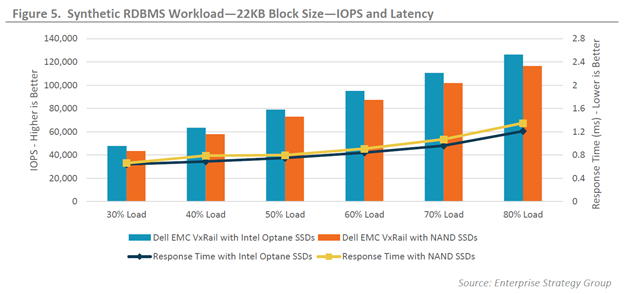

The graph clearly shows the benefit of the Optane drives: they keep on chugging at 2,500MB/sec of throughput the entire time without missing a beat. What’s not to like about that! This is usually when the techie customer in the room will try to burst my bubble by pointing out the unrealistic workload that is in no way reflective of their environment, or most environments… which is true. A more real-world workload would be a simulated relational database workload with a 22KB block size, mixing random 8K and sequential 128K I/O, with 60% reads and 40% writes, and a 600GB per node working set, which is quite a mouthful and is shown in figure 5. The results there show a steady 8.4-8.8% increase in IOPS across the board and a slower rise in latency, resulting in a 10.5% lower response time under 80% load.

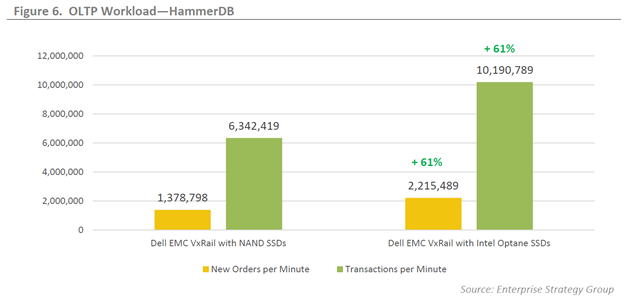

Those of you running OLTP workloads will appreciate the graph shown in figure 6, where HammerDB was used to emulate the database activity of a typical online brokerage firm. The Optane cache drives under that workload sustained a remarkable 61% more transactions per minute (TPM) and new orders per minute (NOPM). That can result in significant business improvement for an online brokerage firm that adopts Optane drives versus one that is using NAND SSDs.

When it comes to write cache, performance is not everything, write endurance is also extremely important. The vSAN spec requires that cache drives be SSD Endurance Class C (3,650 TBW) or above, and Intel Optane beats this hands down with an over tenfold margin at 41 PBW (41,984 TBW). The Intel Optane 3D XPoint architecture allows memory cells to be individually addressed in a dense, transistor-less, stackable design. This extremely high write endurance capability has let us spec a smaller sized cache drive, which in turn lets us maintain a similar VxRail node price point, enabling you the customer to get more performance for your dollar.
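
The "over tenfold" margin follows directly from the two endurance figures. This is a quick sketch, assuming the binary PB-to-TB conversion (1 PB = 1,024 TB) that the 41,984 TBW figure in the text implies; the constant names are illustrative.

```python
TB_PER_PB = 1024  # assumption: binary conversion, consistent with 41 PBW = 41,984 TBW

ENDURANCE_CLASS_C_TBW = 3_650  # minimum for vSAN cache drives, per the text
optane_pbw = 41                # Intel Optane rating quoted in the text

optane_tbw = optane_pbw * TB_PER_PB
margin = optane_tbw / ENDURANCE_CLASS_C_TBW

print(f"Optane: {optane_tbw:,} TBW, {margin:.1f}x the Endurance Class C minimum")
```

That works out to roughly 11.5 times the Class C requirement.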

What’s not to like? Typically, you get to pick any two: faster, better, or cheaper. With Intel Optane drives in your VxRail you get all three: more performance and better endurance, at roughly the same cost. Wins all around!

Author: David Glynn, Sr Principal Engineer, VxRail Tech Marketing

Resources: Dell EMC VxRail with Intel Xeon Scalable Processors and Intel Optane SSDs

New VxRail Node Lets You Start Small with Greater Flexibility in Scaling and Additional Resiliency

Mon, 29 Aug 2022 19:00:25 -0000

When deploying infrastructure, it is important to know two things: current resource needs and that those resource needs will grow. What we don’t always know is in what way the demands for resources will grow. Resource growth is rarely equal across all resources. Storage demands will grow more rapidly than compute, or vice-versa. At the end of the day, we can only make an educated guess, and time will tell if we guessed right. We can, however, make intelligent choices that increase the flexibility of our growth options and give us the ability to scale resources independently. Enter the single processor Dell VxRail P670F.

The availability of the P670F with only a single processor provides more growth flexibility for our customers who have smaller clusters. By choosing a less compute dense single processor node, the same compute workload will require more nodes. There are two benefits to this:

- More efficient storage: More nodes in the cluster open the door to using the more capacity efficient erasure coding vSAN storage option. Erasure coding, also known as parity RAID (such as RAID 5 and RAID 6), has a capacity overhead as low as 33% (with RAID 5), compared to the 100% overhead that mirroring requires. RAID 5 erasure coding can therefore deliver 50% more usable storage capacity from the same amount of raw capacity. While this increase in storage does come with a write performance penalty, VxRail with vSAN has shown that the gap between erasure coding and mirroring has narrowed significantly, and provides significant storage performance capabilities.

- Reduced cluster overhead: Clusters are designed around N+1, where ‘N’ represents sufficient resources to run the preferred workload, and ‘+1’ are spare and unused resources held in reserve should a failure occur in the nodes that make up the N. As the number of nodes in N increases, the percentage of overall resources that are kept in reserve to provide the +1 for planned and unplanned downtime drops.
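
Both benefits come down to simple ratios, sketched below. This assumes the usual vSAN layouts of 3 data + 1 parity for RAID 5 and 4 data + 2 parity for RAID 6 (not spelled out in this post), and a cluster of identical nodes for the N+1 calculation; all function names are illustrative.

```python
def overhead_pct(data_parts: int, redundancy_parts: int) -> float:
    """Extra raw capacity needed per unit of usable capacity, as a percentage."""
    return 100 * redundancy_parts / data_parts


def usable_fraction(data_parts: int, redundancy_parts: int) -> float:
    """Share of raw capacity that ends up usable."""
    return data_parts / (data_parts + redundancy_parts)


def reserve_pct(total_nodes: int) -> float:
    """Share of cluster resources held back as the '+1' in an N+1 design."""
    return 100 / total_nodes


# Assumed layouts: (data parts, redundancy parts)
layouts = {"RAID 1 mirror": (1, 1), "RAID 5 (3+1)": (3, 1), "RAID 6 (4+2)": (4, 2)}
for name, (data, redundancy) in layouts.items():
    print(f"{name}: {overhead_pct(data, redundancy):.0f}% overhead, "
          f"{usable_fraction(data, redundancy):.0%} of raw is usable")

# Usable capacity gained by moving from mirroring to RAID 5 on the same raw capacity.
raid5_gain = usable_fraction(3, 1) / usable_fraction(1, 1) - 1
print(f"RAID 5 vs mirror: {raid5_gain:.0%} more usable capacity")

for nodes in (3, 4, 6, 8):
    print(f"{nodes} nodes: {reserve_pct(nodes):.1f}% of resources held in reserve")
```

Mirroring leaves half of raw capacity usable while RAID 5 leaves three quarters, which is where the 50% gain comes from, and the reserve share falls from a third of a three-node cluster to an eighth of an eight-node one.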

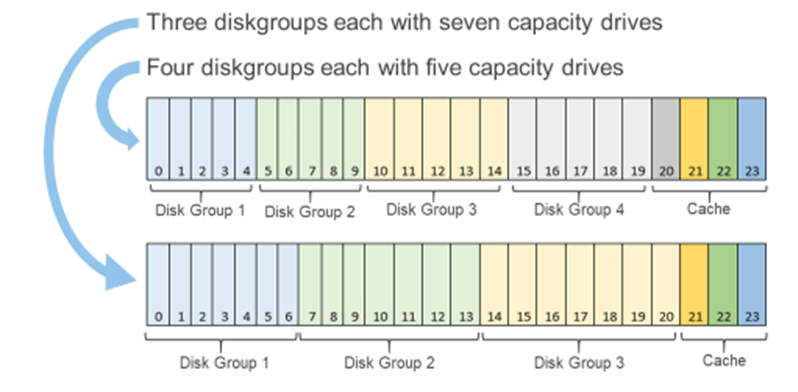

Figure 1: Single processor P670F disk group options

You may be wondering, “How does all of this deliver flexibility in the options for scaling?”

You can scale out the cluster by adding a node. Adding a node is the standard option and can be the right choice if you want to increase both compute and storage resources. However, if you want to grow storage, adding capacity drives will deliver that additional storage capacity. The single processor P670F has disk slots for up to 21 capacity drives with three cache drives, which can be populated one at a time, providing over 160TB of raw storage. (This is also a good time to review virtual machine storage policies: does that application really need mirrored storage?) The single processor P670F does not have a single socket motherboard. Instead, it has the same dual socket motherboard as the existing P670F—very much a platform designed for expanding CPU and memory in the future.

If you are starting small, even really small, as in a 2-node cluster (don’t worry, you can still scale out to 64 nodes), the single processor P670F has additional features that may be of interest to you. Our customers frequently deploy 2-node clusters outside of their core data center at the edge or at remote locations that can be difficult to access. In these situations, the additional data resiliency that is provided by Nested Fault Domains in vSAN is attractive. Providing this additional resiliency on 2-node clusters requires at least three disk groups in each node, for which the single processor P670F is perfectly suited. For more information, see the blog post by VMware’s Teodora Hristov about Nested fault domain for 2 Node cluster deployments. She also posts related information and blog posts on Twitter.

It is impressive how a single change in configuration options can add so much more configuration flexibility, enabling you to optimize your VxRail nodes specifically to your use cases and needs. These configuration options impact your systems today and as you scale into the future.

Author Information

Author: David Glynn, Sr. Principal Engineer, VxRail Technical Marketing

Twitter: @d_glynn