
A Simple Poster at NVIDIA GTC – Running NVIDIA Riva on Red Hat OpenShift with Dell PowerFlex

Fri, 15 Mar 2024 21:45:09 -0000


A few months back, Dell and NVIDIA released a validated design for running NVIDIA Riva on Red Hat OpenShift with Dell PowerFlex. A simple poster—nothing more, nothing less—yet it can unlock much more for your organization. This design shows the power of NVIDIA Riva and Dell PowerFlex to handle audio processing workloads.

What’s more, it will be showcased as part of the poster gallery at NVIDIA GTC this week in San Jose, California. If you are at GTC, we strongly encourage you to join us during the Poster Reception from 4:00 to 6:00 PM. If you are unable to join us, you can view the poster online from the GTC website.

For those familiar with ASR, TTS, and NMT applications, you might be curious as to how we can synthesize these concepts into a simple poster. Read on to learn more.

NVIDIA Riva

For those not familiar with NVIDIA Riva, let’s start there.

NVIDIA Riva is an AI software development kit (SDK) for building conversational AI pipelines, enabling organizations to program AI into their speech and audio systems. It can be used as a smart assistant or even a note taker at your next meeting. Super cool, right?

Taking that up a notch, NVIDIA Riva lets you build fully customizable, real-time conversational AI pipelines, which is a fancy way of saying it allows you to process speech in a bunch of different ways including automatic speech recognition (ASR), text-to-speech (TTS), and neural machine translation (NMT) applications:

- Automatic speech recognition (ASR) – this is essentially dictation. Provide AI with a recording and get a transcript—a near perfect note keeper for your next meeting.

- Text-to-speech (TTS) – a computer reads what you type. In the past, this was often in a monotone voice. It’s been around for more than a couple of decades and has evolved rapidly with more fluid voices and emotion.

- Neural machine translation (NMT) – this is the translation of spoken language in near real-time to a different language. It is a fantastic tool for improving communication, which can go a long way in helping organizations extend business.

Each application is powerful in its own right, so think about what’s possible when we bring ASR, TTS, and NMT together, especially with an AI-backed system. Imagine having a technical support system that could triage support calls, sounded like you were talking to an actual support engineer, and could provide that support in multiple languages. In a word: ground-breaking.

NVIDIA Riva allows organizations to become more efficient in handling speech-based communications. When organizations become more efficient in one area, they can improve in other areas. This is why NVIDIA Riva is part of the NVIDIA AI Enterprise software platform, focusing on streamlining the development and deployment of production AI.

I make it all sound simple; however, those creating large language models (LLMs) around multilingual speech and translation software know it’s not so. That’s why NVIDIA developed the Riva SDK.

The operating platform also plays a massive role in what can be done with workloads. Red Hat OpenShift enables AI speech recognition and inference with its robust container orchestration, microservices architecture, and strong security features. This allows workloads to scale to meet the needs of an organization. As the success of a project grows, so too must the project.

Why is Storage Important

You might be wondering how storage fits into all of this. That’s a great question. You’ll need high-performance storage for NVIDIA Riva. After all, it’s designed to process and/or generate audio files, and doing that in near real-time requires a highly performant, enterprise-grade storage system like Dell PowerFlex.

Additionally, AI workloads are becoming mainstream applications in the data center and should be able to run side by side with other mission critical workloads utilizing the same storage. I wrote about this in my Dell PowerFlex – For Business-Critical Workloads and AI blog.

At this point you might be curious how well NVIDIA Riva runs on Dell PowerFlex. That is what a majority of the poster is about.

ASR and TTS Performance

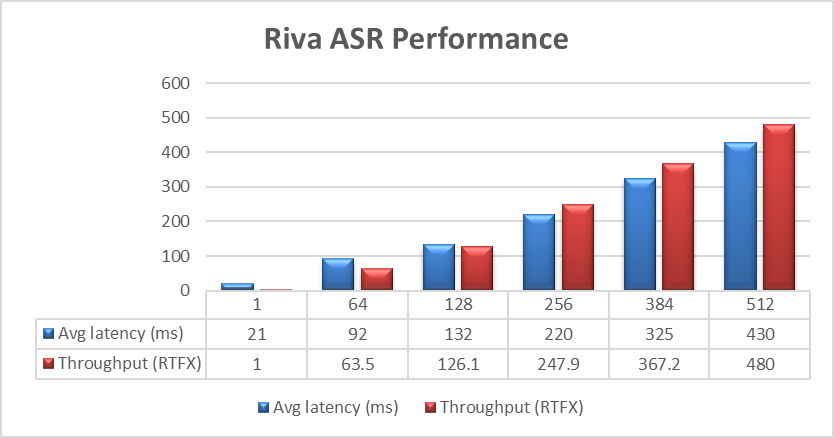

The Dell PowerFlex Solutions Engineering team did extensive testing using the LibriSpeech dev-clean dataset available from Open SLR. With this data set, they performed automatic speech recognition (ASR) testing using NVIDIA Riva. For each test, the stream was increased from 1 to 64, 128, 256, 384, and finally 512, as shown in the following graph.

Figure 1. NVIDIA Riva ASR Performance


The objective of these tests is to have the lowest latency with the highest throughput. Throughput is measured in RTFX, or the duration of audio transcribed divided by computation time. During these tests, the GPU utilization was approximately 48% without any PowerFlex storage bottlenecks. These results are comparable to NVIDIA’s own findings in the NVIDIA Riva User Guide.
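To make the throughput metric concrete, here is a minimal sketch of the RTFX calculation as defined above. The function name and the sample numbers are illustrative assumptions, not figures from the poster.

```python
def rtfx(audio_seconds: float, compute_seconds: float) -> float:
    """Return RTFX: seconds of audio processed per second of computation."""
    if compute_seconds <= 0:
        raise ValueError("compute_seconds must be positive")
    return audio_seconds / compute_seconds


# Hypothetical example: 3,600 s of audio transcribed in 12 s of computation.
example = rtfx(3600.0, 12.0)
print(f"RTFX = {example:.0f}")  # RTFX = 300
```

A higher RTFX means more audio is processed per second of compute, which is why the tests look at it alongside latency rather than in isolation.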

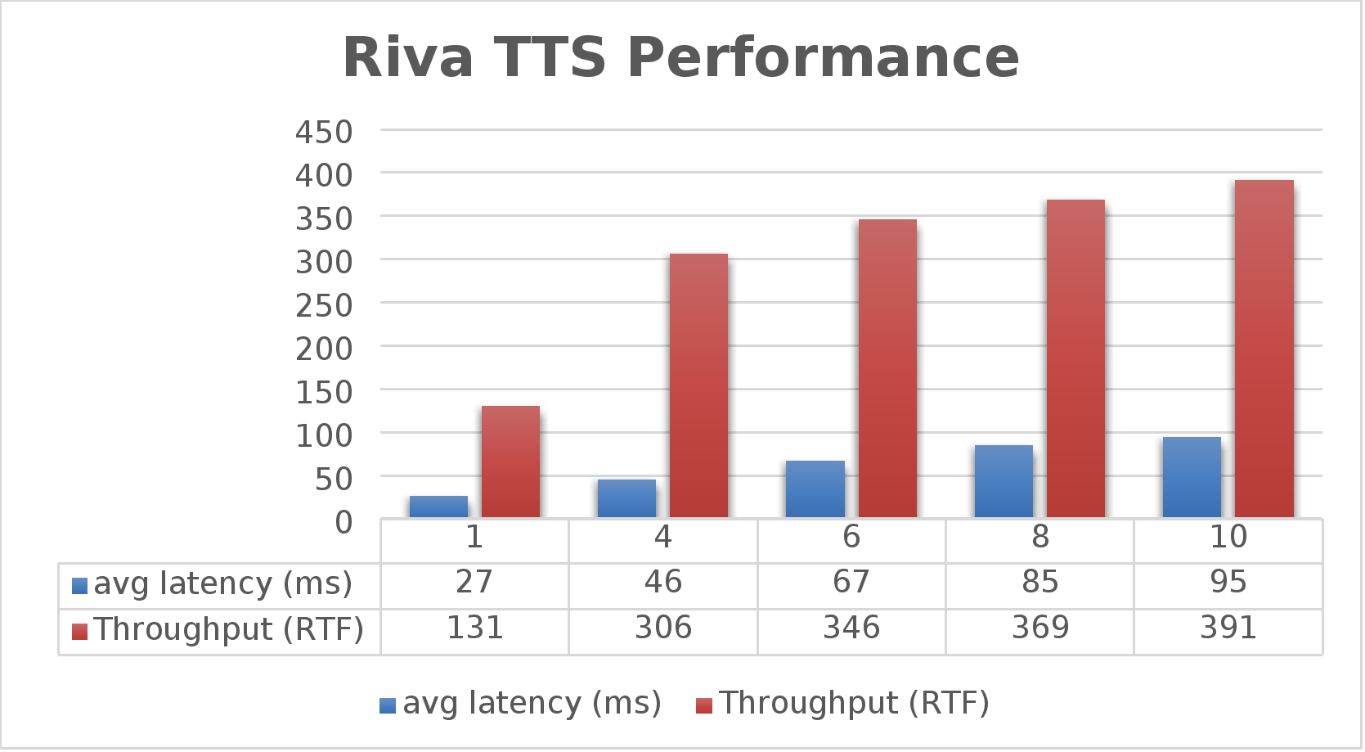

The Dell PowerFlex Solutions Engineering team went beyond just looking at how fast NVIDIA Riva could transcribe text, also exploring the speed at which it could convert text to speech (TTS). They validated this as well. Starting with a single stream, for each run the stream is changed to 4, 6, 8, and 10, as shown in the following graph.

Figure 2. NVIDIA Riva TTS Performance


Again, the goal is to have a low average latency with a high throughput. The throughput (RTFX) in this case is the duration of audio generated divided by computation time. As we can see, this results in an RTFX throughput of 391 with a latency of 91 ms with ten streams. It is also worth noting that during testing, GPU utilization was approximately 82% with no storage bottlenecks.

This is a lot of data to pack into one poster. Luckily, the Dell PowerFlex Solutions Engineering team created a validated architecture that details how all of these results were achieved and how an organization could replicate them if needed.

Now, to put all this into perspective, with PowerFlex you can achieve great results on both spoken language coming into your organization and converting text to speech. Pair this capability with some other generative AI (genAI) tools, like NVIDIA NeMo, and you can create some ingenious systems for your organization.

For example, if an ASR model is paired with a large language model (LLM) for a help desk, users could ask it questions verbally, and—once it found the answers—it could use TTS to provide them with support. Think of what that could mean for organizations.

It's amazing how a simple poster can hold so much information and so many possibilities. If you’re interested in learning more about the research Dell PowerFlex has done with NVIDIA Riva, visit the Poster Reception at NVIDIA GTC on Monday, March 18th from 4:00 to 6:00 PM. If you are unable to join us at the poster reception, the poster will be on display throughout NVIDIA GTC. If you are unable to attend GTC, check out the white paper, and reach out to your Dell representative for more information.

Authors: Tony Foster | Twitter: @wonder_nerd | LinkedIn

Praphul Krottapalli

Kailas Goliwadekar

Reaching the Summit, The Next Chapter of VxBlock History

Wed, 06 Mar 2024 15:34:48 -0000


You stand atop a great mountain looking over all you have done to reach the summit. The air is thin and only a few have ever attempted to join you at these hallowed heights of success. This is the reality of VxBlock and indeed the converged infrastructure market.

As it has ascended to these heights over the last 13 years, the enterprise IT space has changed and morphed, continually reinventing itself. We have seen the rise of hyperconverged infrastructure (HCI), cloud computing, containers, and software defined anything and everything. All these technologies have sprung to life during the 13-year journey of VxBlock.

And like many of you, I have been there for this journey to the mountain top, this extraordinary adventure to do something new and unheard of in the marketplace. Today, the journey changes. Today, we start the next chapter of VxBlock history.

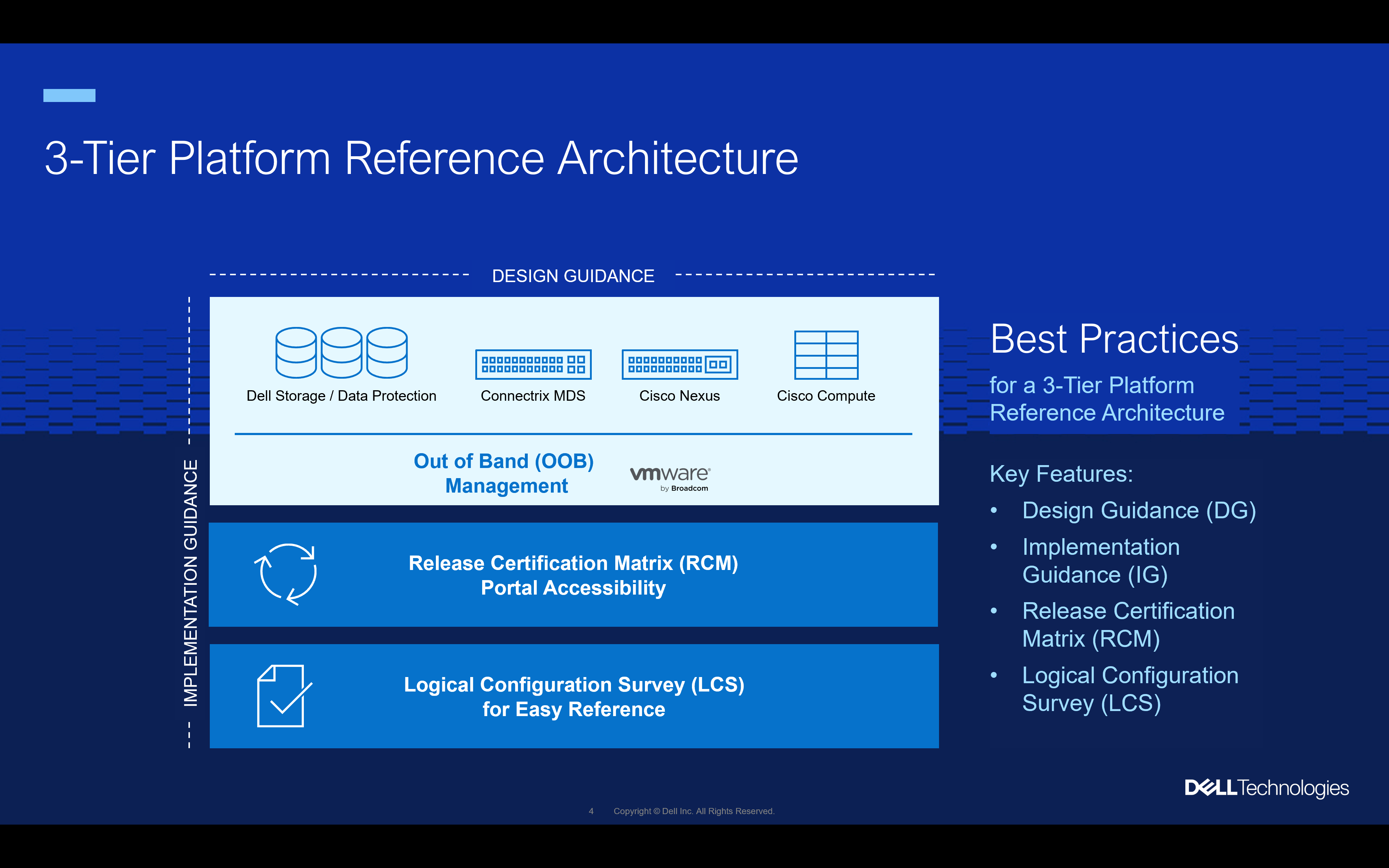

We are turning the page on VxBlock: we are creating a 3-Tier reference architecture that allows anyone to build a 3-Tier architecture. You can scale the mountain and embrace 3-Tier in your data center, but you don’t have to do it alone.

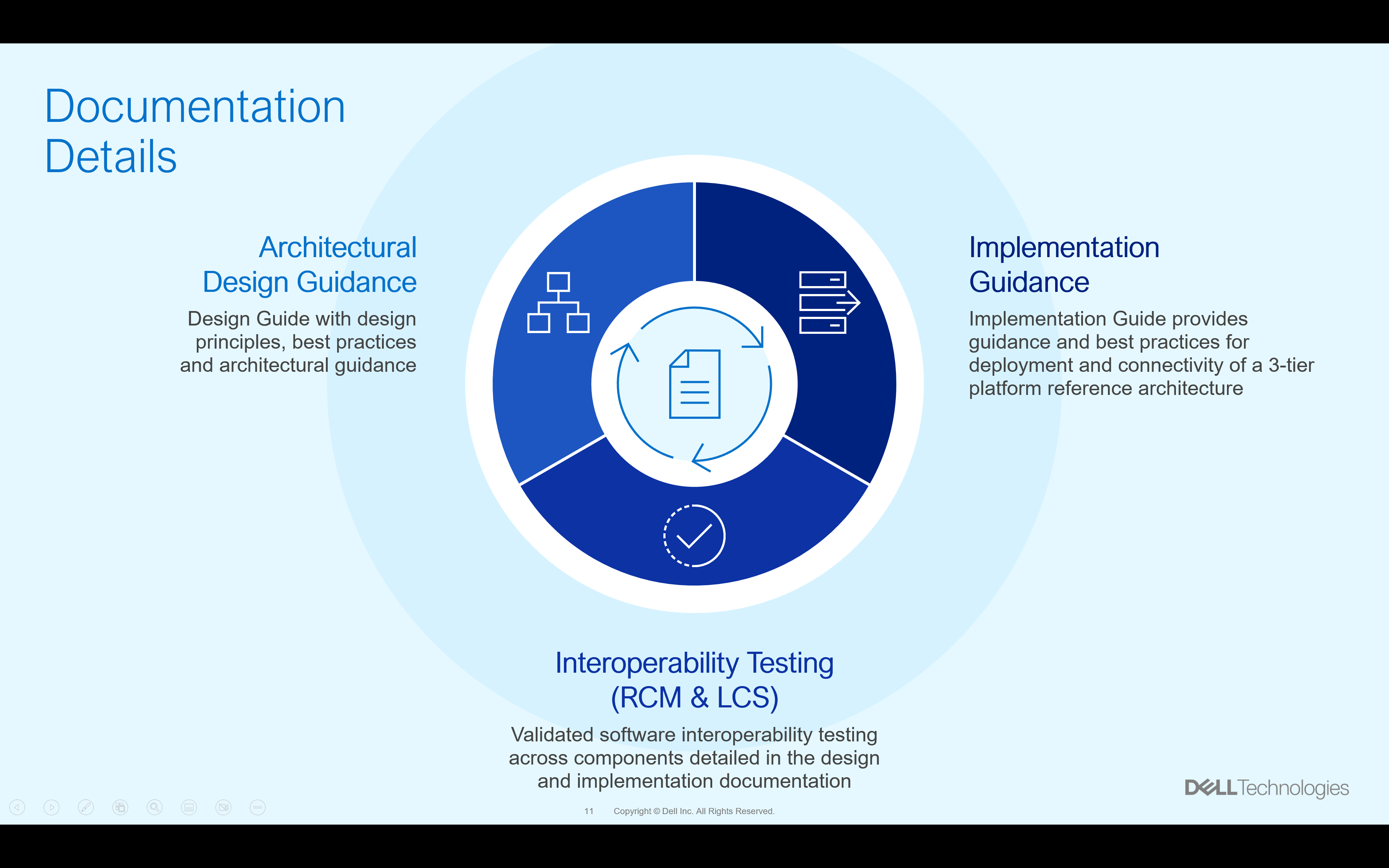

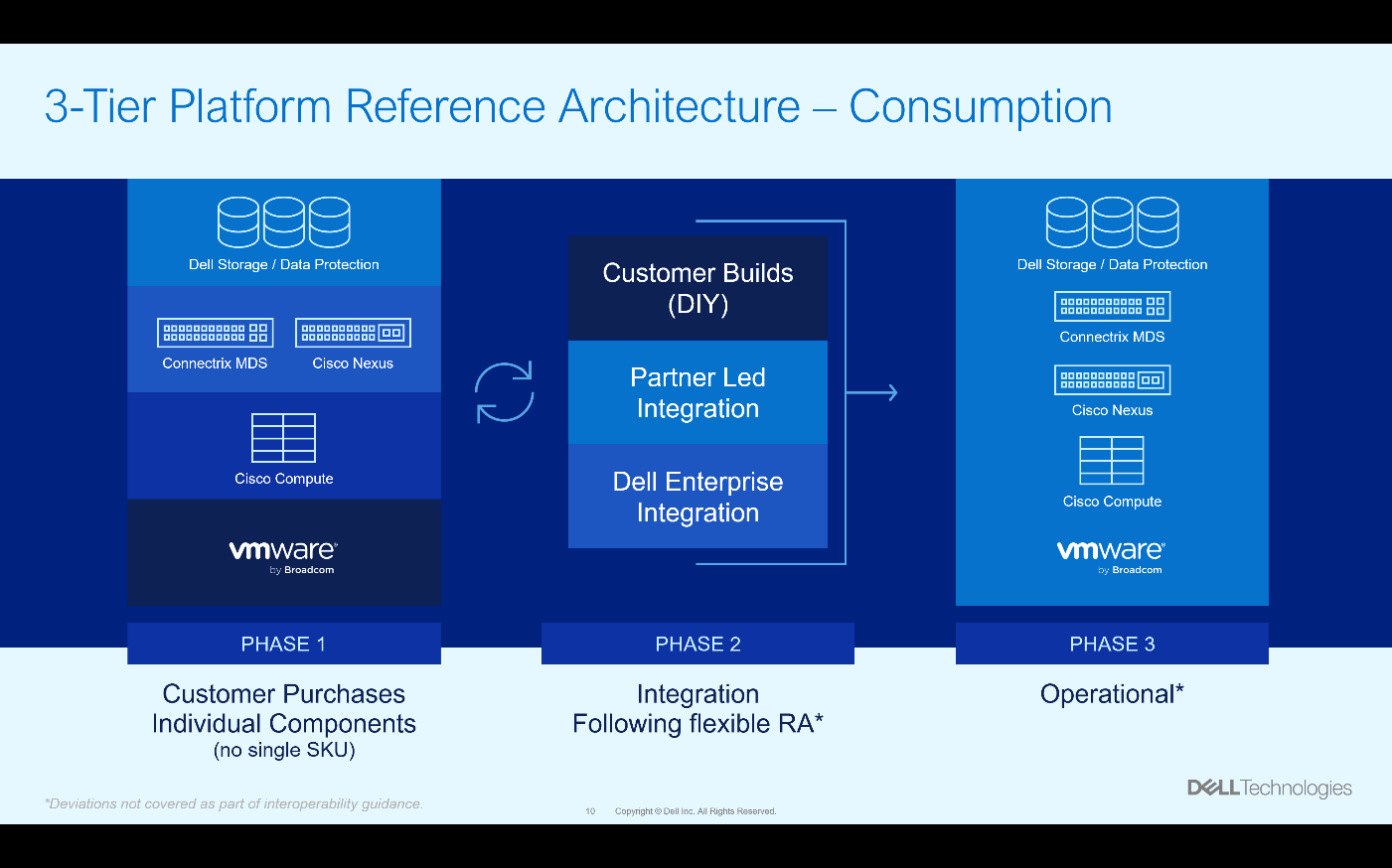

A traditional reference architecture is merely a map to follow. We all know that to scale the high peaks of enterprise IT, you need more than a map, more than a single document as your guide. We realize this and provide four essential documents to guide you on your 3-Tier journey. There is a design guide that helps you plan your journey. There is also an implementation guide to help assemble the right parts for your converged architecture journey. And there are the Release Certification Matrix (RCM) and Logical Configuration Survey (LCS) materials to help you avoid both common and uncommon pitfalls that you may come across. This is shown in the overview below.

These last two pieces of knowledge, the RCM and LCS, allow you to take advantage of some of the same revolutionary items that are used with VxBlock systems. VxBlock users tend to be familiar with both, but for those new to the 3-Tier space, let us explain them in a little more detail.

The LCS, or logical configuration survey, is a document that has been refined over a decade to capture all the points of integration for a new 3-Tier deployment. There is no need to guess what information you might need to have a successful build, as those details are captured in the LCS before you even begin to deploy a 3-Tier architecture. This includes the obvious things, such as domain credentials, as well as the not-so-obvious things, like rack power requirements. This survey makes it much easier to attain a successful deployment.

The RCM, or release certification matrix, has been a staple of 3-Tier architectures for a long time and has made its way into many other architectures. What the RCM provides is a list of interoperable systems. This may not sound like much; after all, most reference architectures (RAs) tell you the components that were tested in the design. The RCM is different in that it’s not just about a single set of components, such as a single server model. The RCM comprises several different components that can be incorporated into a 3-Tier architecture. Plus, it is even more detailed than that: it looks at code-level interoperability. Can the firmware of a switch, a server, and an OS function together? The RCM can help answer that question. Put another way, would you rather climb a mountain in a one-size-fits-all climbing shoe or in a pair of tailor-made shoes fitted to you?
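Conceptually, code-level interoperability comes down to asking whether an exact combination of versions was certified together. The sketch below illustrates that idea only; the class, the function, and every version string are made up for illustration and do not reflect the format or contents of an actual RCM.

```python
from typing import NamedTuple


class Combo(NamedTuple):
    """One certified combination of code levels (all values are made up)."""
    switch_firmware: str
    server_firmware: str
    os_version: str


# Hypothetical certified combinations; a real RCM lists actual tested versions.
CERTIFIED = {
    Combo("sw-1.0", "srv-2.1", "os-8.0"),
    Combo("sw-1.1", "srv-2.1", "os-8.0"),
    Combo("sw-1.1", "srv-2.2", "os-8.1"),
}


def is_certified(combo: Combo) -> bool:
    """Return True only if this exact combination was certified together."""
    return combo in CERTIFIED


print(is_certified(Combo("sw-1.1", "srv-2.2", "os-8.1")))  # True
# Each version below appears somewhere in the matrix, but not together.
print(is_certified(Combo("sw-1.0", "srv-2.2", "os-8.1")))  # False
```

The second lookup is the interesting one: every individual version is listed, yet the combination is not, which is exactly the gap a per-component compatibility list would miss.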

This has been a core feature of VxBlock since its inception and can now be used for interoperability as part of the 3-Tier reference architecture. The RCM provides a reference point as organizations continue the normal life of their VxBlocks. Then, as VxBlock systems reach the end of their operational life, the RCM also provides a pathway to migrate to a 3-Tier reference architecture. It is, if you will, a path for any organization to reach the summit of their IT aspirations.

You are probably wondering how these documents result in a complete 3-Tier architecture in your data center. Let’s look at how all the parts come together with all the documents that make up the 3-Tier Reference Architecture. You can see how they logically come together in the following graphic.

Start by preparing a plan for your environment. The 3-Tier Design Guide can help you with this along with a trusted advisor, such as Dell or another partner or VAR. Once you have a plan in place, you will need to order the infrastructure for your design. That includes the standard items you see in the diagram above like storage, switching, and compute. It also includes things you may not have thought of like racks and cables. No one climbs a mountain without first having a plan.

Something worth noting at this point is that you’ll need to purchase maintenance on each piece of equipment purchased, much as one wouldn’t expect climbing gear and tents to be covered by the same warranty.

Now comes the exciting decision: who’s going to assemble your powerful 3-Tier reference architecture? There are three pathways to choose from. You can have all the gear land on your dock and assemble it yourself by following the Implementation Guide. Of course, that means spending a lot of time just keeping the data center humming. There are other options that might yield a better return on investment for your organization.

You could have someone who is skilled at assembly assemble it for you, following the Implementation Guide, RCM, and LCS. There are many partners who can help you design and build a tailored 3-Tier architecture using the four documents discussed above. Additionally, Dell offers Enterprise Integration Services where a Dell team will work with you to integrate components into a 3-Tier architecture using a build methodology based on the documents above.

Whichever way you choose to assemble the design, the result is a 3-Tier platform for your datacenter. The process could look something like the diagram below, where the individual components are purchased, then assembled according to the documentation, and finally consumed as a single operational system.

Having a structured architecture like this makes the journey to an operational state much easier. Much like climbing a mountain, a well-tested path is a quicker way to the summit.

Dell has made this journey an open process for anyone looking to deploy a 3-Tier architecture in their environment. The team has created more than a typical reference architecture: they have provided access to design guidance, implementation guidance, a release certification matrix (RCM), and a logical configuration survey (LCS).

Having all of these resources is like having not only a map to the summit but also a trained guide with a full understanding of the mountain and a support system every step of the way until you reach the summit. If you’re interested in finding out more about using 3-Tier architectures in your environment, reach out to your Dell representative.

Dell PowerFlex – For Business-Critical Workloads and AI

Wed, 21 Feb 2024 00:10:52 -0000


AI—the buzzword that dances on the tongues of tech enthusiasts, executives, and coffee-break conversationalists alike. It's the shiny promise of automation, insights, and futuristic marvels. But let's step back from the AI dazzle for a moment. Beneath the glitz lies a fundamental truth: business-critical applications are the unsung heroes of organizational success. Enter Dell PowerFlex, the sturdy workhorse that ensures these applications run seamlessly.

The AI hype revisited

Imagine a room abuzz with anticipation. Faces lean forward, eager for the next AI revelation. If you've followed my previous blog, Can I Do That AI Thing on Dell PowerFlex, you know the answer. Yes, you can do that AI thing on PowerFlex. Being able to do AI shouldn’t be the end all be all for organizations. In fact, for most, it’s probably only a small portion of their IT operations. To that end, Dell PowerFlex isn't just built for AI. In fact, PowerFlex’s real strength isn’t AI at all.

Crushing the AI illusion

Let's peel back the layers. Dell PowerFlex isn't a mystical crystal ball, predicting stock market trends or composing poetry. Instead, it's the backbone supporting everyday business operations. Think databases, application servers, file servers—the workhorses that keep your organization humming. These workloads are the lifeblood of any enterprise, and their smooth functioning is non-negotiable. For many organizations, AI operations are a distant second. Why not optimize for the workhorses as well as prepare to support that new AI model?

The workload warriors

- Databases: Customer data, financial records, and inventory details all reside in databases. Dell PowerFlex ensures their availability, scalability, and performance.

- Application Servers: The engines behind web applications, APIs, and services. PowerFlex flexes its muscles here, providing the horsepower needed for user requests, transactions, and data processing.

- File Servers: Shared drives, document repositories, and collaboration spaces rely on file servers. PowerFlex ensures your files flow smoothly, whether you're sharing a presentation or collaborating on a project.

- And So Many Others: ERP systems, CRM platforms, virtual desktops—the list goes on. Each workload has its quirks, demands, and deadlines. Dell PowerFlex steps up, offering a unified platform that simplifies management and boosts performance.

Business-critical, Dell PowerFlex vital

These business-critical workloads are the heartbeat of organizations. They power customer interactions, financial transactions, and strategic decision-making. When these workloads hiccup, the entire operation feels it. That's where Dell PowerFlex shines. Its architecture leverages a robust and resilient software-defined storage (SDS) platform. Translation? It's agile, scalable, and resilient.

So, what's the secret sauce? PowerFlex leverages distributed storage resources, creating a pool of compute and storage nodes. These nodes collaborate harmoniously, distributing data and handling failures gracefully. Whether you're running a database query, serving up a web page, or analyzing mountains of data, PowerFlex ensures the show goes on.

The PowerFlex promise

Dell PowerFlex isn't just a hardware box—it's a promise. A promise to keep your workloads humming, your data secure, and your business thriving. So, the next time AI dazzles you with its potential, remember that PowerFlex is the sturdy engine of reliability in the background, ensuring the lights stay on, the servers stay responsive, and the wheels of progress keep turning.

In the grand scheme of IT, Dell PowerFlex takes center stage—an unassuming force that holds everything together. And as we navigate the AI landscape, let's tip our hats to the real heroes who keep the gears turning, one workload—AI included—at a time.

In the interest of full disclosure, this blog was created with the assistance of AI.

Author: Tony Foster

Twitter: @wonder_nerd

LinkedIn

PowerFlex and CloudStack, an Amazing IaaS match!

Sat, 18 Nov 2023 14:13:00 -0000


Have you heard about Apache CloudStack? Did you know it runs amazingly on Dell PowerFlex? And what does it all have to do with infrastructure as a service (IaaS)? Interested in learning more? If so, then you should probably keep reading!

The PowerFlex team and ShapeBlue have been collaborating to bring ease and simplicity to CloudStack on PowerFlex. They have been doing this for quite a while. As new versions are released, the teams work together to ensure it continues to be amazing for customers. The deep integration with PowerFlex makes it an ideal choice for organizations building CloudStack environments.

Both Dell and ShapeBlue are gearing up for the CloudStack Collaboration Conference (CCC) in Paris on November 23 and 24. The CloudStack Collaboration Conference is the biggest get-together for the Apache CloudStack Community, bringing vendors, users, and developers to one place to discuss the future of open-source technologies, the benefits of CloudStack, new integrations, and capabilities.

CloudStack is open-source software designed to deploy and manage large networks of virtual machines as a highly available, highly scalable Infrastructure as a Service (IaaS) cloud computing platform. CloudStack is used by hundreds of service providers around the world to offer public cloud services and by many companies to provide an on-premises (private) cloud offering or as part of a hybrid cloud solution.

Users can manage their cloud with an easy-to-use web interface, command line tools, and/or a full-featured RESTful API. In addition, CloudStack provides an API that is compatible with AWS EC2 and S3 for organizations that want to deploy hybrid clouds.
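As a rough illustration of what calling that API can look like, the sketch below builds a signed GET URL. It assumes the request-signing scheme described in the CloudStack developer documentation (URL-encode the values, sort by field name, lowercase the string, then HMAC-SHA1 with the secret key and Base64-encode). The endpoint, API key, and secret are placeholders, and the details should be checked against the documentation for your CloudStack version before use.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote, urlencode


def sign(params: dict[str, str], secret_key: str) -> str:
    """Compute a request signature (assumed scheme: sorted, lowercased query + HMAC-SHA1)."""
    pairs = sorted(params.items(), key=lambda kv: kv[0].lower())
    canonical = "&".join(f"{k}={quote(v, safe='')}" for k, v in pairs).lower()
    digest = hmac.new(secret_key.encode(), canonical.encode(), hashlib.sha1).digest()
    return base64.b64encode(digest).decode()


def build_url(endpoint: str, params: dict[str, str], secret_key: str) -> str:
    """Return a GET URL with the signature appended as the last parameter."""
    signed = dict(params, signature=sign(params, secret_key))
    return f"{endpoint}?{urlencode(signed, quote_via=quote)}"


# Placeholder endpoint and credentials, for illustration only.
url = build_url(
    "https://cloudstack.example.com/client/api",
    {"command": "listVirtualMachines", "response": "json", "apiKey": "EXAMPLE_KEY"},
    "EXAMPLE_SECRET",
)
print(url)
```

The signature does not depend on the order in which parameters are supplied, because the fields are sorted before hashing.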

CloudStack can leverage the extensive PowerFlex REST APIs to enhance functionality. This facilitates streamlined provisioning, effective data management, robust snapshot management, comprehensive data protection, and seamless scalability, making the combination of PowerFlex storage and CloudStack a robust choice for modern IaaS environments.

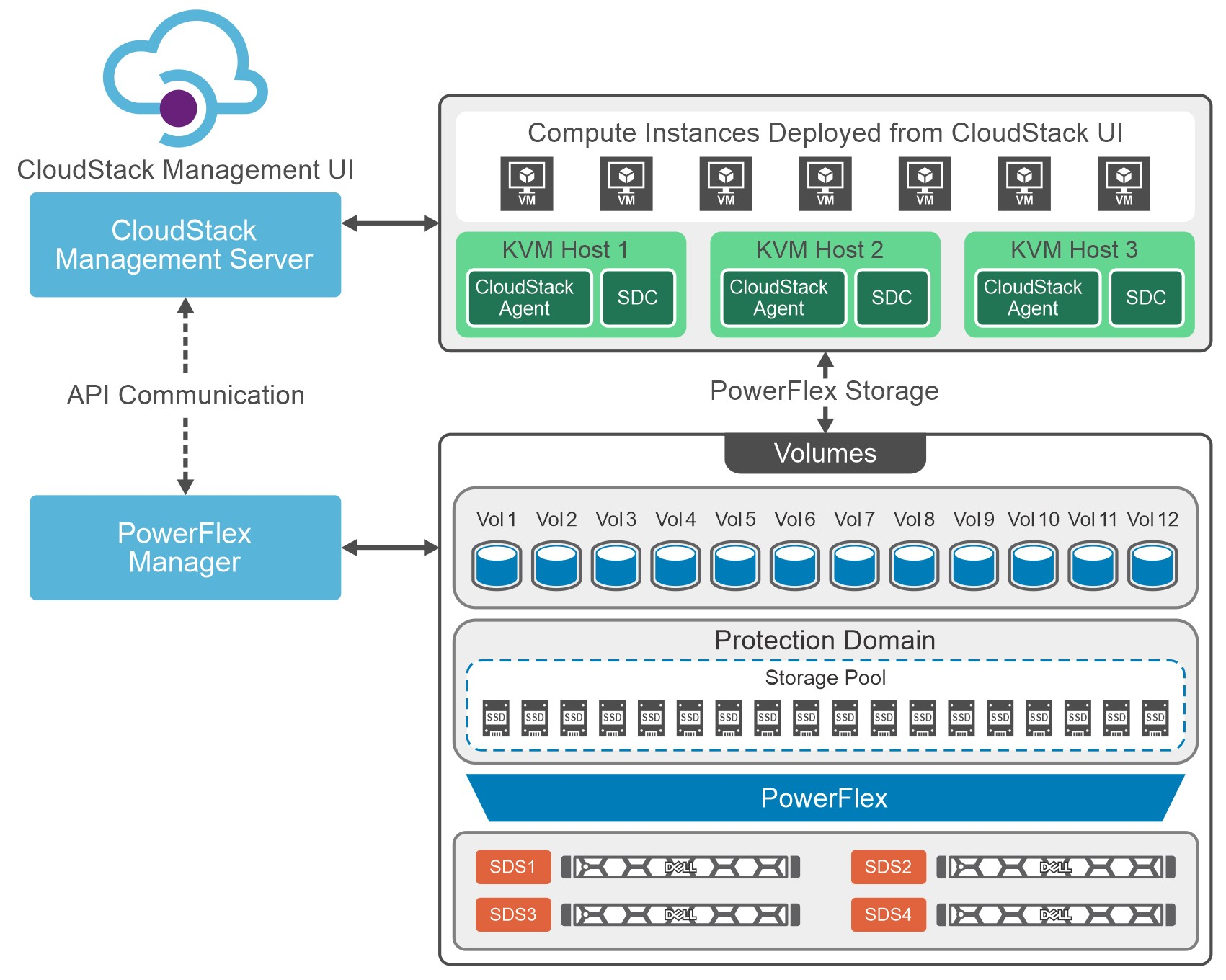

You can see this in the following diagram. CloudStack and PowerFlex communicate with each other using APIs to coordinate operations for VMs. This makes it easier to administer larger environments, enabling organizations to have a true IaaS environment.

Figure 1. CloudStack on PowerFlex Architecture

Let's talk about IaaS for a moment. It is a fantastic concept that can be compared with ordering off a menu at a restaurant. The dishes on the menu seem unrelated until you start looking at their components. For example, you can get three different base sauces (red, pink, and white) with just a red sauce and a white sauce. With a small variety of pasta and proteins, the options multiply quickly. This is the same for IaaS. Have a few base options, sprinkle on some API know-how, and you get a fantastic menu to satisfy workload needs without having a detailed knowledge of the infrastructure.

That makes it easier for the IT organization to become more efficient and shift the focus toward aspirational initiatives. This is especially true when CloudStack and PowerFlex work together. The hungry IT consumers can get what they want with less IT interaction.

Other significant benefits that come from integrating CloudStack with PowerFlex include the following:

- Seamless Data Management: Efficient provisioning, backup, and data management across infrastructure, ensuring data integrity and accessibility.

- Enhanced Performance: Provides low-latency access to data, optimizing I/O, and reducing bottlenecks. This, in turn, leads to improved application and workload performance.

- Reliability and Data Availability: Benefit from advanced redundancy and failover mechanisms and data replication, reducing the risk of data loss and ensuring continuous service availability.

- Scalability: Scalable storage solutions allow organizations to expand their storage resources in tandem with their growing needs. This flexibility ensures that they can adapt to changing workloads and resource requirements.

- Simplified Management: Ability to use a single interface to handle provisioning, monitoring, and troubleshooting, streamlining administrative tasks.

- Enhanced Data Protection: Data protection features, such as snapshots, backups, and disaster recovery solutions, ensure that an organization's data remains secure and can be quickly restored in case of unexpected incidents.

These are tremendous benefits for organizations, especially the data protection aspects. It is often said that it is no longer a question of if an organization will be impacted by an incident. It is a question of when they will be impacted. The IaaS capabilities of CloudStack and PowerFlex play a crucial role in protecting an organization's data. That protection can be automated as part of the IaaS design. That way, when a VM or VMs are requested, they can be assigned to a data protection policy as part of the creation process.

Simply put, that means that a VM can be protected from the moment of creation. No more having to remember to add a VM to a backup, and no more "oh no" when someone realizes they forgot. That is amazing!

If you are at the CloudStack Collaboration Conference and are interested in discovering more, talk with Shashi and Florian. They will also present how CloudStack and PowerFlex create an outstanding IaaS solution.

Register for the CloudStack Collaboration Conference here to join virtually if you are unable to attend in person.

If you want to learn more about how PowerFlex and CloudStack can benefit your organization, reach out to your Dell representative for more details on this amazing solution.


Authors

Tony Foster

Twitter: @wonder_nerd

LinkedIn

Punitha HS

LinkedIn

KubeCon NA23, Google Cloud Anthos on Dell PowerFlex and More

Sun, 05 Nov 2023 23:26:43 -0000


KubeCon will be here before you know it. There are so many exciting things to see and do. While you are making your plans, be sure to add a few things that will make things easier for you at the conference and afterwards.

Before we get into those things, did you know that the Google Cloud team and the Dell PowerFlex team have been collaborating? Recently Dell and Google Cloud published a reference architecture: Google Cloud Anthos and GDC Virtual on Dell PowerFlex. This illustrates how both teams are working together to enable consistency between cloud and on premises environments like PowerFlex. You will see this collaboration at KubeCon this year.

On Tuesday at KubeCon, after breakfast and the keynote, you should make your way to the Solutions Showcase in Hall F on Level 3 of the West building. Once there, make your way over to the Google Cloud booth and visit with the team! They want your questions about PowerFlex and are eager to share with you how Google Distributed Cloud (GDC) Virtual with PowerFlex provides a powerful on-premises container solution.

Also, be sure to catch the lightning sessions in the Google Cloud booth. You’ll get to hear from Dell PowerFlex engineer, Praphul Krottapalli. He will be digging into leveraging GDC Virtual on PowerFlex. That’s not the big thing, though. He’ll also be looking at running a Postgres database distributed across on-premises PowerFlex nodes using GDC Virtual. Beyond that, he will look at how to protect these containerized database workloads, showing you how to use Dell PowerProtect Data Manager to create application consistent backups of a containerized Postgres database instance.

We all know backups are only good if you can restore them. So, Praphul will show you how to recover the Postgres database and have it running again in no time.

Application consistency is an important thing to keep in mind with backups. Would you rather have a database backup where someone had just pulled the plug on the database (crash consistent) or would you like the backup to be as though someone had gracefully shut down the system (application consistent)? For all kinds of reasons (time, cost, sanity), the latter is highly preferable!

We talk about this more in a blog that covers the demo environment we used for KubeCon.

This highlights Dell and Google’s joint commitment to modern apps by ensuring that they can be run everywhere and that organizations can easily develop and deploy modern workloads.

If you are at KubeCon and would like to learn more about how containers work on Dell solutions, be sure to stop by both the Dell and Google Cloud booths. If it’s after KubeCon, be sure to reach out to your Dell representative for more details.

Author: Tony Foster

Using Dell PowerFlex and Google Distributed Cloud Virtual for Postgres Databases and How to Protect Them

Fri, 03 Nov 2023 23:27:04 -0000


Did you know you can get the Google Cloud experience in your data center? Well now, you can! Using Google Distributed Cloud (GDC) Virtual and Dell PowerFlex enables the use of cloud and container workloads – such as Postgres databases – in your data center.

Looking beyond day one operations, the whole lifecycle must be considered, which includes assessing how to protect these cloud native workloads. That’s where Dell PowerProtect Data Manager comes in, allowing you to protect your workloads both in the data center and the cloud. PowerProtect Data Manager enhances data protection by discovering, managing, and sending data directly to the Dell PowerProtect DD series virtual appliance, resulting in unmatched efficiency, deduplication, performance, and scalability. Together with PowerProtect Data Manager, the PowerProtect DD is the ultimate cyber resilient data protection appliance.

In the following blog, we will unpack all this and more, giving you the opportunity to see how Dell PowerFlex and GDC Virtual can transform how you cloud.

What is Google Distributed Cloud Virtual?

We will start by looking at GDC Virtual and how it allows you to consume the cloud on your terms.

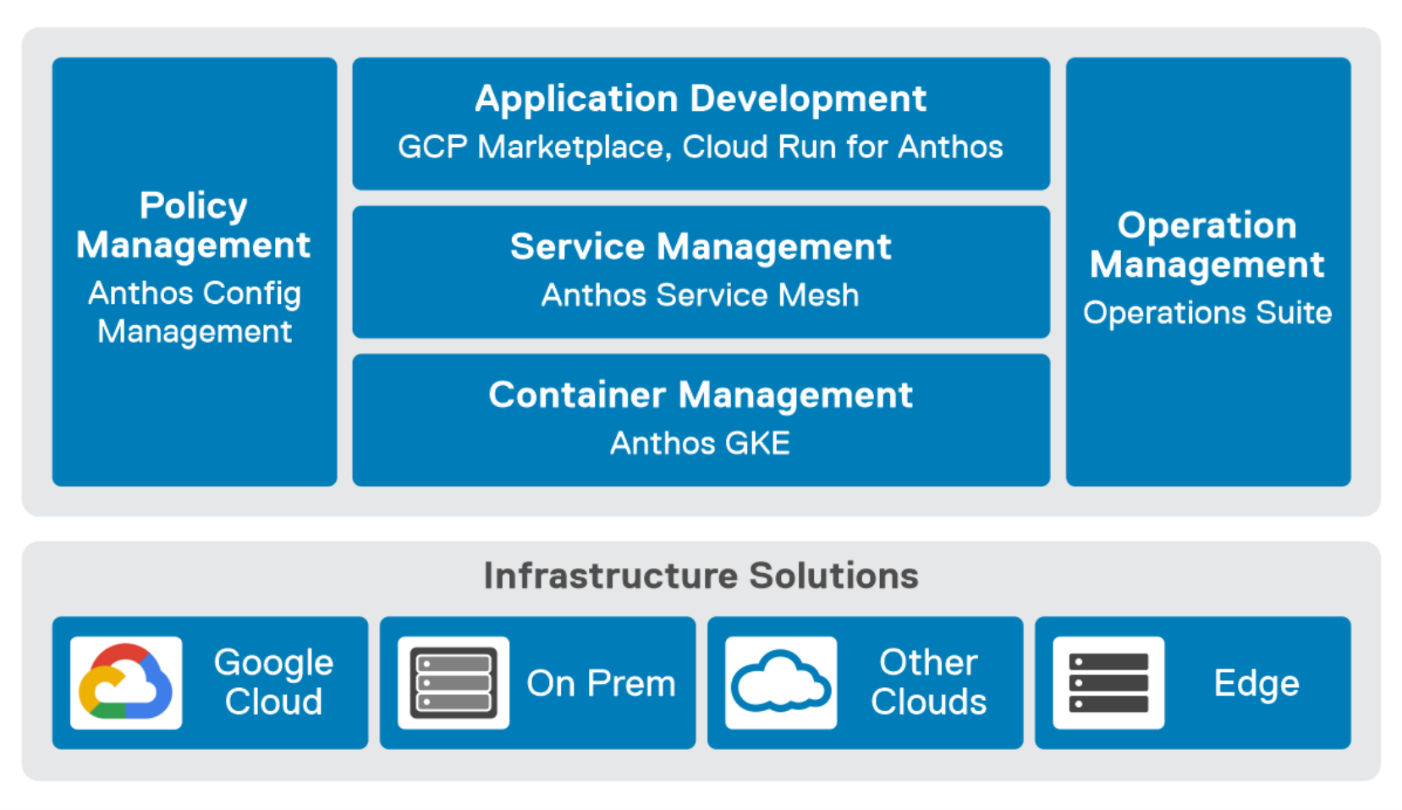

GDC Virtual provides you with a consistent platform for building and managing containerized applications across hybrid infrastructures and helps your developers become more productive across all environments. GDC Virtual provides all the mechanisms required to bring your code into production reliably, securely, and consistently while minimizing risk. GDC Virtual is built on open-source technologies pioneered by Google Cloud including Kubernetes and Istio, enabling consistency between cloud and on premises environments like PowerFlex. Anthos GKE (on GCP and on-prem), Anthos Service Mesh, and Anthos Config Management are the core building blocks of Anthos, which has integrations with platform-level services such as Stackdriver, Cloud Build, and Binary Authorization. GDC Virtual users purchase services and resources from the GCP Marketplace.

Figure 1. GDC Virtual components.

GDC Virtual puts all your IT resources into a consistent development, management, and control framework, automating low-value tasks across your PowerFlex and GCP infrastructure.

Within the context of GCP, the term ‘hybrid cloud’ describes a setup in which common or interconnected services are deployed across multiple computing environments, which include public cloud and on-premises. A hybrid cloud strategy allows you to extend the capacity and capabilities of your IT without upfront capital expense by using the public cloud, while preserving your existing investments by adding one or more cloud deployments to your existing infrastructure. For more information, see Hybrid and Multi-Cloud Architecture Patterns.

PowerFlex delivers software defined storage to both virtual environments and bare metal hosts, providing flexible consumption of resources. This enables both two-tier and three-tier architectures to match the needs of almost any environment.

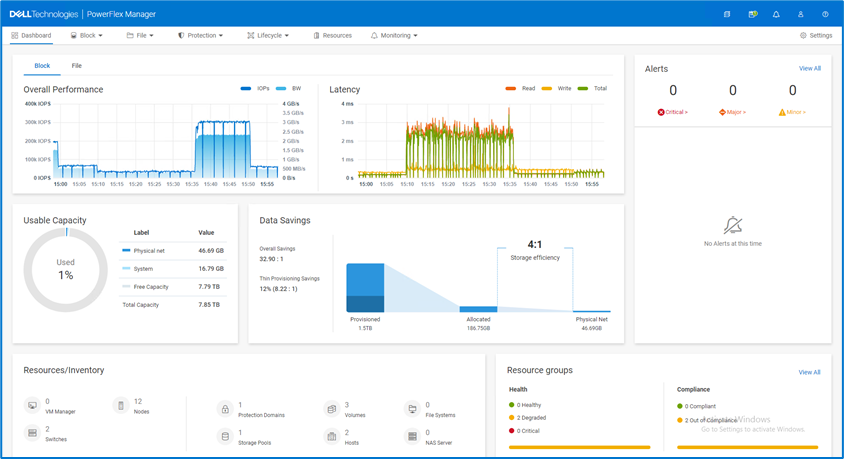

PowerFlex container storage

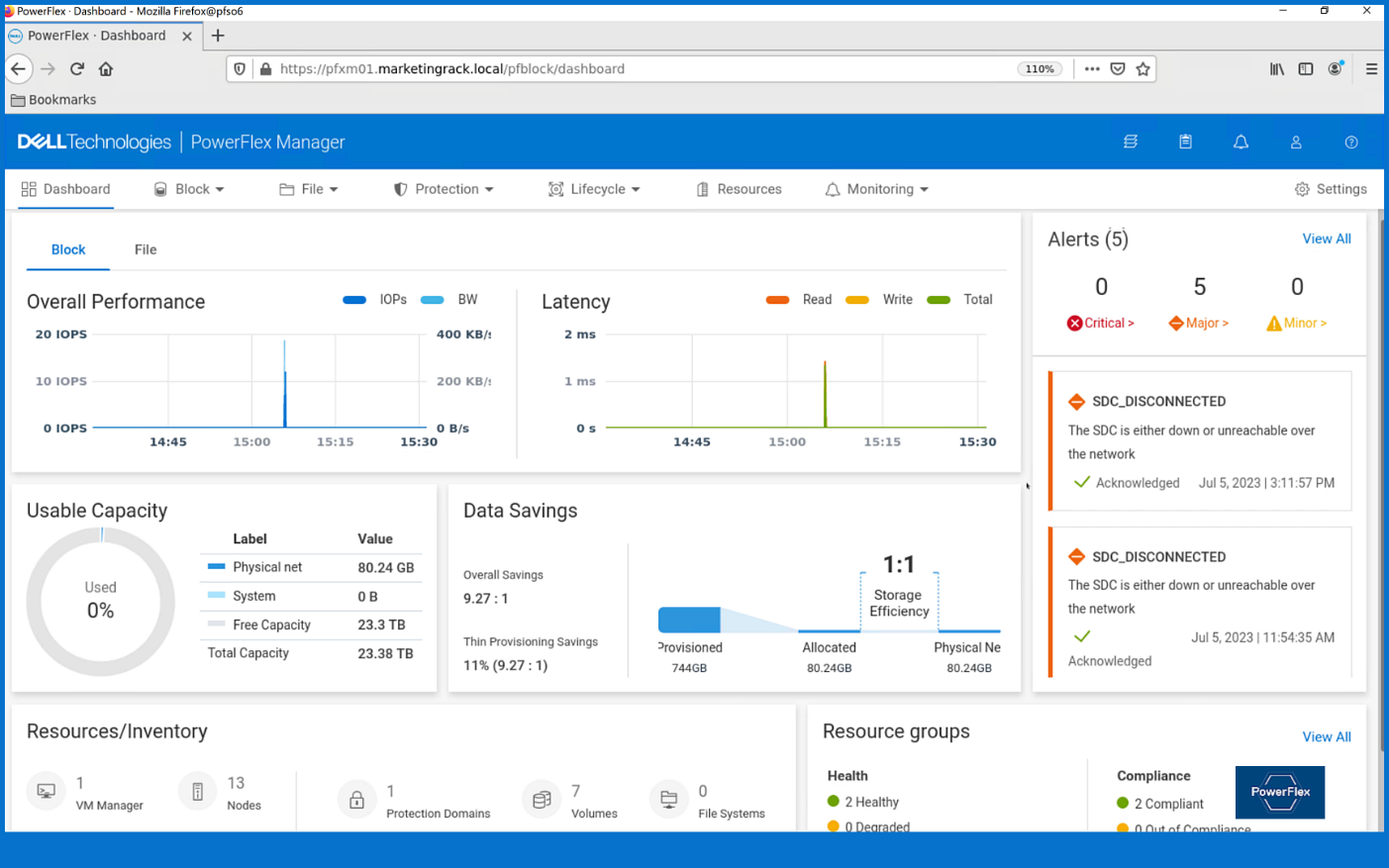

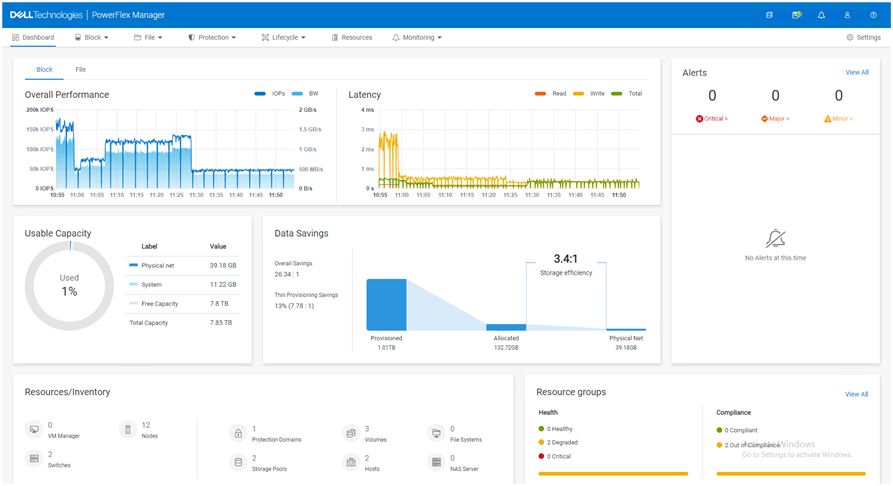

From the PowerFlex UI – shown in the following figure – you can easily monitor the performance and usage of your PowerFlex environment. Additionally, PowerFlex offers a container storage interface (CSI) and container storage modules (CSM) for integration with your container environment. The CSI/CSM allows containers to have persistent storage, which is important when working with workloads like databases that require it.

Figure 2. PowerFlex dashboard provides easy access to information.
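To show what persistent storage looks like from the container side, here is a minimal sketch that builds a standard Kubernetes PersistentVolumeClaim manifest. The claim name, storage class name, and size are hypothetical; the storage class must be whatever your CSI driver installation actually defines.

```python
import json


def pvc_manifest(name: str, storage_class: str, size: str) -> dict:
    """Build a Kubernetes PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# "postgres-data" and "powerflex-sc" are hypothetical names, not values
# taken from the reference architecture.
manifest = pvc_manifest("postgres-data", "powerflex-sc", "50Gi")
print(json.dumps(manifest, indent=2))
```

A database pod that mounts this claim keeps its data across restarts and rescheduling, which is the property the paragraph above refers to.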

To gain a deeper understanding of implementing GDC Virtual on Dell PowerFlex, we invite you to explore our recently published reference architecture.

Dell engineers have recently prepared a PostgreSQL container environment deployed from the Google Cloud to a PowerFlex environment with GDC Virtual in anticipation of KubeCon. If you have deployed Postgres from Google Cloud, you know it doesn’t take long to deploy. It took our team maybe 10 minutes, which makes it effortless to consume and integrate into workloads.

Once we had Postgres deployed, we proceeded to put it under load as we added records to it. To do this, we used pgbench, which is a built-in benchmarking tool in Postgres. This made it easy to fill a database with 10 million entries. We then used pgbench to simulate the load of 40 clients running 40 threads against the freshly loaded database.
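The two pgbench steps described above could be expressed roughly as follows. This sketch assumes the 10 million entries refer to rows in the `pgbench_accounts` table, which pgbench creates at 100,000 rows per unit of scale factor. The database name and the run duration are assumptions, since the post does not state them.

```python
ROWS_PER_SCALE_UNIT = 100_000  # pgbench_accounts rows created per unit of scale factor


def init_command(target_rows: int, dbname: str) -> list[str]:
    """Build the pgbench command that initializes about target_rows accounts."""
    scale = -(-target_rows // ROWS_PER_SCALE_UNIT)  # ceiling division
    return ["pgbench", "-i", "-s", str(scale), dbname]


def load_command(clients: int, threads: int, seconds: int, dbname: str) -> list[str]:
    """Build the pgbench command that runs the default workload for a fixed time."""
    return ["pgbench", "-c", str(clients), "-j", str(threads), "-T", str(seconds), dbname]


# "demo" and the 300-second duration are assumptions, not values from the test.
print(" ".join(init_command(10_000_000, "demo")))   # pgbench -i -s 100 demo
print(" ".join(load_command(40, 40, 300, "demo")))  # pgbench -c 40 -j 40 -T 300 demo
```

The commands are only built and printed here; running them requires a reachable Postgres instance and the usual connection options.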

Our goal wasn’t to capture performance numbers though. We just wanted to get a “warm” database created for some data protection work. That being said, what we saw on our modest cluster was impressive, with sub-millisecond latency and plenty of IO.

Data protection

With our containerized database warmed up, it was time to protect it. As you probably know, there are many ways to do this, some better than others. We’ll spend just a moment talking about two functional methods of data protection – crash consistent and application consistent backups. PowerProtect Data Manager supports both crash-consistent and application consistent database backups.

A “crash consistent” backup is exactly as the name implies. The backup application captures the volume in its running state and copies out the data regardless of what’s currently happening. It’s as if someone had just pulled the power cord on the workload. Needless to say, that’s not the most desirable backup state, but it’s still better than no backup at all.

That’s where an “application consistent” backup can be more desirable. An application consistent backup talks with the application and makes sure the data is all “flushed” and in a “clean” state prior to it being backed up. At least, that’s the simple version.

The longer version is that the backup application talks to the OS and application, asks them to flush their buffers – known as quiescing – and then triggers a snapshot of the volumes to be backed up. Once complete, the system then initiates a snapshot on the underlying storage – in this case PowerFlex – of the volumes used. Once the snapshots are completed, the application-level snapshots are released, the applications begin writing normally to it again, and the backup application begins to copy the storage snapshot to the protected location. All of this happens in a matter of seconds, many times even faster.
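The ordering in that longer version is the important part, so here is a small sketch of it. Everything in it is hypothetical: the function names are invented for illustration and are not PowerProtect Data Manager or PowerFlex APIs. It only shows that the application is quiesced for the short time it takes to snapshot, resumes even if the snapshot fails, and that the slow copy happens afterward from the snapshot.

```python
from contextlib import contextmanager

events = []  # records the order of operations for illustration


@contextmanager
def quiesced(app):
    """Hypothetical helper: flush buffers and pause writes, then always resume."""
    events.append(f"quiesce {app}")
    try:
        yield
    finally:
        # Resuming in 'finally' means a failed snapshot cannot leave the app paused.
        events.append(f"resume {app}")


def take_storage_snapshot(volumes):
    """Hypothetical stand-in for a snapshot on the underlying storage."""
    events.append("snapshot " + ",".join(volumes))
    return {"volumes": list(volumes)}


def application_consistent_backup(app, volumes):
    # The application is only held for the duration of the snapshot.
    with quiesced(app):
        snap = take_storage_snapshot(volumes)
    # The long-running copy reads from the snapshot while the app writes normally.
    events.append("copy snapshot to protection storage")
    return snap


application_consistent_backup("postgres", ["pgdata"])
print(events)
```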

This is why application consistent backups are preferred. The backup can take about the same amount of time to run, but the data is in a known good state, which makes the chances of recovery much greater than crash consistent backups.

In our lab environment, we did this with PowerProtect Data Manager and PowerProtect DD Virtual Edition (DDVE). PowerProtect Data Manager provides a standardized way to quiesce a supported database, back up the data from that database, and then return the database to operation. This works great for protecting Kubernetes workloads running on PowerFlex. It’s able to create application consistent backups of the Postgres containers quickly and efficiently. This also works in concert with GDC Virtual, allowing for the containers to be registered and restored into the cloud environment.

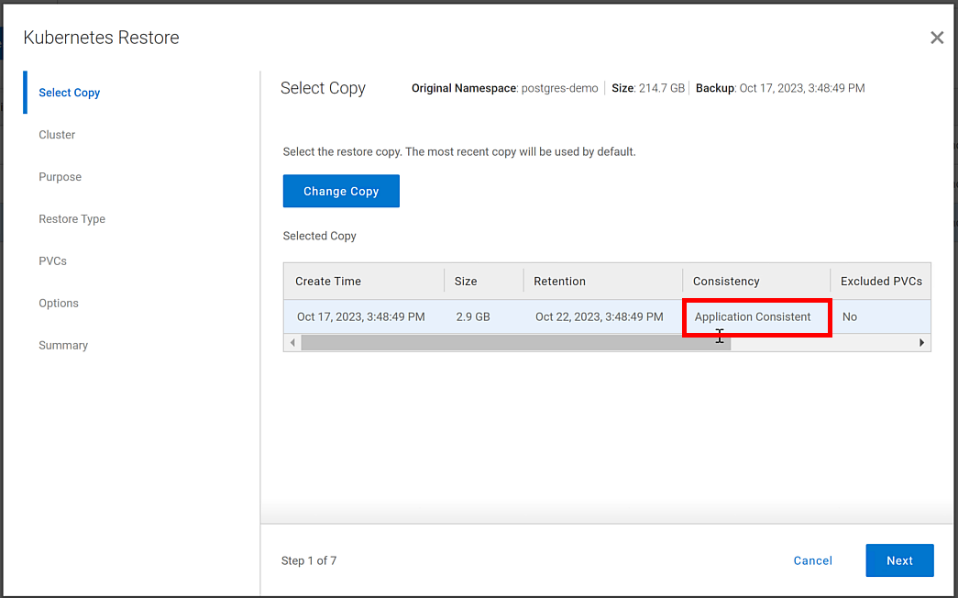

Figure 3. An application consistent backup and its timing in the PowerProtect Data Manager UI

It’s great having application consistent backups of your cloud workloads, “checking” many of those boxes that people require from their backup environments. That said, just as important and not to be forgotten is the recovery of the backups.

Data recovery

As has been said many times, “never trust a backup that hasn’t been tested.” It’s important to test any and all backups to make sure they can be recovered. Testing the recovery of a Postgres database running in GDC Virtual on PowerFlex is as straightforward as can be.

The high-level steps are:

- From the PowerProtect Data Manager UI, select Restore > Assets, and select the Kubernetes tab. Select the checkbox next to the protected namespace and click Restore.

- On the Select Copy page, select the copy you wish to restore from.

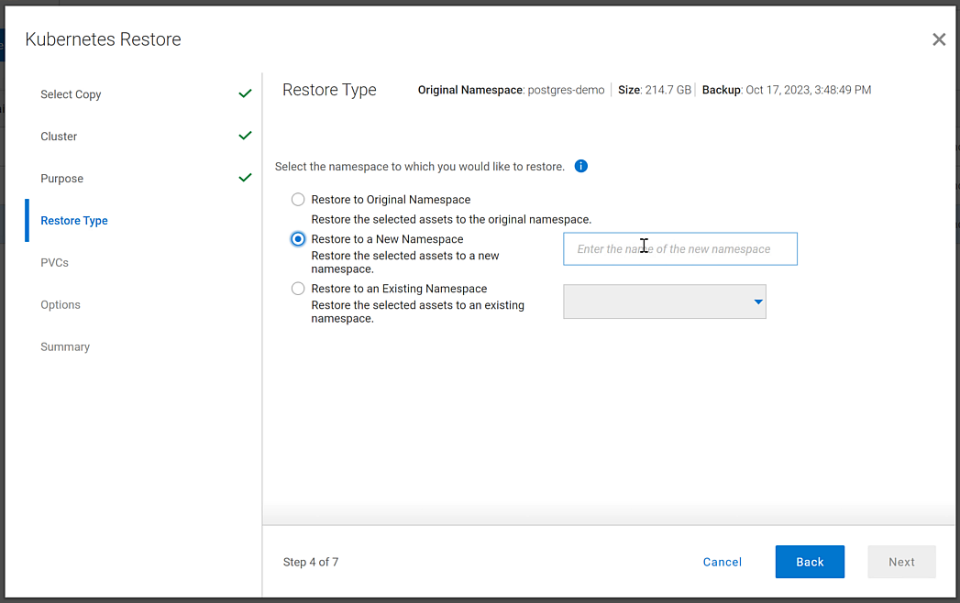

- On the Restore Type page, select where it should be restored to.

- Determine how the Persistent Volume Claims (PVCs) and namespace should be restored.

- When finished, test the restore.

You might have noticed in step 4, I mentioned PVCs, which are the container’s connections to the data and, as the name implies, allow that data to persist across the nodes. This is made possible by the CSI/CSM mentioned earlier. Because of the integration across the environment, restoring PVCs is a simple task.

The following shows some of the recovery options in PowerProtect Data Manager for PVCs.

Figure 4. PowerProtect Data Manager UI – Namespace restore options

The recovery, like most things in data protection, is relatively anticlimactic. Everything is functional, and queries work as expected against the Postgres database instance.

Dell and Google Cloud collaborated extensively to create solutions that leverage both PowerFlex and GDC Virtual. The power of this collaboration really shows through when recovery operations just work. That consistency and ease enable customers to take advantage of a robust environment backed by leaders in the space and help to remove one nightmare that keeps developers and IT admins awake at night, allowing them to rest easy and be prepared to change the world.

If any of this sounds interesting to you and you’ll be at KubeCon in Chicago, Illinois on November 6-9, stop by the Google Cloud booth. We’ll be happy to show you demos of this exciting collaboration in action. Otherwise, feel free to contact your Dell representative for more details.

Resources

Authors: Tony Foster, Vinod Kumar Kumaresan, Harsha Yadappanavar

X (formerly Twitter): @harshauy

Dell PowerFlex at VMware Explore in Barcelona – Nothing Controversial

Thu, 19 Oct 2023 22:38:22 -0000


For those who aren’t aware, there are some big changes happening at VMware. If you watched the VMware Explore Las Vegas keynote, it was a whirlwind of changes and important information. CEOs of several major companies took the stage and spoke about the direction VMware is going, with attendees hanging on their every word and wondering what the changes meant and how they would impact their operations.

For many, the impact is still unclear. This could radically change data centers and how organizations do work, leaving many in IT and business asking questions about what’s next and where things are headed.

We can all expect to find out more at VMware Explore Barcelona coming up 6 to 9 November, which will bring more clarity in direction and illuminate what it will mean for organizations large and small.

I can’t wait to see what’s in store for the Generative AI (GenAI) workloads we’ve all been waiting for (And you thought I was talking about something else?).

At VMware Explore in Las Vegas this year, the message was clear. VMware is embracing AI workloads. NVIDIA CEO Jensen Huang and VMware CEO Raghu Raghuram spoke to this during the general session keynote. Jensen stated, “we’re reinventing enterprise computing after a quarter of a century in order to transition to the future.”

The entire IT industry is moving in the direction of AI. Dell PowerFlex is already there. We’ve been on this journey for quite some time. If you were lucky enough to have stopped at the Kioxia stand during the Las Vegas show, you saw how we are working with both NVIDIA and Kioxia to deliver powerful AI systems for customers to make that transition to the future.

If you couldn’t make it to Las Vegas for VMware Explore but plan to attend VMware Explore in Barcelona, you’re in luck. PowerFlex will be showcasing the amazing performance of Kioxia storage and NVIDIA GPUs again. You can see a live demo at the Kioxia stand, #225 in the Solutions Exchange.

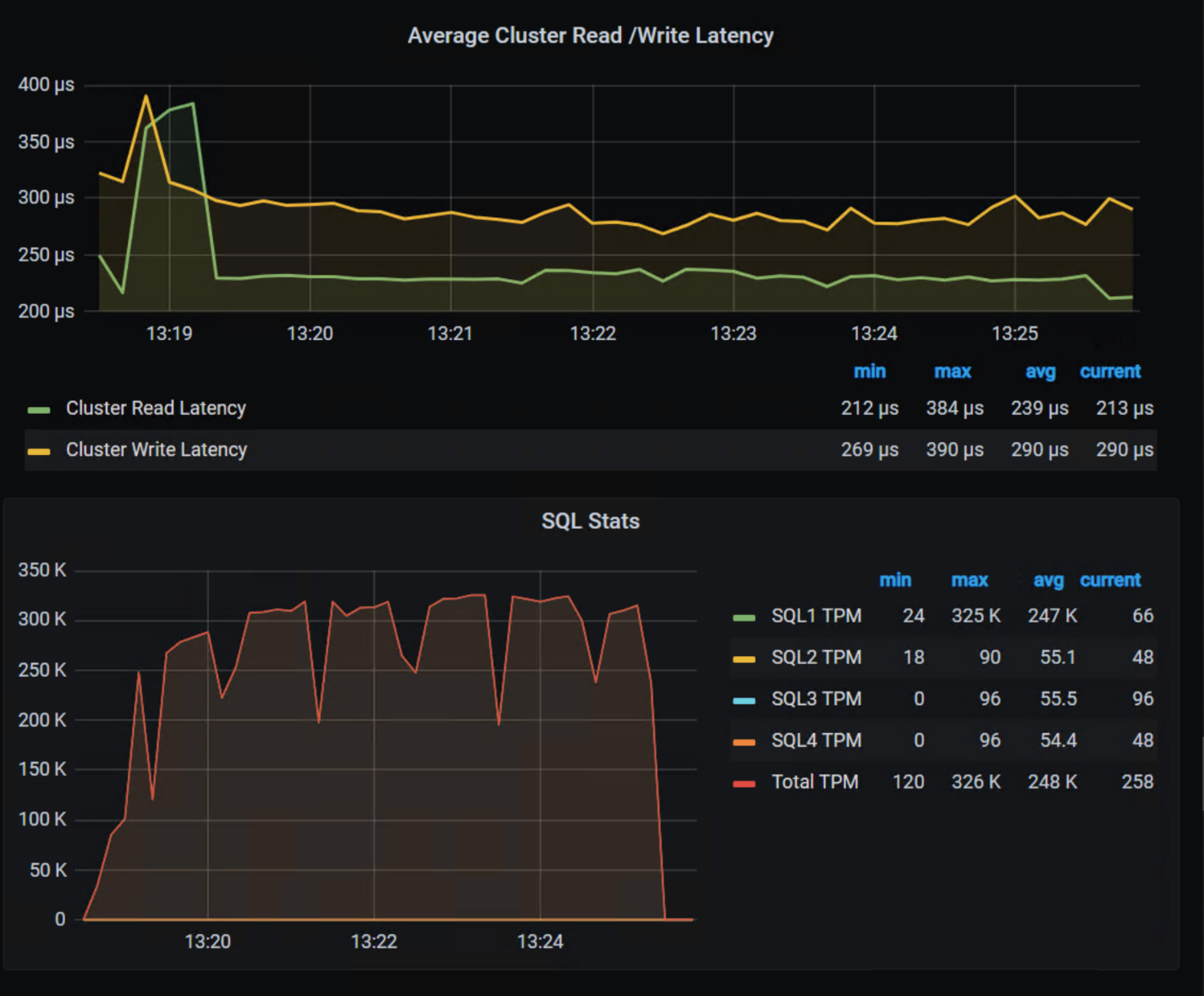

When you visit the Kioxia stand, you will be able to experience the power of running ResNet 50 image classification and Online Transactional Processing (OLTP) workloads simultaneously, live from the show floor. And if that’s not enough, there are experts and lots of them! If you get a chance, talk with Shashi about all the things PowerFlex unlocks for your organization.

PowerFlex supports NVIDIA GPUs with MIG technology, which is part of NVIDIA AI Enterprise. NVIDIA MIG allows you to tailor GPU resources for the workloads that need them (Yes, there is nothing that says you can’t run different workloads on the same hosts). Plus, PowerFlex uses Kioxia PM7 series SSDs, so there are plenty of IOPS to go around while ensuring sub-millisecond latency for both workloads. This allows the data to be closer to the processing, even on the same host.

In our lab tests, we were able to push one million transactions per minute (TPM) with OLTP workloads while also processing 6620 images per second using a ResNet-50 model built on NVIDIA NGC containers. These numbers matter if you want to keep users happy, especially as more and more organizations want to add AI/ML capabilities to their online apps (and more and more data is generated from all those new apps).

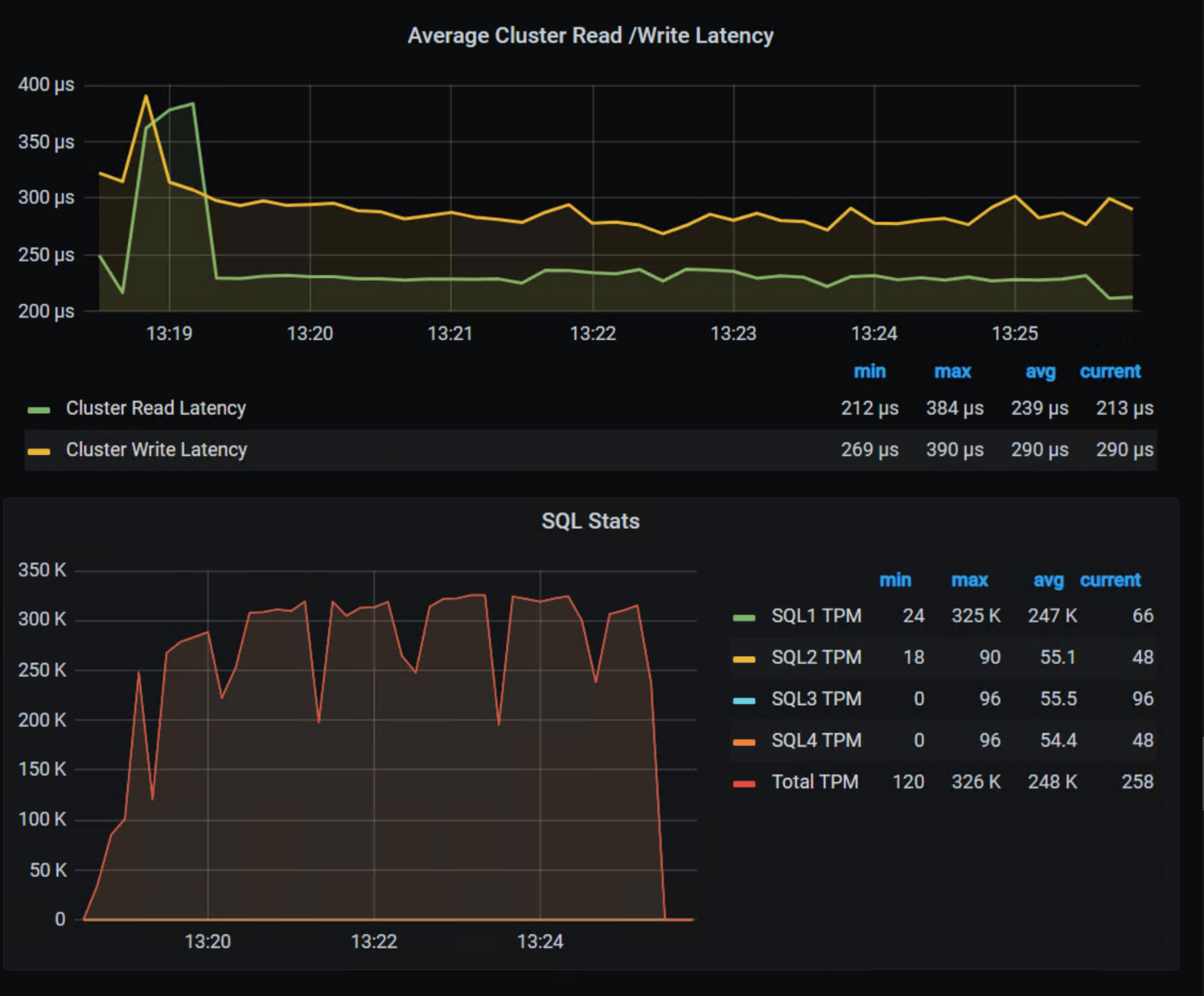

The following shows the TPM results from the demo environment that is running our four SQL VMs. The TPMs in this test are maxing out around 320k, and the latency is always sub-millisecond.

The future is here and waiting for you to visit.

If you are unable to visit the stand and would like to get an overview of PowerFlex’s abilities when it comes to GenAI, check out this video.

As you can see, PowerFlex has true flexibility when it comes to GenAI, making it the ideal platform to reinvent your enterprise IT environment as you transition to the future.

If you find yourself at VMware Explore in Barcelona, be sure to stop by the Kioxia stand (#225) and talk with the team about how Dell PowerFlex, Kioxia drives, and NVIDIA GPUs can accelerate your transition to the future.

See, nothing controversial here!

Resources

- Dell Generative AI Solutions

- VMware and NVIDIA Unlock Generative AI for Enterprises

- VMware’s Approach to Private AI

Author: Tony Foster, Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]

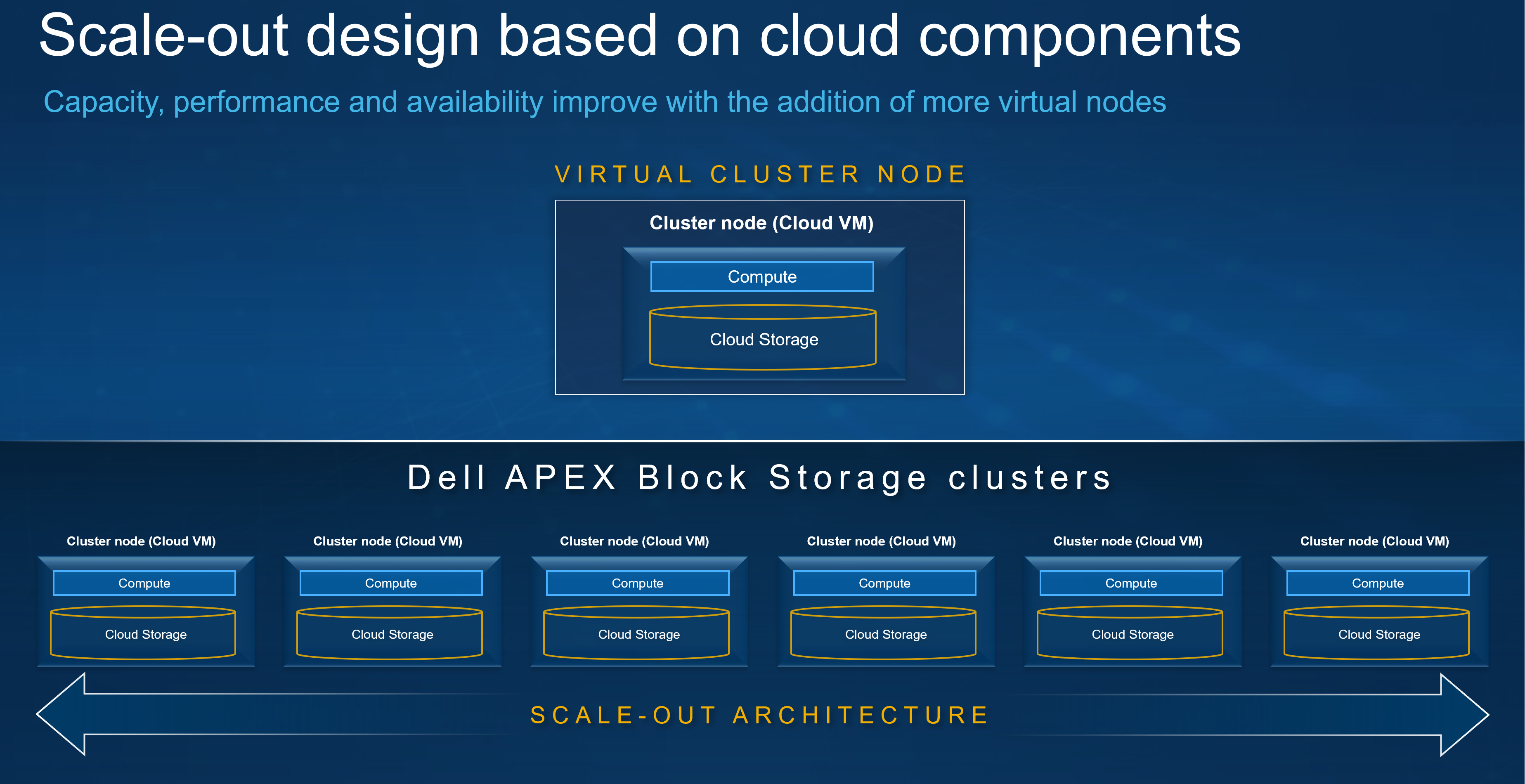

Dell APEX Block Storage: It’s Not Where You Do Cloud, It’s How You Do Cloud

Fri, 13 Oct 2023 22:36:19 -0000


The cloud isn’t just about where you are operating but how you are operating, a paradigm shift for many organizations. That’s where Dell APEX Block Storage comes in.

I’m not going to bore everyone with the history of Dell’s multicloud offerings announced at Dell Tech World and all the different APEX offerings being released. You can read all about those on the Dell APEX InfoHub. Instead, we’ll spend this bit of space talking about how Dell APEX cloud offerings systematically change how you do cloud rather than where you do cloud.

It’s safe to say that most readers here are familiar with the saying “the cloud is just someone else’s computer[s].” I even have a sticker someone gave me at a trade show a few years back with that quote, pictured here. The quote is commonly understood to say that the difference between operating in the cloud and in your own data center is where and whose computer you are using.

Figure 1. Sticker – There is no cloud, it’s just someone else’s computer

This is only a partially accurate statement though. By a show of hands, how many folks reading this would run their workloads the same way on someone else’s computer? You’re probably laughing and, if you work in IT security, potentially yelling at the screen right now. Why? Because it’s someone else’s computer! No one in IT would willingly move their workloads straight from the data center to a random computer and keep things the way they are. Rather, those systems would need to be reworked and reimagined to effectively “do cloud.”

We must approach the cloud as a mindset, not a location, asking, “How can this workload be run on any computer (my computer, your computer, some ‘public’ computer) and get the same results regardless?” After all, it’s just someone else’s computer.

Diving into Dell APEX Block Storage

The Dell APEX offerings aren’t just another cloud. They enable organizations to readily adapt to and adopt a cloud mindset for all workloads. For the sake of brevity, we’ll focus on the block storage varieties, Dell APEX Block Storage for AWS and Dell APEX Block Storage for Azure.

Dell APEX Block Storage allows you to consume cloud storage in either Azure or AWS. Both are powerful cloud platforms for enterprise workloads and PaaS offerings. APEX Block Storage aggregates low-cost cloud storage (just someone else’s storage) from the cloud provider to form cloud environments with enterprise-equivalent storage.

You might be asking, “what is ‘enterprise-equivalent storage?’” The answer may surprise you. Remember earlier when I described the cloud mindset of being able to run workloads anywhere? Adopting that, our workloads should function the same in the enterprise as well as in the cloud, encompassing not only performance (IOs and capacity) but also data resiliency, availability, and consistency across workloads.

But wait, only containerized workloads should run in “the cloud,” and those are already resilient. If a node goes down, just spin up another one. Why are containerized workloads special? Shouldn’t any workload be able to run anywhere, be it a traditional x86 application or a modern containerized app?

That’s why Dell APEX Block Storage is such an integral part of your organization’s cloud journey, enabling you to run any workload in any location and have the same experience. Who doesn’t want choice?

Beyond a consistent approach for consuming storage, the performance of that storage should be uniform across environments as well. If a workload needs a given number of IOPS or amount of storage capacity, it needs them regardless of whether it runs in your data center or on someone else’s computer (AKA the cloud).

The cloud provides many ways to satisfy resource requirements, some easy and some not so easy. If you want that consistent method for managing your data and workloads both on premises and in the cloud, Dell APEX Block Storage makes it easy. It can aggregate cloud storage into a consistent, scale-out, software-defined block storage service. Dell APEX Block Storage enables you to consume block storage from the cloud the same way you consume it in your own data center.

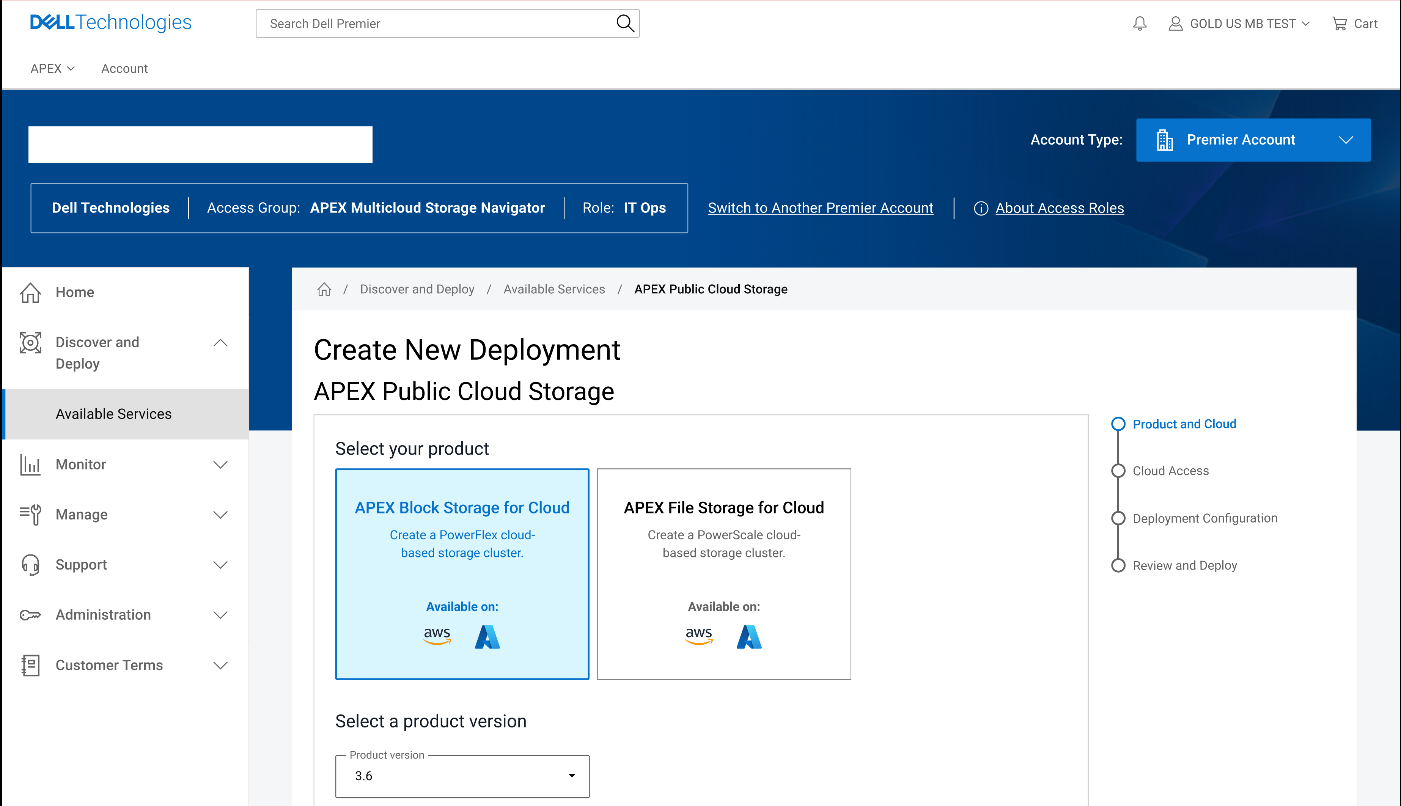

Deploying APEX Block Storage

Let’s walk through this concept. First, log in to your organization's Dell Premier Account. From the right-hand menu, select the Discover and Deploy option, then click on Available Services. From there, you will see the option, if entitled, to create a new APEX Block Storage instance. That can either be deployed on Amazon AWS or Microsoft Azure, as depicted in figure 2.

Figure 2. Dell Premier Account with APEX Block Storage capabilities enabled

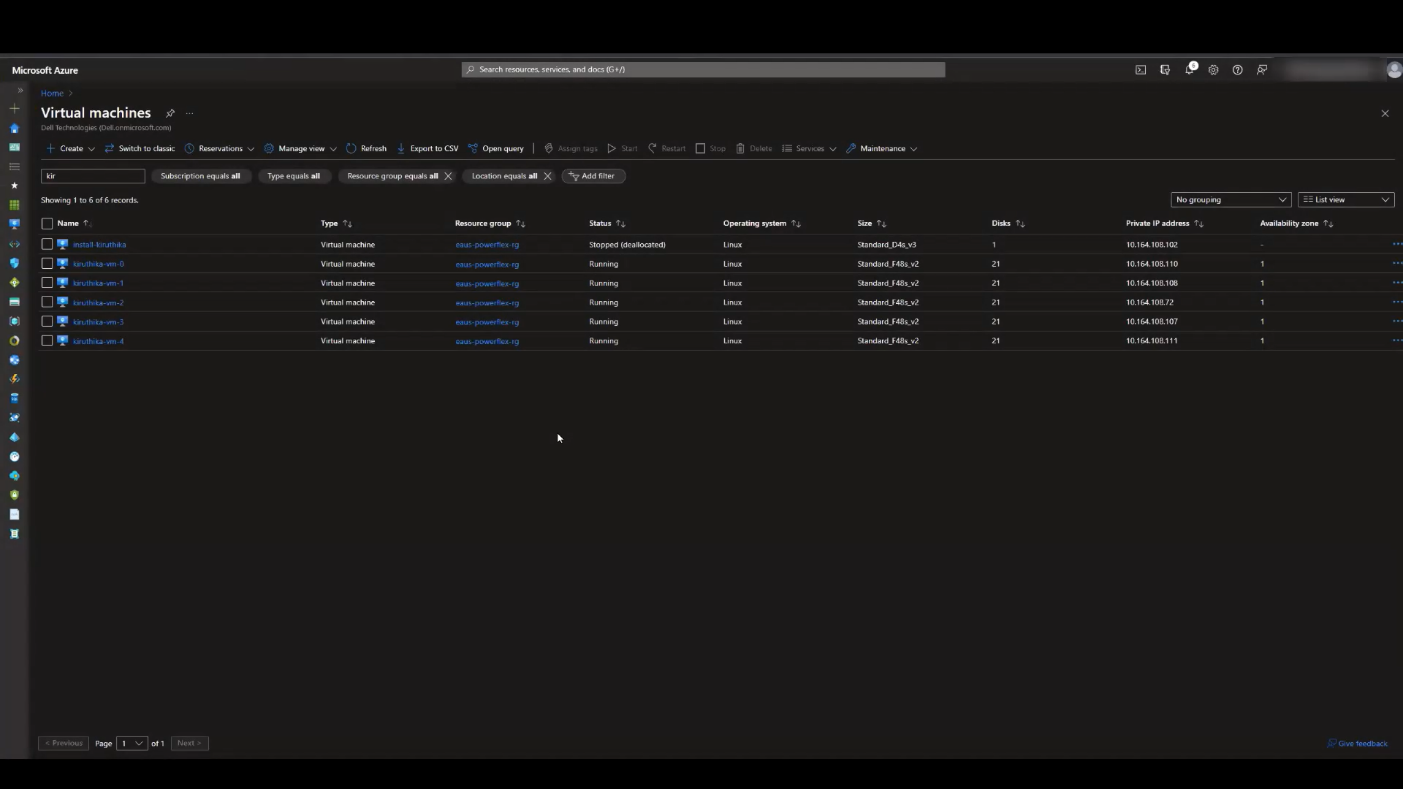

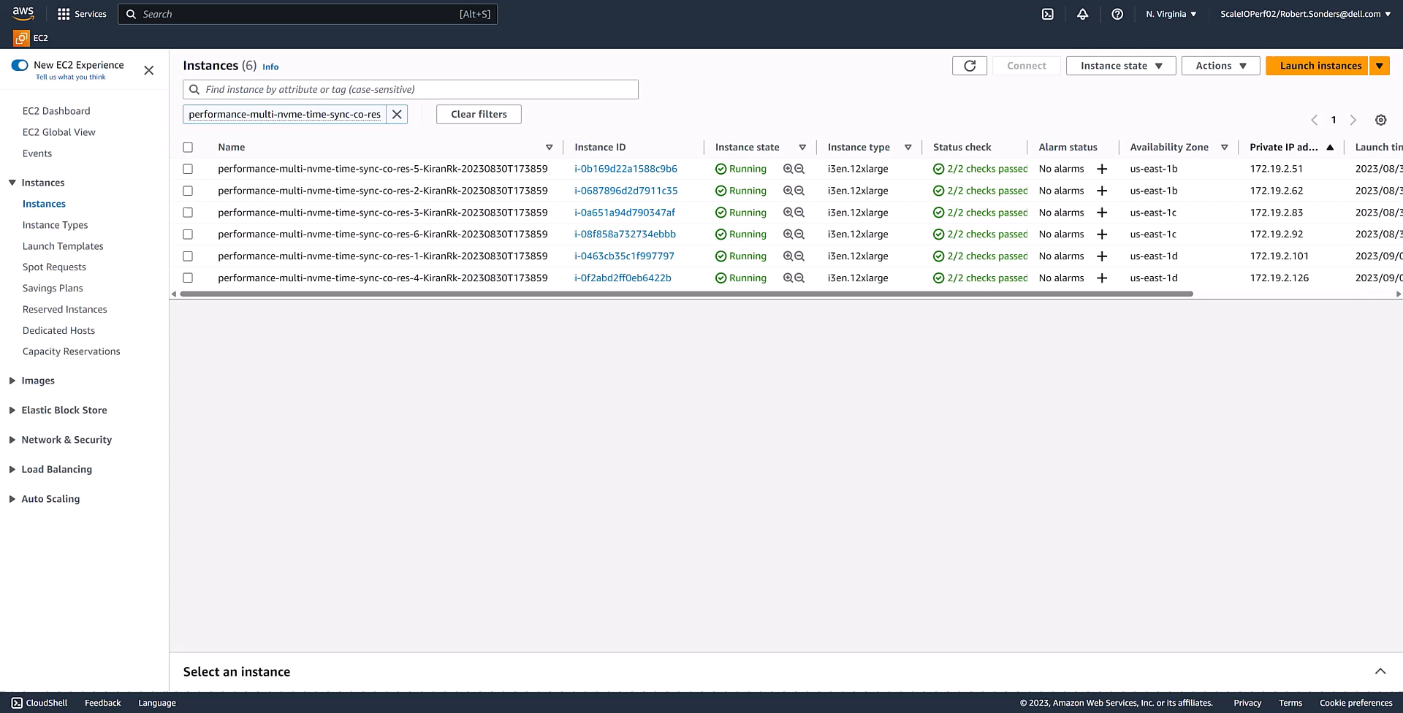

Once you have completed the wizard, you will see additional instances or VMs in your cloud console of choice. The storage resources of these VMs (or instances) are aggregated to deliver APEX Block Storage that can be easily consumed. This is illustrated in the following figures for both APEX Block Storage for Azure and APEX Block Storage for AWS.

Figure 3. APEX Block Storage for Azure VMs

Figure 4. APEX Block Storage for AWS instances

What can APEX Block Storage do for you

The following diagram illustrates this aggregation and shows the relative ease with which storage can be expanded by simply adding an additional VM or instance in the cloud. You might be thinking, “that’s not a big deal, I’ve been doing that in my data center for several years”, and you’d be right. It shouldn’t be a big deal. Expanding storage should be the same, regardless of location.

Figure 5. Aggregating storage with Dell APEX Block Storage

Something else that has been happening in the data center forever is thin provisioning. I remember back when I first started in IT how cool it was to have a SAN in the office that could thin provision. That meant I had a SAN with sixteen 500GB drives, or about 7TB of usable space, and could allocate more terabytes of space to my systems than I had the capacity to deliver. If I ever needed to, I could scale out my capacity to meet the greater demand by adding another shelf to the array. It was awesome and powerful and, for a young IT admin, it sure made life easier. Since then, I’ve always considered thin provisioning when building storage systems, and it’s nice that I can be consistent in my planning wherever my workloads run.
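If thin provisioning is new to you, the idea can be sketched in a few lines. This is a toy model only, not how PowerFlex or any array implements it: the `ThinPool` class and its method names are made up for illustration. Volumes are promised a size up front, but physical space is only consumed as data is actually written.

```python
class ThinPool:
    """Toy thin-provisioned pool: promises can exceed physical capacity."""

    def __init__(self, physical_tb):
        self.physical_tb = physical_tb
        self.provisioned = {}  # volume name -> size promised to the host (TB)
        self.written = {}      # volume name -> space actually consumed (TB)

    def provision(self, name, size_tb):
        # Provisioning consumes no physical space by itself.
        self.provisioned[name] = size_tb
        self.written[name] = 0.0

    def used_tb(self):
        return sum(self.written.values())

    def write(self, name, tb):
        if self.written[name] + tb > self.provisioned[name]:
            raise ValueError("write exceeds the volume's provisioned size")
        if self.used_tb() + tb > self.physical_tb:
            raise RuntimeError("pool is out of physical capacity; add more")
        self.written[name] += tb

    def overcommit_ratio(self):
        return sum(self.provisioned.values()) / self.physical_tb


pool = ThinPool(physical_tb=7)        # roughly the usable space in the story above
pool.provision("file-server", 10)     # hypothetical volumes and sizes
pool.provision("database", 4)
pool.write("file-server", 2)
pool.write("database", 1)
print(pool.used_tb(), pool.overcommit_ratio())
```

The catch, of course, is the `RuntimeError` path: the promise only holds if you add capacity before the written data catches up with the physical space.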

Additionally, Dell APEX Block Storage enables space efficient snapshots in the cloud, helping reduce your space consumption. The reason space efficient snapshots are a big deal is because, instead of making a copy of everything, only the changed bits are recorded. That’s monumental when you’re paying for every IO and byte of space.
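To make “only the changed bits are recorded” concrete, here is a simplified copy-on-write sketch. It is an assumption-laden illustration and not a description of how APEX Block Storage implements snapshots internally; the `Volume` class is hypothetical. A new snapshot starts out empty and only grows when a block on the live volume is overwritten.

```python
class Volume:
    """Toy volume whose snapshots store only blocks changed after the snapshot."""

    def __init__(self, blocks):
        self.blocks = dict(blocks)  # block number -> data
        self.snapshots = []

    def snapshot(self):
        snap = {}  # preserved blocks: block number -> data at snapshot time
        self.snapshots.append(snap)
        return snap

    def write(self, block, data):
        old = self.blocks.get(block)
        for snap in self.snapshots:
            # Preserve the old contents once, the first time a block changes.
            snap.setdefault(block, old)
        self.blocks[block] = data

    def read_snapshot(self, snap, block):
        # Unchanged blocks are read straight from the live volume.
        return snap[block] if block in snap else self.blocks.get(block)


vol = Volume({0: "a", 1: "b", 2: "c", 3: "d"})
snap = vol.snapshot()
vol.write(1, "B")
print(len(snap), vol.read_snapshot(snap, 1), vol.blocks[1])
```

After one overwrite, the snapshot holds a single preserved block rather than a copy of all four, yet it can still present the volume exactly as it was.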

Not only do you get space efficient snapshots, you can also have a lot of them. We’re talking 126 snapshots per volume or roughly 32k snapshots per storage pool, unlocking an abundance of capabilities, especially in the cloud. Remember the whole cloud is a mindset thing? Having data center options at your disposal can come in handy regardless of where the operations are taking place.

Snapshots are nice, but they don’t mean a thing if you can’t do something with them — preferably at the same time you are using the rest of the volume. With APEX Block Storage, you can do just that. You can mount the snapshots and read from or write to them like they are another volume in the environment, similar to what you have been doing with storage in the data center for years.

Earlier, I mentioned scaling. In that regard, Dell APEX Block Storage has you covered. Starting with 10TiB of usable capacity and scaling up to 4PiB in the cluster, you can create volumes from 8GB all the way up to 1PiB, producing massive flexibility for building in the cloud and empowering you to meet the storage needs of your most demanding applications.

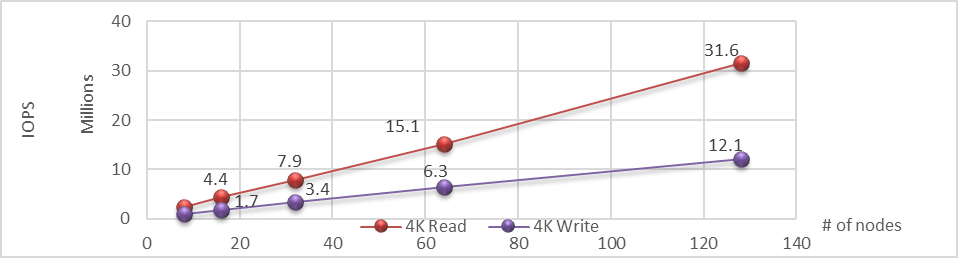

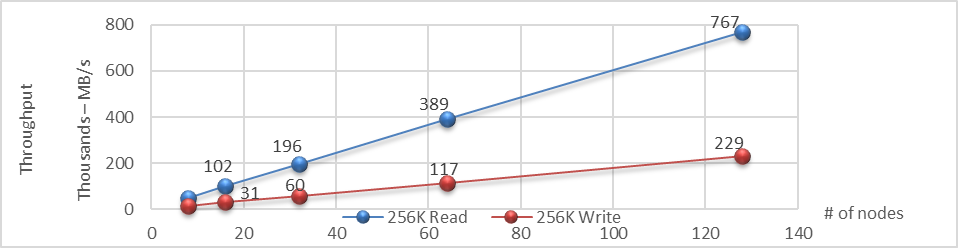

As you increase the capacity, rest assured the performance with Dell APEX Block Storage remains linear. As you can see from the following charts for IOPS and throughput, both scale linearly on both reads and writes, meaning you get reliable and consistent performance from the cloud.¹ This provides the opportunity to apply a cloud mindset at scale, allowing you to focus on optimizing cloud workloads as the cloud infrastructure is ready for them.

Figure 6. Linear IOPS as the number of nodes is increased in the APEX Block Storage environment

Figure 7. Linear throughput as the number of nodes is increased in the APEX Block Storage environment

Conclusion

Dell APEX Block Storage provides a host of capabilities, including aggregating underlying cloud storage into scalable and unified storage that linearly scales IOPS and throughput as new nodes are provided. We also looked at the availability of Dell APEX Block Storage in both Microsoft Azure and Amazon AWS clouds. Finally, we covered how cloud computing is a frame of mind to incorporate into our designs so our workloads can run in any location we choose.

Of course, the areas we went over just scratch the surface of applying a cloud mindset. After all, as we’ve said before, the cloud is just somebody else’s computer.

If you would like to find out more about how Dell APEX Block Storage can enhance your cloud journey, reach out to your Dell representative.

Resources

Author: Tony Foster, Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]

¹ Based on internal testing, March 15th-16th 2021 – Extreme SLAs – Even in the Cloud

VMware Explore, PowerFlex, and Silos of Glitter: this blog has it all!

Fri, 18 Aug 2023 19:30:20 -0000


Those who know me are aware that I’ve long argued that one platform must be able to support multiple workloads, and Dell PowerFlex can. If you are at VMware Explore, you can see a live demo of both traditional database workloads and AI workloads running on the same four PowerFlex nodes.

When virtualization took the enterprise by storm, a war was started against silos. First came servers, and the idea that we could consolidate them on a few large hosts with virtualization. This then rapidly moved to storage and continued to blast through every part of the data center. Yet today we still have silos. Mainly in the form of workloads, these hide in plain sight, disguised with other names like “departmental,” “project,” or “application group.”

Some of these workload silos are becoming even more stealthy and operate under the guise of needing “different” hardware or performance, so IT administrators allow them to operate in a separate silo.

That is wasteful! It wastes company resources, it wastes the opportunity to do more, and it wastes your time managing multiple environments. It has become even more of an issue with the rise of Machine Learning (ML) and AI workloads.

If you are at VMware Explore this year you can see how to break down these silos with Dell PowerFlex at the Kioxia booth (Booth 309). Experience the power of running ResNet 50 image classification and OLTP (Online Transactional Processing) workloads simultaneously, live from the show floor. And if that’s not enough, there are experts, and lots of them! You might even get the chance to visit with the WonderNerd.

This might not seem like a big deal, right? You just need a few specialty systems, some storage, and a bit of IT glitter… some of the systems run the databases, some run the ML workloads. Sprinkle some of that IT glitter and poof you’ve got your workloads running together. Well sort of. They’re in the same rack at least.

Remember: silos are bad. Instead, let’s put some PowerFlex in there! And put that glitter back in your pocket, this is a data center, not a five-year-old’s birthday party.

PowerFlex supports NVIDIA GPUs with MIG technology, which is part of NVIDIA AI Enterprise, so we can customize our GPU resources for the workloads that need them. (Yes, there is nothing that says you can’t run different workloads on the same hosts.) Plus, PowerFlex uses Kioxia PM7 series SSDs, so there are plenty of IOPS to go around while ensuring sub-millisecond latency for both workloads. This allows the data to be closer to the processing, maybe even on the same host.

In our lab tests, we could push one million transactions per minute (TPM) with OLTP workloads while also processing 6620 images per second using a ResNet-50 model built on NVIDIA NGC containers. These numbers matter if you want to keep customers happy, especially as more and more organizations add AI/ML capabilities to their online apps, and more and more data is generated from all those new apps.

Here are the TPM results from the demo environment that is running our four SQL VMs. The TPMs in this test are maxing out around 320k and the latency is always sub-millisecond. This is the stuff you want to show off, not that pocket full of glitter.

Yeah, you can silo your environments and hide them with terms like “project” and “application group,” but everyone will still know they are silos.

We all started battling silos at the dawn of virtualization. PowerFlex with Kioxia drives and NVIDIA GPUs gives administrators a fighting chance to win the silo war.

You can visit the NVIDIA team at Lounge L3 on the show floor during VMware Explore. And of course, you have to stop by the Kioxia booth (309) to see what PowerFlex can do for your IT battles. We’ll see you there!

Author: Tony Foster

Location: The Land of Oz [-6 GMT]

Contributors: Kailas Goliwadekar, Anup Bharti

Managing Dell PowerFlex Licensing and Being Way Less Sad

Mon, 24 Jul 2023 21:20:14 -0000


Imagine there was an easy way to view and manage your Dell PowerFlex licenses. Wouldn’t that be nice? I know I’d be way less sad. Well guess what, I’m way less sad, and there’s a way to easily manage your PowerFlex licenses.

I was on a call today with one of our product managers. He was showing something really cool, and I just had to share it with everyone. You can go into CloudIQ and view all your PowerFlex licenses.

You might think, “big deal, licenses.” You’re right! It is a big deal. Okay, a moderate-sized deal, but it makes me less sad. And here’s why. Have you ever had to track licenses for your environment in a spreadsheet? How about sharing that spreadsheet with everyone else on your team and hoping that no one accidentally removes too many rows or types in the wrong cell? Or maybe you have to correlate a license to how much capacity you’re using. I’m sure 90% of users love this method. What’s that I hear you yelling at your monitor? I’m wrong???

You’re correct, hardly anyone wants to track licenses that way. Why? Because it’s error-prone and difficult to manage, plus it’s not automated. Oh, and it’s licensing. Well, CloudIQ can help you address a lot of this, at least for your PowerFlex environment.

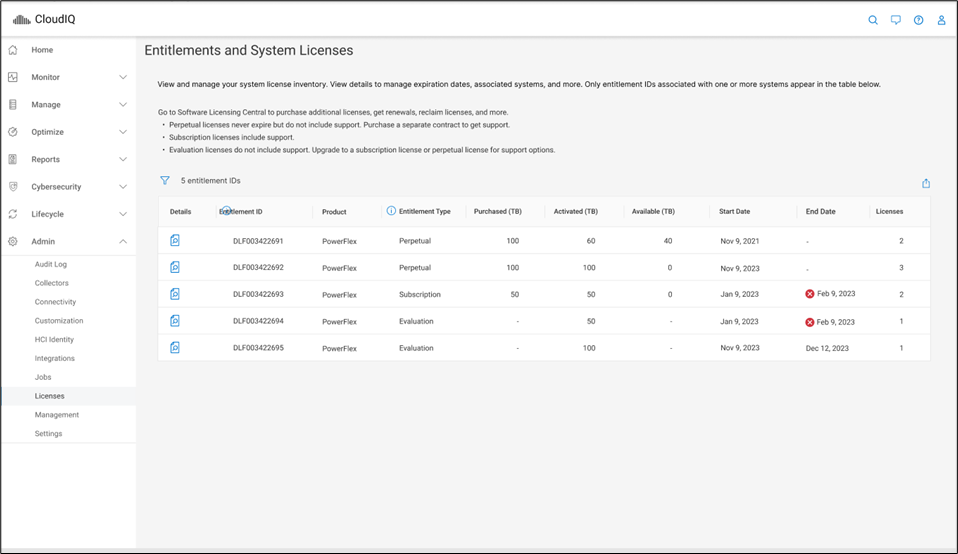

That’s right. You log in, click on the Entitlements and System Licenses option in the menu, and you can see all your entitlements for PowerFlex. With that you can see how many terabytes of capacity each license has as well as the start and end dates. It’s all there, no spreadsheets, no manual entry, it’s easy to manage. Maybe 90% of users would prefer this method over a spreadsheet. You can see this functionality in the screenshot below.

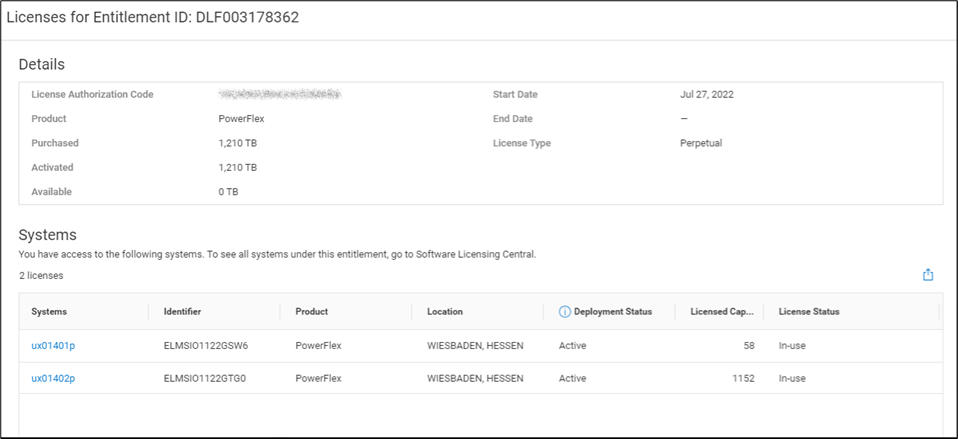

It gets better though. Maybe you want to dig into the details of your environment and see how different licenses are being used. Maybe you are licensed for a petabyte of storage but you’re missing 50ish terabytes and want to see where they went. If you click on the details of an entitlement, you can see which systems are consuming capacity from the license. This makes it a lot easier than a spreadsheet to track down. You can see this in the following screenshot.

I’m sure it’s hard to get excited over licensing, but hopefully this makes you way less sad knowing you don’t have to try and track all this in a spreadsheet. Instead, you just log in to CloudIQ, then click on Entitlements and System Licenses. Poof, there it all is, in an easy-to-consume format. And for those who still want to manage their licenses in a spreadsheet, there’s an export option at the top of the table just for you. You can create pivot tables to your heart’s content. For everyone else, you’ve just unlocked a PowerFlex secret. Hopefully, like me, this makes you way less sad about licensing.

If you’re interested in finding out more about what you can do with licensing in CloudIQ, reach out to your Dell representative, who can guide you on all CloudIQ has to offer.

Author: Tony Foster

Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]

Can I do that AI thing on Dell PowerFlex?

Thu, 20 Jul 2023 21:08:09 -0000


The simple answer is Yes, you can do that AI thing with Dell PowerFlex. For those who might have been busy with other things, AI stands for Artificial Intelligence and is based on trained models that allow a computer to “think” in ways machines haven’t been able to do in the past. These trained models (neural networks) are essentially a long set of IF statements (layers) stacked on one another, and each IF has a ‘weight’. Once something has worked through a neural network, the weights provide a probability about the object. So, the AI system can be 95% sure that it’s looking at a bowl of soup or a major sporting event. That, at least, is my overly simplified description of how AI works. The term carries a lot of baggage as it’s been around for more than 70 years, and the definition has changed from time to time. (See The History of Artificial Intelligence.)
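To go one small step beyond my overly simplified description, here is a toy forward pass through a two-layer network in plain Python. The weights, input features, and labels are all made up for illustration; a real model such as an image classifier has millions of trained weights. The sketch only shows the mechanics: each layer multiplies its inputs by weights, and a final softmax step turns the output scores into probabilities that sum to one.

```python
import math


def dense(inputs, weights, biases):
    """One layer: a weighted sum of the inputs for each output neuron."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """The 'IF' in each neuron: pass positive values, zero out the rest."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, layers, labels):
    activations = features
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return dict(zip(labels, softmax(dense(activations, weights, biases))))


# Hypothetical, hand-picked weights; real weights come from training.
layers = [
    ([[0.8, -0.2], [0.1, 0.9]], [0.0, 0.1]),   # hidden layer
    ([[2.0, -1.0], [-1.0, 1.5]], [0.0, 0.0]),  # output layer
]
probs = classify([1.0, 0.5], layers, ["bowl of soup", "sporting event"])
print(probs)
```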

Most recently, AI has been made famous by large language models (LLMs) for conversational AI applications like ChatGPT. Though these applications have stoked fears that AI will take over the world and destroy humanity, that has yet to be seen. Computers still can do only what we humans tell them to do, even LLMs, and that means if something goes wrong, we, their creators, are ultimately to blame. (See ‘Godfather of AI’ leaves Google, warns of tech’s dangers.)

The reality is that most organizations aren’t building world-destroying LLMs; they are building systems to ensure that every pizza made in their factory has exactly 12 slices of pepperoni evenly distributed on top of the pizza. Or maybe they are looking at loss prevention, or better traffic light timing, or they just want a better technical support phone menu. All of these are uses for AI and each one is constructed differently (they use different types of neural networks).

We won’t delve into these use cases in this blog because we need to start with the underlying infrastructure that makes all those ideas “AI possibilities.” We are going to start with the infrastructure and what many now consider a basic (by today’s standards) image classifier known as ResNet-50 v1.5. (See ResNet-50: The Basics and a Quick Tutorial.)

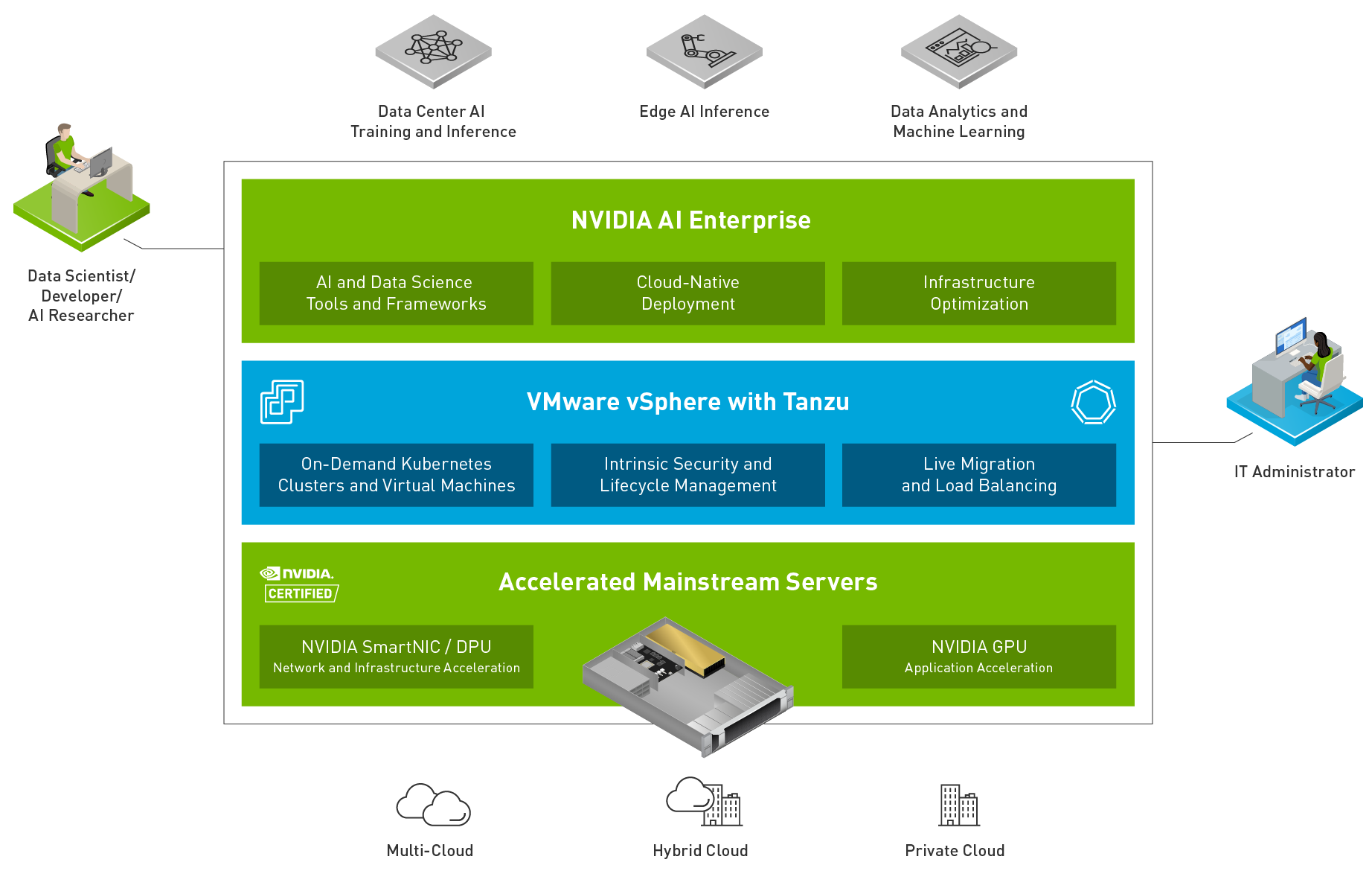

That’s also what the PowerFlex Solution Engineering team did in the validated design they recently published. This design details the use of ResNet-50 v1.5 in a VMware vSphere environment using NVIDIA AI Enterprise as part of a PowerFlex environment. They started out with the basics of how a virtualized NVIDIA GPU works well in a PowerFlex environment. That’s what we’ll explore in this blog – getting started with AI workloads, and not how you build the next AI supercomputer (though you could do that with PowerFlex as well).

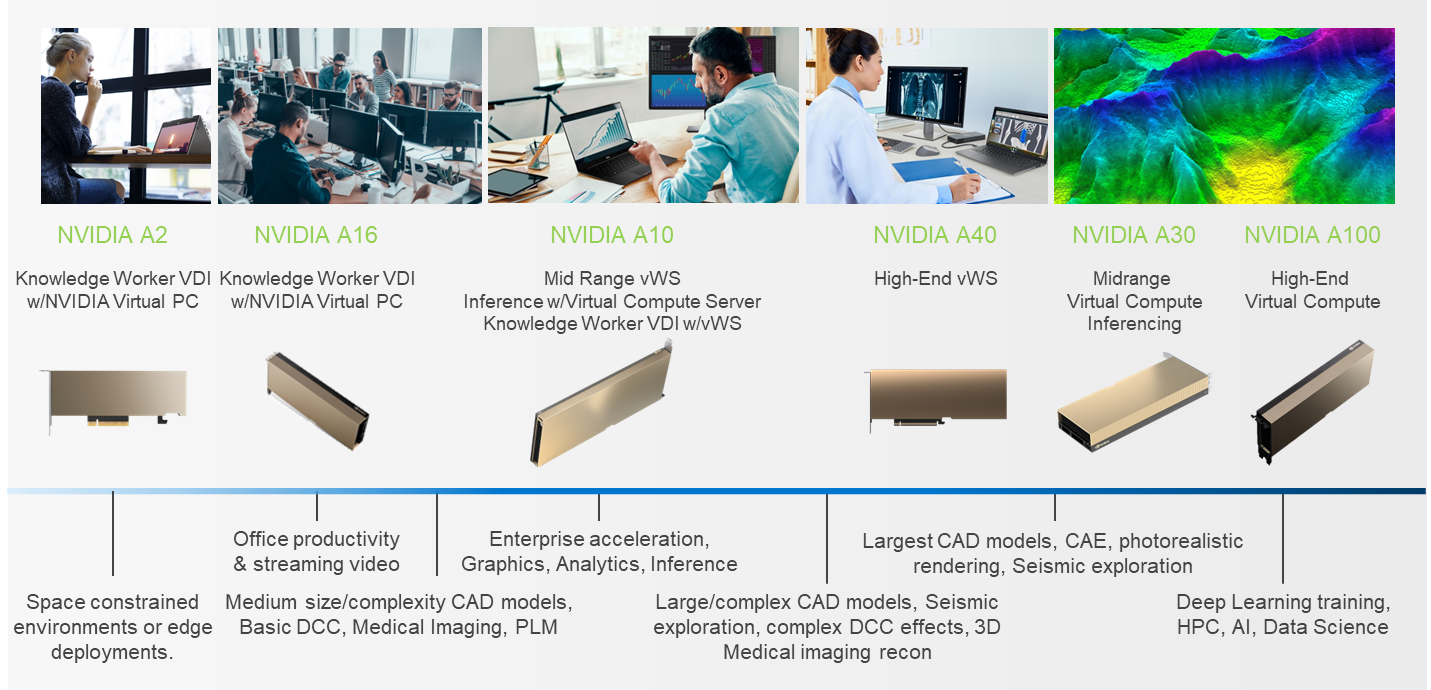

In this validated design, they used the NVIDIA A100 (PCIe) GPU and virtualized it in VMware vSphere as a virtual GPU, or vGPU. With the infrastructure in place, they built Linux VMs that contain the ResNet-50 v1.5 workload and vGPUs. Beyond just working with traditional vGPUs that many may be familiar with, they also worked with NVIDIA’s Multi-Instance GPU (MIG) technology.

NVIDIA’s MIG technology allows administrators to partition a GPU into a maximum of seven GPU instances. Being able to do this provides greater control of GPU resources, ensuring that large and small workloads get the appropriate amount of GPU resources they need without wasting any.

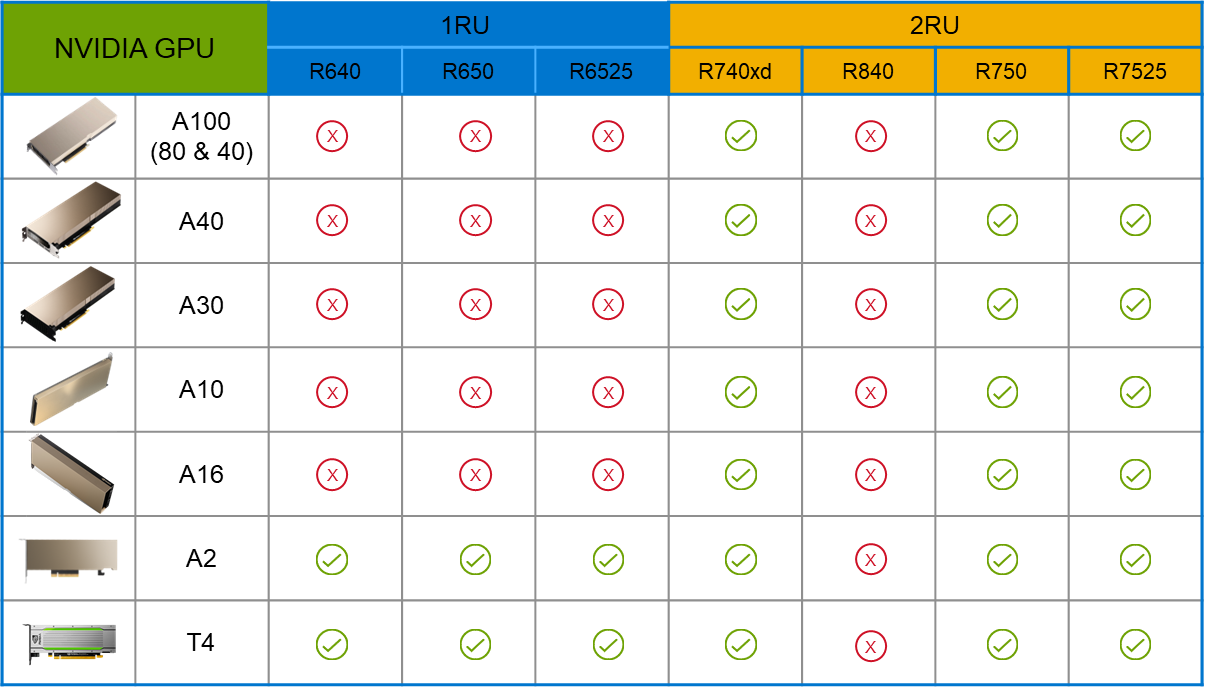

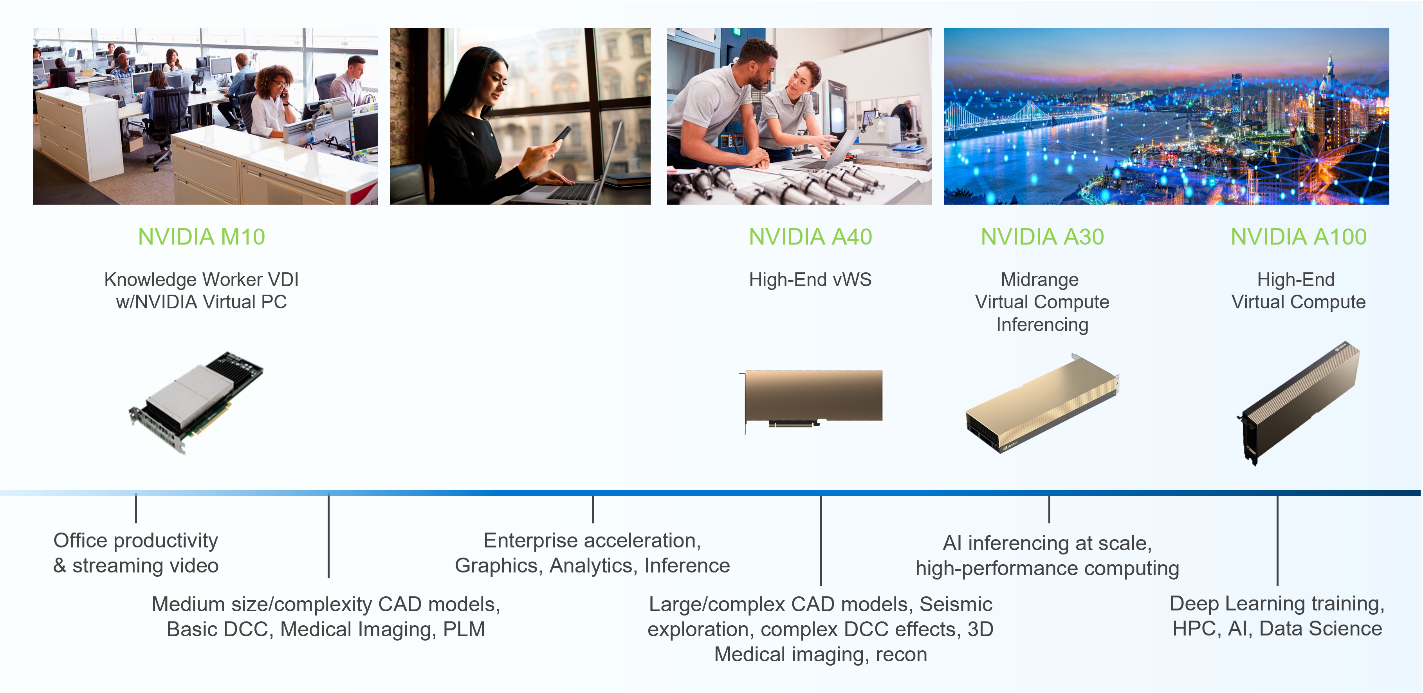

PowerFlex supports a large range of NVIDIA GPUs for workloads, from VDI (Virtual Desktops) to high end virtual compute workloads like AI. You can see this in the following diagram where there are solutions for “space constrained” and “edge” environments, all the way to GPUs used for large inferencing models. In the table below the diagram, you can see which GPUs are supported in each type of PowerFlex node. This provides a tremendous amount of flexibility depending on your workloads.

The validated design describes the steps to configure the architecture and provides detailed links to the NVIDIA and VMware documentation for configuring the vGPUs and the licensing process for NVIDIA AI Enterprise.

These are key steps when building an AI environment. I know from my experience working with various organizations, and from teaching, that many are not used to working with vGPUs in Linux. This is slowly changing in the industry. If you haven’t spent a lot of time working with vGPUs in Linux, be sure to pay attention to the details provided in the guide. It is important and can make a big difference in your performance.

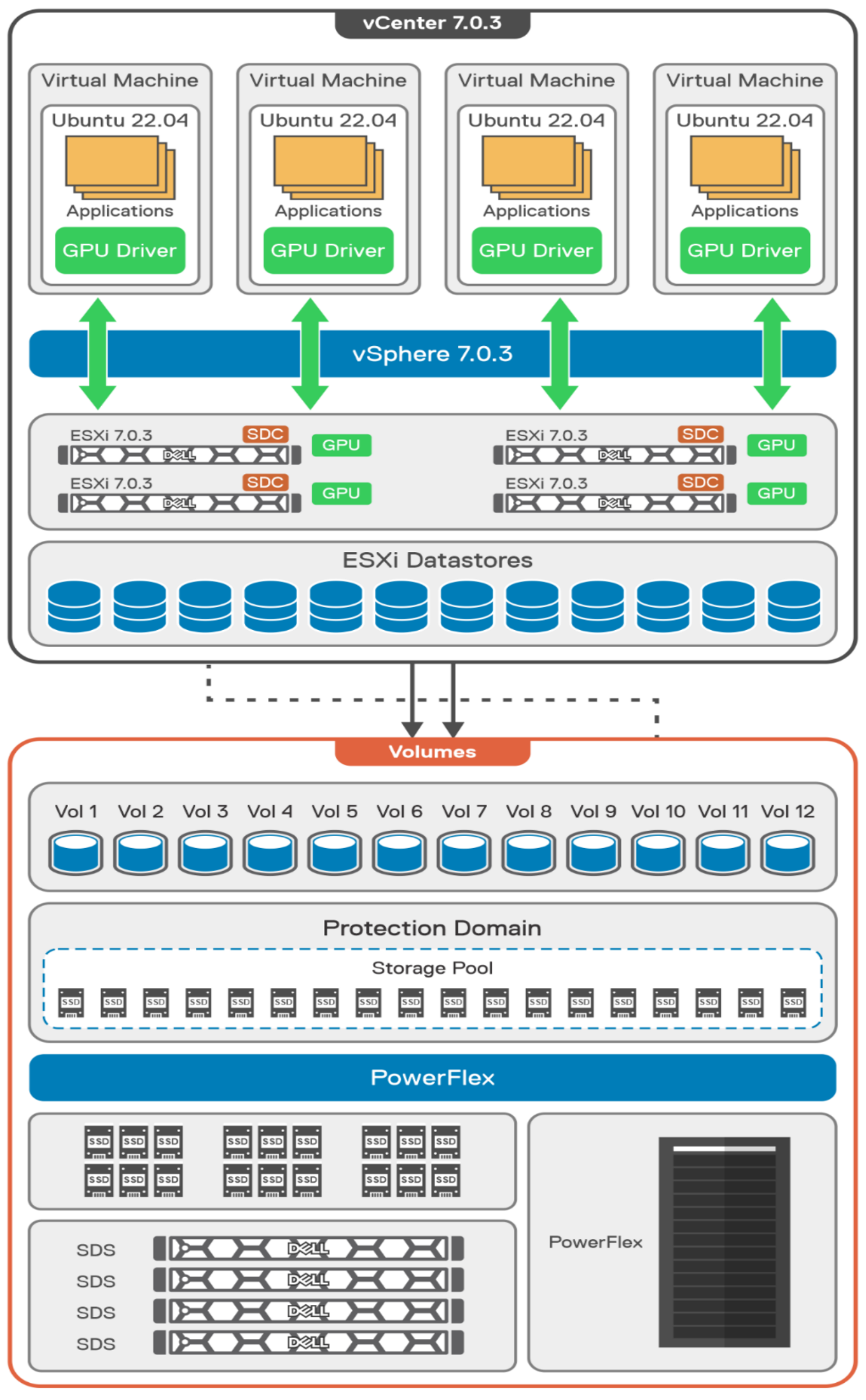

The following diagram shows the validated design’s logical architecture. At the top of the diagram, you can see four Ubuntu 22.04 Linux VMs with the NVIDIA vGPU driver loaded in them. They are running on PowerFlex hosts with VMware ESXi deployed. Each VM contains one NVIDIA A100 GPU configured for MIG operations. This configuration leverages a two-tier architecture where storage is provided by separate PowerFlex software defined storage (SDS) nodes.

A design like this allows for independent scalability for your workloads. What I mean by this is during the training phase of a model, significant storage may be required for the training data, but once the model clears validation and goes into production, storage requirements may be drastically different. With PowerFlex you have the flexibility to deliver the storage capacity and performance you need at each stage.

This brings us to testing the environment. Again, for this paper, the engineering team validated the environment with ResNet-50 v1.5 and the ImageNet 1K data set. For this validation, they enabled several ResNet-50 v1.5 TensorFlow features: multi-GPU training with Horovod, NVIDIA DALI, and Automatic Mixed Precision (AMP). These features let the ResNet-50 v1.5 model take advantage of capabilities that are present in the environment. The paper then describes how to set up and configure ResNet-50 v1.5 and the features mentioned above, and provides details about downloading the ImageNet data.

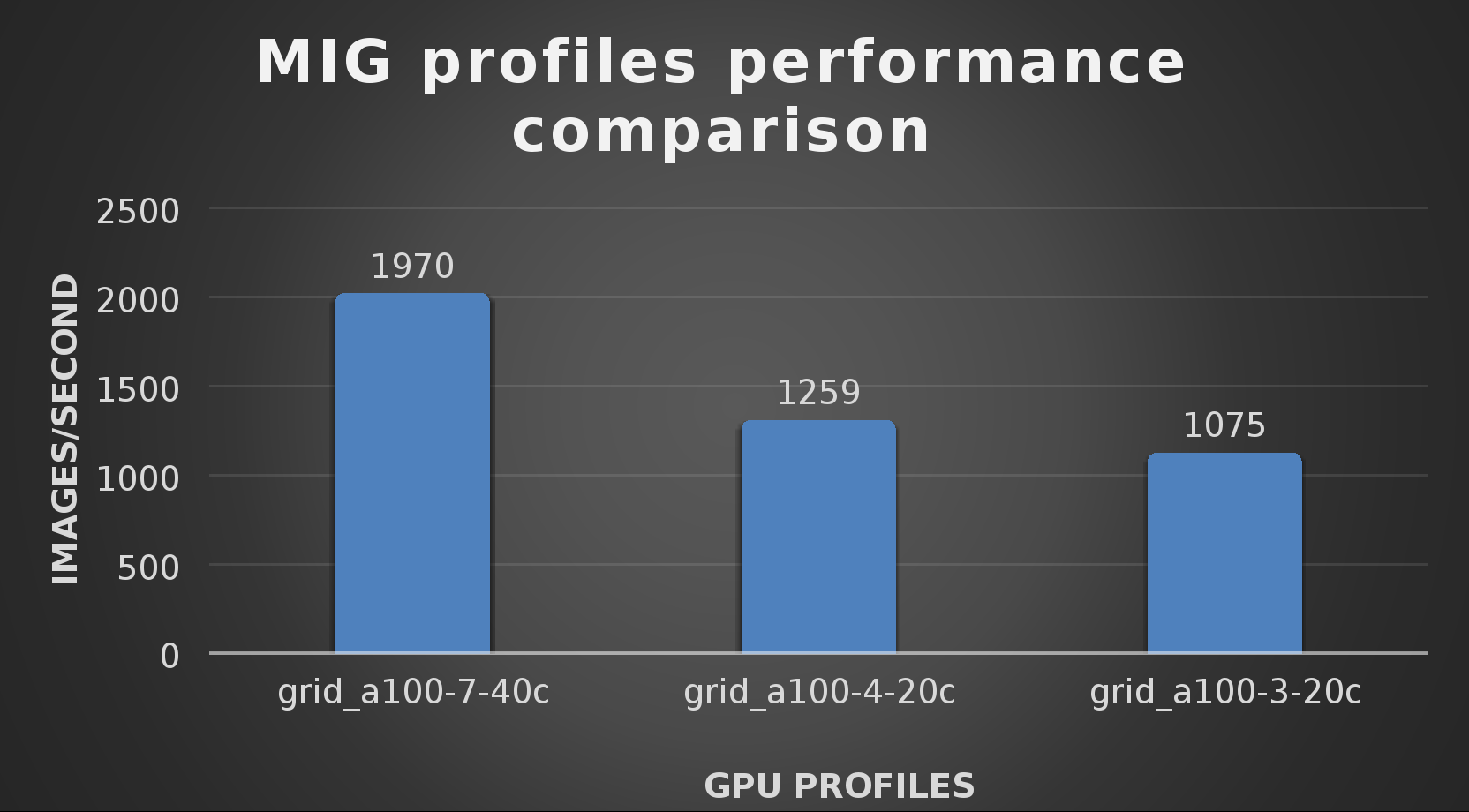

At this stage they were able to train the ResNet-50 v1.5 deployment. The first iteration of training used the NVIDIA A100-7-40C vGPU profile. They then repeated testing with the A100-4-20C vGPU profile and the A100-3-20C vGPU profile. You might be wondering about the A100-2-10C vGPU profile and the A100-1-5C profile. Although those vGPU profiles are available, they are more suited for inferencing, so they were not tested.
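To make the profile names a little more concrete, here is a minimal Python sketch. It assumes the names follow an `A100-<compute slices>-<frame buffer in GB>C` pattern, and that the GPU offers seven compute slices and 40 GB of memory, as the profiles listed above suggest. The helper names are hypothetical, and the check only adds up slices and memory. Real MIG placement has additional rules that are covered in the NVIDIA documentation.

```python
import re
from typing import NamedTuple

# Assumption: seven compute slices (the MIG maximum mentioned earlier) and
# 40 GB of memory, matching the A100-7-40C profile from the first test run.
TOTAL_SLICES = 7
TOTAL_MEMORY_GB = 40


class VgpuProfile(NamedTuple):
    name: str
    slices: int
    memory_gb: int


def parse_profile(name: str) -> VgpuProfile:
    """Split a name such as 'A100-3-20C' into compute slices and memory."""
    match = re.fullmatch(r"A100-(\d+)-(\d+)C", name)
    if match is None:
        raise ValueError(f"unrecognized profile name: {name}")
    return VgpuProfile(name, int(match.group(1)), int(match.group(2)))


def fits_on_one_gpu(names: list[str]) -> bool:
    """Simplified check: sums slices and memory, ignores placement rules."""
    profiles = [parse_profile(n) for n in names]
    return (
        sum(p.slices for p in profiles) <= TOTAL_SLICES
        and sum(p.memory_gb for p in profiles) <= TOTAL_MEMORY_GB
    )
```

Under these assumptions, `fits_on_one_gpu(["A100-4-20C", "A100-3-20C"])` passes the check, while adding any profile alongside `A100-7-40C` does not.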

The results from validating the training workloads for each vGPU profile are shown in the following graph. The vGPUs were running near 98% capacity according to nvitop during each test. The CPU utilization was 14%, and there was no bottleneck with the storage during the tests.

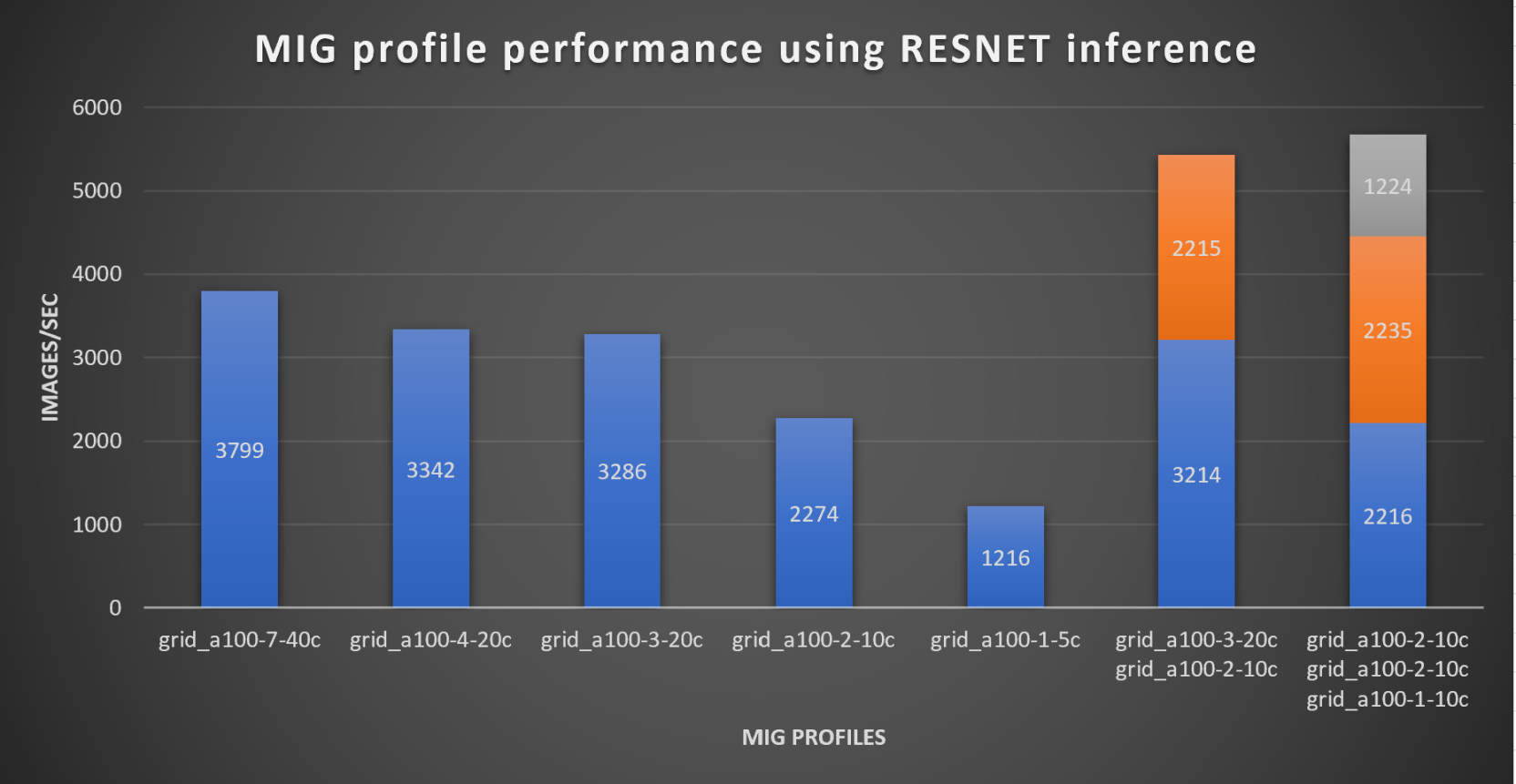

With the models trained, the guide then looks at how well inference runs on the MIG profiles. The following graph shows inferencing images per second of the various MIG profiles with ResNet-50 v1.5.

It’s worth noting that the last two columns show the inferencing running across multiple VMs, on the same ESXi host, that are leveraging MIG profiles. This also shows that GPU resources are partitioned with MIG and that resources can be precisely controlled, allowing multiple types of jobs to run on the same GPU without impacting other running jobs.

This opens the opportunity for organizations to align consumption of vGPU resources in virtual environments. Said a different way, it allows IT to provide “show back” of infrastructure usage in the organization. So if a department only needs an inferencing vGPU profile, that’s what they get, no more, no less.

It’s also worth noting that vGPU utilization was 88% and CPU utilization was 11% during the inference testing.

These validations show that a Dell PowerFlex environment can support the foundational components of modern-day AI. They also show the value of NVIDIA’s MIG technology to organizations of all sizes, allowing them to gain operational efficiencies in the data center and enable access to AI.

This again answers the question of this blog: can I do that AI thing on Dell PowerFlex? Yes, you can run that AI thing! If you would like to find out more about how to run your AI thing on PowerFlex, be sure to reach out to your Dell representative.

Resources

- The History of Artificial Intelligence

- ‘Godfather of AI’ leaves Google, warns of tech’s dangers

- ResNet-50: The Basics and a Quick Tutorial

- Dell Validated Design for Virtual GPU with VMware and NVIDIA on PowerFlex

- NVIDIA NGC Catalog ResNet v1.5 for PyTorch

- NVIDIA AI Enterprise

- NVIDIA A100 (PCIe) GPU

- NVIDIA Virtual GPU Software Documentation

- NVIDIA A100-7-40C vGPU profile

- NVIDIA Multi-Instance GPU (MIG)

- NVIDIA Multi-Instance GPU User Guide

- Horovod

- ImageNet

- DALI

- Automatic Mixed Precision (AMP)

- nvitop

Author: Tony Foster

Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]

What to do with all that data? Answer: SingleStore on PowerFlex

Wed, 10 May 2023 22:55:28 -0000

Every organization has data, every organization has databases, and every organization must figure out what to do with all that data from those databases. According to research by the University of Tennessee, Knoxville’s Haslam College of Business, there were 44 zettabytes of data in 2020, and by 2025 it is estimated that 463 exabytes of data will be created daily. That’s a lot of data, and even if your organization only contributes a fraction of a percent to those 463 exabytes of data a day, that’s still a lot of data to manage. A great approach to this modern ocean of data is using SingleStore on Dell PowerFlex.

Recently Dell and SingleStore released a joint validation white paper on a virtualized SingleStore environment running on PowerFlex. The paper provides an overview of the technologies used and then looks at an architecture that can be used to run SingleStore on PowerFlex. After that, the paper looks at how the environment was validated.

SingleStore

Before I get into the details of the paper, I suspect there might be a few readers who have yet to hear about SingleStore or know about some of its great features, so let’s start there. Built for developers and architects, SingleStoreDB is based on a distributed SQL architecture, delivering 10–100 millisecond performance on complex queries, all while ensuring that your organization can effortlessly scale. Now let’s go a bit deeper.

SingleStoreDB:

- Scales horizontally, providing high throughput across a wide range of platforms.

- Maintains a broad compatibility with common technologies in the modern data processing ecosystem (for example, orchestration platforms, developer IDEs, and BI tools), so you can easily integrate it in your existing environment.

- Features an in-memory rowstore and an on-disk columnstore to handle both highly concurrent operational and analytical workloads.

- Features the SingleStore Pipelines data ingestion technology that streams large amounts of data at high throughput into the database with exactly once semantics.

This means that you can continue to run your traditional SQL queries against your ever-growing data, which all resides on a distributed system, and you can do it fast. This is a big win for organizations that have active data growth in their environment.

What makes this even better is the ability of PowerFlex to scale from a few nodes to thousands. This provides a few different options to match your growing needs. You can start with just your SingleStore system deployed on PowerFlex and migrate other workloads onto the PowerFlex environment as time permits. This allows you to focus on just your database environment to start and then, as infrastructure comes up for renewal, you migrate those workloads and scale up your environment with more compute and storage capacity.

Or maybe you are making a bigger contribution to that 463 exabytes of data per day I mentioned earlier, and you need to scale out your environment to handle your data’s growth. You can do that too!

That’s the great thing about PowerFlex: you can consume resources independently of each other. You can add more storage or compute as you need them.

Additionally, with PowerFlex, you can deliver bare-metal and virtualized environments without having to choose only one. That’s right—you can run bare-metal servers right next to virtualized workloads.

Architecture

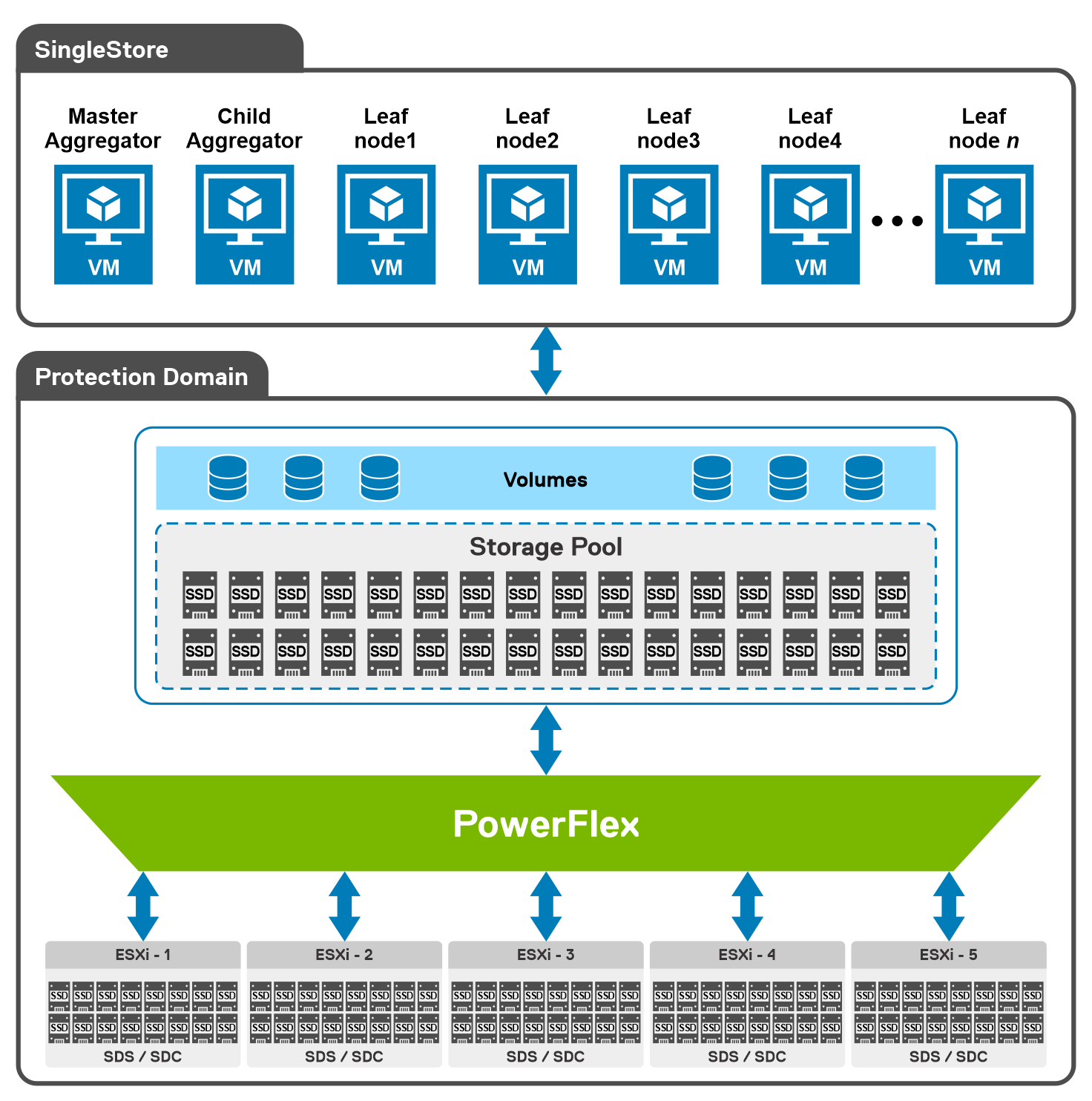

The engineers built this environment using PowerFlex deployed in a hyper-converged infrastructure (HCI) configuration, where the compute nodes are also storage nodes. (PowerFlex supports both two-tier architectures and HCI.)

As shown in the following diagram, our engineering team used five Dell PowerEdge R640 servers with dual CPUs, 384 GB of RAM, and eight SSDs per node. These five nodes were configured as HCI nodes and connected with a 25 Gbps network. The storage from across the nodes is aggregated to create a large software-defined pool of storage as a single protection domain that provides volumes to the SingleStore VMs. This is ideal for even the most demanding databases due to its high I/O capability.
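As a quick illustration of how the per-node resources add up across the cluster, here is a minimal Python sketch. The node values come from the description above. The `HciNode` and `cluster_totals` names are hypothetical, and SSD capacity is not included because the text gives only the drive count.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HciNode:
    """One PowerEdge R640 HCI node, using the values given in the text."""
    cpus: int = 2       # dual CPUs
    ram_gb: int = 384
    ssds: int = 8


def cluster_totals(node_count: int, node: HciNode = HciNode()) -> dict[str, int]:
    """Sum per-node resources across identical nodes."""
    return {
        "cpus": node_count * node.cpus,
        "ram_gb": node_count * node.ram_gb,
        "ssds": node_count * node.ssds,
    }


totals = cluster_totals(5)
print(totals)  # {'cpus': 10, 'ram_gb': 1920, 'ssds': 40}
```

These are raw totals only. The capacity that is actually usable by the SingleStore VMs depends on the PowerFlex protection settings and hypervisor overhead, which the white paper covers.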

For this validation, the SingleStore Cluster VMs consist of two aggregator VMs and multiple leaf VMs. The white paper details the configuration of these VMs.

Additionally, the white paper provides an overview of the steps used to deploy SingleStore on VMware vSphere in a PowerFlex environment. For this validation, they followed the online user interface method to deploy SingleStore.

Testing

With the environment configured, the white paper then discusses how to validate the environment using TPC-DS. This tool provides 99 different queries that can be used to test a database. For this validation, only 95 of the 99 were used. The paper then describes both how the sample data set was created and how the tests were run.

The validation tests were run on 4, 6, and 8 leaf node configurations. This was done to understand the variation in performance as the environment scales. The testing showed that having more SingleStore leaf nodes results in better performance outcomes.

The testing also showed that there were no storage bottlenecks for the TPC-DS-like workload and that using more powerful CPUs could further enhance the environment.
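If you repeat this kind of test across 4, 6, and 8 leaf nodes in your own environment, one simple way to compare the runs is to express each total runtime relative to the smallest configuration. The following Python sketch shows that arithmetic. The `scaling_speedup` function is a hypothetical helper, not something from the white paper, and it expects the timings you measured yourself.

```python
def scaling_speedup(total_seconds: dict[int, float]) -> dict[int, float]:
    """Return the speedup of each leaf count relative to the smallest one.

    total_seconds maps a leaf-node count to the total runtime, in seconds,
    of the same query set on that configuration.
    """
    if not total_seconds:
        raise ValueError("no measurements supplied")
    if any(seconds <= 0 for seconds in total_seconds.values()):
        raise ValueError("runtimes must be positive")
    baseline = total_seconds[min(total_seconds)]
    return {
        leaves: baseline / seconds
        for leaves, seconds in sorted(total_seconds.items())
    }
```

A value above 1.0 for a larger configuration means the query set finished faster than it did on the smallest configuration.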

The white paper shows how SingleStore and PowerFlex can be used to create a dynamic and robust environment for your growing data needs as you do your part to contribute to the 463 exabytes of data that is expected to be created daily by 2025. To find out more about this design, contact your Dell representative.

Author: Tony Foster

Twitter: @wonder_nerd

LinkedIn

How PowerFlex Transforms Big Data with VMware Greenplum

Tue, 01 Nov 2022 21:18:15 -0000

Quick! The word has just come down. There is a new initiative that requires a massively parallel processing (MPP) database, and you are in charge of implementing it. What are you going to do? Luckily, you know the answer. You also just discovered that the Dell PowerFlex Solutions team has you covered with a solutions guide for VMware Greenplum.

What is in the solutions guide and how will it help with an MPP database? This blog provides the answer. We look at what Greenplum is and how to leverage Dell PowerFlex for both the storage and compute resources in Greenplum.

Infrastructure flexibility: PowerFlex

If you have read my other blogs or are familiar with PowerFlex, you know it has powerful transmorphic properties. For example, PowerFlex nodes sometimes function as both storage and compute, like hyperconverged infrastructure (HCI). At other times, PowerFlex functions as a storage-only (SO) node or a compute-only (CO) node. Even more interesting, these node types can be mixed and matched in the same environment to meet the needs of the organization and the workloads that they run.

This transmorphic property of PowerFlex is helpful in a Greenplum deployment, especially with the configuration described in the solutions guide. Because the deployment is built on open-source PostgreSQL, it is optimized for the needs of an MPP database, like Greenplum. PowerFlex can deliver the compute performance necessary to support massive data IO with its CO nodes. The PowerFlex infrastructure can also support workloads running on CO nodes or nodes that combine compute and storage (hybrid nodes). By leveraging the malleable nature of PowerFlex, no additional silos are needed in the data center, and it may even help remove existing ones.

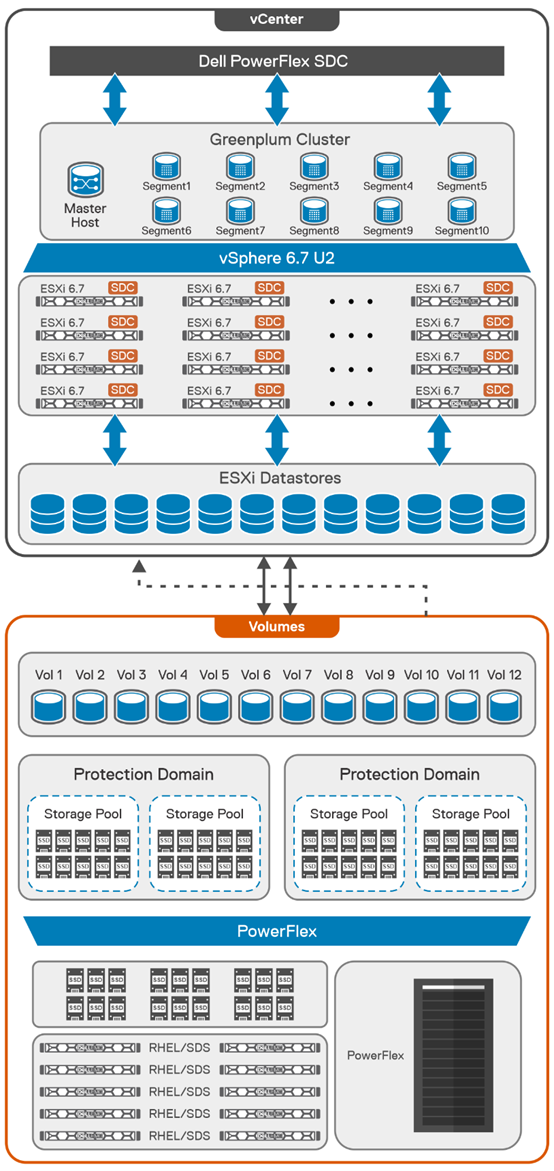

The architecture used in the solutions guide consists of 12 CO nodes and 10 SO nodes. The CO nodes have VMware ESXi installed on them, with Greenplum instances deployed on top. There are 10 segments and one director deployed for the Greenplum environment. The 12th CO node is used for redundancy.

The storage tier uses the 10 SO nodes to deliver 12 volumes backed by SSDs. This configuration creates a high-speed, highly redundant storage system that is needed for Greenplum. Also, two protection domains are used to provide both primary and mirror storage for the Greenplum instances. Greenplum mirrors the volumes between those protection domains, adding another level of protection to the environment, as shown in the following figure:

By using this fluid and composable architecture, the components can be scaled independently of one another, allowing for storage to be increased either independently or together with compute. Administrators can use this configuration to optimize usage and deliver appropriate resources as needed without creating silos in the environment.
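The compute layout described in this section can be checked with a few lines of Python. This is only a sketch: the counts are taken from the text, the `spare_compute_nodes` helper is hypothetical, and it assumes one Greenplum instance per CO node, which the text implies but does not state outright.

```python
# Counts as described in the text for the Greenplum environment.
CO_NODES = 12
GREENPLUM_ROLES = {"segment": 10, "director": 1}


def spare_compute_nodes(co_nodes: int, roles: dict[str, int]) -> int:
    """CO nodes left over, assuming one Greenplum instance per CO node."""
    used = sum(roles.values())
    if used > co_nodes:
        raise ValueError("more Greenplum instances than compute nodes")
    return co_nodes - used


print(spare_compute_nodes(CO_NODES, GREENPLUM_ROLES))  # 1
```

The single remaining node corresponds to the 12th CO node that is held for redundancy.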

Testing and validation with Greenplum: we have you covered

The solutions guide not only describes how to build a Greenplum environment, it also addresses testing, which many administrators want to perform before they finish a build. The guide covers performing basic validations with FIO and gpcheckperf. In the simplest terms, these tools ensure that IO, memory, and network performance are acceptable. The FIO tests that were run for the guide showed that the HBA was fully saturated, maximizing both read and write operations. The gpcheckperf testing showed a performance of 14,283.62 MB/sec for write workloads.

Wouldn’t you feel better if a Greenplum environment was tested with a real-world dataset? That is, taking it beyond just the minimum, maximum, and average numbers? The great news is that the architecture was tested that way! Our Dell Digital team has developed an internal test suite running static benchmarked data. This test suite is used at Dell Technologies across new Greenplum environments as the gold standard for new deployments.

In this test design, all the datasets and queries are static. This scenario allows for a consistent measurement of the environment from one run to the next. It also provides a baseline of an environment that can be used over time to see how its performance has changed, for example, whether the environment sped up or slowed down following a software update.
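The idea of comparing a run against a stored baseline can be sketched in a few lines of Python. This is not the Dell Digital test suite, which is internal. The `compare_to_baseline` function, the query names you pass in, and the 10% tolerance are all assumptions chosen for illustration.

```python
def compare_to_baseline(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.10,
) -> dict[str, str]:
    """Label each baseline query as faster, slower, unchanged, or missing.

    Both dicts map a query name to its runtime in seconds. A change smaller
    than `tolerance` (a fraction of the baseline runtime) counts as
    unchanged. Queries absent from `current` are labeled missing.
    """
    verdicts: dict[str, str] = {}
    for query, base_seconds in baseline.items():
        if query not in current:
            verdicts[query] = "missing"
            continue
        change = (current[query] - base_seconds) / base_seconds
        if change > tolerance:
            verdicts[query] = "slower"
        elif change < -tolerance:
            verdicts[query] = "faster"
        else:
            verdicts[query] = "unchanged"
    return verdicts
```

Because the datasets and queries stay the same between runs, any query labeled slower after a software update points to a change in the environment instead of a change in the workload.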

Massive performance with real data