Expanding VxRail Dynamic Node Storage Options with PowerFlex

Wed, 09 Feb 2022 19:53:55 -0000

It was recently announced that Dell VxRail dynamic nodes now support Dell PowerFlex. This announcement expands the storage possibilities for VxRail dynamic nodes, providing a powerful and complementary option for hyperconverged data centers. A white paper published by the Dell Technologies Solutions Engineering team details this configuration of VxRail dynamic nodes with PowerFlex.

In this blog, we will explore how to use VxRail dynamic nodes with PowerFlex and explain why the combination benefits organizations. We will begin with an overview of dynamic nodes and PowerFlex, then describe why this duo is beneficial, and finally look at some of the highlights of the white paper.

VxRail dynamic nodes and PowerFlex

VxRail

VxRail dynamic nodes are compute-only nodes, meaning these nodes don’t provide vSAN storage. They are available in the E, P, and V Series and accommodate a large variety of use cases. VxRail dynamic nodes rely on an external storage resource as their primary storage, which in this case is PowerFlex.

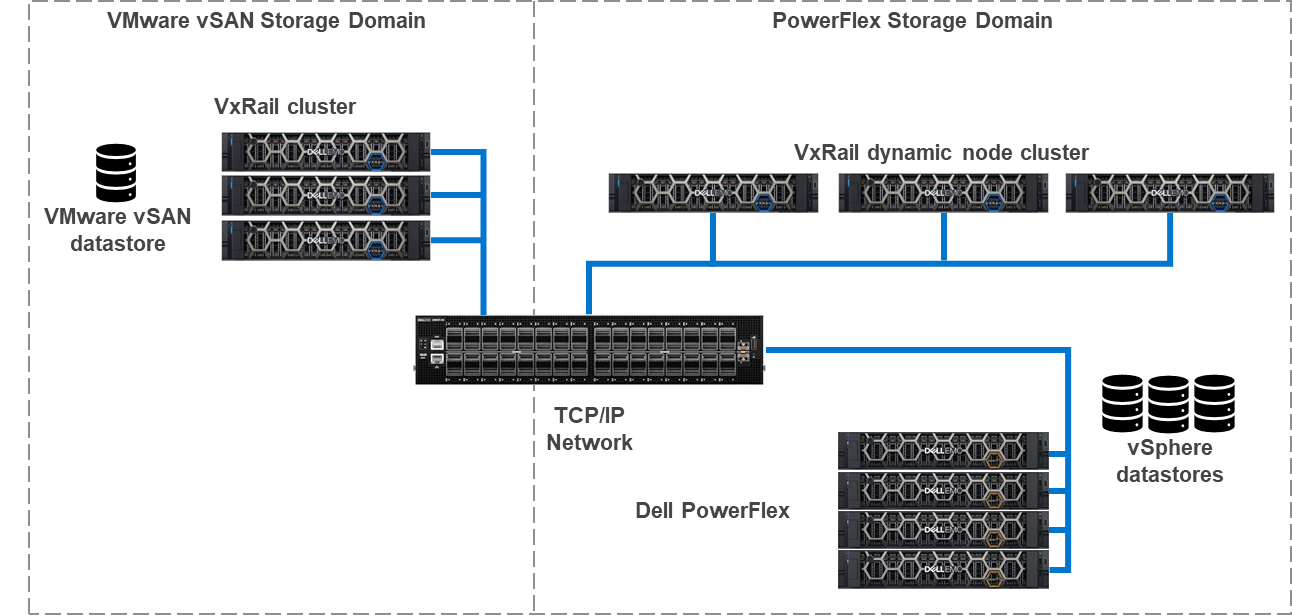

The diagram shows a traditional VxRail environment on the left. This environment uses a VMware vSAN datastore for storage. The right side of the diagram shows a VxRail dynamic node cluster. The VxRail dynamic nodes are compute-only nodes and, in this case, rely on PowerFlex for storage. In this design, the VxRail cluster, the VxRail dynamic node cluster, and the PowerFlex storage can each be scaled independently of one another, which is useful for certain workloads. For example, an organization may want to scale compute and storage separately for Oracle environments to reduce license costs.

To learn more about VxRail dynamic nodes, see my colleague Daniel Chiu’s blog on the VxRail 7.0.240 release.

PowerFlex

PowerFlex is a software-defined infrastructure that delivers linear scaling of performance and resources. It is built on PowerEdge servers and aggregates the storage of four or more PowerFlex nodes to create a high-performance software-defined storage system. PowerFlex uses a standard TCP/IP network to connect nodes and deliver storage to environments, and it is the only storage platform for VxRail dynamic nodes that uses an IP network. Both of these attributes, linear scaling and IP-based storage delivery, are analogous to how VxRail delivers storage.

PowerFlex-VxRail benefits

If this seems confusing, it is because VxRail and PowerFlex do share many of the same characteristics. However, that is also why it makes sense to bring them together. This section describes how the two can be combined to deliver a powerful architecture for certain applications.

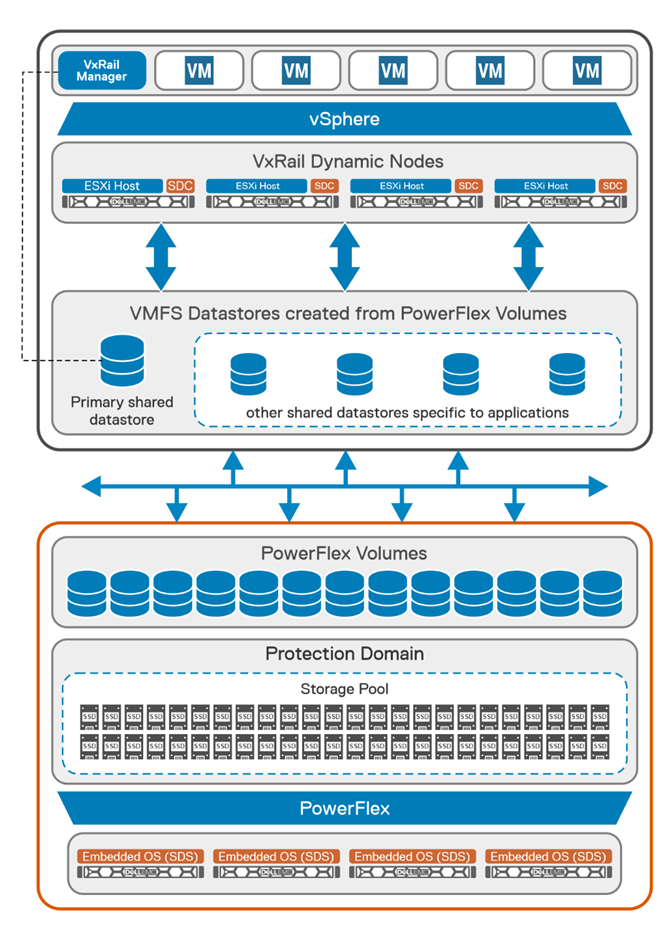

The diagram shows the logical configuration of PowerFlex and VxRail combined. At the top of the diagram is the VxRail cluster, consisting of four dynamic nodes. These dynamic nodes run the PowerFlex Storage Data Client (SDC), a software-based storage adapter that runs in the ESXi kernel. The SDC enables the VxRail dynamic nodes to consume volumes provisioned from the storage on the PowerFlex nodes.

In the lower half of the diagram, we see the PowerFlex nodes and the storage they present. The cluster contains four PowerFlex storage-only nodes. In these nodes, the internal drives are aggregated into a storage pool that spans across all four nodes. The storage pool capacity can then be provisioned as PowerFlex volumes to the VxRail dynamic nodes.
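To make the provisioning step more concrete, here is a minimal Python sketch of the two requests a script might send to a PowerFlex Gateway: create a volume in a storage pool, then map it to the SDC running on a dynamic node. It assumes the PowerFlex 3.x Gateway REST API (`/api/types/Volume/instances` and the `addMappedSdc` action) and an 8 GB volume allocation granularity; the pool and SDC IDs are hypothetical placeholders, and newer PowerFlex releases may expose a different API, so check the REST API reference for your version. The sketch only builds the requests and does not send them.

```python
import json
from dataclasses import dataclass

GIB_IN_KB = 1024 * 1024  # 1 GiB expressed in KB
ALLOC_UNIT_GIB = 8       # assumed PowerFlex allocation granularity


@dataclass
class Request:
    method: str
    path: str
    body: dict


def round_up_size_kb(size_gib: int) -> int:
    """Round a requested size up to the next 8 GiB boundary, returned in KB."""
    if size_gib <= 0:
        raise ValueError("size must be positive")
    units = -(-size_gib // ALLOC_UNIT_GIB)  # ceiling division
    return units * ALLOC_UNIT_GIB * GIB_IN_KB


def create_volume_request(name: str, size_gib: int, storage_pool_id: str) -> Request:
    # POST to the Volume collection creates a new volume in the given pool.
    return Request(
        method="POST",
        path="/api/types/Volume/instances",
        body={
            "name": name,
            "volumeSizeInKb": str(round_up_size_kb(size_gib)),
            "storagePoolId": storage_pool_id,
        },
    )


def map_to_sdc_request(volume_id: str, sdc_id: str) -> Request:
    # Mapping exposes the volume to one SDC, i.e. one ESXi dynamic node.
    return Request(
        method="POST",
        path=f"/api/instances/Volume::{volume_id}/action/addMappedSdc",
        body={"sdcId": sdc_id},
    )


if __name__ == "__main__":
    # Hypothetical IDs for illustration only.
    create = create_volume_request("ai-datasets-01", 100, "pool-0001")
    print(create.method, create.path, json.dumps(create.body))
    mapping = map_to_sdc_request("vol-0001", "sdc-esxi-01")
    print(mapping.method, mapping.path, json.dumps(mapping.body))
```

A real script would first authenticate against the gateway and check each response, using the volume ID returned by the create call, before issuing the mapping request.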

AI workloads offer a great example of where it makes sense to bring these two technologies together. There has been a lot of buzz around virtualizing AI, ML, and HPC workloads. Dell, NVIDIA, and VMware have done amazing things in this area, including NVIDIA AI Enterprise on VxRail. You may think this does not matter to your organization because it has no uses for AI, ML, or HPC, but uses for AI are constantly evolving. For example, AI is even being used extensively in agriculture.

These new AI technologies are data driven and require massive amounts of data to train and validate models. That data needs to be stored somewhere, and the systems processing it benefit from quick access to it, which VxRail is great at providing. There are exceptions, though. What if your data set is too large for VxRail, or what if you have multiple AI models that need to be shared among multiple clusters?

The typical response in this scenario is to add a storage array to the environment. That would work, except you've just added complexity to the environment, and many users move to HCI precisely to drive complexity out of their environments. Fibre Channel is a great example of this complexity.

To reduce complexity, there's another option: PowerFlex. PowerFlex can scale to hundreds of nodes, delivering the high-performance storage that modern, data-hungry applications need. Additionally, it operates on standard TCP/IP networks, eliminating the need for a dedicated storage switch fabric. This makes it an ideal choice for virtualized AI workloads.

Using a standard network may be important to some organizations, either because it avoids that complexity or because they lack the in-house talent to administer a Fibre Channel network. This is particularly true in areas where skilled administrators are hard to find. Now more than ever, it is extremely important to leverage the skills and resources already available within an organization.

Another area where PowerFlex-backed VxRail dynamic nodes can be beneficial is data services such as data at rest encryption (D@RE). Both vSAN and PowerFlex support D@RE. When encryption runs on a host, the encryption and decryption processes consume host resources. The impact varies with the workload: a workload with a lot of I/O can consume more CPU and RAM for encryption than a workload with lower I/O. When D@RE is offloaded to the storage system, the resources it would have consumed on the host can be used for other tasks, such as running workloads.

Beyond D@RE, PowerFlex has many other built-in data resiliency and protection mechanisms, including a distributed mesh mirroring system and native asynchronous replication. These functions help deliver fast data access and a consistent data protection strategy.
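As a rough illustration of what mirroring means for capacity, the sketch below estimates usable capacity from raw capacity. It assumes two-copy mirroring (every block stored twice on different nodes), a pool of identical nodes, and spare capacity equal to one node reserved for rebuilds. It ignores metadata and other overheads, so treat it as a back-of-the-envelope estimate rather than a sizing tool.

```python
def usable_capacity_tb(nodes: int, raw_tb_per_node: float) -> float:
    """Rough usable capacity for a pool of identical nodes.

    Assumptions (illustrative, not an official sizing formula):
    - spare capacity equal to one node is reserved for rebuilds
    - every block is mirrored, so usable space is half of what remains
    """
    if nodes < 4:
        raise ValueError("this example assumes at least four storage nodes")
    raw = nodes * raw_tb_per_node
    spare = raw_tb_per_node  # one node's worth of spare capacity
    return (raw - spare) / 2


# Four nodes with 20 TB raw each: (80 - 20) / 2 = 30 TB usable
print(usable_capacity_tb(4, 20.0))
```

Because the spare reservation is one node regardless of cluster size, it is a smaller fraction of raw capacity in larger clusters.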

The overhead of storage processing, such as encryption, can affect the number of hosts that need to be licensed. Large databases processing millions of transactions per minute (TPM) are a good example. Each data write requires an encryption operation. A single operation may be small and appear inconsequential, until millions of them happen in the same time span. If there aren't enough resources to handle both the encryption processing and the CPU and RAM demands of the database, performance can degrade, and additional hosts may be needed to support the database environment.

In such a scenario, it can be advantageous to use VxRail dynamic nodes with PowerFlex. This offloads encryption to PowerFlex, allowing the full compute capacity of the dynamic nodes to be delivered to the VMs.
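A back-of-the-envelope sketch shows how this adds up. It assumes, purely for illustration, that a database needs a fixed number of CPU cores, that host-side encryption adds a percentage on top of that demand, and that each host contributes a fixed number of usable cores. All of the numbers below are hypothetical; real encryption overhead depends heavily on the workload and the hardware.

```python
import math


def hosts_needed(db_cores: float, encryption_overhead: float, cores_per_host: int) -> int:
    """Hosts required when encryption runs on the same hosts as the database.

    encryption_overhead is a fraction of the database's core demand
    (for example 0.15 for 15 percent). Pass 0.0 to model encryption
    offloaded to external storage such as PowerFlex.
    """
    total_cores = db_cores * (1 + encryption_overhead)
    return math.ceil(total_cores / cores_per_host)


# Hypothetical workload: 200 cores of database demand, 56 usable cores per host.
print(hosts_needed(200, 0.15, 56))  # host-side encryption: 5
print(hosts_needed(200, 0.0, 56))   # encryption offloaded: 4
```

Because hosts come in whole units, even a modest overhead can push the requirement over a boundary and add a host, along with its licenses.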

Dell PowerFlex with VxRail Dynamic Nodes – White Paper

The Solutions Engineering team has included many graphics detailing both the logical and physical design of how VxRail dynamic nodes can be configured with PowerFlex.

The white paper highlights several important prerequisites, including the requirement to run VxRail system software version 7.0.300 or later. This matters because 7.0.300 is the release that added PowerFlex support for VxRail dynamic nodes. If the VxRail environment is not at a compatible version, deployment may be delayed while the environment is upgraded.
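A simple pre-flight check can catch a version mismatch before deployment work begins. The sketch below compares a VxRail system software version string against the 7.0.300 minimum. It assumes the version is available as a dotted string such as "7.0.240", possibly followed by a build suffix after a hyphen; how you retrieve that string is outside the scope of this sketch, and the function names are hypothetical.

```python
MIN_VERSION = (7, 0, 300)  # minimum release with PowerFlex support for dynamic nodes


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '7.0.300' or '7.0.300-12345' into (7, 0, 300)."""
    core = version.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def supports_powerflex(version: str) -> bool:
    # Tuple comparison orders versions component by component.
    return parse_version(version) >= MIN_VERSION


for v in ["7.0.240", "7.0.300", "7.0.350-12345"]:
    print(v, supports_powerflex(v))
```

Comparing tuples of integers rather than raw strings avoids the trap where a string comparison would place "7.0.1000" before "7.0.300".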

Beyond building the environment, the white paper also details how to administer it. While administration is relatively straightforward for seasoned administrators, it's always good to have instructions in case an administrator is out sick or other members of the team are still gaining experience.

All of this and much more is covered in the white paper. If you are interested in the details, be sure to read it, whether your team currently uses VxRail and is looking to add dynamic nodes, or already has both PowerFlex and VxRail and wants to expand the capabilities of each.

Summary

This blog provided an overview of VxRail dynamic nodes and how they can take advantage of PowerFlex software-defined storage when needed. The benefits include reducing licensing costs and keeping complexity, such as Fibre Channel, to a minimum in your environment. To find out more, read the white paper or talk with your Dell representative.

Author Information

Author: Tony Foster

Twitter: @wonder_nerd

Related Blog Posts

PowerFlex and CloudStack, an Amazing IaaS match!

Sat, 18 Nov 2023 14:13:00 -0000

Have you heard about Apache CloudStack? Did you know it runs amazingly on Dell PowerFlex? And what does it all have to do with infrastructure as a service (IaaS)? Interested in learning more? If so, then you should probably keep reading!

The PowerFlex team and ShapeBlue have been collaborating to bring ease and simplicity to CloudStack on PowerFlex. They have been doing this for quite a while. As new versions are released, the teams work together to ensure it continues to be amazing for customers. The deep integration with PowerFlex makes it an ideal choice for organizations building CloudStack environments.

Both Dell and ShapeBlue are gearing up for the CloudStack Collaboration Conference (CCC) in Paris on November 23 and 24. The CloudStack Collaboration Conference is the biggest get-together for the Apache CloudStack community, bringing vendors, users, and developers together to discuss the future of open-source technologies, the benefits of CloudStack, and new integrations and capabilities.

CloudStack is open-source software designed to deploy and manage large networks of virtual machines as a highly available, highly scalable Infrastructure as a Service (IaaS) cloud computing platform. CloudStack is used by hundreds of service providers around the world to offer public cloud services and by many companies to provide an on-premises (private) cloud offering or as part of a hybrid cloud solution.

Users can manage their cloud with an easy-to-use web interface, command-line tools, and/or a full-featured RESTful API. In addition, CloudStack provides an API that is compatible with AWS EC2 and S3 for organizations that want to deploy hybrid clouds.
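For a sense of what using the CloudStack API involves, here is a minimal Python sketch of a signed request, following the signing scheme described in the CloudStack developer documentation: URL-encode the parameter values, sort the pairs by field name, lowercase the resulting string, compute an HMAC-SHA1 with the user's secret key, Base64-encode it, and append it as the `signature` parameter. The endpoint and keys are placeholders, and the sketch only builds the URL rather than sending it.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote

# Placeholder values; substitute your own management server and keys.
ENDPOINT = "http://cloudstack.example.com:8080/client/api"
API_KEY = "my-api-key"
SECRET_KEY = "my-secret-key"


def signed_url(params: dict, api_key: str, secret_key: str, endpoint: str = ENDPOINT) -> str:
    params = {**params, "apiKey": api_key, "response": "json"}
    # Encode values so that spaces become %20 rather than '+'.
    pairs = [(k, quote(str(v), safe="")) for k, v in params.items()]
    query = "&".join(f"{k}={v}" for k, v in pairs)
    # String to sign: pairs sorted by field name, then lowercased as a whole.
    to_sign = "&".join(
        f"{k}={v}" for k, v in sorted(pairs, key=lambda kv: kv[0].lower())
    ).lower()
    digest = hmac.new(secret_key.encode(), to_sign.encode(), hashlib.sha1).digest()
    signature = base64.b64encode(digest).decode()
    return f"{endpoint}?{query}&signature={quote(signature, safe='')}"


print(signed_url({"command": "listVirtualMachines", "state": "Running"}, API_KEY, SECRET_KEY))
```

The management server recomputes the same HMAC from the received parameters, so any change to a parameter after signing causes the request to be rejected.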

CloudStack can leverage the extensive PowerFlex REST APIs to enhance functionality. This facilitates streamlined provisioning, effective data management, robust snapshot management, comprehensive data protection, and seamless scalability, making the combination of PowerFlex storage and CloudStack a robust choice for modern IaaS environments.

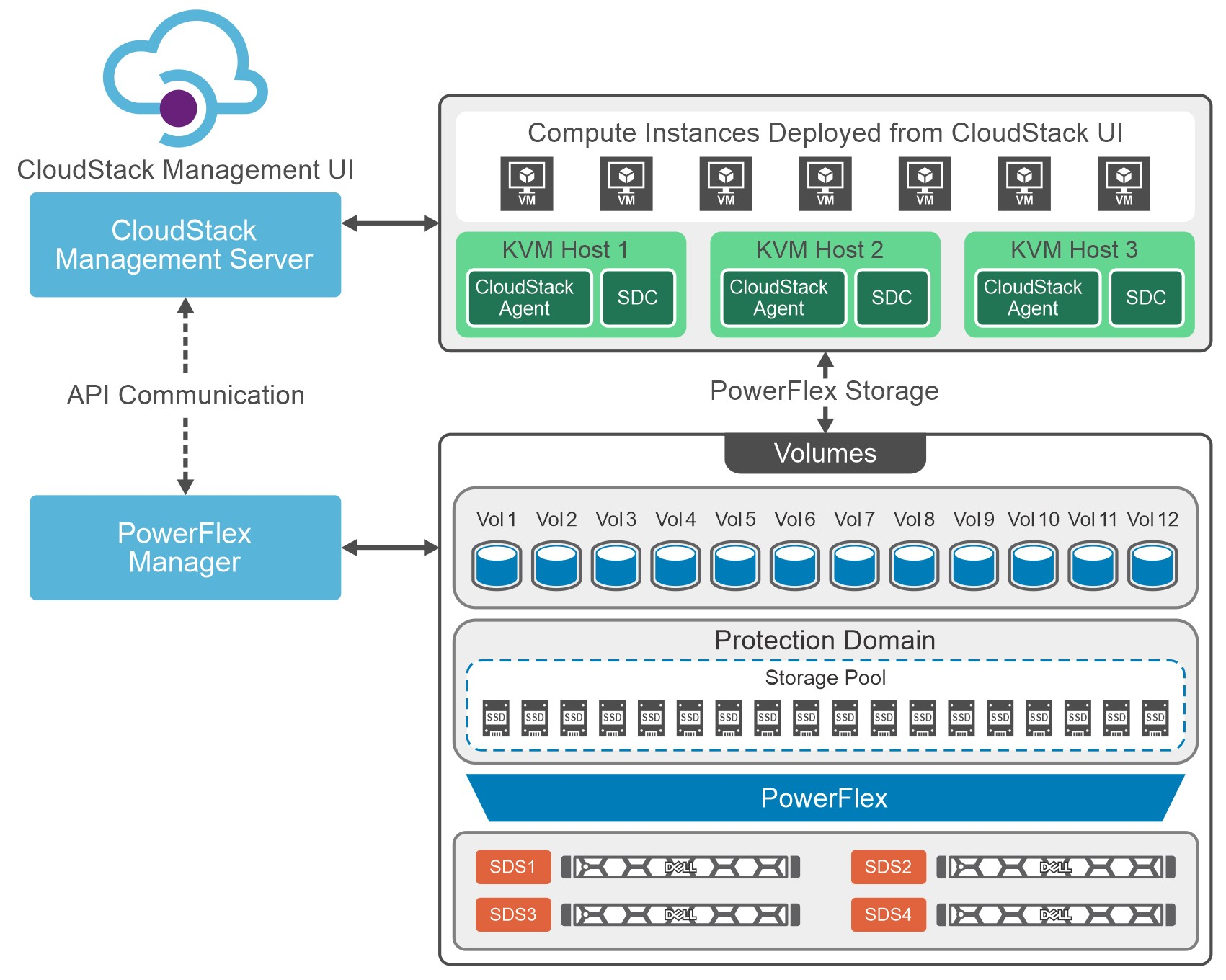

Figure 1 shows this: CloudStack and PowerFlex communicate through APIs to coordinate operations for VMs. This makes larger environments easier to administer, enabling organizations to build a true IaaS environment.

Figure 1. CloudStack on PowerFlex Architecture

Let's talk about IaaS for a moment. It is a fantastic concept that can be compared with ordering off a menu at a restaurant. The dishes on a menu can seem unrelated until you start looking at their components. For example, with just a red sauce and a white sauce, a kitchen can offer three base sauces: red, white, and pink (a mix of the two). Add a small variety of pastas and proteins, and the options become excellent. IaaS works the same way. Start with a few base options, sprinkle on some API know-how, and you get a fantastic menu that satisfies workload needs without requiring detailed knowledge of the infrastructure.

That makes it easier for the IT organization to become more efficient and shift the focus toward aspirational initiatives. This is especially true when CloudStack and PowerFlex work together. The hungry IT consumers can get what they want with less IT interaction.

Other significant benefits that come from integrating CloudStack with PowerFlex include the following:

- Seamless Data Management: Efficient provisioning, backup, and data management across the infrastructure, ensuring data integrity and accessibility.

- Enhanced Performance: Provides low-latency access to data, optimizing I/O, and reducing bottlenecks. This, in turn, leads to improved application and workload performance.

- Reliability and Data Availability: Benefit from advanced redundancy and failover mechanisms and data replication, reducing the risk of data loss and ensuring continuous service availability.

- Scalability: Scalable storage solutions allow organizations to expand their storage resources in tandem with their growing needs. This flexibility ensures that they can adapt to changing workloads and resource requirements.

- Simplified Management: A single interface for provisioning, monitoring, and troubleshooting, streamlining administrative tasks.

- Enhanced Data Protection: Data protection features such as snapshots, backups, and disaster recovery help ensure that an organization's data remains secure and can be quickly restored after unexpected incidents.

These are tremendous benefits for organizations, especially the data protection aspects. It is often said that it is no longer a question of if an organization will be impacted by an incident. It is a question of when they will be impacted. The IaaS capabilities of CloudStack and PowerFlex play a crucial role in protecting an organization's data. That protection can be automated as part of the IaaS design. That way, when a VM or VMs are requested, they can be assigned to a data protection policy as part of the creation process.

Simply put, that means that VM can be protected from the moment of creation. No more having to remember to add a VM to a backup, and no more "oh no" when someone realizes they forgot. That is amazing!
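One way to express "protected from the moment of creation" in code is to make the protection step part of the same function that deploys the VM. The sketch below does this with three CloudStack API commands, `deployVirtualMachine`, `listVolumes`, and `createSnapshotPolicy`, called through a hypothetical `call` helper that is assumed to send a signed request, wait for any asynchronous job to finish, and return the inner response object. The IDs, schedule, and retention are illustrative, and a snapshot policy is only one possible form of protection policy.

```python
from typing import Any, Callable

# Hypothetical transport: call(command, **params) -> response dict.
ApiCall = Callable[..., dict]


def deploy_protected_vm(
    call: ApiCall,
    zone_id: str,
    template_id: str,
    offering_id: str,
    name: str,
    max_snaps: int = 7,
) -> dict:
    vm = call(
        "deployVirtualMachine",
        zoneid=zone_id,
        templateid=template_id,
        serviceofferingid=offering_id,
        name=name,
    )
    # Find the VM's root disk so a policy can be attached to it.
    volumes = call("listVolumes", virtualmachineid=vm["id"], type="ROOT")["volume"]
    root_volume_id = volumes[0]["id"]
    # Daily snapshot at 01:30; DAILY schedules use the MM:HH format.
    policy = call(
        "createSnapshotPolicy",
        volumeid=root_volume_id,
        intervaltype="DAILY",
        schedule="30:01",
        maxsnaps=max_snaps,
        timezone="UTC",
    )
    return {"vm": vm, "snapshot_policy": policy}


# Minimal stand-in for the API, useful for trying the workflow offline.
def fake_call(command: str, **params: Any) -> dict:
    if command == "deployVirtualMachine":
        return {"id": "vm-1", "name": params["name"]}
    if command == "listVolumes":
        return {"volume": [{"id": "vol-1", "type": "ROOT"}]}
    if command == "createSnapshotPolicy":
        return {"id": "pol-1", "volumeid": params["volumeid"]}
    raise ValueError(f"unexpected command: {command}")


result = deploy_protected_vm(fake_call, "zone-1", "tmpl-1", "offer-1", "web-01")
print(result["snapshot_policy"])
```

Injecting `call` keeps the workflow testable offline and lets the same function run against a real management server once a signing transport is plugged in.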

If you are at the CloudStack Collaboration Conference and are interested in discovering more, talk with Shashi and Florian. They will also present how CloudStack and PowerFlex create an outstanding IaaS solution.

Register for the CloudStack Collaboration Conference to join virtually if you are unable to attend in person.

If you want to learn more about how PowerFlex and CloudStack can benefit your organization, reach out to your Dell representative for more details on this amazing solution.

Authors

Tony Foster

Twitter: @wonder_nerd

LinkedIn

Punitha HS

LinkedIn

KubeCon NA23, Google Cloud Anthos on Dell PowerFlex and More

Sun, 05 Nov 2023 23:26:43 -0000

KubeCon will be here before you know it, and there are so many exciting things to see and do. While you are making your plans, be sure to add a few items that will make things easier for you at the conference and afterwards.

Before we get into those, did you know that the Google Cloud team and the Dell PowerFlex team have been collaborating? Dell and Google Cloud recently published a reference architecture: Google Cloud Anthos and GDC Virtual on Dell PowerFlex. It illustrates how both teams are working together to enable consistency between the cloud and on-premises environments such as PowerFlex. You will see this collaboration at KubeCon this year.

On Tuesday at KubeCon, after breakfast and the keynote, you should make your way to the Solutions Showcase in Hall F on Level 3 of the West building. Once there, make your way over to the Google Cloud booth and visit with the team! They want your questions about PowerFlex and are eager to share with you how Google Distributed Cloud (GDC) Virtual with PowerFlex provides a powerful on-premises container solution.

Also, be sure to catch the lightning sessions in the Google Cloud booth. You'll get to hear from Dell PowerFlex engineer Praphul Krottapalli, who will be digging into leveraging GDC Virtual on PowerFlex. That's not all, though: he'll also look at running a Postgres database distributed across on-premises PowerFlex nodes using GDC Virtual. Beyond that, the session will cover how to protect these containerized database workloads, showing how to use Dell PowerProtect Data Manager to create application-consistent backups of a containerized Postgres database instance.

We all know backups are only good if you can restore them. So, Praphul will show you how to recover the Postgres database and have it running again in no time.
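Running Postgres on PowerFlex from Kubernetes typically goes through a CSI driver. As a hedged illustration, the Python sketch below emits a StorageClass and a PersistentVolumeClaim as a JSON `List`, which `kubectl apply -f` accepts. It assumes the Dell CSI driver for PowerFlex with the provisioner name `csi-vxflexos.dellemc.com` and its `storagepool` and `systemID` parameters; the pool name, system ID, namespace, and sizes are placeholders, and the configuration used in the KubeCon demo may differ.

```python
import json

SYSTEM_ID = "0123456789abcdef"  # placeholder PowerFlex system ID
STORAGE_POOL = "pool1"          # placeholder storage pool name


def storage_class(name: str) -> dict:
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi-vxflexos.dellemc.com",
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
        "volumeBindingMode": "WaitForFirstConsumer",
        "parameters": {
            "storagepool": STORAGE_POOL,
            "systemID": SYSTEM_ID,
            "csi.storage.k8s.io/fstype": "ext4",
        },
    }


def postgres_pvc(name: str, namespace: str, size: str, sc_name: str) -> dict:
    # Claim for the Postgres data directory, bound to the class above.
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": sc_name,
            "resources": {"requests": {"storage": size}},
        },
    }


manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        storage_class("powerflex-ext4"),
        postgres_pvc("pgdata", "databases", "64Gi", "powerflex-ext4"),
    ],
}
print(json.dumps(manifest, indent=2))
```

Saving the output to a file and applying it would create both objects; a Postgres StatefulSet or operator would then reference the `pgdata` claim.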

Application consistency is an important thing to keep in mind with backups. Would you rather have a database backup where someone had just pulled the plug on the database (crash consistent) or would you like the backup to be as though someone had gracefully shut down the system (application consistent)? For all kinds of reasons (time, cost, sanity), the latter is highly preferable!
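The difference comes down to whether the application is asked to reach a consistent state before the snapshot is taken. The generic pattern is quiesce, snapshot, resume, with the resume step guaranteed even if the snapshot fails. The Python sketch below shows that pattern with hypothetical hook functions; for Postgres, the hooks might wrap `pg_backup_start()` and `pg_backup_stop()` (PostgreSQL 15 and later). This illustrates the concept only and is not how PowerProtect Data Manager implements it.

```python
from typing import Callable


def application_consistent_snapshot(
    quiesce: Callable[[], None],
    take_snapshot: Callable[[], str],
    resume: Callable[[], None],
) -> str:
    """Quiesce the application, snapshot its storage, then always resume it."""
    quiesce()
    try:
        return take_snapshot()
    finally:
        # Runs whether or not the snapshot succeeded, so the app never stays frozen.
        resume()


# Example with stand-in hooks that just record the order of operations.
events: list = []


def fake_snapshot() -> str:
    events.append("snapshot")
    return "snap-001"


snap_id = application_consistent_snapshot(
    quiesce=lambda: events.append("quiesce"),
    take_snapshot=fake_snapshot,
    resume=lambda: events.append("resume"),
)
print(snap_id, events)
```

A crash-consistent backup is simply the same flow without the quiesce and resume hooks.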

We talk about this more in a blog that covers the demo environment we used for KubeCon.

This highlights Dell and Google’s joint commitment to modern apps by ensuring that they can be run everywhere and that organizations can easily develop and deploy modern workloads.

If you are at KubeCon and would like to learn more about how containers work on Dell solutions, be sure to stop by both the Dell and Google Cloud booths. If it’s after KubeCon, be sure to reach out to your Dell representative for more details.

Author: Tony Foster