VMware Explore, PowerFlex, and Silos of Glitter: this blog has it all!

Fri, 18 Aug 2023 19:30:20 -0000

Those who know me are aware that I’ve long argued that one platform must be able to support multiple workloads, and Dell PowerFlex can. If you are at VMware Explore, you can see a live demo of both traditional database workloads and AI workloads running on the same four PowerFlex nodes.

When virtualization took the enterprise by storm, it started a war against silos. First came servers, and the idea that we could consolidate them onto a few large hosts with virtualization. This rapidly moved to storage and continued to blast through every part of the data center. Yet today we still have silos. Mainly in the form of workloads, these hide in plain sight, disguised with other names like “departmental,” “project,” or “application group.”

Some of these workload silos are becoming even more stealthy and operate under the guise of needing “different” hardware or performance, so IT administrators allow them to operate in a separate silo.

That is wasteful! It wastes company resources, it wastes the opportunity to do more, and it wastes your time managing multiple environments. It has become even more of an issue with the rise of Machine Learning (ML) and AI workloads.

If you are at VMware Explore this year, you can see how to break down these silos with Dell PowerFlex at the Kioxia booth (Booth 309). Experience the power of running ResNet-50 image classification and OLTP (Online Transaction Processing) workloads simultaneously, live from the show floor. And if that’s not enough, there are experts, and lots of them! You might even get the chance to visit with the WonderNerd.

This might not seem like a big deal, right? You just need a few specialty systems, some storage, and a bit of IT glitter… some of the systems run the databases, some run the ML workloads. Sprinkle some of that IT glitter and poof, you’ve got your workloads running together. Well, sort of. They’re in the same rack at least.

Remember: silos are bad. Instead, let’s put some PowerFlex in there! And put that glitter back in your pocket; this is a data center, not a five-year-old’s birthday party.

PowerFlex supports NVIDIA GPUs with MIG technology, which is part of NVIDIA AI Enterprise, so we can customize our GPU resources for the workloads that need them. (Yes, there is nothing that says you can’t run different workloads on the same hosts.) Plus, PowerFlex uses Kioxia PM7 series SSDs, so there are plenty of IOPS to go around while ensuring sub-millisecond latency for both workloads. This allows the data to be closer to the processing, maybe even on the same host.

In our lab tests, we could push one million transactions per minute (TPM) with OLTP workloads while also processing 6620 images per second using a ResNet-50 model built on NVIDIA NGC containers. Numbers like these matter if you want to keep customers happy, especially as more and more organizations add AI/ML capabilities to their online apps, and more and more data is generated from all those new apps.
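
To put the two lab figures on the same footing, here is a quick back-of-the-envelope conversion. It is only arithmetic on the numbers quoted above; the helper names are mine and are not part of any PowerFlex tooling.

```python
# Figures quoted in the post; the helper functions are illustrative only.
LAB_TPM = 1_000_000          # OLTP transactions per minute (lab test)
LAB_IMAGES_PER_SEC = 6620    # ResNet-50 images per second (lab test)


def per_second(rate_per_minute: float) -> float:
    """Convert a per-minute rate to a per-second rate."""
    return rate_per_minute / 60


def per_minute(rate_per_second: float) -> float:
    """Convert a per-second rate to a per-minute rate."""
    return rate_per_second * 60


tps = per_second(LAB_TPM)
images_per_min = per_minute(LAB_IMAGES_PER_SEC)

print(f"{tps:,.0f} transactions per second")
print(f"{images_per_min:,.0f} images per minute")
```

In other words, the same nodes were handling roughly 16,700 transactions and 6,620 images every second.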

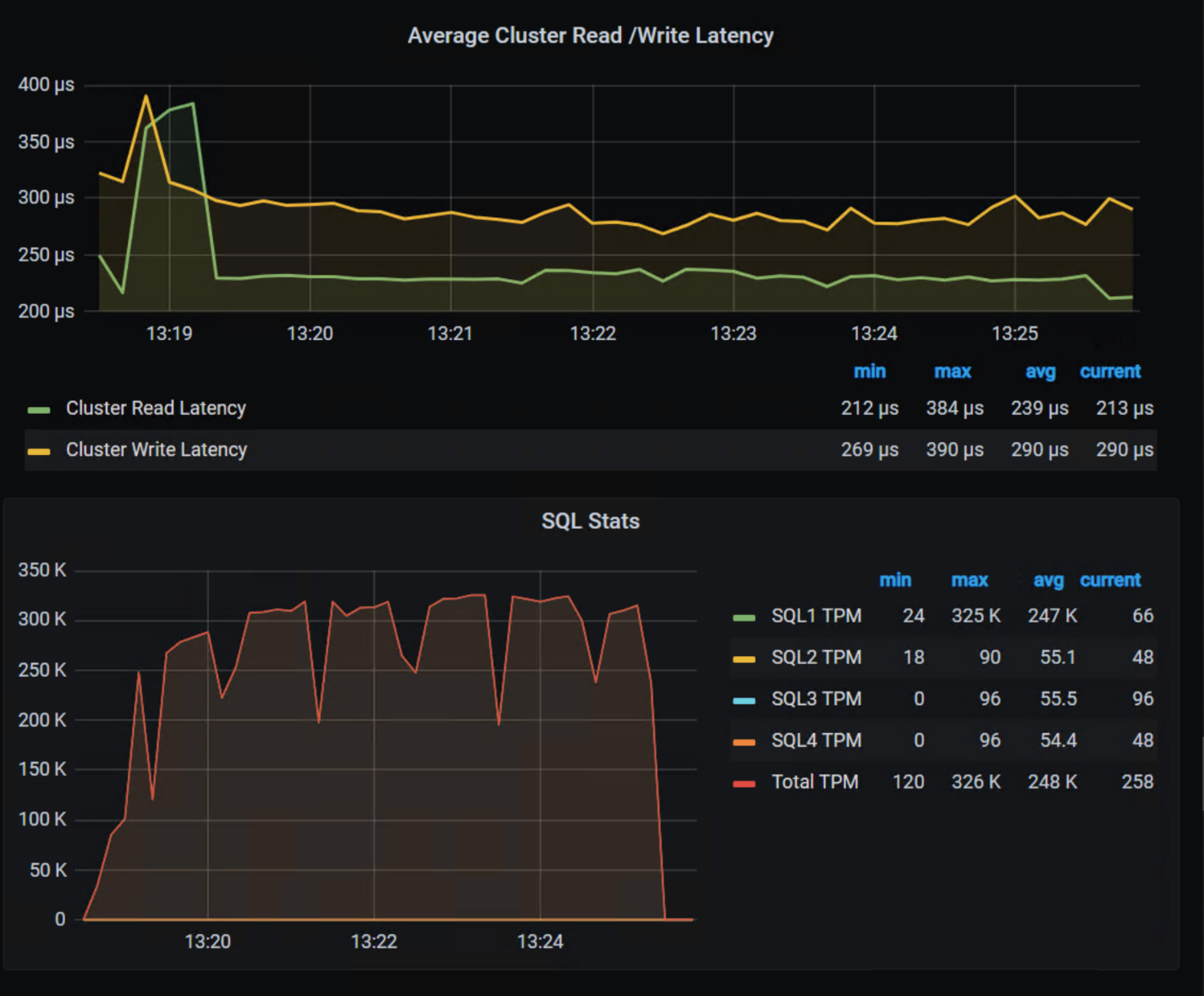

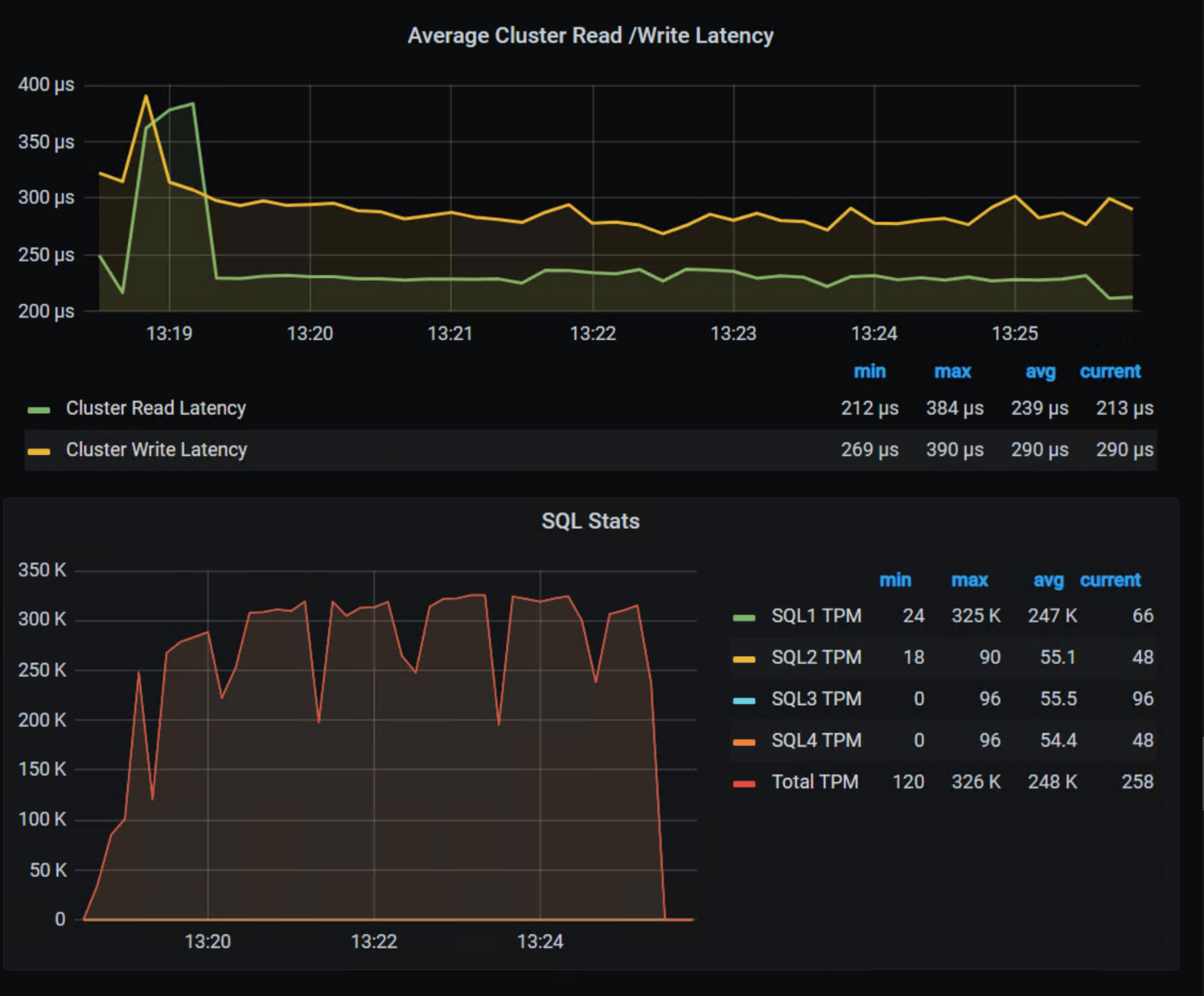

Here are the TPM results from the demo environment that is running our four SQL VMs. The TPMs in this test are maxing out around 320k and the latency is always sub-millisecond. This is the stuff you want to show off, not that pocket full of glitter.

Yeah, you can silo your environments and hide them with terms like “project” and “application group,” but everyone will still know they are silos.

We all started battling silos at the dawn of virtualization. PowerFlex with Kioxia drives and NVIDIA GPUs gives administrators a fighting chance to win the silo war.

You can visit the NVIDIA team at Lounge L3 on the show floor during VMware Explore. And of course, you have to stop by the Kioxia booth (309) to see what PowerFlex can do for your IT battles. We’ll see you there!

Author: Tony Foster

Location: The Land of Oz [-6 GMT]

Contributors: Kailas Goliwadekar, Anup Bharti

Related Blog Posts

Dell PowerFlex at VMware Explore in Barcelona – Nothing Controversial

Thu, 19 Oct 2023 22:38:22 -0000

For those who aren’t aware, there are some big changes happening at VMware. If you watched the VMware Explore Las Vegas keynote, it was a whirlwind of changes and important information. CEOs of several major companies took the stage and spoke about the direction VMware is going, with attendees hanging on their every word and wondering what the changes meant and how they would impact their operations.

For many, the impact is still unclear. This could radically change data centers and how organizations do work, leaving many in IT and business asking questions about what’s next and where things are headed.

We can all expect to find out more at VMware Explore Barcelona coming up 6 to 9 November, which will bring more clarity in direction and illuminate what it will mean for organizations large and small.

I can’t wait to see what’s in store for the Generative AI (GenAI) workloads we’ve all been waiting for. (And you thought I was talking about something else?)

At VMware Explore in Las Vegas this year, the message was clear. VMware is embracing AI workloads. NVIDIA CEO Jensen Huang and VMware CEO Raghu Raghuram spoke to this during the general session keynote. Jensen stated, “we’re reinventing enterprise computing after a quarter of a century in order to transition to the future.”

The entire IT industry is moving in the direction of AI. Dell PowerFlex is already there. We’ve been on this journey for quite some time. If you were lucky enough to have stopped at the Kioxia stand during the Las Vegas show, you saw how we are working with both NVIDIA and Kioxia to deliver powerful AI systems for customers to make that transition to the future.

If you couldn’t make it to Las Vegas for VMware Explore but plan to attend VMware Explore in Barcelona, you’re in luck. PowerFlex will be showcasing the amazing performance of Kioxia storage and NVIDIA GPUs again. You can see a live demo at the Kioxia stand, #225 in the Solutions Exchange.

When you visit the Kioxia stand, you will be able to experience the power of running ResNet-50 image classification and Online Transaction Processing (OLTP) workloads simultaneously, live from the show floor. And if that’s not enough, there are experts, and lots of them! If you get a chance, talk with Shashi about all the things PowerFlex unlocks for your organization.

PowerFlex supports NVIDIA GPUs with MIG technology, which is part of NVIDIA AI Enterprise. NVIDIA MIG allows you to tailor GPU resources for the workloads that need them (Yes, there is nothing that says you can’t run different workloads on the same hosts). Plus, PowerFlex uses Kioxia PM7 series SSDs, so there are plenty of IOPS to go around while ensuring sub-millisecond latency for both workloads. This allows the data to be closer to the processing, even on the same host.

In our lab tests, we were able to push one million transactions per minute (TPM) with OLTP workloads while also processing 6620 images per second using a ResNet-50 model built on NVIDIA NGC containers. Numbers like these matter if you want to keep users happy, especially as more and more organizations want to add AI/ML capabilities to their online apps (and more and more data is generated from all those new apps).

The following shows the TPM results from the demo environment that is running our four SQL VMs. The TPMs in this test are maxing out around 320k, and the latency is always sub-millisecond.

The future is here and waiting for you to visit.

If you are unable to visit the stand and would like to get an overview of PowerFlex’s abilities when it comes to GenAI, check out this video.

As you can see, PowerFlex has true flexibility when it comes to GenAI, making it the ideal platform to reinvent your enterprise IT environment as you transition to the future.

If you find yourself at VMware Explore in Barcelona, be sure to stop by the Kioxia stand (#225) and talk with the team about how Dell PowerFlex, Kioxia drives, and NVIDIA GPUs can accelerate your transition to the future.

See, nothing controversial here!

Resources

- Dell Generative AI Solutions

- VMware and NVIDIA Unlock Generative AI for Enterprises

- VMware’s Approach to Private AI

Author: Tony Foster, Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]

Can I do that AI thing on Dell PowerFlex?

Thu, 20 Jul 2023 21:08:09 -0000

The simple answer is Yes, you can do that AI thing with Dell PowerFlex. For those who might have been busy with other things, AI stands for Artificial Intelligence and is based on trained models that allow a computer to “think” in ways machines haven’t been able to do in the past. These trained models (neural networks) are essentially a long set of IF statements (layers) stacked on one another, and each IF has a ‘weight’. Once something has worked through a neural network, the weights provide a probability about the object. So, the AI system can be 95% sure that it’s looking at a bowl of soup or a major sporting event. That, at least, is my overly simplified description of how AI works. The term carries a lot of baggage as it’s been around for more than 70 years, and the definition has changed from time to time. (See The History of Artificial Intelligence.)
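
To make that overly simplified description a bit more concrete, here is a toy sketch of two stacked layers turning an input into probabilities. Everything in it is made up for illustration: the weights are hand-picked rather than trained, the three input features are arbitrary, and the two labels simply echo the soup and sporting event example above. A real model like ResNet-50 has millions of learned weights.

```python
import math


def dense(inputs, weights, biases):
    """One layer: each output is a weighted sum of the inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Keep positive values, zero out the rest (a common activation)."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical, hand-picked weights; a real model learns these in training.
HIDDEN_W = [[0.9, -0.4, 0.2], [-0.3, 0.8, 0.5]]
HIDDEN_B = [0.1, -0.1]
OUTPUT_W = [[1.5, -1.0], [-1.2, 1.4]]
OUTPUT_B = [0.0, 0.0]
LABELS = ["bowl of soup", "sporting event"]


def classify(features):
    """Run the features through both layers and label the probabilities."""
    hidden = relu(dense(features, HIDDEN_W, HIDDEN_B))
    scores = dense(hidden, OUTPUT_W, OUTPUT_B)
    return dict(zip(LABELS, softmax(scores)))


probabilities = classify([1.0, 0.2, 0.1])
for label, p in probabilities.items():
    print(f"{label}: {p:.1%}")
```

Training is the process of nudging those weights until the probabilities line up with reality, and that is where the GPUs earn their keep.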

Most recently, AI has been made famous by large language models (LLMs) for conversational AI applications like ChatGPT. Though these applications have stoked fears that AI will take over the world and destroy humanity, that has yet to be seen. Computers still can do only what we humans tell them to do, even LLMs, and that means if something goes wrong, we their creators are ultimately to blame. (See ‘Godfather of AI’ leaves Google, warns of tech’s dangers.)

The reality is that most organizations aren’t building world-destroying LLMs; they are building systems to ensure that every pizza made in their factory has exactly 12 slices of pepperoni evenly distributed on top of the pizza. Or maybe they are looking at loss prevention, or better traffic light timing, or they just want a better technical support phone menu. All of these are uses for AI and each one is constructed differently (they use different types of neural networks).

We won’t delve into these use cases in this blog because we need to start with the underlying infrastructure that makes all those ideas “AI possibilities.” We are going to start with the infrastructure and what many now consider a basic (by today’s standards) image classifier known as ResNet-50 v1.5. (See ResNet-50: The Basics and a Quick Tutorial.)

That’s also what the PowerFlex Solution Engineering team did in the validated design they recently published. This design details the use of ResNet-50 v1.5 in a VMware vSphere environment using NVIDIA AI Enterprise as part of a PowerFlex environment. They started out with the basics of how a virtualized NVIDIA GPU works in a PowerFlex environment. That’s what we’ll explore in this blog: getting started with AI workloads, not how you build the next AI supercomputer (though you could do that with PowerFlex as well).

In this validated design, they used the NVIDIA A100 (PCIe) GPU and virtualized it in VMware vSphere as a virtual GPU, or vGPU. With the infrastructure in place, they built Linux VMs that contain the ResNet-50 v1.5 workload and vGPUs. Beyond just working with traditional vGPUs that many may be familiar with, they also worked with NVIDIA’s Multi-Instance GPU (MIG) technology.

NVIDIA’s MIG technology allows administrators to partition a GPU into a maximum of seven GPU instances. Being able to do this provides greater control of GPU resources, ensuring that large and small workloads get the appropriate amount of GPU resources they need without wasting any.
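
A rough way to picture this is to treat the GPU as seven equal slices and check whether a mix of instance sizes fits. The sketch below does only that. It is a simplification I’m assuming for illustration: real MIG placement has extra rules about which instance sizes can sit next to each other, so treat the NVIDIA MIG user guide as the authority.

```python
MAX_GPU_INSTANCES = 7  # the "maximum of seven GPU instances" mentioned above


def fits_on_one_gpu(requested_slices):
    """Return True if the requested instance sizes fit on a single GPU.

    Simplified model: the GPU is seven equal slices and only the total is
    checked. Real MIG placement rules are stricter than this.
    """
    if any(size < 1 for size in requested_slices):
        raise ValueError("each instance needs at least one slice")
    return sum(requested_slices) <= MAX_GPU_INSTANCES


print(fits_on_one_gpu([4, 3]))      # one larger and one mid-size instance
print(fits_on_one_gpu([1] * 7))     # seven small instances
print(fits_on_one_gpu([4, 4]))      # asks for eight slices in total
```

The first two requests fit within seven slices; the last one does not, so that second workload would need another GPU.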

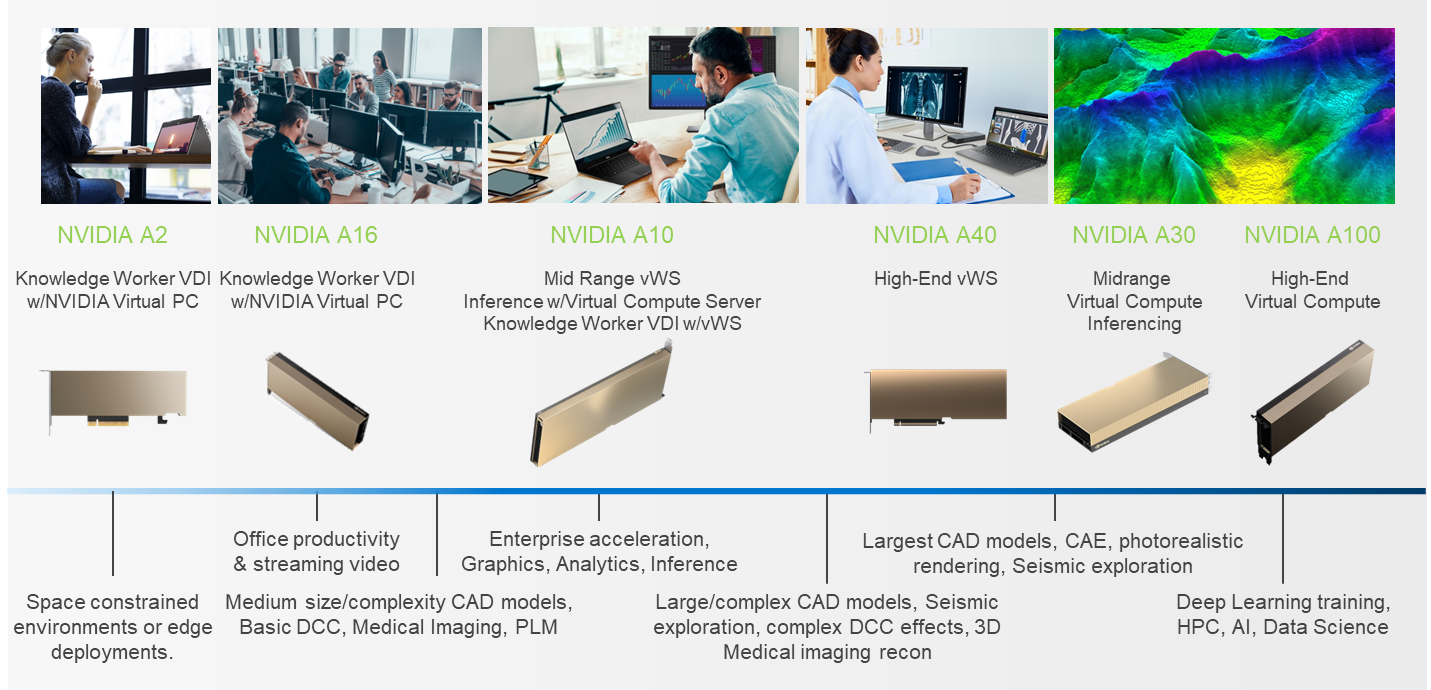

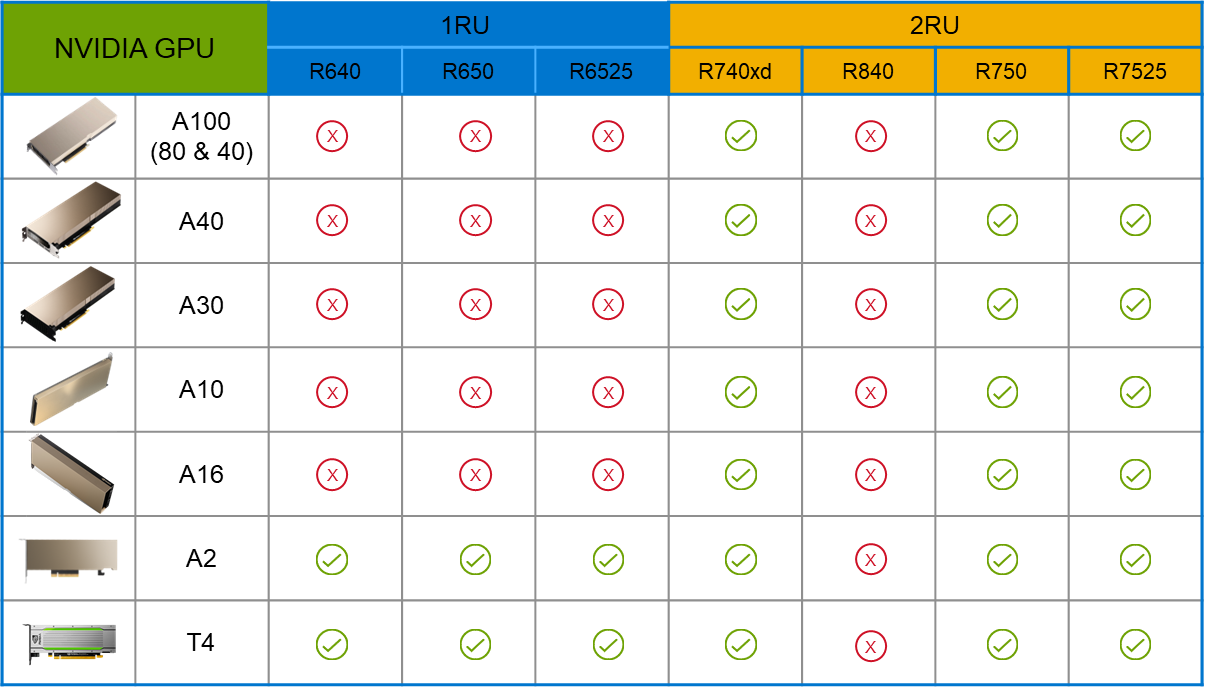

PowerFlex supports a large range of NVIDIA GPUs for workloads, from VDI (Virtual Desktops) to high-end virtual compute workloads like AI. You can see this in the following diagram, where there are solutions for “space constrained” and “edge” environments, all the way to GPUs used for large inferencing models. In the table below the diagram, you can see which GPUs are supported in each type of PowerFlex node. This provides a tremendous amount of flexibility depending on your workloads.

The validated design describes the steps to configure the architecture and provides detailed links to the NVIDIA and VMware documentation for configuring the vGPUs, as well as the licensing process for NVIDIA AI Enterprise.

These are key steps when building an AI environment. I know from my experience working with various organizations, and from teaching, that many are not used to working with vGPUs in Linux. This is slowly changing in the industry. If you haven’t spent a lot of time working with vGPUs in Linux, be sure to pay attention to the details provided in the guide. It is important and can make a big difference in your performance.

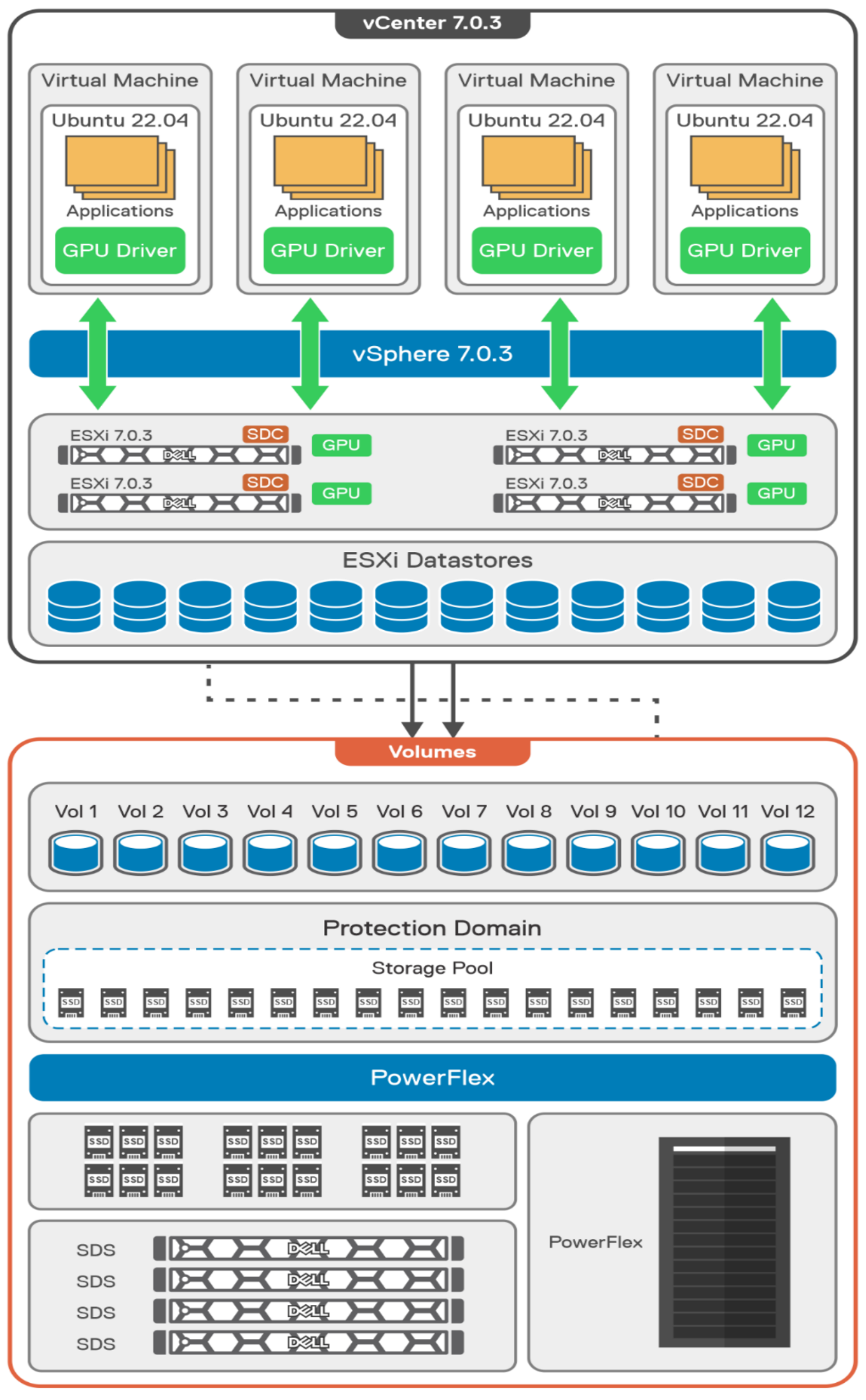

The following diagram shows the validated design’s logical architecture. At the top of the diagram, you can see four Ubuntu 22.04 Linux VMs with the NVIDIA vGPU driver loaded in them. They are running on PowerFlex hosts with VMware ESXi deployed. Each VM contains one NVIDIA A100 GPU configured for MIG operations. This configuration leverages a two-tier architecture where storage is provided by separate PowerFlex software-defined storage (SDS) nodes.

A design like this allows for independent scalability for your workloads. What I mean by this is during the training phase of a model, significant storage may be required for the training data, but once the model clears validation and goes into production, storage requirements may be drastically different. With PowerFlex you have the flexibility to deliver the storage capacity and performance you need at each stage.

This brings us to testing the environment. Again, for this paper, the engineering team validated it with ResNet-50 v1.5 and the ImageNet 1K data set. For this validation they enabled several ResNet-50 v1.5 TensorFlow features: multi-GPU training with Horovod, NVIDIA DALI, and Automatic Mixed Precision (AMP). Horovod spreads training across multiple GPUs, DALI speeds up data loading and preprocessing, and AMP runs parts of the model in lower precision to train faster. The paper then describes how to set up and configure ResNet-50 v1.5 and the features mentioned above, and provides details about downloading the ImageNet data.
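
If you’re wondering what mixed precision looks like in practice, one ingredient is loss scaling: very small gradient values vanish when stored in 16-bit floats, so they are multiplied by a scale factor first and divided back afterwards. The sketch below shows only that effect, using Python’s standard library. It is not the TensorFlow code from the validated design, and the scale factor is a value I picked for illustration.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


LOSS_SCALE = 1024.0    # hypothetical scale factor, chosen for illustration
tiny_gradient = 1e-8   # smaller than anything FP16 can represent

# Stored directly, the value underflows to zero and the update is lost.
unscaled = to_fp16(tiny_gradient)

# Scaled up first, it survives the trip through FP16 and can be unscaled.
scaled = to_fp16(tiny_gradient * LOSS_SCALE) / LOSS_SCALE

print(f"stored directly in FP16:  {unscaled}")
print(f"scaled, stored, unscaled: {scaled:.3e}")
```

Frameworks that implement AMP handle this scaling for you; the point here is just why it exists.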

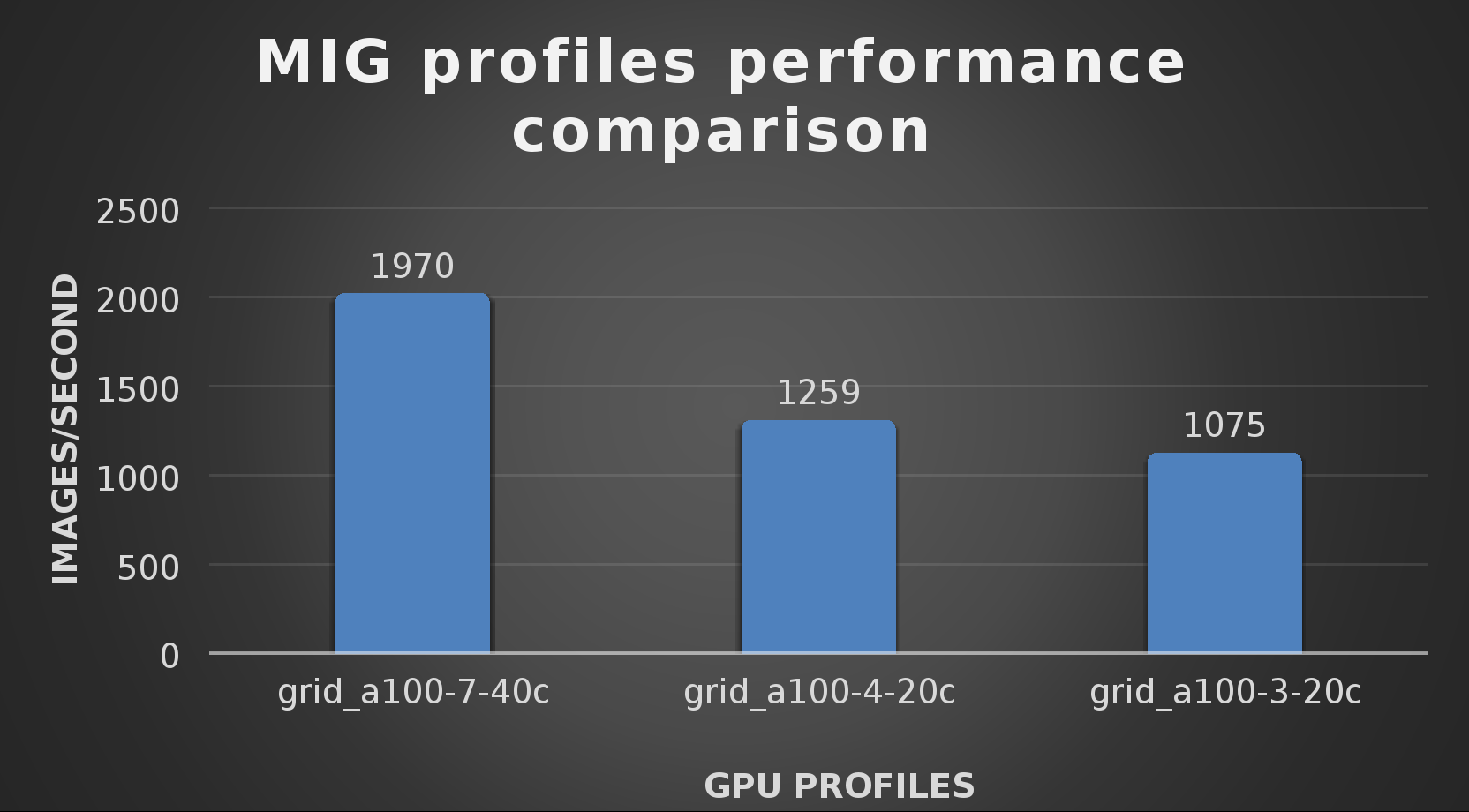

At this stage they were able to train the ResNet-50 v1.5 deployment. The first iteration of training used the NVIDIA A100-7-40C vGPU profile. They then repeated testing with the A100-4-20C vGPU profile and the A100-3-20C vGPU profile. You might be wondering about the A100-2-10C vGPU profile and the A100-1-5C profile. Although those vGPU profiles are available, they are more suited for inferencing, so they were not tested.
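
Those profile names are easier to compare once you pull them apart. The sketch below assumes the name reads as GPU model, number of MIG slices, and frame buffer size in GB (so A100-4-20C would be four slices with 20 GB); that reading and the helper names are my assumptions, so check the NVIDIA vGPU documentation for the definitive list. The training versus inferencing split simply mirrors which profiles were tested above.

```python
import re
from typing import NamedTuple


class VgpuProfile(NamedTuple):
    name: str
    slices: int      # assumed: MIG compute slices, out of seven
    memory_gb: int   # assumed: frame buffer size in GB


PROFILE_PATTERN = re.compile(r"^A100-(\d+)-(\d+)C$")


def parse_profile(name: str) -> VgpuProfile:
    """Split a profile name such as 'A100-4-20C' into its parts."""
    match = PROFILE_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized profile name: {name}")
    return VgpuProfile(name, int(match.group(1)), int(match.group(2)))


def suggested_use(profile: VgpuProfile) -> str:
    """Mirror the split above: larger profiles train, smaller ones infer."""
    return "training" if profile.slices >= 3 else "inferencing"


PROFILES = ["A100-7-40C", "A100-4-20C", "A100-3-20C", "A100-2-10C", "A100-1-5C"]

for profile_name in PROFILES:
    profile = parse_profile(profile_name)
    print(
        f"{profile.name}: {profile.slices} slices, "
        f"{profile.memory_gb} GB, {suggested_use(profile)}"
    )
```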

The results from validating the training workloads for each vGPU profile are shown in the following graph. The vGPUs were running near 98% capacity according to nvitop during each test. The CPU utilization was 14% and there was no bottleneck with the storage during the tests.

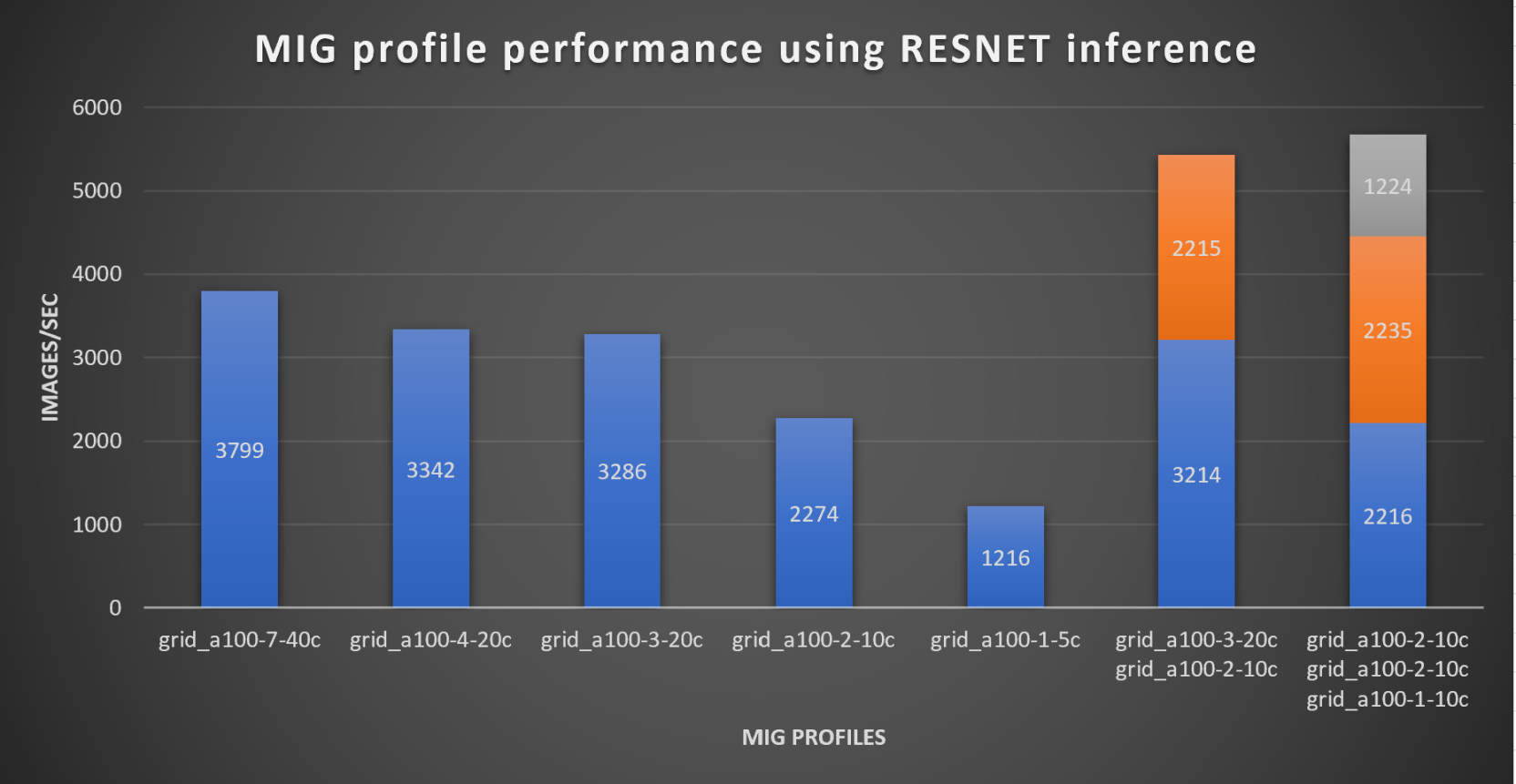

With the models trained, the guide then looks at how well inference runs on the MIG profiles. The following graph shows inferencing images per second of the various MIG profiles with ResNet-50 v1.5.

It’s worth noting that the last two columns show the inferencing running across multiple VMs, on the same ESXi host, that are leveraging MIG profiles. This also shows that GPU resources are partitioned with MIG and that resources can be precisely controlled, allowing multiple types of jobs to run on the same GPU without impacting other running jobs.

This opens the opportunity for organizations to align consumption of vGPU resources in virtual environments. Said a different way, it allows IT to provide “show back” of infrastructure usage in the organization. So if a department only needs an inferencing vGPU profile, that’s what they get, no more, no less.
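
As a rough idea of what “show back” could look like, the sketch below totals GPU-hours per department, weighting each job by the share of the GPU its profile occupies. The departments, hours, and the seven-slice weighting are all hypothetical values I made up for illustration; a real report would pull usage from your own monitoring and follow your own accounting rules.

```python
from collections import defaultdict

SLICES_PER_GPU = 7  # assumption: one MIG-capable GPU split into seven slices

# Hypothetical usage records: (department, slices in the profile, hours used)
usage_records = [
    ("marketing", 1, 40.0),
    ("research", 7, 10.0),
    ("marketing", 2, 5.0),
    ("support", 1, 20.0),
]


def showback(records):
    """Sum GPU-hours per department, weighting each record by GPU share."""
    totals = defaultdict(float)
    for department, slices, hours in records:
        totals[department] += hours * slices / SLICES_PER_GPU
    return dict(totals)


report = showback(usage_records)
for department, gpu_hours in sorted(report.items()):
    print(f"{department}: {gpu_hours:.2f} GPU-hours")
```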

It’s also worth noting that vGPU utilization was 88% and CPU utilization was 11% during the inference testing.

These validations show that a Dell PowerFlex environment can support the foundational components of modern-day AI. They also show the value of NVIDIA’s MIG technology to organizations of all sizes: it allows them to gain operational efficiencies in the data center and enables access to AI.

Which again answers the question of this blog: can I do that AI thing on Dell PowerFlex? Yes, you can run that AI thing! If you would like to find out more about how to run your AI thing on PowerFlex, be sure to reach out to your Dell representative.

Resources

- The History of Artificial Intelligence

- ‘Godfather of AI’ leaves Google, warns of tech’s dangers

- ResNet-50: The Basics and a Quick Tutorial

- Dell Validated Design for Virtual GPU with VMware and NVIDIA on PowerFlex

- NVIDIA NGC Catalog ResNet v1.5 for PyTorch

- NVIDIA AI Enterprise

- NVIDIA A100 (PCIe) GPU

- NVIDIA Virtual GPU Software Documentation

- NVIDIA A100-7-40C vGPU profile

- NVIDIA Multi-Instance GPU (MIG)

- NVIDIA Multi-Instance GPU User Guide

- Horovod

- ImageNet

- DALI

- Automatic Mixed Precision (AMP)

- nvitop

Author: Tony Foster

Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]