Blogs

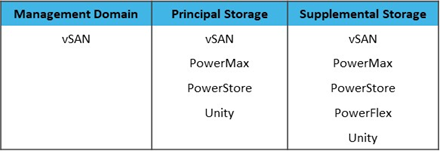

The latest news about PowerFlex releases and updates

A Simple Poster at NVIDIA GTC – Running NVIDIA Riva on Red Hat OpenShift with Dell PowerFlex

Fri, 15 Mar 2024 21:45:09 -0000

A few months back, Dell and NVIDIA released a validated design for running NVIDIA Riva on Red Hat OpenShift with Dell PowerFlex. A simple poster—nothing more, nothing less—yet it can unlock much more for your organization. This design shows the power of NVIDIA Riva and Dell PowerFlex to handle audio processing workloads.

What’s more, it will be showcased as part of the poster gallery at NVIDIA GTC this week in San Jose, California. If you are at GTC, we strongly encourage you to join us during the Poster Reception from 4:00 to 6:00 PM. If you are unable to join us, you can view the poster online from the GTC website.

For those familiar with ASR, TTS, and NMT applications, you might be curious as to how we can synthesize these concepts into a simple poster. Read on to learn more.

NVIDIA Riva

For those not familiar with NVIDIA Riva, let’s start there.

NVIDIA Riva is an AI software development kit (SDK) for building conversational AI pipelines, enabling organizations to program AI into their speech and audio systems. It can be used as a smart assistant or even a note taker at your next meeting. Super cool, right?

Taking that up a notch, NVIDIA Riva lets you build fully customizable, real-time conversational AI pipelines, which is a fancy way of saying it allows you to process speech in a bunch of different ways including automatic speech recognition (ASR), text-to-speech (TTS), and neural machine translation (NMT) applications:

- Automatic speech recognition (ASR) – this is essentially dictation. Provide AI with a recording and get a transcript—a near perfect note keeper for your next meeting.

- Text-to-speech (TTS) – a computer reads what you type. In the past, this was often in a monotone voice. It’s been around for more than a couple of decades and has evolved rapidly with more fluid voices and emotion.

- Neural machine translation (NMT) – this is the translation of spoken language in near real-time to a different language. It is a fantastic tool for improving communication, which can go a long way in helping organizations extend business.

Each application is powerful in its own right, so think about what’s possible when we bring ASR, TTS, and NMT together, especially with an AI-backed system. Imagine having a technical support system that could triage support calls, sound like an actual support engineer, and provide that support in multiple languages. In a word: ground-breaking.

NVIDIA Riva allows organizations to become more efficient in handling speech-based communications. When organizations become more efficient in one area, they can improve in other areas. This is why NVIDIA Riva is part of the NVIDIA AI Enterprise software platform, focusing on streamlining the development and deployment of production AI.

I make it all sound simple; however, those creating large language models (LLMs) around multilingual speech and translation software know it’s not so. That’s why NVIDIA developed the Riva SDK.

The operating platform also plays a massive role in what can be done with workloads. Red Hat OpenShift enables AI speech recognition and inference with its robust container orchestration, microservices architecture, and strong security features. This allows workloads to scale to meet the needs of an organization. As the success of a project grows, so too must the project.

Why Is Storage Important?

You might be wondering how storage fits into all of this. That’s a great question. You’ll need high-performance storage for NVIDIA Riva. After all, it’s designed to process and generate audio files, and doing that in near real-time requires a highly performant, enterprise-grade storage system like Dell PowerFlex.

Additionally, AI workloads are becoming mainstream applications in the data center and should be able to run side by side with other mission critical workloads utilizing the same storage. I wrote about this in my Dell PowerFlex – For Business-Critical Workloads and AI blog.

At this point, you might be curious how well NVIDIA Riva runs on Dell PowerFlex. That is what most of the poster is about.

ASR and TTS Performance

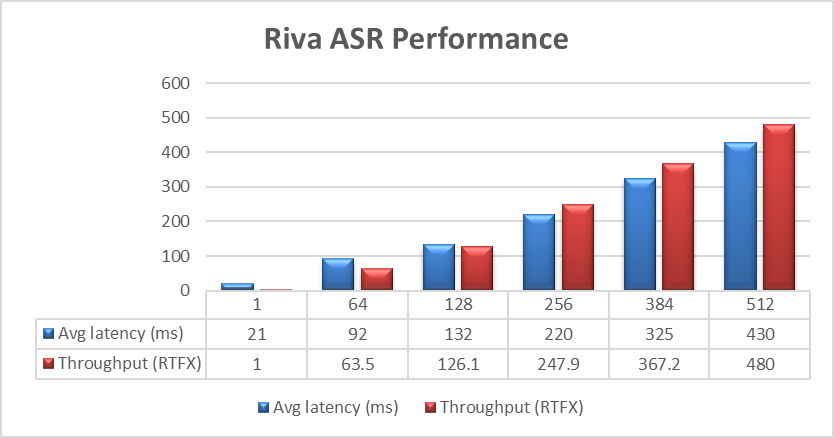

The Dell PowerFlex Solutions Engineering team did extensive testing using the LibriSpeech dev-clean dataset available from Open SLR. With this dataset, they performed automatic speech recognition (ASR) testing using NVIDIA Riva. Across the test runs, the number of concurrent streams was increased from 1 to 64, 128, 256, 384, and finally 512, as shown in the following graph.

Figure 1. NVIDIA Riva ASR Performance

The objective of these tests is to achieve the lowest latency with the highest throughput. Throughput is measured in RTFX, or the duration of audio transcribed divided by computation time. During these tests, the GPU utilization was approximately 48% without any PowerFlex storage bottlenecks. These results are comparable to NVIDIA’s own findings in the NVIDIA Riva User Guide.
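To make the RTFX definition concrete, here is a minimal Python sketch of the calculation. The function name and the sample numbers are illustrative assumptions, not values from the poster or from NVIDIA Riva itself.

```python
def rtfx(audio_seconds: float, compute_seconds: float) -> float:
    """Return throughput as seconds of audio handled per second of computation."""
    if compute_seconds <= 0:
        raise ValueError("compute_seconds must be positive")
    return audio_seconds / compute_seconds


# Hypothetical numbers for illustration only: one hour of audio
# transcribed in nine seconds of computation time.
example = rtfx(audio_seconds=3600.0, compute_seconds=9.0)
print(f"RTFX = {example:.0f}")  # prints "RTFX = 400"
```

A higher RTFX means more audio is processed per second of compute, which is why the tests look at it alongside latency rather than on its own.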

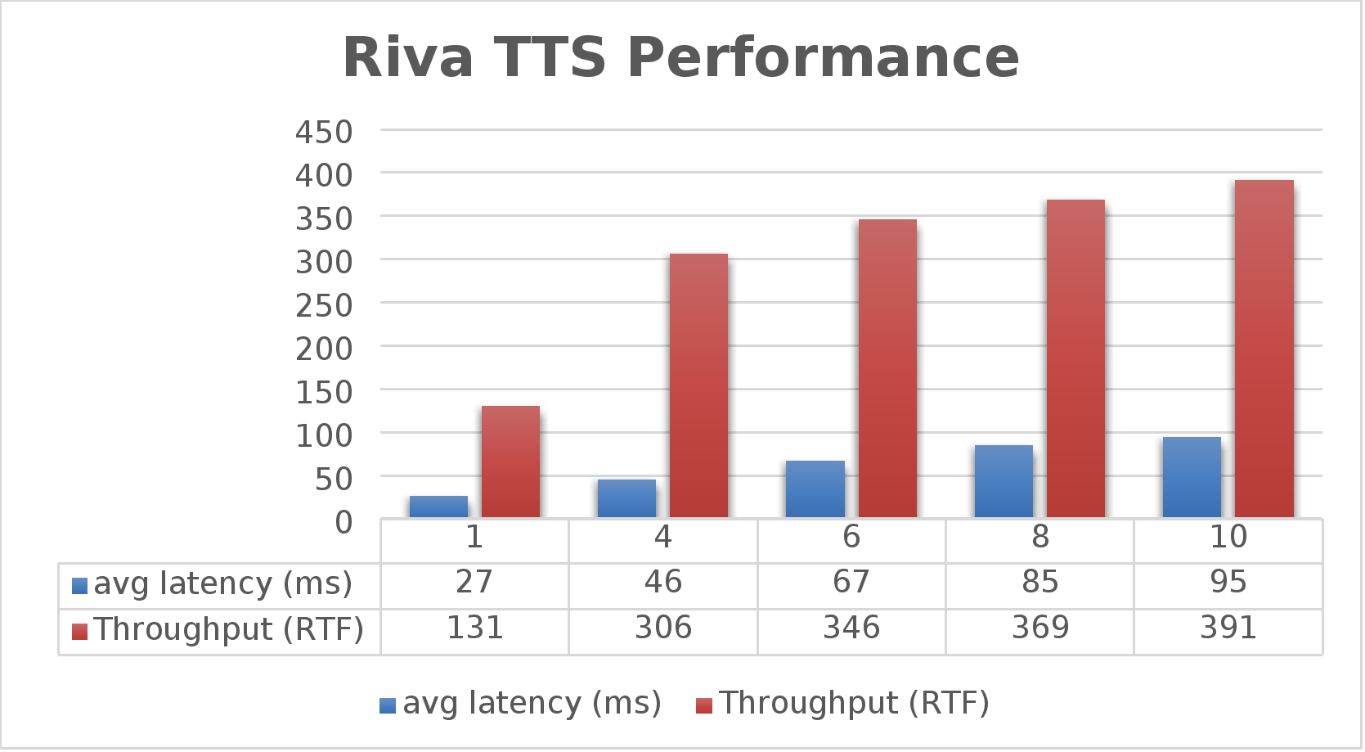

The Dell PowerFlex Solutions Engineering team went beyond just looking at how fast NVIDIA Riva could transcribe speech, also exploring the speed at which it could convert text to speech (TTS). They validated this as well. Starting with a single stream, the number of streams was changed to 4, 6, 8, and 10 in successive runs, as shown in the following graph.

Figure 2. NVIDIA Riva TTS Performance

Again, the goal is to have a low average latency with a high throughput. The throughput (RTFX) in this case is the duration of audio generated divided by computation time. As we can see, this results in an RTFX throughput of 391 with a latency of 91 ms with ten streams. It is also worth noting that during testing, GPU utilization was approximately 82% with no storage bottlenecks.

This is a lot of data to pack into one poster. Luckily, the Dell PowerFlex Solutions Engineering team created a validated architecture that details how all of these results were achieved and how an organization could replicate them if needed.

Now, to put all this into perspective, with PowerFlex you can achieve great results on both spoken language coming into your organization and converting text to speech. Pair this capability with some other generative AI (genAI) tools, like NVIDIA NeMo, and you can create some ingenious systems for your organization.

For example, if an ASR model is paired with a large language model (LLM) for a help desk, users could ask it questions verbally, and—once it found the answers—it could use TTS to provide them with support. Think of what that could mean for organizations.

It's amazing how a simple poster can hold so much information and so many possibilities. If you’re interested in learning more about the research Dell PowerFlex has done with NVIDIA Riva, visit the Poster Reception at NVIDIA GTC on Monday, March 18th from 4:00 to 6:00 PM. If you are unable to join us at the poster reception, the poster will be on display throughout NVIDIA GTC. If you are unable to attend GTC, check out the white paper, and reach out to your Dell representative for more information.

Authors: Tony Foster | Twitter: @wonder_nerd | LinkedIn

Praphul Krottapalli

Kailas Goliwadekar

Dell PowerFlex – For Business-Critical Workloads and AI

Wed, 21 Feb 2024 00:10:52 -0000

AI—the buzzword that dances on the tongues of tech enthusiasts, executives, and coffee-break conversationalists alike. It's the shiny promise of automation, insights, and futuristic marvels. But let's step back from the AI dazzle for a moment. Beneath the glitz lies a fundamental truth: business-critical applications are the unsung heroes of organizational success. Enter Dell PowerFlex, the sturdy workhorse that ensures these applications run seamlessly.

The AI hype revisited

Imagine a room abuzz with anticipation. Faces lean forward, eager for the next AI revelation. If you've followed my previous blog, Can I Do That AI Thing on Dell PowerFlex, you know the answer. Yes, you can do that AI thing on PowerFlex. Being able to do AI shouldn’t be the end all be all for organizations. In fact, for most, it’s probably only a small portion of their IT operations. To that end, Dell PowerFlex isn't just built for AI. In fact, PowerFlex’s real strength isn’t AI at all.

Crushing the AI illusion

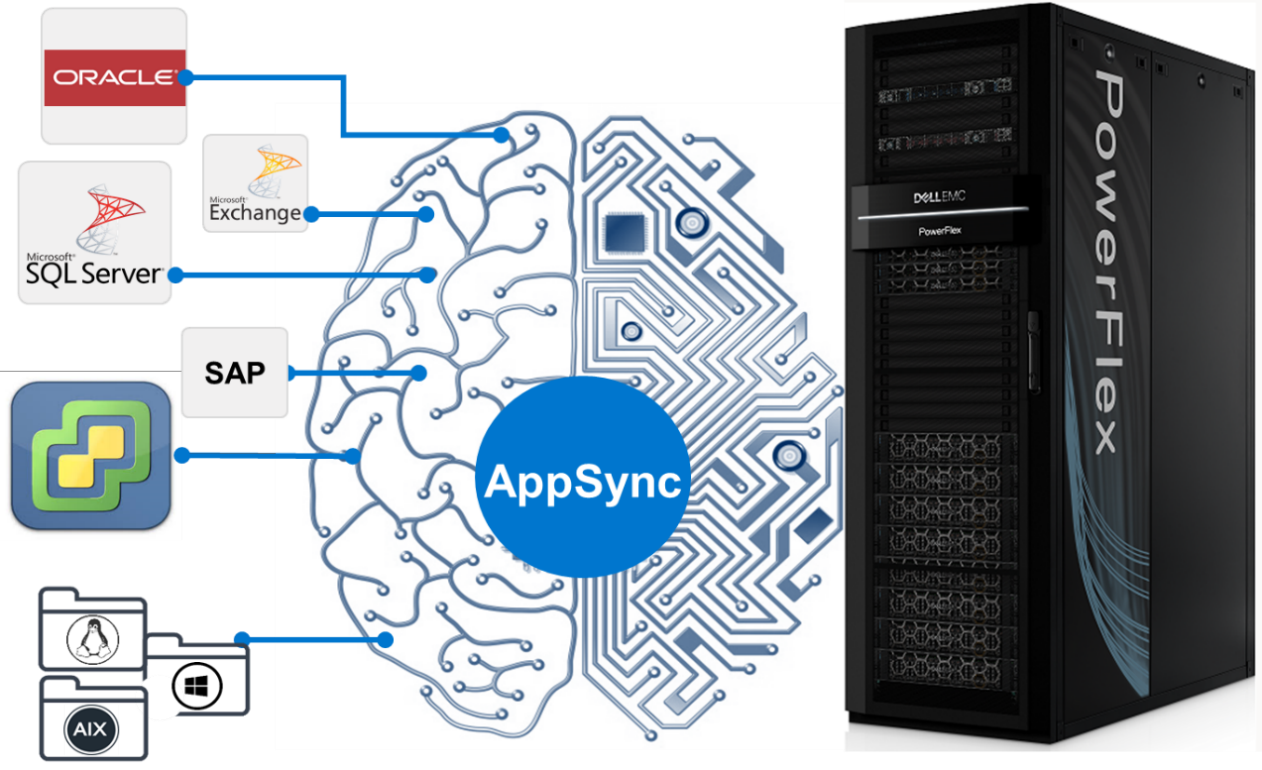

Let's peel back the layers. Dell PowerFlex isn't a mystical crystal ball, predicting stock market trends or composing poetry. Instead, it's the backbone supporting everyday business operations. Think databases, application servers, file servers—the workhorses that keep your organization humming. These workloads are the lifeblood of any enterprise, and their smooth functioning is non-negotiable. For many organizations, AI operations are a distant second. Why not optimize for the workhorses as well as prepare to support that new AI model?

The workload warriors

- Databases: Customer data, financial records, and inventory details all reside in databases. Dell PowerFlex ensures their availability, scalability, and performance.

- Application Servers: The engines behind web applications, APIs, and services. PowerFlex flexes its muscles here, providing the horsepower needed for user requests, transactions, and data processing.

- File Servers: Shared drives, document repositories, and collaboration spaces rely on file servers. PowerFlex ensures your files flow smoothly, whether you're sharing a presentation or collaborating on a project.

- And So Many Others: ERP systems, CRM platforms, virtual desktops—the list goes on. Each workload has its quirks, demands, and deadlines. Dell PowerFlex steps up, offering a unified platform that simplifies management and boosts performance.

Business-critical, Dell PowerFlex vital

These business-critical workloads are the heartbeat of organizations. They power customer interactions, financial transactions, and strategic decision-making. When these workloads hiccup, the entire operation feels it. That's where Dell PowerFlex shines. Its architecture leverages a robust and resilient software-defined storage (SDS) platform. Translation? It's agile, scalable, and resilient.

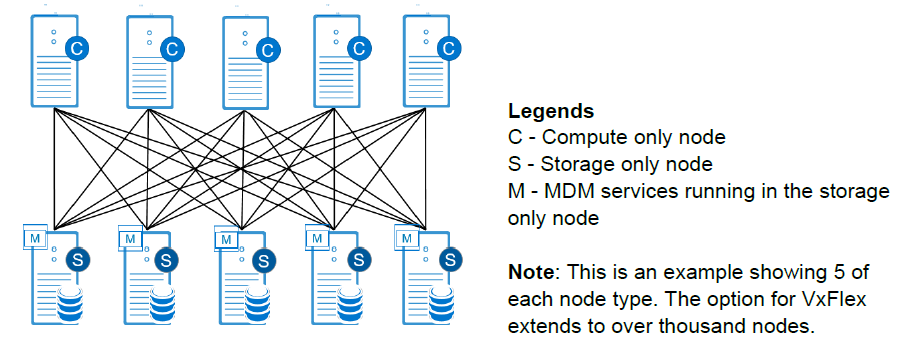

So, what's the secret sauce? PowerFlex leverages distributed storage resources, creating a pool of compute and storage nodes. These nodes collaborate harmoniously, distributing data and handling failures gracefully. Whether you're running a database query, serving up a web page, or analyzing mountains of data, PowerFlex ensures the show goes on.

The PowerFlex promise

Dell PowerFlex isn't just a hardware box—it's a promise. A promise to keep your workloads humming, your data secure, and your business thriving. So, the next time AI dazzles you with its potential, remember that PowerFlex is the sturdy engine of reliability in the background, ensuring the lights stay on, the servers stay responsive, and the wheels of progress keep turning.

In the grand scheme of IT, Dell PowerFlex takes center stage—an unassuming force that holds everything together. And as we navigate the AI landscape, let's tip our hats to the real heroes who keep the gears turning, one workload—AI included—at a time.

In the interest of full disclosure, this blog was created with the assistance of AI.

Author: Tony Foster

Twitter: @wonder_nerd

LinkedIn

PowerFlex and CloudStack, an Amazing IaaS match!

Sat, 18 Nov 2023 14:13:00 -0000

Have you heard about Apache CloudStack? Did you know it runs amazingly on Dell PowerFlex? And what does it all have to do with infrastructure as a service (IaaS)? Interested in learning more? If so, then you should probably keep reading!

The PowerFlex team and ShapeBlue have been collaborating to bring ease and simplicity to CloudStack on PowerFlex. They have been doing this for quite a while. As new versions are released, the teams work together to ensure it continues to be amazing for customers. The deep integration with PowerFlex makes it an ideal choice for organizations building CloudStack environments.

Both Dell and ShapeBlue are gearing up for the CloudStack Collaboration Conference (CCC) in Paris on November 23 and 24. The CloudStack Collaboration Conference is the biggest get-together for the Apache CloudStack Community, bringing vendors, users, and developers to one place to discuss the future of open-source technologies, the benefits of CloudStack, new integrations, and capabilities.

CloudStack is open-source software designed to deploy and manage large networks of virtual machines as a highly available, highly scalable Infrastructure as a Service (IaaS) cloud computing platform. CloudStack is used by hundreds of service providers around the world to offer public cloud services and by many companies to provide an on-premises (private) cloud offering or as part of a hybrid cloud solution.

Users can manage their cloud with an easy-to-use web interface, command line tools, or a full-featured RESTful API. In addition, CloudStack provides an API that is compatible with AWS EC2 and S3 for organizations that want to deploy hybrid clouds.
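For readers who have not called the CloudStack API directly, the following Python sketch shows roughly what a signed request looks like. It assumes the HMAC-SHA1 signing scheme described in the Apache CloudStack developer documentation (URL-encode the values, lowercase and sort the command string, hash it with the secret key, then Base64-encode the result). The endpoint, API key, and secret key are placeholders, so check the scheme against the documentation for your CloudStack version before relying on it.

```python
import base64
import hashlib
import hmac
from typing import Mapping
from urllib.parse import quote


def sign_request(params: Mapping[str, str], secret_key: str) -> str:
    """Return a Base64-encoded HMAC-SHA1 signature for the given parameters."""
    # URL-encode each value, lowercase each pair, and sort by field name.
    pairs = sorted(
        (key.lower(), quote(value, safe="").lower()) for key, value in params.items()
    )
    command_string = "&".join(f"{key}={value}" for key, value in pairs)
    digest = hmac.new(
        secret_key.encode("utf-8"), command_string.encode("utf-8"), hashlib.sha1
    ).digest()
    return base64.b64encode(digest).decode("ascii")


def build_url(endpoint: str, params: Mapping[str, str], secret_key: str) -> str:
    """Assemble the request URL with the signature appended."""
    query = "&".join(f"{key}={quote(value, safe='')}" for key, value in params.items())
    signature = quote(sign_request(params, secret_key), safe="")
    return f"{endpoint}?{query}&signature={signature}"


# Placeholder endpoint and credentials, for illustration only.
request_params = {
    "command": "listVirtualMachines",
    "response": "json",
    "apiKey": "demo-api-key",
}
print(build_url("https://cloudstack.example.com/client/api", request_params, "demo-secret"))
```

Sorting the parameters before hashing means the signature does not depend on the order in which they were supplied.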

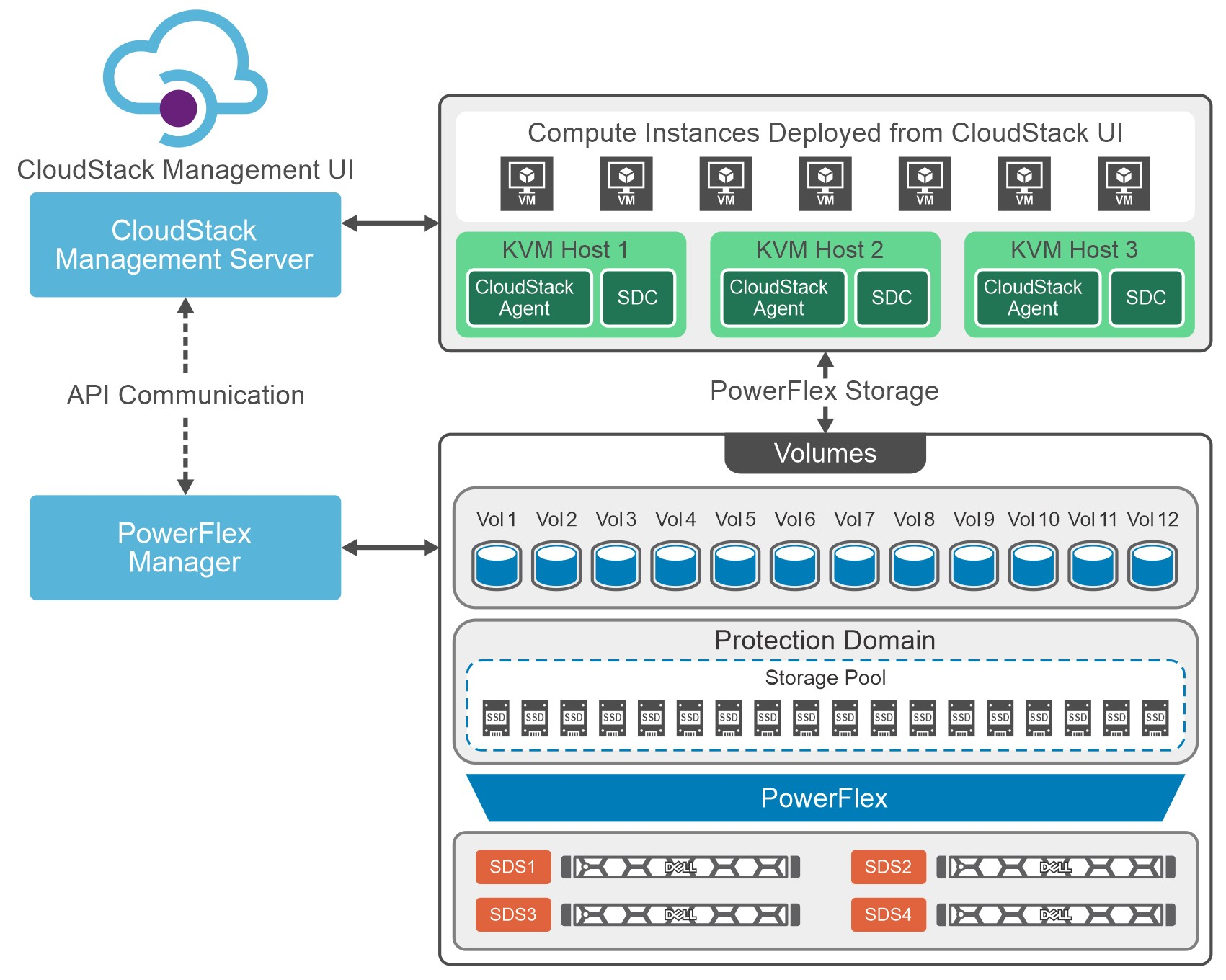

CloudStack can leverage the extensive PowerFlex REST APIs to enhance functionality. This facilitates streamlined provisioning, effective data management, robust snapshot management, comprehensive data protection, and seamless scalability, making the combination of PowerFlex storage and CloudStack a robust choice for modern IaaS environments.

You can see this in the following diagram. CloudStack and PowerFlex communicate with each other using APIs to coordinate operations for VMs. This makes it easier to administer larger environments, enabling organizations to have a true IaaS environment.

Figure 1. CloudStack on PowerFlex Architecture

Let's talk about IaaS for a moment. It is a fantastic concept that can be compared with ordering off a menu at a restaurant. The dishes on the menu seem unrelated until you start looking at their components. For example, a kitchen can offer three different base sauces (red, pink, and white) while stocking just a red sauce and a white sauce, because the pink sauce is a blend of the two. Add a small variety of pasta and proteins, and the number of possible dishes grows quickly. The same is true for IaaS. Have a few base options, sprinkle on some API know-how, and you get a fantastic menu to satisfy workload needs without having a detailed knowledge of the infrastructure.

That makes it easier for the IT organization to become more efficient and shift the focus toward aspirational initiatives. This is especially true when CloudStack and PowerFlex work together. The hungry IT consumers can get what they want with less IT interaction.

Other significant benefits that come from integrating CloudStack with PowerFlex include the following:

- Seamless Data Management: Efficient provisioning, backup, and data management across infrastructure, ensuring data integrity and accessibility.

- Enhanced Performance: Provides low-latency access to data, optimizing I/O, and reducing bottlenecks. This, in turn, leads to improved application and workload performance.

- Reliability and Data Availability: Benefit from advanced redundancy and failover mechanisms and data replication, reducing the risk of data loss and ensuring continuous service availability.

- Scalability: Scalable storage solutions allow organizations to expand their storage resources in tandem with their growing needs. This flexibility ensures that they can adapt to changing workloads and resource requirements.

- Simplified Management: Use a single interface to handle provisioning, monitoring, and troubleshooting, streamlining administrative tasks.

- Enhanced Data Protection: Data protection features, such as snapshots, backups, and disaster recovery solutions, help ensure that an organization's data remains secure and can be quickly restored in case of unexpected incidents.

These are tremendous benefits for organizations, especially the data protection aspects. It is often said that it is no longer a question of if an organization will be impacted by an incident. It is a question of when they will be impacted. The IaaS capabilities of CloudStack and PowerFlex play a crucial role in protecting an organization's data. That protection can be automated as part of the IaaS design. That way, when a VM or VMs are requested, they can be assigned to a data protection policy as part of the creation process.

Simply put, that means a VM can be protected from the moment of creation. No more having to remember to add a VM to a backup, and no more "oh no" when someone realizes they forgot. That is amazing!

If you are at the CloudStack Collaboration Conference and are interested in discovering more, talk with Shashi and Florian. They will also present how CloudStack and PowerFlex create an outstanding IaaS solution.

Register for the CloudStack Collaboration Conference here to join virtually if you are unable to attend in person.

If you want to learn more about how PowerFlex and CloudStack can benefit your organization, reach out to your Dell representative for more details on this amazing solution.

Resources

Authors

Tony Foster

Twitter: @wonder_nerd

LinkedIn

Punitha HS

LinkedIn

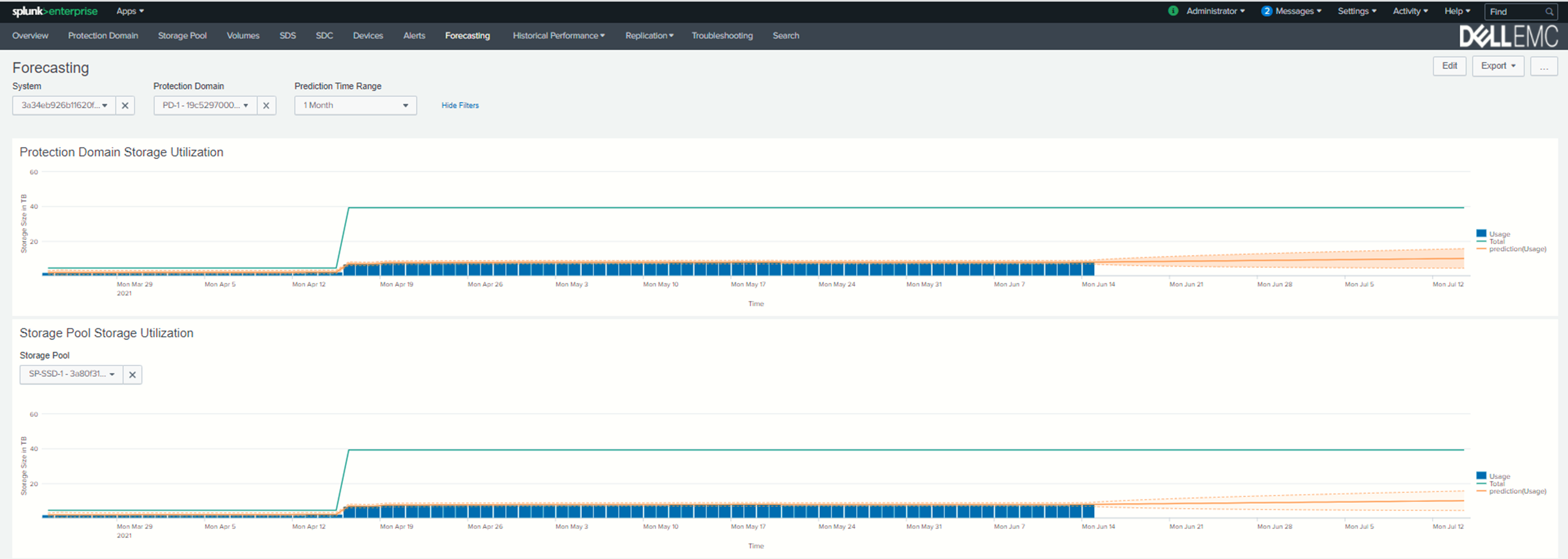

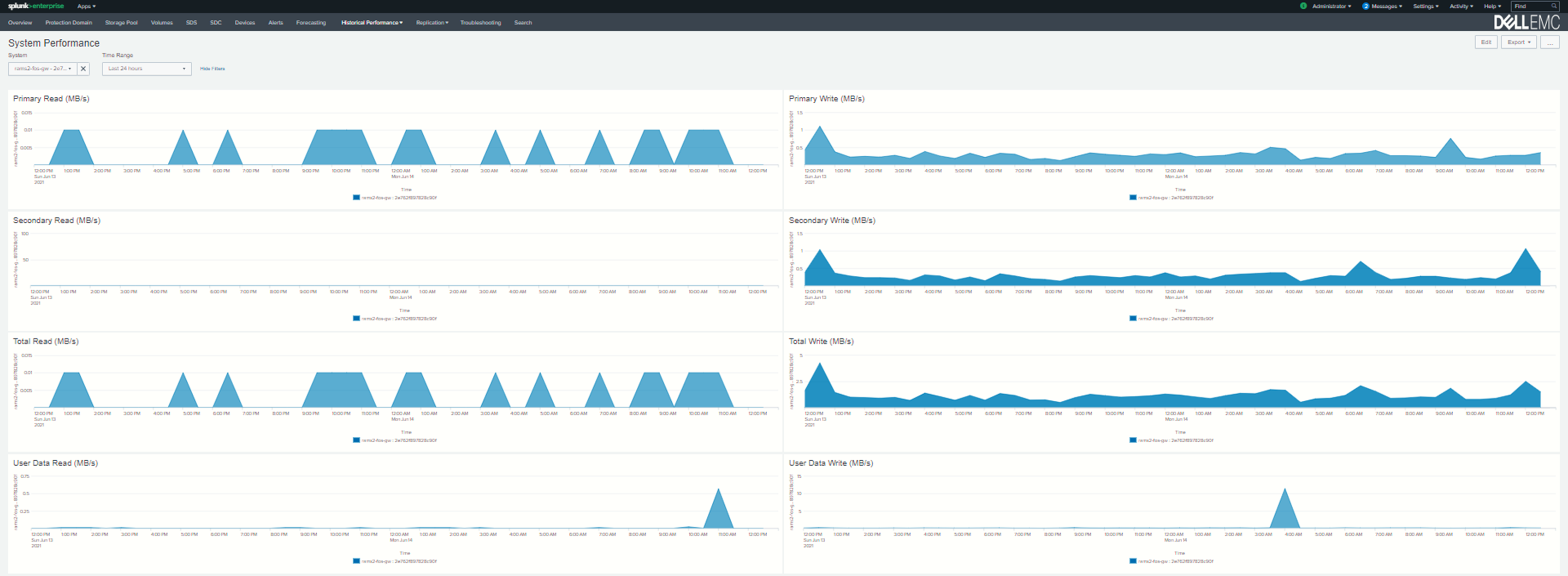

PowerFlex: CloudIQ Enhancements

Thu, 16 Nov 2023 22:07:06 -0000

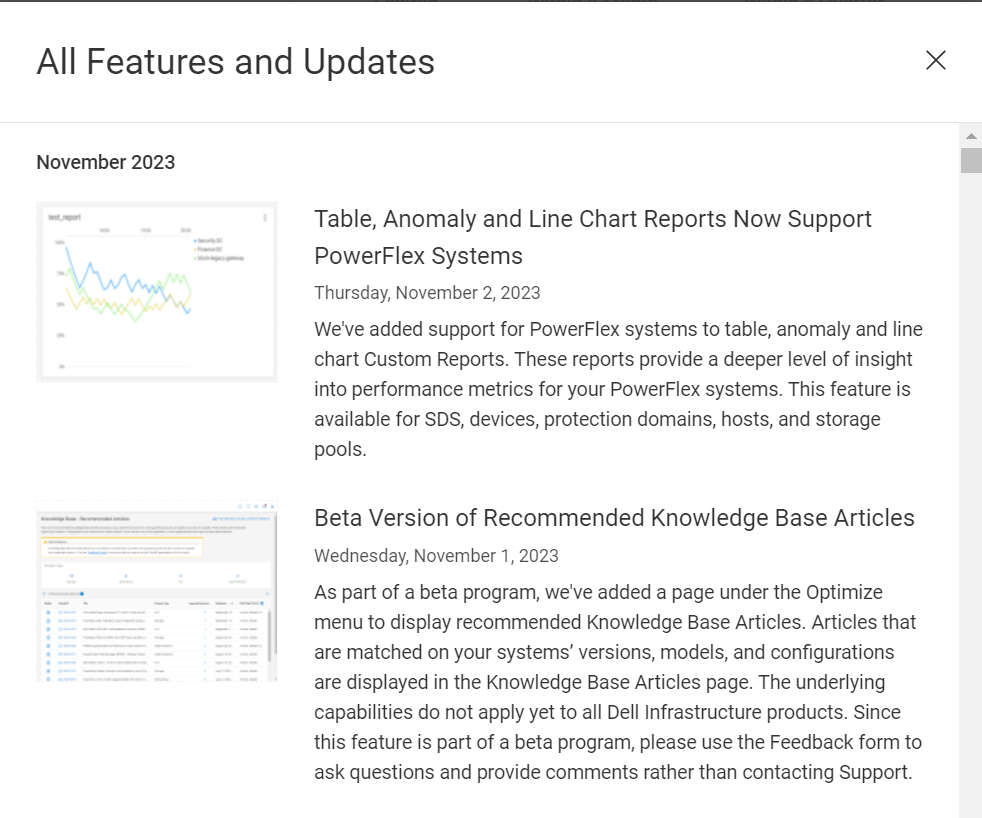

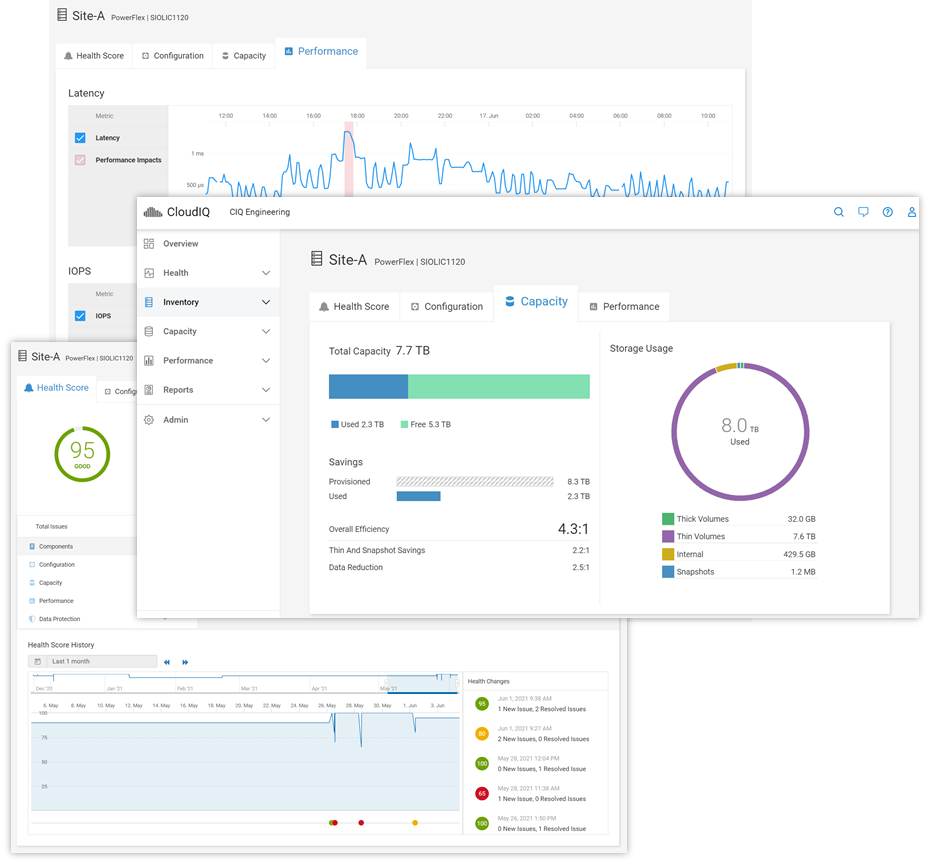

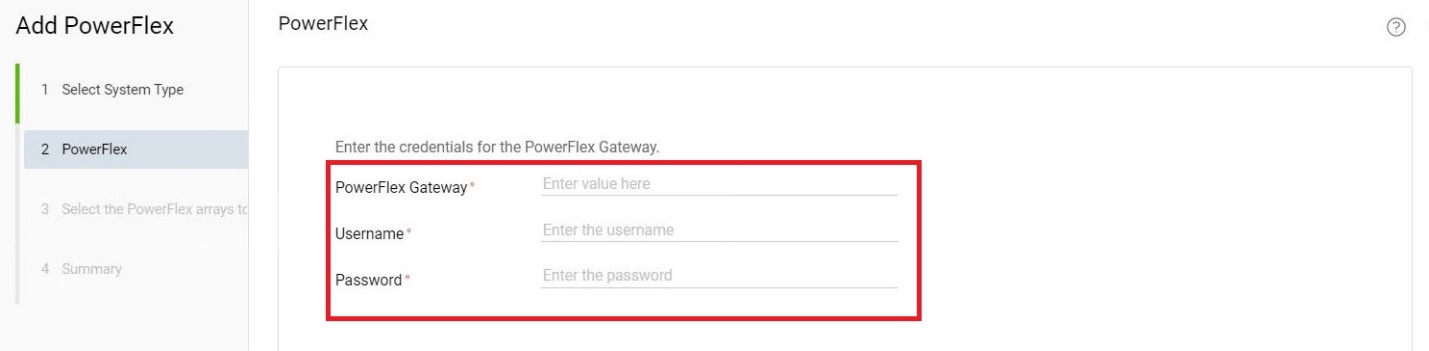

Have you checked out the All Features and Updates dialog in CloudIQ recently? If not, then let’s take a look together!

Figure 1. All Features and Updates dialog in CloudIQ

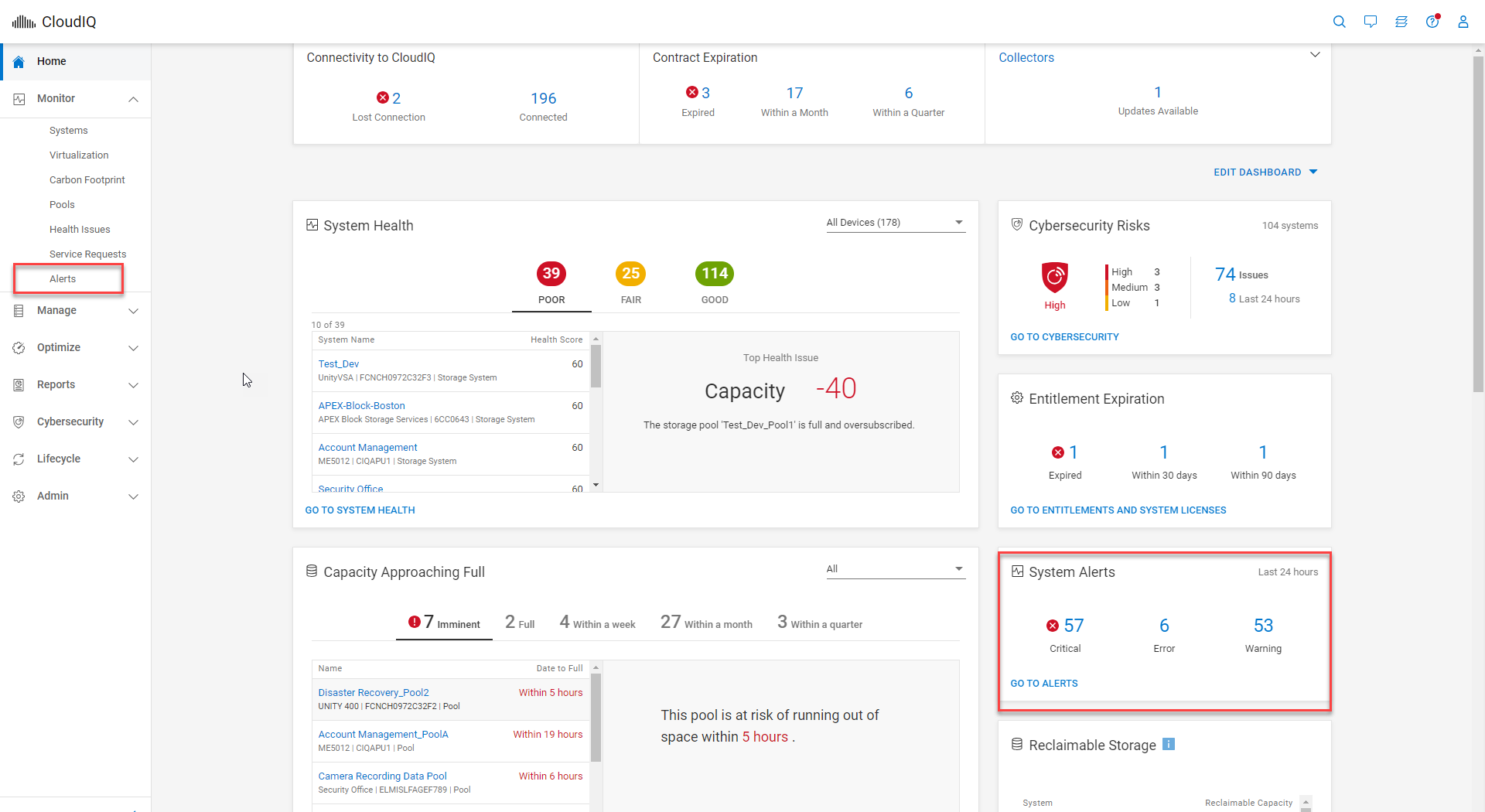

The first enhancement happened early this year with the addition of PowerFlex alerts. The alerts can be viewed through the System Alerts tile on the Home page, shown in figure 2, or by selecting Alerts under the Monitor menu. The System Alerts tile provides an alert count by severity level for all systems monitored by CloudIQ. Selecting the severity icon in the System Alerts tile redirects you to the Alerts page with a filter applied based on the selected severity.

Figure 2. System Alerts tile within CloudIQ Home page

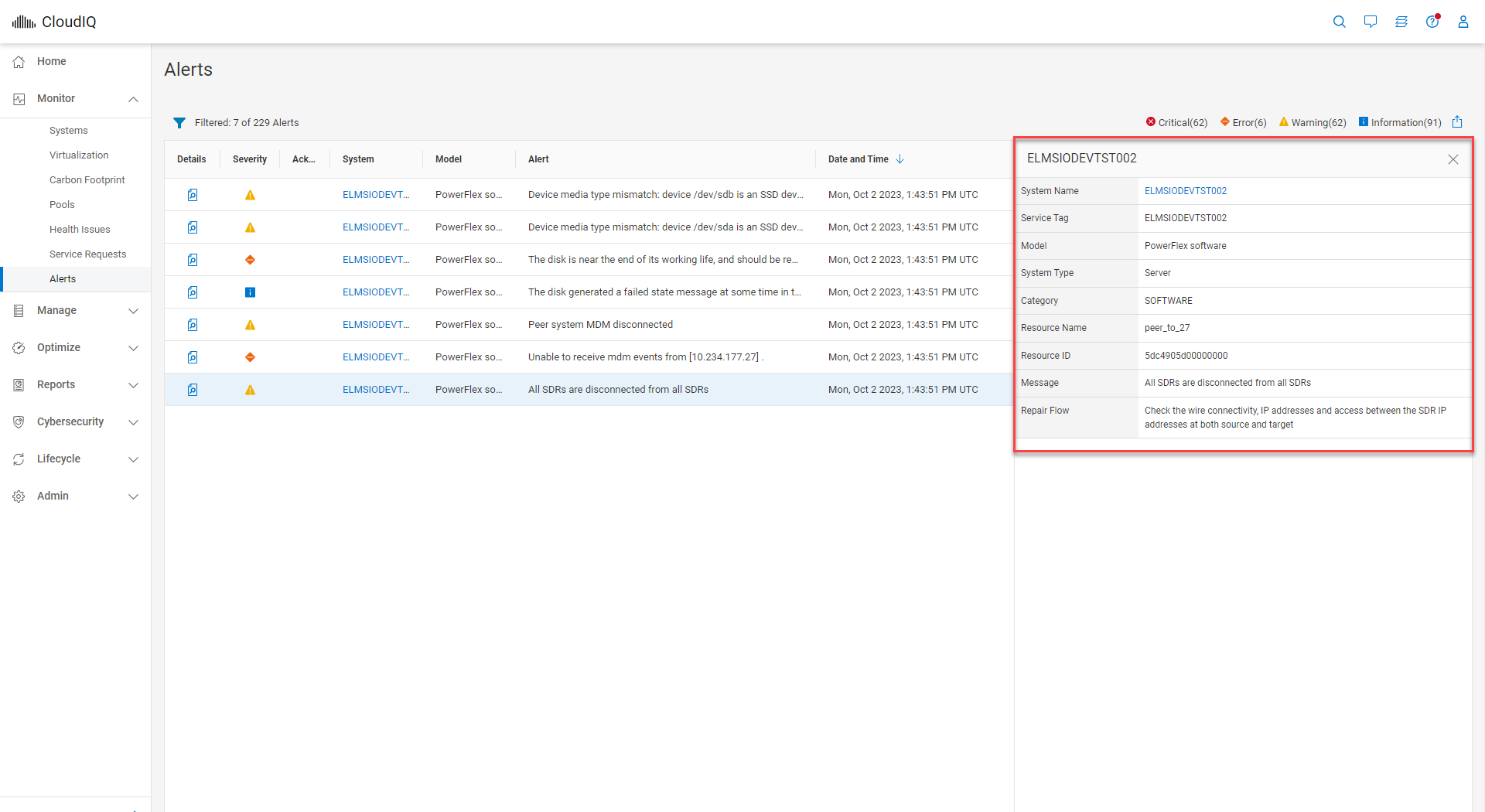

Once on the Alerts page, you can apply additional filters. The Alerts page has a simple table layout and displays information such as severity, system name and model, and alert description, as well as the date and time when the alert occurred. Details of an alert can be viewed by selecting the details icon, as highlighted in figure 3.

Figure 3. Details expanded for CloudIQ alert

In August, the PowerFlex/CloudIQ engineering team was busy releasing a bunch of exciting updates.

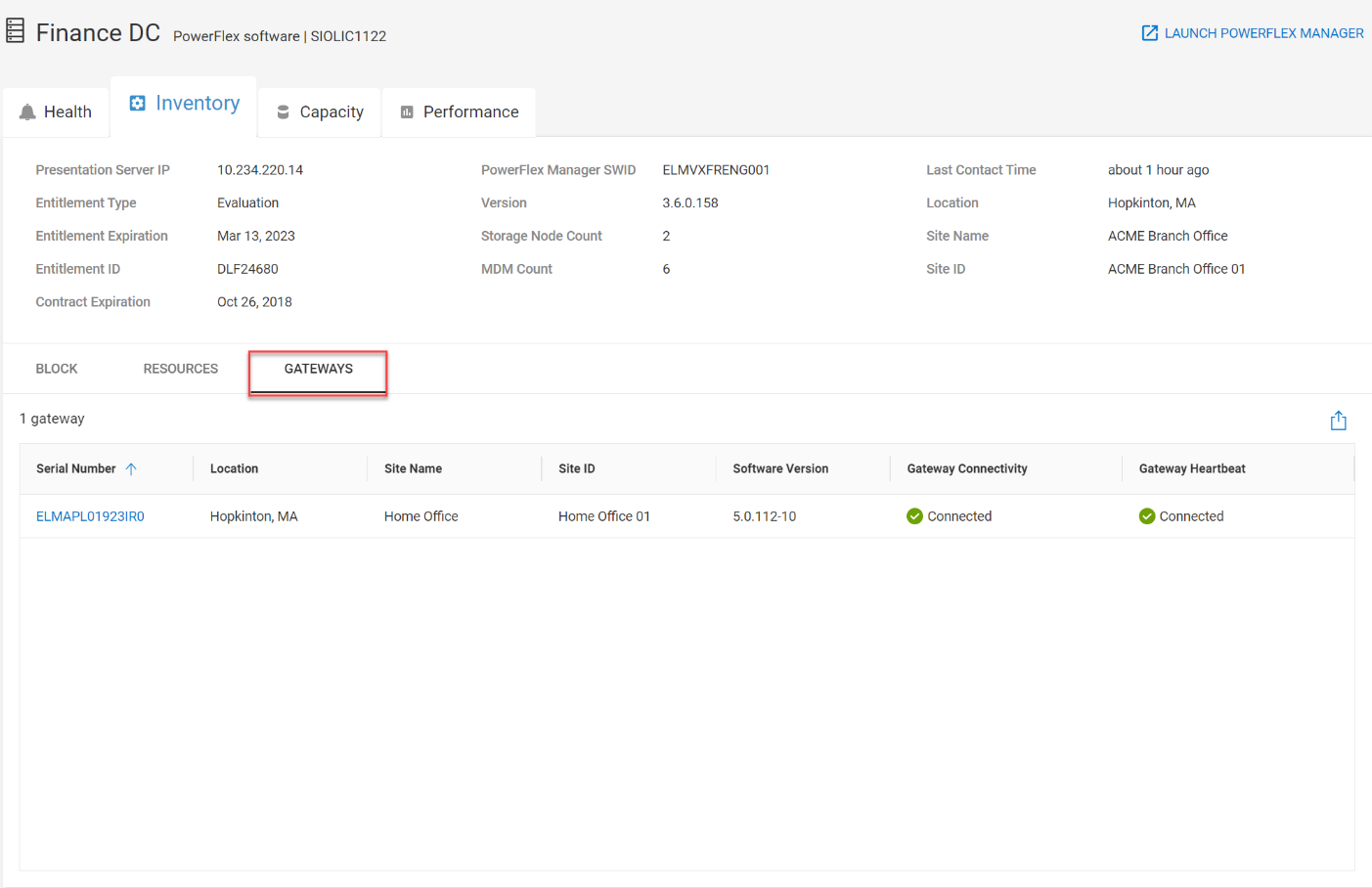

CloudIQ now supports Secure Connect Gateways (SCG) for PowerFlex systems. You can view the SCG information at a system level by visiting the PowerFlex system details page and clicking the GATEWAYS tab, highlighted in figure 4. The GATEWAYS tab shows the gateway serial number, site information, location, gateway version, connectivity status, and heartbeat status.

Figure 4. GATEWAYS tab for Finance DC PowerFlex system

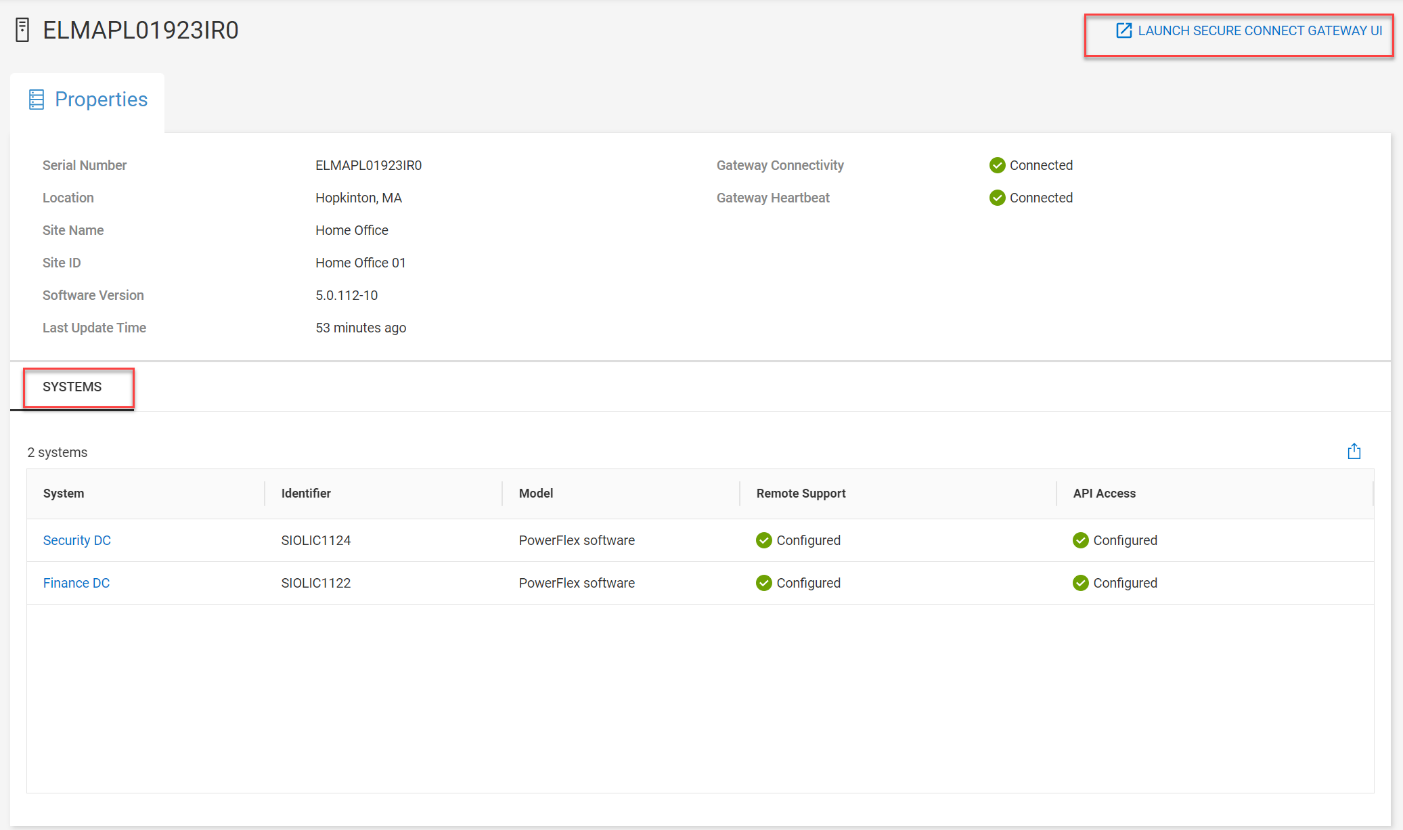

The SCG serial number links to the SCG details page shown in figure 5. All systems connected to the specific SCG are listed on the SCG details page. From here, you can launch the SCG UI by clicking the link at the upper right corner of the page, providing a seamless workflow when working with the gateway from CloudIQ.

Figure 5. SCG details page highlighting connected systems and the SCG UI launch

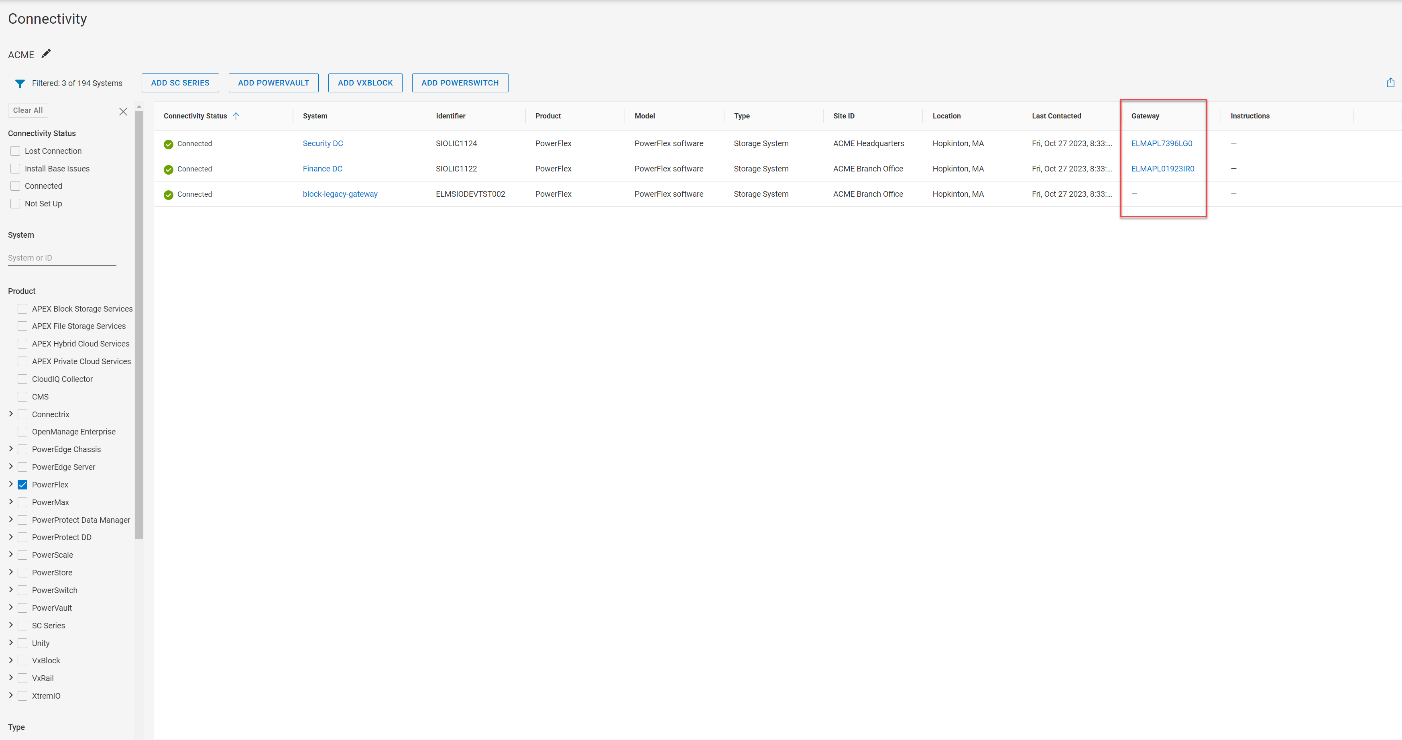

A Gateway column was added to the Admin>Connectivity page. The most recently active gateway for the PowerFlex system is listed in this column and contains a link to the gateway details page.

Figure 6. Gateway column on the Connectivity page

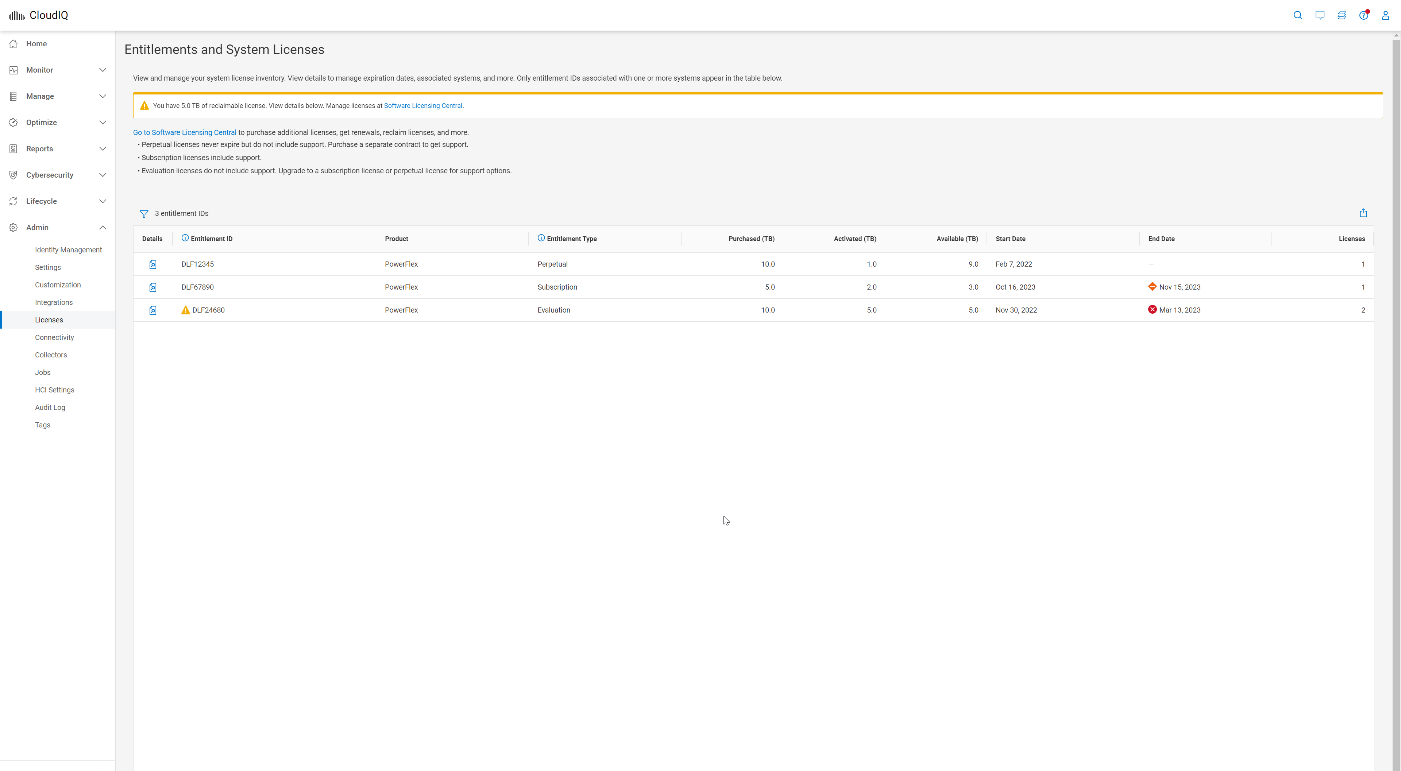

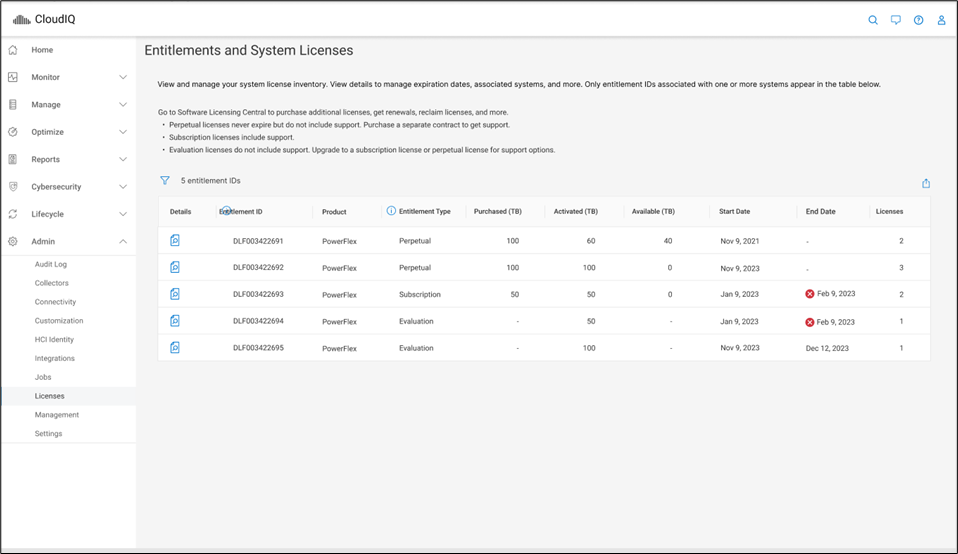

The next update is the Entitlements and System Licenses page, which you can find in the Admin section under Licenses. Here, you can check on the entitlements and licenses for all your PowerFlex systems. For more on this update, refer to the blog, Managing Dell PowerFlex Licensing and Being Way Less Sad.

Figure 7. Entitlements and System Licenses page

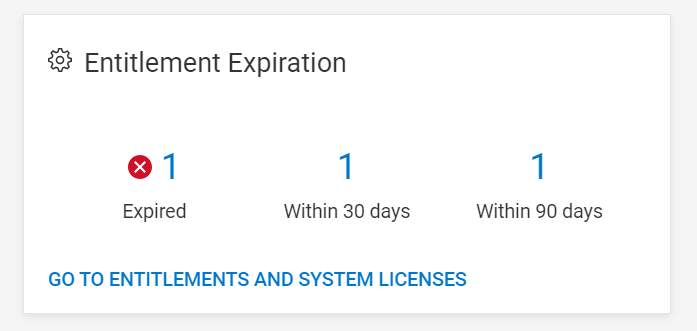

A new Entitlements tile is now available on the CloudIQ Home page, providing a summary of entitlement status. The Entitlements tile lets you quickly view the number of PowerFlex systems with entitlements and licenses that are expired, expiring within 30 days, and expiring within 90 days.

Figure 8. Entitlement Expiration tile on CloudIQ Home page

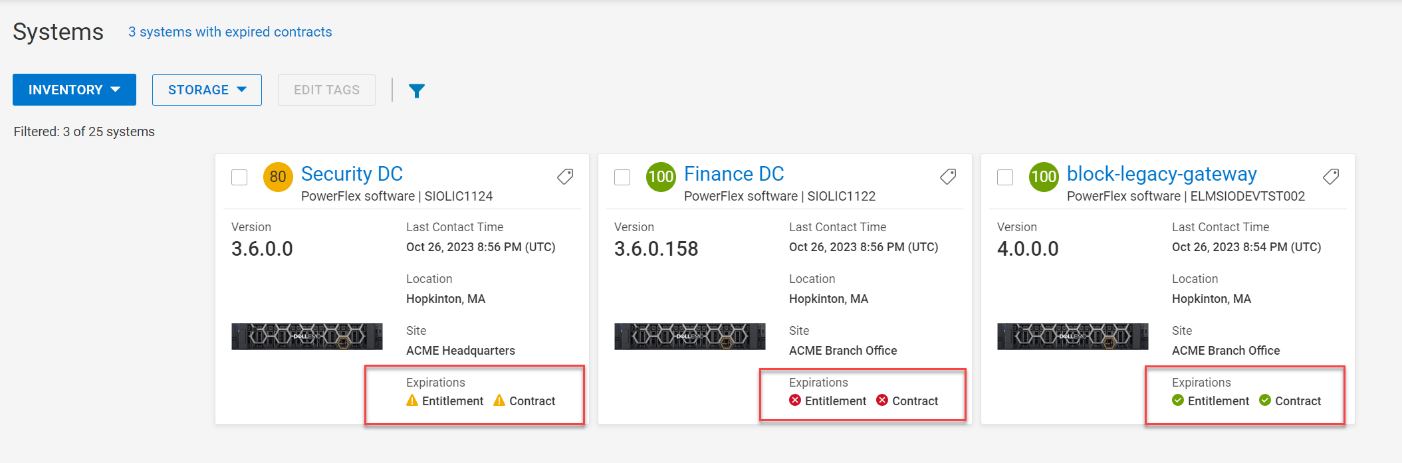

Another location for entitlement and contract status is on the PowerFlex Systems tiles. An entitlement that is in good standing is marked with a green checkmark, soon to expire with a yellow icon, and expired with a red “x.”

Figure 9. Three entitlement and contract statuses in PowerFlex Systems tile

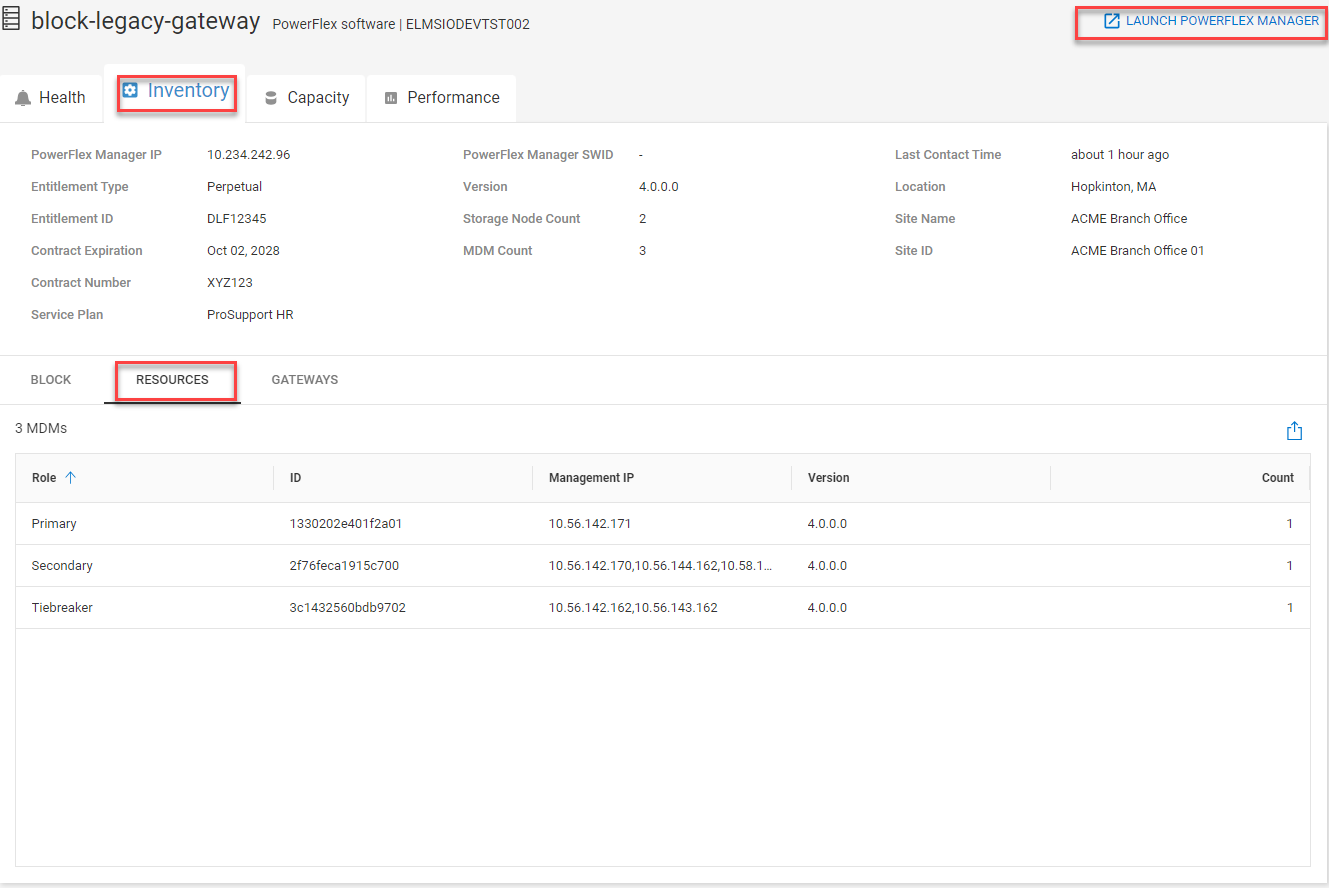

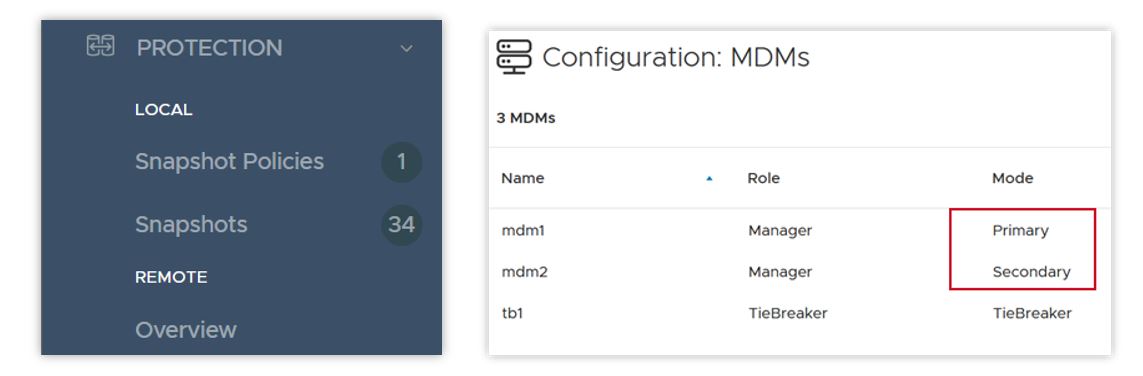

MDM cluster information was added to CloudIQ in July of this year. To view the MDM information, go to the system details page for the PowerFlex system and select the RESOURCES tab, shown in figure 10. You can view a list of the MDMs, MDM role, ID, management IP addresses, and software version.

Figure 10. PowerFlex system details page highlighting the RESOURCES tab under Inventory and the PowerFlex Manager launch

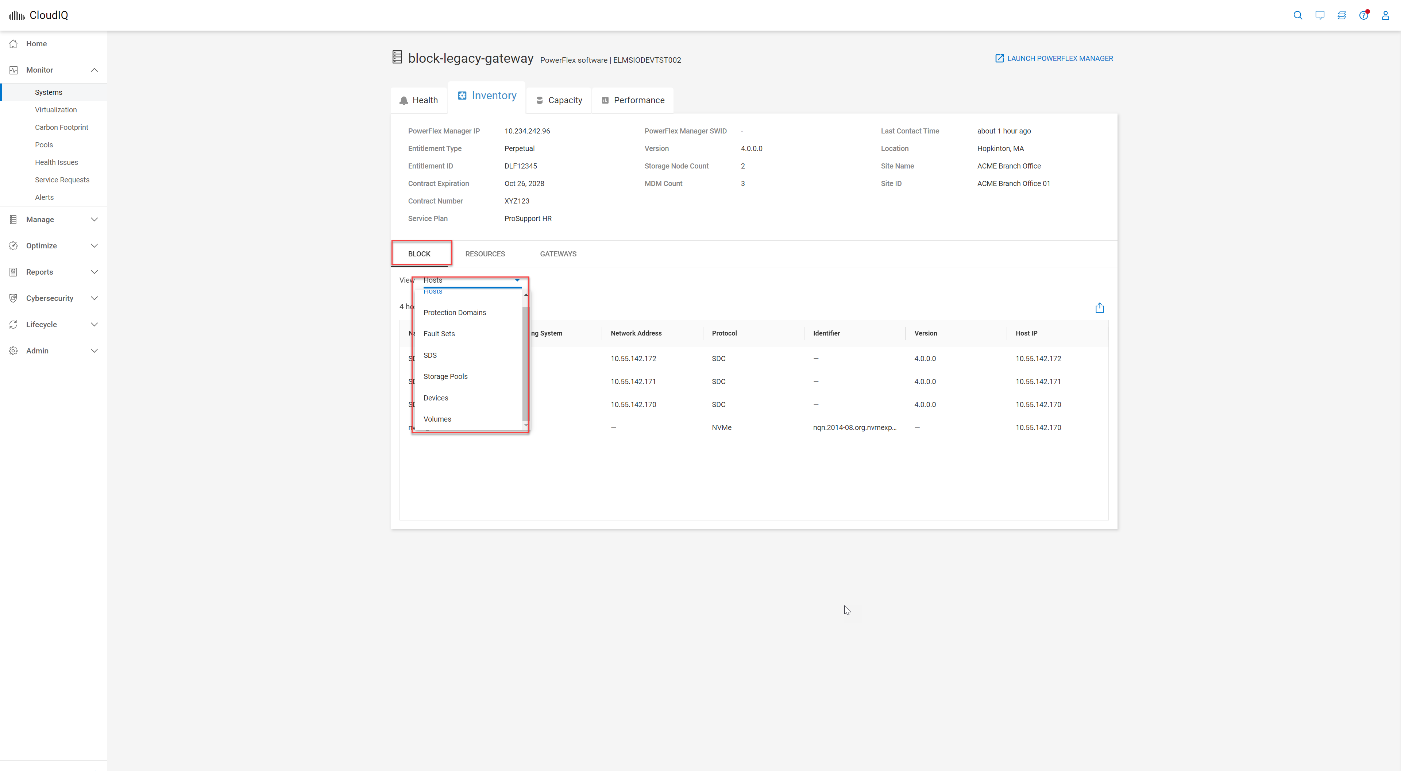

Another addition to the system details page is a link to PowerFlex Manager, making it convenient to launch the PowerFlex Manager UI for the specified system. A BLOCK tab provides details on PowerFlex components, including protection domains, fault sets, SDSs, devices, storage pools, volumes, and hosts. The views may feel familiar to you because they are based on the Block menu in PowerFlex Manager.

Figure 11. BLOCK tab within PowerFlex system details page

Planning on adopting APEX Block Storage for Public Cloud as part of your Multicloud strategy? CloudIQ has you covered on-prem and in the cloud.

Figure 12. CloudIQ Monitoring overview

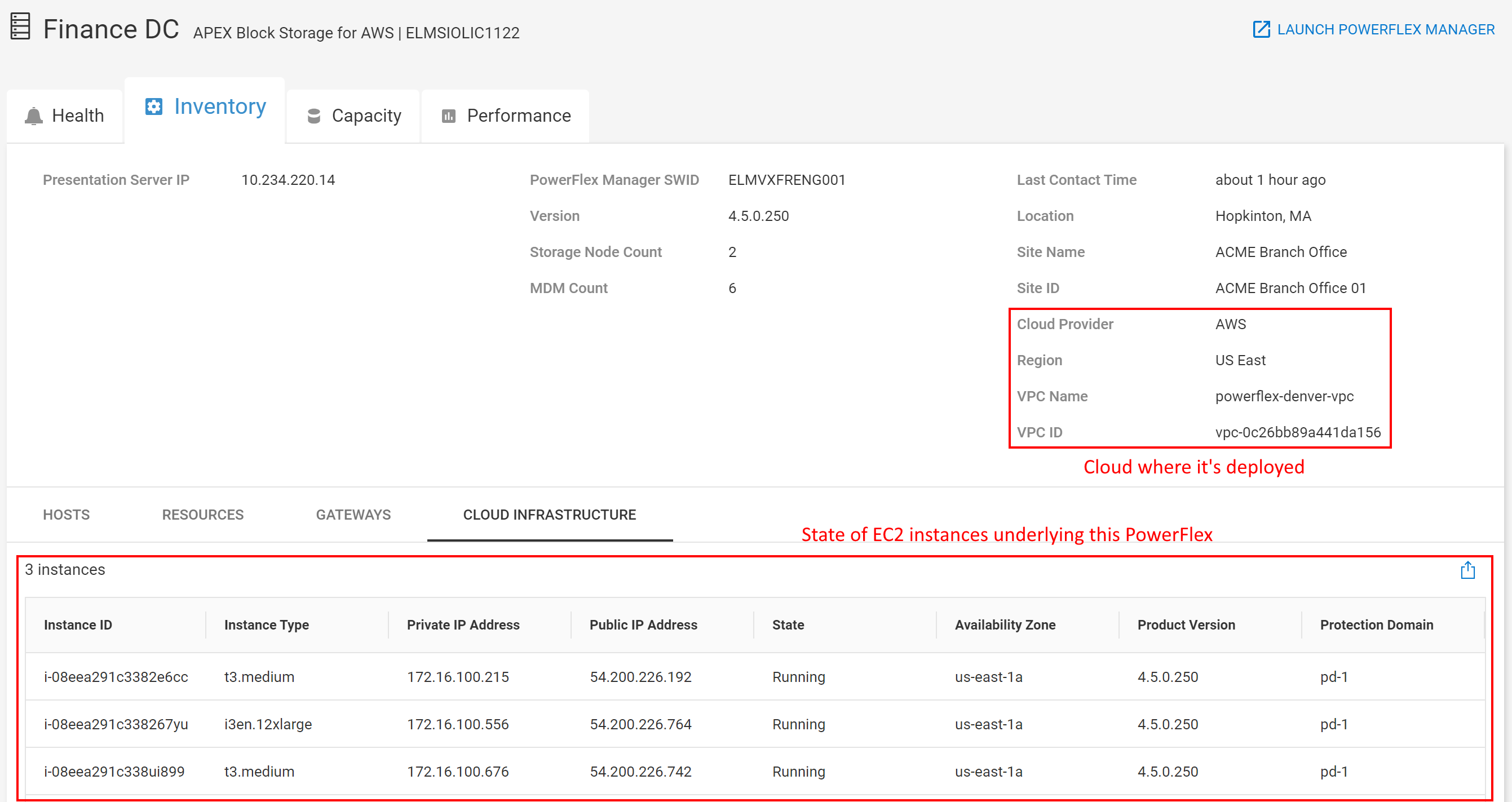

Onboarding your APEX Block Storage for Public Cloud into CloudIQ brings visibility into the cloud infrastructure.

Figure 13. Inventory page for APEX Block Storage for AWS

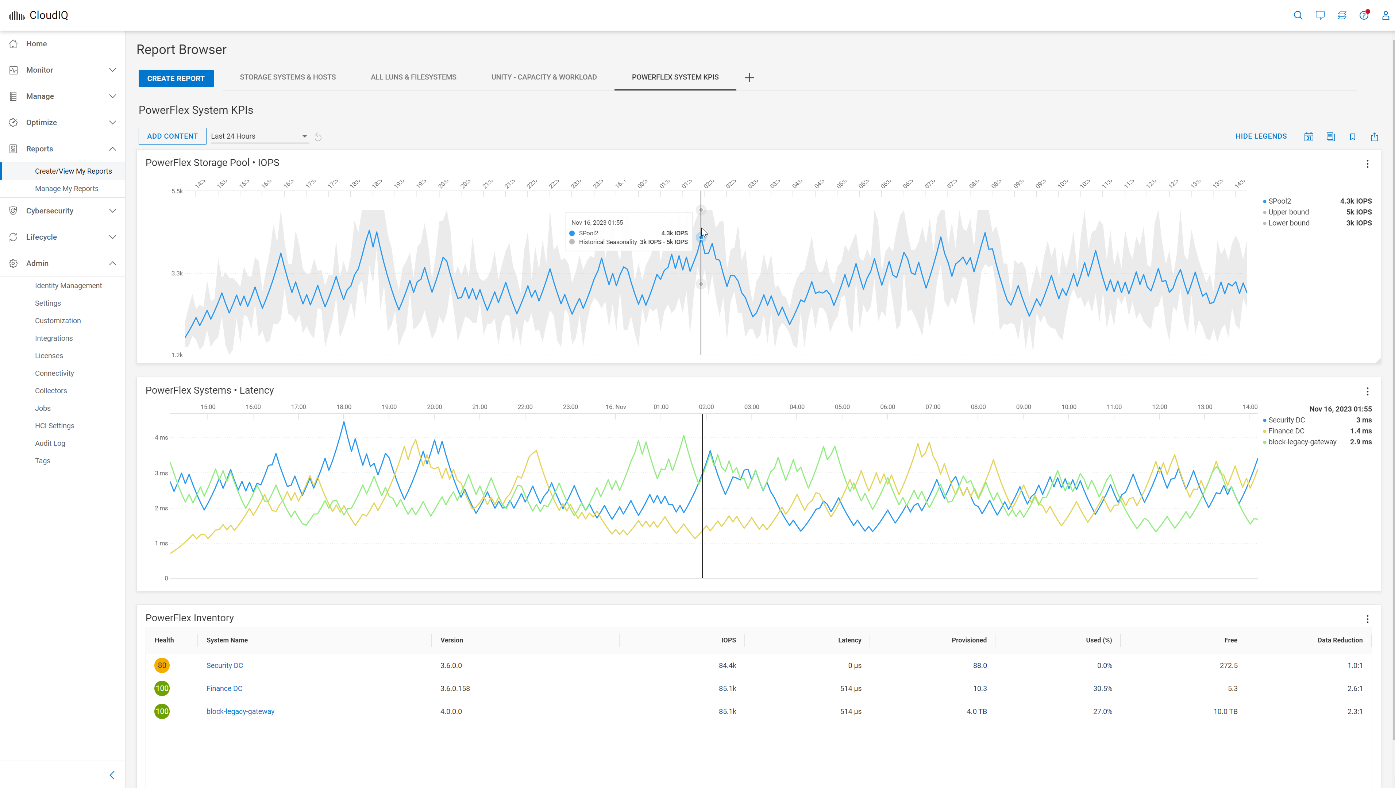

The last enhancement that I will highlight is the addition of PowerFlex in custom reports. This update provides three report types: anomaly charts, line charts, and table reports.

Figure 14. Three report types in a custom report for PowerFlex

The anomaly chart consists of a single performance metric overlaid on the gray historic seasonality value boundaries. The upper and lower bounds establish the normal behavior for the specific metric. The bounds are based on an analysis of the prior three-week history. Historical values that exceed the upper or lower bounds are shaded in light blue to highlight that the value of the metric during this time was outside the historical range. Anomaly charts, at most, display the last 24 hours of data. The line chart allows you to display more than one metric from one or more systems. The example in figure 14 shows system latency from three PowerFlex systems. The table displays metrics and properties, such as system name and code version.
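The idea behind an anomaly chart can be illustrated with a small Python sketch. This is not CloudIQ's actual algorithm, which is not described here. It is a simplified stand-in that assumes hourly samples, builds upper and lower bounds for each hour of the week from three prior weeks of history (mean plus or minus two standard deviations), and flags values from the last 24 hours that fall outside those bounds. All names and sample values are made up.

```python
from statistics import mean, pstdev

HOURS_PER_WEEK = 7 * 24


def seasonal_bounds(history, width=2.0):
    """Return (lower, upper) bounds for each hour of the week.

    history holds hourly samples, oldest first, covering whole weeks.
    """
    if not history or len(history) % HOURS_PER_WEEK != 0:
        raise ValueError("history must cover one or more whole weeks of hourly samples")
    bounds = []
    for slot in range(HOURS_PER_WEEK):
        samples = history[slot::HOURS_PER_WEEK]  # the same hour in each prior week
        center = mean(samples)
        spread = pstdev(samples)
        bounds.append((center - width * spread, center + width * spread))
    return bounds


def find_anomalies(recent, bounds, start_slot=0):
    """Return the offsets in recent whose values fall outside the bounds."""
    flagged = []
    for offset, value in enumerate(recent):
        lower, upper = bounds[(start_slot + offset) % HOURS_PER_WEEK]
        if value < lower or value > upper:
            flagged.append(offset)
    return flagged


# Made-up latency samples (ms): three prior weeks, then the last 24 hours.
history = [1.0] * HOURS_PER_WEEK + [1.2] * HOURS_PER_WEEK + [0.8] * HOURS_PER_WEEK
last_day = [1.0] * 24
last_day[5] = 3.0  # an injected spike
print(find_anomalies(last_day, seasonal_bounds(history)))  # prints [5]
```

Comparing each hour against the same hour in prior weeks is what lets a chart like this respect seasonality, so a busy Monday morning is judged against other Monday mornings rather than against a quiet weekend.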

The next time you are working with CloudIQ, keep an eye out for the latest enhancements. If you have not onboarded your PowerFlex systems into CloudIQ, check out the PowerFlex CloudIQ onboarding article to get started.

Resources

Procedure to Onboard PowerFlex Systems to CloudIQ

Author: Roy Laverty, Principal Technical Marketing Engineer

Twitter: @RoyLaverty

LinkedIn: https://linkedin.com/in/roy-laverty

Using Dell PowerFlex and Google Distributed Cloud Virtual for Postgres Databases and How to Protect Them

Fri, 03 Nov 2023 23:27:04 -0000

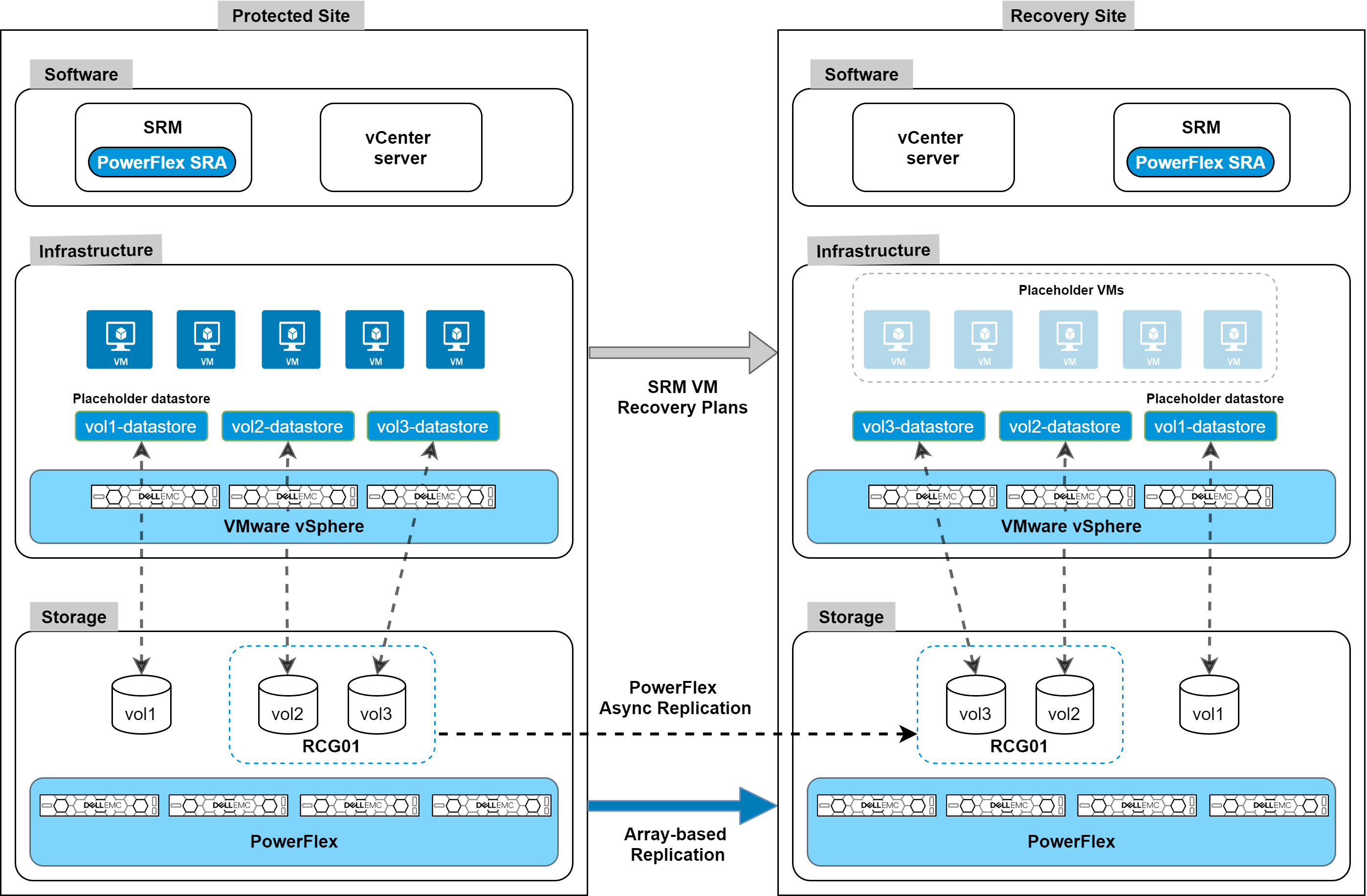

Did you know you can get the Google Cloud experience in your data center? Well now, you can! Using Google Distributed Cloud (GDC) Virtual and Dell PowerFlex enables the use of cloud and container workloads – such as Postgres databases – in your data center.

Looking beyond day one operations, the whole lifecycle must be considered, which includes assessing how to protect these cloud native workloads. That’s where Dell PowerProtect Data Manager comes in, allowing you to protect your workloads both in the data center and the cloud. PowerProtect Data Manager enhances data protection by discovering, managing, and sending data directly to the Dell PowerProtect DD series virtual appliance, resulting in unmatched efficiency, deduplication, performance, and scalability. Together with PowerProtect Data Manager, the PowerProtect DD is the ultimate cyber resilient data protection appliance.

In the following blog, we will unpack all this and more, giving you the opportunity to see how Dell PowerFlex and GDC Virtual can transform how you cloud.

What is Google Distributed Cloud Virtual?

We will start by looking at GDC Virtual and how it allows you to consume the cloud on your terms.

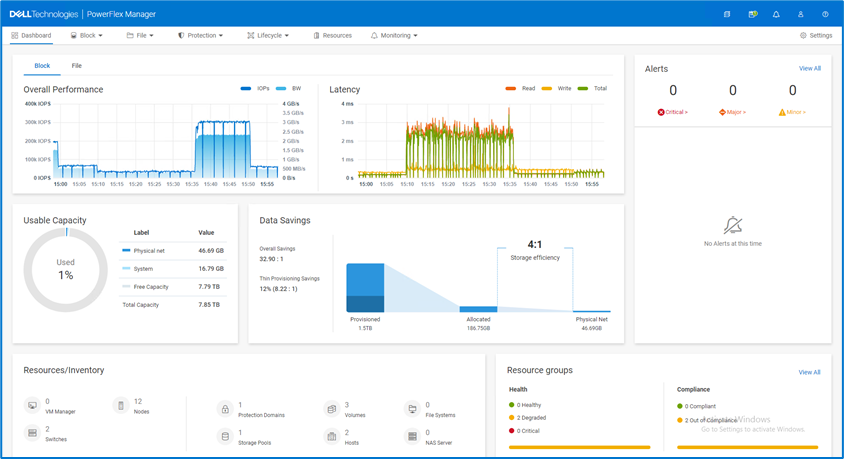

GDC Virtual provides you with a consistent platform for building and managing containerized applications across hybrid infrastructures and helps your developers become more productive across all environments. GDC Virtual provides all the mechanisms required to bring your code into production reliably, securely, and consistently while minimizing risk. GDC Virtual is built on open-source technologies pioneered by Google Cloud including Kubernetes and Istio, enabling consistency between cloud and on premises environments like PowerFlex. Anthos GKE (on GCP and on-prem), Anthos Service Mesh, and Anthos Config Management are the core building blocks of Anthos, which has integrations with platform-level services such as Stackdriver, Cloud Build, and Binary Authorization. GDC Virtual users purchase services and resources from the GCP Marketplace.

Figure 1. GDC Virtual components.

GDC Virtual puts all your IT resources into a consistent development, management, and control framework, automating low-value tasks across your PowerFlex and GCP infrastructure.

Within the context of GCP, the term ‘hybrid cloud’ describes a setup in which common or interconnected services are deployed across multiple computing environments, which include public cloud and on-premises. A hybrid cloud strategy allows you to extend the capacity and capabilities of your IT without upfront capital expense investments, preserving your existing investments while adding one or more public cloud deployments to your existing infrastructure. For more information, see Hybrid and Multi-Cloud Architecture Patterns.

PowerFlex delivers software-defined storage to both virtual environments and bare metal hosts, providing flexible consumption of resources. This enables both two-tier and three-tier architectures to match the needs of almost any environment.

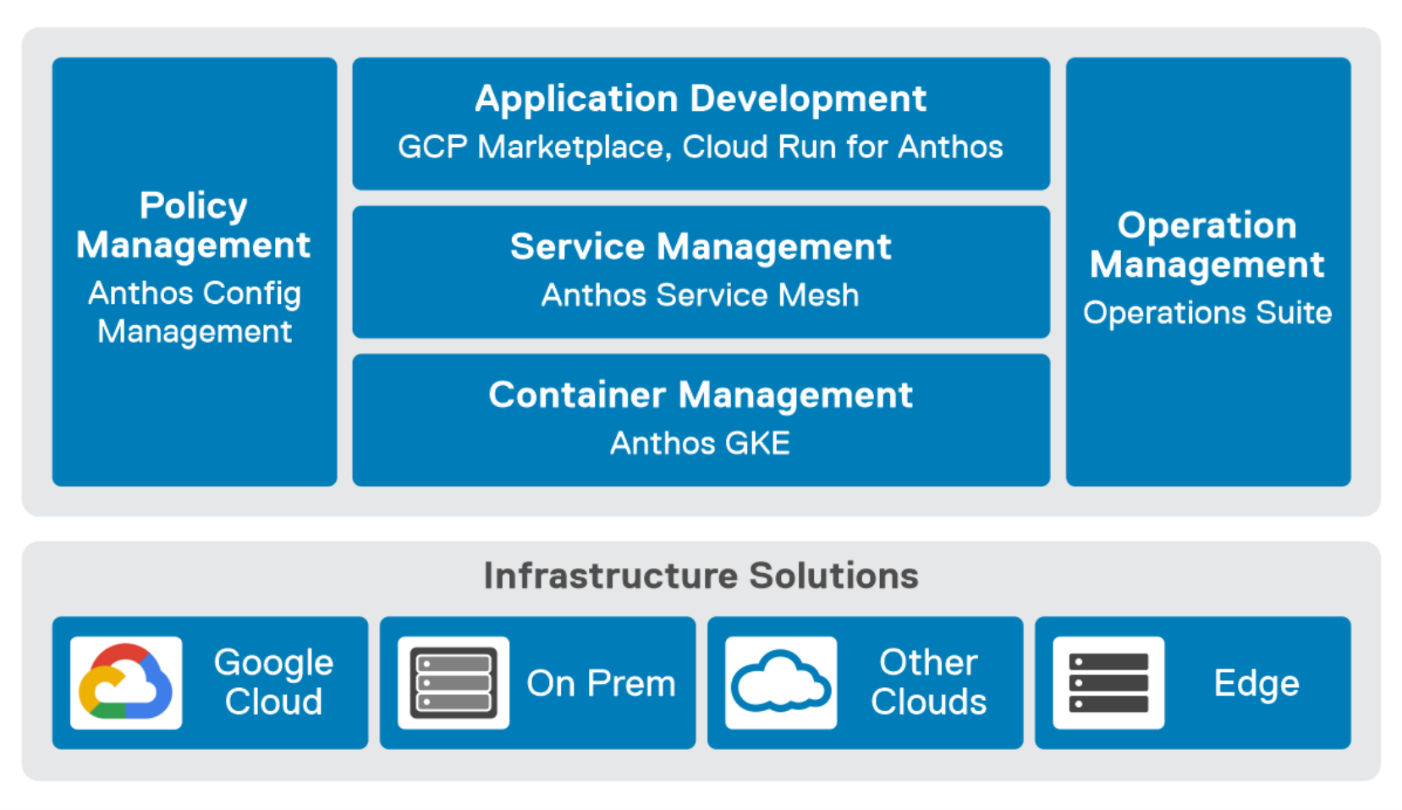

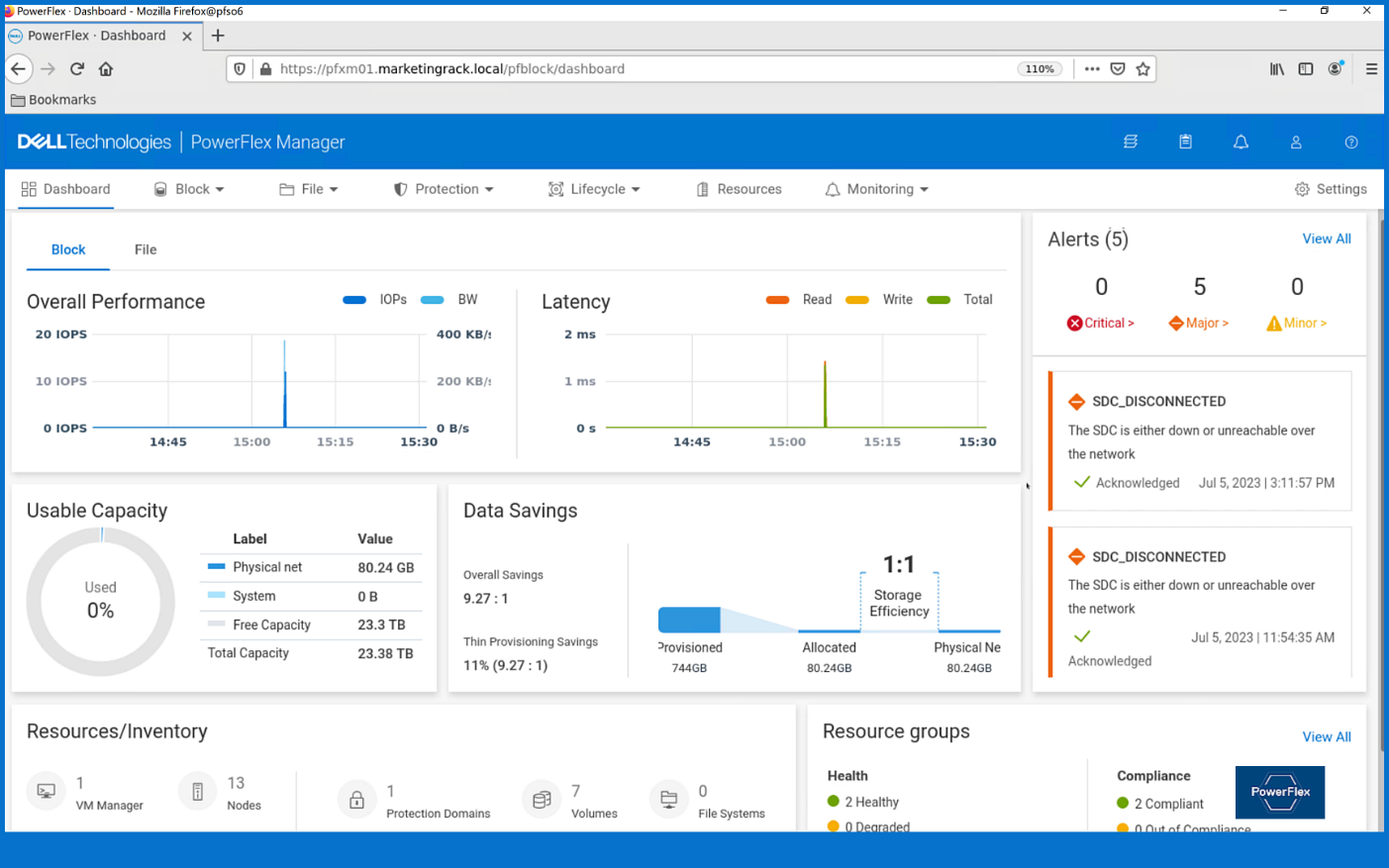

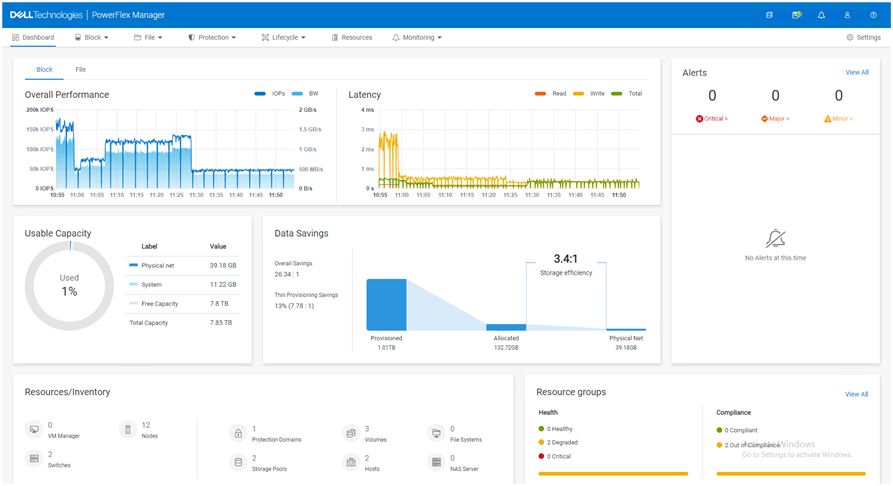

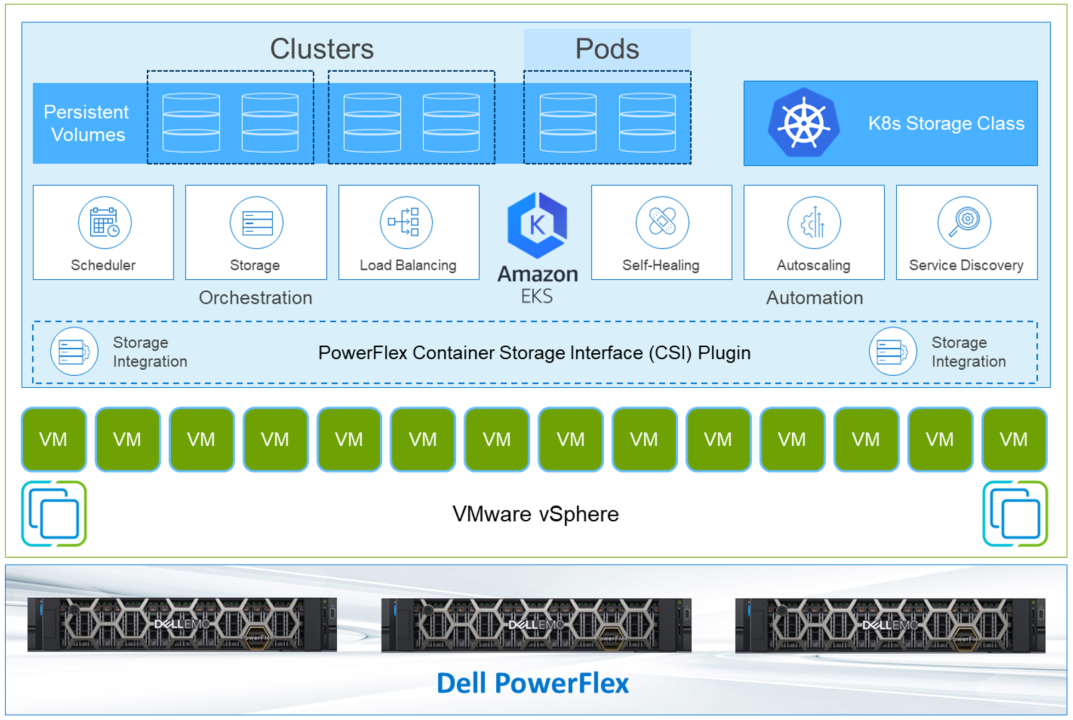

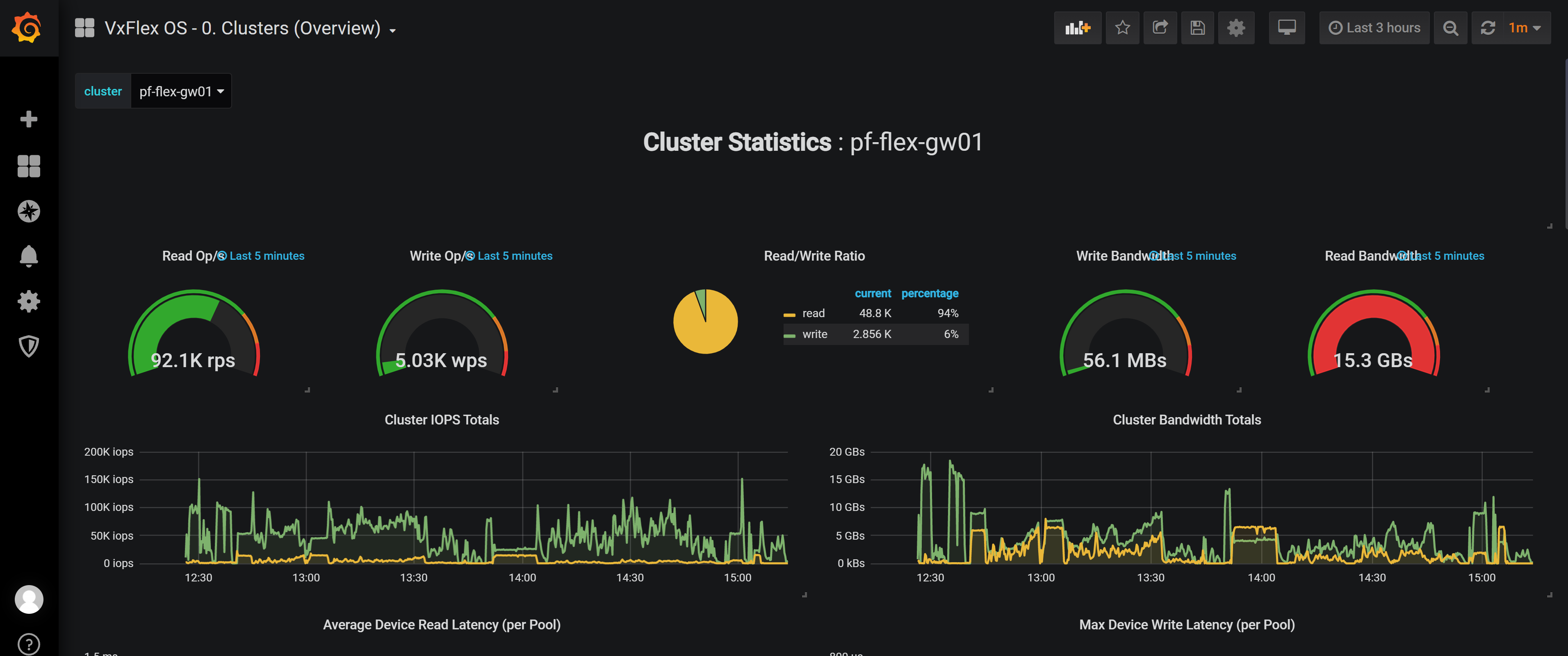

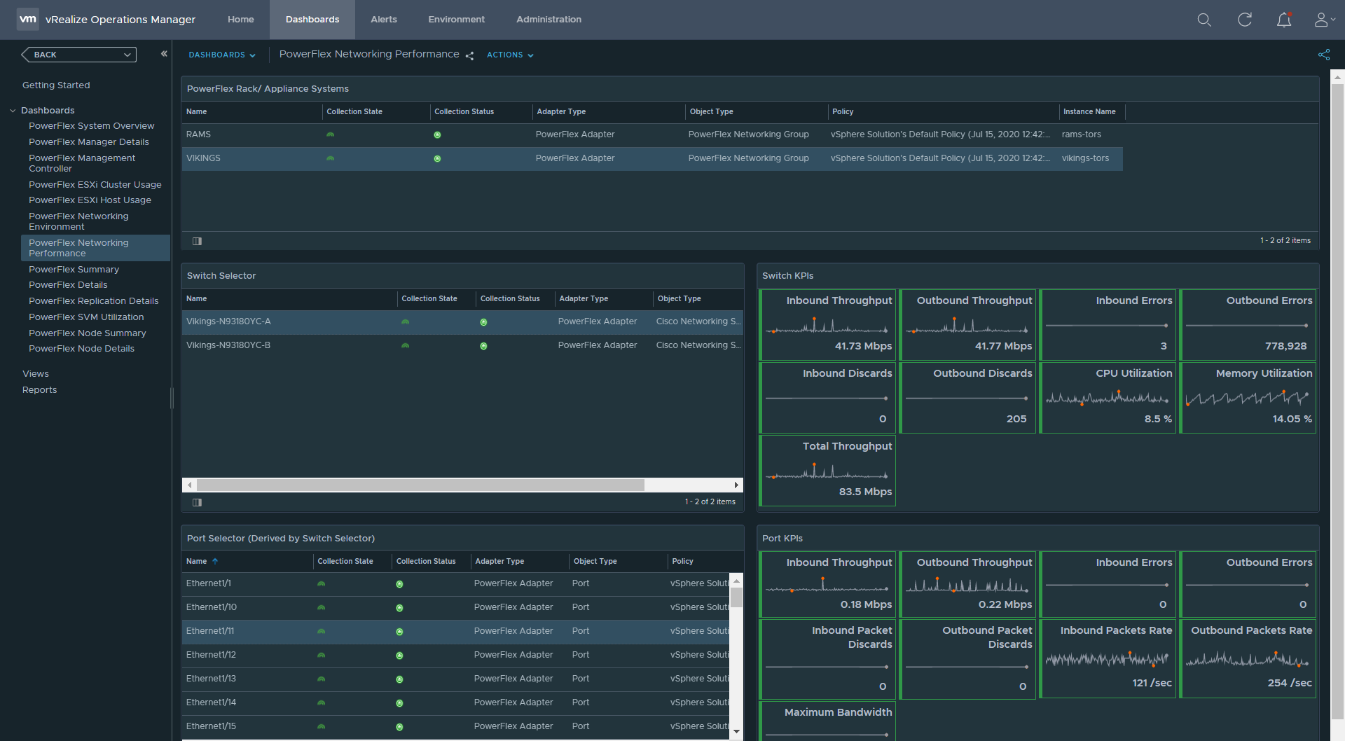

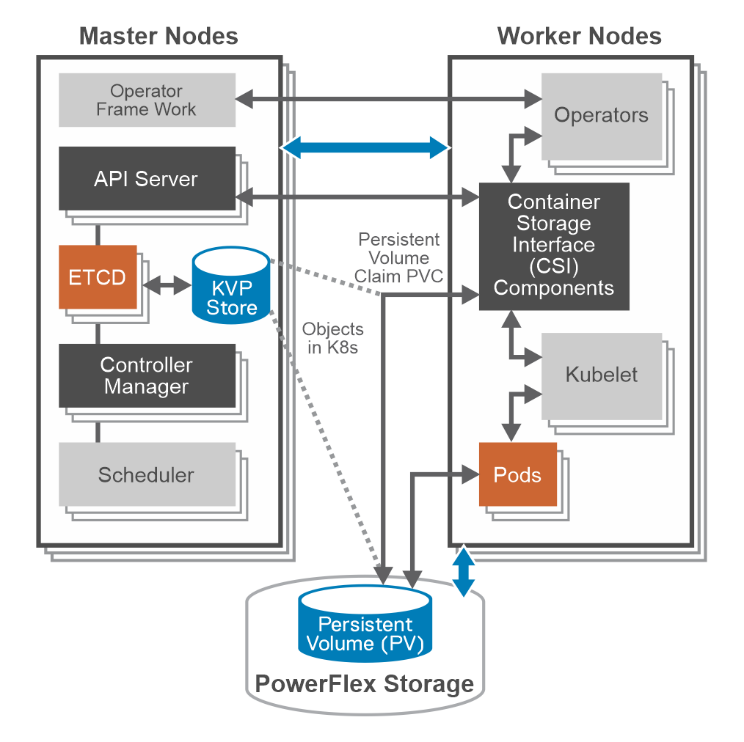

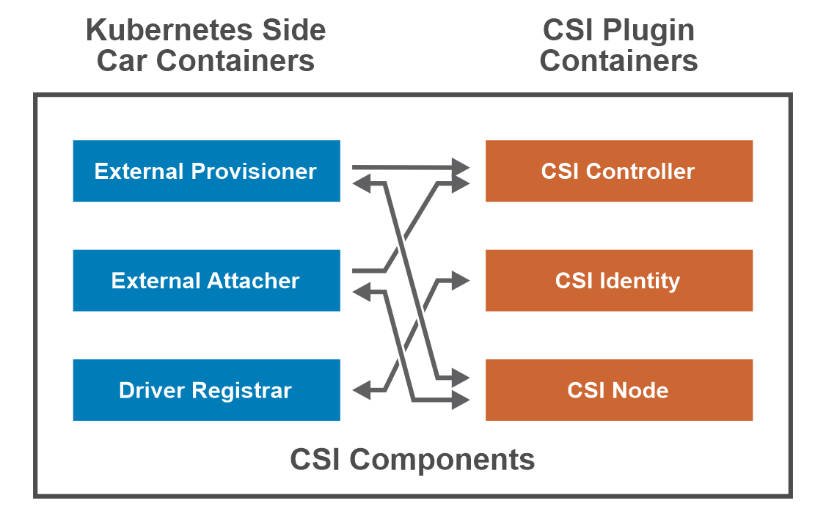

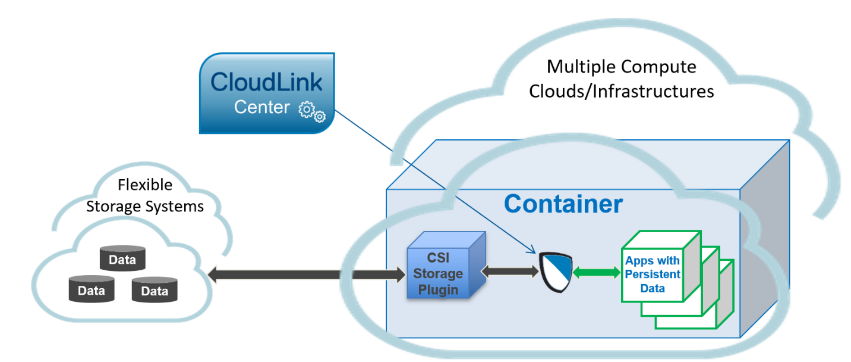

PowerFlex container storage

From the PowerFlex UI – shown in the following figure – you can easily monitor the performance and usage of your PowerFlex environment. Additionally, PowerFlex offers a container storage interface (CSI) and container storage modules (CSM) for integration with your container environment. The CSI/CSM allows containers to have persistent storage, which is important when working with workloads like databases that require it.

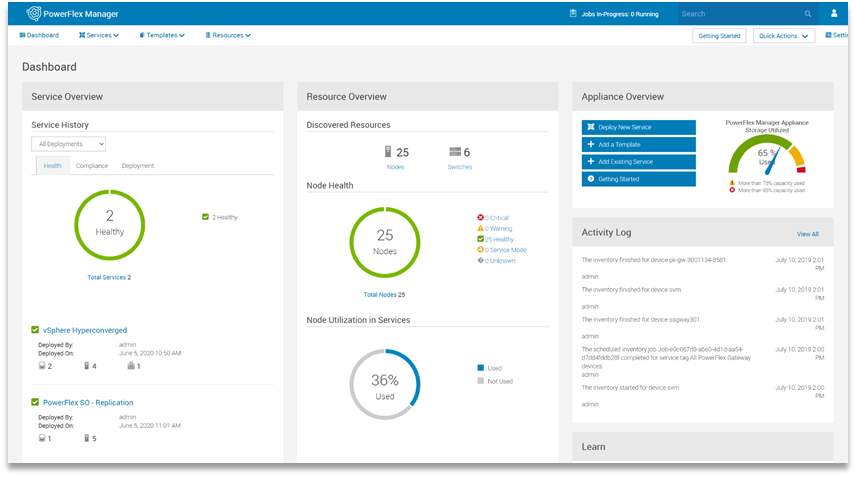

Figure 2. PowerFlex dashboard provides easy access to information.

To gain a deeper understanding of implementing GDC Virtual on Dell PowerFlex, we invite you to explore our recently published reference architecture.

Dell engineers have recently prepared a PostgreSQL container environment deployed from the Google Cloud to a PowerFlex environment with GDC Virtual in anticipation of KubeCon. For those who have deployed Postgres from Google Cloud, you know it doesn’t take long to deploy. It took our team maybe 10 minutes, which makes it effortless to consume and integrate into workloads.

Once we had Postgres deployed, we proceeded to put it under load as we added records to it. To do this, we used pgbench, which is a built-in benchmarking tool in Postgres. This made it easy to fill a database with 10 million entries. We then used pgbench to simulate the load of 40 clients running 40 threads against the freshly loaded database.

Our goal wasn’t to capture performance numbers though. We just wanted to get a “warm” database created for some data protection work. That being said, what we saw on our modest cluster was impressive, with sub-millisecond latency and plenty of IO.
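For anyone who wants to warm up a database in a similar way, the Python sketch below assembles the two pgbench invocations described above. The database name and the 60-second run time are assumptions, since we did not list them. The scale factor of 100 is derived from pgbench creating 100,000 rows in pgbench_accounts per unit of scale, which may not be exactly how the 10 million entries were produced in our lab.

```python
import shlex

ROWS_PER_SCALE_UNIT = 100_000  # pgbench_accounts rows created per unit of scale factor


def init_command(dbname: str, rows: int) -> list[str]:
    """Build the pgbench command that loads roughly `rows` account rows."""
    scale = -(-rows // ROWS_PER_SCALE_UNIT)  # ceiling division
    return ["pgbench", "--initialize", f"--scale={scale}", dbname]


def load_command(dbname: str, clients: int, threads: int, seconds: int) -> list[str]:
    """Build the pgbench command that simulates client load for a fixed time."""
    return [
        "pgbench",
        f"--client={clients}",
        f"--jobs={threads}",
        f"--time={seconds}",
        dbname,
    ]


# "demo" and the 60-second duration are placeholders, not values from our lab.
print(shlex.join(init_command("demo", 10_000_000)))
print(shlex.join(load_command("demo", clients=40, threads=40, seconds=60)))
```

The sketch only prints the commands. Passing either list to subprocess.run would execute it against a reachable Postgres instance, with connection options such as host and user added as needed.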

Data protection

With our containerized database warmed up, it was time to protect it. As you probably know, there are many ways to do this, some better than others. We’ll spend just a moment talking about two functional methods of data protection: crash-consistent and application-consistent backups. PowerProtect Data Manager supports both for databases.

A “crash consistent” backup is exactly as the name implies. The backup application captures the volume in its running state and copies out the data regardless of what’s currently happening. It’s as if someone had just pulled the power cord on the workload. Needless to say, that’s not the most desirable backup state, but it’s still better than no backup at all.

That’s where an “application consistent” backup can be more desirable. An application consistent backup talks with the application and makes sure the data is all “flushed” and in a “clean” state prior to it being backed up. At least, that’s the simple version.

The longer version is that the backup application talks to the OS and application, asks them to flush their buffers – known as quiescing – and then triggers a snapshot of the volumes to be backed up. Once complete, the system then initiates a snapshot on the underlying storage – in this case PowerFlex – of the volumes used. Once the snapshots are completed, the application-level snapshots are released, the applications begin writing normally to it again, and the backup application begins to copy the storage snapshot to the protected location. All of this happens in a matter of seconds, many times even faster.

This is why application-consistent backups are preferred. The backup can take about the same amount of time to run, but the data is in a known good state, which makes the chances of recovery much greater than with crash-consistent backups.
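The ordering of an application-consistent backup can be sketched in a few lines of Python. This is a simulation only: the function names are hypothetical and nothing here calls PowerProtect Data Manager, PowerFlex, or Postgres. It simply records the steps in the order described above and uses a context manager so the application is always released, even if a snapshot step fails.

```python
from contextlib import contextmanager


@contextmanager
def quiesced(app_name, log):
    """Hold the application in a flushed, clean state for the duration of the block."""
    log.append(f"quiesce {app_name}")
    try:
        yield
    finally:
        # Always let the application resume normal writes.
        log.append(f"resume {app_name}")


def application_consistent_backup(app_name, volumes):
    """Return the ordered list of steps for a simulated backup run."""
    log = []
    with quiesced(app_name, log):
        for volume in volumes:
            log.append(f"storage snapshot {volume}")
    # The copy happens after the application is writing again.
    for volume in volumes:
        log.append(f"copy snapshot {volume} to protection storage")
    return log


for step in application_consistent_backup("postgres", ["pvc-data"]):
    print(step)
```

The point of the ordering is that the application is paused only for the short snapshot window, while the slower copy to protection storage runs from the snapshot afterward.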

In our lab environment, we did this with PowerProtect Data Manager and PowerProtect DD Virtual Edition (DDVE). PowerProtect Data Manager provides a standardized way to quiesce a supported database, back up the data from that database, and then return the database to operation. This works great for protecting Kubernetes workloads running on PowerFlex. It’s able to create application-consistent backups of the Postgres containers quickly and efficiently. This also works in concert with GDC Virtual, allowing for the containers to be registered and restored into the cloud environment.

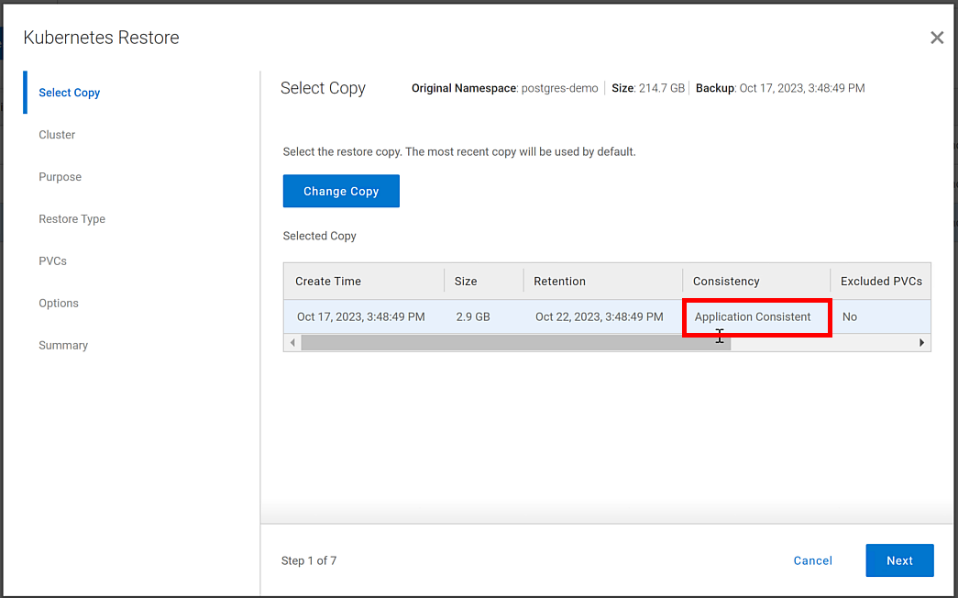

Figure 3. An application consistent backup and its timing in the PowerProtect Data Manager UI

It’s great having application consistent backups of your cloud workloads, “checking” many of those boxes that people require from their backup environments. That said, just as important and not to be forgotten is the recovery of the backups.

Data recovery

As has been said many times, “never trust a backup that hasn’t been tested.” It’s important to test any and all backups to make sure they can be recovered. Testing the recovery of a Postgres database running in GDC Virtual on PowerFlex is as straightforward as can be.

The high-level steps are:

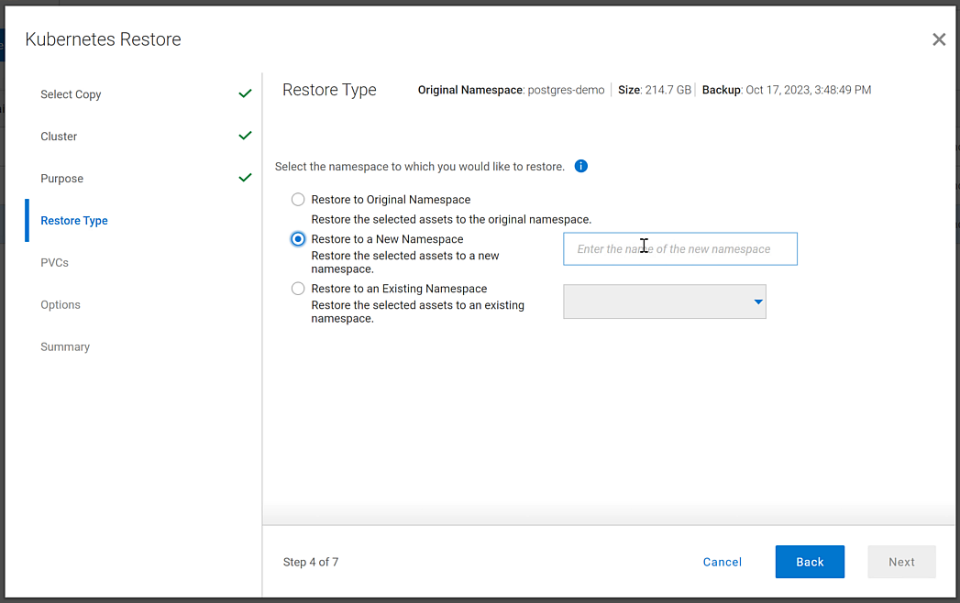

1. From the PowerProtect Data Manager UI, select Restore > Assets, and select the Kubernetes tab. Select the checkbox next to the protected namespace and click Restore.

2. On the Select Copy page, select the copy you wish to restore from.

3. On the Restore Type page, select where it should be restored to.

4. Determine how the Persistent Volume Claims (PVCs) and namespace should be restored.

5. When finished, test the restore.

You might have noticed in step 4, I mentioned PVCs, which are the container’s connections to the data and, as the name implies, allow that data to persist across the nodes. This is made possible by the CSI/CSM mentioned earlier. Because of the integration across the environment, restoring PVCs is a simple task.

The following shows some of the recovery options in PowerProtect Data Manager for PVCs.

Figure 4. PowerProtect Data Manager UI – Namespace restore options

The recovery, like most things in data protection, is relatively anticlimactic. Everything is functional, and queries work as expected against the Postgres database instance.

Dell and Google Cloud collaborated extensively to create solutions that leverage both PowerFlex and GDC Virtual. The power of this collaboration really shows through when recovery operations just work. That consistency and ease enables customers to take advantage of a robust environment backed by leaders in the space and helps to remove one nightmare that keeps developers and IT admins awake at night, allowing them to rest easy and be prepared to change the world.

If any of this sounds interesting to you and you’ll be at KubeCon in Chicago, Illinois on November 6-9, stop by the Google Cloud booth. We’ll be happy to show you demos of this exciting collaboration in action. Otherwise, feel free to contact your Dell representative for more details.

Resources

Authors: Tony Foster, Vinod Kumar Kumaresan, Harsha Yadappanavar

X (formerly Twitter): @harshauy

KubeCon NA23, Google Cloud Anthos on Dell PowerFlex and More

Sun, 05 Nov 2023 23:26:43 -0000

KubeCon will be here before you know it. There are so many exciting things to see and do. While you are making your plans, be sure to add a few stops that will make life easier for you at the conference and afterwards.

Before we get into those things, did you know that the Google Cloud team and the Dell PowerFlex team have been collaborating? Recently Dell and Google Cloud published a reference architecture: Google Cloud Anthos and GDC Virtual on Dell PowerFlex. This illustrates how both teams are working together to enable consistency between cloud and on-premises environments like PowerFlex. You will see this collaboration at KubeCon this year.

On Tuesday at KubeCon, after breakfast and the keynote, you should make your way to the Solutions Showcase in Hall F on Level 3 of the West building. Once there, make your way over to the Google Cloud booth and visit with the team! They want your questions about PowerFlex and are eager to share with you how Google Distributed Cloud (GDC) Virtual with PowerFlex provides a powerful on-premises container solution.

Also, be sure to catch the lightning sessions in the Google Cloud booth. You’ll get to hear from Dell PowerFlex engineer Praphul Krottapalli, who will be digging into leveraging GDC Virtual on PowerFlex. That’s not the big thing, though. He’ll also be looking at running a Postgres database distributed across on-premises PowerFlex nodes using GDC Virtual. Beyond that, he will look at how to protect these containerized database workloads and show you how to use Dell PowerProtect Data Manager to create application consistent backups of a containerized Postgres database instance.

We all know backups are only good if you can restore them. So, Praphul will show you how to recover the Postgres database and have it running again in no time.

Application consistency is an important thing to keep in mind with backups. Would you rather have a database backup where someone had just pulled the plug on the database (crash consistent) or would you like the backup to be as though someone had gracefully shut down the system (application consistent)? For all kinds of reasons (time, cost, sanity), the latter is highly preferable!

We talk about this more in a blog that covers the demo environment we used for KubeCon.

This highlights Dell and Google’s joint commitment to modern apps by ensuring that they can be run everywhere and that organizations can easily develop and deploy modern workloads.

If you are at KubeCon and would like to learn more about how containers work on Dell solutions, be sure to stop by both the Dell and Google Cloud booths. If it’s after KubeCon, be sure to reach out to your Dell representative for more details.

Author: Tony Foster

Dell PowerFlex at VMware Explore in Barcelona – Nothing Controversial

Thu, 19 Oct 2023 22:38:22 -0000

For those who aren’t aware, there are some big changes happening at VMware. If you watched the VMware Explore Las Vegas keynote, it was a whirlwind of changes and important information. CEOs of several major companies took the stage and spoke about the direction VMware is going, with attendees hanging on their every word and wondering what the changes meant and how they would impact their operations.

For many, the impact is still unclear. This could radically change data centers and how organizations do work, leaving many in IT and business asking questions about what’s next and where things are headed.

We can all expect to find out more at VMware Explore Barcelona coming up 6 to 9 November, which will bring more clarity in direction and illuminate what it will mean for organizations large and small.

I can’t wait to see what’s in store for the Generative AI (GenAI) workloads we’ve all been waiting for (And you thought I was talking about something else?).

At VMware Explore in Las Vegas this year, the message was clear. VMware is embracing AI workloads. NVIDIA CEO Jensen Huang and VMware CEO Raghu Raghuram spoke to this during the general session keynote. Jensen stated, “we’re reinventing enterprise computing after a quarter of a century in order to transition to the future.”

The entire IT industry is moving in the direction of AI. Dell PowerFlex is already there. We’ve been on this journey for quite some time. If you were lucky enough to have stopped at the Kioxia stand during the Las Vegas show, you saw how we are working with both NVIDIA and Kioxia to deliver powerful AI systems for customers to make that transition to the future.

If you couldn’t make it to Las Vegas for VMware Explore but plan to attend VMware Explore in Barcelona, you’re in luck. PowerFlex will be showcasing the amazing performance of Kioxia storage and NVIDIA GPUs again. You can see a live demo at the Kioxia stand, #225 in the Solutions Exchange.

When you visit the Kioxia stand, you will be able to experience the power of running ResNet 50 image classification and Online Transactional Processing (OLTP) workloads simultaneously, live from the show floor. And if that’s not enough, there are experts and lots of them! If you get a chance, talk with Shashi about all the things PowerFlex unlocks for your organization.

PowerFlex supports NVIDIA GPUs with MIG technology, which is part of NVIDIA AI Enterprise. NVIDIA MIG allows you to tailor GPU resources for the workloads that need them (Yes, there is nothing that says you can’t run different workloads on the same hosts). Plus, PowerFlex uses Kioxia PM7 series SSDs, so there are plenty of IOPS to go around while ensuring sub-millisecond latency for both workloads. This allows the data to be closer to the processing, even on the same host.

In our lab tests, we were able to push one million transactions per minute (TPM) with OLTP workloads while also processing 6620 images per second using a ResNet-50 model built on NVIDIA NGC containers. These numbers are important if you want to keep users happy, especially as more and more organizations want to add AI/ML capabilities to their online apps (and more and more data is generated from all those new apps).

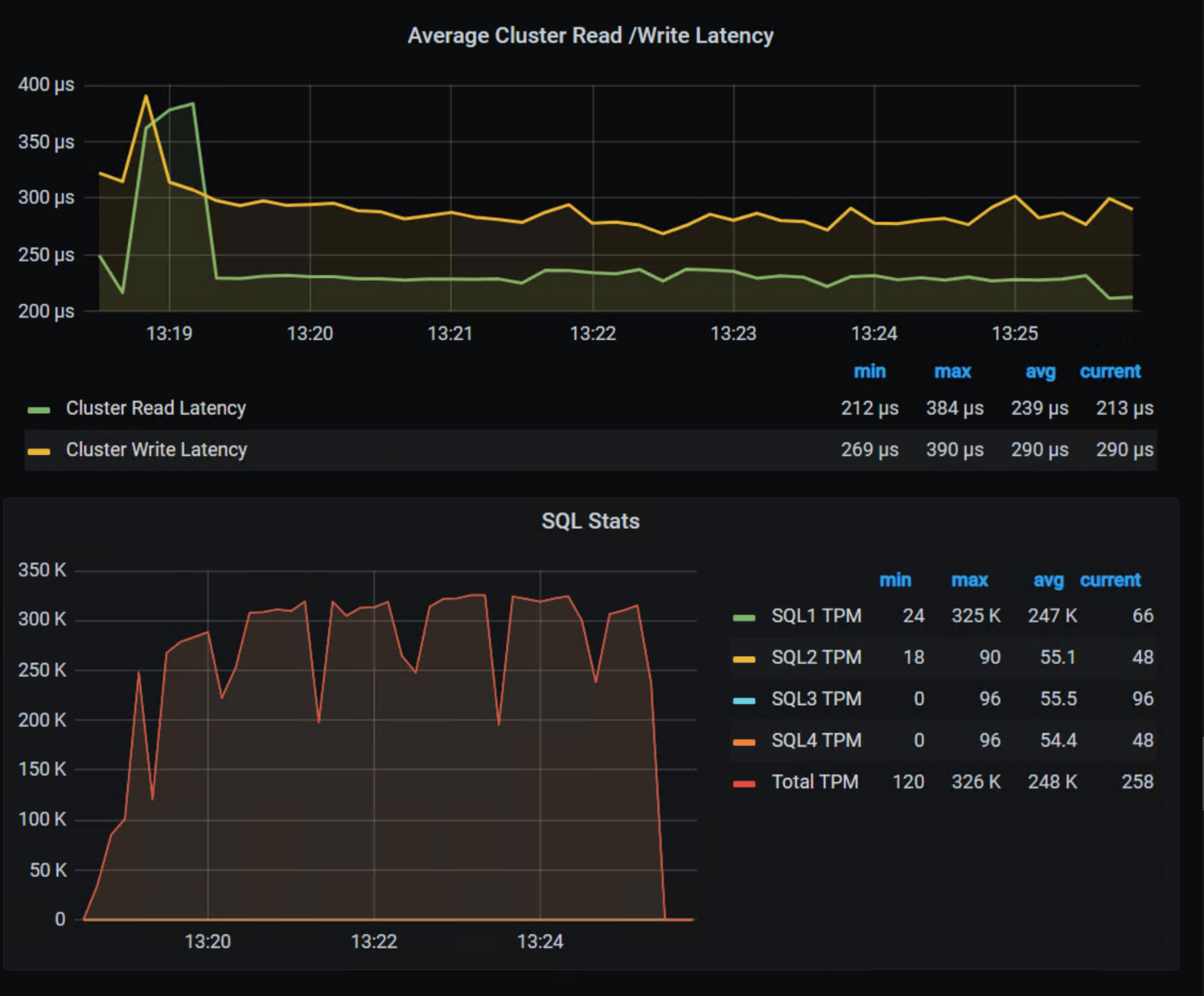

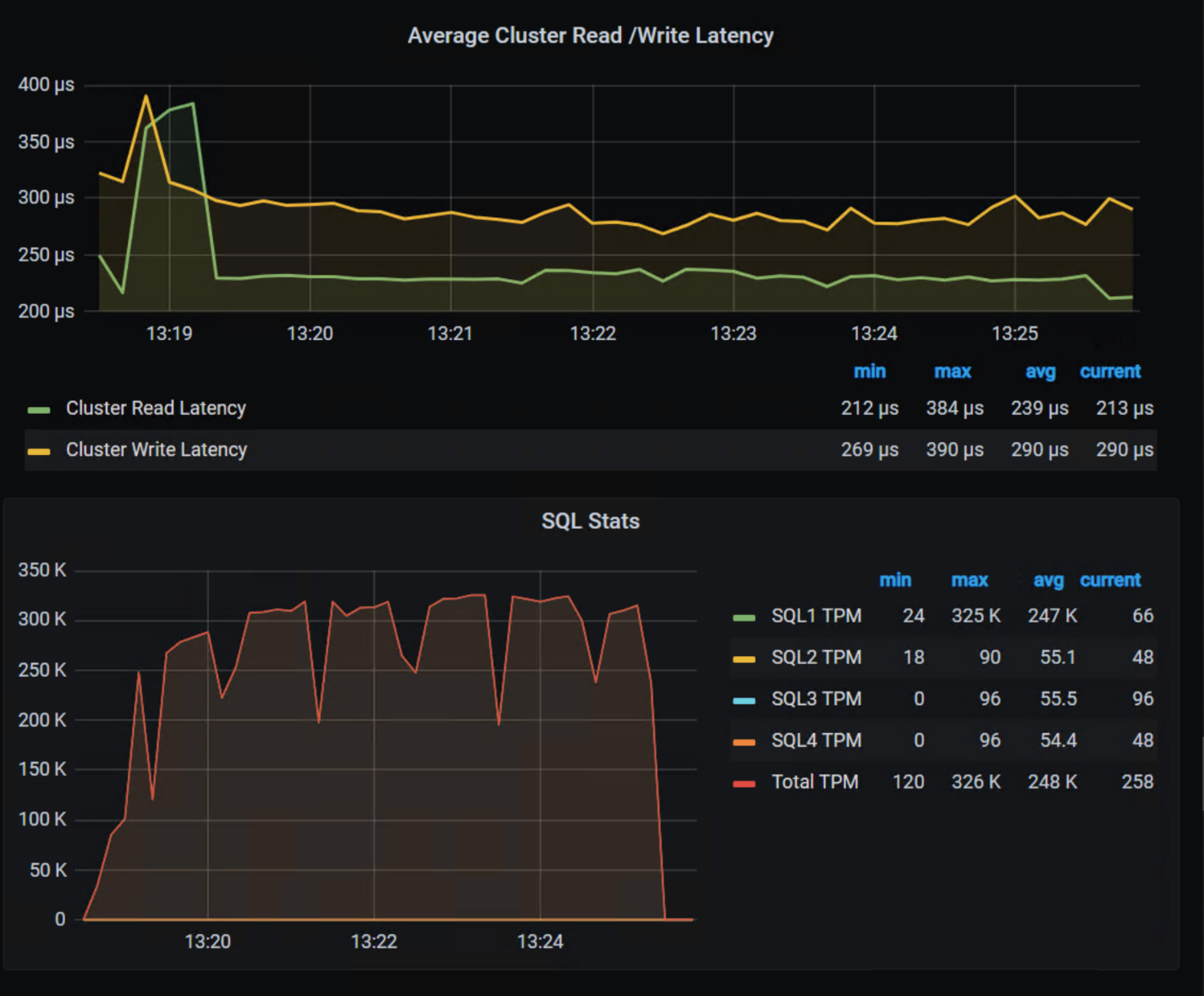

The following shows the TPM results from the demo environment that is running our four SQL VMs. The TPMs in this test are maxing out around 320k, and the latency is always sub-millisecond.

The future is here and waiting for you to visit.

If you are unable to visit the stand and would like to get an overview of PowerFlex’s abilities when it comes to GenAI, check out this video.

As you can see, PowerFlex has true flexibility when it comes to GenAI, making it the ideal platform to reinvent your enterprise IT environment as you transition to the future.

If you find yourself at VMware Explore in Barcelona, be sure to stop by the Kioxia stand (#225) and talk with the team about how Dell PowerFlex, Kioxia drives, and NVIDIA GPUs can accelerate your transition to the future.

See, nothing controversial here!

Resources

- Dell Generative AI Solutions

- VMware and NVIDIA Unlock Generative AI for Enterprises

- VMware’s Approach to Private AI

Author: Tony Foster, Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]

PowerFlex: The DNA of the Ultimate Software-Defined Infrastructure

Mon, 25 Sep 2023 13:24:55 -0000

Introduction

This blog is the first in a series discussing PowerFlex software-defined infrastructure.

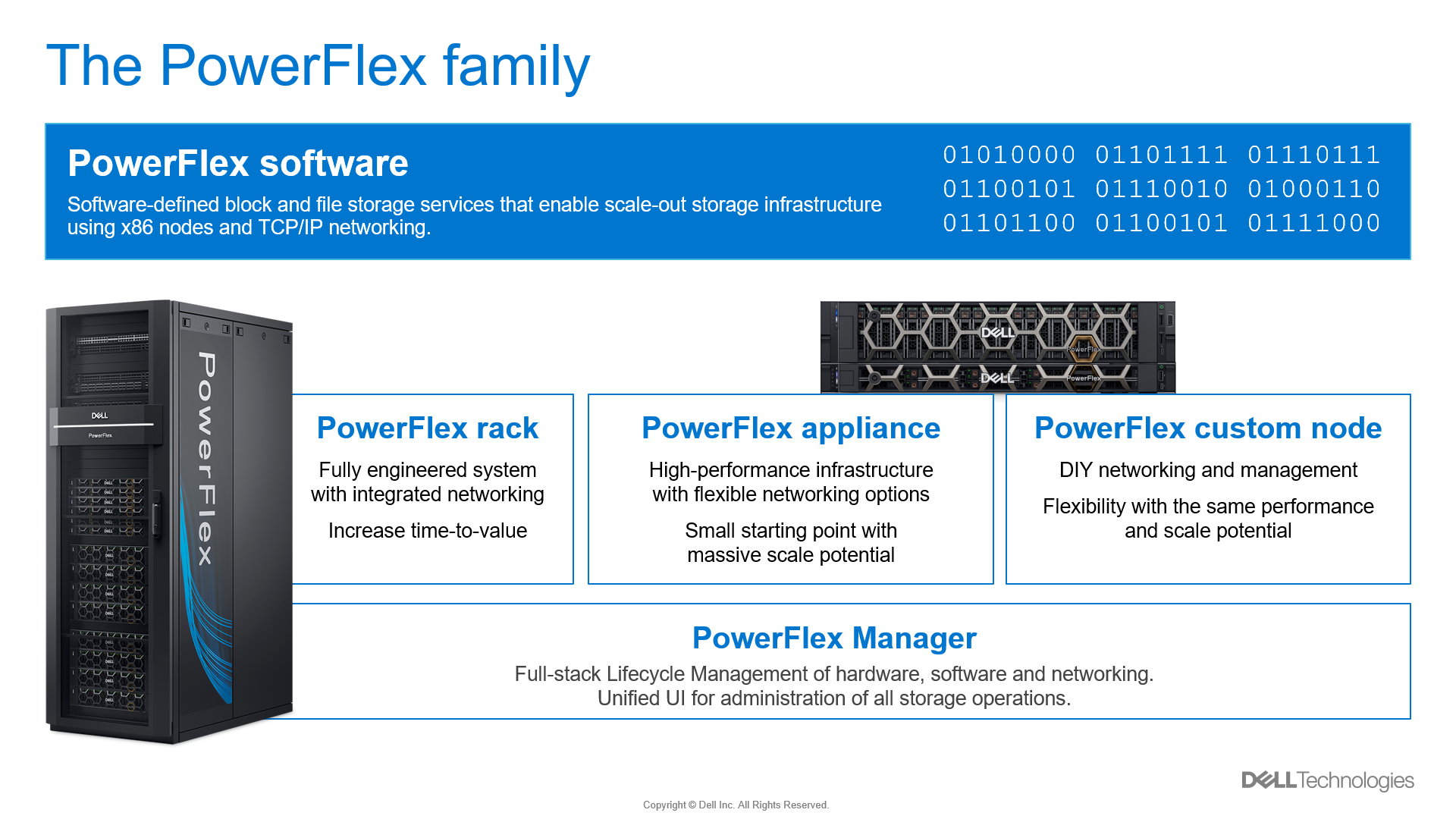

PowerFlex is Dell Technologies’ flexible, resilient, and highly scalable software-defined infrastructure, providing both block and file storage services. Its software-first DNA can be traced back to influential Dell software-defined products such as ScaleIO and VxFlex.

PowerFlex software runs on the ubiquitous x86 node with TCP/IP networking, leveraging the market-leading PowerEdge server in configurations that have been tested and qualified to run PowerFlex.

Flexible consumption options

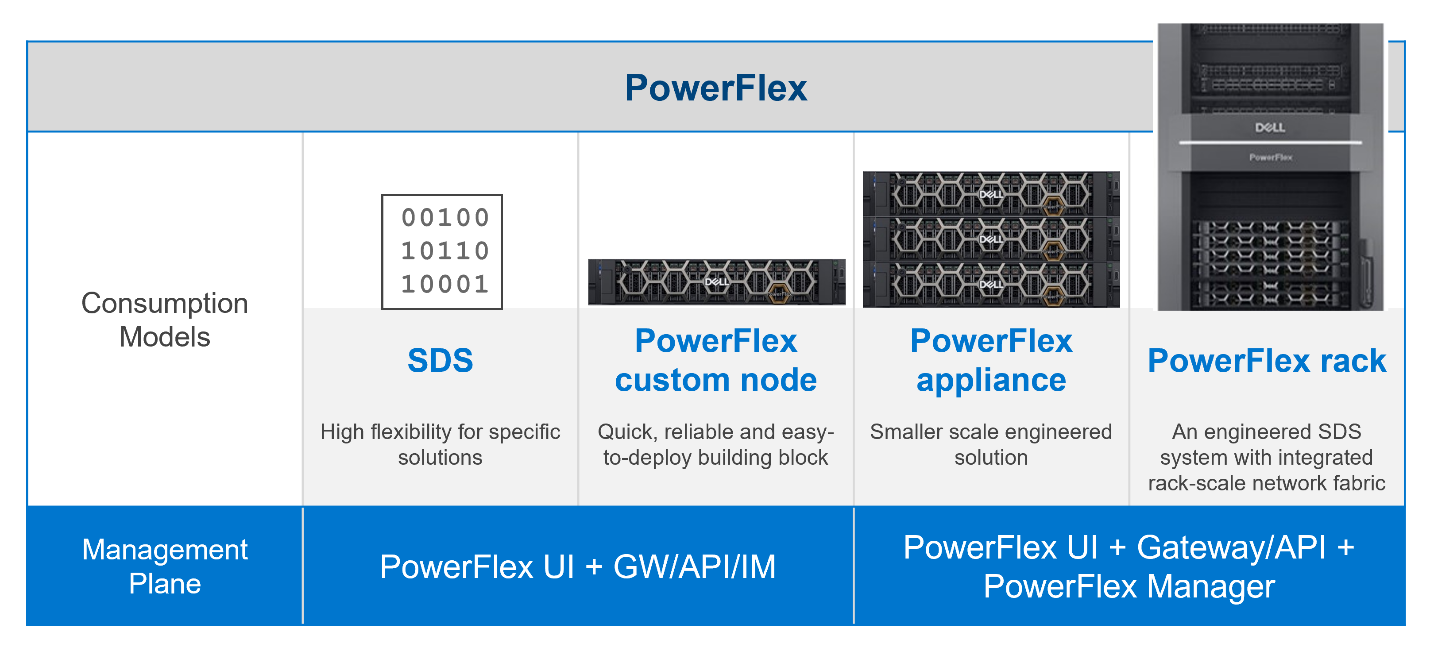

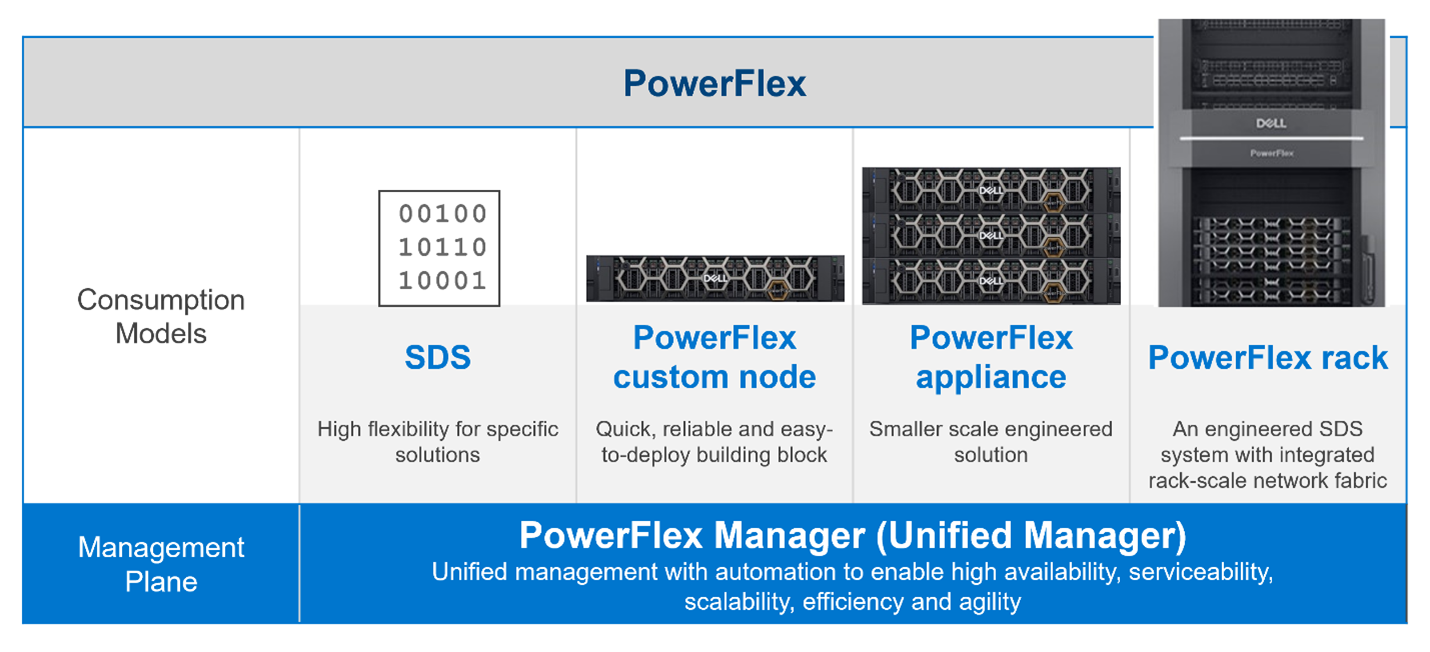

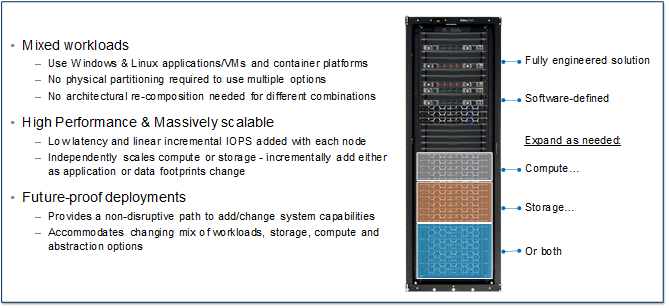

PowerFlex comes in four consumption options: PowerFlex rack, PowerFlex appliance, PowerFlex custom node, and APEX Block Storage for Public Cloud.

- PowerFlex rack is a fully engineered rack-scale system with integrated networking, management nodes, and an intelligent cabinet. As a turnkey solution with faster time-to-value, PowerFlex rack is hard to beat.

- PowerFlex appliance provides the same level of performance as PowerFlex rack but at a smaller starting point and with greater networking options to fit a wide variety of requirements.

- PowerFlex custom node is a DIY experience compared to PowerFlex rack or PowerFlex appliance, yielding the greatest configuration flexibility of all. Custom node deployments—as the name implies—are a node level offering and do not include integrated networking.

- APEX Block Storage for Public Cloud is a deployment of the Dell software-defined block storage in the public cloud. It provides higher performance and resiliency beyond what is available with native public cloud providers.

Figure 1. PowerFlex consumption options

Flexible architecture

There are three building blocks that give PowerFlex its power.

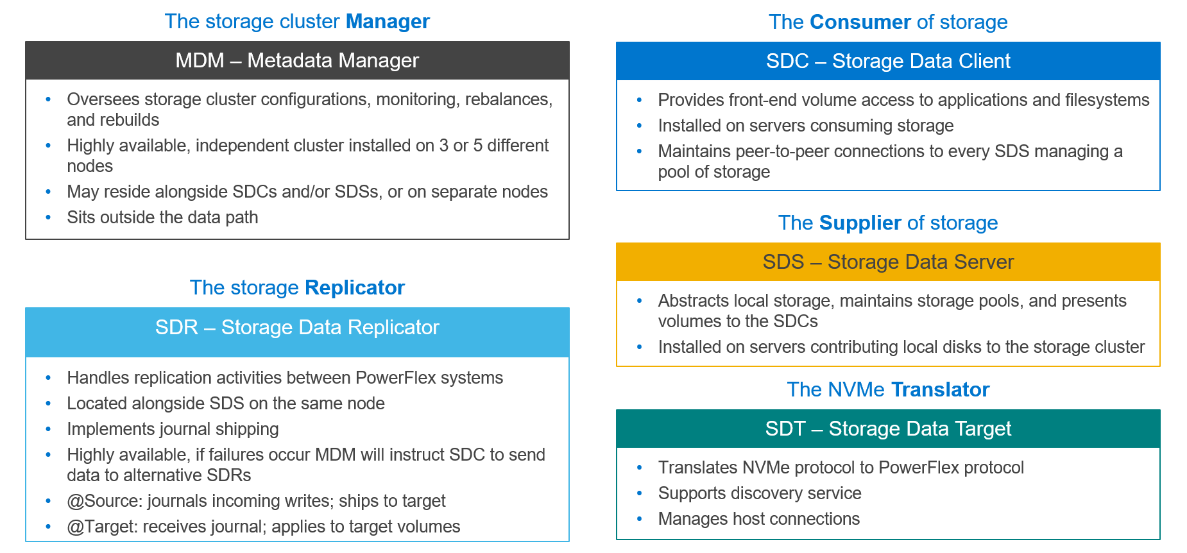

The first is the storage cluster manager called the Meta Data Manager (MDM), which sits outside of the data path. The MDM is a highly available, tightly coupled software cluster of three or five nodes, which has a supervisory role monitoring system health, managing the configuration, and coordinating the rebuilding and protection of data.

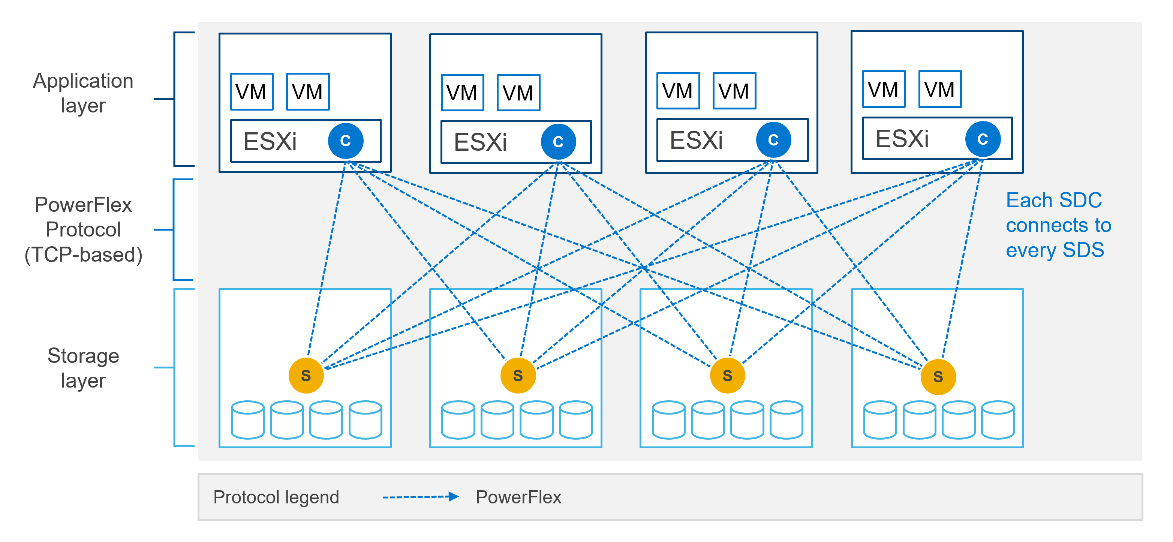

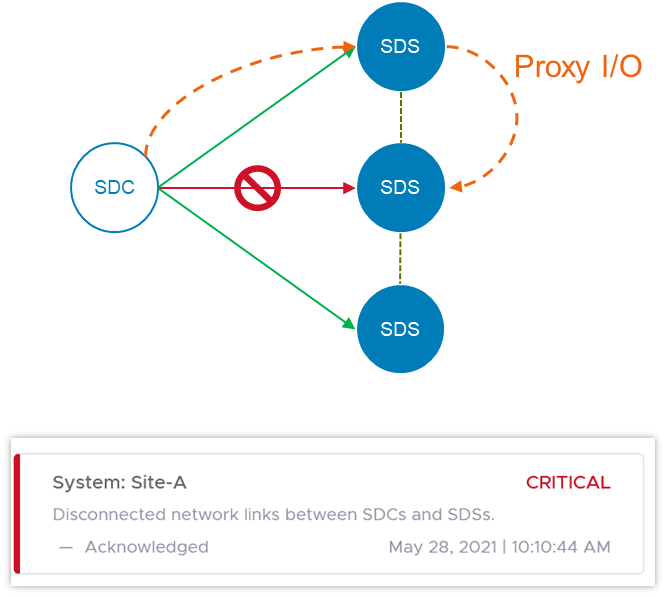

The second software component is the storage creator also known as the Storage Data Server (SDS). The SDS abstracts the local storage in each node into one or more storage pools and presents the volumes that have been provisioned from its local storage to the storage consumer.

The third component is the storage consumer called the Storage Data Client (SDC). The SDC is installed on the application node and presents the PowerFlex volume as a block device to the operating system.

Figure 2. PowerFlex building blocks

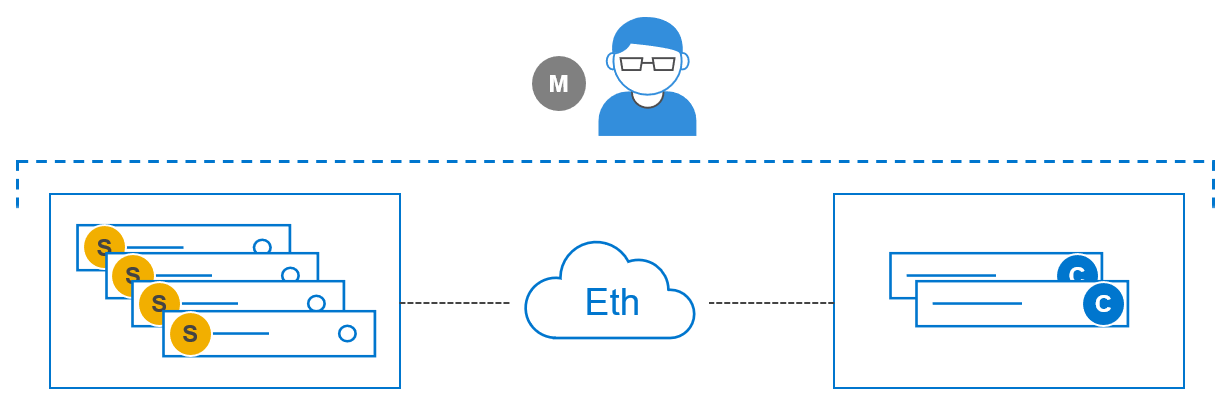

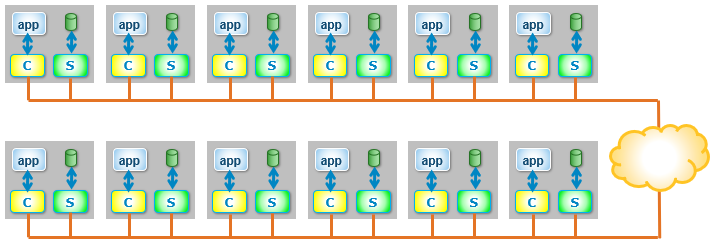

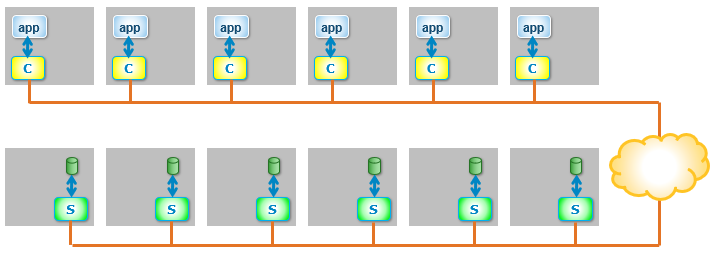

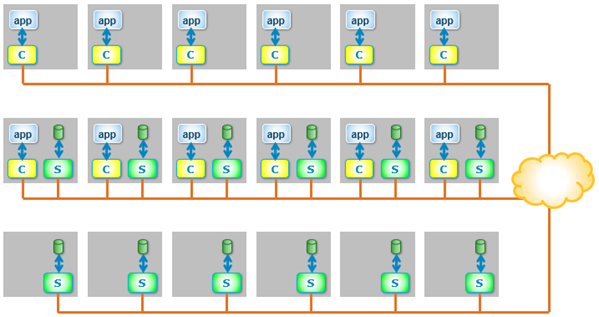

These pieces of software can be installed on the nodes in almost any combination. How they are installed defines the role of the node in a PowerFlex system as well as the type of deployment. The following figure shows a two-layer system with a set of four storage nodes (SDS) and two compute nodes (SDC).

Figure 3. Two-Layer configuration

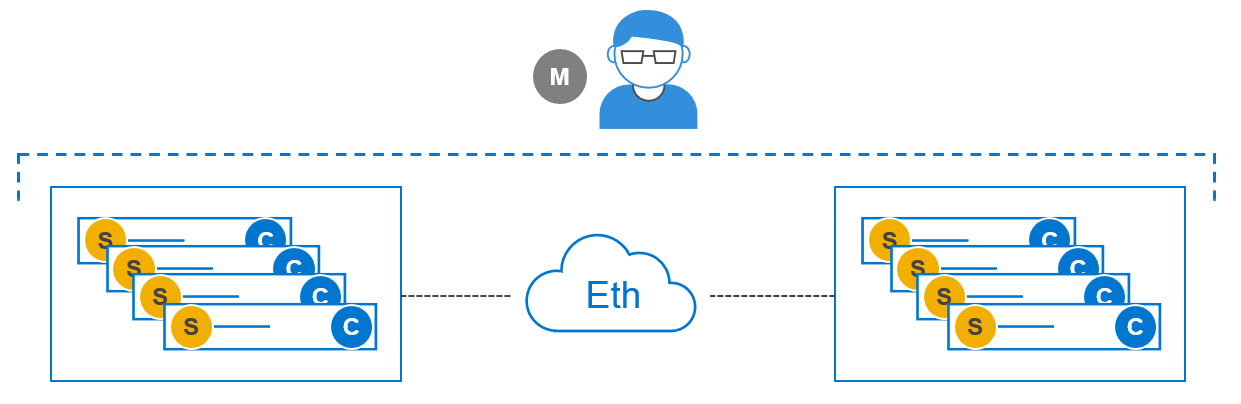

An advantage of the two-layer deployment is that we can scale the storage and compute independently. Additionally, it reduces license costs on application and compute environments that license by CPU core count. The SDC and SDS can also be installed on the same node to create an HCI deployment as shown in Figure 4, reducing complexity and resulting in increased operational efficiencies.

Figure 4. HCI configuration

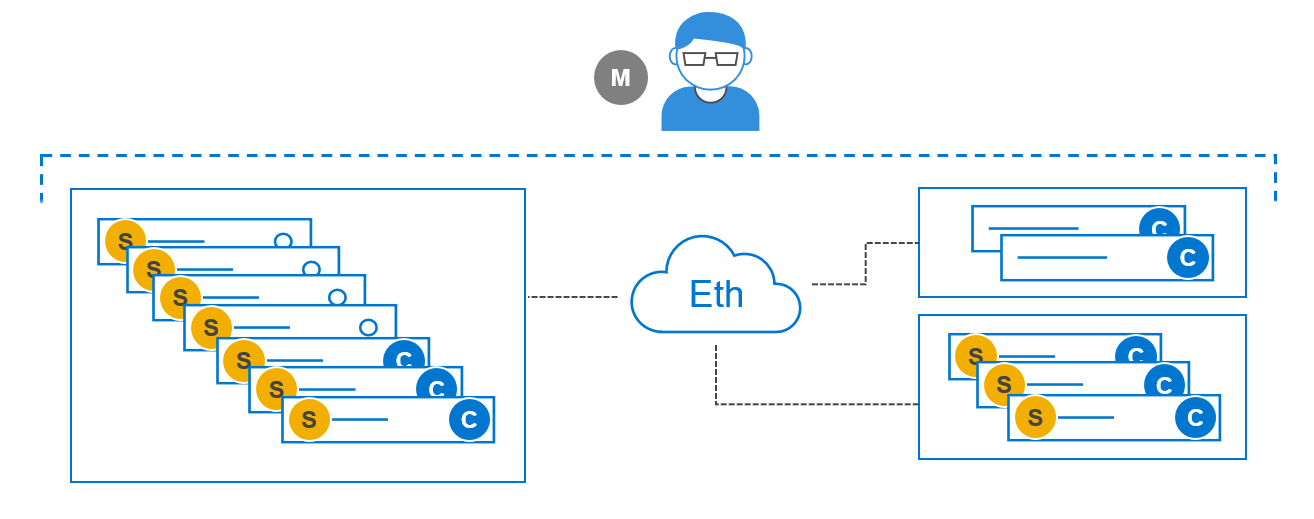

We can also mix two-layer and HCI, all in a single PowerFlex system, as shown in Figure 5.

Figure 5. Mixed – Two-Layer and HCI

As you can see from the available consumption and deployment options, PowerFlex provides extreme flexibility, but it does not end there. PowerFlex boasts broad support for compute operating environments and applications, including Windows and many Linux distributions, and multiple hypervisor environments. PowerFlex is also a fantastic platform for containerized cloud native applications.

Flexibility evolved

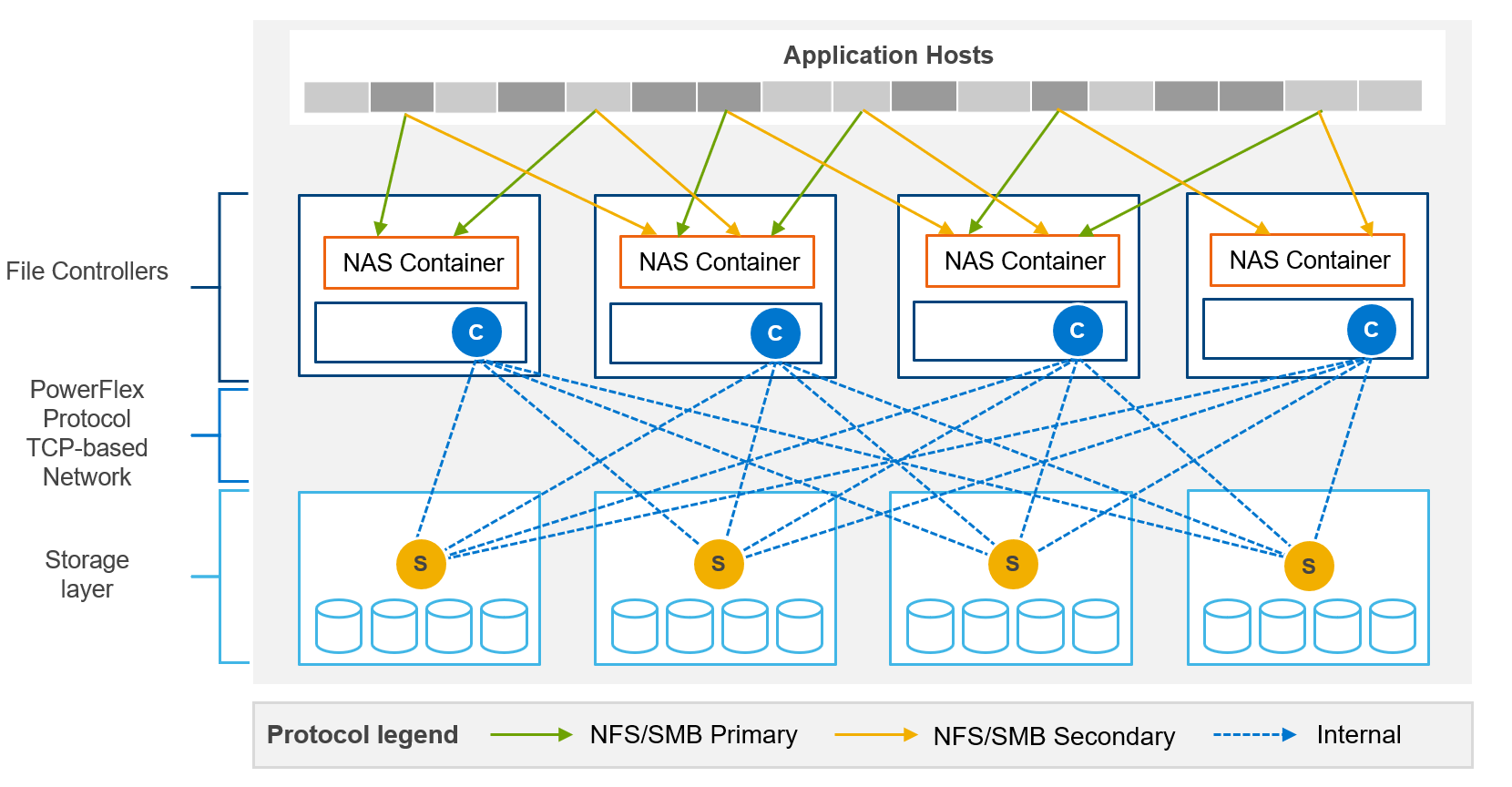

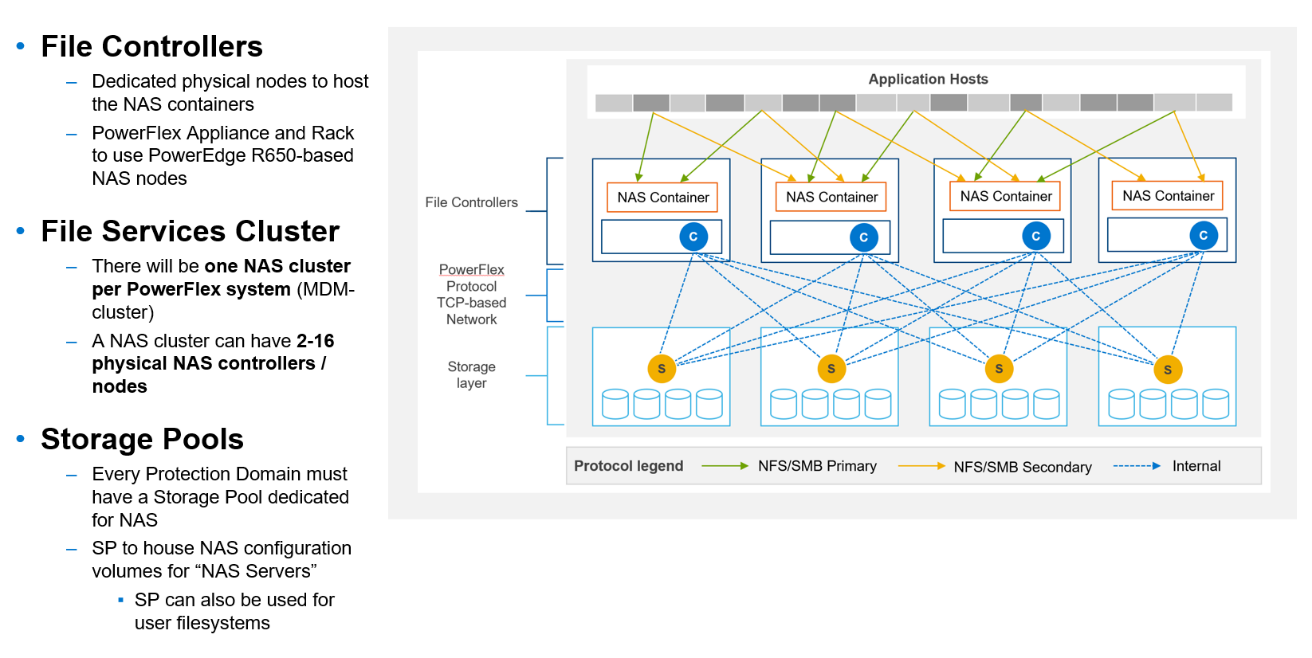

PowerFlex is a continually evolving solution. The most recent steps in the evolution are file services, as illustrated in Figure 6, and NVMe/TCP support for front-end (application) connectivity.

PowerFlex file services use physical nodes for NAS controllers and are similar to compute nodes. When the file service is deployed, a NAS container and an SDC are installed on each dedicated file node. A single NAS cluster is supported per PowerFlex system (MDM cluster). The NAS cluster supports anything from two to sixteen physical NAS controller nodes.

The backend block storage supporting the NAS file system is PowerFlex block storage provisioned from a storage pool. Volumes are created within the selected storage pool for NAS metadata and for user file systems. PowerFlex file storage supports NFSv3/v4, SMBv2/v3, FTP, and SFTP.

Figure 6. File services

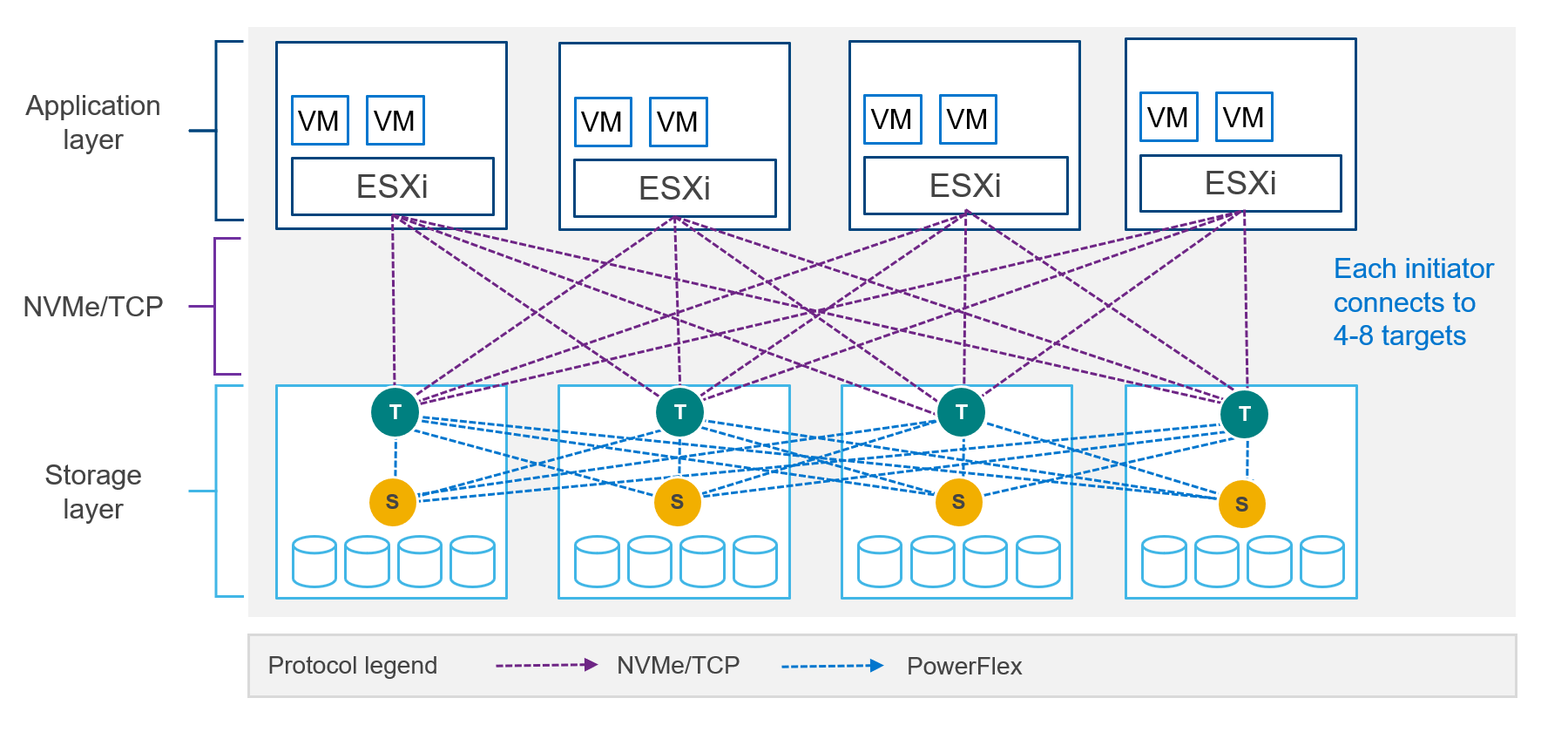

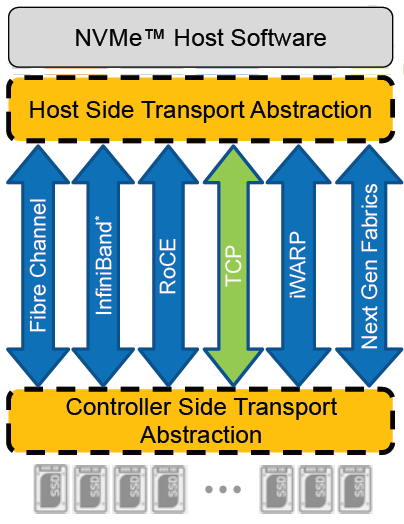

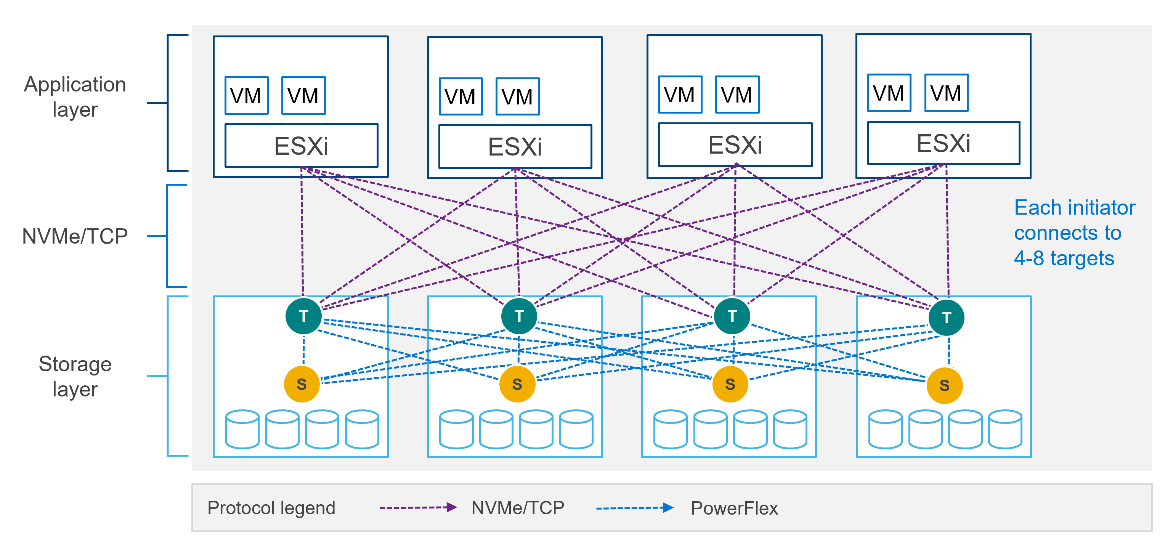

The NVMe specification was established in 2013 to address the issue of using fast, direct-attached nonvolatile storage media with slower interfaces such as SAS. In 2016, the standard was extended to include NVMe devices used over fabrics (NVMe-oF). The SDC is not compatible with the NVMe/TCP protocol; however, most operating system vendors have started to adopt NVMe/TCP natively. As such, a change in host connectivity was required to support NVMe/TCP connectivity, as illustrated in Figure 7.

I mentioned earlier that the SDC holds a map of the volume layout on the storage nodes. The map of the volume layout must be known so that reads and writes go to the appropriate SDS and device. Without an SDC, the mapping logic had to be moved from the compute node to the PowerFlex storage system. Likewise, the translation of the NVMe protocol used by the host to the proprietary PowerFlex protocol on the backend is another technical gap that needed to be filled.

Enter a new PowerFlex software module called the Storage Data Target (SDT). The SDT is installed on the storage nodes alongside the SDS and is responsible for translating the compute IO using NVMe protocol to the PowerFlex protocol. The map of the volume layout held by the SDC has been moved to the SDT.

Figure 7. NVMe/TCP host connectivity

NOTE: Dell PowerFlex and most Linux distributions support NVMe/TCP in tech preview only. Consult with your operating system vendor documentation and the latest PowerFlex documentation for updated information regarding NVMe/TCP support. NVMe/TCP connectivity between VMware ESXi and Dell PowerFlex is supported.

Unmatched scalability

PowerFlex has high growth potential and can scale to thousands of nodes. You can start as small as a four-node system and add nodes as business needs dictate. Furthermore, adding nodes is a nondisruptive operation. More detailed specifications can be found in the PowerFlex Specification sheet.

Software-defined infrastructure

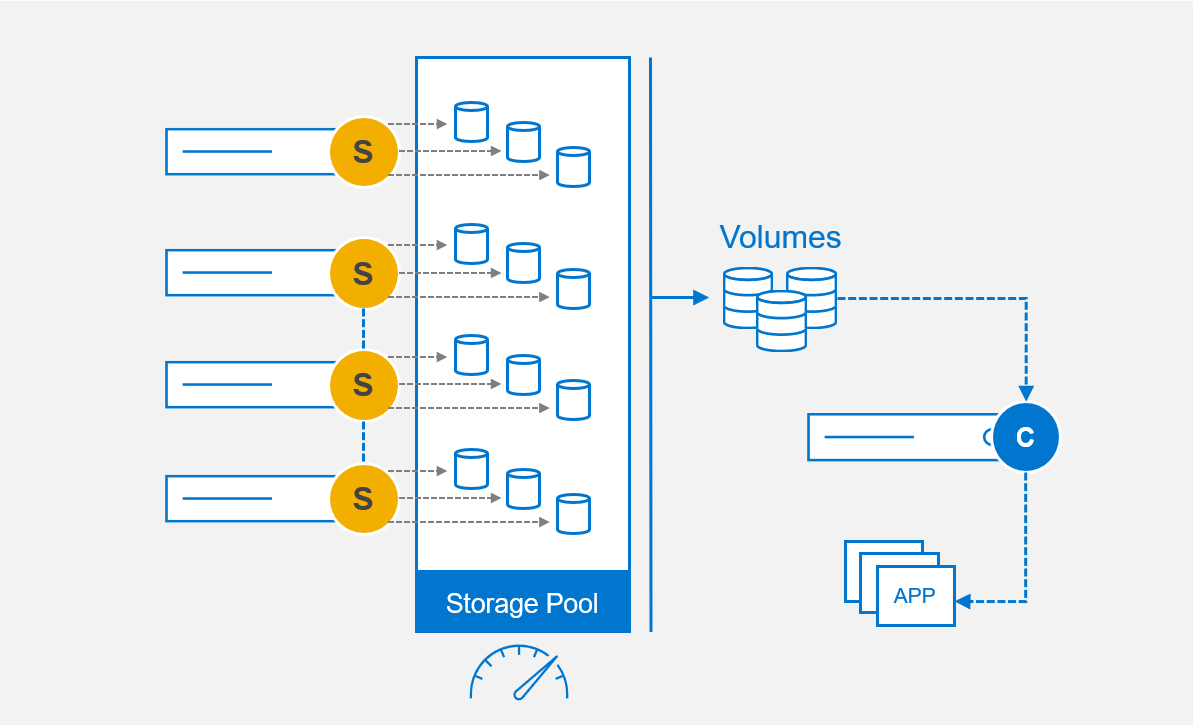

The SDS on each storage node abstracts the local disks and federates all of them into storage pools. In addition to aggregating the storage capacity, PowerFlex software also aggregates the performance capability of each node. For example, if one node has 20 TB of storage and can perform 100k IOPS, then two nodes provide 40 TB of storage and 200k IOPS.

Figure 8. Software-defined infrastructure
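The aggregation arithmetic is simple enough to sketch in a few lines of Python. This assumes ideal linear scaling, as in the 20 TB / 100k example above; the dictionary keys are illustrative names, not PowerFlex fields.

```python
def aggregate(nodes):
    """Sum capacity (TB) and IOPS across nodes, assuming ideal linear scaling."""
    total_tb = sum(n["capacity_tb"] for n in nodes)
    total_iops = sum(n["iops"] for n in nodes)
    return total_tb, total_iops


# One node with 20 TB and 100k IOPS, as in the example above.
node = {"capacity_tb": 20, "iops": 100_000}

print(aggregate([node] * 2))   # (40, 200000)
```

Real systems land near this line rather than exactly on it, which is why the measured results that follow are described as near linear.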

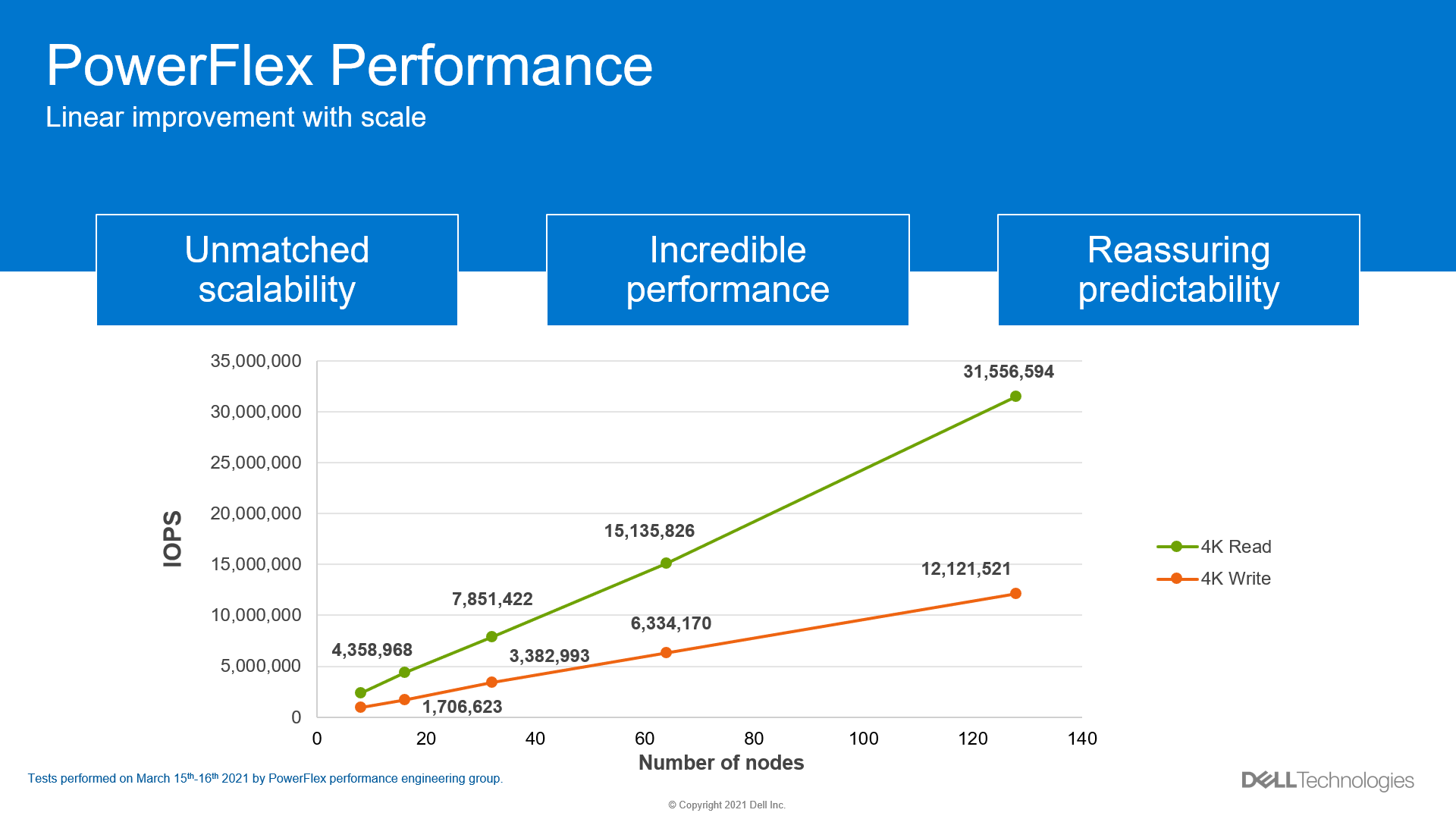

Internal testing at Dell has shown a near linear improvement in performance when adding nodes, as displayed in Figure 9, providing predictable gains when adding nodes to a PowerFlex system.

Figure 9. Linear improvement with IOPS

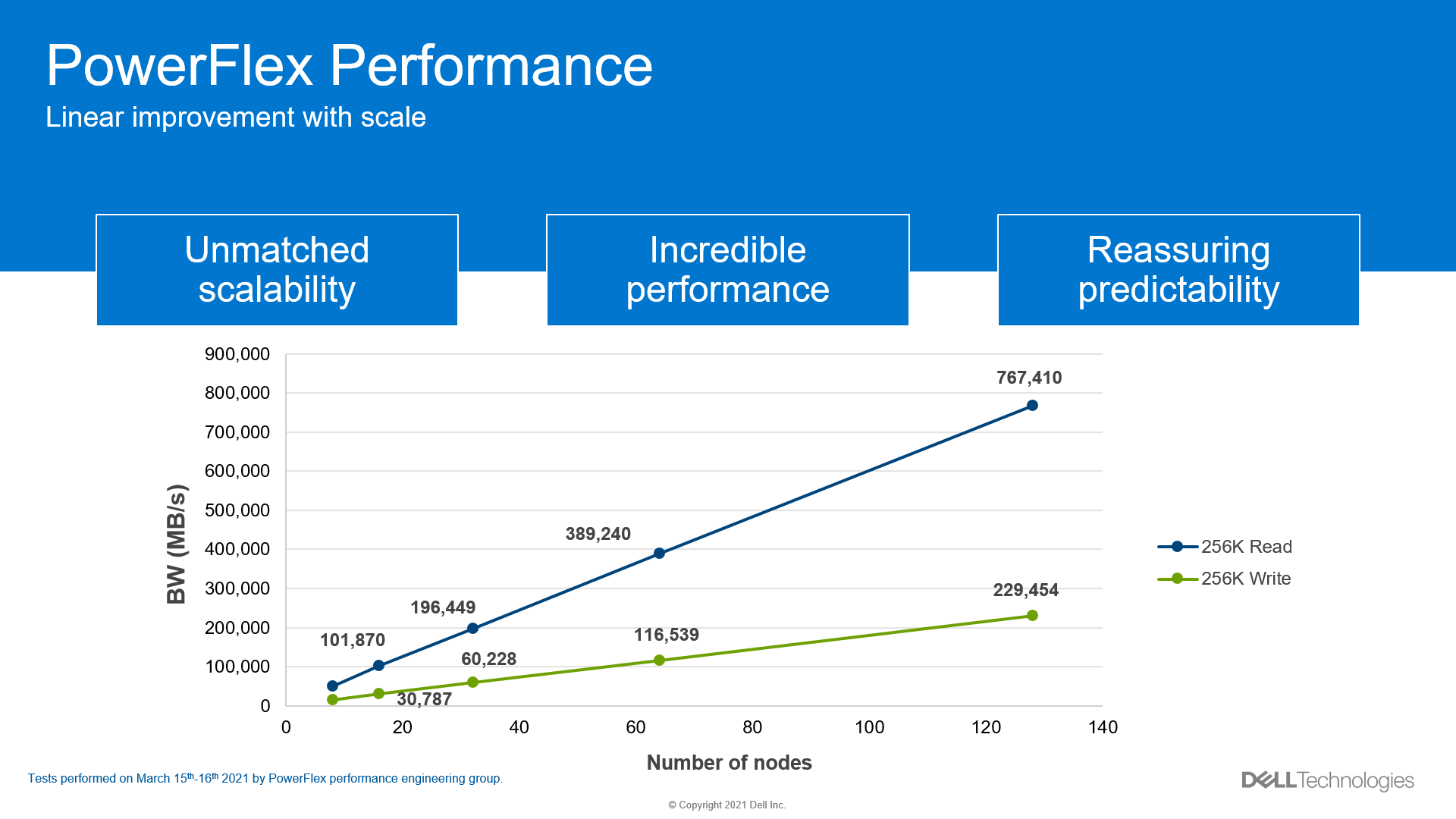

The same linear improvement observed with IOPS in PowerFlex is seen with throughput in Figure 10, all while maintaining submillisecond response times.

Figure 10. Linear improvement with throughput

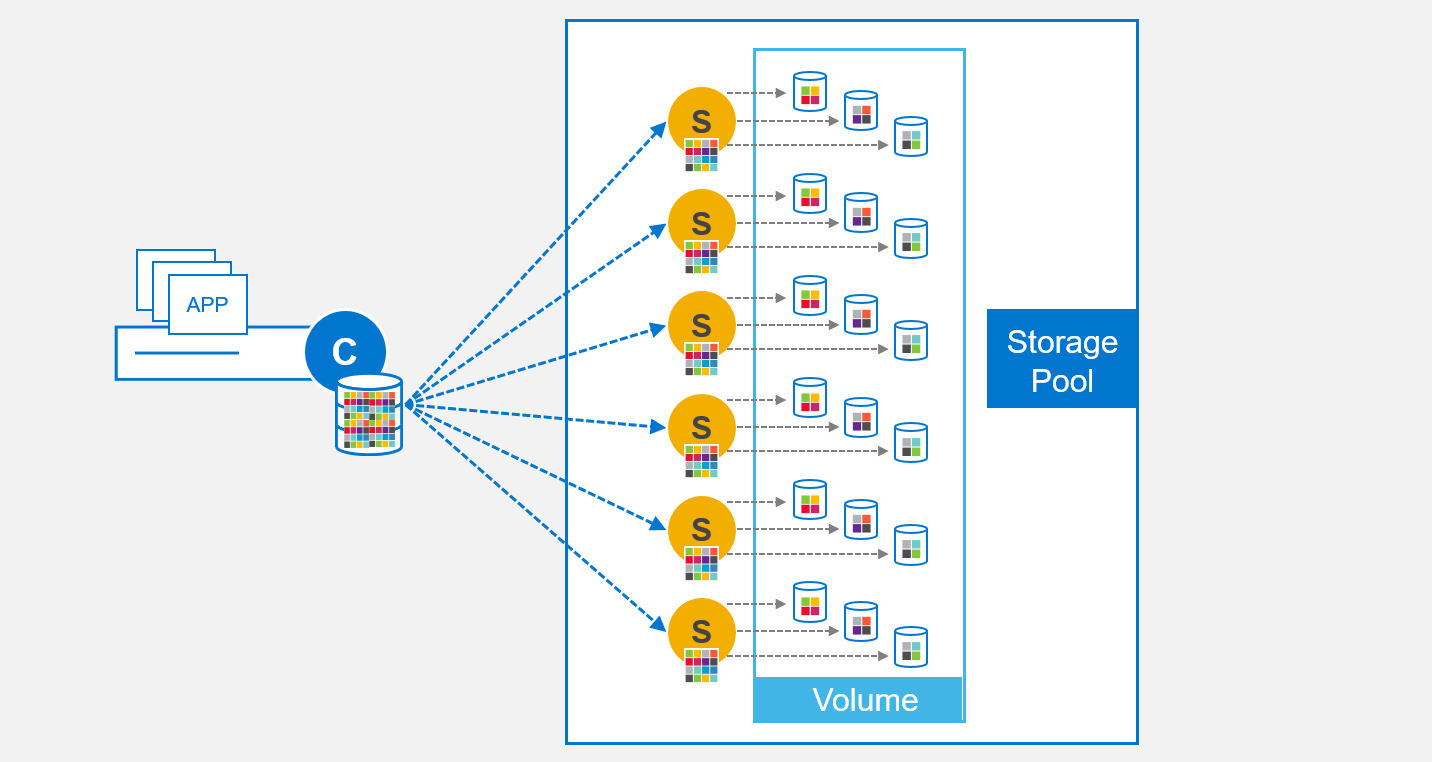

Perfect balance

The MDM determines how to lay out the volume address space in the storage pool when it is created, as illustrated in Figure 11. The MDM sends the data map to the SDSs that are contributing storage and to the SDC that is consuming the volume. Notably, the MDM distributes the volume address space evenly across every SDS and every hard drive that is contributing storage to the storage pool.

Figure 11. Volume addressing
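As a rough illustration of what "evenly across every SDS and every drive" means, here is a small Python sketch. The round-robin placement is an assumption chosen for simplicity, not the actual MDM layout algorithm, and the `sds-N` / `dev-N` names are made up.

```python
from collections import Counter


def build_volume_map(volume_chunks, devices):
    """Assign each chunk index to a (sds, device) pair in round-robin order.

    Round-robin is an illustrative assumption, not the real MDM algorithm.
    """
    return {chunk: devices[chunk % len(devices)] for chunk in range(volume_chunks)}


# Hypothetical pool: four SDS nodes with two drives each.
devices = [(f"sds-{s}", f"dev-{d}") for s in range(4) for d in range(2)]

volume_map = build_volume_map(1024, devices)
per_device = Counter(volume_map.values())

# Every device ends up holding the same number of chunks.
print(sorted(per_device.values()))
```

A client holding this map can work out which SDS and device own any chunk without asking a central service, which is why the map is shared with both the SDSs and the SDC.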

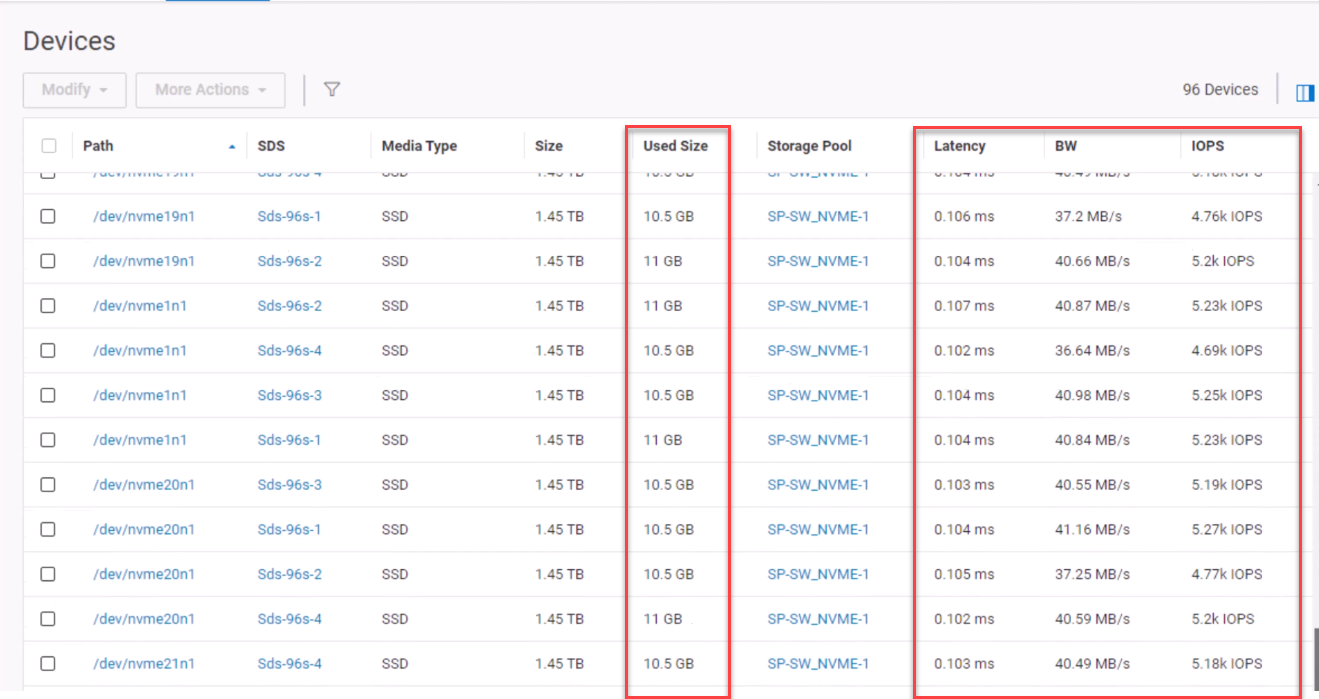

The MDM continually monitors resources and ensures that there are no hot spots in the system. The SDSs communicate with each other over the backend mesh when an imbalance is detected and begin the process of rebalancing. This balancing act ensures capacity is evenly distributed across the backend devices and performance is distributed across the backend mesh, the result of which is displayed in Figure 12. Note that the rebalance is a background process and does not impact production IO.

Figure 12. Balanced devices

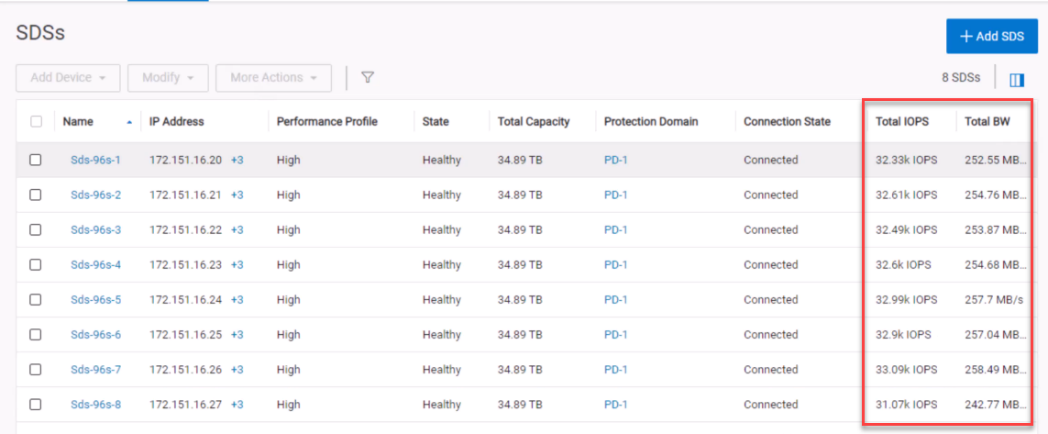

PowerFlex also ensures that reads and writes to the volume are balanced across the SDSs in the storage pool.

Figure 13. Balanced SDSs

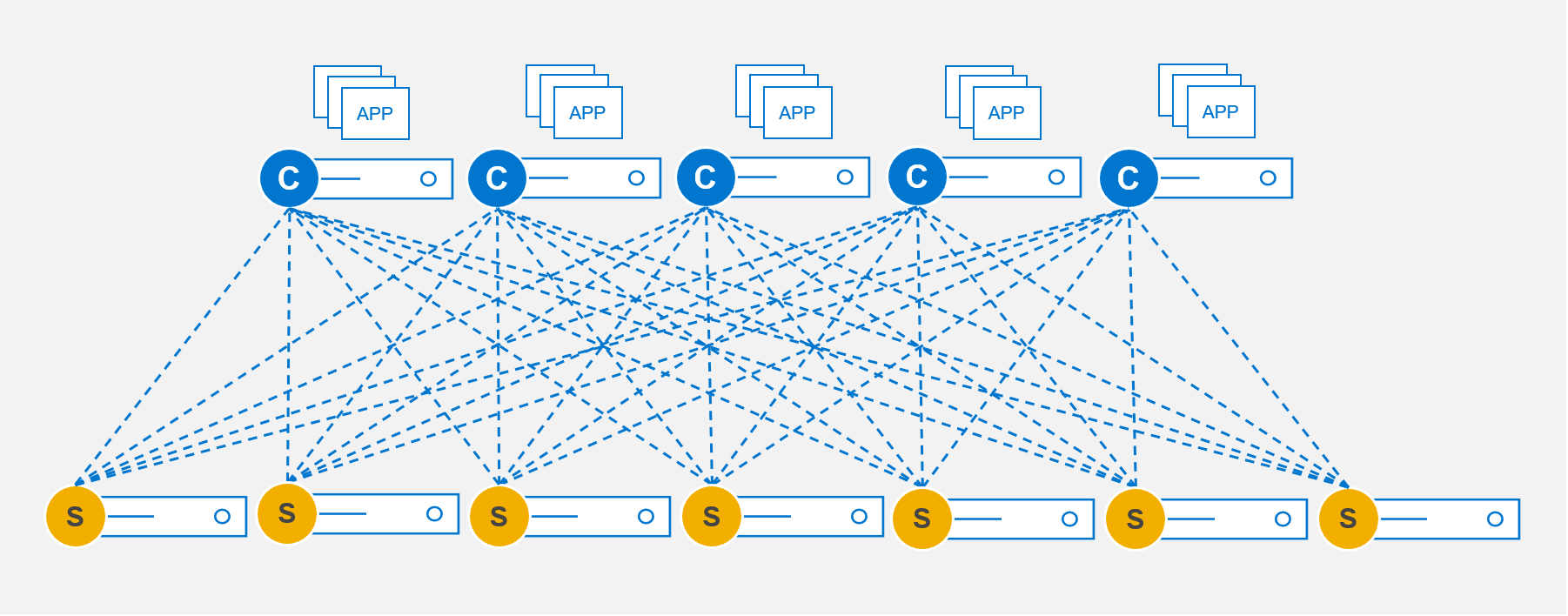

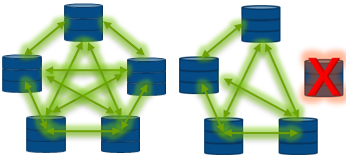

An SDC will use every SDS over a client/server mesh, illustrated in Figure 14. The SDC has automatic multipathing to each of the SDSs, ensuring IOPS are balanced over the front end. This massively parallel architecture ensures maximum throughput while minimizing latency.

Figure 14. Client-Server mesh

Intelligent resiliency

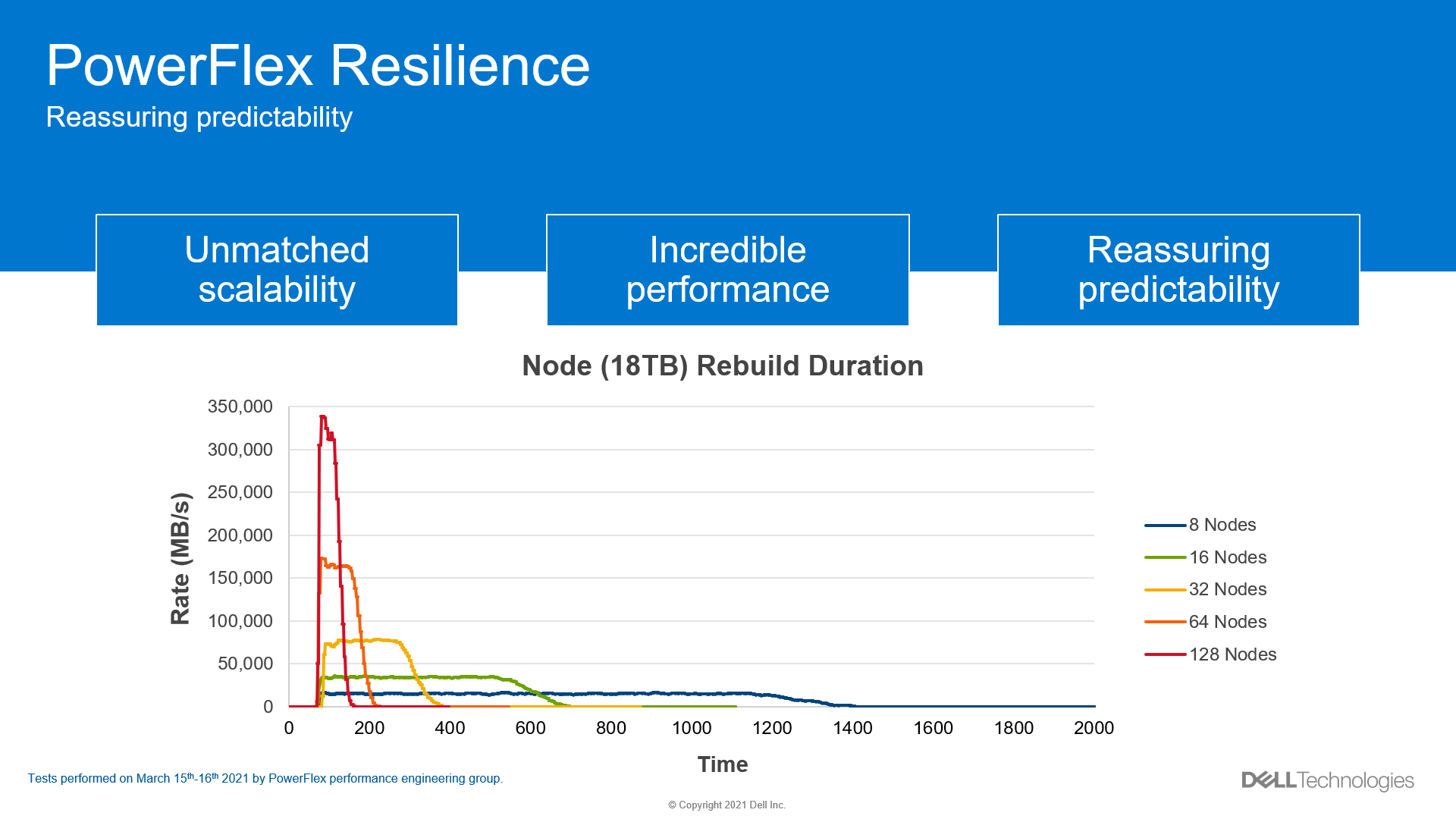

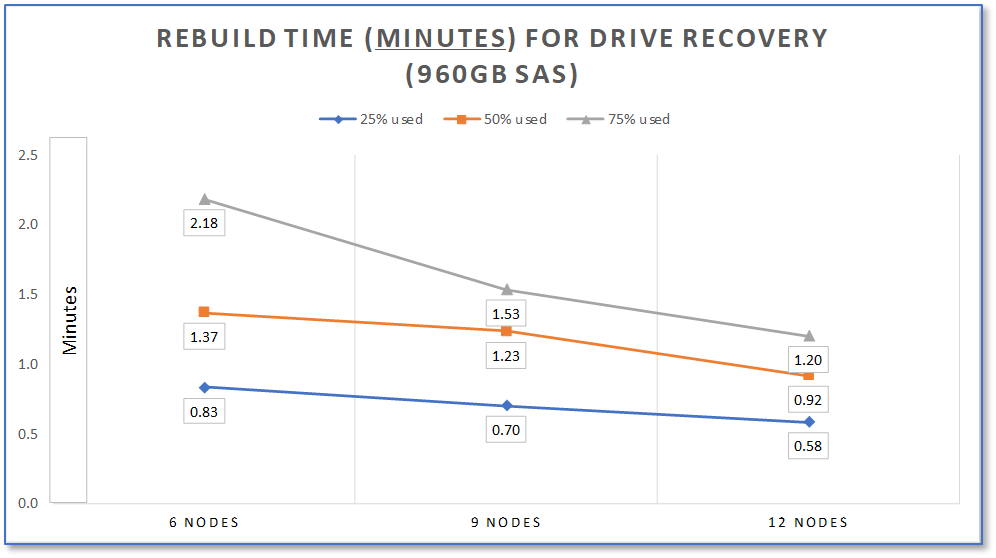

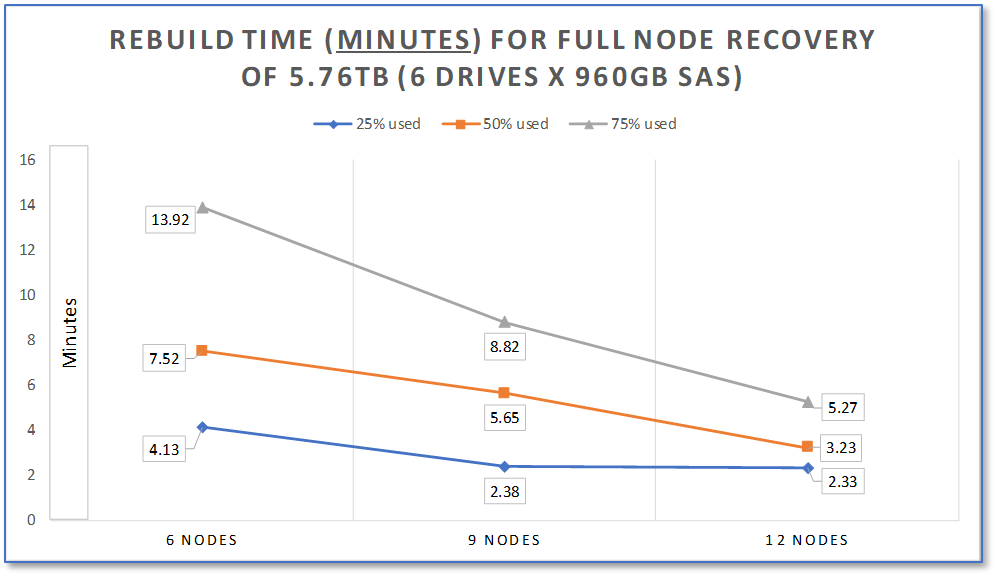

The mesh technology in PowerFlex that gives it incredible performance is also the foundation of its outstanding resiliency. If a drive or node fails, the SDSs will use the same mechanism described in the previous section to rebalance and rebuild data, ensuring six nines (99.9999%) of availability[1]. PowerFlex can reprotect the data in seconds after a drive failure and in minutes after a node failure. The following figure shows how the rebuild duration improves with scale.

Figure 15. Rebuild duration
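For a sense of scale, the availability figure quoted above can be turned into a yearly downtime budget. This is plain arithmetic rather than anything PowerFlex-specific, and it assumes a 365-day year.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000, ignoring leap years


def max_downtime_seconds(availability):
    """Yearly downtime budget implied by an availability fraction."""
    return (1 - availability) * SECONDS_PER_YEAR


# Six nines of availability leaves roughly half a minute per year.
print(round(max_downtime_seconds(0.999999), 1))   # 31.5
```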

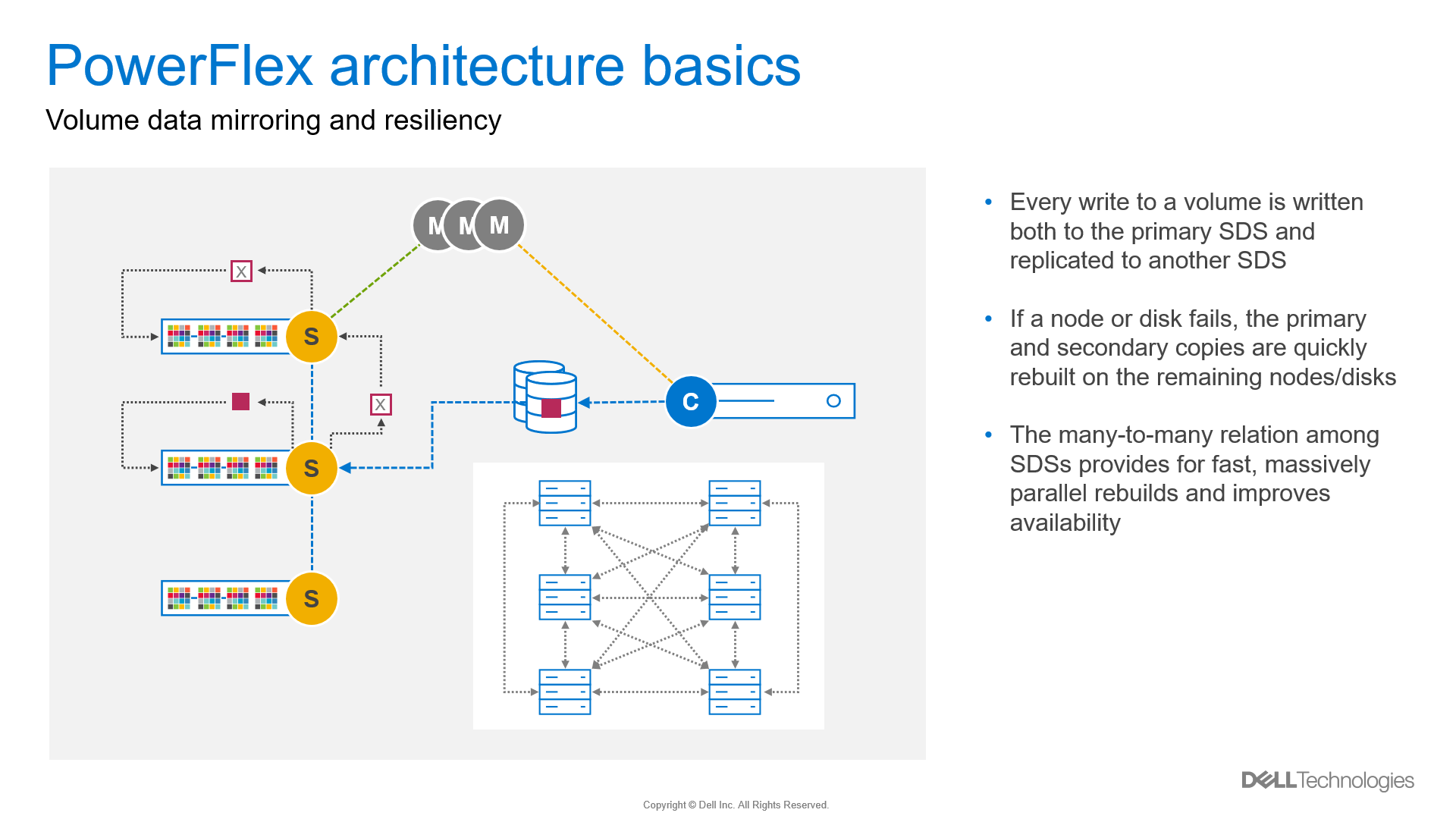

PowerFlex does not use any type of hardware disk-level RAID protection. Instead, on write operations, the SDC sends a chunk of data to an SDS (primary). The SDS then writes the data to a hard drive on the local node. The SDS also ships the chunk to a second SDS (secondary) node which then writes the data to a disk on that node.

Figure 16. Data mirroring
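The write path described above can be sketched as a toy model in Python. The class and method names are hypothetical, and returning the acknowledgment only after both copies are written is an assumption of this sketch, since the text does not spell out when the SDC is acknowledged.

```python
class SDS:
    """Toy storage server: a name plus a dict standing in for local drives."""

    def __init__(self, name):
        self.name = name
        self.disk = {}  # chunk_id -> data held on this node

    def write_primary(self, chunk_id, data, secondary):
        self.disk[chunk_id] = data                  # local write on the primary
        secondary.write_secondary(chunk_id, data)   # ship the chunk to the mirror
        return "ack"                                # assumption: ack after both copies

    def write_secondary(self, chunk_id, data):
        self.disk[chunk_id] = data


def sdc_write(chunk_id, data, primary, secondary):
    """The client sends the chunk to the primary SDS only."""
    return primary.write_primary(chunk_id, data, secondary)


sds_a, sds_b = SDS("sds-a"), SDS("sds-b")
sdc_write(7, b"payload", primary=sds_a, secondary=sds_b)
print(sds_a.disk[7] == sds_b.disk[7])
```

Because the two copies always live on different nodes, losing a single drive or node still leaves one good copy to rebuild from.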

What about planned outages for maintenance? PowerFlex gives administrators three maintenance mode options, each of which is nondisruptive.

The first is Node removal, which is a graceful removal of a single node. PowerFlex does a many-to-many rebalance of data among the remaining nodes during a node removal operation. Data is fully protected, and the PowerFlex system remains operational. The system capacity and performance potential will be reduced when the operation is initiated. This mode is typically used to permanently remove a node from the cluster.

Instant Maintenance Mode (IMM) is designed for quick entry and exit from the maintenance operation. IMM is ideal for rolling upgrades where the maintenance window is short. Data on the node is unavailable and not rebalanced to other nodes in the cluster when a node is placed in IMM. Applications accessing data during IMM are directed to other nodes containing the copy of data. Writes are tracked, and when the node exits IMM, the changes are written to the node in question.

The last mode is called Protected Maintenance Mode (PMM), which provides similar data availability to the other maintenance modes without the single copy exposure risk of IMM. As with the node removal operation, when a node is placed into PMM, PowerFlex will perform a many-to-many copy rebalance to the other nodes. Data on the node placed in PMM is unavailable upon entering and during PMM. Work cannot begin on the node until the copy is complete. Entering PMM takes longer to ensure the data on the node is copied to other storage nodes.

As with IMM, writes affecting the node are tracked, and once the node exits PMM, the updates are written to the node. For more detailed information about PowerFlex maintenance modes, check out this white paper.
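The write tracking that both IMM and PMM rely on can be illustrated with a small Python model. This is a conceptual sketch only: the `MirroredPair` class is hypothetical and reduces the cluster to one node in maintenance and one peer holding the other copy.

```python
class MirroredPair:
    """Two copies of each chunk; node_a can be taken offline for maintenance."""

    def __init__(self):
        self.node_a = {}
        self.node_b = {}
        self.a_in_maintenance = False
        self.tracked = set()  # chunks changed while node_a was offline

    def write(self, chunk_id, data):
        self.node_b[chunk_id] = data
        if self.a_in_maintenance:
            self.tracked.add(chunk_id)      # remember what node_a missed
        else:
            self.node_a[chunk_id] = data

    def enter_maintenance(self):
        self.a_in_maintenance = True

    def exit_maintenance(self):
        for chunk_id in self.tracked:       # replay only the changed chunks
            self.node_a[chunk_id] = self.node_b[chunk_id]
        self.tracked.clear()
        self.a_in_maintenance = False


pair = MirroredPair()
pair.write(1, "old")
pair.enter_maintenance()
pair.write(1, "new")
pair.write(2, "extra")
pair.exit_maintenance()
print(pair.node_a == pair.node_b)
```

Only the chunks that changed are replayed on exit, which is what keeps leaving maintenance mode quick. The difference with PMM is that an additional copy is made before work starts, so the window where only one copy exists is avoided.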

Management and orchestration

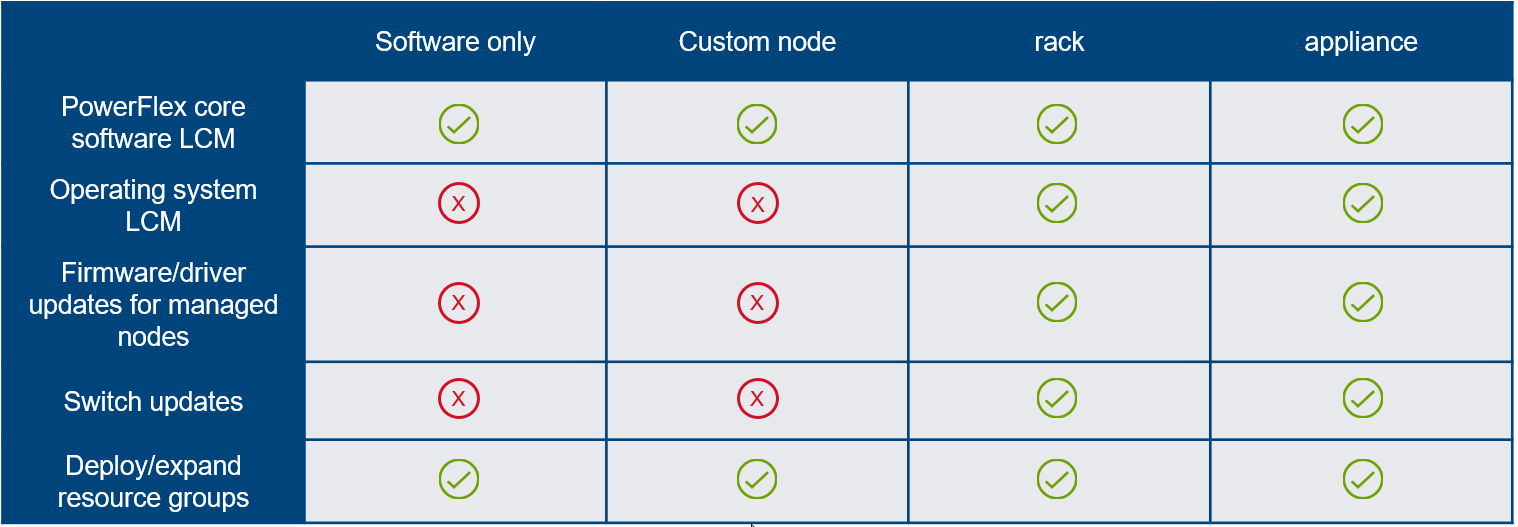

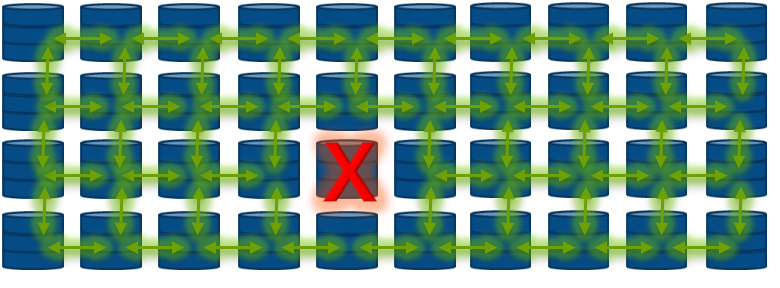

PowerFlex offers an extensive management and orchestration (M&O) ecosystem, starting with PowerFlex Manager. PowerFlex Manager is the unified management application for all PowerFlex consumption models, providing life cycle management, automation, and compliance of software and firmware for PowerFlex rack and appliance. PowerFlex Manager also automates life cycle management of core PowerFlex software for all consumption models.

Figure 17. PowerFlex Manager LCM

In addition to life cycle management, PowerFlex Manager reports on the health, capacity, and performance of PowerFlex hardware components and software and is the ingress point for the full-featured PowerFlex REST API.

Dell Technologies offers automation tools for PowerFlex such as a Python SDK, Ansible modules, and a Terraform provider. Looking to place containerized workloads on PowerFlex? Dell Technologies provides a Container Storage Interface (CSI) driver and Container Storage Modules (CSM) for managing a Kubernetes infrastructure on PowerFlex. Want more information about this topic? Head over to GitHub.

Conclusion

I could continue about the PowerFlex DNA, but I think we can wrap up for now. Stay tuned for more to follow in a future blog. If you are looking for more information in the meantime, head over to Dell Technologies Info Hub where you will find great technical resources such as white papers, reference architectures, solution briefs, and videos.

Resources

Dell PowerFlex YouTube Channel

From Chaos to Order Unifying Silos infographic

Dell Technologies GitHub Repository

Dell PowerFlex: Maintenance Modes

Author: Roy Laverty, Principal Technical Marketing Engineer

LinkedIn: https://linkedin.com/in/roy-laverty

Twitter: @RoyLaverty

[1] Availability claims based on internal Dell testing. (Source: Dell PowerFlex - Unbounded software-defined infrastructure platform.)

VMware Explore, PowerFlex, and Silos of Glitter: this blog has it all!

Fri, 18 Aug 2023 19:30:20 -0000

Those who know me are aware that I’ve been a big proponent of the idea that one platform must be able to support multiple workloads, and Dell PowerFlex can. If you are at VMware Explore, you can see a live demo of both traditional database workloads and AI workloads running on the same four PowerFlex nodes.

When virtualization took the enterprise by storm, a war was started against silos. First came servers, and the idea that we can consolidate them on a few large hosts with virtualization. This then rapidly moved to storage and continued to blast through every part of the data center. Yet today we still have silos. Mainly in the form of workloads, these hide in plain sight, disguised with other names like “departmental,” “project,” or “application group.”

Some of these workload silos are becoming even more stealthy and operate under the guise of needing “different” hardware or performance, so IT administrators allow them to operate in a separate silo.

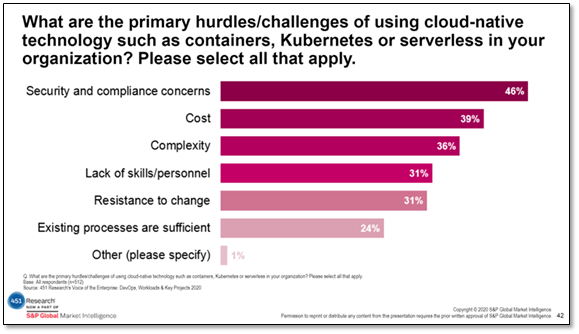

That is wasteful! It wastes company resources, it wastes the opportunity to do more, and it wastes your time managing multiple environments. It has become even more of an issue with the rise of Machine Learning (ML) and AI workloads.

If you are at VMware Explore this year you can see how to break down these silos with Dell PowerFlex at the Kioxia booth (Booth 309). Experience the power of running ResNet 50 image classification and OLTP (Online Transactional Processing) workloads simultaneously, live from the show floor. And if that’s not enough, there are experts, and lots of them! You might even get the chance to visit with the WonderNerd.

This might not seem like a big deal, right? You just need a few specialty systems, some storage, and a bit of IT glitter… some of the systems run the databases, some run the ML workloads. Sprinkle some of that IT glitter and poof you’ve got your workloads running together. Well sort of. They’re in the same rack at least.

Remember: silos are bad. Instead, let’s put some PowerFlex in there! And put that glitter back in your pocket. This is a data center, not a five-year-old’s birthday party.

PowerFlex supports NVIDIA GPUs with MIG technology, which is part of NVIDIA AI Enterprise, so we can customize our GPU resources for the workloads that need them. (Yes, there is nothing that says you can’t run different workloads on the same hosts.) Plus, PowerFlex uses Kioxia PM7 series SSDs, so there are plenty of IOPS to go around while ensuring sub-millisecond latency for both workloads. This allows the data to be closer to the processing, maybe even on the same host.

In our lab tests, we could push one million transactions per minute (TPM) with OLTP workloads while also processing 6620 images per second using a ResNet-50 model built on NVIDIA NGC containers. These numbers are important if you want to keep customers happy, especially as more and more organizations add AI/ML capabilities to their online apps, and more and more data is generated from all those new apps.

Here are the TPM results from the demo environment that is running our four SQL VMs. The TPMs in this test are maxing out around 320k and the latency is always sub-millisecond. This is the stuff you want to show off, not that pocket full of glitter.

Yeah, you can silo your environments and hide them with terms like “project” and “application group,” but everyone will still know they are silos.

We all started battling silos at the dawn of virtualization. PowerFlex with Kioxia drives and NVIDIA GPUs gives administrators a fighting chance to win the silo war.

You can visit the NVIDIA team at Lounge L3 on the show floor during VMware Explore. And of course, you have to stop by the Kioxia booth (309) to see what PowerFlex can do for your IT battles. We’ll see you there!

Author: Tony Foster

Location: The Land of Oz [-6 GMT]

Contributors: Kailas Goliwadekar, Anup Bharti

Managing Dell PowerFlex Licensing and Being Way Less Sad

Mon, 24 Jul 2023 21:20:14 -0000

Imagine there was an easy way to view and manage your Dell PowerFlex licenses. Wouldn’t that be nice? I know I’d be way less sad. Well guess what, I’m way less sad, and there’s a way to easily manage your PowerFlex licenses.

I was on a call today with one of our product managers. He was showing something really cool, and I just had to share it with everyone. You can go into CloudIQ and view all your PowerFlex licenses.

You might think, “big deal, licenses.” You’re right! It is a big deal. Okay, a moderate-sized deal, but it makes me less sad. And here’s why. Have you ever had to track licenses for your environment in a spreadsheet? How about sharing that spreadsheet with everyone else on your team and hoping that no one accidentally removes too many rows or types in the wrong cell? Or maybe you have to correlate a license to how much capacity you’re using. I’m sure 90% of users love this method. What’s that I hear you yelling at your monitor, I’m wrong???

You’re correct, hardly anyone wants to track licenses that way. Why? Because it’s error-prone and difficult to manage, plus it’s not automated. Oh, and it’s licensing. Well, CloudIQ can help you address a lot of this, at least for your PowerFlex environment.

That’s right. You log in, click on the Entitlements and System Licenses option in the menu, and you can see all your entitlements for PowerFlex. With that you can see how many terabytes of capacity each license has as well as the start and end dates. It’s all there, no spreadsheets, no manual entry, it’s easy to manage. Maybe 90% of users would prefer this method over a spreadsheet. You can see this functionality in the screenshot below.

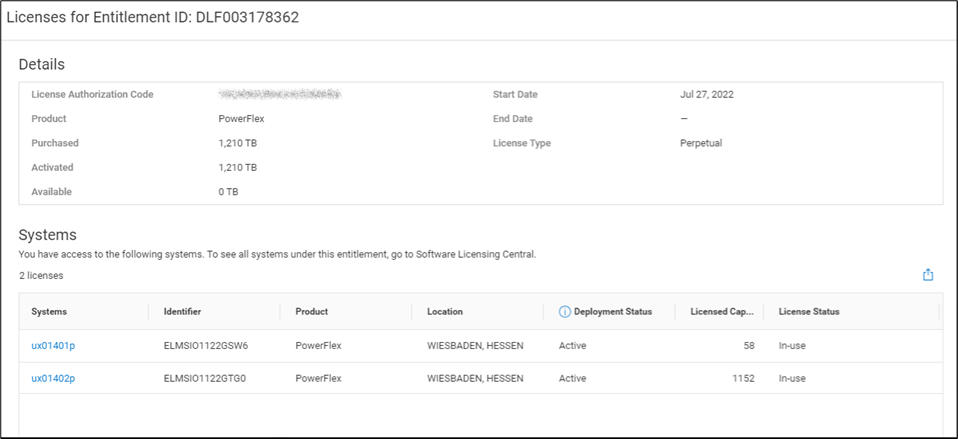

It gets better though…. Maybe you want to dig into the details of your environment and see how different licenses are being used. Maybe you are licensed for a petabyte of storage but you’re missing 50ish terabytes and want to see where they went. If you click on the details of an entitlement, you can see which systems are consuming capacity from the license. This makes it a lot easier than a spreadsheet to track down. You can see this in the following screenshot.

I’m sure it’s hard to get excited over licensing, but hopefully this makes you way less sad knowing you don’t have to try and track all this in a spreadsheet. Instead, you just log in to CloudIQ, then click on Entitlements and System Licenses. Poof, there it all is, in an easy-to-consume format. And for those who still want to manage their licenses in a spreadsheet, there’s an export option at the top of the table just for you. You can create pivot tables to your heart’s content. For everyone else, you’ve just unlocked a PowerFlex secret. Hopefully, like me, this makes you way less sad about licensing.

If you’re interested in finding out more about what you can do with licensing in CloudIQ, reach out to your Dell representative, who can guide you on all CloudIQ has to offer.

Author: Tony Foster

Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]

Can I do that AI thing on Dell PowerFlex?

Thu, 20 Jul 2023 21:08:09 -0000

The simple answer is Yes, you can do that AI thing with Dell PowerFlex. For those who might have been busy with other things, AI stands for Artificial Intelligence and is based on trained models that allow a computer to “think” in ways machines haven’t been able to do in the past. These trained models (neural networks) are essentially a long set of IF statements (layers) stacked on one another, and each IF has a ‘weight’. Once something has worked through a neural network, the weights provide a probability about the object. So, the AI system can be 95% sure that it’s looking at a bowl of soup or a major sporting event. That, at least, is my overly simplified description of how AI works. The term carries a lot of baggage as it’s been around for more than 70 years, and the definition has changed from time to time. (See The History of Artificial Intelligence.)
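To put a number on that "95% sure" idea, here is a deliberately tiny sketch in Python: a single layer of weights followed by a softmax that turns scores into probabilities. The features, weights, and labels are invented for illustration, and a real network such as an image classifier has many layers and millions of learned weights.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for numeric stability
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, weights, labels):
    """One weighted layer: score each label, then pick the most probable."""
    scores = [sum(w * x for w, x in zip(row, features)) for row in weights]
    probs = softmax(scores)
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], probs[best]


# Made-up example: two input features, two possible labels.
labels = ["bowl of soup", "sporting event"]
weights = [[3.0, 0.0], [0.0, 3.0]]

label, confidence = classify([1.0, 0.0], weights, labels)
print(label, f"{confidence:.0%}")
```

With these made-up numbers the model comes out about 95% sure it is looking at a bowl of soup. Training is the process of finding weights that make those probabilities line up with reality.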

Most recently, AI has been made famous by large language models (LLMs) for conversational AI applications like ChatGPT. Though these applications have stoked fears that AI will take over the world and destroy humanity, that has yet to be seen. Computers, even LLMs, still can do only what we humans tell them to do, and that means if something goes wrong, we, their creators, are ultimately to blame. (See ‘Godfather of AI’ leaves Google, warns of tech’s dangers.)

The reality is that most organizations aren’t building world-destroying LLMs. They are building systems to ensure that every pizza made in their factory has exactly 12 slices of pepperoni evenly distributed on top. Or maybe they are looking at loss prevention, or better traffic light timing, or they just want a better technical support phone menu. All of these are uses for AI, and each one is constructed differently (they use different types of neural networks).

We won’t delve into these use cases in this blog because we need to start with the underlying infrastructure that makes all those ideas “AI possibilities.” We are going to start with the infrastructure and what many now consider a basic (by today’s standards) image classifier known as ResNet-50 v1.5. (See ResNet-50: The Basics and a Quick Tutorial.)

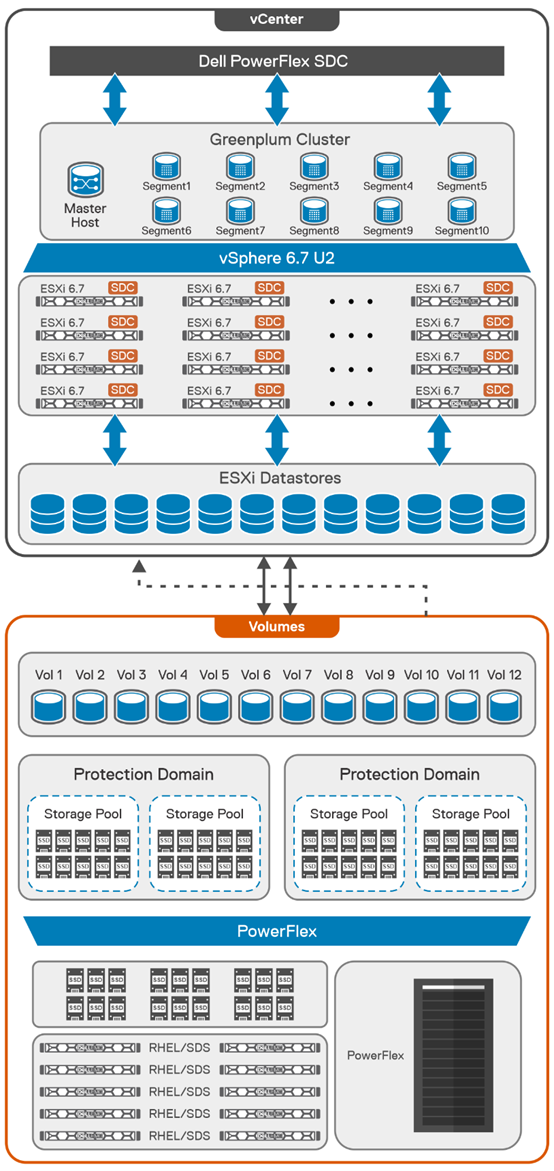

That’s also what the PowerFlex Solution Engineering team did in the validated design they recently published. This design details the use of ResNet-50 v1.5 in a VMware vSphere environment using NVIDIA AI Enterprise as part of a PowerFlex environment. They started out with the basics of how a virtualized NVIDIA GPU works in a PowerFlex environment. That’s what we’ll explore in this blog: getting started with AI workloads, not how you build the next AI supercomputer (though you could do that with PowerFlex as well).

In this validated design, they used the NVIDIA A100 (PCIe) GPU and virtualized it in VMware vSphere as a virtual GPU, or vGPU. With the infrastructure in place, they built Linux VMs to contain the ResNet-50 v1.5 workload and vGPUs. Beyond working with the traditional vGPUs that many may be familiar with, they also worked with NVIDIA’s Multi-Instance GPU (MIG) technology.

NVIDIA’s MIG technology allows administrators to partition a GPU into a maximum of seven GPU instances. Being able to do this provides greater control of GPU resources, ensuring that large and small workloads get the appropriate amount of GPU resources they need without wasting any.
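As a rough illustration of that partitioning, the sketch below checks whether a set of MIG-backed vGPU profiles would fit on one A100. It uses the profile names this post lists for the training tests (A100-7-40C, A100-4-20C, A100-3-20C, A100-2-10C, A100-1-5C) and assumes the name encodes the compute slices and the memory in GB. This is a simple budget check under those assumptions, not NVIDIA tooling. Real MIG placement has additional rules, so treat the NVIDIA Multi-Instance GPU User Guide as the authority on which combinations are supported.

```python
import re

# Assumed budget for one A100 40 GB card: seven compute slices (the MIG
# maximum mentioned above) and 40 GB of GPU memory.
MAX_COMPUTE_SLICES = 7
MAX_MEMORY_GB = 40

# Assumed naming scheme: A100-<compute slices>-<memory in GB>C
PROFILE_PATTERN = re.compile(r"^A100-(\d+)-(\d+)C$")


def parse_profile(name):
    """Split a name such as 'A100-3-20C' into (compute slices, memory GB)."""
    match = PROFILE_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized profile name: {name}")
    return int(match.group(1)), int(match.group(2))


def fits_on_one_gpu(profiles):
    """Return True if the profiles stay within the slice and memory budget."""
    total_slices = 0
    total_memory = 0
    for name in profiles:
        slices, memory_gb = parse_profile(name)
        total_slices += slices
        total_memory += memory_gb
    return total_slices <= MAX_COMPUTE_SLICES and total_memory <= MAX_MEMORY_GB


# One larger and one smaller workload sharing a GPU.
print(fits_on_one_gpu(["A100-4-20C", "A100-3-20C"]))
# Two of the larger profiles exceed the seven-slice budget.
print(fits_on_one_gpu(["A100-4-20C", "A100-4-20C"]))
```

The point of the check is the same as the point of MIG itself: each workload gets a fixed share of the GPU, and the shares have to add up to no more than the whole card.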

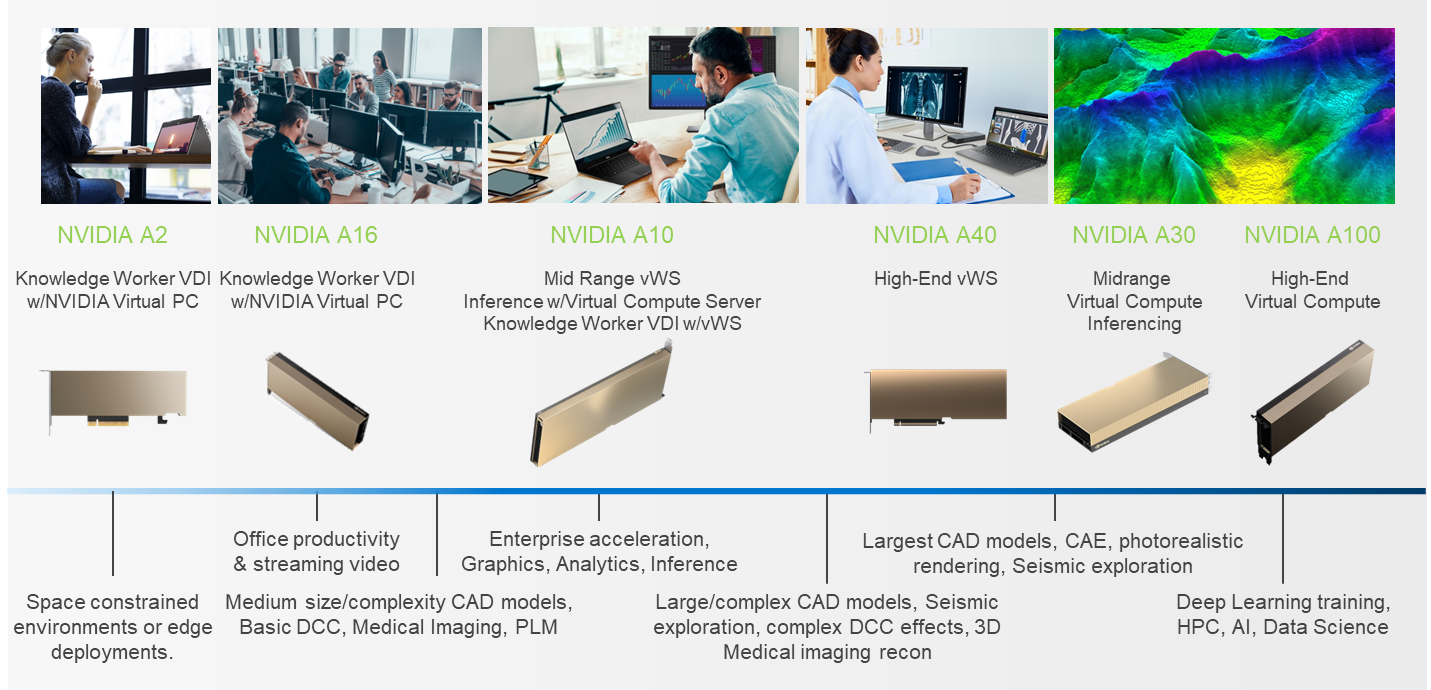

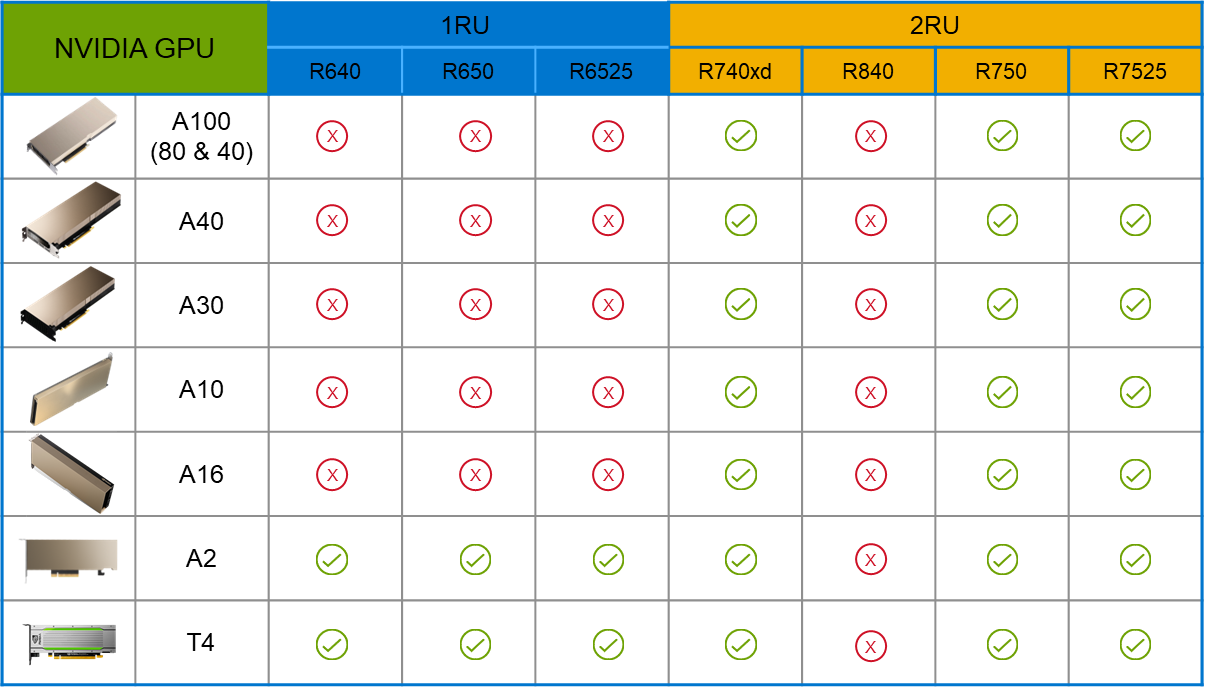

PowerFlex supports a large range of NVIDIA GPUs for workloads, from virtual desktop infrastructure (VDI) to high-end virtual compute workloads like AI. You can see this in the following diagram, where there are solutions for “space constrained” and “edge” environments, all the way to GPUs used for large inferencing models. In the table below the diagram, you can see which GPUs are supported in each type of PowerFlex node. This provides a tremendous amount of flexibility depending on your workloads.

The validated design describes the steps to configure the architecture and provides detailed links to the NVIDIA and VMware documentation for configuring the vGPUs and for the NVIDIA AI Enterprise licensing process.

These are key steps when building an AI environment. I know from my experience working with various organizations, and from teaching, that many are not used to working with vGPUs in Linux. This is slowly changing in the industry. If you haven’t spent a lot of time working with vGPUs in Linux, be sure to pay attention to the details provided in the guide. It is important and can make a big difference in your performance.

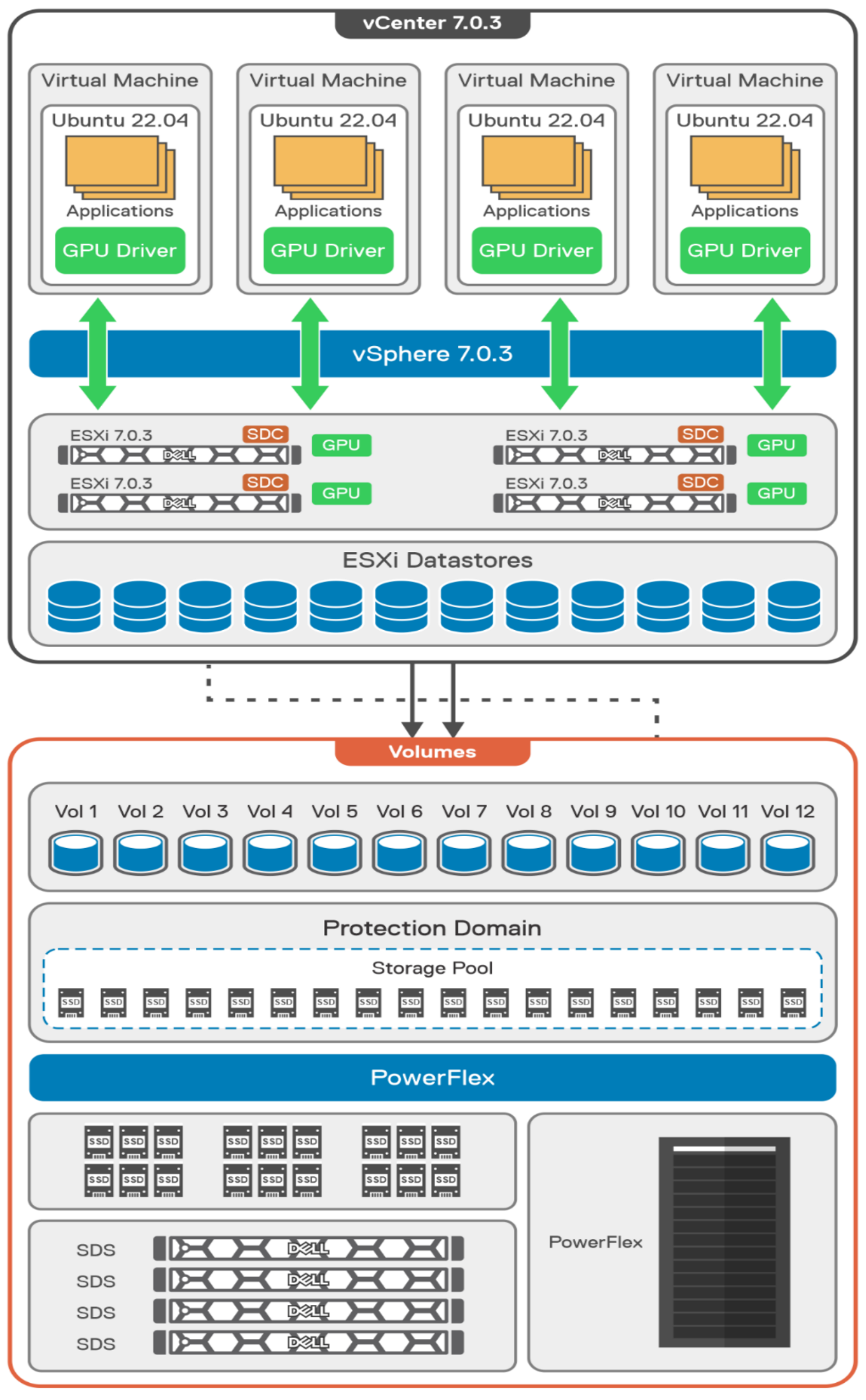

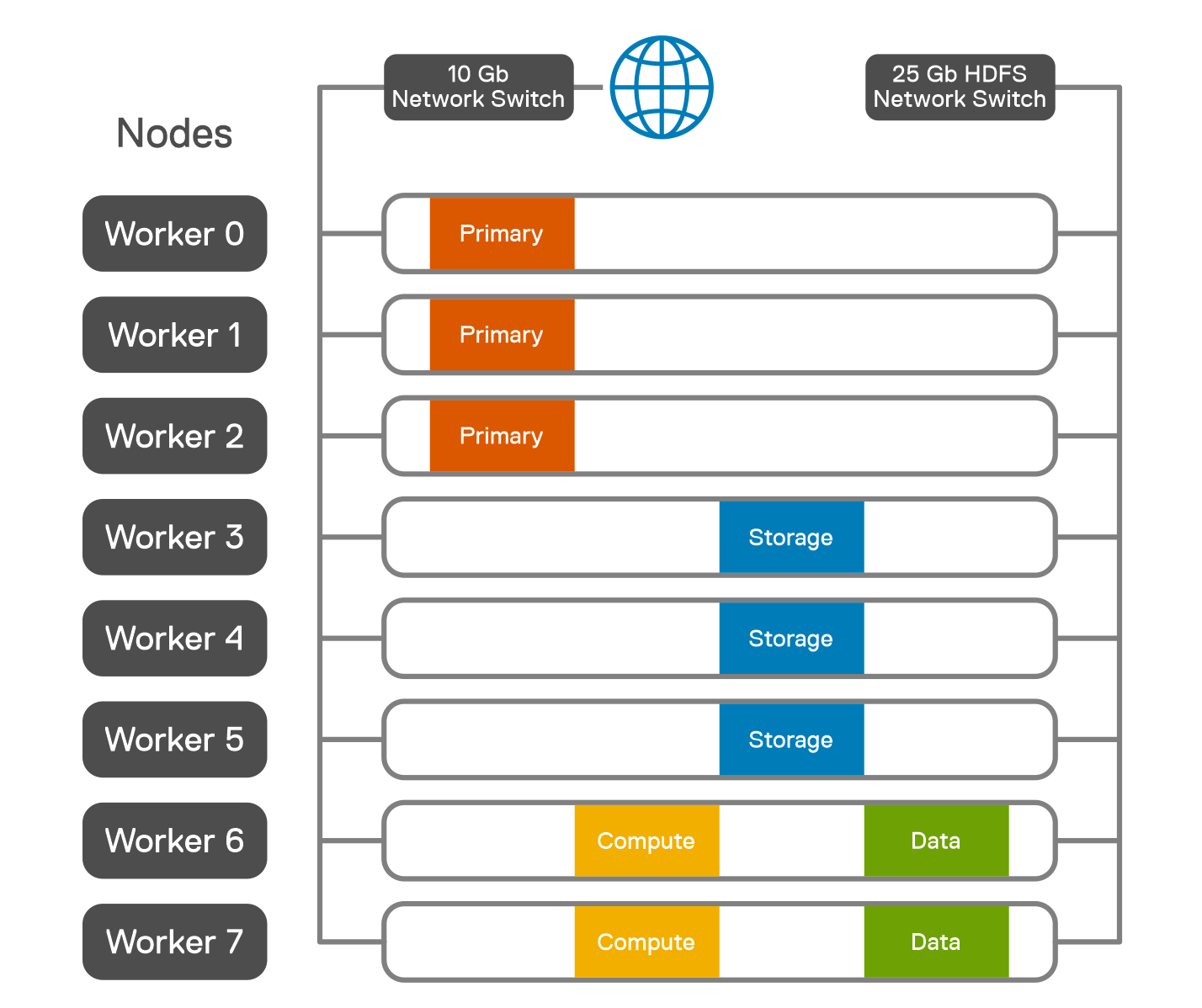

The following diagram shows the validated design’s logical architecture. At the top of the diagram, you can see four Ubuntu 22.04 Linux VMs with the NVIDIA vGPU driver loaded in them. They are running on PowerFlex hosts with VMware ESXi deployed. Each VM contains one NVIDIA A100 GPU configured for MIG operations. This configuration leverages a two-tier architecture where storage is provided by separate PowerFlex software-defined storage (SDS) nodes.

A design like this allows for independent scalability for your workloads. What I mean by this is during the training phase of a model, significant storage may be required for the training data, but once the model clears validation and goes into production, storage requirements may be drastically different. With PowerFlex you have the flexibility to deliver the storage capacity and performance you need at each stage.

This brings us to testing the environment. Again, for this paper, the engineering team validated the environment by running ResNet-50 v1.5 against the ImageNet 1K data set. For this validation, they enabled several ResNet-50 v1.5 TensorFlow features: multi-GPU training with Horovod, NVIDIA DALI, and Automatic Mixed Precision (AMP). These features let the ResNet-50 v1.5 model take advantage of capabilities that are present in the environment. The paper then describes how to set up and configure ResNet-50 v1.5 and the features mentioned above, and gives details about downloading the ImageNet data.

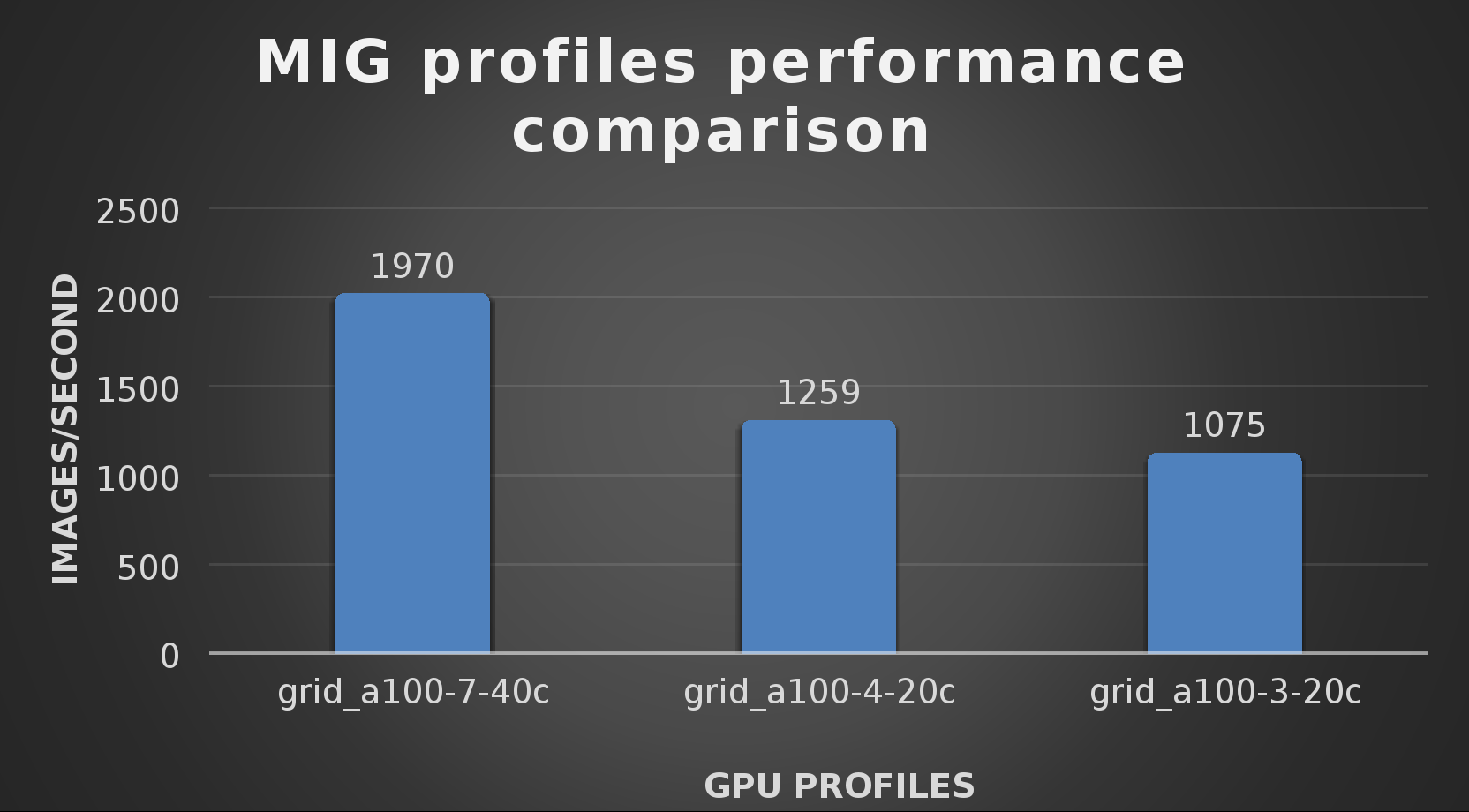

At this stage they were able to train the ResNet-50 v1.5 deployment. The first iteration of training used the NVIDIA A100-7-40C vGPU profile. They then repeated testing with the A100-4-20C vGPU profile and the A100-3-20C vGPU profile. You might be wondering about the A100-2-10C vGPU profile and the A100-1-5C profile. Although those vGPU profiles are available, they are more suited for inferencing, so they were not tested.

The results from validating the training workloads for each vGPU profile are shown in the following graph. The vGPUs were running near 98% capacity according to nvitop during each test. The CPU utilization was 14%, and there was no storage bottleneck during the tests.

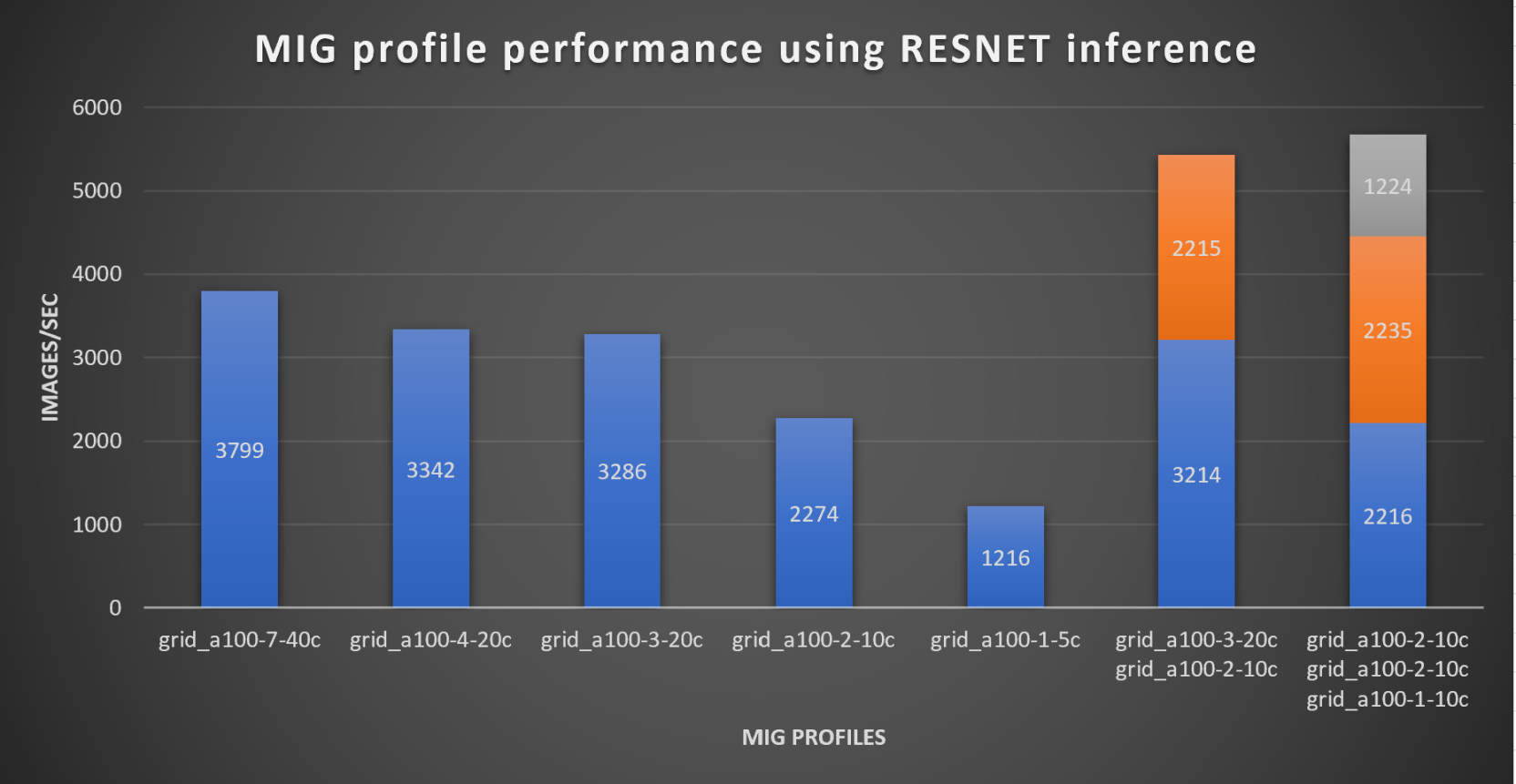

With the models trained, the guide then looks at how well inference runs on the MIG profiles. The following graph shows inferencing images per second of the various MIG profiles with ResNet-50 v1.5.

It’s worth noting that the last two columns show the inferencing running across multiple VMs, on the same ESXi host, that are leveraging MIG profiles. This also shows that GPU resources are partitioned with MIG and that resources can be precisely controlled, allowing multiple types of jobs to run on the same GPU without impacting other running jobs.

This opens the opportunity for organizations to align consumption of vGPU resources in virtual environments with what each group actually uses. Said a different way, it allows IT to provide “showback” of infrastructure usage in the organization. So if a department only needs an inferencing vGPU profile, that’s what they get: no more, no less.

It’s also worth noting that vGPU utilization was 88% and CPU utilization was 11% during the inference testing.

These validations show that a Dell PowerFlex environment can support the foundational components of modern-day AI. They also show the value of NVIDIA’s MIG technology to organizations of all sizes: it lets them gain operational efficiencies in the data center and enables access to AI.

Which again answers the question of this blog: can I do that AI thing on Dell PowerFlex? Yes, you can run that AI thing! If you would like to find out more about how to run your AI thing on PowerFlex, be sure to reach out to your Dell representative.

Resources

- The History of Artificial Intelligence

- ‘Godfather of AI’ leaves Google, warns of tech’s dangers

- ResNet-50: The Basics and a Quick Tutorial

- Dell Validated Design for Virtual GPU with VMware and NVIDIA on PowerFlex

- NVIDIA NGC Catalog ResNet v1.5 for PyTorch

- NVIDIA AI Enterprise

- NVIDIA A100 (PCIe) GPU

- NVIDIA Virtual GPU Software Documentation

- NVIDIA A100-7-40C vGPU profile

- NVIDIA Multi-Instance GPU (MIG)

- NVIDIA Multi-Instance GPU User Guide

- Horovod

- ImageNet

- DALI

- Automatic Mixed Precision (AMP)

- nvitop

Author: Tony Foster

Sr. Principal Technical Marketing Engineer

Location: The Land of Oz [-6 GMT]

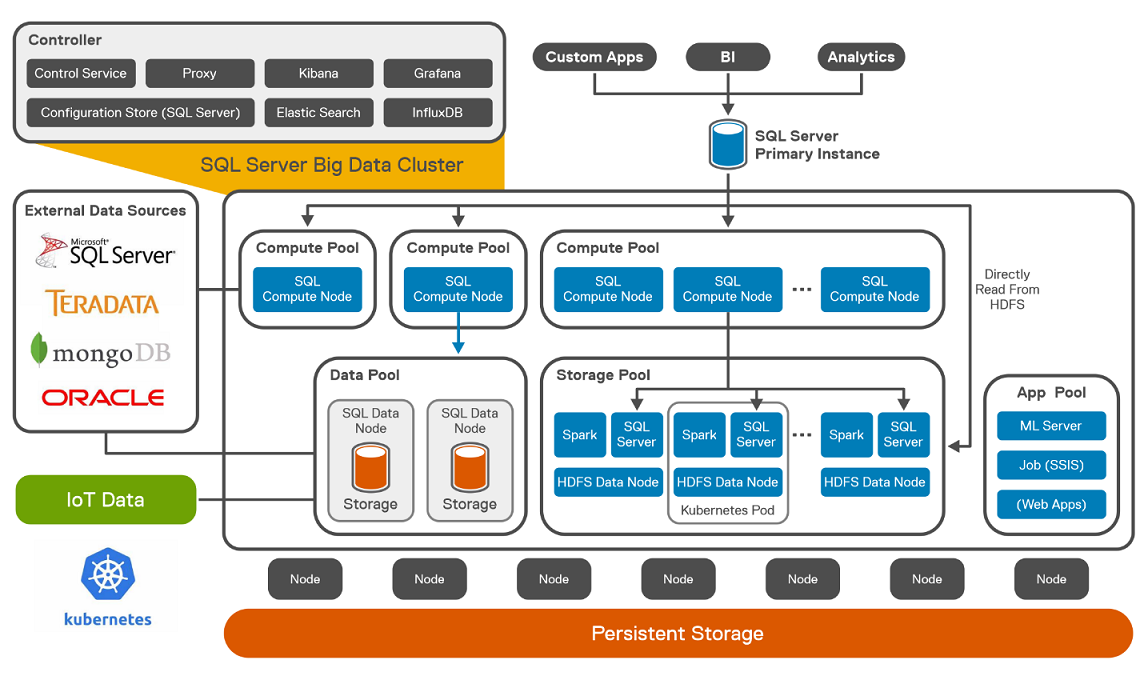

What to do with all that data? Answer: SingleStore on PowerFlex

Wed, 10 May 2023 22:55:28 -0000

Every organization has data, every organization has databases, and every organization must figure out what to do with all that data from those databases. According to research by the University of Tennessee, Knoxville’s Haslam College of Business, there were 44 zettabytes of data in 2020, and by 2025 it is estimated that 463 exabytes of data will be created daily. That’s a lot of data, and even if your organization only contributes a fraction of a percent to those 463 exabytes of data a day, that’s still a lot of data to manage. A great approach to this modern ocean of data is using SingleStore on Dell PowerFlex.
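To put "a fraction of a percent" in perspective, here is a small back-of-the-envelope calculation. The 463 exabytes per day comes from the estimate above. The 0.001% share is a hypothetical value picked only for illustration, and the units are assumed to be decimal (1 EB = 10^18 bytes, 1 PB = 10^15 bytes).

```python
from fractions import Fraction

EXABYTE = 10**18   # decimal units, assumed
PETABYTE = 10**15

# Estimate quoted above: 463 exabytes created per day.
DAILY_GLOBAL_BYTES = 463 * EXABYTE


def daily_share_bytes(share_percent):
    """Bytes per day for a given share, passed as a string such as '0.001'."""
    return DAILY_GLOBAL_BYTES * Fraction(share_percent) / 100


# Hypothetical organization contributing 0.001% of the daily total.
share = daily_share_bytes("0.001")
print(f"{float(share / PETABYTE):.2f} PB per day")
```

Even at a thousandth of a percent, that hypothetical organization would be creating 4.63 petabytes of data every day.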

Recently Dell and SingleStore released a joint validation white paper on a virtualized SingleStore environment running on PowerFlex. The paper provides an overview of the technologies used and then looks at an architecture that can be used to run SingleStore on PowerFlex. After that, the paper looks at how the environment was validated.

SingleStore

Before I get into the details of the paper, I suspect there might be a few readers who have yet to hear about SingleStore or know about some of its great features, so let’s start there. Built for developers and architects, SingleStoreDB is based on a distributed SQL architecture, delivering 10–100 millisecond performance on complex queries—all while ensuring that your organization can effortlessly scale. Now let’s go a bit deeper….

SingleStoreDB:

- Scales horizontally, providing high throughput across a wide range of platforms.

- Maintains a broad compatibility with common technologies in the modern data processing ecosystem (for example, orchestration platforms, developer IDEs, and BI tools), so you can easily integrate it in your existing environment.

- Features an in-memory rowstore and an on-disk columnstore to handle both highly concurrent operational and analytical workloads.

- Features the SingleStore Pipelines data ingestion technology, which streams large amounts of data at high throughput into the database with exactly-once semantics.

This means that you can continue to run your traditional SQL queries against your ever-growing data, which all resides on a distributed system, and you can do it fast. This is a big win for organizations that have active data growth in their environment.
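If "exactly-once semantics" is a new term, the general idea is that a batch of data lands in the database one time, even if the source delivers it again after a retry. The sketch below shows one common way to get that effect: record the batch offset in the same transaction as the data, and skip any batch whose offset has already been recorded. It is not how SingleStore Pipelines is implemented and does not use any SingleStore API. It uses SQLite from the Python standard library as a stand-in, and the table names, source name, and function names are hypothetical.

```python
import sqlite3


def open_store():
    """Create an in-memory database with hypothetical table names."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (payload TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE checkpoints ("
        "source TEXT PRIMARY KEY, last_offset INTEGER NOT NULL)"
    )
    conn.commit()
    return conn


def ingest_batch(conn, source, offset, rows):
    """Store a batch at most once; return True if it was applied."""
    with conn:  # one transaction: commit on success, roll back on error
        found = conn.execute(
            "SELECT last_offset FROM checkpoints WHERE source = ?",
            (source,),
        ).fetchone()
        if found is not None and offset <= found[0]:
            return False  # redelivered batch: already stored, so skip it
        conn.executemany(
            "INSERT INTO events (payload) VALUES (?)",
            [(row,) for row in rows],
        )
        # The offset is saved in the same transaction as the rows, so the
        # data and the checkpoint can never disagree.
        conn.execute(
            "INSERT OR REPLACE INTO checkpoints (source, last_offset) "
            "VALUES (?, ?)",
            (source, offset),
        )
    return True


def count_events(conn):
    """Return how many rows have been stored."""
    return conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]


conn = open_store()
ingest_batch(conn, "sensor-feed", 0, ["a", "b"])
ingest_batch(conn, "sensor-feed", 0, ["a", "b"])  # same batch delivered again
ingest_batch(conn, "sensor-feed", 1, ["c"])
print(count_events(conn))
```

The redelivered batch is ignored, so the table holds three rows rather than five. That is the property that matters when you stream data continuously: retries do not create duplicates.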

What makes this even better is the ability of PowerFlex to scale from a few nodes to thousands. This provides a few different options to match your growing needs. You can start with just your SingleStore system deployed on PowerFlex and migrate other workloads on to the PowerFlex environment as time permits. This allows you to focus on just your database environment to start and then, as infrastructure comes up for renewal, you migrate those workloads and scale up your environment with more compute and storage capacity.

Or maybe you are making a bigger contribution to that 463 exabytes of data per day I mentioned earlier, and you need to scale out your environment to handle your data’s growth. You can do that too!

That’s the great thing about PowerFlex: you can consume resources independently of each other. You can add more storage or compute as you need them.

Additionally, with PowerFlex, you can deliver bare-metal and virtualized environments without having to choose only one. That’s right—you can run bare-metal servers right next to virtualized workloads.

Architecture

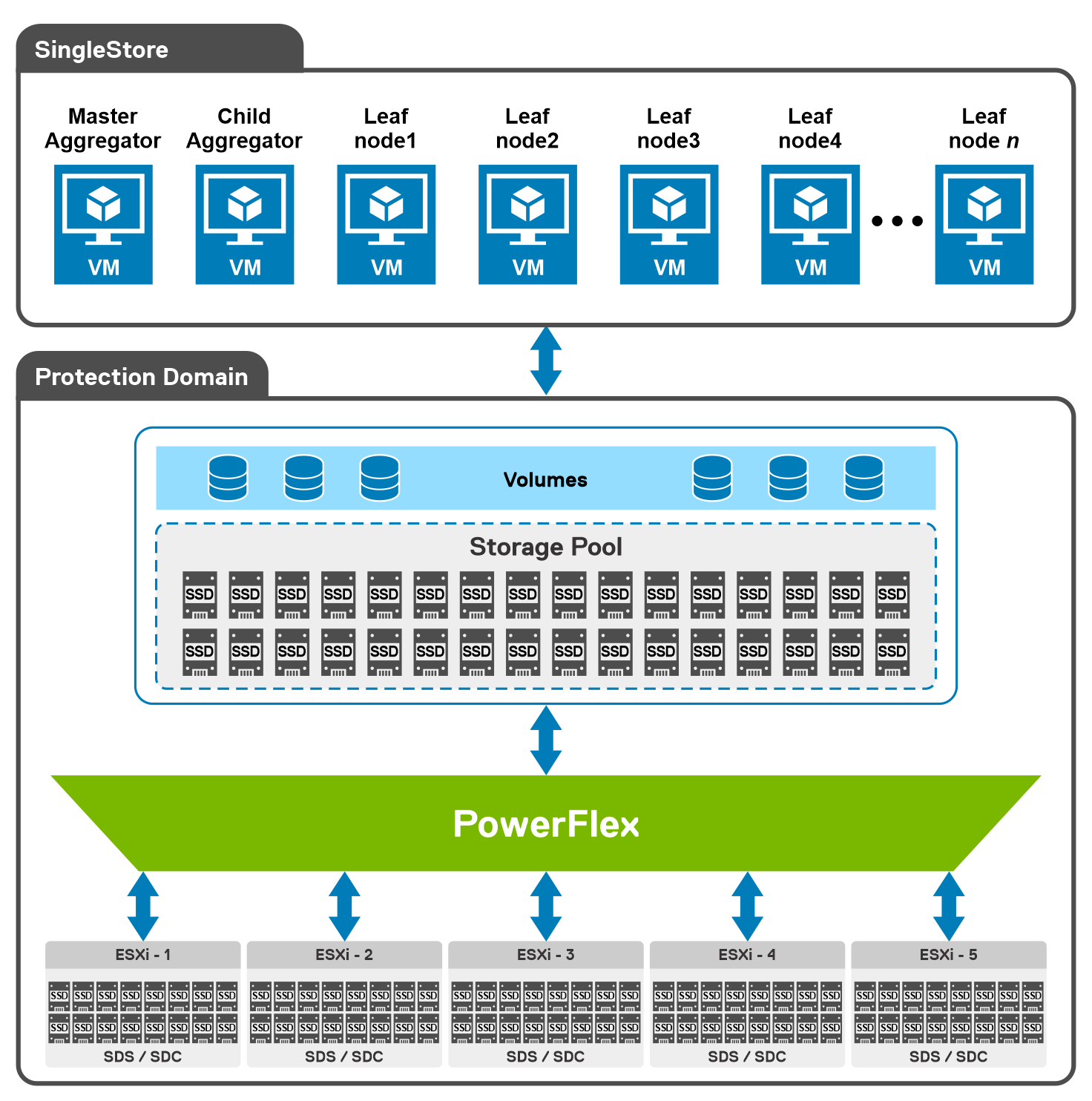

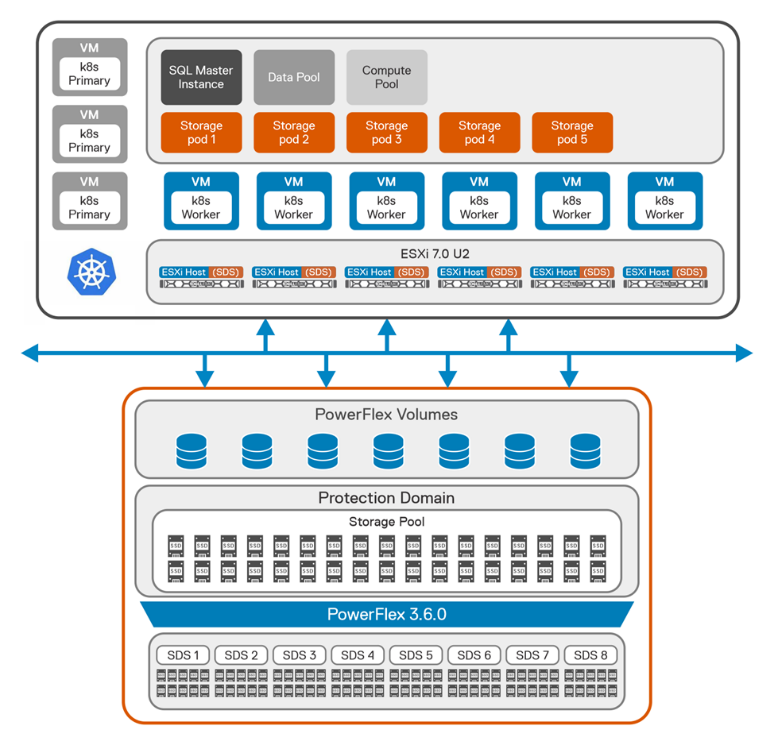

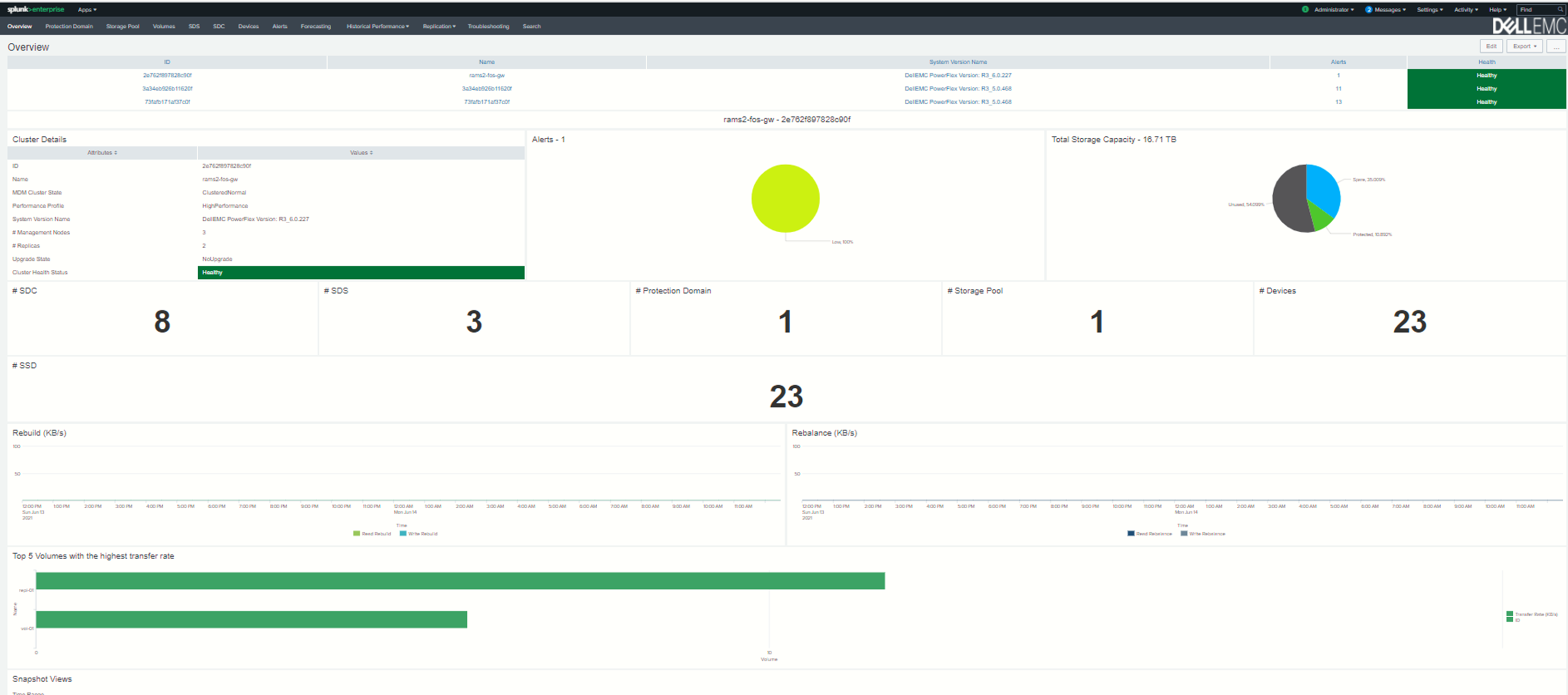

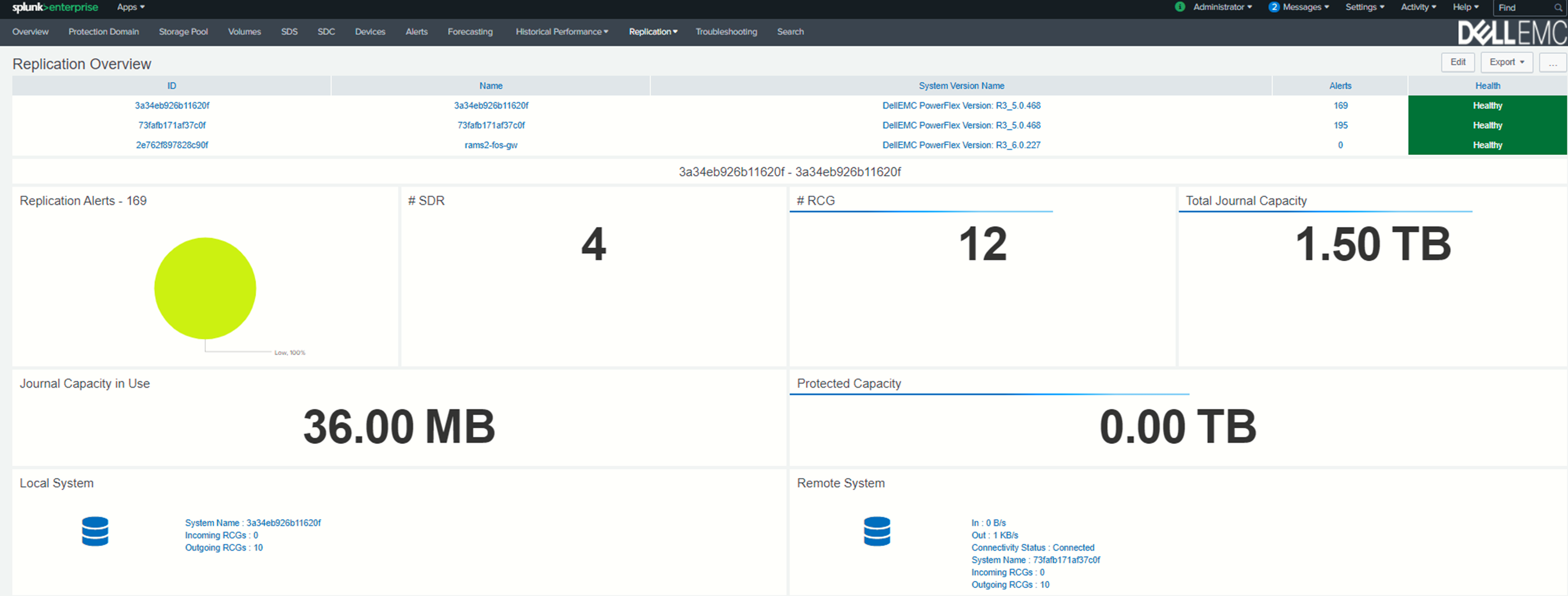

The engineers built this environment with PowerFlex deployed in a hyper-converged infrastructure (HCI) configuration, where the compute nodes are also storage nodes. (PowerFlex supports both two-tier architectures and HCI.)

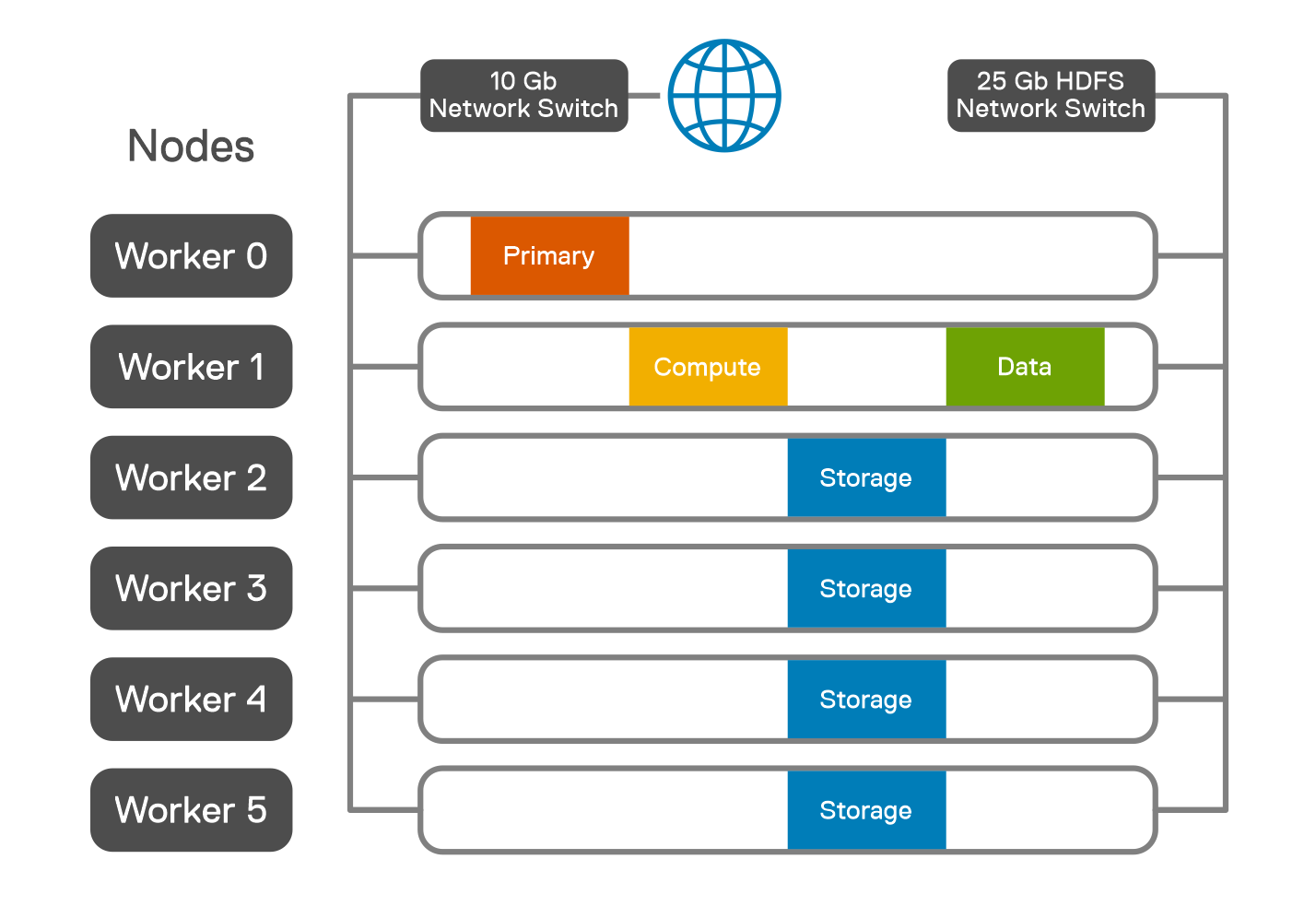

As shown in the following diagram, our engineering team used five Dell PowerEdge R640 servers with dual CPUs, 384 GB of RAM, and eight SSDs per node. These five nodes were configured as HCI nodes and connected with a 25 Gbps network. The storage from across the nodes is aggregated to create a large software-defined pool of storage as a single protection domain that provides volumes to the SingleStore VMs. This is ideal for even the most demanding databases due to its high I/O capability.

For this validation, the SingleStore Cluster VMs consist of two aggregator VMs and multiple leaf VMs. The white paper details the configuration of these VMs.

Additionally, the white paper provides an overview of the steps used to deploy SingleStore on VMware vSphere in a PowerFlex environment. For this validation, they followed the online user interface method to deploy SingleStore.

Testing

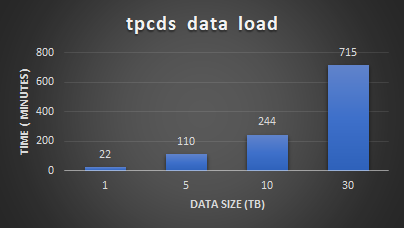

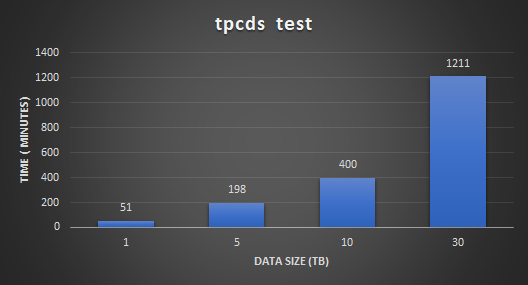

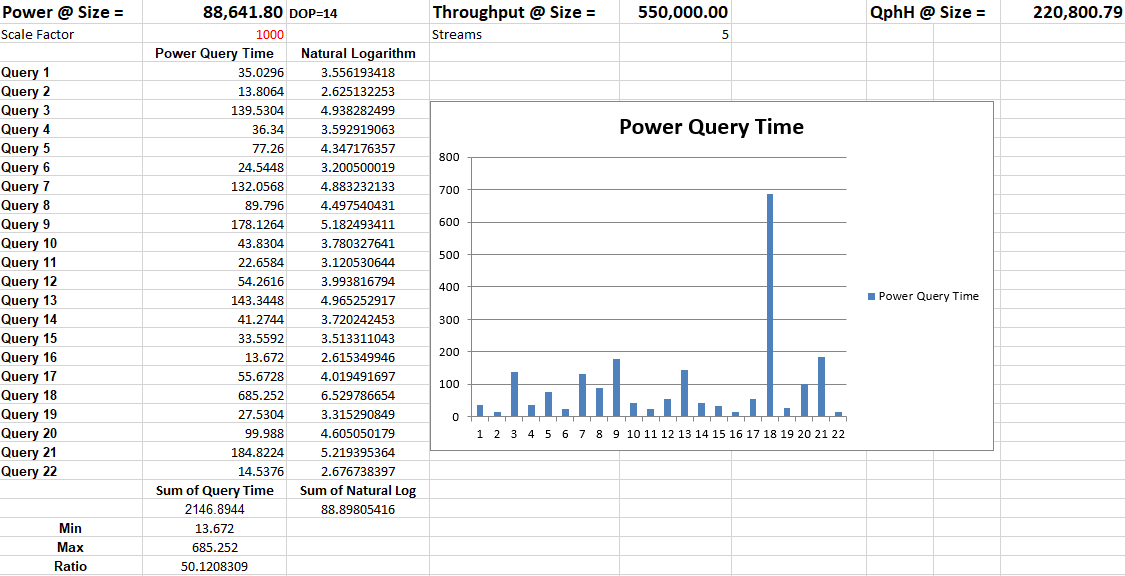

With the environment configured, the white paper then discusses how to validate the environment using TPC-DS. This tool provides 99 different queries that can be used to test a database. For this validation, only 95 of the 99 were used. The paper then describes both how the sample data set was created and how the tests were run.

The validation tests were run on 4, 6, and 8 leaf node configurations. This was done to understand the variation in performance as the environment scales. The testing showed that having more SingleStore leaf nodes results in better performance outcomes.

The testing also showed that there were no storage bottlenecks for the TPC-DS-like workload and that using more powerful CPUs could further enhance the environment.

The white paper shows how SingleStore and PowerFlex can be used to create a dynamic and robust environment for your growing data needs as you do your part to contribute to the 463 exabytes of data that are expected to be created daily by 2025. To find out more about this design, contact your Dell representative.

Author: Tony Foster

Twitter: @wonder_nerd

LinkedIn

What’s New in PowerFlex 4.0 REST API?

Mon, 08 May 2023 18:14:08 -0000

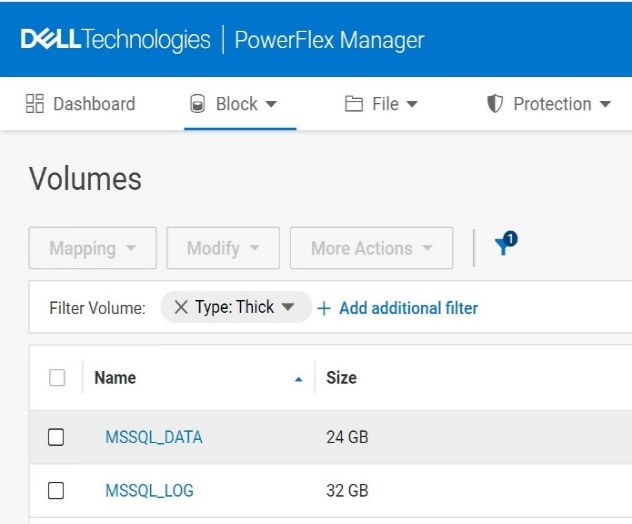

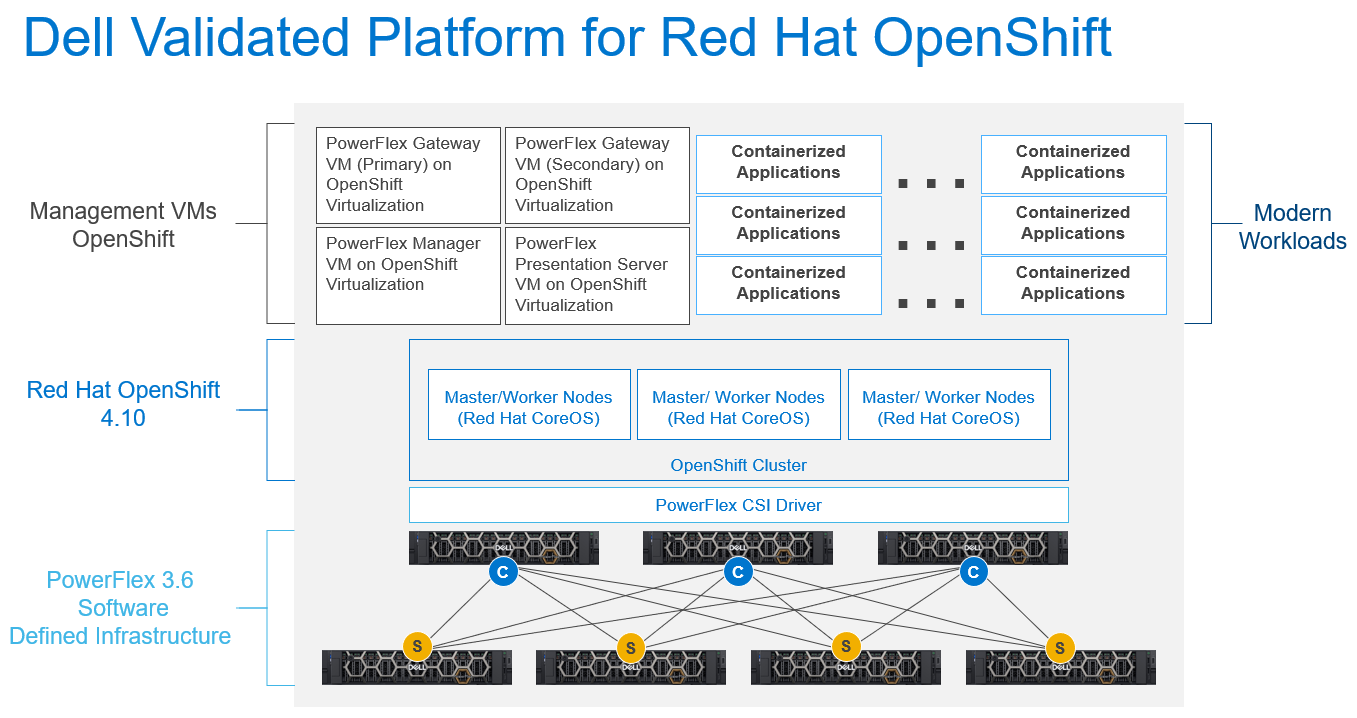

Wow, it’s been a busy year for PowerFlex! It started with the major announcement of PowerFlex on AWS at Dell Technologies World 2022. Then, in late summer of 2022, we announced a major update in PowerFlex v4.0. PowerFlex v4.0 added NVMe/TCP support, File Storage services, and a new management & operations (M&O) stack called unified PowerFlex Manager. In September of 2022, the PowerFlex Solutions team released the Dell Validated Platform for Red Hat OpenShift on PowerFlex.

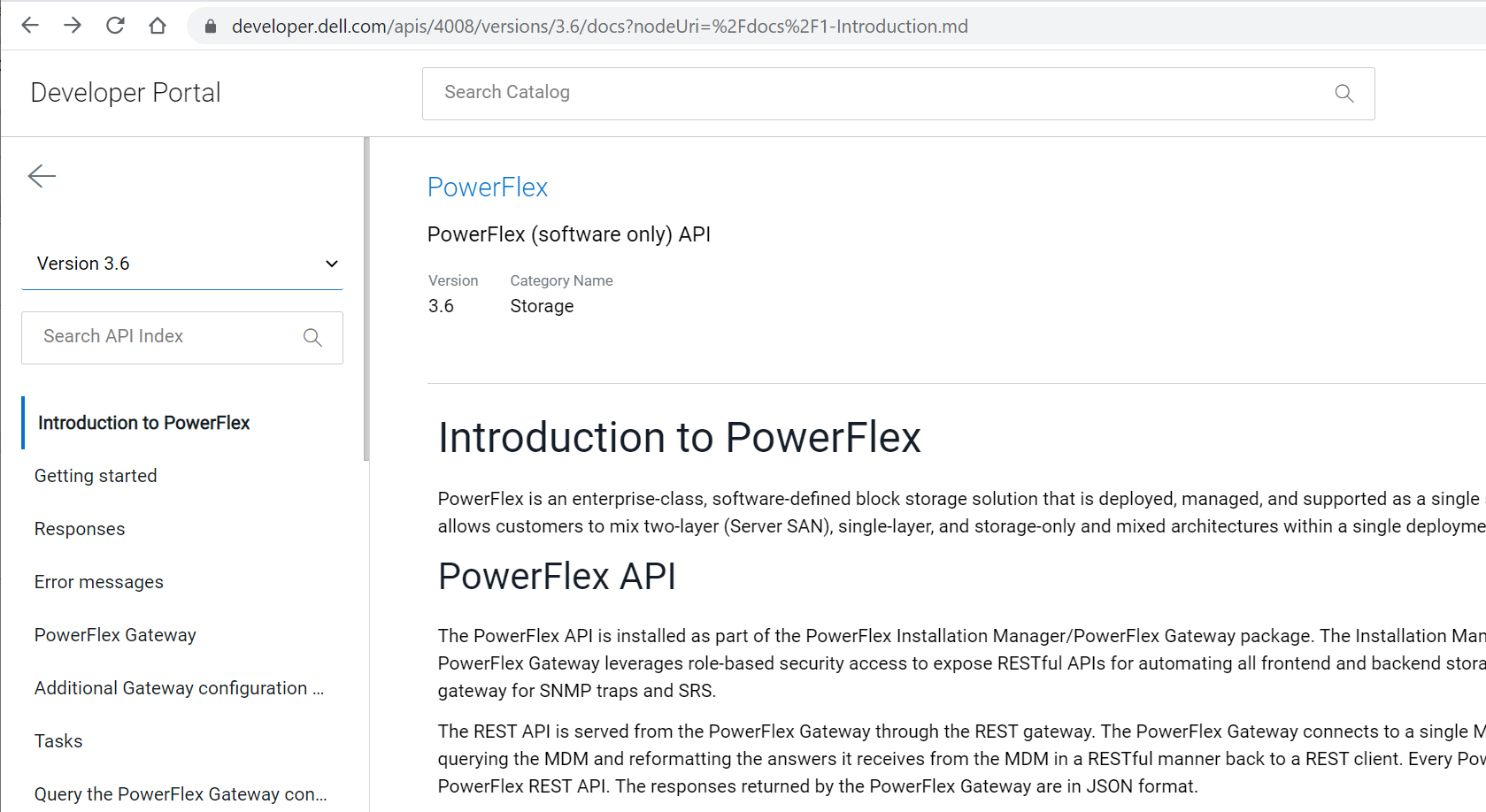

Many of the enhancements solidify PowerFlex’s position as the ultimate infrastructure platform. One such improvement is to the REST API, which is the topic of this blog. If you are new to REST APIs and are looking for a quick introduction, I suggest you start by reading the blog Getting Started with REST API by Florian and Parasar. With that base of understanding, let’s take a closer look at the improvements made to the REST API in PowerFlex 4.0.

Single endpoint

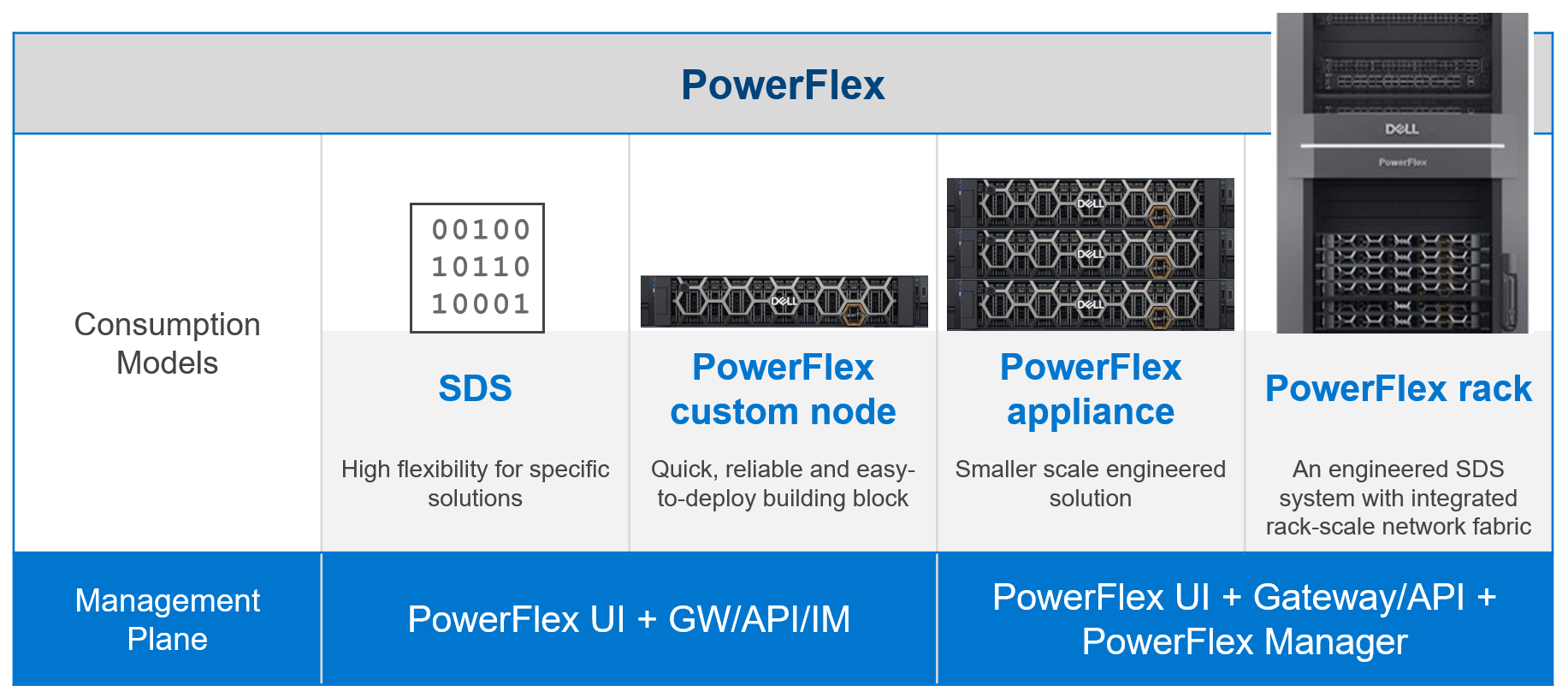

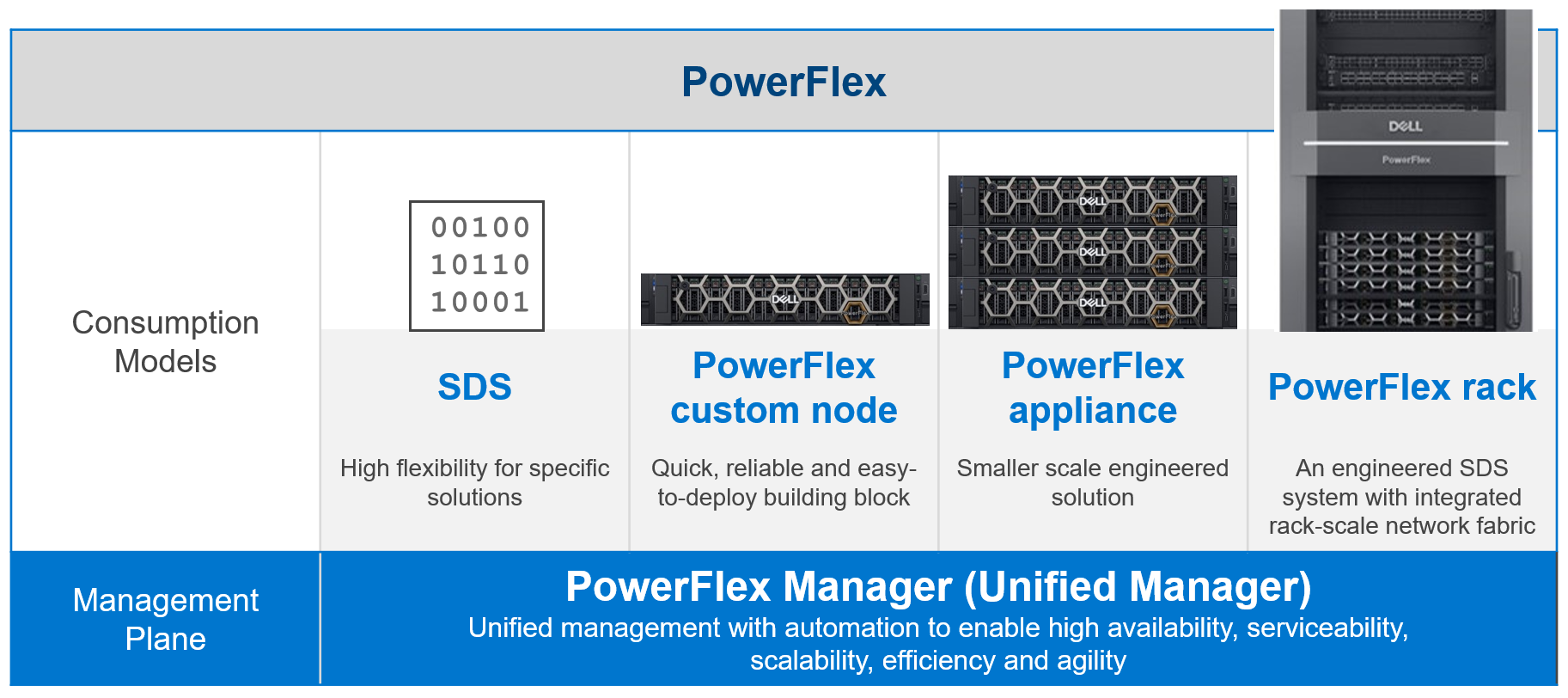

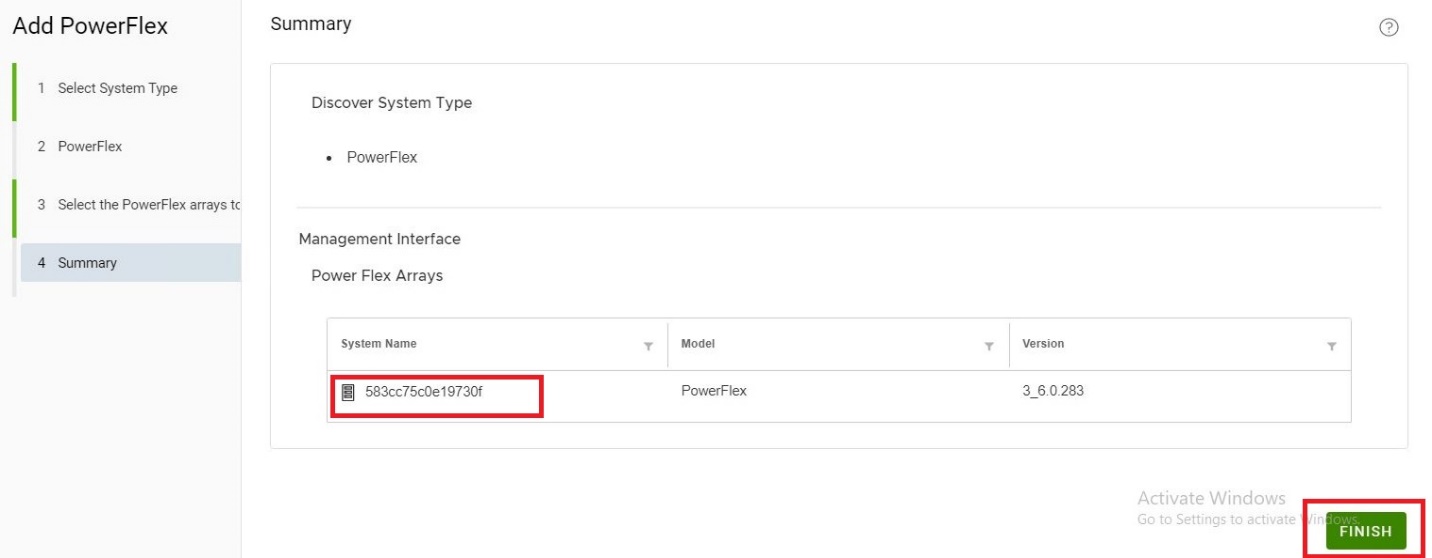

The improvements made to the REST API that I cover here are part of the new unified PowerFlex Manager application. The following figure shows the PowerFlex management plane prior to PowerFlex 4.0. As you can see, the management plane differs by the consumption model. Here there are two REST API endpoints: the PowerFlex Gateway endpoint and the PowerFlex Manager endpoint.

Figure 1. The PowerFlex Management Plane before 4.0