Resiliency Explained — Understanding the PowerFlex Self-Healing, Self-Balancing Architecture

Mon, 17 Aug 2020 21:36:11 -0000


My phone rang. When I picked up, it was Rob*, one of my favourite PowerFlex customers, who runs his company’s storage infrastructure. Last year, his CTO made the decision to embrace digital transformation across the entire company, which included a software-defined approach. During that process, they selected the Dell EMC PowerFlex family as their Software-Defined Storage (SDS) infrastructure because they had a mixture of virtualised and bare-metal workloads, needed a solution that could handle their unpredictable storage growth, and wanted one powerful enough to support their key business applications.

During testing of the PowerFlex system, I educated Rob on how we give our customers an almost endless list of significant benefits – blazingly fast block storage performance that scales linearly as new nodes are added to the system; a self-healing & self-balancing storage platform that automatically ensures that it always gives the best possible performance; super-fast rebuilds in the event of disk or node failures, plus the ability to engineer a system that will meet or exceed his business commitments to uptime & SLAs.

PowerFlex provides all this (and more) thanks to its “Secret Sauce” – its Distributed Mesh-Mirror Architecture. It keeps two copies of your application data at all times, which preserves availability if a disk or node fails. Data is intelligently distributed across all the disk devices in each of the nodes within a storage pool. As more nodes are added, the overall performance increases nearly linearly, without affecting application latencies. At the same time, adding more disks or nodes also shortens rebuild times in those (admittedly rare) failure situations – which means that PowerFlex heals itself more quickly as the system grows!

PowerFlex automatically ensures that the two copies of each block of data written to the Storage Pool reside on different SDS (storage) nodes, because we need to be able to read the second copy if the disk or storage node that holds the first copy fails at any time. And because the data is written across all the disks in all the nodes within a Storage Pool, IO response times are super-quick: we access all data in parallel.

Data also gets written to disk using very small chunk sizes – either 1MB or 4KB, depending on the Storage Pool type. Why is this? Doing this ensures that we always spread the data evenly across all the disk devices, automatically preventing performance hot-spots from ever being an issue in the first place. So, when a volume is assigned to a host or a VM, that data is already spread efficiently across all the disks in all Storage Nodes. For example, a 4-Node PowerFlex system, with 3 volumes provisioned from it, will look something like the following:

Figure 1: A Simplified View of a 4-Node PowerFlex System Presenting 3 Storage Volumes
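
To make the placement idea concrete, here is a minimal sketch of how mirrored chunks could be spread across nodes. It is not the actual PowerFlex placement algorithm, which the text does not describe; the function `place_chunks`, the round-robin policy and the node names are all assumptions made purely for illustration. It only demonstrates the two properties described above: both copies of a chunk sit on different nodes, and the copies end up spread evenly.

```python
from collections import Counter

CHUNK_MB = 1  # chunk size from the text (1 MB pools); 4 KB pools follow the same idea


def place_chunks(volume_mb, nodes):
    """Return one (primary_node, mirror_node) pair per chunk of the volume.

    Hypothetical round-robin policy, not the real PowerFlex algorithm:
    the primary rotates over the nodes, and the mirror offset rotates too,
    so mirrors are spread over all of the other nodes.
    """
    n = len(nodes)
    if n < 2:
        raise ValueError("mirroring needs at least two nodes")
    placements = []
    for chunk in range(volume_mb // CHUNK_MB):
        primary = chunk % n
        # Offset is always in 1..n-1, so the mirror never shares the primary's node.
        offset = 1 + (chunk // n) % (n - 1)
        mirror = (primary + offset) % n
        placements.append((nodes[primary], nodes[mirror]))
    return placements


nodes = ["node1", "node2", "node3", "node4"]
layout = place_chunks(volume_mb=1200, nodes=nodes)

# Count how many chunk copies (primary or mirror) each node holds.
copies_per_node = Counter(node for pair in layout for node in pair)
print(copies_per_node)
```

With this toy policy, losing any single node still leaves one copy of every chunk on the surviving nodes, which is the property the mesh-mirror layout relies on.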

Now, here is where the magic begins. In the event of a drive failure, the PowerFlex rebuild process utilizes an efficient many-to-many scheme for very fast rebuilds. It uses ALL the devices in the storage pool for rebuild operations and will always rebalance the data in the pool automatically whenever new disks or nodes are added to the Storage Pool. This means that, as the system grows, performance increases linearly – which is great for future-proofing your infrastructure if you are not sure how your system will grow. But this also gives another benefit – as your system grows in size, rebuilds get faster!

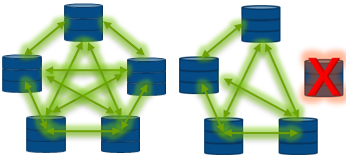

Customers like Rob typically raise their eyebrows at that last statement – until we provide a simple example to get the point across – and then they have a lightbulb moment. Think about what would happen if we used a 4-node PowerFlex system, but only had one disk drive in each storage node. All data would be spread evenly across the 4 nodes, but we would also have some spare capacity reserved, which is likewise spread evenly across each drive. This spare capacity is the space that data is rebuilt into in the event of a disk or node failure, and it usually equates to either the capacity of an entire node or 10% of the entire system, whichever is larger. At a superficial level, a 4-Node system would look something like this:

Figure 2: A Simplified View of a 4-Node PowerFlex System & Available Dataflows
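
The spare-capacity rule of thumb above is easy to express as arithmetic. The sketch below is only an illustration of that rule as stated in this post: the function name `spare_capacity_tb` and the 10 TB node sizes are made up, and using the largest node when nodes differ in size is my assumption.

```python
def spare_capacity_tb(node_capacities_tb, min_fraction=0.10):
    """Spare capacity per the rule of thumb in the text: the larger of
    one whole node or 10% of the total capacity.

    Assumption: if nodes differ in size, "one whole node" means the largest.
    """
    total = sum(node_capacities_tb)
    largest_node = max(node_capacities_tb)
    return max(largest_node, min_fraction * total)


# Small system: one node out of four is far more than 10% of the total.
four_nodes = spare_capacity_tb([10, 10, 10, 10])

# Larger system: one node is only 5% of the total, so the 10% floor applies.
twenty_nodes = spare_capacity_tb([10] * 20)

print(four_nodes, twenty_nodes)
```

Note how the reserved share shrinks as the system grows: a full node is a quarter of a 4-node system, but only a small fraction of a large one.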

If one of those drives (or nodes) failed, then obviously we would end up rebuilding between the three remaining disks, one disk per node:

Figure 3: Our Simplified 4-Node PowerFlex System & Available Dataflows with One Failed Disk

Now of course, in this scenario, that rebuild is going to take some time to complete. We will be performing lots of 1MB or 4KB copies between the three remaining nodes, in both directions, as we rebuild into the spare capacity available on the remaining nodes & get back to having two copies of data in order to be fully protected again. It is worth pointing out here that a node typically contains 10 or 24 drives, not just one, so PowerFlex isn’t just protecting you from “a” drive failure; it can protect you from losing a whole node’s worth of drives at once. This is not your typical RAID card scheme.

Now – let the magic of PowerFlex begin! What happens if we add a fifth storage node into the mix? And what happens when a disk or node fails in this scenario?

Figure 4: Dataflows in a Normally Running 5-Node PowerFlex System … & Available Dataflows with One Failed Disk or Node

It should be clear for all to see that we now have more disks, and more nodes, to participate in the rebuild process, making the rebuild complete substantially faster than in our previous 4-node scenario. But PowerFlex nodes do not have just a single disk inside them. They typically have 10 or 24 drive slots, so even a small deployment of 4 nodes, each with 10 disks, has data placed intelligently and evenly across all 40 drives, configured as one Storage Pool. With today’s flash media, that is a heck of a lot of performance capability at your fingertips, delivered with consistent sub-millisecond latencies.

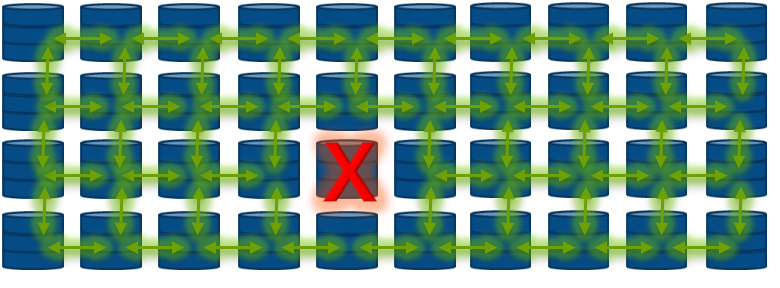

Let me also highlight the “many-to-many” rebuild scheme used by each Storage Pool. This means that the data on any disk in a Storage Pool can be rebuilt across all the other disks in the same Pool. If we have 40 drives in our pool, it means that when one drive fails, the other 39 drives will be utilised to rebuild the data of the failed drive. This results in extremely quick rebuilds that occur in parallel, with minimal impact to application performance if we lose a disk:

Figure 5: A 40-disk Storage Pool, with a Disk Failure… Showing The Magic of Parallel Rebuilds

Note that we had to over-simplify the dataflows between the disks in the figure above, because if we tried to show all the interconnects at play, we would simply have a tangle of green arrows!
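
To see why rebuilds get faster as the pool grows, a back-of-the-envelope model helps. The sketch below is a toy estimate, not a PowerFlex sizing tool: it assumes the rebuild work is shared perfectly evenly by every surviving drive, and the per-drive rebuild rate of 100 MB/s is an arbitrary placeholder rather than a measured figure. Real rebuild rates depend on media, network and throttling settings.

```python
def rebuild_minutes(data_on_failed_gb, surviving_drives, per_drive_mb_s=100.0):
    """Toy estimate of many-to-many rebuild time.

    Assumptions (not from PowerFlex documentation): the failed drive's data is
    re-mirrored in parallel, the work is shared evenly by all surviving drives,
    and each drive contributes a fixed rebuild rate (placeholder: 100 MB/s).
    """
    if surviving_drives < 1:
        raise ValueError("need at least one surviving drive")
    aggregate_mb_s = surviving_drives * per_drive_mb_s
    seconds = data_on_failed_gb * 1024 / aggregate_mb_s
    return seconds / 60


# The three pool sizes discussed in the text: 4 and 5 single-drive nodes,
# then 4 nodes with 10 drives each.
for drives_in_pool in (4, 5, 40):
    minutes = rebuild_minutes(data_on_failed_gb=1000,
                              surviving_drives=drives_in_pool - 1)
    print(f"{drives_in_pool:>2} drives in pool: ~{minutes:.0f} min")
```

Under these assumptions the estimate falls in direct proportion to the number of surviving drives, so going from 3 helpers to 39 cuts the rebuild time by a factor of 13.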

Here’s another example to explain the difference between PowerFlex and conventional RAID-type drive protection. An initial rebuild test on an empty system usually completes in little more than a minute. This is because PowerFlex will only ever rebuild chunks of application data, unlike a traditional RAID controller, which will rebuild disk blocks whether they contain data or not. Why waste resources rebuilding empty blocks of zeroes when you need to recover from a failed disk or node as quickly as possible?
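
The difference between the two approaches comes down to how much data has to be copied. Here is a deliberately simplified comparison; the function `data_to_rebuild_gb`, the scheme labels and the 1920 GB drive size are all hypothetical, chosen only to illustrate the point made above.

```python
def data_to_rebuild_gb(drive_capacity_gb, used_fraction, scheme):
    """Amount of data copied after a drive failure under two simplified schemes.

    "whole_disk"  : RAID-style, every block is rebuilt whether written or not.
    "used_chunks" : only chunks that hold application data are rebuilt.
    """
    if not 0.0 <= used_fraction <= 1.0:
        raise ValueError("used_fraction must be between 0 and 1")
    if scheme == "whole_disk":
        return float(drive_capacity_gb)
    if scheme == "used_chunks":
        return drive_capacity_gb * used_fraction
    raise ValueError(f"unknown scheme: {scheme}")


# A hypothetical 1920 GB drive that is only 5% full.
raid_style = data_to_rebuild_gb(1920, 0.05, "whole_disk")
chunk_style = data_to_rebuild_gb(1920, 0.05, "used_chunks")
print(f"whole disk: {raid_style:.0f} GB, used chunks only: {chunk_style:.0f} GB")
```

On a nearly empty system the second figure is tiny, which is consistent with the very short rebuild seen in an initial test.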

The PowerFlex Distributed Mesh-Mirror architecture is truly unique and gives our customers the fastest, most scalable and most resilient block storage platform available on the market today! Please visit www.DellTechnologies.com/PowerFlex for more information.

* Name changed to protect the innocent!