Phenomenal Power: Automating Dell EMC vSAN Ready Nodes

Tue, 16 Nov 2021 21:07:04 -0000

Dell EMC vSAN Ready Nodes have Identity Modules that act at the lowest level of a node and imbue a host with special features and characteristics. In this blog, we explore how to use the attributes of the Identity Module to automate tasks in a vSphere environment.

Let’s start out by identifying all the Dell EMC vSAN Ready Nodes in our environment and displaying some information about them, such as the BIOS version and ESXi build of each host. After we learn about the hosts in our environment, we will discover what VMs are running on those hosts. We’ll do all of this through VMware’s PowerCLI, which is a collection of modules for Microsoft PowerShell.

Note: We could also easily do this using other tools for vSphere such as Python (with pyVmomi), Ansible, and many others.

The environment we are using is small: three Dell EMC vSAN Ready Nodes R740 with Identity Modules. All three nodes are running ESXi 7.0 U2 (vSAN 7.0 Update 2), and vSAN is using an all-flash configuration. The code we are discussing in this post should work across current Dell EMC vSAN Ready Nodes and current vSphere releases.

The code displayed below may seem trivial, but you can use it as a base to create powerful scripts! This unlocks many automation capabilities for organizations. It also moves them further along in their autonomous operations journey. If you’re not familiar with autonomous operations, read this white paper to see where your organization is with automation. After reading it, also consider where you want your organization to go.

We’re not going to cover many of the things necessary to build and run these scripts, like connecting to a vSphere environment. There are many great blogs that cover these details, and we want to focus on the code for Dell EMC vSAN Ready Nodes.

In this first code block, we start by finding all the Dell EMC vSAN Ready Nodes in our environment. We use a ForEach-Object loop to do this.

    # Assumes an existing session created with Connect-VIServer.
    Get-VMHost -State "Connected" | ForEach-Object {
        # The Identity Module adds "vSAN Ready Node" to the reported model string.
        if ($_.ExtensionData.Hardware.SystemInfo.Model.Contains("vSAN Ready Node")) {
            Write-Output "================================================="
            Write-Output "System Details"
            Write-Output "Model: $($_.ExtensionData.Hardware.SystemInfo.Model)"
            Write-Output "Service Tag: $($_.ExtensionData.Hardware.SystemInfo.SerialNumber)"
            Write-Output "BIOS version: $($_.ExtensionData.Hardware.BiosInfo.BiosVersion)"
            Write-Output "ESXi build: $($_.ExtensionData.Config.Product.Build)"
        }
    }

This code snippet assumes we have connected to a vSphere environment with the Connect-VIServer command. The Get-VMHost command returns every connected host in the environment, and the | (pipe) symbol passes those hosts to the ForEach-Object loop. Inside the loop, the $_ variable represents the host currently being processed, and its ExtensionData property exposes the underlying vSphere API object for that host. We check each host by calling the .Contains() method on the hardware.systemInfo.model property to see whether it contains “vSAN Ready Node”. Note that .Contains() is case sensitive, so the string must match exactly. This string is unique to Dell EMC vSAN Ready Nodes with an Identity Module. It’s set at the factory when the Identity Module is installed.
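The same filter can be sketched in Python, which the note earlier in this post mentions as an alternative. This is a minimal illustration rather than a pyVmomi script: it assumes each host has already been read into a plain dictionary, and the key names (model, service_tag, bios_version, esxi_build) are hypothetical stand-ins for the properties used in the PowerCLI snippet. The second host record is invented sample data.

```python
# Marker string that, per this post, the Identity Module adds to the model name.
MARKER = "vSAN Ready Node"

# Illustrative inventory. The first record reuses the sample values shown in
# this post; the second record is invented to show a host that is filtered out.
hosts = [
    {"model": "PowerEdge R740 vSAN Ready Node", "service_tag": "[redacted]",
     "bios_version": "2.1.12", "esxi_build": "18538813"},
    {"model": "PowerEdge R640", "service_tag": "EXAMPLE1",
     "bios_version": "2.0.0", "esxi_build": "18538813"},
]


def is_ready_node(host):
    """Case-sensitive substring test, like .Contains() in the PowerCLI snippet."""
    return MARKER in host.get("model", "")


def describe(host):
    """Return the report text for one host as a single string."""
    return "\n".join([
        "=" * 49,
        "System Details",
        f"Model: {host['model']}",
        f"Service Tag: {host['service_tag']}",
        f"BIOS version: {host['bios_version']}",
        f"ESXi build: {host['esxi_build']}",
    ])


# Keep only the hosts whose model string carries the marker, then report them.
ready_nodes = [h for h in hosts if is_ready_node(h)]
for h in ready_nodes:
    print(describe(h))
```

Returning the report as a string, instead of printing inside the filter, keeps the selection logic reusable for the larger automation ideas discussed later in this post.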

If the system is a Dell EMC vSAN Ready Node with an Identity Module, we then display information from hardware.systemInfo (the model and service tag) and hardware.biosInfo (the system’s BIOS version). We also display the ESXi build of the host using the config.product.build property of the host.

As we loop through each host, we only display these details for the Dell EMC vSAN Ready Nodes in the environment that have Identity Modules. This results in output similar to the following, which shows one node and omits the remainder:

    PS C:\> .\IDM_Script.ps1
    =================================================
    System Details
    Model: PowerEdge R740 vSAN Ready Node
    Service Tag: [redacted]
    BIOS version: 2.1.12
    ESXi build: 18538813

This provides relevant information that we can use to create automated reports about our environment. You can also use the script as the basis for larger automation projects. For example, when a new Dell EMC vSAN Ready Node is added to an environment, a script could detect that addition, perform a set of tasks for new Dell EMC vSAN Ready Nodes, and notify the IT team when they are complete. These sample scripts can be used as a spark for your own ideas.
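As a rough sketch of the "detect a new node" idea, the following Python compares the service tags seen on a previous run with the hosts reported on the current run. Everything here is an assumption for illustration: the function name, the dictionary keys, and the service tags are invented, and a real script would gather the host records through PowerCLI or pyVmomi and store the known tags somewhere durable.

```python
MARKER = "vSAN Ready Node"


def find_new_ready_nodes(known_tags, current_hosts):
    """Return Ready Node records whose service tag has not been seen before."""
    return [
        host for host in current_hosts
        if MARKER in host["model"] and host["service_tag"] not in known_tags
    ]


# Service tags recorded by a previous run (invented values).
known_tags = {"TAG0001", "TAG0002"}

# Hosts reported by the current run (invented values).
current_hosts = [
    {"model": "PowerEdge R740 vSAN Ready Node", "service_tag": "TAG0001"},
    {"model": "PowerEdge R740 vSAN Ready Node", "service_tag": "TAG0003"},
    {"model": "PowerEdge R640", "service_tag": "TAG0004"},
]

new_nodes = find_new_ready_nodes(known_tags, current_hosts)
for host in new_nodes:
    # A real script would run its onboarding tasks and notify the IT team here.
    print(f"New Ready Node detected: {host['service_tag']}")

# Remember the new tags so the next run does not report them again.
known_tags.update(host["service_tag"] for host in new_nodes)
```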

This next script uses the same ForEach-Object loop from before to find the hosts that are Dell EMC vSAN Ready Nodes and now looks to see what VMs are running on each host. From this example, we can see how the Identity Module is integral in automating the virtual environment from the hosts to the virtual machines.

    Get-VMHost -State "Connected" | ForEach-Object {
        if ($_.ExtensionData.Hardware.SystemInfo.Model.Contains("vSAN Ready Node")) {
            Write-Output "================================================="
            Write-Output "System Details"
            Write-Output "Model: $($_.ExtensionData.Hardware.SystemInfo.Model)"
            Write-Output "Service Tag: $($_.ExtensionData.Hardware.SystemInfo.SerialNumber)"
            Write-Output "BIOS version: $($_.ExtensionData.Hardware.BiosInfo.BiosVersion)"
            Write-Output "ESXi build: $($_.ExtensionData.Config.Product.Build)"
            Write-Output "+++++++++++++++++++++++++++++++++++++++++++++++++"
            Write-Output "$_ list of VMs:"
            # List the virtual machines located on the current host.
            Get-VM -Location $_ | ForEach-Object {
                Write-Output $_.ExtensionData.Name
            }
        }
    }

The new lines, which follow the ESXi build output, build on the previous example by looping through our hosts looking for “vSAN Ready Node” as before. When the script finds a matching host, it uses the Get-VM command to retrieve the virtual machines on that host. The host is specified using the -Location parameter, to which we pass the current host, represented by $_. We then use another ForEach-Object loop to display the name of each VM on the host. Inside that inner loop, $_ refers to the current VM rather than the host.

This gives our code context. If an action is carried out, we can now define the scope of that action, not just on the host but on the workloads it’s running. We can start to build code with intelligence, extracting greater value from the system, which in turn provides the opportunity to drive greater value for the organization.
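One way to make that scope explicit is to build a mapping from each Ready Node to the VMs it runs before taking any action. The Python sketch below shows the idea on plain dictionaries. The host names, VM names, and dictionary keys are invented for illustration, and in practice the records would come from PowerCLI or pyVmomi.

```python
MARKER = "vSAN Ready Node"


def vms_by_ready_node(hosts, vms):
    """Map each Ready Node host name to the names of the VMs running on it."""
    scope = {h["name"]: [] for h in hosts if MARKER in h["model"]}
    for vm in vms:
        # VMs on hosts that are not Ready Nodes are outside the scope.
        if vm["host"] in scope:
            scope[vm["host"]].append(vm["name"])
    return scope


# Invented sample inventory.
hosts = [
    {"name": "esx01.example.com", "model": "PowerEdge R740 vSAN Ready Node"},
    {"name": "esx02.example.com", "model": "PowerEdge R640"},
]
vms = [
    {"name": "web01", "host": "esx01.example.com"},
    {"name": "db01", "host": "esx01.example.com"},
    {"name": "test01", "host": "esx02.example.com"},
]

scope = vms_by_ready_node(hosts, vms)
for host_name, vm_names in scope.items():
    print(f"{host_name}: {', '.join(vm_names)}")
```

With a mapping like this, a script can report which workloads an action on a given host would touch before it performs that action.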

As I said earlier, this is just the starting point of what is possible when building PowerCLI scripts for Dell EMC vSAN Ready Nodes with Identity Modules! Other automation platforms, like Ansible, can also take advantage of the Identity Module features. We only covered the basics, but there are enormous possibilities beyond discovery operations. The nuggets of knowledge in this blog unlock numerous opportunities for you to build automations that empower your data center.

For more information, see Dell EMC vSAN Ready Nodes overview and the Dell EMC vSAN Ready Nodes blog site.

Author Information

Tony Foster

Related Blog Posts

Dell EMC vSAN Ready Nodes: Taking VDI and AI Beyond “Good Enough”

Mon, 18 Oct 2021 13:06:37 -0000

Some people have speculated that 2020 was “the year of VDI” while others say that it will never be the “year of VDI.” However, there is one certainty. In 2020 and part of 2021, organizations worldwide consumed a large amount of virtual desktop infrastructure (VDI). Some of these deployments went extremely well while other deployments were just “good enough.”

If you are a VDI enthusiast like me, there was much to learn from all that happened over the last 24 months. An interesting observation is that test VDI environments turned into production environments overnight. Also, people discovered that the capacity of clouds is not limitless. My favorite observation is the discovery by many IT professionals that GPUs can change the VDI experience from “good enough” to enjoyable, especially when coupled with an outstanding environment powered by Dell Technologies with VMware vSphere and VMware Horizon.

In this blog, I will tell you about how exceptional VDI (and AI/ML) is when paired with powerful technology.

This blog does not address cloud workloads because that is a substantial topic to which I could not give the proper level of attention here. I will address only on-premises deployments.

Many end users adopt hyperconverged infrastructure (HCI) in their data centers because it is easy to consume. One of the most popular HCIs is Dell EMC VxRail Hyperconverged Infrastructure. You can purchase nodes to match your needs. These needs range from the traditional data center workloads, to Tanzu clusters, to VDI with GPUs, and to AI. VxRail enables you to deliver whatever your end users need. If your end users are developers working from home on a container-based AI project and they need a development environment, VxRail can provide it with relative ease.

Some IT teams might want an HCI experience that is more customer managed, but they still want a system that is straightforward to deploy and validate and easy to maintain. This scenario is where Dell EMC vSAN Ready Nodes come into play.

Dell EMC vSAN Ready Nodes provide comprehensive, flexible, and efficient solutions optimized for your workforce’s business goals with a large choice of options (more than 250 as of the September 29, 2021 vSAN Compatibility Guide) from tower to rack mount to blades. A surprising option is that you can purchase Dell EMC vSAN Ready Nodes with GPUs, making them a great platform for VDI and virtualized AI/ML workloads.

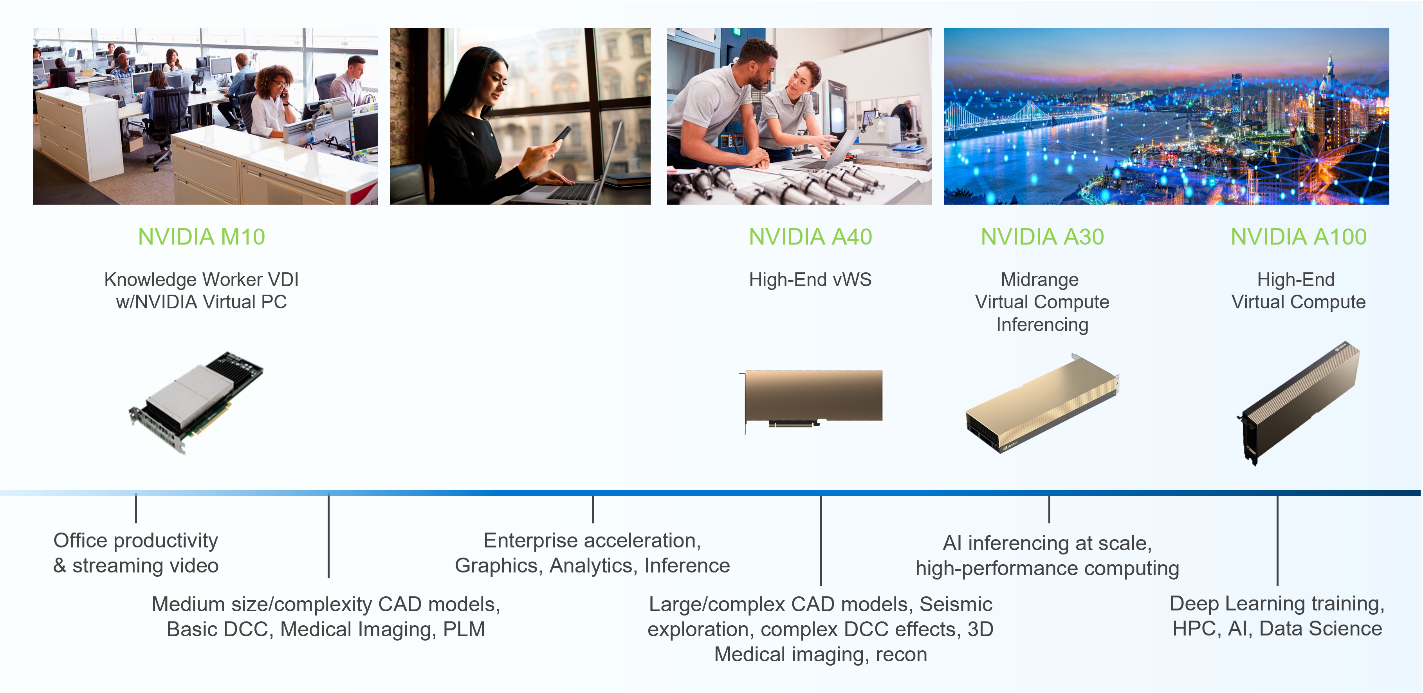

Dell EMC vSAN Ready Nodes support many NVIDIA GPUs used for VDI and AI workloads, notably the NVIDIA M10 and A40 GPUs for VDI workloads and the NVIDIA A30 and A100 GPUs for AI workloads. Other GPUs are available depending on workload requirements; however, this blog focuses on the more common use cases.

For some time, the NVIDIA M10 GPU has been the GPU of choice for VDI-based knowledge workers who typically use applications such as Microsoft PowerPoint and YouTube. The M10 GPU provides a high density of users per card and can support multiple virtual GPU (vGPU) profiles per card. The multiple profiles result from having four GPU chips per PCI board. Each chip can run a unique vGPU profile, which means that you can have four vGPU profiles per card. That is, there are twice as many profiles as are provided by other NVIDIA GPUs. This scenario is well suited for organizations with a larger set of desktop profiles.

By combining this profile capacity with Dell EMC vSAN Ready Nodes, organizations can deliver various desktop options that are still based on a standardized platform. Organizations can let end users choose the system that suits them best and can optimize IT resources by aligning them to an end user’s needs.

Typically, power users need or want more graphics capabilities than knowledge workers. For example, power users working in CAD applications need larger vGPU profiles and other capabilities like NVIDIA’s Ray Tracing technology to render drawings. These power users’ VDI instances tend to be more suited to the NVIDIA A40 GPU and associated vGPU profiles. It allows power users who do more than create Microsoft PowerPoint presentations and watch YouTube videos to have the desktop experience they need to work effectively.

The ideal Dell EMC vSAN Ready Nodes platform for the A40 GPU is based on the Dell EMC PowerEdge R750 server. The PowerEdge R750 server provides the power and capacity for demanding workloads like healthcare imaging and natural resource exploration. These workloads also tend to take full advantage of other features built into NVIDIA GPUs like CUDA. CUDA is a parallel computing platform and programming model that uses GPUs. It is used in many high-end applications. Typically, CUDA is not used with traditional graphics workloads.

In this scenario, we start to see the blend between graphics and AI/ML workloads. Some VDI users not only render complex graphics sets, but also use the GPU for other computational outcomes, much like AI and ML do.

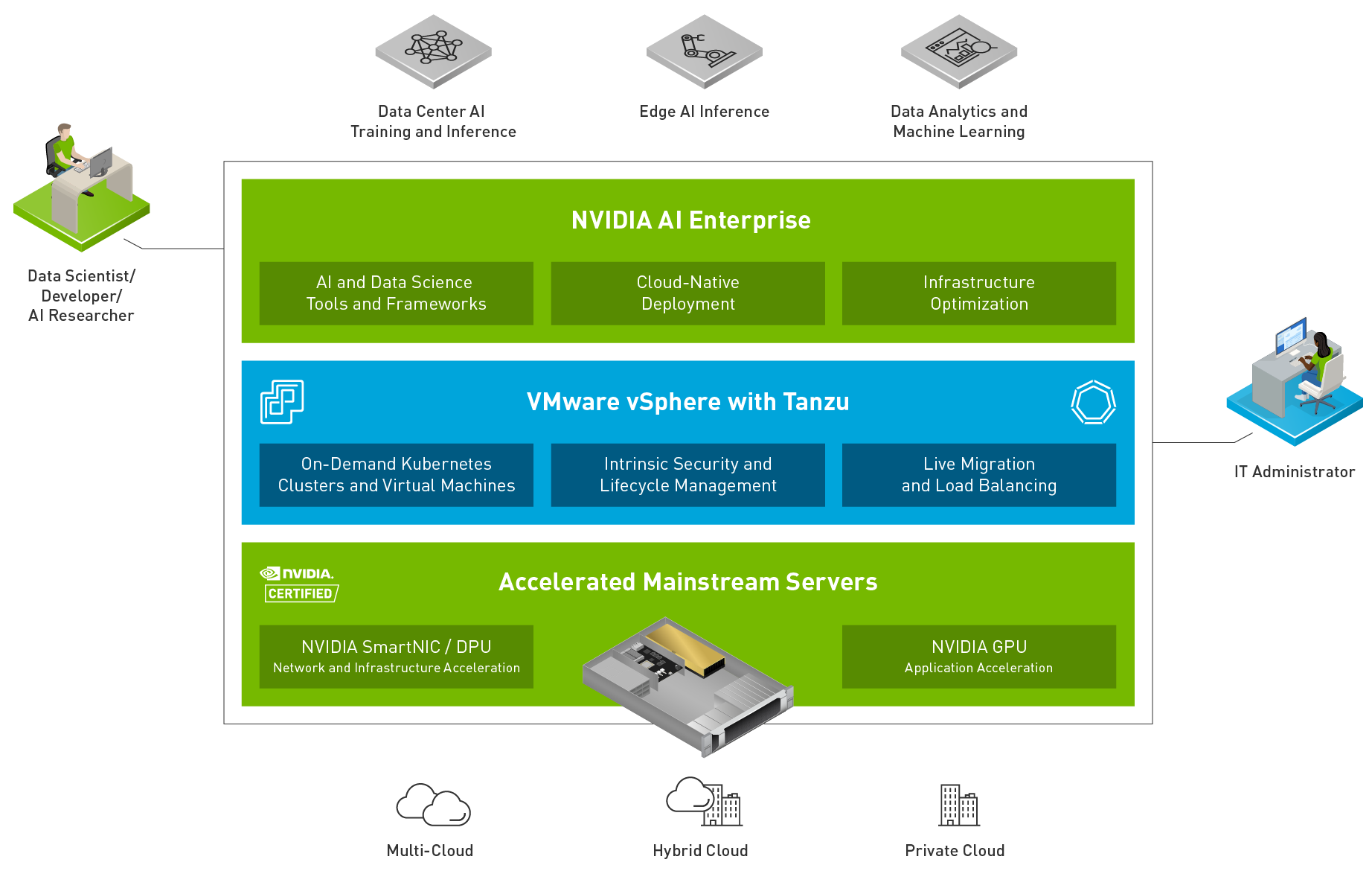

I really like that I can run AI/ML workloads in a virtual environment. It does not matter if you are an IT administrator or an AI/ML administrator. You can run AI and ML workloads in a virtual environment.

Many organizations have realized that the same benefits virtualization has brought to IT can also be realized in the AI/ML space. There are additional advantages, but those are best kept for another time.

For some organizations, IT is now responsible for AI/ML environments, whether delivering test/dev environments for programmers or delivering a complete AI training environment. For other IT groups, this responsibility falls to highly paid data scientists. And for some IT groups, the responsibility is a mix.

In this scenario, virtualization shines. IT administrators can do what they do best: deliver a powerful Dell EMC vSAN Ready Node infrastructure. Then, data scientists can spend their time building systems in a virtual environment consuming IT resources instead of racking and cabling a server.

Dell EMC vSAN Ready Nodes are great for many AI/ML applications. They are easy to consume as a single unit of infrastructure. Both the NVIDIA A30 GPU and the A100 GPU are available so that organizations can quickly and easily assemble the ideal architecture for AI/ML workloads.

This ease of consumption is important for both IT and data scientists. It is unacceptable when IT consumers like data scientists must wait for the infrastructure they need to do their job. Time is money. Data scientists need environments quickly, which Dell EMC vSAN Ready Nodes can help provide. Dell EMC vSAN Ready Nodes deploy 130 percent faster with Dell EMC OpenManage Integration for VMware vCenter (OMIVV). (Based on Dell EMC internal competitive testing of PowerEdge and OMIVV compared to Cisco UCS manual operating system deployment.)

This speed extends beyond day 0 (deployment) to day 1+ operations. When using vLCM and OMIVV, complete hypervisor and firmware updates to an eight-node PowerEdge cluster took under four minutes compared to a manual process, which took 3.5 hours. (Principled Technologies report commissioned by Dell Technologies, New VMware vSphere 7.0 features reduced the time and complexity of routine update and hardware compliance tasks, July 2020.)

Dell EMC vSAN Ready Nodes ensure that you do not have to be an expert in hardware compatibility. With over 250 Dell EMC vSAN Ready Nodes available (as of the September 29, 2021 vSAN Compatibility Guide), you do not need to guess which drives will work or if a network adapter is compatible. You can then focus more on data and the results and less on building infrastructure.

These time-to-value considerations, especially for AI/ML workloads, are important. Being able to deliver workloads such as AI/ML or VDI quickly can have a significant impact on organizations, as has been evident in many organizations over the last two years. It has been amazing to see how fast organizations have adopted or expanded their VDI environments to accommodate everyone from knowledge workers to high-end power users wherever they need to consume IT resources.

Beyond “just expanding VDI” to more users, organizations have discovered that GPUs can improve the end-user experience and, in some cases, were not only helpful but required. For many, the NVIDIA M10 GPU helped users gain the desired remote experience and move beyond “good enough.” For others who needed a more graphics-rich experience, the NVIDIA A40 GPU continues to be an ideal choice.

When GPUs are brought together as part of a Dell EMC vSAN Ready Node, organizations have the opportunity to deliver an expanded VDI and AI/ML experience to their users. To find out more about Dell EMC vSAN Ready Nodes, see Dell EMC vSAN Ready Nodes.

Author: Tony Foster Twitter: @wonder_nerd LinkedIn: https://linkedin.com/in/wondernerd

Learn About the Latest Major VxRail Software Release: VxRail 8.0.210

Fri, 15 Mar 2024 20:13:39 -0000

It’s springtime, VxRail customers! VxRail 8.0.210 is our latest software release to bloom. Come see for yourself what makes this software release shine.

VxRail 8.0.210 provides support for VMware vSphere 8.0 Update 2b. All existing platforms that support VxRail 8.0 can upgrade to VxRail 8.0.210. This is also the first VxRail 8.0 software to support the hybrid and all-flash models of the VE-660 and VP-760 nodes based on Dell PowerEdge 16th Generation platforms that were released last summer and the edge-optimized VD-4000 platform that was released early last year.

Read on for a deep dive into the release content. For a more comprehensive rundown of the features and enhancements in VxRail 8.0.210, see the release notes.

Support for VD-4000

The support for VD-4000 includes vSAN Original Storage Architecture (OSA) and vSAN Express Storage Architecture (ESA). VD-4000 was launched last year with VxRail 7.0 support with vSAN OSA. Support in VxRail 8.0.210 carries over all previously supported configurations for VD-4000 with vSAN OSA. What may intrigue you even more is that VxRail 8.0.210 is introducing first-time support for VD-4000 with vSAN ESA.

In the second half of last year, VMware reduced the hardware requirements to run vSAN ESA to extend its adoption into edge environments. This change enabled customers to consider running the latest vSAN technology in areas where constraints from price points and infrastructure resources were barriers to adoption. Shortly afterward, VxRail added support for the reduced hardware requirements on existing platforms that already supported vSAN ESA, including E660N, P670N, VE-660 (all-NVMe), and VP-760 (all-NVMe). With VD-4000, the VxRail portfolio now has an edge-optimized platform that can run vSAN ESA for environments that also may have space, energy consumption, and environmental constraints. To top that off, it’s the first VxRail platform to support a single-processor node to run vSAN ESA, further reducing the price point.

It is important to set performance expectations when running workload applications on the VD-4000 platform. While our performance testing on vSAN ESA showed stellar gains to the point where we made the argument to invest in 100GbE to maximize performance (check it out here), it is essential to understand that the VD-4000 platform is running with an Intel Xeon-D processor with reduced memory and bandwidth resources. In short, while a VD-4000 running vSAN ESA won’t be setting any performance records, it can be a great solution for your edge sites if you are looking to standardize on the latest vSAN technology and take advantage of vSAN ESA’s data services and erasure coding efficiencies.

Lifecycle management enhancements

VxRail 8.0.210 offers support for a few vLCM feature enhancements that came with vSphere 8.0 Update 2. In addition, the VxRail implementation of these enhancements further simplifies the user experience.

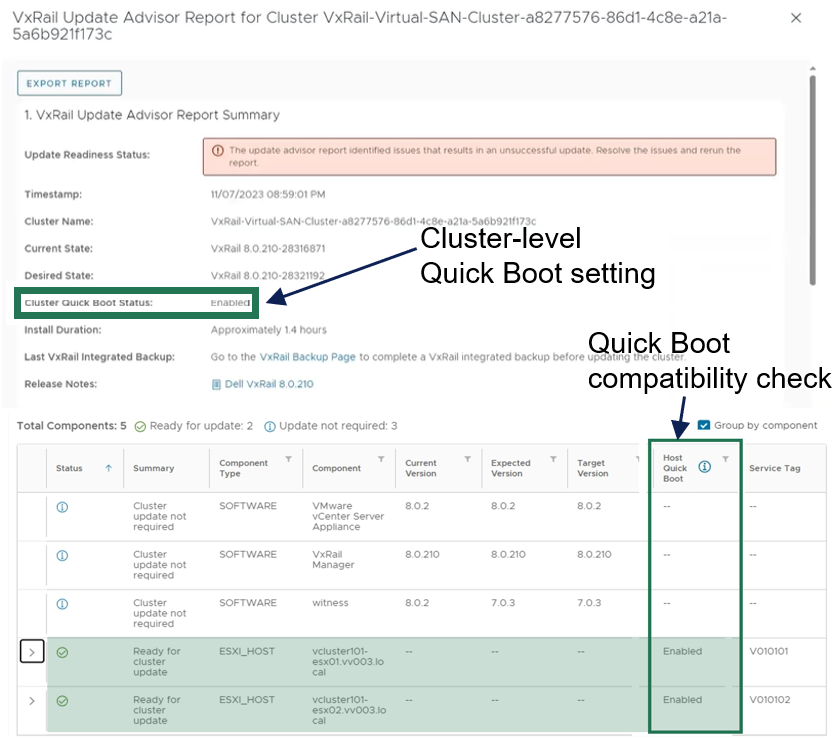

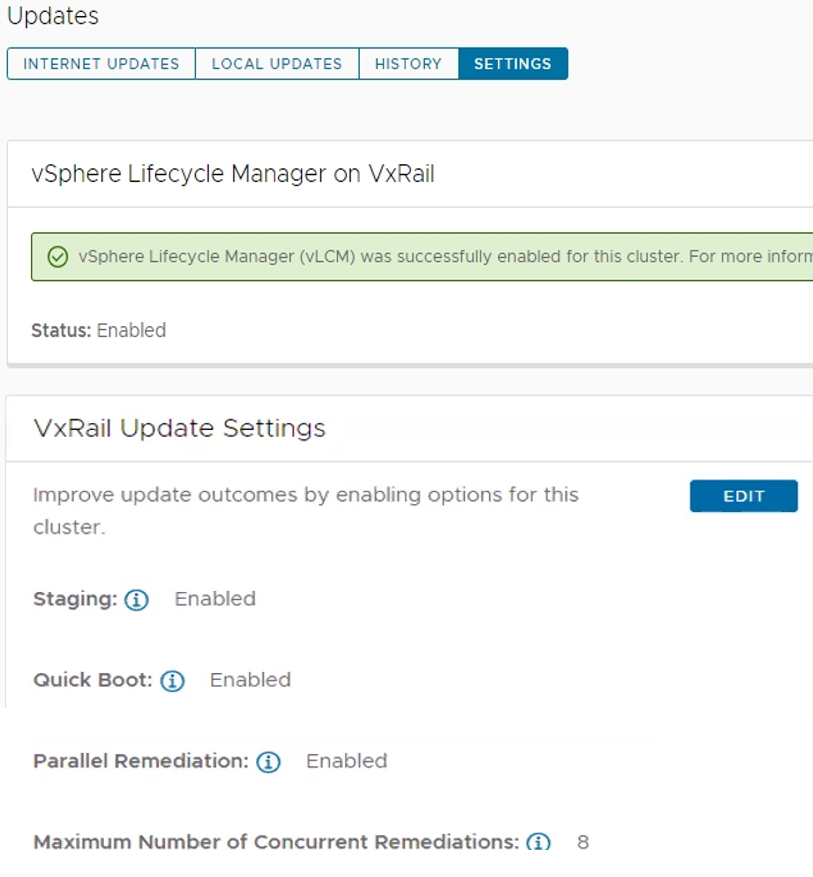

For vLCM-enabled VxRail clusters, we’ve made it easier to benefit from VMware ESXi Quick Boot. The VxRail Manager UI has been enhanced so that users can enable Quick Boot one time, and VxRail will maintain the setting whenever there is a Quick Boot-compatible cluster update. As a refresher for some folks not familiar with Quick Boot, it is an operating system-level reboot of the node that skips the hardware initialization. It can reduce the node reboot time by up to three minutes, providing significant time savings when updating large clusters. That said, any cluster update that involves firmware updates is not Quick Boot-compatible.

Using Quick Boot had been cumbersome in the past because it required several manual steps. To use Quick Boot for a cluster update, you would need to go to the vSphere Update Manager to enable the Quick Boot setting. Because the setting resets to Disabled after the reboot, this step had to be repeated for any Quick Boot-compatible cluster update. Now, the setting can be persisted to avoid manual intervention.

As shown in the following figure, the update advisor report now informs you whether a cluster update is Quick Boot-compatible so that the information is part of your update planning procedure. VxRail leverages the ESXi Quick Boot compatibility utility for this status check.

Figure 1. VxRail update advisor report highlighting Quick Boot information

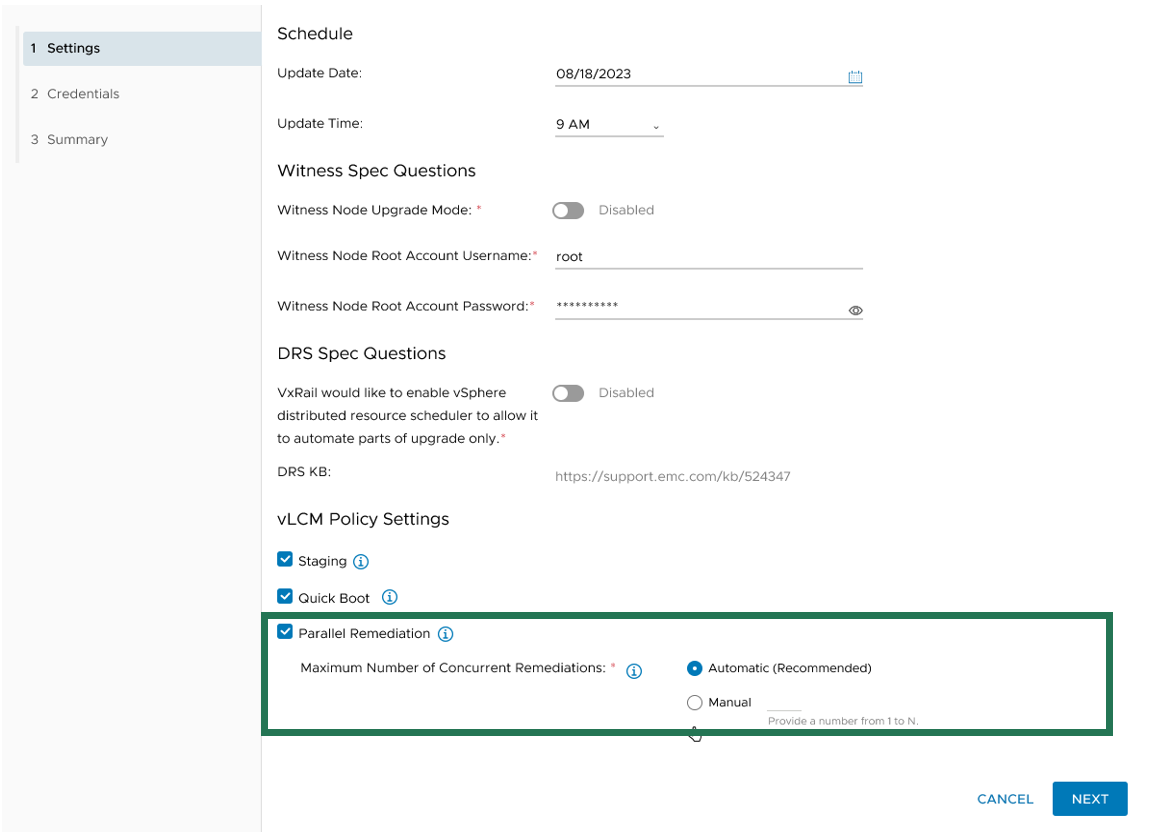

Another new vLCM feature enhancement that VxRail supports is parallel remediation. This enhancement allows you to update multiple nodes at the same time, which can significantly cut down on the overall cluster update time. However, this feature enhancement only applies to VxRail dynamic nodes because the nodes in vSAN clusters still need to be updated one at a time to adhere to storage policy settings.

This feature offers substantial benefits in reducing the maintenance window, and VxRail’s implementation of the feature offers additional protections over how it can be used on vSAN Ready Nodes. For example, enabling parallel remediation with vSAN Ready Nodes means that you would be responsible for managing when nodes go into and out of maintenance mode as well as ensuring application availability because vCenter will not check whether the nodes that you select will disrupt application uptime. The VxRail implementation adds safety checks that help mitigate potential pitfalls, ensuring a smoother parallel remediation process.

VxRail Manager manages when nodes enter and exit maintenance mode and provides the same level of error checking that it already performs on cluster updates. You have the option of letting VxRail Manager automatically set the maximum number of nodes that it will update concurrently, or you can input your own number. The number for the manual setting is capped at the total node count minus two to ensure that the VxRail Manager VM and vCenter Server VM can continue to run on separate nodes during the cluster update.
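The cap on the manual setting is simple arithmetic, shown here as a short Python sketch. The function names are hypothetical, and the lower bound of one node is an assumption added for the example. The only rule taken from this post is that the manual setting cannot exceed the total node count minus two.

```python
def manual_cap(total_nodes):
    """Upper bound for the manual setting: total node count minus two."""
    return total_nodes - 2


def validate_request(requested, total_nodes):
    """Return the requested concurrency if it fits under the cap, else raise.

    The minimum of 1 is an assumption for this sketch, not a documented rule.
    """
    cap = manual_cap(total_nodes)
    if requested < 1 or requested > cap:
        raise ValueError(
            f"requested {requested} concurrent nodes, allowed range is 1 to {cap}"
        )
    return requested


# An eight-node dynamic node cluster leaves two nodes untouched at any moment,
# one for the VxRail Manager VM and one for the vCenter Server VM.
print(manual_cap(8))
```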

Figure 2. Options for setting the maximum number of concurrent node remediations

During the cluster update, VxRail Manager intelligently reduces the node count of concurrent updates if a node cannot enter maintenance mode or if the application workload cannot be migrated to another node to ensure availability. VxRail Manager will automatically defer that node to the next batch of node updates in the cluster update operation.
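To picture the deferral behavior, here is a toy Python model. It is not VxRail Manager's actual scheduling logic. It assumes a fixed concurrency limit and a hypothetical set of nodes that cannot enter maintenance mode on their first attempt but can on the next one. All names are invented for the illustration.

```python
def plan_batches(nodes, max_concurrent, blocked_first_try=()):
    """Group nodes into update batches, deferring blocked nodes by one batch."""
    pending = list(nodes)
    deferred_once = set()
    batches = []
    while pending:
        # Take the next group of candidates, up to the concurrency limit.
        candidates, pending = pending[:max_concurrent], pending[max_concurrent:]
        batch, carry = [], []
        for node in candidates:
            if node in blocked_first_try and node not in deferred_once:
                deferred_once.add(node)
                carry.append(node)  # defer this node to the next batch
            else:
                batch.append(node)
        # Deferred nodes go to the front of the queue for the next batch.
        pending = carry + pending
        if batch:
            batches.append(batch)
    return batches


nodes = ["n1", "n2", "n3", "n4", "n5", "n6"]
batches = plan_batches(nodes, max_concurrent=2, blocked_first_try={"n2"})
for number, batch in enumerate(batches, start=1):
    print(f"Batch {number}: {', '.join(batch)}")
```

In this run the first batch shrinks to a single node because n2 is deferred, and n2 is then updated together with n3 in the second batch.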

The last vLCM feature enhancement in VxRail 8.0.210 that I want to discuss is installation file pre-staging. The idea is to upload as many installation files for the node update as possible onto the node before it actually begins the update operation. Transfer times can be lengthy, so any reduction in the maintenance window would have a positive impact on the production environment.

To reap the maximum benefits of this feature, consider using the scheduling feature when setting up your cluster update. Initiating a cluster update with a future start time allows VxRail Manager the time to pre-stage the files onto the nodes before the update begins.

As you can see, the three vLCM feature enhancements can have varying levels of impact on your VxRail clusters. Automated Quick Boot enablement only benefits cluster updates that are Quick Boot-compatible, meaning there is not a firmware update included in the package. Parallel remediation only applies to VxRail dynamic node clusters. To maximize installation files pre-staging, you need to schedule cluster updates in advance.

That said, two commonalities across all three vLCM feature enhancements are that you must have your VxRail clusters running in vLCM mode and that the VxRail implementation of these three feature enhancements makes them more secure and easier to use. As shown in the following figure, the Updates page on the VxRail Manager UI has been enhanced so that you can easily manage these vLCM features at the cluster level.

Figure 3. VxRail Update Settings for vLCM features

VxRail dynamic nodes

VxRail 8.0.210 also introduces an enhancement for dynamic node clusters with a VxRail-managed vCenter Server. In a recent VxRail software release, VxRail added an option for you to deploy a VxRail-managed vCenter Server with your dynamic node cluster as a Day 1 operation. The initial support was for Fibre Channel-attached storage. The enhancement in this release adds support for dynamic node clusters that use IP-attached storage for their primary datastore. That means iSCSI, NFS, and NVMe over TCP attached storage from PowerMax, VMAX, PowerStore, UnityXT, PowerFlex, and VMware vSAN cross-cluster capacity sharing are now supported. Just like before, you are still responsible for acquiring and applying your own vCenter Server license before the 60-day evaluation period expires.

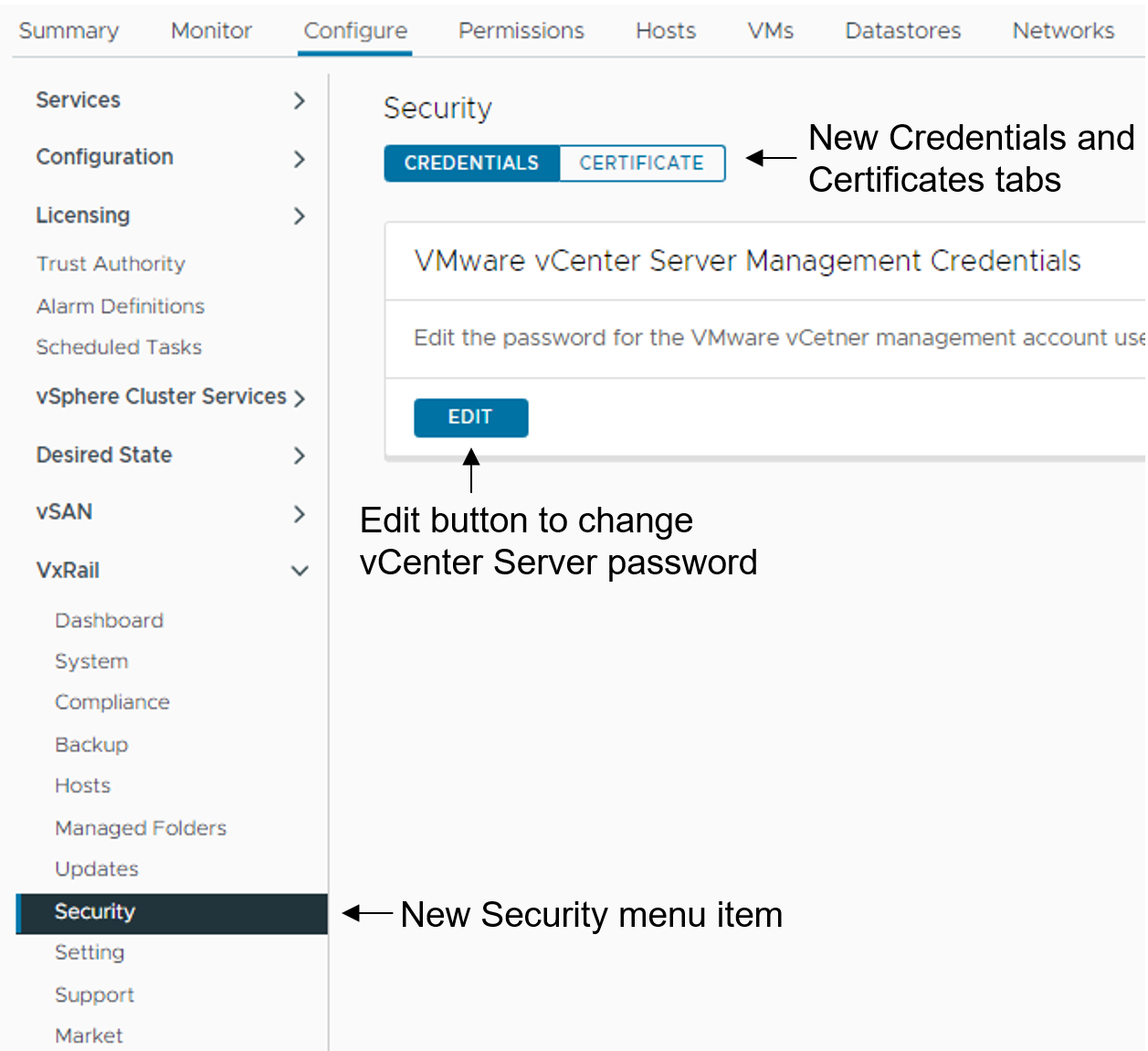

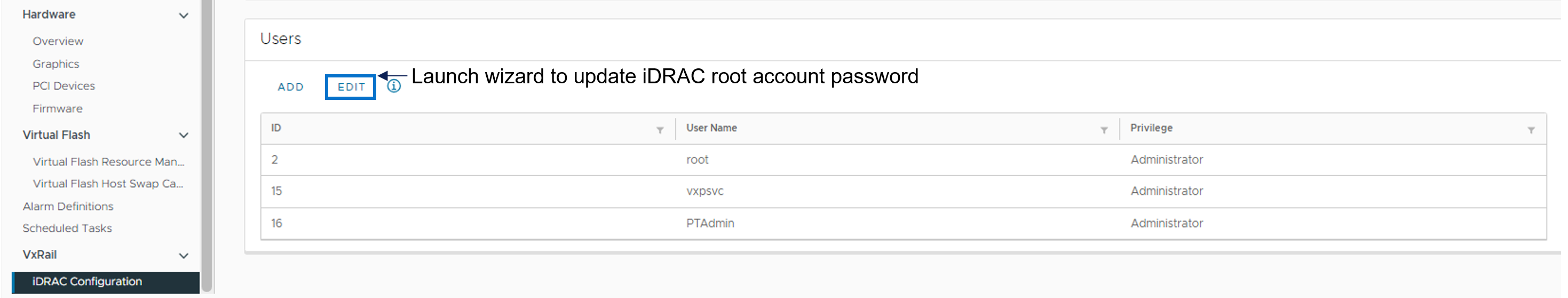

Password management

Password management is one of the key areas of focus in this software release. Previously, to modify the vCenter Server management or iDRAC root account password, you had to change the password on the vCenter Server or iDRAC itself and then go to the VxRail Manager UI to provide the updated password. The VxRail Manager UI has been enhanced so that you can make these changes through a wizard-driven workflow instead. The enhancement simplifies the experience and reduces potential user errors.

To update the vCenter Server management credentials, there is a new Security page that replaces the Certificates page. As illustrated in the following figure, a Certificate tab for the certificates management and a Credentials tab to change the vCenter Server management password are now present.

Figure 4. How to update the vCenter Server management credentials

To update the iDRAC root account password, there is a new iDRAC Configuration page where you can click the Edit button to launch a wizard to change the password.

Figure 5. How to update the iDRAC root password

Deployment flexibility

Lastly, I want to touch on two features in deployment flexibility.

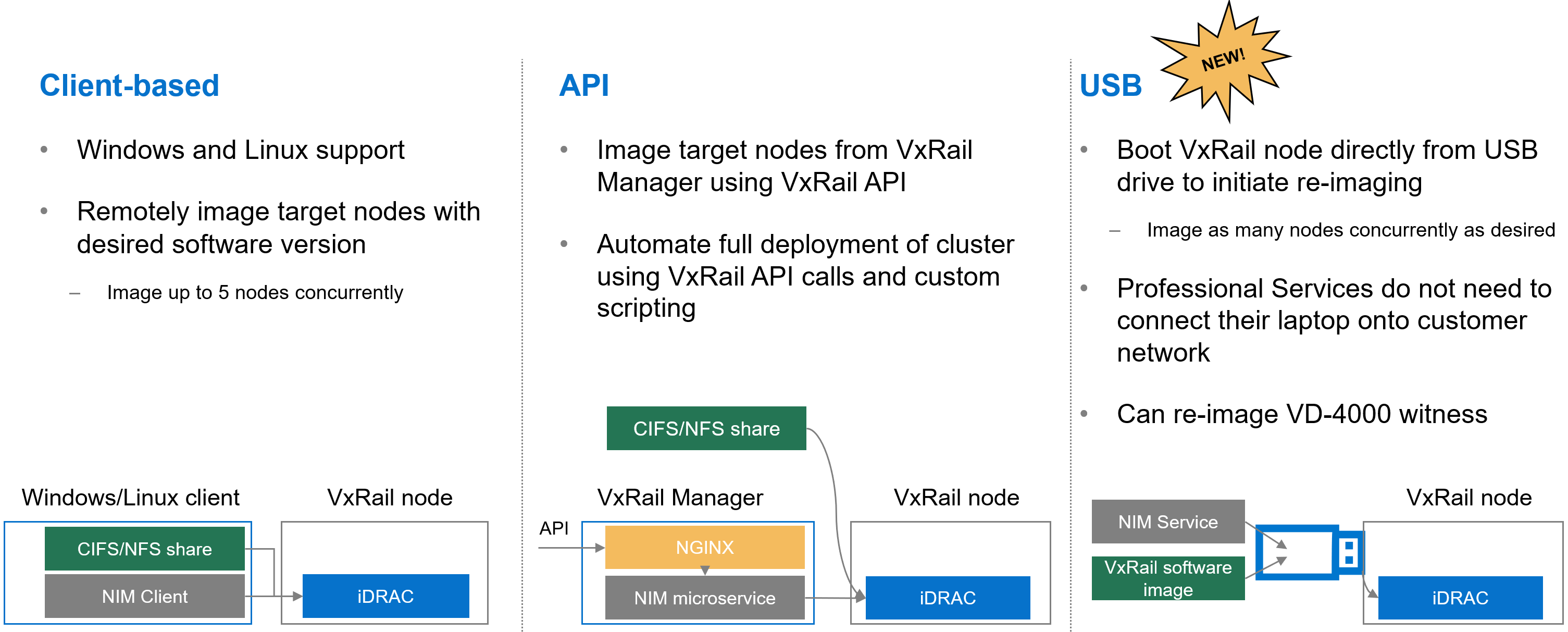

Over the past few years, the VxRail team has invested heavily in empowering you with the tools to recompose and rebuild the clusters on your own. One example is making our VxRail nodes customer-deployable with the VxRail Configuration Portal. Another is the node imaging tool.

Figure 6. Different options to use the node image management tool

Initially, the node imaging tool was Windows client-based where the workstation has the VxRail software ISO image stored locally or on a share. By connecting the workstation onto the local network where the target nodes reside, the imaging tool can be used to connect to the iDRAC of the target node. Users can reimage up to 5 nodes on the local network concurrently. In a more recent VxRail release, we added Linux client support for the tool.

We’ve also refactored the tool into a microservice within the VxRail HCI System Software so that it can be used via VxRail API. This method added more flexibility so that you can automate the full deployment of your cluster by using VxRail API calls and custom scripting.

In VxRail 8.0.210, we are introducing the USB version of the tool. Here, the tool can be self-contained on a USB drive so that users can plug the USB drive into a node, boot from it, and initiate reimaging. This provides benefits in scenarios where the 5-node maximum for concurrent reimage jobs is an issue. With this option, users can scale reimage jobs by setting up more USB drives. The USB version of the tool now allows an option to reimage the embedded witness on the VD-4000.
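The effect of the concurrency limit is easy to see with a little arithmetic. The Python sketch below splits a list of nodes into reimage waves. The limit of five comes from this post, while the node names and the assumption that each USB drive reimages one node at a time are illustrative only.

```python
MAX_CONCURRENT = 5  # concurrent reimage limit stated in this post


def reimage_waves(nodes, max_concurrent=MAX_CONCURRENT):
    """Split nodes into consecutive waves of at most max_concurrent nodes."""
    return [
        nodes[i:i + max_concurrent]
        for i in range(0, len(nodes), max_concurrent)
    ]


# Twelve invented node names: node01 through node12.
nodes = [f"node{n:02d}" for n in range(1, 13)]

# With the client-based tool, twelve nodes need three waves (5, 5, and 2 nodes).
waves = reimage_waves(nodes)
print([len(wave) for wave in waves])

# Assuming one USB drive per node, all twelve nodes fit in a single wave.
usb_waves = reimage_waves(nodes, max_concurrent=12)
print(len(usb_waves))
```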

The final feature for deployment flexibility is support for IPv6. Whether your environment is exhausting the IPv4 address pool or there are requirements in your organization to future-proof your networking with IPv6, you will be pleasantly surprised by the level of support that VxRail offers.

You can deploy IPv6 in a dual or single network stack. In a dual network stack, you can have IPv4 and IPv6 addresses for your management network. In a single network stack, the management network is only on the IPv6 network. Initial support is for VxRail clusters running vSAN OSA with 3 or more nodes. Other than that, the feature set is on par with what you see with IPv4. Select the network stack at cluster deployment.
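When planning a deployment, it can help to check which stack a proposed set of management addresses implies. The following Python sketch uses the standard ipaddress module to do that. The function name and the returned labels are hypothetical, the addresses come from the reserved documentation ranges, and nothing here calls a VxRail API.

```python
import ipaddress


def network_stack(management_addresses):
    """Classify a list of management IP addresses by the IP versions present."""
    versions = {ipaddress.ip_address(a).version for a in management_addresses}
    if versions == {4, 6}:
        return "dual stack"
    if versions == {6}:
        return "single stack (IPv6 only)"
    if versions == {4}:
        return "IPv4 only"
    raise ValueError("no management addresses given")


# A management network with both address families is dual stack.
print(network_stack(["192.0.2.10", "2001:db8::10"]))

# A management network with only IPv6 addresses is single stack.
print(network_stack(["2001:db8::10", "2001:db8::11"]))
```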

Conclusion

VxRail 8.0.210 offers a plethora of new features and platform support such that there is something for everyone. As you digest the information about this release, know that updating your cluster to the latest VxRail software provides you with the best return on your investment from a security and capability standpoint. Backed by VxRail Continuously Validated States, you can update your cluster to the latest software with confidence. For more information about VxRail 8.0.210, please refer to the release notes. For more information about VxRail in general, visit the Dell Technologies website.

Author: Daniel Chiu, VxRail Technical Marketing

https://www.linkedin.com/in/daniel-chiu-8422287/