Assets

Deploying the Llama 3.1 405b Model Using NVIDIA NIM

Fri, 11 Oct 2024 12:35:47 -0000

|Read Time: 0 minutes

This blog is one in a series of three that shows how Dell Technologies and our partner AI ecosystem can help you to provision the most powerful open-source model available easily. In this series of blogs, we share information about the ease of deploying the Llama 3.1 405b model in the Dell PowerEdge XE9680 server using NVIDIA NIM microservices, Dell Enterprise Hub using Text Generation Inference (TGI), or vLLM for LLMs. We hope this series equips you with the knowledge and tools needed for a successful deployment.

This blog describes the NVIDIA NIM for LLMs option.

Overview

On July 23, 2024, Meta announced the most capable open-source LLM model, Llama 3.1 405b. This model is so powerful that it competes with the best-in-class commercial models, exceeding or matching the performance in several benchmarks. However, with great power comes considerable requirements.

Dell Technologies has been working with many partners to create an ecosystem to help customers succeed on their AI journey. For the Llama 3.1 405b model, we worked directly with Meta and published the Running Llama 3.1 405B models on Dell PowerEdge XE9680 blog. The blog explains how to run model inference on a Dell PowerEdge XE9680 server with torchrun from PyTorch, which is the base process provided by Meta with the Llama 3.1 405b model.

Deployment with NVIDIA NIM

We recently published a guide about how to run NVIDIA NIM using Docker in the Introduction to NVIDIA Inference Microservices, aka NIM blog. The following steps guide the deployment of the Llama 3.1 405b model.

NVIDIA provides documentation about how to deploy NVIDIA NIM for LLM at https://docs.nvidia.com/nim/large-language-models/latest/deploy-helm.html. However, before starting the deployment, note that NVIDIA prepared four different versions of the model that are called NIM Profiles. The NIM Profiles enable you to select the version of the model to deploy, based on your hardware availability and business requirements.

Inside the NIM container that is available for download from the NVIDIA NGC at nvcr.io/nim/meta/llama-3.1-405b-instruct:1.2.0, run the following command to list the profiles:

nim@my-nim-0:/$ list-model-profiles SYSTEM INFO - Free GPUs: - [2330:10de] (0) NVIDIA H100 80GB HBM3 (H100 80GB) [current utilization: 0%] - [2330:10de] (1) NVIDIA H100 80GB HBM3 (H100 80GB) [current utilization: 0%] - [2330:10de] (2) NVIDIA H100 80GB HBM3 (H100 80GB) [current utilization: 0%] - [2330:10de] (3) NVIDIA H100 80GB HBM3 (H100 80GB) [current utilization: 0%] - [2330:10de] (4) NVIDIA H100 80GB HBM3 (H100 80GB) [current utilization: 0%] - [2330:10de] (5) NVIDIA H100 80GB HBM3 (H100 80GB) [current utilization: 0%] - [2330:10de] (6) NVIDIA H100 80GB HBM3 (H100 80GB) [current utilization: 0%] - [2330:10de] (7) NVIDIA H100 80GB HBM3 (H100 80GB) [current utilization: 0%] MODEL PROFILES - Compatible with system and runnable: - 8860fe7519bece6fdcb642b907e07954a0b896dbb1b77e1248a873d8a1287971 (tensorrt_llm-h100-fp8-tp8-throughput) - With LoRA support: - Incompatible with system: - b80e254301eff63d87b9aa13953485090e3154ca03d75ec8eff19b224918c2b5 (tensorrt_llm-h100-fp8-tp8-latency) - f8bf5df73b131c5a64c65a0671dab6cf987836eb58eb69f2a877c4a459fd2e34 (tensorrt_llm-a100-fp16-tp8-latency) - b02b0fe7ec18cb1af9a80b46650cf6e3195b2efa4c07a521e9a90053c4292407 (tensorrt_llm-h100-fp16-tp8-latency)

Looking closer at this profile information, we see:

- 8860fe7519bece6fdcb642b907e07954a0b896dbb1b77e1248a873d8a1287971—Sequence of characters that is used to select the version of the model that will be loaded into the GPU memory.

- tensorrt_llm—Backend used by Triton to load the model. For the Llama 3.1 405b model, we have only TRT-LLM, but for other models, vLLM is also available.

- h100—Model of the supported GPU.

- fp8—Float point of the model, which can be FP8 or FP16 for the Llama 3.1 405b model.

- tp8—Number of Tensor Parallelism used to deploy the model. This value represents the number of GPUs that must be available on your system to deploy it, in this case, eight GPUs per node.

- throughput—If the model is optimized for throughput or latency, this information indicates the number of nodes or Pipeline Parallelism (PP). For the throughput model, you can use a single PowerEdge XE9680 server with eight NVIDIA H100 GPUs. For the latency model, you use two PowerEdge XE9680 servers with PP2.

With this profile information in mind, you can select the model to use depending on the necessity and infrastructure available.

NVIDIA NIM prerequisites

To run the following procedure, the infrastructure must be deployed with Kubernetes, NVIDIA GPU Operator, one or two PowerEdge XE9680 servers with eight NVIDIA H100 or A100 GPUs, and follows the Support Matrix.

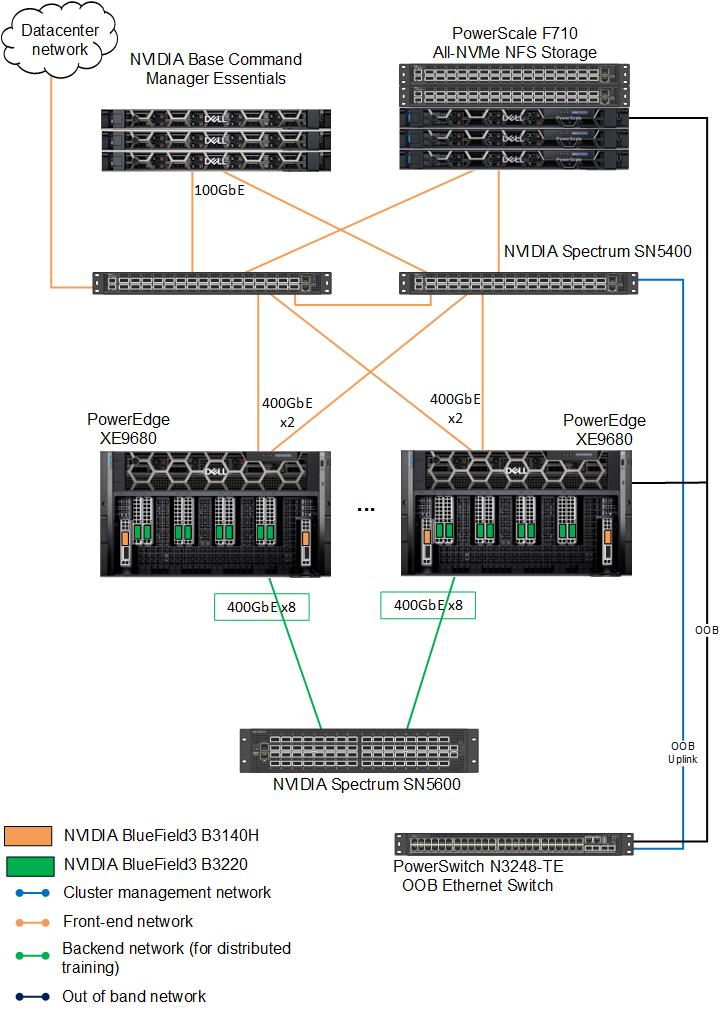

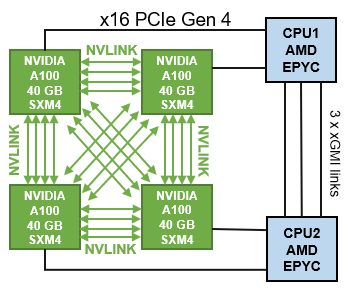

The Dell AI Factory easily deploys this model. The following figure shows the infrastructure used for the deployment:

Figure 1: Dell AI Factory connection diagram

Downloading the Helm chart for NIM

To download the Helm chart for NIM:

- Set up the NGC API Key. See the Introduction to NVIDIA Inference Microservices, aka NIM blog for details.

- Download the Helm chart, which will enable installation on a Kubernetes cluster:

fbronzati@login01:/mnt/f710/NIM$ helm fetch https://helm.ngc.nvidia.com/nim/charts/nim-llm-1.1.2.tgz --username='$oauthtoken' --password=<replace_by_your_NGC_API_KEY> fbronzati@login01:/mnt/f710/NIM$ ll total 184 drwxrwxr-x 2 fbronzati fbronzati 35 Aug 29 11:30 ./ drwxrwxrwx 33 nobody nogroup 1018 Aug 29 11:25 ../ -rw-r--r-- 1 fbronzati fbronzati 27959 Aug 29 11:30 nim-llm-1.1.2.tgz

Creating the Kubernetes namespace and secret

To avoid any conflict, deploy the pod on a new namespace:

- Create the namespace:

fbronzati@login01:/mnt/f710/NIM$ kubectl create namespace nim namespace/nim created

- Create the secret for downloading the container and models from NVIDIA NGC:

fbronzati@login01:/mnt/f710/NIM$ kubectl create secret docker-registry ngc-secret --docker-server="nvcr.io" --docker-username='$oauthtoken' --docker-password=<replace_by_your_NGC_API_KEY> -n nim secret/ngc-secret created fbronzati@login01:/mnt/f710/NIM$ kubectl create secret generic ngc-api --from-literal=NGC_API_KEY=<replace_by_your_NGC_API_KEY> -n nim secret/ngc-api created

- Run the following command to verify that the secrets were properly created inside the NIM namespace:

fbronzati@login01:/mnt/f710/NIM$ kubectl get secrets -n nim NAME TYPE DATA AGE ngc-api Opaque 1 18s ngc-secret kubernetes.io/dockerconfigjson 1 50s

Installing LWS

We recommend LeaderWorkerSet (LWS) deployments as the method for deploying multimode models with NVIDIA NIM. To enable LWS deployments, see the installation instructions in the LWS documentation. The Helm chart defaults to LWS for multinode deployments.

With LWS deployments, Leader and Worker pods coordinate to run your multinode models.

LWS deployments support manual scaling and auto scaling, in which the entire set of pods are treated as a single replica. However, there are some limitations to scaling when using LWS deployments. If scaling manually (autoscaling is not enabled), you cannot scale above the initial number of replicas set in the Helm chart.

The following example command deploys and verifies that the pod was properly deployed and is running:

fbronzati@login01:/mnt/f710/NIM$ kubectl apply --server-side -f https://github.com/kubernetes-sigs/lws/releases/download/v0.3.0/manifests.yaml namespace/lws-system serverside-applied customresourcedefinition.apiextensions.k8s.io/leaderworkersets.leaderworkerset.x-k8s.io serverside-applied serviceaccount/lws-controller-manager serverside-applied role.rbac.authorization.k8s.io/lws-leader-election-role serverside-applied clusterrole.rbac.authorization.k8s.io/lws-manager-role serverside-applied clusterrole.rbac.authorization.k8s.io/lws-metrics-reader serverside-applied clusterrole.rbac.authorization.k8s.io/lws-proxy-role serverside-applied rolebinding.rbac.authorization.k8s.io/lws-leader-election-rolebinding serverside-applied clusterrolebinding.rbac.authorization.k8s.io/lws-manager-rolebinding serverside-applied clusterrolebinding.rbac.authorization.k8s.io/lws-proxy-rolebinding serverside-applied secret/lws-webhook-server-cert serverside-applied service/lws-controller-manager-metrics-service serverside-applied service/lws-webhook-service serverside-applied deployment.apps/lws-controller-manager serverside-applied mutatingwebhookconfiguration.admissionregistration.k8s.io/lws-mutating-webhook-configuration serverside-applied validatingwebhookconfiguration.admissionregistration.k8s.io/lws-validating-webhook-configuration serverside-applied

The following command shows if LWS was properly installed and is running on the cluster:

fbronzati@login01:/mnt/f710/NIM$ kubectl get pods --all-namespaces | grep lws lws-system lws-controller-manager-5c4ff67cbd-n4glc 2/2 Running 0 48s

Customizing the deployment file

Create a custom-values.yaml file and add the following content to the file, customizing as needed for your deployment:

image: # Adjust to the actual location of the image and version you want repository: nvcr.io/nim/meta/llama-3.1-405b-instruct tag: 1.2.0 imagePullSecrets: - name: ngc-secret env: - name: NIM_MODEL_PROFILE #value: "8860fe7519bece6fdcb642b907e07954a0b896dbb1b77e1248a873d8a1287971" #Llama3.1 405b(tensorrt_llm-h100-fp8-tp8-throughput) #value: "b80e254301eff63d87b9aa13953485090e3154ca03d75ec8eff19b224918c2b5" #Llama 3.1 405b(tensorrt_llm-h100-fp8-tp8-latency) pp2 value: "b02b0fe7ec18cb1af9a80b46650cf6e3195b2efa4c07a521e9a90053c4292407" #Llama3.1 405b(tensorrt_llm-h100-fp16-tp8-latency) pp2 nodeSelector: # likely best to set this to `nvidia.com/gpu.present: "true"` depending on cluster setup nvidia.com/gpu.product: NVIDIA-H100-80GB-HBM3 model: name: meta/llama-3_1-405b-instruct ngcAPISecret: ngc-api # NVIDIA recommends using an NFS-style read-write-many storage class. # All nodes will need to mount the storage. In this example, we assume a storage class exists name "nfs". nfs: enabled: true server: f710.f710 path: /ifs/data/Projects/NIM/models readOnly: false # This should match `multiNode.gpusPerNode` resources: limits: nvidia.com/gpu: 8 multiNode: enabled: true workers: 2 gpusPerNode: 8 # Downloading the model will take quite a long time. Give it as much time as ends up being needed. startupProbe: failureThreshold: 1500

We already added the three NVIDIA H100 profiles on the configuration file. You can comment or uncomment the wanted model in the NIM_MODEL_PROFILE section.

Installing the Helm chart

To deploy the model, run the following command to download and start the Kubernetes pod inside the NIM namespace:

fbronzati@login01:/mnt/f710/NIM$ helm install my-nim nim-llm-1.1.2.tgz -f custom-values.yaml -n nim NAME: my-nim LAST DEPLOYED: Wed Sep 11 08:59:26 2024 NAMESPACE: nim STATUS: deployed REVISION: 1 NOTES: Thank you for installing nim-llm. ************************************************** | It may take some time for pods to become ready | | while model files download | ************************************************** Your NIM version is: 1.2.0

The pod will have one or two containers depending on the profile and number of workers. The following example shows the pod running the model over two containers:

fbronzati@login01:/mnt/f710/NIM$ kubectl get pods -n nim -o widefNAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATESfmy-nim-0 1/1 Running 0 14m *.*.*.* helios26 <none> <none> my-nim-0-1 1/1 Running 0 14m *.*.*.* helios24 <none> <none>

Sending requests

The Helm chart also deploys a Kubernetes service to interact with the model sending a request. The following command provides the IP address and port that is created to access the model:

fbronzati@login01:/mnt/f710/NIM$ kubectl get services -n nim NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE my-nim ClusterIP None <none> <none> 110s my-nim-nim-llm ClusterIP *.*.*.* <none> 8000/TCP 110s

NVIDIA NIM uses OpenAI API, therefore, there are many ways to interact with the model. See https://docs.api.nvidia.com/nim/reference/meta-llama-3_1-405b-infer for information to understand the best ways to send requests with Python, Node, and Shell. The following example is a simple shell script to test if the model was deployed correctly:

fbronzati@login01:/mnt/f710/NIM$ curl -X 'POST' \

'http://*.*.*.*:8000/v1/completions' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{

"model": "meta/llama-3.1-405b-instruct",

"prompt": "Once upon a time",

"max_tokens": 64

}' The response is similar to the following example:

{"id":"cmpl-5df0e556fcbf48738159707b65055d83","object":"text_completion","created":1726096116,"model":"meta/llama-3.1-405b-instruct","choices":[{"index":0,"text":", in a small village nestled in the rolling hills of Tuscany, there was a young apprentice named Dario . Dario was an aspiring sommelier, eager to learn the art of pairing wines with the region’s famous dishes. His mentor, the wise and kind-hearted Giovanni, noticed Dario’s passion and","logprobs":null,"finish_reason":"length","stop_reason":null}],"usage":{"prompt_tokens":5,"total_tokens":69,"completion_tokens":64}}Conclusion

Deploying the Llama 3.1 405b model can be challenging due to its size and hardware requirements. However, with NVIDIA NIM on Dell PowerEdge servers, most of the complexity is removed and the task becomes achievable, providing flexibility and scalability for a wide range of applications. The collaboration between Dell Technologies and NVIDIA showcases a high degree of synergy, offering a pathway to use cutting-edge AI capabilities in a production environment. This blog serves as a valuable resource for understanding the deployment intricacies and selecting the optimal approach based on hardware and performance requirements.

Dell AI Factory further simplifies this process by providing preconfigured environments and deployment templates, making it easier for enterprises to adopt the Llama 3.1 405b model without compromising performance or resource efficiency.

Deploying the Llama 3.1 405b Model Using Dell Enterprise Hub

Thu, 10 Oct 2024 19:42:19 -0000

|Read Time: 0 minutes

This blog is one in a series of three that shows how Dell Technologies and our partner AI ecosystem can help you to provision the most powerful open-source model available easily. In this series of blogs, we share information about the ease of deploying the Llama 3.1 405b model on the Dell PowerEdge XE9680 server by using NVIDIA NIM, Dell Enterprise Hub using Text Generation Inference (TGI), or vLLM for Large Language Models (LLMs). We hope this series equips you with the knowledge and tools needed for a successful deployment.

This blog describes the Dell Enterprise Hub for LLMs option.

Overview

In this blog, we describe how to deploy the Llama 3.1 405b model on a Dell PowerEdge XE9680 server using the Dell Enterprise Hub portal developed in partnership with Hugging Face.

We also published the steps to run models using Kubernetes and the Dell Enterprise Hub with Hugging Face in the Scale your Model Deployments with the Dell Enterprise Hub blog, in which we used the Llama 3.1 70b model. We recommend reading this blog for more details about the Text Generation Inference (TGI) implementation and the infrastructure used. TGI is a toolkit for deploying and serving LLMs that enables high-performance text generation for the most popular open-source LLMs.

Dell Enterprise Hub

The Dell Enterprise Hub (https://dell.huggingface.co/) revolutionizes access to and use of optimized models on cutting-edge Dell hardware. It offers a curated collection of models that have been thoroughly tested and validated on Dell systems.

The Dell Enterprise Hub has curated examples for deploying the models with Docker and Kubernetes. In this blog, we show how easy it is to start inferencing with the Llama 3.1 405b model.

The Scale your Model Deployments with the Dell Enterprise Hub blog describes how to access the portal and retrieve the examples, therefore we do not include this information. For more information, see the blog.

Deploying with Docker

To deploy the model with Docker:

- Go to https://dell.huggingface.co/authenticated/models/meta-llama/Meta-Llama-3.1-405B-Instruct-FP8/deploy/docker to access the portal.

- Run the following command to deploy the Llama 3.1 405b model:

docker run \ -it \ --gpus 8 \ --shm-size 1g \ -p 80:80 \ -e NUM_SHARD=8 \ -e MAX_BATCH_PREFILL_TOKENS=16182 \ -e MAX_INPUT_TOKENS=8000 \ -e MAX_TOTAL_TOKENS=8192 \ registry.dell.huggingface.co/enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8

The following example shows a deployment and the output of the command:

fbronzati@node005:~$ docker run \

-it \

--gpus 8 \

--shm-size 1g \

-p 80:80 \

-e NUM_SHARD=8 \

-e MAX_BATCH_PREFILL_TOKENS=16182 \

-e MAX_INPUT_TOKENS=8000 \

-e MAX_TOTAL_TOKENS=8192 \

registry.dell.huggingface.co/enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8

Unable to find image 'registry.dell.huggingface.co/enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8:latest' locally

latest: Pulling from enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8

43f89b94cd7d: Pull complete

45f7ea5367fe: Pull complete

.

.

.

.

e4fdac914fb9: Pull complete

Digest: sha256:c6819ff57444f51abb2a2a5aabb12b103b346bfb056738a613f3fcc0eecbd322

Status: Downloaded newer image for registry.dell.huggingface.co/enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8:latest

2024-09-14T01:06:21.279409Z INFO text_generation_launcher: Args {

model_id: "/model",

revision: None,

validation_workers: 2,

sharded: None,

num_shard: Some(

8,

),

quantize: None,

speculate: None,

dtype: None,

trust_remote_code: false,

max_concurrent_requests: 128,

max_best_of: 2,

max_stop_sequences: 4,

max_top_n_tokens: 5,

max_input_tokens: Some(

8000,

),

max_input_length: None,

max_total_tokens: Some(

8192,

),

waiting_served_ratio: 0.3,

max_batch_prefill_tokens: Some(

16182,

),

max_batch_total_tokens: None,

max_waiting_tokens: 20,

max_batch_size: None,

cuda_graphs: None,

hostname: "1adda5a941d3",

port: 80,

shard_uds_path: "/tmp/text-generation-server",

master_addr: "localhost",

master_port: 29500,

huggingface_hub_cache: Some(

"/data",

),

weights_cache_override: None,

disable_custom_kernels: false,

cuda_memory_fraction: 1.0,

rope_scaling: None,

rope_factor: None,

json_output: false,

otlp_endpoint: None,

otlp_service_name: "text-generation-inference.router",

cors_allow_origin: [],

watermark_gamma: None,

watermark_delta: None,

ngrok: false,

ngrok_authtoken: None,

ngrok_edge: None,

tokenizer_config_path: None,

disable_grammar_support: false,

env: false,

max_client_batch_size: 4,

lora_adapters: None,

disable_usage_stats: false,

disable_crash_reports: false,

}

2024-09-14T01:06:21.280278Z INFO text_generation_launcher: Using default cuda graphs [1, 2, 4, 8, 16, 32]

2024-09-14T01:06:21.280285Z INFO text_generation_launcher: Sharding model on 8 processes

2024-09-14T01:06:21.280417Z INFO download: text_generation_launcher: Starting check and download process for /model

2024-09-14T01:06:25.598785Z INFO text_generation_launcher: Files are already present on the host. Skipping download.

2024-09-14T01:06:26.485957Z INFO download: text_generation_launcher: Successfully downloaded weights for /model

2024-09-14T01:06:26.486723Z INFO shard-manager: text_generation_launcher: Starting shard rank=1

2024-09-14T01:06:26.486744Z INFO shard-manager: text_generation_launcher: Starting shard rank=2

.

.

.

.

2024-09-14T01:09:41.599375Z WARN tokenizers::tokenizer::serialization: /usr/local/cargo/registry/src/index.crates.io-6f17d22bba15001f/tokenizers-0.19.1/src/tokenizer/serialization.rs:159: Warning: Token '<|reserved_special_token_247|>' was expected to have ID '128255' but was given ID 'None'

2024-09-14T01:09:41.601329Z INFO text_generation_router: router/src/main.rs:357: Using config Some(Llama)

2024-09-14T01:09:41.601337Z WARN text_generation_router: router/src/main.rs:366: no pipeline tag found for model /model

2024-09-14T01:09:41.601339Z WARN text_generation_router: router/src/main.rs:384: Invalid hostname, defaulting to 0.0.0.0

2024-09-14T01:09:42.024203Z INFO text_generation_router::server: router/src/server.rs:1572: Warming up model

2024-09-14T01:09:48.458410Z INFO text_generation_launcher: Cuda Graphs are enabled for sizes [32, 16, 8, 4, 2, 1]

2024-09-14T01:09:51.114877Z INFO text_generation_router::server: router/src/server.rs:1599: Using scheduler V3

2024-09-14T01:09:51.114907Z INFO text_generation_router::server: router/src/server.rs:1651: Setting max batch total tokens to 55824

2024-09-14T01:09:51.221691Z INFO text_generation_router::server: router/src/server.rs:1889: ConnectedConfirming the deployment

To confirm that the model is working, send a curl command. Ensure that you use the localhost if you submit the request from the node or the IP address of the host if you are testing from other system.

fbronzati@login01:/mnt/f710/vllm$ curl -X 'POST' 'http://localhost:80/v1/completions' -H 'accept: application/json' -H 'Content-Type: application/json' -d '{

"model": "meta-llama/Meta-Llama-3.1-405B-Instruct-FP8",

"prompt": "Once upon a time",

"max_tokens": 64

}' The following example is a response from the model:

{"object":"text_completion","id":"","created":1726319779,"model":"/model","system_fingerprint":"2.2.0-sha-db7e043","choices":[{"index":0,"text":" in the not so distant past, “EarthFirst” – a search word in the Datastream terminal in the university’s Reference Library brought up a thumbnail entry for Earth First!\nAccording to the International Association of Scholarly Publishers Second Nature Project, published in The Open Library the Earth First! movement held an impressive set of un","logprobs":null,"finish_reason":"length"}],"usage":{"prompt_tokens":5,"completion_tokens":64,"total_tokens":69}}Because the container being used supports the OpenAI API, you can use Python or other languages to interact with the model.

Deploying with Kubernetes

For deploying the model with Kubernetes, go to https://dell.huggingface.co/authenticated/models/meta-llama/Meta-Llama-3.1-405B-Instruct-FP8/deploy/kubernetes?gpus=8&replicas=1&sku=xe9680-nvidia-h100 to access the Dell Enterprise Hub.

Creating the deployment file

To create the deployment file, copy the example available at https://dell.huggingface.co/authenticated/models/meta-llama/Meta-Llama-3.1-405B-Instruct-FP8/deploy/kubernetes?gpus=8&replicas=1&sku=xe9680-nvidia-h100 and save it to a YAML file. For our example, we saved the file as deploy-hf-llama3.1-405B-8xH100-9680.yaml.

The following example is a copy of the content from the Dell Enterprise Hub. We recommend that you always consult the Dell Enterprise Hub for the latest updates.

apiVersion: apps/v1 kind: Deployment metadata: name: tgi-deployment spec: replicas: 1 selector: matchLabels: app: tgi-server template: metadata: labels: app: tgi-server hf.co/model: meta-llama--Meta-Llama-3.1-405B-Instruct-FP8 hf.co/task: text-generation spec: containers: - name: tgi-container image: registry.dell.huggingface.co/enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8 resources: limits: nvidia.com/gpu: 8 env: - name: NUM_SHARD value: "8" - name: MAX_BATCH_PREFILL_TOKENS value: "16182" - name: MAX_INPUT_TOKENS value: "8000" - name: MAX_TOTAL_TOKENS value: "8192" volumeMounts: - mountPath: /dev/shm name: dshm volumes: - name: dshm emptyDir: medium: Memory sizeLimit: 1Gi nodeSelector: nvidia.com/gpu.product: NVIDIA-H100-80GB-HBM3 --- apiVersion: v1 kind: Service metadata: name: tgi-service spec: type: LoadBalancer ports: - protocol: TCP port: 80 targetPort: 80 selector: app: tgi-server --- apiVersion: networking.k8s.io/v1 kind: Ingress metadata: name: tgi-ingress annotations: nginx.ingress.kubernetes.io/rewrite-target: / spec: ingressClassName: nginx-ingress rules: - http: paths: - path: / pathType: Prefix backend: service: name: tgi-service port: number: 80

Creating the Kubernetes namespace and secrets

After creating the deployment file, create a namespace. For our example, we used deh for simple identification on the pods:

fbronzati@login01:/mnt/f710/DEH$ kubectl create namespace deh namespace/deh created

Deploying the deh pod

To deploy the pod and the services that are required to access the model, run the following command:fbronzati@login01:/mnt/f710/DEH$ kubectl apply -f deploy-hf-llama3.1-405B-8xH100-9680.yaml -n dehdeployment.apps/tgi-deployment created service/tgi-service created ingress.networking.k8s.io/tgi-ingress created

For a first-time deployment, the process of downloading the image takes some time because the container is approximately 507 GB and the model is built in to the container image.- To monitor the deployment of the pod and services, run the following commands:

fbronzati@helios25:~$ kubectl get pods -n deh -o wideNAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES tgi-deployment-69fd54c8dd-9fzgn 0/1 ContainerCreating 0 4h49m <none> helios25 <none> <none> fbronzati@helios25:~$ kubectl get services -n deh -o wide NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR tgi-service LoadBalancer *.*.*.* <pending> 80:30523/TCP 4h49m app=tgi-server

- To verify any errors or if the container image is being downloaded, run the kubectl describe command:

fbronzati@login01:/mnt/f710/DEH$ kubectl describe pod tgi-deployment-69fd54c8dd-9fzgn -n deh

Name: tgi-deployment-69fd54c8dd-9fzgn

Namespace: deh

Priority: 0

Service Account: default

Node: helios25/*.*.*.*

Start Time: Fri, 13 Sep 2024 07:19:38 -0500

Labels: app=tgi-server

hf.co/model=meta-llama--Meta-Llama-3.1-8B-Instruct

hf.co/task=text-generation

pod-template-hash=69fd54c8dd

Annotations: cni.projectcalico.org/containerID: c68dc175a1e11bb85435c6b8aaf193c106959087af26f496d7d71cba1b43b779

cni.projectcalico.org/podIP: *.*.*.*/32

cni.projectcalico.org/podIPs: *.*.*.*/32

k8s.v1.cni.cncf.io/network-status:

[{

"name": "k8s-pod-network",

"ips": [

"*.*.*.*"

],

"default": true,

"dns": {}

}]

k8s.v1.cni.cncf.io/networks-status:

[{

"name": "k8s-pod-network",

"ips": [

"*.*.*.*"

],

"default": true,

"dns": {}

}]

Status: Running

IP: *.*.*.*

IPs:

IP: *.*.*.*

Controlled By: ReplicaSet/tgi-deployment-69fd54c8dd

Containers:

tgi-container:

Container ID: containerd://813dc0f197e53d51cfd1a2e9df531cacdfeec0ad205ca517f6c536de509f6182

Image: registry.dell.huggingface.co/enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8

Image ID: registry.dell.huggingface.co/enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8@sha256:c6819ff57444f51abb2a2a5aabb12b103b346bfb056738a613f3fcc0eecbd322

Port: <none>

Host Port: <none>

State: Running

Started: Fri, 13 Sep 2024 17:30:38 -0500

Ready: True

Restart Count: 0

Limits:

nvidia.com/gpu: 8

Requests:

nvidia.com/gpu: 8

Environment:

NUM_SHARD: 8

PORT: 80

MAX_BATCH_PREFILL_TOKENS: 16182

MAX_INPUT_TOKENS: 8000

MAX_TOTAL_TOKENS: 8192

Mounts:

/dev/shm from dshm (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-zxwnf (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

dshm:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

SizeLimit: <unset>

kube-api-access-zxwnf:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: nvidia.com/gpu.product=NVIDIA-H100-80GB-HBM3

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Pulled 52m kubelet Successfully pulled image "registry.dell.huggingface.co/enterprise-dell-inference-meta-llama-meta-llama-3.1-405b-instruct-fp8" in 10h10m58.973s (10h10m58.973s including waiting)

Normal Created 52m kubelet Created container tgi-container

Normal Started 52m kubelet Started container tgi-containerModel Initialization

The model is integrated in the container in the Dell Enterprise Hub. When the container image is downloaded and the container is running, the model is loaded on the GPUs and is ready for use in a few minutes.

To monitor the logs of the pod to verify if the loading process worked or if verification is required, run the following command:

fbronzati@helios25:~$ kubectl logs tgi-deployment-69fd54c8dd-9fzgn -n deh

2024-09-13T22:30:38.886576Z INFO text_generation_launcher: Args {

model_id: "/model",

revision: None,

validation_workers: 2,

sharded: None,

num_shard: Some(

8,

),

quantize: None,

speculate: None,

dtype: None,

trust_remote_code: false,

max_concurrent_requests: 128,

max_best_of: 2,

max_stop_sequences: 4,

max_top_n_tokens: 5,

max_input_tokens: Some(

8000,

),

max_input_length: None,

max_total_tokens: Some(

8192,

),

waiting_served_ratio: 0.3,

max_batch_prefill_tokens: Some(

16182,

),

max_batch_total_tokens: None,

max_waiting_tokens: 20,

max_batch_size: None,

cuda_graphs: None,

hostname: "tgi-deployment-69fd54c8dd-9fzgn",

port: 80,

shard_uds_path: "/tmp/text-generation-server",

master_addr: "localhost",

master_port: 29500,

huggingface_hub_cache: Some(

"/data",

),

weights_cache_override: None,

disable_custom_kernels: false,

cuda_memory_fraction: 1.0,

rope_scaling: None,

rope_factor: None,

json_output: false,

otlp_endpoint: None,

otlp_service_name: "text-generation-inference.router",

cors_allow_origin: [],

watermark_gamma: None,

watermark_delta: None,

ngrok: false,

ngrok_authtoken: None,

ngrok_edge: None,

tokenizer_config_path: None,

disable_grammar_support: false,

env: false,

max_client_batch_size: 4,

lora_adapters: None,

disable_usage_stats: false,

disable_crash_reports: false,

}

2024-09-13T22:30:38.886713Z INFO text_generation_launcher: Using default cuda graphs [1, 2, 4, 8, 16, 32]

2024-09-13T22:30:38.886717Z INFO text_generation_launcher: Sharding model on 8 processes

2024-09-13T22:30:38.886793Z INFO download: text_generation_launcher: Starting check and download process for /model

2024-09-13T22:30:45.130039Z INFO text_generation_launcher: Files are already present on the host. Skipping download.

2024-09-13T22:30:45.991211Z INFO download: text_generation_launcher: Successfully downloaded weights for /model

2024-09-13T22:30:45.991407Z INFO shard-manager: text_generation_launcher: Starting shard rank=0

2024-09-13T22:30:45.991421Z INFO shard-manager: text_generation_launcher: Starting shard rank=1

.

.

.

.

2024-09-13T22:33:22.394579Z INFO shard-manager: text_generation_launcher: Shard ready in 156.399351673s rank=7

2024-09-13T22:33:22.394877Z INFO shard-manager: text_generation_launcher: Shard ready in 156.4004131s rank=5

2024-09-13T22:33:22.490441Z INFO text_generation_launcher: Starting Webserver

2024-09-13T22:33:22.875150Z WARN tokenizers::tokenizer::serialization: /usr/local/cargo/registry/src/index.crates.io-6f17d22bba15001f/tokenizers-0.19.1/src/tokenizer/serialization.rs:159: Warning: Token '<|begin_of_text|>' was expected to have ID '128000' but was given ID 'None'

.

.

.

.

2024-09-13T22:33:22.875571Z WARN tokenizers::tokenizer::serialization: /usr/local/cargo/registry/src/index.crates.io-6f17d22bba15001f/tokenizers-0.19.1/src/tokenizer/serialization.rs:159: Warning: Token '<|reserved_special_token_247|>' was expected to have ID '128255' but was given ID 'None'

2024-09-13T22:33:22.877378Z INFO text_generation_router: router/src/main.rs:357: Using config Some(Llama)

2024-09-13T22:33:22.877385Z WARN text_generation_router: router/src/main.rs:366: no pipeline tag found for model /model

2024-09-13T22:33:22.877388Z WARN text_generation_router: router/src/main.rs:384: Invalid hostname, defaulting to 0.0.0.0

2024-09-13T22:33:23.514440Z INFO text_generation_router::server: router/src/server.rs:1572: Warming up model

2024-09-13T22:33:28.860027Z INFO text_generation_launcher: Cuda Graphs are enabled for sizes [32, 16, 8, 4, 2, 1]

2024-09-13T22:33:30.914329Z INFO text_generation_router::server: router/src/server.rs:1599: Using scheduler V3

2024-09-13T22:33:30.914341Z INFO text_generation_router::server: router/src/server.rs:1651: Setting max batch total tokens to 54016

2024-09-13T22:33:30.976105Z INFO text_generation_router::server: router/src/server.rs:1889: ConnectedVerifying the model’s use of GPU memory

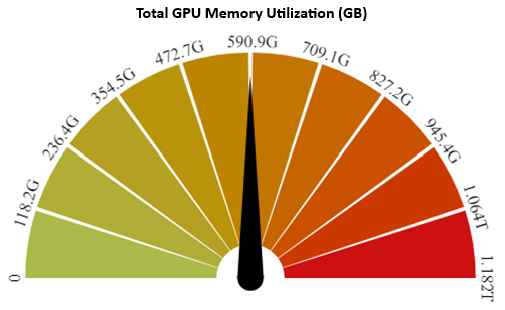

You can verify GPU use by using the nvidia-smi utility. However, with the Dell AI Factory, you can also monitor GPU use with Base Command Manager. The following figure shows that 590 GB of the memory is being used for the PowerEdge XE9680 server with eight NVIDIA H100 GPUs with 80 GB, for a total of 640 GB:

Figure 1: Total GPU memory use

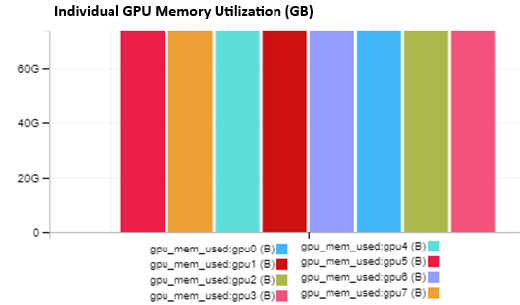

There is an option to identify the memory use of each GPU. The following figure shows that all GPUs are being evenly used with approximately 73 GB of memory:

Figure 2: Individual GPU memory use

Confirming the model

When the model is loaded on the GPUs, send a curl command to confirm that is working as planned:

fbronzati@login01:/mnt/f710/DEH$ curl -X 'POST' 'http://*.*.*.*:80/v1/completions' -H 'accept: application/json' -H 'Content-Type: application/json' -d '{

"model": "meta-llama/Meta-Llama-3.1-405B-Instruct-FP8",

"prompt": "Once upon a time",

"max_tokens": 64

}'The following example is a response from the model:

{"object":"text_completion","id":"","created":1726269695,"model":"/model","system_fingerprint":"2.2.0-sha-db7e043","choices":[{"index":0,"text":", in a small village nestled in the rolling hills of the countryside, there lived a young girl named Emily. Emily was a curious and adventurous child, with a mop of curly brown hair and a smile that could light up the darkest of rooms. She loved to explore the world around her, and spent most of her days","logprobs":null,"finish_reason":"length"}],"usage":{"prompt_tokens":5,"completion_tokens":64,"total_tokens":69}}Because the container that is being used deploys the OpenAI API, you can use Python or other languages to interact with the model.

Conclusion

Using the Dell Enterprise Hub using TGI platform is an exceptional and easy option to deploy the Llama 3.1 405b model. The Dell partnership with Hugging Face prepares, optimizes, and tests the containers so that you can focus on the development of the application. It will solve your business problems and alleviates worry about the complexities of optimizing and building your deployment environment.

The Llama 3.1 405b model is a powerful open-source tool that offers various deployment options that can often lead to dilemmas in the deployment process. This blog series aims to clarify what to expect by providing practical examples, including code snippets and outputs, to enhance your understanding. For additional deployment assistance, we developed the Dell AI Factory, which embodies Dell’s strategy for embracing and implementing AI. It ensures successful and consistent deployments at any scale and in any location.

Ultimately, the Llama 3.1 405b model deploys seamlessly on a single Dell PowerEdge XE9680 server, making it an excellent choice for organizations looking to leverage AI technology effectively.

Deploying the Llama 3.1 405b Model Using vLLM

Thu, 10 Oct 2024 19:42:18 -0000

|Read Time: 0 minutes

This blog is one in a series of three that shows how Dell Technologies and our partner AI ecosystem can help you to provision the most powerful open-source model available easily. In this series of blogs, we share information about the ease of deploying the Llama 3.1 405b model in the Dell PowerEdge XE9680 server by using NVIDIA NIM, Dell Enterprise Hub using Text Generation Inference (TGI), or vLLM for LLMs. We hope this series equips you with the knowledge and tools needed for a successful deployment.

This blog describes the vLLM for LLMs option.

Overview

In another blog in the series, we show how to deploy the Llama 3.1 405b model using NVIDIA NIM in a single and multimode deployment, also known as distributed inference.

By following the process described in the NVIDIA NIM blog, this blog demonstrates how easily you can deploy Llama 3.1 405b in a Dell PowerEdge XE9680 server using vLLM with Docker or with Kubernetes.

Deployment with vLLM

The vLLM library is designed for high-throughput and memory-efficient inference and serving of large language models (LLMs). The vLLM community is a vibrant and active membership that is centered around the development and use of the vLLM library.

In the following sections, we show two simple ways to deploy vLLM with the Llama 3.1 405b model.

Docker deployment

The easiest deployment is to run Llama 3.1 405b with vLLM. The basic requirements include a Dell PowerEdge XE9680 server running Linux, Docker, NVIDIA GPU Driver, and the NVIDIA Container Toolkit. This blog does not include installation information; see https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html for more information.

To deploy the container and download the model, run the following command. The following example shows the output when the command is completed and the model is deployed:

fbronzati@node005:~$ docker run --runtime nvidia --gpus all -v /aipsf710-21/vllm:/root/.cache/huggingface --env "HUGGING_FACE_HUB_TOKEN=<replace_with_your_HuggingFcae_key" -p 8000:8000 --ipc=host vllm/vllm-openai:latest --model meta-llama/Meta-Llama-3.1-405B-Instruct-FP8 --tensor-parallel-size 8 --max_model_len 10000 INFO 09-11 13:44:28 api_server.py:459] vLLM API server version 0.6.0 INFO 09-11 13:44:28 api_server.py:460] args: Namespace(host=None, port=8000, uvicorn_log_level='info', allow_credentials=False, allowed_origins=['*'], allowed_methods=['*'], allowed_headers=['*'], api_key=None, lora_modules=None, prompt_adapters=None, chat_template=None, response_role='assistant', ssl_keyfile=None, ssl_certfile=None, ssl_ca_certs=None, ssl_cert_reqs=0, root_path=None, middleware=[], return_tokens_as_token_ids=False, disable_frontend_multiprocessing=False, enable_auto_tool_choice=False, tool_call_parser=None, model='meta-llama/Meta-Llama-3.1-405B-Instruct-FP8', tokenizer=None, skip_tokenizer_init=False, revision=None, code_revision=None, tokenizer_revision=None, tokenizer_mode='auto', trust_remote_code=False, download_dir=None, load_format='auto', dtype='auto', kv_cache_dtype='auto', quantization_param_path=None, max_model_len=10000, guided_decoding_backend='outlines', distributed_executor_backend=None, worker_use_ray=False, pipeline_parallel_size=1, tensor_parallel_size=8, max_parallel_loading_workers=None, ray_workers_use_nsight=False, block_size=16, enable_prefix_caching=False, disable_sliding_window=False, use_v2_block_manager=False, num_lookahead_slots=0, seed=0, swap_space=4, cpu_offload_gb=0, gpu_memory_utilization=0.9, num_gpu_blocks_override=None, max_num_batched_tokens=None, max_num_seqs=256, max_logprobs=20, disable_log_stats=False, quantization=None, rope_scaling=None, rope_theta=None, enforce_eager=False, max_context_len_to_capture=None, max_seq_len_to_capture=8192, disable_custom_all_reduce=False, tokenizer_pool_size=0, tokenizer_pool_type='ray', tokenizer_pool_extra_config=None, limit_mm_per_prompt=None, enable_lora=False, max_loras=1, max_lora_rank=16, lora_extra_vocab_size=256, lora_dtype='auto', long_lora_scaling_factors=None, max_cpu_loras=None, fully_sharded_loras=False, enable_prompt_adapter=False, max_prompt_adapters=1, max_prompt_adapter_token=0, device='auto', num_scheduler_steps=1, scheduler_delay_factor=0.0, enable_chunked_prefill=None, speculative_model=None, speculative_model_quantization=None, num_speculative_tokens=None, speculative_draft_tensor_parallel_size=None, speculative_max_model_len=None, speculative_disable_by_batch_size=None, ngram_prompt_lookup_max=None, ngram_prompt_lookup_min=None, spec_decoding_acceptance_method='rejection_sampler', typical_acceptance_sampler_posterior_threshold=None, typical_acceptance_sampler_posterior_alpha=None, disable_logprobs_during_spec_decoding=None, model_loader_extra_config=None, ignore_patterns=[], preemption_mode=None, served_model_name=None, qlora_adapter_name_or_path=None, otlp_traces_endpoint=None, collect_detailed_traces=None, disable_async_output_proc=False, override_neuron_config=None, engine_use_ray=False, disable_log_requests=False, max_log_len=None) INFO 09-11 13:44:31 api_server.py:160] Multiprocessing frontend to use ipc:///tmp/4761d363-8d98-4215-a0ae-1d63b684f5c1 for RPC Path. INFO 09-11 13:44:31 api_server.py:176] Started engine process with PID 78 INFO 09-11 13:44:36 config.py:890] Defaulting to use mp for distributed inference INFO 09-11 13:44:36 llm_engine.py:213] Initializing an LLM engine (v0.6.0) with config: model='meta-llama/Meta-Llama-3.1-405B-Instruct-FP8', speculative_config=None, tokenizer='meta-llama/Meta-Llama-3.1-405B-Instruct-FP8', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, override_neuron_config=None, rope_scaling=None, rope_theta=None, tokenizer_revision=None, trust_remote_code=False, dtype=torch.bfloat16, max_seq_len=10000, download_dir=None, load_format=LoadFormat.AUTO, tensor_parallel_size=8, pipeline_parallel_size=1, disable_custom_all_reduce=False, quantization=fbgemm_fp8, enforce_eager=False, kv_cache_dtype=auto, quantization_param_path=None, device_config=cuda, decoding_config=DecodingConfig(guided_decoding_backend='outlines'), observability_config=ObservabilityConfig(otlp_traces_endpoint=None, collect_model_forward_time=False, collect_model_execute_time=False), seed=0, served_model_name=meta-llama/Meta-Llama-3.1-405B-Instruct-FP8, use_v2_block_manager=False, num_scheduler_steps=1, enable_prefix_caching=False, use_async_output_proc=True) WARNING 09-11 13:44:37 multiproc_gpu_executor.py:56] Reducing Torch parallelism from 112 threads to 1 to avoid unnecessary CPU contention. Set OMP_NUM_THREADS in the external environment to tune this value as needed. INFO 09-11 13:44:37 custom_cache_manager.py:17] Setting Triton cache manager to: vllm.triton_utils.custom_cache_manager:CustomCacheManager (VllmWorkerProcess pid=209) INFO 09-11 13:44:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=215) INFO 09-11 13:44:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=211) INFO 09-11 13:44:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=213) INFO 09-11 13:44:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=214) INFO 09-11 13:44:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=210) INFO 09-11 13:44:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=212) INFO 09-11 13:44:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=210) INFO 09-11 13:44:40 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=210) INFO 09-11 13:44:40 pynccl.py:63] vLLM is using nccl==2.20.5 INFO 09-11 13:44:40 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=212) INFO 09-11 13:44:40 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=209) INFO 09-11 13:44:40 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=212) INFO 09-11 13:44:40 pynccl.py:63] vLLM is using nccl==2.20.5 INFO 09-11 13:44:40 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=214) INFO 09-11 13:44:40 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=215) INFO 09-11 13:44:40 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=209) INFO 09-11 13:44:40 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=215) INFO 09-11 13:44:40 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=214) INFO 09-11 13:44:40 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=213) INFO 09-11 13:44:40 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=213) INFO 09-11 13:44:40 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=211) INFO 09-11 13:44:40 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=211) INFO 09-11 13:44:40 pynccl.py:63] vLLM is using nccl==2.20.5 INFO 09-11 13:44:48 custom_all_reduce_utils.py:204] generating GPU P2P access cache in /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json INFO 09-11 13:45:08 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=214) INFO 09-11 13:45:08 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=212) INFO 09-11 13:45:08 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=211) INFO 09-11 13:45:08 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=210) INFO 09-11 13:45:08 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=213) INFO 09-11 13:45:08 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=209) INFO 09-11 13:45:08 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=215) INFO 09-11 13:45:08 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json INFO 09-11 13:45:08 shm_broadcast.py:235] vLLM message queue communication handle: Handle(connect_ip='127.0.0.1', local_reader_ranks=[1, 2, 3, 4, 5, 6, 7], buffer=<vllm.distributed.device_communicators.shm_broadcast.ShmRingBuffer object at 0x7fff44678250>, local_subscribe_port=45245, remote_subscribe_port=None) INFO 09-11 13:45:08 model_runner.py:915] Starting to load model meta-llama/Meta-Llama-3.1-405B-Instruct-FP8... (VllmWorkerProcess pid=210) INFO 09-11 13:45:08 model_runner.py:915] Starting to load model meta-llama/Meta-Llama-3.1-405B-Instruct-FP8... (VllmWorkerProcess pid=212) INFO 09-11 13:45:08 model_runner.py:915] Starting to load model meta-llama/Meta-Llama-3.1-405B-Instruct-FP8... . . . . (VllmWorkerProcess pid=212) INFO 09-11 13:45:11 weight_utils.py:236] Using model weights format ['*.safetensors'] (VllmWorkerProcess pid=210) INFO 09-11 13:45:11 weight_utils.py:236] Using model weights format ['*.safetensors'] Loading safetensors checkpoint shards: 0% Completed | 0/109 [00:00<?, ?it/s] Loading safetensors checkpoint shards: 1% Completed | 1/109 [00:02<04:05, 2.28s/it] Loading safetensors checkpoint shards: 2% Completed | 2/109 [00:05<05:20, 3.00s/it] Loading safetensors checkpoint shards: 3% Completed | 3/109 [00:09<05:47, 3.28s/it] Loading safetensors checkpoint shards: 4% Completed | 4/109 [00:12<05:49, 3.32s/it] Loading safetensors checkpoint shards: 5% Completed | 5/109 [00:17<06:33, 3.78s/it] . . . Loading safetensors checkpoint shards: 97% Completed | 106/109 [06:28<00:11, 3.67s/it] Loading safetensors checkpoint shards: 98% Completed | 107/109 [06:32<00:07, 3.59s/it] Loading safetensors checkpoint shards: 99% Completed | 108/109 [06:36<00:03, 3.86s/it] Loading safetensors checkpoint shards: 100% Completed | 109/109 [06:39<00:00, 3.69s/it] Loading safetensors checkpoint shards: 100% Completed | 109/109 [06:39<00:00, 3.67s/it] INFO 09-11 13:51:55 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=213) INFO 09-11 13:51:55 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=212) INFO 09-11 13:51:55 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=214) INFO 09-11 13:51:55 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=210) INFO 09-11 13:51:55 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=215) INFO 09-11 13:51:55 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=211) INFO 09-11 13:51:55 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=209) INFO 09-11 13:51:55 model_runner.py:926] Loading model weights took 56.7677 GB INFO 09-11 13:52:04 distributed_gpu_executor.py:57] # GPU blocks: 3152, # CPU blocks: 4161 (VllmWorkerProcess pid=213) INFO 09-11 13:52:08 model_runner.py:1217] Capturing the model for CUDA graphs. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. (VllmWorkerProcess pid=213) INFO 09-11 13:52:08 model_runner.py:1221] CUDA graphs can take additional 1~3 GiB memory per GPU. If you are running out of memory, consider decreasing `gpu_memory_utilization` or enforcing eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage. . . . . INFO 09-11 13:52:09 model_runner.py:1217] Capturing the model for CUDA graphs. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. INFO 09-11 13:52:09 model_runner.py:1221] CUDA graphs can take additional 1~3 GiB memory per GPU. If you are running out of memory, consider decreasing `gpu_memory_utilization` or enforcing eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage. (VllmWorkerProcess pid=214) INFO 09-11 13:52:09 model_runner.py:1217] Capturing the model for CUDA graphs. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. (VllmWorkerProcess pid=214) INFO 09-11 13:52:09 model_runner.py:1221] CUDA graphs can take additional 1~3 GiB memory per GPU. If you are running out of memory, consider decreasing `gpu_memory_utilization` or enforcing eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage. (VllmWorkerProcess pid=215) INFO 09-11 13:52:25 custom_all_reduce.py:223] Registering 8855 cuda graph addresses . . . . .. (VllmWorkerProcess pid=209) INFO 09-11 13:52:26 model_runner.py:1335] Graph capturing finished in 17 secs. (VllmWorkerProcess pid=214) INFO 09-11 13:52:26 model_runner.py:1335] Graph capturing finished in 17 secs. INFO 09-11 13:52:26 model_runner.py:1335] Graph capturing finished in 17 secs. INFO 09-11 13:52:27 api_server.py:224] vLLM to use /tmp/tmpje3c_sb0 as PROMETHEUS_MULTIPROC_DIR WARNING 09-11 13:52:27 serving_embedding.py:190] embedding_mode is False. Embedding API will not work. INFO 09-11 13:52:27 launcher.py:20] Available routes are: INFO 09-11 13:52:27 launcher.py:28] Route: /openapi.json, Methods: HEAD, GET INFO 09-11 13:52:27 launcher.py:28] Route: /docs, Methods: HEAD, GET INFO 09-11 13:52:27 launcher.py:28] Route: /docs/oauth2-redirect, Methods: HEAD, GET INFO 09-11 13:52:27 launcher.py:28] Route: /redoc, Methods: HEAD, GET INFO 09-11 13:52:27 launcher.py:28] Route: /health, Methods: GET INFO 09-11 13:52:27 launcher.py:28] Route: /tokenize, Methods: POST INFO 09-11 13:52:27 launcher.py:28] Route: /detokenize, Methods: POST INFO 09-11 13:52:27 launcher.py:28] Route: /v1/models, Methods: GET INFO 09-11 13:52:27 launcher.py:28] Route: /version, Methods: GET INFO 09-11 13:52:27 launcher.py:28] Route: /v1/chat/completions, Methods: POST INFO 09-11 13:52:27 launcher.py:28] Route: /v1/completions, Methods: POST INFO 09-11 13:52:27 launcher.py:28] Route: /v1/embeddings, Methods: POST INFO 09-11 13:52:27 launcher.py:33] Launching Uvicorn with --limit_concurrency 32765. To avoid this limit at the expense of performance run with --disable-frontend-multiprocessing INFO: Started server process [1] INFO: Waiting for application startup. INFO: Application startup complete. INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit) INFO 09-11 13:52:37 metrics.py:351] Avg prompt throughput: 0.0 tokens/s, Avg generation throughput: 0.0 tokens/s, Running: 0 reqs, Swapped: 0 reqs, Pending: 0 reqs, GPU KV cache usage: 0.0%, CPU KV cache usage: 0.0%. INFO 09-11 13:52:47 metrics.py:351] Avg prompt throughput: 0.0 tokens/s, Avg generation throughput: 0.0 tokens/s, Running: 0 reqs, Swapped: 0 reqs, Pending: 0 reqs, GPU KV cache usage: 0.0%, CPU KV cache usage: 0.0%.

Confirming the model

To confirm that the model is working, send a curl command. Ensure that you use the localhost if you submit the request from the node or the IP address of the host if you are testing from another system.

fbronzati@node005:~$ curl -X 'POST' 'http://localhost:8000/v1/completions' -H 'accept: application/json' -H 'Content-Type: application/json' -d '{

"model": "meta-llama/Meta-Llama-3.1-405B-Instruct-FP8",

"prompt": "Once upon a time",

"max_tokens": 64

}'The following example is a response from the model:

{"id":"cmpl-9f94c78172db488db84eac5f1fb5165e","object":"text_completion","created":1726232453,"model":"meta-llama/Meta-Llama-3.1-405B-Instruct-FP8","choices":[{"index":0,"text":" there was a man who devoted his entire life to mastering the art of dancing. He trained tirelessly and meticulously in every style he could find, from waltzing to hip-hop. He quickly became a master of each craft, impressing everyone around him with his incredible raw talent and dedication. People everywhere sought after this Renaissance","logprobs":null,"finish_reason":"length","stop_reason":null,"prompt_logprobs":null}],"usage":{"prompt_tokens":5,"total_tokens":69,"completion_tokens":64}}Because the container that is being used deploys the OpenAI API, you can use Python or other languages to interact with the model.

Kubernetes deployment

Similarly to the NVIDIA NIM deployment, this blog does not describe the installation of the Kubernetes cluster and additional software such as NVIDIA GPU operator. These components are essential. There are many resources on the Internet that describe how to deploy the components. Also, Dell Technologies can provide you with a working environment with the Dell AI Factory solution, which will facilitate your vLLM deployment.

Creating the deployment file

The following example shows how to create a deployment YAML file, which can be expanded with liveness and readiness probes. This blog does not describe these advanced configurations.

- Use your preferred text editor to create a deploy-vllm-llama3.1-405B-8xH100-9680.yaml file.

fbronzati@login01:/mnt/f710/vllm$ vi deploy-vllm-llama3.1-405B-8xH100-9680.yaml

We used vi, however, vim and GNU nano are also good options. - Paste and edit the following content as required for your environment:

apiVersion: apps/v1 kind: Deployment metadata: name: vllm-deployment spec: replicas: 1 selector: matchLabels: app: vllm-server template: metadata: labels: app: vllm-server spec: containers: - name: vllm-container image: "vllm/vllm-openai:v0.6.0" imagePullPolicy: IfNotPresent resources: limits: nvidia.com/gpu: 8 args: - "--model=meta-llama/Meta-Llama-3.1-405B-Instruct-FP8" - "--tensor-parallel-size=8" - "--max_model_len=10000" env: - name: HUGGING_FACE_HUB_TOKEN value: "replace_with_your_HuggingFace_key" volumeMounts: - mountPath: /dev/shm name: dshm - mountPath: /root/.cache/huggingface name: model-cache imagePullSecrets: - name: regcred volumes: - name: dshm emptyDir: medium: Memory sizeLimit: 32Gi - name: model-cache nfs: server: f710.f710 path: /ifs/data/Projects/vllm nodeSelector: nvidia.com/gpu.product: NVIDIA-H100-80GB-HBM3 --- apiVersion: v1 kind: Service metadata: name: vllm-service spec: type: LoadBalancer ports: - protocol: TCP port: 8000 targetPort: 8000 selector: app: vllm-server --- apiVersion: networking.k8s.io/v1 kind: Ingress metadata: name: vllm-ingress annotations: nginx.ingress.kubernetes.io/rewrite-target: / spec: ingressClassName: nginx-ingress rules: - http: paths: - path: / pathType: Prefix backend: service: name: vllm-service port: number: 8000

Some important considerations include:

- The Llama 3.1 405b model is approximately 700 GB. It is impractical to download the model every time that you switch to a different host. Therefore, we recommend having an external NFS such as the PowerScale F710. This configuration is shown on the volumes section of the file.

- You must accept the Hugging Face and Meta contract and use your HF key to be able to download the model. Otherwise, an error message is displayed when deploying the pod.

- We recommend that you create a new namespace for deploying the pod.

- Because the vLLM image is hosted in the Docker registry, you might need to create a secret. Otherwise, the download might be limited.

Creating the Kubernetes namespace and secrets

After creating the deployment file, create a namespace. For our example, we used vllm for simple identification on the pods.

fbronzati@login01:/mnt/f710/vllm$ kubectl create namespace vllm namespace/vllm created

Create the Docker secret to avoid limiting the number of downloads on the Docker repository:

fbronzati@login01:/mnt/f710/vllm$ kubectl create secret docker-registry regcred --docker-username=<replace_with_your_docker_user> --docker-password=<replace _with_your_docker_key> -n vllm

Deploying the vllm pod

To deploy the pod and the services that are required to access the model:

- Run the following command:

fbronzati@login01:/mnt/f710/vllm$ kubectl apply -f deploy-vllm-llama3.1-405B-8xH100-9680.yaml -n vllmdeployment.apps/vllm-deployment created service/vllm-service created ingress.networking.k8s.io/vllm-ingress created

For a first-time deployment, the process of downloading the image and the model takes some time because the model is approximately 683 GB.

fbronzati@login01:/mnt/f710/vllm$ du -sh hub/* 683G hub/models--meta-llama--Meta-Llama-3.1-405B-Instruct-FP8

- To monitor the deployment of the pod and services, run the following commands

fbronzati@login01:/mnt/f710/vllm$ kubectl get pods -n vllm -o wideNAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES vllm-deployment-cd6c8564c-kh6tb 1/1 Running 0 58s 10.194.214.55 helios25 <none> <none> fbronzati@login01:/mnt/f710/vllm$ kubectl get services -n vllm -o wide NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR vllm-service LoadBalancer *.*.*.* <pending> 8000:30757/TCP 83s app=vllm-server

- To verify any errors or if the container image is being downloaded, run the kubectl describe command:

fbronzati@login01:/mnt/f710/vllm$ kubectl describe pod vllm-deployment-cd6c8564c-kh6tb -n vllm

Name: vllm-deployment-cd6c8564c-kh6tb

Namespace: vllm

Priority: 0

Service Account: default

Node: helios25/*.*.*.*

Start Time: Thu, 12 Sep 2024 09:05:22 -0500

Labels: app=vllm-server

pod-template-hash=cd6c8564c

Annotations: cni.projectcalico.org/containerID: 56bbadf0bf9193c47e481263e2a52770595c5c16f6bbee3e63177953a755c52c

cni.projectcalico.org/podIP: 10.194.214.55/32

cni.projectcalico.org/podIPs: 10.194.214.55/32

k8s.v1.cni.cncf.io/network-status:

[{

"name": "k8s-pod-network",

"ips": [

"10.194.214.55"

],

"default": true,

"dns": {}

}]

k8s.v1.cni.cncf.io/networks-status:

[{

"name": "k8s-pod-network",

"ips": [

"10.194.214.55"

],

"default": true,

"dns": {}

}]

Status: Running

IP: *.*.*.*

IPs:

IP: *.*.*.*

Controlled By: ReplicaSet/vllm-deployment-cd6c8564c

Containers:

vllm-container:

Container ID: containerd://92d98154a4faebfb5fe67ffc5aaa0404f1a6e3c37698a8eb94173543fd2b182c

Image: vllm/vllm-openai:v0.6.0

Image ID: docker.io/vllm/vllm-openai@sha256:072427aa6f95c74782a9bc3fe1d1fcd1e1aa3fe47b317584ea2181c549ad2de8

Port: <none>

Host Port: <none>

Args:

--model=meta-llama/Meta-Llama-3.1-405B-Instruct-FP8

--tensor-parallel-size=8

--max_model_len=10000

State: Running

Started: Thu, 12 Sep 2024 09:05:24 -0500

Ready: True

Restart Count: 0

Limits:

nvidia.com/gpu: 8

Requests:

nvidia.com/gpu: 8

Environment:

HUGGING_FACE_HUB_TOKEN: █████████████████████████████

Mounts:

/dev/shm from dshm (rw)

/root/.cache/huggingface from model-cache (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-kd9zq (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

dshm:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

SizeLimit: 32Gi

model-cache:

Type: NFS (an NFS mount that lasts the lifetime of a pod)

Server: f710.f710

Path: /ifs/data/Projects/vllm

ReadOnly: false

kube-api-access-kd9zq:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: nvidia.com/gpu.product=NVIDIA-H100-80GB-HBM3

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events: <none> Model Initialization

After downloading the container image and creating the pod/service, the model is downloaded and loaded to the GPUs. This process might take a long time. We recommend that you monitor the logs of the pod to follow the process. The following example shows sample output that enables you to verify if the behavior is the same in your environment:

fbronzati@login01:/mnt/f710/vllm$ kubectl logs vllm-deployment-cd6c8564c-kh6tb -n vllm -f INFO 09-12 07:05:28 api_server.py:459] vLLM API server version 0.6.0 INFO 09-12 07:05:28 api_server.py:460] args: Namespace(host=None, port=8000, uvicorn_log_level='info', allow_credentials=False, allowed_origins=['*'], allowed_methods=['*'], allowed_headers=['*'], api_key=None, lora_modules=None, prompt_adapters=None, chat_template=None, response_role='assistant', ssl_keyfile=None, ssl_certfile=None, ssl_ca_certs=None, ssl_cert_reqs=0, root_path=None, middleware=[], return_tokens_as_token_ids=False, disable_frontend_multiprocessing=False, enable_auto_tool_choice=False, tool_call_parser=None, model='meta-llama/Meta-Llama-3.1-405B-Instruct-FP8', tokenizer=None, skip_tokenizer_init=False, revision=None, code_revision=None, tokenizer_revision=None, tokenizer_mode='auto', trust_remote_code=False, download_dir=None, load_format='auto', dtype='auto', kv_cache_dtype='auto', quantization_param_path=None, max_model_len=10000, guided_decoding_backend='outlines', distributed_executor_backend=None, worker_use_ray=False, pipeline_parallel_size=1, tensor_parallel_size=8, max_parallel_loading_workers=None, ray_workers_use_nsight=False, block_size=16, enable_prefix_caching=False, disable_sliding_window=False, use_v2_block_manager=False, num_lookahead_slots=0, seed=0, swap_space=4, cpu_offload_gb=0, gpu_memory_utilization=0.9, num_gpu_blocks_override=None, max_num_batched_tokens=None, max_num_seqs=256, max_logprobs=20, disable_log_stats=False, quantization=None, rope_scaling=None, rope_theta=None, enforce_eager=False, max_context_len_to_capture=None, max_seq_len_to_capture=8192, disable_custom_all_reduce=False, tokenizer_pool_size=0, tokenizer_pool_type='ray', tokenizer_pool_extra_config=None, limit_mm_per_prompt=None, enable_lora=False, max_loras=1, max_lora_rank=16, lora_extra_vocab_size=256, lora_dtype='auto', long_lora_scaling_factors=None, max_cpu_loras=None, fully_sharded_loras=False, enable_prompt_adapter=False, max_prompt_adapters=1, max_prompt_adapter_token=0, device='auto', num_scheduler_steps=1, scheduler_delay_factor=0.0, enable_chunked_prefill=None, speculative_model=None, speculative_model_quantization=None, num_speculative_tokens=None, speculative_draft_tensor_parallel_size=None, speculative_max_model_len=None, speculative_disable_by_batch_size=None, ngram_prompt_lookup_max=None, ngram_prompt_lookup_min=None, spec_decoding_acceptance_method='rejection_sampler', typical_acceptance_sampler_posterior_threshold=None, typical_acceptance_sampler_posterior_alpha=None, disable_logprobs_during_spec_decoding=None, model_loader_extra_config=None, ignore_patterns=[], preemption_mode=None, served_model_name=None, qlora_adapter_name_or_path=None, otlp_traces_endpoint=None, collect_detailed_traces=None, disable_async_output_proc=False, override_neuron_config=None, engine_use_ray=False, disable_log_requests=False, max_log_len=None) INFO 09-12 07:05:33 api_server.py:160] Multiprocessing frontend to use ipc:///tmp/29c1a11c-a17a-4fa1-8768-a001a3385714 for RPC Path. INFO 09-12 07:05:33 api_server.py:176] Started engine process with PID 78 INFO 09-12 07:05:37 config.py:890] Defaulting to use mp for distributed inference INFO 09-12 07:05:37 llm_engine.py:213] Initializing an LLM engine (v0.6.0) with config: model='meta-llama/Meta-Llama-3.1-405B-Instruct-FP8', speculative_config=None, tokenizer='meta-llama/Meta-Llama-3.1-405B-Instruct-FP8', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, override_neuron_config=None, rope_scaling=None, rope_theta=None, tokenizer_revision=None, trust_remote_code=False, dtype=torch.bfloat16, max_seq_len=10000, download_dir=None, load_format=LoadFormat.AUTO, tensor_parallel_size=8, pipeline_parallel_size=1, disable_custom_all_reduce=False, quantization=fbgemm_fp8, enforce_eager=False, kv_cache_dtype=auto, quantization_param_path=None, device_config=cuda, decoding_config=DecodingConfig(guided_decoding_backend='outlines'), observability_config=ObservabilityConfig(otlp_traces_endpoint=None, collect_model_forward_time=False, collect_model_execute_time=False), seed=0, served_model_name=meta-llama/Meta-Llama-3.1-405B-Instruct-FP8, use_v2_block_manager=False, num_scheduler_steps=1, enable_prefix_caching=False, use_async_output_proc=True) WARNING 09-12 07:05:37 multiproc_gpu_executor.py:56] Reducing Torch parallelism from 112 threads to 1 to avoid unnecessary CPU contention. Set OMP_NUM_THREADS in the external environment to tune this value as needed. INFO 09-12 07:05:37 custom_cache_manager.py:17] Setting Triton cache manager to: vllm.triton_utils.custom_cache_manager:CustomCacheManager (VllmWorkerProcess pid=210) INFO 09-12 07:05:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=213) INFO 09-12 07:05:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=212) INFO 09-12 07:05:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=211) INFO 09-12 07:05:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=209) INFO 09-12 07:05:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=214) INFO 09-12 07:05:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=215) INFO 09-12 07:05:38 multiproc_worker_utils.py:215] Worker ready; awaiting tasks (VllmWorkerProcess pid=211) INFO 09-12 07:05:48 utils.py:977] Found nccl from library libnccl.so.2 INFO 09-12 07:05:48 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=209) INFO 09-12 07:05:48 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=215) INFO 09-12 07:05:48 utils.py:977] Found nccl from library libnccl.so.2 INFO 09-12 07:05:48 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=211) INFO 09-12 07:05:48 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=209) INFO 09-12 07:05:48 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=210) INFO 09-12 07:05:48 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=215) INFO 09-12 07:05:48 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=213) INFO 09-12 07:05:48 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=214) INFO 09-12 07:05:48 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=210) INFO 09-12 07:05:48 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=212) INFO 09-12 07:05:48 utils.py:977] Found nccl from library libnccl.so.2 (VllmWorkerProcess pid=213) INFO 09-12 07:05:48 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=214) INFO 09-12 07:05:48 pynccl.py:63] vLLM is using nccl==2.20.5 (VllmWorkerProcess pid=212) INFO 09-12 07:05:48 pynccl.py:63] vLLM is using nccl==2.20.5 INFO 09-12 07:05:55 custom_all_reduce_utils.py:204] generating GPU P2P access cache in /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json INFO 09-12 07:06:38 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=213) INFO 09-12 07:06:38 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json (VllmWorkerProcess pid=214) INFO 09-12 07:06:38 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json . . . . (VllmWorkerProcess pid=209) INFO 09-12 07:06:38 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1,2,3,4,5,6,7.json INFO 09-12 07:06:38 shm_broadcast.py:235] vLLM message queue communication handle: Handle(connect_ip='127.0.0.1', local_reader_ranks=[1, 2, 3, 4, 5, 6, 7], buffer=<vllm.distributed.device_communicators.shm_broadcast.ShmRingBuffer object at 0x7fff438fd6f0>, local_subscribe_port=33971, remote_subscribe_port=None) INFO 09-12 07:06:38 model_runner.py:915] Starting to load model meta-llama/Meta-Llama-3.1-405B-Instruct-FP8... (VllmWorkerProcess pid=210) INFO 09-12 07:06:38 model_runner.py:915] Starting to load model meta-llama/Meta-Llama-3.1-405B-Instruct-FP8... (VllmWorkerProcess pid=212) INFO 09-12 07:06:38 model_runner.py:915] Starting to load model meta-llama/Meta-Llama-3.1-405B-Instruct-FP8... . . . . (VllmWorkerProcess pid=209) INFO 09-12 07:06:40 weight_utils.py:236] Using model weights format ['*.safetensors'] (VllmWorkerProcess pid=210) INFO 09-12 07:06:40 weight_utils.py:236] Using model weights format ['*.safetensors'] Loading safetensors checkpoint shards: 0% Completed | 0/109 [00:00<?, ?it/s] Loading safetensors checkpoint shards: 1% Completed | 1/109 [00:03<07:09, 3.97s/it] Loading safetensors checkpoint shards: 2% Completed | 2/109 [00:07<06:20, 3.56s/it] Loading safetensors checkpoint shards: 3% Completed | 3/109 [00:10<06:03, 3.42s/it] . . . . . Loading safetensors checkpoint shards: 96% Completed | 105/109 [06:23<00:12, 3.22s/it] Loading safetensors checkpoint shards: 97% Completed | 106/109 [06:27<00:10, 3.51s/it] Loading safetensors checkpoint shards: 98% Completed | 107/109 [06:31<00:06, 3.44s/it] Loading safetensors checkpoint shards: 99% Completed | 108/109 [06:35<00:03, 3.75s/it] Loading safetensors checkpoint shards: 100% Completed | 109/109 [06:38<00:00, 3.59s/it] Loading safetensors checkpoint shards: 100% Completed | 109/109 [06:38<00:00, 3.66s/it] INFO 09-12 07:13:19 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=209) INFO 09-12 07:13:19 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=211) INFO 09-12 07:13:19 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=212) INFO 09-12 07:13:19 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=215) INFO 09-12 07:13:19 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=214) INFO 09-12 07:13:19 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=210) INFO 09-12 07:13:19 model_runner.py:926] Loading model weights took 56.7677 GB (VllmWorkerProcess pid=213) INFO 09-12 07:13:19 model_runner.py:926] Loading model weights took 56.7677 GB INFO 09-12 07:13:26 distributed_gpu_executor.py:57] # GPU blocks: 2937, # CPU blocks: 4161 (VllmWorkerProcess pid=209) INFO 09-12 07:13:30 model_runner.py:1217] Capturing the model for CUDA graphs. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. (VllmWorkerProcess pid=209) INFO 09-12 07:13:30 model_runner.py:1221] CUDA graphs can take additional 1~3 GiB memory per GPU. If you are running out of memory, consider decreasing `gpu_memory_utilization` or enforcing eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage. . . . . . INFO 09-12 07:13:30 model_runner.py:1217] Capturing the model for CUDA graphs. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. INFO 09-12 07:13:30 model_runner.py:1221] CUDA graphs can take additional 1~3 GiB memory per GPU. If you are running out of memory, consider decreasing `gpu_memory_utilization` or enforcing eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage. (VllmWorkerProcess pid=212) INFO 09-12 07:13:30 model_runner.py:1217] Capturing the model for CUDA graphs. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. (VllmWorkerProcess pid=212) INFO 09-12 07:13:30 model_runner.py:1221] CUDA graphs can take additional 1~3 GiB memory per GPU. If you are running out of memory, consider decreasing `gpu_memory_utilization` or enforcing eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage. (VllmWorkerProcess pid=215) INFO 09-12 07:13:46 custom_all_reduce.py:223] Registering 8855 cuda graph addresses (VllmWorkerProcess pid=211) INFO 09-12 07:13:46 custom_all_reduce.py:223] Registering 8855 cuda graph addresses (VllmWorkerProcess pid=213) INFO 09-12 07:13:46 custom_all_reduce.py:223] Registering 8855 cuda graph addresses . . . . . (VllmWorkerProcess pid=211) INFO 09-12 07:13:46 model_runner.py:1335] Graph capturing finished in 16 secs. (VllmWorkerProcess pid=209) INFO 09-12 07:13:46 model_runner.py:1335] Graph capturing finished in 17 secs. INFO 09-12 07:13:46 model_runner.py:1335] Graph capturing finished in 16 secs. INFO 09-12 07:13:47 api_server.py:224] vLLM to use /tmp/tmpzt8ci2e8 as PROMETHEUS_MULTIPROC_DIR WARNING 09-12 07:13:47 serving_embedding.py:190] embedding_mode is False. Embedding API will not work. INFO 09-12 07:13:47 launcher.py:20] Available routes are: INFO 09-12 07:13:47 launcher.py:28] Route: /openapi.json, Methods: GET, HEAD INFO 09-12 07:13:47 launcher.py:28] Route: /docs, Methods: GET, HEAD INFO 09-12 07:13:47 launcher.py:28] Route: /docs/oauth2-redirect, Methods: GET, HEAD INFO 09-12 07:13:47 launcher.py:28] Route: /redoc, Methods: GET, HEAD INFO 09-12 07:13:47 launcher.py:28] Route: /health, Methods: GET INFO 09-12 07:13:47 launcher.py:28] Route: /tokenize, Methods: POST INFO 09-12 07:13:47 launcher.py:28] Route: /detokenize, Methods: POST INFO 09-12 07:13:47 launcher.py:28] Route: /v1/models, Methods: GET INFO 09-12 07:13:47 launcher.py:28] Route: /version, Methods: GET INFO 09-12 07:13:47 launcher.py:28] Route: /v1/chat/completions, Methods: POST INFO 09-12 07:13:47 launcher.py:28] Route: /v1/completions, Methods: POST INFO 09-12 07:13:47 launcher.py:28] Route: /v1/embeddings, Methods: POST INFO 09-12 07:13:47 launcher.py:33] Launching Uvicorn with --limit_concurrency 32765. To avoid this limit at the expense of performance run with --disable-frontend-multiprocessing INFO: Started server process [1] INFO: Waiting for application startup. INFO: Application startup complete. INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit) INFO 09-12 07:13:57 metrics.py:351] Avg prompt throughput: 0.0 tokens/s, Avg generation throughput: 0.0 tokens/s, Running: 0 reqs, Swapped: 0 reqs, Pending: 0 reqs, GPU KV cache usage: 0.0%, CPU KV cache usage: 0.0%. INFO 09-12 07:14:07 metrics.py:351] Avg prompt throughput: 0.0 tokens/s, Avg generation throughput: 0.0 tokens/s, Running: 0 reqs, Swapped: 0 reqs, Pending: 0 reqs, GPU KV cache usage: 0.0%, CPU KV cache usage: 0.0%.

Verifying the model’s use of GPU memory

With access to the host or the container, you can verify GPU use by using the nvidia-smi utility. The following example shows how the GPUs are displayed on the container after the model is loaded: