Blogs

Short articles related to Dell Technologies solutions for Microsoft SQL Server

SQL Server 2022 is here! Let’s Discuss

Wed, 16 Nov 2022 17:14:16 -0000


Today at the PASS Data Community Summit, Microsoft announced the general availability of SQL Server 2022. Over the past year, Dell Technologies has been working closely with Microsoft so that when this day arrived, our joint customers would be ready to adopt the latest release rapidly and to deploy and manage it with confidence, based on documented testing and best practices.

Schedule

Dell Technologies is a gold sponsor at PASS 2022 and will be engaging with both in-person and virtual conference attendees in the following ways:

In-person sessions

Wednesday 9:30am – 10:45: SQL Server 2022 – A Year in (P)review in room 604

Thursday 6:45AM – 7:45: Deploying and Running SQL Server 2022 on Azure Stack HCI in rooms 602-604

On demand session

Rethink your Backup and Recovery Strategy with SQL Server 2022 available in catalog

Birds of a Feather

Wednesday 12:30 - 2:30 Hybrid Cloud Data Services in Dining Hall 4EF

Thursday 12:30 – 2:30 SQL Server 2022: A Dell Perspective in Dining Hall 4EF

In addition to these sessions, we are excited to announce a wide range of available assets today to assist you with getting the most value out of your Microsoft data platform.

New Feature: T-SQL Snapshot Backups

Taking snapshots of databases isn’t new technology. However, the ability to use them in a supported way on Microsoft SQL Server, driven from T-SQL, is very exciting. Storage-based snapshots are not meant to replace all traditional database backups. Off-appliance and/or offsite backups are still a best practice for full data protection. However, most backup and restore activities do not require off-appliance or offsite backups, and this is where time and space efficiencies come in. Storage-based snapshots accelerate the majority of backup and recovery scenarios without affecting traditional database backups.
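The flow behind a T-SQL snapshot backup can be sketched in a few lines. The Python helper below is a minimal illustration, not the procedure from the linked papers: it only assembles the T-SQL statements that bracket a storage snapshot. The function name, database name, and file path are hypothetical, and the array-side snapshot step is left as a placeholder because it is specific to each storage platform.

```python
from typing import List


def snapshot_backup_statements(database: str, metadata_file: str) -> List[str]:
    """Build the T-SQL steps that bracket a storage-based snapshot.

    Hypothetical helper for illustration only: it returns strings and does
    not connect to SQL Server or to any storage array.
    """
    db = "[" + database.replace("]", "]]") + "]"  # quote the identifier
    path = metadata_file.replace("'", "''")       # escape the string literal
    return [
        # 1. Suspend write I/O so the snapshot captures a consistent image.
        f"ALTER DATABASE {db} SET SUSPEND_FOR_SNAPSHOT_BACKUP = ON;",
        # 2. Placeholder: the snapshot itself is taken on the storage array.
        "-- take the storage snapshot of the database volumes here",
        # 3. Record the snapshot in a small backup metadata file.
        f"BACKUP DATABASE {db} TO DISK = N'{path}' WITH METADATA_ONLY;",
    ]


if __name__ == "__main__":
    for statement in snapshot_backup_statements("SalesDB", r"D:\backup\SalesDB.bkm"):
        print(statement)
```

As we understand Microsoft's documentation, the metadata-only backup in the last step also releases the suspended writes when it completes, so the database is frozen only for the duration of the array snapshot.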

In the links below, we show how to leverage this feature on our PowerStore, PowerMax, and PowerFlex storage platforms. This is a very effective tool moving forward for Windows, Linux, and even containerized environments.

SQL Server 2022 – Time to Rethink your Backup and Recovery Strategy | Dell Technologies Info Hub

SQL Server 2022 Backup Using T-SQL and Dell PowerFlex Storage Snapshots | Dell Technologies Info Hub

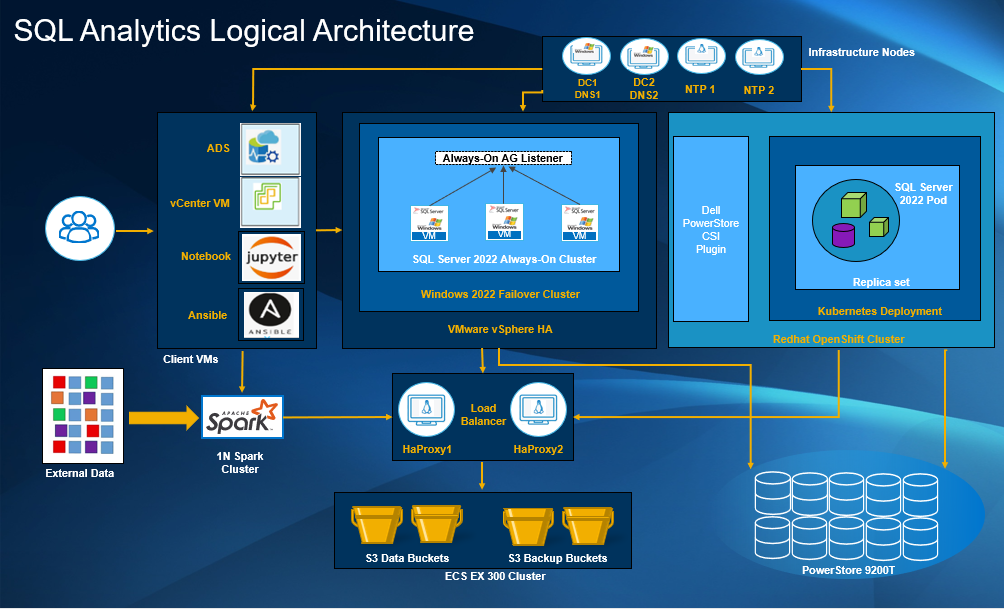

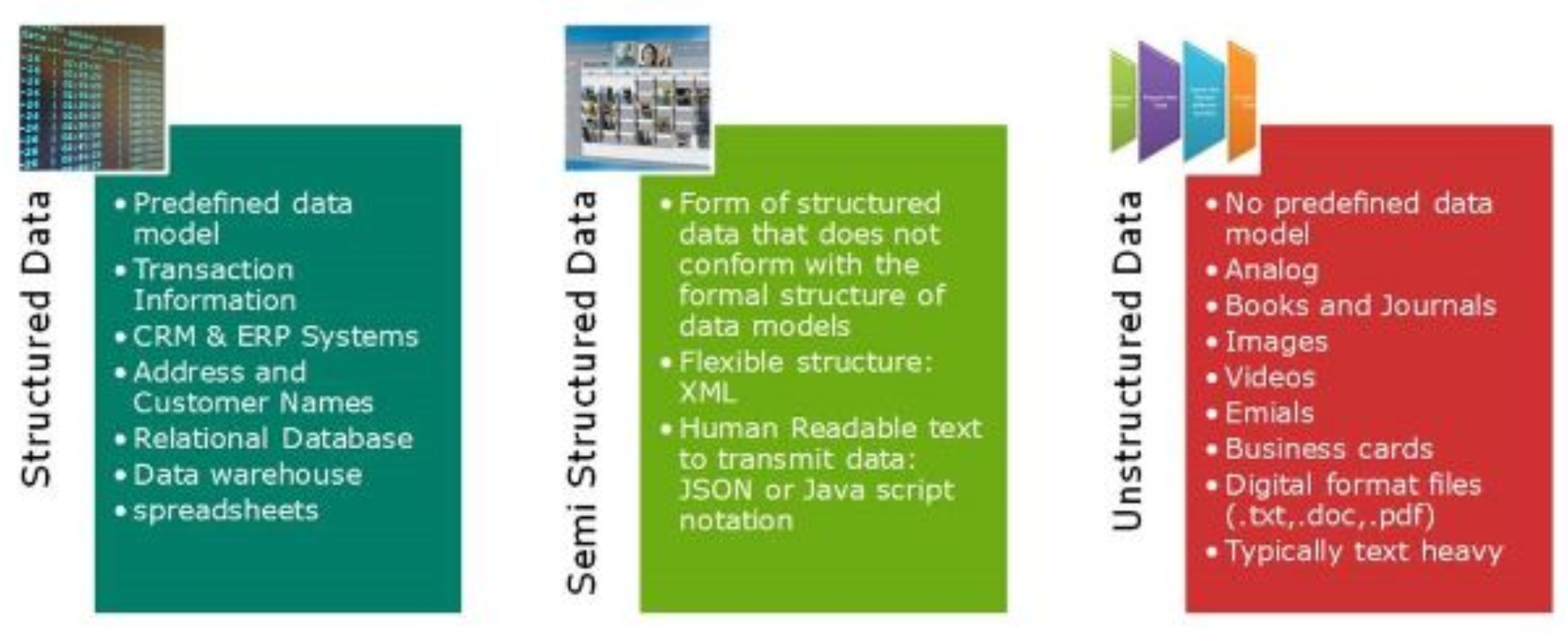

SQL Server 2022 Data Analytics with Dell PowerEdge and Dell ECS

Data virtualization has become popular among large enterprises because unstructured and semi-structured data is everywhere and leveraging this data is challenging. SQL Server 2022 PolyBase makes data virtualization possible for data scientists to use T-SQL for analytic workloads by querying data directly from S3-compatible object storage without separately installing client connection software.

Dell ECS is a modern object storage platform designed for both traditional and next-generation workloads, and it provides organizations with an on-premises alternative to public cloud solutions. Dell Technologies has been named a Leader in the 2022 Gartner® Magic Quadrant™ for Distributed File Systems and Object Storage[1] for the seventh year in a row, a Leader every year since the commencement of this report. According to the Gartner report, Dell Technologies has also once again received the highest overall position for its Ability to Execute in the Leaders quadrant of the report.[1]

As organizations, both large and small, seek to gain an edge with their intelligent data estate, access to all types of datasets must be made available. Together, SQL Server 2022 and Dell ECS are the preferred technology for querying the data lake using a T-SQL surface area. This combination of products and tools yields modern opportunities to store and manage different types of data on-premises and at public cloud scale. This white paper provides an insight into the benefits of a powerful, agile, and flexible infrastructure for SQL Server 2022 data analytics.
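To make the PolyBase idea concrete, here is a minimal sketch of the T-SQL involved in querying S3-compatible object storage, assembled by a small Python helper. It is not taken from the white paper. The endpoint, object path, and object names are hypothetical, the secret is left as a placeholder, and the sketch assumes PolyBase is already enabled and a database master key exists.

```python
from typing import List


def polybase_s3_statements(endpoint: str, object_path: str,
                           credential: str = "ecs_credential",
                           data_source: str = "ecs_data_source") -> List[str]:
    """Return T-SQL for querying a Parquet object over S3 with PolyBase.

    Hypothetical helper for illustration; nothing is executed here.
    """
    return [
        # Credential that holds the S3 access key pair (placeholder secret).
        f"CREATE DATABASE SCOPED CREDENTIAL {credential} "
        "WITH IDENTITY = 'S3 Access Key', SECRET = '<AccessKeyID>:<SecretKeyID>';",
        # External data source that points at the S3-compatible endpoint.
        f"CREATE EXTERNAL DATA SOURCE {data_source} "
        f"WITH (LOCATION = 's3://{endpoint}/', CREDENTIAL = {credential});",
        # Ad hoc query against an object, with no data loaded into the database.
        f"SELECT TOP 10 * FROM OPENROWSET(BULK '{object_path}', "
        f"FORMAT = 'PARQUET', DATA_SOURCE = '{data_source}') AS r;",
    ]


if __name__ == "__main__":
    for statement in polybase_s3_statements("ecs.example.local:9021",
                                            "/sales/orders.parquet"):
        print(statement)
```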

New Feature: Backing up SQL Server to Dell ECS

In the same paper, we used the same environment to showcase the new SQL Server 2022 capability to back up and restore databases using object storage with Dell ECS. One of the benefits of object storage is the ability to move larger read-only tables outside of the SQL database. This reduces the footprint of the database and decreases the time it takes to back up and restore.

In this document we provide the configuration steps for creating the required credential inside the SQL Server Instance for connection to ECS as well as show the correct syntax for backing up and restoring a database.
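As a rough sketch of what the credential and the backup and restore syntax look like, the Python helper below assembles the three statements for an S3-compatible endpoint. It is an illustration under stated assumptions rather than the configuration from the paper: the endpoint, bucket, and database names are hypothetical, and the secret is a placeholder.

```python
from typing import Dict


def s3_backup_statements(database: str, endpoint: str, bucket: str) -> Dict[str, str]:
    """Return T-SQL for backing up to, and restoring from, S3-compatible storage.

    Hypothetical helper for illustration; nothing is executed here.
    """
    url = f"s3://{endpoint}/{bucket}"
    backup_file = f"{url}/{database}.bak"
    return {
        # The credential is named after the bucket URL it grants access to.
        "credential": (
            f"CREATE CREDENTIAL [{url}] "
            "WITH IDENTITY = 'S3 Access Key', SECRET = '<AccessKeyID>:<SecretKeyID>';"
        ),
        "backup": (
            f"BACKUP DATABASE [{database}] TO URL = '{backup_file}' "
            "WITH FORMAT, COMPRESSION;"
        ),
        "restore": (
            f"RESTORE DATABASE [{database}] FROM URL = '{backup_file}' "
            "WITH REPLACE;"
        ),
    }


if __name__ == "__main__":
    for name, statement in s3_backup_statements(
            "SalesDB", "ecs.example.local:9021", "sqlbackups").items():
        print(f"-- {name}\n{statement}")
```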

SQL Server 2022 on Dell Integrated System (DIS) for Azure Stack HCI

Dell Azure Stack HCI provides a flexible, highly available, and cost-effective platform to host Online Transaction Processing (OLTP) workloads such as SQL Server 2022. In this reference architecture we evaluated some performance results and design best practices for a cluster of AMD EPYC™ 7003 Series Processors with 3D V-Cache based DIS for Azure Stack HCI. This cost-effective solution offers strong performance results, a low data center footprint, and a highly flexible configuration in terms of compute and storage.

For those of you joining us in person at PASS, we will be jointly hosting a breakfast session with AMD to present the reference architecture, design principles, and configuration best practices for a SQL Server 2022 solution for Windows Server 2022 on Azure Stack HCI – AX7525.

Join Dell Technologies and AMD for breakfast here.

azure-stack-hci-and-the-microsoft-data-platform.pdf (delltechnologies.com)

Take the next steps

Dell Technologies Solutions for Microsoft Data Platform | Dell USA

Dell Technologies Solutions for Microsoft Azure Arc | Dell USA

PASS Data Community Summit November 15-18 2022

[1] Gartner, Inc. “Magic Quadrant™ for Distributed File Systems and Object Storage” by Julia Palmer, Jerry Rozeman, Chandra Mukhyala, Jeff Vogel, October 19, 2022. Dell Technologies was previously recognized as Dell EMC in the Magic Quadrant (2016-2019).

Joint engineering with AMD for SQL Server 2022

Mon, 14 Nov 2022 13:49:15 -0000


In preparation for the PASS Data Community Summit, Dell Technologies has been heads down in our engineering labs testing some of the new features of SQL Server 2022. In this blog we highlight a number of use cases that leverage AMD EPYC™ 7003 Series Processors with 3D V-Cache. 3D V-Cache processors utilize AMD’s ground-breaking 3D Chiplet architecture with up to 768 MB of L3 cache per socket while providing socket compatibility with existing AMD EPYC™ 7003 platforms. AMD EPYC is optimized for performance with up to 64 cores and 4 TB of memory per CPU.

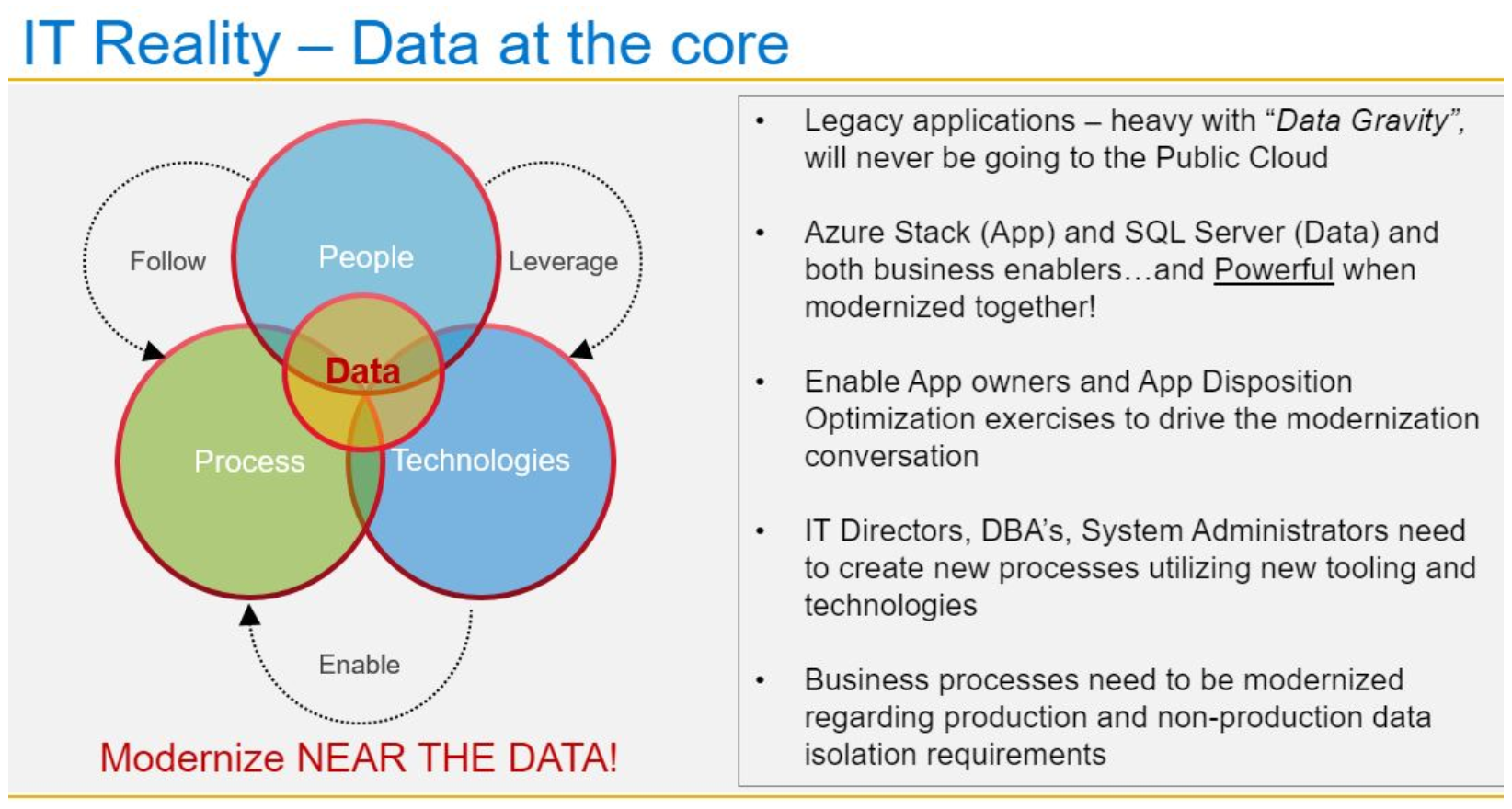

SQL Server 2022 is the most Azure-enabled release of SQL Server, with continued innovation across performance, security, and availability. SQL Server 2022 is part of the Microsoft Intelligent Data Platform, which unifies operational databases, analytics, and data governance. The reference architecture below shows how an Azure Arc-enabled Azure Stack HCI platform helps to consolidate virtualized SQL Server workloads and get up and running quickly on SQL Server 2022.

SQL Server 2022 on Azure Stack HCI

Dell Azure Stack HCI provides a flexible, highly available, and cost-effective platform to host Online Transaction Processing (OLTP) workloads such as SQL Server 2022. This document provides customers with best practice recommendations on how to deploy SQL Server 2022 on a Dell Integrated System (DIS) for Azure Stack HCI. These best practices consider both performance and high availability.


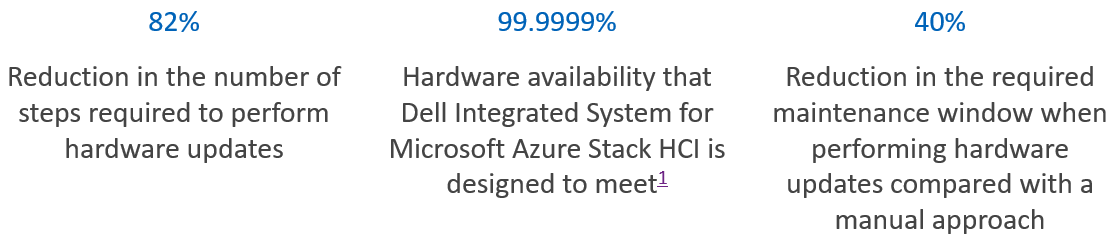

We also evaluated some performance results and design best practices for a cluster of AMD EPYC™ 7003 Series Processors with 3D V-Cache based DIS for Azure Stack HCI. This cost-effective solution offers strong performance results, a low data center footprint, and a highly flexible configuration in terms of compute and storage.

Only Azure Stack HCI from Dell Technologies leverages Dell OpenManage Integration for Windows Admin Center to orchestrate full stack lifecycle management, enabling complex tasks to be completed in a fraction of the time.

Read the full paper here.

If you are joining Dell Technologies and AMD at the PASS Data Community Summit (November 15th-18th), please join us for a breakfast session to get all the details on this solution and ask the experts any questions you may have.

Attending the PASS Data Community Summit? Register for the breakfast here.

SQL Server 2022 Data Analytics with Dell PowerEdge and Dell ECS

Data virtualization has become popular among large enterprises because unstructured and semi-structured data is everywhere and using this data is challenging. SQL Server 2022 PolyBase makes data virtualization possible for data scientists to use T-SQL for analytic workloads by querying data directly from S3-compatible object storage without separately installing client connection software.

Data analytics workloads can be CPU intensive, and selecting the optimal CPUs for the data analytics servers can be challenging, time consuming, and expensive. Because running T-SQL queries for data analytics requires quick response times, here, as in the previous document, we leveraged the new Milan-X AMD EPYC processor with 3D V-Cache technology. The AMD 3D V-Cache technology is the first implementation of the AMD 3D Chiplet Architecture. The 3D V-Cache product offers three times the L3 cache of standard 3rd Gen EPYC processors and keeps memory-intensive compute closer to the core, speeding up performance for database and analytics workloads. AMD 3rd Gen EPYC™ Processors with 3D V-Cache help customers optimize core usage, license costs, and total cost of ownership.

ECS, a modern object storage platform designed for both traditional and next-generation workloads, provides organizations with an on-premises alternative to public cloud solutions. Dell Technologies has been named a Leader in the 2022 Gartner® Magic Quadrant™ for Distributed File Systems and Object Storage for the seventh year in a row, a Leader every year since the commencement of this report. According to the Gartner report, Dell Technologies has also once again received the highest overall position for its Ability to Execute in the Leaders quadrant of the report.

As organizations, both large and small, seek to gain an edge with their intelligent data estate, access to all types of datasets must be made available. Together, SQL Server 2022 and Dell ECS are the preferred technology for querying the data lake using a T-SQL surface area. This combination of products and tools yields modern opportunities to store and manage different types of data on-premises and at public cloud scale.

Read the full paper here.

SQL Server 2022 Backup & Restore with Dell ECS S3 Object Storage

In the same paper, we used the same environment to showcase the new SQL Server 2022 capability to back up and restore databases using object storage with Dell ECS. One of the benefits of object storage is the ability to move larger read-only tables outside of the SQL database. This reduces the footprint of the database and decreases the time it takes to back up and restore.

In this document we provide the configuration steps for creating the required credential inside the SQL Server Instance for connection to ECS as well as show the correct syntax for backing up and restoring a database.

Take the next step…

Microsoft Azure Stack HCI | Dell USA

Dell Technologies Solutions for Microsoft Data Platform | Dell USA

Dell Technologies Solutions for Microsoft Azure Arc | Dell USA

SQL Server deployments–Have you tried this?

Tue, 27 Sep 2022 19:11:24 -0000


SQL Server databases are critical components of most business operations and initiatives. As these systems become more intelligent and complex, maintaining optimal SQL Server database performance and uptime can pose significant challenges to IT—and often have severe implications for the business.

What is SQL Server best practice?

Best practices for a SQL Server database solution provide a comprehensive set of recommendations for both the physical infrastructure and the software stack. This set of recommendations is derived from many hours of testing and the expertise of the Dell Server team, Dell Storage team, and the Dell Solutions and Engineering SQL Server specialists.

Why use SQL Server best practice?

Business-critical applications require an optimized infrastructure to run smoothly and efficiently. An optimized infrastructure reduces performance risks, such as system sluggishness, that could affect system resources and application response time. Such unexpected outcomes can often result in revenue loss, customer dissatisfaction, and damage to brand reputation.

The mission around best practices

Dell’s mission is to ensure that its customers have a robust and high-performance database infrastructure solution by providing best practices for SQL Server 2019 running on PowerEdge R750xs servers and PowerStore T model storage including the new PowerStore 3.0. These best practices aim to offer time savings for our customers by reducing the complex work required to optimize their databases. To enhance the value of best practices, we identify which configuration changes produce the greatest results and categorize them as follows:

Day 1 through Day 3: Most enterprises implement changes based on the delivery cycle:

- Day 1: Indicates configuration changes that are part of provisioning a database. The business has defined these best practices as an essential part of delivering a database.

- Day 2: Indicates configuration changes that are applied after the database has been delivered to the customer. These best practices address optimization steps to further improve system performance.

- Day 3: Indicates configuration changes that provide small incremental improvements in the database performance.

Highly, moderately, and fine-tuning recommendations: Customers want to understand the impact of the best practices and these terms are used to indicate the value of each best practice.

- Highly recommended: Indicates best practices that provided the greatest performance improvement in our tests.

- Moderately recommended: Indicates best practices that provide modest performance improvements, but which are not as substantial as the highly recommended best practices.

- Fine-tuning: Indicates best practices that provide small incremental improvements in database performance.

Best practices test methodology for Intel-based PowerEdge and PowerStore deployments

Within each layer of the infrastructure, the team sequentially tested each component and documented the results. For example, within the storage layer, the goal was to show how optimizing the number of volumes for the SQL User DB Data area improves the performance of a SQL Server database.

The expectation was that performance would sequentially improve. Using this methodology, an overall optimal SQL Server database solution would be achieved during the last test.

The physical architecture consists of:

- 2 x PowerEdge R750xs servers

- 1 x PowerStore T model array

Table 1 and Table 2 show the server configuration and the PowerStore T model configuration.

Table 1. Server configuration

| Component | Configuration |
| --- | --- |
| Processors | 2 x Intel® Xeon® Gold 6338 32-core CPU @ 2.00 GHz |
| Memory | 16 x 64 GB 3200 MT/s memory, total of 1 TB |
| Network adapters | Embedded NIC: 1 x Broadcom BCM5720 1 GbE DP Ethernet; Integrated NIC1: 1 x Broadcom Adv. Dual port 25 Gb Ethernet; NIC slot 5: 1 x Mellanox ConnectX-5-EN 25 GbE Dual port |
| HBA | 2 x Emulex LP35002 32 Gb Dual Port Fibre Channel |

Table 2. PowerStore 5000T configuration details

| Component | Configuration |
| --- | --- |
| Processors | 2 x Intel® Xeon® Gold 6130 CPU @ 2.10 GHz per node |
| Cache size | 4 x 8.5 GB NVMe NVRAM |
| Drives | 21 x 1.92 TB NVMe SSD |
| Total usable capacity | 28.3 TB |
| Front-end I/O modules | 2 x Four-Port 32 Gb FC |

The software layer consists of:

- VMware ESXi 7.0.3

- Red Hat Enterprise Linux 8.5

- SQL Server 2019 CU16 (build 15.0.4223.1)

There are several combinations possible for the software architecture. For this testing, SQL Server 2019, Red Hat Enterprise Linux 8.5, and VMware vSphere 7.0.3 were selected to reflect a design that many database customers use today.

Benchmark tool

HammerDB is a leading benchmarking tool that is used with databases like Oracle, MySQL, Microsoft SQL Server, and others. Dell’s engineering team used HammerDB to generate an Online Transaction Processing (OLTP) workload to simulate enterprise applications. To compare the benchmark results between the baseline configuration and the best practice configuration, there must be a significant load on the SQL Server database infrastructure so that the system is sufficiently taxed. Testing under this load makes it possible to see whether the infrastructure resources are better used after the best practices are applied. Table 3 shows the HammerDB workload configuration.

Table 3. HammerDB workload configuration

| Setting name | Value |
| --- | --- |
| Total transactions per user | 1,000,000 |
| Number of warehouses | 5,000 |
| Number of virtual users | 80 |
| Minutes of ramp up time | 10 |
| Minutes of test duration | 50 |
| Use all warehouses | Yes |
| User delay (ms) | 500 |
| Repeat delay (ms) | 500 |
| Iterations | 1 |

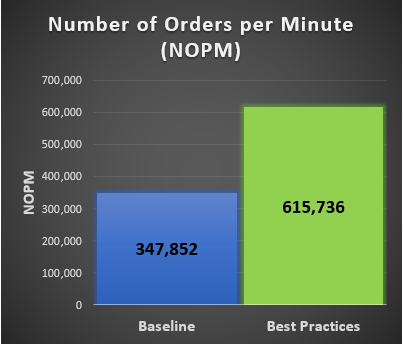

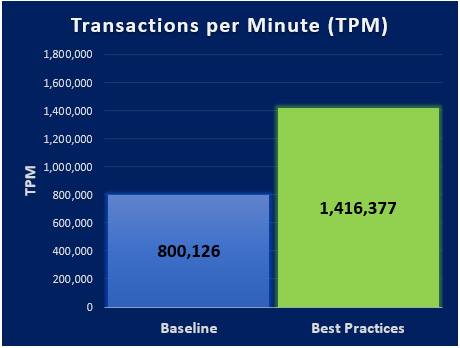

New Orders per Minute (NOPM) and Transactions per Minute (TPM) are the metrics used to interpret the HammerDB results. These metrics are from the TPC-C benchmark and indicate the result of a test. During our best practice validation, we compared those metrics against the baseline configuration to ensure that there was an increase in performance.

Findings

After performing various test cases between the baseline configuration and the best practice configuration, our results showed an improvement over the baseline configuration. The following graphs are derived from the database virtual machines configuration in the following table.

Note: Every database workload and system is different, which means actual results of these best practices may vary from system to system.

Table 4. vCPU and memory allocation

| Resource reservation | Baseline configuration per virtual machine | Number of SQL Server database virtual machines | Total |
| --- | --- | --- | --- |
| vCPU | 10 cores | 6 | 60 cores |
| Memory | 112 GB | 6 | 672 GB |
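The totals in Table 4 follow directly from the per-VM reservation. The short Python sketch below reproduces that arithmetic and, as a rough sanity check, compares it with the raw host capacity listed in Table 1. The class and function names are hypothetical, and the comparison assumes that both R750xs hosts carry database VMs and ignores hypervisor overhead and hyper-threading.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmReservation:
    """Resources reserved for one SQL Server database VM (or a sum of VMs)."""
    vcpus: int
    memory_gb: int


def cluster_totals(per_vm: VmReservation, vm_count: int) -> VmReservation:
    """Multiply a per-VM reservation by the number of VMs."""
    return VmReservation(per_vm.vcpus * vm_count, per_vm.memory_gb * vm_count)


# Values from Table 4.
baseline = VmReservation(vcpus=10, memory_gb=112)
total = cluster_totals(baseline, vm_count=6)

# Raw capacity from Table 1: 2 servers, each with 2 x 32-core CPUs
# and 16 x 64 GB DIMMs. Assumption: both hosts run database VMs.
host_cores = 2 * 2 * 32
host_memory_gb = 2 * 16 * 64

if __name__ == "__main__":
    print(f"Reserved vCPUs: {total.vcpus} of {host_cores} physical cores")
    print(f"Reserved memory: {total.memory_gb} GB of {host_memory_gb} GB")
```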

The Dell PowerEdge R7525: a Leader in Price and performance for SQL Server 2019

Mon, 07 Feb 2022 21:44:20 -0000


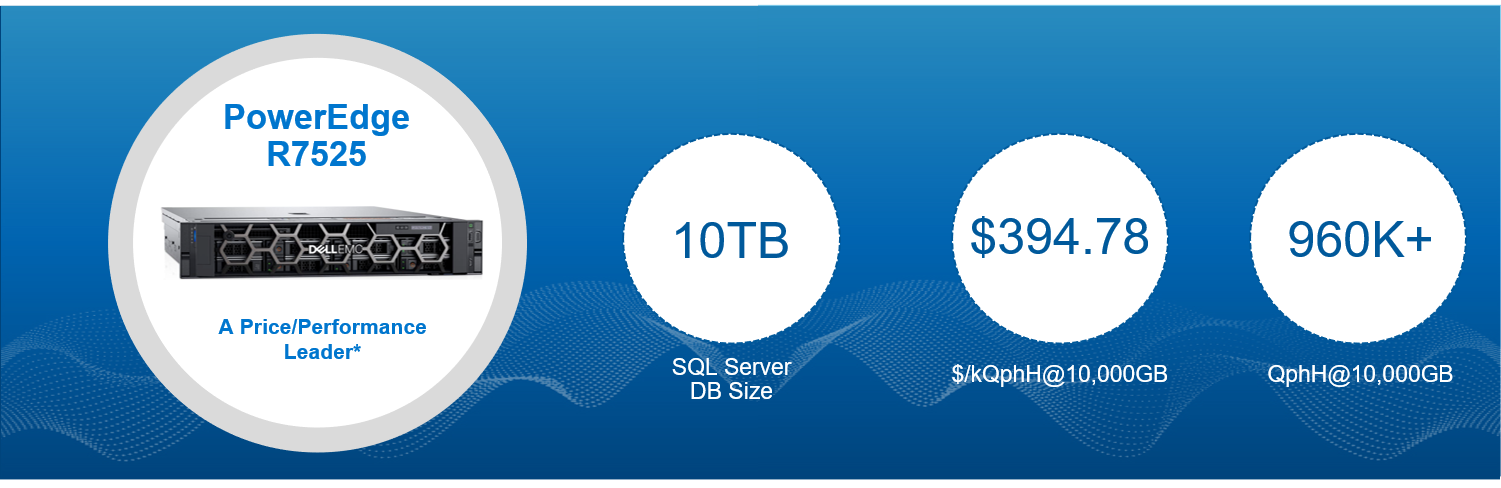

The Microsoft Server team at Dell Technologies is excited to announce the recently published decision support workload benchmark (TPC-H) result that has the Dell PowerEdge R7525 as the price/performance leader according to the 10,000 GB results published on December 15th, 2021. The Transaction Processing Performance Council (TPC) provides the most trusted sources of independently audited database performance benchmarks. These TPC benchmarks provide a way for customers to compare the performance of servers using different sized workloads.

The Dell PowerEdge R7525 is a two-socket server that uses AMD EPYC processors and supports up to 4 TB of memory, making it a strong choice for database workloads. The AMD EPYC 73F3 processor has 16 CPU cores and a base clock speed of 3.5 GHz that can boost up to 4.0 GHz. The 3.5 GHz base clock speed helps the CPU cores process data quickly, which benefits database workloads.

We configured the Dell PowerEdge R7525 with two AMD EPYC 73F3 processors for a total of 32 physical cores and, with simultaneous multithreading (SMT), 64 threads. The server was configured with the maximum amount of memory (4 TB) using DDR4-3200 DRAM in a 32 x 128 GiB configuration. The server storage configuration included ten 1.92 TB SSD drives and eight 6.4 TB enterprise NVMe drives. For the complete PowerEdge R7525 configuration, see the TPC Benchmark H Full Disclosure Report.

We used Microsoft SQL Server 2019 Enterprise Edition and Red Hat Enterprise Linux 8.3 for the TPC-H workload. The decision support workload (TPC-H) is designed to examine volumes of data, execute queries with a high degree of complexity, and provide answers to critical business questions. The key performance metric for decision support benchmarks is the Composite Query-per-Hour metric (QphH@Size). The PowerEdge R7525 achieved a 960,382 QphH@Size rating with a 10,000 GB database size. To determine the price/performance metric, the total system cost of $379,133 USD was divided by the QphH result expressed in thousands. The price/performance metric of 394.78 $/kQphH@10000GB placed the PowerEdge R7525 as a leader in this category.*
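The price/performance calculation described above is simple enough to check by hand. The Python sketch below is a back-of-the-envelope version using the rounded figures quoted in this blog; the function name is hypothetical. Published TPC figures are derived from the audited, unrounded inputs and the TPC rounding rules, so the last digit of this estimate may differ slightly from the official result.

```python
def price_per_kqphh(total_system_cost_usd: float, qphh: float) -> float:
    """Return the system cost per thousand composite queries per hour."""
    if qphh <= 0:
        raise ValueError("qphh must be positive")
    return total_system_cost_usd / (qphh / 1000.0)


if __name__ == "__main__":
    # Figures quoted in the text: $379,133 total cost, 960,382 QphH@10000GB.
    estimate = price_per_kqphh(379_133, 960_382)
    print(f"Estimated price/performance: {estimate:.2f} $/kQphH")
```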

The Microsoft team at Dell Technologies has recently published best practices for SQL Server, many of which were used in this independently audited TPC-H benchmark. To review these best practices that provide insights into how organizations can optimize their SQL Server environments, see this link: AMD-based SQL Server Best Practices.

The SQL Server Best Practices program includes the following:

- VMware ESXi: Round Robin Path Policy

- PowerMax: Adding Storage Groups

- PowerMax: Storage Directors Ports and Interface Emulations

- SQL Server 2019: CPU Affinity

The solutions page for Microsoft SQL Server provides an overview of how Dell Technologies and Microsoft SQL Server can enable your company with modern infrastructure and agile operations. To learn more, see Dell Technologies Solutions for Microsoft Data Platform.

For those interested in harnessing the performance of the AMD-based PowerEdge R7525 server, see the PowerEdge R7525 rack server web page, which provides an overview and technical specifications of the server.

Dell Technologies offers a portfolio of other rack, tower, and modular servers that can be configured to accelerate almost any business workload. To learn more about these options, see Dell Technologies PowerEdge Server Solutions.

* Based on TPC Benchmark H (TPC-H), December 15th, 2021, the Dell EMC PowerEdge R7525 rack server has a TPC-H Composite Query-per-Hour Performance Metric of 960,382 and a price/kQphH metric of 394.79 USD when run against a 10,000 GB Microsoft SQL Server 2019 database and Red Hat Enterprise Linux 8.3 in a non-clustered environment. Actual results may vary based on operating environment. Full results are available at tpc.org.

Dell PowerEdge R7525 Server – AMD Performance for Microsoft SQL Server workloads running on RHEL 8.3

Wed, 19 Jan 2022 21:30:10 -0000


The Dell EMC PowerEdge R7525 is a highly scalable two-socket 2U rack server that delivers powerful performance and flexible configuration, ideal for data analytics workloads. It features 2nd Gen AMD EPYC 7002 series processors with up to 24 NVMe drives that provide a unique combination of non-oversubscribed NVMe storage and plenty of peripheral options to support applications that require maximum performance.

For this benchmark, the PowerEdge R7525 was subjected to an intense workload over 8 contiguous hours which included necessary operations such as backups. The R7525 emerged with an impressive 1,542,560 QphH@3,000GB TPC Benchmark H (TPC-H) performance rating.

Based on the results published on July 03, 2021 at tpc.org, the site of the organization behind the TPC-H decision support benchmark, the Dell PowerEdge R7525 server demonstrated a TPC-H Composite Query-per-Hour metric of 1,542,560 when run against a 3,000 GB database, yielding a TPC-H price/performance rating of $327.38 per kQphH.

This performance was realized based on the installation of Microsoft SQL Server 2019 Enterprise Edition 64-bit on Red Hat Enterprise Linux Server Release 8.3.

Based on TPC-H V3 benchmarks in the 3,000 GB scale factor range, this system has demonstrated an outstanding price-to-performance ratio. You can view the tpc.org results here.

| Performance measurement | |
| --- | --- |
| Performance | 1,542,560 QphH@3,000GB |
| Price/performance | $327.38 per kQphH@3,000GB |

| System information | |
| --- | --- |
| Processors | 2 x AMD EPYC MILAN 75F3, 2.95 GHz (2 Proc / 64 Cores / 128 Threads) |
| Memory (2 TB) | 2,048 GB (16 x 128 GB LRDIMM, 3200 MT/s, Quad Rank) |
| Storage | BOSS controller card with 2 x M.2 480 GB sticks (RAID 1), LP |
| | 4 x 800 GB SSD SAS Mix Use 12 Gbps 512e 2.5 in AG Drive, 3 DWPD |
| | 3 x 960 GB SSD SAS Read Intensive 12 Gbps 512 2.5 in AG Drive, 1 DWPD |
| | 8 x Dell 1.6 TB, NVMe, Mixed Use Express Flash, 2.5 SFF Drive, U.2, P4610 |

Why TPC Benchmarks matter

TPC-H is a decision support benchmark published at tpc.org. This benchmark consists of a suite of business-oriented ad-hoc queries and concurrent data modifications. The queries and the data populating the database were chosen to have broad industry-wide relevance while remaining easy to implement. This benchmark illustrates decision support systems that:

- Examine large volumes of data

- Execute queries with a high degree of complexity

- Give answers to critical business questions

Microsoft’s SQL Server is an enterprise-class database platform that more and more companies are using to store critical and sensitive data. SQL Server is an important cog in Microsoft’s .NET enterprise server architecture. It’s easy to use and takes fewer resources to maintain and performance tune.

The PowerEdge R7525 features 3rd Gen AMD EPYC series processors with Dell NVMe drives that provide a unique combination of non-oversubscribed NVMe storage along with plenty of peripheral options to support applications that require maximum performance.

This measurement is beneficial to a wide range of businesses that use Microsoft’s SQL Server as their enterprise-class database platform. More and more companies are using Microsoft’s SQL Server to store critical and sensitive data. SQL Server remains an important cog in Microsoft .NET enterprise server architecture. It’s easy to use and requires fewer resources to maintain and performance tune.

To find resources for a system that is the right fit for your SQL Server, see the Dell Technologies SQL Server support page.

Additional Dell Technologies Validated Designs to fit your current and future SQL requirements:

- Consolidated Mixed Workloads

- Re-platform Microsoft SQL to Linux

- Run Microsoft SQL on Containers

- Deploy Microsoft SQL 2019 Big Data Clusters

To explore these options, visit the Dell Technologies Solutions for Microsoft Data Platform.

SQL Server Modernization: 7X Performance Gains, 5.25X Faster Rebuilds

Tue, 26 Oct 2021 09:51:41 -0000


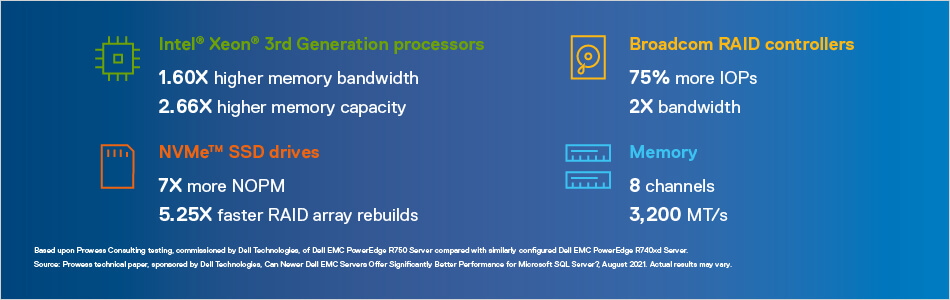

Upgrading to the latest server generation significantly improves performance and data protection

When it comes time to modernize with Microsoft® SQL Server 2019® to take advantage of its significant advancements for extracting real-time insights from data-intensive workloads, it makes sense to consider an upgrade to the underlying hardware as well.

The latest generation of Dell EMC PowerEdge servers can help SQL Server transform raw data into actionable insights with better performance and data protection. These servers are highly adaptable for seamless scaling and equipped to meet the demands of real-time analytics, artificial intelligence (AI) and machine learning (ML).

To help you put some numbers around your infrastructure decision, Dell Technologies recently asked a third-party consulting firm, Prowess Consulting, to test SQL Server performance on the latest generation of Dell EMC PowerEdge servers, compared to the previous generation.

The testing demonstrates that the latest server generation with RAID storage based on the latest NVMe™ drives can significantly improve SQL Server 2019 performance, with the newer server processing more than 7X more new orders per minute (NOPM).[1] In addition, RAID array rebuild times were up to 5.25X faster on the newer platform, enabling significantly faster recovery and less downtime.[1]

These remarkable improvements in performance and data protection are driven by a variety of new technologies incorporated in PowerEdge servers:

- Latest processors with higher core counts: Compared to the previous generation, 3rd Generation Intel® Xeon® Scalable processors are built on a more efficient architecture that increases core performance, memory, and I/O bandwidth and provides additional memory channels to accelerate workloads. In addition, it supports more cores and sockets to further enhance performance and throughput.

- More and faster memory: The 3rd Generation Intel Xeon Scalable processors offer more cores and support more memory modules (DIMMs) at the same price, for up to 1.60X higher memory bandwidth[1] and up to 2.66X higher memory capacity.[1]

- NVMe™ drives with PCIe® Gen4: Compared to the SATA RAID drives with PCIe Gen3 used previously, the latest generation of NVMe solid-state drives paired with PCIe Gen4 interfaces doubles server throughput.[1]

- Revolutionary RAID controller technology: The new Dell PERC H755N front NVMe adapter is based on the Broadcom® SAS3916 PCIe to SAS/SATA/PCIe RAID on Chip (RoC) controller. These are the first RAID controllers from Dell Technologies to offer both PCIe Gen4 host and PCIe Gen4 storage interfaces, which deliver double the bandwidth and 75% more IOPS compared to previous generations.[1]

- Ethernet controllers: The Broadcom NetXtreme® E-Series P425G 4x 25G PCIe NIC combines a high-bandwidth Ethernet controller with a unique set of highly optimized hardware-acceleration engines to enhance network performance and improve server efficiency for enterprise and cloud-scale networking and storage applications, including AI, ML, and data analytics.

Taken together, these modern features deliver a significant SQL Server performance boost with higher-capacity storage and faster database rebuild times. They also greatly increase IT efficiency, a topic we’ll look at in more detail in the next blog.

Learn more

Read the report: Can Newer Dell EMC Servers Offer Significantly Better Performance for Microsoft SQL Server?

Visit: DellTechnologies.com/Microsoft-Data-Platform

Visit: DellTechnologies.com/PowerEdge

[1] Dell EMC R750 server compared with similarly-configured Dell EMC PowerEdge R740xd server. Source: Prowess white paper, sponsored by Dell Technologies, “Can Newer Dell EMC Servers Offer Significantly Better Performance for Microsoft SQL Server?” August 2021. Actual results may vary.

Microsoft SQL Server 2019 TPC-H Performance on Dell EMC PowerEdge R7515

Mon, 14 Jun 2021 14:44:40 -0000


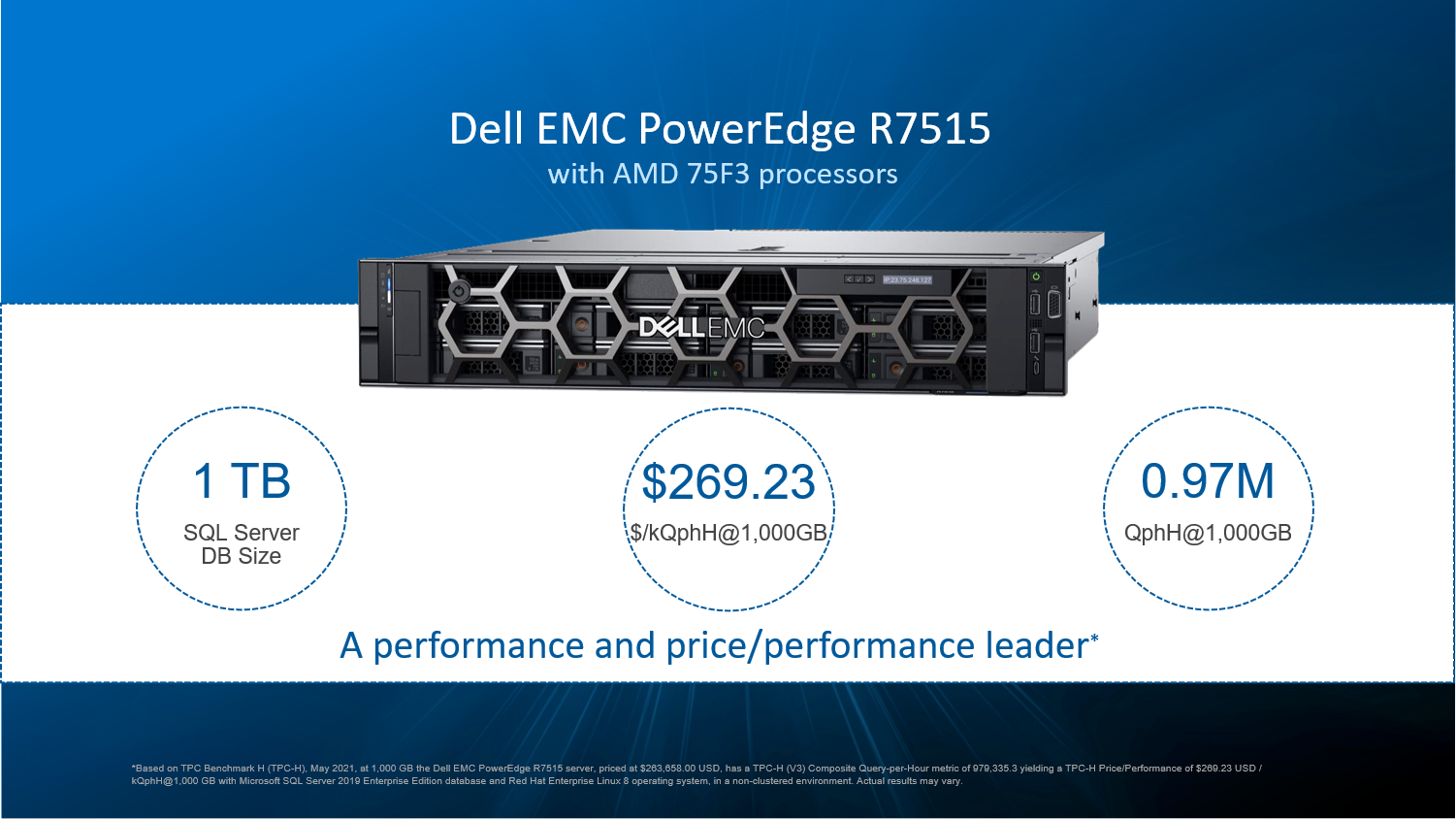

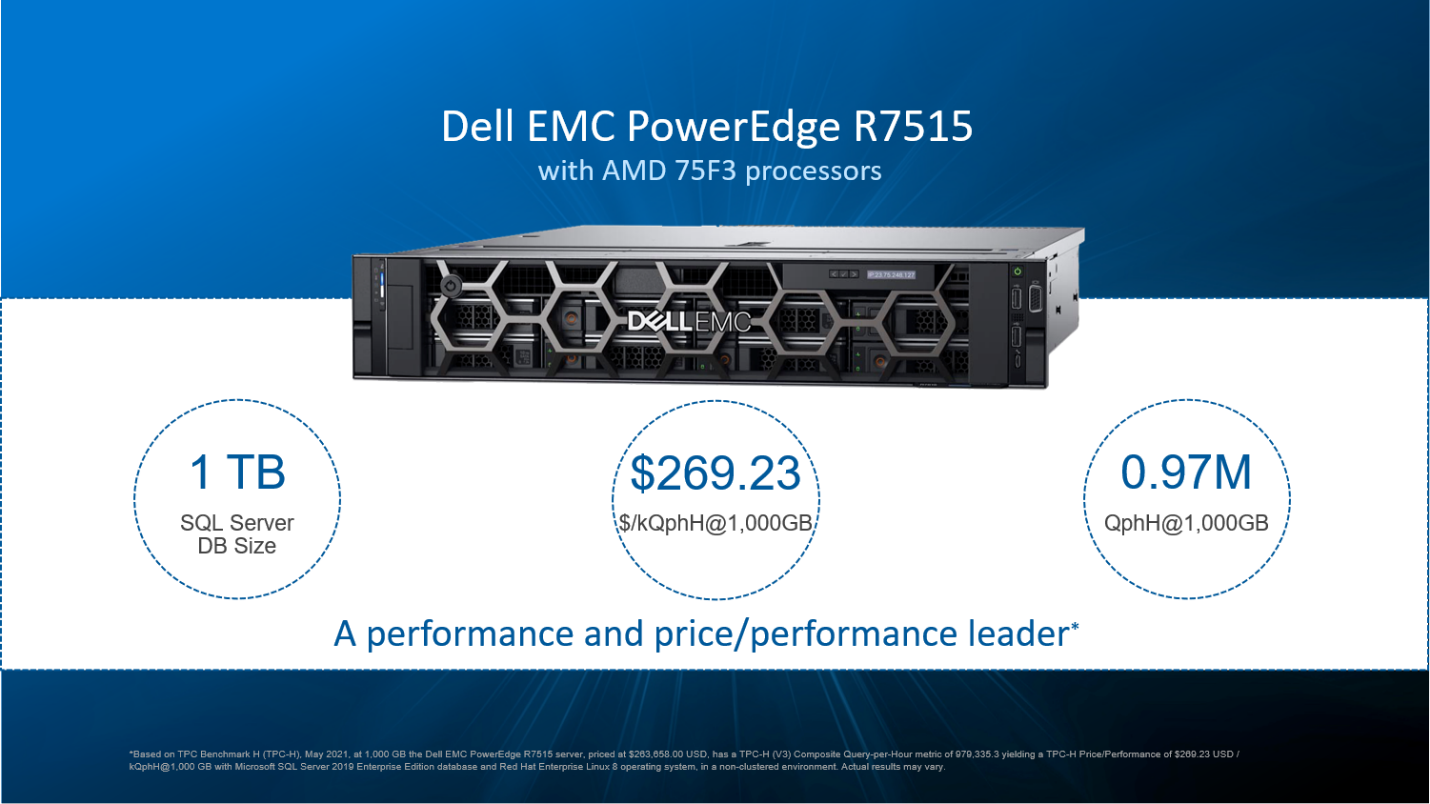

Dell EMC PowerEdge R7515 servers with AMD 75F3 processors deliver impressive performance and price/performance for SQL Server 2019.

While modernizing your data center, there are two very important considerations every organization must weigh. The first is the price of new hardware and the other is how it will perform.

The latest powerful AMD 75F3 processors change the economics of the data center for the better. The Dell EMC PowerEdge R7515 (2U) server, with the AMD processors, delivers the balanced I/O, memory, and computing capacity needed for large-scale analytical and business intelligence applications.

With the latest generation PowerEdge R7515 servers, organizations do not have to compromise on either performance or cost and can instead focus on realizing their IT and digital business potential. Independent tpc.org auditing has demonstrated that these servers are top rated in performance and price/performance at the 1,000 GB scale factor for a Microsoft SQL Server 2019 Enterprise database with the Red Hat Enterprise Linux 8 operating system in a non-clustered environment.[1] Powered by 3rd generation AMD® EPYC™ processors, these servers are capable of handling demanding workloads and applications, such as data warehouses, ecommerce, and databases. For more details about Dell servers, please visit the Dell website.

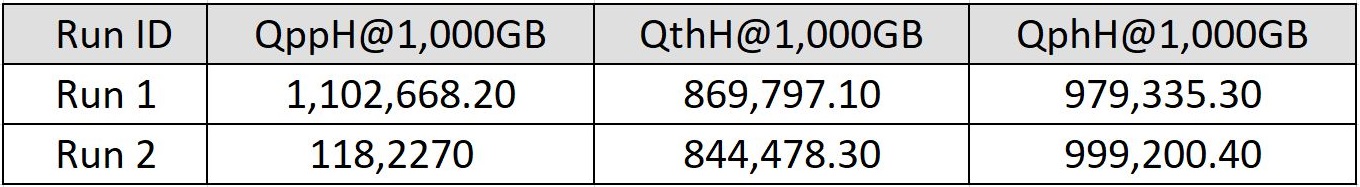

Results (Source: tpc.org as of May 3, 2021)

The Dell PowerEdge R7515 server achieved a result of 979,335.3 QphH@1000GB at a price/performance of $269.23 per kQphH@1000GB, with system availability as of April 29, 2021. Results were officially published on the TPC website on May 3, 2021.[2]
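To see how the two headline numbers relate, here is a minimal sketch that divides the priced configuration by the composite metric expressed in thousands of queries per hour. The price and QphH values come from the footnotes of this post; the function name is our own, and the official figure follows the TPC's rounding rules, so the raw ratio may differ from the published value by a cent.

```python
# Figures quoted in this post's footnotes (TPC-H result, May 2021).
total_system_price_usd = 263_658.00  # priced configuration in USD
composite_qphh = 979_335.3           # QphH@1000GB

def price_per_kqphh(price_usd: float, qphh: float) -> float:
    """Return dollars per thousand composite queries-per-hour."""
    return price_usd / (qphh / 1000.0)

# Raw ratio before any official rounding is applied.
ratio = price_per_kqphh(total_system_price_usd, composite_qphh)
print(f"{ratio:.2f} $/kQphH@1000GB")
```

The raw ratio lands within a cent of the published $269.23.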

Check out the TPC-H V3 Result Highlights for additional information on the benchmark configuration. The detailed official benchmark disclosure report is available on the TPC Results Page.

AMD EPYC 75F3 Processor:

The AMD EPYC™ 7003 Series processors are built on the leading-edge Zen 3 core and AMD Infinity Architecture. The AMD EPYC™ SoC offers a consistent set of features across 8 to 64 cores. Each 3rd Gen AMD EPYC processor consists of up to eight Core Complex Dies (CCDs) and an I/O Die (IOD).

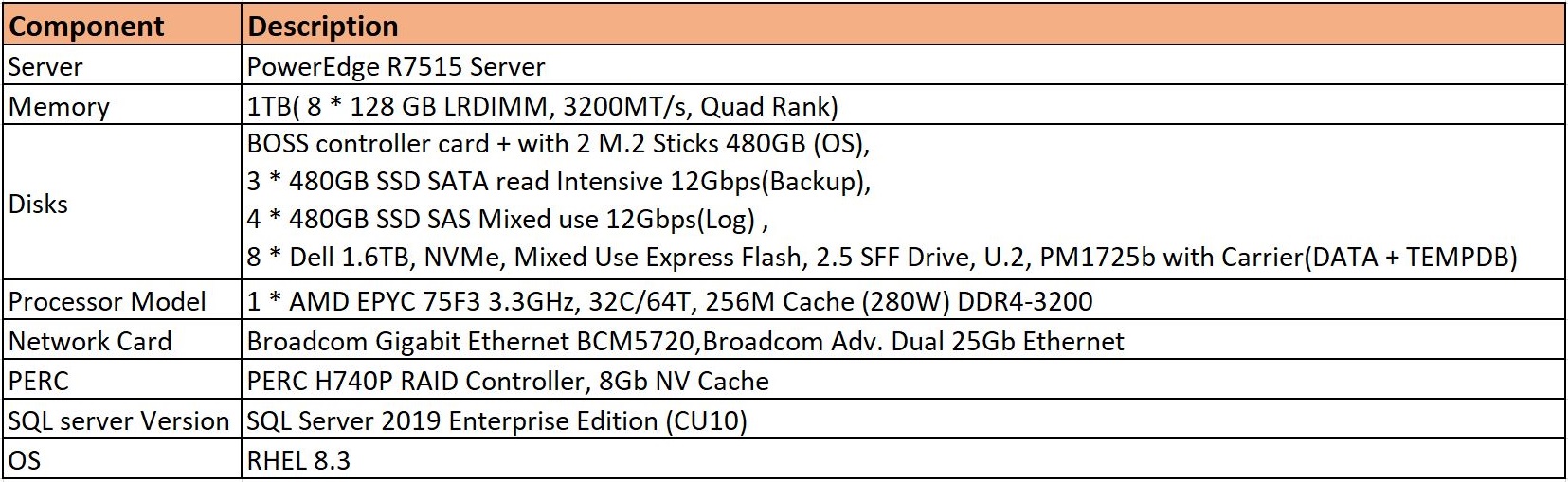

Benchmarking SQL Server 2019 with Dell EMC PowerEdge R7515 Server:

Microsoft SQL Server was run on a PowerEdge R7515 server with the following configuration. The server was equipped with a single EPYC 75F3 processor (3.3GHz, 32C/64T, 256M cache, 280W, DDR4-3200) and 1 TB of memory (up to 2 TB supported). Storage for this system consisted of eight 1.6TB NVMe Gen3 mixed-use Express Flash drives, four 480GB SAS mixed-use SSDs, and three 480GB SATA read-intensive SSDs. The system ran Microsoft SQL Server 2019 Enterprise Edition and Red Hat Enterprise Linux 8. For technical information, see the R7515 rack server page for specifications, customizations, and details.

You can visit the FDR report for additional information on the benchmark configuration.

Performance:

The performance test consists of two runs. Each run consists of one execution of the Power test followed by one execution of the Throughput test.

Run 1 is the first run following the load test, and Run 2 is the run following Run 1. The Run 1 and Run 2 results are shown below. For more detailed information, please see the FDR report.
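As a rough illustration of how the Power and Throughput tests roll up into one number, the sketch below computes the TPC-H composite metric as the geometric mean of the two test results for each run. The per-run values are hypothetical placeholders, not the audited results, and taking the lower of the two runs as the reported figure reflects our reading of the TPC-H specification; check the FDR for the authoritative numbers.

```python
import math

def composite_qphh(power: float, throughput: float) -> float:
    """Composite QphH@Size: geometric mean of the Power and Throughput results."""
    return math.sqrt(power * throughput)

# Hypothetical (Power@Size, Throughput@Size) pairs, for illustration only.
runs = {
    "Run 1": (1_000_000.0, 950_000.0),
    "Run 2": (990_000.0, 960_000.0),
}

composites = {name: composite_qphh(p, t) for name, (p, t) in runs.items()}

# Assumption: the more conservative (lower) run is the one reported.
reported_run = min(composites, key=composites.get)

for name, value in composites.items():
    print(f"{name}: {value:,.1f} QphH")
print(f"Reported run: {reported_run}")
```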

Dell Technologies solutions for Microsoft SQL Server:

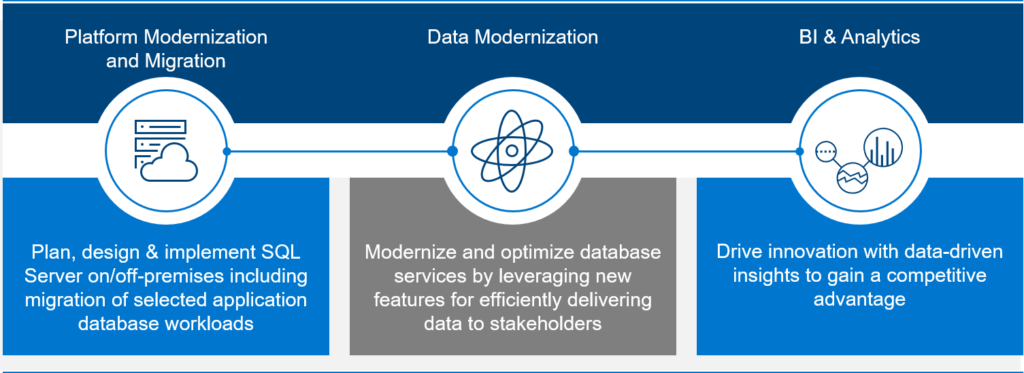

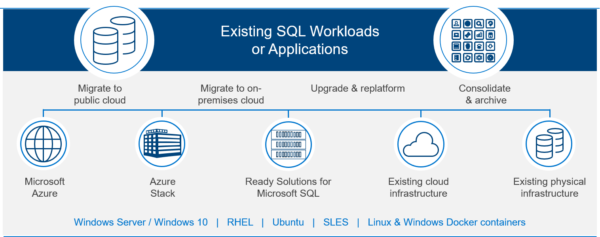

Dell Technologies solutions simplify the deployment, integration and management of Microsoft data platform environments and accelerate time-to-value for better service delivery and business innovation. With a broad infrastructure portfolio and a long-standing partnership with Microsoft, we provide the innovative solutions that reduce complexity and enable you to solve today’s challenges, no matter where you are in your transformation journey.

We have already released 10 TB TPC-H benchmarks on the four-socket PowerEdge R940xa server. For more information about the testing configuration and results, please visit the Dell EMC PowerEdge R940xa Full Disclosure Report.

In addition, Dell Technologies offers a portfolio of other rack servers, tower servers, and modular infrastructure that can be configured to accelerate most any business workload. For more details about Dell Technologies Solutions for Microsoft SQL, please visit https://www.delltechnologies.com/sql

[1] Based on TPC Benchmark H (TPC-H), May 2021, at 1,000 GB the Dell EMC PowerEdge R7515 server, priced at $263,658.00 USD, has a TPC-H (V3) Composite Query-per-Hour metric of 979,335.3 yielding a TPC-H Price/Performance of $269.23 USD / kQphH@1,000 GB with Microsoft SQL Server 2019 Enterprise Edition database and Red Hat Enterprise Linux 8 operating system, in a non-clustered environment. Actual results may vary. Full results on tpc.org.

[2] Based on TPC Benchmark H (TPC-H), May 2021, at 1,000 GB the Dell EMC PowerEdge R7515 server, priced at $263,658.00 USD, has a TPC-H (V3) Composite Query-per-Hour metric of 979,335.3 yielding a TPC-H Price/Performance of $269.23 USD / kQphH@1,000 GB with Microsoft SQL Server 2019 Enterprise Edition database and Red Hat Enterprise Linux 8 operating system, in a non-clustered environment. Actual results may vary. Full results on tpc.org.

AMD EPYC Zen3 Delivers 20% More SQL Server Performance

Mon, 03 May 2021 14:04:47 -0000

|Read Time: 0 minutes

It’s a common question: “How much database performance will we gain when upgrading to the newest server technology?” The person asking the question wants to justify the investment based on measured benefit. An engineering team here at Dell Technologies ran a load test comparing the prior generation of AMD EPYC processors to the new EPYC Zen3 processors. These test findings show a double-digit gain in performance for a typical write-heavy transactional workload using the new AMD EPYC Zen3 processors.

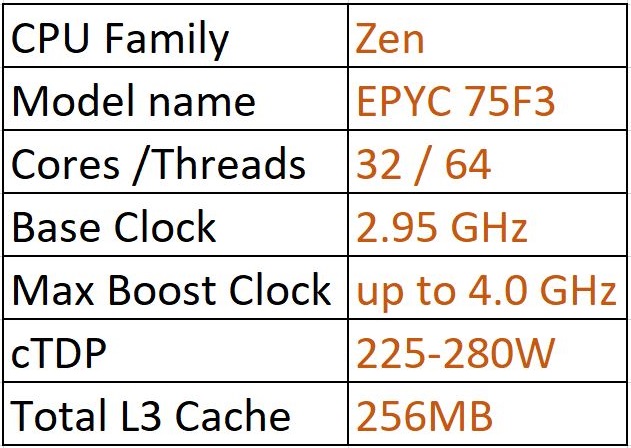

We used two Dell EMC PowerEdge R7525 servers with different generations of AMD EPYC processors. One server was configured with two 32-core AMD EPYC 75F3 processors running with a base clock speed of 2.95 GHz that can boost up to 4 GHz.

The table below compares the AMD EPYC Zen3 processors to the Zen2 processors used in the other server. We see that the new Zen3 processors have a higher boost clock speed and double the L3 cache. These new processors should accelerate database workloads by providing greater performance.

| Component | PowerEdge R7525 with AMD EPYC Zen3 | PowerEdge R7525 with AMD EPYC Zen2 |
| --- | --- | --- |
| AMD EPYC CPU | 75F3 | 7542 |
| Base clock speed | 2.95 GHz | 2.9 GHz |
| Boost clock speed | 4.0 GHz | 3.4 GHz |
| L1 cache | 96K per core | 96K per core |
| L2 cache | 512K per core | 512K per core |
| L3 cache | 256 MB shared | 128 MB shared |

Both generations of processors support 8 memory channels, with each memory channel supporting up to 2 DIMMs. We believe the faster boost clock speed combined with doubling the L3 cache for the new generation AMD EPYC Zen3 processors will drive greater database performance; however, there are many more features that we haven’t covered. This AMD webpage covers the EPYC 75F3 processors. For a deep technical dive into performance tuning database workloads, we recommend this RDBMS tuning guide.
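To put the specification differences in proportion, here is a small sketch that computes the relative change in clock speeds and L3 cache from the comparison table. The dictionary keys and helper name are our own; the values are copied from the table.

```python
# Values copied from the processor comparison table in this post.
zen3 = {"base_ghz": 2.95, "boost_ghz": 4.0, "l3_mb": 256}
zen2 = {"base_ghz": 2.9, "boost_ghz": 3.4, "l3_mb": 128}

def pct_increase(new: float, old: float) -> float:
    """Percentage increase of new over old."""
    return (new - old) / old * 100.0

base_gain = pct_increase(zen3["base_ghz"], zen2["base_ghz"])
boost_gain = pct_increase(zen3["boost_ghz"], zen2["boost_ghz"])
l3_ratio = zen3["l3_mb"] / zen2["l3_mb"]

print(f"Base clock: +{base_gain:.1f}%")
print(f"Boost clock: +{boost_gain:.1f}%")
print(f"L3 cache: {l3_ratio:.0f}x")
```

On paper, the boost clock is the larger of the two frequency changes, which is why we single it out alongside the doubled L3 cache.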

The table below shows a comparison summary of two PowerEdge servers used in the testing.

| Component | PowerEdge Milan (Zen3) | PowerEdge ROME (Zen2) |
| --- | --- | --- |
| Processor | 2 x AMD EPYC 75F3 32 core processor | 2 x AMD EPYC 7542 32 core processor |
| Memory | 2,048 GB 3.2 GHz | 2,048 GB 3.2 GHz |
| Disk Storage | 8 x Dell Express Flash NVMe P4610 1.6 TB | 8 x Dell Express Flash NVMe P4610 1.6 TB |
| Embedded NIC | 1 x Broadcom Gigabit Ethernet BCM5720 | 1 x Broadcom Gigabit Ethernet BCM5720 |
| Integrated NIC | 1 x Broadcom Adv. Dual port 25 GB Ethernet | 1 x Broadcom Adv. Dual port 25 GB Ethernet |

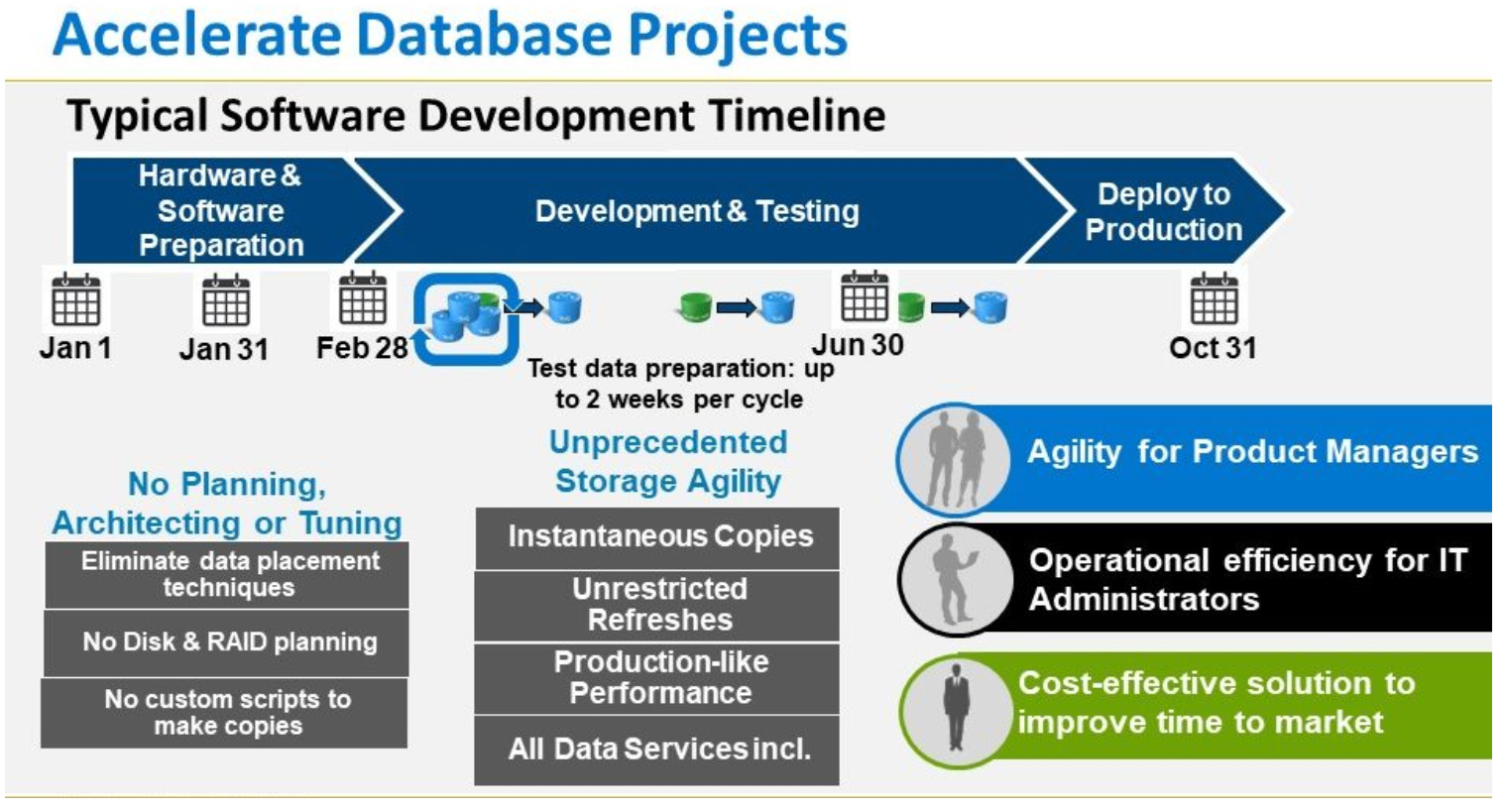

Microsoft SQL Server Enterprise Edition 2019 was virtualized to reflect the most common configuration we see at customer sites. The performance differences between bare-metal and VMware virtualization are rarely a consideration for customers since AMD and VMware are continuously optimizing performance. The paper “Performance Optimizations in VMware vSphere 7.0 U2 CPU Scheduler for AMD EPYC Processors” shows that the vSphere 7.0 U2 CPU scheduler achieves up to 50% better performance than 7.0 U1. Virtualized data management systems have also enabled on-premises Database-as-a-Service offerings for many enterprises. The capability to quickly provision database copies can significantly benefit many IT priorities and programs.

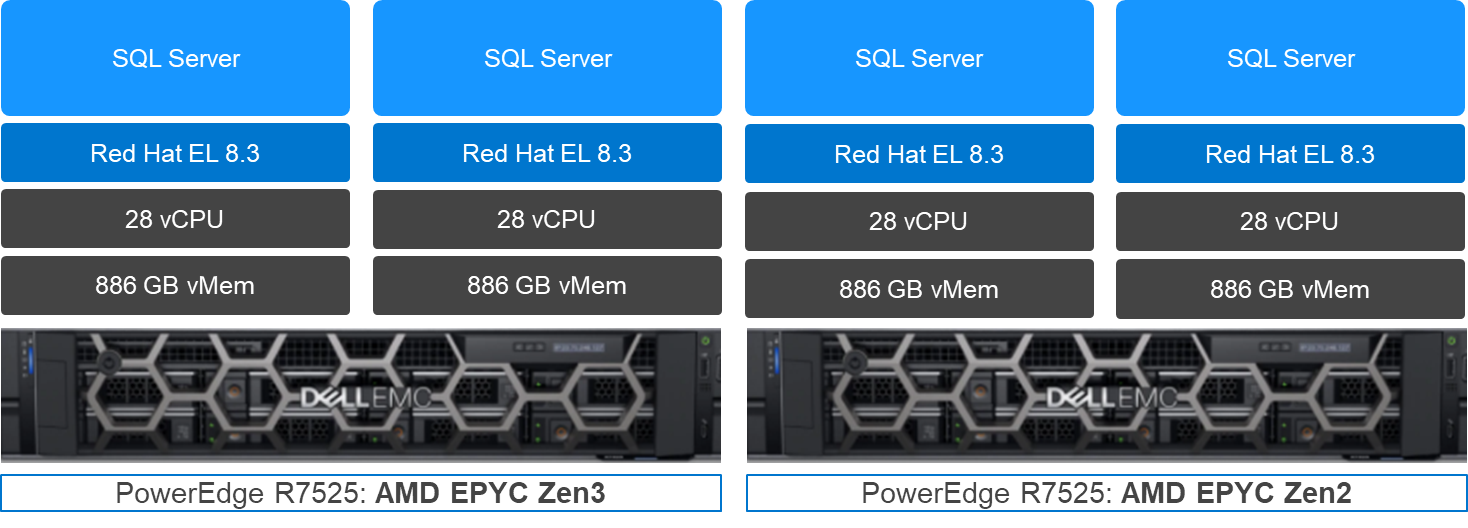

The Dell Engineering team used VMware virtualization to create a SQL Server virtual machine template for this testing. That template allowed the team to quickly provision four copies of the exact same virtualized database across the two PowerEdge servers. We hosted two virtualized SQL Server databases on each PowerEdge server. The figure below shows the infrastructure configuration for this performance test.

The virtual machine configuration for the four SQL Server databases and the memory allocations for SQL Server are detailed below.

| Component | Virtual Machine Configuration and Memory of SQL Server |
| --- | --- |
| vCPU | 28 |
| Memory | 886 GB |
| Disk Storage | 2.7 TB |
| Memory for SQL Server | 758 GB |
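A quick sanity check on sizing: the sketch below adds up what two of these virtual machines ask of one PowerEdge R7525 host. All figures come from the tables in this post; the variable names are our own, and the comparison ignores hypervisor overhead, so treat it as back-of-the-envelope arithmetic rather than a sizing tool.

```python
# Host and VM figures taken from the tables in this post.
cores_per_host = 2 * 32   # two 32-core EPYC processors per server
host_memory_gb = 2048
vms_per_host = 2          # two SQL Server VMs on each PowerEdge server
vcpus_per_vm = 28
vm_memory_gb = 886
sql_memory_gb = 758

total_vcpus = vms_per_host * vcpus_per_vm
total_vm_memory_gb = vms_per_host * vm_memory_gb
sql_share_of_vm = sql_memory_gb / vm_memory_gb

print(f"vCPUs allocated: {total_vcpus} of {cores_per_host} physical cores")
print(f"VM memory allocated: {total_vm_memory_gb} GB of {host_memory_gb} GB")
print(f"SQL Server share of VM memory: {sql_share_of_vm:.0%}")
```

Both totals stay below the physical core count and installed memory, so under these assumptions the VMs are not oversubscribing the host.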

Both databases used VMware vSphere’s Virtual Machine File System (VMFS) on Direct Attached Storage (DAS). The table below shows the size of each storage volume used for the virtual machine. There are two data volumes (Data 1 and Data 2) to increase I/O bandwidth to the disk storage. With two volumes, reads and writes are split between them, increasing storage performance.

| Storage Group | Size (GB) |
| --- | --- |
| Operating System | 100 |
| Data 1 | 600 |
| Data 2 | 600 |
| TempDB and TempDB Log | 200 |
| Log | 200 |
| Backup | 1,000 |
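As a cross-check, the volume sizes in the table can be summed and compared with the 2.7 TB disk figure in the virtual machine configuration. This is a minimal sketch; the list simply holds the sizes in the order the table presents them, and it uses decimal units (1 TB = 1,000 GB).

```python
# Volume sizes in GB, in the order listed in the storage table.
volume_sizes_gb = [100, 600, 600, 200, 200, 1000]

total_gb = sum(volume_sizes_gb)
total_tb = total_gb / 1000  # decimal terabytes

print(f"Total provisioned: {total_gb} GB ({total_tb} TB)")
```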

In summary, the entire SQL Server software stack consisted of:

- Microsoft SQL Server Enterprise Edition 2019 CU9

- Red Hat Enterprise Linux 8.3

- VMware vSphere ESXi 7.0 Update 2

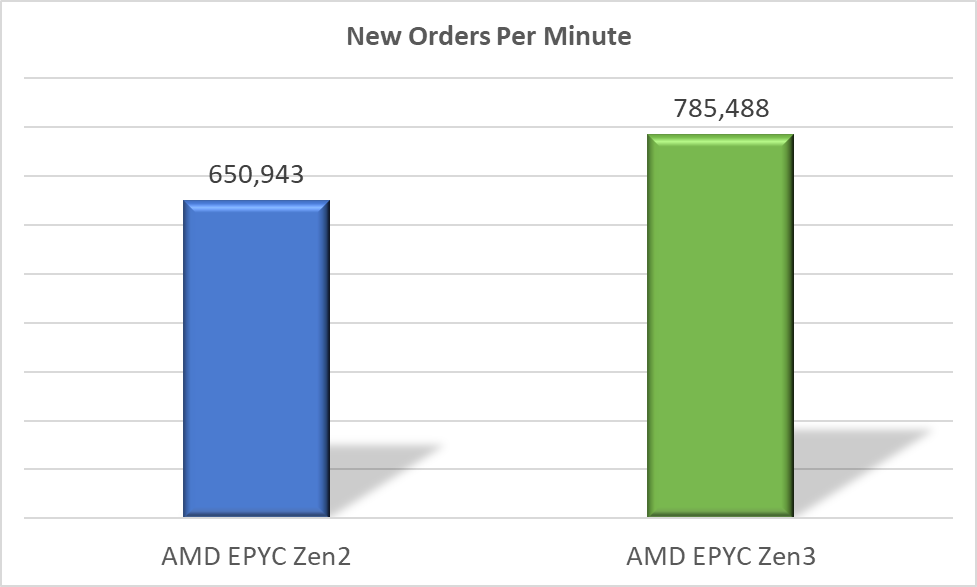

To create an Online Transaction Processing (OLTP) workload on the two SQL Server databases, the team used HammerDB. HammerDB is a leading benchmark tool used with databases like Microsoft SQL Server, Oracle, and others. We used HammerDB to generate a TPC-C workload that simulates terminal operators executing transactions, which is characteristic of an OLTP workload. A typical OLTP workload sends thousands of small read and write requests per minute to the database, and those requests must be committed to storage. New Orders Per Minute (NOPM) indicates the number of orders that were fully processed in one minute and is a metric we can use to compare two database systems. Below are the HammerDB settings used for the TPC-C workload test.

| Setting | Value |
| --- | --- |
| Timed Driver Script | Yes |
| Total Transactions per User | 1,000,000 |
| Minutes of Ramp Up Time | 10 |
| Minutes of Test Duration | 20 |
| Use All Warehouses | Yes |
| Number of Virtual Users | 100 |

The new AMD EPYC Zen3 processors delivered a 20% increase in New Orders Per Minute performance over the prior generation processor! That is a substantial improvement across the two virtualized SQL Server databases running on the PowerEdge R7525 server. See the comparison chart below.
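For readers who want to reproduce the comparison with their own numbers, here is a minimal sketch of the arithmetic: NOPM is the count of completed new orders divided by the minutes in the measured window, and the gain is the relative difference between the two systems. The order counts below are hypothetical, chosen only so the example works out to a 20% gain; they are not the measured results from this test.

```python
TEST_DURATION_MINUTES = 20  # measured window from the HammerDB settings above

def nopm(new_orders_completed: int, minutes: float) -> float:
    """New Orders Per Minute over the measured window (ramp-up excluded)."""
    return new_orders_completed / minutes

def pct_gain(new: float, old: float) -> float:
    """Relative improvement of new over old, as a percentage."""
    return (new - old) / old * 100.0

# Hypothetical order counts, for illustration only.
zen2_orders = 10_000_000
zen3_orders = 12_000_000

zen2_nopm = nopm(zen2_orders, TEST_DURATION_MINUTES)
zen3_nopm = nopm(zen3_orders, TEST_DURATION_MINUTES)
gain = pct_gain(zen3_nopm, zen2_nopm)

print(f"Zen2: {zen2_nopm:,.0f} NOPM, Zen3: {zen3_nopm:,.0f} NOPM, gain: {gain:.0f}%")
```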

It’s very likely that the boost clock speed of 4.0 GHz and the larger L3 cache in the AMD EPYC Zen3 processors contributed significantly to the 20% performance gain. Every database is different, and results will vary. However, this performance test provides value in terms of understanding the potential gains in moving to AMD’s new Zen3 processors. For enterprises considering migrating their databases to a new server platform, serious consideration should be given to using the PowerEdge R7525 servers with the new generation of AMD processors.

Why Canonicalization Should Be a Core Component of Your SQL Server Modernization (Part 2)

Wed, 12 Apr 2023 16:01:55 -0000

|Read Time: 0 minutes

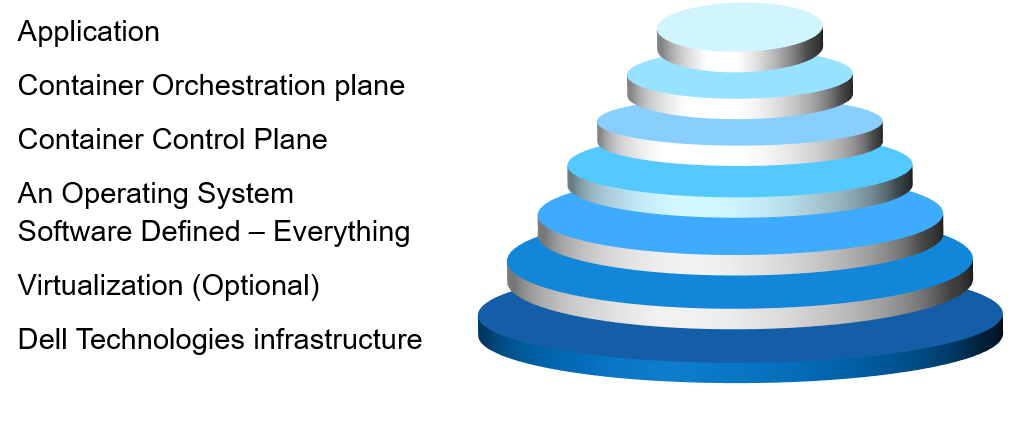

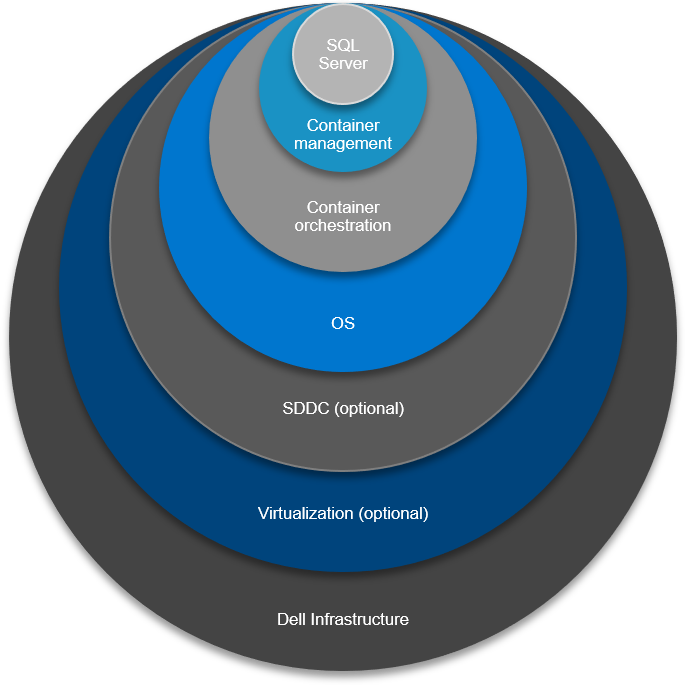

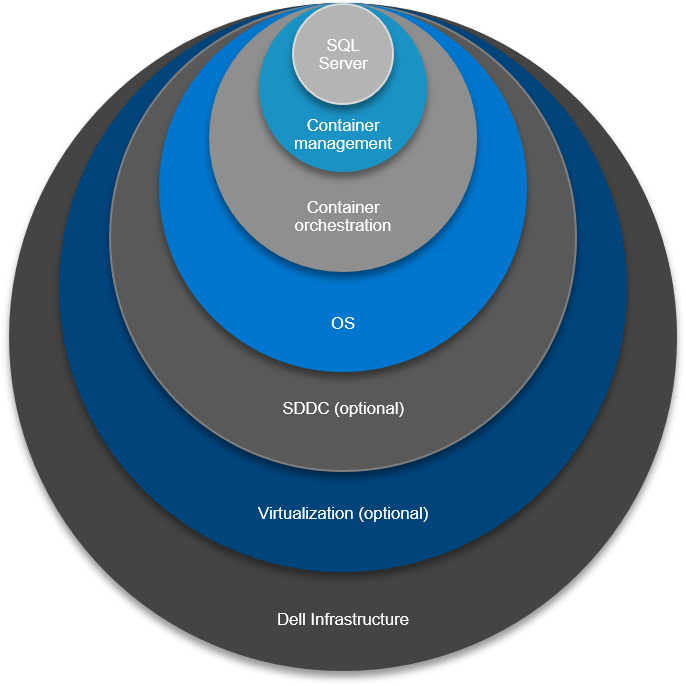

In Part 1 of this blog series, I introduced the Canonical Model, a fairly recent addition to the Services catalog. Canonicalization will become the north star where all newly created work is deployed to and managed, and its simplified approach also allows for vertical integration and solutioning an ecosystem when it comes to the design work of a SQL Server modernization effort. The stack is where the “services” run, starting with bare-metal and going all the way to the application, with seven layers up the stack.

In this blog, I’ll dive further into the details and operational considerations for the 7 layers of the fully supported stack, using as an example the product that makes my socks roll up and down: a SQL Server Big Data Cluster. The SQL BDC is absolutely not the only “application” your IT team would address. This conversation applies to any “top of stack application” solution. One example is persistent storage for databases running in a container. We need to solution for the very top (SQL Server), the very bottom (Dell Technologies infrastructure), and the many optional layers in between.

First, a Word About Kubernetes

One of my good friends at Microsoft, Buck Woody, never fails to mention a particular truth in his deep-dive training sessions for Kubernetes. He says, “If your storage is not built on a strong foundation, Kubernetes will fall apart.” He’s absolutely correct.

Kubernetes or “K8s” is an open-source container-orchestration system for automating deployment, scaling, and management of containerized applications and is the catalyst in the creation of many new business ventures, startups, and open-source projects. A Kubernetes cluster consists of the components that represent the control plane and includes a set of machines called nodes.

To get a good handle on Kubernetes, give Global Discipline Lead Daniel Murray’s blog a read, “Preparing to Conduct Your Kubernetes Orchestra in Tune with Your Goals.”

The 7 Layers of Integration Up the Stack

Let’s look at the vertical integration one layer at a time. This process and solution conversation is very fluid at the start. Facts, IT desires, best practice considerations, and IT maturity are all currently on the table. For me, at this stage, there is zero product conversation. For my data professionals, this is where we get on a white board (or virtual white board) and answer these questions:

- Any data?

- Anywhere?

- Any way?

Answers here will help drive our layer conversations.

From tin to application, we have:

Layer 1

The foundation of any solid design of the stack starts with Dell Technologies Solutions for SQL Server. Dell Technologies infrastructure is best positioned to drive consistency up and down the stack, and it’s supplemented by the company’s subject matter experts who work with you to make optimal decisions concerning compute, storage, and backup.

The requisites and hardware components of Layer 1 are:

- Memory, storage class memory (PMEM), and a consideration for later—maybe a bunch of all-flash storage. Suggested equipment: PowerEdge.

- Storage and CI component. Considerations here include use cases that will drive decisions to be made later within the layers. Encryption and compression in the mix? Repurposing? HA/DR conversations are also potentially spawned here. Suggested hardware: PowerOne, PowerStore, PowerFlex. Other considerations – structured or unstructured? Block? File? Object? Yes to all! Suggested hardware: PowerScale, ECS.

- Hard to argue the huge importance of a solid backup and recovery plan. Suggested hardware: PowerProtect Data Management portfolio.

- Dell Networking. How are we going to “wire up”: converged or hyper-converged, or up the stack of virtualization, containerization and orchestration? How are all those aaS’es going to communicate? These questions concern how the layers of the stack integrate, and that is a key component to get right.

Note: All of Layer 1 should consist of Dell Technologies products with deployment and support services. Full stop.

Layer 2

Now that we’ve laid our foundation from Dell Technologies, we can pivot to other Dell ecosystem solution sets as our journey continues, up the stack. Let’s keep going.

Considerations for Layer 2 are:

- Are we sticking with physical tin (bare-metal)?

- Should we apply a virtualization consolidation factor here? ESXi, Hyper-V, KVM? Virtualization is “optional” at this point. Again, the answers are fluid right now and it’s okay to say, “it depends.” We’ll get there!

- Do we want to move to open-source in terms of a fully supported stack? Do we want the comfort of a supported model? IMO, I like a fully supported model although it comes at a cost. Implementing consolidation economics, however, like I mentioned above with virtualization and containerization, equals doing more with less.

Note: Layer 2 is optional (dependent upon future layers) and would be fully supported by either Dell Technologies, VMware or Microsoft and services provided by Dell Technologies Services or VMware Professional Services.

Layer 3

Choices in Layer 3 help drive decisions and maturity curve comfort levels all the way back to Layer 1. Additionally, at this juncture, we’ll also start talking about subsequent layers and thinking about the orchestration of containers with Kubernetes.

Considerations and some of the purpose-built solutions for Layer 3 include:

- Software-defined everything such as Dell Technologies PowerFlex (formerly VxFlex).

- Network and storage such as the Dell Technologies VMware family (vSAN) and the Microsoft Azure family of on-premises servers (Edge, Azure Stack Hub, Azure Stack HCI).

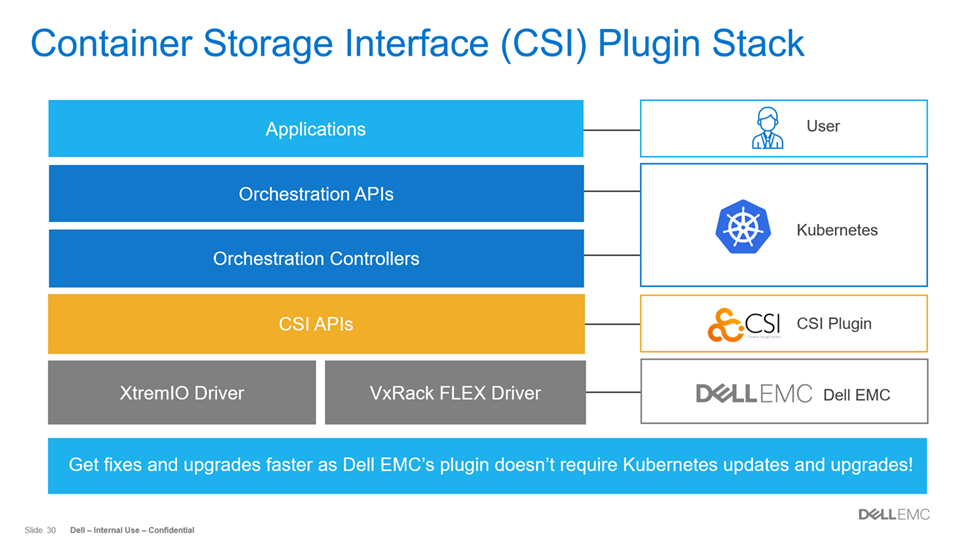

As we walk through the journey to a containerized database world, this level is where we also need to start thinking about the CSI (Container Storage Interface) driver and where it will be supported.

Note: Layer 3 is optional (dependent upon future layers) and would be fully supported by either Dell Technologies, VMware or Microsoft and services provided by Dell Technologies Services or VMware Professional Services.

Layer 4

Ah, we’ve climbed up four rungs on the ladder and arrived at the Operating System where things get really interesting! (Remember the days when OS was tin and an OS?)

Considerations for Layer 4 are:

- Windows Server. Available in a few different forms—Desktop experience, Core, Nano.

- Linux OS. Many choices including RedHat, Ubuntu, SUSE, just to name a few.

Note: Do you want to continue the supported stack path? If so, Microsoft and RedHat are the answers here in terms of where you’ll reach for “phone-a-friend” support.

Option: We could absolutely stop at this point and deploy our application stack. Perfectly fine to do this. It is a proven methodology.

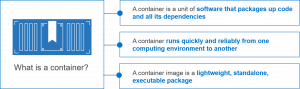

Layer 5

Container technology – the ability to isolate one process from another – dates back to 1979. How is it that I didn’t pick up this technology when I was 9 years old? Now, the age of containers is finally upon us. It cannot be ignored. It should not be ignored. If you have read my previous blogs, especially “The New DBA Role – Time to Get your aaS in Order,” you are already embracing SQL Server on containers. Yes!

Considerations and options for Layer 5, the “Container Control plane” are:

- VMware VCF 4.

- RedHat OpenShift (with our target of a SQL 2019 BDC, we need 4.3+).

- AKS (Azure Kubernetes Service) – on-premises with Azure Stack Hub.

- Vanilla Kubernetes (The original Trunk/Master).

Note: Containers are absolutely optional here. However, certain options in these layers will provide the runway for containers in the future. Virtualization of data and containerization of data can live on the same platform! Even if you are not ready currently, it would be good to set up for success now, so you are ready to start with containers within hours if needed.

Layer 6

The Container Orchestration plane. We all know about virtualization sprawl. Now, we have container sprawl! Where are all these containers running? Which cloud are they running in? On which hypervisor? It’s best to now manage through a single pane of glass, understanding and managing “all the things.”

Considerations for Layer 6 are:

Note: As of this blog’s publish date, Azure Arc is not yet GA; it’s still in preview. No time like the present to start learning Arc’s ins and outs! Sign up for the public preview.

Layer 7

Finally, we have reached the application layer in our SQL Server Modernization. We can now install SQL Server, or any ancillary service offering in the SQL Server ecosystem. But hold on! There are a couple options to consider: Would you like your SQL services to be managed and “Always Current?” For me, the answer would be yes. And remember, we are talking about on-premises data here.

Considerations for Layer 7:

- The application for this conversation is SQL Server 2019.

- The appropriate decisions in building your stack will lead you to Azure Arc Data Services (currently in Preview). SQL Server and Kubernetes are requirements here.

Note: With Dell Technologies solutions, you can deploy at your rate, as long as your infrastructure is solid. Dell Technologies Services has services to move/consolidate and/or upgrade old versions of SQL Server to SQL Server 2019.

The Fully Supported Stack

In terms of considering all the choices and dependencies made at each layer of building and integrating the 7 layers up the stack, there is a fully supported stack available that includes services and products from:

- Dell Technologies

- VMware

- RedHat

- Microsoft

Also, there are absolutely many open-source choices that your teams can make along the way. Perfectly acceptable to do. In the end, it comes down to who wants to support what, and when.

Dell Technologies Is Here to Help You Succeed

There are deep integration points for the fully supported stack. I can speak for all permutations representing the four companies listed above. In my role at Dell Technologies, I engage with senior leadership, product owners, engineers, evangelists, professional services teams, data scientists—you name it. We all collaborate and discuss what is best for you, the client. When you engage with Dell Technologies for the complete solution experience, we have a fierce drive to make sure you are satisfied, both in the near and long term. Find out more about our products and services for Microsoft SQL Server.

I invite you to take a moment to connect with a Dell Technologies Service Expert today and begin moving forward to your fully supported stack and SQL Server modernization.

Why Canonicalization Should Be a Core Component of Your SQL Server Modernization (Part 1)

Tue, 23 Mar 2021 13:00:57 -0000

|Read Time: 0 minutes

The Canonical Model, Defined

A canonical model is a design pattern used to communicate between different data formats; a data model which is a superset of all the others (“canonical”) and creates a translator module or layer to/from which all existing modules exchange data with other modules [1]. It’s a form of enterprise application integration that reduces the number of data translations, streamlines maintenance and cost, standardizes on agreed data definitions associated with integrating business systems, and drives consistency in providing common data naming, definition and values with a generalized data framework.

SQL Server Modernization

I’ve always been a data fanatic and forever hold a special fondness for SQL Server. As of late, many of my clients have asked me: “How do we embark on a new era of data management for the SQL Server stack?”

Canonicalization, in fact, is very much applicable to the design work of a SQL Server modernization effort. Its simplified approach allows for vertical integration and solutioning an entire SQL Server ecosystem. The stack is where the “Services” run, starting with bare-metal and going all the way to the application, with seven integrated layers up the stack.

The 7 Layers of Integration Up the Stack

The foundation of any solid design of the stack starts with Dell Technologies Solutions for SQL Server. Dell Technologies is best positioned to drive consistency up and down the stack, and it’s supplemented by the company’s subject matter infrastructure and services experts who work with you to make the best decisions concerning compute, storage, and backup.

Let’s take a look at the vertical integration one layer at a time. From tin to application, we have:

- Infrastructure from Dell Technologies

- Virtualization (optional)

- Software defined – everything

- An operating system

- Container control plane

- Container orchestration plane

- Application
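One way to keep the layer cake straight during design conversations is to write it down as data. The sketch below is a hypothetical model of the seven layers listed above; the `Layer` class and `describe` helper are illustrative names of our own, and only the layer that the list itself marks as optional is flagged that way.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Layer:
    number: int
    name: str
    optional: bool = False

# The seven layers, from tin (1) to application (7), as listed above.
STACK = [
    Layer(1, "Infrastructure from Dell Technologies"),
    Layer(2, "Virtualization", optional=True),
    Layer(3, "Software defined - everything"),
    Layer(4, "Operating system"),
    Layer(5, "Container control plane"),
    Layer(6, "Container orchestration plane"),
    Layer(7, "Application"),
]

def describe(stack: list[Layer]) -> list[str]:
    """Render the stack bottom-up, flagging optional layers."""
    ordered = sorted(stack, key=lambda layer: layer.number)
    return [
        f"Layer {layer.number}: {layer.name}" + (" (optional)" if layer.optional else "")
        for layer in ordered
    ]

for line in describe(STACK):
    print(line)
```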

There are so many dimensions to choose from as we work up this layer cake of hardware, software-defined infrastructure and, of course, applications. Think: Dell, VMware, RedHat, Microsoft. With the progress of software, evolving at an ever-increasing rate and eating up the world, there is additional complexity. It’s critical you understand how all the pieces of the puzzle work and which pieces work well together, giving consideration to the integration points you may already have in your ecosystem.

Determining the Most Reliable and Fully Supported Solution

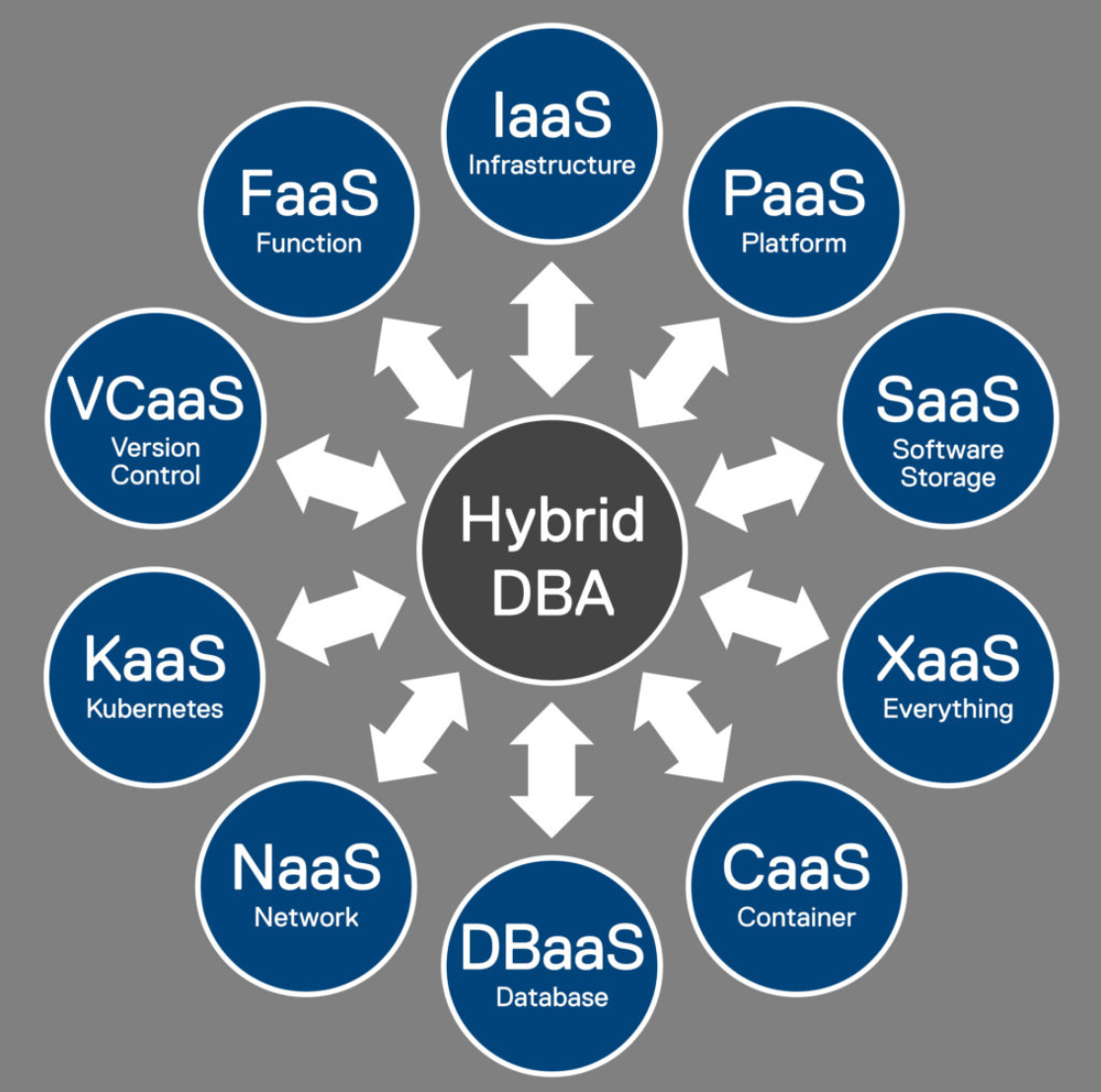

With all this complexity, which architecture do you choose to become properly solutioned? How many XaaS would you like to automate? I hope your answer is: all of them! At what point would you like the control plane, or control planes? Think of a control plane as where your teams manage from, deploy to, and hook their DevOps tooling to. To put it a different way, would you like your teams innovating or maintaining?

As your control plane insertion point moves up towards the application, the automation below increases, as does the complexity. One example here is the Azure Resource Manager, or ARM. There are ways to connect any infrastructure in your on-premises data centers, driving consistent management. We also want all the role-based access control (RBAC) in place – especially for our data stores we are managing. One example, which we will talk about in Part 2, is Azure Arc.

This is the main reason for this blog, understanding the choices and tradeoff of cost versus complexity, or automated complexity. Many products deliver this automation, out of the box. “Pay no attention to the man behind the curtain!”

One of my good friends at Dell Technologies, Stephen McMaster, an Engineering Technologist, describes these considerations as the Plinko Ball, a choose-your-own-adventure type of scenario. This analogy is spot on!

With all the choices of dimensions, we must distill down to the most efficient approach. I like to understand both the current IT tool set and the maturity journey of the organization, before I tackle making the proper recommendation for a solid solution set and fully supported stack.

Dell Technologies Is Here to Help You Succeed

Is “keeping the lights on” preventing your team from innovating?

Dell Technologies Services can complement your team! As your company’s trusted advisor, my team members share deep expertise for Microsoft products and services and we’re best positioned to help you build your stack from tin to application. Why wait? Contact a Dell Technologies Services Expert today to get started.

Stay tuned for Part 2 of this blog series where we’ll dive further into the detail and operational considerations of the 7 layers of the fully supported stack.

New Decision Support Benchmark Has Dell EMC MX740c as Top Performance Leader

Thu, 18 Mar 2021 15:59:40 -0000

|Read Time: 0 minutes

The Microsoft SQL Server team at Dell Technologies is excited to announce the recently published decision support workload benchmark (TPC-H) that, at the time of this blog, has the Dell EMC PowerEdge MX740c modular server at the top position of the leaderboard for performance. For those of you who don’t follow database benchmarking news, it’s the Transaction Processing Performance Council (TPC) that provides the most trusted source of independently audited database performance benchmarks. The TPC has two organizational goals: creating good benchmarks and creating a process for auditing those benchmarks.

Dell Technologies recently achieved the top position on the TPC (tpc.org) leaderboard for the decision support workload benchmark (TPC-H) performance results for a 1,000 GB database.* This benchmark tests decision support systems that are designed to examine large volumes of data, execute queries with a high degree of complexity, and give answers to critical business questions. Our top ranked system was the modular PowerEdge MX740c server running Microsoft SQL Server 2019 Enterprise Edition with the Red Hat Enterprise Linux 8.0 operating system.*

The performance metric reported for the TPC-H benchmark is the Composite Query-per-Hour Performance Metric (QphH@Size). Most database benchmarks for high transaction workloads are stated in transactions per second (TPS). The decision support benchmark is stated in queries per hour because its queries are far more complex than most OLTP transactions: they include a rich combination of transformation operators and selectivity constraints that generate intensive activity on the database server being tested.

When it comes to consolidating data management systems on a performance platform, the Dell EMC PowerEdge MX7000 modular chassis with PowerEdge MX740c server compute sleds can accelerate many data workloads. For the decision support benchmark, the PowerEdge MX740c modular server was configured with:

• PowerEdge MX740c server

- 2 x Intel(R) Xeon(R) Platinum 8268 2.90G, 24C/48T.

- 12 x 64GB memory.

• Disk Drives (HDDs)

- 3 x Dell 1.6TB, NVMe, Mixed Use Express Flash, 2.5 SFF Drive, U.2, PM1725b with Carrier

- 2 x 480GB SSD SAS Mixed Use 12Gbps 512e 2.5in Hot-Plug Drive, PM5-V

- 1 x 2.4TB 10K RPM SAS 12Gbps 512e 2.5in Hot-plug Hard Drive

• Controllers

- PERC H730P RAID Controller

- BOSS controller card with 2 M.2 Sticks 480G (RAID 1), Blade
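For a sense of scale, the configuration above can be totaled with a few lines of arithmetic. This is a minimal sketch using only the quantities in the list; the variable names are our own, and the 3 TB ceiling is the maximum LRDIMM memory quoted for the MX740c in this post, treated here as 3,072 GB.

```python
# Quantities and sizes from the benchmark configuration list above.
dimm_count, dimm_size_gb = 12, 64
nvme_count, nvme_size_tb = 3, 1.6
ssd_count, ssd_size_gb = 2, 480
hdd_count, hdd_size_tb = 1, 2.4

total_memory_gb = dimm_count * dimm_size_gb
total_nvme_tb = nvme_count * nvme_size_tb
total_ssd_gb = ssd_count * ssd_size_gb
total_hdd_tb = hdd_count * hdd_size_tb

# Assumption: 3 TB maximum memory expressed as 3 * 1024 GB.
max_memory_gb = 3 * 1024
memory_used_fraction = total_memory_gb / max_memory_gb

print(f"Memory: {total_memory_gb} GB ({memory_used_fraction:.0%} of the maximum)")
print(f"NVMe: {total_nvme_tb:.1f} TB, SAS SSD: {total_ssd_gb} GB, HDD: {total_hdd_tb} TB")
```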

The PowerEdge MX740c ranked #1 for performance for a 1,000 GB SQL Server 2019 Enterprise Edition database in a non-clustered configuration with a TPC-H composite query-per-hour metric of 824,693.5.* IT professionals benefit from benchmarks that rank database systems using real workloads because they provide a means for comparing performance. Published benchmarks have a great deal of integrity as an independent auditor inspects each benchmark result and performs a comprehensive review. The TPC Full Disclosure Report can be found here and provides insights into how the system was configured to achieve the query-per-hour result.

Microsoft SQL Server is very efficient at using server memory and solid-state drive technology. In general, the more dedicated memory, and the faster the solid-state storage, the faster databases will perform. The PowerEdge MX740c modular server can have up to 3 TB of LRDIMM memory and up to two 28-core 2nd Generation Intel Xeon Scalable processors. This provides the enterprise with the ability to configure the compute sled for most database workloads like decision support, online transaction processing, data warehouses, and more.

Dell Technologies has a broad portfolio of solutions for Microsoft SQL Server in the InfoHub: https://InfoHub.DellTechnologies.com. For example, there are many SQL Server solutions like running the database in containers, Big Data Clusters and with VMware vSphere virtualization. It is a technical library for researching and learning about SQL Server solutions.

More interested in an overview of the many solutions for SQL Server? The solutions page for Microsoft SQL Server provides the business overview of how Dell Technologies and Microsoft SQL Server can enable your company with modern infrastructure and agile operations. To learn more visit: https://DellTechnologies.com/sql.

Technical information about the PowerEdge MX740c modular server can be found at: PowerEdge MX740c Compute Sled. This page lists all the technical specifications, customizations, and details. In addition, Dell Technologies offers a portfolio of other rack, tower, and modular servers that can be configured to accelerate most any business workload: https://www.delltechnologies.com/en-us/servers/index.htm.

* Based on TPC Benchmark H (TPC-H), March 2021, the Dell EMC PowerEdge MX740c modular server has a TPC-H Composite Query-per-Hour Performance Metric of 824,693.5 when run against a 1,000 GB Microsoft SQL Server 2019 database and Red Hat Enterprise Linux 8.0 in a non-clustered environment. Actual results may vary based on operating environment. Full results on tpc.org.

Dell Technologies partners with Microsoft and Red Hat running SQL Server Big Data Clusters on OpenShift

Wed, 19 Aug 2020 22:32:11 -0000

Introduced with Microsoft SQL Server 2019, SQL Server Big Data Clusters allow customers to deploy scalable clusters of SQL Server, Spark, and HDFS containers running on Kubernetes. Complete info on Big Data Clusters can be found in the Microsoft documentation. Many Dell Technologies customers are using Red Hat OpenShift Container Platform as their Kubernetes platform of choice and with this and many other solutions we are leading the way on OpenShift applications.

In Cumulative Update 5 (CU5) of Microsoft SQL Server 2019 Big Data Clusters (BDC), OpenShift 4.3+ is supported as a platform for Big Data Clusters. This has been a highly anticipated launch as customers not only realize the power of BDC and OpenShift but also look for the support of Dell Technologies, Microsoft, and Red Hat to run mission-critical workloads. Dell Technologies has been working with Microsoft and Red Hat to develop architecture guidance and best practices for deploying and running BDC on OpenShift.

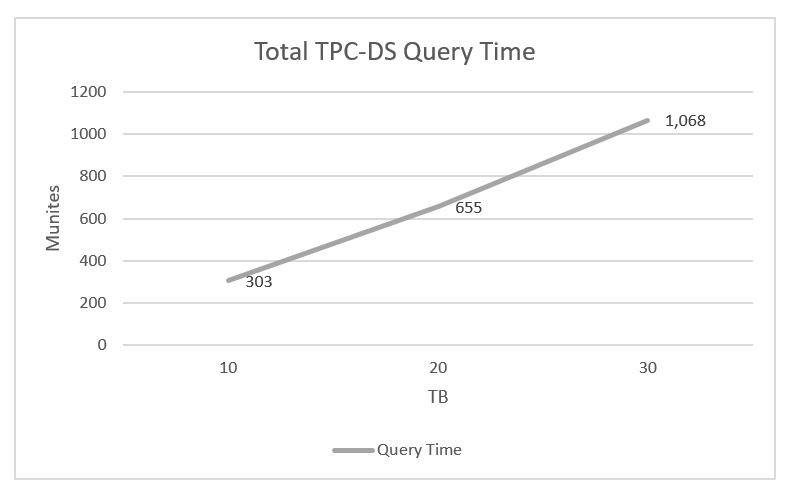

For this effort we used the Databricks TPC-DS Spark SQL kit to populate a dataset and run a workload on the OpenShift 4.3 BDC cluster to test the various architecture components of the solution. The TPC-DS benchmark is a popular database benchmark used to evaluate performance in decision support and Big Data environments.

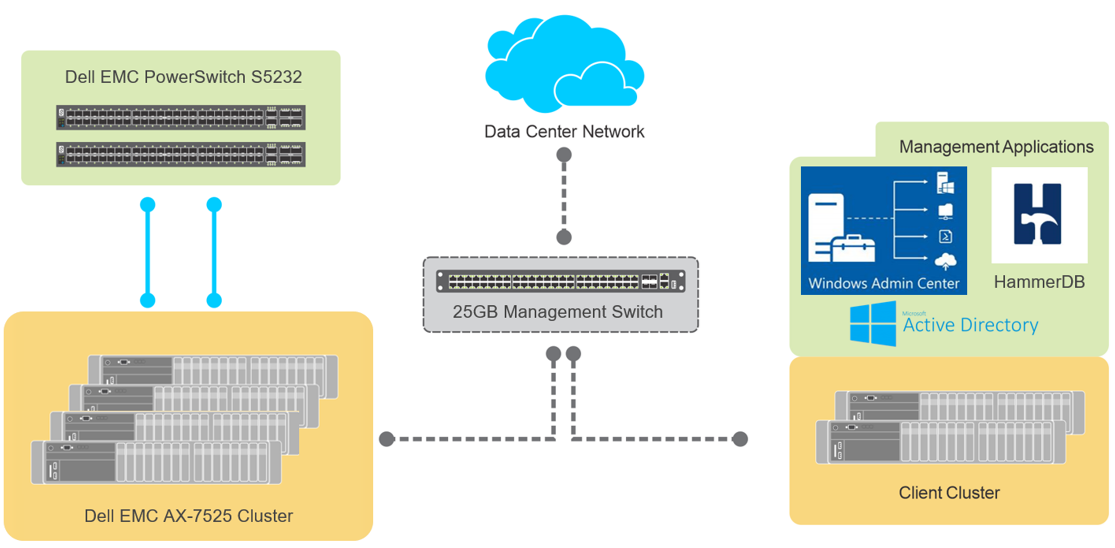

Based on our testing, we were able to achieve linear scale of our workload while fully exercising our OpenShift cluster consisting of 12 Dell EMC PowerEdge R640 servers and a single Dell EMC Unity 880F storage array.

Total time of all queries run for 10 TB, 20 TB, and 30 TB datasets

As a result of this testing, a fully detailed OpenShift reference architecture and a best practices paper for running Big Data Clusters on Dell EMC Unity storage are under way and will be published soon. More information on Dell Technologies solutions for OpenShift can be found on our OpenShift Info Hub. Additional information on Dell Technologies for SQL Server can be found on our Microsoft SQL webpage.

Database security methodologies of SQL Server

Mon, 03 Aug 2020 16:06:37 -0000

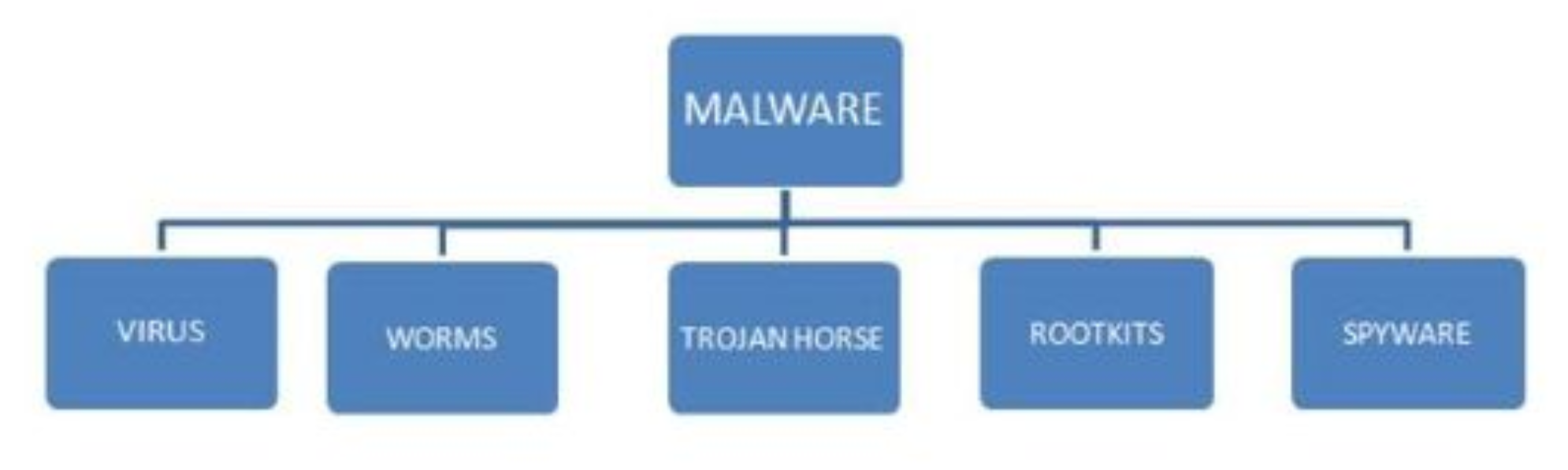

In general, security touches every aspect and activity of an information system. The subject of security is vast, and we need to understand that security can never be perfect. Every organization has a unique way of dealing with security based on its requirements. In this blog, I describe database security models and briefly review SQL Server security principles.

A few definitions:

- Database: A collection of information stored in a computer

- Security: Freedom from danger

- Database security: The mechanism that protects the database against intentional or accidental threats or that protects it against malicious attempts to steal (view) or modify data

Database security models

Today’s organizations rely on database systems as the key data management technology for a large variety of tasks ranging from regular business operations to critical decision making. The information in the databases is used, shared, and accessed by various users. It needs to be protected and managed because any changes to the database can affect it or other databases.

The main role of a security system is to preserve the integrity of an operational system by enforcing a security policy that is defined by a security model. These security models are the basic theoretical tools to start with when developing a security system.

Database security models include the following elements:

- Subject: Individual who performs some activity on the database

- Object: Database unit that requires authorization before it can be manipulated

- Access mode/action: Any activity that might be performed on an object by a subject

- Authorization: Specification of access modes for each subject on each object

- Administrative rights: Who has rights in system administration and what responsibilities administrators have

- Policies: Enterprise-wide accepted security rules

- Constraint: A more specific rule regarding an aspect of an object and action
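The elements listed above can be tied together in a few lines of code. The following is a minimal sketch, not a description of how any particular DBMS implements its checks: the class name `SecurityModel`, the subjects `alice` and `bob`, the objects `orders` and `audit_log`, and the audit-log constraint are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple

# A constraint is a more specific rule that can veto an otherwise authorized action.
Constraint = Callable[[str, str, str], bool]


@dataclass
class SecurityModel:
    # Authorization: access modes held by each subject on each object.
    grants: Dict[Tuple[str, str], Set[str]] = field(default_factory=dict)
    constraints: List[Constraint] = field(default_factory=list)

    def authorize(self, subject: str, obj: str, mode: str) -> None:
        """Record that `subject` may perform `mode` on `obj`."""
        self.grants.setdefault((subject, obj), set()).add(mode)

    def is_permitted(self, subject: str, obj: str, mode: str) -> bool:
        """Allowed only if authorized and no constraint rejects the action."""
        authorized = mode in self.grants.get((subject, obj), set())
        return authorized and all(c(subject, obj, mode) for c in self.constraints)


def audit_log_is_append_only(subject: str, obj: str, mode: str) -> bool:
    # Hypothetical constraint: nobody may delete from the audit log.
    return not (obj == "audit_log" and mode == "DELETE")


def build_demo_model() -> SecurityModel:
    model = SecurityModel(constraints=[audit_log_is_append_only])
    model.authorize("alice", "orders", "SELECT")
    model.authorize("bob", "audit_log", "SELECT")
    model.authorize("bob", "audit_log", "DELETE")  # granted, but vetoed by the constraint
    return model
```

In this sketch the authorization table answers "who may do what to which object", while constraints express narrower rules that apply regardless of what has been granted.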

Database security approaches

A typical DBMS supports three basic approaches to data security: discretionary control, mandatory control, and role-based access control.

Discretionary control: A given user typically has different access rights, also known as privileges, for different objects. For discretionary access control, we need a language to support the definition of rights—for example, SQL.

Mandatory control: Each data object is labeled with a certain classification level, and a given object can be accessed only by a user with a sufficient clearance level. Mandatory access control is applicable to the databases in which data has a rather static or rigid classification structure—for example, military or government environments.

In both discretionary and mandatory control cases, the unit of data and the data object to be protected can range from the entire database to a single, specific tuple.
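Mandatory control applied at the level of a single tuple can be illustrated with a short sketch. The level names, the sample rows, and the functions `can_read` and `visible_rows` are assumptions made for this example; real systems define their own classification schemes and enforcement points.

```python
from enum import IntEnum
from typing import Dict, List


class Level(IntEnum):
    # Hypothetical classification levels, ordered from least to most sensitive.
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def can_read(clearance: Level, classification: Level) -> bool:
    """A user may read an object only with a sufficient clearance level."""
    return clearance >= classification


# Each row (tuple) carries its own classification label.
ROWS: List[Dict] = [
    {"id": 1, "payload": "cafeteria menu", "label": Level.UNCLASSIFIED},
    {"id": 2, "payload": "salary bands", "label": Level.CONFIDENTIAL},
    {"id": 3, "payload": "deployment plan", "label": Level.SECRET},
]


def visible_rows(rows: List[Dict], clearance: Level) -> List[Dict]:
    """Return only the rows whose label the given clearance is allowed to read."""
    return [row for row in rows if can_read(clearance, row["label"])]
```

Unlike discretionary control, nothing here is granted by an object owner: the decision follows entirely from the labels on the data and the clearance of the user.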

Role-based access control (RBAC): Permissions are associated with roles, and users are made members of appropriate roles. A role differs from a user group: a role brings together a set of users on one side and a set of permissions on the other, whereas a user group is typically defined as a set of users only.

Role-based security provides the flexibility to define permissions at a high level of granularity in Microsoft SQL Server, thus greatly reducing the attack surface area of the database system.

RBAC mechanisms are a flexible alternative to mandatory access control (MAC) and discretionary access control (DAC).

RBAC terminology:

- Objects: Any system resource, such as a file, printer, terminal, or database record.

- Operations: An executable image of a program, which upon invocation performs some function for the user.

- Permissions: An approval to perform an operation on one or more RBAC-protected objects

- Role: A job function within the context of an organization with some associated semantics regarding the authority and responsibility conferred on the user assigned to the role.
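The RBAC terms above map naturally onto two lookup tables: one from roles to permissions and one from users to roles. The sketch below is illustrative only; the class `Rbac`, the role `order_reader`, and the user and object names are hypothetical.

```python
from typing import Dict, Set, Tuple

Permission = Tuple[str, str]  # (operation, object)


class Rbac:
    def __init__(self) -> None:
        self.role_permissions: Dict[str, Set[Permission]] = {}
        self.user_roles: Dict[str, Set[str]] = {}

    def grant(self, role: str, operation: str, obj: str) -> None:
        """Attach a permission (an operation on an object) to a role."""
        self.role_permissions.setdefault(role, set()).add((operation, obj))

    def assign(self, user: str, role: str) -> None:
        """Make a user a member of a role."""
        self.user_roles.setdefault(user, set()).add(role)

    def can(self, user: str, operation: str, obj: str) -> bool:
        """A user holds a permission only through one of their roles."""
        return any(
            (operation, obj) in self.role_permissions.get(role, set())
            for role in self.user_roles.get(user, set())
        )


def build_demo_rbac() -> Rbac:
    rbac = Rbac()
    rbac.grant("order_reader", "SELECT", "orders")
    rbac.grant("order_editor", "UPDATE", "orders")
    rbac.assign("alice", "order_reader")
    rbac.assign("bob", "order_reader")
    rbac.assign("bob", "order_editor")
    return rbac
```

Because permissions are never attached to users directly, changing what a job function may do means editing one role rather than every account that performs it.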

For more information, see Database Security Models — A Case Study.

Note: Access control mechanisms regulate who can access which data. The need for such mechanisms can be concluded from the variety of actors that work with a database system—for example, DBA, application admin and programmer, and users. Based on actor characteristics, access control mechanisms can be divided into three categories – DAC, RBAC, and MAC.

Principles of SQL Server security

A SQL Server instance contains a hierarchical collection of entities, starting with the server. Each server contains multiple databases, and each database contains a collection of securable objects. Every SQL Server securable has associated permissions that can be granted to a principal, which is an individual, group, or process granted access to SQL Server.

For each security principal, you can grant rights that allow that principal to access or modify a set of the securables, which are the objects that make up the database and server environment. They can include anything from functions to database users to endpoints. SQL Server scopes the objects hierarchically at the server, database, and schema levels:

- Server-level securables include databases as well as objects such as logins, server roles, and availability groups.

- Database-level securables include schemas as well as objects such as database users, database roles, and full-text catalogs.

- Schema-level securables include objects such as tables, views, functions, and stored procedures.
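The three scopes can be written down as a simple lookup, which makes the containment explicit. This is only a sketch built from the examples named in the list above; it is not a complete catalog of SQL Server securable classes, and the function names are invented for illustration.

```python
from typing import Dict, List

# Scopes ordered from outermost to innermost.
SCOPE_ORDER: List[str] = ["server", "database", "schema"]

# Securable classes per scope, taken from the examples in the list above.
SECURABLE_SCOPES: Dict[str, List[str]] = {
    "server": ["database", "login", "server role", "availability group"],
    "database": ["schema", "database user", "database role", "full-text catalog"],
    "schema": ["table", "view", "function", "stored procedure"],
}


def scope_of(securable_class: str) -> str:
    """Return the scope (server, database, or schema) that contains the securable class."""
    for scope, classes in SECURABLE_SCOPES.items():
        if securable_class in classes:
            return scope
    raise KeyError(f"unknown securable class: {securable_class}")


def containment_path(securable_class: str) -> List[str]:
    """Return the scopes from the server down to the one holding the securable class."""
    depth = SCOPE_ORDER.index(scope_of(securable_class))
    return SCOPE_ORDER[: depth + 1]
```

For example, a table sits at the end of the path server, database, schema, whereas a login belongs to the server scope alone.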

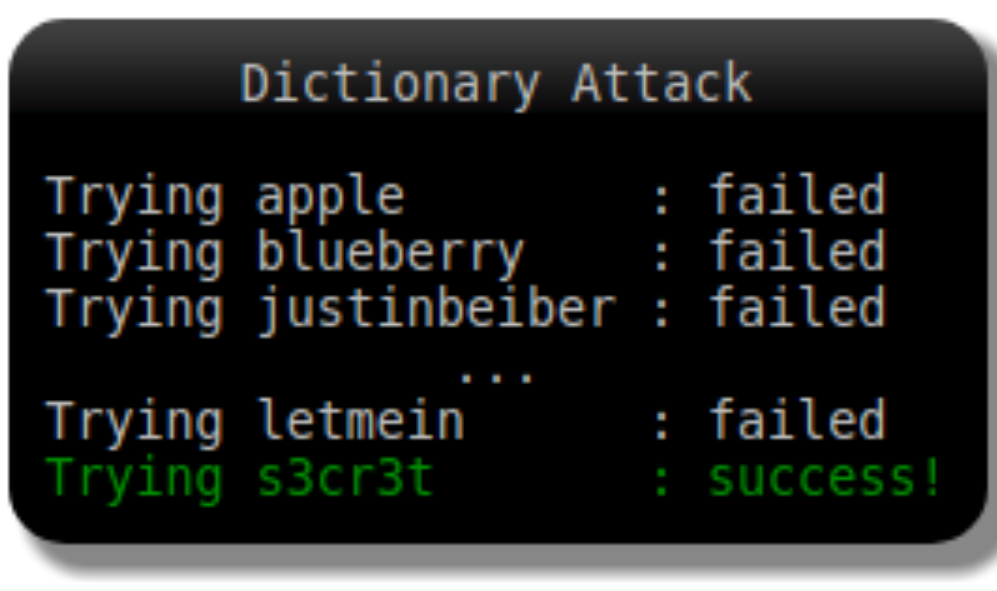

SQL Server security relies on two processes, authentication and authorization:

- Authentication: Authentication is the SQL Server login process by which a principal requests access by submitting credentials that the server evaluates. Authentication establishes the identity of the user or process being authenticated. SQL Server authentication helps ensure that only authorized users with valid credentials can access the database server. SQL Server supports two authentication modes: Windows authentication mode and mixed mode.

  - Windows authentication is often referred to as integrated security because this SQL Server security model is tightly integrated with Windows.

  - Mixed mode supports authentication both by Windows and by SQL Server, using usernames and passwords.

- Authorization: Authorization is the process of determining which securable resources a principal can access and which operations are allowed for those resources. Microsoft SQL Server-based technologies support this principle by providing mechanisms to define granular object-level permissions and simplify the process by implementing role-based security. Granting permissions to roles rather than users simplifies security administration.

  - It is a best practice to use server-level roles for managing server-level access and security, and database roles for managing database-level access.

  - Role-based security provides the flexibility to define permissions at a high level of granularity in Microsoft SQL Server, thus greatly reducing the attack surface area of the database system.
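From a client's point of view, the two authentication modes differ mainly in what the connection string carries. The sketch below builds ODBC-style connection strings using the standard `Trusted_Connection`, `UID`, and `PWD` keywords; the driver name, server name, database name, and account shown are assumptions for illustration, and the function only builds the string without opening a connection.

```python
from typing import Optional


def connection_string(
    server: str,
    database: str,
    user: Optional[str] = None,
    password: Optional[str] = None,
    driver: str = "ODBC Driver 17 for SQL Server",  # assumed to be installed
) -> str:
    """Build an ODBC connection string for Windows or SQL Server authentication."""
    parts = [f"Driver={{{driver}}}", f"Server={server}", f"Database={database}"]
    if user is None:
        # Windows authentication (integrated security): no credentials in the string.
        parts.append("Trusted_Connection=yes")
    else:
        # SQL Server authentication: only accepted when the instance runs in mixed mode.
        if password is None:
            raise ValueError("a password is required for SQL Server authentication")
        parts += [f"UID={user}", f"PWD={password}"]
    return ";".join(parts) + ";"


# Hypothetical server and database names.
windows_auth = connection_string("sql01.example.com", "Sales")
sql_auth = connection_string("sql01.example.com", "Sales", "app_login", "s3cret")
```

The sketch does not escape special characters in passwords, and in practice credentials would come from a secret store rather than source code. The Windows authentication form avoids handling passwords in the application at all.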

Here are a few additional recommended best practices for SQL Server authentication:

- Use Windows authentication.

  - Enables centralized management of SQL Server principals via Active Directory

  - Uses Kerberos security protocol to authenticate users

  - Supports integrated password policy enforcement including complexity validation for strong passwords, password expiration, and account lockout

- Use separate accounts to authenticate users and applications.

  - Enables limiting the permissions granted to users and applications

  - Reduces the risks of malicious activity such as SQL injection attacks

- Use contained database users.

  - Isolates the user or application account to a single database

  - Improves performance, as contained database users authenticate directly to the database without an extra network hop to the master database

  - Supports both SQL Server and Azure SQL Database, as well as Azure SQL Data Warehouse

Conclusion

Database security is an important goal of any data management system. Each organization should have a data security policy, which is a set of high-level guidelines determined by:

- User requirements

- Environmental aspects

- Internal regulations

- Government laws

Database security is based on three important constructs—confidentiality, integrity, and availability. The goal of database security is to protect your critical and confidential data from unauthorized access.

References

https://docs.microsoft.com/en-us/dotnet/framework/data/adonet/sql/overview-of-sql-server-security

https://sqlsunday.com/2014/07/20/the-sql-server-security-model-part-1/

Manage and analyze humongous amounts of data with SQL Server 2019 Big Data Cluster

Wed, 19 Aug 2020 22:07:59 -0000

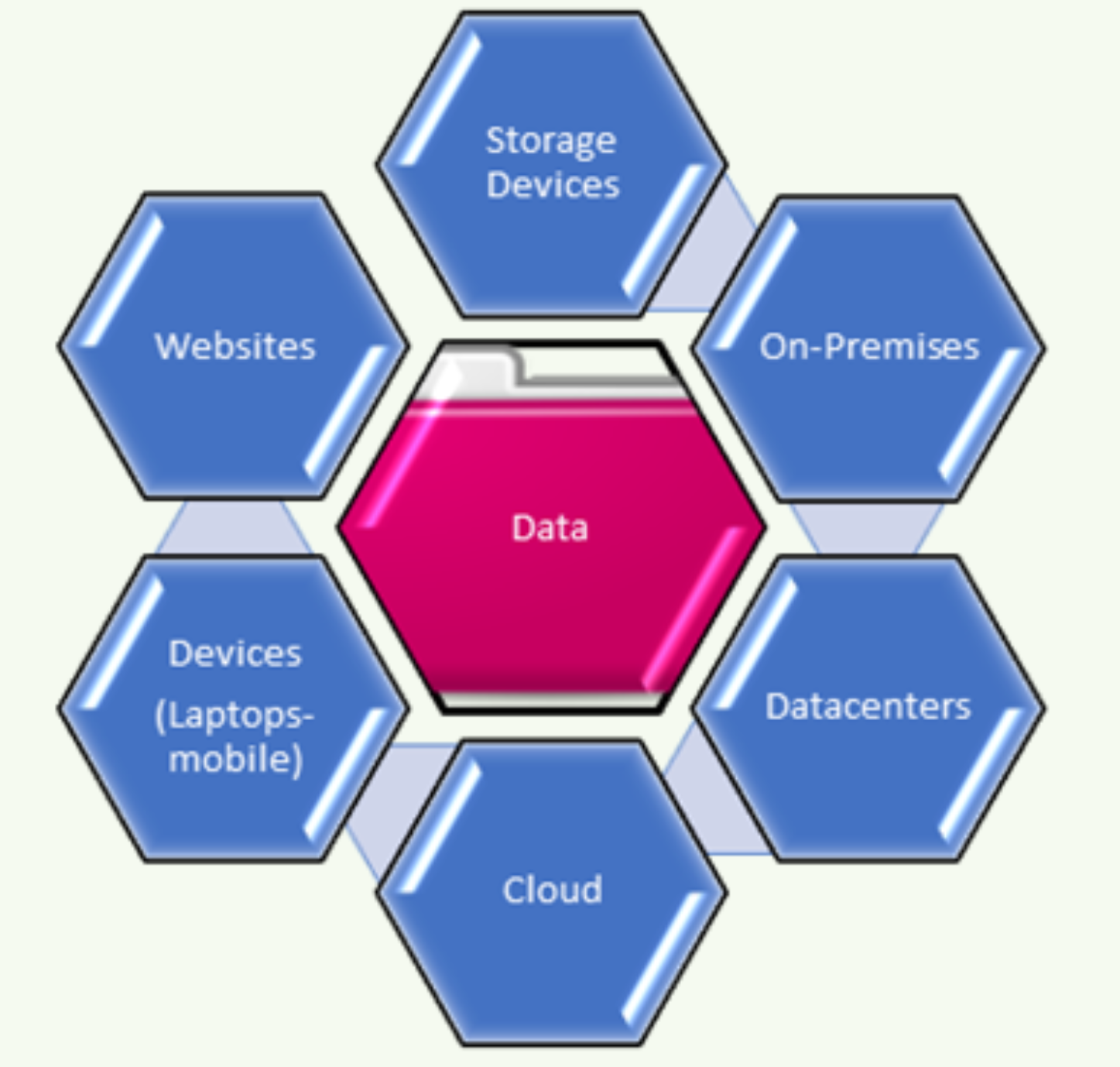

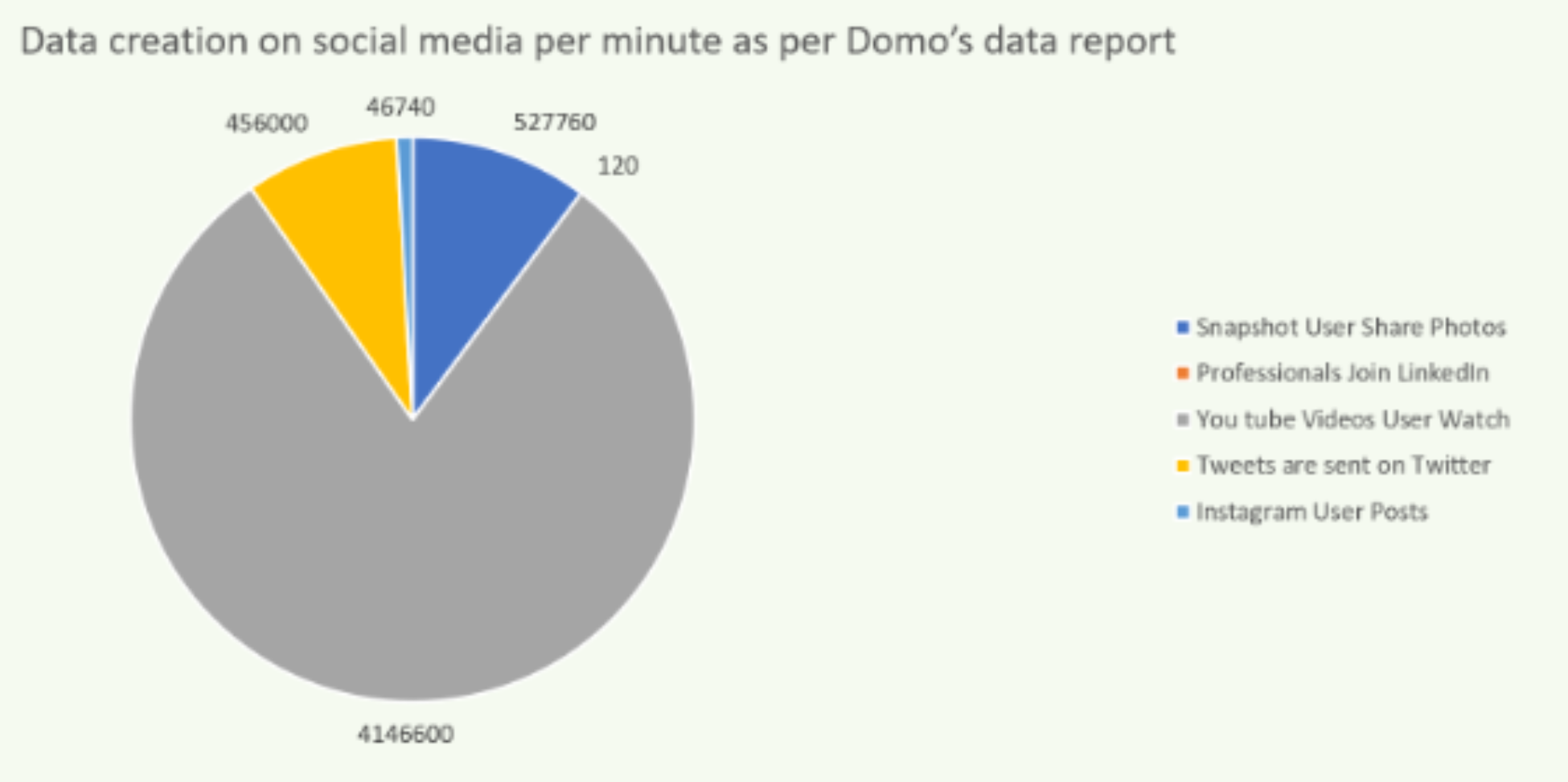

A collection of facts and statistics for reference or analysis is called data, and the term “big data” simply refers to a very large volume of it. The big data concept has been around for many years, and the volume of data is growing like never before, which is why data is a hugely valued asset in this connected world. Effective big data management enables an organization to locate valuable information with ease, regardless of how large or unstructured the data is. The data is collected from various sources including system logs, social media sites, and call detail records.

The four V's associated with big data are Volume, Variety, Velocity, and Veracity:

- Volume is about the size—how much data you have.

- Variety means that the data comes in many different types and structures.

- Velocity is about how fast the data is getting to you.

- Veracity, the final V, is a difficult one: it concerns how trustworthy the data is, because big data is often unreliable.

SQL Server Big Data Clusters make it easy to manage this complex assortment of data.

You can use SQL Server 2019 to create a secure, hybrid, machine learning architecture starting with preparing data, training a machine learning model, operationalizing your model, and using it for scoring. SQL Server Big Data Clusters make it easy to unite high-value relational data with high-volume big data.

Big Data Clusters bring together multiple instances of SQL Server with Spark and HDFS, making it much easier to unite relational and big data and use them in reports, predictive models, applications, and AI.

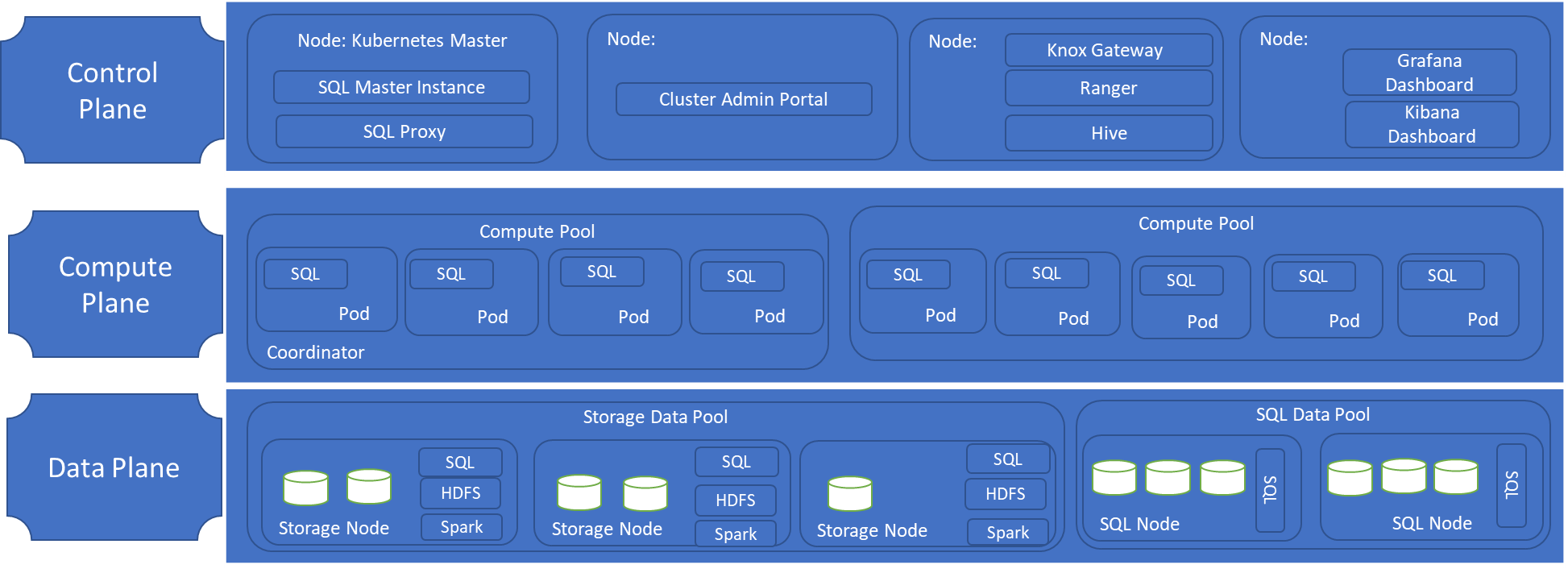

In addition, using PolyBase, you can connect to many different external data sources such as MongoDB, Oracle, Teradata, SAP HANA, and more. Hence, SQL Server 2019 Big Data Cluster is a scalable, performant, and maintainable SQL platform, data warehouse, data lake, and data science platform that doesn’t require compromising between cloud and on-premises. Components include:

Controller | The controller provides management and security for the cluster. It contains the control service, the configuration store, and other cluster-level services such as Kibana, Grafana, and Elastic Search. |

Compute pool | The compute pool provides computational resources to the cluster. It contains nodes running SQL Server on Linux pods. The pods in the compute pool are divided into SQL compute instances for specific processing tasks. |