Blogs

Dell Technologies industry experts post their thoughts about our Communication Service Providers Solutions.

Distribution of 5G Core to Network Edge

Thu, 25 Apr 2024 16:15:38 -0000


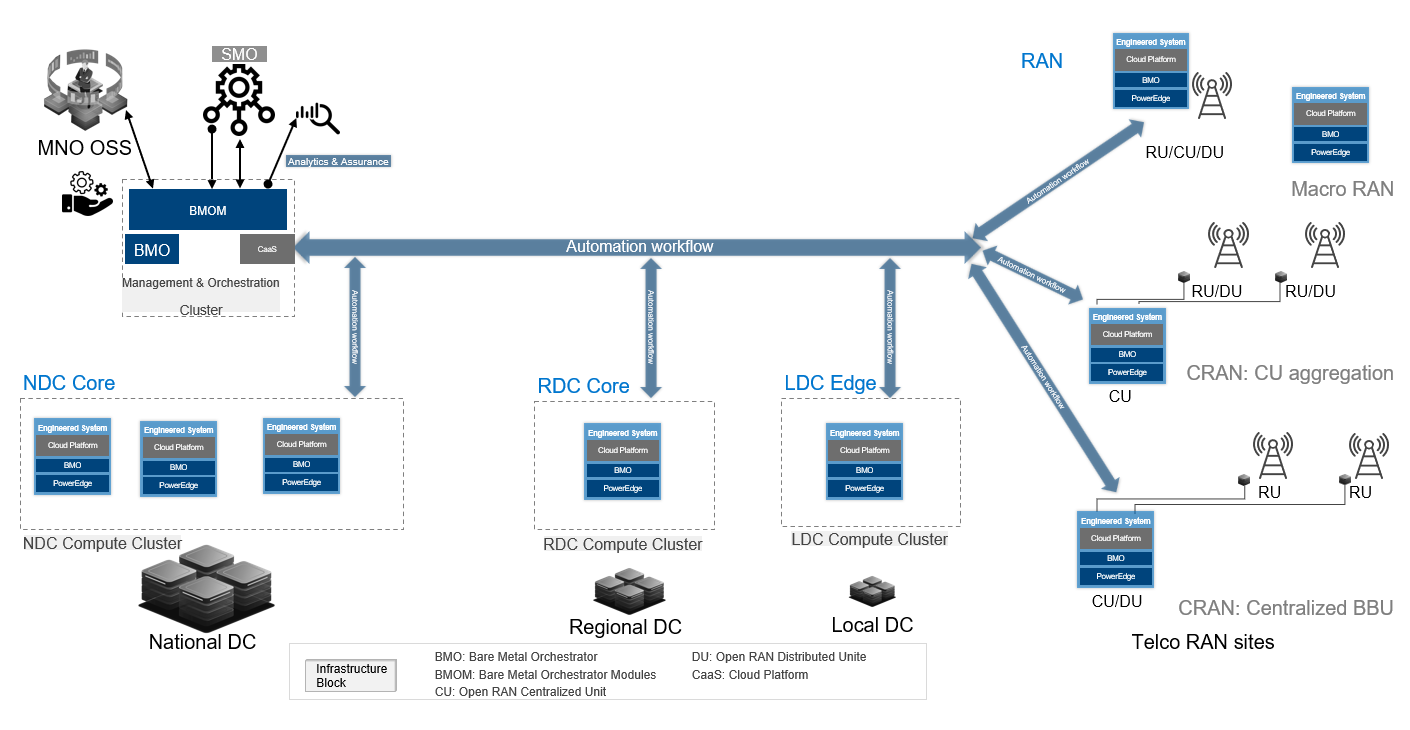

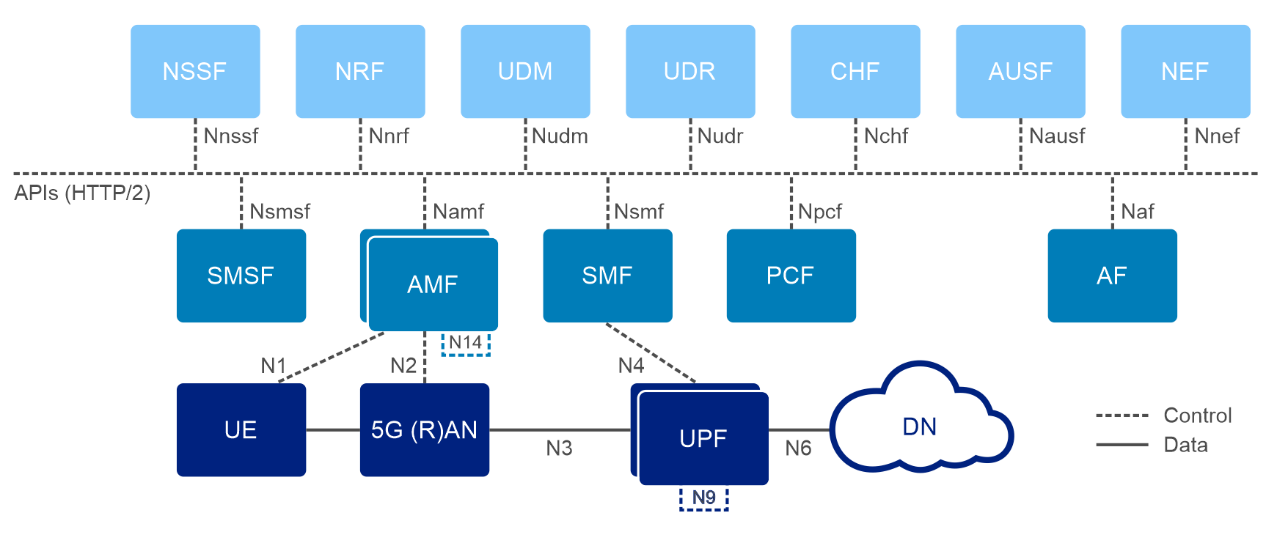

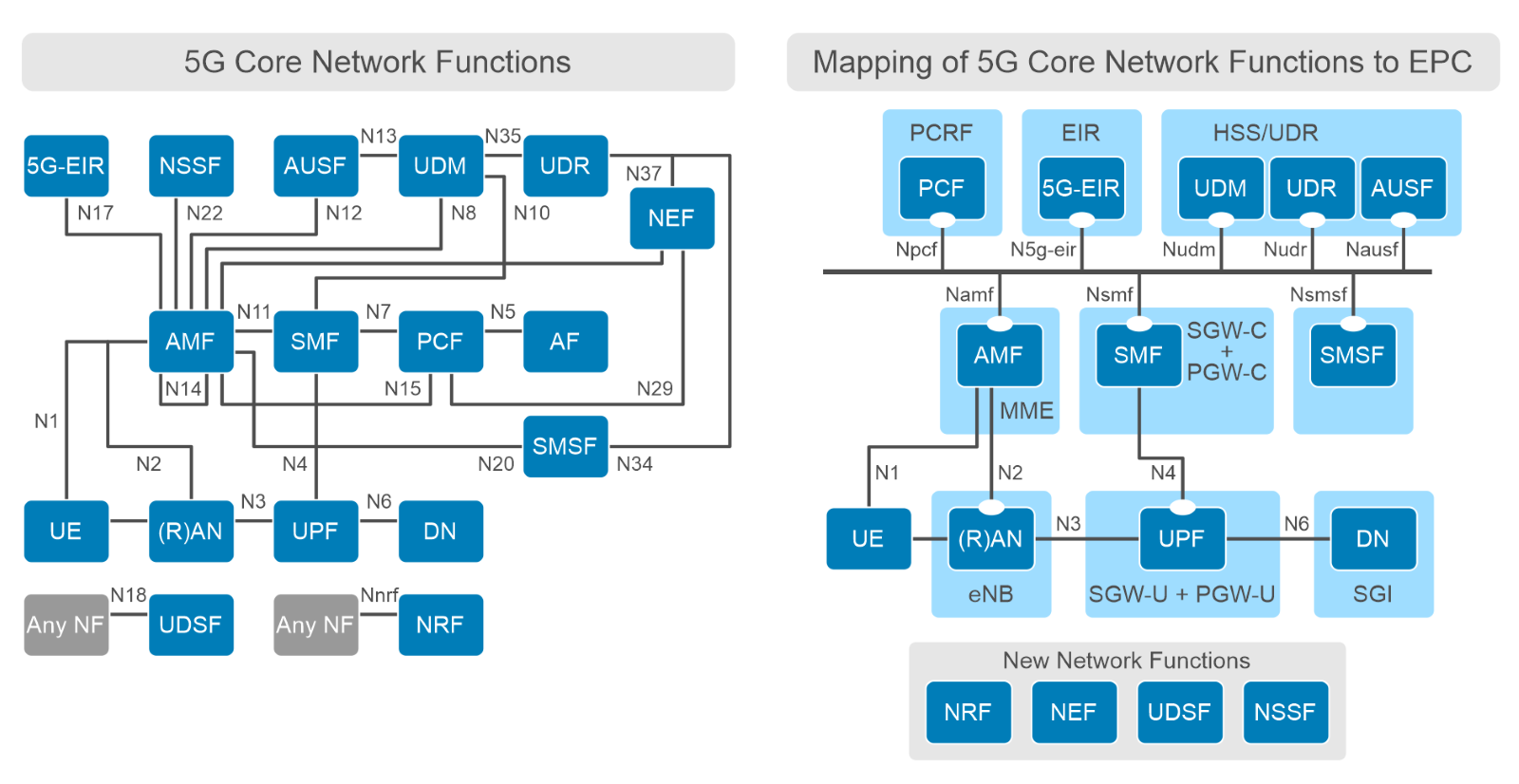

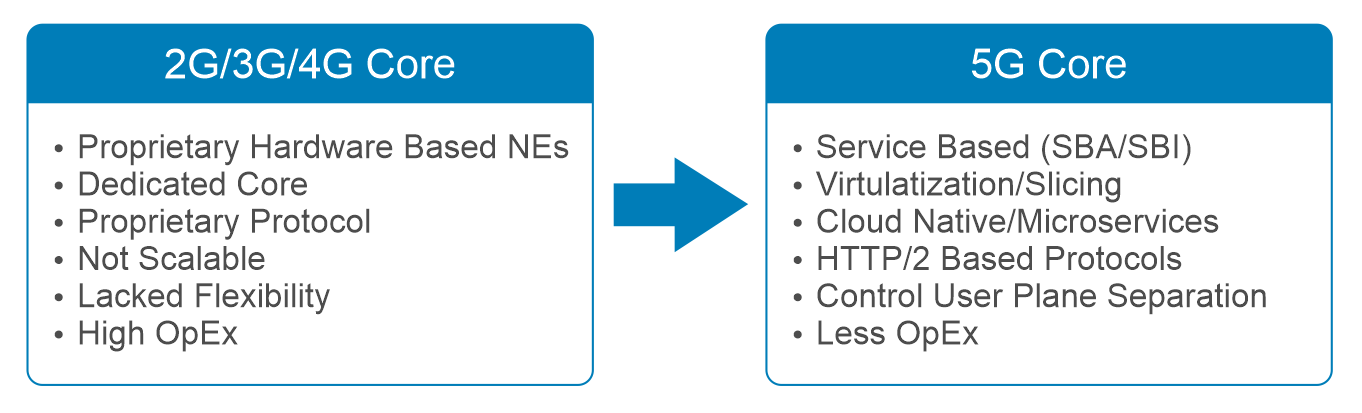

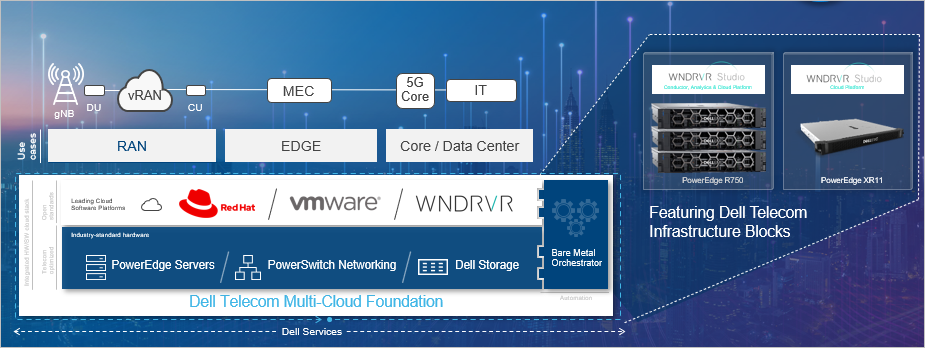

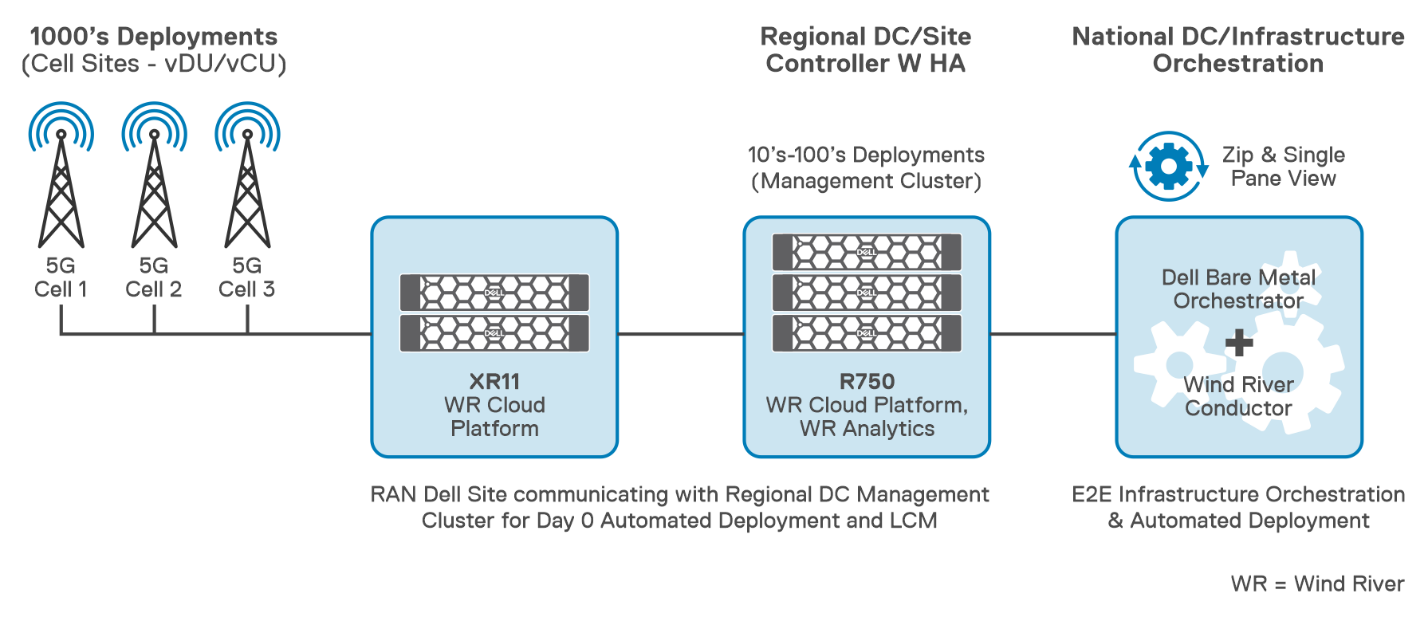

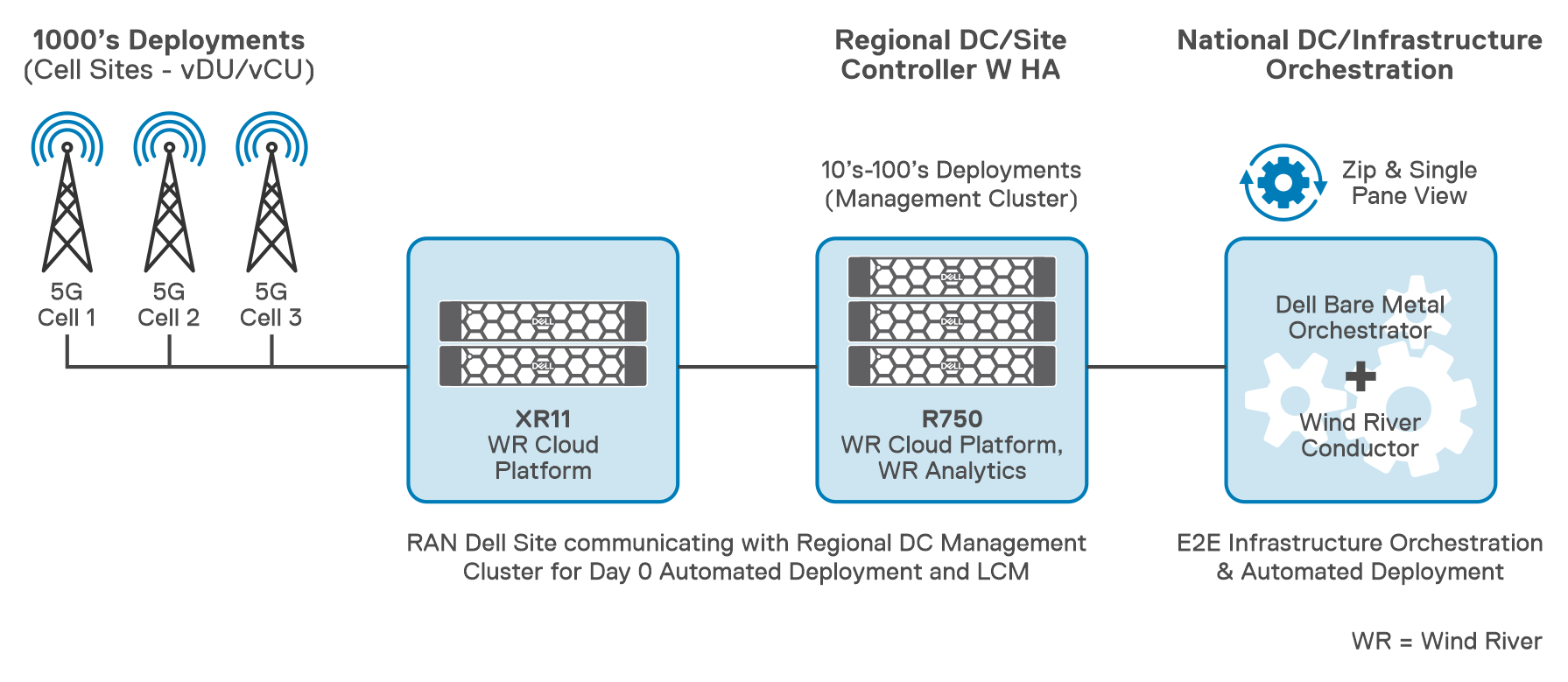

Thus far in our blog series, we have discussed migrating to an open cloud-native ecosystem, the 5G Core and its architecture, and how Dell Telecom Infrastructure Blocks for Red Hat can help simplify 5G network deployment on Red Hat® OpenShift®. Now, we would like to introduce a key use case: distributing 5G Core User Plane functions from the centralized data center to the network edge.

Distributing Core Functions in 5G networks

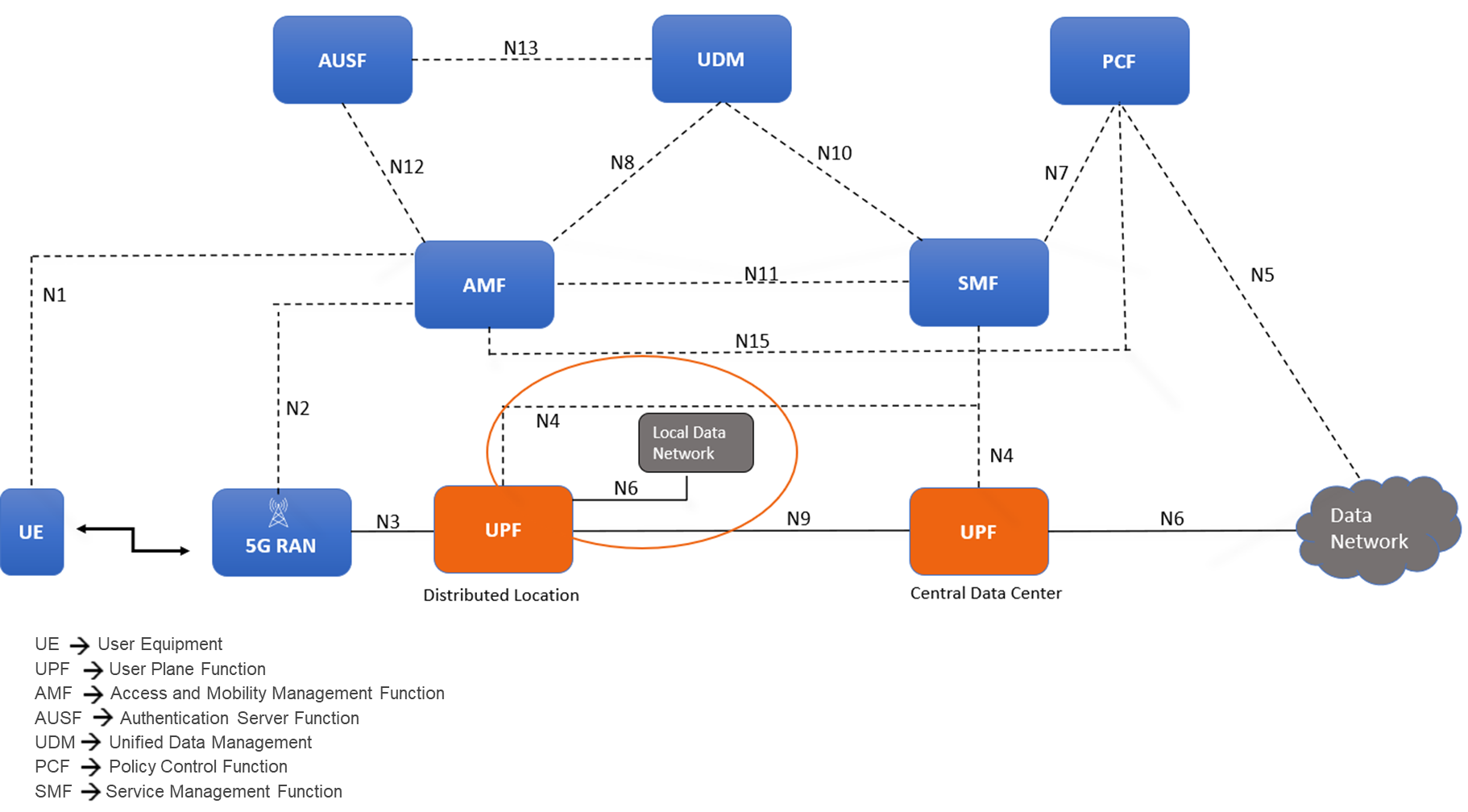

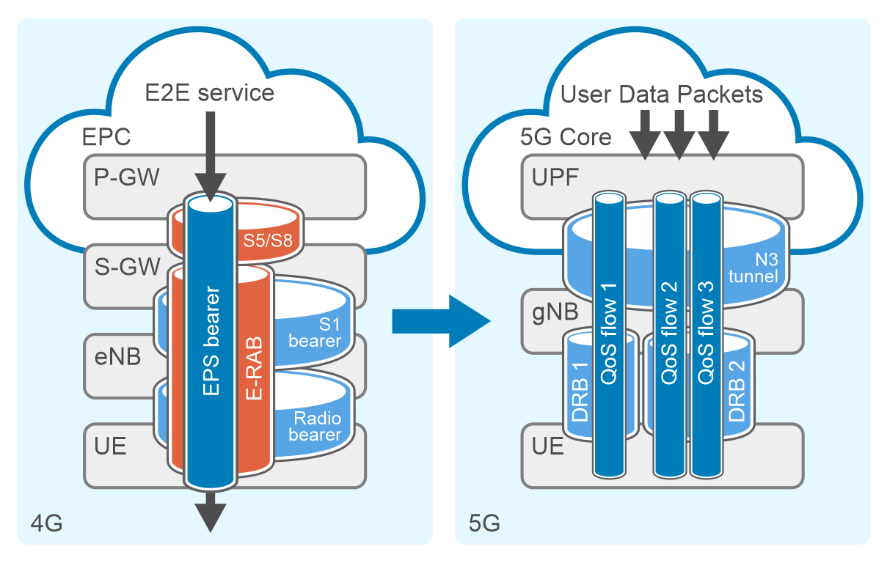

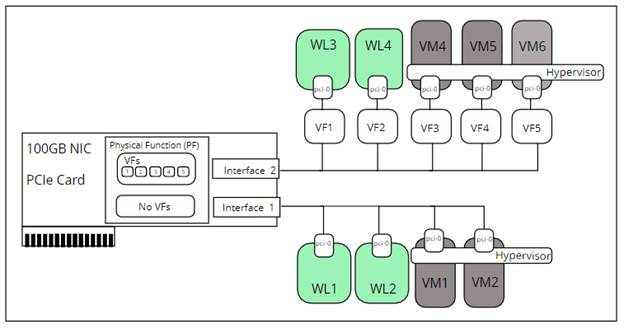

The evolution of communication technology has brought us to the era of 5G networks, promising faster speeds, lower latency, and the ability to connect billions of devices simultaneously. However, to achieve these ambitious goals, the architecture of 5G networks needs to be more flexible, scalable, and efficient than ever before. When Control and User Plane Separation (CUPS) arrived in later LTE releases, the telecommunications industry had high expectations for a distributed control and user plane architecture, and CUPS was seen as a stepping stone towards the more advanced 5G networks on the horizon. CUPS separates control plane and user plane functions, and 5G carries this separation forward: the control plane (specifically the Session Management Function, or SMF) is typically centralized, while the User Plane Function (UPF) can be co-located with the control plane or distributed to other locations in the network as specific use cases demand.

Understanding the need for Distributed UPF

The UPF is a key component in 5G networks, responsible for handling user data traffic. Distributed User Plane Function (D-UPF) is a network architecture that distributes UPF functionality across multiple nodes closer to the user and enables local breakout (LBO) for use cases that require lower latency or more privacy, creating a more scalable and flexible networking environment. With D-UPF, operators can reduce latency, enhance overall network performance, and manage growing data demands across consumer and enterprise use cases in a cost-effective manner.
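To make the control and user plane split concrete, here is a minimal Python sketch of how a control plane function might choose a UPF for a new session. It prefers a UPF at the UE's edge site that supports local breakout when the session needs low latency, and otherwise uses the central UPF. In 5G the SMF performs UPF selection. Everything else here is hypothetical: the `Upf` and `SessionRequest` types, the `select_upf` helper, and the site names. Real selection logic also weighs inputs such as DNN, network slice, UE location, and UPF load.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Upf:
    name: str
    site: str           # hypothetical site label, e.g. "central-dc" or "edge-a"
    supports_lbo: bool  # True if this UPF can break out traffic locally


@dataclass(frozen=True)
class SessionRequest:
    ue_site: str             # edge site closest to the UE's serving RAN node
    needs_low_latency: bool  # e.g. an industrial automation or AR session


def select_upf(req: SessionRequest, upfs: List[Upf],
               central_site: str = "central-dc") -> Upf:
    """Toy UPF selection: local breakout for low-latency sessions,
    otherwise (or if no local UPF fits) the centralized UPF."""
    if req.needs_low_latency:
        for upf in upfs:
            if upf.site == req.ue_site and upf.supports_lbo:
                return upf
    for upf in upfs:
        if upf.site == central_site:
            return upf
    raise LookupError("no suitable UPF available")


upfs = [Upf("upf-central-1", "central-dc", False), Upf("upf-edge-a", "edge-a", True)]
print(select_upf(SessionRequest("edge-a", True), upfs).name)  # upf-edge-a
print(select_upf(SessionRequest("edge-b", True), upfs).name)  # upf-central-1
```

The fallback to the central UPF reflects the point above: distribution is an option chosen per use case, not a replacement for the centralized anchor.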

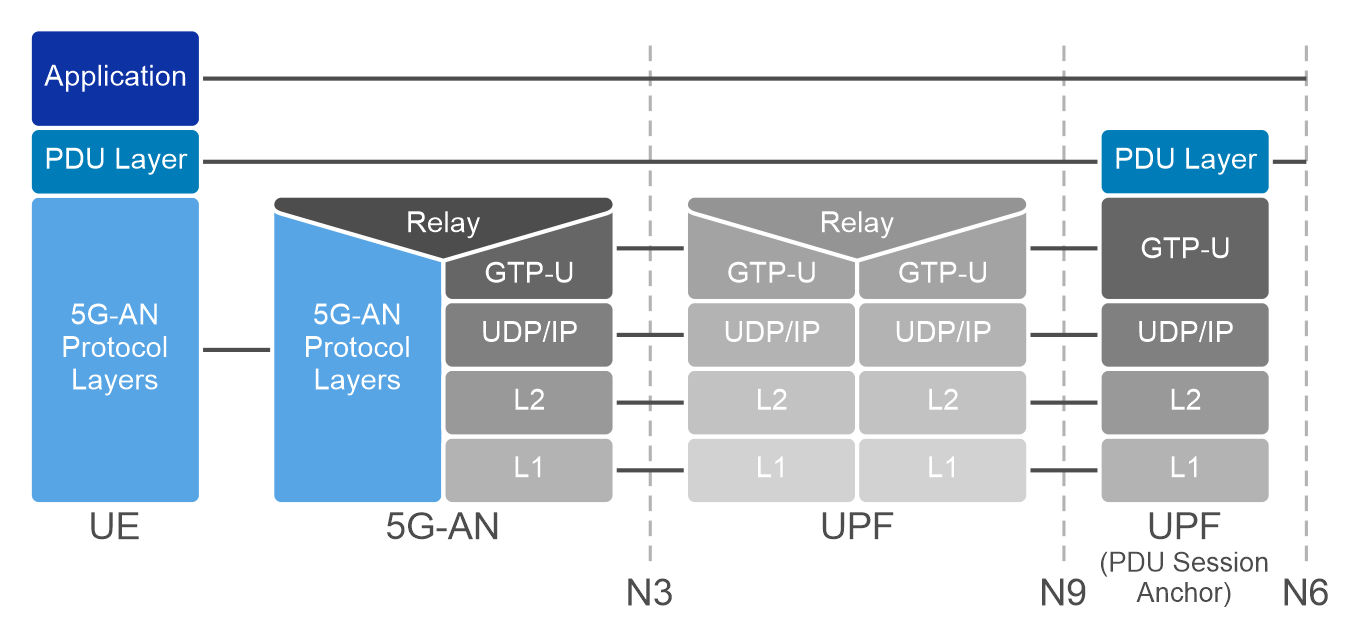

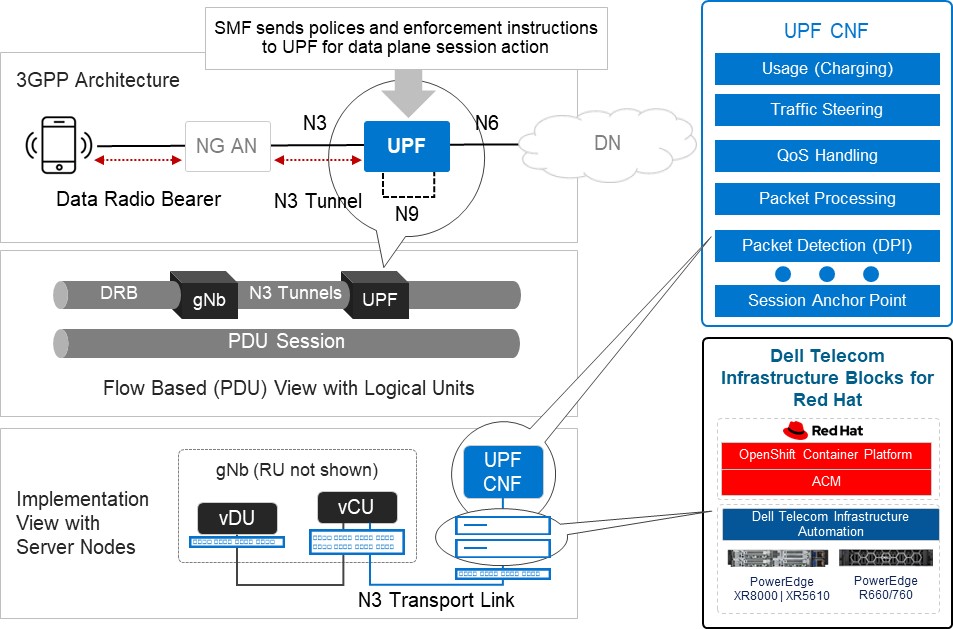

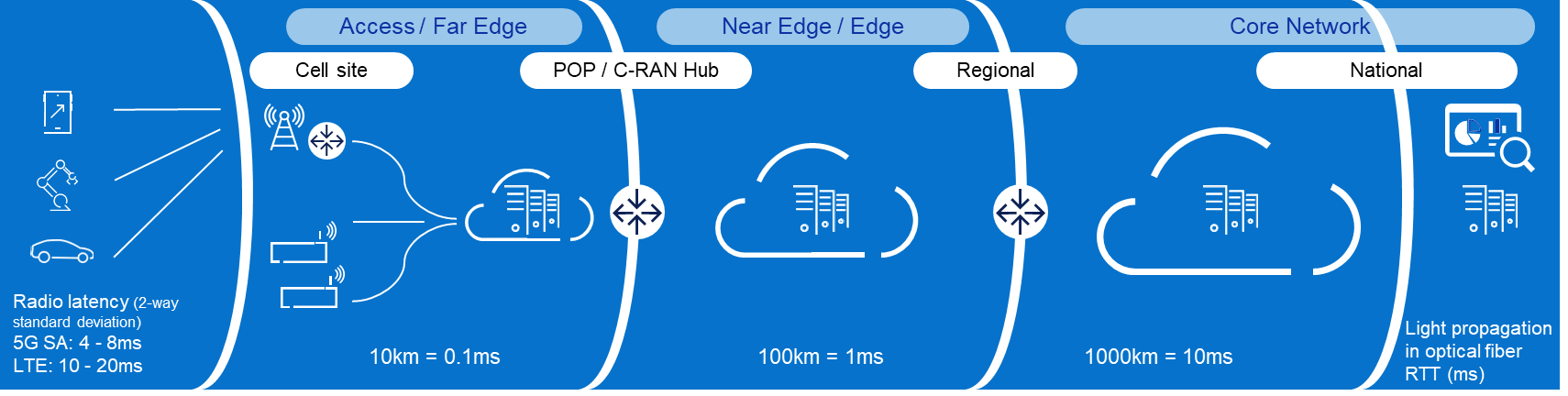

Figure 1: Distributed User Plane function in 5G Core Architecture

D-UPF also plays a crucial role in enabling edge computing in 5G networks. By distributing the user plane traffic closer to the network edge, D-UPF reduces the latency associated with data transmission to and from centralized data centers. This opens opportunities for real-time applications, such as autonomous vehicles, augmented reality, and industrial automation, where low latency is critical for their proper functioning.
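Part of this latency benefit comes down to physics. The sketch below estimates round-trip propagation delay over optical fiber, assuming light travels at roughly 200,000 km/s in fiber (about 5 µs per km one way). The 500 km and 10 km distances are illustrative assumptions. The model also ignores queuing, processing, and indirect fiber routes, so real latencies will be higher.

```python
# Rough speed of light in optical fiber (refractive index ~1.5):
# ~200,000 km/s, i.e. ~200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds.
    Ignores queuing, processing, and non-straight fiber paths."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Illustrative distances from the UE's RAN site to the UPF.
for label, km in [("central data center", 500), ("edge site", 10)]:
    print(f"{label}: {round_trip_propagation_ms(km):.2f} ms")
# central data center: 5.00 ms
# edge site: 0.10 ms
```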

Distributed UPF deployment options

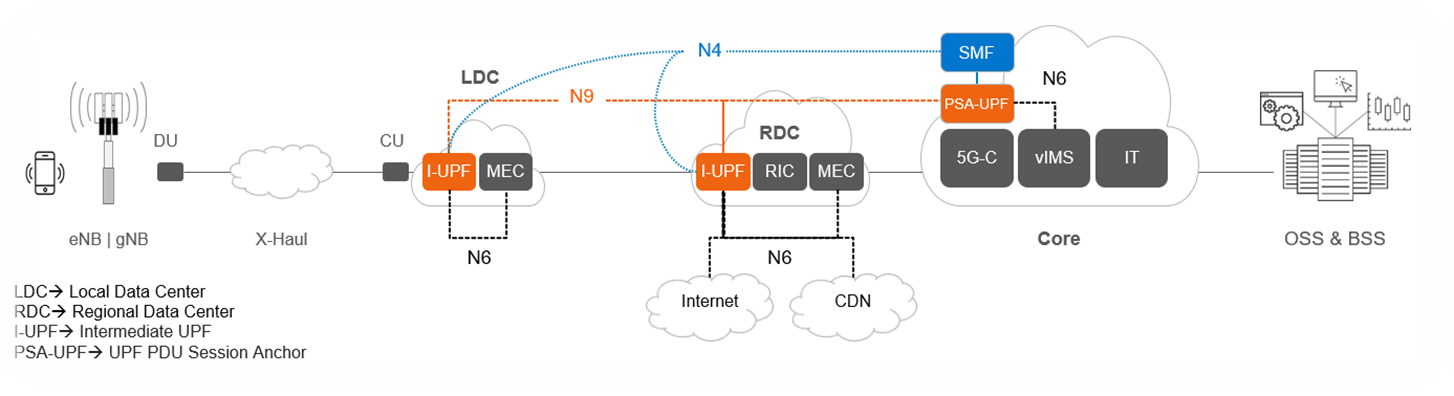

Figure 2: D-UPF deployment and functionality

The above diagram provides an overview of the different roles D-UPF may play in a 5G architecture. For example:

- Centralized UPF/PSA-UPF: In the simplest scenario, the UPF is centralized in the data center and acts as the PDU Session Anchor (PSA), which handles long-term, stable IP address assignment. One example is a VoLTE/VoNR call, where the PSA-UPF steers traffic to the IMS.

- Intermediate UPF (I-UPF): An I-UPF can be inserted on the user plane path between the RAN and a PSA. Here are two possible reasons to do that:

  - Mobility: if the UE moves to a new RAN node that cannot support an N3 tunnel to the old PSA, an I-UPF is inserted with an N3 interface towards the RAN and an N9 interface towards the PSA-UPF. Linking multiple UPFs in this way is called UPF chaining: user data flows are directed through a series of UPFs, each performing specific functions.

  - Low latency: you might deploy a UPF in a local data center or at the edge to steer data traffic to a co-deployed multi-access edge computing (MEC) platform for edge services, or to break out traffic to the local data network.
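The I-UPF mobility case can be modeled as a small path-building function. This is a minimal sketch, not a 3GPP procedure. The function, type, and node names are hypothetical, and the logic only encodes the rule described above: N3 from the RAN, N9 between UPFs. For completeness it also shows N6, the 3GPP reference point between the anchor UPF and the data network.

```python
from typing import List, Tuple

# One user-plane hop: (from node, 3GPP reference point, to node).
Hop = Tuple[str, str, str]


def user_plane_path(gnb: str, psa_upf: str, gnb_can_reach_psa: bool,
                    i_upf: str = "i-upf-1") -> List[Hop]:
    """Build the user-plane path for a PDU session after a handover."""
    if gnb_can_reach_psa:
        # The new RAN node can terminate an N3 tunnel at the anchor directly.
        return [(gnb, "N3", psa_upf), (psa_upf, "N6", "data-network")]
    # UPF chaining: the I-UPF terminates N3 and forwards to the PSA over N9.
    return [(gnb, "N3", i_upf), (i_upf, "N9", psa_upf),
            (psa_upf, "N6", "data-network")]


def describe(path: List[Hop]) -> str:
    """Render a path as 'node -> [ref] node -> ...'."""
    return " -> ".join([path[0][0]] + [f"[{ref}] {dst}" for _, ref, dst in path])


print(describe(user_plane_path("gnb-new", "psa-upf", gnb_can_reach_psa=False)))
# gnb-new -> [N3] i-upf-1 -> [N9] psa-upf -> [N6] data-network
```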

Challenges and considerations for D-UPF deployments

Now that we have reviewed the need for D-UPF and the different deployment scenarios, let’s consider some of the obstacles you will encounter along the way. These network functions have their own needs, especially given the amount of data being inspected, routed, and forwarded from the core to the edge and back again. Below are four areas for consideration:

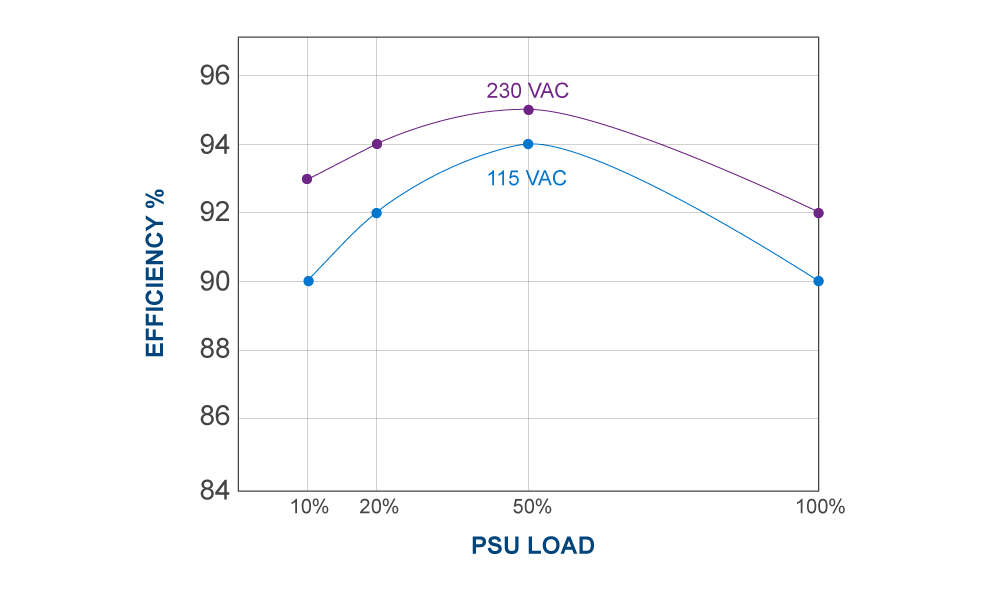

- Resource Constraints: Edge or remote locations often have limited physical space available for deploying network equipment. The challenge lies in accommodating the necessary hardware, cooling systems, and other infrastructure within these space-constrained environments. Remote locations may also have limited or unreliable power supply infrastructure. Opting for infrastructure that is power-efficient, dense, serviceable, ruggedized, and built in edge-optimized form factors becomes important as UPFs are extended to the edge.

- Performance Requirements: Low-latency infrastructure is critical to ensure real-time responsiveness and a seamless user experience when deploying core functions to the edge. Processing data at the network edge with minimal latency also reduces the need for high-bandwidth links to transmit data to the centralized core. This optimizes network bandwidth, lowers operating costs, and ultimately reduces the CSP’s reliance on centralized core infrastructure for time-sensitive operations.

- Orchestration and Automation: Deploying and managing UPFs distributed across edge locations is a complex challenge. This includes tasks such as workload placement, resource allocation, and automated management of edge infrastructure. Choosing a horizontal telco cloud platform that supports automated distributed core deployment and provides the capability to expand and scale the compute and storage resources to accommodate the varying demands at the edge is a must.

- Lifecycle Management and Operating Cost: Another significant factor is the increased cost of first deploying and then operating remote sites. The large number of these locations, coupled with their limited accessibility, makes them more expensive to construct and maintain. To address this, zero-touch provisioning at network setup and sustainable lifecycle management are necessary to optimize the economics of the edge.
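The workload placement and resource allocation tasks described under Orchestration and Automation can be illustrated with a simple heuristic. The sketch below uses first-fit decreasing, a classic bin-packing heuristic, to place workloads on edge sites subject to CPU core and power headroom. This is an illustrative assumption, not a description of how any particular orchestrator works. The site names, core counts, and wattages are made up.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class EdgeSite:
    name: str
    free_cores: int
    power_budget_w: float  # remaining power headroom at the site


@dataclass(frozen=True)
class Workload:
    name: str
    cores: int
    power_w: float


def place(workloads: List[Workload], sites: List[EdgeSite]) -> Dict[str, Optional[str]]:
    """First-fit decreasing: place the largest workloads first, each on the
    first site with enough free cores and power. None means unplaced.
    Note: mutates the sites' remaining capacity."""
    placement: Dict[str, Optional[str]] = {}
    for wl in sorted(workloads, key=lambda w: w.cores, reverse=True):
        placement[wl.name] = None
        for site in sites:
            if site.free_cores >= wl.cores and site.power_budget_w >= wl.power_w:
                site.free_cores -= wl.cores
                site.power_budget_w -= wl.power_w
                placement[wl.name] = site.name
                break
    return placement


sites = [EdgeSite("tower-a", 16, 300.0), EdgeSite("tower-b", 32, 600.0)]
jobs = [Workload("upf-1", 24, 400.0), Workload("du-1", 12, 200.0),
        Workload("upf-2", 16, 300.0)]
print(place(jobs, sites))
# {'upf-1': 'tower-b', 'upf-2': 'tower-a', 'du-1': None}
```

The unplaced `du-1` is the kind of signal that tells an operator an edge site needs to expand its compute resources.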

The Horizontal Cloud Platform: Dell Telecom Infrastructure Blocks

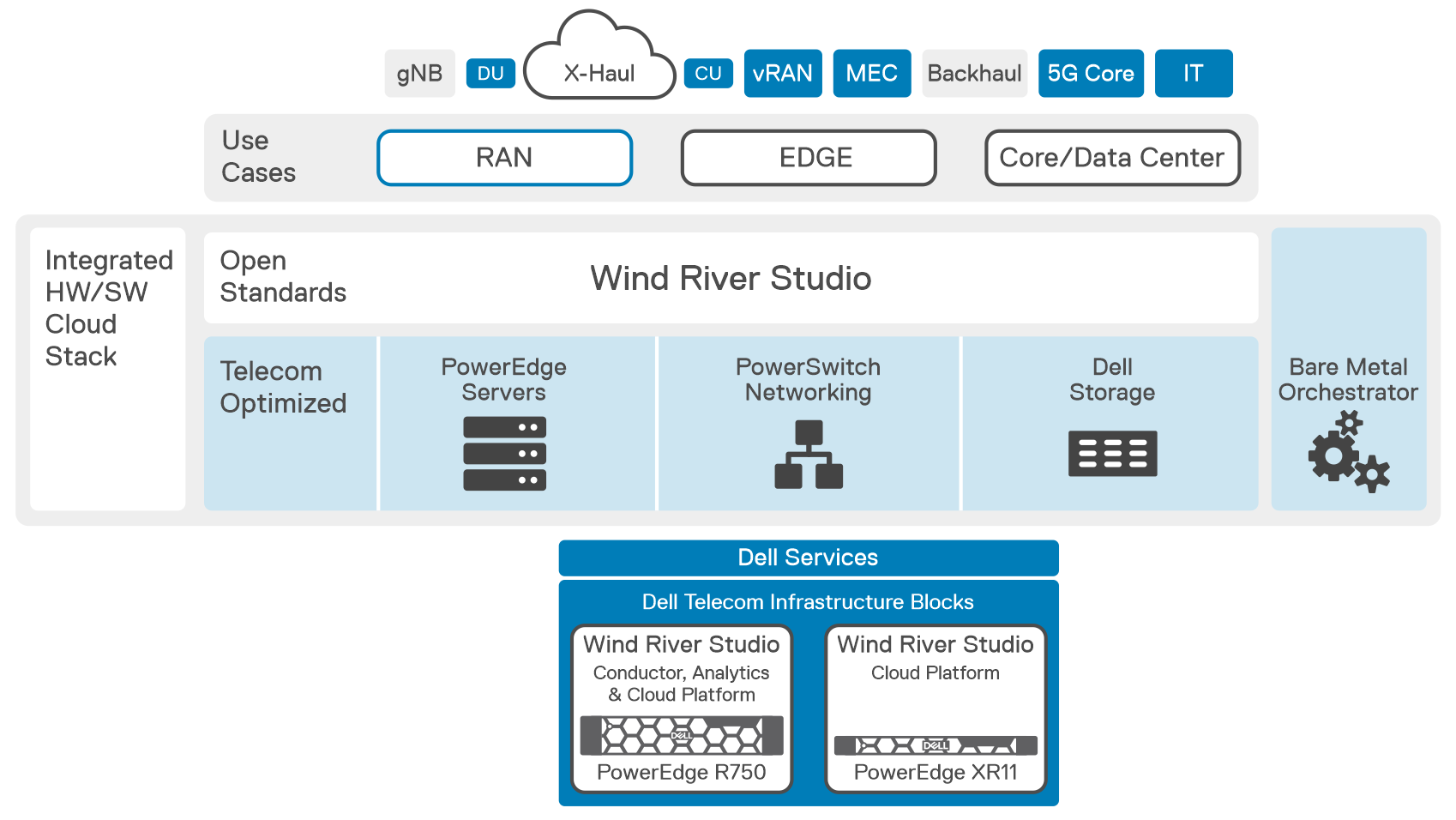

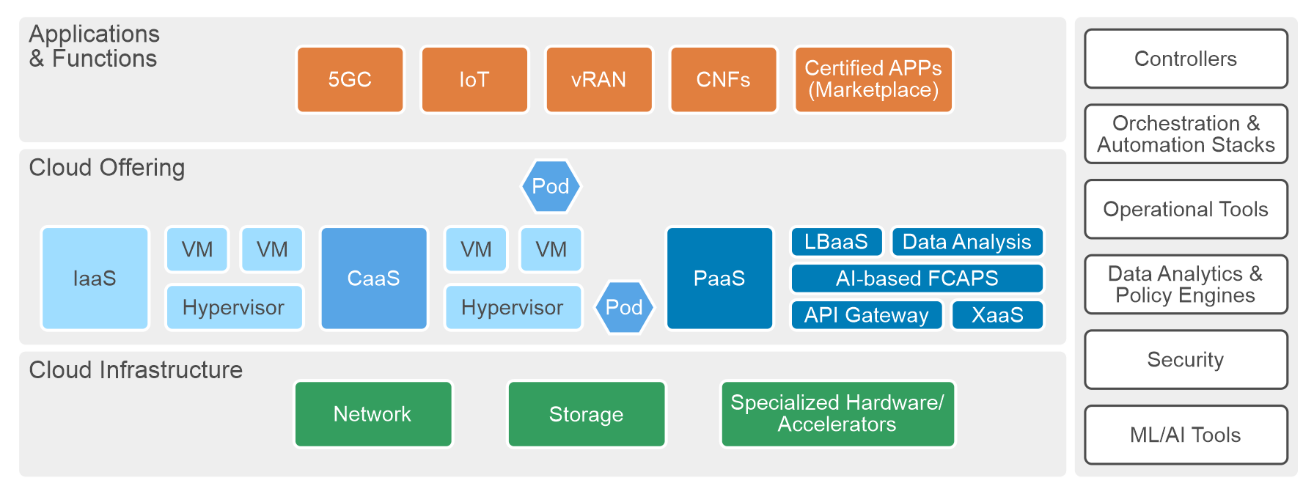

Figure 3: An Implementation View of Dell Telecom Infrastructure Blocks for Red Hat running the UPF

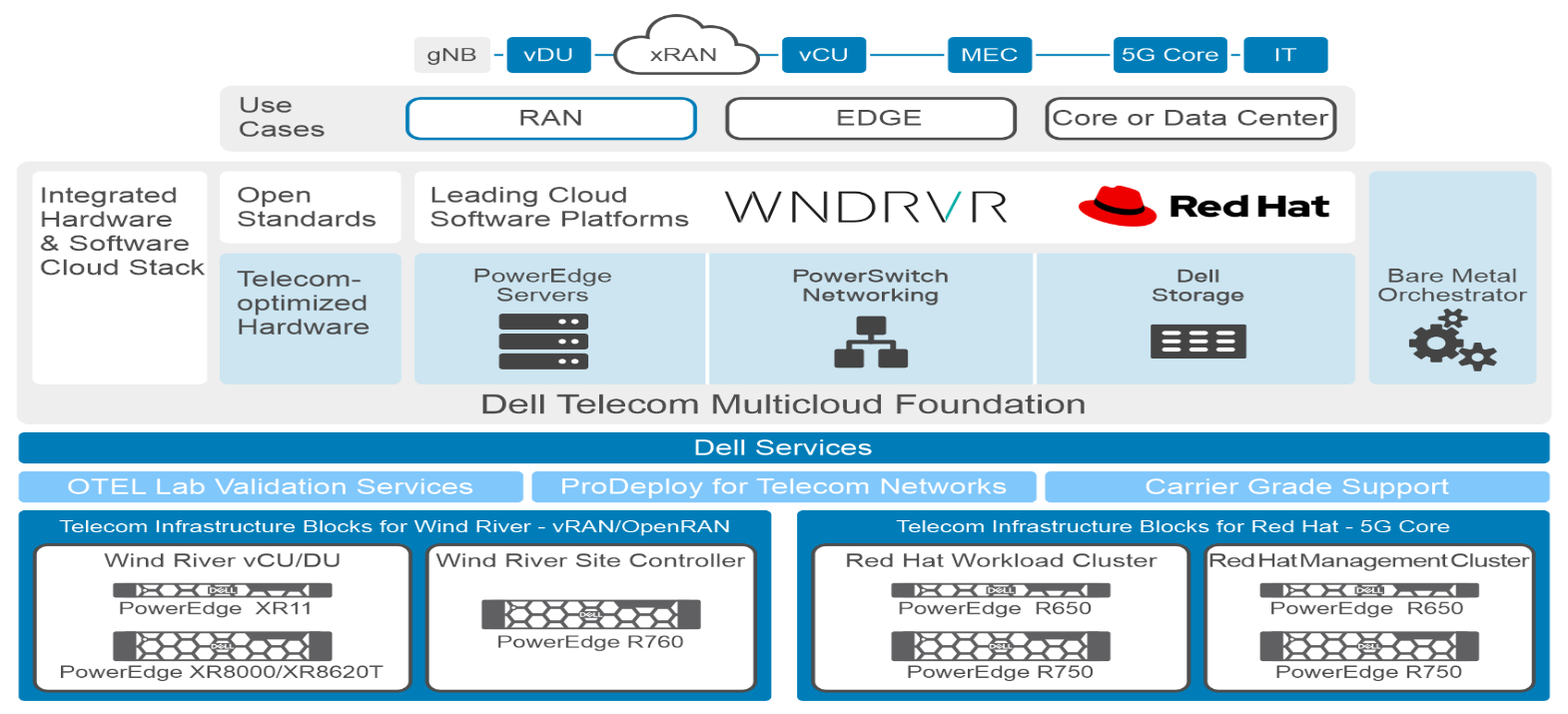

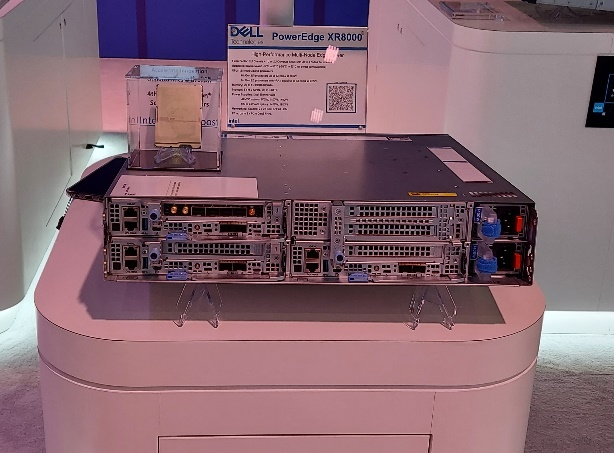

Dell Technologies is at the forefront of providing cutting-edge cloud-native solutions for the 5G telecom industry. As discussed in our previous blog, Telecom Infrastructure Blocks for Red Hat is one of those solutions, helping operators break down technology silos and empowering them to deploy a common cloud platform from Core to Edge to RAN. These are engineered systems, based on high-performance telecom edge-optimized Dell PowerEdge servers, that have been pre-validated and integrated with Red Hat OpenShift ecosystem software. This makes them a perfect solution for tackling the D-UPF challenges outlined in this blog.

- Resource Constraints:

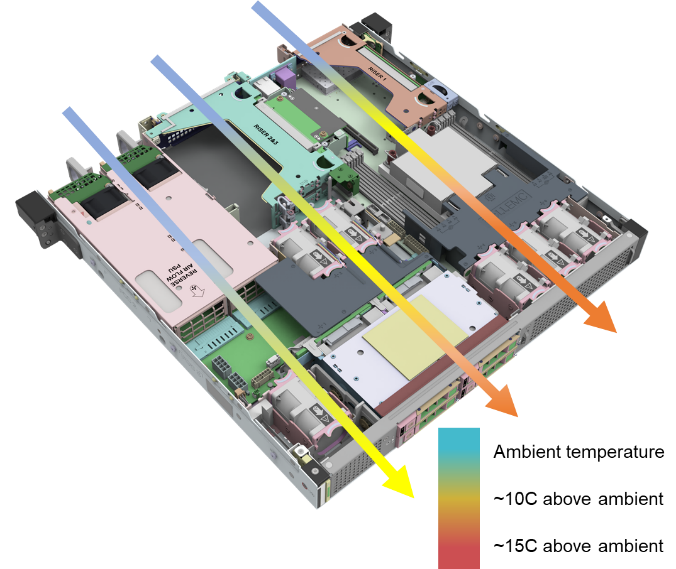

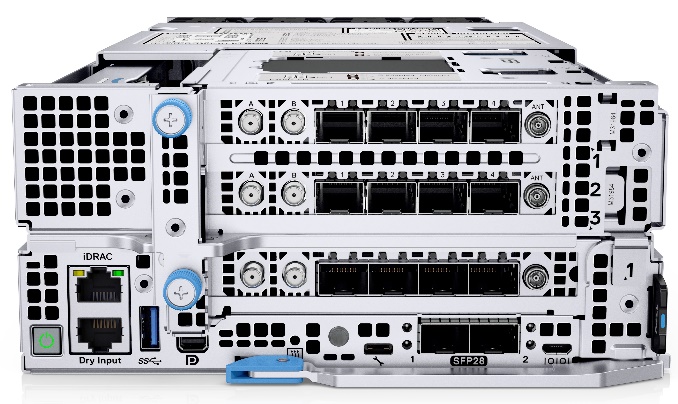

- Space-efficient, modular server designs for telecom environments, such as the Dell PowerEdge XR8000 series, allow providers to mix and match components based on workload needs and to run multiple workloads, such as CU/DU and UPF, in the same chassis.

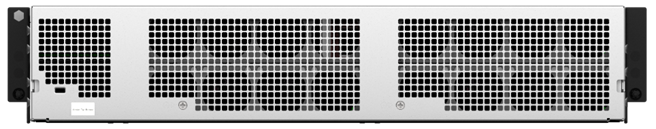

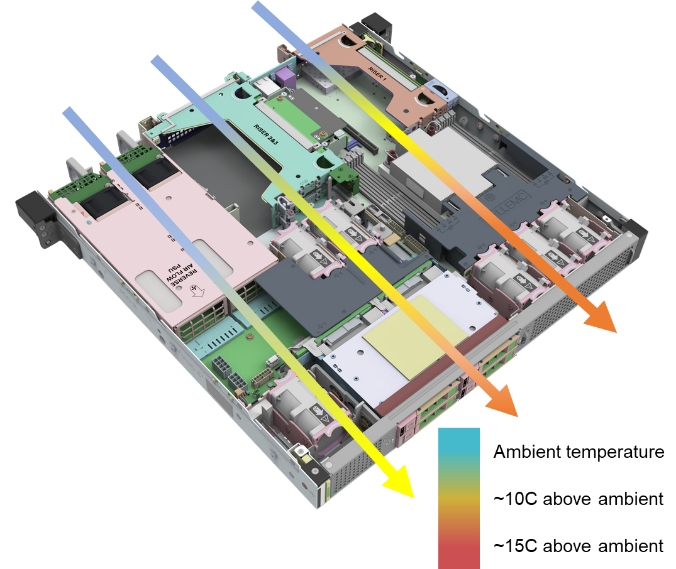

- Smart cooling designs support harsh edge environments and keep systems at optimal temperatures without using more energy than is needed.[1]

- Rugged and flexible server options that are less than half the length of traditional servers and offer front or rear connectivity make installation in small enclosures at the base of cell towers easier.[2]

- Performance Requirements:

- Provides low-latency processing for edge computing nodes at the network edge.[3]

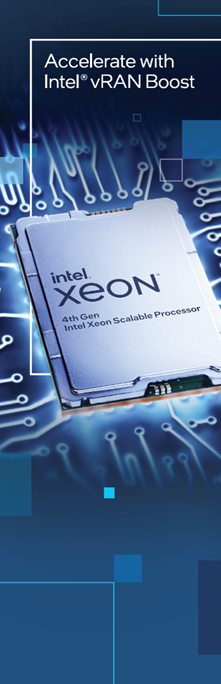

- 4th Gen Intel Xeon Scalable processors with Intel vRAN Boost increase DU capacity (up to 2X in specific scenarios) and increase packet core UPF and RAN CU performance by 42%.[2]

- Orchestration and Automation:

- An engineered, horizontal cloud platform based on Red Hat OpenShift allows operators to pool resources to meet changing workload requirements. It does this by automating server discovery, creating and maintaining a server inventory, and providing the ability to configure and reconfigure the full hardware and software stack as workload requirements evolve.

- Servers leverage dynamic resource allocation to ensure that computing resources are allocated precisely where and when they are needed. This real-time optimization minimizes resource waste and maximizes network efficiency.[3]

- Lifecycle Management and Operating Cost:

- Dell Telecom Infrastructure Automation software is included as a fundamental component to automate deployment and lifecycle management.

- Backed by a unified support model from Dell with options that meet carrier grade SLAs, CSPs do not have to worry about multi-vendor management for the cloud infrastructure support (for both the hardware and cloud platform software), as Dell becomes the single point of contact in the support of telco cloud platform and works with its partners to resolve issues.

Summary

In summary, the need for D-UPF in 5G networks arises from the requirements of handling massive data volumes, improving network efficiency, reducing latency, enabling edge computing, and supporting advanced 5G services. Whichever deployment scenario you select, you will need infrastructure that meets today’s objectives and offers the flexibility and scalability to support your long-term goals. For example, Dell Telecom Infrastructure Blocks for Red Hat can also serve as a telco cloud building block for hosting and managing a content delivery network (CDN) at the network edge. By implementing this engineered telco cloud platform from Dell, we believe CSPs will be able to streamline the process and reduce the costs of deploying and maintaining UPFs across edge locations.

To learn more about Telecom Infrastructure Blocks for Red Hat, visit our website Dell Telecom Multicloud Foundation solutions.

[1] Source: ACG Report, “The TCO Benefits of Dell’s Next-Generation Telco Servers”, February 2023

[2] Source: Dell Technologies, “Introducing New Dell OEM PowerEdge XR Servers”

[3] Source: Dell Technologies, “Competing in the new world of Open RAN in Telecom”

Empowering Telecom: Samsung, Dell, and Intel Lead the Open Disaggregated Revolution

Fri, 29 Mar 2024 16:00:28 -0000


The telecom industry and Communication Service Providers (CSPs) are transitioning to a new phase with disaggregated RAN. This includes embracing open, cloud-based technologies as the foundational model to support their current and upcoming services.

Samsung, Dell, and Intel are leading this transformation by crafting solutions that integrate advanced open technologies such as virtual Radio Access Network (vRAN) and Open Radio Access Network (Open RAN). Their end-to-end delivery model includes open commercial hardware designed to facilitate a horizontally automated, multi-vendor network built on modern open and disaggregated architectures.

Understanding the importance of a seamless technology transition, Dell Technologies and Intel initiated an Early Customer Evaluation Program. This program empowers customers to leverage the latest infrastructure technologies even before they hit the market. This initiative also provides Samsung, and other strategic partners of Dell and Intel, access to cutting-edge prototype offerings to conduct early solution validation.

In the initial phase of the evaluation program, Dell PowerEdge XR11 servers featuring Intel® vRAN Accelerator ACC100 Adapter cards were selected. In the second phase, the team selected Dell PowerEdge XR8000 servers, powered by 4th Gen Intel Xeon Scalable processors with Intel vRAN Boost integrated acceleration, as the foundational hardware for the open infrastructure platform. These ruggedized servers, with features such as a short-depth design, NEBS certification, and optimized total cost of ownership (TCO), are ideal for vRAN and Open RAN deployments.

The Early Customer Evaluation Program facilitates the integration and verification phase of Samsung’s virtual Central Unit (vCU) and virtualized Distributed Unit (vDU), well before the commercial release dates of Dell's infrastructure platforms. This emphasizes the telecom industry’s mantra, "Time is Money."

Below are some of Samsung’s recent accomplishments, made possible with the support of Dell and Intel:

- Conducting trials with several major operators across Asia Pacific, North America, and Europe.

- Successfully implementing the Samsung vRAN solution on Dell Servers powered by Intel Xeon processors for Vodafone UK’s commercial network, demonstrating superior performance compared to legacy hardware-based RAN in urban areas. The test results surpassed targeted Key Performance Indicators (KPIs), including 4G and 5G downlink and uplink throughput.

Henrik Jansson, Vice President and Head of SI Business Group, Networks Business at Samsung Electronics, states, "With the backing of Dell and Intel’s Early Customer Evaluation Program, we've expedited the integration of our solutions on the latest server and processor versions, delivering greater value to operators."

Gautam Bhagra, Dell Technologies Vice President of Strategic Partnerships adds, "We are happy to support Samsung’s open Telecom initiatives, along with our partner Intel. Our Early Customer Evaluation Program has been a proven testament to the power of collaboration, enabling our partners to anticipate the integration and validation efforts, with significant improvement on time to value.”

Additionally, Cristina Rodriguez, Vice President and General Manager of Wireless Access Networking Division at Intel states, “Intel is pleased to collaborate with Dell in the Early Customer Evaluation Program for our valued strategic ecosystem allies and customers. This impactful initiative enables the ecosystem to seamlessly integrate and validate their solutions on cutting-edge computing platforms, even before these platforms hit the commercial market."

Advancing O-RAN-Based 5G Adoption Through Collaborative Excellence

Thu, 22 Feb 2024 19:15:40 -0000


Within the telecom industry, a robust endorsement of the O-RAN ecosystem has emerged, a testament to the sector's commitment to accelerating open RAN adoption. This commitment is encapsulated in the Open RAN Ecosystem Experience (OREX), an O-RAN service brand by DOCOMO, collaboratively developed with multiple global partners.

DOCOMO’s new open RAN service makes mobile networks a whole new experience, allowing operators to build open RAN networks with service customization in mind. By selecting OREX to deploy O-RAN networks, Communication Service Providers (CSPs) will benefit from reduced Total Cost of Ownership (TCO) and power consumption, as well as improved operations. In a strategic collaboration, DOCOMO, Fujitsu, Wind River, and Dell have worked together to integrate a specific OREX network blueprint and manage its lifecycle. CSPs can efficiently leverage the open and disaggregated advantages of the O-RAN ecosystem without incurring expensive network design and system integration tasks.

A multi-vendor stack for open RAN

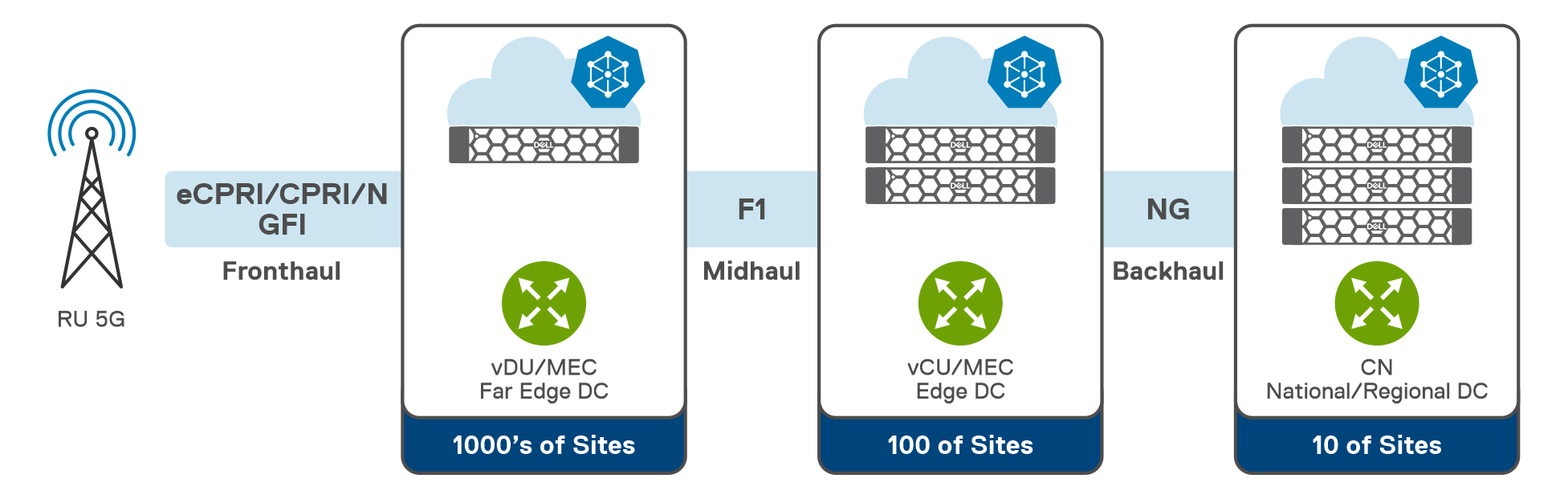

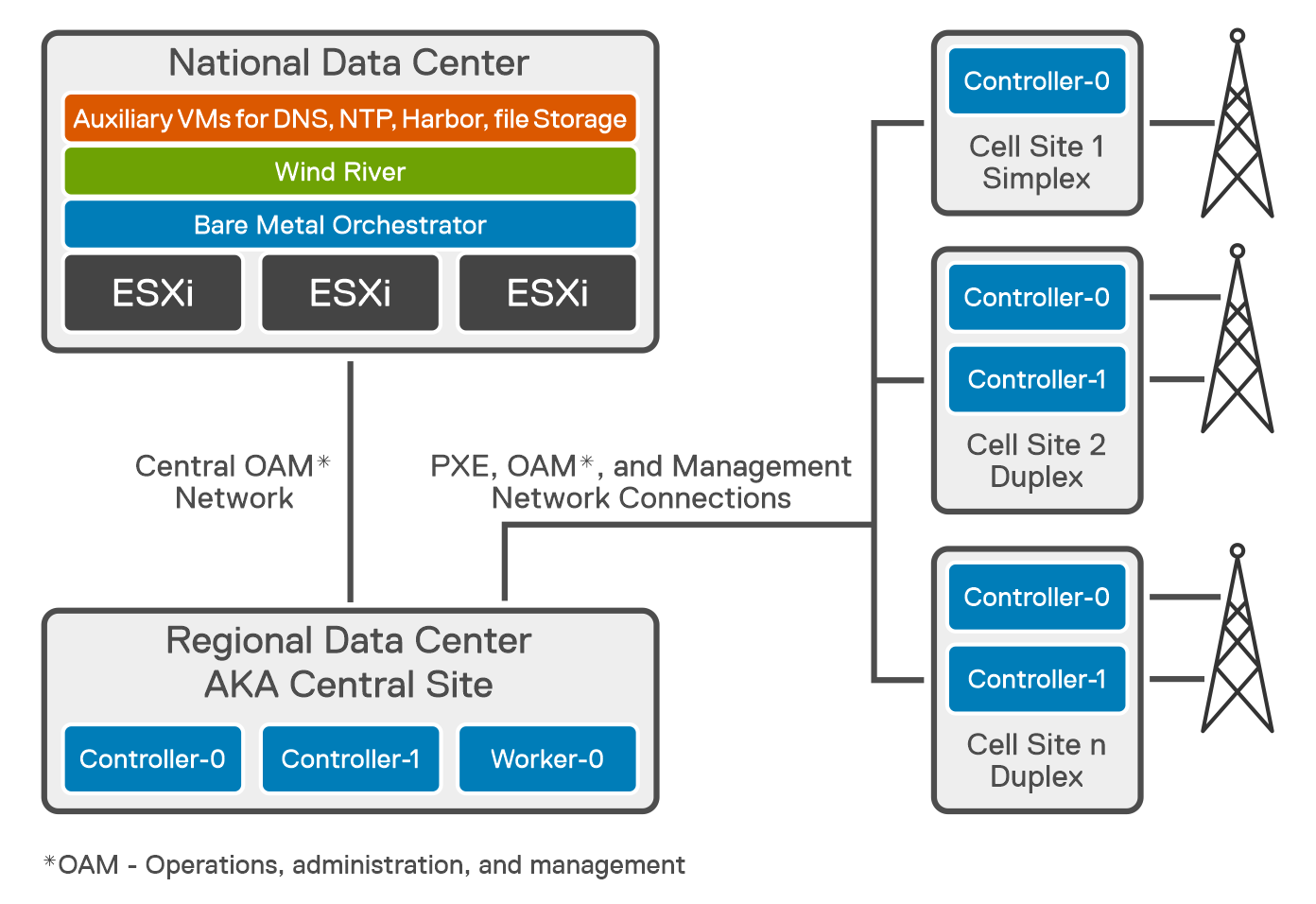

To support Cloud RAN workloads and to provide the infrastructure layer for the full stack, Fujitsu chose Dell PowerEdge XR8000 and XR5610 servers. These ruggedized servers, which are ideally suited for O-RAN and vRAN deployments, are TCO-optimized and feature a short-depth design and NEBS certification. Dell engineers these servers for demanding deployment environments, and they integrate seamlessly with Wind River Studio.

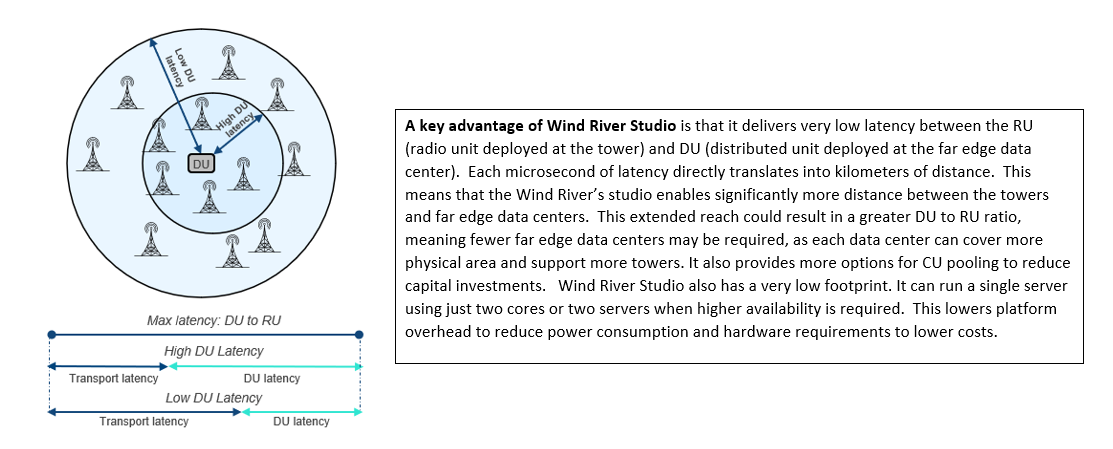

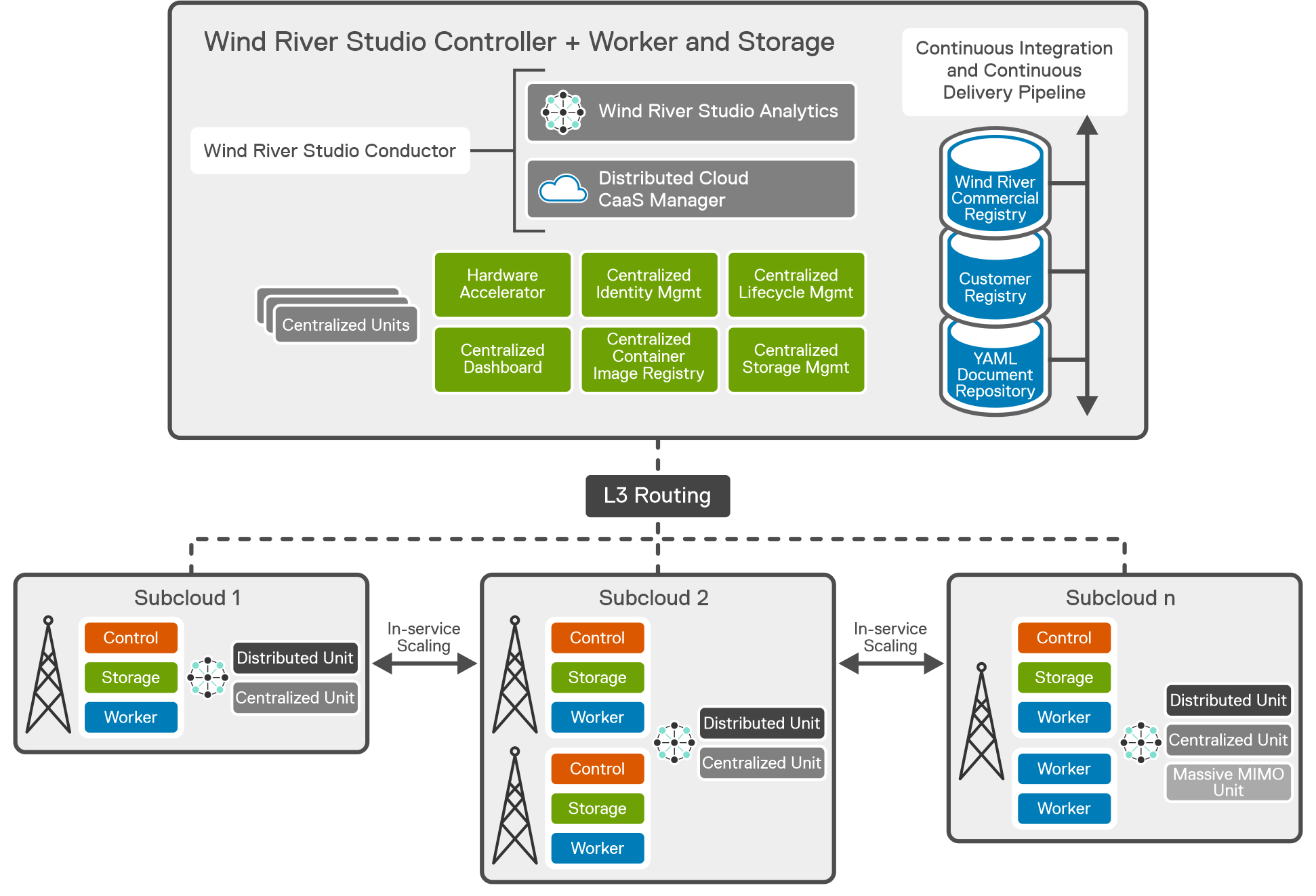

Wind River’s Studio Cloud container as a service (CaaS) platform, a cloud-native toolset for deploying and operating distributed clouds at scale, is the cloud software that provides the abstraction layer between the 5G workloads and the infrastructure hardware.

The collaboration with Dell enables Fujitsu’s vRAN software to be deployed on Dell's Open RAN Accelerator. This inline accelerator, powered by Marvell, handles Layer 1 computations, offloading server CPU cores and eliminating the need for separate fronthaul and midhaul network interface cards (NICs).

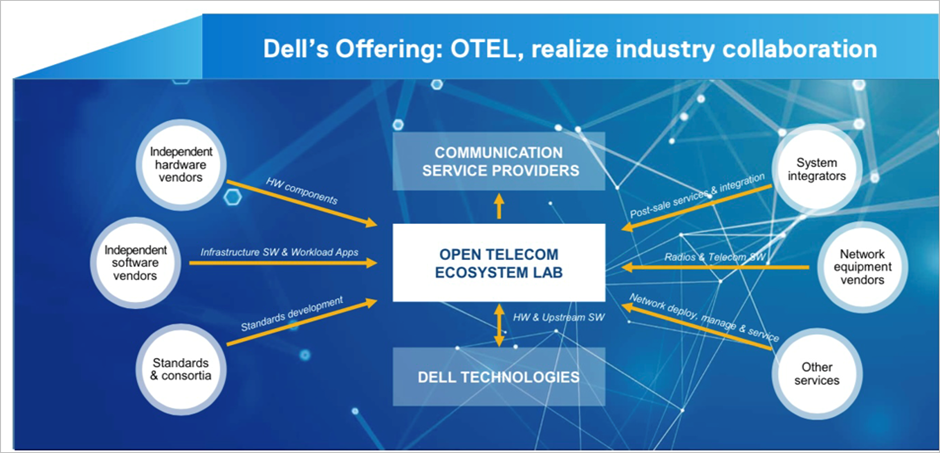

Dell Open Telecom Ecosystems Lab (OTEL) has been selected for the full stack validation process. OTEL removes the risk from the open transformation journey that CSPs and telecom software vendors are undertaking by providing access to an advanced testing environment that can be accessed remotely using secure connectivity. OTEL established a collaborative testing environment to bring together engineering teams from DOCOMO, Fujitsu, Wind River, and Dell, to test the deployment readiness of this multivendor O-RAN blueprint. The engineering teams have cooperated to mutually agree on test scenarios that will be rigorously and repeatably tested to ensure the stability of the full stack.

- “By embracing this validated design, CSPs now possess an end-solution that not only simplifies deployment but also expedites time to value—a testament to the collaborative efficiency with our partners DOCOMO, Fujitsu, and Wind River." -- Gautam Bhagra, Dell Technologies, Vice President (Telecom System Business – Strategic Alliances)

- “The combined solution, powered by Fujitsu, Dell, and Wind River results in a remarkable 30 percent reduction in power consumption, contributing to environmental sustainability while also substantially reducing TCO.” -- Toru Sekino, Fujitsu, Executive Director (vRAN)

- “By working together with NTT DOCOMO, Fujitsu, and Dell on the OREX RAN platform, we can deliver new efficiencies and greater flexibility to CSPs . . . . A proven solution with global service providers, Wind River Studio delivers a fully cloud-native, Kubernetes and container-based architecture for the development, deployment, operations, and servicing of distributed edge networks at scale.” -- Scott Walker, Wind River Global Vice President (Telco Ecosystem and CSP Sales)

- “The collaborative work among Dell, Fujitsu, Wind River, and DOCOMO helps all the OREX partners to expand their open RAN business opportunities by focusing on the development of their products and realizing timely end-to-end verification of integrated OREX products. Through this effort, we will provide true open RAN to CSPs across the globe.” -- Masafumi Masuda, General Manager of Radio Access Network Technology Promotion Office, Radio Access Network Design Department (NTT DOCOMO, INC.)

Defining the future of O-RAN Management with Vodafone, Amdocs, and Dell Technologies

Thu, 22 Feb 2024 13:08:00 -0000


Seizing the initiative to define the future of Open RAN management

The transformative journey of communication service provider (CSP) networks has reached a new, exciting stage. As operators increasingly adopt cloud technologies and embrace disaggregated architecture, the O-RAN Alliance is leading an expansion into the radio access network (RAN) realm. By disrupting the traditional RAN landscape, O-RAN is driving the industry towards a software-driven approach that leverages diverse software and hardware from multiple vendors to achieve the best possible outcomes. The goal is to create integrated, tested and certified solutions that deliver lower total cost of ownership (TCO) and amplified innovation.

With over 40 years of industry expertise, Amdocs is a leading provider of software and services to communications and media companies. The company offers market-leading capabilities for service providers’ operations support systems (OSS) and radio access networks (RANs), and has delivered proven solutions in network management, planning, and optimization. To meet emerging challenges, Amdocs also collaborates closely with leading industry organizations like the Telecom Infra Project and the O-RAN Alliance.

Dell Technologies is a global leader in digital transformation and infrastructure. Its products are widely utilized by global telecom operators in network and IT infrastructure, ranging from purpose-built telecom servers to cloud-native orchestration and infrastructure automation solutions. The company also offers bundled solutions developed in close collaboration with a diverse ecosystem of partners in O-Cloud and workload layers, and has extensive representation in key industry forums, including the O-RAN Alliance, Telecom Infra Project, and 3GPP.

To advance a shared vision for O-RAN management, our two companies have partnered to enable cloud transformations throughout the industry. For example, consider Amdocs Service Management and Orchestration (SMO) for O-RAN, whose capabilities include orchestration, inventory and assurance for any managed element, including x/rAPPs.

While the Amdocs offering supports any O-Cloud, across bare metal and CaaS, when integrated with Dell Telecom Infrastructure Automation Suite it supports deployments on Dell Technologies’ industry-leading telecom servers, as well as O-Cloud layer software provided by partner organizations. This integration enables CSPs to rapidly provision, manage, and monitor their O-Cloud infrastructure, and to simplify the lifecycle management of infrastructure nodes in a dynamic, disaggregated network. A proof of concept (PoC) showcasing this solution's capabilities is currently underway at Vodafone Group, encompassing both immediate use cases and a roadmap of forward-looking scenarios.

Bringing efficiencies to O-RAN with Service Management and Orchestration (SMO)

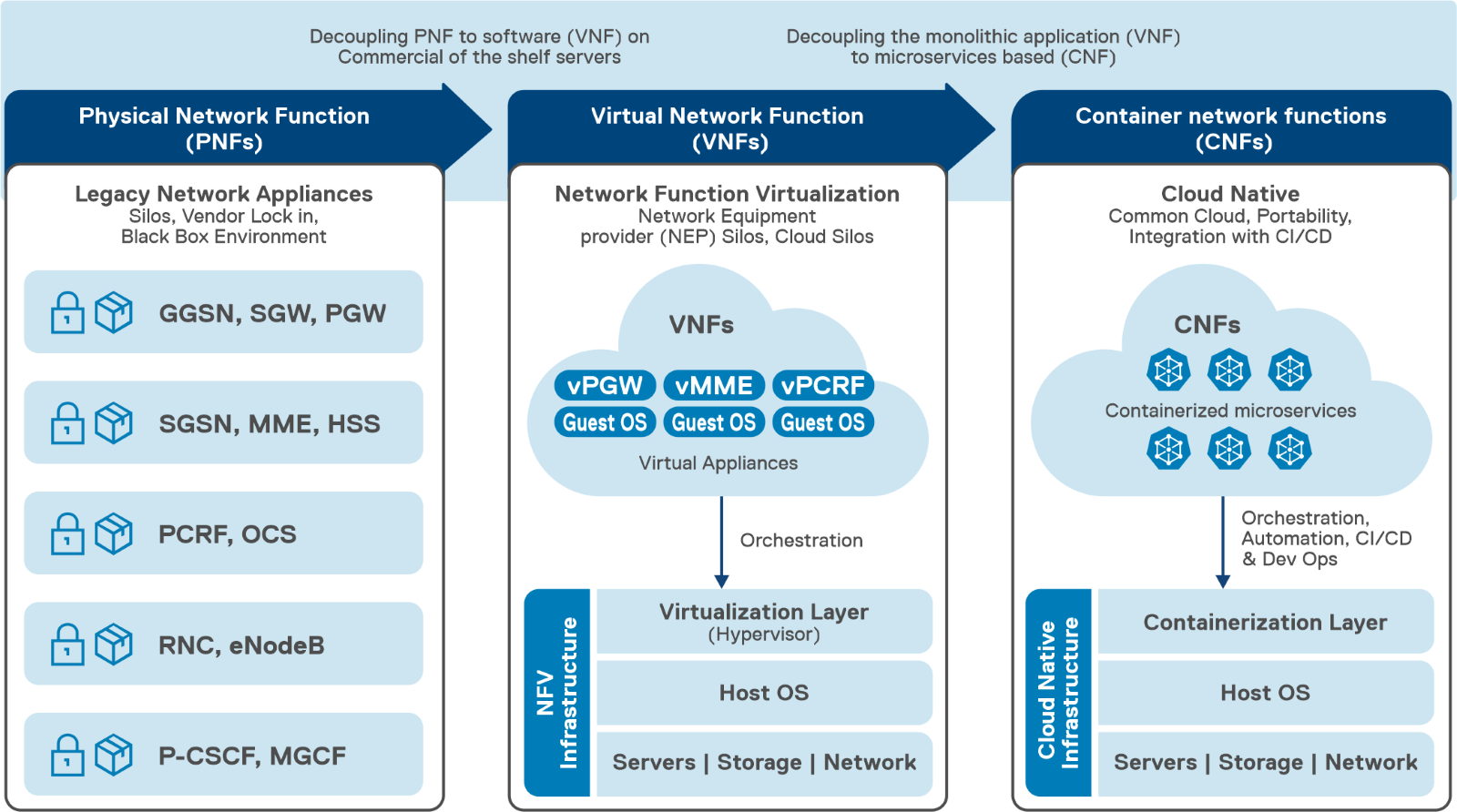

Service Management and Orchestration (SMO) is a key pillar in service and network orchestration, addressing specific CSP needs. By operating across multiple hierarchies, SMO efficiently manages multi-vendor, multi-technology entities with varying lifecycles. Furthermore, by focusing on cloud infrastructure, virtualized and containerized cloud-native functions (CNFs), it’s fully aligned with the industry’s developing architecture, seamlessly integrating with, and actively contributing to O-RAN standards and interfaces.

Amdocs SMO provides all the capabilities required to manage O-RAN. It supports the end-to-end lifecycle of the network, including design and onboarding, orchestration and management, inventory, and assurance processes. This approach also extends to embracing the openness and disaggregated approach of O-RAN, with support for heterogeneous multi-technology, multi-vendor networks – bringing CSPs cost efficiencies and empowering innovation.

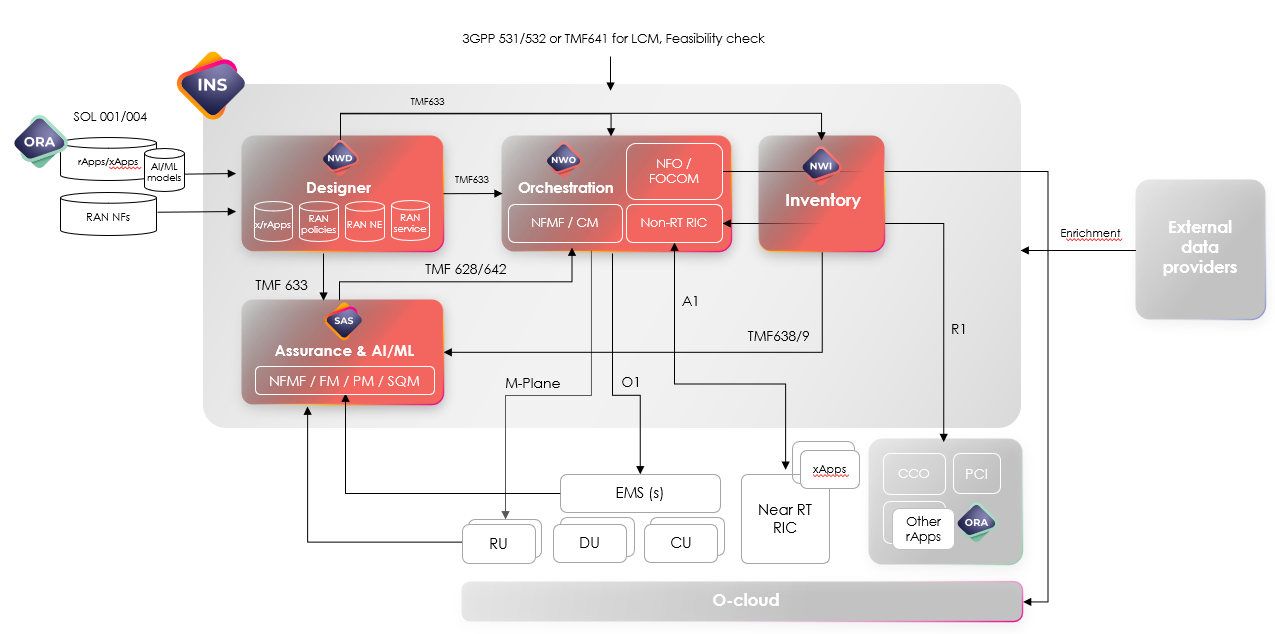

Figure 1 Amdocs Service Management and Orchestration Solution Overview

Amdocs’ SMO supports a diverse set of use cases, from O-RAN network rollout, network slicing and O-RAN energy efficiency savings, to assurance and closed-loop operations. Furthermore, it’s instrumental in simplifying the rollout process, addressing challenges presented by the disaggregated, multi-vendor nature of O-RAN.

Post-rollout, SMO also plays a pivotal role in managing each individual network slice, ensuring RAN performance, maintaining service-level objectives, and undertaking corrective actions. This is achieved by leveraging standard fault management (FM), performance management (PM), and service quality management (SQM) capabilities, as well as O-RAN apps deployed within both the Non-RT RIC (rApps) and the Near-RT RIC (xApps) to support different optimization use cases. Throughout, the solution fully adheres to O-RAN specifications and standards.
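Closed-loop operations of this kind follow a monitor, analyze, act pattern. The minimal sketch below flags cells whose downlink throughput samples fall below a service-level objective and passes each flagged cell to a corrective action. The `KpiSample` type, the threshold, and the `act` callback are hypothetical stand-ins. In a real deployment the samples would come from PM data, and the action might be an rApp policy change or a trouble ticket.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class KpiSample:
    cell_id: str
    dl_throughput_mbps: float


def closed_loop_step(samples: List[KpiSample], slo_mbps: float,
                     act: Callable[[str], None]) -> List[str]:
    """One loop iteration: find cells with any sample below the SLO
    (analyze) and invoke the corrective action once per cell (act)."""
    degraded = sorted({s.cell_id for s in samples if s.dl_throughput_mbps < slo_mbps})
    for cell_id in degraded:
        act(cell_id)
    return degraded


samples = [KpiSample("cell-1", 120.0), KpiSample("cell-2", 35.0),
           KpiSample("cell-2", 41.0), KpiSample("cell-3", 48.5)]
actions: List[str] = []
print(closed_loop_step(samples, slo_mbps=50.0, act=actions.append))
# ['cell-2', 'cell-3']
```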

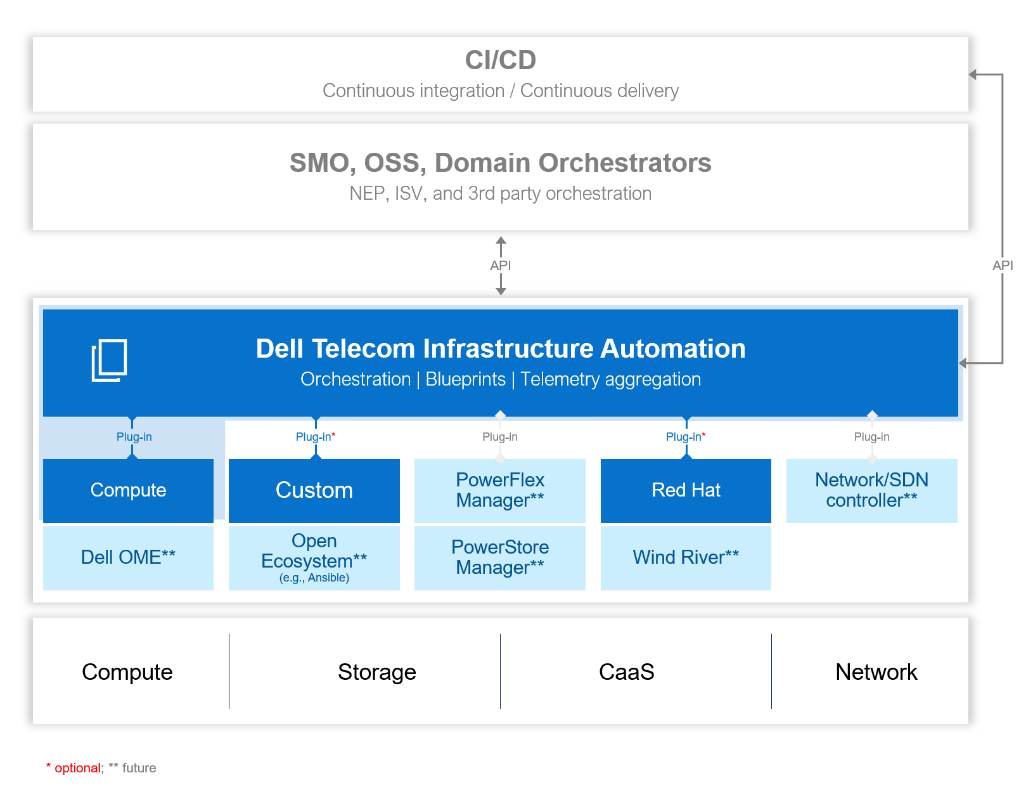

Streamlining with Infrastructure and O-Cloud automation

Dell Telecom Infrastructure Automation Suite helps simplify and automate infrastructure management in disaggregated networks, allowing CSPs to seamlessly provision, manage, and monitor their infrastructure. In addition to operating based on the O-RAN O2-IMS and O2-DMS APIs, the Suite provides an open, model-driven framework for a ubiquitous single point of control. The Suite then serves as the unified entry and exit point for automated deployment and orchestration of multi-site and multi-vendor infrastructure, as well as streamlined day 2 lifecycle management, including updates and upgrades.

Figure 2 Dell Telecom Infrastructure Automation Suite

Dell Telecom Infrastructure Automation Suite’s open and extensible architecture serves as the driving force behind O-RAN infrastructure automation. It comprises a comprehensive set of components: full orchestration, data-driven telemetry of cloud infrastructure, resource controllers, API adaptors, a user interface, and a single pane of glass for the complete cloud infrastructure.

Importantly, the suite, with its open declarative automation framework, also delivers support for cloud infrastructure operations, lower infrastructure total cost of ownership (TCO), accelerated time to market (TTM)/time to repair (TTR), and a modular, extensible architecture to avoid vendor lock-in.
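In a declarative framework, operators describe the desired state and a controller works out the steps needed to reach it. The sketch below shows that reconciliation idea in its simplest form. It is a generic pattern, familiar from Kubernetes controllers, and not the Suite's actual API. The node names and version strings are hypothetical.

```python
from typing import Dict, List, Tuple

# Node name -> software stack version (hypothetical example values).
State = Dict[str, str]


def reconcile(desired: State, observed: State) -> List[Tuple[str, str]]:
    """Diff desired vs. observed state into (action, node) pairs.
    A real controller would execute these, re-observe, and repeat
    until the diff is empty."""
    actions: List[Tuple[str, str]] = []
    for node, version in sorted(desired.items()):
        if node not in observed:
            actions.append(("provision", node))
        elif observed[node] != version:
            actions.append(("update", node))
    for node in sorted(observed.keys() - desired.keys()):
        actions.append(("decommission", node))
    return actions


desired = {"edge-1": "v2", "edge-2": "v2", "edge-3": "v2"}
observed = {"edge-1": "v2", "edge-2": "v1", "edge-9": "v1"}
print(reconcile(desired, observed))
# [('update', 'edge-2'), ('provision', 'edge-3'), ('decommission', 'edge-9')]
```

Because the output depends only on the two states, running the loop again after the actions succeed yields an empty list. This idempotence is what makes zero-touch, repeatable rollouts practical.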

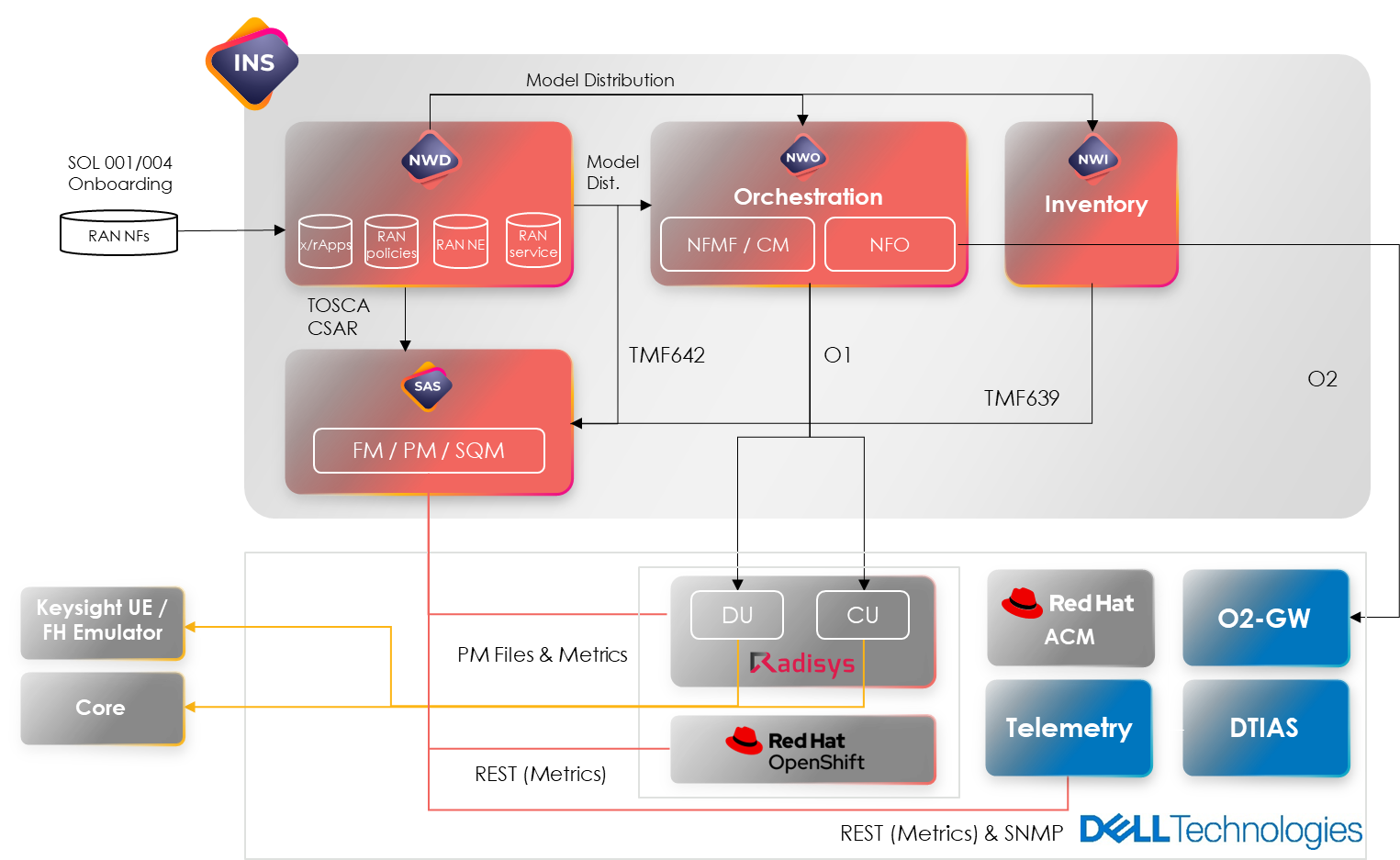

A ground-breaking proof of concept with Vodafone

A main takeaway from our collaboration with Vodafone was that the ability to replace manual processes with zero-touch operations would represent a real game changer. To showcase this vision, Amdocs and Dell Technologies set the goal of building a proof-of-concept (PoC) that would achieve this objective. Taking an end-to-end distributed zero-touch deployment approach, we set out to build a model that significantly reduces the time to bring new sites and services online. Ultimately, Vodafone also seeks to automate the radio network rollout and validate the joint solution’s ability to manage a hybrid, multi-vendor, and disaggregated O-RAN network.

For this PoC, a joint blueprint was created, whereby Amdocs would manage SMO and system integration, with Dell overseeing the O-Cloud and infrastructure (including bare metal) layers, and Radisys providing O-RAN CNFs. Additional software will include Red Hat® OpenShift®, a hybrid cloud application platform powered by Kubernetes, as the CaaS platform, and OpenTelemetry for collecting performance metrics in the CaaS layer.

Figure 3 Vodafone O-RAN PoC blueprint

Vodafone Proof of Concept use cases

The PoC aims to showcase the seamless integration of Amdocs SMO with Dell Telecom Infrastructure Automation Suite, enabling zero-touch deployment of a RAN site. The deployment involves transitioning infrastructure from bare metal to the cloud using a declarative approach. Once the site is deployed, Amdocs and Dell will demonstrate end-to-end implementation through a data call. Dell Telecom Infrastructure Automation Suite and the assurance capabilities of Amdocs SMO will gather telemetry data from the infrastructure, the CaaS layer, and the RAN network functions and feed it to Amdocs SMO, facilitating real-time monitoring of alarms and events. The setup is versatile and supports service assurance and closed-loop automation.

Roadmap to innovation

Looking ahead, Amdocs and Dell Technologies remain committed to evolving SMO and O-Cloud management in alignment with O-RAN standards, and empowering CSPs with the flexibility and agility they need for O-RAN deployment activities.

Amdocs SMO remains central to this goal, supporting a rich set of capabilities, including model-driven dynamic orchestration, service decomposition, network slicing, dynamic inventory, and closed-loop SLA assurance. Importantly, we’re also investing in specific O-RAN capabilities such as the O1, O2, R1, and A1 interfaces, as well as management of x/rApps and their respective ML models.

Meanwhile, Dell Telecom Infrastructure Automation Suite effectively manages the complete lifecycle of the O-Cloud, using the O2 API and RESTful APIs. Employing an open software framework with vendor-agnostic resource controllers, the Suite empowers CSPs to fully capitalize on the advantages of disaggregated infrastructure and cloud layers. It can also seamlessly configure the O-Cloud by orchestrating intricate dependencies, coordinating tasks across various infrastructure elements and cloud stacks.

Even as Amdocs and Dell Technologies solidify our positions as key players in O-RAN development, we remain equally excited to find new ways to collaborate and innovate in the ever-evolving O-RAN management landscape.

Telecom Cloud Core Optimized with the AMD-based PowerEdge R7615

Tue, 13 Feb 2024 19:09:50 -0000


The transition of telecom core functions from purpose-built hardware to commercially available servers has introduced a number of challenges, including compute density, power efficiency, and performance optimization in a cloud environment. Since this large-scale migration kicked off about ten years ago, we’ve seen massive compute densification, moving from around 12 cores per CPU to upwards of 128 cores per CPU today. As a result, a few servers can now provide the same compute capacity that previously required an entire rack of equipment.

Figure 1. AMD EPYC CPUs Powered by Zen4 and Zen4c


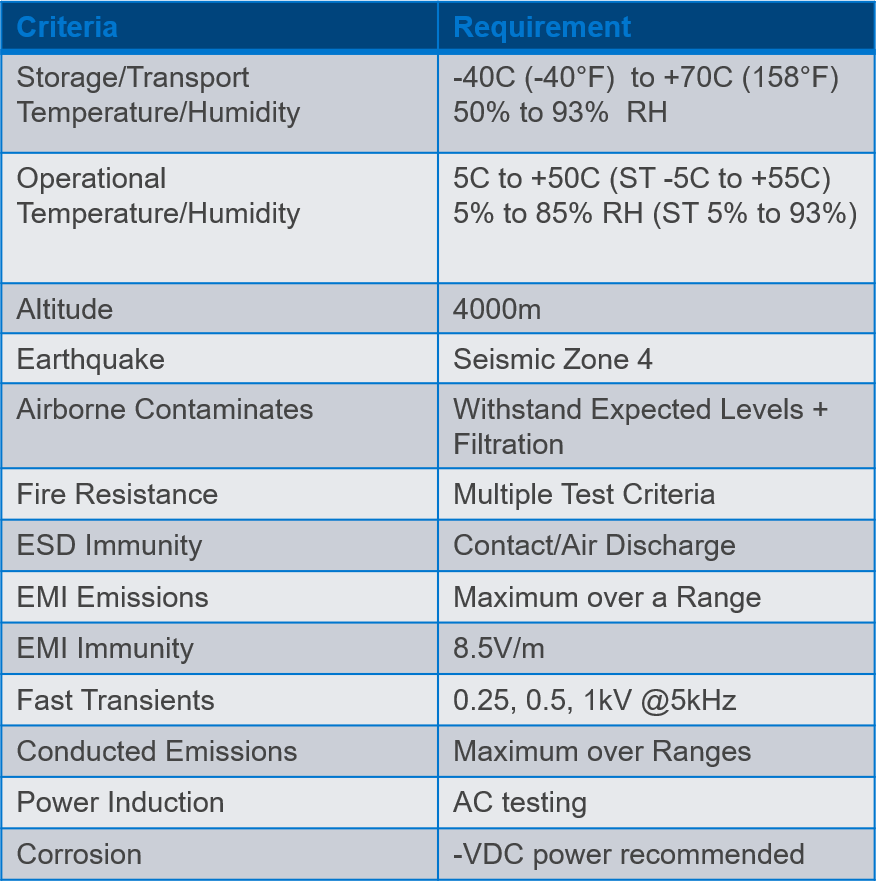

Dell Technologies is enabling such dense telecom cloud core solutions with the introduction of support for the AMD EPYC™ 9654P and EPYC™ 9754 in the PowerEdge R7615, which will feature NEBS Level 3 Certification for deployments into telecom environments. The R7615 is a 2U, single-socket 19” rackmount server that, with its upcoming NEBS certification (2Q24), will provide the densest, most power-efficient telecom cloud core solution available. Paired with this increased compute density, twelve fast DDR5 (4800 MT/s) memory channels, PCIe Gen5 support, and NVMe-based storage deliver a dense, power-efficient cloud computing platform that has no equal in the telecom space.

NEBS Level 3 Certification is often required in the telecom space. The key feature of this certification is the resilience to provide non-throttled performance from -5C to +55C and the ability to survive a catastrophic seismic event while continuing to provide critical Cloud Core Services, providing a highly available and fault tolerant environment.

Figure 2. Dell PowerEdge R7615 with EDSFF Storage

The PowerEdge R7615 provides the densest telecom cloud core solution available on the market today and achieves this with a single CPU (up to 128 cores/256 threads) per server. This allows for deployments based on a single non-uniform memory access (NUMA) node, simplifying the challenges of deploying core services to a telecom cloud. For those who have had to plan deployments across two (or more) NUMA nodes, the benefit of the R7615 is crystal clear.

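On Linux, you can check how many NUMA nodes a server actually exposes before planning core placement. The sketch below reads the standard sysfs files under `/sys/devices/system/node`. The helper names are our own. On AMD EPYC systems the BIOS NUMA-nodes-per-socket (NPS) setting can split even a single socket into several NUMA nodes, so a check like this is worthwhile.

```python
import glob
import os
import re
from typing import Dict, List


def parse_cpulist(text: str) -> List[int]:
    """Expand a Linux cpulist string such as '0-3,8,10-11' into CPU IDs."""
    cpus: List[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_topology(sys_node_dir: str = "/sys/devices/system/node") -> Dict[int, List[int]]:
    """Map each NUMA node ID to its logical CPUs via nodeN/cpulist in sysfs."""
    topo: Dict[int, List[int]] = {}
    for path in glob.glob(os.path.join(sys_node_dir, "node[0-9]*")):
        match = re.fullmatch(r"node(\d+)", os.path.basename(path))
        if match is None:
            continue
        with open(os.path.join(path, "cpulist")) as f:
            topo[int(match.group(1))] = parse_cpulist(f.read())
    return topo


if __name__ == "__main__":
    topo = numa_topology()
    print(f"{len(topo)} NUMA node(s)")
    for node, cpus in sorted(topo.items()):
        print(f"  node{node}: {len(cpus)} logical CPUs")
```

On a single-socket server configured to expose one NUMA node, you would expect a single `node0` entry listing every logical CPU.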

The NEBS-certified R7615 (certification planned for 2Q2024), with one 128-core AMD EPYC 9754, will provide the same core/thread compute density as two NEBS-certified servers of the next closest dual-socket solution available today. This reduces maintenance activities and yields an estimated 56 percent reduction in power consumption and a 40 percent performance-per-watt improvement. This can result in estimated savings of 1049€ ($1128) per year, given an average electricity cost of 0.26€ ($0.28) per kWh, which was the average European price at the time of publication. Of course, results will vary depending on many factors, including server configuration, workload, server utilization, and the price of electricity.
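
The savings figure can be sanity-checked by working backwards from the paragraph's own numbers. The sketch below only rearranges those figures; it assumes, as a simplification, that the savings come from a constant power reduction over all 8,760 hours of a year, and it uses no measured wattage for either configuration.

```python
# Back-of-envelope check that only rearranges the figures quoted above.
# Assumption: the savings accrue from a constant power reduction, 24 hours a day.
HOURS_PER_YEAR = 24 * 365  # 8,760 h (leap years ignored)


def implied_energy_kwh(annual_savings: float, price_per_kwh: float) -> float:
    """Energy saved per year (kWh) needed to produce the quoted annual savings."""
    return annual_savings / price_per_kwh


def implied_average_watts(energy_kwh_per_year: float) -> float:
    """Continuous power reduction (W) that saves that much energy over one year."""
    return energy_kwh_per_year * 1000 / HOURS_PER_YEAR


for label, savings, price in (("EUR", 1049, 0.26), ("USD", 1128, 0.28)):
    kwh = implied_energy_kwh(savings, price)
    print(f"{label} basis: {kwh:,.0f} kWh/year, about {implied_average_watts(kwh):.0f} W continuous")
```

Both currency bases imply roughly 4,030 kWh per year, or a continuous reduction of about 460 W.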

If you’re planning to attend MWC24 and are interested in learning more about this significant advancement in telecom cloud core compute densification, stop by the AMD (2M61) and Dell Technologies (3M30) booths to see the hardware and join further discussions.

Accelerate intelligent operations using AIOps for cloud native networks

Tue, 30 Jan 2024 17:07:35 -0000

Dell Technologies infrastructure blocks enable telco customers to adopt telco-centric AIOps to improve operations.

Communication service providers (CSPs) are racing towards fully autonomous networks and see great value in adopting automation and artificial intelligence (AI) in telecom networks. According to the latest industry insights report published by TM Forum® (New-generation intelligent operations: the service-centric transformation path), most CSPs aim to achieve Level-3 automation (conditional autonomous networks) and Level-4 automation (highly autonomous networks) by 2025. There is also growing interest in accelerating towards Level-5 automation (AI-driven automation) using AIOps solutions.

However, telco adoption of AI-centric automation is not easy, primarily because most CSPs operate geographically distributed brownfield networks and manage a multi-generation fleet of infrastructure and resources. CSPs also operate at different scales, which means there is no simple, “cookie-cutter” approach to AI-driven operations (AIOps).

In addition, CSPs adopt solutions based on clearly defined, standard telecom architectures from bodies like ETSI® (European Telecommunications Standards Institute), TM Forum® (TeleManagement Forum), 3GPP® (3rd Generation Partnership Project), and O-RAN (O-RAN ALLIANCE). CSPs also source solutions that can interwork and interoperate at a global scale. Finally, CSPs expect these solutions to integrate fully into their brownfield environments.

Hence, CSPs need an outcome-based solution that supports existing operations while enabling them to accelerate adoption of the next era of operations, based on data-driven insights and artificial intelligence.

How to adopt AIOps-based, intelligent operations for networks

CSPs are working alongside many standards bodies (especially the TM Forum®) to accelerate automation towards Level-4 (full-service orchestration and automation) and Level-5 (AI-driven automation). However, there is still no clear path for applying these principles to large-scale networks. Building the right architecture starts with clearly quantified requirements.

The right AIOps solution for CSPs must align with these unique telco-specific requirements:

- Distributed topology maps. Telco networks have been purpose-built and deployed over many decades to deliver critical and differentiated services. Unlike data centers, these networks are a fleet of resources, such as home networking, fiber, transport, radio, core, cloud, and WAN services. Topology alignment (such as A/B planes and rings) and service resilience are key requirements.

- Multi-vendor and multi-generation. Typically, CSPs operate a brownfield network over multiple generations. Most of these systems have an extended lifetime of 10 to 15 years. So, the solution should not only be future-proof but also cater to the requirements of existing deployed solutions.

- Data models. CSP networks are, by nature, highly protected, with network data existing in many silos. Network operations also follow a hierarchical, process-based delivery model defined by Network Operations Centers (NOCs). In addition, data knowledge depends on the tools and systems that vendors provide.
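
Topology alignment can be checked mechanically once the topology map is held as data. The minimal sketch below treats the A/B-plane requirement as: a site must reach its core site using plane-A links alone and, independently, using plane-B links alone. The site names, links, and this definition of resilience are illustrative assumptions, not a CSP or Dell data model.

```python
from collections import defaultdict, deque

# Hypothetical topology: each link is (site_a, site_b, plane).
LINKS = [
    ("edge-1", "agg-1", "A"), ("agg-1", "core-1", "A"),
    ("edge-1", "agg-2", "B"), ("agg-2", "core-1", "B"),
    ("edge-2", "agg-1", "A"),  # edge-2 has no plane-B uplink
]


def reachable(links, plane, src, dst):
    """Breadth-first search restricted to the links of one plane."""
    graph = defaultdict(set)
    for a, b, p in links:
        if p == plane:
            graph[a].add(b)
            graph[b].add(a)
    seen, queue = {src}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for nxt in graph[node] - seen:
            seen.add(nxt)
            queue.append(nxt)
    return False


def plane_diverse(links, src, dst, planes=("A", "B")):
    """True if src reaches dst independently on every plane (A/B redundancy)."""
    return all(reachable(links, p, src, dst) for p in planes)


for site in ("edge-1", "edge-2"):
    print(site, "A/B resilient:", plane_diverse(LINKS, site, "core-1"))
```

Checking each plane separately catches single-homed sites such as `edge-2`. It only proves link-level separation; a stricter check would also require the two paths to be node-disjoint so that no shared intermediate site is a single point of failure.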

Although AIOps systems are already proven and prevalent in the cloud and IT industries, these systems and solutions must be adapted to meet CSP requirements.

AIOps in networks should strictly align with both telecom standards and network-specific needs—delivering the following capabilities:

- Process alignment. The current operational model of a telco cloud relies heavily on the operations team's knowledge base and expertise. So, AIOps is not just about data; the unique experience of CSP operations teams matters just as much.

- Data access. CSPs follow strict security and privacy requirements under which customer data and information cannot be exposed. So, to adopt AIOps, data access models must be standardized to ensure AIOps use cases can retrieve data only according to approved policies.

- Tuning. Because CSP-deployed networks must operate for extended periods of time, current solutions built on rigid AI rules cannot meet their future requirements. Therefore, AIOps systems must be adaptive.

- Scalability. CSPs operate at different scales, ranging from Tier-1 (many geographies) to Tier-2 (small scale). Therefore, telco-specific AIOps systems should offer a T-shirt sizing approach (predefined small, medium, and large deployment sizes).
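
The data-access requirement can be made concrete as a default-deny filter: each AIOps use case gets an approved policy listing the fields it may read, and subscriber identifiers are replaced with keyed pseudonyms so records stay joinable without exposing raw values. The policy structure, use-case name, and field names below are hypothetical illustrations, not a standardized CSP model.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical per-use-case policy: readable fields, and which of them are pseudonymized."""
    allowed_fields: frozenset
    pseudonymized_fields: frozenset


# Illustrative policy registry; use-case and field names are assumptions.
POLICIES = {
    "anomaly-detection": AccessPolicy(
        allowed_fields=frozenset({"cell_id", "timestamp", "prb_utilization", "imsi"}),
        pseudonymized_fields=frozenset({"imsi"}),
    ),
}


def pseudonymize(value: str, key: bytes) -> str:
    # Keyed HMAC-SHA256: the same subscriber maps to the same token (records stay joinable),
    # but the raw identifier cannot be recovered without the key.
    return hmac.new(key, value.encode(), hashlib.sha256).hexdigest()[:16]


def apply_policy(use_case: str, record: dict, key: bytes) -> dict:
    """Return only what the approved policy allows; refuse use cases without a policy."""
    policy = POLICIES.get(use_case)
    if policy is None:
        raise PermissionError(f"no approved data-access policy for {use_case!r}")
    result = {}
    for field, value in record.items():
        if field not in policy.allowed_fields:
            continue  # default deny: drop anything not explicitly allowed
        if field in policy.pseudonymized_fields:
            value = pseudonymize(str(value), key)
        result[field] = value
    return result


sample = {"cell_id": "C-17", "timestamp": 1706630000, "prb_utilization": 0.82,
          "imsi": "001010123456789", "msisdn": "+15550100"}
print(apply_policy("anomaly-detection", sample, key=b"per-deployment-secret"))
```

A keyed HMAC is used rather than a plain hash because identifier spaces such as IMSIs are small enough that unkeyed hashes could be reversed by brute force.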

Accelerate the network AIOps journey

Today, many CSPs have already deployed small-scale AIOps solutions. However, most of these solutions are not well aligned with telco-specific requirements, resulting in many silos that are hard to manage and scale. Further, CSPs must invest heavily in time and cost for the Life Cycle Management (LCM) of these solutions. All of this creates barriers to cloud native transformation.

Just as CSPs have adopted standards like ETSI®, LFN® (Linux Foundation Networking), and O-RAN for their telco clouds, they now need a standard architecture for a Telco Multicloud AI foundation that integrates smoothly with brownfield networks. Below are the key capabilities of an AI-centric telco platform that can enable AIOps use cases:

- Horizontal AI platform. The telco-centric AI platform should be composable, consisting of:

  - AIOps application layer: hosting various AIOps tasks

  - Machine Learning (ML) layer: adopting specific ML models suitable for AIOps

  - Knowledge layer: integrating the NOC processes and knowledge of CSPs

  - Data layer: resolving any data silos in networks

  - Physical layer: managing telecom networks using fully decoupled infrastructure automation

- Distributed data ingestion. The telco-centric AI platform should ingest data from fully distributed networks, delivering both reactive (responding after an event occurs) and proactive (predictive) use cases through:

  - MOP integration: Existing methods of procedure (MOPs) and workflows must be integrated.

  - Operational processes integration: Existing NOC processes must be integrated into data pipelines.

- Cloud native MLOps and AIOps capabilities. Telcos must supplement in-house operational knowledge with ML models and find ways to tune and extend those models. Different models must be integrated, and success in network operations requires a systematic, use case-driven integration of knowledge systems with ML models.
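
The difference between reactive and proactive use cases can be shown on a single KPI stream. In the minimal sketch below, a reactive check fires only once a threshold is crossed, while a proactive check fits a straight-line least-squares trend and estimates how many sampling intervals remain before the breach. The KPI, sampling interval, threshold, and the choice of a simple linear trend are illustrative assumptions; production AIOps models would typically also account for seasonality and noise.

```python
def reactive_alarm(samples: list, threshold: float) -> bool:
    """Reactive: fire only after the latest sample has already crossed the threshold."""
    return samples[-1] >= threshold


def linear_trend(samples: list) -> tuple:
    """Least-squares fit y = slope * t + intercept over t = 0, 1, ..., n-1 (needs n >= 2)."""
    n = len(samples)
    mean_t = (n - 1) / 2
    mean_y = sum(samples) / n
    sxx = sum((t - mean_t) ** 2 for t in range(n))
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(samples))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_t


def steps_until_breach(samples: list, threshold: float, horizon: int):
    """Proactive: extrapolate the trend and return how many intervals ahead it reaches
    the threshold, or None if it does not within `horizon` intervals."""
    slope, intercept = linear_trend(samples)
    if slope <= 0:
        return None  # flat or improving KPI: nothing to predict
    last_t = len(samples) - 1
    for step in range(1, horizon + 1):
        if slope * (last_t + step) + intercept >= threshold:
            return step
    return None


# Hypothetical link-utilization KPI (%), sampled every 15 minutes, alarm at 90 %.
utilization = [50, 55, 60, 65, 70]
print("reactive alarm now:", reactive_alarm(utilization, 90))
print("predicted breach in", steps_until_breach(utilization, 90, horizon=8), "intervals")
```

Here the reactive check stays silent, while the trend (5 points per interval) predicts a breach four intervals, about an hour, ahead.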

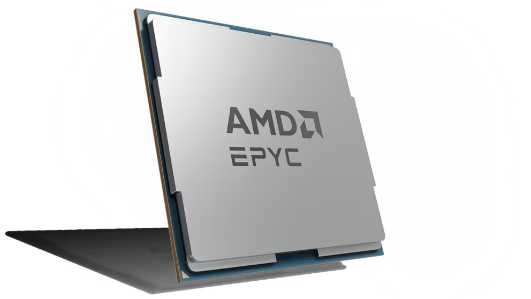

Figure: Reference architecture for AIOps-driven telecom network

How to adopt AIOps operations using telecom infrastructure blocks

Dell Technologies has worked closely with leading cloud partners, including Wind River® and Red Hat®, to bring forward an operationally ready telco cloud platform. This platform is thoroughly tested, validated, and automated to deliver telco AIOps use cases. This platform also accelerates a CSP’s adoption of zero-touch operations while consistently aligning to telecom standards and frameworks.

The Dell Technologies Telecom Multicloud Foundation flexibly transforms network operations towards programmable infrastructure, using consistent tooling and AIOps capabilities.

Because the platform supports multiple versions and offerings from various partners, CSPs can operate all of these foundational infrastructure blocks as one. Through the key capabilities below, our solution can quickly transform operational models and processes and enable the agile MLOps required in a telco environment.

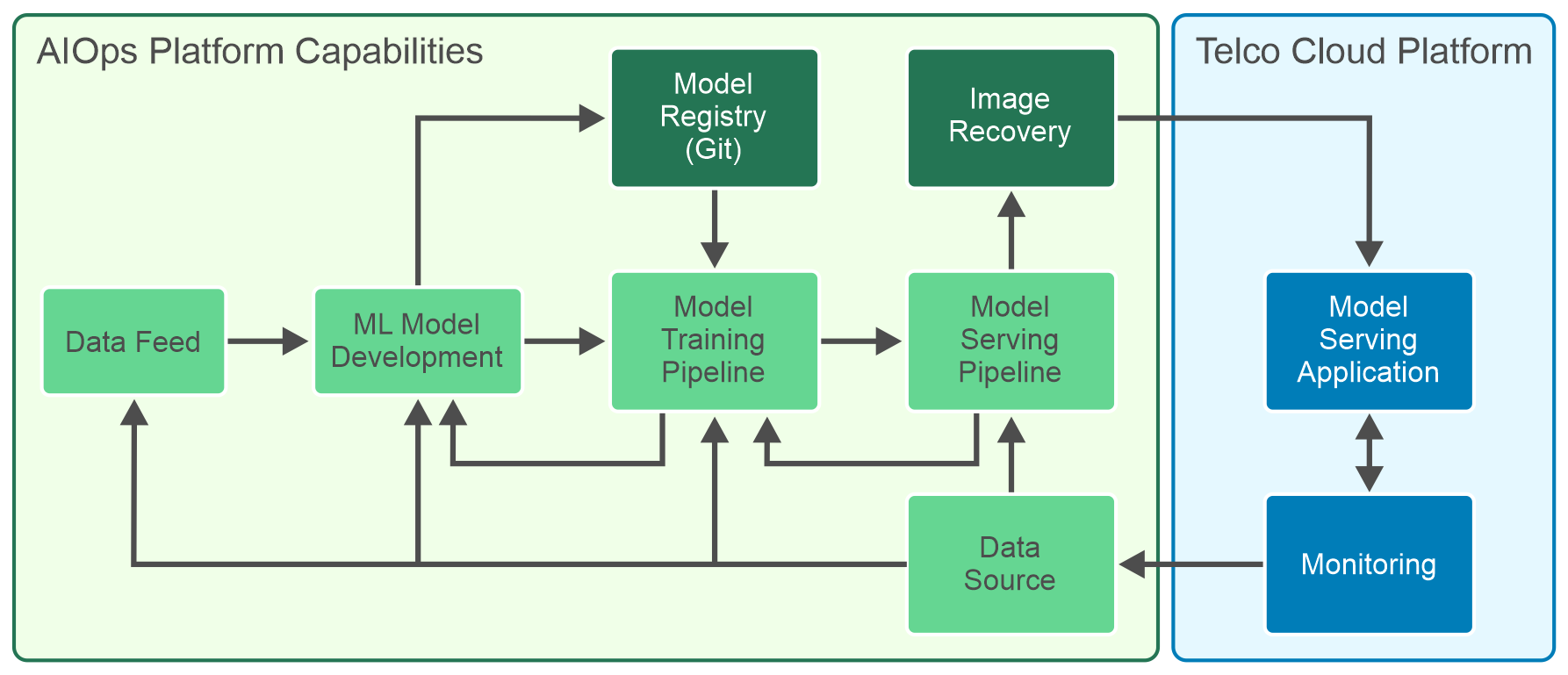

Figure: Solution architecture for AIOps Foundation

To support the unique CSP requirements to adopt AIOps and a cloud operating model, our Telecom Infrastructure Blocks provide the following key capabilities:

- Consistent platform. The first challenge is to deliver a standard and consistent platform that can integrate all layers above—abstracting the complexities of multiple technologies and components from different vendors.

- Cloud native MLOps. AIOps use cases require cloud-type agility for data and ML. The current version of our infrastructure blocks delivers a ready platform. In future releases, we plan to enable AI enhancements (like OpenShift® AI) on top of this platform. This means CSPs can build, program, and manage all their ML models and capabilities in the same way they manage cloud resources.

- Autonomous operations. Adopting data-centric architectures and ML approaches provides CSPs a smooth evolution path from their current automation approaches to an AI-centric automation that is aligned with the telco future mode of operations (FMO).

- Data-driven architecture: The automation architecture is data-driven and distributed, so data can be tapped from edge and regional sites—enabling real-time use cases and data-driven operations.

- Automated fault management: The FMO is based on zero-touch, intent-driven networks. Our solution is fully aligned with this vision, enabling all cloud platforms to use declarative workflows. The solution also enables all northbound integration towards orchestration and assurance systems.

- Single pane for DevOps and MLOps operations: As CSPs adopt ML/AI frameworks to deliver AIOps use cases, there is an increasing requirement to integrate and operate DevOps and MLOps as one. In addition, AIOps platform capabilities must be enabled in telco cloud platforms. Doing so provides a single management and observation platform.

Figure: DevOps and MLOps workflow using AIOps platform capabilities

Dell Technologies developed Telecom Multicloud Foundation and Telecom Infrastructure Blocks to accelerate telco cloud transformation. Our engineered and factory-integrated system delivers a consistent platform. This platform is ready to deliver telco-specific AIOps use cases that are fully aligned with telecom architectures—enabling our customers to accelerate AIOps solutions in networks.

Visit the Dell Telecom Multicloud Foundation site to learn more about our solution.

Simplifying 5G Network Deployment with Dell Telecom Infrastructure Blocks for Red Hat

Fri, 19 Jan 2024 15:08:05 -0000

Welcome back to our 5G Core blog series. In the second blog post of the series, we discussed the 5G Core, its architecture and how it differs from its predecessors, the role of cloud-native architectures, the concept of network slicing, and how these elements come together to define the 5G network architecture.

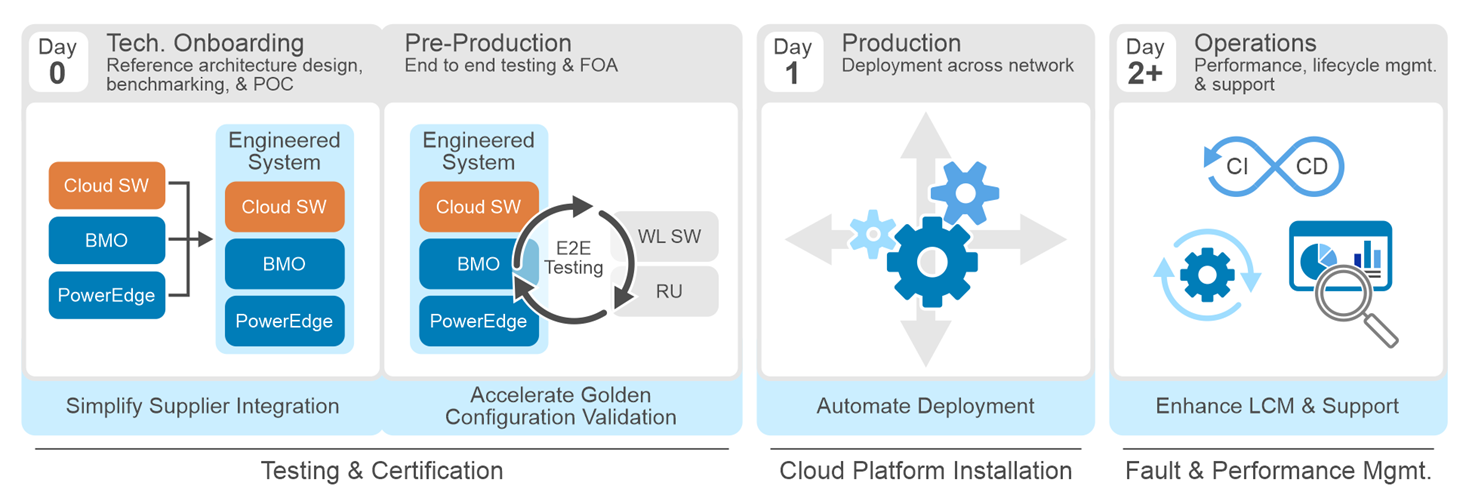

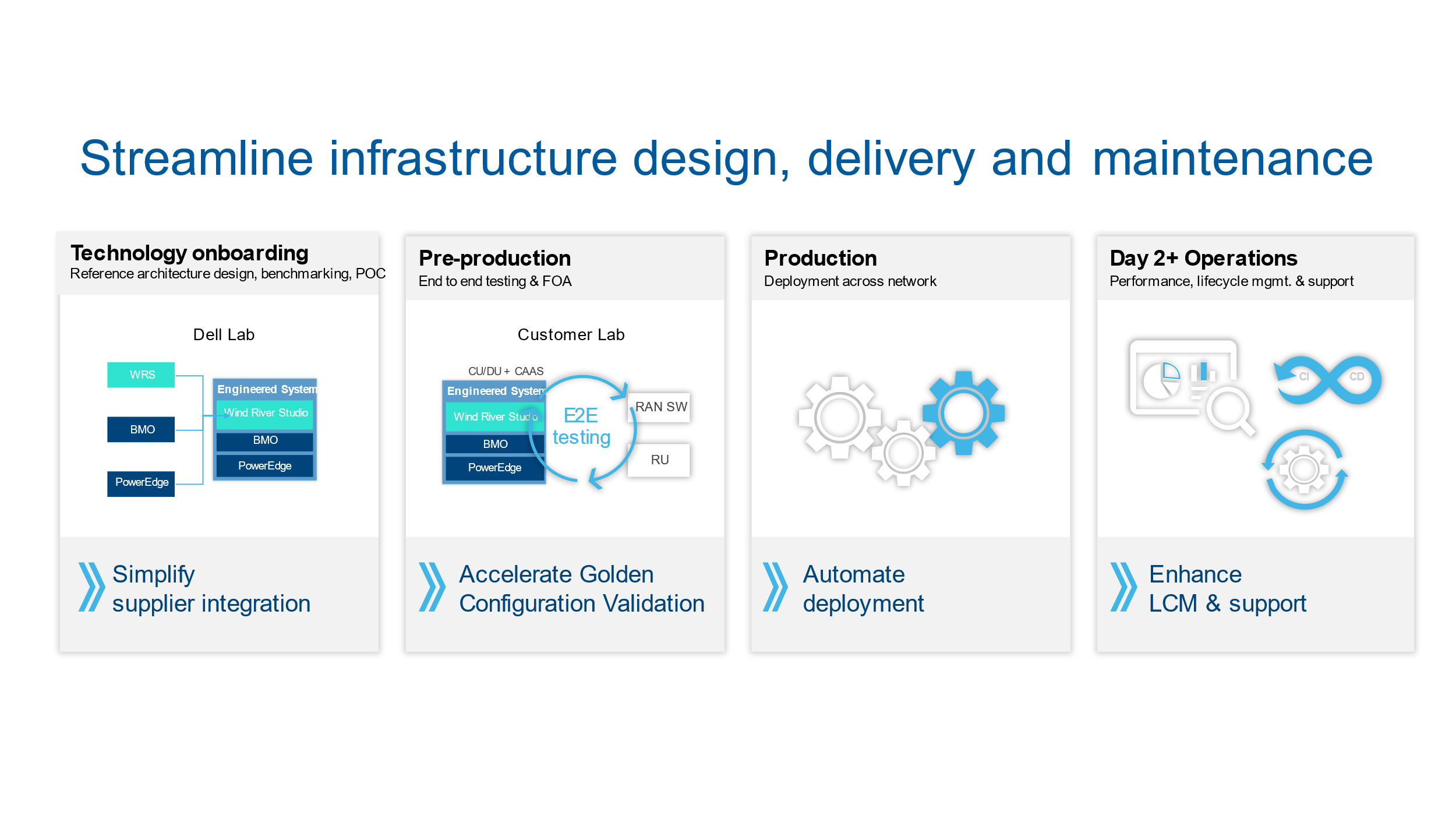

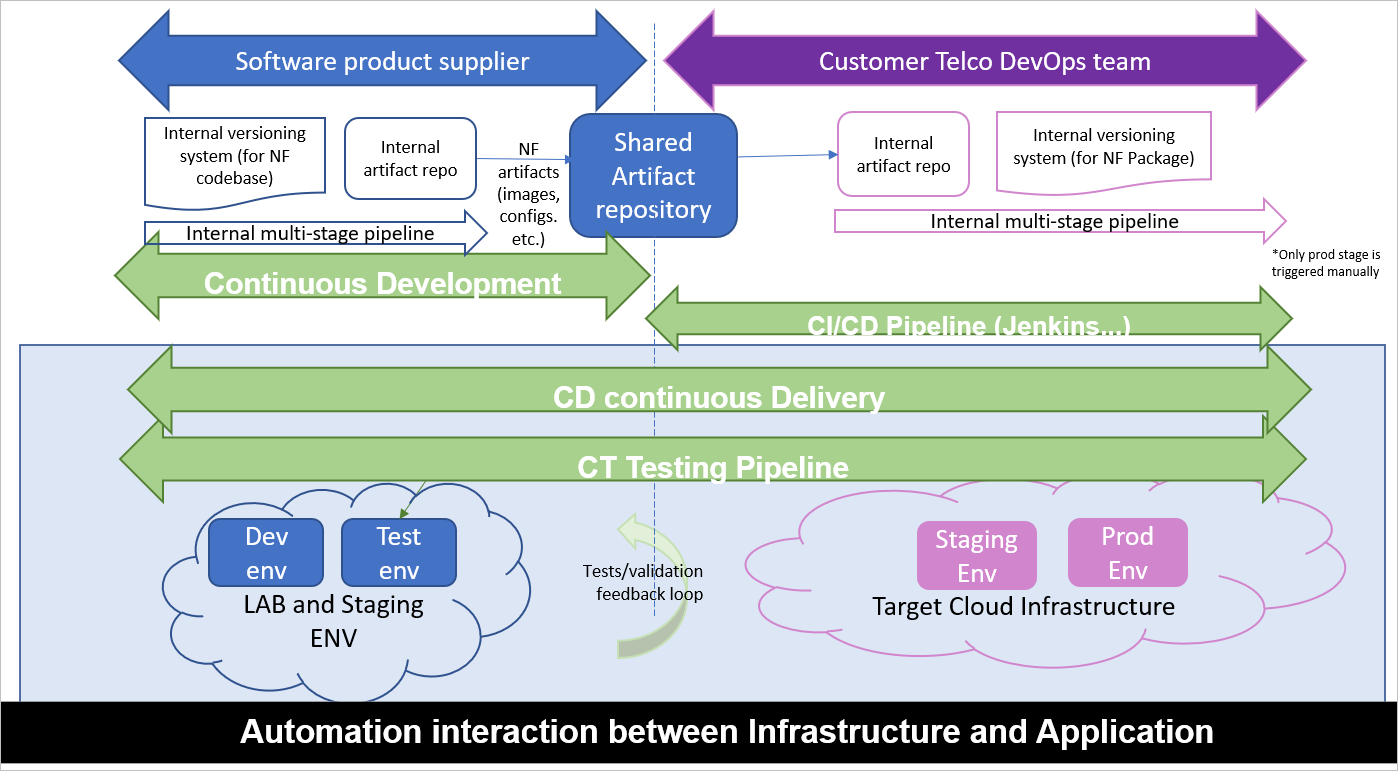

In this third blog, we look at the collaboration between Dell Technologies and Red Hat on their latest offering, Dell Telecom Infrastructure Blocks for Red Hat. We explore how Infrastructure Blocks streamline communications service providers’ (CSPs’) processes for a Telco cloud running the 5G core, from initial technology onboarding on Day 0/1 to Day 2 life cycle management.

Helping CSPs transition to a cloud-native 5G core

Building a cloud-native 5G core network is not easy. It requires careful planning, implementation, and expertise in cloud-native architectures. The network needs to be designed and deployed in a way that ensures high availability, resiliency, low latency, efficient resource utilization, and flawless component interoperability. CSPs may feel overwhelmed when considering the transition from legacy architectures to an open, best-of-breed cloud-native architecture. This can lead to delays in design, deployment, and life cycle management processes that stall projects and reduce a CSP’s ability to effectively deploy and manage their disaggregated cloud-native network.

Automation plays a critical role in managing deployment and life cycle management processes. Many projects stall or fail due to poorly defined automation strategies that make it difficult to ensure compatibility between hardware and software configurations across a large, distributed network. This is especially true when trying to deploy and manage a cloud platform running on bare metal.

Dell Telecom Infrastructure Blocks for Red Hat are foundational building blocks for creating a Telco cloud that is based on Red Hat OpenShift. They aim to reduce the time, cost, and risk of designing, deploying, and maintaining 5G networks using open software and industry standard infrastructure. The current release of Telecom Infrastructure Blocks for Red Hat supports the creation of management and workload clusters for 5G core network functions running Red Hat OpenShift on bare metal servers.

There are a number of challenges in building and maintaining Kubernetes clusters on bare metal to run 5G network functions:

- Ensuring interoperability and fault tolerance in a disaggregated network is not an easy task. Deploying and managing Kubernetes clusters on bare metal requires extensive design, planning, and interoperability testing to ensure a reliable, fault tolerant, and performant system.

- Automating the deployment and life cycle management of hardware resources and cloud software in a bare metal environment can be complex. It involves deploying and updating a fleet of bare metal servers at scale.

- There is a lack of pre-built software integrations specifically designed for deploying Kubernetes clusters on bare metal servers and bringing those cluster configurations to a state where they are ready to run workloads. This means that configuring and deploying Kubernetes on bare metal frequently requires more manual effort to build and maintain the automation needed to manage deployments and upgrades at scale. This manual effort can be time-consuming and add complexity that introduces risk to the process.

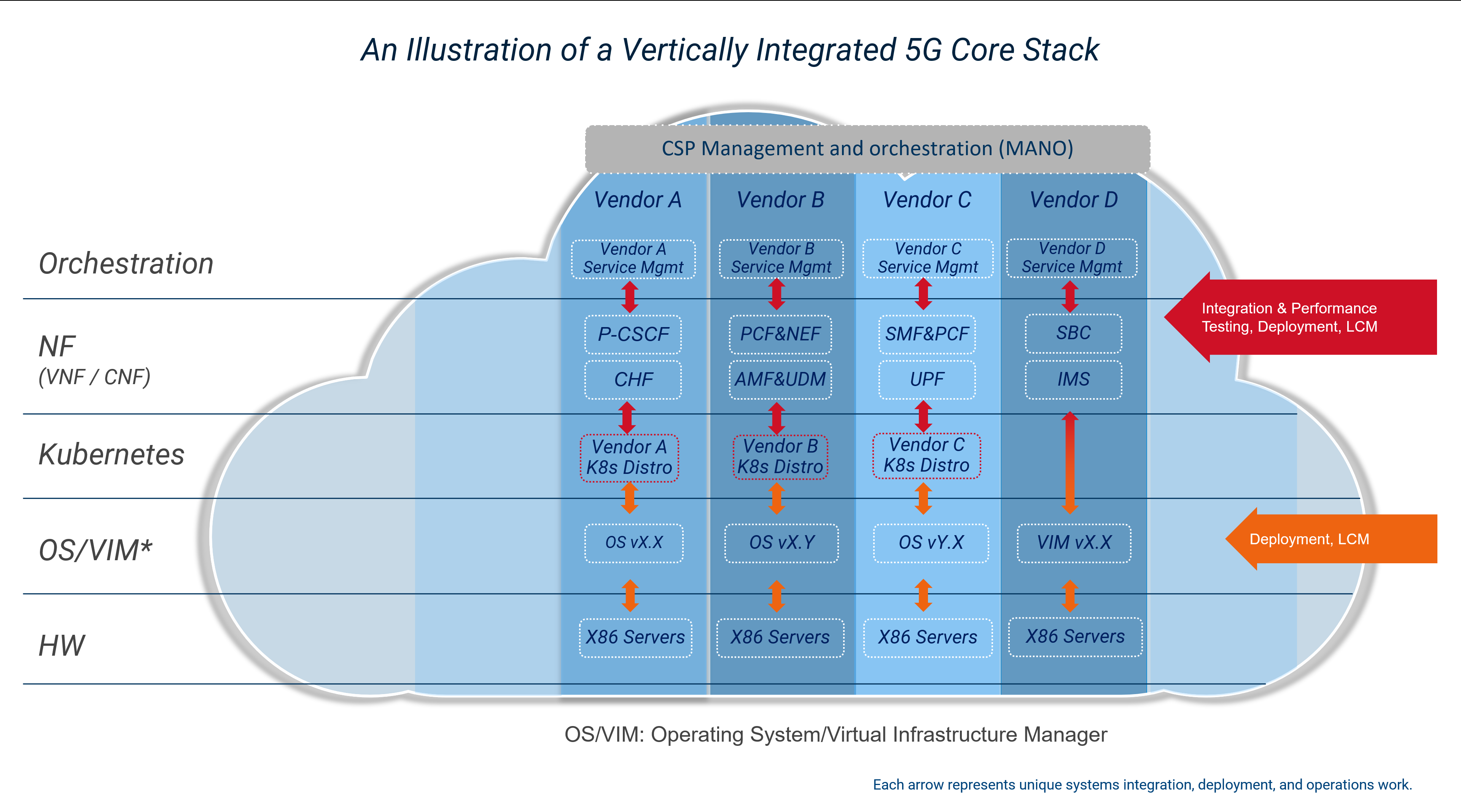

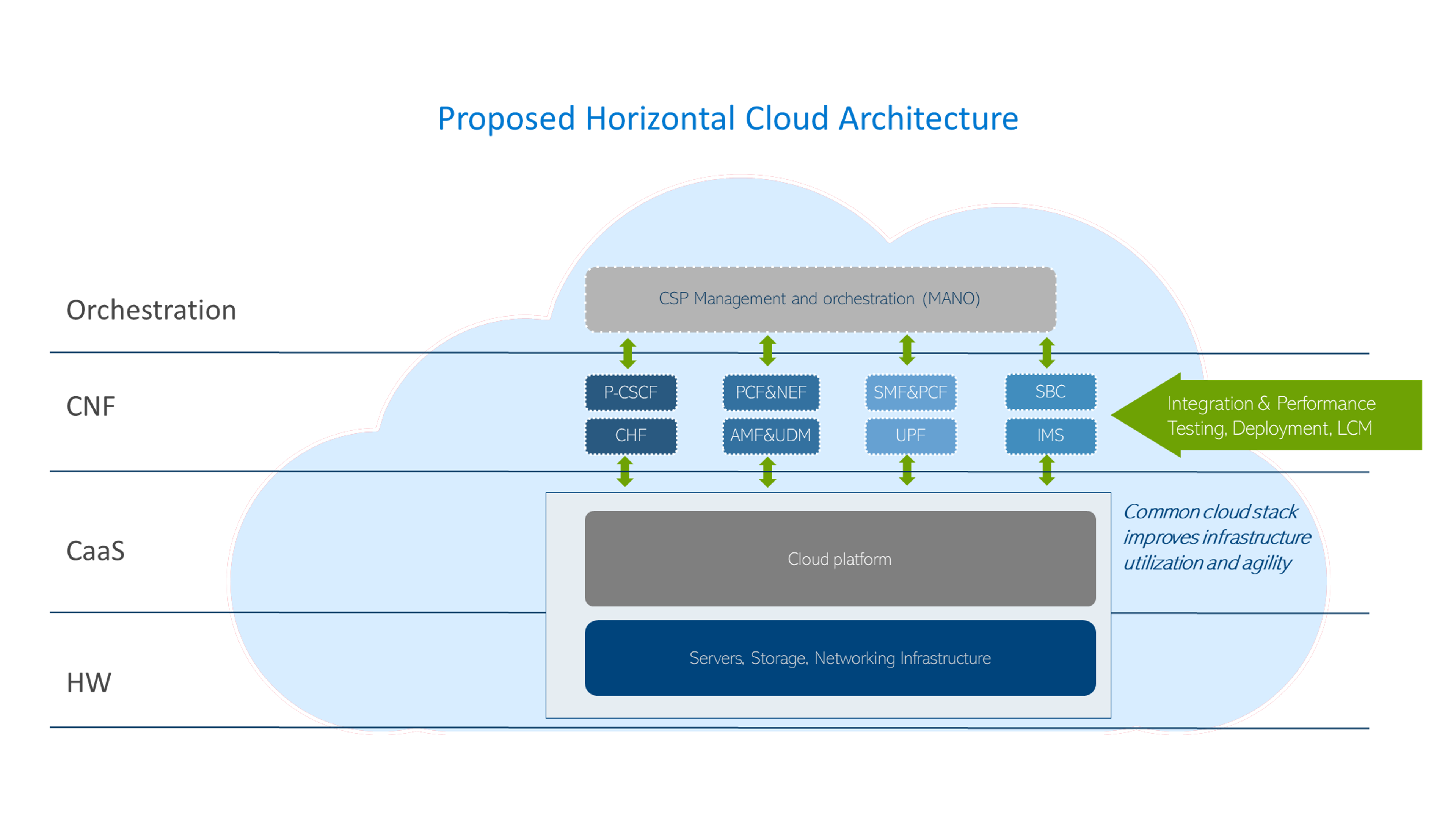

- This lack of consistent, easy-to-manage automation to deploy and update the cloud stack to meet workload requirements also makes it harder to implement a unified cloud platform across all workloads. This leads to infrastructure silos that limit the ability to pool resources and improve infrastructure utilization rates, which in turn raises the network's total cost of ownership (TCO).

These challenges are amplified when running 5G network functions, which require low latency and high reliability to meet carrier-grade service level agreements (SLAs). This collaboration between Dell and Red Hat aims to offer a comprehensive solution for CSPs that addresses the challenges associated with building and maintaining carrier-grade cloud infrastructure for 5G core network functions.

Key objectives of Dell Telecom Infrastructure Blocks for Red Hat

Implement a “shift left” approach

In software development, the term “shift left” refers to moving tasks to an earlier stage in the development or production process to reduce time to value. The shift left approach offered with Infrastructure Blocks moves much of the testing and integration work normally performed by the CSP into the supply chain, before the new technology is onboarded. This gives CSPs a faster path to value by shortening the preparation and validation phase for a new network deployment. It also simplifies procurement by reducing the number of suppliers the CSP needs to work with, and simplifies support by providing one-call support for the full cloud stack. Proactive problem-solving, fewer field touch points, risk minimization, and operational simplification are byproducts of the Infrastructure Block approach that hasten the introduction of new technology into a CSP’s network. By adopting this approach, CSPs can achieve faster rollout times and reduced operational costs. Dell does three things to help CSPs shift the technology onboarding processes left:

Engineering

Telecom Infrastructure Blocks are foundational building blocks that are co-designed with Red Hat to help CSPs build and scale out their network. These building blocks are purpose-built to meet specific workload requirements. Dell collaborates with Red Hat to maintain a roadmap of feature enhancements and perform continuous design and integration testing to accelerate the adoption of new technologies and software upgrades. The design planning and extensive interoperability testing performed by Dell simplifies the processes of building and maintaining a fault tolerant and performant cloud platform to run 5G core workloads.

Automation

Many CSPs today rely on procedural automation that they build and maintain on their own to automate the deployment and life cycle management of their cloud platform at scale. Procedural automation requires an understanding of the current state of their cloud stack and the maintenance of scripts or playbooks to define the steps needed to update the configuration to the desired state. When deploying Kubernetes on bare metal to support 5G core workloads, there are a number of items with dependencies that must be configured appropriately, including the following properties:

- Cloud platform software version

- BIOS version and settings

- Firmware versions for network interface cards (NICs) and other Peripheral Component Interconnect Express (PCIe) cards

- Single root I/O virtualization (SR-IOV) / Data Plane Development Kit (DPDK) configurations

- RAID configurations

- Site-specific data

Building and maintaining these scripts and automation playbooks is no easy task. It requires an up-to-date view of the current configuration of the infrastructure, an understanding of the dependencies between hardware and software that must be met to perform an update, and people with specialized skills that include knowledge of server hardware and the tools to manage them, the cloud software, and how to write or update playbooks to execute deployments and upgrades.
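
The hardware/software dependencies described above are what make procedural playbooks hard to maintain: someone has to encode the correct order by hand. A dependency graph lets the order be derived instead. The minimal sketch below uses Python's standard-library `graphlib` (Python 3.9+) to compute a valid update order; the dependency edges are illustrative assumptions, not Dell's validated sequence.

```python
from graphlib import CycleError, TopologicalSorter  # Python 3.9+

# Illustrative dependency map: item -> items that must already be at the target state.
# The items mirror the configuration list above; the exact edges are assumptions.
UPDATE_DEPENDENCIES = {
    "bios_settings": {"bios_version"},
    "nic_firmware": {"bios_version"},
    "raid_config": {"bios_version"},
    "sriov_dpdk_config": {"bios_settings", "nic_firmware"},
    "platform_software": {"sriov_dpdk_config", "raid_config"},
    "site_specific_data": {"platform_software"},
}


def update_plan(dependencies: dict) -> list:
    """Return an order in which every item follows all of its prerequisites."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as err:
        # graphlib stores the nodes forming the cycle in err.args[1].
        raise ValueError(f"circular dependency: {err.args[1]}") from err


plan = update_plan(UPDATE_DEPENDENCIES)
print(" -> ".join(plan))
```

Adding a new item then means declaring its prerequisites once, rather than re-sequencing every playbook that touches it.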

Managing this across a large, distributed network with a range of workloads that frequently require unique configurations of the cloud stack is a difficult and time-consuming process. Also consider that, in an open ecosystem environment, there is always a new version of software, BIOS, or firmware coming and the people with the needed skill sets are in short supply, resulting in a herculean effort with mixed results.

Telecom Infrastructure Blocks include purpose-built automation software that is easy to use and maintain. The blocks integrate with Red Hat Advanced Cluster Management and Red Hat OpenShift to automate deployment and life cycle management of the hardware and software stack used in a Telco cloud. This software uses declarative automation to simplify the deployment and upgrade of the cloud platform hardware and software to align with approved configuration profiles. With declarative automation, the CSP simply defines the desired state of the cloud stack, and the automation software determines the steps required to achieve the desired state and executes those steps to align the system with the approved configuration.

This infrastructure automation software uses a declarative data model that defines the desired state of the system and the properties of its resources, and keeps a record of the current state of the cloud configuration and inventory, which significantly simplifies the deployment and life cycle management of the cloud stack. Infrastructure Blocks come with Topology and Orchestration Specification for Cloud Applications (TOSCA) workflows that define the configurations needed to update the system, based on the extensive design and validation testing performed by Dell and Red Hat. Dell provides regular updates that simplify the process of upgrading to the latest release. CSPs simply update a Customer Input Questionnaire, and the automation software updates the hardware and the cloud platform software and brings them to a workload-ready state with a single click.
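
The core idea of declarative automation, stating the desired state and letting software derive the steps, can be sketched as a diff between a desired profile and the observed inventory. This is a minimal illustration of the concept only, not the Dell automation software or its TOSCA workflows; the profile fields and values are hypothetical, and ordering and executing the changes are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    node: str
    setting: str
    current: object
    desired: object


def plan_changes(desired: dict, current: dict) -> list:
    """Declarative diff: compare each node's desired profile with its observed state
    and return only the settings that differ. Compliant nodes yield nothing."""
    changes = []
    for node, profile in sorted(desired.items()):
        observed = current.get(node, {})
        for setting, want in sorted(profile.items()):
            have = observed.get(setting)
            if have != want:
                changes.append(Change(node, setting, have, want))
    return changes


# Hypothetical approved profile and inventory; names and values are illustrative only.
GOLDEN_PROFILE = {"bios_version": "2.1.0", "sriov_vfs": 16, "raid": "RAID1"}
DESIRED = {node: dict(GOLDEN_PROFILE) for node in ("worker-01", "worker-02")}
CURRENT = {
    "worker-01": {"bios_version": "2.1.0", "sriov_vfs": 16, "raid": "RAID1"},  # compliant
    "worker-02": {"bios_version": "2.0.3", "sriov_vfs": 8, "raid": "RAID1"},   # drifted
}

for change in plan_changes(DESIRED, CURRENT):
    print(f"{change.node}: {change.setting} {change.current!r} -> {change.desired!r}")
```

Running the diff again after the changes are applied returns an empty list, which is what makes declarative updates safe to repeat.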

Integration

Dell ships fully integrated systems that are designed, optimized, and tested to meet the requirements of a range of telecom use cases and workloads. They include all the hardware, software, and licenses needed to build and scale out a Telco cloud. Delivering fully integrated building blocks from Dell’s factory significantly reduces the time spent configuring infrastructure onsite or in a network configuration center. They are also backed by Dell Technologies one-call support for the full cloud stack that meets telecom SLAs. Dell has established escalation paths with Red Hat to ensure the highest levels of support for customers.

Streamlining Day 0 through Day 2 tasks with Telecom Infrastructure Blocks

In a typical CSP network operating model, a CSP goes through four stages, from initial technology onboarding to managing ongoing operations. These stages are:

- Stage 1: Technology onboarding (Day 0)

- Stage 2: Pre-production (Day 0)

- Stage 3: Production (Day 1)

- Stage 4: Operations and lifecycle management (Day 2+)

Dell Telecom Infrastructure Blocks were built to streamline each stage of the processes to reduce the time and risk of building and maintaining a Telco cloud. We do this by proactively working with Red Hat to create an engineered system that meets telecom SLAs, includes automation that delivers zero-touch provisioning, and simplifies life cycle management through continuous design and integration testing.

Let's look at how Dell Telecom Infrastructure Blocks affect CSP processes from Day 0 to Day 2+.

Stage 1: Day 0 technology onboarding

Dell and Red Hat collaborate to design an engineered system that is validated through an extensive array of test cases to ensure its reliability and performance. These test cases cover aspects such as functionality, interoperability, security, reliability, scalability, and infrastructure-specific requirements for 5G Core workloads. This testing aims to deliver optimal performance across a diverse array of performance metrics and scale points, so that the system operates reliably and performantly.

Some of our design and validation test cases include:

Cloud Infrastructure Cluster testing: Cloud Infrastructure Cluster testing refers to the process of testing the infrastructure components of a cloud cluster to ensure their proper functioning, performance, and scalability. It involves validating the networking, storage, compute resources, and other infrastructure elements within a cluster. These test cases include:

- Installation and validation of Infrastructure Block plugins and automation.

- Cluster storage (Red Hat OpenShift Data Foundation) validation and testing.

- Validation of cluster network configurations

- Verification of Container Network Interface (CNI) plugin configurations in the cluster.

- Validation of high availability configurations

- Scalability and performance testing

Performance Benchmarking testing: Performance Benchmarking testing usually includes several steps. First, test scenarios are created to simulate real-world usage. Second, performance tests are conducted and the resulting performance data is collected and analyzed. Finally, the results are compared to established benchmarks. Some of the testing performed with Infrastructure Blocks includes:

- CPU benchmarking

- Memory benchmarking

- Storage benchmarking

- Network benchmarking

- Container as a Service (CaaS) benchmarking

- Interoperability tests between the Infrastructure and CaaS layers
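
The final benchmarking step, comparing results against established benchmarks, can be expressed as a small check that flags regressions beyond a tolerance. The metric names, baseline values, and 5 percent tolerance in this minimal sketch are hypothetical placeholders, not figures from Dell's test suites.

```python
# Hypothetical baselines: metric -> (baseline value, True if higher is better).
BASELINES = {
    "cpu_score": (1000.0, True),
    "mem_bandwidth_gbps": (350.0, True),
    "storage_p99_latency_ms": (2.0, False),
    "net_throughput_gbps": (90.0, True),
}


def regressions(measured: dict, baselines: dict, tolerance: float = 0.05) -> dict:
    """Return metrics that are missing or worse than baseline by more than `tolerance`."""
    failed = {}
    for metric, (baseline, higher_is_better) in baselines.items():
        value = measured.get(metric)
        if value is None:
            failed[metric] = "missing"
            continue
        change = (value - baseline) / baseline  # relative change, e.g. -0.08 = 8 % lower
        worse = change < -tolerance if higher_is_better else change > tolerance
        if worse:
            failed[metric] = f"{change:+.1%}"
    return failed


MEASURED = {"cpu_score": 980.0, "mem_bandwidth_gbps": 320.0,
            "storage_p99_latency_ms": 2.05, "net_throughput_gbps": 91.0}
print(regressions(MEASURED, BASELINES))
```

Recording the direction of each metric matters: a latency that rises and a throughput that falls are both regressions.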

At this step, we define the automation workflows and configuration templates that will be used by the automation software during the deployment. Dell works proactively with its customers and partners to understand their best practices and incorporate those into the blueprints included with every Telecom Infrastructure Block.

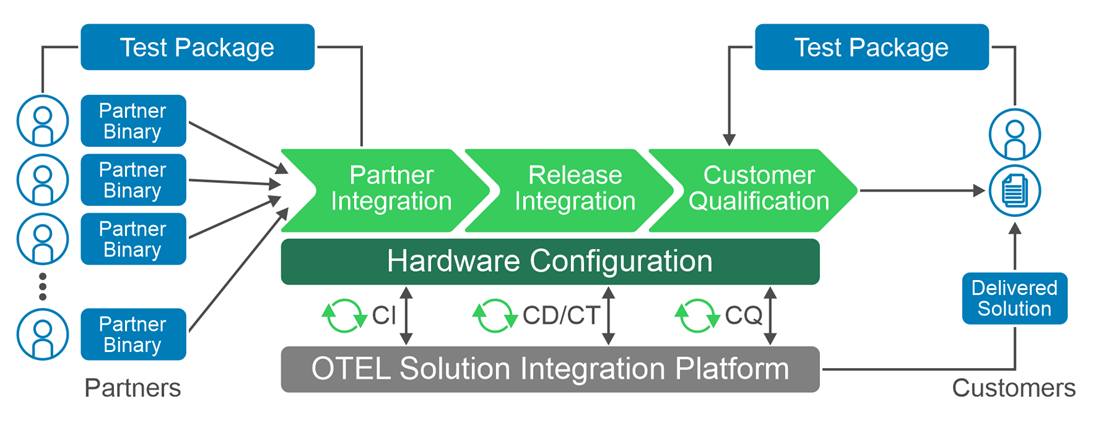

This process produces an engineered system that streamlines the CSP’s reference architecture design, benchmarking, and proof of concept (POC) processes to reduce engineering costs and accelerate the onboarding of new technology. To further streamline the design, validation, and certification process, we provide Dell Open Telecom Ecosystem Lab (OTEL), which can act as an extension of a CSP’s lab to validate workloads on Infrastructure Blocks that meet the CSP’s defined requirements.

Stage 2: Pre-production

The main objective in stage 2 is to onboard network functions onto the cloud infrastructure, define the network golden configuration, and prepare it for production deployment. Infrastructure Blocks eliminate some of the touch points in onboarding by:

- Delivering an integrated and validated building block direct from Dell’s factory

- Delivering a deployment guide that simplifies onboarding

- Providing Customer Input Questionnaires that are configuration input templates used by the automation software to streamline deployment

CSPs can also leverage the Red Hat test line in Dell’s Open Telecom Ecosystem Lab (OTEL) to validate and certify the CSP’s workloads on Infrastructure Blocks. OTEL can play a significant role in enabling CSPs and partners by developing custom blueprints or performing custom tests required by the CSP using Dell’s Solution Integration Platform (SIP) which is part of OTEL. SIP is an advanced automation and service integration platform developed by Dell. It supports multi-vendor system integration and life cycle management testing at scale. It uses industry standard components, toolkits, and solutions such as GitOps.

Dell Services also offers tailor-made configurations to cater to specific operator needs. These are carried out at a Dell second-touch configuration facility. Here, configurations customized to the customer's specifications are pre-installed and then dispatched directly to the customer's location or configuration facility.

Stage 3: Production

At the production stage, Dell integrates and configures all hardware and settings to support the discovery and installation of the validated version of the cloud platform software, which eliminates the need to configure hardware on site or in a configuration center. Dell’s infrastructure automation software then deploys the validated versions of Red Hat Advanced Cluster Management and Red Hat OpenShift on the servers used in the management and workload clusters, and brings those clusters to a workload-ready state. This ensures a consistent and reliable installation that reduces the risk of configuration errors or compatibility issues. Dell's automation enables zero-touch provisioning that configures hundreds of servers at the same time, with full visibility into the health and status of the server infrastructure before and after deployment. Should the CSP need assistance with deployment, Dell's professional services team is standing by to help. Dell ProDeploy for Telecom provides either on-site support to rack, stack, and integrate servers into the CSP's network, or remote deployment support.
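
The pattern behind provisioning hundreds of servers at once with health visibility can be sketched as a bounded, concurrent fan-out with a health gate before and after each server's install step. Everything in this sketch is a hypothetical stand-in: `health_check` and `provision` only simulate the work, and the worker count is arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor


def health_check(server: str) -> bool:
    # Hypothetical stand-in for a real hardware health query (for example via the BMC).
    return not server.endswith("-bad")


def provision(server: str) -> str:
    """Gate the (omitted) install step on health checks before and after it."""
    if not health_check(server):
        return "skipped: failed pre-check"
    # The actual discovery and platform installation step is omitted in this sketch.
    return "ready" if health_check(server) else "failed: post-check"


def provision_fleet(servers: list, max_workers: int = 32) -> dict:
    """Provision many servers concurrently with a bounded worker pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, so zip pairs each server with its status.
        return dict(zip(servers, pool.map(provision, servers)))


fleet = [f"worker-{i:03d}" for i in range(1, 201)] + ["worker-201-bad"]
status = provision_fleet(fleet)
print(sum(s == "ready" for s in status.values()), "of", len(status), "servers ready")
```

Bounding the pool keeps many servers in flight without overwhelming shared services such as image repositories, and the per-server status map is the visibility the paragraph describes.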

Stage 4: Operations and lifecycle management

In Day 2+ operations, CSPs must sustain network performance while adapting to changes to the network over time. This includes ensuring software and infrastructure compatibility as updates are made to the network, performing rolling updates, fault and performance management, scaling resources to meet network demands, ensuring efficient use of network resources, and adapting to technology evolution.

Infrastructure Blocks simplify Day 2+ operations in several ways:

- Dell works with Red Hat, its customers, and other partners to capture updates and new requirements necessary to evolve Infrastructure Blocks to support new technologies and software enhancements over time. This requires pro-active collaboration to ensure continuous roadmap alignment across parties. Dell then performs extensive design and validation testing on these enhancements before integrating them into Infrastructure Blocks to deliver a resilient and performant design. This helps CSPs stay on the leading edge of the technology curve while minimizing the risk of encountering faults and performance issues in production.

- Today, Telecom Infrastructure Blocks offers support for three releases per year. In every release, we prioritize the introduction of new capabilities, features, components, and solution enhancements. In addition, there are six patch releases per year that prioritize sub features to ensure compatibility across different releases. Long Term Support releases are provided at the end of the twelve-month release cycle, with a focus on fixing any solution defects that may arise.

- The out-of-the-box automation provided with Infrastructure Blocks ensures a consistent, carrier-grade deployment or upgrade of the hardware and cloud platform software every time. This removes a common source of configuration errors and further reduces issues found in production.

- When bringing together various hardware and software components, CSPs frequently manage different release cycles to support a range of workload requirements. To address any difficulties with software compatibility and life cycle management, Dell Technologies has created a system release cadence process. It includes testing, validating, and locking the release compatibility matrices for all Infrastructure Block components. This helps to resolve deployment problems affecting software compatibility and Day 2+ life cycle management procedures.

- Dell Professional Services can also provide custom integrations into a CSP’s CI/CD pipeline, providing the CSP with validated updates to the cloud infrastructure that pass directly into the CSP’s CI/CD tool chains to enhance DevOps processes.

- In addition, Dell offers single-call, carrier grade support that meets telecom grade SLAs with guaranteed response and service restoration times for the entire cloud stack (hardware & software).

- The declarative automation provided with Infrastructure Blocks eliminates the time spent updating scripts and playbooks to push out system updates and minimizes the risk of configuration errors that lead to fault or performance issues.
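
Locking a compatibility matrix per release turns "is this combination supported?" into a lookup. The minimal sketch below, built on hypothetical component names and placeholder versions, checks whether a deployed inventory matches a validated release exactly and, if not, which components separate it from a target release.

```python
# Hypothetical locked compatibility matrices, one per system release.
# Component names and version strings are illustrative placeholders.
LOCKED_RELEASES = {
    "R1": {"platform": "1.0", "cluster_mgmt": "1.0", "bios": "1.4.2", "nic_fw": "22.1"},
    "R2": {"platform": "1.1", "cluster_mgmt": "1.1", "bios": "1.6.6", "nic_fw": "22.3"},
}


def matching_release(inventory: dict):
    """Return the locked release whose matrix the inventory matches exactly, else None."""
    for release, matrix in LOCKED_RELEASES.items():
        if all(inventory.get(component) == version for component, version in matrix.items()):
            return release
    return None


def gaps_to(release: str, inventory: dict) -> dict:
    """List {component: (deployed, required)} still blocking alignment with a release."""
    return {
        component: (inventory.get(component), version)
        for component, version in LOCKED_RELEASES[release].items()
        if inventory.get(component) != version
    }


# A cluster whose platform software moved ahead on its own, leaving an untested mix.
mixed = {**LOCKED_RELEASES["R1"], "platform": "1.1"}
print(matching_release(mixed))  # None: not a validated combination
print(gaps_to("R2", mixed))
```

Treating any unmatched combination as unsupported, rather than guessing which partial mixes are safe, is what locking the matrix buys.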

Summary

Dell Telecom Infrastructure Blocks for Red Hat offers a streamlined and efficient way to build and manage Telco cloud infrastructure. From initial technology onboarding to Day 2+ operations, they simplify every step of the process. This makes it easier for CSPs to transition from their vertically integrated, legacy architectures of today to an open cloud-native software platform running on industry standard hardware that delivers reliable and high-quality services to their customers.

This blog post is a collaborative effort from the staff of Dell Technologies and Red Hat.

Monetizing Network Exposure Through Open APIs

Fri, 08 Dec 2023 19:40:56 -0000

Market Background

5G, especially 5G standalone, has not yet lived up to expectations. End users are not yet seeing significant differences compared to 4G, and CSPs are not yet seeing new revenue streams. To address these challenges, we previously published two blogs (Network Slicing and Network Edge). In this third blog, we continue to describe a realistic view of how CSPs could maximize the 5G standalone experience and go beyond being merely connectivity providers. This blog focuses on exposing network capabilities (services and user/network information) that service providers and enterprises can use to enable innovative, monetizable services.

Figure 1: Monetizing Network Exposure

Figure 1: Monetizing Network Exposure

The success of numerous innovative mobile applications can be traced to the availability of mobile Software Development Kits (SDKs). SDKs are available for both the iOS and Android mobile platforms. These SDKs provide open tools, libraries, and documentation that allow application developers to easily create mobile applications that rely on the capabilities of existing mobile platforms (such as notifications and analytics) and device hardware (like GPS and camera). Most importantly, these two mobile platforms alone currently support over four billion users. The next step is to apply the same principles on the network side, using Open APIs that provide unified access to network capabilities.

The concept of network exposure is not new. There have been a few less-than-successful attempts in the past, such as the Service Capability Exposure Function (SCEF) and APIs for IMS/voice. These solutions were not able to scale sufficiently to attract a significant number of application developers, because the specifications were too complicated for anybody outside of the telecom world to understand or implement. The integration of network exposure into the 5G design is groundbreaking: API exposure is now fundamental to 5G and natively built into the architecture, enabling applications to interact seamlessly with the network.

Monetizing mobile networks using Open APIs relies on implementing communication APIs for voice, video, and messaging, as well as network APIs for location, authentication, and quality of service. By exposing these capabilities through Open APIs, CSPs can establish partnerships that facilitate the creation of tailored, high-value services for businesses, enabling CSPs to monetize 5G beyond traditional connectivity and bundled offerings. These new revenue streams are paramount because revenue from traditional mobile broadband services is flat while costs continue to rise. Moreover, deploying a cloud-native 5G standalone network requires substantial investment, making it crucial to identify new revenue streams that can justify the business case.

Technical Background and Standardization

5G standalone was specified in 3GPP Release 15, and its architecture standardized the Network Exposure Function (NEF). NEF, one of the 5G core network functions, allows applications to subscribe to network events and to request network information and capabilities. NEF enables an extensive set of network exposure capabilities, but it lacks the scale, agility, and simplicity that application developers require. GSMA’s Open Gateway Initiative, the CAMARA project, and TM Forum’s Open APIs all aim to address this gap.

- GSMA’s Open Gateway Initiative achieves scale by committing CSPs to implement the common system framework in a unified manner.

- The actual Service APIs are defined by the CAMARA project, an open-source project hosted by the Linux Foundation.

- TM Forum’s Open APIs are used in this framework for Operation, Administration, and Management (OAM).

The use case is well described in the GSMA’s Open Gateway white paper.

Network APIs

Open APIs and network capabilities in this new concept have much to offer. The CAMARA project has already defined 18 Service APIs, such as Quality on Demand, Device Location, Device Status, Number Verification, Simple Edge Discovery, One Time Password SMS, Carrier Billing, and SIM swap. Three of the most popular APIs are described in more detail below:

Quality on Demand: Many applications can benefit from better quality (higher bandwidth and lower latency). The challenge is how the network can fulfill such a request instantaneously and cost-effectively. Some Proofs of Concept (PoCs) demonstrate that a Quality on Demand request can trigger either a new Network Slice or a different Quality of Service Class Identifier (QCI). For more information, see our Network Slicing blog.

Device Location: This API verifies whether the device is in a specific geographical area. The main benefits of a network-based request are that it can be used when a GPS signal is not available, and that it is considered more trustworthy (location information cannot be spoofed).

Device Status: This API provides a simple request to determine whether the subscriber is roaming.
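To make the Device Location description more concrete, the sketch below shows the kind of decision a network-side check might make for a circular area. It is not the CAMARA API: the function names, the circular area shape, and the three-valued result are assumptions for illustration. It uses the standard haversine great-circle formula and treats the network's position estimate as a disc with an accuracy radius, because a network-derived location is an estimate rather than an exact point.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def verify_in_circle(est_lat: float, est_lon: float, accuracy_m: float,
                     center_lat: float, center_lon: float, radius_m: float) -> str:
    """Hypothetical check: does a network location estimate lie in a circle?

    est_lat/est_lon is the network's estimate of the device position and
    accuracy_m its uncertainty radius. Returns "inside", "outside", or "uncertain".
    """
    d = haversine_m(est_lat, est_lon, center_lat, center_lon)
    if d + accuracy_m <= radius_m:
        return "inside"     # the whole uncertainty disc is within the area
    if d - accuracy_m > radius_m:
        return "outside"    # the whole uncertainty disc is outside the area
    return "uncertain"      # the uncertainty disc straddles the boundary
```

For example, `verify_in_circle(48.8584, 2.2945, 50, 48.8584, 2.2945, 500)` returns `"inside"`. Reporting an explicit "uncertain" outcome, rather than forcing a yes/no answer, is one way to keep the result honest when the estimate's accuracy is coarse relative to the area.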

None of these Service APIs offers anything the market has not seen before. Their intrinsic value comes from being part of a unified platform that provides a consistent way to access network capabilities and information, similar to how mobile SDKs became a catalyst for the thriving mobile device ecosystem we know today. Only time will tell how much value application developers will get from these Open APIs.

Use Cases and Commercial Models

The value of new features and applications comes up whenever 5G monetization is discussed. We are still in the early phase of Open APIs, but the TM Forum’s Catalyst Program and CAMARA Open API showcases give good insight into what upcoming commercial deployments could look like. These programs have triggered several PoCs whose use cases required optimized performance (Quality on Demand), user location/roaming information, and feedback on consumer experience. In these PoCs, service providers were able to consume the Open APIs directly or through a hyperscaler marketplace. For example, in one PoC, guaranteed Quality of Delivery was needed for a 360-degree 8K live streaming service with content monetization through APIs (with CSPs curating markets at the edge). Another PoC included an end-to-end implementation of a marketplace from which one could consume network services from multiple CSP networks (Simple hyperscaler integrated network experience).

We can expect several commercial models for these Open APIs, because they can be used in various ways, such as providing network/subscriber information, optimizing functionalities/features, and allocating network capacity/resources. It is yet to be determined how these Open APIs can be consumed easily. Service providers are unlikely to integrate and set up individual contracts with every other service provider in the world. Therefore, an aggregation layer is needed to hide this complexity behind a single portal. This role can be assumed by a group of service providers or by hyperscalers who onboard these services onto their marketplaces.
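The aggregation argument can be sketched as a single entry point that routes each request to whichever CSP serves the number, so the developer never integrates with individual operators. Everything here is hypothetical: the `Aggregator` class, the roaming-check backends (echoing the Device Status example), and especially the routing by number prefix, which is a simplification since number portability means a prefix does not reliably identify the serving CSP.

```python
from typing import Callable, Dict

# A backend answers "is this number roaming?" for numbers served by one CSP.
# Hypothetical: a real backend would call that CSP's own exposure endpoint.
RoamingBackend = Callable[[str], bool]


class Aggregator:
    """Single entry point that hides which CSP serves a given phone number."""

    def __init__(self) -> None:
        self._routes: Dict[str, RoamingBackend] = {}

    def register(self, prefix: str, backend: RoamingBackend) -> None:
        """Onboard one CSP's backend for numbers starting with `prefix`."""
        self._routes[prefix] = backend

    def is_roaming(self, phone_number: str) -> bool:
        """Route the request to the CSP with the longest matching prefix."""
        for prefix in sorted(self._routes, key=len, reverse=True):
            if phone_number.startswith(prefix):
                return self._routes[prefix](phone_number)
        raise LookupError(f"no CSP registered for {phone_number}")


if __name__ == "__main__":
    agg = Aggregator()
    agg.register("+46", lambda number: False)   # stub for one CSP
    agg.register("+4670", lambda number: True)  # stub for a more specific range
    print(agg.is_roaming("+46701234567"))        # longest prefix "+4670" wins: True
```

The design point is in the counting: with D developers and C CSPs, bilateral integration needs up to D × C agreements, while an aggregator needs roughly D + C, because each developer and each CSP connects once.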

Challenges and The Road Ahead

One of the main Key Performance Indicators (KPIs) that define success for service providers is the ability to scale and achieve global reach. It is critical to avoid fragmentation and for the community to work towards a unified approach. Jointly agreed solutions and specifications take more time to develop; therefore, another year may pass before we see commercial use case launches (as forecast by Borje Ekholm, Ericsson CEO, during the Q3 2023 earnings call).

The journey to unified 5G is not easy, and it presents various challenges:

- Technology migration poses a challenge as mobile operators need to transition from existing systems to effectively utilize the potential of the 5G NEF and Open APIs.

- Another significant obstacle is bridging the gap between software developers and mobile operators. Developers require clear, simple, unified, and well-documented APIs to leverage the network capabilities effectively.

- At the same time, mobile operators must ensure that exposing their network does not compromise its security, and that end users retain full control over where and how their information is stored and used.

Some service providers have already launched platforms with a few Service APIs. Early deployments can introduce a risk of fragmentation. However, this risk is outweighed by the benefits of testing the concept in the real world and deriving more concrete requirements from actual user experience with these services.

Regardless of how much commercial success these new Network and Service APIs achieve in the coming years, they are an important step towards more open, agile, and programmable networks. Dell has likewise embraced this vision in our Telecom strategy, as reflected in our Multi-Cloud Foundation concept, Bare Metal Orchestration, and Open RAN development projects. In our vision, Open APIs are needed in all layers (Infrastructure, Network, Operations, and Services). Stay tuned for more from Dell about the open infrastructure ecosystem and automation (#MWC24).

Improving Network Operations and Observability for Cloud-Native Networks

Tue, 24 Oct 2023 14:35:04 -0000

Communication service providers (CSPs) are rapidly modernizing their networks towards cloud-native and open architectures. However, as the scale of these deployments increases, so does concern about operational management and complexity.

According to the latest TM Forum report on Autonomous Networks, most CSPs still manage and operate their networks at level-2 automation, where most tasks are completed using statically configured rules. This limits a CSP’s ability to monetize the benefits of network transformation. As a result, major CSPs are investing in automating their operations to move to level-3 automation at scale (from 13 percent today to 36 percent by 2026), achieving zero-touch, closed-loop operations through dynamic and programmable policies. Level-3 automation will also accelerate adoption of level-4 automation (from 4 percent today to 23 percent by 2026), which is ML- and AI-centric.

Intelligent operations offer several business benefits, including better returns through lower TCO, faster Time to Value (TTV) for offerings, and improved resource efficiency. However, there is no cookie-cutter approach to improving network operations. The primary challenge is that many CSPs operate brownfield networks and manage fleets of networks and resources. As a result, building a reference architecture that aligns with their existing operations, while also accelerating the adoption of the next era of operations, is a complex challenge.

Simplifying Network Operations and Observability

Today, many CSPs are working to identify the right solution and platform to optimize their operational models. However, these efforts usually result in bespoke solutions that are hard to manage and scale. CSPs also invest heavily, in both time and cost, in performing Life Cycle Management (LCM) of these solutions. These challenges create barriers to reaping the benefits of cloud and network transformation.

Dell Technologies has worked closely with leading cloud partners, including Wind River and Red Hat, to offer an operationally ready Telco Cloud platform as part of the Dell Telecom Multi-Cloud Foundation offer. This solution includes co-engineered building blocks referred to as Telecom Infrastructure Blocks, which support zero-touch operations and closed-loop automation. By automating the deployment and life-cycle management of the cloud platforms used in a telecom network, Dell’s Telco Cloud reduces operational costs while consistently meeting telco-grade SLAs.

Additionally, customers can optimize their infrastructures with a cloud platform of choice that is aligned end-to-end to workload vendor specifications and use cases, effectively transforming their operational models and processes. This solution not only streamlines telecom cloud design, deployment, and management with integrated hardware, software, and support, but also fully aligns with a telco-centric operational model.

Telecom Infrastructure Blocks will be delivered in an agile manner, with multiple releases per year, to simplify life cycle management. By the end of 2023, Dell Telecom Infrastructure Blocks will support Radio Access Network and Core Network workloads with:

- Dell Telecom Infrastructure Blocks for Wind River, which will support vRAN and Open RAN workloads.

- Dell Telecom Infrastructure Blocks for Red Hat, which will initially target Core Network workloads.

To support CSPs’ operational transformation that addresses optimal cost structure, telecom SLAs, and their ability to automate and orchestrate at scale, Dell Telecom Infrastructure Blocks provide the following key capabilities:

- Interoperability – Operating all telco cloud platforms as one abstracts away the complexity of multiple technologies and components from different vendors. This allows CSPs to run and manage the entire platform together.

- Lifecycle management – A telco network typically requires long life-cycle commitments and the ability to coordinate multiple systems running different versions. Dell Telecom Infrastructure Blocks address these issues by providing configuration changes and version alignment for firmware, BIOS, CaaS software, and more.

- Closed-loop operations – Operational transformation is evolving towards zero-touch. This requires a new strategic platform that can decouple infrastructure from applications and integrate smoothly with application orchestration and assurance systems, following a telco future mode of operations (FMO).
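As a rough illustration of closed-loop operation driven by a programmable policy (as opposed to a statically configured rule), the sketch below runs an observe, decide, act loop over utilization samples. The metric, thresholds, window, and scale-out/scale-in actions are all hypothetical and are not part of Telecom Infrastructure Blocks; a real loop would read telemetry from an assurance system and call an orchestrator instead of adjusting a counter.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ScalePolicy:
    """Hypothetical programmable policy; thresholds can be updated at runtime."""
    high: float = 0.80  # scale out when utilization stays above this
    low: float = 0.30   # scale in when utilization stays below this
    window: int = 3     # consecutive samples required before acting


def decide(history: List[float], policy: ScalePolicy) -> str:
    """Decide step: return 'scale_out', 'scale_in', or 'hold'."""
    recent = history[-policy.window:]
    if len(recent) < policy.window:
        return "hold"  # not enough evidence yet
    if all(s > policy.high for s in recent):
        return "scale_out"
    if all(s < policy.low for s in recent):
        return "scale_in"
    return "hold"


def run_loop(samples: List[float], policy: ScalePolicy,
             replicas: int) -> Tuple[int, List[str]]:
    """Observe-decide-act loop over a stream of utilization samples."""
    history: List[float] = []
    actions: List[str] = []
    for sample in samples:
        history.append(sample)            # observe
        action = decide(history, policy)  # decide
        if action == "scale_out":         # act
            replicas += 1
            history.clear()               # restart the window after acting
        elif action == "scale_in" and replicas > 1:  # never go below one replica
            replicas -= 1
            history.clear()
        actions.append(action)
    return replicas, actions
```

With the default policy, `run_loop([0.5, 0.9, 0.9, 0.9, 0.2], ScalePolicy(), 2)` scales out once, after the third consecutive high sample. Because the thresholds live in a policy object rather than in the loop, raising `high` changes the loop's behavior without rewriting it, which is the essence of a programmable rather than static rule.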

Transforming Operations Using Telecom Infrastructure Blocks

Dell Telecom Multi-Cloud Foundation provides CSPs with a platform-centric solution that promises full support for, and alignment toward, CSP level-4 automation. CSPs can flexibly transform their operations to programmable infrastructure using consistent tooling and capabilities.

Through multiple versions and offers with various partners, CSPs can operate all such foundational infrastructure blocks as one through the following key capabilities:

- Remote upgrades – This solution follows a consistent tooling and operational model, which allows operational teams to operate complete telecom cloud platforms at scale and integrates seamlessly with central orchestration tools.