
Secure Cloud: Check! Flexible Cloud Networking: Check! Powerful Cloud Hardware: Check!

Fri, 26 Apr 2024 12:20:39 -0000


Dell Technologies and VMware are happy to announce the availability of VMware Cloud Foundation 4.3.0 on VxRail 7.0.202. This new release provides several security-related enhancements, including FIPS 140-2 support, password auto-rotation support, SDDC Manager secure API authentication, data protection enhancements, and more. VxRail-specific enhancements include support for the more powerful 3rd Gen AMD EPYC™ CPUs and NVIDIA A100 GPUs (check this blog for more information about the corresponding VxRail release), and more flexible network configuration options with support for multiple system Virtual Distributed Switches (vDS).

Let’s quickly review the full list of new enhancements and features:

VCF and VxRail Software BOM Updates

These include the updated version of vSphere, vSAN, NSX-T, and VxRail Manager. Please refer to the VCF on VxRail Release Notes for comprehensive, up-to-date information about the release and supported software versions.

VCF on VxRail Networking Enhancements

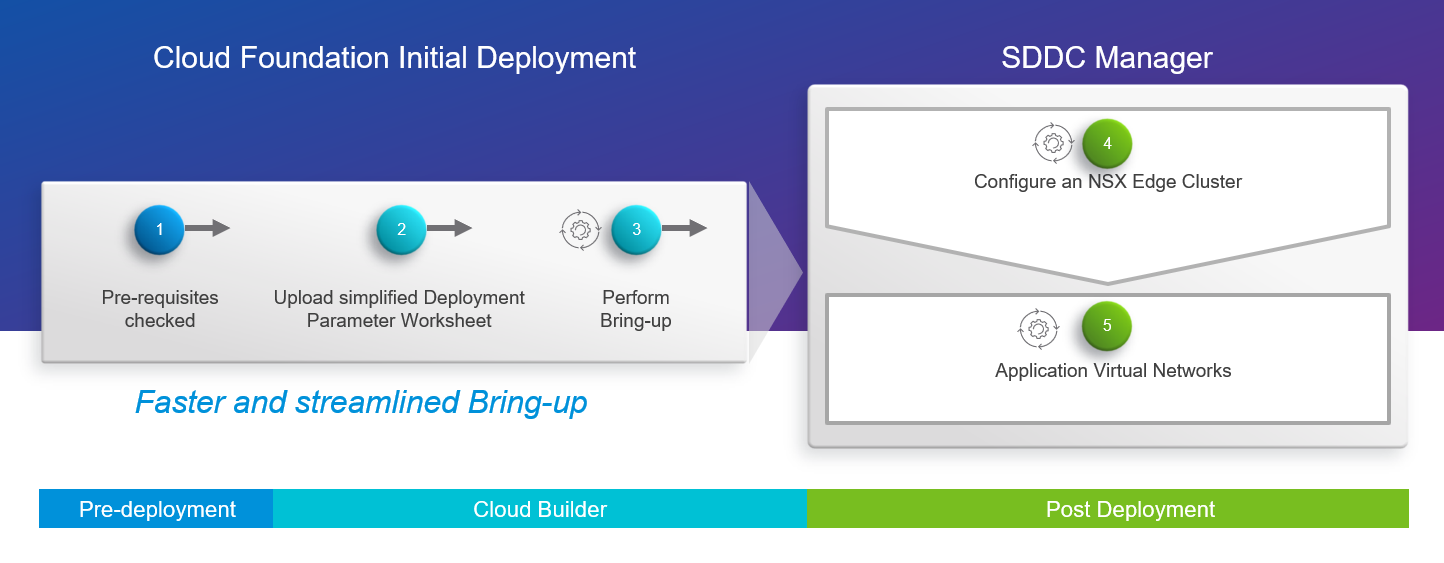

Day 2 AVN deployment using SDDC Manager workflows

The configuration of an NSX-T Edge cluster and Application Virtual Networks (AVNs) is now a post-deployment (Day 2) process that is automated through SDDC Manager. This approach simplifies and accelerates VCF on VxRail bring-up. It also provides more flexibility for network configuration after the initial deployment of the platform.

Figure 1: Cloud Foundation Initial Deployment – Day 2 NSX-T Edge and AVN

Shrink and expand operations of NSX-T Edge Clusters using SDDC Manager workflows

NSX-T Edge clusters can now be expanded and shrunk using built-in automation from within SDDC Manager. VCF operators can scale resources on demand instead of sizing for peak demand up front. The result is more flexibility and better use of the platform's infrastructure resources.

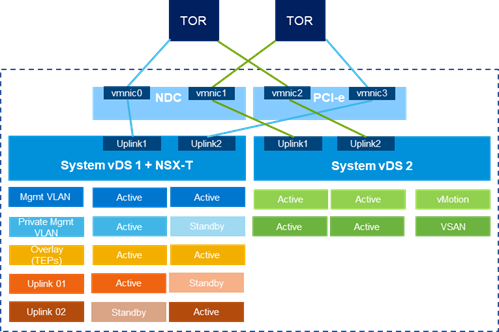

VxRail Multiple System VDS support

Support for configurations with two system Virtual Distributed Switches (vDS) was introduced in VxRail 7.0.13x. VCF 4.3 on VxRail 7.0.202 now supports VxRail clusters deployed with two system vDS, offering more flexibility and choice for the platform's network configuration. This is relevant for customers with strict requirements for separating network traffic. For instance, some customers might want to use a dedicated network fabric and vDS for vSAN. See Figure 2 below for a sample diagram of the newly supported network topology:

Figure 2: Multiple System VDS Configuration Example

VCF on VxRail Data Protection Enhancements

Expanded SDDC Manager backup and restore capabilities for improved VCF platform recovery

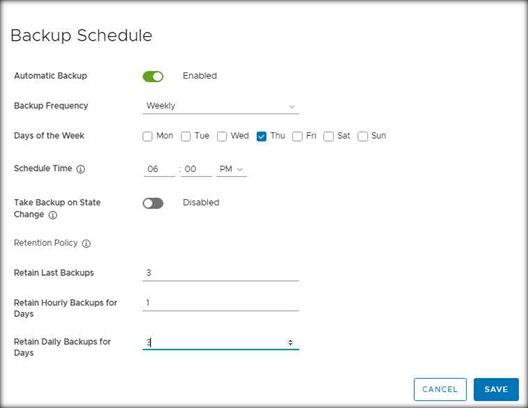

This release adds the ability to define a periodic backup schedule and backup retention policies, and to enable or disable these schedules in the SDDC Manager UI. This simplifies backup and recovery of the platform (see the screenshot in Figure 3).

Figure 3: Backup Schedule
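At its core, a retention policy decides which backups to keep. The minimal Python sketch below assumes a simple “keep the newest N backups” rule. SDDC Manager's actual retention options may be expressed differently, and `apply_retention` is a hypothetical helper.

```python
from datetime import datetime


def apply_retention(backups: list[datetime], keep_last: int) -> tuple[list[datetime], list[datetime]]:
    """Split backup timestamps into (kept, pruned).

    Hypothetical rule: keep only the newest `keep_last` backups.
    """
    if keep_last < 0:
        raise ValueError("keep_last must be non-negative")
    ordered = sorted(backups, reverse=True)  # newest first
    return ordered[:keep_last], ordered[keep_last:]


if __name__ == "__main__":
    history = [datetime(2021, 8, day) for day in (1, 2, 3, 4, 5)]
    kept, pruned = apply_retention(history, keep_last=3)
    print([b.day for b in kept])    # [5, 4, 3]
    print([b.day for b in pruned])  # [2, 1]
```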

VCF on VxRail Security Enhancements

SDDC Manager certificate management operations – expanded support for using SAN attributes

The built-in automated workflow for generating certificate signing requests (CSRs) within SDDC Manager has been further enhanced. It now includes the option to enter a Subject Alternative Name (SAN) when generating a CSR. This improves security and prevents vulnerability scanners from flagging the certificates as invalid.

SDDC Manager Password Management auto-rotation support

Many customers need to rotate and update passwords regularly across their infrastructure, and this can be a tedious task if not automated. VCF 4.3 provides automation to update individual supported platform component passwords, or to rotate all supported platform component passwords (including integrated VxRail Manager passwords) in a single workflow. This feature enhances security and improves the productivity of platform admins.

FIPS 140-2 Support for SDDC Manager, vCenter, and Cloud Builder

This release makes more VCF on VxRail components FIPS 140-2 compliant, in addition to VxRail Manager, which already complies with this security standard. It improves platform security and regulatory compliance with FIPS 140-2.

Improved VCF API security

Token-based authentication for API access to SDDC Manager is now enabled by default in VCF 4.3. Access to private APIs that use Basic Auth has been restricted. This change improves platform security when interacting with the VCF API.
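The following Python sketch illustrates the token-based flow. It builds, but does not send, the token request and the header used for later calls. It assumes the VCF public API's `POST /v1/tokens` endpoint, which accepts a username and password and returns an `accessToken`; verify this against the VCF API reference for your release. The helper names are hypothetical.

```python
import json
import urllib.request


def build_token_request(sddc_manager: str, username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a token request to SDDC Manager.

    Assumption: the VCF public API exposes POST /v1/tokens.
    """
    body = json.dumps({"username": username, "password": password}).encode("utf-8")
    return urllib.request.Request(
        url=f"https://{sddc_manager}/v1/tokens",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def bearer_headers(access_token: str) -> dict[str, str]:
    """Headers for subsequent API calls: a bearer token instead of Basic Auth."""
    return {"Authorization": f"Bearer {access_token}", "Accept": "application/json"}
```

After you send the request with `urllib.request.urlopen()`, pass the `accessToken` field from the JSON response to `bearer_headers()` for later calls.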

VxRail Hardware Platform Enhancements

VCF 4.3 on VxRail 7.0.202 brings new hardware features, including support for the 3rd Generation AMD EPYC CPU platform and NVIDIA A100 GPUs.

These new hardware options provide better performance and more configuration choices. Check this blog for more information about the corresponding VxRail release.

VCF on VxRail Multi-Site Architecture Enhancements

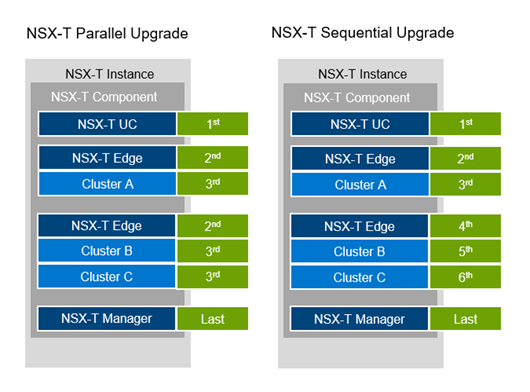

NSX-T Federation guidance - upgrade and password management Day 2 operations

New manual guidance is available for password and certificate management, and for backup and restore of NSX-T Global Managers.

As you can see, most of the new enhancements in this release focus on improving platform security and providing more flexible network configuration. Dell Technologies and VMware continue to deliver an optimized, turnkey platform experience for customers adopting the hybrid cloud operating model. If you’d like to learn more, please check the additional resources linked below.

Additional Resources

VMware Cloud Foundation on Dell EMC VxRail Release Notes

VxRail page on DellTechnologies.com

VCF on VxRail Interactive Demos

Author Information

Author: Karol Boguniewicz, Senior Principal Engineering Technologist, VxRail Technical Marketing

Twitter: @cl0udguide

SmartFabric Services for VxRail

Fri, 26 Apr 2024 11:37:47 -0000


HCI networking made easy (again!). Now even more powerful with multi-rack support.

The Challenge

Network infrastructure is a critical component of HCI. Legacy 3-tier architectures typically have dedicated storage and a dedicated storage network. In contrast, HCI architecture is more integrated and simplified. Its design lets the software-defined storage share the same network infrastructure used for workload traffic and inter-cluster communication. The reliability and proper setup of this network therefore determine not only whether running workloads are reachable from the external network, but also the performance and availability of the storage. As a result, they determine the health of the whole HCI system.

Unfortunately, in most cases, setting up this critical component properly is complex and error-prone. Why? Because of the disconnect between the responsible teams. Typically, configuring a physical network requires expert network knowledge, which is quite rare among HCI admins. The reverse is also true: network admins typically have limited knowledge of HCI systems, because this is not their area of expertise and responsibility.

The situation gets even more challenging with increasingly complex deployments that go beyond a single pair of ToR switches and a single rack. This scenario is becoming more common as HCI becomes a mainstream data center architecture. Its maturity and simplicity have made it an ideal infrastructure foundation for digital transformation and VDI/End User Computing (EUC) initiatives. These larger deployments need much more computing power and storage capacity to handle increased workload requirements.

At the same time, with the broader adoption of HCI, customers are looking for ways to connect their existing infrastructure to the same fabric, in order to simplify the migration process to the new architecture or to leverage dedicated external NAS systems, such as Isilon, to store files and application or user data.

A brief history of SmartFabric Services for VxRail

Here at Dell Technologies, we recognize these challenges. That’s why we introduced SmartFabric Services (SFS) for VxRail. SFS for VxRail is built into Dell EMC Networking SmartFabric OS10 Enterprise Edition, the operating system that runs on the Dell EMC PowerSwitch networking switch portfolio. We announced the first version of SFS for VxRail at VMworld 2018. With this functionality, customers can quickly and easily deploy and automate data center fabrics for VxRail, while reducing the risk of misconfiguration.

Since that time, Dell has expanded the capabilities of SFS for VxRail. The initial release of SFS for VxRail allowed VxRail to fully configure the switch fabric to support the VxRail cluster (as part of the VxRail 4.7.0 release back in Dec 2018). The following release included automated discovery of nodes added to a VxRail cluster (as part of VxRail 4.7.100 in Jan 2019).

The new solution

This week we are excited to introduce a major new release of SFS for VxRail as a part of Dell EMC SmartFabric OS 10.5.0.5 and VxRail 4.7.410.

So, what are the main enhancements?

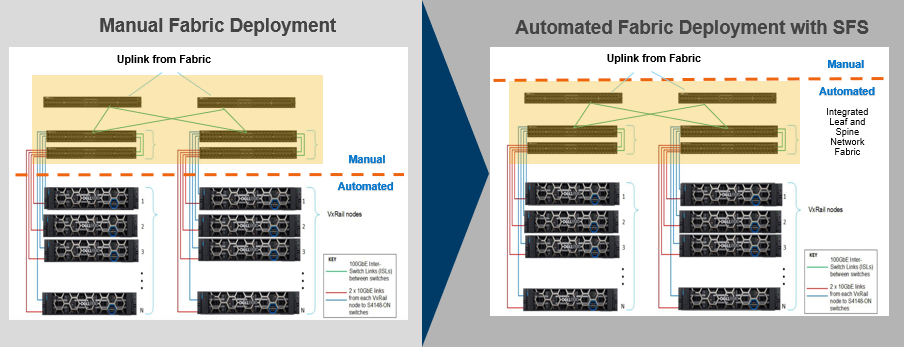

- Automation at scale: Customers can easily scale their VxRail deployments. They can start with a single rack with two ToR leaf switches and expand to multi-rack, multi-cluster VxRail deployments with up to 20 switches in a leaf-spine network architecture at a single site. SFS now automates over 99% (!) of the network configuration steps* for leaf and spine fabrics across multiple racks, significantly simplifying complex multi-rack deployments.

- Improved usability: An updated version of the OpenManage Network Integration (OMNI) plugin provides a single pane for “day 2” fabric management and operations through vCenter (the main management interface used by VxRail and vSphere admins). In addition, a new embedded SFS UI simplifies “day 1” setup of the fabric.

- Greater expandability: Customers can now connect non-VxRail devices, such as additional PowerEdge servers or NAS systems, to the same fabric. The onboarding can be performed as a “day 2” operation from the OMNI plugin. In this way, customers can reduce the cost of additional switching infrastructure when building more sophisticated solutions with VxRail.

Figure 1. Comparison of a multi-rack VxRail deployment, without and with SFS

Solution components

In order to take advantage of this solution, you need the following components:

- At a minimum, a pair of supported Dell EMC PowerSwitch Data Center Switches. For an up-to-date list of supported hardware and software components, please consult the latest VxRail Support Matrix. At the time of writing this post, the following models are supported: S4100 (10GbE) and S5200 (25GbE) series for the leaf layer, and Z9200 series or S5232 for the spine layer. To learn more about the Dell EMC PowerSwitch product portfolio, please visit the PowerSwitch website.

- Dell EMC Networking SmartFabric OS10 Enterprise Edition (version 10.5.0.5 or later). This operating system is available for the Dell EMC PowerSwitch Data Center Switches, and implements SFS functionality. To learn more, please visit the OS10 website.

- A VxRail cluster consisting of 10GbE or 25GbE nodes, with software version 4.7.410 or later.

- OpenManage Network Integration (OMNI) for VMware vCenter version 1.2.30 or later.

How does the multi-rack feature work?

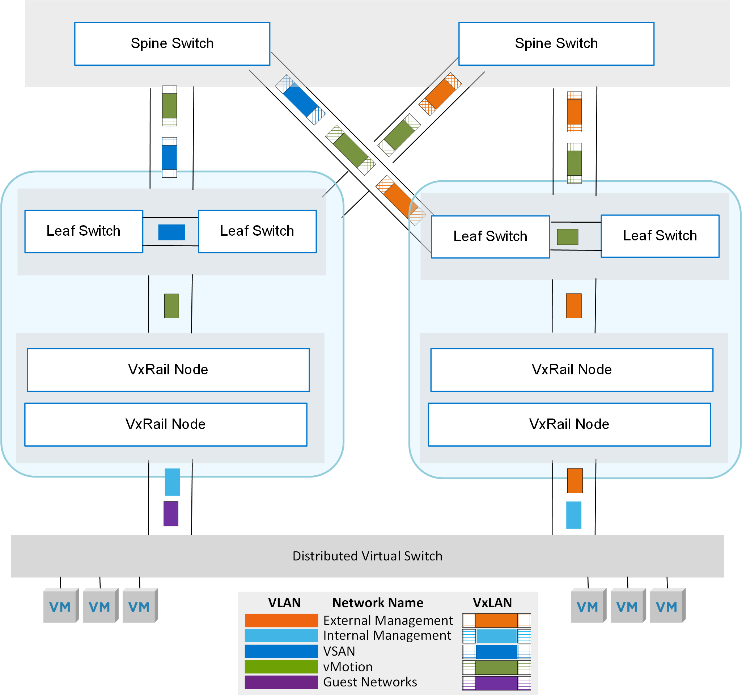

The multi-rack feature is implemented using the hardware VTEP (VxLAN Tunnel Endpoint) functionality in Dell EMC PowerSwitches. It relies on the automated creation of a VxLAN tunnel network across the switch fabric in multiple racks.

VxLAN (Virtual Extensible Local Area Network) is an overlay technology that allows you to extend a Layer 2 (L2) “overlay” network over a Layer 3 (L3) “underlay” network. Encapsulation works by adding a VxLAN header to the original L2 Ethernet frame. The result is placed into an IP/UDP packet that is transported across the L3 underlay network.
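The minimal Python sketch below makes the encapsulation step concrete. It implements the 8-byte VxLAN header defined in RFC 7348: an 8-bit flags field with the “I” bit set, a 24-bit VxLAN Network Identifier (VNI), and reserved bits. It only illustrates the header format. On PowerSwitch, the hardware VTEP performs the encapsulation, and the outer Ethernet/IP/UDP headers are not built here. The VNI value used in the example is arbitrary.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned UDP destination port for VXLAN (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the 8-byte VXLAN header to an L2 Ethernet frame.

    The result is the UDP payload a VTEP sends across the L3 underlay;
    the outer Ethernet/IP/UDP headers are not built in this sketch.
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Layout: flags(8) | reserved(24) | VNI(24) | reserved(8)
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(udp_payload: bytes) -> tuple[int, bytes]:
    """Return (vni, inner_frame) from a VXLAN UDP payload."""
    if len(udp_payload) < 8:
        raise ValueError("payload shorter than the VXLAN header")
    word0, word1 = struct.unpack("!II", udp_payload[:8])
    if not ((word0 >> 24) & VXLAN_FLAG_VNI_VALID):
        raise ValueError("VNI-valid flag not set")
    return word1 >> 8, udp_payload[8:]
```

For example, `vxlan_encapsulate(frame, 5001)` yields the header bytes `08 00 00 00 00 13 89 00` followed by the original frame, and `vxlan_decapsulate()` recovers `(5001, frame)`.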

By default, all VxRail networks are configured as L2. With the configuration of this VxLAN tunnel, the L2 network is “stretched” across multiple racks with VxRail nodes. This combines the scalability of L3 networks with the VM mobility benefits of an L2 network. For example, the nodes in a VxRail cluster can reside on any rack within the SmartFabric network. VMs can then be migrated to any other node in the same VxRail cluster without manual network configuration.

Figure 2. Overview of the VLAN and VxLAN VxRail traffic with SFS for multi-rack VxRail

This new functionality is enabled by the new L3 Fabric personality, available as of OS10 version 10.5.0.5. It automates configuration of a leaf-spine fabric in a single-rack or multi-rack deployment and supports both L2 and L3 upstream connectivity. What is a fabric personality? An SFS personality is a setting that enables the functionality and supported configuration of the switch fabric.

To see how simple it is to configure the fabric and to deploy a VxRail multi-rack cluster with SFS, please see the following demo: Dell EMC Networking SFS Deployment with VxRail - L3 Uplinks.

Single pane for management and “day 2” operations

SFS not only automates the initial deployment (“day 1” fabric setup), but also greatly simplifies ongoing management and operations of the fabric. This is done from vCenter, an interface familiar to VxRail / vSphere admins, through the OMNI plugin, which is distributed as a virtual appliance.

It’s powerful! From this “VMware admin-friendly” interface you can:

- Add a SmartFabric instance to be managed (OMNI can manage multiple fabrics from the same vCenter / OMNI plugin).

- Get visibility into the configured fabric – domain, fabric nodes, rack, switches, and so on.

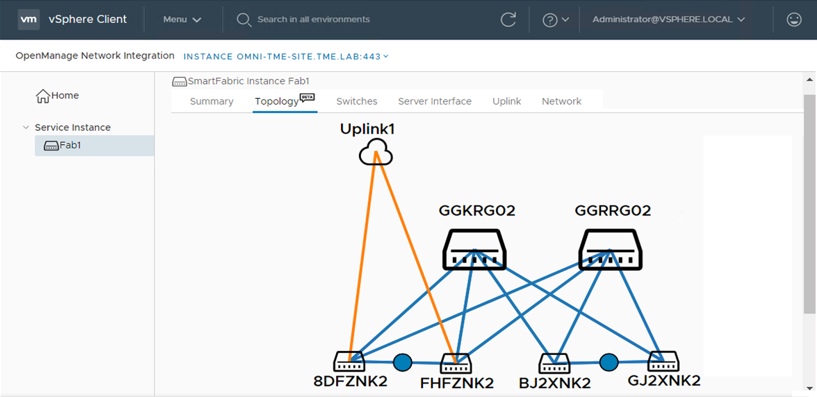

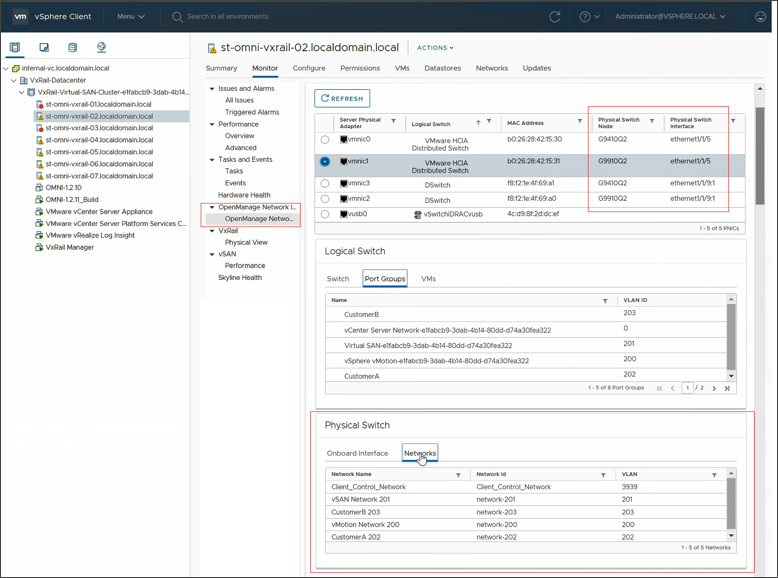

- Visualize the fabric and the configured connections between the fabric elements with a “live” diagram that allows “drill-down” to get more specific information (Figure 3).

- Manage breakout ports and jump ports, as well as onboard additional servers or non-VxRail devices.

- Configure L2 or L3 fabric uplinks, allowing more flexibility and support of multiple fabric topologies.

- Create, edit, and delete VxLAN and VLAN-based networks, to customize the network setup for specific business needs.

- Create a host-centric network inventory that provides a clear mapping between configured virtual and physical components (interfaces, switches, networks, and VMs). For instance, you can inspect virtual and physical network configuration from the same host monitoring view in vCenter (Figure 4). This is extremely useful for troubleshooting potential network connectivity issues.

- Upgrade SmartFabric OS on the physical switches in the fabric and replace switches, which simplifies lifecycle management of the fabric.

Figure 3. Sample view from the OMNI vCenter plugin showing a fabric topology

To see how simple it is to deploy the OMNI plugin and to get familiar with some of the options available from its interface, please see the following demo: Dell EMC OpenManage Network Integration for VMware vCenter.

OMNI also monitors VMware virtual networks for changes (such as changes to port groups on vSS and vDS virtual switches) and, as necessary, reconfigures the underlying physical fabric.
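This post does not describe OMNI's internal logic. The Python sketch below only illustrates the general idea of such a reconciliation. It derives which VLANs each host's switch-facing ports should carry from the virtual port groups, then computes what to add and what to remove. The `PortGroup` type, the per-host model, and the function names are hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    name: str
    vlan_id: int
    hosts: frozenset[str]  # hosts whose uplinks carry this port group


def desired_vlans(port_groups: list[PortGroup]) -> dict[str, set[int]]:
    """Map each host to the VLANs its switch-facing ports must carry."""
    needed: dict[str, set[int]] = {}
    for pg in port_groups:
        for host in pg.hosts:
            needed.setdefault(host, set()).add(pg.vlan_id)
    return needed


def reconcile(
    current: dict[str, set[int]], port_groups: list[PortGroup]
) -> dict[str, tuple[set[int], set[int]]]:
    """Return {host: (vlans_to_add, vlans_to_remove)} for every host that drifted."""
    target = desired_vlans(port_groups)
    changes: dict[str, tuple[set[int], set[int]]] = {}
    for host in current.keys() | target.keys():
        want, have = target.get(host, set()), current.get(host, set())
        if want != have:
            changes[host] = (want - have, have - want)
    return changes
```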

Figure 4. OMNI – monitor virtual and physical network configuration from a single view

Thanks to OMNI, managing the physical network for VxRail becomes much simpler, less error-prone, and can be done by the VxRail admin directly from a familiar management interface, without having to log into the console of the physical switches that are part of the fabric.

Supported topologies

This new SFS release is very flexible and supports multiple fabric topologies. Due to the limited size of this post, I will only list them by name:

- Single-Rack – just a pair of leaf switches in a single rack, supports both L2 and L3 upstream connectivity / uplinks – the equivalent of the previous SFS functionality

- (New) Single-Rack to Multi-Rack – starts with a pair of switches, expands to multi-rack by adding spine switches and additional racks with leaf switches

- (New) Multi-Rack with Leaf Border - adds upstream connectivity via the pair of leaf switches; this supports both L2 and L3 uplinks

- (New) Multi-Rack with Spine Border - adds upstream connectivity via the pair of spine switches; this supports L3 uplinks

- (New) Multi-Rack with Dedicated Leaf Border - adds upstream connectivity via the dedicated pair of border switches above the spine layer; this supports L3 uplinks

For detailed information on these topologies, please consult Dell EMC VxRail with SmartFabric Network Services Planning and Preparation Guide.

Note that SFS for VxRail does not currently support NSX-T or VCF on VxRail.

Final thoughts

This latest version of SmartFabric Services for VxRail takes HCI network automation to the next level. It goes beyond the simpler single-rack, dual-switch configuration and now solves the much bigger network complexity problem of multi-rack environments. With SFS, customers can:

- Reduce the CAPEX and OPEX related to HCI network infrastructure, thanks to automation (over 99% of the required configuration steps* are automated when setting up a multi-rack fabric) and a reduced infrastructure footprint

- Accelerate the deployment of essential IT infrastructure for their business initiatives

- Reduce the risk related to the error-prone configuration of complex multi-rack, multi-cluster HCI deployments

- Increase the availability and performance of hosted applications

- Use a familiar management console (vSphere Client / vCenter) to drive additional automation of day 2 operations

- Rapidly perform any necessary changes to the physical network, in an automated way, without requiring highly-skilled network personnel

Additional resources:

Dell EMC VxRail with SmartFabric Network Services Planning and Preparation Guide

Dell EMC Networking SmartFabric Services Deployment with VxRail

SmartFabric Services for OpenManage Network Integration User Guide Release 1.2

Demo: Dell EMC OpenManage Network Integration for VMware vCenter

Demo: Expand SmartFabric and VxRail to Multi-Rack

Demo: Dell EMC Networking SFS Deployment with VxRail - L2 Uplinks

Demo: Dell EMC Networking SFS Deployment with VxRail - L3 Uplinks

Author: Karol Boguniewicz, Senior Principal Engineer, VxRail Technical Marketing

Twitter: @cl0udguide

*Disclaimer: Based on internal analysis comparing SmartFabric to manual network configuration, Oct 2019. Actual results will vary.

Protecting VxRail from Power Disturbances

Wed, 24 Apr 2024 13:49:39 -0000


Preserving data integrity in case of unplanned power events

The challenge

Over the last few years, VxRail has evolved significantly, becoming an ideal platform for most use cases and applications spanning the core data center, edge locations, and the cloud. With its simplicity, scalability, and flexibility, it’s a great foundation for customers’ digital transformation initiatives. It also suits high-value and more demanding workloads, such as SAP HANA.

Running more business-critical workloads requires following best practices for data protection and availability. Dell Technologies specializes in data protection solutions and offers a portfolio of products that can fulfill even the most demanding RPO/RTO requirements. However, we are probably not giving enough attention to another related area: protection against power disturbances and outages. Uninterruptible Power Supply (UPS) systems are at the heart of a data center’s electrical systems. Because VxRail runs critical workloads, it is a best practice to use a UPS to protect them and to ensure data integrity in case of unplanned power events. I want to highlight a solution from one of our partners, Eaton.

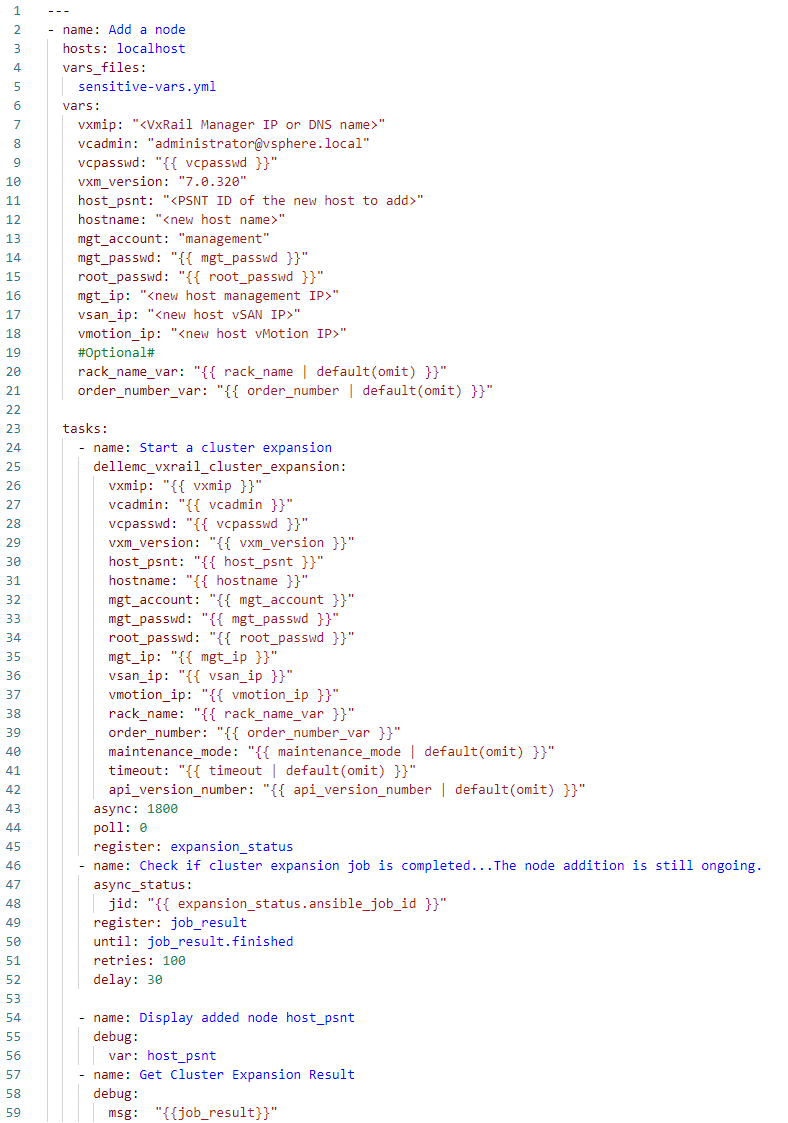

The solution

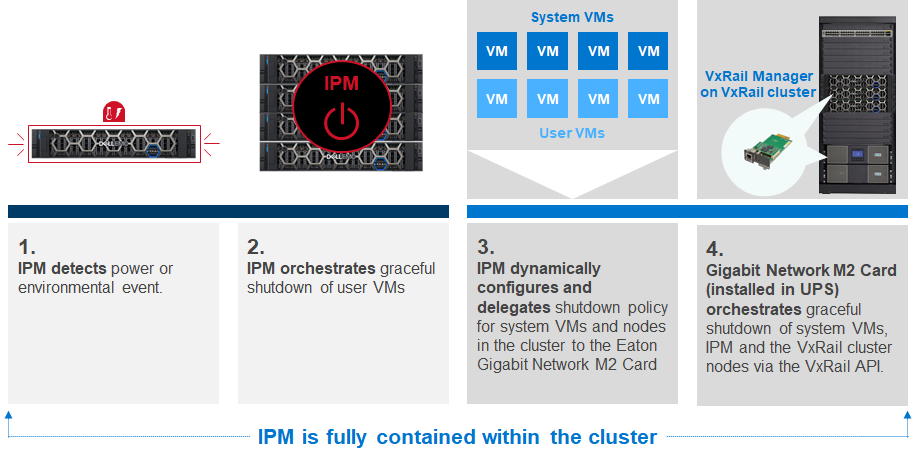

Eaton is an Advantage member of the Dell EMC Technology Connect Partner Program and the first UPS vendor to integrate its solution with VxRail. Eaton’s solution is a great example of how Dell Technologies partners can leverage VxRail APIs to provide additional value for our joint customers. Eaton’s Intelligent Power Manager (IPM) software is integrated with VxRail APIs and works with Eaton’s Gigabit Network Card, so the solution can run on the same VxRail cluster it protects. This removes the need for additional external compute infrastructure to host the power management software; only a compatible Eaton UPS is required.

The solution consists of:

- VxRail version 4.5.300 (minimum), 4.7.x, 7.0.x, and later

- Eaton IPM software v1.67.243 or later

- Eaton UPS – 5P, 5PX, 9PX, 9SX, 93PM, 93E, 9PXM

- Eaton M2 Network Card firmware v1.7.5

- IPM Gold License Perpetual

The main benefits are:

- Preserving data integrity and business continuity by enabling automated and graceful shutdown of VxRail clusters that are experiencing unplanned extended power events

- Reducing the need for onsite IT staff with simple set-up and remote management of power infrastructure using familiar VMware tools

- Safeguarding the VxRail system from power anomalies and environmental threats

How does it work?

It’s quite simple (see the figure below). What’s interesting and unique is that the IPM software running on the cluster delegates the final shutdown of the system VMs and the cluster to the network card in the UPS. The card then uses VxRail APIs to execute the cluster shutdown.

Figure 1. Eaton UPS and VxRail integration explained
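This post does not describe Eaton's IPM policy logic or the card's firmware, so the following Python sketch is purely illustrative. It assumes a hypothetical policy: shut down after a set time on battery, or when the remaining runtime falls below a threshold. It then builds, but does not send, a VxRail API cluster-shutdown request. The `POST /rest/vxm/v1/cluster/shutdown` path and its `dryrun` field are assumptions to verify against the VxRail API User Guide. The thresholds and helper names are hypothetical.

```python
import json
import urllib.request

# Hypothetical policy thresholds; real values would be configured in Eaton IPM.
MAX_SECONDS_ON_BATTERY = 300
MIN_RUNTIME_SECONDS = 600


def should_shut_down(on_battery: bool, seconds_on_battery: int, runtime_left: int) -> bool:
    """Decide whether an outage counts as an 'extended power event'."""
    if not on_battery:
        return False
    return seconds_on_battery >= MAX_SECONDS_ON_BATTERY or runtime_left <= MIN_RUNTIME_SECONDS


def build_cluster_shutdown(vxm_ip: str, dry_run: bool = True) -> urllib.request.Request:
    """Build (not send) a cluster-shutdown request.

    Assumption: POST /rest/vxm/v1/cluster/shutdown with a {"dryrun": ...} body.
    Defaults to a dry run so the request is safe to experiment with.
    """
    return urllib.request.Request(
        url=f"https://{vxm_ip}/rest/vxm/v1/cluster/shutdown",
        data=json.dumps({"dryrun": dry_run}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```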

Summary

Protection against unplanned power events should be a part of a business continuity strategy for all customers who run their critical workloads on VxRail. This ensures data integrity by enabling automated and graceful shutdown of VxRail cluster(s). Eaton’s solution is a great example of providing such protection and how Dell Technologies partners can leverage VxRail APIs to provide additional value for our joint customers.

Additional resources:

Eaton website: Eaton ensures connectivity and protects Dell EMC VxRail from power disturbances

Brochure: Eaton delivers advanced power management for Dell EMC VxRail systems

Blog post: Take VxRail automation to the next level by leveraging APIs

Author: Karol Boguniewicz, Senior Principal Engineer, VxRail Technical Marketing

Twitter: @cl0udguide

Take VxRail automation to the next level by leveraging APIs

Wed, 24 Apr 2024 13:49:15 -0000


VxRail REST APIs

The Challenge

VxRail Manager, available as a part of HCI System Software, drastically simplifies the lifecycle management and operations of a single VxRail cluster. A “single click” user experience is available directly in the vCenter interface. From there, you can perform a full upgrade of all software components of the cluster. That covers not only vSphere and vSAN, but also the complete server hardware firmware and drivers, such as NICs, disk controller(s), drives, etc. That’s a simplified experience that you won’t find in any other VMware-based HCI solution.

But what if you need to manage not a single cluster, but a farm of dozens or hundreds of VxRail clusters? Or maybe you’re using an orchestration tool to holistically automate the IT infrastructure and processes? Would you still need to log in manually as an operator to each of these clusters separately and click a button to shut down a cluster, collect log information or health data, or perform LCM operations?

This is where VxRail REST APIs come in handy.

The VxRail API Solution

REST APIs are very important for customers who would like to programmatically automate operations of their VxRail-based IT environment and integrate with external configuration management or cloud management tools.

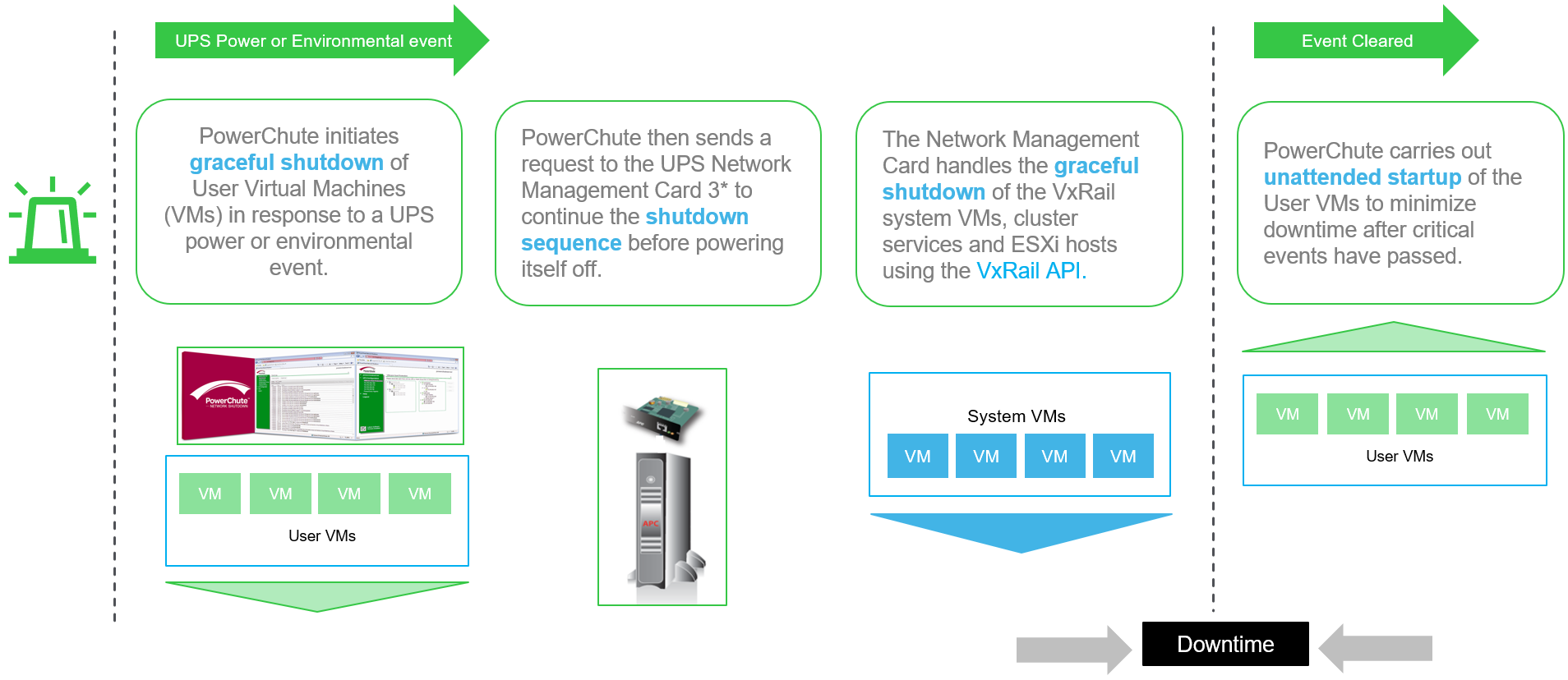

In VxRail HCI System Software 4.7.300 we’ve introduced very significant improvements in this space:

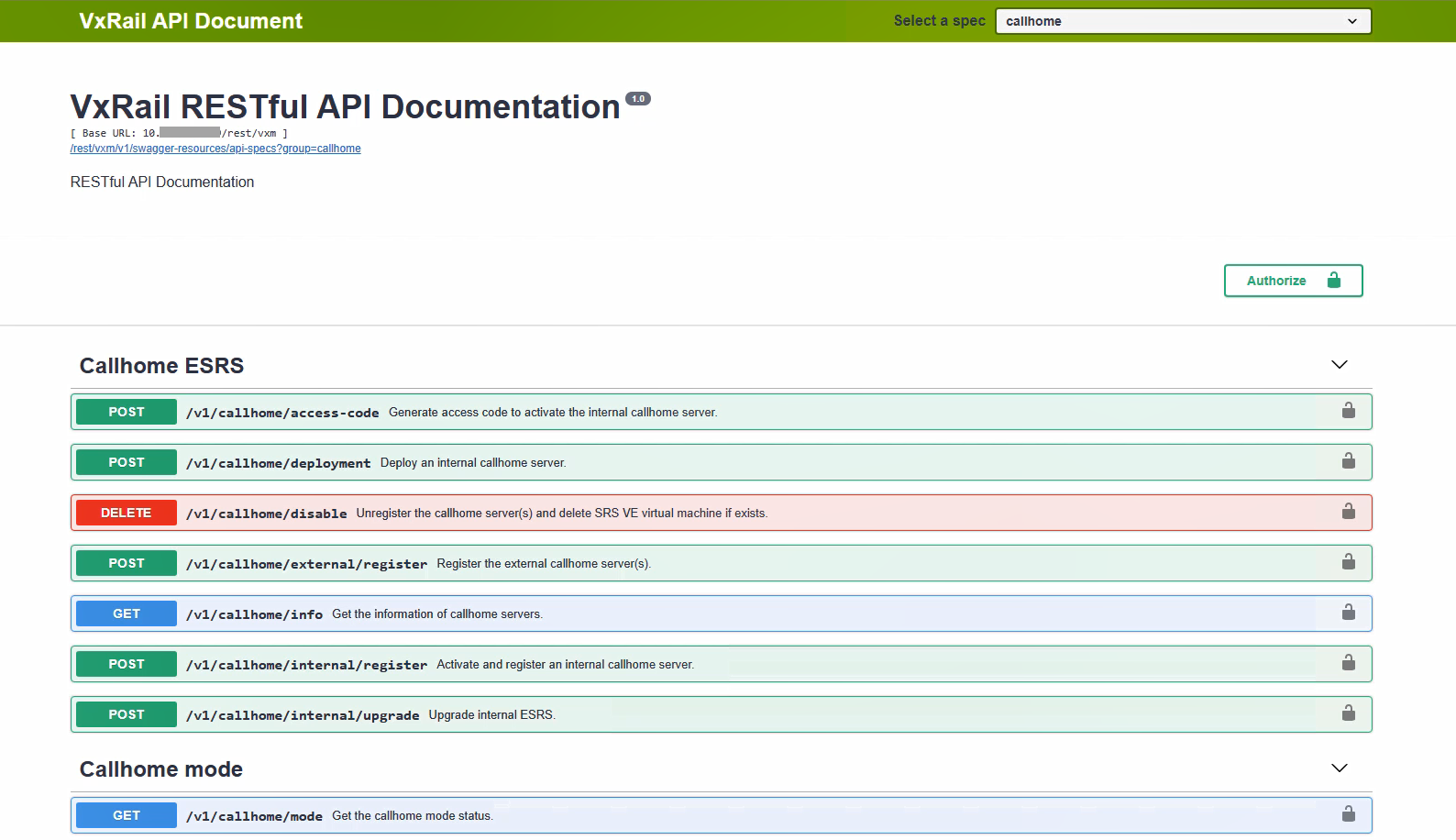

- Swagger integration - which allows for simplified consumption of the APIs and their documentation;

- Comprehensiveness – we’ve almost doubled the number of public APIs available;

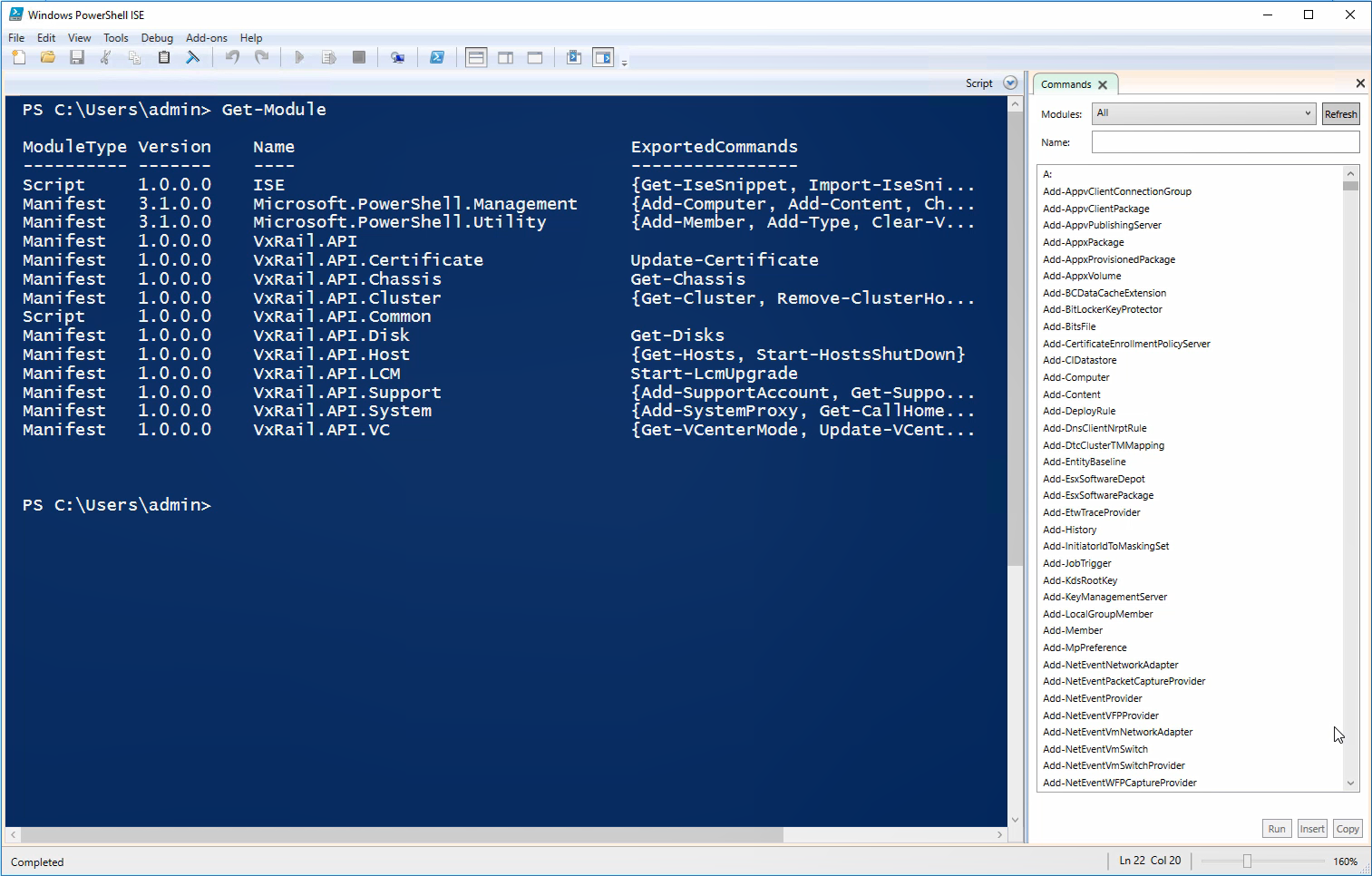

- PowerShell integration – that allows consumption of the APIs from the Microsoft PowerShell or VMware PowerCLI.

The easiest way to start accessing and using these APIs is through a web browser, thanks to the Swagger integration. Swagger is an open-source toolkit that simplifies OpenAPI development, and it can be launched from within the VxRail Manager virtual appliance. To access the documentation, open the following URL in a web browser: https://<VxM_IP>/rest/vxm/api-doc.html (where <VxM_IP> is the IP address of VxRail Manager). You should see a page similar to the one shown below:

Figure 1. Sample view into VxRail REST APIs via Swagger
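For scripted access outside the browser, you can call the same `/rest/vxm` base path directly. The following Python sketch builds, but does not send, a GET request. It assumes HTTP Basic authentication with vCenter credentials and uses `v1/system` as an example resource, so verify both against the Swagger page or the VxRail API User Guide for your release. The helper name `vxrail_api_request` is hypothetical.

```python
import base64
import urllib.request


def vxrail_api_request(vxm_ip: str, path: str, username: str, password: str) -> urllib.request.Request:
    """Build (not send) a GET request against the VxRail REST API.

    Assumptions: HTTP Basic authentication and the /rest/vxm base path.
    """
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"https://{vxm_ip}/rest/vxm/{path.lstrip('/')}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )
```

To actually send the request, pass it to `urllib.request.urlopen()`. If VxRail Manager presents a self-signed certificate, you may need an `ssl.SSLContext` configured to trust it.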

This interface is intended for customers who use orchestration or configuration management tools. They can use it to accelerate the integration of VxRail clusters into their automation workflows. The VxRail API complements the APIs offered by VMware.

Would you like to see this in action? Watch the first part of the recorded demo available in the additional resources section.
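When the same call has to run against dozens or hundreds of clusters, a small fan-out helper keeps one unreachable cluster from stopping the whole run. This Python sketch is hypothetical. `collect_from_fleet` and the `fetch` callable are illustrative placeholders, not part of any VxRail tooling. For example, `fetch` could be a function that performs an HTTP GET against one VxRail Manager.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def collect_from_fleet(
    clusters: list[str],
    fetch: Callable[[str], dict],
    max_workers: int = 8,
) -> tuple[dict[str, dict], dict[str, str]]:
    """Run `fetch` against every VxRail Manager address in parallel.

    Returns (results, errors) keyed by address, so a failure on one
    cluster is recorded instead of aborting the whole run.
    """
    results: dict[str, dict] = {}
    errors: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {vxm: pool.submit(fetch, vxm) for vxm in clusters}
        for vxm, future in futures.items():
            try:
                results[vxm] = future.result()
            except Exception as exc:  # record the failure and continue
                errors[vxm] = str(exc)
    return results, errors
```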

PowerShell integration for Windows environments

Customers who prefer scripting in a Windows environment, using Microsoft PowerShell or VMware PowerCLI, will benefit from the VxRail.API PowerShell Modules Package. It simplifies the consumption of the VxRail REST APIs from PowerShell and focuses on the physical infrastructure layer. Management of VMware vSphere and the solutions layered on top (such as Software-Defined Data Center, Horizon, etc.) can be scripted using a similar interface available in VMware PowerCLI.

Figure 2. VxRail.API PowerShell Modules Package

To see that in action, check the second part of the recorded demo available in the additional resources section.

Bringing it all together

VxRail REST APIs further simplify IT Operations, fostering operational freedom and a reduction in OPEX for large enterprises, service providers and midsize enterprises. Integrations with Swagger and PowerShell make them much more convenient to use. This is an area of VxRail HCI System Software that rapidly gains new capabilities, so please make sure to check the latest advancements with every new VxRail release.

Additional resources:

Demo: VxRail API - Overview

Demo: VxRail API - PowerShell Package

Dell EMC VxRail Appliance – API User Guide

Author: Karol Boguniewicz, Sr Principal Engineer, VxRail Tech Marketing

Twitter: @cl0udguide

Learn About the Latest VMware Cloud Foundation 5.1.1 on Dell VxRail 8.0.210 Release

Tue, 26 Mar 2024 18:47:52 -0000


The latest VCF on VxRail release delivers GenAI-ready infrastructure, runs more demanding workloads, and is an excellent choice for supporting hardware tech refreshes and achieving higher consolidation ratios.

VMware Cloud Foundation 5.1.1 on VxRail 8.0.210 is a minor release from the perspective of versioning and new functionality but is significant in terms of support for the latest VxRail hardware platforms. This new release is based on the latest software bill of materials (BOM) featuring vSphere 8.0 U2b, vSAN 8.0 U2b, and NSX 4.1.2.3. Read on for more details…

VxRail hardware platform updates

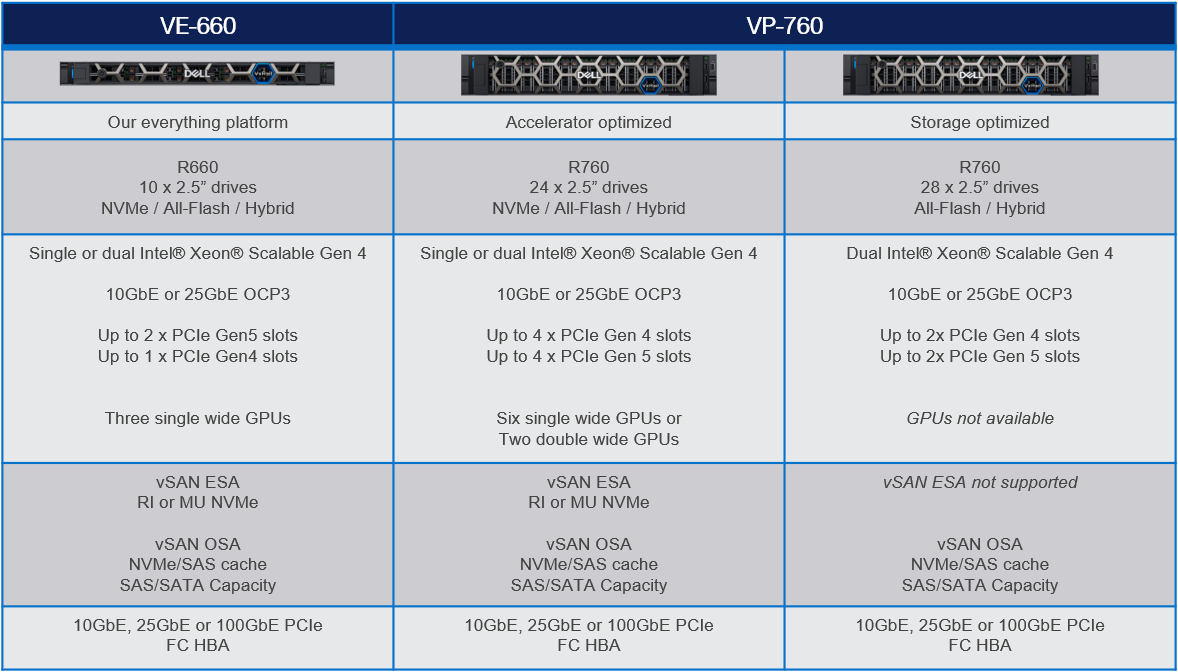

16th generation VxRail VE-660 and VP-760 hardware platform support

Cloud Foundation on VxRail customers can now benefit from the latest, more scalable, and robust 16th generation hardware platforms. This includes a full spectrum of hybrid, all-flash, and all NVMe options that have been qualified to run VxRail 8.0.210 software. This is fantastic news as these new hardware options bring many technical innovations, which my colleagues discussed in detail in previous blogs.

These new hardware platforms are based on Intel® 4th Generation Xeon® Scalable processors, which increase VxRail core density per socket to 56 (112 max per node). They also come with built-in Intel® AMX accelerators (Advanced Matrix Extensions) that support AI and HPC workloads without the need for additional drivers or hardware.

VxRail on the 16th generation hardware supports deployments with either vSAN Original Storage Architecture (OSA) or vSAN Express Storage Architecture (ESA). The VP-760 and VE-660 can take advantage of vSAN ESA’s single-tier storage architecture, which enables RAID-5 resiliency and capacity with RAID-1 performance.
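The capacity side of that claim comes down to simple arithmetic. With a failures-to-tolerate setting of 1, RAID-1 mirroring stores two full copies of the data. vSAN ESA RAID-5 instead stores parity, using either a 2+1 or a 4+1 stripe depending on cluster size; the host-count thresholds depend on the vSAN release. The Python sketch below ignores slack space, metadata, and data services such as compression, so treat its results as rough estimates.

```python
# Raw capacity consumed per unit of data for FTT=1 policies.
OVERHEAD = {
    "RAID-1 (mirror)": 2.0,
    "RAID-5 (2+1)": 1.5,   # vSAN ESA scheme for smaller clusters
    "RAID-5 (4+1)": 1.25,  # vSAN ESA scheme for larger clusters
}


def usable_tb(raw_tb: float, policy: str) -> float:
    """Approximate usable capacity, ignoring slack space, metadata, and compression."""
    return raw_tb / OVERHEAD[policy]
```

For 100 TB of raw capacity, this gives about 50 TB usable with RAID-1 versus 80 TB with RAID-5 (4+1).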

This table summarizes the configurations of the newly added platforms:

To learn more about the VE-660 and VP-760 platforms, please check Mike Athanasiou’s VxRail’s Latest Hardware Evolution blog. To learn more about Intel® AMX capability set, make sure to check out the VxRail and Intel® AMX, Bringing AI Everywhere blog, authored by Una O’Herlihy.

VCF on VxRail LCM updates

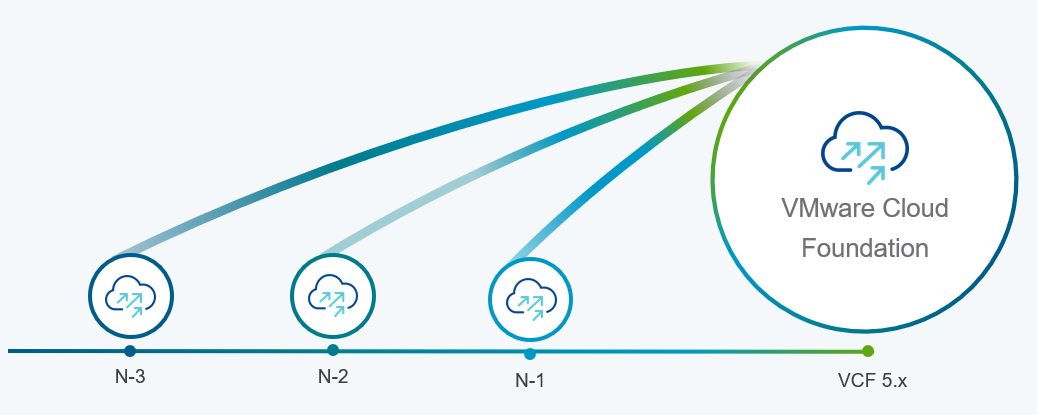

Support upgrades to VCF 5.1.1 from existing VCF 4.4.x and higher environments (N-3 upgrade support)

Customers who have already upgraded to VCF 5.x are familiar with the concept of the skip-level upgrade. It allows them to upgrade directly to the latest 5.x release without first upgrading to the interim versions. This significantly reduces the time required to perform the upgrade and enhances the overall upgrade experience. VCF 5.1.1 introduces so-called “N-3” upgrade support (as illustrated in the following diagram), which extends skip-level upgrades to VCF 4.4.x. This means customers can now perform a direct LCM upgrade from VCF 4.4.x, 4.5.x, 5.0.x, and 5.1.0 to VCF 5.1.1.
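The supported source range for the direct upgrade can be expressed as a simple version check. The Python sketch below is only an illustration, because SDDC Manager performs its own compatibility checks. `direct_upgrade_to_511_supported` is a hypothetical helper that assumes purely numeric, dot-separated version strings.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn '4.5.2' into (4, 5, 2); assumes numeric dot-separated parts."""
    return tuple(int(part) for part in version.split("."))


def direct_upgrade_to_511_supported(current: str) -> bool:
    """True if `current` is in the 4.4.x to 5.1.0 range listed for a direct upgrade to 5.1.1."""
    v = parse_version(current)
    return (4, 4) <= v[:2] and v < (5, 1, 1)
```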

VCF licensing changes

Simplified licensing using a single solution license key

Starting with VCF 5.1.1, vCenter Server, ESXi, and TKG component licenses are now entered using a single “VCF Solution License” key. This helps to simplify the licensing by minimizing the number of individual component keys that require separate management. VMware NSX Networking, HCX, and VMware Aria Suite components are automatically entitled from the vCenter Server post-deployment. The single licensing key and existing keyed licenses will continue to work in parallel.

Removal of VCF+ cloud-connected subscriptions as a supported VCF licensing type

The other significant licensing change is the deprecation of VCF+ licensing, which the new subscription model has replaced.

Support for deploying or expanding VCF instances using Evaluation Mode

VMware Cloud Foundation 5.1.1 allows deploying a new VCF instance in evaluation mode without entering license keys. An administrator has 60 days to enter licensing for the deployment, and SDDC Manager is fully functional during this period. The workflows for expanding a cluster, adding a new cluster, or creating a VI workload domain also provide an option to license later within a 60-day timeframe.
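Tracking the evaluation window is simple date arithmetic. The Python sketch below assumes the 60-day period starts on the deployment date; exactly how SDDC Manager counts the window is not stated here. Both helpers are hypothetical.

```python
from datetime import date, timedelta

EVALUATION_PERIOD = timedelta(days=60)


def license_deadline(deployed_on: date) -> date:
    """Deadline for entering license keys, assuming the window starts at deployment."""
    return deployed_on + EVALUATION_PERIOD


def days_remaining(deployed_on: date, today: date) -> int:
    """Days left in the evaluation window (never negative)."""
    return max((license_deadline(deployed_on) - today).days, 0)
```

Under this assumption, a deployment on 26 March 2024 would need licensing by 25 May 2024.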

For more comprehensive information about changes in VCF licensing, please consult the VMware website.

Core VxRail enhancements

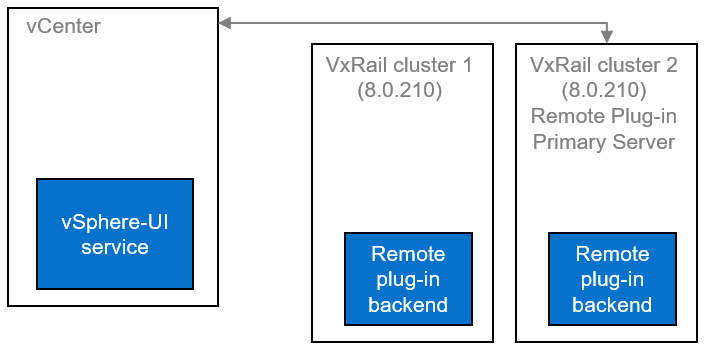

Support for remote vCenter plug-in

One of the notable enhancements in VxRail 8.0.210 is the adoption of the vSphere Client remote plug-in architecture. This follows the latest vSphere architecture guidelines: local plug-ins are deprecated in vSphere 8.0 and won’t be supported in vSphere 9.0. The remote plug-in architecture integrates plug-in functionality without running it inside vCenter Server. It’s a more robust architecture that separates vCenter Server from plug-ins and provides more security. It also gives more flexibility and scalability when choosing programming frameworks and introducing new features. Starting with 8.0.210, a new VxRail Manager remote plug-in is deployed in the VxRail Manager appliance.

LCM enhancements, including improved VxRail pre-checks and self-remediation of iDRAC issues

VxRail 8.0.210 also comes with several smaller features, based on customer feedback, that together improve the reliability of the LCM experience. These include:

- A VxRail Manager root disk space precheck prevents upgrade errors caused by insufficient disk space (for RPM-based upgrades).

- Self-remediation of iDRAC issues during LCM upgrades provides a more reliable firmware upgrade experience. By clearing the iDRAC job queue and resetting the iDRAC, the process may recover from a firmware update failure.
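The two ideas in this list, checking free space before an upgrade and retrying a failed update after a remediation step, can be sketched in Python as follows. This is an illustration of the pattern only, not VxRail internals: the 5 GiB threshold, the function names, and the callbacks are hypothetical.

```python
import shutil
from typing import Callable, Tuple

# Hypothetical threshold for illustration only; the real requirement is set by VxRail.
REQUIRED_FREE_BYTES = 5 * 1024**3  # 5 GiB


def root_disk_precheck(path: str = "/", required_bytes: int = REQUIRED_FREE_BYTES) -> Tuple[bool, int]:
    """Return (passed, free_bytes) for the file system that contains `path`."""
    free = shutil.disk_usage(path).free
    return free >= required_bytes, free


def update_with_remediation(
    update: Callable[[], bool],
    remediate: Callable[[], None],
    max_attempts: int = 2,
) -> bool:
    """Run `update`; after each failure except the last, run `remediate` and retry.

    In the iDRAC case described above, `remediate` stands in for clearing the
    job queue and resetting the controller (hypothetical callbacks here).
    """
    for attempt in range(1, max_attempts + 1):
        if update():
            return True
        if attempt < max_attempts:
            remediate()
    return False


if __name__ == "__main__":
    passed, free = root_disk_precheck("/")
    print(f"precheck passed={passed}, free={free} bytes")
```

Running the precheck before any change is made means a disk-space problem is reported up front instead of surfacing as a failed upgrade midway.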

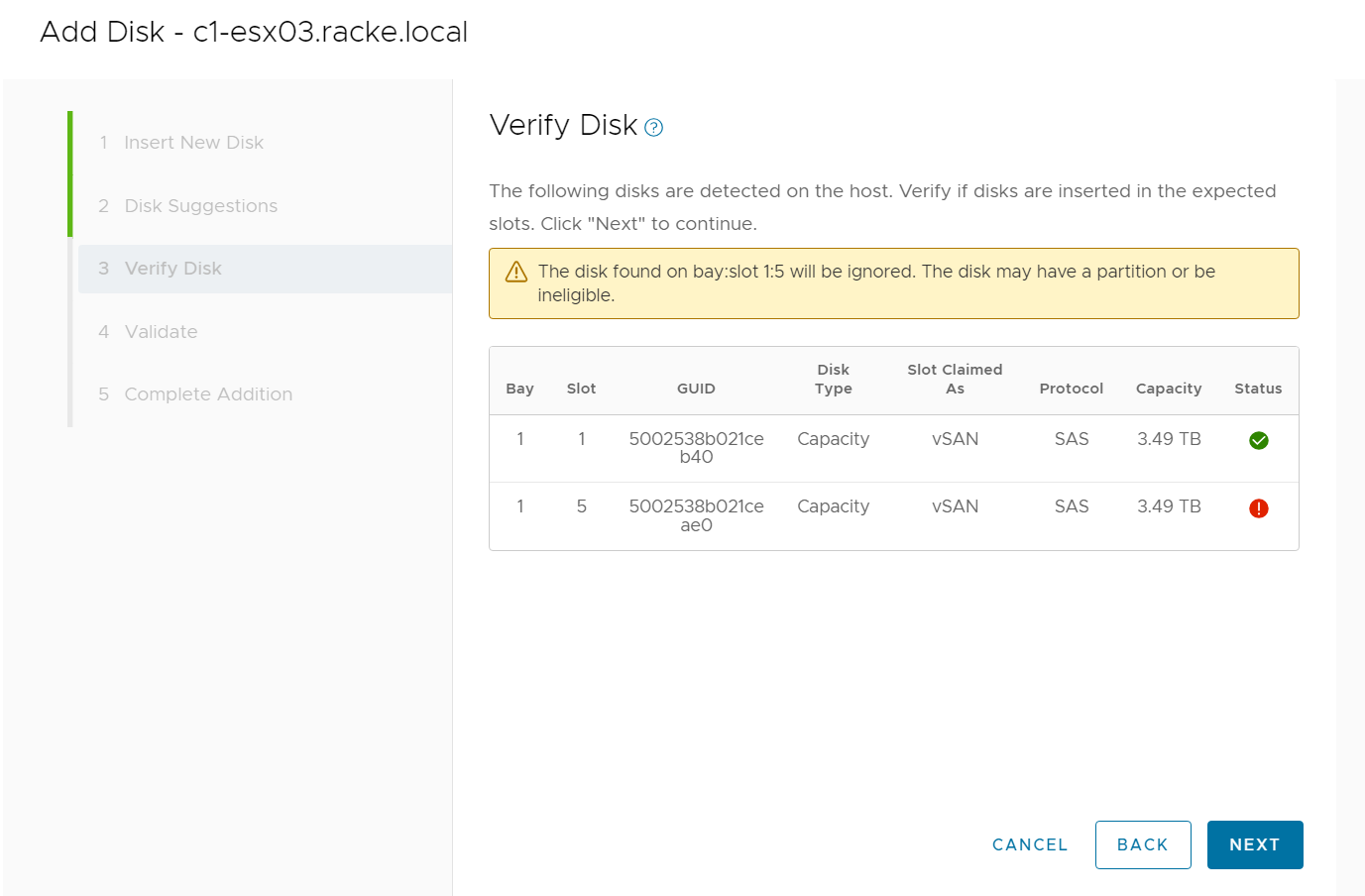

Serviceability enhancements, including improved expansion pre-checks, external storage reporting, and improved troubleshooting capabilities

Another group of features contributes to overall improved serviceability and visibility into the system:

- The UI now displays errors and warnings when a user tries to add an incompatible disk during the disk addition process (see the following figure).

- Improved hardware views report storage capacity and utilization for dynamic nodes, improving visibility into the external storage attached to dynamic nodes directly from the vSphere Client.

- VxRail cluster troubleshooting efficiency has improved thanks to better standardization of log format and event grooming for disk exhaustion.

- Improved node-add health checks reduce the risk of adding a faulty or mismatched node to a VxRail cluster.
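The idea behind a node-add health check, comparing a candidate node against the cluster before it joins, can be sketched as follows. The attributes in `NodeProfile` are a hypothetical subset chosen for illustration; the actual VxRail checks are more extensive and are not exposed as this code.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class NodeProfile:
    """Hypothetical subset of node attributes; real node-add checks cover far more."""
    software_version: str
    nic_count: int
    nic_speed_gbps: int


def node_add_mismatches(reference: NodeProfile, candidate: NodeProfile) -> List[str]:
    """List every attribute where the candidate node differs from the cluster reference."""
    problems = []
    for f in fields(NodeProfile):
        expected = getattr(reference, f.name)
        actual = getattr(candidate, f.name)
        if expected != actual:
            problems.append(f"{f.name}: expected {expected!r}, found {actual!r}")
    return problems


if __name__ == "__main__":
    cluster = NodeProfile("8.0.210", 4, 25)
    new_node = NodeProfile("8.0.210", 4, 10)
    print(node_add_mismatches(cluster, new_node))
    # ['nic_speed_gbps: expected 25, found 10']
```

An empty list means the candidate matches on every checked attribute. Otherwise, each entry names the mismatch so that it can be fixed before the node is added.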

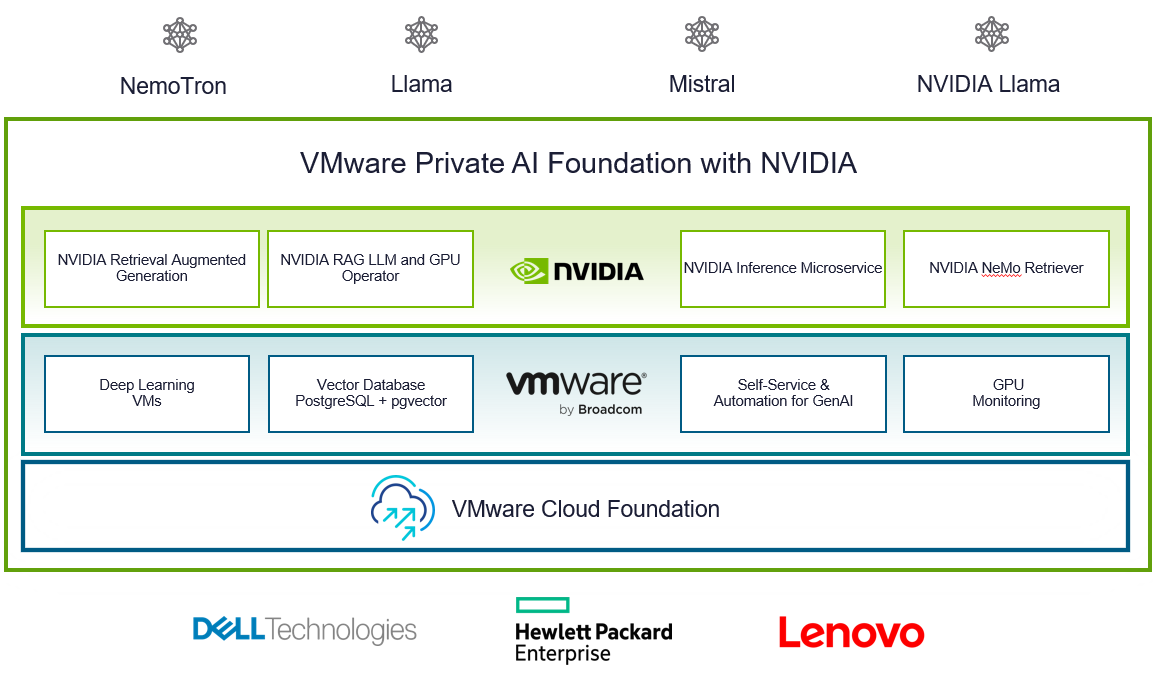

VMware Private AI Foundation with NVIDIA

With VCF 5.1.1, VMware introduces VMware Private AI Foundation with NVIDIA as an Initial Access offering. Dell Technologies Engineering intends to validate this feature when it becomes generally available.

This solution aims to enable enterprise customers to adopt Generative AI capabilities more easily and securely by providing enterprises with a cost-effective, high-performance, and secure environment for delivering business value from Large Language Models (LLMs) using their private data.

Summary

The new VCF 5.1.1 on VxRail 8.0.210 release is an excellent option for customers looking for a hardware refresh, for Gen AI-ready infrastructure to run more demanding workloads, or for higher consolidation ratios. Additional enhancements to core VxRail functionality improve the overall LCM experience, serviceability, and visibility into the system.

Thank you for your time, and please check the additional resources if you would like to learn more.

Resources

- VxRail’s Latest Hardware Evolution blog

- VxRail and Intel® AMX, Bringing AI Everywhere

- VxRail product page

- VxRail Infohub page

- VxRail Videos

- VMware Cloud Foundation on Dell VxRail Release Notes

- VCF on VxRail Interactive Demo

- VMware Product Lifecycle Matrix

Author: Karol Boguniewicz

Twitter: @cl0udguide

VxRail API—Updated List of Useful Public Resources

Fri, 09 Feb 2024 16:07:26 -0000


Well-managed companies are always looking for new ways to increase efficiency and reduce costs while maintaining excellence in the quality of their products and services. Hence, IT departments and service providers look at the cloud and Application Programming Interfaces (APIs) as the enablers for automation, driving efficiency, consistency, and cost-savings.

This blog helps you get started with VxRail API by grouping the most useful VxRail API resources available from various public sources in one place. This list of resources is updated every few months. Consider bookmarking this blog as it is a useful reference.

Before jumping into the list, it is essential to answer some of the most obvious questions:

What is VxRail API?

VxRail API is a feature of the VxRail HCI System Software that exposes management functions with a RESTful application programming interface. It is designed for ease of use by VxRail customers and ecosystem partners who want to better integrate third-party products with VxRail systems. VxRail API is:

- Simple to use—Thanks to embedded, interactive, web-based documentation and to PowerShell and Ansible modules, you can easily consume the API using a supported web browser, a familiar command-line interface for Windows and VMware vSphere admins, or Ansible playbooks.

- Powerful—VxRail offers dozens of API calls for essential operations such as automated life cycle management (LCM), and its capabilities are growing with every new release.

- Extensible—This API is designed to complement APIs and tools from VMware (such as the vSphere Automation API, PowerCLI, and the VMware Cloud Foundation on Dell EMC VxRail API), offering a familiar look and feel and vast capabilities.
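To make "RESTful" concrete, the Python sketch below prepares, but does not send, a GET request for the system resource using only the standard library. Two details are assumptions that you should verify against the VxRail API User Guide for your release: the `/rest/vxm/v<version>/` base path and HTTP Basic authentication. The host name and credentials are placeholders.

```python
import base64
import urllib.request


def build_vxrail_get(host: str, resource: str, username: str, password: str,
                     version: int = 1) -> urllib.request.Request:
    """Prepare (but do not send) a GET request to a VxRail API resource.

    Assumptions: base path /rest/vxm/v<version>/ and HTTP Basic authentication;
    verify both against the VxRail API User Guide for your release.
    """
    url = f"https://{host}/rest/vxm/v{version}/{resource.lstrip('/')}"
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        method="GET",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


if __name__ == "__main__":
    req = build_vxrail_get("vxm.example.local", "system", "administrator@vsphere.local", "changeme")
    print(req.get_method(), req.full_url)
    # To actually send it, pass req to urllib.request.urlopen() with a trusted certificate.
```

The PowerShell and Ansible modules wrap this same request/response pattern, so anything you can express this way can also be scripted in those tools.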

Why is VxRail API relevant?

VxRail API enables you to use the full power of automation and orchestration services across your data center. This extensibility enables you to build and operate infrastructure with cloud-like scale and agility. It also streamlines the integration of the infrastructure into your IT environment and processes. Instead of manually managing your environment through the user interface, the software can programmatically trigger and run repeatable operations.

More customers are embracing DevOps and Infrastructure as Code (IaC) models because they need reliable and repeatable processes to configure the underlying infrastructure resources that are required for applications. IaC uses APIs to store configurations in code, making operations repeatable and greatly reducing errors.

How can I start? Where can I find more information?

To help you navigate through all available resources, I grouped them by level of technical difficulty, starting with 101 (the simplest, explaining the basics, use cases, and value proposition), through 201, up to 301 (the most in-depth technical level).

101 Level

- Solution Brief—Dell VxRail API – Solution Brief is a concise brochure that describes the VxRail API at a high level, typical use cases, and where you can find additional resources for a quick start. I highly recommend starting your exploration from this resource.

- Learning Tool—VxRail Interactive Journey is the "go-to resource" to learn about VxRail and HCI System Software. It includes a dedicated module for the VxRail API, with essential resources to maximize your learning experience.

- On-demand Session—Automation with VxRail API is a one-hour interactive learning session delivered as part of the Tech Exchange Live VxRail Series, available on-demand. This session is an excellent introduction for anyone new to VxRail API, discussing the value, typical use cases, and how to get started.

- On-demand Session—Infrastructure as Code (IaC) with VxRail is another one-hour interactive learning session delivered as part of the Tech Exchange Live VxRail Series, available on-demand. This one is an introduction to adopting Infrastructure as Code on VxRail, with automation tools like Ansible and Terraform.

- Instructor Session—Automation with VxRail is a live, interactive training session offered by Dell Technologies Education Services. Hear directly from the VxRail team about new capabilities, the latest advancements, and what’s on the roadmap for new VxRail releases.

During the session you will:

• Learn about the VxRail ecosystem and leverage its automation capabilities

• Elevate performance of automated VxRail operations using the latest tools

• Experience live demonstrations of customer use cases and apply these examples to your environment

• Increase your knowledge of VxRail API tools such as PowerShell and Ansible modules

• Receive bonus material to support you in your automation journey

- Infographic—Dell VxRail HCI System Software RESTful API is an infographic that provides quick facts about VxRail HCI System Software differentiation. This infographic explains the value of the VxRail API.

- Whiteboard Video—Level up your HCI automation with VxRail API – This technical whiteboard video introduces you to automation with VxRail API. We discuss different ways you can access the API, and provide example use cases.

- Blog Post—Take VxRail automation to the next level by leveraging APIs is my first blog that focuses on VxRail API. It addresses some of the challenges related to managing a farm of VxRail clusters and how VxRail API can be a solution. It also covers the enhancements introduced in VxRail HCI System Software 4.7.300, such as Swagger and PowerShell integration.

- Blog Post—VxRail – API PowerShell Module Examples is a blog from my colleague David, explaining how to install and get started with the VxRail API PowerShell Modules Package.

- Blog Post—Infrastructure as Code with VxRail Made Easier with Ansible Modules for Dell VxRail – my blog with an introduction to VxRail Ansible Modules, including a demo.

- (New!) Blog Post—VxRail Edge Automation Unleashed - Simplifying Satellite Node Management with Ansible – my blog explaining the use of Ansible Modules for Dell VxRail for satellite node management, including a demo.

- Blog Post—Protecting VxRail from Power Disturbances is my second API-related blog, in which I explain an exciting use case by Eaton, our ecosystem partner, and the first UPS vendor who integrated their power management solution with VxRail using the VxRail API.

- Blog Post—Protecting VxRail From Unplanned Power Outages: More Choices Available describes another UPS solution integrated with the VxRail API, from our ecosystem partner APC (Schneider Electric).

- Demo—VxRail API – Overview is our first VxRail API demo published on the official Dell YouTube channel. Recorded using VxRail HCI System Software 4.7.300, it explains VxRail API basics, the API enhancements introduced in that version, and how you can explore the API using the Swagger UI.

- Demo—VxRail API – PowerShell Package is a continuation of the API overview demo referenced above, focusing on PowerShell integration. It was recorded using VxRail HCI System Software 4.7.300.

- Demo—Ansible Modules for Dell VxRail provides a quick overview of VxRail Ansible Modules. It was recorded using VxRail HCI System Software 7.0.x.

- (New!) Demo—Ansible Modules for Dell VxRail – Automating Satellite Node Management continues the subject of VxRail Ansible Modules, showcasing the satellite node management use case for the Edge. It was recorded using VxRail HCI System Software 8.0.x.

201 Level

- (Updated!) HoL—Hands On Lab: HOL-0310-01 - Scalable Virtualization, Compute, and Storage with the VxRail REST API allows you to experience the VxRail API in a virtualized demo environment using various tools. It premiered at Dell Technologies World 2022 and is a very valuable self-learning tool for the VxRail API. It includes five modules:

- Module 1: Getting Started (~10 min / Basic) - The aim of this module is to get the lab up and running and dip your toe in the VxRail API waters using our web-based interactive documentation.

• Access interactive API documentation

• Explore available VxRail API functions

• Test a VxRail API function

• Explore Dell Technologies' Developer Portal

- Module 2: Monitoring and Maintenance (~15 min / Intermediate) - In this module, you will navigate our VxRail PowerShell Modules and VxRail Manager to become more familiar with the options available to monitor the health indicators of a VxRail cluster. Some maintenance tasks also show how these functions can simplify the management of your environment.

Monitoring the health of a VxRail cluster:

• Check the cluster's overall health

• Check the health of the nodes

• Check the individual components of a node

Maintenance of a VxRail cluster:

• View iDRAC IP configuration

• Collect a log bundle of the VxRail cluster

• Cluster shutdown (Dry run)

- Module 3: Add & Update VxRail Satellite Nodes (~30 min / Intermediate) - In this module, you will experiment with adding and updating VxRail satellite nodes using the VxRail API and VxRail API PowerShell Modules.

• Add a VxRail satellite node

• Update a VxRail satellite node

- Module 4: Cluster Expansion or Scaling Out (~25 min / Advanced) - In this module, you will experience our official VxRail Ansible Modules and see how easy it is to expand the cluster with an additional node.

• Connect to Ansible server

• View VxRail Ansible Modules documentation

• Add a node to the existing VxRail cluster

• Verify cluster state after expansion

- Module 5: Lifecycle Management or LCM (~25 min / Advanced) - In this module, you will use our VxRail APIs with Postman. You will see how easy LCM operations are with our VxRail API and software.

• Explore Postman

• Generate a compliance report

• Explore the LCM pre-check and LCM upgrade API functions used to upgrade the cluster to the next VxRail version.


Note: If you’re a customer, you will need to ask your Dell or partner account team to create a session for you and provide a hyperlink to access this lab.

- vBrownBag session—vSphere and VxRail REST API: Get Started in an Easy Way is a vBrownBag community session that took place at the VMworld 2020 TechTalks Live event. There are no slides and no “marketing fluff,” but an extensive demo showing:

- How you can begin your API journey by using interactive, web-based API documentation

- How you can use these APIs from different frameworks (such as scripting with PowerShell in Windows environments) and configuration management tools (such as Ansible on Linux)

- How you can consume these APIs virtually from any application in any programming language.

- vBrownBag session—Automating large scale HCI deployments programmatically using REST APIs is a vBrownBag community session that took place at the VMworld 2021 TechTalks Live event. This session of approximately 10 minutes discusses sample use cases and the tools at your disposal, allowing you to quickly jumpstart your API journey in various frameworks. It includes a demo of VxRail cluster expansion using PowerShell.

301 Level

- (Updated!) Manual—VxRail API User Guide at Dell Technologies Developer Portal is an official web-based version of the reference manual for VxRail API. It provides a detailed description of each available API function.

Make sure to check the “Tutorials” section of this web-based manual, which contains code examples for various use cases and will replace the API Cookbook over time.

- Manual—VxRail API User Guide is an official reference manual for VxRail API in PDF format. It provides a detailed description of each available API function, support information for specific VxRail HCI System Software versions, request parameters and possible response codes, successful call response data models, and example values returned. Dell Technologies Support portal access is required.

- (Updated!) Ansible Modules—The Ansible Modules for Dell VxRail available on GitHub and Ansible Galaxy allow data center and IT administrators to use Red Hat Ansible to automate and orchestrate the configuration and management of Dell VxRail.

The Ansible Modules for Dell VxRail are used for gathering system information and performing cluster-level operations. These tasks can be executed by running simple playbooks written in YAML syntax. The modules are written so that all operations are idempotent; making multiple identical requests has the same effect as making a single request.

- PowerShell Package—VxRail API PowerShell Modules is a package with VxRail.API PowerShell Modules that allows simplified access to the VxRail API, using dedicated PowerShell commands and integrated help. This version supports VxRail HCI System Software 7.0.010 or later.

Note: You must sign in to the Dell Technologies Support portal to access this link.

- API Reference—vSphere Automation API is an official vSphere REST API reference that provides API documentation, request/response samples, and usage descriptions of the vSphere services.

- API Reference—VMware Cloud Foundation on Dell VxRail API Reference Guide is an official VMware Cloud Foundation (VCF) on VxRail REST API reference that provides API documentation, request/response samples, and usage descriptions of the VCF on VxRail services.

- Blog Post—Deployment of Workload Domains on VMware Cloud Foundation 4.0 on Dell VxRail using Public API is a VMware blog explaining how you can deploy a workload domain on VCF on VxRail using the API with the curl shell command.
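The Ansible Modules entry above describes every operation as idempotent. The hypothetical Python function below is not module code. It only illustrates the property with a "desired state" operation that reports whether it changed anything, much like the `changed` flag that Ansible tasks report. Applying it twice leaves the same state as applying it once.

```python
from typing import FrozenSet, Tuple


def ensure_node_present(nodes: FrozenSet[str], node: str) -> Tuple[FrozenSet[str], bool]:
    """Desired-state operation: returns (new_state, changed).

    Applying it again with the same input leaves the state unchanged and reports
    changed=False, mirroring the 'changed' flag that Ansible tasks report.
    """
    if node in nodes:
        return nodes, False
    return nodes | {node}, True


if __name__ == "__main__":
    state = frozenset({"node-1", "node-2"})
    state, changed_first = ensure_node_present(state, "node-3")
    state, changed_second = ensure_node_present(state, "node-3")
    print(sorted(state), changed_first, changed_second)
    # ['node-1', 'node-2', 'node-3'] True False
```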

I hope you find this list useful. If so, make sure that you bookmark this blog for your reference. I will update it over time to include the latest collateral.

Enjoy your Infrastructure as Code journey with the VxRail API!

Author: Karol Boguniewicz, Senior Principal Engineering Technologist, VxRail Technical Marketing

Twitter: @cl0udguide

VxRail Edge Automation Unleashed - Simplifying Satellite Node Management with Ansible

Thu, 30 Nov 2023 17:43:03 -0000


VxRail Edge Automation Unleashed

Simplifying Satellite Node Management with Ansible

In the previous blog, Infrastructure as Code with VxRail made easier with Ansible Modules for Dell VxRail, I introduced the modules which enable the automation of VxRail operations through code-driven processes using Ansible and VxRail API. This approach not only streamlines IT infrastructure management but also aligns with Infrastructure as Code (IaC) principles, benefiting both technical experts and business leaders.

The corresponding demo is available on YouTube:

The previous blog laid the foundation for this continued journey, in which we explore more advanced Ansible automation techniques with a focus on satellite node management in the VxRail ecosystem. I highly recommend reading that blog before diving deeper into the topics discussed here, because the concepts shown in this demo will then be much easier to absorb.

What are the VxRail satellite nodes?

VxRail satellite nodes are individual nodes designed specifically for deployment in edge environments and are managed through a centralized primary VxRail cluster. Satellite nodes do not leverage vSAN to provide storage resources and are an ideal solution for those workloads where the SLA and compute demands do not justify even the smallest of VxRail 2-node vSAN clusters.

Satellite nodes enable customers to achieve uniform, centralized operations in the data center and at the edge, with VxRail management throughout. This includes comprehensive, automated lifecycle management for VxRail satellite nodes that covers both hardware and software and significantly reduces the need for manual intervention.

To learn more about satellite nodes, please check the following blogs from my colleagues:

- David’s introduction: Satellite nodes: Because sometimes even a 2-node cluster is too much

- Stephen’s update on enhancements: Enhancing Satellite Node Management at Scale

Automating VxRail satellite node operations using Ansible

You can leverage the Ansible Modules for Dell VxRail to automate various VxRail operations, including more advanced use cases, like satellite node management. It’s possible today by using the provided samples available in the official repository on GitHub.

Have a look at the following demo, which leverages the latest available version of these modules at the time of recording – 2.2.0. In the demo, I discuss and demonstrate how you can perform the following operations from Ansible:

- Collecting information about the number of satellite nodes added to the primary VxRail cluster

- Adding a new satellite node to the primary VxRail cluster

- Performing lifecycle management operations – staging the upgrade bundle and executing the upgrade on managed satellite nodes

- Removing a satellite node from the primary cluster

The examples used in the demo are slightly modified versions of the following samples from the modules' documentation on GitHub. If you’d like to replicate these in your environment, here are the links to the corresponding samples for your reference, which need slight modification:

- Retrieving system information: systeminfo.yml

- Adding a new satellite node: add_satellite_node.yml

- Performing LCM operations: upgrade_host_folder.yml (both staging and upgrading as explained in the demo)

- Removing a satellite node: remove_satellite_node.yml.

In the demo, you can also observe an interesting feature of the Ansible Modules for Dell VxRail that is shown in action but not explained explicitly. Some VxRail API functions are available in multiple versions. Typically, a new version is introduced when new features are added to the VxRail HCI System Software, and the previous versions are retained to provide backward compatibility. One example is “GET /vX/system”, which is used to retrieve the number of satellite nodes; this property was introduced in version 4. If you don’t specify a version, the modules automatically select the latest supported version, which simplifies the end-user experience.
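The version-selection behavior described above can be sketched as a small Python function. This is a hypothetical illustration of the rule, not the modules' actual implementation. If a version is requested, it is used only when it is supported and new enough. Otherwise, the newest supported version at or above a required minimum is chosen, for example 4 when the satellite node count is needed.

```python
from typing import Iterable, Optional


def select_api_version(supported: Iterable[int], requested: Optional[int] = None,
                       minimum: int = 1) -> int:
    """Pick the API version to call (hypothetical helper).

    - If the caller requested a version, use it when it is supported and new enough.
    - Otherwise use the newest supported version that is at least `minimum`.
    """
    available = sorted(v for v in supported if v >= minimum)
    if not available:
        raise ValueError(f"no supported API version >= {minimum}")
    if requested is None:
        return available[-1]
    if requested not in available:
        raise ValueError(f"version {requested} is not supported or is older than {minimum}")
    return requested


if __name__ == "__main__":
    print(select_api_version([1, 2, 3, 4]))             # 4
    print(select_api_version([1, 2, 3, 4], minimum=4))  # 4
```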

How can you get more hands-on experience with automating VxRail operations programmatically?

The above demo, which covers satellite node management using Ansible, was configured in the VxRail API hands-on lab, available in the Dell Technologies Demo Center. With the help of the Demo Center team, we built this lab as a self-education tool for learning the VxRail API and how it can be used to automate VxRail operations through various methods: the built-in, interactive, web-based documentation, VxRail API PowerShell Modules, Ansible Modules for Dell VxRail, and Postman.

The hands-on lab provides a safe VxRail API sandbox, where you can easily start experimenting by following the exercises from the lab guide or trying some other use cases on your own without any concerns about making configuration changes to the VxRail system.

The lab was refreshed for the Dell Technologies World 2023 conference to use VxRail HCI System Software 8.0.x and the latest version of the Ansible Modules. If you’re a Dell partner, you should have direct access. If you’re a customer who would like access, please contact your Dell or Dell partner account SE. The lab is available in the catalog as “HOL-0310-01 - Scalable Virtualization, Compute, and Storage with the VxRail REST API”.

Conclusion

In the fast-evolving landscape of IT infrastructure, the ability to automate operations efficiently is not just a convenience but a necessity. Using satellite node management as an example, we’ve explored how Ansible Modules for Dell VxRail can meet this need. We encourage you to embrace the full potential of VxRail automation using the VxRail API with Ansible or other tools. If this is new to you, you can gain experience by experimenting with the hands-on lab available in the Demo Center catalog.

Resources

- Previous blog: Infrastructure as Code with VxRail Made Easier with Ansible Modules for Dell VxRail

- The “master” blog containing a curated list of publicly-available educational resources about the VxRail API: VxRail API - Updated List of Useful Public Resources

- Ansible Modules for Dell VxRail on GitHub, which is the central code repository for the modules. It also contains complete product documentation and examples.

- Dell Technologies Demo Center, which includes VxRail API hands-on lab.

Author: Karol Boguniewicz, Senior Principal Engineering Technologist, VxRail Technical Marketing

Twitter/X: @cl0udguide

LinkedIn: https://www.linkedin.com/in/boguniewicz/

Announcing VMware Cloud Foundation 5.0 on Dell VxRail 8.0.100

Thu, 22 Jun 2023 13:00:59 -0000


A more flexible and scalable hybrid cloud platform with simpler upgrades from previous releases

The latest release of the co-engineered turnkey hybrid cloud platform is now available, and I wanted to take this great opportunity to discuss its enhancements.

Many new features are included in this major release, including support for the latest VCF and VxRail software component versions based on the latest vSphere 8.0 U1 virtualization platform generation, and more. Read on for the details!

In-place upgrade lifecycle management enhancements

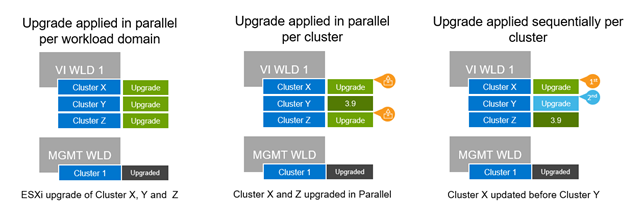

Support for automated in-place upgrades from VCF 4.3.x and higher to VCF 5.0

This is the most significant feature our customers have been waiting for. In the past, due to significant architectural changes between major VCF releases and their SDDC components (such as NSX), a migration was required to move from one major version to the next. (Moving from VCF 4.x to VCF 5.x is considered a major version upgrade.) In this release, this type of upgrade is now drastically improved.

After SDDC Manager has been upgraded to version 5.0 (by downloading the latest SDDC Manager update bundle and performing an in-place, automated LCM update orchestrated by SDDC Manager), an administrator can follow the new built-in SDDC Manager in-place upgrade workflow. The workflow is designed to upgrade the environment without any migrations. Domains and clusters can be upgraded sequentially or in parallel. This reduces the number and duration of maintenance windows and allows administrators to complete an upgrade in less time. In addition, VI domain skip-level upgrade support streamlines the upgrade path to VCF 5.0. Domains running VCF 4.3.x BOMs can skip the intermediate 4.4.x and 4.5.x releases, and domains running VCF 4.4.x BOMs can skip 4.5.x. All of this is performed automatically as part of the native SDDC Manager LCM workflows.
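The skip-level rule above can be expressed as a short Python sketch. It treats VCF 4.3.x, 4.4.x, and 4.5.x as the supported sources for an in-place upgrade to 5.0, as stated in this section, and lists the intermediate release trains that a given source skips. The helper names are hypothetical. Always confirm supported upgrade paths in the release notes.

```python
from typing import List, Tuple

# Source releases described as supporting an automated in-place upgrade to VCF 5.0.
DIRECT_SOURCES = [(4, 3), (4, 4), (4, 5)]


def major_minor(version: str) -> Tuple[int, int]:
    """Convert '4.3.1' to (4, 3)."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def skipped_releases(source: str) -> List[str]:
    """Return the intermediate 4.x release trains skipped by going straight to 5.0."""
    mm = major_minor(source)
    if mm not in DIRECT_SOURCES:
        raise ValueError(f"VCF {source} is not a supported source for an in-place upgrade to 5.0")
    return [f"{major}.{minor}.x" for major, minor in DIRECT_SOURCES if (major, minor) > mm]


if __name__ == "__main__":
    print(skipped_releases("4.3.1"))  # ['4.4.x', '4.5.x']
    print(skipped_releases("4.4.0"))  # ['4.5.x']
```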

What does this look like from the VCF on VxRail administrator’s perspective? The administrator is first notified that a new SDDC Manager 5.0 upgrade is available. Administrators will be guided first to update their SDDC Manager instance to version 5.0. With SDDC Manager 5.0 in place, administrators can take advantage of the new enhancements which streamline the in-place upgrade process that can be used for the remaining components in the environment. These enhancements follow VMware best practices, reduce risk, and allow administrators to upgrade the full stack of the platform in a staged manner. These enhancements include:

- Context aware prechecks

- vRealize Suite prechecks

- Config drift awareness

- vCenter Server migration workflow

- Licensing update workflow

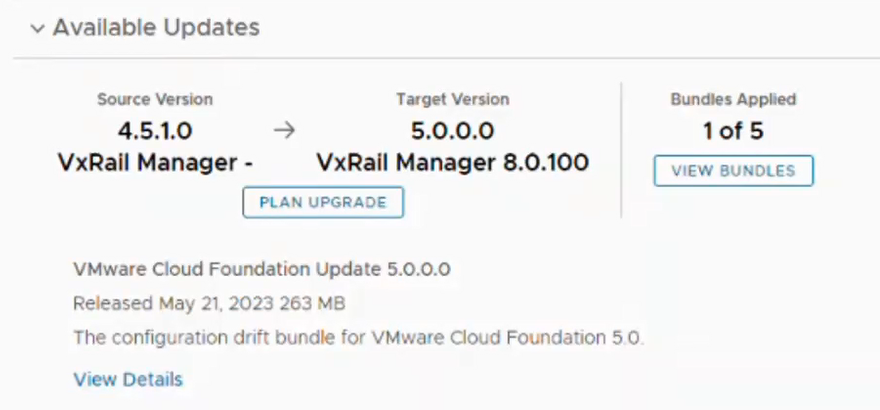

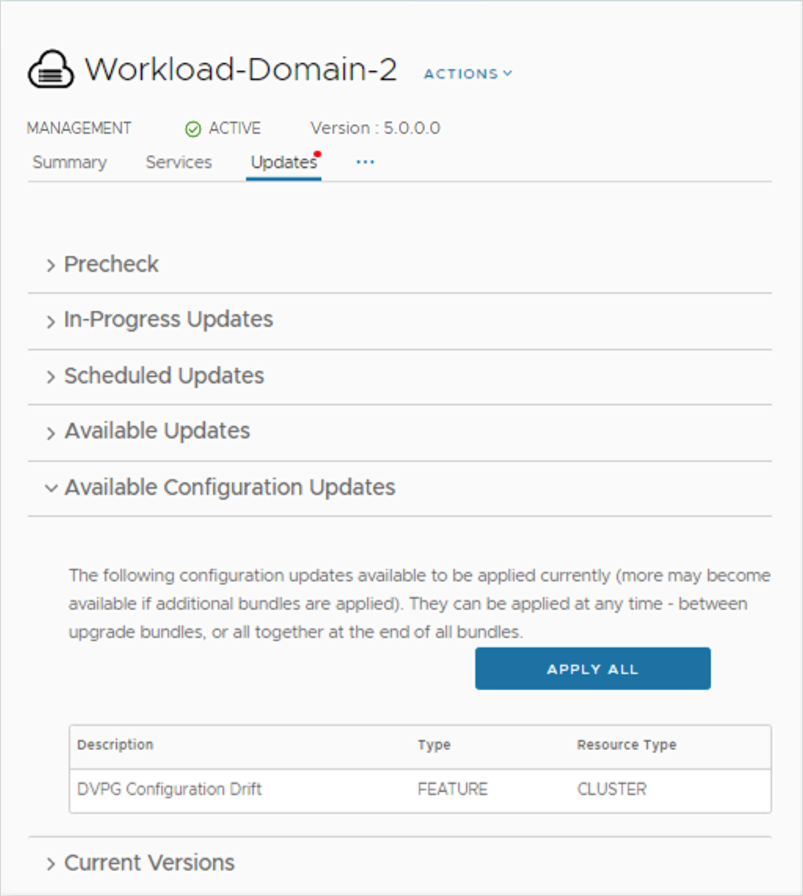

The following image highlights part of the new upgrade experience from the SDDC Manager UI. First, on the updates tab for a given domain, we can see the availability of the upgrade from VCF 4.5.1 to VCF 5.0.0 on VxRail 8.0.100 (Note: In this example, the first upgrade bundle for the SDDC Manager 5.0 was already applied.)

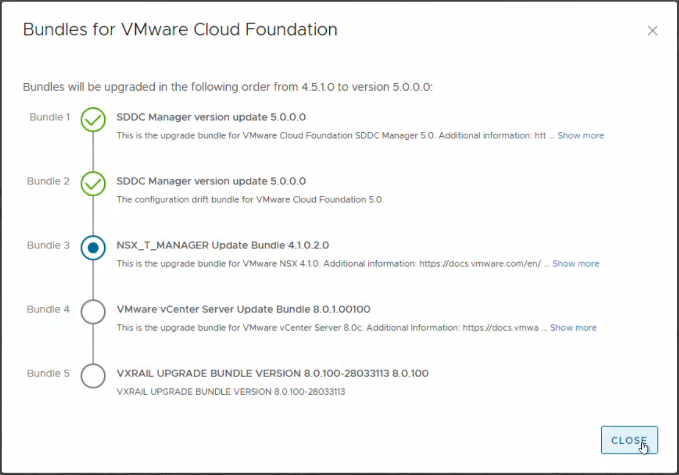

When the administrator clicks View Bundles, SDDC Manager displays a high-level workflow that highlights the upgrade bundles that would be applied, and in which order.

To see the in-place upgrade in action, check out the following demo:

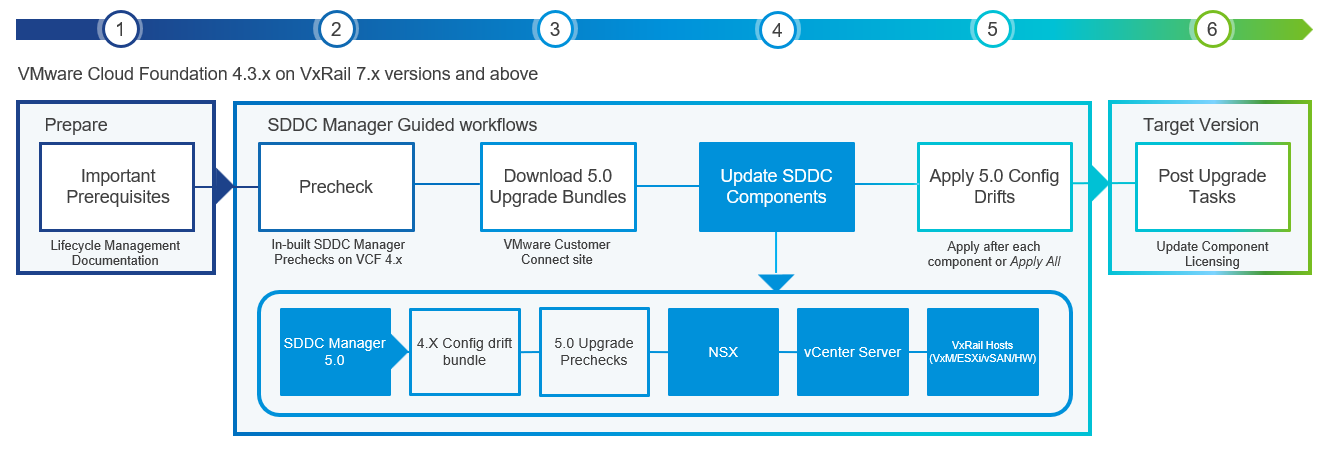

Now let’s dive a little deeper into the upgrade workflow steps. The following diagram depicts the end-to-end workflow for performing an in-place LCM upgrade from VCF 4.3.x/4.4.x/4.5.x to VCF 5.0 for the management domain.

The in-place upgrade workflow for the management domain consists of the following six steps:

- Plan and prepare by ensuring all important prerequisites are met (for example, the minimum supported VCF on VxRail version for an in-place upgrade is validated, in-place upgrade supported topologies are being used, and so on).

- Run an update precheck and resolve any issues before starting the upgrade process.

- Download the VMware Cloud Foundation and VxRail Upgrade Bundles from the Online Depot within SDDC Manager using a MyVMware account and a Dell support online depot account respectively.

- Upgrade components using the automated guided workflow, including SDDC Manager, NSX-T Data Center, vCenter Server for VCF, and VxRail hosts.

- Apply configuration drifts, which capture required configuration changes between release versions.

- When the upgrade is completed, update component licensing using the built-in SDDC Manager workflow (only applicable for VCF instances deployed using perpetual licensing).
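The six steps are strictly ordered, and a failure should stop the run before later steps start. The Python sketch below models that behavior with placeholder actions. The step names paraphrase the list above. The runner itself is a hypothetical illustration, not how SDDC Manager is implemented.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_upgrade_workflow(steps: Sequence[Step]) -> Tuple[List[str], Optional[str]]:
    """Run steps strictly in order and stop at the first failure.

    Returns (completed_step_names, failed_step_name_or_None).
    """
    completed: List[str] = []
    for name, action in steps:
        if not action():
            return completed, name
        completed.append(name)
    return completed, None


STEP_NAMES = [
    "plan and prepare",
    "run update precheck",
    "download bundles",
    "upgrade components",
    "apply configuration drifts",
    "update licensing",
]

if __name__ == "__main__":
    # Placeholder actions that always succeed; a real run would call SDDC Manager.
    steps = [(name, lambda: True) for name in STEP_NAMES]
    print(run_upgrade_workflow(steps))
```

Returning the last completed step together with the failing step gives the administrator a clear resume point after the issue is resolved.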

Upgrading workload domains follows a similar workflow.

If performed manually, the in-place upgrade process to VCF 5.0 on VxRail 8.0.100 from previous releases would be potentially error-prone and time-consuming. The guided, simplified, and automated experience now provided in SDDC Manager 5.0 greatly reduces the effort and risk for customers, by helping them perform this operation in a fully controlled, guided, and automated manner on their own, providing a much better user experience and better value.

SDDC Manager context aware prechecks

Keeping a large-scale cloud environment in a healthy, well-managed state is very important to achieve the desired service levels and increase the success rate of LCM operations. In SDDC Manager 5.0, prechecks have been further enhanced and are now context aware. But what does this mean?

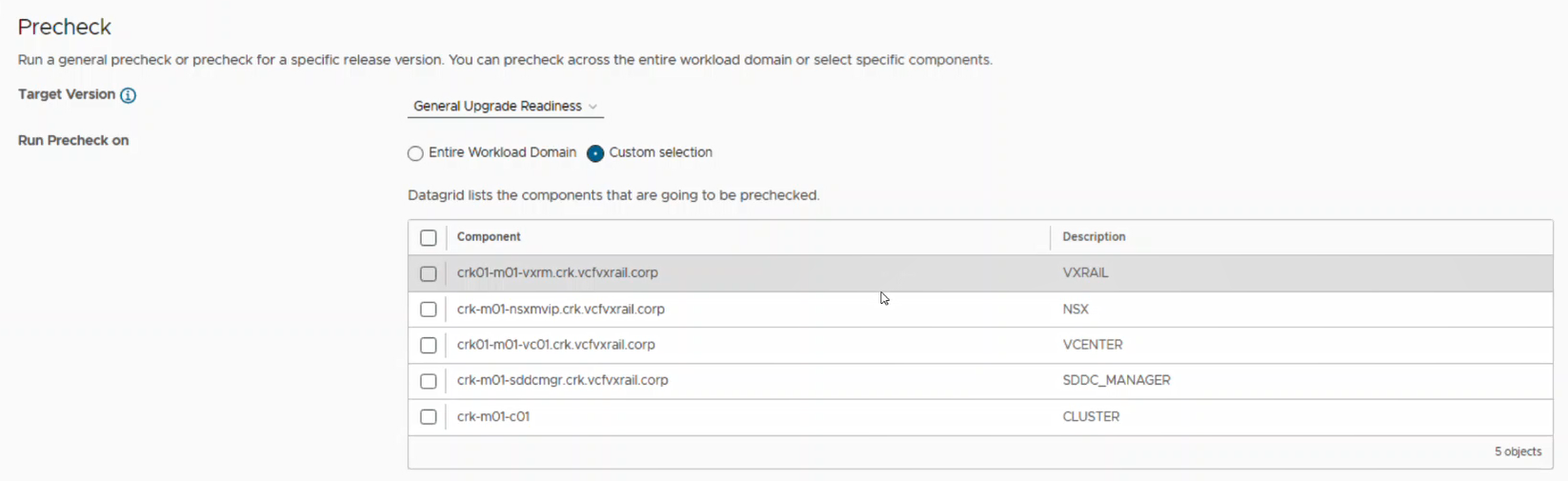

Before performing the upgrade, administrators can choose to run a precheck against a specific VCF release (“Target Version”) or run a “General Upgrade Readiness” precheck. Each type of precheck allows the administrator to select the specific VCF on VxRail objects to run the precheck on: an entire domain, a VxRail cluster, or even an individual SDDC software component such as NSX and vRealize/Aria Suite components. For example, running a precheck at the VxRail cluster level can be useful for large workload domains configured with multiple clusters. It can also reduce planned maintenance windows because components of the domain can be updated separately.

But what is the difference between the “Target Version” and “General Upgrade Readiness” precheck types? Let me explain:

- Target Version precheck - Prechecks for a specific “Target Version” will run prechecks related to the components between the source and target VCF on VxRail release. (Note that the drop-down in the SDDC Manager UI will only show target versions from VCF 5.x on VxRail 8.x after the SDDC Manager has been updated to 5.0). This feature reduces the risk of issues during the upgrade to the target VCF release.

- General Upgrade Readiness precheck - The “General Upgrade Readiness” precheck can be run at any time to plan and assess upgrade readiness without triggering the upgrade. It can also be run periodically as a health check on a given SDDC component.
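One way to picture the difference in scope is the simplified Python model below. A Target Version precheck focuses on the components whose versions change between the source and target BOM. A General Upgrade Readiness precheck considers everything currently deployed. Real prechecks validate far more than version differences. The component versions used here ("v1", "v2") are placeholders, and the functions are hypothetical.

```python
from typing import Dict, List


def target_version_precheck_scope(source_bom: Dict[str, str], target_bom: Dict[str, str]) -> List[str]:
    """Components whose version differs between the source and target BOM (or are new)."""
    return sorted(name for name, version in target_bom.items() if source_bom.get(name) != version)


def general_readiness_scope(current_bom: Dict[str, str]) -> List[str]:
    """Every currently deployed component, independent of any target release."""
    return sorted(current_bom)


if __name__ == "__main__":
    source = {"SDDC Manager": "v2", "NSX": "v1", "vCenter Server": "v1", "VxRail": "v1"}
    target = {"SDDC Manager": "v2", "NSX": "v2", "vCenter Server": "v2", "VxRail": "v1"}
    print(target_version_precheck_scope(source, target))  # ['NSX', 'vCenter Server']
```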

The following screenshot shows what this feature looks like from the system administrator perspective in the SDDC Manager UI:

Platform security and scalability enhancements

Isolated domains with individual SSO

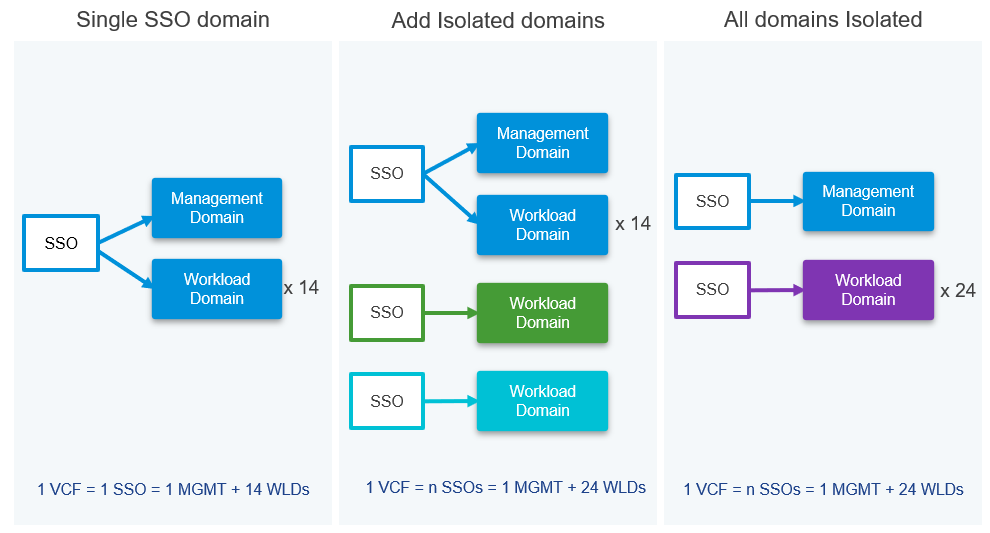

Another significant new feature I’d like to highlight is the introduction of Isolated workload domains. This has a significant impact on both the security and scalability of the platform.

In the past, VMware Cloud Foundation 4.x deployments by design have been configured to use a single SSO instance shared between the management domain and all VI workload domains (WLDs). All WLDs’ vCenter Servers are connected to each other using Enhanced Linked Mode (ELM). After a user is logged into SDDC Manager, ELM provides seamless access to all the products in the stack without being challenged to authenticate again.

VCF 5.0 on VxRail 8.0.100 deployments allow administrators to configure new workload domains using separate SSO instances. These are known as Isolated domains. This capability can be very useful, especially for Managed Service Providers (MSPs) who can allocate Isolated workload domains to different tenants with their own SSO domains for better security and separation between the tenant environments. Each Isolated SSO domain within VCF 5.0 on VxRail 8.0.100 is also configured with its own NSX instance.

As a positive side effect of this new design, the maximum number of supported domains per VCF on VxRail instance has increased to 25 (this includes the management domain and assumes all workload domains are deployed as isolated domains). This is possible because isolated domains are not joined to the management SSO domain through ELM, so they do not count toward the maximum of 15 vCenter Server instances in a single ELM configuration. In other words, increasing the security and separation between workload domains can also increase the overall scalability of the VCF on VxRail cloud platform.

The following diagram illustrates how customers can increase the scalability of the VCF on VxRail platform by adding isolated domains with their dedicated SSO:

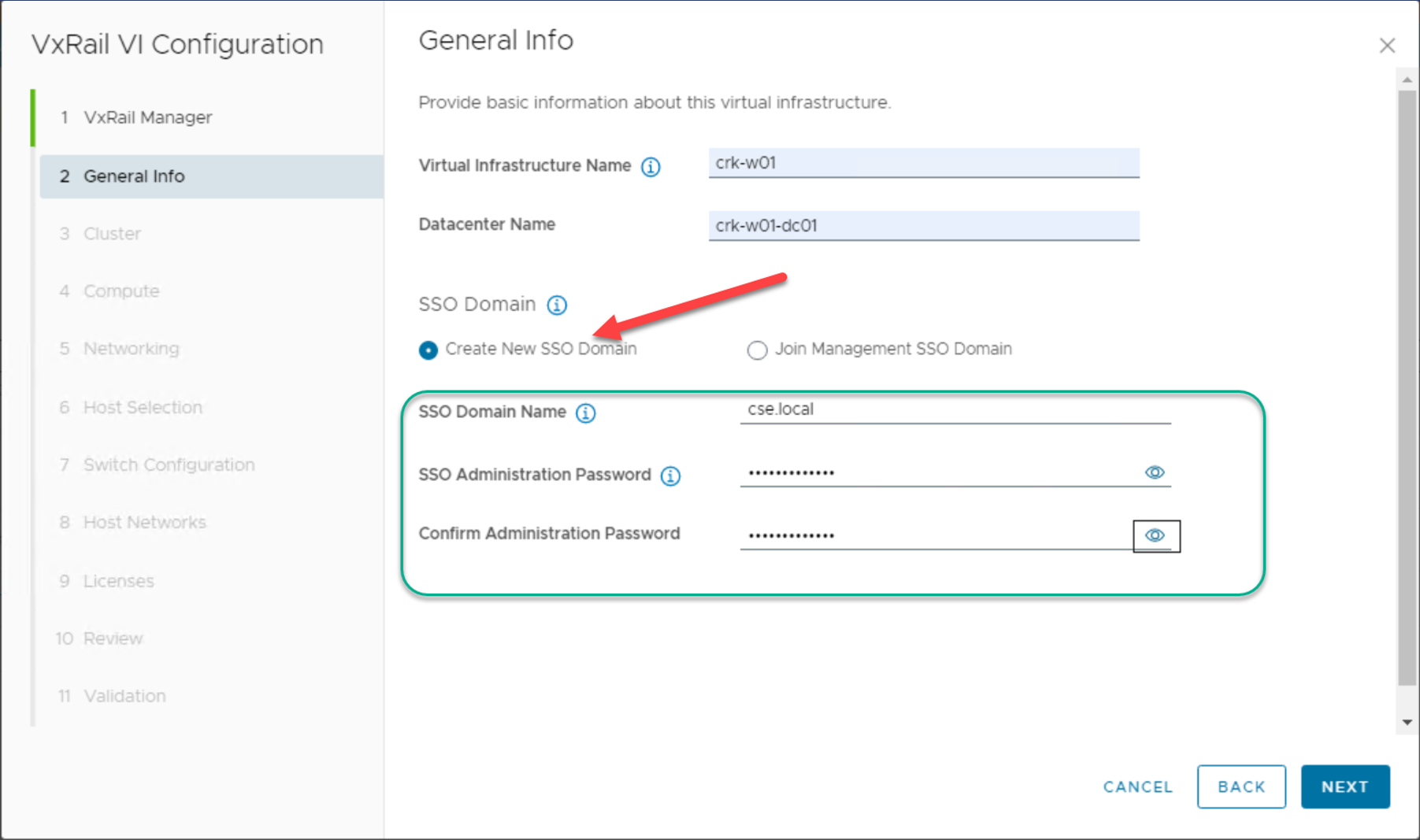

What does this new feature look like from the VCF on VxRail administrator’s perspective?

When creating a new workload domain, a new option in the UI wizard lets the administrator either join the new WLD to the management SSO domain or create a new SSO domain:

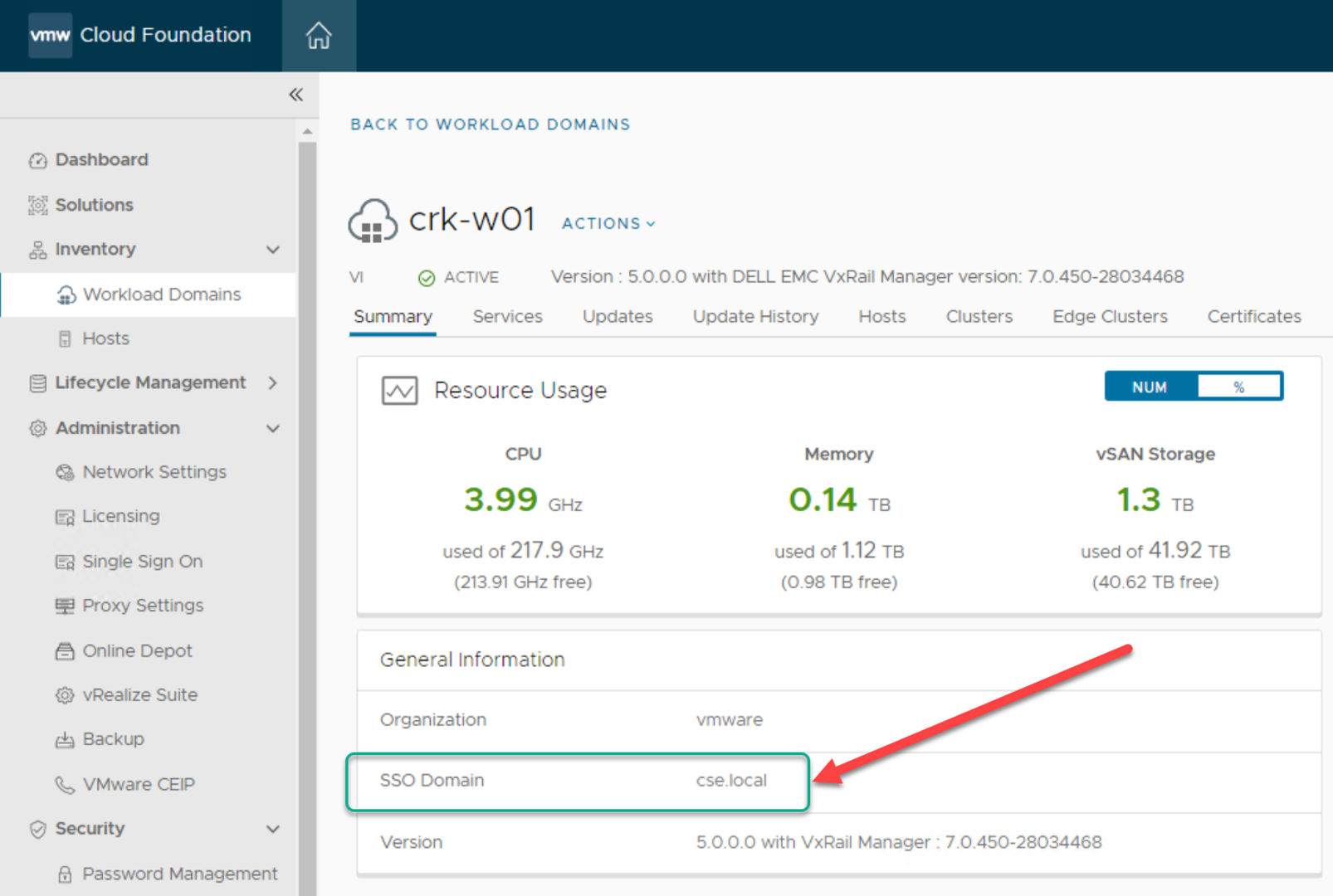

After the SSO domain is created, its information is shown in the workload domain summary screen:

General LCM updates

VxRail accurate versioning support and SDDC Manager ‘Release Versions’ UI and API enhancements

These two features should be discussed together. Beginning with VCF 5.0 on VxRail 8.0.100, enhancements to the SDDC Manager LCM service enable more granular compatibility tracking of current and previous VxRail versions against current and previous VCF versions. This makes VCF on VxRail more flexible by supporting different VxRail versions within a given VCF release. Administrators can apply and track asynchronous VxRail release patches outside of the 1:1-mapped, fully compatible VCF on VxRail release, instead of waiting for that release to become available. Information about available and supported VCF and VxRail release versions is integrated into the SDDC Manager UI and API.

Flexible WLD target versions

VCF 5.0 on VxRail 8.0.100 introduces the ability for each workload domain to run a different version, as far back as N-2 releases, where N is the current version of the management domain. With this flexibility, VCF on VxRail administrators are not forced to upgrade workload domains to match the management domain immediately. In the context of VCF 5.0 on VxRail 8.0.100, this can help admins spread upgrades over a longer period when maintenance windows are tight.
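To make the N-2 rule concrete, here is a minimal Python sketch. It assumes a hypothetical, ordered list of release names (placeholders, not real VCF on VxRail versions) and counts "N-2" as positions in that list; how SDDC Manager actually counts releases is not covered by this sketch.

```python
from typing import Sequence

# Hypothetical release train, oldest to newest. These are placeholders, not
# real VCF on VxRail versions; "N-2" is counted as positions in this list.
RELEASES = ["R1", "R2", "R3", "R4"]


def wld_version_allowed(releases: Sequence[str], mgmt: str, wld: str) -> bool:
    """Return True if the workload domain release is at most two releases
    behind the management domain release and never ahead of it."""
    n = releases.index(mgmt)  # position of N (management domain)
    w = releases.index(wld)   # position of the workload domain release
    return n - 2 <= w <= n


print(wld_version_allowed(RELEASES, "R4", "R2"))  # N-2: allowed
print(wld_version_allowed(RELEASES, "R4", "R1"))  # N-3: not allowed
```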

SDDC Manager config drift awareness

Each VMware Cloud Foundation release introduces several new features and configuration changes to its underlying components. Update bundles contain these configuration changes to ensure that an upgraded VCF on VxRail instance functions like a greenfield deployment of the same version. Configuration drift awareness allows administrators to view these parameter and configuration changes as part of the upgrade. Examples of configuration drift include a new service account or an ESXi lockdown enhancement. This added visibility helps customers better understand new features and capabilities and their impact on their deployments.
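Conceptually, drift awareness is a comparison between the current configuration and the baseline of the target release. The following Python sketch illustrates that idea with a simple dictionary diff; the parameter names and values are hypothetical and do not reflect how SDDC Manager stores or compares its configuration.

```python
from typing import Any, Dict

# Hypothetical parameter names and values, for illustration only; they do not
# reflect the actual SDDC Manager configuration schema.


def config_drift(current: Dict[str, Any], target: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Compare the current configuration with the target-release baseline and
    report what the upgrade would add, change, or remove."""
    added = {k: target[k] for k in sorted(target.keys() - current.keys())}
    removed = {k: current[k] for k in sorted(current.keys() - target.keys())}
    changed = {
        k: (current[k], target[k])
        for k in sorted(current.keys() & target.keys())
        if current[k] != target[k]
    }
    return {"added": added, "changed": changed, "removed": removed}


current = {"esxi_lockdown_mode": "disabled"}
target = {"esxi_lockdown_mode": "normal", "service_account": "svc-example"}
print(config_drift(current, target))
```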

The following screenshot shows how this new feature appears to the administrator of the platform:

SDDC Manager prechecks for vRealize/Aria Suite component versions

SDDC Manager 5.0 allows administrators to run a precheck for vRealize/VMware Aria Suite component compatibility. The vRealize/Aria Suite component precheck is run before upgrading core SDDC components (NSX, vCenter Server, and ESXi) to a newer VCF target release, and can be run from VCF 4.3.x on VxRail 7.x and above. The precheck will verify if all existing vRealize/Aria Suite components will be compatible with core SDDC components of a newer VCF target release by checking them against the VMware Product Interoperability Matrix.
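The logic of such a precheck can be sketched as a lookup of each installed component in an interoperability table. In this minimal Python sketch the matrix entries, version strings, and core release names are invented placeholders, not data from the VMware Product Interoperability Matrix, and unknown combinations are conservatively treated as incompatible.

```python
from typing import Dict, List, Set, Tuple

# Placeholder matrix: (product, version) -> core SDDC releases it works with.
# These entries are invented for illustration and are NOT taken from the
# VMware Product Interoperability Matrix.
MATRIX: Dict[Tuple[str, str], Set[str]] = {
    ("Aria Operations", "X.1"): {"core-A", "core-B"},
    ("Aria Automation", "Y.1"): {"core-A"},
}


def incompatible_components(installed: Dict[str, str], target_core: str,
                            matrix: Dict[Tuple[str, str], Set[str]]) -> List[str]:
    """List installed components not recorded as compatible with the target
    core release. Unknown product/version pairs are treated as incompatible."""
    return sorted(
        f"{product} {version}"
        for product, version in installed.items()
        if target_core not in matrix.get((product, version), set())
    )


installed = {"Aria Operations": "X.1", "Aria Automation": "Y.1"}
print(incompatible_components(installed, "core-B", MATRIX))
```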

General security updates

Enhanced certificate management

VCF 5.0 on VxRail 8.0.100 contains improved workflows that orchestrate the process of configuring Certificate Authorities and Certificate Signing Requests. Administrators can better manage certificates in VMware Cloud Foundation, with improved certificate upload and installation, and new workflows to ensure certificate validity, trust, and proper installation. These new workflows help to reduce configuration time and minimize configuration errors.

Storage updates

Support for NVMe over TCP connected supplemental storage

Supplemental storage can be used to add storage capacity to any domain or cluster within VCF, including the management domain. It is configured as a Day 2 operation. What’s new in VCF 5.0 on VxRail 8.0.100 is the support for the supplemental storage to be connected with the NVMe over TCP protocol.

Administrators can benefit from using NVMe over TCP storage in a standard Ethernet-based networking environment. NVMe over TCP can be more cost-efficient than NVMe over FC, and it eliminates the need to deploy and manage a Fibre Channel fabric for organizations that would rather avoid one.

Operations and serviceability updates

VCF+ enhancements

VMware Cloud Foundation+ has been enhanced for the VCF 5.0 release with greater scale and integrated lifecycle management. First, scalability has increased: administrators can now connect up to eight domains per VCF instance (including the management domain) to the VMware Cloud portal. Second, updates to the Lifecycle Notification Service within the VMware Cloud portal provide visibility of pending updates to any component within the VCF+ Inventory. Administrators can initiate updates through the VCF+ Lifecycle Management Notification Service, which connects back to the specific SDDC Manager instance to be updated. From there, administrators can use familiar SDDC Manager prechecks and workflows to update their environment.

VxRail hardware platform updates

Support for single socket 15G VxRail P670F

A new VxRail hardware platform is now supported, providing customers more flexibility and choice. The single-socket VxRail P670F, a performance-focused platform, is now supported in VCF on VxRail deployments and can offer customers savings on software licensing in specific scenarios.

Other asynchronous release related updates

VCF Async Patch Tool 1.1.0.1 release

While not directly tied to the VCF 5.0 on VxRail 8.0.100 release, VMware has also released the latest version of the VCF Async Patch Tool, which now supports applying patches to VCF 5.0 on VxRail 8.0.100 environments.

Summary

VMware Cloud Foundation 5.0 on Dell VxRail 8.0.100 is a new major platform release based on the latest generation of VMware’s vSphere 8 hypervisor. It provides several exciting new capabilities, especially around automated upgrades and lifecycle management. This is the first major release that provides guided, simplified upgrades between major releases directly in the SDDC Manager UI, offering a much better experience and more value for customers.

All of this makes the new VCF on VxRail release a more flexible and scalable hybrid cloud platform, with simpler upgrades from previous releases, and lays the foundation for even more beneficial features to come.

Author: Karol Boguniewicz, Senior Principal Engineering Technologist, VxRail Technical Marketing

Twitter: @cl0udguide

Additional Resources:

VMware Cloud Foundation on Dell VxRail Release Notes

VxRail page on DellTechnologies.com

VCF on VxRail Interactive Demo

Infrastructure as Code with VxRail Made Easier with Ansible Modules for Dell VxRail

Thu, 26 Jan 2023 17:32:42 -0000


Many customers are looking at Infrastructure as Code (IaC) as a better way to automate their IT environments, which is especially relevant for those adopting DevOps. However, not many customers know that they can accelerate IaC implementation with VxRail by using something we have offered for some time already: Ansible Modules for Dell VxRail.

What is it? It's an Ansible collection of modules, developed and maintained by Dell, that uses the VxRail API to automate VxRail operations from Ansible.

By the way, if you're new to the VxRail API, first watch the introductory whiteboard video available on YouTube.

Ansible Modules for Dell VxRail are well-suited for IaC use cases. They are written in such a way that all requests are idempotent and hence fault-tolerant. This means that the result of a successfully performed request is independent of the number of times it is run.
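The idea of idempotency can be illustrated with a minimal Python sketch of an "ensure" operation. This is not how the Dell modules are implemented: an in-memory set stands in for the cluster state, and the function name is hypothetical. The point is that running the same request twice leaves the system in the same state, so a failed or interrupted run can simply be repeated.

```python
def ensure_host_in_cluster(cluster: set, host: str) -> bool:
    """Hypothetical idempotent operation: add the host only if it is missing.

    Returns True if the state changed (similar to Ansible's 'changed' flag).
    """
    if host in cluster:
        return False  # desired state already reached: nothing to do
    cluster.add(host)
    return True


cluster = {"node-1", "node-2"}
first = ensure_host_in_cluster(cluster, "node-3")   # changes the state
second = ensure_host_in_cluster(cluster, "node-3")  # safe to re-run, no change
print(first, second, sorted(cluster))
```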

Besides that, instead of just providing a wrapper for individual API functions, we automated holistic workflows (for instance, cluster deployment, cluster expansion, and LCM upgrade), so customers don't have to figure out how to monitor the progress of the asynchronous VxRail API functions. These modules provide rich functionality and are maintained by Dell, which means we keep introducing new functionality over time. They are already mature: we recently released version 1.4.

Finally, we are also reducing the risk for customers willing to adopt the Ansible modules in their environment, thanks to the community support model, which allows you to interact with the global community of experts. From the implementation point of view, the architecture and end-user experience are similar to the modules we provide for Dell storage systems.

Getting Started

Ansible Modules for Dell VxRail are available publicly from the standard code repositories: Ansible Galaxy and GitHub. You don't need a Dell Support account to download and start using them.

Requirements

The requirements for the specific version are documented in the "Prerequisites" section of the description/README file.

In general, you need a Linux-based server with the supported Ansible and Python versions. Before installing the modules, you have to install a corresponding, lightweight Python SDK library named "VxRail Ansible Utility," which is responsible for the low-level communication with the VxRail API. You must also meet the minimum version requirements for the VxRail HCI System Software on the VxRail cluster.

This is a summary of requirements for the latest available version (1.4.0) at the time of writing this blog:

| Ansible Modules for Dell VxRail | VxRail HCI System Software version | Python version | Python library (VxRail Ansible Utility) version | Ansible version |
| --- | --- | --- | --- | --- |
| 1.4.0 | 7.0.400 | 3.7.x | 1.4.0 | 2.9 and 2.10 |

Installation

You can install the SDK library by using git and pip commands. For example:

git clone https://github.com/dell/ansible-vxrail-utility.git
cd ansible-vxrail-utility/
pip install .

Then you can install the collection of modules with this command:

ansible-galaxy collection install dellemc.vxrail:1.4.0

Testing

After the successful installation, we're ready to test the modules and communication between the Ansible automation server and VxRail API.

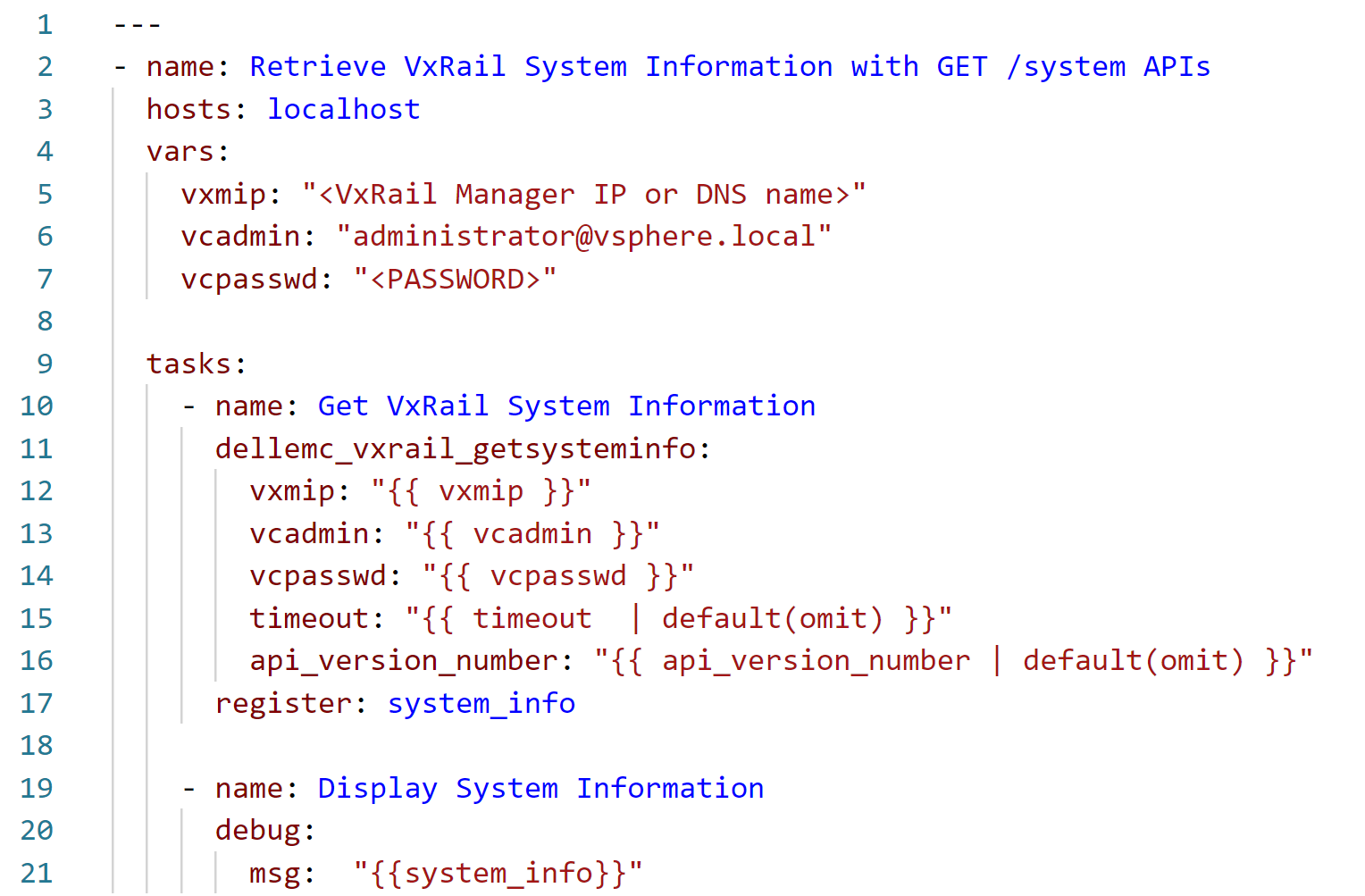

I recommend performing that check with a simple module (and corresponding API function) such as dellemc_vxrail_getsysteminfo, using GET /system to retrieve VxRail System Information.
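For comparison, this is roughly what the underlying GET /system call looks like without Ansible. It is a minimal Python sketch using only the standard library, assuming the /rest/vxm/v1/system path and HTTP basic authentication with vCenter SSO credentials; verify both against the VxRail API reference for your version. The host name and credentials are hypothetical.

```python
import base64
import json
import ssl
import urllib.request
from typing import Optional


def build_system_info_request(vxm_host: str, user: str, password: str,
                              api_version: str = "v1") -> urllib.request.Request:
    """Build a GET request for VxRail system information.

    Assumes the /rest/vxm/<version>/system path and HTTP basic authentication
    with vCenter SSO credentials; verify both against the VxRail API reference.
    """
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{vxm_host}/rest/vxm/{api_version}/system",
        method="GET",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


def get_system_info(req: urllib.request.Request,
                    context: Optional[ssl.SSLContext] = None) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, context=context) as resp:
        return json.load(resp)


# Hypothetical host name and credentials.
req = build_system_info_request("vxm.example.local",
                                "administrator@vsphere.local", "changeme")
print(req.get_method(), req.full_url)
# info = get_system_info(req)  # needs network access to a real VxRail Manager
```

The modules and the VxRail Ansible Utility hide these details, which is one reason to prefer them over hand-written HTTP calls.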

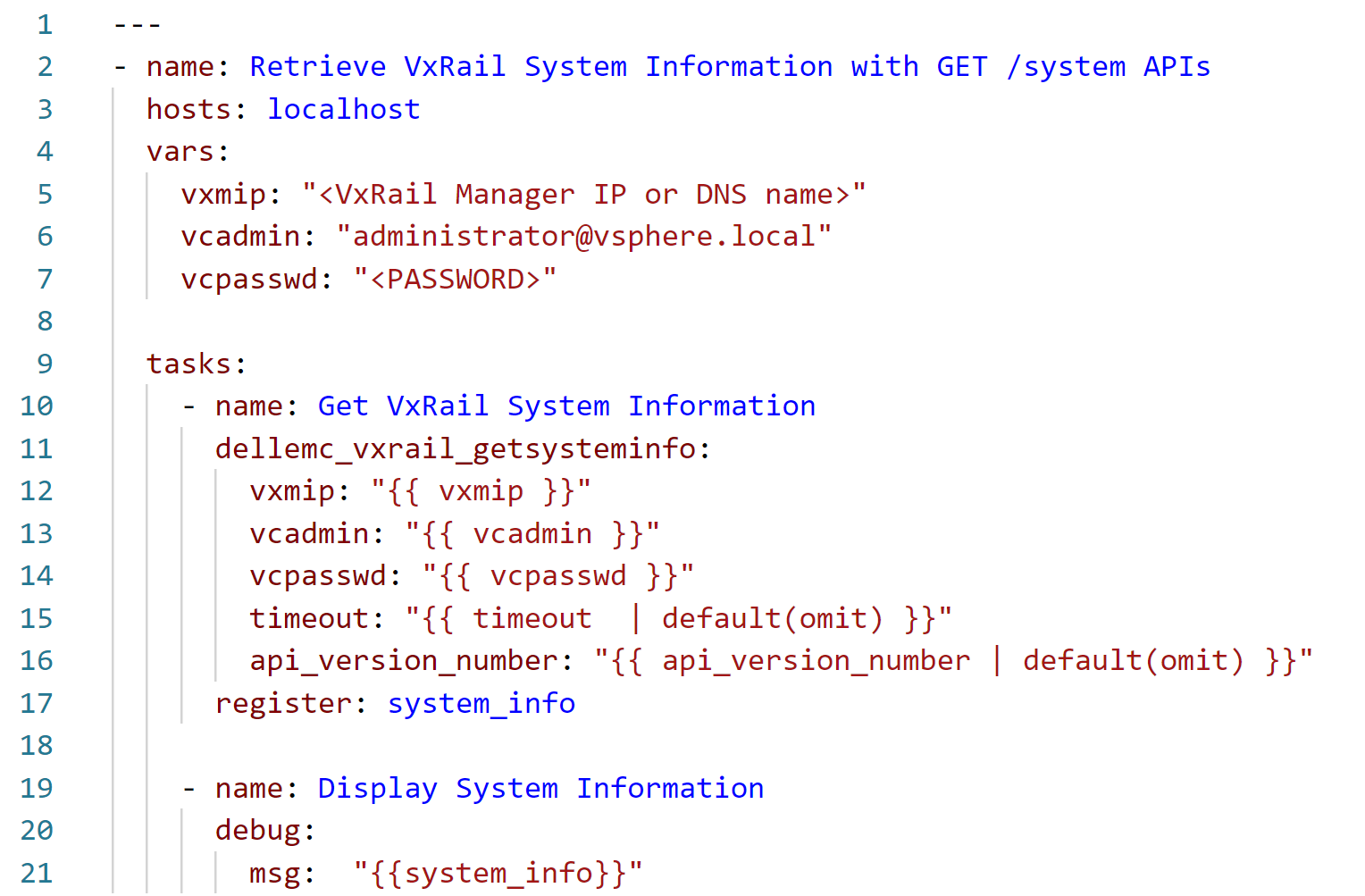

Let's have a look at this example (you can find the source code on GitHub):

Note that this playbook runs on a local Ansible server (localhost), which communicates with the VxRail API running on the VxRail Manager appliance through the SDK library. In the vars section, we need to provide, at a minimum, the authentication details for VxRail Manager required to call the corresponding API function. We could move these variable definitions to a separate file and include that file in the playbook with vars_files. We could also store sensitive information, such as passwords, in an encrypted file using the Ansible Vault feature. However, to keep this example simple, we are not using these options.

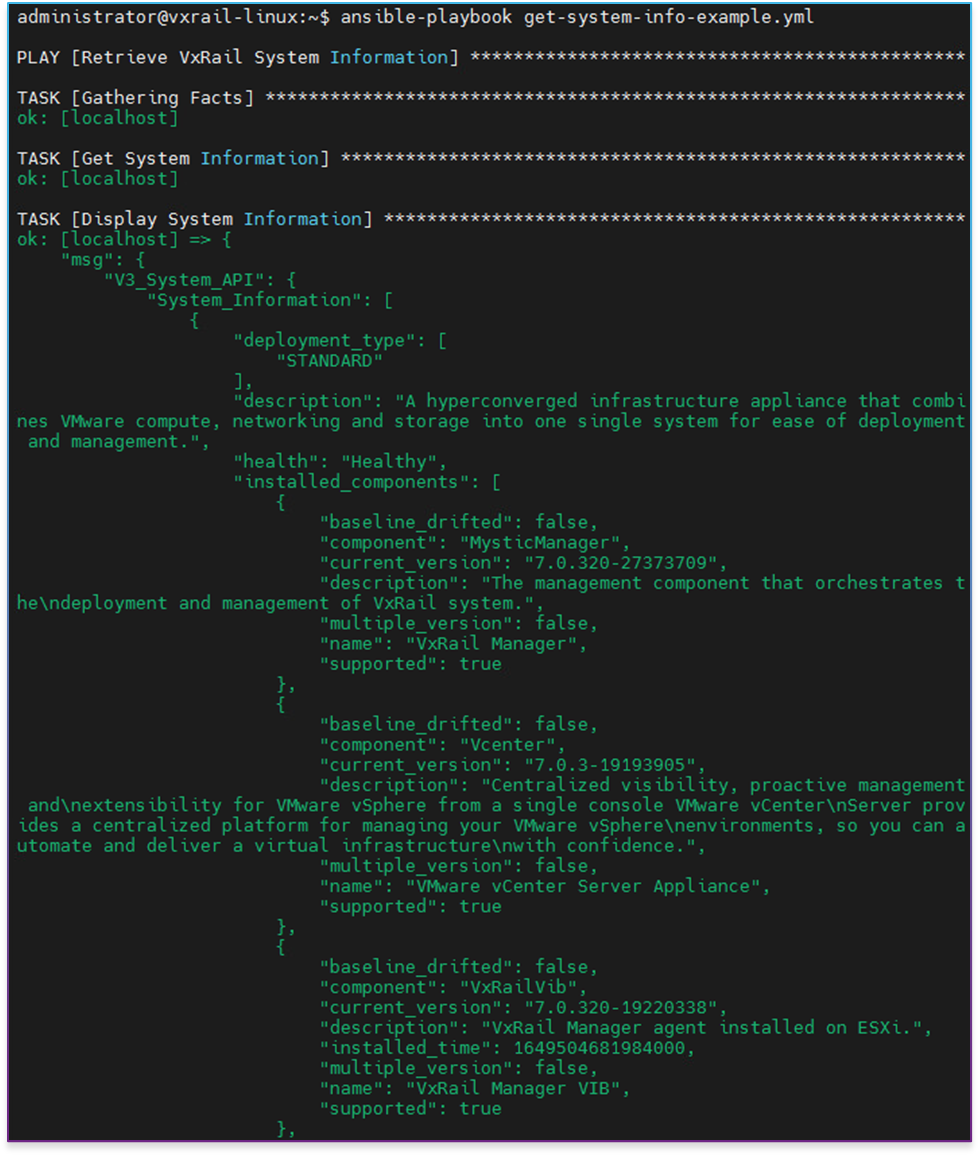

After running this playbook, we should see output similar to the following example (in this case, this is the output from the older version of the module):

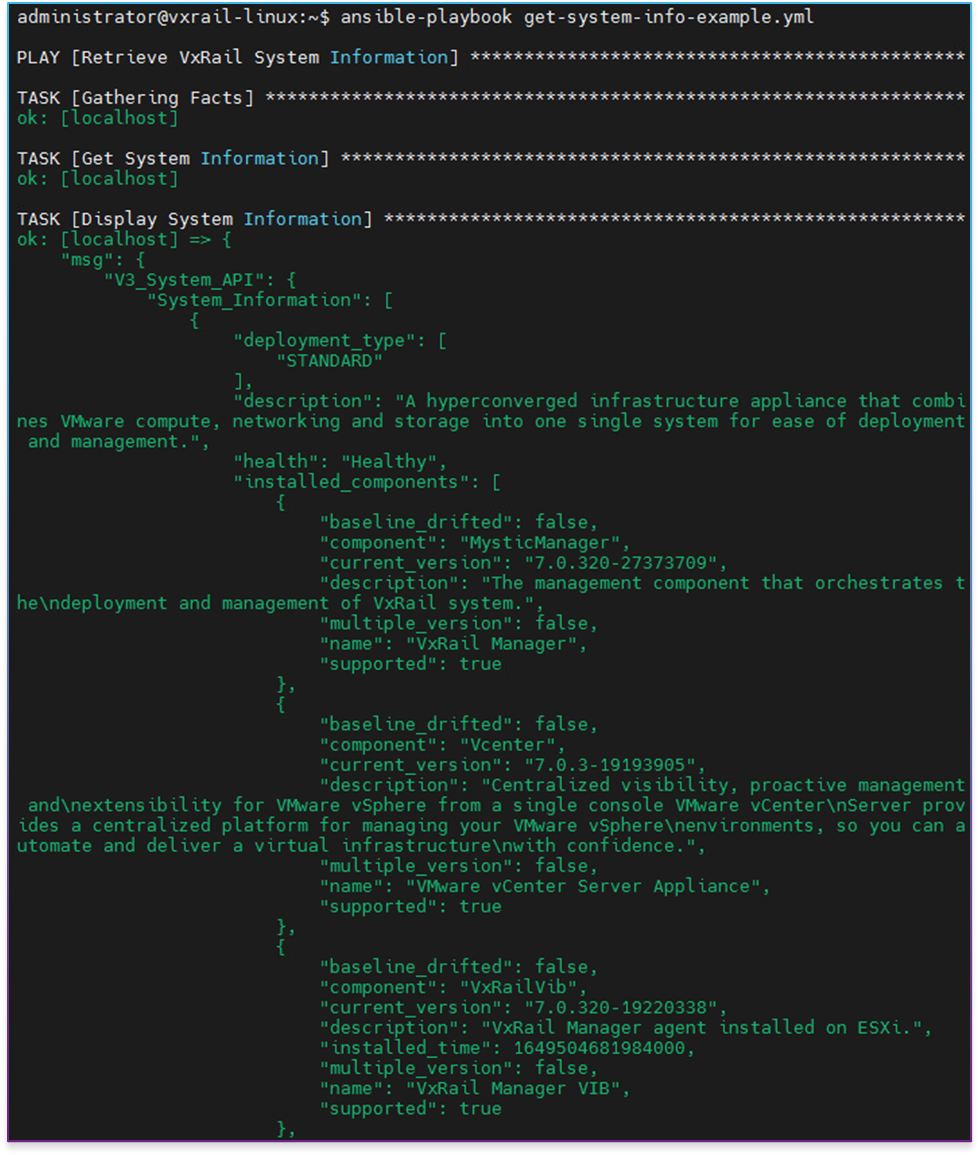

Cluster expansion example

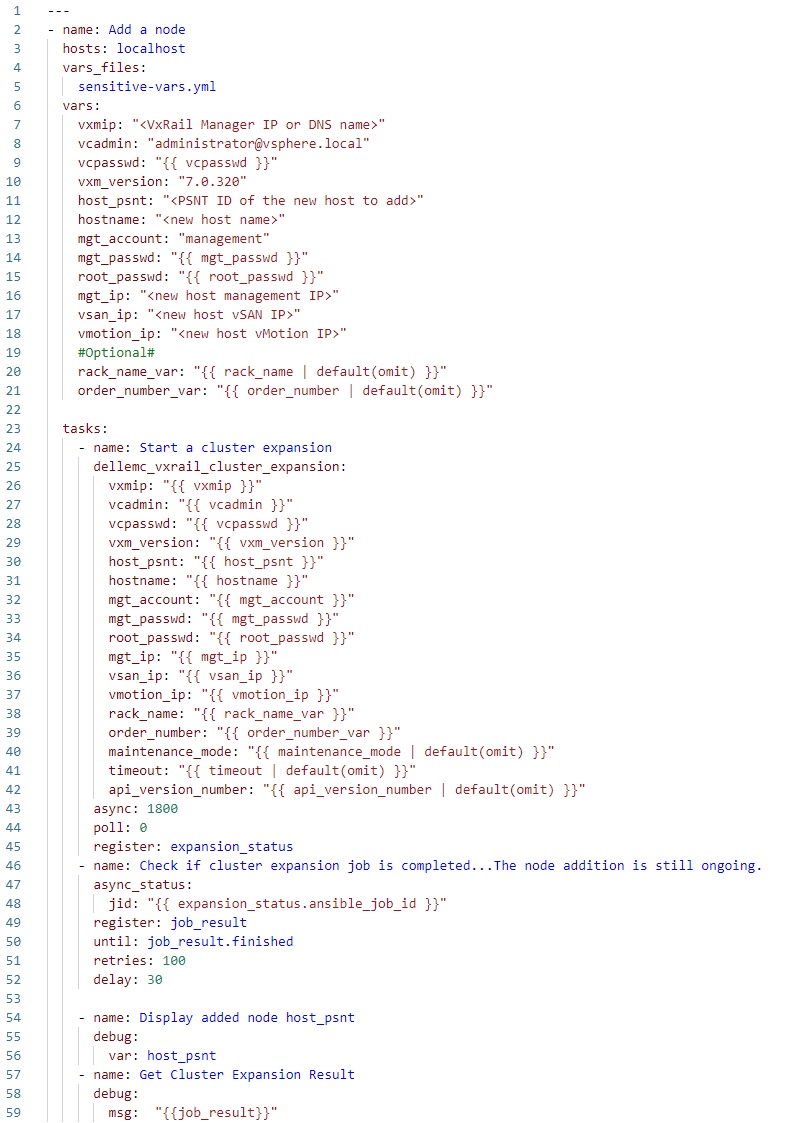

Now let's have a look at a bit more sophisticated, yet still easy-to-understand, example. A typical operation that many VxRail customers face at some point is cluster expansion. Let's see how to perform this operation with Ansible (the source code is available on GitHub):

In this case, I've moved the definitions of the sensitive variables, such as vcpasswd, mgt_passwd, and root_passwd, into a separate, encrypted Ansible Vault file, sensitive-vars.yml, following the best practice of not storing them in clear text directly in playbooks.

As you might expect, besides authentication, we now need to provide more parameters in the vars section, namely the configuration of the newly added host. We select the new host from the pool of available hosts using its PSNT identifier (the host_psnt variable).

This is an example of an operation performed by an asynchronous API function. Cluster expansion does not complete immediately; it takes minutes. Therefore, the playbook monitors the progress of the expansion in a loop until it finishes or the number of retries is exceeded. If you communicated with the VxRail API directly by using the URI module from your playbook, you would have to implement such monitoring logic on your own; here, you can use the example we provide.
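Selecting the host by PSNT amounts to finding one entry in the list of available, unconfigured hosts. The following Python sketch shows that step; the field names and PSNT values are hypothetical placeholders, not the VxRail API response schema.

```python
from typing import Dict, List

# Hypothetical data: field names and PSNT values are placeholders, not the
# VxRail API response schema for available (unconfigured) hosts.
AVAILABLE_HOSTS: List[Dict[str, str]] = [
    {"psnt": "PSNT-EXAMPLE-0001", "model": "example-model"},
    {"psnt": "PSNT-EXAMPLE-0002", "model": "example-model"},
]


def select_host_by_psnt(available: List[Dict[str, str]], psnt: str) -> Dict[str, str]:
    """Return the available host whose PSNT matches, or raise LookupError."""
    for host in available:
        if host.get("psnt") == psnt:
            return host
    raise LookupError(f"no available host with PSNT {psnt!r}")


host_psnt = "PSNT-EXAMPLE-0002"  # plays the role of the playbook's host_psnt
print(select_host_by_psnt(AVAILABLE_HOSTS, host_psnt))
```

Failing loudly when the PSNT is not found is a deliberate choice: expanding a cluster with the wrong node is worse than stopping early.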
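The monitoring loop described above follows a common polling pattern. Here is a minimal Python sketch of it, assuming a hypothetical get_state callable that returns the state of the asynchronous request and assuming COMPLETED and FAILED as terminal state names; the real state values come from the VxRail API and may differ.

```python
import time
from typing import Callable


def wait_for_request(get_state: Callable[[], str], retries: int = 60,
                     delay_s: float = 30.0,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll an asynchronous request until it reaches a terminal state.

    get_state is a hypothetical callable returning the request state; the
    state names COMPLETED and FAILED are assumptions for illustration.
    Raises TimeoutError if the request is still running after all retries.
    """
    for _ in range(retries):
        state = get_state()
        if state in ("COMPLETED", "FAILED"):
            return state
        sleep(delay_s)  # wait before checking again
    raise TimeoutError(f"request still running after {retries} checks")


states = iter(["IN_PROGRESS", "IN_PROGRESS", "COMPLETED"])
print(wait_for_request(lambda: next(states), delay_s=0))
```

Injecting the sleep function keeps the loop easy to test without real delays.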

You can watch the operation of the cluster expansion Ansible playbook with my commentary in this demo:

Getting help

The primary source of information about the Ansible Modules for Dell VxRail is the documentation available on GitHub. There you'll find all the necessary details on all currently available modules: a quick description, supported endpoints (VxRail API functions used), required and optional parameters, return values, and the location of the log file (the modules have a built-in logging feature to simplify troubleshooting; logs are written to the /tmp directory on the Ansible automation server). The GitHub documentation also contains multiple samples showing how to use the modules, which you can easily clone and adapt to the specifics of your VxRail environment.