Direct from Development: Tech Notes

Implementing AI: Dell PowerEdge XE9640 and Intel® Data Center GPU Max 1550

Fri, 12 Apr 2024 15:36:58 -0000



An AI inference and training POC powered by Dell Technologies

Authors: Esther Baldwin (Intel), Yashesh Shroff (Intel), Justin King (Dell)

Summary

In the current economic climate, CIOs find it challenging to access infrastructure for Artificial Intelligence (AI) development and delivery. Beyond the increasing demand for FLOPs to generate new and faster insights for their business, CIOs face challenges on several fronts. These include supply chain constraints and long lead times for traditional resources. They also need to keep maintaining modernized environments that drive growth while reducing program costs and delivering tangible value.

In addition to modernization challenges for new data management approaches, such as data lakes, CIOs are being asked to support artificial intelligence technology for various uses as it permeates all aspects of the business environment. For many, this is an emerging technology, and they are often under a barrage of marketing and sales information. Dell Technologies, as a trusted advisor, is there to alleviate these pressures and help its customers navigate the complex decisions that turn vision and planning into reality.

Dell Technologies designed and optimized this solution to give CIOs options. With the Intel® Data Center GPU Max Series, developers working on multiple models for inference and training will find extensive resources to compete in today's landscape. This brief provides an overview of how the solution meets AI developers' needs.

Dell and Intel have partnered to deliver a server solution, the Dell PowerEdge XE9640, which combines Dell infrastructure with the Intel Max Series GPU.


Business Challenges and Benefits

The Intel Max Series 1550 GPU meets industry challenges with flexible options that empower you to deliver everything you would expect from a modern high-performance graphics processing unit.

• Program once - no code changes between Intel® Xeon® CPUs and Max Series GPUs

• Intel oneAPI for hardware vendor independence - no vendor lock-in

• AI-boosting Intel® Xe Matrix Extensions (XMX) with deep systolic arrays enabling vector and matrix capabilities in a single device

• Solving large problems - largest L2 cache for a GPU, 408MB (10x that of the A100 and 25x that of the MI250)

• Built-in hardware-accelerated ray tracing cores, an advantage for visualization

• Xe Link - a high-speed, coherent, unified fabric that offers flexibility for any form factor and enables scale-up, with 16 Xe Links for GPU-to-GPU communication

• Advanced manufacturing processes - Modular & Flexible architecture that allows the SoC to be constructed from 47 individual silicon tiles

• High Bandwidth Memory - integrated on the package

• Versatile - supports both HPC and AI workloads, with AI support for popular models such as ResNet, BERT, Cosmic Tagger, Llama 2 (7B, 13B, 70B), GPT-J 6B, BLOOM-176B, and more.

• Available today from Dell


What is Intel® Data Center GPU Max 1550?

The Intel Max Series GPU supports over 60 AI models. It also offers application readiness for high-performance computing workloads in energy, life sciences, and physics, as well as for top applications in financial services, manufacturing, and more. It is available with the Dell PowerEdge XE9640.

What do AI developers care about, and where and why will the XE9640 work for them?

AI developers look for several key features in a compute platform, such as performance, versatility, scalability, ease of use, and support for AI frameworks. The Dell XE9640 platform is designed with these needs in mind.

Performance: With the Max 1550 GPUs, the Dell XE9640 platform offers 2.7X peak throughput across various datatypes (FP64, FP32, TF32, BF16/FP16, and INT8).

Versatility: This performance translates into inference and training advantages for the top AI models used for image classification, image segmentation, object detection, natural language processing, speech recognition, speech synthesis, and recommendation. Details of these workloads are provided in the following section.

Scalability: With Xe Link, the high-speed GPU-to-GPU fabric on the Max 1550, developers can scale up workloads to four cards on the Dell XE9640 platform. In addition, Ethernet or InfiniBand fabrics can connect GPUs across nodes in a scale-out configuration.

Ease of use: Numerous models are now available for easy onboarding on GitHub (https://github.com/IntelAI/models), and the oneAPI software stack is well documented. Developers can start building applications that use the advanced capabilities of Intel data center CPUs, such as AVX-512, and of the Max Series GPU.

AI Frameworks: The oneAPI software stack supports the latest releases of PyTorch and TensorFlow through plugins: Intel Extension for PyTorch (IPEX) and Intel Extension for TensorFlow (ITEX). With these plugins, code can run efficiently on Max 1550 GPU cards with as few as two lines of change. Find more details at https://software.intel.com/.
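The "two lines of change" can be pictured with the minimal sketch below. It assumes PyTorch and Intel Extension for PyTorch are installed. If the extension or an XPU device is missing, the sketch falls back to the CPU. `select_device` and `prepare_for_inference` are hypothetical helper names used only for illustration. The two IPEX lines (`import intel_extension_for_pytorch as ipex` and `ipex.optimize(model)`) are the parts that correspond to the change described above.

```python
# A minimal sketch, assuming PyTorch and Intel Extension for PyTorch (IPEX) may be installed.
# select_device and prepare_for_inference are hypothetical helpers, not IPEX APIs.
def select_device() -> str:
    """Return "xpu" when an Intel GPU is usable, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"
    try:
        # Importing IPEX registers the "xpu" device on PyTorch builds without native XPU support.
        import intel_extension_for_pytorch  # noqa: F401
    except ImportError:
        pass
    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"


def prepare_for_inference(model):
    """Move a torch.nn.Module to the selected device and apply IPEX optimizations on XPU."""
    device = select_device()
    model = model.to(device).eval()
    if device == "xpu":
        import intel_extension_for_pytorch as ipex  # change 1: import the extension
        model = ipex.optimize(model)  # change 2: apply IPEX optimizations to the model
    return model, device
```

Input tensors must also be moved to the same device, for example `inputs.to(device)`, before calling the model.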

AI Workloads

Published AI and HPC workloads can be found here. Detailed guides explain how to run the workloads with supported frameworks and essential open-source libraries, which give developers the tools and experiences they need to deliver value. The workloads are provided as deployable PyTorch and TensorFlow containers and include sample scripts that minimize deployment time.

Below are sample use cases and associated models:

- Enterprise: Llama 2, GPT-J-6B, BLOOM-176B, ResNet-50, BERT-Large, and many more

- Financial Services: STAC-A2 and FSI Kernels

- Life & Material Sciences: LAMMPS Multi-GPU scaling–Tungsten workload, NWChemEx PWDFT, AutoDock, NAMD, RELION

- Astrophysics: DPEcho

- Physics: 3D GAN for Particle Shower Simulation, DeepGalaxy, QMCPack

- Earth Systems Modelling: SpecFEM3D_Globe Multi-GPU Scaling (Global_s362ani_shakemovie), ECMWF Cloudsc

- Energy: Seismic Kernel Multi-GPU scaling

- Manufacturing: CoMLSim, JacobiSolver

Generative AI is of high interest in delivering business impact. The Dell PowerEdge XE9640 has the software and hardware capabilities to drive GenAI use cases in an Enterprise setting. Along with traditional deep learning (CV, RecSys, NLP) models, there is growing support for GenAI workloads such as Llama-2, Mistral for use with inference, fine-tuning, and developing Retrieval Augmented Generation (RAG) pipelines. To see the performance results for a workload that interests you, contact your Dell representative.

oneAPI Software and AI Tools from Intel

oneAPI makes developers' lives easier. It is an open, standards-based specification that supports multiple architecture types, including but not limited to GPUs, CPUs, and FPGAs. The specification defines a set of library interfaces that are commonly used in a variety of workflows.

AI Tools from Intel is a toolkit that provides familiar Python tools and frameworks to data scientists, AI developers, and researchers to accelerate end-to-end data science and analytics pipelines on Intel® architecture, a vital component of the Dell PowerEdge XE9640. The components are built using oneAPI libraries for low-level compute optimizations.

The AI Tools maximize performance from preprocessing through machine learning and provide interoperability for efficient model development. Train on Intel® CPUs and GPUs and integrate fast inference into your AI development workflow with Intel®-optimized deep learning frameworks for TensorFlow and PyTorch, pre-trained models, and model optimization tools. Don’t forget to look at the Intel Distribution for Python with highly optimized scikit-learn which is part of the AI Tools from Intel.

With compute-intensive Python packages such as Modin*, scikit-learn*, and XGBoost, you can achieve drop-in acceleration for data preprocessing and machine learning workflows.
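"Drop-in" acceleration for scikit-learn can be enabled at runtime through the Intel Extension for Scikit-learn (pip package `scikit-learn-intelex`, module `sklearnex`). The minimal sketch below calls its `patch_sklearn()` function. It assumes the package may be absent and falls back to stock scikit-learn in that case. `enable_sklearn_acceleration` is a hypothetical helper name.

```python
# A minimal sketch, assuming the Intel Extension for Scikit-learn (module "sklearnex")
# may or may not be installed. enable_sklearn_acceleration is a hypothetical helper.
def enable_sklearn_acceleration() -> bool:
    """Patch scikit-learn with Intel-optimized estimators when sklearnex is available."""
    try:
        from sklearnex import patch_sklearn
    except ImportError:
        return False  # keep using stock scikit-learn
    patch_sklearn()  # must run before the scikit-learn estimators are imported
    return True
```

Call it before imports such as `from sklearn.cluster import KMeans` so that the patched estimators are picked up. Modin follows a similar pattern: replacing `import pandas as pd` with `import modin.pandas as pd` keeps the pandas API while parallelizing the work.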

For more details, refer to the oneAPI specification page here and to the References section at the end of this brief, where you can download the oneAPI Base Toolkit and AI Tools from Intel.

Dell PowerEdge XE9640 Overview

Density-optimized AI acceleration with the Dell PowerEdge XE9640 delivers real-time insights. The 2U XE9640 is Dell's first liquid-cooled 4-way GPU platform. It is designed to drive the latest cutting-edge AI, Machine Learning, and Deep Learning Neural Network applications.

- Combines a high core count of up to 56 cores per 4th Gen Intel® Xeon® processor with the most GPU memory and bandwidth available today to break through the bounds of today's and tomorrow's AI computing.

- Four Intel Data Center GPU Max 1550 600W OAM GPUs are fully interconnected with Xe Link.

- Ideal 2U form factor building block for dense Supercomputer and HPC acceleration workloads and applications.

- Supports rack direct liquid cooling infrastructure: CoolIT with 42U and 48U XE9640 rack manifolds.

Security

Security is integrated into every phase of the PowerEdge lifecycle, including a protected supply chain and factory-to-site integrity assurance. Silicon-based root of trust anchors end-to-end boot resilience while Multi-Factor Authentication (MFA) and role-based access controls ensure trusted operations.

- Cryptographically signed firmware

- Data at Rest Encryption (SEDs with local or external key mgmt)

- Secure Boot

- Secured Component Verification (Hardware integrity check)

- Secure Erase

- Silicon Root of Trust

- System Lockdown (requires iDRAC9 Enterprise or Datacenter)

- TPM 2.0 FIPS, CC-TCG certified, TPM 2.0 China NationZ

Accelerated I/O throughput

- Direct liquid-cooled Processors and GPUs enable efficient cooling for the highest performance, efficient power utilization, and lower TCO

- Dell Multi-vector cooling manages components to operate optimally

- The XE9640 is an ideal dual-socket 2U rack server for dense scale-out data center computing applications. With the flexibility of 2.5" or 3.5" drives, the performance of NVMe, and embedded intelligence, it ensures optimized application performance in a secure platform.

Dell Infrastructure Components

The following Dell components provide the foundation for AI solutions that lend themselves to development and delivery.

Dell PowerScale is an AI-ready data platform designed to easily store, manage, and protect data. Accelerate your AI workloads wherever your unstructured data lives—on-premises, at the edge, and in any cloud.

Dell Unity XT Storage provides flexible hybrid flash storage for cost-sensitive enterprises that want to leverage a combination of flash and disk for lower cost than all flash/NVMe architectures. It supports unified block and file workloads, online upgrades without migrations, guaranteed 3:1 dedupe, and sync replication.

Dell PowerVault Storage is optimized for DAS and SAN applications and supports PowerEdge server capacity expansion via PowerEdge-ready JBODs. It provides management simplicity and low-cost block storage and is ideal for edge and high-capacity data warehouse deployments.

Dell ECS Storage is an enterprise-grade, cloud-scale object storage platform providing comprehensive protocol support for unstructured object and file workloads on a single modern platform. Depending on capacity requirements, either the ECS EX500 or EX5000 may be used.

Dell PowerSwitch Networking switches are based on open standards to free the data center from outdated, proprietary approaches. They support future-ready networking technology that helps you improve network performance, lower network management cost and complexity, and adopt innovations in networking.

Why Dell Technologies

The technology required for data management and enterprise analytics is evolving quickly, and companies may not have experts on staff who have the time to design, deploy, and manage solution stacks at the pace required. Dell Technologies has been a leader in AI, Big Data, and advanced analytics for over a decade with proven products, solutions, and expertise. Dell Technologies has teams of application and infrastructure experts dedicated to staying on the cutting edge, testing new technologies, and tuning solutions for your applications to help you keep pace with this constantly evolving landscape.

Dell Technologies is building a broad ecosystem of partners in the data space to bring our customers the necessary experts, resources, and capabilities and accelerate their data strategy. We believe customers should be able to deliver AI innovation using data irrespective of where it resides, across on-prem, public cloud, and edge. By partnering with industry leaders in enterprise data management and analytics, we create optimized solutions for our customers.

Dell Technologies uniquely provides an extensive portfolio of technologies to deliver the advanced infrastructure that underpins successful data implementations. With years of experience and an ecosystem of curated technology and service partners, Dell Technologies provides innovative solutions, servers, networking, storage, workstations, and services that reduce complexity and enable you to capitalize on a data universe.

Proof Points

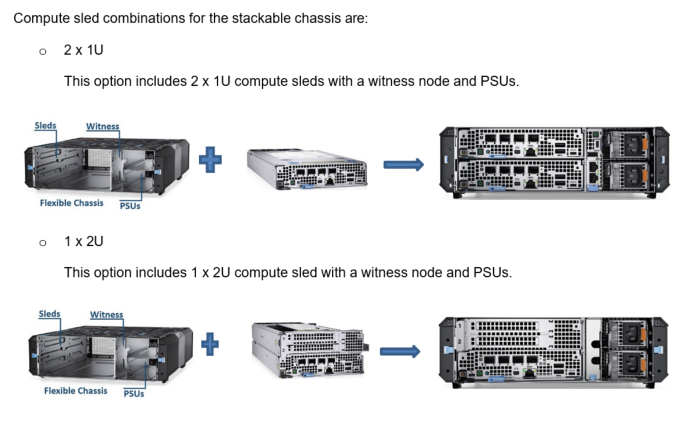

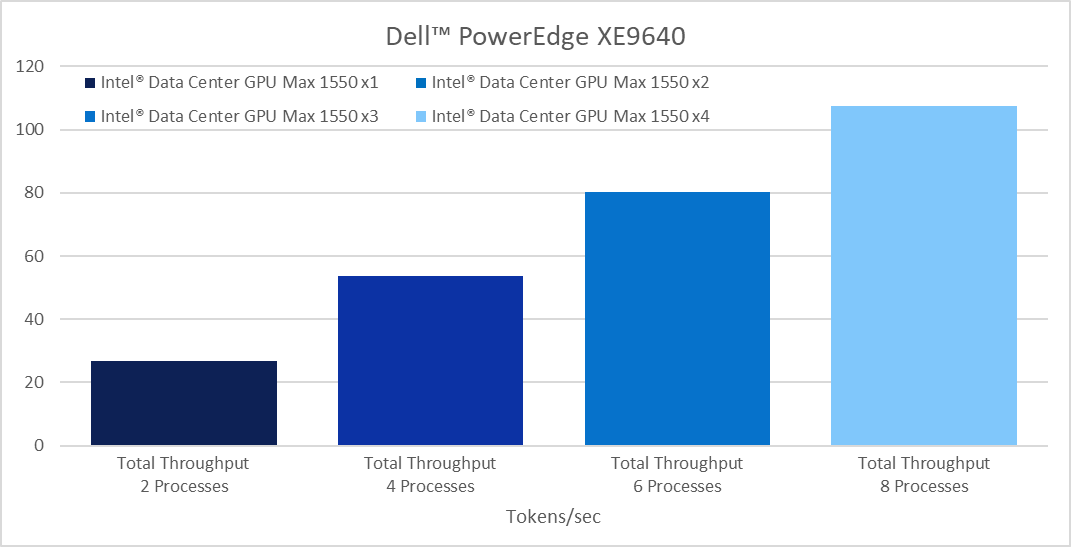

One of the more recent and fast-growing use cases is Generative AI (GenAI). The following chart shows a sample benchmark of a GenAI workload run on a Dell PowerEdge XE9640 x4 platform:

This figure demonstrates efficient linear scale-up from a single card to four cards for Llama 2 7B inference. Details on the workload configuration and environment setup can be found on the Dell InfoHub blog: https://infohub.delltechnologies.com/p/expanding-gpu-choice-with-intel-data-center-gpu-max-series/. Scalers AI, a Dell partner, developed and performed the benchmark.
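Scaling efficiency is one common way to read a scale-up chart like this one. It is the measured speedup divided by the ideal speedup, where the ideal on n cards is exactly n, so 1.0 means perfectly linear scaling. The function below is a minimal sketch. The throughput values in the example are hypothetical and are not results from the Scalers AI benchmark.

```python
def scaling_efficiency(throughput_1: float, throughput_n: float, n_cards: int) -> float:
    """Measured speedup divided by ideal (linear) speedup; 1.0 means perfectly linear."""
    if throughput_1 <= 0 or n_cards < 1:
        raise ValueError("throughput_1 must be positive and n_cards must be at least 1")
    speedup = throughput_n / throughput_1  # how many times faster than one card
    return speedup / n_cards  # ideal speedup on n cards is exactly n


# Hypothetical example values (tokens/s), not results from the benchmark above.
print(f"{scaling_efficiency(100.0, 380.0, 4):.2f}")  # prints 0.95
```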

Conclusion

Whether you want to expand your existing capabilities or start your first project, talk to us about your AI vision and what you need.

Your company needs all tools and technologies working in concert to achieve success. Fast, effective systems that complement time management practices are crucial to maximizing every employee hour. High-level data collection and processing that provides rich, detailed analytics can ensure your marketing campaigns strategically target your ideal customers and encourage conversion. To top it off, you need affordable products in a timely fashion that meet your criteria and then some. The XE9640 with the Intel Max 1550 GPU will meet the needs of AI developers.

Understanding AI Language

AI Models

A model is a program that analyzes data. Many different models are used in AI, each specialized for the type of data it analyzes. The model used for this brief is Llama 2, a collection of pretrained and fine-tuned generative AI models that generate text and range from 7 to 70 billion parameters. Llama 2 is part of a new trend toward "nimble" models, which are smaller, more customized to specific business needs, and lower cost to train and deploy.

“Dell uses Llama 2 internally for both experimental work and production deployment. One use case provides a chatbot-style interface to support Retrieval Augmented Generation (RAG) to get information from Dell’s knowledge base of articles. Llama 2 itself is a freely available open-source technology.” [i]
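The retrieval step of a RAG pipeline like the one described above can be sketched as follows. The sketch finds the knowledge-base passages most similar to the question and prepends them to the prompt that is sent to the model. As a simplifying assumption, it uses bag-of-words cosine similarity instead of the embedding model and vector database a production pipeline would typically use. The article titles and helper names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def _vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a toy stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, articles: List[str], k: int = 1) -> List[str]:
    """Return the k articles most similar to the query."""
    q = _vectorize(query)
    return sorted(articles, key=lambda doc: _cosine(q, _vectorize(doc)), reverse=True)[:k]


def build_prompt(query: str, context: List[str]) -> str:
    """Augment the user's question with retrieved context before sending it to the LLM."""
    bullets = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only the context below.\nContext:\n{bullets}\nQuestion: {query}"


# Hypothetical knowledge-base articles for illustration only.
kb = [
    "How to reset the iDRAC password",
    "Replacing a failed power supply unit",
    "Updating BIOS firmware from the lifecycle controller",
]
question = "How do I reset my iDRAC password?"
print(build_prompt(question, retrieve(question, kb)))
```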

What is “GenAI”?

Generative AI, or GenAI, is a subset of artificial intelligence with the potential to transform the business world due to its ability to create new content from existing data. It is a powerful tool that can generate text, images, videos, and even code, revolutionizing businesses' operations.

- Healthcare: generate synthetic data for research without violating privacy regulations.

- Research: create new models of chemical compounds for pharmaceutical drug discovery.

- Manufacturing: product and part design.

- Creativity: create music, fashion design, and product design; edit images; create unique art; provide realistic images and immersive worlds for virtual and augmented reality; augment game development for in-game content creation and game play adaptation.

- Natural language understanding and processing: human-like chatbot interaction, virtual assistants.

- Software: write code, significantly reducing development time and costs.

- Data generation: create synthetic data for training machine learning models and testing edge-based communication systems.

Parameters

The learned weights of a model. The number of parameters indicates the size of the model; for instance, Llama 2 70B has around 70 billion parameters.
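Parameter count translates directly into memory. Each parameter occupies a fixed number of bytes for its data type, so the weights alone need roughly parameters × bytes per parameter. The sketch below estimates this figure using nominal parameter counts. It deliberately ignores the KV cache, activations, and (for training) optimizer state, all of which add to the total.

```python
# Bytes needed to store one parameter in common data types.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Approximate memory (decimal GB) needed just to hold the model weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("Llama 2 7B", 7e9), ("Llama 2 13B", 13e9), ("Llama 2 70B", 70e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights in BF16")
```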

Tokens

The unit a model uses to measure the length of text. Tokens can be pieces of words, punctuation, or emojis, and the model uses them to learn context and semantics. Text is split into tokens for processing, and new text is then generated token by token. One way to measure hardware capacity is the number of tokens it can process.
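The sketch below illustrates both ideas: splitting text into tokens and measuring throughput in tokens per second. The splitter is a toy that only separates words from punctuation and emojis. Real LLM tokenizers, such as the SentencePiece-based tokenizer used by Llama 2, split text into learned subword pieces, so their token counts differ.

```python
import re
from typing import List


def toy_tokenize(text: str) -> List[str]:
    """Split text into word and punctuation pieces (a toy stand-in for a real tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text)


def tokens_per_second(num_tokens: int, elapsed_s: float) -> float:
    """Throughput metric commonly used to compare LLM inference hardware."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return num_tokens / elapsed_s
```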

References

- Dell PowerScale

- Dell PowerEdge XE9640

- PowerEdge XE9640 Rack Server

- Dell PowerVault Storage

- Dell Unity XT Storage

- Dell ECS Object Storage

- Dell PowerSwitch Networking

- https://arxiv.org/abs/2210.05837 for CoMLSim background

- Intel® oneAPI Base Toolkit

- AI Tools from Intel

PowerEdge XE9680 Rack Integration

Tue, 12 Sep 2023 13:22:37 -0000


Introduction

Proper server rack integration is crucial for a data center's efficient and reliable operation. Optimizing space, power, and cooling can reduce downtime, simplify fleet management, improve serviceability, and lower overall costs. However, successful server rack integration requires careful planning, attention to detail, and expertise in server hardware, networking, and system administration.

This paper focuses on the critical aspects of deploying the PowerEdge XE9680 server in your data center. It describes key factors such as selecting the appropriate rack type, sizing the rack to meet current and future needs, installing and configuring the server hardware and related components, and ensuring proper power and cooling.

At Dell Technologies, we understand the importance of meeting our customers where they are. Whether you require full-service rack integration and deployment services or expert advice, we are committed to providing the support you need to achieve your goals. By leveraging our expertise and resources, you can be confident in your ability to implement the server rack integration that meets your unique needs and requirements.

The PowerEdge XE9680

The Dell PowerEdge XE9680 is a high-performance server designed to deliver exceptional performance for machine learning workloads, AI inferencing, and high-performance computing. Table 1 lists key specifications to consider when installing it in a rack.

Table 1. Server specifications

| Feature | Technical specifications |
|---|---|
| Form factor | 6U rack server |
| Height | 263.2 mm / 10.36 inches |
| Width | 482.0 mm / 18.98 inches |
| Depth | 1008.77 mm / 39.72 inches with bezel; 995 mm / 39.17 inches without bezel; 1075 mm / 42.32 inches with Cable Management Arm (CMA) |
| Weight | 107 kg / 236 lbs |
| Cooling options | Air cooling |

XE9680 rack integration – critical factors

Server operating environment

The American Society of Heating, Refrigerating, and Air-Conditioning Engineers (ASHRAE) data center specifications focus on temperature and humidity control, optimized air distribution, airflow management, air quality, and energy efficiency. Key recommendations include maintaining appropriate temperature and humidity ranges, implementing hot aisle/cold aisle configurations and containment systems, managing airflow effectively, ensuring high indoor air quality, and adopting energy-efficient technologies.

The Dell PowerEdge XE9680 complies with the A2 Class ASHRAE specifications in Table 2.

Table 2. Operating environment specifications

| Parameter | Product operation | Product power off |
|---|---|---|
| Dry-bulb temperature | 10 to 35 °C | 5 to 45 °C |
| Humidity range (noncondensing) | -12 °C DP and 8% RH to 21 °C DP and 80% RH | 8% to 80% RH |
| Maximum dew point | 21 °C | |
| Maximum elevation | 3050 m | |
| Maximum rate of change | 20 °C/hour | |

Note: The maximum operating temperature is derated by 1°C per 300m above 900m in altitude.

For optimal performance and reliability, it is recommended to operate within the defined specification ranges. While it is possible to operate at the edge of these ranges, Dell does not recommend continuous operation under such conditions due to potential impacts on performance and reliability.
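The altitude derating note can be applied as a quick site check. The sketch below assumes the derating is linear, meaning 1 °C is subtracted from the 35 °C maximum for every 300 m above 900 m. It also rejects sites above the 3050 m maximum elevation listed in Table 2. The constant and function names are illustrative.

```python
BASE_MAX_TEMP_C = 35.0   # maximum operating dry-bulb temperature (Table 2)
DERATE_START_M = 900.0   # derating applies above this altitude
DERATE_STEP_M = 300.0    # 1 °C lost per 300 m (assumed linear)
MAX_ELEVATION_M = 3050.0 # maximum operating elevation (Table 2)


def max_operating_temp_c(altitude_m: float) -> float:
    """Derated maximum inlet temperature for a site at the given altitude."""
    if altitude_m > MAX_ELEVATION_M:
        raise ValueError("altitude exceeds the 3050 m maximum elevation")
    excess_m = max(0.0, altitude_m - DERATE_START_M)
    return BASE_MAX_TEMP_C - excess_m / DERATE_STEP_M


for site in (500, 1800, 3050):
    print(f"{site} m: {max_operating_temp_c(site):.1f} °C")
```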

Cabinet recommendations

When choosing a cabinet, it is important to consider factors such as size, ventilation, cable management, and security. The right cabinet should provide ample space for equipment, efficient airflow to prevent overheating, organized cable routing, and robust physical protection for valuable server hardware. Careful consideration of these factors ensures optimal performance, reliability, and ease of maintenance for your server infrastructure. We recommend the following cabinet specifications for optimal XE9680 installation:

- Minimum width of 600mm / 23.62 inches

- Minimum depth of 1200mm / 47.24 inches

- 42 or 48RU height

- Rear cable management support

- Support for rear-facing horizontal or vertical PDUs

- Cabinet extensions, which may be needed (depending on the cabinet vendor) to accommodate the depth of the server and its I/O cables

- Side panels for single cabinets

Rack and stack

Installing servers in a rack is a crucial aspect of server management. Proper placement within the rack ensures efficient use of space, ease of access, and optimal airflow. Each server should be securely mounted in the rack, taking into account factors such as weight distribution and cable management. Strategic placement allows for better cooling, reducing the risk of overheating, and prolonging the lifespan of the equipment. Additionally, thoughtful placement enables easy maintenance, troubleshooting, and scalability as the server environment evolves. By giving careful consideration to the placement of servers in a rack, you can create a well-organized and functional setup that maximizes performance and minimizes downtime. We recommend the following:

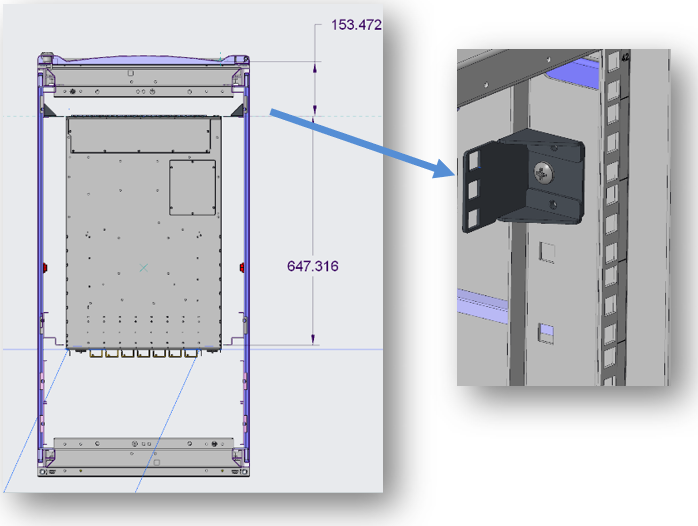

- The PowerEdge XE9680 has a maximum chassis weight of 107kg/236 lbs. It is recommended to install the first XE9680 server in the 1RU location, and to install any additional servers directly above it. This configuration helps maintain a low center of gravity, reducing the risk of cabinet tipping.

- For ease of assembly and seismic bracket installation, we recommend starting at the 3RU position when using seismic hardware. It is important for customers to adhere to local building codes, and to ensure that all necessary facility accommodations are in place and that the seismic brackets are correctly installed.

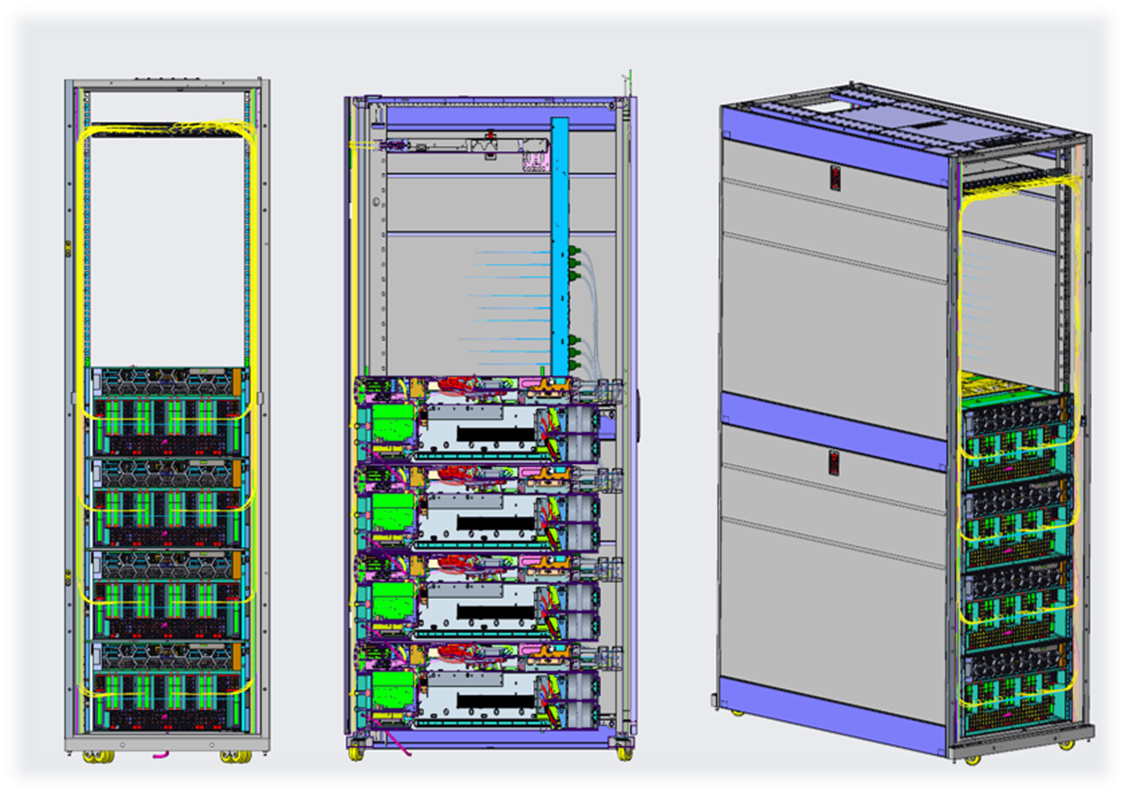

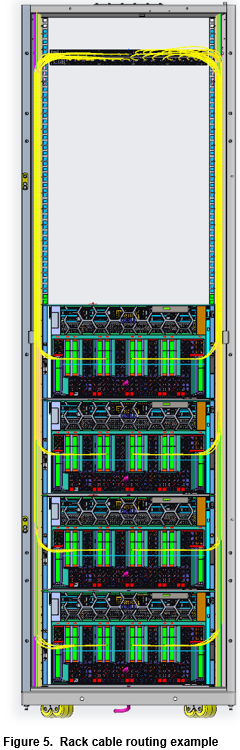

Figure 1. 4x PowerEdge XE9680 servers in a rack

Power distribution recommendations

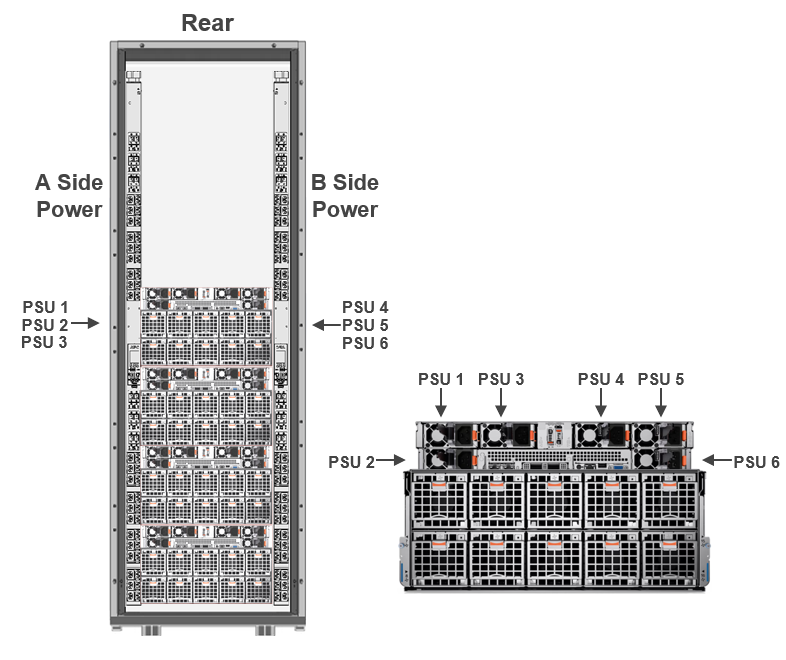

The PowerEdge XE9680, equipped with H100 GPUs, has an approximate maximum power draw of 11.5kW. It comes with six 2800W Mixed Mode power supply units (PSUs) that feature a C22 input socket.

The XE9680 currently supports 5+1 fault-tolerant redundancy (FTR). (An additional 3+3 FTR configuration will be introduced in the Fall of 2023.) It is important to note that in 3+3 mode, system performance may throttle upon power supply failure to prevent overloading the remaining power supplies.
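The throttling caveat follows from simple arithmetic. The sketch assumes each PSU delivers its full 2800 W rating and that 3+3 FTR is designed to survive the loss of three PSUs, as in a feed failure. Under 5+1, losing one PSU leaves 14,000 W, which covers the roughly 11.5 kW maximum draw. Under 3+3, losing three PSUs leaves 8,400 W, which does not. The helper name is hypothetical.

```python
PSU_RATING_W = 2800         # per-PSU rating from the text (assumed fully available)
SYSTEM_MAX_DRAW_W = 11_500  # approximate maximum draw with H100 GPUs


def capacity_after_loss_w(total_psus: int, psus_lost: int, rating_w: float = PSU_RATING_W) -> float:
    """PSU capacity remaining after psus_lost units fail."""
    return max(0, total_psus - psus_lost) * rating_w


for label, lost in (("5+1, one PSU lost", 1), ("3+3, three PSUs lost", 3)):
    cap = capacity_after_loss_w(6, lost)
    status = "covers max draw" if cap >= SYSTEM_MAX_DRAW_W else "may throttle"
    print(f"{label}: {cap:.0f} W available -> {status}")
```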

Figure 2. PowerEdge XE9680 with PDU


For the XE9680, we recommend the following PDU specifications:

- Vertical or horizontal PDUs

- One circuit breaker per power supply

- C19 receptacles

Table 3. PDU specifications

| PDU input voltage | XE9680s per cabinet | PDUs per cabinet | Circuit breakers per PDU (min) | Single PDU requirement (min) |
|---|---|---|---|---|
| 208V | 2 | 2 | 6 | 60A (48A rated), 17.3kW |
| 208V | 2 | 4 | 3 | 30A (24A rated), 8.6kW |
| 208V | 4 | 2 | 12 | 100A (80A rated), 28.8kW |
| 208V | 4 | 4 | 6 | 60A (48A rated), 17.3kW |
| 400/415V | 2 | 2 | 6 | |
| 400/415V | 2 | 4 | 3 | 20A (16A rated), 11.1kW @ 400V / 11.5kW @ 415V |
| 400/415V | 4 | 2 | 12 | |
| 400/415V | 4 | 4 | 6 | |

Note: Single PDU power requirement = input voltage × rated current × 1.73.

The factor of 1.73 (the square root of 3) is used to account for three-phase power systems commonly used in data centers and industrial settings. By multiplying the input voltage, current rating, and 1.73, you can determine the power capacity needed for a single PDU to adequately support the connected equipment. This calculation helps ensure that the PDU can handle the power load and prevent overloading or electrical issues.
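The populated rows of Table 3 can be reproduced from this formula. In the table, the rated current is 80% of the breaker size, and the input voltage is the line-to-line voltage of the three-phase supply. The sketch below uses `math.sqrt(3)` instead of the rounded 1.73, which gives the same results to one decimal place for these rows. The function names are illustrative.

```python
import math


def rated_current_a(breaker_a: float, continuous_factor: float = 0.8) -> float:
    """Continuous (rated) current; Table 3 lists 80% of the breaker size."""
    return breaker_a * continuous_factor


def single_pdu_power_kw(voltage_v: float, rated_current: float) -> float:
    """Three-phase capacity: line-to-line voltage x rated current x sqrt(3), in kW."""
    return voltage_v * rated_current * math.sqrt(3) / 1000


# Populated rows of Table 3: (input voltage, breaker size in amps).
for voltage, breaker in [(208, 60), (208, 30), (208, 100), (400, 20), (415, 20)]:
    amps = rated_current_a(breaker)
    print(f"{voltage}V, {breaker}A breaker ({amps:.0f}A rated): {single_pdu_power_kw(voltage, amps):.1f} kW")
```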

Optimal thermal management for performance and reliability

Thermal management is important in data centers to ensure equipment reliability, optimize performance, improve energy efficiency, prolong equipment lifespan, and reduce environmental impact. By maintaining appropriate temperature levels, data centers can achieve a balance between operational reliability, energy efficiency, and cost-effectiveness.

Dell Technologies recommends the following best practices for thermal management:

- Ensure a cold aisle inlet airflow of 1200 CFM.

- If additional equipment is rear-facing, consider using 1 or 2 RU ducts.

- Use filler panels for all open front U spaces.

- For stand-alone racks, install cabinet side panels to optimize airflow.

The XE9680 is engineered to operate efficiently within ambient temperature conditions of up to 35°C. Although it is technically capable of functioning in such environments, maintaining lower temperatures is highly recommended to ensure the device's optimal performance and reliability. By operating the XE9680 in a cooler environment, the risk of overheating and potential performance degradation can be mitigated, resulting in a more stable and reliable operation overall.
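The standard sensible-heat relation for air connects the 1200 CFM inlet airflow recommendation to the server's heat output: BTU/hr = 1.08 × CFM × ΔT(°F), with 1 W ≈ 3.412 BTU/hr. The sketch below estimates the resulting inlet-to-exhaust temperature rise. It rests on three assumptions: the 1200 CFM figure applies per server, all 11.5 kW of electrical draw leaves as heat in that airflow, and the air has standard sea-level density. The function names are illustrative.

```python
BTU_PER_HR_PER_WATT = 3.412  # unit conversion
SENSIBLE_HEAT_FACTOR = 1.08  # BTU/hr per (CFM x °F) for standard sea-level air


def exhaust_delta_t_f(heat_load_w: float, airflow_cfm: float) -> float:
    """Approximate inlet-to-exhaust temperature rise (°F) for a heat load and airflow."""
    return heat_load_w * BTU_PER_HR_PER_WATT / (SENSIBLE_HEAT_FACTOR * airflow_cfm)


def delta_f_to_delta_c(delta_f: float) -> float:
    """Convert a temperature difference (not an absolute temperature) from °F to °C."""
    return delta_f * 5 / 9


rise_f = exhaust_delta_t_f(11_500, 1_200)
print(f"~{rise_f:.1f} °F (~{delta_f_to_delta_c(rise_f):.1f} °C) rise at 11.5 kW and 1200 CFM")
```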

Cabinet cable management

Proper cable management in a server rack improves organization, airflow, accessibility, safety, and scalability. It enhances the reliability, performance, and maintainability of the entire IT infrastructure.

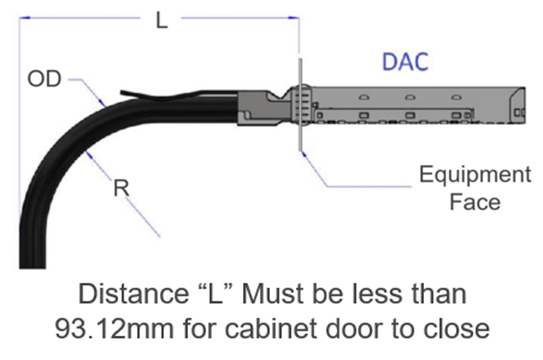

The PowerEdge XE9680 supports Ethernet and InfiniBand network adapters, which are installed at the front of the server for easy access from the cold aisle. To ensure proper cable management, the chosen cabinet solution should provide a minimum clearance of 93.12mm from the face of the network adapter to the cabinet door. This clearance is necessary to accommodate the bend radius of a typical direct attach cable (DAC) (see Figure 3).

Figure 3. DAC clearance recommendations

The maximum cable length shown in Figure 3 is 2.07 meters (81.49 inches).


With adjacent racks, it is possible to improve cable management by removing the inner side panels. This alteration provides an open space along the sides of the racks, allowing cables to be conveniently routed between adjacent racks. By eliminating the inner side panels, technicians or IT professionals gain unobstructed access to the interconnecting cables, making it simpler to establish and maintain organized cabling infrastructure.

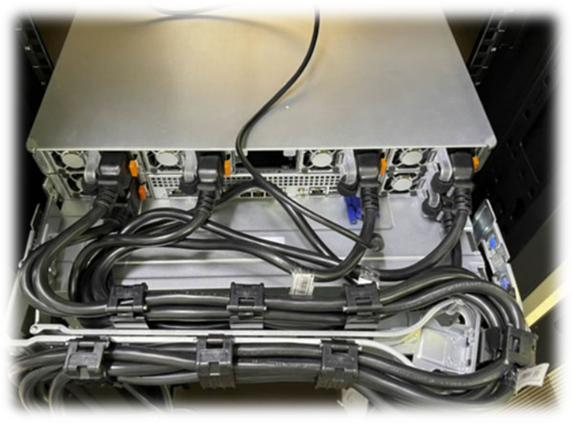

The following two figures show power cables routed through the optional cable management arm (CMA). The CMA can be mounted to either side of the sliding server rails.

Figure 4. Power cables in cable arm

Network switch

AI server network switches play a crucial role in supporting high-performance and data-intensive artificial intelligence workloads. These switches handle the demanding requirements of AI applications, providing high bandwidth, low latency, and efficient data transfer. They facilitate seamless communication and data exchange between AI servers, to ensure optimal performance and to minimize bottlenecks.

Installing a switch in a rack for servers is vital for establishing a robust and efficient network infrastructure, enabling seamless communication, centralized management, scalability, and optimal performance for the server environment.

The network switch may require offsetting within the rack to accommodate the bend radius of specific networking cables. To achieve this, a bracket can be utilized to push the network switch towards the rear of the rack, creating space for the necessary cable bend radius while ensuring proper installation of the front door. The accompanying images demonstrate the process of using the bracket to adjust the network switch position within the rack. This allows for optimal cable management and ensures the smooth operation of the network infrastructure.

Figure 6. Switch offset brackets

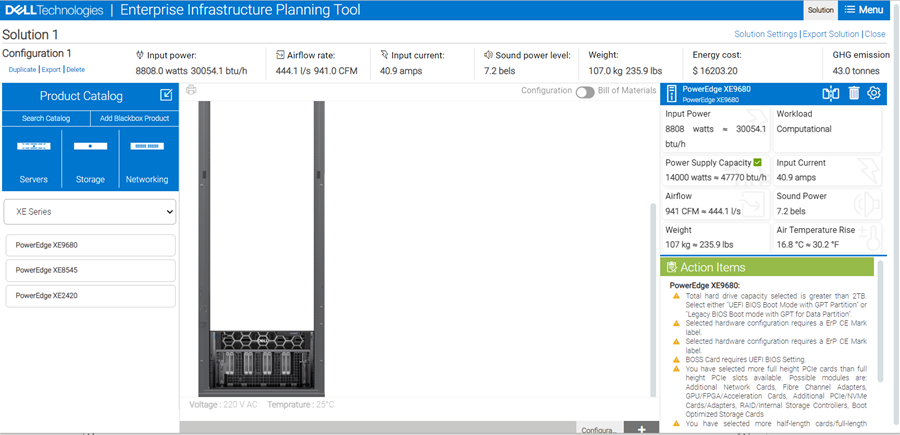

Enterprise Infrastructure Planning Tool (EIPT)

The Dell Enterprise Infrastructure Planning Tool (EIPT) helps IT professionals plan and tune their computer and infrastructure equipment for maximum efficiency. With a wide range of configuration flexibility and environmental inputs, it can help right-size your IT environment. EIPT is a model-driven tool that supports many products and configurations for infrastructure sizing. EIPT models are based on hardware measurements taken under operating conditions representative of typical use cases. Workloads can affect power consumption greatly; for example, the same percent CPU utilization under different workloads can lead to widely different power consumption. Because a model cannot cover every workload, environmental, and customer data center factor, no percent-accuracy figure can be given with any degree of confidence. Dell Technologies therefore anticipates (but does NOT guarantee or claim) some variation between estimates and actual results. Customers are always advised to confirm EIPT estimates with actual measurements under their own workloads.

Figure 7. Dell EIPT tool

Dell Deployment Services

Leading edge technologies bring implementation challenges that can be reduced or eliminated with Dell Rack Integration Services. We have the experience and expertise to engineer, integrate, and install your Dell storage, server, or networking solution. Our proven integration methodology will take you step by step from a plan to a ready-to-use solution:

- Project management

- Solutioning and rack layout engineering

- Physical integration and validation

- Logistics and installation

Contact your account manager and go to Custom deployment services to learn more.

References

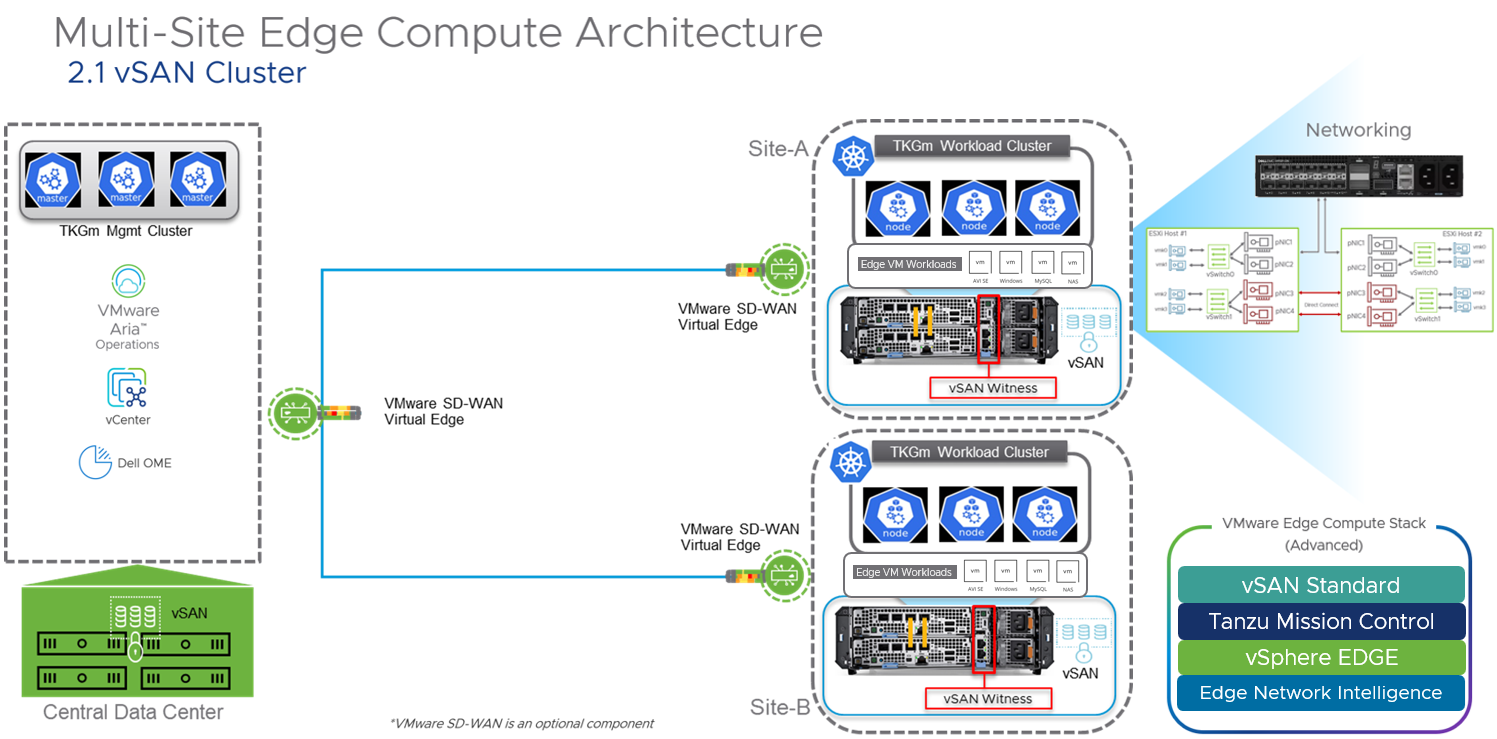

Dell PowerEdge XR4000 with VMware Edge Compute Stack for Edge Computing

Thu, 31 Aug 2023 17:42:58 -0000


Overview

Enterprises want to build and operate applications that have low latency requirements to process and analyze real-time data, and they want to provide intelligence for smarter decision-making at the edge. However, they face many challenges: aging infrastructure, limited edge-computing resources, environmental factors, and lack of IT staff to deploy and support applications across many edge sites.

This document provides an overview of a combined edge platform built on Dell PowerEdge XR servers and VMware Edge Compute Stack to solve these challenges. It describes key use cases in retail, manufacturing, and other industries.

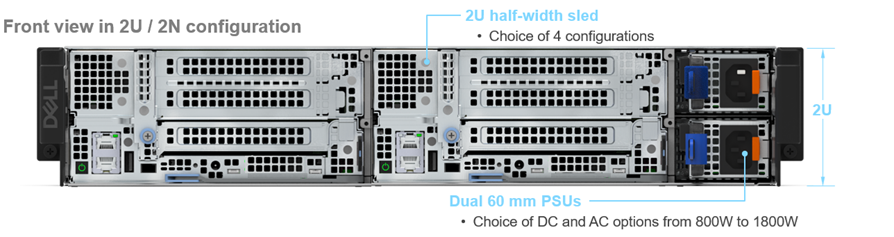

The PowerEdge XR server series is built to capture and process more data at the edge, with enterprise-grade compute abilities providing high performance with low latency for the edge. The XR servers can withstand unpredictable and challenging deployment environments. XR4000 is the new high-performance multi-node XR server, purpose-built for ultra-short depth and low power, and with flexible configurations. These configurations are also available on our Dell vSAN Ready Nodes.

- 1S Intel® Ice Lake Xeon-D® with integrated security and cyber-resilient architecture

- 355-mm-deep chassis with wall-mount option

- Rugged operating range from –5°C to 55°C (23°F to 131°F)

- Flexible 1U and 2U compute sleds; self-contained 2-node VMware vSAN cluster

Edge Compute Stack (ECS) is a fully integrated edge platform for customers with many edge sites. ECS empowers IT and OT to deliver intelligent real-time solutions, offering flexibility, consistency, security, and extensibility:

- Flexibility to run virtual and container applications, standard and real-time operating systems

- Consistent interoperability across edges, data centers, and clouds

- Security to protect applications, users, devices, and data against threats

- Open platform that offers component choices and extensibility

This document includes a combined XR4000 and ECS reference architecture validated and supported by Dell Technologies and VMware. It also provides sample configurations for customers and partners to use as a starting point to design and implement the combined edge platform.

Customer use cases

Key use cases for the solution are in the retail, manufacturing, and government sectors.

Retail

Retailers adapted to the pandemic with increased use of self-service checkout and new delivery mechanisms. They are deploying edge applications to improve customer experience and profitability:

- Self-checkout—Camera and computer vision solutions help prevent loss from missed scans and switched products or price stickers by instantaneously matching products with prices.

- Optimal shelf provisioning—Inventory tracking and data analysis solutions can optimize shelf-provisioning to increase sales.

- Immersive experience—Interactive mirrors in apparel stores give customers an immersive experience when they are trying out an item by providing additional colors or variations.

- POS—Virtualize and extend the point-of-sale life cycle and realize impactful ROI through faster innovation, a transformative customer experience, and proactive management of retail infrastructure.

The XR4000 and ECS platform provides high flexibility and performance to deploy and run these retail solutions while optimizing expensive retail space and meeting store environmental requirements.

Manufacturing

The Industry 4.0 movement is digitizing manufacturing for greater efficiency and flexibility. Manufacturers are deploying edge applications for the following use cases:

- IT/OT convergence—Virtualization of industrial PCs and programmable logic controllers (PLCs) enables skilled operators to work from anywhere with low latency, while allowing OT and IT applications to run on the same hardware for greater efficiency.

- Predictive maintenance—Solutions that use smart sensor data can reduce machine downtime by 50 percent.

- Simulated manufacturing—Digital twin software creates a simulation running in parallel to physical machines to optimize operational efficiency.

- Quality control—Computer vision can spot defects to increase quality and yield.

The XR4000 and ECS platform provides a foundation for these solutions for machine aggregation and virtualization, OT/IT translation, industrial automation, and AI inferencing.

Government

Defense, law enforcement, and emergency response organizations have specific requirements for tactical and mobile edge deployments:

- Tactical edge—Military and civil defense organizations are implementing real-time analytics solutions using ruggedized form factors at the tactical edge.

- Mobile edge—Law enforcement and emergency response organizations are adopting vehicle-based mobile edge solutions.

XR4000 is highly portable and hardened for dusty, hot, and cold operating conditions. It is tested for NEBS Level 3 and MIL certifications. With ruggedized, ATA-compliant, compact, and mobile systems from Dell OEM partners, the XR4000 and ECS platform is ideal for tactical and mobile edge workloads.

Features

Figure 1 illustrates the combined XR4000 and ECS reference architecture. It consolidates VMs and the Kubernetes management cluster in the central data center. It also includes self-contained 2-node vSAN and TKG Multi-Cloud (TKGm) clusters at every edge site. A purpose-built vSAN witness node XR4000w (Nano Processing Unit, shown in Figure 2) is integrated within several XR4000 chassis options, enabling a highly efficient and reliable edge stack. An optional SD-WAN virtual edge can provide optimal connectivity and additional security. The centralized VMware vCenter and TKG management cluster simplify vSAN and TKGm deployment at the edge sites.

Figure 1. XR4000 and ECS reference architecture

Figure 2. Nano Processing Unit

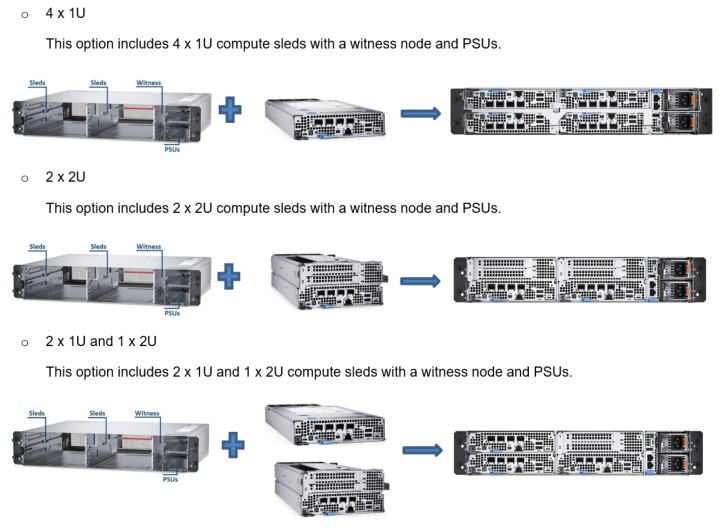

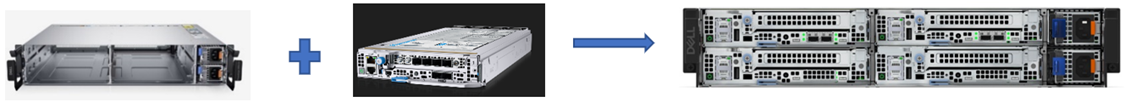

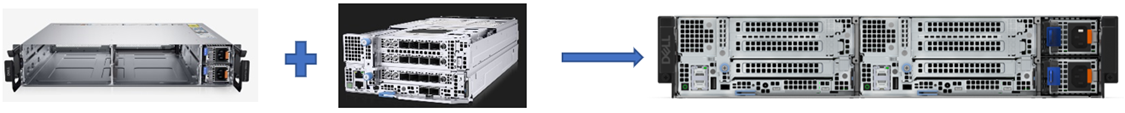

PowerEdge XR4000 and Edge Compute Stack configurations

Dell PowerEdge XR4000 is a rugged multi-node edge server available in two unique and flexible form factors. The “rackable” chassis supports up to four 1U sleds; the “stackable” chassis supports up to two 2U sleds. The 1U sled is provided for dense compute requirements. The 2U sled shares the same “1st U” and common motherboard with the 1U sled but includes an additional riser to provide two more PCIe Gen4 FHFL I/O slots. Customers who need additional storage or PCIe expansion can choose the 2U sled option. All XR4000 chassis support both front-to-back and back-to-front airflow.

Sample configurations

The following table provides details for two sample configurations—one rackable and the other stackable.

Table 1. Sample configurations

| Component | Rackable configuration (2 x 2U) | Stackable configuration (2 x 1U) |
|---|---|---|
| Edge Compute Stack (ECS) | VMware ECS Advanced (vSphere Edge, vSAN Standard for Edge, Tanzu Mission Control Advanced), 1/3/5-year term license, up to 128 cores per edge instance | Same as rackable |
| Chassis | Dell PowerEdge XR4000r 2U, 14 inches deep, 19 inches wide | Dell PowerEdge XR4000z 2U, 14 inches deep, 10.5 inches wide |
| Mounting options | Mounting ears to support a standard 19-inch-wide rack | Deployed in desktop, VESA plate, DIN rail, or stacked environments |
| Power supply | Front port access, dual, hot-plug (1+1), 1400 W, RAF | Same as rackable |
| Operating range | –5°C to 55°C (23°F to 131°F) | Same as rackable |
| Witness node | 1 x Dell PowerEdge XR4000w, VMware Certified | Same as rackable |
| Server | 2 x Dell PowerEdge XR4520c sleds, VMware Certified | 2 x Dell PowerEdge XR4510c sleds, VMware Certified |
| Capacity basis | Total capacity of 2 x 2U sleds | Total capacity of 2 x 1U sleds |
| Security | Trusted Platform Module 2.0 V3 | Same as rackable |
| CPU cores* | 32 cores (2 x 1S Intel Ice Lake Xeon-D 16-core CPU) | Same as rackable |
| Memory* | 256 GB (8 x 32 GB RDIMM) | 128 GB (8 x 16 GB RDIMM) |
| Boot drive | 2 x BOSS-N1 controller card with 2 x M.2 960 GB, RAID 1 | 2 x BOSS-N1 controller card with 2 x M.2 480 GB, RAID 1 |
| Storage* | 15.2 TB (8 x 1.9 TB, SSDR, 2E, M.2) | Same as rackable |
| Network | 4 x 10 GbE Base-T or SFP for 4/8 core CPU; | Same as rackable |
| GPU (optional) | 2 x NVIDIA Ampere A2, PCIe, 60 W, 16 GB, passive, full height, VMware Certified | Not applicable |
| System management | iDRAC9, Dell OpenManage Enterprise Advanced Plus, integration for VMware vCenter | Same as rackable |

*In a High Availability (HA) 2-node vSAN cluster, for failover to work properly, total consumable CPU, Memory, and Storage for application workloads should not exceed the available resources of a single node.
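The footnote's sizing rule can be checked mechanically. The minimal Python sketch below assumes the table's capacity figures are split evenly across two identical sleds and ignores hypervisor and vSAN overhead, which a real sizing exercise must include. The helper names and the workload figures used in the checks are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_cores: int
    memory_gb: int
    storage_tb: float


def per_node_share(total: Resources, nodes: int = 2) -> Resources:
    """Split a cluster total evenly across nodes (assumes identical sleds)."""
    return Resources(total.cpu_cores // nodes, total.memory_gb // nodes, total.storage_tb / nodes)


def fits_on_single_node(workload_total: Resources, per_node: Resources) -> bool:
    """True if the combined workload fits on ONE node.

    In a 2-node HA cluster, the surviving node must carry every workload
    after a failure. Hypervisor and vSAN overhead are ignored in this sketch.
    """
    return (
        workload_total.cpu_cores <= per_node.cpu_cores
        and workload_total.memory_gb <= per_node.memory_gb
        and workload_total.storage_tb <= per_node.storage_tb
    )


if __name__ == "__main__":
    # Rackable totals from Table 1, split across the two 2U sleds.
    node = per_node_share(Resources(cpu_cores=32, memory_gb=256, storage_tb=15.2))
    # Hypothetical application footprints.
    print(fits_on_single_node(Resources(12, 96, 5.0), node))  # within one node
    print(fits_on_single_node(Resources(20, 96, 5.0), node))  # too many cores
```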

Engage Dell and VMware

The edge platform built on Dell PowerEdge XR4000 server and VMware Edge Compute Stack aims to help retail, manufacturing, and government customer organizations build and operate applications that provide intelligence for smarter decision-making and deliver immersive digital experiences at the edge. The combined reference architecture and configuration examples described in this document are designed to help our joint customers in designing and implementing a consistent, flexible, secure, and extensible edge solution.

To learn more about the flexible configurations of the Dell XR4000 chassis and compute sleds, see PowerEdge XR Rugged Servers.

For more information about VMware Edge Compute Stack, see VMware Edge Compute Stack and contact the VMware team at edgecomputestack@vmware.com.

References

- Dell PowerEdge XR4000 Specification Sheet

- Dell PowerEdge XR4000r Chassis

- Dell PowerEdge XR4000: Multi-Node Design

- VMware SASE and Edge

- How is VMware Edge Compute Stack Accelerating Digital Transformation Across Industries?

Understanding Thermal Design and Capabilities for the PowerEdge XR8000 Server

Thu, 27 Jul 2023 20:40:00 -0000


Summary

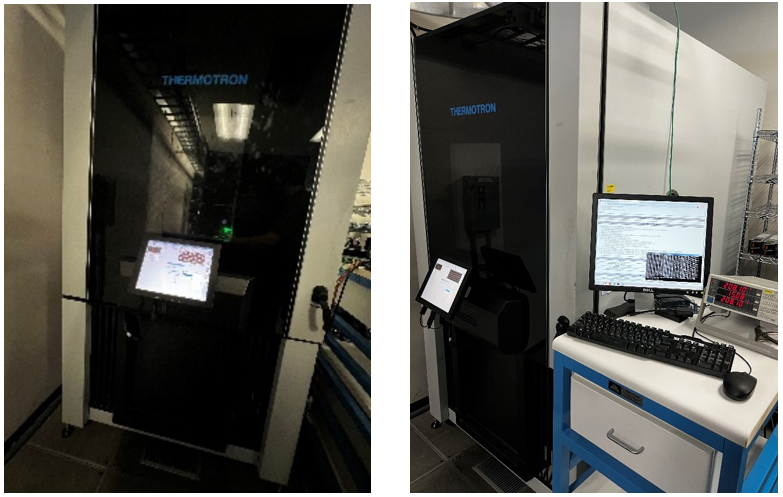

This study is intended to help customers understand the behavior of the XR8000 PowerEdge server in harsh environmental conditions at the edge, and its resulting performance.

The need to improve power efficiency and provide sustainable solutions has been pressing for some time. According to a Bloomberg report, data centers in some countries will account for an estimated 5-10% of energy consumption by 2030, including demand from edge and cloud computing [1]. Dell Technologies continues to innovate in this area and has launched its latest portfolio of XR servers for the edge and telecom this year.

The latest offering from the Dell XR portfolio is a series of rugged servers purpose-built for the edge and telecom, targeting workloads in retail, manufacturing, and defense in particular. This document highlights the test results for power consumption and fan speed across a varying temperature range of -5°C to 55°C (23°F to 131°F), obtained by running iPerf3 on the XR8000 server.

About PowerEdge XR8000 – a flexible, innovative, sled-based, RAN-optimized server

The short-depth XR8000 server, which comes in a sledded server architecture (with 1U and 2U single-socket form factors), is optimized for total cost of ownership (TCO) and performance in O-RAN applications. It is RAN optimized with integrated networking and 1/0 PTP/SyncE support. Its front-accessible design radically simplifies sled serviceability in the field.

The PowerEdge XR8000 server is built rugged to operate in temperatures from -5°C to 55°C for select configurations. (For additional details, see the PowerEdge XR8000 Specification Sheet.)

Figure 1. Dell PowerEdge XR8000

Thermal chamber testing

To conduct this test, we placed a 2U XR8000 inside the thermal chamber in our test lab. While it was in the chamber, we ran the iPerf3 workload on the system for more than eight hours, stressing the system at a 5-20% load. We measured power consumption and fan speed with iDRAC at 10°C intervals from 0°C to 55°C.

The iPerf3 throughput measured for 1GB, 10GB, and 25GB remained consistent across the entire temperature range, with no impact on performance as temperature increased. Fan speed and power consumption increased with temperature, which is the expected behavior.
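The note does not say how the iDRAC readings were logged. One possible approach, sketched below in Python, polls the DMTF Redfish Power and Thermal resources that iDRAC9 exposes and extracts `PowerConsumedWatts` and the fan `Reading` values. The chassis ID `System.Embedded.1`, the placeholder host and credentials, and the helper names are assumptions. Newer firmware may prefer the `PowerSubsystem` and `ThermalSubsystem` resources, Redfish typically reports fan speed in RPM (see `ReadingUnits`) rather than the percentage shown in this note, and `urlopen` will reject iDRAC's default self-signed certificate unless its CA is trusted.

```python
from __future__ import annotations

import base64
import json
import urllib.request
from statistics import mean

# Standard Redfish resources on iDRAC9; the chassis ID is an assumption.
POWER_PATH = "/redfish/v1/Chassis/System.Embedded.1/Power"
THERMAL_PATH = "/redfish/v1/Chassis/System.Embedded.1/Thermal"


def fetch_json(base_url: str, path: str, user: str, password: str) -> dict:
    """GET a Redfish resource with HTTP basic auth and decode the JSON body."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(base_url + path, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def consumed_watts(power_payload: dict) -> float:
    """PowerConsumedWatts from the first PowerControl entry."""
    return float(power_payload["PowerControl"][0]["PowerConsumedWatts"])


def fan_readings(thermal_payload: dict) -> list[float]:
    """All non-null fan readings (units given by each fan's ReadingUnits)."""
    return [float(fan["Reading"]) for fan in thermal_payload.get("Fans", []) if fan.get("Reading") is not None]


def summarize(power_payload: dict, thermal_payload: dict) -> dict:
    """One sample: chassis power plus the mean fan reading (None if no fans)."""
    fans = fan_readings(thermal_payload)
    return {"power_watts": consumed_watts(power_payload), "mean_fan_reading": mean(fans) if fans else None}


# Example (requires a reachable iDRAC; host and credentials are placeholders):
# base = "https://idrac.example.local"
# print(summarize(fetch_json(base, POWER_PATH, "user", "pass"),
#                 fetch_json(base, THERMAL_PATH, "user", "pass")))
```

Calling `summarize` once per temperature step would produce a power-and-fan series comparable in shape to the one plotted in this note.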

Figure 2. Thermal chamber in the Dell performance testing lab

System configuration

Table 1. System configuration

| Node hardware configuration | Chassis configuration | SW component | Version |
|---|---|---|---|
| 1 x 6421N (4th Generation Intel® Xeon® Scalable Processors) | 2 x 8610t | BIOS | 1.1.0 |
| 8 x 64GB PC5 4800MT | 2 x 1400W PSU | CPLD | 1.1.1 |
| 1 x Dell NVMe 7400 M.2 960GB | | iDRAC | 6.10.89.00 Build X15 |
| 1 x DP 25GB BCM 57414 | | CM | 1.10 |
| | | PCIe SSD | 1.0.0 |
| | | BCM 57414 | 21.80.16.92 |

iPerf3

iPerf3 is an open-source tool for actively measuring the maximum achievable bandwidth on IP networks. It supports tuning of various parameters related to timing, buffers, and protocols (TCP, UDP, and SCTP, over IPv4 and IPv6). For each test, it reports bandwidth, loss, and other parameters. iPerf3 is also well suited to measuring network performance between two servers in geographically different locations. (For additional details about iPerf3, see iPerf - The ultimate speed test tool for TCP, UDP and SCTP.)
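To reproduce a throughput-versus-temperature check like the one described here, iperf3's `-J` flag emits a JSON report. The Python sketch below runs a TCP client test and reads the receiver-side throughput from the report's `end.sum_received.bits_per_second` field, then computes how far each reading strays from the mean. The server address, test duration, and stream count are placeholders, and the spread helper is a hypothetical way to quantify "consistent" throughput, not the method used in this study.

```python
from __future__ import annotations

import json
import subprocess


def run_iperf3_client(server: str, seconds: int = 30, streams: int = 1) -> dict:
    """Run an iperf3 TCP client test against `server` and parse its JSON (-J) report."""
    completed = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-P", str(streams), "-J"],
        capture_output=True,
        text=True,
        check=True,
    )
    return json.loads(completed.stdout)


def received_gbps(report: dict) -> float:
    """Receiver-side TCP throughput in Gbit/s from the end-of-test summary."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def max_relative_spread(throughputs: list[float]) -> float:
    """Largest deviation from the mean, as a percentage of the mean."""
    avg = sum(throughputs) / len(throughputs)
    return max(abs(t - avg) for t in throughputs) * 100.0 / avg


# Example (needs `iperf3 -s` running on the placeholder host):
# gbps = received_gbps(run_iperf3_client("192.0.2.10", seconds=60, streams=4))
```

Collecting one `received_gbps` value per chamber temperature and passing the list to `max_relative_spread` gives a single number for how flat the throughput curve is.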

Results

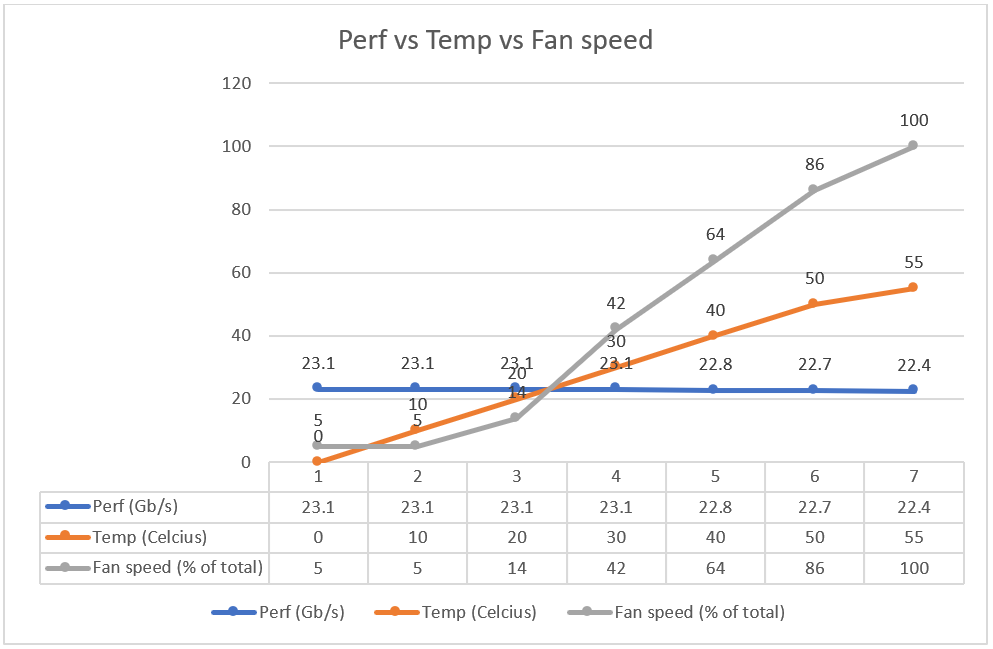

Figure 3. Constant networking performance with varying temperature and fan speed

Figure 3 shows that as temperature and fan speed increase, iPerf3 throughput stays the same. Fan speed is only 14% at temperatures near 20°C.

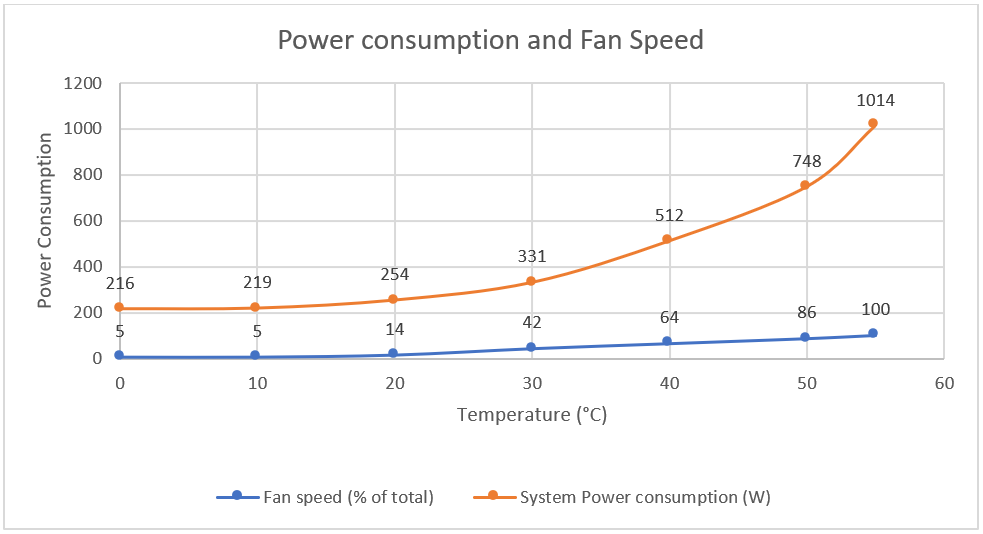

Figure 4. Power consumption and fan speed

Figure 4 shows that chassis power consumption increases with temperature; at 20°C it is 254 W.

A deep dive into the results

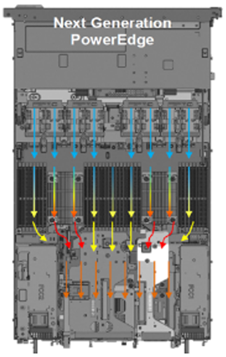

The consistent performance with increasing temperature and power can be attributed to several considerations in the design and build of these edge/telecom servers:

- RAF: The Reverse Airflow option offered in these servers carries over from Dell’s innovation in Multi-Vector Cooling (MVC) technology. While most MVC innovations center on optimizing thermal controls and management, the physical cooling hardware and its layout also help. XR servers are shallower, which can mean less airflow impedance and therefore more airflow.

- Fans: XR servers are designed with high-performance fans, which have significantly increased airflow performance over previous fan generations.

- Layout: The T-shaped system motherboard layout, with PSUs located at the corners of the chassis, improves airflow balancing and, consequently, system cooling efficiency. This layout also improves PSU cooling by reducing the risk of high pre-heat coming from the CPU heat sinks. The streamlined airflow helps with PCIe cooling and enables support for PCIe Gen5 adapters.

- Smaller PSU Form Factor: In the 1U systems, a new, narrower, 60mm form factor PSU is implemented to increase the exhaust path space.

- TCase: XR servers usually support CPUs with higher TCase specifications. TCase (case temperature) is the maximum temperature allowed at the processor Integrated Heat Spreader (IHS) [2].

For more details about the design considerations used for edge servers, see the blog Computing on the Edge–Other Design Considerations for the Edge.

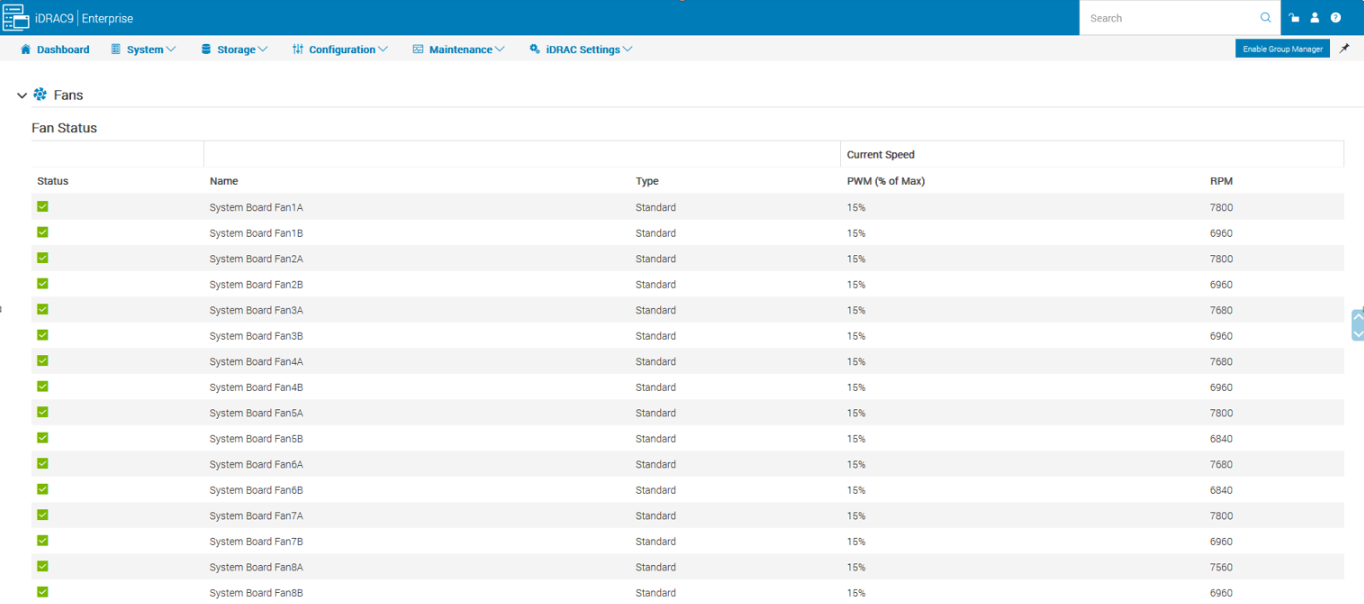

iDRAC

To complement the improved cooling hardware, the PowerEdge engineering team carried over key features from the previous generation of PowerEdge servers to deliver autonomous thermal solutions capable of cooling next-generation PowerEdge servers.

An iDRAC feature in the XR8000 detects Dell PCIe cards and automatically delivers the correct airflow to the slot to cool that card. When non-Dell PCIe cards are detected, the customer can enter the airflow requirement (LFM, linear feet per minute) specified by the card manufacturer. iDRAC and the fan algorithm ‘learn’ this information, and the card is then automatically cooled with the proper airflow. This feature saves power because the fans do not have to run fast enough to cool a worst-case card in the system, and it also reduces noise.
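The airflow behavior described above can be illustrated with a simplified model in which the fans target the most demanding installed card instead of a fixed worst case. The Python sketch below is not iDRAC's algorithm; the card names, LFM values, and the worst-case fallback for unidentified cards without user input are all hypothetical, and real systems manage airflow per slot and per fan zone rather than with a single number.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical airflow needs (LFM) for Dell-qualified cards; not real Dell values.
KNOWN_CARD_LFM = {"dell-nic-a": 200, "dell-gpu-b": 500}

# Hypothetical worst-case target used when nothing is known about a card.
WORST_CASE_LFM = 800


@dataclass(frozen=True)
class PcieCard:
    model: str
    user_lfm: Optional[int] = None  # value the customer entered for a non-Dell card


def card_requirement(card: PcieCard) -> int:
    """Known Dell card -> lookup; non-Dell card with user input -> that value."""
    if card.model in KNOWN_CARD_LFM:
        return KNOWN_CARD_LFM[card.model]
    if card.user_lfm is not None:
        return card.user_lfm
    return WORST_CASE_LFM  # unidentified card and no input: stay conservative


def required_slot_airflow(cards: list[PcieCard]) -> int:
    """Airflow target = the most demanding installed card, not a global worst case."""
    return max((card_requirement(card) for card in cards), default=0)


if __name__ == "__main__":
    installed = [PcieCard("dell-nic-a"), PcieCard("vendor-x-nic", user_lfm=300)]
    print(required_slot_airflow(installed))  # targets the 300 LFM card, not 800
```

The design point mirrors the text: once every card's requirement is known, the target drops from the conservative default to what the installed hardware actually needs.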

For more information about thermal management, see “Thermal Manage” Features and Benefits.

Figure 5. iDRAC settings to view fan status during our XR8000 testing in the thermal chamber

Conclusion

Dell Technologies is continuing its efforts to test other XR devices and to determine power consumption for various workloads and its variation with changes in temperature. This study is intended to help customers understand the behavior of XR servers in harsh environmental conditions at the edge and their resulting performance.

References

- PowerEdge XR8000 Specification Sheet

- iPerf - The ultimate speed test tool for TCP, UDP and SCTP

- Computing on the Edge–Other Design Considerations for the Edge

- “Thermal Manage” Features and Benefits

[1] https://stlpartners.com/articles/sustainability/edge-computing-sustainability

[2] https://www.intel.com/content/www/us/en/support/articles/000038309/processors/intel-xeon-processors.html

Accelerating AI Performance: MLPerf 3.0 Training Results with Dell PowerEdge

Wed, 28 Jun 2023 00:02:48 -0000


Executive Summary

The PowerEdge XE9680 is a high-performance server designed and optimized to enable uncompromising performance for artificial intelligence, machine learning, and high-performance computing workloads. Dell PowerEdge has launched its innovative 8-way GPU platform with the following advanced features and capabilities:

- 8x NVIDIA H100 80GB 700W SXM GPUs or 8x NVIDIA A100 80GB 500W SXM GPUs

- 2x Fourth Generation Intel® Xeon® Scalable Processors

- 32x DDR5 DIMMs at 4800MT/s

- 10x PCIe Gen 5 x16 FH Slots

- 8x SAS/NVMe SSD Slots (U.2 or E.3) and BOSS-N1 with NVMe RAID

We are thrilled to share this report on the exceptional capabilities of the PowerEdge XE9680. Based on rigorous testing and evaluation with the MLPerf 3.0 benchmarks from MLCommons, it offers a detailed analysis of the PowerEdge XE9680's outstanding performance in AI model training.

MLPerf is a suite of benchmarks that assess the performance of machine learning (ML) workloads, focusing on two crucial aspects of the ML life cycle: training and inference. This tech note focuses specifically on the training aspect of MLPerf 3.0.

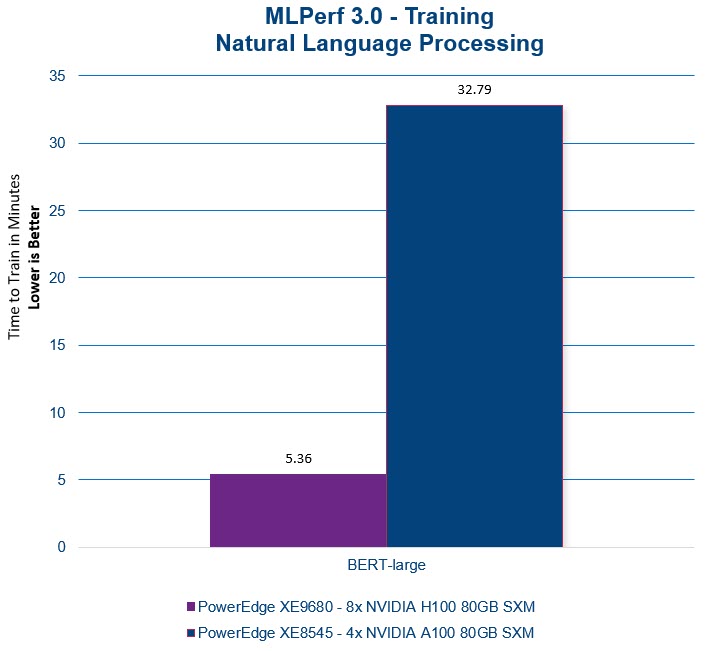

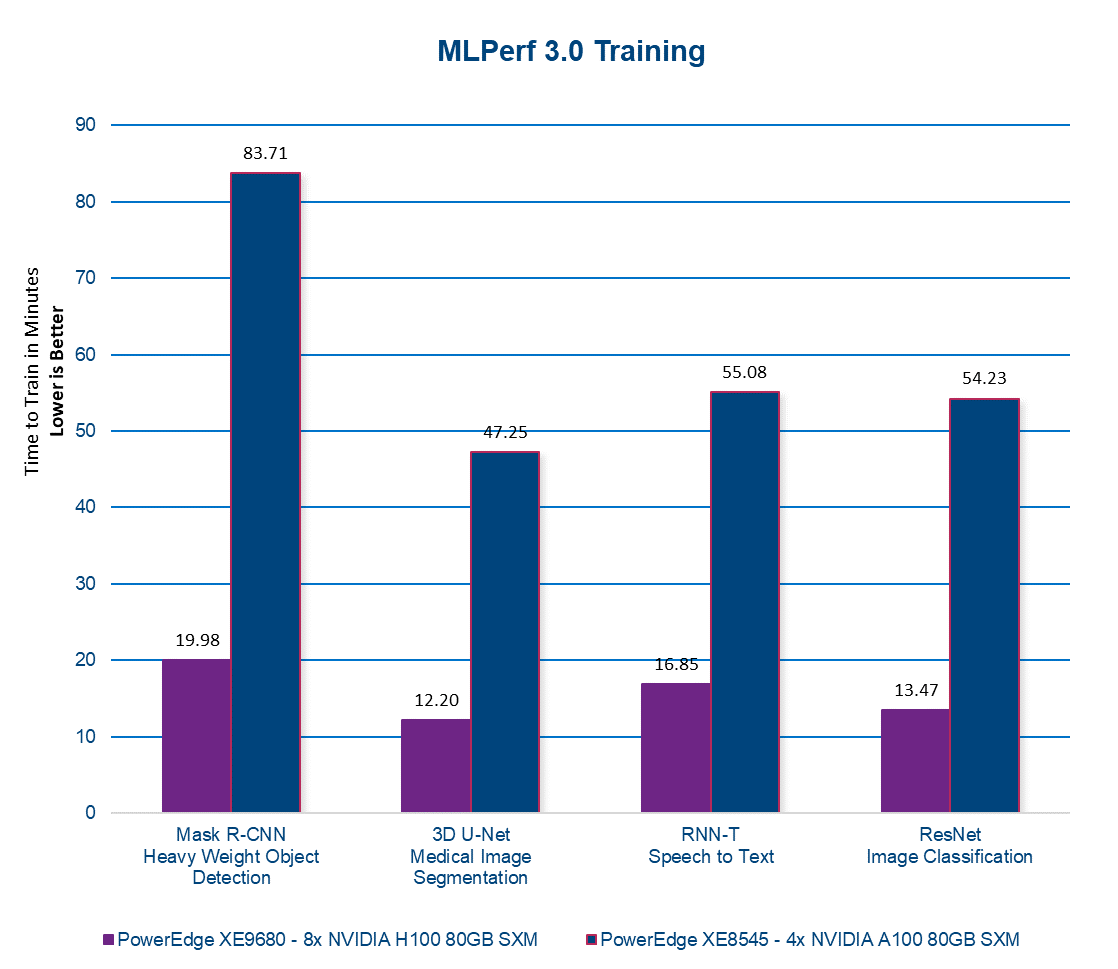

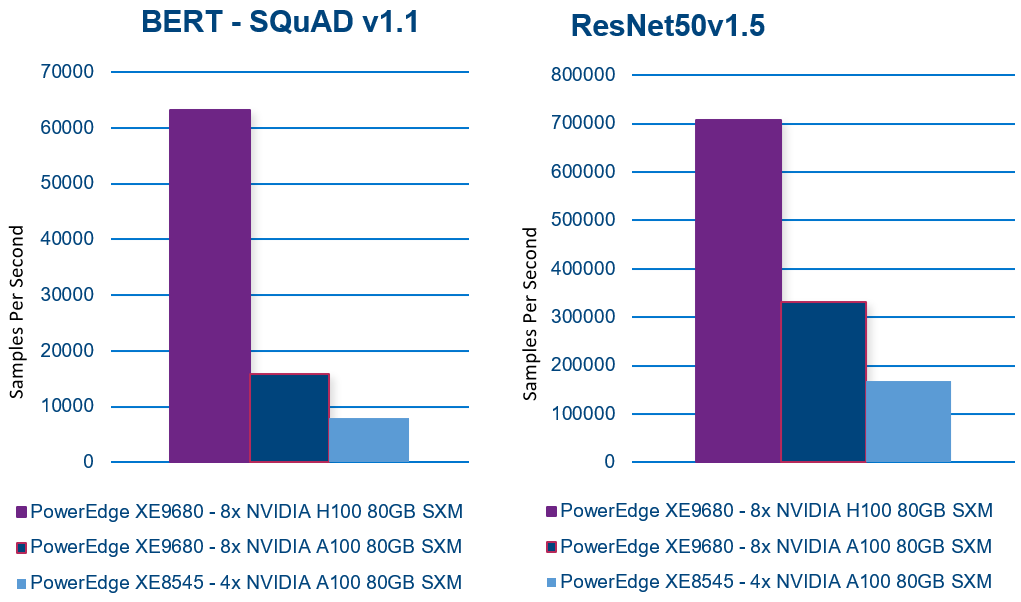

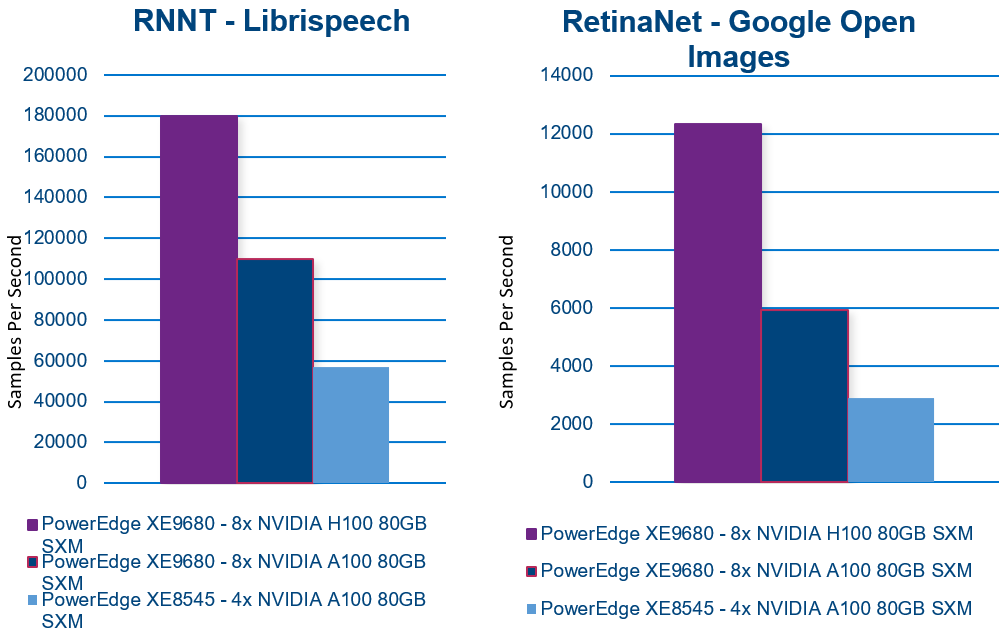

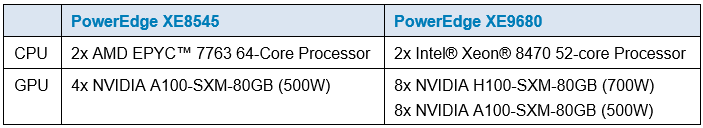

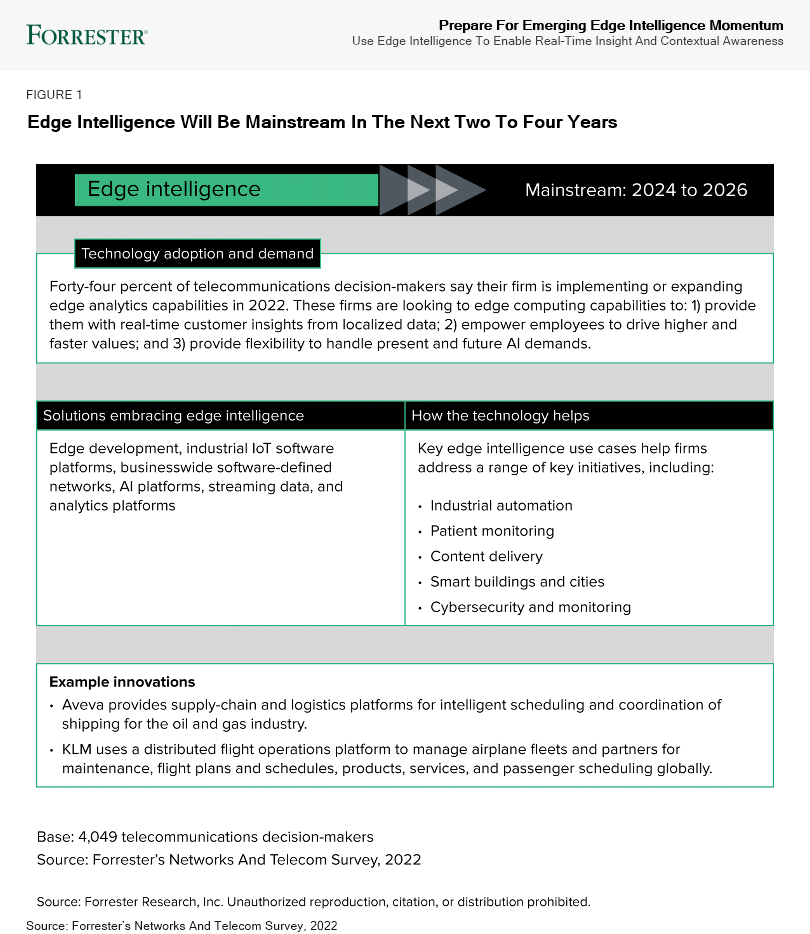

Performance

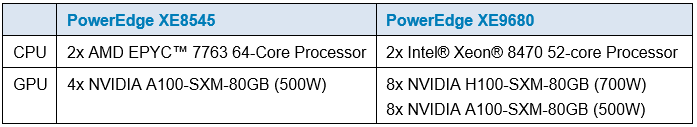

The Dell performance labs conducted MLPerf 3.0 Training benchmarks using the latest PowerEdge XE9680 with 8x NVIDIA H100 80GB SXM GPUs. For comparison, we also ran these tests on the previous generation PowerEdge XE8545, equipped with 4x NVIDIA A100 80GB SXM GPUs.

BERT (Bidirectional Encoder Representations from Transformers) is a transformer-based neural network model introduced by Google in 2018. It is designed to understand natural language by capturing the context and meaning of each word from the words on both sides of it in a sequence. We are thrilled that the PowerEdge XE9680 with H100 GPUs delivered a 6x time-to-train improvement over the PowerEdge XE8545 in the MLPerf NLP benchmark, which uses the BERT-large model with the Wikipedia dataset. This translates to accelerated time-to-value as we help our customers unlock the potential of remarkably faster model training.

Please note that throughout this report, a lower time-to-train value indicates better efficiency and faster model convergence. As you analyze the graphs and performance metrics, remember that lower time-to-train values demonstrate the PowerEdge XE9680's ability to speed up AI model training.
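Because time-to-train is a lower-is-better metric, a "6x" improvement is the ratio of the baseline time to the new time. The minimal Python sketch below makes that arithmetic explicit and shows that a 6x speedup removes about 83% of the training time. The 60-minute and 10-minute inputs are hypothetical and are not the measured MLPerf times.

```python
def time_to_train_speedup(baseline_minutes: float, new_minutes: float) -> float:
    """Speedup for a lower-is-better metric: baseline time / new time."""
    if baseline_minutes <= 0 or new_minutes <= 0:
        raise ValueError("times must be positive")
    return baseline_minutes / new_minutes


def time_saved_percent(baseline_minutes: float, new_minutes: float) -> float:
    """Share of the baseline training time that is eliminated, in percent."""
    return (1.0 - new_minutes / baseline_minutes) * 100.0


if __name__ == "__main__":
    # Hypothetical times, used only to illustrate the arithmetic.
    print(time_to_train_speedup(60.0, 10.0))         # 6.0x speedup
    print(round(time_saved_percent(60.0, 10.0), 1))  # about 83% less time
```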

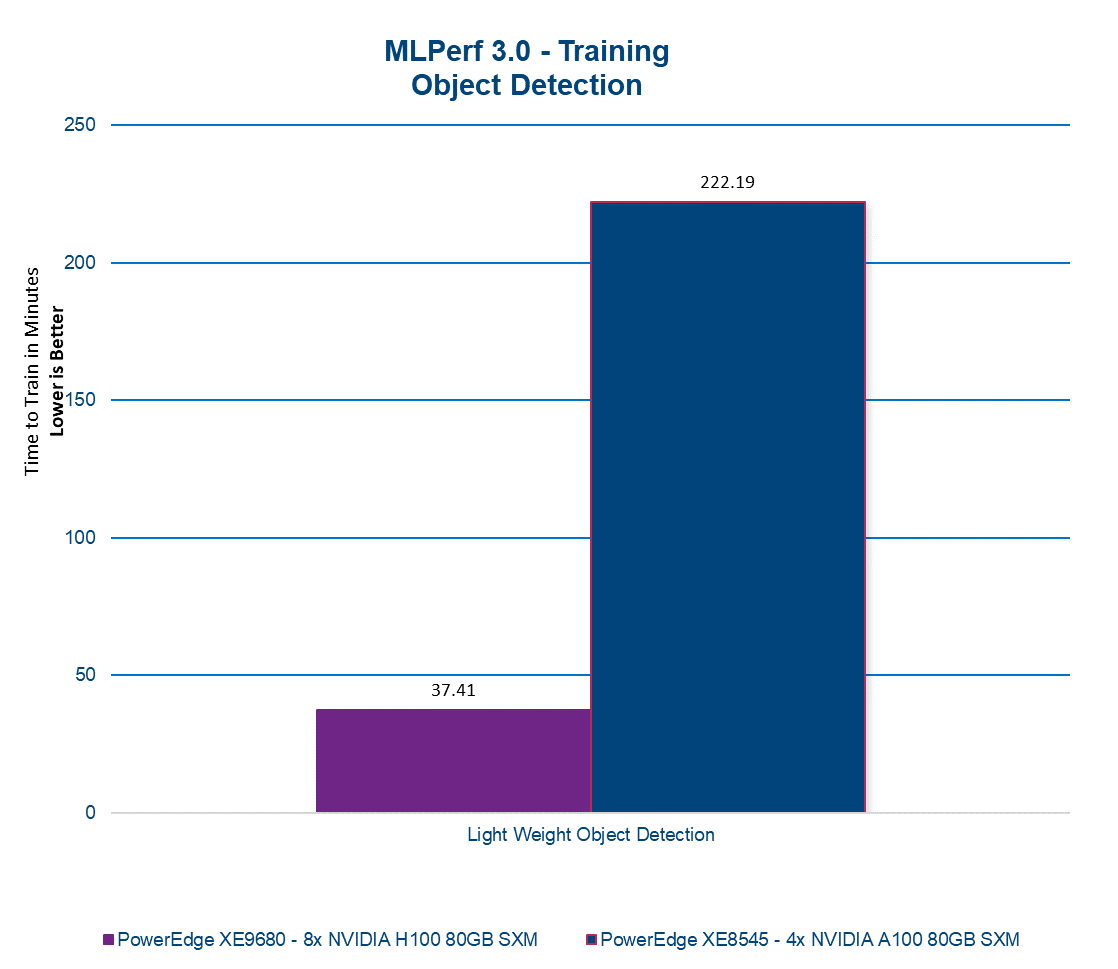

In MLPerf 3.0, the RetinaNet benchmark uses the Open Images dataset, which contains millions of diverse images. In this benchmark, we observed an impressive, nearly 6x reduction in training time for the model.

By utilizing the RetinaNet model with the Open Images dataset, MLPerf enables comprehensive evaluations and comparisons of system capabilities. The scale and diversity of the dataset ensure a robust assessment of object detection performance across various domains and object categories.

The PowerEdge XE9680 consistently delivers remarkable results across the entire MLPerf 3.0 Training benchmark suite, as depicted in the following figure. This robust performance underscores the server's exceptional capabilities and reliability in tackling a wide range of demanding machine learning tasks.

Conclusion

The PowerEdge XE9680 server surpasses our previous generation offering by delivering up to a 6x performance boost. This remarkable advancement translates into significantly accelerated AI model training, enabling your team to complete training tasks faster. To learn more about this server, we encourage you to contact your dedicated account executive or visit www.dell.com.

Table 1. Server configuration

| Component | PowerEdge XE8545 | PowerEdge XE9680 |
|---|---|---|
| CPU | 2x AMD EPYC 7763 64-Core Processor | 2x Intel® Xeon® 8470 52-core Processor |
| GPU | 4x NVIDIA A100-SXM-80GB (500W) | 8x NVIDIA H100-SXM-80GB (700W) |

- Testing conducted by Dell in June of 2023. Performed on PowerEdge XE9680 with 8x NVIDIA H100 SXM5-80GB and PowerEdge XE8545 with 4x NVIDIA A100-SXM-80GB. MLPerf v3.0 Training results in models BERT Large, Mask R-CNN, ResNet, RetinaNet, RNN-T, and 3D U-Net. The MLPerf name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use is strictly prohibited. See www.mlcommons.org for more information. Individual results will vary.


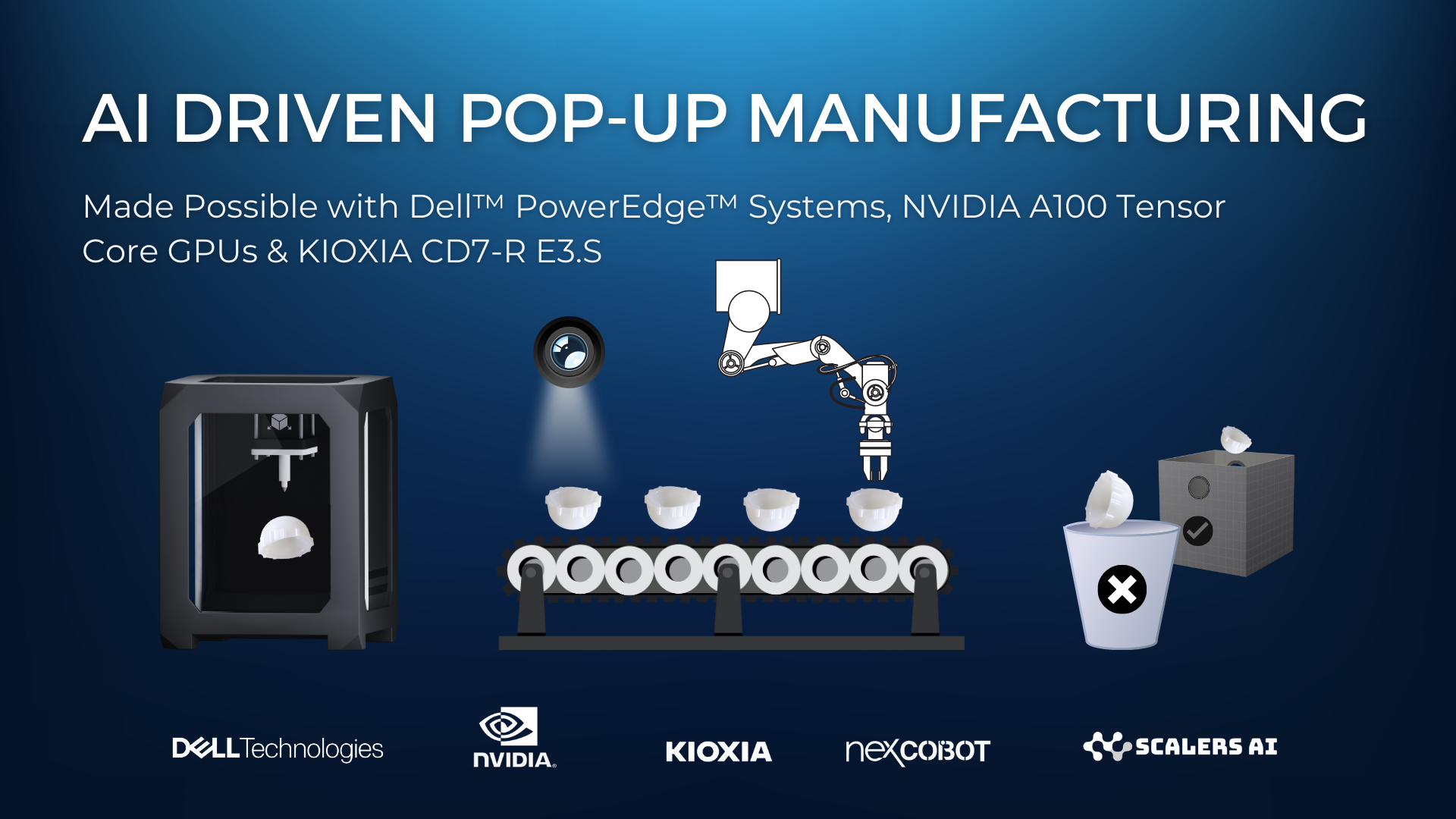

Partnership Drives AI Innovation

Fri, 19 May 2023 19:49:42 -0000



Up to 29% Higher Inference Performance: PowerEdge R750xa and NVIDIA H100 PCIe GPU

Tue, 11 Apr 2023 22:40:39 -0000


Executive Summary - PowerEdge R750xa

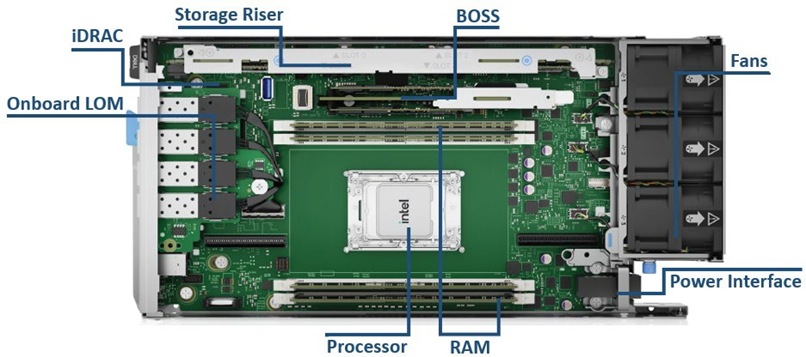

The Dell PowerEdge R750xa, powered by 3rd Generation Intel® Xeon® Scalable processors, is a dual-socket 2U rack server that delivers outstanding performance for the most demanding emerging and intensive GPU workloads. It supports eight memory channels per CPU and up to 32 DDR4 DIMMs at 3200 MT/s. In addition, the PowerEdge R750xa supports PCIe Gen 4 and up to eight SAS/SATA SSD or NVMe drives.

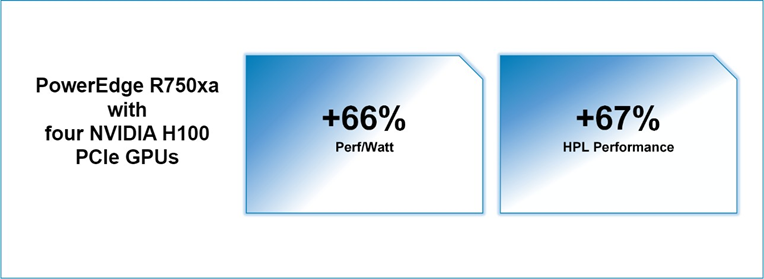

Up to 29% higher inference performance: PowerEdge R750xa and NVIDIA H100 PCIe GPU (1)

One platform that supports all of the PCIe GPUs in the PowerEdge portfolio makes the PowerEdge R750xa the ideal server for workloads including AI-ML/DL training and inferencing, high-performance computing, and virtualization environments. The PowerEdge R750xa includes all of the benefits of core PowerEdge: serviceability, consistent systems management with iDRAC, and the latest in extreme acceleration.


NVIDIA H100 PCIe GPU

The new NVIDIA® H100 PCIe GPU is optimal for delivering the fastest business outcomes with the latest accelerated servers in the Dell PowerEdge portfolio, starting with the R750xa. The PowerEdge R750xa boosts workloads to new performance heights with GPU and accelerator support for demanding workloads, including enterprise AI. With its enhanced, air-cooled design and support for up to four NVIDIA double-width GPUs, the PowerEdge R750xa server is purpose-built for optimal performance across the entire spectrum of HPC, AI-ML/DL training, and inferencing workloads.

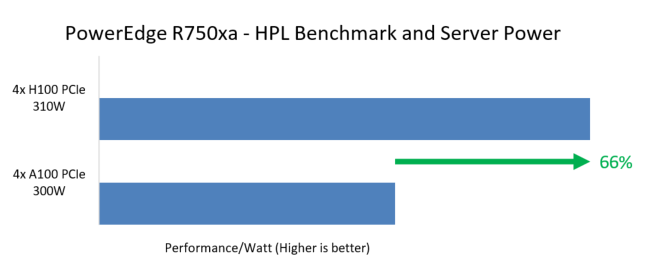

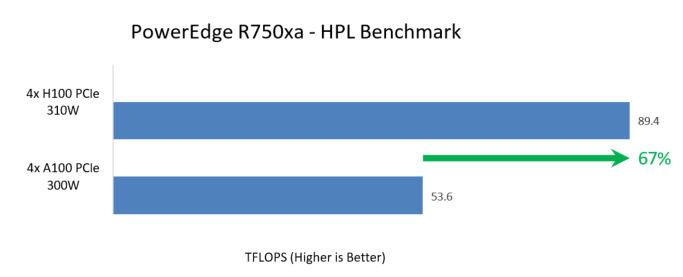

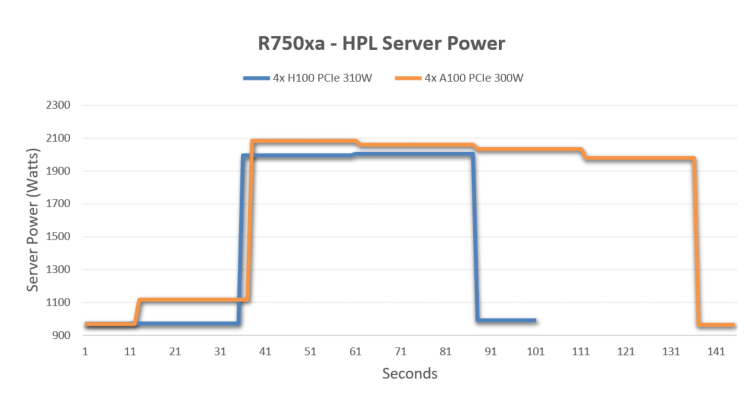

Next-Generation GPU Performance Analysis

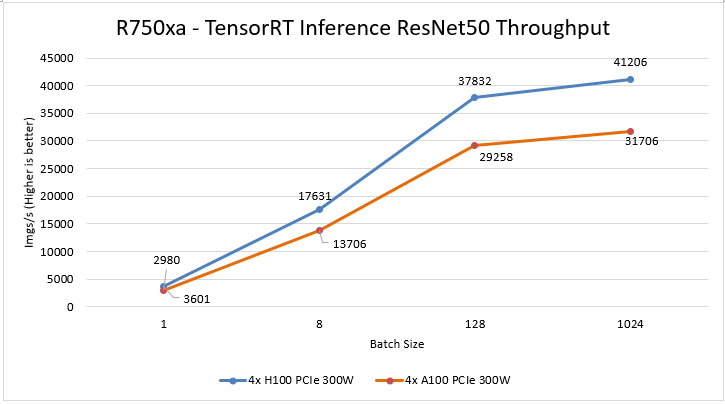

The Dell HPC & AI Innovation Lab compared the performance of the new NVIDIA® H100 PCIe 310W GPU to the previous-generation A100 PCIe GPU in the Dell PowerEdge R750xa. The team ran the popular TensorRT Inference benchmark across various batch sizes to evaluate inferencing performance.

The results are in Figure 1.

Figure 1. TensorRT

According to the industry standard TensorRT Inference Resnet50-v1.5 benchmark, the PowerEdge R750xa with NVIDIA's H100 PCIe 310W GPU processes approximately 29% more images per second than the NVIDIA A100 PCIe 300W GPU on the same server across various batch sizes. This significant improvement in image processing speed translates to higher overall throughput for inferencing workloads, making the PowerEdge R750xa with the H100 GPU an excellent choice for demanding applications.

Test Configuration

| R750xa with 4 NVIDIA H100 | R750xa with 4 NVIDIA A100 |

Server | PowerEdge R750xa | |

CPU | 2x Intel(R) Xeon(R) Gold 6338 CPU | |

Memory | 512G system memory | |

Storage | 1x 3.5T SSD | |

BIOS/iDRAC | 1.9.0/6.0.0.0 | |

Benchmark version | TensorRT Inference Resnet50-v1.5 | |

Operating System | Ubuntu 20.04 LTS | |

GPU | NVIDIA H100-PCIe-80GB (310W) | NVIDIA A100-PCIe-80GB (300W) |

Driver | CUDA 11.8 | CUDA 11.8 |

Conclusion

The PowerEdge R750xa supports up to four NVIDIA H100 PCIe adaptor GPUs and is available with new orders or as a customer upgrade kit for existing deployments.

Legal Disclosure

- Based on October 2022 Dell labs testing subjecting the PowerEdge R750xa 4x NVIDIA H100 PCIe Adaptor GPU configuration and the PowerEdge R750xa 4x NVIDIA A100 PCIe adaptor GPU configuration to TensorRT Inference Resnet50-v1.5 testing. Actual results will vary.

Unlocking Machine Learning with Dell PowerEdge XE9680: Insights into MLPerf 2.1 Training Performance

Tue, 28 Mar 2023 23:05:15 -0000


Executive Summary

The Dell PowerEdge XE9680 is a high-performance server designed and optimized to enable uncompromising performance for artificial intelligence, machine learning, and high-performance computing workloads. Dell PowerEdge is launching its innovative 8-way GPU platform with the following advanced features and capabilities:

- 8x NVIDIA H100 80GB 700W SXM GPUs or 8x NVIDIA A100 80GB 500W SXM GPUs

- 2x Fourth Generation Intel® Xeon® Scalable Processors

- 32x DDR5 DIMMs at 4800MT/s

- 10x PCIe Gen 5 x16 FH Slots

- 8x SAS/NVMe SSD Slots (U.2) and BOSS-N1 with NVMe RAID

This tech note, Direct from Development (DfD), offers valuable insights into the performance of the PowerEdge XE9680 using MLPerf 2.1 benchmarks from MLCommons.

Testing

MLPerf is a suite of benchmarks that assess the performance of machine learning (ML) workloads, with a focus on two crucial aspects of the ML life cycle: training and inference. This tech note specifically delves into the training aspect of MLPerf.

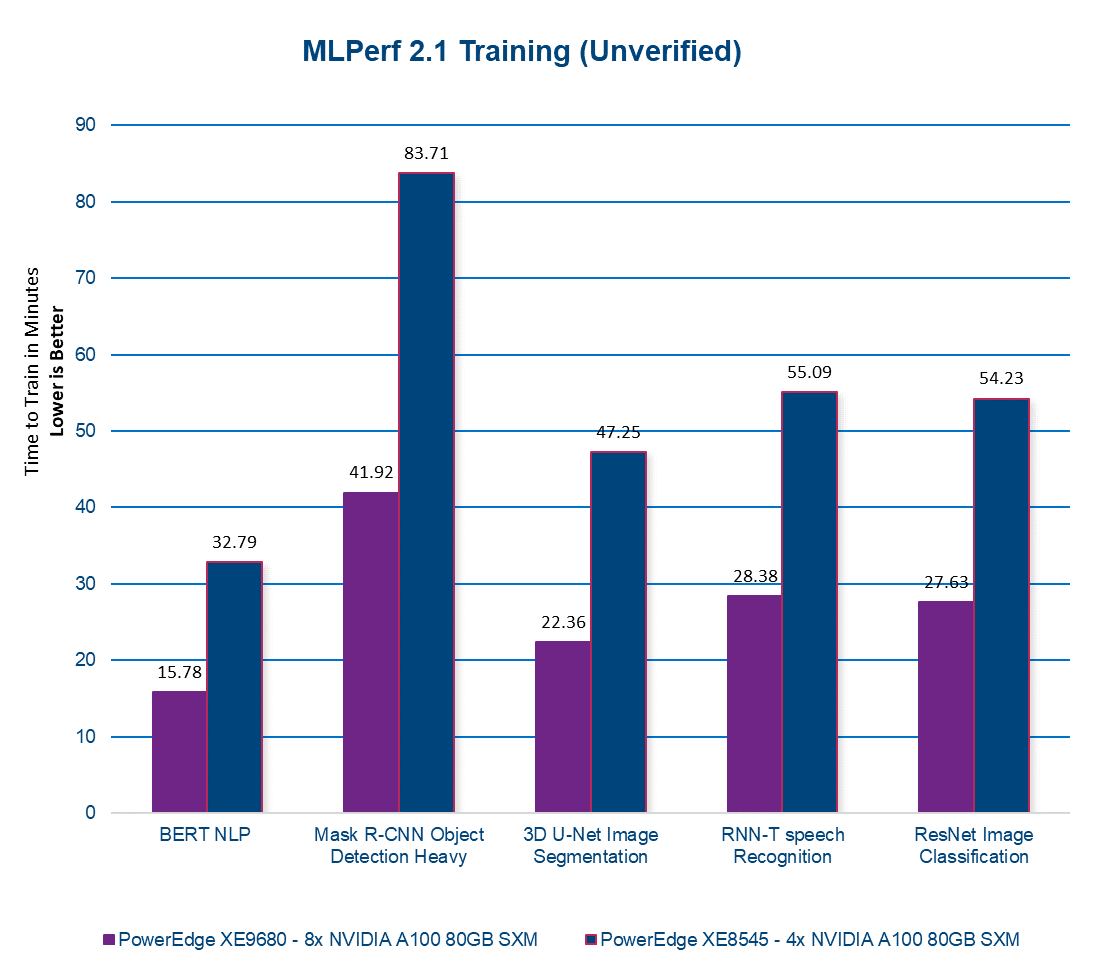

The Dell CET AI Performance Lab and the Dell HPC & AI Innovation Lab conducted MLPerf 2.1 Training benchmarks using the latest PowerEdge XE9680 equipped with 8x NVIDIA A100 80GB SXM GPUs. For comparison, we also ran these tests on the previous-generation PowerEdge XE8545, equipped with 4x NVIDIA A100 80GB SXM GPUs. The following section presents the results of our tests. Please note that in the figure below, a lower number indicates better performance, and the results have not been verified by MLCommons.

Performance

Figure 1. MLPERF 2.1 Training

Our latest server, the PowerEdge XE9680 with 8x NVIDIA A100 80GB SXM GPUs, delivers on average twice the performance of our previous-generation server. This translates to faster AI model training, enabling models to be trained in half the time! With the PowerEdge XE9680, you can accelerate your AI workloads and achieve better results, faster than ever before. Contact your account executive or visit www.dell.com to learn more.

Table 1. Server configuration

(1) Testing conducted by Dell in March of 2023. Performed on PowerEdge XE9680 with 8x NVIDIA A100 SXM4-80GB and PowerEdge XE8545 with 4x NVIDIA A100-SXM-80GB. Unverified MLPerf v2.1 BERT NLP v2.1, Mask R-CNN object detection, heavy-weight v2.1 COCO 2017, 3D U-Net image segmentation v2.1 KiTS19, RNN-T speech recognition v2.1 rnnt Training. Result not verified by MLCommons Association. The MLPerf name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use is strictly prohibited. See www.mlcommons.org for more information. Actual results will vary.

Accelerating AI Inferencing with Dell PowerEdge XE9680: A Performance Analysis

Tue, 28 Mar 2023 23:05:16 -0000


Executive Summary

The Dell PowerEdge XE9680 is a high-performance server designed and optimized to enable uncompromising performance for artificial intelligence, machine learning, and high-performance computing workloads. Dell PowerEdge is launching its innovative 8-way GPU platform with the following advanced features and capabilities:

- 8x NVIDIA H100 80GB 700W SXM GPUs or 8x NVIDIA A100 80GB 500W SXM GPUs

- 2x Fourth Generation Intel® Xeon® Scalable Processors

- 32x DDR5 DIMMs at 4800MT/s

- 10x PCIe Gen 5 x16 FH Slots

- 8x SAS/NVMe SSD Slots (U.2) and BOSS-N1 with NVMe RAID

This Direct from Development (DfD) tech note provides valuable insights on AI inferencing performance for the recently launched PowerEdge XE9680 server by Dell Technologies.

Testing

To evaluate the inferencing performance of each GPU option available on the new PowerEdge XE9680, the Dell CET AI Performance Lab and the Dell HPC & AI Innovation Lab selected several popular AI models for benchmarking. To provide a basis for comparison, they also ran the benchmarks on our last-generation PowerEdge XE8545. The following workloads were chosen for the evaluation:

- BERT-large (Bidirectional Encoder Representations from Transformers) – Natural language processing tasks such as text classification, sentiment analysis, question answering, and language translation
  - XE8545 Batch Size 512
  - XE9680-A100 Batch Size 512
  - XE9680-H100 Batch Size 1024

- ResNet (Residual Network) – Image recognition: classification, object detection, and segmentation
  - XE8545 Batch Size 2048
  - XE9680-A100 Batch Size 2048
  - XE9680-H100 Batch Size 2048

- RNN-T (Recurrent Neural Network Transducer) – Speech recognition: converts audio signals to words
  - XE8545 Batch Size 2048
  - XE9680-A100 Batch Size 2048
  - XE9680-H100 Batch Size 2048

- RetinaNet – Object detection in images
  - XE8545 Batch Size 16
  - XE9680-A100 Batch Size 32
  - XE9680-H100 Batch Size 16

Performance

The results are remarkable! The PowerEdge XE9680 demonstrates exceptional inferencing performance!

+300%: PowerEdge XE9680 NVIDIA A100 to H100 performance(1)

+700%: When compared to PowerEdge XE8545(2)

Comparing the NVIDIA A100 SXM configuration with the NVIDIA H100 SXM configuration on the same PowerEdge XE9680 reveals up to a 300% improvement in inferencing performance! (1)

Even more impressive is the comparison between the PowerEdge XE9680 NVIDIA H100 SXM server and the XE8545 NVIDIA A100 SXM server, which shows up to a 700% improvement in inferencing performance! (2)
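For a higher-is-better metric such as inferences per second, "+N%" means new = baseline × (1 + N/100). Read literally, +300% therefore corresponds to 4x the baseline throughput and +700% to 8x. The small Python helpers below are a sketch of that conversion; they are not part of Dell's test methodology.

```python
def percent_improvement(baseline: float, new: float) -> float:
    """Relative gain for a higher-is-better metric, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (new / baseline - 1.0) * 100.0


def speedup_from_percent(percent: float) -> float:
    """Convert an 'N% improvement' figure into a throughput multiple."""
    return 1.0 + percent / 100.0


if __name__ == "__main__":
    print(speedup_from_percent(300.0))  # 4.0x
    print(speedup_from_percent(700.0))  # 8.0x
```

The distinction matters when comparing notes: "6x" and "+500%" describe the same gain.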

Here are the results of each benchmark. In all cases, higher is better.

With exceptional AI inferencing performance, the PowerEdge XE9680 sets a high benchmark for today’s and tomorrow's AI demands. Its advanced features and capabilities provide a solid foundation for businesses and organizations to take advantage of AI and unlock new opportunities.

Contact your account executive or visit www.dell.com to learn more.

Table 1. Server configuration

(1) Testing conducted by Dell in March of 2023. Performed on PowerEdge XE9680 with 8x NVIDIA H100 SXM5-80GB and PowerEdge XE9680 with 8x NVIDIA A100 SXM4-80GB. Actual results will vary.

(2) Testing conducted by Dell in March of 2023. Performed on PowerEdge XE9680 with 8x NVIDIA H100 SXM5-80GB and PowerEdge XE8545 with 4x NVIDIA A100-SXM-80GB. Actual results will vary.

Accelerating High-Performance Computing with Dell PowerEdge XE9680: A Look at HPL Performance

Tue, 28 Mar 2023 23:05:16 -0000


Executive Summary

The Dell PowerEdge XE9680 is a high-performance server designed and optimized to enable uncompromising performance for artificial intelligence, machine learning, and high-performance computing workloads. Dell PowerEdge is launching its innovative 8-way GPU platform with the following advanced features and capabilities:

- 8x NVIDIA H100 80GB 700W SXM GPUs or 8x NVIDIA A100 80GB 500W SXM GPUs

- 2x Fourth Generation Intel® Xeon® Scalable Processors

- 32x DDR5 DIMMs at 4800MT/s

- 10x PCIe Gen 5 x16 FH Slots

- 8x SAS/NVMe SSD Slots (U.2) and BOSS-N1 with NVMe RAID

This Direct from Development (DfD) tech note offers valuable performance insights for High-Performance Linpack (HPL), a widely accepted benchmark for measuring HPC system performance.

Testing

The TOP500 list relies on HPL to assess and rank supercomputer performance. Derived from the LINPACK benchmark, HPL measures FLOPS (floating-point operations per second) by generating and solving a dense system of linear equations, making it a reliable benchmark for evaluating HPC system efficiency.
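To make "measures FLOPS by solving linear equations" concrete, the toy Python sketch below times a dense solve using Gaussian elimination with partial pivoting and converts the elapsed time to GFLOPS with the operation count HPL reports, 2/3·N³ + 3/2·N². It then checks the residual |Ax - b|, in the spirit of HPL's own correctness check. This is an illustration only: real HPL runs a blocked, distributed LU factorization across MPI ranks with optimized (here, GPU-accelerated) BLAS, and pure Python reports a tiny fraction of a system's capability. The function names, matrix size, and random inputs are assumptions.

```python
from __future__ import annotations

import random
import time


def solve_dense(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting (inputs unchanged)."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy [A | b]
    for k in range(n):
        # Partial pivoting: move the largest remaining entry in column k to row k.
        pivot = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[pivot] = m[pivot], m[k]
        for i in range(k + 1, n):
            factor = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= factor * m[k][j]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def hpl_flop_count(n: int) -> float:
    """Operation count HPL uses when reporting performance: 2/3 n^3 + 3/2 n^2."""
    return (2.0 / 3.0) * n**3 + 1.5 * n**2


def linpack_style_run(n: int = 120, seed: int = 0) -> tuple[float, float]:
    """Time one dense solve; return (GFLOPS, max residual |A x - b|)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    residual = max(abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n))
    return hpl_flop_count(n) / elapsed / 1e9, residual


if __name__ == "__main__":
    gflops, residual = linpack_style_run()
    print(f"{gflops:.4f} GFLOPS, max residual {residual:.2e}")
```

The same ratio of operation count to wall-clock time underlies the TeraFLOPS figures HPL reports, just at vastly larger N on real hardware.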

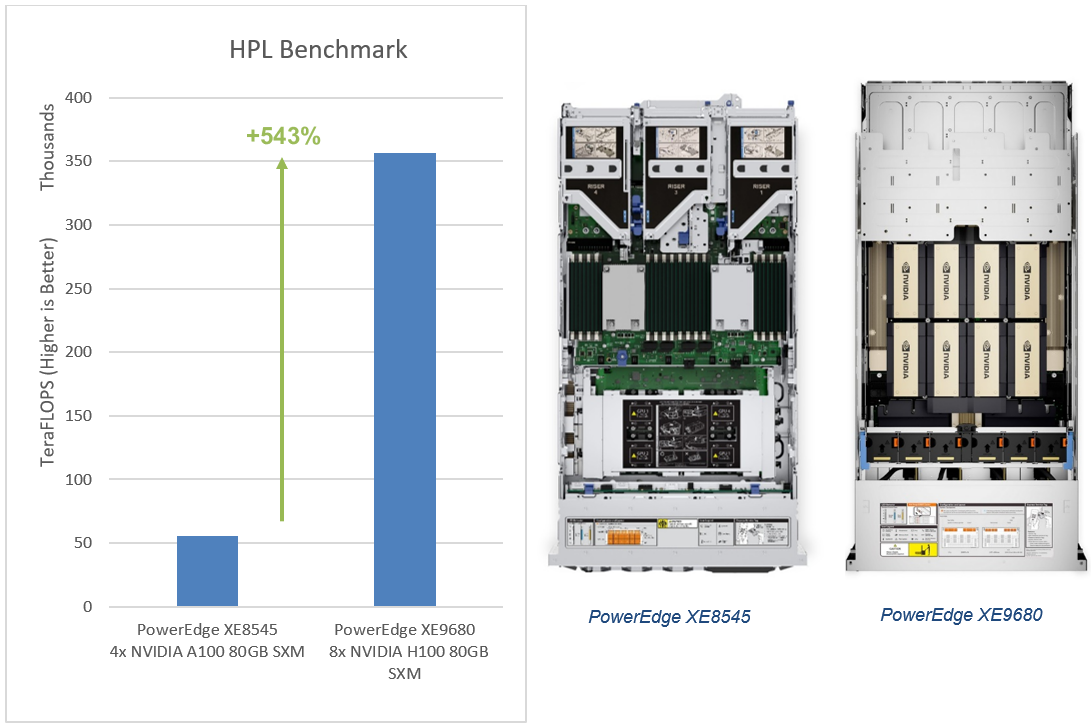

The Dell HPC & AI Innovation Lab used HPL to compare the performance of the PowerEdge XE9680 to our last-generation PowerEdge XE8545. Two key differences between the servers affect HPL performance here: the number and model of GPUs supported by each platform.

Regarding GPU configuration, the PowerEdge XE9680 was equipped with 8x H100 80GB SXM GPUs, while the PowerEdge XE8545 was outfitted with 4x A100 80GB SXM GPUs.

Performance

In the HPL benchmark, the PowerEdge XE9680 equipped with NVIDIA's latest H100 80GB SXM GPUs outperforms the PowerEdge XE8545, delivering an impressive 543% more TeraFLOPS (1).
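A "percent more" figure converts to a multiple as 1 + X/100, so 543% more corresponds to roughly 6.43x the XE8545 result, assuming the figure is meant literally as (new − old) / old. Because the two servers had different GPU counts (8 vs. 4), the sketch below also derives a rough per-GPU multiple. That step assumes HPL throughput scales linearly with GPU count, which is a simplifying assumption, not a measured result. The function names are hypothetical.

```python
def percent_more_to_multiple(percent_more: float) -> float:
    """'X% more' means new = old * (1 + X / 100)."""
    return 1.0 + percent_more / 100.0


def per_unit_multiple(system_multiple: float, new_units: int, old_units: int) -> float:
    """Rough per-GPU ratio, ASSUMING performance scales linearly with GPU count."""
    return system_multiple * old_units / new_units


# Figure from the text: XE9680 (8x H100) vs XE8545 (4x A100), 543% more TFLOPS.
system = percent_more_to_multiple(543.0)
per_gpu = per_unit_multiple(system, new_units=8, old_units=4)
print(f"whole server: {system:.2f}x, per GPU (linear-scaling assumption): {per_gpu:.3f}x")
```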

The PowerEdge XE9680, with the latest NVIDIA H100 SXM GPU, advances HPC performance. With exceptional HPL performance, the PowerEdge XE9680 sets a high benchmark for today’s and tomorrow's HPC demands. Contact your account executive or visit www.dell.com to learn more.

Table 1. Server configuration

(1) Testing conducted by Dell in March of 2023. Performed on PowerEdge XE9680 with 8x NVIDIA H100 SXM5-80GB and PowerEdge XE8545 with 4x NVIDIA A100-SXM-80GB. Actual results will vary.

Next-Generation Dell PowerEdge XR5610 Machine Learning Performance

Fri, 03 Mar 2023 20:01:50 -0000


Summary

Dell Technologies has recently announced the launch of next-generation Dell PowerEdge servers that deliver advanced performance and energy-efficient design.

This Direct from Development Tech Note describes the new capabilities you can expect from the next generation of PowerEdge servers. It discusses the tests and results for machine learning (ML) performance of the PowerEdge XR5610 using the industry-standard MLPerf Inference v2.1 benchmarking suite. The XR5610 targets workloads in networking and communication, enterprise edge, and military and defense, all of which require AI/ML inferencing capabilities at the edge.

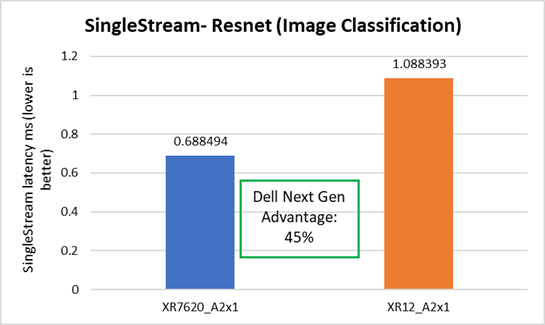

The single-socket XR5610 is an edge-optimized, short-depth, rugged 1U server powered by 4th Generation Intel® Xeon® Scalable processors with the MCC SKU stack. It includes the latest generation of technologies, with up to 8x DDR5 DIMM slots and two PCIe Gen5 x16 card slots, and delivers 46 percent faster image classification (reduced latency) than the previous-generation PowerEdge XR12.

PowerEdge XR5610—Designed for the edge

Edge computing, in essence, brings compute power close to the source of the data. As Internet of Things (IoT) endpoints and other devices generate more and more time-sensitive data, edge computing becomes increasingly important. Machine learning (ML) and artificial intelligence (AI) applications are particularly suitable for edge computing deployments. The environmental conditions for edge computing are typically vastly different from those at centralized data centers. At best, edge computing sites might consist of little more than a telecommunications closet with minimal or no HVAC.

Dell PowerEdge XR5610 is a rugged, short-depth (400 mm class) 1U server for the edge, designed for deployment in locations constrained by space or environmental challenges. It is built to operate in temperatures ranging from –5°C to 55°C (23°F to 131°F) and designed to excel with telecom vRAN workloads, military and defense deployments, and retail AI, including video monitoring, IoT device aggregation, and PoS analytics.

Figure 1. Dell PowerEdge XR5610 – 1U

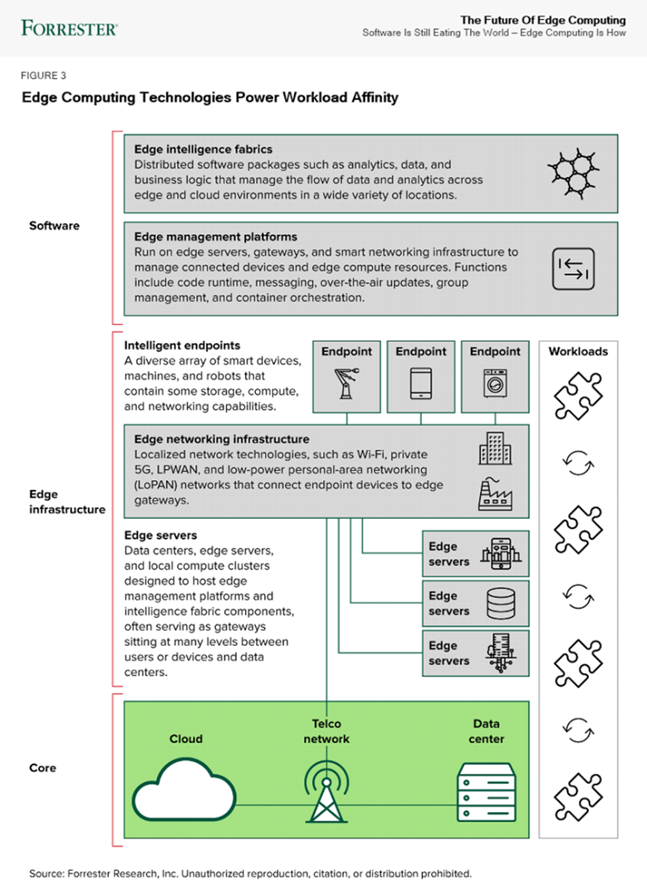

The emerging technology of edge intelligence

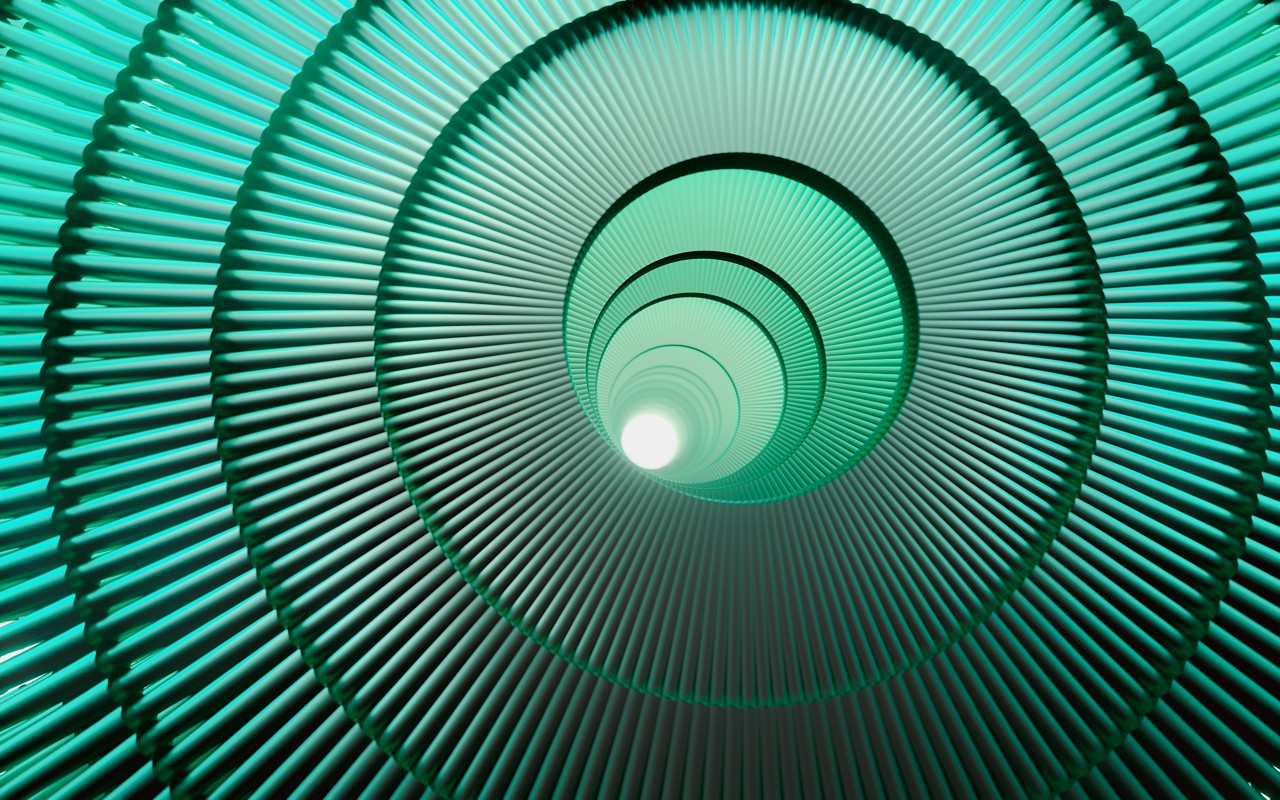

According to a recent Forrester report, “Edge intelligence, a top 10 emerging technology in 2022, helps capture data, embed inferencing, and connect insight in a real-time network of application, device, and communication ecosystems.”

Figure 2. Forrester report excerpt, reprinted with permission

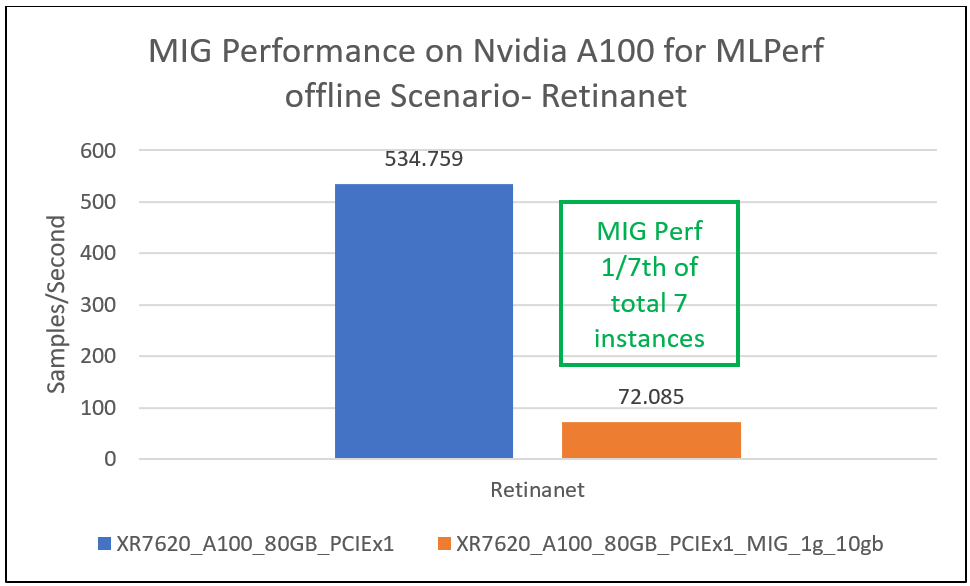

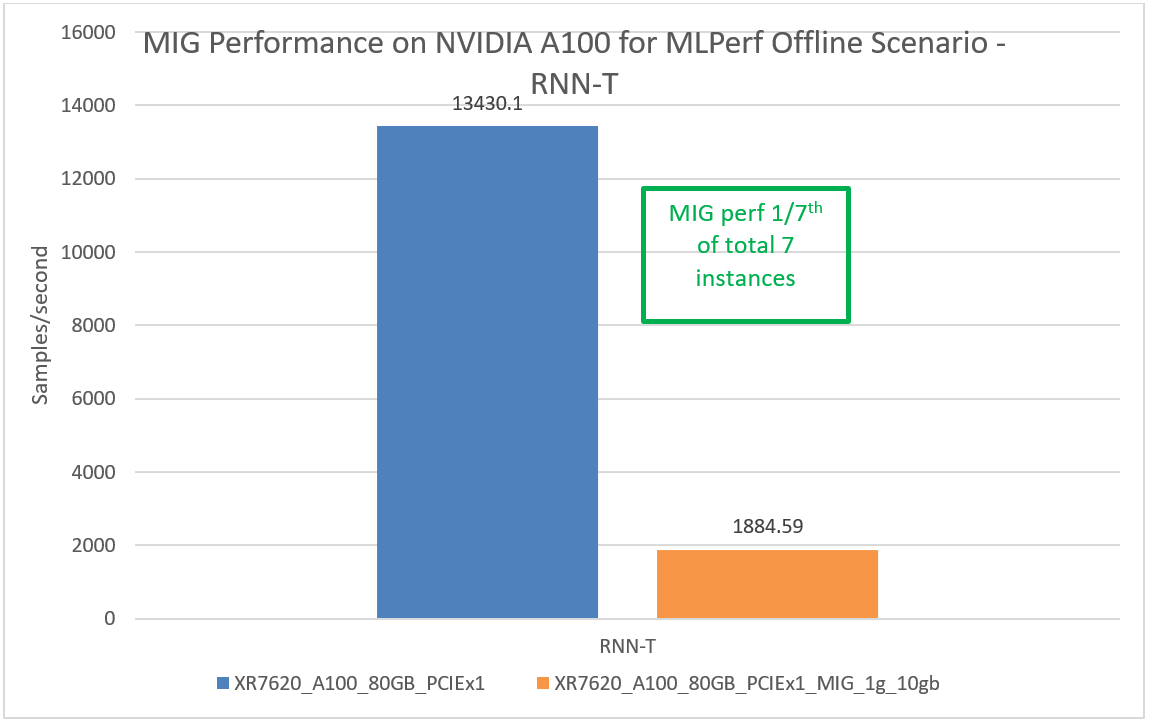

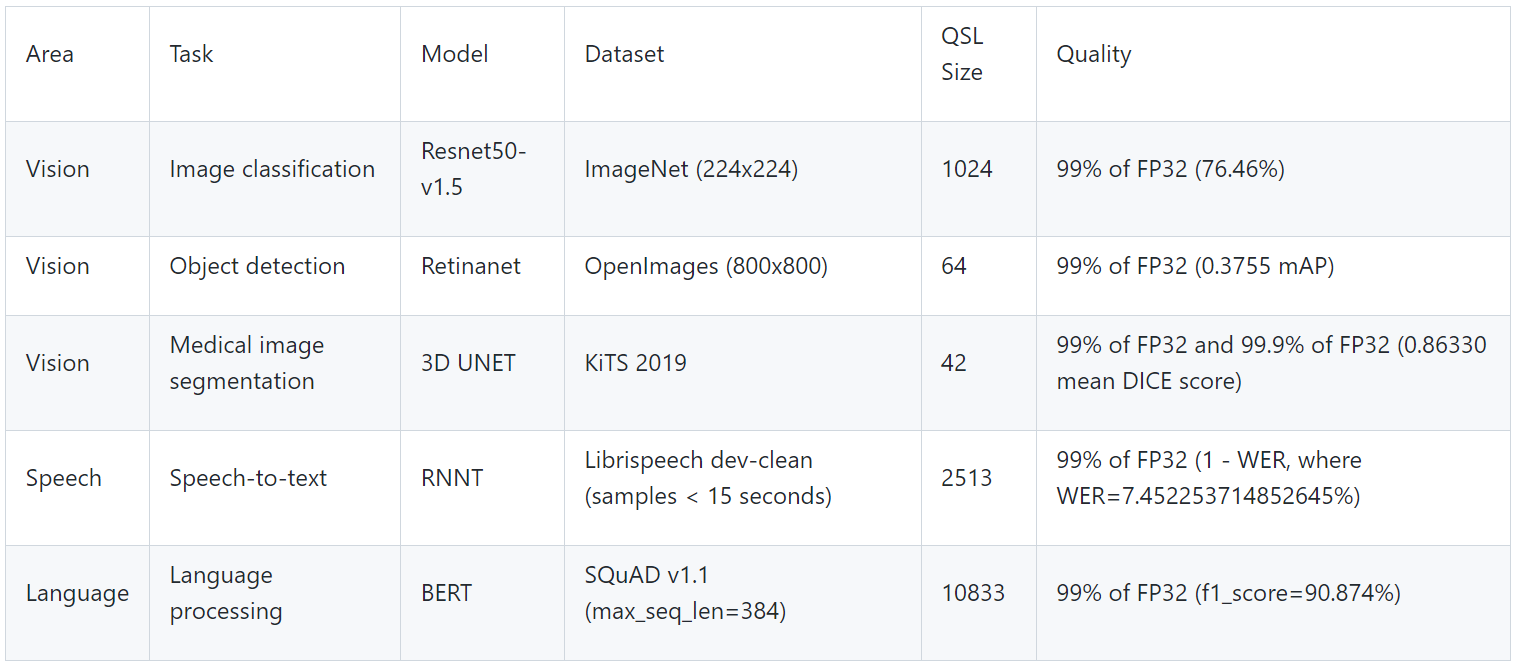

MLPerf Inference workload summary

MLPerf Inference is a multifaceted benchmark framework that measures five workload types across three processing scenarios. The workloads are image classification, object detection, medical imaging, speech-to-text, and natural language processing (BERT). The processing scenarios, outlined in the following table, are single stream, multistream, and offline.

Table 1. MLPerf Inference benchmark scenarios

| Scenario | Performance metric | Use case |
|---|---|---|
| Single stream | 90th percentile latency | Search results: waits until a query is made and returns the search results. Example: Google voice search |
| Multistream | 99th percentile latency | Multicamera monitoring and quick decisions: acts like a CCTV back-end system that processes multiple real-time streams and identifies suspicious behavior. Example: a self-driving car that merges multiple camera inputs and makes driving decisions in real time |
| Offline | Measured throughput | Batch processing, also known as offline processing. Example: a Google Photos service that identifies pictures, tags people, and generates albums of specific people, locations, or events offline |
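The three metrics in Table 1 can be illustrated with a minimal sketch: a percentile over per-query latencies for single stream (90th) and multistream (99th), and samples per second for offline. It uses a simple nearest-rank percentile and hypothetical function names and latencies. MLPerf LoadGen's actual measurement and reporting rules differ in detail, so treat this as an illustration of the metric definitions only.

```python
import math


def percentile_latency(latencies_ms: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


def offline_throughput(num_samples: int, wall_time_s: float) -> float:
    """Offline scenario: samples processed per second over the whole run."""
    return num_samples / wall_time_s


if __name__ == "__main__":
    # Hypothetical per-query latencies in ms: 1.0, 1.1, ..., 10.9.
    latencies = [1.0 + 0.1 * i for i in range(100)]
    print("single stream p90:", percentile_latency(latencies, 90))
    print("multistream p99:", percentile_latency(latencies, 99))
    print("offline:", offline_throughput(50_000, 25.0), "samples/s")
```

A tail percentile rather than a mean is used for the latency scenarios because a few slow queries matter to interactive users even when the average is low.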

The MLPerf suite for inferencing includes the following benchmarks:

Table 2. MLPerf suite for inferencing benchmarks

| Area | Task | Model | Dataset | QSL size | Quality |
|---|---|---|---|---|---|
| Vision | Image classification | Resnet50-v1.5 | ImageNet (224x224) | 1024 | 99% of FP32 (76.46%) |
| Vision | Object detection | Retinanet | OpenImages (800x800) | 64 | 99% of FP32 (0.3755 mAP) |
| Vision | Medical image segmentation | 3D UNET | KiTS 2019 | 42 | 99% of FP32 and 99.9% of FP32 (0.86330 mean DICE score) |
| Speech | Speech-to-text | RNNT | Librispeech dev-clean (samples < 15 seconds) | 2513 | 99% of FP32 (1 – WER, where WER=7.452253714852645%) |
| Language | Language processing | BERT | SQuAD v1.1 (max_seq_len=384) | 10833 | 99% of FP32 (f1_score=90.874%) |
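Each Quality entry in Table 2 acts as a floor: a run must reach the stated fraction of the FP32 reference score. The sketch below computes those floors from the table's values; for RNNT the reference is expressed as 1 − WER in percent. The dictionary keys and function name are illustrative labels, not official MLPerf benchmark identifiers.

```python
# (FP32 reference score, required fraction of it), transcribed from Table 2.
QUALITY_TARGETS = {
    "Resnet50-v1.5 (top-1 %)": (76.46, 0.99),
    "Retinanet (mAP)": (0.3755, 0.99),
    "3D UNET 99% (mean DICE)": (0.86330, 0.99),
    "3D UNET 99.9% (mean DICE)": (0.86330, 0.999),
    "RNNT (1 - WER, %)": (100.0 - 7.452253714852645, 0.99),
    "BERT (F1 %)": (90.874, 0.99),
}


def minimum_quality(reference: float, fraction: float) -> float:
    """Lowest score a run may report and still meet the quality target."""
    return reference * fraction


if __name__ == "__main__":
    for name, (ref, frac) in QUALITY_TARGETS.items():
        print(f"{name}: must reach >= {minimum_quality(ref, frac):.4f}")
```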

MLPerf Inference performance

The following table outlines the key specifications of the PowerEdge XR5610 that was used for the MLPerf Inference test suite.

Table 3. Dell PowerEdge XR5610 key specifications for MLPerf Inference test suite

| Component | Specifications |
|---|---|
| CPU | 4th Gen Intel Xeon Scalable processors MCC SKU |
| Operating system | CentOS 8.2.2004 |
| Memory | 256 GB |
| GPU | NVIDIA A2 |
| GPU count | 1 |
| Networking | 1x ConnectX-5 IB EDR 100 Gbps |
| Software stack | |
| Storage | NVMe SSD 1.8 TB |

Table 4 shows the specifications of the NVIDIA GPUs that were used in the benchmark tests.

Table 4. NVIDIA GPUs tested

| GPU model | GPU memory | Maximum power consumption | Form factor | 2-way bridge | Recommended workloads |
|---|---|---|---|---|---|
| PCIe adapter form factor | | | | | |
| A2 | 16 GB GDDR6 | 60 W | SW, HHHL, or FHHL | Not applicable | AI inferencing, edge, VDI |

The edge server offloads image processing to the GPU. Just as servers come at different price/performance levels to suit different requirements, so do GPUs. The XR5610 supports up to two single-wide (SW) GPUs, as did the previous-generation XR11.

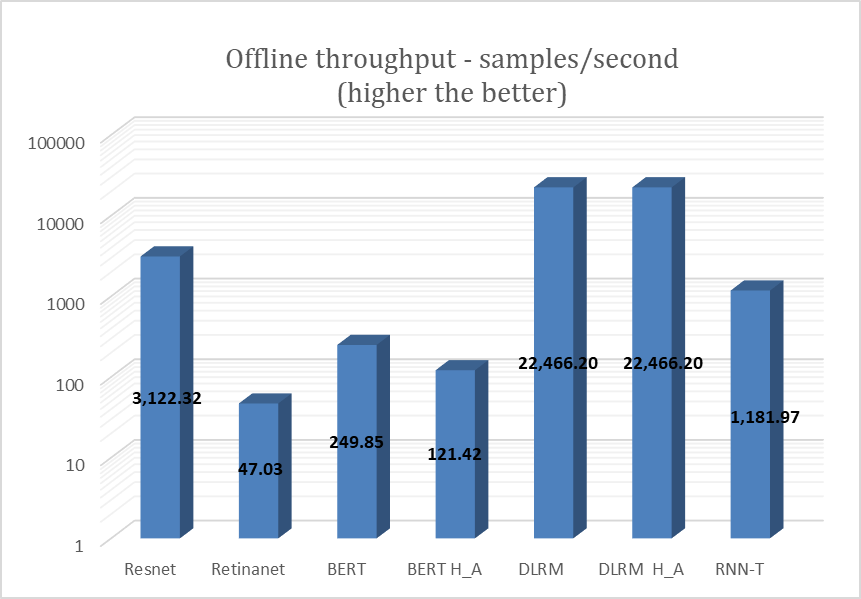

The XR5610 was tested with the NVIDIA A2 GPU across the full range of MLPerf workloads in the offline scenario. The following figure shows the results of the testing.

Figure 3. NVIDIA A2 GPU test results for MLPerf offline scenario

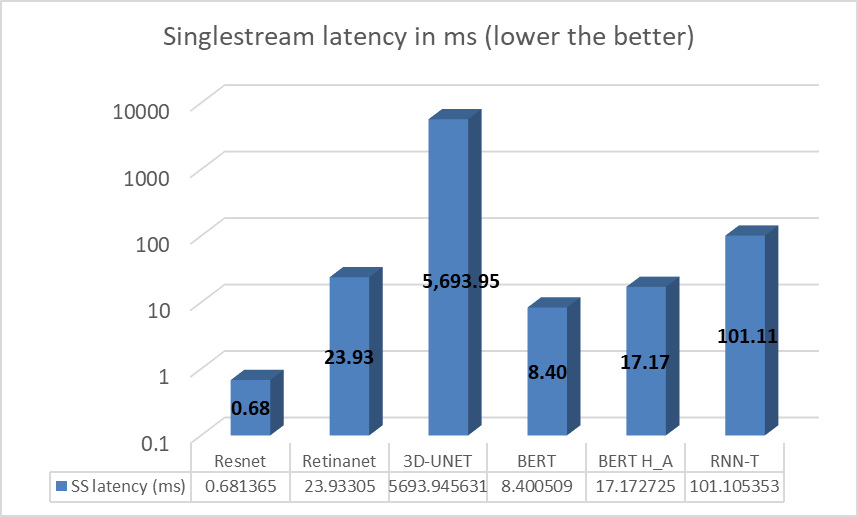

The XR5610 was also tested with the NVIDIA A2 GPU across the full range of MLPerf workloads in the single stream scenario. The following figure shows the results of that testing.

Figure 4. NVIDIA A2 GPU test results for MLPerf single stream scenario

In some workloads, the XR5610 showed improvements over previous generations, resulting from the integration of new technologies such as PCIe Gen 5.

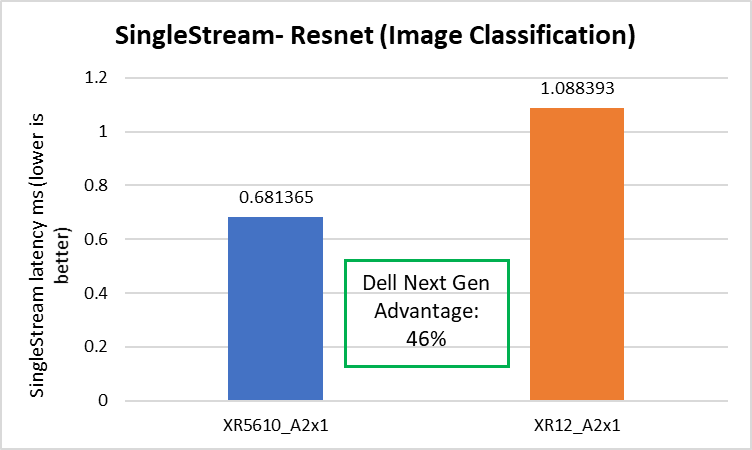

Image classification

The PowerEdge XR5610 delivered 46 percent better image classification latency compared to the prior-generation PowerEdge server, as shown in the following figure.

Figure 5. Image classification latencies: XR5610 and prior-generation PowerEdge server
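The phrase "46 percent better latency" can be read two ways, and the note does not say which is meant: latency reduced by 46% (new = 0.54 × old, about a 1.85x speedup) or 46% faster (new = old / 1.46, about a 31.5% latency reduction). The sketch below shows both readings against a 10 ms baseline that is invented purely for illustration; the function names are hypothetical.

```python
def latency_if_reduced(old_ms: float, pct: float) -> float:
    """Reading 1: latency cut by pct percent (new = old * (1 - pct/100))."""
    return old_ms * (1 - pct / 100)


def latency_if_faster(old_ms: float, pct: float) -> float:
    """Reading 2: pct percent faster, a speedup of 1 + pct/100 (new = old / (1 + pct/100))."""
    return old_ms / (1 + pct / 100)


if __name__ == "__main__":
    baseline_ms = 10.0  # hypothetical prior-generation latency, for illustration only
    for label, fn in (("46% lower latency", latency_if_reduced), ("46% faster", latency_if_faster)):
        new_ms = fn(baseline_ms, 46)
        print(f"{label}: {new_ms:.2f} ms, speedup {baseline_ms / new_ms:.2f}x, "
              f"latency reduction {100 * (1 - new_ms / baseline_ms):.1f}%")
```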

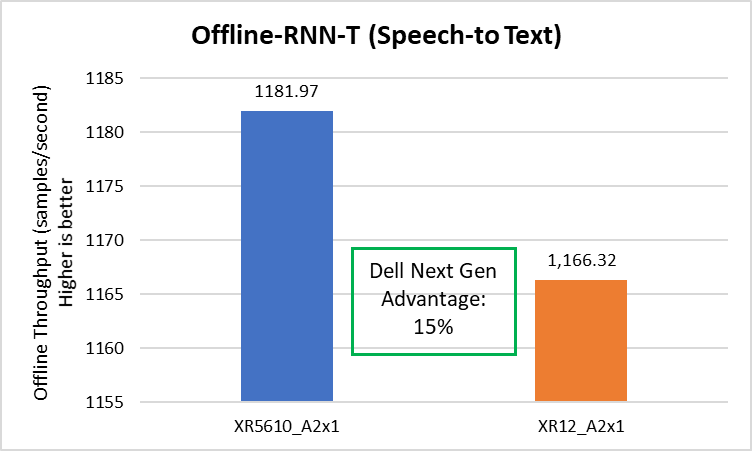

Speech to text

The Dell XR5610 delivered 15 percent better speech-to-text throughput compared to the prior-generation PowerEdge server, as shown in the following figure.

Figure 6. Speech to text latencies: XR5610 and prior-generation PowerEdge server

Conclusion

The PowerEdge XR portfolio continues to provide a streamlined approach for various edge and telecom deployment options based on different use cases. It addresses the need for a small form factor at the edge with industry-standard rugged certifications (NEBS), offering a compact solution with scalability and flexibility across a temperature range of –5°C to +55°C.


Notes:

- Based on testing conducted in Dell Cloud and Emerging Technology lab in January 2023. Results to be submitted to MLPerf in Q2, FY24.

- Unverified MLPerf v2.1 Inference. Result not verified by MLCommons Association. MLPerf name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use strictly prohibited. See www.mlcommons.org for more information.

Next-Generation Dell PowerEdge XR Server CPU Improvements

Fri, 03 Mar 2023 19:57:24 -0000


Summary

Dell Technologies has recently introduced the next generation of Dell PowerEdge XR servers. Powered by 4th Gen Intel® Xeon® Scalable processors with the MCC SKU stack, these servers deliver advanced performance in an energy-efficient design. Dell continues to provide scalability and flexibility with its latest portfolio of short-depth XR servers. These servers integrate technologies such as 4th Gen Intel CPUs, PCIe Gen5, DDR5, NVMe drives, and GPU slots, and they are compliance-tested for NEBS and MIL-STD.

This tech note discusses our CPU performance benchmark testing of the next-generation PowerEdge XR server portfolio and the test results that show improvements over previous PowerEdge XR servers powered by 3rd Gen Intel Xeon Scalable processors and Xeon D processors.

Benchmarks

4th Gen Intel Xeon Scalable processors with the MCC SKU stack were tested using the STREAM and HPL benchmarks and compared with the CPUs used in the previous generation of XR servers.

STREAM

The STREAM benchmark is a simple, synthetic benchmark designed to measure sustainable memory bandwidth (in MB/s) and a corresponding computation rate for four simple vector kernels: Copy, Scale, Add, and Triad. The STREAM benchmark is designed to work with datasets much larger than the available cache on any system so that the results are (presumably) more indicative of the performance of very large, vector-style applications. Ultimately, it provides a reference for memory-bandwidth-bound performance.
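To illustrate what a STREAM kernel measures, here is a minimal NumPy sketch of the Triad kernel (a = b + scalar·c) that counts bytes the way STREAM does: three 8-byte words per element, reporting the best of several trials and validating the result. This is not the official STREAM code, and the function name and default array size are assumptions. Because NumPy evaluates Triad in two passes, it moves more data than it counts, so its figure will typically understate the hardware's sustainable bandwidth. Set the array size well above the system's total cache size.

```python
import time

import numpy as np


def stream_triad_mb_per_s(n: int = 10_000_000, scalar: float = 3.0, trials: int = 5) -> float:
    """Best-of-trials Triad bandwidth in MB/s, counting bytes as STREAM does."""
    a = np.zeros(n)          # float64 arrays, 8 bytes per element
    b = np.full(n, 2.0)
    c = np.full(n, 1.0)
    best = float("inf")
    for _ in range(trials):
        start = time.perf_counter()
        np.multiply(c, scalar, out=a)  # a = scalar * c
        np.add(a, b, out=a)            # a = b + scalar * c
        best = min(best, time.perf_counter() - start)
    # Validate the result before reporting a rate.
    if not np.allclose(a, b + scalar * c):
        raise RuntimeError("Triad result failed validation")
    counted_bytes = 3 * n * a.itemsize  # read b, read c, write a
    return counted_bytes / best / 1e6
```

For example, `stream_triad_mb_per_s(n=50_000_000)` uses about 1.2 GB across the three arrays; choose `n` to fit the system under test.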

HPL

HPL is a high-performance LINPACK benchmark implementation. The code solves a uniformly random system of linear equations and reports time and floating-point operations per second using a standard formula for operation count. It provides a reference for a system's floating-point compute performance.
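A small-scale sketch of the same procedure: generate a uniformly random dense system, solve it by LU factorization with partial pivoting (here via NumPy's LAPACK-backed `numpy.linalg.solve`), time the solve, and validate the answer with a scaled residual modeled on the check HPL applies before accepting a run. The function name, the entry range [-0.5, 0.5], the problem size, and the 16.0 threshold are assumptions for illustration; real HPL runs distribute the matrix across processes and use far larger problems.

```python
import time

import numpy as np


def hpl_style_run(n: int = 2000, seed: int = 0, threshold: float = 16.0):
    """Solve a random dense system and apply an HPL-style scaled residual check."""
    rng = np.random.default_rng(seed)
    a = rng.uniform(-0.5, 0.5, size=(n, n))  # uniformly random coefficient matrix
    b = rng.uniform(-0.5, 0.5, size=n)       # uniformly random right-hand side

    start = time.perf_counter()
    x = np.linalg.solve(a, b)  # LU factorization with partial pivoting (LAPACK gesv)
    elapsed_s = time.perf_counter() - start

    # ||Ax - b||_inf / (eps * (||A||_inf * ||x||_inf + ||b||_inf) * n)
    eps = np.finfo(np.float64).eps
    residual = np.linalg.norm(a @ x - b, np.inf) / (
        eps * (np.linalg.norm(a, np.inf) * np.linalg.norm(x, np.inf)
               + np.linalg.norm(b, np.inf)) * n
    )
    return elapsed_s, residual, residual < threshold


if __name__ == "__main__":
    t, r, ok = hpl_style_run()
    print(f"solve time {t:.3f} s, scaled residual {r:.3e}, {'PASSED' if ok else 'FAILED'}")
```

The residual check matters because a fast but inaccurate solve would otherwise inflate the reported rate.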

Performance results

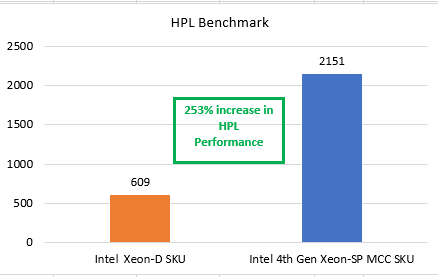

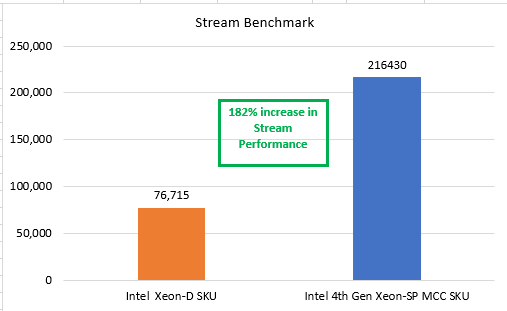

Benchmark testing showed significant performance increases with the 4th Gen Intel Xeon Scalable MCC SKU stack when it was compared with both the Intel Xeon D SKU and the 3rd Gen Intel Xeon Scalable MCC SKU.

Comparison of 4th Gen Intel Xeon Scalable MCC SKU with Intel Xeon D SKU

In our tests, the single-socket PowerEdge XR servers with the 4th Gen Intel Xeon Scalable CPU (32 core) MCC SKU stack delivered a 253 percent increase in HPL performance and a 182 percent increase in STREAM performance. Thus, these servers are faster at the network edge or enterprise edge than the previous-generation PowerEdge XR servers powered by the Intel Xeon D (16 core) SKU.

Figure 1 and Figure 2 show the results of the benchmark tests that compared the performance of the 4th Gen Intel Xeon Scalable processor MCC SKU stack with the Intel Xeon D SKU.

Figure 1. HPL performance comparison: Intel Xeon D SKU and 4th Gen Intel Xeon Scalable MCC SKU

Figure 2. STREAM performance comparison: Intel Xeon D SKU and 4th Gen Intel Xeon Scalable MCC SKU

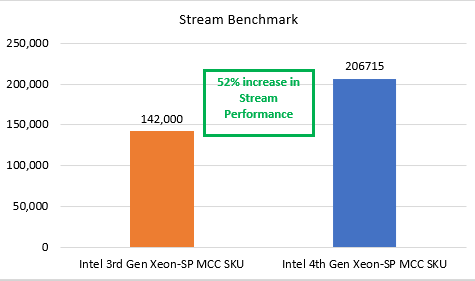

Comparison of 4th Gen and 3rd Gen Intel Xeon Scalable MCC SKU

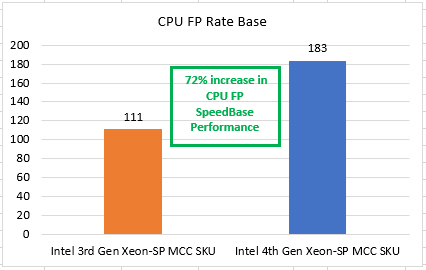

In our tests, the single-socket PowerEdge XR servers with the 4th Gen Intel Xeon Scalable CPU (32 core) MCC SKU stack delivered a 52 percent increase in STREAM performance and a 72 percent increase in CPU FP rate base performance (floating-point performance for the CPU). Thus, these servers are faster for compute at the network edge or enterprise edge than the previous generation of PowerEdge XR servers powered by the 3rd Gen Intel Xeon Scalable MCC SKU.

Figure 3 and Figure 4 show the results of the benchmark tests that compared the performance of the 4th Gen and 3rd Gen Intel Xeon Scalable processor MCC SKU stacks.

Figure 3. STREAM performance for 4th and 3rd Gen Intel Xeon Scalable processors

Figure 4. CPU FP rate base performance for 4th and 3rd Gen Intel Xeon Scalable processors

Conclusion

The Dell PowerEdge XR portfolio continues to provide CPU-based improvements and a streamlined approach for various edge and telecom deployment options. The XR portfolio provides a solution to the challenge of needing a small form factor at the edge with industry-standard rugged certifications (NEBS). It provides a compact solution for scalability along with flexibility for operating in temperatures ranging from –5°C to +55°C.


PTP and SyncE on Dell Next-Generation Servers

Fri, 03 Mar 2023 19:57:24 -0000


Summary

The telecom industry is on a journey of transformation, making pitstops to disaggregate hardware and software, virtualize networks, and introduce cloudification across RAN and core domains. The introduction of 5G and ORAN has accelerated the transformation, and we now see telecom becoming a universal phenomenon and touching all aspects of life.