Dell PowerEdge Servers Offer Comprehensive GPU Acceleration Options

Fri, 03 Mar 2023 19:57:27 -0000

Summary

The next generation of PowerEdge servers is engineered to accelerate insights by enabling the latest technologies. These include next-generation CPUs that bring support for DDR5 memory and PCIe Gen5, along with support for a wide range of enterprise-class GPUs. Over 75% of next-generation Dell PowerEdge servers support GPU acceleration.

Accelerate insights

For the digital enterprise, success hinges on leveraging big, fast data. But as data sets grow, traditional data centers are starting to hit performance and scale limitations — especially when ingesting and querying real-time data sources. While some have long taken advantage of accelerators for speeding visualization, modeling, and simulation, today, more mainstream applications than ever before can leverage accelerators to boost insight and innovation. Accelerators such as graphics processing units (GPUs) complement and accelerate CPUs, using parallel processing to crunch large volumes of data faster. Accelerated data centers can also deliver better economics, providing breakthrough performance with fewer servers, resulting in faster insights and lower costs. Organizations in multiple industries are adopting server accelerators to outpace the competition — honing product and service offerings with data-gleaned insights, enhancing productivity with better application performance, optimizing operations with fast and powerful analytics, and shortening time to market by doing it all faster than ever before. Dell Technologies offers a choice of server accelerators in Dell PowerEdge servers so you can turbo-charge your applications.

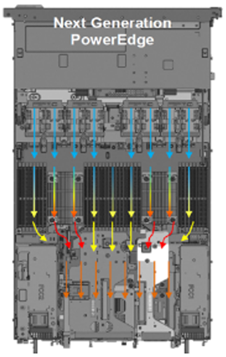

Accelerated server architecture

Our world-class engineering team designs PowerEdge servers with the latest technologies for ultimate performance. Here’s how.

Industry-enabled technologies

- Next Generation Intel and AMD Processors

- DDR5 Memory

- PCIe Gen5

- GPU Form Factor Options

Next generation air and Direct Liquid Cooling (DLC) technology

PowerEdge ensures no-compromise system performance through innovative cooling solutions while offering customers options that fit their facility or usage model.

- Innovations that extend the range of air-cooled configurations

  - Advanced designs: airflow pathways are streamlined within the server, directing the right amount of air to where it is needed

  - Latest-generation fans and heat sinks: manage the latest high-TDP CPUs and other key components

  - Intelligent thermal controls: automatically adjust airflow during workload or environmental changes, with seamless support for channel add-in cards and enhanced customer control options for temperature, power, and acoustics

- For high-performance CPU and GPU options in dense configurations, Dell DLC effectively manages heat while improving overall system efficiency

Our GPU partners

AMD

Dell Technologies and AMD have established a solid partnership to help organizations accelerate their AI initiatives. Together, our technologies provide the foundation for successful AI solutions that drive the development of advanced deep learning (DL) software frameworks. These technologies also deliver massively parallel computing in the form of AMD Graphics Processing Units (GPUs) for parallel model training, and scale-out file systems to support the concurrency, performance, and capacity requirements of unstructured image and video data sets. With the AMD ROCm open software platform, built for flexibility and performance, the HPC and AI communities gain access to open compute languages, compilers, libraries, and tools designed to accelerate code development and solve the toughest challenges in the world today.

Intel

Dell Technologies and Intel are giving customers new choices in enterprise-class GPUs. The Intel Data Center GPUs are available with our next generation of PowerEdge servers. These GPUs are designed to accelerate AI inferencing, VDI, and model training workloads. And with toolsets like Intel® oneAPI and OpenVINO™, developers have the tools they need to develop new AI applications and migrate existing applications to run optimally on Intel GPUs.

NVIDIA

Dell Technologies solutions designed with NVIDIA hardware and software enable customers to deploy high-performance deep learning and AI-capable enterprise-class servers from the edge to the data center. This relationship allows Dell to offer Ready Solutions for AI and built-to-order PowerEdge servers with your choice of NVIDIA GPUs. With Dell Ready Solutions for AI, organizations can rely on a Dell-designed and validated set of best-of-breed technologies for software, including AI frameworks and libraries, together with compute, networking, and storage. With NVIDIA CUDA, developers can accelerate computing applications by harnessing the power of GPUs. Operations such as matrix multiplication, which typically run serially on CPUs, can run on thousands of GPU cores in parallel.
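To make the serial-versus-parallel contrast concrete, here is a minimal sketch in plain Python. It is not CUDA code and it runs on the CPU. The helper `output_element` is a hypothetical stand-in for a single GPU thread: each call computes one element of the result from its own row and column only, so no call depends on any other. That independence is what allows a GPU to spread the elements across many cores at once.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_serial(a, b):
    """Multiply a (n x k) by b (k x m), one multiply-add at a time."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            for p in range(k):
                c[i][j] += a[i][p] * b[p][j]
    return c


def output_element(a, b, i, j):
    """Hypothetical stand-in for one GPU thread: computes only c[i][j]."""
    return sum(a[i][p] * b[p][j] for p in range(len(b)))


def matmul_independent(a, b, workers=4):
    """Compute every output element as its own independent task.

    The thread pool only demonstrates that the tasks can be scheduled
    separately; it is an illustration, not a performance claim.
    """
    n, m = len(a), len(b[0])
    coords = [(i, j) for i in range(n) for j in range(m)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda ij: output_element(a, b, *ij), coords))
    # pool.map preserves input order, so rows can be rebuilt by slicing.
    return [values[i * m:(i + 1) * m] for i in range(n)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
serial_result = matmul_serial(a, b)
independent_result = matmul_independent(a, b)
```

Both functions produce the same matrix; the second simply expresses the work as a set of tasks that could be handed to separate cores.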

GPU options for next-generation PowerEdge servers

Turbo-charge your applications with performance accelerators available in select Dell PowerEdge tower and rack servers. The number and type of accelerators that fit in PowerEdge servers are based on the physical dimensions of the PCIe adapter cards and the GPU form factor.

| Brand | GPU Model | GPU Memory | Max Power Consumption | Form Factor | 2-way Bridge | Recommended Workloads |
|---|---|---|---|---|---|---|
| PCIe Adapter Form Factor | | | | | | |
| NVIDIA | A2 | 16 GB GDDR6 | 60 W | SW, HHHL or FHHL | n/a | AI Inferencing, Edge, VDI |
| NVIDIA | A16 | 64 GB GDDR6 | 250 W | DW, FHFL | n/a | VDI |
| NVIDIA | A40, L40 | 48 GB GDDR6 | 300 W | DW, FHFL | Y, N | Performance graphics, Multi-workload |
| NVIDIA | A30 | 24 GB HBM2 | 165 W | DW, FHFL | Y | AI Inferencing, AI Training |
| NVIDIA | A100 | 80 GB HBM2e | 300 W | DW, FHFL | Y, Y | AI Training, HPC, AI Inferencing |
| NVIDIA | H100 | 80 GB HBM2e | 300 - 350 W | DW, FHFL | Y | AI Training, HPC, AI Inferencing |
| AMD | MI210 | 64 GB HBM2e | 300 W | DW, FHFL | Y | HPC, AI Training |
| Intel | Max 1100* | 48 GB HBM2e | 300 W | DW, FHFL | Y | HPC, AI Training |
| Intel | Flex 140* | 12 GB GDDR6 | 75 W | SW, HHHL or FHHL | n/a | AI Inferencing |
| SXM / OAM Form Factor | | | | | | |
| NVIDIA | HGX A100* | 80 GB HBM2 | 500 W | SXM w/ NVLink | n/a | AI Training, HPC |
| NVIDIA | HGX H100* | 80 GB HBM3 | 700 W | SXM w/ NVLink | n/a | AI Training, HPC |
| Intel | Max 1550* | 128 GB HBM2e | 600 W | OAM w/ XeLink | n/a | AI Training, HPC |

\* Development or under evaluation
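As a rough illustration of how the table could be used for shortlisting, the sketch below encodes the PCIe adapter rows as Python records and filters them by workload and a per-card power budget. The `Gpu` record, the `shortlist` function, and the 200 W and 300 W budgets are hypothetical names and values chosen for this example; they are not part of any Dell tool. Where the table gives a power range (the H100), the upper bound is used. Whether a card actually fits depends on the specific server, so the result is only a starting point.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    brand: str
    model: str
    memory_gb: int
    max_power_w: int  # upper bound where the table lists a range
    workloads: tuple


# PCIe adapter rows transcribed from the table above.
PCIE_GPUS = [
    Gpu("NVIDIA", "A2", 16, 60, ("AI Inferencing", "Edge", "VDI")),
    Gpu("NVIDIA", "A16", 64, 250, ("VDI",)),
    Gpu("NVIDIA", "A40, L40", 48, 300, ("Performance graphics", "Multi-workload")),
    Gpu("NVIDIA", "A30", 24, 165, ("AI Inferencing", "AI Training")),
    Gpu("NVIDIA", "A100", 80, 300, ("AI Training", "HPC", "AI Inferencing")),
    Gpu("NVIDIA", "H100", 80, 350, ("AI Training", "HPC", "AI Inferencing")),
    Gpu("AMD", "MI210", 64, 300, ("HPC", "AI Training")),
    Gpu("Intel", "Max 1100", 48, 300, ("HPC", "AI Training")),
    Gpu("Intel", "Flex 140", 12, 75, ("AI Inferencing",)),
]


def shortlist(gpus, workload, power_budget_w):
    """Return models that list the workload and fit the per-card power budget."""
    return [g.model for g in gpus
            if workload in g.workloads and g.max_power_w <= power_budget_w]


# Hypothetical budgets, chosen only to exercise the filter.
inferencing_under_200w = shortlist(PCIE_GPUS, "AI Inferencing", 200)
training_under_300w = shortlist(PCIE_GPUS, "AI Training", 300)
```

With these example budgets, the inferencing query keeps the A2, A30, and Flex 140, while the training query keeps every training-capable card except the H100, whose upper power bound exceeds 300 W.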