Multi-Instance GPU on the Edge

Download PDFFri, 03 Mar 2023 19:57:25 -0000

|Read Time: 0 minutes

Summary

Dell has recently announced the launch of Next-generation Dell PowerEdge servers that deliver advanced performance and energy efficient design.

This Direct from Development (DfD) tech note describes the new capabilities you can expect from the next-generation Dell PowerEdge servers powered by Intel 4th Gen Intel® Xeon® Scalable processors MCC SKU stack. This document covers the test and results for ML performance benchmarking for the offline scenario on Dell’s next generation PowerEdge XR 7620 using Multi-Instance GPU technology. XR7620 has target workloads in manufacturing, retail, defense, and telecom - all key workloads requiring AI/ML inferencing capabilities at the edge. Dell continues to provide scalability and flexibility with its latest short-depth XR servers portfolio, integrated with the latest technologies such as 4th Gen Intel CPU, PCIe Gen5, DDR5, NVMe drives, and GPU slots, along with compliance testing for NEBS and MIL-STD.

MIG overview

Multi-Instance GPU (MIG) expands the performance and value of NVIDIA H100, A100, and A30 Tensor Core GPUs. MIG can partition the GPU into as many as seven instances, each fully isolated with its own high-bandwidth memory, cache, and compute cores. This gives administrators the ability to support every workload, from the smallest to the largest, with guaranteed quality of service (QoS) and extending the reach of accelerated computing resources to every user.

Without MIG, different jobs running on the same GPU, such as different AI inference requests, compete for the same resources. A job-consuming larger memory bandwidth starves others, resulting in several jobs missing their latency targets. With MIG, jobs run simultaneously on different instances, each with dedicated resources for compute, memory, and memory bandwidth, resulting in a predictable performance with QoS and maximum GPU utilization.

Figure 1. Seven different instances with MIG

MIG at the edge

Dell defines edge computing as technology that brings compute, storage, and networking closer to the source where data is created. This enables faster processing of data, and consequently, quicker decision making and faster insights. For edge use cases such as running an edge server on a factory floor or in a retail store, requires multiple applications to run simultaneously. One solution to solve this problem can be to add a piece of hardware for each application, but this solution is not scalable or sustainable in the long run. Thus, deploying multiple applications on the same piece of hardware is an option but it can cause much higher latency for different applications.

With multiple applications running on the same device, the device time-slices the applications in a queue so that applications are run sequentially as opposed to concurrently. There is always a delay in results while the device switches from processing data for one application to another.

MIG is an innovative technology to use in such use cases for the edge, where power, cost, and space are important constraints. AI inferencing applications such as computer vision and image detection need to run instantaneously and continuously to avoid any serious consequences due to lack of safety.

Jobs running simultaneously with different resources result in predictable performance with quality of service and maximum GPU utilization. This makes MIG an essential addition to every edge deployment.

MIG can be used in a multitenant environment. It is different from virtual GPU technology because MIG is hardware based, which makes edge computing even more secure.

Provision and configure instances as needed

A GPU can be partitioned into different-sized MIG instances. For example, in an NVIDIA A100 40GB, an administrator could create two instances with 20 gigabytes (GB) of memory each, three instances with 10GB each, or seven instances with 5GB each, or a combination of these.

MIG instances can also be dynamically reconfigured, enabling administrators to shift GPU resources in response to changing user and business demands. For example, seven MIG instances can be used during the day for low-throughput inference and reconfigured to one large MIG instance at night for deep learning training.

System Config for Next Generation Dell PowerEdge XR Server MIG Testing

Table 1. System architecture

MLPerf system | Edge |

Operating System | CentOS 8.2.2004 |

CPU | 4th Gen Intel Xeon Scalable processors MCC SKU |

Memory | 512GB |

GPU | NVIDIA A100 |

GPU Count | 1 |

Networking | 1x ConnectX-5 IB EDR 100Gb/Sec |

Software Stack | TensorRT 8.4.2 CUDA 11.6 cuDNN 8.4.1 Driver 510.73.08 DALI 0.31.0 |

Table 2. MLPerf scenario used in this test and MIG specs

Scenario | Performance metric | Example use cases | |

Offline | Measured throughput | Batch processing aka Offline processing. Google photos identifies pictures, tags, and people and generates an album with specific people and locations/events offline. | |

MIG Specifications | A100 | ||

Instance types | 7x 10GB 3x 20GB 2x 40GB 1x 80GB | ||

GPU profiling and monitoring | Only one instance at a time | ||

Secure Tenants | 1x | ||

Media decoders | Limited options | ||

Table 3. High accuracy benchmarks and their degree of precision

| BERT | BERT H_A | DLRM | DLRM H_A | 3D-Unet | 3D-Unet H_A |

Precision | int8 | fp16 | int8 | int8 | int8 | int8 |

DLRM H_A and 3D-Unet H_A is the same as DLRM and 3D-unet respectively. They were able to reach the target accuracy with int8 precision.

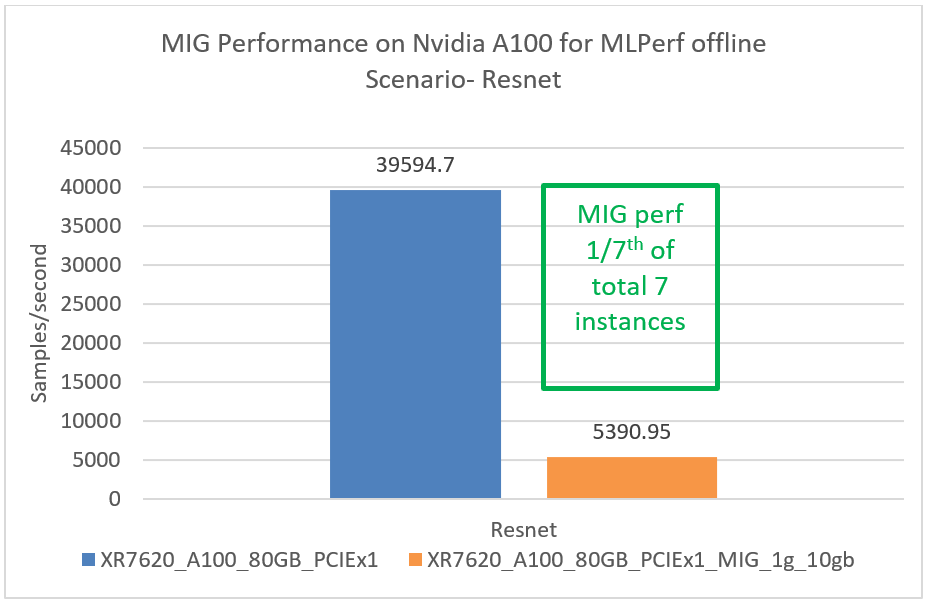

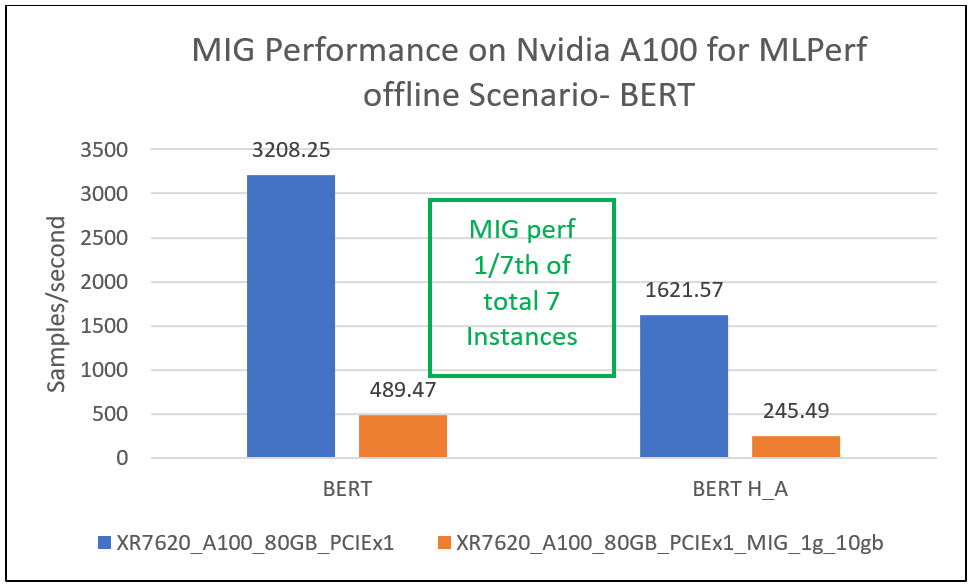

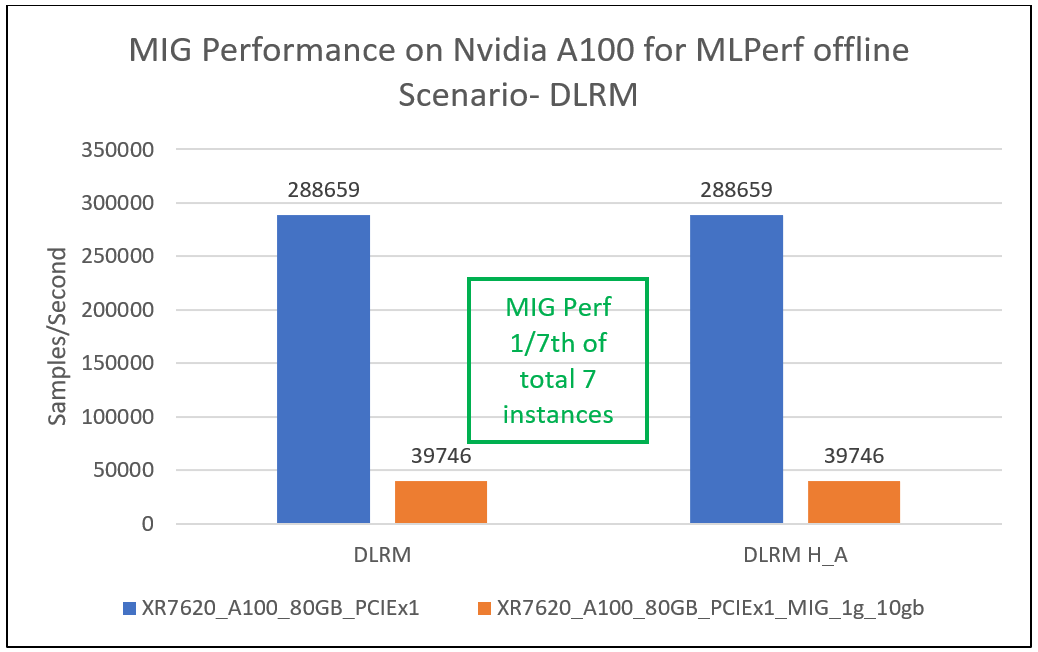

Performance results

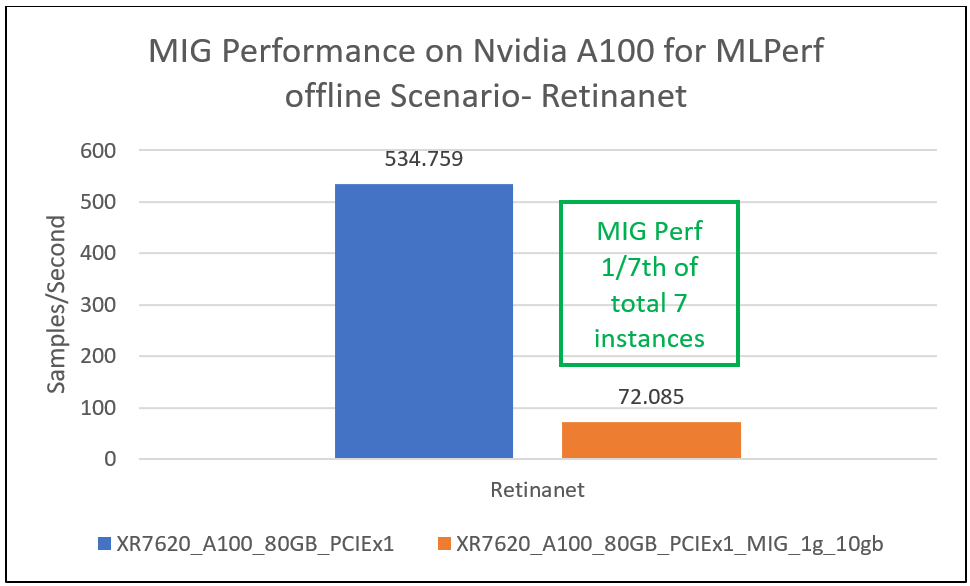

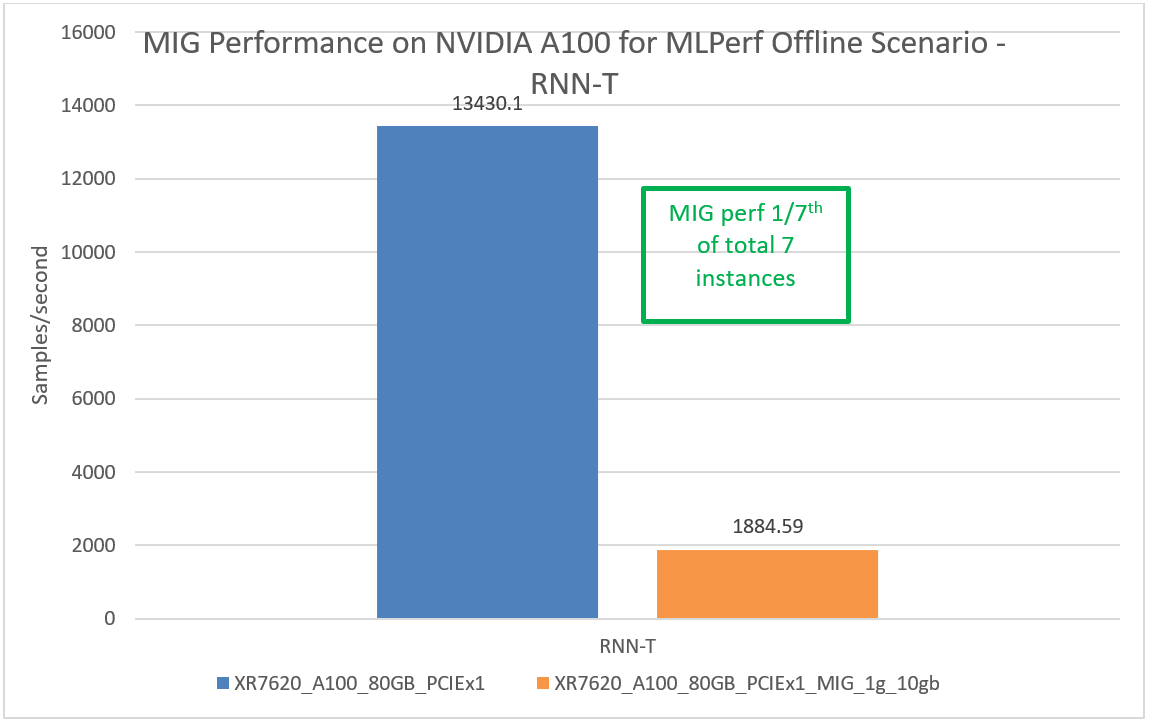

This section provides MIG performance results for various scenarios, showing that when divided into seven instances, each instance can provide equal performance without any loss in throughput.

Conclusion

Dell XR portfolio continues to provide a streamlined approach for various edge and telecom deployment options based on different use cases. It provides a solution to the challenge of small form factors at the edge with industry-standard rugged certifications (NEBS) that provide a compact solution for scalability, and flexibility in a temperature range of -5 to +55°C. The MIG capability for MLPerf workloads provides real-life scenarios for showcasing AI/Ml inferencing on multiple instances for edge use cases. Based on the results in this document, Dell servers continue to provide a complete solution.

References

- https://www.nvidia.com/en-us/technologies/multi-instance-gpu

- Running Multiple Applications on the Same Edge Devices | NVIDIA Technical Blog

- MLPerf Inference Benchmark

Notes:

- Based on testing conducted in Dell Cloud and Emerging Technology lab in January 2023. Results to be submitted to MLPerf in Q2, FY24.

- Unverified MLPerf v2.1 Inference. Results not verified by MLCommons Association. MLPerf name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use strictly prohibited. See www.mlcommons.org for more information.