Blogs

Short articles related to Microsoft HCI Solutions from Dell Technologies

Preview of Intelligent Automation in Dell APEX Cloud Platform for Microsoft Azure

Wed, 24 Apr 2024 15:35:21 -0000

UPDATE 11/7/2023: This blog and the embedded YouTube videos were published after Dell APEX Cloud Platform for Microsoft Azure was first announced at Dell Technologies World 2023. It contains early preview content. Please proceed to the following link to see the most up-to-date collateral and YouTube demo videos, created after the platform became generally available in September 2023: https://www.youtube.com/playlist?list=PL2nlzNk2-VMEkNM7E8m0ia_lLHWlOuT5h

It was another exhilarating Dell Technologies World (DTW) back in May. It’s always fun connecting with colleagues, customers, and partners in Las Vegas. As always, Vegas managed to surprise me with something I’d never seen before. I finally witnessed the incredible iLuminate team up close and personal at the APEX After Dark party. I tried to describe the phenomenon to a friend who hasn’t experienced one of their performances, but words cannot adequately convey this mesmerizing spectacle of sight and sound! In the end, only one of my photos from the event and a link to one of their recorded shows could make it real for them.

Similarly, words alone can’t do justice to the game-changing potential of the new APEX Cloud Platform announced at DTW. That’s why I created a demo video giving customers an early preview[1] of the new management and orchestration capabilities coming to our APEX Cloud Platform Foundation Software. This software integrates intelligent automation into the familiar management tools of each supported cloud ecosystem – Microsoft Azure, Red Hat OpenShift, and VMware vSphere.

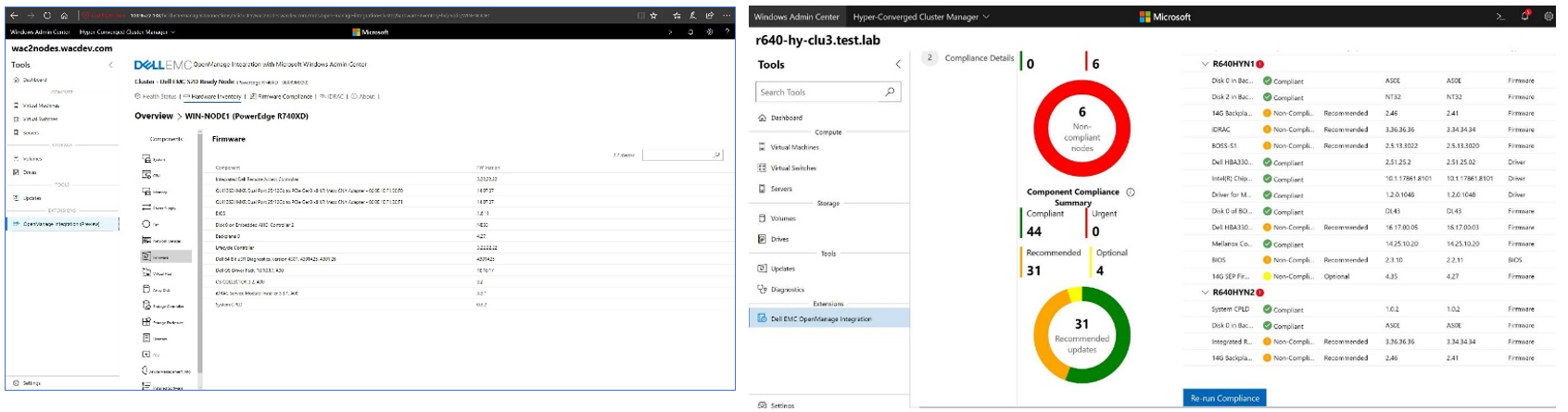

In this blog, I want to showcase APEX Cloud Platform for Microsoft Azure and the features and functionality we integrate into Microsoft Windows Admin Center. My colleague and friend, Kenny Lowe, wrote a brilliant analysis of our new solution in his recent blog post, Delving Into the APEX Cloud Platform for Microsoft Azure. He included some screenshots from my demo video, which hasn’t been shared publicly until now. I highly recommend reading his enlightening article, which provides invaluable context before viewing the demos.

Please be aware that the clips below are sections of a lengthier video that shares the story of a fictional retail company named WhyGoBuy. They used APEX Cloud Platform Foundation Software to accelerate their time to value and improve operational efficiency. Because this video was over 15 minutes long, I divided it into bite-sized chunks and included a brief introduction to each administrative task. You can view the full video HERE.

Seeing is believing

Without further ado, let’s dive into the technology!

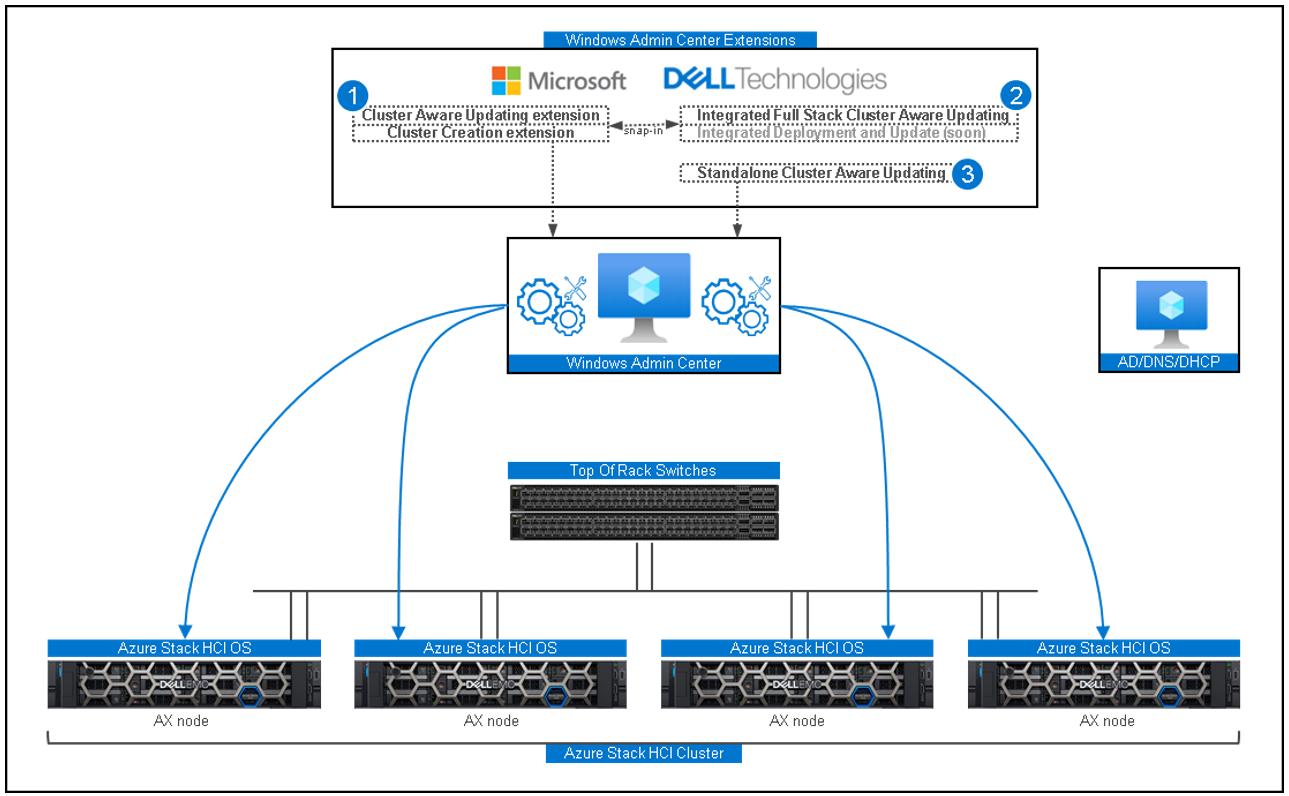

At initial release of APEX Cloud Platform for Microsoft Azure, Dell Technologies is offering a white-glove deployment experience through Dell ProDeploy Services. Our expert technicians will walk you through your first deployments to help you get comfortable with the process. Soon after announcing general availability, we will empower you to install the platform yourself using the APEX Cloud Platform Foundation Software deployment automation. In this first video, our administrators at WhyGoBuy followed the step-by-step user input configuration method and provided the settings in each step of the deployment wizard.

The next video presents a common Day 2 operations scenario. Some of WhyGoBuy’s Storage Spaces Direct volumes were approaching maximum capacity, and one volume required immediate attention. Luckily, APEX Cloud Platform for Microsoft Azure offered a consistent hybrid management experience. Administrators were promptly made aware of the issue through Azure Monitor, which provided observability for their entire fleet of platforms across data center and edge locations. Then, they navigated to the Windows Admin Center extension for further investigation and remediation of the issue.

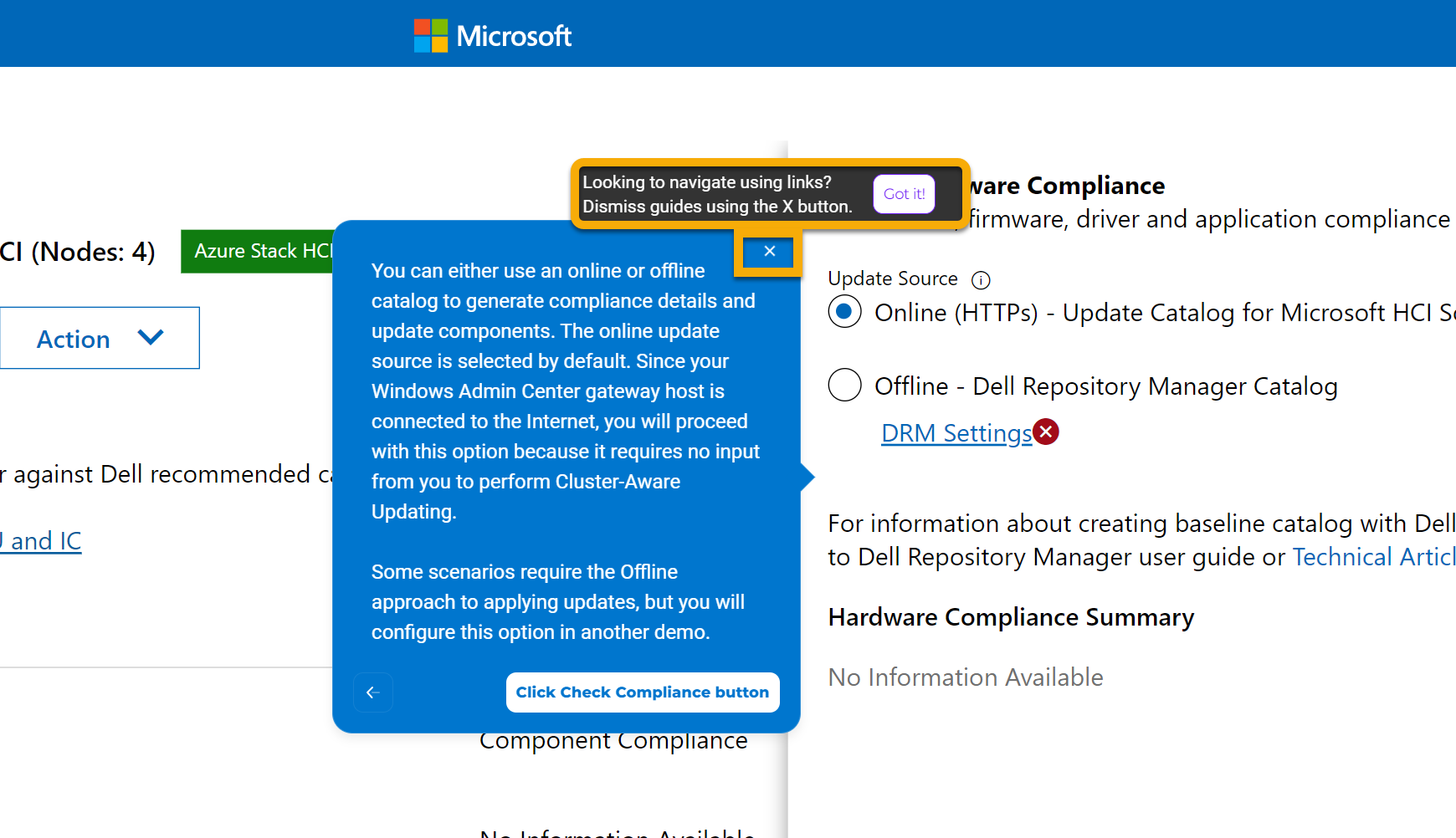

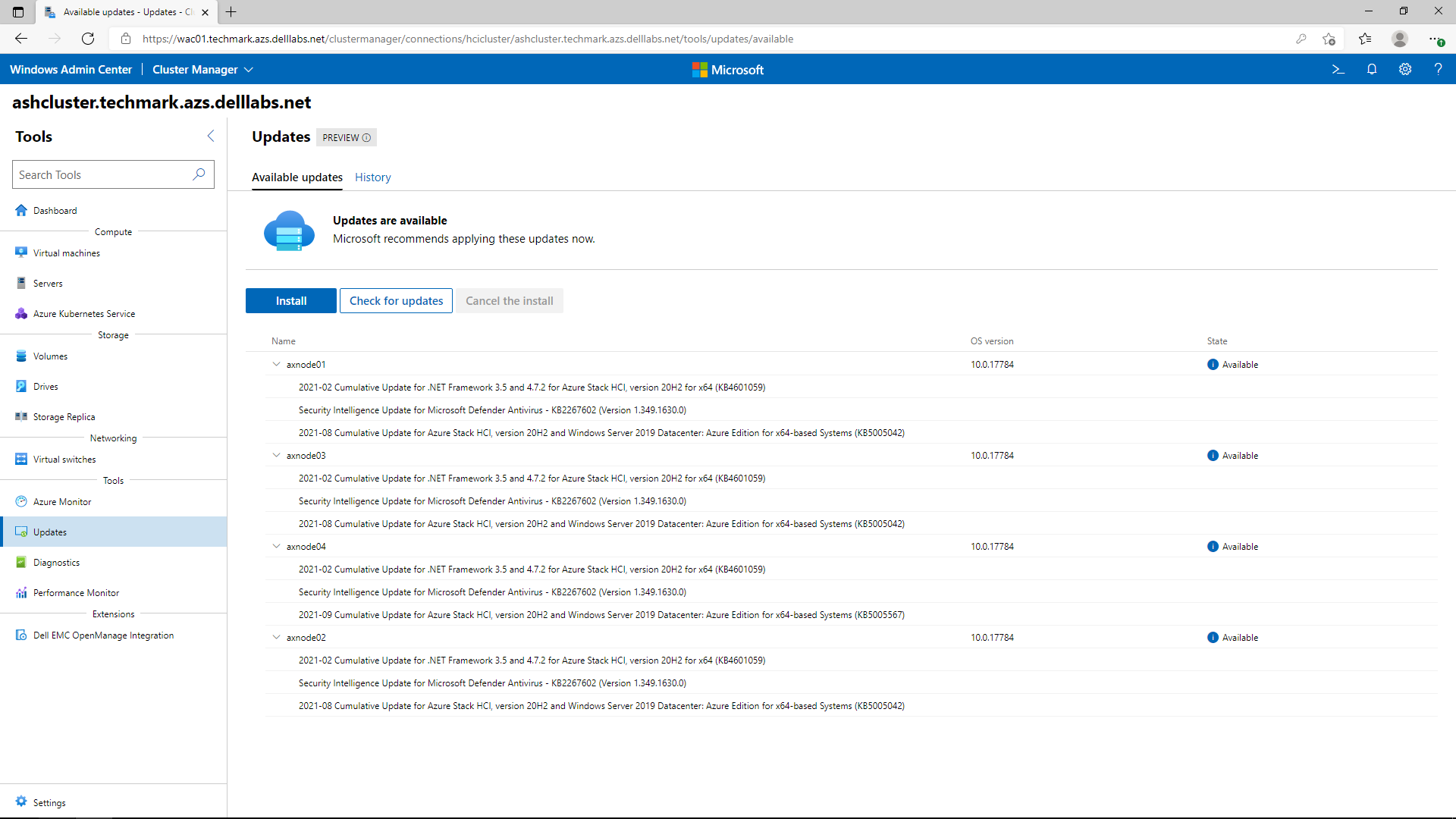

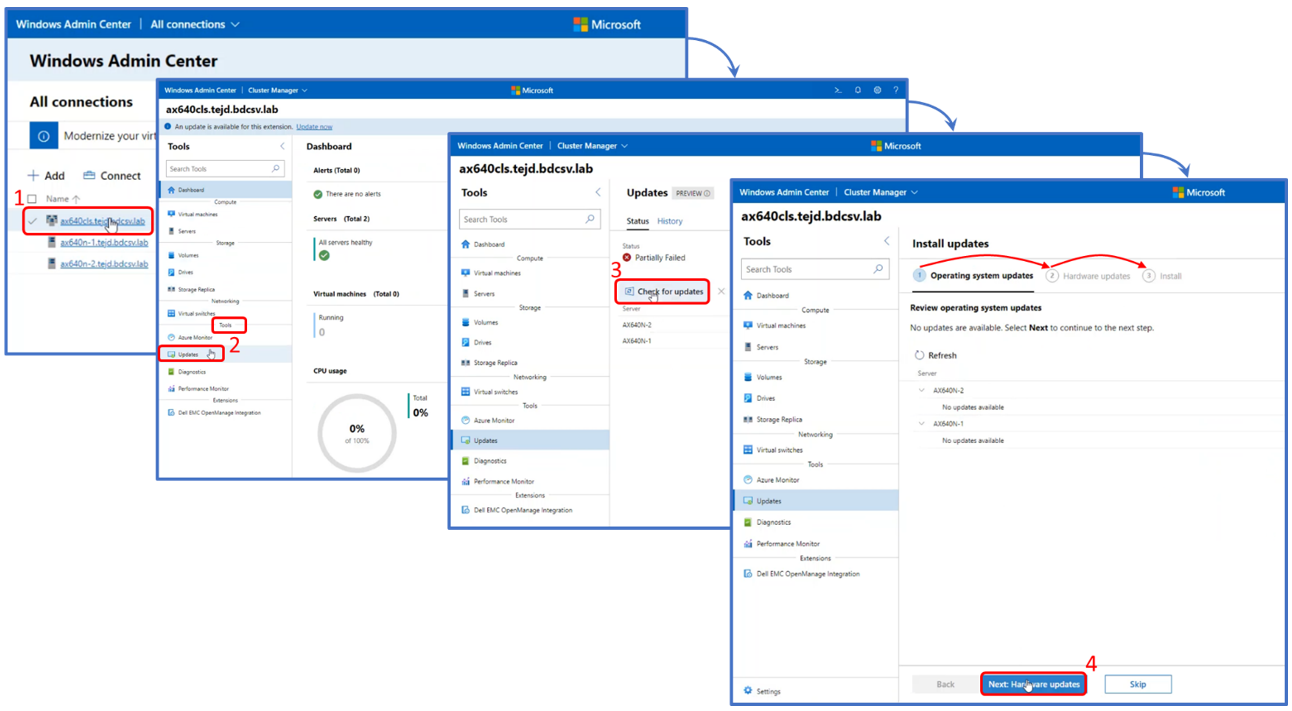

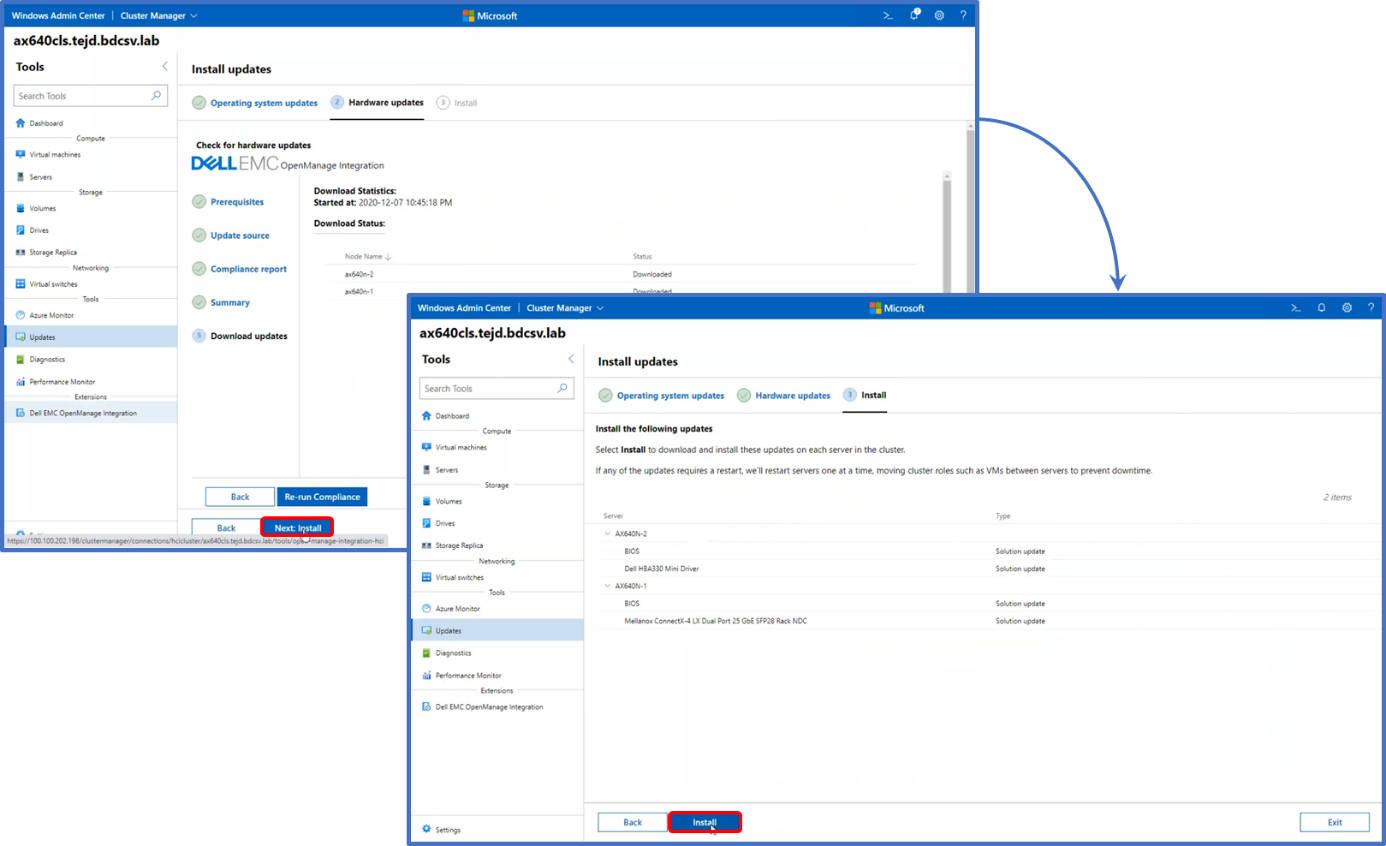

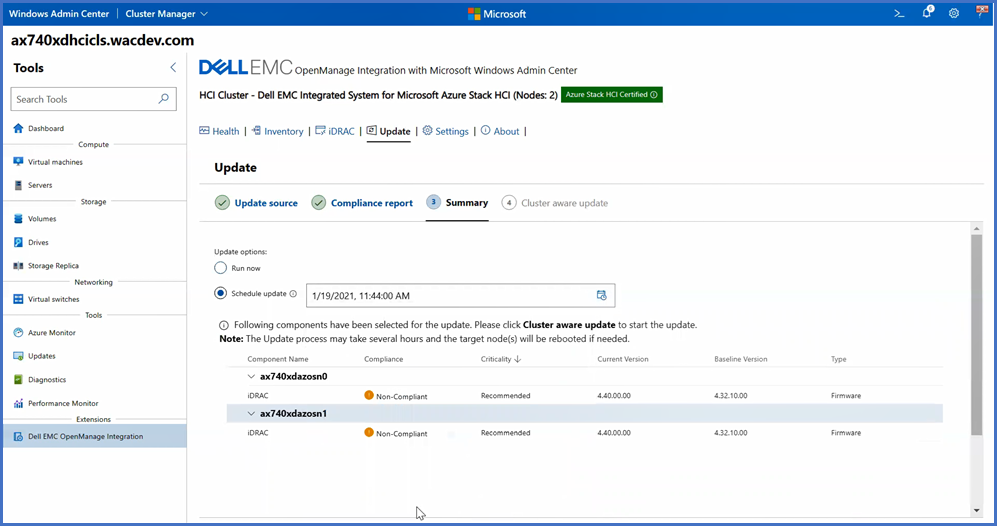

Lifecycle management is critical to ensuring the optimal security, performance, and reliability of any infrastructure. With APEX Cloud Platform Foundation Software, Dell helps our customers remain in a continuously validated state – updating the platform from one known good state to the next, inclusive of hardware, operating system, and systems management software. A few months passed since WhyGoBuy deployed their first platform, and the time came to apply a quarterly baseline bundle using the Windows Admin Center extension. The following video captures their experience.

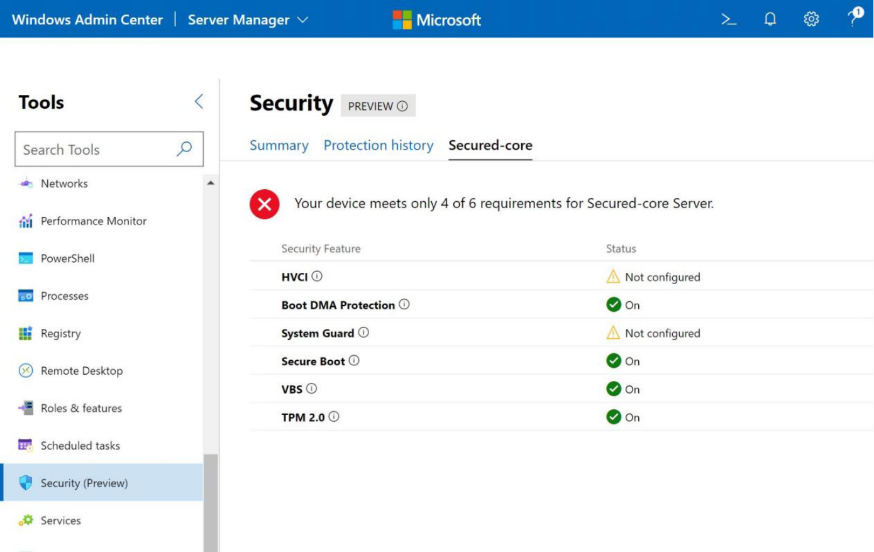

WhyGoBuy was committed to maintaining a robust security posture. They used APEX Cloud Platform Foundation Software intrinsic infrastructure security management features to help them accomplish this. The next video showcases two of these features:

- Infrastructure Lock – Protects against unauthorized or malicious changes to configuration settings by enabling the System Lockdown feature in Dell iDRAC. This also prevents updates to BIOS, firmware, and drivers to guard against cybersecurity attacks.

- Secured-core server – Proactively defends against many of the paths attackers might use to exploit a system by establishing a hardware root-of-trust, protecting firmware, and introducing virtualization-based security.

In this final video, WhyGoBuy set up connectivity to Dell ProSupport to benefit from log collection, phone home, automated case creation, and remote support. They also wanted to send telemetry data to Dell CloudIQ cloud-based software for multi-cluster monitoring. CloudIQ provided proactive monitoring, machine learning, and predictive analytics so they could take quick action and simplify operations of all their on-premises APEX Cloud Platforms.

The future’s so bright

We are excited to bring Dell APEX Cloud Platform for Microsoft Azure to market later this year. I’ve compiled the following list of available resources for further learning.

- Dell APEX Cloud Platform for Microsoft Azure Playlist

- Solution Brief – Deploy mission-critical database and virtual desktop workloads in a Microsoft hybrid cloud environment

- Thomas Maurer Speaks with Kenny Lowe on APEX Cloud Platform for Microsoft Azure

- Building the Future of Azure Stack HCI

- Delving Into the APEX Cloud Platform for Microsoft Azure

After we launch this solution, you’ll be able to find white papers, videos, blogs, and more at the APEX tile at our Info Hub site.

And as always, please reach out to your Dell account team if you would like to have more in-depth discussions about the APEX portfolio. If you don’t currently have a Dell contact, we’re here to help on our corporate website.

Author: Michael Lamia, Engineering Technologist at Dell Technologies

Follow me on Twitter: @Evolving_Techie

LinkedIn: https://www.linkedin.com/in/michaellamia/

Email: michael.lamia@dell.com

[1] Dell APEX Cloud Platform for Microsoft Azure will be generally available later in 2023. Some of the features and functionality depicted in these videos may behave differently at initial release or may not be available until later releases. Dell makes no representation and undertakes no obligations with regard to product planning information, anticipated product characteristics, performance specifications, or anticipated release dates (collectively, “Roadmap Information”). Roadmap Information is provided by Dell as an accommodation to the recipient solely for the purposes of discussion and without intending to be bound thereby.

Accelerate your SQL Server Workloads with Dell Integrated System for Azure Stack HCI

Fri, 28 Jul 2023 16:05:27 -0000

Microsoft presented SQL Server 2022 last November, during the Microsoft Ignite 2022 event. This was a highly expected release, introducing several key improvements for database operations, availability, security, and performance.

SQL Server 2022 constitutes the most cloud-connected database Microsoft has released to date. Building an Azure Arc-enabled database platform with Azure Arc-enabled SQL Server facilitates extending your data management operations from your own data center to any edge location, public cloud, or hosting facility.

With the simple installation of a new agent into the SQL Server instance, a full set of management, security, and performance options are enabled.

See more details on these new features at this Microsoft Learn page.

As of today, one of the most powerful deployment scenarios for SQL Server is a hybrid environment. With Arc-enabled service, we can deploy, manage, and operate from a single point and have the flexibility to place every SQL Server instance where it should be to benefit from the best resource allocation and manageability, and thus provide the best IT experience to meet the business demands.

Thinking about an HCI platform to host the on-premises side of our hybrid approach seems reasonable, as HCI solutions have become predominant in their IT segment, as analysts report.

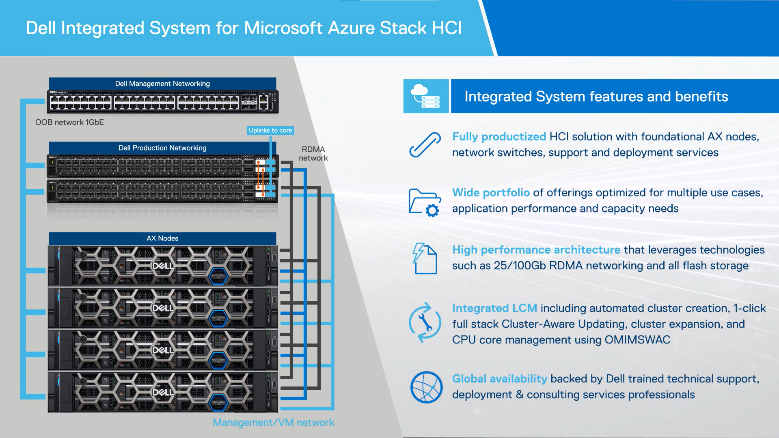

Dell Integrated System for Azure Stack HCI represents a perfect choice to meet the SQL Server 2022 requirements, providing a fully productized platform that offers, out of the box, intelligently designed configurations to minimize hardware and software customizations often required for this type of environment.

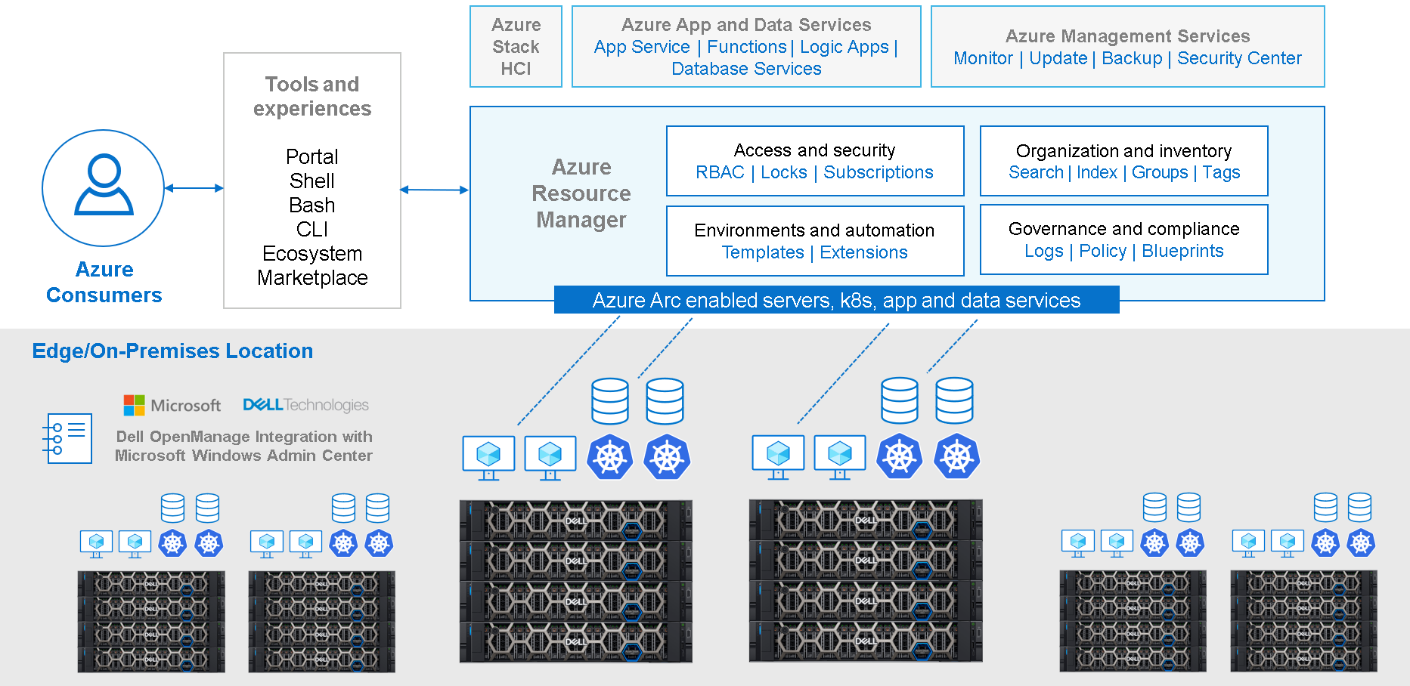

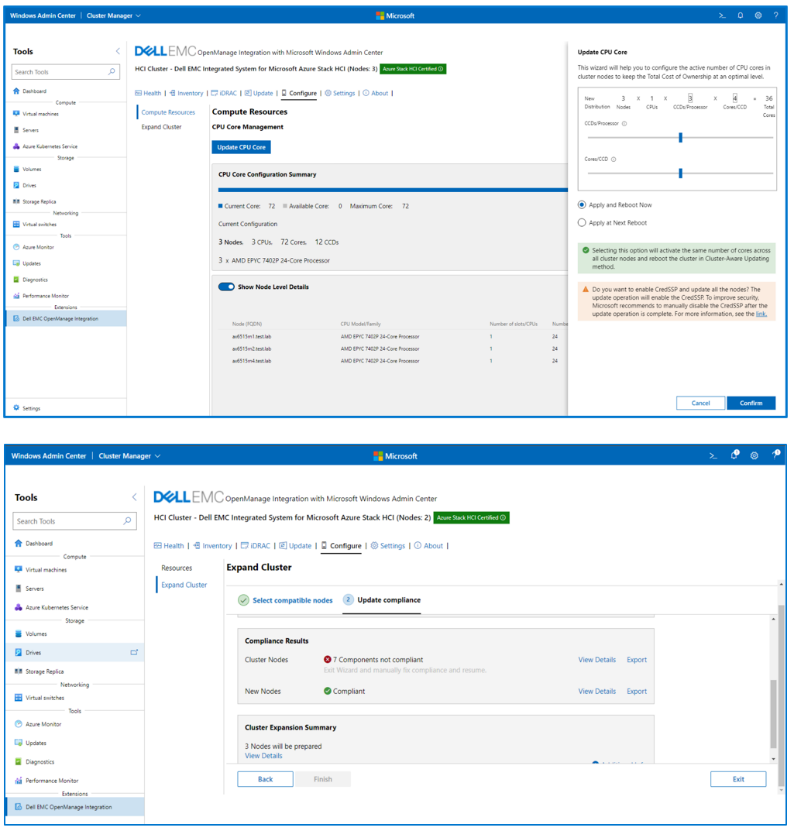

If we want to populate our hybrid solution with a set of tools to ensure repeatable and predictable infrastructure operations, Dell OpenManage Integration with Microsoft Windows Admin Center provides in-depth, cluster-level automation capabilities that enable an efficient and flexible operation of the Azure Stack HCI platform.

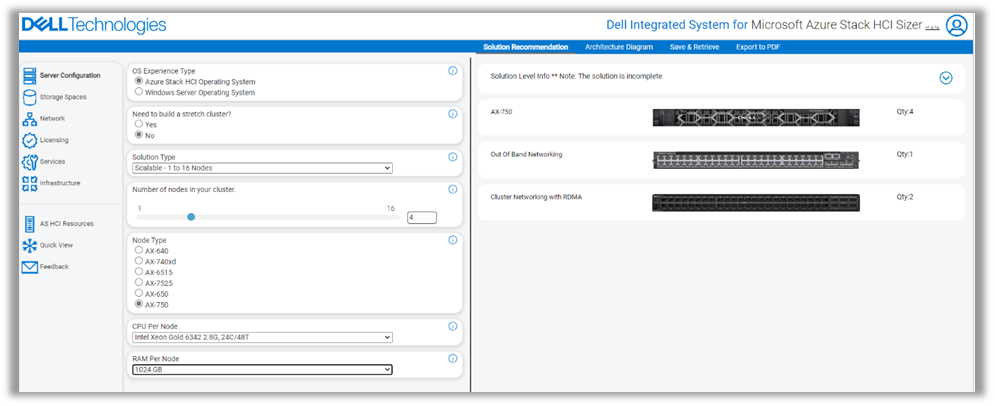

For optimal platform sizing, to properly address SQL Server workload demands, we can use a free, online tool such as Dell Live Optics. With the information gathered by Live Optics software collectors, we can better understand application performance and capacity requirements. That information can be used by the Dell sales team to influence the selection available to configure the Azure Stack HCI platform in Dell’s Azure Stack HCI Sizer tool. You can find more details on Live Optics here. For specifics on Live Optics and database workloads, check this site.

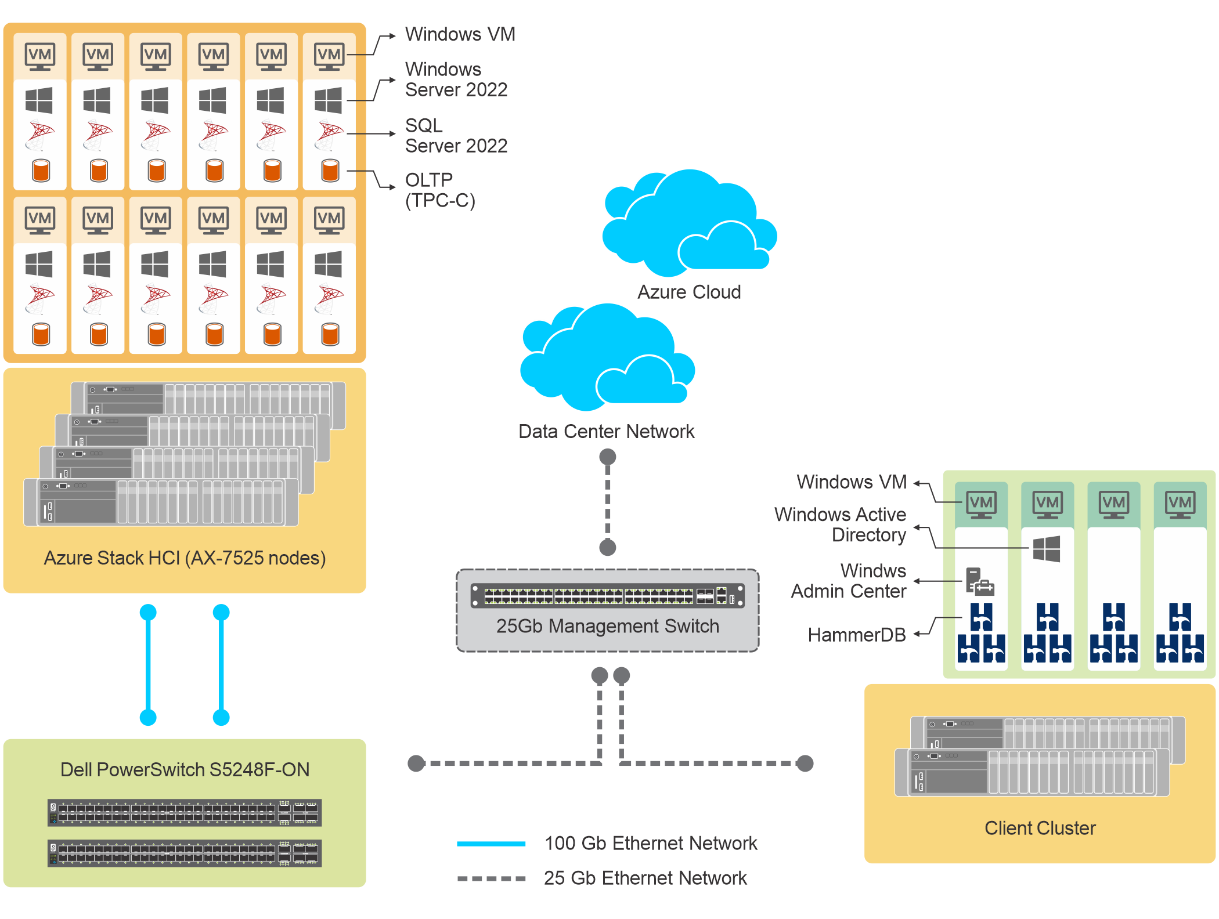

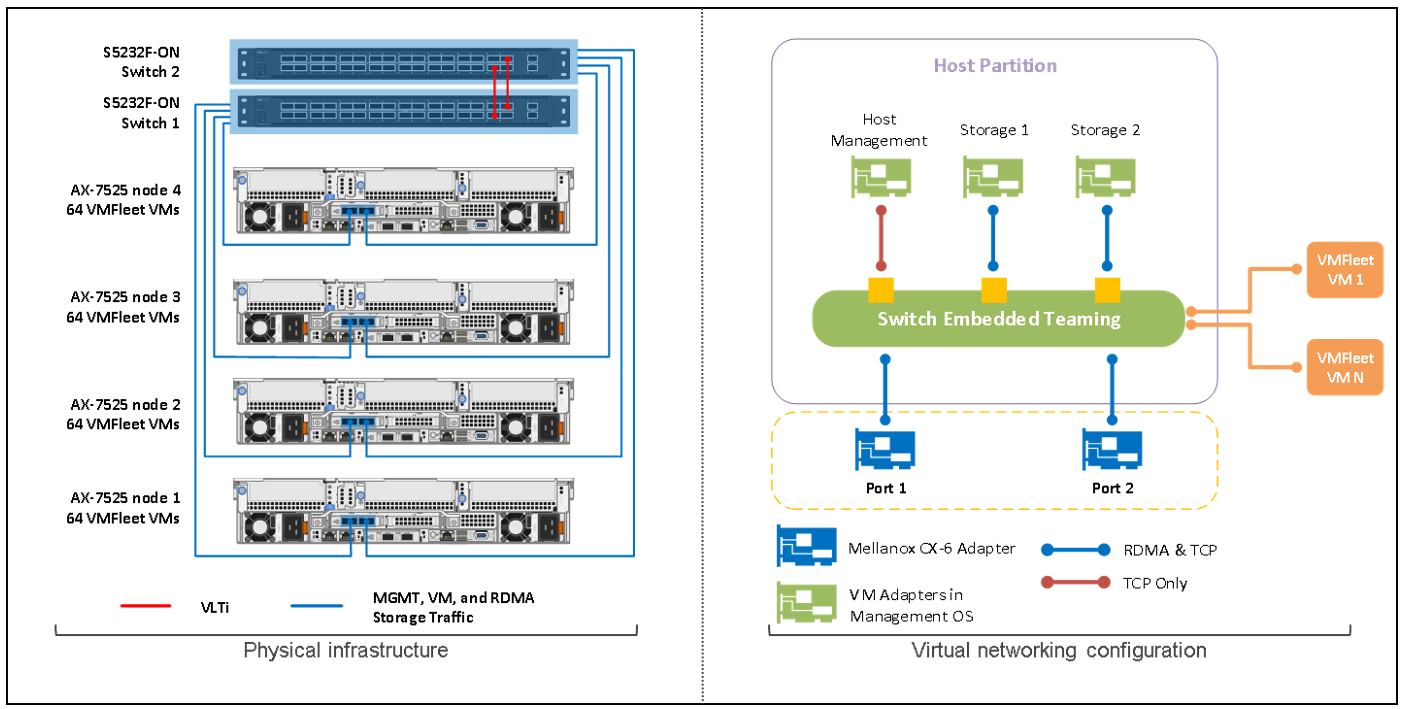

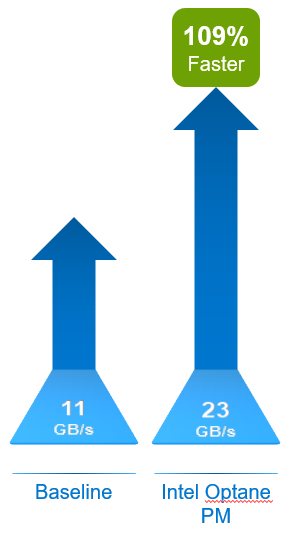

To evaluate SQL Server performance in this hybrid scenario, we have configured a four-node Dell Integrated System for Microsoft Azure Stack HCI. The underlying infrastructure is based on Dell AX-7525 nodes, each powered by two AMD EPYC processors, 2 TB of RAM and 12 NVMe drives.

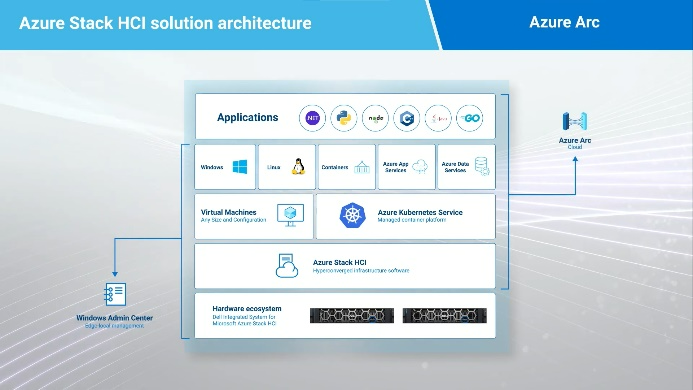

The solution architecture looks like this:

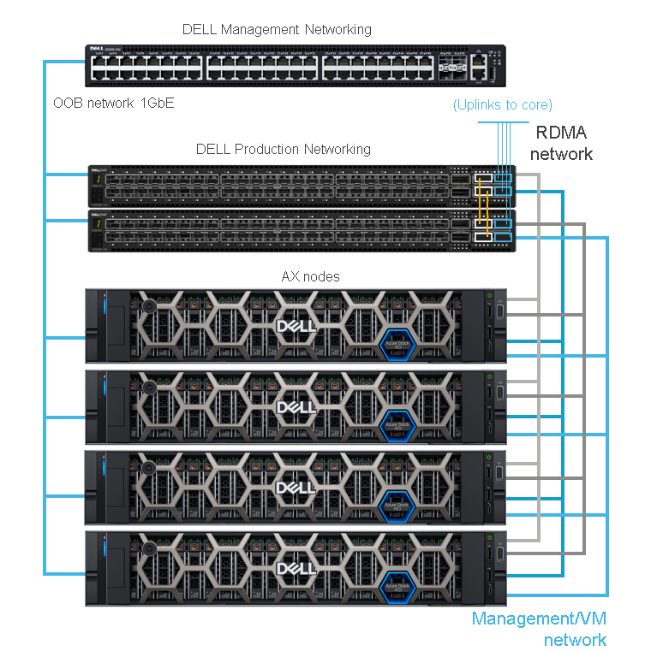

Figure 1. Dell Integrated System for Azure Stack HCI architecture overview

On the storage side of the solution, Microsoft Storage Spaces Direct manages the NVMe drives made available by the four AX-7525 nodes, creating a single pool, accessed through Cluster Shared Volumes (CSVs) in which Virtual Hard Disks (.vhds) were placed.

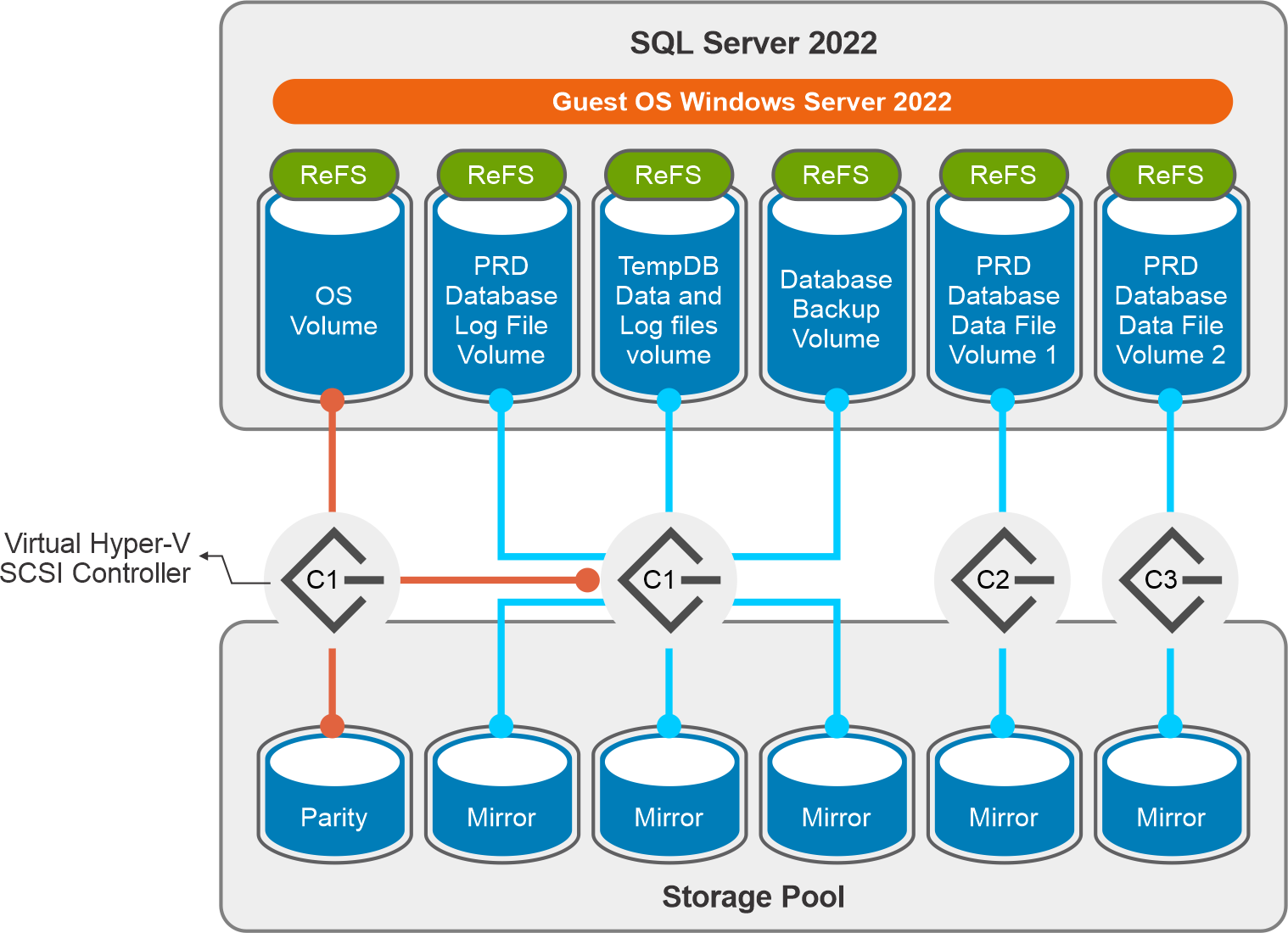

The following figure shows the volume and controller layout.

Figure 2. Storage layout

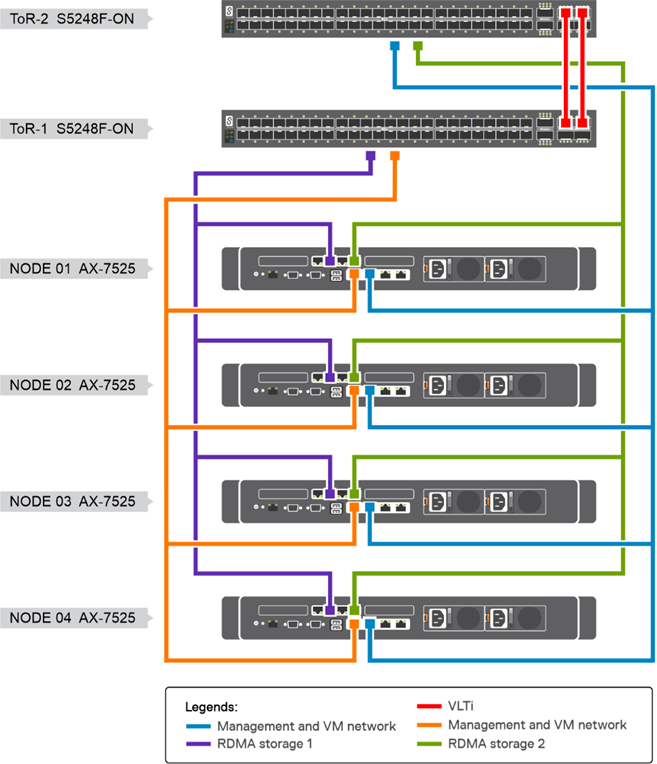

We also need to design and configure the networking component of the test environment. For this SQL Server case, we have chosen to provide top-of-rack connectivity through two Dell S5248F-ON switches, with L2 multipath support using Virtual Link Trunking (VLT) for a highly available configuration. With the addition of NVIDIA Mellanox ConnectX-6 Dx Dual Port 100 GbE adapters, we can provide Remote Direct Memory Access (RDMA) to our storage network using RDMA over Converged Ethernet (RoCE). The overarching network architecture looks as follows:

Figure 3. Network architecture

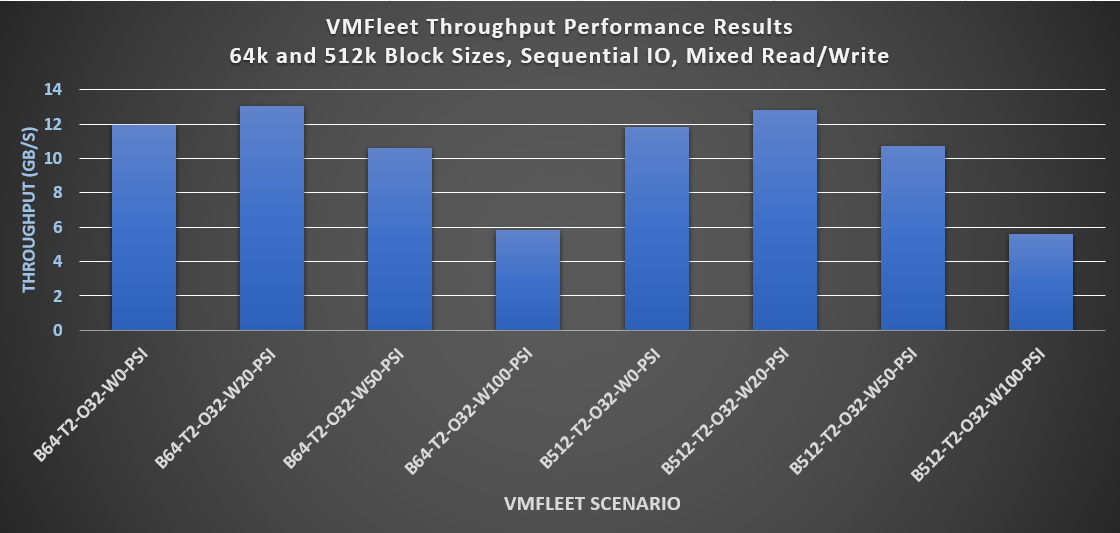

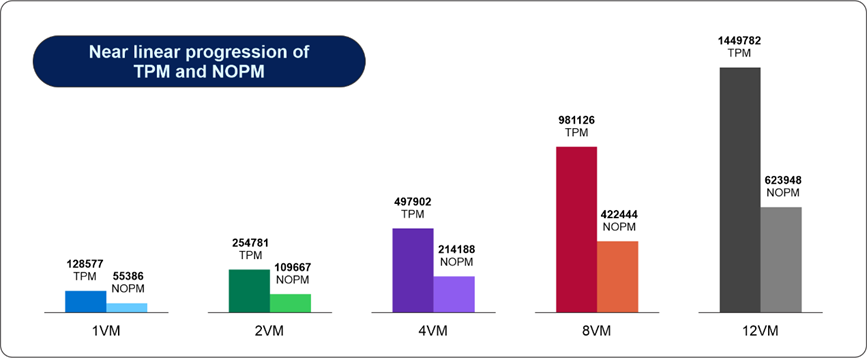

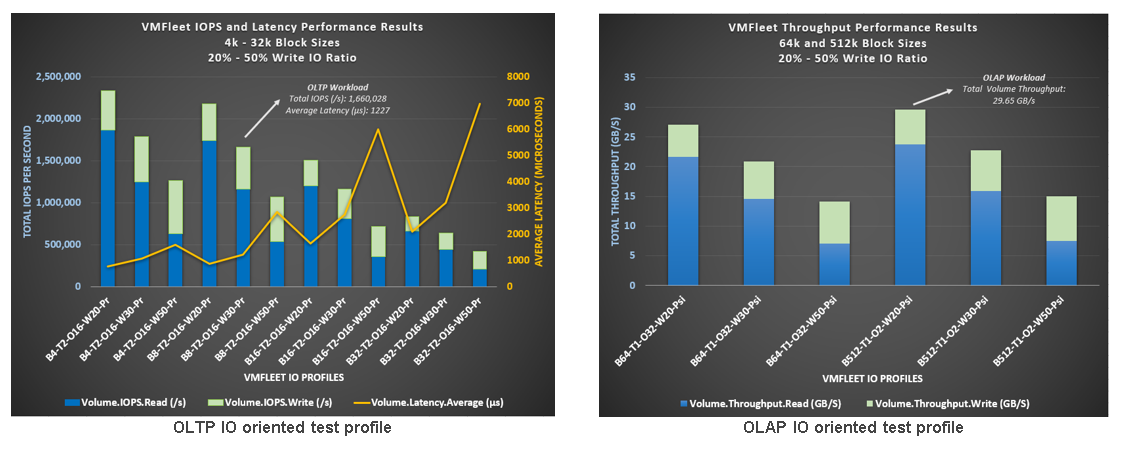

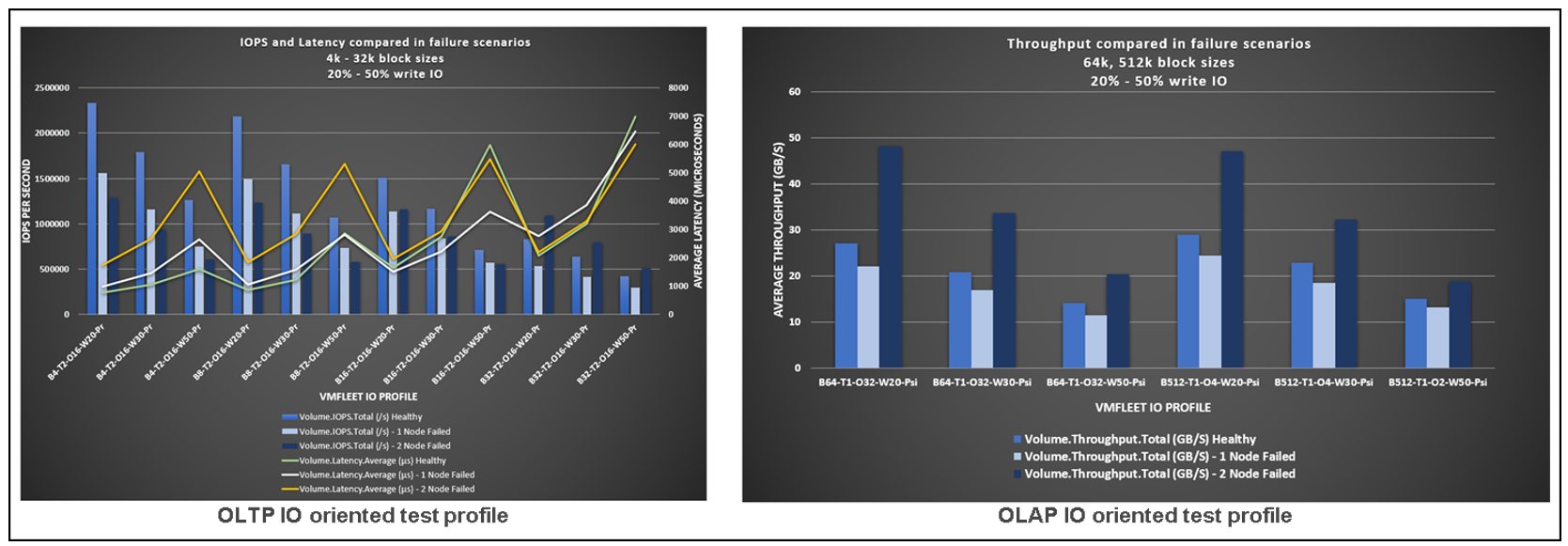

With this infrastructure scenario, we chose a test methodology that started with one SQL Server VM and scaled up to 12 VMs. For each SQL Server VM, we installed and configured HammerDB instances on a cluster of clients running Windows Server 2022. For benchmarking, we chose TPROC-C, an online transaction processing (OLTP) benchmark workload derived from the TPC-C standard.

With a dataset built at a scale factor of 4,000 and a size of 400 GB, we started running the test on one SQL Server VM, then scaled to two, four, eight, and finally, 12 VMs.

We focused the test on two key performance indicators, transactions per minute (TPM) and new orders per minute (NOPM). The main goal was to obtain performance scaling as linear as possible when going from one to twelve VMs, keeping CPU utilization in a safe range, to leave ample performance space to run other workloads. Each of these benchmarking tests was conducted while the TPROC-C transaction load from HammerDB was running concurrently on the respective number of VMs running SQL Server.
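The idea of "scaling as linear as possible" can be made concrete with a small calculation. The following is a minimal sketch, not part of the original test harness: it compares the aggregate TPM at each VM count against what perfectly linear scaling from the smallest configuration would give. The function name and the sample numbers are illustrative placeholders, not measured results from this study.

```python
def scaling_efficiency(results):
    """Return the fraction of ideal linear scaling achieved at each VM count.

    results maps a VM count to the aggregate TPM measured at that count.
    The smallest VM count is used as the baseline for "linear".
    """
    base_vms = min(results)
    per_vm_baseline = results[base_vms] / base_vms
    return {
        vms: tpm / (vms * per_vm_baseline)
        for vms, tpm in sorted(results.items())
    }


# Placeholder values for illustration only; these are NOT the measured results.
sample = {1: 100_000, 2: 196_000, 4: 380_000, 8: 720_000, 12: 1_020_000}
eff = scaling_efficiency(sample)

for vms, fraction in eff.items():
    print(f"{vms:>2} VMs: {fraction:.0%} of linear scaling")
```

The same function can be applied to NOPM instead of TPM. A value close to 100% at 12 VMs would indicate near-linear scaling.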

The following figure shows a summary of the results obtained:

Figure 4. SQL performance summary

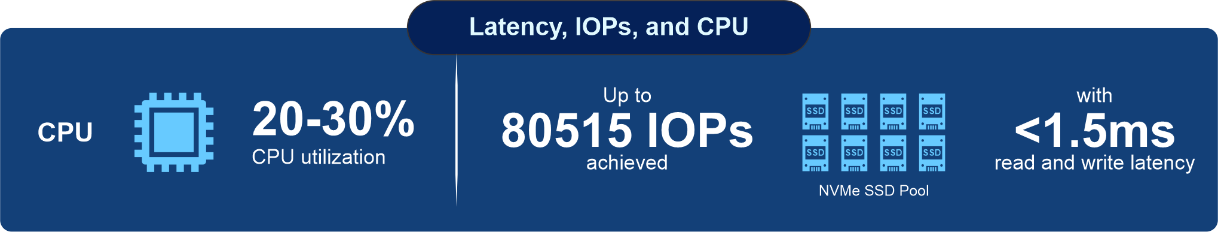

As usual, keeping latency low and IOPS high while maintaining consistent CPU utilization throughout the tests was an additional goal. A summary of the results is shown in the following figure:

Figure 5. Latency, IOPS, and CPU utilization results

In summary, running our SQL Server 2022 workloads on Dell’s Azure Stack HCI, connected to Microsoft Azure through Azure Arc Resource Manager, provides excellent performance with rich management features for on-premises operations through Dell OpenManage Integration for Microsoft Windows Admin Center.

For more technical content on Dell Integrated System for Azure Stack HCI, visit our Info Hub.

Resources

- Dell Integrated Systems for Azure Stack HCI Info Hub

- Microsoft Storage Spaces Direct

- Live Optics main page

- Live Optics Knowledge Base

Author:

Inigo Olcoz, Senior Principal Engineer Technologist at Dell

Twitter: @VirtualOlcoz

2023 Updates for Azure Stack HCI and Hub (Part I)

Wed, 30 Aug 2023 22:05:17 -0000

The first half of 2023 has been quite prolific for the Dell Azure Stack HCI ecosystem, providing many important incremental updates in the platform. This article summarizes the most relevant changes inside the program.

Azure Stack HCI

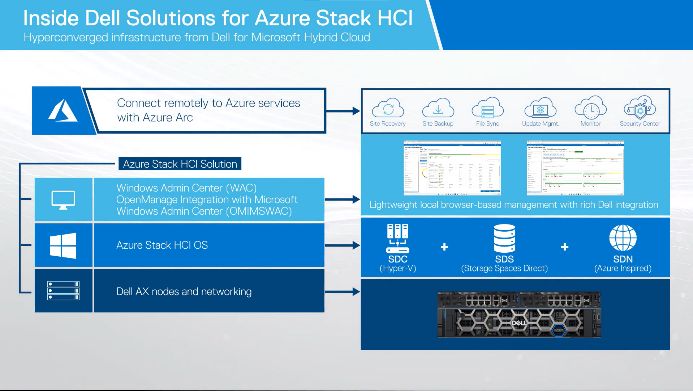

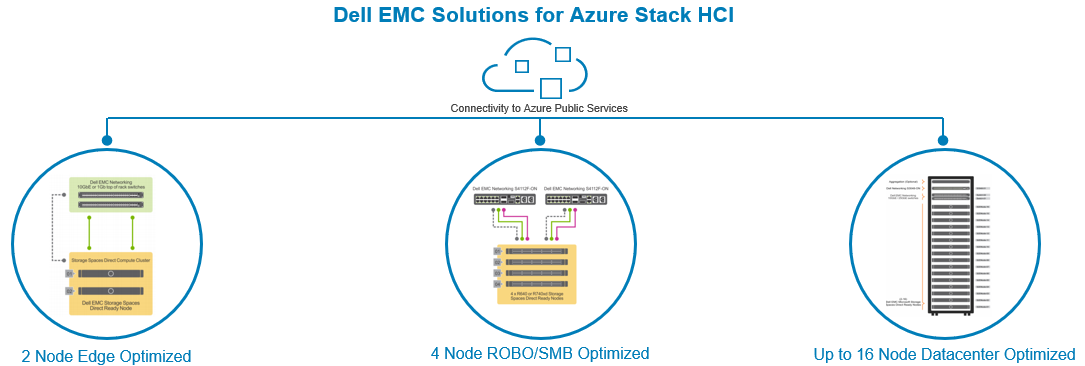

Dell Integrated System for Microsoft Azure Stack HCI delivers a fully productized, validated, and supported hyperconverged infrastructure solution that enables organizations to modernize their infrastructure for improved application uptime and performance, simplified management and operations, and lower total cost of ownership. The solution integrates the software-defined compute, storage, and networking features of Microsoft Azure Stack HCI with AX nodes from Dell to offer the high-performance, scalable, and secure foundation needed for a software-defined infrastructure.

With Azure Arc, we can now unlock new hybrid scenarios for customers by extending Azure services and management to our HCI infrastructure. This allows customers to build, operate, and manage all their resources for traditional, cloud-native, and distributed edge applications in a consistent way across the entire IT estate.

What’s new with Azure Stack HCI?

A lot. There have been so many updates in the Azure Stack HCI front that it is difficult to detail all of them in just a single blog. So, let’s focus on the most important ones.

Azure Stack HCI software and hardware updates

From a software and hardware perspective, the biggest change during the first half of 2023 was the introduction of Azure Stack HCI, version 22H2 (factory install and field support). The most important features in this release are Network ATC, GPU partitioning (GPU-P), and security improvements.

- Network ATC simplifies the virtual network configuration by leveraging intent-based network deployment and incorporating Microsoft best practices by default. It provides the following advantages over manual deployment:

  - Reduces network configuration deployment time, complexity, and incorrect input errors

  - Uses the latest Microsoft validated network configuration best practices

  - Ensures configuration consistency across all nodes in the cluster

  - Eliminates configuration drift, with periodic consistency checks every 15 minutes

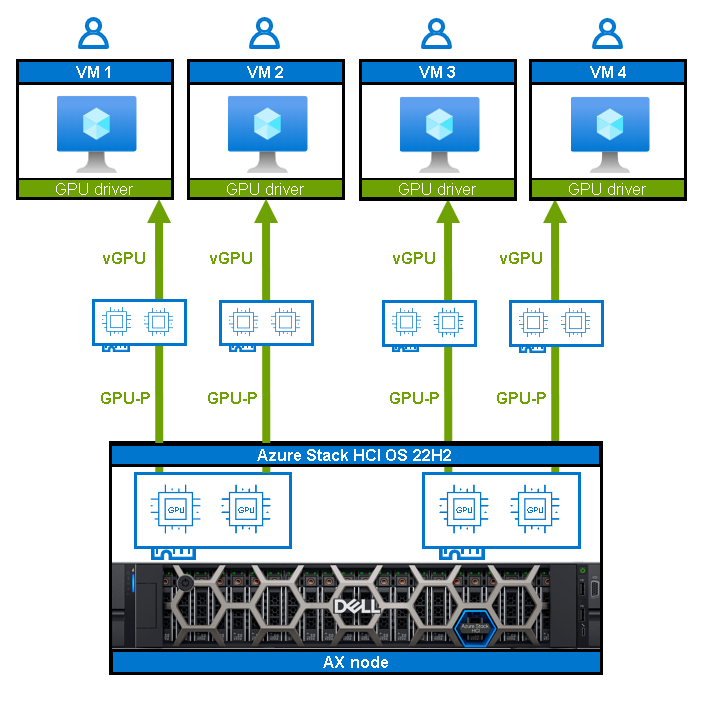

- GPU-P allows sharing a physical GPU device among several VMs. By leveraging single root I/O virtualization (SR-IOV), GPU-P provides VMs with a dedicated and isolated fractional part of the physical GPU. The obvious advantage of GPU-P is that it enables enterprise-wide utilization of highly valuable and limited GPU resources.

- Azure Stack HCI OS 22H2 security has been improved with more than 200 security settings enabled by default within the OS (“Secured-by-default”), enabling customers to closely meet Center for Internet Security (CIS) benchmark and Defense Information Systems Agency (DISA) Security Technical Implementation Guide (STIG) requirements for the OS. These changes also improve the security posture by disabling legacy protocols and ciphers.
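To illustrate the idea behind the Network ATC consistency checks mentioned above, here is a minimal conceptual sketch of intent-based drift detection: a single declared intent is compared against the settings reported by each node, and any differences are flagged. This is not how Network ATC is implemented. The function name, the setting names, and the values are all hypothetical and chosen only for illustration.

```python
def find_drift(intent, node_configs):
    """Compare each node's settings to the declared intent.

    Returns a dict of node name -> {setting: (wanted, actual)} containing
    only the nodes and settings that differ from the intent.
    """
    drift = {}
    for node, actual in node_configs.items():
        diffs = {
            key: (wanted, actual.get(key))
            for key, wanted in intent.items()
            if actual.get(key) != wanted
        }
        if diffs:
            drift[node] = diffs
    return drift


# Hypothetical intent and node settings, for illustration only.
intent = {"mtu": 9014, "rdma_enabled": True, "storage_vlan": 711}
nodes = {
    "node1": dict(intent),              # matches the intent
    "node2": {**intent, "mtu": 1514},   # someone changed the MTU by hand
}

drift = find_drift(intent, nodes)
print(drift)
```

A scheduler that runs a check like this at a fixed interval and reapplies the intent whenever drift is found captures the general pattern of declaring the desired state once and enforcing it continuously.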

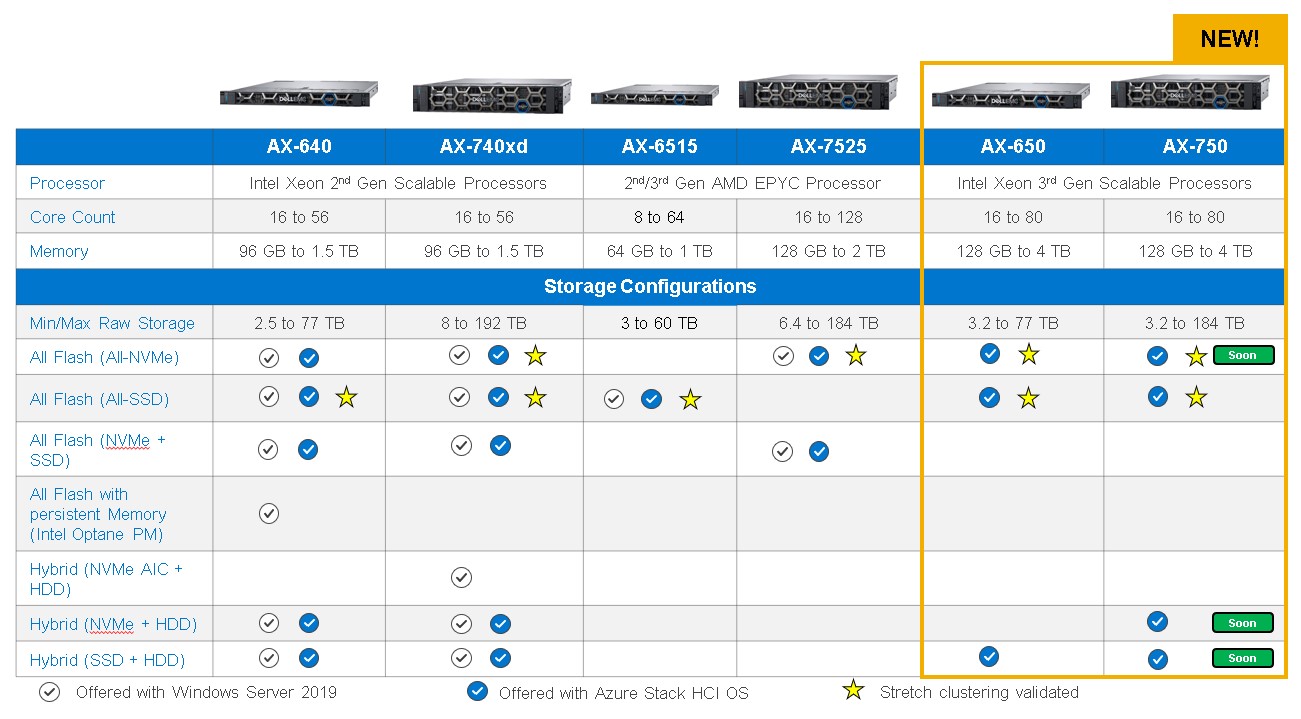

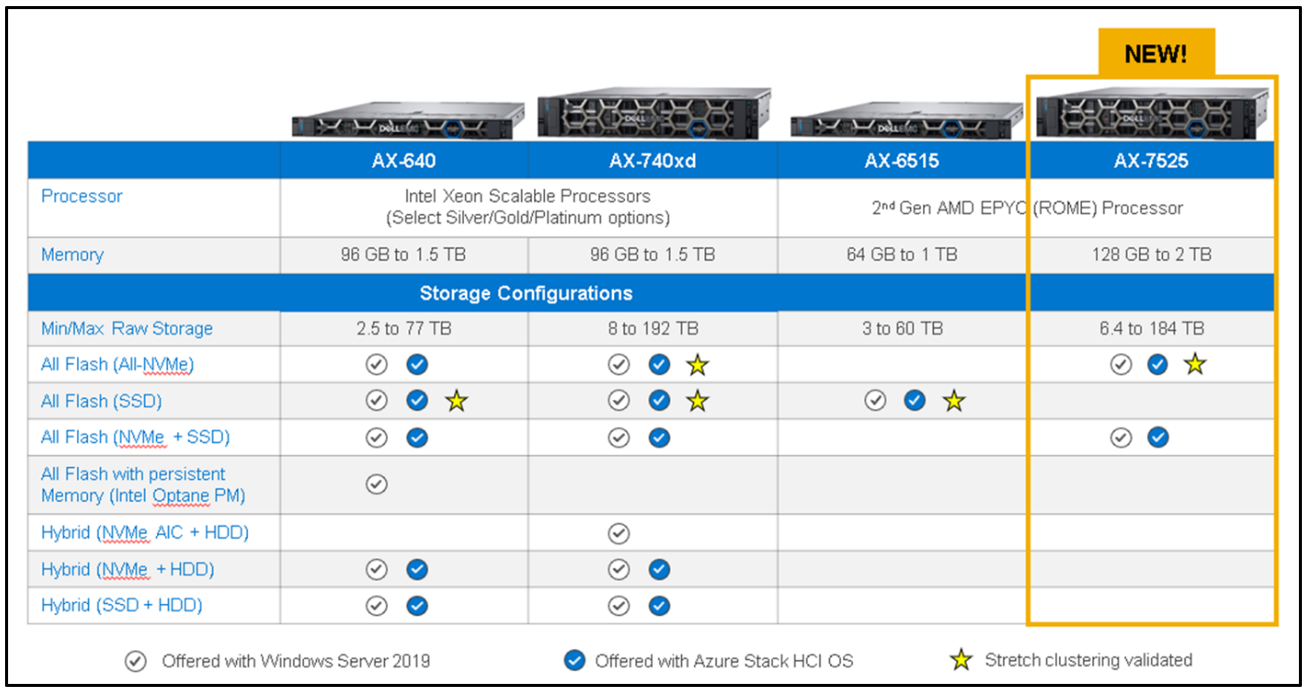

From a hardware perspective, these are the most relevant additions to the AX node family:

- More NIC options:

  - Mellanox ConnectX-6 25 GbE

  - Intel E810 100 GbE; also adds RoCEv2 support (now iWARP or RoCE)

- More GPU options for GPU-P and Discrete Device Assignment (DDA):

  - GPU-P validation for: NVIDIA A40, A16, A2

  - DDA options: NVIDIA A30, T4

To better understand GPU-P and DDA, check this blog.

Azure Stack HCI End of Life (EOL) for several components

As the platforms mature, it is inevitable that some of the aging components are discontinued or replaced with newer versions. The most important changes on this front have been:

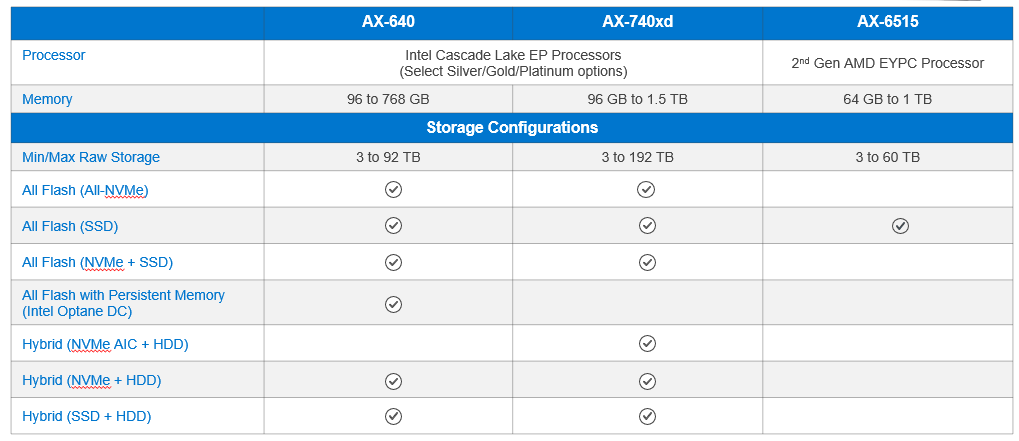

- EOL for AX-640/AX-740xd nodes: The Azure Stack HCI 14G servers, AX-640 and AX-740xd, reached their EOL on March 31, 2023, and are therefore no longer available for quoting or new orders. These servers will be supported for up to seven years, until their End of Service Life (EOSL) date. Azure Stack HCI 15G AX-650/AX-750/AX-6515/AX-7525 platforms will continue to be offered to address customer demands.

- EOL for Windows Server 2019: While Windows Server 2019 will be reaching end of sales/distribution from Dell on June 30, 2023, the product will continue to be in the Microsoft Mainstream Support life cycle until January 9, 2024. That means that our customers will be eligible for security and quality updates from Microsoft free of charge until that date. After January 9, 2024, Windows Server 2019 will enter a 5-year Extended Support life cycle that will provide our customers with security updates only. Any quality and product fixes will be available from Microsoft for a fee. It is highly recommended that customers migrate their current Windows Server 2019 workloads to Windows Server 2022 to maintain up-to-date support.

Finally, we have introduced a set of short and easily digestible training videos (seven minutes each, on average) to learn everything you need to know about Azure Stack HCI, from the AX platform and Microsoft’s Azure Stack HCI operating system, to the management tools and deploy/support services.

Conclusion

It’s certainly a challenge to synthesize the last six months of incredible innovation into a brief article, but we have highlighted the most important updates: focus on learning all the new Azure Stack HCI 22H2 features and updates, keep current with the hardware updates, and… stay tuned for important announcements by the last quarter of the year: big things are coming in Part 2!!!

Thank you for reading.

Author: Ignacio Borrero, Senior Principal Engineer, Technical Marketing

Live Optics and Azure Stack HCI

Thu, 15 Jun 2023 00:58:28 -0000

The IT industry has coped with many challenges during the last decades. One of the most impactful ones, probably due to its financial implications, has been the “IT budget reduction”—the need for IT departments to increase efficiency, reduce the cost of their processes and operations, and optimize asset utilization.

This do-more-with-less mantra has a wide range of implications, from cost of acquisition to operational expenses and infrastructure payback period.

Cost of acquisition is not only related to our ability to get the best IT infrastructure price from technology vendors but also to the less obvious fact of optimal workload characterization that leads to the minimum infrastructure assets to service business demand.

Without the proper tools, assessing the specific needs that each infrastructure acquisition process requires is not simple. Obtaining precise workload requirements often involves input from several functional groups, requiring their time and dedication. This is often not possible, so the only choice is to do a high-level estimation of requirements and select a hardware offering that can cover by ample margin the performance requirements.

Those ample margins do not align very well with concepts such as optimal asset utilization and, thus, do not lead to the best choice under a budget reduction paradigm.

But there is free online software, Live Optics, that can be used to collect, visualize, and share data about your IT infrastructure and the workloads it hosts. Live Optics helps you understand your workloads’ performance by providing in-depth data analysis. It makes the project requirements much clearer, so the sizing decision, based on real data, is more accurate and relies less on estimates.

Azure Stack HCI, as a hyperconverged system, greatly benefits from such sizing considerations. It is often used to host a mix of workloads with different performance profiles. Being able to characterize the CPU, memory, storage, network, or protection requirements is key when infrastructure needs to be defined. This way, the final node configuration for the Azure Stack HCI platform will be able to cope with the workload requirements without oversizing the hardware and software offerings, and we are able to select the type of Azure Stack HCI node that best fits the workload requirements.

Microsoft recommends using an official sizing tool such as Dell’s tool, and Live Optics incorporates all Azure Stack HCI design constraints and best practices, so the tool outcome is optimized to the workload requirements.

Imagine that we had to host in the Azure Stack HCI infrastructure a number of business applications with sets of users. With the performance data gathered by Live Optics, and using the Azure Stack HCI sizing tool, we can select the type of node we need, the amount of memory each node will have, what CPU we will equip, how many drives are needed to cover the I/O demand, and the network architecture.
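As a rough illustration of how measured peak demand translates into a node count, consider the following minimal sketch. It is not the algorithm used by the Dell sizing tool, which also accounts for Azure Stack HCI design constraints such as storage resiliency. The function name, the headroom and spare-node parameters, and all numbers are hypothetical.

```python
import math


def nodes_required(peak_demand, node_capacity, headroom=0.2, spare_nodes=1):
    """Estimate the node count needed to cover peak demand.

    peak_demand and node_capacity use the same keys (for example CPU cores,
    memory, IOPS). headroom is the fraction of each node kept free, and
    spare_nodes adds capacity to tolerate node failures or maintenance.
    """
    usable = {key: cap * (1 - headroom) for key, cap in node_capacity.items()}
    # The most constrained resource dictates the node count.
    needed = max(math.ceil(peak_demand[key] / usable[key]) for key in peak_demand)
    return needed + spare_nodes


# Hypothetical figures, standing in for values a collector might report.
peak_demand = {"cpu_cores": 150, "memory_gb": 2400, "iops": 300_000}
node_capacity = {"cpu_cores": 64, "memory_gb": 1024, "iops": 200_000}

print(nodes_required(peak_demand, node_capacity))
```

With these placeholder inputs, CPU and memory each call for three nodes at 80% usable capacity, and one spare node brings the total to four. The point is that the estimate is driven by measured peaks rather than by generous guesses.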

We can see a sample of the sizing tool input in the following figure:

Figure 1. Example from Dell's sizing tool for Azure Stack HCI

In this case, we have chosen to base our Azure Stack HCI infrastructure on four AX-750 nodes, with Intel Gold 6342 CPUs and 1 GB of RAM per node.

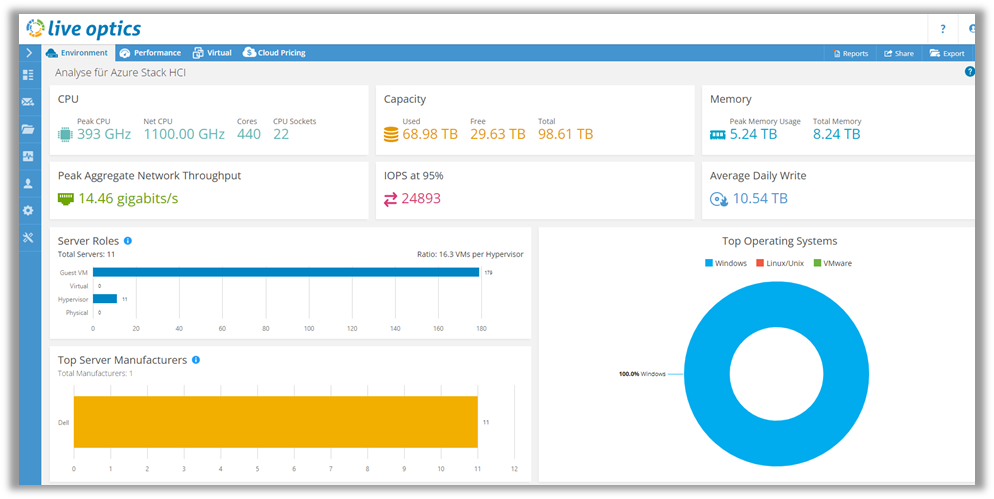

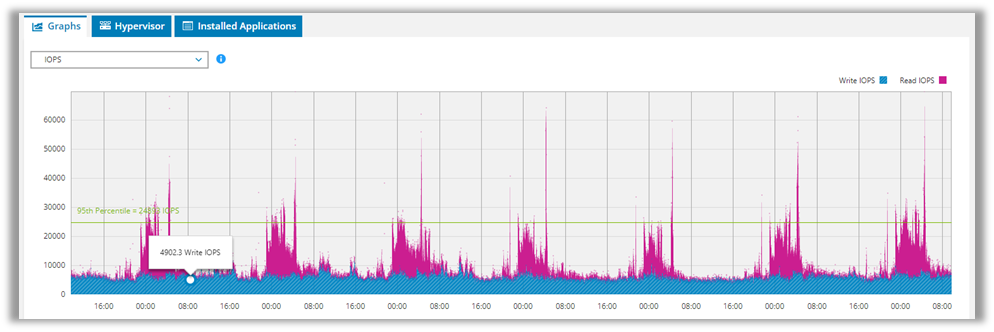

Because we have used Live Optics to gather and analyze performance data, we have sized our hardware assets based on real customer usage data such as that shown in the next figure:

Figure 2. Live Optics performance dashboard

This Live Optics dashboard shows the general requirements of the analyzed environment. Data of aggregated network throughput, IOPS, and memory usage or CPU utilization are displayed and, thus, can be used to size the required hardware.

There are more specific dashboards that show more details on each performance core statistic. For precise storage sizing, we can display read and write I/O behavior in great detail, as we can see in the following figure:

Figure 3. IOPS graphics through a Live Optics read/write I/O dashboard

With a tool such as Live Optics, we can size our Azure Stack HCI infrastructure based on real workload requirements, not assumptions made because information is lacking. This leads to an accurate configuration, usually at a lower price, and provides assurance that the proposed infrastructure can handle even the peak business workload requirements.

Check the resources shown below to find links to the Live Optics site and collector application, as well as some Dell technical information and sizing tools for Azure Stack HCI.

Resources

- Live Optics app

- Live Optics site

- Info Hub: Microsoft HCI Solutions from Dell Technologies

- Microsoft Azure Stack HCI sizer from Dell Technologies

Author: Inigo Olcoz

Twitter: @VirtualOlcoz

Dell and Azure Stack HCI Made Easy: the Video Series

Tue, 25 Apr 2023 17:05:23 -0000

It is incredible how time flies. December 10, 2020, when Microsoft initially released Azure Stack HCI, still feels like yesterday.

Today, Azure Stack HCI is a huge success and, in combination with Azure Arc, the foundation for any real Microsoft hybrid strategy.

But believe it or not, 850+ days later, Azure Stack HCI is still a big unknown for part of the Microsoft community. In our daily customer engagements, we keep on observing that there are knowledge gaps around the Azure Stack HCI program itself and the partner ecosystem that surrounds it.

In these circumstances, we have decided to take action and create a very short and easy-to-follow video series explaining everything you need to know about Azure Stack HCI from a technical perspective.

What you will find

This initial video training library consists of five videos, each averaging seven minutes in length. Here’s a summary of what you will discover in each of the videos:

Video: What Is Inside Azure Stack HCI

Learn the basics and fundamental components of Azure Stack HCI and get to know the Dell Integrated System for Microsoft Azure Stack HCI platform.

Meet the AX node platform and take the Dell Integrated System for Microsoft Azure Stack HCI route to deliver consistent Azure Stack HCI deployments.

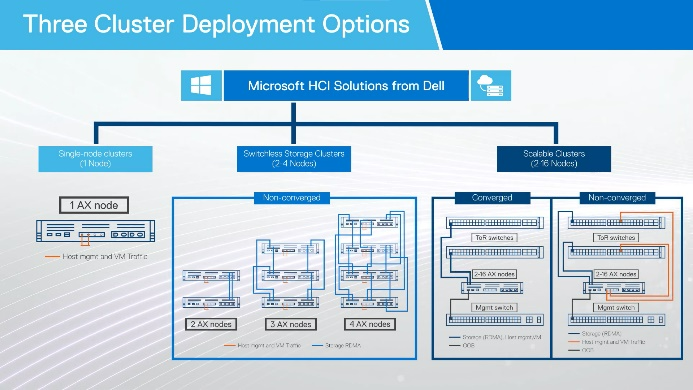

Video: Topology and networking

Explore topology and network deployment options for Dell Integrated System for Microsoft Azure Stack HCI. Make good Azure Stack HCI environments even better with the Dell PowerSwitch family.

Learn about Azure Stack HCI local management with Windows Admin Center and OpenManage. This is the perfect combination for quick and easy controlled local deployments…and a solid foundation for true hybrid management.

Explore end-to-end deployment and support for the Dell Integrated System for Microsoft Azure Stack HCI platform with ProDeploy and ProSupport services.

Will there be more?

Absolutely.

We are already working on the next series, where we’ll be covering other important topics that are beyond the scope of this initial launch (such as best practices and stretched clusters).

Conclusion

There is no doubt that Azure Stack HCI is a very hot topic. In fact, it is the key foundational element that enables a true Microsoft hybrid strategy by delivering on-premises infrastructure fully integrated with Azure. This video series explains the different elements that make this possible.

All videos in the series are important, none should be skipped… but if there is one not to be missed, please, go for Dell Azure Stack HCI: Local Management. This topic is actually the hook for the next release (Hint -> Hybrid management is the next big thing!).

Thanks for reading and… stay tuned for additional videos on the Info Hub!

Author: Ignacio Borrero, Senior Principal Engineer, Technical Marketing Dell CI & HCI

Test Drive Azure Stack HCI in the Dell Demo Center!

Wed, 24 Apr 2024 15:29:51 -0000

A picture is worth a thousand words, but the value of a good hands-on lab is immeasurable!

Our newly minted interactive demo and hands-on lab are published in the Dell Technologies Demo Center:

- The interactive demo (ITD-0910) offers an immersive look at all Azure Stack HCI management and governance features in Dell OpenManage Integration with Microsoft Windows Admin Center.

- If you're seeking a deep-dive into the Dell Integrated System for Microsoft Azure Stack HCI initial deployment experience, you may prefer the PowerShell-heavy hands-on lab (HOL-0313-01).

In this blog, we'll begin with a brief introduction to these test drives. Then, we'll share our list of other virtual labs that will prove invaluable on your journey to becoming an Azure Stack HCI champion. Fasten your seatbelt and get ready to take your skills to the next level!

The interactive demo can be accessed directly by all customers and partners. When first navigating to the Demo Center site, remember to click the Sign In drop-down menu in the upper right corner of the page.

At the present time, you will not see the hands-on lab appear in the Demo Center catalog. You will need to contact your Dell Technologies account team to gain access to HOL-0313-01.

Interactive Demo ITD-0910

Taking this demo is like competing in a Formula 1 or NASCAR race. It is fast-paced and remains within the secure confines of the track's guardrails. Each module in the demo guides you down a well-defined path that leads to a desired business outcome. Here is a summary of the benefits our OpenManage Integration extension delivers:

- Uses automation to streamline operational efficiency and flexibility

- Provides a consistent hybrid management experience by using a single Dell HCI Configuration Profile policy definition

- Reduces operational expense by intelligently right-sizing infrastructure to match your workload profile

- Ensures stability and security of infrastructure with real-time monitoring and lifecycle management

- Protects your IT estate from costly changes to configuration settings made inadvertently or maliciously

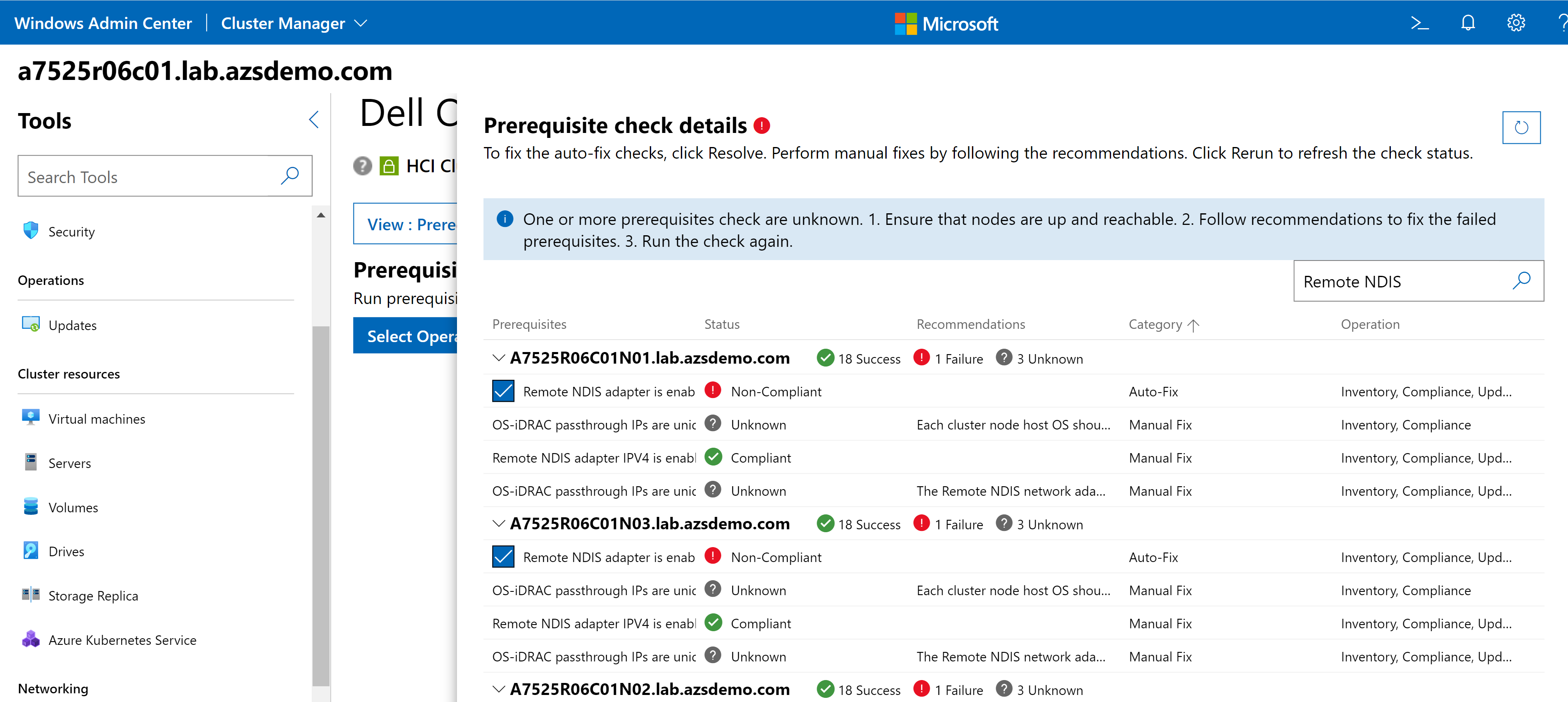

Whenever new features are released for our extension, you'll be able to familiarize yourself with them here first. In the latest release (v3.0), we completely revamped the user interface for improved usability and navigation. We also added server and cluster-level checks to ensure that all prerequisites are met for seamless enablement of management and monitoring operations. The following figure illustrates the results of a prerequisite check. In the interactive demo, you learn more about these failures and how to use the OpenManage Integration extension to fix them.

When we first start driving, we need our parents and teachers to provide turn-by-turn directions. If you're exploring the extension for the first time, you'll want to keep the guides enabled to aid your understanding.

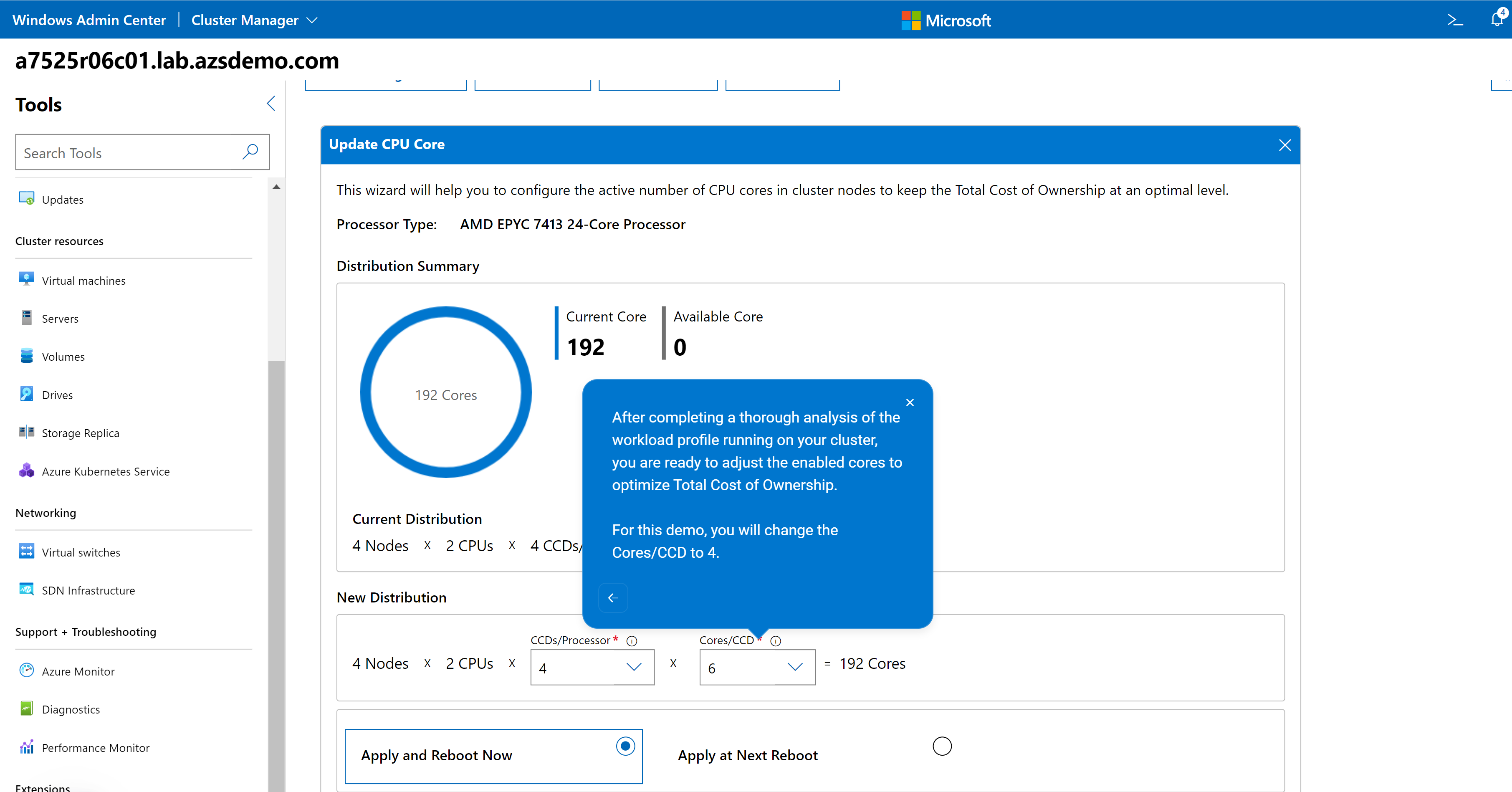

For example, consider the CPU Core Management feature. This feature allows you to right-size your Azure Stack HCI cluster by enabling or disabling CPU cores to meet the requirements of your workload profile. It can also help save on subscription costs because Azure Stack HCI hosts are billed by CPU core per month. The guide in the following figure reminds you that a thorough analysis of your workload characteristics is essential prior to reducing the enabled CPU cores on this cluster.
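Because billing is per CPU core per month, the effect of disabling cores on the subscription bill is simple arithmetic. The following minimal sketch shows the calculation. The function name, the cluster size, the core counts, and especially the per-core price are placeholder assumptions, so check current Azure pricing before relying on any figure.

```python
def monthly_core_cost(nodes, enabled_cores_per_node, price_per_core):
    """Monthly subscription cost when hosts are billed per enabled CPU core."""
    return nodes * enabled_cores_per_node * price_per_core


# Placeholder price per core per month; NOT an official figure.
PRICE_PER_CORE = 10.0

before = monthly_core_cost(nodes=4, enabled_cores_per_node=64, price_per_core=PRICE_PER_CORE)
after = monthly_core_cost(nodes=4, enabled_cores_per_node=48, price_per_core=PRICE_PER_CORE)
savings = before - after

print(f"Before: {before:.2f}  After: {after:.2f}  Monthly savings: {savings:.2f}")
```

In this hypothetical four-node cluster, disabling 16 cores per node removes 64 billable cores. Whether that is safe depends entirely on the workload analysis the guide calls for.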

After you've familiarized yourself with the talk track, you can leave your parents and teachers at home and drive through the demos without the detailed explanations. You can navigate using links alone by clicking the X in the upper right-hand corner of any guide. You might choose to proceed down this road to test your knowledge. As a Dell Technologies partner, you might want to create the illusion of performing a demo from a live environment to impress prospective clients.

Hands-on Lab HOL-0313-01

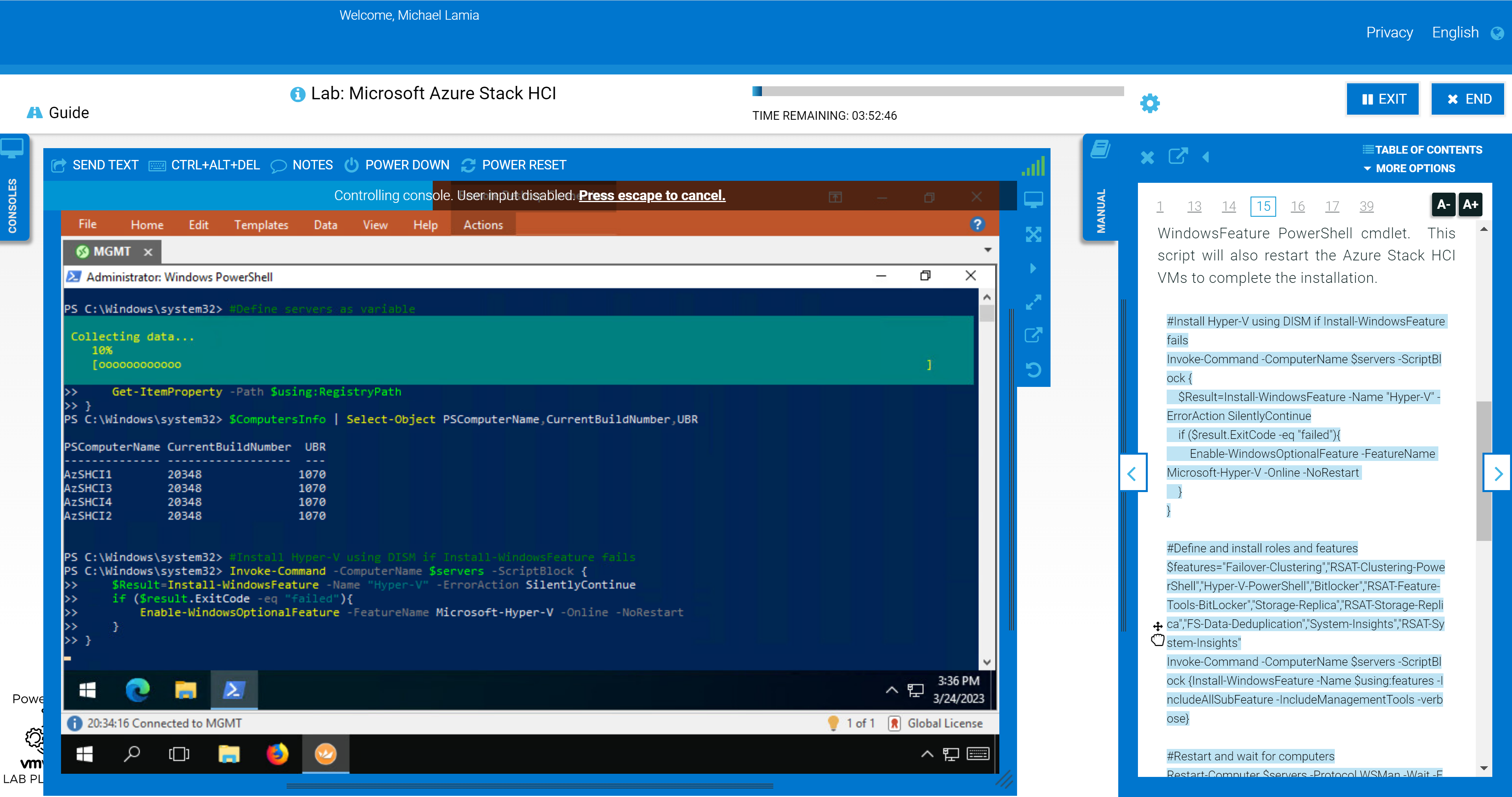

The Microsoft Azure Stack HCI Deployment hands-on lab in the Demo Center will appeal to the more mechanically inclined. It pops open the hood so you can get your hands dirty with all the PowerShell automation in our End-to-End Deployment Guide for Day 1 deployments. It is accompanied by an in-depth student manual to point you in the right direction, but there is a bit more freedom to go off-road compared with the interactive demo. Keep in mind that this is a virtual environment, so certain tasks that require the physical hardware may be limited.

This figure illustrates how you can drag and drop the PowerShell code into the console, so you aren't wasting time typing everything yourself:

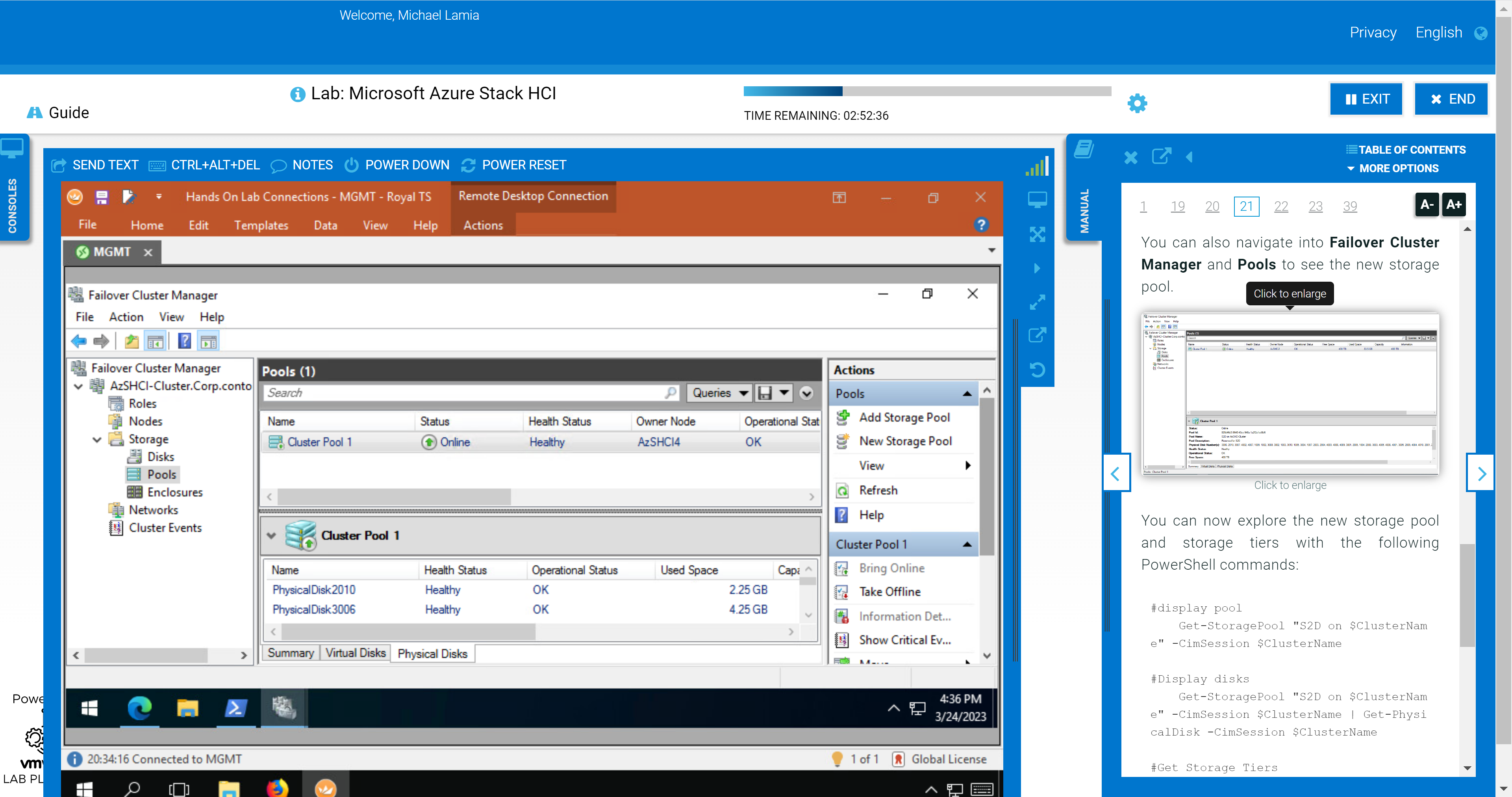

We still show the GUI some love in the later portions of the lab. Failover Cluster Manager and Windows Admin Center make an appearance after you've used PowerShell to configure the hosts, create the cluster, configure a cluster witness, and enable Storage Spaces Direct (S2D). You'll be able to use the graphical tools to confirm that your work at the command line produced the expected outcome.

The following figure shows the step where you use Failover Cluster Manager to inspect the newly created storage pool after it's created with PowerShell.

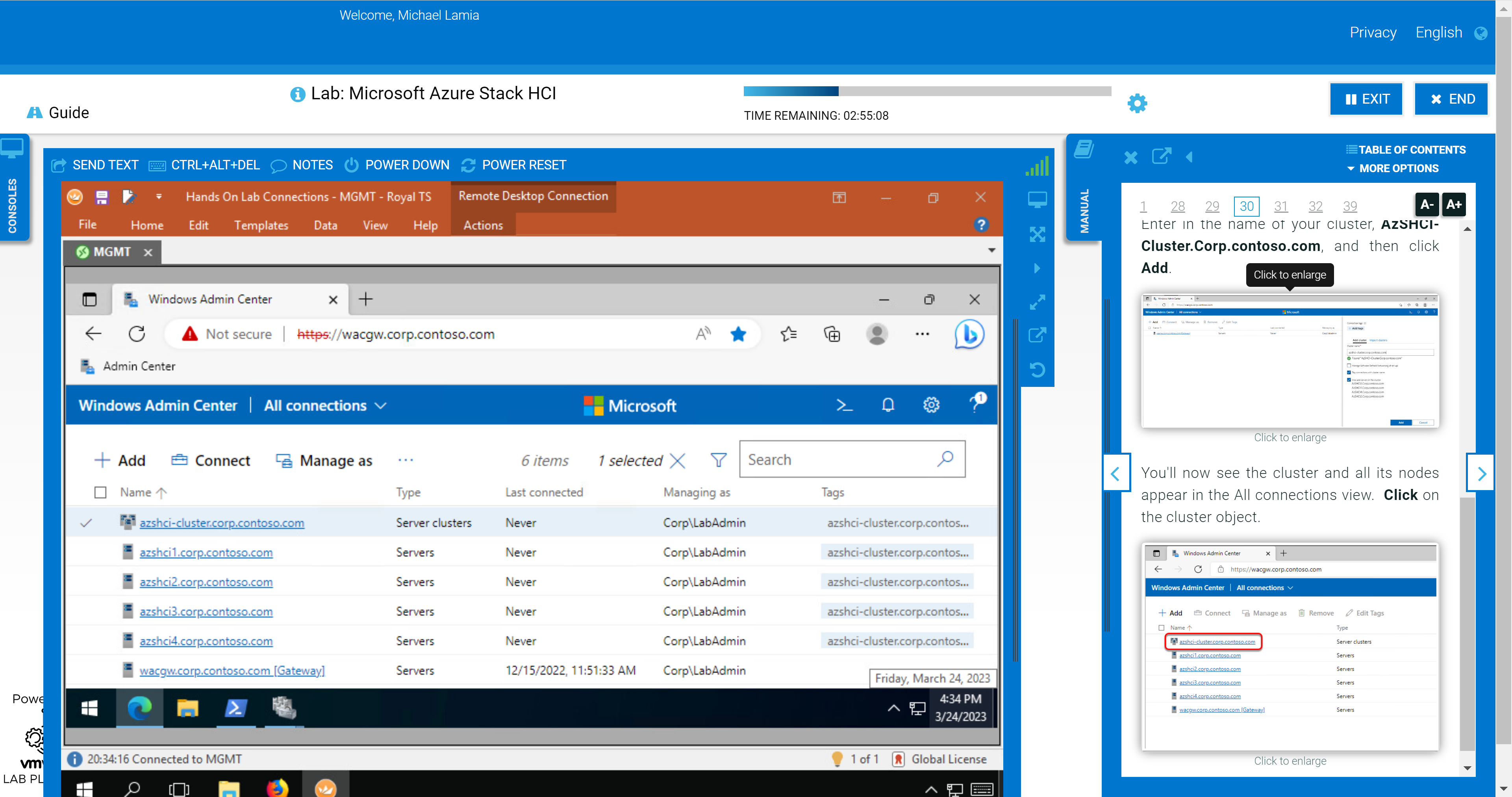

You'll also explore some of the management and monitoring capabilities in Windows Admin Center after adding your new cluster as a connection. This section of the HOL stops short of exploring the OpenManage Integration extension, though. We provide a link in the student manual to the interactive demo. If you’re not a fan of the layout of the lab shown in the following figure, you can rearrange the panes to fit your preferences. For example, you can open the manual in a separate window and allow your virtual desktop to consume all your screen real estate.

Other Opportunities to Get Hands-On

Maybe the interactive demo and hands-on lab don't meet your needs. Maybe you're looking to kick the tires on Azure Stack HCI without any training wheels. In that case, there are other options available to you. We have compiled a great list of resources that address a variety of use cases:

- MSLab – Using this GitHub project, you can run entire virtual Azure Stack HCI environments directly on your laptop if it meets moderate hardware requirements. There are endless Azure hybrid scenarios available to try (Azure Kubernetes Service hybrid, Azure Virtual Desktop, Azure Arc portfolio, and so on), and new ones are added almost immediately after new features are released.

- Dell GEOS Azure Stack HCI Hands-on Lab Guides – The Dell Global Engineering Outreach Specialists have crafted extensive guides to accompany the MSLab scenarios.

- Dell Technologies & Microsoft | Hybrid Jumpstart – The goal of this jumpstart is to help you grow your knowledge, skills, and experience around several core hybrid cloud solutions from the Dell Technologies and Microsoft hybrid portfolio. This has many highly prescriptive hands-on modules and resembles more of a Pluralsight or Microsoft Learn course.

- Azure Arc Jumpstart – If you want to skip the infrastructure deployment steps and get right into the key features of the Azure Arc portfolio, then this project is for you. All you need is an Azure subscription and a single resource group to get started immediately.

- Dell Technologies Customer Solution Center – Speak with your Dell Technologies account team to schedule a personalized engagement with a Customer Solution Center. Our dedicated subject matter experts can help you with extensive Proofs of Concept with your target workloads.

If you're looking for educational materials on Azure Stack HCI, like white papers, blogs, and videos, visit our Info Hub and main product page.

Be sure to follow me on Twitter @Evolving_Techie and LinkedIn.

Author: Mike Lamia

GPU Acceleration for Dell Azure Stack HCI: Consistent and Performant AI/ML Workloads

Wed, 01 Feb 2023 15:50:35 -0000


The end of 2022 brought us excellent news: Dell Integrated System for Azure Stack HCI introduced full support for GPU factory install.

As a reminder, Dell Integrated System for Microsoft Azure Stack HCI is a fully integrated HCI system for hybrid cloud environments that delivers a modern, cloud-like operational experience on-premises. It is intelligently and deliberately configured with a wide range of hardware and software component options (AX nodes) to meet the requirements of nearly any use case, from the smallest remote or branch office to the most demanding business workloads.

With the introduction of GPU-capable AX nodes, now we can also support more complex and demanding AI/ML workloads.

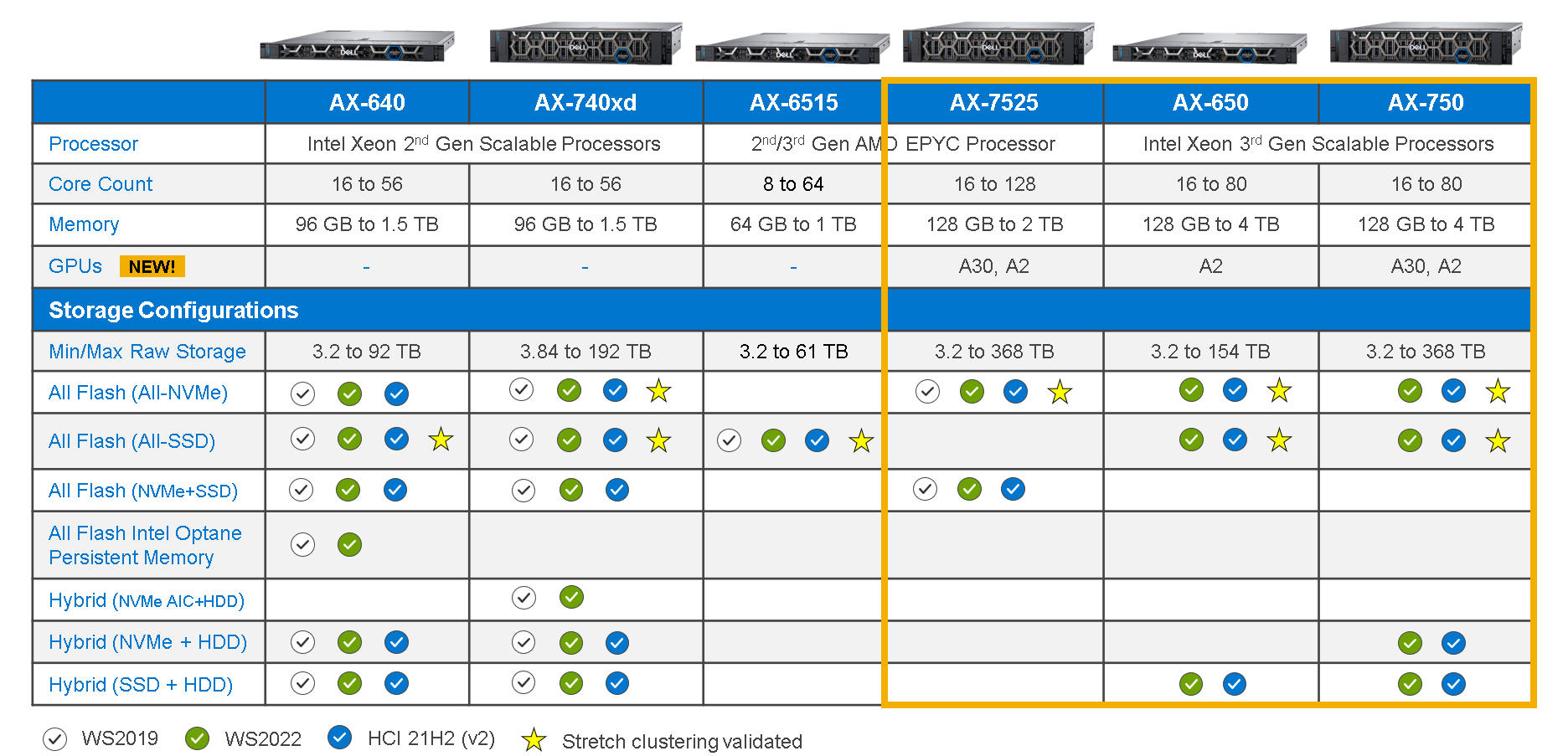

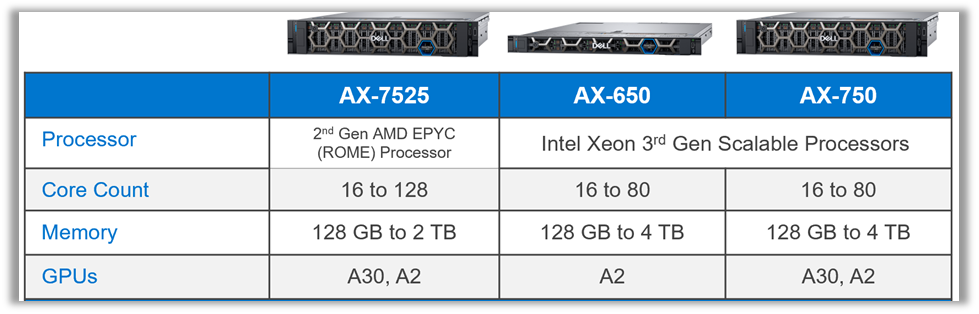

New GPU hardware options

Not all AX nodes support GPUs. As you can see in the table below, AX-750, AX-650, and AX-7525 nodes running Azure Stack HCI 21H2 or later are the only AX node platforms that support GPU adapters.

Table 1: Intelligently designed AX node portfolio

Note: AX-640, AX-740xd, and AX-6515 platforms do not support GPUs.

The next obvious question is what GPU type and number of adapters are supported by each platform.

We have selected the following two NVIDIA adapters to start with:

- NVIDIA Ampere A2, PCIe, 60W, 16GB GDDR6, Passive, Single Wide

- NVIDIA Ampere A30, PCIe, 165W, 24GB HBM2, Passive, Double Wide

The following table details how many GPU adapter cards of each type are allowed in each AX node:

Table 2: AX node support for GPU adapter cards

| GPU adapter | AX-750 | AX-650 | AX-7525 |
|---|---|---|---|
| NVIDIA A2 | Up to 2 | Up to 2 | Up to 3 |
| NVIDIA A30 | Up to 2 | -- | Up to 3 |
| Maximum GPU number (must be same model) | 2 | 2 | 3 |
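The rules in Table 2 can be expressed as a small lookup. The following Python sketch is only an illustration of how you might sanity-check a proposed configuration; the function and dictionary names are made up for this example and are not part of any Dell tool.

```python
# Maximum adapters per node, transcribed from Table 2 (None = not supported).
MAX_GPUS = {
    "AX-750": {"A2": 2, "A30": 2},
    "AX-650": {"A2": 2, "A30": None},
    "AX-7525": {"A2": 3, "A30": 3},
}

def is_supported(node, gpus):
    """Check a proposed list of GPU models for one node against Table 2."""
    if node not in MAX_GPUS:
        return False  # for example, AX-640, AX-740xd, and AX-6515
    if not gpus:
        return True  # a GPU-capable node with no GPUs installed is valid
    if len(set(gpus)) != 1:
        return False  # all adapters in a node must be the same model
    limit = MAX_GPUS[node].get(gpus[0])
    return limit is not None and len(gpus) <= limit

print(is_supported("AX-7525", ["A30", "A30", "A30"]))
print(is_supported("AX-650", ["A30"]))
print(is_supported("AX-750", ["A2", "A30"]))
```

The three calls cover a valid maximum configuration, an adapter that the platform does not support, and a mixed-model configuration that the same-model rule rejects.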

Use cases

The NVIDIA A2 is the entry-level option for any server to get basic AI capabilities. It delivers versatile inferencing acceleration for deep learning, graphics, and video processing in a low-profile, low-consumption PCIe Gen 4 card.

The A2 is a strong candidate for data center workloads that need only light AI capability. It especially shines in edge environments, due to the excellent balance among form factor, performance, and power consumption, which results in lower costs.

The NVIDIA A30 is a more powerful mainstream option for the data center, typically covering scenarios that require more demanding accelerated AI performance and a broad variety of workloads:

- AI inference at scale

- Deep learning training

- High-performance computing (HPC) applications

- High-performance data analytics

Options for GPU virtualization

There are two GPU virtualization technologies in Azure Stack HCI: Discrete Device Assignment (also known as GPU pass-through) and GPU partitioning.

Discrete Device Assignment (DDA)

DDA support for Dell Integrated System for Azure Stack HCI was introduced with Azure Stack HCI OS 21H2. With DDA, GPUs are dedicated (no sharing): DDA passes an entire PCIe device into a VM to provide high-performance access to the device while allowing the VM to use the device's native drivers. The following figure shows how DDA directly reassigns the whole GPU from the host to the VM:

Figure 1: Discrete Device Assignment in action

To learn more about how to use and configure GPUs with clustered VMs with Azure Stack HCI OS 21H2, you can check Microsoft Learn and the Dell Info Hub.

GPU partitioning (GPU-P)

GPU partitioning allows you to share a physical GPU device among several VMs. By leveraging single root I/O virtualization (SR-IOV), GPU-P provides VMs with a dedicated and isolated fractional part of the physical GPU. The following figure explains this more visually:

Figure 2: GPU partitioning virtualizing 2 physical GPUs into 4 virtual vGPUs

The obvious advantage of GPU-P is that it enables enterprise-wide utilization of highly valuable and limited GPU resources.

Note these important considerations for using GPU-P:

- Azure Stack HCI OS 22H2 or later is required.

- Host and guest VM drivers for GPU are needed (requires a separate license from NVIDIA).

- Not all GPUs support GPU-P; currently Dell only supports A2 (A16 coming soon).

- We strongly recommend using Windows Admin Center for GPU-P to avoid mistakes.

You’re probably wondering about Azure Virtual Desktop on Azure Stack HCI (still in preview) and GPU-P. We have a Dell Validated Design today and will be refreshing it to include GPU-P during this calendar year.

To learn more about how to use and configure GPU-P with clustered VMs with Azure Stack HCI OS 22H2, you can check Microsoft Learn and the Dell Info Hub (Dell documentation coming soon).

Timeline

As of today, Dell Integrated System for Microsoft Azure Stack HCI only provides support for Azure Stack HCI OS 21H2 and DDA.

Full support for Azure Stack HCI OS 22H2 and GPU-P is around the corner, by the end of the first quarter, 2023.

Conclusion

The wait is finally over: our Azure Stack HCI environments can now draw on the GPU power that highly demanding AI/ML workloads require.

Today, DDA provides fully dedicated GPU pass-through utilization, whereas with GPU-P we will very soon have the choice of providing a more granular GPU consumption model.

Thanks for reading, and stay tuned for the ever-expanding list of validated GPUs that will unlock and enhance even more use cases and workloads!

Author: Ignacio Borrero, Senior Principal Engineer, Technical Marketing Dell CI & HCI

@virtualpeli

Single-Node Azure Stack HCI is now available!

Wed, 19 Oct 2022 15:25:20 -0000


Earlier this year, Microsoft announced the release of a new flavor for Azure Stack HCI: Azure Stack HCI single node. This is another milestone in Microsoft’s long history of evolution for the Azure Stack family of products.

Back in 2017, Microsoft announced Azure Stack, the platform to extend the cloud to the customers’ data centers. One of the key design principles for this release was to make it easy to create hybrid cloud environments.

In March 2019, a new member of the Azure Stack family was announced: Azure Stack HCI. This newcomer is a main driver for IT modernization, infrastructure consolidation, and true hybridity for Microsoft environments. Azure Stack HCI enables customers to run virtual machines (VMs), cloud native applications, and Azure Services on-premises on top of hyperconverged infrastructure (HCI) integrated systems as an optimal solution in performance and cost. Dell Integrated Systems for Azure Stack HCI delivers a seamless Azure experience, simplifies Azure on-premises, and accelerates innovation.

Figure 1 Dell Technologies vision of Microsoft Azure Stack HCI

While Azure Stack HCI was born as a scalable solution to adapt to most customer IT needs, certain scenarios require other intrinsic characteristics. We think of the “edge” as the IT place where data is acted on near its creation point to generate immediate and essential value. In many cases, these edge locations have severe space and cooling restrictions, with more emphasis on data proximity and operational efficiency than scalability or resiliency. For these scenarios, having a low-cost, highly performing, and easy-to-manage platform is more important than prioritizing scalability and cluster-level high availability.

The edge is becoming the next technology turning point, where organizations are planning to increase their IT spending significantly (IDC EdgeView Survey). Microsoft designed an Azure Stack HCI platform for this scenario. Any IT deployment in which we benefit from the data being collected and processed where it’s produced, away from a core data center, will become eligible for an Azure Stack HCI single-node deployment. Edge can be manufacturing, retail, energy, telco, healthcare, smart connected cities—you name it. If we think about Machine Learning (ML), Artificial Intelligence (AI), or Internet of Things (IoT) scenarios, single-node Azure Stack HCI clusters fit perfectly into these typical edge needs. A single-node cluster provides a cost-sensitive solution that supports the same workloads a multi-node cluster does and behaves in a similar way.

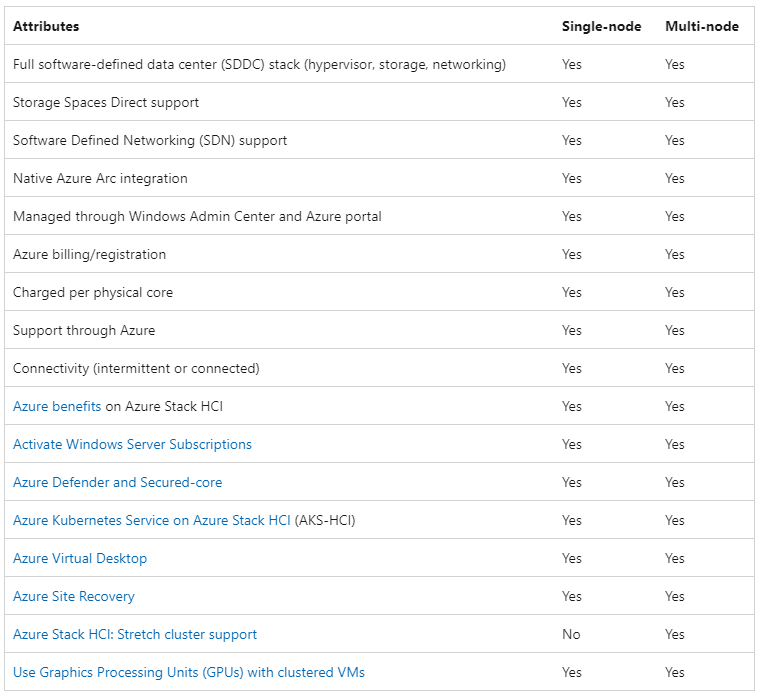

Dell Technologies portfolio for Azure Stack HCI single node is based on the same 15G models also available for multi-node deployments, as shown here:

Figure 2 Dell Technologies Integrated System for Microsoft Azure Stack HCI portfolio

In terms of features, as mentioned before, single-node and multi-node systems behave similarly. The following table shows the main attributes of both. Note that they are nearly identical except for a few distinctions, the most relevant being the lack of stretched-cluster support:

Figure 3 Azure Stack HCI single and multi-node attributes comparison (Source: Microsoft)

There are a few differences worth highlighting:

- Windows Admin Center (WAC) does not support the creation of single-node clusters. Deployment is done through PowerShell and Storage Spaces Direct enablement.

- Stretched clusters are not supported with single-node deployments. Stretched clusters require a minimum of two nodes at each site.

- Storage Bus Layer (SBL) cache, which is commonly used to improve read/write performance on Windows Server/Azure Stack HCI OS, is not supported in single-node clusters.

- There are limitations on WAC cluster management functionality. PowerShell can be used to cover those limitations.

- Single-node clusters support only a single drive type: either NVMe or SSD drives, but not a mix of both.

- For cluster lifecycle management, OpenManage Integration with Microsoft Windows Admin Center (OMIMSWAC) Cluster-Aware Updating (CAU) cannot be used. Vendor-provided solutions (drivers and firmware) or PowerShell and the Server Configuration tool (SConfig) are valid alternatives.

If your Azure-based edge workloads are moving further from the data center, and you understand the design differences listed above for Dell Azure Stack HCI single node, this could be a great fit for your business.

We expect Azure Stack HCI single-node clusters to evolve over time, so check our Info Hub site for the latest updates!

Author: Iñigo Olcoz

Twitter: VirtualOlcoz

References

- IDC EdgeView Survey 2022

- Microsoft: Azure Stack HCI single-node clusters

- Info Hub: Microsoft HCI Solutions

- Info Hub: Dell OpenManage Integration with Microsoft Windows Admin Center v2.0 Technical Walkthrough

- Info Hub: Technology leap ahead: 15G Intel based Dell Integrated System for Microsoft Azure Stack HCI

Dell Hybrid Management: Azure Policies for HCI Compliance and Remediation

Mon, 30 May 2022 17:05:47 -0000


Companies that take an “Azure hybrid first” strategy are making a wise and future-proof decision by consolidating the advantages of both worlds—public and private—into a single entity.

Sounds like the perfect plan, but a key consideration for these environments to work together seamlessly is true hybrid configuration consistency.

A major challenge in the past was having the same level of configuration rules concurrently in Azure and on-premises. This required different tools and a lot of costly manual interventions (subject to human error) that resulted, usually, in potential risks caused by configuration drift.

But those days are over.

We are happy to introduce Dell HCI Configuration Profile (HCP) Policies for Azure, a revolutionary and crucial differentiator for Azure hybrid configuration compliance.

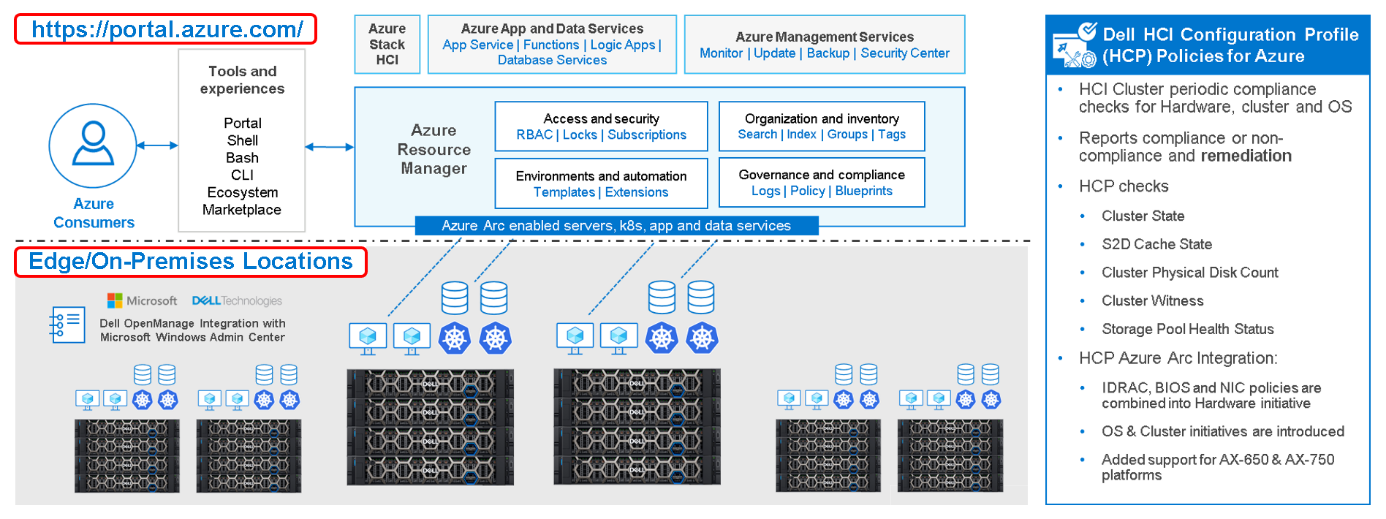

Figure 1: Dell Hybrid Management with Windows Admin Center (local) and Azure/Azure Arc (public)

So, what is it? How does it work? What value does it provide?

Dell HCP Policies for Azure is our latest development for Dell OpenManage Integration with Windows Admin Center (OMIMSWAC). With it, we can now integrate Dell HCP policy definitions into Azure Policy. Dell HCP is the specification that captures the best practices and recommended configurations for Azure Stack HCI and Windows-based HCI solutions from Dell to achieve better resiliency and performance with Dell HCI solutions.

The HCP Policies feature functions at the cluster level and is supported for clusters that are running Azure Stack HCI OS (21H2) and pre-enabled for Windows Server 2022 clusters.

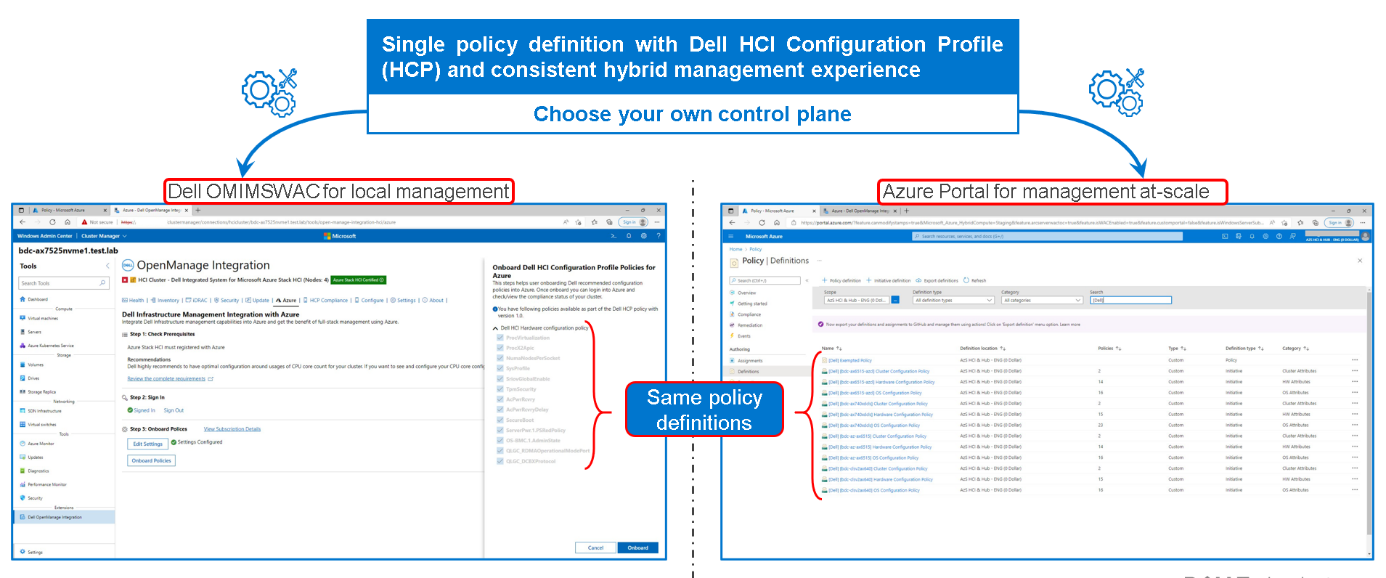

IT admins can manage Azure Stack HCI environments through two different approaches:

- At-scale through the Azure portal using the Azure Arc portfolio of technologies

- Locally on-premises using Windows Admin Center

Figure 2: Dell HCP Policies for Azure - onboarding Dell HCI Configuration Profile

By using a single Dell HCP policy definition, both options provide a seamless and consistent management experience.

Running Check Compliance automatically compares the recommended rules packaged together in the Dell HCP policy definitions with the settings on the running integrated system. These rules include configurations that address the hardware, cluster symmetry, cluster operations, and security.
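Conceptually, a compliance check of this kind is a comparison between a set of recommended values and the values found on the running system. The Python sketch below illustrates that idea only. It is not how Dell HCP or Azure Policy is implemented, and the setting names and values are hypothetical.

```python
def check_compliance(recommended, actual):
    """Return the settings whose actual value differs from the recommended one."""
    drift = {}
    for setting, expected in recommended.items():
        found = actual.get(setting)  # None when the setting is missing
        if found != expected:
            drift[setting] = {"expected": expected, "found": found}
    return drift

# Hypothetical rule names and values, for illustration only.
recommended = {
    "bios.system_profile": "Performance",
    "os.page_file": "Fixed",
    "security.infrastructure_lock": True,
}
actual = {
    "bios.system_profile": "Performance",
    "os.page_file": "Automatic",
}

report = check_compliance(recommended, actual)
for setting, detail in report.items():
    print(f"{setting}: expected {detail['expected']!r}, found {detail['found']!r}")
```

A setting that is missing from the running system is reported as drift as well, which matches the intent of catching anything that deviates from the recommended profile.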

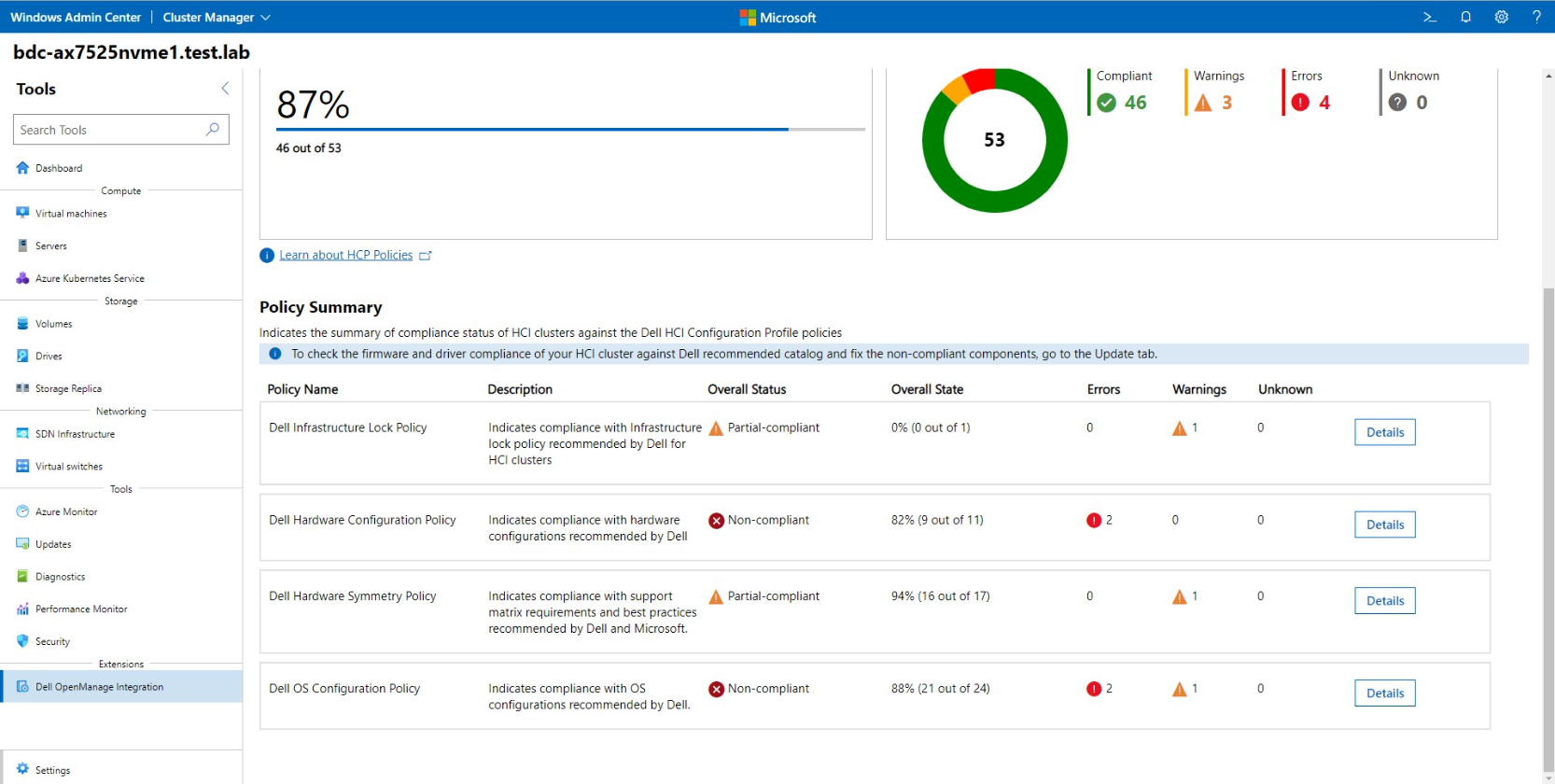

Figure 3: Dell HCP Policies for Azure - HCP policy compliance

Dell HCP Policy Summary provides the compliance status of four policy categories:

- Dell Infrastructure Lock Policy - Indicates enhanced security compliance to protect against unintentional changes to infrastructure

- Dell Hardware Configuration Policy - Indicates compliance with Dell recommended BIOS, iDRAC, firmware, and driver settings that improve cluster resiliency and performance

- Dell Hardware Symmetry Policy - Indicates compliance with integrated-system validated components on the support matrix and best practices recommended by Dell and Microsoft

- Dell OS Configuration Policy - Indicates compliance with Dell recommended operating system and cluster configurations

Figure 4: Dell HCP Policies for Azure - HCP Policy Summary

To re-align non-compliant settings with the best practices validated by Dell Engineering, our Dell HCP policy remediation integration with WAC (unique at the moment) helps fix any compliance errors. Simply click “Fix Compliance.”

Figure 5: Dell HCP Policies for Azure - HCP policy remediation

Some fixes may require manual intervention; others can be corrected in a fully automated manner using the Cluster-Aware Updating framework.

Conclusion

The “Azure hybrid first” strategy is real today. You can use Dell HCP Policies for Azure, which provides a single-policy definition with Dell HCI Configuration Profile and a consistent hybrid management experience, whether you use Dell OMIMSWAC for local management or Azure Portal for management at-scale.

With Dell HCP Policies for Azure, policy compliance and remediation are fully covered for Azure and Azure Stack HCI hybrid environments.

You can see Dell HCP Policies for Azure in action at the interactive Dell Demo Center.

Thanks for reading!

Author: Ignacio Borrero, Dell Senior Principal Engineer CI & HCI, Technical Marketing

Twitter: @virtualpeli

Exclusive Preview of Dell Azure Stack HCI Arc Integrated Configuration Compliance

Tue, 01 Mar 2022 20:39:03 -0000


Who doesn’t enjoy VIP treatment? Exciting opportunities to feel like royalty include winning box seats at a sporting event or getting invited to attend opening night at a new restaurant. I received an unexpected upgrade to business class on a flight a couple years ago and remember texting every celebratory meme I could find to friends and family! These are the moments in life to really savor.

In my line of work as a technical marketing engineer, I relish any situation where VIP stands for Very Important Person rather than Virtual IP address. Private previews of the latest technology often provide both flavors of VIP.

I consider myself fortunate to be among the first to experience cutting-edge solutions with the potential to solve today’s most vexing business challenges. I also get direct access to the best minds in the software and hardware industry. They welcome my feedback, and there’s no better feeling than knowing that I’ve made a meaningful contribution to a product that will benefit the broader community! Now it’s your turn to feel the thrill of gaining early access to long-awaited new software capabilities for Azure Stack HCI.

Your official preview invitation has arrived.

You are cordially invited to participate in an exclusive VIP preview of Azure Stack HCI Configuration and Policy Compliance Visibility from Dell Technologies, integrated with Azure Arc.

The Azure Arc portfolio demonstrates the unique Microsoft approach to delivering hybrid cloud by extending Azure platform services and management capabilities to data center, edge, and multi-cloud environments. Dell Technologies uses the Azure Policy guest configuration feature and Azure Arc-enabled servers to audit software and hardware settings in Dell Integrated System for Microsoft Azure Stack HCI.

Our engineering-validated integrated system is Azure hybrid by design and delivers efficient operations using our Dell OpenManage Integration with Microsoft Windows Admin Center extension and snap-ins.

When we first developed our extension, we delivered deep hardware monitoring, inventory, and troubleshooting capabilities. Over the last few years, we have collected valuable feedback from preview programs to drive further investment and innovation into our extension. Customer experience has helped us shape new features including:

- One-click full stack lifecycle management using Cluster-Aware Updating

- Automated cluster creation and expansion

- Dynamic CPU core management

- Intrinsic infrastructure security management

The Azure Arc integration from Dell Technologies complements Windows Admin Center and our OpenManage extension by applying robust governance services to the integrated system. Our Azure Arc integration creates software and hardware compliance policies for near real-time detection of infrastructure configuration drift at-scale. It protects clusters in the data center or geographically dispersed to ROBO and edge locations from malicious threats and inadvertent changes to operating system, BIOS, iDRAC, and network adapter settings on AX nodes from Dell Technologies. Without this visibility, you leave yourself vulnerable to security breaches, costly downtime, and degraded application performance.

All we need now is your experience and valuable feedback to help us fine-tune this critical capability!

Consider Azure Portal your observation deck.

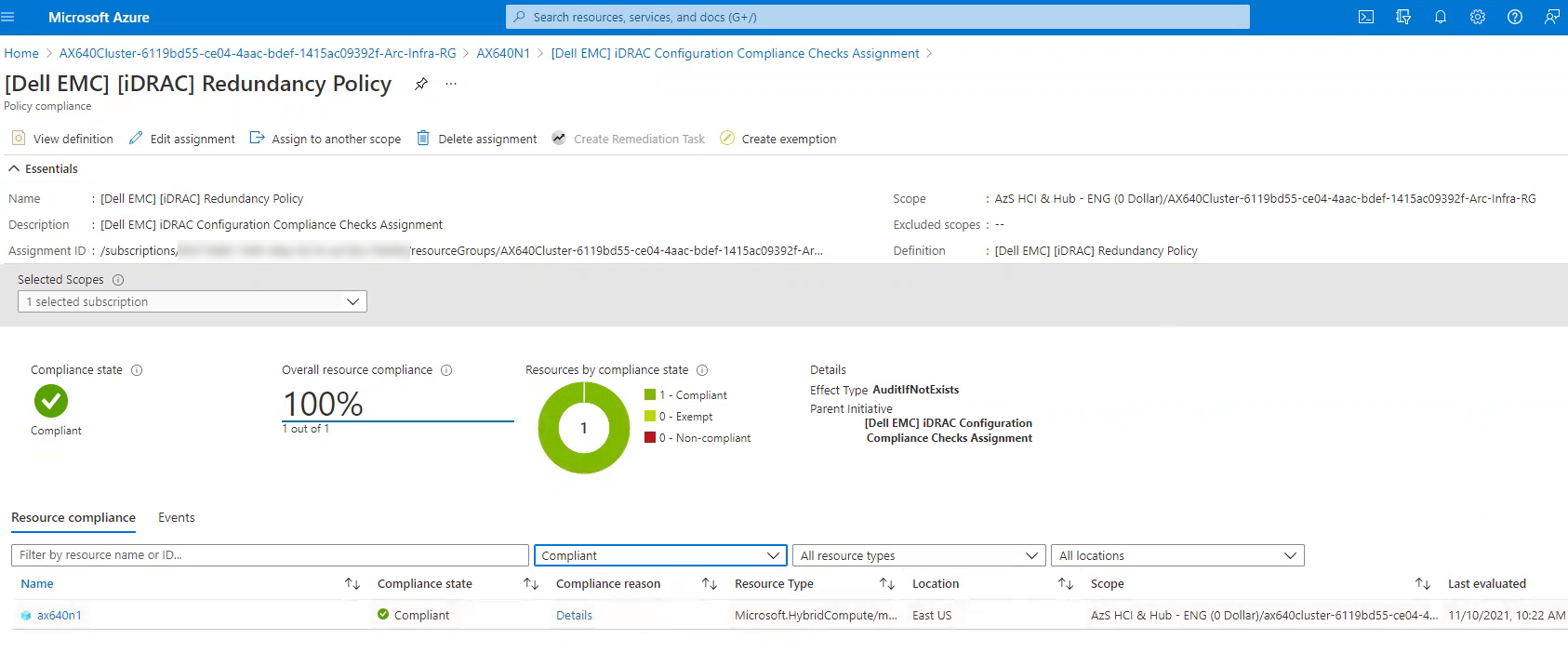

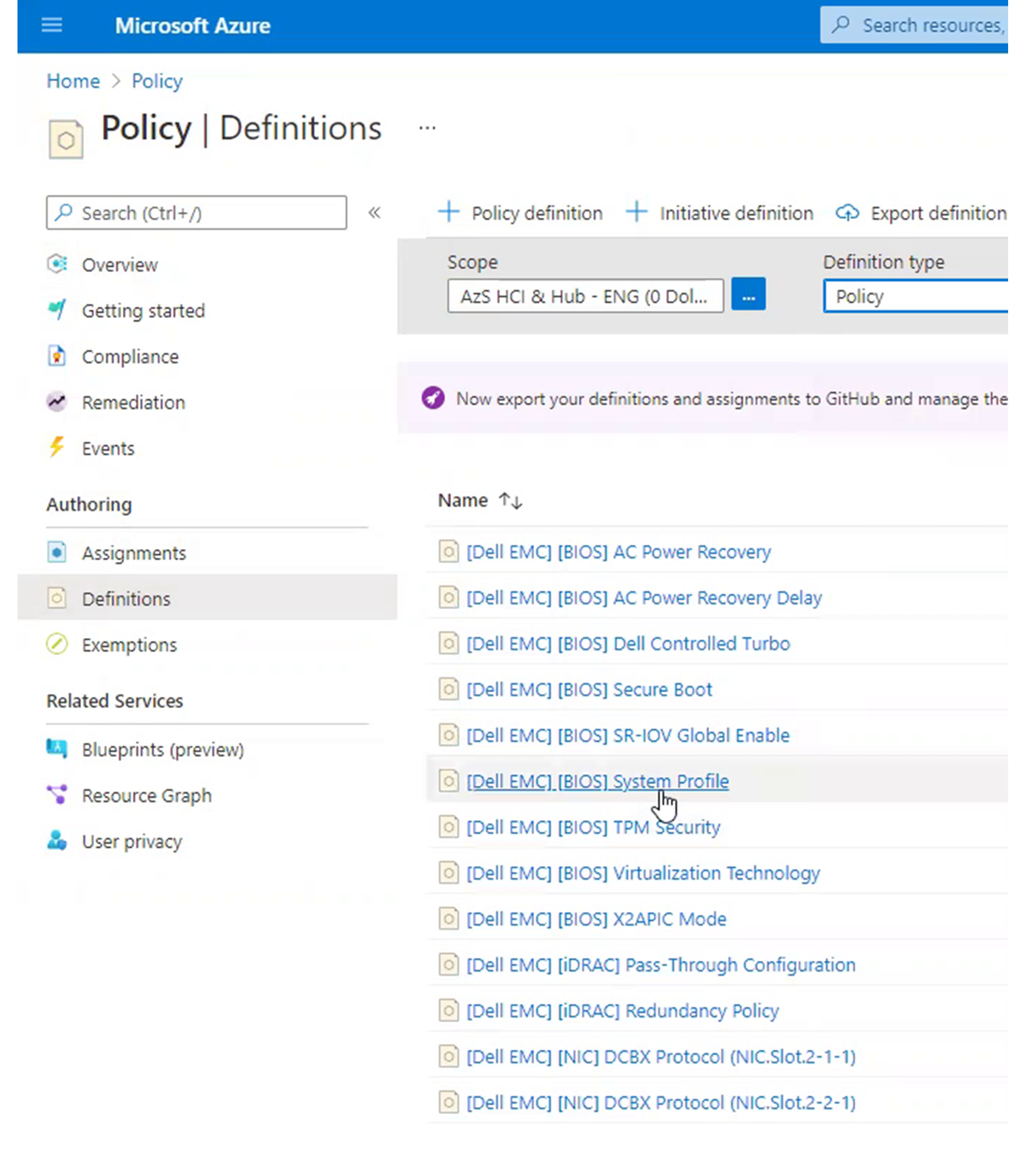

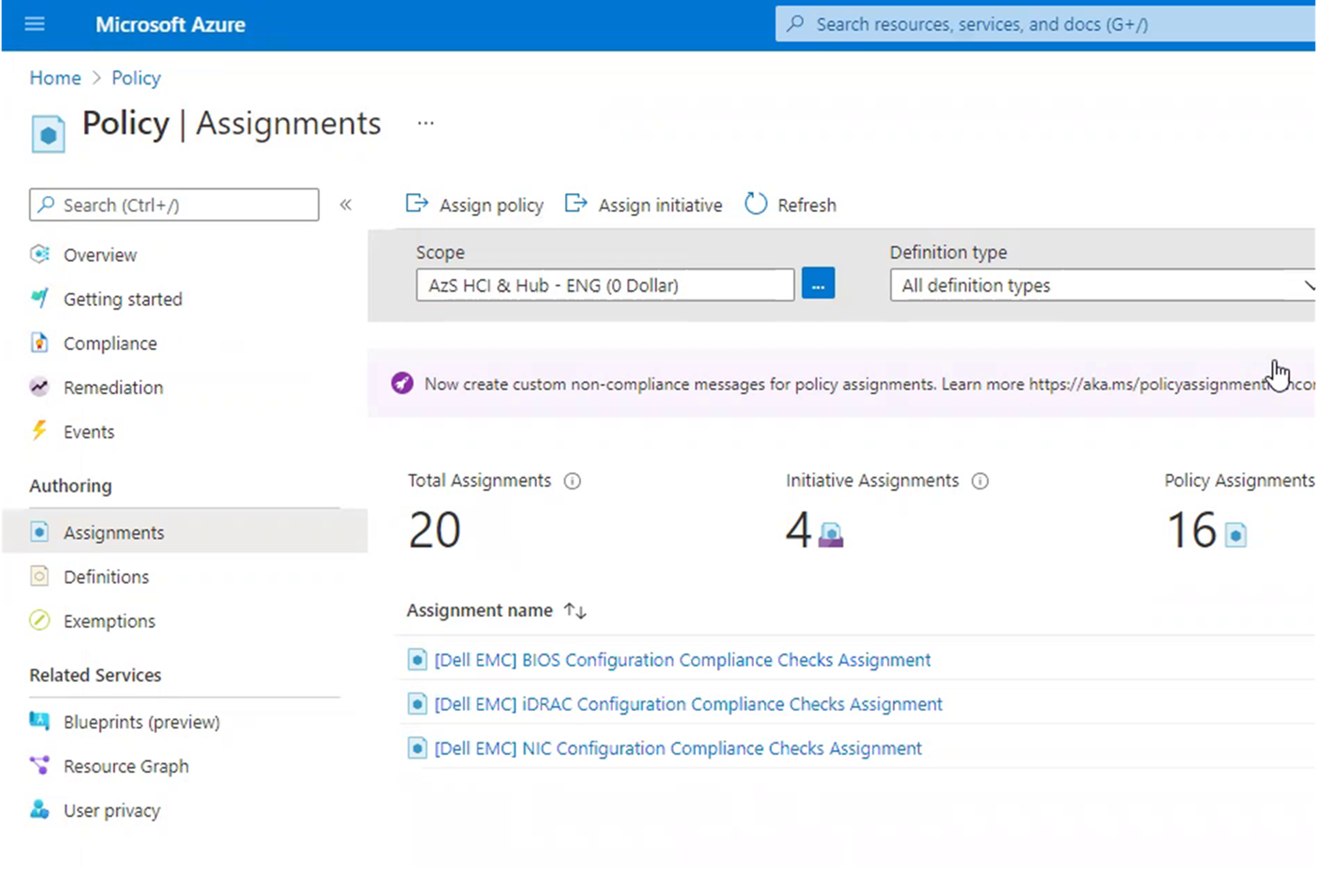

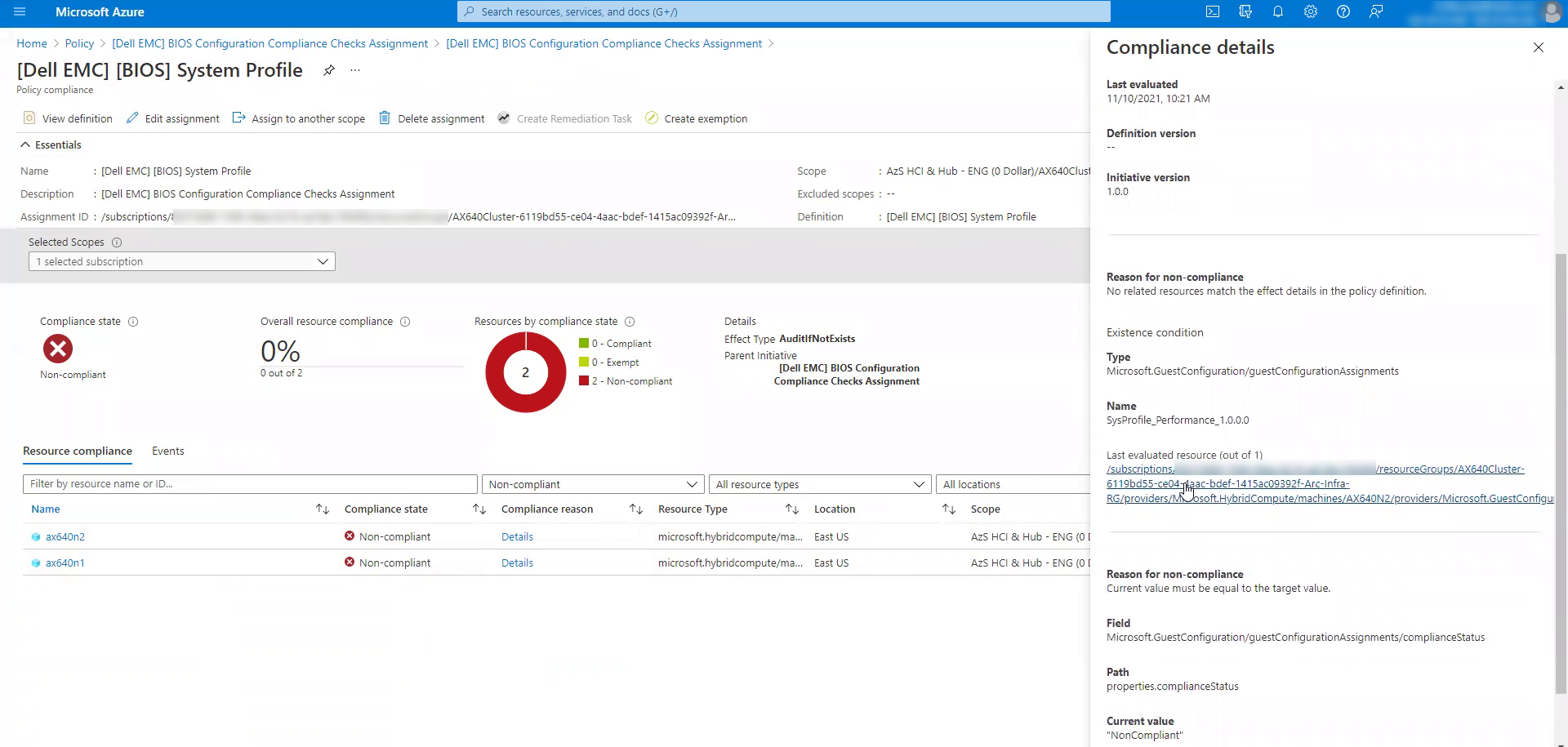

Intentionally selected AX node attributes and values targeted by our Azure Arc integration are routinely checked for compliance against pre-defined business rules. Then, compliance results are visualized in the Policy blade of the Azure portal as shown in the following screen shots.

Help prevent costly business setbacks.

This guided preview is checking select OS-level, cluster-level, BIOS, iDRAC, and network adapter attributes that optimize Azure Stack HCI. If an unapproved change to these attribute values goes undetected, the integrated system may experience degradation to performance, availability, and security. The abnormal behavior of the system may not be readily traced back to the modified OS and hardware setting – delaying Mean Time to Repair (MTTR). The longer the incident takes to resolve, the greater the consequences to your business in the form of decreased productivity, lost revenue, or tarnished reputation.

Ready for your sneak peek?

Here are just some of the preview benefits in store:

- Playing with the newest toys in your own sandbox and directly with the engineers creating the solution

- Helping to make a cutting-edge technology even better with a vendor who is listening and responding to your feedback

- Achieving superhero status at your business by automating routine administrative tasks that strengthen infrastructure integrity and improve operational efficiency

Availability is limited for this guided preview. To claim your spot, please contact your account manager right away. They will coordinate with the internal teams at Dell Technologies and schedule further conversations with you. A professional services engagement is required to install the Azure Arc integration during the preview phase. We will work together to prepare the Azure artifacts and run the required scripts. Over time, Dell Technologies intends to expand this compliance visibility to a much larger set of attributes in an extensible, user-friendly framework.

I hope you’re as excited as I am to deliver this configuration and policy compliance visibility using Azure Arc to Dell Integrated System for Microsoft Azure Stack HCI. The technical previews that I’ve been a part of have been some of the most memorable and rewarding experiences of my career. An unexpected upgrade to business class is nice but contributing to the success of a technology that will help my industry peers for years to come? Priceless.

Author: Michael Lamia

Twitter: @Evolving_Techie

LinkedIn: https://www.linkedin.com/in/michaellamia/

Azure Stack HCI automated and consistent protection through Secured-core and Infrastructure lock

Mon, 21 Feb 2022 17:45:58 -0000


Global damages related to cybercrime were predicted to reach USD 6 trillion in 2021! This staggering number highlights the very real security threat faced not only by big companies, but also by small and medium businesses across all industries.

Cyber attacks are becoming more sophisticated every day and the attack surface is constantly increasing, now even including the firmware and BIOS on servers.

Figure 1: Cybercrime figures for 2021

However, this isn’t all bad news, as there are now two new technologies (and some secret sauce) that we can leverage to proactively defend against unauthorized access and attacks to our Azure Stack HCI environments, namely:

- Secured-core Server

- Infrastructure lock

Let’s briefly discuss each of them.

Secured-core is a set of Microsoft security features that leverage the latest security advances in Intel and AMD hardware. It is based on the following three pillars:

- Hardware root-of-trust: requires TPM 2.0 v3 and verifies that firmware is validly signed at boot time to prevent tamper attacks

- Firmware protection: uses Dynamic Root of Trust for Measurement (DRTM) technology to isolate the firmware and limit the impact of vulnerabilities

- Virtualization-based security (VBS): in conjunction with hypervisor-protected code integrity (HVCI), VBS provides granular isolation of privileged parts of the OS (like the kernel) to prevent attacks and exfiltration of data

Infrastructure lock provides robust protection against unauthorized access to resources and data by preventing unintended changes to both hardware configuration and firmware updates.

When the infrastructure is locked, any attempt to change the system configuration is blocked and an error message is displayed.

Now that we understand what these technologies provide, one might have a few more questions, such as:

- How do I install these technologies?

- Is it easy to deploy and configure?

- Does it require a lot of manual (and perhaps error-prone) human interaction?

In short, deploying these technologies is not an easy task unless you have the right set of tools in place.

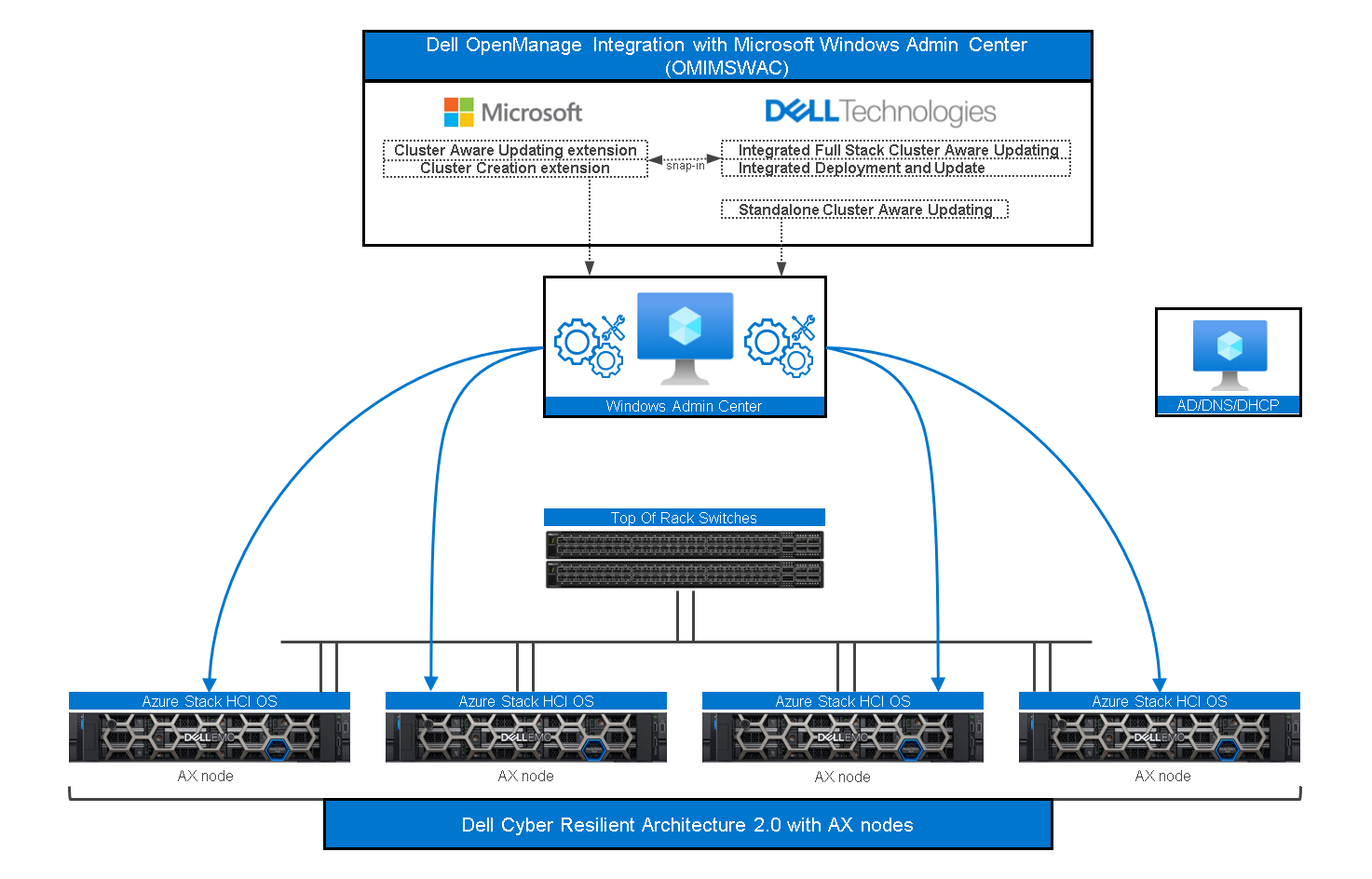

This is when you’ll need the “secret sauce”— which is the Dell OpenManage Integration with Microsoft Windows Admin Center (OMIMSWAC) on top of our certified Dell Cyber-resilient Architecture, as illustrated in the following figure:

Figure 2: OMIMSWAC and Dell Cyber-resilient Architecture with AX Nodes

As a quick reminder, Windows Admin Center (WAC) is Microsoft’s single pane of glass for all Windows management related tasks.

Dell OMIMSWAC extensions make WAC even better by providing additional controls and management possibilities for certain features, such as Secured-core and Infrastructure lock.

Dell Cyber Resilient Architecture 2.0 safeguards customers’ data and intellectual property with a robust, layered approach.

Since a picture is worth a thousand words, the next section will show you what WAC extensions look like and how easy and intuitive they are to play with.

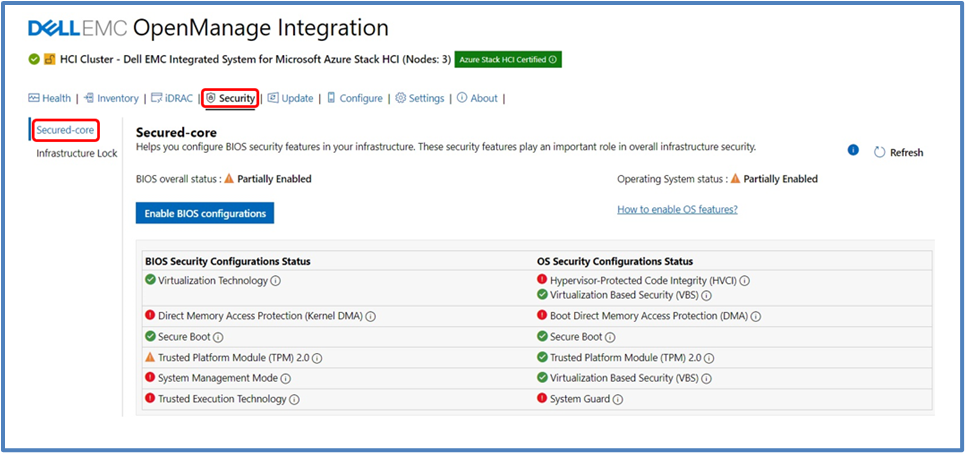

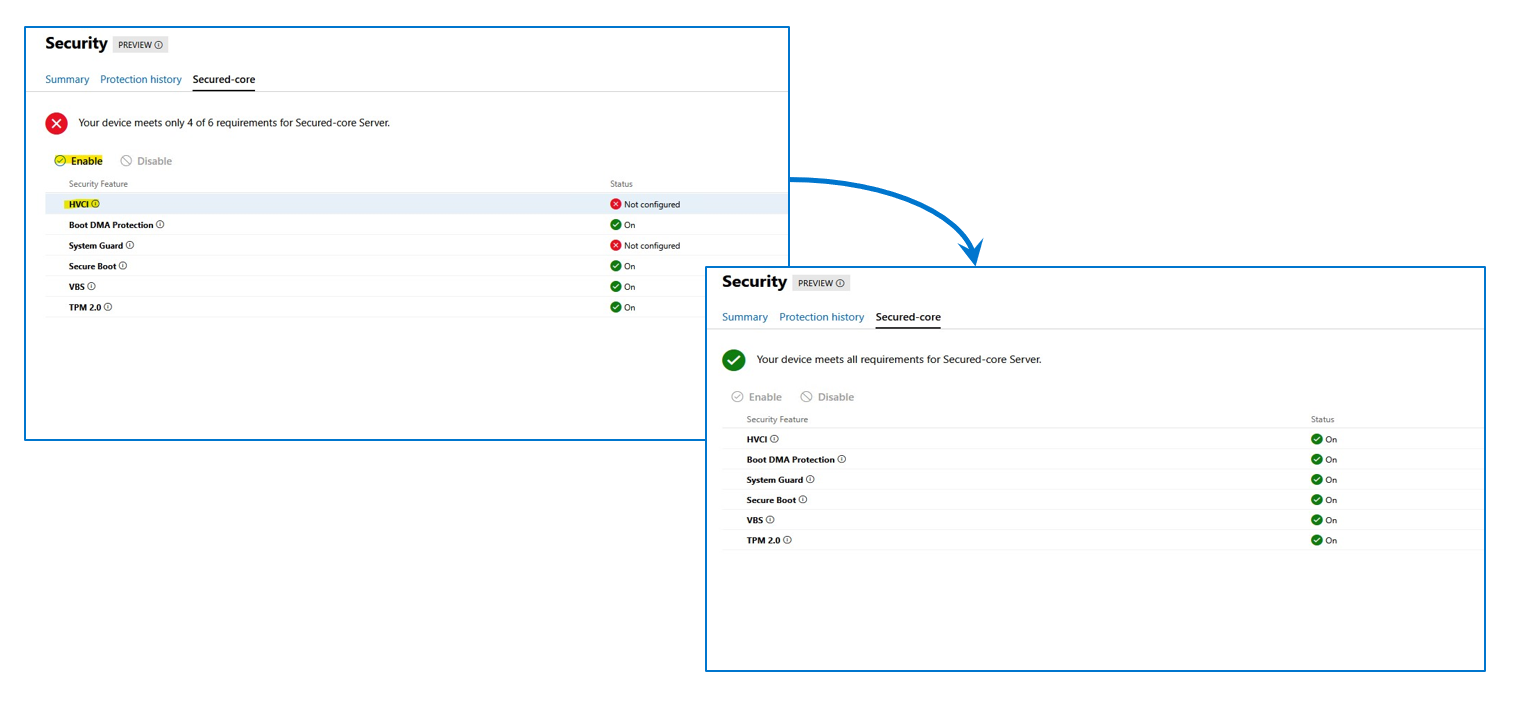

Dell OMIMSWAC Secured-core

The following figure shows our Secured-core snap-in integration inside the WAC security blade and workflow.

Figure 3: OMIMSWAC Secured-core view

The OS Security Configuration Status and the BIOS Security Configuration Status are displayed. The BIOS Security Configuration Status is where we can set the Secured-core required BIOS settings for the entire cluster.

OS Secured-core settings are visible but cannot be altered using OMIMSWAC (you would directly use WAC for it). You can also view and manage BIOS settings for each node individually.

Figure 4: OMIMSWAC Secured-core, node view

Prior to enabling Secured-core, the cluster nodes must be updated to Azure Stack HCI, version 21H2 (or newer). For AMD Servers, the DRTM boot driver (part of the AMD Chipset driver package) must be installed.

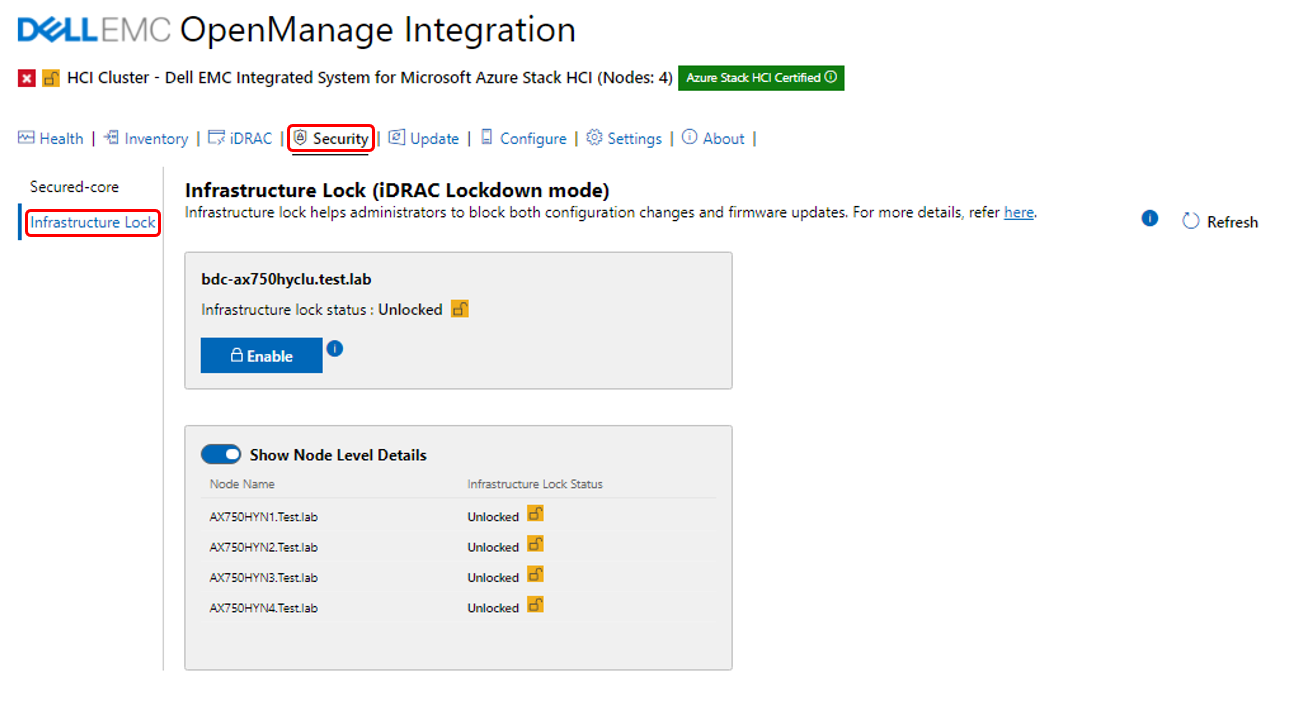

Dell OMIMSWAC Infrastructure lock

The following figure illustrates the Infrastructure lock snap-in integration inside the WAC security blade and workflow. Here we can enable or disable Infrastructure lock to prevent unintended changes to both hardware configuration and firmware updates.

Figure 5: OMIMSWAC Infrastructure lock

Enabling Infrastructure lock also blocks the server or cluster firmware update process that uses the OpenManage Integration extension tool. If you run a Cluster-Aware Updating (CAU) operation while Infrastructure lock is enabled, a compliance report is generated and the cluster updates are blocked. If this occurs, you will have the option to temporarily disable Infrastructure lock and have it automatically re-enabled when the CAU is complete.
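The "temporarily disable, then automatically re-enable" behavior follows a familiar pattern: release a guard for the duration of an operation and restore it afterward, even if the operation fails. The Python sketch below models that pattern with a context manager. The `Cluster` class and its methods are hypothetical stand-ins for this example, not a Dell or Microsoft API.

```python
from contextlib import contextmanager

class Cluster:
    """Hypothetical stand-in for a cluster; not a Dell or Microsoft API."""

    def __init__(self):
        self.infrastructure_lock = True
        self.firmware_version = "1.0"

    def apply_update(self, version):
        # Updates are refused while the lock is enabled.
        if self.infrastructure_lock:
            raise PermissionError("Infrastructure lock is enabled; update blocked")
        self.firmware_version = version

@contextmanager
def lock_temporarily_disabled(cluster):
    """Disable the lock for the update window, then restore its prior state."""
    previous = cluster.infrastructure_lock
    cluster.infrastructure_lock = False
    try:
        yield cluster
    finally:
        # Runs on success and on failure, so the lock is always restored.
        cluster.infrastructure_lock = previous

cluster = Cluster()

try:
    cluster.apply_update("2.0")
    blocked = False
except PermissionError:
    blocked = True

with lock_temporarily_disabled(cluster):
    cluster.apply_update("2.0")

print(blocked, cluster.firmware_version, cluster.infrastructure_lock)
```

Restoring the lock in a `finally` clause is the design choice that matters here: the protection returns whether or not the update succeeds, so a failed maintenance window does not leave the cluster unlocked.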

Conclusion

Dell understands the importance of the new security features introduced by Microsoft and has developed a programmatic approach, through OMIMSWAC and Dell’s Cyber-resilient Architecture, to consistently deliver and control these new features in each node and cluster. These features allow customers to always be secure and compliant on Azure Stack HCI environments.

Stay tuned for more updates (soon) on the compliance front. Thank you for reading this far!

Author Information

Ignacio Borrero, Senior Principal Engineer, Technical Marketing

Twitter: @virtualpeli

References

2020 Verizon Data Breach Investigations Report

2019 Accenture Cost of Cybercrime Study

Global Ransomware Damage Costs Predicted To Reach $20 Billion (USD) By 2021

Cybercrime To Cost The World $10.5 Trillion Annually By 2025

The global cost of cybercrime per minute to reach $11.4 million by 2021

Experts Recommend Automation for a Healthier Lifestyle

Wed, 20 Oct 2021 19:59:25 -0000


Like any good techie, I can get a little obsessed with gadgets that improve my quality of life. Take, for example, my recent discovery of wearable technology that eases the symptoms of motion sickness. For most of my life, I’ve had to take over-the-counter or prescription medicine when boating, flying, and going on road trips. Then, I stumbled across a device that I could wear around my wrist that promised to solve the problem without the side effects. Hesitantly, I bought the device and asked a friend to drive like a maniac around town while I sat in the back seat. It actually worked – no headache, no nausea, and no grogginess from meds! Needless to say, I never leave home without my trusty gizmo to keep motion sickness at bay.

Throughout my career in managing IT infrastructure, stress has affected my quality of life almost as much as motion sickness. There is one responsibility that has always caused more angst than anything else: lifecycle management (LCM). To narrow that down a bit, I’m specifically talking about patching and updating IT systems under my control. I have sometimes been derelict in my duties because of annoying manual steps that distract me from working on the fun, highly visible projects. It’s these manual steps that can cause the dreaded DU/DL (data unavailable or data loss) to rear its ugly head. Can you say insomnia?

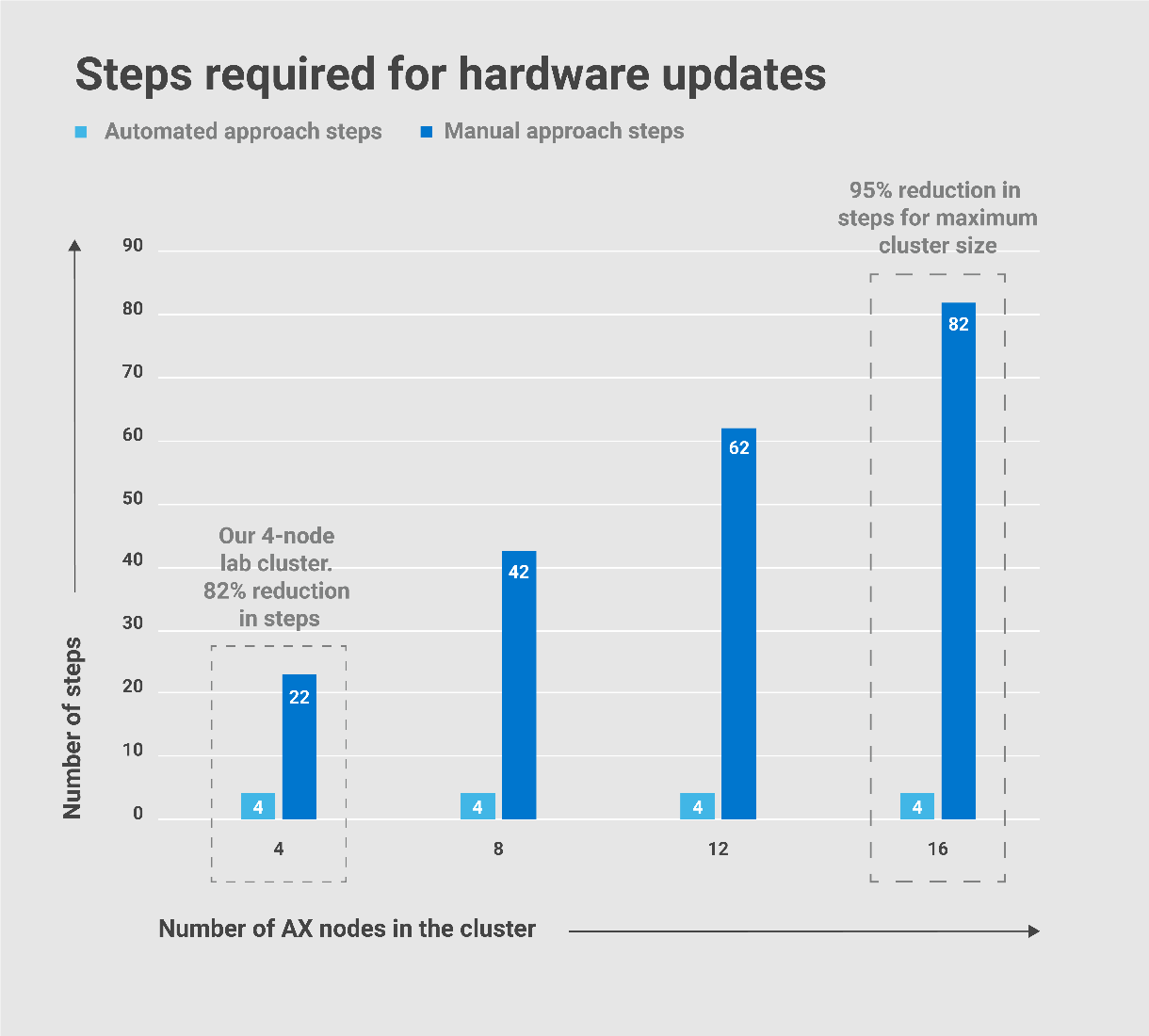

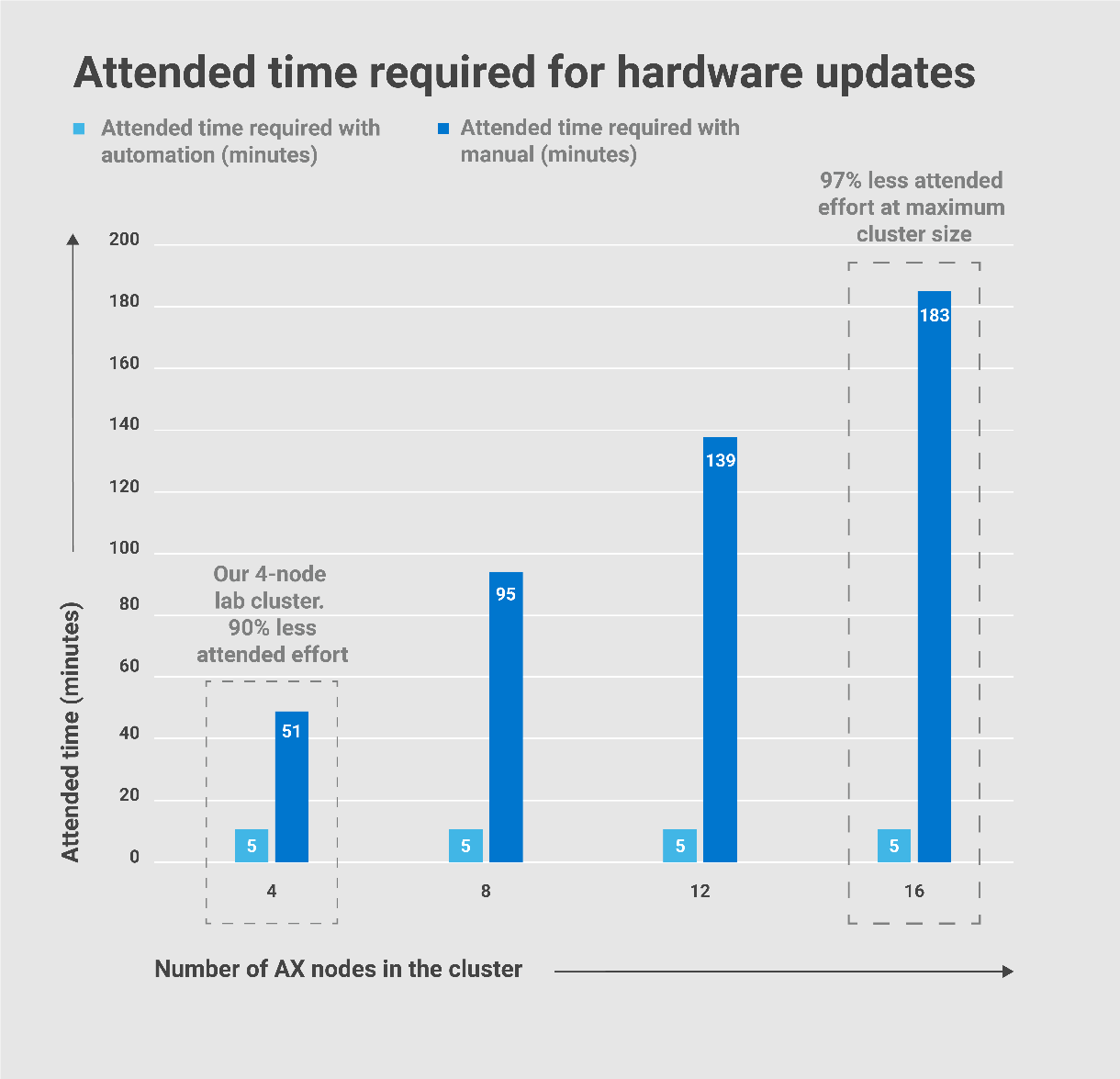

Innovative technology to the rescue once again! While creating a demo video last year for our Dell EMC OpenManage Integration with Microsoft Windows Admin Center (OMIMSWAC), I was blown away by how easy we made the BIOS, firmware, and driver updates on clusters. The video did a pretty good job of showing the power of the Cluster-Aware Updating (CAU) feature, but it didn’t go far enough. I needed to quantify its full potential to change an IT professional’s life by pitting OMIMSWAC’s automated CAU approach against a manual, node-based approach. I captured the results of the bake off in Dell EMC HCI Solutions for Microsoft Windows Server: Lifecycle Management Approach Comparison.

Automation Triumphs!

For this white paper to really stand the test of time, I knew I needed to be very clever to compare apples-to-apples. First, I referred to HCI Operations Guide—Managing and Monitoring the Solution Infrastructure Life Cycle, which detailed the hardware updating procedures for both the CAU and node-based approaches. Then, I built a 4-node Dell EMC HCI Solutions for Windows Server 2019 cluster, performed both update scenarios, and recorded the task durations. We all know that automation is king, but I didn’t expect the final tally to be quite this good:

- The automated approach reduced the number of steps in the process by 82%.

- The automated approach required 90% less of my focused attention. In other words, I was able to attend to other duties while the updates were installing.

- If I were in a production environment, the maintenance window approved by the change control board would have been cut in half.

- The automated process left almost no opportunity for human error.

As you can see from the following charts taken from the paper, these numbers only improved as I extrapolated them out to the maximum Windows Server HCI cluster size of 16 nodes.

I thought these results were too good to be true, so I checked my steps about 10 times. In fact, I even debated with my Marketing and Product Management counterparts about sharing these claims with the public! I could hear our customers saying, “Oh, yeah, right! These are just marketecture hero numbers.” But in this case, I collected the hard data myself. I am still confident that these results will stand up to any scrutiny. This is reality – not dreamland!

Just when I thought it couldn’t get any better

So why am I blogging about a project I did last year? Just when I thought the testing results in the white paper couldn’t possibly get any better, Dell EMC Integrated System for Microsoft Azure Stack HCI came along. Azure Stack HCI is Microsoft’s purpose-built operating system delivered as an Azure service. The current release when writing this blog was Azure Stack HCI, version 20H2. Our Solution Brief provides a great overview of our all-in-one validated HCI system, which delivers efficient operations, flexible consumption models, and end-to-end enterprise support and services. But what I’m most excited about are two lifecycle management enhancements – 1-click full stack LCM and Kernel Soft Reboot – that will put an end to the old adage, “If it looks too good to be true, it probably is.”

Let’s invite OS updates to the party

OMIMSWAC was at version 1.1 when I did my testing last year. In that version, the CAU feature focused on the hardware – BIOS, firmware, and drivers. In OMIMSWAC v2.0, we developed an exclusive snap-in to Microsoft’s Failover Cluster Tool Extension to create 1-click full stack LCM. This feature is available only for clusters running Azure Stack HCI. A simple workflow in Windows Admin Center automates not only the hardware updates but also the operating system updates. How do I see this feature lowering my blood pressure?

- Applying the OS and hardware updates can typically require multiple server reboots. With 1-click full stack LCM, reboots are delayed until all updates are installed. A single reboot per node in the cluster results in greater time savings and shorter maintenance windows.

- I won’t have to use multiple tools to patch different aspects of my infrastructure. The more I can consolidate the number of management tools in my environment, the better.

- A simple, guided workflow that tightly integrates the Microsoft extension and OMIMSWAC snap-in ensures that I won’t miss any steps and provides one view to monitor update progress.

- The OMIMSWAC snap-in provides necessary node validation at the beginning of the hardware updates phase of the workflow. These checks verify that my cluster is running validated AX nodes from Dell Technologies and that all the nodes are homogeneous. This gives me peace of mind knowing that my updates will be applied successfully. I can also rest assured that there will be no interruption to the workloads running in my VMs and containers since this feature leverages CAU.

- The hardware updates leverage the Microsoft HCI solution catalog from Dell Technologies. Each BIOS, firmware, and driver in this catalog is validated by our engineering team to optimize the Azure Stack HCI experience.
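The node validation described in the list above can be pictured with a small sketch. This is only an illustration of the idea (confirm that every node is a validated AX model and that all nodes match), not how the OMIMSWAC snap-in is implemented. The `Node` fields, the `AX-` prefix check, and the sample model and CPU strings are all assumptions made for the example.

```python
from dataclasses import dataclass


# Hypothetical inventory record; the field names are illustrative only
# and do not reflect the OMIMSWAC data model.
@dataclass(frozen=True)
class Node:
    name: str
    model: str
    cpu: str
    memory_gb: int


def validate_nodes(nodes, supported_prefix="AX-"):
    """Return a list of problems; an empty list means both checks passed."""
    if not nodes:
        return ["cluster has no nodes"]

    problems = []

    # Check 1: every node must be a validated model (assumed here to mean
    # that the model string starts with "AX-").
    for node in nodes:
        if not node.model.startswith(supported_prefix):
            problems.append(f"{node.name}: model {node.model} is not a validated AX node")

    # Check 2: every node must match the first node (homogeneous cluster).
    reference = nodes[0]
    for node in nodes[1:]:
        same = (node.model, node.cpu, node.memory_gb) == (
            reference.model, reference.cpu, reference.memory_gb)
        if not same:
            problems.append(f"{node.name}: configuration differs from {reference.name}")

    return problems


if __name__ == "__main__":
    cluster = [
        Node("node1", "AX-740xd", "Gold 6230R", 384),
        Node("node2", "AX-740xd", "Gold 6230R", 384),
    ]
    print(validate_nodes(cluster))  # prints []
```

Returning a list of problems instead of a single pass/fail flag mirrors what I want from any pre-check: tell me everything that is wrong before the maintenance window starts, not one issue at a time.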

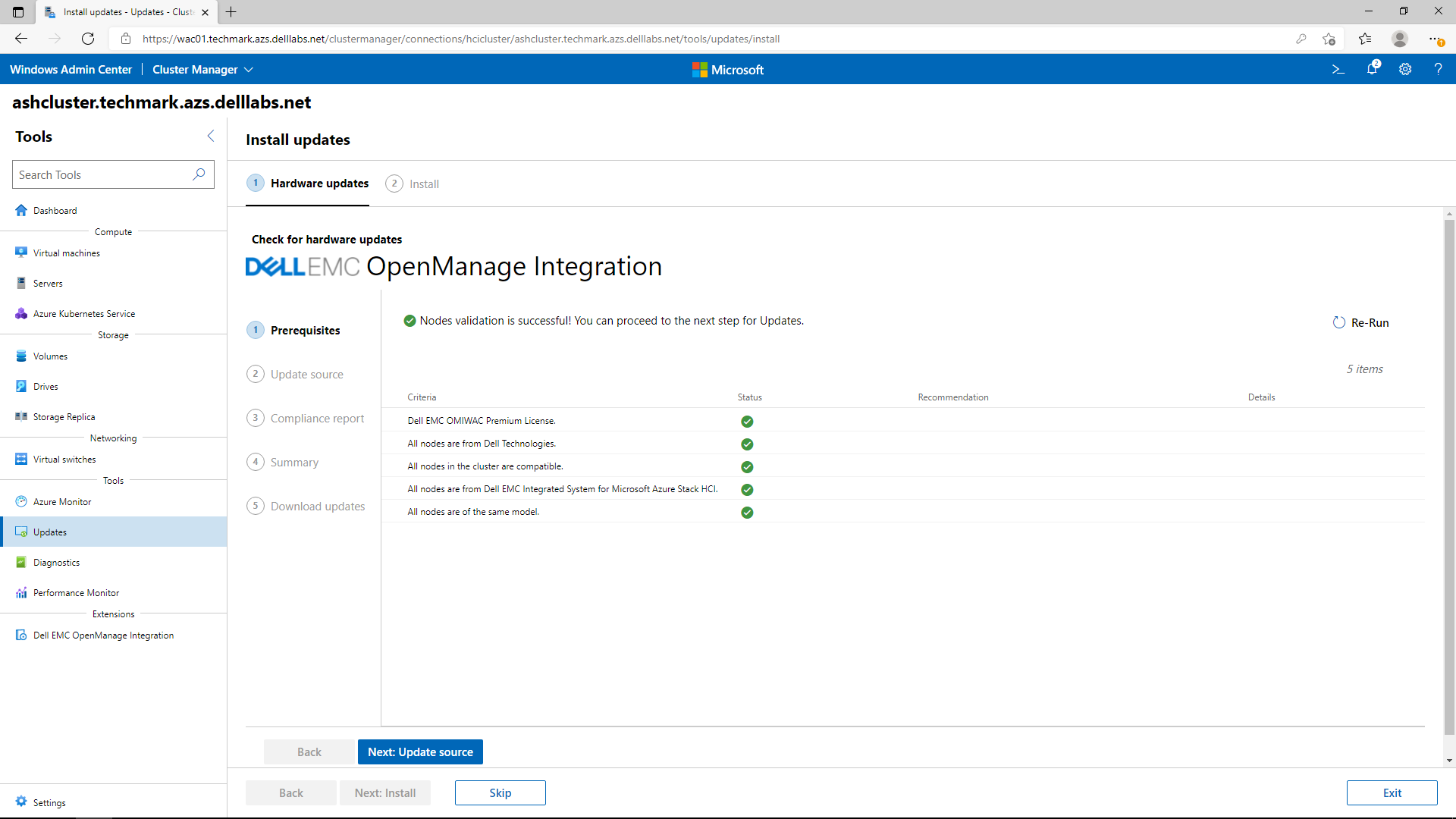

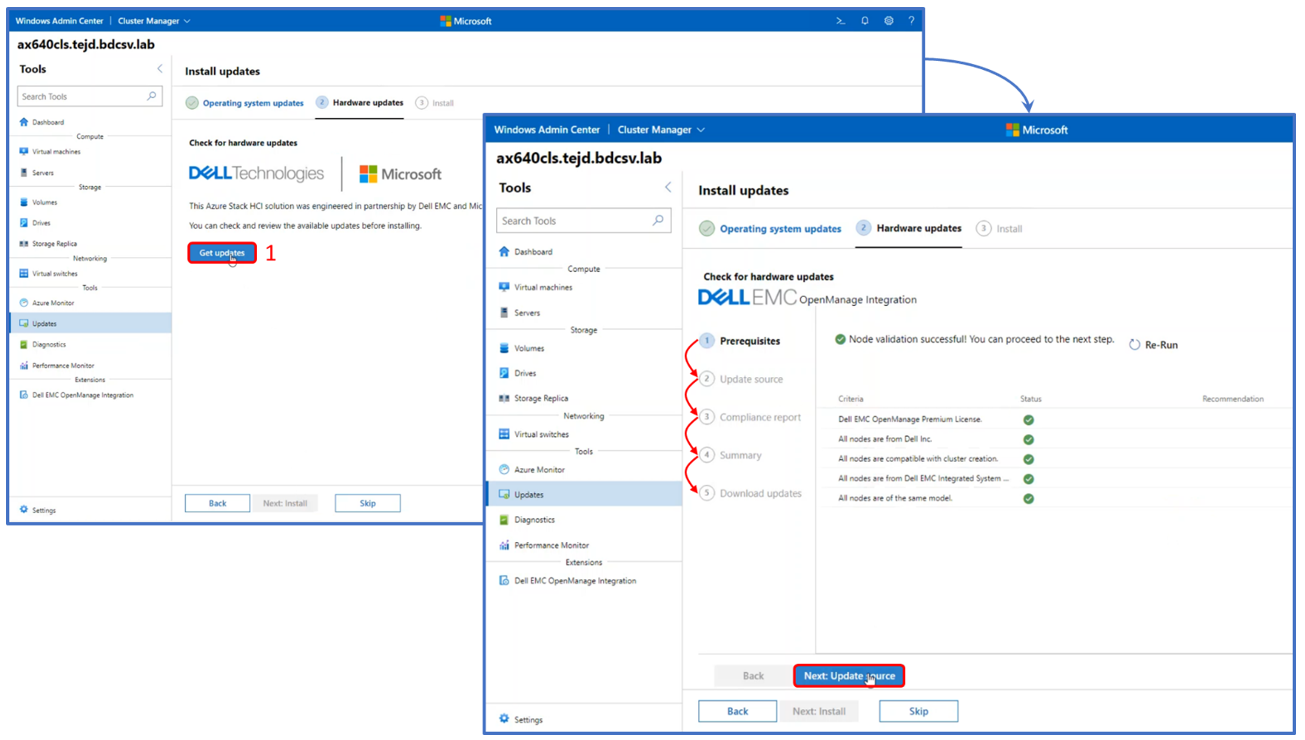

The following screen shots were taken from the full stack CAU workflow. The first step indicates which OS updates are available for the cluster nodes.

Node validation is performed first before moving forward with hardware updates.

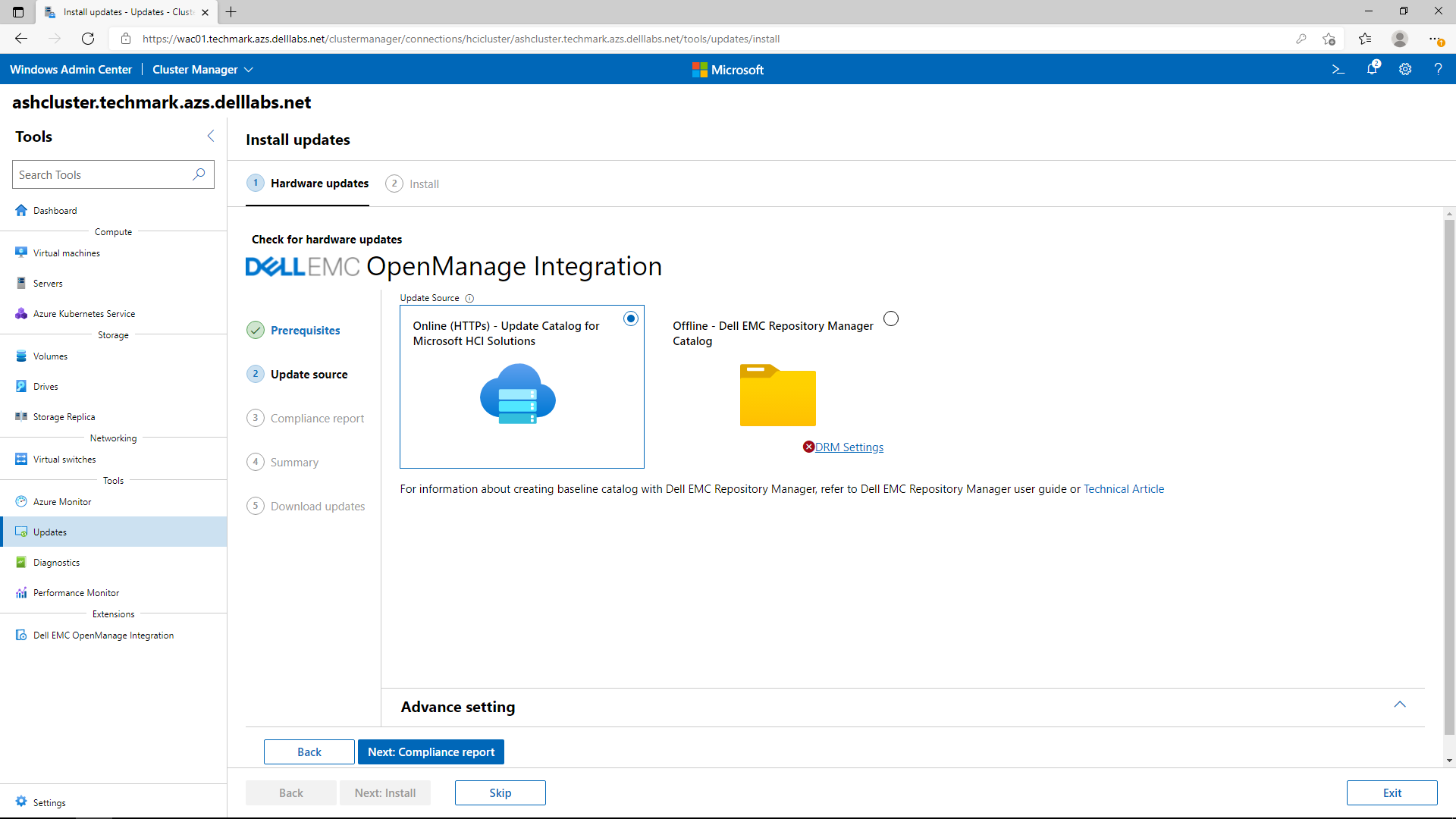

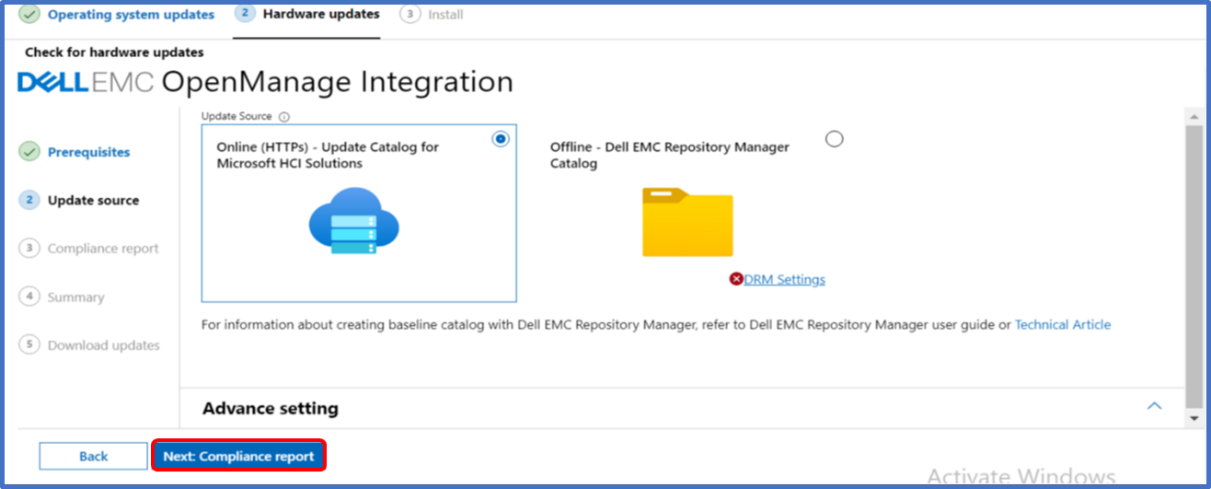

If the Windows Admin Center host is connected to the Internet, the online update source approach obtains all the systems management utilities and the engineering validated solution catalog automatically. If operating in an edge or disconnected environment, the solution catalog can be created with Dell EMC Repository Manager and placed on a file server share accessible from the cluster nodes.

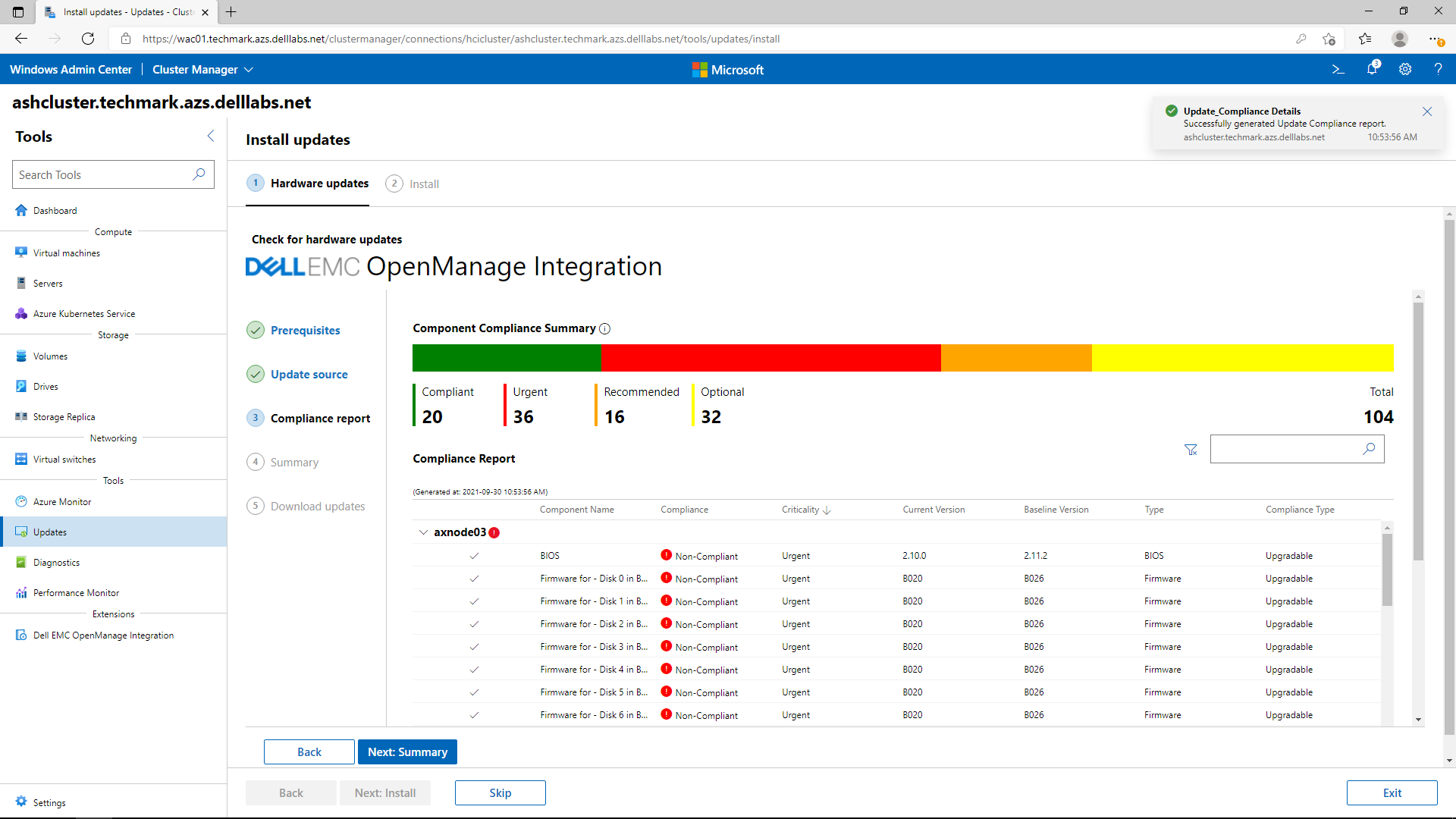

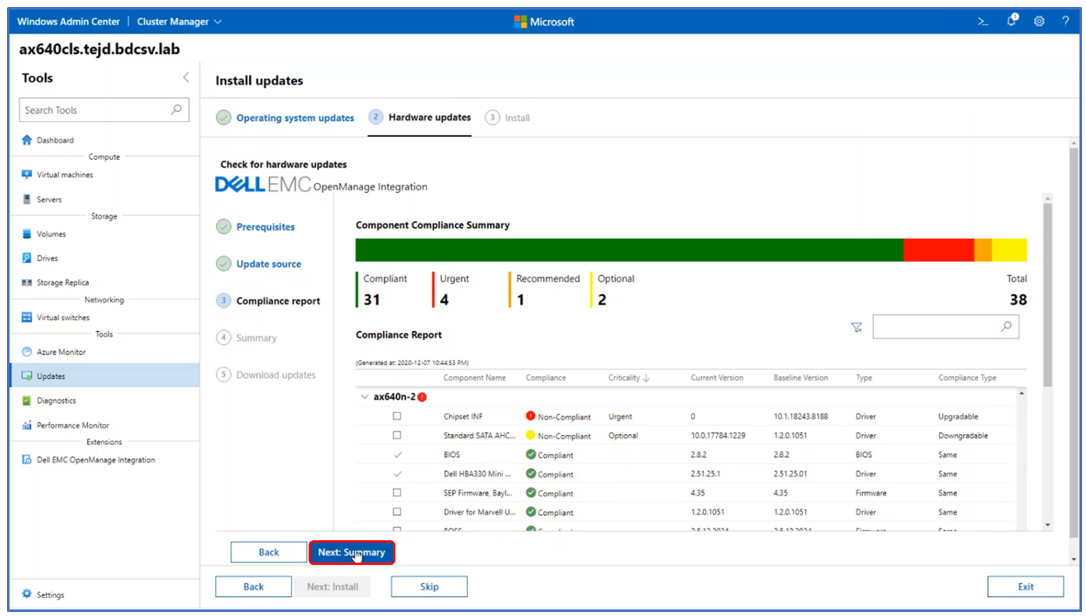

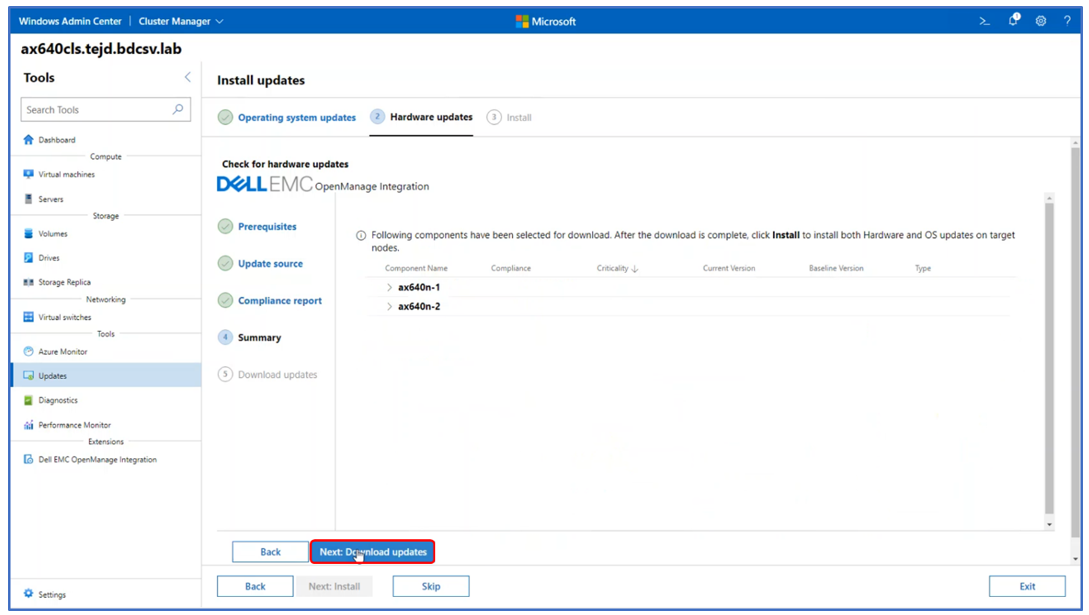

The following image shows a generated compliance report. All non-compliant components are selected by default for updating. After this point, all the OS and non-compliant hardware components will be updated together with only a single reboot per node in the cluster and with no impact to running workloads.
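For readers who prefer logic to screenshots, the idea behind a compliance report can be sketched in a few lines. This is a simplified illustration, not the OMIMSWAC implementation: the function names, the component names, and the version strings are invented, and a plain equality test stands in for whatever version comparison the real tooling performs.

```python
def build_compliance_report(installed, catalog):
    """Compare installed component versions with the catalog baseline.

    Both arguments are dicts that map a component name to a version string.
    A component is treated as compliant only when the versions are identical
    (an assumption made to keep the sketch short).
    """
    report = []
    for component, target in sorted(catalog.items()):
        current = installed.get(component)  # None if the component was not found
        compliant = current == target
        report.append({
            "component": component,
            "installed": current,
            "catalog": target,
            "compliant": compliant,
            # Non-compliant components are selected for update by default.
            "selected": not compliant,
        })
    return report


def selected_components(report):
    """Names of the components that would be updated."""
    return [row["component"] for row in report if row["selected"]]


if __name__ == "__main__":
    # Hypothetical versions, for illustration only.
    installed = {"BIOS": "2.9.4", "NIC firmware": "20.5.13"}
    catalog = {"BIOS": "2.10.0", "NIC firmware": "20.5.13"}
    print(selected_components(build_compliance_report(installed, catalog)))  # prints ['BIOS']
```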

Life is too short to wait for server reboots

Speaking of reboots, Kernel Soft Reboot (KSR) is a new feature coming in Azure Stack HCI, version 21H2, that also has the potential to make my white paper claims even more jaw-dropping. KSR will give me the ability to perform a “software-only restart” on my servers – sparing me from watching the paint dry during those long physical server reboots. Initially, the types of updates in scope will be OS quality and security hotfixes since these don’t require BIOS/firmware initialization. Dell Technologies is also working on leveraging KSR for the infrastructure updates in a future release of OMIMSWAC.

KSR will be especially beneficial when using Microsoft’s CAU extension in Windows Admin Center. The overall time savings from KSR multiply for clusters because faster restarts mean less resyncing of data after CAU resumes each cluster node. Each node should reboot at Mach speed if there are only Azure Stack HCI OS hotfixes and Dell EMC Integrated System infrastructure updates that do not require the full reboot. I will definitely be hounding my Product Managers and Engineering team to deliver KSR for infrastructure updates in our OMIMSWAC extension ASAP.
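Here is a back-of-the-envelope sketch of why fewer and faster reboots add up on a cluster. It assumes CAU works through the nodes one at a time, so per-node costs are simply summed. Every timing below is a placeholder I made up for illustration, not a measurement from my white paper or from any Dell or Microsoft test, and the third scenario assumes a future in which a soft reboot also covers infrastructure updates.

```python
def cluster_update_minutes(nodes, install_min, reboots_per_node, reboot_min, resync_min):
    """Estimate a maintenance window when nodes are updated one after another.

    Each reboot is assumed to be followed by a storage resync before the
    next node is taken down.
    """
    per_node = install_min + reboots_per_node * (reboot_min + resync_min)
    return nodes * per_node


# Placeholder timings in minutes. These are NOT measured values.
SCENARIOS = {
    "separate OS and hardware passes": dict(reboots_per_node=2, reboot_min=10, resync_min=5),
    "1-click full stack LCM": dict(reboots_per_node=1, reboot_min=10, resync_min=5),
    "full stack LCM with a soft reboot": dict(reboots_per_node=1, reboot_min=2, resync_min=2),
}


def compare(nodes, install_min=30):
    """Return the estimated window in minutes for each scenario."""
    return {
        name: cluster_update_minutes(nodes, install_min, **params)
        for name, params in SCENARIOS.items()
    }


if __name__ == "__main__":
    for name, minutes in compare(nodes=4).items():
        print(f"{name}: {minutes} minutes")
```

Because the per-node cost is multiplied by the node count, any minute shaved off a reboot or resync is saved once per node, which is why the gap between scenarios widens as the cluster grows.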

Bake off rematch

I decided to hold off on doing a new bake off until Azure Stack HCI, version 21H2 is released with KSR. I also want to wait until we bring the benefits of KSR to OMIMSWAC for infrastructure updates. The combination of OMIMSWAC 1-click full stack CAU and KSR will continue to make OMIMSWAC unbeatable for seamless lifecycle management. This means better outcomes for our organizations, improved blood pressure and quality of life for IT pros, and more motion-sickness-free adventure vacations. I’m also looking forward to spending more time learning exciting new technologies and less time with routine administrative tasks.

If you’d like to get hands-on with all the different features in OMIMSWAC, check out the Interactive Demo in Dell Technologies Demo Center. Also, check out my other white papers, blogs, and videos in the Dell Technologies Info Hub.

It’s Time to Expect Flexible Disaster Recovery

Thu, 14 Oct 2021 14:52:42 -0000


Rigid and complex disaster recovery (DR) can be a thing of the past with Dell EMC Integrated System for Microsoft Azure Stack HCI.

When data is currency, DR is non-negotiable

If your organization is like many others—of any size—it relies increasingly on data to thrive. This is particularly true for businesses that are on track to modernize their infrastructure and application architectures. For those organizations, data and the workloads that process it are truly the lifeblood of the business.

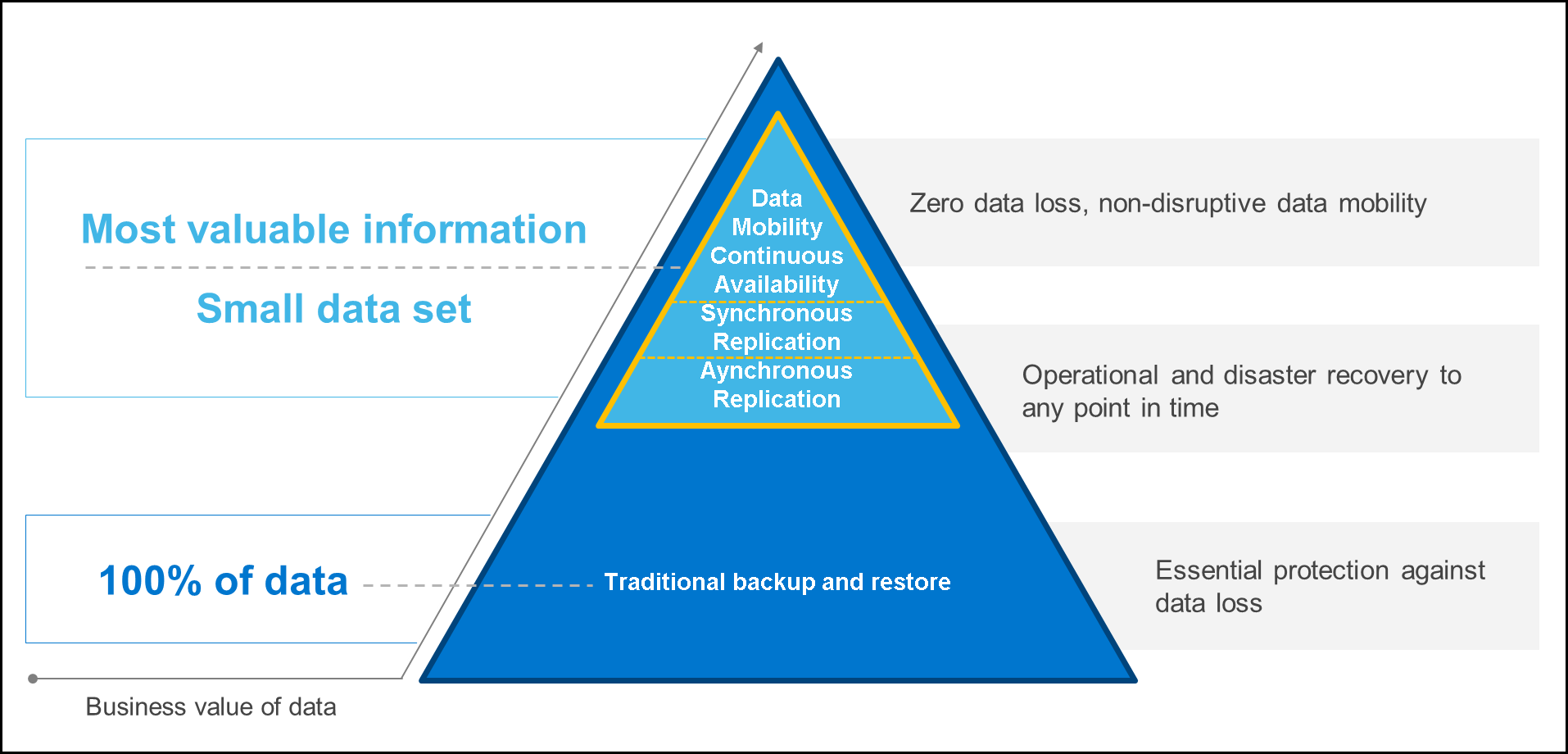

When business relies on data to function, recovery-point objectives (RPOs) and recovery-time objectives (RTOs) must be as low as possible. However, legacy disaster recovery (DR) solutions are complex to design and maintain, and they might require manual intervention during a DR scenario. These solutions can also be costly, especially if you must maintain a dedicated DR site. That’s why a flexible and performant DR solution is a crucial part of infrastructure modernization.

Stretch clustering could be the answer

Today, enterprise organizations are consolidating, refreshing, and modernizing their aging virtualization platforms with hyperconverged infrastructure (HCI). HCI architectures help customers achieve a highly automated and orchestrated cloud-operations experience. The architectures are designed to deliver high levels of performance and scalability with software-defined compute, storage and networking. HCI solutions are also designed to simplify the implementation of high availability and DR for workloads running in virtual machines (VMs) and containers.

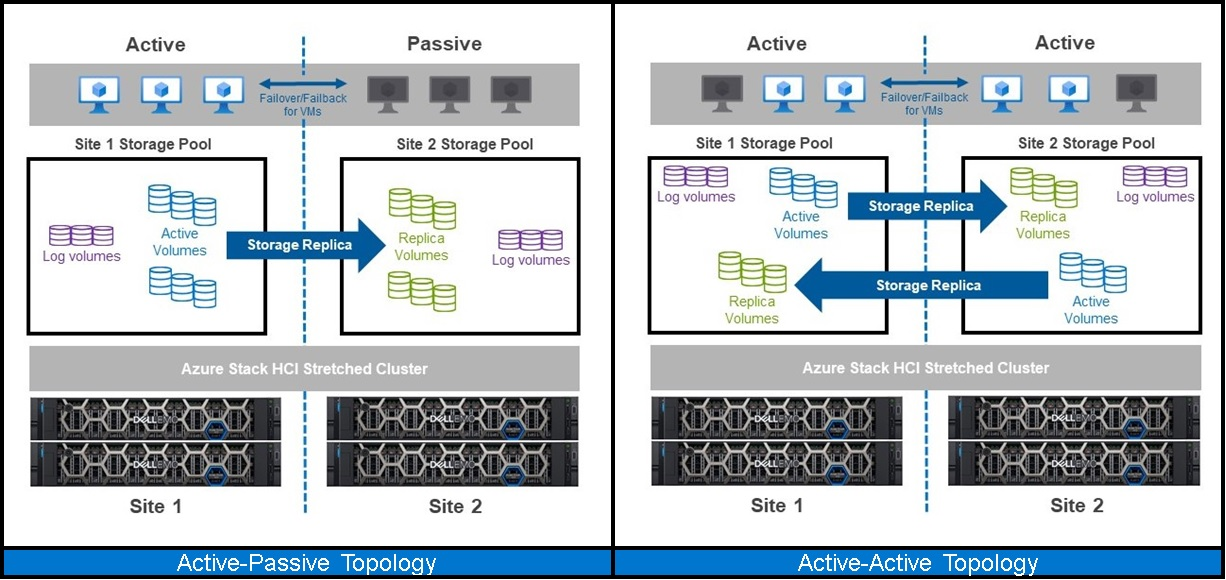

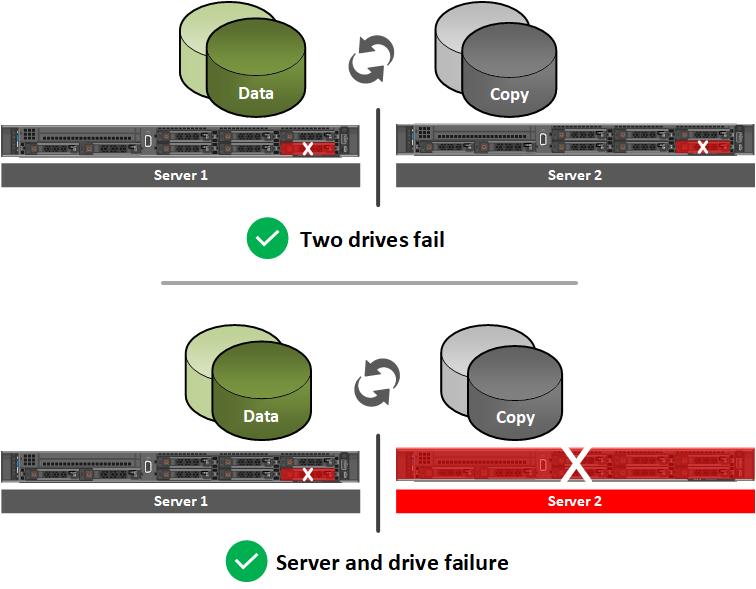

What if you could stretch a single HCI cluster across two locations as a DR solution? That would simplify and accelerate DR. Such a solution is now within reach using Microsoft Azure Stack HCI, version 20H2 or later. Azure Stack HCI includes built-in stretch clustering capabilities, which use Storage Replica for volume replication. Stretch clustering allows organizations to split a single HCI cluster across two locations, whether they be rooms, buildings, cities or regions. It provides automatic failover of Microsoft Hyper-V VMs if a site failure occurs.
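The failover behavior described above can be pictured with a deliberately simplified sketch: prefer a healthy node in the same site, and move to the other site only when the local site has no healthy node left. This is an illustration of the concept, not the actual failover clustering placement logic, and the node names, site names, and dictionary layout are invented for the example.

```python
def pick_failover_node(failed_node, nodes):
    """Choose where the VMs from a failed node should restart.

    `nodes` maps a node name to {"site": str, "up": bool}.
    Returns a node name, or None if no healthy node remains.
    """
    site = nodes[failed_node]["site"]
    healthy = [name for name, info in nodes.items()
               if info["up"] and name != failed_node]

    # First choice: a healthy node in the same site as the failed node.
    same_site = sorted(name for name in healthy if nodes[name]["site"] == site)
    if same_site:
        return same_site[0]

    # Otherwise fall back to any healthy node in the other site.
    return sorted(healthy)[0] if healthy else None


if __name__ == "__main__":
    cluster = {
        "site1-node1": {"site": "site1", "up": False},  # failed node
        "site1-node2": {"site": "site1", "up": True},
        "site2-node1": {"site": "site2", "up": True},
        "site2-node2": {"site": "site2", "up": True},
    }
    print(pick_failover_node("site1-node1", cluster))  # prints site1-node2
```

If `site1-node2` were also marked as down, the same call would return `site2-node1`, which corresponds to the site-failure case.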

In general, stretch clustering on Azure Stack HCI is an ideal DR solution for scenarios like these:

- Introducing automatic failover with orchestration for recovery of a web-based application’s front-end server tier after a disaster at a hosting location

- Distributing primary and secondary instances of an infrastructure’s core services, such as Microsoft Active Directory, across two physical locations

- Hosting applications with lower write input/output (I/O) performance characteristics

- Running file-system-based services and other business services that can tolerate being hosted on crash-consistent volumes

- Running database workloads such as Microsoft SQL Server, with one caveat: these workloads often cannot sustain the loss of even a single transaction, so application-layer recoverability solutions such as SQL Always On availability groups might be more appropriate for them

Putting the solution to the test

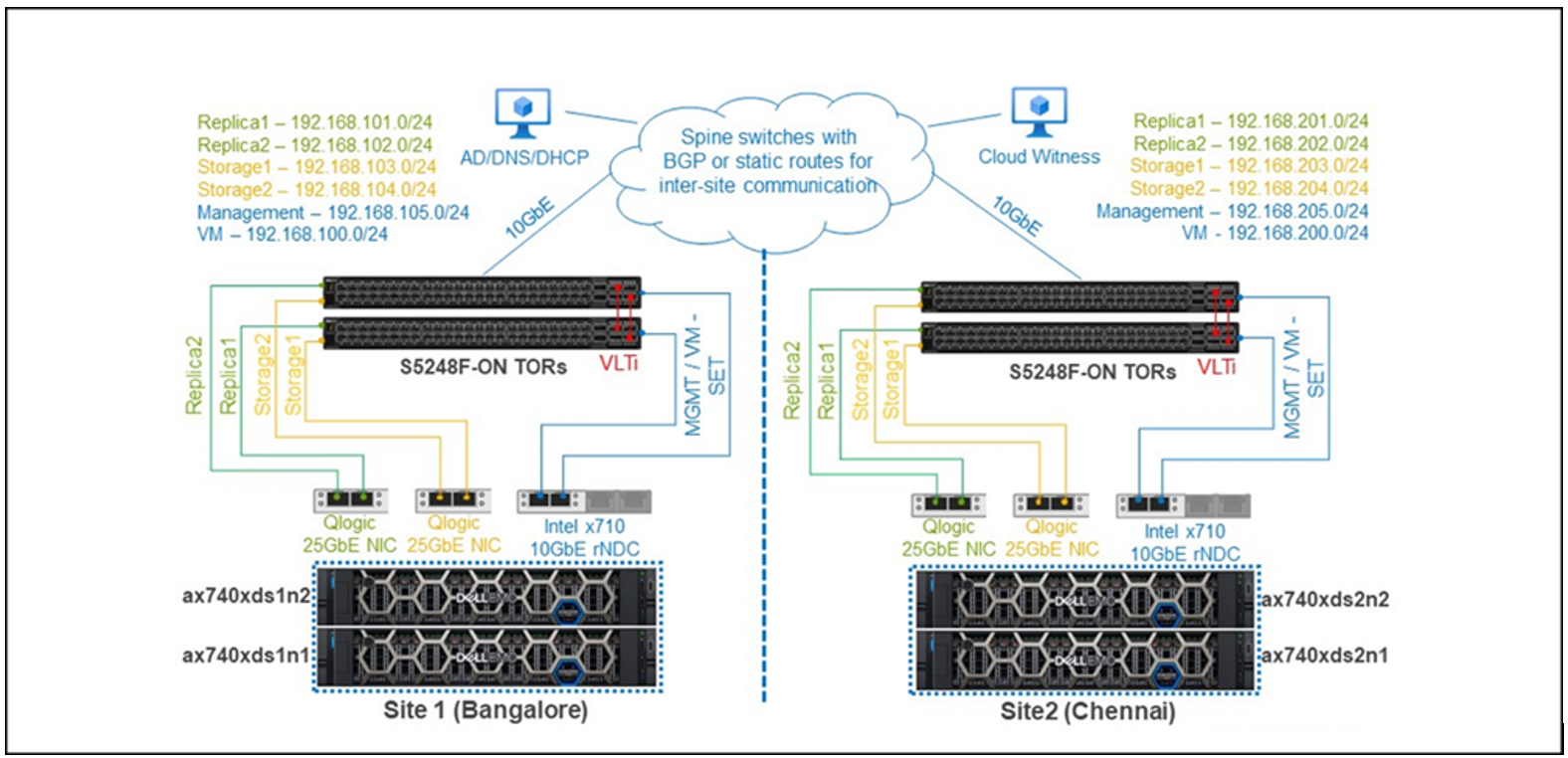

Dell Technologies engineers conducted proof-of-concept (PoC) tests to show how Dell EMC Integrated System for Azure Stack HCI with stretch clustering can handle VM and volume placement. We also wanted to observe the impact on a real running application (Dell EMC OpenManage Enterprise) during failover scenarios. Each of the four nodes (two per site) in our testing environment included two Intel® Xeon® Gold 6230R processors and 384 GB of memory, running Azure Stack HCI, version 20H2.

We tested the following scenarios and observed the outcomes listed. For full details, read the white paper, Adding Flexibility to DR Plans with Stretch Clustering for Azure Stack HCI.

- Unplanned cluster-node failure: All VMs fully restarted on the second node at the same site in about 5 minutes.

- Unplanned site failure: Affected VMs moved and came fully back online in 15–20 minutes.

- Planned site failover: The OpenManage Enterprise application was reachable from the client device within 3 minutes of the live migration to site 2.

- Lifecycle management: Applying the BIOS, firmware and driver updates to the stretched cluster took approximately three hours, and the process had no impact on the Dell EMC OpenManage Enterprise (OME) application.
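One way to use these observations is to compare them with your own recovery-time objectives. The sketch below is a minimal example of that comparison. The observed minutes come from the results listed above (using the upper end of the 15–20 minute range), while the RTO targets are hypothetical values that you would replace with your own.

```python
# Recovery times in minutes, taken from the PoC results listed above.
# The site-failure entry uses the upper end of the reported 15-20 minute range.
OBSERVED_MINUTES = {
    "unplanned node failure": 5,
    "unplanned site failure": 20,
    "planned site failover": 3,
}


def rto_gaps(observed, rto_targets):
    """Return {scenario: minutes over target} for scenarios that miss their RTO.

    Scenarios without a target are skipped.
    """
    return {
        scenario: observed[scenario] - rto_targets[scenario]
        for scenario in observed
        if scenario in rto_targets and observed[scenario] > rto_targets[scenario]
    }


if __name__ == "__main__":
    # Hypothetical RTO targets in minutes; substitute your organization's values.
    targets = {
        "unplanned node failure": 10,
        "unplanned site failure": 15,
        "planned site failover": 5,
    }
    print(rto_gaps(OBSERVED_MINUTES, targets))  # prints {'unplanned site failure': 5}
```

An empty result means every observed recovery time fits within its target. Keep in mind that your own timings will depend on your hardware, network, and workloads.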

An accelerated path to simple DR

Dell Technologies offers a broad portfolio of solution configurations designed to meet the requirements of any workload. The solution for DR built on Dell EMC Integrated System for Azure Stack HCI features intelligently designed configurations of AX nodes from Dell Technologies. Dell engineers validate every component of these configurations, including firmware and driver versions. Additionally, Dell ProSupport technicians know the entire solution, from hardware to operating system to Microsoft Storage Spaces Direct to networking. They can help keep the cluster operating at peak performance and availability.

To see the full details of our tests and to learn more about the stretch clustering capability in Azure Stack HCI, read the white paper, Adding Flexibility to DR Plans with Stretch Clustering for Azure Stack HCI.

Virtualize Demanding Applications with a Dell EMC Integrated System for Microsoft Azure Stack HCI

Thu, 14 Oct 2021 14:52:42 -0000


Break through performance barriers

If your organization is on the road to infrastructure modernization, chances are good that your underlying legacy virtualization clusters are being stretched to their limits. This could mean suboptimal performance and resiliency, which can make it difficult to scale clusters and meet service-level agreements (SLAs).

In addition, overtaxed and aging clusters might not meet the performance requirements of applications that you would like to virtualize. Leaving those applications unvirtualized can mean a larger data center footprint and higher corresponding power and cooling costs.

If you’re thinking about refreshing and modernizing your legacy virtualization environments, you might want to consider a Dell EMC Integrated System for Microsoft Azure Stack HCI.

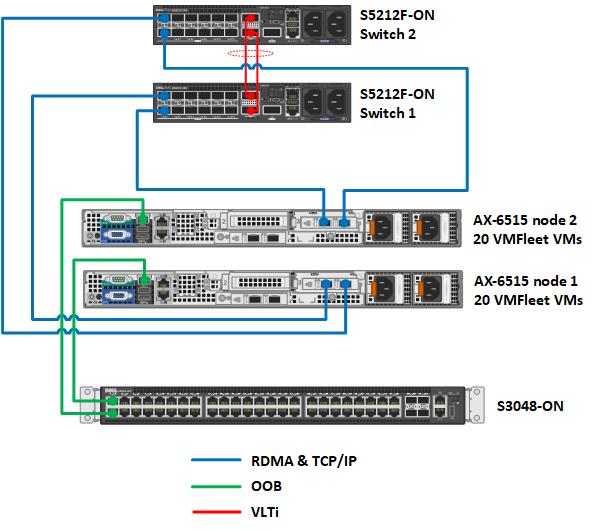

Solution overview