Blogs

Dell Technologies blog posts about the MX-series modular switches (PowerEdge MX)

MX8116n Fabric Expander Module (FEM) port mapping with external Fabric Switching Engine (FSE)

Fri, 14 Jul 2023 13:16:33 -0000


The Dell Networking MX8116n FEM acts as an Ethernet repeater, taking signals from an attached compute sled and repeating those signals to the associated lane on the external QSFP56-DD connector. The MX8116n FEM includes two QSFP56-DD interfaces, with each interface providing up to four 100 Gbps connections to the chassis, and eight internal 100 GbE server-facing ports.

The Dell PowerSwitch Z9432F-ON fixed switch serves as the designated FSE of the MX platform and can support MX chassis deployed with 100 GbE or 25 GbE-based compute sleds. The switch comes equipped with 32 QSFP56-DD ports that provide uplinks, Virtual Link Trunking interconnect (VLTi), and fabric expansion connections.

This blog explains the port mapping of the MX8116n FEM. Internally, the module connects to the NICs in the compute sleds. Externally, its ports connect to the Fabric Switching Engine.

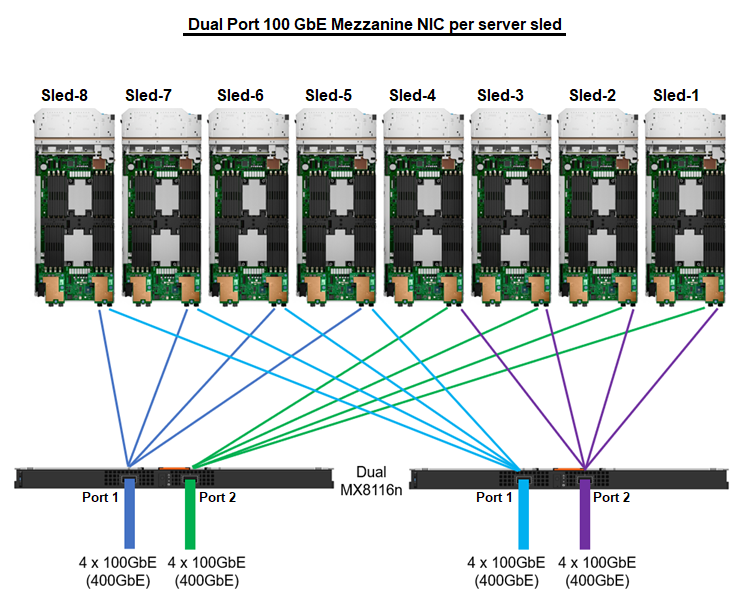

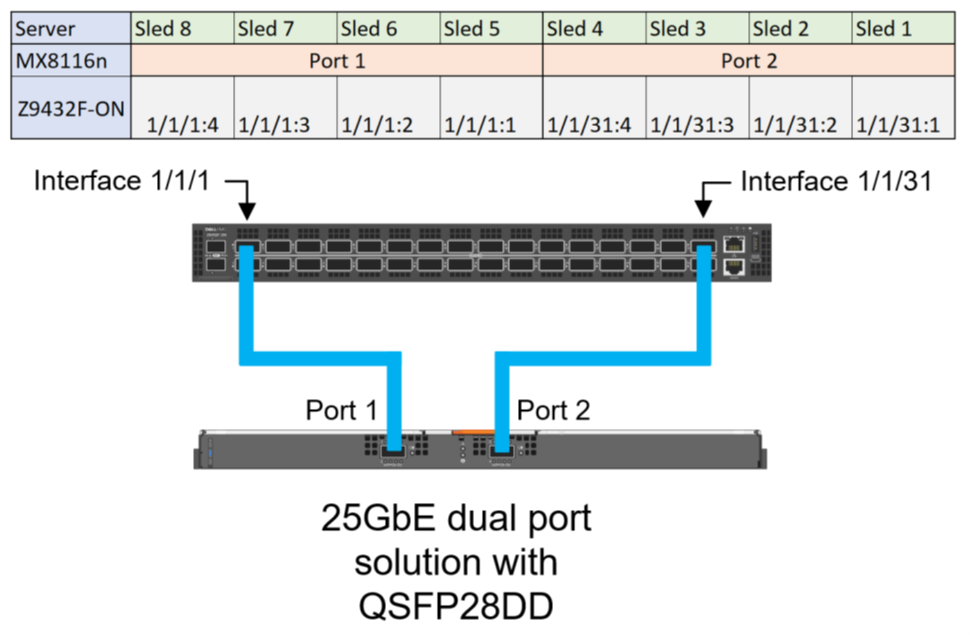

Figure 1. Port mapping of dual MX8116n FEM ports to NIC ports

Sled 1 through Sled 4 use Port 2 on the MX8116n, while Sled 5 through Sled 8 use Port 1.
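The sled-to-port rule above can be written as a small lookup. This is a minimal sketch for illustration; the function name is hypothetical and only encodes the mapping stated in the text.

```python
def mx8116n_port_for_sled(sled: int) -> int:
    """Return the MX8116n external port (1 or 2) that serves a sled slot."""
    if not 1 <= sled <= 8:
        raise ValueError(f"sled must be 1-8, got {sled}")
    # Per the text: Sled 1 through Sled 4 use Port 2, Sled 5 through Sled 8 use Port 1.
    return 2 if sled <= 4 else 1


for sled in range(1, 9):
    print(f"Sled {sled} -> MX8116n Port {mx8116n_port_for_sled(sled)}")
```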

Figure 2. MX8116n internal port mapping

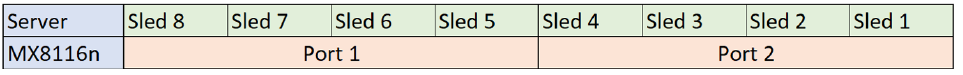

The MX7000 chassis supports up to four MX8116n FEMs in Fabric A and Fabric B. Figure 3 shows one MX8116n FEM with two QSFP56-DD 400 GbE ports, each of which can be split into 4x100 GbE FSE-facing links, and 8x100 GbE internal ports that face the sled NICs.

Figure 3. MX7000 chassis front and back physical view with IOMs and sleds port mapping

The MX8116n FEM can operate at 25 GbE and 100 GbE. The 25 GbE solution supports both dual port and quad port NICs, while the 100 GbE solution supports dual port NICs only. The following examples show the PowerSwitch Z9432F-ON port mapping for 100 GbE dual port NICs using QSFP56-DD cables, and for 25 GbE dual port and quad port NICs using QSFP56-DD and QSFP28-DD cables.

The interfaces used on the Z9432F-ON are arbitrary. QSFP56-DD interfaces on the Z9432F-ON can be connected in any order.

Each port group in the PowerSwitch Z9432F-ON contains two physical interfaces. The following examples show the first port group, 1/1/1, which contains interfaces 1/1/1 and 1/1/2, and the last port group, 1/1/16, which contains interfaces 1/1/31 and 1/1/32. The port mode for each interface is set in the port group configuration.
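The relationship between port groups and interfaces can be sketched as follows. The text only gives the first and last groups, so the assumption here is that the groups in between follow the same pattern (group N holds interfaces 2N-1 and 2N). The function names are hypothetical.

```python
from typing import Tuple


def port_group_for_interface(port: int) -> str:
    """Return the port group that holds interface 1/1/<port>."""
    if not 1 <= port <= 32:
        raise ValueError(f"port must be 1-32, got {port}")
    # Assumed pattern: ports 1-2 -> group 1, ports 3-4 -> group 2, ..., ports 31-32 -> group 16.
    return f"1/1/{(port + 1) // 2}"


def interfaces_in_port_group(group: int) -> Tuple[str, str]:
    """Return the two interfaces that belong to port group 1/1/<group>."""
    if not 1 <= group <= 16:
        raise ValueError(f"port group must be 1-16, got {group}")
    first = 2 * group - 1
    return (f"1/1/{first}", f"1/1/{first + 1}")


print(port_group_for_interface(31))
print(interfaces_in_port_group(1))
```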

Compute sleds with 100 GbE dual port mezzanine cards

The following port group settings are required for 100 GbE dual port mezzanine cards for the Z9432F-ON:

port-group 1/1/1

profile unrestricted

port 1/1/1 mode Eth 100g-4x

port 1/1/2 mode Eth 100g-4x

port-group 1/1/16

profile unrestricted

port 1/1/31 mode Eth 100g-4x

port 1/1/32 mode Eth 100g-4x
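When many port groups need the same settings, the lines above can be generated rather than typed by hand. The following is a sketch only: the helper name is hypothetical, it assumes port group N holds interfaces 2N-1 and 2N, and it produces text to review and paste rather than talking to a switch.

```python
def render_port_group(group: int, profile: str, first_mode: str, second_mode: str) -> str:
    """Render the CLI lines for one Z9432F-ON port group as plain text."""
    if not 1 <= group <= 16:
        raise ValueError(f"port group must be 1-16, got {group}")
    first = 2 * group - 1  # assumed numbering: group N holds ports 2N-1 and 2N
    return "\n".join([
        f"port-group 1/1/{group}",
        f"profile {profile}",
        f"port 1/1/{first} mode Eth {first_mode}",
        f"port 1/1/{first + 1} mode Eth {second_mode}",
    ])


# Reproduce the two port groups shown above for the 100 GbE dual port case.
for group in (1, 16):
    print(render_port_group(group, "unrestricted", "100g-4x", "100g-4x"))
```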

Once the port modes are configured and the connections are made, the MX8116n ports auto-negotiate to match the port operating mode of the Z9432F-ON interfaces. The internal server-facing ports of the MX8116n auto-negotiate with the mezzanine card port speed of 100 GbE.

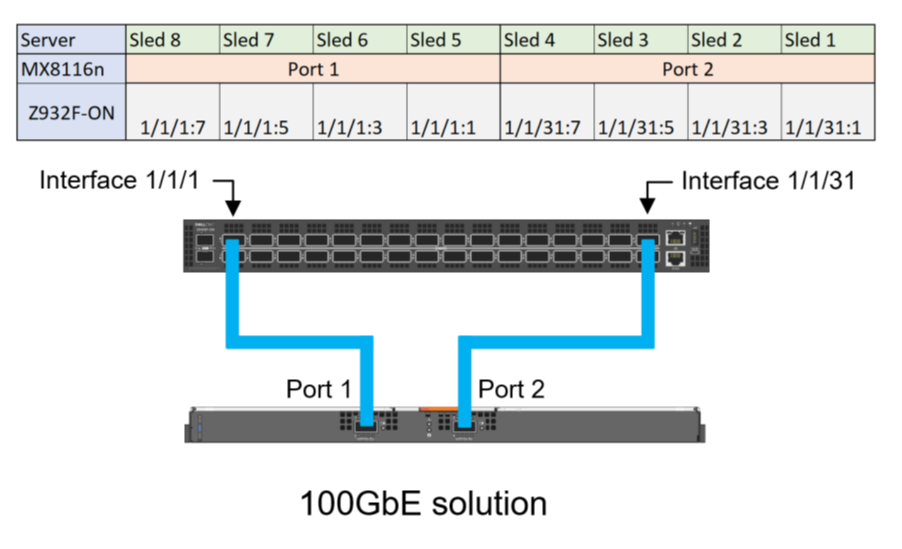

Figure 4 shows the interface numbering for each sled and corresponding MX8116n port when using a QSFP56-DD based optic or cable:

Figure 4. Z9432F-ON port mapping for 100 GbE solution

Compute sleds with 25 GbE quad port NIC using QSFP56-DD

The following port group settings are required for 25 GbE quad port NIC using QSFP56-DD on the Z9432F-ON:

- For the required 25g-8x port mode operation, the profile must first be set to restricted. This restriction means that the second port interface in the port group can only operate in a restricted mode.

- The restriction on the second port means that it must operate in a 1x mode, making the even ports unsuitable for connections to the MX8116n. Therefore, only the odd ports can be used for connections to the MX8116n.

port-group 1/1/1

profile restricted

port 1/1/1 mode Eth 25g-8x

port 1/1/2 mode Eth 400g-1x

port-group 1/1/16

profile restricted

port 1/1/31 mode Eth 25g-8x

port 1/1/32 mode Eth 400g-1x
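The two constraints described for the quad port case can be checked before a configuration is applied. This sketch encodes only the rules stated above (25g-8x needs the restricted profile, and the second port of a restricted group must use a 1x mode). It is not a complete model of what the switch accepts, and the function name is hypothetical.

```python
from typing import List


def check_port_group(profile: str, first_mode: str, second_mode: str) -> List[str]:
    """Return a list of rule violations for one port group (empty if none)."""
    problems = []
    if first_mode == "25g-8x" and profile != "restricted":
        problems.append("25g-8x requires the restricted profile")
    if profile == "restricted" and not second_mode.endswith("-1x"):
        problems.append("the second port must use a 1x mode under the restricted profile")
    return problems


print(check_port_group("restricted", "25g-8x", "400g-1x"))    # matches the example above
print(check_port_group("unrestricted", "25g-8x", "400g-1x"))  # profile not set to restricted
```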

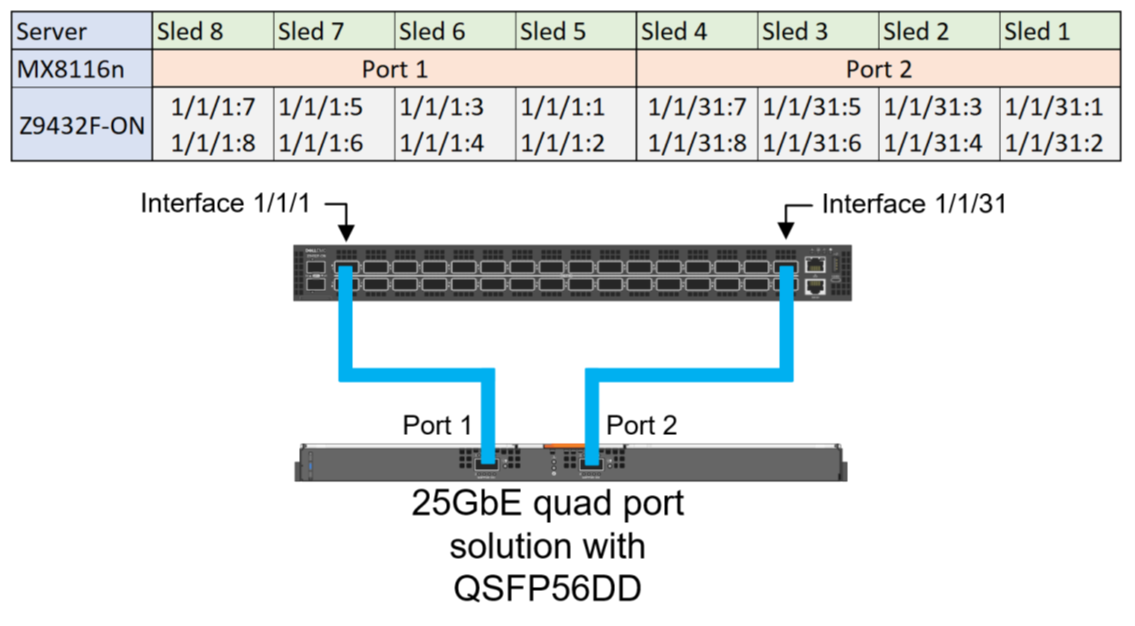

Figure 5 shows the interface numbering for each sled and corresponding MX8116n port when using a QSFP56-DD based optic or cable:

Figure 5. Z9432F-ON Port mapping for 25 GbE quad port solution with QSFP56-DD based optics and cables

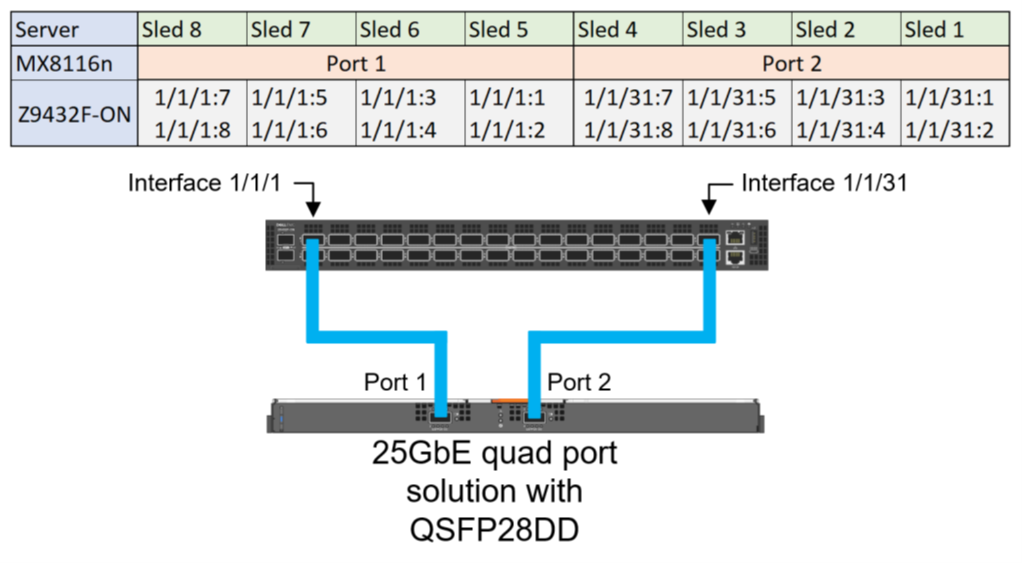

Compute sleds with 25 GbE quad port NIC using QSFP28-DD

The 25 GbE quad port NIC solution can use QSFP28-DD based optics and cables. The following configuration shows the final state required for 25 GbE quad port NICs:

port-group 1/1/1

profile restricted

port 1/1/1 mode Eth 25g-8x

port 1/1/2 mode Eth 400g-1x

port-group 1/1/16

profile restricted

port 1/1/31 mode Eth 25g-8x

port 1/1/32 mode Eth 400g-1x

Figure 6. Z9432F-ON Port mapping for 25 GbE quad port solution with QSFP28-DD based optics and cables

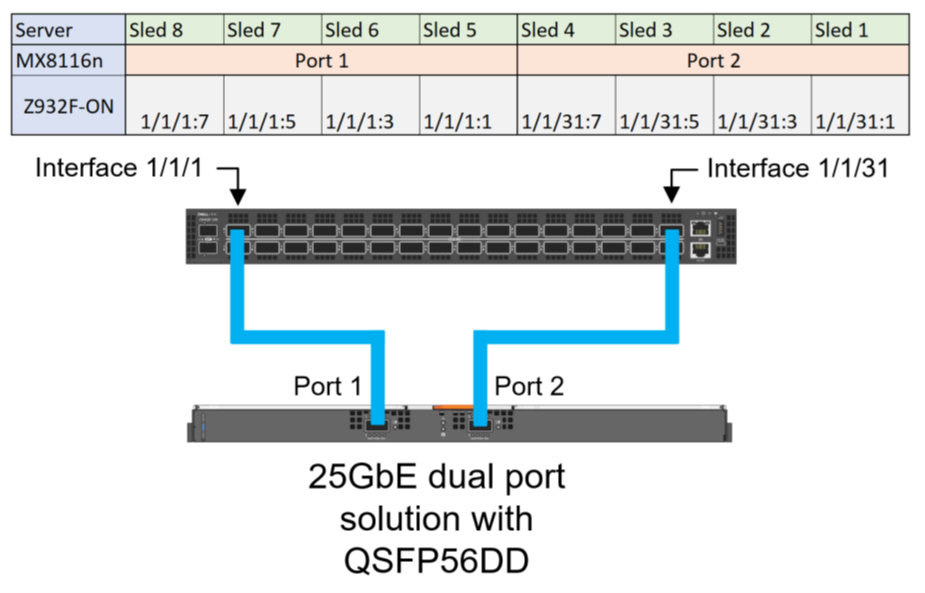

Compute sleds with 25 GbE dual port NIC using QSFP56-DD

For the required 25g-4x port mode operation, the profile should stay in the default unrestricted setting. Unlike quad port deployments, dual port deployments can use both even and odd ports on the Z9432F-ON.

port-group 1/1/1

profile unrestricted

port 1/1/1 mode Eth 25g-4x

port 1/1/2 mode Eth 400g-1x

port-group 1/1/16

profile unrestricted

port 1/1/31 mode Eth 25g-4x

port 1/1/32 mode Eth 400g-1x

Figure 7 shows the interface numbering for each sled and corresponding MX8116n port when using a QSFP56-DD based optic or cable:

Figure 7. Z9432F-ON Port mapping for 25 GbE dual port solution with QSFP56-DD based optics and cables

Compute sleds with 25 GbE dual port NIC using QSFP28-DD

The following configuration shows the final state required for 25 GbE dual port mezzanine cards. The profile should stay in the default unrestricted setting:

port-group 1/1/1

profile unrestricted

port 1/1/1 mode Eth 25g-4x

port 1/1/2 mode Eth 400g-1x

port-group 1/1/16

profile unrestricted

port 1/1/31 mode Eth 25g-4x

port 1/1/32 mode Eth 400g-1x

Figure 8. Z9432F-ON Port mapping for 25 GbE dual port solution with QSFP28-DD based optics and cables

References

Dell PowerEdge MX Networking Deployment Guide

Dell Technologies PowerEdge MX 100 GbE Solution with external FSE blog

Dell PowerEdge MX7000 Chassis User Guide

Dell Technologies PowerEdge MX 100 GbE solution with external Fabric Switching Engine

Mon, 26 Jun 2023 20:31:38 -0000


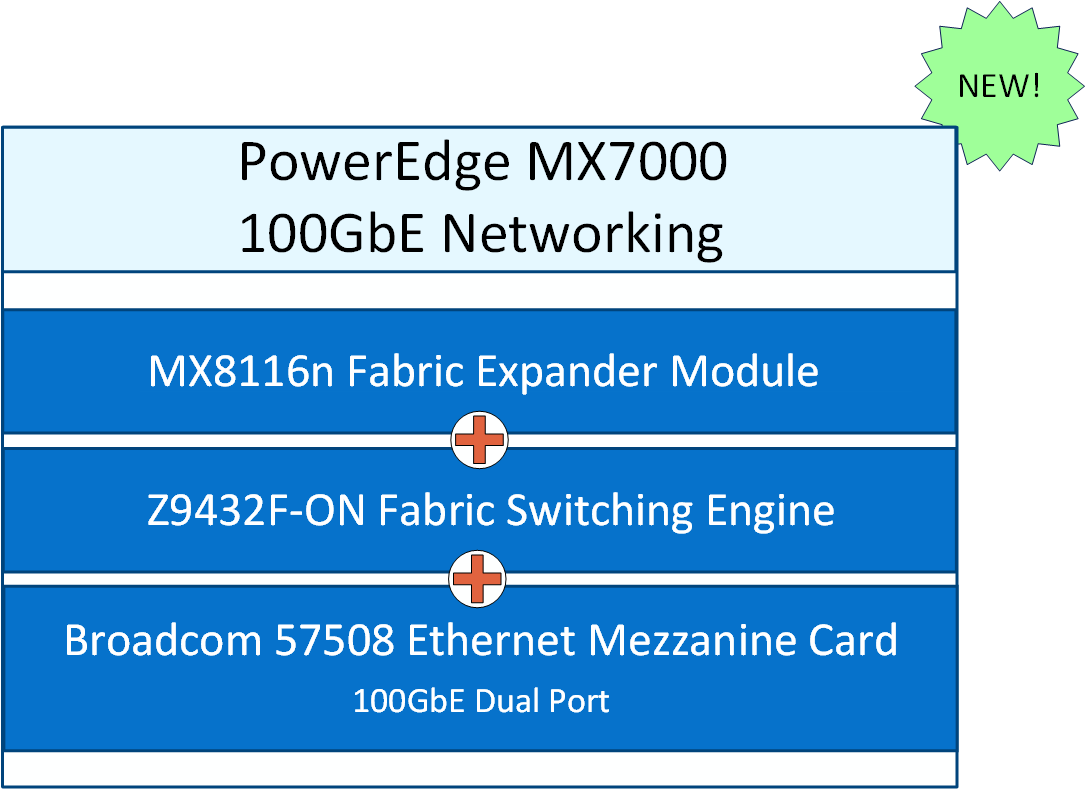

The Dell PowerEdge MX platform is advancing its position as the leading high-performance data center infrastructure by introducing a 100 GbE networking solution. This evolved networking architecture not only provides the benefit of 100 GbE speed but also increases the number of MX7000 chassis within a Scalable Fabric. The 100 GbE networking solution brings a new type of architecture, starting with an external Fabric Switching Engine (FSE).

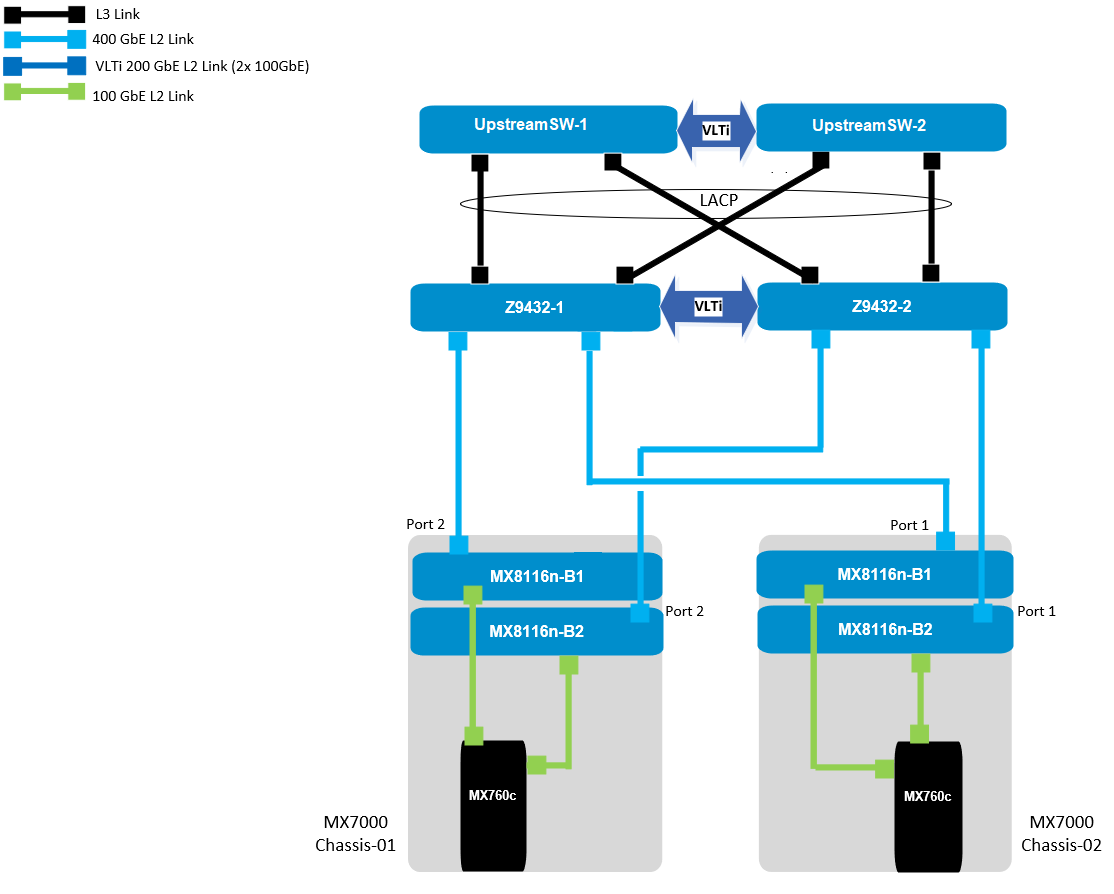

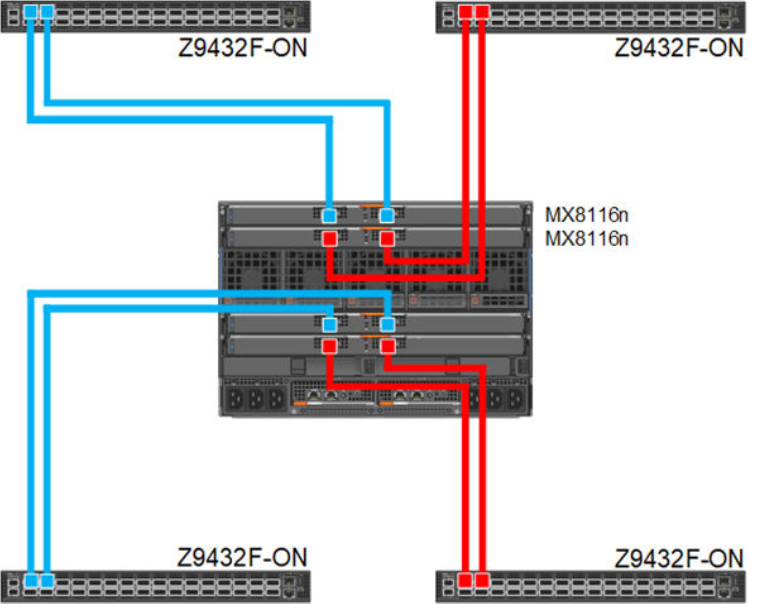

PowerEdge MX 100 GbE solution design example

The diagram shows only one connection on each MX8116n for simplicity. For details, see the port-mapping section in the networking deployment guide.

Figure 1. 100 GbE solution example topology

Components for 100 GbE networking solution

The key hardware components for 100 GbE operation within the MX platform are briefly described below.

Dell Networking MX8116n Fabric Expander Module

The MX8116n FEM includes two QSFP56-DD interfaces, with each interface providing up to 4x 100Gbps connections to the chassis, 8x 100 GbE internal server-facing ports for 100 GbE NICs, and 16x 25 GbE for 25 GbE NICs.

The MX7000 chassis supports up to four MX8116n FEMs in Fabric A and Fabric B.
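The internal port counts quoted for the MX8116n line up with simple breakout arithmetic: two external QSFP56-DD interfaces multiplied by the number of links each interface is split into. The sketch below assumes that the trailing number in a port mode name such as 100g-4x or 25g-8x is that split factor. This is a reading of the figures in the text, not a statement about the hardware design.

```python
EXTERNAL_PORTS = 2  # QSFP56-DD interfaces on one MX8116n (from the text)


def internal_ports(port_mode: str) -> int:
    """Estimate server-facing ports from a port mode name such as '100g-4x'."""
    # Assumption: the suffix "4x" or "8x" is the number of links per external interface.
    breakout = int(port_mode.split("-")[1].rstrip("x"))
    return EXTERNAL_PORTS * breakout


print(internal_ports("100g-4x"))  # compare with the 8x 100 GbE ports quoted above
print(internal_ports("25g-8x"))   # compare with the 16x 25 GbE ports quoted above
```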

Figure 2. MX8116n FEM

The following MX8116n FEM components are labeled in the preceding figure:

- Express service tag

- Power and indicator LEDs

- Module insertion and removal latch

- Two QSFP56-DD fabric expander ports

Dell PowerEdge MX760c compute sled

- The MX760c is ideal for dense virtualization environments and can serve as a foundation for collaborative workloads.

- Businesses can install up to eight MX760c sleds in a single MX7000 chassis and combine them with compute sleds from different generations.

- Single or dual CPU (up to 56 cores per processor/socket with 4x UPI at 24 GT/s) and 32x DDR5 DIMM slots with eight memory channels.

- 8x E3.S NVMe (Gen5 x4) or 6 x 2.5" SAS/SATA SSDs or 6 x NVMe (Gen4) SSDs and iDRAC9 with lifecycle controller.

Note: The 100 GbE Dual Port Mezzanine card is also available on the MX750c.

Figure 3. Dell PowerEdge MX760c sled with eight E3.S SSD drives

Dell PowerSwitch Z9432F-ON external Fabric Switching Engine

The Z9432F-ON provides state-of-the-art, high-density 100/400 GbE ports and a broad range of functionality to meet the growing demands of modern data center environments. Its compact 1RU design offers an industry-leading density of 32 ports of 400 GbE in QSFP56-DD, 128 ports of 100 GbE, or up to 144 ports of 10/25/50 GbE (through breakout). A non-blocking switching fabric of up to 25.6 Tbps (full duplex) delivers line-rate performance under full load. The switch provides L2 multipath support using Virtual Link Trunking (VLT) and Routed VLT, along with scalable L2 and L3 Ethernet switching with QoS and a full complement of standards-based IPv4 and IPv6 features, including OSPF and BGP routing support.

Figure 4. Dell PowerSwitch Z9432F-ON

Note: Mixed dual port 100 GbE and quad port 25 GbE mezzanine cards connecting to the same MX8116n are not a supported configuration.

100 GbE deployment options

There are four deployment options for the 100 GbE solution, and every option requires servers with a dual port 100 GbE mezzanine card. You can install the mezzanine card in mezzanine slot A, slot B, or both. When you use the Broadcom 575 KR dual port 100 GbE mezzanine card, set the Z9432F-ON port group to the unrestricted profile and configure the port mode as 100g-4x.

PowerSwitch CLI example:

port-group 1/1/1

profile unrestricted

port 1/1/1 mode Eth 100g-4x

port 1/1/2 mode Eth 100g-4x

Note: In a 100 GbE solution deployment that uses the same pair of FSEs, a maximum of 14 chassis is supported in a single fabric, and a maximum of 7 chassis is supported in a dual fabric.
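One way to make sense of the chassis limits in the note is to count FSE ports. The sketch below rests on an assumption the text does not spell out: for each fabric, one MX8116n per chassis connects to each Z9432F-ON and uses both of its QSFP56-DD ports. Under that assumption both limits consume the same number of switch ports, leaving the remainder for uplinks and VLTi. Treat it as a sanity check, not as the official sizing rule.

```python
FSE_PORTS = 32         # QSFP56-DD ports on one Z9432F-ON (from the text)
PORTS_PER_FEM = 2      # QSFP56-DD ports on one MX8116n (from the text)
SLEDS_PER_CHASSIS = 8  # maximum compute sleds in one MX7000 chassis (from the text)


def fse_ports_used(chassis: int, fabrics: int) -> int:
    """Ports consumed on ONE FSE by fabric expander links."""
    # Assumption: per fabric, one FEM per chassis lands on each FSE with both ports.
    return chassis * fabrics * PORTS_PER_FEM


for label, chassis, fabrics in (("single fabric", 14, 1), ("dual fabric", 7, 2)):
    used = fse_ports_used(chassis, fabrics)
    print(f"{label}: {used} FEM-facing ports per FSE, {FSE_PORTS - used} left over, "
          f"{chassis * SLEDS_PER_CHASSIS} sleds maximum")
```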

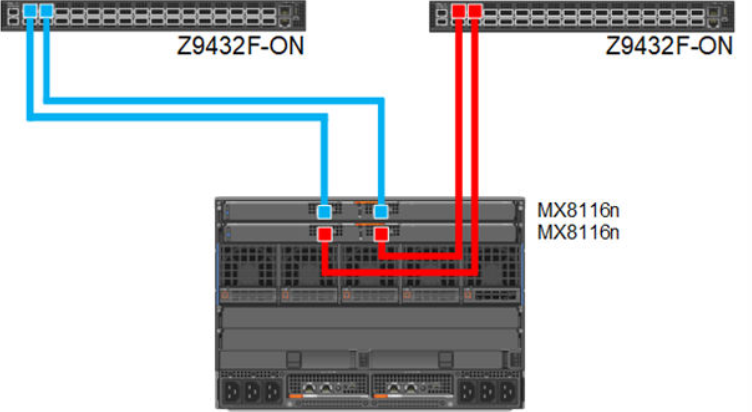

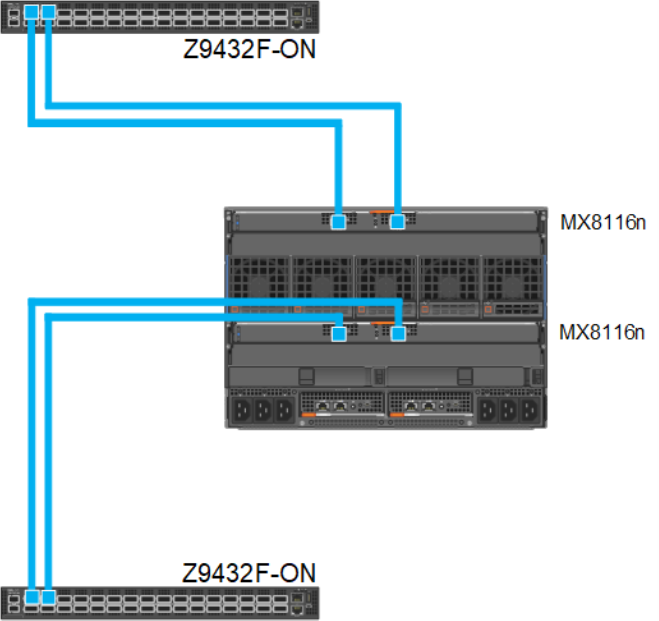

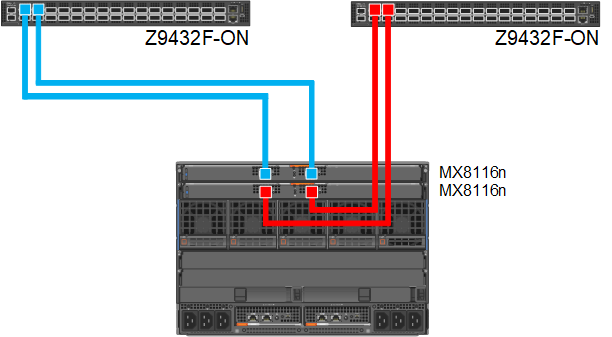

Single fabric

In a single fabric deployment, two MX8116n can be installed either in Fabric A or Fabric B, and the corresponding slot of the sled in slot-A or slot-B can have the 100 GbE mezzanine card installed.

Figure 5. 100 GbE Single Fabric

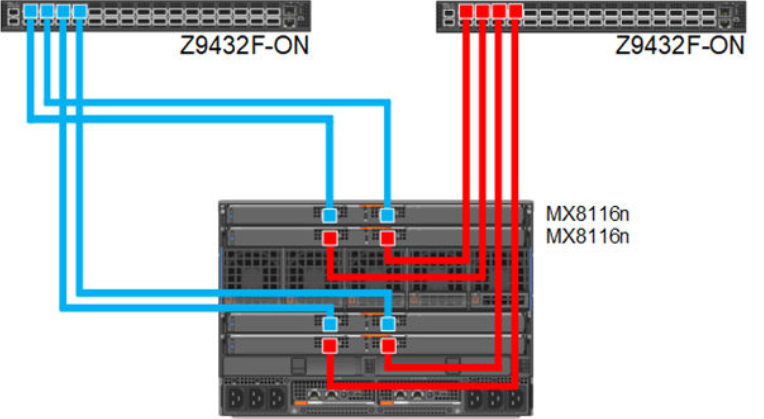

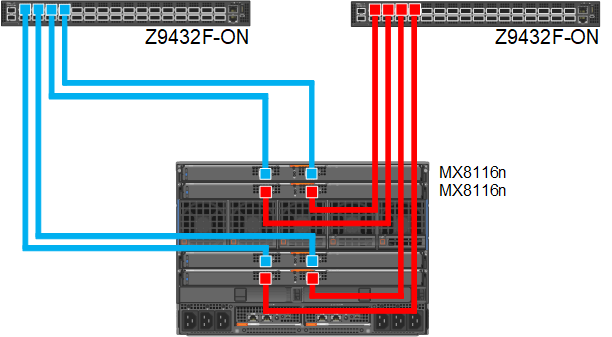

Dual fabric combined fabrics

In this option, four MX8116n (2x in Fabric A and 2x in Fabric B) can be installed and combined to connect to the Z9432F-ON external FSE.

Figure 6. 100 GbE Dual Fabric combined Fabrics

Dual fabric separate fabrics

In this option, four MX8116n (2x in Fabric A and 2x in Fabric B) can be installed and connected to two different networks. In this case, the MX760c server module has two mezzanine cards, with each card connected to a separate network.

Figure 7. 100 GbE Dual Fabric separate Fabrics

Dual fabric, single MX8116n in each fabric, separate fabrics

In this option, two MX8116n (1x in Fabric A and 1x in Fabric B) can be installed and connected to two different networks. In this case, the MX760c server module has two mezzanine cards, each connected to a separate network.

Figure 8. 100 GbE Dual Fabric single FEM in separate Fabrics

References

Dell PowerEdge Networking Deployment Guide

A chapter about 100 GbE solution with external Fabric Switching Engine

Dell Technologies PowerEdge MX Platform: Advanced NPAR

Wed, 03 May 2023 15:47:10 -0000


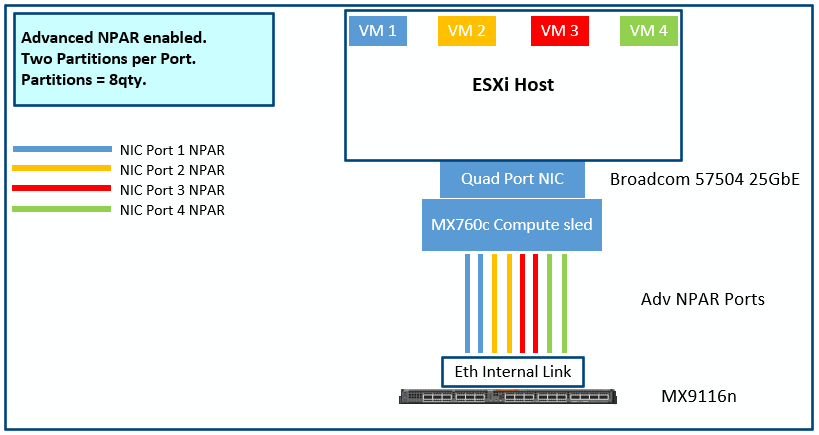

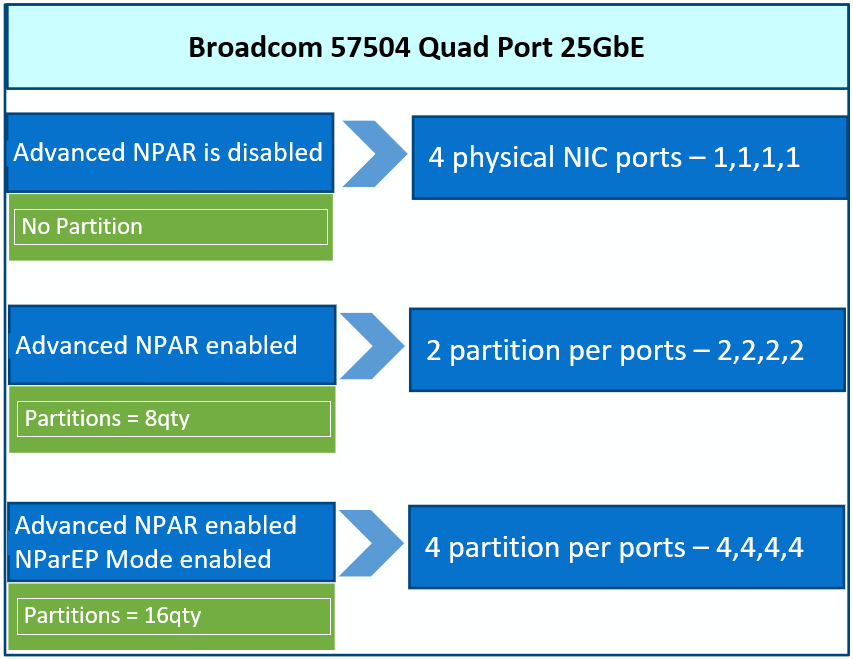

Advanced Network Partitioning (NPAR) is now available in both SmartFabric and Full switch modes with the release of OME-M 2.10.00.

- The main advantage that separates Adv NPAR from standard NPAR is that it provides users with the ability to assign VLANs to each partition.

- The Adv NPAR feature is only supported on the 25 GbE-based IOMs in the MX chassis.

- The Adv NPAR feature is only supported on Broadcom 57504 NIC and VMware ESXi 7.0.U3 and above as the server host operating system.

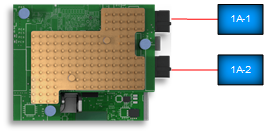

The following diagram shows how the connection from an MX9116n internal Ethernet port to the Broadcom 57504 25 GbE quad port NIC is split into eight partitions:

Figure 1. Advanced NPAR NIC Port Partitions

The BCOM 57504 quad port mezzanine card is used in this example and can only support Adv NPAR. The following combinations are supported:

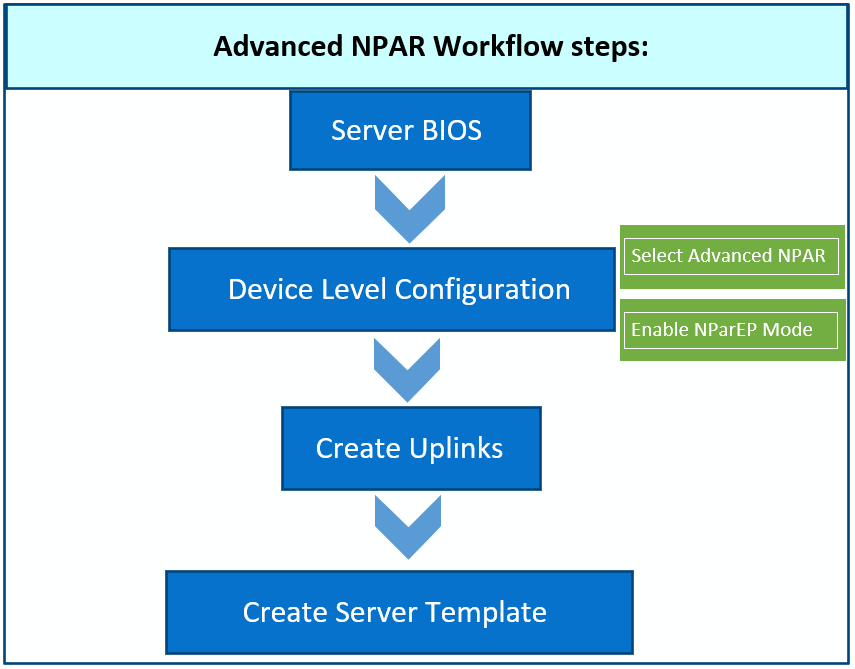

The Advanced NPAR setting and the configuration workflow are shown in the following image:

For more information, see the latest Dell Technologies PowerEdge MX Networking Deployment Guide. It has information on the following:

- Topology and port information

- Hardware and software requirements

- Restrictions and limitations

- Adv NPAR deployment in SmartFabric and in Full Switch modes.

- Configuration validation CLI outputs

See also this blog post:

Dell Technologies PowerEdge MX Platform: Network Interface Card Partitioning

PowerEdge MX7000 100 GbE Networking

Tue, 09 May 2023 14:01:03 -0000


100 Gigabit Ethernet networking has arrived on the Dell PowerEdge Platform! The Dell Networking MX8116n Fabric Expander Module is now available to deploy with the PowerSwitch Z9432F-ON as a Fabric Switching Engine.

The MX8116n FEM can be installed in Fabric A and Fabric B. Each 400GbE port connects to the Z9432F-ON FSE and carries a single 100GbE connection for each of four compute sleds.

The MX Scalable Fabric Architecture allows the Z9432F-ON FSE to support up to a maximum of 14 MX7000 chassis and 112 MX compute sleds in a single fabric deployment. The Z9432F-ON FSE operates on Dell Networking SmartFabric OS10 in Full Switch mode only.

Here are some common deployment options for the 100GbE solution.

Figure 1. 100GbE single fabric deployment

Figure 2. 100GbE dual fabric deployment

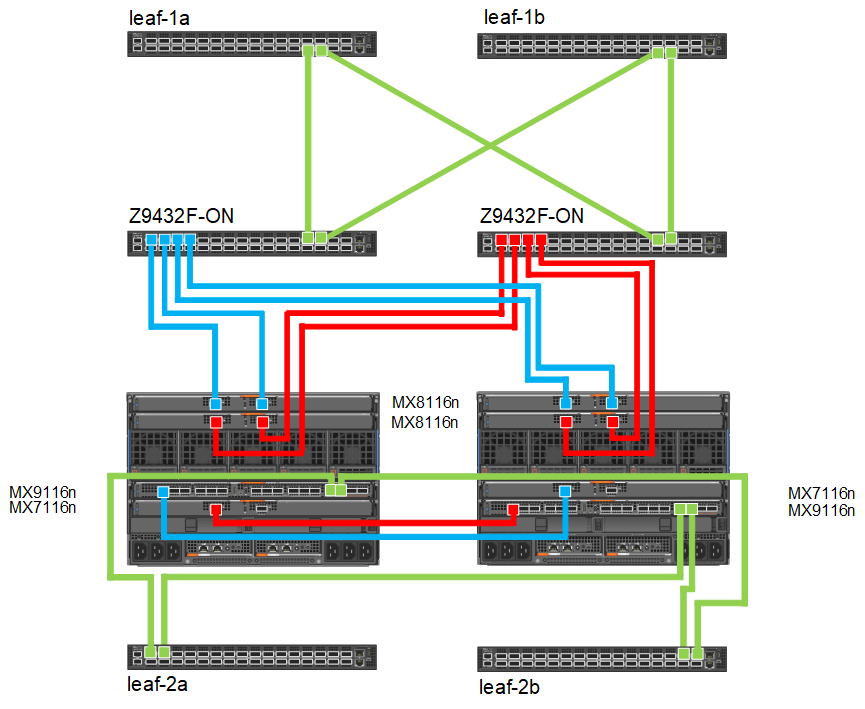

Figure 3. Combination MX8116n/Z9432F-ON and MX9116n/MX7116n deployment

For additional deployment options and detailed technical documentation on the new 100GbE networking solution see the updated Dell Technologies PowerEdge MX Networking Deployment Guide.

Another great source for hardware specifications, deployment diagrams, and cables/optics is the PowerEdge MX I/O Guide.

Dell Technologies PowerEdge MX with Cisco ACI Integration

Thu, 09 Feb 2023 20:05:01 -0000


Introduction

This paper provides an example of integrating the Dell PowerEdge MX platform running Dell SmartFabric Services (SFS) with Cisco Application Centric Infrastructure (ACI).

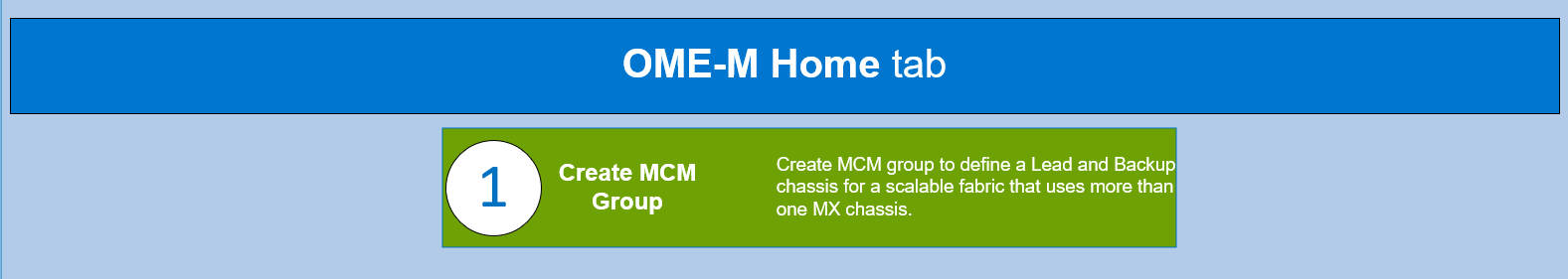

The example in this blog assumes that the PowerEdge MX7000 chassis are configured in a Multi Chassis Management Group (MCM) and that you have a basic understanding of the PowerEdge MX platform.

As part of the PowerEdge MX platform, the SmartFabric OS10 network operating system includes SmartFabric Services, a network automation and orchestration solution that is fully integrated with the MX platform.

Configuration Requirements

Configuration of SmartFabric on PowerEdge MX with Cisco ACI makes the following assumptions:

- All MX7000 chassis and management modules are cabled correctly and in an MCM group.

- VLTi cables between MX Fabric Switching Engines (FSE) and Fabric Expander Modules (FEM) are connected correctly.

- PowerEdge and Cisco ACI platforms are in healthy status and are running updated software.

The example setup is validated using the following software versions:

- MX chassis: 2.00.00

- MX IOMs (MX9116n): 10.5.4.1.29

- Cisco APIC: 5.2(6e)

- Cisco leaf switches: 4.2(7u)

Refer to the Dell Networking Support and Interoperability Matrix for the latest validated versions.

Hardware and Logical Topology

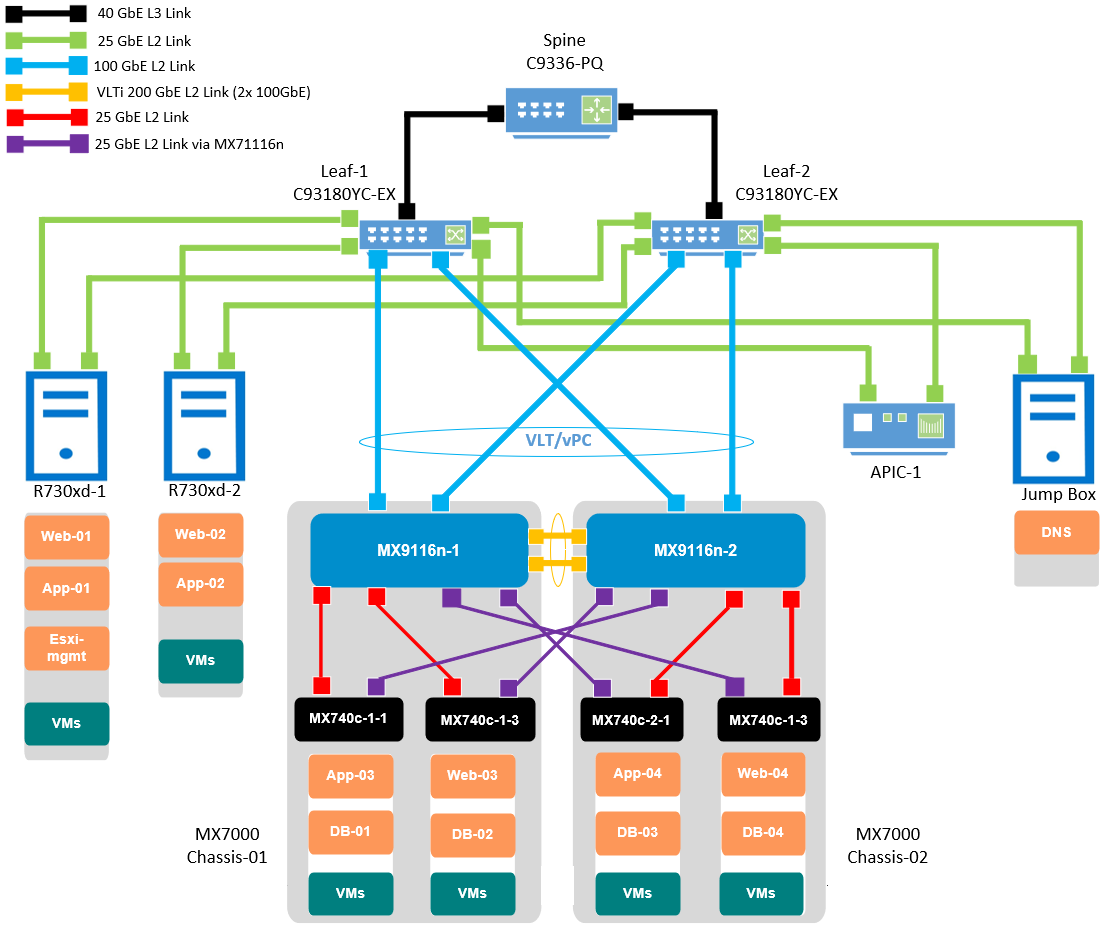

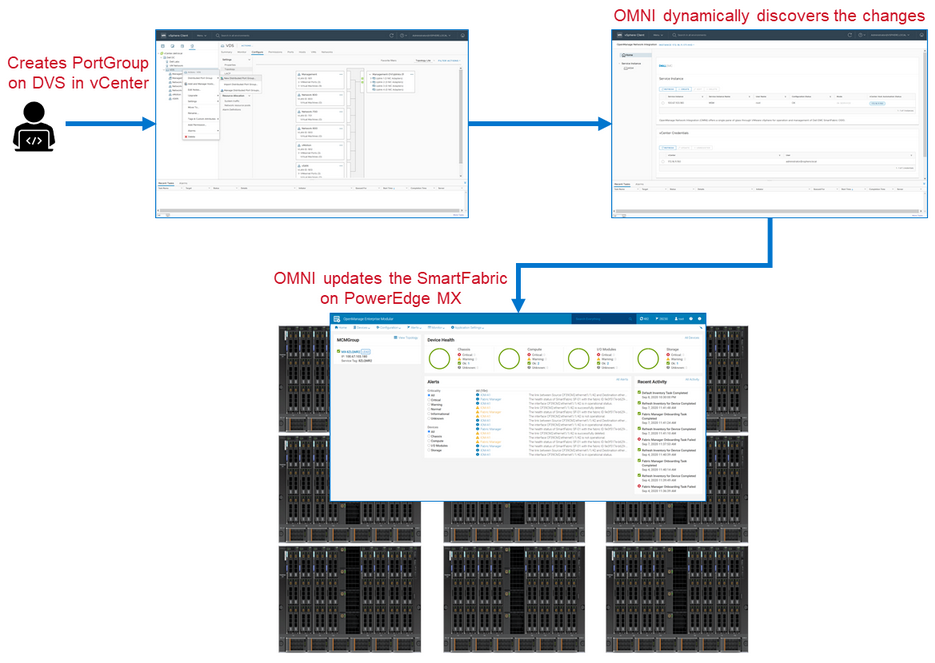

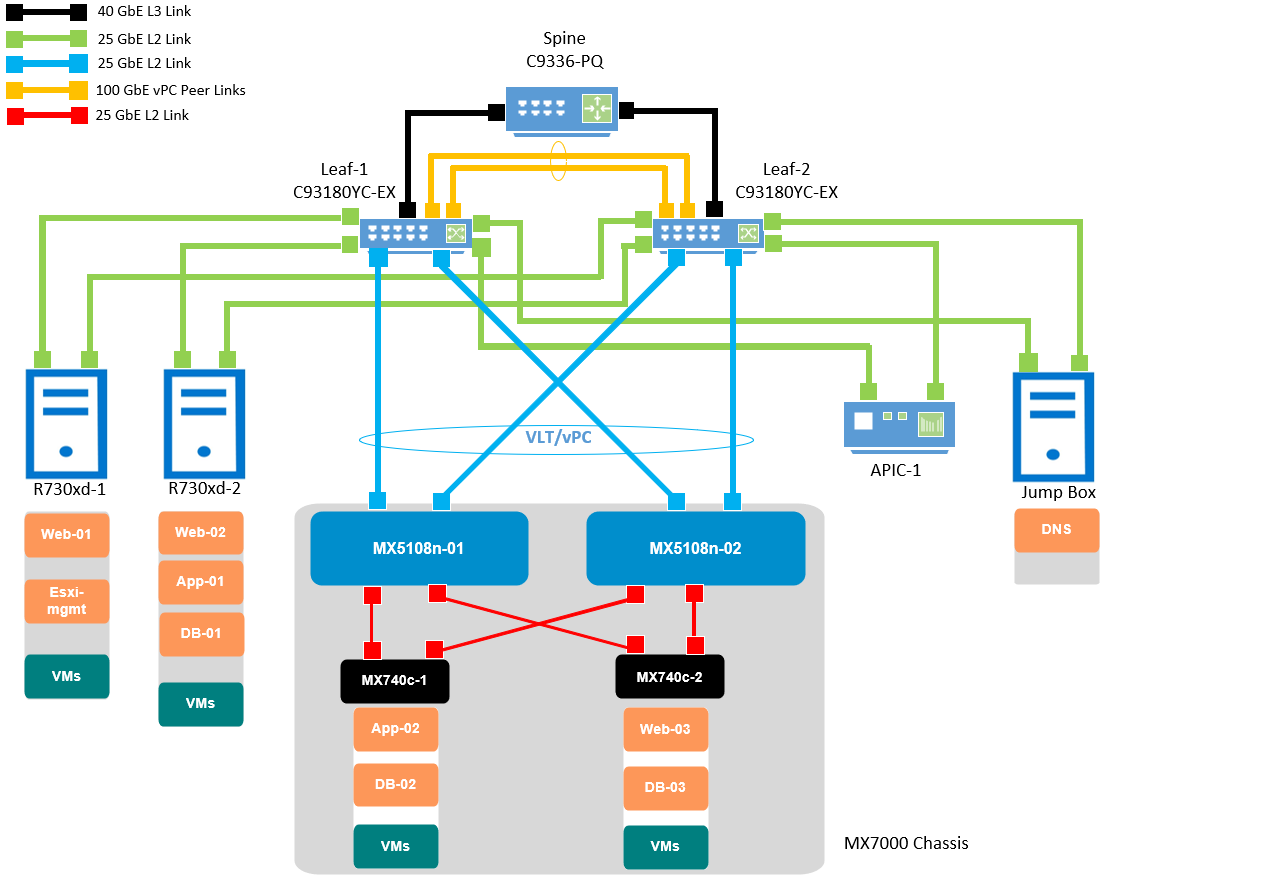

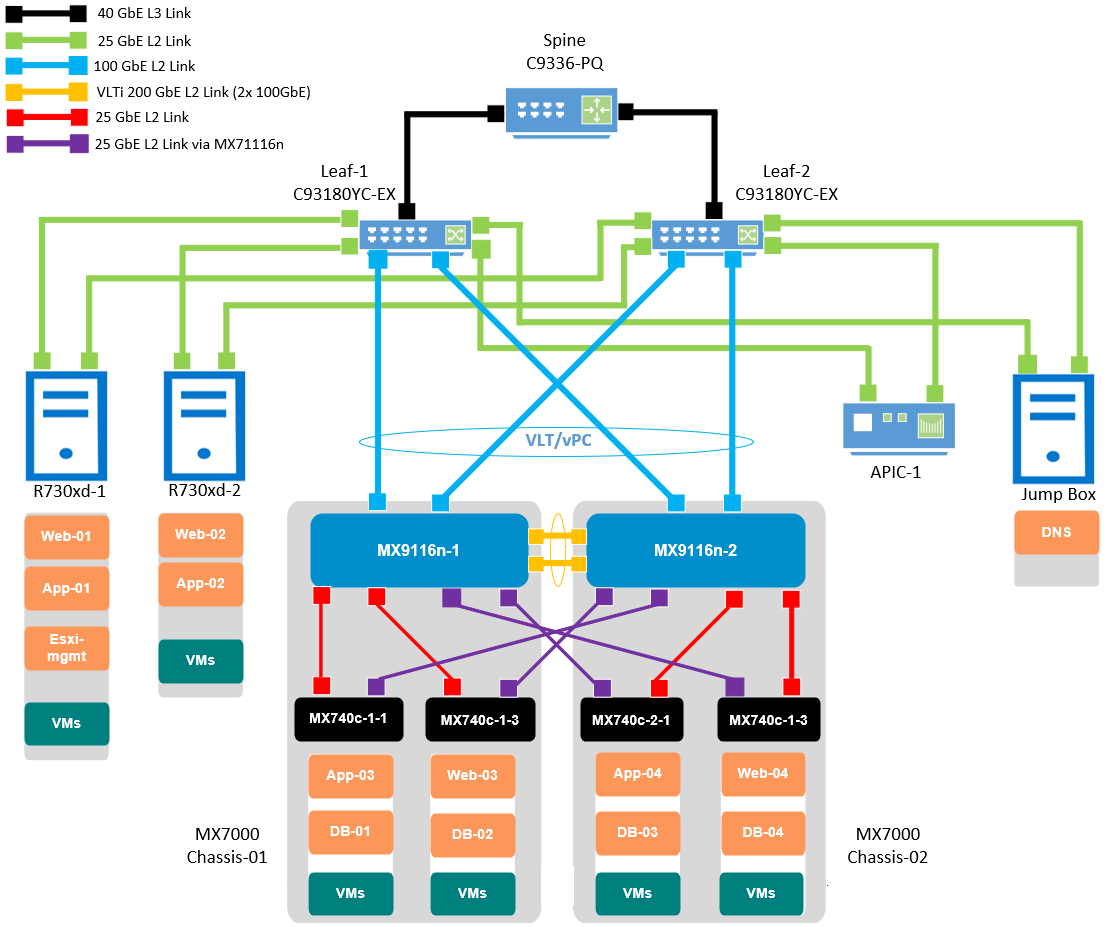

The validated Cisco ACI environment includes a pair of Nexus C93180YC-EX switches as leafs. These switches are connected to a single Nexus C9336-PQ switch as the spine using 40GbE connections. MX9116n FSE switches are connected to the C93180YC-EX leafs using 100GbE cables.

The following section provides an overview of the topology and configuration steps. For detailed configuration instructions, refer to the Dell EMC PowerEdge MX SmartFabric and Cisco ACI Integration Guide.

Caution: The connection of an MX switch directly to the ACI spine is not supported.

Figure 1 Validated SmartFabric and ACI environment logical topology

This blog is categorized into four major parts:

- Cisco Application Policy Infrastructure Controller (APIC)

- Dell PowerEdge MX OpenManage Enterprise-Modular (OME-M)

- VMware vCenter Server Appliance (VCSA)

- Dell OpenManage Network Integration (OMNI)

Cisco APIC

Cisco APIC provides a single point of automation and fabric element management in both virtual and physical environments. It helps the operators build fully automated and scalable multi-tenant networks.

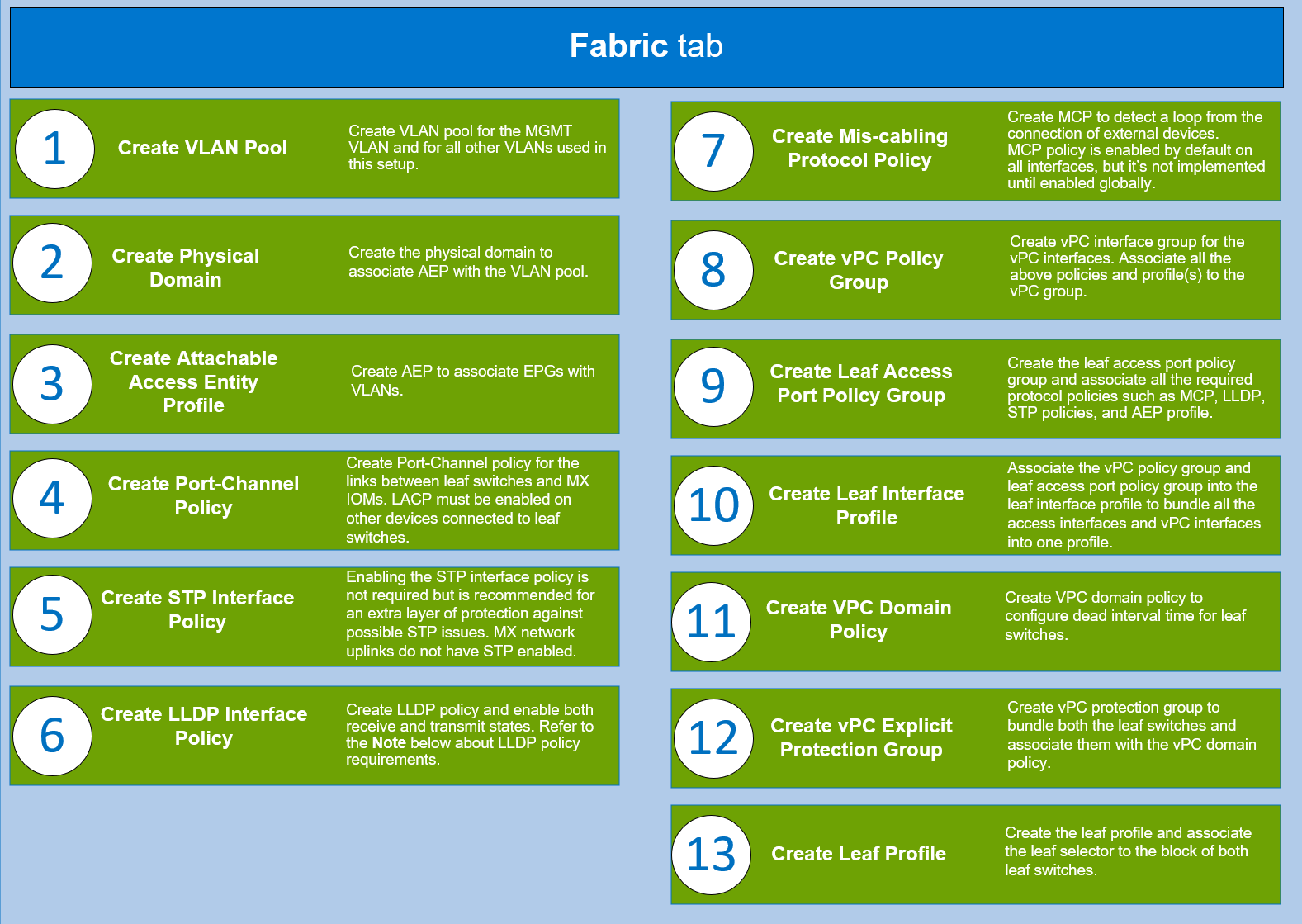

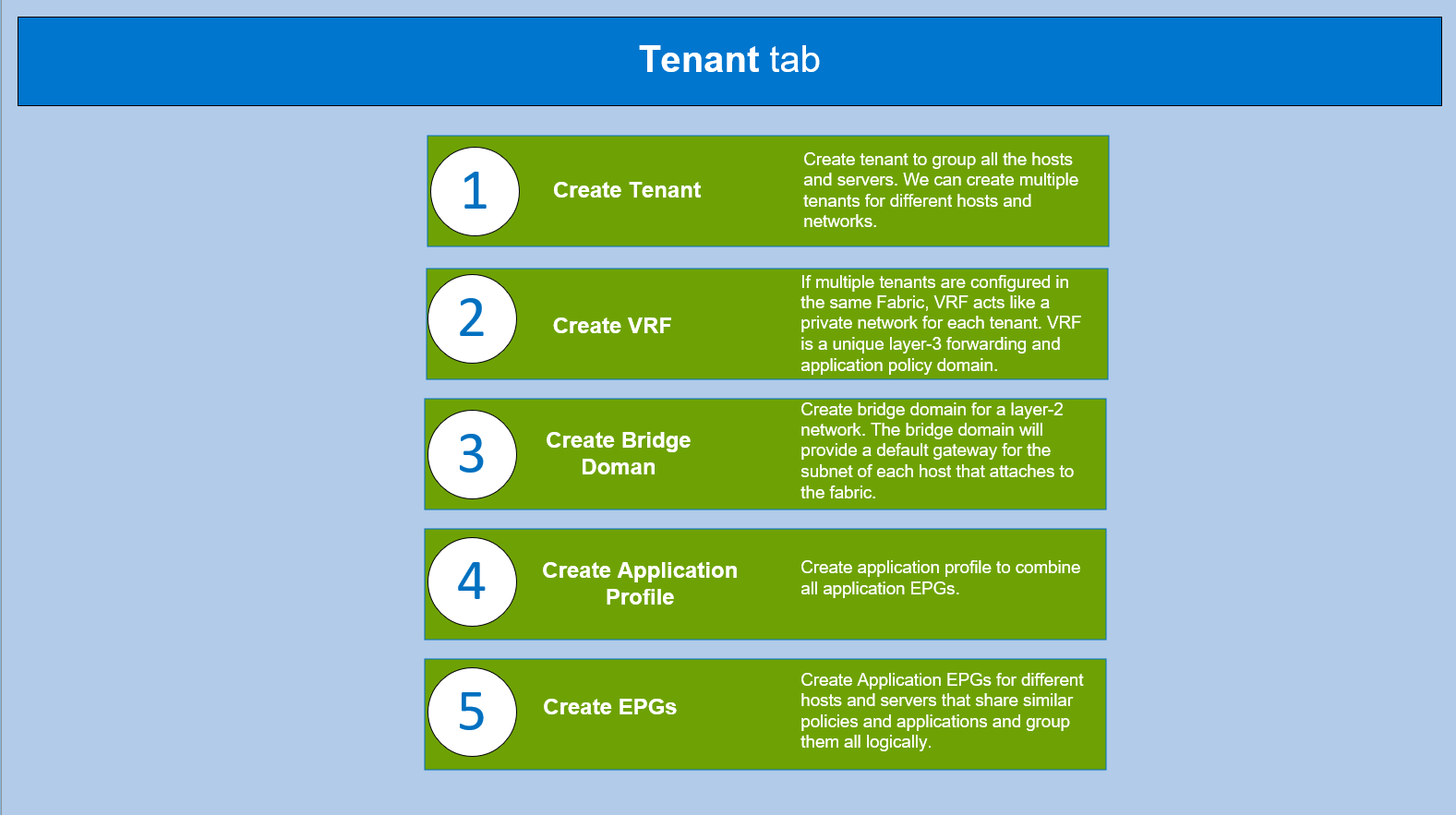

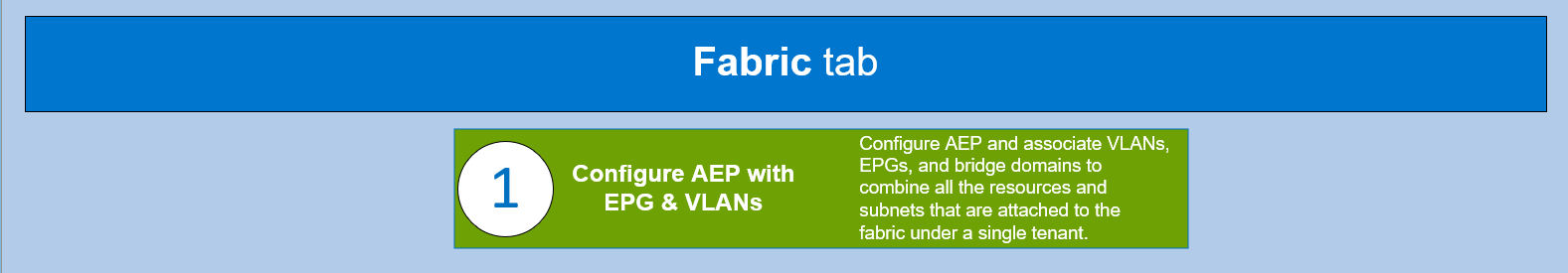

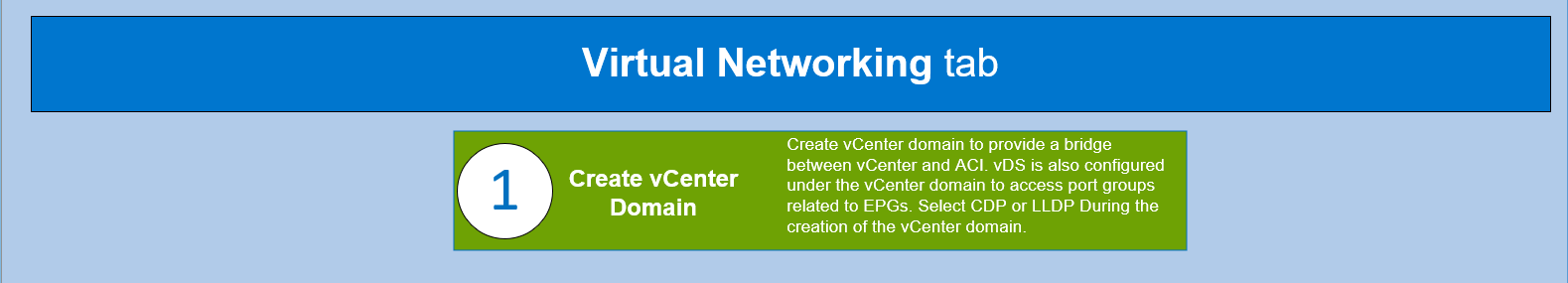

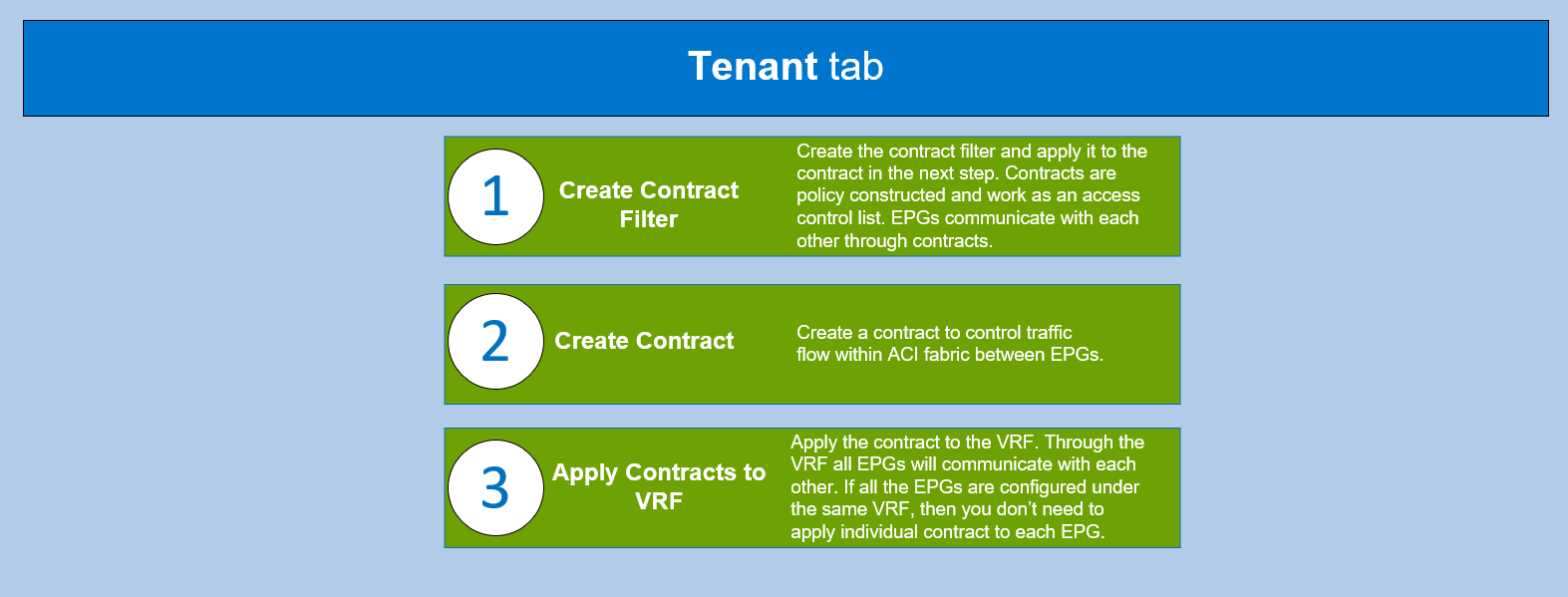

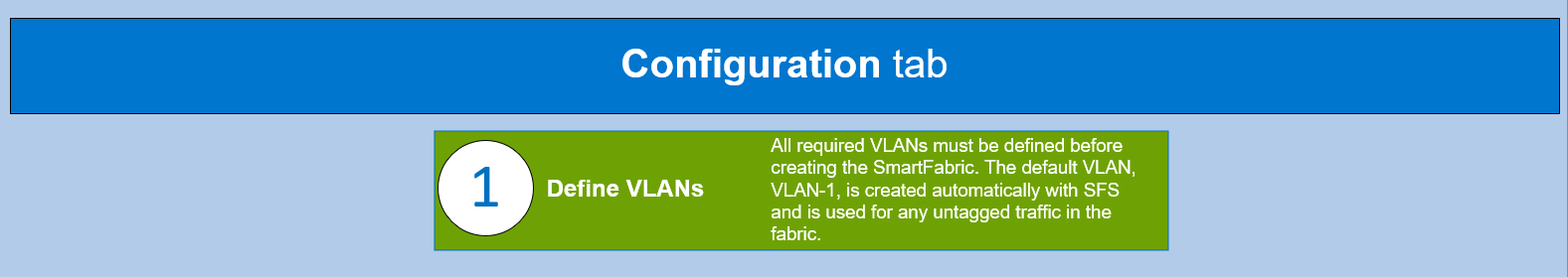

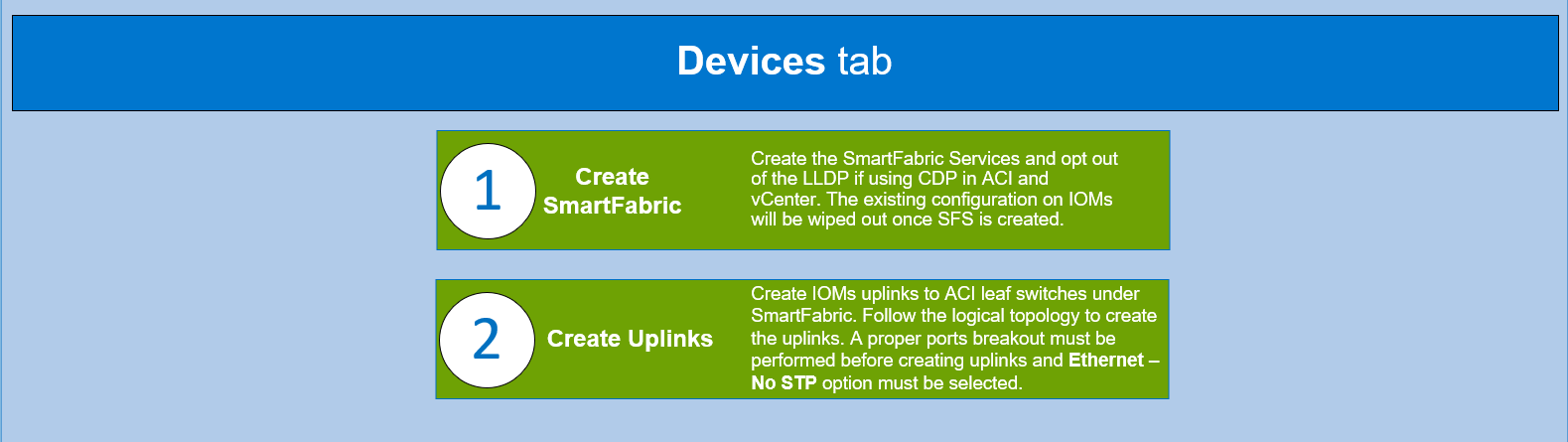

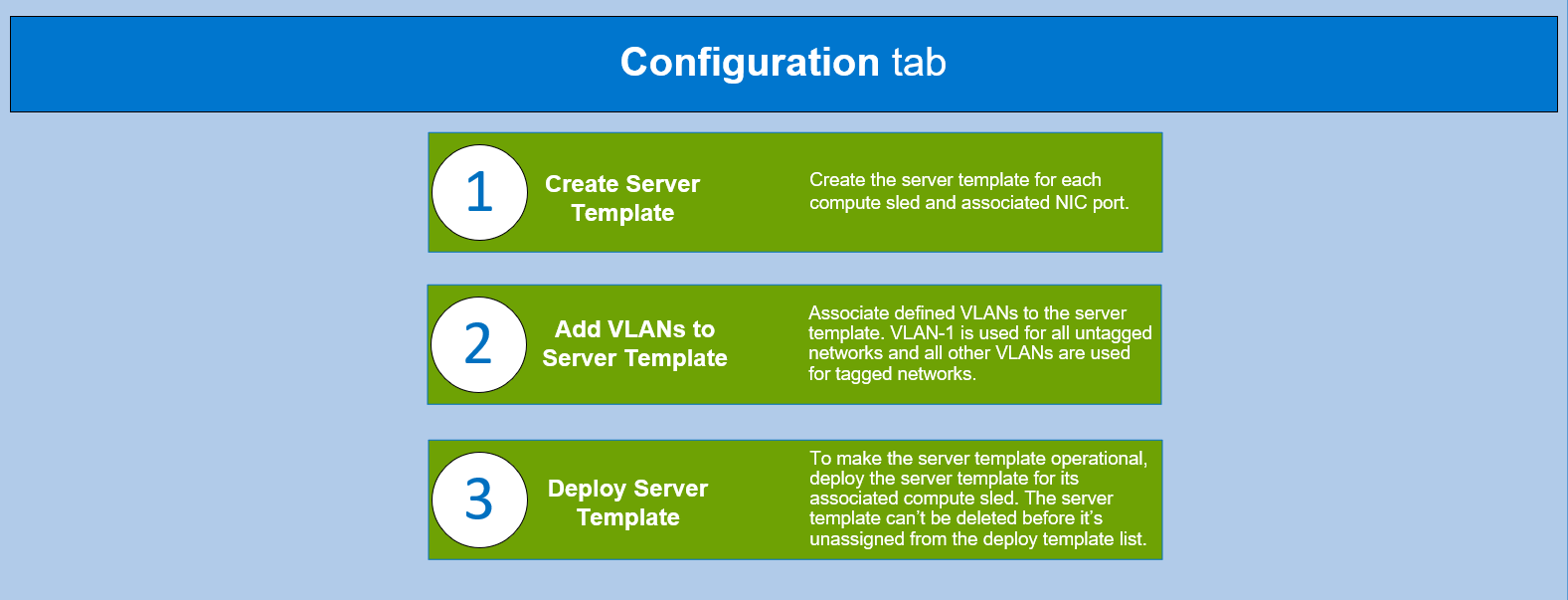

To understand the required protocols, policies, and features that you must configure to set up the Cisco ACI, log in to the Cisco APIC controller and complete the steps shown in the following flowcharts.

CAUTION: Ensure all the required hardware is in place and all the connections are made as shown in the above logical topology.

Note: If a storage area network protocol (such as FCoE) is configured, Dell Technologies suggests that you use CDP as a discovery protocol on ACI and vCenter, while LLDP remains disabled on the MX SmartFabric.

PowerEdge MX OME-M

The PowerEdge MX platform is a unified, high-performance data center infrastructure. It provides the agility, resiliency, and efficiency to optimize a wide variety of traditional and new, emerging data center workloads and applications. With its kinetic architecture and agile management, PowerEdge MX dynamically configures compute, storage, and fabric; increases team effectiveness; and accelerates operations. The responsive design delivers the innovation and longevity that customers need for their IT and digital business transformations.

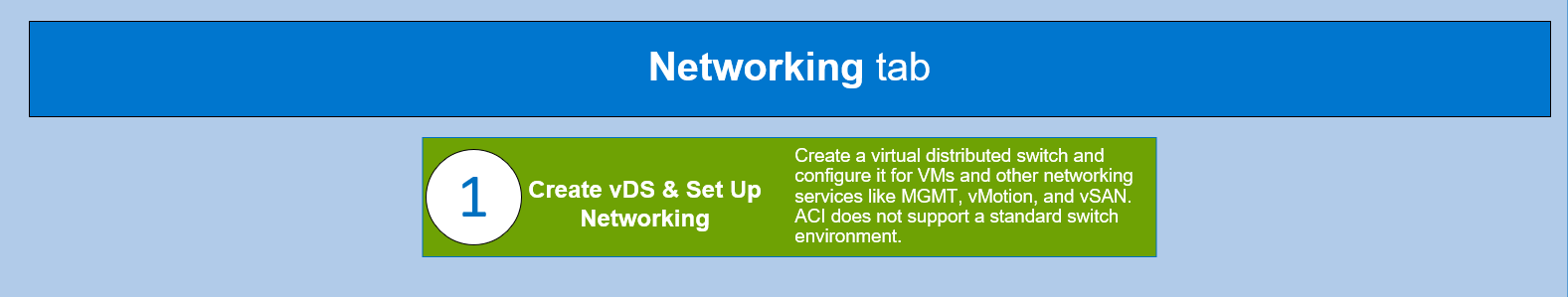

VMware vCenter

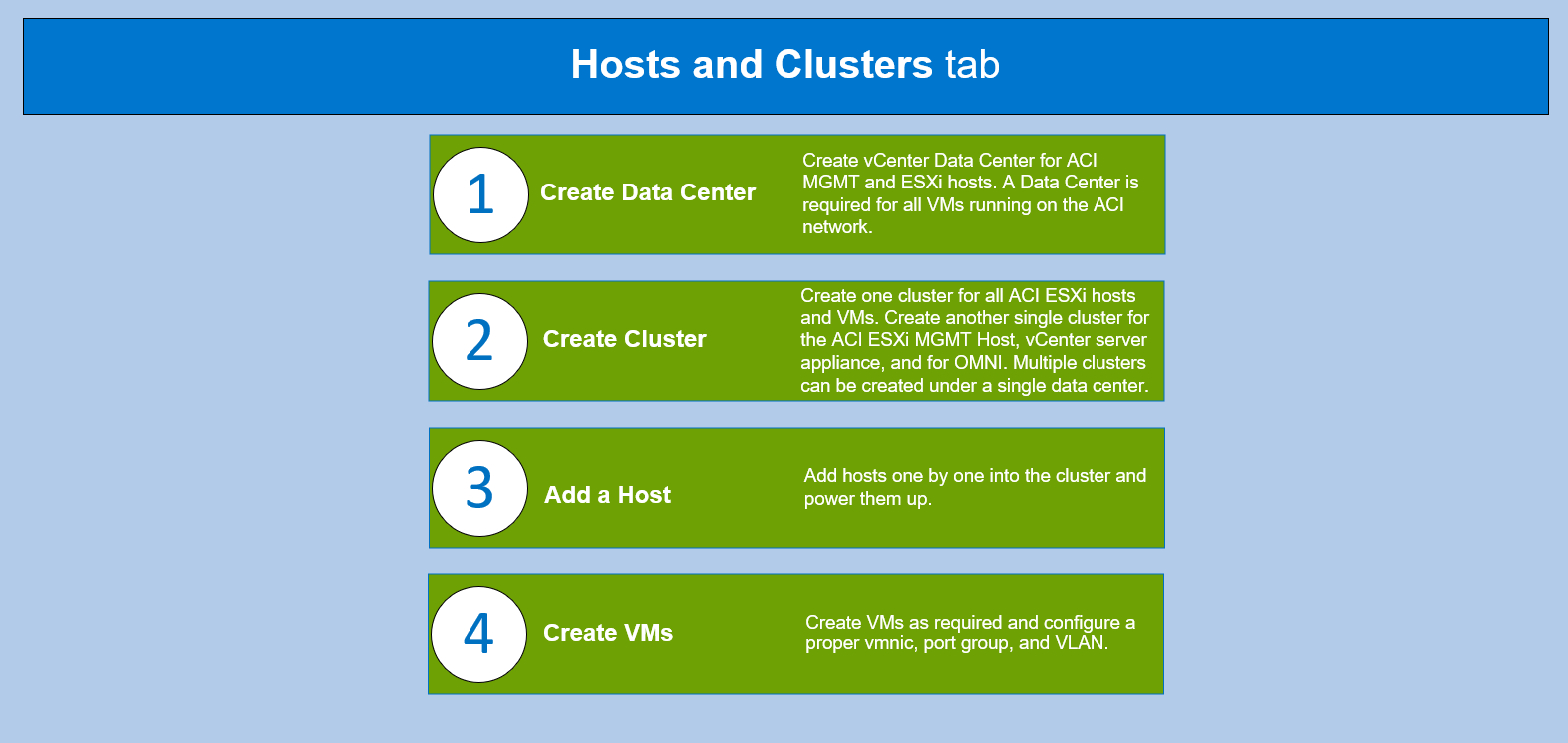

VMware vCenter is an advanced centralized management platform. The flowchart below assumes that you have completed the following prerequisites:

- Install the vCenter server appliance on the ESXi MGMT server.

- Install the ESXi VMvisor on the ESXi host servers for the MX SmartFabric and Cisco ACI integration environment.

OMNI

OMNI is an external plug-in for VMware vCenter that is designed to complement SFS by integrating with VMware vCenter to perform fabric automation. This integration automates VLAN changes that occur in VMware vCenter and propagates those changes into the related SFS instances running on the MX platform, as shown in the following flowchart figure.

The combination of OMNI and Cisco ACI vCenter integration creates a fully automated solution. OMNI and the Cisco APIC recognize and allow a VLAN change to be made in vCenter, and this change will flow through the entire solution without any manual intervention.

For more information about OMNI, see the SmartFabric Services for OpenManage Network Integration User Guide on the Dell EMC OpenManage Network Integration for VMware vCenter documentation page.

Figure 2 OMNI integration workflow

MX Single Chassis Deployment for ACI Integration

A single MX7000 chassis may also join an existing Cisco ACI environment by using the MX5108n ethernet switch. The MX chassis in this example has two MX5108n ethernet switches and two MX compute sleds.

The connections between the ACI environment and the MX chassis are made using a double-sided multi-chassis link aggregation group (MLAG). The MLAG is called a vPC on the Cisco ACI side and a VLT on the PowerEdge MX side. The following figure shows the environment.

Figure 3 SmartFabric and ACI environment using MX5108n Ethernet switches logical topology

Reference

List of Acronyms

- ACI: Cisco Application Centric Infrastructure

- AEP: Attachable Access Entity Profile

- APIC: Cisco Application Policy Infrastructure Controller

- CDP: Cisco Discovery Protocol

- EPG: End Point Groups

- LLDP: Link Layer Discovery Protocol

- MCP: Mis-Cabling Protocol

- MCM: Multi Chassis Management Group

- MLAG: Multi-chassis link aggregation group

- MX FSE: Dell MX Fabric Switching Engines

- MX FEM: Dell MX Fabric Expander Modules

- MX IOMs: Dell MX I/O Modules

- MX MCM: Dell MX Multichassis Management Group

- OME-M: Dell OpenManage Enterprise-Modular

- OMNI: Dell OpenManage Network Integration

- PC: Port Channel

- STP: Spanning Tree Protocol

- VCSA: VMware vCenter Server Appliance

- vDS: Virtual Distributed Switch

- VLAN: Virtual Local Area Network

- VM: Virtual machine

- VMM: VMware Virtual Machine Manager

- vPC: Virtual Port Channel

- VRF: Virtual Routing and Forwarding

Documentation and Support

Dell EMC PowerEdge MX Networking Deployment Guide

Dell EMC PowerEdge MX SmartFabric and Cisco ACI Integration Guide

Networking Support & Interoperability Matrix

Dell EMC PowerEdge MX VMware ESXi with SmartFabric Services Deployment Guide

Integrate Dell PowerEdge MX with Cisco ACI

Thu, 09 Feb 2023 20:05:01 -0000


You can integrate the Dell PowerEdge MX platform running Dell SmartFabric Services (SFS) with Cisco Application Centric Infrastructure (ACI). This blog shows an example setup using PowerEdge MX7000 chassis that are configured in a Multi Chassis Management Group (MCM).

We validated the example setup using the following software versions:

- MX chassis: 2.00.00

- MX IOMs (MX9116n): 10.5.4.1.29

- Cisco APIC: 5.2(6e)

- Cisco leaf switches: 4.2(7u)

The validated Cisco ACI environment includes a pair of Nexus C93180YC-EX switches as leafs. We connected these switches to a single Nexus C9336-PQ switch as the spine using 40GbE connections. We connected MX9116n FSE switches to the C93180YC-EX leafs using 100GbE cables.

Configuration

The Dell Technologies PowerEdge MX with Cisco ACI Integration blog provides an overview of the configuration steps for each of the components:

- Cisco Application Policy Infrastructure Controller (APIC)

- Dell PowerEdge MX OpenManage Enterprise-Modular (OME-M)

- VMware vCenter Server Appliance (VCSA)

- Dell OpenManage Network Integration (OMNI)

For more detailed configuration instructions, refer to the Dell EMC PowerEdge MX SmartFabric and Cisco ACI Integration Guide.

Resources

Dell EMC PowerEdge MX Networking Deployment Guide

Dell EMC PowerEdge MX SmartFabric and Cisco ACI Integration Guide

Networking Support & Interoperability Matrix

Dell EMC PowerEdge MX VMware ESXi with SmartFabric Services Deployment Guide

Dell Technologies PowerEdge MX Platform: MX IOM Firmware Update Process

Thu, 08 Dec 2022 23:40:12 -0000


If you are reading this blog, most likely your interest is in how to update the firmware on the Dell Networking MX I/O modules (IOMs). This blog will go through all the necessary steps to update both the Dell OME-Modular application and the networking IOMs for all types of deployments.

Starting with OME-M 2.00.00, a new recommended order for updating the MX chassis and all its components has been documented in Appendix B of the Dell OpenManage Enterprise – Modular Edition Version 2.00.00 for PowerEdge MX7000 Chassis User’s Guide. The new process, called phased update order, is supported for upgrading from OME-M 1.40.xx to OME-M 2.00.00 and later.

Note: If updating to a version prior to OME-M 2.00.00 or updating from a version prior to OME-M 1.40.00, use the update process documented in the section titled Updating firmware for PowerEdge MX solution in the appropriate Dell OpenManage Enterprise – Modular Edition PowerEdge MX7000 Chassis User’s Guide.
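The version conditions above can be expressed as a short check. This is a sketch under the assumption that OME-M versions are three dot-separated numeric fields (for example 1.40.00). The function names are hypothetical.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '2.00.00' into (2, 0, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def use_phased_update_order(current: str, target: str) -> bool:
    """True when the phased update order applies, per the conditions in the text."""
    # Phased order: starting from OME-M 1.40.xx or later, going to 2.00.00 or later.
    return parse_version(current) >= (1, 40, 0) and parse_version(target) >= (2, 0, 0)


print(use_phased_update_order("1.40.20", "2.00.00"))
print(use_phased_update_order("1.30.00", "2.00.00"))
```

When the check returns False, the note above applies and the update process from the matching chassis user's guide should be followed instead.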

This blog will follow the phased update order process. After updating the Networking IOMs, please refer to the MX7000 Chassis User Guide linked above for server update instructions.

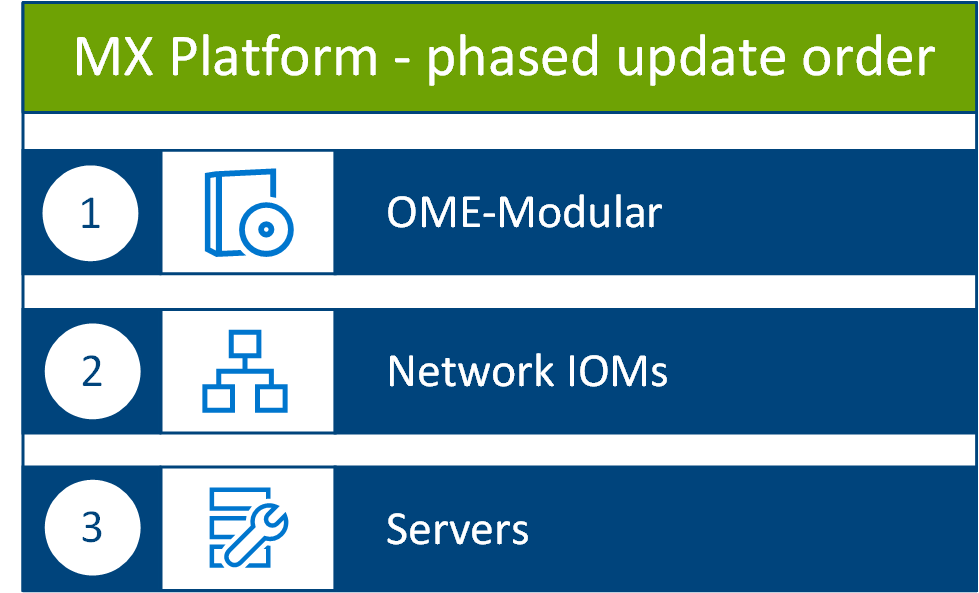

The following image shows the update order for all deployment types. In the sections below, the instructions will provide additional information when updating multichassis or using different IOM operating modes.

Note: The update order for versions prior to OME-M 2.00.00 recommended an order of servers, followed by OME-M, and then network IOMs. Although the order is different, the steps to update OME-M and network IOMs remain the same.

MX Platform phased update order


MX7000 Solution Baseline

All components and devices within the MX chassis are tested as a validated solution stack or firmware baseline. Each release of the OME-Modular software includes an update to the firmware baseline. The MX7000 Chassis User Guide contains the current baseline for that release and the baselines for past OME-M releases. The details are listed in a table within the Updating firmware for PowerEdge MX section.

The firmware baseline information can also be seen at the Networking Support & Interoperability Matrix.

Firmware location and catalogs

Firmware location

Dell update packages (DUPs): https://www.dell.com/support

OME-M DUPs: https://www.dell.com/support/home/en-us/product-support/product/poweredge-mx7000/drivers

IOM DUPs:

- https://www.dell.com/support/home/en-us/product-support/product/networking-mx9116n/drivers

- https://www.dell.com/support/home/en-us/product-support/product/networking-mx5108n/drivers

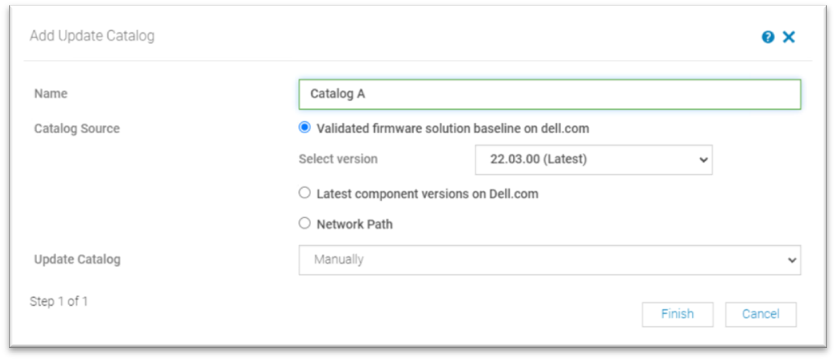

Catalogs

Catalogs are a list of firmware available for the components and devices installed on the chassis. There are three main types of catalogs:

- Validated firmware solution baseline on dell.com

- Latest component versions on Dell.com

- Network Path (Custom catalog from Dell Repository Manager)

The most common catalog type is the Validated firmware solution baseline on dell.com, which ensures that you follow the baseline. Catalogs based on baselines are available approximately 30 days after the release of a new OME-M version. This blog focuses on the validated firmware solution baseline.

Catalogs can be added to OME-M through the Configuration > Firmware Compliance section of the OME-M GUI.

Once a catalog is established, it can be used to establish a Firmware Compliance baseline to check compliance to that catalog. Once a compliance check is completed, the compliance report can be used to show components that do not match the catalog. From the compliance report, you can select components to update via the Make Compliant button. This is especially helpful for server components.
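Conceptually, a compliance check compares what is installed against what the catalog lists. The sketch below illustrates that idea only. It is not how OME-M implements the check, and the component names and version strings are made up for the example.

```python
from typing import Dict, Tuple


def out_of_compliance(installed: Dict[str, str], catalog: Dict[str, str]) -> Dict[str, Tuple[str, str]]:
    """Map component name -> (installed version, catalog version) for mismatches."""
    return {
        name: (version, catalog[name])
        for name, version in installed.items()
        if name in catalog and version != catalog[name]
    }


# Hypothetical inventory and catalog, for illustration only.
installed = {"OME-Modular": "1.40.20", "IOM": "10.5.4.1", "Server BIOS": "1.9.2"}
catalog = {"OME-Modular": "2.00.00", "IOM": "10.5.4.1", "Server BIOS": "1.9.2"}
print(out_of_compliance(installed, catalog))
```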

For chassis updates with OME-Modular and IOMs, the process described in this blog will start from the Devices view within OME-M.

OME-Modular update process

OME-M resides in the management module. Updating the chassis to the latest OME-M will update all components within the management module.

When updating a single chassis deployment, access the OME-M GUI using the chassis management IP address.

When updating a multi-chassis deployment, access the OME-M GUI of the lead chassis. If you select all the management modules in an MCM group, OME-M updates them in the required order.

Single chassis OME-M update

Follow the steps below to update to the desired OME-M release for a single chassis deployment.

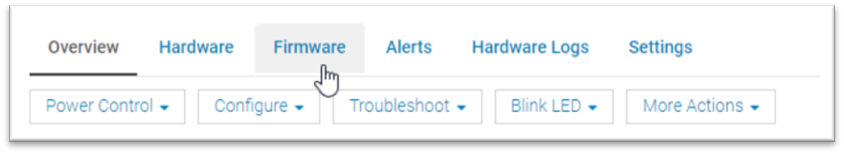

- Navigate to the chassis IP address.

- Click the Firmware tab.

Click the Firmware tab


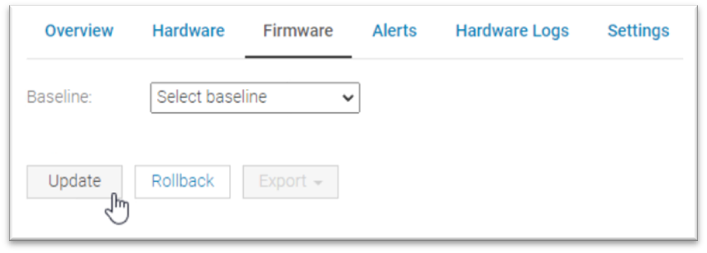

- Click Update.

Click Update


- Select the update source. If you have downloaded the DUP package from the Dell support site, choose Individual package and choose the file from your local directory. You can also download the update file directly from the OME-M GUI using the Baseline selection.

- Select both instances of OpenManage Enterprise Modular and click Next.

- Click either Update Now or Schedule Later, depending on your preference.

- Click Finish.

OME-Modular starts a job for the update process. You can monitor the job in the Monitor > Jobs area of the OME-M GUI.

- Wait for the job to fully complete. The OME-M GUI may be unreachable during parts of the upgrade process. Check that the job has fully completed before starting IOM updates.
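If the wait is scripted rather than watched in the GUI, the logic amounts to a polling loop that tolerates the management interface dropping out for a while. The sketch below is generic: `get_status` is a caller-supplied function, and the status strings "Completed" and "Failed" are placeholders, not values taken from OME-M.

```python
import time
from typing import Callable, Optional


def wait_for_job(
    get_status: Callable[[], str],
    poll_seconds: float = 60,
    timeout_seconds: float = 3 * 60 * 60,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until the job reports completion; return False if it reports failure."""
    waited = 0.0
    while True:
        try:
            status: Optional[str] = get_status()
        except ConnectionError:
            # The management interface can be unreachable mid-upgrade; keep waiting.
            status = None
        if status == "Completed":
            return True
        if status == "Failed":
            return False
        if waited >= timeout_seconds:
            raise TimeoutError("job did not finish within the timeout")
        sleep(poll_seconds)
        waited += poll_seconds


# Demo with canned statuses instead of a real chassis, and no real sleeping.
_statuses = iter(["Running", "Running", "Completed"])
print(wait_for_job(lambda: next(_statuses), sleep=lambda _seconds: None))
```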

Multi-chassis OME-M update

The process for updating multiple chassis is the same as the single chassis. The main difference is to select all of the chassis in the group.

- Navigate to the lead chassis IP address.

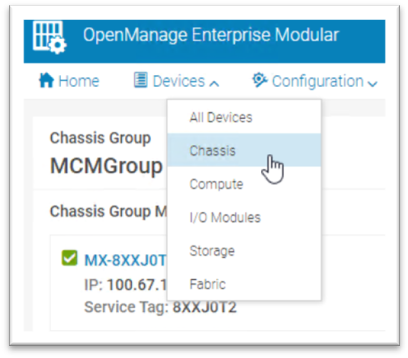

- Click Devices > Chassis.

Click Devices > Chassis

- Select all of the chassis in the group that you want to update.

- Click Update.

- Select the update source. If you have downloaded the DUP package from the Dell support site, choose Individual package and choose the file from your local directory. You can also download the update file directly from the OME-M GUI using the Baseline selection.

- Select all instances of OpenManage Enterprise Modular and click Next.

- Click either Update Now or Schedule Later, depending on your preference.

- Click Finish.

OME-Modular starts a job for the update process. You can monitor the job in the Monitor > Jobs area of the OME-M GUI.

- Wait for the job to fully complete. The OME-M GUI may be unreachable during parts of the upgrade process. Check that the job has fully completed before starting IOM updates.

Networking IOM update process

Once the chassis update process is completed, the IOMs are ready for the update process. The following sections will show the update process using the OME-M GUI and the DUP firmware package.

SmartFabric mode updates

SmartFabric and OME-Modular control the update process of the IOM pair in SmartFabric mode in the MX deployment. Updating an IOM on a fabric will start the update process for all IOMs in the fabric. OME-M will coordinate the update process to ensure continuity of the fabric, updating each IOM one at a time.

- Navigate to the lead chassis IP address.

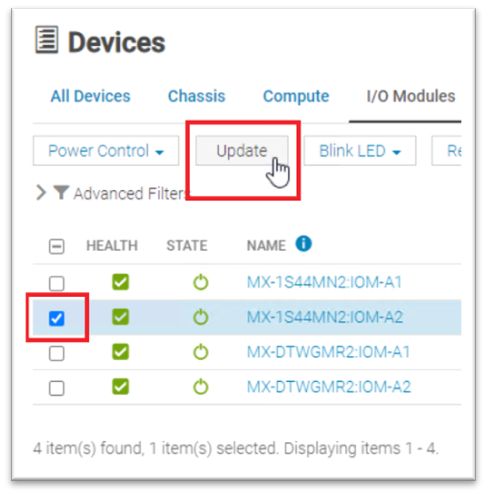

- Click Devices > I/O Modules.

- From the list of available IOMs, choose a single IOM in the desired fabric by using the selection box on the left side of the list. Ensure the selection is an MX9116n or MX5108n model.

- Click Update directly above the list.

Select a single IOM and click Update

- Select the update source. If you downloaded the DUP package from the Dell support site, choose Individual package and choose the file from your local directory. You can also download the update file directly from the OME-M GUI using the Baseline selection.

- Select the IOM device again and click Next or Finish.

Note: No servers were included in this update. Disregard the warnings for server options.

From this point, SmartFabric and OME-M coordinate the upgrade of all IOMs in the SmartFabric. All IOMs will be shown in a single job. To monitor the IOM upgrades, navigate to Monitor > Jobs, select the desired job, and click View Details.

The job is not fully complete until the job messages show completed and the green status checkmark is displayed. This update process can take 2 hours or more to complete. It is common to see the first IOM reboot and then have a long waiting period between status messages. The SmartFabric is performing checks to ensure the databases and configuration are properly established before reloading the second IOM.

If there is another fabric with a second set of IOMs in the chassis or scalable fabric, repeat the above steps for the other fabric.

Full Switch mode updates

IOMs in Full Switch mode operate and are configured much like traditional rack switches. However, the MX platform has the OME-Modular GUI to manage the IOMs. Dell Technologies recommends that you use the OME-Modular GUI to download and initiate the update process, as described in the SmartFabric operation steps above.

In Full Switch mode, you manage the update process and ensure connectivity is maintained throughout the update process. Update only one switch at a time to ensure connectivity is maintained.

For deployments with storage uplinks such as FCoE or FC, Dell Technologies recommends that you verify the active path and maintain storage connectivity throughout the process. If any issues occur in migrating the active storage uplink during the update process, contact Dell Support for assistance.

- Navigate to the lead chassis IP address.

- Click Devices > I/O Modules.

- From the list of available IOMs, choose a single IOM from the desired fabric. Ensure the selection is an MX9116n or MX5108n model. Use the selection box on the left side of the list.

- Click Update directly above the list.

- Select the update source. If you downloaded the DUP package from the Dell support site, choose Individual package and choose the file from your local directory. You can also download the update file directly from the OME-M GUI using the Baseline selection.

- Select the IOM device again and click Next or Finish.

- Wait for the update job to fully complete. To monitor the IOM upgrades, navigate to Monitor > Jobs, select the desired job, and click View Details. The job is not fully complete until the job messages show completed and the green status checkmark is displayed.

- Repeat the update process for the second IOM in steps 2 through 7.
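The one-switch-at-a-time rule for Full Switch mode can be sketched as a small control loop. This is only an illustration of the ordering, not an OME-M API: `start_update` and `job_completed` are hypothetical callables that stand in for starting the job in the GUI and checking that the job messages show completed, and the IOM names are placeholders.

```python
from typing import Callable, Iterable, List


def rolling_update(
    ioms: Iterable[str],
    start_update: Callable[[str], str],
    job_completed: Callable[[str], bool],
) -> List[str]:
    """Update IOMs strictly one at a time; stop at the first job that fails."""
    updated: List[str] = []
    for iom in ioms:
        job_id = start_update(iom)      # hypothetical: operator starts the job
        if not job_completed(job_id):   # hypothetical: job shows completed
            raise RuntimeError(f"update of {iom} did not complete; stopping")
        updated.append(iom)             # only now move on to the next IOM
    return updated


# Usage with stand-in callables (no real OME-M calls are made here).
done = rolling_update(
    ["IOM-A1", "IOM-A2"],
    start_update=lambda iom: f"job-{iom}",
    job_completed=lambda job_id: True,
)
print(done)
```

Stopping at the first incomplete job keeps the second IOM untouched, so the fabric retains one switch on its original firmware while the problem is investigated.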

Video

Dell PowerEdge MX7000 Platform firmware update process example:

Dell Technologies PowerEdge MX platform: I/O Module and Fibre Channel Switch Module Replacement Process

Sat, 10 Dec 2022 01:45:06 -0000


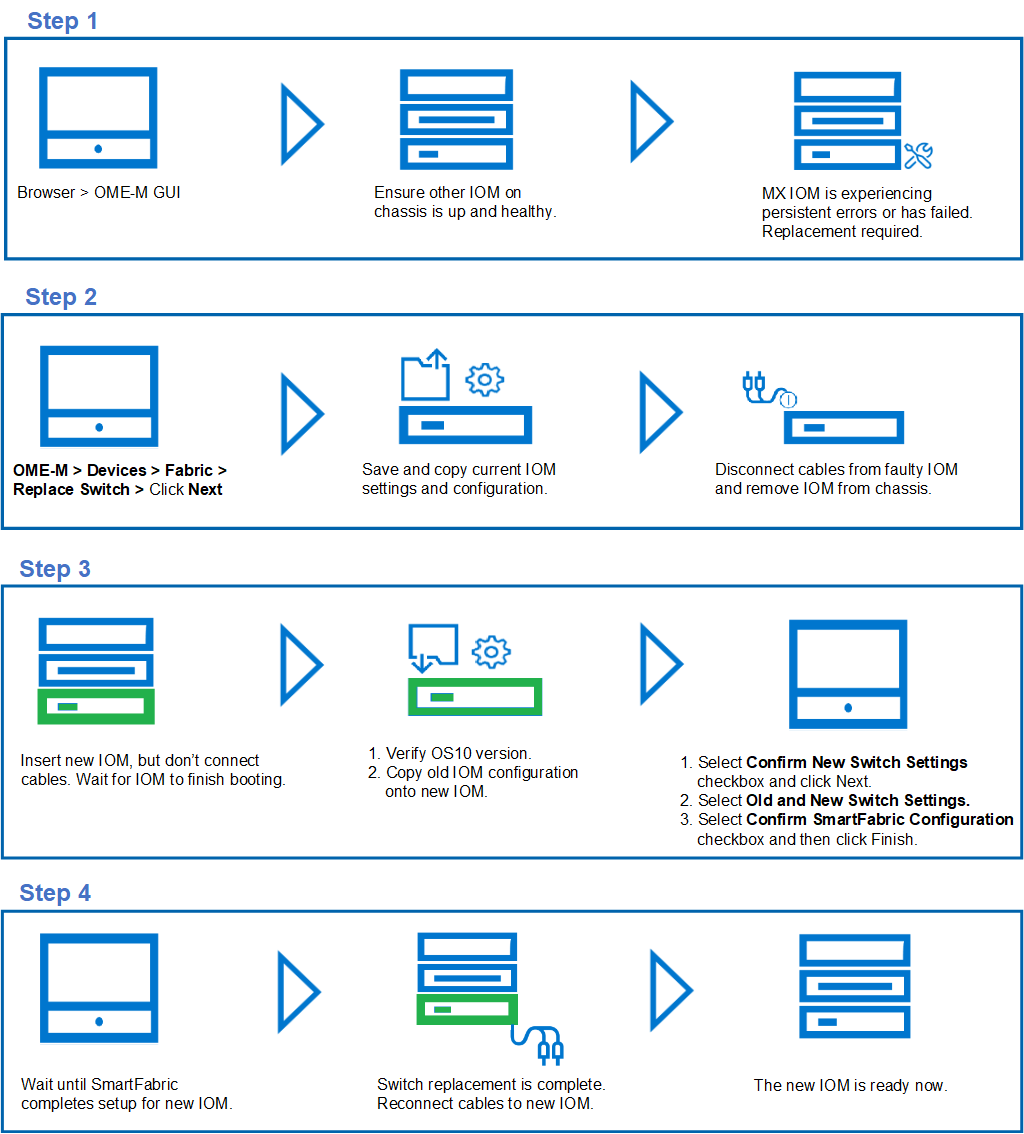

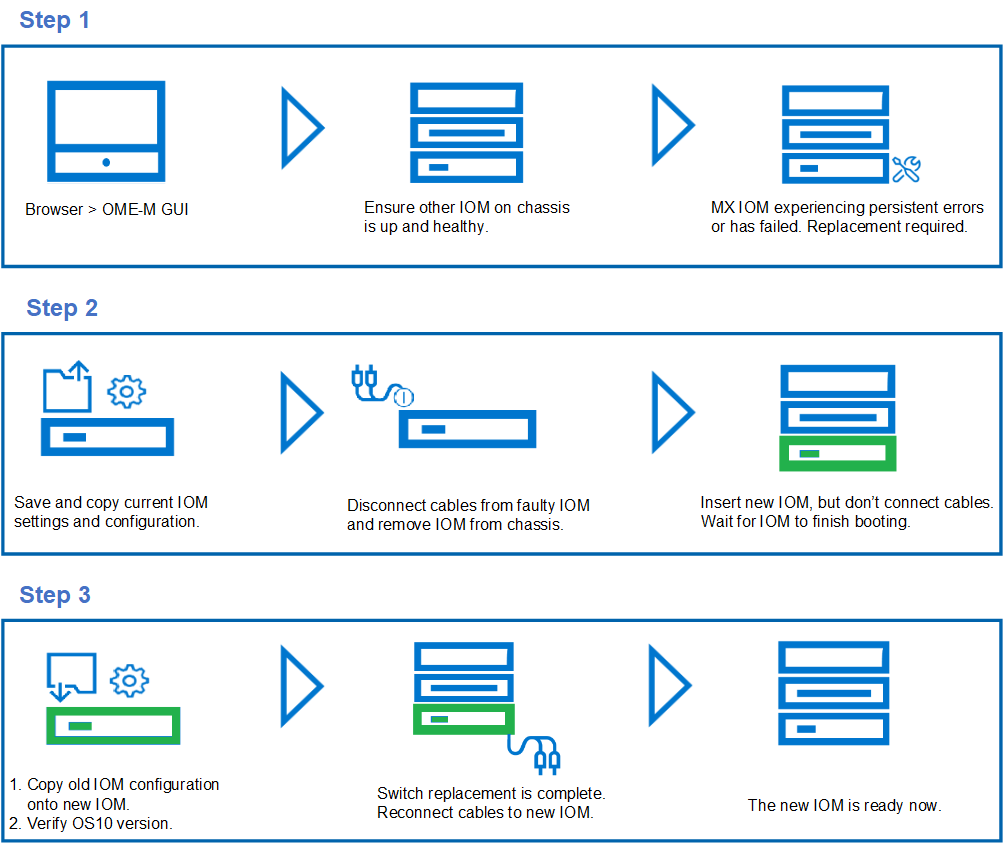

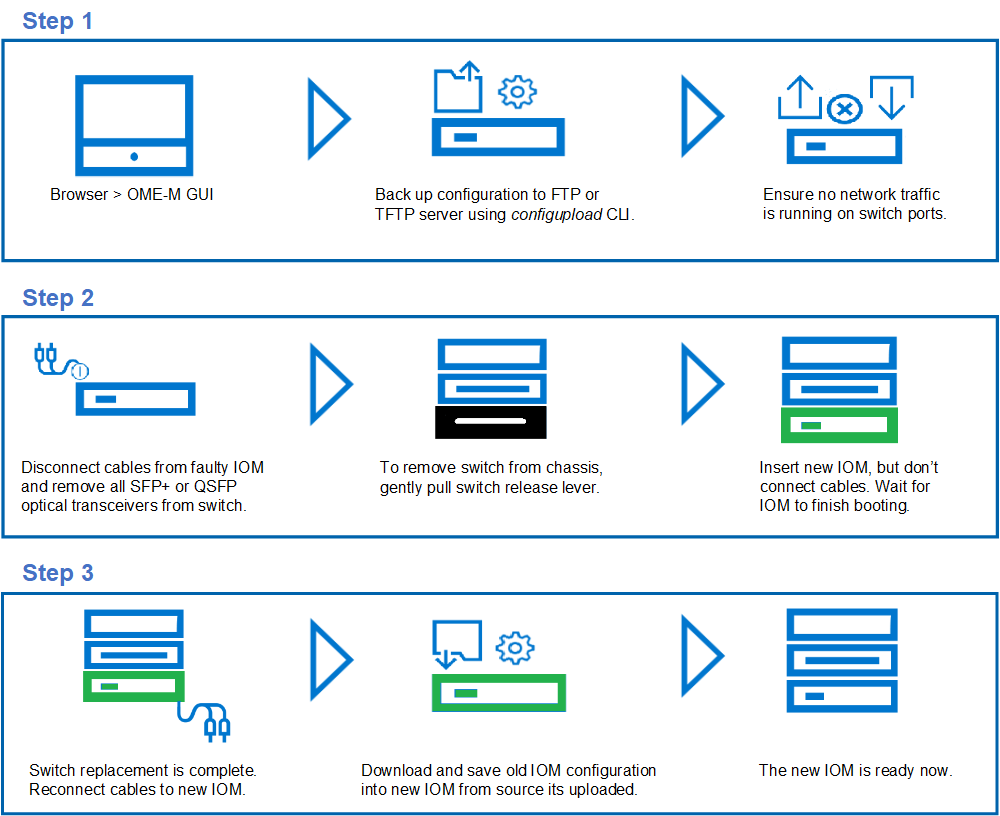

The Dell PowerEdge MX platform with OME-M 1.30.00 and later enables you to replace the MX I/O Module (IOM) and MX Fibre Channel (FC) switch module. This capability lets you recover from persistent errors, hardware failure, or other valid reasons. You can replace the IOM through the OME-M Graphical User Interface (GUI) in SmartFabric mode, or you can replace it manually through the Command Line Interface (CLI) in Full Switch mode.

This blog describes how to:

- Replace the MX IOM in SmartFabric mode.

- Replace the MX IOM in Full Switch mode.

- Replace the MXG610S FC switch module.

Note: Before starting the MX module switch replacement process, contact Dell Technical Support. For technical support, go to https://www.dell.com/support or call (USA) 1-800-945-3355.

Note: The new IOM includes OS10 factory default version and settings, and all ports are in no shutdown mode by default.

MX I/O Module replacement process in SmartFabric Mode

- Once the SmartFabric is created, you can only perform the IOM replacement process through the OME-M GUI.

- The IOM must be replaced with another IOM of the same type.

- Ensure that the OS10 version on the new IOM is identical to the OS10 version of the IOM being replaced.

- If the new OS10 version does not match another existing IOM in the chassis, then cancel the OME-M replacement wizard and upgrade or downgrade the new IOM OS10 firmware to match.

- Once the OME-M replacement wizard completes setup for the new IOM, connect all the cables to the new IOM.

Note: As a best practice, manually back up the IOM startup configuration on a regular basis. If a configuration is not available for the faulty IOM, you must configure the IOM through the standard initial setup process.
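The two matching rules above (same IOM type, identical OS10 version) can be expressed as a simple pre-check. This is a minimal sketch: the `Iom` record and the version strings are hypothetical placeholders, and in practice the values would be read from the switches themselves.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Iom:
    model: str          # for example "MX9116n"
    os10_version: str   # version string as reported by the switch


def replacement_issues(faulty: Iom, new: Iom) -> List[str]:
    """List the reasons the new IOM is not yet a valid replacement."""
    issues: List[str] = []
    if new.model != faulty.model:
        issues.append("IOM type differs: replace with the same type")
    if new.os10_version != faulty.os10_version:
        issues.append("OS10 version differs: upgrade or downgrade the new IOM")
    return issues


# Placeholder version strings, for illustration only.
old = Iom("MX9116n", "10.5.x.a")
print(replacement_issues(old, Iom("MX9116n", "10.5.x.a")))
print(replacement_issues(old, Iom("MX9116n", "10.5.x.b")))
```

An empty list means both conditions hold and the replacement can proceed.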

MX I/O Module replacement process in Full Switch Mode

- Before replacing the faulty IOM, ensure that the other IOM on the chassis is up, healthy, and in Full Switch mode. A complete network traffic outage might occur if these conditions are not met.

- The IOM must be replaced with another IOM of the same type.

- The OS10 firmware is factory-installed in the MX9116n Fabric Switching Engine (FSE) and in the MX5108n Ethernet Switch.

- Ensure that the OS10 version on the new IOM is identical to the OS10 version of the IOM being replaced.

- If the new OS10 version does not match another existing IOM in the chassis, then cancel the OME-M replacement wizard and upgrade or downgrade the new IOM OS10 firmware to match.

- Once the OME-M completes setup for the new IOM, connect all the cables to the new IOM.

MXG610S Fibre Channel Switch module replacement process

The PowerEdge MX platform allows replacement of an MXG610S FC switch module. The MXG610S has a flexible architecture that lets connectivity and bandwidth scale dynamically with the latest generation of Fibre Channel for the PowerEdge MX7000 platform. The MXG610S features up to 32 Fibre Channel ports, which auto-negotiate to 32, 16, or 8 Gbps speed.

Note: Never leave the slot on the blade server chassis open for an extended period. To maintain proper airflow, fill the slot with either a replacement switch module or filler blade.

Note: As a best practice, manually back up the IOM startup configuration on a regular basis. If a configuration is not available for the faulty IOM, you must configure the IOM through the standard initial setup process.

References:

MX SmartFabric mode IOM replacement process

MX Full Switch mode IO module replacement process

MXG610 Fibre Channel switch module replacement process

Upgrading Dell EMC SmartFabric OS10

Manual backup of IOM configuration through the CLI

Interactive Demo: OpenManage Enterprise Modular for MX solution management

Dell Technologies PowerEdge MX Platform: NPAR

Mon, 22 May 2023 18:49:51 -0000


Network interface card partitioning (NPAR) allows users to minimize the number of physical network interface cards (NICs) and to separate Local Area Network (LAN) and Storage Area Network (SAN) connections. NPAR improves bandwidth allocation, network traffic management, and utilization in virtualized and non-virtualized network environments. Although the number of physical servers may shrink, the demand for NIC ports keeps growing.

The NPAR feature allows you to use a single physical network adapter for multiple logical networking connections.

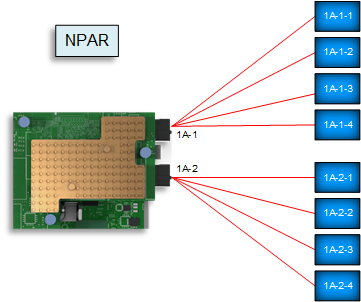

NIC with NPAR enabled

Creating multiple virtual NICs for different applications uses Operating System (OS) resources. Deploying NPAR on the NIC will reduce the OS resource consumption and put most of the load on the NIC itself.

Note: Not every implementation requires NPAR. NPAR benefits depend on the server NIC and the network traffic that should run on that NIC.

This blog describes how to validate, enable, and configure NPAR on a Dell PowerEdge MX Platform through the server System Setup and the MX compute sled Server Templates within Dell OpenManage Enterprise Modular (OME-M).

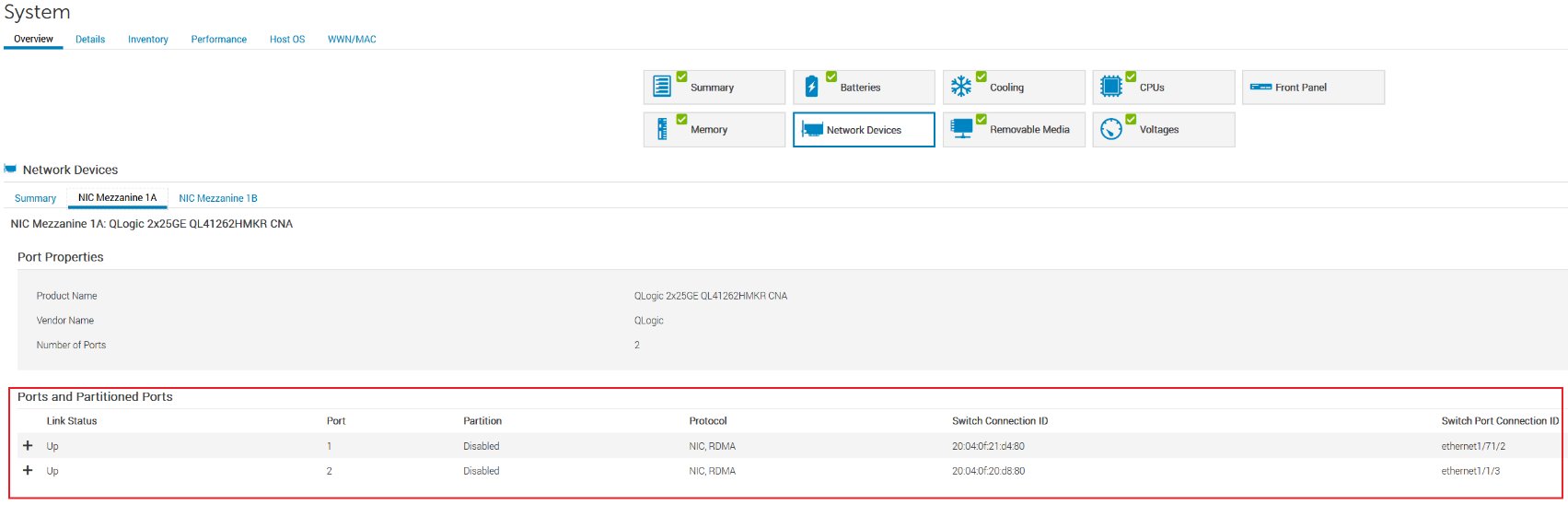

The MX750c compute sled and QLogic-41262 Converged Network Adapter (CNA) have been used in the deployment example described throughout this blog.

Validating the NIC port without NPAR enabled

NPAR is not enabled by default on the MX compute sled NIC. This section demonstrates how to verify the current settings through the following methods:

- Server Integrated Dell Remote Access Controller (iDRAC)

- MX OME-M

- Windows operating system

- VMware vSphere ESXi

Note: The following figures show NIC status without NPAR enabled for all the techniques.

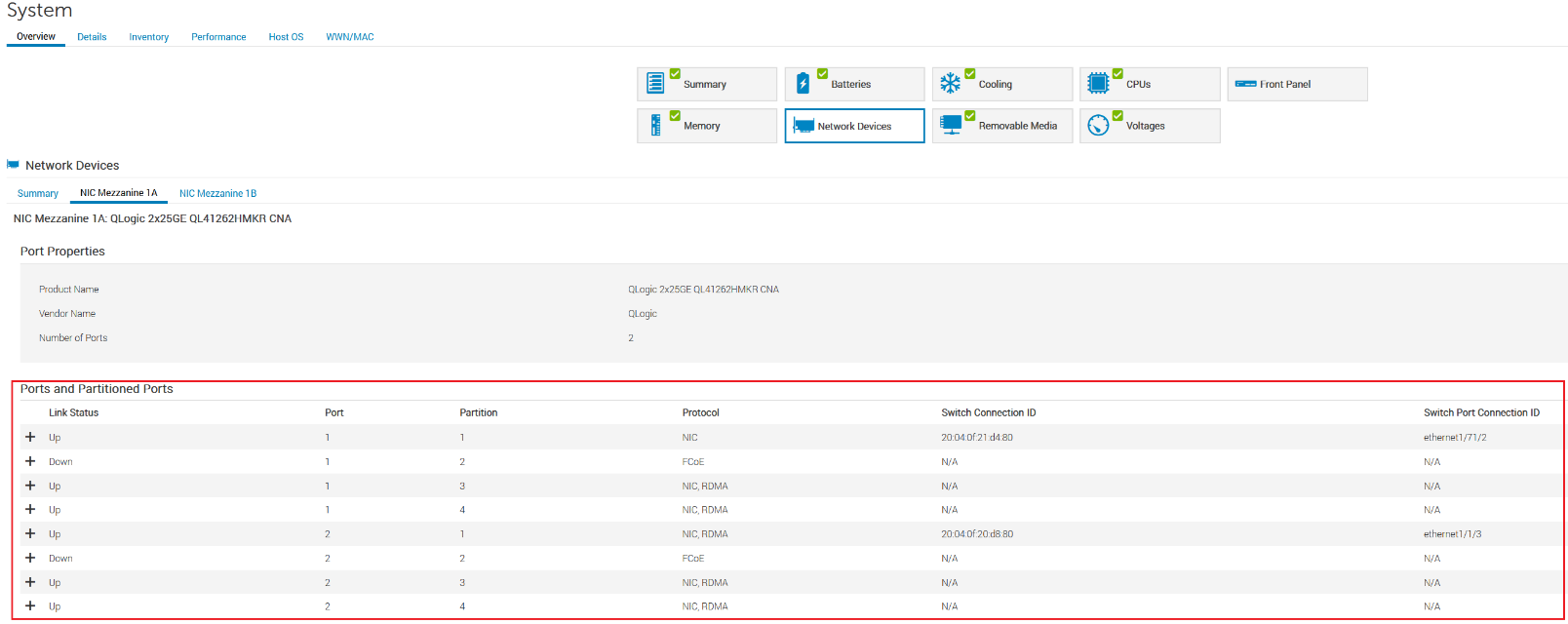

Server iDRAC

- Open the server Integrated Dell Remote Access Controller (iDRAC).

- Click System > Overview > Network Devices.

The partition is Disabled for port-1 and port-2 of NIC Mezzanine 1A, as shown in the figure below.

MX 750c Sled server iDRAC settings

MX OME-M

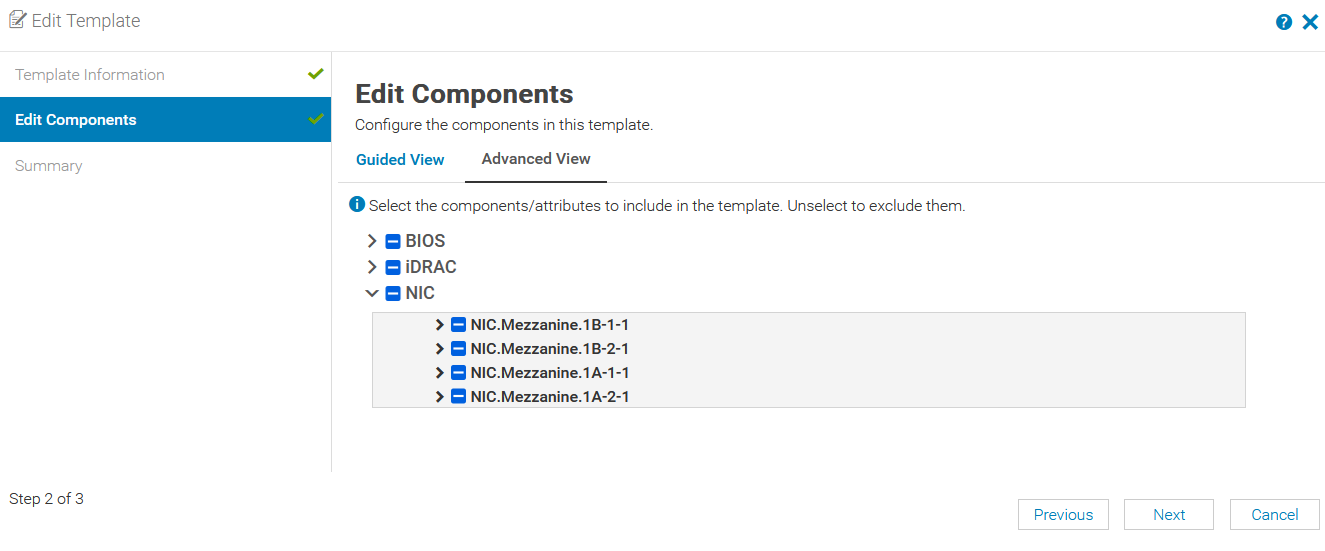

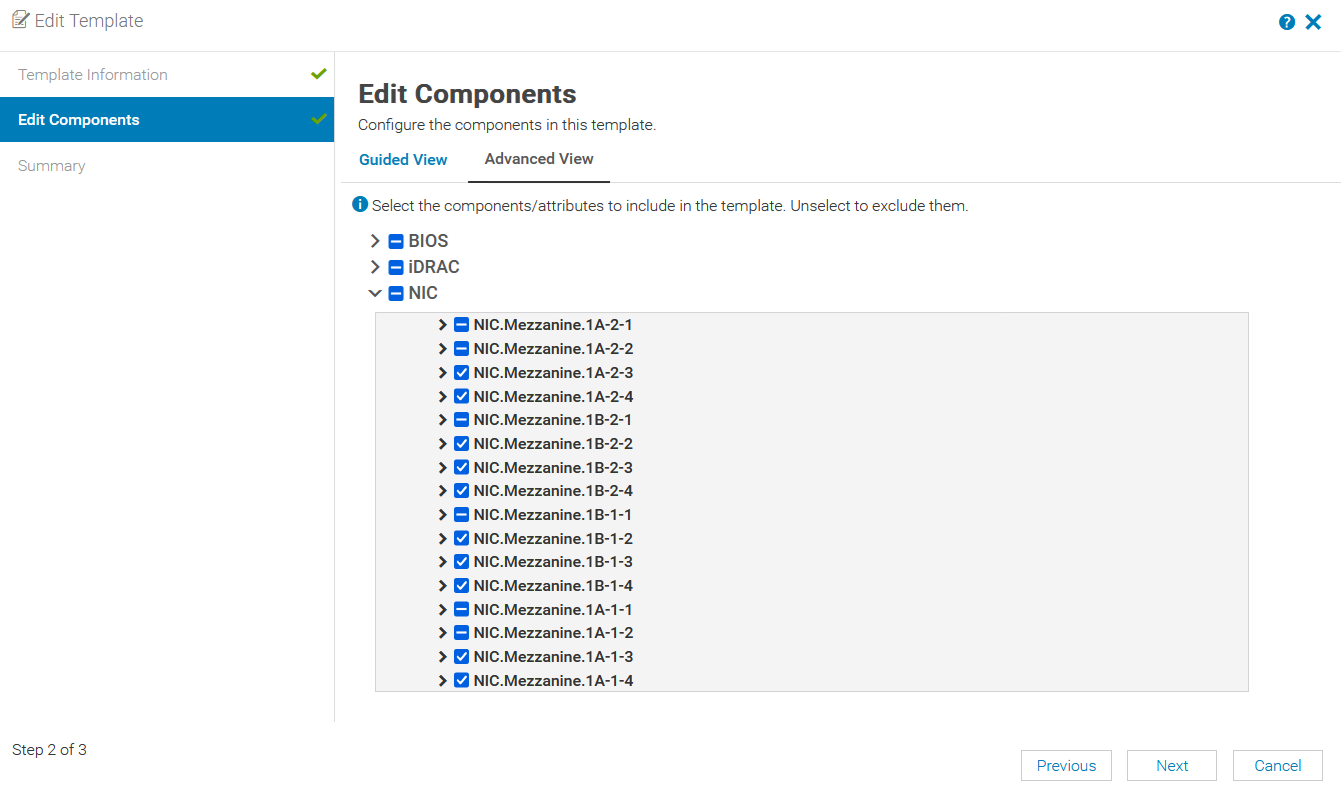

- In OME-M, select Configuration > Template.

- In the Edit Template window, select the server template and then click Edit > Next.

- On the Edit Components page, select the Advanced View tab.

No partitions are enabled for port-1 and port-2 of NIC Mezzanine 1A, as shown in the figure below.

OME-M server Edit Template settings

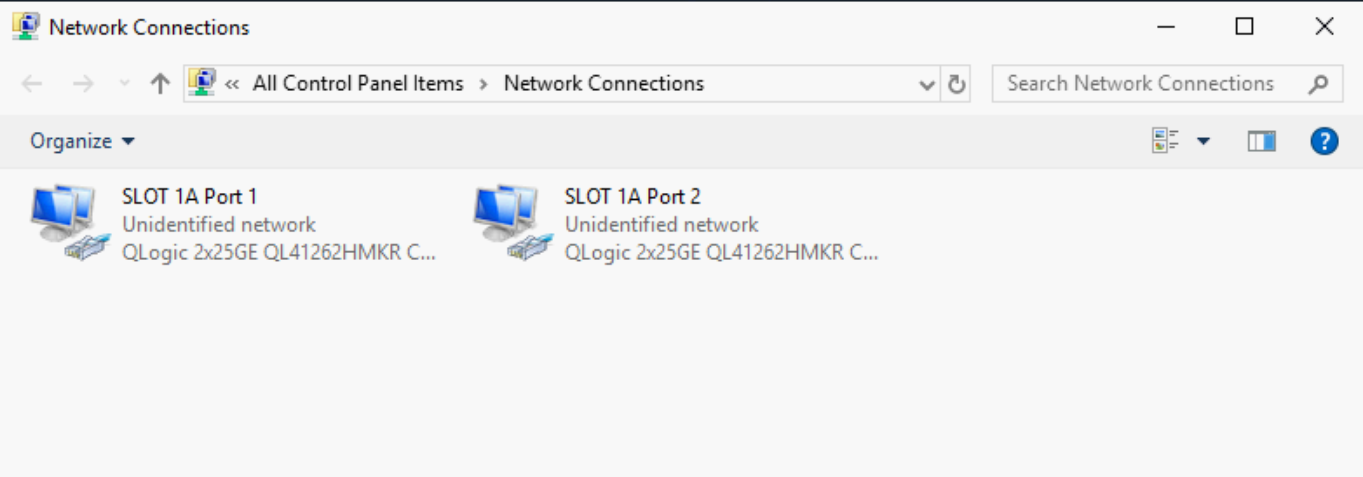

Windows operating system

- Log into Windows Server.

- Open the Windows Control Panel.

- Click Network and Sharing Center > Change adapter settings.

No partitions are enabled for NIC SLOT 1A, port-1 and port-2, as shown in the figure below.

Windows Network adapter settings

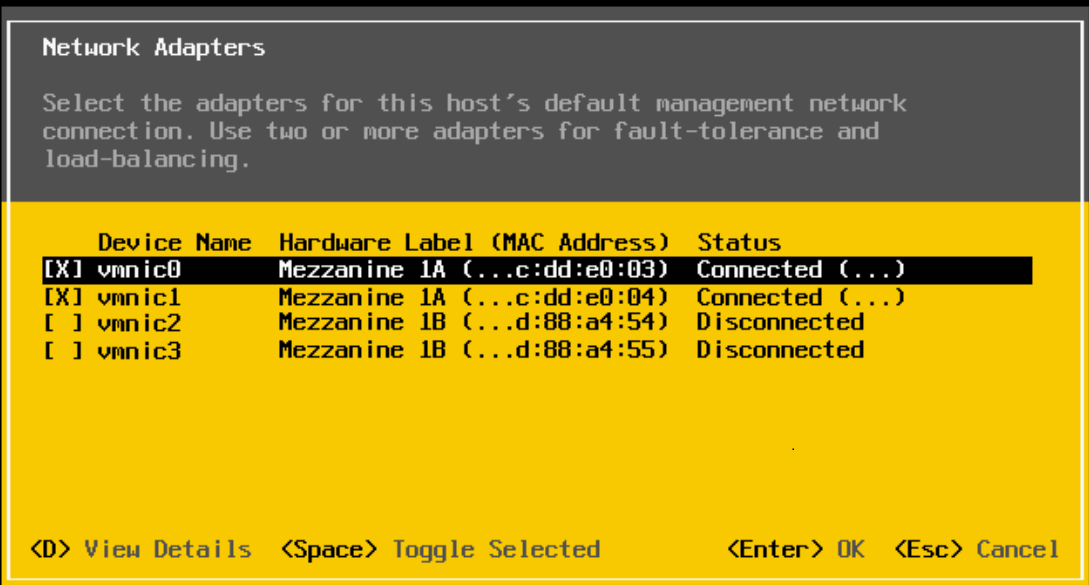

VMware vSphere ESXi

- Log into the VMware host server.

- Click Configure Management Network > Network Adapters.

No partitions are enabled for port-1 and port-2 of NIC Mezzanine 1A, as shown in the figure below.

VMware Network Adapters settings

Configure NPAR Device Settings and NIC Partitioning

You can configure NPAR device settings and NIC Partitioning on the MX compute sled through the server System Setup wizard.

The QLogic-41262 CNA shown in this example supports eight partitions per CNA port. In the following deployment example, we create four NIC partitions. However, only two partitions are used: one for Ethernet traffic and one for FCoE traffic.

System Setup wizard

To enable and configure NPAR on a server NIC through the System Setup wizard:

- In OME-M, select Compute.

- Select the required server.

- In the URL field, enter the IP address for the server.

- Open the Virtual Console.

- From the menu at the top of the window, click Next Boot.

- Select BIOS Setup and click OK.

- To reboot the server:

- From the menu at the top of the window, click Power.

- Select Reset System (warm boot) and then click Yes.

Device Settings

To configure the device settings:

- From the System Setup main menu, select Device Settings.

- Select Port-1 from mezzanine 1A of the CNA.

The Main Configuration page displays.

- To enable Virtualization Mode:

- Click Device Level Configuration.

- From the Virtualization Mode list, select NPar.

- Click Back.

Note: Do not enable NParEP-Mode. Enabling NParEP-Mode will create eight partitions per CNA port.

NIC Partitioning Configuration

To configure NIC partitioning for Partition 1:

- Click NIC Partitioning Configuration.

- Select Partition 1 Configuration.

- Validate NIC is set to Enabled.

- Set NIC + RDMA Mode to Disabled.

- Click Back.

To configure NIC partitioning for Partition 2:

- Select Partition 2 Configuration.

- Set FCoE Mode to Enabled.

- Click Back to return to the Main Configuration Page.

- To set the boot protocol:

- Select NIC Configuration.

- Set Boot Protocol to UEFI FCoE.

- To enable FCoE Configuration:

- Select FCoE Configuration.

- Set Connect 1 to Enabled.

- Click Back.

In this example partition-3 and partition-4 are not used. To disable NPar for Partition 3 and 4:

- Click NIC Partitioning Configuration.

- Set NIC partition-3 Mode to Disabled and click Back.

- Set NIC partition-4 Mode to Disabled and click Back.

- Click Back and then click Finish.

- To save the changes, click Yes.

- On the Success window, click OK.

The Device Settings Page displays.

- To return to the System Setup Main Menu, click Finish.

Configure second CNA port

To configure the second CNA port:

- From the System Setup main menu, select Device Settings.

- Select Port-2 from mezzanine 1A of the CNA.

The Main Configuration page displays.

- Repeat the steps in the previous sections, Device Settings and NIC Partitioning Configuration.

- To reboot the server:

- Click Finish.

- On the Confirm Exit window, click Yes.
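The partition layout configured in this example (Partition 1 for Ethernet, Partition 2 for FCoE, Partitions 3 and 4 disabled, applied identically to both CNA ports) can be written down as a small data structure. The sketch below is only a way to record and sanity-check the intended layout; the names `EXAMPLE_LAYOUT` and `active_modes` are illustrative and do not correspond to any Dell tool.

```python
from typing import Dict, List, Tuple

# Mode of partitions 1..4 on one CNA port, as configured in this example.
EXAMPLE_LAYOUT: Tuple[str, ...] = ("NIC", "FCoE", "Disabled", "Disabled")

# Both ports of mezzanine 1A receive the same layout.
PORTS: Dict[str, Tuple[str, ...]] = {
    "Port-1": EXAMPLE_LAYOUT,
    "Port-2": EXAMPLE_LAYOUT,
}


def active_modes(layout: Tuple[str, ...]) -> List[str]:
    """Return the modes of the partitions that carry traffic."""
    return [mode for mode in layout if mode != "Disabled"]


for port, layout in PORTS.items():
    print(port, len(layout), "partitions,", "in use:", active_modes(layout))
```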

Confirm NPAR status

The MX compute sled NIC is now configured for NPAR. The following sections describe how to confirm the NIC status with NPAR enabled.

Server iDRAC

To confirm the NPAR status on the server iDRAC:

- Open the server iDRAC.

- Click System > Overview > Network Devices.

Port-1 and port-2 of NIC Mezzanine 1A have four partitions for each NIC port, as shown in the following figure.

MX 750c Sled server iDRAC settings

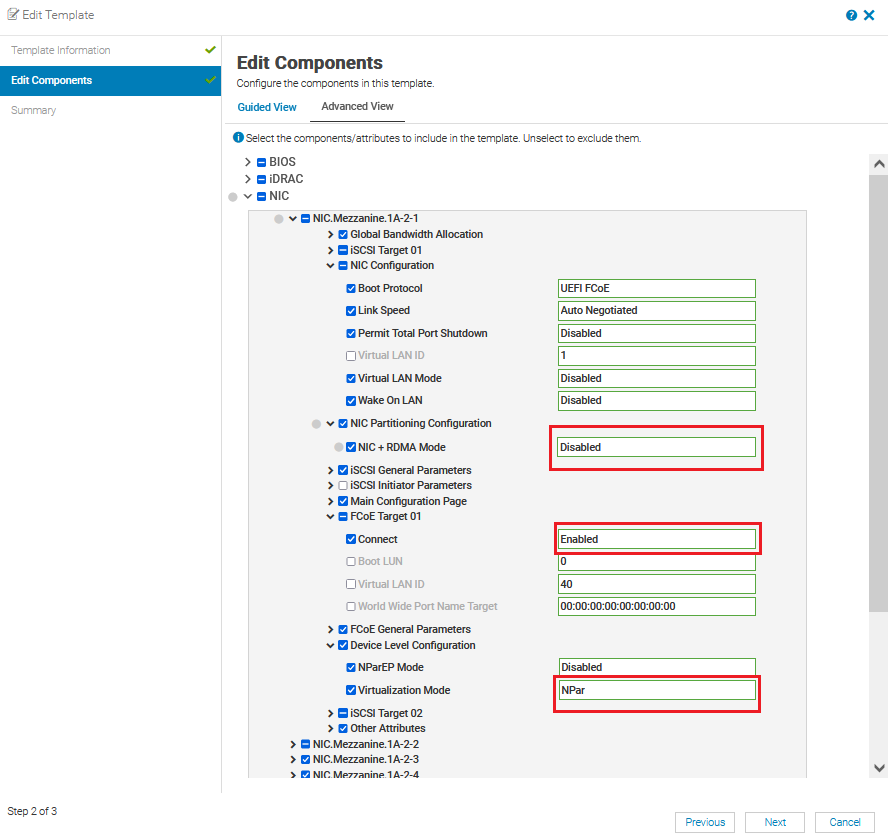

MX OME-M

To confirm the NPAR status in OME-M:

- In OME-M, select Configuration > Template.

- In the Edit Template window, select the server template and then click Edit > Next.

- On the Edit Components page, select the Advanced View tab.

Partitions for NIC port-1 and port-2 of NIC Mezzanine 1A have been enabled, as shown in the figure below.

MX OME-M Compute Sled NIC-NPAR enabled

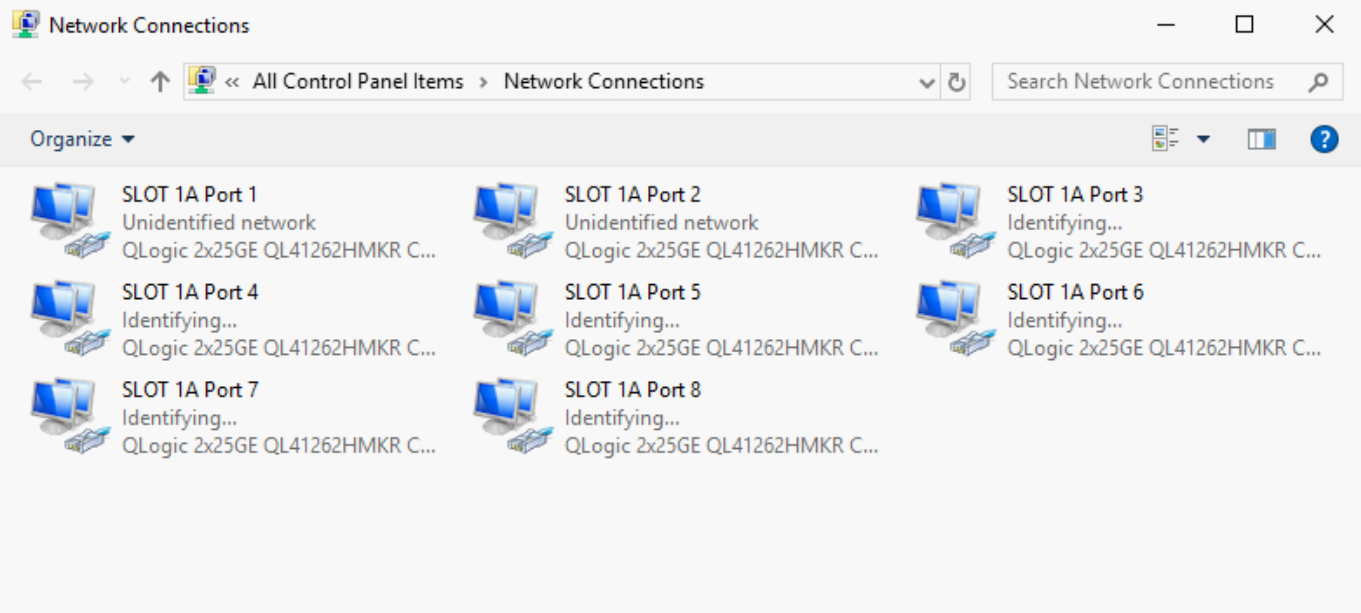

Windows operating system

To confirm the NPAR status in the Windows Control Panel:

- Log into Windows Server.

- Open the Windows Control Panel.

- Click Network and Sharing Center > Change adapter settings.

NIC Mezzanine 1A has four partitions for each NIC port, as shown in the figure below.

Windows Network adapter settings

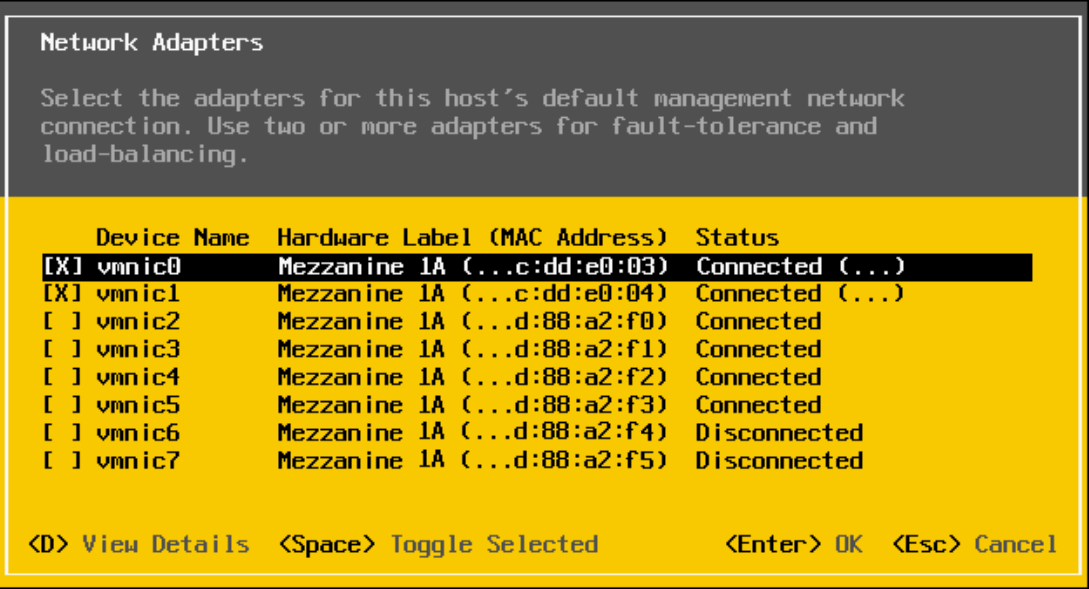

VMware vSphere ESXi

To confirm the NPAR status in VMware vSphere ESXi:

- Log into the VMware host server.

- Click Configure Management Network > Network Adapters.

The partitions for port-1 and port-2 of NIC Mezzanine 1A are displayed, as shown in the figure below.

VMware Network adapters settings

Configure NIC Partitioning with a template in OME-M

OME-M 1.40.00 introduces NPAR NIC configurations in the MX compute sled server template GUI. To configure NPAR settings on the PowerEdge MX platform, you must have administrator access.

Note: Ensure NPAR is enabled through System Setup before using the MX server template GUI for NPAR settings.

The MX server template GUI allows users to modify the NIC attributes. To configure NPAR attributes on the MX compute sled using the server template GUI:

- Open the OME-M console.

- If NPAR is configured on an existing server template, proceed to step 10.

- If NPAR is not configured on an existing server template, from the navigation menu, select Configuration, then click Templates.

Note: With OME-M 1.20.10 and earlier, the Templates option is called Deploy.

- From the center panel, click Create Template, then click From Reference Device to open the Create Template window.

- In the Template Name box, enter a name.

- Optionally, enter a description in the Description box, then click Next.

- In the Device Selection section, click Select Device.

- Select the compute sled from the desired chassis and click Finish.

- To complete the compute selection for server template, click Finish.

The server template status shows Running and then displays Completed after the process completes.

- Select the server template.

- Click Edit and then click Next.

- On the Edit Components page, select the Advanced View tab.

- Expand the NIC and the NIC port as shown in the figure below.

- Expand the desired NIC Port and expand Device Level Configuration. Verify the Virtualization Mode is set to NPAR.

- Expand the NIC Configuration and set Boot Protocol to UEFI FCoE.

- Expand the NIC Partitioning Configuration and set NIC + RDMA Mode to Disabled.

- Expand the FCoE menu and set Connect to Enabled.

- Click Next.

- Click Finish.

OME-M Server Template Edit settings

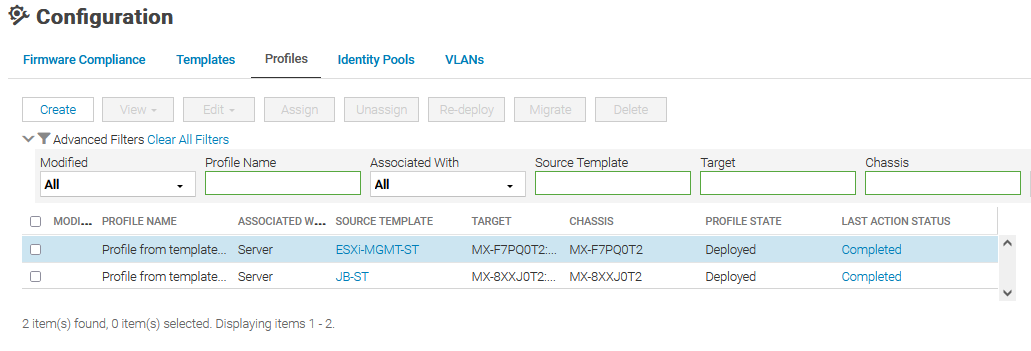

Server Template Profile

The PowerEdge MX platform supports profiles with OME-M 1.30.00 and later. OME-M creates and automatically assigns a profile once the server template is deployed successfully. Profiles can be used to deploy server templates with modified attributes, including NPAR settings. A single profile can be applied to multiple server templates with only the modified attributes, or with all attributes.

The following figure shows two profiles from template deployments that have been created and deployed.

Note: The server template cannot be deleted until it is unassigned from a profile.

MX Server Template Profiles

Choosing a NIC

The following sections provide information to assist in choosing the appropriate NIC for your environment.

PowerEdge MX Ethernet Mezzanine Cards

The following table shows NPAR support details for MX-qualified NICs:

| Vendor | Model | Max Speed | Ports | NIC Type | NPAR | Number of Partitions |
|---|---|---|---|---|---|---|
| Marvell/QLogic | QL41262 | 10/25GbE | 2 | CNA | Yes | 8/port, total 16 |
| Marvell/QLogic | QL41232 | 10/25GbE | 2 | NIC | Yes | 8/port, total 16 |
| Broadcom | 57504 | 10/25GbE | 4 | NIC | Yes | 4/port, total 16 |
| Intel | XXV710 | 10/25GbE | 2 | NIC | No | N/A |
| Mellanox | ConnectX-4 LX | 10/25GbE | 2 | NIC | No | N/A |
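The totals in the table follow from ports multiplied by partitions per port. As a quick check, the sketch below encodes only the NPAR-capable rows of the table; the dictionary name and function are illustrative.

```python
from typing import Dict, Tuple

# (ports, partitions per port) for the NPAR-capable NICs listed in the table.
NPAR_NICS: Dict[str, Tuple[int, int]] = {
    "QL41262": (2, 8),
    "QL41232": (2, 8),
    "57504": (4, 4),
}


def total_partitions(model: str) -> int:
    """Total NPAR partitions for one adapter: ports x partitions per port."""
    ports, per_port = NPAR_NICS[model]
    return ports * per_port


for model in NPAR_NICS:
    print(model, total_partitions(model))
```

All three adapters reach the same total of 16, either as two ports with eight partitions each or as four ports with four partitions each.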

Comparison of NIC NPAR and Cisco Virtual Interface Card (VIC)

NIC NPAR and Cisco VIC both provide multiple network connections using limited physical ports of the NIC. In the comparison table below, some key differences between NIC NPAR and Cisco VIC are highlighted.

| NIC NPAR | Cisco VIC |
|---|---|
| Industry standard. Works with any supported network switch. | Cisco proprietary LAN and SAN interface card for UCS and modular servers. |
| Up to four or up to eight physical function vNICs per adapter port. | Up to 256 PCIe devices can be configured. Traffic performance is physically limited by the bandwidth available on the network link. |
| Configured in the BIOS or iDRAC of the server. | Requires a UCS Fabric Interconnect to associate vNIC ports. |
| MAC and bandwidth allotment are assigned and configured in the BIOS. | MAC and bandwidth allotment are determined by a service profile. |
| NIC port enumeration is predictable and provides uniform device name assignments across a population of identical, freshly deployed ESXi hosts. | Cisco UCS can manage the order in which NICs are enumerated in ESXi. |

NIC teaming options on the MX Platform

Below are the NIC teaming options available on MX compute sleds.

| Teaming option | Description |
|---|---|
| No teaming | No NIC bonding, teaming, or switch-independent teaming |
| LACP teaming | LACP (also called 802.3ad or dynamic link aggregation). NOTE: LACP Fast timer is not currently supported. |
| Other | Other. NOTE: If using the Broadcom 57504 Quad-Port NIC and two separate LACP groups are needed, select this option, and configure the LACP groups in the Operating System. Otherwise, this setting is not recommended as it can have a performance impact on link management. |

Restrictions

- The following restrictions apply for both Full Switch and SmartFabric modes:

- If NPAR is not in use, both switch-dependent (LACP and static LAG) and switch-independent teaming methods are supported.

- If NPAR is in use, only switch-independent teaming methods are supported. Switch-dependent teaming (LACP and static LAG) is not supported.

- If switch dependent (LACP) teaming is used, the following restrictions apply:

- The iDRAC shared LAN on motherboard (LOM) feature can only be used if the Failover option on the iDRAC is enabled.

- If the host operating system is Microsoft Windows, the LACP timer MUST be set to Slow, also referred to as Normal.

- In SmartFabric mode, deploying an imported template that has NPAR enabled does not configure the bandwidth settings for IOMs.
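The NPAR teaming restriction above reduces to a single rule, sketched here as a lookup. The function name and the string labels are illustrative; the rule itself is the one stated in the restrictions list.

```python
from typing import FrozenSet

SWITCH_INDEPENDENT: FrozenSet[str] = frozenset({"switch-independent"})
SWITCH_DEPENDENT: FrozenSet[str] = frozenset({"LACP", "static LAG"})


def supported_teaming(npar_in_use: bool) -> FrozenSet[str]:
    """Teaming methods supported in both Full Switch and SmartFabric modes."""
    if npar_in_use:
        # With NPAR, switch-dependent teaming is not supported.
        return SWITCH_INDEPENDENT
    return SWITCH_INDEPENDENT | SWITCH_DEPENDENT


print(sorted(supported_teaming(npar_in_use=True)))
print(sorted(supported_teaming(npar_in_use=False)))
```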

References

Profiles Deployment: Profiles with server template deployment

VMware knowledge base: How VMware ESXi determines the order in which names are assigned to devices (2091560)

Dell EMC OpenManage Enterprise-Modular Edition for PowerEdge MX7000 Chassis User's Guide

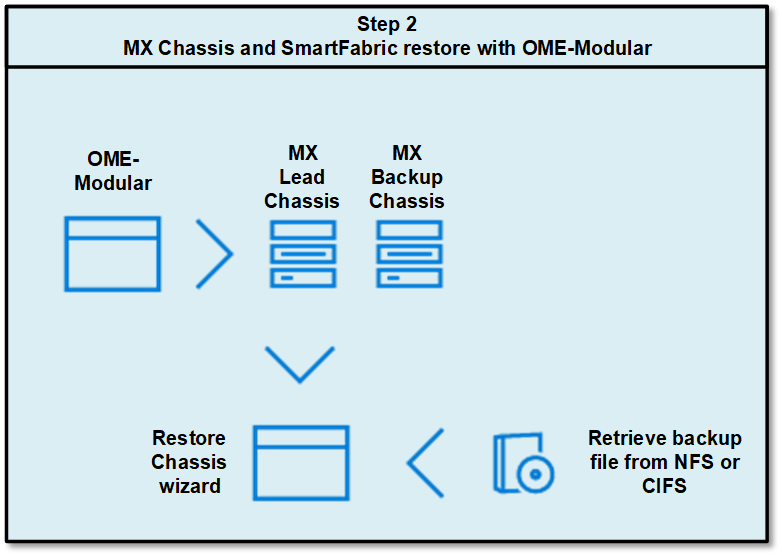

Dell Technologies PowerEdge MX Platform: Backing Up and Restoring the MX Platform

Fri, 09 Dec 2022 20:24:42 -0000


An essential part of any data center disaster recovery plan is the ability to back up and restore the infrastructure. As a best practice, logs, server settings, routing information, and switch configurations should be backed up, with several copies secured in multiple locations.

The Dell Technologies PowerEdge MX Platform OME-M 1.40.00 release includes a significant new addition to the backup and restore feature: SmartFabric settings are now included.

This blog describes the full network backup and restore process, with emphasis on the SmartFabric settings and complete IOM startup.xml configuration.

Backing up the MX7000 chassis

In the following scenarios, you might need to restore the MX7000 chassis:

- The chassis was misconfigured

- The MX platform is unresponsive or malfunctioning

- Configurations were deleted due to disaster or human error

Note: If the MX chassis is in a MultiChassis Management (MCM) group, the backup will only be performed on the lead chassis. Member chassis do not need to be backed up because they inherit the configuration from the lead chassis.

MX Chassis, OME-M, and SmartFabric settings and configurations

MX platform backups include the following configurations and settings:

- Application settings

- Setup configuration

- Power configuration

- Chassis network configuration

- Local access configuration

- Location configuration

- Slot configuration

- OME Modular network settings

- User's settings

- Security settings

- Alert settings

OME-M 1.40.00 introduces the following:

- System configuration

- Templates

- Profiles

- Identity pools and VLANs

- Catalogs and baselines

- Alert policies

- SmartFabric

- MCM configuration

Back up the MX platform

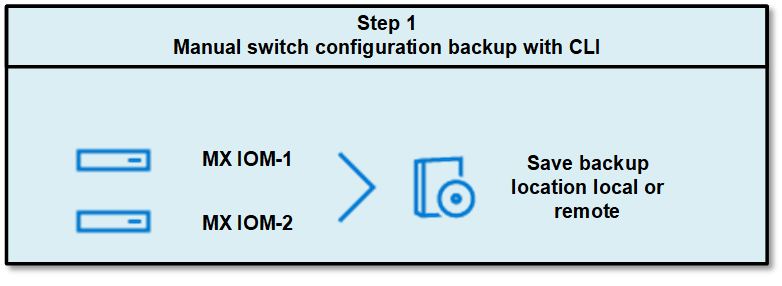

The OME-M Chassis Backup wizard includes chassis settings and configurations, but it does not include the I/O Modules (IOMs) configuration. Let’s get started by backing up the IOM configurations manually through the CLI.

Manual backup of IOM configuration provides a backup of the running configuration. The running configuration contains the current OS10 system configuration and consists of a series of OS10 commands in a text file that you can view and edit with a text editor. Copy the configuration file to a remote server or local directory as a backup or for viewing and editing.
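As a sketch of the manual backup, the helper below only assembles the two OS10 commands used in this section as strings: save the running configuration to the startup configuration, then copy startup.xml to a dated file. It does not connect to a switch. Reading the example file name backup-3-22.xml as month and day is an assumption, and the year passed in the usage line is a placeholder.

```python
from datetime import date
from typing import List


def iom_backup_commands(day: date) -> List[str]:
    """Build the OS10 commands for a local, dated backup of startup.xml."""
    # Assumed naming scheme: backup-<month>-<day>.xml
    backup_name = f"backup-{day.month}-{day.day}.xml"
    return [
        "copy running-configuration startup-configuration",
        f"copy config://startup.xml config://{backup_name}",
    ]


# The year is a placeholder; only month and day appear in the file name.
for command in iom_backup_commands(date(2023, 3, 22)):
    print(command)
```

The commands must be run on each IOM, because the chassis backup does not include the switch configuration.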

- In the CLI, run the following command to manually save each IOM switch configuration:

OS10# copy running-configuration startup-configuration

- Back up the startup file to the local directory or to an external resource such as a TFTP server, an FTP server, or a USB drive.

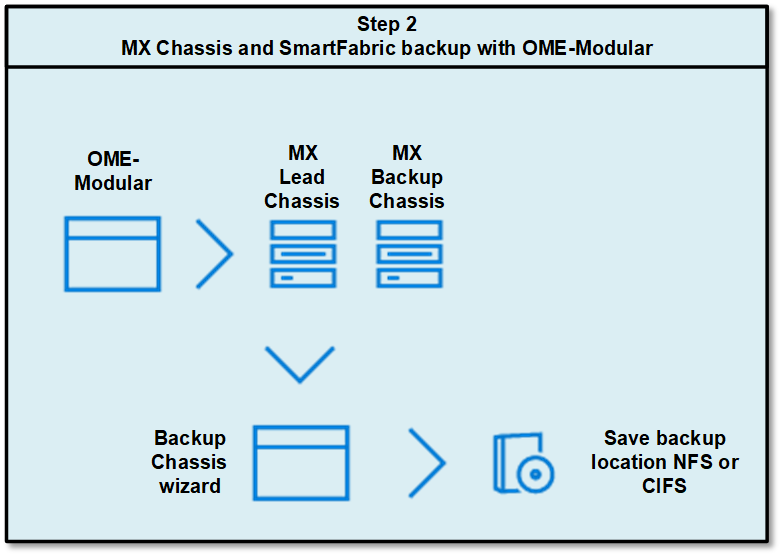

In the example below, the configuration is saved on a local directory of the switch by running the following CLI command:

OS10# copy config://startup.xml config://backup-3-22.xml

- Access the MX lead chassis Overview page and click More Actions > Backup.

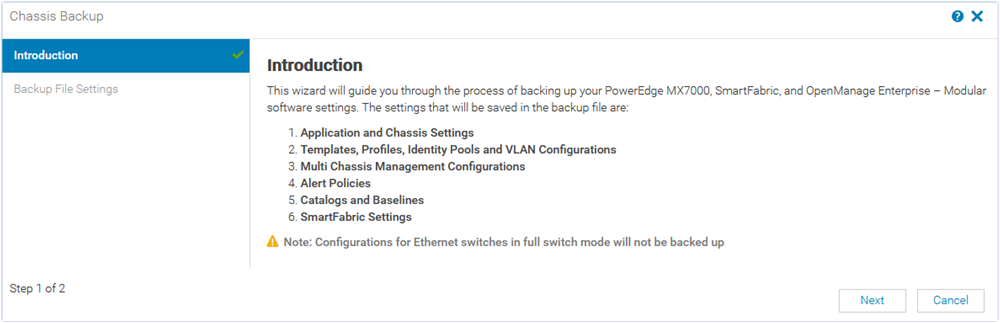

The Chassis Backup wizard is displayed.

- On the Introduction page, review the settings that are included in the backup file and click Next.

The Backup File Settings page is displayed.

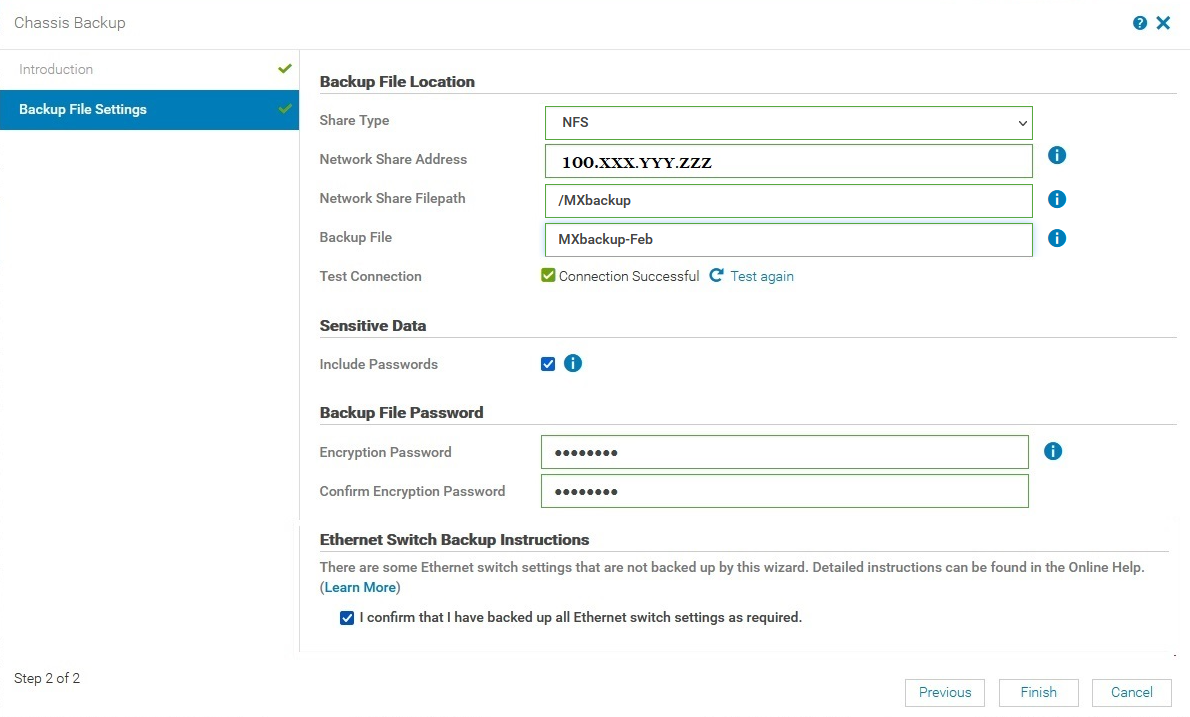

- In Backup File Location, select the Share Type where you want to store the chassis backup file.

The available options are CIFS and NFS.

In this example, NFS is selected. Therefore, the NFS server should be preconfigured and the network connection should be tested before starting the backup process.

- Enter the Network Share Address and the Network Share FilePath for the backup file, starting the path with a forward slash, for example /MXbackup

The Network Share Address is the NFS server NIC IP. The Network Share FilePath is the path or folder on the NFS server where the backup file will be saved, and it must be predefined on the NFS server.

- Enter a name for the Backup File.

In this example, MXbackup-Feb is entered.

- (Optional) Select Test connection. If the MX chassis and NFS server are in the same network, you can skip this step.

- (Optional) To allow users to include passwords while backing up the chassis, select Sensitive Data.

For more information about sensitive data, see Chassis Backup and Restore.

- In Backup File Password, enter a password in the Encryption Password and Confirm Encryption Password textboxes.

The backup file is encrypted and cannot be edited. Only authorized users can retrieve and restore the file on the chassis. Provide the password and secure it in a safe place.

Note: The password must be 8 to 32 characters long and must combine an uppercase letter, a lowercase letter, a special character (+, &, ?, >, -, }, |, ., !, (, ', ,, _, [, ", @, #, ), *, ;, $, ], /, §, %, =, <, :, {, I), and a number.

- In the Ethernet Switch Backup Instructions, select the check box to confirm that you have manually saved the Ethernet switch backup settings.

For more detailed information about all Ethernet switch CLI settings, see CLI commands not part of chassis backup.

- When the MX7000 chassis is in SmartFabric mode, the backup process does not include some switch settings.

- In Full Switch mode, the chassis backup process does not include any switch settings.

- To start the backup, click Finish.

- (Optional) To view the progress of the backup operation:

- Click Monitor > Jobs.

- Select the appropriate job and click View Details.

Available information includes current task status, percentage complete, elapsed time, and much more.
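The encryption password rule from the backup steps (8 to 32 characters, with an uppercase letter, a lowercase letter, a number, and a special character) can be checked before the wizard is started. This is a minimal sketch, not the validation OME-M itself performs: the special-character set is copied from the password note, the trailing "I" in that list is left out on the assumption that it is not a special character, and whether characters outside these classes are accepted is not stated in the note, so the sketch does not reject them.

```python
import string

# Special characters copied from the password note; the trailing "I" in that
# list is omitted here (assumption: it is not a special character).
SPECIALS = set("+&?>-}|.!(',_[\"@#)*;$]/§%=<:{")


def password_ok(pw: str) -> bool:
    """Check length and the four required character classes."""
    return (
        8 <= len(pw) <= 32
        and any(c in string.ascii_uppercase for c in pw)
        and any(c in string.ascii_lowercase for c in pw)
        and any(c in string.digits for c in pw)
        and any(c in SPECIALS for c in pw)
    )


print(password_ok("Backup#2023"))   # has all four classes, 11 characters
print(password_ok("backup#2023"))   # no uppercase letter
```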

Restore the MX platform

This section describes the steps to restore an MX chassis and the IOM configuration.

You can use the OME-M GUI to restore the MX chassis working configuration with the backup file we created in the previous section.

The GUI doesn’t restore the IOM configuration, so you must restore the IOM configuration manually through the CLI.

Before you start the restore operation, make sure you have network access to the location where the backup file has been saved.

Restore the system

As a best practice, verify network connectivity to the location where the backup file has been saved.

You can restore the chassis through the OME-M GUI. The GUI doesn’t restore the IOM configuration, so the IOM configuration must be restored manually through the CLI.

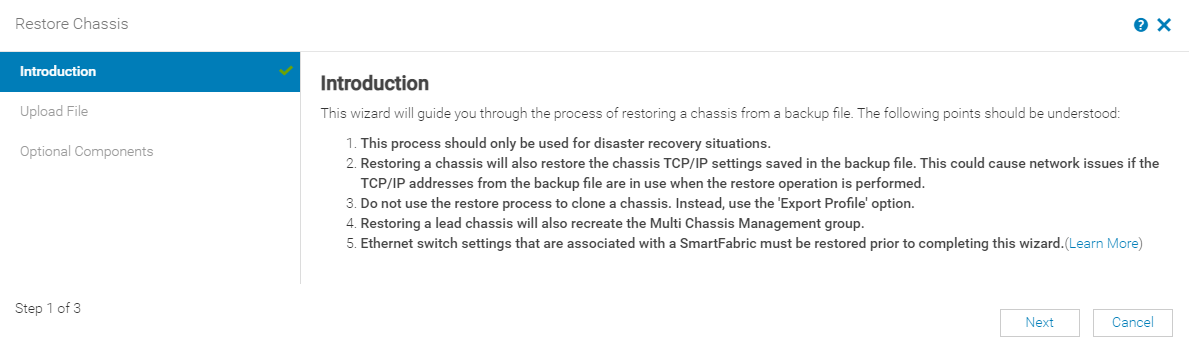

- From the lead chassis, open the OME-M GUI and browse to Overview > More Actions > Restore.

The Introduction page displays.

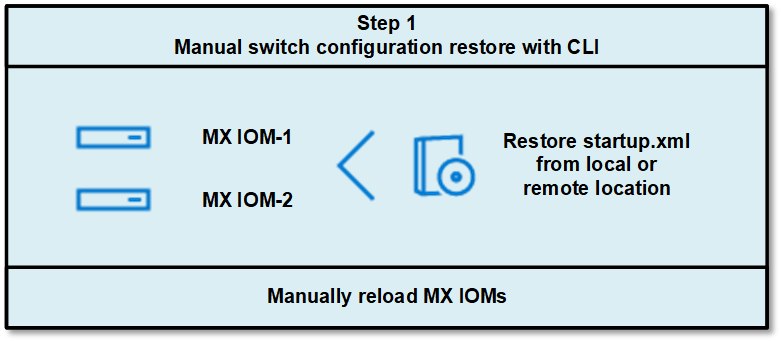

- Read the process and click the Learn More link in point 5 (shown in the figure above) to see more information about the Ethernet switch restore.

Note: Do not click Next. The Ethernet switch configuration must be manually restored before proceeding to the next step in the restore wizard.

- Access the MX IOM CLI of each switch and restore the Ethernet Switch configuration.

The IOMs can be restored manually from the local directory on the switch or from external resources such as a TFTP server, an FTP server, or a USB drive. In this example, the IOMs are restored manually through the CLI from the local directory on the switch.

In the CLI, run the following commands:

OS10# copy config://backup-3-22.xml config://startup.xml

OS10# reload

Proceed to reboot the system? [confirm yes/no]:yes

System configuration has been modified. Save? [yes/no]:no

Caution: Reload the IOMs immediately after restoring the startup configuration, because the running configuration is automatically written to the startup.xml every 30 minutes. Reloading the IOM immediately after each startup configuration restore avoids the startup.xml being overwritten.

- After the IOMs are restored and reloaded successfully, resume the restore process in the OME-M GUI. Click Next on the Introduction page.

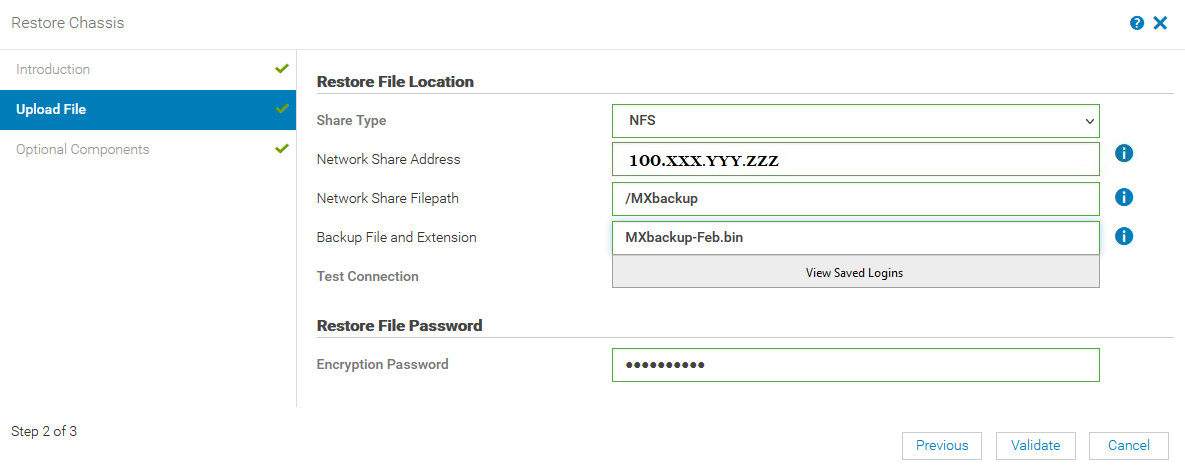

The Upload File page displays.

- Enter the restore file location details and enter the encryption password.

- In the Restore File section, enter the appropriate information.

| Field | Input |
|---|---|
| Share Type | Select the share type where the configuration backup file is located. In our example, since we selected the NFS server option for our backup, select NFS. |
| Network Share Address | Provide the NFS server NIC IP. |
| Network Share Filepath | Enter the same Network Share Filepath used for the backup file, including a forward slash: /MXbackup |
| Backup Filename | Type the Backup Filename with extension as shown in the figure above: MXbackup-Feb.bin. |

- In the Restore File Password section, provide the same Encryption Password used during the backup process. The encryption password prevents unauthorized access.


- To validate the chassis connectivity with the NFS server or location where backup file is saved, click Validate.

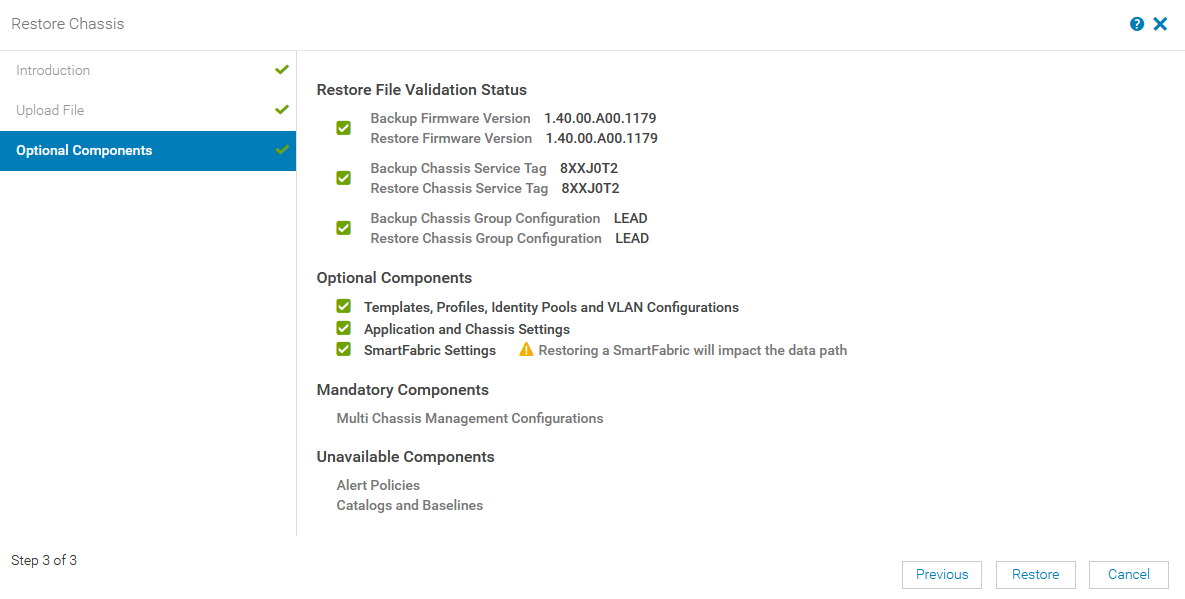

After the validation completes successfully, the Optional Components section is displayed.

- (Optional) On the Optional components page, you can choose to restore files on the selected components.

The following table provides details about the available options:

| Component | Description |
|---|---|
| Restore File Validation Status | Displays the validation status of the restore files: backup and restore chassis firmware version; backup and restore chassis service tag information; the role of the backup chassis and the restore chassis. Note: The restore chassis firmware version must match the firmware version that was installed when the backup file was created. |
| Optional Components | Displays the components that you can select for the restore operation. |
| Mandatory Components | Displays mandatory components, if applicable. A restoring chassis that is in the MCM group is a Mandatory Component. Mandatory components restore automatically in the restore process. |
| Unavailable Components | Displays all other components that were not backed up during the backup process and are therefore unavailable for the restore operation. |

- Click Restore to start the chassis restore process.

- (Optional) To view the progress of the restore operation:

- Click Monitor > Jobs.

- Select the appropriate job and click View Details.

Available information includes current task status, percentage complete, elapsed time, and much more.

Notes:

- The restore process may take several hours, depending on the network settings and configuration.

- Once the MCM lead chassis has been restored, set the backup lead manually by assigning a backup lead chassis.
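The manual IOM restore that precedes the OME-M wizard can be summarized as an ordered list of prompts and inputs, taken from the CLI example in this blog. The sketch below only builds that list for one IOM and does not talk to a switch. The comment on the final prompt reflects a reading of the caution about startup.xml being overwritten, not an official statement.

```python
from typing import List, Tuple


def iom_restore_session(backup_file: str) -> List[Tuple[str, str]]:
    """Return (prompt, input) pairs for restoring one IOM from a local backup."""
    return [
        ("OS10#", f"copy config://{backup_file} config://startup.xml"),
        ("OS10#", "reload"),
        ("Proceed to reboot the system? [confirm yes/no]:", "yes"),
        # Answer "no" so the running configuration is not saved over the
        # startup.xml that was just restored (interpretation of the caution).
        ("System configuration has been modified. Save? [yes/no]:", "no"),
    ]


for prompt, answer in iom_restore_session("backup-3-22.xml"):
    print(prompt, answer)
```

Repeat the same sequence on each IOM, reloading each one immediately after its startup configuration is restored.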

Resources

Dell Technologies PowerEdge MX7000 Networking Deployment Guide

Dell Technologies OME-M for PowerEdge MX7000 Chassis User’s Guide

Dell Technologies PowerEdge MX7000 Networking Interactive Demos