Deploy VMware vSphere

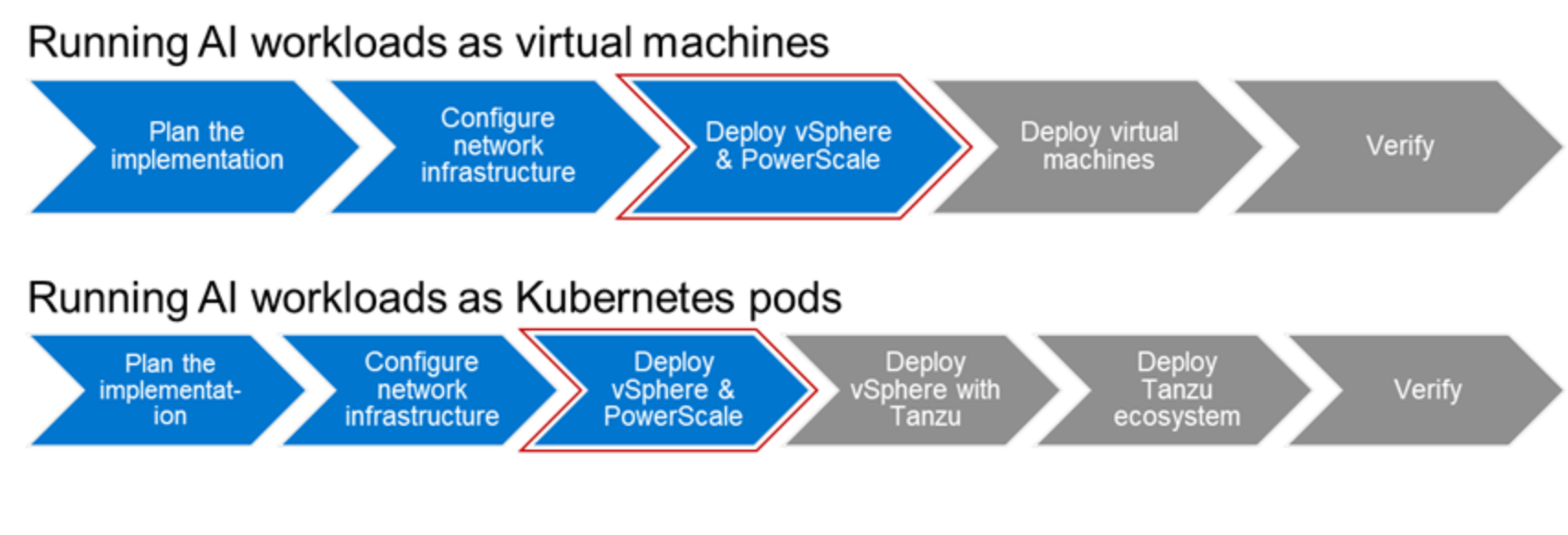

The next step is to deploy VMware vSphere and configure PowerScale storage for AI workload datasets.

vSphere is a sophisticated product with multiple components that require installation and configuration. To ensure a successful vSphere deployment, understand the sequence of required tasks. Installing vSphere includes the following tasks:

- Install ESXi on at least one PowerEdge server.

- Configure ESXi.

- Deploy vCenter Server Appliance.

- Log in to the vSphere Client to create and organize your vCenter Server inventory.
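The order of these tasks matters, because each one depends on the one before it. As a minimal sketch only, the sequence can be tracked with a small Python helper. The names `VSPHERE_TASKS` and `next_task` are hypothetical and exist only for illustration; they are not part of any VMware or Dell tool.

```python
from typing import Iterable, Optional

# The four installation tasks, in the order listed above.
VSPHERE_TASKS = [
    "Install ESXi on at least one PowerEdge server",
    "Configure ESXi",
    "Deploy vCenter Server Appliance",
    "Log in to the vSphere Client and organize the vCenter Server inventory",
]


def next_task(completed: Iterable[str]) -> Optional[str]:
    """Return the first task that is not yet done, or None when all are done.

    Raises ValueError if a later task is marked done while an earlier
    one is still pending, because the tasks must run in sequence.
    """
    done = set(completed)
    for index, task in enumerate(VSPHERE_TASKS):
        if task not in done:
            skipped_ahead = [t for t in VSPHERE_TASKS[index + 1:] if t in done]
            if skipped_ahead:
                raise ValueError(
                    f"'{skipped_ahead[0]}' is marked done before '{task}'"
                )
            return task
    return None
```

For example, `next_task(VSPHERE_TASKS[:2])` returns the vCenter Server Appliance deployment task, because ESXi has already been installed and configured.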

ESXi on PowerEdge servers

Factory installation of ESXi is not supported on PowerEdge servers with GPUs, so you must install ESXi yourself. We recommend installing ESXi on the BOSS controller card in the PowerEdge server. Use the Dell-customized ESXi image on PowerEdge servers, because it includes the latest drivers for the servers.

For more information about deploying ESXi, see the installation guide.

VMware vCenter for management

VMware vCenter Server can be deployed as a VM in your data center. vCenter is critical to the deployment, operation, and maintenance of a vSAN environment. For this reason, Dell Technologies recommends that you deploy vCenter on a highly available management cluster that is separate from the compute cluster. For more information about deploying vCenter, see the vCenter Server installation guide.

Configure VMware vSAN - Storage for VMs

The Dell Validated Design for AI is flexible and provides various options for storage deployment.

We recommend using vSAN for VM storage. In our solution validation, we use Dell vSAN Ready Nodes to simplify the requirements for a vSAN cluster deployment, and we use the VMware vCenter Server Appliance utility to streamline the vSAN deployment steps. For details about vSAN deployment, see the VMware vSAN Design Guide. After you deploy your initial vCenter instance, we recommend that you use the vCenter Quickstart wizard to finish configuring your vSAN cluster. See Using Quickstart to Configure and Expand a vSAN Cluster for help with streamlining your vSAN cluster provisioning.

PowerStore and PowerScale can also be used for shared storage for VMs. See the PowerStore and PowerScale product documentation for more information about deployment.

Configure PowerScale storage - Storage for AI workloads (datasets)

PowerScale can also be used as a storage repository for AI datasets. PowerScale provides various options in node types and software services that enable you to correctly size the storage, and incrementally scale infrastructure and data services to match diverse workload requirements. For example, with PowerScale, you can start with an initial deployment as small as three nodes and 12 drives, and incrementally expand up to 252 nodes, up to 93 PB, and up to 945 GB/s throughput.
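As a rough illustration of the node-count range quoted above, the following Python sketch checks a planned cluster size and reports how many nodes can still be added. It assumes only the 3-node minimum and 252-node maximum stated in this section; actual limits depend on the node type and OneFS release, and the function name `expansion_headroom` is hypothetical.

```python
# Node-count range quoted in this section (assumed to apply to the planned cluster).
MIN_NODES = 3
MAX_NODES = 252


def expansion_headroom(current_nodes: int) -> int:
    """Return how many nodes can still be added to a cluster of the given size."""
    if not MIN_NODES <= current_nodes <= MAX_NODES:
        raise ValueError(
            f"node count must be between {MIN_NODES} and {MAX_NODES}, "
            f"got {current_nodes}"
        )
    return MAX_NODES - current_nodes
```

A cluster that starts at the three-node minimum therefore has room for 249 additional nodes under these assumptions.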

The following steps create NFS volumes in PowerScale storage that are used for AI workloads:

- Log in to the PowerScale OneFS web console.

- Click Protocols > UNIX Sharing (NFS) > NFS Exports.

- Click Create Export.

- For the Directory Paths setting, enter or browse to the directory that you want to export.

- To add multiple directory paths, click Add another directory path for each additional path.

- In the Description field, type a comment that describes the export.

- Specify the NFS clients that can access the export.

You can specify NFS clients in any or all of the client fields. A client can be identified by a hostname, an IPv4 or IPv6 address, a subnet, or a netgroup. IPv4 addresses mapped into the IPv6 address space are translated and stored as IPv4 addresses to remove any possible ambiguity.

You can specify multiple clients in each field by typing one entry per line.
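The client rules above can be sketched in Python to show how a one-entry-per-line client list might be normalized before it is submitted. This is a minimal sketch, not the OneFS implementation: the function names are hypothetical, the payload keys `paths`, `description`, and `clients` simply mirror the web console fields and are assumptions, and the example path and hostname are placeholders. Anything that does not parse as an IP address or subnet is assumed to be a hostname or netgroup and is passed through unchanged.

```python
import ipaddress
from typing import Dict, List


def normalize_client(entry: str) -> str:
    """Return a canonical form of one NFS client entry."""
    entry = entry.strip()
    if not entry:
        raise ValueError("empty client entry")
    try:
        if "/" in entry:
            # Subnet, for example 10.10.0.0/24 or 2001:db8::/32.
            return str(ipaddress.ip_network(entry, strict=False))
        address = ipaddress.ip_address(entry)
    except ValueError:
        # Not an IP address or subnet: assume a hostname or netgroup.
        return entry
    # IPv4-mapped IPv6 addresses (::ffff:a.b.c.d) are stored as plain IPv4.
    if address.version == 6 and address.ipv4_mapped is not None:
        return str(address.ipv4_mapped)
    return str(address)


def build_export_payload(
    paths: List[str], description: str, clients_text: str
) -> Dict[str, object]:
    """Build an export description from console-style inputs.

    clients_text holds one client per line, as in the web console fields.
    Duplicate clients are dropped while the original order is kept.
    """
    clients: List[str] = []
    for line in clients_text.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        client = normalize_client(line)
        if client not in clients:
            clients.append(client)
    return {"paths": list(paths), "description": description, "clients": clients}


if __name__ == "__main__":
    import json

    # Hypothetical export path and client names, for illustration only.
    payload = build_export_payload(
        ["/ifs/data/ai-datasets"],
        "AI training datasets",
        "gpu-vm01.example.com\n::ffff:192.0.2.10\n10.10.0.5/24\n",
    )
    print(json.dumps(payload, indent=2))
```

Normalizing entries before submission makes duplicates easy to spot: an IPv4-mapped IPv6 address and its plain IPv4 form collapse to a single client.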

In the HPC & Innovation Lab, we have validated the PowerScale F810 as part of this validated design. For more information about deploying PowerScale, see the PowerScale documentation.