
Deploy the solution for running AI Workloads as VMs

This section provides guidelines for deploying a vSphere 8.0 GPU cluster on PowerEdge servers with NVIDIA A100 or A30 GPUs. It focuses on enabling Multi-Instance GPU (MIG) mode and configuring VMs with access to a virtual GPU (vGPU).

Installing and configuring the NVIDIA AI Enterprise Host software

See the NVIDIA AI Enterprise platform deployment guide for detailed instructions about installing and configuring the NVIDIA Virtual GPU Manager for vSphere, which includes these high-level steps:

- Use the vSphere Installation Bundle (VIB) to install the NVIDIA Virtual GPU Manager for vSphere.

- Configure vSphere vMotion with vGPU by enabling an advanced vCenter Server setting.

- Change the Default Graphics Type in vSphere.

- Configure a GPU for MIG-backed vGPUs. By default, MIG mode is not enabled on the A100 or A30 GPU.
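As a rough sketch of the host-side steps above, the following script prints (and optionally runs) the ESXi shell commands that are typically involved. The VIB path and GPU index are hypothetical placeholders, and the script assumes that the host is already in maintenance mode. A host reboot may be required after the VIB installation and after changing MIG mode. The vMotion setting is omitted because it is changed in vCenter Server rather than on the host. Treat the NVIDIA deployment guide as the authoritative source for the exact commands.

```shell
#!/bin/sh
# Host-side sketch for the ESXi shell. Prints commands unless DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"
# Hypothetical values: adjust to the actual VIB location and GPU index.
VIB_PATH="${VIB_PATH:-/vmfs/volumes/datastore1/nvidia-vgpu-manager.vib}"
GPU_INDEX="${GPU_INDEX:-0}"

# Print the command in dry-run mode; otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

host_setup() {
  # Install the NVIDIA Virtual GPU Manager VIB.
  run esxcli software vib install -v "$VIB_PATH"
  # Set the default graphics type to Shared Direct (SharedPassthru), which vGPU uses.
  run esxcli graphics host set --default-type SharedPassthru
  # Enable MIG mode on the selected GPU, then report the current MIG mode.
  run nvidia-smi -i "$GPU_INDEX" -mig 1
  run nvidia-smi -i "$GPU_INDEX" --query-gpu=mig.mode.current --format=csv
}

host_setup
```

With the default `DRY_RUN=1`, the script only lists the commands so that they can be reviewed before anything is changed on the host.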

Configuring a VM to use MIG

Follow these steps to configure a VM to use MIG:

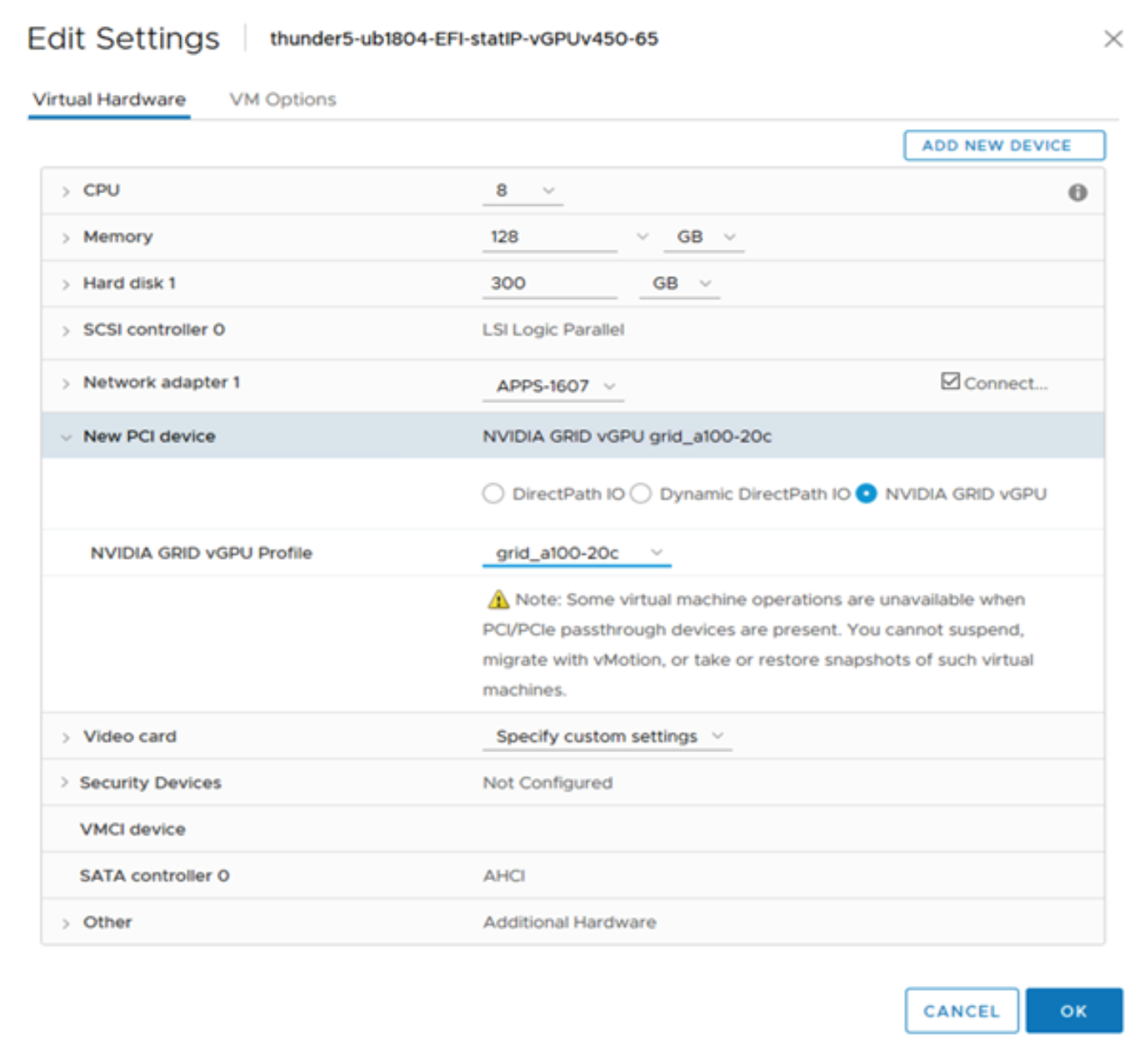

- Create a VM, provide virtual processor and memory configurations, and then assign a MIG partition, as shown in the following figure:

Figure 6. Creating a VM and assigning a MIG profile

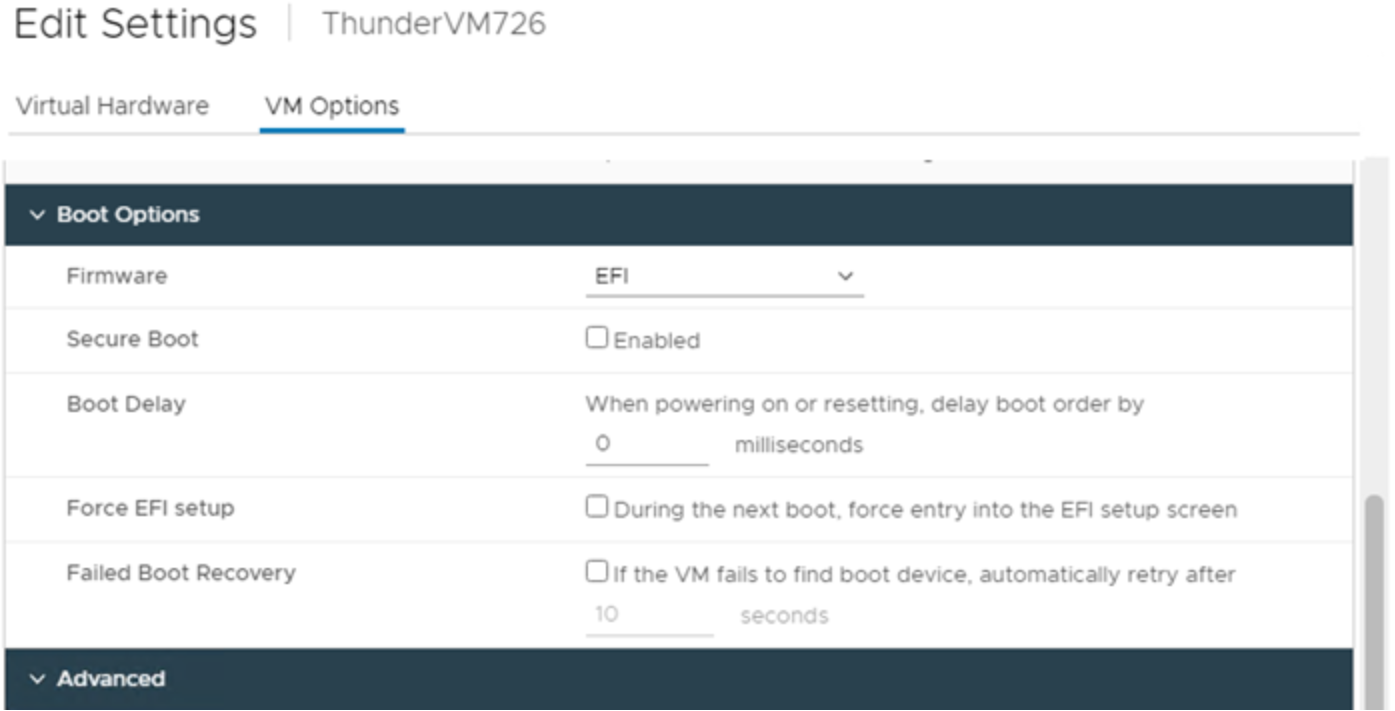

- Configure the VM to boot the guest operating system in EFI or UEFI mode, which is required for correct GPU use:

- In the vSphere Client, select your VM.

- Go to Edit Settings > VM Options > Boot Options. In the Firmware field, ensure that UEFI or EFI is enabled, as shown in the following figure:

Figure 7. VM boot options

- Click Advanced > Configuration Parameters > Edit Configuration, and then set the following parameters:

pciPassthru.use64bitMMIO: TRUE

pciPassthru.allowP2P: TRUE

pciPassthru.64bitMMIOSizeGB: 64

- Install the Linux operating system on the VM.

- Install the NVIDIA vGPU Software Graphics Driver on the VM.

- License GRID vGPU on the VM.

For detailed instructions about installing the NVIDIA driver and licensing the GPU, see the Virtual GPU Software User Guide.
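For reference, the advanced parameters set in the steps above can also be written as `.vmx`-style `key = "value"` entries. The sketch below prints those three entries and then lists two guest-side commands that are commonly used to check the result once the driver is installed and licensed. The function names are illustrative only, and the 64 GB MMIO size is the value given in this guide; other GPU configurations may need a different value.

```shell
#!/bin/sh
# MMIO size from the steps above; override it if your configuration differs.
MMIO_SIZE_GB="${MMIO_SIZE_GB:-64}"

# Print the three advanced parameters in .vmx key = "value" form.
vmx_entries() {
  printf 'pciPassthru.use64bitMMIO = "TRUE"\n'
  printf 'pciPassthru.allowP2P = "TRUE"\n'
  printf 'pciPassthru.64bitMMIOSizeGB = "%s"\n' "$MMIO_SIZE_GB"
}

# Print guest-side checks to run after the driver is installed and licensed.
guest_checks() {
  echo "nvidia-smi"      # shows the vGPU that is visible to the guest
  echo "nvidia-smi -q"   # full query; includes the vGPU license status
}

vmx_entries
guest_checks
```

The script only prints text. It does not modify the VM, so the entries still have to be applied through the vSphere Client as described above.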

Mounting datasets from PowerScale to the VM

Use the following steps to mount AI datasets from PowerScale to the VMs:

- Use SSH to connect to the Linux VM.

- Create a directory to serve as the mount point for the remote NFS share:

sudo mkdir /mnt/dataset

- Mount the NFS share by running the following command as root or as a user with sudo privileges:

sudo mount -t nfs <NFS IP addr>:<path> /mnt/dataset

- Verify that the remote NFS volume is successfully mounted:

df -h
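The mount created in the steps above does not persist across reboots. As a minimal sketch, assuming a persistent mount is wanted, the following script builds a matching `/etc/fstab` entry and checks whether the mount point is currently active. The server address `192.0.2.10` and the export path `/ifs/data/datasets` are hypothetical placeholders, and the `mountpoint` utility is assumed to be available in the guest (it is part of util-linux).

```shell
#!/bin/sh
# Hypothetical values: replace with the PowerScale NFS address and export path.
NFS_SERVER="${NFS_SERVER:-192.0.2.10}"
NFS_EXPORT="${NFS_EXPORT:-/ifs/data/datasets}"
MOUNT_POINT="${MOUNT_POINT:-/mnt/dataset}"

# Build an /etc/fstab line; _netdev delays the mount until networking is up.
fstab_line() {
  printf '%s:%s %s nfs defaults,_netdev 0 0\n' \
    "$NFS_SERVER" "$NFS_EXPORT" "$MOUNT_POINT"
}

# Succeed only if the mount point is an active mount.
is_mounted() {
  mountpoint -q "$MOUNT_POINT"
}

fstab_line
if is_mounted; then
  echo "$MOUNT_POINT is mounted"
else
  echo "$MOUNT_POINT is not mounted"
fi
```

The script prints the entry instead of editing `/etc/fstab`, so the line can be reviewed before it is added manually.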

Installing NVIDIA AI Enterprise Suite

The AI and data science applications and frameworks are distributed as NGC container images through the NVIDIA NGC Enterprise Catalog. Each container image contains the entire user-space software stack that is required to run the application or framework, such as the CUDA libraries, cuDNN, any required Magnum IO components, TensorRT, and the framework itself. For details, see the Installing AI and data science applications and Frameworks chapter in the NVIDIA AI Enterprise documentation.
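As an illustration of how such a container image might be pulled and checked inside the VM, the sketch below prints (and optionally runs) the usual Docker commands. It assumes that Docker and the NVIDIA Container Toolkit are installed in the guest, which this guide does not cover. The image reference is a deliberate placeholder; take the real name and tag from the NGC Enterprise Catalog.

```shell
#!/bin/sh
# Prints commands unless DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"
# Placeholder only: take the real image name and tag from the NGC Enterprise Catalog.
IMAGE="${IMAGE:-nvcr.io/<org>/<image>:<tag>}"

# Print the command in dry-run mode; otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

ngc_container_smoke_test() {
  # NGC registry logins use the literal user name $oauthtoken;
  # the password is an NGC API key, entered at the prompt.
  run docker login --username '$oauthtoken' nvcr.io
  run docker pull "$IMAGE"
  # --gpus all exposes the GPU to the container (needs the NVIDIA Container Toolkit).
  run docker run --rm --gpus all "$IMAGE" nvidia-smi
}

ngc_container_smoke_test
```

If the final command lists the vGPU from inside the container, the driver, license, and container runtime are likely working together as expected.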