Deploy the solution for running AI Workloads as Kubernetes pods

This section provides guidelines for deploying a Tanzu Kubernetes cluster for running AI workloads as Kubernetes pods.

Overview

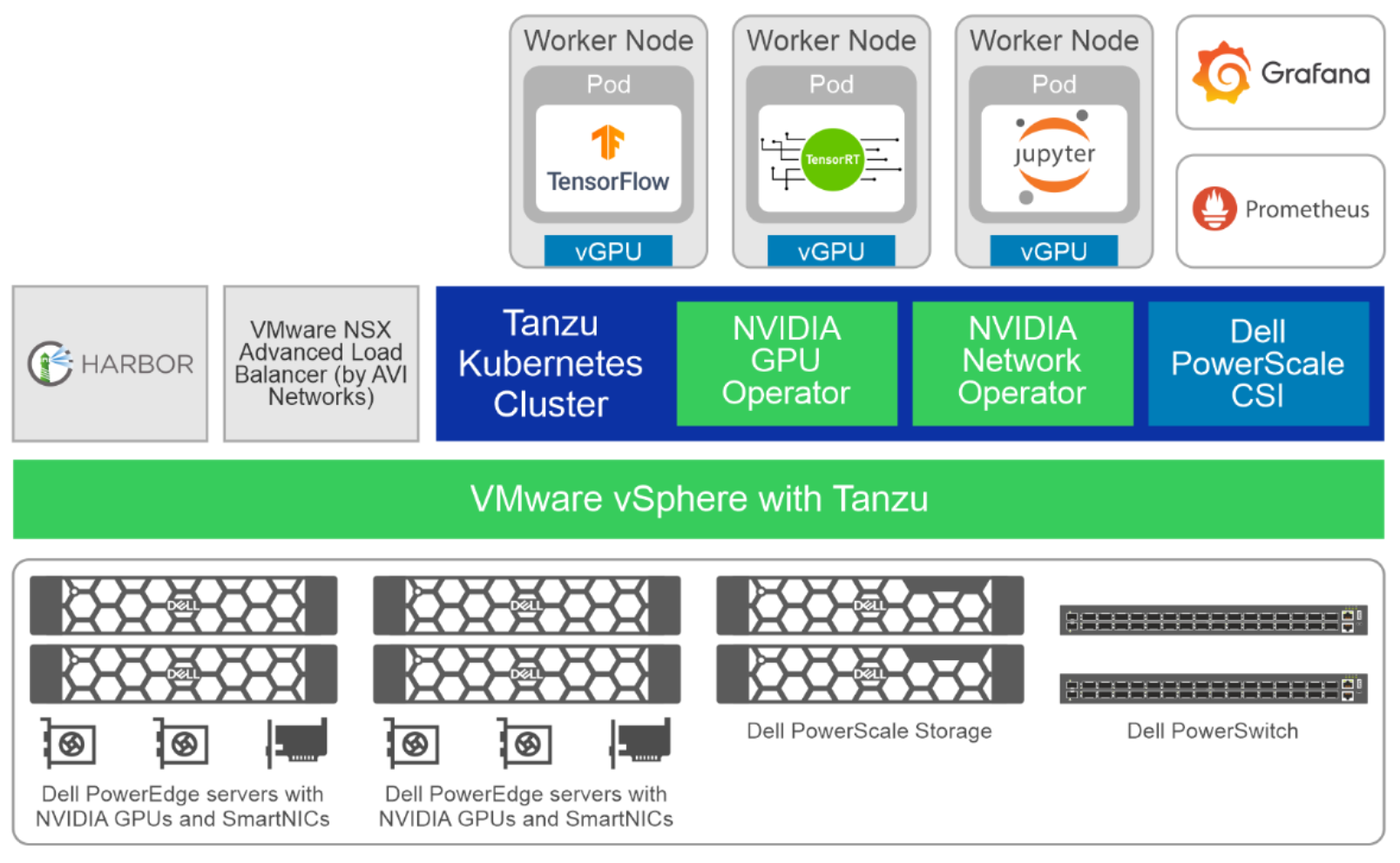

The following figure shows the software components of VMware vSphere with Tanzu:

Figure 8. VMware vSphere with Tanzu – software components

Deploying NSX Advanced Load Balancer

For installation instructions, see Install and Configure the NSX Advanced Load Balancer. The high-level steps are:

- Create a vSphere Distributed Switch for the Supervisor Cluster.

- Import the NSX Advanced Load Balancer to a Local Content Library.

- Deploy a Controller Cluster.

- Power on and configure the Controller.

- Add a license.

- Assign a certificate to the Controller.

- Configure a Service Engine Group.

- Configure a Virtual IP Network.

- Configure the Default Gateway.

- Test the NSX Advanced Load Balancer.

Configure the Supervisor Cluster, enable Workload Management, and create a namespace

Enable a vSphere cluster for Workload Management by creating a Supervisor Cluster. See Enable Workload Management with vSphere Networking and the Tanzu product documentation for more information.

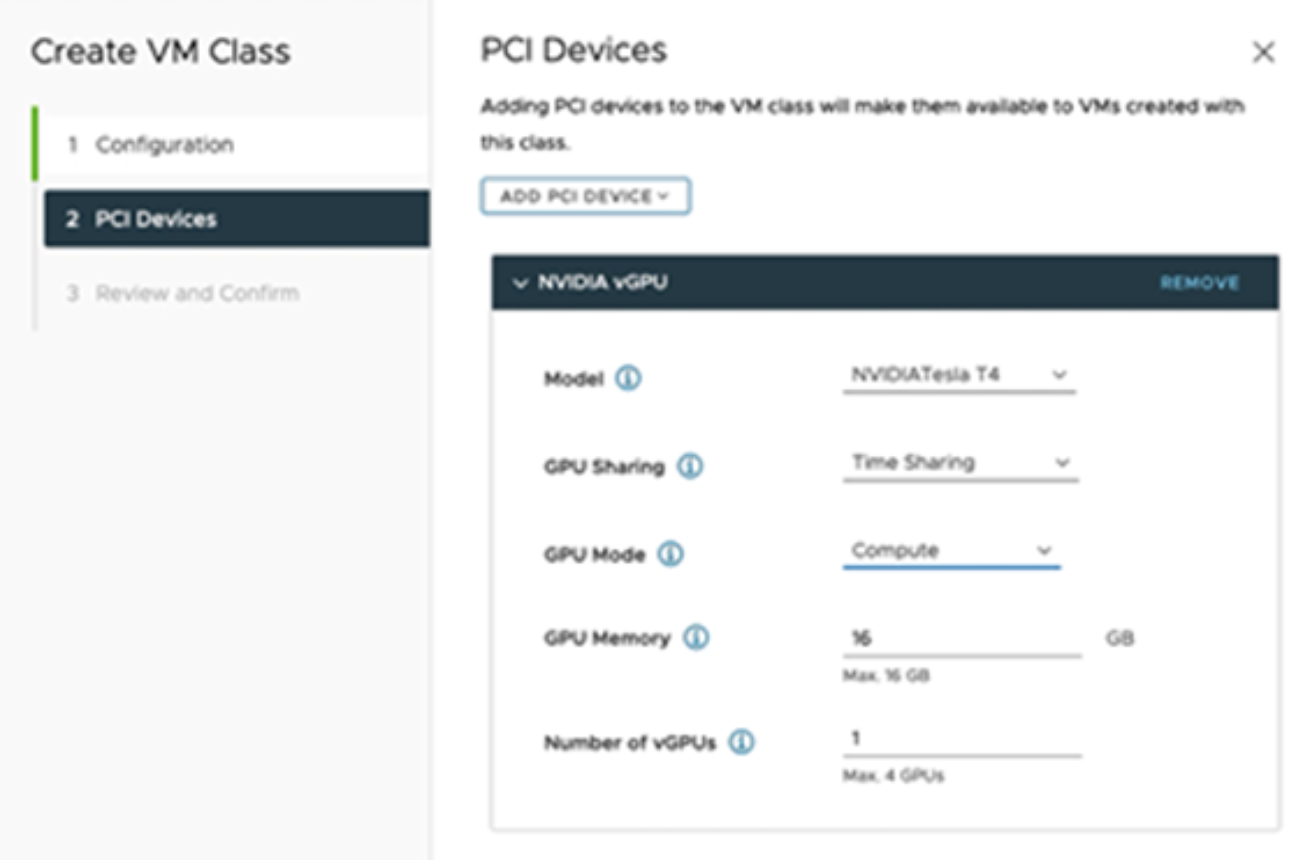

Create a VM class and associate it with the namespace

A VM class is a template that defines CPU, memory, and reservations for VMs. GPU allocations are also added to the VM class. VM classes set guardrails for the policy and governance of VMs by anticipating development needs and accounting for resource availability and constraints. We recommend creating one VM class per Tanzu Kubernetes cluster, or allocating the same GPU resource to all the VM classes that the cluster uses.

The steps to create a VM class include:

- Log in to vCenter with administrator access and go to Workload Management.

- Select Services, and then click Manage on the VM Service tile.

- Select VM Classes, and then click the Create VM Class tile.

- Enter a name for the VM class.

- Select the ADD PCI DEVICE drop-down list and select NVIDIA vGPU.

- Select a GPU model from the drop-down list.

- Configure GPU Sharing by selecting one of the two options:

  - Time Sharing

  - Multi-Instance GPU Sharing

Time Sharing refers to temporal partitioning, while Multi-Instance GPU sharing refers to NVIDIA MIG capability, as shown in the following figure:

Figure 9. GPU sharing field

- Select the amount of GPU memory that corresponds to the number of GPU partition slices that you want to allocate to the VM.

- Review the information on the Review and Confirm page and click Finish.

- After the VM class is created, associate it with the namespace.
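The association can also be checked from the command line. The following is a minimal sketch rather than a verified procedure: it assumes that kubectl is logged in to the Supervisor Cluster and that your release exposes `virtualmachineclassbindings` objects in the namespace (some releases expose `virtualmachineclass` objects there instead). The function name `vm_class_bound` is hypothetical.

```shell
# Hypothetical helper: succeed if VM class $1 is associated with namespace $2.
# Assumes VirtualMachineClassBinding objects are named after the VM class;
# change the resource name if your release exposes the classes differently.
vm_class_bound() {
    # "-o name" prints "<resource>/<object name>", one object per line.
    # sed keeps only the object name; grep -Fx requires an exact literal match.
    kubectl get virtualmachineclassbindings --namespace "$2" -o name |
        sed 's|.*/||' |
        grep -Fxq -- "$1"
}
```

For example, `vm_class_bound vm-class-cpu-32c-512g tkg-ns && echo associated` prints `associated` only when a binding with that name exists in the `tkg-ns` namespace.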

VM classes can also be used with NVIDIA networking. NVIDIA networking cards can be added to VM classes as PCI devices. You can use VM classes with NVIDIA networking without GPUs. For detailed instructions, see the NVIDIA AI Enterprise documentation.

Provision and configure a Tanzu Kubernetes cluster

After the VM classes and namespaces are created, use a YAML file to create a Tanzu Kubernetes cluster. The following example shows a YAML file.

Note: Replace the placeholders in angle brackets (< >) with the details for your cluster.

    apiVersion: run.tanzu.vmware.com/v1alpha3
    kind: TanzuKubernetesCluster
    metadata:
      name: tkg-a30-cx6
      namespace: tkg-ns
      annotations:
        run.tanzu.vmware.com/resolve-os-image.nodepool-a30-cx6: os-name=ubuntu
    spec:
      distribution:
        fullVersion: v1.23.8---vmware.2-tkg.2-zshippable
      settings:
        storage:
          defaultClass: vsan-default-storage-policy
        network:
          cni:
            name: antrea
          services:
            cidrBlocks: [<IP range>]
          pods:
            cidrBlocks: [<IP range>]
          serviceDomain: local
      topology:
        controlPlane:
          replicas: 3
          storageClass: <Kubernetes Storage Class>
          tkr:
            reference:
          vmClass: vm-class-control-plane
        nodePools:
        - name: nodepool-a30-cx6
          labels:
            node-label: gpu-infer-large
          replicas: 2
          storageClass: <Kubernetes Storage Class>
          tkr:
            reference:
          vmClass: vm-class-cpu-32c-512g
          volumes:
          - name: containerd
            mountPath: /var/lib/containerd
            capacity:
              storage: 100Gi
          - name: kubelet
            mountPath: /var/lib/kubelet
            capacity:
              storage: 100Gi
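Before applying the manifest, it can help to confirm that no angle-bracket placeholder was left unfilled. The following sketch assumes that any `<...>` text in the file is an unreplaced placeholder, which may not hold if your manifest legitimately contains angle brackets. The function name `find_placeholders` is hypothetical.

```shell
# Hypothetical helper: report lines in manifest $1 that still contain an
# angle-bracket placeholder such as <IP range>.
# Returns 0 if none are found, 1 if any remain, 2 if the file is unreadable.
find_placeholders() {
    if [ ! -r "$1" ]; then
        echo "cannot read manifest: $1" >&2
        return 2
    fi
    # grep -n prints each matching line prefixed with its line number.
    if grep -n '<[^<>]*>' "$1"; then
        return 1
    fi
    return 0
}
```

For example, `find_placeholders tanzucluster.yaml && kubectl apply -f tanzucluster.yaml` applies the file only when the check passes.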

Run the following command to apply the configuration in the YAML file and create the Tanzu Kubernetes cluster. Ensure that kubectl is set to the correct context, which is the Supervisor Cluster.

kubectl apply -f tanzucluster.yaml

Run the following command to see the status of the cluster and ensure that the cluster is deployed and ready.

kubectl get tkc
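Instead of polling the status by hand, you can block until the cluster is ready. This is a sketch under stated assumptions: it relies on the cluster object reporting a `Ready` condition in its status, and the function name `wait_for_tkc` and the 30-minute default timeout are illustrative choices, not values from this guide.

```shell
# Hypothetical helper: wait until Tanzu Kubernetes cluster $1 in Supervisor
# namespace $2 reports the Ready condition. $3 is an optional timeout
# (default 30m, an arbitrary illustrative value).
wait_for_tkc() {
    kubectl wait --for=condition=Ready "tkc/$1" \
        --namespace "$2" --timeout="${3:-30m}"
}
```

For example, `wait_for_tkc tkg-a30-cx6 tkg-ns` waits for the cluster defined in the example manifest and returns a nonzero status if the timeout expires first. After the cluster is ready, log in to it with `kubectl vsphere login`, passing the `--tanzu-kubernetes-cluster-name` and `--tanzu-kubernetes-cluster-namespace` options, before deploying workloads.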

Deploying NVIDIA operators

NVIDIA GPU Operator uses the operator framework in Kubernetes to automate the management of all NVIDIA software components that are needed to provision the GPU. These components include the NVIDIA drivers (to enable CUDA), the Kubernetes device plug-in for GPUs, the NVIDIA Container Runtime, automatic node labeling, DCGM-based monitoring, and others.

NVIDIA Network Operator uses the operator framework in Kubernetes to manage networking-related components that enable fast networking, RDMA, and GPUDirect for workloads in a Kubernetes cluster. The Network Operator works with the GPU Operator to enable GPUDirect RDMA on compatible systems.

See the NVIDIA documentation for information about deploying the operators.
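After the GPU Operator is running, a quick check is to confirm that the nodes advertise the `nvidia.com/gpu` resource and that a pod can use it. The following is a minimal sketch, assuming that kubectl points at the Tanzu Kubernetes cluster (not the Supervisor Cluster). The function names and the pod name `gpu-smoke-test` are hypothetical, and the container image is a parameter that you supply because this guide does not specify one.

```shell
# List each node with the number of GPUs it advertises to the scheduler.
# A "<none>" value means no GPU has been registered on that node.
list_node_gpus() {
    kubectl get nodes \
        -o 'custom-columns=NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu'
}

# Print a one-shot pod manifest that requests a single GPU and runs nvidia-smi.
# $1 is a CUDA-capable container image of your choice (an assumption here).
gpu_test_pod_manifest() {
    cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: gpu-smoke-test
spec:
  restartPolicy: Never
  containers:
  - name: cuda
    image: $1
    command: ["nvidia-smi"]
    resources:
      limits:
        nvidia.com/gpu: 1
EOF
}
```

For example, run `list_node_gpus`, then `gpu_test_pod_manifest "$IMAGE" | kubectl apply -f -`, and inspect the result with `kubectl logs gpu-smoke-test`. To pin the pod to the GPU node pool from the example manifest, you could add a `nodeSelector` on the `node-label: gpu-infer-large` label.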

Deploy the Tanzu Ecosystem

This section provides an overview of the software components that are deployed as part of the Tanzu ecosystem.

Tanzu Mission Control

Tanzu Mission Control is available as SaaS in the VMware Cloud Services portfolio. Follow the steps in the invitation link or service sign-up email to create a Cloud Services account. When you have created an account, you can access Tanzu Mission Control as one of the services available to your account.

Deploying TKG Extensions: Prometheus, Grafana, and Harbor

Deploy the following TKG extensions:

- Deploy the TKG Extension for Prometheus to collect and view metrics for Tanzu Kubernetes clusters. Prometheus is a monitoring system for systems and services. It collects metrics from configured targets at specified intervals, evaluates rule expressions, displays the results, and can trigger alerts when a condition is observed to be true. Alertmanager handles the alerts that Prometheus generates and routes them to their receiving endpoints. For more information, see Deploy and Manage the TKG Extension for Prometheus Monitoring.

- Deploy the TKG Extension for Grafana to generate and view metrics for Tanzu Kubernetes clusters. Grafana enables you to query, visualize, alert on, and explore metrics no matter where they are stored. Grafana provides tools to form graphs and visualizations from application data. For more information, see Deploy and Manage the TKG Extension for Grafana Monitoring.

- Deploy the TKG Extension for Harbor Registry as a private registry store for the container images that you want to deploy to Tanzu Kubernetes clusters. Harbor is an open-source container registry. For more information, see Deploy and Manage the TKG Extension for Harbor Registry.