
Dell Shifts vRAN into High Gear on PowerEdge with Intel vRAN Boost

Thu, 17 Aug 2023 18:33:05 -0000


What has passed

Mobile World Congress 2023 was an important event for both Dell Technologies and Intel that marked a true foundational turning point for vRAN viability. At this event, Intel launched its 4th Gen Intel Xeon Scalable processor, with Intel vRAN Boost, and Dell announced two new ruggedized server platforms, the PowerEdge XR5610 and XR8000, with support for vRAN Boost CPU SKUs.

The features and capabilities of the PowerEdge XR5610 and XR8000 have been highlighted in previous blogs, and both have been available to order since May 2023. These new ruggedized servers have been evaluated and adopted as Cloud RAN reference platforms by network equipment providers (NEPs) such as Samsung and Ericsson. Short-depth, NEBS-certified, and TCO-optimized, these servers are purpose-built for the demanding deployment environments of Mobile Operators and are now married to the Intel vRAN Boost processor to provide a powerful and efficient alternative to classical appliance options.

What is now

Starting August 16, 2023, the 4th Gen Intel Xeon Scalable processor with Intel vRAN Boost is available to order with the PowerEdge XR5610 and XR8000. These two critical pieces of the vRAN puzzle have been brought together and are now available to order from our PowerEdge XR Rugged Servers page with the following CPU SKUs.

| CPU SKU | Cores | Base Freq. (GHz) | TDP |
|---|---|---|---|
| 6433N | 32 | 2.0 | 205 W |
| 5423N | 20 | 2.1 | 145 W |
| 6423N | 28 | 2.0 | 195 W |
| 6403N | 24 | 1.9 | 185 W |

Table 1. Intel vRAN Boost SKUs available today from Dell

Additional details on these new CPU SKUs and all 4th Gen Intel® Xeon® Scalable processors can be found on the Intel Ark Site.

These processors, with Intel vRAN Boost, integrate key acceleration blocks for 4G and 5G Radio Layer 1 processing into the CPU. These include:

- 5G Low Density Parity Check (LDPC) encoder/decoder

- 4G Turbo encoder/decoder

- Rate match/dematch

- Hybrid Automatic Repeat Request (HARQ) with access to DDR memory for buffer management

- Fast Fourier Transform (FFT) block providing DFT/iDFT for the 5G Sounding Reference Signal (SRS)

- Queue Manager (QMGR)

- DMA subsystem

One of the most interesting features of the vRAN Boost CPU is how this acceleration block is accessed by software. Although it is integrated on-chip with the CPU, the vRAN Boost block still presents itself to the Cores/OS as a PCIe device. The genius of this approach is in software compatibility. Virtual Distributed Unit (vDU) applications written for the previous generation HW will access the new vRAN Boost block using the same standardized, open APIs that were developed for the previous generation product. This creates a platform that can support past, present (and possibly future) generations of Intel’s vRAN optimized HW with the same software image.
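Because the integrated block enumerates as an ordinary PCIe function, software can locate either generation the same way. Below is a minimal sketch, assuming a Linux host that exposes PCIe devices under sysfs. The helper `find_l1_accelerators` and the vendor/device ID table are illustrative assumptions (check the IDs against `lspci -nn` output or the DPDK baseband driver sources for your platform), not Dell or Intel tooling; production vDU software typically reaches the accelerator through the DPDK baseband device (bbdev) API rather than a script like this.

```python
from pathlib import Path

# Assumed (vendor ID, device ID) -> name table, for illustration only.
# Verify these IDs on your own platform before relying on them.
KNOWN_ACCELERATORS = {
    ("0x8086", "0x0d5c"): "ACC100 (PCIe adapter, PF)",
    ("0x8086", "0x57c0"): "vRAN Boost (integrated, PF)",
}


def find_l1_accelerators(sysfs_root="/sys/bus/pci/devices"):
    """Return (pci_address, name) for every known L1 accelerator found.

    The add-in card and the on-chip block both appear as PCIe functions,
    so one discovery routine covers either hardware generation.
    """
    found = []
    root = Path(sysfs_root)
    if not root.is_dir():
        return found
    for dev in sorted(root.iterdir()):
        try:
            vendor = (dev / "vendor").read_text().strip().lower()
            device = (dev / "device").read_text().strip().lower()
        except OSError:
            continue  # skip entries without readable ID files
        name = KNOWN_ACCELERATORS.get((vendor, device))
        if name is not None:
            found.append((dev.name, name))
    return found
```

The design choice mirrors the point in the text: the calling code does not care which generation it finds, only that a supported accelerator is present.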

What is to come

Prior to vRAN Boost, the reference architecture for a vDU was a 3rd Gen Intel Xeon Scalable processor paired with a FEC/LDPC accelerator such as the Intel vRAN Accelerator ACC100 Adapter, and most of today's vRAN deployments use this configuration. While the ACC100 meets the L1 acceleration needs of vRAN, it does so at a price: the space of a half-height, half-length (HHHL) PCIe card and an additional 54 W of power that must be supplied (and cooled). In addition, the card occupies a PCIe slot, which reduces I/O expansion options and limits in-chassis scaling due to slot count. vRAN Boost alleviates both issues.

With the new Intel vRAN Boost processors’ fully integrated acceleration, Intel has taken a huge step in closing the performance gap with purpose-built hardware, while remaining true to the “Open” in O-RAN.

Intel states that the new Intel vRAN Boost processor delivers up to 2x capacity and approximately 20% compute power savings compared with its previous-generation processor with ACC100 external acceleration. At the Cell Site, where every watt counts, operators constantly look for opportunities to reduce both power consumption and the associated “cooling tax” of keeping the hardware within its operating range, typically inside a sealed enclosure.
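The two headline figures compound. A short worked calculation, assuming the "up to 2x capacity" and "~20% compute power savings" figures apply to the same configuration (they are Intel's best-case numbers, so real deployments will vary):

```python
def capacity_per_watt_gain(capacity_ratio, power_ratio):
    """Relative capacity per watt versus a baseline.

    capacity_ratio: new capacity / baseline capacity (2.0 means "2x")
    power_ratio:    new power / baseline power (0.8 means "20% less")
    """
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return capacity_ratio / power_ratio


# Intel's "up to" figures, taken at face value.
gain = capacity_per_watt_gain(2.0, 1.0 - 0.20)
print(f"~{gain:.1f}x capacity per watt")
```

Because the cooling load scales with the power dissipated, the "cooling tax" at the cell site would shrink along with the compute power, on top of this ratio.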

Dell and Intel have worked together to provide early-access XR5610s and XR8000s provisioned with Intel vRAN Boost to multiple partners and customers for integration, evaluation, and proofs of concept. One early evaluator, Deutsche Telekom, states:

“Deutsche Telekom recently conducted a performance evaluation of Dell’s PowerEdge XR5610 server, based on Intel’s 4th Gen Intel Xeon Scalable processor with Intel vRAN Boost. Testing under selected scenarios concluded a 2x capacity gain, using approximately 20% less power versus the previous generation. We aim to leverage these significant performance gains on our journey to vRAN.”

-- Petr Ledl, Vice President of Network Trials and Integration Lab and Access Disaggregation Chief Architect, Deutsche Telekom AG

With such a solid industry foundation of the telecom-optimized PowerEdge XR5610s/XR8000s, and 4th Gen Intel Xeon Scalable processors with Intel vRAN Boost, expect to see accelerated deployments of open, vRAN-based infrastructure solutions.

Dell’s PowerEdge XR7620 for Telecom/Edge Compute

Fri, 07 Jul 2023 15:03:04 -0000


The XR7620 is an Edge-optimized, short-depth, dual-socket server, purpose-built and compact, offering acceleration-focused solutions for the Edge. Like the other new PowerEdge XR servers reviewed in this blog series (the XR4000, XR8000, and XR5610), the XR7620 is a ruggedized design built to tolerate dusty environments, extreme temperatures, and humidity, and it is NEBS Level 3, GR-3108 Class 1, and MIL-STD-810G certified.

Figure 1. PowerEdge XR7620 Server

The XR7620 is intended to be a generational improvement over the previous PowerEdge XR2 and XE2420 servers, with similar base features and the newest components, including:

- A CPU upgrade to the recently announced Intel 4th Generation Xeon Scalable processor, up to 32 cores.

- 2x the memory bandwidth with the upgrade from DDR4 to DDR5

- Higher performance I/O capabilities with the upgrade from PCIe Gen 4 to PCIe Gen 5, with 5 x PCIe slots.

- Enhanced storage capabilities with up to 8 x NVMe drives, BOSS support, and HW-based NVMe RAID.

- Dense acceleration capabilities at the edge, where the XR7620 excels, with support for up to 2 x double-width (DW) accelerators at up to 300W each, or 4 x single-width (SW) accelerators at up to 150W each.

- Filtered bezel for work in dusty environments.

Targeted workloads include Digital Manufacturing workloads for machine aggregation, VDI, AI inferencing, OT/IT translation, industrial automation, ROBO, and military applications where a rugged design is required. In the Retail vertical, the XR7620 is designed for such applications as warehouse operations, POS aggregation, inventory management, robotics and AI inferencing.

For additional details on the XR7620’s performance, see the tech notes on the server’s machine learning (ML) capabilities.

The XR7620 shares the ruggedized design features of the previously reviewed XR servers, and its strength lies in bringing dense acceleration capabilities to the Edge. Rather than repeat the features and capabilities highlighted in previous blogs, I would like to discuss a few other PowerEdge features that have special significance at the Edge, in the areas of:

- Security

- Cooling

- Management

Security

Security is a core tenet and the common foundation of the entire PowerEdge portfolio. Dell designs PowerEdge with security in mind at every phase of the server lifecycle: it starts before the server is built, with a Secure Supply Chain; extends to the delivered server, with Secure Lifecycle Management and a Silicon Root of Trust; and then secures what the server creates and stores through Data Protection.

Figure 2. Dell's Cyber Resilient Architecture


This is a Zero Trust security approach that enforces least-privilege access permissions and requires validation at every access and implementation point, with features such as Identity and Access Management (IAM) and Multi-Factor Authentication (MFA).

Especially at the Edge, where servers are not typically deployed in a secured, “lights out” data center environment, the ability to detect and respond to any tampering or intrusion is critical. Dell’s silicon-based platform Root of Trust creates a secure boot environment to ensure that firmware comes from a trusted, untampered source. PowerEdge can also lock down a system configuration, detect any changes to firmware versions or configuration, and, on detection, initiate a rollback to the last known good environment.
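The detect-and-roll-back behavior can be illustrated with a small conceptual sketch. It is not Dell's implementation: a SHA-256 comparison against a trusted digest stands in for the cryptographic signature verification that a hardware root of trust performs, and the helpers `select_boot_image` and `config_drift` are hypothetical names used only for this example.

```python
import hashlib
import hmac

_MISSING = object()  # sentinel so an absent key differs from a None value


def sha256_hex(image: bytes) -> str:
    """Hex SHA-256 digest of a firmware image."""
    return hashlib.sha256(image).hexdigest()


def select_boot_image(candidate: bytes, trusted_digest: str,
                      last_known_good: bytes) -> bytes:
    """Return the candidate if it matches the trusted digest, else roll back."""
    # Constant-time comparison avoids leaking how much of the digest matched.
    if hmac.compare_digest(sha256_hex(candidate), trusted_digest):
        return candidate
    # Mismatch: treat the image as tampered or unapproved and fall back.
    return last_known_good


def config_drift(locked: dict, current: dict) -> set:
    """Keys added, removed, or changed relative to the locked-down baseline."""
    return {k for k in locked.keys() | current.keys()
            if locked.get(k, _MISSING) != current.get(k, _MISSING)}
```

For example, `config_drift({"bios": "1.2", "bmc": "6.10"}, {"bios": "1.3", "bmc": "6.10"})` flags only `"bios"`, which is the kind of change a configuration lockdown would report.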

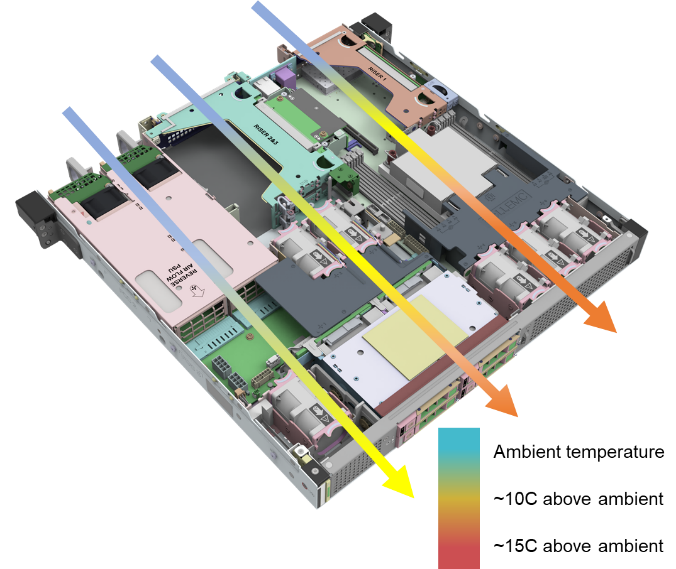

Cooling

Figure 3. Intelligent Cooling Designs

As covered in a previous blog, optimized thermal performance is critical in the design of resilient, ruggedized Edge servers. The PowerEdge XR servers are designed with balanced, cooling-efficient airflow and comprehensive thermal management that optimize airflow while minimizing fan speeds and reducing server power consumption. XR servers have a cooling design that allows them to operate from -5°C to 55°C. Dell engineers are currently working on solutions to extend that operational range even further.


All PowerEdge XR servers are designed with multiple dual counter-rotating fans (essentially two fans in one housing) and support N+1 fan redundancy. For NEBS certification, fan failure is only “evaluated”; to certify as a GR-3108 Class 1 device, however, the server must continue to operate with a single fan failure at up to 40°C for a minimum of four hours.
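N+1 redundancy reduces to an airflow budget: after the worst single failure, the surviving fans must still deliver the airflow the server needs. A minimal sketch under a simplified model (cooling is treated as adequate if total surviving airflow meets the requirement at the 40°C test ambient); the CFM figures are hypothetical, and a real qualification test measures component temperatures rather than summing fan ratings.

```python
def tolerates_single_fan_failure(fan_cfm, required_cfm):
    """True if the fans still meet the airflow requirement after the
    largest single fan fails (N+1 redundancy under a simple budget model).

    fan_cfm:      airflow each fan module contributes, in CFM (hypothetical)
    required_cfm: airflow needed at the test ambient, in CFM (hypothetical)
    """
    if not fan_cfm:
        return False
    remaining = sum(fan_cfm) - max(fan_cfm)  # worst case: lose the biggest fan
    return remaining >= required_cfm


print(tolerates_single_fan_failure([30, 30, 30, 30, 30], 110))
print(tolerates_single_fan_failure([30, 30, 30, 30], 110))
```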

Management

All Dell PowerEdge servers share a common, three-tier approach to systems management in the form of the Integrated Dell Remote Access Controller (iDRAC), OpenManage Enterprise (OME), and CloudIQ. These three tiers build on Dell’s approach to systems management as a unified, simple, automated, and secure solution. This approach scales from managing a single server at the iDRAC Baseboard Management Controller (BMC) console, to managing thousands of servers simultaneously with OME, to using intelligent infrastructure insights and predictive analytics to maximize server productivity with CloudIQ.
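At the single-server tier, iDRAC exposes the DMTF Redfish REST API, which is also what higher-level tools and scripts typically build on. A minimal sketch using only the Python standard library: `System.Embedded.1` is the system resource ID iDRAC uses, while the host, credentials, and helper names are placeholders for illustration. Only `summarize` is exercised offline; `fetch_system` needs a reachable iDRAC.

```python
import base64
import json
import ssl
import urllib.request

# Redfish ComputerSystem resource for the host managed by an iDRAC.
SYSTEM_PATH = "/redfish/v1/Systems/System.Embedded.1"


def fetch_system(host, user, password, verify_tls=True):
    """GET the Redfish ComputerSystem resource from an iDRAC."""
    req = urllib.request.Request(f"https://{host}{SYSTEM_PATH}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {token}")
    ctx = ssl.create_default_context()
    if not verify_tls:  # e.g. lab iDRACs with self-signed certificates
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return json.load(resp)


def summarize(system):
    """Pick out a few standard Redfish ComputerSystem properties."""
    status = system.get("Status", {})
    return {
        "model": system.get("Model"),
        "power": system.get("PowerState"),
        # Prefer the rolled-up health of subcomponents when present.
        "health": status.get("HealthRollup", status.get("Health")),
    }
```

Scaling the same call across many hosts is, in spirit, what the OME tier automates; this sketch does not model OME or CloudIQ.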

Conclusion

The XR7620 is a valuable addition to the PowerEdge XR portfolio, providing dense compute, storage, and I/O capabilities in a short-depth, ruggedized form factor for environmentally challenging deployments. But by far the XR7620’s best capability is a design that brings a dense GPU acceleration environment to the edge while continuing to meet the performance requirements of NEBS Level 3, something that has previously not been an option.

Dell’s focus on security, cooling, and management creates a solution that can be efficiently and confidently deployed and maintained in the challenging environment that is today’s Edge.

Author information

In closing out this blog series, I would like to thank you for taking your valuable time to review my thoughts on Design for the Edge. To continue these discussions, connect with me here:

Mike Moore, Telecom Solutions Marketing Consultant at Dell Technologies

Dell’s PowerEdge XR5610 for Telecom/Edge Compute

Tue, 25 Apr 2023 16:58:19 -0000


In June 2021, Dell announced the PowerEdge XR11 Server. This was Dell’s first design created for the requirements of Telecom Edge Environments. A 1U, short-depth, ruggedized, NEBS Level 3 compliant server, the XR11 has been successfully deployed in multiple O-RAN compliant commercial networks, including DISH Network and Vodafone.

Dell has followed up on the success of the XR11 with a generational improvement: the PowerEdge XR5610.

Like its predecessor, the XR5610 is a short-depth ruggedized, single socket, 1U monolithic server, purpose-built for the Edge and Telecom workloads. Its rugged design also accommodates military and defense deployments, retail AI including video monitoring, IoT device aggregation, and PoS analytics.

Figure 1. PowerEdge XR5610 1U Server


Improvements to the XR5610 include:

- A CPU upgrade to the recently announced 4th Generation Xeon Scalable processor, up to 32 cores.

- Support for the new Intel vRAN Boost variant, which will embed a vRAN accelerator in the CPU.

- A doubling of the memory bandwidth with the upgrade from DDR4 to DDR5.

- Higher performance I/O capabilities with the upgrade from PCIe Gen 4 to Gen 5.

- Dry contact inputs, common in remote environments, which provide insight into edge enclosure conditions such as door-open alarms, moisture detection, and more.

- Support for multiple accelerators, such as GPUs and O-RAN L1 Accelerators, and storage options including SAS, SATA, or NVMe.
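Dry contact inputs are simple open/closed signals, so monitoring software mostly has to map each input to the sensor wired to it. A minimal sketch, assuming the input states are available as a bitmask (how the raw state is read is platform-specific and not shown). The `CONTACT_MAP` wiring and the `active_alarms` helper are hypothetical and site-specific.

```python
# Hypothetical wiring: which dry-contact input is connected to which sensor.
CONTACT_MAP = {
    0: "door_open",
    1: "moisture_detected",
    2: "smoke_detected",
}


def active_alarms(contact_bits: int, contact_map=CONTACT_MAP, active_high=True):
    """Decode a bitmask of dry-contact inputs into named alarms.

    Bit n set means input n is closed. With active_high=False an alarm is
    raised when the contact is open instead (normally-closed sensors).
    """
    alarms = []
    for bit, name in sorted(contact_map.items()):
        closed = bool((contact_bits >> bit) & 1)
        if closed == active_high:
            alarms.append(name)
    return alarms


print(active_alarms(0b101))
```

Supporting both polarities matters in practice, because many enclosure sensors are wired normally closed so that a cut wire also raises an alarm.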

Topics where the XR5610 delivers at the Edge are:

- Form factor and deployability

- Environment and rugged design

- Efficient power options

Form factor and deployability

The monolithic chassis design of the XR5610 is a traditional, short-depth form factor and suits certain deployment cases more efficiently than the XR8000. This form factor will often be preferred where limited or single-server edge deployments are required, or for a planned long-term installation with limited or planned upgrades.

Figure 2. Site support cabinet

The XR5610 is compatible with much of today’s Edge infrastructure. These servers are designed with a short-depth, “400mm Class” form factor that is compatible with most existing Telecom Site Support Cabinets, with flexible Power Supply options and dynamic power management to make efficient use of limited resources at the edge.


This 400mm Class server fits well within the commonly deployed edge enclosure depths of 600mm. With front maintenance capabilities, the XR5610 can be installed in Edge Cloud racks, and provide sufficient front clearance for power and network cabling, without creating a difficult-to-maintain cabling design or potentially one that obstructs airflow.

Environment and rugged design

While the XR5610 is designed to meet the environmental requirements of NEBS Level 3 and GR-3108 Class 1 for deployment at the Telecom Edge, Dell also wanted to create a platform with uses and applications outside the Telecom sector. The PowerEdge XR5610 is also designed as a ruggedized compute platform for both military and maritime environments. The XR5610 is tested to MIL-STD and Maritime specifications, including shock, vibration, altitude, sand, and dust. This wider vision for the deployment potential of the XR5610 creates a computing platform that can exist comfortably in an O-RAN Edge Cloud environment without being restricted to Telecom only.

A smart filtered bezel option is also available so the XR5610 can work in dusty environments and send an alert when a filter should be replaced. This saves maintenance costs because technicians can be called out on an as-needed basis, and customers don’t have to be concerned with over-temperature alarms caused by prematurely clogged filters.

Efficient power options

The XR5610 supports 2 PSU slots that can accommodate multiple power capacities, in both 120/240 VAC and -48 VDC input powers.

Dell has worked with our power supply vendors to create an efficient range of Power Supply Units (PSUs), from 800W to 1800W. This allows the customer to select a PSU that most closely matches the power available at the facility and the power draw of the server, reducing power lost in the voltage conversion process.
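Matching the PSU to the server's draw is a sizing exercise, because conversion efficiency typically peaks near half load and falls off at light load. A minimal sketch, assuming a 1+1 redundant configuration in which one PSU must be able to carry the whole load. The intermediate capacities in `PSU_CAPACITIES_W` and the 60% target utilization are illustrative assumptions, not Dell's actual catalogue or guidance.

```python
# Hypothetical catalogue spanning the 800 W to 1800 W range in the text.
PSU_CAPACITIES_W = [800, 1100, 1400, 1800]


def pick_psu(expected_draw_w, max_load_fraction=0.6, capacities=PSU_CAPACITIES_W):
    """Smallest PSU that carries the full expected draw at or below the
    target utilization (1+1 redundancy: either PSU may run alone).

    Picking the smallest qualifying unit keeps utilization as high as the
    target allows, closer to the efficiency sweet spot than an oversized PSU.
    """
    for cap in sorted(capacities):
        if expected_draw_w <= cap * max_load_fraction:
            return cap
    raise ValueError("no PSU in the catalogue is large enough")


print(pick_psu(450), pick_psu(700))
```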

Conclusion

The Dell PowerEdge XR servers, in particular the XR5610 and XR8000, are providing a new Infrastructure Hardware Foundation that allows Wireless Operators to transition away from traditional, purpose-built, classical BBU appliances, decoupling HW and SW to an open, virtualized RAN that gives operators the choice to create innovative, best-in-class solutions from a multi-vendor ecosystem.

Dell’s PowerEdge XR8000 for Telecom/Edge Compute

Fri, 31 Mar 2023 17:38:53 -0000


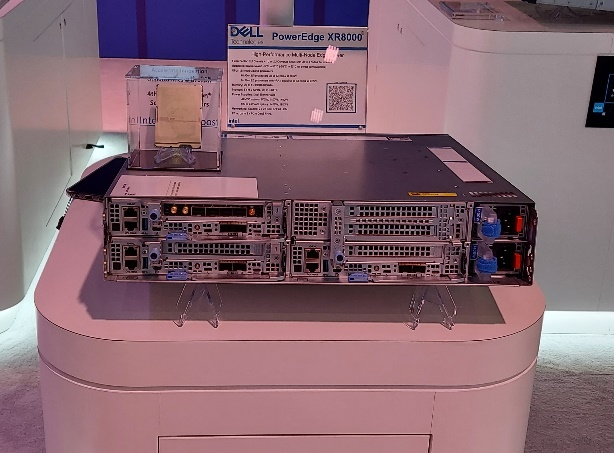

The design goals of a Telecom-inspired Edge Server are not only to complement existing installations, such as traditional Baseband Units (BBUs), all the way out to the cell site, but eventually to replace these purpose-built proprietary platforms with a cloud-based, open solution. The new Dell Technologies PowerEdge XR8000 achieves this goal in terms of form factor, operations, and environmental specifications.

Figure 1. XR8000 2U Chassis


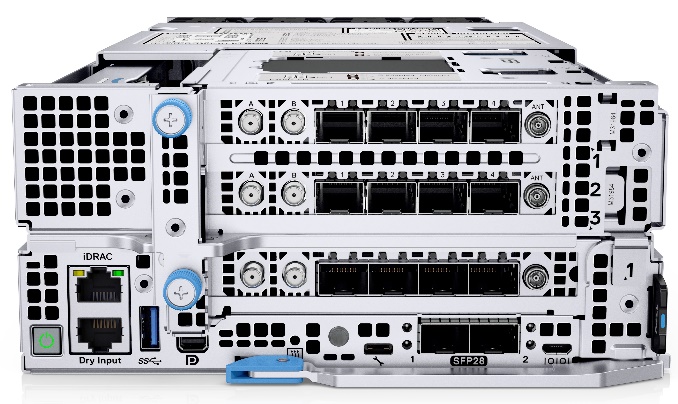

The XR8000 is composed of a 2U, short depth, 400mm class Chassis with options to choose from 1U or 2U half-width hot-swappable Compute Sleds with up to 4 nodes per chassis. The XR8000 supports 3 sled configurations designed for flexible deployments. These can be 4 x 1U sleds, 2 x 1U and 1 x 2U sleds or 2 x 2U sleds.
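The three supported sled mixes follow from the chassis geometry. A minimal sketch, assuming the 2U chassis can be modeled as two half-width bays, each 2U tall, where a bay holds either two 1U sleds or one 2U sled (this bay model is an illustration, not a Dell specification):

```python
from itertools import combinations_with_replacement

# Assumed model: two half-width bays, each 2U tall.
BAY_OPTIONS = ("2 x 1U", "1 x 2U")


def chassis_configs():
    """Enumerate the distinct sled mixes for a two-bay chassis."""
    configs = []
    # Bay order does not matter, so use combinations with replacement.
    for bays in combinations_with_replacement(BAY_OPTIONS, 2):
        one_u = sum(2 for b in bays if b == "2 x 1U")
        two_u = sum(1 for b in bays if b == "1 x 2U")
        configs.append({"1U": one_u, "2U": two_u, "nodes": one_u + two_u})
    return configs


for cfg in chassis_configs():
    print(cfg)
```

The enumeration yields exactly three mixes (4 x 1U, 2 x 1U plus 1 x 2U, and 2 x 2U), matching the configurations listed above, with four nodes as the maximum.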

The Chassis also supports 2 PSU slots that can accommodate up to 5 power capacities, with both 120/240 VAC and -48 VDC input power supported.

The 1U and 2U Compute Sleds are based on Intel’s 4th Generation Xeon Scalable Processors, up to 32 cores, with support for both Sapphire Rapids SP and Edge Enhanced (EE) Intel® vRAN Boost processors. Both sled types have 8 x RDIMM slots, support for 2 x M.2 NVMe boot devices with optional RAID1 support, 2 optional 25GbE LAN-on-Motherboard (LoM) ports, and 8 Dry Contact Sensors through an RJ-45 connector.

The 1U Compute Sled adds support for one x16 FHHL (Full Height Half Length) slot (PCIe Gen4 or Gen5).

Figure 2. XR8610t 1U Compute Sled

The 2U Compute Sled builds upon the foundation of the 1U Sled and adds support for an additional two x16 FHHL slots.

These 2 Sled configurations can create both dense compute and dense I/O configurations. The 2U Sled also provides the ability to accommodate GPU-optimized workloads.

This sledded architecture is designed for deployment into traditional Edge and Cell Site Environments, complementing or replacing current hardware and allowing for the reuse of existing infrastructure. Design features that make this platform ideal for Edge deployments include:

Figure 3. XR8620t 2U Compute Sled

- Improved Thermal Performance

- Efficient maintenance operations

- Reduced power cabling

- Simplified generational upgrades

Let’s take a look at each one of these.

Improved thermal performance

The XR8000 is designed for NEBS Level 3 compliance, which specifies an operational temperature range of -5°C to +55°C. However, creating a server that operates efficiently through this whole temperature range can require some “padding” on either side. Dell has designed the XR8000 to operate both below -5°C and above +55°C, which creates a server that operates comfortably and efficiently across the NEBS Level 3 range.

On the low side of the temperature scale, as discussed in the sixth blog in this series, commercial-grade components are typically not specified to operate below 0°C. New to Dell PowerEdge design is the XR8000 sled pre-heater controller, which, on a cold start where the temperature is below -5°C, internally warms the server up to the specified starting temperature before applying power to the rest of the server.
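The pre-heater sequence is a small control loop: check the temperature, heat if it is too cold, and only then apply main power. A conceptual sketch, not Dell firmware: the callbacks are hypothetical hooks, the -5°C trigger comes from the text, and the target temperature is an assumed parameter because the actual "specified starting temperature" is not stated here.

```python
def cold_start(read_temp_c, heater_on, heater_off, power_on,
               trigger_c=-5.0, target_c=-5.0, max_polls=10_000):
    """Hold main power off and run the heater until the sled is warm enough.

    read_temp_c, heater_on, heater_off, power_on: hypothetical hardware hooks.
    trigger_c: heat only if the cold-start reading is below this (from text).
    target_c:  temperature to reach before powering on (assumed value).
    Returns the number of polls spent heating.
    """
    polls = 0
    if read_temp_c() < trigger_c:
        heater_on()
        while read_temp_c() < target_c:
            # A real controller would pause between readings.
            polls += 1
            if polls >= max_polls:
                heater_off()
                raise TimeoutError("sled did not reach start temperature")
        heater_off()
    power_on()
    return polls
```

Keeping the main power off until the target is reached is the key design point: commercial-grade components never see power below their rated minimum temperature.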

On the high side of the temperature scale, Dell is introducing new, advanced heat sink technologies to allow for extended operations above +55°C. Another advantage of this new class of heat sinks will be in power savings, as at more nominal operating temperatures the sled’s cooling fans will not have to spin at as high a rate to dissipate the equivalent amount of heat, consuming fewer watts per fan.
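The power saving from slower fans is larger than it might first appear. Under the idealized fan affinity laws, fan power scales with the cube of rotational speed, so a modest speed reduction yields an outsized power reduction. A short sketch; the 20 W rated fan power is a hypothetical figure, and real fans deviate somewhat from the ideal cube law.

```python
def fan_power_w(rated_power_w, speed_fraction):
    """Fan affinity law: power scales with the cube of speed (idealized)."""
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0 and 1")
    return rated_power_w * speed_fraction ** 3


# Hypothetical 20 W fan: compare full speed with 80% speed.
for speed in (1.0, 0.8):
    print(f"{speed:.0%} speed: {fan_power_w(20.0, speed):.2f} W")
```

In this model, running 20% slower cuts fan power roughly in half, which is why better heat sinks that let fans idle lower translate directly into watts saved per fan.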

Efficient maintenance operations

Figure 4. XR8000 Front View. All Front Maintenance


Figure 5. XR8000 Rear View. Nothing to see here.

In many Cell Site deployments, access to the back of the server is not possible without pulling the entire server. This is typical, for example, in dedicated Site Support Cabinets, with no rear access, or in Concrete Huts where racks of equipment are located close to the wall, allowing no rear aisle for maintenance.

Maintenance procedures at a Cell Site are intended to be fast and simple. The area where a Cell Site Enclosure sits is not a controlled environment. Sometimes there will be a roof over the enclosure to reduce solar load, but more often than not it is exposed to everything Mother Nature has to offer. So FRU (Field Replaceable Unit) maintenance needs to be simple and fast, and it needs to quickly bring the system back into full service. For the XR8000, the 2 basic FRUs are the Compute Sled and the PSUs. Simple, fast procedures not only restore service more quickly, but the shorter maintenance cycle also allows more sites to be serviced by the same technicians, saving both time and money.

Reduced power cabling

Up to four compute sleds are supported in the XR8000 Chassis, supplied by two 60mm PSUs. A traditional rackmount equivalent would be either 4U of single-socket or 2U to 4U of dual-socket servers. Assuming redundant PSUs for each server, there would be between four and eight PSUs for equivalent compute capacity, and between four and eight power cables. This consolidation of PSUs and cables not only reduces the cost of the installation, due to fewer PSUs, but also reduces the cabling, clutter, and Power Distribution Unit (PDU) ports used in the installation.
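The consolidation arithmetic above can be written out directly. A short sketch, assuming two PSUs per standalone server and one power cord per PSU; the server counts mirror the 4U single-socket and 2U dual-socket cases in the text.

```python
XR8000_PSUS = 2  # two PSUs shared by up to four sleds (from the text)


def standalone_psus(servers, psus_per_server=2):
    """PSUs (and, at one cord per PSU, power cables) for standalone servers."""
    return servers * psus_per_server


for label, servers in [("4 x 1U single-socket", 4), ("2 x dual-socket", 2)]:
    n = standalone_psus(servers)
    print(f"{label}: {n} PSUs / {n} cords vs XR8000: {XR8000_PSUS}")
```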

Simplified generational upgrades

With the release of Intel’s new 4th Generation Xeon Scalable Processor, a single server in 2023 can do the work of multiple servers from only 10 years ago. Not only can processor efficiency be expected to continue to improve, but greater capabilities and performance in peripherals, including GPUs, DPUs/IPUs, and Application Specific Accelerators, will continue this processing densification trend. The XR8000 Chassis is designed to accommodate multiple generations of future Compute Sleds, enabling fast and efficient upgrades while keeping service disruptions to a minimum.

Conclusion

It is said that imitation is the sincerest form of flattery. In this respect, our customers have requested, and Dell has delivered the XR8000, which is designed in a compact and efficient form factor with similar maintenance procedures as found in the existing, deployed RAN infrastructure.

Building upon a Classical BBU architecture, the XR8000 adopts an all-front maintenance approach with 1U and 2U Sledded design that makes server/PSU installation and upgrades quick and efficient.

Dell’s PowerEdge XR4000 for Telecom/Edge Compute

Wed, 08 Feb 2023 18:04:19 -0000


Compute capabilities are increasingly migrating away from the centralized data center and being deployed closer to the end user, where data is created, processed, and analyzed in order to generate rapid insights and new value.

Dell Technologies is committed to building infrastructure that can withstand unpredictable and challenging deployment environments. In October 2022, Dell announced the PowerEdge XR4000, a high-performance server, based on the Intel® Xeon® D Processor that is designed and optimized for edge use cases.

Figure 1. Dell PowerEdge XR4000 “rackable” (left) and “stackable” (right) Edge Optimized Servers

The PowerEdge XR4000 is designed from the ground up with the specifications to withstand rugged and harsh deployment environments for multiple industry verticals. This includes a server/chassis designed to the foundation requirements of GR-63-CORE (including -5°C to +55°C operations) and GR-1089-CORE for NEBS Level 3 and GR-3108 Class 1 certification. Designed beyond the NEBS requirements of Telecom, the XR4000 also meets MIL-STD specifications for defense applications, marine specifications for shipboard deployments, and environmental requirements for installations in the power industry.

The XR4000 marks a continuation of Dell Technologies’ commitment to creating platforms that can withstand the unpredictable and often challenging deployment environments encountered at the edge, as focused compute capabilities are increasingly migrating away from the Centralized Data Center and being deployed closer to the End User, at the Network Edge or on premises.

Attention to a wide range of deployment environments creates a platform that can be reliably deployed from the Data Center to the Cell Site, to the Desktop, and anywhere in between. Its rugged design makes the XR4000 an attractive option to deploy at the Industrial Edge, on the Manufacturing Floor, with the power and expandability to support a wide range of computing requirements, including AI/Analytics with bleeding-edge GPU-based acceleration.

The XR4000 is also an extremely short-depth platform, measuring only 342.5mm (13.48 inches) deep, which makes it deployable in a wide variety of locations. With a focus on deployment flexibility, the “rackable” version supports EIA-310-compatible 19” rack mounting rails, while the “stackable” version supports common, industry-standard VESA/DIN rail mounts, with built-in latches that allow chassis to be mounted on top of each other using a single VESA/DIN mount.

Additionally, both Chassis types have the option to include a lockable intelligent filtered bezel, to prevent unwanted access to the Sleds and PSUs, with filter monitoring which will create a system alert when the filter needs to be changed. Blocking airborne contaminants, as discussed in a previous blog, is key to extending the life of a server by reducing contaminant build-up that can lead to reduced cooling performance, greater energy costs, corrosion and outage-inducing shorts.

The modular design of the XR4000, along with the short-depth Compute Sled design creates an easily scalable solution. Maintenance procedures are simplified with an all-front-facing, sled-based design.

Conclusion

Specifying and deploying Edge Compute can very often involve selecting a Server Solution outside of the more traditional data center choices. The XR4000 addresses the challenges of moving compute to the Edge with a compact, NEBS-compliant, and ruggedized approach, with Sled-based servers, all-front access, reversible airflow, and flexible mounting options, to provide ease of maintenance and upgrades, reducing server downtime and improving TCO.

Computing on the Edge–Other Design Considerations for the Edge: Part 2

Thu, 02 Feb 2023 15:30:52 -0000


The previous blog discussed the physical aspects of a ruggedized chassis, including short depth and serviceability. The overarching theme was creating a durable server in a form factor that can be deployed in a wide range of Telecom and Edge compute environments.

This blog will focus on the inside of the server, specifically design aspects that cover efficient and long-term, reliable server operations. This blog covers the following topics:

- Optimal Thermal Performance

- Power Efficiency

- Contaminant Protections and Smart Bezel Design

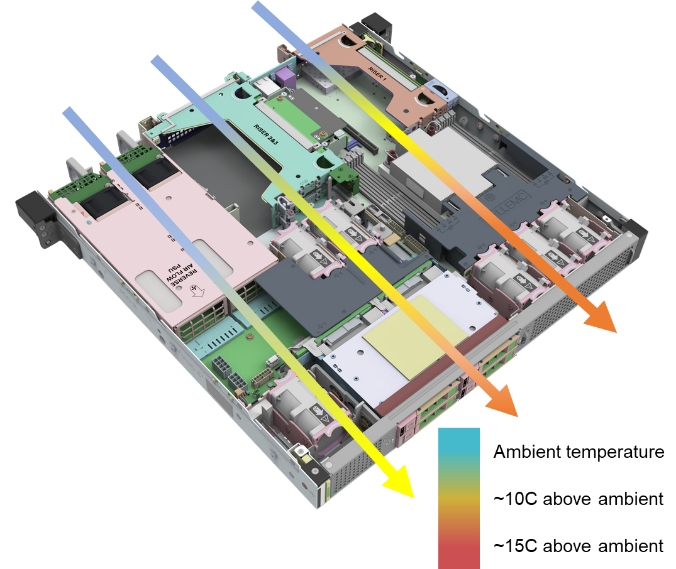

Optimal thermal performance

Figure 1. Serial Heat Rise Example from Front to Back

Certainly, one of the greatest challenges of Edge Server Design is architecture and layout. It is extremely challenging to optimize airflow such that heat is efficiently dissipated over the entire operational temperature and humidity range.

In an Edge Server, there are still the same compute, storage, memory, and networking demands required for a traditional data center server. However, the designers are dealing with 30 percent less real estate to work with—and even less space when dealing with some of the sledded server architectures, such as with Dell’s new PowerEdge XR4000 Server.

These design restrictions typically result in components being placed much closer together on the motherboard and a concentration of heat generation in a smaller area. Smart component placement that keeps pre-heated air from passing over other sensitive components, together with advanced heat sinks, high-performance fans, and air channels that direct air through the server, is critical to creating server designs that can tolerate temperature extremes without creating excessive hotspots.
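Figure 1's serial heat rise can be estimated with a basic energy balance: each component heats the shared airstream by ΔT = P / (ρ · c_p · Q), so every downstream part sees warmer inlet air. A minimal sketch; the air properties are rough sea-level approximations (air is less dense when hot and at altitude, which increases the rise), and the airflow and component wattages in the example are hypothetical.

```python
AIR_DENSITY = 1.2   # kg/m^3, approximate at sea level and ~20 °C (assumed)
AIR_CP = 1005.0     # J/(kg*K), approximate specific heat of air


def serial_inlet_temps(ambient_c, airflow_m3s, component_watts):
    """Inlet air temperature seen by each component along one airflow path.

    Returns (per-component inlet temperatures, exhaust temperature) in °C.
    """
    if airflow_m3s <= 0:
        raise ValueError("airflow must be positive")
    mass_flow = AIR_DENSITY * airflow_m3s  # kg/s of air through the path
    temps = []
    t = ambient_c
    for watts in component_watts:
        temps.append(t)                   # this part breathes the current air
        t += watts / (mass_flow * AIR_CP)  # then heats it for the next part
    return temps, t


# Hypothetical path at the NEBS 55 °C ceiling: CPU, then DIMMs, then a NIC.
inlets, exhaust = serial_inlet_temps(55.0, 0.02, [150.0, 30.0, 75.0])
print([round(x, 1) for x in inlets], round(exhaust, 1))
```

Placing the hottest part first, as in this example, pushes the largest temperature rise onto every component behind it, which is exactly what careful layout tries to avoid.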

These designs are repeatedly simulated and optimized using a Computational Fluid Dynamics (CFD) application. Hot spots are identified and mitigated until a design is created that maintains all active components within their specified operating temperatures over the entire operational range of the server. For example, for NEBS Level 3 this would range from -5°C to +55°C, as discussed in the third blog of this series.

Bringing together these server performance requirements, thermal dissipation challenges, component selection, and effective airflow simulations, while involving considerable engineering and applied science is very much an art form. A well-designed server is remarkable not only in its performance but the efficiency and elegance of its layout. Perhaps that’s a little overboard, but I can’t help but admire an efficient server layout and consider all the design iterations, time, engineering efforts and simulations that went into its creation.

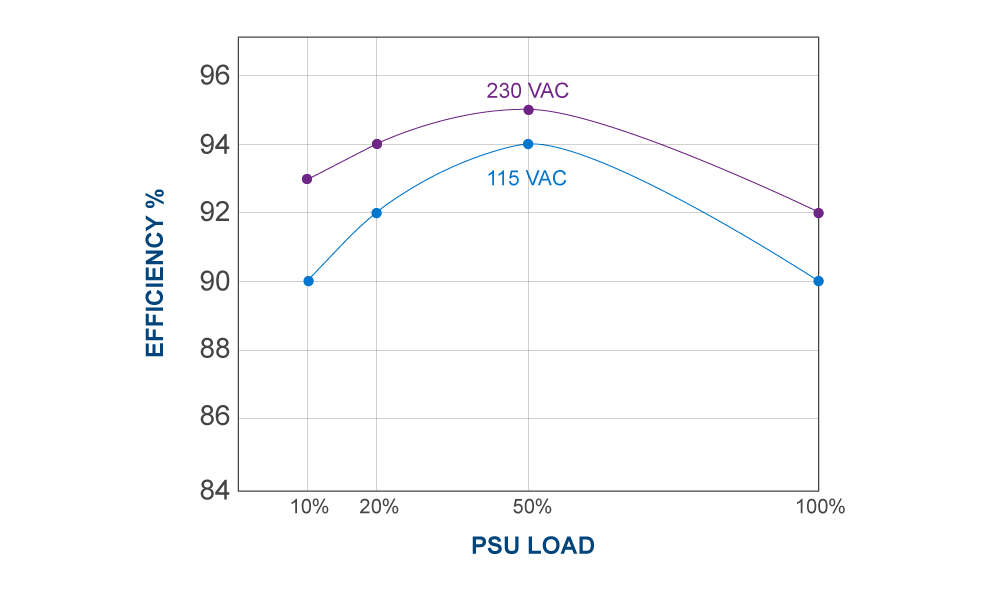

Figure 2. Example 80PLUS Platinum Efficiency Core

Power efficiency


Having high-efficiency Power Supply Unit (PSU) options that support multiple voltages (both AC and DC) and multiple PSU capacities allows for the optimal conversion of input power (110VAC, 220VAC, -48VDC) to server-consumable voltages (12VDC, 5VDC).

Power supplies operate most efficiently within a certain utilization range. PSUs are generally rated with a voluntary certification called 80PLUS, which rates power conversion efficiency. The minimum rating is 80 percent conversion efficiency. The flip side of an 80 percent efficiency rating is that 20 percent of the input power is wasted as heat. The maximum PSU efficiency rating is currently around 96 percent. Of course, the higher the efficiency, the higher the price of the PSU, but with electricity costs increasing globally, correctly dimensioning the PSU can result in significant TCO savings.

Ensuring that a server vendor has multiple PSU options that provide optimal PSU efficiencies, over the performance range of the server can save hundreds to thousands of dollars in inefficient power conversion over the lifetime of the server. If you also consider that the power conversion loss represents generated heat, the potential savings in cooling costs are even greater.
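The cost of conversion loss follows from the efficiency definition: input power is load divided by efficiency, so the loss is load × (1/efficiency − 1). A short sketch of the lifetime cost; the 500 W continuous load, $0.15/kWh tariff, and five-year service life are hypothetical inputs, and the avoided cooling cost is not included.

```python
HOURS_PER_YEAR = 8760


def conversion_loss_cost(load_w, efficiency, price_per_kwh, years):
    """Cost of energy lost as heat in the PSU over the given period."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    loss_w = load_w * (1.0 / efficiency - 1.0)  # input minus delivered power
    kwh = loss_w * HOURS_PER_YEAR * years / 1000.0
    return kwh * price_per_kwh


# Hypothetical inputs: 500 W continuous load, $0.15/kWh, five-year life.
for eff in (0.80, 0.90, 0.94):
    print(f"{eff:.0%}: ${conversion_loss_cost(500, eff, 0.15, 5):,.0f}")
```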

Contaminant protection and Smart Bezel design

GR-63-CORE specifies three types of airborne contaminants that need to be addressed: particulate, organic vapors, and reactive gases. Organic vapors and reactive gases can lead to rapid corrosion, especially where copper or silver components are exposed in the server. With the density of server components on a motherboard increasing from generation to generation and the size of the components decreasing, corrosion becomes an increasingly complex issue to resolve.

Particulate contaminants range from fine particles, such as salt crystals, to coarse ones, such as common dust and metallic particles like zinc whiskers. They can cause corrosion, but they can also result in leakage, eventual electrical arcing, and sudden failures. Common dust build-up within a server can reduce the efficiency of heat dissipation, and dust can absorb humidity, which can cause shorts and resulting failures.

Hybrid outdoor cabinet solutions may become more common as operators look to reduce energy costs. These would involve a combination of Air Ventilation (AV), Active Cooling (AC), and Heat Exchangers (HEX). Depending on the region, AV+AC (warmer climates) or AV+HEX (cooler climates) can be used to efficiently evacuate heat from an enclosure, falling back on AC or HEX only when AV cannot sufficiently cool the cabinet. However, exposure to outside air brings a whole new set of design challenges, including an increased risk of corrosion.
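The fallback behavior described above can be sketched as a mode-selection function. This Python sketch is illustrative only: the 5°C margin, the cabinet temperature limit, and the warm/cool region flag are hypothetical parameters, and a real cabinet controller would also add hysteresis to avoid rapid mode switching.

```python
from enum import Enum


class Mode(Enum):
    AV = "air ventilation only"
    AV_PLUS_AC = "air ventilation + active cooling"
    AV_PLUS_HEX = "air ventilation + heat exchanger"


def select_mode(outside_c: float, cabinet_c: float, cabinet_limit_c: float,
                region_is_warm: bool, margin_c: float = 5.0) -> Mode:
    """Prefer outside air; fall back to AC (warm regions) or HEX (cool regions).

    Assumption: outside air is treated as sufficient only if it is at least
    margin_c below the cabinet limit and the cabinet is currently within limit.
    """
    av_sufficient = (outside_c + margin_c <= cabinet_limit_c
                     and cabinet_c <= cabinet_limit_c)
    if av_sufficient:
        return Mode.AV
    return Mode.AV_PLUS_AC if region_is_warm else Mode.AV_PLUS_HEX


if __name__ == "__main__":
    print(select_mode(outside_c=20, cabinet_c=35, cabinet_limit_c=45, region_is_warm=True))
    print(select_mode(outside_c=42, cabinet_c=44, cabinet_limit_c=45, region_is_warm=True))
```

The design choice mirrors the text: ventilation with outside air is the default because it is the cheapest, and the more energy-intensive or more expensive mechanism is engaged only when AV alone cannot hold the cabinet temperature.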

One method of protecting against corrosive contaminants in hostile environments is a Conformal Covering. This is a thin layer of non-conductive material, typically an acrylic, that is applied to the electronics in a server and acts as a barrier to corrosive elements. The layer is thin enough that it does not impede heat conduction. Conformal Coverings can also help against dust build-up. This is not a common practice in servers, due to the complexity of applying the coating to the multiple modules (motherboard, DIMMs, PCIe cards, and more) that compose a modern server, and it is not without cost. However, weighing the cost of coating a server against the savings of using AV may make this practice more common in the future.

A filtered bezel is a common option for dust. These filters block dust from entering the server, but the dust collects in the filter itself. Eventually, the accumulated dust reduces airflow through the server, which can cause components to run hotter or the fans to spin faster, consuming more electricity.

Periodically replacing filters is critical, but how often, and when? Smart Filter Bezels can be an effective answer to this question. These bezels notify operations when a filter needs to be swapped, which can save time spent on unnecessary periodic checks or on reacting rapidly when over-temperature alarms are suddenly received from the server.
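The sketch below illustrates, in Python, one heuristic a monitoring script could use to flag a loaded filter, based on the effect described above: a clogged filter forces the fans to spin faster for the same inlet temperature. This is not how Dell's Smart Filter Bezel works (its mechanism is not described here); the clean-filter baseline, the 20 percent threshold, and the function name are all hypothetical.

```python
from statistics import mean
from typing import Callable, Iterable, Tuple


def filter_needs_service(
    samples: Iterable[Tuple[float, float]],
    clean_baseline_rpm: Callable[[float], float],
    rise_threshold: float = 0.20,
) -> bool:
    """Return True when fans run well above their clean-filter baseline.

    samples: recent (inlet_temp_c, fan_rpm) readings (must be non-empty).
    clean_baseline_rpm: hypothetical calibration mapping inlet temperature
        to the fan speed measured when the filter was new.
    rise_threshold: hypothetical fractional rise that triggers a swap notice.
    """
    ratios = [rpm / clean_baseline_rpm(inlet) for inlet, rpm in samples]
    # Averaging over the window smooths out short load spikes.
    return mean(ratios) - 1.0 > rise_threshold


if __name__ == "__main__":
    # Hypothetical calibration: 4000 RPM at 25°C inlet, +150 RPM per degree.
    baseline = lambda inlet_c: 4000 + 150 * (inlet_c - 25)
    print(filter_needs_service([(25, 4100), (30, 4800)], baseline))  # clean filter
    print(filter_needs_service([(25, 5200), (30, 5900)], baseline))  # loaded filter
```

Normalizing against inlet temperature matters: fans legitimately speed up on hot days, so comparing raw RPM to a fixed value would raise false alarms.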

Conclusion

The last two blogs in this series covered a few of the design aspects that should be considered when designing a compute solution for the edge that is powerful, compact, ruggedized, environmentally tolerant and power efficient. These designs need to be flexible, deployable into existing environments, often short-depth, and operate reliably with a minimum of physical maintenance for multiple years.

Dell PowerEdge Servers for OpenRAN Edge Deployments

Mon, 16 Jan 2023 19:50:53 -0000


Summary

Dell Technologies is helping to shape the future of Open RAN solutions with our partnerships and our high-performance, purpose-built XR11 and XR12 PowerEdge servers designed for Open RAN and edge deployments.

Introduction

The future of telecommunications includes an open, cloud-native architecture within an open ecosystem of vendors working together to build this new architecture. One of the more exciting aspects of this open future is Open Radio Access Networks (Open RAN). Open RAN is an industry-wide movement that promotes the adoption of open and interoperable solutions at the RAN.


PowerEdge XR11/XR12: Designed for O-RAN

Open RAN provides opportunities to replace the proprietary, purpose-built RAN equipment of the past with standardized, virtualized hardware that can be deployed anywhere—at the far edge, regional edge, or centralized data centers. Also, intelligent controllers can provide optimized performance and enhanced automation capabilities to improve operational efficiency.

In the O-RAN frameworks, you can separate the baseband unit (BBU) of the traditional RAN into virtualized distributed unit (vDU) and virtualized centralized unit (vCU) components. You can also scale these components independently as control- and user-plane traffic requirements dictate. When building an open-hardware platform for a vRAN architecture, you must consider six critical factors:

- Form factor

- Environment

- Components

- Security

- Automation and management

- Supply chain

With the growing number of edge deployments required to support 5G O-RAN services, edge-optimized cloud infrastructure is essential. Addressing these six factors helps telco providers build their 5G RAN on a scalable, highly available, and long-term sustainable foundation. Dell Technologies considered each of these factors when designing its PowerEdge XR11 and XR12 servers. These servers are built specifically for O-RAN and edge environments, including multi-access edge computing (MEC) and content delivery network (CDN) applications. The following sections examine how the XR11 and XR12 servers meet, and in many cases exceed, the criteria for O-RAN and edge deployments across these six critical factors.

Best-of-breed components built for harsh environments

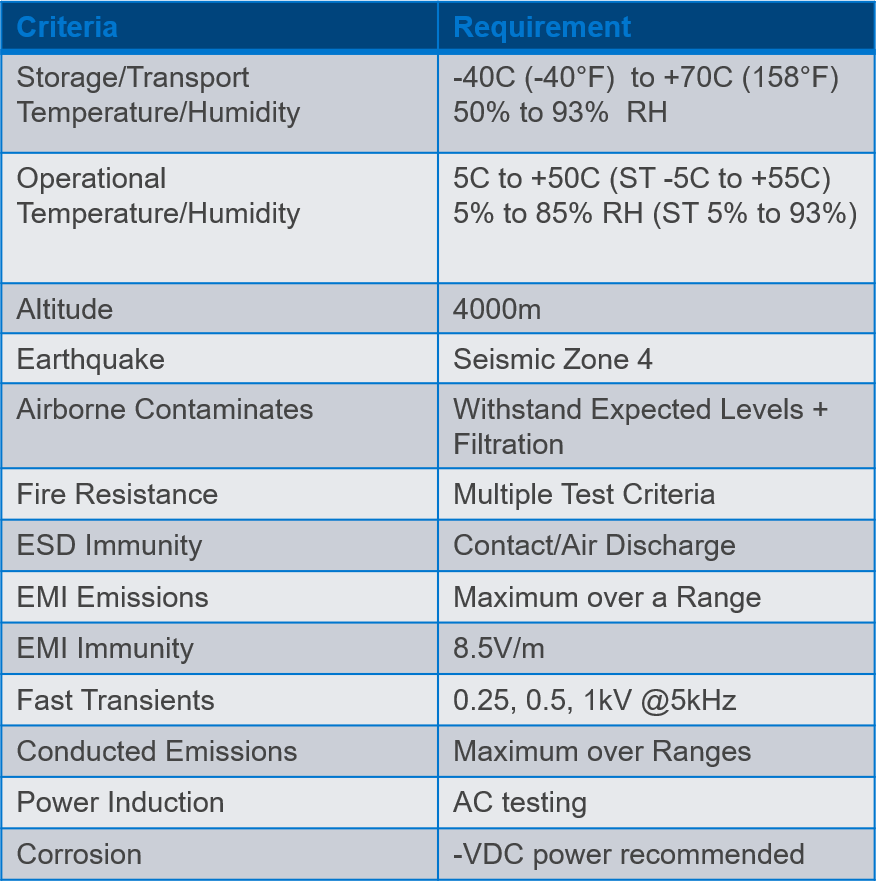

Unlike data centers, which are carefully controlled environments, RAN components are often subject to extreme temperature changes and less-than-ideal conditions such as humidity, dust, and vibration. For years, the telecommunications industry has used the Network Equipment-Building System (NEBS) as a standard for telco-grade equipment design. The PowerEdge XR11 and XR12 are designed to exceed NEBS Level 3 compliance (meets or exceeds the GR-63-CORE and GR-1089-CORE standards). They also meet military and marine standards for shock, vibration, sand, dust, and other environmental challenges.

Because they are fully operational across extreme temperatures from -5°C (23°F) to 55°C (131°F), XR11/12 servers can be deployed in almost any environment, even where heat, dust, and humidity are factors. The XR11/12 series is designed to withstand earthquakes and is fully tested to NEBS Seismic Zone 4 levels. As a result, you can trust Dell PowerEdge servers to keep working no matter where they are deployed.

The PowerEdge XR11 and XR12 provide significant flexibility over purpose-built, all-in-one appliances by using the industry’s most advanced, best-of-breed components. Also, by providing multiple CPU, storage, peripheral, and acceleration options, PowerEdge XR11/12 servers enable telecommunications providers to deploy their vRAN systems in many different environments.

Both models feature the following components:

- 3rd Generation Intel® Xeon® Scalable processors

- Up to 8 DIMMs

- PCI Express 4.0 enabled expansion slots

- Choice of network interface technologies

- Up to 90 TB storage

One example test shows the performance possibilities of the PowerEdge XR12 with 3rd Gen Intel® Xeon® Scalable processors: the solution delivered 2x the massive MIMO throughput for a 5G vRAN deployment compared to the previous generation.[1]

Dell security, management systems, and supply-chain advantage

PowerEdge XR11/12 servers are designed with a security-first approach to deliver proactive safeguards through integrated hardware and software protection. This security extends from a hardware-based silicon root of trust to asset retirement across the entire supply chain. From the moment a PowerEdge server leaves our factory, we can detect and verify whether it has been tampered with, providing a foundation of trust that continues for the life of the server. The Integrated Dell Remote Access Controller (iDRAC) is the source of this day-zero trust. After the XR11/12 server is plugged in, iDRAC checks the firmware against the factory configuration down to the smallest detail. If you change the memory, iDRAC detects it. If you change the firmware, iDRAC detects it. Also, we build every PowerEdge server with a cyber-resilient architecture[2] that includes firmware signatures, drift detection, and BIOS recovery.

Besides providing proactive and comprehensive security, PowerEdge XR11/12 servers combine ease of management with automation to reduce operational complexity and cost while accelerating time-to-market for new services. Dell OpenManage provides a single systems-management platform across all Dell components. This platform makes it easier for telecommunications providers to manage their hardware components remotely, from configuration to security patches. Dell also delivers powerful analytics capabilities to help manage server data and cloud storage. iDRAC's agentless server monitoring also allows telecommunications providers to proactively detect and mitigate potential server issues before they impact production traffic. By analyzing telemetry data, iDRAC can detect the root cause of poor server performance and identify cluster events that can predict future hardware failure.
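iDRAC also exposes the DMTF Redfish REST API, so the same telemetry can be consumed by external tooling. As a hedged sketch, the Python function below classifies temperature sensors in a Redfish `Thermal` resource using the schema fields `Temperatures`, `ReadingCelsius`, `UpperThresholdNonCritical`, and `UpperThresholdCritical`. On iDRAC9 this resource is commonly found at `/redfish/v1/Chassis/System.Embedded.1/Thermal`, but verify the path on your firmware. The sample payload is invented for illustration, and fetching it (HTTPS with credentials) is left out.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical payload shaped like a Redfish Thermal resource (values invented).
SAMPLE_THERMAL: Dict[str, Any] = {
    "Temperatures": [
        {"Name": "CPU1 Temp", "ReadingCelsius": 92,
         "UpperThresholdNonCritical": 88, "UpperThresholdCritical": 95},
        {"Name": "System Board Inlet Temp", "ReadingCelsius": 47,
         "UpperThresholdNonCritical": 42, "UpperThresholdCritical": 47},
        {"Name": "System Board Exhaust Temp", "ReadingCelsius": 40,
         "UpperThresholdNonCritical": 70, "UpperThresholdCritical": 75},
    ]
}


def temperature_alerts(thermal: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (sensor name, severity) for sensors at or above a threshold."""
    alerts = []
    for sensor in thermal.get("Temperatures", []):
        reading = sensor.get("ReadingCelsius")
        if reading is None:  # sensor absent or not reporting
            continue
        name = sensor.get("Name", "unknown")
        critical = sensor.get("UpperThresholdCritical")
        warning = sensor.get("UpperThresholdNonCritical")
        if critical is not None and reading >= critical:
            alerts.append((name, "critical"))
        elif warning is not None and reading >= warning:
            alerts.append((name, "warning"))
    return alerts


if __name__ == "__main__":
    for name, severity in temperature_alerts(SAMPLE_THERMAL):
        print(f"{severity.upper():8} {name}")
```

With the invented sample, the CPU sensor is reported as a warning and the inlet sensor as critical, while the exhaust sensor is within limits.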

Over the last year, the importance of a secure and stable supply chain has become apparent as many manufacturers struggle to adapt to widespread supply-chain disruption. As telecommunications providers look to ramp up 5G services, they require partners they can depend on to deliver, innovate, scale, and support their plans for the future. Because we are the world’s largest supplier of data-center servers, telecommunications providers can depend on Dell Technologies. We operate in 180 countries worldwide, with 25 unique manufacturing locations, 50 distribution and configuration centers, and over 900 parts-distribution centers. Our global, secure supply chain means that telecommunications providers can grow their business with confidence.

Dell Open RAN reference architecture

Dell Technologies does not stop at the server. We work closely with our open partner ecosystem to integrate and validate our technology in multivendor solutions that provide a best-of-breed, end-to-end vRAN system. You will find this partnership at work in our latest technology preview of the Dell Open RAN reference architecture, featuring VMware Telco Cloud Platform (TCP) 1.0, Intel FlexRAN technology, and vRAN software from Mavenir. Our O-RAN solution architecture delivers the disaggregated components that compose the RAN: vRU, vCU, and vDU. It can also be deployed in hybrid (private and public) clouds as well as in bare-metal server environments. Having a pre-built, integrated solution allows telecommunications providers to deploy O-RAN solutions quickly and confidently, knowing that they have the power of our global supply chain and expert services behind them.

Conclusion

With many initial 5G core network transformations complete, telecommunications providers are now turning their attention to the RAN. For them, there are several paths to choose. They can continue to work with legacy vendors by growing out their proprietary RAN systems, missing out on the opportunity to build a best-of-breed RAN solution from multiple partners. Or, they can follow the path of Open RAN with Dell Technologies as a trusted partner to assemble and manage the right pieces from the industry’s O-RAN leaders.

Dell PowerEdge XR11/12 servers are the latest examples of our commitment to open 5G solutions. These servers are built by telco experts specifically for telco edge applications, using a security-first approach and featuring high-performance compute, storage, and analytics components. They have also been bundled with our broader Open RAN reference architecture to form the foundation of a seamless, complete vRAN solution that includes hardware, software, and services.

O-RAN is more than the edge of the future. It is a competitive edge for telecommunications providers that must quickly deliver and monetize 5G services, from private mobile networks to high-performance computing applications. Make Dell Technologies your competitive edge, and ask your Dell representative about our portfolio of telco-grade edge solutions.

[1] Bringing high performance and reliability to the edge with rugged Dell EMC PowerEdge XR servers

[2] PowerEdge Cyber Resilient Architecture Infographic



Computing on the Edge: Other Design Considerations for the Edge – Part 1

Fri, 13 Jan 2023 19:46:50 -0000


In past blogs, we addressed the requirements for NEBS Level 3 certification, with even higher demands depending on the Outside Plant (OSP) installation requirements. Now, additional design considerations come into play to create a hardware solution that not only survives the environment at the edge but also provides a platform that can be effectively deployed there.

Ruggedized Chassis Design

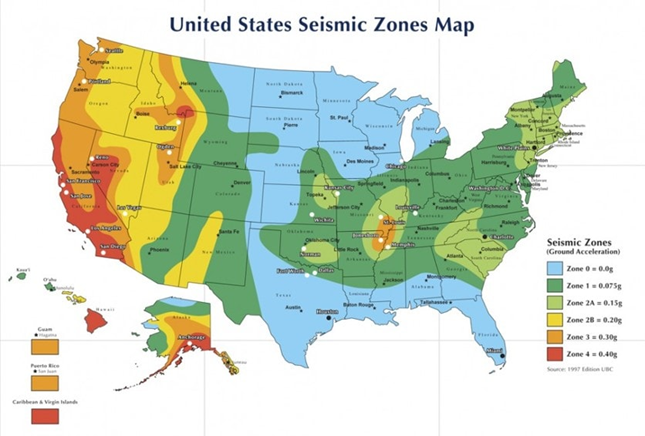

The first design consideration that we’ll cover for an Edge Server is the Ruggedized Chassis. This is certainly a chassis that can stand up to the demands of Seismic Zone 4 testing and can also withstand impacts, drops, and vibration, right?

Not necessarily.

While earthquakes are violent and demanding, they are relatively short-duration events, and the shock and vibration profile can differ significantly when the server is taken out from under the Cell Tower. We are talking beyond the base of the tower, to edge environments that might be encountered in Private Wireless or Multi-Access Edge Compute (MEC) deployments. Some vibration and shock impacts are tested in GR-63-CORE under the test criteria for Transportation and Packaging, but ruggedized designs need to go beyond this level of testing.

Figure 1. Portable Edge Compute Platforms

Consider, for example, ruggedized servers in mining or military environments, where compute setups can be more temporary in nature and often use portable cases, such as Pelican Cases. These cases are subject to environmental stresses and can require ruggedized rails and upgraded mounting brackets on the chassis for those rails. For longer-lasting deployments, enclosures can be less than ideal, requiring a device that meets all the requirements of a GR-3108-CORE Class 2 device and perhaps some additional considerations.

Dell Technologies also tests its Ruggedized (XR-series) Servers to MIL-STD-810 and Marine testing specifications. In general, MIL-STD-810 temperature requirements are aligned with GR-63-CORE on the high side but test operation down to -57°C (-70°F) on the low side. This reflects some extreme parts of the world where the military is expected to operate. MIL-STD-810 also covers land, sea, and air deployments, so its non-operational (shipping) criteria are much more in-depth, as are its acceleration, shock, and vibration criteria. These include scenarios such as crash survivability, where the server can be exposed to up to 40 g of acceleration. Of course, this tests not only the server but also the enclosure and mounting rails used in testing.

So why have I detoured onto MIL-STD and Marine testing? First, their extreme “dynamic” testing requirements, which are not seen in NEBS, are interesting in their own right. Second, creating a server that can survive MIL-STD and Marine environments complements NEBS and results in an even more durable product with applications beyond the Cellular Network.

Server Form Factor

Figure 2. Typical Short Depth Cell Site Enclosure

Another key factor in chassis design for the edge is the form factor. This involves understanding the physical deployment scenarios and legacy environments, leading to a server form factor that can be installed in existing enclosures without major infrastructure improvements. For servers, 19-inch rackmount or 2-post mounting is common, with 1U or 2U heights. But the key driver in chassis design for compatibility with legacy telecom environments is short depth.

Server depth is not covered by NEBS, but supplemental documentation created by telecom operators, and typically reflected in RFPs, defines the depth required for installation into legacy environments. For instance, AT&T’s Network Equipment Power, Grounding, Environmental, and Physical Design Requirements document states that “newer technology” deployed to a 2-post rack, which certainly applies to deployments like vRAN and MEC, “shall not” exceed 24 inches (609 mm) in depth. This disqualifies most traditional rackmount servers.

The key is deployment flexibility. Edge compute should be mountable anywhere and adapt to the constraints of the deployment environment. For instance, in a space-constrained location, front maintenance is a design requirement, because these servers are often installed close to a wall or mounted in a cabinet with no rear access. In addition, supporting reversible airflow allows the server to adapt to whatever cooling infrastructure (if any) is already installed.
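The depth limit and access constraints above can be turned into a simple pre-deployment check. This is a hedged Python sketch: the `Chassis` and `Site` fields and the sample dimensions are hypothetical, and the only figure taken from the text is the 24-inch (about 609.6 mm) 2-post depth limit quoted from the AT&T requirements document.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

# 24 inches expressed in millimetres (about 609.6 mm).
MAX_2POST_DEPTH_MM = 24 * 25.4


@dataclass
class Chassis:
    depth_mm: float
    front_serviceable: bool
    airflow_directions: FrozenSet[str]  # e.g. {"front-to-back", "back-to-front"}


@dataclass
class Site:
    rear_access: bool
    airflow_direction: str  # direction required by the existing cooling


def fit_issues(chassis: Chassis, site: Site,
               max_depth_mm: float = MAX_2POST_DEPTH_MM) -> List[str]:
    """List the reasons a chassis would not fit a site (empty list means it fits)."""
    issues = []
    if chassis.depth_mm > max_depth_mm:
        issues.append(f"depth {chassis.depth_mm:.0f} mm exceeds {max_depth_mm:.1f} mm")
    if not site.rear_access and not chassis.front_serviceable:
        issues.append("no rear access at site and chassis is not front-serviceable")
    if site.airflow_direction not in chassis.airflow_directions:
        issues.append(f"chassis cannot run {site.airflow_direction} airflow")
    return issues


if __name__ == "__main__":
    cabinet = Site(rear_access=False, airflow_direction="back-to-front")
    short_depth = Chassis(430, True, frozenset({"front-to-back", "back-to-front"}))
    legacy = Chassis(750, False, frozenset({"front-to-back"}))
    print(fit_issues(short_depth, cabinet))
    print(fit_issues(legacy, cabinet))
```

Returning a list of reasons, rather than a single yes/no, makes it easier to see whether a candidate server fails on depth, serviceability, or airflow.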

Conclusion

While NEBS requirements focus on Environmental and Electrical Testing, ultimately the design needs to consider the target deployment environment and meet the installation requirements of the targeted edge locations.

Computing on the Edge: Outdoor Deployment Classes

Fri, 02 Dec 2022 20:21:29 -0000


Ultimately, all the testing involved with GR-63-CORE and GR-1089-CORE is intended to qualify hardware designs that have the environmental, electrical, and safety qualities that allow for installations from the Central Office all the way out to the Cell Site. For deployments at the Cell Site, it turns out that NEBS Level 3 is really only the start: the minimum environmental threshold for a controlled Cell Site environment.

This is where GR-3108-CORE comes into scope. GR-3108-CORE, Generic Requirements for Network Equipment in Outside Plant (OSP), defines the environmental tolerances for equipment deployed throughout a Telecom Network, from the Central Office, up the tower of the Cell Site, and out to the customer premises.

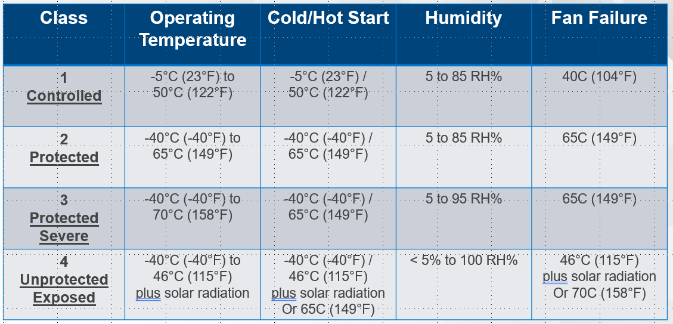

Figure 1. GR-3108-CORE Equipment Classes

The four classes of equipment defined in GR-3108-CORE are:

- Class 1: Equipment in Controlled or Protected Environments

- Class 2: Protected Equipment in Outside Environments

- Class 3: Protected Equipment in Severe Outside Environments

- Class 4: Products in Unprotected Environment directly exposed to the weather

The primary drivers of these classes include:

- Thermal, including Cold Start and Hot Start

- Temperature and Humidity Cycling

- Salt Fog Exposure

- Closure and Housing Requirements

Class 1: Equipment in Controlled or Protected Environments

Figure 2. Typical OSP Enclosure and Concrete Hut

The OSP for Class 1 enclosures includes Controlled Environmental Vaults, Huts and Cabinets with active heating and cooling, Telecom Closets in-building or on-building, and Residential Locations. The requirements for Class 1 installations are very much in line with NEBS Level 3 specifications, and the recurring theme for these enclosures is that there is some active means of environmental control. The methods of maintaining a controlled environment are not specified, but the method used must maintain operating temperatures between -5°C (23°F) and 50°C (122°F) and humidity levels between 5 and 85 percent.

Other expectations for Class 1 enclosures include performing initial, cold, or hot startup throughout the entire temperature range, and continued operation if a single fan fails, although at a lower upper-temperature threshold.

Class 2: Protected Equipment in Outside Environments

Figure 3. Example Class 2 Protected Enclosures

The internal enclosures or spaces of a Class 2 OSP have an extended temperature range of -40°C (-40°F) to 65°C (149°F), with the same humidity levels as Class 1. Typically, while these OSPs continue to protect the hardware from the outside elements, environmental controls are less capable and often involve cooling fans, heat exchangers, and raised fins to dissipate heat. Besides outdoor enclosures, Class 2 environments can also include customer premises locations such as garages, attics, or uncontrolled warehouses.

For hardware designers creating Carrier Grade Servers, this is where it’s particularly important to pay attention to the components used when targeting a Class 2 deployment environment. Many manufacturers specify the maximum temperature at which an IC component will operate. Typically, this maximum is a die temperature, and the method of heat evacuation, such as heat sinks and fans for airflow, is left to the hardware designers.

However, for those attempting compliance with Class 2, the lower temperature range also becomes important, because many ICs are not tested for operation below 0°C. IC temperature grades generally come in Commercial (0°C/32°F to 70°C/158°F), Industrial (-40°C/-40°F to 85°C/185°F), Military (-55°C/-67°F to 125°C/257°F), and Automotive grades.

So the specs for Commercial grade ICs may not accommodate the requirements of even a Class 1 OSP. What do designers do? Sometimes you’ll see an “asterisk” on the server spec sheet indicating that the device can run at the lower end of the temperature range but cannot start there. In this case, the design starts at 0°C or above and then generates sufficient heat to keep the ICs warm down through -5°C (23°F).
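The cold-end mismatch can be checked mechanically. This Python sketch compares only lower bounds, because the upper limit is a die temperature that depends on the heat evacuation design. The grade ranges and class minimums are the figures quoted in this series; the Automotive grade is omitted because no range is given for it, and the function name is illustrative.

```python
# Operating ranges (°C) for the IC grades quoted above.
IC_GRADES = {
    "commercial": (0, 70),
    "industrial": (-40, 85),
    "military": (-55, 125),
}

# Lowest ambient temperature (°C) for each GR-3108-CORE class, as quoted in this series.
CLASS_MIN_C = {1: -5, 2: -40, 3: -40, 4: -40}


def grades_meeting_cold_start(gr3108_class: int) -> list:
    """IC grades rated down to the class's lowest temperature, i.e. parts that
    could cold-start there without a heater or a start-warm-then-run approach."""
    t_min = CLASS_MIN_C[gr3108_class]
    return [grade for grade, (low, _high) in IC_GRADES.items() if low <= t_min]


if __name__ == "__main__":
    for cls in CLASS_MIN_C:
        print(cls, grades_meeting_cold_start(cls))
```

Even for Class 1, Commercial parts drop out, which is exactly the gap the “asterisk” start-warm approach papers over.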

Designers may also include a pre-heater or enclosure heater that brings the device up to 0°C before startup is allowed, or incur the added expense of extended-temperature parts.
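The pre-heater approach amounts to a startup interlock. Below is a minimal Python sketch of that ordering: heat, wait until the enclosure reaches 0°C, then allow power-on. The `read_temp_c`, `set_heater`, and `power_on` callbacks are hypothetical hooks into an enclosure controller, and switching the heater off after startup assumes the running server then keeps itself warm, as in the “asterisk” case described above. The timeout value is likewise an assumption.

```python
import time
from typing import Callable

START_MIN_C = 0.0  # lowest temperature at which commercial-grade parts may start


def preheat_then_start(
    read_temp_c: Callable[[], float],
    set_heater: Callable[[bool], None],
    power_on: Callable[[], None],
    poll_s: float = 1.0,
    timeout_s: float = 1800.0,
) -> bool:
    """Hold off power-on until the enclosure reaches START_MIN_C.

    Returns True if the server was powered on, False if heating timed out.
    The heater is always switched off on exit (hypothetical policy).
    """
    deadline = time.monotonic() + timeout_s
    set_heater(True)
    try:
        while read_temp_c() < START_MIN_C:
            if time.monotonic() > deadline:
                return False  # never reached a safe start temperature
            time.sleep(poll_s)
    finally:
        set_heater(False)
    power_on()
    return True
```

Putting the heater shutdown in `finally` ensures it is switched off on both the success and timeout paths, so a failed start never leaves the heater running indefinitely.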

Class 3: Protected Equipment in Severe Outside Environments

Severe is certainly the theme for Class 3 OSPs. In these environments, the device sits inside an enclosure that protects it from direct sunlight and rain, but the enclosure may not be sealed from other outside stresses such as hot, cold, and humidity extremes, dust and other airborne contaminants, and salt fog. Temperatures range from -40°C (-40°F) to 70°C (158°F) and humidity levels from 5% to 95%, with a single-fan-failure requirement of 65°C. Indoor hostile environments, such as boiler rooms, furnace spaces, and attics, also exist that would require Class 3 designed solutions.

Figure 4. Protected Server Cabinet

Class 4: Products in Unprotected Environment directly exposed to the weather

Figure 5. Class 4 Radio Units

This class of equipment is intended for outdoor deployments, with full exposure to sun, rain, wind, and all the environmental challenges found, for example, at the top of a Cell Tower. For Telecom, Class 4 certification would typically be the domain of Antennas and Remote Radio Heads. These units, mounted on towers, buildings, street lamps, and other places, are fully exposed to the entire spectrum of environmental challenges. Class 4 devices get a bit of a break on temperature, -40°C (-40°F) to 46°C (115°F), due to their direct exposure to sunlight, but must tolerate 100% humidity due to their exposure to rain.
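The class envelopes quoted in this post can be captured as a small lookup table. The Python sketch below returns every GR-3108-CORE class whose temperature range and maximum humidity contain a site's observed conditions. It is a first-pass filter only, under stated simplifications: it ignores the 5 percent humidity floor, salt fog, cold and hot start, and housing requirements, and Class 4 is really defined by direct weather exposure rather than by its narrower temperature range.

```python
from typing import List, NamedTuple


class Envelope(NamedTuple):
    t_min_c: float
    t_max_c: float
    rh_max_pct: float


# Operating envelopes for each GR-3108-CORE class, as quoted in this post.
GR3108_CLASSES = {
    1: Envelope(-5, 50, 85),
    2: Envelope(-40, 65, 85),
    3: Envelope(-40, 70, 95),
    4: Envelope(-40, 46, 100),
}


def covering_classes(t_min_c: float, t_max_c: float, rh_max_pct: float) -> List[int]:
    """Classes whose envelope contains the observed min/max temperature and peak humidity."""
    return [
        cls for cls, env in GR3108_CLASSES.items()
        if env.t_min_c <= t_min_c and t_max_c <= env.t_max_c and rh_max_pct <= env.rh_max_pct
    ]


if __name__ == "__main__":
    # Hypothetical enclosure readings: -20°C to 60°C, humidity peaking at 80%.
    print(covering_classes(-20, 60, 80))
```

For those hypothetical readings, Classes 2 and 3 both cover the conditions, so Class 2 would be the least demanding design target.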

Conclusion

For Carrier Grade Servers, Class 1 (NEBS Level 3 equivalent) is the most common target for designers creating compute, storage, and networking platforms for Telecom consumption. Class 2 servers are also achievable, and demand for them may increase as Edge Computing and O-RAN/Cloud RAN deployments become more common. Moving beyond Class 2 will require specialty, more purpose-built designs.

Computing on the Edge: NEBS Criteria Levels

Tue, 15 Nov 2022 14:43:44 -0000


In our previous blogs, we’ve explored the types of tests involved in successfully passing the criteria of GR-63-CORE, Physical Protection, and GR-1089-CORE, Electromagnetic Compatibility and Electrical Safety. The goal of successfully completing these tests is to create Carrier Grade, NEBS compliant equipment. However, apart from highlighting the set of documents that compose NEBS, nothing has been said about the NEBS levels and the requirements to achieve each level. NEBS levels are defined in Special Report SR-3580.

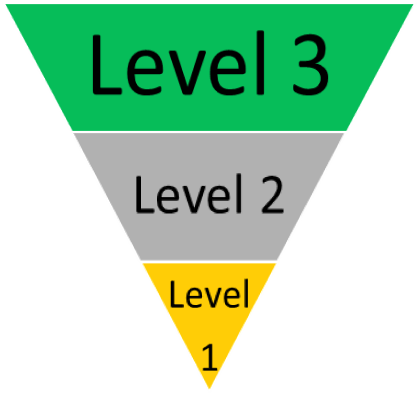

Figure 1. NEBS Certification Levels

NEBS Level 3 compliance is expected in most Telecom environments outside of a traditional data center. So, what NEBS level do equipment manufacturers aim to achieve?

At first, I created Figure 1 as a pyramid, not inverted, with Level 1 as the base and Level 3 as the peak. However, I reorganized the graphic because Level 1 isn’t really a foundation, it is a minimum acceptable level. Let’s dive into what is required to achieve each NEBS certification level.

NEBS Level 1

NEBS Level 1 is the lowest level of NEBS certification. It provides the minimum level of environmental hardening and stresses safety criteria to minimize hazards for installation and maintenance administrators.

This level is the minimum acceptable level of NEBS environmental compatibility required to preclude hazards and degradation of the network facility and hazards to personnel.

This level includes the following tests:

- Fire resistance

- Radiated radiofrequency (RF)

- Electrical safety

- Bonding or grounding

Level 1 criteria do not assess Temperature/Humidity, Seismic, ESD, or Corrosion.

Operability, enhanced resilience, and environmental tolerances are assessed in Levels 2 and 3.

NEBS Level 2

Figure 2. Map of Seismic Potential in the US

NEBS Level 2 assesses some environmental criteria, but the target deployment is a “normal” environment, such as a data center installation, where temperature and humidity are well controlled. These environments typically experience limited impacts from EMI, ESD, and EFTs, and have some protection from lightning, surges, and power faults. Some seismic testing is also performed on the EUT, but only to Zone 2. While there is no direct correlation between seismic zones and earthquake intensity, in the United States Zone 2 generally covers the Rocky Mountains, much of the West, and parts of the Southeast and Northeast regions.

NEBS Level 2 certification may be sufficient for some Central Office (CO) installations but is not sufficient for deployment to Far Edge or Cell Site Enclosures which can be exposed to environmental and electromagnetic extremes, or in regions covered by seismic zones 3 or 4.

NEBS Level 3

Figure 3. Level 3 criteria


NEBS Level 3 certification is the highest level of NEBS Certification and is the level that is expected by most North American telecom and network providers when specifying equipment requirements for installation into controlled environments.

Level 3 is required to provide maximum assurance of equipment operability within the network facility environment.

Level 3 criteria are also suited for equipment applications that demand minimal service interruptions over the equipment’s life.

Full NEBS Level 3 certification can take from three to six months to complete. This includes prepping and delivering the hardware to the lab, test scheduling, performance, analysis of test results, and the production of the final report. If a failure occurs, systems can be redesigned for retesting.
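The level guidance in this post reduces to a rough rule of thumb. The Python function below is a hypothetical simplification of this post's discussion, not the SR-3580 criteria themselves: Level 2 may suffice for well-controlled, data-center-like spaces in seismic Zone 2 or lower; anything else, such as Far Edge or Cell Site enclosures or Zones 3 and 4, points to Level 3. It never returns Level 1, since the post treats Level 1 as a minimum acceptable level rather than a deployment target.

```python
def minimum_nebs_level(seismic_zone: int, controlled_datacenter: bool) -> int:
    """Rough minimum NEBS level suggested by this post's discussion.

    seismic_zone: seismic zone of the installation site (the post discusses Zones 2-4).
    controlled_datacenter: True for well-controlled, data-center-like spaces;
        False for Far Edge or Cell Site enclosures.
    """
    if controlled_datacenter and seismic_zone <= 2:
        return 2  # Level 2 may be sufficient for some Central Office installations
    return 3  # environmental extremes or Zones 3-4 call for Level 3


if __name__ == "__main__":
    print(minimum_nebs_level(2, True))
    print(minimum_nebs_level(4, True))
    print(minimum_nebs_level(1, False))
```

In practice, as noted above, most North American providers expect Level 3 regardless, so this rule mainly shows why Level 2 falls short outside controlled, low-seismic sites.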

Conclusion

While the environmental, electrical, electromagnetic, and safety specifications described in NEBS Level 3 certification are the minimum required for deployment into a controlled telecom network environment, they are only the beginning for outdoor deployments. The next blog in this series will explore more of these specifications, such as GR-3108-CORE, Generic Requirements for Network Equipment in Outside Plant (OSP). Stay tuned.