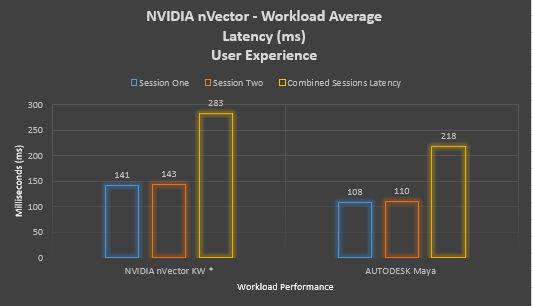

End-user latency

The end-user latency metric defines how responsive a remote desktop or application is. It measures the lag that an end user experiences at the endpoint device when interacting with a remote desktop or application. The following figure shows that the Maya collaborative workload performs well on end-user latency compared with the Knowledge Worker baseline workload, with slightly lower latency.
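
This section does not describe how nVector captures latency. The minimal sketch below assumes that each user input can be paired with the moment its response appears at the endpoint, and that latency is the difference between the two. It then summarizes the resulting distribution with a mean, a median, and a 95th percentile. The function names and the sample timestamps are hypothetical and are not measured results.

```python
from statistics import mean, quantiles


def interaction_latencies_ms(input_times_ms, display_times_ms):
    """Latency of each interaction: time its response appeared minus time of the input."""
    if len(input_times_ms) != len(display_times_ms):
        raise ValueError("every input event needs a matching display event")
    return [shown - sent for sent, shown in zip(input_times_ms, display_times_ms)]


def summarize_latency(latencies_ms):
    """Mean, median and 95th percentile of a latency distribution (needs >= 2 samples)."""
    cuts = quantiles(latencies_ms, n=100, method="inclusive")  # 99 percentile cut points
    return {"mean": mean(latencies_ms), "p50": cuts[49], "p95": cuts[94]}


# Hypothetical timestamps (ms), for illustration only.
lat = interaction_latencies_ms([0, 100, 200, 300], [40, 150, 230, 360])
print(lat)  # [40, 50, 30, 60]
print(summarize_latency(lat))
```

Reporting percentiles alongside the mean is one way to compare latency distributions between sessions, not just their averages.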

In summary, end-user latency results for the baseline nVector Knowledge Worker sessions and the Maya collaborative sessions were similar. The Maya latency distribution appears similar regardless of which session takes the collaborative lead. Overall, the Maya-Omniverse collaborative sessions performed slightly better than the baseline Knowledge Worker workload.

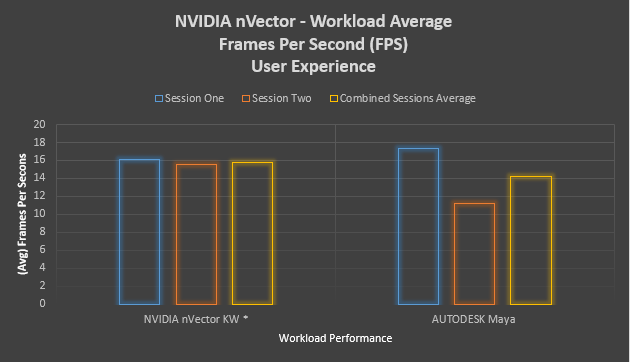

Frame rate (FPS)

The frame rate metric defines how smooth the end-user experience (EUE) is. It measures the rate at which frames are delivered to the screen of the endpoint device while the user interacts with a remote desktop or application. Frame rate is sampled at 5-second intervals for the duration of the workload.
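
To illustrate how sampling at 5-second intervals turns a stream of delivered frames into FPS values, the sketch below buckets frame arrival times into consecutive 5-second windows, divides each count by the window length, and averages the samples. It assumes frame arrival timestamps are available in seconds from workload start; the function names and the synthetic 30 FPS stream are hypothetical, and nVector's own sampling may differ.

```python
def fps_per_interval(frame_times_s, duration_s, interval_s=5.0):
    """Frames delivered per second in each consecutive interval of the workload.

    frame_times_s: times (seconds from workload start) at which frames reached the endpoint.
    Frames at or after the end of the last complete interval are ignored.
    """
    n_windows = int(duration_s // interval_s)
    counts = [0] * n_windows
    for t in frame_times_s:
        window = int(t // interval_s)
        if t >= 0 and window < n_windows:
            counts[window] += 1
    return [c / interval_s for c in counts]


def average_fps(samples):
    """Mean of the per-interval FPS samples (0.0 if there are none)."""
    return sum(samples) / len(samples) if samples else 0.0


# Hypothetical frame stream: 30 frames per second for 10 seconds.
frames = [k / 30 for k in range(300)]
samples = fps_per_interval(frames, duration_s=10)
print(samples)               # [30.0, 30.0]
print(average_fps(samples))  # 30.0
```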

The following figure shows similar frame rate performance for the Knowledge Worker workload and the Maya collaborative workload, although the Knowledge Worker workload achieved more consistent per-session FPS averages than the Maya collaborative workload.
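
The text does not state how consistency across sessions was judged. One simple measure, assumed here, is the coefficient of variation (population standard deviation divided by the mean) of the per-session averages: the lower it is, the closer the sessions sit to one another. The function name and the input values below are hypothetical, for illustration only.

```python
from statistics import mean, pstdev


def session_consistency(session_averages):
    """Return (combined average, coefficient of variation) for per-session averages.

    A lower coefficient of variation means the session averages sit closer together,
    that is, performance was more consistent across sessions.
    """
    combined = mean(session_averages)
    spread = pstdev(session_averages)
    return combined, (spread / combined if combined else float("nan"))


# Hypothetical per-session FPS averages, for illustration only.
print(session_consistency([20.0, 20.0]))  # (20.0, 0.0)
print(session_consistency([18.0, 22.0]))  # (20.0, 0.1)
```

Both hypothetical inputs have the same combined average, so the coefficient of variation alone distinguishes them. The same measure can be applied to per-session SSIM scores.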

For the Maya workload, the Session 1 results are the most relevant. Session 2 users typically initiate their own activities of interest, such as object rotation. They are therefore more concerned with how quickly data updated by Session 1 becomes visible to them than with the absolute frame rate at which they view Session 1 activities.

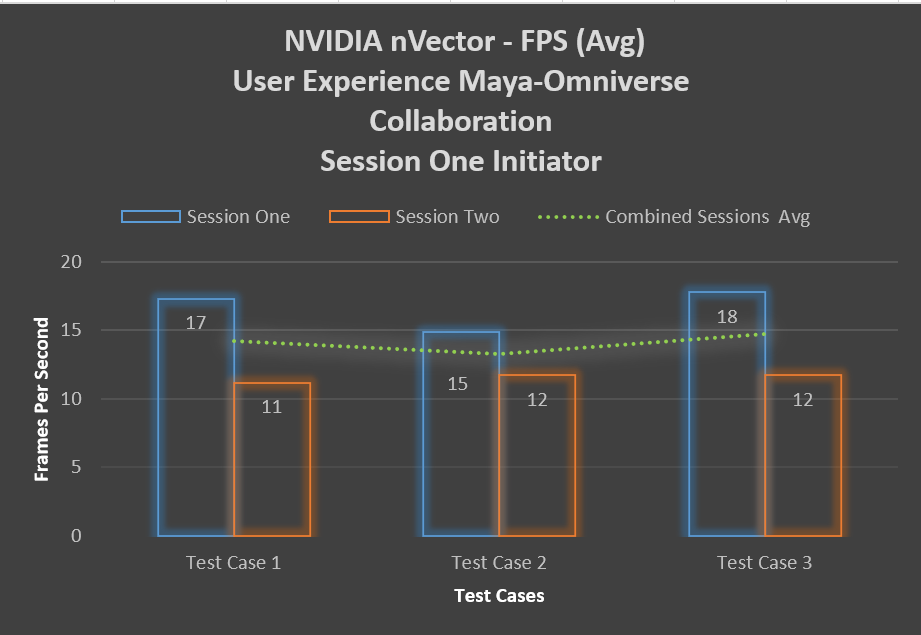

The following figure shows the average frame rates for both Maya sessions when Session 1 leads the collaboration:

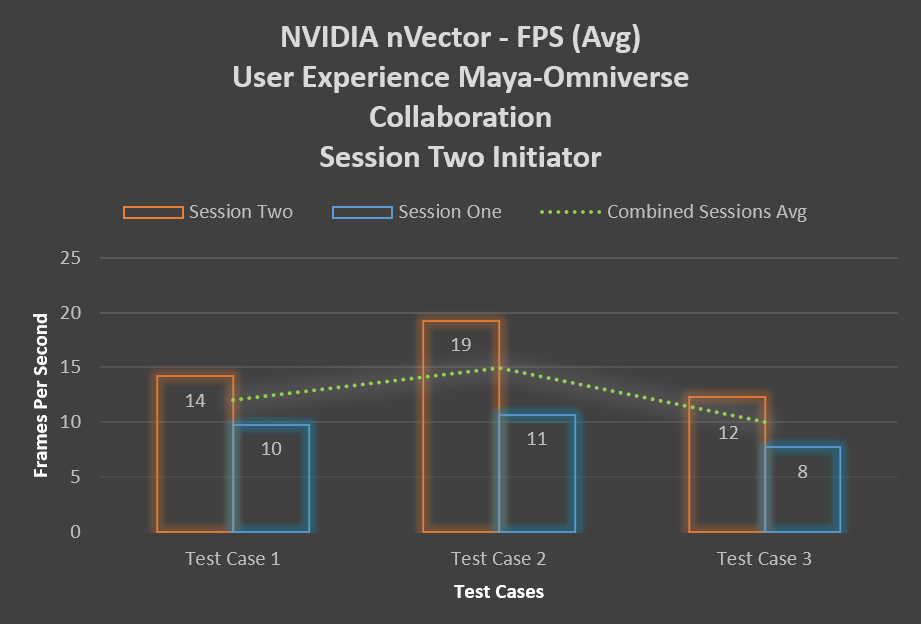

The following figure shows the average frame rates for both Maya sessions when Session 2 leads the collaboration:

In summary, frame rate results for the baseline nVector Knowledge Worker sessions and the Maya collaborative sessions were similar. The average FPS of the Maya workload appears to depend on which session takes the collaborative lead. For the reasons outlined above, we consider this acceptable.

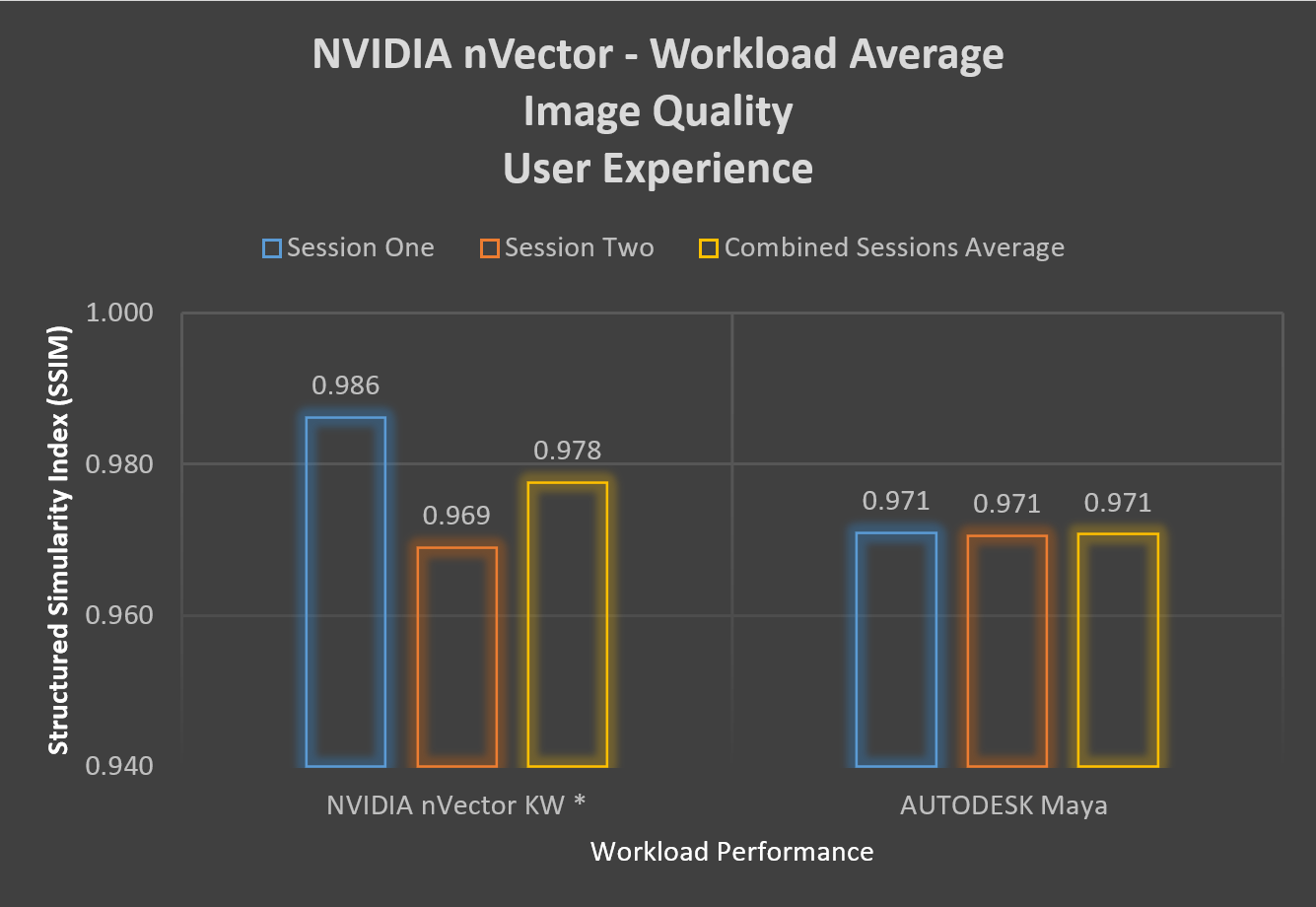

Image quality (SSIM)

This index metric defines image quality. It uses the structural similarity index measure (SSIM) to compare an image rendered on the target desktop or workstation VM with the same image as displayed on the endpoint device. The SSIM scores of all image pairs captured at a single point in time during the VDI session are averaged. Because the index is calculated only once during the workload, each workload receives a single score.
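
For reference, SSIM compares two images x and y through their means (μx, μy), variances (σx², σy²), and covariance (σxy): SSIM(x, y) = ((2μxμy + C1)(2σxy + C2)) / ((μx² + μy² + C1)(σx² + σy² + C2)). The constants are C1 = (K1·L)² and C2 = (K2·L)², with conventional defaults K1 = 0.01 and K2 = 0.03 and L = 255 for 8-bit pixels. Standard SSIM evaluates this formula over local sliding windows and averages the results. For brevity, the sketch below uses one global window per image and then averages over image pairs. This section does not describe nVector's windowing, color handling, or frame capture, so treat the code as illustrative only. The function names and sample images are hypothetical.

```python
def global_ssim(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """SSIM between two equally sized grayscale images, computed over one global window.

    x, y: flat lists of pixel intensities (rendered frame, displayed frame).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("images must have the same number of pixels (at least 2)")
    c1 = (k1 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * dynamic_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


def average_ssim(image_pairs):
    """Mean SSIM over (rendered, displayed) image pairs captured at one point in time."""
    scores = [global_ssim(rendered, displayed) for rendered, displayed in image_pairs]
    return sum(scores) / len(scores)


# Hypothetical 2x2 images, for illustration only.
rendered = [0, 255, 0, 255]
print(global_ssim(rendered, rendered))              # 1.0
print(global_ssim(rendered, [255, 0, 255, 0]) < 0)  # True: inverted contrast
```

A score of 1.0 means the displayed image is identical to the rendered one. Compression or transport artifacts lower the score.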

The following figure shows that the Maya collaborative workload achieved more consistent per-session image quality than the baseline Knowledge Worker workload, although the baseline achieved a marginally higher combined session average.

In summary, the SSIM image quality results for the baseline nVector Knowledge Worker sessions and the Maya collaborative sessions were similar. The Maya workload SSIM scores were very similar regardless of which session took the collaborative lead. Overall, the Maya–Omniverse collaborative sessions performed well compared with the baseline workload.