Software-defined storage (SDS) is a key building block of Azure Stack HCI, in which software manages the storage hardware. SDS separates the management and presentation layers of storage from the underlying physical hardware. It also eliminates the complex and error-prone process of configuring logical unit numbers (LUNs) over storage area networks (SANs) to deploy virtualized workloads. Instead of relying on proprietary and expensive SAN technologies, customers can take advantage of this flexible and economical server-based solution, which uses local disks accessed over remote file sharing protocols on high-bandwidth, low-latency networking.

SDS running inside Azure Stack HCI enables customers to build their data centers with the following characteristics:

- Starts as small as 2 servers with a switchless configuration. If scaling is planned, start with 3 to 16 servers

- Scales up to 384 drives and up to 3 PB of raw storage per cluster

- Adds drives and memory to scale up

- Adds servers to scale out

- Absorbs new drives into the storage pool automatically, which makes drive upgrades and replacements hassle-free

- Offers better storage efficiency and performance at larger, distributed scale

- Simplifies procurement because its converged design runs over Ethernet, with no special hardware or cables required

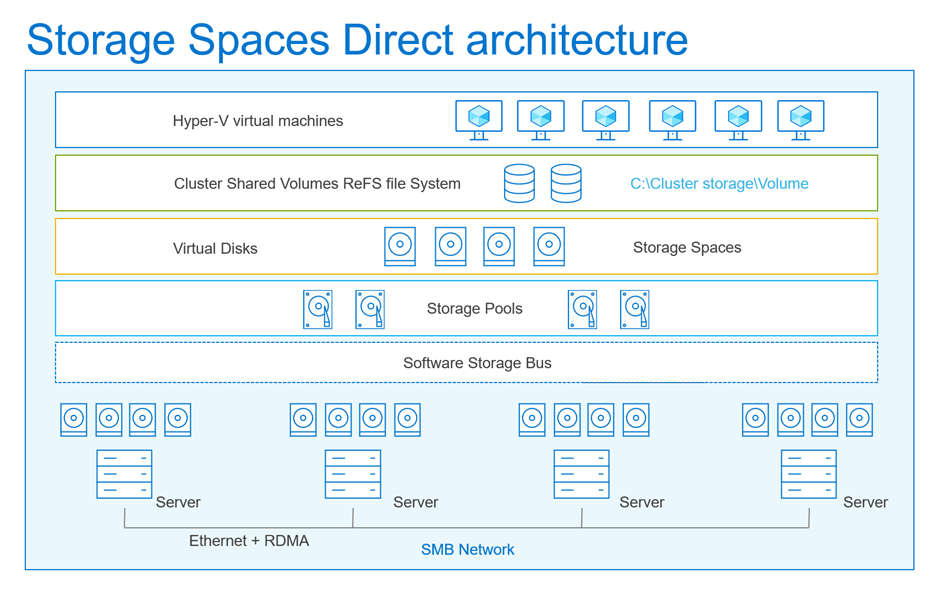

Storage Spaces Direct (S2D) evolved from Storage Spaces, which was first introduced with Windows Server 2012. While Storage Spaces was limited to pooling resources from a single server and its direct-attached storage (JBODs), iSCSI, and Fibre Channel enclosures, S2D extends pooling across multiple servers. S2D is powered by features such as Failover Clustering, the Cluster Shared Volumes (CSV) file system, Server Message Block 3 (SMB3), and Storage Spaces. The following figure shows the overall resource stack of S2D.

A storage pool is a single logical disk entity formed by aggregating hard drives of any type and size. Storage spaces are virtual disks created from the available disk space in a storage pool. They are equivalent to LUNs in a SAN environment.

The Software Storage Bus is an S2D component that establishes a software-defined storage fabric spanning the cluster. It enables all the servers to see each other's local drives, providing a large shared pool of storage space. It also provides server-side read/write caching that accelerates I/O and boosts throughput. The caching behavior is determined automatically by the types of drives present in the server. The following table summarizes the different cache configurations and their behavior:

| Configuration | Cache drives | Capacity drives | Cache behavior (default) |
| --- | --- | --- | --- |
| All NVMe | None (Optional: configure manually) | NVMe | Write-only (if configured) |
| All SSDs | None (Optional: configure manually) | SSD | Write-only (if configured) |
| NVMe + SSD | NVMe | SSD | Write-only |
| NVMe + HDD | NVMe | HDD | Read + Write |
| SSD + HDD | SSD | HDD | Read + Write |
| NVMe + SSD + HDD | NVMe | SSD + HDD | Read + Write for HDD, Write-only for SSD |
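The rule behind the table can be expressed compactly: the fastest drive type present acts as the cache, and the default behavior depends on the capacity drive type. The following Python sketch only illustrates that rule. The function name `default_cache_plan` and the returned dictionary layout are hypothetical and are not part of any S2D API. An all-HDD configuration is rejected because the table does not cover it.

```python
from typing import Dict, Iterable

# Drive types ordered from fastest to slowest, matching the table.
SPEED_ORDER = ["NVMe", "SSD", "HDD"]


def default_cache_plan(drive_types: Iterable[str]) -> Dict[str, object]:
    """Illustrative only: derive cache drives, capacity drives, and default
    cache behavior from the set of drive types present in a server."""
    types = set(drive_types)
    if not types or not types <= set(SPEED_ORDER):
        raise ValueError(f"unsupported drive types: {sorted(types)}")

    present = [t for t in SPEED_ORDER if t in types]
    if present == ["HDD"]:
        raise ValueError("an all-HDD configuration is not covered by the table")

    if len(present) == 1:
        # Single drive type: no cache by default; write-only if set up manually.
        only = present[0]
        return {
            "cache": None,
            "capacity": [only],
            "behavior": {only: "write-only (if configured manually)"},
        }

    # Mixed drive types: the fastest type caches for all slower types.
    cache, capacity = present[0], present[1:]
    behavior = {
        t: "read + write" if t == "HDD" else "write-only" for t in capacity
    }
    return {"cache": cache, "capacity": capacity, "behavior": behavior}


if __name__ == "__main__":
    for config in (["NVMe"], ["NVMe", "SSD"], ["SSD", "HDD"], ["NVMe", "SSD", "HDD"]):
        print(config, "->", default_cache_plan(config))
```

Deriving the result from a speed ordering, rather than hard-coding six rows, keeps the sketch short and makes the pattern in the table explicit: HDD capacity drives get read and write caching, while flash capacity drives get write caching only.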

Storage Spaces Direct clusters use SMB3, including SMB Direct and SMB Multichannel, over Ethernet to communicate between servers.

- Server Message Block 3.x (SMB) is a network file sharing protocol that provides access to files over a traditional Ethernet network by means of the TCP/IP transport protocol.

- SMB Multichannel is part of the SMB 3.x protocol implementation. It significantly improves network performance and availability by configuring itself automatically, increasing throughput, and providing fault tolerance.

- SMB Direct optimizes the use of remote direct memory access (RDMA) network adapters for SMB traffic, allowing them to function at full speed with low latency and low CPU utilization.

Storage Spaces (virtual disks) give the system high resiliency and fault tolerance by protecting data with mirroring and parity. Virtual disk resiliency resembles the technology used in Redundant Array of Independent Disks (RAID).
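To give a rough sense of what mirroring costs in capacity, the sketch below estimates usable space for mirrored virtual disks. It relies on an assumption that is not stated in the text above: a two-way mirror keeps two copies of the data and a three-way mirror keeps three. The function name is hypothetical, parity layouts are not modeled, and any capacity held in reserve for repairs is ignored.

```python
# Number of data copies kept by each mirror type (assumption, see lead-in).
MIRROR_COPIES = {"two-way mirror": 2, "three-way mirror": 3}


def usable_capacity_tb(raw_tb: float, resiliency: str) -> float:
    """Illustrative estimate: usable capacity of a mirrored virtual disk,
    computed as raw capacity divided by the number of copies."""
    if raw_tb < 0:
        raise ValueError("raw capacity must be non-negative")
    try:
        copies = MIRROR_COPIES[resiliency]
    except KeyError:
        raise ValueError(f"unsupported resiliency type: {resiliency!r}") from None
    return raw_tb / copies


if __name__ == "__main__":
    for kind in MIRROR_COPIES:
        print(f"{kind}: {usable_capacity_tb(120, kind):.1f} TB usable of 120 TB raw")
```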

Cluster Shared Volumes (CSV) is a clustered file system that enables multiple nodes of a failover cluster to read from and write to the same set of storage volumes concurrently. This is achieved by mapping CSV volumes to subdirectories within the C:\ClusterStorage\ directory. Every cluster node can then access the same content through the same file system path. While each node can independently read from and write to individual files on a given volume, a single cluster node serves a special role as the CSV owner (that is, the coordinator) of that volume. A failover cluster automatically distributes CSV ownership among cluster nodes, but a given volume can also be assigned to a specific owner.
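The single-namespace idea can be illustrated with a few lines of Python. This is only a sketch of the path mapping: the volume name `Volume1`, the node names, and the file path are hypothetical examples, and the code does not touch a real cluster.

```python
from pathlib import PureWindowsPath

# Root directory under which CSV volumes appear on every node.
CSV_ROOT = PureWindowsPath(r"C:\ClusterStorage")


def csv_path(volume: str, relative: str) -> PureWindowsPath:
    """Build the path of a file on a CSV volume; the result does not
    depend on which cluster node performs the access."""
    return CSV_ROOT / volume / relative


if __name__ == "__main__":
    # Hypothetical node names: each node resolves the file to the same path.
    for node in ("node1", "node2", "node3"):
        print(node, "->", csv_path("Volume1", r"VMs\vm1.vhdx"))
```

Because the path is identical on every node, a virtual machine can move between nodes without any change to the location of its disk files.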

Resilient File System (ReFS) is a file system purpose-built for virtualization. It accelerates file operations such as creation, expansion, and checkpoint merging, and it has integrated checksums to detect and correct bit errors. It also introduces tiering that moves data between so-called "hot" and "cold" storage tiers in real time based on usage.