Azure Kubernetes Service on Azure Stack HCI (AKS-HCI) provides a consistent platform for running containerized applications. Modern applications are increasingly built using containers, in which microservices are packaged together with their dependencies and configurations. Kubernetes, the core component of AKS-HCI, is open-source software for deploying and managing these containers at scale. As applications grow, they span multiple containers deployed across multiple servers, and operating them at scale becomes more complex. To manage this complexity, Kubernetes provides an open-source API that determines how and where these containers run.

Kubernetes¹ orchestrates a cluster of VMs and schedules containers to run on those VMs based on the VMs' available compute resources and the containers' resource requirements. Containers are grouped into pods, the basic operational unit of Kubernetes, and pods scale with the needs of the application. Kubernetes also manages service discovery, load balancing, and resource allocation, and scales workloads based on compute utilization. It monitors the health of each individual resource and enables applications to self-heal automatically by restarting or replicating containers.
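The placement step described above, matching a container's resource requirements against each VM's free capacity, can be sketched as follows. This is a simplified, hypothetical model rather than the real kube-scheduler: kube-scheduler also works in filter and score phases, but the `Node` class, the `schedule` function, and the scoring rule used here (average fraction of CPU and memory left free) are illustrative assumptions, and real scheduling also weighs affinity, taints, and many other factors.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    """A VM in the cluster: allocatable capacity and what is already reserved."""
    name: str
    cpu_capacity_m: int   # allocatable CPU in millicores
    mem_capacity_mi: int  # allocatable memory in MiB
    cpu_used_m: int = 0
    mem_used_mi: int = 0

    def free_cpu(self) -> int:
        return self.cpu_capacity_m - self.cpu_used_m

    def free_mem(self) -> int:
        return self.mem_capacity_mi - self.mem_used_mi


def schedule(nodes: list[Node], cpu_request_m: int, mem_request_mi: int) -> str | None:
    """Return the name of the node chosen for a pod, or None if nothing fits."""
    # Filter: keep only nodes with enough free CPU and memory for the request.
    feasible = [
        n for n in nodes
        if n.free_cpu() >= cpu_request_m and n.free_mem() >= mem_request_mi
    ]
    if not feasible:
        return None  # a real cluster would leave the pod Pending

    # Score: prefer the node that keeps the largest share of resources free
    # after placement (a simplified "least allocated" heuristic).
    def score(n: Node) -> float:
        cpu_left = (n.free_cpu() - cpu_request_m) / n.cpu_capacity_m
        mem_left = (n.free_mem() - mem_request_mi) / n.mem_capacity_mi
        return (cpu_left + mem_left) / 2

    best = max(feasible, key=score)
    # Reserve the requested resources on the chosen node.
    best.cpu_used_m += cpu_request_m
    best.mem_used_mi += mem_request_mi
    return best.name


nodes = [Node("vm-1", 2000, 4096, cpu_used_m=1500), Node("vm-2", 4000, 8192)]
print(schedule(nodes, 500, 512))   # vm-2: both fit, vm-2 has more headroom
print(schedule(nodes, 8000, 512))  # None: no VM has 8 CPUs free
```

Scoring after filtering keeps the two concerns separate: filtering answers "can this VM run the pod at all?", while scoring only ranks the VMs that can.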

Setting up and maintaining Kubernetes can be complex. AKS-HCI helps simplify setting up Kubernetes on-premises, making it faster to get started hosting Linux and Windows containers.

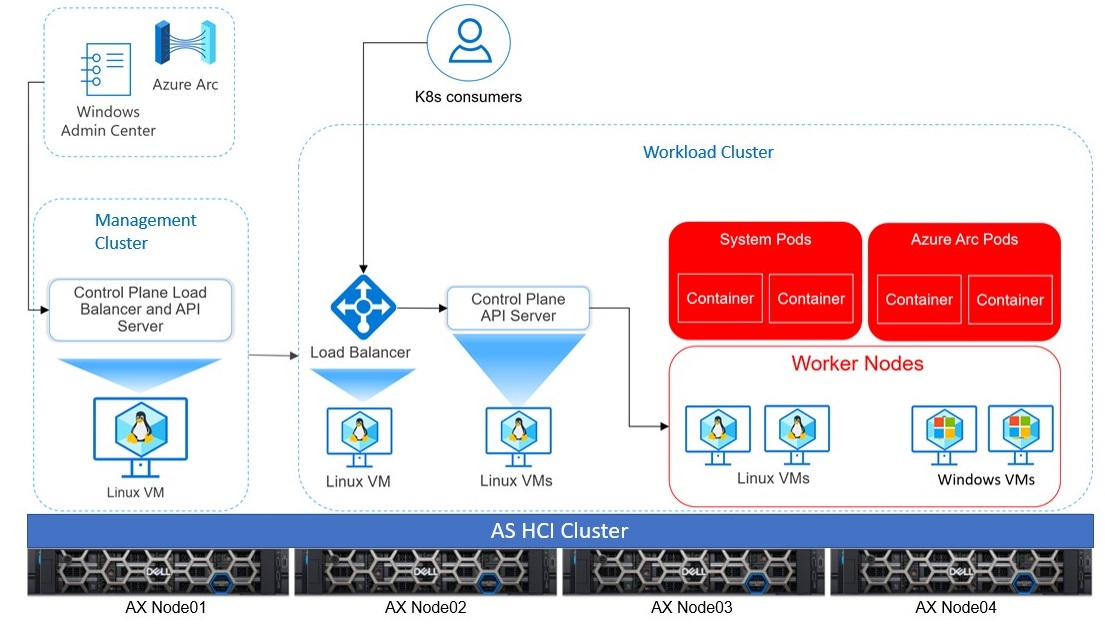

Windows Admin Center and PowerShell are two options for managing the life cycle of Azure Kubernetes Service clusters on Azure Stack HCI. The figure shows the core infrastructure components of Kubernetes on AKS-HCI, which are divided into two main units based on their function:

- Management Cluster (also known as the AKS host): provides the core orchestration mechanism and an interface for deploying and managing one or more workload clusters.

- Workload Cluster: comprises the target clusters where containerized applications are deployed.

The management cluster is created automatically when AKS is deployed on Azure Stack HCI. It is mainly responsible for provisioning and managing the workload clusters where workloads run.

The API server exposes the underlying Kubernetes APIs and provides the interface through which management tools, such as Windows Admin Center, PowerShell modules, and kubectl, interact with the management cluster.
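To make the API server's role more concrete, the sketch below shows how a resource maps to the REST path that the Kubernetes API exposes. Core resources such as pods are served under `/api/v1`, while resources in named API groups such as `apps` are served under `/apis/<group>/<version>`; for example, `kubectl get pods -n default` reads from `/api/v1/namespaces/default/pods`. The `resource_path` helper is hypothetical and only builds the path string; it does not contact a cluster.

```python
from __future__ import annotations


def resource_path(group: str, version: str, resource: str,
                  namespace: str | None = None, name: str | None = None) -> str:
    """Build the Kubernetes API server REST path for a resource (hypothetical helper)."""
    # The core group ("") lives under /api; named groups live under /apis/<group>.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")  # namespaced resources only
    parts.append(resource)
    if name is not None:
        parts.append(name)  # address a single object instead of the collection
    return "/".join(parts)


print(resource_path("", "v1", "pods", namespace="default"))
print(resource_path("apps", "v1", "deployments", namespace="default", name="web"))
print(resource_path("", "v1", "nodes"))  # nodes are cluster-scoped
```

Windows Admin Center, the PowerShell modules, and kubectl all ultimately issue HTTP requests against paths of this shape.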

kubectl is the command line interface for Kubernetes clusters.

The load balancer is a single dedicated Linux VM with a load balancing rule for the API server of the management cluster.

The workload cluster is a highly available deployment of Kubernetes that uses Linux VMs to run the Kubernetes control plane components and Linux worker nodes; Windows Server-based VMs serve as worker nodes for Windows containers. A single management cluster can manage multiple workload clusters.

In the workload cluster, the API server likewise allows interaction with the Kubernetes API and provides the interface for management tools such as Windows Admin Center, PowerShell modules, and kubectl.

etcd is a distributed key-value store that holds the data required for life cycle management of the cluster, including the control plane state.
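The toy model below illustrates the kind of data model etcd provides. It is not etcd: it omits Raft replication, persistence, leases, and watches, and the `ToyKVStore` class and its methods are hypothetical. It shows two properties of etcd's design: every write advances a store-wide revision, and an update can be made conditional on a key's `mod_revision`, which lets concurrent writers avoid overwriting each other (Kubernetes surfaces this kind of optimistic concurrency as an object's `resourceVersion`). The key used below imitates the `/registry/...` layout the Kubernetes API server uses by default, purely for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class KeyValue:
    value: str
    create_revision: int  # store revision at which the key was created
    mod_revision: int     # store revision of the key's latest write
    version: int          # number of writes to this key since creation


class ToyKVStore:
    """Single-node, in-memory model of a revisioned key-value store."""

    def __init__(self) -> None:
        self.revision = 0
        self._data: dict[str, KeyValue] = {}

    def put(self, key: str, value: str) -> int:
        # Every write, to any key, bumps the store-wide revision.
        self.revision += 1
        old = self._data.get(key)
        if old is None:
            self._data[key] = KeyValue(value, self.revision, self.revision, 1)
        else:
            self._data[key] = KeyValue(value, old.create_revision, self.revision, old.version + 1)
        return self.revision

    def get(self, key: str) -> KeyValue | None:
        return self._data.get(key)

    def compare_and_put(self, key: str, expected_mod_revision: int, value: str) -> bool:
        """Write only if the key is unchanged since expected_mod_revision.

        expected_mod_revision=0 means "create only if the key is absent".
        """
        current = self._data.get(key)
        current_rev = current.mod_revision if current else 0
        if current_rev != expected_mod_revision:
            return False  # someone else wrote first; caller must re-read and retry
        self.put(key, value)
        return True


store = ToyKVStore()
key = "/registry/deployments/default/web"  # illustrative key
store.put(key, "spec-v1")
print(store.compare_and_put(key, 1, "spec-v2"))  # True: no other write since revision 1
print(store.compare_and_put(key, 1, "spec-v3"))  # False: key is now at mod_revision 2
```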

The load balancer is a Linux VM running HAProxy and Keepalived to provide highly available, load-balanced services for the workload clusters deployed by the management cluster. Each workload cluster has at least one load balancer VM defined with a load balancing rule.
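The behavior of such a load balancer can be modeled roughly as below. This is an assumption-laden simplification, not the AKS-HCI configuration: `RoundRobinBalancer` imitates an HAProxy backend that rotates through servers and skips any that fail health checks, and `vip_owner` imitates Keepalived's VRRP election, in which the live node with the highest priority holds the virtual IP. The addresses, port 6443 (the usual kube-apiserver port), and priorities are illustrative; VRRP tie-breaking and advertisement timers are not modeled.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Backend:
    address: str          # illustrative address of an API server endpoint
    healthy: bool = True  # result of the most recent health check


class RoundRobinBalancer:
    """Rotate through backends in order, skipping unhealthy ones."""

    def __init__(self, backends: list[Backend]) -> None:
        self.backends = backends
        self._next = 0

    def pick(self) -> str:
        n = len(self.backends)
        # Try each backend at most once per call.
        for _ in range(n):
            b = self.backends[self._next]
            self._next = (self._next + 1) % n
            if b.healthy:
                return b.address
        raise RuntimeError("no healthy backends")


def vip_owner(priorities: dict[str, int], alive: set[str]) -> str | None:
    """VRRP-style election: the live load balancer VM with the highest priority holds the VIP."""
    candidates = [vm for vm in priorities if vm in alive]
    if not candidates:
        return None
    return max(candidates, key=lambda vm: priorities[vm])


lb = RoundRobinBalancer([
    Backend("10.0.0.11:6443"),
    Backend("10.0.0.12:6443", healthy=False),  # failed health check
    Backend("10.0.0.13:6443"),
])
print([lb.pick() for _ in range(4)])  # the unhealthy backend is skipped
print(vip_owner({"lb-1": 150, "lb-2": 100}, alive={"lb-2"}))  # lb-2 takes over the VIP
```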

A Worker Node is a VM that runs the Kubernetes node components and hosts the pods and services that make up the application workload.
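Self-healing by restarting containers is carried out on each worker node by the kubelet, one of the Kubernetes node components. The sketch below models the restart back-off that upstream Kubernetes documents for repeatedly failing containers: a 10-second delay that doubles after each restart and is capped at five minutes. The `restart_delays` function is a hypothetical illustration of that schedule only; it does not reproduce the kubelet's implementation, and the defaults may differ between Kubernetes versions.

```python
from __future__ import annotations


def restart_delays(restarts: int, initial_s: int = 10, cap_s: int = 300) -> list[int]:
    """Delay in seconds before each of `restarts` successive container restarts.

    Exponential back-off: start at initial_s, double after every restart,
    and never exceed cap_s (300 s = five minutes).
    """
    delays = []
    delay = min(initial_s, cap_s)
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap_s)
    return delays


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

Backing off rather than restarting immediately keeps a crash-looping container from consuming the node's resources.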

A pod represents a single running instance of an application and typically maps 1:1 to a container. In advanced scenarios, a pod can contain multiple containers that share resources such as networking and storage.
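A multi-container pod can be sketched as a manifest built in Python. The example assumes a hypothetical application container and a hypothetical log-shipping sidecar (the image names are placeholders) that share an `emptyDir` volume; both containers also share the pod's network namespace. The field names (`apiVersion`, `kind`, `spec.volumes`, `volumeMounts`, and so on) follow the Kubernetes Pod API, and because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.

```python
import json


def sidecar_pod(name: str, app_image: str, sidecar_image: str) -> dict:
    """Build a v1 Pod manifest with two containers sharing one volume."""
    # Both containers mount the same emptyDir volume at the same path.
    mount = {"name": "shared-logs", "mountPath": "/var/log/app"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # An emptyDir volume lives as long as the pod and is visible to all its containers.
            "volumes": [{"name": "shared-logs", "emptyDir": {}}],
            "containers": [
                {"name": "app", "image": app_image, "volumeMounts": [dict(mount)]},
                {"name": "log-shipper", "image": sidecar_image, "volumeMounts": [dict(mount)]},
            ],
        },
    }


manifest = sidecar_pod(
    "web",
    "registry.example.com/web:1.0",          # placeholder image
    "registry.example.com/log-shipper:1.0",  # placeholder image
)
print(json.dumps(manifest, indent=2))
```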

A deployment defines the number of replicas (pods) to be created. If pods or nodes encounter problems, the deployment's controller creates replacement pods, and the Kubernetes scheduler places them on healthy nodes.
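This recovery behavior can be sketched as a single reconciliation pass. In real Kubernetes the work is split: the Deployment and ReplicaSet controllers create or delete pods, and kube-scheduler binds new pods to nodes. The hypothetical `reconcile` function below collapses both into one step and uses a simple spread-by-count placement rule purely for illustration; the node and pod names are made up.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import count

_pod_ids = count(1)  # source of unique names for newly created pods


@dataclass
class Pod:
    name: str
    node: str


def reconcile(desired: int, pods: list[Pod], healthy_nodes: list[str]) -> list[Pod]:
    """Return the pod set after one pass toward `desired` replicas."""
    healthy = set(healthy_nodes)
    # Pods on failed nodes are treated as lost; surplus pods are dropped.
    kept = [p for p in pods if p.node in healthy][:desired]

    # Count pods per healthy node so new pods can be spread out.
    load = {n: 0 for n in healthy_nodes}
    for p in kept:
        load[p.node] += 1

    # Create replacements on the least-loaded healthy node. With no healthy
    # nodes this loop does nothing (a real cluster would leave pods Pending).
    while len(kept) < desired and healthy_nodes:
        target = min(healthy_nodes, key=lambda n: load[n])
        kept.append(Pod(f"web-{next(_pod_ids)}", target))
        load[target] += 1
    return kept


# node-2 has failed: its two pods are replaced on the remaining healthy nodes.
before = [Pod("web-a", "node-1"), Pod("web-b", "node-2"), Pod("web-c", "node-2")]
after = reconcile(3, before, healthy_nodes=["node-1", "node-3"])
print([(p.name, p.node) for p in after])
```

Because each pass only compares desired state with observed state, running it repeatedly is safe: once three healthy replicas exist, further passes change nothing.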