
Architecture and processes overview

SyncIQ leverages the full complement of resources in a PowerScale cluster and the scalability and parallel architecture of the Dell PowerScale OneFS file system. SyncIQ uses a policy-driven engine to run replication jobs across all nodes in the cluster.

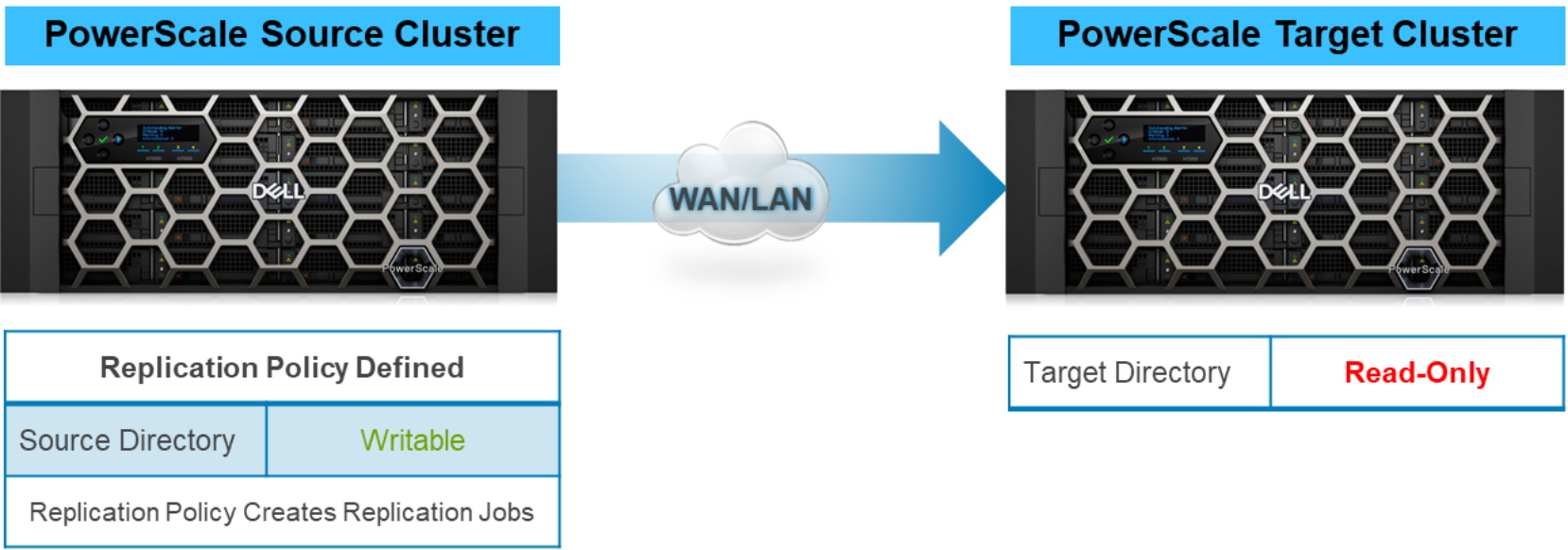

Multiple policies can be defined to allow for high flexibility and resource management. A replication policy is created on the source cluster, and data is replicated to the target cluster. When the source and target clusters are defined, the source and target directories are also selected. These selections determine which data is replicated from the source cluster and where it is placed on the target cluster. Policies can run on a user-defined schedule or be started manually. This flexibility allows administrators to replicate datasets based on predicted cluster usage, network capabilities, and requirements for data availability.
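The elements of a policy described above can be summarized in a small illustrative model. This is a minimal sketch for orientation only: the class, field names, host name, paths, and schedule string are all hypothetical and do not correspond to OneFS API or CLI names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ReplicationPolicy:
    """Illustrative policy model; every field name here is hypothetical."""
    name: str
    source_dir: str                  # directory on the source cluster to replicate
    target_host: str                 # address of the target cluster
    target_dir: str                  # directory on the target cluster that receives the data
    schedule: Optional[str] = None   # None means the policy is started manually

    @property
    def is_scheduled(self) -> bool:
        return self.schedule is not None


# Two example policies defined on the same source cluster (made-up values).
policies = [
    ReplicationPolicy("projects-nightly", "/ifs/data/projects",
                      "target.example.com", "/ifs/dr/projects",
                      schedule="every day at 01:00"),
    ReplicationPolicy("archive-manual", "/ifs/data/archive",
                      "target.example.com", "/ifs/dr/archive"),
]

# Policies that run on a schedule rather than being started manually.
scheduled = [p.name for p in policies if p.is_scheduled]
```

Here, one policy carries a schedule and the other is left for manual starts, mirroring the two ways a policy can be run.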

After the replication policy starts, a replication job is created on the source cluster. Within a cluster, many replication policies can be configured.

During the initial run of a replication job, the target directory is set to read-only and is updated only by jobs associated with the configured replication policy. When access is required to the target directory, the replication policy between the source and target must be broken. When access is no longer required on the target directory, subsequent jobs require an initial or differential replication to reestablish the sync between the source and target clusters.

Note: Exercise extreme caution before breaking a policy between a source and target cluster or allowing writes on a target cluster. First ensure that you understand the repercussions. For more information, see Impacts of modifying SyncIQ policies and Allow-writes compared to break association.

Figure 9. PowerScale SyncIQ replication policies and jobs

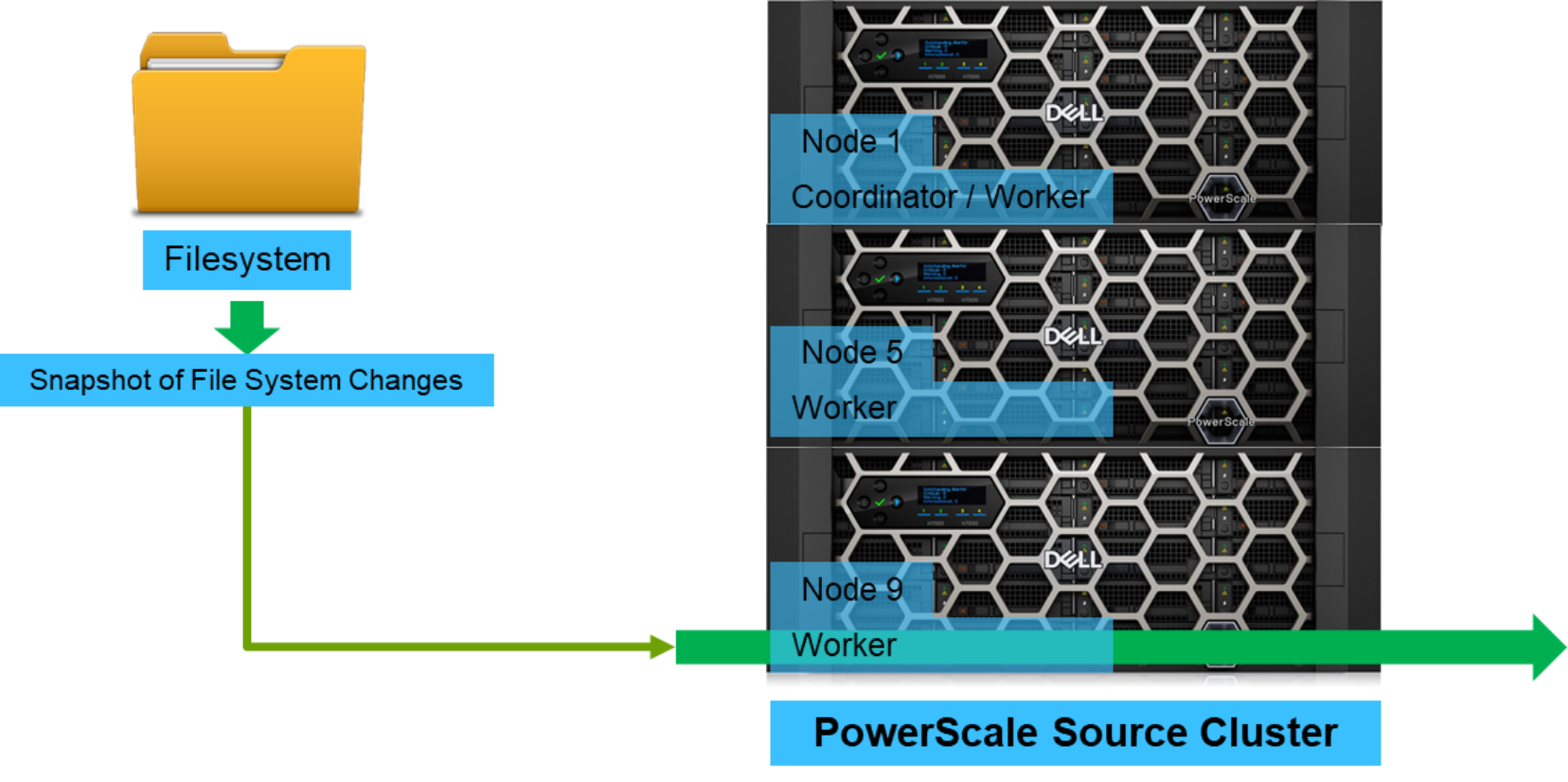

When a SyncIQ job is initiated, from either a scheduled or manually applied policy, the system first takes a snapshot of the data to be replicated. SyncIQ compares this snapshot to the snapshot from the previous replication job to quickly identify the changes to be propagated. Those changes can be new files, changed files, metadata changes, or file deletions. SyncIQ pools the aggregate resources from the cluster, splitting the replication job into smaller work items and distributing the items among multiple workers across all nodes in the cluster. Each worker scans a part of the snapshot differential for changes and transfers those changes to the target cluster. While the cluster resources are managed to maximize replication performance, administrators can decrease the impact on other workflows using configurable SyncIQ resource limits in the policy.
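The snapshot comparison and work distribution described above can be illustrated with a simplified conceptual sketch. It is not SyncIQ code: the snapshot representation (a mapping of path to fingerprint), the function names, and the round-robin split are assumptions made for illustration. In particular, the sketch computes the differential in one place and then hands out the results, whereas SyncIQ workers each scan a part of the snapshot differential themselves.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: path -> fingerprint of content and metadata.
Snapshot = Dict[str, str]
Change = Tuple[str, str]  # (kind, path)


def diff_snapshots(previous: Snapshot, current: Snapshot) -> List[Change]:
    """Return new, changed, and deleted paths between two snapshots."""
    changes: List[Change] = []
    for path in sorted(current):
        if path not in previous:
            changes.append(("new", path))
        elif previous[path] != current[path]:
            changes.append(("changed", path))
    for path in sorted(previous):
        if path not in current:
            changes.append(("deleted", path))
    return changes


def split_work(changes: List[Change], worker_count: int) -> List[List[Change]]:
    """Deal the changes round-robin into one work list per worker."""
    if worker_count < 1:
        raise ValueError("worker_count must be at least 1")
    work: List[List[Change]] = [[] for _ in range(worker_count)]
    for index, change in enumerate(changes):
        work[index % worker_count].append(change)
    return work


# Snapshot from the previous job compared with the snapshot from this job.
previous = {"/a.txt": "v1", "/b.txt": "v1", "/c.txt": "v1"}
current = {"/a.txt": "v1", "/b.txt": "v2", "/d.txt": "v1"}

changes = diff_snapshots(previous, current)
work_items = split_work(changes, worker_count=2)
```

In this example, the unchanged file produces no work, and the three remaining changes (one changed, one new, one deleted) are spread across two workers.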

Replication workers on the source cluster are paired with workers on the target cluster to accrue the benefits of parallel and distributed data transfer. As more jobs run concurrently, SyncIQ employs more workers to use more cluster resources. As more nodes are added to the cluster, file system processing on the source cluster and file transfer to the remote cluster are accelerated, a benefit of the PowerScale scale-out NAS architecture.

Figure 10. SyncIQ snapshots and work distribution

SyncIQ is configured through the OneFS WebUI, which provides a simple, intuitive method to create policies, manage jobs, and view reports. In addition to the web-based interface, all SyncIQ functionality is integrated into the OneFS command-line interface. For a full list of commands, run isi sync --help.