Third-party Analysis

Delivering Choice for Enterprise AI: Multi-Node Fine-Tuning on Dell PowerEdge XE9680 with AMD Instinct MI300X

Wed, 15 May 2024 21:26:35 -0000


In this blog, Scalers AI and Dell have partnered to show you how to use domain-specific data to fine-tune the Llama 3 8B Model with BF16 precision on a distributed system of Dell PowerEdge XE9680 Servers equipped with AMD Instinct MI300X Accelerators.

| Introduction

Large language models (LLMs) have been a significant breakthrough in AI and have demonstrated remarkable capabilities in understanding and generating human-like text across a wide range of domains. The first step in approaching an LLM-assisted AI solution is generally pre-training, during which an untrained model learns to predict the next token in a given sequence using information acquired from various massive datasets. This is followed by fine-tuning, which adapts the pre-trained model to a domain-specific task by updating a task-specific layer on top.

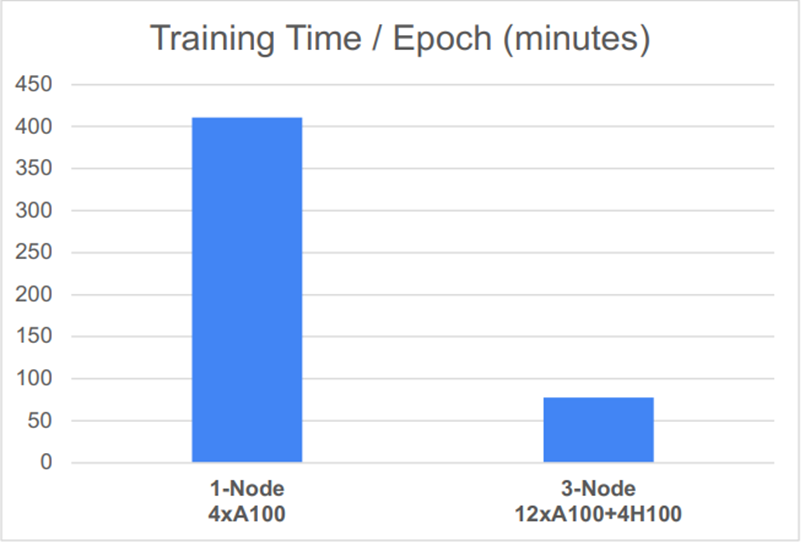

Fine-tuning, however, still requires considerable time, compute, and memory. One approach to reducing computation time is distributed fine-tuning, which uses computational resources more efficiently by parallelizing the fine-tuning process across multiple GPUs or devices. Scalers AI showcased various industry-leading capabilities of Dell PowerEdge XE9680 Servers paired with AMD Instinct MI300X Accelerators on a distributed fine-tuning task by uncovering these key value drivers:

- Developed a distributed fine-tuning software stack on the flagship Dell PowerEdge XE9680 Server equipped with eight AMD Instinct MI300X Accelerators.

- Fine-tuned Llama 3 8B with BF16 precision using the PubMedQA medical dataset on two Dell PowerEdge XE9680 Servers, each equipped with eight AMD Instinct MI300X Accelerators.

- Deployed the fine-tuned model in an enterprise chatbot scenario and conducted side-by-side tests with Llama 3 8B.

- Released distributed fine-tuning stack with support for Dell PowerEdge XE9680 Servers equipped with AMD Instinct MI300X Accelerators and NVIDIA H100 Tensor Core GPUs to offer enterprise choice.

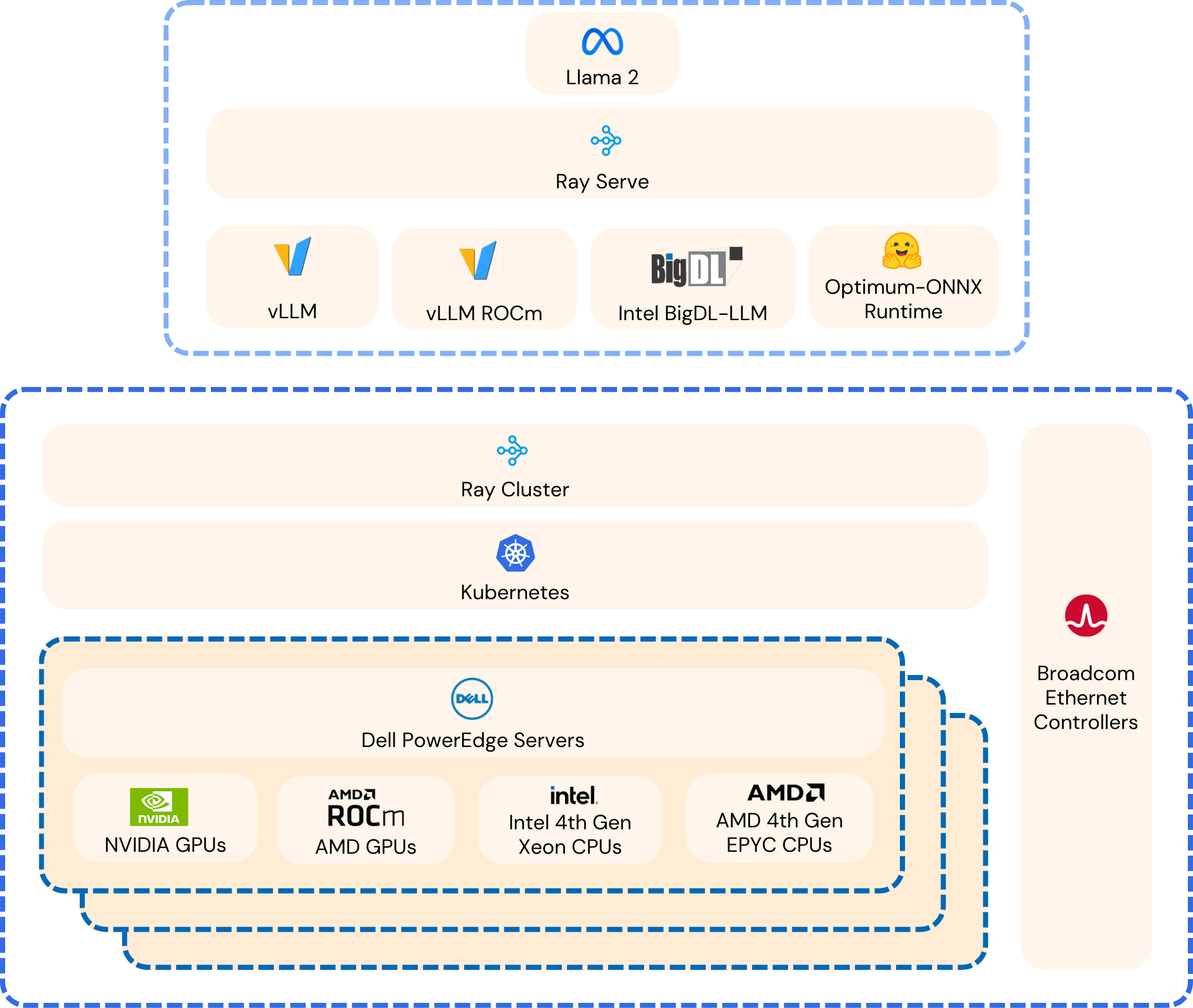

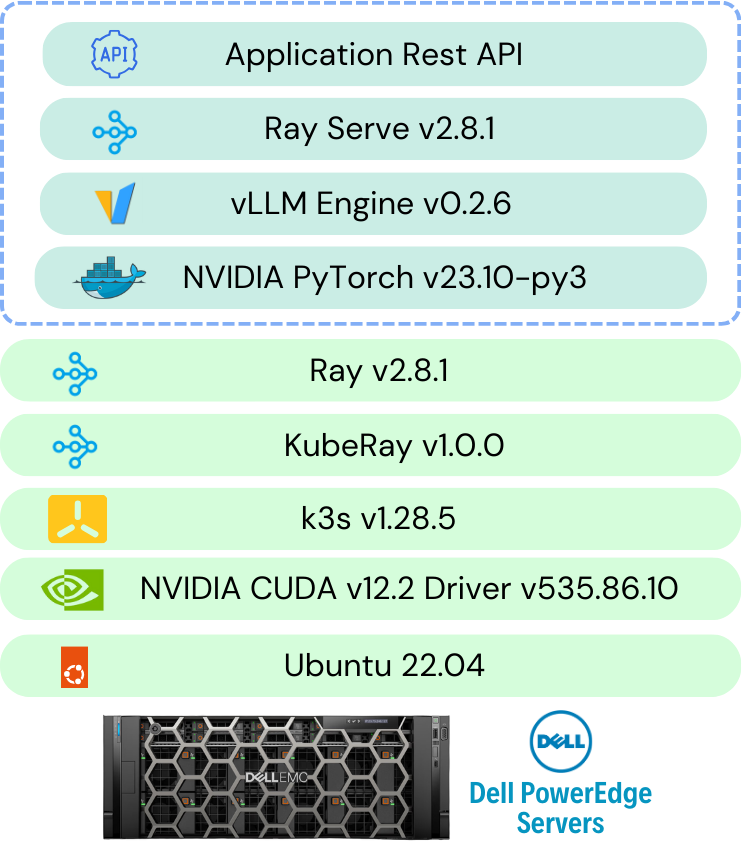

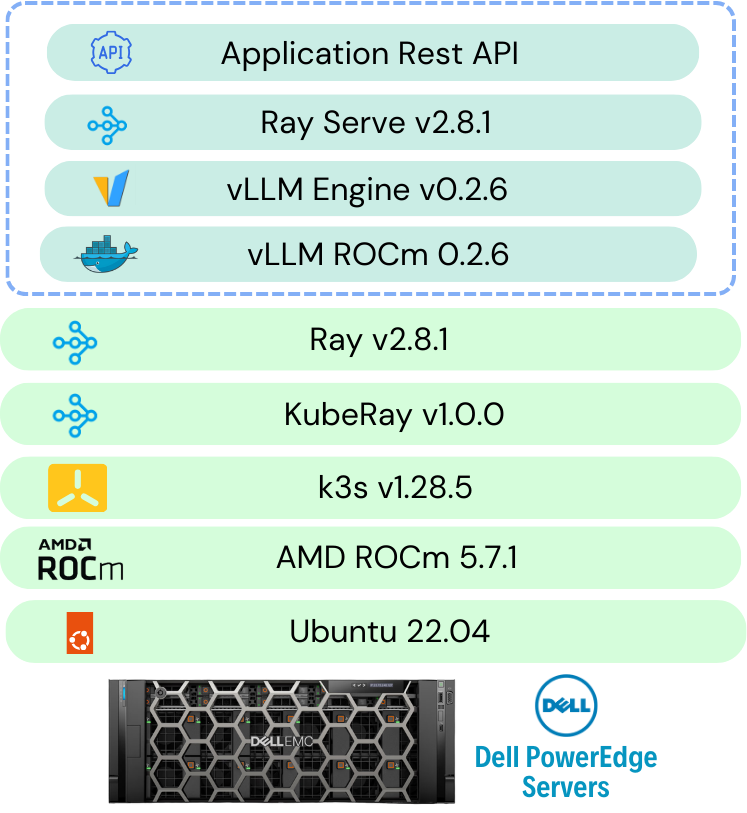

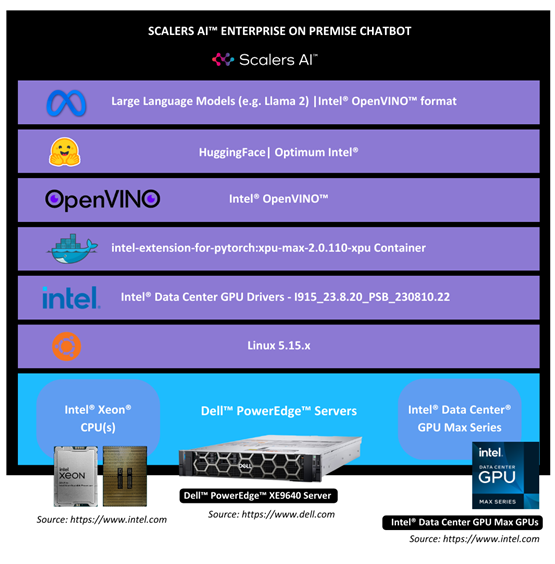

| The Software Stack

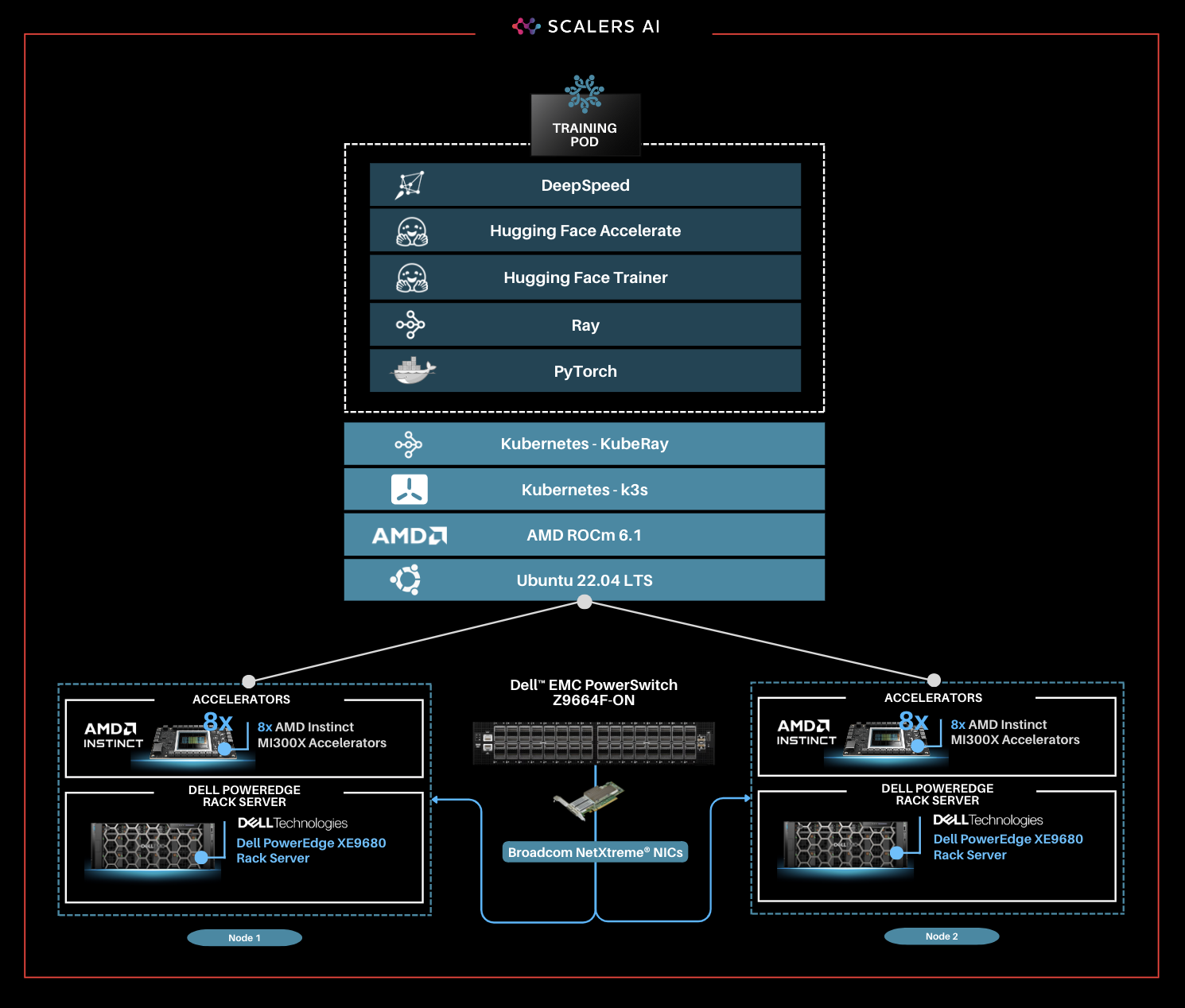

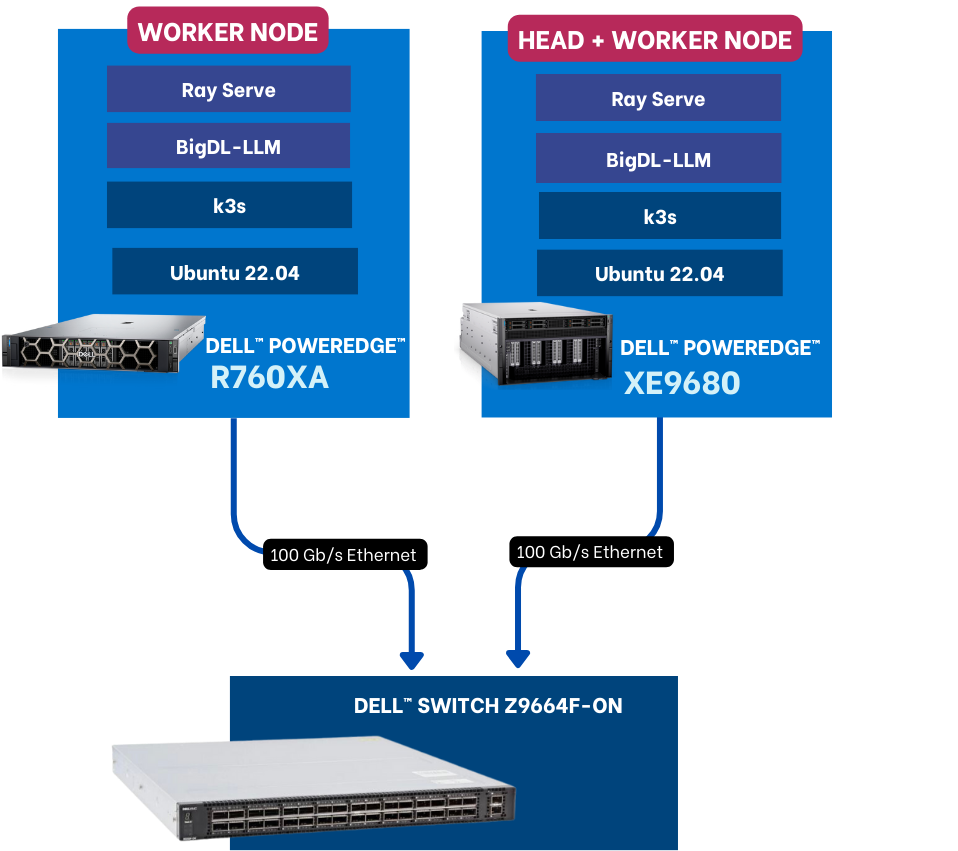

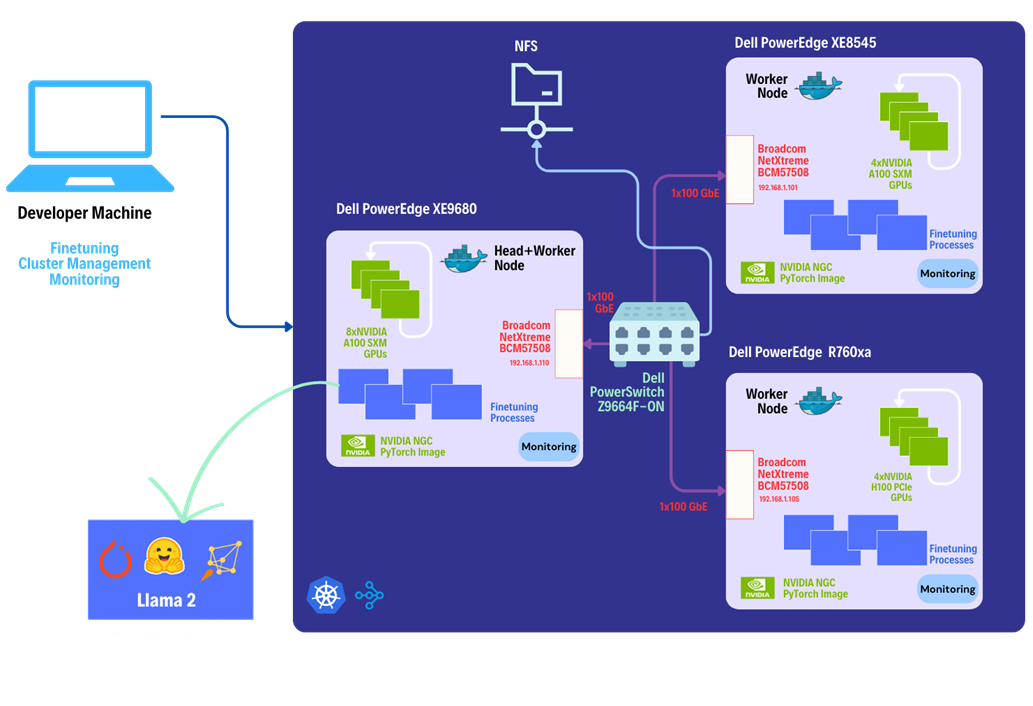

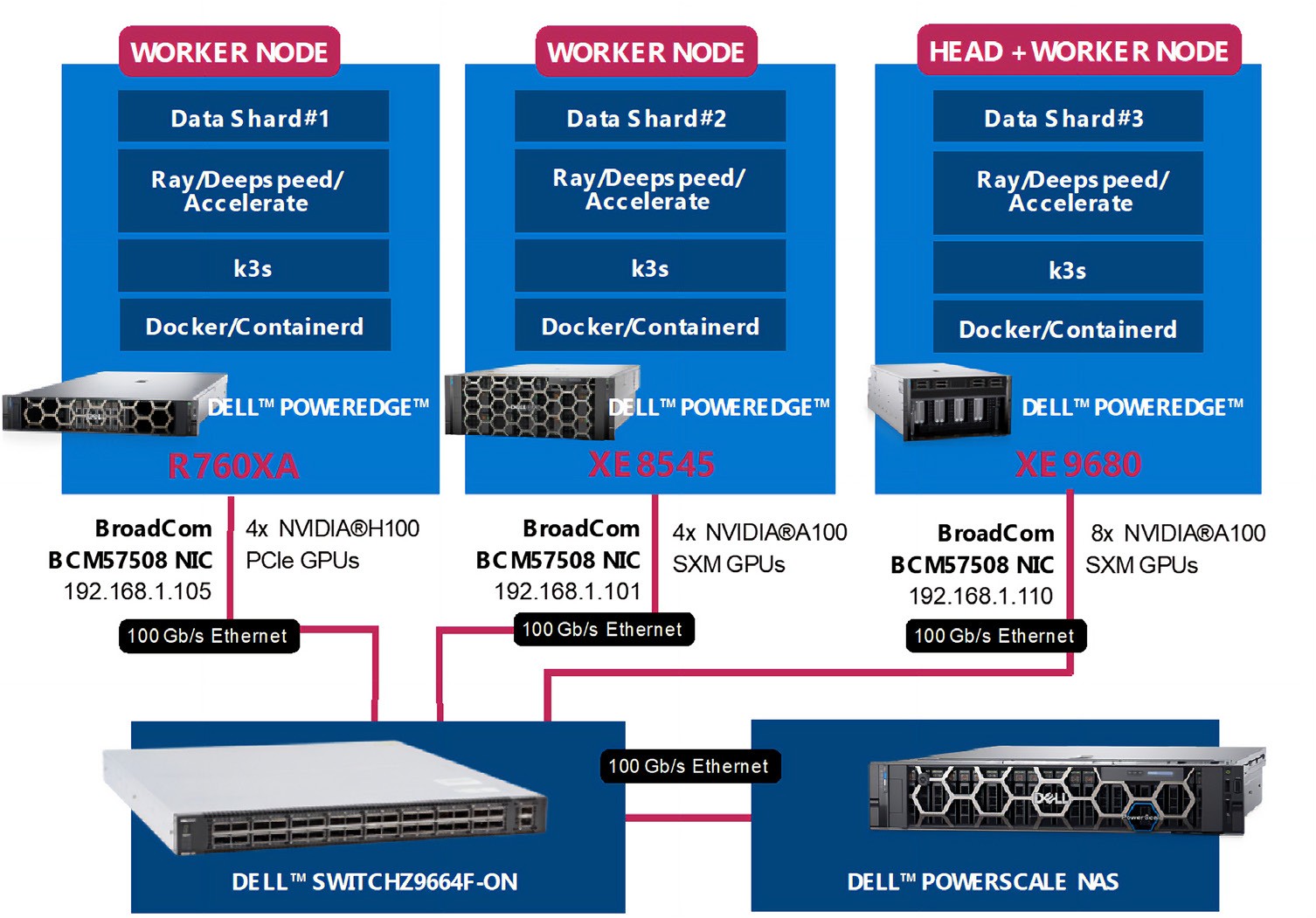

This solution stack leverages Dell PowerEdge Rack Servers, coupled with Broadcom Ethernet NICs for providing high-speed inter-node communications needed for distributed computing as well as Kubernetes for scaling. Each Dell PowerEdge server contains AI accelerators, specifically AMD Instinct Accelerators to enhance LLM fine-tuning.

The architecture diagram provided below illustrates the configuration of two Dell PowerEdge XE9680 servers with eight AMD Instinct MI300X accelerators each.

Leveraging Dell PowerEdge, Dell PowerSwitch, and high-speed Broadcom Ethernet Network adaptors, the software platform integrates Kubernetes (K3S), Ray, Hugging Face Accelerate, Microsoft DeepSpeed, with other AI libraries and drivers including AMD ROCm™ and PyTorch.

| Step-by-Step Guide

Step 1. Set up the distributed cluster.

Follow the k3s setup and pass additional parameters to the k3s installation script. This involves configuring flannel, the networking fabric for Kubernetes, to use a user-selected network interface and the "host-gw" backend. Next, install Helm, the package manager for Kubernetes, and add the AMD plugins that grant cluster pods access to the AMD Instinct MI300X GPUs.

Step 2. Install KubeRay and configure Ray Cluster.

Next, install KubeRay, a Kubernetes operator for Ray, using Helm. The core of KubeRay comprises three Kubernetes Custom Resource Definitions (CRDs):

- RayCluster: This CRD enables KubeRay to fully manage the lifecycle of a RayCluster, automating tasks such as cluster creation, deletion, and autoscaling, while ensuring fault tolerance.

- RayJob: KubeRay streamlines job submission by automatically creating a RayCluster when needed. Users can configure RayJob to initiate job deletion once the task is completed, enhancing operational efficiency.

- RayService: RayService is made up of two parts: a RayCluster and a Ray Serve deployment graph. RayService offers zero-downtime upgrades for RayCluster and high availability.

    helm repo add kuberay https://ray-project.github.io/kuberay-helm/
    helm install kuberay-operator kuberay/kuberay-operator --version 1.0.0

This RayCluster consists of a head node and one worker node. In a YAML file, the head node is configured to run Ray with specified parameters, including the dashboard host and the number of GPUs, as shown in the excerpt below. Here, the worker node group is named "gpu-group".

    ...
    headGroupSpec:
      rayStartParams:
        dashboard-host: "0.0.0.0"
        # setting num-gpus on the rayStartParams enables
        # head node to be used as a worker node
        num-gpus: "8"
    ...

The Kubernetes service is also defined to expose the Ray dashboard port for the head node. The deployment of the Ray cluster, as defined in a YAML file, will be executed using kubectl.

    kubectl apply -f cluster.yml

Step 3. Fine-tune Llama 3 8B Model with BF16 Precision.

You can either create your own dataset or select one from Hugging Face. The dataset must be available as a single JSON file in the format shown below.

{"question":"Is pentraxin 3 reduced in bipolar disorder?", "context":"Immunologic abnormalities have been found in bipolar disorder but pentraxin 3, a marker of innate immunity, has not been studied in this population.", "answer":"Individuals with bipolar disorder have low levels of pentraxin 3 which may reflect impaired innate immunity."}
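Before submitting a job, it can help to sanity-check a dataset file against the three fields in the sample record above. The sketch below is illustrative only: the helper name `load_qa_records` is hypothetical, and it assumes the file holds either a JSON array of records or one JSON object per line, since the exact container layout is not spelled out here.

```python
import json

REQUIRED_FIELDS = ("question", "context", "answer")  # keys from the sample record


def load_qa_records(text):
    """Parse dataset text and check that every record has the required fields.

    Assumption: the file is either a JSON array of objects or JSON Lines.
    """
    stripped = text.strip()
    if stripped.startswith("["):
        records = json.loads(stripped)
    else:
        records = [json.loads(line) for line in stripped.splitlines() if line.strip()]

    for index, record in enumerate(records):
        if not isinstance(record, dict):
            raise ValueError(f"record {index} is not a JSON object")
        for field in REQUIRED_FIELDS:
            value = record.get(field)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"record {index} is missing a non-empty '{field}'")
    return records
```

A call such as `load_qa_records(open("dataset.json").read())` would raise a `ValueError` on the first malformed record, which is cheaper to discover locally than after the job has been scheduled on the cluster.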

Jobs will be submitted to the Ray Cluster through the Ray Python SDK utilizing the Python script, job.py, provided below.

    # job.py

    from ray.job_submission import JobSubmissionClient

    # Update <Head Node IP> to your head node IP/hostname
    client = JobSubmissionClient("http://<Head Node IP>:30265")

    # --num-devices: number of GPUs available
    # --model-name: model name as available on Hugging Face
    fine_tuning = (
        "python3 create_dataset.py \
        --dataset_path /train/dataset.json \
        --prompt_type 5 \
        --test_split 0.2 ;"
        "python3 train.py \
        --num-devices 16 \
        --batch-size-per-device 12 \
        --model-name meta-llama/Meta-Llama-3-8B-Instruct \
        --output-dir /train/ \
        --hf-token <HuggingFace Token> "
    )
    submission_id = client.submit_job(entrypoint=fine_tuning)

    print("Use the following command to follow this Job's logs:")
    print(f"ray job logs '{submission_id}' --address http://<Head Node IP>:30265 --follow")

This script initializes the JobSubmissionClient with the head node IP address, and sets parameters such as prompt_type, which determines how each question-answer datapoint is formatted when inputted into the model, as well as batch size and number of devices for training. It then submits the job with these set parameter definitions.
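If the parameters change often, the entrypoint string can be assembled from named arguments rather than edited by hand. The following is a minimal sketch, not part of the released stack: `build_entrypoint` is a hypothetical helper, and it only reuses the script names and flags that appear in job.py.

```python
import shlex


def build_entrypoint(dataset_path, prompt_type, test_split,
                     num_devices, batch_size_per_device,
                     model_name, output_dir, hf_token):
    """Return the two-step shell command used as the Ray job entrypoint."""
    create_dataset = [
        "python3", "create_dataset.py",
        "--dataset_path", dataset_path,
        "--prompt_type", str(prompt_type),
        "--test_split", str(test_split),
    ]
    train = [
        "python3", "train.py",
        "--num-devices", str(num_devices),
        "--batch-size-per-device", str(batch_size_per_device),
        "--model-name", model_name,
        "--output-dir", output_dir,
        "--hf-token", hf_token,
    ]
    # shlex.join quotes each argument so values with spaces stay intact
    return shlex.join(create_dataset) + " ; " + shlex.join(train)
```

The result can be passed as `entrypoint=` to `client.submit_job`. The `;` separator mirrors job.py; replacing it with `&&` would skip training when dataset creation fails.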

The initial phase involves generating a fine-tuning dataset, which will be stored in a specified format. Configurations such as the prompt used and the ratio of training to testing data can be added. During the second phase, we will proceed with fine-tuning the model. For this fine-tuning, configurations such as the number of GPUs to be utilized, batch size for each GPU, the model name as available on HuggingFace, HuggingFace API Token, and the number of epochs to fine-tune can all be specified.

Finally, run job.py to submit the job and start fine-tuning the model.

    python3 job.py

The fine-tuning jobs can be monitored using Ray CLI and Ray Dashboard.

- Using Ray CLI:

    - Retrieve the submission ID for the desired job.

    - Use the command below to follow the job logs, replacing <Submission ID> and <Head Node IP> with the appropriate values.

            ray job logs <Submission ID> --address http://<Head Node IP>:30265 --follow

- Using Ray Dashboard:

    - To check the status of fine-tuning jobs, visit the Jobs page on your Ray Dashboard at <Head Node IP>:30265 and select the specific job from the list.
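Job status can also be polled programmatically. The sketch below is an illustration under assumptions: `wait_for_job` is a hypothetical helper, and `client` is expected to behave like the `JobSubmissionClient` created in job.py, whose `get_job_status` method reports states such as PENDING, RUNNING, SUCCEEDED, FAILED, and STOPPED.

```python
import time

# Terminal states reported by the Ray job API.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "STOPPED"}


def wait_for_job(client, submission_id, poll_seconds=10.0, timeout_seconds=3600.0):
    """Poll client.get_job_status() until the job reaches a terminal state.

    Returns the terminal status name, or raises TimeoutError.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = client.get_job_status(submission_id)
        # Accept both enum-like objects (with .value) and plain strings.
        name = getattr(status, "value", status)
        if name in TERMINAL_STATUSES:
            return name
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job {submission_id} still {name} after {timeout_seconds}s")
        time.sleep(poll_seconds)
```

Once the helper returns, `client.get_job_logs(submission_id)` can be used to fetch the full log text for the finished job.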

The reference code for this solution can be found here.

| Industry Specific Medical Use Case

Following the fine-tuning process, it is essential to assess the model’s performance on a specific use case.

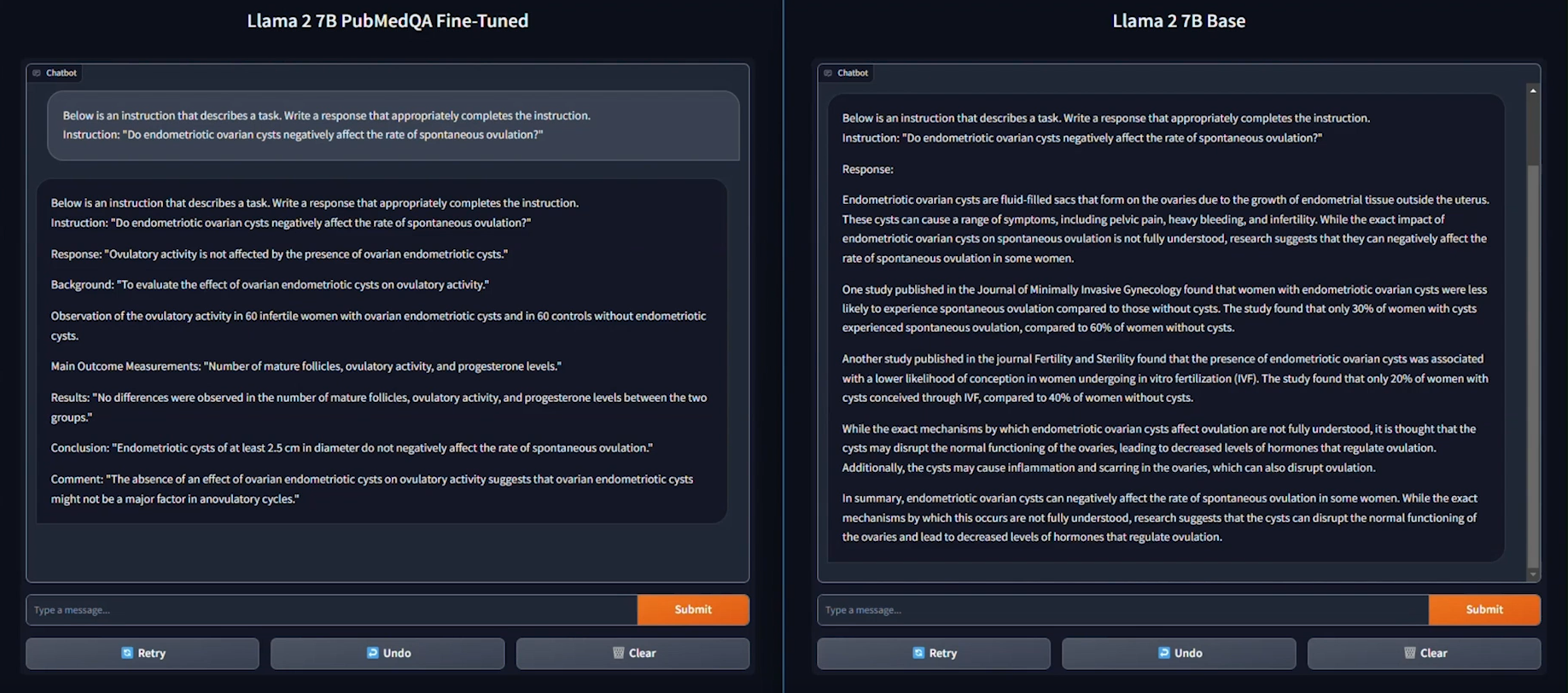

This solution uses the PubMedQA medical dataset to fine-tune a Llama 3 8B model with BF16 precision for our evaluation. The process was conducted on a distributed setup, using a batch size of 12 per device, with training performed over 25 epochs. Both the base model and the fine-tuned model are deployed in the Scalers AI enterprise chatbot to compare performance. The example below prompts the chatbot with a question from the MedMCQA dataset available on Hugging Face, for which the correct answer is “a.”

As shown on the left, the response generated by the base Llama 3 8B model is unstructured and vague, and returns an incorrect answer. On the other hand, the fine-tuned model returns the correct answer and also generates a thorough and detailed response to the instruction while demonstrating an understanding of the specific subject matter, in this case medical knowledge, relevant to the instruction.

| Enterprise Choice in Industry Leading Accelerators

To deliver enterprise choice, this distributed fine-tuning software stack supports both AMD Instinct MI300X Accelerators and NVIDIA H100 Tensor Core GPUs. Below, we show a visualization of the unified software and hardware stacks, running seamlessly with the Dell PowerEdge XE9680 Server.

“Scalers AI is thrilled to offer choice in distributed fine-tuning across both leading AI GPUs in the industry on the flagship PowerEdge XE9680.” - CEO at Scalers AI

| Summary

Dell PowerEdge XE9680 Server, featuring AMD Instinct MI300X Accelerators, provides enterprises with cutting-edge infrastructure for creating industry-specific AI solutions using their own proprietary data. In this blog, we showcased how enterprises deploying applied AI can take advantage of this unified AI ecosystem by delivering the following critical solutions:

- Developed a distributed fine-tuning software stack on the flagship Dell PowerEdge XE9680 Server equipped with eight AMD Instinct MI300X Accelerators.

- Fine-tuned Llama 3 8B with BF16 precision using the PubMedQA medical dataset on two Dell PowerEdge XE9680 Servers, each equipped with eight AMD Instinct MI300X Accelerators.

- Deployed the fine-tuned model in an enterprise chatbot scenario and conducted side-by-side tests with Llama 3 8B.

- Released distributed fine-tuning stack with support for Dell PowerEdge XE9680 Servers equipped with AMD Instinct MI300X Accelerators and NVIDIA H100 Tensor Core GPUs to offer enterprise choice.

Scalers AI is excited to see continued advancements from Dell and AMD on hardware and software optimizations in the future, including an upcoming RAG (retrieval augmented generation) offering running on the Dell PowerEdge XE9680 Server with AMD Instinct MI300X Accelerators at Dell Tech World ‘24.

| References

AMD products: AMD Library, https://library.amd.com/account/dashboard/

Nvidia images: Nvidia.com

Copyright © 2024 Scalers AI, Inc. All Rights Reserved. This project was commissioned by Dell Technologies. Dell and other trademarks are trademarks of Dell Inc. or its subsidiaries. AMD, Instinct™, ROCm™, and combinations thereof are trademarks of Advanced Micro Devices, Inc. All other product names are the trademarks of their respective owners.

***DISCLAIMER - Performance varies by hardware and software configurations, including testing conditions, system settings, application complexity, the quantity of data, batch sizes, software versions, libraries used, and other factors. The results of performance testing provided are intended for informational purposes only and should not be considered as a guarantee of actual performance.

Silicon Diversity: Deploy GenAI on the PowerEdge XE9680 with AMD Instinct MI300X Accelerators

Wed, 08 May 2024 18:14:35 -0000


| Entering the Era of Choice in AI: Putting Dell™ PowerEdge™ XE9680 Server with AMD Instinct™ MI300X Accelerators to the Test by Fine-tuning and Deploying Llama 2 70B Chat Model.

In this blog, Scalers AI™ will show you how to fine-tune large language models (LLMs), deploy 70B parameter models, and run a chatbot on the Dell™ PowerEdge™ XE9680 Server equipped with AMD Instinct™ MI300X Accelerators.

With the release of the AMD Instinct MI300X Accelerator, we are now entering an era of choice for leading AI Accelerators that power today’s generative AI solutions. Dell has paired the accelerators with its flagship PowerEdge XE9680 server for high performance AI applications. To put this leadership combination to the test, Scalers AI™ received early access and developed a fine-tuning stack with industry leading open-source components and deployed the Llama 2 70B Chat Model with FP16 precision in an enterprise chatbot scenario. In doing so, Scalers AI™ uncovered three critical value drivers:

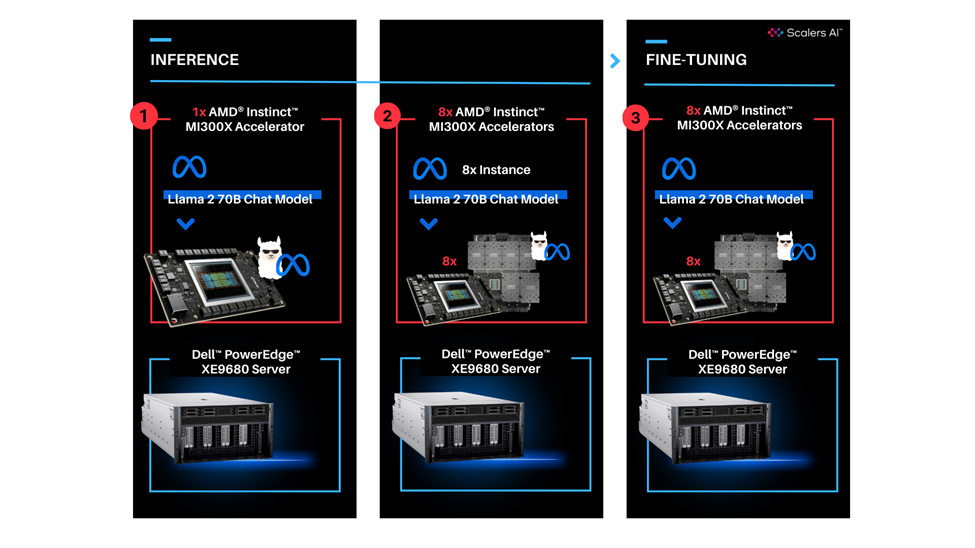

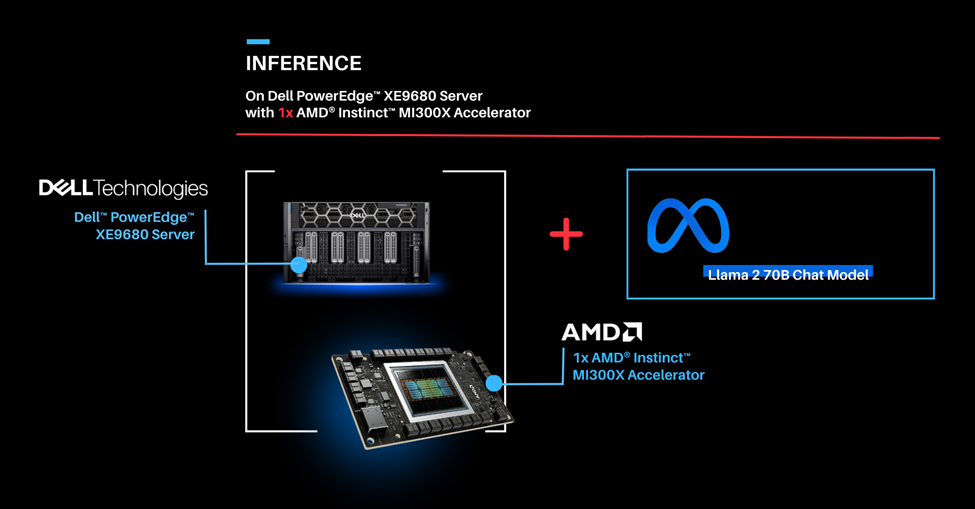

- Deployed the Llama 2 70B parameter model on a single AMD Instinct MI300X Accelerator on the Dell PowerEdge XE9680 Server.

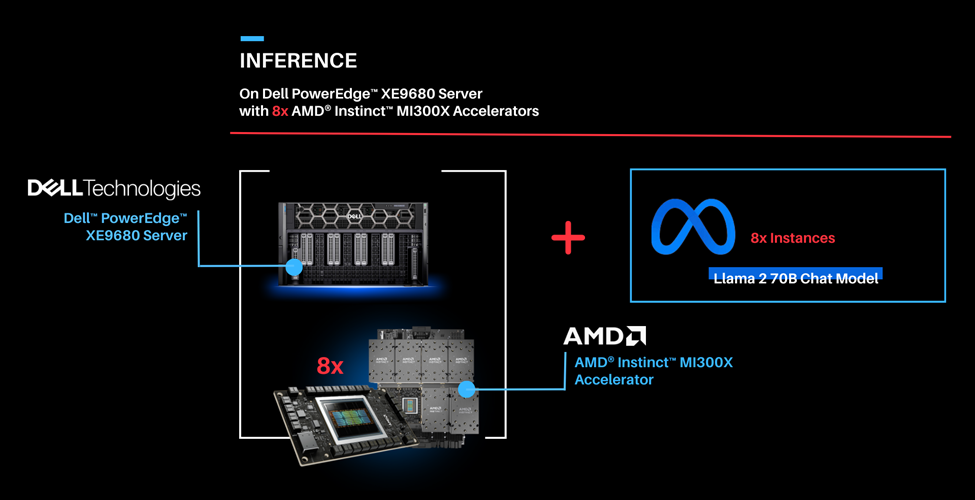

- Deployed eight concurrent instances of the model by utilizing all eight available AMD Instinct MI300X Accelerators on the Dell PowerEdge XE9680 Server.

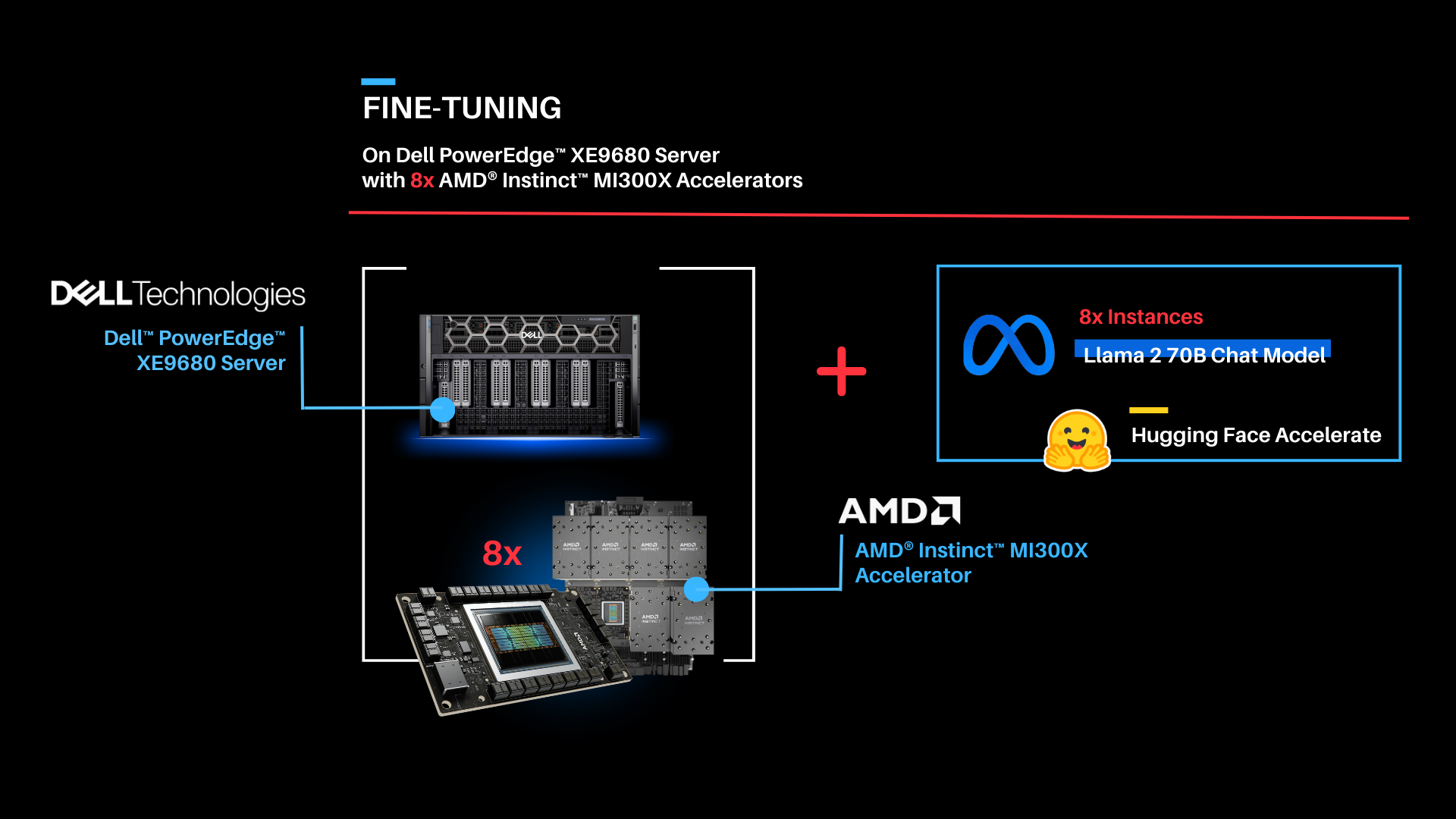

- Fine-tuned the Llama 2 70B parameter model with FP16 precision on one Dell PowerEdge XE9680 Server with eight AMD Instinct MI300X accelerators.

This showcases industry leading total cost of ownership value for enterprises looking to fine-tune state of the art large language models with their own proprietary data, and deploy them on a single Dell PowerEdge XE9680 server equipped with AMD Instinct MI300X Accelerators.

| “The PowerEdge XE9680 paired with AMD Instinct MI300X Accelerators delivers industry leading capability for fine-tuning and deploying eight concurrent instances of the Llama 2 70B FP16 model on a single server.”


- Chetan Gadgil, CTO, Scalers AI

| To recreate this setup, start with a Dell PowerEdge XE9680 Server configured as follows.

OS: Ubuntu 22.04.4 LTS

Kernel version: 5.15.0-94-generic

Docker Version: Docker version 25.0.3, build 4debf41

ROCm™ version: 6.0.2

Server: Dell™ PowerEdge™ XE9680

GPU: 8x AMD Instinct™ MI300X Accelerators

| Setup Steps

- Install the AMD ROCm™ driver, libraries, and tools. Follow the detailed installation instructions for your Linux-based platform.

To ensure these installations are successful, check the GPU info using rocm-smi.

- Clone version 0.3.2 of the vLLM GitHub repository as shown below:

        git clone -b v0.3.2 https://github.com/vllm-project/vllm.git

- Build the Docker container from the Dockerfile.rocm file inside the cloned vLLM repository.

        cd vllm
        sudo docker build -f Dockerfile.rocm -t vllm-rocm:latest .

- Use the command below to start the vLLM ROCm docker container and open the container shell.

        sudo docker run -it \
            --name vllm \
            --network=host \
            --device=/dev/kfd \
            --device=/dev/dri \
            --shm-size 16G \
            --group-add=video \
            --workdir=/ \
            vllm-rocm:latest bash

- Request access to the Llama 2 70B Chat Model from Meta and Hugging Face. Once the request is approved, log in to the Hugging Face CLI and enter your Hugging Face access token when prompted:

        huggingface-cli login

Part 1.0: Let’s start by showcasing how you can run the Llama 2 70B Chat Model on one AMD Instinct MI300X Accelerator on the PowerEdge XE9680 server. Previously, this task required two cutting-edge GPUs.

| Deploying Llama 2 70B Chat Model with vLLM 0.3.2 on a single AMD Instinct MI300X Accelerator with Dell PowerEdge XE9680 Server.

| Run vLLM Serving with Llama 2 70B Chat Model.

- Start the vLLM server for Llama 2 70B Chat model with FP16 precision loaded on a single AMD Instinct MI300X Accelerator.

        python3 -m vllm.entrypoints.openai.api_server --model meta-llama/Llama-2-70b-chat-hf --dtype float16 --tensor-parallel-size 1

- Execute the following curl request to verify that vLLM is successfully serving the model at the chat completion endpoint.

        curl http://localhost:8000/v1/chat/completions \
            -H "Content-Type: application/json" \
            -d '{
                "model": "meta-llama/Llama-2-70b-chat-hf",
                "max_tokens": 256,
                "temperature": 1.0,
                "messages": [
                    {"role": "system", "content": "You are a helpful assistant."},
                    {"role": "user", "content": "Describe AMD ROCm in 180 words."}
                ]
            }'

The response should look as follows.

    {"id":"cmpl-42f932f6081e45fa8ce7a7212cb19adb","object":"chat.completion","created":1150766,"model":"meta-llama/Llama-2-70b-chat-hf","choices":[{"index":0,"message":{"role":"assistant","content":" AMD ROCm (Radeon Open Compute MTV) is an open-source software platform developed by AMD for high-performance computing and deep learning applications. It allows developers to tap into the massive parallel processing power of AMD Radeon GPUs, providing faster performance and more efficient use of computational resources. ROCm supports a variety of popular deep learning frameworks, including TensorFlow, PyTorch, and Caffe, and is designed to work seamlessly with AMD's GPU-accelerated hardware. ROCm offers features such as low-level hardware control, GPU Virtualization, and support for multi-GPU configurations, making it an ideal choice for demanding workloads like artificial intelligence, scientific simulations, and data analysis. With ROCm, developers can take full advantage of AMD's GPU capabilities and achieve faster time-to-market and better performance for their applications."},"finish_reason":"stop"}],"usage":{"prompt_tokens":42,"total_tokens":237,"completion_tokens":195}}
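The same request can be issued from Python using only the standard library. This is a minimal sketch that mirrors the curl call above; the helper names `build_chat_payload` and `chat` are illustrative, and the base URL assumes the server is listening on localhost port 8000 as in the curl example.

```python
import json
import urllib.request


def build_chat_payload(model, user_prompt,
                       system_prompt="You are a helpful assistant.",
                       max_tokens=256, temperature=1.0):
    """Build the JSON body for the /v1/chat/completions endpoint."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }


def chat(base_url, payload, timeout=300):
    """POST the payload and return the assistant's reply text."""
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

For example, `chat("http://localhost:8000", build_chat_payload("meta-llama/Llama-2-70b-chat-hf", "Describe AMD ROCm in 180 words."))` would return the content field of a response shaped like the one shown above.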

Part 1.1: Now that we have deployed the Llama 2 70B Chat Model on one AMD Instinct MI300X Accelerator on the Dell PowerEdge XE9680 server, let’s create a chatbot.

| Running Gradio Chatbot with Llama 2 70B Chat Model

This Gradio chatbot sends the user query received through the user interface to the Llama 2 70B Chat Model served by vLLM. Because the vLLM server is compatible with the OpenAI Chat API, the request is sent in the OpenAI Chat API format. The model generates a response based on the request, which is sent back to the client and displayed in the Gradio chatbot user interface.
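To make the request format concrete, the sketch below converts a chat history into the message list that an OpenAI-compatible chat endpoint expects. It is an illustration, not the code of the vLLM example script: `history_to_messages` is a hypothetical helper, and it assumes the history arrives as a list of (user, assistant) pairs.

```python
def history_to_messages(message, history,
                        system_prompt="You are a helpful assistant."):
    """Flatten (user, assistant) turns plus the new message into chat messages."""
    messages = [{"role": "system", "content": system_prompt}]
    for user_turn, assistant_turn in history:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    # The newest user query always goes last.
    messages.append({"role": "user", "content": message})
    return messages
```

Sending the full history with every request is what lets the model answer follow-up questions in context, at the cost of a longer prompt on each turn.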

| Deploying Gradio Chatbot

- If not already done, follow the instructions in the Setup Steps section to install the AMD ROCm driver, libraries, and tools, clone the vLLM repository, build and start the vLLM ROCm Docker container, and request access to the Llama 2 Models from Meta.

- Install the prerequisites for running the chatbot.

        pip3 install -U pip
        pip3 install openai==1.13.3 gradio==4.20.1

- Log in to the Hugging Face CLI and enter your HuggingFace access token when prompted:

        huggingface-cli login

- Start the vLLM server for Llama 2 70B Chat model with data type FP16 on one AMD Instinct MI300X Accelerator.

        python3 -m vllm.entrypoints.openai.api_server --model meta-llama/Llama-2-70b-chat-hf --dtype float16

- Run the gradio_openai_chatbot_webserver.py from the /app/vllm/examples directory within the container with the default configurations.

        cd /app/vllm/examples
        python3 gradio_openai_chatbot_webserver.py --model meta-llama/Llama-2-70b-chat-hf

The Gradio chatbot runs on port 8001 and can be accessed at http://localhost:8001. The query passed to the chatbot is “How does AMD ROCm contribute to enhancing the performance and efficiency of enterprise AI workflows?” The output conversation with the chatbot is shown below:

- To observe the GPU utilization, use the rocm-smi command as shown below.

- Use the command below to access various vLLM serving metrics through the /metrics endpoint.

        curl http://127.0.0.1:8000/metrics

The output should look as follows.

    # HELP exceptions_total_counter Total number of requested which generated an exception
    # TYPE exceptions_total_counter counter
    # HELP requests_total_counter Total number of requests received
    # TYPE requests_total_counter counter
    requests_total_counter{method="POST",path="/v1/chat/completions"} 1
    # HELP responses_total_counter Total number of responses sent
    # TYPE responses_total_counter counter
    responses_total_counter{method="POST",path="/v1/chat/completions"} 1
    # HELP status_codes_counter Total number of response status codes
    # TYPE status_codes_counter counter
    status_codes_counter{method="POST",path="/v1/chat/completions",status_code="200"} 1
    # HELP vllm:avg_generation_throughput_toks_per_s Average generation throughput in tokens/s.
    # TYPE vllm:avg_generation_throughput_toks_per_s gauge
    vllm:avg_generation_throughput_toks_per_s{model_name="meta-llama/Llama-2-70b-chat-hf"} 4.222076684555402
    # HELP vllm:avg_prompt_throughput_toks_per_s Average prefill throughput in tokens/s.
    # TYPE vllm:avg_prompt_throughput_toks_per_s gauge
    vllm:avg_prompt_throughput_toks_per_s{model_name="meta-llama/Llama-2-70b-chat-hf"} 0.0
    ...
    # HELP vllm:prompt_tokens_total Number of prefill tokens processed.
    # TYPE vllm:prompt_tokens_total counter
    vllm:prompt_tokens_total{model_name="meta-llama/Llama-2-70b-chat-hf"} 44
    ...
    vllm:time_per_output_token_seconds_count{model_name="meta-llama/Llama-2-70b-chat-hf"} 136.0
    vllm:time_per_output_token_seconds_sum{model_name="meta-llama/Llama-2-70b-chat-hf"} 32.18783768080175
    ...
    vllm:time_to_first_token_seconds_count{model_name="meta-llama/Llama-2-70b-chat-hf"} 1.0
    vllm:time_to_first_token_seconds_sum{model_name="meta-llama/Llama-2-70b-chat-hf"} 0.2660619909875095
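The `_sum` and `_count` pairs in this output can be turned into averages. In the sample above, 32.19 seconds over 136 output tokens works out to about 0.24 seconds per token, which is consistent with the reported generation throughput of roughly 4.2 tokens/s. The sketch below shows one way to compute this from the raw text; the helper names are hypothetical, and the parser is deliberately simple: it drops labels and keeps the last value seen for each metric name, which is adequate only when a single model is being served.

```python
def parse_metrics(text):
    """Map metric name -> float value, ignoring labels and comment lines."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name_and_labels, _, raw_value = line.rpartition(" ")
        name = name_and_labels.split("{", 1)[0]
        try:
            values[name] = float(raw_value)
        except ValueError:
            continue  # skips non-metric lines such as "..." elisions
    return values


def mean_seconds(values, prefix):
    """Average of a sum/count metric pair, e.g. time per output token."""
    return values[f"{prefix}_sum"] / values[f"{prefix}_count"]
```

Calling `mean_seconds(parse_metrics(text), "vllm:time_per_output_token_seconds")` on the output of the /metrics endpoint gives the average time per output token; the same call with `"vllm:time_to_first_token_seconds"` gives the average time to first token.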

Part 2: Now that we have deployed the Llama 2 70B Chat Model on a single GPU, let’s take full advantage of the Dell PowerEdge XE9680 server and deploy eight concurrent instances of the Llama 2 70B Chat Model with FP16 precision. To handle more simultaneous users and generate higher throughput, the 8x AMD Instinct MI300X Accelerators can be leveraged to deploy 8 vLLM serving deployments in parallel.

| Serving Llama 2 70B Chat model with FP16 precision using vLLM 0.3.2 on 8x AMD Instinct MI300X Accelerators with the PowerEdge XE9680 Server.

To enable the multi-GPU vLLM deployment, we use a Kubernetes-based stack. The stack consists of a Kubernetes Deployment with 8 vLLM serving replicas and a Kubernetes Service to expose all vLLM serving replicas through a single endpoint. The Kubernetes Service uses a round-robin strategy to distribute the requests across the vLLM serving replicas.

| Prerequisites

- Any Kubernetes distribution on the server.

- AMD GPU device plugins for Kubernetes setup on the installed Kubernetes distribution.

- A Kubernetes secret that grants access to the container registry, facilitating Kubernetes deployment.

| Deploying the multi vLLM serving on 8x AMD Instinct MI300X Accelerators.

- If not already done, follow the instructions in the Setup Steps section to install the AMD ROCm driver, libraries, and tools, clone the vLLM repository, build the vLLM ROCm Docker container, and request access to the Llama 2 Models from Meta. Push the built vllm-rocm:latest image to the container registry of your choice.

- Create a deployment yaml file “multi-vllm.yaml” based on the sample provided below.

    # vllm deployment
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: vllm-serving
      namespace: default
      labels:
        app: vllm-serving
    spec:
      selector:
        matchLabels:
          app: vllm-serving
      replicas: 8
      template:
        metadata:
          labels:
            app: vllm-serving
        spec:
          containers:
          - name: vllm
            image: container-registry/vllm-rocm:latest # update the container registry name
            args: [
              "python3", "-m", "vllm.entrypoints.openai.api_server",
              "--model", "meta-llama/Llama-2-70b-chat-hf"
            ]
            env:
            - name: HUGGING_FACE_HUB_TOKEN
              value: "" # add your huggingface token with Llama 2 models access
            resources:
              requests:
                cpu: 15
                memory: 150G
                amd.com/gpu: 1 # each replica is allocated 1 GPU
              limits:
                cpu: 15
                memory: 150G
                amd.com/gpu: 1
          imagePullSecrets:
          - name: cr-login # kubernetes container registry secret
    ---
    # nodeport service with round robin load balancing
    apiVersion: v1
    kind: Service
    metadata:
      name: vllm-serving-service
      namespace: default
    spec:
      selector:
        app: vllm-serving
      type: NodePort
      ports:
      - name: vllm-endpoint
        port: 8000
        targetPort: 8000
        nodePort: 30800 # the external port endpoint to access the serving

- Deploy the multi vLLM serving using the deployment configuration with kubectl. This will deploy eight replicas of vLLM serving using the Llama 2 70B Chat model with FP16 precision.

        kubectl apply -f multi-vllm.yaml

- Execute the following curl request to verify that the model is being successfully served at the chat completion endpoint on port 30800.

        curl http://localhost:30800/v1/chat/completions \
            -H "Content-Type: application/json" \
            -d '{
                "model": "meta-llama/Llama-2-70b-chat-hf",
                "max_tokens": 256,
                "temperature": 1.0,
                "messages": [
                    {"role": "system", "content": "You are a helpful assistant."},
                    {"role": "user", "content": "Describe AMD ROCm in 180 words."}
                ]
            }'

The response should look as follows:

    {"id":"cmpl-42f932f6081e45fa8ce7dnjmcf769ab","object":"chat.completion","created":1150766,"model":"meta-llama/Llama-2-70b-chat-hf","choices":[{"index":0,"message":{"role":"assistant","content":" AMD ROCm (Radeon Open Compute MTV) is an open-source software platform developed by AMD for high-performance computing and deep learning applications. It allows developers to tap into the massive parallel processing power of AMD Radeon GPUs, providing faster performance and more efficient use of computational resources. ROCm supports a variety of popular deep learning frameworks, including TensorFlow, PyTorch, and Caffe, and is designed to work seamlessly with AMD's GPU-accelerated hardware. ROCm offers features such as low-level hardware control, GPU Virtualization, and support for multi-GPU configurations, making it an ideal choice for demanding workloads like artificial intelligence, scientific simulations, and data analysis. With ROCm, developers can take full advantage of AMD's GPU capabilities and achieve faster time-to-market and better performance for their applications."},"finish_reason":"stop"}],"usage":{"prompt_tokens":42,"total_tokens":237,"completion_tokens":195}}

- We used load testing tools similar to Apache Bench to simulate concurrent user requests to the serving endpoint. The screenshot below showcases the output of rocm-smi while Apache Bench is running 2048 concurrent requests.
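For readers without a load testing tool at hand, concurrent requests can be approximated with a thread pool. The sketch below is a simple stand-in, not the tool used for the test described above: `run_load_test` is a hypothetical helper, and `send_request` is any callable you supply that posts one chat completion request to the service endpoint on port 30800 and raises on failure.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def run_load_test(send_request, total_requests, concurrency):
    """Call send_request(i) total_requests times across `concurrency` threads.

    Returns (successes, failures, elapsed_seconds).
    """
    def attempt(index):
        try:
            send_request(index)
            return True
        except Exception:
            return False

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        outcomes = list(pool.map(attempt, range(total_requests)))
    elapsed = time.perf_counter() - start

    successes = sum(outcomes)
    return successes, total_requests - successes, elapsed
```

While such a test runs, rocm-smi in a second terminal should show whether load is spread across all eight accelerators.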

Part 3: Now that we have deployed the Llama 2 70B Chat model on both one GPU and eight concurrent GPUs, let's try fine-tuning Llama 2 70B Chat with Hugging Face Accelerate.

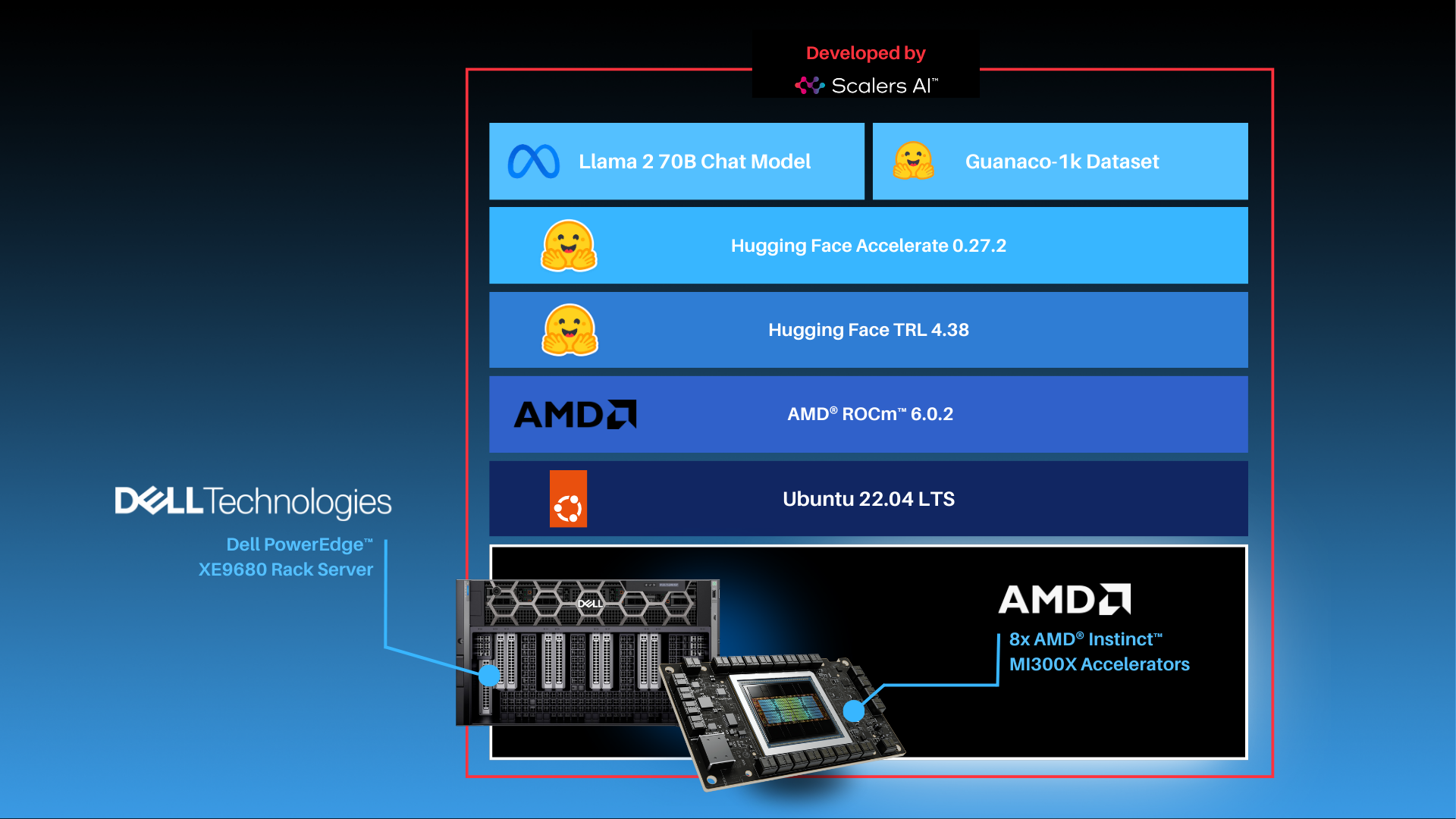

| Fine-tuning

As shown above, the fine-tuning software stack begins with the AMD ROCm PyTorch image as the base, offering a tailored PyTorch library for optimal fine-tuning. The Hugging Face Transformers library, alongside Hugging Face Accelerate, provides multi-GPU fine-tuning capabilities. The Llama 2 70B Chat model will be fine-tuned with FP16 precision, using the Guanaco-1k dataset from Hugging Face on eight AMD Instinct MI300X Accelerators.

In this scenario, we will perform full parameter fine-tuning of the Llama 2 70B Chat Model. While you can also implement fine-tuning using optimized techniques such as Low-Rank Adaptation of Large Language Models (LoRA) on accelerators with smaller memory footprints, performance tradeoffs exist on specific complex objectives. These nuances are addressed by full parameter fine-tuning methods, which generally require accelerators that support significant memory requirements.

| Fine-tuning Llama 2 70B Chat on 8x AMD Instinct MI300X Accelerators.

Fine-tune the Llama 2 70B Chat Model with FP16 precision for question-and-answer tasks using the mlabonne/guanaco-llama2-1k dataset on the 8x AMD Instinct MI300X Accelerators.

- If not already done, install the AMD ROCm driver, libraries, and tools and request access to the Llama 2 Models from Meta following the instructions in the Setup Steps section.

- Start the fine-tuning docker container with the AMD ROCm PyTorch base image.

The command below opens a shell within the Docker container.

        sudo docker run -it \
            --name fine-tuning \
            --network=host \
            --device=/dev/kfd \
            --device=/dev/dri \
            --shm-size 16G \
            --group-add=video \
            --workdir=/ \
            rocm/pytorch:rocm6.0.2_ubuntu22.04_py3.10_pytorch_2.1.2 bash

- Install the necessary Python prerequisites.

        pip3 install -U pip
        pip3 install transformers==4.38.2 trl==0.7.11 datasets==2.18.0

- Log in to Hugging Face CLI and enter your HuggingFace access token when prompted.

        huggingface-cli login

- Import the required Python packages.

        from datasets import load_dataset
        from transformers import (
            AutoModelForCausalLM,
            AutoTokenizer,
            TrainingArguments,
            pipeline
        )
        from trl import SFTTrainer

- Load the Llama 2 70B Chat Model and the mlabonne/guanaco-llama2-1k dataset from Hugging Face.

        # load the model and tokenizer
        base_model_name = "meta-llama/Llama-2-70b-chat-hf"

        # tokenizer parameters
        llama_tokenizer = AutoTokenizer.from_pretrained(base_model_name, trust_remote_code=True)
        llama_tokenizer.pad_token = llama_tokenizer.eos_token
        llama_tokenizer.padding_side = "right"

        # load the base model
        base_model = AutoModelForCausalLM.from_pretrained(
            base_model_name,
            device_map="auto",
        )
        base_model.config.use_cache = False
        base_model.config.pretraining_tp = 1

        # load the dataset from huggingface
        dataset_name = "mlabonne/guanaco-llama2-1k"
        training_data = load_dataset(dataset_name, split="train")

- Define the fine-tuning configuration and start fine-tuning for 1 epoch. The fine-tuned model will be saved in the finetuned_llama2_70b directory.

        # fine-tuning parameters
        train_params = TrainingArguments(
            output_dir="./runs",
            num_train_epochs=1,  # fine-tune for 1 epoch
            per_device_train_batch_size=8  # per-GPU batch size
        )

        # define the trainer
        fine_tuning = SFTTrainer(
            model=base_model,
            train_dataset=training_data,
            dataset_text_field="text",
            tokenizer=llama_tokenizer,
            args=train_params,
            max_seq_length=512
        )

        # start the fine-tuning run
        fine_tuning.train()

        # save the fine-tuned model
        fine_tuning.model.save_pretrained("finetuned_llama2_70b")
        print("Fine-tuning completed")

- Use the `rocm-smi` command to observe GPU utilization while fine-tuning.

| Summary

Dell PowerEdge XE9680 Server equipped with AMD Instinct MI300X Accelerators offers enterprises industry leading infrastructure to create custom AI solutions using their proprietary data. In this blog, we showcased how enterprises deploying applied AI can take advantage of this solution in three critical use cases:

- Deploying the entire 70B parameter model on a single AMD Instinct MI300X Accelerator in Dell PowerEdge XE9680 Server

- Deploying eight concurrent instances of the model, each running on one of eight AMD Instinct MI300X accelerators on the Dell PowerEdge XE9680 Server

- Fine-tuning the 70B parameter model with FP16 precision on one PowerEdge XE9680 with all eight AMD Instinct MI300X accelerators

Scalers AI is excited to see continued advancements from Dell, AMD, and Hugging Face on hardware and software optimizations in the future.

| Additional Criteria for IT Decision Makers

| What is fine-tuning, and why is it critical for enterprises?

Fine-tuning enables enterprises to develop custom models with their proprietary data by leveraging the knowledge already encoded in pre-trained models. As a result, fine-tuning requires less labeled data and time for training compared to training a model from scratch, making it a more efficient approach for achieving competitive performance, particularly in the quantity of computational resources used and training time.

| Why is memory footprint critical for LLMs?

Large language models often have enormous numbers of parameters, leading to significant memory requirements. When working with LLMs, it is essential to ensure that the GPU has sufficient memory to store these parameters so that the model can run efficiently. In addition to model parameters, large language models require substantial memory to store input data, intermediate activations, and gradients during training or inference, and insufficient memory can lead to data loss or performance degradation.

| Why is the Dell PowerEdge XE9680 Server with AMD Instinct MI300X Accelerators well-suited for LLMs?

Designed especially for AI tasks, the Dell PowerEdge XE9680 Server is a robust data-processing server equipped with eight AMD Instinct MI300X accelerators, making it well-suited for AI workloads, especially those involving training, fine-tuning, and conducting inference with LLMs. The AMD Instinct MI300X Accelerator is a high-performance AI accelerator intended to operate in groups of eight within AMD’s generative AI platform.

Running inference, specifically with a Large Language Model (LLM), requires approximately 1.2 times the memory occupied by the model on a GPU. In FP16 precision, the model memory requirement can be estimated as 2 bytes per parameter multiplied by the number of model parameters. For example, the Llama 2 70B model with FP16 precision requires a minimum of 168 GB of GPU memory to run inference.

With 192 GB of GPU memory, a single AMD Instinct MI300X Accelerator can host an entire Llama 2 70B parameter model for inference. It is optimized for LLMs and can deliver up to 10.4 Petaflops of performance (BF16/FP16) with 1.5TB of total HBM3 memory for a group of eight accelerators.
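The sizing rule above can be sketched as a short calculation. The 2 bytes per parameter, the 1.2x factor, the 70B parameter count, and the 192 GB capacity come from the text; the function name and the use of decimal gigabytes are assumptions made for illustration.

```python
def inference_memory_gb(num_params: float,
                        bytes_per_param: int = 2,
                        overhead: float = 1.2) -> float:
    """Estimate GPU memory for inference in decimal GB.

    bytes_per_param=2 corresponds to FP16/BF16 weights; overhead=1.2 is the
    rule-of-thumb multiplier quoted in the text.
    """
    return num_params * bytes_per_param * overhead / 1e9


MI300X_MEMORY_GB = 192          # per-accelerator memory, from the text
ACCELERATORS_PER_SERVER = 8     # per PowerEdge XE9680, from the text

llama2_70b_gb = inference_memory_gb(70e9)
fits_on_one_accelerator = llama2_70b_gb <= MI300X_MEMORY_GB
total_hbm_gb = ACCELERATORS_PER_SERVER * MI300X_MEMORY_GB

print(f"Estimated inference memory: {llama2_70b_gb:.0f} GB")
print(f"Fits on a single MI300X: {fits_on_one_accelerator}")
print(f"Total memory across eight accelerators: {total_hbm_gb} GB")
```

The estimate of 168 GB sits below the 192 GB available on one accelerator, and eight accelerators together provide 1,536 GB, which matches the roughly 1.5 TB quoted above.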

Copyright © 2024 Scalers AI, Inc. All Rights Reserved. This project was commissioned by Dell Technologies. Dell and other trademarks are trademarks of Dell Inc. or its subsidiaries. AMD, Instinct™, ROCm™, and combinations thereof are trademarks of Advanced Micro Devices, Inc. All other product names are the trademarks of their respective owners.

***DISCLAIMER - Performance varies by hardware and software configurations, including testing conditions, system settings, application complexity, the quantity of data, batch sizes, software versions, libraries used, and other factors. The results of performance testing provided are intended for informational purposes only and should not be considered as a guarantee of actual performance.

Lab Insight: Dell AI PoC for Transportation & Logistics

Wed, 20 Mar 2024 21:23:12 -0000


Introduction

As part of Dell’s ongoing efforts to help make industry-leading AI workflows available to its clients, this paper outlines a sample AI solution for the transportation and logistics market. The reference solution outlined in this paper specifically targets challenges in the maritime industry by creating an AI-powered cargo monitoring PoC built with Dell™ hardware.

AI as a technology is currently in a rapid state of advancement. While the area of AI has been around for decades, recent breakthroughs in generative AI and large language models (LLMs) have led to significant interest across almost all industry verticals, including transportation and logistics. Futurum Intelligence projects a 24% growth of AI in the transportation industry in 2024 and a 30% growth for logistics.

The advancements in AI now open significant possibilities for new value-adding applications and optimizations; however, different industries will require different hardware and software capabilities to overcome industry-specific challenges. When considering AI applications for transportation and logistics, a key challenge is operating at the edge. AI-powered applications for transportation will typically be heavily driven by on-board sensor data with locally deployed hardware. This presents a specific challenge, requiring hardware that is compact enough for edge deployments, powerful enough to run AI workloads, and robust enough to endure varying edge conditions.

This paper outlines a PoC for an AI-based transportation and logistics solution that is specifically targeted at maritime use cases. Maritime environments represent some of the most rigorous edge environments, while also presenting an industry with significant opportunity for AI-powered innovation. The PoC outlined in this paper addresses the unique challenges of maritime-focused AI solutions with hardware from Dell and Broadcom™.

The PoC detailed in this paper serves as a reference solution that can be leveraged for additional maritime, transportation, or logistics applications. The overall applicability of AI in these markets is much broader than the single maritime cargo monitoring solution; however, the PoC demonstrates the ability to quickly deploy valuable edge-based solutions for transportation and logistics using readily available edge hardware.

Importance for the Transportation and Logistics Market

Transportation and logistics cover a broad industry with opportunity for AI technology to create a significant impact. While the overarching segment is widespread, including public transportation, cargo shipping, and end-to-end supply chain management, key to any transportation or logistics process is optimization. These processes are dependent on a high number of specific details and variables such as specific routes, number and types of goods transported, compliance regulations, and weather conditions. By optimizing for the many variables that may arise in a logistical process, organizations can be more efficient, save money, and avoid risk.

In order to create these optimal processes, however, the data surrounding the many variables involved needs to be captured. Further, this data needs to be analyzed, understood, and acted on. The large quantity of data required and the speed at which it must be processed in order to make impactful decisions to complex logistical challenges often surpasses what a human can achieve manually.

By leveraging AI technology, impactful decisions about transportation and logistics processes can be reached more quickly and with greater accuracy. Cameras and other sensors can capture relevant data that is then processed and understood by an AI model. AI can quickly process vast amounts of data and lead to optimized logistics conclusions that would otherwise be too time-consuming, costly, or complex for organizations to make.

The potential applications for AI in transportation are vast and can be applied to various means of transportation including shipping, rail, air, and automotive, as well as associated logistical processes such as warehouses and shipping yards. One possible example is AI-optimized route planning, which could pertain to either transportation of cargo or public transportation and could optimize for several factors including cost, weather conditions, traffic, or environmental impact. Additional applications could include automated fleet management, AI-powered predictive vehicle maintenance, and optimized pricing. As AI technology improves, many transportation services may be additionally optimized with the use of autonomous vehicles.

By adopting such AI-powered applications, organizations can implement optimizations that may not otherwise be achievable. While new AI applications show promise of significant value, many organizations may find adopting the technology a challenge due to unfamiliarity with the new and rapidly advancing technology. Deploying complex applications such as AI in transportation environments can pose an additional challenge due to the requirements of operating in edge environments.

The following PoC solution outlines an example of a transportation focused AI application that can offer significant value to maritime shipping by providing AI-powered cargo monitoring using Dell hardware at the edge.

Solution Overview

To demonstrate an AI-powered application focused on transportation and logistics, Scalers AI™, in partnership with Dell, Broadcom, and The Futurum Group, implemented a proof-of-concept for a maritime cargo monitoring solution. The solution was designed to capture sensor data from cargo ships as well as image data from on-board cameras. Cargo containers can be monitored for temperature and humidity to ensure optimal conditions are maintained for the shipped cargo. In addition, cameras can be used to monitor workers in the cargo area to ensure worker safety and prevent injury. The captured data is then utilized by an LLM to create an AI-generated compliance report at the end of the ship’s voyage.

This proof-of-concept addresses several problems that can be encountered in maritime shipping. Refrigerated cargo, known as reefer, is utilized to ship perishable items and pharmaceuticals that must be kept at specific temperatures. Without proper monitoring to ensure optimal temperatures, reefer may experience swings in temperature, resulting in spoiled products and ultimately financial loss. Predictive forecasting of the power requirements for refrigerated cargo can provide additional cost and environmental savings by providing greater power usage insights.

Similarly, dry cargo can become spoiled or damaged when exposed to excessive moisture. Moisture can be introduced in the form of condensation, known as cargo sweat, due to changes in climate and humidity during the ship’s journey. By monitoring the temperature and humidity of the cargo, alerts can be raised signaling the possibility of cargo sweat, allowing ventilation adjustments to be made that can prevent moisture-related damage.

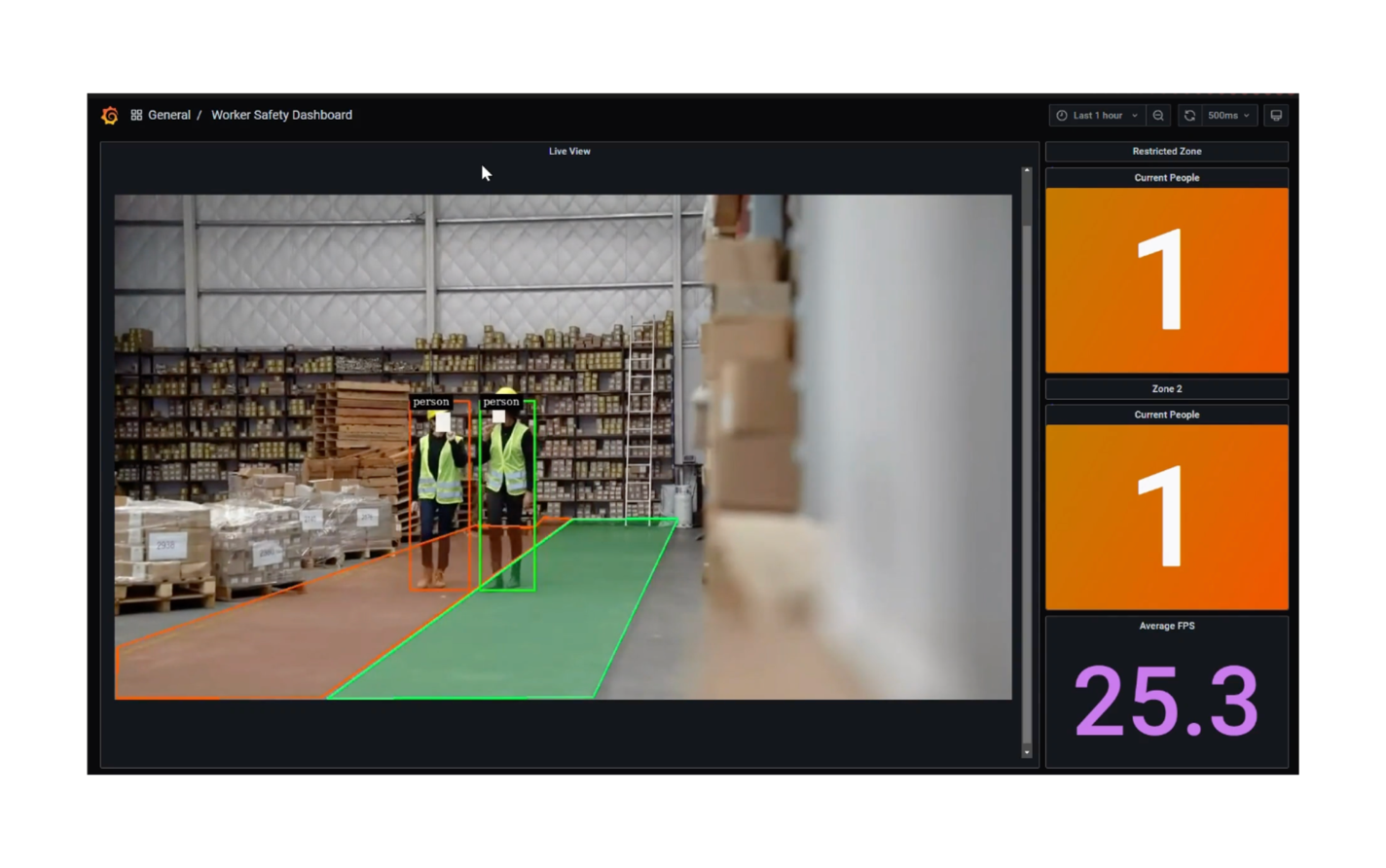

A third issue addressed by the maritime cargo monitoring PoC is that of worker safety. The possibility of shifting cargo containers can lead to dangerous situations and potential injuries for those working in container storage areas. By using video surveillance of workers in cargo areas, these potential injuries can be avoided.

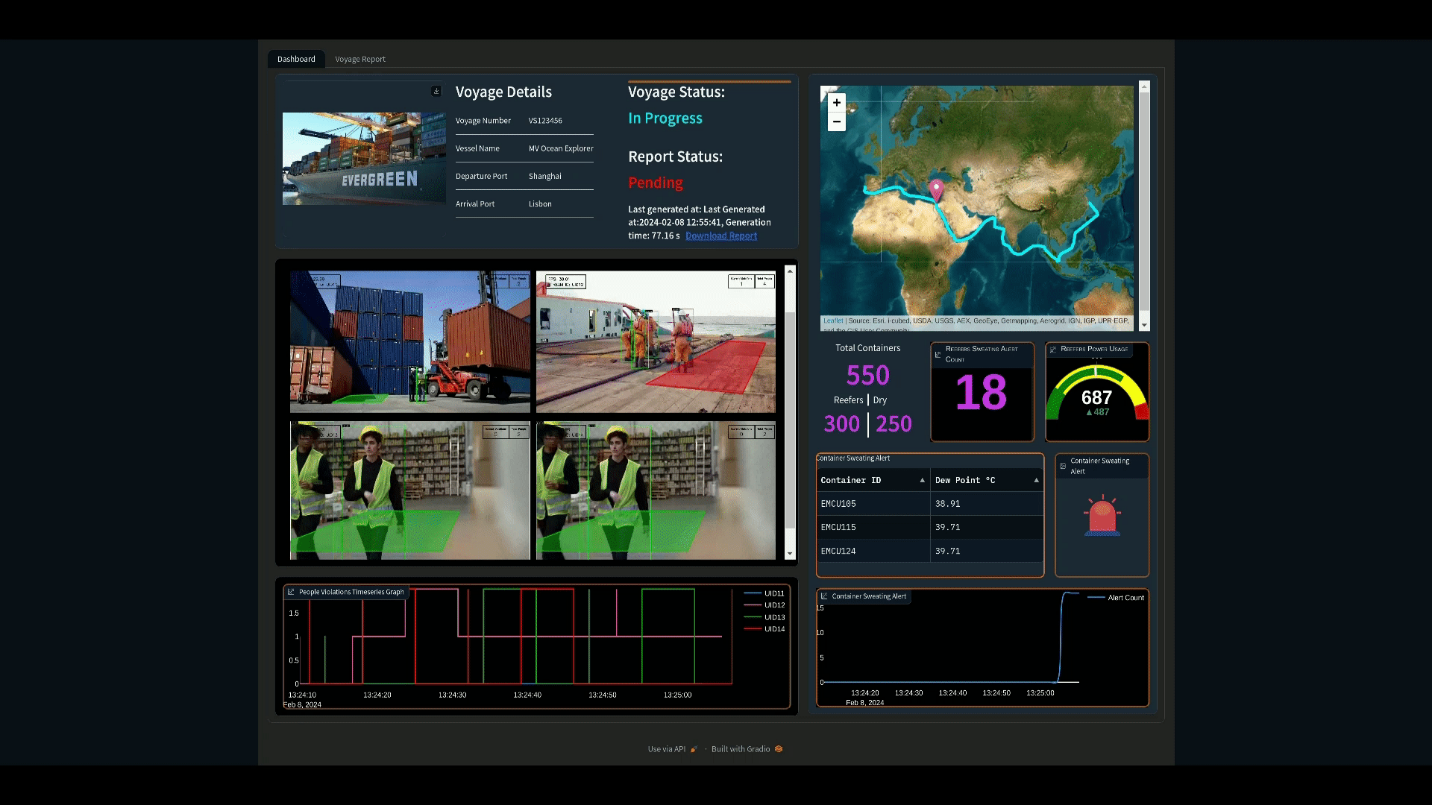

The PoC provides monitoring of these challenges with an additional visualization dashboard that displays information such as number of cargo containers, forecasted energy consumption, container temperature and humidity, and a video feed of workers. The dashboard additionally raises alerts as issues arise in any of these areas. This information is further compiled into an end-of-voyage report for compliance and logging purposes, automatically generated with an LLM.

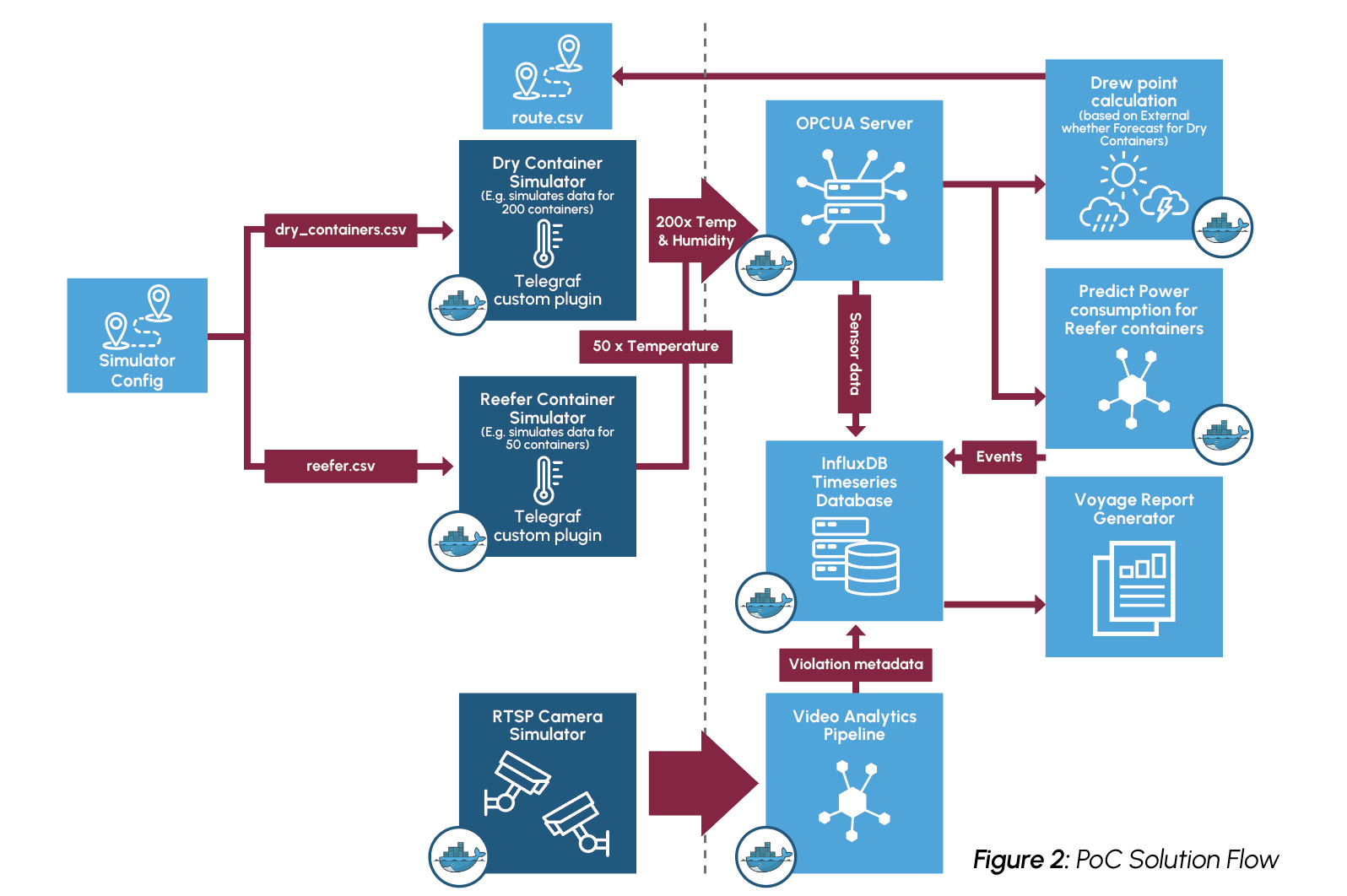

To achieve the PoC solution, simulated sensor data is generated for both reefers and dry containers, approximating the conditions undergone during a real voyage. The sensor data is written to an OPC UA server, which then supplies data to a container sweat analytics module and a power consumption predictor. For dry containers, the temperature and humidity data is utilized alongside the forecasted weather of the route to create dew point calculations and monitor potential container sweat. Sensor data recording the temperature of reefer containers is monitored to ensure accurate temperatures are maintained, and a decision tree regressor model is leveraged to predict power consumption for the next hour.
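The dew point check described above can be illustrated with a minimal sketch. It uses the Magnus approximation with one commonly used set of constants; the PoC's actual formula, the function names, the use of a container surface temperature, and the 1 °C safety margin are all assumptions made for illustration, not details taken from the PoC.

```python
import math

# Magnus approximation constants (one commonly used set, valid over water)
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # degrees Celsius


def dew_point_c(air_temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate dew point in degrees Celsius from temperature and relative humidity."""
    gamma = (math.log(rel_humidity_pct / 100.0)
             + (MAGNUS_A * air_temp_c) / (MAGNUS_B + air_temp_c))
    return (MAGNUS_B * gamma) / (MAGNUS_A - gamma)


def sweat_risk(air_temp_c: float, rel_humidity_pct: float,
               surface_temp_c: float, margin_c: float = 1.0) -> bool:
    """Flag possible condensation when a surface is at or near the dew point.

    surface_temp_c is a hypothetical input, for example a container wall
    temperature estimated from the forecasted weather along the route.
    margin_c is an illustrative safety margin.
    """
    return surface_temp_c <= dew_point_c(air_temp_c, rel_humidity_pct) + margin_c


# Warm, humid air inside the container and a wall cooled by colder outside air
print(sweat_risk(air_temp_c=25.0, rel_humidity_pct=60.0, surface_temp_c=15.0))
# The same air with a warmer wall
print(sweat_risk(air_temp_c=25.0, rel_humidity_pct=60.0, surface_temp_c=20.0))
```

A threshold rule like this is easy to evaluate per sensor reading, which suits a streaming analytics module; a production system would likely tune the margin per cargo type.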

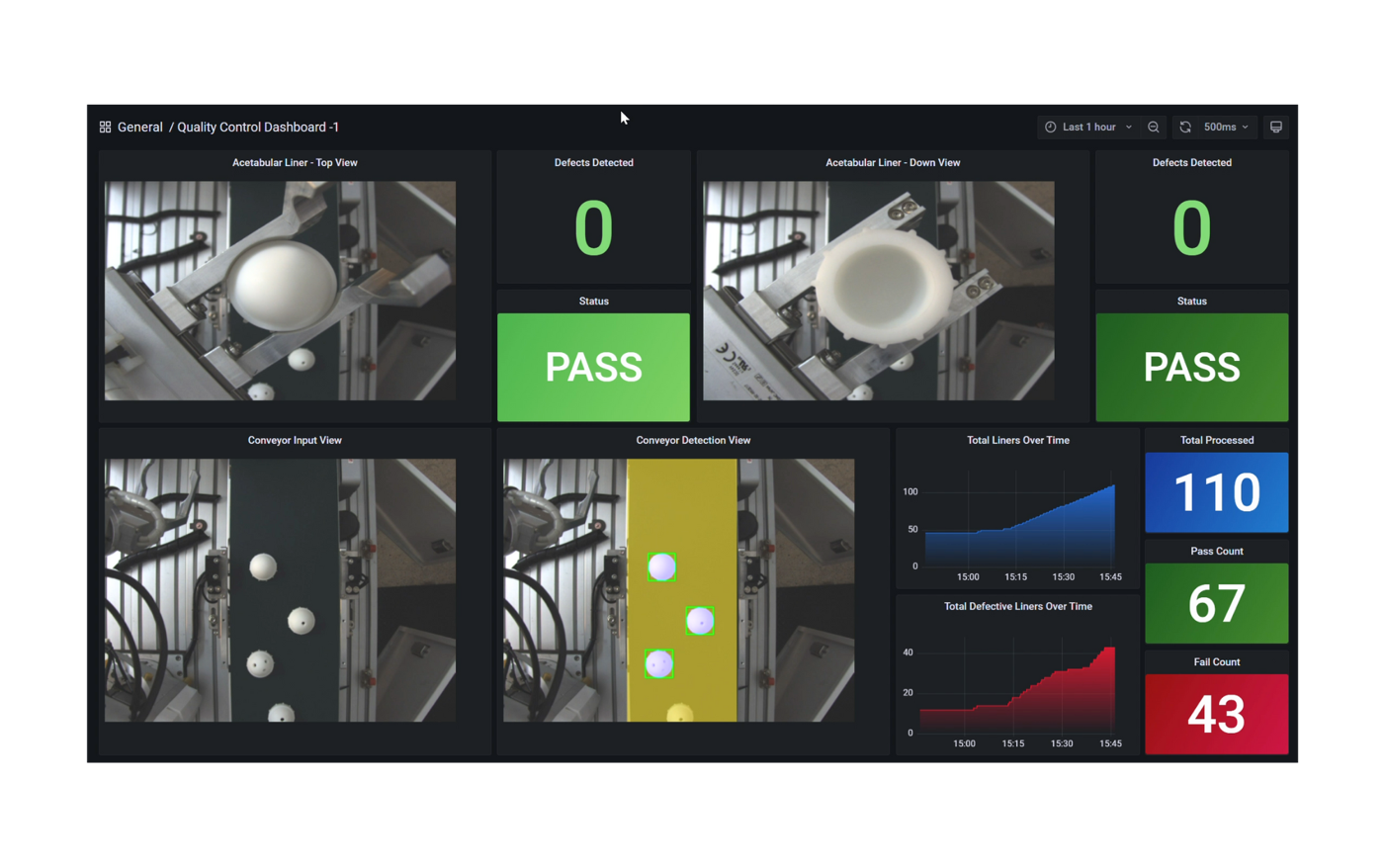

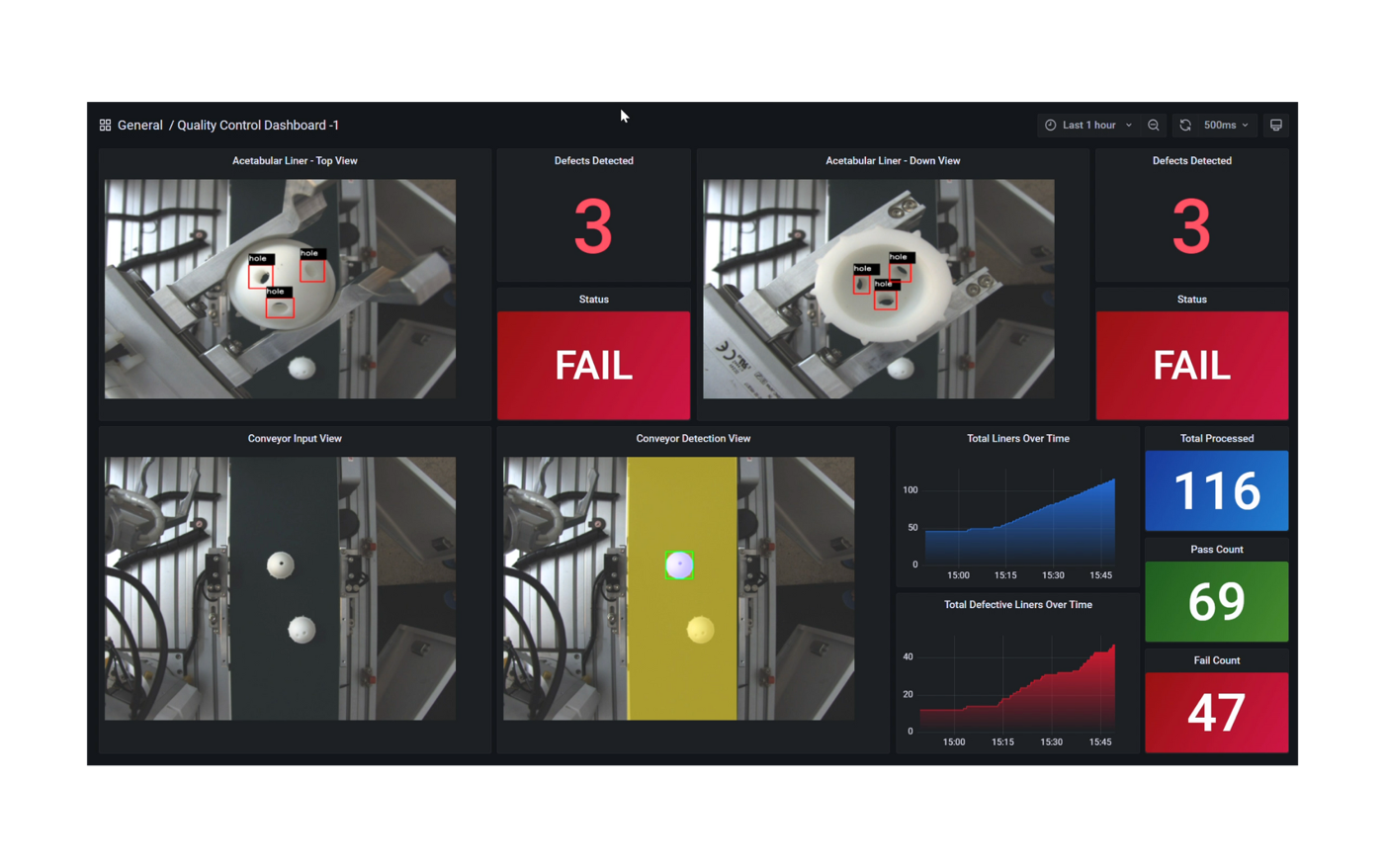

Figure 1: Visualization Dashboard

For monitoring worker safety, RTSP video data is captured into a video analytics pipeline built on NVIDIA™ DeepStream. Streaming data is decoded and then inferenced using the YOLOv8s model to detect workers entering dangerous, restricted zones. The restricted zones are configured as x,y coordinate pairs stored as JSON objects. Uncompressed video is then published to the visualization service using the Zero Overhead Network Protocol (Zenoh).
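As a rough illustration of how a zone defined by x,y coordinate pairs in JSON could be checked against a detection, the sketch below applies a standard ray-casting point-in-polygon test. The JSON layout, the zone name, the bounding-box format, and the choice of the box's bottom-center as the reference point are hypothetical; the PoC's actual schema and logic are not given in the text.

```python
import json

# Hypothetical zone configuration: each zone is a polygon of [x, y] pixel pairs
ZONES_JSON = """
{"zones": [
    {"name": "crane_bay",
     "points": [[100, 100], [400, 100], [400, 300], [100, 300]]}
]}
"""


def point_in_polygon(x: float, y: float, polygon: list) -> bool:
    """Ray casting: count how many polygon edges a rightward ray from (x, y) crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y coordinate can be crossed
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def violated_zones(bbox: tuple, zones: list) -> list:
    """Return names of zones containing the bottom-center of a detection box.

    bbox is (left, top, width, height) in pixels, an assumed detector output format.
    """
    left, top, width, height = bbox
    foot_x, foot_y = left + width / 2, top + height
    return [z["name"] for z in zones
            if point_in_polygon(foot_x, foot_y, z["points"])]


zones = json.loads(ZONES_JSON)["zones"]
print(violated_zones((200, 150, 50, 100), zones))  # worker standing inside the zone
print(violated_zones((500, 150, 50, 100), zones))  # worker outside the zone
```

Using the bottom-center of the box approximates where a person's feet touch the floor, which tends to be more reliable than the box center when the camera views the scene at an angle.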

Monitoring and alerts for all of these challenges are displayed on a visualization dashboard, as can be seen in Figure 1, and are summarized in an end-of-voyage compliance report. The resulting compliance report, which details the information collected on the voyage, is AI-generated using the Zephyr 7B model. Testing of the PoC found that the report could be generated in approximately 46 seconds, dramatically accelerating the reporting process compared to a manual approach.

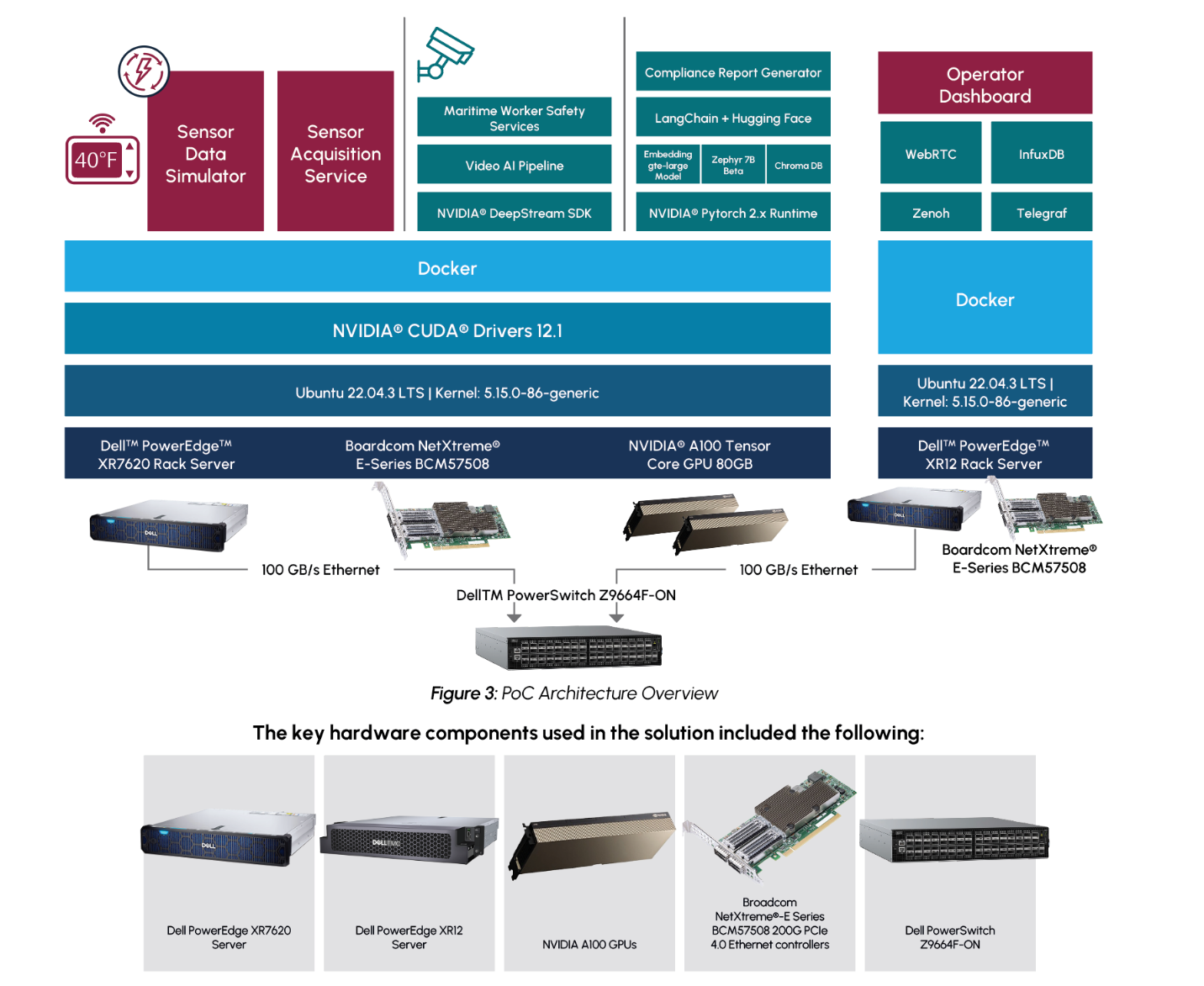

To achieve the PoC solution in line with the constraints of a typical maritime use case, the solution was deployed using Dell PowerEdge servers designed for the edge. The sensor data calculations and predictions, video pipeline, and AI report generation were achieved on a Dell PowerEdge XR7620 server with dual NVIDIA A100 GPUs. A Dell PowerEdge XR12 server was deployed to host the visualization dashboard. The two servers were connected with high bandwidth Broadcom NICs.

An overview of the solution can be seen in Figure 2.

Additional details about the implementation and performance testing of the PoC are available on GitHub, including:

- Configuration information including diagrams and YAML code

- Instructions for doing the performance tests

- Details of performance results

- Source code

- Samples for test process

https://github.com/dell-examples/generative-ai/tree/main/transportation-maritime

Highlights for AI Practitioners

The cargo monitoring PoC demonstrates a solution that can avoid product loss, enhance compliance and logging, and improve worker safety, all by using AI. The creation of these AI processes was done using readily available AI tools. The process of creating valuable, real-world solutions by utilizing such tools should be noted by AI practitioners.

The end-of-voyage compliance report is generated using the Zephyr 7B LLM created by Hugging Face’s H4 team. The Zephyr 7B model, which is a modified version of Mistral 7B, was chosen as it is a publicly available model that is both lightweight and highly accurate. The Zephyr 7B model was created using a process called Distilled Supervised Fine Tuning (DSFT), which allows the model to provide similar performance to much larger models while utilizing far fewer parameters. Zephyr 7B, which is a 7 billion parameter model, has demonstrated performance comparable to 70 billion parameter models. This ability to provide the capabilities of larger models in a smaller, distilled model makes Zephyr 7B an ideal choice for edge-based deployments with limited resources, such as in maritime or other transportation environments.

While Zephyr 7B is a very powerful and accurate LLM, it was trained on a broad data set and is intended for general-purpose usage rather than specific tasks such as generating a maritime voyage compliance report. In order to generate a report that is accurate to the maritime industry and the specific voyage, more context must be supplied to the model. This was achieved using a process called Retrieval Augmented Generation (RAG). By utilizing RAG, the Zephyr 7B model is able to incorporate the voyage-specific information to generate an accurate report that details the recorded container and worker safety alerts. This is notable for AI practitioners as it demonstrates the ability to use a broad, pre-trained LLM, which is freely available, to achieve an industry-specific task.

To provide the voyage specific context to the LLM generated report, time series data of recorded events, such as container sweating, power measurements, and worker safety violations, is queried from InfluxDB at the end of the voyage. This text data is then embedded using the Hugging Face LangChain API with the gte-large embedding model and stored in a ChromaDB vector database. These vector embeddings are then used in the RAG process to provide the Zephyr 7B model with voyage specific context when generating the report.
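The retrieval step of this RAG flow can be sketched in a few lines. This is a toy illustration only: it swaps the gte-large embedding model for simple word counts and the ChromaDB vector database for an in-memory list, and the event strings, function names, and prompt wording are all hypothetical rather than taken from the PoC.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(task: str, context_docs: list) -> str:
    """Assemble an LLM prompt that carries the retrieved voyage records."""
    context = "\n".join(f"- {d}" for d in context_docs)
    return f"Use only the voyage records below.\n{context}\n\nTask: {task}"


# Hypothetical event records, standing in for rows queried from a time series store
events = [
    "Container D-17 sweat alert: dew point exceeded at 02:10",
    "Reefer R-04 power draw 6.2 kW, temperature within range",
    "Worker safety violation: person detected in restricted zone near bay 3",
]

top = retrieve("worker safety violation in restricted zone", events, k=1)
prompt = build_prompt("Summarize worker safety violations.", top)
print(prompt)
```

The structure is the point here: records are embedded once, the query is embedded at report time, and only the closest records are placed in the prompt so that a general-purpose model answers from voyage data rather than from its training set.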

AI practitioners should also note that AI image detection is utilized to detect workers entering restricted zones. This image detection capability was built using the YOLOv8s object detection model and NVIDIA DeepStream. YOLOv8s is a state-of-the-art, open-source AI model for object detection built by Ultralytics. The model is used to detect workers within a video frame and detect if they enter pre-configured restricted zones. NVIDIA DeepStream is a software development toolkit provided by NVIDIA to build and accelerate AI solutions from streaming video data, and it is optimized for NVIDIA hardware such as the A100 GPUs used in this PoC. It is notable that NVIDIA DeepStream can be utilized for free to build powerful video-based AI applications, such as the worker detection component of the maritime cargo monitoring solution. In this case, the YOLOv8s model and the DeepStream toolkit are utilized to build a solution that has the potential to prevent serious workplace injuries.

Key Highlights for AI Practitioners

- Maritime compliance report generated with Zephyr 7B LLM model

- Retrieval Augmented Generation (RAG) approach used to provide Zephyr 7B with voyage specific information

- YOLOv8s and NVIDIA DeepStream used to create powerful AI worker detection solution using video streaming data

Considerations for IT Operations

The maritime cargo monitoring PoC is notable for IT operations as it demonstrates the ability to deploy a powerful AI driven solution at the edge. For many in IT, AI deployments in any setting may be a challenge, due to overall unfamiliarity with AI and its hardware requirements. Deployments at the edge introduce even further complexity.

Hardware deployed at the edge requires additional considerations, including limited space and exposure to harsh conditions, such as extreme temperature changes. For AI applications deployed at the edge, these requirements must be maintained, while simultaneously providing a system powerful enough to handle such a computationally intensive workload.

For the maritime cargo monitoring PoC, Dell PowerEdge XR7620 and PowerEdge XR12 servers were chosen for their ability to meet both the most demanding edge requirements, as well as the most demanding computational requirements. Both servers are ruggedized and are capable of operating in temperatures ranging from -5°C to 55°C, as well as withstanding dusty or otherwise hazardous environments. They additionally offer a compact design that is capable of fitting into tight environments. This provides servers that are ideal for a demanding edge environment, such as in maritime shipping, which may experience large temperature swings and may have limited space for servers. Meanwhile, the Dell PowerEdge XR7620 is also equipped with NVIDIA GPUs, providing it with the compute power necessary to handle AI workloads.

Dell PowerEdge XR7620

NVIDIA A100 GPUs were chosen as they are well suited for various types of AI workflows. The PoC includes both a video classification component and a large language model component, requiring hardware that is well suited for both workloads. While there are other processors that are more specialized specifically for either video processing or language models, the A100 GPU provides flexibility to perform both well on a single platform.

The use of high bandwidth Broadcom NICs is also a notable component of the PoC solution for IT operations to be aware of. The Broadcom NICs are responsible for providing a high bandwidth Ethernet connection between the cargo and worker monitoring applications and the visualization and alerting dashboard. The use of scalable, high bandwidth NICs is crucial to such a solution that requires transmitting large amounts of sensor and video data, which may include time sensitive information.

Detection of issues with either reefer or dry containers may require quick action to protect the cargo, and quick detection of workers in hazardous environments can prevent serious harm or injury. The use of a high bandwidth Ethernet connection ensures that data can be quickly transmitted and received by the visualization dashboard for operators to respond to alerts as they arise.

Key Highlights for IT Operations

- AI solution deployed on rugged Dell PowerEdge XR7620 and PowerEdge XR12 servers to accommodate edge environment and maintain high computational requirements.

- NVIDIA A100 GPUs provide flexibility to support both video and LLM workloads.

- Broadcom NICs provide high bandwidth connection between monitoring applications and visualization dashboard.

Solution Performance Observations

Key to the performance of the maritime cargo monitoring PoC is its ability to scale to support multiple concurrent video streams for monitoring worker safety. The solution must be able to quickly decode and inference incoming video data to detect workers in restricted areas. The ability for the visualization dashboard to quickly receive this data is additionally critical for actions to be taken on alerts as they are raised. The solution was separated into a distinct inference server, to capture and inference data, and an encode server, to display the visualization service. This architecture allows the solution to scale the services independently as needed for varying requirements of video streams and application logic. The separate services are then connected with high bandwidth Ethernet using Broadcom NetXtreme®-E Series Ethernet controllers. The following performance data demonstrates the ability to scale the solution with an increasing number of data streams. Each test was run for a total of 10 minutes and video streams were scaled evenly across the two NVIDIA A100 GPUs. Additional performance results are available in the appendix.

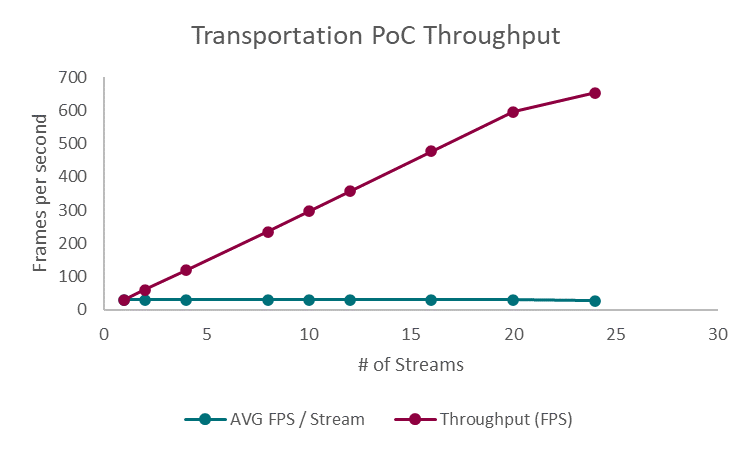

Figure 4: Transportation PoC Throughput

Figure 4 displays the total throughput of frames per second as well as the average throughput as the number of streams increased. The frames per second metric includes video decoding, inference, post-processing, and publishing of an uncompressed stream. The PoC displayed increasing throughput with a maximum of 653.7 frames per second when tested with 24 concurrent streams. Notably, the average frames per second remained steady at approximately 30 frames per second for up to 20 streams, which is considered an industry standard for video processing workloads. When tested with 24 streams, the solution did experience a slight drop, with an average of 27.24 frames per second. Overall, the throughput performance demonstrates the ability of the Dell PowerEdge Server and the NVIDIA A100 GPUs to successfully handle a demanding video-based AI workload with a significant number of concurrent streams.

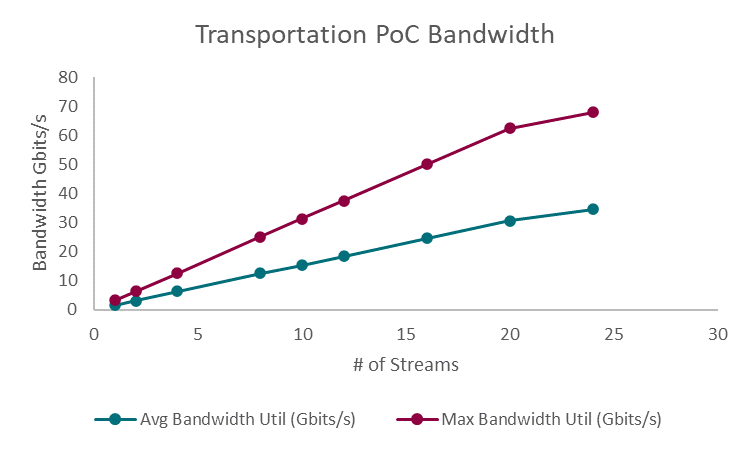

Figure 5: Transportation PoC Bandwidth Utilization

Figure 5 displays the solution’s bandwidth utilization as the number of streams increased from 1 to 24. The results demonstrate the increase in required bandwidth, both at a maximum and on average, as the number of streams increased. The average bandwidth utilization scaled from 1.56 Gb/s with a single video stream to 34.6 Gb/s when supporting 24 concurrent streams. The maximum bandwidth utilization was observed to be 3.13 Gb/s with a single stream, rising to 67.9 Gb/s with 24 streams. By utilizing scalable, high bandwidth 100 Gb/s Broadcom Ethernet, the solution is able to meet the increasing bandwidth required when adding video streams.
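A back-of-the-envelope calculation helps explain why uncompressed video drives bandwidth into this range. The total of 653.7 frames per second across 24 streams and the 100 Gb/s link speed come from the text; the 1920x1080 resolution and 3 bytes per pixel are assumptions, since the stream format is not stated, and protocol overhead is ignored.

```python
def uncompressed_stream_gbps(width: int, height: int, fps: float,
                             bytes_per_pixel: int = 3) -> float:
    """Raw video bandwidth in Gb/s (decimal), ignoring protocol overhead."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps / 1e9


# Figures reported in the text
TOTAL_FPS_24_STREAMS = 653.7
LINK_GBPS = 100

# Assumed stream format (not stated in the text): 1080p, 24-bit color
WIDTH, HEIGHT = 1920, 1080

avg_fps_per_stream = TOTAL_FPS_24_STREAMS / 24
single_stream_gbps = uncompressed_stream_gbps(WIDTH, HEIGHT, 30)
aggregate_gbps = uncompressed_stream_gbps(WIDTH, HEIGHT, TOTAL_FPS_24_STREAMS)

print(f"Average FPS per stream at 24 streams: {avg_fps_per_stream:.2f}")
print(f"One 30 FPS stream: {single_stream_gbps:.2f} Gb/s")
print(f"All frames at 24 streams: {aggregate_gbps:.1f} Gb/s")
print(f"Headroom on the link: {LINK_GBPS - aggregate_gbps:.1f} Gb/s")
```

Under these assumptions a single stream needs about 1.49 Gb/s and 24 streams need roughly 32.5 Gb/s, the same order of magnitude as the measured averages of 1.56 Gb/s and 34.6 Gb/s. The gap is plausibly transport overhead, though the text does not confirm the stream format.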

The performance results showcase the PoC as a flexible solution that can be scaled to accommodate varying levels of video requirements while maintaining performance and scaling bandwidth as needed. The solution also provides the foundation for additional AI-powered transportation and logistics applications that may require similar transmission of sensor and video data.

Final Thoughts

The maritime cargo monitoring PoC provides a concrete example of how AI can improve transportation and logistics processes by monitoring container conditions, detecting dangerous working environments, and generating automated compliance reports. While the PoC presented in this paper is limited in scope and executed using simulated sensor datasets, the solution serves as a starting point for expanding such a solution and a reference for developing related AI applications.

The solution additionally demonstrates several notable results. The solution utilizes readily available AI tools including Zephyr 7B, YOLOv8s, and NVIDIA DeepStream to create valuable AI applications that can be deployed to provide tangible value in industry specific environments. The use of RAG in the Zephyr 7B implementation is especially notable, as it provides customization to a general-purpose language model, enabling it to function for a maritime specific use case. The PoC also showcased the ability to deploy an AI solution in demanding edge environments with the use of Dell PowerEdge XR7620 and XR12 servers and to provide high bandwidth when transmitting critical data by using Broadcom NICs.

When tested, the PoC solution demonstrated the ability to scale up to 24 concurrent streams while experiencing little loss of throughput and successfully supporting increased bandwidth requirements. Testing of the LLM report generation showed that an AI-augmented maritime compliance report could be generated in as little as 46 seconds. The testing of the PoC demonstrates both its real-world applicability in solving maritime challenges and its flexibility to scale to individual deployment requirements.

Transportation and logistics are areas that rely heavily upon optimization. With the advancements in AI technology, these markets are well positioned to benefit from AI-driven innovation. AI is capable of processing data and deriving solutions to optimize transportation and logistics processes at a scale and speed that humans are not capable of achieving manually. The opportunity for AI to create innovative solutions in this market is broad and extends well beyond the maritime PoC detailed in this paper. By understanding the approach to creating an AI application and the hardware components used, however, organizations in the transportation and logistics market can apply similar solutions to innovate and optimize their business.

Appendix

Figure 6 shows full performance testing results for the cargo monitoring PoC.

CONTRIBUTORS

Mitch Lewis

Research Analyst | The Futurum Group

PUBLISHER

Daniel Newman

CEO | The Futurum Group

INQUIRIES

Contact us if you would like to discuss this report and The Futurum Group will respond promptly.

CITATIONS

This paper can be cited by accredited press and analysts, but must be cited in-context, displaying author’s name, author’s title, and “The Futurum Group.” Non-press and non-analysts must receive prior written permission by The Futurum Group for any citations.

LICENSING

This document, including any supporting materials, is owned by The Futurum Group. This publication may not be reproduced, distributed, or shared in any form without the prior written permission of The Futurum Group.

DISCLOSURES

The Futurum Group provides research, analysis, advising, and consulting to many high-tech companies, including those mentioned in this paper. No employees at the firm hold any equity positions with any companies cited in this document.

ABOUT THE FUTURUM GROUP

The Futurum Group is an independent research, analysis, and advisory firm, focused on digital innovation and market-disrupting technologies and trends. Every day our analysts, researchers, and advisors help business leaders from around the world anticipate tectonic shifts in their industries and leverage disruptive innovation to either gain or maintain a competitive advantage in their markets.

© 2024 The Futurum Group. All rights reserved.

Dell POC for Scalable and Heterogeneous Gen-AI Platform

Fri, 08 Mar 2024 18:35:58 -0000


Introduction

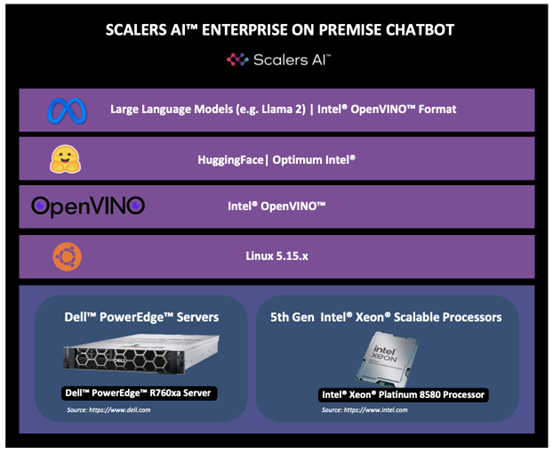

As part of Dell’s ongoing efforts to help make industry leading AI workflows available to their clients, this paper outlines a scalable AI concept that can utilize heterogeneous hardware components. The featured Proof of Concept (PoC) showcases a Generative AI Large Language Model (LLM) in active production, capable of functioning across diverse hardware systems.

Currently, most AI offerings are highly customized and designed to operate with specific hardware, either a particular vendor's CPUs or a specialized hardware accelerator such as a GPU. Although the operational stacks in use vary across different operational environments, they maintain a core similarity and adapt to each specific hardware requirement.

Today, the conversation around Generative-AI LLMs often revolves around their training and the methods for enhancing their capabilities. However, the true value of AI comes to light when we deploy it in production. This PoC focuses on the application of generative AI models to generate useful results. Here, the term 'inferencing' is used to describe the process of extracting results from an AI application.

As companies transition AI projects from research to production, data privacy and security emerge as crucial considerations. Utilizing corporate IT-managed equipment and AI stacks, firms ensure the necessary safeguards are in place to protect sensitive corporate data. They effectively manage and control their AI applications, including security and data privacy, by deploying AI applications on industry-standard Dell servers within privately managed facilities.

Multiple PoC examples are presented on Dell PowerEdge hardware, offering support for both Intel and AMD CPUs, as well as Nvidia and AMD GPU accelerators. These configurations showcase a broad range of performance options for production inferencing deployments. Following our previous Dell AI Proof of Concept,[1] which examined the use of distributed fine-tuning to personalize an AI application, this PoC can serve as the subsequent step, transforming a trained model into one that is ready for production use.

Designed to be industry-agnostic, this PoC provides an example of how we can create a general-purpose generative AI solution that can utilize a variety of hardware options to meet specific Gen-AI application requirements.

In this Proof of Concept, we investigate the ability to perform scale-out inferencing for production and to utilize a similar inferencing software stack across heterogeneous CPU and GPU systems to accommodate different production requirements. The PoC highlights the following:

- A single CPU based system can support multiple, simultaneous, real-time sessions

- GPU augmented clusters can support hundreds of simultaneous, real-time sessions

- A common AI inferencing software architecture is used across heterogeneous hardware

| Futurum Group Comment: The novel aspect of this proof of concept is the ability to operate across different hardware types, including Intel and AMD CPUs along with support for both Nvidia and AMD GPUs. By utilizing a common inferencing framework, organizations are able to choose the most appropriate hardware deployment for each application’s requirements. This unique approach helps reduce the extensive customization required by AI practitioners, while also helping IT operations to standardize on common Dell servers, storage and networking components for their production AI deployments. |

Distributed Inferencing PoC Highlights

The inferencing examples include single-node CPU-only systems and multi-node CPU clusters, along with single nodes and clusters of GPU-augmented systems. Across this range of hardware options, the resulting generative AI application provides a broad range of performance, from the ability to support several interactive query and response streams on a CPU up to the highest performing example, which supports thousands of queries utilizing a 3-node cluster with GPU cards to accelerate performance.

The objective of this PoC was to evaluate the scalability of production deployments of Generative-AI LLMs on various hardware configurations. Evaluations included deployment on CPU-only as well as GPU-assisted configurations. Additionally, the ability to scale inferencing by distributing the workload across multiple nodes of a cluster was investigated. Various metrics were captured in order to characterize the performance and scaling, including the total throughput rate of various solutions, the latency or delay in obtaining results, and the utilization rates of key hardware elements.

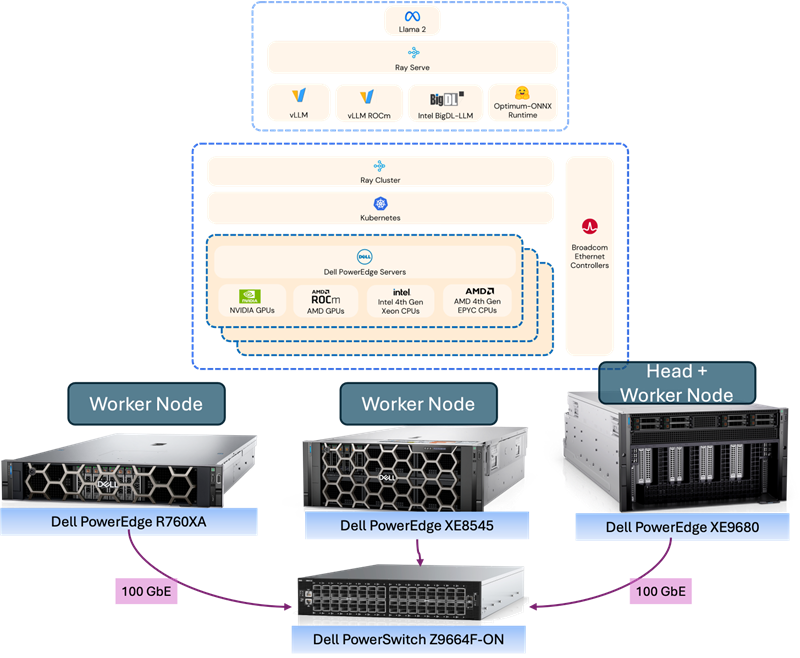

The examples included between one and three Dell PowerEdge servers, with additional GPU acceleration provided in some cases by either AMD or Nvidia GPUs. Dell PowerEdge Servers used included a Dell XE8545, a Dell XE9680 and a Dell R760XA. Each Dell PowerEdge system included a Broadcom network interface card (NIC) for all internode communications, connected via a Dell PowerSwitch Ethernet switch for distributed inferencing.

Shown in Figure 1 below is a 3-node example that includes the hardware and general software stack.

Figure 1: General, Scale-Out AI Inferencing Stack (Source: Scalers.AI)

There are several important aspects of the architecture utilized, enabling organizations to customize and deploy generative AI applications in their choice of colocation or on-premises data center. These include:

- Dell PowerEdge Sixteenth Gen Servers, with 4th generation CPUs and PCIe Gen 5 connectivity

- Broadcom NetXtreme BCM57508 NICs with up to 200 Gb/s per Ethernet port

- Dell PowerSwitch Z line Ethernet switches, supporting up to 400 Gb/s connectivity

This PoC demonstrating both heterogeneous and distributed inferencing of LLMs provides multiple advantages compared to typical inferencing solutions:

- Enhanced Scalability: Distributed inferencing enables the use of multiple nodes to scale the solution to the desired performance levels.

- Increased Throughput: By distributing the inferencing processes across multiple nodes, the overall throughput increases.

- Increased Performance: The speed of generated results may be an important consideration. By supporting both CPU and GPU inferencing, the appropriate hardware can be selected.

- Increased Efficiency: Providing a choice of using CPU or GPUs and the number of nodes enables organizations to align the solution's capabilities with their application requirements.

- Increased Reliability: With distributed inferencing, even if one node fails, the others can continue to function, ensuring that the LLM remains operational. This redundancy enhances the reliability of the system.

Although the capabilities outlined above are related, certain considerations may be more important than others for specific deployments. Perhaps more importantly, this PoC demonstrates the ability to stand up multiple production deployments, using a consistent set of software that can support multiple deployment scenarios, ranging from a single user up to thousands of simultaneous requests. In this PoC, there are multiple hardware solution deployment examples, summarized as follows:

- CPU based inferencing using both AMD and Intel CPUs, scaled from 1 to 2 nodes

- GPU based inferencing using Nvidia and AMD GPUs, scaled from 1 to 3 nodes

For the GPU based configurations, a three node 12-GPU configuration achieved nearly 3,000 words per second in total output generation. For the scale-out configurations, inter-node communications were an important aspect of the solution. Each configuration utilized a Broadcom BCM-57508 Ethernet card enabling high-speed and low latency communications. Broadcom’s 57508 NICs allow data to be loaded directly into accelerators from storage and peers, without incurring extra CPU or memory overhead.

Futurum Group Comment: A scale out inferencing solution leveraging industry standard Dell servers, networking and optional GPU accelerators provides a highly adaptable reference that can be deployed as an edge solution where few inferencing sessions are required, up to enterprise deployments supporting hundreds of simultaneous inferencing outputs. |

Evaluating Solution Performance

In order to compare the performance of the different examples, it is important to understand some of the concepts commonly used to measure LLM inferencing. These include the concept of a token, which typically consists of a group of characters, with larger words composed of multiple tokens. Currently, there is no standard token size utilized across LLMs, although each LLM typically utilizes common token sizes. Each of the PoCs utilizes the same LLM and tokenizer, resulting in a common ratio of tokens to words across the examples. Another common metric is that of a request, which is essentially the input provided to the LLM and may also be called a query.

A common method of improving the overall efficiency of the system is to batch requests, or submit multiple requests simultaneously, which improves the total throughput. While batching requests increases total throughput, it comes at the cost of increasing the latency of individual requests. In practice, batch sizes and individual query response delays must be balanced to provide the response throughput and latencies that best meet a particular application’s needs.

Other factors to consider include the size of the base model utilized, typically expressed in billions of parameters, such as Mistral-7B (denoting 7 billion parameters), or in this instance, Llama2-70B, indicating that the base model utilized 70 billion parameters. Model parameter sizes are directly correlated to the necessary hardware requirements to run them.
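To make the link between parameter count and hardware requirements concrete, the following sketch estimates the memory needed to hold the model weights alone. It assumes 16-bit (2-byte) parameters, which is an illustrative assumption rather than a stated detail of this PoC, and it ignores activations, the key-value cache and runtime overhead. The function name is hypothetical.

```python
def weight_memory_gb(num_parameters: float, bytes_per_parameter: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    bytes_per_parameter=2 assumes 16-bit weights (an assumption for this sketch).
    """
    return num_parameters * bytes_per_parameter / 1e9


# Parameter counts taken from the model names mentioned in the text.
for name, params in [("Mistral-7B", 7e9), ("Llama2-70B", 70e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights at 16-bit precision")
```

Under these assumptions, a 70 billion parameter model needs roughly ten times the weight memory of a 7 billion parameter model, which is one reason larger models are typically split across multiple accelerators.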

Performance testing was performed to capture important aspects of each configuration, with the following metrics collected:

- Requests per Second (RPS): A measure of total throughput, or total requests processed per second

- Token Throughput: Designed to gauge the LLM's performance using token processing rate

- Request Latency: Reports the amount of delay (latency) for the complete response, measured in seconds, and for individual tokens, measured in milliseconds.

- Hardware Metrics: These include CPU, GPU, Network and Memory utilization rates, which can help determine when resources are becoming overloaded, and further splitting or “sharding” of a model across additional resources is necessary.

Note: The full testing details are provided in the Appendix.
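As a rough illustration of how the throughput and latency metrics listed above could be derived from per-request measurements, consider the sketch below. The record fields, function names and sample values are hypothetical and are not taken from the PoC tooling; the percentile uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One completed request; all fields are hypothetical for illustration."""
    latency_s: float     # time to receive the complete response, in seconds
    output_tokens: int   # number of tokens generated for this request


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records, wall_clock_s):
    """Compute throughput and latency metrics over one measurement window."""
    total_tokens = sum(r.output_tokens for r in records)
    total_latency_s = sum(r.latency_s for r in records)
    return {
        "requests_per_second": len(records) / wall_clock_s,
        "tokens_per_second": total_tokens / wall_clock_s,
        "p95_request_latency_s": percentile([r.latency_s for r in records], 95),
        "mean_ms_per_token": 1000 * total_latency_s / total_tokens,
    }


# Synthetic sample data, not measured results.
sample = [
    RequestRecord(2.0, 100),
    RequestRecord(4.0, 200),
    RequestRecord(3.0, 100),
    RequestRecord(5.0, 200),
]
for metric, value in summarize(sample, wall_clock_s=10.0).items():
    print(f"{metric}: {value:.2f}")
```

Hardware utilization metrics would come from separate system monitoring and are not modeled here.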

Testing evaluated the following aspects and use cases:

- Effects of scaling for interactive use cases, and batch use cases

- Scaling from 1 to 3 nodes, for GPU configurations, using 4, 8, 12 and 16 total GPUs

- Scaling from 1 to 3 nodes for CPU only configurations (using 112, 224 and 448 total CPU cores)

- For GPU configuration, the effect of moderate batch sizes (32) vs. large batching (256)

- Note: for CPU configurations, the batch size was always 1, meaning a single request per instance

We have broadly stated that two different use cases were tested, interactive and batch. An interactive use case may be considered an interactive chat agent, where a user is interacting with the inferencing results and expects to experience good performance. We subsequently define what constitutes “good performance” for an interactive user. An additional use case could be batch processing of large numbers of documents, or other scenarios where a user is not directly interacting with the inferencing application, and hence there is no requirement for “good interactive performance”.

As noted, for GPU configurations, two different batch sizes per instance were used, either 32 or 256. Interactive uses of an LLM application include chatbots, where small delays (i.e. low latency) are the primary consideration, and total throughput is a secondary consideration. Another use case is that of processing documents for analysis or summarization. In this instance, total throughput is the most important objective and the latency of any one process is inconsequential. For this case, the batch operation would be more appropriate, in order to maximize hardware utilization and total processing throughput.

Interactive Performance

For interactive performance, the rate of text generation should ideally match or exceed the user's reading or comprehension rate. Also, each additional word output should be created with relatively small delays. According to The Futurum Group’s analysis of reading rates, 200 words per minute can be considered a relatively fast rate for comprehending unseen, non-fiction text. Using this as a guideline results in a rate of 3.33 words per second.

- 200 words per minute / 60 seconds per minute = 3.33 words per second

Moreover, we will utilize a rate of 3.33 wps as the desired minimum generation rate for assessing the ability to meet the needs of a single interactive user. In terms of latency, 1 over 3.33, or 300 milliseconds would be considered an appropriate maximum delay threshold.
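The arithmetic behind these two thresholds can be sketched in a few lines of Python. The function names are illustrative and are not part of the PoC software.

```python
def words_per_second(words_per_minute: float) -> float:
    """Convert a reading rate in words per minute to words per second."""
    return words_per_minute / 60.0


def max_word_latency_ms(wps: float) -> float:
    """Longest per-word delay, in milliseconds, that still sustains the given rate."""
    return 1000.0 / wps


reading_rate_wps = words_per_second(200)  # 200 wpm guideline from the text
print(f"{reading_rate_wps:.2f} words per second")
print(f"{max_word_latency_ms(reading_rate_wps):.0f} ms maximum delay per word")
```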

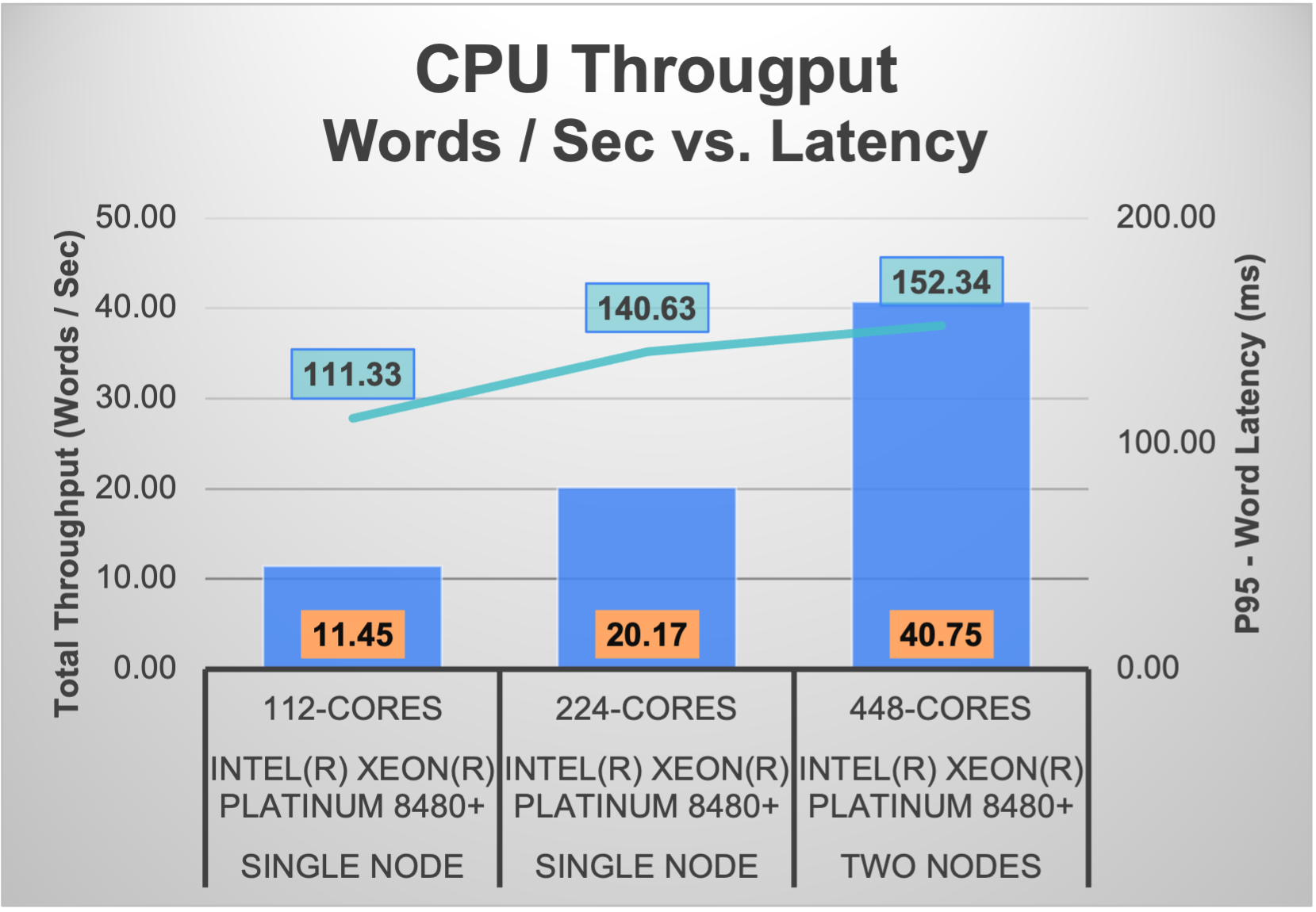

Note: Figures 2 – 5 each utilize two axes. The primary (left) vertical axis represents the throughput for the bars in words per second. The second (right) vertical axis represents the 95th percentile of latency results for each word generated.

In Figure 2 below, we show the total throughput of 3 different CPU configurations, along with the associated per word latency. As seen, a CPU only example can support over 40 words per second, significantly greater than the 3.33 word per second rate required for good interactive performance, while maintaining a latency of 152 ms., well under 300 ms.

Figure 2: Interactive Inferencing Performance for CPUs (Source: Futurum Group)

Using a rate of 3.33 words / sec., we can see that two systems, each with 224 CPU cores, can support inferencing of up to 12 simultaneous sessions.

- Calculated as: 40 wps / 3.33 wps / session = 12 simultaneous sessions.

Futurum Group Comment: It is often expected that all generative AI applications require the use of GPUs in order to support real-time deployments. As evidenced by the testing performed, it can be seen that a single system can support multiple, simultaneous sessions, and by adding a second system, performance scales linearly, doubling from 20 words per second up to more than 40 words per second. Moreover, for smaller deployments, a single CPU based system supporting inferencing may be sufficient. |

In Figure 3 below, we show the total throughput of 4 different configurations, along with the associated per word latency. As seen, even at the rate of 1,246 words per second, latency remains at 100 ms., well below our 300 ms. threshold.

Figure 3: Interactive Inferencing Performance for GPUs (Source: Futurum Group)

Again, using 3.33 words / sec., each example can support a large number of interactive sessions:

- 1 node + 4 GPUs: 414 wps / 3.33 wps / session = 124 simultaneous sessions

- 2 nodes + 8 GPUs: 782 wps / 3.33 wps / session = 235 simultaneous sessions

- 2 nodes + 12 GPUs: 1,035 wps / 3.33 wps / session = 311 simultaneous sessions

- 3 nodes + 16 GPUs: 1,246 wps / 3.33 wps / session = 374 simultaneous sessions
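The session counts above follow from dividing each configuration's total throughput by the per-session rate. A minimal sketch of that calculation, using the throughput values from the list above and a hypothetical helper name, is shown here. It rounds to the nearest whole session to match the figures in the text; a more conservative capacity plan might round down instead.

```python
PER_SESSION_WPS = 3.33  # per-user rate used in the text (200 words per minute)

# Total throughput in words per second, as reported in the list above.
gpu_throughput_wps = {
    "1 node + 4 GPUs": 414,
    "2 nodes + 8 GPUs": 782,
    "2 nodes + 12 GPUs": 1035,
    "3 nodes + 16 GPUs": 1246,
}


def simultaneous_sessions(total_wps: float, per_session_wps: float = PER_SESSION_WPS) -> int:
    """Number of interactive sessions supported, rounded to the nearest session."""
    return round(total_wps / per_session_wps)


for config, wps in gpu_throughput_wps.items():
    print(f"{config}: {simultaneous_sessions(wps)} simultaneous sessions")
```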

Futurum Group Comment: Clearly, the GPU based results significantly exceed those of the CPU based deployment examples. In these examples, we can see that once again, performance scales well, although not quite linearly. Perhaps more importantly, as additional nodes are added, the latency does not increase above 100 ms., which is well below our established desired threshold. Additionally, the inferencing software stack was very similar to the CPU only stack, with Nvidia libraries used in place of Intel CPU libraries. |

Batch Processing Performance

Inferencing of LLMs becomes memory bound as the model size increases. For larger models such as Llama2-70B, memory bandwidth, between either the CPU and main memory, or GPU and GPU memory, is the primary bottleneck. By batching requests, multiple requests may be processed by the GPU or CPU without loading new data into memory, thereby improving the overall efficiency significantly.

Having an inference serving system that can operate at large batch sizes is critical for cost efficiency, and for large models like Llama2-70B the best cost/performance occurs at large batch sizes.
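The reasoning above can be illustrated with a deliberately simplified, roofline-style model of a single decoding step. This is a sketch under stated assumptions and not a model of the PoC hardware: the bandwidth and compute figures are hypothetical, the weights are assumed to be read once per step regardless of batch size, and effects such as the key-value cache, sharding across GPUs and network transfers are ignored.

```python
def decode_step_seconds(batch_size, weight_bytes, mem_bandwidth_bytes_s,
                        flops_per_token, peak_flops_s):
    """Time for one decoding step that produces one token per request.

    The weights are read once per step regardless of batch size, while the
    arithmetic grows with the number of requests in the batch. The step time
    is bounded by whichever of the two takes longer.
    """
    memory_time = weight_bytes / mem_bandwidth_bytes_s
    compute_time = batch_size * flops_per_token / peak_flops_s
    return max(memory_time, compute_time)


# Hypothetical accelerator and model figures, chosen only for illustration.
WEIGHT_BYTES = 140e9     # e.g. 70 billion parameters at 2 bytes each
BANDWIDTH = 2e12         # memory bandwidth in bytes per second
FLOPS_PER_TOKEN = 140e9  # roughly 2 operations per parameter per token
PEAK_FLOPS = 4e14        # peak arithmetic rate in operations per second

for batch in (1, 32, 256):
    step = decode_step_seconds(batch, WEIGHT_BYTES, BANDWIDTH,
                               FLOPS_PER_TOKEN, PEAK_FLOPS)
    print(f"batch {batch:>3}: {step * 1000:.1f} ms per token, "
          f"{batch / step:.0f} tokens/s")
```

In this toy model, small batches leave the step time unchanged because the memory read dominates, so throughput grows almost for free. Once the batch is large enough for compute to dominate, per-token latency begins to rise, which is the trade-off between throughput and latency described in this section.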

In Figure 4 below we show the throughput capabilities of the same hardware configuration used in Figure 3, but this time with a larger batch size of 256.

Figure 4: Batch Inferencing Performance for GPUs (Source: The Futurum Group)

For this example, we would not claim the ability to support interactive sessions. Rather the primary consideration is the total throughput rate, shown in words per second. By increasing the batch size by a factor of 8X (from 32 to 256), the total throughput more than doubles, along with a significant increase in the per word latency, making this deployment appropriate for offline, or non-interactive scenarios.

Futurum Group Comment: Utilizing the exact same inferencing software stack, and hardware deployment, we can show that for batch processing of AI, the PoC example is able to achieve rates up to nearly 3,000 words per second. |

Comparison of Batch vs. Interactive

As described previously, we utilized a per-user generation rate of 200 words per minute, or 3.33 words per second, which yields a maximum delay of 300 ms per word as a level that would produce acceptable interactive performance. In Figure 5 below, we compare the throughput and associated latency of the “interactive” configuration to the “batch” configuration.

Figure 5: Comparison of Interactive vs. Batch Inferencing on GPUs (Source: The Futurum Group)

As seen above, while the total throughput, measured in words per second increases by 2.4X, the latency of individual word output slows substantially, by a factor of 6X. It should be noted that in both cases batching was utilized. The batch size of the “interactive” results was set to 32, while the batch size of the “batch” results utilized a setting of 256. The “interactive” label was applied to the lower results, due to the fact that the latency delay of 100 ms. was significantly below the threshold of 300 ms. for typical interactive use. In summary:

- Throughput increase of 2.4X (1,246 to 2,962) for total throughput, measured in words per second

- Per word delays increased 6X (100 ms. to 604 ms.) measured as latency in milli-seconds

These results highlight that total throughput can be improved, albeit at the expense of interactive performance, with individual words requiring over 600 ms (six tenths of a second) when the larger batch size of 256 was used. With this setting, the latency significantly exceeded the threshold of what is considered acceptable for interactive use, where a latency of 300 ms would be acceptable.
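The comparison can be reproduced from the reported figures with a short calculation. The dictionary layout and names below are illustrative; the numeric values are those quoted in this section for the two batch sizes.

```python
INTERACTIVE_LATENCY_LIMIT_MS = 300  # per-word threshold established earlier

# Figures quoted in the text for the two GPU batch-size settings.
interactive = {"batch_size": 32, "throughput_wps": 1246, "latency_ms": 100}
batch = {"batch_size": 256, "throughput_wps": 2962, "latency_ms": 604}


def increase_factor(metric: str) -> float:
    """Ratio of the batch setting to the interactive setting for one metric."""
    return batch[metric] / interactive[metric]


print(f"Batch size increase:  {increase_factor('batch_size'):.0f}X")
print(f"Throughput increase:  {increase_factor('throughput_wps'):.1f}X")
print(f"Latency increase:     {increase_factor('latency_ms'):.0f}X")

for label, result in (("interactive", interactive), ("batch", batch)):
    ok = result["latency_ms"] <= INTERACTIVE_LATENCY_LIMIT_MS
    print(f"{label}: {'meets' if ok else 'exceeds'} the "
          f"{INTERACTIVE_LATENCY_LIMIT_MS} ms interactive threshold")
```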

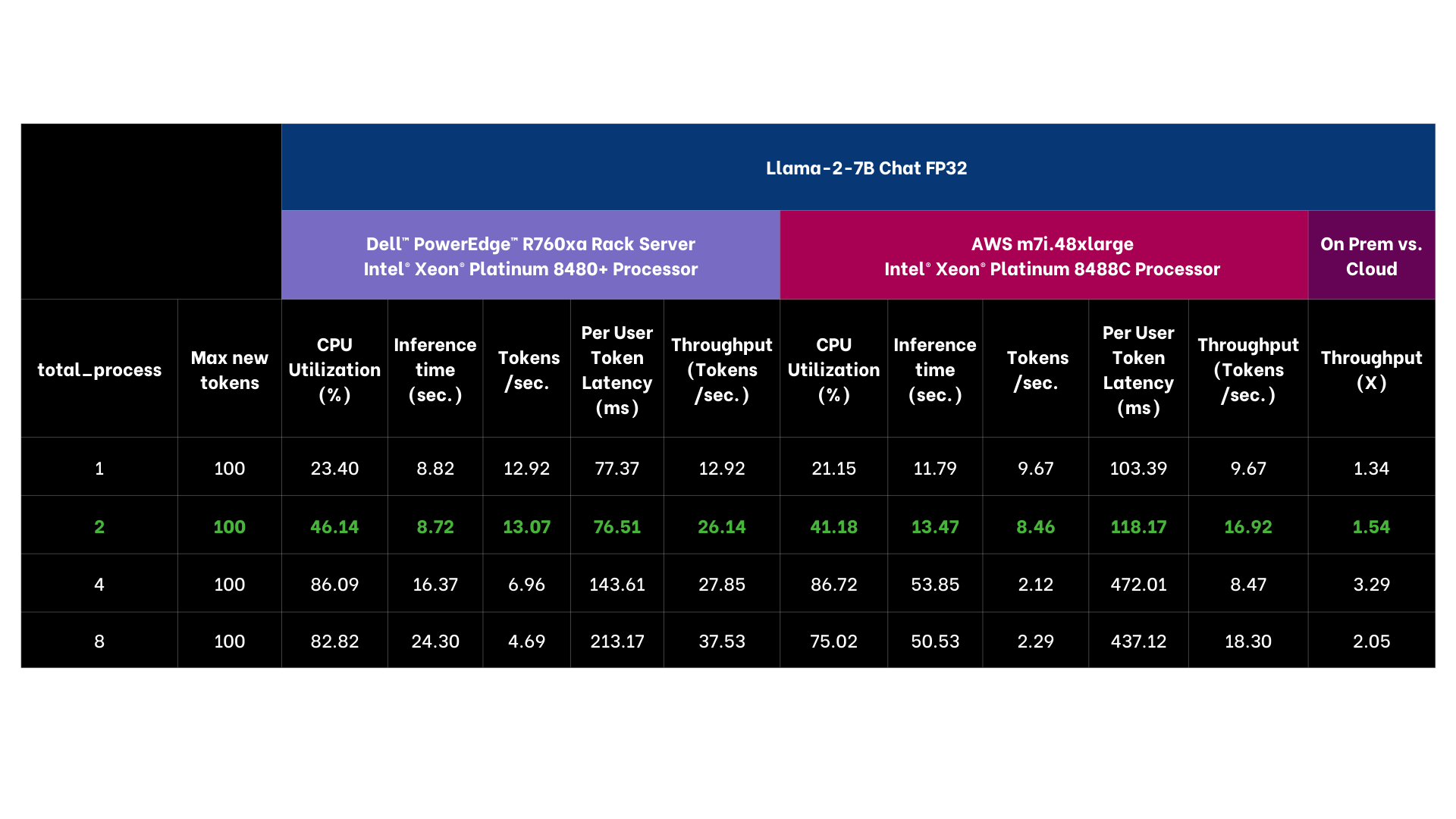

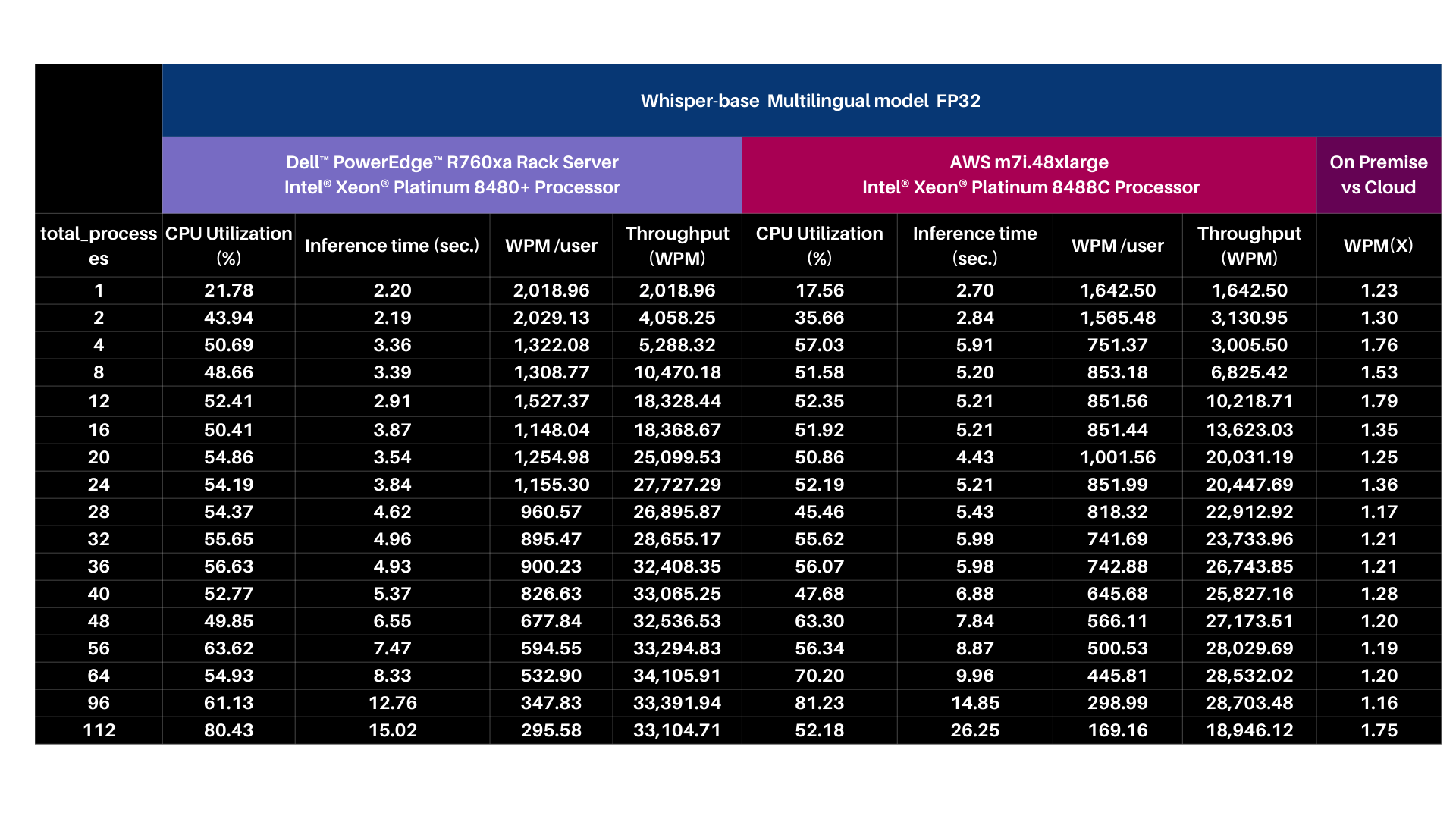

Highlights for IT Operations