
Real-time data import and query


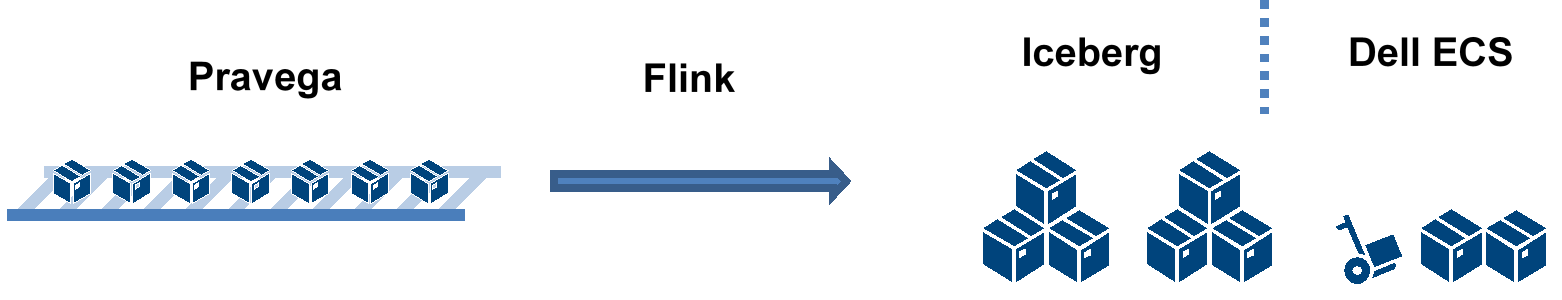

As demand for fresher data grows and message queues become more widely adopted, more data is sent to the data lake in real time. With Flink SQL, you can easily identify, filter, and split the data in a message queue and write it to an Iceberg table, where it can be queried in near real time. Flink can also query the Iceberg table continuously, so you can follow updates to the table data stored in ECS as they are committed.

Figure 15. Data import and query

```sql
SET execution.type = streaming;

-- Source: a Pravega stream of JSON messages
CREATE TABLE `external`.`sample` (
  id BIGINT,
  data STRING
) WITH (
  'connector' = 'pravega',
  'controller-uri' = 'tcp://localhost:9090',
  'scope' = 'scope',
  'scan.reader-group.name' = 'group1',
  'scan.streams' = 'stream',
  'format' = 'json'
);

-- Sink: an Iceberg table in the ECS catalog, partitioned by partition_id
CREATE TABLE `ecs`.`default`.`sample` (
  id BIGINT,
  partition_id INT,
  data STRING
) PARTITIONED BY (partition_id);

-- Continuously copy messages into Iceberg, deriving partition_id from id
INSERT INTO `ecs`.`default`.`sample`
SELECT
  id,
  id % 10,
  data
FROM `external`.`sample`;
```
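The INSERT statement above forwards every message to a single table. The sketch below illustrates the filtering and splitting mentioned at the start of this section: one job reads from the Pravega source table and routes each row into one of two Iceberg tables. The table names `sample_valid` and `sample_rejected` and the filter conditions are hypothetical, chosen only for illustration, and the sketch assumes a Flink 1.13 or later SQL client, which supports `BEGIN STATEMENT SET`.

```sql
-- Hypothetical target tables, for illustration only
CREATE TABLE `ecs`.`default`.`sample_valid` (
  id BIGINT,
  partition_id INT,
  data STRING
) PARTITIONED BY (partition_id);

CREATE TABLE `ecs`.`default`.`sample_rejected` (
  id BIGINT,
  data STRING
);

-- A statement set submits both INSERTs as a single Flink job
BEGIN STATEMENT SET;

-- Keep rows that have a payload and a non-negative id (illustrative rule)
INSERT INTO `ecs`.`default`.`sample_valid`
SELECT id, id % 10, data
FROM `external`.`sample`
WHERE data IS NOT NULL AND id >= 0;

-- Route every other row, including rows with a NULL id, to a separate table
INSERT INTO `ecs`.`default`.`sample_rejected`
SELECT id, data
FROM `external`.`sample`
WHERE data IS NULL OR id IS NULL OR id < 0;

END;
```

The two WHERE clauses are written to be complementary, so each message lands in exactly one table. Because both INSERTs run in one job, each Iceberg table receives a new snapshot on the same checkpoint.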

The INSERT INTO `ecs`.`default`.`sample` statement continuously imports data from the Pravega stream into the Iceberg table `sample`. The Iceberg sink commits the written data as a new table snapshot each time a Flink checkpoint completes, so the checkpoint configuration determines how often new data becomes visible. Once ingestion is running, simple SQL is enough to query the data in real time. The following query returns the number of rows currently in the table, and the result is updated each time a new snapshot is committed.

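```sql
SET execution.type = streaming;
-- Allow per-query options to be passed through SQL hints
SET table.dynamic-table-options.enabled = true;

-- 'streaming'='true' makes the Iceberg source keep monitoring the table for
-- new snapshots instead of scanning only the current snapshot once;
-- 'monitor-interval' sets how often it checks for new snapshots.
SELECT COUNT(*) FROM `ecs`.`default`.`sample` /*+ OPTIONS('streaming'='true', 'monitor-interval'='1s') */;
```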
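Because the Iceberg sink commits only when a checkpoint completes, checkpointing must be enabled for the INSERT job, and its interval bounds how quickly new rows appear in query results. A minimal sketch of setting the interval from the Flink SQL client follows, assuming Flink 1.13 or later (quoted `SET` syntax); the 10-second value is an arbitrary example, not a recommendation from this document.

```sql
-- Take a checkpoint, and therefore commit a new Iceberg snapshot, every 10 seconds.
-- Run this in the session before submitting the INSERT INTO statement.
SET 'execution.checkpointing.interval' = '10s';
```

Shorter intervals make new data visible sooner, but they also produce more snapshots and smaller data files, which may need periodic compaction.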