Management components

Single-system and multisystem management solutions use installed software components based on the LCM release for the deployed solution version.

The following table shows the hardware and software components of the management solution:

Table 99. Management solution hardware and software components

| Resource | Components |
| --- | --- |
| Compute | Cisco UCS C220 M6 SFF Rack Server<br>Cisco UCS C220 M7 SFF Rack Server |
| Storage | Dell Unity XT 480 Hybrid<br>Dell Unity XT 480 Hybrid with optical connectivity |
| Network | Cisco Nexus 93108TC-FX3P OOB management switch<br>Cisco Nexus 92348GC-X OOB management switch<br>Cisco Nexus 9336C-FX2 OOB management aggregation and ToR switch<br>VMware vSphere Distributed Switch (VDS) |
| Management Tools | Dell Secure Connect Gateway<br>Dell PowerPath/VE Management Appliance<br>Microsoft Windows Server Embedded Standard<br>Cisco Intersight Appliance<br>Dell Technologies optional and ecosystem applications |
| Virtualization | VMware vSphere with the following features:<br>- VMware vSphere ESXi<br>- VMware vCenter Server Appliance (vCSA)<br>- VMware vSphere Client (HTML5)<br>- VMware Host Client (HTML5)<br>- VMware vSphere Lifecycle Manager (vLCM)<br>- VMware Single Sign-On (SSO) Service<br>- VMware vSphere HA<br>- VMware Distributed Resource Scheduler (DRS)<br>- VMware vSphere Distributed Switch (VDS)<br>- VMware vSphere vMotion<br>- VMware Storage vMotion<br>- VMware Enhanced vMotion Compatibility (EVC)<br>- VMware Distributed Resource Scheduler (DRS) (capacity only)<br>- VMware Storage-driven profiles (user-defined only)<br>- VMware vSphere Inventory Service<br>- VMware Syslog Service<br>- VMware Core Dump Collector |

Compute specifications

The following table shows the compute specifications for the management solution:

Table 100. Management solution compute specifications

| Cisco UCS C220 M6 Specifications | Cisco UCS C220 M7 Specifications |
| --- | --- |
| 2 x Intel 4310 2.1 GHz, 120 W, 12C, 18.00 MB DDR4 2667 MHz | 2 x Intel 4410Y 2.0 GHz, 150 W, 12C, 30.00 MB DDR5 4800 MHz |
| Single: 256 GB using 16 x 16 GB DDR4-3200-MHz RDIMM, 1Rx4, 1.2v<br>Multi: 512 GB using 16 x 32 GB DDR4-3200-MHz RDIMM, 2Rx4, 1.2v | Single: 256 GB using 16 x 16 GB DDR5-4800-MHz RDIMM, 1Rx8, 1.2v<br>Multi: 512 GB using 16 x 32 GB DDR5-4800-MHz RDIMM, 1Rx4, 1.2v |
| 1 x Cisco UCS VIC 1467 Quad Port 10/25G SFP28 mLOM<br>1 x Intel X550 Dual Port 10GBase-T LOM | 1 x Cisco UCS VIC 15427 Quad Port 10/25/50G mLOM (SFP+/SFP28/SFP56)<br>1 x Intel X710T2LG Dual Port 10GBase-T PCIe NIC |
| 2 x 240 GB M.2 (RAID 1) | 2 x 240 GB M.2 (RAID 1) |
| TPM 2.0 (non-TPM server is available) | TPM 2.0 (non-TPM server is available) |
| 2 x Cisco UCS 1050 W AC PSU | 2 x Cisco UCS 1200 W AC PSU |

Storage

Storage offerings range from entry-level capacity through expansion to large-capacity configurations. The amount of storage available in a management solution depends on the requirements of the deployed core, optional, and ecosystem applications.

Unity XT hybrid configurations

The management solution uses iSCSI for the supported Unity XT Hybrid storage arrays to provide block storage to the Cisco UCS servers.

The solution uses one of the following Unity storage arrays:

- Unity XT 480 hybrid storage array

- Unity XT 480 hybrid storage array with optical connectivity, which uses 25 GbE mezzanine card ports.

Unity XT hybrid storage arrays are available in multiple configurations to support growth and expansion. The default storage array configuration consists of eight 2.0 TB data LUNs, while the extra-small system consists of four 2.0 TB LUNs. If capacity exists on the deployed storage array, you can expand the default LUNs or create new LUNs.

The following table shows the available configurations:

Table 101. Dell Unity XT hybrid configurations

| Storage array | Raw capacity (TB) | Usable capacity (TB) | Performance (IOPS) |
| --- | --- | --- | --- |
| Extra small (single system management only) | 16 | 10 | 14,156 |
| Small | 27 | 21 | 23,750 |
| Medium | 37 | 30 | 33,250 |
| Large | 54 | 45 | 45,499 |
| Extra large | 72 | 60 | 64,218 |
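As a rough illustration of how much capacity remains for expanding or adding LUNs, the following Python sketch subtracts the default 2.0 TB data LUNs from the usable capacity in Table 101. It assumes that every size other than extra small ships with the eight-LUN default and that LUN capacity counts one-to-one against usable capacity (no allowance for snapshots, thin provisioning, or pool overhead). The names `USABLE_TB` and `headroom_tb` are hypothetical and exist only for this example.

```python
# Usable capacity per configuration, in TB, copied from Table 101.
USABLE_TB = {
    "Extra small": 10,
    "Small": 21,
    "Medium": 30,
    "Large": 45,
    "Extra large": 60,
}

LUN_SIZE_TB = 2.0  # default data LUN size stated in this section


def default_lun_count(config_name: str) -> int:
    """Four LUNs for the extra-small system, eight otherwise (assumed for all other sizes)."""
    return 4 if config_name == "Extra small" else 8


def headroom_tb(config_name: str) -> float:
    """Usable capacity left after the default LUNs (simplified 1:1 accounting)."""
    return USABLE_TB[config_name] - default_lun_count(config_name) * LUN_SIZE_TB


for name in USABLE_TB:
    print(f"{name}: {headroom_tb(name):.1f} TB remaining after default LUNs")
```

Under these assumptions the extra-small system leaves about 2 TB for growth, while the extra-large system leaves about 44 TB.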

The Unity XT Hybrid configurations include FAST Cache, D@RE, and FAST VP in the base license.

The default pool specifications are based on a two-tier storage configuration of performance (SAS) and extreme performance (SSD) drives, with an appropriate drive count in each tier to meet the specified capacity options.

Dell Unity OE 5.2 (Kite) and later versions changed the default pool type for hybrid arrays from traditional to dynamic. To understand the concepts and operational changes and for additional information, see the Dell Unity: Dynamic Pools White Paper.

Bill of Materials

The following tables show details of the components that are common to all Dell Unity storage configurations.

Note: The tables do not include software, support, mounting hardware, or consumables (transceivers, cables, and so on).

Table 102. Unity array components

| Module name | Option name | Quantity |
| --- | --- | --- |
| Starter pack drives | Unity SYSPACK 4X600GB 10K SAS 25X2.5 | 1 |
| Fast cache drives | Unity 400 GB FAST CACHE 25X2.5 SSD | 3 |
| 4 port cards | Copper: Unity 2X4 Port Card 10GBaseT | 1 |
| 4 port cards | Optical: Unity 2X4 Port Card 25 GbE OPT | 1 |

Table 103. Storage array components by size

| Module name | Option name | 10 TB | 20 TB | 30 TB | 45 TB | 60 TB |
| --- | --- | --- | --- | --- | --- | --- |
| Additional drives (Extreme Performance) | D4 400GB SAS FLASH 25X2.5 SSD | 6 | 10 | 14 | 19 | 27 |
| Additional drives (Performance) | Unity 1.2 TB 10K SAS 25 X 2.5 drive | 11 | 20 | 28 | 42 | 55 |
| SPIO | Copper: Unity 2 x 4 port I/O 10GBaseT | 1 | 2 | 2 | 2 | 2 |
| SPIO | Optical: N/A | - | - | - | - | - |
| Unity XT HFA 25 x 2.5 DAE | Unity 2U 25 x 2.5 DAE customer-supplied rack (pair of SAS cables included) | 0 | 1 | 1 | 2 | 3 |
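The DAE quantities in Table 103 can be cross-checked with simple slot arithmetic. The sketch below is illustrative only. It assumes that the array enclosure (DPE) provides 25 drive slots, which this section does not state, that each DAE adds 25 slots as its "25 x 2.5" name suggests, and that the system pack (4 drives) and FAST Cache drives (3 drives) from Table 102 occupy slots alongside the additional drives. The constant and function names are hypothetical.

```python
import math

DPE_SLOTS = 25         # assumption: 25 x 2.5-inch slots in the array enclosure
DAE_SLOTS = 25         # from the "25 x 2.5 DAE" option name
COMMON_DRIVES = 4 + 3  # system pack drives + FAST Cache drives (Table 102)

# Usable size (TB) -> (extreme performance drives, performance drives), from Table 103.
ADDITIONAL_DRIVES = {
    10: (6, 11),
    20: (10, 20),
    30: (14, 28),
    45: (19, 42),
    60: (27, 55),
}


def total_drives(size_tb: int) -> int:
    """All drives in the array for a given capacity option."""
    ssd, sas = ADDITIONAL_DRIVES[size_tb]
    return COMMON_DRIVES + ssd + sas


def daes_needed(size_tb: int) -> int:
    """Expansion enclosures needed once the DPE slots are full."""
    overflow = max(0, total_drives(size_tb) - DPE_SLOTS)
    return math.ceil(overflow / DAE_SLOTS)


for size in ADDITIONAL_DRIVES:
    print(f"{size} TB: {total_drives(size)} drives, {daes_needed(size)} DAE(s)")
```

Under these assumptions the computed counts (0, 1, 1, 2, and 3) agree with the DAE row of Table 103.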

Network

The management solution uses Cisco Nexus management and ToR network switches for network connectivity.

The following server hardware is used for connectivity to management and ToR network switches:

- Cisco UCS VIC mLOM uses the first two ports to connect to the ToR switches.

If the Cisco Nexus 92348GC-X is the management switch, the last two mLOM ports connect through 10G optical to the QSFP 10G breakout ports on the management switch.

- The Cisco UCS 10GBase-T LOM (M6) or PCIe NIC (M7) and the Cisco UCS IMC 1 GbE port connect to the management switches.

The server VIC connects to the ToR switch ports using 100 GbE QSFP 4 x 25 GbE breakout transceivers to support multiple connections per port. The default is two transceivers per switch to connect a minimum of four servers. There are two servers per switch port, using two of four breakout cables per transceiver.

When the Cisco Nexus 92348GC-X is used as the management switch, the switch QSFP ports are provisioned with QSFP-40G-SR4 transceivers in a 10G breakout configuration. The default is two transceivers per switch, which provides connectivity to a maximum of six servers. This configuration consumes three of the four breakout ports on each QSFP. The fourth breakout port on each QSFP is reserved for the customer uplinks. Each server uses mLOM ports 2 and 3 (the last two ports), each provisioned with an SFP-10G-SR, to connect to the QSFP 10G breakout ports.
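The server counts in the two preceding paragraphs follow from the breakout arithmetic. The following Python sketch reproduces them, assuming that each server consumes one breakout lane per switch. The function name `servers_per_switch` and its parameters are hypothetical and are used only for illustration.

```python
def servers_per_switch(transceivers: int, lanes_for_servers: int,
                       lanes_per_transceiver: int = 4) -> int:
    """Servers reachable from one switch, assuming one breakout lane per server."""
    if not 0 <= lanes_for_servers <= lanes_per_transceiver:
        raise ValueError("lanes_for_servers must be between 0 and lanes_per_transceiver")
    return transceivers * lanes_for_servers


# ToR switch: two 100 GbE QSFP 4 x 25 GbE transceivers, two of four lanes used for servers.
tor_servers = servers_per_switch(transceivers=2, lanes_for_servers=2)

# Cisco Nexus 92348GC-X: two QSFP-40G-SR4 transceivers in 10G breakout,
# three lanes for servers and one lane reserved for customer uplinks.
mgmt_servers = servers_per_switch(transceivers=2, lanes_for_servers=3)

print(f"ToR default: {tor_servers} servers per switch")
print(f"Management switch default: {mgmt_servers} servers per switch")
```

With the default two transceivers per switch, the sketch yields four servers on the ToR switch and six on the management switch, matching the figures in the text.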

The following table shows the network components that the Unity XT storage arrays use:

Table 104. Dell Unity XT network components

| Storage arrays in management and data networks | Components |
| --- | --- |
| Dell Unity XT hybrid management networks | 2 x 1GBase-T management ports (one per storage processor) |
| Dell Unity XT 480 hybrid data networks | 4 x 10GBase-T ports from the mezzanine card (two per storage processor)<br>16 x 10GBase-T ports from the input and output modules, iom_0 and iom_1 (eight per storage processor) |
| Dell Unity XT 480 hybrid with optical data networks | 4 x 10/25 Gbps ports from the optical mezzanine card (two per storage processor)<br>IOM slots are not populated. |

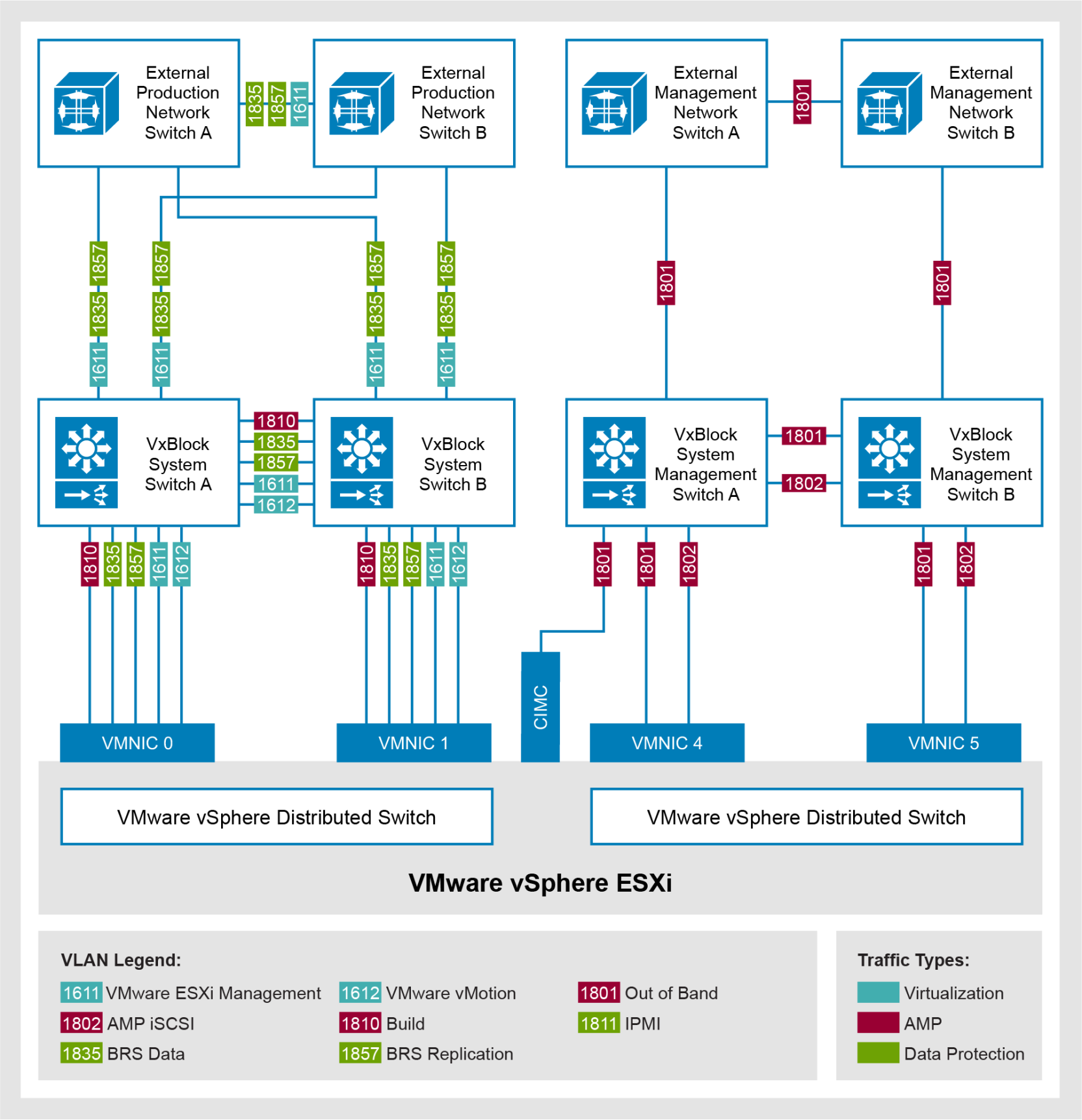

Logical network topologies

Unique naming is essential for autonomous computing, containers, APIs, and custom scripting. Starting from VMware vSphere 7.0, both the management solution and 3-Tier Platforms use a unique set of default VLAN names, values, and examples.

- VMNIC0 and VMNIC1 are configured on the Cisco UCS VIC mLOM (the first two ports) for connectivity to the ToR.

- VMNIC4 and VMNIC5 are configured on the LOM (M6) or PCIe NIC (M7) for connectivity to the Cisco Nexus 93108TC-FX3P management switch.

- If the Cisco Nexus 92348GC-X is deployed as the management switch, use the Cisco UCS VIC VMNIC2 and VMNIC3 (10G optical) to connect to the management switch uplink (QSFP) ports.

The following figure shows Unity XT with VMware vSphere:

Figure 20. Dell Unity XT with VMware vSphere

- DVSwitch-Mgmt uses VMNIC4 and VMNIC5 when connecting to the Cisco Nexus 93108TC-FX3P.

- DVSwitch-Mgmt uses VMNIC2 and VMNIC3 when connecting to the Cisco Nexus 92348GC-X.
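For scripting or validation, the uplink rules above can be captured in a small lookup. This Python sketch is only an illustration of the mapping described in this section. The dictionary and function names are hypothetical and do not correspond to any VMware or Cisco API.

```python
# Uplinks to the ToR switches (first two ports of the Cisco UCS VIC mLOM).
TOR_UPLINKS = ("VMNIC0", "VMNIC1")

# DVSwitch-Mgmt uplinks, keyed by the deployed management switch model.
MGMT_UPLINKS = {
    "Cisco Nexus 93108TC-FX3P": ("VMNIC4", "VMNIC5"),  # LOM (M6) or PCIe NIC (M7)
    "Cisco Nexus 92348GC-X": ("VMNIC2", "VMNIC3"),     # VIC mLOM, 10G optical
}


def dvswitch_mgmt_uplinks(switch_model: str) -> tuple:
    """Return the VMNICs that DVSwitch-Mgmt uses for the given management switch."""
    try:
        return MGMT_UPLINKS[switch_model]
    except KeyError:
        raise ValueError(f"Unsupported management switch: {switch_model}") from None


for model in MGMT_UPLINKS:
    print(f"{model}: DVSwitch-Mgmt uplinks {dvswitch_mgmt_uplinks(model)}")
```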