
Running Meta Llama 3 Models on Dell PowerEdge XE9680

Thu, 02 May 2024 17:54:12 -0000


Introduction

Recently, Meta has open-sourced its Meta Llama 3 text-to-text models with 8B and 70B sizes, which are the highest scoring LLMs that have been open-sourced so far in their size ranges, in terms of quality of responses[1]. In this blog, we will run those models on the Dell PowerEdge XE9680 server to show their performance and improvement by comparing them to the Llama 2 models.

Open-sourcing large language models (LLMs) gives easy access to this state-of-the-art technology and has accelerated innovation in the field and adoption across applications. As shown in Table 1, this Llama 3 release includes five models in total: two pre-trained models with sizes of 8B and 70B, their instruction-tuned versions, and a safeguard version for the 8B model[2].

Table 1: Released Llama 3 Models

Model size (Parameters) | Model names | Context | Training tokens | Vocabulary length |

8B | | 8K | 15T | 128K |

70B | | 8K | 15T | 128K |

Llama 3 is trained on 15T tokens, which is 7.5X the number of tokens on which Llama 2 was trained. Training with large, high-quality datasets and refined post-training processes improved the Llama 3 models’ capabilities, such as reasoning, code generation, and instruction following. Evaluated across the main accuracy benchmarks, Llama 3 not only exceeds its predecessor but also leads other major open-source models by significant margins. The Llama 3 70B instruct model shows results close to, or even better than, those of commercial closed-source models such as Gemini Pro[1].

The model architecture of Llama 3 8B is similar to that of Llama 2 7B, with one significant difference. Besides having more parameters, the Llama 3 8B model uses the grouped-query attention (GQA) mechanism instead of the multi-head attention (MHA) mechanism used in the Llama 2 7B model. Unlike MHA, which has the same number of Q (query), K (key), and V (value) matrices, GQA reduces the number of K and V matrices required by sharing the same KV matrices across a group of Q matrices. This reduces the memory required and improves computing efficiency during inferencing[3]. In addition, the Llama 3 models increase the maximum context window length to 8192 tokens, compared to 4096 for the Llama 2 models. Llama 3 uses a new tokenizer based on tiktoken that expands the vocabulary size to 128K, compared to 32K in Llama 2. The new tokenization scheme offers improved token efficiency, yielding up to 15% fewer tokens compared to Llama 2 based on Meta’s benchmark[1].
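To make the K/V sharing concrete, the following minimal sketch maps each query head to the KV head it reads and estimates the KV-cache size per token. The layer and head counts (32 layers, 32 query heads, head dimension 128, with 32 KV heads for Llama 2 7B and 8 KV heads for Llama 3 8B) come from the published model configurations rather than from this blog, so treat them as assumptions. The function names are illustrative only.

```python
def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Return the index of the KV head shared by the given query head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                             bytes_per_value: int = 2) -> int:
    """K and V each store n_kv_heads * head_dim values per layer per token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value


# Assumed configurations (not stated in this blog), 16-bit values.
mha = kv_cache_bytes_per_token(n_layers=32, n_kv_heads=32, head_dim=128)  # Llama 2 7B
gqa = kv_cache_bytes_per_token(n_layers=32, n_kv_heads=8, head_dim=128)   # Llama 3 8B

# With 32 query heads and 8 KV heads, every 4 consecutive query heads share one KV head.
print([kv_head_for_query_head(h, 32, 8) for h in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
print(f"MHA: {mha // 1024} KiB/token, GQA: {gqa // 1024} KiB/token")
```

Under these assumptions the KV cache shrinks by a factor of four per token, which is the saving that matters most at large batch sizes and long sequences.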

This blog focuses on inference performance tests of the Llama 3 models running on the Dell PowerEdge XE9680 server, and especially on the comparison with the Llama 2 models, to show the improvements in the new generation of models.

Test setup

The server we used to benchmark the performance is the PowerEdge XE9680 with 8x H100 GPUs[4]. The detailed server configurations are shown in Table 2.

Table 2: XE9680 server configuration

System Name | PowerEdge XE9680 |

Status | Available |

System Type | Data Center |

Number of Nodes | 1 |

Host Processor Model | 4th Generation Intel® Xeon® Scalable Processors |

Host Processor Name | Intel® Xeon® Platinum 8470 |

Host Processors per Node | 2 |

Host Processor Core Count | 52 |

Host Processor Frequency | 2.0 GHz, 3.8 GHz Turbo Boost |

Host Memory Capacity and Type | 2TB, 32x 64 GB DIMM, 4800 MT/s DDR5 |

Host Storage Capacity | 1.8 TB, NVME |

GPU Number and Name | 8x H100 |

GPU Memory Capacity and Type | 80GB, HBM3 |

GPU High-speed Interface | PCIe Gen5 / NVLink Gen4 |

The XE9680 is optimized for AI workloads, with eight H100 GPUs interconnected through NVSwitch. The high-speed NVLink interconnect allows deployment of large models like Llama 3 70B that need to span multiple GPUs to meet performance and memory capacity requirements. With its 10 PCIe slots, the XE9680 also provides a flexible PCIe architecture that enables a variety of AI fabric options.

For these tests, we deployed the Llama 3 models Meta-Llama-3-8B and Meta-Llama-3-70B, and the Llama 2 models Llama-2-7b-hf and Llama-2-70b-hf. These models are available for download from Hugging Face once Meta approves the access request. For a fair comparison between Llama 2 and Llama 3 models, we ran the models with native precision (float16 for Llama 2 models and bfloat16 for Llama 3 models) instead of any quantized precision.

Because it has the same basic model architecture as Llama 2, Llama 3 can easily be integrated into any software ecosystem that currently supports the Llama 2 model. For the experiments in this blog, we chose the latest release of NVIDIA TensorRT-LLM (version 0.9.0) as the inference framework. The NVIDIA CUDA version was 12.4, and the driver version was 550.54.15. The operating system for the experiments was Rocky Linux 9.1.

Because Llama 3 is already known to improve accuracy significantly, we concentrated first on inferencing speed tests. More specifically, we tested the Time-to-First-Token (TTFT) and throughput over different batch sizes for both Llama 2 and Llama 3 models, as shown in the Results section. To make the comparison between the two generations of models easy, and to mimic a summarization task, we kept the input token length and output token length at 2048 and 128 respectively for most of the experiments. Because the longer context window is one of the most significant improvements in Llama 3, we also measured its throughput with the long input token length (8192). Because H100 GPUs support the fp8 data format for the models with negligible accuracy loss, we measured the throughput under the long input token length for the Llama 3 model at fp8 precision.
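The two metrics can be computed from per-request timestamps. The sketch below shows one common way to define them. The blog does not state exactly how TensorRT-LLM's benchmark computes throughput, so the definition used here (generated tokens divided by the wall-clock time of the batch) is an assumption, and the `RequestTiming` type is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RequestTiming:
    start_s: float        # time the request was submitted
    first_token_s: float  # time the first output token arrived
    end_s: float          # time the last output token arrived
    output_tokens: int    # number of generated tokens


def time_to_first_token(t: RequestTiming) -> float:
    """Seconds from submitting the prompt to receiving the first output token."""
    return t.first_token_s - t.start_s


def batch_throughput(timings: List[RequestTiming]) -> float:
    """Generated tokens per second over the wall-clock time of the whole batch."""
    total_tokens = sum(t.output_tokens for t in timings)
    wall_time_s = max(t.end_s for t in timings) - min(t.start_s for t in timings)
    return total_tokens / wall_time_s


# Illustrative numbers only, not measurements from this blog:
# a batch of 4 requests, each generating 128 tokens in 4 seconds.
batch = [RequestTiming(0.0, 0.5, 4.0, 128) for _ in range(4)]
print(time_to_first_token(batch[0]))  # 0.5
print(batch_throughput(batch))        # 128.0
```

TTFT is dominated by the prefill over the 2048 input tokens, while throughput reflects how quickly the decoding stage produces the 128 output tokens for every request in the batch.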

Results

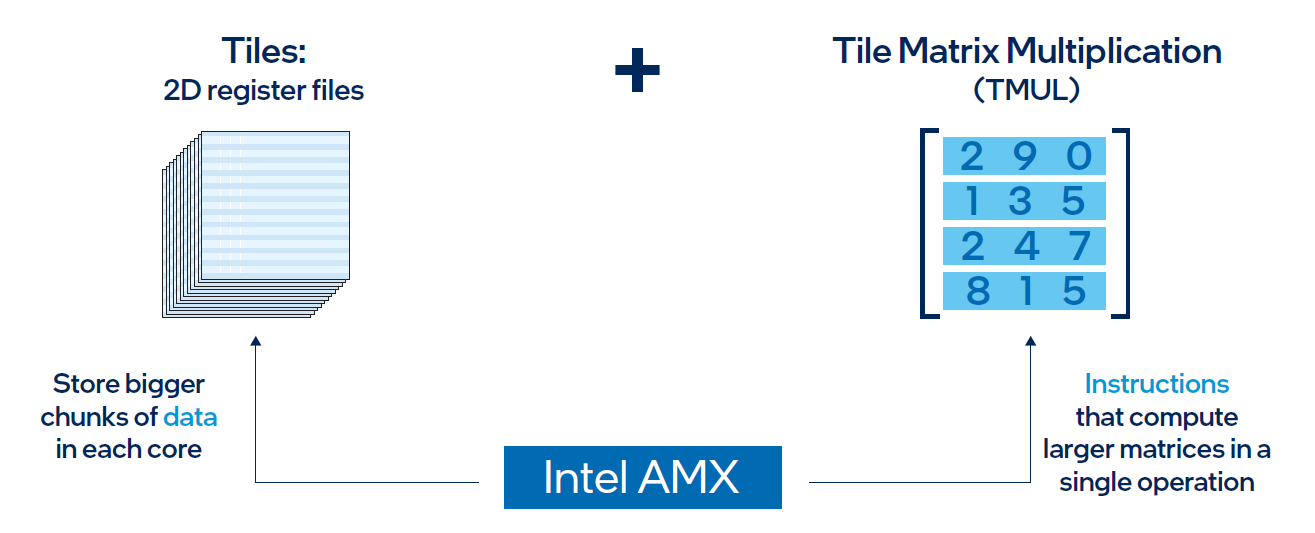

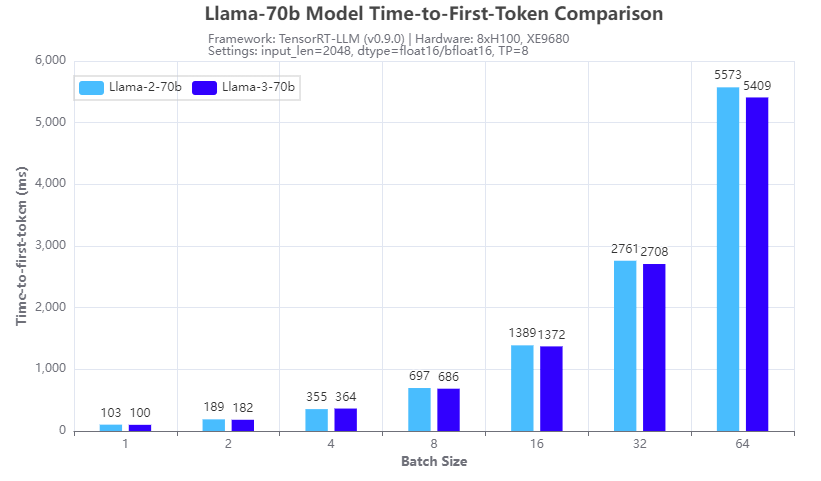

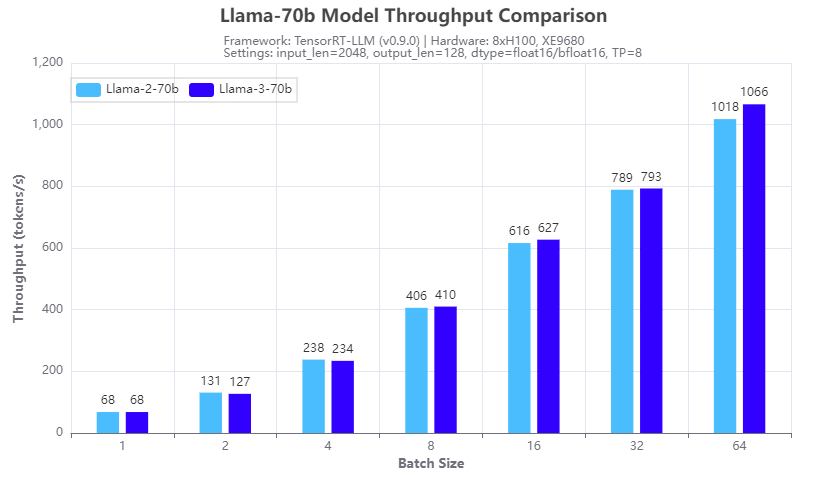

Figure 1. Inference speed comparison: Llama-3-70b vs Llama-2-70b: Time-to-First-Token

Figure 2: Inference speed comparison: Llama-3-70b vs Llama-2-70b: Throughput

Figures 1 and 2 show the inference speed comparison between the 70B Llama 2 (Llama-2-70b) and Llama 3 (Llama-3-70b) models running across eight H100 GPUs in a tensor parallel (TP=8) fashion on an XE9680 server. From the test results, we can see that for both TTFT (Figure 1) and throughput (Figure 2), the Llama 3 70B model has a similar inference speed to the Llama 2 70B model. This is expected given the same size and architecture of the two models. So, by deploying Llama 3 instead of Llama 2 on an XE9680, organizations can immediately see a big boost in accuracy and quality of responses, using the same software infrastructure, without any impact on the latency or throughput of the responses.

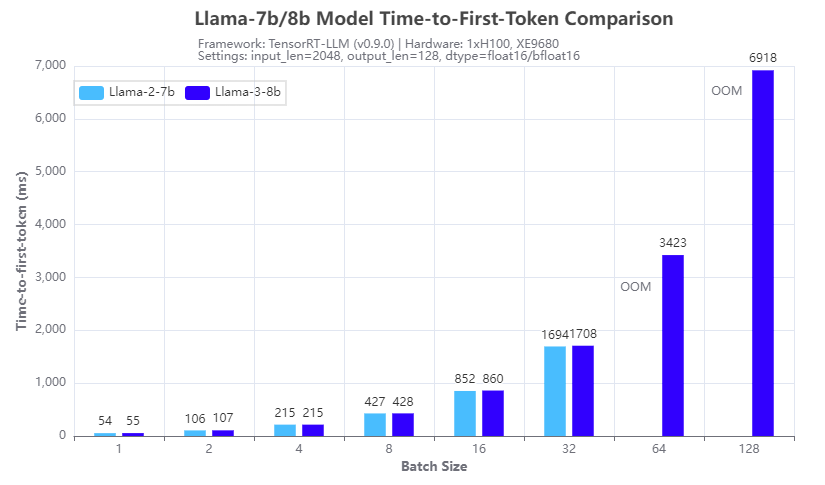

Figure 3. Inference speed comparison: Llama-3-8b vs Llama-2-7b: Time-to-First-Token

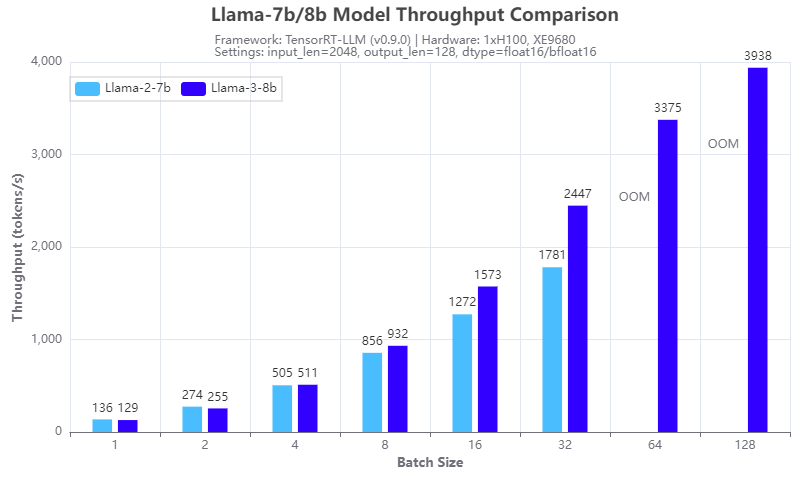

Figure 4: Inference speed comparison: Llama-3-8b vs Llama-2-7b: Throughput

Figures 3 and 4 show the inference speed comparison between the 7B Llama 2 (Llama-2-7b) and 8B Llama 3 (Llama-3-8b) models running on a single H100 GPU on an XE9680 server. From the results, we can see the benefits of using grouped-query attention (GQA) in the Llama 3 8B architecture: it reduces the GPU memory footprint in the prefill stage and speeds up the calculation in the decoding stage of LLM inferencing. Figure 3 shows that Llama 3 8B has a similar response time in generating the first token even though it is a 15% larger model compared to Llama-2-7b. Figure 4 shows that Llama-3-8b has higher throughput than Llama-2-7b when the batch size is 4 or larger. The benefits of GQA grow as the batch size increases. We can see from the experiments that:

- Llama-2-7b cannot run at a batch size of 64 or larger with 16-bit precision and the given input/output token lengths, because of an out-of-memory (OOM) error

- Llama-3-8b can run at a batch size of 128, which gives more than 2x the throughput with the same hardware configuration
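A back-of-the-envelope estimate is consistent with these two observations. The sketch below adds the 16-bit weight size to the KV-cache size at the tested sequence length (2048 input plus 128 output tokens). The parameter counts (6.74B and 8.03B) and the layer and head counts are taken from the published model configurations, not from this blog, and the estimate ignores activations and framework workspace, so it is a lower bound rather than a measurement.

```python
GIB = 1024 ** 3
GPU_MEMORY_GIB = 80  # H100 capacity from Table 2, treated as GiB for this rough check


def estimated_footprint_gib(n_params: float, n_layers: int, n_kv_heads: int,
                            head_dim: int, batch_size: int, seq_len: int,
                            bytes_per_value: int = 2) -> float:
    """Weights plus KV cache, in GiB. Activations and workspace are ignored."""
    weights = n_params * bytes_per_value
    kv_per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value
    kv_cache = kv_per_token * batch_size * seq_len
    return (weights + kv_cache) / GIB


SEQ_LEN = 2048 + 128  # input plus output tokens used in the experiments

# Assumed model configurations (not stated in this blog).
llama2_7b = estimated_footprint_gib(6.74e9, 32, 32, 128, batch_size=64, seq_len=SEQ_LEN)
llama3_8b = estimated_footprint_gib(8.03e9, 32, 8, 128, batch_size=128, seq_len=SEQ_LEN)

print(f"Llama-2-7b, batch 64:  {llama2_7b:.1f} GiB, fits: {llama2_7b < GPU_MEMORY_GIB}")
print(f"Llama-3-8b, batch 128: {llama3_8b:.1f} GiB, fits: {llama3_8b < GPU_MEMORY_GIB}")
```

Under these assumptions, Llama-2-7b at a batch size of 64 already needs slightly more than the GPU can hold, while Llama-3-8b at twice the batch size needs roughly 49 GiB, because its KV cache is four times smaller per token.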

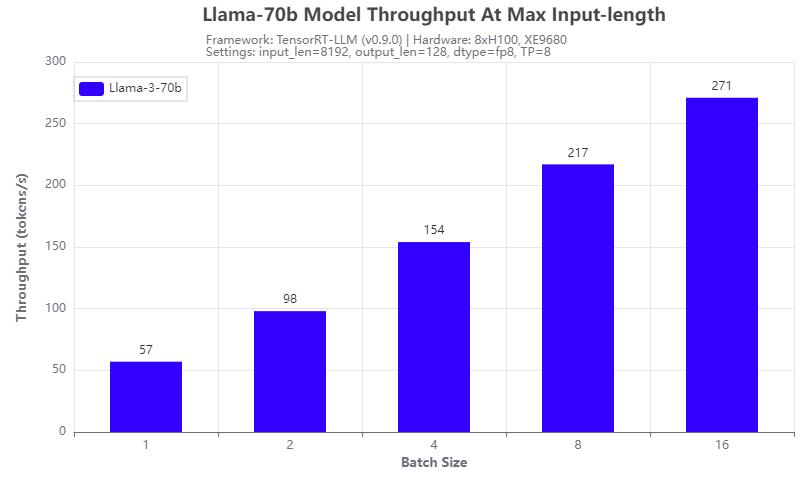

Figure 5: Llama-3-70b throughput under 8192 input token length

Another improvement of the Llama 3 model is that it supports a maximum input token length of 8192, which is twice that of a Llama 2 model. We tested it with the Llama-3-70b model running on the 8 H100 GPUs of the XE9680 server. The results are shown in Figure 5. The throughput is linearly proportional to the batch size tested and reaches 271 tokens/s at a batch size of 16, which suggests that more aggressive quantization techniques can further improve the throughput.

Conclusion

In this blog, we investigated the recently released Llama 3 models by comparing their inferencing speed with that of the Llama 2 models on a Dell PowerEdge XE9680 server. With the numbers collected through experiments, we showed that not only is the Llama 3 model series a big leap in terms of the quality of responses, it also has great inferencing advantages in terms of high throughput with a large achievable batch size, and long input token length. This makes Llama 3 models great candidates for long-context and offline processing applications.

Authors: Tao Zhang, Khushboo Rathi, and Onur Celebioglu

[1] Meta AI, “Introducing Meta Llama 3: The most capable openly available LLM to date”, https://ai.meta.com/blog/meta-llama-3/.

[3] J. Ainslie et al., “GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints”, https://arxiv.org/abs/2305.13245

MLPerf™ Inference 4.0 on Dell PowerEdge Server with Intel® 5th Generation Xeon® CPU

Mon, 22 Apr 2024 05:40:38 -0000


Introduction

In this blog, we present the MLPerf™ v4.0 Data Center Inference results obtained on a Dell PowerEdge R760 with the latest 5th Generation Intel® Xeon® Scalable Processors (a CPU only system).

These new Intel® Xeon® processors use an Intel® AMX matrix multiplication engine in each core to boost overall inferencing performance. With a focus on ease of use, Dell Technologies delivers exceptional CPU performance results out of the box with an optimized BIOS profile that fully unleashes the power of Intel’s oneDNN software, which is fully integrated with both the PyTorch and TensorFlow frameworks. The server configurations and the CPU specifications in the benchmark experiments are shown in Tables 1 and 2, respectively.

Table 1. Dell PowerEdge R760 Server Configuration

System Name | PowerEdge R760 |

Status | Available |

System Type | Data Center |

Number of Nodes | 1 |

Host Processor Model | 5th Generation Intel® Xeon® Scalable Processors |

Host Processors per Node | 2 |

Host Processor Core Count | 64 |

Host Processor Frequency | 1.9 GHz, 3.9 GHz Turbo Boost |

Host Memory Capacity | 2 TB, 16 x 128 GB 5600 MT/s |

Host Storage Capacity | 7.68TB, NVME |

Table 2. 5th Generation Intel® Xeon® Scalable Processor Technical Specifications

Product Collection | 5th Generation Intel® Xeon® Scalable Processors |

Processor Name | Platinum 8592+ |

Status | Launched |

# of CPU Cores | 64 |

# of Threads | 128 |

Base Frequency | 1.9 GHz |

Max Turbo Speed | 3.9 GHz |

Cache L3 | 320 MB |

Memory Type | DDR5 5600 MT/s |

ECC Memory Supported | Yes |

MLPerf™ Inference v4.0 - Datacenter

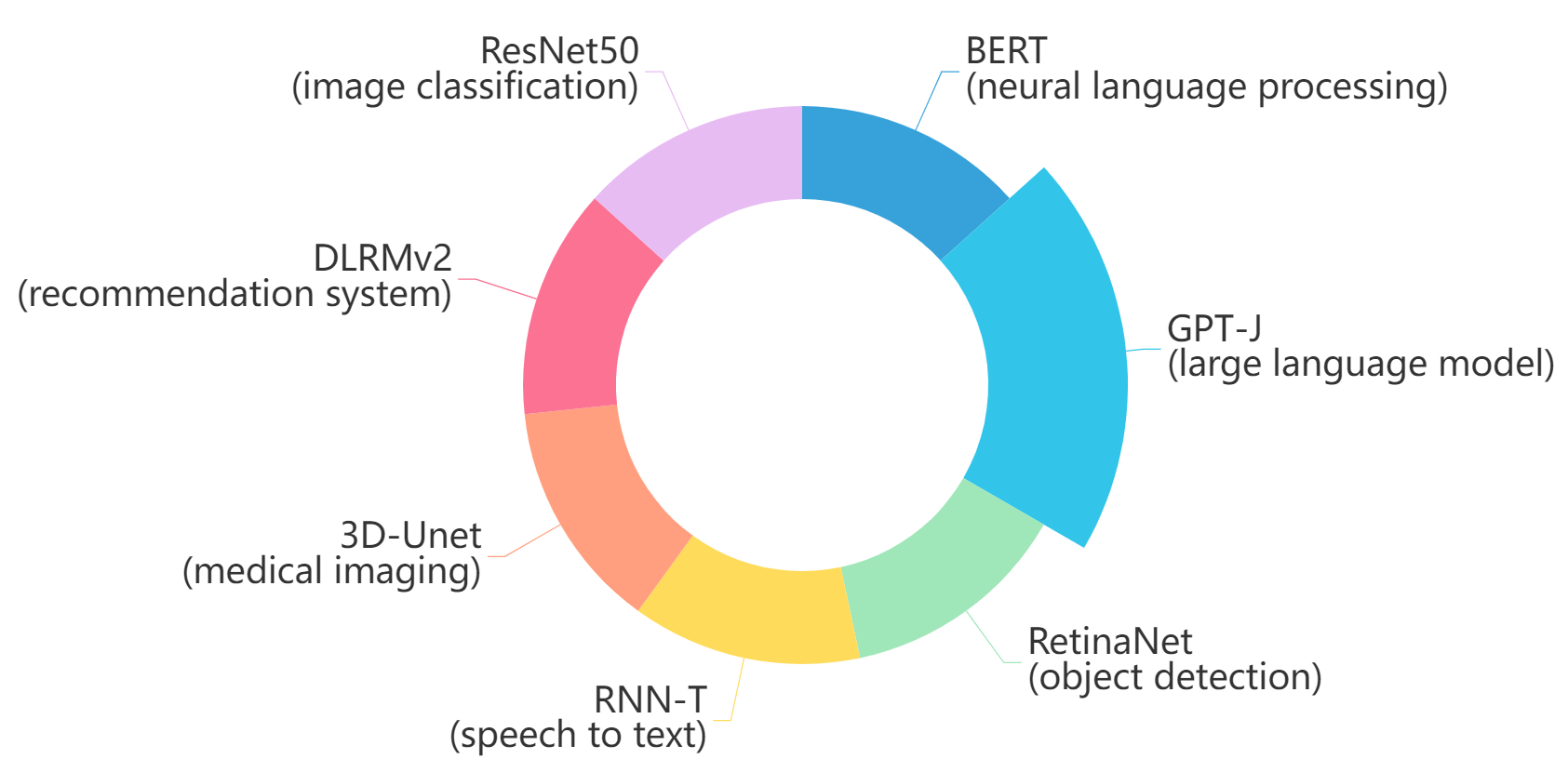

The MLPerf™ inference benchmark measures how fast a system can perform ML inference using a trained model with new data in a variety of deployment scenarios. There are two benchmark suites, one for Datacenter systems and one for Edge. Figure 1 shows the 7 models, each targeting a different task, in the official v4.0 release for the Datacenter systems category that were run on this PowerEdge R760 and submitted in the closed category. The dataset and quality target are defined for each model for benchmarking, as listed in Table 3.

Figure 1. Benchmarked models for MLPerf™ datacenter inference v4.0

Table 3. Datacenter Suite Benchmarks. Source: MLCommons™

Area | Task | Model | Dataset | QSL Size | Quality | Server latency constraint |

Vision | Image classification | ResNet50-v1.5 | ImageNet (224x224) | 1024 | 99% of FP32 (76.46%) | 15 ms |

Vision | Object detection | RetinaNet | OpenImages (800x800) | 64 | 99% of FP32 (0.20 mAP) | 100 ms |

Vision | Medical imaging | 3D-Unet | KITS 2019 (602x512x512) | 16 | 99.9% of FP32 (0.86330 mean DICE score) | N/A |

Speech | Speech-to-text | RNN-T | Librispeech dev-clean (samples < 15 seconds) | 2513 | 99% of FP32 (1 - WER, where WER=7.452253714852645%) | 1000 ms |

Language | Language processing | BERT-large | SQuAD v1.1 (max_seq_len=384) | 10833 | 99% of FP32 and 99.9% of FP32 (f1_score=90.874%) | 130 ms |

Language | Summarization | GPT-J | CNN Dailymail (v3.0.0, max_seq_len=2048) | 13368 | 99% of FP32 (f1_score=80.25% rouge1=42.9865, rouge2=20.1235, rougeL=29.9881). | 20 s |

Commerce | Recommendation | DLRMv2 | Criteo 4TB multi-hot | 204800 | 99% of FP32 (AUC=80.25%) | 60 ms |
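The Quality column expresses each target as a fraction of the FP32 reference score. As a small worked example, the sketch below converts two of the rows in Table 3 into the minimum score a submission must reach. The helper name is illustrative, and the reference values are copied from the table.

```python
def min_required_quality(fp32_reference: float, target_fraction: float) -> float:
    """Lowest score that still satisfies the quality target."""
    return fp32_reference * target_fraction


# Reference values copied from Table 3 (in percent).
resnet50_99 = min_required_quality(76.46, 0.99)    # ResNet50-v1.5 top-1 accuracy
bert_99 = min_required_quality(90.874, 0.99)       # BERT-large F1, 99% target
bert_999 = min_required_quality(90.874, 0.999)     # BERT-large F1, 99.9% target

print(f"ResNet50 99%: {resnet50_99:.4f}")
print(f"BERT 99%:     {bert_99:.4f}")
print(f"BERT 99.9%:   {bert_999:.4f}")
```

For ResNet50 this works out to roughly 75.70% top-1 accuracy, and the 99.9% BERT target leaves less than a tenth of a point of F1 headroom, which is why the high-accuracy variants are harder to meet with reduced precision.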

Scenarios

The models are deployed in a variety of critical inference applications or use cases known as “scenarios” where each scenario requires different metrics, demonstrating production environment performance in practice. Following is the description of each scenario. Table 4 shows the scenarios required for each Datacenter benchmark included in this submission v4.0.

Offline scenario: represents applications that process input in batches, where all data is available immediately and there are no latency constraints. Performance is measured in samples per second.

Server scenario: represents deployment of online applications with random input queries. Performance is measured in queries per second (QPS) subject to a latency bound. The server scenario is more complicated in terms of latency constraints and input query generation. This complexity is reflected in the throughput degradation compared to the offline scenario.
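The difference between the two metrics can be sketched in a few lines. The code below is a simplified illustration and not the MLPerf LoadGen implementation: it assumes queries arrive with exponentially distributed gaps (a Poisson process) and that the latency bound is checked at the 99th percentile. Both are assumptions here, so consult the MLCommons rules for the exact definitions.

```python
import math
import random
from typing import List


def offline_samples_per_second(num_samples: int, elapsed_s: float) -> float:
    """Offline metric: raw throughput with no latency constraint."""
    return num_samples / elapsed_s


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_latency_bound(latencies_ms: List[float], bound_ms: float,
                        pct: float = 99.0) -> bool:
    """Server metric is only valid if the tail latency stays within the bound."""
    return percentile(latencies_ms, pct) <= bound_ms


def poisson_arrival_times(qps: float, num_queries: int, seed: int = 0) -> List[float]:
    """Random query arrival times (seconds) with an average rate of `qps`."""
    rng = random.Random(seed)
    now, times = 0.0, []
    for _ in range(num_queries):
        now += rng.expovariate(qps)
        times.append(now)
    return times


# Illustrative values: 99 fast queries and one slow one against a 15 ms bound.
latencies = [10.0] * 99 + [200.0]
print(offline_samples_per_second(1000, 4.0))         # 250.0
print(meets_latency_bound(latencies, bound_ms=15.0))  # True
```

Because a server run must keep tail latency inside the bound while queries arrive at random times, the achievable QPS is usually lower than the offline samples-per-second figure for the same model.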

Each Datacenter benchmark requires the following scenarios:

Table 4. Datacenter Suite Benchmark Scenarios. Source: MLCommons™

Area | Task | Required Scenarios |

Vision | Image classification | Server, Offline |

Vision | Object detection | Server, Offline |

Vision | Medical imaging | Offline |

Speech | Speech-to-text | Server, Offline |

Language | Language processing | Server, Offline |

Language | Summarization | Server, Offline |

Commerce | Recommendation | Server, Offline |

Software stack and system configuration

The software stack and system configuration used for this submission are summarized in Table 5.

Table 5. System Configuration

OS | CentOS Stream 8 (GNU/Linux x86_64) |

Kernel | 6.7.4-1.el8.elrepo.x86_64 |

Intel® Optimized Inference SW for MLPerf™ | MLPerf™ Intel® OneDNN integrated with Intel® Extension for PyTorch (IPEX) |

ECC memory mode | ON |

Host memory configuration | 2TB, 16 x 128 GB, 1 DIMM per channel, well balanced |

Turbo mode | ON |

CPU frequency governor | Performance |

What is Intel® AMX (Advanced Matrix Extensions)?

Intel® AMX is a built-in accelerator that enables 5th Gen Intel® Xeon® Scalable processors to optimize deep learning (DL) training and inferencing workloads. With the high-speed matrix multiplications enabled by Intel® AMX, 5th Gen Intel® Xeon® Scalable processors can quickly pivot between optimizing general computing and AI workloads.

Imagine an automobile that could excel at city driving and then quickly shift to deliver Formula 1 racing performance. 5th Gen Intel® Xeon® Scalable processors deliver this level of flexibility. Developers can code AI functionality to take advantage of the Intel® AMX instruction set as well as code non-AI functionality to use the processor instruction set architecture (ISA). Intel® has integrated the oneAPI Deep Neural Network Library (oneDNN) – its oneAPI DL engine – into popular open-source tools for AI applications, including TensorFlow, PyTorch, PaddlePaddle, and ONNX.

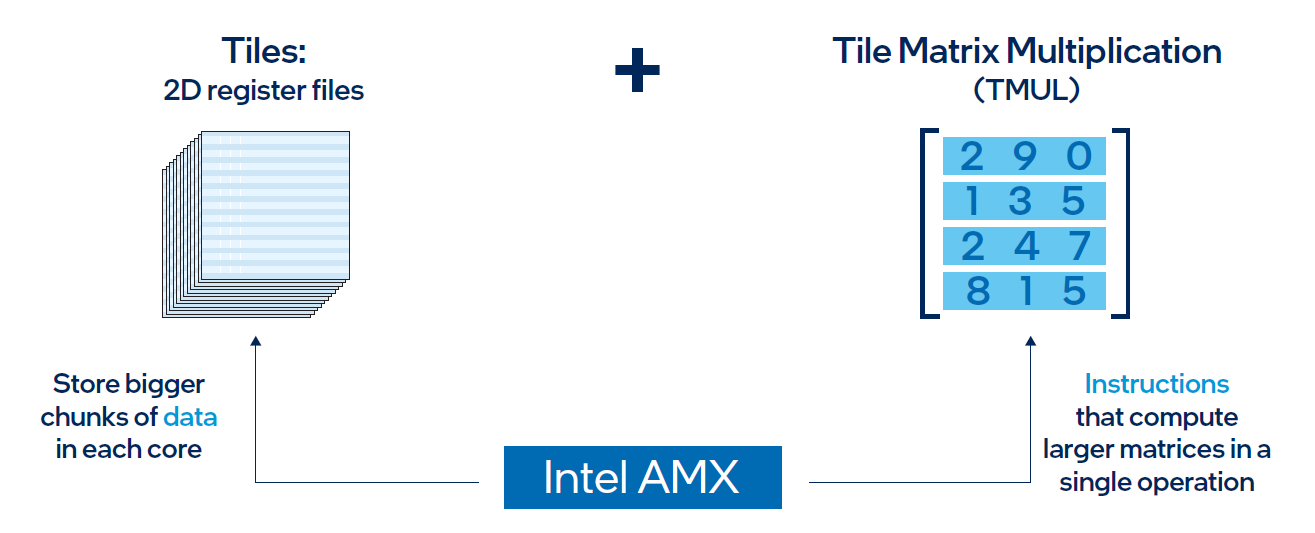

AMX architecture

The Intel® AMX architecture consists of two components, as shown in Figure 2:

- Tiles consist of eight two-dimensional registers, each 1 kilobyte in size. They store large chunks of data.

- Tile Matrix Multiplication (TMUL) is an accelerator engine attached to the tiles that performs matrix-multiply computations for AI.

Figure 2. Intel® AMX architecture consists of 2D register files (tiles) and TMUL
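As a rough mental model of the tiles and TMUL, the sketch below emulates one tile multiply-accumulate in plain Python. The blog only states that each tile is 1 kilobyte. The 16-row by 64-byte tile shape used here is taken from Intel's public AMX documentation and should be treated as an assumption, and the code ignores the packed memory layout that the real instructions expect for the second operand.

```python
from typing import List, Tuple

TILE_ROWS = 16        # assumed maximum rows per tile
TILE_ROW_BYTES = 64   # assumed maximum bytes per row
TILE_BYTES = TILE_ROWS * TILE_ROW_BYTES  # 1024 bytes, matching the 1 KB tile size


def elements_per_tile(bytes_per_element: int) -> Tuple[int, int]:
    """Tile shape (rows, columns) for a given element size."""
    return TILE_ROWS, TILE_ROW_BYTES // bytes_per_element


def tile_matmul_accumulate(c: List[List[int]], a: List[List[int]],
                           b: List[List[int]]) -> List[List[int]]:
    """Functional model of a tile multiply: c (MxN) += a (MxK) @ b (KxN)."""
    for i in range(len(a)):
        for j in range(len(b[0])):
            c[i][j] += sum(a[i][k] * b[k][j] for k in range(len(b)))
    return c


print(elements_per_tile(1))  # int8: (16, 64)
print(elements_per_tile(2))  # bf16: (16, 32)

# One int8 tile multiply: a 16x64 tile times a 64x16 operand into a 16x16 accumulator.
a = [[1] * 64 for _ in range(16)]
b = [[1] * 16 for _ in range(64)]
c = tile_matmul_accumulate([[0] * 16 for _ in range(16)], a, b)
print(c[0][0])  # 64
```

The point of the model is the granularity: a single tile operation consumes a whole block of low-precision values at once, which is why int8 and bf16 inference benefit most.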

Results

Both MLPerf™ v3.1 and MLPerf™ v4.0 benchmark results are based on the Dell R760 server but with different generations of Xeon® CPUs (4th Generation Intel® Xeon® CPUs for MLPerf™ v3.1 versus 5th Generation Intel® Xeon® CPUs for MLPerf™ v4.0) and optimized software stacks. In this section, we show the results side by side so that the improvement from the last submission can be easily observed.

Comparing Performance from MLPerf™ v4.0 to MLPerf™ v3.1

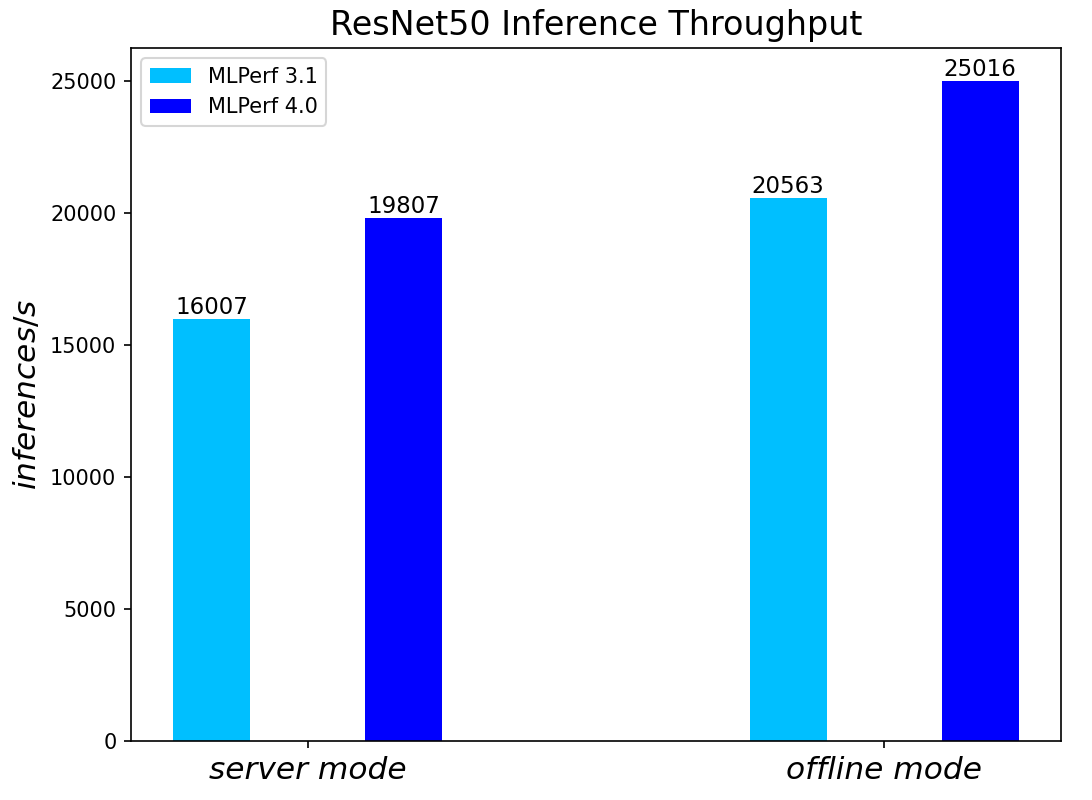

ResNet50 server & offline scenarios:

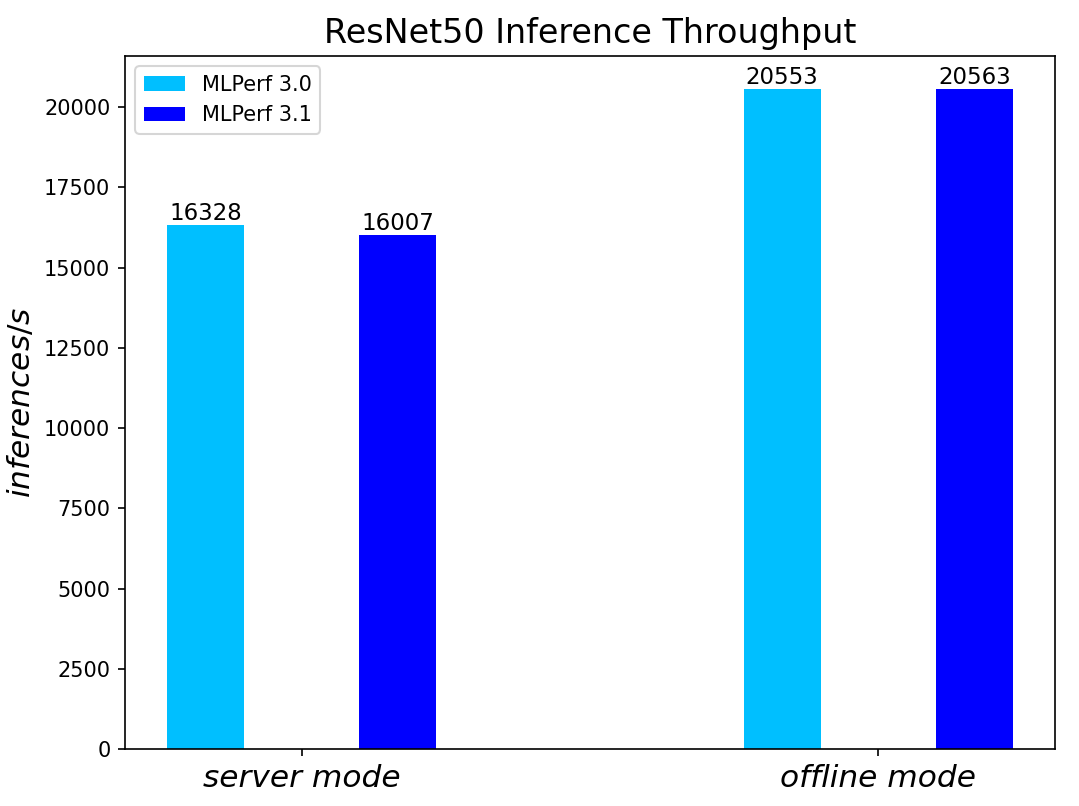

Figure 3. ResNet50 inference throughput in server and offline scenarios

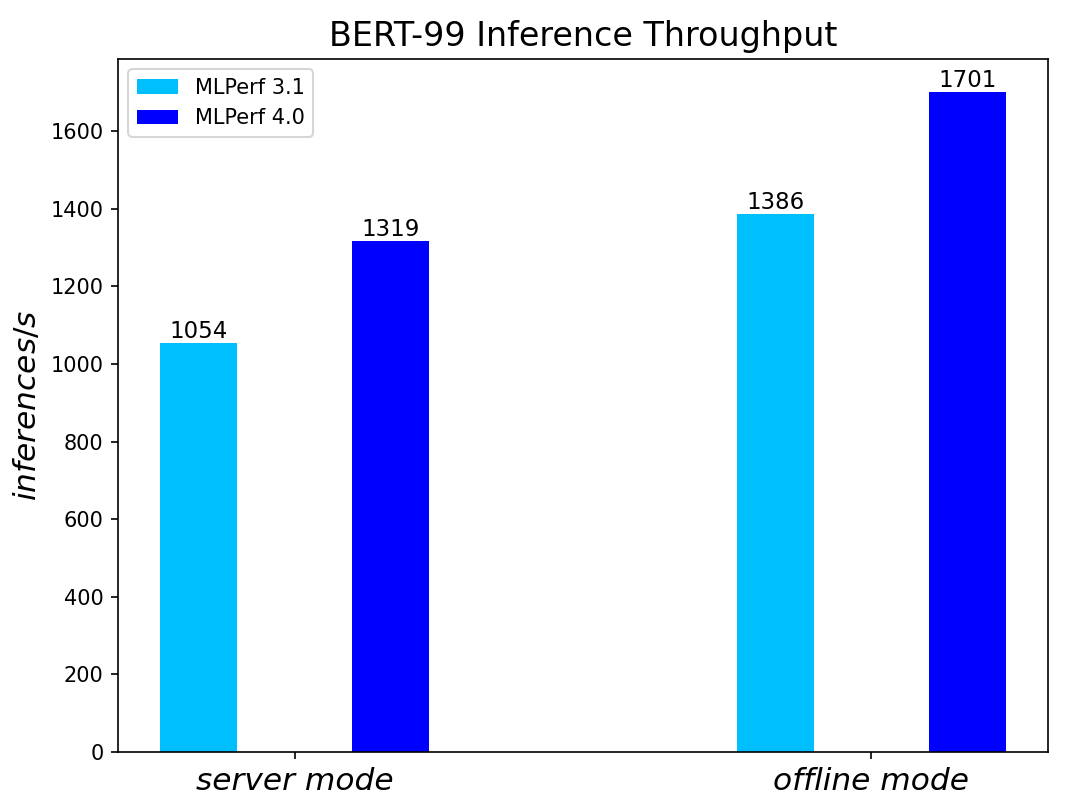

BERT Large Language Model server & offline scenarios:

Figure 4. BERT Inference results for server and offline scenarios

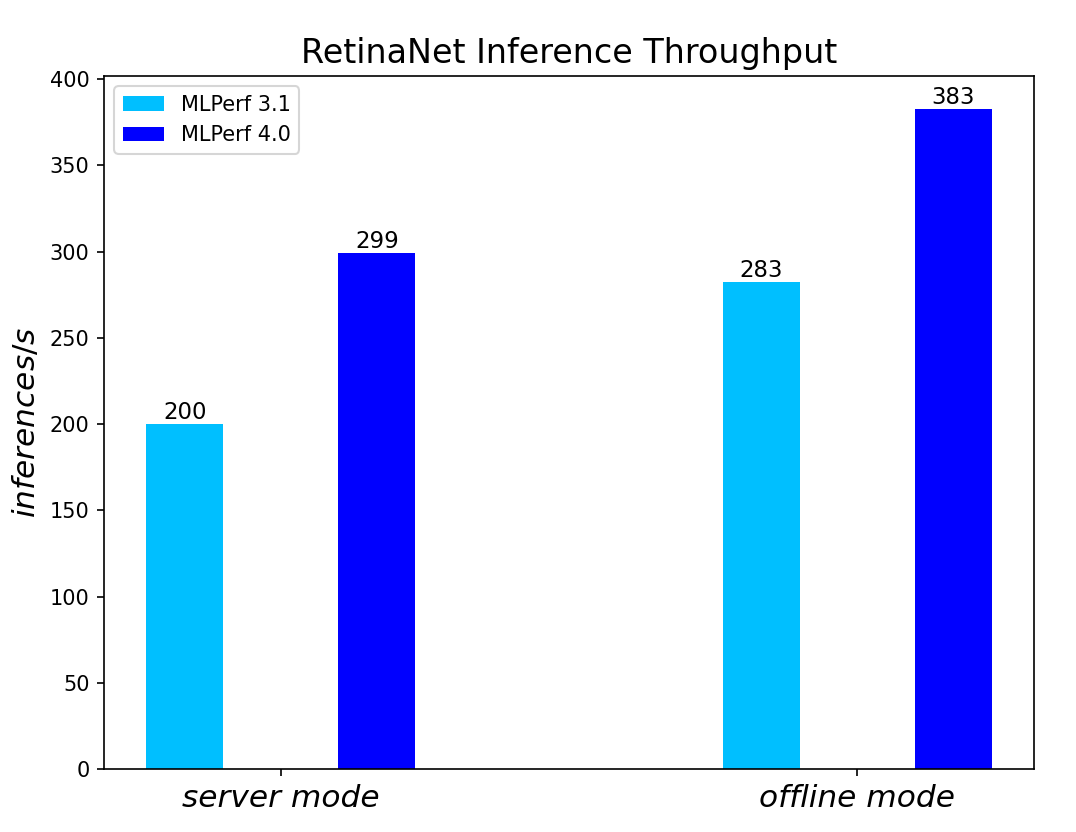

RetinaNet Object Detection Model server & offline scenarios:

Figure 5. RetinaNet Object Detection Model Inference results for server and offline scenarios

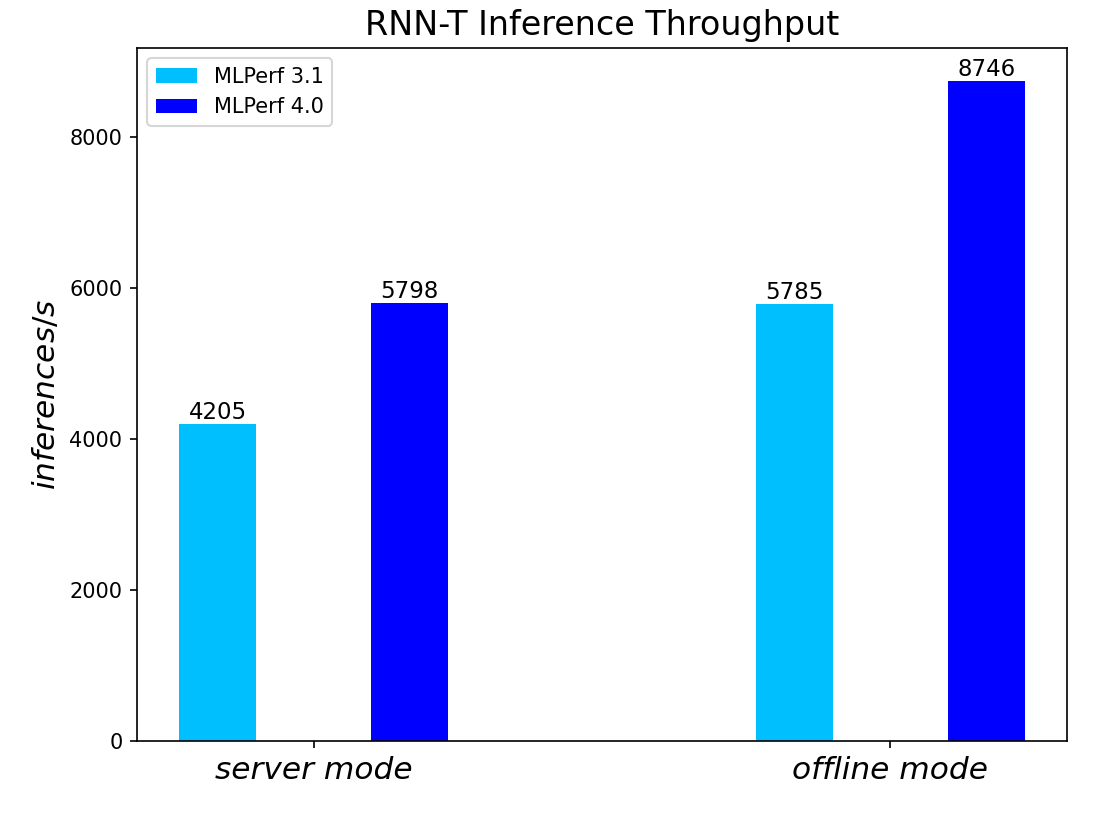

RNN-T Speech-to-Text Model server & offline scenarios:

Figure 6. RNN-T Speech-to-Text Model Inference results for server and offline scenarios

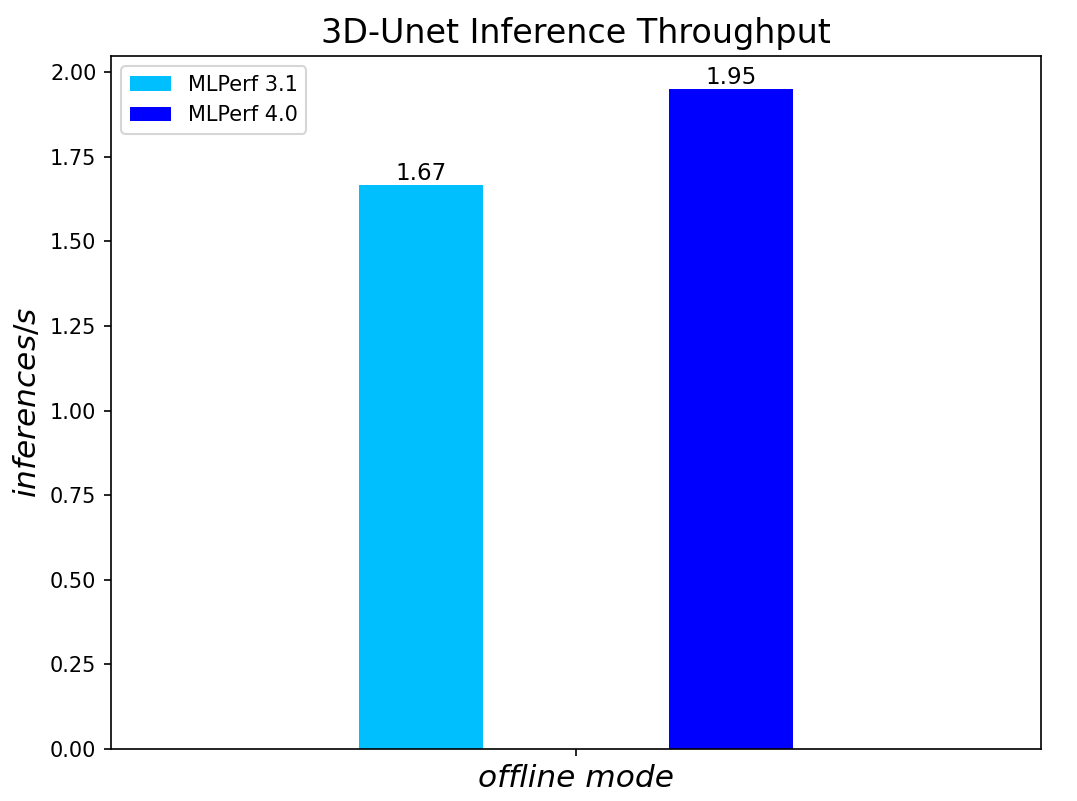

3D-Unet Medical Imaging Model offline scenario:

Figure 7. 3D-Unet Medical Imaging Model Inference results for the offline scenario

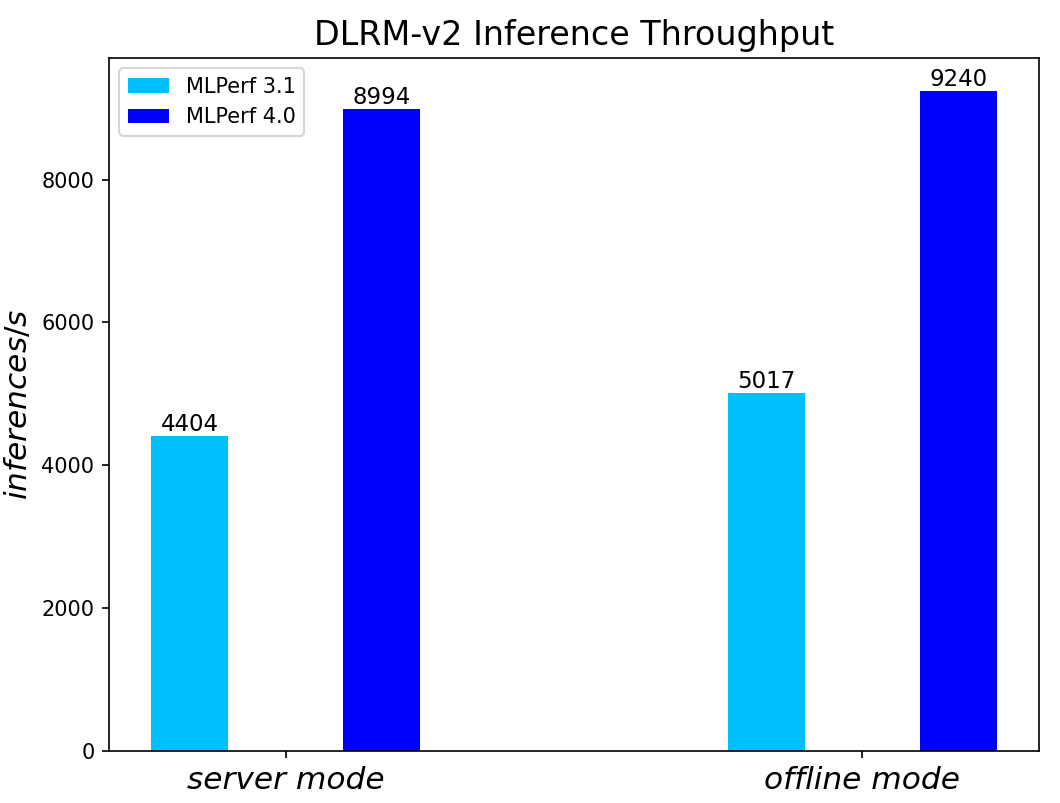

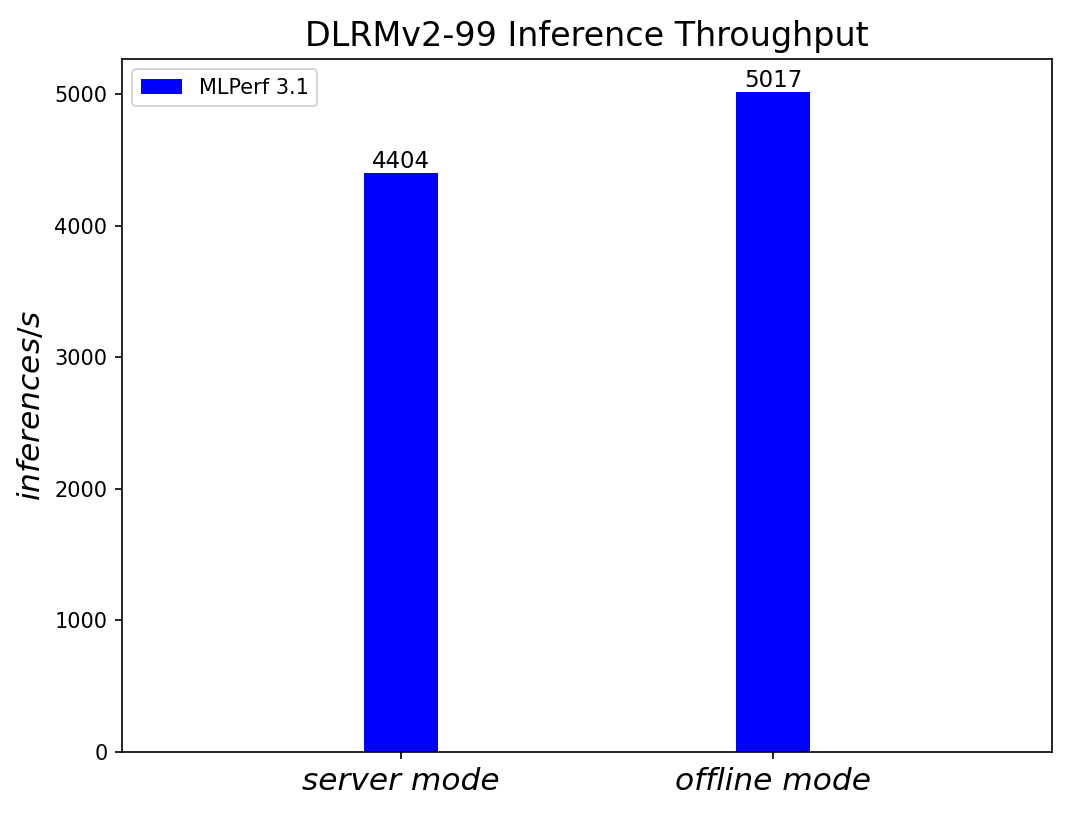

DLRMv2-99 Recommendation Model server & offline scenarios:

Figure 8. DLRMv2-99 Recommendation Model Inference results for server and offline scenarios

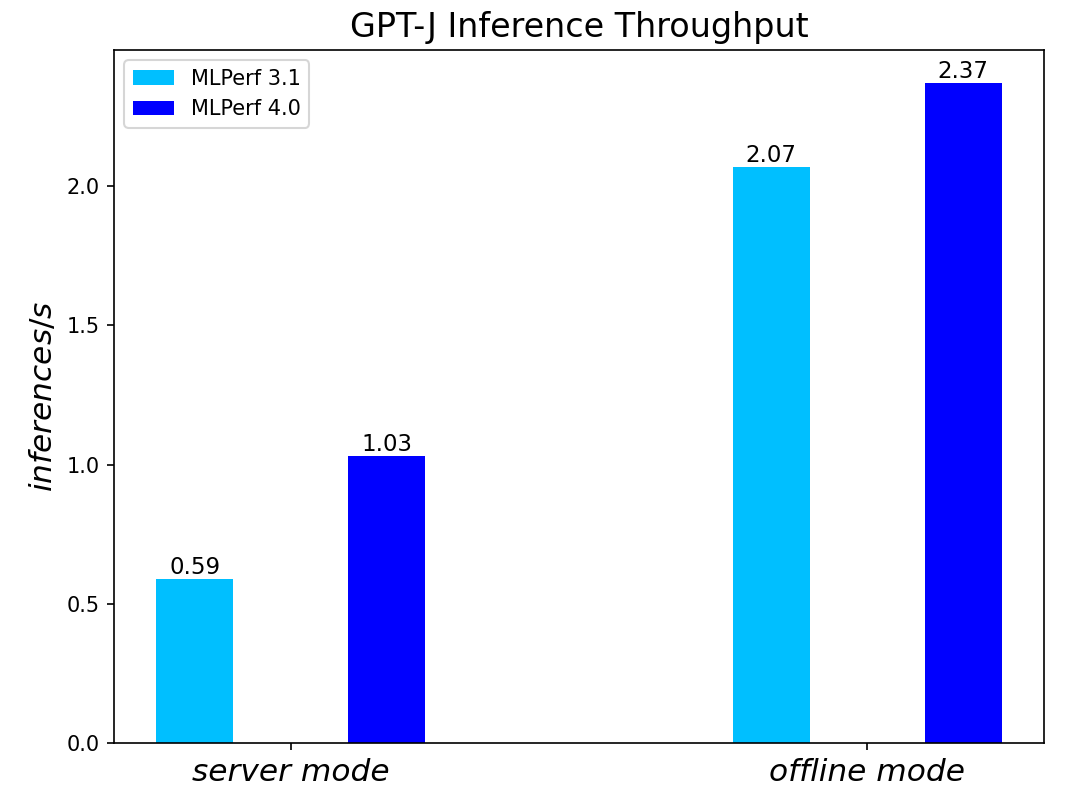

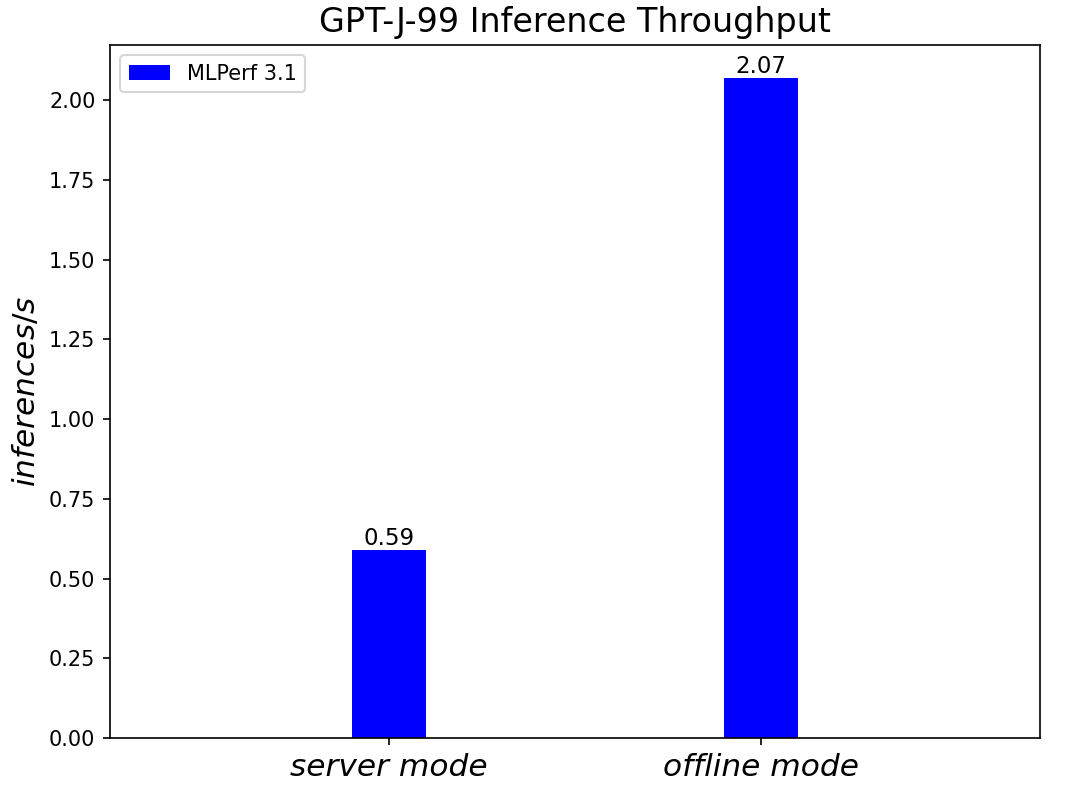

GPT-J-99 Summarization Model server & offline scenarios:

Figure 9. GPT-J-99 Summarization Model Inference results for server and offline scenarios

Conclusion

- The PowerEdge R760 server with 5th Generation Intel® Xeon® Scalable Processors produces strong data center inference performance, confirmed by the official version 4.0 MLPerf™ benchmarking results from MLCommons™.

- The high performance and versatility are demonstrated across natural language processing, image classification, object detection, medical imaging, speech-to-text inference, recommendation, and summarization systems.

- Compared to its prior version 3.0 and 3.1 submissions enabled by 4th Generation Intel® Xeon® Scalable Processors, the R760 with 5th Generation Intel® Xeon® Scalable Processors shows significant performance improvement across different models, including generative AI models like GPT-J.

- The R760 supports different deep learning inference scenarios in the MLPerf™ benchmark scenarios as well as other complex workloads such as database and advanced analytics. It is an ideal solution for data center modernization to drive operational efficiency, lead to higher productivity, and minimize total cost of ownership (TCO).

References

MLCommons™ MLPerf™ v4.0 Inference Benchmark Submission IDs

ID | Submitter | System |

4.0-0026 | Dell | Dell PowerEdge Server R760 (2x Intel® Xeon® Platinum 8592+) |

Investigating the Memory Access Bottlenecks of Running LLMs

Thu, 18 Jan 2024 20:20:03 -0000


Introduction

Memory access and computing are the two main functions in any computer system. In past decades, the computing capability of processors has greatly benefited from Moore’s Law, which brings smaller and faster transistors onto the silicon die almost every year. System memory, on the other hand, has not kept pace: as fabrication technology shrinks, memory access has become much slower relative to compute. This imbalance causes computer system performance to be bottlenecked by memory access, which is referred to as the “memory wall” issue. The issue gets worse for large language model (LLM) applications, because larger models require more memory and more computation, and therefore more memory accesses to execute.

In this blog, we investigate the impact of memory access bottlenecks on LLM inference results. For the experiments, we chose the Llama2 chat models running on a Dell PowerEdge HS5610 server with 4th Generation Intel® Xeon® Scalable Processors. For quantitative analysis, we use Intel’s profiling tool, Intel® VTune™ Profiler, to capture memory access information while running the workload. After identifying the location of the memory access bottlenecks, we propose possible techniques and configurations to mitigate the issues in the conclusion section.

Background

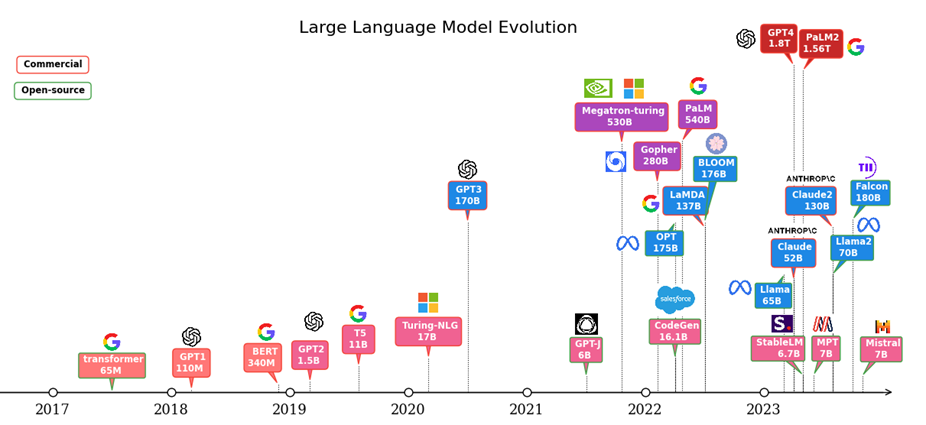

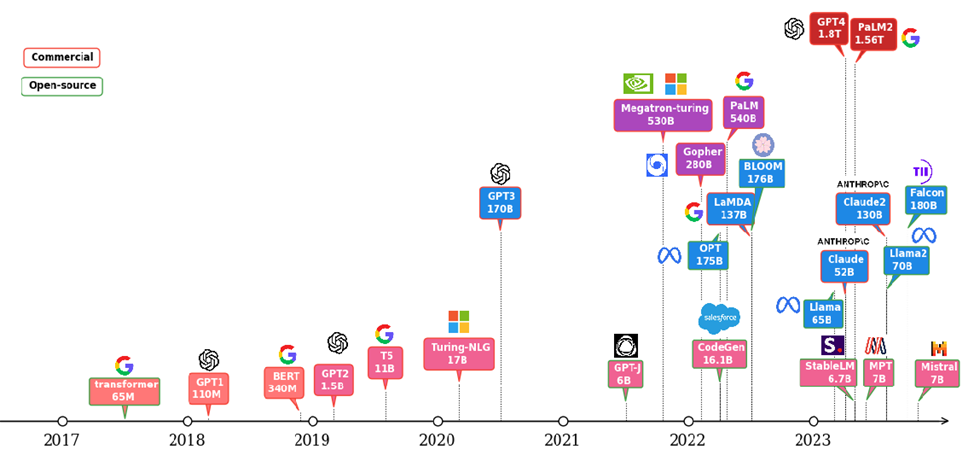

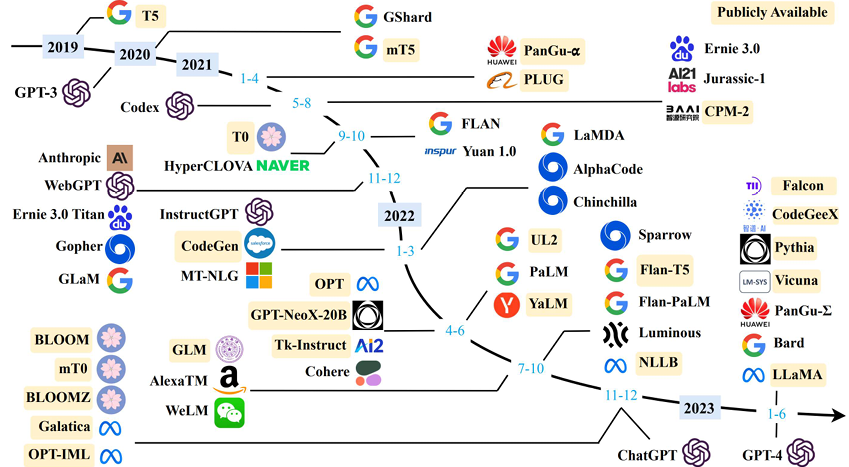

Natural Language Processing (NLP) has greatly benefited from the transformer architecture since it was introduced in 2017 [1]. NLP models have moved to transformer-based architectures because of their parallelization and scalability advantages over traditional Recurrent Neural Network (RNN) architectures. Research shows a scaling law for transformer-based language models, in which accuracy is strongly related to the model size, dataset size, and amount of compute [2]. This inspired interest in using Large Language Models (LLMs) for high-accuracy and complicated tasks. Figure 1 shows the evolution of LLMs since the transformer architecture was invented. We can see that the parameter counts of LLMs have increased dramatically in the last 5 years, and this trend is continuing. As shown in the figure, most of the LLMs today come with more than 7 billion parameters. Some models like GPT4 and PaLM2 have trillion-level parameters to support multimodal features.

Figure 1: LLM evolution

Large models bring challenges for the hardware systems used to train and run them. On the one hand, the computation required is tremendous, as it is proportional to the model size. On the other hand, memory access is expensive. This mainly comes from the off-chip communication and complicated cache architectures required to support the large model parameters and computation.

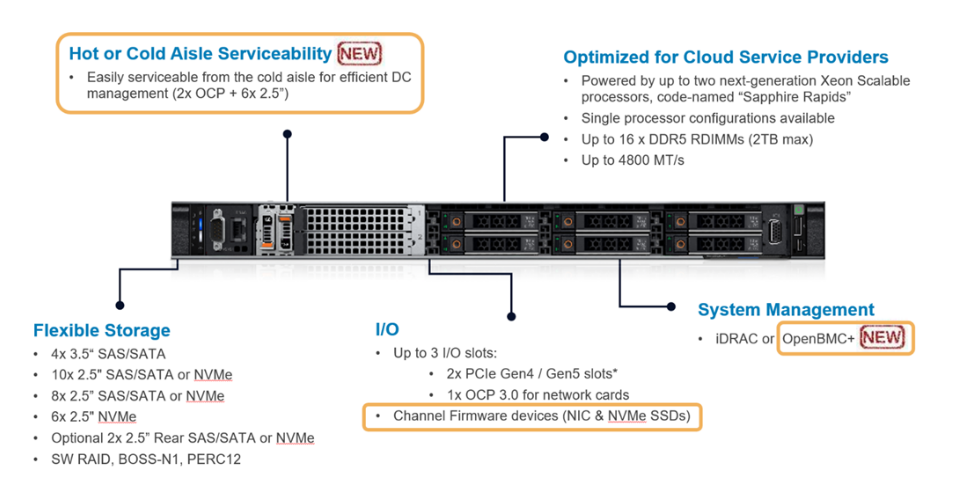

Test Setup

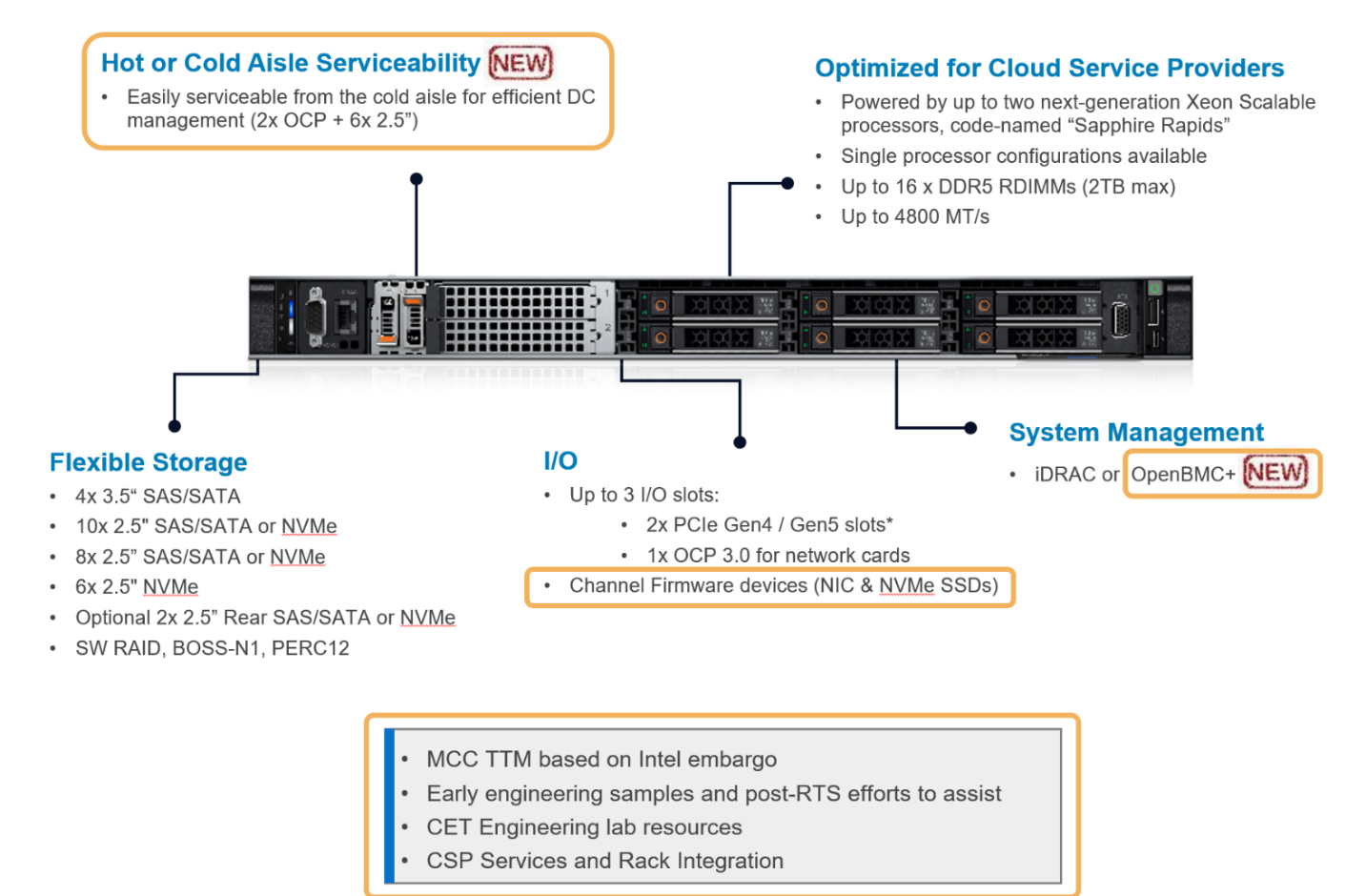

The hardware platform we used for this study is the HS5610, the latest 16G cloud-optimized server in the Dell product portfolio. Figure 2 gives an overview of the HS5610. It is designed with CSP features and offers the full PowerEdge features and management of mainstream Dell servers, as well as open management (OpenBMC), cold aisle service, channel firmware, and services. The server has two sockets, with a 32-core 4th Generation Intel® Xeon® CPU in each socket. The TDP of each CPU is 250W. Table 1 and Table 2 show the details of the server configurations and CPU specifications.

Figure 2: PowerEdge HS5610 [3]

System Name | PowerEdge HS5610 |

Status | Available |

System Type | Data Center |

Number of Nodes | 1 |

Host Processor Model | 4th Generation Intel® Xeon® Scalable Processors |

Host Processors per Node | 2 |

Host Processor Core Count | 32 |

Host Processor Frequency | 2.0 GHz, 3.8 GHz Turbo Boost |

Host Memory Capacity | 1TB, 16 x 64GB DIMM 4800 MT/s |

Host Storage Capacity | 4.8 TB, NVME |

Table 1: HS5610 Server Configurations

Product Collection | 4th Generation Intel® Xeon® Scalable Processors |

Processor Name | Platinum 8480+ |

Status | Launched |

# of CPU Cores | 32 |

# of Threads | 64 |

Base Frequency | 2.0 GHz |

Max Turbo Speed | 3.8 GHz |

Cache L3 | 64 MB |

Memory Type | DDR5 4800 MT/s |

ECC Memory Supported | Yes |

Table 2: 4th Generation 32-core Intel® Xeon® Scalable Processor Technical Specifications

Software Stack and System Configuration

The software stack and system configuration used for this study are summarized in Table 3. The PyTorch framework and Transformers library have been optimized to unleash the AI instruction capabilities of the Xeon CPU. In addition, a low-level tool, Intel® Neural Compressor, has been used for high-accuracy quantization.

OS | CentOS Stream 8 (GNU/Linux x86_64) |

Intel® Optimized Inference SW | OneDNN™ Deep Learning, ONNX, Intel® Extension for PyTorch (IPEX), Intel® Extension for Transformers (ITREX), Intel® Neural Compressor |

ECC memory mode | ON |

Host memory configuration | 1TiB |

Turbo mode | ON |

CPU frequency governor | Performance |

Table 3: Software stack and system configuration

The model under test is the Llama2 chat model with 13 billion parameters (Llama2-13b-chat-hf). The model is based on the pre-trained 13 billion parameter Llama2 model and fine-tuned with human feedback for chatbot applications. The Llama2 model comes in light (7b), medium (13b), and heavy (70b) versions.

The profiling tool used in the experiments is Intel® VTune™. It is a powerful low-level performance analysis tool for x86 CPUs that supports algorithm, micro-architecture, parallelism, and IO-related analysis, among others. For the experiments, we use the memory access analysis under the micro-architecture category. Note that Intel® VTune™ consumes significant hardware resources, which affects the performance results if we run the tool along with the workload. So, we use it as a profiling and debugging tool to investigate the bottleneck. The performance numbers we report here were measured without Intel® VTune™ running.

The experiments are targeted to cover the following:

- Single-socket performance vs dual-socket performance to demonstrate the NUMA memory access impact.

- Performance under different CPU-core numbers within a single socket to demonstrate the local memory access impact.

- Performance with different quantization to demonstrate the quantization impact.

- Intel® VTune™ memory access results.

Because Intel® VTune™ has minimum capture duration and maximum capture size requirements, we focus on capturing the results for the medium-size model (Llama2-13b-chat-hf). This keeps the inference time from being too short or too long, which avoids underloading or overloading the capture. All the experiments use a batch size of 1. Performance is characterized by latency or throughput. To reduce measurement errors, the inference is executed 10 times and the results are averaged. A warm-up process that loads the parameters and runs a sample test is executed before running the defined inference.
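The measurement procedure described above (a warm-up pass followed by 10 timed runs that are averaged) can be sketched as a small harness. This is a minimal illustration and not the script used for the experiments. The `run_inference` callable stands in for whatever generates one response and is hypothetical.

```python
import time
from statistics import mean
from typing import Callable


def benchmark(run_inference: Callable[[], None], num_runs: int = 10,
              num_warmup: int = 1) -> float:
    """Return the mean latency in seconds over num_runs timed executions."""
    # Warm-up: load parameters and caches so the timed runs are representative.
    for _ in range(num_warmup):
        run_inference()
    latencies = []
    for _ in range(num_runs):
        start = time.perf_counter()
        run_inference()
        latencies.append(time.perf_counter() - start)
    return mean(latencies)


def tokens_per_second(new_tokens: int, mean_latency_s: float) -> float:
    """Throughput at batch size 1: generated tokens divided by mean latency."""
    return new_tokens / mean_latency_s


# Stand-in workload so the sketch runs on its own.
mean_latency = benchmark(lambda: sum(range(10_000)))
print(f"mean latency: {mean_latency:.6f} s")
```

With a batch size of 1, latency and throughput are two views of the same measurement, so either can be reported from the averaged timing.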

Results

In this section, we showcase the performance results in terms of throughput for single-socket and dual-socket scenarios under different quantization types, followed by the Intel® VTune™ capture results.

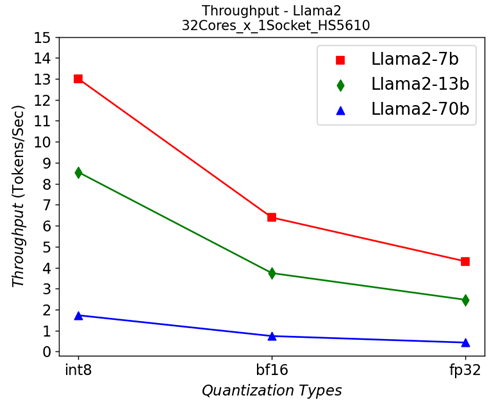

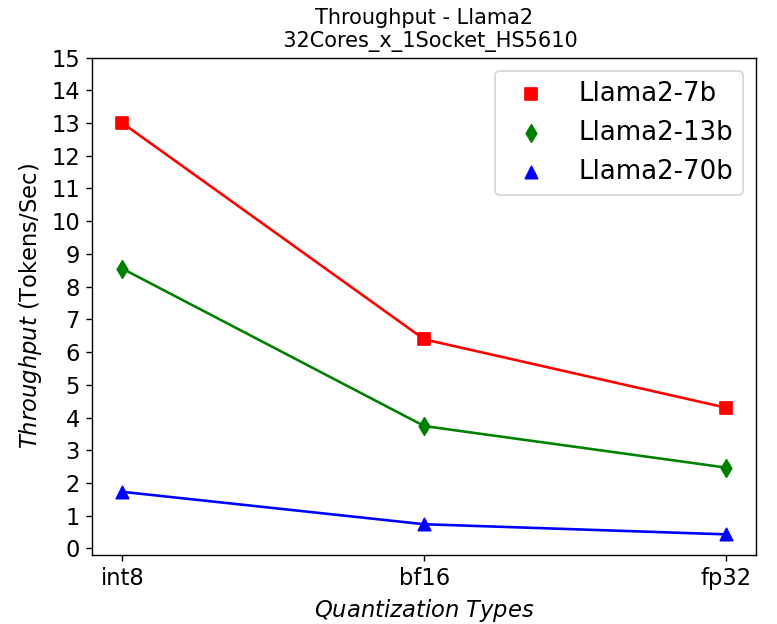

Single-socket Results Under Different Quantization Types:

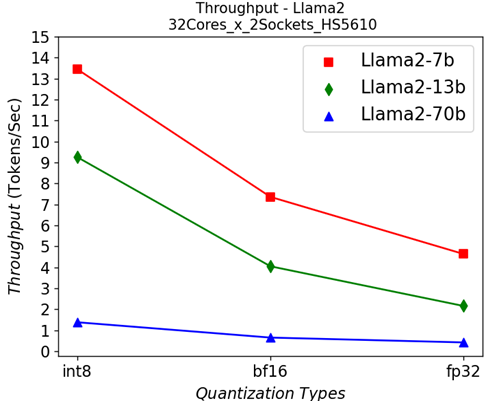

Figure 3: Single-socket throughput in HS5610 server running Llama2 models under different quantization types

Figure 3 shows the throughputs of running different Llama2 chat models with different quantization types on a single socket. The “numactl” command is used to confine the workload within one single 32-core CPU. From the results, we can see that quantization greatly helps to improve the performance across different models.
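For readers who want to reproduce the single-socket setup, the sketch below builds a `numactl` command line that binds both the CPUs and the memory allocations of a workload to one NUMA node. The blog does not list the exact options used, so the `--cpunodebind` and `--membind` choice is an assumption, and the script name is hypothetical.

```python
import shlex
from typing import List


def numactl_command(workload: List[str], node: int = 0) -> List[str]:
    """Prefix a command so that it runs on, and allocates from, one NUMA node."""
    return ["numactl", f"--cpunodebind={node}", f"--membind={node}", *workload]


# Hypothetical inference script; replace with the actual workload.
cmd = numactl_command(["python", "run_llama2.py"], node=0)
print(shlex.join(cmd))
# numactl --cpunodebind=0 --membind=0 python run_llama2.py
```

The resulting list can be passed to `subprocess.run`. Binding memory as well as CPUs matters here, because otherwise pages may be allocated on the remote socket and the run would no longer isolate local memory access.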


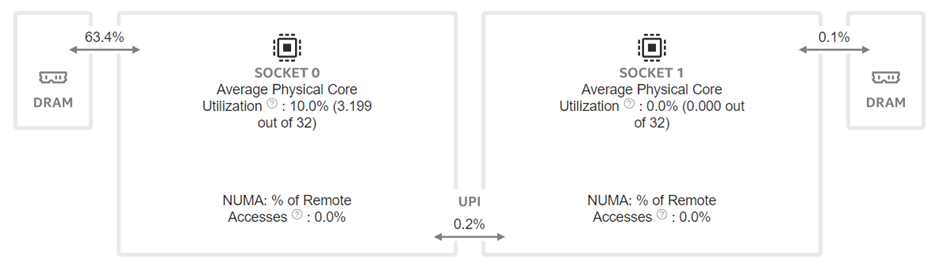

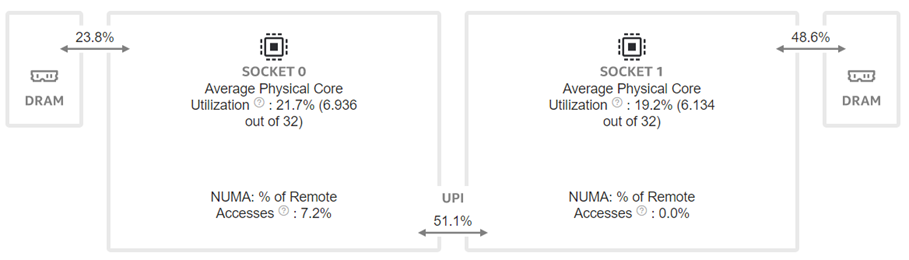

Figure 4: Intel® VTune™ memory analysis results for single-socket fp32 results:

(a). bandwidth and utilization diagram (b). elapsed time analysis


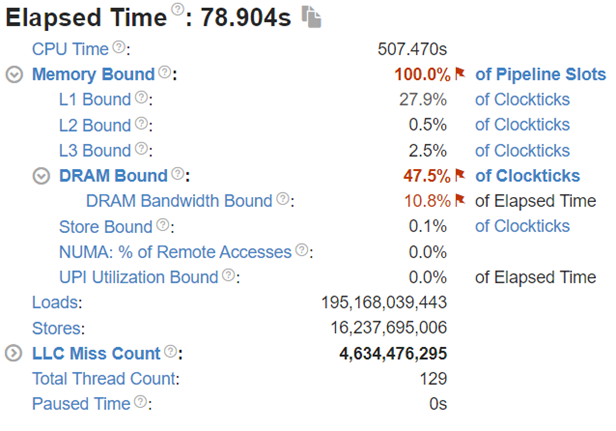


Figure 5: Intel® VTune™ memory analysis results for single-socket bf16 results:

(a). bandwidth and utilization diagram (b). elapsed time analysis

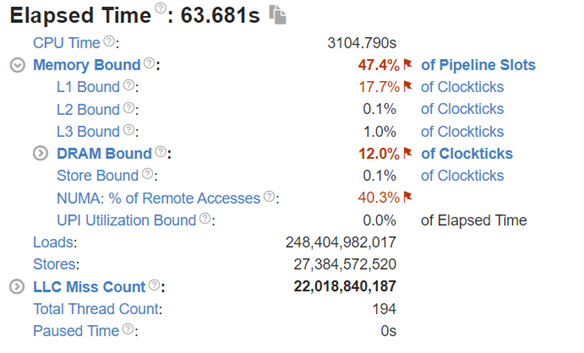

To better understand what happens at the lower level, we take the Llama2 13 billion parameter model as an example. We use Intel® VTune™ to capture the bandwidth and utilization diagram and the elapsed time analysis for the fp32 data type (shown in Figure 4) and the bf16 data type (shown in Figure 5). We can see that by reducing the number of bits used to represent each value, the bandwidth required for communication between the CPU and DRAM is reduced. In this scenario, the DRAM utilization drops from 63.4% for fp32 (shown in Figure 4 (a)) to 28.7% for bf16 (shown in Figure 5 (a)). This also indicates that the weight data can arrive at the CPU chip more quickly, so we benefit from the quicker memory communication. The CPU utilization also increases from 10% for fp32 (shown in Figure 4 (a)) to 15.6% for bf16 (shown in Figure 5 (a)). Both faster memory access and better CPU utilization translate to better performance, with a throughput boost of more than 50% (from 2.47 tokens/s for fp32 to 3.74 tokens/s for bf16) as shown in Figure 3. Diving deeper with the elapsed time analysis shown in Figure 4 (b) and Figure 5 (b), the L1 cache is one of the performance bottleneck locations on the chip. Quantization reduces the possibility that the task gets stalled.
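The throughput numbers can be cross-checked with simple arithmetic. The sketch below computes the relative gain and a rough estimate of the weight traffic implied by each data type. The traffic estimate assumes that, at a batch size of 1, every generated token streams all 13 billion weights from DRAM exactly once and ignores caches, the KV cache, and activations, so it is a sanity check rather than a reproduction of the VTune measurements.

```python
def relative_gain(before: float, after: float) -> float:
    """Fractional improvement of `after` over `before`."""
    return (after - before) / before


def implied_weight_traffic_gb_s(n_params: float, bytes_per_param: int,
                                tokens_per_s: float) -> float:
    """GB/s of weight reads if each token reads every weight once (assumption)."""
    return n_params * bytes_per_param * tokens_per_s / 1e9


gain = relative_gain(2.47, 3.74)                           # fp32 -> bf16 throughput
fp32_traffic = implied_weight_traffic_gb_s(13e9, 4, 2.47)  # 4 bytes per fp32 weight
bf16_traffic = implied_weight_traffic_gb_s(13e9, 2, 3.74)  # 2 bytes per bf16 weight

print(f"throughput gain: {gain:.1%}")
print(f"fp32 weight traffic: {fp32_traffic:.1f} GB/s")
print(f"bf16 weight traffic: {bf16_traffic:.1f} GB/s")
```

Under this assumption, bf16 delivers about 51% more tokens per second while moving fewer bytes per second than fp32, which matches the direction of the DRAM utilization drop reported above.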

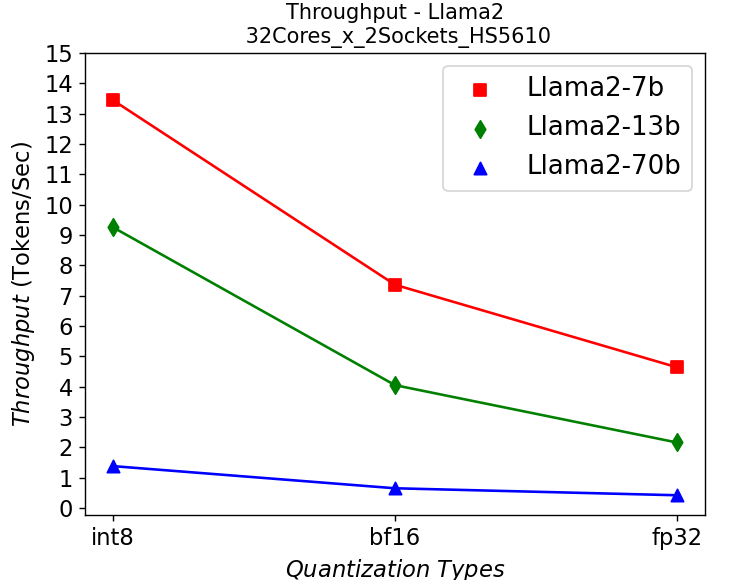

Dual-socket Results Under Different Quantization Types:

Figure 6: Dual-socket throughput in HS5610 server running Llama2 models under different quantization types


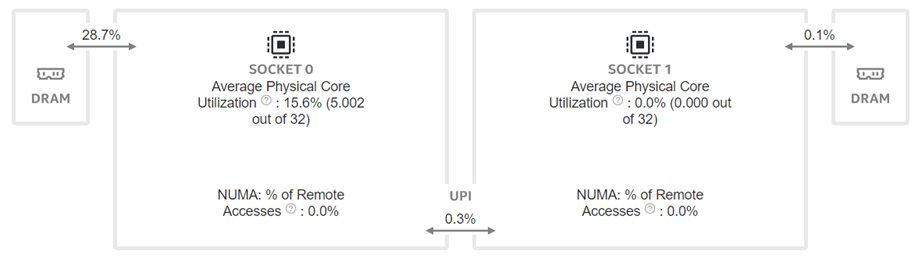


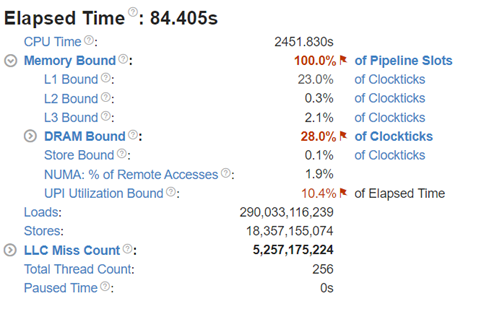

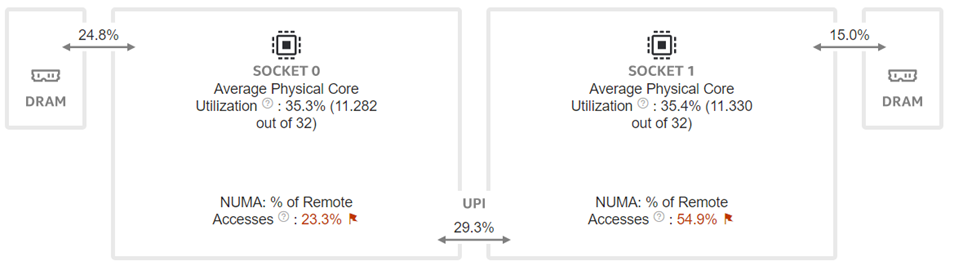

Figure 7: Intel® VTune™ memory analysis results for dual-socket fp32 results:

(a). bandwidth and utilization diagram (b). elapsed time analysis


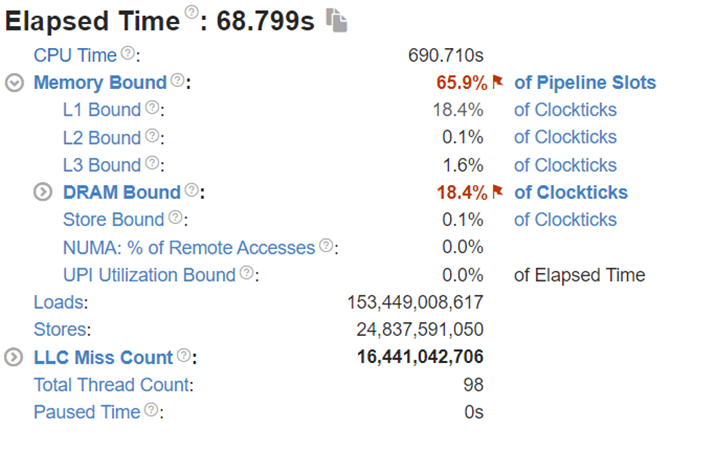


Figure 8: Intel® VTune™ memory analysis results for dual-socket bf16 results:

(a). bandwidth and utilization diagram (b). elapsed time analysis

Moving to the dual-socket scenarios shown in Figures 6 through 8, we have similar observations regarding the impact of quantization: quantization increases CPU utilization and reduces the L1 cache bottleneck, therefore boosting the throughput across different Llama2 models.

Comparing the performance between the single-socket (shown in Figure 3) and dual-socket (shown in Figure 6) scenarios indicates negligible performance improvement. As seen in Figures 7 and 8, even though we get better CPU utilization, the communication between the two sockets (the UPI or the NUMA memory access) becomes the main bottleneck that offsets the benefits of having more computing cores.

Conclusion

Based on the experiment results for different Llama2 models under various configurations, we draw the following conclusions:

- Quantization improves performance across models of different sizes by reducing the L1 cache bottleneck and increasing CPU utilization. It also indicates that we can optimize the TCO by reducing the memory requirements (in terms of capacity and speed) if we are able to quantize the model properly.

- Cross-socket communication, through either UPI or NUMA memory access, is a significant bottleneck that may affect performance. Possible optimizations include reducing the inter-socket communication; for example, better partitioning of the model is critical. Alternatively, this also indicates that executing one workload on a single dedicated CPU with enough cores is desirable for cost and performance considerations.

References

[1]. A. Vaswani et al., “Attention Is All You Need”, https://arxiv.org/abs/1706.03762

[2]. J. Kaplan et al., “Scaling Laws for Neural Language Models”, https://arxiv.org/abs/2001.08361

[3]. https://www.dell.com/en-us/shop/ipovw/poweredge-hs5610

Deploying Llama 7B Model with Advanced Quantization Techniques on Dell Server

Tue, 16 Jan 2024 20:05:01 -0000


Introduction

Large language models (LLMs) have gained great industrial and academic interest in recent years. Different LLMs have been adopted in various applications, such as content generation, text summarization, sentiment analysis, and healthcare. The LLM evolution diagram in Figure 1 shows the popular pre-trained models since 2017, when the transformer architecture was first introduced [1]. The timeline shows a clear trend toward larger models and more open-source models. Open-source models boosted the popularity of LLMs by eliminating the huge training cost associated with the large scale of the infrastructure and long training time required. Another portion of the cost of LLM applications comes from deployment, where an efficient inference platform is required.

This blog focuses on how to deploy LLMs efficiently on Dell platform with different quantization techniques. We first benchmarked the model accuracy under different quantization techniques. Then we demonstrated their performance and memory requirements of running LLMs under different quantization techniques through experiments. Specifically, we chose the open-source model Llama-2-7b-chat-hf for its popularity [2]. The server is chosen to be Dell main-stream server R760xa with NVIDIA L40 GPUs [3] [4]. The deployment framework in the experiments is TensorRT-LLM, which enables different quantization techniques including advanced 4bit quantization as demonstrated in the blog [5].

Figure 1 :LLM evolution

Background

LLM inference tends to be slow and power hungry because LLMs have large weights and generate tokens auto-regressively. Making the inference process more efficient under limited hardware resources is among the most critical problems for LLM deployment. Quantization is an important technique widely used to make LLM deployment more efficient. It relieves the large hardware resource requirements by reducing the memory footprint and computation energy, and it improves performance through faster memory access compared to deploying the original unquantized model. For example, in [6], the throughput in tokens per second (tokens/s) of the Llama-2-7b model improves by more than 2x when quantizing from the 16-bit floating-point format to 8-bit integers. Recent research has made more aggressive quantization, such as 4-bit, possible and available in some deployment frameworks like TensorRT-LLM. However, quantization is not free; it normally comes with accuracy loss. Besides cost, users care about reliable performance with acceptable accuracy for their specific applications. This blog therefore covers two key topics: accuracy and performance. We first benchmark the accuracy of the original model and the quantized models over different tasks. We then deploy those models on a Dell server and measure their performance. Finally, we measure the GPU memory usage for each scenario.

Test Setup

The model under investigation is Llama-2-7b-chat-hf [2]. It is a fine-tuned LLM, trained with human feedback and optimized for dialogue use cases, based on the 7-billion-parameter Llama-2 pre-trained model. We load the fp16 model from Hugging Face as the baseline by setting torch_dtype to float16.
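The baseline loading step described above can be sketched as follows. This is a minimal sketch, assuming the Hugging Face `transformers` and `torch` packages are installed and that access to the gated Llama 2 repository has been granted; the helper name `load_fp16_baseline` is illustrative and not taken from the original experiments.

```python
MODEL_ID = "meta-llama/Llama-2-7b-chat-hf"  # model repository from reference [2]


def load_fp16_baseline(model_id: str = MODEL_ID):
    """Load the tokenizer and the fp16 baseline model (illustrative helper)."""
    # Imported lazily so that this module can be read without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # torch_dtype=torch.float16 keeps the weights in half precision.
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16)
    model.eval()  # inference only, no training
    return tokenizer, model
```

Calling `load_fp16_baseline()` downloads the weights on first use, so it needs network access and enough disk space for the checkpoint.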

We investigated two advanced 4-bit quantization techniques to compare with the baseline fp16 model. One is activation-aware weight quantization (AWQ) and the other is GPTQ [7] [8]. TensorRT-LLM integrates the toolkit that allows quantization and deployment for these advanced 4-bit quantized models.
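To make the idea of 4-bit weight quantization concrete, the sketch below applies plain round-to-nearest quantization to one small group of weights. It is deliberately neither AWQ nor GPTQ: both methods add calibration-based steps on top of this basic mapping to limit the accuracy loss. The function names and the sample weights are made up for illustration.

```python
def quantize_group_4bit(weights):
    """Map one group of float weights to 4-bit codes (0..15) plus scale and offset."""
    w_min, w_max = min(weights), max(weights)
    span = w_max - w_min
    scale = span / 15 if span > 0 else 1.0  # 4 bits give 16 levels
    codes = [min(15, max(0, round((w - w_min) / scale))) for w in weights]
    return codes, scale, w_min


def dequantize_group_4bit(codes, scale, w_min):
    """Reconstruct approximate float weights from the 4-bit codes."""
    return [c * scale + w_min for c in codes]


group = [-0.80, -0.10, 0.00, 0.33, 0.70]  # made-up weights for illustration
codes, scale, w_min = quantize_group_4bit(group)
restored = dequantize_group_4bit(codes, scale, w_min)
max_error = max(abs(a - b) for a, b in zip(group, restored))
print(codes, round(max_error, 4))
```

The reconstruction error of each weight is bounded by half the step size `scale`, which is why smaller groups (and therefore smaller value ranges per group) tend to preserve accuracy better.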

For accuracy evaluation across models with different quantization techniques, we chose the Massive Multitask Language Understanding (MMLU) dataset. The benchmark covers 57 subjects across different difficulty levels and tests both world knowledge and problem-solving ability [9]. The granularity and breadth of the subjects in the MMLU dataset allow us to evaluate model accuracy across different applications. To summarize the results more easily, the 57 subjects can be further grouped into 21 categories, or even into 4 main categories: STEM, humanities, social sciences, and others (business, health, misc.) [10].
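The grouping of subjects into main categories can be sketched as below. The two dictionaries are a four-subject excerpt written in the style of the mapping in [10], the counts are placeholders rather than measured results, and weighting each category by its number of questions is an assumption about how the scores are aggregated.

```python
from collections import defaultdict

# Excerpt in the style of the mapping in [10]; only four of the 57 subjects are shown.
SUBJECT_TO_SUBCATEGORY = {
    "abstract_algebra": "math",
    "college_physics": "physics",
    "international_law": "law",
    "econometrics": "economics",
}
SUBCATEGORY_TO_CATEGORY = {
    "math": "STEM",
    "physics": "STEM",
    "law": "humanities",
    "economics": "social sciences",
}


def category_accuracy(per_subject):
    """Aggregate {subject: (num_correct, num_questions)} into per-category accuracy."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, (n_correct, n_questions) in per_subject.items():
        category = SUBCATEGORY_TO_CATEGORY[SUBJECT_TO_SUBCATEGORY[subject]]
        correct[category] += n_correct
        total[category] += n_questions
    return {category: correct[category] / total[category] for category in total}


# Placeholder counts, not measured results.
example = {
    "abstract_algebra": (30, 100),
    "college_physics": (25, 100),
    "international_law": (60, 120),
    "econometrics": (40, 100),
}
print(category_accuracy(example))
```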

Performance is evaluated in tokens/s across different batch sizes on a Dell R760xa server with one L40 GPU installed in a PCIe slot. The R760xa server configuration and the high-level specification of the L40 are shown in Tables 1 and 2 [3] [4]. To make the comparison easier, we fix the input sequence length and output sequence length at 512 and 200 tokens, respectively.

System Name | PowerEdge R760xa |

Status | Available |

System Type | Data Center |

Number of Nodes | 1 |

Host Processor Model | 4th Generation Intel® Xeon® Scalable Processors |

Host Processor Name | Intel® Xeon® Gold 6430 |

Host Processors per Node | 2 |

Host Processor Core Count | 32 |

Host Processor Frequency | 2.0 GHz, 3.8 GHz Turbo Boost |

Host Memory Capacity and Type | 512GB, 16 x 32GB DIMM, 4800 MT/s DDR5 |

Host Storage Capacity | 1.8 TB, NVME |

Table 1: R760xa server configuration

GPU Architecture | L40 NVIDIA Ada Lovelace Architecture |

GPU Memory | 48 GB GDDR6 with ECC |

Max Power Consumption | 300W |

Form Factor | 4.4" (H) x 10.5" (L) Dual Slot |

Thermal | Passive |

Table 2: L40 High-level specification

The inference framework that includes different quantization tools is NVIDIA TensorRT-LLM initial release version 0.5. The operating system for the experiments is Ubuntu 22.04 LTS.
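The tokens/s metric used in the test setup can be computed as in the sketch below. It assumes that throughput counts only generated tokens (batch size times output length) divided by the wall-clock time of one batched generation call; the timing value is a placeholder, not a measurement.

```python
def tokens_per_second(batch_size, output_len, elapsed_s):
    """Generated tokens per second for one batched generation call."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return batch_size * output_len / elapsed_s


INPUT_LEN = 512   # fixed input sequence length from the test setup
OUTPUT_LEN = 200  # fixed output sequence length from the test setup

# Placeholder timing, not a measured value: a batch of 8 finishing in 4 seconds.
print(tokens_per_second(batch_size=8, output_len=OUTPUT_LEN, elapsed_s=4.0))
```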

Results

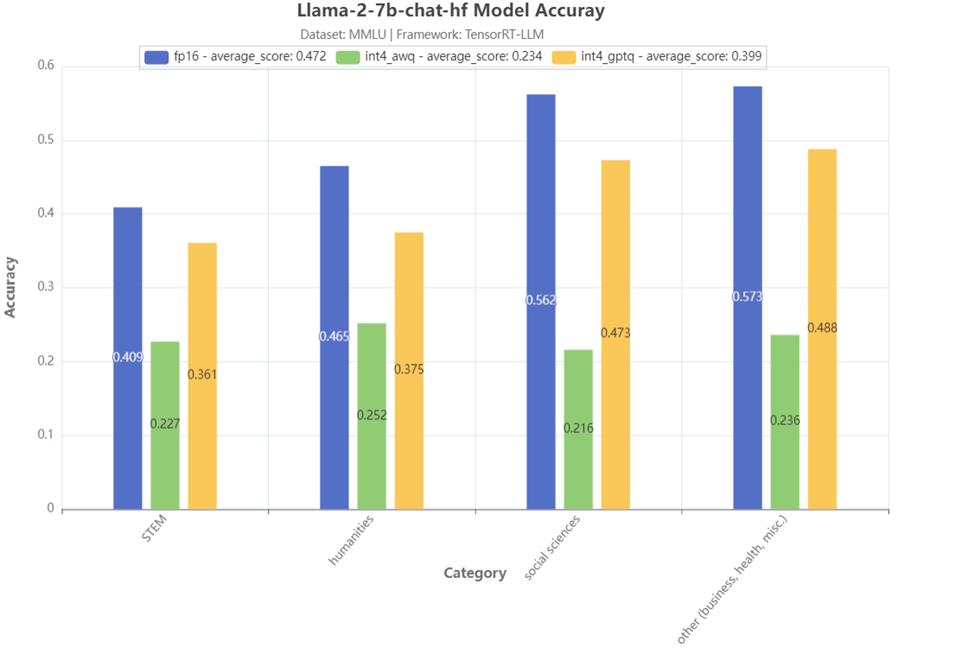

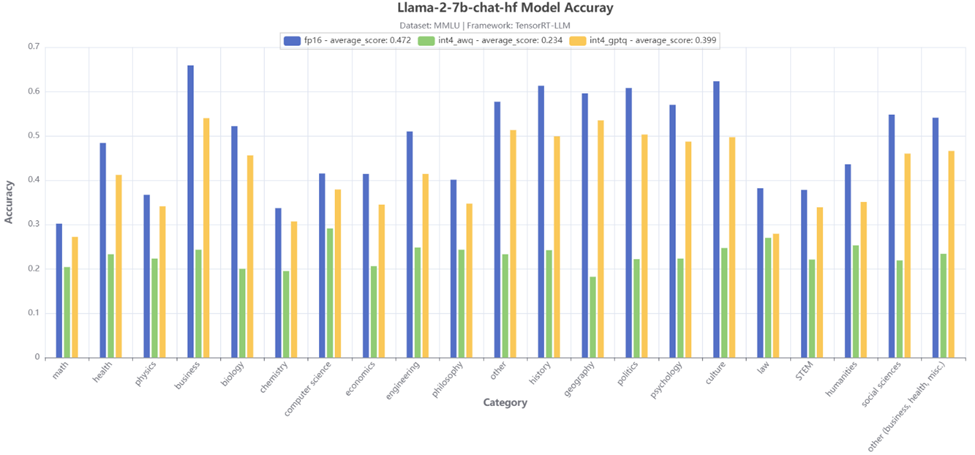

We first show the model accuracy results based on the MMLU dataset tests in Figure 2 and Figure 3, followed by the throughput results when running those models on the PowerEdge R760xa in Figure 4. Lastly, we show the peak GPU memory usage for the different scenarios in Figure 5. A brief discussion is given for each result, and the conclusions are summarized in the next section.

Accuracy

Figure 2: MMLU 4-category accuracy test result

Figure 2 shows the accuracy test results for the 4 main MMLU categories with the Llama-2-7b-chat-hf model. Compared to the baseline fp16 model, the model with 4-bit AWQ shows a significant accuracy drop. The model with 4-bit GPTQ, on the other hand, shows a much smaller accuracy drop; for the STEM category in particular, the drop is smaller than 5%.

Figure 3: MMLU 21-category accuracy test result

Figure 3 further shows the accuracy test results for the 21 MMLU sub-categories with the Llama-2-7b-chat-hf model. The conclusion is similar: 4-bit GPTQ quantization gives much better accuracy, except in the law category, where the two quantization techniques achieve similar accuracy.

Performance

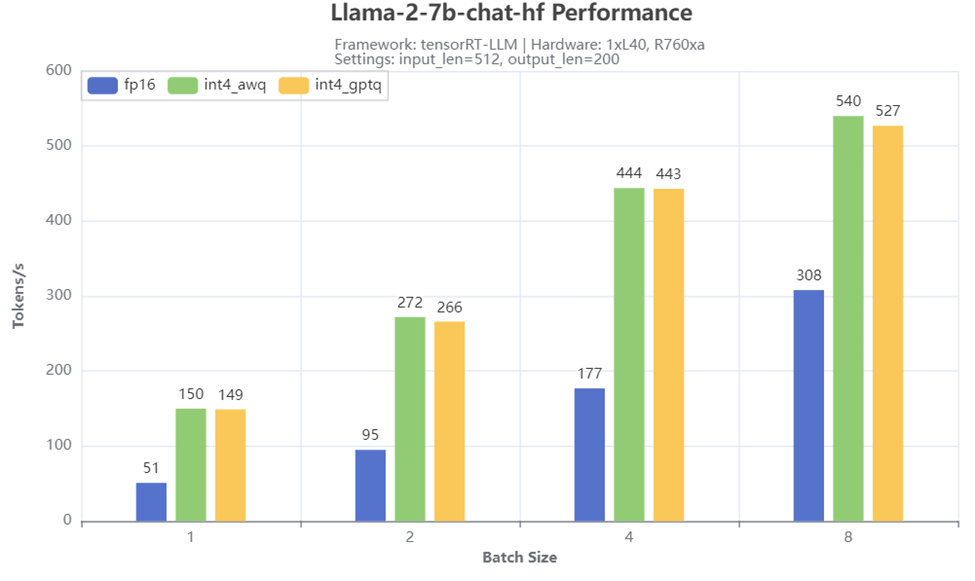

Figure 4: Throughput test result

Figure 4 shows the throughput when running Llama-2-7b-chat-hf with different batch sizes and quantization methods on the R760xa server. We observe a significant throughput boost with 4-bit quantization, especially when the batch size is small. For example, at a batch size of 1, the 4-bit AWQ and GPTQ scenarios achieve 3x the tokens/s of the 16-bit baseline. AWQ and GPTQ give similar performance across the different batch sizes.

GPU Memory Usage

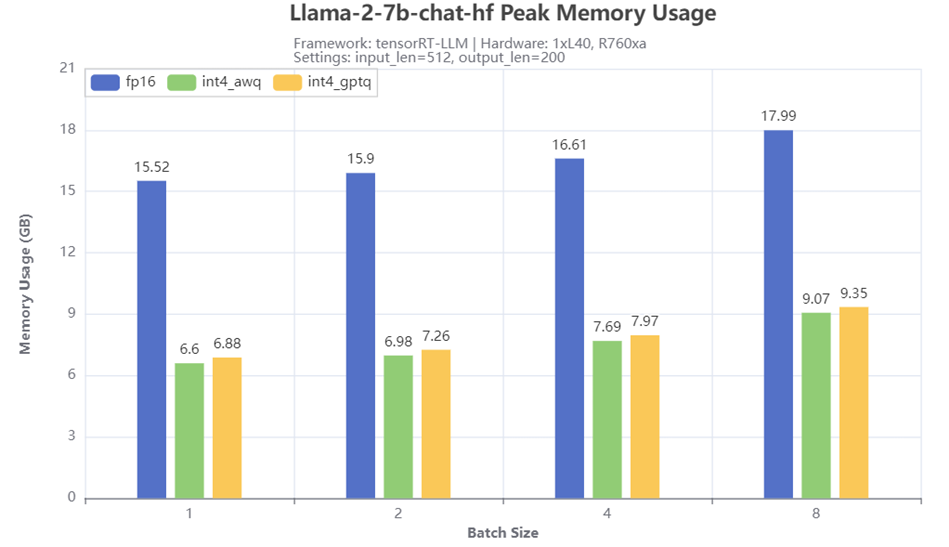

Figure 5: Peak GPU memory usage

Figure 5 shows the peak GPU memory usage when running Llama-2-7b-chat-hf with different batch sizes and quantization methods on the R760xa server. The 4-bit quantization techniques greatly reduce the memory required to run the model. Compared to the baseline fp16 model, the models quantized with AWQ or GPTQ require half of the memory or even less, depending on the batch size. The GPTQ-quantized model also shows a slightly larger peak memory usage than the AWQ-quantized model.
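A back-of-the-envelope estimate helps explain why the saving depends on the batch size. The sketch below assumes roughly 7 billion parameters, the Llama 2 7B shape (32 layers, hidden size 4096, multi-head attention), and a key/value cache kept in fp16; it ignores activations, quantization scales, and framework overhead, so it is not expected to reproduce the measured peaks in Figure 5.

```python
GB = 1e9  # decimal gigabyte


def weight_bytes(num_params, bits_per_weight):
    """Memory for the weights alone, ignoring quantization scales and zero points."""
    return num_params * bits_per_weight / 8


def kv_cache_bytes(batch_size, seq_len, num_layers=32, hidden_size=4096, bytes_per_value=2):
    """Key and value cache size; the defaults assume the Llama 2 7B shape and an fp16 cache."""
    return 2 * num_layers * hidden_size * bytes_per_value * seq_len * batch_size


params = 7e9             # approximate parameter count
seq_len = 512 + 200      # input plus output length from the test setup
for batch in (1, 8, 32):
    cache = kv_cache_bytes(batch, seq_len)
    fp16_total = weight_bytes(params, 16) + cache
    int4_total = weight_bytes(params, 4) + cache
    print(f"batch={batch}: fp16 ~{fp16_total / GB:.1f} GB, 4-bit ~{int4_total / GB:.1f} GB")
```

Quantizing the weights shrinks only the first term. The cache term grows with the batch size and is the same in both cases under these assumptions, so the relative saving is largest at small batch sizes.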

Conclusion

- We have shown the impact on accuracy, performance, and GPU memory usage of applying advanced 4-bit quantization techniques when running the Llama 7B model on a Dell PowerEdge server.

- We have demonstrated the great benefits of these 4-bit quantization techniques in improving throughput and saving GPU memory.

- We have quantitatively compared the quantized models with the baseline model in terms of accuracy across various subjects based on the MMLU dataset.

- Tests showed that, with an acceptable accuracy loss, 4-bit GPTQ is an attractive quantization method for LLM deployment where hardware resources are limited. On the other hand, large accuracy drops across many MMLU subjects were observed for 4-bit AWQ. This indicates that the AWQ-quantized model should be limited to applications tied to specific subjects. Otherwise, other techniques such as re-training or fine-tuning may be required to improve accuracy.

References

[1]. A. Vaswani et al., “Attention Is All You Need”, https://arxiv.org/abs/1706.03762

[2]. https://huggingface.co/meta-llama/Llama-2-7b-chat-hf

[4]. https://www.nvidia.com/en-us/data-center/l40/

[5]. https://github.com/NVIDIA/TensorRT-LLM

[7]. J. Lin et al., “AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration”, https://arxiv.org/abs/2306.00978

[8]. E. Frantar et al., “GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers”, https://arxiv.org/abs/2210.17323

[9]. D. Hendrycks et al., “Measuring Massive Multitask Language Understanding”, https://arxiv.org/abs/2009.03300

[10]. https://github.com/hendrycks/test/blob/master/categories.py

MLPerf™ Inference 3.1 on Dell PowerEdge Server with Intel® 4th Generation Xeon® CPU

Thu, 11 Jan 2024 19:43:07 -0000

Introduction

MLCommons™ has released the v3.1 results for its machine learning inference benchmark suite, MLPerf™. This blog focuses on the impressive datacenter inference results obtained across different use cases by using the new 4th Generation Intel Xeon Scalable Processors on a Dell PowerEdge R760 server. This submission covers the benchmark results for all 7 use cases defined in MLPerf™, which are Natural Language Processing (BERT), Image Classification (ResNet50), Object Detection (RetinaNet), Speech-to-Text (RNN-T), Medical Imaging (3D-Unet), Recommendation Systems (DLRMv2), and Summarization (GPT-J).

These new Intel® Xeon® processors use an Intel AMX® matrix multiplication engine in each core to boost overall inferencing performance. With a focus on ease of use, Dell Technologies delivers exceptional CPU performance results out of the box with an optimized BIOS profile that fully unleashes the power of Intel’s OneDNN software – software which is fully integrated with both PyTorch and TensorFlow frameworks. The server configurations and the CPU specifications in the benchmark experiments are shown in Tables 1 and 2 respectively.

System Name | PowerEdge R760 |

Status | Available |

System Type | Data Center |

Number of Nodes | 1 |

Host Processor Model | 4th Generation Intel® Xeon® Scalable Processors |

Host Processors per Node | 2 |

Host Processor Core Count | 56 |

Host Processor Frequency | 2.0 GHz, 3.8 GHz Turbo Boost |

Host Memory Capacity | 1TB, 16 x 64GB DIMM 4800 MT/s |

Host Storage Capacity | 4.8 TB, NVME |

Table 1. Dell PowerEdge R760 Server Configuration

Product Collection | 4th Generation Intel® Xeon® Scalable Processors |

Processor Name | Platinum 8480+ |

Status | Launched |

# of CPU Cores | 56 |

# of Threads | 112 |

Base Frequency | 2.0 GHz |

Max Turbo Speed | 3.8 GHz |

Cache L3 | 105 MB |

Memory Type | DDR5 4800 MT/s |

ECC Memory Supported | Yes |

Table 2. 4th Generation Intel® Xeon® Scalable Processor Technical Specifications

MLPerf™ Inference v3.1 - Datacenter

The MLPerf™ inference benchmark measures how fast a system can perform ML inference using a trained model with new data in a variety of deployment scenarios. There are two benchmark suites – one for Datacenter systems and one for Edge. Table 3 lists the 7 mature models with each targeting a different task in the official release v3.1 for Datacenter systems category that were run on this PowerEdge R760. Compared to the v3.0 release, v3.1 added the updated version of the recommendation model – DLRMv2 – and introduced the first Large-Language Model (LLM) – GPT-J.

Area | Task | Model | Dataset | QSL Size | Quality | Server latency constraint |

Vision | Image classification | ResNet50-v1.5 | ImageNet (224x224) | 1024 | 99% of FP32 (76.46%) | 15 ms |

Vision | Object detection | RetinaNet | OpenImages (800x800) | 64 | 99% of FP32 (0.20 mAP) | 100 ms |

Vision | Medical imaging | 3D-Unet | KITS 2019 (602x512x512) | 16 | 99.9% of FP32 (0.86330 mean DICE score) | N/A |

Speech | Speech-to-text | RNN-T | Librispeech dev-clean (samples < 15 seconds) | 2513 | 99% of FP32 (1 - WER, where WER=7.452253714852645%) | 1000 ms |

Language | Language processing | BERT-large | SQuAD v1.1 (max_seq_len=384) | 10833 | 99% of FP32 and 99.9% of FP32 (f1_score=90.874%) | 130 ms |

Language | Summarization | GPT-J-99 | CNN Dailymail (v3.0.0, max_seq_len=2048) | 13368 | 99% of FP32 (f1_score=80.25% rouge1=42.9865, rouge2=20.1235, rougeL=29.9881). | 20 s |

Commerce | Recommendation | DLRMv2 | Criteo 4TB Multi-hot | 204800 | 99% of FP32 (AUC=80.25%) | 60 ms |

Table 3. Datacenter Suite Benchmarks. Source: MLCommons™
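The Quality column in Table 3 expresses each accuracy target as a fraction of the FP32 reference score. The sketch below works out a few of those targets from the values in the table; the dictionary layout and function name are illustrative.

```python
# FP32 reference scores and required fractions copied from Table 3.
REFERENCE = {
    "ResNet50-v1.5 (top-1 %)": (76.46, 0.99),
    "BERT-large (F1 %, 99% target)": (90.874, 0.99),
    "BERT-large (F1 %, 99.9% target)": (90.874, 0.999),
    "3D-Unet (mean DICE)": (0.86330, 0.999),
}


def quality_target(fp32_score, fraction):
    """Minimum score a submission must reach to satisfy the quality constraint."""
    return fp32_score * fraction


for name, (score, fraction) in REFERENCE.items():
    print(f"{name}: must reach at least {quality_target(score, fraction):.5f}")
```

For example, a ResNet50 submission must keep top-1 accuracy at or above 0.99 x 76.46% = 75.6954%.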

Scenarios

The models are deployed in a variety of critical inference applications or use cases known as “scenarios” where each scenario requires different metrics, demonstrating production environment performance in practice. Following is the description of each scenario. Table 4 shows the scenarios required for each Datacenter benchmark included in this submission v3.1.

Offline scenario: represents applications in which all input data is available immediately and is processed in batches without latency constraints. Performance is measured in samples per second.

Server scenario: represents online applications that receive random input queries. Performance is measured in queries per second (QPS) subject to a latency bound. The server scenario is more demanding because of its latency constraints and query generation, which is reflected in its lower throughput compared to the offline scenario.
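The difference between the two scenarios can be illustrated with a toy simulation of the server case. This is a simplified sketch, not the official MLPerf load generator: it assumes Poisson query arrivals, a single first-in-first-out server with a fixed service time, and a 99th-percentile latency check. All function names and the example numbers are hypothetical.

```python
import random


def simulate_server_scenario(target_qps, service_time_s, num_queries=10_000, seed=0):
    """Return per-query latencies for Poisson arrivals served one at a time."""
    rng = random.Random(seed)
    arrival = 0.0
    server_free_at = 0.0
    latencies = []
    for _ in range(num_queries):
        arrival += rng.expovariate(target_qps)  # random gap between queries
        start = max(arrival, server_free_at)    # wait if the server is still busy
        server_free_at = start + service_time_s
        latencies.append(server_free_at - arrival)
    return latencies


def percentile(values, pct):
    """Simple nearest-rank style percentile of a list of numbers."""
    ordered = sorted(values)
    index = min(len(ordered) - 1, int(len(ordered) * pct / 100))
    return ordered[index]


def meets_latency_bound(latencies, bound_s, pct=99.0):
    """True if the chosen latency percentile stays within the bound."""
    return percentile(latencies, pct) <= bound_s


# Hypothetical example: 20 ms per query, 30 QPS offered, 130 ms bound.
latencies = simulate_server_scenario(target_qps=30, service_time_s=0.020)
print(meets_latency_bound(latencies, bound_s=0.130))
```

In the offline case the same system would simply process queries back to back, so its throughput approaches 1 / service time. In the server case the offered QPS has to be lowered until queueing delays stay within the bound, which is why server results are typically lower than offline results.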

Each Datacenter benchmark requires the following scenarios:

Area | Task | Required Scenarios |

Vision | Image classification | Server, Offline |

Vision | Object detection | Server, Offline |

Vision | Medical imaging | Offline |

Speech | Speech-to-text | Server, Offline |

Language | Language processing | Server, Offline |

Language | Summarization | Server, Offline |

Commerce | Recommendation | Server, Offline |

Table 4. Datacenter Suite Benchmark Scenarios. Source: MLCommons™

Software stack and system configuration

The software stack and system configuration used for this submission are summarized in Table 5.

OS | CentOS Stream 8 (GNU/Linux x86_64) |

Intel® Optimized Inference SW for MLPerf™ | MLPerf™ Intel OneDNN integrated with PyTorch |

ECC memory mode | ON |

Host memory configuration | 1TiB |

Turbo mode | ON |

CPU frequency governor | Performance |

Table 5. System Configuration

What is Intel AMX (Advanced Matrix Extensions)?

Intel AMX is a built-in accelerator that enables 4th Gen Intel Xeon Scalable processors to optimize deep learning (DL) training and inferencing workloads. With the high-speed matrix multiplications enabled by Intel AMX, 4th Gen Intel Xeon Scalable processors can quickly pivot between optimizing general computing and AI workloads.

Imagine an automobile that could excel at city driving and then quickly shift to deliver Formula 1 racing performance. 4th Gen Intel Xeon Scalable processors deliver this level of flexibility. Developers can code AI functionality to take advantage of the Intel AMX instruction set as well as code non-AI functionality to use the processor instruction set architecture (ISA).

Intel has integrated the Intel® oneAPI Deep Neural Network Library (oneDNN) – its oneAPI DL engine – into popular open-source tools for AI applications, including TensorFlow, PyTorch, PaddlePaddle, and ONNX.

AMX architecture

Intel AMX architecture consists of two components, as shown in Figure 1:

- Tiles consist of eight two-dimensional registers, each 1 kilobyte in size. They store large chunks of data.

- Tile Matrix Multiplication (TMUL) is an accelerator engine attached to the tiles that performs matrix-multiply computations for AI.

Figure 1. Intel AMX architecture consists of 2D register files (tiles) and TMUL
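The tile sizes above translate into matrix shapes as sketched below. The count of eight tiles and the 1-kilobyte size come from the description above; the layout of 16 rows by 64 bytes per tile is stated here as an assumption about the maximum tile configuration.

```python
NUM_TILES = 8        # from the description above
TILE_BYTES = 1024    # 1 kilobyte per tile, from the description above
TILE_ROWS = 16       # assumption: maximum layout of 16 rows x 64 bytes
ROW_BYTES = TILE_BYTES // TILE_ROWS


def tile_shape(element_bytes):
    """Rows and columns of one full tile for a given element size in bytes."""
    return TILE_ROWS, ROW_BYTES // element_bytes


print("int8 tile:", tile_shape(1))
print("bf16 tile:", tile_shape(2))
print("fp32 accumulator tile:", tile_shape(4))
print("total tile storage in bytes:", NUM_TILES * TILE_BYTES)
```

Lower-precision data types pack more elements into each tile, which is one reason quantized models benefit from the TMUL engine.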

Results

Both MLPerf™ v3.0 and MLPerf™ v3.1 benchmark results are based on the latest Dell R760 server utilizing 4th Generation Intel® Xeon® Scalable Processors.

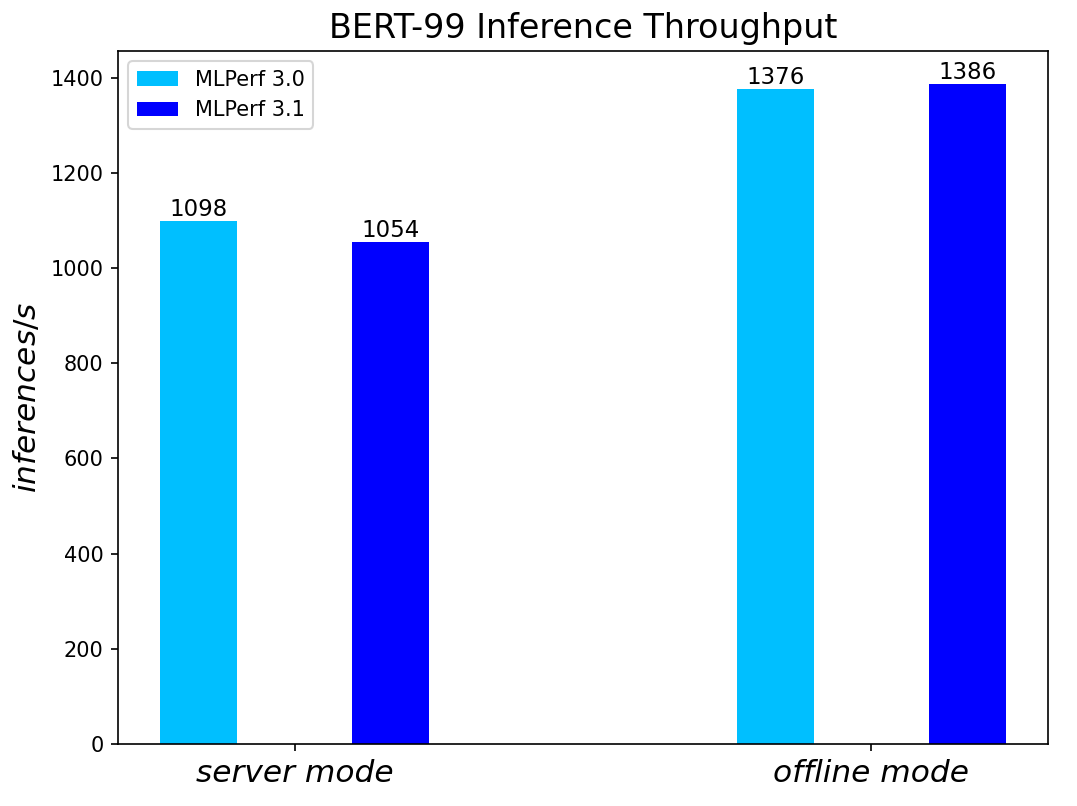

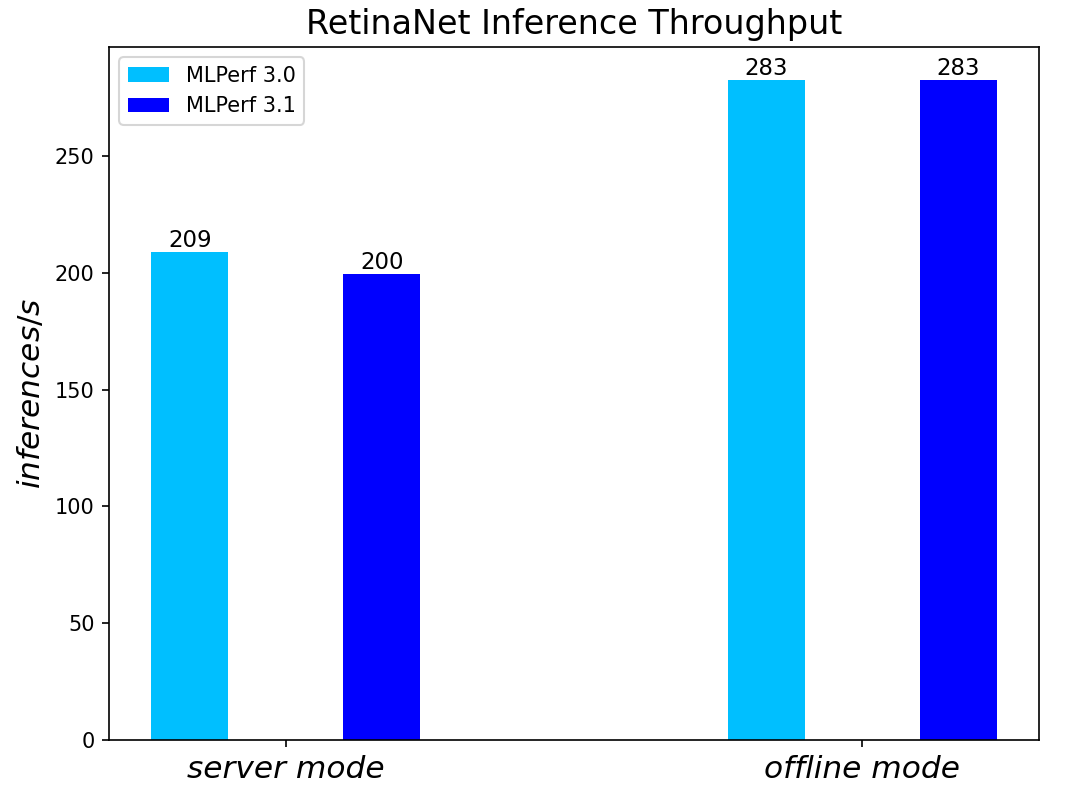

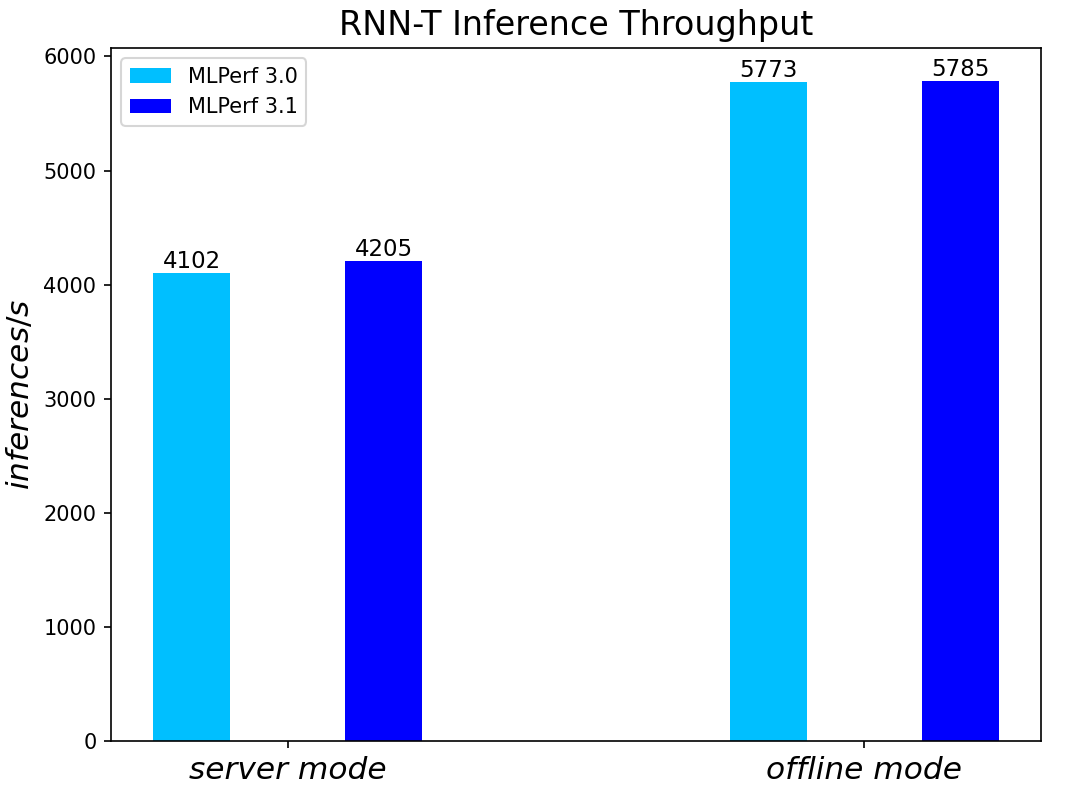

For the ResNet50 Image Classification, RetinaNet Object Detection, BERT Large Language, and RNN-T Speech Models – which are identical models with the same datasets for both MLPerf™ v3.0 and MLPerf™ v3.1 – we re-ran them for the latest submission. The results show negligible differences between the two submissions.

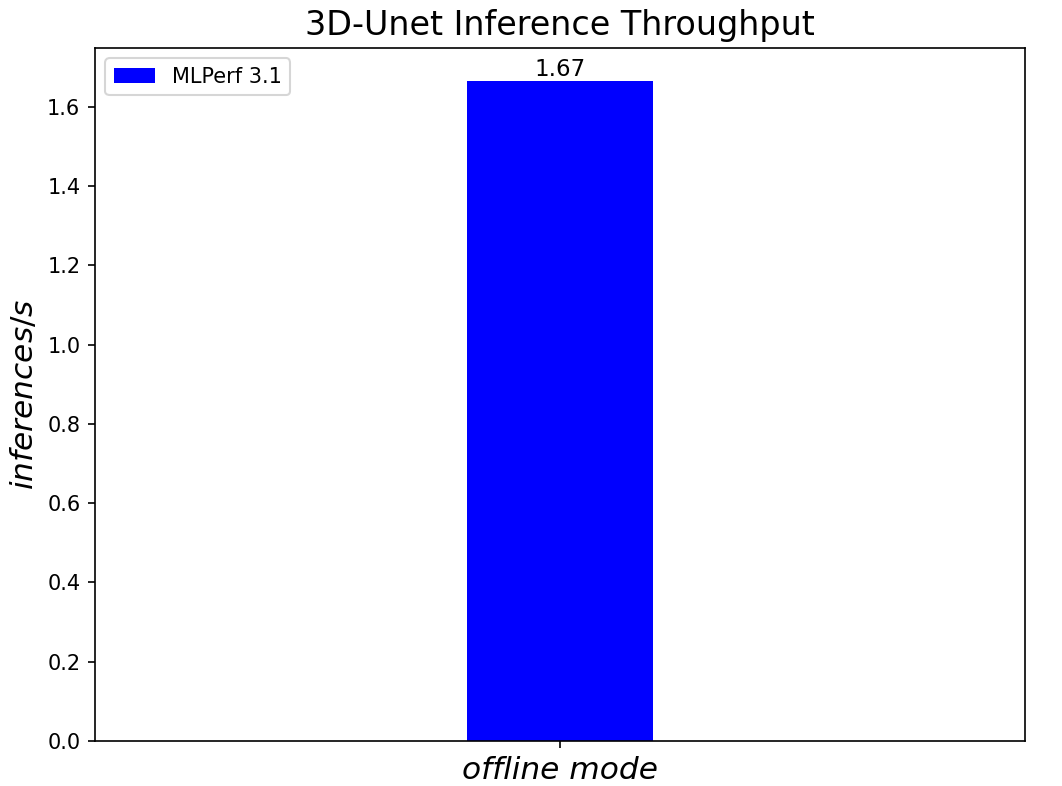

We added three new benchmark results for MLPerf™ v3.1 submission compared to MLPerf™ v3.0 submission. Those are 3D-Unet Medical Imaging, DLRMv2 Recommendation, and GPT-J Summarization models. Given that there is no previous result for comparison, we simply show the current result on the R760.

Comparing Performance from MLPerf™ v3.1 to MLPerf™ v3.0

ResNet50 server & offline scenarios:

Figure 2. ResNet50 inference throughput in server and offline scenarios

BERT Large Language Model server & offline scenarios:

Figure 3. BERT Inference results for server and offline scenarios

RetinaNet Object Detection Model server & offline scenarios:

Figure 4. RetinaNet Object Detection Model Inference results for server and offline scenarios

RNN-T Speech-to-Text Model server & offline scenarios:

Figure 5. RNN-T Speech-to-Text Model Inference results for server and offline scenarios

3D-Unet Medical Imaging Model offline scenario:

Figure 6. 3D-Unet Medical Imaging Model Inference results for the offline scenario

DLRMv2-99 Recommendation Model server & offline scenarios:

Figure 7. DLRMv2-99 Recommendation Model Inference results for server and offline scenarios (submitted in the open category)

GPT-J-99 Summarization Model server & offline scenarios:

Figure 8. GPT-J-99 Summarization Model Inference results for server and offline scenarios

Conclusion

- The PowerEdge R760 server with 4th Generation Intel® Xeon® Scalable Processors produces strong data center inference performance, confirmed by the official version 3.1 MLPerf™ benchmarking results from MLCommons™.

- The high performance and versatility are demonstrated across natural language processing, image classification, object detection, medical imaging, speech-to-text inference, recommendation, and summarization systems.

- The R760 with 4th Generation Intel® Xeon® Scalable Processors shows good performance in supporting generative AI models like GPT-J.

- The R760 supports different deep learning inference scenarios in the MLPerf™ benchmark as well as other complex workloads such as database and advanced analytics. It is an ideal solution for data center modernization to drive operational efficiency, increase productivity, and minimize total cost of ownership (TCO).

References

MLCommons™ MLPerf™ v3.1 Inference Benchmark Submission IDs

ID | Submitter | System |

3.1-0059 | Dell | Dell PowerEdge Server R760 (1x Intel Xeon Platinum 8480+) |

3.1-0060 | Dell | Dell PowerEdge Server R760 (1x Intel Xeon Platinum 8480+) |

3.1-4184 | Dell | Dell PowerEdge Server R760 (1x Intel Xeon Platinum 8480+) |

Authors: Tao Zhang (tao.zhang9@dell.com); Brandt Springman (brandt.springman@dell.com); Bhavesh Patel (bhavesh_a_patel@dell.com); Louie Tsai (louie.tsai@intel.com); Yuning Qiu (yuning.qiu@intel.com); Ramesh Chukka (ramesh.n.chukka@intel.com)

Running LLMs on Dell PowerEdge Servers with Intel® 4th Generation Xeon® CPUs

Thu, 11 Jan 2024 19:38:53 -0000

Introduction

Large language models (LLMs) have attracted great industrial and academic interest in recent years. Different LLMs have been adopted in various applications, such as content generation, text summarization, sentiment analysis, and healthcare. The list goes on.

When we think about LLMs and the methodologies available for inferencing and fine-tuning, the question always comes up as to which compute device we should use. For inferencing, we wanted to explore what performance can be achieved on an Intel 4th Generation Xeon CPU and which variables are worth exploring.

This blog focuses on LLM inference results on Dell PowerEdge servers with 4th Generation Intel® Xeon® Scalable Processors. Specifically, we demonstrate the performance and power consumption of the stable diffusion and Llama2 chat models running on R760 and HS5610 servers. We also explore, through experiments, how different quantization bit widths and CPU core and socket counts affect performance and power.

We selected the aforementioned Dell platforms because we wanted to explore how our CSP-focused platforms like HS5610 perform when it comes to inferencing and whether they can meet the requirements for LLM models. These new Intel® Xeon® processors use an Intel AMX® matrix multiplication engine in each core to boost overall inferencing performance. By combining with the quantization techniques, we further improved the inference performance with the CPU-only system. Moreover, we also show how the CPU core and socket numbers affect the performance results.

Background

Transformer is regarded as the 4th fundamental model after Multilayer Perceptron (MLP), Recurrent Neural Networks (RNN), and Convolutional Neural Networks (CNN). Known for its parallelization and scalability, transformer has greatly boosted the performance and capability of LLMs since it was introduced in 2017 [1].

Today, LLMs have been rapidly adopted in various applications such as content generation, text summarization, sentiment analysis, code generation, and healthcare, as shown in Figure 1 [2]. This trend is continuing, with new open-source LLMs appearing almost monthly. Moreover, transformer-based techniques are being used alongside other methods, greatly improving the accuracy and performance of the original tasks. For example, the stable diffusion model uses an LLM at its input as the natural language understanding engine. Combined with the diffusion model, this has greatly improved the quality and throughput of text-to-image generation [3]. Note that, for simplicity, this blog uses the term “LLMs” for both the transformer-based models shown in Figure 1 and derivative models such as stable diffusion.

Figure 1. LLM Timeline [2]. Image credit: Wayne Xin Zhao et al., “A Survey of Large Language Models”

While training and fine-tuning LLMs is normally time-consuming and costly, deploying LLMs at the edge has its own challenges. Considering both performance and power, deployment can be, in a sense, even more cost-sensitive given the number of systems required to cover various applications. GPUs are widely used to deploy LLMs. In this blog, we demonstrate the feasibility of deploying LLMs on 4th Generation Intel® Xeon® CPUs in Dell PowerEdge servers and illustrate that good performance can be achieved for popular LLMs with a proper configuration, such as the CPU core count and quantization method.

Test Setup

The hardware platforms used for the experiments are the PowerEdge R760 and HS5610, which are the latest mainstream and cloud-optimized servers, respectively, in the Dell product portfolio. Figure 2 shows the rack-level interface of the HS5610 server. As a cloud-optimized solution, the HS5610 is designed with CSP features that offer the full PowerEdge features and management of a mainstream server like the R760, as well as open management (OpenBMC), cold aisle service, channel firmware, and services. Both servers have two sockets, each with a 4th Generation Intel® Xeon® CPU. The R760 features a 56-core Intel® Xeon® Platinum 8480+ (TDP: 350W) in each socket, and the HS5610 has a 32-core Intel® Xeon® Gold 6430 (TDP: 250W) in each socket. Tables 1-4 show the details of the server configurations and CPU specifications. During the tests, we use the numactl command to set the number of sockets or CPU cores that execute the LLM inference tasks.

Figure 2. PowerEdge HS5610 [4]
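The numactl pinning mentioned in the test setup can be sketched as follows. The helper builds a command line using the standard `--cpunodebind`, `--membind`, and `--physcpubind` options; the script name `run_inference.py` is hypothetical, and the exact options used in the original experiments are not stated in this blog. The sketch also assumes that NUMA node 0 corresponds to the first socket.

```python
import shlex


def numactl_command(workload, node=None, cores=None):
    """Build a numactl command line that pins a workload to a socket or core range."""
    cmd = ["numactl"]
    if node is not None:
        # Run on the CPUs of one NUMA node and allocate memory from the same node.
        cmd += [f"--cpunodebind={node}", f"--membind={node}"]
    if cores is not None:
        # Restrict execution to an explicit list or range of CPUs.
        cmd.append(f"--physcpubind={cores}")
    return cmd + list(workload)


workload = ["python", "run_inference.py"]  # hypothetical script name
print(shlex.join(numactl_command(workload, node=0)))        # one socket
print(shlex.join(numactl_command(workload, cores="0-15")))  # 16 cores
```

The returned list can be passed directly to `subprocess.run` to launch the pinned workload.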

System Name | PowerEdge R760 |

Status | Available |

System Type | Data Center |

Number of Nodes | 1 |

Host Processor Model | 4th Generation Intel® Xeon® Scalable Processors |

Host Processors per Node | 2 |

Host Processor Core Count | 56 |

Host Processor Frequency | 2.0 GHz, 3.8 GHz Turbo Boost |

Host Memory Capacity | 1TB, 16 x 64GB DIMM 4800 MT/s |

Host Storage Capacity | 4.8 TB, NVME |

Table 1. R760 Server Configuration

Product Collection | 4th Generation Intel® Xeon® Scalable Processors |

Processor Name | Platinum 8480+ |

Status | Launched |

# of CPU Cores | 56 |

# of Threads | 112 |

Base Frequency | 2.0 GHz |

Max Turbo Speed | 3.8 GHz |

Cache L3 | 105 MB |

Memory Type | DDR5 4800 MT/s |

ECC Memory Supported | Yes |

Table 2. 4th Generation 56-core Intel® Xeon® Scalable Processor Technical Specifications

System Name | PowerEdge HS5610 |

Status | Available |

System Type | Data Center |

Number of Nodes | 1 |

Host Processor Model | 4th Generation Intel® Xeon® Scalable Processors |

Host Processors per Node | 2 |

Host Processor Core Count | 32 |

Host Processor Frequency | 2.0 GHz, 3.8 GHz Turbo Boost |

Host Memory Capacity | 1TB, 16 x 64GB DIMM 4800 MT/s |

Host Storage Capacity | 4.8 TB, NVME |

Table 3. HS5610 Server Configuration

Product Collection | 4th Generation Intel® Xeon® Scalable Processors |

Processor Name | Gold 6430 |

Status | Launched |

# of CPU Cores | 32 |

# of Threads | 64 |

Base Frequency | 2.0 GHz |

Max Turbo Speed | 3.8 GHz |

Cache L3 | 64 MB |

Memory Type | DDR5 4800 MT/s |

ECC Memory Supported | Yes |

Table 4. 4th Generation 32-core Intel® Xeon® Scalable Processor Technical Specifications

Software stack and system configuration

The software stack and system configuration used for these experiments are summarized in Table 5. The PyTorch framework and the Transformers library have been optimized to unleash the machine learning capabilities of the Xeon CPU. Moreover, a low-level tool, Intel® Neural Compressor, has been used for high-accuracy quantization.

OS | CentOS Stream 8 (GNU/Linux x86_64) |

Intel® Optimized Inference SW | OneDNN™ Deep Learning, ONNX, Intel® Extension for PyTorch (IPEX), Intel® Extension for Transformers (ITREX), Intel® Neural Compressor |

ECC memory mode | ON |

Host memory configuration | 1TiB |

Turbo mode | ON |

CPU frequency governor | Performance |

Table 5. Software stack and system configuration

The models under test are stable diffusion version 1.4 (~1 billion parameters) and the Llama2-chat-HF models with 7 billion, 13 billion, and 70 billion parameters. We purposely chose those models because they are open source, representative, and cover a wide parameter range. Different quantization bit widths are tested to characterize the corresponding performance and power consumption.

All experiments use a batch size of 1. Performance is characterized by latency or throughput. To reduce measurement error, each inference is executed 10 times and the results are averaged. A warm-up step, which loads the parameters and runs a sample test, is executed before the measured inference runs.
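The measurement procedure described above (a warm-up followed by 10 timed runs that are averaged) can be sketched as a small harness. The function names are illustrative, and `fake_inference` is a stand-in for a real model call.

```python
import time
from statistics import mean


def benchmark(run_inference, warmup_runs=1, timed_runs=10):
    """Return (mean latency in seconds, list of per-run latencies)."""
    for _ in range(warmup_runs):
        run_inference()  # warm-up: results are discarded
    latencies = []
    for _ in range(timed_runs):
        start = time.perf_counter()
        run_inference()
        latencies.append(time.perf_counter() - start)
    return mean(latencies), latencies


def fake_inference():
    time.sleep(0.01)  # placeholder for a real model call


avg_latency, samples = benchmark(fake_inference)
print(f"mean latency over {len(samples)} runs: {avg_latency:.4f} s")
```

With a batch size of 1, throughput for a text model follows directly as the number of generated tokens divided by the mean latency.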

Results

We show some typical results in this section alongside brief discussions for each result. The conclusions are summarized in the next section.

HS5610 Results

Latency vs Quantization vs Cores – Stable Diffusion Model:

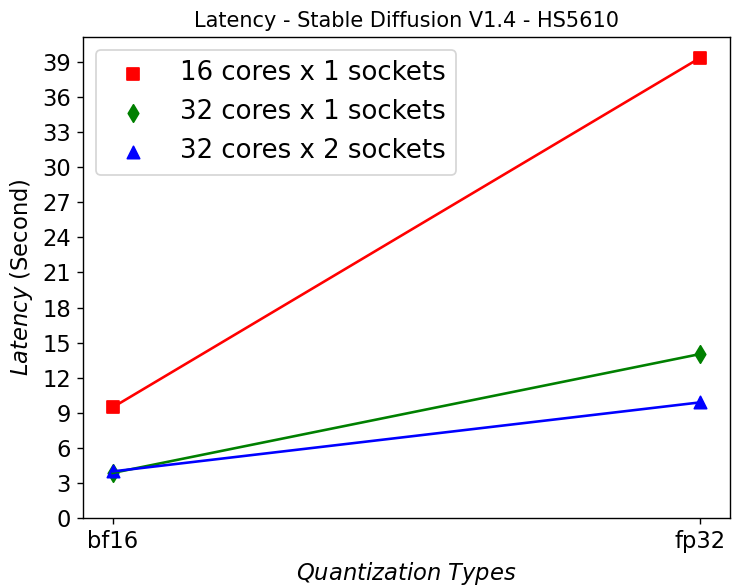

Figure 3. Latency in HS5610 server running Stable Diffusion

Figure 3 shows that the HS5610 can generate a new image in approximately 3 seconds when running the bf16 Stable Diffusion V1.4 model. Quantizing to 16 bits greatly reduces the latency compared to the fp32 model. Scaling up from 16 to 32 cores also greatly reduces the latency; however, scaling across sockets does not help, mainly because of the NUMA remote memory bottleneck.

Power Consumption – Stable Diffusion Model:

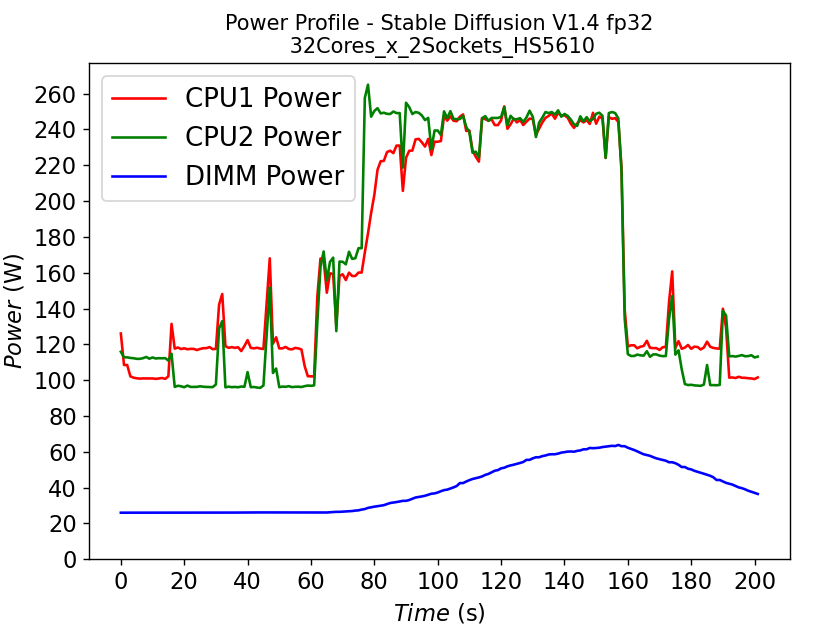

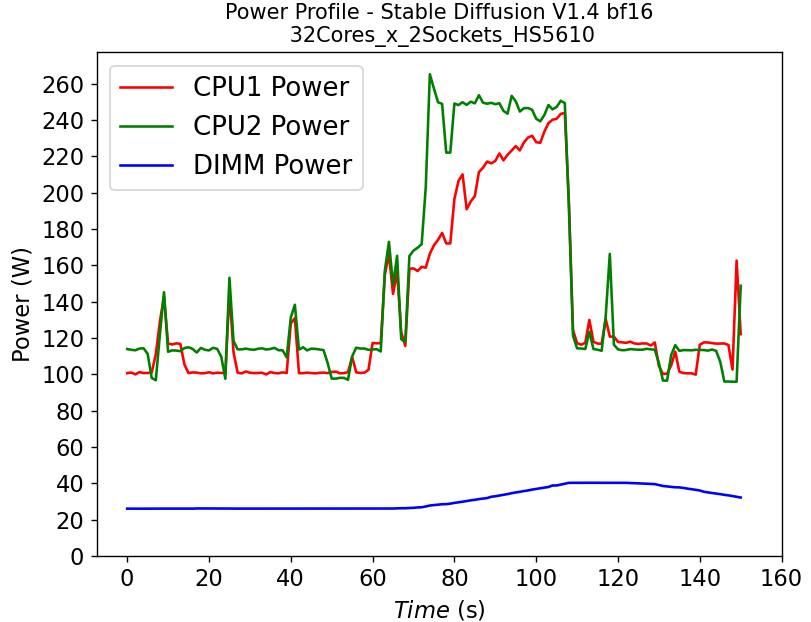

Figure 4. Power consumption of CPU and DIMM in HS5610 server running stable diffusion: (a) fp32 model (b) bf16 model

Figure 4 compares the power profiles of the HS5610 when running the stable diffusion model with (a) fp32 weights and (b) bf16 weights. To finish the same tasks (warm-up and inferencing), the bf16 model takes significantly less time (a shorter power profile duration) than the fp32 model. The plots also show that running fp32 requires much more DIMM power than running bf16. Executing the task pushes the CPUs close to their TDP limit, with the exception of CPU1 in Figure 4b, indicating that the latency of the bf16 model could be reduced further.

Throughput vs Quantization vs Cores – Llama2 Chat Models:

Figure 5. Throughput in HS5610 server running Llama2: (a) 1-socket (b) 2-socket

Figure 5 shows the throughput when running the Llama2 chat models with different parameter sizes and quantization bit widths on the HS5610 server. Figure 5a shows the single-socket scenario and Figure 5b shows the dual-socket scenario. As expected, smaller models with fewer quantization bits give higher throughput. As with the stable diffusion model, quantization greatly improves the throughput. However, scaling up with more CPU cores across sockets has a negligible effect on performance.

R760 Results

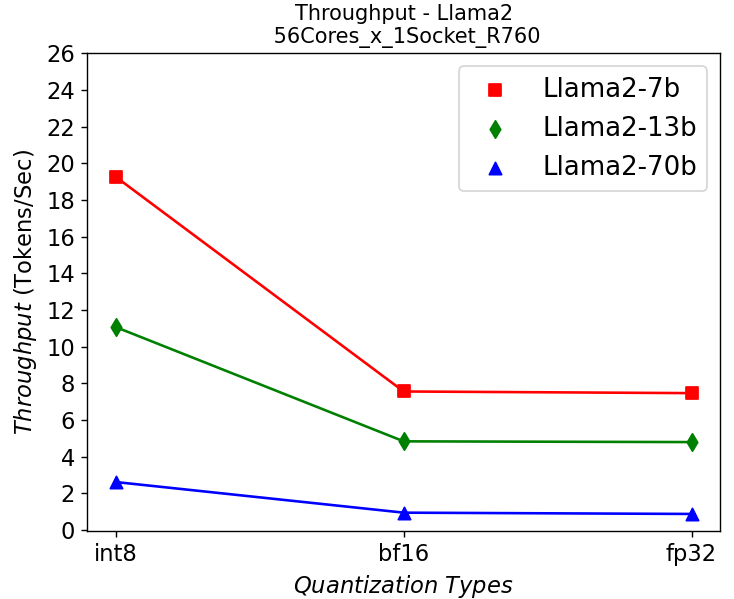

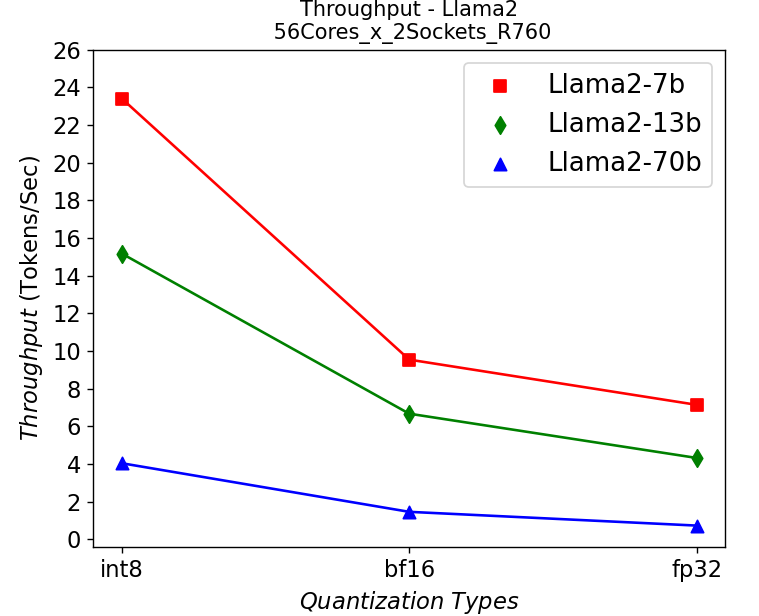

Throughput vs Quantization vs Cores – Llama2 Chat Models:

Figure 6. Throughput in R760 server running Llama2: (a) 1-socket (b) 2-socket

Figure 6 shows the throughput when running the Llama2 chat models with different parameter sizes and quantization bit widths on the R760 server. The observations are similar to those for the HS5610 server: a smaller model gives a higher throughput, and quantization greatly improves the throughput. One difference is that scaling across sockets yields a 10-30% performance improvement, depending on the model, showing a benefit from the larger core count. The performance across the models is good enough for most real-time chatbot applications.

Performance Per Watt – Llama2 Chat Models:

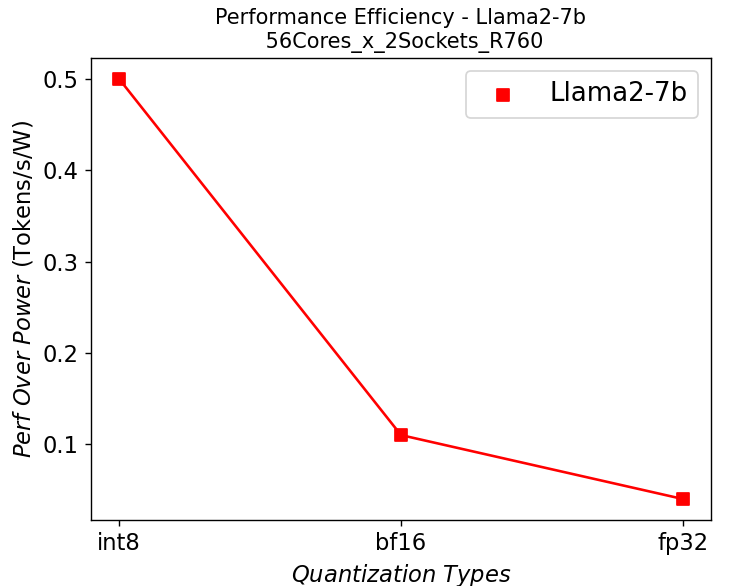

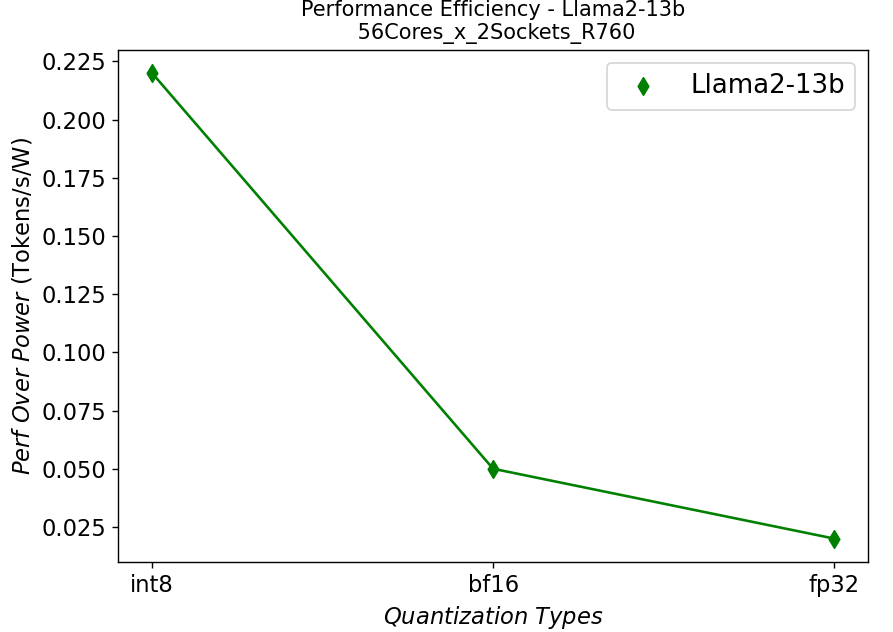

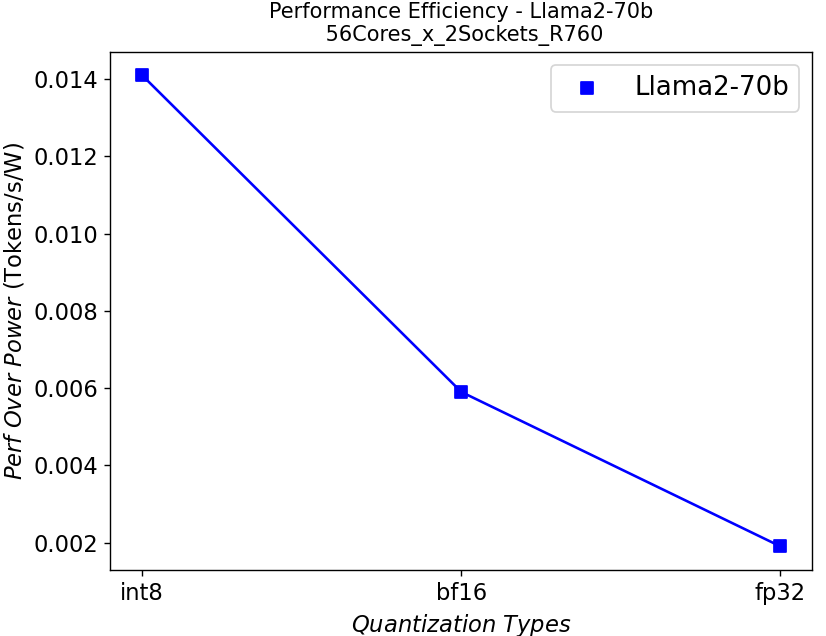

Figure 7. Performance per watt in R760 server running Llama2: (a) 7b (b) 13b (c) 70b

In Figure 7, we further plot the performance per watt, which is strongly related to the total cost of ownership (TCO) of the system. The plots show that quantization greatly improves performance efficiency, especially for the models with more parameters.
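Performance per watt can be computed as in the sketch below, assuming it is defined as throughput divided by the average power drawn during the run. The power samples and the throughput value are placeholders, not measurements from the experiments.

```python
from statistics import mean


def performance_per_watt(tokens_per_second, power_samples_w):
    """Throughput divided by the average of the sampled power draw in watts."""
    avg_power = mean(power_samples_w)
    if avg_power <= 0:
        raise ValueError("average power must be positive")
    return tokens_per_second / avg_power


# Placeholder values, not measurements from the experiments.
power_trace_w = [300.0, 320.0, 310.0, 330.0]  # e.g. CPU plus DIMM power samples
print(performance_per_watt(tokens_per_second=12.6, power_samples_w=power_trace_w))
```

Because quantization raises throughput and, as Figure 4 suggests for DIMM power, can lower power draw, both the numerator and the denominator move in a favorable direction.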

Conclusion

- We have shown that 4th Generation Intel® Xeon® CPUs on Dell PowerEdge mainstream and HS-class platforms can easily meet performance requirements for inferencing with Llama2 models.

- We have also quantitatively demonstrated the benefits of quantization, that is, using lower precision for inferencing, which gives a better TCO in terms of performance per watt and memory footprint and enables a better user experience by improving throughput.

- These studies also show that we need to right-size the infrastructure based on the application and model size.

References

[1]. A. Vaswani et al., “Attention Is All You Need”, https://arxiv.org/abs/1706.03762

[2]. W. Zhao et al., “A Survey of Large Language Models”, https://doi.org/10.48550/arXiv.2303.18223

[3]. R. Rombach et al., “High-Resolution Image Synthesis with Latent Diffusion Models”, https://arxiv.org/abs/2112.10752

[4]. https://www.dell.com/en-us/shop/ipovw/poweredge-hs5610

Authors: Tao Zhang (tao.zhang9@dell.com); Bhavesh Patel (bhavesh_a_patel@dell.com)