Delivering VxRail simplicity with vLCM compatibility

Wed, 24 Apr 2024 12:03:05 -0000


As the days start off with cooler mornings and later sunrises, we welcome the autumn season. Growing up, each season brought forth its own traditions and activities. While venturing through corn mazes was fun, autumn first and foremost meant that it was apple-picking time. Combing through the orchard, you’re constantly looking for which apple to pick, even comparing ones from the same branch because no two are alike. The same goes for the newly introduced VMware vSphere Lifecycle Manager (vLCM) compatibility in VxRail 7.0.240: the VxRail implementation differs from that of the Dell EMC vSAN Ready Nodes, though they’re from the same vLCM “branch.”

Now that VxRail offers vLCM compatibility, it’s a good opportunity to provide an update to Cliff’s blog post last year where he provided a comprehensive review of the customer experiences with lifecycle management of vSAN Ready Nodes and VxRail clusters. While my previous blog post about the VxRail 7.0.240 release provided a summary of VxRail’s vLCM implementation and the added value, I’ll focus more on customer experience this time. Combining the practice of Continuously Validated States to ensure cluster integrity with a VxRail-driven experience truly showcases how automated the vLCM process can be.

In this blog, I’ll cover the following:

- Overview of VMware vLCM

- Compare how to establish a baseline image

- Compare how to perform a cluster update

Overview of VMware vLCM

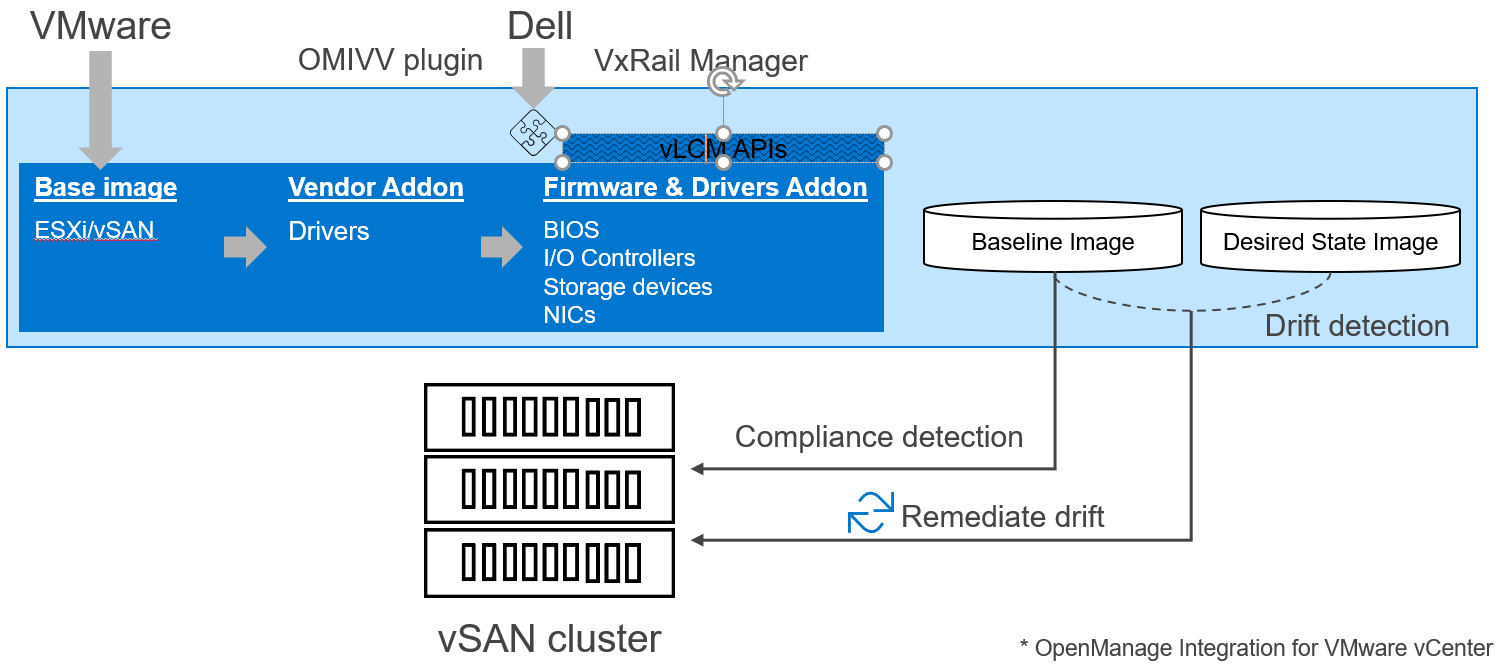

Figure 1: VMware vSphere Lifecycle Manager vLCM framework

VMware vLCM was introduced in vSphere 7.0 as a framework to allow for software and hardware to be updated together as a single system. Being able to combine the ESXi image and component firmware and drivers into a single workflow helps streamline the update experience. To do that, server vendors are tasked with developing their own plugin into this vLCM framework to perform the function of the firmware and drivers addon as depicted in Figure 1. The server vendor implementation provides functionality to build the hardware catalog of firmware and drivers on the server and supply the bits to vCenter. For some components, the server vendors do not supply their own firmware and drivers and instead rely on the individual component vendors to provide the addon capability. Put together, the software and hardware form a cluster image. To start using vLCM, you need to build out a cluster image and assign it as the baseline image. For future updates, you have to build out a cluster image and assign it as the desired state image. Drift detection between the two determines what needs to be remediated for the cluster to arrive at the desired state.
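To make the drift-detection idea concrete, here is a minimal Python sketch. It is not the vLCM API: the `detect_drift` helper, the component names, and the version strings are all hypothetical, and a cluster image is modeled as a plain dictionary of component name to version.

```python
from typing import Dict, Optional, Tuple

# Hypothetical model: a cluster image is a mapping of component name -> version.
Image = Dict[str, str]


def detect_drift(current: Image, desired: Image) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {component: (current_version, desired_version)} for every mismatch."""
    drift = {}
    for component in sorted(set(current) | set(desired)):
        have = current.get(component)   # None if the component is not installed
        want = desired.get(component)   # None if the desired image drops it
        if have != want:
            drift[component] = (have, want)
    return drift


# Illustrative values only; these are not real VxRail or ESXi build numbers.
baseline = {"esxi": "7.0.1", "bios": "2.8.2", "nic-driver": "1.0.0"}
desired = {"esxi": "7.0.2", "bios": "2.8.2", "nic-driver": "1.1.0"}

for name, (have, want) in detect_drift(baseline, desired).items():
    print(f"{name}: {have} -> {want}")
```

Only the components that differ show up in the result, which is the list a remediation step would need to act on.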

For Dell EMC vSAN Ready Nodes, you will use the OMIVV (OpenManage Integration with VMware vCenter) plugin to vCenter to use the vLCM framework. Now VxRail has enhanced VxRail Manager to plug into vCenter in its vLCM implementation. The difference between the two implementations really drives home that vSAN Ready Nodes, whether they’re Dell EMC’s or other server vendors’, deliver a customer-driven experience versus a VxRail-driven experience. Both implementations have their merits because they target different customer problems. The customer-driven experience makes sense for customers who have already invested the IT resources to have more operational control of what is installed on their clusters. For customers looking for operational efficiency that reduces and simplifies their day-to-day responsibility to administer and secure infrastructure, the VxRail-driven experience provides them with the confidence to do so.

Enabling VMware vLCM with the baseline image

A baseline image is a cluster image that you have identified as the version set to deliver that happy state for your cluster. The IT operations team is happy because the cluster is running secure and stable code that complies with their company’s security standards. End users of the applications running on the cluster are happy because they are getting the consistent service required to perform their jobs.

For Dell EMC vSAN Ready Nodes or any vSAN Ready Nodes, users first need to arrive at what the baseline image should be before deploying their clusters. That requires research and testing to validate that the set of firmware and drivers is compatible and interoperable with the ESXi image. Importing it into the vLCM framework involves a series of steps.

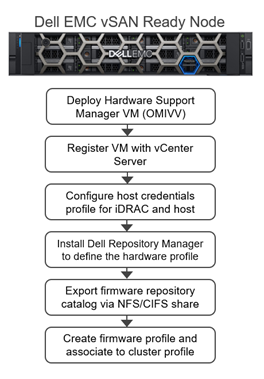

Figure 2: Customer-driven process to establish a baseline image for Dell EMC vSAN Ready Nodes

Dell EMC vSAN Ready Nodes use the OMIVV plugin to interface with vCenter Server. A user needs to first deploy this OMIVV virtual machine on vCenter.

- Once deployed, the user has to register it with vCenter Server.

- From the vCenter UI, the user must configure the host credentials profile for iDRAC and the host.

- To acquire the bits for the firmware and drivers, the user needs to install the Dell Repository Manager, which provides the depot for all firmware and drivers. Here is where the user can build the catalog of firmware and drivers component-by-component (BIOS, NICs, storage controllers, IO controllers, and so on) for their cluster.

- With the catalog in place, the user uploads each file into an NFS/CIFS share that the vCenter Server can access.

- From the vCenter UI, the user creates a repository profile that points to the share with the firmware and drivers. The next step is defining the cluster profile with the ESXi image running on the cluster and the repository profile. This cluster profile becomes the baseline image for future compliance checks and drift remediation scans.

For VxRail, vLCM is not automatically enabled once your cluster is updated to VxRail 7.0.240. It’s a decision you make based on the benefits that vLCM compatibility provides (described in my previous blog post). Once enabled, it cannot be disabled. To enable vLCM, your VxRail cluster needs to be running in a Continuously Validated State. It is a good idea to run the compliance checker first.

Once you have made the decision to move forward, VxRail’s vLCM implementation is astoundingly simple! There’s no need for you to define the baseline image because you’re already running in a Continuously Validated State. The VxRail implementation abstracts away the plugin interaction and uses the vLCM APIs to automate all the previously described manual steps. As a result, enabling vLCM and establishing the baseline image have been reduced to a three-step process.

- Enter the vCenter user credentials.

- VxRail automatically performs a compliance check to verify the cluster is running in a Continuously Validated State.

- VxRail automatically ports the Continuously Validated State into the formation of the baseline image.

And that’s it! The following video clip captures the compliance check you can run first and then the three-step process to enable vLCM:

Figure 3: How to enable vLCM on VxRail

Cluster update with vLCM

For Dell EMC vSAN Ready Nodes, the customer-driven process to build the desired state image is similar to the baseline image. It requires investigation, research, and testing to define the next happy state and the use of the Dell Repository Manager to save and export the hardware catalog to vCenter. From there, users build out a cluster image that includes the ESXi image and the hardware catalog that becomes the desired state image.

Not surprisingly, performing a cluster update with vLCM doesn’t fall too far from the VxRail tree: VxRail streamlines that process down to a few steps within VxRail Manager. By using vLCM APIs, VxRail incorporates the vLCM process into the VxRail Manager experience for a complete LCM experience.

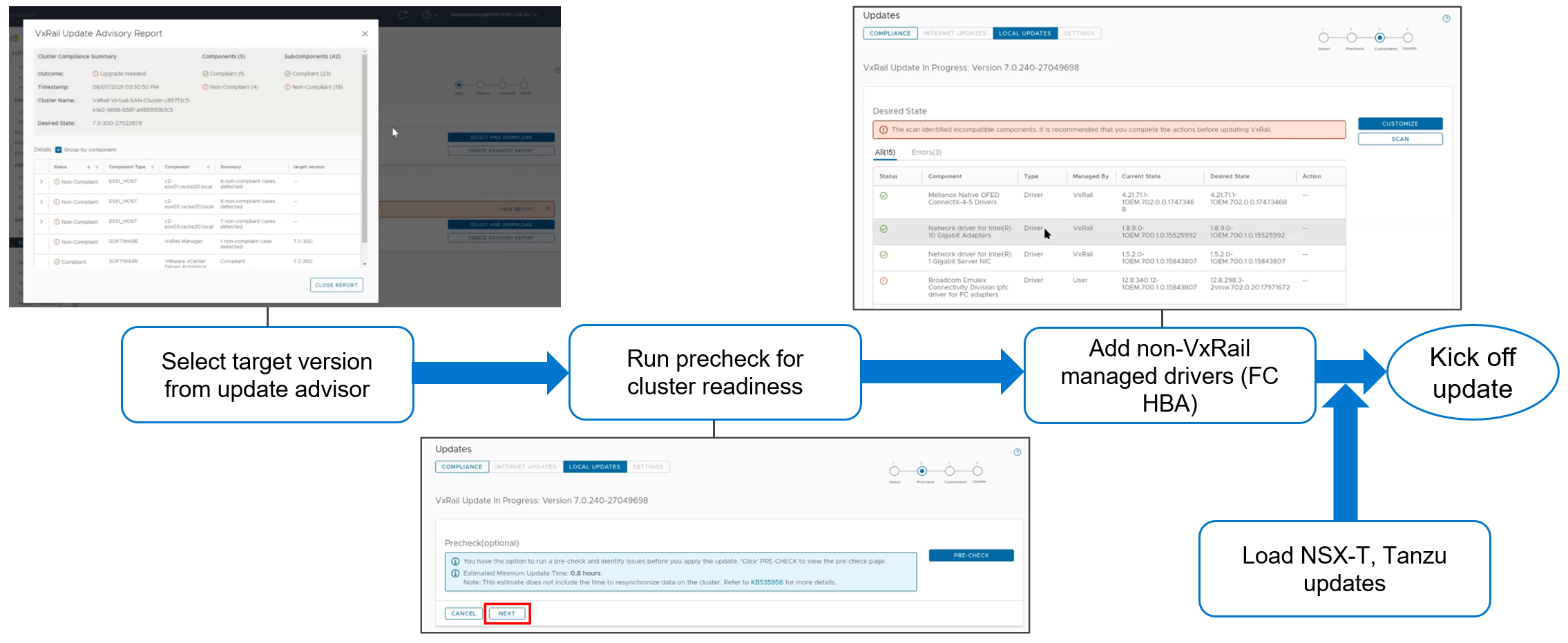

Figure 4: Process to perform cluster update with VxRail

- From the new update advisor tool, select the target VxRail version to which you want to update your cluster. The update advisor then generates a drift remediation report (called an advisory report) that provides a component-by-component analysis of what needs to be updated. This information along with estimated update time will help you plan the length of your maintenance window.

- Running a cluster readiness precheck ahead of your maintenance window is good practice. It allows you time to address any issues that may be found ahead of your scheduled window or to plan for additional time.

- Having passed the precheck, VxRail Manager will incorporate the vLCM process into its own experience. VxRail Manager includes the vendor addon capability in vLCM so that you can add separate firmware and drivers that are not part of the VxRail Continuously Validated State, such as those for a Fibre Channel HBA. Using the vLCM APIs, VxRail can automatically port the Continuously Validated State LCM bundle and any non-VxRail managed component firmware and drivers into the cluster image for remediation.

- If you want to customize the cluster image even more with NSX-T or Tanzu VIBs, you can add them from the vCenter UI. Once they are included in the desired state image, you have the option of initiating the remediation from either vCenter or the VxRail Manager UI. For those not adding these VIBs, the entire cluster update experience stays within the simple and familiar VxRail Manager experience.

Check out the following video clip to see this end-to-end process in action:

Figure 5: How to update your VxRail cluster with VMware vLCM

Conclusion

With both Dell EMC vSAN Ready Nodes and VxRail using the same vLCM framework, it’s a much easier task to deliver an apples-to-apples comparison that clearly shows the simplicity of VxRail LCM with vLCM compatibility. This vLCM implementation is a perfect example of how VxRail is built with VMware and made to enhance VMware. We’ve integrated the innovations of vLCM into the simple and streamlined VxRail-driven experience. As VMware looks to deliver more features to vLCM, VxRail is well positioned to present these capabilities in VxRail fashion.

For more information about this topic, check out the latest podcast: https://infohub.delltechnologies.com/p/vxrail-vlcm-compatibility/

Author Information

Daniel Chiu, Senior Technical Marketing Manager at Dell Technologies

LinkedIn: https://www.linkedin.com/in/daniel-chiu-8422287/

Related Blog Posts

Learn About the Latest Major VxRail Software Release: VxRail 8.0.210

Wed, 24 Apr 2024 12:09:24 -0000


It’s springtime, VxRail customers! VxRail 8.0.210 is our latest software release to bloom. Come see for yourself what makes this software release shine.

VxRail 8.0.210 provides support for VMware vSphere 8.0 Update 2b. All existing platforms that support VxRail 8.0 can upgrade to VxRail 8.0.210. This is also the first VxRail 8.0 software to support the hybrid and all-flash models of the VE-660 and VP-760 nodes based on Dell PowerEdge 16th Generation platforms that were released last summer and the edge-optimized VD-4000 platform that was released early last year.

Read on for a deep dive into the release content. For a more comprehensive rundown of the features and enhancements in VxRail 8.0.210, see the release notes.

Support for VD-4000

The support for VD-4000 includes vSAN Original Storage Architecture (OSA) and vSAN Express Storage Architecture (ESA). VD-4000 was launched last year with VxRail 7.0 support with vSAN OSA. Support in VxRail 8.0.210 carries over all previously supported configurations for VD-4000 with vSAN OSA. What may intrigue you even more is that VxRail 8.0.210 is introducing first-time support for VD-4000 with vSAN ESA.

In the second half of last year, VMware reduced the hardware requirements to run vSAN ESA to extend its adoption of vSAN ESA into edge environments. This change enabled customers to consider running the latest vSAN technology in areas where constraints from price points and infrastructure resources were barriers to adoption. VxRail added support for the reduced hardware requirements shortly after for existing platforms that already supported vSAN ESA, including E660N, P670N, VE-660 (all-NVMe), and VP-760 (all-NVMe). With VD-4000, the VxRail portfolio now has an edge-optimized platform that can run vSAN ESA for environments that also may have space, energy consumption, and environmental constraints. To top that off, it’s the first VxRail platform to support a single-processor node to run vSAN ESA, further reducing the price point.

It is important to set performance expectations when running workload applications on the VD-4000 platform. While our performance testing on vSAN ESA showed stellar gains to the point where we made the argument to invest in 100GbE to maximize performance (check it out here), it is essential to understand that the VD-4000 platform is running with an Intel Xeon-D processor with reduced memory and bandwidth resources. In short, while a VD-4000 running vSAN ESA won’t be setting any performance records, it can be a great solution for your edge sites if you are looking to standardize on the latest vSAN technology and take advantage of vSAN ESA’s data services and erasure coding efficiencies.

Lifecycle management enhancements

VxRail 8.0.210 offers support for a few vLCM feature enhancements that came with vSphere 8.0 Update 2. In addition, the VxRail implementation of these enhancements further simplifies the user experience.

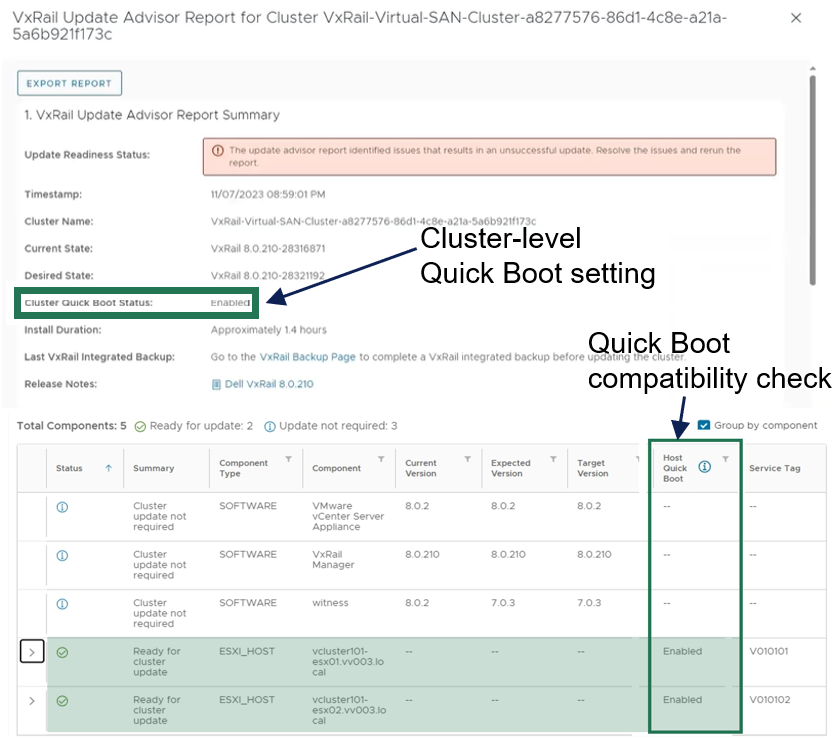

For vLCM-enabled VxRail clusters, we’ve made it easier to benefit from VMware ESXi Quick Boot. The VxRail Manager UI has been enhanced so that users can enable Quick Boot one time, and VxRail will maintain the setting whenever there is a Quick Boot-compatible cluster update. As a refresher for some folks not familiar with Quick Boot, it is an operating system-level reboot of the node that skips the hardware initialization. It can reduce the node reboot time by up to three minutes, providing significant time savings when updating large clusters. That said, any cluster update that involves firmware updates is not Quick Boot-compatible.

Using Quick Boot had been cumbersome in the past because it required several manual steps. To use Quick Boot for a cluster update, you would need to go to the vSphere Update Manager to enable the Quick Boot setting. Because the setting resets to Disabled after the reboot, this step had to be repeated for any Quick Boot-compatible cluster update. Now, the setting can be persisted to avoid manual intervention.

As shown in the following figure, the update advisor report now informs you whether a cluster update is Quick Boot-compatible so that the information is part of your update planning procedure. VxRail leverages the ESXi Quick Boot compatibility utility for this status check.

Figure 1. VxRail update advisor report highlighting Quick Boot information

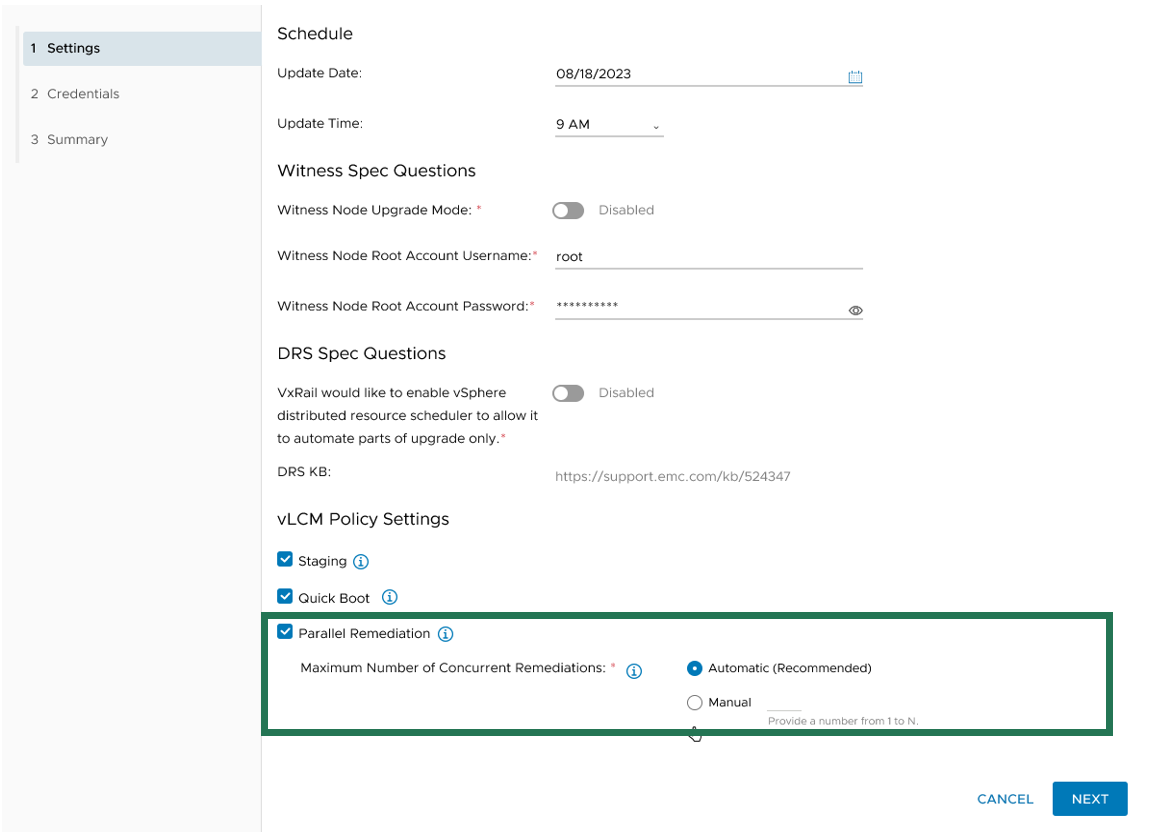

Another new vLCM feature enhancement that VxRail supports is parallel remediation. This enhancement allows you to update multiple nodes at the same time, which can significantly cut down on the overall cluster update time. However, this feature enhancement only applies to VxRail dynamic nodes because the nodes in vSAN clusters still need to be updated one at a time to adhere to storage policy settings.

This feature offers substantial benefits in reducing the maintenance window, and VxRail’s implementation of the feature offers additional protections over how it can be used on vSAN Ready Nodes. For example, enabling parallel remediation with vSAN Ready Nodes means that you would be responsible for managing when nodes go into and out of maintenance mode as well as ensuring application availability because vCenter will not check whether the nodes that you select will disrupt application uptime. The VxRail implementation adds safety checks that help mitigate potential pitfalls, ensuring a smoother parallel remediation process.

VxRail Manager manages when nodes enter and exit maintenance modes and provides the same level of error checking that it already performs on cluster updates. You have the option of letting VxRail Manager automatically set the maximum number of nodes that it will update concurrently, or you can input your own number. The number for the manual setting is capped at the total node count minus two to ensure that the VxRail Manager VM and vCenter Server VM can continue to run on separate nodes during the cluster update.

Figure 2. Options for setting the maximum number of concurrent node remediations
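The manual limit described above can be sketched as a small validation function. This is an illustration only: the function name and the error handling are assumptions, and the logic VxRail Manager uses for the automatic setting is not described here, so it is not modeled.

```python
def validate_manual_limit(total_nodes: int, requested: int) -> int:
    """Check a user-supplied concurrency limit against the 'node count minus two' cap."""
    # Two nodes stay available so the VxRail Manager VM and the vCenter Server VM
    # can keep running on separate nodes during the update.
    cap = total_nodes - 2
    if cap < 1:
        raise ValueError(f"a {total_nodes}-node cluster leaves no room for concurrent remediation")
    if not 1 <= requested <= cap:
        raise ValueError(f"requested {requested}, but the allowed range is 1..{cap}")
    return requested


print(validate_manual_limit(total_nodes=8, requested=6))
```

For an 8-node dynamic node cluster, the highest value this check accepts is 6.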

During the cluster update, VxRail Manager intelligently reduces the node count of concurrent updates if a node cannot enter maintenance mode or if the application workload cannot be migrated to another node to ensure availability. VxRail Manager will automatically defer that node to the next batch of node updates in the cluster update operation.
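The deferral behavior can be pictured with a simple batching sketch. It is not VxRail Manager's algorithm: `plan_batches`, the `can_enter_maintenance` callback, and the `max_deferrals` safety limit are all hypothetical names introduced for illustration.

```python
from collections import deque
from typing import Callable, Deque, List, Sequence, Tuple


def plan_batches(
    nodes: Sequence[str],
    max_concurrent: int,
    can_enter_maintenance: Callable[[str, int], bool],
    max_deferrals: int = 3,
) -> Tuple[List[List[str]], List[str]]:
    """Group nodes into update batches, deferring nodes that are not ready.

    Returns (batches, skipped). `can_enter_maintenance(node, round_no)` is a
    hypothetical stand-in for the real maintenance-mode and migration checks.
    """
    if max_concurrent < 1:
        raise ValueError("max_concurrent must be at least 1")
    queue: Deque[Tuple[str, int]] = deque((node, 0) for node in nodes)
    batches: List[List[str]] = []
    skipped: List[str] = []
    round_no = 0
    while queue:
        take = min(max_concurrent, len(queue))
        candidates = [queue.popleft() for _ in range(take)]
        batch: List[str] = []
        deferred: List[Tuple[str, int]] = []
        for node, deferrals in candidates:
            if can_enter_maintenance(node, round_no):
                batch.append(node)
            elif deferrals >= max_deferrals:
                skipped.append(node)  # give up after too many deferrals
            else:
                deferred.append((node, deferrals + 1))
        # Deferred nodes go to the front of the queue, so they lead the next batch.
        queue.extendleft(reversed(deferred))
        if batch:
            batches.append(batch)
        round_no += 1
    return batches, skipped


# Example: "n2" cannot enter maintenance mode in the first round only.
def ready(node: str, round_no: int) -> bool:
    return not (node == "n2" and round_no == 0)


batches, skipped = plan_batches(["n1", "n2", "n3", "n4"], 2, ready)
print(batches, skipped)
```

In the example, the first batch shrinks to a single node and `n2` is picked up in the second batch, which mirrors the "reduce the concurrent count, then defer" behavior described above.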

The last vLCM feature enhancement in VxRail 8.0.210 that I want to discuss is installation file pre-staging. The idea is to upload as many installation files for the node update as possible onto the node before it actually begins the update operation. Transfer times can be lengthy, so any reduction in the maintenance window would have a positive impact on the production environment.

To reap the maximum benefits of this feature, consider using the scheduling feature when setting up your cluster update. Initiating a cluster update with a future start time allows VxRail Manager the time to pre-stage the files onto the nodes before the update begins.

As you can see, the three vLCM feature enhancements can have varying levels of impact on your VxRail clusters. Automated Quick Boot enablement only benefits cluster updates that are Quick Boot-compatible, meaning there is not a firmware update included in the package. Parallel remediation only applies to VxRail dynamic node clusters. To maximize installation files pre-staging, you need to schedule cluster updates in advance.

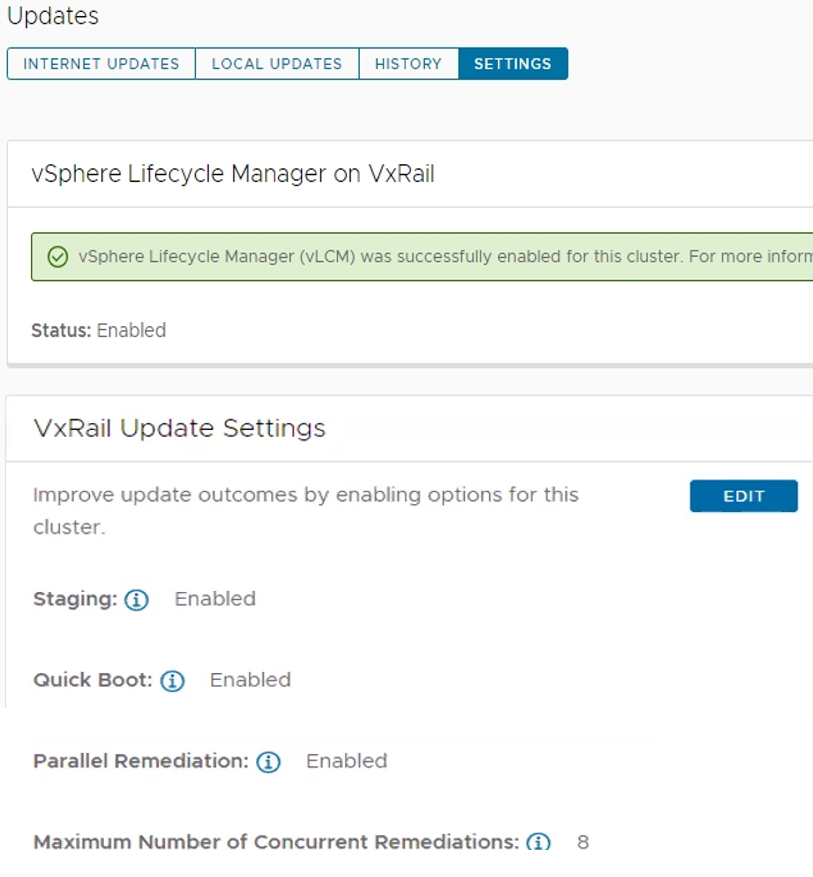

That said, two commonalities across all three vLCM feature enhancements are that you must have your VxRail clusters running in vLCM mode and that the VxRail implementation of these three feature enhancements makes them more secure and easier to use. As shown in the following figure, the Updates page on the VxRail Manager UI has been enhanced so that you can easily manage these vLCM features at the cluster level.

Figure 3. VxRail Update Settings for vLCM features

VxRail dynamic nodes

VxRail 8.0.210 also introduces an enhancement for dynamic node clusters with a VxRail-managed vCenter Server. In a recent VxRail software release, VxRail added an option for you to deploy a VxRail-managed vCenter Server with your dynamic node cluster as a Day 1 operation. The initial support was for Fibre Channel-attached storage. The parallel enhancement in this release adds support for dynamic node clusters using IP-attached storage for their primary datastore. That means iSCSI, NFS, and NVMe over TCP attached storage from PowerMax, VMAX, PowerStore, UnityXT, PowerFlex, and VMware vSAN cross-cluster capacity sharing are now supported. Just like before, you are still responsible for acquiring and applying your own vCenter Server license before the 60-day evaluation period expires.

Password management

Password management is one of the key areas of focus in this software release. To reduce the manual steps to modify the vCenter Server management and iDRAC root account passwords, the VxRail Manager UI has been enhanced to allow you to make the changes via a wizard-driven workflow instead of having to change the password on the vCenter Server or iDRAC themselves and then go onto VxRail Manager UI to provide the updated password. The enhancement simplifies the experience and reduces potential user errors.

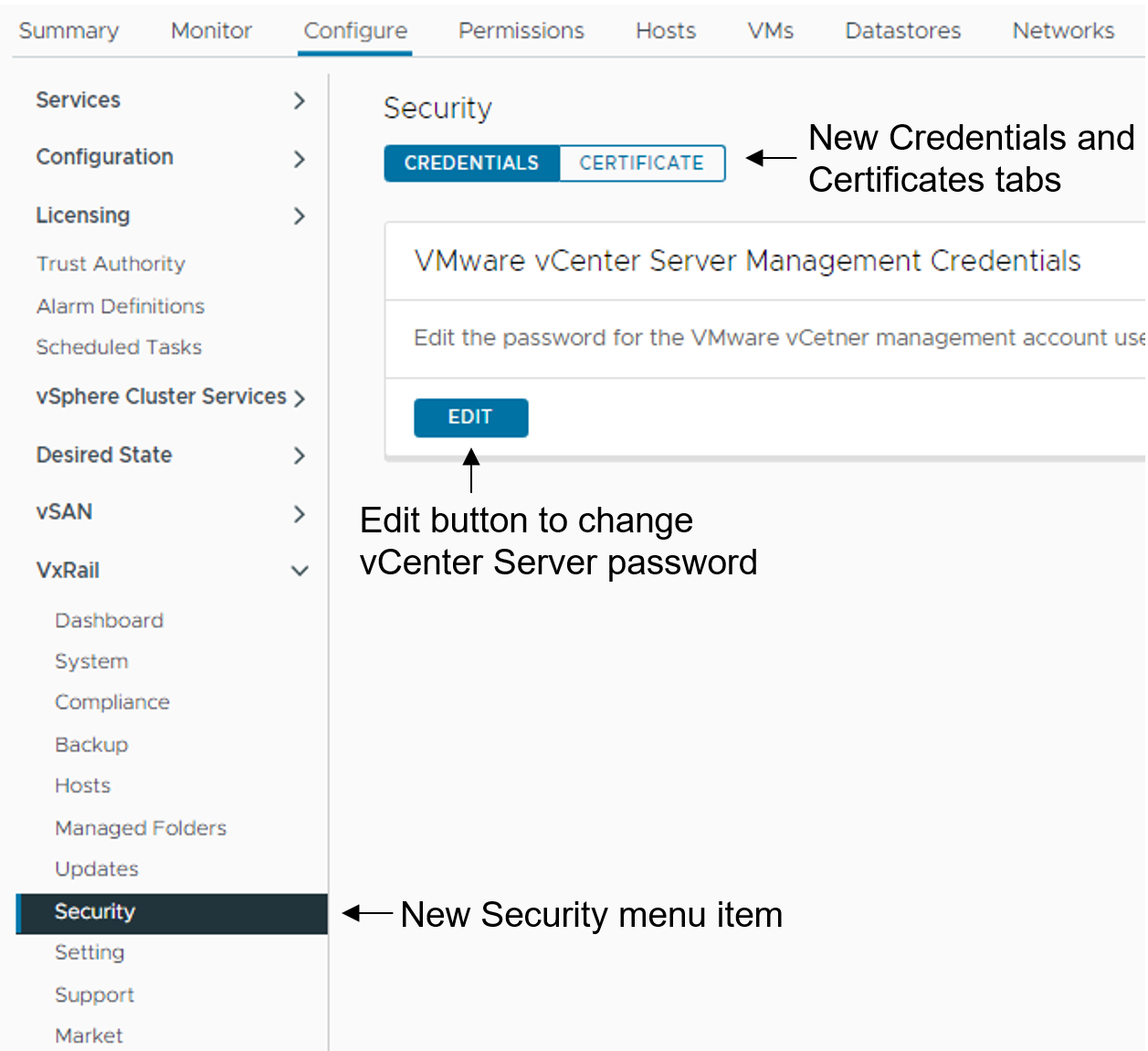

To update the vCenter Server management credentials, there is a new Security page that replaces the Certificates page. As illustrated in the following figure, a Certificate tab for the certificates management and a Credentials tab to change the vCenter Server management password are now present.

Figure 4. How to update the vCenter Server management credentials

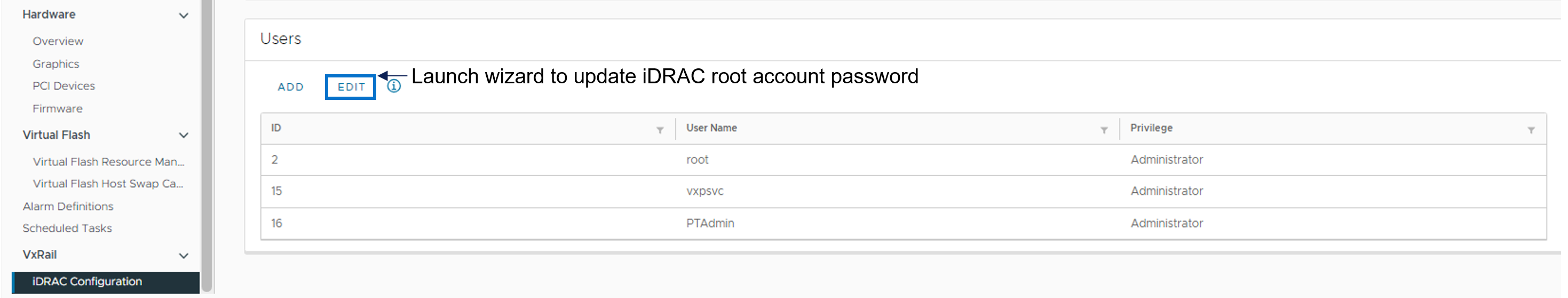

To update the iDRAC root account password, there is a new iDRAC Configuration page where you can click the Edit button to launch a wizard to change the password.

Figure 5. How to update the iDRAC root password

Deployment Flexibility

Lastly, I want to touch on two features in deployment flexibility.

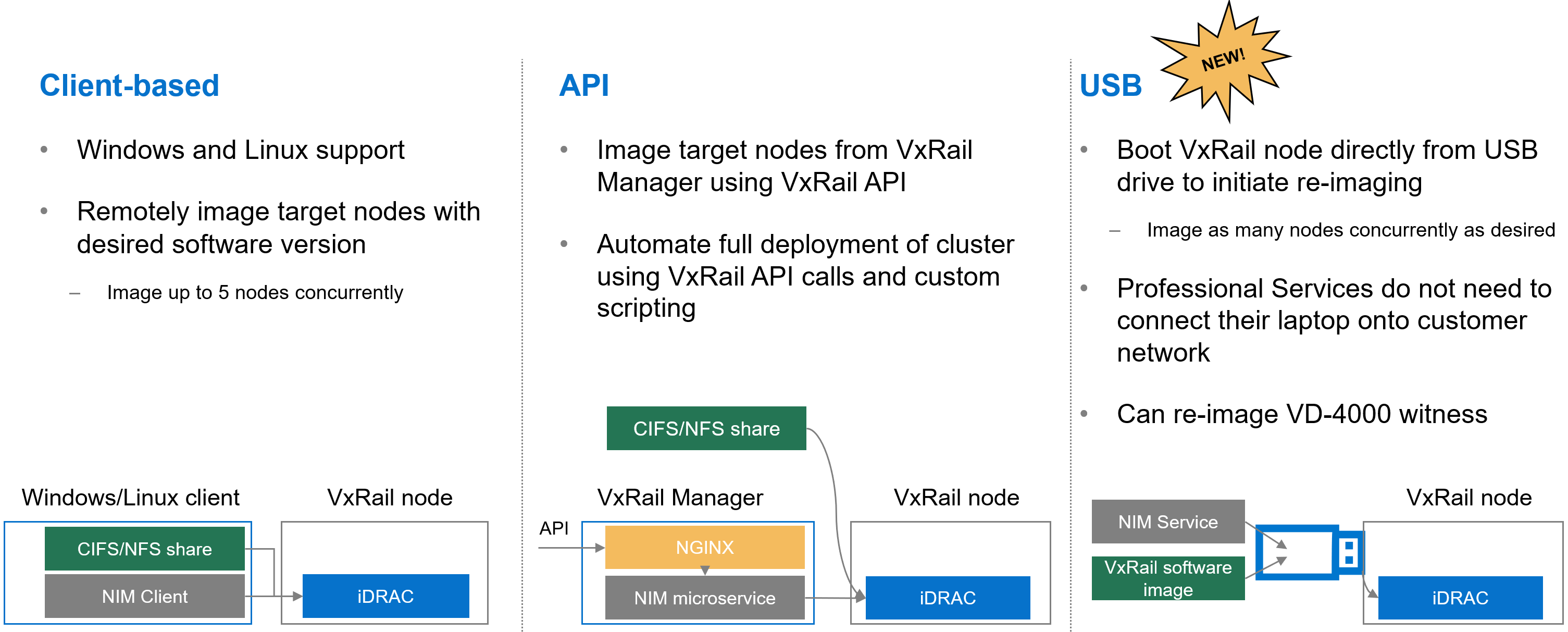

Over the past few years, the VxRail team has invested heavily in empowering you with the tools to recompose and rebuild the clusters on your own. One example is making our VxRail nodes customer-deployable with the VxRail Configuration Portal. Another is the node imaging tool.

Figure 6. Different options to use the node image management tool

Initially, the node imaging tool was Windows client-based where the workstation has the VxRail software ISO image stored locally or on a share. By connecting the workstation onto the local network where the target nodes reside, the imaging tool can be used to connect to the iDRAC of the target node. Users can reimage up to 5 nodes on the local network concurrently. In a more recent VxRail release, we added Linux client support for the tool.

We’ve also refactored the tool into a microservice within the VxRail HCI System Software so that it can be used via VxRail API. This method added more flexibility so that you can automate the full deployment of your cluster by using VxRail API calls and custom scripting.

In VxRail 8.0.210, we are introducing the USB version of the tool. Here, the tool can be self-contained on a USB drive so that users can plug the USB drive into a node, boot from it, and initiate reimaging. This provides benefits in scenarios where the 5-node maximum for concurrent reimage jobs is an issue. With this option, users can scale reimage jobs by setting up more USB drives. The USB version of the tool now allows an option to reimage the embedded witness on the VD-4000.

The final feature for deployment flexibility is support for IPv6. Whether your environment is exhausting the IPv4 address pool or there are requirements in your organization to future-proof your networking with IPv6, you will be pleasantly surprised by the level of support that VxRail offers.

You can deploy IPv6 in a dual or single network stack. In a dual network stack, you can have IPv4 and IPv6 addresses for your management network. In a single network stack, the management network is only on the IPv6 network. Initial support is for VxRail clusters running vSAN OSA with 3 or more nodes. Other than that, the feature set is on par with what you see with IPv4. Select the network stack at cluster deployment.
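As a quick illustration of the two options, the following sketch classifies a set of management addresses by network stack using Python's standard `ipaddress` module. The function name and the sample addresses (taken from the reserved documentation ranges) are assumptions for illustration and have nothing to do with how VxRail itself validates a deployment.

```python
import ipaddress
from typing import Iterable


def network_stack(management_addresses: Iterable[str]) -> str:
    """Classify management addresses as 'dual', 'ipv6-only', or 'ipv4-only'."""
    versions = {ipaddress.ip_address(addr).version for addr in management_addresses}
    if versions == {4, 6}:
        return "dual"
    if versions == {6}:
        return "ipv6-only"
    if versions == {4}:
        return "ipv4-only"
    raise ValueError("no management addresses supplied")


print(network_stack(["192.0.2.10", "2001:db8::10"]))  # IPv4 and IPv6 together
print(network_stack(["2001:db8::10"]))                # single stack on IPv6
```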

Conclusion

VxRail 8.0.210 offers a plethora of new features and platform support such that there is something for everyone. As you digest the information about this release, know that updating your cluster to the latest VxRail software provides you with the best return on your investment from a security and capability standpoint. Backed by VxRail Continuously Validated States, you can update your cluster to the latest software with confidence. For more information about VxRail 8.0.210, please refer to the release notes. For more information about VxRail in general, visit the Dell Technologies website.

Author: Daniel Chiu, VxRail Technical Marketing

https://www.linkedin.com/in/daniel-chiu-8422287/

Learn More About the Latest Major VxRail Software Release: VxRail 7.0.480

Tue, 24 Oct 2023 15:51:48 -0000


Happy Autumn, VxRail customers! As the morning air gets chillier and the sun rises later, this blog on our latest software release – VxRail 7.0.480 – paired with your Pumpkin Spice Latte will give you the boost you need to kick start your day. It may not be as tasty as freshly made cider donuts, but this software release has significant additions to the VxRail lifecycle management experience that can surely excite everyone.

VxRail 7.0.480 provides support for VMware ESXi 7.0 Update 3o and VMware vCenter 7.0 Update 3o. All existing platforms that support VxRail 7.0, except ones based on Dell PowerEdge 13th Generation platforms, can upgrade to VxRail 7.0.480. This includes the VxRail systems based on PowerEdge 16th Generation platforms that were released in August.

Read on for a deep dive into the VxRail Lifecycle Management (LCM) features and enhancements in this latest VxRail release. For a more comprehensive rundown of the features and enhancements in VxRail 7.0.480, see the release notes.

Improving update planning activities for unconnected clusters or clusters with limited connectivity

VxRail 7.0.450, released earlier this year, provided significant improvements to update planning activities in a major effort to streamline administrative work and increase cluster update success rates. Enhancements to the cluster pre-update health check and the introduction of the update advisor report were designed to drive even more simplicity to your update planning activities. By having VxRail Manager automatically run the update advisor report, inclusive of the pre-update health check, every 24 hours against the latest information, you will always have an up-to-date report to determine your cluster’s readiness to upgrade to the latest VxRail software version.

If you are not familiar with the LCM capabilities added in VxRail 7.0.450, you can review this blog for more information.

VxRail 7.0.450 offered a seamless path for clusters that are connected to the Dell cloud to take advantage of these new capabilities. Internet-connected clusters can automatically download LCM pre-checks and the installer metadata files, which provide the manifest information about the latest VxRail software version, from the Dell cloud. The ability to periodically scan the Dell cloud for the latest files ensures the update advisor report is always up to date to support your decision-making.

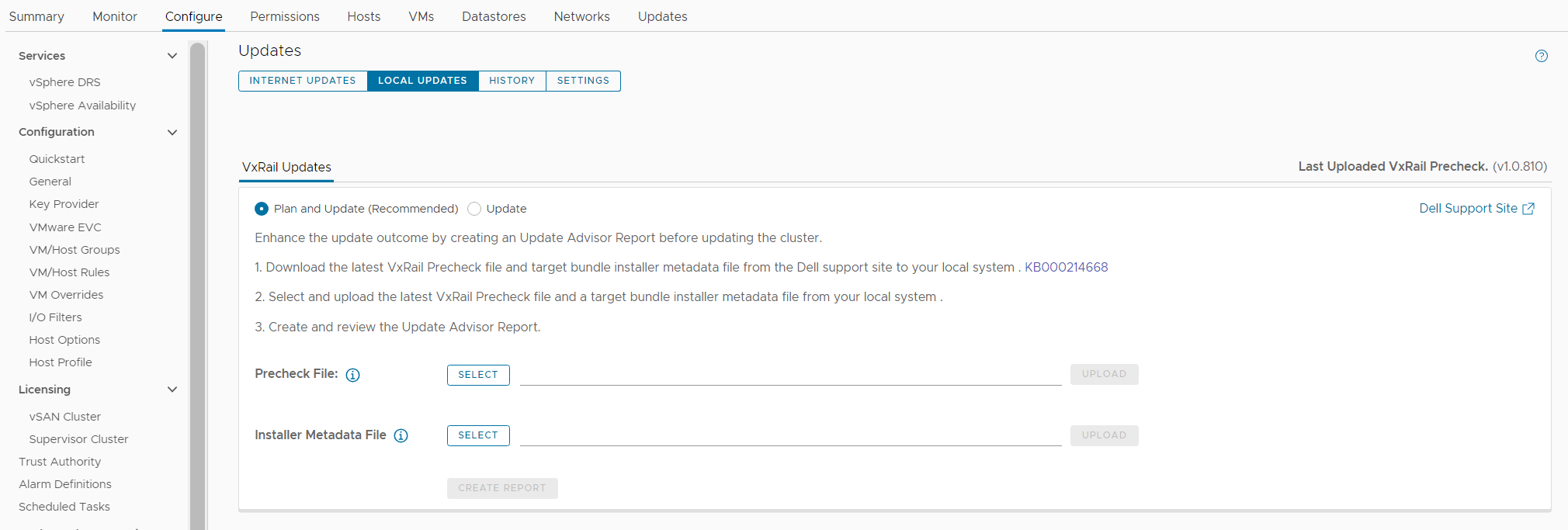

While unconnected clusters could use these features, the user experience in VxRail 7.0.450 made it more cumbersome for users to upload the latest LCM pre-checks and installer metadata files. VxRail 7.0.480 aims to improve the user experience for those who have clusters deployed in dark or remote sites that have limited network connectivity.

Starting in VxRail 7.0.480, users of unconnected clusters will have an easier experience uploading the latest LCM pre-checks file onto VxRail Manager. The VxRail Manager UI has been enhanced, so you no longer have to upload via CLI.

Knowing that some clusters are deployed in areas where network bandwidth is at a premium, the VxRail Manager UI has also been updated so that you only need to upload the installer metadata file to generate the update advisor report. In VxRail 7.0.450, users had to upload the full LCM bundle for the update advisor report. The difference in the payload size of greater than 10GB for a full LCM bundle versus a 50KB installer metadata file is a tremendous improvement for bandwidth-constrained clusters, eliminating a barrier to relying on the update advisor report as a standard cluster management practice. With VxRail 7.0.480, whether you have connected or unconnected clusters, these update planning features are easy to use and will help increase your cluster update success rates.
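To put the payload difference in perspective, here is a back-of-the-envelope calculation. The 10 Mbps link speed is an assumed figure for a bandwidth-constrained site, the 10GB value is a lower bound (the bundle is "greater than 10GB"), and the estimate ignores protocol overhead and latency.

```python
def transfer_seconds(size_bytes: int, link_mbps: float) -> float:
    """Ideal transfer time in seconds, ignoring protocol overhead and latency."""
    bits = size_bytes * 8
    return bits / (link_mbps * 1_000_000)


LINK_MBPS = 10                 # assumed link speed for a remote site
full_bundle = 10 * 10**9       # lower bound for the full LCM bundle (10GB)
metadata_file = 50 * 10**3     # 50KB installer metadata file

print(f"full LCM bundle: {transfer_seconds(full_bundle, LINK_MBPS) / 3600:.1f} hours")
print(f"metadata file:   {transfer_seconds(metadata_file, LINK_MBPS):.2f} seconds")
print(f"size ratio:      {full_bundle // metadata_file:,}x")
```

Under these assumptions, the full bundle takes a little over two hours to upload while the metadata file takes a fraction of a second, a size difference of 200,000 to 1.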

To accommodate these improvements, the Local Updates tab has been modified to support these new capabilities. There are now two sub-tabs underneath the Local Updates tab:

- The Update sub-tab represents the existing cluster update workflow where you would upload the full LCM bundle to generate the update advisor report and initiate the cluster update operation.

- The Plan and Update sub-tab is the recommended path which incorporates the enhancements in VxRail 7.0.480. Here you can upload the latest LCM pre-checks file and the installer metadata file that you found and downloaded from the Dell Support website. Uploading the LCM pre-checks file is optional to create a new report because there may not always be an updated file to apply. However, you do need to upload an installer metadata file to generate a new report from here. Once uploaded, VxRail Manager will generate an update advisor report against that installer metadata file every 24 hours.

Figure 1. New look to the Local Updates tab

Easier record-keeping for compliance drift and update advisor reports

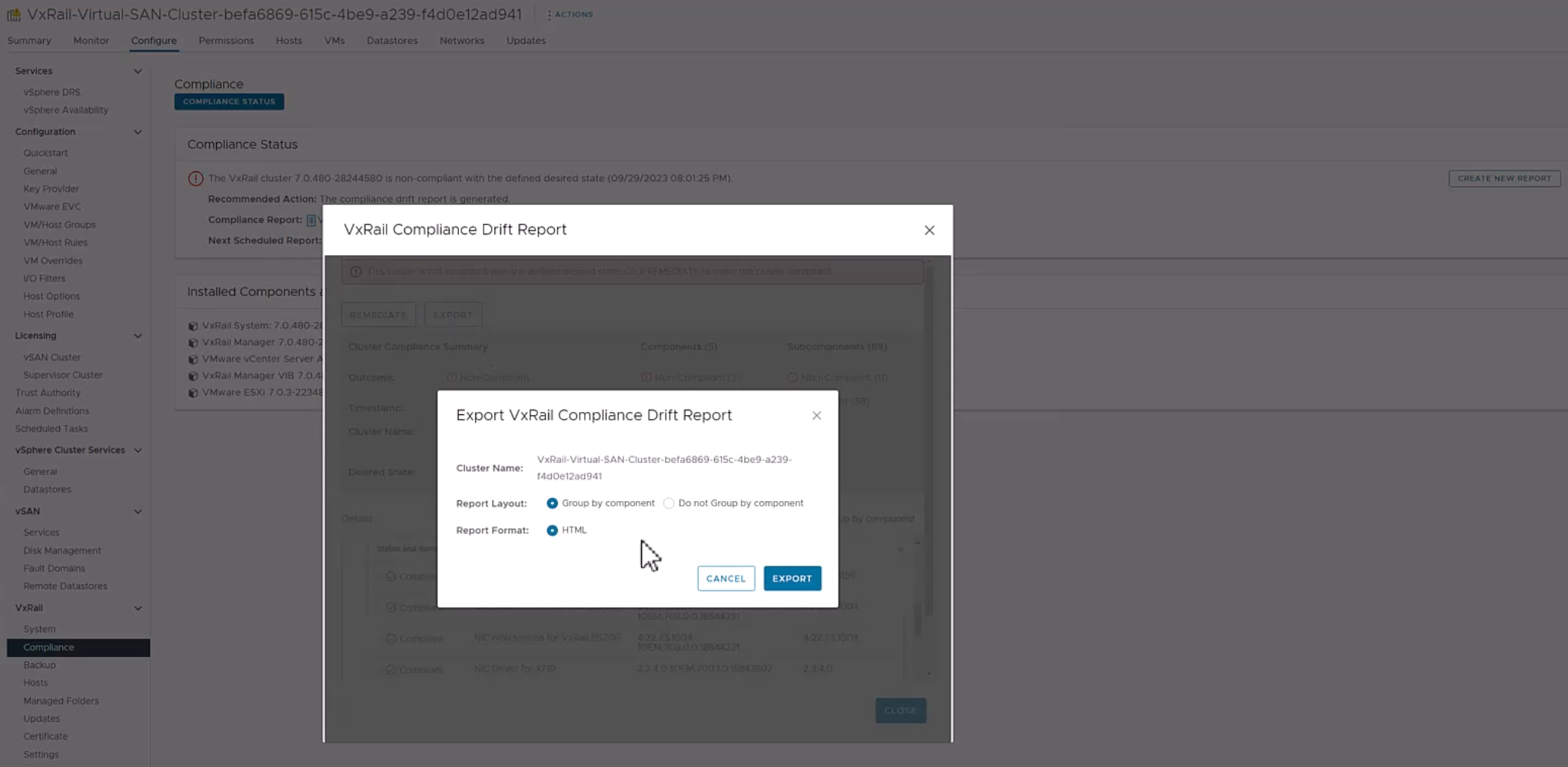

VxRail 7.0.480 adds new functionality to make the compliance drift reports exportable to outside the VxRail Manager UI while also introducing a history tab to access past update advisor reports.

Some of you use the contents of the compliance drift report to build out a larger infrastructure status report for information sharing across your organizations. Making the report exportable would simplify that report building process. When exporting the report, there is an option to group the information by host if you prefer.

Note that the compliance check functionality has moved from the Compliance tab under the Updates page to a separate page, which you can navigate to by selecting Compliance from under the VxRail section.

Figure 2. Exporting the compliance drift report

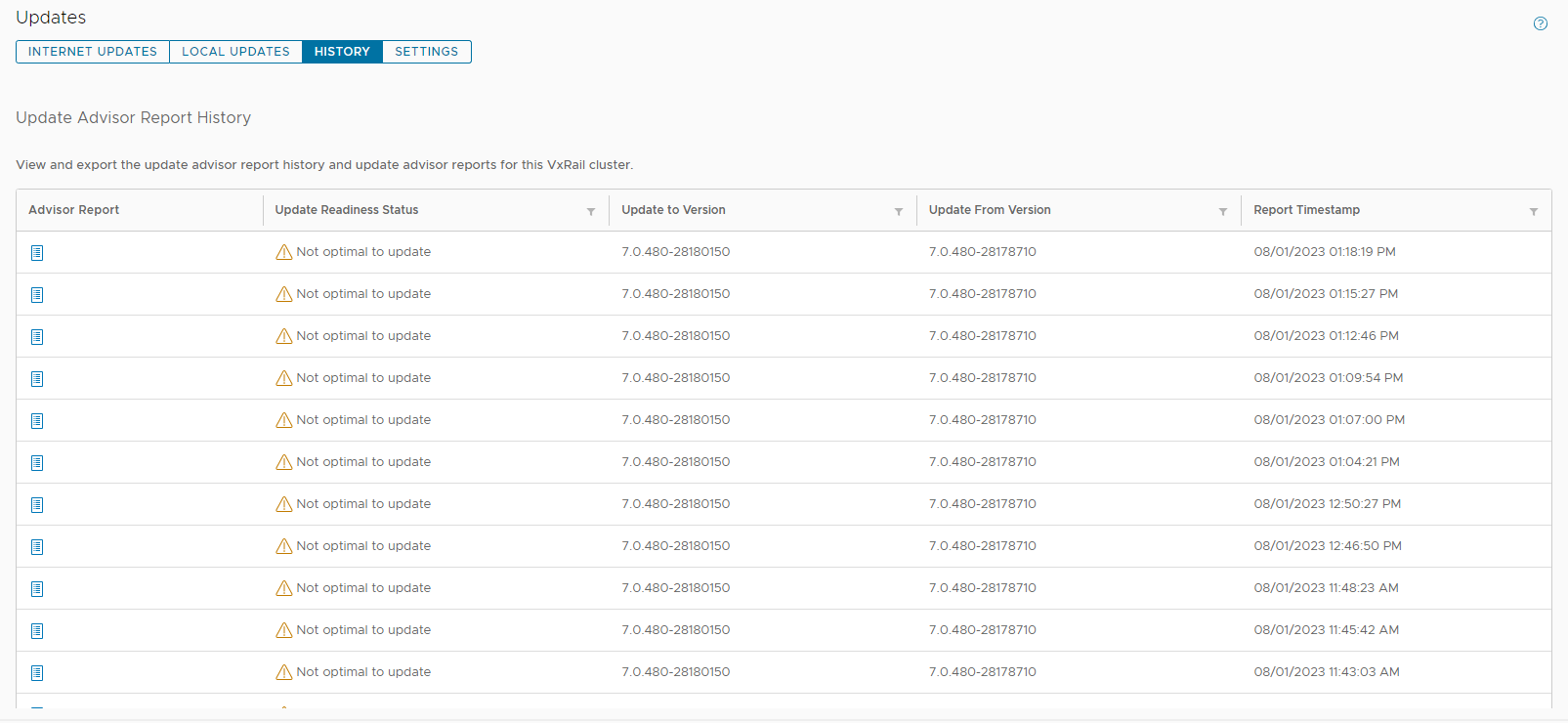

The exit of the Compliance tab comes with the introduction of the History tab on the Updates page in VxRail 7.0.480. Because VxRail Manager automatically generates a new update advisor report every 24 hours and you have the option to generate one on-demand, the update advisor report is often overwritten. To avoid the need to constantly export them as a form of record-keeping, the new History tab stores the last 30 update advisor reports. The reports are listed in a table format where you can see which target version the report was run against and when it was run. To view the full report, you can click on the icon on the left-hand column.

Figure 3. New History tab to store the last 30 update advisor reports
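The "keep the last 30 reports" behavior is a classic bounded history. The sketch below shows one way to model it with a fixed-length `deque`; the `AdvisorReport` and `ReportHistory` names and fields are hypothetical and do not reflect how VxRail Manager stores reports internally.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass(frozen=True)
class AdvisorReport:
    target_version: str   # version the report was run against
    generated_at: str     # timestamp, kept as a string for simplicity


class ReportHistory:
    """Keep only the most recent `limit` reports, newest first when listed."""

    def __init__(self, limit: int = 30) -> None:
        self._reports: Deque[AdvisorReport] = deque(maxlen=limit)

    def add(self, report: AdvisorReport) -> None:
        # When the deque is full, appending drops the oldest entry automatically.
        self._reports.append(report)

    def entries(self) -> List[AdvisorReport]:
        return list(reversed(self._reports))


history = ReportHistory()
for day in range(1, 36):  # 35 daily reports
    history.add(AdvisorReport("7.0.480", f"day-{day}"))

print(len(history.entries()), history.entries()[0].generated_at, history.entries()[-1].generated_at)
```

After 35 daily reports, only the newest 30 remain, so the five oldest have aged out without any manual cleanup.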

Addressing cluster update challenges for larger-sized clusters

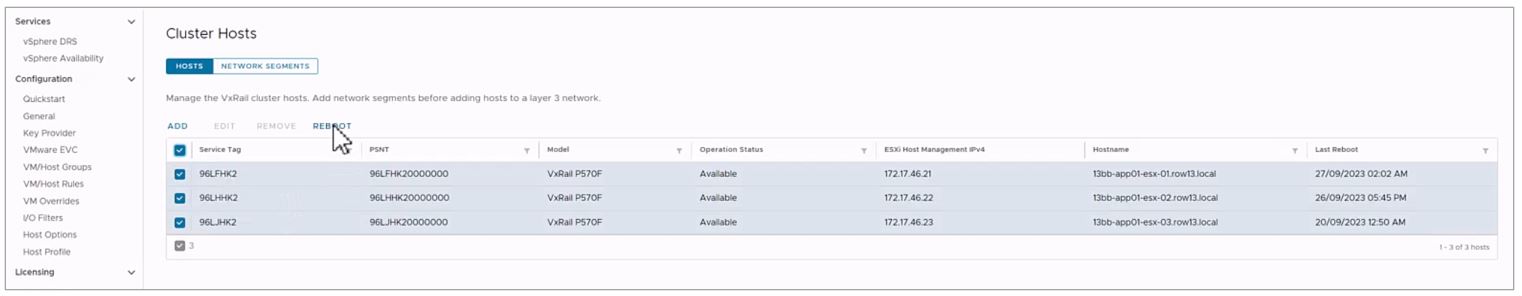

For some of you that have larger-sized clusters, cluster updates pose challenges that may prevent you from upgrading more frequently. For example, the length of the maintenance window required to complete a full cluster update may not fit within your normal business operations such that any cluster update activity will impact service availability. As a result, cluster updates are kept to a minimum and nodes inevitably are not rebooted for long periods of time. While the cluster pre-update health check is an effective tool to determine cluster readiness for an upgrade, some issues may be lurking that a node reboot can uncover. That’s why some of you script your own node reboot sequence that acts as a test run for a cluster upgrade. The script reboots each node one at a time to ensure service levels of your workloads are maintained. If any nodes fail to reboot, you can investigate those nodes.

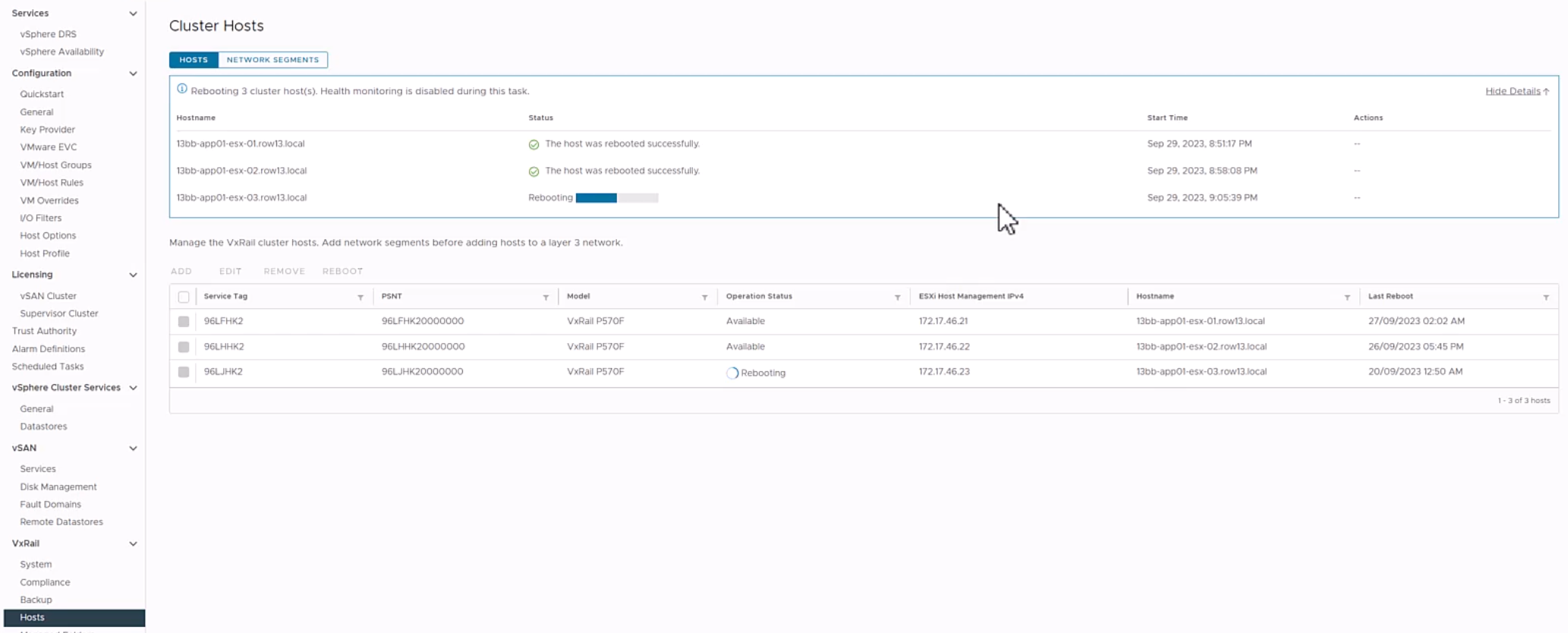

VxRail 7.0.480 introduces the node reboot sequence on VxRail Manager UI so that you do not have to manage your scripts anymore. The new feature includes cluster-level and node-level prechecks to ensure it is safe to perform this activity. If nodes fail to reboot, there is an option for you to retry the reboot or skip it. Making this activity easy may also encourage more customers to do this additional pre-check before upgrading their clusters.

Figure 5. Monitoring the node reboot sequence on the dashboard
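For readers who have not written such a script, a one-node-at-a-time reboot test might look roughly like the sketch below. It is illustrative only: `reboot` is a hypothetical callable supplied by the caller, the cluster-level and node-level prechecks are left out, and the retry-then-skip policy is automated here whereas the VxRail Manager UI offers it as a choice.

```python
from typing import Callable, Dict, Iterable


def rolling_reboot(
    nodes: Iterable[str],
    reboot: Callable[[str], bool],
    retries: int = 1,
) -> Dict[str, str]:
    """Reboot nodes strictly one at a time and record the outcome per node.

    `reboot(node)` is a hypothetical callable that returns True once the node
    is back and healthy. A failed node is retried up to `retries` times and
    then skipped so the remaining nodes can still be tested.
    """
    results: Dict[str, str] = {}
    for node in nodes:
        attempts = 0
        while True:
            attempts += 1
            if reboot(node):
                results[node] = "ok"
                break
            if attempts > retries:
                results[node] = "skipped"  # flag this node for investigation
                break
    return results


# Simulated cluster: node-2 fails once and then recovers, node-3 never comes back.
calls: Dict[str, int] = {}


def fake_reboot(node: str) -> bool:
    calls[node] = calls.get(node, 0) + 1
    if node == "node-2":
        return calls[node] >= 2
    return node != "node-3"


outcome = rolling_reboot(["node-1", "node-2", "node-3"], fake_reboot)
print(outcome)
```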

VxRail 7.0.480 also provides the capability to split your cluster update into multiple parts. Doing so allows you to separate your cluster upgrade into smaller maintenance windows and work around your business operation needs. Though this capability could reduce the impact of a cluster upgrade to your organization, VMware does recommend that you complete the full upgrade within one week given that there are some Day 2 operations that are disabled while the cluster is partially upgraded. VxRail enables this capability only through VxRail API. When a cluster is in a partially upgraded state, features in the Updates tab are disabled and a banner appears alerting you of the cluster state. Cluster expansion and node removal operations are also unavailable in this scenario.

Conclusion

The new lifecycle management capabilities added to VxRail 7.0.480 are part of the continual evolution of the VxRail LCM experience. They also represent how we value your feedback on how to improve the product and our dedication to making your suggestions come to fruition. The LCM capabilities added to this software release will drive more effective cluster update planning, which will result in higher rates of cluster update success that will drive more efficiencies in your IT operations. Though this blog focuses on the improvements in lifecycle management, please refer to the release notes for VxRail 7.0.480 for a complete list of features and enhancements added to this release. For more information about VxRail in general, visit the Dell Technologies website.

Author: Daniel Chiu