
GPUs


NVIDIA A2 accelerator

The NVIDIA A2 Tensor Core GPU provides entry-level inference with low power, a small footprint, and high performance for NVIDIA AI at the edge. Built as a low-profile PCIe Gen4 card with a low, configurable thermal design power (TDP) of 40 W to 60 W, the A2 brings versatile inference acceleration to any server for deployment at scale.

The following figure shows the NVIDIA A2 PCIe GPU:

Figure 8. NVIDIA A2 PCIe GPU

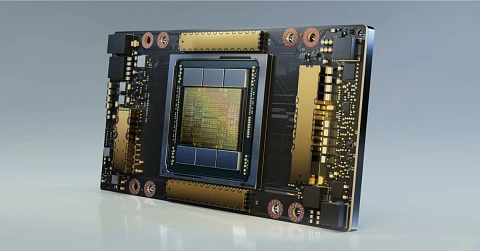

NVIDIA A100 GPUs

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale to power the world's highest-performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications. As the engine of the NVIDIA data center platform, the A100 provides up to 20X higher performance than the prior NVIDIA Volta™ generation. The A100 GPU can scale up efficiently or be partitioned into as many as seven isolated GPU instances with Multi-Instance GPU (MIG), providing a unified platform that lets elastic data centers adjust dynamically to shifting workload demands.
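To make the MIG partitioning idea concrete, the following is a simplified sketch, not NVIDIA's placement logic. It assumes that an A100 exposes seven compute slices and that an instance of size "Ng" consumes N of them, using the compute-slice counts of the A100 MIG profiles (1g, 2g, 3g, 4g, 7g). The function names `fits_on_a100` and `max_instances` are hypothetical. Real MIG placement also enforces slice alignment and memory-slice limits, which this model does not capture.

```python
# Simplified model of A100 Multi-Instance GPU (MIG) partitioning.
# Assumption: the GPU exposes 7 compute slices, and an instance of
# size "Ng" consumes N slices. Real MIG placement rules (slice
# alignment, memory slices) are stricter and are NOT modeled here.

A100_COMPUTE_SLICES = 7
VALID_SIZES = {1, 2, 3, 4, 7}  # compute-slice counts of A100 MIG profiles


def fits_on_a100(instance_sizes):
    """Return True if the requested instance sizes fit the 7-slice budget."""
    if any(size not in VALID_SIZES for size in instance_sizes):
        raise ValueError(f"unsupported instance size in {instance_sizes}")
    return sum(instance_sizes) <= A100_COMPUTE_SLICES


def max_instances(size):
    """Largest number of same-size instances under this simplified model."""
    if size not in VALID_SIZES:
        raise ValueError(f"unsupported instance size: {size}")
    return A100_COMPUTE_SLICES // size


if __name__ == "__main__":
    print(max_instances(1))            # seven isolated 1g instances
    print(fits_on_a100([3, 2, 1, 1]))  # mixed partition using all 7 slices
    print(fits_on_a100([4, 4]))        # exceeds the compute-slice budget
```

Under this model, `max_instances(1)` returns 7, matching the seven isolated GPU instances described above, while a mixed request such as one 3g, one 2g, and two 1g instances uses the full budget. Before configuring MIG on a real system, consult NVIDIA's documentation for the supported profile combinations.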

The following figure shows the NVIDIA A100 PCIe accelerator:

Figure 9. NVIDIA A100 PCIe accelerator

The following figure shows the NVIDIA A100 SXM accelerator:

Figure 10. NVIDIA A100 SXM accelerator