This section provides an overview of network planning considerations for a VxRail deployment, drawing on the Dell VxRail Network Planning Guide. It covers best practices, recommendations, and requirements for both the physical and virtual network environments.


VxRail network planning considerations


Top-of-Rack switch

A VxRail cluster depends on adjacent switches, usually a Top-of-Rack (ToR) switch, for all connectivity between VxRail nodes, because the nodes have no shared backplane. The VxRail nodes can attach to any compatible network infrastructure at 1/10/25 GbE speeds using RJ45 or SFP+ ports. Most production environments deploy dual ToR switches to avoid a single point of failure. Typically, all network traffic in a VxRail cluster is Layer 2 (data link layer): all hosts are in the same broadcast domain and do not require routing services. A Layer 2 configuration is easier to administer and to integrate with the upstream network, and the switches do not have to provide Layer 3 (network layer) routing beyond the local broadcast domain, which would require more configuration and management. Choose a switch that supports IPv6 multicast, because VxRail nodes use it for node discovery. In a production environment, managed switches are recommended.

Consider switch performance when selecting the best ToR switch solution:

- When deploying all-flash storage on the VxRail cluster, the minimum supported switch speed is 10 GbE. Dell Technologies recommends using switches with 25 GbE speeds if possible.

- When planning to use advanced switch features, such as Layer 3 routing services, choose a switch with sufficient resources to handle contention for switch buffer space.

- Switches with higher port speeds are designed with larger Network Processor Unit (NPU) buffers. A shared NPU buffer of at least 16 MB is recommended for 10 GbE network connectivity, and at least 32 MB is recommended for 25 GbE network connectivity.

- For large VxRail clusters with demanding performance requirements and advanced switch services enabled, choose switches with additional resource capacity and deeper buffer capacity.
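The selection criteria above can be captured as a small checklist function when comparing candidate switches. This is a minimal sketch, assuming the thresholds stated in this section (10 GbE minimum and 25 GbE preferred for all-flash, 16 MB and 32 MB NPU buffers at 10 and 25 GbE); the `SwitchCandidate` type and `review_switch` function are hypothetical names, not part of any Dell tool.

```python
from dataclasses import dataclass

# Thresholds taken from the guidance in this section (hypothetical constant names).
MIN_SPEED_ALL_FLASH_GBE = 10
RECOMMENDED_SPEED_ALL_FLASH_GBE = 25
MIN_BUFFER_MB = {10: 16, 25: 32}  # port speed (GbE) -> recommended shared NPU buffer (MB)


@dataclass
class SwitchCandidate:
    name: str
    port_speed_gbe: int
    npu_buffer_mb: int
    managed: bool
    ipv6_multicast: bool


def review_switch(sw: SwitchCandidate, all_flash: bool) -> list[str]:
    """Return findings for a candidate ToR switch; an empty list means no issues found."""
    findings = []
    if not sw.ipv6_multicast:
        findings.append("IPv6 multicast is required for VxRail node discovery")
    if not sw.managed:
        findings.append("managed switches are recommended for production")
    if all_flash:
        if sw.port_speed_gbe < MIN_SPEED_ALL_FLASH_GBE:
            findings.append("all-flash clusters need at least 10 GbE")
        elif sw.port_speed_gbe < RECOMMENDED_SPEED_ALL_FLASH_GBE:
            findings.append("25 GbE is recommended for all-flash where possible")
    required = MIN_BUFFER_MB.get(sw.port_speed_gbe)
    if required is not None and sw.npu_buffer_mb < required:
        findings.append(
            f"NPU buffer {sw.npu_buffer_mb} MB is below the {required} MB "
            f"recommended at {sw.port_speed_gbe} GbE"
        )
    return findings
```

For example, a managed 10 GbE switch with a 12 MB buffer in an all-flash design produces two findings: the 25 GbE recommendation and the undersized buffer. Layer 3 services and cluster size are left out because the section gives no numeric thresholds for them.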

VxRail requirements

For the VxRail cluster to deploy and function fully, several requirements must be met. The first requirement is that the network services VxRail depends on are functional: the cluster must have access to DNS (Domain Name System) and NTP (Network Time Protocol). These settings are entered during setup, and VxRail will not deploy unless it can reach both services. Syslog is not required, but it is recommended for monitoring the system.
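Because deployment stops if DNS or NTP is unreachable, it can help to test both from a machine on the External Management network beforehand. The following is a minimal sketch, assuming Python 3.9+ and IPv4 NTP servers; it resolves names with the local system resolver (not a specific DNS server) and sends a basic SNTP client request to UDP port 123. The function names are hypothetical, and this is not a VxRail tool.

```python
import socket
import struct
import time

NTP_PORT = 123  # UDP port from the NTP row of the open-port requirements
NTP_EPOCH_OFFSET = 2208988800  # seconds from 1900-01-01 (NTP epoch) to 1970-01-01 (Unix epoch)


def build_sntp_request() -> bytes:
    """48-byte SNTP client request: LI=0, VN=3, Mode=3 packs into a first byte of 0x1B."""
    return b"\x1b" + bytes(47)


def parse_transmit_time(reply: bytes) -> float:
    """Return the server's transmit timestamp (bytes 40-47) as Unix time."""
    if len(reply) < 48:
        raise ValueError("NTP reply shorter than 48 bytes")
    seconds, fraction = struct.unpack("!II", reply[40:48])
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def ntp_offset(server: str, timeout: float = 2.0) -> float:
    """Query an NTP server over UDP and return its approximate offset from the local clock."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_sntp_request(), (server, NTP_PORT))
        reply, _ = sock.recvfrom(512)
    return parse_transmit_time(reply) - time.time()


def resolve(hostname: str) -> list[str]:
    """Resolve a name with the local system resolver; raises socket.gaierror on failure."""
    return sorted({info[4][0] for info in socket.getaddrinfo(hostname, None)})


def preflight(dns_names: list[str], ntp_servers: list[str]) -> dict[str, object]:
    """Run every check and record either the result or the failure reason."""
    results: dict[str, object] = {}
    for name in dns_names:
        try:
            results[f"dns:{name}"] = resolve(name)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            results[f"dns:{name}"] = f"FAILED: {exc}"
    for server in ntp_servers:
        try:
            results[f"ntp:{server}"] = round(ntp_offset(server), 3)
        except (OSError, ValueError) as exc:  # socket timeouts are OSError subclasses
            results[f"ntp:{server}"] = f"FAILED: {exc}"
    return results
```

A call such as `preflight(["vxrail-manager.example.local"], ["ntp1.example.local"])` (hypothetical hostnames) returns resolved addresses and clock offsets, or a `FAILED:` reason for each entry. The offset ignores network round-trip delay, so treat it as approximate.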

Another requirement is to choose the type of vCenter deployment. The embedded option comes packaged with VxRail and is a good choice for a single-cluster deployment. Alternatively, you can deploy an external vCenter instance elsewhere in the network, which is better suited to environments where multiple VxRail clusters must be managed.

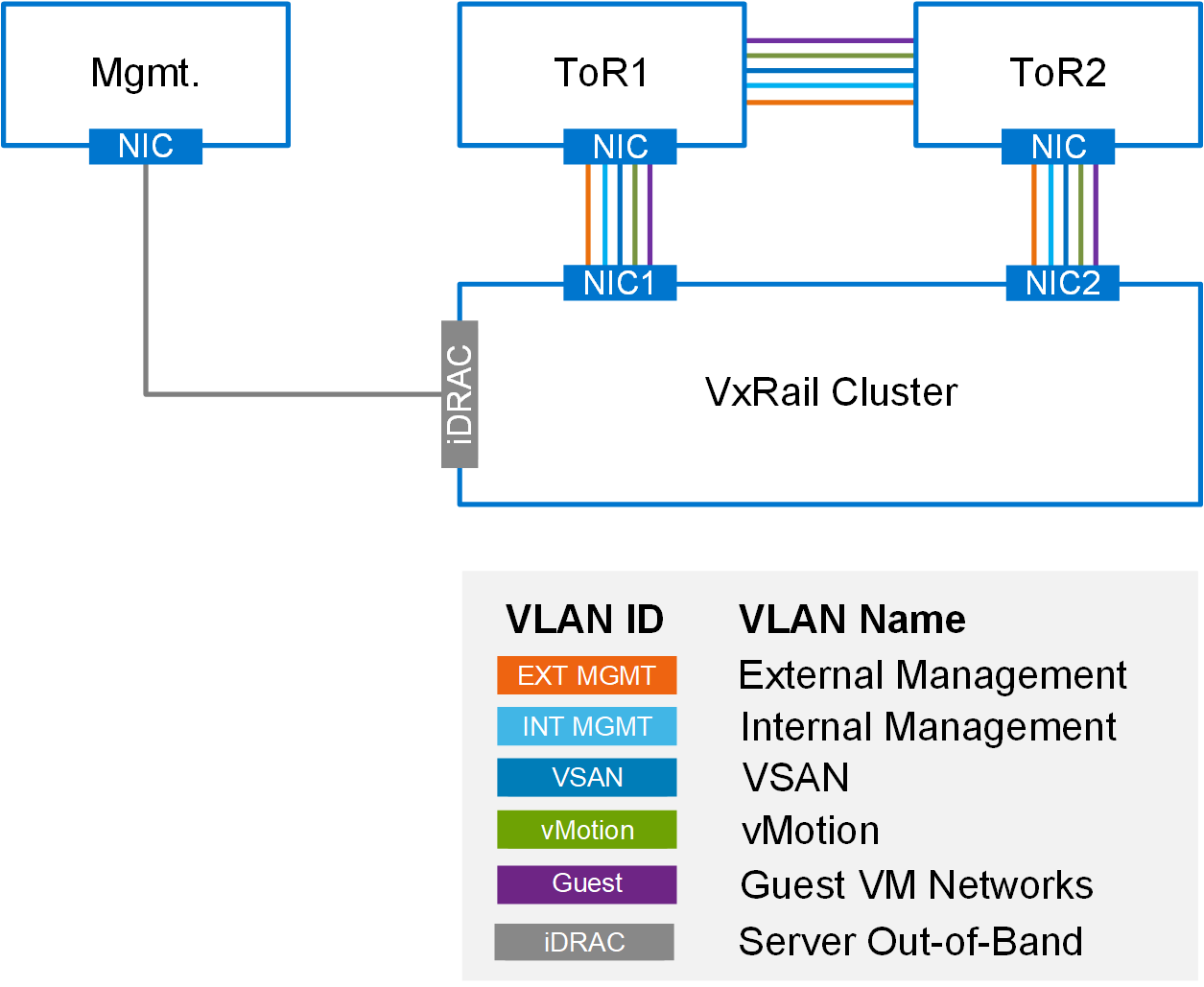

Designate Virtual Local Area Network (VLAN) IDs in your network to be assigned to the VxRail network. The minimum VxRail VLANs that must be deployed to enable full functionality are listed below. The External Management network must be able to route to DNS and NTP services.

- External Management

- Internal Management

- vSAN

- vMotion

- Guest VM Networks

- Server Out-of-Band (iDRAC)

The following figure illustrates a server cluster connected to two ToR switches for high availability and a separate out-of-band management node.

Figure 15. VxRail VLAN diagram
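A VLAN plan for the networks listed above can be checked mechanically before it is handed to the switch team. This is a minimal sketch, assuming one VLAN ID per network (in practice, guest VM traffic may span several VLANs) and assuming each network gets its own VLAN, as the segmented design implies; `validate_vlan_plan` is a hypothetical helper.

```python
# The minimum VxRail networks listed in this section.
REQUIRED_NETWORKS = (
    "External Management",
    "Internal Management",
    "vSAN",
    "vMotion",
    "Guest VM Networks",
    "Server Out-of-Band (iDRAC)",
)


def validate_vlan_plan(plan: dict[str, int]) -> list[str]:
    """Return problems with a {network name: VLAN ID} plan; empty means it passes."""
    problems = []
    for net in REQUIRED_NETWORKS:
        if net not in plan:
            problems.append(f"missing VLAN for {net}")
    seen: dict[int, str] = {}
    for net, vlan in plan.items():
        if not 1 <= vlan <= 4094:  # 0 and 4095 are reserved by IEEE 802.1Q
            problems.append(f"{net}: VLAN {vlan} is outside 1-4094")
        elif vlan in seen:
            problems.append(f"{net}: VLAN {vlan} already used by {seen[vlan]}")
        else:
            seen[vlan] = net
    return problems
```

With a plan such as `{"External Management": 110, "Internal Management": 3939, "vSAN": 120, "vMotion": 130, "Guest VM Networks": 140, "Server Out-of-Band (iDRAC)": 100}` (example values only), the function returns an empty list.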

Assign IP addresses to the different networks. The Internal Management network does not require IP addressing, while the External Management network requires routable IP addresses to reach network services. The vSAN and vMotion networks can use nonroutable addresses, because their traffic stays within the cluster. If you are planning a multirack deployment, allocate a larger subnet to accommodate the additional nodes.
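Sizing a subnet for the eventual node count, rather than the initial one, is simple arithmetic: a subnet needs the planned host addresses plus the network and broadcast addresses. The sketch below, assuming IPv4 and hypothetical helper names, returns the smallest prefix length that fits.

```python
import ipaddress


def smallest_prefix(hosts_needed: int) -> int:
    """Smallest IPv4 prefix whose usable hosts (minus network and broadcast) cover hosts_needed."""
    if hosts_needed < 1:
        raise ValueError("hosts_needed must be at least 1")
    # A subnet needs hosts_needed + 2 addresses; (hosts_needed + 1).bit_length()
    # is the number of host bits required to hold that many.
    host_bits = max(2, (hosts_needed + 1).bit_length())
    return 32 - host_bits


def usable_hosts(prefix: int) -> int:
    """Usable host addresses in an IPv4 subnet of the given prefix length (prefix <= 30)."""
    return ipaddress.ip_network(f"0.0.0.0/{prefix}").num_addresses - 2
```

For example, planning for 24 nodes plus four other addresses (a hypothetical count) gives `smallest_prefix(28) == 27`, a /27 with 30 usable addresses. Rounding up to the next prefix leaves room for further expansion racks.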

When planning the networks previously mentioned, take into account the IP address ranges reserved for VxRail Manager: 172.28.0.0/16, 172.29.0.0/16, 10.0.0.0/24, and 10.0.1.0/24. Avoid assigning addresses from these ranges to the VxRail networks. During the initial build of the VxRail cluster, the External Management network must follow these rules:

- The IP address scheme must be public (routable).

- The IP addresses must be fixed (not DHCP).

- The IP addresses must not already be in use.

- All IP addresses in the range must be in the same subnet.
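The External Management rules above lend themselves to a quick check with Python's standard `ipaddress` module. This is a minimal sketch, assuming that the reserved VxRail Manager ranges must not overlap the External Management subnet; `validate_external_mgmt` is a hypothetical helper. It cannot verify routability or that addresses are statically assigned, since both depend on the live network.

```python
import ipaddress
from collections.abc import Iterable

# Ranges listed above as reserved for VxRail Manager. This sketch assumes they
# must not overlap the External Management subnet.
RESERVED = [ipaddress.ip_network(n) for n in
            ("172.28.0.0/16", "172.29.0.0/16", "10.0.0.0/24", "10.0.1.0/24")]


def validate_external_mgmt(subnet: str, addresses: Iterable[str],
                           in_use: Iterable[str] = ()) -> list[str]:
    """Check planned External Management addresses; return a list of problems."""
    net = ipaddress.ip_network(subnet)
    taken = {ipaddress.ip_address(a) for a in in_use}
    problems = []
    if any(net.overlaps(r) for r in RESERVED):
        problems.append(f"{net} overlaps a VxRail reserved range")
    seen = set()
    for a in addresses:
        ip = ipaddress.ip_address(a)
        if ip not in net:
            problems.append(f"{ip} is not in {net} (all addresses must share one subnet)")
        elif ip in (net.network_address, net.broadcast_address):
            problems.append(f"{ip} is the network or broadcast address of {net}")
        if ip in taken or ip in seen:
            problems.append(f"{ip} is already in use")
        seen.add(ip)
    # Not checked here: routability and static (non-DHCP) assignment, which
    # depend on the upstream network and on how the addresses are configured.
    return problems
```

Passing addresses already known to be assigned (for example, from an IPAM export) through `in_use` catches conflicts before the initial build.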

Use the following table of VxRail open port requirements, from Appendix D of the Dell VxRail Network Planning Guide, to identify which services must be allowed across the network between the listed source and destination devices.

Table 3. VxRail open port requirements

| Description | Source devices | Destination devices | Protocol | Ports |
|---|---|---|---|---|
| DNS | VxRail Manager, Dell iDRAC | DNS servers | UDP | 53 |
| NTP client | Host ESXi Management Interface, Dell iDRAC, VMware vCenter Servers, VxRail Manager | NTP servers | UDP | 123 |
| Syslog | Host ESXi Management Interface, vRealize Log Insight | Syslog server | TCP | 514 |
| LDAP | VMware vCenter Servers, PSC | LDAP server | TCP | 389, 636 |
| SMTP | SRS Gateway VMs, vRealize Log Insight | SMTP servers | TCP | 25 |
| ESXi Management | Administrators | Host ESXi management interface | TCP, UDP | 902 |
| VxRail Management GUI/Web interface | Administrators | VMware vCenter Server, VxRail Manager, Host ESXi Management, Dell iDRAC port, vRealize Log Insight, PSC | TCP | 80, 443 |
| Dell server management | Administrators | Dell iDRAC | TCP | 623, 5900, 5901 |
| SSH and SCP | Administrators | Host ESXi Management, vCenter Server Appliance, Dell iDRAC port, VxRail Manager Console | TCP | 22 |
| vSphere Clients to vCenter server | vSphere clients | vCenter server | TCP | 5480, 8443, 9443, 10080, 10443 |
| Managed Hosts to vCenter | Host ESXi Management | vCenter server | TCP | 443, 902, 5988, 5989, 6500, 8000, 8001 |
| Managed Hosts to vCenter Heartbeat | Host ESXi Management | vCenter server | UDP | 902 |
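Keeping the port requirements as data makes it easier to derive firewall rules per destination. The sketch below transcribes a subset of Table 3 (not every row) and lists the (source, protocol, port) flows that must be permitted toward a given destination; `PortRule` and `flows_to` are hypothetical names, and device names are matched exactly as they appear in the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortRule:
    description: str
    sources: tuple[str, ...]
    destinations: tuple[str, ...]
    protocols: tuple[str, ...]
    ports: tuple[int, ...]


# A subset of Table 3, transcribed as data.
RULES = (
    PortRule("DNS", ("VxRail Manager", "Dell iDRAC"), ("DNS servers",), ("UDP",), (53,)),
    PortRule("NTP client",
             ("Host ESXi Management Interface", "Dell iDRAC", "VMware vCenter Servers", "VxRail Manager"),
             ("NTP servers",), ("UDP",), (123,)),
    PortRule("Syslog", ("Host ESXi Management Interface", "vRealize Log Insight"),
             ("Syslog server",), ("TCP",), (514,)),
    PortRule("ESXi Management", ("Administrators",), ("Host ESXi management interface",),
             ("TCP", "UDP"), (902,)),
    PortRule("Managed Hosts to vCenter", ("Host ESXi Management",), ("vCenter server",),
             ("TCP",), (443, 902, 5988, 5989, 6500, 8000, 8001)),
    PortRule("Managed Hosts to vCenter Heartbeat", ("Host ESXi Management",), ("vCenter server",),
             ("UDP",), (902,)),
)


def flows_to(destination: str) -> list[tuple[str, str, int]]:
    """List (source, protocol, port) tuples that must be allowed toward a destination."""
    flows = []
    for rule in RULES:
        if destination in rule.destinations:
            for src in rule.sources:
                for proto in rule.protocols:
                    for port in rule.ports:
                        flows.append((src, proto, port))
    return sorted(flows)
```

For example, `flows_to("DNS servers")` yields one UDP 53 flow each from Dell iDRAC and VxRail Manager. Translating these tuples into a specific firewall syntax is left to the platform in use.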

VxRail network implementation overview

The following is a high-level checklist to ensure seamless integration of the VxRail cluster into your environment prior to deployment. For more details on each step, see the Dell VxRail Network Planning Guide.

- Decide on the VxRail single point of management.

- Decide on the VxRail network traffic segmentation.

- Plan the VxRail logical network.

- Determine if jumbo frames will be used, and plan MTU sizing accordingly.

- Identify the IP address range for VxRail logical networks.

- Identify unique hostnames for VxRail management components.

- Identify external applications and settings for VxRail.

- Create DNS records for VxRail management components.

- Prepare the customer-supplied vCenter Server.

- Reserve IP addresses for VxRail, vMotion, and vSAN networks.

- Decide on the VxRail logging solution.

- Decide on passwords for VxRail management.
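For the jumbo-frame item, the MTU that actually works end to end is the smallest MTU on the path, so every hop must be at least as large as the MTU planned for the VxRail network. This is a minimal sketch, assuming a 9000-byte target and hypothetical hop names; it is a planning aid, not a VxRail validation tool.

```python
IPV4_ICMP_HEADERS = 28  # 20-byte IPv4 header + 8-byte ICMP header


def path_mtu(hop_mtus: dict[str, int]) -> tuple[str, int]:
    """Return the hop with the smallest MTU; that value bounds the whole path."""
    hop = min(hop_mtus, key=hop_mtus.get)
    return hop, hop_mtus[hop]


def check_jumbo(hop_mtus: dict[str, int], required: int = 9000) -> list[str]:
    """List hops whose MTU is below the MTU planned for the VxRail network."""
    return [f"{hop}: MTU {mtu} < {required}" for hop, mtu in hop_mtus.items() if mtu < required]


def ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet of this MTU."""
    return mtu - IPV4_ICMP_HEADERS
```

`ping_payload(9000)` returns 8972, which is the payload size commonly used with `vmkping -d -s 8972 <peer>` on ESXi to confirm a 9000-byte path without fragmentation.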

In addition to these items, estimate the amount of network traffic to make sure the switches provide adequate bandwidth and port density for the new cluster or clusters. A network diagram that shows where ports are reserved, which type of logical network is used, and which networking features are required also makes integration smoother.
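A rough port-density and bandwidth estimate can be worked out from a few planning numbers. This sketch assumes node ports are split evenly across a ToR pair and ignores inter-switch (VLT) links and iDRAC ports; `ClusterPlan` and its fields are hypothetical, and the example figures are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class ClusterPlan:
    nodes: int
    ports_per_node: int       # data ports cabled from each node to the ToR pair
    port_speed_gbe: int
    uplinks_per_switch: int   # ports from each ToR toward the upstream network
    uplink_speed_gbe: int
    switches: int = 2


def switch_ports_needed(plan: ClusterPlan) -> int:
    """Ports consumed on each ToR switch (node ports split evenly, plus uplinks)."""
    return math.ceil(plan.nodes * plan.ports_per_node / plan.switches) + plan.uplinks_per_switch


def oversubscription(plan: ClusterPlan) -> float:
    """Ratio of node-facing bandwidth to upstream bandwidth across the switch pair."""
    downstream = plan.nodes * plan.ports_per_node * plan.port_speed_gbe
    upstream = plan.switches * plan.uplinks_per_switch * plan.uplink_speed_gbe
    return downstream / upstream
```

For 8 nodes with four 25 GbE ports each and two 100 GbE uplinks per switch, each switch uses 18 ports and the oversubscription ratio is 2.0. Because traffic such as vSAN stays between nodes on the ToR pair, a ratio above 1 mainly limits simultaneous north-south traffic.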

Multirack VxRail cluster considerations

Some business applications and workloads call for VxRail clusters that span multiple racks. VxRail clusters can extend to as many as six racks. In a single rack, all IP addressing must be within the same subnet. When deploying multiple racks, there are two options: use the same assigned subnet ranges for all VxRail nodes in the expansion racks, or assign a new subnet range, with a new gateway, to the VxRail nodes in the expansion racks.
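For the second option, one way to plan addressing is to carve a larger allocation into one subnet per rack, each with its own gateway. This is a minimal sketch, assuming equal-size subnets per rack and the convention (assumed here, not required by VxRail) that the first usable address in each subnet is the gateway; `carve_rack_subnets` is a hypothetical helper.

```python
import ipaddress


def carve_rack_subnets(allocation: str, racks: int, prefix: int) -> list[tuple[str, str]]:
    """Split an allocation into one subnet per rack; return (subnet, gateway) pairs.

    The first usable host in each subnet is used as its gateway (an assumed convention).
    """
    if not 1 <= racks <= 6:
        raise ValueError("a VxRail cluster can span at most six racks")
    subnets = list(ipaddress.ip_network(allocation).subnets(new_prefix=prefix))
    if len(subnets) < racks:
        raise ValueError(f"{allocation} holds only {len(subnets)} /{prefix} subnets")
    return [(str(net), str(net.network_address + 1)) for net in subnets[:racks]]
```

For example, carving three /24 subnets from a hypothetical 192.168.32.0/21 allocation gives 192.168.32.0/24 through 192.168.34.0/24, with gateways at .1, and leaves room for up to five more racks' worth of /24s.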

Network redundancy

To support high availability, better performance, and network failover, there must be at least two ToR switches, connected to each other by a pair of cables. Additionally, configure link aggregation to enable features such as load balancing and failure protection. VxRail supports NIC teaming, which pairs physical ports on the node. For network-intensive workloads that require high availability, choose switches that support multichassis link aggregation, such as Dell Virtual Link Trunking (VLT) port channels. VxRail version 7.0.130 introduced support for Link Aggregation Control Protocol (LACP) at the cluster level, so the switches that support the VxRail cluster should support LACP for better manageability and broader load-balancing options.
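Link aggregation spreads traffic by hashing fields of each flow to pick one member link, so a given flow stays on one link (preserving packet order) while different flows spread across links. The sketch below illustrates that general principle with CRC32 over source and destination IPv4 addresses; it is a generic example under that assumption, not the hash used by Dell switches, VLT, or the VMware VDS, which use their own configurable hashing modes. The function and link names are hypothetical.

```python
import ipaddress
import zlib


def select_member_link(src_ip: str, dst_ip: str, members: list[str]) -> str:
    """Pick one active member link of a LAG by hashing the flow's source and destination IPs."""
    if not members:
        raise ValueError("LAG has no active members")
    key = ipaddress.ip_address(src_ip).packed + ipaddress.ip_address(dst_ip).packed
    return members[zlib.crc32(key) % len(members)]
```

If a member link fails, removing it from `members` rehashes affected flows onto the remaining links, which is the failure protection that link aggregation provides.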

For more information, see the High Availability and Disaster Recovery chapter.

Network considerations after implementation

After the VxRail cluster is deployed, VxRail supports several features that enhance network functionality. One is NIC teaming with failover policies for VxRail networks, which lets a single network use multiple physical ports. To make the best use of this feature, configure link aggregation together with the connected ToR switch or switches. Another post-deployment feature is support for optional PCIe adapter cards that carry network traffic. For instance, a PCIe card and the Network Daughter Card (NDC) can be used together for fault tolerance, so that if one card fails, the other still passes network traffic. A third feature is the ability to deploy more than one vSphere Distributed Switch (VDS). This is helpful in environments with stringent security requirements, where certain network traffic must use a separate path.
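The NDC-plus-PCIe fault-tolerance idea can be modeled as an active/standby teaming policy in which each failed active uplink is replaced by the next healthy standby uplink. This is a simplified model written under that assumption, not VMware's implementation; the uplink names and their mapping to the NDC or a PCIe card are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TeamingPolicy:
    active: list[str]
    standby: list[str] = field(default_factory=list)

    def carrying_uplinks(self, link_up: dict[str, bool]) -> list[str]:
        """Healthy active uplinks, plus one healthy standby (in order) per failed active uplink."""
        healthy_active = [u for u in self.active if link_up.get(u, False)]
        failed = len(self.active) - len(healthy_active)
        healthy_standby = [u for u in self.standby if link_up.get(u, False)]
        return healthy_active + healthy_standby[:failed]
```

Placing one active uplink on the NDC (`vmnic0`) and one on the PCIe card (`vmnic2`), with a standby on each card, means a whole-card failure still leaves traffic flowing on the other card.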