
Implementation workflow


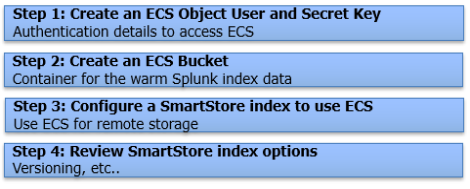

The following figure shows the minimal steps required to implement the solution.

Figure 2. Configuration steps

Create the ECS object user, secret key, and bucket

SmartStore needs an ECS object user, an S3 secret key for that user, and a bucket in which to store index data. You can create all three from the ECS Web Portal or through the ECS Management API.

- Create a new object user and generate an S3 secret key.

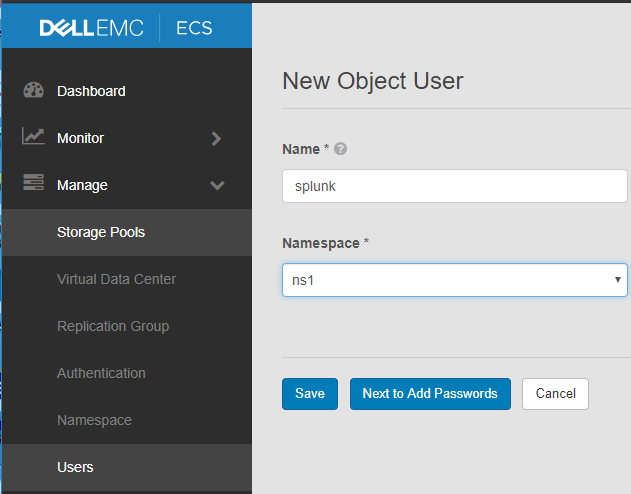

- From the ECS Web Portal, navigate to Manage > Users and click the New Object User button.

- Enter the name of the user and a namespace.

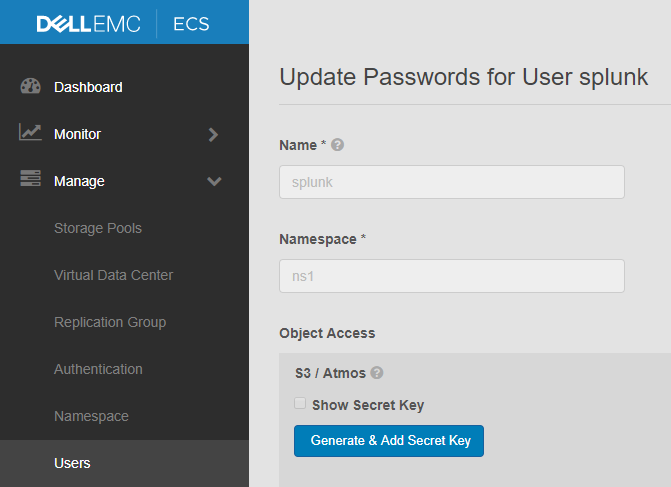

- Click the Next to Add Passwords button, then click the Generate & Add Secret Key button under the Object Access section.

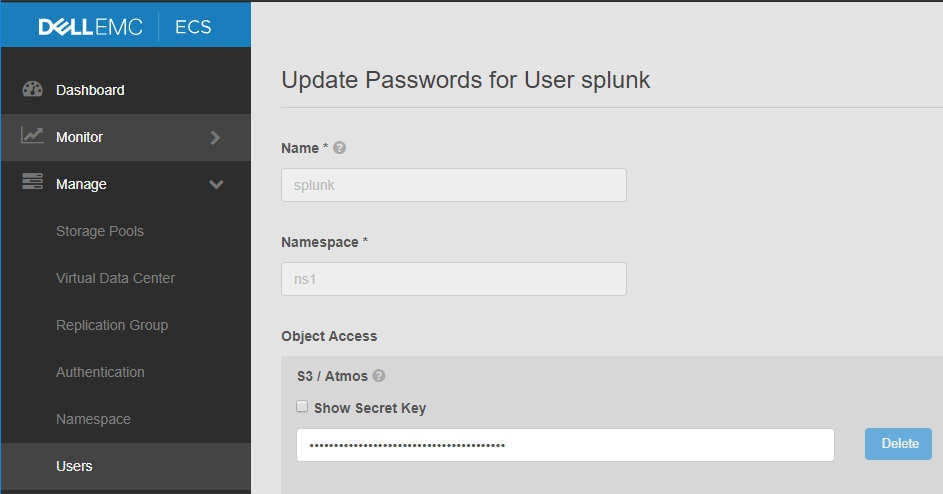

- Click the Show Secret Key box to display the secret key, then click the Close button at the bottom of the page.

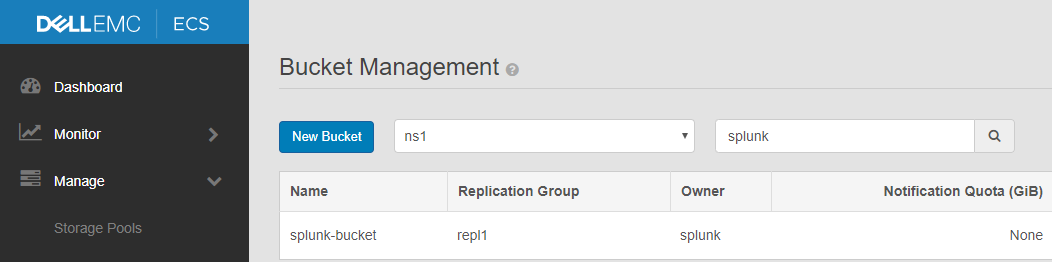

- Create an ECS bucket.

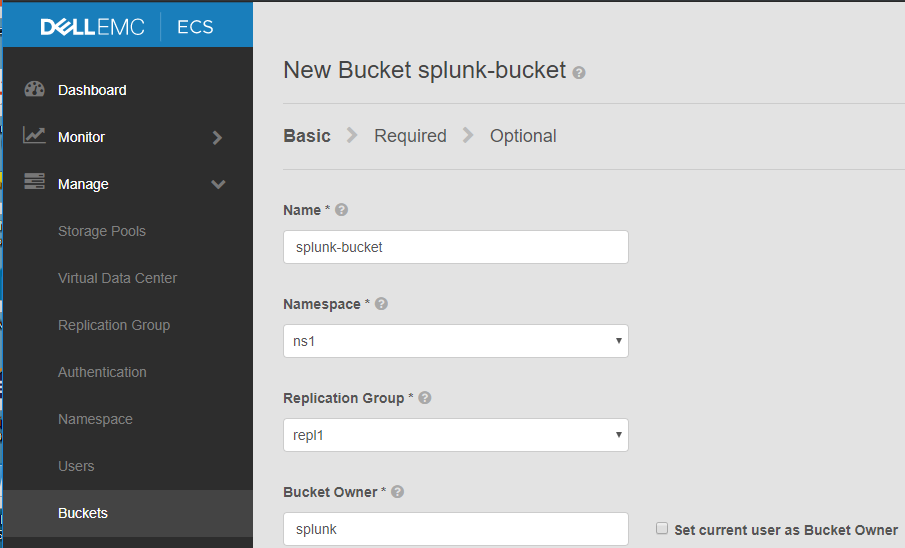

- Navigate to Manage > Buckets and select the namespace that you chose when creating the object user (ns1 in this example). Click the New Bucket button.

- Enter a bucket name and a bucket owner. The bucket owner is the ECS object user that was created earlier in this section.

- Click Next at the bottom of the page.

Note: Do not enable File System unless you have reviewed Best practices. The Required and Optional configuration settings can all be left at their defaults; however, review the ECS documentation to better understand each option. Access During Outage can be enabled if the replication group chosen for the bucket spans multiple ECS sites. For details of this feature, see the Temporary Site Outage section in the ECS Overview and Architecture document.

- Click the Save button at the bottom of the final page to create the bucket.
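Before moving on to the Splunk configuration, it can be useful to confirm that the new object user can reach the bucket over S3. The following is a minimal sketch of one way to do that, not part of the documented procedure. It assumes the third-party boto3 package is installed; the helper names, the endpoint URL `http://ecs.example.com:9020`, and the placeholder secret key are hypothetical and must be replaced with values from your own environment.

```python
"""Sanity-check S3 access to an ECS bucket (illustrative sketch only)."""


def s3_client_kwargs(endpoint, access_key, secret_key):
    """Build the keyword arguments for boto3.client("s3", ...)."""
    if not endpoint.startswith(("http://", "https://")):
        raise ValueError("endpoint must start with http:// or https://")
    return {
        "endpoint_url": endpoint,
        "aws_access_key_id": access_key,      # ECS object user name
        "aws_secret_access_key": secret_key,  # S3 secret key generated for that user
    }


def bucket_is_reachable(bucket, **client_kwargs):
    """Return True if a HEAD request on the bucket succeeds."""
    # boto3 is imported lazily so that s3_client_kwargs has no dependency on it.
    import boto3
    from botocore.exceptions import ClientError

    client = boto3.client("s3", **client_kwargs)
    try:
        client.head_bucket(Bucket=bucket)
        return True
    except ClientError:
        return False


# Example call (placeholder values, assumed for illustration):
# kwargs = s3_client_kwargs("http://ecs.example.com:9020", "splunk", "<secret key>")
# print(bucket_is_reachable("splunk-bucket", **kwargs))
```

If the check returns False, the usual suspects are a mistyped secret key, a bucket created in a different namespace, or an endpoint that does not point at the ECS S3 service.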

Configure SmartStore indexes with ECS

This section outlines the steps to configure a SmartStore index with ECS.

The SmartStore settings in indexes.conf enable and control SmartStore indexes. You can enable SmartStore for all of an indexer's indexes, or you can enable it on an index-by-index basis, allowing a mix of SmartStore and non-SmartStore indexes on the same indexer.

Note: When you configure these settings on an indexer cluster's peer nodes, you must deploy the settings through the configuration bundle method. As with all settings in indexes.conf, SmartStore settings must be the same across all peer nodes.

This example configures SmartStore for an indexer cluster. On the master node, change to the $SPLUNK_HOME/etc/master-apps/_cluster/local directory and create a file named indexes.conf. The SmartStore example below stores the warm buckets of all indexes, including internal indexes such as _audit and _internal, on ECS.

Sample indexes.conf file:

    [default]
    # Configure all indexes to use the SmartStore remote volume called
    # "ecs_store".
    # Note: If you want only some of your indexes to use SmartStore,
    # place this setting under the individual stanzas for each of the
    # SmartStore indexes, rather than here.
    remotePath = volume:ecs_store/$_index_name
    repFactor = auto

    # Configure the remote volume
    [volume:ecs_store]
    storageType = remote
    # On the next line, the path attribute points to the remote storage location
    # where indexes reside. Each SmartStore index resides directly below the location
    # specified by the path attribute. The <scheme> identifies a supported remote
    # storage system type, such as S3. The <remote-location-specifier> is a
    # string specific to the remote storage system that specifies the location
    # of the indexes inside the remote system.
    # This is an S3 example: "path = s3://mybucket/some/path".
    path = s3://splunk-bucket/indexes
    # The following S3 settings are required only if you are using the access and secret
    # keys. They are not needed if you are using AWS IAM roles.
    remote.s3.access_key = splunk
    remote.s3.secret_key = <ECS Object Users S3 Secret Key>
    remote.s3.endpoint = <Endpoint to access ECS nodes>

    # This example stanza configures a custom index, "cs_index".
    [cs_index]
    homePath = $SPLUNK_DB/cs_index/db
    thawedPath = $SPLUNK_DB/cs_index/thaweddb
    # SmartStore-enabled indexes do not use coldPath, but you must still specify it here.
    coldPath = $SPLUNK_DB/cs_index/colddb

The path, remote.s3.access_key, remote.s3.secret_key, and remote.s3.endpoint parameters in the sample above are the values that must be modified to store index data in ECS.

Table 6. indexes.conf configuration attributes

| Attribute | Description |
| --- | --- |
| path | The example above uses the example bucket from Solution verification, together with a prefix (path) named “indexes”. The bucket must exist on ECS before the configuration bundle is deployed; SmartStore creates any prefixes automatically. |
| remote.s3.access_key | The name of the ECS object user that was created in Solution verification. |
| remote.s3.secret_key | The S3 secret key of the ECS object user, generated in Solution verification. |
| remote.s3.endpoint | The HTTP or HTTPS endpoint used to access the ECS nodes. This is typically the address of the load balancer in front of the ECS cluster. |
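The attributes described above can be checked mechanically before the file is deployed. The following is a rough sketch of such a check using Python's standard configparser module. The function name `check_smartstore_conf` is hypothetical, the list of required keys reflects only what this document treats as required for ECS, and configparser only approximates how Splunk itself parses .conf files, so treat the result as a first-pass lint rather than a definitive validation.

```python
import configparser

# Settings this document treats as required on the remote volume for ECS.
REQUIRED_VOLUME_KEYS = (
    "storageType",
    "path",
    "remote.s3.access_key",
    "remote.s3.secret_key",
    "remote.s3.endpoint",
)


def check_smartstore_conf(text):
    """Return a list of problems found in indexes.conf text (empty if none)."""
    parser = configparser.ConfigParser(
        interpolation=None, strict=False, delimiters=("=",)
    )
    parser.optionxform = str  # keep setting names case-sensitive
    parser.read_string(text)

    problems = []
    checked = set()
    for section in parser.sections():
        remote_path = parser[section].get("remotePath")
        if remote_path is None:
            continue  # not a SmartStore stanza
        if not remote_path.startswith("volume:"):
            problems.append(f"[{section}] remotePath should reference a volume")
            continue
        # "volume:ecs_store/$_index_name" -> "volume:ecs_store"
        stanza = "volume:" + remote_path[len("volume:"):].split("/", 1)[0]
        if stanza in checked:
            continue
        checked.add(stanza)
        if not parser.has_section(stanza):
            problems.append(f"[{section}] refers to missing stanza [{stanza}]")
            continue
        volume = parser[stanza]
        missing = [key for key in REQUIRED_VOLUME_KEYS if key not in volume]
        problems.extend(f"[{stanza}] is missing {key}" for key in missing)
        if missing:
            continue
        if volume["storageType"] != "remote":
            problems.append(f"[{stanza}] storageType should be remote")
        if not volume["path"].startswith("s3://"):
            problems.append(f"[{stanza}] path should start with s3://")
        if not volume["remote.s3.endpoint"].startswith(("http://", "https://")):
            problems.append(f"[{stanza}] endpoint should be an http(s) URL")
    return problems
```

Calling `check_smartstore_conf(open("indexes.conf").read())` returns an empty list when every referenced volume carries the expected settings, and one message per problem otherwise.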

Push the configuration bundle to the peer node(s) from the master. This action may cause the peer node(s) to restart.
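The push itself is normally done with the Splunk CLI on the master node. As a small sketch, the snippet below assembles the commands commonly used for this: validate the bundle and check whether a restart is needed, apply it, then show its status. The function name `bundle_push_commands` and the install path `/opt/splunk` are assumptions for illustration; adjust them to your environment and confirm the commands against the Splunk documentation for your version.

```python
import shlex


def bundle_push_commands(splunk_home="/opt/splunk"):
    """Return the CLI invocations for validating and pushing a cluster bundle."""
    splunk = f"{splunk_home}/bin/splunk"  # assumed install location
    return [
        # Validate the bundle and report whether peers would need a restart.
        [splunk, "validate", "cluster-bundle", "--check-restart"],
        # Distribute the bundle to the peer nodes without prompting.
        [splunk, "apply", "cluster-bundle", "--answer-yes"],
        # Report the status of the bundle push across the peers.
        [splunk, "show", "cluster-bundle-status"],
    ]


# Print the commands so they can be reviewed before being run on the master.
for command in bundle_push_commands():
    print(shlex.join(command))
```

Each list can be passed to `subprocess.run(command, check=True)` on the master node once the printed commands have been reviewed.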
