Two vDS (system and NSX) network topologies

Introduction

The second vDS enables several different network topologies, some of which are covered in this section. Note that in these examples we focus on the connectivity from the vDS and do not take the mapping of physical NICs to vDS uplinks into consideration. With the custom profiles feature, there are too many combinations to cover in this guide.

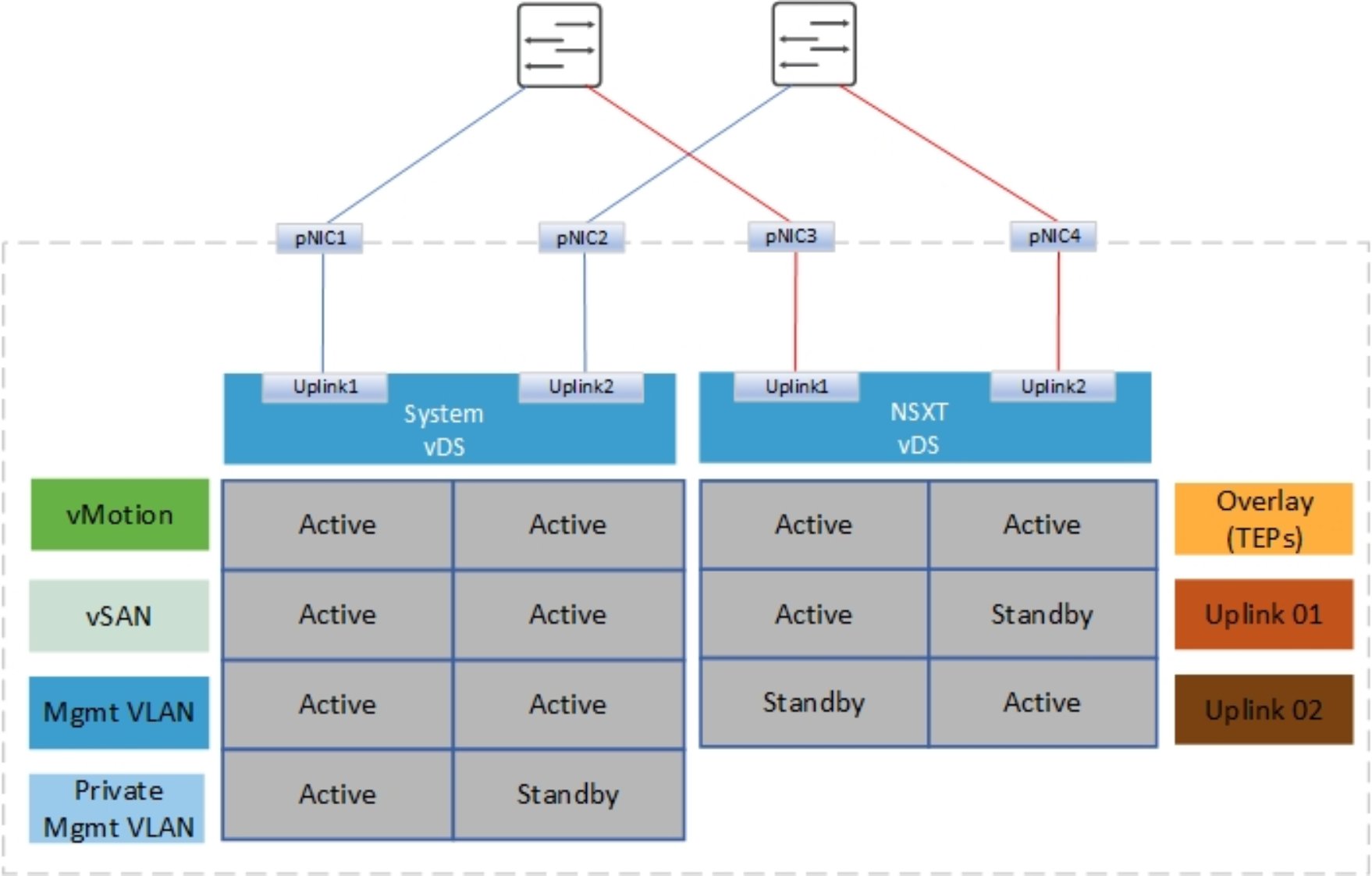

Two vDS (system and NSX) – 4‑pNIC topology

The first option uses four pNICs, two uplinks on the VxRail (system) vDS, and two uplinks on the NSX vDS.

Figure 18. Two vDS with four pNICs

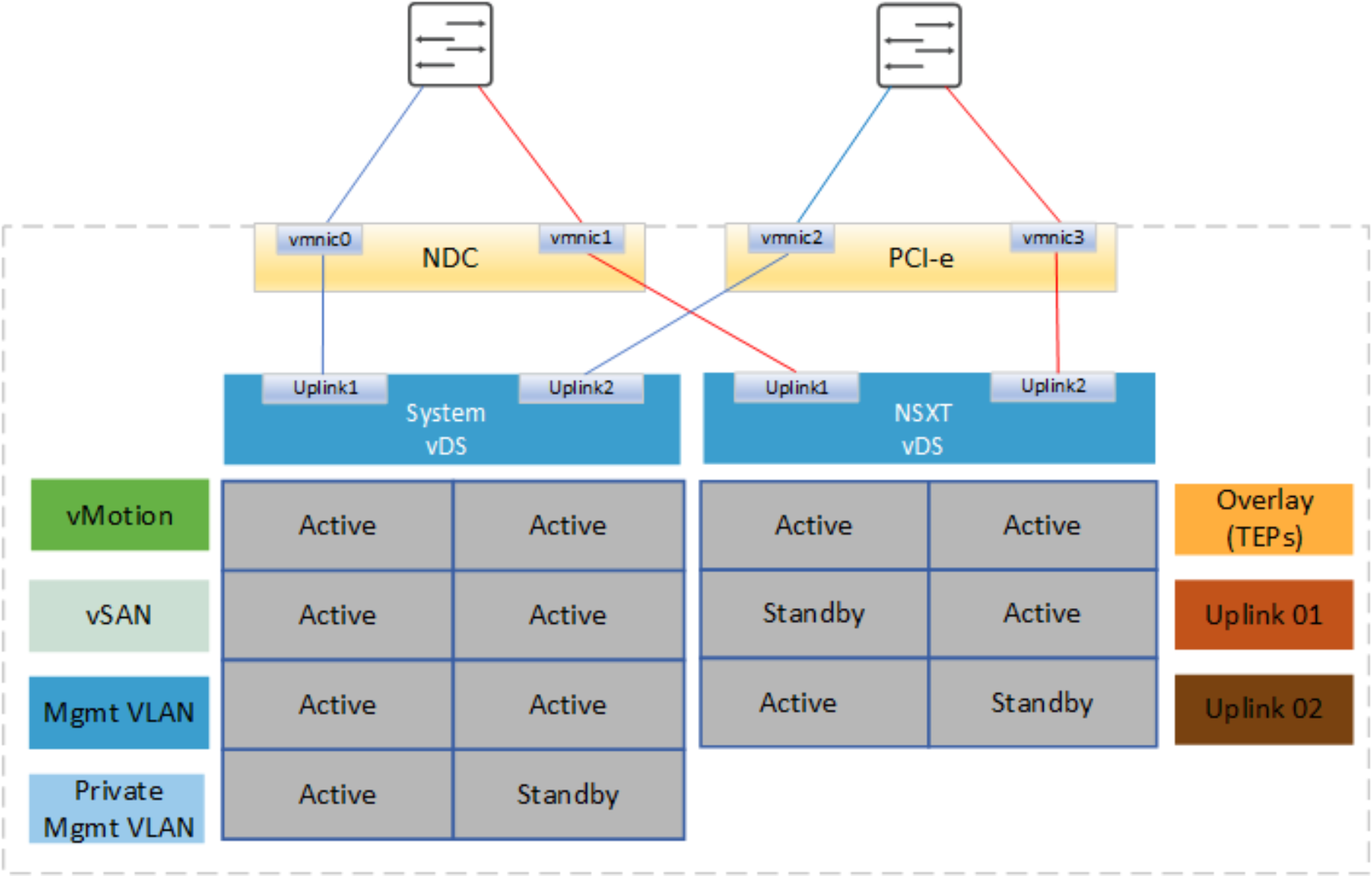

If we now consider NIC-level redundancy in the two-vDS design, the VxRail vDS must be deployed using a custom profile that uses uplink1 and uplink2 for all traffic. Uplink1 must be mapped to a port on the NDC and uplink2 must be mapped to a port on the PCIe card, providing NIC-level redundancy for the system traffic. When the VxRail cluster is added to VCF, the remaining two pNICs (one from the NDC and one from the PCIe card) can be selected to provide NIC-level redundancy for the NSX traffic. The next figure illustrates this network design.

Note: Both ports from NDC must connect to switch A, and both ports from the PCIe must connect to switch B. These connections are required for VCF vmnic lexicographic ordering.

Figure 19. Two vDS with custom profile and NIC-level redundancy

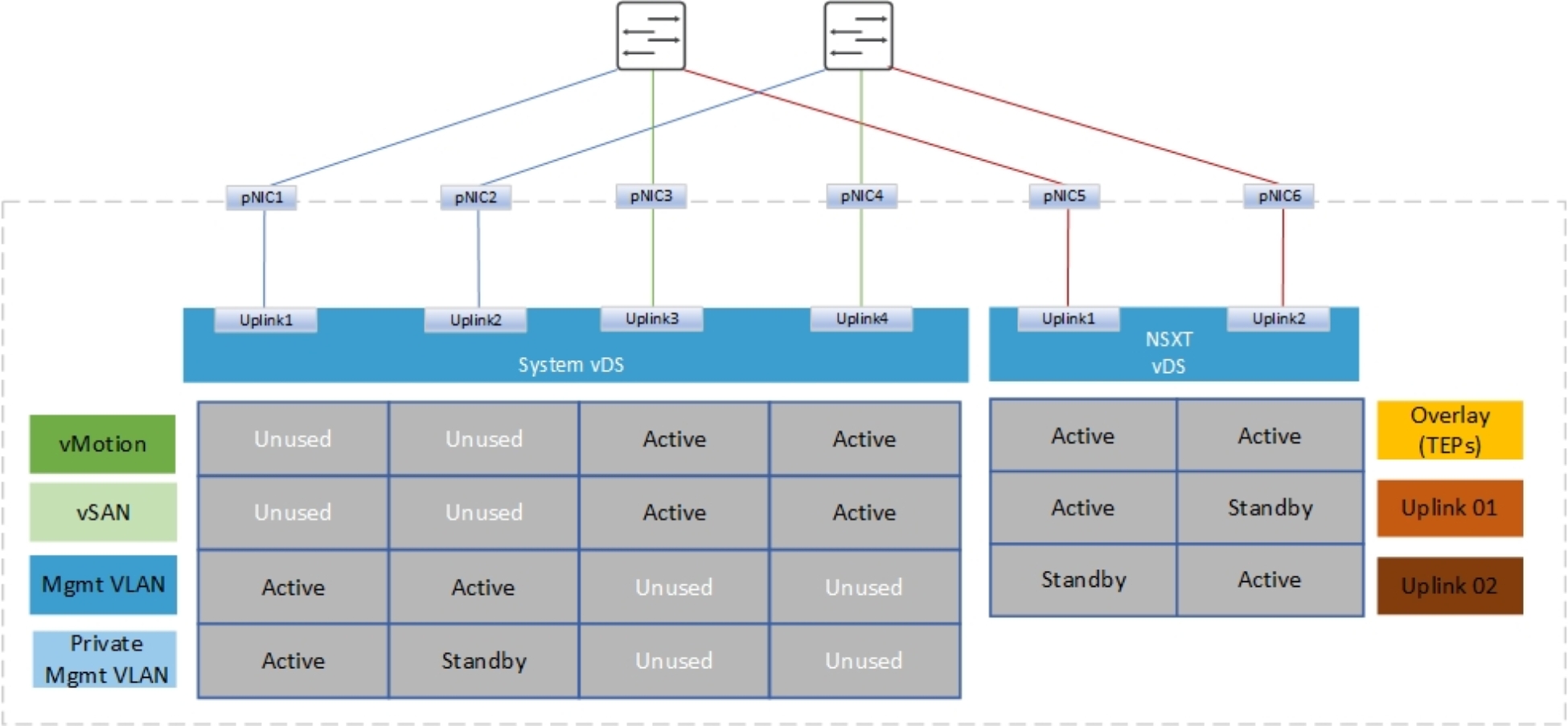
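The NIC-level redundancy rule described above can be checked mechanically. The following Python sketch is illustrative only: the vmnic names, the card and switch labels, and the helper functions are hypothetical and are not part of any VxRail or VCF tooling. It models a host with two NDC ports cabled to switch A and two PCIe ports cabled to switch B, then checks that each vDS has one uplink on each card and on each switch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pnic:
    card: str    # physical adapter the port belongs to: "NDC" or "PCIe"
    switch: str  # switch the port is cabled to: "A" or "B"


# Hypothetical host inventory; the vmnic names are assumptions for illustration.
PNICS = {
    "vmnic0": Pnic(card="NDC", switch="A"),
    "vmnic1": Pnic(card="NDC", switch="A"),
    "vmnic2": Pnic(card="PCIe", switch="B"),
    "vmnic3": Pnic(card="PCIe", switch="B"),
}

# One NDC port and one PCIe port per vDS, as described in the text.
LAYOUT = {
    "system_vds": ["vmnic0", "vmnic2"],
    "nsx_vds": ["vmnic1", "vmnic3"],
}


def spans_two_cards(uplinks):
    """True if the uplinks sit on at least two different physical adapters."""
    return len({PNICS[u].card for u in uplinks}) >= 2


def spans_two_switches(uplinks):
    """True if the uplinks are cabled to at least two different switches."""
    return len({PNICS[u].switch for u in uplinks}) >= 2


def check_layout(layout):
    """Return, per vDS, whether it has both NIC-level and switch-level redundancy."""
    return {
        vds: spans_two_cards(uplinks) and spans_two_switches(uplinks)
        for vds, uplinks in layout.items()
    }


if __name__ == "__main__":
    print(check_layout(LAYOUT))
```

A layout that places both NDC ports on the same vDS, for example `{"system_vds": ["vmnic0", "vmnic1"]}`, fails the check because the loss of the NDC would take down both uplinks.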

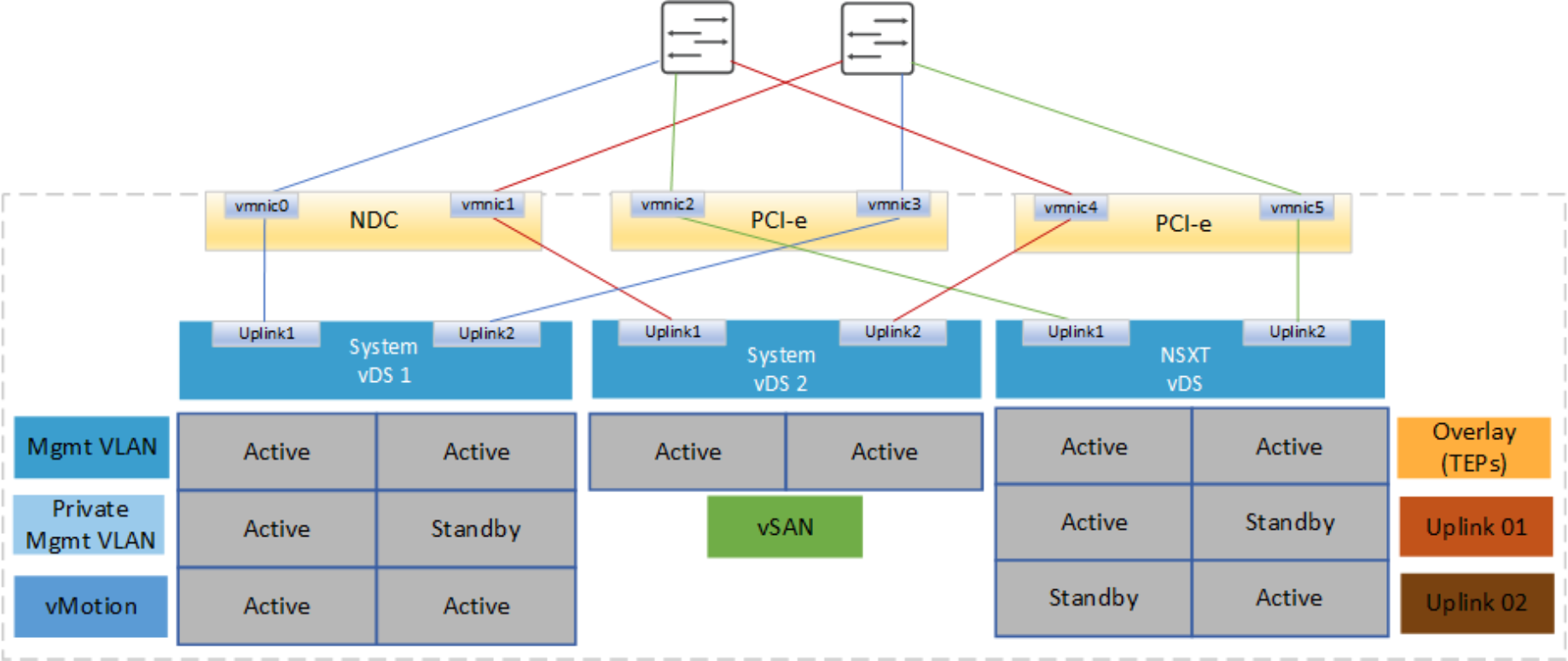

Two vDS (system and NSX) – 6‑pNIC topologies

There are two options in a 6-pNIC design with two vDS. For the first option, we have four pNICs on the VxRail vDS and use two additional pNICs dedicated to NSX traffic on the NSX vDS. This option might be required to keep management and vSAN/vMotion traffic on different physical interfaces when NSX-T also needs its own dedicated interfaces.

Figure 20. Two vDS with six pNICs option 1

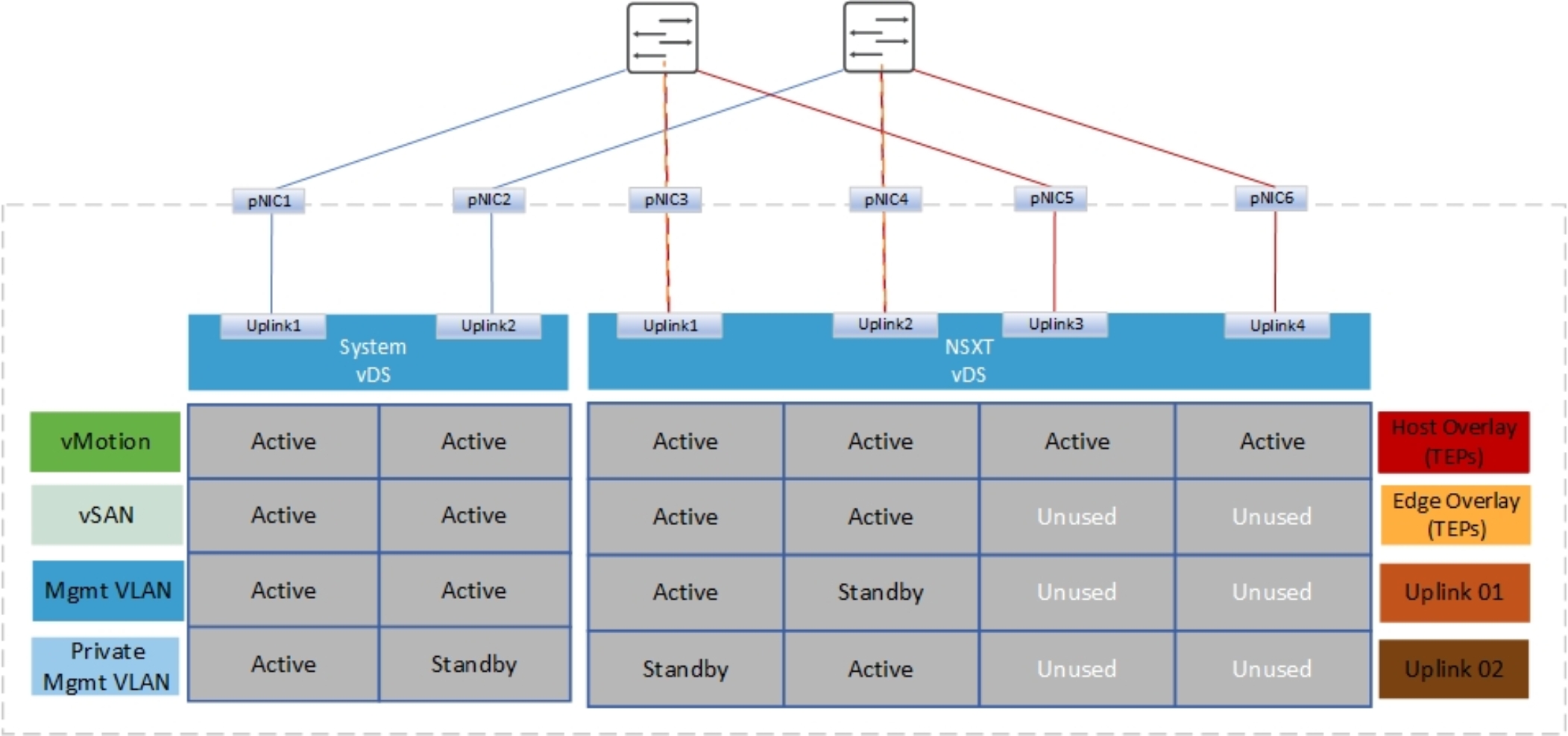

The second option with six pNICs uses a system vDS with two pNICs and the NSX vDS with four pNICs. This configuration increases the bandwidth for NSX east-west traffic between transport nodes. The use case for this design might be when the east-west bandwidth requirement scales beyond two pNICs. The host overlay traffic uses all four uplinks on the NSX vDS, load-balanced using source ID teaming. By default, the Edge VMs, including the Edge overlay and Edge uplink traffic, use uplinks 1 and 2 on the NSX vDS.

Note: Starting with VCF 4.3, you can select which uplinks to use on the vDS for the Edge VM traffic when a dedicated NSX-T vDS has been deployed with four uplinks.

Figure 21. Two vDS with six pNICs option 2

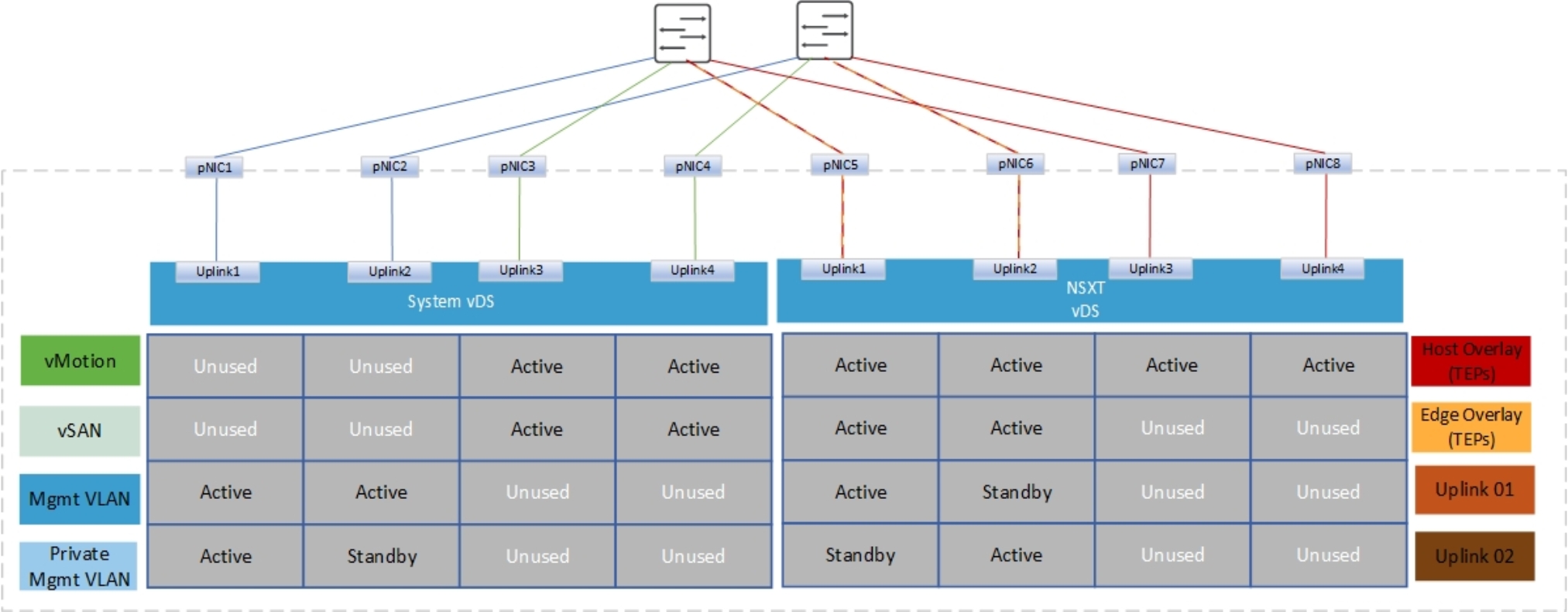
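To make the uplink usage in this option more concrete, the following Python sketch shows a toy model of source-based teaming. This guide does not describe how NSX selects an uplink internally, so the modulo mapping, the port IDs, and the function names below are assumptions for illustration only. The sketch shows the general idea: each source port is pinned to one uplink, and many sources spread across all available uplinks.

```python
# Uplink sets taken from the text: host overlay uses all four uplinks,
# Edge VM traffic uses uplinks 1 and 2 by default.
HOST_OVERLAY_UPLINKS = ["uplink1", "uplink2", "uplink3", "uplink4"]
EDGE_DEFAULT_UPLINKS = ["uplink1", "uplink2"]


def pick_uplink(source_port_id, uplinks):
    """Pin a source port to a single uplink.

    Toy model: a simple modulo over the uplink list. This is an assumption,
    not the algorithm NSX actually uses.
    """
    return uplinks[source_port_id % len(uplinks)]


def distribution(port_ids, uplinks):
    """Count how many source ports land on each uplink."""
    counts = {uplink: 0 for uplink in uplinks}
    for port_id in port_ids:
        counts[pick_uplink(port_id, uplinks)] += 1
    return counts


if __name__ == "__main__":
    # Eight hypothetical source ports spread over four uplinks.
    print(distribution(range(8), HOST_OVERLAY_UPLINKS))
    # The same ports restricted to the two default Edge uplinks.
    print(distribution(range(8), EDGE_DEFAULT_UPLINKS))
```

In this model, a single source never uses more than one uplink at a time, so the bandwidth gain from four uplinks appears only when traffic originates from several sources.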

Two vDS (system and NSX) – 8‑pNIC topologies

The 8-pNIC option illustrated in the following figure provides a high level of network isolation and the maximum bandwidth for NSX east-west traffic between host transport nodes. This comes at the cost of a large port count on the switches: each host requires four ports per switch. The VxRail vDS (system) uses four uplinks and the NSX vDS also uses four uplinks.

Figure 22. Two vDS (system and NSX) design with eight pNICs
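The switch port cost of each topology is simple arithmetic. The sketch below is a minimal illustration that assumes two switches with each host's pNICs split evenly between them; the function name and the cluster size in the usage note are hypothetical.

```python
def ports_per_switch(pnics_per_host, hosts=1, switches=2):
    """Switch ports consumed on each switch.

    Assumes every host splits its pNICs evenly across the switches.
    """
    if pnics_per_host % switches != 0:
        raise ValueError("pNICs per host must divide evenly across switches")
    return hosts * pnics_per_host // switches


if __name__ == "__main__":
    for pnics in (4, 6, 8):
        print(pnics, "pNICs per host:", ports_per_switch(pnics), "ports per switch")
```

For a single host, the 8-pNIC design yields four ports per switch, matching the text. For a hypothetical 16-host cluster, `ports_per_switch(8, hosts=16)` gives 64 ports on each switch, compared with 32 for the 4-pNIC design.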

Two system vDS

Starting with VCF 4.3.0, you can have two VxRail system vDS to separate system traffic onto two different vDS. For example, vMotion and external management traffic can be on one vDS and vSAN on another vDS. Either one of the two VxRail vDS can be used for NSX-T traffic. Alternatively, you can use a dedicated NSX-T vDS, which results in three vDS in the network design. Sometimes physical separation is needed for management/vMotion, vSAN, and NSX-T traffic. The three-vDS design provides this capability. This section describes sample topologies.

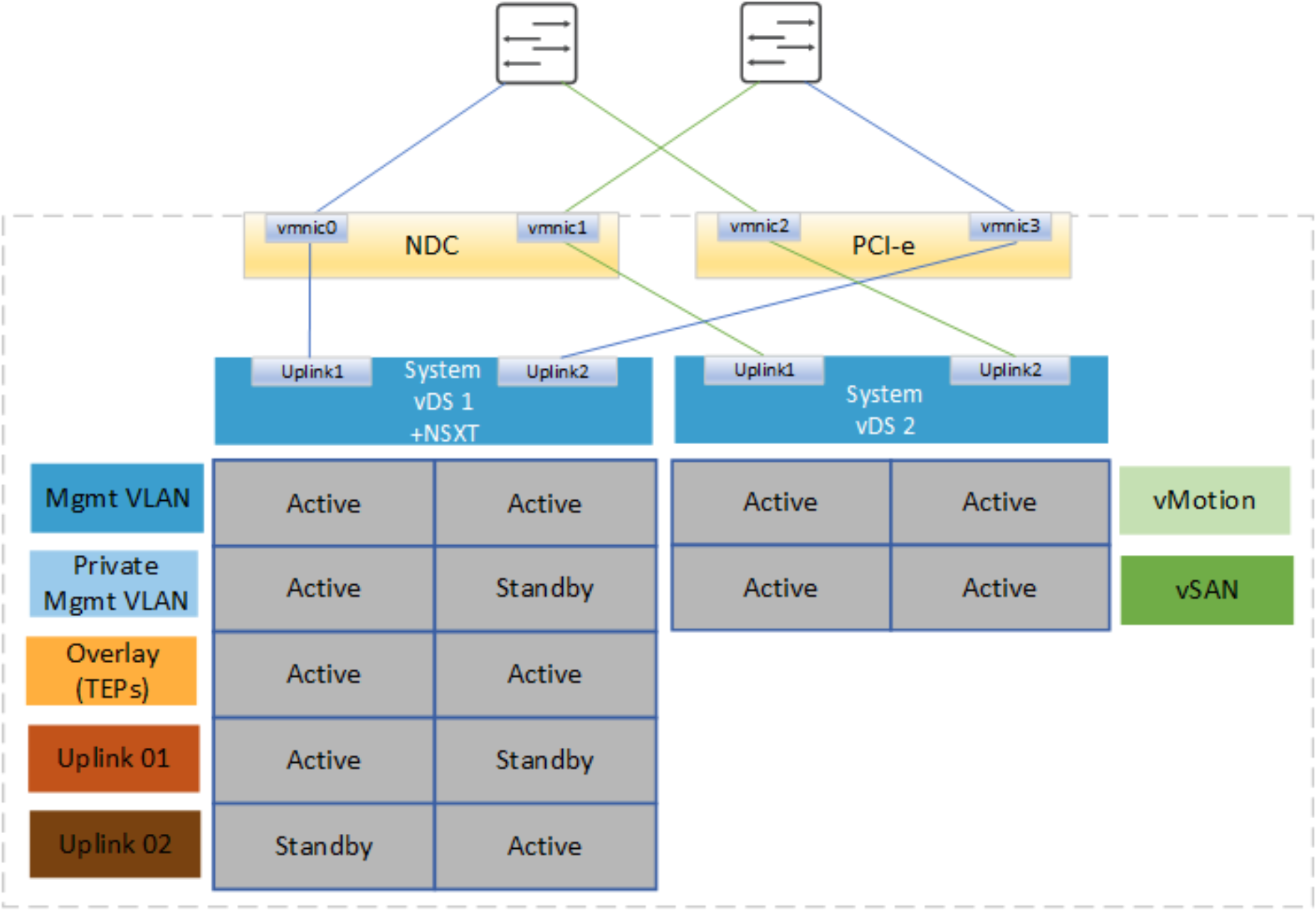

Two system vDS – four pNIC

In this first example, two system vDS are used. The first system vDS is used for management and NSX-T traffic, and the second system vDS is used for vMotion and vSAN traffic. This design also incorporates NIC-level redundancy.

Figure 23. Two-system vDS design with four pNIC

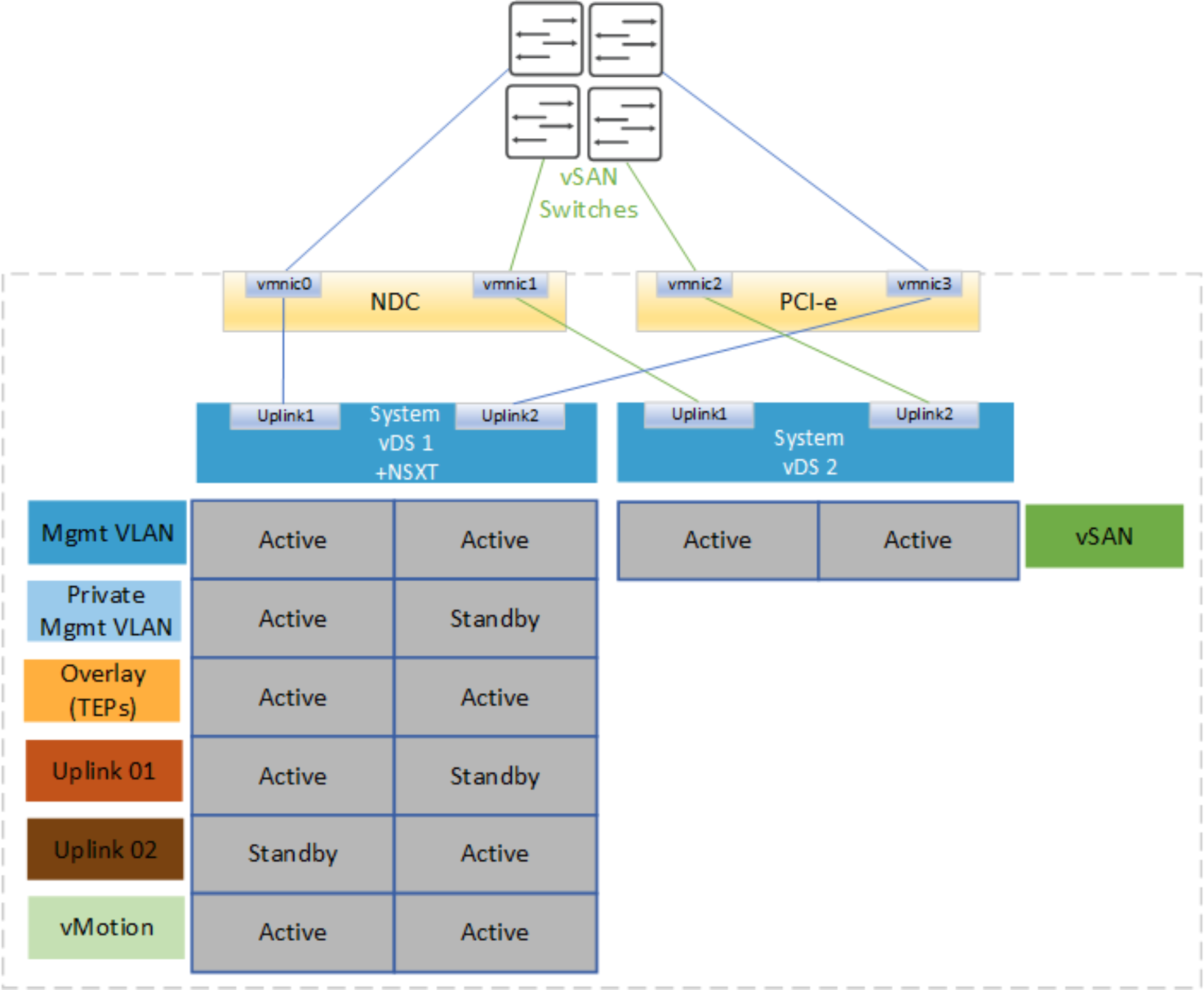

In the next example, vSAN is isolated to a dedicated network fabric. This design might be needed if there is a requirement for physical separation of storage traffic from management and workload production traffic.

Figure 24. Two-system vDS design with four pNIC and isolated vSAN

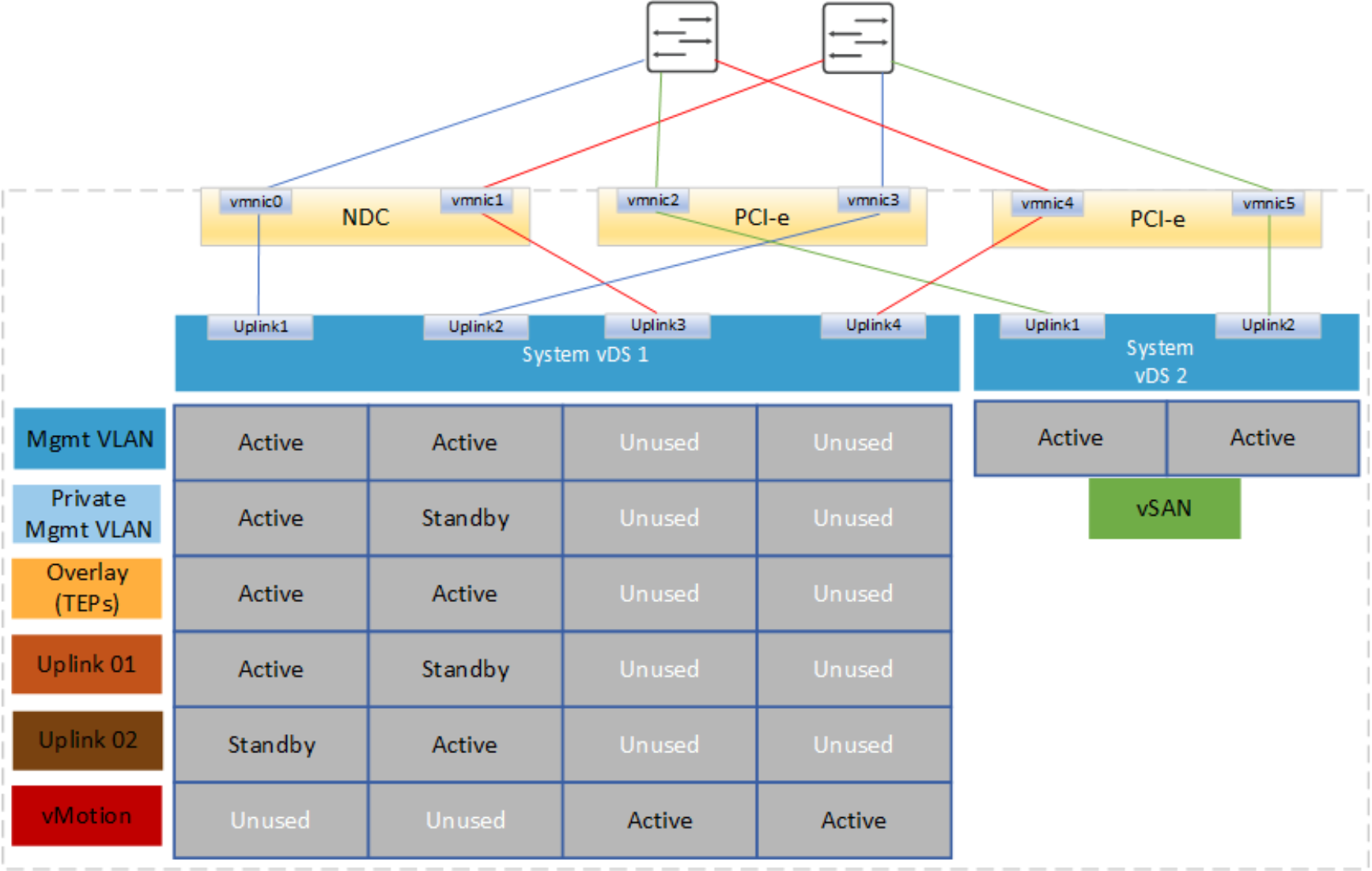

Two system vDS – six pNIC

Another option is to deploy the first system vDS with four uplinks. This option allows separation of vMotion from management and workload production traffic and allows vSAN on its own dedicated vDS. This option requires six pNICs per host. With VCF 4.3, you can pin the NSX-T traffic to the same NICs that are used for management, vMotion, or vSAN on either vDS. In this example, the management NICs on system vDS1 have been selected, which was the default behavior in the previous release.

Figure 25. Two-system vDS design with six pNIC

Note: VCF 4.3 provides the option to place NSX-T traffic onto the same two pNICs used for external management, vMotion or vSAN on either system vDS.

A third vDS is required when both NSX-T traffic and vSAN traffic must be isolated. In this case, two system vDS and an NSX-T vDS are required. The first system vDS is used for management and vMotion, the second system vDS is used for vSAN, and the third vDS is dedicated to NSX-T traffic. This design results in six pNICs per host.

Figure 26. Two system vDS and NSX-T vDS
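The three-vDS design can be written down as a small data structure to confirm the pNIC count and the isolation it provides. This is a sketch under stated assumptions: the dictionary keys and helper functions are hypothetical, and the split of two pNICs per vDS is inferred from the six-pNIC total rather than stated explicitly in the text.

```python
# Traffic placement from the text; two pNICs per vDS is an assumption
# derived from the stated total of six pNICs per host.
THREE_VDS_DESIGN = {
    "system_vds1": {"traffic": ["management", "vMotion"], "pnics": 2},
    "system_vds2": {"traffic": ["vSAN"], "pnics": 2},
    "nsx_vds": {"traffic": ["NSX-T"], "pnics": 2},
}


def total_pnics(design):
    """Sum the pNICs consumed by every vDS on a host."""
    return sum(vds["pnics"] for vds in design.values())


def shares_vds(design, traffic_a, traffic_b):
    """True if two traffic types are carried by the same vDS."""
    return any(
        traffic_a in vds["traffic"] and traffic_b in vds["traffic"]
        for vds in design.values()
    )


if __name__ == "__main__":
    print("pNICs per host:", total_pnics(THREE_VDS_DESIGN))
    print("NSX-T shares a vDS with vSAN:", shares_vds(THREE_VDS_DESIGN, "NSX-T", "vSAN"))
```

In this model, NSX-T and vSAN never share a vDS, while management and vMotion do, which mirrors the separation described above.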