Third-party Analysis

VMware Cloud Foundation 5.1 on next gen Dell PowerEdge servers

Thu, 16 May 2024 15:42:24 -0000


A Principled Technologies deployment guide

The release of VMware Cloud Foundation™ 5.1 brings new scalability and security features, along with other enhancements, that can help organizations meet essential infrastructure-as-a-service (IaaS) requirements. When backed by next gen Dell™ PowerEdge™ servers (with their own advantages in performance, security, management, and more), the VMware and Dell solution could deliver a robust, scalable, and efficient on-premises cloud infrastructure to support your strategic business goals.

This comprehensive guide explains the deployment process for VMware Cloud Foundation 5.1 on a cluster of Dell PowerEdge servers, steps that we verified by doing the work ourselves. As your organization continues to evolve in the dynamic landscape of modern IT, this guide can empower your system administrators, architects, and IT professionals with the knowledge and expertise to implement the VMware and Dell cloud solution efficiently and effectively.

In addition to verifying the straightforward deployment process of VMware Cloud Foundation 5.1 on next gen Dell PowerEdge servers, we ran an online transaction processing (OLTP) workload on 24 VMs configured with MySQL database software. The consistent new orders per minute (NOPM) metrics that we captured demonstrate the potential transactional database performance value that the VMware and Dell cloud solution can deliver.

About 16th Generation Dell PowerEdge servers

With enhanced processing power, advanced security features, and transformative management capabilities, the 16th Generation servers can deliver strong server performance for your data center. According to Dell, “Whether your applications and data reside in the cloud, data centers, or edge environments, Dell’s PowerEdge portfolio empowers you to harness the full ease and agility of the cloud.”[i] Learn more about the portfolio of 16th Generation Dell PowerEdge rack, tower, purpose-built, and modular infrastructure servers.

VMware Cloud Foundation 5.1 overview

VMware Cloud Foundation (VCF) 5.1 allows your organization to build and operate a robust private or hybrid cloud infrastructure, seamlessly integrating essential resources and services into a unified platform. Boasting features such as automated lifecycle management and intrinsic security, Cloud Foundation 5.1 can simplify the deployment and management of cloud environments while ensuring efficiency.

This latest release includes security updates, fixes for UI and Lifecycle Management issues, enhancements to prechecks at the bundle level for VMware vCenter®, and several key enhancements that address requirements for cloud scale infrastructure.[ii],[iii] It provides a complete set of software-defined services for compute, storage, network, and security, along with cloud management capabilities.[iv],[v]

Learn more about VCF 5.1.

VMware Cloud Foundation components

VCF 5.1 comprises the following:

• VMware vSphere®

• VMware NSX®

• VMware vSphere with Tanzu™

• VMware ESXi™ 8.0 U2

• VMware vCenter Server® 8.0 U2

• VMware vSAN™ 8.0 U2

• VMware Aria Suite™

• VMware Cloud Builder

• VMware Software-Defined Data Center (SDDC) Manager 5.1[vi]

VCF automates deployment and configuration of the private or hybrid cloud software stack for your virtual infrastructure. The initial VCF deployment creates a management domain that you can then use to add workload domains. Workload domains consist of clusters of at least three ESXi hosts and can manage and segregate resources and workloads in your private cloud.

For deploying and overseeing the logical infrastructure within the private cloud, VCF incorporates Cloud Builder and SDDC Manager virtual appliances to enhance VMware virtualization and management elements. These components were essential in our deployment to the next gen Dell PowerEdge server cluster.

VMware Cloud Builder automates the deployment of the management domain, the first cluster in a VCF deployment that manages the health of the VCF stack and the deployment of workload domains (server clusters that you use to run workload VMs).

SDDC Manager automates the virtualization software life cycle, encompassing configuration, provisioning, upgrades, and patching, including host firmware, while simplifying day-to-day management and operations. Through the SDDC Manager interface, which Figure 1 shows, the virtual infrastructure administrator or cloud administrator can provision new private cloud resources, monitor changes to the logical infrastructure, and oversee life cycle and other operational activities.

Figure 1: The VMware SDDC Manager interface. Source: Principled Technologies.

SDDC Manager uses vSphere Lifecycle Manager to bundle, stage, and deploy software, OS, and firmware updates on a per-workload-domain basis.

Additionally, VMware lists other features that we did not use in our deployment but that could be helpful in your next gen Dell PowerEdge cluster environment:[vii]

- vSphere with Tanzu/Workload Management integration to run Kubernetes workloads natively in vSphere

- vSAN stretched cluster configuration to provision two availability zones within a single workload domain (including the management domain), which could provide native high availability and physical resiliency and could minimize downtime for management services and workloads

- NSX Federation to manage network configuration across multiple VCF instances and management domains

- VMware Cloud Foundation+ to connect to VMware Cloud® using a subscription model rather than key-based component licensing and to manage your VCF instance via the VMware Cloud Console

Setting up the next gen Dell PowerEdge servers

The Dell PowerEdge servers in our cluster included the following:

- Four Dell PowerEdge R750xs servers for the management domain

- Three Dell PowerEdge R760 servers for the virtual infrastructure workload domain

We deployed the VCF management domain on the four Dell PowerEdge R750xs servers. Each PowerEdge R750xs server had two BOSS drives for VMware ESXi 8.0.2 and eight SAS SSDs for VMware vSAN storage. The VCF management domain uses its own vCenter, NSX networking, and vSAN storage. To deploy and configure those resources and SDDC Manager automatically during the Cloud Builder deployment, VCF used our configuration details from the Deployment Parameter Workbook. This is an Excel workbook where you enter credentials, IPs, VLANs, and other configuration details for the VCF deployment and then upload it to Cloud Builder, which tests the input and allows you to continue deploying VCF once everything passes validation.

After deploying the VCF management domain, we deployed a virtual infrastructure (VI) workload domain on the three Dell PowerEdge R760 servers. Each Dell PowerEdge R760 server had two BOSS drives for ESXi 8.0.2 plus four NVMe® drives and 20 SAS SSDs for vSAN storage. VI workload domains are additional vSphere clusters of at least three hosts with their own storage (in our case, vSAN) and their own vCenter Server instance. VI workload domains provide physical and logical units for segregating and managing customer workloads.[viii]

All the Dell PowerEdge servers in our testbed had two 25Gb Ethernet connections to a Dell S5248F switch. We also used a Dell PowerEdge R6625 server as an infrastructure server where we deployed the AD/DNS server, the Certificate Authority server, a jumpbox VM, and routers to manage the VLANs on the Dell S5248F switch.
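The host counts in this testbed can be checked against VCF's minimums with a small sketch. It assumes a minimum of four hosts for a vSAN-backed management domain (VMware's guidance for the standard architecture, as we understand it; verify for your release) and three hosts for a VI workload domain, as stated earlier. The domain labels mgmt and wld01 are hypothetical, not values from our workbook.

```python
from dataclasses import dataclass

# Assumed minimum host counts per domain type (verify against the VCF 5.1 docs):
# four for a vSAN-backed management domain, three for a VI workload domain.
MIN_HOSTS = {"management": 4, "vi_workload": 3}


@dataclass(frozen=True)
class Domain:
    name: str          # hypothetical label, e.g. "mgmt" or "wld01"
    kind: str          # "management" or "vi_workload"
    server_model: str
    host_count: int


def check_host_counts(domains):
    """Return one message per domain that is below its minimum host count."""
    problems = []
    for domain in domains:
        minimum = MIN_HOSTS[domain.kind]
        if domain.host_count < minimum:
            problems.append(
                f"{domain.name}: {domain.host_count} hosts, needs at least {minimum}"
            )
    return problems


# The testbed described above: four R750xs hosts and three R760 hosts.
testbed = [
    Domain("mgmt", "management", "PowerEdge R750xs", 4),
    Domain("wld01", "vi_workload", "PowerEdge R760", 3),
]
print(check_host_counts(testbed))  # []
```

Keeping the minimums in one table makes it easy to adjust them if your VCF release or architecture model (for example, a consolidated deployment) calls for different values.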

Overview of our VCF 5.1 deployment on a Dell PowerEdge server cluster

Figure 2 shows an example of how you can deploy all management components in VMware Cloud Foundation, which was the general process we followed.

Figure 2. Example flow chart of a VMware Cloud Foundation 5.1 deployment. Source: Principled Technologies based on VMware Deployment Overview of VMware Cloud Foundation.

According to VMware, you can either install VCF 5.1 “as a new release or perform a sequential or skip-level upgrade to VMware Cloud Foundation 5.1.”[ix] The new installation process, which we broadly followed, has three phases:[x]

- Preparing the environment: “The Planning and Preparation Workbook provides detailed information about the software, tools, and external services that are required to implement a Software-Defined Data Center (SDDC) with VMware Cloud Foundation, using a standard architecture model.”[xi] Note: Both workbooks are Excel files, but they serve different purposes. You complete the Deployment Parameter Workbook and upload it to Cloud Builder. The Planning and Preparation Workbook, by contrast, is a long list of values, IPs, VLANs, domain names, usernames, passwords, and other details. Your infrastructure team completes it with all the environment details, and you use it as a reference throughout the deployment process, but you never upload it.

- Imaging all servers with ESXi: “Image all servers with the ESXi version mentioned in the Cloud Foundation Bill of Materials (BOM) section. See the VMware Cloud Foundation Deployment Guide for information on installing ESXi.”[xii] We installed the latest Dell-customized ESXi 8 version to each host under test at the start of testing and configured the OS as the Planning and Preparation Workbook specified.

- Installing Cloud Foundation 5.1: VMware Cloud Builder handles the bulk of the VCF deployment. Using the Planning and Preparation Workbook as a reference, in conjunction with the automation of VCF, made our deployment a smooth and almost entirely automated process. (Once you have uploaded the Deployment Parameter Workbook and it passes validation with no errors, you click a button to kick off the automated deployment of the management domain.)

Key takeaway

Less-experienced infrastructure administrators might find VMware Cloud Foundation difficult to set up because of its complex initial environment requirements, such as DNS, networking/IP pools, and host and disk preparation. In our experience, however, the validation process for the Deployment Parameter Workbook helped us troubleshoot and ensure accuracy before deployment. Once the software successfully validated the workbook, Cloud Builder fully automated the VCF deployment, including vSAN, NSX, vCenter, and SDDC Manager. This automation makes deployment much easier and faster for admins at any level than completing the same processes manually.
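Some of the network mistakes that workbook validation catches can also be checked before you upload anything. The sketch below is not Cloud Builder's actual validation logic; it assumes only that host IPs and the gateway must fall inside a segment's subnet, that host IPs must be unique and must not be the network, broadcast, or gateway address, and that VLAN IDs fall in the range 0 to 4094 (treating 0 as untagged). The segment names and addresses are hypothetical.

```python
import ipaddress


def validate_segment(name, cidr, gateway, vlan_id, host_ips):
    """Return human-readable errors for one network segment (empty list = passes)."""
    errors = []
    net = ipaddress.ip_network(cidr, strict=True)
    gw = ipaddress.ip_address(gateway)
    if gw not in net:
        errors.append(f"{name}: gateway {gw} is outside {net}")
    # Assumption: 0 (untagged) through 4094 are acceptable VLAN IDs.
    if not 0 <= vlan_id <= 4094:
        errors.append(f"{name}: VLAN ID {vlan_id} is outside 0-4094")
    seen = set()
    for raw in host_ips:
        ip = ipaddress.ip_address(raw)
        if ip not in net:
            errors.append(f"{name}: host IP {ip} is outside {net}")
        elif ip in (net.network_address, net.broadcast_address):
            errors.append(f"{name}: host IP {ip} is the network or broadcast address")
        if ip == gw:
            errors.append(f"{name}: host IP {ip} collides with the gateway")
        if ip in seen:
            errors.append(f"{name}: duplicate host IP {ip}")
        seen.add(ip)
    return errors


# Hypothetical management segment for four hosts: passes.
print(validate_segment("mgmt", "10.0.10.0/24", "10.0.10.1", 10,
                       ["10.0.10.11", "10.0.10.12", "10.0.10.13", "10.0.10.14"]))  # []
# Hypothetical vMotion segment with a bad VLAN ID and a duplicated host IP.
print(validate_segment("vmotion", "10.0.20.0/24", "10.0.20.1", 4095,
                       ["10.0.20.11", "10.0.20.11"]))
# ['vmotion: VLAN ID 4095 is outside 0-4094', 'vmotion: duplicate host IP 10.0.20.11']
```

Returning a list of messages rather than stopping at the first problem mirrors the way a validation report lists every issue at once, so you can fix them in a single pass.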

Deploying VMware Cloud Foundation 5.1 on Dell PowerEdge servers

Getting started

Note that we deployed our solution by following the steps in this document but skipped some optional steps, such as deploying Workspace ONE.

Prior to deploying VCF via VMware Cloud Builder, we set up infrastructure components in our environment, including the following:

- A Windows Server 2022 VM that we configured as an Active Directory domain controller for our VCF domain (in our case, vcfdomain.local). This VM also:

  - Ran a DNS server with forward and reverse lookups for core infrastructure components as the Planning and Preparation Workbook defined, including fully qualified domain names (FQDNs) for SDDC Manager, vCenter, and other key components of the VCF deployment

  - Hosted an internal NTP server for VCF hosts

- DNS with forward and reverse lookups for all relevant DNS entries

- Another Windows Server 2022 VM that we configured as a child domain controller joined to the primary VCF domain and that hosted the Active Directory Certificate Services role

  - SDDC Manager used this server as an internal Certificate Authority to allow trusted communication between the various VCF components

- Other necessary infrastructure components, such as pfSense VMs to handle inter-VLAN routing and DHCP and to provide NAT to the internal network where we deployed VCF

- Our Dell S5248F switch, which we configured as the Planning and Preparation Workbook required

We also configured static IPs for the management domain hosts and prepared vSAN-ready disks for the management cluster.
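Because VCF relies on forward and reverse DNS records for its components, a quick scripted check of every FQDN in the Planning and Preparation Workbook can catch missing or mismatched records before validation. This is a minimal sketch using Python's standard socket module (IPv4 lookups only); the resolver functions are parameters so the check can be exercised without a live DNS server.

```python
import socket


def check_forward_reverse(fqdn, resolve=socket.gethostbyname,
                          reverse=socket.gethostbyaddr):
    """Return (ok, message): ok is True when fqdn -> IP -> PTR name maps back to fqdn."""
    try:
        ip = resolve(fqdn)
    except OSError as exc:  # socket.gaierror is a subclass of OSError
        return False, f"{fqdn}: forward lookup failed ({exc})"
    try:
        ptr_name, _aliases, _addresses = reverse(ip)
    except OSError as exc:  # socket.herror is a subclass of OSError
        return False, f"{fqdn} -> {ip}: reverse lookup failed ({exc})"
    # Compare case-insensitively and ignore any trailing root dot.
    if ptr_name.rstrip(".").lower() != fqdn.rstrip(".").lower():
        return False, f"{fqdn} -> {ip} -> {ptr_name}: PTR record does not match"
    return True, f"{fqdn} <-> {ip}"


def check_all(fqdns):
    """Print one line per FQDN and return the names that failed."""
    failed = []
    for fqdn in fqdns:
        ok, message = check_forward_reverse(fqdn)
        print(("OK   " if ok else "FAIL ") + message)
        if not ok:
            failed.append(fqdn)
    return failed
```

For example, running check_all(["sddc-manager.vcfdomain.local", "mgmt-vc01.vcfdomain.local"]) from a jump box that uses the lab DNS server would list any records that still need fixing; both hostnames are hypothetical stand-ins for the FQDNs in your own workbook.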

Using the Planning and Preparation Workbook

We filled out the VCF Planning and Preparation Workbook with our environment’s details. This is a Microsoft Excel workbook that serves as a configuration guide for the required VCF components and for select components after the automated deployment. Download the Planning and Preparation Workbook.

Our environment details included our supporting virtual infrastructure as well as IP addresses, hostnames, credentials, and other relevant details. VMware Cloud Builder configured some of these settings automatically during the deployment, and we configured others manually afterward. As we mentioned earlier, the Planning and Preparation Workbook is different from the VCF Deployment Parameter Workbook, which we used to define the environment details for the automated VCF deployment via the Cloud Builder appliance.

Imaging the servers

After we fully populated the workbook, we followed the steps from this document to prepare the individual hosts for the management domain:

- We installed ESXi on each of the hosts. We used ESXi 8.0.2 build 22380479 (Dell Customized), the most recent Dell-customized ESXi 8 version at the time.

- We configured networking on each of the hosts. We used the ESXi direct console UI to set the network adapter, hostname, static IP address, subnet mask, gateway, and DNS as specified in the planning workbook. We also enabled SSH in troubleshooting options.

- We logged into the ESXi host client for each host and started the NTP service. We also ensured that the SSH service was running.

- After setting the hostnames, we regenerated the self-signed certificates on each host so that the common name of the certificate included the hostname. We connected to each host using SSH, regenerated the self-signed certificate, and restarted the hostd and vpxa services.
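The certificate step above can be scripted from a jump box. This sketch assumes OpenSSH is installed, root SSH access is enabled on each host (as in our preparation), and that the command sequence is the one VMware documents for this step: running /sbin/generate-certificates and then restarting the hostd and vpxa services. Verify those commands against the VCF 5.1 documentation for your ESXi build before running anything. The run parameter exists only so the sequence can be tested without real hosts.

```python
import subprocess

# Sequence for regenerating an ESXi host's self-signed certificate so its
# common name picks up the new hostname (verify against VMware's docs).
REGEN_CERT_COMMANDS = [
    "/sbin/generate-certificates",
    "/etc/init.d/hostd restart",
    "/etc/init.d/vpxa restart",
]


def ssh_argv(host, command, user="root"):
    """Build an OpenSSH argument list that runs one command on a remote host."""
    return ["ssh", f"{user}@{host}", command]


def regenerate_certificates(hosts, run=subprocess.run):
    """Run the sequence on each host in order; stop at the first failing command."""
    for host in hosts:
        for command in REGEN_CERT_COMMANDS:
            result = run(ssh_argv(host, command), check=False)
            if result.returncode != 0:
                raise RuntimeError(
                    f"{host}: '{command}' exited with status {result.returncode}"
                )
```

For example, regenerate_certificates(["esxi01.vcfdomain.local", "esxi02.vcfdomain.local"]) would process two hosts, where the hostnames are hypothetical. Because stdin is inherited, ssh can still prompt for each root password interactively if you have not set up key-based login.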

Installing VMware Cloud Builder

We then populated the Deployment Parameter Workbook with our environment details, networking information, and credentials. (For more information on deploying Cloud Builder, see the guide.)

After preparing all four ESXi hosts for the management domain, we deployed the VMware Cloud Builder appliance to our infrastructure host using the ESXi host client and the Cloud Builder appliance OVA file, specifying admin and root credentials and networking details for the appliance. After we deployed Cloud Builder, we connected to the Cloud Builder VM via SSH and confirmed that it could successfully ping the ESXi hosts. See this VMware document for more information.
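The reachability check we ran from the Cloud Builder VM amounts to pinging each host. A scripted version could look like the following sketch. It assumes a Linux-style ping that accepts -c for the packet count and treats a zero exit status as reachable. It is intended for a jump box or other Linux machine on the management network; we did not check whether the Cloud Builder appliance includes a Python interpreter.

```python
import subprocess


def ping_argv(host, count=1):
    """Build a Linux ping command line; '-c' limits the number of echo requests."""
    return ["ping", "-c", str(count), host]


def unreachable_hosts(hosts, run=subprocess.run):
    """Return the hosts whose ping exited with a non-zero status."""
    failed = []
    for host in hosts:
        result = run(ping_argv(host), stdout=subprocess.DEVNULL,
                     stderr=subprocess.DEVNULL, check=False)
        if result.returncode != 0:
            failed.append(host)
    return failed
```

Calling unreachable_hosts() with the four management-domain host FQDNs should return an empty list when every host answers; any names it returns point to a networking, VLAN, or DNS problem to fix before validation.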

We then logged into the VMware Cloud Builder appliance web interface by navigating to its FQDN in a web browser and following the steps listed on the web interface. The steps consisted of filling out the VCF Deployment Parameter Workbook with values from the Planning and Preparation Workbook and then uploading it to the Cloud Builder VCF deployment wizard. Cloud Builder validated the entire configuration as the Deployment Parameter Workbook specified. To learn more about the Deployment Parameter Workbook, visit https://docs.vmware.com/en/VMware-Cloud-Foundation/5.1/vcf-deploy/GUID-08E5E911-7B4B-4E1C-AE9B-68C90124D1B9.html.

After populating the Deployment Parameter Workbook with the relevant networking, existing infrastructure, credentials, and licensing information and testing that everything passed validation in the VMware Cloud Builder, we clicked Deploy SDDC and the Cloud Builder automatically deployed SDDC Manager and the other components of our initial VCF management cluster, including vCenter, NSX Manager, and vSAN.

VMware documentation states that Cloud Builder lists any issues with validation as errors or warnings in the UI. Users must address any configuration or environment errors before continuing. We did not encounter any errors or warnings in our deployment.

After validating and testing the environment parameters, the Cloud Builder appliance used our information to deploy the management domain cluster, consisting of four hosts. The deployment process included deploying a VMware vCenter Server environment, configuring NSX and vSAN, deploying SDDC Manager, and transferring control of the hosts and environment to SDDC Manager.

As we previously noted, VCF is compatible with vSphere with Tanzu workloads and Workload Management for running Kubernetes-based applications and workloads natively on the ESXi hypervisor layer. You could enable Workload Management on the management domain cluster or on specific workload domain clusters. We did not do this in our testing or use vSphere with Tanzu.[xiii]

Figure 3: The VMware Cloud Builder post-deployment success screen. Source: Principled Technologies.

Post-deployment configuration

With our core VCF components and management domain cluster deployed, we needed to complete some steps in SDDC Manager before deploying the first VI workload domain or VMware Aria Suite components.

Based on recommendations in VMware documentation,[xiv] we deployed Aria Operations after the initial management domain deployment and configured the software to provide workload and performance visibility into the VCF management domain and our eventual virtual infrastructure workload domains.

We logged into our newly deployed SDDC Manager instance and configured it to authenticate with VMware Customer Connect so it could download installation and update bundles for Aria Suite Lifecycle Manager and the VI workload domain deployment. We also configured SDDC Manager to use our internal Certificate Authority server to manage CA-signed certificates for the physical infrastructure underlying our VCF deployment. In our management domain, we deployed an NSX Manager and Edge cluster and application virtual networks. We referenced these VMware documents.

Next, we followed the steps in this document to deploy VMware Aria Suite Lifecycle in the management domain. We used SDDC Manager to generate and sign a certificate for Lifecycle Manager by following these steps. We configured Lifecycle Manager to communicate with our management domain vCenter. We did the same for Aria Operations, deploying it to our management domain for visibility into our virtual and physical infrastructure and then configuring it in SDDC Manager. See how to configure Lifecycle Manager and more information on deploying Aria Operations. After we installed VMware Aria Operations, the entire VCF management domain deployment was complete (see Figure 3).

Preparing for workload activity

To deploy a VI workload domain cluster, we prepared three new hosts the same way we configured the management domain ESXi hosts. We created a network pool for the workload domain cluster and commissioned the hosts into the SDDC Manager inventory. We then deployed the VI workload domain by following these steps. We deployed an NSX Edge cluster to the workload domain for virtual networking infrastructure and generated certificates in SDDC Manager for the VI workload domain hosts. We considered our workload domain fully configured at this point and ready for our proof-of-concept database workload.
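Before commissioning hosts, it can help to confirm that each network in the pool has enough addresses. This sketch assumes, as we understand VCF network pools, that each commissioned host consumes one IP address from the inclusion range of each network (here vMotion and vSAN) in its pool. The subnets and ranges are hypothetical, and the vSAN range is deliberately one address short for three hosts so the output shows a shortfall.

```python
import ipaddress


def range_capacity(cidr, ranges):
    """Count addresses in the inclusion ranges after checking each lies inside the subnet."""
    net = ipaddress.ip_network(cidr)
    total = 0
    for start, end in ranges:
        first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
        if first not in net or last not in net or first > last:
            raise ValueError(f"range {start}-{end} is not a valid range inside {net}")
        total += int(last) - int(first) + 1
    return total


def pool_shortfalls(pool, host_count):
    """Map each network type to how many addresses it is short (empty dict = enough)."""
    shortfalls = {}
    for network_type, spec in pool.items():
        capacity = range_capacity(spec["cidr"], spec["ranges"])
        if capacity < host_count:
            shortfalls[network_type] = host_count - capacity
    return shortfalls


# Hypothetical pool for a three-host VI workload domain; the vSAN range is
# deliberately one address short.
wld_pool = {
    "vMotion": {"cidr": "172.16.20.0/24", "ranges": [("172.16.20.11", "172.16.20.20")]},
    "vSAN": {"cidr": "172.16.30.0/24", "ranges": [("172.16.30.11", "172.16.30.12")]},
}
print(pool_shortfalls(wld_pool, host_count=3))  # {'vSAN': 1}
```

Sizing the ranges with headroom beyond the initial host count leaves room to expand the cluster later without editing the pool.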

Deploying the OLTP database workload

We used the TPROC-C benchmark from the HammerDB suite to simulate a real-world online transaction processing database workload. We created a VM running MySQL database software on the workload domain cluster with 16 vCPUs, 64 GB of memory, and 2 TB of storage from the vSAN datastore. We installed Ubuntu 22.04 and MySQL 8.0 on the VM. We then scaled out to 24 such VMs across the three Dell PowerEdge R760 servers. We ran the HammerDB 4.9 TPROC-C workload on each VM with 500 warehouses and measured new orders per minute.
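The aggregate footprint of the database VMs follows directly from the per-VM sizing above. This sketch assumes the 24 VMs were spread evenly across the three R760 hosts (eight per host), which the text does not state, and it reports provisioned capacity only. The raw vSAN capacity consumed depends on the storage policy, which this section does not specify.

```python
def footprint(vm_count, host_count, vcpus_per_vm, mem_gb_per_vm, disk_tb_per_vm):
    """Aggregate provisioned resources for identical VMs, assuming an even spread."""
    per_host_vms = vm_count / host_count  # assumption: even placement across hosts
    return {
        "total_vcpus": vm_count * vcpus_per_vm,
        "total_memory_gb": vm_count * mem_gb_per_vm,
        "total_provisioned_tb": vm_count * disk_tb_per_vm,
        "vms_per_host": per_host_vms,
        "vcpus_per_host": per_host_vms * vcpus_per_vm,
        "memory_gb_per_host": per_host_vms * mem_gb_per_vm,
    }


result = footprint(vm_count=24, host_count=3, vcpus_per_vm=16,
                   mem_gb_per_vm=64, disk_tb_per_vm=2)
for key, value in result.items():
    print(f"{key}: {value:g}")
# total_vcpus: 384
# total_memory_gb: 1536
# total_provisioned_tb: 48
# vms_per_host: 8
# vcpus_per_host: 128
# memory_gb_per_host: 512
```

Comparing the per-host vCPU and memory figures with each server's physical cores and installed RAM shows how heavily the hosts were consolidated, which helps when interpreting the CPU utilization results.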

About HammerDB

HammerDB is an open-source benchmarking tool that tests the performance of many leading databases. The benchmark tool includes two built-in workloads derived from industry standards: a transactional (TPROC-C) workload and an analytics (TPROC-H) workload. We chose the TPROC-C (TPC-C-like) workload to demonstrate the online transaction processing performance capabilities of each instance, which benefit from high core counts and fast memory. TPROC-C runs a transaction processing workload that simulates an ecommerce business with five types of transactions: receiving a customer order, recording a payment, delivering an order, checking an order’s status, and checking stock in inventory.[xv] Note that our test results do not represent official TPC results and are not comparable to official TPC-audited results. To learn more about HammerDB, visit https://www.hammerdb.com/.

Get strong cloud OLTP database performance

We ran the TPROC-C workload three times and collected the total NOPM and transactions per minute (TPM) across all 24 MySQL VMs (see Table 1). Run 2 was the median run.

Table 1: The total new orders per minute and transactions per minute for all 24 MySQL VMs in our testbed. Source: Principled Technologies.

| | TPROC-C run 1 | TPROC-C run 2 (median) | TPROC-C run 3 |

| Total NOPM | 342,850 | 344,889 | 345,961 |

| Total TPM | 796,817 | 801,329 | 803,411 |
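How consistent the three runs in Table 1 were can be quantified with a few lines of arithmetic. This sketch takes the median of the three runs, expresses the gap between the highest and lowest runs as a percentage of the median, and divides the median total by 24 to get an average per VM. The per-VM figure is derived arithmetic, not a measured per-VM result.

```python
from statistics import median

# Totals across all 24 VMs for the three runs in Table 1.
RUNS = {
    "NOPM": [342_850, 344_889, 345_961],
    "TPM": [796_817, 801_329, 803_411],
}
VM_COUNT = 24


def summarize(values, vm_count):
    """Return the median, the max-min spread as a percent of the median, and the mean per VM."""
    mid = median(values)
    spread_pct = (max(values) - min(values)) / mid * 100
    return mid, spread_pct, mid / vm_count


for metric, values in RUNS.items():
    mid, spread_pct, per_vm = summarize(values, VM_COUNT)
    print(f"{metric}: median {mid:,}, spread {spread_pct:.1f}%, {per_vm:,.0f} per VM")
# NOPM: median 344,889, spread 0.9%, 14,370 per VM
# TPM: median 801,329, spread 0.8%, 33,389 per VM
```

A spread under 1 percent across runs supports describing the results as consistent.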

Figure 4 shows CPU utilization during the median run (run 2). CPU utilization stayed around 70 percent during the test, which was our target for simulating a real-world OLTP workload.

Figure 4: CPU utilization during the median run of our testing. Source: Principled Technologies.

Figure 5 shows the average vSAN storage latencies for the Dell PowerEdge cluster during run 2. Read latency stayed between 1.5 and 2 milliseconds, and write latency stayed between 2.5 and 3 milliseconds, showing that storage latency remained relatively low and consistent throughout the OLTP workload.

Figure 5: Average vSAN storage latencies for the Dell PowerEdge cluster with VCF 5.1. Source: Principled Technologies.

Conclusion

Deploying VMware Cloud Foundation 5.1 on next gen Dell PowerEdge servers brings together critical virtualization capabilities and high-performing hardware infrastructure. Based on our hands-on experience, this deployment guide offers a comprehensive roadmap that can guide your organization through the seamless integration of advanced VMware cloud solutions with the performance and reliability of Dell PowerEdge servers. In addition to the deployment efficiency, the Cloud Foundation 5.1 and PowerEdge solution delivered strong performance while running a MySQL database workload. By leveraging VMware Cloud Foundation 5.1 and PowerEdge servers, you could help your organization embrace cloud computing with confidence, potentially unlocking a new level of agility, scalability, and efficiency in your data center operations.

This project was commissioned by Dell Technologies.

May 2024

Principled Technologies is a registered trademark of Principled Technologies, Inc.

All other product names are the trademarks of their respective owners.

Read the report on the PT site at https://facts.pt/Hse6826 and see the science at https://facts.pt/vXo6g7E.

[i] Dell Technologies, “Dell PowerEdge Servers,” accessed January 3, 2024.

[ii] VMware, “VMware Cloud Foundation 5.0 Release Notes,” accessed December 20, 2023.

[iii] Rick Walsworth, “Announcing VMware Cloud Foundation 5.0,” accessed December 20, 2023, https://blogs.vmware.com/cloud-foundation/2023/06/01/announcing-vmware-cloud-foundation-5-0/.

[iv] VMware, “VMware Cloud Foundation 5.0 Release Notes.”

[v] VMware, “VMware Cloud Foundation Overview,” accessed December 20, 2023.

[vi] VMware, “Frequently Asked Questions: VMware Cloud Foundation 5.0,” accessed December 19, 2023.

[vii] VMware, “VMware Cloud Foundation Features,” accessed February 9, 2024.

[viii] VMware, “VMware Cloud Foundation Glossary,” accessed February 9, 2024.

[ix] VMware, “VMware Cloud Foundation 5.1 Release Notes,” accessed January 3, 2024.

[x] VMware, “VMware Cloud Foundation 5.1 Release Notes.”

[xi] VMware, “VMware Cloud Foundation 5.1 Release Notes.”

[xii] VMware, “VMware Cloud Foundation 5.1 Release Notes.”

[xiii] VMware, “VMware Cloud Foundation with VMware Tanzu,” accessed February 9, 2024.

[xiv] VMware, “Unified Cloud Management for VMware Cloud Foundation,” accessed February 9, 2024.

[xv] HammerDB, “Understanding the TPROC-C workload derived from TPC-C,” accessed March 13, 2024, https://www.hammerdb.com/docs/ch03s05.html.

Author: Principled Technologies

Reimagine the Employee Experience in Hybrid Work Environments

Wed, 29 Nov 2023 04:46:00 -0000


Prowess Consulting identified how an integrated workforce solution like VMware® Anywhere Workspace with Dell™ PowerEdge™ servers can help organizations succeed with hybrid work environments.

Executive Summary

Hybrid work is here to stay. 64% of executives are convinced that flexible work options motivate employees, and more than 70% of employees are working from home at least 2–3 days per week.[1] But hybrid work environments create a unique set of challenges for organizations. As employees use more devices, access more applications, and work from more locations, IT teams are scrambling to support them. Some organizations try to get by on legacy technologies and point solutions. However, traditional IT environments and management solutions are not designed to support a distributed workforce. Using traditional tools can lead to operational complexity, fragmented security, and poor user experiences.

Prowess Consulting evaluated VMware® Anywhere Workspace running on Dell™ PowerEdge™ servers as a potential solution for managing hybrid work environments. Anywhere Workspace integrates modern tools into a single platform to deliver IT services like onboarding employees, managing remote devices and virtual desktops, and monitoring for security threats. After reviewing components including VMware Workspace ONE®, VMware® Secure Access Service Edge (SASE), and VMware® Carbon Black, we determined that organizations that deploy the Anywhere Workspace platform can enhance user experiences and achieve consistent, secure performance across locations and devices for hybrid workers. For employers embracing hybrid work environments for their employees, this solution could be a significant win.

Hybrid Work Environments

Organizations are supporting hybrid work environments as executives conclude that flexible work options keep employees motivated.[1] In a hybrid work environment, some employees might work remotely, others might work on-premises, and others might split their time between the office and other locations, such as home or coffee shops. But designing and managing hybrid work environments is not easy.

Challenges of Hybrid Work Environments

Today’s executives face a wide variety of challenges as the concept of the workplace evolves from being a physical space to a digital space. Organizations must maximize employee engagement and productivity when employees work from almost anywhere. To support digital workspaces, IT teams must support an increasing number of device types and applications across multiple clouds and different networks. Processes are often manual, and organizations might be constrained by legacy on-premises computing systems. Multiple IT management tools are often needed to run different platforms and operating systems, and each needs specific skill sets and resources. Additionally, an increased number of endpoints expands the attack surface and increases security risks. To address these challenges, organizations need an integrated workforce solution that unifies IT management into a single platform.

The Platform Advantage

Prowess Consulting observed that Anywhere Workspace can be used to replace multiple IT tools, reducing the number of IT skill sets and resources needed. In our analysis, we noted that Anywhere Workspace consists of three components, as shown in Figure 1: VMware Workspace ONE, VMware SASE, and VMware Carbon Black.

VMware Workspace ONE®

We started our evaluation by reviewing VMware Workspace ONE. Gartner has cited Workspace ONE as a leader in the unified endpoint management (UEM) category for the last five years.[2] In our analysis, we identified three key benefits of Workspace ONE UEM:

1. Automated onboarding. New employee devices can register over the air during the initial power-up. Admins can easily set up and customize an imageless configuration of work profiles such as emails, VPNs, and Wi-Fi.

Employees are 2.6x as likely to be satisfied with their employer if onboarding is exceptional.[3]

61% of organizations report that they wrestle with onboarding.[4]

2. Management across endpoints. Workspace ONE UEM manages and secures devices and apps by taking advantage of the native mobile device management (MDM) capabilities of iOS® and Android™ devices and mobile-cloud management efficiencies of Windows®, Apple® macOS®, and Google Chrome™ devices.

3. Integrated single sign-on (SSO). Integrated SSO eliminates multiple logins for better security, speed, and ease of use.

Virtual Apps and Desktops

VMware Workspace ONE integrates UEM technology with virtual application delivery through VMware Horizon®. We identified the following key benefits:

- Automatic software installation. IT teams can create an automated workflow for installing software, applications, files, scripts, and commands, which can save time and reduce expenses.

- Broad support. Workspace ONE makes use of VMware Horizon, a virtualization software product, to deliver desktops and apps on Windows, macOS, Linux®, iOS, Chrome, and Android endpoints.

- Secure virtual apps. IT teams can secure sensitive and confidential information.

Measuring VM Density with VMware Horizon®

To better evaluate Workspace ONE, we measured the virtual desktop infrastructure (VDI) density created using Workspace ONE with VMware Horizon. VMware Horizon enables IT departments to run remote desktops and applications in the data center and deliver these desktops and applications to employees. VDI density refers to the number of virtual desktops that can be efficiently and effectively hosted on a single cluster of servers in a VDI environment. The concept of VDI density is important for several reasons:

- Resource utilization: Efficient VDI density maximizes the use of hardware resources and can lead to improved performance.

- Cost efficiency: Increased VDI density reduces the number of physical servers required, which can lead to cost savings.

- Simplified management: With higher VDI density, the administrative overhead associated with server management, updates, and maintenance can be reduced.
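The link between VDI density and server count can be made concrete with simple arithmetic. The sketch below treats density as capped by whichever resource runs out first: CPU (at an assumed vCPU-to-physical-core ratio) or memory (after an assumed hypervisor reserve). Every number in it is hypothetical; none comes from the Prowess measurements.

```python
import math


def desktops_per_host(physical_cores, vcpu_ratio, vcpus_per_desktop,
                      host_mem_gb, reserved_mem_gb, mem_gb_per_desktop):
    """Density is capped by whichever resource runs out first: CPU or memory."""
    cpu_limit = (physical_cores * vcpu_ratio) // vcpus_per_desktop
    mem_limit = (host_mem_gb - reserved_mem_gb) // mem_gb_per_desktop
    return int(min(cpu_limit, mem_limit))


def hosts_needed(desktops, density):
    """Round up: a partially filled host still has to be purchased."""
    return math.ceil(desktops / density)


# Hypothetical host: 64 cores, 1,024 GB RAM with 64 GB reserved,
# 2-vCPU / 4 GB desktops, and 1,000 desktops to deliver.
for ratio in (6, 8):
    density = desktops_per_host(64, ratio, 2, 1024, 64, 4)
    print(f"{ratio}:1 vCPU ratio -> {density} desktops per host, "
          f"{hosts_needed(1000, density)} hosts for 1,000 desktops")
# 6:1 vCPU ratio -> 192 desktops per host, 6 hosts for 1,000 desktops
# 8:1 vCPU ratio -> 240 desktops per host, 5 hosts for 1,000 desktops
```

In this example, raising the CPU ratio lifts density until memory becomes the binding limit at 240 desktops per host, and the higher density saves one server.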

[1] EY. “Workplace of the Future Index 2.0.” November 2022.

[2] VMware. “VMware Named a Leader in the 2022 Gartner® Magic Quadrant™ for Unified Endpoint Management for Fifth Year in a Row.” August 2022.

[3] Gallup. “The Relationship Between Engagement at Work and Organizational Outcomes.” October 2020.

[4] Tolly. “VMware Work From Home Test Report by Tolly.” Commissioned by VMware. January 2021.

Improve workload resilience & streamline management of modern apps

Wed, 06 Sep 2023 00:21:03 -0000


The combination of the latest hardware and software platforms can give DevOps and infrastructure admin teams better reliability and more provisioning options for containers—with simple and efficient management.

Introduction

Developer and operations teams that adopt DevOps philosophies and methodologies often rely on container technologies for the flexibility, efficiency, and consistency containers provide. Compared to running multiple VMs, which can be more resource-intensive, containers require less overhead and offer greater portability.[i] When teams need to spin up their workloads, containers are a convenient way for infrastructure admins to provide those resources and a speedy way for developers to test applications. These teams also need orchestration and management software to manage those containerized workloads. Kubernetes® is one such orchestration platform. It helps admins fully manage, automate, deploy, and scale containerized applications and workloads across an environment.[ii]

VMware® developed vSphere® with Tanzu to integrate Kubernetes containers with vSphere and to provide self-service environments for DevOps applications. With vSphere 8.0, the latest version of the platform, VMware has further enhanced vSphere with Tanzu.

Using an environment that included the latest 16th Generation Dell™ PowerEdge™ servers, we explored three new vSphere with Tanzu features that VMware released with vSphere 8.0:

- the ability to span clusters with Workload Availability Zones to increase resiliency and availability

- the introduction of the ClusterClass definition in the Cluster API, which helps streamline Kubernetes cluster creation

- the ability to create custom node images using vSphere Tanzu Kubernetes Grid (TKG) Image Builder.

We found that these features could let DevOps teams save time while also increasing availability, helping pave the way for more efficient application development processes.

Understanding containers and their use in DevOps

Containers are packages of software that contain all the elements they need to run in a computing environment. Those elements include applications and services, libraries, and configuration files, among others. Containers do not typically contain operating system images but rather share elements of the operating system on the device (in our test cases, VMs running on PowerEdge servers). Using containers can benefit developers and DevOps applications because containers are more lightweight and portable than single-function VMs.

Additionally, containers offer quick, reliable, and consistent deployments across different environments because they run as resource-isolated processes. They can run in a private data center, in the public cloud, or on a developer’s personal laptop.

An organizational advantage of containers is that they can help teams focus on their tasks: Developers can focus on application development and code issues, and operations teams can focus on the physical and virtual infrastructure. Some organizations may choose to combine developers and operations teams in a single DevOps group that manages the entire application development lifecycle, from planning to deployment and operations. In other organizations, separate development and operational teams may choose to adopt DevOps methodologies to streamline processes. For our test purposes, we assumed that a DevOps team is responsible for provisioning and managing virtual infrastructure for developers but not necessarily using that infrastructure themselves.

16th Generation Dell PowerEdge servers

The 16th Generation PowerEdge servers, the latest line of servers from Dell, come in multiple form factors for workload acceleration. They also offer the Dell PowerEdge RAID Controller 12 (PERC 12 Series), which provides expanded support and capabilities compared to previous versions, including support for SAS4, SATA, and NVMe® drive types.

Dell PowerEdge servers, including those from the 16th Generation, offer resiliency in the form of Fault Resilient Memory (FRM).[iii] FRM creates a fault-resilient area of memory that protects the hypervisor against uncorrectable memory errors, which in turn helps safeguard the system from unplanned downtime. On the virtual side, VMware vSAN™ 8.0 allows DevOps and infrastructure teams to pool storage to create smaller fault domains.

To learn more about the latest 16th Generation Dell PowerEdge servers, visit https://www.dell.com/en-us/shop/dell-poweredge-servers/sc/servers.

To see more of our recent work with the latest Dell PowerEdge servers, visit https://www.principledtechnologies.com/portfolio-marketing/Dell/2023.

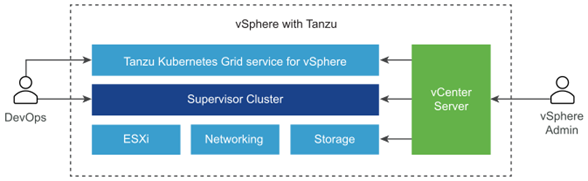

A quick look at vSphere with Tanzu

VMware created the Tanzu Kubernetes Grid (TKG) framework to simplify the creation of a Kubernetes environment. VMware includes it as a central component in many of their Tanzu offerings.[iv] vSphere with Tanzu takes TKG one step further by integrating the Tanzu Kubernetes environments with vSphere clusters. The addition of vSphere Namespaces (collections of CPU, memory, and storage quotas for running TKG clusters) allows vSphere admins to allocate resources to each Namespace to help balance developer requests with resource realities.[v]
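The quota idea can be made concrete with a small model. The sketch below treats a Namespace as a set of CPU, memory, and storage limits and admits a resource request only if total usage stays within every limit. The class and field names and the units (GHz, GiB) are assumptions for illustration; this is not a VMware API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Resources:
    """CPU (GHz), memory (GiB), and storage (GiB); the units are illustrative assumptions."""
    cpu_ghz: float
    memory_gib: float
    storage_gib: float

    def __add__(self, other: "Resources") -> "Resources":
        return Resources(self.cpu_ghz + other.cpu_ghz,
                         self.memory_gib + other.memory_gib,
                         self.storage_gib + other.storage_gib)

    def fits_within(self, limit: "Resources") -> bool:
        return (self.cpu_ghz <= limit.cpu_ghz
                and self.memory_gib <= limit.memory_gib
                and self.storage_gib <= limit.storage_gib)


@dataclass
class NamespaceQuota:
    """Hypothetical model of one vSphere Namespace's resource limits and current usage."""
    limit: Resources
    used: Resources = field(default_factory=lambda: Resources(0.0, 0.0, 0.0))

    def admit(self, request: Resources) -> bool:
        """Reserve `request` only if every dimension stays within the limit."""
        proposed = self.used + request
        if not proposed.fits_within(self.limit):
            return False  # reject without changing current usage
        self.used = proposed
        return True


if __name__ == "__main__":
    ns = NamespaceQuota(limit=Resources(cpu_ghz=20, memory_gib=64, storage_gib=500))
    print(ns.admit(Resources(8, 32, 200)))   # fits
    print(ns.admit(Resources(8, 40, 100)))   # rejected: memory would exceed 64 GiB
    print(ns.used)
```

Checking every dimension before reserving anything keeps a rejected request from partially consuming quota.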

vSphere admins can use vSphere with Tanzu to create environments in which developers can quickly and easily request and access the containers they need to develop and maintain workloads. At the same time, vSphere with Tanzu allows admins to monitor and manage resource consumption using familiar VMware tools. (To learn more about how Dell and VMware solutions can enable a self-service DevOps environment, read our report “Give DevOps teams self-service resource pools within your private infrastructure with Dell Technologies APEX cloud and storage solutions” at https://facts.pt/MO2uvKh.)

New features with VMware vSphere 8.0

As we previously noted, VMware vSphere 8.0 brings new and improved features to vSphere with Tanzu. These include the ability to deploy vSphere with Tanzu Supervisor in a zonal configuration, the inclusion of ClusterClass specification in the TKG API, and tools that let administrators customize OS images for TKG cluster worker nodes. In this section, we describe these features and how they function; later, we cover how we tested them and what our results might mean to your organization.

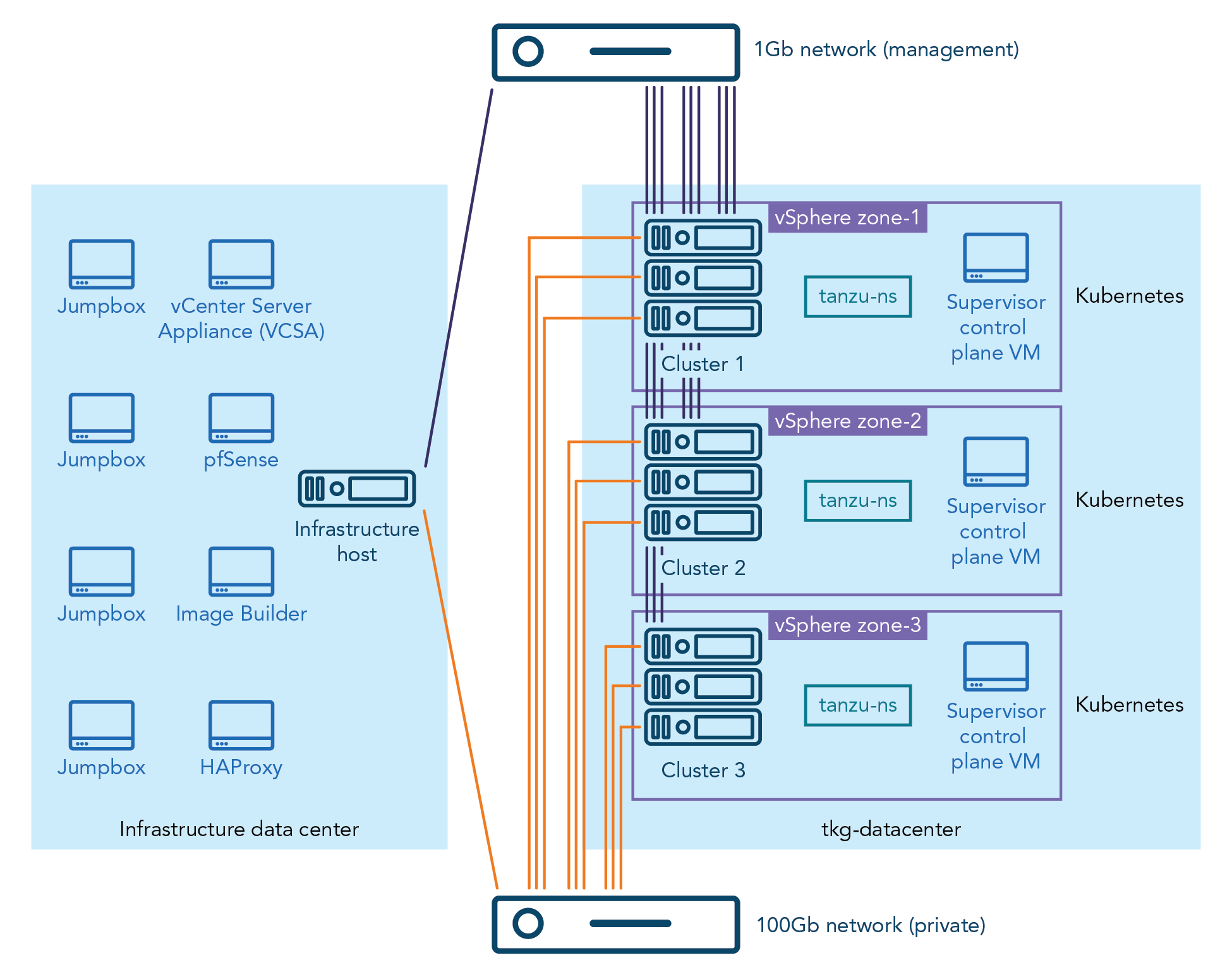

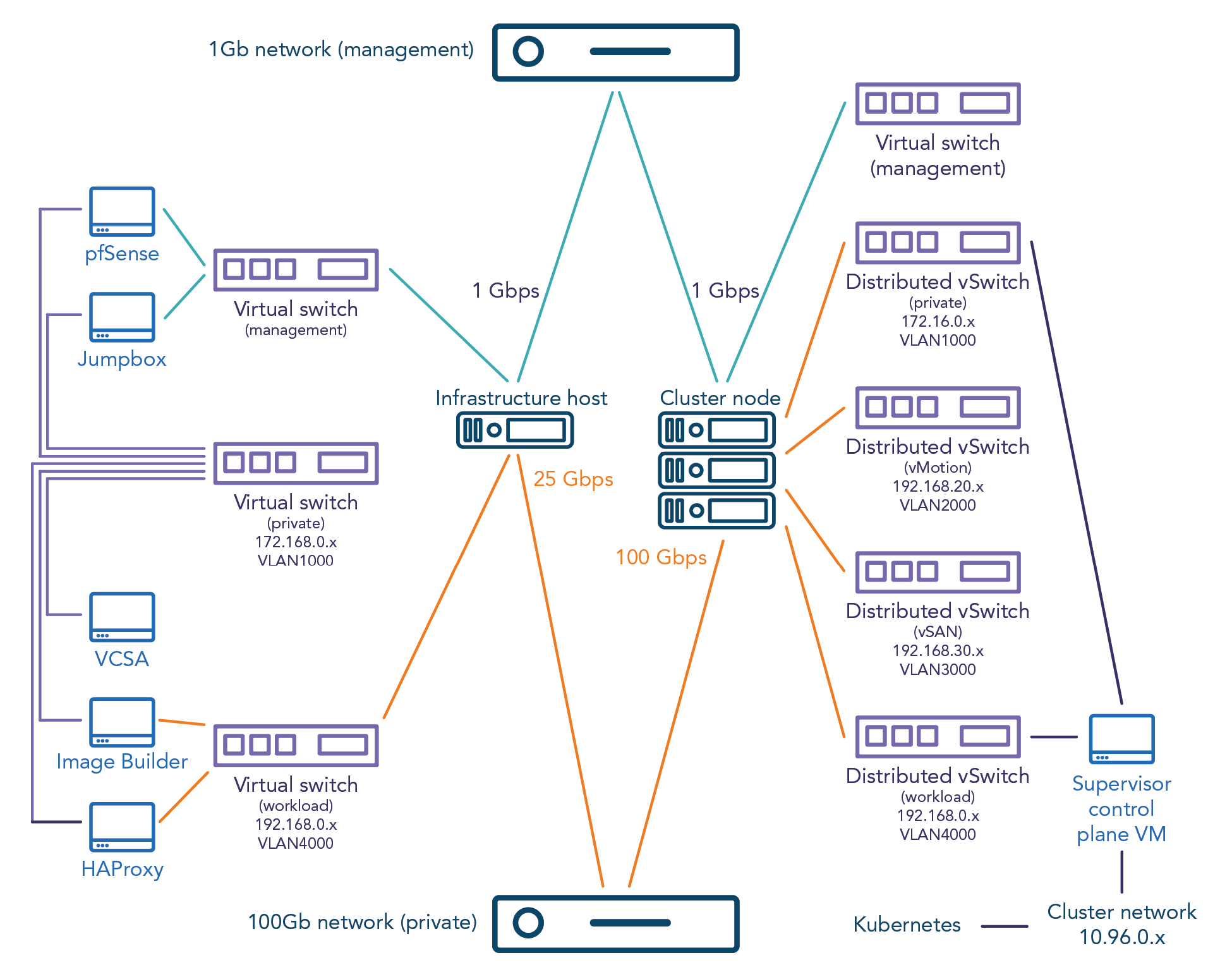

Deploying vSphere with Tanzu Supervisor in a zonal configuration provides high availability

In previous versions of vSphere, administrators could deploy vSphere with Tanzu Supervisor to only a single vSphere host cluster. vSphere 8.0 introduces the ability to deploy the Supervisor in a configuration spanning three Workload Availability Zones, which map directly to three respective clusters of physical hosts. This zonal deployment of the Supervisor provides high availability at the vSphere cluster layer and keeps Tanzu Kubernetes workloads running even if one cluster or availability zone is temporarily unavailable. Figure 1 shows how we set up our environment to test the functionality of Workload Availability Zones.

Figure 1: The zonal setup of our testbed. Source: Principled Technologies.

The vSphere with Tanzu Supervisor is a vSphere-managed Kubernetes control plane that operates on the VMware ESXi™ hypervisor layer and functionally replaces a standalone TKG management cluster. vSphere administrators can configure and deploy the Supervisor via the Workload Management tool in VMware vCenter Server®. Once an administrator has deployed the Supervisor cluster and associated it with a vSphere Namespace, the cluster acts as a control and management plane for the Namespace and all the TKG workload clusters it contains.

The Namespace provides role-based access control (RBAC) to the virtual infrastructure and defines the hardware resources—CPU, memory, and storage—available to the Supervisor for provisioning workload clusters. It also lets infrastructure administrators specify which VM classes, storage policies, and Tanzu Kubernetes Releases (TKrs) users with access to the Namespace can use. Users can then provision workload clusters as necessary to host Kubernetes applications and services, and those clusters remain visible to the hypervisor and to vSphere administrators.
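Before provisioning, a user can check a cluster request against what the Namespace exposes. The sketch below does that check and returns one reason for each VM class, storage policy, or TKr the Namespace does not offer. The data model is hypothetical; the example names in the test and usage are placeholders, not values from our environment.

```python
from dataclasses import dataclass, field


@dataclass
class NamespacePolicy:
    """Hypothetical view of what an administrator has exposed in one vSphere Namespace."""
    vm_classes: set[str] = field(default_factory=set)
    storage_policies: set[str] = field(default_factory=set)
    tkrs: set[str] = field(default_factory=set)


@dataclass
class ClusterRequest:
    vm_class: str
    storage_policy: str
    tkr: str


def policy_violations(policy: NamespacePolicy, request: ClusterRequest) -> list[str]:
    """Return a human-readable reason for each requested setting the Namespace does not offer."""
    problems = []
    if request.vm_class not in policy.vm_classes:
        problems.append(f"VM class {request.vm_class!r} is not assigned to this Namespace")
    if request.storage_policy not in policy.storage_policies:
        problems.append(f"storage policy {request.storage_policy!r} is not assigned")
    if request.tkr not in policy.tkrs:
        problems.append(f"TKr {request.tkr!r} is not available")
    return problems


if __name__ == "__main__":
    policy = NamespacePolicy({"best-effort-small"}, {"vsan-default"}, {"v1.24.9"})
    print(policy_violations(policy, ClusterRequest("guaranteed-large", "vsan-default", "v1.24.9")))
```

Returning every violation at once, rather than stopping at the first, saves the user a round trip per mistake.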

Based on our testing, having the Supervisor span three Workload Availability Zones increased the resiliency of our Tanzu Kubernetes workload clusters. We deployed a web server application to a workload cluster spanning all three availability zones. Even after we powered off the physical hosts of one of the three Workload Availability Zones, the application remained functional and accessible. After we powered the hosts back on, the Supervisor repaired itself and automatically brought all missing control plane and worker nodes back online without administrator intervention.
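Losing one zone is survivable under a specific assumption. Suppose the control plane runs three etcd-backed nodes, one per zone. etcd stays writable only while a majority of members, floor(n/2) + 1, is up. The sketch below checks that rule for any placement. The placements and zone names are illustrative, and we did not inspect node placement this way during testing.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd-style cluster with `members` voting members."""
    return members // 2 + 1


def survives_zone_loss(nodes_per_zone: dict[str, int], lost_zone: str) -> bool:
    """True if the control plane members outside `lost_zone` still form a majority."""
    total = sum(nodes_per_zone.values())
    remaining = total - nodes_per_zone.get(lost_zone, 0)
    return remaining >= quorum(total)


def tolerates_any_single_zone_loss(nodes_per_zone: dict[str, int]) -> bool:
    return all(survives_zone_loss(nodes_per_zone, zone) for zone in nodes_per_zone)


if __name__ == "__main__":
    spread = {"zone-a": 1, "zone-b": 1, "zone-c": 1}   # one control plane node per zone
    packed = {"zone-a": 2, "zone-b": 1, "zone-c": 0}   # two nodes share a zone
    print(tolerates_any_single_zone_loss(spread))  # True: 2 of 3 remain after any loss
    print(tolerates_any_single_zone_loss(packed))  # False: losing zone-a leaves 1 of 3
```

Three zones is the smallest layout in which one control plane node per zone survives any single-zone loss. With two zones, one zone must hold a majority, and losing that zone breaks quorum.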

Defining clusters with the ClusterClass specification in the TKG API is easier

Another significant improvement to vSphere with Tanzu in vSphere 8.0 is the introduction of the ClusterClass resource to the TKG API. vSphere with Tanzu in vSphere 8.0 uses TKG 2, which implements the ClusterClass abstraction from the Kubernetes Cluster API and provides a modular, cloud-agnostic format for configuring and provisioning clusters and cluster lifecycle management.

Previous versions of vSphere with Tanzu used the TKG 1.x API. This API used a VMware proprietary cluster definition called tanzukubernetescluster (TKC) that was specific to vSphere. With the introduction of ClusterClass to the v1beta1 version of the open-source Kubernetes Cluster API and its implementation in the TKG 2.x API, cluster provisioning and management in vSphere with Tanzu are now more directly aligned with the broader multi-cloud Kubernetes ecosystem and provide a more portable and modular way to define clusters regardless of the specific cloud environment. The ClusterClass specification also allows users to define which Workload Availability Zones any specific workload cluster will span and is less complicated than the previous TKC cluster specification.
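The sketch below shows what a ClusterClass-based definition can look like. It builds a Cluster API v1beta1 `Cluster` object as a Python dictionary and prints it as JSON; `kubectl apply -f` accepts JSON as well as YAML. The sketch pins one worker `machineDeployment` to each zone through the Cluster API `failureDomain` field. Several names are assumptions based on TKG 2 examples, not the configuration files we used: the class name `tanzukubernetescluster`, the worker class `node-pool`, and the `vmClass` and `storageClass` variables. Every value passed in is a hypothetical placeholder. Check all of these against your Supervisor's documentation.

```python
import json


def zonal_cluster(name: str, namespace: str, tkr_version: str, zones: list[str],
                  vm_class: str, storage_class: str, workers_per_zone: int = 1) -> dict:
    """Build a Cluster API v1beta1 Cluster that uses a ClusterClass topology.

    Each zone gets its own machineDeployment whose failureDomain names that zone,
    so worker nodes are spread across zones by construction.
    """
    machine_deployments = [
        {
            "class": "node-pool",          # assumed TKG 2 worker class name
            "name": f"{name}-np-{zone}",   # must be unique within the cluster
            "replicas": workers_per_zone,
            "failureDomain": zone,         # assumed to match the vSphere Zone name
        }
        for zone in zones
    ]
    return {
        "apiVersion": "cluster.x-k8s.io/v1beta1",
        "kind": "Cluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "topology": {
                "class": "tanzukubernetescluster",  # assumed default ClusterClass name
                "version": tkr_version,
                "controlPlane": {"replicas": 3},
                "workers": {"machineDeployments": machine_deployments},
                "variables": [
                    {"name": "vmClass", "value": vm_class},
                    {"name": "storageClass", "value": storage_class},
                ],
            }
        },
    }


if __name__ == "__main__":
    # Every value below is a hypothetical placeholder.
    manifest = zonal_cluster("demo-cluster", "demo-ns", "v1.24.9---vmware.1-tkg.4",
                             ["zone-a", "zone-b", "zone-c"],
                             "best-effort-small", "vsan-default-storage-policy")
    print(json.dumps(manifest, indent=2))
```

Generating one worker pool per zone keeps the zone mapping explicit in the cluster definition, rather than leaving it implicit in the infrastructure.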

Administrators can customize OS images for TKG cluster worker nodes by using new tools

vSphere 8.0 also provides tools for administrators to build their own OS images for TKG cluster worker nodes. vSphere with Tanzu includes a VMware-curated set of worker node images for specific combinations of Kubernetes releases and Linux OSes, but administrators can now create custom OS images and make them available to DevOps users via a local content library for use in provisioning workload clusters. This lets administrators go beyond the images distributed with TKG, including building worker nodes based on Red Hat® Enterprise Linux® 8, which TKG supports but does not currently include in its default image set. To suit the needs of their DevOps users, administrators can also use this feature to preinstall binaries and packages that the OS does not include by default.
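The sketch below shows one way to preinstall a package. It writes a Packer variables file that adds packages to an Ubuntu node image. It assumes the upstream Kubernetes `image-builder` convention of an `extra_debs` variable that holds a space-separated package list. VMware's TKG Image Builder may expose this setting differently, and the output file name is hypothetical.

```python
import json
import tempfile
from pathlib import Path


def write_extra_packages_vars(packages: list[str], path: Path) -> dict:
    """Write a Packer var file requesting extra .deb packages; return what was written."""
    # Drop blanks and duplicates while preserving the order the packages were listed in.
    unique = list(dict.fromkeys(p.strip() for p in packages if p.strip()))
    variables = {"extra_debs": " ".join(unique)}  # assumed image-builder variable name
    path.write_text(json.dumps(variables, indent=2) + "\n")
    return variables


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        out = Path(tmp) / "extra-packages.json"  # hypothetical file name
        print(write_extra_packages_vars(["curl", "curl"], out))  # {'extra_debs': 'curl'}
```

Keeping package additions in a small variables file, rather than editing the build templates, makes the customization easy to review and reuse across image builds.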

What can the combination of 16th Generation Dell PowerEdge servers and VMware vSphere 8.0 deliver for containerized environments?

A multi-node Dell PowerEdge server cluster running VMware vSphere 8.0 could improve workload resiliency and streamline management and security of modern apps. The following sections delve into our testing and explore how the new vSphere 8.0 features could benefit developers and infrastructure teams.

About VMware vSphere 8.0

vSphere is an enterprise compute virtualization program that aims to bring “the benefits of cloud to on-premises workloads” by combining “industry-leading cloud infrastructure technology with data processing unit (DPU)- and GPU-based acceleration to boost workload performance.”[vi]

This latest version introduces the vSphere Distributed Services Engine, which enables organizations to distribute infrastructure services across compute resources available to the VMware ESXi host and offload networking functions to the DPU.[vii]

In addition to the improvements for vSphere with Tanzu and the introduction of vSphere Distributed Services Engine, the latest version of the vSphere platform offers new features for vSphere Lifecycle Manager, artificial intelligence and machine learning hardware and software, and other facets of data center operations.

To learn more about vSphere 8.0, visit https://core.vmware.com/vmware-vsphere-8.

How we tested

About the Dell PowerEdge R6625 servers we used

The PowerEdge R6625 rack server is a high-density, two-socket 1U server. According to Dell, the company designed the server for “data speed” and “HPC workloads or running multiple VDI instances,”[viii] and to serve “as the backbone of your data center.”[ix] Two AMD EPYC™ 4th Generation 9004 Series processors power these servers, delivering up to 128 cores per processor. For storage, customers can get up to 153.6 TB in the front bays from 10 2.5-inch SAS, SATA, or NVMe HDD or SSD drives, and up to 30.72 TB in the rear bays from two 2.5-inch SAS or SATA HDD or SSD drives. For memory, servers have 24 DDR5 DIMM slots to support up to 6 TB of RDIMM at speeds up to 4800 MT/s. Customers can also choose from many power and cooling options. The server and processor offer the following security features:[x]

- AMD Secure Encrypted Virtualization (SEV)

- AMD Secure Memory Encryption (SME)

- Cryptographically signed firmware

- Data at Rest Encryption (SEDs with local or external key management)

- Secure Boot

- Secured Component Verification (hardware integrity check)

- Secure Erase

- Silicon Root of Trust

- System Lockdown (requires iDRAC9 Enterprise or Datacenter)

- TPM 2.0 FIPS, CC-TCG certified, TPM 2.0 China Nation

Table 1 shows the technical specifications of the six 16th Generation PowerEdge R6625 servers we tested. Three of the servers had one configuration, and the other three had a different one. We clustered the similarly configured servers together.

Table 1: Specifications of the 16th Generation Dell PowerEdge R6625 servers we tested. Source: Principled Technologies.

| | Cluster 1 | Cluster 2 |
|---|---|---|
| Hardware | 3x Dell PowerEdge R6625 | 3x Dell PowerEdge R6625 |
| Processor | 2x AMD EPYC 9554 64-core processor, 128 cores, 3.1 GHz | 2x AMD EPYC 9554 64-core processor, 128 cores, 3.1 GHz |
| Disks (vSAN) | 4x 3.2TB Dell Ent NVMe v2 AGN MU U.2; 2x 600GB Seagate ST600MM0069 | 4x 6.4TB Dell Ent NVMe v2 AGN MU U.2 |
| Disks (OS) | 2x 600GB Seagate ST600MM0069 | 2x servers: 2x 600GB Seagate ST600MM0069; 1x server: 2x 600GB Toshiba AL15SEB060NY |
| Total memory in system (GB) | 128 | 128 |
| Operating system name and version/build number | VMware ESXi, 8.0.1, 21495797 | VMware ESXi, 8.0.1, 21495797 |

In addition to the 16th Generation Dell PowerEdge R6625 servers, we included previous-generation PowerEdge R7525 servers in our testbed (see Table 2). Our use of the older servers in testing reflects actual use cases where organizations might include them for less-critical applications or as failover options.

Table 2: Specifications of the Dell PowerEdge R7525 servers we tested. Source: Principled Technologies.

| | Cluster 3 |
|---|---|
| Hardware | 3x Dell PowerEdge R7525 |
| Processor | 2x AMD EPYC 7513 32-Core Processor, 64 cores, 2.6 GHz |
| Disks (vSAN) | 4x 3.2TB Dell Ent NVMe v2 AGN MU U.2 |
| Disks (OS) | 2x servers: 2x 240GB Micron MTFDDAV240TDU; 1x server: 2x 240GB Intel SSDSCKKB240G8R |
| Total memory in system (GB) | 512 |
| Operating system name and version/build number | VMware ESXi, 8.0.1, 21495797 |
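Multiplying the per-host figures in Tables 1 and 2 by the three hosts in each cluster gives the aggregate capacity of each vSphere Zone. The sketch below does that arithmetic. It counts only the NVMe drives listed under Disks (vSAN) and ignores vSAN overhead, so the storage figures are raw capacity, not usable capacity.

```python
from dataclasses import dataclass


@dataclass
class ClusterSpec:
    name: str
    hosts: int
    cores_per_host: int
    memory_gb_per_host: int
    nvme_drives_per_host: int
    nvme_drive_tb: float

    def total_cores(self) -> int:
        return self.hosts * self.cores_per_host

    def total_memory_gb(self) -> int:
        return self.hosts * self.memory_gb_per_host

    def raw_vsan_tb(self) -> float:
        # Raw NVMe capacity only; usable space depends on vSAN policy and overhead.
        # Rounding removes floating-point noise such as 38.400000000000006.
        return round(self.hosts * self.nvme_drives_per_host * self.nvme_drive_tb, 2)


# Per-host values copied from Tables 1 and 2.
CLUSTERS = [
    ClusterSpec("Cluster 1 (R6625)", 3, 128, 128, 4, 3.2),
    ClusterSpec("Cluster 2 (R6625)", 3, 128, 128, 4, 6.4),
    ClusterSpec("Cluster 3 (R7525)", 3, 64, 512, 4, 3.2),
]

if __name__ == "__main__":
    for c in CLUSTERS:
        print(f"{c.name}: {c.total_cores()} cores, {c.total_memory_gb()} GB memory, "
              f"{c.raw_vsan_tb()} TB raw vSAN NVMe")
```

The totals show the trade-off between the generations. The R6625 clusters have twice the cores of the R7525 cluster, while the R7525 cluster has four times the memory.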

For more information on the servers we used in testing, see the science behind this report.

To learn more about the Dell PowerEdge R6625, visit https://www.dell.com/en-us/shop/cty/pdp/spd/poweredge-r6625/pe_r6625_tm_vi_vp_sb.

Setting up the VMware vCenter environment

We started with six Dell PowerEdge R6625 and three Dell PowerEdge R7525 servers and installed the VMware vSphere 8.0.1 release of the Dell-customized VMware ESXi image on each server. To support our environment, we deployed the latest version of VMware vCenter on a separate server. We then created three separate vSAN-enabled vSphere clusters, as we defined in Tables 1 and 2, and deployed vSphere with Tanzu with a zonal Supervisor cluster spanning the three vSphere clusters, each set as a vSphere Zone. Figure 2 shows the details of our testbed.

Figure 2: Our testbed in detail. Source: Principled Technologies.

Testing Workload Availability Zones

To demonstrate the utility of a zonal Supervisor deployment spanning three Workload Availability Zones, we deployed a simple “hello world” NGINX web server application to a workload cluster that spanned all three zones. Our deployment defined three replicas of the application, which the Supervisor distributed across the three zones automatically. Then, to simulate a system failure—such as might occur with a power outage—we powered off the physical hosts of one vSphere cluster mapped as an availability zone.
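Our deployment relied on the Supervisor to place the three replicas. To make that spread explicit in the workload itself, one option is a standard Kubernetes `topologySpreadConstraints` entry keyed on the well-known `topology.kubernetes.io/zone` node label, as in the sketch below. We did not test this alternative. It assumes the workload cluster's nodes carry that label for their vSphere Zone, and the names and image tag are placeholders.

```python
import json


def zone_spread_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Build an apps/v1 Deployment that asks the scheduler to balance pods across zones."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # maxSkew 1: per-zone pod counts should differ by at most one.
                    # ScheduleAnyway: prefer balance, but still place replacement pods
                    # when a zone is down instead of leaving them Pending.
                    "topologySpreadConstraints": [{
                        "maxSkew": 1,
                        "topologyKey": "topology.kubernetes.io/zone",
                        "whenUnsatisfiable": "ScheduleAnyway",
                        "labelSelector": {"matchLabels": labels},
                    }],
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 80}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(zone_spread_deployment("hello-nginx", "nginx:1.25"), indent=2))
```

We chose a soft constraint (`ScheduleAnyway`) so that, during a zone outage like the one we simulated, Kubernetes can still reschedule lost replicas onto the surviving zones.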

Even after this loss of power on the cluster, the workload continued to function. The missing Supervisor and worker nodes and their services automatically came back online after we powered on the hosts and they reconnected to the vCenter Server.

For clusters hosting any kind of key workload, whether user-facing or internal, availability is critical. If a cluster goes down completely—whether due to natural disaster, human error, or malicious intent—the resulting downtime can have severe consequences, from hurting productivity to losing revenue. As an example for our use case, downtime for containerized microservices that are part of an ecommerce app could lead to miscalculations in inventory or the inability to process an order, potentially causing customer dissatisfaction and lost revenue. Workload Availability Zones offer a resiliency that can ease the minds of both development and infrastructure teams by ensuring that containerized applications and services have the uptime to meet usage demands.

Testing ClusterClass

We used the v1beta1 Cluster API implementation of ClusterClass to deploy workload clusters using TKG 2.0 on a multi-zone vSphere with Tanzu Supervisor. The Cluster API, implemented in the vSphere with Tanzu environment as part of TKG, handles the provisioning and operation of Kubernetes clusters across different types of infrastructure, including vSphere, Amazon Web Services, and Microsoft Azure, among others. We used YAML configuration files in our test environment to define and test two sample ClusterClass cluster definitions representing different options available to users when provisioning a workload cluster in vSphere with Tanzu: one spanning all three Workload Availability Zones, and one that uses the Antrea Container Network Interface (CNI) rather than the default Calico CNI.

The implementation of the ClusterClass object in the TKG 2 API replaces the previous tanzukubernetescluster resource used in TKG 1.x and brings Tanzu cluster definitions into a more modular and cloud-agnostic format that aligns with user expectations and helps with portability across diverse cloud environments. Although we did not test the full extent of the feature, we found that it worked well in our testing.

Testing node image customization on the workload cluster

Using the VMware Tanzu Image Builder, we created a custom Ubuntu 20.04 image for use with Kubernetes v1.24.9 and vSphere with Tanzu. We added a simple utility package (curl) that the base Ubuntu installation does not include by default. To confirm that vSphere used the custom OS image on the provisioned cluster's nodes as we expected, we connected to one of the worker nodes via SSH and found that curl was installed, so the feature had worked as intended.
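A check like the one we did by hand can be scripted. The sketch below runs `command -v curl` on a node over SSH and treats a zero exit status as evidence that the binary is present. Three values are assumptions to adjust for your environment: the default user name `vmware-system-user`, the key path, and the node address.

```python
import shlex
import subprocess
from typing import Optional


def ssh_check_command(node: str, binary: str, user: str = "vmware-system-user",
                      key_file: Optional[str] = None) -> list[str]:
    """Build an ssh argv whose remote command exits 0 only if `binary` is on PATH."""
    argv = ["ssh", "-o", "BatchMode=yes"]  # fail instead of prompting for a password
    if key_file:
        argv += ["-i", key_file]
    # `command -v` is a POSIX shell builtin; quote the name in case it has odd characters.
    argv += [f"{user}@{node}", f"command -v {shlex.quote(binary)}"]
    return argv


def binary_present(node: str, binary: str, **ssh_options) -> bool:
    """True if the remote check succeeded; SSH errors (exit 255) and timeouts count as False."""
    try:
        result = subprocess.run(ssh_check_command(node, binary, **ssh_options),
                                capture_output=True, text=True, timeout=30)
    except subprocess.TimeoutExpired:
        return False
    return result.returncode == 0
```

For example, `binary_present("192.0.2.10", "curl", key_file="/path/to/node-key")` would check one node (the address and key path are placeholders). Looping the same call over every worker node would confirm that the whole node pool used the custom image.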

This feature allows teams to build OS images to meet the specific needs of their applications or services. They can then add the custom-built OS images as a custom TKr in vSphere with Tanzu to provision workload clusters.

DevOps teams can create custom machine images for the management and workload cluster nodes to use as VM templates. Each custom machine image packages a base operating system, a Kubernetes version, and any additional customizations into an image that runs on vSphere. However, custom images must use the operating systems that vSphere with Tanzu supports; currently, it supports Ubuntu 20.04, Ubuntu 18.04, Red Hat Enterprise Linux 8, Photon OS 3, and Windows Server 2019.[xi]

This kind of customization could save time for DevOps teams and help maintain Tanzu environmental consistency. It also allows users to predefine binaries included on an OS image rather than install them afterward.

Conclusion

The combination of 16th Generation Dell PowerEdge servers and VMware vSphere 8.0 provides a resilient and efficient solution for organizations running containerized applications. Upgrading to the hardware and software combination provides helpful features that increase workload availability and streamline management. We found Workload Availability Zones, ClusterClass, and node image customization features to function well and as we expected, thus aiding both development and infrastructure teams in a DevOps context. Deploying vSphere 8.0 on Dell PowerEdge servers enables organizations to take advantage of the benefits of containerization and modernize their IT infrastructure.

This project was commissioned by Dell Technologies.

August 2023

Principled Technologies is a registered trademark of Principled Technologies, Inc.

All other product names are the trademarks of their respective owners.

[i] Rosbach, Felix, “Why we use Containers and Kubernetes - an Overview,” accessed July 10, 2023, https://insights.comforte.com/why-we-use-containers-and-kubernetes-an-overview.

[ii] Rosbach, Felix, “Why we use Containers and Kubernetes - an Overview.”

[iii] Dell Technologies, “Dell Fault Resilient Memory,” accessed June 28, 2023, https://dl.dell.com/content/manual19947903-dell-fault-resilient-memory.pdf?language=en-us.

[iv] VMware, “VMware Tanzu Kubernetes Grid Documentation,” accessed July 7, 2023, https://docs.vmware.com/en/VMware-Tanzu-Kubernetes-Grid/index.html.

[v] VMware, “What is a vSphere Namespace?,” accessed July 7, 2023, https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-with-tanzu-concepts-planning/GUID-638682AE-5EC9-47AA-A71C-0BECF9DC27A1.html.

[vi] VMware, “VMware vSphere,” accessed June 29, 2023, https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/vsphere/vmw-vsphere-datasheet.pdf.

[vii] VMware, “Introducing VMware vSphere Distributed Services Engine and Networking Acceleration by Using DPUs,” accessed June 29, 2023, https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-esxi-installation/GUID-EC3CE886-63A9-4FF0-B79F-111BCB61038F.html.

[viii] Dell Technologies, “PowerEdge R6625 Rack Server,” accessed June 28, 2023, https://www.dell.com/en-us/shop/cty/pdp/spd/poweredge-r6625/pe_r6625_tm_vi_vp_sb.

[ix] Dell Technologies, “PowerEdge R6625: Breakthrough performance,” accessed June 28, 2023, https://www.dell-technologies.com/asset/en-us/products/servers/technical-support/poweredge-r6625-spec-sheet.pdf.

[x] Dell Technologies, “PowerEdge R6625: Breakthrough performance.”

[xi] VMware, “Tanzu Kubernetes Releases and Custom Node Images,” accessed July 10, 2023, https://docs.vmware.com/en/VMware-Tanzu-Kubernetes-Grid/2/about-tkg/tkr.html.

Author: Principled Technologies

Process 84% more MySQL database activity with the latest-gen PowerEdge R760 server running VMware vSphere 8.0

Wed, 21 Jun 2023 21:54:59 -0000


The 16th Generation server handled more database transactions than a previous-generation PowerEdge R750 server with vSphere 7

Upgrading hardware and software in your infrastructure can bring a bevy of benefits to your organization, including the potential for increased performance. For database servers, that could mean more ecommerce activity or faster inventory management.

At Principled Technologies, we used online transaction processing (OLTP) workloads targeting a MySQL 8.0 database to test a latest-generation Dell™ PowerEdge™ R760 server running a VMware® vSphere® 8.0 environment and a previous-generation PowerEdge R750 server running vSphere 7.0. Based on the increased OLTP performance of the PowerEdge R760, upgrading to the newer server with the latest version of the hypervisor software could provide a boost in MySQL production workload performance.

We also used Dell Live Optics software to monitor the latest-gen PowerEdge server and vSphere 8.0 environment. Live Optics enabled us to gather environment and workload characteristics, which could help optimize MySQL workload performance and potentially minimize overspending on data center resources.

How we tested

We used an online transaction processing workload, called TPROC-C, from the benchmarking tool HammerDB. We created VMware vSphere VMs on the two servers and set up one workload on each VM that used a MySQL 8.0 database. We then scaled up to 10 VMs on each server. We chose that number so we could divide each server into medium-sized database VMs equally while leaving some cores and memory for hypervisor overhead. In terms of average CPU load, the PowerEdge R760 utilized 79.9 percent of its CPU, and the PowerEdge R750 utilized 69.3 percent.

We performed all testing remotely. For more information on how we tested, see the science behind this report.

About the Dell PowerEdge R760

The 2U Dell PowerEdge R760 rack server features up to two 4th Generation Intel® Xeon® Scalable processors with up to 56 cores per processor to provide “performance and versatility for demanding applications.”1

Key features of the Dell PowerEdge R760 include:

- Up to two 300W (dual-width) or six 75W (single-width) GPUs

- Up to 28 storage drives (including up to 24 NVMe® direct-attached drives) and 32 DDR5 DIMM slots for memory

- Flexible I/O options, with up to eight PCIe® slots and optional two 1GbE LOM and one OCP 3.0 slots

- Dell OpenManage systems management platform for automated deployment, updates, and maintenance (features depend on license)

- Dell Smart Flow design and OpenManage Enterprise Power Manager 3.0 (with license) to improve energy efficiency

For more PowerEdge R760 details, visit https://www.dell.com/en-us/shop/cty/pdp/spd/poweredge-r760.

Why NOPM?

NOPM is a metric for OLTP workloads that shows only the number of new-order transactions completed in one minute as part of a serialized business workload. HammerDB claims that because NOPM is “independent of any particular database implementation [it] is the recommended primary metric to use.”2 NOPM comes from the database schema itself, which means IT staff and IT decision makers can use the data to compare performance of different databases that run different transaction types. For example, a database administrator could compare the NOPM of their ecommerce database workload to the NOPM of their inventory database workload because both run new-order transactions but differ in other transaction types.
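NOPM itself is simple arithmetic: the change in the count of completed new orders, divided by the elapsed time in minutes. HammerDB takes that count from the test schema at the start and end of a timed run. The sketch below shows only the arithmetic, and the sample counts are hypothetical.

```python
def nopm(orders_at_start: int, orders_at_end: int, elapsed_seconds: float) -> float:
    """New orders per minute over a timed run."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    if orders_at_end < orders_at_start:
        raise ValueError("order count cannot decrease during a run")
    return (orders_at_end - orders_at_start) / (elapsed_seconds / 60)


if __name__ == "__main__":
    # Hypothetical: 60,000 new orders over a 5-minute measured window.
    print(nopm(1_000, 61_000, 300))  # 12000.0
```

Because only committed new orders enter the count, the metric does not depend on how a particular database engine counts its other transactions.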

About VMware vSphere 8.0

vSphere is an enterprise compute virtualization program that aims to bring “the benefits of cloud to on-premises workloads” by combining “industry-leading cloud infrastructure technology with data processing unit (DPU)- and GPU-based acceleration to boost workload performance.”3

This latest version introduces the vSphere Distributed Services Engine, which enables organizations to distribute infrastructure services across compute resources available to the VMware ESXi™ host and, for systems with DPUs, offload networking functions to the DPU.4 (We did not use DPUs in our testing.)

How the servers performed

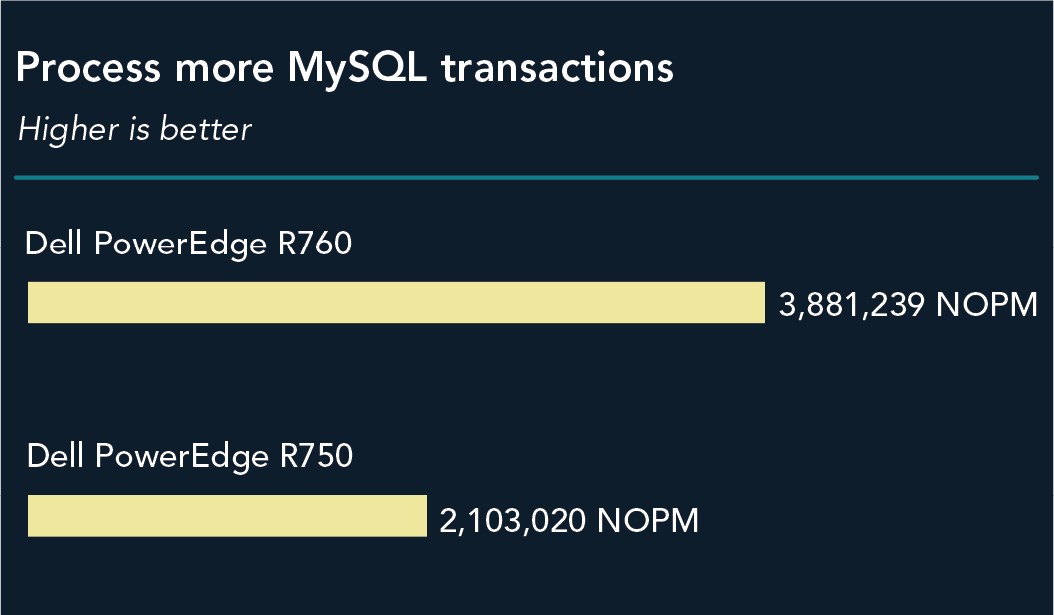

Process more MySQL transactions

When we ran the TPROC-C workload from HammerDB on both solutions, we saw an advantage of 84 percent for the latest-generation Dell PowerEdge R760 running VMware vSphere 8.0. The solution processed 3,881,239 NOPM, while the previous-generation PowerEdge R750 running vSphere 7 processed 2,103,020. Figure 1 shows the total NOPM each server processed.
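The 84 percent figure follows directly from the two totals. The short sketch below reproduces it with relative gain = (new − old) / old; the result is about 84.56 percent.

```python
def percent_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    if old <= 0:
        raise ValueError("baseline must be positive")
    return (new - old) / old * 100


R760_NOPM = 3_881_239   # PowerEdge R760 with vSphere 8.0 (our result)
R750_NOPM = 2_103_020   # PowerEdge R750 with vSphere 7 (our result)

if __name__ == "__main__":
    print(f"{percent_gain(R760_NOPM, R750_NOPM):.2f}%")  # 84.56%
```

Because each server's NOPM is the total across its 10 database VMs, the same formula applies at the server level without any per-VM weighting.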

This performance increase from upgrading to PowerEdge R760 servers and vSphere 8.0 could enable organizations to provide a better experience in many use cases where MySQL is the back end. Customer-facing ecommerce applications could process more sales and support a larger customer base. Internal-facing inventory management applications using MySQL could have a faster response, thus providing a potentially better experience for their users. IT monitoring applications using MySQL as a back end could potentially handle more transactions.

About HammerDB results

We tested each server with a TPROC-C OLTP workload from the HammerDB suite of benchmarks. While the HammerDB developers derived TPROC-C from the TPC-C specification, the workload is not a full implementation of the TPC-C standard. For this reason, our test results are not official TPC results and are not comparable to them in any manner.

For more information on the HammerDB benchmark suite, visit their website at www.hammerdb.com.

Collect data quickly with Dell Live Optics

Dell offers customers and service providers Live Optics, online IT infrastructure software, at no cost to help with collecting, visualizing, and sharing environment and workload data. According to Dell, customers and service providers can “gain deeper insights into performance, workload simulations, utilization, and support” and “analyze data in 24 hours or less rather than…weeks to months”5 with Live Optics. Monitoring infrastructure with Live Optics can help admins optimize systems and workloads to avoid over- and underutilizing resources.

In our testing, we used Live Optics to get a bird’s-eye view of our environment and its performance metrics. We pointed Live Optics at our VMware vCenter server, provided credentials, and selected a time period for the collection to run. After the collection period finished, we could view performance metrics and detailed inventory data for our physical and virtual environment.

Live Optics collects information on the following five components of infrastructure:

- Server and cloud: Inventory and performance monitoring of hosts and VMs regardless of platform or vendor

- Workloads: Machine and installation details, SQL Server-specific features, and database information

- File: Insight into unstructured data, such as file growth and potential for space savings

- Storage: Hardware inventory, configuration, performance history, and value assessment

- Data protection: Backup software and appliances and front-end capacity management

For more information about Live Optics, visit https://www.dell.com/en-us/dt/live-optics/index.htm.

Conclusion

New servers and software versions can be a boon for your organization. If you’re running MySQL workloads for web applications, retail, or other use cases, your organization could see a performance boost by upgrading to latest-generation Dell PowerEdge R760 servers running VMware vSphere 8.0. In our testing, a PowerEdge R760 server handled 84.5 percent more NOPM than an older Dell PowerEdge R750 server. We also found that Live Optics infrastructure monitoring software allows you to view performance data for new PowerEdge R760 and vSphere 8.0 environments so you can manage those resources to optimize your MySQL workloads.

1. Dell, “Dell PowerEdge Rack Servers,” accessed May 11, 2023, https://i.dell.com/sites/csdocuments/Product_Docs/en/poweredge-rack-quick-reference-guide.pdf.

2. HammerDB, “Comparing HammerDB results,” accessed May 11, 2023, https://www.hammerdb.com/docs/ch03s04.html.

3. VMware, “VMware vSphere,” accessed May 11, 2023, https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/vsphere/vmw-vsphere-datasheet.pdf.

4. VMware, “Introducing VMware vSphere Distributed Services Engine and Networking Acceleration by Using DPUs,” accessed May 11, 2023, https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-esxi-installation/GUID-EC3CE886-63A9-4FF0-B79F-111BCB61038F.html.

5. Dell, “Live Optics for Service Providers,” accessed May 11, 2023, https://www.dell.com/en-us/shop/live-optics/cp/live-optics.

This project was commissioned by Dell Technologies.

June 2023

Accelerate Workload Performance with NVIDIA GPUs on VMware vSphere Tanzu and PowerEdge Servers

Wed, 21 Jun 2023 18:36:32 -0000


VMware vSphere with Tanzu

VMware vSphere with Tanzu transforms vSphere into a platform for running Kubernetes workloads natively on the hypervisor layer. When enabled on a vSphere cluster, vSphere with Tanzu provides the capability to run Kubernetes workloads directly on ESXi hosts and to create upstream Kubernetes clusters within dedicated resource pools. Tanzu Kubernetes Grid is an enterprise-ready Kubernetes runtime built to run consistently with any app on any cloud. It runs the same Kubernetes workloads across the data center, public cloud, and edge, providing a consistent, secure experience with on-demand access while keeping your workloads properly isolated and secure.

Graphics Processing Units

Graphics processing units (GPUs) are dedicated parallel hardware accelerators originally designed to speed up graphics-intensive processing. Today, GPUs have become an essential part of artificial intelligence workloads, especially machine learning and deep learning. Moreover, containers help organizations modernize applications and align closely with current business needs (see Consolidate your VMs and Modernize Aging Environments with PowerEdge MX750c Compute Sleds and VMware Tanzu). It is only logical to let container-based applications and workloads take advantage of any accelerators that the hardware infrastructure can provide.

As investments in artificial intelligence grow and organizations explore AI for critical business workloads, VMware vSphere with Tanzu containerized environments make it possible to deploy applications rapidly. Although generic applications run well in standard containers, computationally demanding workloads can benefit tremendously from GPU accelerators, which process many computations concurrently. VMware Tanzu Kubernetes on NVIDIA GPUs enables enterprises to adopt and deploy AI workloads such as machine learning and deep learning.
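In a Kubernetes cluster, a containerized workload typically asks for a GPU by requesting an extended resource in its Pod specification. The Python sketch below builds such a manifest as a dictionary and prints it as JSON, which `kubectl apply -f` also accepts. It assumes the cluster exposes GPUs through the NVIDIA device plugin (for example, as installed by the NVIDIA GPU Operator), which advertises them as `nvidia.com/gpu`. The workload name and container image are hypothetical, and this is not a Tanzu-specific API.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpu_count: int = 1) -> dict:
    """Build a minimal Kubernetes Pod manifest that requests GPUs.

    Assumes GPUs are advertised as the extended resource "nvidia.com/gpu"
    by the NVIDIA device plugin running in the cluster.
    """
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources are requested through limits; the
                    # scheduler only places the Pod on a node with free GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": gpu_count}},
                }
            ],
        },
    }


# Hypothetical workload name and image, for illustration only.
manifest = gpu_pod_manifest("resnet50-train", "registry.example.com/resnet50:latest")
print(json.dumps(manifest, indent=2))
```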

NVIDIA Graphics Processing Units

The NVIDIA A100 Tensor Core GPU, based on the NVIDIA Ampere architecture, is a powerful graphics processing unit (GPU) that runs diverse compute-intensive applications at every scale in modern cloud data centers. These applications include AI deep learning (DL) training and inference, data analytics, scientific computing, genomics, edge video analytics and 5G services, graphics rendering, cloud gaming, and many more. Accelerating containerized workloads with NVIDIA GPUs can help those containers scale rapidly and deliver superior performance.

With thousands of cores that process work simultaneously, NVIDIA GPUs help accelerate a wide range of applications across industry segments such as:

- HPC: Deep Learning, Defense, Weather Forecasting, Bioscience Research

- Consumer Applications: Transportation, Video Editing, 3D Graphics, Machine Learning

- Entertainment: Gaming, Visual Effects

- Automotive: Visual Data, Sensors, Automation

- Finance: Analytics, Security, Fraud Detection

Testing the benefits of workloads on containers with GPU acceleration

In this study, we ran ResNet-50, a deep learning image classification workload, on a Dell PowerEdge R750 server with an NVIDIA A100 Tensor Core GPU running VMware vSphere with Tanzu. NVIDIA vGPU software “creates virtual GPUs that can be shared across multiple virtual machines, accessed by any device, anywhere.” Companies can use NVIDIA vGPU software for a wide range of workloads. This approach combines the management and security benefits of virtualization with the performance of GPUs, from which many modern workloads can benefit. The results show that the PowerEdge R750 with an NVIDIA A100 Tensor Core GPU, in a VMware Tanzu Kubernetes environment with GPU virtualization, can flexibly apportion GPU compute capability across multiple machine learning workloads in VMware Tanzu Kubernetes clusters.
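One way to picture how vGPU apportions a single GPU is by framebuffer: with equal-size, time-sliced vGPUs, each VM gets a fixed slice of GPU memory and a share of GPU compute time, so the slice size caps how many VMs one GPU can serve. The Python sketch below does that division. It assumes a 40 GB A100 and uses illustrative slice sizes; the profiles NVIDIA actually supports depend on the GPU model and vGPU software release, so check NVIDIA's vGPU documentation before sizing a deployment.

```python
def equal_vgpu_slices(gpu_memory_gb: int, vgpu_memory_gb: int) -> int:
    """Return how many equal-size vGPUs fit in one physical GPU's memory."""
    if vgpu_memory_gb <= 0 or vgpu_memory_gb > gpu_memory_gb:
        raise ValueError("vGPU size must be positive and fit within the GPU")
    # Integer division: a partial slice cannot back another VM.
    return gpu_memory_gb // vgpu_memory_gb


A100_MEMORY_GB = 40  # assumption: the 40 GB A100 variant

# Illustrative slice sizes (GB of framebuffer per vGPU); an assumption, not a profile list.
for size in (4, 5, 8, 10, 20, 40):
    vms = equal_vgpu_slices(A100_MEMORY_GB, size)
    print(f"{size:>2} GB per vGPU -> {vms} VMs per GPU")
```

Smaller slices let more machine learning workloads share the GPU; larger slices give each workload more memory and a larger share of compute time.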

Dell PowerEdge servers

Modern data centers require fast, flexible, cloud-enabled infrastructure to respond to complex compute demands. Dell PowerEdge servers provide a scalable business architecture, intelligent automation, and integrated security for workloads ranging from traditional applications and virtualization to cloud-native workloads. The Dell PowerEdge difference is that we deliver the same user experience and the same integrated management experience across all our servers, so you have one way to patch, manage, update, refresh, and retire servers across the entire data center. PowerEdge servers also incorporate the embedded efficiencies of OpenManage systems management, which enable IT pros to spend more time on strategic business objectives and less time on routine IT tasks.

Dell PowerEdge R750

The Dell PowerEdge R750, powered by 3rd Generation Intel® Xeon® Scalable processors, is a dual-socket 2U rack server that optimizes application performance and acceleration and delivers outstanding performance for the most demanding workloads. It supports eight memory channels per CPU and up to 32 DDR4 DIMMs at speeds up to 3200 MT/s. To deliver substantial throughput improvements, the PowerEdge R750 supports PCIe Gen 4 and up to 24 NVMe drives, with improved air-cooling features and optional Direct Liquid Cooling to meet increasing power and thermal requirements. These features make the R750 an ideal server for data center standardization on a wide range of workloads, including database and analytics, high performance computing (HPC), traditional corporate IT, virtual desktop infrastructure, and AI/ML environments that require high performance, extensive storage, and Data Processing Unit (DPU) and Graphics Processing Unit (GPU) support.
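The memory figures above imply a theoretical peak memory bandwidth: channels × transfer rate × bytes per transfer. The Python sketch below works through that arithmetic. It assumes a 64-bit (8-byte) data path per DDR4 channel and that every DIMM runs at 3200 MT/s. Populating two DIMMs per channel can lower the supported memory speed in some configurations, and real-world throughput is always below this theoretical peak.

```python
SOCKETS = 2               # dual-socket R750
CHANNELS_PER_CPU = 8      # from the text
MAX_DIMMS = 32            # from the text
TRANSFER_RATE_MTS = 3200  # DDR4-3200, mega-transfers per second (assumed for all DIMMs)
BYTES_PER_TRANSFER = 8    # assumption: 64-bit data bus per channel, ECC bits excluded

# Full population spreads 32 DIMMs across 2 sockets x 8 channels.
dimms_per_channel = MAX_DIMMS // (SOCKETS * CHANNELS_PER_CPU)

# MT/s x bytes per transfer = MB/s per channel (decimal megabytes).
per_cpu_mb_s = CHANNELS_PER_CPU * TRANSFER_RATE_MTS * BYTES_PER_TRANSFER
system_mb_s = SOCKETS * per_cpu_mb_s

print(f"DIMMs per channel at full population: {dimms_per_channel}")
print(f"Theoretical peak per CPU: {per_cpu_mb_s / 1000:.1f} GB/s")
print(f"Theoretical peak per server: {system_mb_s / 1000:.1f} GB/s")
```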


About the author: Thomas MM works on the technical marketing team that focuses on Dell PowerEdge servers with VMware software. With vast experience in the IT industry in various roles, Thomas specializes in PowerEdge servers and VMware software and creates technical collateral that highlights the many unique benefits of running VMware software on PowerEdge servers for Dell and VMware customers and partners.

Streamline operations with new and updated VMware vSphere 8.0 features on 16th Generation PowerEdge servers

Mon, 22 May 2023 17:07:42 -0000


Managing infrastructure at scale requires significant monitoring and maintenance from data center administrators. By updating your environment with clusters of the latest 16th Generation Dell™ PowerEdge™ servers and the latest versions of software, you can arm administrators with cutting-edge tools to help simplify routine tasks. The latest release of VMware® vSphere®, version 8.0, offers new and updated features to make monitoring and managing Dell PowerEdge servers easier.

Connecting to a cluster of two remote 16th Generation Dell PowerEdge R6625 servers from our data center, we explored how vSphere 8.0 can simplify some PowerEdge server, host, and VM configuration tasks as well as some ongoing monitoring tasks. We found that the new and upgraded vSphere 8.0 features we evaluated provide the functionality they aim to deliver. For your organization, that could translate to better hardware and software performance and less downtime. In addition, your data center admins could focus less on routine management and monitoring tasks and spend more time on remediation or other work to help the organization.

For the latest 16th Generation Dell PowerEdge servers, what’s new and improved with VMware vSphere 8.0?

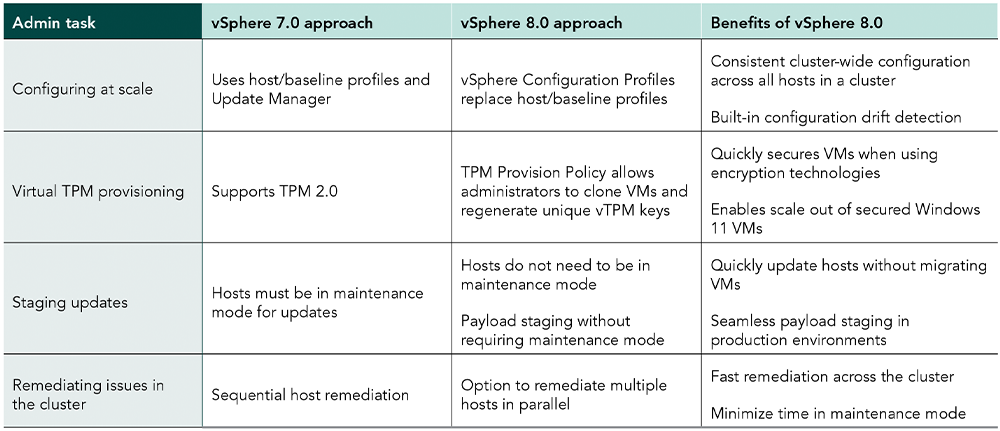

Centralized management capabilities in VMware vSphere 8.0 have improved over the previous version, further easing management burdens for administrators. Figure 1 shows the improvements that we found.

Stage cluster image updates

With vSphere 8.0, admins no longer need to put hosts into maintenance mode when staging updates for the 16th Generation PowerEdge cluster. This can shorten the time that hosts must spend in maintenance mode and thus reduce potential downtime due to host updates. In addition, VMs in the PowerEdge cluster do not need to shut down or migrate to another host while updates are staged.
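Staging separates pre-loading new content onto a host from applying it. The conceptual Python sketch below models that split with a hypothetical `Host` class; it is not a vSphere or vSphere Lifecycle Manager API, and the real workflow involves more steps (compliance checks, VM evacuation, reboots). It only shows which step touches maintenance mode.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    """Hypothetical, simplified host model; not a vSphere API object."""

    name: str
    running_image: str
    staged_image: Optional[str] = None
    in_maintenance_mode: bool = False
    maintenance_windows: int = 0  # how many times the host entered maintenance mode


def stage(hosts: List[Host], image: str) -> None:
    """Pre-load a new image on every host without entering maintenance mode."""
    for host in hosts:
        host.staged_image = image  # VMs keep running; nothing is applied yet


def remediate(host: Host) -> None:
    """Apply the staged image; only this step requires maintenance mode."""
    if host.staged_image is None:
        raise RuntimeError(f"{host.name}: no image staged")
    host.in_maintenance_mode = True
    host.maintenance_windows += 1
    host.running_image = host.staged_image  # the only step that changes the running image
    host.staged_image = None
    host.in_maintenance_mode = False


# Hypothetical host names and image labels, for illustration only.
cluster = [Host("host-1", "image-A"), Host("host-2", "image-A")]
stage(cluster, "image-B")
print([(h.name, h.staged_image, h.maintenance_windows) for h in cluster])
```

Because the slow content transfer happens during staging, the later maintenance window only has to cover applying the already-present image.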

Remediate hosts in a PowerEdge server cluster in parallel

Admins can remediate issues on multiple hosts in parallel in vSphere 8.0. Parallel remediation of hosts could reduce the overall time to remediate a cluster of latest-gen PowerEdge servers.
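The time savings from parallel remediation come from processing hosts in batches: with n hosts and up to k remediated at once, the cluster needs ceil(n / k) rounds instead of n. The Python sketch below estimates wall-clock time under the simplifying assumption that every host takes the same time. The host count, per-host time, and parallelism level are hypothetical, and in practice the number of hosts that can be in maintenance mode at once is limited by the cluster's spare capacity for running VMs.

```python
import math


def remediation_minutes(hosts: int, minutes_per_host: float, max_parallel: int = 1) -> float:
    """Estimate wall-clock time to remediate a cluster in batches.

    Hosts are remediated max_parallel at a time, so the number of batches
    is ceil(hosts / max_parallel). Assumes every host takes the same time.
    """
    if hosts < 0 or max_parallel < 1:
        raise ValueError("invalid host count or parallelism")
    return math.ceil(hosts / max_parallel) * minutes_per_host


# Hypothetical numbers for illustration: 8 hosts, 30 minutes each.
print(remediation_minutes(8, 30))                  # sequential -> 240
print(remediation_minutes(8, 30, max_parallel=4))  # four hosts at a time -> 60
```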

Configure hosts in a PowerEdge cluster at scale

Configuration Profiles, a new feature in vSphere 8.0, allows admins to configure all hosts in a PowerEdge cluster at once with the same configuration. Ensuring hosts in a cluster have the same configuration can help minimize errors at the host level so VMs can function properly regardless of placement within the cluster.
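The idea behind a cluster-wide configuration is a single desired state that every host is checked against. The conceptual Python sketch below compares each host's settings with a desired profile and reports any drift. The setting names and values are hypothetical, and this is not the vSphere Configuration Profiles API or schema.

```python
from typing import Dict, List, Tuple

Config = Dict[str, object]
Drift = Dict[str, List[Tuple[str, object, object]]]


def find_drift(desired: Config, hosts: Dict[str, Config]) -> Drift:
    """Compare each host's settings with a desired cluster-wide profile.

    Returns, per host, a list of (setting, expected, actual) tuples for every
    setting that differs or is missing (missing reads as None). Hosts that
    match the profile are omitted.
    """
    drift: Drift = {}
    for host, actual in hosts.items():
        diffs = [
            (key, expected, actual.get(key))
            for key, expected in desired.items()
            if actual.get(key) != expected
        ]
        if diffs:
            drift[host] = diffs
    return drift


# Hypothetical setting names and values, for illustration only.
desired_profile = {
    "ntp_server": "ntp.example.com",
    "ssh_enabled": False,
    "syslog": "udp://logs.example.com:514",
}
hosts = {
    "host-1": dict(desired_profile),
    "host-2": {**desired_profile, "ssh_enabled": True},
}
print(find_drift(desired_profile, hosts))
```

Applying one profile to every host, and flagging any host that deviates from it, is what lets VMs behave the same regardless of where they are placed.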

Reduce potential issues when securing VMs

Cloning a VM that has a virtual Trusted Platform Module (vTPM) also clones the vTPM's secrets, including its Endorsement Key, which could introduce security risks. vSphere 8.0 offers a new feature, the TPM Provision Policy, to ensure that each cloned VM in the PowerEdge cluster can have a unique vTPM.
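To make the risk concrete, the conceptual Python sketch below models two cloning behaviors: copying the source vTPM's key, or provisioning a new one for the clone. As we understand it, these loosely mirror the copy and replace choices that the TPM Provision Policy offers. The `VirtualMachine` class and the `tpm_policy` parameter are hypothetical, not the vSphere API, and the random hex string is only a stand-in: a real Endorsement Key is generated inside the vTPM, not by Python's `secrets` module.

```python
import secrets
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VirtualMachine:
    """Hypothetical model of a VM with a vTPM; not a vSphere API object."""

    name: str
    vtpm_endorsement_key: str  # stand-in value for the vTPM's secret key


def clone(vm: VirtualMachine, new_name: str, tpm_policy: str = "replace") -> VirtualMachine:
    """Clone a VM, either copying its vTPM secret or provisioning a new one."""
    if tpm_policy == "copy":
        key = vm.vtpm_endorsement_key  # clone shares the source's secret key
    elif tpm_policy == "replace":
        key = secrets.token_hex(32)  # stand-in for a newly generated vTPM key
    else:
        raise ValueError("tpm_policy must be 'copy' or 'replace'")
    return replace(vm, name=new_name, vtpm_endorsement_key=key)


source = VirtualMachine("template-vm", secrets.token_hex(32))
copied = clone(source, "clone-a", tpm_policy="copy")
fresh = clone(source, "clone-b", tpm_policy="replace")
print(copied.vtpm_endorsement_key == source.vtpm_endorsement_key)  # True: shared secret
print(fresh.vtpm_endorsement_key == source.vtpm_endorsement_key)   # False: unique vTPM
```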

Conclusion

By using the latest software and 16th Generation Dell PowerEdge servers in your VMware vSphere environment, you can provide your data center administrators with new or updated tools that simplify routine tasks in both initial host setup and ongoing monitoring. In our exploration of the latest features in vSphere 8.0 Lifecycle Manager, we found that vSphere 8.0 on 16th Generation Dell PowerEdge servers offers advantages over the previous generation, which may make an infrastructure update worth your while. By introducing vSphere Configuration Profiles and providing simpler image updates to vSphere clusters, VMware vSphere 8.0 on the latest 16th Generation Dell PowerEdge servers can help streamline operations for your administrative staff.

This project was commissioned by Dell Technologies.

April 2023. Revised April 2023.