Direct from Development- Tech Notes

Dell PowerEdge C6615 Delivers World Record VMmark Power-Performance Results

Thu, 18 Apr 2024 16:24:02 -0000

|Read Time: 0 minutes

Abstract

The Dell PowerEdge C6615 has set a new VMmark 3 world record. This result is the world’s highest power-performance score achieved on VMmark 3 using vSAN storage. This configuration also achieved the highest 4-node server power-performance score and the highest server-storage power-performance score. All of these records illustrate the sheer efficiency, scalability, and performance-density of the C6615 modular platform.

This document summarizes the VMmark 3 benchmark results for the PowerEdge C6615 published on 1/23/2024. It lists the results, summarizes the major configuration details, and links to the results on the VMmark website.

Benchmark results

- Performance Score: 17.42 @ 16tiles

- Server Power-Performance: 18.2000 @ 16 tiles

- Server Storage Power-Performance: 11.0600 @ 16 tiles

What do these scores mean?

The following world records were achieved:

- highest power-performance score achieved on VMMark 3 using vSAN storage.

- highest 4-node server power-performance score

- highest server-storage power-performance score

The Dell PowerEdge C6615 is a modular server node in the C6600 chassis with the AMD 4th Gen EPYC 8004 series of processors. This configuration allows for four 1-S nodes in a single 2U chassis, enabling high performance density and power efficiency, especially when using local storage for vSAN.

The configuration also leveraged the latest 96GB DDR5 DIMMs that offer exceptional value and capacity for a variety of use cases.

These results showcase the great performance density, power efficiency, and scalability of the Dell PowerEdge C6615 servers for virtualization use cases. VMmark is a benchmark standard for today’s virtualized applications in the datacenter.

Notes

- Results referenced are current as of April 3rd. 2024.

- To view all VMmark 3 results, see VMmark 3.x Results (vmware.com).

- This benchmark was run by Dell in the Dell SPA lab and audited by VMware.

Key configuration details

We used 4x Dell PowerEdge C6615 server nodes in a single C6600 chassis.

Each node had the following configuration:

- 1x AMD EPYC 8534P 64 core processors at 2.3GHz (AMD EPYC 8534P | AMD)

- 6x 96 GB Dual Rank x4 DDR5 4800MT/s RDIMM (576GB total)

- Dell Customized Image of VMware ESXi 8.0 U2, Build 22380479

- VMware vCenter Server 8.0 U2a

- Boot Storage: Dell EC NVMe ISE 7400 RI M.2 960GB

- vSAN Storage:

- 1x Disk Group per Host

- 4x Dell Ent NVMe v2 AGN MU U.2 6.4TB

- Networking:

- 1x Mellanox CX-6 Dx Dual Port 100GbE QSFP56 Adapter

- 1x Broadcom Advanced Dual 25Gb Ethernet Adapter

About the Dell PowerEdge C6615

The Dell PowerEdge C6615 node with AMD EPYC 8004 series of processors is designed to maximize value and minimize TCO for scale-out workloads, using a scalable dense compute infrastructure focused on performance per watt, per dollar.

Some of the key features include:

- AMD 4th Gen EPYC up to 64 cores/CPU

- Single-socket only

- Enables DDR5 at 4800 MT/s memory and PCIe Gen5 with double the speed of previous PCIe Gen4 for faster access and transport of data, to optimize application output

- Fully featured systems management with iDRAC, and OpenManage Enterprise, and CloudIQ

These features allow you to maximize security with the PowerEdge Cyber Resilient Architecture.

Figure 1. The PowerEdge C6615 sled and the PowerEdge C6600 chassis

What is VMmark 3.0?

VMmark is a free tool used by hardware vendors and others to measure the performance, scalability, and power consumption of virtualization platforms.

Figure 2. Outline of the VMmark benchmark components

The VMmark benchmark combines commonly-virtualized applications into predefined bundles called "tiles". This version of the benchmark has 19 unique virtual machines per tile. The number of VMmark tiles a virtualization platform can run, as well as the cumulative performance of those tiles and of a variety of platform-level workloads, determine the VMmark 3 score.

References

- For details about VMmark, see VMmark (vmware.com).

- PowerEdge C6615 Server Node

Dell PowerEdge 16G Server BIOS Settings for Optimized Performance: R7625, R6625, R7615, R6615, C6615

Tue, 26 Mar 2024 22:46:05 -0000

|Read Time: 0 minutes

BIOS setting recommendations

The following tables provide the BIOS setting recommendations for the latest generation of PowerEdge servers:

Table 1. BIOS setting recommendations - System profile settings

| System setup screen | Setting | BIOS Defaults | SPEC cpu2017 int rate (General Purpose Performance) | SPEC cpu2017 fp rate | SPEC cpu2017 int speed | SPEC cpu2017 fp speed | Memory Throughput | HPC | Latency |

| System profile setting | System profile | Performance Per Watt | Custom | Custom | Custom | Custom | Custom | Custom | Custom |

| System profile setting[*] | CPU Power Management | OS DBPM | OS DBPM | OS DBPM | OS DBPM | OS DBPM | OS DBPM | Max Performance | Max Performance |

| System profile setting | Memory Frequency | Max Performance | Max Performance | Max Performance | Max Performance | Max Performance | Max Performance | Max Performance | Max Performance |

| System profile setting | Turbo Boost | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| System profile setting | C-States | Enabled | Enabled | Enabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| System profile setting | Write Data CRC | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Enabled | Disabled |

| System profile setting | Memory Patrol Scrub | Standard | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| System profile setting | Memory Refresh Rate | 1x | 1x | 1x | 1x | 1x | 1x | 1x | 1x |

| System profile setting | Workload Profile | not configured | not configured | not configured | not configured | not configured | not configured | HPL | not configured |

| System profile setting | PCI ASPM L1 Link Power Management | Enabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| System profile setting | Determinism Slider | Performance Determinism | Power Determinism | Power Determinism | Power Determinism | Power Determinism | Power Determinism | Power Determinism | Power Determinism |

| System profile setting | Power Profile Select | High Performance Mode | High Performance Mode | High Performance Mode | High Performance Mode | High Performance Mode | High Performance Mode | High Performance Mode | High Performance Mode |

| System profile setting | PCIE Speed PMM Control | Auto | Auto | Auto | Auto | Auto | Auto | Auto | (GEN 5) |

| System profile setting | EQ Bypass To Highest Rate | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| System profile setting | DF PState Frequency Optimizer | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| System profile setting | DF PState Latency Optimizer | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| System profile setting | DF CState | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| System profile setting | Host System Management Port (HSMP) Support | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| System profile setting | Boost FMax | 0-Auto | 0-Auto | 0-Auto | 0-Auto | 0-Auto | 0-Auto | 0-Auto | 0-Auto |

| System profile setting | Algorithm Performance Boost Disable (ApbDis) | Disabled | Disabled | Disabled | Enabled | Enabled | Disabled | Disabled | Enabled |

| System profile setting | ApbDis Fixed Socket P-State[2] | P0 | P0 | P0 | |||||

| System profile setting | Dynamic Link Width Management (DLWM) | Unforced | Unforced | Unforced | Unforced | Unforced | Unforced | Unforced | Forced x16 |

[*] For C6615, apply setting from Table 3.

[1] Depends on how system was ordered. Other System Profile defaults are driven by this choice and may be different than the examples listed. Select Performance Profile first, and then select Custom to load optimal profile defaults for further modification.

[2] Pstate field is dependent on Algorithm Performance Boost Disable (ApbDis) and is visible only when it is enabled.

Table 2. BIOS setting recommendations – Memory, processor, and iDRAC settings

| System setup screen | Setting | BIOS Defaults | SPEC cpu2017 int rate (General Purpose Performance) | SPEC cpu2017 fp rate | SPEC cpu2017 int speed | SPEC cpu2017 fp speed | Memory Throughput | HPC | Latency |

| Memory settings | System Memory Testing | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Memory settings | DRAM Refresh Delay | Minimum | Performance | Performance | Performance | Performance | Performance | Performance | Performance |

| Memory settings | Correctable memory ECC SMI | Enabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Memory settings | Uncorrectable Memory Error (DIMM Self healing on uncorrectable memory) | Enabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Memory settings | Correctable Error Logging | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Processor settings | Logical Processor | Enabled | Enabled | Disabled[1] | Disabled[1] | Disabled[1] | Disabled[1] | Disabled[1] | Disabled[1] |

| Processor settings | Virtualization Technology | Enabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Processor settings | IOMMU Support | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| Processor settings | Kernel DMA Protection | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Processor settings | L1 Stream HW Prefetcher | Enabled | Enabled | Disabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| Processor settings | L2 Stream HW Prefetcher | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| Processor settings | L1 Stride Prefetcher | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| Processor settings | L1 Region Prefetcher | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| Processor settings | L2 Up Down Prefetcher | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| Processor settings | MADT Core Enumeration | Linear | Linear | Linear | Linear | Linear | Linear | Linear | Linear |

| Processor settings[*] | NUMA Node Per Socket | 1 | 4 | 4 | 4 | 1 | 4 | 4 | 4 |

| Processor settings | L3 cache as NUMA | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Processor settings | Secure Memory Encryption | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Processor settings | Minimum SEV no-ES ASID | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| Processor settings | SNP Memory Coverage | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Processor settings | Secure Nested Paging | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Processor settings | Transparent Secure Memory Encryption | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |

| Processor settings | ACPI CST C2 Latency | 800 | 18 | 18 | 18 | 18 | 800 | 18 | 800 |

| Processor settings | Configurable TDP | Maximum | Maximum | Maximum | Maximum | Maximum | Maximum | Maximum | Maximum |

| Processor settings | x2APIC Mode | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled | Enabled |

| Processor settings | Number of CCDs per Processor | All | All | All | All | All | All | All | All |

| Processor settings | Number of Cores per CCD | All | All | All | All | All | All | All | All |

| iDRAC settings | Thermal Profile | Default | Maximum Performance | Maximum Performance | Maximum Performance | Maximum Performance | Maximum Performance | Maximum Performance | Maximum Performance |

[*] For C6615, apply setting from Table 3.

[1] Logical Processor (Hyper Threading) tends to benefit throughput-oriented workloads such as SPEC CPU2017. Many HPC workloads disable this option.

Table 3. BIOS setting recommendations specific to C6615 (apply remaining settings from Table 1 and 2)

System setup screen | Setting | BIOS Defaults | SPEC cpu2017 int rate (General Purpose Performance) | SPEC cpu2017 fp rate | SPEC cpu2017 int speed | SPEC cpu2017 fp speed | Memory Throughput | HPC | Latency |

Processor settings | NUMA Node per Socket | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 2 |

System profile setting | CPU Power Management | OS DBPM | OS DBPM | OS DBPM | OS DBPM | OS DBPM | OS DBPM | OS DBPM | OS DBPM |

iDRAC recommendations

Following are what we would recommend for an iDRAC environment:

- Thermally challenged environments should increase fan speed through iDRAC Thermal Section.

- All Power Capping should be removed in performance-sensitive environments.

Glossary

System profile: (Default="Performance Per Watt")

To assist the average customer in setting each individual power/performance feature for their specific environment, a menu option is provided that can help a customer optimize the system for factors such as minimum power usage/acoustic levels, maximum efficiency, Energy Star optimization, and maximum performance.

Performance Per Watt OS mode optimizes the performance/watt efficiency with a bias towards performance. It is the favored mode for Energy Star. Note that this mode is slightly different than Performance Per Watt DAPC mode. In this mode, no bus speeds are derated, leaving the OS in charge of making those changes.

Custom allows the user to individually modify any of the low-level settings that are preset and unchangeable in any of the other four preset modes.

C-States

C-states reduce CPU idle power. There are three options in this mode: Legacy, Autonomous, and Disable.

Enabled: When “Enabled” is selected, the operating system initiates the C-state transitions. Some OS SW may defeat the ACPI mapping, such as intel_idle driver.

Autonomous: When "Autonomous" is selected, HALT and C1 requests get converted to C6 requests in hardware.

Disable: When "Disable" is selected, only C0 and C1 are used by the OS. C1 gets enabled automatically when an OS autohalts.

CPU Power Management

CPU Power Management allows the selection of CPU power management methodology. Maximum Performance is typically selected for performance-centric workloads where it is acceptable to consume additional power to achieve the highest possible performance for the computing environment. This mode drives processor frequency to the maximum across all cores (although idled cores can still be frequency-reduced by C-States enforcement through BIOS or OS mechanisms if enabled). This mode also offers the lowest latency of the CPU Power Management Mode options, so it is always preferred for latency-sensitive environments. OS DBPM is another Performance Per Watt option that relies on the operating system to dynamically control individual core frequency. Both Windows and Linux can take advantage of this mode to reduce the frequency of idled or underutilized cores in order to save power. This will be Read-only unless System Profile is set to Custom.

Memory Frequency

Memory Frequency governs the BIOS memory frequency. The variables that govern maximum memory frequency include the maximum rated frequency of the DIMMs, the DIMMs per channel population, the processor choice, and this BIOS option. Additional power savings can be achieved by reducing the memory frequency at the expense of reduced performance. This will be Read-only unless System Profile is set to Custom.

Turbo Boost

Turbo Boost governs the Boost Technology. This feature allows the processor cores to be automatically clocked up in frequency beyond the advertised processor speed. The amount of increased frequency (or 'turbo upside') one can expect from an EPYC processor depends on the processor model, thermal limitations of the operating environment, and in some cases power consumption. In general terms, the fewer cores being exercised with work, the higher the potential turbo upside. The potential drawbacks for Boost are mainly centered on increased power consumption and possible frequency jitter that can affect a small minority of latency-sensitive environments. This will be Read-only unless System Profile is set to Custom.

Memory Patrol Scrub

Memory Patrol Scrubbing searches the memory for errors and repairs correctable errors to prevent the accumulation of memory errors. When set to Disabled, no patrol scrubbing will occur. When set to Standard Mode, the entire memory array will be scrubbed once in a 24-hour period. When set to Extended Mode, the entire memory array will be scrubbed more frequently to further increase system reliability. This will be Read-only unless System Profile is set to Custom.

Memory Refresh Rate

The memory controller will periodically refresh the data in memory. The frequency at which memory is normally refreshed is referred to as 1X refresh rate. When memory modules are operating at a higher-than-normal temperature or to further increase system reliability, the refresh rate can be set to 2X, however this may have a negative impact on memory subsystem performance under certain circumstances. This will be Read-only unless System Profile is set to Custom.

PCI ASPM L1 Link Power Management

When enabled, PCIe Advanced State Power Management (ASPM) can reduce overall system power while slightly reducing system performance.

Note: Some devices may not perform properly (they may hang or cause the system to hang) when ASPM is enabled; for this reason, L1 will only be enabled for validated qualified cards.

Determinism Slider

The Determinism Slider controls whether BIOS will enable determinism to control performance.

Performance Determinism: BIOS will enable 100% deterministic performance control.

Power Determinism: BIOS will not enable deterministic performance control.

Power Profile Select

High Performance Mode (default): Favors core performance. All DF P-States are available in this mode, and the default DF P-State and DLWM algorithms are active.

Efficiency Mode: Configures the system for power efficiency. Limits boost frequency available to cores and restricts DF P-States available in the system. Maximum IO.

Performance Mode: Sets up Data Fabric to maximize IO sub-system performance.

Algorithm Performance Boost Disable (ApbDis)

When enabled, a specific hard-fused Data Fabric (SoC) P-state is forced for optimizing workloads sensitive to latency or throughput. For higher performance and power savings, when disabled, P-states will be automatically managed by the Application Power Management, allowing the processor to provide maximum performance while remaining within a specified power-delivery and thermal envelope.

ApbDis Fixed Socket P-State

This value defines the forced P-state when ApbDis is enabled.

Dynamic Link Width Management (DLWM)

DLWM reduces the XGMI link width between sockets from x16 to x8 (default) when no traffic is detected on the link. As with Data Fabric and Memory P-states, this feature is optimized to trade power between core and high IO/memory bandwidth workloads.

Forced: Force link width to x16, x8, or x2.

Unforced: Link width will be managed by DLWM engine.

System Memory Testing

System Memory Testing indicates whether or not the BIOS system memory tests are conducted during POST. When set to Enabled, memory tests are performed.

Note: Enabling this feature will result in a longer boot time. The extent of the increased time depends on the size of the system memory.

Dram Refresh Delay

By enabling the CPU memory controller to delay running the REFRESH commands, you can improve the performance for some workloads. By minimizing the delay time, it is ensured that the memory controller runs the REFRESH command at regular intervals. For Intel-based servers, this setting only affects systems configured with DIMMs which use 8 Gb density DRAMs.

Correctable Memory ECC SMI

Allows the system to log ECC-corrected DRAM errors into the SEL log. Logging these rare errors can help identify marginal components, however the system will pause for a few milliseconds after an error while the log entry is created. Latency-conscious customers may want to disable the feature. Spare Mode and Mirror mode require this feature to be enabled.

DIMM Self Healing (Post Package Repair) on Uncorrectable Memory Error Enable/Disable Post Package Repair (PPR) on Uncorrectable Memory Error.

Correctable Error Logging

Enable/Disable logging of correctable memory threshold error.

Logical Processor

Each processor core supports up to two logical processors. When set to Enabled, the BIOS reports all logical processors. When set to Disabled, the BIOS only reports one logical processor per core. Generally, a higher processor count results in increased performance for most multi-threaded workloads, and the recommendation is to keep this enabled. However, there are some floating point/scientific workloads, including HPC workloads, where disabling this feature may result in higher performance.

Virtualization Technology

When set to Enabled, the BIOS will enable processor Virtualization features and provide the virtualization support to the Operating System (OS) through the DMAR table. In general, only virtualized environments such as VMware(r) ESX(tm), Microsoft Hyper-V(r), Red Hat(r) KVM, and other virtualized operating systems will take advantage of these features. Disabling this feature is not known to significantly alter the performance or power characteristics of the system, so leaving this option Enabled is advised for most cases.

IOMMU Support

Enable or Disable IOMMU support. Required to create IVRS ACPI Table.

Kernel DMA Protection

When set to Enabled, using IOMMU, BIOS & Operating System will enable direct memory access protection for DMA-capable peripheral devices. Enable IOMMU Support to use this option.

L1 Stream HW Prefetcher

When set to Enabled, the processor provides advanced performance tuning by controlling the L1 stream HW prefetcher setting. Use the recommended setting, and this option will allow for optimizing overall workloads.

L2 Stream HW Prefetcher

When set to Enabled, the processor provides advanced performance tuning by controlling the L2 stream HW prefetcher setting. Use the recommended setting, and this option will allow for optimizing overall workloads.

L1 Stride Prefetcher

When set to Enabled, the processor provides additional fetch to the data access for an individual instruction for performance tuning by controlling the L1 stride prefetcher setting. Use the recommended setting, and this option will allow for optimizing overall workloads.

L1 Region Prefetcher

When set to Enabled, the processor provides additional fetch to data along with the data access to the given instruction for performance tuning by controlling the L1 region prefetcher setting. Use the recommended setting, and this option will allow for optimizing overall workloads.

L2 Up Down Prefetcher

When set to Enabled, the processor uses memory access to determine whether to fetch next or previous for all memory accesses for advanced performance tuning by controlling the L2 up/down prefetcher setting. Use the recommended setting, and this option will allow for optimizing overall workloads.

MADT Core Enumeration

This field determines how BIOS enumerates processor cores in the ACPI MADT table. When set to Round Robin, processor cores are enumerated in a Round Robin order to evenly distribute interrupt controllers for the OS across all Sockets and Dies. When set to Linear, processor cores are enumerated across all Dies within a Socket before enumerating additional Sockets for a linear distribution of interrupt controllers for the OS.

NUMA Nodes Per Socket

This field specifies the number of NUMA nodes per socket. The Zero option is for 2 socket configurations.

L3 cache as NUMA Domain

This field specifies that each CCX within the processor will be declared as a NUMA Domain.

Secure Memory Encryption

This field enables or disables AMD secure encryption features such as Secure Memory Encryption (SME) and Secure Encrypted Virtualization (SEV). In addition to enabling this option, SME must be supported and activated by the operating system. Similarly, SEV must be supported and activated by the hypervisor. This option also determines if other secure encryption feature such as TSME and SEV-SNP features can be enabled.

Minimum SEV non-ES ASID

This field determines the number of Secure Encrypted Virtualization (SEV) Encrypted States (ES) and non-ES available Address Space IDs. The number specified is the dividing line between ES and non-ES ASIDs. The register save state area is also encrypted along with the entire guest memory area. The maximum number of ASIDs available depends on installed CPU and memory configuration which can either be 15, 253, or 509. The default value is 1, and the value entered by user means the number of non-ES ASIDs starts from the value entered and ends at the maximum number of ASIDs available. A value of 1 means there are only non-ES ASIDs available. For example, if the maximum number of ASIDs is 15, the default value 1 means there are 15 SEV non-ES ASIDs and 0 SEV ES ASIDs. Alternatively, if the maximum number of ASIDs is 15, the value 4 means there are 12 SEV non-ES ASIDs and 3 SEV ES ASIDs. Further, if the maximum number of ASIDs is 509, the value 40 means there are 470 SEV non-ES ASIDs and 39 SEV ES ASIDs.

Secure Nested Paging

This option enables or disables SEV-SNP, a set of additional security protections.

SNP Memory Coverage

This option selects the operating mode of the Secure Nested Paging (SNP) Memory and the Reverse Map Table (RMP). The RMP is used to ensure a one-to-one mapping between system physical addresses and guest physical addresses.

Transparent Secure Memory Encryption

This field enables or disables Transparent Secure Memory Encryption (TSME). TSME is always-on memory encryption that does not require operating system or hypervisor support. If the operating system supports SME, this field does not need to be enabled. If the hypervisor supports SEV, this field does not need to be enabled. Enabling TSME affects system memory performance.

ACPI CST C2 Latency

Enter in 18 - 1000 microseconds (decimal value). Larger C2 latency values will reduce the number of C2 transitions and reduce C2 residency. Fewer transitions can help when performance is sensitive to the latency of C2 entry and exit. Higher residency can improve performance by allowing higher frequency boost and reduce idle core power. With Linux kernel 6.0 or later, the C2 transition cost is significantly reduced. The best value will be dependent on kernel version, use case, and workload.

Configurable TDP

Configurable TDP allows the reconfiguration of the processor Thermal Design Power (TDP) levels based on the power and thermal delivery capabilities of the system. TDP refers to the maximum amount of power the cooling system is required to dissipate.

Note: This option is only available on certain SKUs of the processors, and the number of alternative levels varies as well.

x2APIC Mode

Enable or Disable x2APIC mode. Compared to the traditional xAPIC architecture, x2APIC extends processor addressability and enhances interrupt delivery performance.

Number of CCDs per Processor

This field enables the number of CCDs per Processor.

Number of Cores per CCD

This field enables the number of Cores per CCD.

Authors: Charan Soppadandi, Chris Cote, Donald Russell, Kavya AR

Accelerating Relational Database Workloads with 16G Dell PowerEdge R6625 Servers Equipped with PCIe 5.0 E3.S

Thu, 08 Feb 2024 02:28:42 -0000

|Read Time: 0 minutes

Summary

The latest 16G Dell PowerEdge R6625 servers support the PCIe 5.0 interface and the Enterprise and Datacenter Standard Form Factor (EDSFF) E3.S form factor. They deliver significant performance benefits and an improved system airflow that enhances heat dissipation. This can lead to less thermal throttling and increased lifespans for system components such as CPUs, memory and storage when compared with prior PCIe generations deployed with 2.5-inch1 form factor SSDs.

The purpose of this tech note is to present a generational server performance and power consumption comparison using PostgreSQL® relational database2 workloads. It compares 16G Dell PowerEdge R6625 PCIe 5.0 E3.S servers deployed with KIOXIA CM7-R Series E3.S enterprise NVMe SSDs with a previous generation system configuration.

The test results indicate that the latest 16G Dell PowerEdge R6625 servers deliver almost twice the relational database transactions using approximately the same amount of power when compared with the previous generation system.

Market positioning

Relational databases are vital to today’s data centers as they store an overwhelming amount of data captured on premises and at the edge of the network. Sales transactions and information relating to customers, vendors, products and financials represent key data.

IT teams need solutions that scale their data center storage platforms to better address large datasets and future growth. As these databases are dependent on fast underlying storage, one way to achieve high performance and scalability is by utilizing servers equipped with enterprise SSDs based on the latest PCIe 5.0 interface and the NVMe 2.0 protocol. The PCIe 5.0 revision can move data through the PCIe interface almost twice as fast when compared with the previous PCIe

4.0 generation. This enables SSDs to deliver input/output (I/O) even faster while requiring fewer servers to achieve the same level of performance.

With the recent availability of EDSFF SSDs, storage performance and total capacity per server can also increase. Servers with EDSFF E3.S slots deployed with E3.S SSDs deliver fast data throughput, fast input/output operations per second (IOPS) performance, low latency, high density and thermally optimized capabilities.

Product Features

Dell PowerEdge 6625 Rack Server (Figure 1)

Specifications: https://www.delltechnologies.com/asset/en-us/products/servers/technical-support/poweredge-r6625-spec- sheet.pdf.

Figure 1: Side angle of Dell PowerEdge 6625 Rack Server3

KIOXIA CM7 Series Enterprise NVMe SSD (Figure 2) Specifications:https://americas.kioxia.com/en-us/business/ssd/enterprise-ssd.html.

Figure 2: Front view of KIOXIA CM7 Series SSD4

PCIe 5.0 and NVMe 2.0 specification compliant; Two configurations: CM7-R Series (read intensive), 1 Drive Write Per Day5 (DWPD), up to 30,720 gigabyte6 (GB) capacities and CM7-V Series (higher endurance mixed use), 3 DWPD, up to 12,800 GB capacities.

Performance specifications: SeqRead = up to 14,000 MB/s; SeqWrite = up to 7,000 MB/s; RanRead = up to 2.7M IOPS; RanWrite = up to 600K IOPS.

Hardware/Software test configuration

The hardware and software equipment used in this virtualization comparison (Figure 3):

Server Information | ||

Server Model | Dell PowerEdge R6625 | Dell PowerEdge R6525 |

No. of Servers | 1 | 1 |

CPU Information | ||

CPU Model | AMD EPYC™ 9334 | AMD EPYC 7352 |

No. of Sockets | 2 | 2 |

No. of Cores | 32 | 24 |

Memory Information | ||

Memory Type | DDR5 | DDR4 |

Memory Speed (in megatransfers per second) | 4,800 MT/s | 3,200 MT/s |

Memory Size (in gigabytes) | 384 GB | 128 GB |

SSD Information | ||

SSD Model | KIOXIA CM7-R Series | KIOXIA CM6-R Series |

SSD Type | Read intensive | Read intensive |

Form Factor | E3.S | 2.5-inch (U.3) |

Interface | PCIe 5.0 x4 | PCIe 4.0 x4 |

Interface Speed (in gigatransfers per second) | 128 GT/s | 64 GT/s |

No. of SSDs | 4 | 4 |

SSD Capacity (in terabytes6) | 3.84 TB | 3.84 TB |

DWPD | 1 | 1 |

Active Power | up to 24 watts | up to 19 watts |

Operating System Information | ||

Operating System (OS) | Ubuntu® | Ubuntu |

OS Version | 22.04.2 | 22.04.2 |

Kernel | 5.15.0-76-generic | 5.15.0-76-generic |

RAID | RAID 57 | RAID 5 |

RAID Version | mdadm 4.2 | mdadm 4.2 |

Test Software Information | ||

Software | HammerDB8 | HammerDB |

Benchmark | TPROC-C9 | TPROC-C |

Version | 4.8 | 4.8 |

No. of Virtual Users | 128 | 128 |

Figure 3: Hardware/software configuration used in the comparison

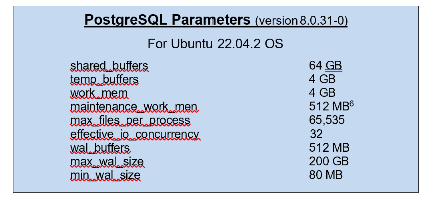

For additional information regarding PostgreSQL relational database parameters and the OS tuning parameters used in this comparison, see Appendix A.

Set-up and test procedures

Set-up #1:

A Dell PowerEdge 6625 Rack Server was set-up with the Ubuntu 22.04.2 operating system.

Additional OS level parameters were adjusted to help increase system performance (to adjust these parameters, refer to Appendix A).

The system was rebooted.

Four 3.84 TB KIOXIA CM7 Series SSDs were placed in a RAID 5 set (via mdadm) to hold the PostgreSQL database in the server.

An XFS® file system was placed on top of the RAID 5 set and was mounted with noatime10 and discard11 flags. PostgreSQL relational database was installed in the server and the service was started.

HammerDB test software was installed on the server for the KIOXIA CM7 Series SSDs, enabling the TPROC-C online transaction processing (OLTP) workloads to run against the PostgreSQL database.

Set-up #2:

A Dell PowerEdge 6525 Rack Server was set-up with the Ubuntu 22.04.2 operating system.

Additional OS level parameters were adjusted to help increase system performance (to adjust these parameters, refer to Appendix A).

The system was rebooted.

Four 3.84 TB KIOXIA CM6 Series SSDs were placed in a RAID 5 set (via mdadm) to hold the PostgreSQL database in the server.

An XFS file system was placed on top of the RAID 5 set and was mounted with noatime and discard flags. PostgreSQL relational database was installed in the server and the service was started.

HammerDB test software was installed on the server for the KIOXIA CM6 Series SSDs, enabling the TPROC-C OLTP workloads to run against the PostgreSQL database.

Test procedures:

The following metrics were recorded when the TPROC-C workload was run against each configuration: Average Database Throughput

Average Drive Read Latency

Average Drive Write Latency Average Server Power Consumption

For each individual metric, three total runs were performed and the average of the three runs were calculated and compared with each configuration.

Test results12

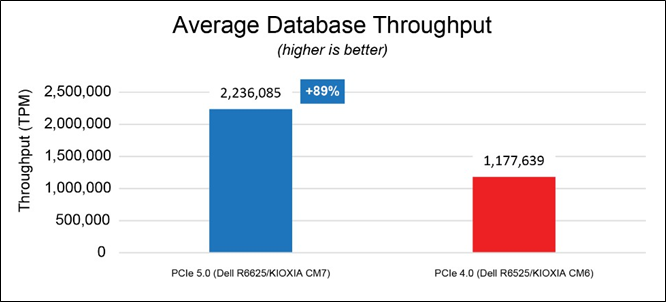

Average Database Throughput (Figure 4).

This test measured how many transactions in the TPROC-C workload were executed per minute. The HammerDB software, executing the TPROC-C transaction profile, randomly performed new order, payment, order status, delivery and stock level transactions. The benchmark simulated an OLTP environment with a large number of users conducting simple and short transactions (that require sub-second response times and return relatively few records). Figure 4 shows the average database throughput from three test runs for each set of drives. The results are in transactions per minute (TPM)

- the higher result is better.

Figure 4: Average database throughput results

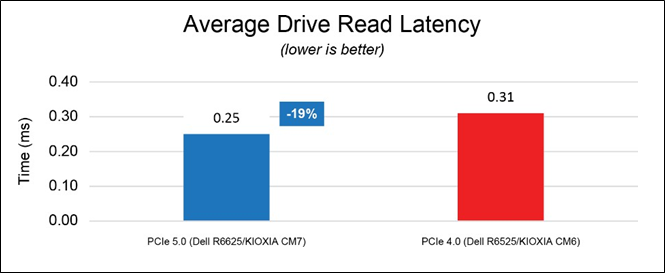

Average Read Latency (Figure 5).

This test measured drive read latency in milliseconds (ms) - the time it took to perform a drive read operation and included the time it took to complete the operation and receive a ‘successfully completed’ acknowledgement. These metrics were obtained from the drives while the database workload was running. Figure 5 shows the average read latency from three test runs for each set of drives - the lower result is better.

Figure 5: Average read latency results

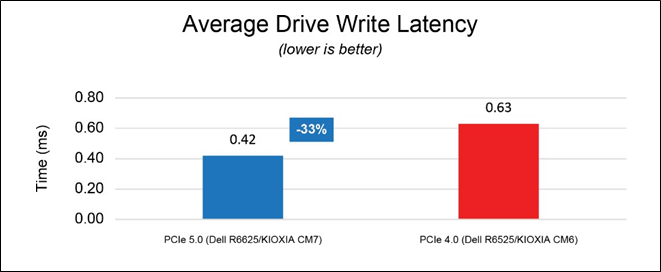

Average Write Latency (Figure 6).

This test measured drive write latency in milliseconds (ms) - the time it took to perform a drive write operation and included the time it took to complete the operation and receive a ‘successfully completed’ acknowledgement. These metrics were obtained from the drives while the database workload was running. Figure 6 shows the average write latency from three test runs for each set of drives - the lower result is better.

Figure 6: Average write latency results

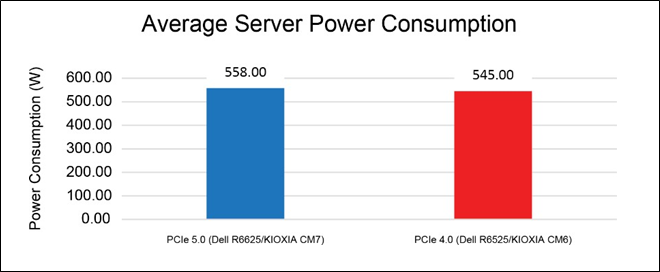

Average Server Power Consumption (Figure 7).

This test measured the average amount of power drawn by each server system in its entirety including all of the individual components that run from the server’s power supply unit (PSU). This includes the motherboard, CPU, memory, storage and other server components. The following results in Figure 7 were obtained from the Integrated Dell Remote Access Controller (iDRAC) – the results are in watts (W).

Figure 7: Average server power consumption results

Although the overall system power draw is slightly higher in the PCIe 5.0 configuration, the solution is able to maintain 89% higher database throughput, 19% lower read latency and 33% lower write latency on average.

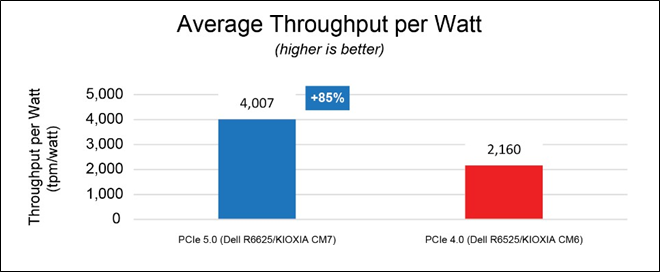

From the Figure 7 results, database throughput per watt can be easily determined by dividing the average database throughput by the average server consumption as depicted in Figure 8 – the higher result is better.

Figure 8: Average throughput per watt results

The PCIe 5.0 configuration was able to deliver 4,007 TPM per watt versus 2,160 TPM per watt delivered by the PCIe 4.0 configuration, nearly doubling database throughput per watt. At the data center level, these results enable administrators to use the same number of servers for nearly double the performance, or converse to this, scale the number of servers to help save on power consumption and total cost of ownership without sacrificing performance.

Final analysis

Next generation Dell PowerEdge 6625 Rack Servers deployed with KIOXIA CM7 Series PCIe 5.0 E3.S SSDs show nearly double the database performance when compared with a previous generation while lowering SSD latency by performing read/write operations faster. This system delivered 89% more transactions per minute enabling higher relational database workload densities while reducing the footprint of servers needed to service these workloads.

The Dell PowerEdge R6625 and KIOXIA CM7 Series SSD test configuration also demonstrated a comparable server power draw when compared with the previous generation test system. Though the active power increased from PCIe 4.0 to PCIe 5.0 by approximately 13 watts, the system was able to process almost twice as many transactions while consuming almost the same amount of power. As such, fewer servers are necessary to achieve the same level of performance without experiencing a power consumption spike.

The test results indicate that the latest 16G Dell PowerEdge 6625 Rack Servers deliver almost twice the relational database transactions using approximately the same amount of power when compared with prior PCIe generations.

Appendix A – PostgreSQL Parameters / OS Tuning Parameters

The PostgreSQL parameters used for this comparison include:

Additional tuning parameters performed on the OS to optimize system performance were made to /etc/sysctl.conf files and

/etc/security/limits.conf files. The /etc/sysctl.conf files override OS default kernel parameter values while the

/etc/security/limits.conf files allow resource limits to be set. These tuning parameters include:

/etc/sysctl.conf file changes:

Parameter | Value |

vm.swappiness | 0 |

kernel.sem | 250 32000 100 128 |

fs.file-max | 6815744 |

net.core.rmem_default | 262144 |

net.core.rmem_max | 4194304 |

net.core.wmem_default | 262144 |

net.core.wmem_max | 1048576 |

fs.aio-max-nr | 1048576 |

vm.nr_hugepages | 35000 |

/etc/security/limits.conf file changes:

User | Values | ||

* | soft | nproc | 65535 |

* | hard | nproc | 65535 |

* | soft | nofile | 65535 |

* | hard | nofile | 65535 |

root | soft | nproc | 65535 |

root | hard | nproc | 65535 |

root | soft | nofile | 65535 |

root | hard | nofile | 65535 |

postgres | soft | memlock | 100000000 |

postgres | hard | memlock | 100000000 |

References

Footnotes

- 2.5-inch indicates the form factor of the SSD and not its physical size.

- PostgreSQL is a powerful, open source object-relational database system with over 35 years of active development and a reputation for reliability, feature robustness and performance.

- The product image shown is a representation of the design model and not an accurate product depiction.

- The product image shown was provided with permission from KIOXIA America, Inc. and is a representation of the design model and not an accurate product depiction.

- Drive Write Per Day (DWPD) means the drive can be written and re-written to full capacity once a day, every day for five years, the stated product warranty period. Actual results may vary due to system configuration, usage and other factors. Read and write speed may vary depending on the host device, read and write conditions and file size.

- Definition of capacity - KIOXIA Corporation defines a megabyte (MB) as 1,000,000 bytes, a gigabyte (GB) as 1,000,000,000 bytes and a terabyte (TB) as 1,000,000,000,000 bytes. A computer operating system, however, reports storage capacity using powers of 2 for the definition of 1Gbit = 230 bits = 1,073,741,824 bits, 1GB = 230 bytes = 1,073,741,824 bytes and 1TB = 240 bytes = 1,099,511,627,776 bytes and therefore shows less storage capacity. Available storage capacity (including examples of various media files) will vary based on file size, formatting, settings, software and operating system, and/or pre-installed software applications, or media content. Actual formatted capacity may vary.

- RAID 5 is a redundant array of independent disks configuration that uses disk striping with parity - Data and parity are striped evenly across all of the disks, so no single disk is a bottleneck.

- HammerDB is benchmarking and load testing software that is used to test popular databases. It simulates the stored workloads of multiple virtual users against specific databases to identify transactional scenarios and derive meaningful information about the data environment, such as performance comparisons.

- TPROC-C is the OLTP workload implemented in HammerDB derived from the TPC-C™ specification with modification to make running HammerDB straightforward and cost-effective on any of the supported database environments. The HammerDB TPROC-C workload is an open source workload derived from the TPC-C Benchmark Standard and as such is not comparable to published TPC-C results, as the results comply with a subset rather than the full TPC-C Benchmark Standard. TPROC-C means Transaction Processing Benchmark derived from the TPC "C" specification.

- The noatime option turns off access time recording so that the file system will ignore access time updates on files. If the file system is used for database workloads, specifying noatime can reduce writes to the file system.

- The discard option allows the file system to inform the underlying block device to issue a TRIM command when blocks are longer used. KIOXIA makes no warranties regarding the test results and performance can vary due to system configuration usage and other factors.

- Read and write speed may vary depending on the host device, read and write conditions and file size.

Trademarks

AMD EPYC and combinations thereof are trademark of Advanced Micro Devices, Inc. Dell and PowerEdge are registered trademarks or trademarks of Dell Inc.

NVMe is a registered or unregistered trademark of NVM Express, Inc. in the United States and other countries. PCIe is a registered trademark of PCI-SIG.

PostgreSQL is a registered trademark of the PostgreSQL Community Association of Canada.

All other company names, product names and service names may be trademarks or registered trademarks of their respective companies.

TPC-C is a trademark of the Transaction Processing Performance Council. All other company names, product names and service names may be trademarks or registered trademarks of their respective companies.

Ubuntu is a registered trademark of Canonical Ltd.

XFS is a registered trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries.

All other company names, product names and service names may be trademarks or registered trademarks of their respective companies.

Disclaimers

© 2023 Dell, Inc. All rights reserved. Information in this tech note, including product specifications, tested content, and assessments are current and believed to be accurate as of the date that the document was published and subject to change without prior notice. Technical and application information contained here is subject to the most recent applicable product specifications.

13% Better Performance in Financial Trading with PowerEdge R7615 and AMD EPYC 9374F

Wed, 16 Aug 2023 15:41:36 -0000

|Read Time: 0 minutes

Summary

Dell PowerEdge R7615 with 4th Generation AMD EPYC 9374F provides up to a 13 percent performance gain over Dell PowerEdge R7615 with 4th Generation AMD EPYC 9354P for financial trading benchmarks.[1] This Direct to Development (DfD) document looks at CPU benchmarks for three R7615 32-core based CPU configurations and highlights key features that enable businesses enterprises to host different workloads.

Dell PowerEdge R7615

Dell PowerEdge R7615 is a 2U, single-socket rack server. It is designed to be the best investment per dollar for your data center. This server provides performance, and flexible low-latency storage options in an air or Direct Liquid Cooling (DLC) configuration by using an AMD EPYC 4th generation processor to deliver up to 50% more core count per single socket platform in an innovative air-cooled chassis. It delivers breakthrough innovation for traditional and emerging workloads, including software-defined storage, data analytics, and virtualization, using the latest performance and density.

Figure 1. Side angle of the extremely scalable R7615

4th Generation AMD EPYC processors

PowerEdge R7615 is the latest single socket AMD server supporting 4th Generation AMD EPYC 9004 Series processors, the latest generation of the AMD64 System-on-Chip (SoC) processor family. It is based on the Zen 4 microarchitecture introduced in 2022, supporting up to 128 cores (256 threads) and 12 memory channels per socket, a 50% increase over the previous generation. This series includes three different CPU(s) with 32 cores:

Processor | CPU Cores | Threads | Max. Boost Clock | All core boost speed | Base clock | L3 Cache | Default TDP |

AMD EPYC 9374F | 32 | 64 | Up to 4.3GHz | 4.1GHz | 3.85GHz | 256MB | 320W |

AMD EPYC 9354P | 32 | 64 | Up to 3.8GHz | 3.75GHz | 3.25GHz | 256MB | 280W |

AMD EPYC 9334 | 32 | 64 | Up to 3.9GHz | 3.85GHz | 2.7GHz | 128MB | 210W |

The Base Clock, also known as Base Frequency, refers to the minimum operational clock speed of an AMD processor's cores when running under normal conditions. It serves as the foundational clock speed for the processor's overall performance. During tasks that do not require intense processing power, the processor operates at or around this speed, conserving energy and minimizing heat generation.

The Max Boost Clock, often called Max Turbo Frequency or Max Turbo Boost, signifies the upper limit of a processor's clock speed. This clock speed is achieved when specific cores of the AMD processor dynamically increase their frequency to deliver peak performance. The Max Boost Clock is typically applied to a subset of cores and is triggered when the workload demands require a burst of processing power, such as for gaming, video editing, financial trading, and other intensive applications.

The All-Core Boost Speed refers to the clock speed that all cores of an AMD processor can achieve simultaneously when under load. Unlike the Max Boost Clock, which is applicable to only a select number of cores, the All-Core Boost Speed ensures that all cores are operating at an elevated clock speed for optimized multi-threaded performance. This feature is particularly advantageous for tasks that rely heavily on parallel processing, such as rendering, simulations, and content creation.

AMD EPYC 9374F is the frequency/core optimized offering which provides up to a 13 percent increase in all core boost speed over AMD EPYC 9354P, the basic 32 core 1-socket offering. The series also includes AMD EPYC 9334 which has half the L3 Cache but offers over 52 percent drop in Default TDP over AMD EPYC 9374F, making it the most energy efficient of the three CPUs.

Performance data

We captured four benchmarks:

- Sockperf is a network benchmarking tool designed to measure network latency and throughput performance using the Socket Direct Protocol (SDP) for high-performance computing clusters and data centers.

- The QuantLib benchmark is a software library used in quantitative finance and derivatives pricing for modeling and analyzing financial instruments, providing tools for pricing, risk management, and quantitative research. It is widely used by financial professionals and institutions for accurate and efficient financial calculations.

- High Performance Conjugate Gradient measures the computational efficiency of solving a sparse linear system using conjugate gradient methods, providing insights into HPC system performance and optimization. It complements the traditional HPL benchmark, reflecting real-world application characteristics.

- The dav1d benchmark is a performance testing tool used to assess the decoding speed and efficiency of the AV1 video codec, helping to evaluate its real-time playback capabilities and effectiveness in video streaming applications. It aids in optimizing AV1 codec implementations for improved video compression and playback performance.

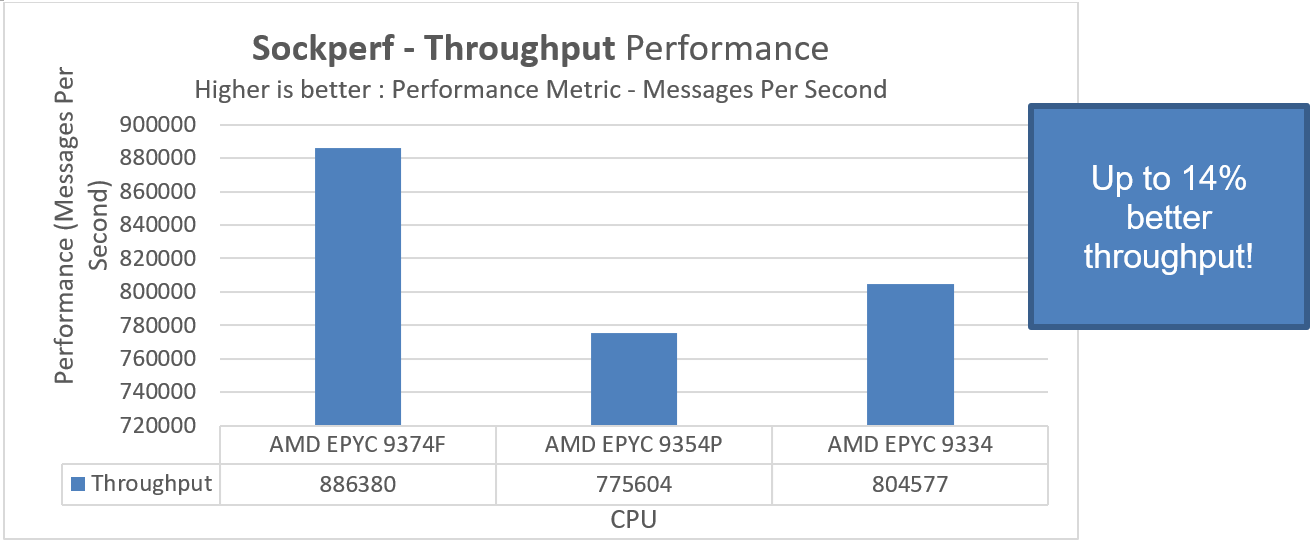

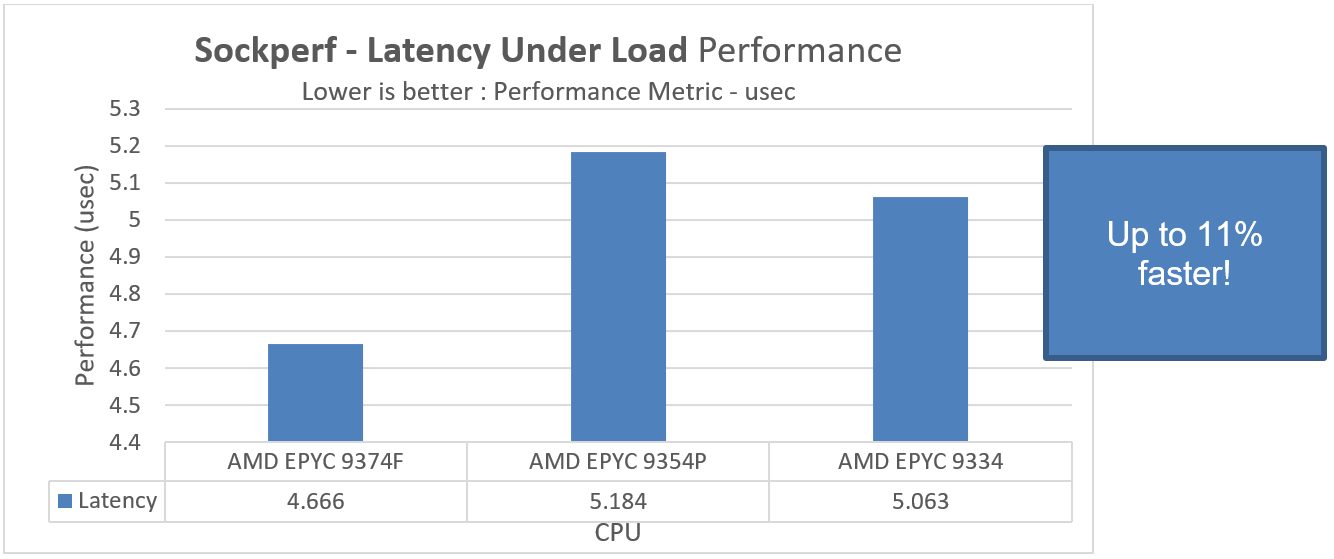

To compare performance across three R7615 4th Generation AMD EPYC processors, let us first consider the Sockperf benchmark. This benchmark reports throughput in terms of messages per second, the speed at which queries are processed and data is retrieved or stored. It also reports latency overload in usec, measuring the system's response time (latency) under different load conditions.

Figure 1. Three CPU comparison demonstrating Throughput performance using the Sockperf benchmark

Figure 2. CPU comparison showing Latency under Load performance using the Sockperf benchmark

In PowerEdge R7615 with AMD EPYC 9374F, we see up to 14 percent better throughput performance and an 11 percent drop-in time taken for the Latency Under Load subtest to complete using the Sockperf benchmark over AMD EPYC 9354P.

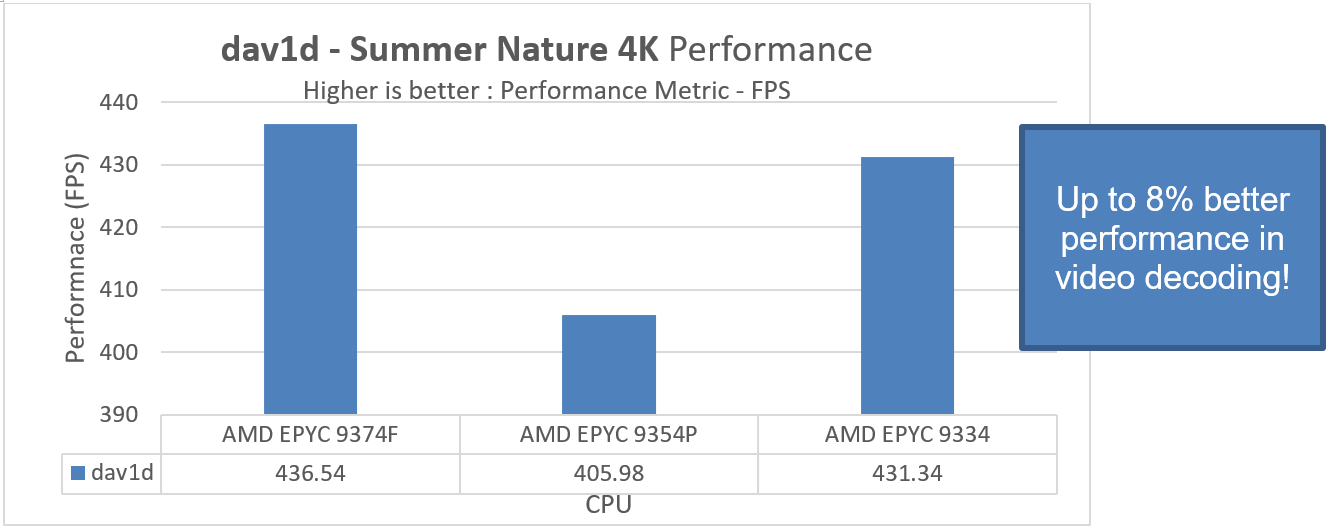

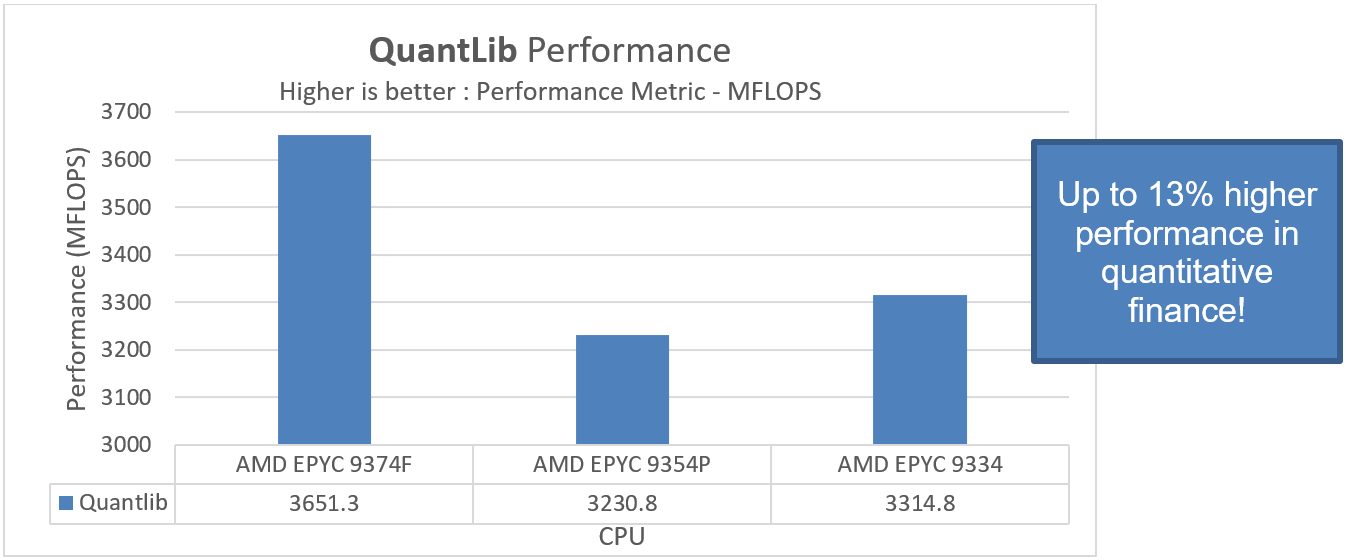

We also report dav1d results in Frames per second. This test measures the time taken to decode AV1 video content and QuantLib results in MFLOPS, a benchmark for quantitative finance for modeling, trading, and risk management scenarios.

Figure 3. A three CPU comparison demonstrating dav1d performance

Figure 4. A three CPU comparison demonstrating performance using the QuantLib benchmark

Performance in PowerEdge R7615 with AMD EPYC 9374F is better for the dav1d and QuantLib benchmarks than for the other tested configurations. We find an up to 8 percent performance uptake for video decoding and an additional 13 percent better performance for financial modelling and trading workloads in Dell Technologies PowerEdge R7615 with the frequency optimized AMD EPYC 9374F.

Conclusion

Some workloads benefit from more cores and some benefit from higher frequency. Here we have shown examples of workloads that take advantage of the higher boost frequencies.

Like most industries, the financial trading industry continues to evolve. Firms are pushing workloads harder and with larger datasets, all while expecting immediate or real-time results. These organizations must be confident that they are investing in the right platforms to support computational requirements. With PowerEdge R7615 with AMD EPYC 9374F, Dell Technologies delivers the systems to address the current and expanding needs for high-performance quantitative trading modelling and risk management scenarios.

References

- Dell PowerEdge R7615 Spec Sheet

- AMD EPYC™ 9374F Processors | AMD

- DDR5 Memory Bandwidth for Next-Generation PowerEdge Servers Featuring 4th Gen AMD EPYC Processors | Dell Technologies Info Hub

[1] Tests were performed in August 2023 at the Solutions and Performance Analysis Lab at Dell Technologies.

Save Time, Rack Space, and Money—5:1 Server Consolidation Made Possible with the Latest AMD EPYC Processors

Thu, 20 Apr 2023 17:41:37 -0000

|Read Time: 0 minutes

Summary

The latest Dell PowerEdge servers with AMD EPYC 4th Generation processors, each with up to 96 cores, deliver exceptional value to our customers. The large number of cores coupled with the high-speed DDR5 memory and very high-speed PCIe Gen5 devices makes for servers that can run almost any workload with ease. These servers are especially well suited for virtualization workloads. These unprecedented performance enhancements enabled Dell Technologies to achieve multiple virtualization world records. The cluster-level benchmarks for virtualized workloads are an excellent example of the performance and power-performance world record gains that are achievable.

Running a mixture of architectures in your data center can be cause for some concern—especially if you are looking to upgrade to the latest AMD servers and you are currently running the workloads on legacy Intel® based servers. Even with the greatest level of planning, there is always the fear that some unexpected variable might turn everything upside down during the migration process. Now, there is a new tool for your toolbox to make such migrations easier. The VMware Architecture Migration Tool1 is a PowerShell script that uses VMware PowerCLI to eliminate the guesswork and complexity involved in migrating a virtual machine from one hardware architecture to another.

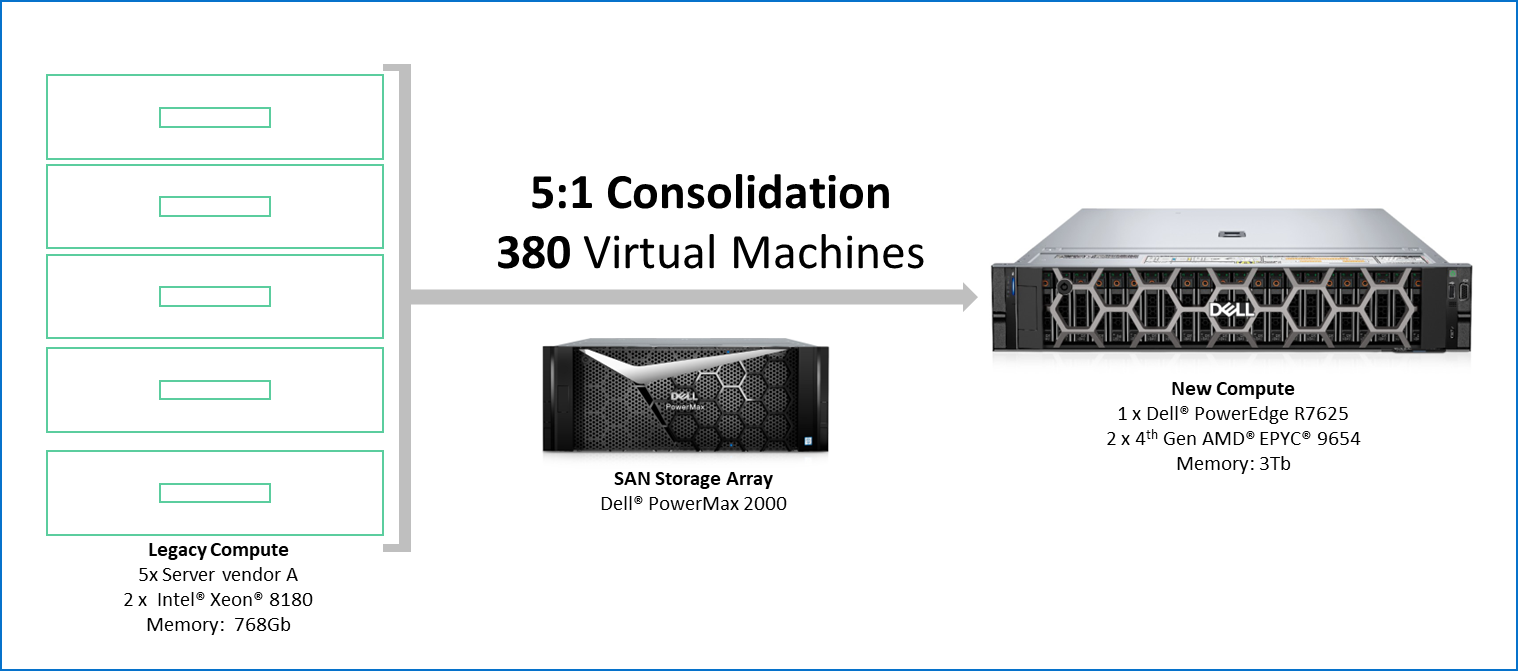

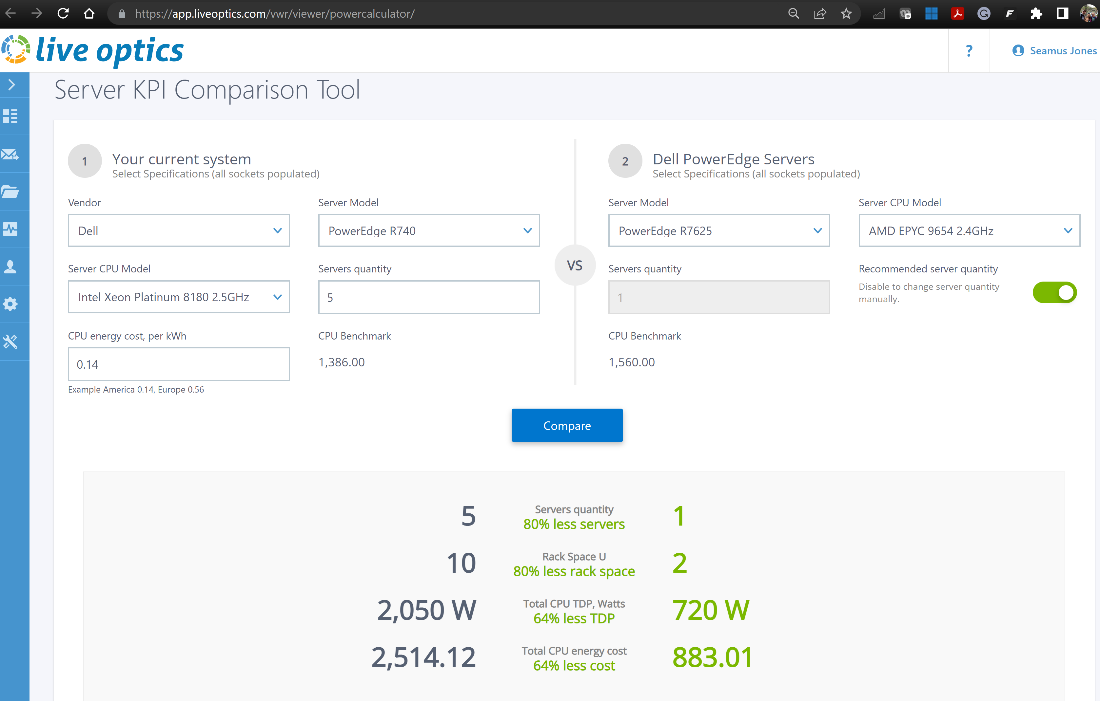

To fully test the tool, Dell ran a full migration scenario. We were able to consolidate 380 VMs running on five legacy Intel platform servers into one Dell PowerEdge R7625 with AMD EPYC 4th Gen processors. We describe our testing in more detail later in this paper.

Why migrate?

In today’s IT departments, workloads are always evolving. There is increasing pressure to support new workloads while keeping existing workloads to support existing business needs—all while also trying to reduce costs and meet corporate goals.

The latest technology tends to bring multiple advantages, driving the need to upgrade. Some of these advantages are:

- Higher performance

The latest Dell PowerEdge servers with 4th Gen AMD EPYC processors have class-leading performance with up to 121 percent higher scores than prior generations.2

- Better efficiency

The Dell PowerEdge servers with 4th Gen AMD EPYC processors are some of the first to achieve the EPEAT silver rating, indicating the highest level of environmental responsibility and efficiency. Dell has achieved 159 percent higher performance per kilowatt on the VMmark benchmark with the R7625 compared to the prior-generation model server.3

- More security

With Dell’s Cyber Resilient Architecture and AMD’s Infinity Guard, the PowerEdge servers with 4th Gen AMD EPYC processors offer top-class security to ensure that your data and infrastructure are protected.4

- Workload optimizations

The 4th Generation AMD EPYC processors have several optimizations, such as support for AVX-512, INT8, and BFLOAT16. The processors can deliver exceptional performance for workloads that can take advantage of such optimizations.

VMware Architecture Migration Tool

The VMware Architecture Migration Tool (VAMT) was developed jointly by AMD and VMware to automate the migration of legacy VMs from Intel architecture to AMD architecture, with the goal of delivering a better user experience and better business value. Freely available on GitHub, VAMT offers several key features:

- Architecture agnostic and open source

- Fully automated cold migration

- VM success validation

- Process throttling

- Change window support

- Email and syslog support

- Audit trail

- Rollback

The tool streamlines and simplifies the migration process in a trustworthy fashion.

Benchmarking

Dell leveraged the VAMT tool and the VMmark benchmark to achieve some remarkable consolidation on the PowerEdge R7625.

The VMmark benchmark allowed us to set up a workload in the form of tiles within each hardware cluster. Each tile consisted of 19 different VMs running a workload internally. The benchmark was deployed across five legacy Intel based servers and eventually migrated to a single AMD based PowerEdge server. A Dell PowerMax 2000 SAN was used for data storage. The following table shows the configuration details:

Table 1. Configuration of source and target servers

| Component/specification | Source | Target |

|---|---|---|

Number of servers | 5 | 1 |

Processor | Intel 8180 | AMD EPYC 9654 |

Cores per server | 56 | 192 |

Memory | 768 GB | 3 TB |

Tiles | 4 | 20 |

VMs per server | 76 | 380 |

Server | Server vendor A | Dell PowerEdge R7625 |

Storage | PowerMax 2000; 30 TB spread across 6 LUNs | |

Network | 32 GB FC network for storage, 25 GbE for data network on VMs through a 4-way splitter, 100 Gb switch | |

We were able to run four tiles per legacy server for a total of 380 VMs. The VAMT was then used to migrate the VMs across to the target PowerEdge server.

The tool completed a cold migration of all 380 VMs to the target server in 57 minutes!

Achieving value

The Dell PowerEdge R7625 with 4th Gen AMD EPYC processors delivers significant technology advancements that can deliver value in any virtualized deployment. Consolidating from five servers to a single server is an example of the extent of savings possible. This kind of consolidation allows for significant license cost savings and fewer hours on system management. Decommissioning the five legacy systems also reduces power draw and operational costs by as much 64 percent,5 even while also running workloads on the latest architecture with security features like Secure Memory Encryption (SME) and Secure Encrypted Virtualization (SEV). AMD SEV helps safeguard privacy and integrity by encrypting each virtual machine.

1 https://github.com/vmware-samples/vmware-architecture-migration-tool

2 Based on Dell analysis of submitted SPECFPRate score of 1410 achieved on a Dell PowerEdge R7625 with AMD EPYC 9654s compared to the previous high score of 636 on a Dell PowerEdge R7525 with AMD EPYC 7763 processors as of 11/3/2022. Actual performance might vary.

3 Based on Dell analysis of published VMmark Server Power-Performance score of 21.0179@21 tiles achieved on a Dell PowerEdge R7615 cluster with AMD EPYC 9654P processors compared to the score of 8.1263@12 tiles achieved on a Dell PowerEdge R7515 cluster with the AMD EPYC 7763 processors as of 4/13/2023. Actual performance might vary.

5 Based on Dell internal analysis comparing the total CPU TDP of 2,050 W from five dual-socket servers with the Intel Xeon 8180 processors compared to the total CPU TDP of 720 W from a single dual-socket Dell PowerEdge server with AMD EPYC 9654 processors as of 4/13/2023. Actual performance might vary.

VDI on Dell PowerEdge Infrastructure with 4th Generation AMD EPYC Processors

Fri, 14 Apr 2023 15:17:26 -0000

|Read Time: 0 minutes

Summary

Dell PowerEdge server improvements for VDI

The all-new Dell PowerEdge R7625 with AMD EPYC 4th Gen processors delivers up to 50 percent higher CPU density in terms of cores per server. This platform is based on the latest technology from AMD to provide better performance and improved scalability for a variety of workloads, including VDI.

Some of the platform enhancements that are especially relevant to VDI workloads are:

- CPU—Up to 50 percent more cores with up to 96 cores per socket, allowing VDI virtual machine (VM) per-node density increases and better VDI VM performance.

- Memory—33 percent more memory channels with 50 percent faster memory, allowing greater memory capacity and performance to support richer VDI desktop VM configurations for applications that require increased memory.

- I/O—PCIe Gen5 with twice the bandwidth, allowing for high-speed and low-latency NVMe drives, NICs, and GPU accelerators.

- Smart Cooling Technology—Advanced thermal designs and options, such as streamlined airflow pathways within the server, liquid cooling options, and so on, to keep CPUs, high-performance NICs, and GPUs cool and performing optimally.

- Boot Optimized Storage—The 3rd generation Boot Optimized Storage Solution (BOSS-N1), which has been enhanced with full hot-plug support for enterprise class M.2 NVMe SSDs. Additionally, the design is integrated into the server, eliminating the need to dedicate a PCIe slot and giving customers more flexibility with their choice of I/O slots and peripherals.

Benchmarking for VDI

Login VSI by Login Consultants is the industry-standard tool for testing VDI environments and server-based computing (RDSH environments). It installs a standard collection of desktop application software (for example, Microsoft Office, Adobe Acrobat Reader) on each VDI desktop. It then uses launcher systems to connect a specified number of users to available desktops within the environment. Once each user is connected, the workload is started through a login script, which starts the test script once the user environment is configured by the login script. Each launcher system can launch connections to several ‘target’ machines (VDI desktops).

When designing a desktop virtualization solution, understanding user workloads and profiles is key to understanding the density numbers that the solution can support. At Dell Technologies, we use several Login VSI workload/profile levels, each of which is bound by specific metrics and capabilities, with two targeted at graphics-intensive use cases.

To understand the improvements that we can expect to see with the latest generation of servers compared with the prior-generation servers, we ran the same Login VSI benchmark against both servers. We used a Knowledge Worker profile consisting of 5 to 9 applications and 360p video. The following table shows the user VM configuration:

Table 1. Login VSI Knowledge Worker profile

Workload | VM profiles | ||||

vCPUs | RAM | RAM reserved | Desktop video resolution | Operating system | |

Knowledge Worker | 2 | 4 GB | 2 GB | 1920 x 1080 | Windows 10 Enterprise 64-bit |

The following table outlines the test configuration of the hardware and software components:

Table 2. Hardware and software configuration

Component | Configuration |

Compute host hardware |

|

Management host hardware |

|

Storage | PERC with 6x mixed use SSDs (RAID 10) |

Network | Dell S5248-ON switch |

Broker | VMware Horizon 8 2209 |

Hypervisor | VMware ESXi 8.0.0 |

SQL | Microsoft SQL Server 2019 |

Desktop operating system | Microsoft Windows 10 Enterprise 64-bit, 22h2 version |

Office | Microsoft Office 365 |

Profile management | FSLogix |

Management operating system | Windows Server 2022 |

Login VSI | Version 4.1.40.1 |

Results summary—R7525 compared with R7625

Comparing the 32 core processors of the 4th Gen AMD EPYC to the 3rd Gen AMD EPYC using Login VSI showed approximately 30 percent improvement in VM density. At the same time, we observed approximately 11 percent improvement in response time.

The following table outlines the test results:

Table 3. Key results of Login VSI testing

Server | Density per host (higher is better) | User experience—VSI base (lower is better) |

PowerEdge R7525 | 265 VMs | 896 milliseconds |

PowerEdge R7625 | 345 VMs | 794 milliseconds |

Conclusion

With up to 96 cores per socket and significant increases in memory bandwidth, Dell PowerEdge servers with 4th Gen AMD EPYC processors continue to provide best-in-class features and specifications to satisfy the most demanding workloads. For VDI workloads, with the same number of cores, we observed a 30 percent increase in density with more than 11 percent reduction in response time.

DDR5 Memory Bandwidth for Next-Generation PowerEdge Servers Featuring 4th Gen AMD EPYC Processors

Wed, 03 May 2023 15:49:23 -0000

|Read Time: 0 minutes

Summary

Dell Technologies has announced some exciting new servers featuring the latest 4th Gen AMD EPYC processors. These servers come in 1- and 2-socket versions in 1U and 2U form factors. Each socket supports up to 12 DIMMs at speeds of up to 4,800 MT/s. This document compares the memory bandwidth readings observed with these new servers against previous-generation servers running 3rd Gen AMD EPYC processors.

4th Gen AMD EPYC memory architecture

The 4th Gen AMD EPYC processors are the first AMD x86 server processors to support DDR5 memory. The CPUs themselves still have a chiplet design with a central I/O chiplet surrounded by compute chiplets. The memory runs at speeds of up to 4,800 MT/s, which is 50 percent faster than the 3,200 MT/s that the previous 3rd Gen AMD EPYC processors supported.

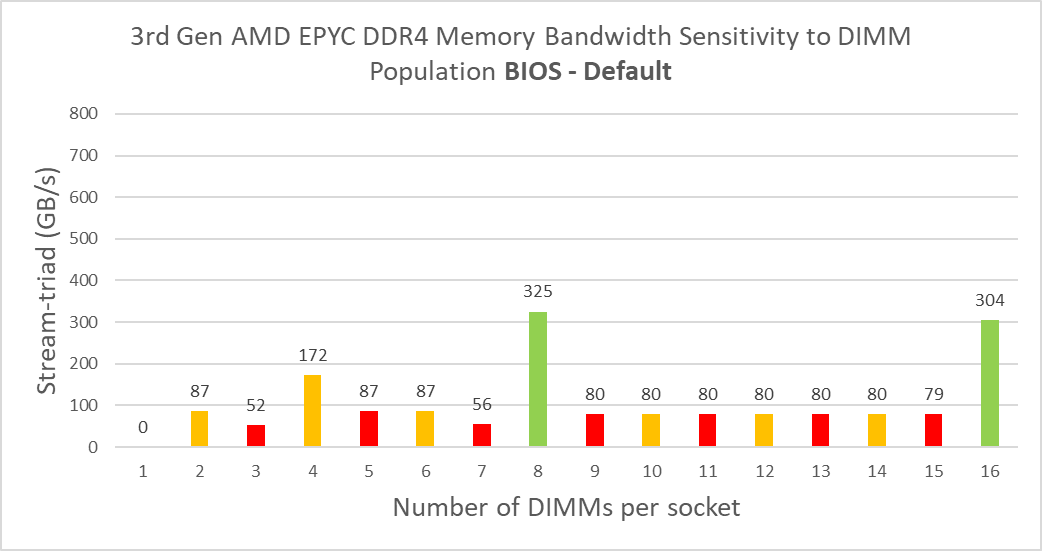

One other significant difference is in the number of populated slots. The 3rd Gen AMD EPYC processors supported up to 16 DIMMs per socket in a 2 DIMMs per channel configuration or 8 DIMMs per socket in a 1 DIMM per channel configuration. The 2 DIMMs per channel configuration supported a maximum speed of 2,933 MT/s.

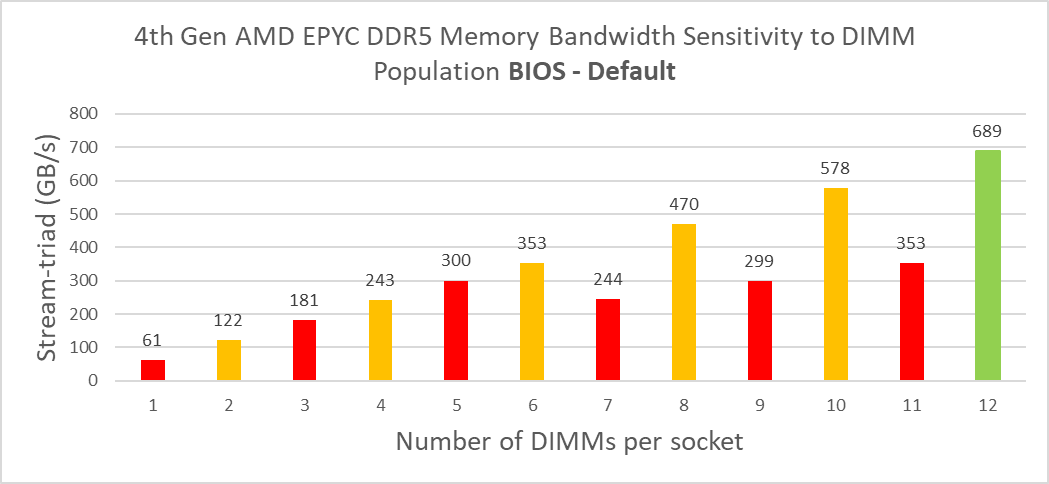

Memory bandwidth test

To quantify the impact of this increase in memory support, we performed two studies.1 The first study (see Figure 1) measured memory bandwidth determined by the number of DIMMs per CPU populated. To measure the memory bandwidth, we used the STREAM Triad benchmark. STREAM Triad is a synthetic benchmark that is designed to measure sustainable memory bandwidth (in MB/s) and a corresponding computation rate for four simple vector kernels. Of all the vector kernels, Triad is the most complex scenario. We ran the benchmark on the following systems:

- Previous-generation Dell PowerEdge R7525 powered by AMD’s 3rd Gen EPYC CPUs populated with up to 16 DDR4 3,200 MT/s DIMMs per channel

- Latest-generation Dell PowerEdge R7625 powered by AMD’s 3rd Gen EPYC CPUs populated with up to 12 DDR5 4,800 MT/s DIMMs per socket

We used default BIOS configurations for this test.

The following figures show the system aggregate memory bandwidth across two CPUs:

Figure 1. System aggregate memory bandwidth trends with DIMM population for 4th Gen AMD EPYC processor-based PowerEdge servers with default BIOS settings

Figure 2. System aggregate memory bandwidth trends with DIMM population for 3rd Gen AMD EPYC processor-based PowerEdge servers with default BIOS settings

Consider that a fully balanced configuration requires all DIMM channels to be populated—that is 8 DIMMs for the 3rd Gen and 12 DIMMs for the 4th Gen. Given these differences, it is challenging to do a direct comparison. However, if we compare the numbers for a balanced configuration with 1 DIMM per channel, we see a 112 percent increase in bandwidth. With just 8 channels populated in both cases, we see a 45 percent increase in bandwidth. Despite this not being a balanced configuration, we still see a significant performance increase at this point.

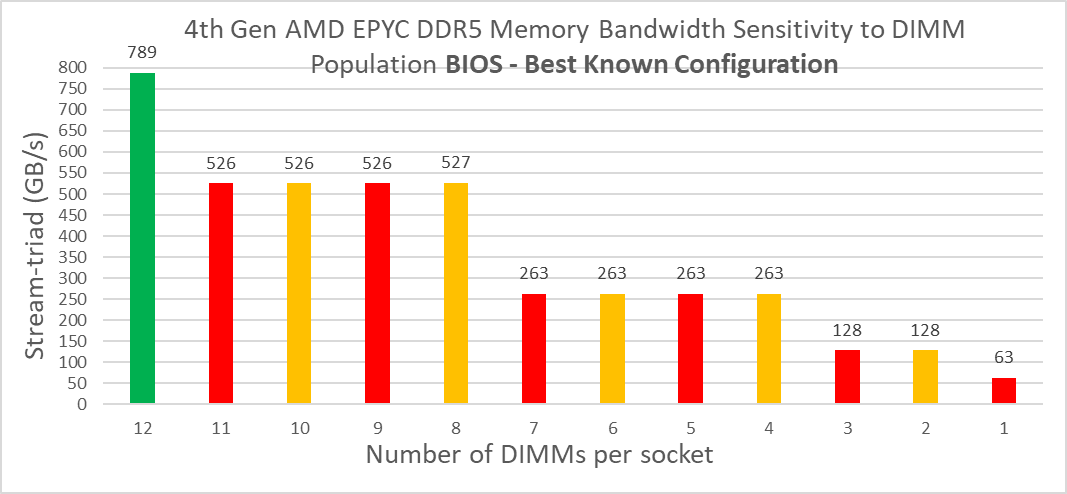

Figure 3. System aggregate memory bandwidth trends with DIMM population for 4th Gen AMD EPYC processor-based PowerEdge servers with tuned BIOS settings

We collected a second series of datapoints on the R7625 with BIOS settings adjusted for best memory performance. This included setting the NPS setting to NPS4 and disabling CCX as NUMA. With these settings, we see that the maximum bandwidth of the R7625 further increases by another 14.5 percent to a class-leading 789 GB/s.

Conclusion

With up to 96 cores per socket and significant increases in memory bandwidth, Dell PowerEdge servers with 4th Gen AMD EPYC processors continue to provide best-in-class features and specifications to satisfy the most demanding workloads.

1 Tests were performed in January 2023 at the Solutions and Performance Analysis Lab at Dell Technologies.

Understanding the Value of AMDs Socket to Socket Infinity Fabric

Tue, 17 Jan 2023 00:43:22 -0000

|Read Time: 0 minutes

Summary

AMD socket-to-socket Infinity Fabric increases CPU-to-CPU transactional speeds by allowing multiple sockets to communicate directly to one another through these dedicated lanes. This DfD will explain what the socket-to-socket Infinity Fabric interconnect is, how it functions and provides value, as well as how users can gain additional value by dedicating one of the x16 lanes to be used as a PCIe bus for NVMe or GPU use.

Introduction

Prior to socket-to-socket Infinity Fabric (IF) interconnect, CPU-to-CPU communications generally took place on the HyperTransport (HT) bus for AMD platforms. Using this pathway for multi-socket servers worked well during the lifespan of HT, but developing technologies pushed for the development of a solution that would increase data transfer speeds, as well as allow for combo links.

AMD released socket-to-socket Infinity Fabric (also known as xGMI) to resolve these bottlenecks. Having dedicated IF links for direct CPU-to- CPU communications allowed for greater data-transfer speeds, so multi-socket server users could do more work in the same amount of time as before.

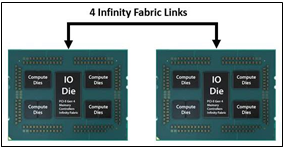

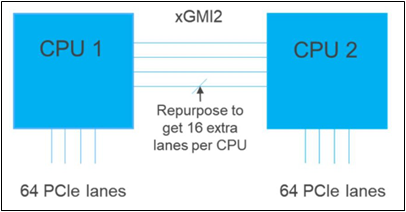

How Socket-to-Socket Infinity Fabric Works

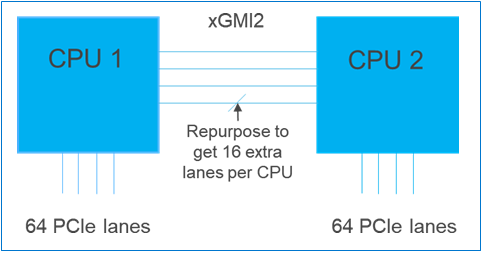

IF is the external socket-to-socket interface for 2-socket servers. The architecture used for IF links is a combo of serializer/deserializer (SERDES) that can be both PCIe and xGMI, allowing for sixteen lanes per link and a lot of platform flexibility. xGMI2 is the current generation available and it has speeds that reach up to 18Gbps; which is faster than the PCIe Gen4 speed of 16Gbps. Two CPUs can be supported by these IF links. Each IF lane connects from one CPU IO die to the next, and they are interwoven in a similar fashion, directly connecting the CPUs to one- another. Most dual-socket servers have three to four IF links dedicated for CPU connections. Figure 1 depicts a high- level illustration of how socket to socket IF links connect across CPUs.

Figure 1 – 4 socket to socket IF links connect two CPUs

The Value of Infinity Fabric Interconnect

Socket to socket IF interconnect creates several advantages for PowerEdge customers:

- Dedicated IF lanes are routed directly from one CPU to the other CPU, ensuring inter-socket communications travel the shortest distance possible

- xGMI2 speeds (18Gbps) exceed the speeds of PCIe Gen4, allowing for extremely fast inter-socket data transfer speeds

Furthermore, if customers require additional PCIe lanes for peripheral components, such as NVMe or GPU drives, one of the four IF links are a cable with a connector that can be repurposed as a PCIe lane. AMD’s highly optimized and flexible link topologies enable sixteen lanes per socket of Infinity Fabric to be repurposed. This means that 2S AMD servers, such as the PowerEdge R7525, have thirty-two additional lanes giving a total of 160 PCIe lanes for peripherals. Figure 2 below illustrates what this would look like:

Figure 2 – Diagram showing additional PCIe lanes available in a 2S configuration

Conclusion

AMDs socket-to-socket Infinity Fabric interconnect replaced the former HyperTransport interconnect in order to allow massive amounts of data to travel fast enough to avoid speed bottlenecks. Furthermore, customers needing additional PCIe lanes can repurpose one of the four IF links for peripheral support. These advantages allow AMD PowerEdge servers, such as the R7525, to meet our server customer needs.

Understanding Confidential Computing with Trusted Execution Environments and Trusted Computing Base models

Tue, 17 Jan 2023 00:35:08 -0000

|Read Time: 0 minutes

Summary

As the value of data increases, it becomes essential to protect data in- use from unauthorized access. Confidential Computing provides various levels of protection options to mitigate different kinds of threat vectors.

Introduction

Data is the new oil. As the value of data increases, it becomes increasingly important to protect data in-use to perform computations. Data in use is often stored in the clear in memory (DRAM) and accessed via unencrypted memory buses. Whether data in use is a machine learning data set or relates to keeping a secret in memory, data in-use can be vulnerable to threats vectors that can snoop on the contents of memory or the access bus. Data- in-use protection is necessary to secure computations that are increasingly operating on large data sets in memory. Additionally, code executing on the data must be trusted, tamper-free with facilities to separate trusted and non-trusted code execution environments with respect to data in-use.

Trusted Execution Environments and Trusted Computing Base models

With per country regulation requirements on data confidentiality increasing, data generators and users need secure TEEs (Trusted Executions Environments) to satisfy data privacy and protection regulations. Hosting and Infrastructure providers must enable trusted execution environment to guarantee data confidentiality of client data. This requires that entities outside the trust boundary should not be able to access the data in-use

To mitigate against increasing threat vectors combined with usage models that range from multi-tenant environments to edge deployments, trust boundaries need to shrink. Data owners and clients should prefer to keep a small TCB (Trusted Computing Base) to minimize attack coordinates and data misuse by untrusted elements. They should look closely at what TCB levels they can trust for their usage model. A TCB level informs the code footprint that can be trusted

While a reduced TCB can be achieved using software techniques, silicon-aided features can greatly aid the creation, separation and protection of TEEs with reduced TCBs. Silicon features are needed to minimize TCB to a Trusted Host Execution Environment, Trusted Virtual Machine Execution environment, and a Trusted Application Execution Environment for new and emerging deployments

Picking an appropriate TCB footprint level

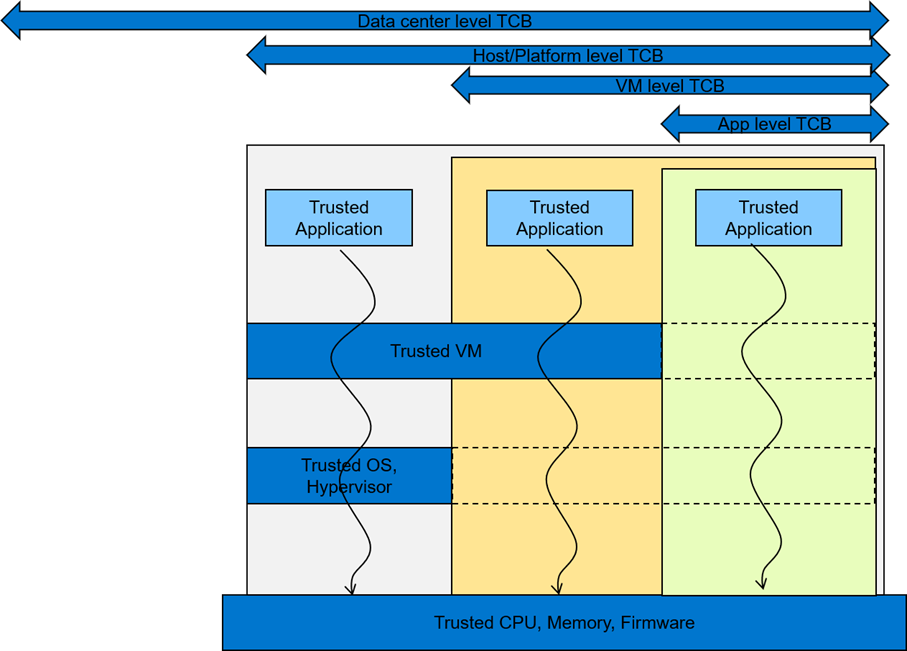

To consider an appropriate TCB footprint level, one should determine if the entity hosting the code and data execution environment can be trusted and has the facility to separate trusted and non- trusted components. For e.g., a data center level TCB can imply a data center administrator is a trusted operator for the data in use. This means the entire data center execution environment is trusted and applications users can employ a data center wide application/workload deployment policy. A Platform/Host level TCB requirement can imply a system administrator is a trusted operator for the data and the code running on the platform and can deploy a trusted Host execution environment for the workloads. A VM level TCB footprint requirement implies a trusted guest machine user for data in use running in a trusted Guest Execution Environment. An App level TCB footprint requirement can imply only the App owner is trusted with data in use access. See Figure 1 for a representation of various TCB footprint levels. If you observe carefully, as TCB footprint shrinks, the application owner has fewer layers of trusted software.

Figure 1 A view of various TCB footprint levels

These levels come with varying degree of usability to application deployments. They have unique advantages and tradeoffs when it comes performance, application mobility, trust granularity and integration with management stacks.

In general, to enable these TEEs, silicon enables memory encryption such that trusted, differentiated and secure memory access is possible for data in use. Data/app owners must be able to independently attest to the integrity of the platform and the TCB levels supported by the underlying infrastructure.

Dell believes in the power of choice when it comes to offering a trusted execution environment with a level of TCB needed to run your applications. Dell’s breadth of technologies including the enhanced cyber resilient architecture that is part of the latest generations of PowerEdge servers enables usages at the edge, core and the cloud.

Conclusion

To maximize protection of data in-use, consideration should be given to the TCB footprint that is appropriate for the use case. Dell EMC PowerEdge servers are loaded with top notch security features to provide maximum protection for your data. In addition, Dell Technologies is pleased to partner with key vendors to support features like SME, SEV-ES, and SGX, etc. with various levels of confidential computing usage models that cater to various Trusted Execution Environments

Efficient Machine Learning Inference on Dell EMC PowerEdge R7525 and R7515 Servers using NVIDIA GPUs

Tue, 17 Jan 2023 00:28:16 -0000

|Read Time: 0 minutes

Summary