Blogs

Blogs about VDI solutions

Preparing for the Metaverse with NVIDIA Omniverse and Dell Technologies

Mon, 21 Mar 2022 11:47:03 -0000


As technology evolves, it can sometimes seem like creativity leads to complexity. In the case of 3D graphics creation, teams with a broad range of skills must come together. Each team member, from artists, designers, and animators to engineers and project managers, typically has a specialized skill set that requires its own tools, systems, and work environment.

But the technical issues do not stop there. As existing technologies mature and new ones enter the scene, the number of specialized 3D design and content creation tools rapidly increases. Many of them lack compatibility or interoperability with other tools. And across the 3D graphics ecosystem, hybrid workforces require a “physical workstation” experience wherever they may be.

It is no small task to provide compute-power access to a geographically distributed team in a way that enables collaboration without compromising security. But to remain competitive and retain top talent, companies must provide the necessary tools to the remote workforce.

Solutions for remote, collaborative, and secure 3D graphics creation

The new Dell Validated Design for VDI with NVIDIA Omniverse makes it possible for 3D graphics creation teams to work together from anywhere in real time using multiple applications within shared virtual 3D worlds. NVIDIA® Omniverse users can access the resources and compute power they need through a virtualized desktop infrastructure (VDI), without the need for a local physical workstation.

By running NVIDIA Omniverse on Dell PowerEdge NVIDIA‑Certified Systems™, companies can enable 3D graphics teams to connect with major design tools, assets, and projects to collaborate and iterate seamlessly from early-stage ideation through to finished creation.

Designs for NVIDIA Omniverse rely on Dell PowerEdge NVIDIA‑Certified Systems and leverage a VxRail‑based virtual workstation environment with NVIDIA A40 GPUs. VMware® Horizon® can provide workload virtualization. The designs were validated with Autodesk® Maya® 3D animation and visual effects software.

The flexible solution supports the varying needs of different industries. Within media and entertainment, for example, the goal might be to create virtual worlds for films or games. Those in architecture, engineering, and construction might want to see their innovative building designs come to life within a 3D reality. Manufacturers might look to collaborate on interactive and physically accurate visualizations of potential future products or create realistic simulations of factory floors.

For all organizations, the benefits are significant:

- Empower the workforce: Enhance 3D interactive user collaboration and productivity for remote and distributed teams.

- Boost efficiency: Streamline deployment, management, and support with engineering‑validated designs created in close collaboration with NVIDIA.

- Collaborate securely: Protect data against cyberthreats from the data center to the endpoint with built‑in security features.

Transforming 3D graphics production

At Dell Technologies, we are excited about NVIDIA Omniverse and its ability to enable collaboration for designers, engineers, and other innovators leveraging Dell PowerEdge servers. Dell Validated Design for VDI with NVIDIA Omniverse solutions have the potential to transform every stage of 3D production, a crucial step toward the metaverse. According to the Marvel® database, “The Omniverse is every version of reality and existence imaginable and unimaginable.” The metaverse, in turn, brings together our physical and digital lives with augmented reality, extended reality, and virtual reality in our physical world, much like the Oasis in Ready Player One.

An open platform built for virtual collaboration and real‑time simulation, NVIDIA Omniverse streamlines and accelerates even the most complex 3D workflows by enabling remote workers to collaborate using multiple apps simultaneously. Dell Technologies is committed to offering innovative solutions to help customers manage their collaborative and performance-intensive workflows.

Learn more about Dell Validated Design for VDI with NVIDIA Omniverse.

Research and learn seamlessly from anywhere

Tue, 22 Feb 2022 15:28:11 -0000


Traditionally, designers and engineers requiring 2D/3D visualization and analysis used high-end physical workstations. Deploying, upgrading, and maintaining these workstations is difficult and time-consuming for IT. Using VDI with virtual workstations instead provides an opportunity to overcome many of the challenges of IT management and maintenance as well as offering better collaboration, security, and flexibility.

However, a further complication to delivering a high-quality and consistent user experience in the modern higher education sector is the need to support remote learners with the same standards as are available for on-campus students, even when they are subject to a wide variety of distance and performance impacts in their LAN and WAN environments.

Recently, the Dell Technologies Validated Designs team conducted testing and developed best practices to optimize the remote user experience on professional graphics-accelerated applications for higher education, specifically focusing on geographic information systems (GIS) and engineering computer-aided design (CAD) applications. The resulting paper provides details of performance test results for both baseline local area network (LAN) and remote wide area network (WAN) user experiences with virtual desktop implementations of Esri's ArcGIS Pro on Dell Technologies VDI with NVIDIA virtual GPUs, using NVIDIA’s nVector performance monitoring tool.

Why did we do this?

Measuring end-user experience (EUE) at the VDI desktop or workstation level can be informative if the end users' endpoints are close to the hosting data center (for example, on the same LAN or campus, in the same building or site). But what if the user is remote or doesn’t have a strong WAN connection? Then measuring EUE at the desktop or workstation level is much less representative. In this case, measuring EUE at the actual endpoint device gives a better indication of the remote user experience than relying on data captured at the VDI desktop or workstation.

Testing

We ran the NVIDIA nVector Lite tool to assess the end-user experience. The tool measured the following remote user experience attributes:

● End-user latency: This metric defines the level of response of a remote desktop or application. It measures the duration of any lag that an end-user experiences when interacting with a remote desktop or application. The metric is based on the graphics driver on the user’s endpoint device and is measured along the whole graphics pipeline from the VDI desktop to the remote screen.

● Frame rate: This metric is a common measure of user experience and defines how smooth the experience is. It measures the rate, in frames per second (fps), at which frames are delivered to the screen of the endpoint device.

● Image quality: This metric defines the impact of remoting on image quality. It uses the Structural Similarity Index (SSIM) to compare an image that is rendered on the target desktop/workstation VM with the image that is displayed on the endpoint device. The average SSIM index of all pairs of images is computed for a single point in time for the remote VDI session. The index score is calculated once during the workload so a single value score is given for each workload.
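To make the image quality metric more concrete, here is a minimal sketch of the standard SSIM formula and of the averaging over image pairs described above. It is not the nVector implementation: it computes a single-window (global) SSIM over grayscale pixel values, uses the commonly cited constants 0.01 and 0.03, and the function names `global_ssim` and `average_ssim` are hypothetical. Production SSIM implementations typically use a sliding window over the image instead.

```python
from statistics import fmean


def global_ssim(reference, displayed, dynamic_range=255.0):
    """Single-window SSIM between two equal-length grayscale pixel sequences.

    Assumption: pixel values lie in [0, dynamic_range]. This is the textbook
    formula, not necessarily what the nVector tool computes internally.
    """
    if len(reference) != len(displayed) or not reference:
        raise ValueError("images must be non-empty and the same size")

    # Stabilizing constants from the standard SSIM definition.
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2

    mu_x = fmean(reference)
    mu_y = fmean(displayed)
    var_x = fmean([(p - mu_x) ** 2 for p in reference])
    var_y = fmean([(p - mu_y) ** 2 for p in displayed])
    cov_xy = fmean([(a - mu_x) * (b - mu_y) for a, b in zip(reference, displayed)])

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def average_ssim(image_pairs):
    """Average SSIM over (rendered_on_vm, shown_on_endpoint) image pairs."""
    return fmean([global_ssim(ref, shown) for ref, shown in image_pairs])


if __name__ == "__main__":
    rendered = [0, 50, 100, 150, 200, 250]   # toy "image" rendered on the VM
    shown = [10, 40, 110, 140, 190, 255]     # toy "image" seen at the endpoint
    print(average_ssim([(rendered, rendered), (rendered, shown)]))
```

A score of 1 means the endpoint image is identical to the image rendered on the VM; lower scores indicate more degradation from remoting.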

We got the following test results:

End-user latency

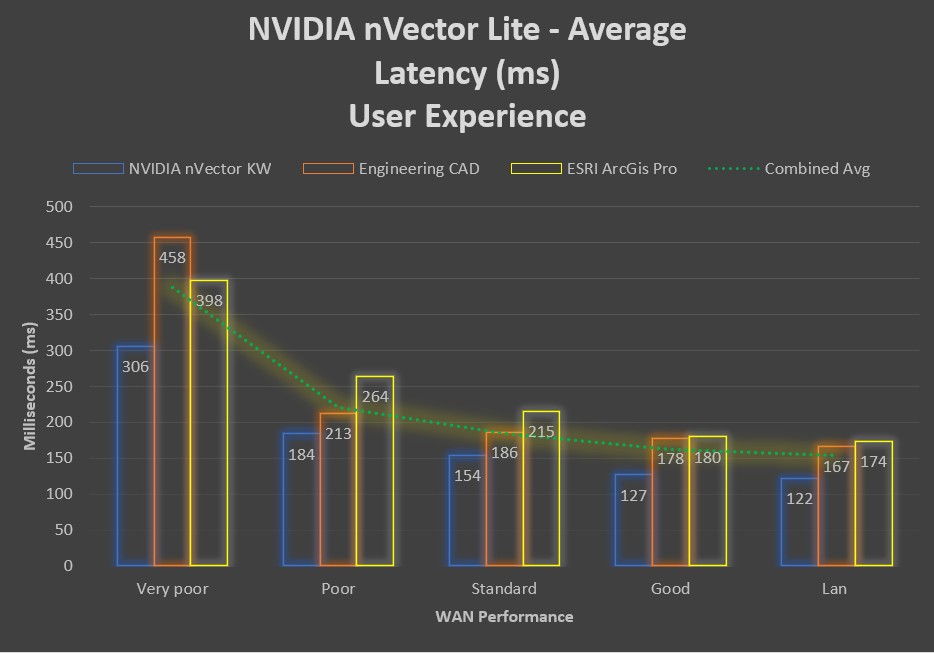

The end-user latency metric defines how responsive a remote desktop or application is. The following figure confirms the common assumption that the better the network connection, the lower the end-user latency. The chart shows that average end-user latency more than doubles for a remote user with a “Very Poor” network connection when compared to an office user with a local LAN connection.

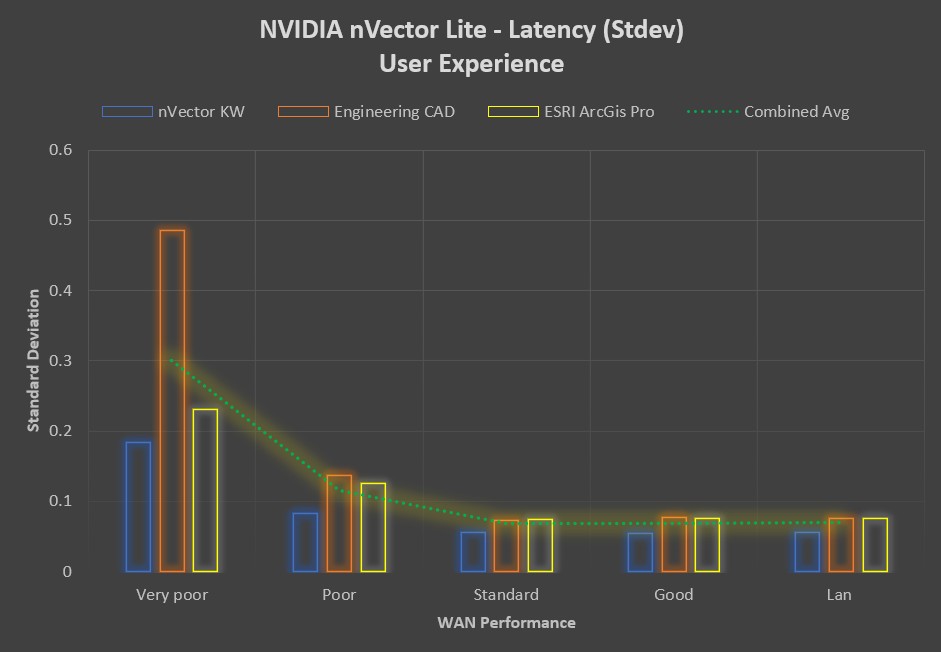

The following figure shows the end-user latency variability between the graphics-accelerated workloads.

Remote users with a “Standard” to “Good” network connection can expect to see the same consistent user experience, with end-user latency distribution well below 500 ms. Latency consistency decreases as the network performance decreases, with outlying values in excess of 2 seconds for specific applications.

Frame rate

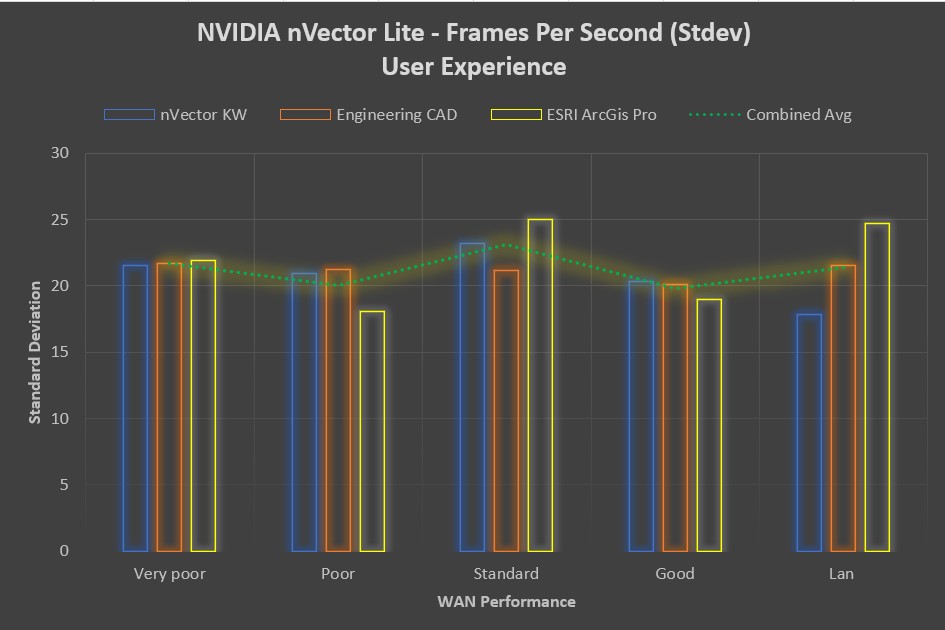

The frame rate metric defines how smooth the end-user experience is. It is a measurement of “smoothness” at an endpoint device when a user is interacting with a remote VDI desktop or application. The nVector tool samples frame rates at 5-second intervals for the duration of the workload.

The higher the number of endpoint frames per second, the smoother the end-user experience. The average fps numbers show a marginal increase with network performance, with minimal variability between the application workloads.

Image Quality

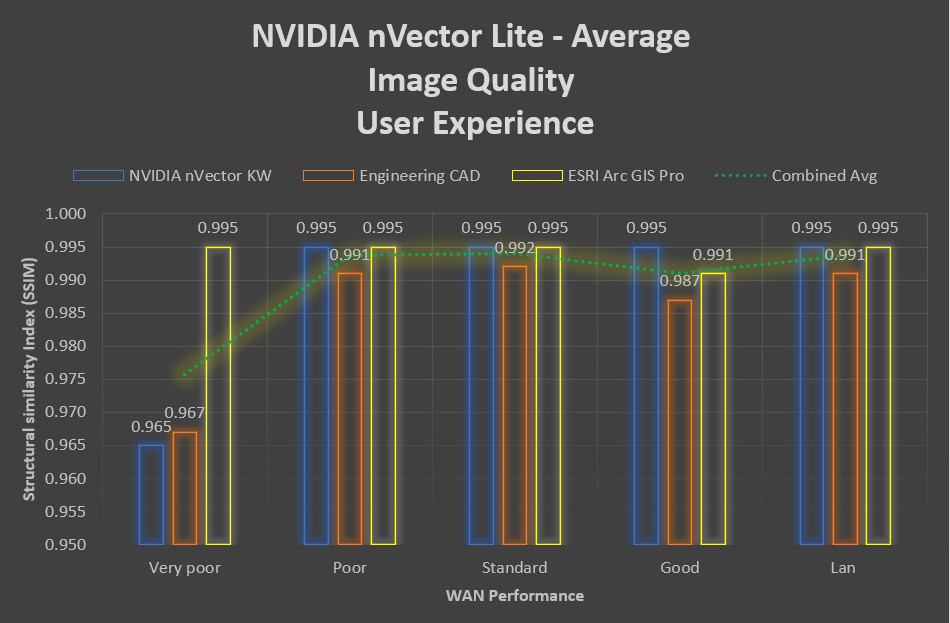

While image quality remained highly consistent across all network categories for some workloads, it showed a noticeable drop in the “Very Poor” network category for others. Remote image quality appears to be related to a combination of the workload and the network performance. The following figure shows the average image quality:

Summary & Conclusions

The dynamics of modern-day education are changing. With diverse user situations, such as working from campus compared to working from home, it has become challenging for IT teams to provide a consistent user experience. While the general intuition that "The better the network performance, the better the end-user experience" holds true, our test results show that a typical remote ArcGIS Pro and engineering CAD user with a "Standard" to "Good" broadband connection can expect the same end-user experience as an on-campus or office LAN user, as measured by NVIDIA's nVector performance monitoring tool.

To learn more, read the technical white paper and check out the Touro College of Dental Medicine customer case study.

VDI Connectivity to the Cloud

Fri, 09 Dec 2022 13:51:47 -0000


Introduction

As the cloud operating model becomes more pervasive, solutions such as virtual desktop infrastructure (VDI) are frequently deployed in a hybrid model, allowing them to take advantage of the benefits of both on-prem and public cloud environments for different use cases and user types.

VMware Cloud Foundation (VCF) is a great hybrid cloud platform for managing VMs. It enables a secure and consistent infrastructure for your VMware Horizon deployment. Once set up, the administrative interfaces make management quite simple; however, understanding all the underlying components is key to a successful deployment. To help you understand these components, I will guide you through a few key areas that need extra attention when setting up VCF so that it can be connected to VMware Cloud on Amazon Web Services (VMC on AWS). Understanding these points when working with your Dell/VMware professional services teams will help ensure a successful deployment.

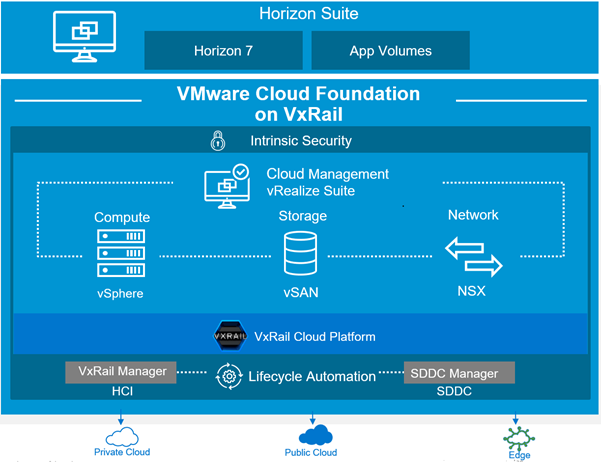

Figure 1: VCF Overview


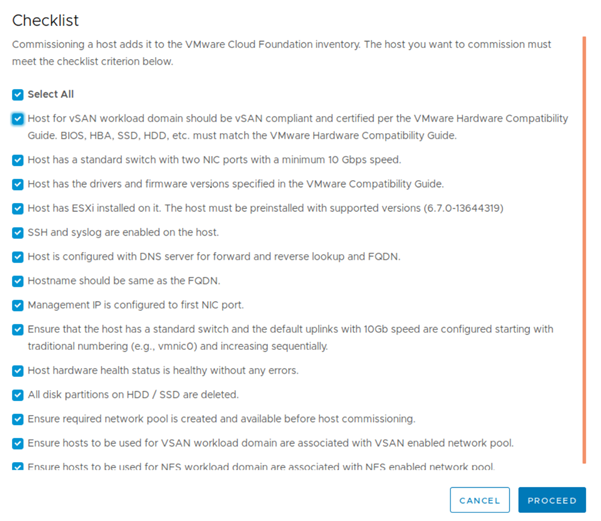

VCF Checklist

The checklist shown in Figure 2 goes through everything you need to have in place before you can deploy VCF. This checklist doesn’t do any system validation, so you should verify manually that these items are in place. Any misconfiguration will cause VMware Cloud Builder to fail, so it is important to make sure this list is complete before you continue.

Figure 2: VCF Checklist

Once VCF has deployed, you can move on to choosing a Virtual Private Network (VPN) configuration and mapping out the network topology.

VPN

The setup of VMware NSX changes depending on which VPN you plan to implement, so it is best to decide on a direction before you start this step. There are three VPN options to use with VMC on AWS. I have laid out some basic pros, cons, and use cases for each option below, along with pointers to the VMware resources that cover the configuration steps for each. However, more research and understanding may be needed to choose the best option for your type of infrastructure.

Route-Based: Uses a routing protocol to tell the peer which networks it can reach. Both the on-prem and cloud sides then use that information to decide which traffic should be sent through the VPN.

Use Case: You need to access multiple subnets or networks at the remote site and want a dynamic routing protocol (BGP, OSPF, etc.) running across the VPN.

| PROS | CONS |
| --- | --- |
| Can configure VPN tunnel redundancy. | Requires a routing protocol. |
| More scalable. | Requires a routed subnet. |

Policy-Based: Uses policies to dictate how different traffic uses the VPN. If the network changes, the policies will have to be changed as well, or the changes will be ignored.

Use Case: You need to access only one subnet or one network at a remote site using VPN.

| PROS | CONS |
| --- | --- |
| Remote access VPN can be used. | When the local network changes, the policies must be manually updated. |
| Easier to set up. | No tunnel redundancy. |

Direct Connect: Provides a private connection for workloads that require higher speed and lower latency between cloud and on-prem.

Use Case: If you are prohibited from transferring sensitive data across the public internet or need to run a workload that requires a low latency connection to the cloud.

| PROS | CONS |
| --- | --- |
| Low latency | Higher costs |
| Private connection between on-prem and AWS | |
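The use cases above can be summarized as a simple decision helper. The sketch below only encodes the pros, cons, and use cases listed in this post; the class, field, and function names are hypothetical, and a real design decision should also take into account the VMware documentation and your own infrastructure constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectivityNeeds:
    """Hypothetical description of what a site needs from the connection."""
    remote_subnets: int                         # subnets/networks to reach at the remote site
    needs_dynamic_routing: bool = False         # e.g. BGP or OSPF across the tunnel
    needs_tunnel_redundancy: bool = False
    needs_private_low_latency_link: bool = False  # no public internet, low latency


def suggest_connectivity(needs: ConnectivityNeeds) -> str:
    """Return 'direct-connect', 'route-based', or 'policy-based'."""
    # Sensitive data that must stay off the public internet, or
    # latency-critical workloads, point to Direct Connect.
    if needs.needs_private_low_latency_link:
        return "direct-connect"
    # Multiple subnets, dynamic routing, or tunnel redundancy point to route-based.
    if (needs.remote_subnets > 1
            or needs.needs_dynamic_routing
            or needs.needs_tunnel_redundancy):
        return "route-based"
    # A single subnet with static needs is the simplest case.
    return "policy-based"


if __name__ == "__main__":
    print(suggest_connectivity(ConnectivityNeeds(remote_subnets=1)))
    print(suggest_connectivity(ConnectivityNeeds(remote_subnets=4, needs_dynamic_routing=True)))
```

Direct Connect is checked first because, in the use case above, it is driven by hard requirements (data that may not cross the public internet, or low latency) rather than by network topology.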

VMware NSX

The NSX setup changes depending on which VPN is being set up. While most of the core components stay the same, there are minor configuration differences. See the following VMware documentation for how NSX is configured for each VPN setup.

NSX Configuration Policy Based

NSX Configuration Direct Connect

Once the VPN and NSX are set up and working on-prem, you can use these as guidelines to show how the set-up is done on the VMC on AWS side.

- VPN & Hybrid Linked Mode

- Hybrid Cloud Extension

- HCX Interconnect and Network Extension

- Live Migration

- Disaster Recovery

Hopefully, this post has helped you understand the different components needed to get connected to the cloud and how these components fit together.

References:

To get more information on Dell EMC Ready Solutions for VDI, visit our info hub page:

https://infohub.delltechnologies.com/t/solutions/vdi/

VCF with VxRail ordering Guide:

Provides guidance for setting up VDI on Dell Technologies Cloud Platform (DTCP) using VMware Horizon:

https://infohub.delltechnologies.com/section-assets/h18160-vdi-dtcp-horizon-reference-architecture

VDI on Dell Technologies Cloud Platform – Part 1: Introduction

Fri, 09 Dec 2022 13:47:56 -0000


The way we work is changing. With more employees working from home and outside the office on flexible schedules, organizations are shifting towards digital workspaces. Digital workspaces allow employees to access their applications and data from anywhere, anytime, across any device. The flexibility offered by digital workspaces fosters collaboration and enhances the productivity of employees.

Virtual desktop infrastructure (VDI) is an enabling technology for workspace transformation initiatives. A growing number of organizations rely on VDI for providing accessibility to business applications and data while ensuring a secure and superior user experience. VDI provides the agility, security, and centralized management that are critical to successful workspace transformation initiatives.

According to a survey by market intelligence company IDC, 93 percent of customers will deploy their workloads across two or more clouds. A multi-cloud approach comes with its unique benefits, and VDI is a workload that takes full advantage of it. For example, VDI customers can utilize the flexibility and economics of the multi-cloud approach by extending their on-premises infrastructure for seasonal demand spikes and/or can also host a disaster recovery (DR) environment on the public cloud.

However, a multi-cloud approach can increase complexity by creating multiple management and operational silos. Due to the difference in the architecture and environments of the multiple clouds involved, workload migrations are often complicated. Maintaining consistent and efficient security is challenging with multiple cloud providers, and existing security best practices adopted by your organization may not be portable across a multi-cloud environment. The best solution to overcome these challenges is a hybrid cloud approach that offers consistent operations and infrastructure.

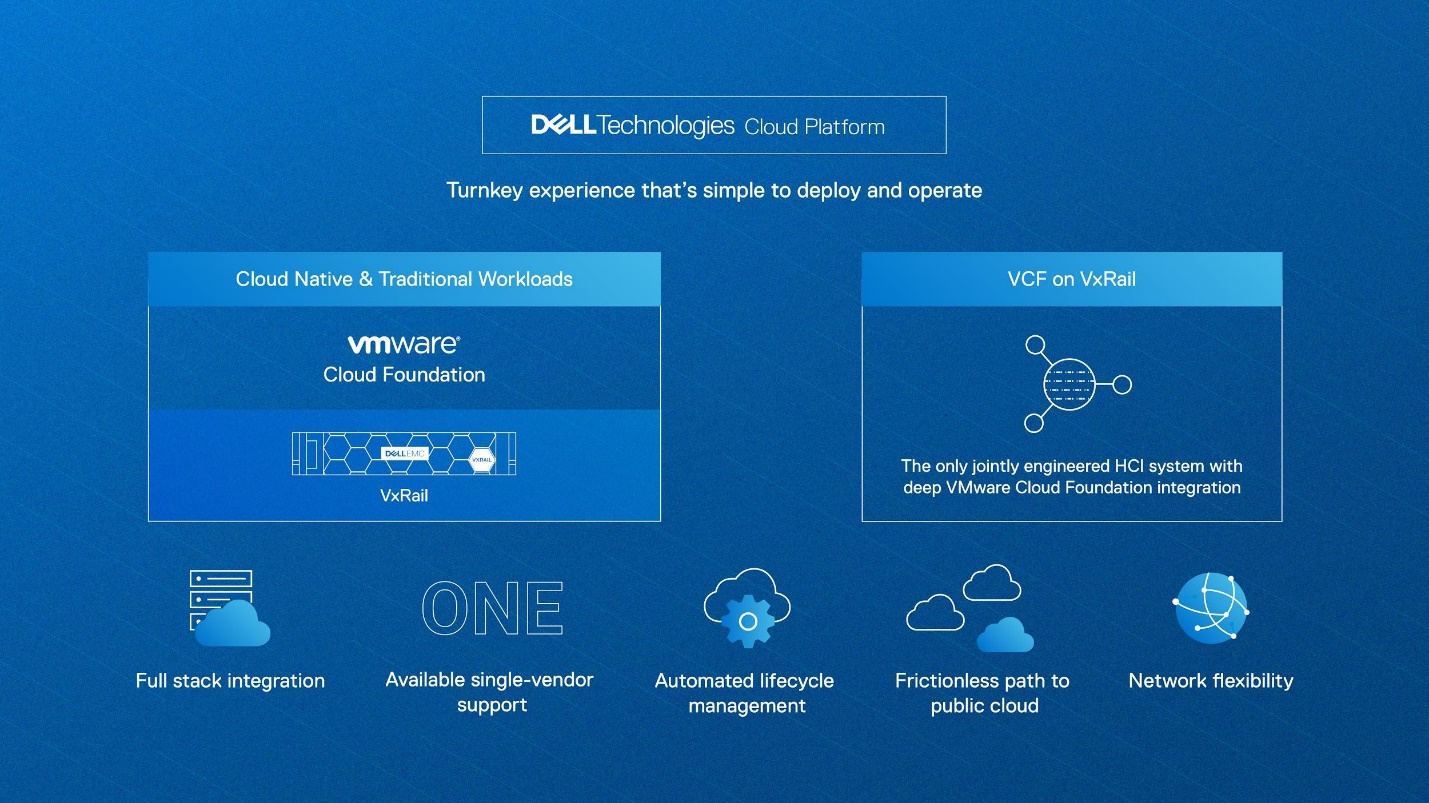

VCF on VxRail, the Dell Technologies Cloud Platform¹ (DTCP), takes the complexity out of a multi-cloud environment by offering true hybrid compatibility and facilitating consistent operations across private and public cloud environments. DTCP is an on-premises infrastructure based on industry-leading Dell EMC VxRail hyper-converged infrastructure running VMware Cloud Foundation (VCF). It offers options to extend your infrastructure to Dell Technologies’ partner public clouds, providing choice and flexibility. DTCP allows you to build a standardized VMware Software-Defined Data Center (SDDC) architecture that provides a consistent infrastructure connecting your on-premises and public clouds.

Figure 1: VCF on VxRail, Dell Technologies Cloud Platform

Let’s see how you can benefit from a VDI solution based on VMware Horizon running on DTCP.

VMware Horizon on DTCP

Dell Technologies offers a tested and validated VMware Horizon solution running on DTCP for your VDI workloads. Horizon on DTCP allows you to leverage a software-defined infrastructure for compute, storage, networking, and security with the market-leading capabilities of VMware Horizon for a complete, secure, and easy-to-operate desktop and application virtualization solution. The native integration of VxRail Manager with SDDC Manager offers automation and simplifies lifecycle management for your entire VDI stack, including hardware. With VMware NSX², you can secure east-west traffic within your data center by creating fast and simple network policies that follow virtual desktops. The micro-segmentation feature of NSX creates a perimeter defense around the virtual desktops, eliminating unauthorized access between virtual desktops and adjacent critical workloads.

Our Horizon solution architecture aligns with the VMware Horizon Cloud Pod Architecture (CPA)³. CPA allows you to join multiple pods to form a single Horizon implementation. This pod federation spans multiple sites, simplifying the administration effort that is required to manage a large-scale Horizon deployment. See the ‘VDI on DTCP using VMware Horizon’ reference architecture guide⁴ available on the VDI InfoHub for more details on our validated solution.

VMware Horizon on DTCP offers a hybrid platform where you can easily enable public cloud use-cases like provisioning additional capacity and DR. With DTCP, you can have an extended Horizon deployment on one of our public partner clouds such as VMware Cloud (VMC) on AWS. VMC on AWS delivers VMware SDDCs as a service on the AWS cloud. If you already have a Horizon installation on-premises on VMware SDDC, you can leverage those skills to build a Horizon infrastructure on VMC on AWS. You get a unified architecture, operational consistency, and a similar feature set for Horizon across on-prem and VMC on AWS.

Conclusion

DTCP can offer you a true hybrid cloud experience by delivering consistent operations and infrastructure for your VDI workloads across a multi-cloud environment. By running VDI on DTCP powered by Dell EMC VxRail hyper-converged infrastructure, you can enable typical VDI use-cases like provisioning additional capacity and DR in a simple, flexible and cost-effective manner.

DTCP is also available with subscription pricing⁵, which gives you freedom of choice between CapEx and OpEx models. You can start small, easily scale, and align with growing business needs.

I hope you enjoyed reading part 1 of this blog series. In part 2, we will discuss the public cloud interoperability use-cases of VMware Horizon on DTCP in detail. Stay tuned!

Additional Resources

- DTCP: https://www.delltechnologies.com/en-in/solutions/cloud/vmware-cloud-foundation-on-vxrail.htm

- NSX for Horizon: https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/products/horizon/vmware-nsx-with-horizon.pdf

- Horizon Cloud Pod Architecture: https://techzone.vmware.com/resource/workspace-one-and-horizon-reference-architecture#sec10-sub2

- VDI on DTCP using VMware Horizon: https://infohub.delltechnologies.com/section-assets/h18160-vdi-dtcp-horizon-reference-architecture

- DTCP with the subscription: https://www.dellemc.com/en-us/collaterals/unauth/offering-overview-documents/products/dell-technologies-cloud/h18181-dell-technologies-cloud-platform-with-subscription-solution-brief.pdf

A VMware Horizon solution on Dell EMC PowerEdge R7525 servers based on 2nd Gen AMD EPYC processors

Mon, 12 Dec 2022 13:38:14 -0000


Many VDI deployments experience performance issues and poor user experience when trying to maintain a cost-effective consolidation ratio. A higher consolidation ratio of virtual machines to physical servers offers better economics and lower Total Cost of Ownership (TCO). The amount of TCO benefits might vary depending on the size of your VDI environment. It is a challenge for today’s organizations to deploy a cost-effective VDI environment while striking the right balance between performance and density.

The Dell Technologies Ready Solutions for VDI team provides a solution that resolves these challenges. It uses VMware Horizon based on Dell EMC PowerEdge R7525 servers equipped with new 2nd Gen AMD EPYC processors. The PowerEdge R7525 is a highly scalable, two-socket 2U rack server that delivers powerful performance and flexible configuration options. The servers are equipped with 2nd Gen AMD EPYC processors that can accommodate up to 64 cores per socket. A dual-socket R7525 server can have up to 128 cores, providing excellent user densities and a lower TCO for your VDI deployment. This solution offers you the flexibility to correctly size your VDI environment for performance and an exceptional end-user experience.

In this blog, we will discuss the key benefits of this solution and the results of performance testing carried out by the Dell Technologies Ready Solutions for VDI team.

Key benefits of the solution

- High performance and density: PowerEdge R7525 servers based on 2nd Gen AMD EPYC processors are designed for performance. With a high number of cores per CPU socket, you can achieve higher user densities per server.

- Lower security risks with a diverse CPU architecture: The 2nd Gen AMD EPYC processors in this solution present an opportunity to diversify the CPU architecture within your data center. A data center with diverse CPU architecture poses a lower risk to your organization during security threats. Customers can move business-critical data to an appropriate and safe environment while a security event is resolved. With AMD Infinity Guard, which includes the AMD secure processor, Secure Memory Encryption (SME), and Secure Encrypted Virtualization (SEV) capabilities, you can minimize potential attack surfaces and deploy your workloads with confidence.

- Excellent graphics capability: The solution also offers excellent graphics performance with the capability of hosting up to 6 NVIDIA T4 cards (each with x16 PCIe lane access) on the PowerEdge R7525 server, providing up to 96 GB of graphics frame-buffer per server.

Solution performance testing

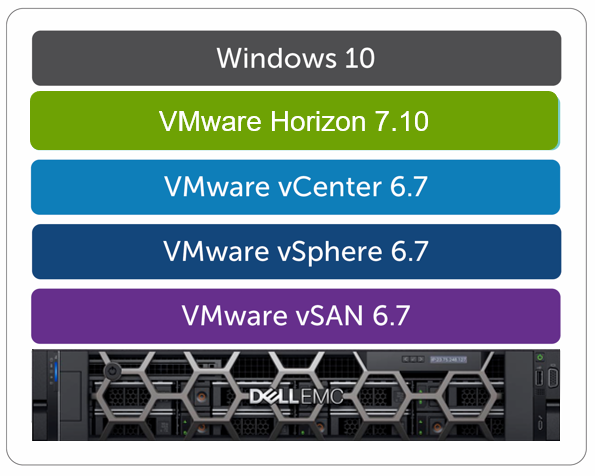

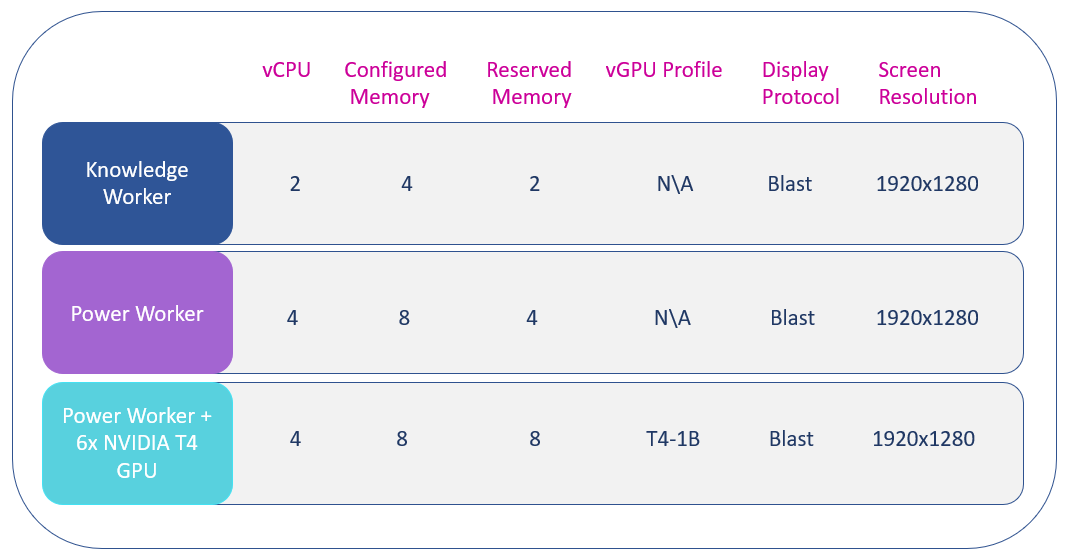

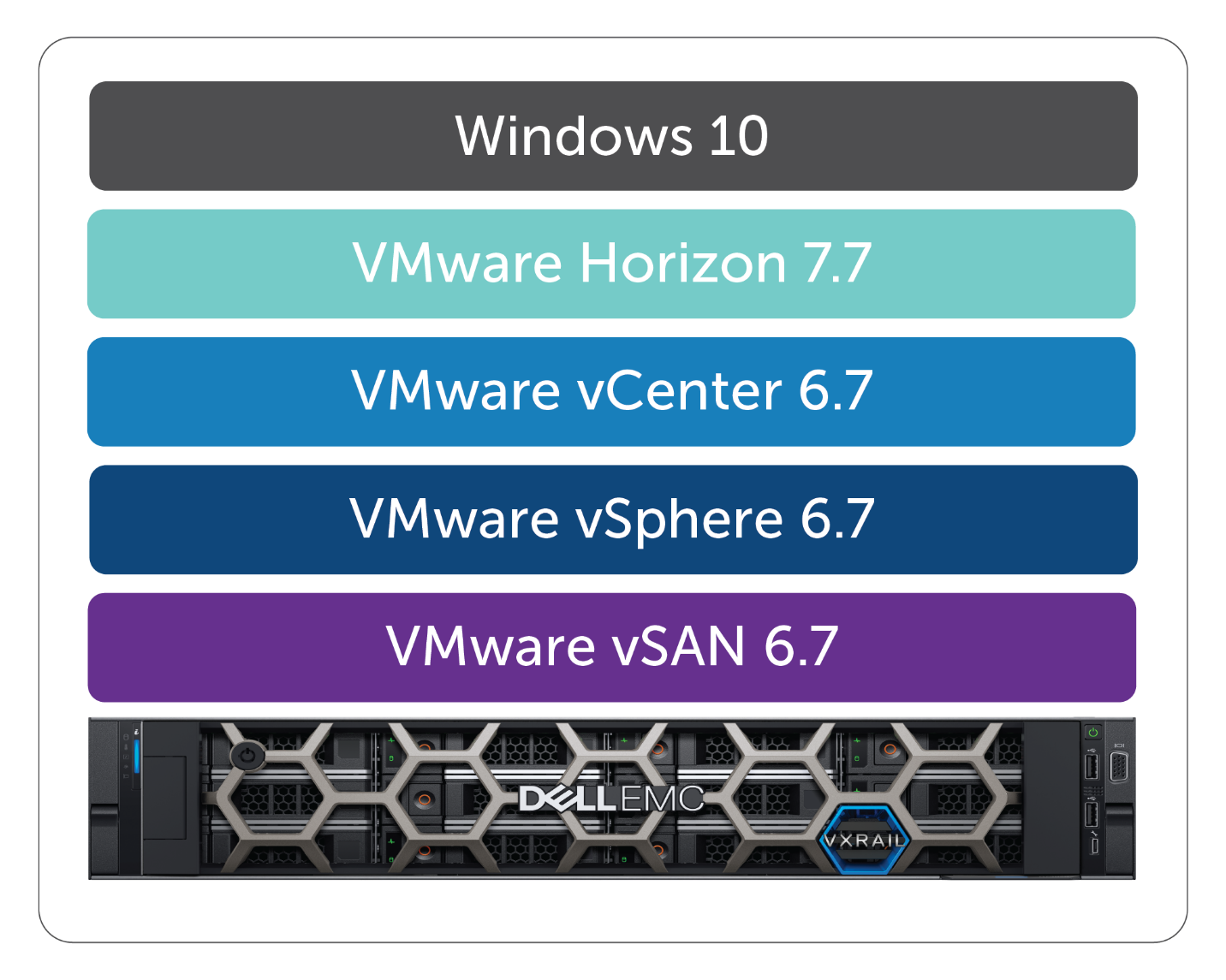

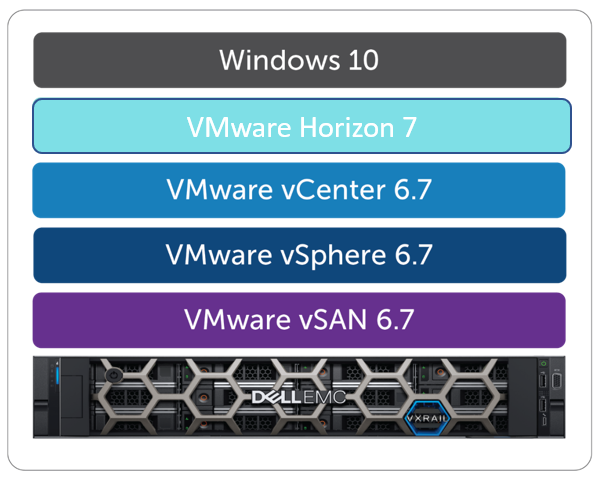

The Dell Technologies Ready Solutions for VDI team used the Login VSI benchmark tool for performance testing. We performed testing on a 3-node VMware vSAN cluster based on PowerEdge R7525 servers with a ‘Density Optimized’ configuration. VMware ESXi 6.7 update 3 was used as the hypervisor and the Horizon 7 virtual desktops were provisioned by instant clones. See Figure 1 for the solution stack.

Figure 1: VMware Horizon on PowerEdge R7525 solution stack

The environment configuration was:

- PowerEdge R7525 server (Density Optimized configuration)

- 2 x AMD EPYC 7502 (32 core @2.5 GHz)

- 1024 GB (16 x 64 GB @ 3200 MHz)

- 2 x 800 GB WI SAS SSD (cache)

- 4 x 1.92 TB MU SAS SSD (capacity)

- Mellanox ConnectX-5 25 GbE dual-port SFP28

- 6 x NVIDIA T4

- vSAN all-flash datastore

- VMware ESXi 6.7u3 hypervisor

- VMware Horizon 7.10 VDI software layer
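As a quick sanity check on the per-host figures, the configuration above can be expressed as a small data structure. This is only an illustrative sketch: the `HostConfig` class and its field names are hypothetical, and the 16 GB of frame buffer per NVIDIA T4 is an assumption that is consistent with the "up to 96 GB per server" figure quoted earlier for six cards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostConfig:
    """Hypothetical summary of one host in the tested cluster."""
    sockets: int
    cores_per_socket: int
    dimm_count: int
    dimm_size_gb: int
    gpu_count: int
    gpu_memory_gb: int  # assumption: 16 GB per NVIDIA T4

    @property
    def total_cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def total_memory_gb(self) -> int:
        return self.dimm_count * self.dimm_size_gb

    @property
    def total_gpu_memory_gb(self) -> int:
        return self.gpu_count * self.gpu_memory_gb


# Density Optimized configuration as listed above.
density_optimized = HostConfig(
    sockets=2, cores_per_socket=32,   # 2 x AMD EPYC 7502
    dimm_count=16, dimm_size_gb=64,   # 16 x 64 GB
    gpu_count=6, gpu_memory_gb=16,    # 6 x NVIDIA T4
)

if __name__ == "__main__":
    print(density_optimized.total_cores)          # physical cores per host
    print(density_optimized.total_memory_gb)      # GB of RAM per host
    print(density_optimized.total_gpu_memory_gb)  # GB of GPU frame buffer per host
```

Note that the tested hosts use 32-core processors, so each host has 64 physical cores rather than the 128-core maximum the platform supports.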

See Table 1 for the VM configuration that we tested for different Login VSI workloads. For details of the test environment, configuration and testing process and an analysis of the test results, see the Reference Architecture Guide available on the Dell Technologies VDI Infohub.

Table 1: Virtual machine configuration for different Login VSI workloads

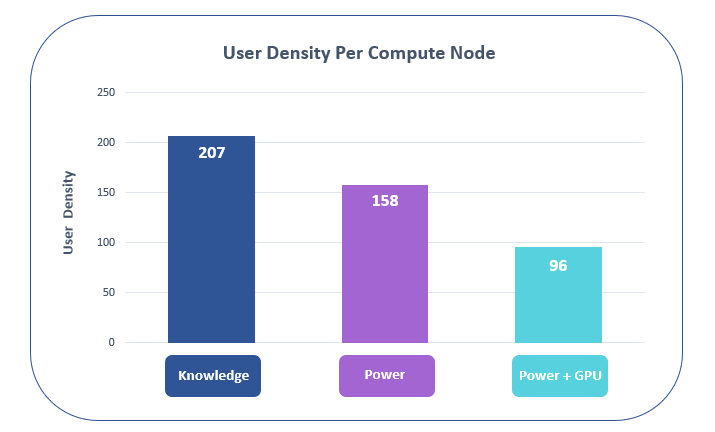

Figure 2 shows the recommended density figures per host for Login VSI workloads based on our performance testing. We recommend these density figures after monitoring and analyzing a combination of host utilization parameters (CPU, memory, network, and disk utilization) and Login VSI results. We monitored the relevant host utilization parameters and applied relatively conservative thresholds for the Login VSI testing. The thresholds are carefully selected to deliver an optimal combination of excellent end-user experience and cost per user while also providing burst capacity for seasonal or intermittent spikes in usage.

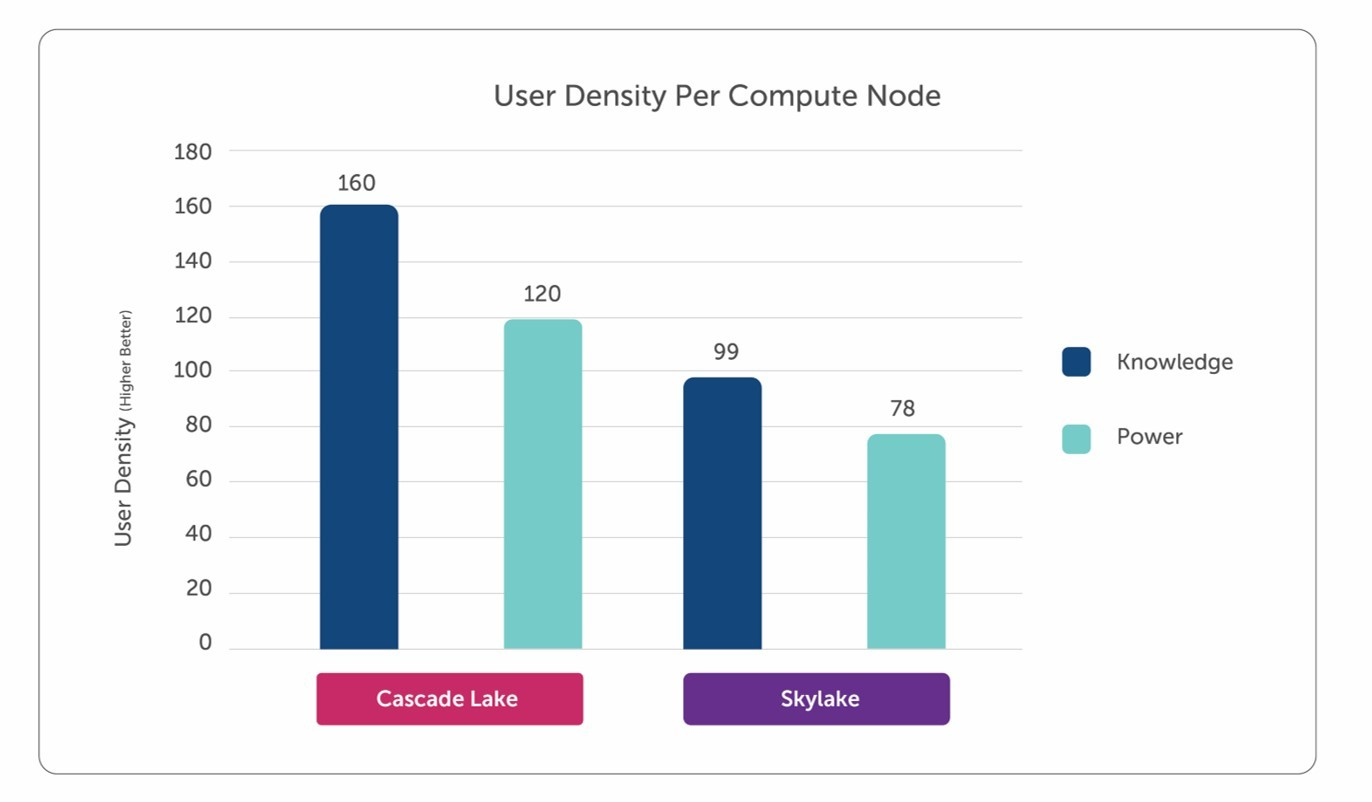

Figure 2: Horizon on PowerEdge R7525 solution user density figures

Conclusion

Our performance testing achieved excellent consolidation ratios for the solution while maintaining good performance for typical VDI workloads. PowerEdge R7525 servers based on AMD processors are dual-socket systems that can host up to 128 cores per server, increasing user density within a VDI environment.

If you are running a mixed workload on your hypervisor, including your VDI workload, note that VMware licensing has a limitation for CPUs with more than 32 cores. See the licensing details here. However, this limitation doesn't apply to the VMware vSphere Desktop edition, which is intended only for desktop virtualization deployments and is licensed based on powered-on desktop virtual machines.

The high CPU core density per server results in exceptional user densities and high performance for VDI workloads. The 2nd Gen AMD EPYC processors with high core counts present an opportunity to design your VDI environment with CPU oversubscription ratios that result in the right balance between performance and user density.
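To illustrate what a CPU oversubscription ratio means when sizing, here is a minimal sketch. The function names are hypothetical, and the desktop counts in the example are made-up numbers for illustration only; they are not the density figures from our testing, which are shown in Figure 2.

```python
def cpu_oversubscription_ratio(desktops: int, vcpus_per_desktop: int,
                               physical_cores: int) -> float:
    """Ratio of allocated vCPUs to physical cores on one host."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return desktops * vcpus_per_desktop / physical_cores


def max_desktops_for_ratio(target_ratio: float, vcpus_per_desktop: int,
                           physical_cores: int) -> int:
    """Largest desktop count that stays at or below the target ratio."""
    if vcpus_per_desktop <= 0:
        raise ValueError("vcpus_per_desktop must be positive")
    return int(target_ratio * physical_cores // vcpus_per_desktop)


if __name__ == "__main__":
    # Hypothetical example: 200 desktops with 2 vCPUs each on a 64-core host.
    print(cpu_oversubscription_ratio(200, 2, 64))
    # Hypothetical example: how many 2-vCPU desktops fit at a 6:1 target.
    print(max_desktops_for_ratio(6.0, 2, 64))
```

A higher ratio increases user density but leaves less CPU headroom per desktop, which is why the right value depends on the workload profile.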

Dell EMC VxBlock 1000 and VDI – A Real Better Together Story

Wed, 19 Aug 2020 23:24:12 -0000


Originally published on August 21, 2019

Enterprises driving towards digital transformation need IT infrastructure designed to support those initiatives. Along with modernizing applications and the underlying infrastructure, it is equally important to focus on transforming the workspaces of end-users. End-user experience is an essential aspect of digital transformation initiatives that depend on the performance and responsiveness of digital workspaces.

Virtual desktop infrastructure (VDI) is the heart of digital workspace implementations. Enterprises planning for workspace transformation rely on VDI to provide a seamless desktop experience to their employee workspaces. VDI provides the agility, security, and centralized management that are critical to successful workspace transformation initiatives.

In this blog, we will discuss how the latest Dell EMC VxBlock System 1000 converged infrastructure and VDI together form a better strategy for your digital transformation initiatives.

VxBlock 1000 converged infrastructure is a combination of best-in-breed technologies from Dell EMC, Cisco, and VMware. You get a turnkey product that is engineered, manufactured, managed, supported, and most importantly sustained as one system.

Figure 1: VxBlock System 1000

VxBlock 1000 from Dell EMC offers you a design that ensures your IT infrastructure can support next-generation technologies and meet performance, agility, scalability, and simplicity requirements for digital transformation. With the flexibility to choose from Dell EMC’s powerful portfolio of storage and data protection products, and from Cisco’s compute and networking products, you can consolidate and run a variety of workloads, including mission-critical ones such as SAP, Microsoft SQL, Oracle, Epic, and VDI on a single platform. From a life-cycle management (LCM) perspective, the integration of VxBlock Central software with VMware’s vRealize Orchestrator and vRealize Operations software delivers cloud operations faster and more efficiently. VxBlock 1000 simplifies LCM by performing upgrades and patches 6 times faster than traditional 3-tier system management. For more information regarding the benefits of VxBlock 1000, see the Dell EMC VxBlock website.

The migration of applications to the public cloud is sometimes regarded as a step forward towards reaching the goal of digital transformation. However, most enterprises quickly find that the public cloud is not a one-size-fits-all solution for hosting their applications. Some applications are better suited to hosting on-premises for a variety of reasons, including security, operational control, and data governance. So, the ‘hybrid cloud’ is emerging as the model that best fits the needs of many enterprises embracing digital transformation. VxBlock System 1000 can be the foundation for your hybrid cloud infrastructure to speed up your efforts towards digital transformation. You can read more about VxBlock 1000 and digital transformation in this Dell EMC blog. And, for the majority of the applications running on-premises, enterprises can use VDI infrastructure running on VxBlock 1000 to present these applications on end-users’ digital workspaces, while reaping all the benefits of a VDI solution.

Enterprises with VxBlock 1000 can run VDI workloads in combination with high-value workloads like SAP, Oracle, Microsoft SQL, and Epic on a common platform. In an enterprise-scale VDI environment, VxBlock 1000 can both scale up and scale out seamlessly to support additional users. The VxBlock 1000 architecture supports the granular scalability of each component independently, whether it is the compute, networking, or storage, thus providing flexibility and agility for your VDI environment. If you are a large enterprise running a VDI workload, you are likely to get a lower Total Cost of Ownership (TCO) when using VxBlock 1000, compared to a DIY design approach or public cloud offering.

Dell EMC now offers an integrated, validated, and tested solution for a VMware Horizon 7 VDI running on VxBlock Systems 1000. In our testing, we have found that such an environment provides excellent performance and an exceptional user experience, both of which are paramount for your workspace transformation initiatives. For design options, a validated architecture, and best practices, see the Design and Validation Guides available under "Designs for VMware Horizon on VxBlock System 1000" on our VDI Info Hub for Ready Solutions website.

Come find out more about the benefits of a Dell EMC VDI solution based on VxBlock 1000 at VMworld US 2019, where we will be discussing the solution in the Dell EMC Infrastructure Solutions Group booth and in our executive suite (click to register for the event). We look forward to seeing you there.

In the next blog, we will discuss in detail why the Dell EMC VxBlock 1000 is an ideal choice for running Enterprise-Scale and Multi-Workload VDI Environments. Stay tuned, and we’d love to get your feedback in the comments section!

Published By

Anand Johnson

Principal Engineer at Dell EMC, Technical Marketing, Ready Solutions for VDI

VDI Data Protection Part 1: Protecting Your VDI Environment - What You Need to Consider

Tue, 11 Jul 2023 18:44:31 -0000

|Read Time: 0 minutes

Virtual Desktop Infrastructure (VDI) plays a crucial role in today's business transformation initiatives. Although there is an increase in SaaS-based and cloud native applications, the majority of the applications in today's enterprises continue to be Microsoft Windows-based applications. VDI is the most efficient way to present these Windows applications to end users in their digital workspaces, and provides a consistent user experience across user devices for the modern-day mobile workforce. Organizations increasingly rely on VDI to provide the agility, security, and centralized management that are so important for their workforce.

According to the Global Data Protection Index survey by Dell EMC, organizations managed an average of 9.7PB of data in 2018 - representing an explosive growth of 569% compared to the 1.45PB managed in 2016. What’s worrying is that the number of businesses unable to recover data after an incident nearly doubled between 2016 and 2018. This information is alarming because incidents such as these can cause substantial monetary losses, reduced employee productivity, and damage to the reputation of the affected organizations. VDI environments are arguably the most critical workload in the corporate data center because they impact user desktops and user data - the primary gateways to user productivity. Any loss or reduced availability of these components will directly impact both user productivity and the business.

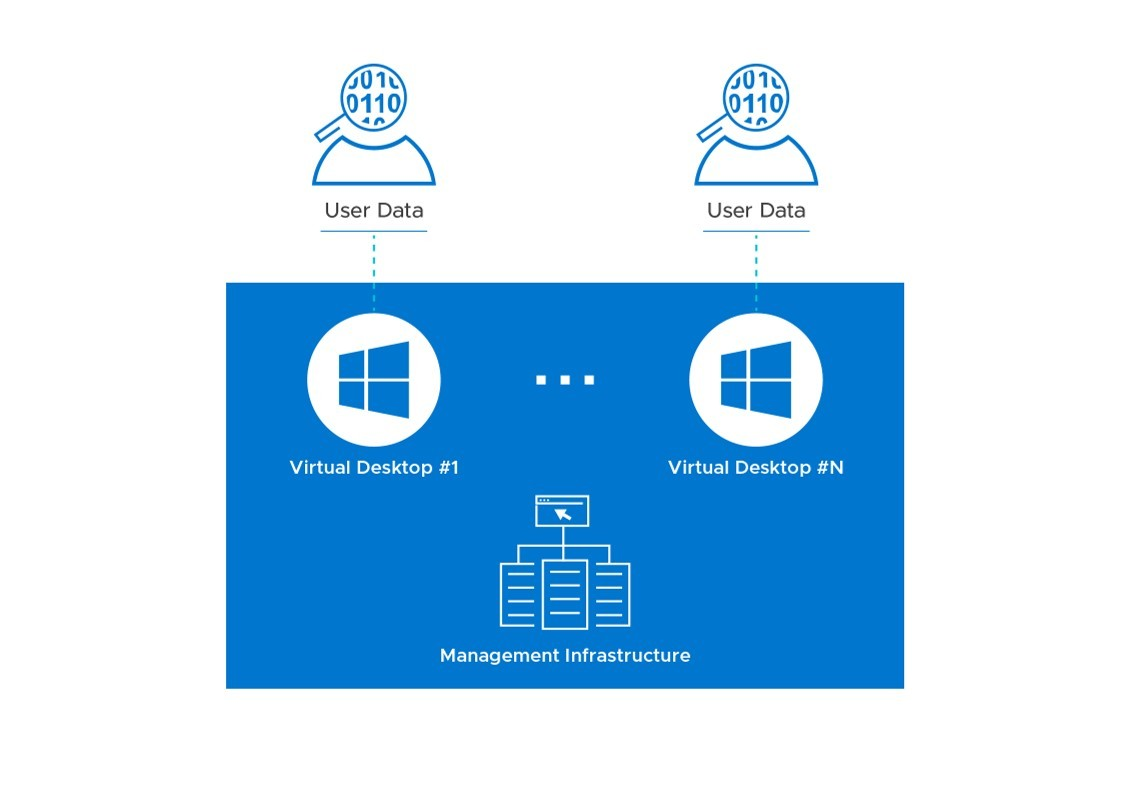

For physical desktops and laptops, data protection is often restricted to storing user data on a shared folder somewhere in the organization's network, then protecting that folder. But when it comes to VDI, virtual desktops reside in the data center, and it is the responsibility of IT to not only protect the user-specific data but also to protect the desktop and associated desktop management infrastructure.

The success of a VDI data protection plan depends on these classic data protection parameters:

- Availability -- the percentage of time that a service/application (in this case the VDI environment) is available. Five-nines or 99.999% availability means 5 minutes, 15 seconds or less of downtime in a year.

- Recovery time objective (RTO) -- the maximum acceptable elapsed time between an incident and the point at which virtual desktops are available again

- Recovery point objective (RPO) -- the time period (minutes, hours) of acceptable user and configuration data loss from the VDI environment prior to when the incident occurred

For example, a policy might state that we must restore service within 4 hours (RTO) with no more than 1 hour of data loss (RPO). A robust data protection plan is necessary to ensure that availability, RTO, and RPO objectives are met. Such a plan will require the protection of all essential components of the VDI environment to ensure that the plan meets its service level agreement (SLA) to avoid business impact.
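To make these parameters concrete, here is a minimal Python sketch that converts an availability target into the yearly downtime it allows and checks an incident against the example policy (4-hour RTO, 1-hour RPO). The function names and the sample incident figures are illustrative assumptions, not part of any Dell EMC or VMware tool.

```python
from datetime import timedelta

MINUTES_PER_YEAR = 365 * 24 * 60  # non-leap year


def allowed_downtime(availability_pct: float) -> timedelta:
    """Return the maximum yearly downtime permitted by an availability target."""
    unavailable_fraction = 1 - availability_pct / 100
    return timedelta(minutes=MINUTES_PER_YEAR * unavailable_fraction)


def meets_policy(outage: timedelta, data_loss: timedelta,
                 rto: timedelta, rpo: timedelta) -> bool:
    """True when the outage is within the RTO and the data loss is within the RPO."""
    return outage <= rto and data_loss <= rpo


# Example policy from the text: restore within 4 hours, lose at most 1 hour of data.
RTO = timedelta(hours=4)
RPO = timedelta(hours=1)

print(allowed_downtime(99.999))
# Hypothetical incident: 3 hours of downtime, 45 minutes of lost data.
print(meets_policy(timedelta(hours=3), timedelta(minutes=45), RTO, RPO))
```

For the five-nines target, the first call yields roughly 5 minutes 15 seconds, which agrees with the availability figure quoted in the list above.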

As shown in Figure 1 below, a VDI environment typically consists of management infrastructure, desktop infrastructure, and user data components (often a file share or dedicated unstructured data storage platform such as Dell EMC Isilon) where user data is stored. The functions of these layers are summarized below:

- The management layer performs the provisioning, brokering, policy management, and related management functions

- The desktop layer is the user’s desktop, which is often made available to multiple users using an appropriate cloning technology

- The third layer is the user data (stored in user profile shares, home folders, etc.)

A VDI data protection plan should cover all three of these component layers.

Figure 1: Components of a VDI Environment that Require Protection

The availability and recoverability goals described above will determine the overall design for your VDI infrastructure. The level of redundancy and other factors will vary depending on whether it will be a single-site or multi-site design. The operational backup of data and the disaster recovery plan, two major aspects of data protection, will vary across organizations based on these parameters. Careful consideration needs to be given to these requirements during the design of the VDI infrastructure, to meet the Service Level Agreement (SLA).

In the next installments of this blog series on data protection for VDI, we will discuss in detail how these objectives can be met by describing some of the important considerations for multi-site disaster recovery, single-site protection, and what the future of data protection for VDI environments in a multi-cloud world might look like. The next blog will be a deep-dive on VDI multi-site disaster recovery from a Dell EMC perspective. Stay tuned and we’d love to get your feedback!

Published By

Anand Johnson

Principal Engineer at Dell EMC, Technical Marketing, Ready Solutions for VDI

VDI Data Protection - Part 2: A Deep Dive into VMware Horizon 7 Multi-Site Scenarios

Mon, 12 Dec 2022 21:26:47 -0000

|Read Time: 0 minutes

In the first part of this blog series, VDI Data Protection - Part 1: Protecting Your VDI Environment - What You Need to Consider, we discussed the major components of virtual desktop infrastructure (VDI) data protection and the parameters involved in selecting a data protection plan that is right for your VDI environment. As discussed in that blog post, disaster recovery and operational backup are two significant aspects of data protection.

A VDI outage directly impacts user productivity in ways that are usually measurable by the organization. Even if your mission-critical application servers and databases are online, users still need access to their desktops to be productive. So, it is important to have an adequately formulated Business Continuity Plan or Disaster Recovery (DR) Plan for VDI that aligns with the organization’s business objectives.

A complete risk assessment of the likely disaster scenarios and their business impact should be done to formulate an optimal DR plan for your VDI environment. Disaster recovery protection and execution come with a cost, so you should strike the right balance between cost and the availability of VDI services required by the organization. You should:

- Divide the VDI user groups into segments based on the criticality of the applications they use.

- Establish an optimal Recovery Point Objective (RPO) and Recovery Time Objective (RTO) for each desktop group so that a disaster will have the least impact on your business while also conforming with the organization’s budget. (RTO is the elapsed time until virtual desktops are available or recovered after an incident. RPO is the acceptable time duration (in minutes or hours) of data loss from a VDI environment in the event of a disaster.)

In this blog, we will deep dive into the different multi-site approaches used in a VMware Horizon 7 VDI Disaster Recovery plan.

Multi-Site Horizon 7 VDI with Cloud Pod Architecture

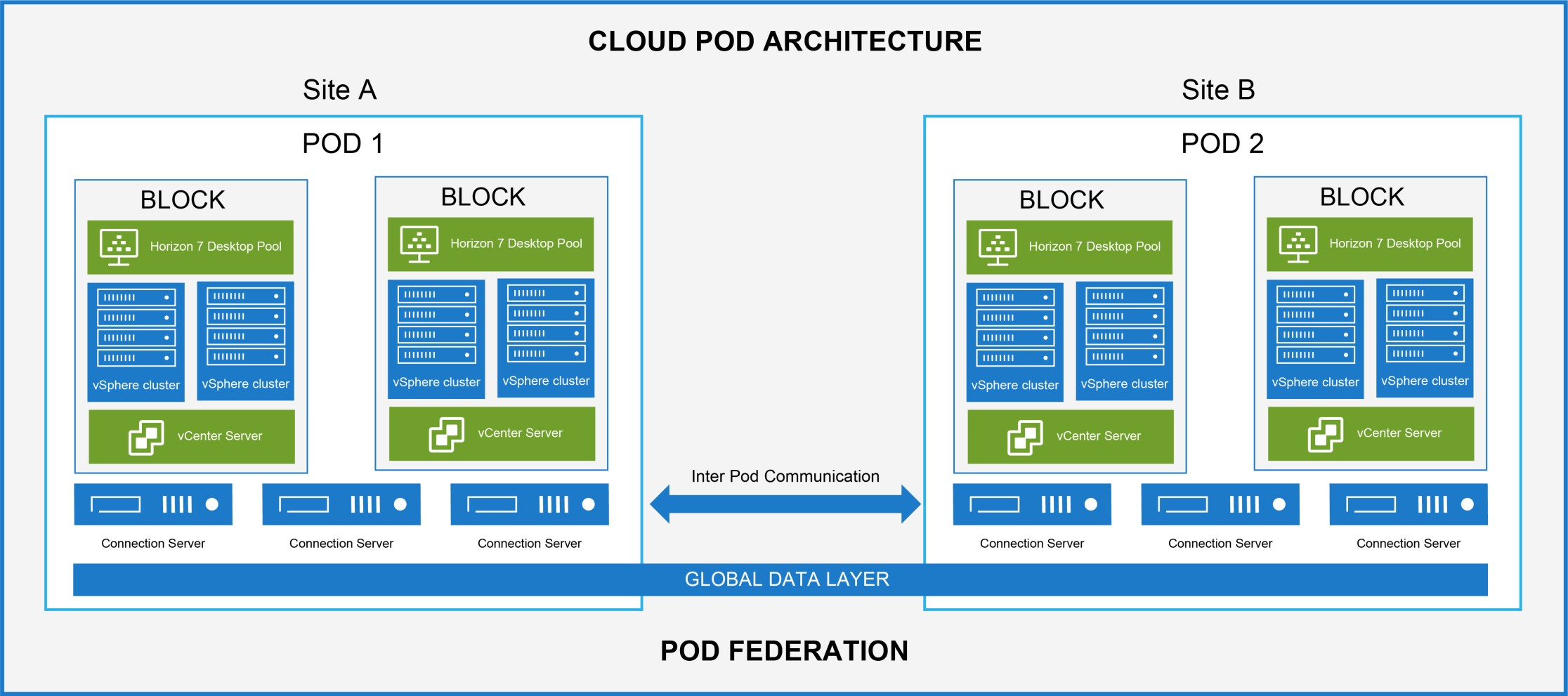

Cloud Pod Architecture (CPA) is the foundation for VMware’s Horizon 7 VDI Disaster Recovery. A pod is made up of a group of interconnected Connection Servers that broker connections to desktops or published applications. A pod consists of multiple blocks, and a block is a collection of one or more vSphere clusters hosting pools of desktops or applications. Each block has a dedicated vCenter Server.

With CPA, you can have multiple pods connected in a federation to improve reliability. For example, you can have pods in each of your CPA sites, and all of them can be connected via the CPA to form a federation. In a CPA environment, a site is a collection of well-connected pods in the same physical location, typically in a single data center. Connection Server instances in a pod federation use a data-sharing approach known as the Global Data Layer to replicate information about pod federation topology, user/group assignments, policies, and other CPA configuration settings.

With a global entitlement, you can publish desktop icons from Horizon pools from any of the pods in the federation. You can create a global entitlement to publish the VDI desktop icon, then assign users or user groups to the global entitlement, which gives users access to desktops across multiple sites. Each site has a minimum of one pod.

Figure 1 shows a basic CPA architecture involving two Horizon 7 sites or data centers. For more information regarding the architecture and configuration of Horizon 7 CPA, see the VMware documentation.

Figure 1: VMware Horizon 7 Multi-Site Deployment with Cloud Pod Architecture

When a user launches a VDI desktop icon, the following occurs:

- The request goes to a Global Server Load Balancer (for example, VMware NSX Advanced Load Balancer).

- The Global Server Load Balancer redirects the request to a Horizon Connection Server in one of the pods.

- The Horizon Connection Server brokers the virtual desktops.

Home Site and Scope policies defined in a global entitlement determine the scope of the search to identify a desktop for the user. A Home Site is a relationship between a user or group and a CPA site. Typically, a user’s data and profile reside in the site that is configured as a Home Site. With the Home Site option enabled, the preference will be to launch the desktop from the Home Site, irrespective of the user’s location. If the Home Site is not configured, is unavailable, or does not have resources to satisfy the user's request, Horizon continues searching other sites according to the Scope policy set for the global entitlement. The Scope policy options available in a Horizon 7 global entitlement are ‘All Sites,’ ‘Within Site,’ and ‘Within Pod.’
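The search order described above can be illustrated with a small Python sketch. This is a simplified model built only from the description in this post, not VMware's implementation or API: the `Pod` class, the function names, and the pod and site names are all hypothetical, and real Horizon brokering considers more factors than free capacity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Pod:
    name: str
    site: str
    free_desktops: int
    online: bool = True


def candidate_pods(pods: List[Pod], connecting_pod: Pod, scope: str) -> List[Pod]:
    """Order the pods that the Scope policy allows, nearest to the user first."""
    same_pod = [p for p in pods if p is connecting_pod]
    same_site = [p for p in pods
                 if p.site == connecting_pod.site and p is not connecting_pod]
    other_sites = [p for p in pods if p.site != connecting_pod.site]
    if scope == "Within Pod":
        return same_pod
    if scope == "Within Site":
        return same_pod + same_site
    if scope == "All Sites":
        return same_pod + same_site + other_sites
    raise ValueError(f"unknown scope: {scope}")


def find_desktop_pod(pods: List[Pod], connecting_pod: Pod, scope: str,
                     home_site: Optional[str] = None) -> Optional[Pod]:
    """Pick the pod that should serve the desktop, or None if nothing is available."""
    def usable(pod: Pod) -> bool:
        return pod.online and pod.free_desktops > 0

    # The Home Site is preferred regardless of where the user connects from.
    if home_site is not None:
        for pod in pods:
            if pod.site == home_site and usable(pod):
                return pod
    # Home Site not configured, unavailable, or full: fall back to the Scope policy.
    for pod in candidate_pods(pods, connecting_pod, scope):
        if usable(pod):
            return pod
    return None


pods = [Pod("pod-a1", "site-a", free_desktops=0), Pod("pod-b1", "site-b", free_desktops=25)]
chosen = find_desktop_pod(pods, connecting_pod=pods[1], scope="All Sites", home_site="site-a")
print(chosen.name)  # pod-b1, because the Home Site has no free desktops
```

Keeping the Home Site check separate from the Scope search mirrors the two-step behavior in the text: the Home Site preference is tried first, and the Scope policy only decides where to look next.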

Horizon CPA architecture is typically used with non-persistent desktop models based on instant clones or linked clones, where we can de-couple the user data from the rest of the desktop. A similar desktop virtual machine (VM), without user data, is also provisioned in other sites. The user data can then be replicated to the other sites to provide a consistent experience while accessing these desktops.

You can have an active/active or active/passive approach for your VDI DR plan based on Horizon CPA. When providing DR for Horizon-based services, it is important to consider the active or passive approach from the perspective of the user:

- With an active/active approach, services delivered to a particular user will be available on all sites.

- With an active/passive approach, only the primary site will be active, and in the event of a disaster, services will be available to the user on the secondary site.

Home Site and Scope policies defined in the global entitlement should align with the DR approach devised for your VDI environment. For example, in a VDI environment based on an active/active approach, the global entitlement policies are configured to search desktops from all the sites in the pod federation. Even if one of the sites is not available, the desktop can be searched and launched from the pods in the other active sites. In a VDI environment based on an active/passive DR approach, global entitlement policies should be set to launch desktops only from the pods in the active site. If there is an outage in the primary active site, services are enabled in the secondary passive site, with manual intervention, and desktops are launched from the secondary site. For more information about configuring global entitlement, see the VMware documentation.

Even though Horizon 7 CPA supports both approaches, the decision concerning the DR approach will depend on a variety of factors, including the availability requirements of business-critical applications, the distance between sites, the cost of the DR infrastructure, replication of data, and network connectivity.

In an active/active multi-site scenario, user data should be replicated synchronously across all sites in real-time to maintain application responsiveness and the user experience. It could be a challenge to replicate large data files across a Wide Area Network (WAN) without impacting the user experience. For example, consider a user who works with an application that processes large files. If the user is redirected to a different active site at each login, they may experience slowness, or the application may not work properly, because the associated data is not available in real-time in all sites. The speed of replication plays a more significant role under this combination of requirements - it depends on the distance between sites, the type of WAN, available bandwidth, and so on. Real-time replication traffic can also consume a large chunk of your network bandwidth and may affect other traffic, including production. So, you must consider these implications before deciding on an active/active approach.
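A rough back-of-the-envelope estimate helps show why large files are a problem over a WAN. The Python sketch below is an assumption-laden illustration: the function name, the 70% usable-bandwidth default, and the sample file size and link speeds are all hypothetical, and the calculation ignores latency, protocol behavior, compression, and deduplication.

```python
def transfer_seconds(size_gb: float, link_mbps: float,
                     usable_fraction: float = 0.7) -> float:
    """Estimate the seconds needed to copy size_gb over a link of link_mbps."""
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    size_megabits = size_gb * 1000 * 8  # decimal gigabytes to megabits
    return size_megabits / (link_mbps * usable_fraction)


# Hypothetical case: a 2 GB project file replicated over two different WAN links.
for mbps in (100, 1000):
    print(f"{mbps} Mbps link: about {transfer_seconds(2, mbps):.0f} s")
```

Even under these optimistic assumptions, a user who logs in at another site shortly after saving a large file may arrive before the data does, which is the situation the paragraph above warns about.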

Alternatively, you can have a partial active/active DR approach, with 50% of users homed to one of the active sites. The other site will still be active, serving the other 50% of users. However, each data center should be sized to carry 100% of the user load in the event of a disaster at the other site. With this approach, you can avoid the replication challenges described above. You can schedule replication outside business hours on a daily or weekly basis without impacting critical production traffic. Users also get a better experience because their desktops launch at the Home Site, where their data and profile reside.

An active/passive DR solution is the simplest approach in Horizon 7 CPA multi-site deployments because only one site is active at any given point in time. Users are assigned to global entitlements and are homed to the primary active site, while the secondary site remains passive. In this approach, you do not have to worry about the replication challenges because the desktop always launches on the Home Site, which is the primary or active site for users. However, data is replicated to the passive site based on the RPO requirements. In the event of a disaster, services in the primary site are failed over to the secondary passive site with manual intervention, and the global entitlement then launches desktops on the secondary site.

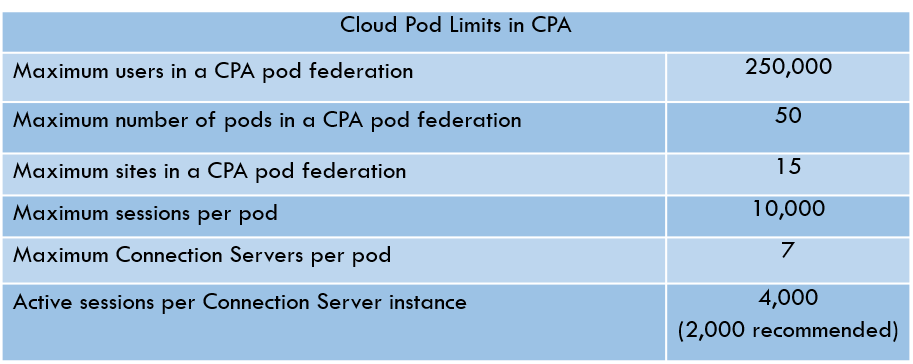

Table 1 shows the limits of various components in CPA architecture for Horizon version 7.8 or later. For more information regarding limits, refer to VMware Horizon 7 sizing limits and recommendations.

Table 1: Cloud Pod Limits in Horizon 7 CPA

Multi-Site Horizon 7 VDI with vSAN stretched clusters

Architectures based on VMware’s vSAN technology support active/passive multi-site deployment for Horizon 7 VDI. This is a truly active/passive approach that leverages a stretched cluster: one that extends a vSAN cluster across two sites or data centers. The solution builds on the VMware vSAN replication technology that replicates data across the sites involved in a stretched cluster and the VMware vSphere HA feature that provides high availability across the hosts in a cluster. Horizon 7 management servers and desktop VMs are pinned to the active site using VMware vSphere Storage DRS, VM DRS, host DRS groups, and VM-Host affinity rules. In the event of a disaster in the active site, the VMs are failed over and restarted in the passive site using VMware HA. The pinning of Horizon management and desktop VMs to storage and compute resources on a single site means that these VMs only reside on a single site at any given time – either the originally active site in normal operation or the originally passive site after a DR event has occurred.

A vSAN stretched architecture consists of three fault domains called preferred (active site), secondary (passive site), and witness (third site). The latency between data sites should not be more than 5 ms round-trip time (RTT). The latency between the data sites and the witness site should not be more than 200 ms RTT. vSAN communication between the data sites can be over stretched L2 or L3 networks, and vSAN communication between data sites and the witness site can be routed over L3. For details on a vSAN stretched cluster-based DR approach, see the VMware documentation.
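As a simple planning aid, the two latency limits quoted above can be encoded in a short Python check. This sketch only compares measured RTT values against those limits; the function name and the sample measurements are hypothetical, and it does not query vSAN or any VMware API.

```python
from typing import Dict, List

DATA_SITE_RTT_LIMIT_MS = 5.0    # preferred <-> secondary limit quoted in this post
WITNESS_RTT_LIMIT_MS = 200.0    # data site <-> witness limit quoted in this post


def latency_violations(data_rtt_ms: float,
                       witness_rtts_ms: Dict[str, float]) -> List[str]:
    """Return a list of violations; an empty list means both limits are met."""
    problems = []
    if data_rtt_ms > DATA_SITE_RTT_LIMIT_MS:
        problems.append(
            f"data sites: {data_rtt_ms} ms exceeds {DATA_SITE_RTT_LIMIT_MS} ms")
    for site, rtt in witness_rtts_ms.items():
        if rtt > WITNESS_RTT_LIMIT_MS:
            problems.append(
                f"{site} to witness: {rtt} ms exceeds {WITNESS_RTT_LIMIT_MS} ms")
    return problems


# Hypothetical measurements taken between the three fault domains.
print(latency_violations(3.2, {"preferred": 80.0, "secondary": 95.0}))
print(latency_violations(7.5, {"preferred": 80.0, "secondary": 240.0}))
```

The first call returns an empty list, while the second reports both the inter-site and the witness violation.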

A typical use-case when leveraging a vSAN stretched cluster DR approach is full-clone persistent desktop pools, where user data is tightly integrated with the desktop. vSAN storage cluster technology replicates VDI VMs across the sites in the stretched cluster in real-time. The architecture only supports data centers that are close to each other and connected by high-bandwidth, low-latency network links. In this DR approach, RTO is higher compared to a CPA-based DR solution because the management and desktop VMs need to be restarted at the passive site in the event of a disaster.

Dell EMC VxRail hyper-converged appliances based on VMware vSAN technology can run a multi-site Horizon 7 VDI environment using the vSAN stretched cluster architecture discussed above. For more details on deploying a VMware Horizon 7 VDI on VxRail appliances, see the Design Guide under "Designs for VMware Horizon on VxRail and vSAN Ready Nodes" on our VDI Info Hub for Ready Solutions website.

Summary

Getting your users back to being productive with a minimum loss of time and data is of vital importance in the event of a disaster. With technologies where we can de-couple user data from a virtual desktop, Horizon 7 VDI disaster recovery boils down to how you handle the replication of user data across your sites while providing an equivalent set of VMs in other sites. For non-persistent desktop use-cases where user data is usually decoupled from the rest of the desktop, a Horizon CPA-based DR approach is recommended. If you are planning for an active/active DR solution, you should consider the replication challenges discussed earlier in this blog. For use-cases where full-clone persistent desktops cannot be converted to a non-persistent model for various business reasons, an active/passive DR approach based on vSAN stretched cluster architecture is the best fit.

In the next blog in this series, we will discuss the operational backup aspects of VDI data protection based on testing done by the Dell EMC Ready Solutions for VDI Engineering team. So, stay tuned!

Published By

Anand Johnson

Principal Engineer at Dell EMC, Technical Marketing, Ready Solutions for VDI


VDI Data Protection - Part 3: An Operational Backup Approach for Horizon 7

Mon, 12 Dec 2022 21:26:48 -0000

|Read Time: 0 minutes

In Part 1 of this blog series we discussed how disaster recovery and operational backup are two significant aspects of Virtual Desktop Infrastructure (VDI) data protection. In this blog, we will discuss the operational backup aspects of VMware Horizon data protection. For details on disaster recovery, see Part 2.

Loss of VDI environment availability or data has the potential to degrade a user’s ability to perform daily operational tasks. So, it is important for organizations to have an optimal plan to back up and recover VDI data. A robust data protection plan should meet the availability, Recovery Time Objective (RTO), and Recovery Point Objective (RPO) targets defined in Service Level Agreements (SLAs).

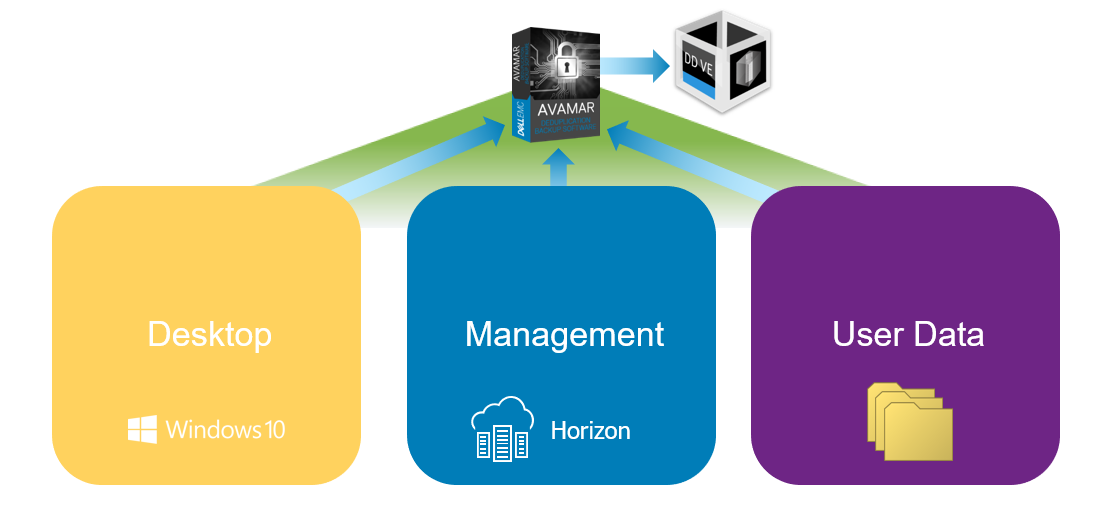

For a VMware Horizon virtual desktop environment, three key component layers require protection:

- The desktop layer, that is, the user’s desktop (which is often made available to multiple users using an appropriate provisioning technology)

- The management layer (which performs the provisioning, brokering, policy management, and related management functions)

- The user data layer (stored in user profile shares, home folders, and so on)

The backup and recovery requirements of each component layer depend on the type of the desktop pools and provisioning method used in the Horizon 7 environment. For example, a persistent (stateful) desktop pool can be created with full clones or full virtual machines, which requires a full backup of the virtual machines. A persistent pool can also be created with Horizon instant clones or linked clones with App Volumes (App Stacks and User Writable Volumes) to store the user-installed apps and user-related data. In this scenario, the gold image of the desktop and the persistent data related to App Volumes need protection.

For a non-persistent (stateless) desktop pool, only the gold image of the desktop needs to be protected. In the case of non-persistent desktops, you should also consider protecting the user data that is stored in user profile shares and home folders, based on the user environment.
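The rules above for deciding what to back up in the desktop layer can be summarized as a small lookup. The Python sketch below is only an illustration of the logic described in this post: the function name and the provisioning labels are hypothetical and do not correspond to any Horizon or Avamar setting.

```python
from typing import List


def desktop_backup_targets(persistent: bool, provisioning: str) -> List[str]:
    """List what to protect for a desktop pool, following the rules in this post."""
    if provisioning not in {"full_clone", "instant_clone", "linked_clone"}:
        raise ValueError(f"unknown provisioning method: {provisioning}")
    if not persistent:
        # Stateless pool: desktops are disposable, so protect the image and user data.
        return ["gold image", "user profile shares and home folders"]
    if provisioning == "full_clone":
        # Stateful full clones hold user state inside the VM itself.
        return ["full virtual machines"]
    # Stateful pool built from clones, with App Volumes holding the persistent data.
    return ["gold image", "App Volumes data (App Stacks and User Writable Volumes)"]


print(desktop_backup_targets(persistent=True, provisioning="full_clone"))
print(desktop_backup_targets(persistent=True, provisioning="instant_clone"))
print(desktop_backup_targets(persistent=False, provisioning="instant_clone"))
```

The management layer and any shared user data stores still need their own protection in every case; this lookup covers only the desktop-layer decision.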

Figure 1: Horizon 7 Operational Backup Approach

Dell EMC offers comprehensive backup and recovery solutions that include products like Integrated Data Protection Appliances (IDPA), Avamar, Data Domain, and Data Protection Suite. For the data protection of a Horizon 7 environment, you can choose from this broad range of Dell EMC data protection products to match your user environment and existing data protection regime. For further information, visit the Dell EMC Data Protection web page.

The Dell EMC Ready Solutions for VDI team has published an operations guide that outlines how Avamar Virtual Edition (AVE) and Data Domain Virtual Edition (DD VE) can be used to facilitate backup and recovery of a Horizon 7 non-persistent desktop pool provisioned by instant clone technology. AVE and DD VE are the software-defined versions of the industry-leading Dell EMC data protection products Avamar and Data Domain. Avamar facilitates fast and efficient backup and recovery for a Horizon environment. Variable-length data deduplication, a key feature of Avamar data protection software, reduces network traffic significantly and provides better storage efficiency. Data Domain provides backup as well as archival capabilities. Data Domain’s tight integration with Avamar delivers added performance and scalability advantages for large Horizon 7 environments. Let’s look at some of the key points discussed in the operations guide for backup and recovery of the Horizon 7 desktop, management, and user data layers.

In the management layer, the Horizon 7 configuration details are stored in a View LDAP repository as part of the Connection Server configuration. To schedule backups of this repository, select the Connection Server instance in the Horizon console to generate a configuration backup file in a file share. You can then use Avamar VE to back up and restore this configuration backup file. If you are using linked clones, you also need to back up the Composer database.

As discussed earlier in this blog, the backup requirements of the desktop layer depend on the desktop pools and provisioning method. In the case of Horizon instant clones, only the gold image of each desktop pool needs to be backed up. We recommend taking a clone of the original gold image (containing snapshots) and using that clone for the backup cycles.

The user data layer contains user-profile shares and other user-related files that are backed up by Avamar software. This layer needs to be protected using a standard data protection approach that is appropriate for user data in any environment.

For a more detailed description of the process to protect each of the layers described above, refer to the operations guide published by the Dell EMC Ready Solutions for VDI team.

The backup and recovery approach for Horizon virtual desktop environments is different from the approach followed for physical desktops and other virtual machines. When developing an operational backup strategy for Horizon, the key point is that all three component layers (desktop, management, user data) must be considered. The successful recovery of each of these interdependent components is essential to restore and deliver a fully functional user desktop. To make sure that your backup and recovery plan is effective from a user and business perspective, we recommend that you perform a backup and recovery test for all three layers simultaneously.

In the next part, we will conclude the blog series with some discussion on multi-cloud and hybrid cloud strategies for Horizon 7. So, stay tuned for more!

Thanks for Reading,

Anand Johnson - On Twitter @anandjohns

VDI Data Protection - Part 4: Summary

Mon, 12 Dec 2022 21:26:48 -0000

|Read Time: 0 minutes

In the previous blog posts in this series (part 1, part 2 and part 3) we discussed the components of data protection, disaster recovery, and operational backup approaches in a VMware Horizon environment. The components that require data protection in a Horizon environment are management infrastructure, desktop infrastructure, and user data components where user profiles, home drives and so on are stored.

Today’s organizations rely heavily on VDI to extend their business-critical applications to digital workspaces, giving users on-demand access from any device, no matter where they are. An outage of the VDI environment can cause a major disruption to business continuity and productivity as users are prevented from accessing the applications. So, a well-formulated DR and backup plan is critical to business continuity and to the success of VDI deployments. You can read more about the components of data protection, DR, and the backup aspects of Horizon data protection in the previous posts in this blog series.

We will conclude this series by exploring the public cloud disaster recovery options that are enabled by Horizon on the Dell Technologies Cloud Platform (DTCP) solution from the Dell Technologies Ready Solutions for VDI team.

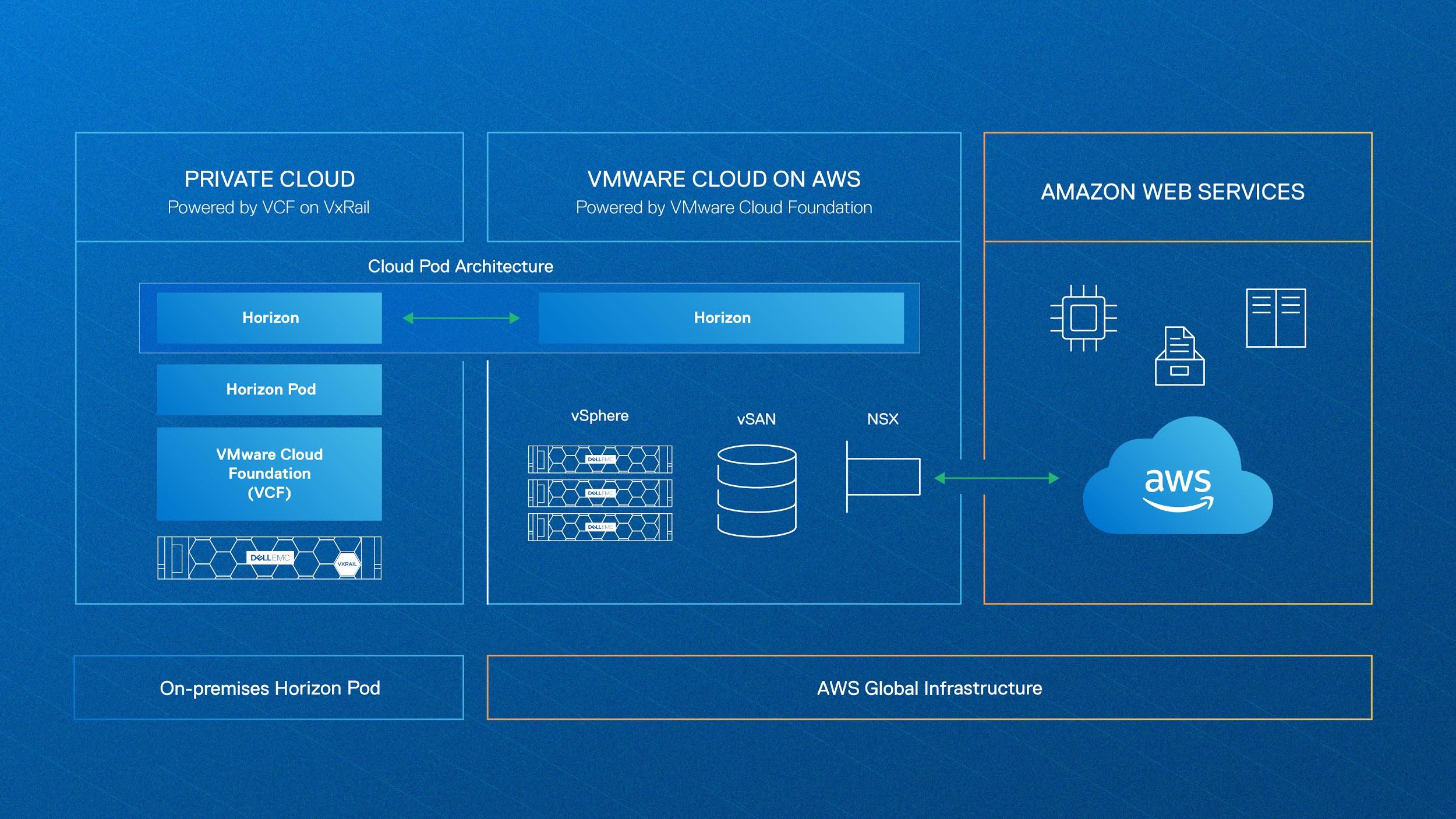

VDI Data Protection in a Public Cloud - With DTCP and VMC on AWS

VMware Horizon on DTCP is a true hybrid cloud platform for VDI workloads that easily enables disaster recovery on the public cloud. DTCP is based on Dell EMC VxRail hyper-converged infrastructure running VMware Cloud Foundation (VCF), delivering consistent infrastructure and operations. DTCP allows you to build a standardized VMware Software-Defined Data Center (SDDC) architecture that provides a consistent infrastructure connecting your on-premises environment and a public cloud. Watch this video to learn more about the Horizon on DTCP solution.

With DTCP, you can configure DR for Horizon 7 by having an on-premises active-primary site and a passive-secondary site on VMC on AWS, one of our partner public clouds. VMC on AWS delivers VMware SDDCs as-a-service on the AWS cloud. The consistent infrastructure that is offered by DTCP allows you to leverage the same existing skills to build a Horizon 7 infrastructure on VMC on AWS. By using VMC on AWS as a passive site for DR, you can take advantage of the hourly billing option and the pay-as-you-go benefit.

Figure 1: VMware Horizon on DTCP using VMC on AWS as a DR site

VMware Cloud Pod Architecture (CPA) allows you to join multiple pods to form a single Horizon implementation. This pod federation can span multiple sites and data centers, simplifying the administration effort that is required to manage a large-scale Horizon deployment. The CPA architecture also simplifies the DR fail-over process. Read more about CPA and different Horizon DR approaches in part 2 of this data protection blog series.

For a VDI environment based on non-persistent or stateless virtual desktops, you can keep a small host footprint on VMC on AWS, where you will deploy your Horizon 7 instance, store your updated golden images, and create a small pool of VMs. You should also replicate App Volumes, Dynamic Environment Manager settings, user profiles, and other user-related data to maintain consistency across on-premises and VMC on AWS sites. If you have an environment based on persistent or stateful virtual desktops, you must periodically replicate your full-clone desktops from on-premises to VMC on AWS. However, this type of protection is expensive and involves more effort. See this reference architecture guide from the Dell Technologies Ready Solutions for VDI team to learn more about the design considerations and replication options when deploying a Horizon solution based on DTCP and VMC on AWS.

Conclusion

VDI consolidates desktop storage from many devices onto centrally managed infrastructure in the data center. The management of centralized desktops is easier and more secure than that of distributed physical desktops, and it gives more control to administrators. However, an outage of the VDI environment could affect the user’s ability to access business-critical data.

All three component layers (desktop, management, and user data) must be considered when developing a backup strategy for your Horizon environment. The backup approach might vary depending on whether you are using a persistent or non-persistent virtual desktop environment. For multi-site disaster recovery, it is recommended that you use an approach based on Horizon CPA architecture.

The availability and recoverability goals that are defined in the service level agreement (SLA) will determine the overall data protection plan for your VDI infrastructure. The level of redundancy and other factors will vary depending on whether it is a single-site or multi-site design. For the data protection of a Horizon 7 environment, you can choose from the broad range of Dell Technologies data protection products to match your user environment and existing data protection policy. For further information, see the Dell Technologies Data Protection web page.

Creating clarity through multiple lenses

Wed, 24 Apr 2024 12:20:55 -0000

|Read Time: 0 minutes

Originally published on August 20, 2019

With just a single lens, a camera captures objects in 2D space. Using only the camera on a cellphone, users are capable of recording ultra-high definition video and playing it back on their home entertainment screens with amazing fidelity. While the movie depicts a masterful 3D scene, we perceive it as realistic only as long as we remain in our seats. Moving from side to side will not allow the viewer to see objects not captured by the camera. We remain satisfied with our viewing experience for media, as we are given the view that the recorder intended.

Adding another camera to a phone allows users to capture another dimension: depth. Ironically, the images from each lens look extremely similar and oftentimes are not noticeably different unless viewed side-by-side. The differences between the images are minor, but when viewed on a 3D-capable television they provide an impressive experience emulating reality. While 3D television may not be the preference for all viewers of media, it does prove that adding a second lens can provide an additional measurement of depth, which is precisely the point.

Dell EMC is adding an additional perspective to our infrastructure considerations and guidelines for VDI (click here to access designs from our Info Hub). In the past, Dell and Dell EMC have relied solely on the LoginVSI scores under specific testing thresholds to provide our guidance for user density and sizing expectations. This benchmarking tool has been a positive and reliable industry standard benchmark and it will remain a part of our design guidance based on its effectiveness at displaying a maximum threshold for VDI. To complement that perspective, Dell EMC VDI Solutions will, where appropriate, begin using the NVIDIA nVector to provide the second lens necessary for focusing on experience.

The NVIDIA nVector toolset monitors the VDI experience differently from LoginVSI. LoginVSI focuses mainly on server-side metrics and measures individual components throughout the load test. NVIDIA nVector also uses server-side metrics but adds client-side measurements to monitor frame rate, latency, and protocol fidelity (or accuracy). These elements are geared toward measuring how the end user perceives the experience, whereas LoginVSI focuses on optimizing to the maximum limits of the hardware. Because VDI solutions are composed of components beyond the server, a holistic measurement tool for the entire VDI environment gives Dell EMC the ability to measure the experience impact of components such as switches, SD-WAN, thin clients, and more.

Come find out more about the benefits of Dell EMC VDI Solutions at VMworld 2019, as we present in the NVIDIA booth, our executive suite (click to register for the event), as well as the many great VDI sessions from Dell Technologies. We hope you'll gain from our enhanced perspective.

Published By

David Pfahler

Solution Product Manager

Next-Generation Graphics Acceleration for Digital Workplaces from Dell EMC and NVIDIA

Fri, 09 Dec 2022 13:58:56 -0000


Originally published June 2019

For most organizations undergoing a digital transformation, maintaining a good user experience on virtual desktops—an essential component of digital workplaces—is a challenge. Users naturally compare their new virtual desktop experience to their previous physical endpoint experience. As the user experience continues to gain importance in digital workplaces (see this blog for more information), it is essential that virtualized environments keep pace with growing demands for user experience improvements.

This focus on the new user experience is being addressed by developers of modern-day operating systems and applications, who strive to meet the high expectations of their consumers. For example, the Windows 10 operating system, which plays a significant role in today's digital transformation initiatives, is more graphics-intensive than its predecessors. A study by Lakeside Software's SysTrack Community showed a 32 percent increase in graphics requirements when you move from Windows 7 to Windows 10. Microsoft Office applications (PowerPoint, Outlook, Excel, and so on), Skype for Business collaboration software, and all modern-day web browsers are designed to use more graphics acceleration in their newest releases.

Dell EMC Ready Solutions for VDI with NVIDIA Tesla T4 GPU

Dell EMC Ready Solutions for VDI, coupled with NVIDIA GRID Virtual PC (GRID vPC) and Virtual Apps (GRID vApps) software, provides comprehensive graphics acceleration solutions for your desktop virtualization workloads. The core of the NVIDIA GRID software is NVIDIA vGPU technology. This technology creates virtual GPUs, which enables sharing of the underlying GPU hardware among multiple users or virtual desktops running concurrently on a single host. This video compares the quality of a “CPU-only” VDI desktop with a VDI desktop powered by NVIDIA vGPU technology.

The latest NVIDIA GPU offering that supports virtualization is the NVIDIA Tesla T4, which is a universal GPU that can cater to a variety of workloads. The Tesla T4 comes with 16 GB of GDDR6 memory. It operates at 70 W, providing higher energy efficiency and lower operating costs than its predecessors, and has a single-slot PCIe form factor. You can configure up to six Tesla T4s in a single Dell EMC PowerEdge R740xd server, providing the highest density for GPU-accelerated VMs in a Dell EMC server. For more details about the NVIDIA Tesla T4 GPU, see the Tesla T4 for Virtualization Technology Brief.

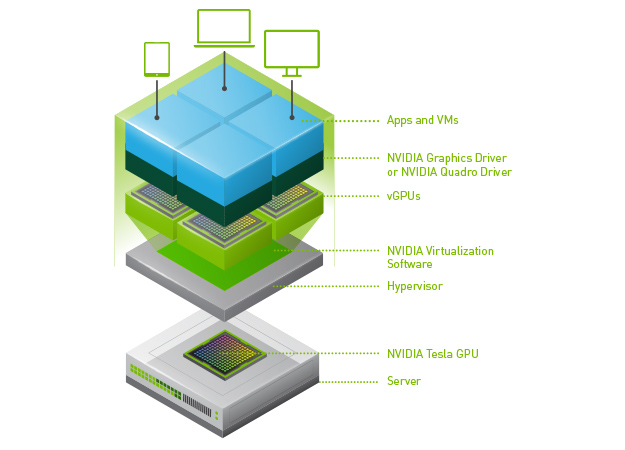

Image courtesy NVIDIA Corporation

Figure 1. NVIDIA vGPU technology stack

Tesla T4 vs. earlier Tesla GPU cards

Let's compare the NVIDIA Tesla T4 with other widely used cards—the NVIDIA Tesla P40 and the NVIDIA Tesla M10.

Tesla T4 vs. Tesla P40:

- The Tesla T4 comes with a maximum framebuffer of 16 GB. In a PowerEdge R740xd server, T4 cards can provide up to 96 GB of memory (16 GB x 6 GPUs), compared to the maximum 72 GB provided by the P40 cards (24 GB x 3 GPUs). So, for higher user densities and cost efficiency, the Tesla T4 is a better option in VDI workloads.

- You might have to sacrifice the 3, 6, 12, and 24 GB profiles when using the T4, but the 2 GB and 4 GB profiles, which are the most tested and configured profiles in VDI workloads, work well with the Tesla T4. However, NVIDIA Quadro vDWS use cases that require more memory per profile are better served by the Tesla P40.

Tesla T4 vs. Tesla M10:

- In the PowerEdge R740xd server, three Tesla M10 cards give you the same 96 GB of memory as six Tesla T4 cards. However, the six Tesla T4 cards consume only 420 W (70 W x 6 cards), while the three Tesla M10 cards consume 675 W (225 W x 3 cards), a substantial difference of 255 W per server. Compared to the Tesla M10, the Tesla T4 therefore saves power and reduces your data center operating costs.

- Tesla M10 cards support a 512 MB profile, which is not supported by the Tesla T4. However, the 512 MB profile is not a viable option in today’s workplaces, where the graphics-intensive Windows 10 operating system, multiple monitors, and 4K monitors are prevalent.

The following table provides a summary of the Tesla T4, P40, and M10 cards.

Table 1. Comparison of NVIDIA Tesla T4, P40 & M10

| GPU | Form factor | GPUs/board | Memory size | vGPU profiles | Power |
| --- | --- | --- | --- | --- | --- |
| T4 | PCIe 3.0 single slot | 1 | 16 GB GDDR6 | 1 GB, 2 GB, 4 GB, 8 GB, 16 GB | 70 W |
| P40 | PCIe 3.0 dual slot | 1 | 24 GB GDDR5 | 1 GB, 2 GB, 3 GB, 4 GB, 6 GB, 8 GB, 12 GB, 24 GB | 250 W |
| M10 | PCIe 3.0 dual slot | 4 | 32 GB GDDR5 (8 GB per GPU) | 0.5 GB, 1 GB, 2 GB, 4 GB, 8 GB | 225 W |
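The per-server figures quoted in the comparison follow directly from the per-card values in Table 1. The short Python sketch below reproduces that arithmetic. The card counts per PowerEdge R740xd server (six T4, three P40, three M10) are taken from the text above; the class and function names are illustrative only and are not part of any Dell or NVIDIA tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    memory_gb: int       # total framebuffer per board (Table 1)
    power_w: int         # board power (Table 1)
    max_per_server: int  # cards per PowerEdge R740xd, as stated in the text


CARDS = {
    "T4": Card("T4", 16, 70, 6),
    "P40": Card("P40", 24, 250, 3),
    "M10": Card("M10", 32, 225, 3),
}


def server_totals(card: Card) -> tuple[int, int]:
    """Return (total framebuffer in GB, total GPU power in W) for a full server."""
    return card.memory_gb * card.max_per_server, card.power_w * card.max_per_server


for card in CARDS.values():
    memory_gb, power_w = server_totals(card)
    print(f"{card.name}: {memory_gb} GB framebuffer, {power_w} W")

# Power difference between three M10 cards and six T4 cards in one server
power_delta_w = server_totals(CARDS["M10"])[1] - server_totals(CARDS["T4"])[1]
print(f"M10 vs. T4 power difference: {power_delta_w} W")
```

The sketch covers GPU board power only; it does not model the rest of the server or cooling overhead.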

GPU sizing and support for mixed workloads

With multiple monitors and 4K monitors becoming the norm in the modern workplace, streaming high-resolution videos can saturate the encoding engine on the GPUs and increase the load on the CPUs, affecting the performance and scalability of VDI systems. Thus, it is important to size the GPUs based on the number of encoding streams and required frames per second (fps). The Tesla T4 comes with an enhanced NVIDIA NVENC encoder that can provide higher compression and better image quality in H.264 and H.265 (HEVC) video codecs. The Tesla T4 can encode 22 streams at 720p (progressive scan) resolution, with simultaneous display in high-quality mode. On average, the Tesla T4 can also handle 10 streams at 1080p and 2–3 streams at Ultra HD (2160p) resolutions. Running in a low-latency mode, it can encode 37 streams at 720p resolution, 17–18 streams at 1080p resolution, and 4–5 streams in Ultra HD.
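As a rough illustration of sizing by encoder streams, the following Python sketch turns the stream counts quoted above into a first-pass estimate of how many Tesla T4 cards a given number of concurrent streams would need. It assumes the lower bound wherever the text gives a range (for example, 2 streams for "2–3"), assumes all streams share one resolution and mode, and ignores the frame-rate target. The names `STREAMS_PER_T4` and `t4_cards_for_streams` are hypothetical.

```python
import math

# Concurrent NVENC streams per Tesla T4, taken from the figures in the text.
# Where the text gives a range, the lower bound is used (a conservative assumption).
STREAMS_PER_T4 = {
    "high_quality": {"720p": 22, "1080p": 10, "2160p": 2},
    "low_latency": {"720p": 37, "1080p": 17, "2160p": 4},
}


def t4_cards_for_streams(streams: int, resolution: str, mode: str = "high_quality") -> int:
    """Estimate the number of T4 cards needed to encode `streams` concurrent streams."""
    if streams < 0:
        raise ValueError("streams must be non-negative")
    per_card = STREAMS_PER_T4[mode][resolution]
    return math.ceil(streams / per_card)


if __name__ == "__main__":
    for mode in STREAMS_PER_T4:
        cards = t4_cards_for_streams(96, "1080p", mode)
        print(f"96 streams at 1080p, {mode}: {cards} T4 card(s)")
```

Treat the result as a starting point for testing rather than a guarantee; real encoder load depends on content, frame rate, and protocol settings.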

VDI remote protocols such as VMware Blast Extreme can use NVIDIA GRID software and the Tesla T4 to encode video streams in H.265 and H.264 codecs, which can reduce the encoding latency and improve fps, providing a better user experience in digital workplaces. The new Tesla T4 NVENC encoder provides up to 25 percent bitrate savings for H.265 and up to 15 percent bitrate savings for H.264. Refer to this NVIDIA blog to learn more about the Tesla T4 NVENC encoding improvements.

The Tesla T4 is well suited for use in a data center with mixed workloads. For example, it can run VDI workloads during the day and compute workloads at night. This concept, known as VDI by Day, HPC by Night, increases the productivity and utilization of data center resources and reduces data center operating costs.

Tesla T4 testing on Dell EMC VDI Ready Solution

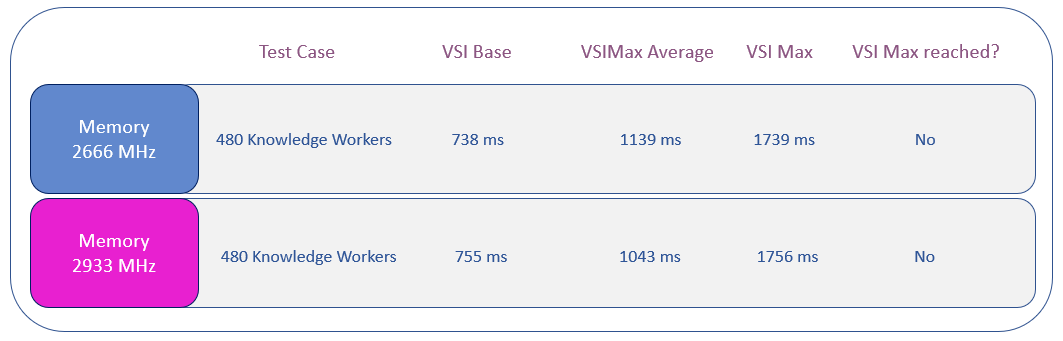

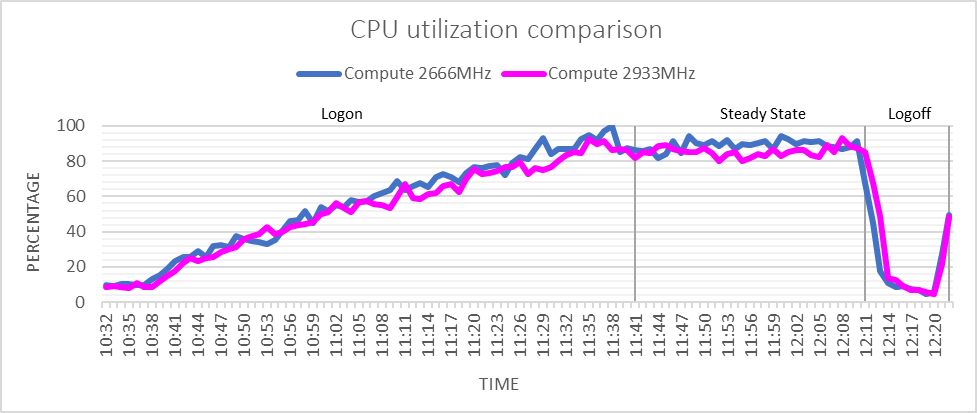

At Dell EMC, our engineering team tested the NVIDIA Tesla T4 on our Ready Solutions VDI stack based on the Dell EMC VxRail hyperconverged infrastructure. The test bed environment was a 3-node VxRail V570F appliance cluster that was optimized for VDI workloads. The cluster was configured with 2nd Generation Intel Xeon Scalable processors (Cascade Lake) and with NVIDIA Tesla T4 cards in one of the compute hosts. The environment included the following components:

- PowerEdge R740xd server

- Intel Xeon Gold 6248, 2 x 20-core, 2.5 GHz processors (Cascade Lake)

- NVIDIA Tesla T4 GPUs, with 768 GB of system memory (12 x 64 GB @ 2,933 MHz)

- VMware vSAN hybrid datastore using an SSD caching tier

- VMware ESXi 6.7 hypervisor

- VMware Horizon 7.7 VDI software layer

Dell EMC Engineering used the Power Worker workload from Login VSI for testing. You can find background information about Login VSI analysis at Login VSI Analyzing Results.

The GPU-enabled PowerEdge compute server hosted 96 VMs with a GRID vPC vGPU profile (T4-1B) of 1 GB memory each. The host was configured with six NVIDIA Tesla T4 cards, the maximum possible configuration for the NVIDIA Tesla T4 in a Dell PowerEdge R740xd server.

With all VMs powered on, the host server recorded a steady-state average CPU utilization of approximately 95 percent and a steady-state average GPU utilization of approximately 34 percent. Login VSImax—the active number of sessions at the saturation point of the system—was not reached, which means the performance of the system was very good. Our standard threshold of 85 percent for average CPU utilization was relaxed for this testing to demonstrate the performance when graphics resources are fully utilized (96 profiles per host). You might get a better user experience with managing CPU at a threshold of 85 percent by decreasing user density or by using a higher-binned CPU. However, if your CPU is a previous generation Intel Xeon Scalable processor (Skylake), the recommendation is to use only up to four NVIDIA Tesla cards per PowerEdge R740xd server. With six T4 cards per PowerEdge R740xd server, the GPUs were connected to both x8 and x16 lanes. We found no issues using both x8 and x16 lanes and, as indicated by the Login VSI test results, system performance was very good.
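To get a feel for how much density you might trade for CPU headroom, the sketch below scales the measured result (96 VMs at roughly 95 percent CPU) down to the standard 85 percent threshold. It assumes CPU utilization grows linearly with the number of sessions, which is a simplification that real workloads will not follow exactly, so the output is a planning estimate and not a tested result. The function name is hypothetical.

```python
import math


def density_at_cpu_target(measured_vms: int, measured_cpu_pct: float, target_cpu_pct: float) -> int:
    """Estimate VM density at a target CPU utilization.

    Assumes utilization scales linearly with session count (a simplification).
    """
    if measured_cpu_pct <= 0:
        raise ValueError("measured_cpu_pct must be positive")
    return math.floor(measured_vms * target_cpu_pct / measured_cpu_pct)


if __name__ == "__main__":
    estimate = density_at_cpu_target(measured_vms=96, measured_cpu_pct=95, target_cpu_pct=85)
    print(f"Estimated VMs per host at 85 percent CPU: {estimate}")
```

Any density chosen this way should be confirmed with a Login VSI run on the target hardware.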

Dell EMC Engineering performed similar tests with a Login VSI Multimedia Workload using 48 vGPU-enabled VMs on a GPU-enabled compute host, each having a Quadro vDWS-vGPU profile (T4-2Q) with a 2 GB frame buffer. With all VMs powered on, the average steady-state CPU utilization was approximately 48 percent, and the average steady-state GPU utilization was approximately 35 percent. The system performed well and the user experience was very good.
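The VM counts in both tests line up with the framebuffer available on the host. The minimal Python sketch below shows the calculation, assuming every VM on the host uses the same profile size and that framebuffer is the only limit (CPU, system memory, and licensing are ignored). The function name is illustrative.

```python
def vgpu_vms_per_host(gpus: int, gpu_memory_gb: int, profile_gb: int) -> int:
    """Maximum vGPU-enabled VMs on a host when all VMs share one profile size.

    A vGPU framebuffer cannot span physical GPUs, so the count is computed
    per GPU and then multiplied by the number of GPUs.
    """
    if profile_gb <= 0:
        raise ValueError("profile_gb must be positive")
    return gpus * (gpu_memory_gb // profile_gb)


if __name__ == "__main__":
    # Six Tesla T4 GPUs with 16 GB each, as in the tested PowerEdge R740xd host
    print("T4-1B (1 GB):", vgpu_vms_per_host(6, 16, 1), "VMs")
    print("T4-2Q (2 GB):", vgpu_vms_per_host(6, 16, 2), "VMs")
```

With six 16 GB cards, the 1 GB profile gives the 96 VMs used in the Power Worker test and the 2 GB profile gives the 48 VMs used in the Multimedia Workload test.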

For more information about the test-bed environment configuration and additional resource utilization metrics, see the design and validation guides for VMware Horizon on VxRail and vSAN on our VDI Info Hub.

Summary

Just as Windows 10 and modern applications are incorporating more graphics to meet user expectations, virtualized environments must keep pace with demands for an improved user experience. Dell EMC Ready Solutions for VDI, coupled with the NVIDIA Tesla T4 vGPU, are tested and validated solutions that provide the high-quality user experience that today’s workforce demands. Dell EMC Engineering used Login VSI’s Power Worker Workload and Multimedia Workload to test Ready Solutions for VDI with the Tesla T4, and observed very good results in both system performance and user experience.

In the next blog, we will discuss the effect of memory speed on VDI user density, based on testing done by the Dell EMC VDI engineering team. Stay tuned, and we’d love to get your feedback!

Improved performance and user densities with Dell EMC Ready Solutions for VDI, powered by 2nd Generation Intel Xeon Scalable processors

Mon, 03 Aug 2020 16:17:48 -0000

Published on May 15, 2019 by Anand Johnson, Principal Engineer

Performance is one of the key factors required for a successful Virtual Desktop Infrastructure (VDI) deployment. It is often challenging for IT teams to measure performance and perform capacity planning for their environments without proper benchmarking tests. With the discovery of the Spectre, Meltdown, and L1 Terminal Fault vulnerabilities in 2018, VDI support teams wanted to know the potential negative performance impact these vulnerabilities might have on their environments. A VMware benchmark study performed in August 2018 found that VDI systems patched for L1TF/Foreshadow had a performance degradation of up to 30 percent.

Spectre, Meltdown, and L1 Terminal Fault are all types of side-channel vulnerabilities, meaning that they use certain design characteristics of modern-day processors, such as timing information, power consumption, electromagnetic leaks, and emitted sounds, to gain information for use in the attack. Mitigations provided in the form of operating system patches, hypervisor patches, or microcode updates address these vulnerabilities indirectly. However, hardware-level fixes are preferable because addressing these vulnerabilities at the kernel or software level is likely to have a negative impact on system performance.

The recently announced 2nd Generation Intel Xeon Scalable processors (Cascade Lake) include fixes in the silicon for Spectre (variant 2), Meltdown (variant 3), and L1 Terminal Fault side-channel methods. These fixes mean that the new processors are expected to provide better performance than first-generation Intel Xeon Scalable processors (Skylake) or other previous-generation processors, which still require software-level fixes to protect against side-channel vulnerabilities. Cascade Lake processors also come with an improved architecture and higher thermal efficiency that boost system performance.

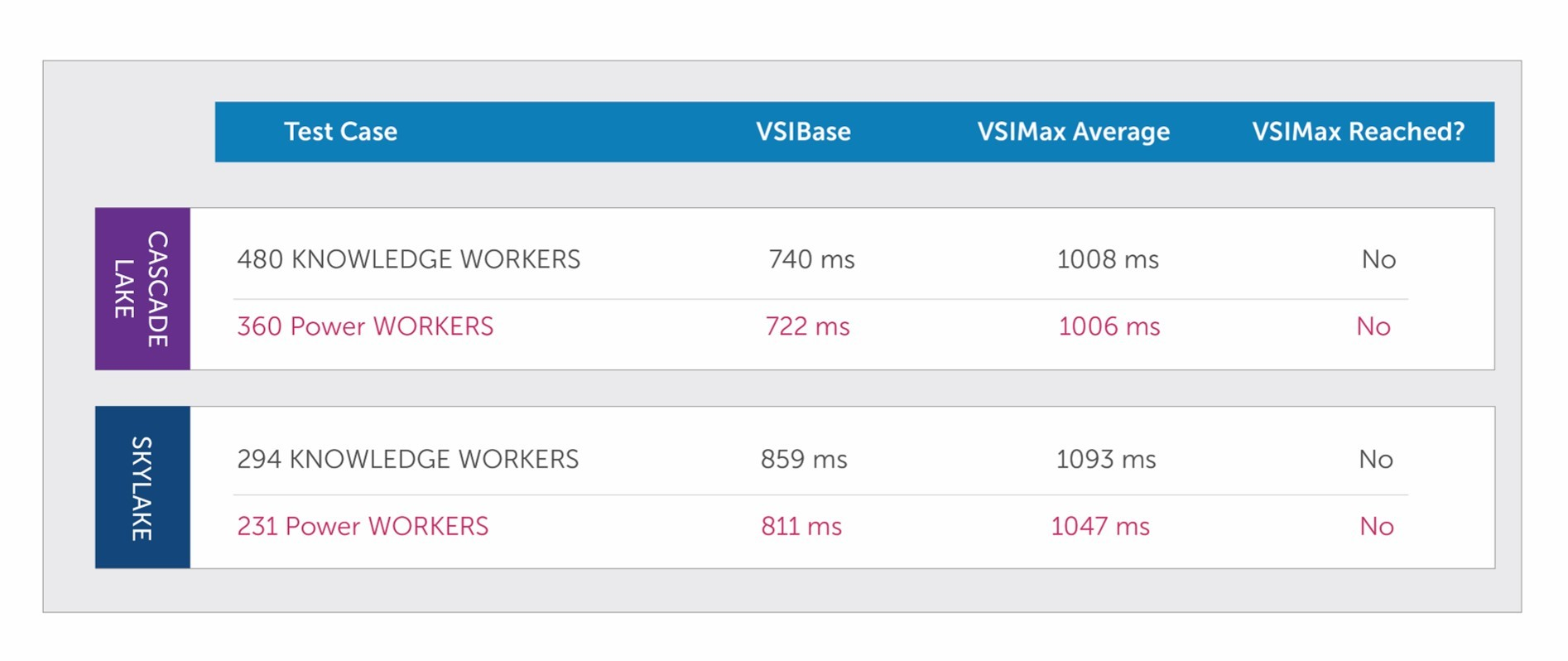

Dell EMC Ready Solutions for VDI on both VxRail and vSAN ReadyNodes are now available with 2nd Generation Intel Xeon Scalable processors (Cascade Lake). Cascade Lake processors have more benefits beyond better security, which you can read about here. The Dell EMC VDI engineering team compared the Intel Xeon Gold 6248 processor (Cascade Lake) with the previous generation’s Intel Xeon Gold 6138 processor (Skylake); the results are described below. I think that’s enough of an introduction; let’s get to the meat of the testing.

Test environment

The Dell EMC VDI engineering team performed tests with Login VSI, an industry standard for benchmarking VDI workloads. The following workloads were tested:

· Knowledge Workload running on VMs configured with 2 vCPU and 4 GB RAM

· Power Workload running on VMs configured with 4 vCPU and 8 GB RAM