Live Optics and Azure Stack HCI

Thu, 15 Jun 2023 00:58:28 -0000


The IT industry has coped with many challenges over the last few decades. One of the most impactful, probably because of its financial implications, has been “IT budget reduction”: the need for IT departments to increase efficiency, reduce the cost of their processes and operations, and optimize asset utilization.

This do-more-with-less mantra has a wide range of implications, from cost of acquisition to operational expenses and infrastructure payback period.

Cost of acquisition depends not only on our ability to get the best IT infrastructure price from technology vendors, but also on the less obvious factor of optimal workload characterization, which identifies the minimum infrastructure assets needed to serve business demand.

Without the proper tools, assessing the specific needs of each infrastructure acquisition is not simple. Obtaining precise workload requirements often involves input from several functional groups, requiring their time and dedication. This is often not possible, so the only option left is to make a high-level estimate of the requirements and select a hardware offering that covers the performance requirements by an ample margin.

Those ample margins do not align very well with concepts such as optimal asset utilization and, thus, do not lead to the best choice under a budget reduction paradigm.

But there is free online software, Live Optics, that can be used to collect, visualize, and share data about your IT infrastructure and the workloads it hosts. Live Optics helps you understand your workloads’ performance by providing in-depth data analysis. It makes the project requirements much clearer, so the sizing decision, based on real data, is more accurate and relies less on estimation.

Azure Stack HCI, as a hyperconverged system, greatly benefits from such sizing considerations. It is often used to host a mix of workloads with different performance profiles. Being able to characterize the CPU, memory, storage, network, or protection requirements is key when the infrastructure needs to be defined. This way, the final node configuration for the Azure Stack HCI platform can cope with the workload requirements without oversizing the hardware and software offerings, and we can select the type of Azure Stack HCI node that best fits those requirements.

Microsoft recommends using an official sizing tool, such as Dell’s. Used together with Live Optics data, the Dell tool incorporates all Azure Stack HCI design constraints and best practices, so the outcome is optimized to the workload requirements.

Imagine that we had to host a number of business applications, each with its own set of users, on the Azure Stack HCI infrastructure. With the performance data gathered by Live Optics, and using the Azure Stack HCI sizing tool, we can select the type of node we need, the amount of memory in each node, the CPU to equip, how many drives are needed to cover the I/O demand, and the network architecture.
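As a rough illustration of the arithmetic behind choosing node count and memory, the following Python sketch estimates how many nodes are needed to carry a workload's peak CPU and memory demand while keeping one node in reserve for failover. The `NodeSpec` and `nodes_required` names, the 80% utilization ceiling, and the N+1 reservation are assumptions for illustration; they are not the rules the Dell sizing tool applies.

```python
import math
from dataclasses import dataclass


@dataclass
class NodeSpec:
    """Hypothetical per-node capacity, used only for this illustration."""
    cores: int
    memory_gb: int


def nodes_required(peak_cores: float, peak_memory_gb: float, node: NodeSpec,
                   max_utilization: float = 0.8, spare_nodes: int = 1) -> int:
    """Estimate a node count that carries the measured peak at or below
    max_utilization, plus spare_nodes held back to absorb a node failure.
    The 0.8 ceiling and the single spare node are assumed defaults."""
    usable_cores = node.cores * max_utilization
    usable_memory_gb = node.memory_gb * max_utilization
    # Whichever resource needs more nodes determines the active node count.
    active = max(math.ceil(peak_cores / usable_cores),
                 math.ceil(peak_memory_gb / usable_memory_gb))
    return max(active, 1) + spare_nodes
```

For example, a measured peak of 100 cores and 2,000 GB of memory on hypothetical 56-core, 1,024 GB nodes needs three active nodes plus one spare, four in total. Sizing against the most demanding resource, rather than the average of all of them, avoids a configuration that fits on CPU but runs out of memory.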

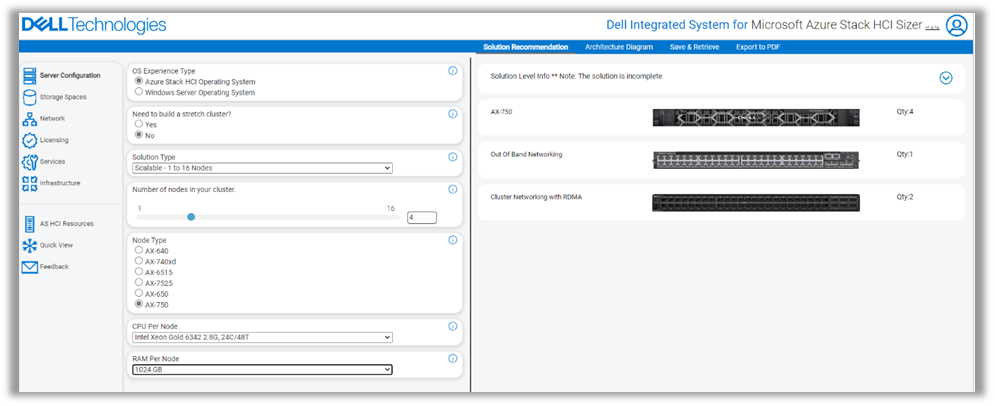

We can see a sample of the sizing tool input in the following figure:

Figure 1. Example from Dell's sizing tool for Azure Stack HCI

In this case, we have chosen to base our Azure Stack HCI infrastructure on four AX-750 nodes, with Intel Xeon Gold 6342 CPUs and 1 TB of RAM per node.

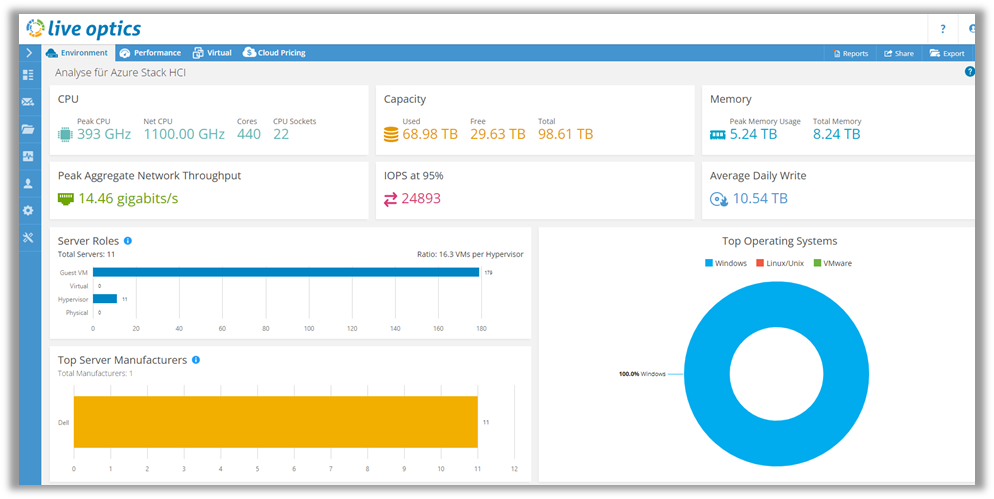

Because we have used Live Optics to gather and analyze performance data, we have sized our hardware assets based on real customer usage data such as that shown in the next figure:

Figure 2. Live Optics performance dashboard

This Live Optics dashboard shows the general requirements of the analyzed environment. Aggregated network throughput, IOPS, memory usage, and CPU utilization are displayed and can therefore be used to size the required hardware.
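Cluster-wide figures like these are sums across all monitored servers at each sample time. The sketch below shows one way to reproduce that from per-server samples and derive both the absolute peak and a 95th-percentile value, two values commonly compared when deciding how much headroom to size for. The input layout (one list of samples per server, on a shared time grid) and the function names are assumptions; this is not the Live Optics export format or its internal calculation.

```python
from statistics import quantiles


def aggregate(series_per_server: list[list[float]]) -> list[float]:
    """Sum samples taken at the same timestamps across servers.

    Assumes every server's series shares one time grid; zip() stops at the
    shortest series, so misaligned inputs would be silently truncated.
    """
    return [sum(samples) for samples in zip(*series_per_server)]


def peak_and_p95(totals: list[float]) -> tuple[float, float]:
    """Return the absolute peak and the 95th percentile of an aggregated series.

    quantiles(n=100) yields 99 cut points; index 94 is the 95th percentile.
    It needs at least two samples.
    """
    return max(totals), quantiles(totals, n=100)[94]
```

Sizing to the 95th percentile rather than the single highest sample ignores brief spikes; which of the two to use is a business decision, not something the data alone settles.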

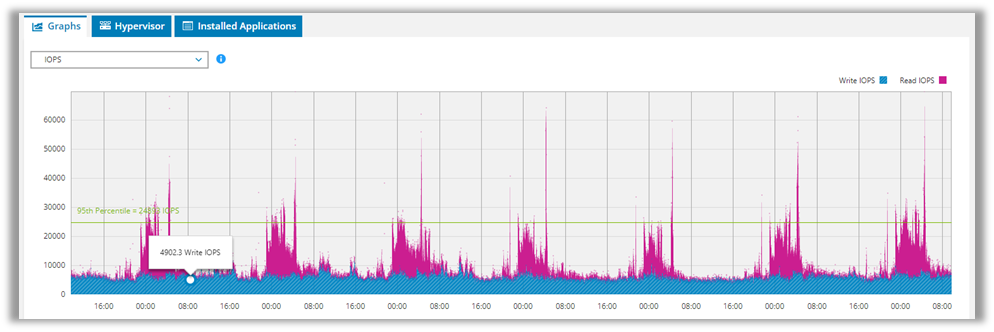

More specific dashboards show each core performance statistic in greater detail. For precise storage sizing, we can examine read and write I/O behavior closely, as shown in the following figure:

Figure 3. IOPS graphics through a Live Optics read/write I/O dashboard
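Separating reads from writes matters because, with mirrored volumes, every front-end write is committed once per copy while a read is served from a single copy. A minimal sketch, assuming three-way mirroring and a hypothetical per-drive IOPS rating supplied by the user; the function names are illustrative, and the sketch ignores caching, drive type, and other factors a real sizing tool considers.

```python
import math


def backend_iops(read_iops: float, write_iops: float, copies: int = 3) -> float:
    """Back-end load: each read hits one copy, each write hits every mirror copy."""
    return read_iops + write_iops * copies


def drives_for_iops(read_iops: float, write_iops: float,
                    iops_per_drive: float, copies: int = 3) -> int:
    """Minimum drive count whose combined (assumed) rated IOPS covers the load."""
    return math.ceil(backend_iops(read_iops, write_iops, copies) / iops_per_drive)
```

With a 70/30 read/write split of 100,000 front-end IOPS, the back end sees 160,000 IOPS under three-way mirroring, which is why a write-heavy profile can need noticeably more drives than its headline IOPS suggests.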

With a tool such as Live Optics, we can size our Azure Stack HCI infrastructure based on real workload requirements rather than on assumptions made for lack of information. This leads to an accurate configuration, usually at a lower price, and ensures that the proposed infrastructure can handle even peak business workload requirements.

Check the resources shown below to find links to the Live Optics site and collector application, as well as some Dell technical information and sizing tools for Azure Stack HCI.

Resources

- Live Optics app

- Live Optics site

- Info Hub: Microsoft HCI Solutions from Dell Technologies

- Microsoft Azure Stack HCI sizer from Dell Technologies

Author: Inigo Olcoz

Twitter: VirtualOlcoz

Related Blog Posts

Accelerate your SQL Server Workloads with Dell Integrated System for Azure Stack HCI

Fri, 28 Jul 2023 16:05:27 -0000


Microsoft presented SQL Server 2022 last November, during the Microsoft Ignite 2022 event. This was a highly anticipated release, introducing several key improvements in database operations, availability, security, and performance.

SQL Server 2022 is the most cloud-connected database Microsoft has released to date. Building an Azure Arc-enabled database platform with Azure Arc-enabled SQL Server makes it easier to extend your data management operations from your own data center to any edge location, public cloud, or hosting facility.

Simply installing a new agent on the machine that hosts the SQL Server instance enables a full set of management, security, and performance options.

See more details on these new features at this Microsoft Learn page.

As of today, one of the most powerful deployment scenarios for SQL Server is a hybrid environment. With Azure Arc-enabled services, we can deploy, manage, and operate from a single point and have the flexibility to place every SQL Server instance where it benefits from the best resource allocation and manageability, thus providing the best IT experience to meet business demands.

Using an HCI platform to host the on-premises side of our hybrid approach is a reasonable choice because, as analysts report, HCI solutions have become predominant in their IT segment.

Dell Integrated System for Azure Stack HCI represents a perfect choice to meet the SQL Server 2022 requirements, providing a fully productized platform that offers, out of the box, intelligently designed configurations to minimize hardware and software customizations often required for this type of environment.

If we want to equip our hybrid solution with tools that ensure repeatable and predictable infrastructure operations, Dell OpenManage Integration with Microsoft Windows Admin Center provides in-depth, cluster-level automation capabilities that enable efficient and flexible operation of the Azure Stack HCI platform.

For optimal platform sizing that properly addresses SQL Server workload demands, we can use a free online tool such as Dell Live Optics. With the information gathered by the Live Optics software collectors, we can better understand application performance and capacity requirements. The Dell sales team can then use that information to guide the configuration choices for the Azure Stack HCI platform in Dell’s Azure Stack HCI Sizer tool. You can find more details on Live Optics here. For specifics on Live Optics and database workloads, check this site.

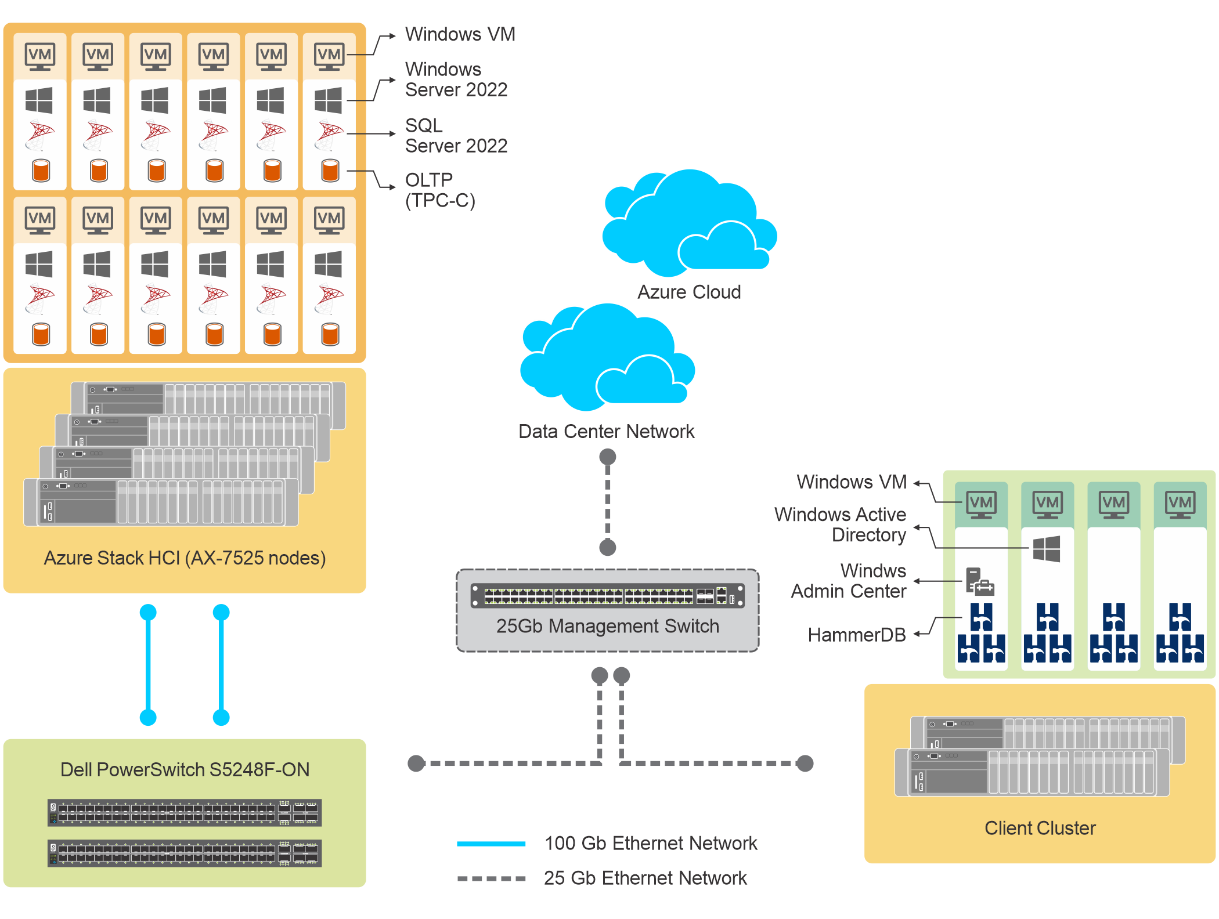

To evaluate SQL Server performance in this hybrid scenario, we configured a four-node Dell Integrated System for Microsoft Azure Stack HCI. The underlying infrastructure is based on Dell AX-7525 nodes, each with two AMD EPYC processors, 2 TB of RAM, and 12 NVMe drives.

The solution architecture looks like this:

Figure 1. Dell Integrated System for Azure Stack HCI architecture overview

On the storage side of the solution, Microsoft Storage Spaces Direct pools the NVMe drives from the four AX-7525 nodes into a single storage pool. Volumes created from this pool are accessed as Cluster Shared Volumes (CSVs), where the virtual hard disks (VHDs) were placed.
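To make the pooling concrete, the sketch below estimates usable capacity for a pool built like this one (4 nodes × 12 NVMe drives). The drive size is a hypothetical parameter, since the post does not state it; volumes are assumed to use three-way mirroring (one-third efficiency); and the reserve follows Microsoft's general Storage Spaces Direct guidance of leaving unallocated the equivalent of one capacity drive per server, up to four drives, for in-place repairs. The function name is illustrative only.

```python
def usable_capacity_tb(nodes: int, drives_per_node: int, drive_tb: float,
                       mirror_copies: int = 3) -> float:
    """Estimate mirrored, usable capacity of a single Storage Spaces Direct pool."""
    raw_tb = nodes * drives_per_node * drive_tb
    # Keep one drive's worth of capacity per server (at most four) unallocated.
    reserve_tb = min(nodes, 4) * drive_tb
    # Each mirrored byte consumes mirror_copies bytes of pool capacity.
    return (raw_tb - reserve_tb) / mirror_copies
```

With a hypothetical 3.84 TB drive, `usable_capacity_tb(4, 12, 3.84)` gives about 56.3 TB of usable mirrored capacity from roughly 184 TB raw.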

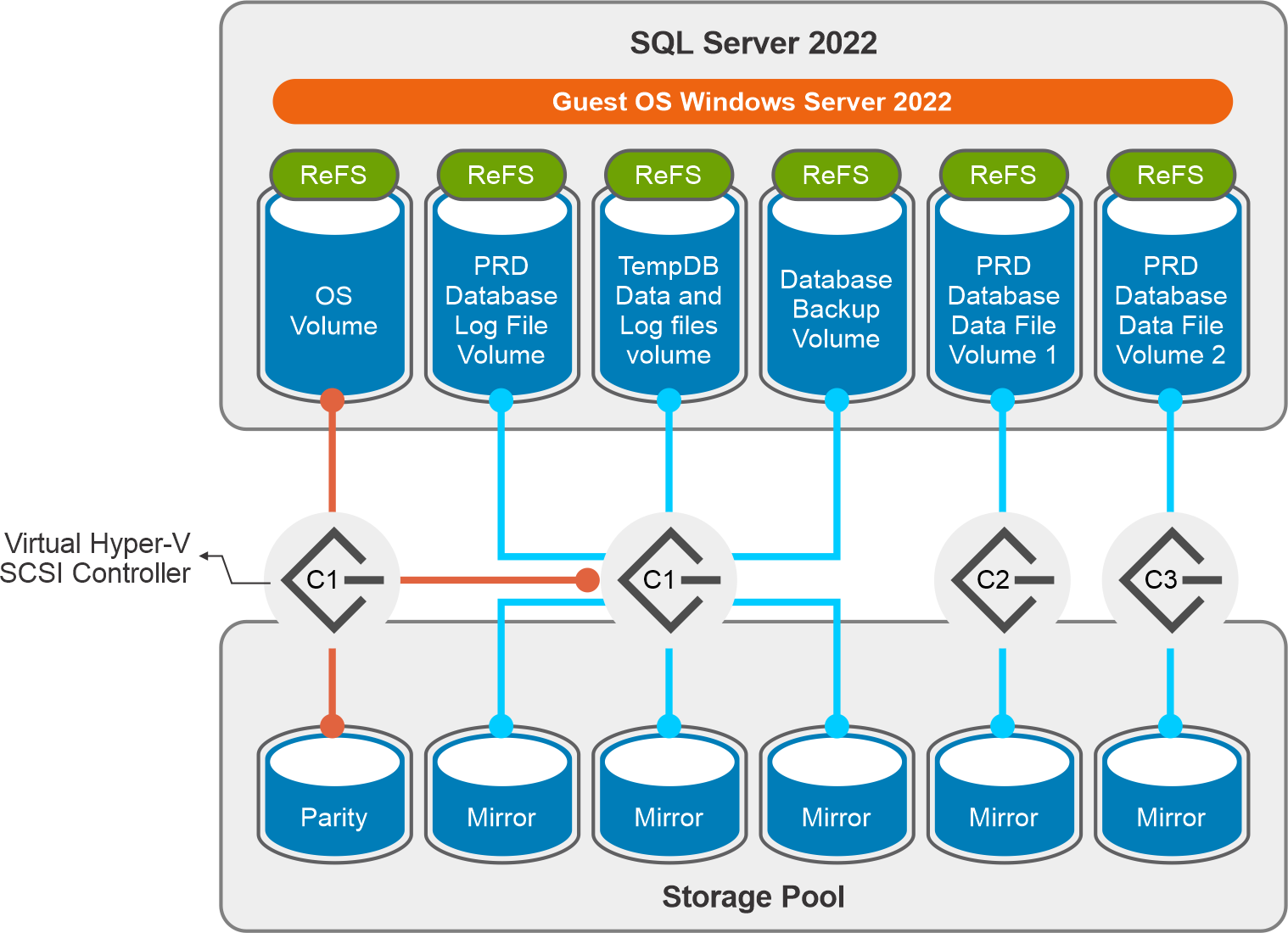

The following figure shows the volume and controller layout.

Figure 2. Storage layout

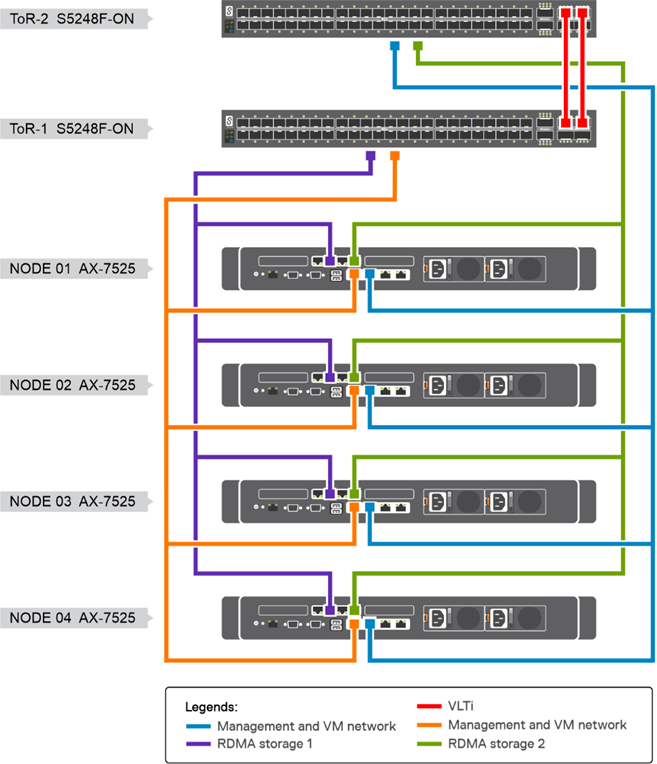

We also need to design and configure the networking component of the test environment. For this SQL Server case, we chose to provide top-of-rack connectivity through two Dell S5248F-ON switches, with L2 multipath support using Virtual Link Trunking (VLT) for a highly available configuration. With the addition of NVIDIA Mellanox ConnectX-6 Dx dual-port 100 GbE adapters, we can provide Remote Direct Memory Access (RDMA), through RDMA over Converged Ethernet (RoCE), to our storage network. The overall network architecture looks as follows:
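For a sense of scale, the sketch below converts the adapters' port speed into theoretical per-node storage bandwidth and shows what remains if one path is lost. It assumes one dual-port adapter per node, cabled with one port to each switch, so a switch failure removes one port; it ignores protocol overhead, so real throughput will be lower. The function name is hypothetical.

```python
def line_rate_gbytes_per_s(ports: int, port_gbps: float, failed_ports: int = 0) -> float:
    """Theoretical line rate in GB/s for the surviving ports (8 bits per byte).

    Protocol overhead is ignored, so this is an upper bound, not a measurement.
    """
    active_ports = max(ports - failed_ports, 0)
    return active_ports * port_gbps / 8
```

Under these assumptions, `line_rate_gbytes_per_s(2, 100)` returns 25.0, and `line_rate_gbytes_per_s(2, 100, failed_ports=1)` returns 12.5, which is the bandwidth each node keeps while one switch is down.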

Figure 3. Network architecture

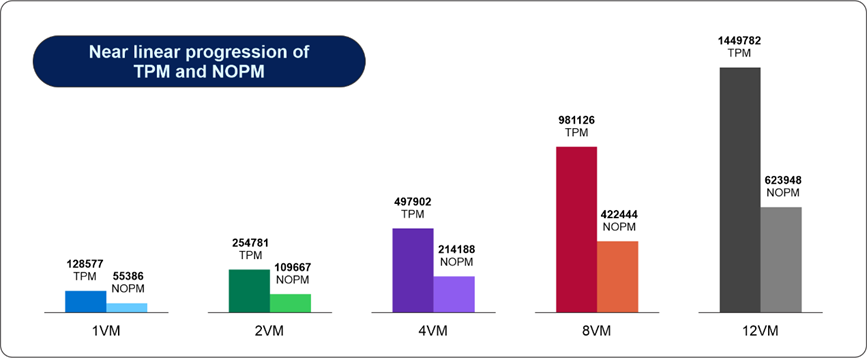

With this infrastructure in place, we chose a test methodology that started with one SQL Server VM and scaled up to 12 VMs. For each SQL Server VM, we installed and configured a HammerDB instance on a cluster of clients running Windows Server 2022. For benchmarking, we chose TPROC-C, HammerDB's online transaction processing (OLTP) workload derived from the TPC-C specification.

With a dataset of 4,000 warehouses (the TPROC-C scale factor) and a size of 400 GB, we started running the test on one SQL Server VM, then scaled to two, four, eight, and finally 12 VMs.
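The two figures quoted imply about 100 MB of initial data per warehouse (400 GB / 4,000 warehouses). The sketch below uses that ratio, taken from this test rather than from any HammerDB documentation, to estimate dataset size for other warehouse counts. The function names are hypothetical, decimal units are assumed (1 GB = 1,000 MB), and actual on-disk size will vary with database settings and with the rows the benchmark inserts as it runs.

```python
# Ratio implied by this test: 400 GB / 4,000 warehouses = 100 MB per warehouse.
# Integer math avoids floating-point rounding surprises.
MB_PER_WAREHOUSE = (400 * 1_000) // 4_000


def dataset_size_gb(warehouses: int) -> float:
    """Approximate initial dataset size for a given TPROC-C warehouse count."""
    return warehouses * MB_PER_WAREHOUSE / 1_000


def warehouses_for_size(target_gb: int) -> int:
    """Smallest warehouse count whose estimated dataset reaches target_gb."""
    return -(-target_gb * 1_000 // MB_PER_WAREHOUSE)  # ceiling division
```

Round-tripping the test's own numbers, `dataset_size_gb(4_000)` returns 400.0 and `warehouses_for_size(400)` returns 4000.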

We focused the test on two key performance indicators: transactions per minute (TPM) and new orders per minute (NOPM). The main goal was to obtain performance scaling as close to linear as possible when going from one to 12 VMs, while keeping CPU utilization in a safe range to leave ample headroom for other workloads. Each benchmarking test was conducted while the TPROC-C transaction load from HammerDB ran concurrently on the corresponding number of SQL Server VMs.
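One common way to express how close to linear the scaling is: divide the aggregate result at n VMs by n times the single-VM result. A sketch with a hypothetical function name; its input would be the aggregate TPM or NOPM values from the results figure, and no measured values are reproduced here.

```python
def scaling_efficiency(measured: dict[int, float]) -> dict[int, float]:
    """Map VM count -> measured / (VM count x single-VM result).

    `measured` holds an aggregate metric (for example, TPM or NOPM summed over
    all VMs) keyed by VM count, and must include the single-VM run (key 1).
    A value of 1.0 means perfectly linear scaling.
    """
    baseline = measured[1]
    return {vms: total / (vms * baseline) for vms, total in sorted(measured.items())}
```

A value that stays near 1.0 as the VM count grows indicates that adding VMs adds throughput almost proportionally; a steady decline points to a shared bottleneck such as CPU, storage, or network.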

The following figure shows a summary of the results obtained:

Figure 4. SQL performance summary

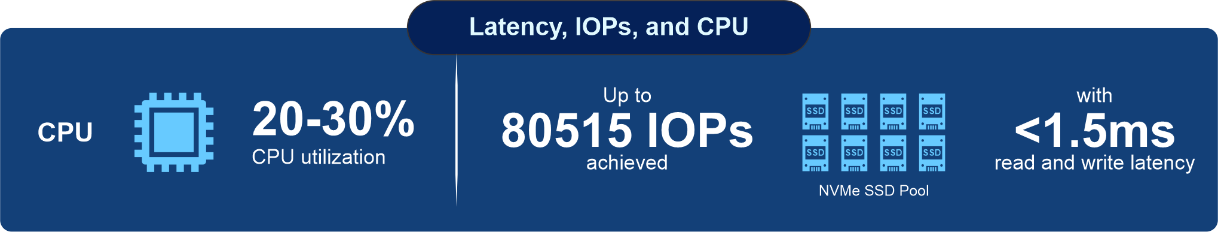

As usual, keeping latency low while increasing IOPS was a goal alongside maintaining consistent CPU utilization throughout the tests. A summary of the results is shown in the following figure:

Figure 5. Latency, IOPS, and CPU utilization results
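Latency and IOPS are linked through Little's law: the average number of I/Os in flight equals the I/O rate multiplied by the mean latency. The sketch below applies it in both directions; the function names are hypothetical, and real inputs would be read off the latency and IOPS charts.

```python
def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's law: mean I/Os in flight = I/O rate (per s) x mean latency (s)."""
    return iops * latency_ms / 1_000


def implied_latency_ms(iops: float, outstanding: float) -> float:
    """Rearranged form: mean latency (ms) for a given queue depth and I/O rate."""
    return outstanding * 1_000 / iops
```

For instance, a hypothetical 100,000 IOPS at 0.5 ms corresponds to about 50 I/Os in flight across the cluster. Holding latency flat while IOPS rises therefore means the storage is absorbing a deeper queue without slowing down.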

In summary, running our SQL Server 2022 workloads on Dell Integrated System for Azure Stack HCI, connected to Microsoft Azure through Azure Arc, provides excellent performance, with rich management features for on-premises operations through Dell OpenManage Integration with Microsoft Windows Admin Center.

For more technical content on Dell Integrated System for Azure Stack HCI, visit our Info Hub.

Resources

- Dell Integrated Systems for Azure Stack HCI Info Hub

- Microsoft Storage Spaces Direct

- Live Optics main page

- Live Optics Knowledge Base

Author:

Inigo Olcoz, Senior Principal Engineer Technologist at Dell

Twitter: @VirtualOlcoz

Single-Node Azure Stack HCI is now available!

Wed, 19 Oct 2022 15:25:20 -0000



Earlier this year, Microsoft announced the release of a new flavor for Azure Stack HCI: Azure Stack HCI single node. This is another milestone in Microsoft’s long history of evolution for the Azure Stack family of products.

Back in 2017, Microsoft announced Azure Stack, the platform to extend the cloud to the customers’ data centers. One of the key design principles for this release was to make it easy to create hybrid cloud environments.

In March 2019, a new member of the Azure Stack family was announced: Azure Stack HCI. This newcomer is a main driver of IT modernization, infrastructure consolidation, and true hybridity for Microsoft environments. Azure Stack HCI enables customers to run virtual machines (VMs), cloud-native applications, and Azure services on-premises on top of hyperconverged infrastructure (HCI) integrated systems, as a solution optimized for performance and cost. Dell Integrated System for Azure Stack HCI delivers a seamless Azure experience, simplifies Azure on-premises, and accelerates innovation.

Figure 1 Dell Technologies vision of Microsoft Azure Stack HCI

While Azure Stack HCI was born as a scalable solution to adapt to most customer IT needs, certain scenarios require other intrinsic characteristics. We think of the “edge” as the place where data is acted on near its point of creation to generate immediate and essential value. In many cases, these edge locations have severe space and cooling restrictions and place more emphasis on data proximity and operational efficiency than on scalability or resiliency. For these scenarios, a low-cost, high-performance, easy-to-manage platform matters more than scalability and cluster-level high availability.

The edge is becoming the next technology turning point, where organizations plan to increase their IT spending significantly (IDC EdgeView Survey). Microsoft designed an Azure Stack HCI platform for this scenario. Any IT deployment that benefits from collecting and processing data where it is produced, away from a core data center, is a candidate for an Azure Stack HCI single-node deployment. Edge locations can be found in manufacturing, retail, energy, telco, healthcare, smart connected cities; you name it. Machine learning (ML), artificial intelligence (AI), and Internet of Things (IoT) scenarios are typical edge needs that single-node Azure Stack HCI clusters fit well. A single-node cluster provides a cost-effective solution that supports the same workloads as a multi-node cluster and behaves in a similar way.

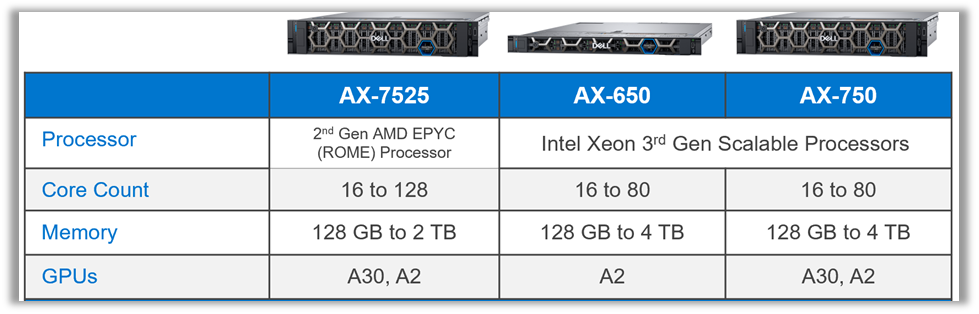

The Dell Technologies portfolio for Azure Stack HCI single node is based on the same 15G models that are also available for multi-node deployments, as shown here:

Figure 2 Dell Technologies Integrated System for Microsoft Azure Stack HCI portfolio

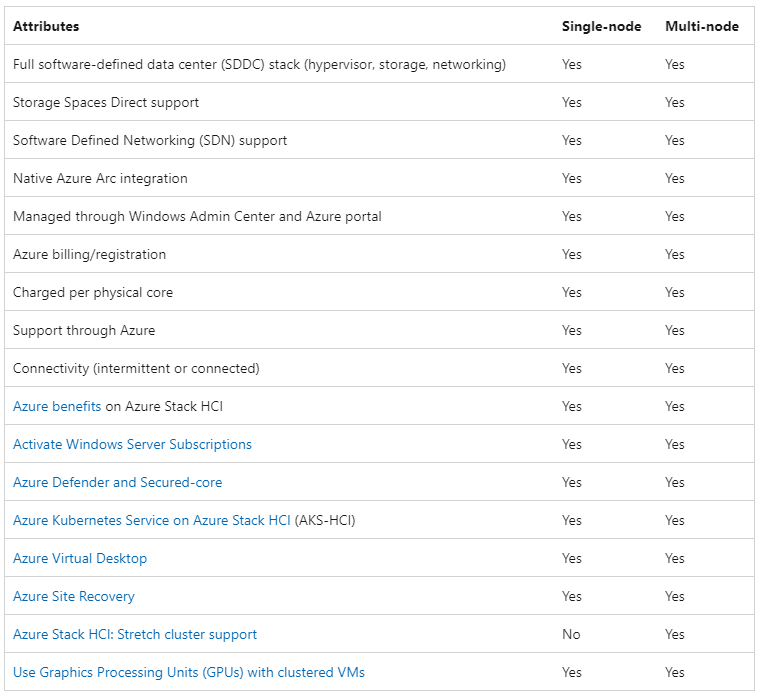

In terms of features, as mentioned before, single-node and multi-node systems behave similarly. The following table shows the main attributes of both. Note that they are nearly identical except for a few distinctions, the most relevant being the lack of stretched-cluster support:

Figure 3 Azure Stack HCI single and multi-node attributes comparison (Source: Microsoft)

There are a few differences worth highlighting:

- Windows Admin Center (WAC) does not support the creation of single-node clusters. Deployment is done through PowerShell, including enabling Storage Spaces Direct.

- Stretched clusters are not supported with single-node deployments. Stretched clusters require a minimum of two nodes at each site.

- The Storage Bus Layer (SBL) cache, which is commonly used to improve read/write performance on Windows Server and Azure Stack HCI OS, is not supported in single-node clusters.

- There are limitations on WAC cluster management functionality. PowerShell can be used to cover those limitations.

- Single-node clusters support only a single drive type: either NVMe or SSD drives, but not a mix of both.

- For cluster lifecycle management, OpenManage Integration with Microsoft Windows Admin Center (OMIMSWAC) Cluster-Aware Updating (CAU) cannot be used. Vendor-provided solutions (drivers and firmware), or PowerShell and the Server Configuration tool (SConfig), are valid alternatives.
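The drive-type and stretched-cluster restrictions above are easy to check before deployment. The following sketch encodes just those two; `SingleNodePlan` and `plan_issues` are hypothetical names, not part of any Dell or Microsoft tool, and the remaining items (WAC, SBL cache, CAU) are operational limits that a simple data check does not capture.

```python
from dataclasses import dataclass, field


@dataclass
class SingleNodePlan:
    """Hypothetical description of a planned single-node deployment."""
    drive_types: list[str] = field(default_factory=list)  # e.g. ["NVMe"] * 8
    stretched: bool = False


def plan_issues(plan: SingleNodePlan) -> list[str]:
    """Return the listed single-node restrictions this plan would violate."""
    issues = []
    kinds = {d.strip().upper() for d in plan.drive_types}
    if not kinds:
        issues.append("no drives listed")
    elif len(kinds) > 1:
        issues.append(f"mixed drive types {sorted(kinds)}: use only NVMe or only SSD")
    elif not kinds <= {"NVME", "SSD"}:
        issues.append(f"unsupported drive type {sorted(kinds)[0]}: use NVMe or SSD")
    if plan.stretched:
        issues.append("stretched clusters are not supported on a single node")
    return issues
```

An empty result means the plan passes these two checks only; it says nothing about the management and update limitations listed above.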

If your Azure-based edge workloads are moving further from the data center, and you understand the design differences listed above for Dell Azure Stack HCI single node, this could be a great fit for your business.

We expect Azure Stack HCI single-node clusters to evolve over time, so check our Info Hub site for the latest updates!

Author: Iñigo Olcoz

Twitter: VirtualOlcoz

References

- IDC EdgeView Survey 2022

- Microsoft: Azure Stack HCI single-node clusters

- Info Hub: Microsoft HCI Solutions

- Info Hub: Dell OpenManage Integration with Microsoft Windows Admin Center v2.0 Technical Walkthrough

- Info Hub: Technology leap ahead: 15G Intel based Dell Integrated System for Microsoft Azure Stack HCI