
Oracle database VM configuration


Processor and memory

Sizing the vCPUs and memory of the virtual machines appropriately requires understanding the Oracle workload. Avoid overcommitting processor and memory resources on the physical node. Allocate only as many vCPUs as the workload needs. Excess vCPUs can degrade performance because of hypervisor scheduling constraints.

Hyperthreading is a hardware technology on Intel processors that lets each physical processor core act as two logical processors. In general, enabling hyperthreading on newer Intel processors provides a performance advantage.

Each VMware vSphere® physical node also runs a Nutanix Controller VM (CVM), so account for the processor and memory that the CVM requires when sizing database VMs. Exactly one CVM runs on each physical node, and it does not move to another physical node when a failure event occurs.
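To illustrate the sizing rules above, the following Python sketch adds up the vCPUs and memory of the database VMs plus the CVM on one node and flags overcommitment. The class and function names, and the node and CVM sizes used in any example, are hypothetical. The sketch ignores ESXi memory overhead. By default it counts only physical cores, and it counts hyperthreads only when `count_hyperthreads` is set. This is one conservative policy, not a Dell EMC or Nutanix rule.

```python
from dataclasses import dataclass


@dataclass
class NodeSpec:
    physical_cores: int   # physical cores across all sockets
    hyperthreading: bool  # True if hyperthreading is enabled
    memory_gb: int        # installed memory


@dataclass
class VmSpec:
    name: str
    vcpus: int
    memory_gb: int


def check_node(node, vms, cvm, count_hyperthreads=False):
    """Return overcommit warnings for one node (empty list if none).

    The CVM is always included because exactly one CVM runs on every node.
    Hypervisor memory overhead is not modeled in this sketch.
    """
    threads = 2 if (node.hyperthreading and count_hyperthreads) else 1
    cpu_limit = node.physical_cores * threads
    everything = list(vms) + [cvm]
    total_vcpus = sum(vm.vcpus for vm in everything)
    total_mem = sum(vm.memory_gb for vm in everything)

    warnings = []
    if total_vcpus > cpu_limit:
        warnings.append(f"vCPU overcommit: {total_vcpus} vCPUs > {cpu_limit} CPUs")
    if total_mem > node.memory_gb:
        warnings.append(f"memory overcommit: {total_mem} GB > {node.memory_gb} GB")
    return warnings
```

For example, consider a 28-core node with a hypothetical 8-vCPU CVM. A single 24-vCPU database VM would trigger a vCPU warning under the physical-core policy, but it passes when hyperthreads are counted.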

Although multiple Oracle database VMs can run on the same physical node, for performance reasons it is better to spread them across multiple nodes and minimize the number of database instances running on any one node. For Oracle RAC, the RAC instance VMs should run on different physical nodes. Set up VM-host affinity or anti-affinity rules for the database VMs to define where they can run within the cluster.
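The RAC placement rule can be expressed as a simple check. The Python sketch below is hypothetical. It only inspects a snapshot of VM-to-host placement supplied as a dictionary. In practice, the vSphere affinity rules described above keep the VMs apart, and this check only shows the condition those rules enforce.

```python
from collections import defaultdict


def rac_placement_violations(placement, rac_vms):
    """Return {host: [vms]} for every host running more than one RAC instance VM.

    placement maps VM name -> ESXi host name (for example, exported from
    vCenter); rac_vms lists the VMs that belong to one RAC database.
    """
    by_host = defaultdict(list)
    for vm in rac_vms:
        by_host[placement[vm]].append(vm)
    return {host: sorted(vms) for host, vms in by_host.items() if len(vms) > 1}
```

An empty result means every RAC instance VM in the snapshot sits on its own host.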

XC Series storage container and VMware storage virtualization

On XC Series storage, a typical configuration uses a single storage container composed of all SSDs and HDDs in the cluster. This maximizes storage capacity and lets auto-tiering work more efficiently. The container is mounted on the ESXi hosts through NFS as a datastore. Multiple virtual disks are created on this datastore and presented to the guest OS as SCSI disks that Oracle ASM can use.

Nutanix Acropolis Block Services (ABS) can present storage through iSCSI directly to a physical (non-VM) host or to a virtualized guest OS, bypassing the VMware storage virtualization layer. This approach requires additional planning and extra configuration steps on both the Nutanix cluster and the guest OS.

For virtual machines on VMware, it is recommended to present storage as virtual disks with VMware storage virtualization because it offers a good balance between flexibility, performance, and ease of use.

Find more information on ABS at the Nutanix portal.

VM storage controller and virtual disks

Typically, an Oracle database spans multiple LUNs to increase performance by allowing parallel I/O streams. In a virtualized environment, multiple virtual disks serve the same purpose. For better performance, Nutanix recommends at least four to six database virtual disks, with more disks added as capacity requirements dictate.
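One way to apply this guidance is to start from a floor of four disks and add disks only when the capacity requirement exceeds what that floor provides. The Python sketch below assumes equally sized disks. Its 200 GB default disk size is borrowed from the example configuration in Table 1. The function name and defaults are hypothetical.

```python
import math


def plan_data_disks(required_gb, disk_size_gb=200, minimum=4):
    """Number of equally sized database virtual disks for a capacity target.

    Uses at least `minimum` disks (the low end of the four-to-six guidance)
    and adds disks only when capacity demands it.
    """
    if required_gb <= 0 or disk_size_gb <= 0:
        raise ValueError("sizes must be positive")
    return max(minimum, math.ceil(required_gb / disk_size_gb))
```

For example, 500 GB still gets four disks, while 1500 GB needs eight 200 GB disks.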

As a best practice, create multiple controllers and separate the guest OS virtual disk from the database virtual disks. Place the guest OS virtual disk on the primary controller, and create additional controllers to separate the virtual disks for data and log files.

Table 1 shows an example configuration of controllers and virtual disks for an Oracle database VM.

Table 1. Virtual adapter and vdisk configuration for Oracle database VM

| Controller | Adapter type | Usage | Virtual disks |
|---|---|---|---|
| Controller 0 | LSI Logic Parallel | Guest OS | 1 x 100 GB |
| Controller 1 | Paravirtual | Data files, redo logs | 4 x 200 GB |
| Controller 2 | Paravirtual | Archived logs | 2 x 200 GB |

Use the default adapter type LSI Logic Parallel for SCSI controller 0.

Choose the Paravirtual SCSI (PVSCSI) adapter type for the controllers that hold the virtual disks for data files, redo logs, and archived logs. The PVSCSI adapter provides greater I/O throughput and lower CPU utilization, and VMware recommends it for virtual machines with demanding I/O workloads.

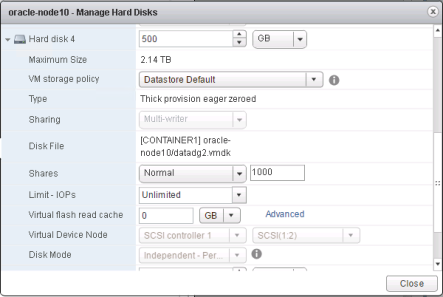
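The layout in Table 1 corresponds to a small set of entries in the VM's .vmx configuration file. The Python sketch below generates these entries to show the mapping. `scsiN.virtualDev` selects the adapter type: `lsilogic` for LSI Logic Parallel and `pvscsi` for Paravirtual. Each disk occupies a `scsiN:U` slot, and unit 7 is skipped because it is reserved for the controller. The VMDK file names are hypothetical, and the sketch assumes the classic limit of 15 disks per controller. The vSphere Web Client normally writes these entries when you add controllers and disks, so the sketch is only an illustration and does not replace that workflow.

```python
# (controller number, virtualDev value, usage tag, number of disks), per Table 1
TABLE_1_LAYOUT = [
    (0, "lsilogic", "os", 1),
    (1, "pvscsi", "data", 4),
    (2, "pvscsi", "arch", 2),
]

# SCSI unit 7 is reserved for the controller itself, so disks use 0-6 and 8-15.
USABLE_UNITS = [u for u in range(16) if u != 7]


def vmx_controller_lines(vm_name, layout):
    """Return .vmx lines declaring the controllers and disks in `layout`."""
    lines = []
    for ctrl, virtual_dev, usage, count in layout:
        if count > len(USABLE_UNITS):
            raise ValueError(f"too many disks for scsi{ctrl}")
        lines.append(f'scsi{ctrl}.present = "TRUE"')
        lines.append(f'scsi{ctrl}.virtualDev = "{virtual_dev}"')
        for i, unit in enumerate(USABLE_UNITS[:count]):
            # Hypothetical naming scheme: <vm>_<usage><index>.vmdk
            lines.append(f'scsi{ctrl}:{unit}.present = "TRUE"')
            lines.append(f'scsi{ctrl}:{unit}.fileName = "{vm_name}_{usage}{i}.vmdk"')
    return lines
```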

Shared access for virtual disks

By default, VMware does not allow multiple virtual machines to access the same virtual disks. In an Oracle RAC implementation, multiple database VMs need to access the same set of virtual disks, so this default protection must be disabled by setting the multi-writer option. The option is available in the virtual machine settings in the vSphere Web Client. It can be set when new virtual disks are created or while the virtual machine is powered off.
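In the .vmx file, the multi-writer option is stored as a `sharing` entry on each shared disk slot, in the form `scsiN:U.sharing = "multi-writer"`. The Python sketch below builds these entries for a list of (controller, unit) slots. The function name is hypothetical. Rejecting controller 0 is an assumption drawn from Table 1, where controller 0 holds only the unshared guest OS disk.

```python
def multi_writer_lines(slots):
    """Return .vmx entries that enable multi-writer sharing on (controller, unit) slots."""
    lines = []
    for controller, unit in slots:
        if controller == 0:
            # Assumption from Table 1: controller 0 carries only the guest OS disk.
            raise ValueError("controller 0 holds the unshared guest OS disk")
        if unit == 7:
            raise ValueError("SCSI unit 7 is reserved for the controller")
        lines.append(f'scsi{controller}:{unit}.sharing = "multi-writer"')
    return lines
```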

Queue depth and outstanding disk requests

Splitting virtual disks across multiple controllers raises the number of outstanding I/Os that a virtual machine can sustain. For demanding I/O workloads, the default queue depths might not be sufficient. The default PVSCSI queue depth is 64 per virtual disk and 254 per virtual controller. To increase these settings, refer to VMware KB article 2053145.
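A rough upper bound shows why splitting disks helps. Each controller contributes the smaller of two limits: its number of disks times the per-disk queue depth, or its own controller queue depth. The Python sketch below uses the default PVSCSI values quoted above. It ignores host-side limits such as the ESXi device queue depth, so treat the result as a ceiling, not a measured figure. The function name is hypothetical.

```python
def max_outstanding_io(disks_per_controller, per_disk=64, per_controller=254):
    """Upper bound on a VM's outstanding I/Os across its PVSCSI controllers.

    Each controller is capped both by the per-disk queue depth times its
    number of disks and by its own controller queue depth.
    """
    return sum(min(n * per_disk, per_controller) for n in disks_per_controller)
```

With the two PVSCSI controllers from Table 1 (four disks and two disks), the bound is 254 + 128 = 382. Placing all six disks on one controller caps it at 254.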

For vSphere versions earlier than 5.5, the maximum number of outstanding disk requests for virtual machines sharing a datastore/LUN is limited by the Disk.SchedNumReqOutstanding parameter. Beginning with vSphere 5.5, this parameter is deprecated, and the value is set per LUN instead. Review the setting and increase it if necessary. Refer to VMware KB article 1268 for details.
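On vSphere 5.5 and later, the per-LUN value is typically changed with `esxcli storage core device set` and its `-O` (`--sched-num-req-outstanding`) option, as covered in VMware KB 1268. The Python sketch below only builds the command lines for a list of device IDs so that you can review them before running them in the ESXi shell. It does not execute anything. The device ID and value are placeholders, not recommendations. Check the KB for the valid range on your ESXi version.

```python
import shlex


def sched_outstanding_commands(device_ids, value):
    """Build (but do not run) esxcli commands that set the per-LUN
    outstanding-request limit on ESXi 5.5 and later."""
    if value < 1:
        raise ValueError("value must be a positive integer")
    return [
        ["esxcli", "storage", "core", "device", "set", "-d", dev, "-O", str(value)]
        for dev in device_ids
    ]


if __name__ == "__main__":
    # Placeholder device ID; list real ones with `esxcli storage core device list`.
    for argv in sched_outstanding_commands(["naa.600000000000000000000000000000aa"], 64):
        print(shlex.join(argv))
```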

Enabling virtual disk UUID

It is important to correctly identify the virtual disks inside the guest OS before performing any disk-related operation, such as formatting or partitioning. For disks that support it, the World Wide Name (WWN) is commonly used as the unique identifier. For the guest OS to see the WWNs of the virtual disks, the EnableUUID parameter (disk.EnableUUID) must be set to TRUE in the virtual machine configuration. The steps to set this parameter can be found in Appendix A: How to identify and query the disk ids/WWNs.
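The Python sketch below covers both sides of this step. `set_vmx_option` edits the lines of a .vmx file so that `disk.EnableUUID` is `"TRUE"`. Use it only while the VM is powered off, and note that matching keys case-insensitively is an assumption. `wwn_map` runs inside a Linux guest. It lists the `wwn-*` links that udev creates under /dev/disk/by-id and maps each one, including partition links, to its kernel device name. Both function names are hypothetical, and Appendix A remains the reference procedure.

```python
from pathlib import Path


def set_vmx_option(lines, key, value):
    """Return a copy of .vmx `lines` with `key = "value"`, appending it if absent."""
    result, found = [], False
    for line in lines:
        name, sep, _ = line.partition("=")
        if sep and name.strip().lower() == key.lower():
            result.append(f'{key} = "{value}"')  # replace the existing entry
            found = True
        else:
            result.append(line)
    if not found:
        result.append(f'{key} = "{value}"')
    return result


def wwn_map(by_id_dir="/dev/disk/by-id"):
    """Map udev wwn-* link names to kernel device names (e.g. 'sdb') in a Linux guest."""
    directory = Path(by_id_dir)
    if not directory.is_dir():
        return {}
    return {link.name: link.resolve().name for link in sorted(directory.glob("wwn-*"))}
```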

VM networking

A minimum of two 10GbE interfaces is recommended for each ESXi host. The actual number required depends on the number of vSwitches and the total network bandwidth requirement. Each host should connect to dual redundant switches for network path redundancy, as described in Network configuration. Table 2 lists the vSwitches and their target usage.

Table 2. vSwitches and target use

| vSwitch | Physical adapters | Speed | Network | Usage |
|---|---|---|---|---|
| vSwitchNutanix | NA | Intra CVM and ESXi host | svm-iscsi-pg, vmk-svm-iscsi-pg | Primary storage communication path |
| vSwitch0 | vmnic0, vmnic1 | 10GbE | Management network, VM network | Management traffic, VM public traffic, inter-node communication |
| vSwitch1 | vmnic2, vmnic3 | 10GbE | VM network: Oracle | Private Oracle RAC interconnect |
| vSwitch2 | vmnic4, vmnic5 | 10GbE | VM network: iSCSI | Dedicated iSCSI traffic to VMs |

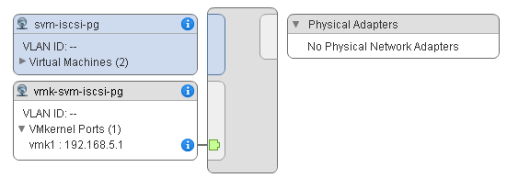
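The redundancy rules behind Table 2 can be checked mechanically. Every vSwitch that carries external traffic should have at least two uplinks, and no physical adapter should be shared between vSwitches, in line with the recommendation to use dedicated NICs for each traffic type. The Python sketch below encodes Table 2 as a dictionary and reports violations. The data structure and function name are hypothetical, and nothing is read from a live ESXi host.

```python
# Table 2 encoded as data; "internal" marks the host-only Nutanix vSwitch.
VSWITCHES = {
    "vSwitchNutanix": {"uplinks": [], "internal": True},
    "vSwitch0": {"uplinks": ["vmnic0", "vmnic1"], "internal": False},
    "vSwitch1": {"uplinks": ["vmnic2", "vmnic3"], "internal": False},
    "vSwitch2": {"uplinks": ["vmnic4", "vmnic5"], "internal": False},
}


def check_uplinks(vswitches):
    """Return a list of redundancy or dedication problems (empty if none)."""
    problems, owner = [], {}
    for name, cfg in vswitches.items():
        if cfg["internal"]:
            continue  # no physical uplinks expected
        if len(cfg["uplinks"]) < 2:
            problems.append(f"{name}: fewer than two uplinks, no path redundancy")
        for nic in cfg["uplinks"]:
            if nic in owner:
                problems.append(f"{nic} shared by {owner[nic]} and {name}")
            else:
                owner[nic] = name
    return problems
```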

Nutanix local virtual switch

Each ESXi host that is part of the cluster has a local vSwitch, created automatically when the Nutanix operating system is installed, as shown in the following image.

This switch is used for local communication between the CVM and the ESXi host. The host has a VMkernel interface on this vSwitch, and the CVM has an interface bound to a port group called svm-iscsi-pg, which serves as the primary communication path to the storage. Do not modify this virtual switch configuration.

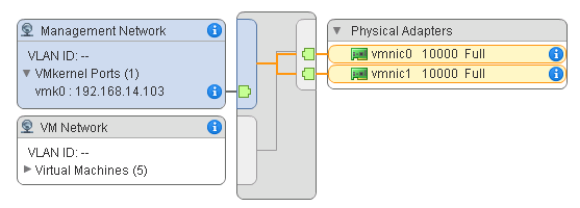

Management/inter-node network

This virtual switch (vSwitch0) was created using two 10GbE physical adapters, vmnic0 and vmnic1, as shown in the following image. Management traffic is minimal, but the Acropolis Distributed Storage Fabric (DSF) needs connectivity across CVMs for synchronous write replication and for Nutanix cluster management. This network also carries VMware vSphere vMotion and other cluster management traffic.

Public VM network

All public client access to the virtual machines flows through this network. Client traffic can be bursty, and at times the largest volumes of data are transferred between clients and VMs. Set up this network on 10GbE.

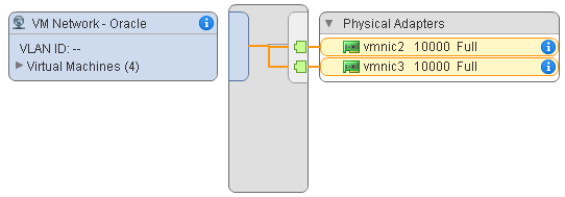

Oracle RAC interconnect

When deploying Oracle RAC, Oracle recommends setting up a dedicated network for traffic between RAC nodes. A separate vSwitch with redundant physical adapters can provide this dedicated RAC interconnect network. Only RAC traffic should use this network.

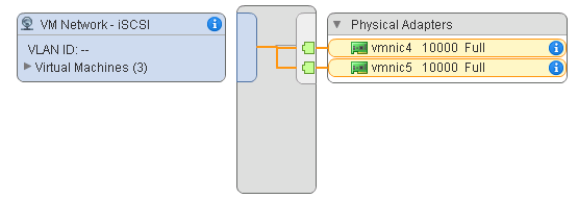

iSCSI network

iSCSI is not typically used in an XC Series Nutanix environment. The ABS feature, part of Nutanix release 4.7, enables presenting DSF storage resources directly to virtualized guest operating systems or physical hosts using the iSCSI protocol. If this feature is used, dedicate at least two 10GbE interfaces to iSCSI traffic. The following image shows the vSwitch configuration used for iSCSI connectivity in this solution.

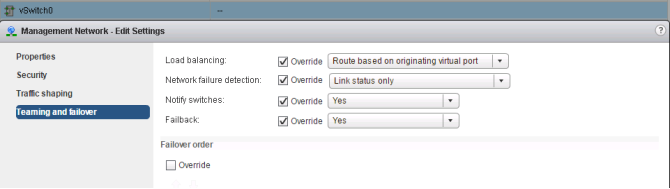

Other networking best practices

Additional networking best practices include the following:

- Use dedicated NICs on the hosts for management, iSCSI, and RAC interconnect traffic, and use dedicated VLANs to separate each type of traffic.

- For a standard virtual switch configuration, use the default load-balancing policy, Route based on originating virtual port. This policy simplifies implementation because it does not require physical switch configurations such as LACP.

- The standard Ethernet MTU is 1500 bytes, and jumbo frames use a much larger MTU of 9000 bytes. A larger transfer unit carries more data in each packet, so fewer packets are needed. This results in higher throughput and lower CPU utilization and overhead. Use jumbo frames only when all network devices on the path, including the network switches, CVMs, VMs, and ESXi hosts, support the same MTU size.
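The benefit of jumbo frames can be estimated with simple arithmetic. The Python sketch below assumes IPv4 and TCP headers without options (40 bytes per packet) and standard Ethernet per-frame overhead on the wire: a 14-byte header, 4-byte FCS, 8-byte preamble, and 12-byte inter-frame gap. It estimates wire efficiency only and does not model CPU savings, which depend on the NIC and driver. `max_ping_payload` gives the largest ICMP payload that fits in one unfragmented packet, which is the size to use when testing the path with `vmkping -d -s` from an ESXi host. The function names are hypothetical.

```python
import math

ETH_OVERHEAD = 14 + 4 + 8 + 12  # header + FCS + preamble/SFD + inter-frame gap (bytes)
IP_TCP_HEADERS = 20 + 20        # IPv4 + TCP headers without options (bytes)


def tcp_efficiency(mtu):
    """Fraction of on-the-wire bytes that carry TCP payload at this MTU."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_OVERHEAD)


def frames_needed(payload_bytes, mtu):
    """Full-size frames needed to carry payload_bytes of TCP data."""
    return math.ceil(payload_bytes / (mtu - IP_TCP_HEADERS))


def max_ping_payload(mtu):
    """ICMP echo payload that exactly fills the MTU (20-byte IPv4 + 8-byte ICMP headers)."""
    return mtu - 28
```

For 1 MiB of data, the sketch gives 719 frames at MTU 1500 versus 118 at MTU 9000, with wire efficiency of about 94.9% versus 99.1%. At MTU 9000, the ping payload is 8972 bytes. If a don't-fragment ping of that size fails while a smaller one succeeds, some device on the path is not configured for the same MTU.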